1. Introduction

Hyperspectral anomaly detection (HAD), which identifies subtle, unknown materials within hyperspectral images (HSIs), is a fundamental task in hyperspectral data analysis [1]. HAD typically operates in an unsupervised manner without requiring prior spectral knowledge of targets, aiming to detect objects whose spectral characteristics differ significantly from their background [2]. This capability makes HAD particularly valuable for surveillance applications in which specific targets are unknown beforehand, such as detecting camouflaged personnel in battlefield scenarios [3], identifying pesticide residues during food safety inspections [4], and locating tumors in medical imaging [5].

In the nascent stage of HAD development, statistics-based methods predominated. These approaches, rooted in signal detection theory, were primarily employed for background characterization. The Reed–Xiaoli (RX) detector [6], derived from a binary hypothesis testing framework under a multivariate Gaussian distribution assumption, became the most widely adopted HAD method owing to its algorithmic simplicity and computational efficiency. Numerous variants followed, including local-RX (LRX) [7], which uses only pixels from dual concentric sliding windows to keep the background set uncontaminated; weighted-RX (WRX) [8], which applies weighting schemes to enhance background representation and improve the detection performance of RX; and kernel-RX (KRX) [9], which projects the original data into a higher-dimensional feature space through nonlinear mapping to amplify the separability between background and anomalies. Further methodological innovations introduced the signal-to-noise ratio (SNR) as an alternative optimization criterion for detector design [10]. Notably, the formulation of a hyperspectral target detection (HTD) dual theory specifically for HAD applications marked the first comprehensive exploration of their inherent duality relationships.
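As a rough illustration of the RX idea described above, the following sketch scores each pixel by its squared Mahalanobis distance to the global background statistics. It is the textbook global RX form under the Gaussian assumption, not necessarily the exact implementation of [6]; the function name `rx_scores`, the use of a pseudo-inverse, and the synthetic data cube are assumptions made for this example.

```python
import numpy as np

def rx_scores(hsi):
    """Global RX sketch: squared Mahalanobis distance of each pixel to the scene mean.

    hsi is assumed to be an array of shape (height, width, bands).
    """
    h, w, b = hsi.shape
    pixels = hsi.reshape(-1, b).astype(np.float64)
    mu = pixels.mean(axis=0)                      # background mean spectrum
    centered = pixels - mu
    cov = centered.T @ centered / (pixels.shape[0] - 1)
    # Assumption of this sketch: a pseudo-inverse guards against an
    # ill-conditioned covariance matrix.
    cov_inv = np.linalg.pinv(cov)
    scores = np.einsum("ij,jk,ik->i", centered, cov_inv, centered)
    return scores.reshape(h, w)

# Synthetic example: Gaussian background with one implanted anomalous pixel.
rng = np.random.default_rng(0)
cube = rng.normal(size=(20, 20, 5))
cube[3, 7] += 8.0
rx_map = rx_scores(cube)
```

In this toy setting the implanted pixel receives by far the largest score, because it lies far from the background distribution in every band.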

However, HAD algorithms designed around statistical assumptions encounter a fundamental limitation: the probability density function is unknown in practical applications. This challenge has led to the widespread investigation and application of sparse coding (SC) [11,12] and matrix low-rank representation (MLRR) [13,14,15] techniques in HAD research. These methods rely on carefully designed regularization terms that employ various norms to capture essential data characteristics, particularly low-rankness and sparsity. SC-based HAD approaches utilize over-complete dictionaries with multiple atoms to represent test pixels through

-norm optimization, as demonstrated in background joint SC [11] and archetypal analysis with structured SC [12]. At the matrix level, common optimization objectives incorporate the Frobenius norm,

-norm, and nuclear norm to enforce desired structural properties. The low-rank and sparse representation (LRASR) framework [13] unifies these concepts by jointly modeling low-rank structures in coefficient matrices and sparse structures in residual matrices. By imposing nuclear norm constraints on the coefficient matrix, LRASR effectively captures the intrinsic HSI structure while isolating anomalies. Further developments, such as graph and total variation regularized low-rank representation (GTVLRR) [14], augment LRASR with dual regularization terms that better preserve spatial structures. Recent advances include nonconvex-based HAD [15], where generalized shrinkage mapping achieves three objectives: approximating

penalties through group sparsity constraints, simulating

TV penalties via TV regularization, and enforcing low-rank properties with nuclear norm penalties. This integrated approach enables comprehensive spatial correlation modeling.
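To make the role of the nuclear norm concrete, the sketch below implements singular value thresholding, the standard proximal operator of the nuclear norm that low-rank solvers typically apply at each iteration. It illustrates the general mechanism only and is not the solver of [13], [14], or [15]; the function name `svt`, the threshold `tau`, and the synthetic matrices are assumptions of this example.

```python
import numpy as np

def svt(matrix, tau):
    """Singular value thresholding: shrink every singular value by tau, floor at zero."""
    u, s, vt = np.linalg.svd(matrix, full_matrices=False)
    s_shrunk = np.maximum(s - tau, 0.0)
    return (u * s_shrunk) @ vt

# Synthetic example: a rank-2 matrix plus small dense noise.
rng = np.random.default_rng(0)
low_rank = rng.normal(size=(30, 2)) @ rng.normal(size=(2, 10))
noisy = low_rank + 0.01 * rng.normal(size=(30, 10))
denoised = svt(noisy, tau=0.5)
```

Because the noise contributes only small singular values, thresholding removes them and leaves a low-rank estimate, which is the behavior the nuclear norm penalty encourages for the background component.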

The intrinsic data structure of an HSI is fundamentally tensor-based, yet most existing methods convert the HSI data into matrix form, which can compromise the inherent spatial characteristics of the HSI. In contrast, tensor representation (TR) methods preserve spatial information and consequently achieve enhanced HAD performance. TR algorithms focus on tensor rank minimization, for which (unlike matrix rank) multiple definitions exist, including the CANDECOMP/PARAFAC (CP) rank [16], Tucker rank [17], ring rank [18], average rank [19], and tubal rank [20]. A CP rank-based TR model [16] decomposes backgrounds via mode-3 products of abundance tensors and endmember matrices while preserving spatial-spectral integrity. By leveraging the inherent sparsity of core tensors generated through Tucker decomposition of gradient tensors, a specialized regularization term [17] is developed. This unified term simultaneously encodes both the low-rank and local smoothness constraints of background components, consequently improving detection performance. The tensor ring decomposition (TRD) method [18] exploits low-rank characteristics inherent in HSI background tensors. This approach decomposes a third-order background tensor through multilinear tensor products of three factor tensors, systematically uncovering low-rank features across the different dimensions of the background tensor. A TR model [19] incorporates both low average rank and piecewise smooth constraints, employing the tensor nuclear norm (TNN) derived from tensor singular value decomposition (T-SVD) to induce low-rank properties on the background tensor. The tensor low-rank and sparse representation (TLRSR) framework [20] extends LRASR to the tensor domain, employing the weighted tensor nuclear norm (WTNN) as the convex relaxation for tensor tubal rank. This formulation enables computationally efficient Fourier-domain implementation while maintaining strong theoretical guarantees. The TLRSR further incorporates the

norm to enforce structured group sparsity constraints. As a tensor dictionary representation model, the spatial invariant tensor self-representation (SITSR) model [21] merges the unfolded matrices of two representative coefficients and constrains them in a low-dimensional subspace. This strategy fully preserves the multi-dimensional structure. Beyond convex formulations, some nonconvex TR methods [22,23,24] have been explored as alternatives to unbiased estimators. These nonconvex formulations can replace their convex counterparts to promote sparsity, group sparsity, and low-rankness, and they have shown clear advantages in HAD.
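The Fourier-domain computation mentioned above for T-SVD-based norms can be sketched as follows: transform the tensor along its third (spectral) mode, take the matrix singular values of every frontal slice, and sum them. This is the plain, unweighted TNN; the 1/n3 normalization follows one common definition and is an assumption here, as are the function name and the toy tensor. The weighted variant used by TLRSR [20] is not reproduced.

```python
import numpy as np

def tensor_nuclear_norm(tensor):
    """Unweighted tensor nuclear norm of a third-order tensor via FFT along mode 3."""
    n3 = tensor.shape[2]
    spectrum = np.fft.fft(tensor, axis=2)         # frontal slices in the Fourier domain
    total = 0.0
    for k in range(n3):
        singular_values = np.linalg.svd(spectrum[:, :, k], compute_uv=False)
        total += singular_values.sum()
    return total / n3                             # assumed normalization

# Toy tensor whose only nonzero frontal slice is the first one.
rng = np.random.default_rng(0)
slice0 = rng.normal(size=(4, 3))
toy = np.zeros((4, 3, 5))
toy[:, :, 0] = slice0
tnn_value = tensor_nuclear_norm(toy)
```

For this toy tensor every Fourier-domain slice equals `slice0`, so under the assumed normalization the TNN reduces to the ordinary matrix nuclear norm of `slice0`, which gives a quick sanity check.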

More recently, deep learning (DL) has achieved significant success in multiple real-world tasks [25,26,27,28]. This rapid development has prompted numerous researchers to apply DL to HAD, as evidenced by the growing body of work in this field [29,30,31,32,33,34,35]. A well-known DL method is the autonomous HAD (Auto-AD) network [29], which uses a fully convolutional autoencoder (AE) network to reconstruct the background and uses the reconstruction error as the anomaly score. Auto-AD applies an adaptive weighted loss function that further suppresses the reconstruction of anomalies by lowering the weight of anomalous pixels during training. The robust graph autoencoder (RGAE) [30] incorporates a graph regularization term to preserve the spatial geometric structure during the reconstruction process. RGAE utilizes a superpixel segmentation-based graph regularization term instead of constructing a graph Laplacian matrix to reduce the computational complexity. In addition, the

norm is introduced into the loss function to improve the robustness to anomalies. The transformer-based AE framework (TAEF) [31] customizes a multilinear transformation decoder, which aims to improve the background learning capability of the vanilla AE. In the preprocessing phase, TAEF uses a clustering-based method to suppress anomalies. The deep feature aggregation network (DFAN) [32] discovers anomalies by enhancing the representation of various background patterns and calculating the Mahalanobis distance of the residual results. The gated transformer network for HAD (GT-HAD) [33] is a dual-branch framework consisting of an anomaly-focused branch and a gated transformer block, designed to accurately reconstruct the background while suppressing the anomalies. To further improve HAD performance, some studies have built deep networks based on the dual-window idea [34,35]. The spatial-spectral dual window mask transformer (S2DWMTrans) [34] makes full use of spatial-spectral information. Specifically, S2DWMTrans develops a local shallow feature extraction module to integrate adjacent spatial information, and an encoder block based on dual-window mask multihead self-attention further eliminates anomalies. Moreover, DirectNet [35] incorporates a sliding dual-window model to adaptively reconstruct the background, which increases the reconstruction error of anomalies.

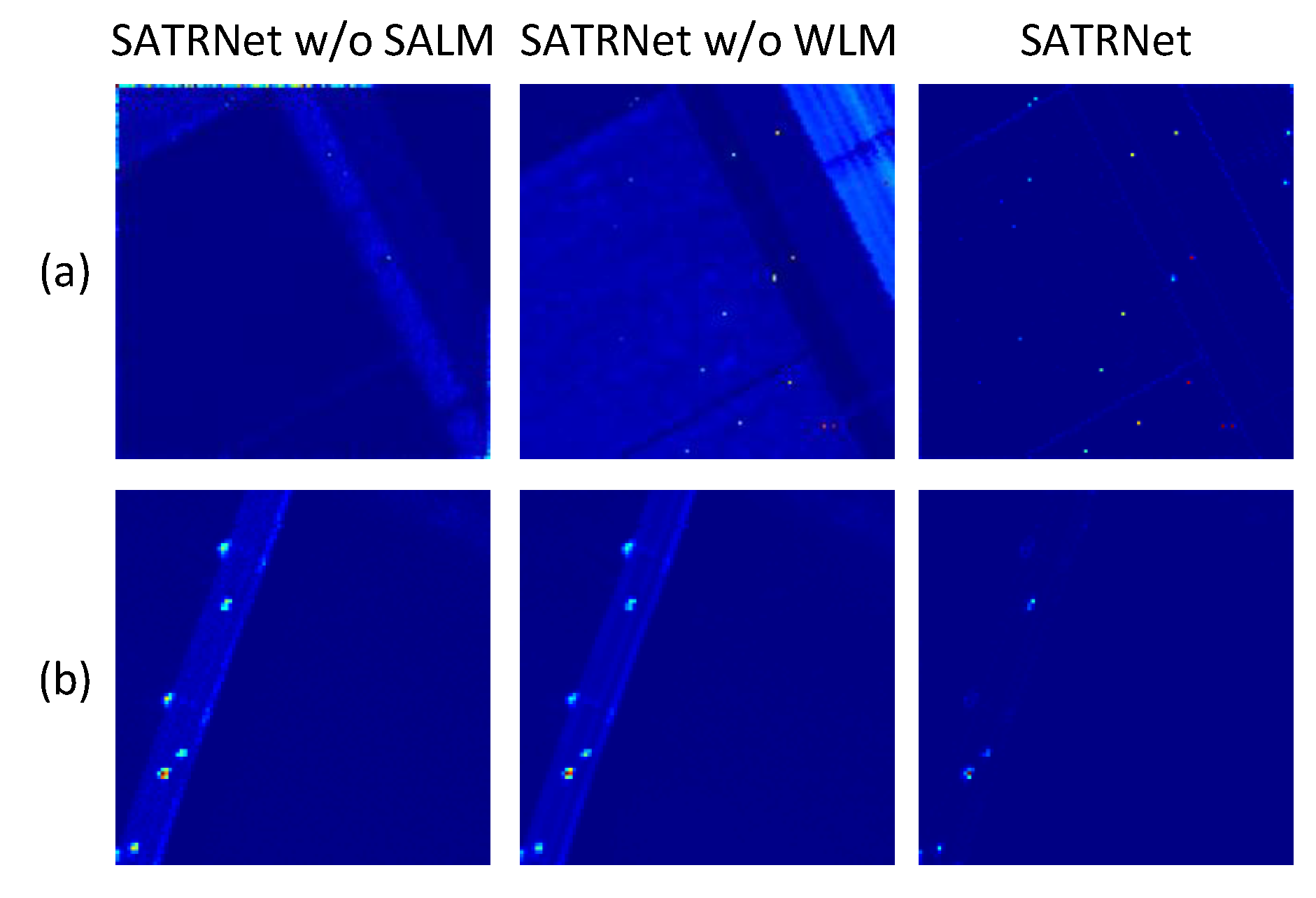

Although the DL-based methods have become promising approaches in HAD, there are still some challenges. As mentioned in [

36,

37,

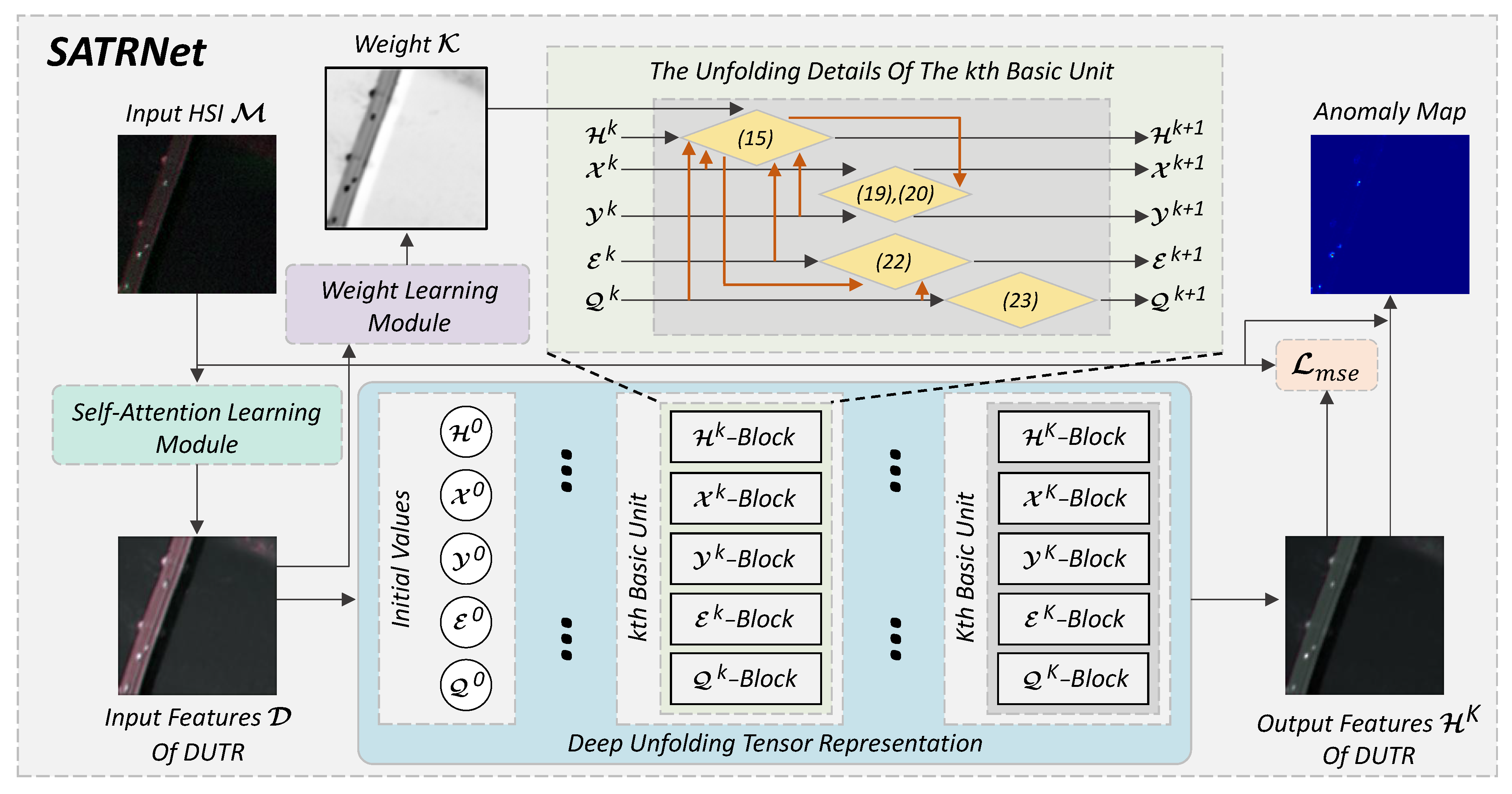

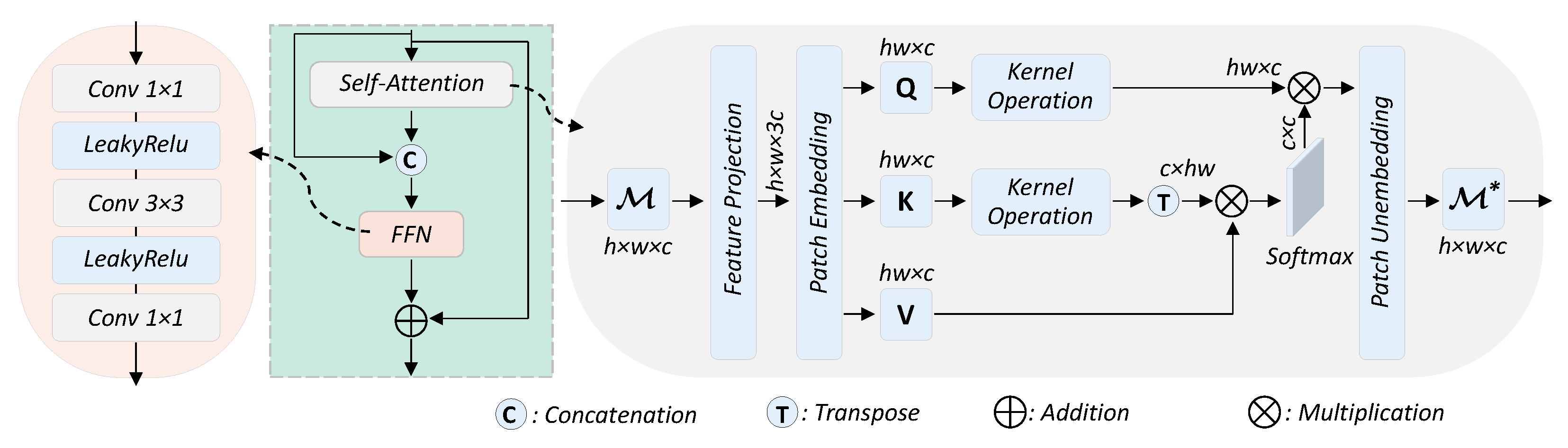

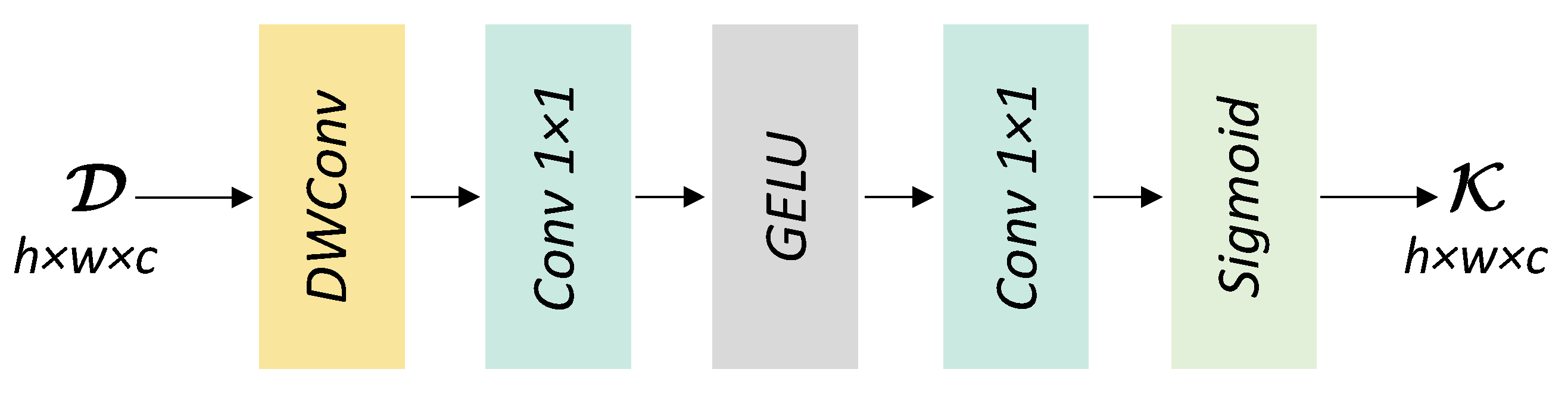

38], the DL-based HAD methods widely suffer from the identity mapping (IM) problem, which refers to the phenomenon that the network reconstructs the whole HSI completely so that the anomalies are represented with imperceptible errors and, thus, are hard to detect. Moreover, the black-box nature of DL-based HAD methods makes them lack interpretability, which aggravates the IM issue to some extent. In fact, most of the existing DL-based methods, no matter how the architecture varies, learn meaningless mappings more or less due to the lack of interpretability and finally drive the network towards IM. On the contrary, traditional methods such as TR algorithms are fully interpretable and do not suffer from the IM problem, despite their limited generalization capability. Based on this observation, we naturally think of combining the TR methods with DL-based methods. Inspired by the deep unfolding technology, we propose a self-attention-aided deep unfolding tensor representation network called SATRNet for HAD. Specifically, SATRNet introduces Deep Unfolding Tensor Representation (DUTR), the architecture of which is designed by unfolding a customized TR algorithm. Due to the global modeling nature of DUTR, a Weight Learning Module (WLM) was developed to inject local details, enhancing the accuracy of background reconstruction. Moreover, a Self-Attention Learning Module (SALM) was introduced before DUTR to extract discriminative features, weakening the subsequent network’s ability to reconstruct the anomalies. In this way, the working principles of the key components in SATRNet are consistent with the iterative algorithms of TR models, which avoids the meaningless feature mappings of the black-box models, and the network is guided towards the explicit objective. The IM problem can be effectively alleviated and thus enhance HAD performance and generalization capability. The main contributions of this work can be summarized as follows:

An interpretable network, the deep unfolding tensor representation network, is developed for HAD, solving the traditional TR model within a deep network. The combination of these two techniques integrates the interpretability of the TR model and the efficiency of the deep network for reliable anomaly detection. Moreover, a Weight Learning Module (WLM) is designed to learn local details to accurately reconstruct the background.

A Self-Attention Learning Module (SALM) is introduced to extract discriminative features, which avoids reconstructing the anomalies during the background reconstruction process. This also helps to effectively alleviate the identity mapping (IM) problem.

In addition to a systematic evaluation on 11 HSI benchmark datasets, an adversarial attack experiment for HAD is performed in this work for the first time, demonstrating the adversarial robustness of the proposed SATRNet.

The rest of this paper is organized as follows. Notations, basic definitions, and related works are introduced in Section 2. Section 3 presents the proposed SATRNet model in detail. Comprehensive experimental results are given in Section 4. Discussions on the adversarial robustness of the proposed method are provided in Section 5. Finally, Section 6 concludes the paper.

5. Discussion

Despite the great success that deep learning-based models have achieved in different fields, recent studies reveal that deep learning is vulnerable to adversarial attacks [67]. Szegedy et al. [67] first found that deep neural networks are fragile when facing adversarial examples. Adversarial examples, also called adversarial noises, are generated by adding subtle adversarial perturbations to the original images and appear visually similar to them. In remote sensing, adversarial examples have also been shown to exist, drawing attention to the adversarial robustness of networks [68]. As far as we know, this topic has not yet been studied in HAD. Unlike traditional noise, which visibly degrades images, adversarial noise can make networks output wrong results without being perceived by humans. This may cause serious security problems in some application scenarios [68]. Therefore, it is essential to analyze the adversarial robustness of HAD methods.

To examine the adversarial robustness of the proposed SATRNet, we first generated adversarial samples. Generating these samples requires mounting an adversarial attack against a well-trained model. Goodfellow et al. [69] introduced a potent adversarial attack method, the fast gradient sign method (FGSM). Notably, FGSM is an established and effective attack method that can, in principle, attack any loss function [69]. Therefore, we used FGSM to obtain the adversarial samples. The samples $x_{adv}$ are obtained through FGSM as follows:

$$x_{adv} = \mathrm{clip}\left(x + \epsilon \cdot \mathrm{sign}\left(\nabla_{x} L(x)\right)\right)$$

where $\mathrm{clip}(\cdot)$ clips the pixel values in the image, $\nabla_{x}$ calculates the gradients of the loss function $L$ with respect to the input sample $x$, $\mathrm{sign}(\cdot)$ is the sign function, and the parameter $\epsilon$ is used to control the attack strength. Further details of the method can be found in [69].
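The FGSM step described above (perturb the input along the sign of the loss gradient, scale by the attack strength, then clip) can be sketched in a few lines. To stay self-contained, this example attacks a hypothetical linear reconstructor with a squared-error loss whose gradient is written analytically; the matrix `W`, the functions `recon_loss` and `recon_loss_grad`, and the clipping range [0, 1] are illustrative assumptions and have nothing to do with SATRNet's actual loss or architecture.

```python
import numpy as np

def fgsm(x, grad_fn, eps, lo=0.0, hi=1.0):
    """One FGSM step: move x by eps along the sign of the loss gradient, then clip."""
    return np.clip(x + eps * np.sign(grad_fn(x)), lo, hi)

# Hypothetical stand-in model: a linear map W that tries to reconstruct its input.
rng = np.random.default_rng(0)
W = 0.1 * rng.normal(size=(8, 8))

def recon_loss(x):
    """Squared reconstruction error ||W x - x||^2 of the toy model."""
    r = W @ x - x
    return float(r @ r)

def recon_loss_grad(x):
    """Analytic gradient of recon_loss with respect to x."""
    return 2.0 * (W - np.eye(8)).T @ (W @ x - x)

x = rng.uniform(0.2, 0.8, size=8)     # a clean "spectrum" with values in [0, 1]
x_adv = fgsm(x, recon_loss_grad, eps=0.04)
```

The perturbation is bounded by `eps` in every band, which is why small attack strengths are visually hard to perceive, yet it is aligned with the gradient and therefore increases the loss of the toy model.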

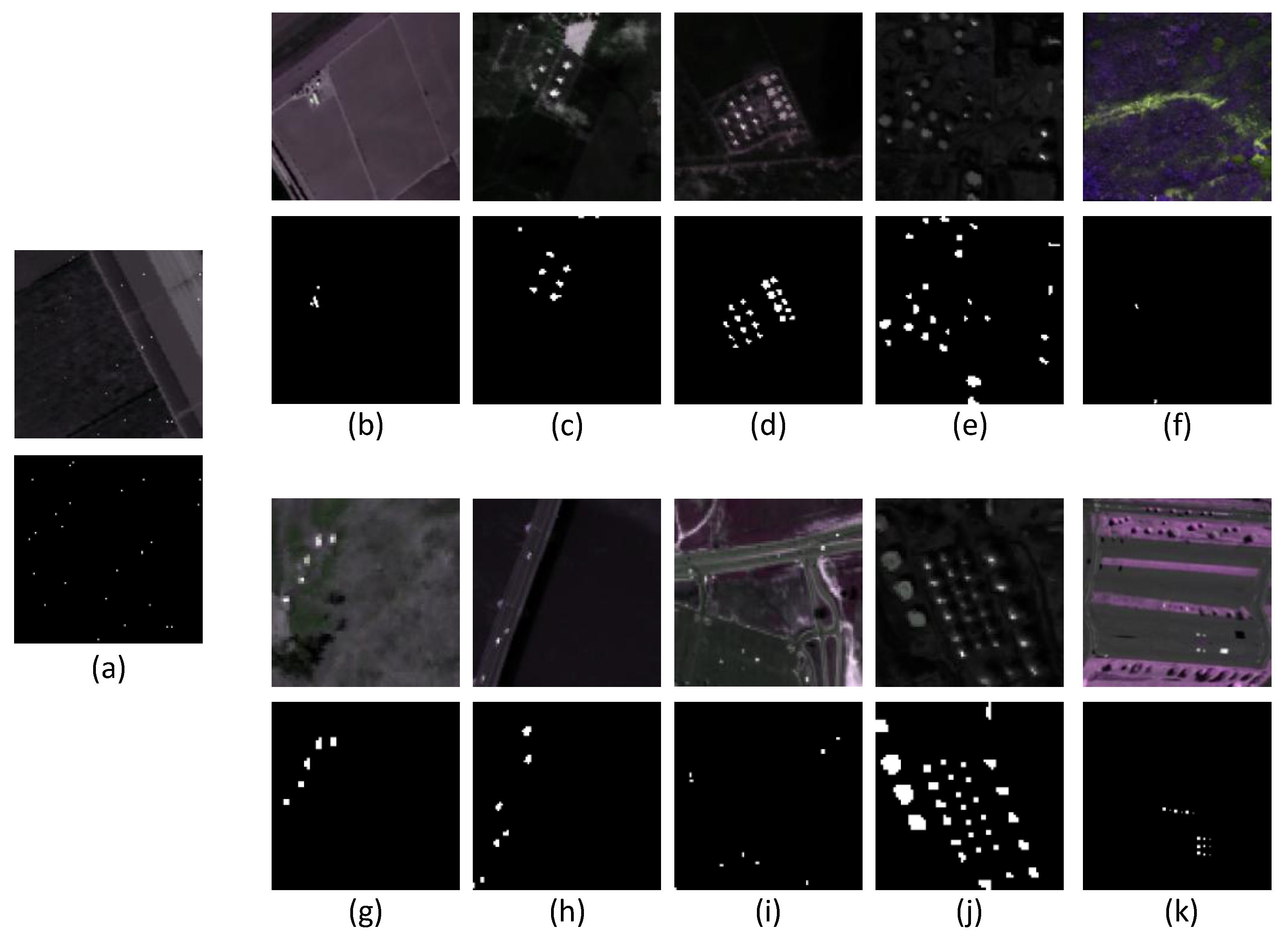

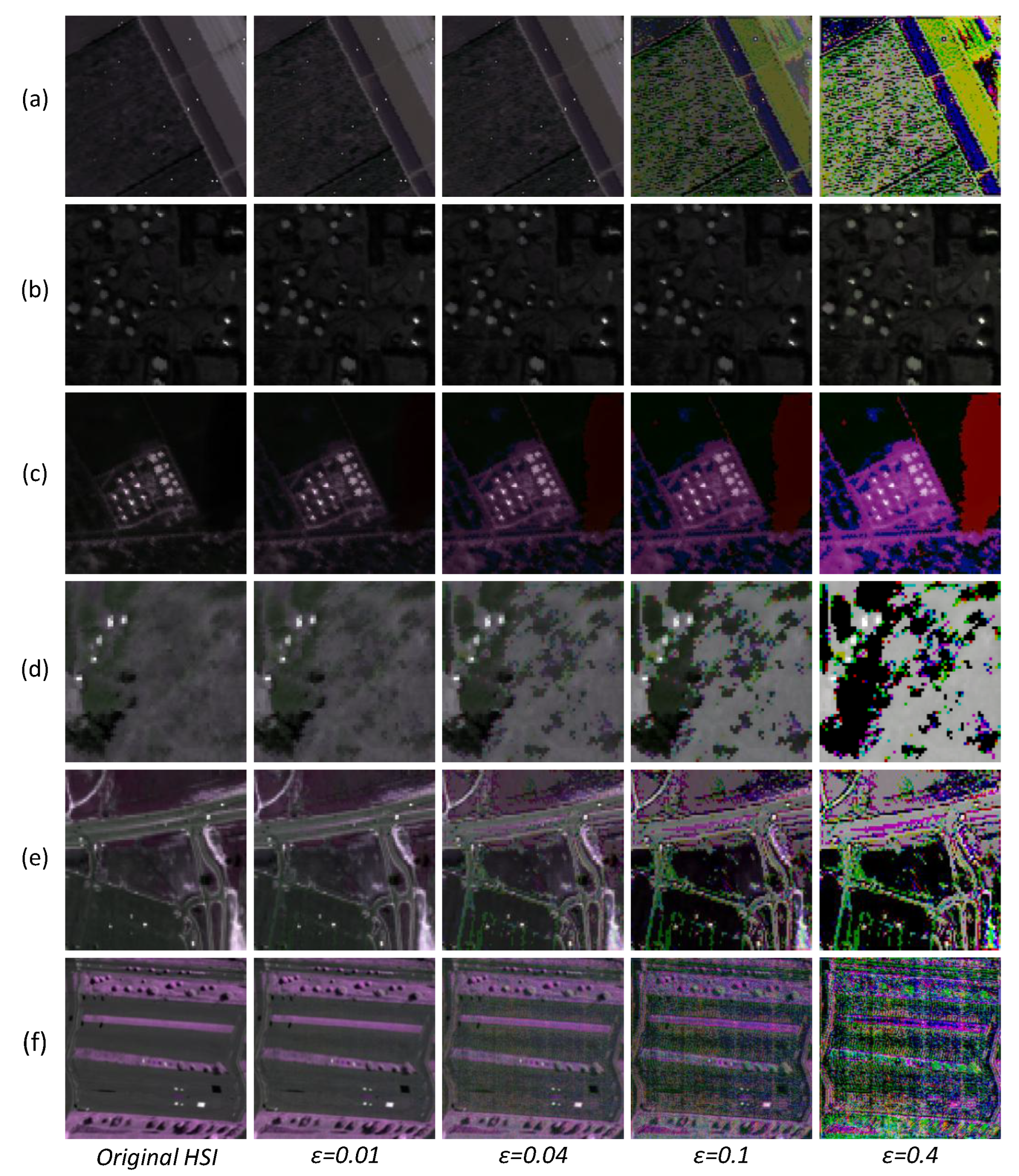

We used six HSI datasets to test the adversarial robustness of the proposed method. Figure 14 visualizes the adversarial examples on the six datasets under different attack strengths. As shown in Figure 14, on all six datasets, the changes in adversarial examples with

and

are hard to perceive compared to clean data. When

and

, the quality degradation becomes obvious on five of the datasets, the exception being Urban-IV. The small-sized anomalies are completely submerged in the background, especially on the Salinas-I, HYDICE, and SpecTIR datasets. For the Urban-IV dataset, the quality degradation remains relatively minor as the attack strength increases. Based on these observations, and in order to balance attack strength with visual imperceptibility, we selected an attack strength of 0.04 to evaluate adversarial robustness. These observations are broadly consistent with the findings in [68,70], where the attack strength was also set to 0.04.

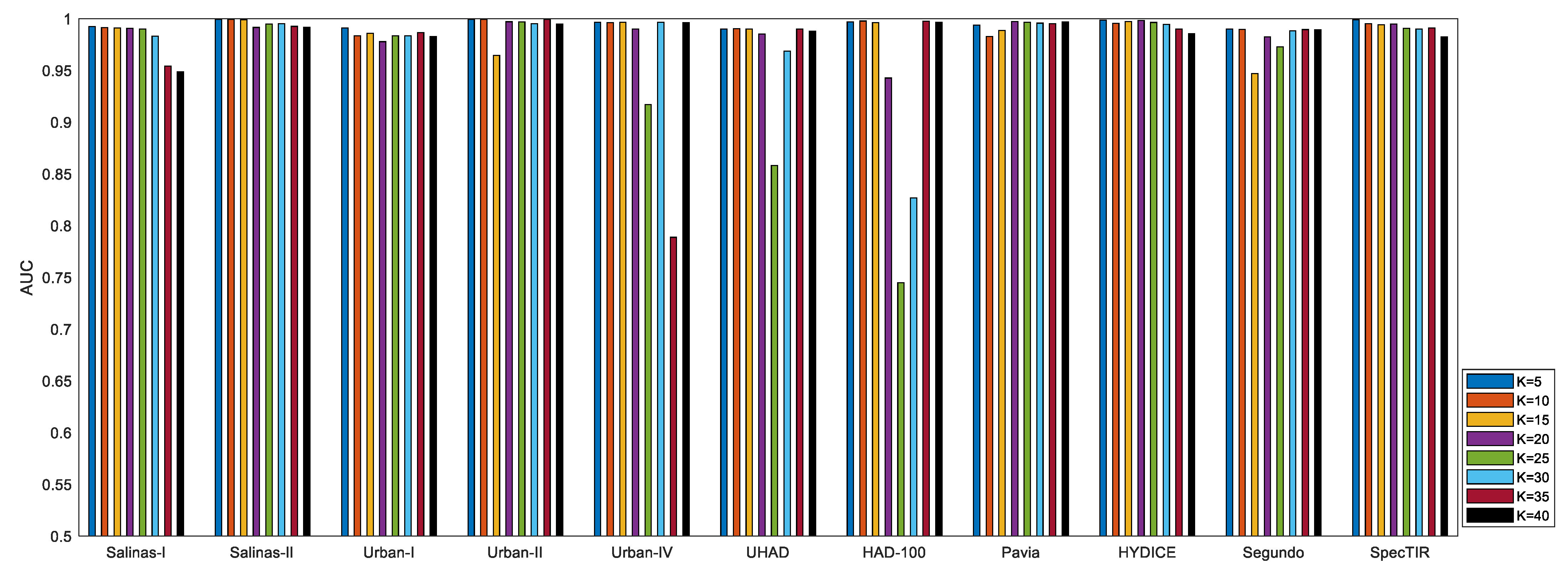

The quantitative performance of the proposed method under different attack strengths on the six datasets is reported in Table 6. On the Salinas-I, HYDICE, SpecTIR, and HAD-100 datasets, the detection performance of the proposed method declined continuously as the attack strength increased. Compared to the detection performance on clean images, the performance degradation on these four datasets was not obvious at

and

. Among them, detection performance on the Salinas-I dataset at

even exceeded the performance on clean images, possibly because the adversarial attack highlighted small-sized anomalies to some extent while interfering with background learning. At

, the detection performance on the HYDICE, HAD-100, and SpecTIR datasets began to show a large degree of degradation, and at

, the detection results became unsatisfactory. In contrast, on the two urban datasets, Urban-II and Urban-IV, the proposed method showed almost consistently reliable detection performance under different attack strengths, with only a slight performance degradation on the Urban-II dataset at

, although the result remains reliable. The difference in the results can be attributed to the diversity of the surface features in the datasets. Studies have shown that the adversarial robustness of deep neural networks also depends on the remote sensing dataset [71]. In general, datasets with higher diversity tend to yield higher robustness under adversarial attacks. The Urban-II and Urban-IV datasets capture urban scenes, which have higher diversity than the large patches of grassland in other datasets such as HAD-100. This is one possible reason for the difference in performance. Apart from the

index, further comprehensive quantitative analysis is performed using two metrics:

[

23] and

[

23].

focuses on reflecting the background suppression level of the detector, whereas

measures the overall performance of the detection rate and false alarm rate.

Table 7 and

Table 8 show the

and

of the proposed method under different attack strengths, respectively. Table 7 and Table 8 show that adversarial attacks have a negative impact on the background suppression capability and overall performance of the detector, which is consistent with the trend in the AUC values. To investigate the adversarial robustness of the proposed method, we further studied the detection performance of

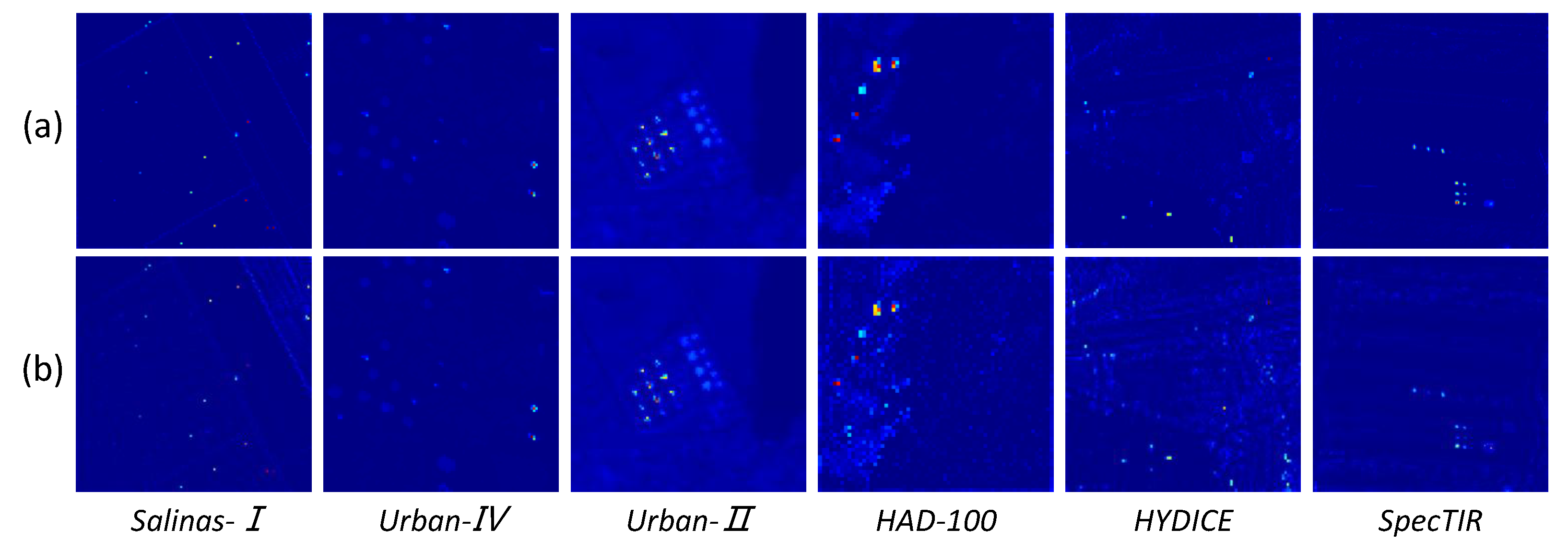

.

Figure 15 shows the visual detection results of the 6 datasets when

. As shown in Figure 15, the visual detection results on the Urban-II and Urban-IV datasets remain basically unchanged, whereas on the other datasets a small number of false detections appear in the background area of the detection map compared with the original detection results. Nevertheless, under the adversarial attack, the detection maps still retain strong discrimination ability. These results indicate that, despite the difficulties that adversarial attacks introduce to the HAD problem, the proposed SATRNet maintains strong detection performance under reasonable attack intensity and presents good visual effects.
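For readers who want to reproduce this kind of comparison, the sketch below computes the standard area under the ROC curve (detection rate versus false alarm rate) from a detection map and a binary ground-truth mask. It covers only this basic AUC; the two additional indices taken from [23] are not reproduced. The function name `roc_auc` and the toy inputs are assumptions, and the sketch assumes the scores contain no ties.

```python
import numpy as np

def roc_auc(scores, labels):
    """Area under the ROC curve by sweeping the threshold over sorted scores.

    scores: anomaly scores (any shape); labels: same shape, nonzero = anomaly.
    Assumes there are no tied scores and that both classes are present.
    """
    scores = np.asarray(scores, dtype=float).ravel()
    labels = np.asarray(labels).ravel().astype(bool)
    order = np.argsort(-scores, kind="stable")    # highest score first
    hits = labels[order]
    # Detection rate and false alarm rate after admitting each pixel in turn.
    tpr = np.concatenate(([0.0], np.cumsum(hits) / hits.sum()))
    fpr = np.concatenate(([0.0], np.cumsum(~hits) / (~hits).sum()))
    # Trapezoidal integration of detection rate over false alarm rate.
    return float(np.sum(np.diff(fpr) * (tpr[1:] + tpr[:-1]) / 2.0))

toy_scores = np.array([0.9, 0.7, 0.6, 0.2])
toy_labels = np.array([1, 0, 1, 0])
toy_auc = roc_auc(toy_scores, toy_labels)
```

A detector whose anomalies all outrank the background pixels reaches an AUC of 1, so comparing this value across attack strengths gives a compact view of how much the ranking degrades.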

To evaluate the robustness of the proposed algorithm more comprehensively, we compared SATRNet with S³ANet [70], a recently proposed classification network with strong adversarial robustness. The original S³ANet is designed for hyperspectral image classification, a supervised task that is inherently different from the unsupervised HAD problem in this work. To fit its structure and learning paradigm to the HAD task, we made the following modifications. Firstly, in the original S³ANet, the number of output channels equals the number of categories; we changed it to the number of bands of the input image so that the input and output dimensions are consistent. Secondly, the loss function was replaced by the MSE regression loss to optimize the reconstruction quality. These adjustments enable S³ANet to reconstruct the background of hyperspectral images in an unsupervised manner and thus achieve anomaly detection through a residual operation. In the training phase, the batch size was 1, the learning rate was 0.001, and the number of training iterations was 100.
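The residual operation mentioned above can be sketched as follows: given an input cube and a network's reconstruction of it with the same number of bands, the per-pixel anomaly score is the energy of the reconstruction residual. The exact residual form used in the experiments is not stated, so the squared L2 norm over bands, the function name `residual_scores`, and the toy arrays are assumptions of this example.

```python
import numpy as np

def residual_scores(hsi, recon):
    """Per-pixel anomaly score as the squared L2 norm of the reconstruction residual.

    Both arrays are assumed to have shape (height, width, bands).
    """
    diff = hsi.astype(np.float64) - recon.astype(np.float64)
    return np.sum(diff ** 2, axis=2)

# Toy example: a reconstruction that matches the input everywhere except one pixel,
# mimicking a background model that fails to reproduce an anomaly.
rng = np.random.default_rng(0)
recon = rng.uniform(size=(6, 6, 4))
cube = recon.copy()
cube[2, 4] += 1.0
score_map = residual_scores(cube, recon)
```

A well-behaved background model reproduces background pixels closely, so large residuals concentrate on anomalies; a network that drifts towards identity mapping would shrink these residuals and make anomalies harder to separate.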

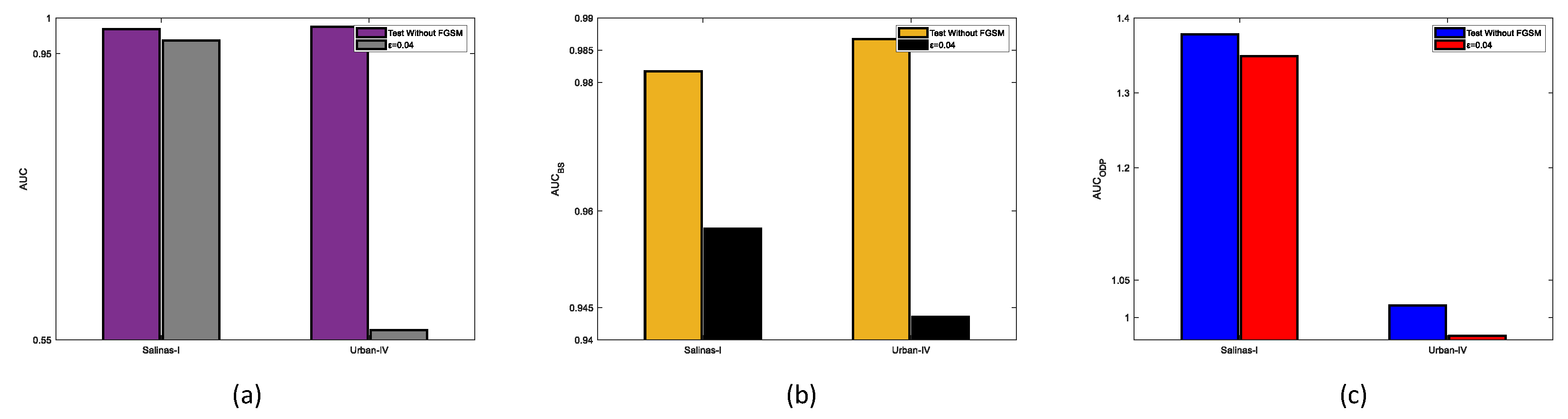

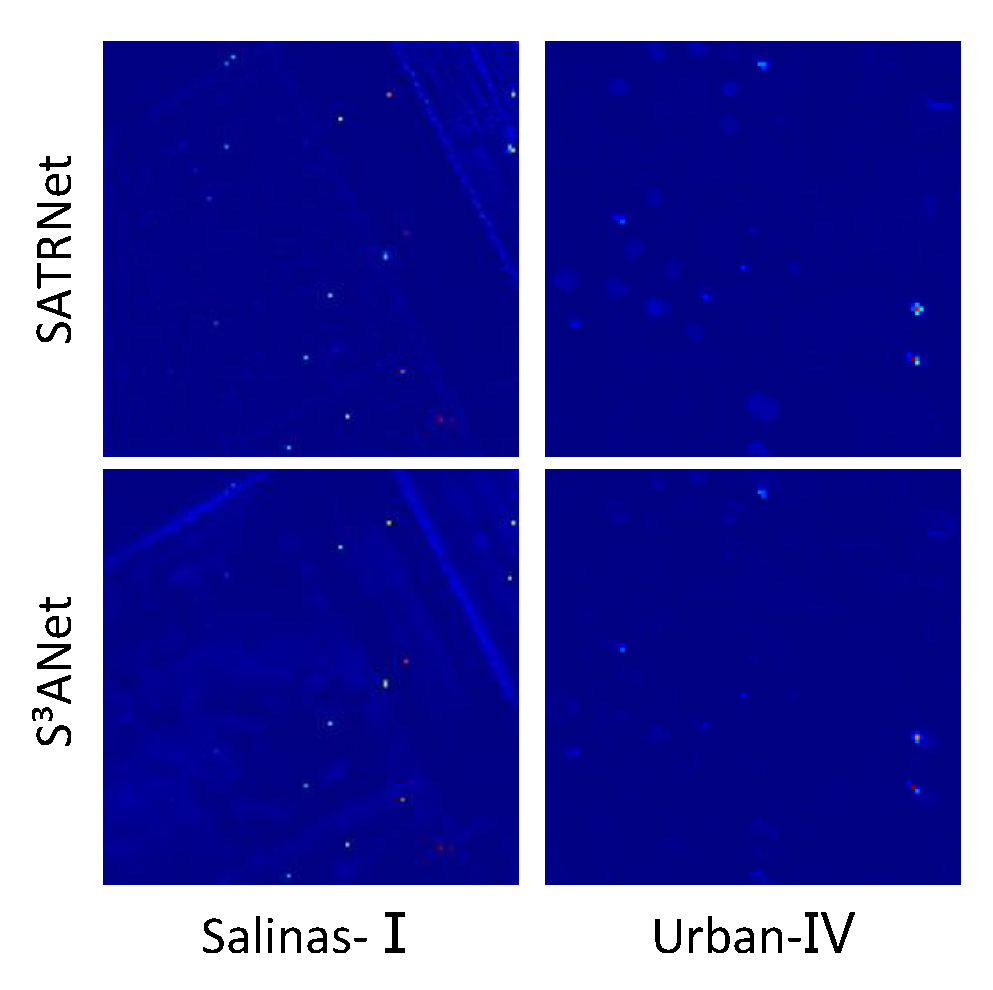

Figure 16 shows the performance of S³ANet on the Salinas-I and Urban-IV datasets when

. It can be seen from

Figure 16 that S

3ANet experiences a significant decline in various detection indicators on the Salinas-I and Urban-IV datasets, where the performance degradation on the Urban-IV dataset is more significant. As shown in

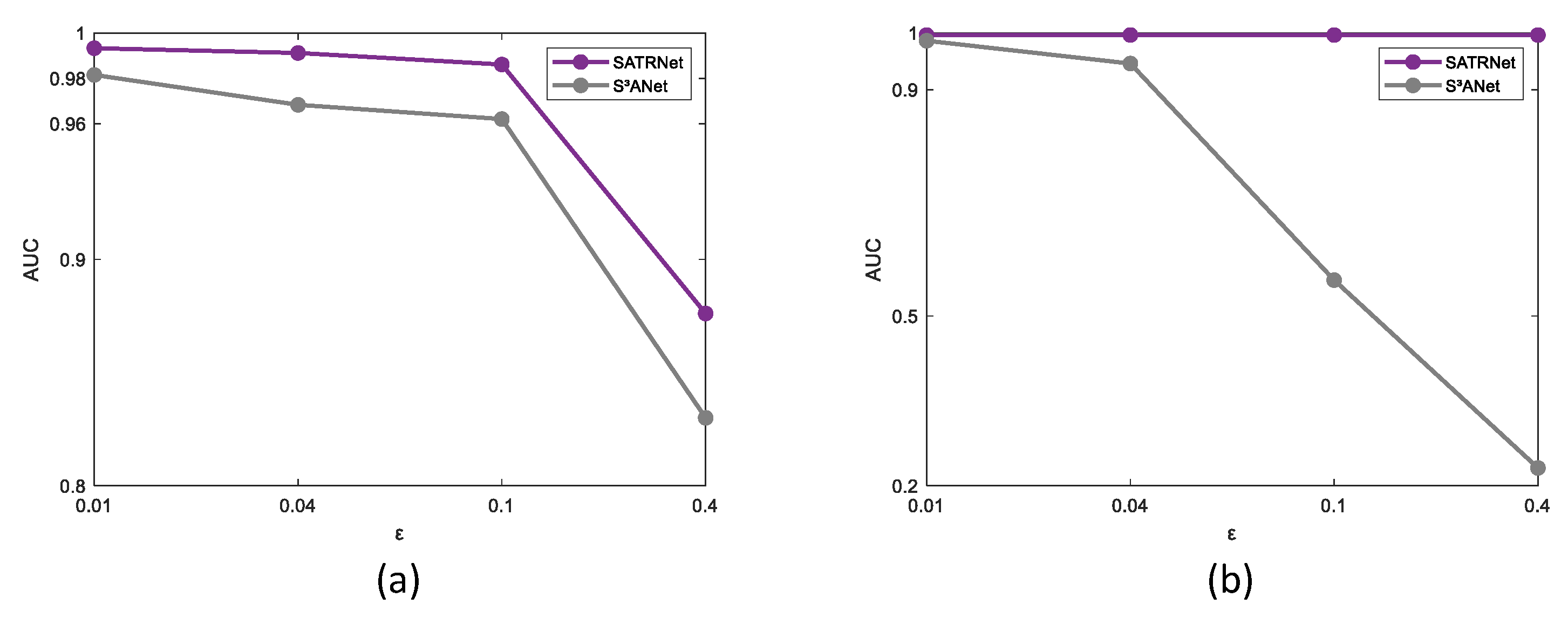

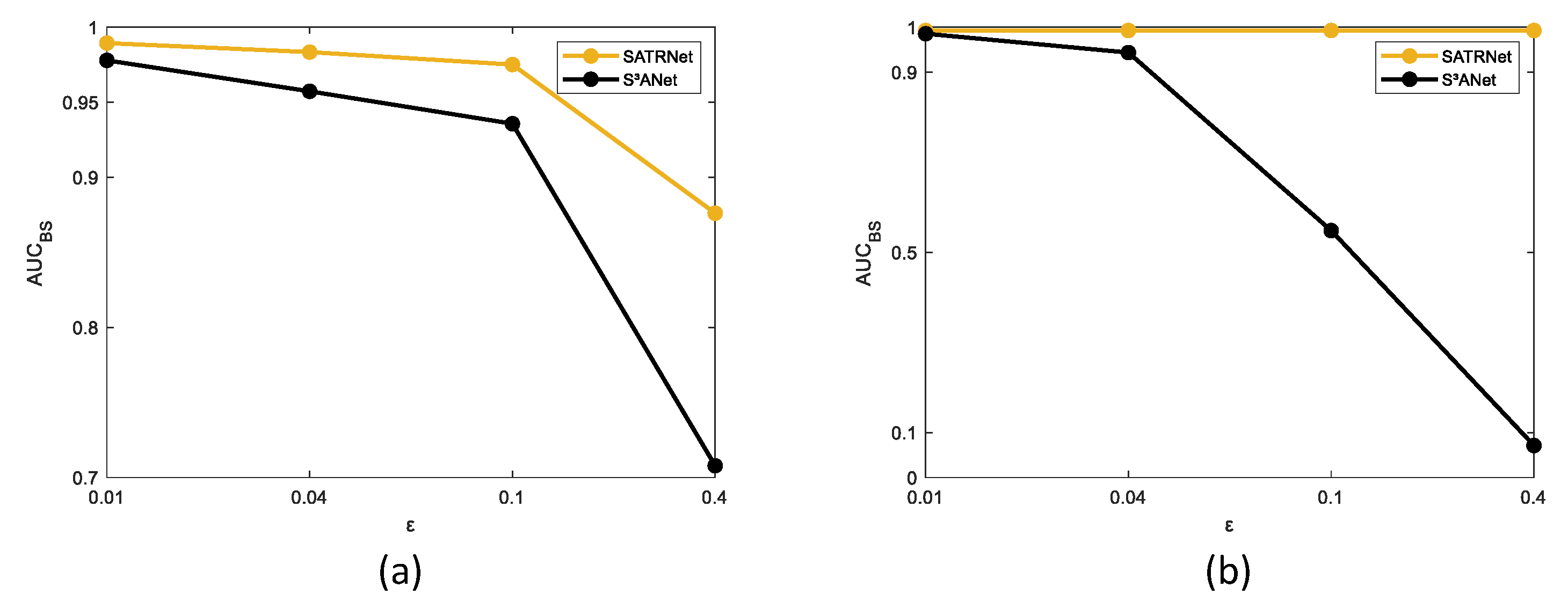

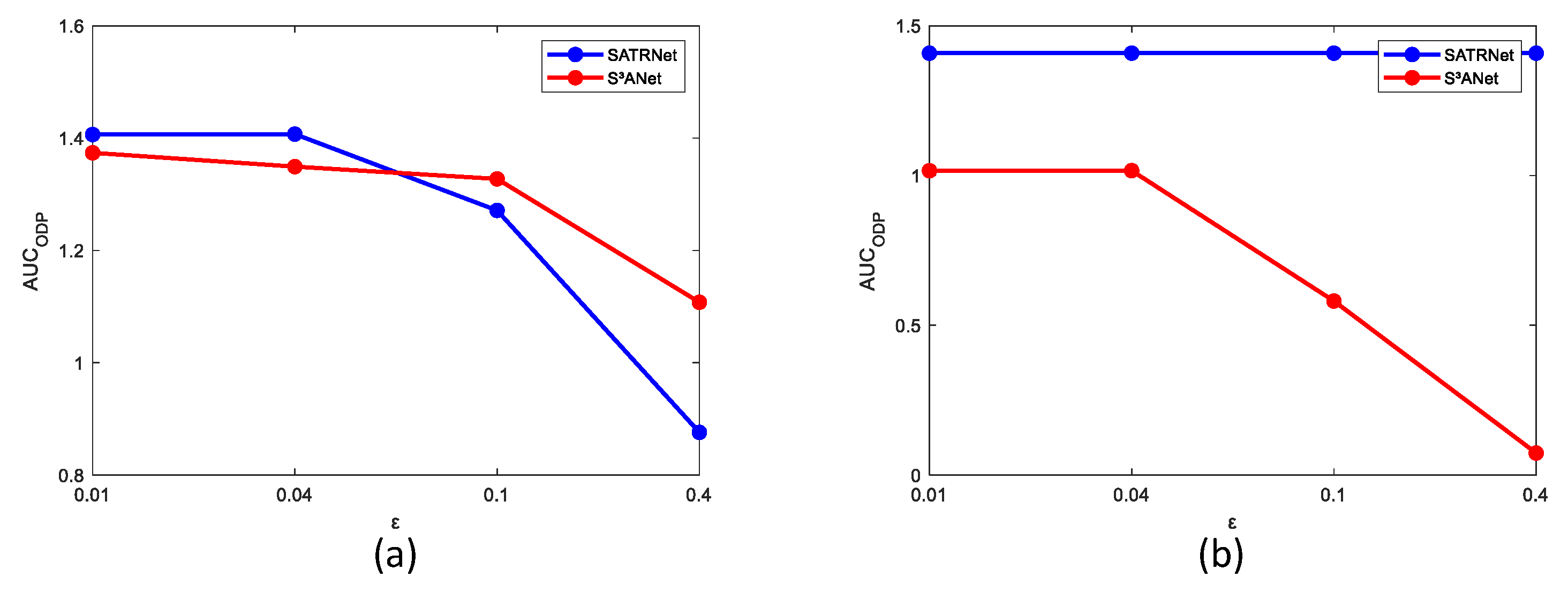

Figure 17,

Figure 18 and

Figure 19, the AUC,

, and

metrics under different attack strengths were tested on the Salinas-I and Urban-IV datasets, respectively. The experimental results show that the proposed method outperforms S³ANet in most cases across the different AUC metrics on the Salinas-I dataset. On the Urban-IV dataset, the performance of S³ANet declines sharply as the attack strength increases, while the proposed SATRNet remains stable in all metrics, showing a significant advantage over S³ANet. Detection maps of the two methods at

are given in Figure 20, which also shows that the proposed SATRNet exhibits better detection accuracy. Taken together, these observations suggest that the proposed method has a certain degree of adversarial robustness. This benefits from the capture of spatial correlation by self-attention learning and the inherent robustness of the tensor model [72].