Airborne and Spaceborne Hyperspectral Remote Sensing in Urban Areas: Methods, Applications, and Trends

Abstract

Highlights

- What are the main findings?

- •

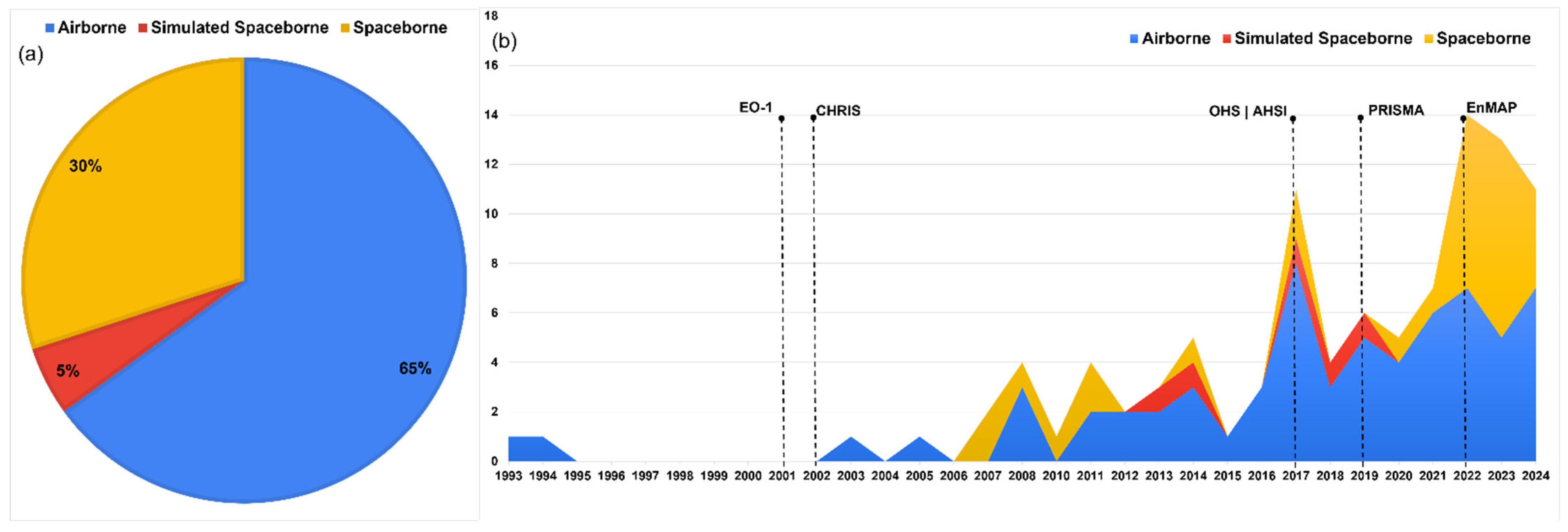

- Airborne imagery remains the dominant hyperspectral data type used in urban remote sensing owing to higher spatial resolution and availability of benchmark datasets, while spaceborne applications have significantly increased since 2019.

- •

- Machine Learning (in particular, Support Vector Machine and Random Forest) is the most widely used image processing approach. Deep Learning is increasingly exploited, however constraints due to needed data and computing resources currently apply.

- What is the implication of the main finding?

- •

- The growing accessibility of spaceborne hyperspectral data (e.g., PRISMA, EnMAP) is shifting the field from conceptual studies toward urban monitoring applications.

- •

- To achieve full operational integration, future research should focus on robust data fusion techniques, standardized urban classification frameworks, real-world case studies beyond benchmark datasets, and capability for multi-temporal monitoring.


1. Introduction

2. Research Aims

- What are the predominant trends in hyperspectral remote sensing data analysis for urban applications, regardless of the platform used to collect data?

- Which hyperspectral image processing techniques are most frequently applied to the analysis of urban areas?

- To what extent are spaceborne and airborne hyperspectral imaging currently exploited in urban areas?

- How does spaceborne hyperspectral imaging perform in comparison to airborne hyperspectral imaging?

3. Materials and Methods

3.1. Terminology Definition

- Airborne platforms: airborne campaigns have been conducted since 1987, when AVIRIS performed the first flight campaign [15]. Depending on sensor characteristics and how the flight survey is performed (e.g., flight altitude), these platforms offer high spatial resolution (SR; 1–20 m) and variable temporal resolution. However, their major drawbacks include high acquisition costs, limited coverage, and challenges related to flight altitude and speed [16]. Furthermore, airborne datasets are mostly collected as one-off surveys, and multi-temporal observations are constrained by acquisition costs.

- Spaceborne platforms: spaceborne missions are relatively recent, with the first generation including Hyperion [17] (2000–2017) and CHRIS [18] (2001–2021), followed by newer missions such as PRISMA [19] (2019), HISUI [20] (2019), and EnMAP [21] (2022), among others [22]. These platforms offer wide spatial coverage and the possibility of repeated acquisitions over the same areas at a nominal revisit interval. However, their major limitations include greater exposure to adverse weather (airborne platforms are more flexible in scheduling acquisitions under varying weather conditions) and a coarser SR (20–60 m), which is the trade-off for their greater cost-effectiveness [16].

- AHSI has more bands than EnMAP and PRISMA, twice their swath width, and a higher revisit frequency.

- PRISMA offers a coregistered panchromatic (PAN) image with a 5 m Ground Sampling Distance (GSD).

| Sensor | Mission/Platform | Years of Service | No. of Bands | Spectral Resolution (nm) | Spectral Range (nm) | Spatial Resolution (m) | Swath Width (km) | Peak Signal-to-Noise Ratio | Nadir Revisit Time |
|---|---|---|---|---|---|---|---|---|---|
| Hyperion [17] | EO-1 | 2000–2017 | 220 | 10 | VNIR (357–1000); SWIR (900–2576) | 30 | 7.7 | 190:1 (VNIR); 110:1 (SWIR) | 30 days |
| CHRIS [28] | PROBA | 2001–2022 | 62 | 1.25–12 | VNIR (400–1000) | 34 | 14 | 160:1 (VNIR) | 7 days |
| OHS [24,27] | Zhuhai-1 | 2018 | 32 | 2.5 | VNIR (400–1000) | 10 | 150 | Not specified | 2 days |
| AHSI [29] | Gaofen-5 | 2018 | 330 | 4 (VNIR); 8 (SWIR) | 390–2550 | 30 | 60 | 650:1 (VNIR); 190:1 (SWIR) | 51 days (pers. comm., Dr. Yinnian Liu, ynliu@mail.sitp.ac.cn) |
| PRISMA [19] | PRISMA | 2019 | 240 (incl. 1 PAN) | 10 | VNIR (400–1010); SWIR (920–2550); PAN (400–700) | 30; 5 (PAN) | 30 | 600:1 (VNIR); 200:1 (SWIR) | Less than 29 days |
| Hyperspectral Imager [30] | EnMAP | 2022 | 224 | 6.5 (VNIR); 10 (SWIR) | 420–2445; VNIR (420–1000); SWIR (900–2445) | 30 | 30 | 620:1 (VNIR); 230:1 (SWIR) | 21 days |

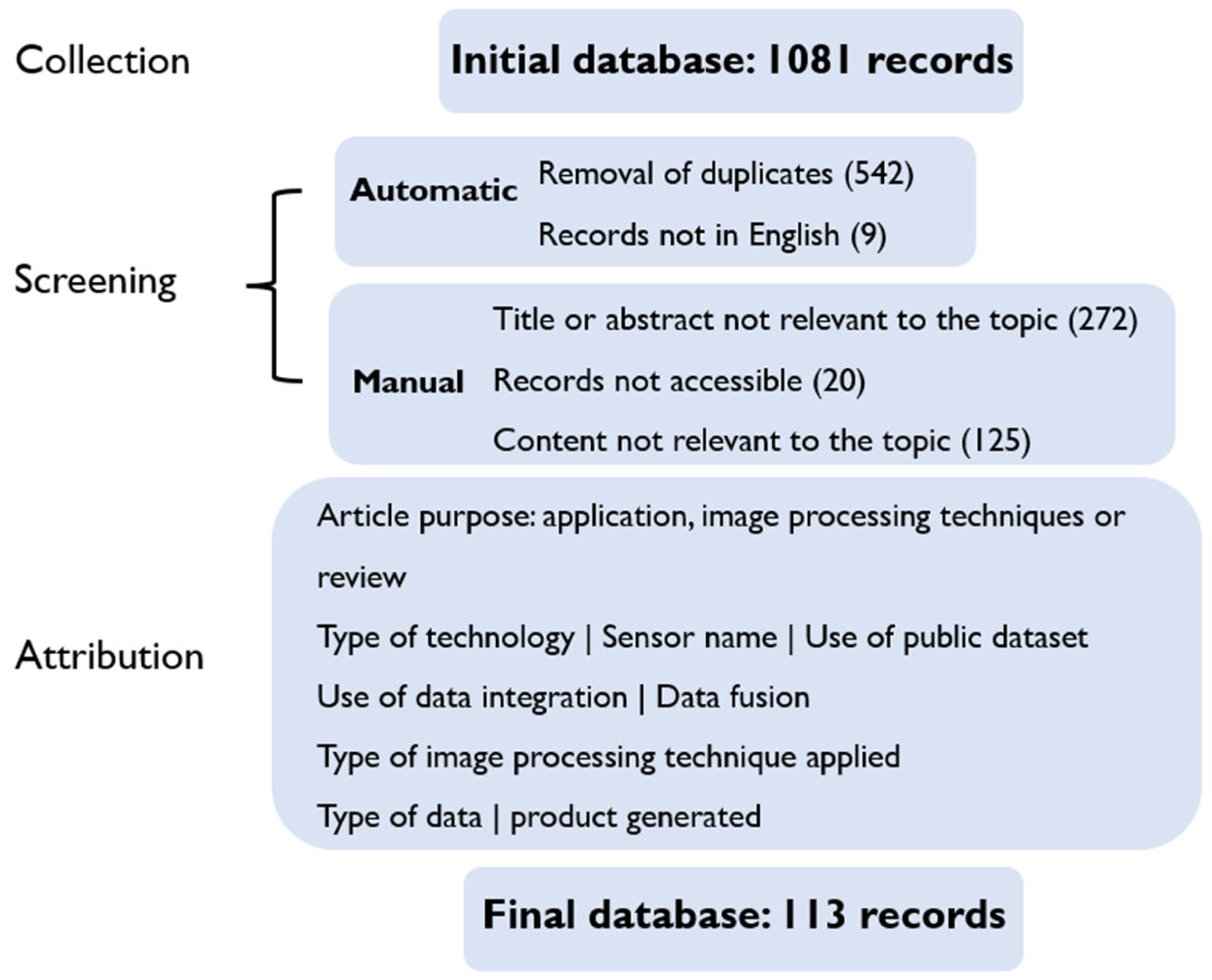

3.2. Methodology

- Remote Sensing AND Hyperspectral AND Urban AND Classification

- Remote Sensing AND Hyperspectral AND Urban AND Satellite

- Remote Sensing AND Hyperspectral AND Urban AND Spaceborne

- Remote Sensing AND Hyperspectral AND Urban AND Airborne

- Hyperspectral imagery had to be the primary data source used in the analysis.

- The study area had to consist predominantly, or entirely, of urban environments.

4. Results

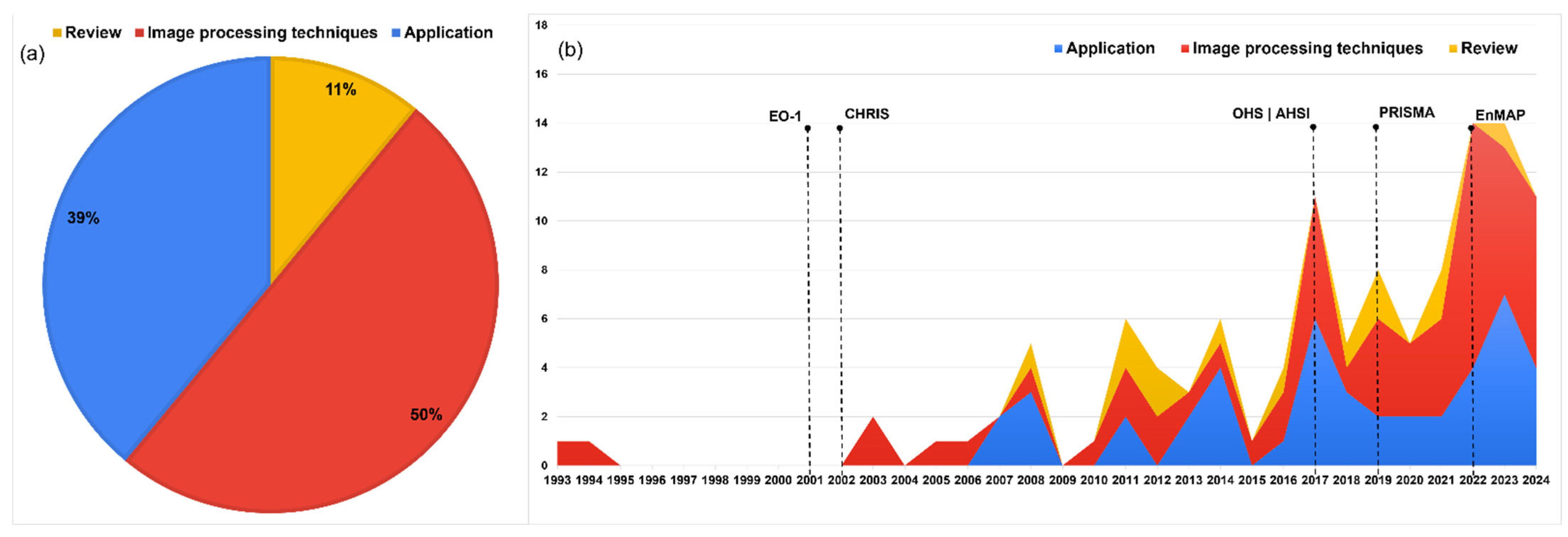

4.1. General Statistics

4.2. Earlier Literature Reviews on Hyperspectral Remote Sensing in Urban Areas

- A lack of studies that consider the trajectory of HSI in urban applications as a comprehensive topic.

- A lack of studies that systematically analyze image-processing techniques and applications that have historically been, and are currently, trending in urban environments.

- A lack of papers that go beyond the SR parameter to establish a comparison between airborne and spaceborne sensors.

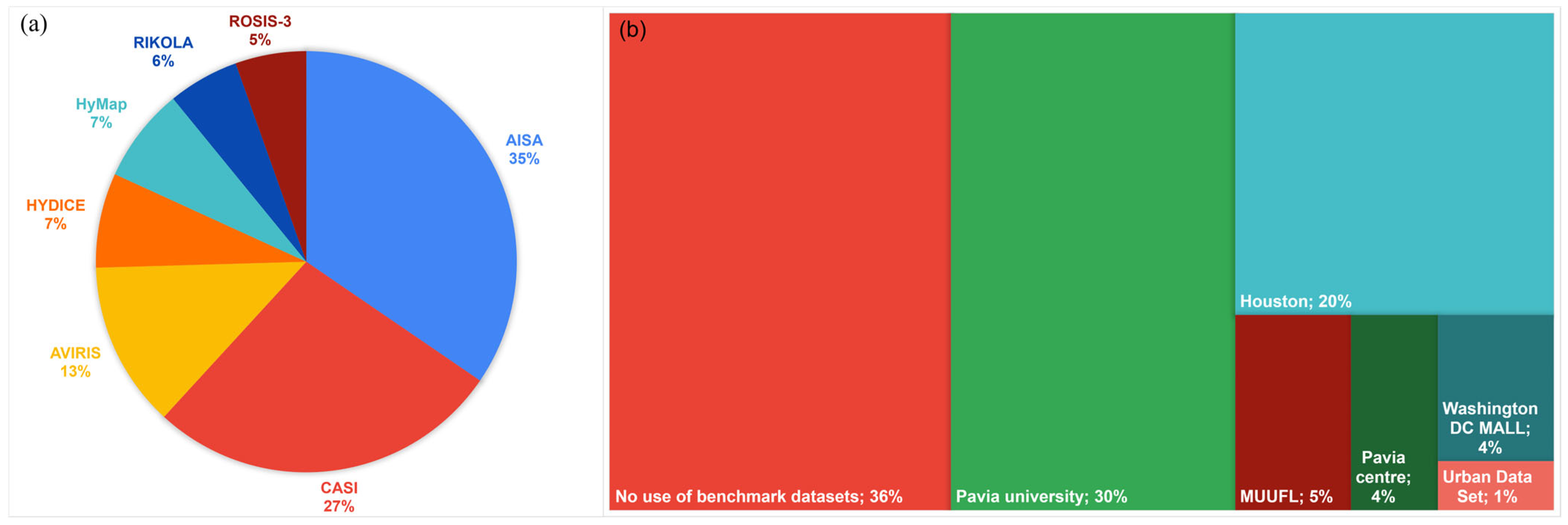

4.3. Hyperspectral Sensors and Datasets

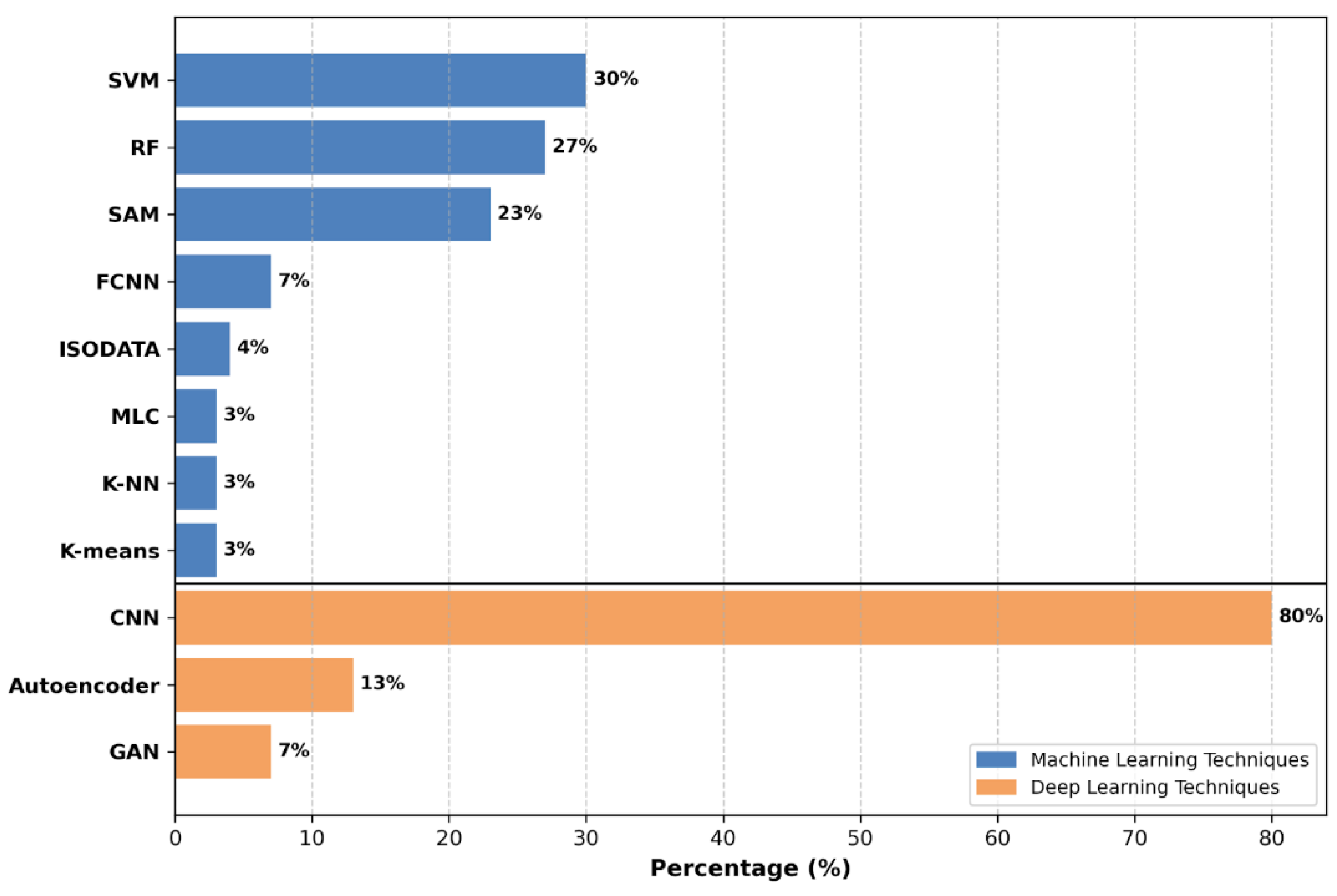

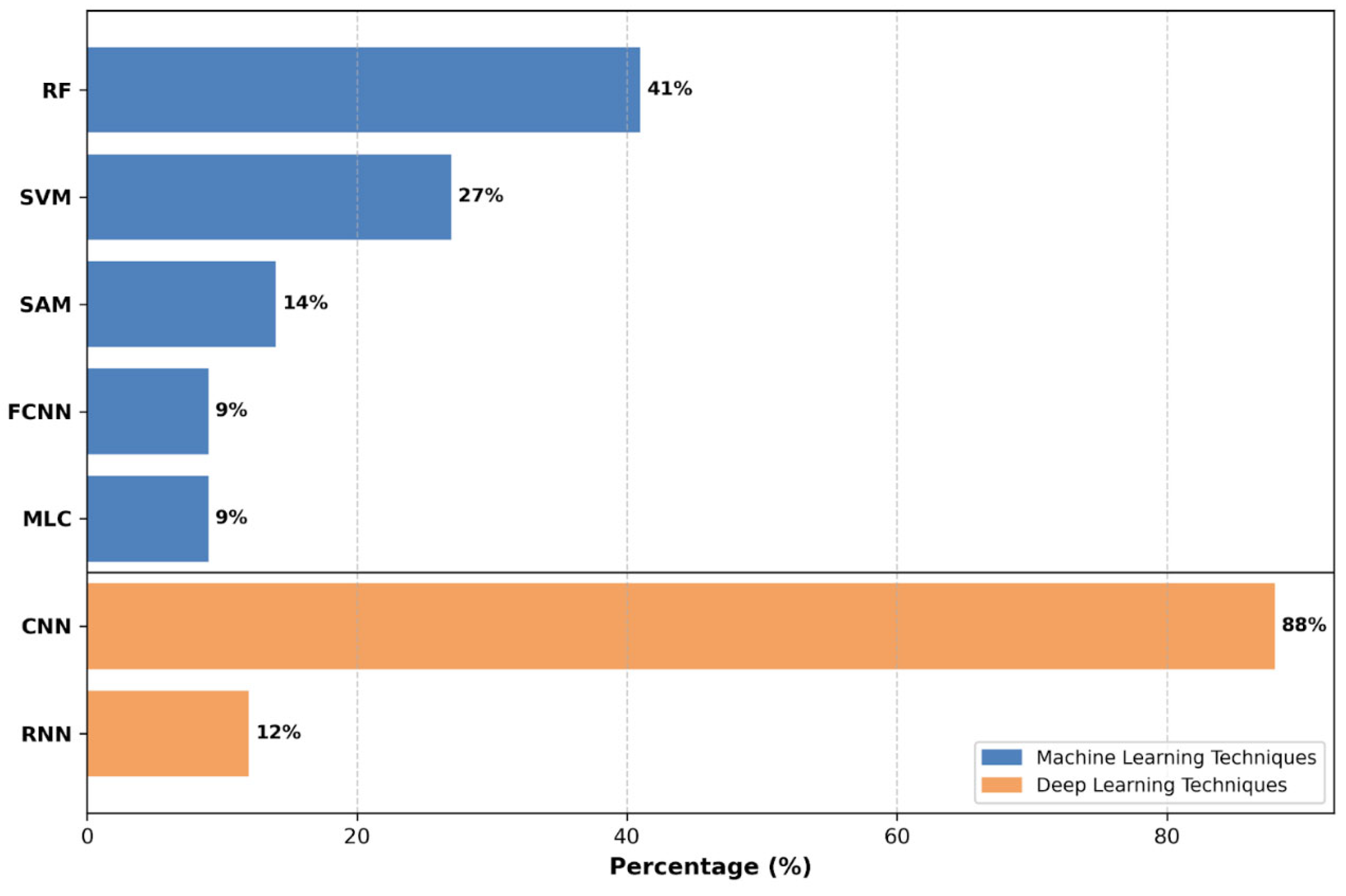

4.4. Image Processing Techniques

- Spectral Angle Mapper (SAM) is a physically based spectral classification method that measures the similarity between spectra as an n-dimensional angle, treating each pixel and reference spectrum as vectors in a space whose number of dimensions equals the number of spectral bands [66].

- Random Forest (RF) is a supervised ensemble classifier composed of multiple Decision Trees (DT). The final prediction is obtained by voting (for classification tasks) or averaging (for regression tasks) across the trees. Each tree is trained on a random subset of the training samples drawn with replacement (bootstrap aggregating, or bagging), and only a random subset of the features is considered at each split.

- Support Vector Machine (SVM) is a supervised technique based on statistical learning theory, in which the input data are mapped through a nonlinear transformation into a higher-dimensional feature space where the classes can be separated by a linear decision surface (a hyperplane). The classifier seeks the hyperplane that maximizes the margin, i.e., the distance between the hyperplane and the closest training samples of each class (the support vectors); a larger margin generally yields better generalization [67]. The transformation to the higher-dimensional space is performed implicitly via a kernel function. Several kernels are available, as they suit different kinds of data: Polynomial (nonlinear data), Linear (linear data), and Radial Basis Function kernels (no clear separation among classes) are the most significant [68].

- Convolutional Neural Networks (CNNs) use a convolutional layer as their core structural unit, whose main purpose is to apply a set of kernels to the input data to extract patterns and features. Pooling layers reduce the spatial dimensions of the feature maps, facilitating processing in subsequent convolutional layers, and activation layers introduce a nonlinear activation function, enabling the model to learn more complex features from the data [70]. There are three main types of CNN architecture: 1-D, 2-D, and 3-D. The 1-D architecture is the most basic, as it performs the convolution along the spectral dimension, treating each pixel as a vector and ignoring the spatial properties of the data. The 2-D architecture applies the convolution to spatial data, converting the pixel spectral vectors into two-dimensional spectral images so that the network can exploit spatial information to improve accuracy, albeit without taking the full spectral depth into account [71]. The 3-D architecture performs the convolution jointly over spectral-spatial features, as the third dimension allows the full spectral depth of the hyperspectral image to be analyzed [72].

- Auto-encoders (AEs) are an unsupervised architecture that learns to reconstruct input data from a lower-dimensional representation through an encoder, a bottleneck layer, and a decoder [68]. They are mostly employed for dimensionality reduction and high-level spectral feature extraction.

- A Recurrent Neural Network (RNN) is a supervised architecture with looped connections, in which the node activations at each step depend on the previous step, making it well suited to analyzing temporal sequences. The most common RNN is the Long Short-Term Memory (LSTM) network, whose recurrent unit consists of a cell that retains values over arbitrary time intervals and three gates (input, output, and forget) that regulate the flow of information into and out of the cell [73].
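To make the SAM description concrete: for a pixel spectrum $\mathbf{t}$ and a reference spectrum $\mathbf{r}$ over $n$ bands, the spectral angle is $\alpha = \arccos\left(\frac{\mathbf{t}\cdot\mathbf{r}}{\lVert\mathbf{t}\rVert\,\lVert\mathbf{r}\rVert}\right)$, and each pixel is assigned to the reference with the smallest angle. The NumPy sketch below is a minimal illustration, not the implementation used in any reviewed study; the function names, the optional `max_angle` rejection threshold, and the 4-band toy spectra are hypothetical.

```python
import numpy as np
from typing import Optional


def spectral_angle(pixel: np.ndarray, reference: np.ndarray) -> float:
    """Angle (radians) between a pixel spectrum and a reference spectrum."""
    cos_theta = np.dot(pixel, reference) / (np.linalg.norm(pixel) * np.linalg.norm(reference))
    # Clip to [-1, 1] to guard against floating-point rounding before arccos
    return float(np.arccos(np.clip(cos_theta, -1.0, 1.0)))


def sam_classify(cube: np.ndarray, references: np.ndarray,
                 max_angle: Optional[float] = None) -> np.ndarray:
    """Label each pixel of a (rows, cols, bands) cube with the index of the
    reference spectrum (n_classes, bands) that has the smallest spectral angle.
    Pixels whose smallest angle exceeds max_angle (if given) are labeled -1."""
    pixels = cube.reshape(-1, cube.shape[-1])
    # Unit-normalize so that the dot product equals the cosine of the angle
    p = pixels / np.linalg.norm(pixels, axis=1, keepdims=True)
    r = references / np.linalg.norm(references, axis=1, keepdims=True)
    angles = np.arccos(np.clip(p @ r.T, -1.0, 1.0))  # shape (n_pixels, n_classes)
    labels = np.argmin(angles, axis=1)
    if max_angle is not None:
        labels[angles.min(axis=1) > max_angle] = -1
    return labels.reshape(cube.shape[:2])


# Hypothetical 4-band reference spectra and a 1 x 2 pixel "image"
refs = np.array([[0.1, 0.2, 0.3, 0.4],
                 [0.4, 0.3, 0.2, 0.1]])
cube = np.array([[[0.2, 0.4, 0.6, 0.8],     # twice as bright as refs[0]
                  [0.35, 0.3, 0.2, 0.1]]])  # close in shape to refs[1]
print(sam_classify(cube, refs))  # [[0 1]]
```

Because only the angle between vectors is used, the first pixel, which is simply a brighter copy of the first reference, still receives label 0; this insensitivity to overall brightness (e.g., illumination scaling) is the main practical appeal of SAM.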
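The bagging and voting steps described for RF can be sketched from scratch in NumPy. This is a simplified illustration under stated assumptions, not the configuration of any reviewed study: trees are grown greedily with the Gini index up to a fixed `max_depth`, √(number of bands) features are sampled at each split (a common default for classification), and the labeled "spectra" are synthetic. In practice a tested library implementation (e.g., scikit-learn's `RandomForestClassifier`) would normally be used.

```python
import numpy as np


def gini(y):
    """Gini impurity of an integer label vector."""
    _, counts = np.unique(y, return_counts=True)
    p = counts / counts.sum()
    return 1.0 - np.sum(p ** 2)


def build_tree(X, y, depth, max_depth, n_feats, rng):
    """Grow a decision tree; leaves are class labels, internal nodes are
    (feature, threshold, left_subtree, right_subtree) tuples."""
    if depth >= max_depth or np.unique(y).size == 1:
        return int(np.bincount(y).argmax())  # leaf: majority class
    best = None
    # Random feature subset at each split: the "random" part of Random Forest
    for f in rng.choice(X.shape[1], size=n_feats, replace=False):
        for t in np.unique(X[:, f])[:-1]:  # exclude max so both sides are non-empty
            left = X[:, f] <= t
            score = left.mean() * gini(y[left]) + (~left).mean() * gini(y[~left])
            if best is None or score < best[0]:
                best = (score, f, t, left)
    if best is None:  # no valid split among the sampled features
        return int(np.bincount(y).argmax())
    _, f, t, left = best
    return (f, t,
            build_tree(X[left], y[left], depth + 1, max_depth, n_feats, rng),
            build_tree(X[~left], y[~left], depth + 1, max_depth, n_feats, rng))


def predict_tree(node, x):
    while isinstance(node, tuple):
        f, t, left, right = node
        node = left if x[f] <= t else right
    return node


def fit_forest(X, y, n_trees=25, max_depth=5, seed=0):
    rng = np.random.default_rng(seed)
    n_feats = max(1, int(np.sqrt(X.shape[1])))
    forest = []
    for _ in range(n_trees):
        # Bagging: each tree sees a bootstrap sample drawn with replacement
        idx = rng.integers(0, len(X), size=len(X))
        forest.append(build_tree(X[idx], y[idx], 0, max_depth, n_feats, rng))
    return forest


def predict_forest(forest, X):
    """Majority vote across trees for each sample."""
    votes = np.array([[predict_tree(tree, x) for tree in forest] for x in X])
    return np.array([np.bincount(v).argmax() for v in votes])


# Synthetic example: 100 "spectra" with 10 bands; class 1 is brighter in bands 3-6
rng = np.random.default_rng(42)
X = rng.normal(0.2, 0.05, size=(100, 10))
y = np.repeat([0, 1], 50)
X[y == 1, 3:7] += 0.3
forest = fit_forest(X, y)
print("training accuracy:", (predict_forest(forest, X) == y).mean())
```

Sampling both the training samples (bagging) and the candidate features makes the individual trees less correlated, which is what lets the majority vote reduce the variance of a single deep tree.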
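The SVM kernels listed above can be written directly. A kernel returns the inner product $K(\mathbf{x},\mathbf{z}) = \varphi(\mathbf{x})\cdot\varphi(\mathbf{z})$ in the transformed space without computing the mapping $\varphi$ explicitly; for a separating hyperplane $\mathbf{w}\cdot\varphi(\mathbf{x}) + b = 0$ in canonical form, the margin width is $2/\lVert\mathbf{w}\rVert$. In the sketch below, the parameter names `gamma`, `coef0`, and `degree` follow a common convention (e.g., scikit-learn's `SVC`), all values are illustrative, and the helper `phi_poly2` is a hypothetical explicit feature map used only to check that the degree-2 polynomial kernel equals a dot product in a 3-D feature space. Training the SVM (solving for the support vectors) is not shown.

```python
import numpy as np


def linear_kernel(x, z):
    return float(x @ z)


def polynomial_kernel(x, z, degree=3, gamma=1.0, coef0=1.0):
    return float((gamma * (x @ z) + coef0) ** degree)


def rbf_kernel(x, z, gamma=1.0):
    # Radial Basis Function: similarity decays with squared Euclidean distance
    return float(np.exp(-gamma * np.sum((x - z) ** 2)))


def gram_matrix(X, kernel, **params):
    """Pairwise kernel values between all samples (rows of X)."""
    n = len(X)
    K = np.empty((n, n))
    for a in range(n):
        for c in range(n):
            K[a, c] = kernel(X[a], X[c], **params)
    return K


def phi_poly2(x):
    """Explicit feature map whose dot product equals (x . z)^2 for 2-D inputs."""
    x1, x2 = x
    return np.array([x1 ** 2, np.sqrt(2) * x1 * x2, x2 ** 2])


x = np.array([1.0, 2.0])
z = np.array([3.0, 4.0])
print(polynomial_kernel(x, z, degree=2, gamma=1.0, coef0=0.0))  # 121.0
print(phi_poly2(x) @ phi_poly2(z))  # same value, up to floating-point rounding
```

The equality shown by the two printed values is the "kernel trick": the classifier only ever needs the Gram matrix of kernel values, so a very high-dimensional (for the RBF kernel, infinite-dimensional) feature space never has to be constructed.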
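The 1-D CNN case described above (convolution along the spectral axis, a nonlinear activation, then pooling) can be sketched for a single pixel in NumPy. Assumptions: the kernels are random and untrained, the spectrum is synthetic with 224 bands (matching EnMAP's band count), and, as in most deep learning libraries, the "convolution" is implemented as cross-correlation (the kernel is not flipped). The function names are hypothetical.

```python
import numpy as np


def conv1d(spectrum, kernels, bias):
    """Valid 1-D convolution along the spectral axis.
    spectrum: (bands,), kernels: (n_filters, k), bias: (n_filters,)
    returns feature maps of shape (n_filters, bands - k + 1)."""
    k = kernels.shape[1]
    # Each row is a window of k consecutive bands
    windows = np.array([spectrum[s:s + k] for s in range(spectrum.shape[0] - k + 1)])
    return kernels @ windows.T + bias[:, None]


def relu(x):
    """Nonlinear activation: negative responses are set to zero."""
    return np.maximum(0.0, x)


def max_pool1d(feature_maps, size=2):
    """Non-overlapping max pooling; trailing values that do not fill a window are dropped."""
    n_filters, length = feature_maps.shape
    trimmed = feature_maps[:, : length - length % size]
    return trimmed.reshape(n_filters, -1, size).max(axis=2)


rng = np.random.default_rng(0)
spectrum = rng.random(224)                      # synthetic pixel spectrum
kernels = rng.normal(0.0, 0.1, size=(8, 7))     # 8 untrained filters spanning 7 bands
bias = np.zeros(8)
pooled = max_pool1d(relu(conv1d(spectrum, kernels, bias)), size=2)
print(pooled.shape)  # (8, 109)
features = pooled.ravel()  # would feed further conv or fully connected layers
```

In a trained network the kernel weights are learned by backpropagation; the 2-D and 3-D variants follow the same pattern but slide the kernels over two or three axes of the data cube instead of one.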
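The LSTM gating described above can be illustrated with a single forward step in NumPy, following the standard formulation: input gate $i$, forget gate $f$, and output gate $o$ (sigmoids), a candidate $g$ (tanh), cell update $c_t = f \odot c_{t-1} + i \odot g$, and hidden state $h_t = o \odot \tanh(c_t)$. This is a sketch under assumptions: the stacking order of the gate weights, the sizes, and the random weights are illustrative, the input sequence is synthetic (e.g., one pixel observed at several dates), and no training is shown.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x, h_prev, c_prev, W, U, b):
    """One LSTM time step.
    x: (D,), h_prev and c_prev: (H,), W: (4H, D), U: (4H, H), b: (4H,).
    Rows of W, U, b are assumed stacked as: input, forget, candidate, output."""
    H = h_prev.shape[0]
    z = W @ x + U @ h_prev + b
    i = sigmoid(z[0:H])          # input gate: how much new content to write
    f = sigmoid(z[H:2 * H])      # forget gate: how much old memory to keep
    g = np.tanh(z[2 * H:3 * H])  # candidate values for the cell
    o = sigmoid(z[3 * H:4 * H])  # output gate: how much of the cell to expose
    c = f * c_prev + i * g
    h = o * np.tanh(c)
    return h, c


def lstm_run(sequence, W, U, b):
    """Run the cell over a (T, D) sequence and return the final hidden state."""
    H = U.shape[1]
    h, c = np.zeros(H), np.zeros(H)
    for x in sequence:
        h, c = lstm_step(x, h, c, W, U, b)
    return h


rng = np.random.default_rng(0)
D, H, T = 5, 8, 6            # features per time step, hidden size, number of time steps
W = rng.normal(0.0, 0.1, size=(4 * H, D))
U = rng.normal(0.0, 0.1, size=(4 * H, H))
b = np.zeros(4 * H)
sequence = rng.random((T, D))  # synthetic multi-temporal observations of one pixel
h_final = lstm_run(sequence, W, U, b)
print(h_final.shape)  # (8,)
```

The forget gate multiplies the previous cell state rather than overwriting it, which is what allows information to persist across many time steps.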

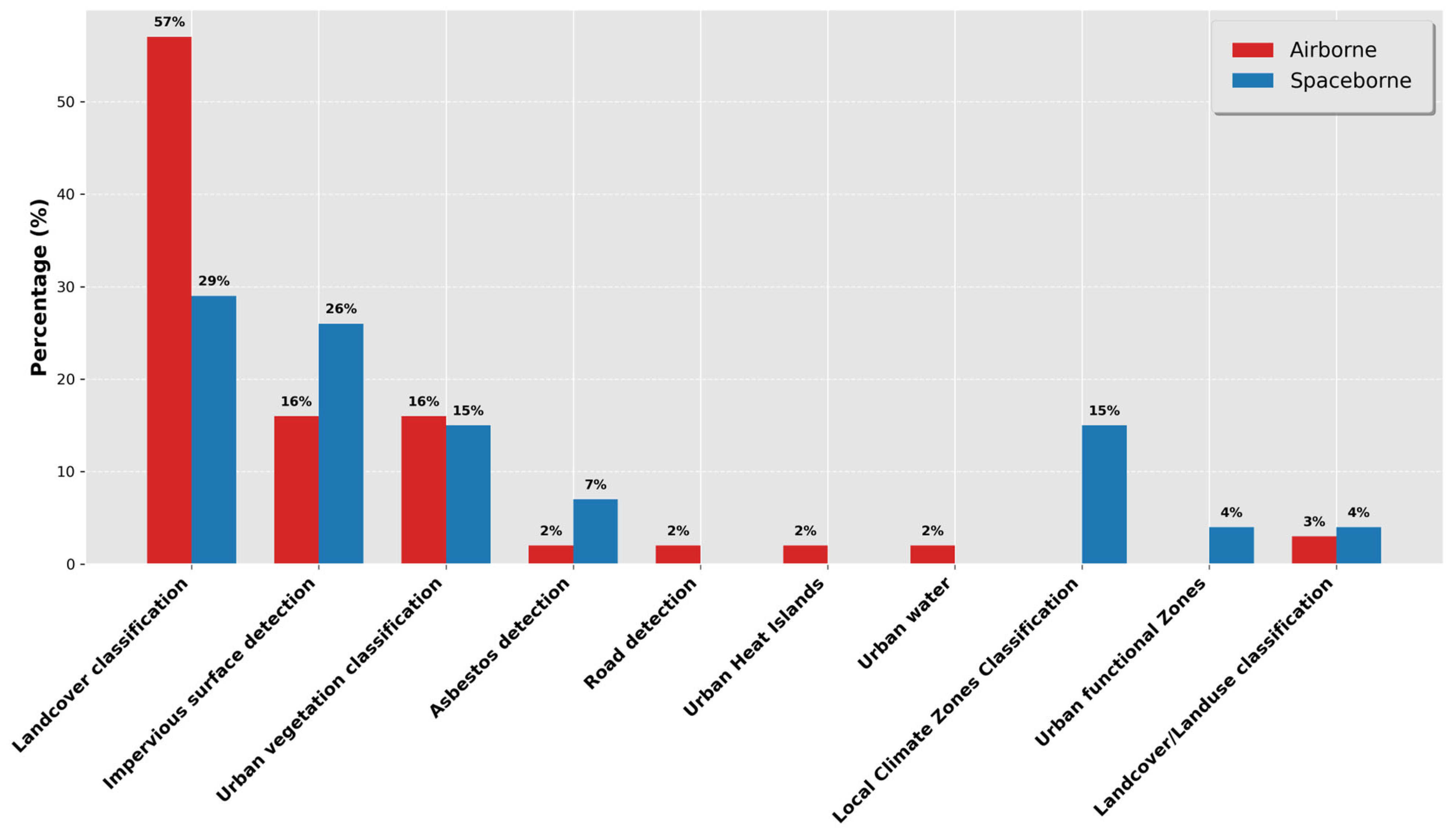

4.5. Main Application Trends

- Urban vegetation classification: This application focuses on distinguishing various types of vegetation, with most studies aiming to identify species [74]. It accounts for a significant share of studies for both sensor types (15–16%).

- Impervious surface detection: Impervious surfaces are natural or artificial coverings in urban areas that prevent water infiltration into the ground. This classification is generally simpler, often framed as a dichotomy (soil | impervious) or a trichotomy (soil | impervious | vegetation) [24], although some authors do not apply this scheme and instead treat roof/pavement and grass/tree as separate classes [75]. This application relies more frequently on spaceborne (26%) than on airborne (16%) sensors.

- Land cover/land use classification: This application combines two distinct but often conflated concepts. Whereas a land cover class usually corresponds to a single spectral category, land use classes are information classes that result from the combination of diverse spectral categories. While combining the two concepts in a single product may seem beneficial, as it could provide more comprehensive information, they typically serve different objectives: environmental monitoring (land cover) and policymaking (land use) [76]. This difference in purpose can confuse end users, as further discussed in the Discussion section. This application accounts for a small share for both sensor types (4% for spaceborne; 3% for airborne).

4.6. Use of Data Integration/Fusion

5. Discussion

5.1. Main Insights Extracted from Basic Statistics: Theoretical Stage of the Topic

- Limited availability of spaceborne and airborne hyperspectral imagery for many years before the launch of new satellite missions. As indicated in Figure 5, the major problem with airborne HSI lies in its high acquisition cost, which led most authors to rely on public datasets to test techniques and perform benchmarking, resulting in a lack of application-oriented papers. This lack of data has been compensated over the last 5 years by the launch of a new generation of hyperspectral satellites with free-of-charge, quasi-open data policies (such as PRISMA), as shown by the increasing use of this technology compared with previous years.

- Coarse resolution of spaceborne imaging: many authors argue that SR is a major factor influencing accuracy in urban areas, since these are composed of a mixture of land covers at small scales [54]. For this reason, some authors preferred to work with spaceborne MSI or airborne HSI [55], which offer better SR, despite their disadvantages in terms of spectral resolution and/or the cost of accessing the data.

- Complexity of the urban environment: several factors contribute to this issue, such as the similar spectral signatures of many urban land covers [99] or the inherent geometric complexity of cities (3D structures), which leads to nonlinear data [100]. Although hyperspectral data can address many of these challenges, misclassifications may still occur when relying solely on spectral criteria, requiring the integration of different data sources. This is particularly important when different objects exhibit similar spectral signatures due to shared materials or surface properties, or when the same object returns different spectra because of differences in condition, illumination, or background effects [101]. These factors have led researchers to favor natural and rural environments when starting to develop frameworks and applications.

5.2. Image Processing Techniques

- Semantic representation through deep features: early layers capture low-level features (spatial-spectral metrics), middle layers capture patterns, and deep layers relate both to the semantic concepts indicated during training. This is especially useful in urban areas, as it helps the model cope with their complexity [127].

- Universal approximation: neural networks can approximate a very wide range of functions, so radically different inputs can be included [73].

- High flexibility of structural design: the model can be adapted to specific tasks and to different learning strategies, which is fundamental in remote sensing applications [73].

5.3. Trends in Urban Applications

- Pixel analysis approach: pixel-based analysis is often limited by coarse SR in complex areas, where the spectral signal may be mixed due to the presence of multiple land covers within individual pixels. Sub-pixel analysis techniques, such as regression-based ML or DL methods or spectral unmixing, can potentially extract more detailed information [75]. However, within the literature reviewed in this article, sub-pixel analysis has not yet been applied to land cover classification using HSI, as the high number of land cover categories makes it considerably more complex to determine accurate material fractions.

- Study area morphology: Welch [144] analyzed the minimum pixel size required to effectively study urban areas in various cities worldwide. The study found that the critical factor in determining a suitable SR was mainly the contrast between different urban land cover types. In heterogeneous urban environments, where built-up areas, vegetation, transportation infrastructure, and bare soil often coexist within small spatial extents, high spectral and spatial contrast enables more effective discrimination of land cover types, even at coarser resolutions. In areas with low contrast or gradual transitions between covers, even finer SR may struggle to produce accurate classifications.

- Size of features: Another aspect to take into consideration is the size of the urban feature to classify, as coarser SR tends to overlook smaller or more fragmented features. This limitation becomes relevant when the classification task involves detailed land cover categories. In such cases, the spatial heterogeneity within urban environments may not be accurately captured, leading to underrepresentation or misclassification of fine-scale and linear elements such as narrow roads, small buildings, or isolated vegetation patches [145].
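The mixed-pixel problem raised in this list can be quantified with simple arithmetic and addressed with linear spectral unmixing. For example, a 6 m-wide road crossing a 30 m × 30 m pixel covers 6 × 30 = 180 m² of 900 m², i.e., 20% of the pixel. Linear unmixing models a pixel as $\mathbf{x} = \mathbf{E}\mathbf{a} + \boldsymbol{\varepsilon}$, where the columns of $\mathbf{E}$ are endmember spectra and $\mathbf{a}$ holds their abundances. The NumPy sketch below is illustrative only: it imposes the sum-to-one constraint by appending a heavily weighted row of ones to a least-squares solve, does not enforce non-negativity, and uses hypothetical 6-band endmember spectra rather than measured ones.

```python
import numpy as np


def unmix_sum_to_one(pixel, endmembers, delta=1e3):
    """Sum-to-one constrained linear unmixing via least squares.
    pixel: (bands,), endmembers: (bands, n_endmembers) -> abundances (n_endmembers,).
    The extra row weighted by `delta` pushes sum(abundances) toward 1;
    non-negativity of the abundances is NOT enforced here."""
    n = endmembers.shape[1]
    A = np.vstack([endmembers, delta * np.ones((1, n))])
    b = np.concatenate([pixel, [delta]])
    abundances, *_ = np.linalg.lstsq(A, b, rcond=None)
    return abundances


# Hypothetical endmember spectra (columns: asphalt, vegetation, roof tile), 6 bands
E = np.array([
    [0.05, 0.04, 0.10],
    [0.06, 0.08, 0.15],
    [0.07, 0.05, 0.25],
    [0.08, 0.45, 0.30],
    [0.09, 0.30, 0.35],
    [0.10, 0.15, 0.30],
])
true_fractions = np.array([0.2, 0.5, 0.3])  # e.g., 20% road as in the example above
pixel = E @ true_fractions                   # noise-free synthetic mixed pixel
print(np.round(unmix_sum_to_one(pixel, E), 3))  # [0.2 0.5 0.3]
```

With real, noisy data and many similar urban endmembers, the recovered fractions become far less reliable, which is consistent with the difficulty of sub-pixel land cover analysis noted above; fully constrained variants add non-negativity (e.g., via a non-negative least-squares solver).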

5.4. Comparison Between Satellite and Airborne HSI

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AEs | Auto-encoders |
| ANN | Artificial Neural Network |
| ASI | Italian Space Agency |
| CHIME | Copernicus Hyperspectral Imaging Mission for the Environment |
| CNN | Convolutional Neural Network |
| DL | Deep Learning |
| DT | Decision Trees |
| FCNN | Fully Connected Neural Network |
| GAN | Generative Adversarial Network |
| GIS | Geographic Information System |
| GSD | Ground Sampling Distance |
| HSI | Hyperspectral Imaging |
| K-NN | K-Nearest Neighbors |
| LCZ | Local Climate Zone |
| LIDAR | Light Detection and Ranging |
| LSMA | Linear Spectral Mixture Analysis |
| LSTM | Long Short-Term Memory |
| ML | Machine Learning |
| MLC | Maximum Likelihood Classifier |
| MSI | Multispectral Imaging |
| NMF | Nonnegative Matrix Factorization |
| PAN | Panchromatic |
| RF | Random Forest |
| RNN | Recurrent Neural Network |
| SAM | Spectral Angle Mapper |
| SAR | Synthetic Aperture Radar |
| SNR | Signal-to-Noise Ratio |
| SR | Spatial Resolution |
| SU | Spectral Unmixing |
| SVM | Support Vector Machine |
| SWIR | Short-Wave Infrared |
| UCP | Urban Canopy Parameter |
| VNIR | Visible and Near-Infrared |

References

- Keivani, R. A review of the main challenges to urban sustainability. Int. J. Urban Sustain. Dev. 2009, 1, 5–16.

- United Nations World Urbanization Prospects: The 2018 Revision. Department of Economic and Social Affairs. 2018. Available online: https://www.un.org/development/desa/en/news/population/2018-revision-of-world-urbanization-prospects.html (accessed on 6 June 2025).

- United Nations Transforming Our World: The 2030 Agenda for Sustainable Development 2015; Division for Sustainable Development Goals: New York, NY, USA, 2015; Available online: https://www.un.org/sustainabledevelopment/cities/ (accessed on 6 June 2025).

- Zhu, C.; Li, J.; Zhang, S.; Wu, C.; Zhang, B.; Gao, L.; Plaza, A. Impervious Surface Extraction From Multispectral Images via Morphological Attribute Profiles Based on Spectral Analysis. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 4775–4790.

- de Almeida, C.R.; Teodoro, A.C.; Gonçalves, A. Study of the Urban Heat Island (UHI) Using Remote Sensing Data/Techniques: A Systematic Review. Environments 2021, 8, 105.

- Shojanoori, R.; Shafri, H. Review on the Use of Remote Sensing for Urban Forest Monitoring. Arboric. Urban For. 2016, 42, 400–417.

- Herold, M.; Roberts, D.A.; Gardner, M.E.; Dennison, P.E. Spectrometry for urban area remote sensing—Development and analysis of a spectral library from 350 to 2400 nm. Remote Sens. Environ. 2004, 91, 304–319.

- Fabbretto, A.; Bresciani, M.; Pellegrino, A.; Alikas, K.; Pinardi, M.; Mangano, S.; Padula, R.; Giardino, C. Tracking Water Quality and Macrophyte Changes in Lake Trasimeno (Italy) from Spaceborne Hyperspectral Imagery. Remote Sens. 2024, 16, 1704.

- Fernández-Manso, A.; Quintano, C.; Fernández-Guisuraga, J.M.; Roberts, D. Next-gen regional fire risk mapping: Integrating hyperspectral imagery and National Forest Inventory data to identify hot-spot wildland-urban interfaces. Sci. Total Environ. 2024, 940, 173568.

- Hajaj, S.; Harti, A.E.; Pour, A.B.; Jellouli, A.; Adiri, Z.; Hashim, M. A review on hyperspectral imagery application for lithological mapping and mineral prospecting: Machine learning techniques and future prospects. Remote Sens. Appl. Soc. Environ. 2024, 35, 101218.

- Ram, B.G.; Oduor, P.; Igathinathane, C.; Howatt, K.; Sun, X. A systematic review of hyperspectral imaging in precision agriculture: Analysis of its current state and future prospects. Comput. Electron. Agric. 2024, 222, 109037.

- Goetz, A.; Vane, G.; Solomon, J.; Rock, B. Imaging Spectrometry for Earth Remote Sensing. Science 1985, 228, 1147–1153.

- Selci, S. The Future of Hyperspectral Imaging. J. Imaging 2019, 5, 84.

- Manolakis, D.; Marden, D.; Shaw, G. Hyperspectral image processing for automatic target detection applications. Linc. Lab. J. 2003, 14, 79–116.

- Vane, G.; Green, R.O.; Chrien, T.G.; Enmark, H.T.; Hansen, E.G.; Porter, W.M. The airborne visible/infrared imaging spectrometer (AVIRIS). Remote Sens. Environ. 1993, 44, 127–143.

- Lu, B.; Dao, P.D.; Liu, J.; He, Y.; Shang, J. Recent Advances of Hyperspectral Imaging Technology and Applications in Agriculture. Remote Sens. 2020, 12, 2659.

- Pearlman, J.; Carman, S.; Segal, C.; Jarecke, P.; Clancy, P.; Browne, W. Overview of the Hyperion Imaging Spectrometer for the NASA EO-1 mission. Scanning the Present and Resolving the Future. In Proceedings of the IEEE 2001 International Geoscience and Remote Sensing Symposium, Sydney, NSW, Australia, 9–13 July 2001; Cat. No.01CH37217. Volume 7, pp. 3036–3038.

- Barnsley, M.J.; Settle, J.J.; Cutter, M.A.; Lobb, D.R.; Teston, F. The PROBA/CHRIS mission: A low-cost smallsat for hyperspectral multiangle observations of the Earth surface and atmosphere. IEEE Trans. Geosci. Remote Sens. 2004, 42, 1512–1520.

- Cogliati, S.; Sarti, F.; Chiarantini, L.; Cosi, M.; Lorusso, R.; Lopinto, E.; Miglietta, F.; Genesio, L.; Guanter, L.; Damm, A.; et al. The PRISMA imaging spectroscopy mission: Overview and first performance analysis. Remote Sens. Environ. 2021, 262, 112499.

- Matsunaga, T.; Iwasaki, A.; Tsuchida, S.; Tanii, J.; Kashimura, O.; Nakamura, R.; Yamamoto, H.; Tachikawa, T.; Rokugawa, S. Hyperspectral Imager Suite (HISUI). In Optical Payloads for Space Missions; John Wiley & Sons, Ltd.: Hoboken, NJ, USA, 2015; pp. 215–222.

- Chabrillat, S.; Foerster, S.; Segl, K.; Beamish, A.; Brell, M.; Asadzadeh, S.; Milewski, R.; Ward, K.J.; Brosinsky, A.; Koch, K.; et al. The EnMAP spaceborne imaging spectroscopy mission: Initial scientific results two years after launch. Remote Sens. Environ. 2024, 315, 114379.

- Qian, S.-E. Hyperspectral Satellites, Evolution, and Development History. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 7032–7056.

- Kudela, R.M.; Hooker, S.B.; Guild, L.S.; Houskeeper, H.F.; Taylor, N. Expanded Signal to Noise Ratio Estimates for Validating Next-Generation Satellite Sensors in Oceanic, Coastal, and Inland Waters. Remote Sens. 2024, 16, 1238.

- Feng, X.; Shao, Z.; Huang, X.; He, L.; Lv, X.; Zhuang, Q. Integrating Zhuhai-1 Hyperspectral Imagery With Sentinel-2 Multispectral Imagery to Improve High-Resolution Impervious Surface Area Mapping. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 2410–2424.

- Roy, D.P.; Wulder, M.A.; Loveland, T.R.; Woodcock, C.E.; Allen, R.G.; Anderson, M.C.; Helder, D.; Irons, J.R.; Johnson, D.M.; Kennedy, R.; et al. Landsat-8: Science and product vision for terrestrial global change research. Remote Sens. Environ. 2014, 145, 154–172.

- Spoto, F.; Sy, O.; Laberinti, P.; Martimort, P.; Fernandez, V.; Colin, O.; Hoersch, B.; Meygret, A. Overview Of Sentinel-2. In Proceedings of the 2012 IEEE International Geoscience and Remote Sensing Symposium, Munich, Germany, 22–27 July 2012; pp. 1707–1710.

- Li, J.; Huang, X.; Tu, L. WHU-OHS: A benchmark dataset for large-scale Hersepctral Image classification. Int. J. Appl. Earth Obs. Geoinformation 2022, 113, 103022.

- Barducci, A.; Guzzi, D.; Marcoionni, P.; Pippi, I. CHRIS-PROBA performance evaluation: Signal-to-noise ratio, instrument efficiency and data quality from acquisitions over San Rossore (ITALY) test site. In Proceedings of the 3rd ESA CHRIS/Proba Workshop, Noordwijk, The Netherlands, 21–23 March 2005.

- Liu, Y.-N.; Sun, D.-X.; Hu, X.-N.; Ye, X.; Li, Y.-D.; Liu, S.-F.; Cao, K.-Q.; Chai, M.-Y.; Zhou, W.-Y.-N.; Zhang, J.; et al. The Advanced Hyperspectral Imager: Aboard China’s GaoFen-5 Satellite. IEEE Geosci. Remote Sens. Mag. 2019, 7, 23–32.

- Storch, T.; Honold, H.-P.; Chabrillat, S.; Habermeyer, M.; Tucker, P.; Brell, M.; Ohndorf, A.; Wirth, K.; Betz, M.; Kuchler, M.; et al. The EnMAP imaging spectroscopy mission towards operations. Remote Sens. Environ. 2023, 294, 113632.

- Green, R.O.; Eastwood, M.L.; Sarture, C.M.; Chrien, T.G.; Aronsson, M.; Chippendale, B.J.; Faust, J.A.; Pavri, B.E.; Chovit, C.J.; Solis, M.; et al. Imaging Spectroscopy and the Airborne Visible/Infrared Imaging Spectrometer (AVIRIS). Remote Sens. Environ. 1998, 65, 227–248.

- Cocks, T.; Jenssen, R.; Stewart, A.; Wilson, I.; Shields, T. The HyMap Airborne Hyperspectral Sensor: The System, Calibration and Performance. In Proceedings of the 1st EARSeL Workshop on Imaging Spectroscopy, Zurich, Switzerland, 6–8 October 1998.

- Babey, S.K.; Anger, C.D. Compact airborne spectrographic imager (CASI): A progress review. In Proceedings of the Imaging Spectrometry of the Terrestrial Environment; Vane, G., Ed.; SPIE: Bellingham, WA, USA, 1993; Volume 1937, pp. 152–163.

- Centre for Remote Sensing (CRS), University of Iceland ROSIS. 2019. Available online: https://crs.hi.is/?page_id=877 (accessed on 15 May 2025).

- Spectral Imaging Ltd. (SPECIM). Specim FX10 Hyperspectral Camera Datasheet. Available online: https://www.specim.com/products/specim-fx10/ (accessed on 12 August 2025).

- Basedow, R.W.; Carmer, D.C.; Anderson, M.E. HYDICE system: Implementation and performance. In Proceedings of the Imaging Spectrometry; Descour, M.R., Mooney, J.M., Perry, D.L., Illing, L.R., Eds.; SPIE: Bellingham, WA, USA, 1995; Volume 2480, pp. 258–267.

- Weeks, J. Population: An Introduction to Concepts and Issues, 10th ed.; Wadsworth Thomson Learning: Belmont, CA, USA, 2008.

- Weeks, J. Defining Urban Areas. In Remote Sensing of Urban and Suburban Areas; Springer: Berlin/Heidelberg, Germany, 2010; Volume 10, pp. 33–45.

- UN-Habitat. What Is a City? United Nations Human Settlements Programme: Nairobi, Kenya, 2020; Available online: https://unhabitat.org/sites/default/files/2020/06/city_definition_what_is_a_city.pdf (accessed on 12 June 2025).

- Page, M.J.; Moher, D.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. PRISMA 2020 explanation and elaboration: Updated guidance and exemplars for reporting systematic reviews. BMJ 2021, 372, n160.

- Cuca, B.; Zaina, F.; Tapete, D. Monitoring of Damages to Cultural Heritage across Europe Using Remote Sensing and Earth Observation: Assessment of Scientific and Grey Literature. Remote Sens. 2023, 15, 3748.

- Schmitt, M.; Zhu, X.X. Data Fusion and Remote Sensing: An ever-growing relationship. IEEE Geosci. Remote Sens. Mag. 2016, 4, 6–23.

- Taherzadeh, E.; Mansor, S.B.; Ashurov, R. Hyperspectral Remote Sensing of Urban Areas: An Overview of Techniques and Applications. 2012. Available online: https://api.semanticscholar.org/CorpusID:16655704 (accessed on 15 May 2025).

- van der Linden, S.; Okujeni, A.; Canters, F.; Degerickx, J.; Heiden, U.; Hostert, P.; Priem, F.; Somers, B.; Thiel, F. Imaging Spectroscopy of Urban Environments. Surv. Geophys. 2019, 40, 471–488.

- Transon, J.; D’Andrimont, R.; Maugnard, A.; Defourny, P. Survey of Hyperspectral Earth Observation Applications from Space in the Sentinel-2 Context. Remote Sens. 2018, 10, 157.

- Torres Gil, L.K.; Valdelamar Martínez, D.; Saba, M. The Widespread Use of Remote Sensing in Asbestos, Vegetation, Oil and Gas, and Geology Applications. Atmosphere 2023, 14, 172.

- Abbasi, M.; Mostafa, S.; Vieira, A.S.; Patorniti, N.; Stewart, R.A. Mapping Roofing with Asbestos-Containing Material by Using Remote Sensing Imagery and Machine Learning-Based Image Classification: A State-of-the-Art Review. Sustainability 2022, 14, 8068.

- Fassnacht, F.E.; Latifi, H.; Stereńczak, K.; Modzelewska, A.; Lefsky, M.; Waser, L.T.; Straub, C.; Ghosh, A. Review of studies on tree species classification from remotely sensed data. Remote Sens. Environ. 2016, 186, 64–87.

- Ciesielski, M.; Sterenczak, K. Accuracy of determining specific parameters of the urban forest using remote sensing. IForest Biogeosci. For. 2019, 12, 498–510.

- Weng, Q. Remote sensing of impervious surfaces in the urban areas: Requirements, methods, and trends. Remote Sens. Environ. 2012, 117, 34–49.

- Kuras, A.; Brell, M.; Rizzi, J.; Burud, I. Hyperspectral and Lidar Data Applied to the Urban Land Cover Machine Learning and Neural-Network-Based Classification: A Review. Remote Sens. 2021, 13, 3393.

- Segl, K.; Guanter, L.; Rogass, C.; Kuester, T.; Roessner, S.; Kaufmann, H.; Sang, B.; Mogulsky, V.; Hofer, S. EeteS—The EnMAP End-to-End Simulation Tool. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2012, 5, 522–530.

- Gong, Z.; Zhong, P.; Hu, W. Diversity in Machine Learning. IEEE Access 2019, 7, 64323–64350.

- Sawant, S.; Prabukumar, M. Band fusion based hyper spectral image classification. Int. J. Pure Appl. Math. 2017, 117, 71–76.

- Gamba, P. A collection of data for urban area characterization. In Proceedings of the IGARSS 2004. 2004 IEEE International Geoscience and Remote Sensing Symposium, Anchorage, AK, USA, 20–24 September 2004; Volume 1, p. 72.

- Zhang, L.; You, J. A spectral clustering based method for hyperspectral urban image. In Proceedings of the 2017 Joint Urban Remote Sensing Event (JURSE), Dubai, United Arab Emirates, 6–8 March 2017; pp. 1–3.

- Nischan, M.L.; Kerekes, J.P.; Baum, J.E.; Basedow, R.W. Analysis of HYDICE noise characteristics and their impact on subpixel object detection. In Proceedings of the Imaging Spectrometry V; Descour, M.R., Shen, S.S., Eds.; SPIE: Bellingham, WA, USA, 1999; Volume 3753, pp. 112–123.

- Kalman, L.S.; Bassett, E.M., III. Classification and material identification in an urban environment using HYDICE hyperspectral data. In Proceedings of the Imaging Spectrometry III; Descour, M.R., Shen, S.S., Eds.; SPIE: Bellingham, WA, USA, 1997; Volume 3118, pp. 57–68.

- Gao, H.; Feng, H.; Zhang, Y.; Xu, S.; Zhang, B. AMSSE-Net: Adaptive Multiscale Spatial–Spectral Enhancement Network for Classification of Hyperspectral and LiDAR Data. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–17.

- Rasti, B.; Ghamisi, P.; Gloaguen, R. Fusion of Multispectral LiDAR and Hyperspectral Imagery. In Proceedings of the IGARSS 2020—2020 IEEE International Geoscience and Remote Sensing Symposium, Waikoloa, HI, USA, 26 September–2 October 2020; pp. 2659–2662.

- ITRES CASI-1500. 2008. Available online: http://www.formosatrend.com/Brochure/CASI-1500.pdf (accessed on 28 April 2025).

- Wei, J.; Wang, X. An Overview on Linear Unmixing of Hyperspectral Data. Math. Probl. Eng. 2020, 2020, 3735403.

- Keshava, N.; Mustard, J.F. Spectral unmixing. IEEE Signal Process. Mag. 2002, 19, 44–57.

- Wang, M.; Zhao, M.; Chen, J.; Rahardja, S. Nonlinear Unmixing of Hyperspectral Data via Deep Autoencoder Networks. IEEE Geosci. Remote Sens. Lett. 2019, 16, 1467–1471.

- Lary, D.J.; Alavi, A.H.; Gandomi, A.H.; Walker, A.L. Machine learning in geosciences and remote sensing. Geosci. Front. 2016, 7, 3–10.

- Rashmi, S.; Addamani, S.; Venkat; Ravikiran, S. Spectral Angle Mapper Algorithm for Remote Sensing Image Classification. Int. J. Innov. Sci. Eng. Technol. 2014, 1, 201–205. Available online: http://www.ijiset.com/ (accessed on 2 May 2025).

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

- Tejasree, G.; Agilandeeswari, L. An extensive review of hyperspectral image classification and prediction: Techniques and challenges. Multimed. Tools Appl. 2024, 83, 80941–81038.

- Lv, W.; Wang, X. Overview of Hyperspectral Image Classification. J. Sens. 2020, 2020, 4817234.

- Krichen, M. Convolutional Neural Networks: A Survey. Computers 2023, 12, 151.

- Gao, H.; Lin, S.; Yang, Y.; Li, C.; Yang, M. Convolution Neural Network Based on Two-Dimensional Spectrum for Hyperspectral Image Classification. J. Sens. 2018, 2018, 8602103.

- Li, Y.; Zhang, H.; Shen, Q. Spectral–Spatial Classification of Hyperspectral Imagery with 3D Convolutional Neural Network. Remote Sens. 2017, 9, 67.

- Paoletti, M.E.; Haut, J.M.; Plaza, J.; Plaza, A. Deep learning classifiers for hyperspectral imaging: A review. ISPRS J. Photogramm. Remote Sens. 2019, 158, 279–317.

- Liu, L.; Coops, N.C.; Aven, N.W.; Pang, Y. Mapping urban tree species using integrated airborne hyperspectral and LiDAR remote sensing data. Remote Sens. Environ. 2017, 200, 170–182.

- Okujeni, A.; Van der Linden, S.; Jakimow, B.; Rabe, A.; Verrelst, J.; Hostert, P. A Comparison of Advanced Regression Algorithms for Quantifying Urban Land Cover. Remote Sens. 2014, 6, 6324–6346.

- Comber, A.J. Land use or land cover? J. Land Use Sci. 2008, 3, 199–201.

- Vavassori, A.; Oxoli, D.; Venuti, G.; Brovelli, M.A.; de Cumis, M.S.; Sacco, P.; Tapete, D. A combined Remote Sensing and GIS-based method for Local Climate Zone mapping using PRISMA and Sentinel-2 imagery. Int. J. Appl. Earth Obs. Geoinf. 2024, 131, 103944.

- Yuan, J.; Wang, S.; Wu, C.; Xu, Y. Fine-Grained Classification of Urban Functional Zones and Landscape Pattern Analysis Using Hyperspectral Satellite Imagery: A Case Study of Wuhan. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 3972–3991.

- Sajadi, P.; Gholamnia, M.; Bonafoni, S.; Mills, G.; Sang, Y.-F.; Li, Z.; Khan, S.; Han, J.; Pilla, F. Automated Pixel Purification for Delineating Pervious and Impervious Surfaces in a City Using Advanced Hyperspectral Imagery Techniques. IEEE Access 2024, 12, 82560–82583. [Google Scholar] [CrossRef]

- Negri, R.G.; Dutra, L.V.; Sant’Anna, S.J.S. Comparing support vector machine contextual approaches for urban area classification. Remote Sens. Lett. 2016, 7, 485–494.

- Le Bris, A.; Chehata, N.; Briottet, X.; Paparoditis, N. Spectral Band Selection for Urban Material Classification Using Hyperspectral Libraries. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, III–7, 33–40.

- Cavalli, R.M.; Fusilli, L.; Pascucci, S.; Pignatti, S.; Santini, F. Hyperspectral Sensor Data Capability for Retrieving Complex Urban Land Cover in Comparison with Multispectral Data: Venice City Case Study (Italy). Sensors 2008, 8, 3299–3320.

- Weng, Q.; Hu, X.; Lu, D. Extracting impervious surfaces from medium spatial resolution multispectral and hyperspectral imagery: A comparison. Int. J. Remote Sens. 2008, 29, 3209–3232.

- Kumar, V.; Garg, R.D. Comparison of Different Mapping Techniques for Classifying Hyperspectral Data. J. Indian Soc. Remote Sens. 2012, 40, 411–420.

- Gadal, S.; Ouerghemmi, W.; Barlatier, R.; Mozgeris, G. Critical Analysis of Urban Vegetation Mapping by Satellite Multispectral and Airborne Hyperspectral Imagery. In Proceedings of the 5th International Conference on Geographical Information Systems Theory, Applications and Management, Heraklion, Greece, 3–5 May 2019.

- Niedzielko, J.; Kopeć, D.; Wylazłowska, J.; Kania, A.; Charyton, J.; Halladin-Dąbrowska, A.; Niedzielko, M.; Berłowski, K. Airborne data and machine learning for urban tree species mapping: Enhancing the legend design to improve the map applicability for city greenery management. Int. J. Appl. Earth Obs. Geoinf. 2024, 128, 103719.

- Perretta, M.; Delogu, G.; Funsten, C.; Patriarca, A.; Caputi, E.; Boccia, L. Testing the Impact of Pansharpening Using PRISMA Hyperspectral Data: A Case Study Classifying Urban Trees in Naples, Italy. Remote Sens. 2024, 16, 3730.

- Degerickx, J.; Hermy, M.; Somers, B. Mapping Functional Urban Green Types Using High Resolution Remote Sensing Data. Sustainability 2020, 12, 2144.

- Stewart, I.D.; Oke, T.R. Local Climate Zones for Urban Temperature Studies. Bull. Am. Meteorol. Soc. 2012, 93, 1879–1900.

- Civicioglu, P.; Besdok, E. Pansharpening of remote sensing images using dominant pixels. Expert Syst. Appl. 2024, 242, 122783.

- Khan, A.; Vibhute, A.D.; Mali, S.; Patil, C.H. A systematic review on hyperspectral imaging technology with a machine and deep learning methodology for agricultural applications. Ecol. Inform. 2022, 69, 101678.

- Waigl, C.F.; Prakash, A.; Stuefer, M.; Verbyla, D.; Dennison, P. Fire detection and temperature retrieval using EO-1 Hyperion data over selected Alaskan boreal forest fires. Int. J. Appl. Earth Obs. Geoinf. 2019, 81, 72–84.

- Keramitsoglou, I.; Kontoes, C.; Sykioti, O.; Sifakis, N.; Xofis, P. Reliable, accurate and timely forest mapping for wildfire management using ASTER and Hyperion satellite imagery. For. Ecol. Manag. 2008, 255, 3556–3562.

- Shahtahmassebi, A.R.; Li, C.; Fan, Y.; Wu, Y.; Lin, Y.; Gan, M.; Wang, K.; Malik, A.; Blackburn, G.A. Remote sensing of urban green spaces: A review. Urban For. Urban Green. 2021, 57, 126946.

- Digra, M.; Dhir, R.; Sharma, N. Land use land cover classification of remote sensing images based on the deep learning approaches: A statistical analysis and review. Arab. J. Geosci. 2022, 15, 1003.

- Alonzo, M.; Bookhagen, B.; Roberts, D.A. Urban tree species mapping using hyperspectral and lidar data fusion. Remote Sens. Environ. 2014, 148, 70–83.

- Gaur, S.; Das, N.; Bhattacharjee, R.; Ohri, A.; Patra, D. A novel band selection architecture to propose a built-up index for hyperspectral sensor PRISMA. Earth Sci. Inform. 2023, 16, 887–898.

- Poursanidis, D.; Panagiotakis, E.; Chrysoulakis, N. Sub-pixel Material Fraction Mapping in the UrbanScape using PRISMA Hyperspectral Imagery. In Proceedings of the 2023 Joint Urban Remote Sensing Event (JURSE), Heraklion, Greece, 21–23 March 2023; pp. 1–5.

- Rosentreter, J.; Hagensieker, R.; Okujeni, A.; Roscher, R.; Wagner, P.D.; Waske, B. Subpixel Mapping of Urban Areas Using EnMAP Data and Multioutput Support Vector Regression. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 1938–1948.

- Li, X.; Li, Z.; Qiu, H.; Hou, G.; Fan, P. An overview of hyperspectral image feature extraction, classification methods and the methods based on small samples. Appl. Spectrosc. Rev. 2023, 58, 367–400.

- Wang, R.; Wang, M.; Ren, C.; Chen, G.; Mills, G.; Ching, J. Mapping local climate zones and its applications at the global scale: A systematic review of the last decade of progress and trend. Urban Clim. 2024, 57, 102129.

- Vaddi, R.; Kumar, B.L.N.P.; Manoharan, P.; Agilandeeswari, L.; Sangeetha, V. Strategies for dimensionality reduction in hyperspectral remote sensing: A comprehensive overview. Egypt. J. Remote Sens. Space Sci. 2024, 27, 82–92.

- Rasti, B.; Hong, D.; Hang, R.; Ghamisi, P.; Kang, X.; Chanussot, J.; Benediktsson, J.A. Feature Extraction for Hyperspectral Imagery: The Evolution From Shallow to Deep: Overview and Toolbox. IEEE Geosci. Remote Sens. Mag. 2020, 8, 60–88.

- Han, T.; Goodenough, D.G. Investigation of nonlinearity in hyperspectral remotely sensed imagery—A nonlinear time series analysis approach. In Proceedings of the 2007 IEEE International Geoscience and Remote Sensing Symposium, Barcelona, Spain, 23–27 July 2007; pp. 1556–1560.

- Christovam, L.E.; Pessoa, G.G.; Shimabukuro, M.H.; Galo, M.L.B.T. Land Use and Land Cover Classification Using Hyperspectral Imagery: Evaluating The Performance Of Spectral Angle Mapper, Support Vector Machine And Random Forest. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, XLII-2/W13, 1841–1847.

- Liu, Y.; Lu, S.; Lu, X.; Wang, Z.; Chen, C.; He, H. Classification of Urban Hyperspectral Remote Sensing Imagery Based on Optimized Spectral Angle Mapping. J. Indian Soc. Remote Sens. 2019, 47, 289–294.

- Ouerghemmi, W.; Gadal, S.; Mozgeris, G. Urban Vegetation Mapping Using Hyperspectral Imagery and Spectral Library. In Proceedings of the IGARSS 2018—2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 1632–1635.

- Belgiu, M.; Drăguţ, L. Random forest in remote sensing: A review of applications and future directions. ISPRS J. Photogramm. Remote Sens. 2016, 114, 24–31.

- Brabant, C.; Alvarez-Vanhard, E.; Laribi, A.; Morin, G.; Thanh Nguyen, K.; Thomas, A.; Houet, T. Comparison of Hyperspectral Techniques for Urban Tree Diversity Classification. Remote Sens. 2019, 11, 1269.

- Freeman, E.A.; Moisen, G.G.; Frescino, T.S. Evaluating effectiveness of down-sampling for stratified designs and unbalanced prevalence in Random Forest models of tree species distributions in Nevada. Ecol. Model. 2012, 233, 1–10.

- Colditz, R.R. An Evaluation of Different Training Sample Allocation Schemes for Discrete and Continuous Land Cover Classification Using Decision Tree-Based Algorithms. Remote Sens. 2015, 7, 9655–9681.

- Mellor, A.; Boukir, S.; Haywood, A.; Jones, S. Exploring issues of training data imbalance and mislabelling on random forest performance for large area land cover classification using the ensemble margin. ISPRS J. Photogramm. Remote Sens. 2015, 105, 155–168.

- Melgani, F.; Bruzzone, L. Classification of hyperspectral remote sensing images with support vector machines. IEEE Trans. Geosci. Remote Sens. 2004, 42, 1778–1790.

- Foody, G. Thematic Map Comparison: Evaluating the Statistical Significance of Differences in Classification Accuracy. Photogramm. Eng. Remote Sens. 2004, 70, 627–633.

- Moughal, T.A. Hyperspectral image classification using Support Vector Machine. J. Phys. Conf. Ser. 2013, 439, 012042.

- Gopinath, G.; Sasidharan, N.; Surendran, U. Landuse classification of hyperspectral data by spectral angle mapper and support vector machine in humid tropical region of India. Earth Sci. Inform. 2020, 13, 633–640.

- Okujeni, A.; van der Linden, S.; Suess, S.; Hostert, P. Ensemble Learning From Synthetically Mixed Training Data for Quantifying Urban Land Cover With Support Vector Regression. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 1640–1650.

- Mantero, P.; Moser, G.; Serpico, S.B. Partially Supervised classification of remote sensing images through SVM-based probability density estimation. IEEE Trans. Geosci. Remote Sens. 2005, 43, 559–570.

- Martins, L.A.; Viel, F.; Seman, L.O.; Bezerra, E.A.; Zeferino, C.A. A real-time SVM-based hardware accelerator for hyperspectral images classification in FPGA. Microprocess. Microsyst. 2024, 104, 104998.

- Khodadadzadeh, M.; Li, J.; Prasad, S.; Plaza, A. Fusion of Hyperspectral and LiDAR Remote Sensing Data Using Multiple Feature Learning. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 1–13.

- Adugna, T.; Fan, J. Comparison of Random Forest and Support Vector Machine Classifiers for Regional Land Cover Mapping Using Coarse Resolution FY-3C Images. Remote Sens. 2022, 14, 574.

- Li, H.-X.; Yang, J.-L.; Zhang, G.; Fan, B. Probabilistic support vector machines for classification of noise affected data. Inf. Sci. 2013, 221, 60–71.

- Mountrakis, G.; Im, J.; Ogole, C. Support vector machines in remote sensing: A review. ISPRS J. Photogramm. Remote Sens. 2011, 66, 247–259.

- Sheykhmousa, M.; Mahdianpari, M.; Ghanbari, H.; Mohammadimanesh, F.; Ghamisi, P.; Homayouni, S. Support Vector Machine Versus Random Forest for Remote Sensing Image Classification: A Meta-Analysis and Systematic Review. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 6308–6325.

- Ahmad, M.; Shabbir, S.; Roy, S.K.; Hong, D.; Wu, X.; Yao, J.; Khan, A.M.; Mazzara, M.; Distefano, S.; Chanussot, J. Hyperspectral Image Classification—Traditional to Deep Models: A Survey for Future Prospects. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 968–999.

- Youssef, R.; Aniss, M.; Jamal, C. Machine Learning and Deep Learning in Remote Sensing and Urban Application: A Systematic Review and Meta-Analysis. In GEOIT4W-2020: Proceedings of the 4th Edition of International Conference on Geo-IT and Water Resources 2020, Geo-IT and Water Resources 2020, Al-Hoceima, Morocco, 11–12 March 2020; Association for Computing Machinery: New York, NY, USA, 2020.

- Zhang, G.; Zhang, R.; Zhou, G.; Jia, X. Hierarchical spatial features learning with deep CNNs for very high-resolution remote sensing image classification. Int. J. Remote Sens. 2018, 39, 5978–5996.

- Li, Y.; Zhang, H.; Xue, X.; Jiang, Y.; Shen, Q. Deep learning for remote sensing image classification: A survey. WIREs Data Min. Knowl. Discov. 2018, 8, e1264.

- Li, X.; Fan, X.; Fan, J.; Li, Q.; Gao, Y.; Zhao, X. DASR-Net: Land Cover Classification Methods for Hybrid Multiattention Multispectral High Spectral Resolution Remote Sensing Imagery. Forests 2024, 15, 1826.

- Zheng, Y.; Liu, S.; Chen, H.; Bruzzone, L. Hybrid FusionNet: A Hybrid Feature Fusion Framework for Multisource High-Resolution Remote Sensing Image Classification. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–14.

- Hong, D.; Yokoya, N.; Xia, G.-S.; Chanussot, J.; Zhu, X.X. X-ModalNet: A semi-supervised deep cross-modal network for classification of remote sensing data. ISPRS J. Photogramm. Remote Sens. 2020, 167, 12–23.

- Rafiezadeh Shahi, K.; Ghamisi, P.; Rasti, B.; Gloaguen, R.; Scheunders, P. MS²A-Net: Multiscale Spectral–Spatial Association Network for Hyperspectral Image Clustering. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 1–13.

- Bera, S.; Shrivastava, V.K.; Satapathy, S.C. Advances in Hyperspectral Image Classification Based on Convolutional Neural Networks: A Review. CMES—Comput. Model. Eng. Sci. 2022, 133, 219–250.

- Ma, X.; Man, Q.; Yang, X.; Dong, P.; Yang, Z.; Wu, J.; Liu, C. Urban Feature Extraction within a Complex Urban Area with an Improved 3D-CNN Using Airborne Hyperspectral Data. Remote Sens. 2023, 15, 992.

- Noshiri, N.; Beck, M.A.; Bidinosti, C.P.; Henry, C.J. A comprehensive review of 3D convolutional neural network-based classification techniques of diseased and defective crops using non-UAV-based hyperspectral images. Smart Agric. Technol. 2023, 5, 100316.

- Jaiswal, G.; Rani, R.; Mangotra, H.; Sharma, A. Integration of hyperspectral imaging and autoencoders: Benefits, applications, hyperparameter tunning and challenges. Comput. Sci. Rev. 2023, 50, 100584.

- Mou, L.; Ghamisi, P.; Zhu, X.X. Deep Recurrent Neural Networks for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 3639–3655.

- Nascimento, J.M.P.; Dias, J.M.B. Vertex component analysis: A fast algorithm to unmix hyperspectral data. IEEE Trans. Geosci. Remote Sens. 2005, 43, 898–910.

- Plaza, A.; Benediktsson, J.A.; Boardman, J.W.; Brazile, J.; Bruzzone, L.; Camps-Valls, G.; Chanussot, J.; Fauvel, M.; Gamba, P.; Gualtieri, A.; et al. Recent advances in techniques for hyperspectral image processing. Remote Sens. Environ. 2009, 113, S110–S122.

- Vali, A.; Comai, S.; Matteucci, M. Deep Learning for Land Use and Land Cover Classification Based on Hyperspectral and Multispectral Earth Observation Data: A Review. Remote Sens. 2020, 12, 2495.

- Li, R.; Gao, X.; Shi, F.; Zhang, H. Scale Effect of Land Cover Classification from Multi-Resolution Satellite Remote Sensing Data. Sensors 2023, 23, 6136.

- Li, X.; Chen, G.; Zhang, Y.; Yu, L.; Du, Z.; Hu, G.; Liu, X. The impacts of spatial resolutions on global urban-related change analyses and modeling. iScience 2022, 25, 105660.

- Sliuzas, R.; Kuffer, M.; Masser, I. The Spatial and Temporal Nature of Urban Objects; Springer: Berlin/Heidelberg, Germany, 2009; pp. 67–84. ISBN 978-1-4020-4371-0.

- Welch, R. Spatial resolution requirements for urban studies. Int. J. Remote Sens. 1982, 3, 139–146.

- Fisher, J.; Acosta Porras, E.; Dennedy-Frank, P.; Kroeger, T.; Boucher, T. Impact of satellite imagery spatial resolution on land use classification accuracy and modeled water quality. Remote Sens. Ecol. Conserv. 2017, 4, 137–149.

- Hasani, H.; Samadzadegan, F.; Reinartz, P. A metaheuristic feature-level fusion strategy in classification of urban area using hyperspectral imagery and LiDAR data. Eur. J. Remote Sens. 2017, 50, 222–236.

- Zhang, H.; Wan, L.; Wang, T.; Lin, Y.; Lin, H.; Zheng, Z. Impervious Surface Estimation From Optical and Polarimetric SAR Data Using Small-Patched Deep Convolutional Networks: A Comparative Study. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 2374–2387.

- Mozgeris, G.; Gadal, S.; Jonikavičius, D.; Straigytė, L.; Ouerghemmi, W.; Juodkienė, V. Hyperspectral and color-infrared imaging from ultralight aircraft: Potential to recognize tree species in urban environments. In Proceedings of the 2016 8th Workshop on Hyperspectral Image and Signal Processing: Evolution in Remote Sensing (WHISPERS), Los Angeles, CA, USA, 21–24 August 2016; pp. 1–5.

- Liang, Y.; Song, W.; Cao, S.; Du, M. Local Climate Zone Classification Using Daytime Zhuhai-1 Hyperspectral Imagery and Nighttime Light Data. Remote Sens. 2023, 15, 3351.

- Moix, E.; Giuliani, G. Mapping Local Climate Zones (LCZ) Change in the 5 Largest Cities of Switzerland. Urban Sci. 2024, 8, 120.

- Huang, F.; Jiang, S.; Zhan, W.; Bechtel, B.; Liu, Z.; Demuzere, M.; Huang, Y.; Xu, Y.; Ma, L.; Xia, W.; et al. Mapping local climate zones for cities: A large review. Remote Sens. Environ. 2023, 292, 113573.

- Aslam, A.; Rana, I.A. The use of local climate zones in the urban environment: A systematic review of data sources, methods, and themes. Urban Clim. 2022, 42, 101120.

- Goetz, A.F.H. Three decades of hyperspectral remote sensing of the Earth: A personal view. Remote Sens. Environ. 2009, 113, S5–S16.

- Okujeni, A.; van der Linden, S.; Hostert, P. Extending the vegetation–impervious–soil model using simulated EnMAP data and machine learning. Remote Sens. Environ. 2015, 158, 69–80.

- Granero-Belinchon, C.; Adeline, K.; Lemonsu, A.; Briottet, X. Phenological Dynamics Characterization of Alignment Trees with Sentinel-2 Imagery: A Vegetation Indices Time Series Reconstruction Methodology Adapted to Urban Areas. Remote Sens. 2020, 12, 639.

- El Mendili, L.; Puissant, A.; Chougrad, M.; Sebari, I. Towards a Multi-Temporal Deep Learning Approach for Mapping Urban Fabric Using Sentinel 2 Images. Remote Sens. 2020, 12, 423.

- Xu, F.; Heremans, S.; Somers, B. Urban land cover mapping with Sentinel-2: A spectro-spatio-temporal analysis. Urban Inform. 2022, 1, 8.

| Sensor | No. of Bands | Spectral Resolution (nm) | Spectral Range (nm) | Spatial Resolution (m) | Swath Width (km) | Peak Signal-to-Noise Ratio |
|---|---|---|---|---|---|---|
| AVIRIS [31] | 224 | ~10 | 400–2500 | 4–20 | ~11 | >1000:1 |
| HyMap [32] | 128–160 | ~15 | 450–2500 | 3–5 | 2.5–5 | >500:1 |
| CASI [33] | 228 | 2–10 | 380–1050 | 0.5–4 | 2 | 1095:1 |
| ROSIS-3 [34] | 115 | 4 | 430–860 | 2.3 | 2 | Mentioned as “high” but no value was provided |
| AISA [35] | 63–488 | 2–10 | 400–2500 | 1–5 | 2 | >1000:1 |
| HYDICE [36] | 210 | 10 | 400–2500 | 1–4 | 2 | ~800:1 |

| Publication | Main Topic | Time Range | Outcomes |
|---|---|---|---|
| [43] | Techniques and applications of hyperspectral remote sensing in urban areas | 1990–2012 | |
| [44] | Influence of spatial resolution (SR) on hyperspectral remote sensing in urban areas | 1995–2017 | |
| [46] | Analysis of asbestos and vegetation in urban areas using hyperspectral remote sensing | 1998–2022 | |
| [48] | State-of-the-art tree species classification in urban areas using hyperspectral remote sensing | 1967–2015 | |
| [49] | Different analytical approaches to urban forests using hyperspectral remote sensing | 1997–2018 | |
| [50] | State-of-the-art impervious surface classification in urban areas using hyperspectral remote sensing | 1975–2010 | |
| [51] | State-of-the-art land cover classification in urban areas using hyperspectral airborne remote sensing | 1991–2021 | |
| [47] | State-of-the-art asbestos classification in urban areas using hyperspectral airborne remote sensing and Machine Learning | 1980–2022 | |
| [45] | Applicability of hyperspectral sensors compared with multispectral Sentinel-2 data | 2000–2017 | |

| Name of the Public Dataset | Brief Description | Classes | Sensor Used | No. of Bands | Spectral Range (nm) | Spatial Resolution (m) | Spectral Resolution (nm) | Peak Signal-to-Noise Ratio |
|---|---|---|---|---|---|---|---|---|
| Pavia University (2003) [34,53] | Gathered over north-east Pavia (Northern Italy), containing 42,776 labeled samples | Asphalt, Meadows, Gravel, Trees, Metal sheet, Bare soil, Bitumen, Brick, Shadow | ROSIS-3 | 115 | 430–860 | 2.3 | 4 | Mentioned as “high” but no value was provided |
| Pavia Centre (2003) [54,55] | Gathered over the city center of Pavia (Northern Italy), containing 7456 labeled samples | Water, Trees, Asphalt, Self-blocking bricks, Bitumen, Tiles, Shadows, Meadows, Bare soil | ROSIS-3 | 115 | 430–860 | 2.3 | 4 | Mentioned as “high” but no value was provided |
| Washington DC Mall (1995) [56,57] | Gathered over Washington, DC (US), containing 76,777 labeled samples | Roofs, Street, Grass, Trees, Path, Water, Shadow | HYDICE | 210 | 400–2500 | 1–4 | 10 | 800:1 |
| Urban Dataset (1995) [58] | Captured over Yuma City (Arizona, US), containing 94,249 labeled samples | Asphalt, Grass, Tree, Roof, Metal, Dirt | HYDICE | 210 | 400–2500 | 1–4 | 10 | 800:1 |
| MUUFL (2010) [59] | Gathered over the Gulf Park campus (Mississippi, US), containing 53,687 labeled samples and a LiDAR image | Trees, Mostly Grass, Mixed Ground, Dirt and Sand, Roads, Water, Building Shadows, Buildings, Sidewalks, Yellow curbs, Cloth panels | ITRES CASI-1500 | Up to 288 (64 used in this dataset) | 380–1050 | 0.5 | 10.4 | 1095:1 |
| Houston (2018) [60,61] | Gathered over Houston (Texas, US), containing 504,172 labeled samples and a LiDAR-derived Digital Surface Model | Healthy grass, Stressed grass, Artificial turf, Evergreen trees, Deciduous trees, Bare earth, Water, Residential buildings, Non-residential buildings, Roads, Sidewalks, Crosswalks, Major thoroughfares, Highways, Railways, Paved parking lots, Unpaved parking lots, Cars, Trains, Stadium seats | ITRES CASI-1500 | Up to 288 (48 used in this dataset) | 380–1050 | 0.5 | 14 | 1095:1 |

| Type of Data Used for Integration/Fusion | Hyperspectral Spaceborne | Hyperspectral Airborne |
|---|---|---|
| Panchromatic/Multispectral | 9 (8%) | 0 |
| LiDAR | 0 | 11 (10%) |
| SAR | 3 (3%) | 0 |
| Geographic Information System (GIS) | 3 (3%) | - |
| No integration/fusion (both platforms combined) | 87 (76%) | |

| Characteristic | Airborne HSI | Spaceborne HSI |
|---|---|---|
| Spatial Resolution | Very high (0.5–5 m) | Moderate (10–60 m) |
| Coverage | Local to regional; limited swath (few km) | Regional to global; wide swath (30–150 km) |
| Temporal Resolution | Sporadic (campaign-based, one-shot surveys) | Repeat coverage (days to weeks, depending on the mission observation scenario) and/or on demand |
| Data Accessibility | Mostly proprietary or costly flight campaigns; public benchmark datasets available | Increasingly free access for scientific research purposes (e.g., PRISMA, EnMAP) |
| Signal-to-Noise Ratio (SNR) | High, depending on sensor and conditions | Variable; generally lower than airborne but improving in second-generation missions |
| Typical Applications | Algorithm testing, benchmark datasets, detailed land cover classification, urban vegetation studies, impervious surfaces | Broader-scale land cover mapping, impervious surfaces, LCZ analysis, regional and city-scale vegetation studies |
| Limitations | High acquisition cost, limited coverage, poor temporal repeatability | Coarser spatial resolution, mixed pixel problem, weather dependence |
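
The “mixed pixel problem” listed as a limitation of spaceborne HSI arises because a single 10–60 m pixel over a city usually blends several materials (e.g., asphalt, vegetation, roofing). Spectral unmixing addresses this with the linear mixing model, y = E a + n, in which the pixel spectrum y is a weighted sum of pure-material (endmember) spectra stored as the columns of E, and the weights a are the sub-pixel abundances. The Python/NumPy snippet below is a minimal sketch under two assumptions: the mixture is linear and the endmembers are known in advance. It estimates abundances by sum-to-one constrained least squares (SCLS). The function `scls_unmix` and the six-band endmember values are hypothetical, chosen only for illustration, and the snippet is not the processing chain of any study reviewed here.

```python
import numpy as np


def scls_unmix(pixel: np.ndarray, endmembers: np.ndarray) -> np.ndarray:
    """Sum-to-one constrained least-squares (SCLS) abundance estimate.

    pixel:      (bands,) spectrum of one mixed pixel.
    endmembers: (bands, p) matrix whose columns are pure-material spectra.
    Returns a (p,) vector a minimising ||pixel - endmembers @ a||^2
    subject to sum(a) == 1. Non-negativity is NOT enforced.
    """
    E = endmembers
    gram_inv = np.linalg.inv(E.T @ E)      # (E^T E)^-1, requires full column rank
    a_ls = gram_inv @ E.T @ pixel          # unconstrained least-squares solution
    ones = np.ones(E.shape[1])
    # Lagrange-multiplier correction that moves a_ls onto the plane sum(a) == 1
    correction = gram_inv @ ones * (1.0 - ones @ a_ls) / (ones @ gram_inv @ ones)
    return a_ls + correction


# Hypothetical 6-band endmember spectra (illustrative reflectances, not measured data)
asphalt = np.array([0.05, 0.06, 0.07, 0.08, 0.09, 0.10])
vegetation = np.array([0.04, 0.08, 0.05, 0.45, 0.40, 0.20])
tile_roof = np.array([0.08, 0.12, 0.25, 0.30, 0.35, 0.38])
endmembers = np.column_stack([asphalt, vegetation, tile_roof])

# Simulate a mixed pixel: 50% asphalt, 30% vegetation, 20% tile roof
true_abundances = np.array([0.5, 0.3, 0.2])
mixed_pixel = endmembers @ true_abundances

# Noise-free case: the estimate matches the simulated fractions (up to rounding)
estimated = scls_unmix(mixed_pixel, endmembers)
print(np.round(estimated, 3))

# With a little simulated sensor noise the fractions drift but still sum to one
rng = np.random.default_rng(0)
noisy_pixel = mixed_pixel + rng.normal(0.0, 0.005, mixed_pixel.size)
noisy_estimate = scls_unmix(noisy_pixel, endmembers)
print(np.round(noisy_estimate, 3), noisy_estimate.sum())
```

The closed-form correction keeps the sketch short, but abundances may turn slightly negative under noise. A fully constrained variant (FCLS) adds non-negativity and is usually solved iteratively, for example with a non-negative least-squares solver. Nonlinear mixing, which the unmixing literature cited in this review also addresses, falls outside this linear sketch.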

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gámez García, J.A.; Lazzeri, G.; Tapete, D. Airborne and Spaceborne Hyperspectral Remote Sensing in Urban Areas: Methods, Applications, and Trends. Remote Sens. 2025, 17, 3126. https://doi.org/10.3390/rs17173126
