Abstract

Visual grounding for remote sensing (RSVG) focuses on localizing specific objects in remote sensing (RS) imagery based on linguistic expressions. Existing methods typically employ pre-trained models to locate the referenced objects. However, due to the insufficient capability of cross-modal interaction and alignment, the extracted visual features may suffer from semantic drift, limiting the performance of RSVG. To address this, this article introduces a novel RSVG framework named the text–visual interaction multilevel feature alignment network (TVI-MFAN), which leverages a text–visual interaction attention (TVIA) module to dynamically generate adaptive weights and biases at both spatial and channel dimensions, enabling the visual features to focus on relevant linguistic expressions. Additionally, a multilevel feature alignment network (MFAN) aggregates contextual information by using cross-modal alignment to enhance features and suppress irrelevant regions. Experiments demonstrate that the proposed method achieves accuracies of 75.65% and 80.24% (absolute improvements of 2.42% and 3.1%) on the OPT-RSVG and DIOR-RSVG datasets, respectively, validating its effectiveness.

1. Introduction

The aim of visual grounding for remote sensing (RSVG) is to accurately locate a uniquely specified object within remote sensing (RS) imagery, guided by linguistic expressions. For humans, the process of locating a described target involves progressively refining the extraction of visual features in conjunction with linguistic expressions. In comparison with other cross-modal tasks, RSVG more closely emulates human cognitive processes, which makes it easy for non-expert users to identify and locate an object in RS images. Given its compatibility with human perception and ease of use, it has wide application prospects in fields such as intelligence analysis, disaster response and agricultural detection [1,2,3,4,5,6,7,8].

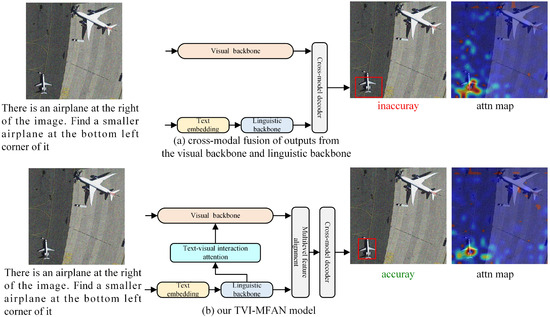

The concept of RSVG was first introduced in GeoVG [9], which addresses the challenge of scale variation in RSVG through a Transformer-based multi-level cross-modal (MLCM) feature learning module. TransVG [10] was the pioneering work that introduced a Transformer-based framework for visual grounding (VG), with its core fusion transformer being responsible for multiple tasks. However, this multifunctionality may increase the learning complexity. To address this issue, TransVG++ [11] proposed a language-conditioned vision transformer that serves dual purposes: vision feature encoding and vision-language reasoning. More recently, methods have begun to focus on the design of the visual branch, employing cross-modal attention mechanisms to adjust visual features [12,13,14,15]. For example, VLTVG [16] introduced a visual-linguistic verification module to concentrate encoded features on regions related to linguistic expressions, achieving excellent performance. MGIMM [17] facilitates the learning process of the multimodal model by establishing consistency between visual regions and the corresponding linguistic expressions through region-level instruction tuning. Given the significance of early interaction between visual features and language descriptions, the reliance of these methods on a rudimentary cross-modal interaction module is problematic, despite the satisfactory grounding efficiency they achieve. Specifically, aligning visual and linguistic features only at the cross-modal fusion stage, and localizing the described target afterwards, may induce semantic drift, as shown in Figure 1a. This phenomenon can cause the extracted visual features to diverge from the true semantics conveyed by the linguistic expressions. This prompts us to ask whether visual features can be dynamically modulated by linguistic expressions throughout the entire VG task. The core idea centers on investigating whether integrating linguistic semantic information into visual features can enhance their semantic representation and boost localization precision, thereby strengthening the correlation between visual and linguistic features.

Figure 1.

Two types of RSVG frameworks.

To overcome semantic drift, we propose a novel RSVG framework that strengthens the interaction between linguistic and visual modalities through an attention-based mechanism, thereby enhancing the correlation between visual and linguistic features, as shown in Figure 1b. The key to our approach is the generation of adaptive attention parameters derived from linguistic expressions that dynamically modulate the visual feature extraction process of the described target. Subsequently, we employ a multilevel feature alignment network based on the cross-attention mechanism to perform modal alignment within the feature embedding space, bridging the processes of feature extraction and interaction. This method ensures semantic consistency between visual features and linguistic expressions, thereby improving the accuracy of VG. Linguistic expressions contain the classification, shape, color and dimensional attributes of the described target. Dynamically adjusting the extraction process of visual features based on linguistic expressions therefore provides a feasible strategy for achieving precise VG.

The main contributions of this work are as follows:

(1) We introduce a novel RSVG framework, named the text–visual interaction multilevel feature alignment network (TVI-MFAN), composed of text–visual interaction attention (TVIA) and multilevel feature alignment (MFAN) modules. The former utilizes language information to dynamically modulate the visual feature extraction process of the described target. The latter can aggregate visual contextual information to perform modal alignment within the feature embedding space. Compared with previous methods, our approach dynamically adjusts the extraction and cross-modal interaction process for visual features by generating adaptive attention parameters.

(2) We propose a TVIA module that dynamically generates adaptive attention parameters derived from linguistic expressions at both spatial and channel dimensions. These parameters are utilized to dynamically modulate the visual feature extraction process of the described target, thereby enhancing the alignment between visual and linguistic information.

(3) We design an MFAN that performs cross-modal alignment within the feature embedding space, effectively bridging the processes of feature extraction and interaction. This method ensures semantic consistency between visual features and linguistic expressions, thereby enhancing the overall coherence and accuracy of the visual grounding task.

The proposed framework systematically addresses cross-modal distribution bias and scale variation challenges inherent in RSVG tasks, ensuring robust grounding performance even under intricate remote sensing scenarios.

2. Related Works

In this section, we present a concise review of works that are closely related to RSVG. Unlike natural images, RS images are characterized by more complex geospatial relationships and finer-grained texture details. Additionally, objects requiring localization in RS images often face challenges such as significant scale variations, ambiguous boundaries and occlusion interference. While visual grounding tasks have witnessed substantial progress in the domain of natural imagery, their application and exploration in the context of remote sensing remain relatively underdeveloped [18,19,20,21,22].

GeoVG was the first to introduce the concept of RSVG and developed the initial RSVG dataset based on large-scale RS images. This dataset has effectively facilitated the validation of RSVG methods on high-resolution RS imagery. A subsequent advancement, MGVLF [23], further expanded the domain by constructing the DIOR-RSVG dataset. This dataset comprises 17,402 RS images and 38,320 language expressions spanning 20 object categories. RINet [24] locates small-scale objects via an alignment module and a correction gate in RS imagery. VSMR [25] achieves accurate localization by adaptively selecting features and performing multistage cross-modal reasoning. FQRNet [26] leverages Fourier transforms to capture global structured information from RS data to achieve robust semantic coreference. LQVG [27] enhances the localization capability for the described target through the interaction between sentence-level textual features and visual features. MB-ORES [28] integrates diverse network inputs, including object queries, initial bounding boxes, and class name embedding, to identify all objects within an image and/or pinpoint a specific object via a language query. FLAVARS [29] integrates the strengths of both contrastive learning and masked modeling to achieve vision–language alignment and zero-shot classification capability. Other relevant works include [30,31,32,33,34,35,36,37].

In addition, recent studies have focused on vision–language models (VLMs) whereby models learn rich vision–language correlations from large-scale image-text pairs available on the web. CLIP [38] leverages extensive pre-training on image–text paired data to achieve semantic alignment, thus enabling zero-shot predictions in downstream tasks such as image classification and cross-modal retrieval. In the remote sensing domain, most CLIP-based studies [39,40,41] adopt a model architecture analogous to that of CLIP. BLIP [42] attains state-of-the-art performance in multimodal tasks by incorporating a captioner-filter mechanism to mitigate supervised text noise. FLAVA [43], through the integration of a dual-encoder approach and a fusion encoder within a unified model via the shared backbone, demonstrates adaptability to both single-modal and multi-modal tasks.

Methodologically, the above-mentioned methods employ Transformer-based multi-level cross-modal fusion modules (MLCM) to integrate multi-scale visual features (e.g., hierarchical features extracted from ResNet) with multi-granularity textual embeddings (e.g., word-level and sentence-level features derived from BERT [44]). Through the utilization of self-attention and cross-attention mechanisms, these methods significantly enhance cross-modal interactions, thereby improving localization accuracy. However, these approaches primarily focus on cross-modal feature fusion while neglecting the alignment of heterogeneous feature distributions. This oversight may result in mismatches between textual semantics and visual targets, leading to semantic misalignment that ultimately compromises grounding precision.

3. Materials and Methods

In this section, we present the proposed TVI-MFAN framework. We first provide an overview of TVI-MFAN in Section 3.1. Then, we elaborate on the TVIA module in Section 3.2. Finally, we detail the MFAN in Section 3.3.

3.1. Overview

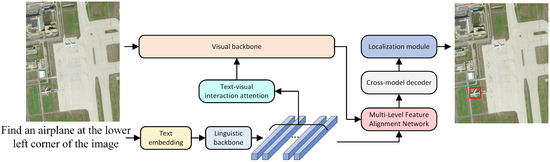

To mitigate semantic misalignment caused by cross-modal discrepancies, we propose the TVI-MFAN, as illustrated in Figure 2.

Figure 2.

Framework of the proposed TVI-MFAN.

Given a referring expression, the linguistic backbone tokenizes the expression and extracts linguistic features of size l × d, where l denotes the number of tokens and d represents the dimensionality of the linguistic features. These linguistic features are subsequently input into text–visual interaction attention modules to generate several sets of weights and biases for modulating the visual backbone. For a given RS image, visual features of size h × w × c are extracted by the visual backbone under modulation by the linguistic features, where h, w, and c represent the height, width, and number of channels of the visual features, respectively. Subsequently, the visual features and linguistic features are mapped to a common dimension through two separate linear projections. Following this, multilevel cross-modal interaction is performed to enhance the features of the referred objects. Finally, the coordinates of the bounding box are predicted by a localization module.

3.1.1. Visual Feature Extraction

Given an input RS image, we employ ResNet as the visual backbone network to extract hierarchical visual features. Specifically, multi-scale features are generated from three intermediate layers with spatial strides of {8, 16, 32}. The final stage is further processed by a convolutional layer with a stride of 2 applied to the output of the preceding layer, resulting in a fourth feature map with a spatial stride of 64. This process yields four-level multi-scale visual features characterized by spatial strides of {8, 16, 32, 64}, which collectively capture both fine-grained details and global contextual patterns within the remote sensing imagery.
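The four-level pyramid described above can be made concrete with a small sketch that lists the spatial size of each level. The helper name `feature_map_sizes`, the 800 × 800 input size and the use of ceiling division (which matches the usual padded convolutions in ResNet-style backbones) are assumptions made for illustration, not details confirmed by the text.

```python
def feature_map_sizes(height, width, strides=(8, 16, 32, 64)):
    """Return the (h, w) size of each pyramid level for a given input size.

    Ceiling division is assumed, which corresponds to padded stride-2
    convolutions; an unpadded backbone would give slightly smaller maps.
    """
    return [(-(-height // s), -(-width // s)) for s in strides]


# Hypothetical example: an 800 x 800 RS image.
sizes = feature_map_sizes(800, 800)
for stride, (h, w) in zip((8, 16, 32, 64), sizes):
    print(f"stride {stride:>2}: {h} x {w} feature map")
```

Under these assumptions the coarsest level keeps only a 13 × 13 grid, which illustrates why the finer levels are needed for the small targets that are common in RS imagery.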

3.1.2. Textual Feature Extraction

For the input linguistic expressions, a pre-trained BERT with a hidden size of 768 is utilized to generate textual features, which include word-level feature embeddings for each token in the description. These textual features inherently encode rich semantic information regarding target attributes such as category, geometry and spectral properties. This rich semantic encoding enables effective cross-modal feature alignment with visual representations. The alignment mechanism explicitly mitigates semantic misalignment by harmonizing linguistic semantics with the spatially heterogeneous visual patterns present in RS images.

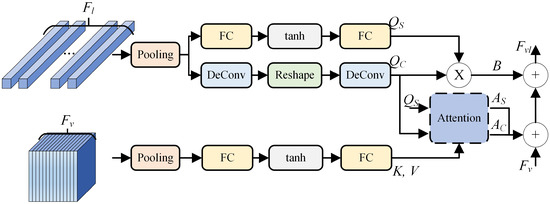

3.2. Text–Visual Interaction Attention

Multi-level visual features encapsulate detailed textures and complex spatial relationships of target regions, while textual features convey refined semantic attributes, such as category, morphology, chromatic properties and scale of the described objects. However, the inherent weak correlation between these heterogeneous modalities in the semantic space necessitates explicit cross-modal alignment. To bridge this gap, we design a TVIA module that semantically enhances visual features by leveraging textual semantics, thereby aligning visual and linguistic representations of target concepts, as depicted in Figure 3.

Figure 3.

Framework of the proposed TVIA.

Our approach employs hierarchical cross-modal interaction to progressively fuse multilevel visual features with linguistic features. Initially, we employ an average pooling layer to aggregate multigranular linguistic expressions. Subsequently, the fully connected (FC) layer and tanh function project the pooled features to the same channel dimension as the visual features, thereby generating an adaptive query matrix that contains spatial information. Similarly, the deconvolution layer (DeConv) is adopted to reshape the pooled multigranularity linguistic expressions and generate the adaptive query matrix with channel information. This process facilitates semantic-guided feature recalibration, where linguistic semantics dynamically enhance visual representations at multiple scales. Mathematically, the interaction is formulated as:

For the adaptive query matrix, we apply a process similar to that described in Equation (1) to learn the key and value matrices. The computing process is defined as

Then, the attention weights of the channel and spatial dimensions are calculated based on the adaptive matrix, including adaptive weights and biases. We adopt the idea of affine transformation: the adaptive parameters derived from the multigranularity linguistic information adjust the visual feature extraction process, so that the extracted visual features are more in line with the semantics of the linguistic expressions. Here, ⊗ denotes element-wise multiplication.
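The affine modulation idea can be illustrated with a minimal sketch. It assumes a FiLM-style form in which each feature value is multiplied by a channel weight and a spatial weight and then shifted by a per-channel bias; the exact composition used in TVIA is not recoverable from the text here, so the function name `modulate`, the argument layout and the toy values are all hypothetical.

```python
def modulate(feature, channel_w, spatial_w, bias):
    """Apply a text-conditioned affine transform to a C x H x W feature map.

    feature:   nested list indexed as [c][y][x]
    channel_w: one weight per channel           (length C)
    spatial_w: one weight per spatial position  (H x W)
    bias:      one bias per channel             (length C)
    All weights are applied element-wise (the role of the ⊗ operator).
    """
    out = []
    for c, plane in enumerate(feature):
        out.append([
            [channel_w[c] * spatial_w[y][x] * value + bias[c]
             for x, value in enumerate(row)]
            for y, row in enumerate(plane)
        ])
    return out


# Toy example: 2 channels on a 1 x 2 spatial grid.
feature = [[[1.0, 2.0]], [[3.0, 4.0]]]
channel_w = [2.0, 0.5]    # in a real model these would be predicted from the text
spatial_w = [[1.0, 0.0]]  # keep the first position, suppress the second
bias = [0.1, -0.1]
modulated = modulate(feature, channel_w, spatial_w, bias)
```

In this toy case the second spatial position is reduced to the bias alone, which mirrors how language-derived weights could suppress regions unrelated to the expression.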

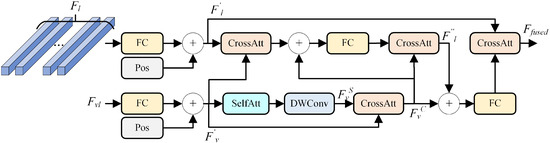

3.3. Multi-Level Feature Alignment Network

During the cross-modal decoding process, the semantic complementarity and inconsistency between the visual and linguistic modalities can lead to semantic misalignment when heterogeneous features are simply fused and directly decoded. To ensure semantic consistency throughout the reasoning process, we introduce a multilevel feature alignment module prior to the cross-modal decoder. This module leverages cross-attention mechanisms to align visual and linguistic semantics, thereby enhancing the semantic association between different modalities. Moreover, while RS images inherently contain rich semantic information across spatial and channel dimensions, they are also susceptible to issues such as noise interference and feature redundancy. The interaction between cross-modal attention and depthwise convolution (DWConv) can enhance the saliency of the described target, reduce the interference of background noise, and mitigate redundant features, thereby improving the localization accuracy of the described target, as shown in Figure 4. The depthwise convolution layer is configured with 1 input channel and 1 output channel, uses a convolution kernel of size 5 × 5, and operates with a stride of 2.
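To show what a 5 × 5 kernel with a stride of 2 does to the spatial resolution, the sketch below applies the standard convolution output-size formula. The padding value of 2 is an assumption (the text does not state it), as are the helper name `conv_output_size` and the example input sizes.

```python
def conv_output_size(size, kernel=5, stride=2, padding=2):
    """Spatial output size of a convolution: floor((n + 2p - k) / s) + 1.

    kernel=5 and stride=2 follow the DWConv configuration in the text;
    padding=2 is an assumed value chosen so that the size roughly halves.
    """
    return (size + 2 * padding - kernel) // stride + 1


# Hypothetical feature-map side lengths before the depthwise convolution.
for n in (100, 50, 25, 13):
    print(n, "->", conv_output_size(n))
```

With this padding each side length is roughly halved, so the number of visual tokens drops by about a factor of four, which is one plausible way the layer reduces redundant features.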

Figure 4.

Framework of the proposed MFAN.

Given a visual feature map and a linguistic feature vector, two linear projections are employed to map them into the same dimension, followed by the addition of position embeddings. This process is formulated as follows:

where the two embedding functions return the positional embeddings of the visual and linguistic tokens, and c and n represent the token numbers and dimension. We obtain two visual feature representations through DWConv, self-attention and cross-attention mechanisms.

Here, the two attention operators are self-attention and cross-attention, and the two resulting visual features encompass both channel and spatial dimensions. The relationships among channels are learned via self-attention and the DWConv, while the connections between spatial elements are established through cross-modal attention. This dual approach yields richer feature representations.
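The cross-modal attention step can be sketched as single-head scaled dot-product attention. This is a simplified illustration and not the MFAN implementation: it assumes that visual tokens act as queries and linguistic tokens as keys and values, omits the learned projections, positional embeddings and DWConv, and uses the hypothetical names `softmax` and `cross_attention`.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(visual_tokens, text_tokens):
    """Single-head scaled dot-product attention without learned projections.

    Each visual token (query) is replaced by a weighted average of the
    text tokens (keys and values). Returns (outputs, attention_weights).
    """
    dim = len(text_tokens[0])
    outputs, weights = [], []
    for query in visual_tokens:
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in text_tokens]
        attn = softmax(scores)
        weights.append(attn)
        outputs.append([sum(w * value[i] for w, value in zip(attn, text_tokens))
                        for i in range(dim)])
    return outputs, weights


# Toy example: two visual tokens and two text tokens of dimension 2.
visual = [[1.0, 0.0], [0.0, 1.0]]
text = [[4.0, 0.0], [0.0, 4.0]]
attended, attn_weights = cross_attention(visual, text)
```

Each visual token attends most strongly to the text token it is most similar to, which is the mechanism by which regions matching the expression are emphasized and unrelated regions are down-weighted.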

The multimodal features are ultimately utilized for the localization task. Within this multilevel structure, visual features are persistently fused with contextual information derived from the linguistic expressions, providing richer localization cues and reducing referential ambiguity. The proposed TVI-MFAN establishes a stronger visual–linguistic connection, facilitating the integration of more complete and reliable features of the referred objects. The overall training process of the proposed TVI-MFAN is illustrated in Algorithm 1.

| Algorithm 1 TVI-MFAN |
| --- |
| Require: Linguistic expression, remote sensing images, learnable token, ground truth Bbox. |
| Ensure: Bbox. |
| 1: Initialize all weights. |
| 2: for epoch < epochs do |
| 3: Execute the linguistic backbone to extract linguistic features. |
| 4: Execute the TVIA to dynamically generate adaptive weights that guide the visual backbone in extracting features by Equations (1) to (4). |
| 5: Execute the MFAN to enhance the uniqueness of the object to obtain the refined multimodal features for localization by Equations (5) to (7). |
| 6: Input the refined multimodal features into the localization module of the TVI-MFAN framework to predict the coordinates of the Bbox. |
| 7: Utilize a loss function to calculate the discrepancy between the predicted Bbox and the ground truth Bbox. |
| 8: end for |
| 9: Obtain predicted Bbox. |

4. Discussion

4.1. Data Description

The proposed method was evaluated on the OPT-RSVG [45] and DIOR-RSVG datasets. OPT-RSVG encompasses 25,452 remote sensing images paired with 48,952 linguistic expressions across 14 object categories. This dataset is marked by substantial category imbalance: vehicles form the most prevalent category (16.73%, 8188 instances), whereas harbors are the least frequent (1920 instances). The remaining 12 categories display relatively uniform distributions, with instance counts varying between 2500 and 5000. DIOR-RSVG, developed by Northwestern Polytechnical University in 2023, is constructed upon DIOR. This dataset comprises 38,320 language expressions across 17,402 RS images, each with a pixel size of 800 × 800. The average expression length is 7.47, and the vocabulary size is 100. It covers 20 object categories.

The linguistic expressions exhibit an average length of 10.10 words (minimum: 3, maximum: 32), encompassing a variety of spatial, spectral and relational descriptions. The relative sizes of the referents are categorized according to predefined thresholds: tiny targets occupy less than 0.01% of the image area, small targets range from 0.01% to 0.1%, large targets from 0.1% to 0.2%, and gigantic targets exceed 0.5% of the image area. Notably, the dataset is primarily composed of small targets, with large and gigantic targets being significantly underrepresented. This distribution mirrors the real-world challenges in RSVG, where small objects are more prevalent and larger objects are relatively rare. The dataset partitioning is conducted in accordance with a 40%-10%-50% split. Specifically, 40% of the text-image pairs are randomly assigned to the training set, 10% to the validation set, and 50% to the test set. This partitioning scheme ensures a statistically robust evaluation, thereby rigorously testing the model’s generalizability across diverse geospatial contexts and linguistic complexities.
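The 40%-10%-50% random partition can be sketched as follows. The function name `split_pairs`, the fixed seed and the rounding of subset sizes are assumptions made for illustration; the text does not specify how the random assignment was implemented.

```python
import random


def split_pairs(pairs, train=0.4, val=0.1, seed=0):
    """Shuffle text-image pairs and split them into train/val/test subsets.

    The test set receives whatever remains after the train and validation
    subsets are taken, i.e. about 50% with the default fractions.
    """
    shuffled = list(pairs)
    random.Random(seed).shuffle(shuffled)
    n_train = int(len(shuffled) * train)
    n_val = int(len(shuffled) * val)
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])


# Hypothetical example with 100 placeholder pair identifiers.
train_set, val_set, test_set = split_pairs(range(100))
print(len(train_set), len(val_set), len(test_set))
```

Fixing the seed keeps the partition reproducible across runs, which matters when results are averaged over several independent runs.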

To quantitatively assess the efficacy of the proposed method, we utilize Precision@X under varying Intersection over Union (IoU) thresholds and mean Intersection over Union (meanIoU) as principal statistical metrics. The meanIoU and cumIoU can be formulated as follows:

$$\mathrm{meanIoU} = \frac{1}{M}\sum_{t=1}^{M}\frac{I_t}{U_t}, \qquad \mathrm{cumIoU} = \frac{\sum_{t=1}^{M} I_t}{\sum_{t=1}^{M} U_t}$$

where t is the index of the image–language pairs and M represents the size of the dataset. $I_t$ and $U_t$ are the intersection and union areas of the predicted and ground-truth bounding boxes.

Specifically, IoU quantifies the overlap between the predicted bounding box and the ground-truth bounding box, while Precision@X evaluates the percentage of test images where the IoU score surpasses a predefined threshold X. For comprehensive benchmarking against existing methods, we additionally integrate cumulative IoU (cumIoU) and meanIoU into the evaluation framework. Collectively, these metrics offer rigorous insights into localization accuracy, robustness across scale variations, and semantic alignment fidelity under complex geospatial scenarios. Note that all evaluation metrics were computed by averaging the results obtained from 10 independent runs on the dataset.
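The metrics above can be computed with a short sketch. Boxes are assumed to be given as (x1, y1, x2, y2) corner coordinates, and the threshold set 0.5 to 0.9 is a common choice rather than one stated in the text; the function names `box_areas` and `grounding_metrics` are hypothetical.

```python
def box_areas(pred, gt):
    """Return (intersection, union) areas of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(pred[0], gt[0]), max(pred[1], gt[1])
    ix2, iy2 = min(pred[2], gt[2]), min(pred[3], gt[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_pred = (pred[2] - pred[0]) * (pred[3] - pred[1])
    area_gt = (gt[2] - gt[0]) * (gt[3] - gt[1])
    return inter, area_pred + area_gt - inter


def grounding_metrics(preds, gts, thresholds=(0.5, 0.6, 0.7, 0.8, 0.9)):
    """Compute Pr@X, meanIoU and cumIoU over paired predictions and ground truths."""
    pairs = [box_areas(p, g) for p, g in zip(preds, gts)]
    ious = [i / u if u > 0 else 0.0 for i, u in pairs]
    m = len(ious)
    # Pr@X: fraction of samples whose IoU surpasses the threshold X.
    metrics = {f"Pr@{x}": sum(v > x for v in ious) / m for x in thresholds}
    # meanIoU averages per-sample ratios; cumIoU divides the summed areas.
    metrics["meanIoU"] = sum(ious) / m
    metrics["cumIoU"] = sum(i for i, _ in pairs) / sum(u for _, u in pairs)
    return metrics


# Toy example: one perfect prediction and one half-shifted prediction.
preds = [(0, 0, 10, 10), (0, 0, 10, 10)]
gts = [(0, 0, 10, 10), (5, 0, 15, 10)]
print(grounding_metrics(preds, gts))
```

Because cumIoU pools areas before dividing, it is dominated by large objects, whereas meanIoU weights every sample equally; reporting both helps on datasets where small targets are the majority.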

4.2. Implementation Details

All experiments were performed using the PyTorch 2.8.0 framework on a GreatWall HyperCloud server, which is configured with an Intel Xeon Silver 4210R 2.4 GHz CPU, 128 GB of RAM, and four NVIDIA GeForce RTX 3090 GPUs (each with 24 GB of VRAM). The OPT-RSVG and DIOR-RSVG datasets were partitioned into training (40%), validation (10%), and test (50%) sets via random assignment of text–image pairs.

For hierarchical visual feature extraction, ResNet-50 is initialized with pre-trained weights from DETR [46]. The language branch utilizes a pre-trained BERT [47] model with a hidden layer dimension of 768. Optimization is conducted using AdamW [48] with a weight decay of 0.9 and a learning rate of 0.0001. The model is trained for 150 epochs with a batch size of 16, and learning rate warmup is applied during the first 10% of iterations. This configuration ensures reproducibility and fair comparison with existing methods while leveraging state-of-the-art (SOTA) hardware for efficient large-scale RSVG training.
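The warmup schedule can be sketched as a function of the iteration index. The linear ramp and the constant rate after warmup are assumptions, since the text only states that warmup covers the first 10% of iterations; the function name `learning_rate` and the example step counts are hypothetical.

```python
def learning_rate(step, total_steps, base_lr=1e-4, warmup_frac=0.1):
    """Learning rate at a given iteration (0-indexed).

    Assumed schedule: linear warmup to base_lr over the first
    warmup_frac of iterations, then a constant rate.
    """
    warmup_steps = max(1, int(total_steps * warmup_frac))
    if step < warmup_steps:
        return base_lr * (step + 1) / warmup_steps
    return base_lr


# Hypothetical run with 1000 iterations: warmup covers the first 100.
for step in (0, 49, 99, 500):
    print(step, learning_rate(step, 1000))
```

A gradual ramp of this kind is commonly used to avoid unstable early updates when pre-trained backbones are combined with newly initialized modules.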

4.3. Comparisons with SOTA Methods

Table 1 and Table 2 compare the performance of the proposed method with that of other SOTA methods on the OPT-RSVG and DIOR-RSVG datasets. The best and second best performances are highlighted in red and blue, respectively. The proposed TVI-MFAN framework achieves competitive performance on both datasets, thereby validating its efficacy. TVI-MFAN consistently outperforms the current one-stage methods [49,50]. Specifically, it attains accuracies of 75.65% and 80.24% on the OPT-RSVG and DIOR-RSVG datasets, respectively, representing absolute improvements of 5.43% and 6.63% over the previous best performance of the one-stage framework LBYL-Net. Previous one-stage methods necessitate manually designed visual–language fusion modules to generate bounding boxes, which may not adequately capture the salient visual attributes or contextual information in the referring expression. In contrast, our approach enhances the visual features of the referred objects through multi-level visual–linguistic fusion, resulting in more accurate object localization.

Table 1.

Comparison of TVI-MFAN with the SOTA methods on the test set of OPT-RSVG. The best and second best performances are highlighted in red and blue.

Table 2.

Comparison of TVI-MFAN with the SOTA methods on the test set of DIOR-RSVG. The best and second best performances are highlighted in red and blue.

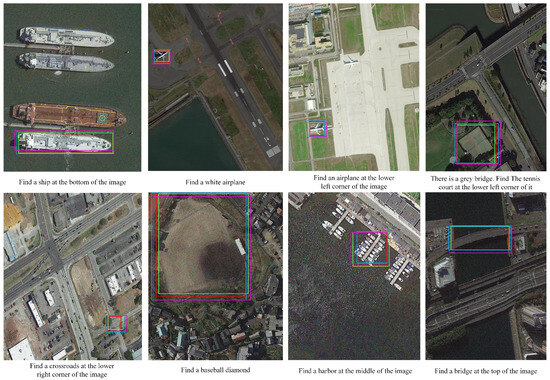

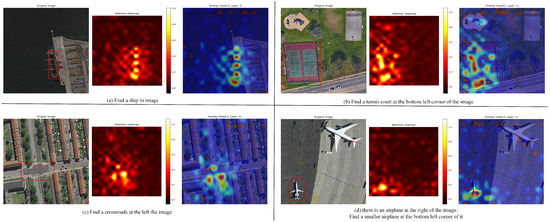

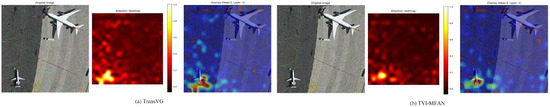

The essence of recent Transformer-based methods [51,52] lies in the design of cross-modal fusion mechanisms. For example, VLTVG enhances object localization by establishing language-conditioned discriminative features and conducting multi-stage cross-modal reasoning. However, its performance is highly dependent on the quality of visual feature extraction. When using ResNet-50 as the visual backbone, VLTVG underperforms compared to TransVG. In contrast, our method employs linguistic features to modulate the visual backbone at multiple stages, directing visual attention to the referred objects and enabling more fine-grained identification. Compared to the recent work MGVLF, the proposed TVI-MFAN achieves significant improvements. Figure 5 and Figure 6 illustrate visualizations of bounding boxes predicted by TVI-MFAN on the OPT-RSVG and DIOR-RSVG datasets; GT is indicated by red boxes, while predictions by TransVG and TVI-MFAN are represented by blue and green boxes. It is evident that the attention is concentrated on objects or regions relevant to the descriptions in the language expressions. Figure 7 and Figure 8 show a comparison of the attention maps of TransVG and TVI-MFAN. The deficiency in early-stage linguistic feature integration may cause TransVG to suffer from attention drift during the object positioning process. In contrast, our proposed method incorporates a more extensive array of linguistic features, enabling it to attain superior positioning accuracy.

Figure 5.

Comparison of the final localization results of TVI-MFAN and other existing methods on the test set of OPT-RSVG. GT is indicated by red boxes, while predictions by TransVG, MGVLF and TVI-MFAN are represented by blue, pink and green boxes, respectively.

Figure 6.

Comparison of the final localization results of TVI-MFAN and other existing methods on the test set of DIOR-RSVG. GT is indicated by red boxes, while predictions by TransVG, MGVLF and TVI-MFAN are represented by blue, pink and green boxes, respectively.

Figure 7.

Visualization of the attention maps of TVI-MFAN.

Figure 8.

Comparison of the attention maps of TransVG and TVI-MFAN.

4.4. Ablation Study

In this section, we present the results of the ablation study conducted on the OPT-RSVG test set to systematically evaluate the effectiveness of the proposed framework.

4.4.1. Effectiveness of TVI-MFAN Components

To evaluate the necessity of the proposed modules, we conducted experiments by selectively removing them and reporting the Precision@0.5 (Pr@0.5) results. The experiments are summarized in Table 3. The results indicate that the performance decreases by 3.69% in the absence of the TVIA module, 6.28% in the absence of the MFAN, and 9.12% when both modules are removed. These observations demonstrate the effectiveness of each module and verify the locating ability of the proposed method.

Table 3.

Effectiveness of TVI-MFAN components.

4.4.2. Effectiveness of TVIA Module Position

To systematically evaluate the influence of the number and placement of the TVIA module within the proposed framework, ResNet-50 was employed as the visual backbone. The modulation scope was defined as [i, i + 1], where i denotes the i-th stage of the visual backbone. Comprehensive experimental results and Grad-CAM visualizations are presented in Table 4. Notably, the most significant performance drop was observed when all stages of the backbone network were subjected to modulation. This decline can be attributed to the premature inclusion of linguistic information in the initial stages of the visual feature extraction process. Introducing linguistic guidance too early may impede the ability of TVI-MFAN to effectively learn fundamental visual features, consequently hindering its capacity for generalization across different classes.

Table 4.

Effectiveness of the different positions of the TVIA module.

4.4.3. Effectiveness of the Modules in Balancing Accuracy and Computational Efficiency

Taking into account both model performance and computational efficiency, TransVG is chosen as the baseline for comparison in this work. In addition, Table 5 reports the effect of the components on model performance. Notably, TVI-MFAN achieves 75.65% Pr@0.5 with the TVIA and MFAN modules, which represents a 6.34% improvement over TransVG. The ablation study reveals that removing the TVIA module results in a 6.28% drop in inference accuracy, a reduction of 0.466 GFLOPs, and a 1.15 increase in FPS. Similarly, elimination of the MFAN module causes a 3.69% decrease in inference accuracy, a decrease of 0.13 GFLOPs, and a 0.43 rise in FPS. These experiments underscore the significance of both modules in balancing accuracy and computational efficiency.

Table 5.

Comparison of the components on the OPT-RSVG test set.

4.4.4. Effectiveness of TVIA Module Components

To evaluate the contributions of channel weights, spatial weights, and biases within the proposed TVIA module, ablation experiments were conducted, with results presented in Table 6. The experiments demonstrate that excluding biases results in a 0.64% decrease in performance. When employing only channel weights or only spatial weights, the model achieves accuracies of 74.69% and 72.14%, respectively.

Table 6.

Effectiveness of the TVIA module components.

4.4.5. Effectiveness of Linguistic Feature Granularity

To evaluate the effect of linguistic feature granularity within the proposed TVIA module, ablation experiments were conducted, with results presented in Table 7. When the sentence + word granularity is chosen, the model performance deteriorates compared to using the sentence granularity alone. This decline may stem from the mixed granularity introducing semantic ambiguity into the linguistic feature, thereby causing confusion in the model’s semantic interpretation and processing. Such ambiguity hampers the model’s ability to accurately comprehend and utilize the linguistic information.

Table 7.

Effectiveness of linguistic feature granularity.

5. Conclusions

In this article, we propose a novel RSVG framework named TVI-MFAN, which guides the visual backbone attention and performs cross-modal feature enhancement for accurate object localization. Firstly, a Text–Visual Interaction Attention Module (TVIA) is introduced to recalibrate visual features using textual features aggregated based on image context. In addition, a Multi-Level Feature Alignment Net (MFAN) is developed to harmonize the differences between remote sensing images and text by modeling multimodal context. Specifically, it hierarchically aligns visual and textual features across spatial, semantic and contextual dimensions to mitigate distribution divergence. Cross-modal attention is then applied between these visual features and textual features to extract target information. Experimental results show that the proposed TVI-MFAN outperforms existing natural image VG and RSVG methods on the RSVG task, demonstrating its effectiveness and superiority. One limitation of this study is that the proposed model can only locate a single object based on query expressions, while real-world scenarios often involve multi-object grounding tasks. In future work, we will enhance the capabilities of RSVG in long-text comprehension and multi-object matching to address the challenges of multi-object grounding tasks in RS.

Author Contributions

Conceptualization, H.C. and W.Q.; methodology, H.C.; software, H.C.; validation, H.C.; formal analysis, W.G.; investigation, H.C.; resources, B.A.; data curation, W.G.; writing—original draft preparation, H.C.; writing—review and editing, X.C.; visualization, H.C.; supervision, W.Q.; project administration, W.Q.; funding acquisition, W.Q. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Excellent Youth Foundation of Shan’xi Scientific Committee under grant 2025JC-JCQN-077 and was supported in part by NSFC under grant No. 62125305.

Data Availability Statement

The datasets analyzed in this study are managed by the Rocket Force University of Engineering and can be made available by the corresponding author upon request.

Conflicts of Interest

The authors declare no conflicts of interest.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).