HDAMNet: Hierarchical Dilated Adaptive Mamba Network for Accurate Cloud Detection in Satellite Imagery

Abstract

1. Introduction

- We propose HDAMNet, a deep neural network for cloud segmentation built on a state space model. It brings the visual state space model (VSSM) to cloud detection by replacing the convolutional downsampling modules in the encoder of a conventional U-shaped network with HDAMamba Blocks, which model long-range spatial dependencies.

- We design the Hierarchical Dilated Cross Scan (HDCS) mechanism to handle the multi-scale nature of clouds. It combines a multi-scale shifted-window scheme with an adaptive dilation strategy to expand the receptive field and perceive features hierarchically. The resulting features are then dynamically fused by our Multi-Resolution Adaptive Feature Extraction (MRAFE) module to strengthen the final representation.

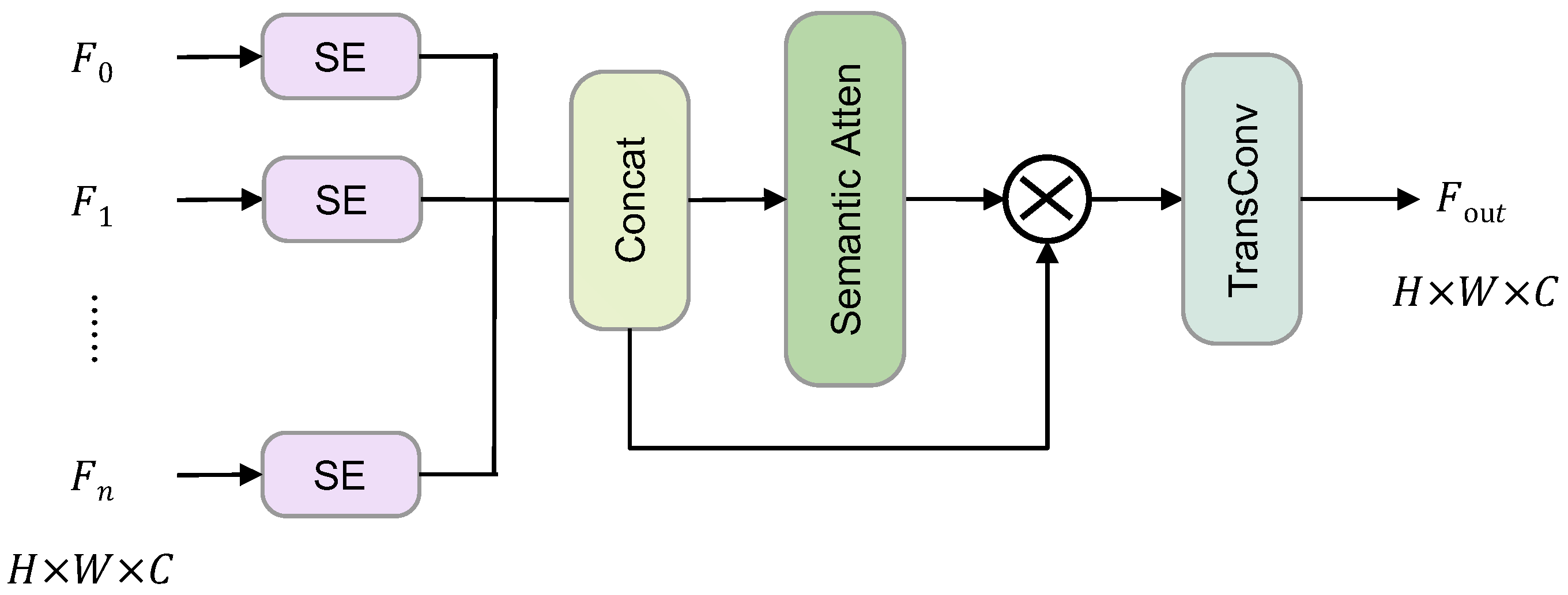

- To resolve boundary ambiguities, we introduce a Layer-wise Adaptive Attention (LAA) mechanism at the skip connections. This mechanism adaptively recalibrates feature channels to promote an effective fusion of shallow spatial details with deep semantic context, leading to more precise segmentation.
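The channel recalibration described for LAA can be illustrated with a squeeze-and-excitation-style gate. The sketch below is not the authors' implementation: the function names `channel_gate` and `recalibrated_skip` are hypothetical, the learned layers that would normally sit between pooling and the sigmoid are omitted, and fusing by element-wise addition is an assumption.

```python
import math


def channel_gate(feature):
    """Return one sigmoid gate per channel from global average pooling.

    `feature` is a list of channels, each a 2D list (H x W) of floats.
    Assumption: no learned projection is applied before the sigmoid.
    """
    gates = []
    for channel in feature:
        values = [v for row in channel for v in row]
        mean = sum(values) / len(values)
        gates.append(1.0 / (1.0 + math.exp(-mean)))
    return gates


def recalibrated_skip(shallow, deep):
    """Scale each shallow channel by its gate, then add the deep feature.

    Both inputs must share the same channel count and spatial size.
    """
    gates = channel_gate(shallow)
    fused = []
    for gate, s_ch, d_ch in zip(gates, shallow, deep):
        fused.append([[gate * s + d for s, d in zip(s_row, d_row)]
                      for s_row, d_row in zip(s_ch, d_ch)])
    return fused


# Two 2x2 channels from the encoder and an all-zero decoder feature.
shallow = [[[0.0, 0.0], [0.0, 0.0]], [[2.0, 2.0], [2.0, 2.0]]]
deep = [[[0.0, 0.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 0.0]]]
fused = recalibrated_skip(shallow, deep)
```

In this toy input the channel with the larger mean activation receives the larger gate, so it contributes more to the fused skip feature.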

2. Related Work

2.1. CNN-Based Methods

2.2. Transformer-Based Methods

2.3. Mamba-Based Methods

3. Methodology

3.1. Preliminaries

3.2. HDAMNet

3.3. HDAMamba Block

3.3.1. HDCS

3.3.2. MRAFE

3.4. LAA

3.5. Loss Function

4. Results

4.1. Datasets

4.1.1. HRC_WHU Dataset

4.1.2. CloudS_M24 Dataset

4.1.3. WHU Cloud Dataset

4.2. Comparative Methods

4.2.1. CNN-Based Methods

4.2.2. Transformer-Based Methods

4.2.3. Mamba-Based Methods

4.3. Implementation Details

4.4. Evaluation Metrics

4.5. Ablation Study of Key Components

4.6. Comparison Test of the HRC_WHU Dataset

4.7. Generalization Experiment of the CloudS_M24 Dataset

4.8. Generalization Experiment of the WHU Cloud Dataset

5. Discussion

5.1. Analysis of Shifted Window Scale Settings

5.2. Comparative Analysis of Core Modules: Mamba and Transformer

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Method | HDAMamba | MRAFE | LAA | Jaccard (%) | OA (%) | F1-Score (%) |
|---|---|---|---|---|---|---|
| Variant-I | ✗ | ✗ | ✗ | 86.97 | 96.20 | 93.03 |
| Variant-II | ✓ | ✗ | ✗ | 88.30 | 96.85 | 93.79 |
| Variant-III | ✓ | ✓ | ✗ | 89.49 | 97.22 | 94.45 |
| Variant-IV | ✗ | ✗ | ✓ | 86.27 | 96.02 | 92.63 |
| HDAMNet | ✓ | ✓ | ✓ | 91.12 | 97.42 | 95.36 |
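The contribution of each component is easier to read as a difference from the baseline. The snippet below is a small illustrative calculation over the ablation rows above; the helper name `gains_over` is hypothetical, and the numbers are copied from the table rather than recomputed from model outputs.

```python
# Rows copied from the ablation table: (Jaccard, OA, F1-Score) in percent.
ablation = {
    "Variant-I": (86.97, 96.20, 93.03),
    "Variant-II": (88.30, 96.85, 93.79),
    "Variant-III": (89.49, 97.22, 94.45),
    "Variant-IV": (86.27, 96.02, 92.63),
    "HDAMNet": (91.12, 97.42, 95.36),
}


def gains_over(baseline, rows):
    """Return per-metric differences (percentage points) from `baseline`."""
    base = rows[baseline]
    return {
        name: tuple(round(v - b, 2) for v, b in zip(vals, base))
        for name, vals in rows.items()
        if name != baseline
    }


gains = gains_over("Variant-I", ablation)
for name, (jaccard, oa, f1) in gains.items():
    print(f"{name:12s} Jaccard {jaccard:+.2f}  OA {oa:+.2f}  F1 {f1:+.2f}")
```

Read this way, Variant-IV (LAA alone) falls slightly below the baseline on all three metrics, while the full HDAMNet gains 4.15 points in Jaccard.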

| Network Type | Method | Jaccard (%) | Precision (%) | Recall (%) | Specificity (%) | OA (%) | F1-Score (%) |
|---|---|---|---|---|---|---|---|
| CNN-based | UNet | 86.97 | 96.89 | 89.47 | 98.87 | 96.20 | 93.03 |
| CNN-based | PSPNet | 87.34 | 95.59 | 91.00 | 98.34 | 96.26 | 93.24 |
| CNN-based | CloudNet | 87.50 | 97.18 | 89.77 | 98.97 | 96.37 | 93.33 |
| CNN-based | CDnet | 87.51 | 95.88 | 90.93 | 98.45 | 96.32 | 93.34 |
| CNN-based | CloudSegNet | 83.95 | 93.17 | 89.46 | 97.41 | 95.16 | 91.27 |
| Transformer-based | UNetFormer | 87.76 | 96.07 | 91.03 | 98.53 | 96.41 | 93.48 |
| Transformer-based | Swin-Unet | 85.67 | 96.11 | 88.75 | 98.58 | 95.80 | 92.28 |
| Transformer-based | CMTFNet | 86.81 | 95.80 | 90.24 | 98.44 | 96.12 | 92.24 |
| Transformer-based | TransUNet | 83.35 | 95.32 | 87.45 | 98.30 | 95.23 | 91.22 |
| Mamba-based | Samba | 89.11 | 94.94 | 93.54 | 98.03 | 96.76 | 94.24 |
| Mamba-based | VM-UNet | 88.98 | 94.76 | 93.58 | 97.96 | 96.72 | 94.17 |
| Mamba-based | Mamba-UNet | 89.83 | 96.41 | 92.93 | 98.63 | 97.02 | 94.64 |
| Mamba-based | RS3Mamba | 90.40 | 95.52 | 94.39 | 98.25 | 97.16 | 94.95 |
| Mamba-based | HDAMNet | 91.12 | 97.04 | 93.73 | 98.87 | 97.42 | 95.36 |
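The six columns above can all be derived from the four counts of a binary confusion matrix. The sketch below uses the standard definitions, which are assumed (not confirmed by the text available here) to match the ones the paper uses; the function name `segmentation_metrics` and the example counts are hypothetical.

```python
def segmentation_metrics(tp, fp, fn, tn):
    """Compute binary segmentation metrics from pixel counts.

    tp / fp / fn / tn are true-positive, false-positive, false-negative and
    true-negative pixel counts, with "cloud" as the positive class.
    Values are returned as fractions in [0, 1], not percentages.
    """
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {
        "jaccard": tp / (tp + fp + fn),
        "precision": precision,
        "recall": recall,
        "specificity": tn / (tn + fp),
        "oa": (tp + tn) / (tp + fp + fn + tn),
        "f1": 2 * precision * recall / (precision + recall),
    }


# Hypothetical counts for a 100-pixel image.
metrics = segmentation_metrics(tp=8, fp=2, fn=2, tn=88)
for name, value in metrics.items():
    print(f"{name:12s} {100 * value:6.2f}")
```

When both are computed from the same counts, F1 and Jaccard satisfy F1 = 2J / (1 + J). Metrics averaged per image before reporting need not obey this identity exactly.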

| Network Type | Method | Jaccard (%) | Precision (%) | Recall (%) | Specificity (%) | OA (%) | F1-Score (%) |
|---|---|---|---|---|---|---|---|
| CNN-based | UNet | 74.65 | 86.24 | 84.74 | 97.76 | 95.92 | 85.47 |
| CNN-based | PSPNet | 78.22 | 89.97 | 85.70 | 98.42 | 96.61 | 87.78 |
| CNN-based | CloudNet | 78.98 | 87.98 | 88.52 | 98.00 | 96.66 | 88.25 |
| CNN-based | CDnet | 75.90 | 89.20 | 83.58 | 98.33 | 96.23 | 86.30 |
| CNN-based | CloudSegNet | 69.27 | 84.48 | 79.37 | 97.59 | 95.00 | 81.84 |
| Transformer-based | UNetFormer | 81.36 | 88.71 | 90.75 | 98.09 | 97.05 | 89.72 |
| Transformer-based | Swin-Unet | 68.71 | 87.59 | 76.12 | 98.22 | 95.08 | 81.45 |
| Transformer-based | CMTFNet | 78.58 | 91.53 | 84.74 | 98.70 | 96.72 | 88.00 |
| Transformer-based | TransUNet | 80.68 | 91.95 | 86.82 | 98.74 | 97.05 | 89.31 |
| Mamba-based | Samba | 81.99 | 90.80 | 89.42 | 98.50 | 97.21 | 90.10 |
| Mamba-based | VM-UNet | 81.88 | 91.15 | 88.95 | 98.57 | 97.21 | 90.04 |
| Mamba-based | Mamba-UNet | 71.37 | 91.37 | 76.53 | 98.80 | 95.64 | 83.29 |
| Mamba-based | RS3Mamba | 80.83 | 89.60 | 89.20 | 98.29 | 97.00 | 89.40 |
| Mamba-based | HDAMNet | 84.29 | 92.61 | 90.37 | 98.81 | 97.61 | 91.47 |

| Network Type | Method | Jaccard (%) | Precision (%) | Recall (%) | Specificity (%) | OA (%) | F1-Score (%) |
|---|---|---|---|---|---|---|---|
| CNN-based | UNet | 62.16 | 72.94 | 80.79 | 98.95 | 98.33 | 76.66 |
| CNN-based | PSPNet | 35.89 | 80.19 | 39.37 | 99.66 | 97.60 | 52.83 |
| CNN-based | CloudNet | 53.00 | 72.35 | 66.47 | 99.10 | 97.99 | 69.28 |
| CNN-based | CDnet | 58.89 | 75.17 | 73.12 | 99.15 | 98.26 | 74.13 |
| CNN-based | CloudSegNet | 41.79 | 87.69 | 44.39 | 99.78 | 97.89 | 58.94 |
| Transformer-based | UNetFormer | 41.87 | 66.55 | 53.04 | 99.06 | 97.49 | 59.03 |
| Transformer-based | Swin-Unet | 57.85 | 82.13 | 66.18 | 99.49 | 98.36 | 73.30 |
| Transformer-based | CMTFNet | 58.41 | 65.98 | 83.58 | 98.48 | 97.97 | 73.74 |
| Transformer-based | TransUNet | 61.50 | 69.96 | 83.57 | 98.73 | 98.22 | 76.16 |
| Mamba-based | Samba | 53.30 | 63.65 | 76.63 | 98.46 | 97.72 | 69.54 |
| Mamba-based | Mamba-UNet | 60.74 | 79.47 | 72.03 | 99.35 | 98.42 | 75.57 |
| Mamba-based | RS3Mamba | 52.02 | 57.22 | 85.13 | 97.76 | 97.33 | 68.44 |
| Mamba-based | HDAMNet | 63.01 | 74.11 | 80.78 | 99.01 | 98.38 | 77.30 |

| Scale Setting | Jaccard (%) | Precision (%) | Recall (%) | Specificity (%) | OA (%) | F1-Score (%) |
|---|---|---|---|---|---|---|
| {0.25, 0.25, 0.25} | 89.52 | 97.13 | 91.95 | 98.93 | 96.95 | 94.47 |
| {0.25, 0.25, 0.50} | 90.82 | 96.58 | 93.84 | 98.69 | 97.32 | 95.19 |
| {0.10, 0.20, 0.30} | 88.44 | 96.30 | 91.55 | 98.61 | 96.61 | 93.87 |
| {0.10, 0.30, 0.50} | 88.64 | 97.06 | 91.09 | 98.91 | 96.70 | 93.98 |
| {0.20, 0.40, 0.75} | 89.81 | 96.74 | 92.62 | 98.77 | 97.03 | 94.63 |
| {0.25, 0.50, 0.75} | 90.11 | 96.14 | 93.50 | 98.52 | 97.10 | 94.80 |
| {0.25, 0.50, 1.00} | 91.12 | 97.04 | 93.73 | 98.87 | 97.42 | 95.36 |

| Method | Inference Time (s) | FLOPs (M) | Jaccard (%) | OA (%) | F1-Score (%) |
|---|---|---|---|---|---|
| Transformer-based | 0.4571 | 571.695 | 80.74 | 94.05 | 89.34 |
| Mamba-based | 0.3182 | 197.15 | 91.12 | 97.42 | 95.36 |
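The efficiency gap in this comparison is clearer as ratios. The snippet below is an illustrative calculation over the two rows above. The variable names are hypothetical, and the figures are copied from the table with FLOPs in the unit it reports (M).

```python
# Values copied from the Mamba vs. Transformer comparison table.
transformer = {"time_s": 0.4571, "flops_m": 571.695, "jaccard": 80.74}
mamba = {"time_s": 0.3182, "flops_m": 197.15, "jaccard": 91.12}

# Ratio of inference times: values above 1 mean the Mamba variant is faster.
speedup = transformer["time_s"] / mamba["time_s"]

# Fraction of the Transformer variant's FLOPs that the Mamba variant saves.
flops_reduction = 1.0 - mamba["flops_m"] / transformer["flops_m"]

# Accuracy difference in percentage points.
jaccard_gain = mamba["jaccard"] - transformer["jaccard"]

print(f"speedup: {speedup:.2f}x")
print(f"FLOPs reduction: {flops_reduction:.1%}")
print(f"Jaccard gain: {jaccard_gain:.2f} points")
```

On these figures the Mamba-based core is about 1.44 times faster, uses roughly 65.5% fewer FLOPs, and scores 10.38 points higher in Jaccard.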

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, Y.; Li, Y.; Yang, X.; Jiang, R.; Zhang, L. HDAMNet: Hierarchical Dilated Adaptive Mamba Network for Accurate Cloud Detection in Satellite Imagery. Remote Sens. 2025, 17, 2992. https://doi.org/10.3390/rs17172992