PWFNet: Pyramidal Wavelet–Frequency Attention Network for Road Extraction

Abstract

1. Introduction

- We propose PWFNet, a novel road extraction model that integrates multi-scale spatial and frequency-domain features. By jointly exploiting spatial continuity and frequency-domain structural information, the model addresses challenges such as complex textures and severe occlusions in remote sensing imagery, improving robustness and representational capacity in complex scenes. The source code is publicly available at https://github.com/zong3124/PWFNet (accessed on 18 August 2025).

- We design a Pyramidal Wavelet Convolution (PWC) module, which applies the Discrete Wavelet Transform (DWT) at multiple spatial scales to decompose features into high- and low-frequency components and then reconstruct them. This strengthens the model’s ability to capture both global road structure and local detail, making it particularly effective for road extraction in scenes with large variations in road scale.

- We propose a Frequency-Aware Adjustment Module (FAM), which decomposes features into multiple frequency bands in the Fourier domain and modulates each band with a learned, adaptive weight. This strengthens road-related low-frequency components while suppressing high-frequency background interference, improving extraction accuracy in texture-rich environments.
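The multi-scale wavelet decomposition and reconstruction that PWC performs can be illustrated with a minimal NumPy sketch. It is not the published module: it assumes a single-channel feature map, the orthonormal Haar wavelet, and spatial sizes divisible by 2**levels. The scalar gains `low_gain` and `high_gains` are hypothetical stand-ins for the learned per-subband convolutions a real PWC block would apply, and all function names are illustrative rather than taken from the PWFNet code.

```python
import numpy as np


def haar_dwt2(x):
    """One level of the orthonormal 2D Haar DWT of an (H, W) array (H, W even).

    Returns the low-frequency band LL and a tuple of three detail bands,
    each of shape (H/2, W/2). Detail-band naming conventions vary by library.
    """
    a, b = x[0::2, 0::2], x[0::2, 1::2]  # top-left, top-right of each 2x2 block
    c, d = x[1::2, 0::2], x[1::2, 1::2]  # bottom-left, bottom-right
    ll = (a + b + c + d) / 2.0           # local average (low frequency)
    lh = (a - b + c - d) / 2.0           # left-right differences
    hl = (a + b - c - d) / 2.0           # top-bottom differences
    hh = (a - b - c + d) / 2.0           # diagonal differences
    return ll, (lh, hl, hh)


def haar_idwt2(ll, bands):
    """Exact inverse of haar_dwt2."""
    lh, hl, hh = bands
    h, w = ll.shape
    x = np.empty((2 * h, 2 * w), dtype=ll.dtype)
    x[0::2, 0::2] = (ll + lh + hl + hh) / 2.0
    x[0::2, 1::2] = (ll - lh + hl - hh) / 2.0
    x[1::2, 0::2] = (ll + lh - hl - hh) / 2.0
    x[1::2, 1::2] = (ll - lh - hl + hh) / 2.0
    return x


def pyramid_wavelet_filter(x, levels, low_gain=1.0, high_gains=None):
    """Hypothetical PWC-style operation on a single-channel map.

    Decomposes x over `levels` Haar levels, rescales each subband (scalar gains
    stand in for learned per-subband convolutions), and reconstructs the map
    from coarse to fine.
    """
    x = np.asarray(x, dtype=float)
    if x.shape[0] % 2**levels or x.shape[1] % 2**levels:
        raise ValueError("both spatial sizes must be divisible by 2**levels")
    if high_gains is None:
        high_gains = [1.0] * levels
    ll, details = x, []
    for level in range(levels):          # fine-to-coarse decomposition
        ll, bands = haar_dwt2(ll)
        details.append(tuple(high_gains[level] * band for band in bands))
    ll = low_gain * ll
    for bands in reversed(details):      # coarse-to-fine reconstruction
        ll = haar_idwt2(ll, bands)
    return ll
```

With unit gains the pyramid returns its input exactly, which is what allows a module to edit individual subbands without discarding information. Setting every detail gain to zero keeps only the coarsest low-frequency content, so each 8×8 block of a three-level result collapses to its mean.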

2. Related Works

2.1. Road Extraction in Deep Learning

2.2. Wavelet Transform in CNNs

3. Methods

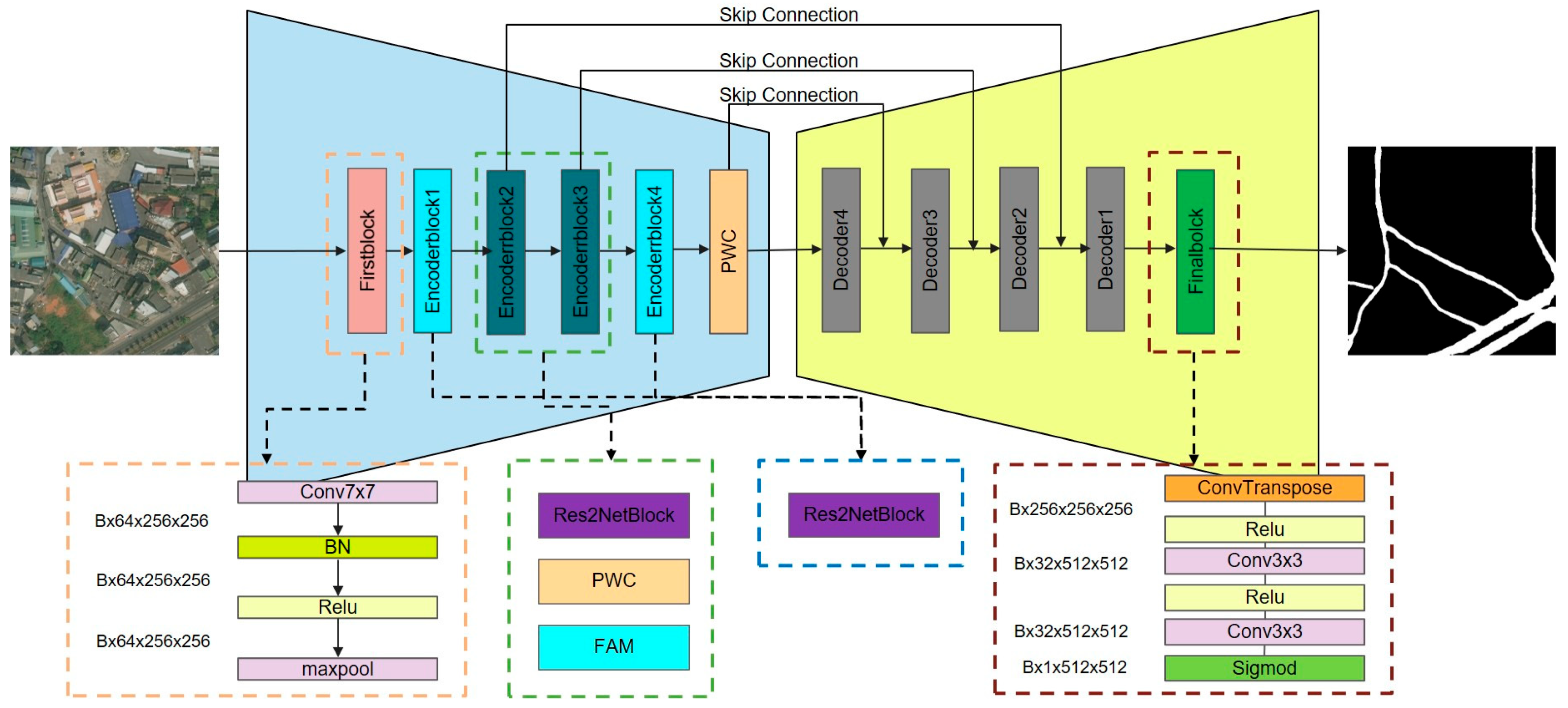

3.1. Overall Structure of PWFNet

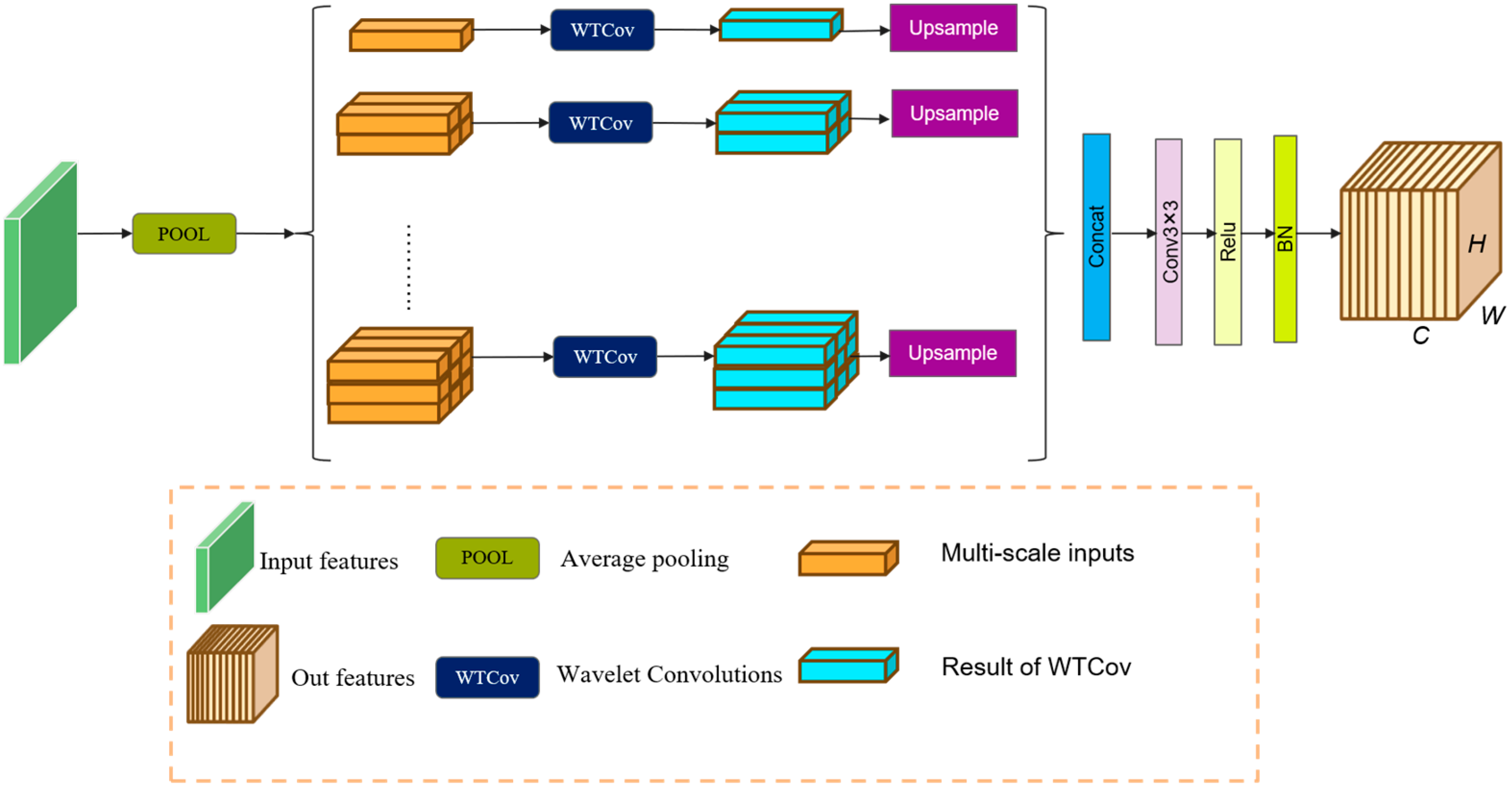

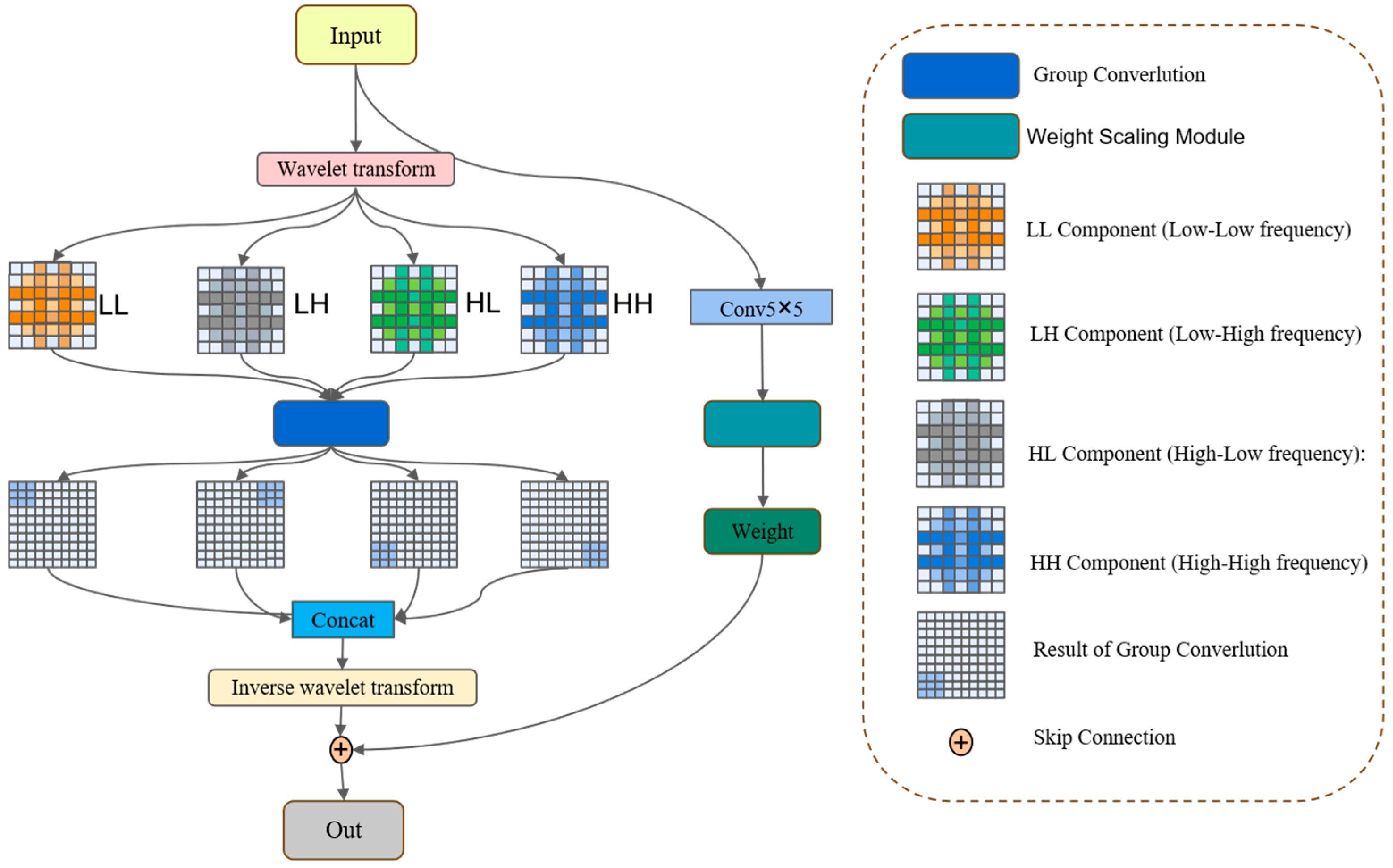

3.2. Pyramidal Wavelet Convolution Module

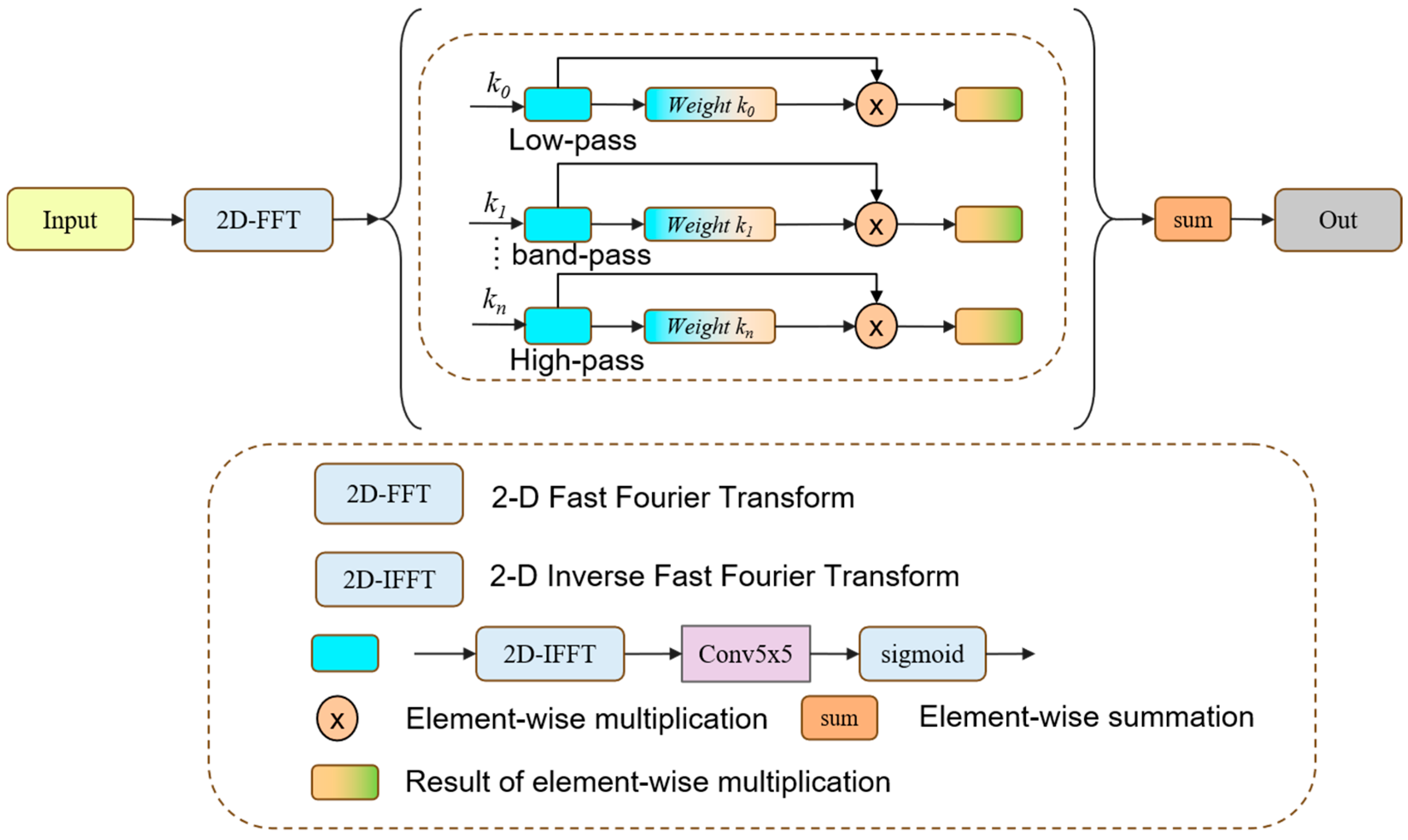

3.3. Frequency-Aware Adjustment Module
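The band-wise Fourier reweighting that the Introduction attributes to FAM can be sketched in NumPy as follows. This is an illustrative assumption, not the published module: the spectrum of a single-channel map is split into equal-width radial rings of normalized frequency, and each ring is scaled by one entry of `band_weights`. A trained module would learn these weights (for example, through one sigmoid-gated parameter per band); here they are passed in directly. The function names are hypothetical.

```python
import numpy as np


def radial_band_masks(h, w, num_bands):
    """Partition the rfft2 frequency grid of an (h, w) map into radial rings.

    Every frequency bin lands in exactly one boolean mask; band 0 contains
    the DC term and the lowest frequencies.
    """
    fy = np.fft.fftfreq(h)[:, None]   # signed row frequencies, shape (h, 1)
    fx = np.fft.rfftfreq(w)[None, :]  # non-negative column frequencies, (1, w//2 + 1)
    radius = np.sqrt(fy**2 + fx**2)
    edges = np.linspace(0.0, radius.max(), num_bands + 1)
    masks = []
    for i in range(num_bands):
        # Half-open rings, except the last one, which also includes the maximum radius.
        upper = radius <= edges[i + 1] if i == num_bands - 1 else radius < edges[i + 1]
        masks.append((radius >= edges[i]) & upper)
    return masks


def frequency_adjust(x, band_weights):
    """Hypothetical FAM-style reweighting: scale each frequency band, then invert."""
    x = np.asarray(x, dtype=float)
    h, w = x.shape
    spectrum = np.fft.rfft2(x)
    gain = np.zeros(spectrum.shape)
    for mask, weight in zip(radial_band_masks(h, w, len(band_weights)), band_weights):
        gain[mask] = weight
    # The gain depends only on |frequency|, so Hermitian symmetry is preserved
    # and the inverse transform is real.
    return np.fft.irfft2(spectrum * gain, s=(h, w))
```

With all weights equal to 1 the map is returned unchanged. Weights that decrease with band index, such as `[1.2, 1.0, 0.5, 0.2]`, emphasize low-frequency components and attenuate high-frequency texture, which mirrors the behaviour described for FAM.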

3.4. Comparative Methods

4. Experiment and Datasets

4.1. Datasets

4.1.1. DeepGlobe Road Dataset

4.1.2. CHN6-CUG Road Dataset

4.1.3. LSRV Road Dataset

4.2. Implementation Details

4.3. Evaluation Metrics

4.4. Comparison of the Methods

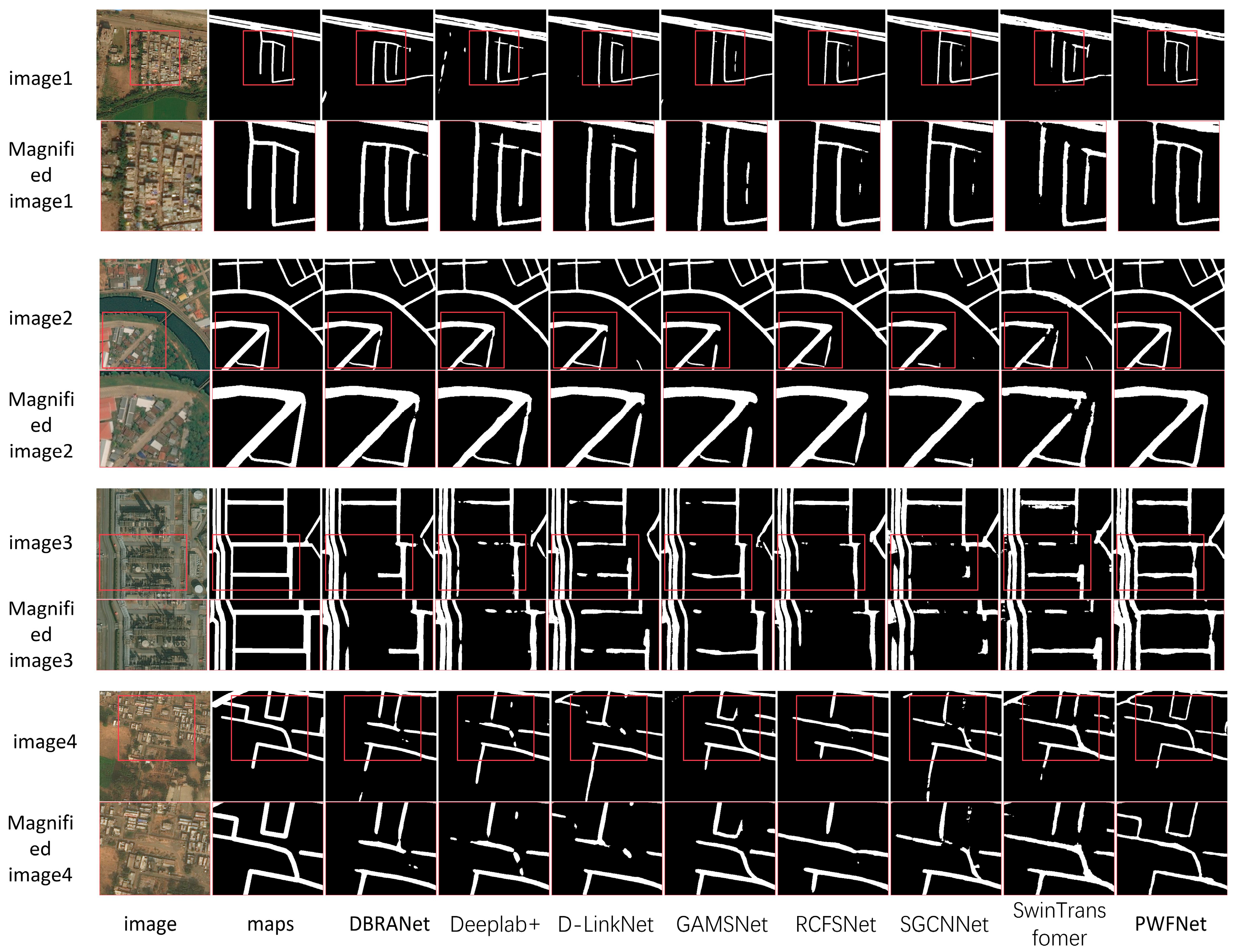

4.4.1. Experiments on the DeepGlobe Road Dataset

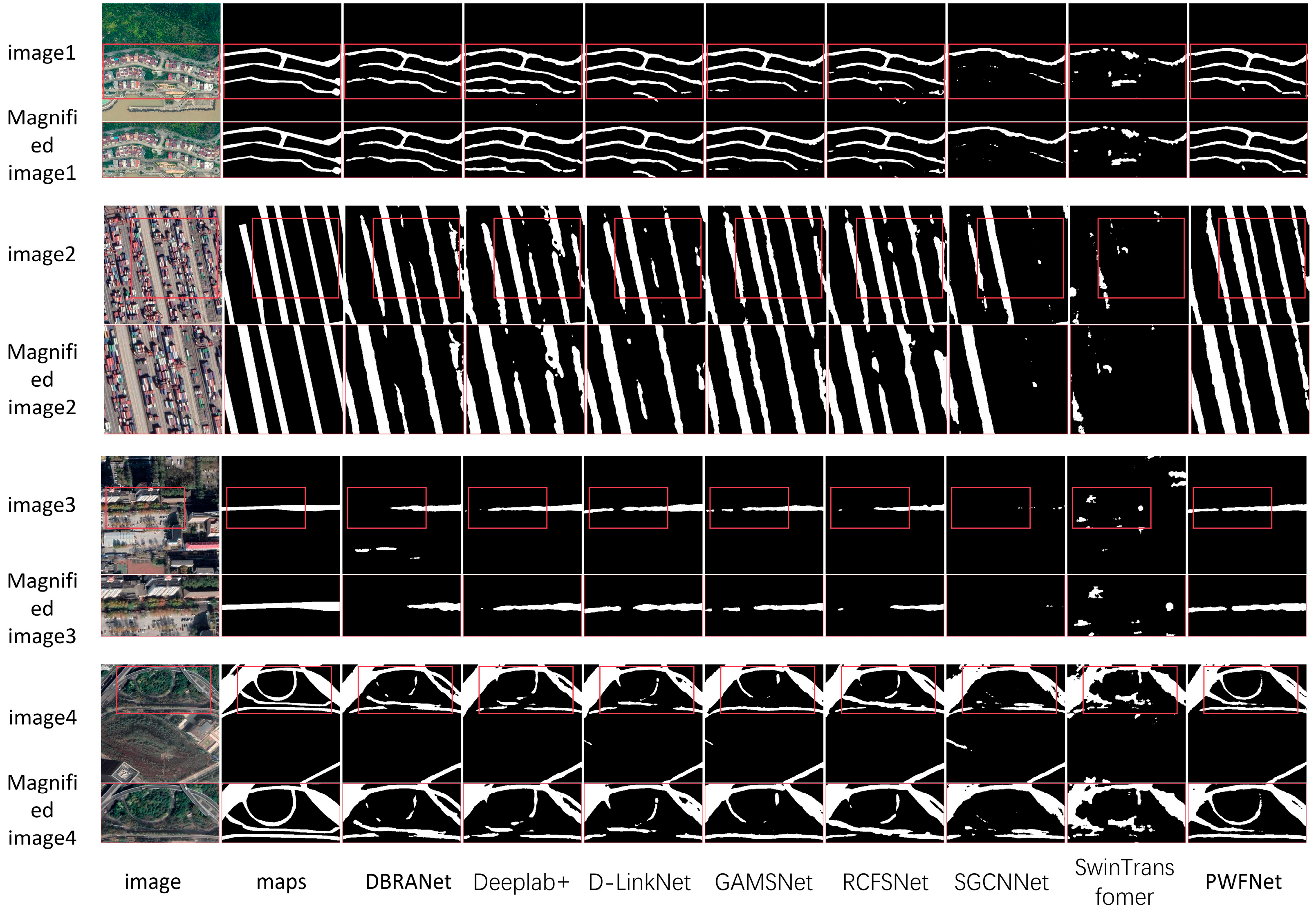

4.4.2. Experiments on the CHN6-CUG Road Dataset

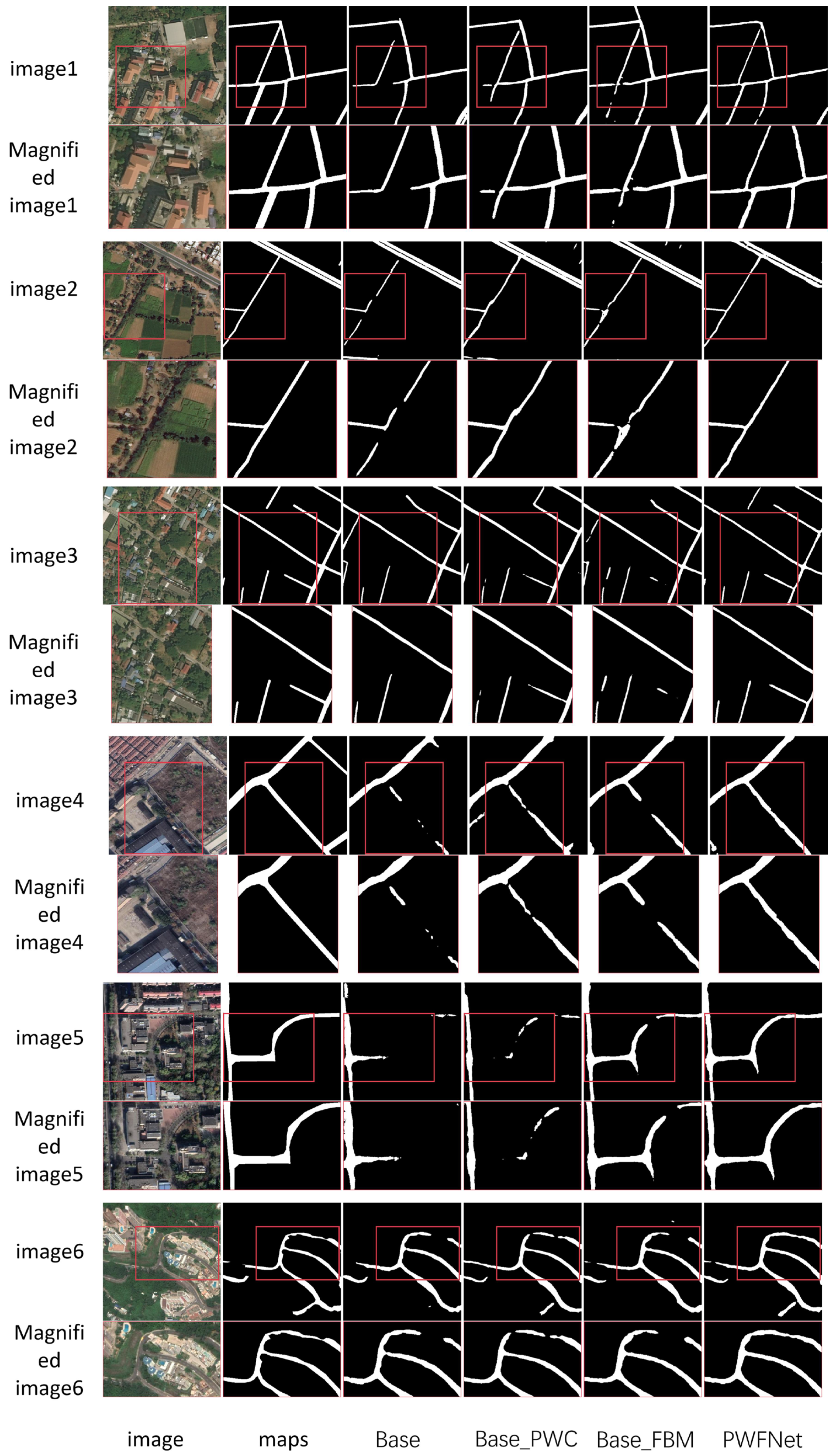

4.4.3. Ablation Experiments Between Modules

5. Discussion

5.1. Model Complexity and Time Complexity Analysis

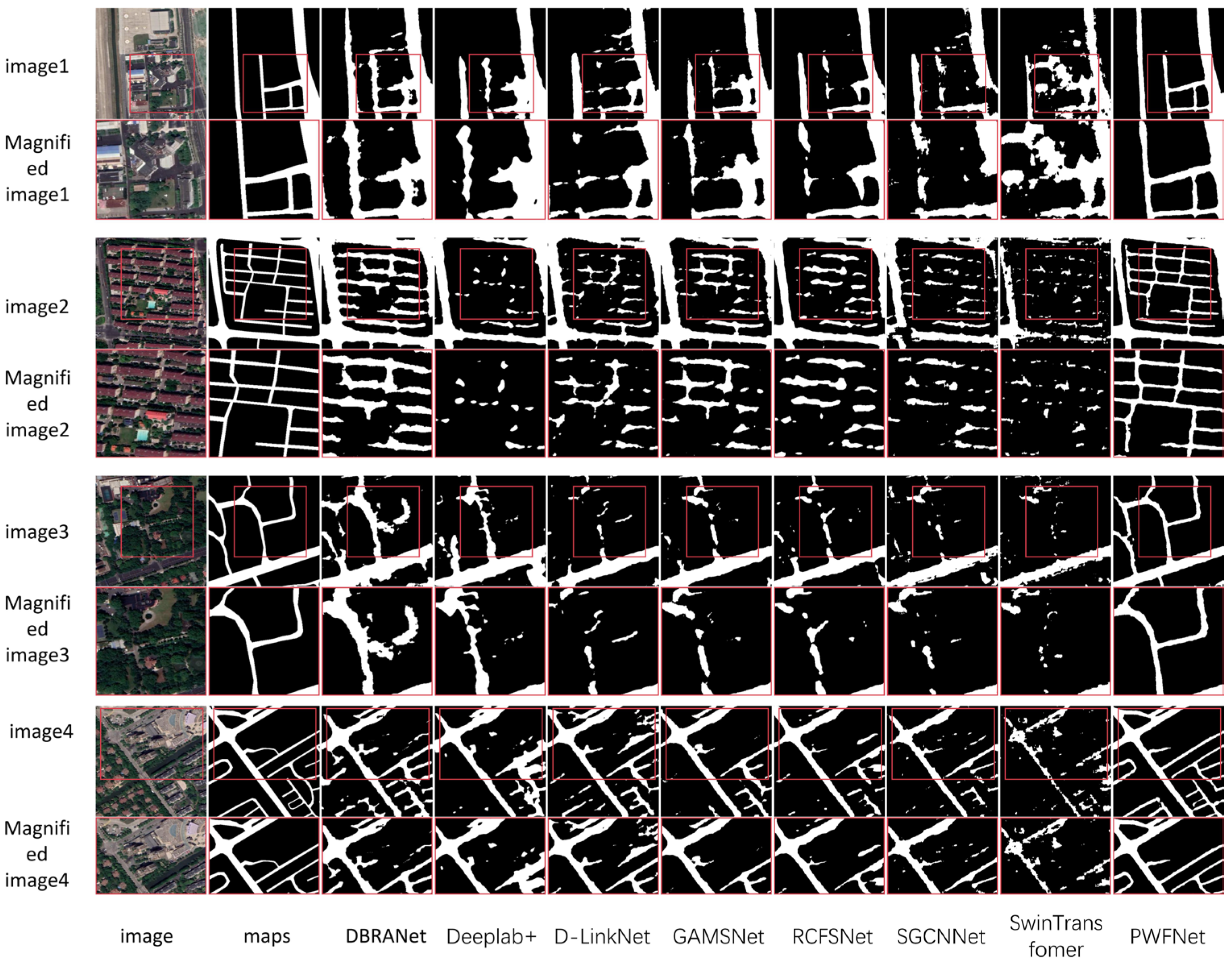

5.2. Cross-Region Transfer Experiments

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Liu, R.; Wu, J.; Lu, W.; Miao, Q.; Zhang, H.; Liu, X.; Lu, Z.; Li, L. A Review of Deep Learning-Based Methods for Road Extraction from High-Resolution Remote Sensing Images. Remote Sens. 2024, 16, 2056.

- Boyko, A.; Funkhouser, T. Extracting roads from dense point clouds in large scale urban environment. ISPRS J. Photogramm. Remote Sens. 2011, 66, S2–S12.

- Wang, J.; Song, J.; Chen, M.; Yang, Z. Road network extraction: A neural-dynamic framework based on deep learning and a finite state machine. Int. J. Remote Sens. 2015, 36, 3144–3169.

- Senthilnath, J.; Varia, N.; Dokania, A.; Anand, G.; Benediktsson, J.A. Deep TEC: Deep transfer learning with ensemble classifier for road extraction from UAV imagery. Remote Sens. 2020, 12, 245.

- Wang, X.; Jin, X.; Dai, Z.; Wu, Y.; Chehri, A. Deep Learning-Based Methods for Road Extraction From Remote Sensing Images: A vision, survey, and future directions. IEEE Geosci. Remote Sens. Mag. 2025, 13, 55–78.

- Yuan, X.; Shi, J.; Gu, L. A review of deep learning methods for semantic segmentation of remote sensing imagery. Expert Syst. Appl. 2021, 169, 114417.

- Wang, W.; Yang, N.; Zhang, Y.; Wang, F.; Cao, T.; Eklund, P. A review of road extraction from remote sensing images. J. Traffic Transp. (Engl. Ed.) 2016, 3, 271–282.

- Abdollahi, A.; Pradhan, B.; Alamri, A. VNet: An end-to-end fully convolutional neural network for road extraction from high-resolution remote sensing data. IEEE Access 2020, 8, 179424–179436.

- Zhong, Z.; Li, J.; Cui, W.; Jiang, H. Fully convolutional networks for building and road extraction: Preliminary results. In Proceedings of the 2016 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 June 2016; pp. 1591–1594.

- Zhang, Z.; Liu, Q.; Wang, Y. Road extraction by deep residual U-Net. IEEE Geosci. Remote Sens. Lett. 2018, 15, 749–753.

- Wang, Y.; Peng, Y.; Li, W.; Alexandropoulos, G.C.; Yu, J.; Ge, D.; Xiang, W. DDU-Net: Dual-decoder-U-Net for road extraction using high-resolution remote sensing images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–12.

- Jiang, X.; Li, Y.; Jiang, T.; Xie, J.; Wu, Y.; Cai, Q.; Jiang, J.; Xu, J.; Zhang, H. RoadFormer: Pyramidal deformable vision transformers for road network extraction with remote sensing images. Int. J. Appl. Earth Obs. Geoinf. 2022, 113, 102987.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Meng, Q.; Zhou, D.; Zhang, X.; Yang, Z.; Chen, Z. Road Extraction from Remote Sensing Images via Channel Attention and Multi-Layer Axial Transformer. IEEE Geosci. Remote Sens. Lett. 2024, 21, 5504705.

- Yang, H.; Zhou, C.; Xing, X.; Wu, Y.; Wu, Y. A High-Resolution Remote Sensing Road Extraction Method Based on the Coupling of Global Spatial Features and Fourier Domain Features. Remote Sens. 2024, 16, 3896.

- Liu, H.; Zhou, X.; Wang, C.; Chen, S.; Kong, H. Fourier-Deformable Convolution Network for Road Segmentation from Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2024, 62, 4415117.

- Li, Y.; Liu, Z.; Yang, J.; Zhang, H. Wavelet transform feature enhancement for semantic segmentation of remote sensing images. Remote Sens. 2023, 15, 5644.

- Sardar, A.; Mehrshad, N.; Mohammad Razavi, S. Efficient image segmentation method based on an adaptive selection of Gabor filters. IET Image Process. 2020, 14, 4198–4209.

- Omati, M.; Sahebi, M.R. Change detection of polarimetric SAR images based on the integration of improved watershed and MRF segmentation approaches. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 4170–4179.

- Valero, S.; Chanussot, J.; Benediktsson, J.A.; Talbot, H.; Waske, B. Advanced directional mathematical morphology for the detection of the road network in very high resolution remote sensing images. Pattern Recognit. Lett. 2010, 31, 1120–1127.

- Jeong, M.; Nam, J.; Ko, B.C. Lightweight multilayer random forests for monitoring driver emotional status. IEEE Access 2020, 8, 60344–60354.

- Song, M.; Civco, D. Road extraction using SVM and image segmentation. Photogramm. Eng. Remote Sens. 2004, 70, 1365–1371.

- Huan, H.; Sheng, Y.; Zhang, Y.; Liu, Y. Strip attention networks for road extraction. Remote Sens. 2022, 14, 4516.

- Buslaev, A.; Seferbekov, S.; Iglovikov, V.; Shvets, A. Fully convolutional network for automatic road extraction from satellite imagery. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 207–210.

- Pan, D.; Zhang, M.; Zhang, B. A generic FCN-based approach for the road-network extraction from VHR remote sensing images–using OpenStreetMap as benchmarks. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 2662–2673.

- Chaurasia, A.; Culurciello, E. LinkNet: Exploiting encoder representations for efficient semantic segmentation. In Proceedings of the 2017 IEEE Visual Communications and Image Processing (VCIP), St. Petersburg, FL, USA, 10–13 December 2017; pp. 1–4.

- Wan, J.; Xie, Z.; Xu, Y.; Chen, S.; Qiu, Q. DA-RoadNet: A dual-attention network for road extraction from high resolution satellite imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 6302–6315.

- Zhou, L.; Zhang, C.; Wu, M. D-LinkNet: LinkNet with pretrained encoder and dilated convolution for high resolution satellite imagery road extraction. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 182–186.

- Mei, J.; Li, R.; Gao, W.; Cheng, M. CoANet: Connectivity attention network for road extraction from satellite imagery. IEEE Trans. Image Process. 2021, 30, 8540–8552.

- Zhu, X.; Huang, X.; Cao, W.; Yang, X.; Zhou, Y.; Wang, S. Road extraction from remote sensing imagery with spatial attention based on Swin Transformer. Remote Sens. 2024, 16, 1183.

- Ge, C.; Nie, Y.; Kong, F.; Xu, X. Improving road extraction for autonomous driving using Swin Transformer UNet. In Proceedings of the 2022 IEEE 25th International Conference on Intelligent Transportation Systems (ITSC), Beijing, China, 18 September–12 October 2022; pp. 1216–1221.

- Liu, W.; Gao, S.; Zhang, C.; Yang, B. RoadCT: A hybrid CNN-transformer network for road extraction from satellite imagery. IEEE Geosci. Remote Sens. Lett. 2024, 21, 105605.

- Wang, R.; Cai, M.; Xia, Z.; Zhou, Z. Remote sensing image road segmentation method integrating CNN-Transformer and UNet. IEEE Access 2023, 11, 144446–144455.

- Chen, H.; Li, Z.; Wu, J.; Xiong, W.; Du, C. SemiRoadExNet: A semi-supervised network for road extraction from remote sensing imagery via adversarial learning. ISPRS J. Photogramm. Remote Sens. 2023, 198, 169–183.

- Gao, L.; Zhou, Y.; Tian, J.; Cai, W.; Lv, Z. MCMCNet: A Semi-supervised Road Extraction Network for High-resolution Remote Sensing Images via Multiple Consistency and Multi-task Constraints. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–16.

- Zhou, M.; Sui, H.; Chen, S.; Liu, J.; Shi, W.; Chen, X. Large-scale road extraction from high-resolution remote sensing images based on a weakly-supervised structural and orientational consistency constraint network. ISPRS J. Photogramm. Remote Sens. 2022, 193, 234–251.

- Lu, X.; Zhong, Y.; Zheng, Z.; Wang, J. Cross-domain road detection based on global-local adversarial learning framework from very high resolution satellite imagery. ISPRS J. Photogramm. Remote Sens. 2021, 180, 296–312.

- Finder, S.E.; Zohav, Y.; Ashkenazi, M.; Treister, E. Wavelet feature maps compression for image-to-image CNNs. Adv. Neural Inf. Process. Syst. 2022, 35, 20592–20606.

- Huang, J.; Zhao, Y.; Li, Y.; Dai, Z.; Chen, C.; Lai, Q. ACM-UNet: Adaptive Integration of CNNs and Mamba for Efficient Medical Image Segmentation. arXiv 2025, arXiv:2505.24481.

- Xing, R. FreqU-FNet: Frequency-Aware U-Net for Imbalanced Medical Image Segmentation. arXiv 2025, arXiv:2505.17544.

- Li, Q.; Shen, L.; Guo, S.; Lai, Z. Wavelet integrated CNNs for noise-robust image classification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 7245–7254.

- Huang, H.; He, R.; Sun, Z.; Tan, T. Wavelet-SRNet: A wavelet-based CNN for multi-scale face super resolution. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1689–1697.

- Finder, S.E.; Amoyal, R.; Treister, E.; Freifeld, O. Wavelet convolutions for large receptive fields. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; Springer: Berlin/Heidelberg, Germany, 2024; pp. 363–380.

- Eliasof, M.; Bodner, B.J.; Treister, E. Haar wavelet feature compression for quantized graph convolutional networks. IEEE Trans. Neural Netw. Learn. Syst. 2023, 35, 4542–4553.

- Liu, H.; Wang, C.; Zhao, J.; Chen, S.; Kong, H. Adaptive Fourier convolution network for road segmentation in remote sensing images. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–14.

- Lin, S.; Yao, X.; Liu, X.; Wang, S.; Chen, H.; Ding, L.; Zhang, J.; Chen, G.; Mei, Q. MS-AGAN: Road extraction via multi-scale information fusion and asymmetric generative adversarial networks from high-resolution remote sensing images under complex backgrounds. Remote Sens. 2023, 15, 3367.

- Song, R.; Shi, F.; Du, G.; Zhang, X.; Jiang, C. MG-RoadNet: Road Segmentation Network for Remote Sensing Images Based on Multi-Receptive Field Graph Convolution. Signal Image Video Process. 2025, 19, 679.

- Chen, L.; Gu, L.; Li, L.; Yan, C.; Fu, Y. Frequency Dynamic Convolution for Dense Image Prediction. In Proceedings of the Computer Vision and Pattern Recognition Conference, Nashville, TN, USA, 11–15 June 2025; pp. 30178–30188.

- Gao, S.; Cheng, M.; Zhao, K.; Zhang, X.; Yang, M.; Torr, P. Res2Net: A new multi-scale backbone architecture. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 652–662.

- Chen, L.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 801–818.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 10012–10022.

- Yang, Z.; Zhou, D.; Yang, Y.; Zhang, J.; Chen, Z. Road extraction from satellite imagery by road context and full-stage feature. IEEE Geosci. Remote Sens. Lett. 2022, 20, 8000405.

- Zhou, G.; Chen, W.; Gui, Q.; Li, X.; Wang, L. Split depth-wise separable graph-convolution network for road extraction in complex environments from high-resolution remote-sensing images. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–15.

- Lu, X.; Zhong, Y.; Zheng, Z.; Zhang, L. GAMSNet: Globally aware road detection network with multi-scale residual learning. ISPRS J. Photogramm. Remote Sens. 2021, 175, 340–352.

- Chen, S.; Ji, Y.; Tang, J.; Luo, B.; Wang, W.; Lv, K. DBRANet: Road extraction by dual-branch encoder and regional attention decoder. IEEE Geosci. Remote Sens. Lett. 2021, 19, 3002905.

- Demir, I.; Koperski, K.; Lindenbaum, D.; Pang, G.; Huang, J.; Basu, S.; Hughes, F.; Tuia, D.; Raskar, R. A challenge to parse the earth through satellite images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 172–181.

- Zhu, Q.; Zhang, Y.; Wang, L.; Zhong, Y.; Guan, Q.; Lu, X.; Zhang, L.; Li, D. A global context-aware and batch-independent network for road extraction from VHR satellite imagery. ISPRS J. Photogramm. Remote Sens. 2021, 175, 353–365.

- Batra, A.; Singh, S.; Pang, G.; Basu, S.; Jawahar, C.V.; Paluri, M. Improved road connectivity by joint learning of orientation and segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 10385–10393.

| Methods | IoU (%) | P (%) | R (%) | F1 (%) |
|---|---|---|---|---|
| GAMSNet | 66.74 | 81.14 | 78.99 | 80.05 |
| DBRANet | 65.14 | 81.54 | 76.41 | 78.89 |
| Deeplab+ | 65.87 | 81.82 | 77.17 | 79.43 |
| D-LinkNet | 64.44 | 81.63 | 75.36 | 78.37 |
| SGCN | 64.96 | 81.86 | 75.88 | 78.76 |
| RCFSNet | 64.94 | 80.25 | 77.30 | 78.75 |
| Swin Transformer | 61.69 | 78.70 | 74.06 | 76.31 |
| PWFNet | 70.52 | 92.69 | 84.67 | 82.71 |
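The tables report IoU, precision (P), recall (R), and F1 for the road class. A minimal sketch of how these metrics are commonly computed from binary masks is given below; it assumes pixel-level TP/FP/FN counts pooled over the evaluated images, a protocol this section does not spell out. When all four metrics come from the same counts, F1 = 2PR/(P + R) and IoU = F1/(2 - F1), so a row can be cross-checked; the GAMSNet row above satisfies both relations to the reported two decimals. The function names are illustrative.

```python
import numpy as np


def road_metrics(pred, target):
    """Pixel-level IoU, P, R and F1 (in %) for binary road masks (1 = road)."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    tp = int(np.sum(pred & target))    # road predicted as road
    fp = int(np.sum(pred & ~target))   # background predicted as road
    fn = int(np.sum(~pred & target))   # road pixels that were missed
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * p * r / (p + r) if p + r else 0.0
    iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    return {"IoU": 100 * iou, "P": 100 * p, "R": 100 * r, "F1": 100 * f1}


def f1_from_pr(p, r):
    """F1 (%) as the harmonic mean of precision and recall (both in %)."""
    return 2 * p * r / (p + r)


def iou_from_f1(f1):
    """IoU (%) implied by F1 (%) when both come from the same TP/FP/FN counts."""
    f = f1 / 100
    return 100 * f / (2 - f)


# Cross-check the GAMSNet row: P = 81.14, R = 78.99, F1 = 80.05, IoU = 66.74.
print(round(f1_from_pr(81.14, 78.99), 2), round(iou_from_f1(80.05), 2))  # prints 80.05 66.74
```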

| Methods | IoU (%) | P (%) | R (%) | F1 (%) |
|---|---|---|---|---|
| GAMSNet | 61.08 | 78.61 | 73.26 | 75.84 |
| DBRANet | 60.82 | 79.35 | 72.25 | 75.63 |
| Deeplab+ | 60.87 | 78.18 | 73.32 | 75.67 |
| D-LinkNet | 60.72 | 80.17 | 71.46 | 75.56 |
| SGCN | 53.43 | 79.64 | 61.89 | 69.65 |
| RCFSNet | 61.38 | 78.36 | 73.91 | 76.07 |
| Swin Transformer | 45.71 | 65.66 | 57.71 | 61.38 |
| PWFNet | 62.63 | 74.90 | 79.26 | 77.02 |

| Model | PWC | FAM | DeepGlobe F1 (%) | DeepGlobe IoU (%) | CHN6-CUG F1 (%) | CHN6-CUG IoU (%) |
|---|---|---|---|---|---|---|
| BaseLine | | | 79.08 | 65.39 | 73.48 | 58.07 |
| BaseLine_FAM | | √ | 79.72 | 66.28 | 76.33 | 61.72 |
| BaseLine_PWC | √ | | 79.94 | 66.58 | 76.45 | 61.88 |
| PWFNet | √ | √ | 82.71 | 70.52 | 77.02 | 62.63 |
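The contribution of each module in the ablation table can be read as the change relative to BaseLine. The short script below only recomputes those differences, in percentage points, from the reported F1 and IoU values; it adds no new measurements, and the dictionary layout and function name are illustrative.

```python
# (F1, IoU) in % per dataset, copied from the ablation table.
ABLATION = {
    "BaseLine":     {"DeepGlobe": (79.08, 65.39), "CHN6-CUG": (73.48, 58.07)},
    "BaseLine_FAM": {"DeepGlobe": (79.72, 66.28), "CHN6-CUG": (76.33, 61.72)},
    "BaseLine_PWC": {"DeepGlobe": (79.94, 66.58), "CHN6-CUG": (76.45, 61.88)},
    "PWFNet":       {"DeepGlobe": (82.71, 70.52), "CHN6-CUG": (77.02, 62.63)},
}


def gains_over_baseline(table, baseline="BaseLine"):
    """Return {model: {dataset: (dF1, dIoU)}} in percentage points vs. the baseline."""
    base = table[baseline]
    return {
        model: {
            ds: (round(f1 - base[ds][0], 2), round(iou - base[ds][1], 2))
            for ds, (f1, iou) in scores.items()
        }
        for model, scores in table.items()
        if model != baseline
    }


for model, per_dataset in gains_over_baseline(ABLATION).items():
    print(model, per_dataset)
```

On DeepGlobe, the F1 gain of the full model (3.63 points) exceeds the sum of the single-module gains (0.64 + 0.86 = 1.50), whereas on CHN6-CUG it is smaller than that sum (3.54 versus 2.85 + 2.97 = 5.82).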

| Methods | Params (M) | FLOPs (G) |
|---|---|---|
| GAMSNet | 29.33 | 58.32 |
| DBRANet | 47.68 | 53.16 |
| Deeplab+ | 40.29 | 69.14 |
| D-LinkNet | 217.65 | 120.31 |
| SGCN | 42.73 | 311.58 |
| RCFSNet | 58.23 | 182.31 |
| Swin Transformer | 30.90 | 48.5 |
| BaseLine | 29.03 | 73.87 |
| BaseLine_FAM | 29.09 | 72.94 |
| BaseLine_PWC | 79.94 | 102.20 |
| PWFNet | 80.00 | 102.31 |

| Methods | IoU (%) | P (%) | R (%) | F1 (%) |
|---|---|---|---|---|
| GAMSNet | 57.97 | 73.29 | 73.50 | 73.39 |
| DBRANet | 55.79 | 65.83 | 78.53 | 71.62 |
| Deeplab+ | 49.90 | 62.70 | 70.96 | 66.58 |
| D-LinkNet | 56.34 | 67.02 | 77.94 | 72.07 |
| SGCN | 53.40 | 75.25 | 64.78 | 69.62 |
| RCFSNet | 55.03 | 72.94 | 69.14 | 70.99 |
| Swin Transformer | 43.55 | 75.19 | 50.86 | 60.68 |
| PWFNet | 65.88 | 84.18 | 80.28 | 80.15 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zong, J.; Sun, Y.; Wang, R.; Xu, D.; Yang, X.; Zhao, X. PWFNet: Pyramidal Wavelet–Frequency Attention Network for Road Extraction. Remote Sens. 2025, 17, 2895. https://doi.org/10.3390/rs17162895
