1. Introduction

The lane graph is a critical component in autonomous driving and advanced driver-assistance systems (ADAS). It provides a structured representation of the road environment, modeling lanes as directed edges and representing intersections, merging points, and splitting points as nodes, effectively capturing the connectivity, directionality, and spatial relationships among lanes. Each node contains precise geospatial information, facilitating accurate vehicle localization, while edges support motion planning. Together, these elements enable some level of autonomous driving and detailed navigation planning.

The lane graph can be constructed and updated by collecting data with an ego-vehicle equipped with multiple sensors such as LiDAR, cameras, and inertial measurement units (IMUs). However, this approach has several limitations, including a restricted field of view, long data collection times, and dependence on vehicle-based infrastructure, which also increases costs. By contrast, aerial imagery can rapidly cover larger areas and eliminates the need for vehicle-based infrastructure, resulting in a more efficient and scalable solution than sensor-equipped ego-vehicles. Nevertheless, constructing an accurate lane graph from aerial imagery remains challenging due to several factors, including the large area to be covered, the complexity of crossroads, the low resolution of ground objects, changes in road texture, occlusions caused by trees, vehicle queues, or bridges, and variations in lighting conditions caused by shadows.

It is also worth mentioning that the task of lane graph extraction differs from lane line detection [1] and lane marking detection [2]. Lane graph extraction focuses on the topology of lane centerlines and how lanes are interconnected, whereas lane line detection targets the boundaries of the lanes, and lane marking detection focuses solely on identifying markings without considering the topological relationships between lanes.

Approaches for extracting lane graphs from aerial imagery can be broadly categorized into two main types: segmentation-based methods [3] and graph-based methods [4,5]. Segmentation-based methods start by predicting lane segmentation masks, which are subsequently processed using conventional segmentation-to-graph algorithms to obtain the final lane graph. In contrast, graph-based methods directly predict the lane graph without relying on segmentation-to-graph algorithms. These methods may employ an agent [4] to predict local lane graphs based on its position or use parametric graph representations such as Bézier graphs [5]. There are trade-offs between the two approaches. Segmentation-based methods offer faster and more consistent inference times as the area increases, making them more scalable. However, they are highly dependent on the quality of the lane segmentation masks to produce high-quality lane graphs and often struggle with complex crossroads. In contrast, graph-based methods may generate more precise lane-level graphs but require algorithms to aggregate local graphs, which can significantly slow performance when dealing with large areas.

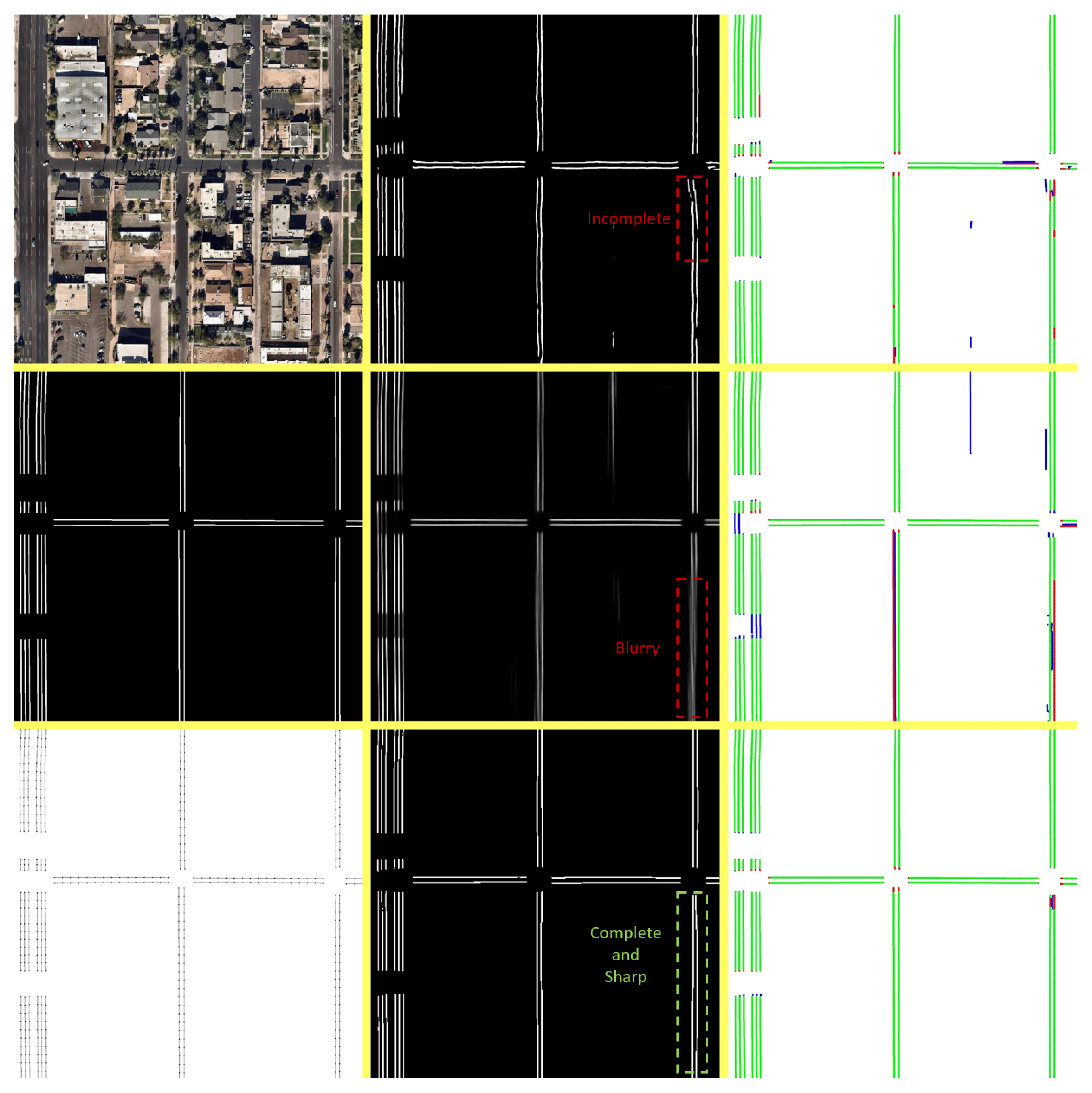

Segmentation-based methods employ CNNs to extract lane segmentation masks. However, these networks often struggle to produce sharp and continuous lane masks due to the intrinsic characteristics of aerial imagery, such as occlusions caused by trees, vehicle queues, or bridges, or changes in visual patterns resulting from variations in road texture and lighting conditions (see Figure 1). Since the subsequent segmentation-to-graph algorithm relies heavily on the quality of these masks, any inaccuracies can significantly degrade the overall quality of the lane graphs. These methods attempt to address these challenges by applying post-processing heuristics or incorporating specialized loss functions and dilated convolutional layers [3]. Nonetheless, the lane masks produced by these methods still lack continuity and sharpness. Our method addresses these issues by refining the lane segmentation masks output by a CNN, enhancing their continuity and sharpness (as shown in Figure 1), which leads to higher-quality lane graphs.

Recently, diffusion models [6,7,8] have emerged as an alternative for solving segmentation problems, demonstrating state-of-the-art results in specific domains [9,10,11,12]. Diffusion models offer several advantages over CNNs, including their flexibility to create ensembles by using different seeds at inference time and their ability to infer segmentation masks from complex visual patterns where CNNs often struggle. Because of this ability to handle complex visual patterns, we employ diffusion models to refine the lane segmentation masks. Methods [9,10,11,12] that leverage diffusion models for segmentation problems depend on ensembles of these models to improve the quality of segmentation mask predictions beyond what could be achieved with a single diffusion model. However, this approach falls short when segmenting thin structures, which is a critical challenge in the context of lane segmentation from aerial imagery. Slight lateral variations in the segmented pixels across the ensemble lead to a blurred average segmentation mask (refer to the discussion in Section 1.3 for more details). On the other hand, a standard diffusion model, i.e., one that starts inference from Gaussian noise, lacks the robustness needed to produce high-quality segmentation masks (as confirmed and discussed in Section 3.4).

To overcome these limitations, our approach avoids the ensemble strategy and employs a single diffusion model with a deterministic sampling path and a conditioned starting latent variable. Instead of initiating the sampling procedure from Gaussian noise, as is standard in diffusion models, we start with a latent variable conditioned on the segmentation masks produced by a CNN and use deterministic sampling. Since this starting point is already a reasonable approximation of the ground truth segmentation mask, the diffusion model improves the approximation, effectively refining the segmentation mask. A notable outcome of this refinement is that the lane masks become complete and sharp (see qualitative results in Figure 2 and Figure 3), significantly improving the connectivity of the lane graph, an essential and highly desirable attribute.

In summary, this paper makes the following contributions:

We introduce a novel approach for refining lane segmentation masks produced by a CNN. Our method employs a diffusion model with a deterministic sampling procedure, initialized from a latent variable conditioned on the initial CNN’s segmentation mask predictions. These refined masks are then utilized to extract the lane graph using a conventional segmentation-to-graph algorithm. Experiments conducted on a public dataset confirm that our method, which integrates a CNN and a diffusion model, outperforms each component used individually (see Table 1).

We also carry out an ablation study on the components of the diffusion model used in our method (see Table 2), as well as on the impact of varying the number of sampling steps required for lane mask refinement (see Table 3). Our results demonstrate that high-quality refinement can be achieved within only a few sampling steps.

Furthermore, we conduct extensive experiments to evaluate how different variants of our sampling strategy affect the overall lane graph metrics (refer to Table 3 and Table 4).

In the following sections, we present the related work relevant to our method, which includes (1) lane graph extraction from onboard sensors, (2) lane graph extraction from aerial imagery, and (3) the use of diffusion models for segmentation tasks.

1.1. Lane Graph Extraction from Onboard Sensors

Several previous works [13,14,15,16,17] have utilized either street-view images or 3D point clouds collected from onboard sensors such as cameras and LiDAR to extract lane graphs. Street-view images provide rich semantic and visual cues, enhancing the detection of lane centerlines and lane-level topology. Furthermore, 3D point clouds offer precise geometric and elevation data, capturing lane shapes and boundaries. Inspired by human annotators, Homayounfar et al. [13] train a hierarchical recurrent neural network to sequentially identify and trace lane boundaries, first attending to initial boundary regions and then outputting complete structured polylines. Zürn et al. [14] employ a multi-modal bird’s-eye view (BEV) representation that integrates LiDAR, RGB, vehicle segmentation masks, and a semantic map (excluding vehicles) as input to train a graph region-based convolutional neural network (Graph R-CNN) [18]. The network is trained with a regression loss and the method uses a post-processing algorithm to predict the lane graph within local BEV patches. The post-processing step eliminates false positive connections by applying probability and distance thresholds on the output lane graph. In both works, the ground truth data are obtained by projecting 3D LiDAR point clouds into a BEV representation. DAGMapper [15] uses LiDAR intensity images as input to a recurrent convolutional network with three heads that parameterize a directed acyclic graphical model (DAGM), which iteratively constructs the lane graph. Formulating the problem as a directed acyclic graph, i.e., a directed graph without cycles, is advantageous, as it mirrors the approach human annotators would take when labeling a lane graph on an aerial image. Zhang et al. [17] combine sensing data from LiDAR and cameras to train a hierarchical fully convolutional network that predicts a lane-level road network. Combining LiDAR and camera data leverages the strengths of both modalities, resulting in a more robust and reliable lane graph. Zhou et al. employ an encoder–decoder architecture to generate semantic maps from camera and LiDAR data, accumulating frames into a LiDAR-synchronized BEV projection. They also incorporate OpenStreetMap (OSM) data to help the model infer lane connections at intersections. Can et al. [19,20] employ images from a single onboard camera. In their first work [19], they use a Transformer [21] with two heads that predict lane centerlines and bounding boxes of objects. A Transformer is used instead of a CNN to model long-range dependencies and global context more effectively, which may be beneficial for extracting the lane-level graph. In their second work [20], they refine their first approach by replacing the object detection head with a minimal cycle head. This head is designed to identify the smallest cycles formed by directed curve segments between intersections.

Although extracting the lane graph using an ego-vehicle equipped with onboard sensors typically results in high-quality lane-level graphs, this approach has several drawbacks. The main disadvantages include high costs and extended data collection times, making it difficult to scale to large geographical areas. Furthermore, onboard sensors have limited fields of view and struggle with occlusions caused by other vehicles, buildings, or vegetation, leading to incomplete or inconsistent lane graphs. Additionally, maintaining and operating sensor-equipped vehicles requires logistical resources and infrastructure, further hindering scalability.

In contrast, aerial imagery offers broader coverage with faster data collection times and does not depend on vehicle-based infrastructure, making it a more scalable and cost-effective solution. Its consistent top–down perspective simplifies lane detection, provides a wider field of view that reduces occlusion issues, and enables mapping of remote or hard-to-reach areas that may be inaccessible or impractical for sensor-equipped vehicles.

1.2. Lane Graph Extraction from Aerial Imagery

These advantages position aerial imagery as a compelling alternative to onboard sensors for extracting lane-level graphs. However, aerial imagery also poses challenges, including low ground object resolution, occlusions caused by urban infrastructure and vehicles, as well as variations in road texture and lighting conditions. Another significant challenge is the scarcity of datasets for this task, though recent releases [3,4] have enabled the development of some methods [3,4,5] to address this task.

Büchner et al. [4] employ centerline regressor models with a virtual agent positioned at a specific point in a BEV patch to iteratively predict the successor sub-graph relative to the agent’s current location. They identify potential nodes within corridors likely to contain the successor sub-graph, which is then predicted using a causal variant of a message-passing network [22]. The causality prior enables the network to incorporate information about predecessor and successor features during message passing, allowing it to model directional relationships between nodes. Blayney et al. [5] represent the lane graph as a Bézier Graph, where nodes define the start and end points of cubic Bézier curves, parameterized by four control points, and edges represent the curves connecting these points. Nodes store the position of the ending points and direction vectors, while edges contain two length values. The intermediate control points are computed from the attributes of the nodes and edges. In this graph, nodes aligned in a straight line are significantly reduced. This compact representation decreases the total number of nodes, enabling the use of a Transformer [21] architecture. An encoder Transformer processes visual features extracted from the aerial image by a backbone CNN. A Transformer decoder then performs cross-attention between the encoder output and a set of node and edge queries, producing node and edge embeddings. These embeddings are subsequently passed through multilayer perceptron (MLP) heads to predict the parameters of the nodes and edges of the Bézier Graph. These methods [4,5] can be considered graph-based approaches, as they do not produce lane segmentation masks as the final output of the learning process, although they may utilize segmentation masks within their training pipelines. As mentioned earlier, graph-based methods typically yield high connectivity and completeness in lane graphs. However, they require algorithms to aggregate the local graphs predicted within each patch, making them slower than segmentation-based approaches.
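As a rough illustration of the Bézier Graph parameterization described above, the following Python sketch derives the two intermediate control points of an edge from the node positions, the node direction vectors, and the two edge lengths, and then evaluates the cubic curve. The exact formula used in [5] is not stated here, so placing each inner control point along its node's direction vector is an assumption, and all function names are hypothetical.

```python
def bezier_control_points(p_start, d_start, p_end, d_end, len_start, len_end):
    """Return the four control points of one edge (assumed parameterization)."""
    # Assumption: each inner control point lies on the tangent line of its node,
    # offset from the node by the corresponding edge length.
    p1 = (p_start[0] + len_start * d_start[0], p_start[1] + len_start * d_start[1])
    p2 = (p_end[0] - len_end * d_end[0], p_end[1] - len_end * d_end[1])
    return [p_start, p1, p2, p_end]


def cubic_bezier(ctrl, t):
    """Evaluate the cubic Bezier curve defined by ctrl at parameter t in [0, 1]."""
    # Bernstein basis weights for the four control points.
    b = ((1 - t) ** 3, 3 * (1 - t) ** 2 * t, 3 * (1 - t) * t ** 2, t ** 3)
    x = sum(w * p[0] for w, p in zip(b, ctrl))
    y = sum(w * p[1] for w, p in zip(b, ctrl))
    return (x, y)


# A straight lane segment from (0, 0) to (3, 0), heading along +x at both nodes.
ctrl = bezier_control_points((0.0, 0.0), (1.0, 0.0), (3.0, 0.0), (1.0, 0.0), 1.0, 1.0)
midpoint = cubic_bezier(ctrl, 0.5)
```

Because a whole curved segment is described by two nodes and one edge, long straight or smoothly curved lanes need far fewer nodes than a densely sampled polyline, which is the compactness the paragraph above refers to.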

In contrast to graph-based methods, segmentation-based approaches offer faster inference times and simpler solutions for merging different segmented aerial patches. Once the entire area is merged, the lane graph is extracted using a conventional segmentation-to-graph algorithm. He et al. [3] tackle the problem this way, where the final outputs of the learning process are lane segmentation masks. These masks are subsequently post-processed using a traditional segmentation-to-graph algorithm. This approach divides the task into two subtasks: lane extraction in non-intersection areas and turning lane extraction at intersections. This division simplifies the learning process for the CNNs, as the visual patterns and lanes differ significantly between intersection and non-intersection areas. For instance, lanes in non-intersection areas do not cross, while those at intersections often do, and intersection areas occupy only a small portion of the aerial image compared to non-intersection areas. Due to the complex visual patterns and intricate lane connections, intersection areas may also require additional pre- or post-processing. In the first subtask, a D-LinkNet [23], a CNN based on a U-Net [24] with dilated convolutions [25,

26], is employed to extract segmentation and direction maps of the lanes. Direction maps are segmentation maps where colors encode the driving direction of the lanes. Using direction maps alongside lane segmentation masks has been shown to improve performance in road segmentation, a task closely related to lane segmentation. The direction map is then used to predict the direction of the graph. In the second subtask, a classifier distinguishes between valid and invalid lane connections. It leverages aerial images, potential segmentation masks of the lane connections, and positional information from the terminal nodes (defined as nodes with exactly one incoming or outgoing edge). These inputs provide geometric cues and visual features that help the classifier differentiate between valid and invalid connections. The potential connection segmentation masks are generated using a network similar to the one employed in the first subtask. Lane connections are selected from a pool of ordered terminal node pairs. Candidate terminal node pairs are constructed based on a distance threshold between terminal nodes within the lane graph derived from non-intersection areas extracted in the first subtask. This approach is effective because terminal nodes from different crossroads are typically much farther apart than those from the same crossroad, reflecting the fact that most crossroads are not situated close to one another. This process is followed by predicting segmentation masks for valid connections, which may represent either curves (for turns) or straight lines, using a D-LinkNet similar to the one used previously. Once the segmentation mask is obtained, the segmentation-to-graph algorithm is applied to extract the subgraph of the lane connections. Finally, the lane graphs extracted from both subtasks are integrated by using the terminal nodes as shared junctions.
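The candidate selection step described above can be sketched as follows. This is only an illustration under stated assumptions: the lane graph is given as a list of directed edges, a lane end is taken to be a node with one incoming and no outgoing edge, a lane start is the reverse, and the helper names and the threshold value are hypothetical rather than taken from [3].

```python
import math
from collections import defaultdict


def split_terminal_nodes(edges):
    """Return (lane_ends, lane_starts) of a directed lane graph given as (u, v) edges."""
    indeg, outdeg = defaultdict(int), defaultdict(int)
    for u, v in edges:
        outdeg[u] += 1
        indeg[v] += 1
    nodes = sorted(set(indeg) | set(outdeg))
    # Assumed definition: a terminal node has exactly one incident edge.
    ends = [n for n in nodes if indeg[n] == 1 and outdeg[n] == 0]
    starts = [n for n in nodes if indeg[n] == 0 and outdeg[n] == 1]
    return ends, starts


def candidate_pairs(positions, edges, max_dist):
    """Ordered (lane end, lane start) pairs closer than max_dist."""
    ends, starts = split_terminal_nodes(edges)
    pairs = []
    for e in ends:
        for s in starts:
            if math.dist(positions[e], positions[s]) <= max_dist:
                pairs.append((e, s))
    return pairs


# Two lanes meeting near one crossroad and a third lane far away.
positions = {"a": (0, 0), "b": (100, 0), "c": (110, 5), "d": (210, 5),
             "e": (1000, 0), "f": (1100, 0)}
edges = [("a", "b"), ("c", "d"), ("e", "f")]
pairs = candidate_pairs(positions, edges, max_dist=30.0)
```

In this toy graph only the pair `("b", "c")` survives the threshold; in the pipeline described above, each surviving pair would then be passed to the classifier that separates valid from invalid connections.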

Our method builds upon this previous work but is specifically tailored to improve lane extraction in non-intersection areas (first subtask). It addresses a key limitation of segmentation-based approaches: incomplete and noisy lane masks. By producing sharp and complete lane masks, the subsequent segmentation-to-graph algorithm yields higher-quality lane graphs, especially in terms of connectivity. We leave the second subtask for future work, as it presents additional challenges, including applying diffusion models on intersection areas and integrating geo-positional information for the terminal nodes. Nevertheless, we believe our method could be extended to address these challenges with further modifications. We present several potential approaches in Section 4.

1.3. Diffusion Models for Image Segmentation

Diffusion models (DMs) are generative models that synthesize new data from Gaussian noise. The intuition behind these models is to corrupt a clean sample by progressively adding noise and then learn a stochastic process that reverses this corruption. Thanks to the stochasticity of the learned reverse process, new data can be generated. Diffusion models comprise three principal components: the forward process, the denoising process, and the sampling procedure (also referred to as inference) [27]. During the forward process, noise is progressively added to the data over a fixed time horizon. This process can be formalized as a Markov chain, i.e., each state depends only on the previous state, with Gaussian transition distributions following a variance schedule that controls the balance between signal and noise. The denoising process progressively removes the noise introduced during the forward process. While it can also be modeled as a Markov chain, computing the true transition distributions is intractable, so these distributions are approximated using parameterized models. To learn the parameters, a neural network is trained by optimizing a variational lower-bound loss. Once trained, the neural network is used in the sampling procedure to generate new data from noise sampled from a standard normal distribution. Denoising diffusion probabilistic models (DDPMs) [7] provide a simple parameterization for learning the denoising process, resulting in the generation of high-quality images, which subsequent works [28,29] further improve. Denoising diffusion implicit models (DDIMs) [8] accelerate the sampling procedure by generalizing DDPMs to non-Markovian chains, substantially reducing the number of sampling steps relative to the forward steps.

Conditional diffusion models are diffusion models that condition data generation on additional inputs such as class labels [28,29] or embeddings [30] derived from external inputs, including text, semantic maps, or images. These conditional inputs guide the model to produce more accurate and relevant outputs. Conditional diffusion models are employed in image segmentation tasks for two reasons: their flexibility in creating ensembles through different random seeds during inference, which makes the segmentation output more robust, and their ability to accurately segment complex visual patterns, where CNNs often struggle. However, these models also have some drawbacks, including higher inference times and challenges in achieving deterministic segmentation outputs. Despite these limitations, conditional diffusion models have demonstrated state-of-the-art performance for image segmentation in specific domains [9,10,11,12]. SegDiff [9] utilizes a conditional diffusion model for image segmentation across diverse domains, where segmentation masks are diffused, conditioned on the corresponding images. This method generates an ensemble of segmentation masks through multiple sampling processes, which are then averaged to produce the final segmentation mask. Building on SegDiff, MedSegDiff [11] enhances the original architecture of SegDiff to segment medical imagery by introducing a feature frequency parser (FF-Parser), an attention module that operates in the Fourier space of the image features. This module can be viewed as a learnable counterpart of the frequency filters widely used in digital image processing. MedSegDiff-V2 [12] further refines this approach by employing a Transformer architecture within the FF-Parser module, achieving improved segmentation performance compared to its predecessor.

Although diffusion models have been successfully employed for image segmentation, ensembles of diffusion models struggle with thin structures, such as lane segmentation masks. This limitation arises from slight lateral variations in the segmented pixels across the ensemble, resulting in a blurred average segmentation mask (see the second row of Figure 2 and Figure 3), which degrades the quality of the final lane graph. By contrast, this issue is less pronounced for larger objects, where minor discrepancies along the contours have minimal impact on the overall segmentation mask. Our method addresses this challenge by using conditional diffusion models for segmenting the lane masks without relying on an ensemble. Instead, we initiate the sampling procedure from a latent variable conditioned on the output of a CNN and follow a deterministic sampling process (see Section 2.2).
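The blurring effect can be reproduced with a small toy computation. The sketch below averages an ensemble of one-dimensional mask cross-sections that differ only by a lateral shift of up to two pixels and measures which fraction of the averaged support is no longer a confident value of 1. The widths, shifts, and function names are illustrative assumptions and are not taken from the experiments.

```python
def profile(width, center, length):
    """Binary cross-section of a mask: `width` pixels set to 1 around `center`."""
    half = width // 2
    return [1.0 if abs(i - center) <= half else 0.0 for i in range(length)]


def ensemble_mean(width, center, length, shifts):
    """Average of ensemble members that differ only by a lateral shift."""
    members = [profile(width, center + s, length) for s in shifts]
    return [sum(col) / len(members) for col in zip(*members)]


def uncertain_fraction(mean_profile):
    """Share of the averaged support whose value is strictly between 0 and 1."""
    support = [v for v in mean_profile if v > 0.0]
    blurred = [v for v in support if v < 1.0]
    return len(blurred) / len(support)


shifts = [-2, -1, 0, 1, 2]
thin_lane = ensemble_mean(width=3, center=10, length=21, shifts=shifts)
wide_object = ensemble_mean(width=41, center=40, length=81, shifts=shifts)

thin_blur = uncertain_fraction(thin_lane)    # every pixel of the lane is blurred
wide_blur = uncertain_fraction(wide_object)  # only the contour is blurred
```

With these toy settings no pixel of the three-pixel lane is agreed upon by all five members, whereas the wide object keeps a confident interior and is only blurred along its contour.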

2. Materials and Methods

Our method is divided into three distinct stages: (1) lane segmentation, (2) lane segmentation refinement, and (3) lane graph extraction (see Figure 4). Each stage addresses a specific challenge: the First Stage utilizes a CNN to perform an initial segmentation of the lanes; the Second Stage employs a conditional diffusion model and a novel sampling strategy to refine the lane segmentation masks; and the Third Stage converts these refined masks into a graph using a conventional segmentation-to-graph algorithm. It is important to note that only the first two stages involve training and inference phases, whereas the Third Stage requires no training, relying instead on a traditional rule-based algorithm.

Our primary contribution lies in the lane segmentation refinement stage, while the other two stages are taken from LaneExtraction [3]. As a result of this refinement process, the lane masks appear significantly sharper, i.e., lane masks exhibit clearly delineated boundaries, and lane segments become continuous (see Figure 2 and Figure 3 for visual examples). This improvement in the lane segmentation masks leads to a higher-quality lane graph after the extraction process in the Third Stage (see quantitative evaluations in Table 1). In addition to enhancing the quality of the resulting lane graph, our approach does not require sequential training, since the models for the first two stages can be trained independently. This independence allows for the flexible use of different architectures and training procedures for the segmentation and diffusion models, enabling parallel training. This parallelization improves training efficiency but requires more computational resources compared to methods that only require one model (refer to Table 5). However, at inference time, the sequential order of the stages must be maintained (see Figure 4).

For the lane segmentation refinement, we employ a conditional diffusion model due to its effectiveness in addressing complex visual challenges that CNNs typically struggle with. The diffusion model refines the lane masks by reducing false positives and false negatives, thereby enhancing both their completeness and sharpness. We train the diffusion model conditioned on the RGB aerial images, following the framework of Improved DDPM [29]. For further technical details, we refer the reader to Section 2.2.

For sampling, we employ DDIM [8], which improves efficiency and enables a deterministic sampling path. Unlike standard diffusion models that start DDIM sampling from Gaussian noise, our approach conditions the initial latent variable on the output of the CNN (referred to as the unrefined segmentation mask), which is then refined through a few DDIM sampling steps (see Figure 5 for a visualization of the process). The unrefined segmentation mask serves as a good approximation of the ground truth segmentation mask, thereby reducing the number of plausible sampling trajectories for the diffusion model. In contrast, starting from Gaussian noise makes the process significantly more difficult due to the vastly larger space of potential sampling trajectories. This observation is supported by experimental results in Table 4, where starting from pure Gaussian noise (last row) leads to worse performance compared to initializing from a latent variable conditioned on the unrefined segmentation mask (other rows of Table 4).
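To make the deterministic sampling path concrete, the sketch below runs the standard DDIM update with no injected noise, starting from an intermediate time step instead of pure Gaussian noise. It is a toy under stated assumptions: masks are flattened lists scaled to [-1, 1], the linear beta schedule is a placeholder, and the oracle noise predictor (which knows the clean mask) merely stands in for a trained, image-conditioned U-Net. All names are hypothetical.

```python
import math


def make_alpha_bar(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta_t) for a placeholder linear schedule."""
    alpha_bar, prod = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / max(num_steps - 1, 1)
        prod *= 1.0 - beta
        alpha_bar.append(prod)
    return alpha_bar


def ddim_refine(x_start, t_start, timesteps, alpha_bar, eps_model):
    """Deterministic DDIM (eta = 0) from step t_start through `timesteps` to a clean estimate."""
    x, t = list(x_start), t_start
    for t_prev in list(timesteps) + [None]:  # None marks the final, noise-free state
        a_t = alpha_bar[t]
        a_prev = 1.0 if t_prev is None else alpha_bar[t_prev]
        eps = eps_model(x, t)
        # Predict the clean mask, then move it to the noise level of the next step.
        x0_pred = [(xi - math.sqrt(1 - a_t) * ei) / math.sqrt(a_t) for xi, ei in zip(x, eps)]
        x = [math.sqrt(a_prev) * x0i + math.sqrt(1 - a_prev) * ei for x0i, ei in zip(x0_pred, eps)]
        t = t_prev
    return x


def make_oracle(x0_true, alpha_bar):
    """Stand-in for the trained network: returns the noise implied by the true mask."""
    def eps_model(x, t):
        a = alpha_bar[t]
        return [(xi - math.sqrt(a) * x0i) / math.sqrt(1 - a) for xi, x0i in zip(x, x0_true)]
    return eps_model


alpha_bar = make_alpha_bar(100)
gt_mask = [-1.0, 1.0, 1.0, -1.0]    # toy ground truth with a continuous lane
cnn_mask = [-1.0, 1.0, -1.0, -1.0]  # unrefined mask with a gap
noise = [0.3, -0.2, 0.5, -0.1]      # fixed noise, for reproducibility
a60 = alpha_bar[60]
x_start = [math.sqrt(a60) * m + math.sqrt(1 - a60) * n for m, n in zip(cnn_mask, noise)]

eps_model = make_oracle(gt_mask, alpha_bar)
refined = ddim_refine(x_start, 60, [40, 20, 0], alpha_bar, eps_model)
refined_again = ddim_refine(x_start, 60, [40, 20, 0], alpha_bar, eps_model)
```

Since no noise is drawn inside the loop, repeated runs from the same starting latent return the same result, which is the property that removes the need for an ensemble.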

We explore the conditioning of the starting latent variable with various strategies, including directly starting from the unrefined segmentation mask, adding Gaussian noise to it, and applying noise through several forward diffusion steps (as during training). The rationale behind adding Gaussian noise is to make the latent variable more closely resemble a sample from a Gaussian distribution, which is the expected input for DDIM sampling. Applying noise via forward steps better aligns with the noisy samples used during training for learning the denoising process, but we also include a comparison with the simpler approach of adding Gaussian noise directly. We show experimentally that adding noise to the unrefined segmentation mask, either directly or through the forward steps, enhances the performance metrics and stability of the model compared to directly using the unrefined mask in DDIM sampling (see Table 3 and Table 4). We present our analysis of the results in Section 3.4.
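The three ways of building the starting latent can be sketched as below. This is a minimal illustration, assuming the unrefined mask is a flattened list of values, that the direct variant adds zero-mean Gaussian noise with a chosen standard deviation `sigma`, and that the forward-step variant uses the closed-form DDPM forward process with cumulative signal coefficient `alpha_bar_t`. The function names and parameter values are hypothetical.

```python
import math
import random


def start_from_mask(mask):
    """Strategy 1: use the unrefined mask itself as the starting latent."""
    return list(mask)


def start_with_added_noise(mask, sigma, rng):
    """Strategy 2: add Gaussian noise directly to the unrefined mask."""
    return [m + sigma * rng.gauss(0.0, 1.0) for m in mask]


def start_with_forward_steps(mask, alpha_bar_t, rng):
    """Strategy 3: diffuse the mask forward to step t, as done during training."""
    signal, noise = math.sqrt(alpha_bar_t), math.sqrt(1.0 - alpha_bar_t)
    return [signal * m + noise * rng.gauss(0.0, 1.0) for m in mask]


unrefined = [-1.0, 1.0, -1.0, -1.0]
latent_plain = start_from_mask(unrefined)
latent_noisy = start_with_added_noise(unrefined, sigma=0.5, rng=random.Random(0))
latent_forward = start_with_forward_steps(unrefined, alpha_bar_t=0.6, rng=random.Random(0))
```

The forward-step variant attenuates the mask while adding noise, so the resulting latent has the same statistics as the noisy samples seen at step t during training, whereas the direct variant leaves the mask amplitude unchanged.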

In the following sections, we provide detailed technical explanations of the three stages of our method, as well as relevant implementation details.

2.1. First Stage: Lane Segmentation

For the First Stage, we use the same architecture as LaneExtraction [3], which employs a D-LinkNet [23] with two heads: one outputs the segmentation mask while the other predicts the lane direction map. D-LinkNet includes dilated convolutional layers, which are beneficial for lane segmentation from aerial imagery because they expand the receptive field without increasing the number of parameters or reducing spatial resolution. This allows the network to capture broader contextual information essential for identifying continuous structures like lanes while preserving fine details such as lane edges and markings. Joint training of lane segmentation masks with direction maps has proven effective in improving the segmentation performance of both road- [32] and lane-level [3] networks. We also follow a similar preprocessing procedure, which involves extracting random patches of fixed size

(

) from the training tiles (see

Section 3.1 for more details on the dataset), where the training tiles have a size of

. This patch size represents a sweet spot, providing sufficient context while ensuring a diverse variety of patches. After selecting a random patch, we apply augmentation techniques, including random rotations and random adjustments to color and brightness, to improve the robustness of the model to lighting and orientation variations, which enhances generalization. We let

be the patch training set of

n triplets induced by the preprocessing procedure, where

is an aerial training patch,

is its ground truth (GT) segmentation mask, and

is its ground truth direction map. The segmentation loss

is a combination of the cross-entropy loss and the dice loss for the segmentation head and the

loss for the direction map head is formally defined as follows:

where

and

are the predictions for the segmentation and direction map, respectively, output by the CNN given the aerial patch

. The CNN performs multitask learning, simultaneously predicting lane direction maps and segmentation masks. Both tasks are considered equally important. The loss function in Equation (

1) consists of three components: the

loss encourages the model to accurately estimate direction maps, while the cross-entropy (CE) loss and Dice loss guide the model to obtain correct segmentation masks. This dual segmentation loss formulation leverages the strengths of both approaches: CE promotes pixel-wise classification accuracy while Dice loss mitigates class imbalance, which is particularly significant in lane segmentation masks. The chosen weights,

for the

loss and

for both CE and Dice losses, which together sum to

for the segmentation branch, ensure that direction and segmentation contribute equally to the overall loss. This weighting strategy promotes balanced optimization between the two tasks, without favoring one over the other.
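A compact way to see how the three terms combine is the sketch below, written for flattened per-pixel lists. It is an assumption-laden illustration: the cross-entropy is taken to be binary, the direction term is assumed to be a mean squared error, and the weights `w_dir` and `w_seg` are left as arguments whose example values are placeholders rather than the values used in the paper.

```python
import math


def bce(pred, target, eps=1e-7):
    """Mean binary cross-entropy between predicted probabilities and 0/1 targets."""
    total = 0.0
    for p, y in zip(pred, target):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(pred)


def dice_loss(pred, target, smooth=1.0):
    """1 - Dice coefficient; insensitive to the large number of background pixels."""
    inter = sum(p * y for p, y in zip(pred, target))
    return 1.0 - (2.0 * inter + smooth) / (sum(pred) + sum(target) + smooth)


def l2_loss(pred, target):
    """Mean squared error, used here for the direction map (assumed form)."""
    return sum((p - y) ** 2 for p, y in zip(pred, target)) / len(pred)


def multitask_loss(seg_pred, seg_gt, dir_pred, dir_gt, w_dir, w_seg):
    """Weighted sum of the direction term and the two segmentation terms."""
    seg = bce(seg_pred, seg_gt) + dice_loss(seg_pred, seg_gt)
    return w_dir * l2_loss(dir_pred, dir_gt) + w_seg * seg


seg_gt, dir_gt = [1.0, 0.0, 1.0, 0.0], [0.5, 0.0, -0.5, 0.0]
good = multitask_loss(seg_gt, seg_gt, dir_gt, dir_gt, w_dir=1.0, w_seg=0.5)
bad = multitask_loss([0.5] * 4, seg_gt, [0.0] * 4, dir_gt, w_dir=1.0, w_seg=0.5)
```

Applying the same weight to the CE and Dice terms and a single weight to the direction term keeps the two heads on a comparable scale, which matches the balancing argument made above.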

The inference is performed using a sliding window, with the same size as the training patches (

), applied to the full test image tiles of size

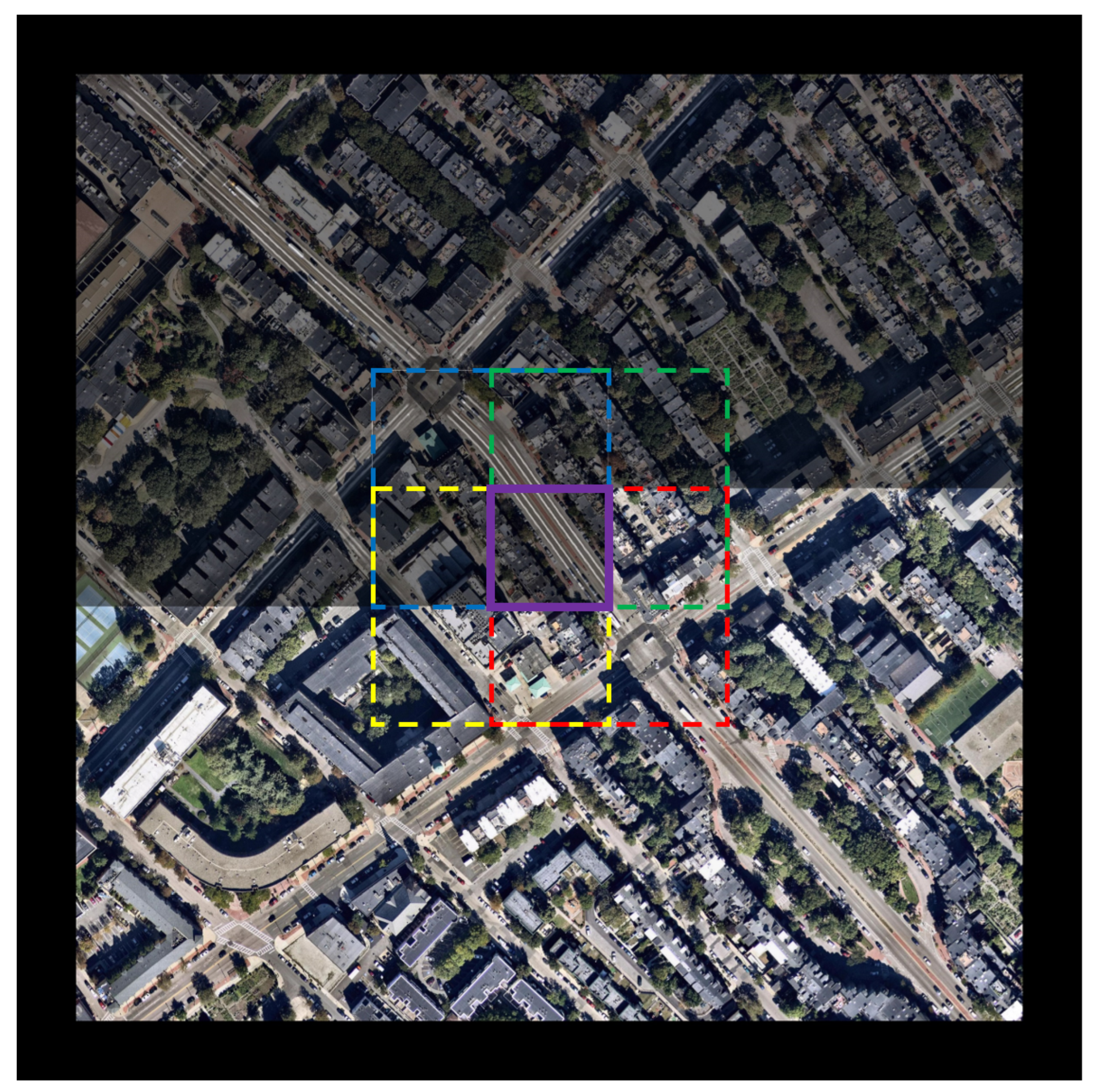

with both horizontal and vertical strides of 512. For each sliding window, a prediction is made using the trained network. Once the entire tile is covered, predictions in overlapping areas (see dashed squares in different colors in

Figure 6) are averaged to produce the final output. The average is taken over four overlapping regions, which enhances robustness. As we only need to apply the sliding window

times to cover the entire tile, efficiency is also guaranteed.
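The sliding-window inference with averaged overlaps can be sketched as follows. This is a toy version on nested lists, assuming a square window, a stride of half the window size, and a tile whose size minus the window size is a multiple of the stride so that every pixel is covered. The `predict` callable stands in for the trained network, and the small sizes are illustrative only.

```python
def sliding_window_average(tile, window, stride, predict):
    """Run `predict` on every window of `tile` and average overlapping outputs."""
    h, w = len(tile), len(tile[0])
    total = [[0.0] * w for _ in range(h)]
    count = [[0] * w for _ in range(h)]
    for top in range(0, h - window + 1, stride):
        for left in range(0, w - window + 1, stride):
            patch = [row[left:left + window] for row in tile[top:top + window]]
            pred = predict(patch)
            for i in range(window):
                for j in range(window):
                    total[top + i][left + j] += pred[i][j]
                    count[top + i][left + j] += 1
    averaged = [[total[i][j] / count[i][j] for j in range(w)] for i in range(h)]
    return averaged, count


# Toy 8x8 tile, 4x4 window, stride 2; the identity stands in for the CNN.
tile = [[float(i * 8 + j) for j in range(8)] for i in range(8)]
averaged, count = sliding_window_average(tile, window=4, stride=2, predict=lambda p: p)
num_windows = ((8 - 4) // 2 + 1) ** 2
```

With a stride of half the window size, interior pixels are covered by four windows while border pixels are covered by fewer, so only the interior benefits from the full four-way averaging.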

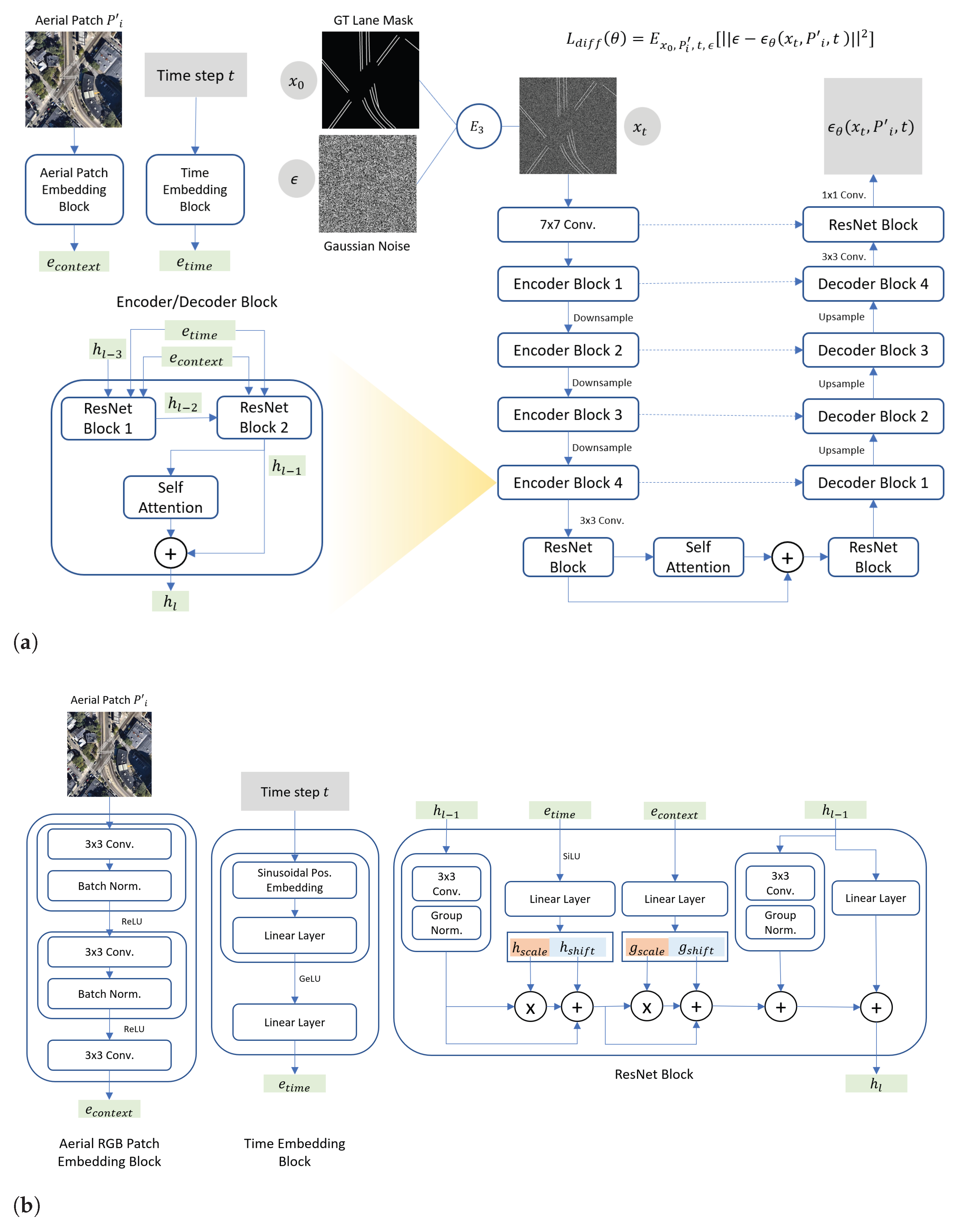

2.2. Second Stage: Lane Segmentation Refinement

Initially, in the Second Stage, we preprocess the training dataset similarly to the previous stage, applying cropping, random rotations, and random adjustments to color and brightness, followed by rescaling. As in the previous stage, these data augmentation techniques also enhance the generalization capabilities of the model. We let

represent the preprocessed dataset, where

is the ground truth (GT) segmentation mask and

is an aerial patch, initially sized

pixels (as in the First Stage), subsequently rescaled to

to fit GPU memory constraints. Then, we encode the rescaled aerial patches

using a simple three-layer CNN and generate timestep embeddings through a block composed of sinusoidal positional embeddings [21], widely used in diffusion models, combined with two linear layers (see embedding blocks in Figure 7). We then utilize the rescaled GT segmentation masks to conduct the forward diffusion process of a DDPM [7], producing noisy segmentation masks guided by a sigmoid variance schedule [33], used for training stability. Finally, we train a U-Net [24] to learn the denoising process, following the parametrization introduced by DDPM [7]. The U-Net architecture is widely utilized in diffusion models for image processing due to its skip connections between the encoder and decoder, which effectively capture global context and restore fine details from noisy inputs. The aerial RGB patches and timestep embeddings are injected into the U-Net via ResNet blocks [31]. The overall architecture for learning the denoising process of the diffusion model closely follows the design principles of Improved DDPM [29]. The U-Net is trained with the following loss:

where

,

is the data distribution of the GT segmentation masks, and

is the corresponding rescaled aerial patch of the sample

, with

,

is the time-step sampled from the uniform distribution

U,

T is the length of the forward diffusion process,

and

is computed from

by means of the following equation (as in DDPM):

where

, with

and

s are taken from the sigmoid variance schedule [

33].
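The forward noising step can be sketched in NumPy using the standard DDPM closed form, in which the noisy mask at step t is a weighted mix of the clean mask and Gaussian noise, with weights given by the cumulative product of (1 - beta). The variable names are assumptions, and the schedule is passed in by the caller; the usage lines use a placeholder linear schedule and a hypothetical horizon purely for illustration, not the sigmoid schedule or the settings of this work.

```python
import numpy as np

def alpha_bars(betas):
    """Cumulative products of (1 - beta_s) for s = 1..t."""
    return np.cumprod(1.0 - np.asarray(betas, dtype=np.float64))

def q_sample(x0, t, abar, rng):
    """Draw x_t given x_0 in closed form; t is 1-indexed.

    x_t = sqrt(abar_t) * x_0 + sqrt(1 - abar_t) * eps, with eps ~ N(0, I).
    Returns both x_t and the noise eps that was used.
    """
    eps = rng.standard_normal(x0.shape)
    a = abar[t - 1]
    return np.sqrt(a) * x0 + np.sqrt(1.0 - a) * eps, eps

# Placeholder linear schedule and hypothetical horizon (illustration only).
T_demo = 1000
abar_demo = alpha_bars(np.linspace(1e-4, 0.02, T_demo))
rng_demo = np.random.default_rng(0)
mask_demo = rng_demo.random((4, 4))
noisy_demo, eps_demo = q_sample(mask_demo, t=500, abar=abar_demo, rng=rng_demo)
```

Because the step is available in closed form, a training iteration can sample any timestep directly without simulating the intermediate steps.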

Once the diffusion model is trained, we follow the DDIM sampling procedure introduced by Song et al. [

8], where samples can be generated through a deterministic process from the initial point

to the final point

, i.e., no noise is added during intermediate steps. Because the non-Markovian formulation of DDIM allows timesteps to be skipped, sampling is significantly faster than standard DDPM sampling. Although this typically results in lower sample quality, our case is less affected because we do not start from Gaussian noise. Additionally, in DDIM, the number of forward steps used in training is independent of the number of sampling steps, which allows sampling over only a selected subset of timesteps during inference. We choose a subset

, where

is an increasing subsequence of

of length

S, such that the difference between

and

is constant, with

. This constant step size makes the sampling process more stable by avoiding large jumps between steps while still maintaining efficiency. The resulting sampling trajectory is

(the reverse of

), where

is the step size,

S is the total number of sampling steps, and

. Each pair

and

is connected through the following equation, which corresponds to a special case of Equation (12) in DDIM [

8], with

:

where

is the output of U-Net at time-step

t and the remaining terms are defined above. In DDIM,

controls the amount of noise added during sampling, and when it is set to 0, the process is no longer random but deterministic.
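A minimal sketch of deterministic DDIM sampling over an evenly spaced sub-sequence is given below. It is written in terms of a predicted noise `eps_pred`, which is an assumption about the network output (a model trained with another parametrization would first be converted to a noise estimate). The helper names, the rule used to build the sub-sequence, and the assumption that S divides T are all illustrative choices.

```python
import numpy as np

def sampling_timesteps(T, S):
    """Increasing, evenly spaced subsequence of 1..T that ends at T.

    Assumes S divides T; the constant gap is T // S.
    """
    step = T // S
    return list(range(step, T + 1, step))

def ddim_step(x_t, eps_pred, abar_t, abar_prev):
    """One deterministic DDIM update (no noise added)."""
    x0_pred = (x_t - np.sqrt(1.0 - abar_t) * eps_pred) / np.sqrt(abar_t)
    return np.sqrt(abar_prev) * x0_pred + np.sqrt(1.0 - abar_prev) * eps_pred

def ddim_sample(x, eps_fn, abar, taus):
    """Walk the trajectory from the largest timestep in taus down to 0.

    eps_fn(x, t) returns the predicted noise; abar is indexed so that
    abar[t - 1] belongs to timestep t, and timestep 0 has abar = 1.
    """
    for i in range(len(taus) - 1, -1, -1):
        t = taus[i]
        abar_prev = abar[taus[i - 1] - 1] if i > 0 else 1.0
        x = ddim_step(x, eps_fn(x, t), abar[t - 1], abar_prev)
    return x
```

With a perfect noise estimate, every step stays on the line between the clean sample and the noise, so the trajectory ends exactly at the clean sample regardless of the number of sampling steps. In practice the network estimate is imperfect, which is where the choice of S matters.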

To cover each test tile in full, we adopt the same sliding window approach used in the First Stage, employing a fixed patch size of

with identical vertical and horizontal strides of 512 to refine each sliding window. However, at this stage, the diffusion model receives two inputs: the aerial patch and its corresponding unrefined segmentation mask, both resized to

to match the input size of the model. The refined mask output is then resized back to the original patch dimensions of

. Once the entire tile is processed, we compute the average of the overlapping regions to obtain the final refined segmentation mask (see

Figure 6). As in the previous stage, averaging is used to enhance robustness, since overlapping patches may include different contextual information.

2.3. Third Stage: Segmentation-to-Graph Algorithm

In the Third Stage, we follow the approach used in LaneExtraction [

3]. Specifically, we apply a threshold

to the refined segmentation masks (interpreted as probability maps) to obtain binary masks. These masks are then processed using a morphological thinning algorithm [

34] to produce a skeleton structure, which is subsequently converted into a graph. Morphological thinning simplifies the mask to a skeleton, preserving the essential structural features of the lanes while eliminating redundant pixels. A post-processing step prunes the graph by removing small connected components and spurs (defined as edges extending from a node without connecting back to the main graph structure). Finally, the Douglas–Peucker algorithm [

35] is employed to simplify the graph, reducing the number of nodes for improved clarity and computational efficiency while preserving its overall shape.
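Two of the steps above, thresholding and Douglas–Peucker simplification, can be sketched in plain NumPy. This is an illustrative version only: the threshold and tolerance are caller-supplied, the thinning and graph-conversion steps are omitted, and the simplification is applied to a single polyline (for example, the pixel chain of one graph edge) rather than to a full graph.

```python
import numpy as np

def binarize(prob_map, threshold):
    """Turn a probability map into a boolean mask."""
    return np.asarray(prob_map) > threshold

def douglas_peucker(points, tol):
    """Simplify a 2-D polyline, keeping points farther than tol from the chord.

    Distances are measured perpendicular to the line through the first and
    last point; the endpoints are always kept.
    """
    pts = np.asarray(points, dtype=float)
    if len(pts) < 3:
        return pts
    start, end = pts[0], pts[-1]
    chord = end - start
    norm = np.hypot(chord[0], chord[1])
    rel = pts[1:-1] - start
    if norm == 0.0:
        # Degenerate chord (closed loop): fall back to distance from start.
        dists = np.hypot(rel[:, 0], rel[:, 1])
    else:
        dists = np.abs(chord[0] * rel[:, 1] - chord[1] * rel[:, 0]) / norm
    k = int(np.argmax(dists)) + 1  # index of the farthest interior point
    if dists[k - 1] <= tol:
        return np.array([start, end])
    left = douglas_peucker(pts[:k + 1], tol)
    right = douglas_peucker(pts[k:], tol)
    return np.vstack([left[:-1], right])  # drop the duplicated split point
```

Points that deviate from the chord by less than the tolerance are dropped, while sharp deviations are retained, which is how the node count falls without changing the overall shape.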

2.4. Implementation Details

The architecture for the D-LinkNet [

23] in the First Stage was implemented in TensorFlow 2 [

36]. We used the same network hyperparameters as LaneExtraction [

3]. To be more precise, we trained the model for 500 epochs, starting with a learning rate of

and reducing it by a factor of 10 at epochs 350 and 450, using the AdamW [

37] optimizer. The architecture of the D-LinkNet was adopted from prior work (refer to Figure 3 in LaneExtraction [

3]). Data augmentation techniques, including random cropping, rotations, and random modifications to color and brightness, were employed. We used patch sizes of

throughout our pipeline. The batch size was set to 8.
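The step decay described above can be written as a small function. This is a sketch in which the initial learning rate is supplied by the caller and epochs are assumed to be counted from 0, with each drop taking effect at the start of the listed epoch; the function name is hypothetical.

```python
def learning_rate(epoch, base_lr, milestones=(350, 450), factor=10.0):
    """Divide base_lr by `factor` once for every milestone already reached."""
    drops = sum(1 for m in milestones if epoch >= m)
    return base_lr / (factor ** drops)
```

For example, with a base rate of 1.0 the schedule stays at 1.0 through epoch 349, drops by one factor of 10 at epoch 350, and by another at epoch 450.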

The architecture for the diffusion model in the Second Stage was implemented in PyTorch 2 [

38]. We used the sigmoid variance schedule [

33] with hyperparameters set to

,

, and

. The length of the forward process was set to

. The model was trained for

steps, utilizing a learning rate of

with the Adam optimizer [

39] and applying an exponential moving average (EMA) with a decay factor of 0.995. The dimensions for both time and aerial patch embeddings were set to 256. Segmentation masks used one channel, while aerial RGB patches used three channels. The batch size was set to 8. Data augmentation strategies included random cropping, horizontal and vertical flipping, integer rotations within

degrees, and color jitter for the aerial RGB patches, which were sized to

. As outlined in

Section 2.2, the patches were resized to

to fit into the GPU memory. Our implementation for the diffusion model is based on the following git repository:

https://github.com/lucidrains/denoising-diffusion-pytorch (accessed on 30 July 2025). This implementation uses the v-parametrization (as explained in Appendix D in [

40]) to train the diffusion model; we left this setting unchanged.
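The EMA of the model weights mentioned above follows the usual update rule, sketched here on a plain dictionary of scalar parameters. This is an illustration of the rule with the stated decay of 0.995, not the code of the referenced repository, which may add details such as warm-up or update intervals.

```python
def ema_update(ema_params, params, decay=0.995):
    """Blend the running average with the current parameters."""
    return {k: decay * ema_params[k] + (1.0 - decay) * params[k] for k in params}

# Toy usage: the average moves 0.5% of the way toward the new value per update.
shadow = {"w": 1.0}
shadow = ema_update(shadow, {"w": 0.0})
```

With a decay of 0.995, each update retains 99.5% of the running average, so the averaged weights change slowly and smooth out step-to-step fluctuations of the trained weights.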

The training and inference of both models were carried out on a single NVIDIA GeForce RTX 3090 GPU using CUDA Version 12.2.

4. Discussion

Our experiments demonstrate that integrating a CNN with a diffusion model, where the CNN’s lane segmentation prediction conditions the initial latent variable in the sampling procedure of a conditional diffusion model, yields complete and sharp lane masks, surpassing the capabilities of either model in isolation (see

Figure 2 and

Figure 3). This enhancement leads to higher-quality lane graphs extracted from the lane masks, with a notable improvement in connectivity, as reflected by the TOPO

score (refer to

Table 1). Furthermore, by analyzing the impact of varying noise levels applied to the initial unrefined segmentation mask during DDIM sampling, we found that while introducing Gaussian noise enhances the stability and robustness of the method, it does not lead to significant improvements in quantitative metrics.

The lane graph extracted from the refined lane masks output by the diffusion model can be viewed as a denoised version of the lane graph produced by the CNN. However, two limitations are apparent in the results: (1) our method struggles to recover large false negative segments (e.g., the prominent red segment at the bottom-right bifurcation in

Figure 2), and (2) it sometimes generates node segments with significant positional shifts, causing mismatches (visible in

Figure 2 as overlapping blue–red segments near the same bifurcation). Future research could address these two key challenges: (1) restoring complete segments when the CNN provides no cues for the diffusion model and (2) reducing positional shifts to prevent mismatches in the final lane graph. Potential solutions to Challenge (1) include designing specialized loss functions for the CNN that focus on large occluded regions, as well as increasing the number of training samples that represent such scenarios. Additionally, incorporating contextual cues from nearby lanes could help the diffusion model infer missing segments by leveraging the structure of the surrounding road network. Introducing auxiliary supervision—such as occlusion masks or semantic segmentation maps (e.g., of trees or bridges)—could further assist the CNN in handling these areas. Pretraining or multitask learning on related tasks, such as road topology estimation, could also enhance the CNN’s ability to generalize in the presence of large, continuous occlusions. Finally, applying a graph completion step during post-processing may help recover missing connections by exploiting structural patterns in the predicted lane graph. To address Challenge (2), one potential approach is to widen the ground truth lane segmentation masks used by the CNN or to incorporate additional geometric information—such as distances or other geospatial properties between lanes—as input to both models. An alternative strategy could involve introducing a third model that refines the predicted lane graph using geometric cues, such as lane curvature, or applying geometric losses to regress the nodes toward their correct positions.

While our method currently focuses on undirected lane graphs in non-intersection areas, we believe that extending it to directed lane graphs across all road areas is a promising research direction as well. To estimate the direction of lanes, we can utilize the direction map predicted by the CNN (First Stage) and determine the most likely direction using a heuristic based on edge orientations within a lane segment (similar to Equation (

2) in [

3]). To extend our method to intersection areas, we consider several possible strategies. We first recall that terminal nodes are defined as those with exactly one incoming or outgoing edge within lanes in non-intersection areas. We then define intersection paths as sets of connected nodes that link two lanes from non-intersection areas. The following list outlines three such approaches:

Iterative Key Node Prediction: One approach is to use a model that predicts key nodes within intersection areas, such as nodes derived from Bézier curves connecting terminal nodes. An iterative algorithm can then use visual context and the current node position to predict the next node, starting from a terminal node and stopping when reaching another terminal node.

Joint Segmentation and Diffusion Model: Another alternative is to predict segmentation masks of intersection paths and merge them with the lane segmentation masks from non-intersection regions. Then, we could apply a conditional DDIM that takes both segmentation masks into account, allowing the model to connect all lane segments in a unified manner.

Terminal Node Pairing: A third approach involves training a model to identify pairs of terminal nodes that should be connected within an intersection. Intersection areas could be detected using additional segmentation masks. Once a pair is identified, another model could predict the intermediate nodes and edges that connect the two terminal nodes. This approach is similar to the one proposed in LaneExtraction [

3].
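All three approaches start from the terminal nodes of the non-intersection lane graph. A minimal sketch of how they could be collected is shown below, assuming the graph is given as a list of node-pair edges and reading "exactly one incoming or outgoing edge" as a total degree of one; the function name and input format are hypothetical.

```python
from collections import defaultdict

def terminal_nodes(edges):
    """Return the nodes touched by exactly one edge, in sorted order."""
    degree = defaultdict(int)
    for u, v in edges:
        degree[u] += 1
        degree[v] += 1
    return sorted(n for n, d in degree.items() if d == 1)

# Two disjoint lane segments: 0-1-2 and 3-4.
lane_edges = [(0, 1), (1, 2), (3, 4)]
endpoints = terminal_nodes(lane_edges)
```

The resulting endpoints are the candidates that an intersection model would then try to connect, whether by iterative node prediction, mask-based completion, or explicit pairing.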

It is also worth noting that our method can be extended to other domains, such as biomedical imaging for vessel segmentation, contour line extraction, or any application requiring sharp and complete segmentation of thin structures. Potential extensions could include integration with stroke-based rendering techniques [

42] or value-function-guided segmentation [

43].