Context-Aware Feature Adaptation for Mitigating Negative Transfer in 3D LiDAR Semantic Segmentation

Abstract

1. Introduction

- A novel feature disentanglement technique that separates object-specific from context-specific information in 3D point cloud data, together with a cross-attention mechanism that refines source context features using target-domain information.

- A modular feature refinement framework that can be integrated into various unsupervised domain adaptation (UDA) techniques to enhance their performance by addressing the negative transfer that arises from context shift.

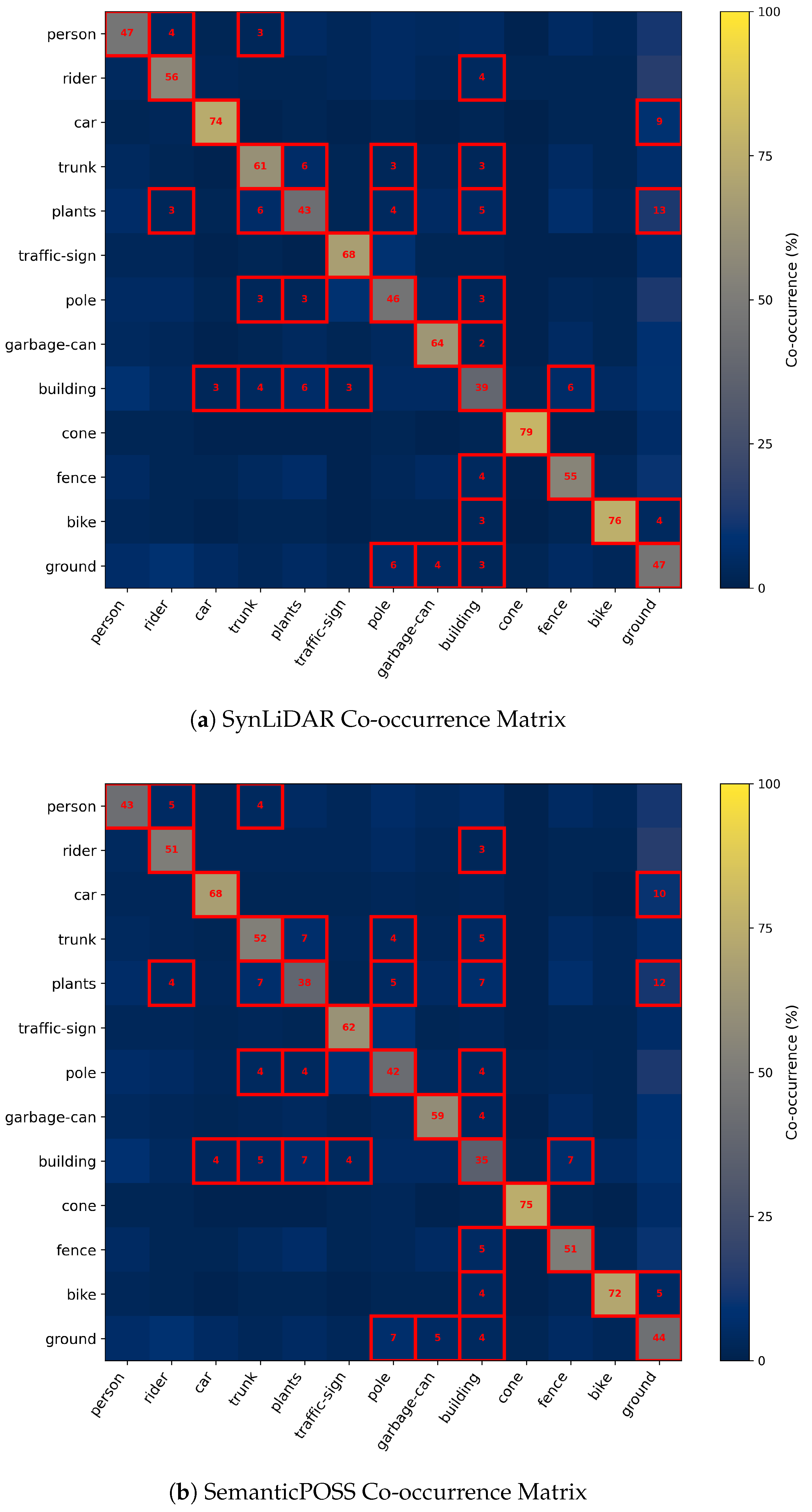

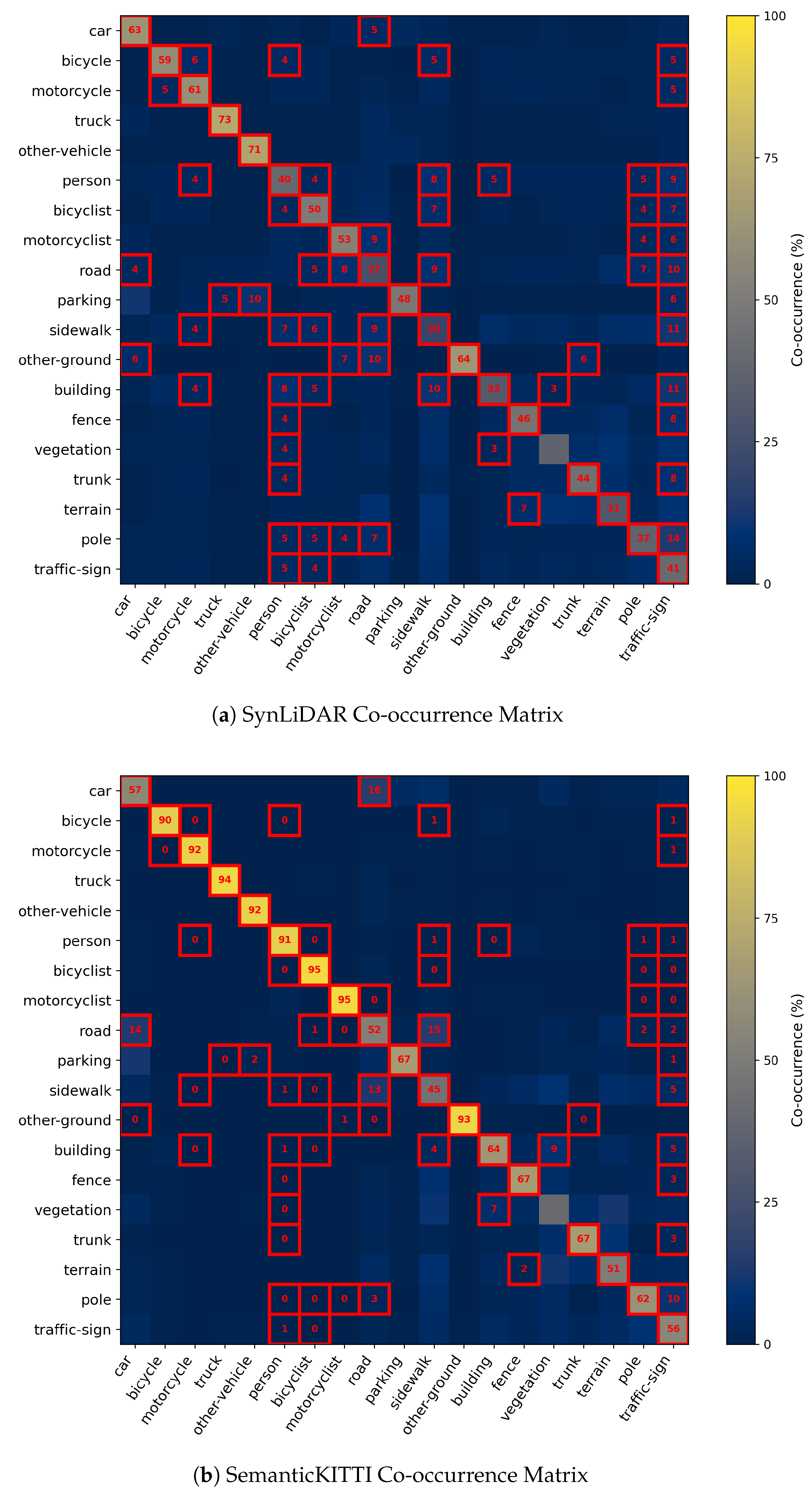

- A method to quantify context shift to enable an analytical evaluation of performance improvements relative to context variability.

- Extensive experiments demonstrating that our approach reduces negative transfer (NT) and improves UDA performance on challenging 3D semantic segmentation tasks.

2. Related Work

3. Materials and Methods

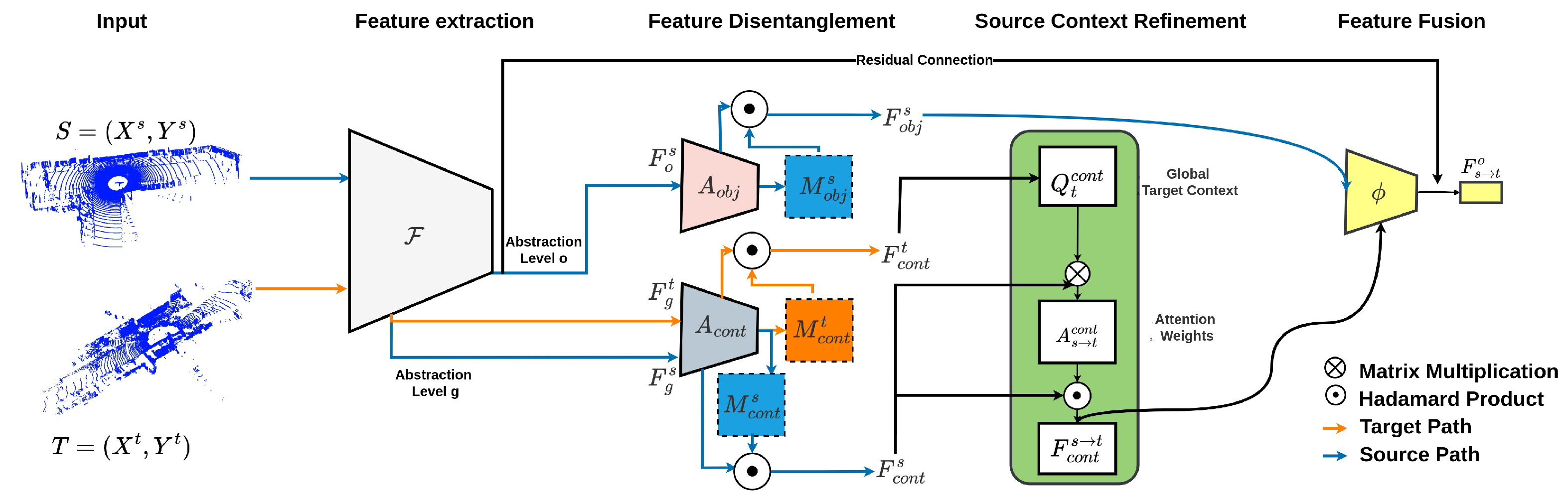

3.1. Context-Aware Feature Adaptation

3.1.1. Object and Context Feature Disentanglement

3.1.2. Source Context Feature Refinement

3.1.3. Cross-Domain Feature Fusion

| Algorithm 1 Context-Aware Feature Adaptation (CAFA) | |
|---|---|
| Require: Source point cloud , Target point cloud , Backbone network F | |
| Ensure: Adapted source features | |
| 1: function DisentangleFeatures() | |
| 2: | ▹ Extract multi-scale features |
| 3: | ▹ Object attention |
| 4: | ▹ Context attention |
| 5: return | |
| 6: end function | |
| 7: function CrossAttentionRefinement() | |
| 8: | ▹ Global target context |
| 9: | ▹ Cross-attention weights |
| 10: return | |
| 11: end function | |
| 12: function FeatureFusion() | |
| 13: | |
| 14: return | ▹ : learnable parameter |
| 15: end function | |
| 16: | |
| 17: | |
| 18: | |
| 19: | |
| 20: return | |
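
The three functions named in Algorithm 1 can be illustrated with a minimal Python sketch. The exact formulas are not recoverable from the listing above, so every concrete choice here is an assumption made for illustration: the sigmoid channel gates and their weights `w_obj` and `w_ctx`, mean pooling for the global target context, the scaled dot-product score, and the fusion rule `object + gamma * refined_context`. The backbone is omitted and per-point features are taken as given.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def disentangle(feats, w_obj, w_ctx):
    """Split per-point features into object and context parts.

    Assumption: each part is the input gated channel-wise by a sigmoid.
    """
    obj = [[f * sigmoid(w * f) for f, w in zip(row, w_obj)] for row in feats]
    ctx = [[f * sigmoid(w * f) for f, w in zip(row, w_ctx)] for row in feats]
    return obj, ctx


def global_context(ctx):
    """Mean-pool context features over all points into a single vector."""
    n = len(ctx)
    return [sum(col) / n for col in zip(*ctx)]


def cross_attention_refine(src_ctx, tgt_ctx):
    """Reweight each source context feature by its similarity to the
    global target context (assumed scaled dot product plus sigmoid)."""
    g = global_context(tgt_ctx)
    scale = math.sqrt(len(g))
    refined = []
    for row in src_ctx:
        score = sum(a * b for a, b in zip(row, g)) / scale
        weight = sigmoid(score)
        refined.append([weight * a for a in row])
    return refined


def fuse(obj, refined_ctx, gamma):
    """Combine object and refined context features.

    gamma stands in for the learnable fusion parameter (hypothetical form).
    """
    return [
        [o + gamma * c for o, c in zip(o_row, c_row)]
        for o_row, c_row in zip(obj, refined_ctx)
    ]


def cafa(src_feats, tgt_feats, w_obj, w_ctx, gamma):
    """Disentangle both domains, refine source context, then fuse."""
    src_obj, src_ctx = disentangle(src_feats, w_obj, w_ctx)
    _, tgt_ctx = disentangle(tgt_feats, w_obj, w_ctx)
    refined = cross_attention_refine(src_ctx, tgt_ctx)
    return fuse(src_obj, refined, gamma)


# Toy inputs: 3 source points and 2 target points with 2 channels each.
src = [[1.0, -0.5], [0.2, 0.8], [0.0, 0.3]]
tgt = [[0.5, 0.5], [-1.0, 0.1]]
adapted = cafa(src, tgt, w_obj=[1.0, 1.0], w_ctx=[-1.0, -1.0], gamma=0.5)
print(len(adapted), len(adapted[0]))  # 3 2
```

In this sketch, setting `gamma` to 1 reduces the fusion to a plain sum of the two feature groups, and setting it to 0 keeps only the object features; in a trained model the parameter would be learned rather than fixed by hand.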

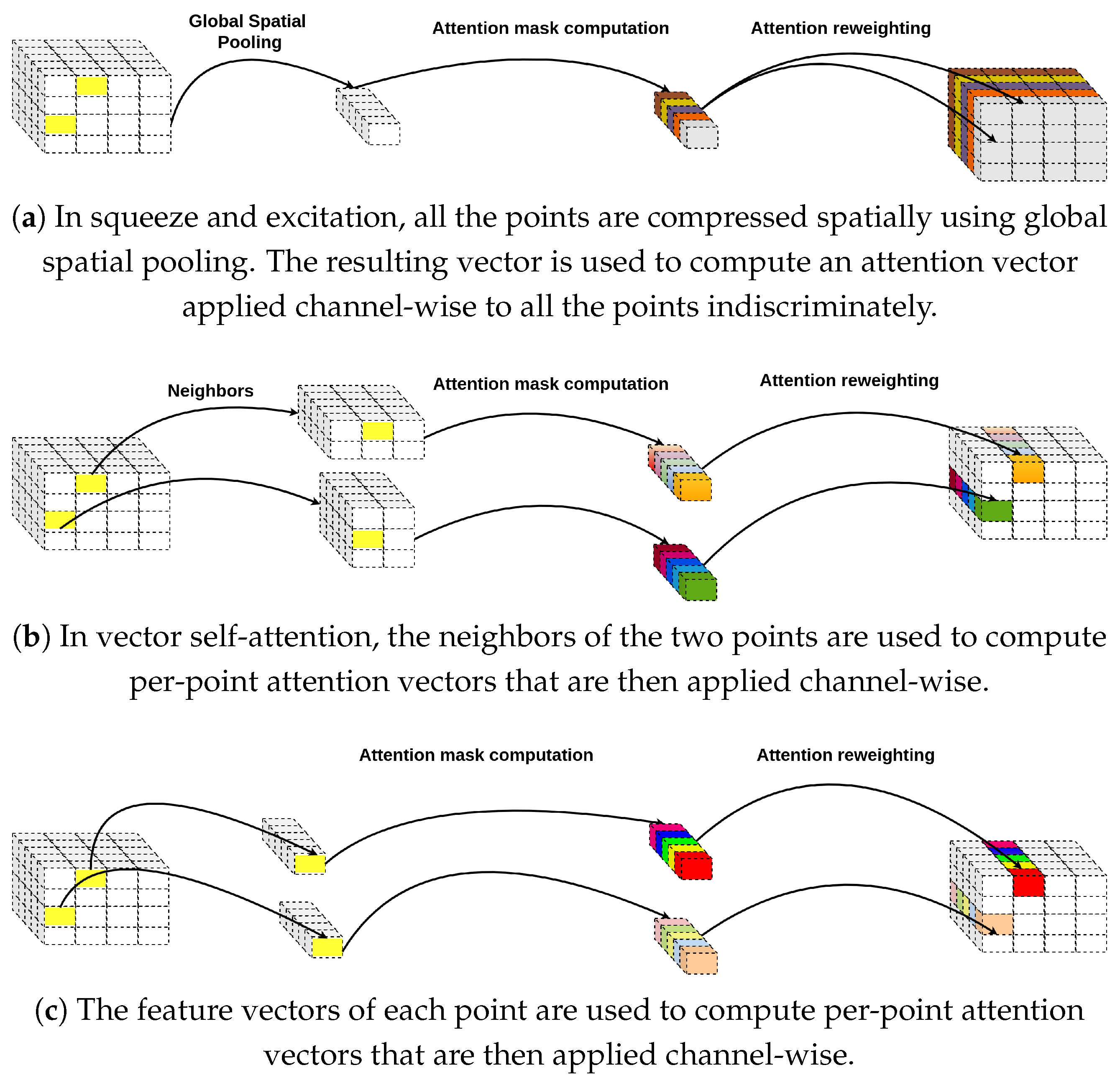

3.2. Relationship to Other Attention Mechanisms

3.2.1. Vector Attention

3.2.2. Squeeze-and-Excitation Module

3.3. Training Objective

4. Results

4.1. Datasets and Baselines

4.1.1. Datasets

4.1.2. Baselines and Training

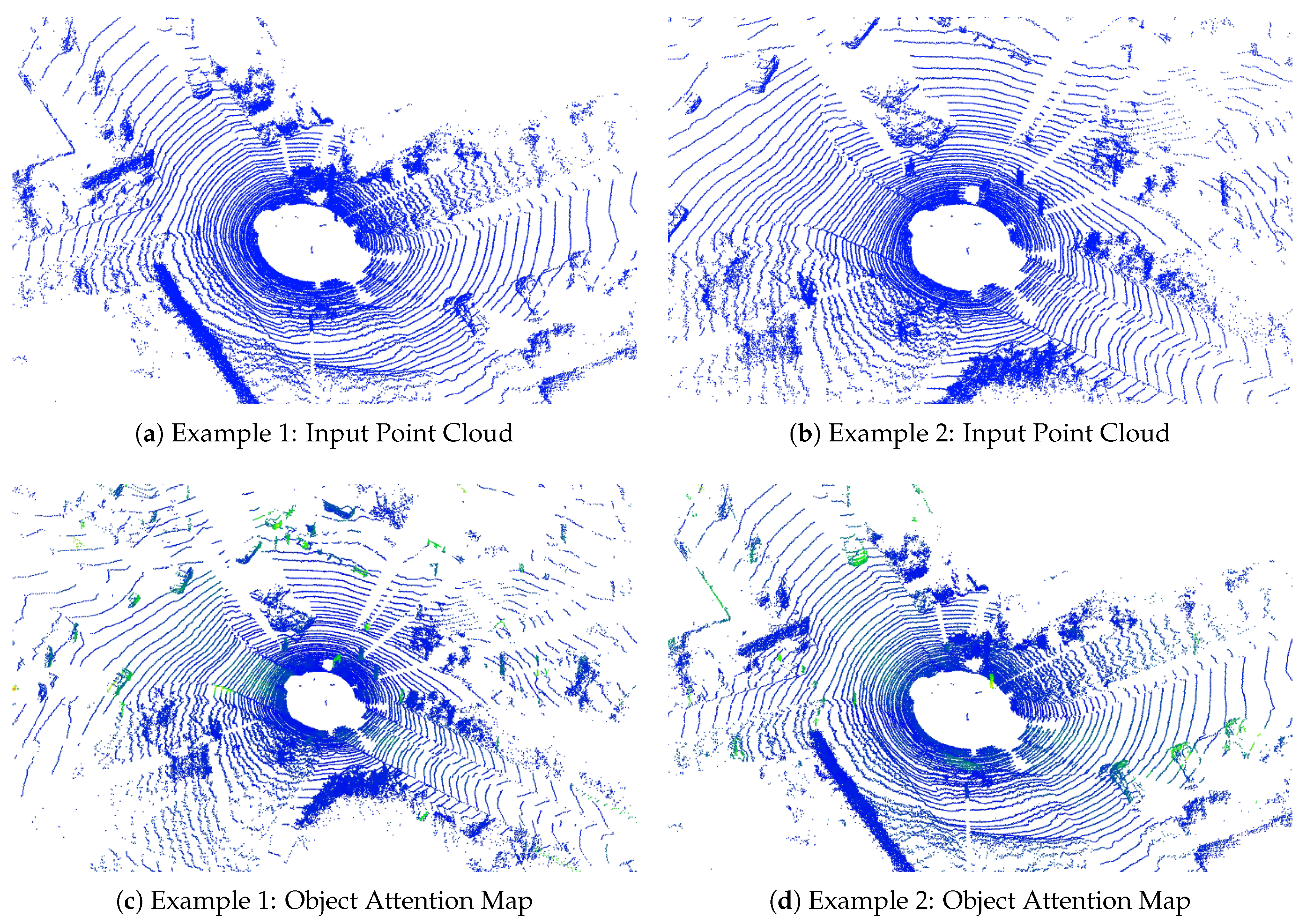

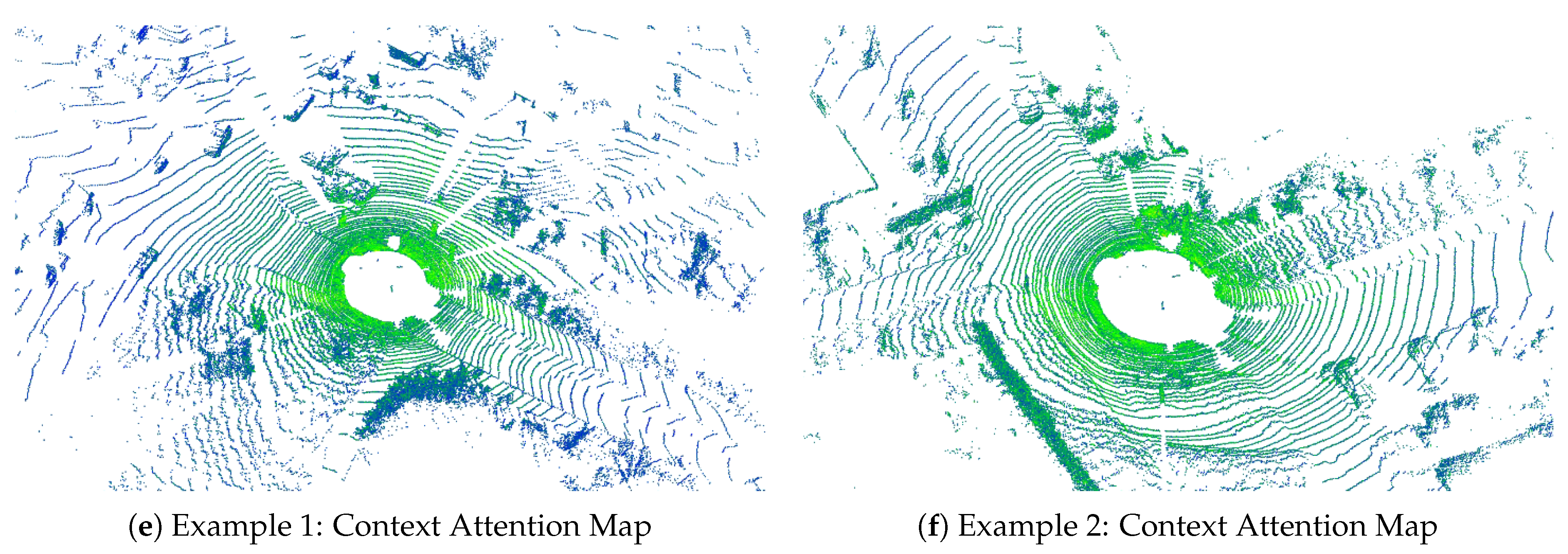

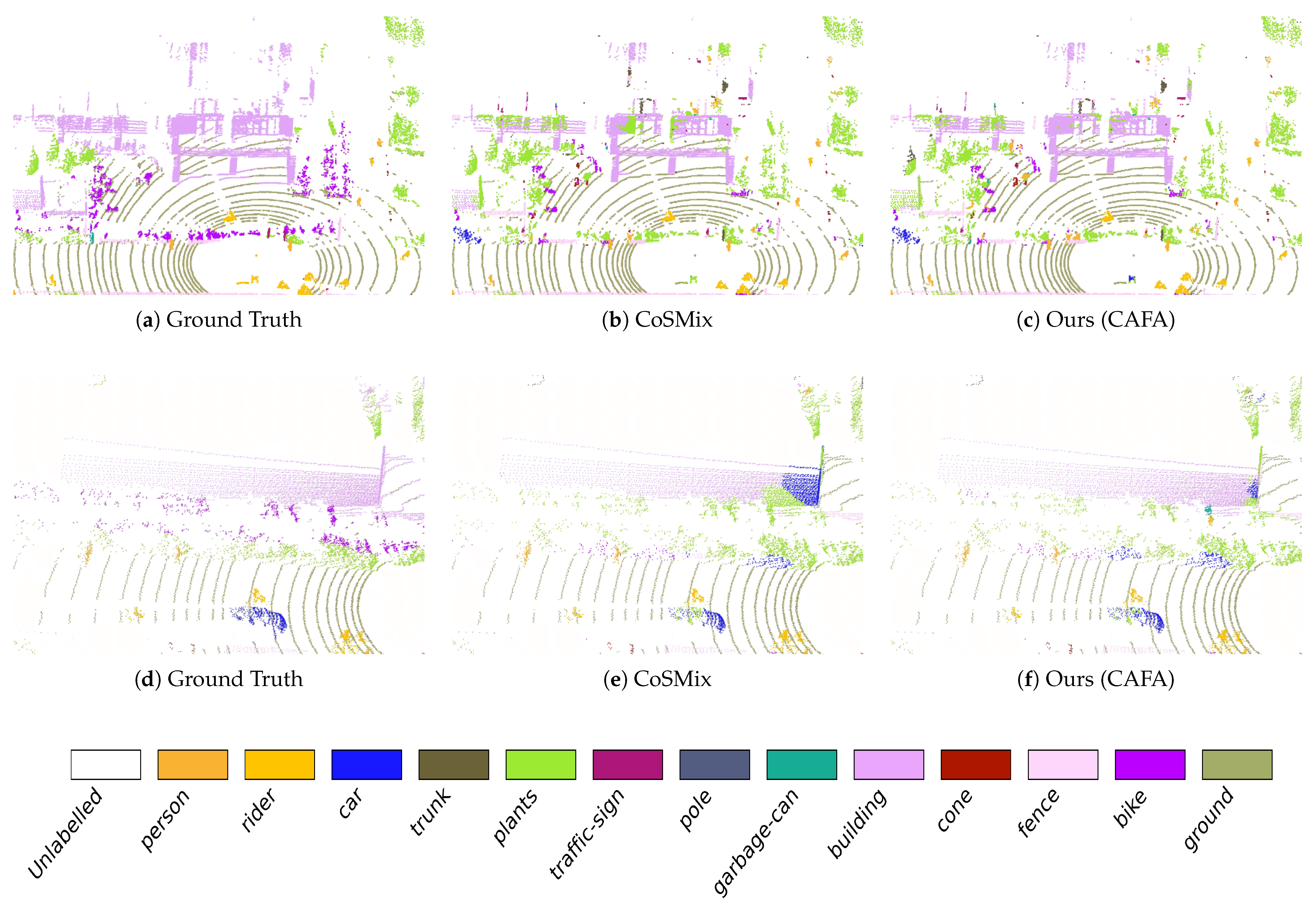

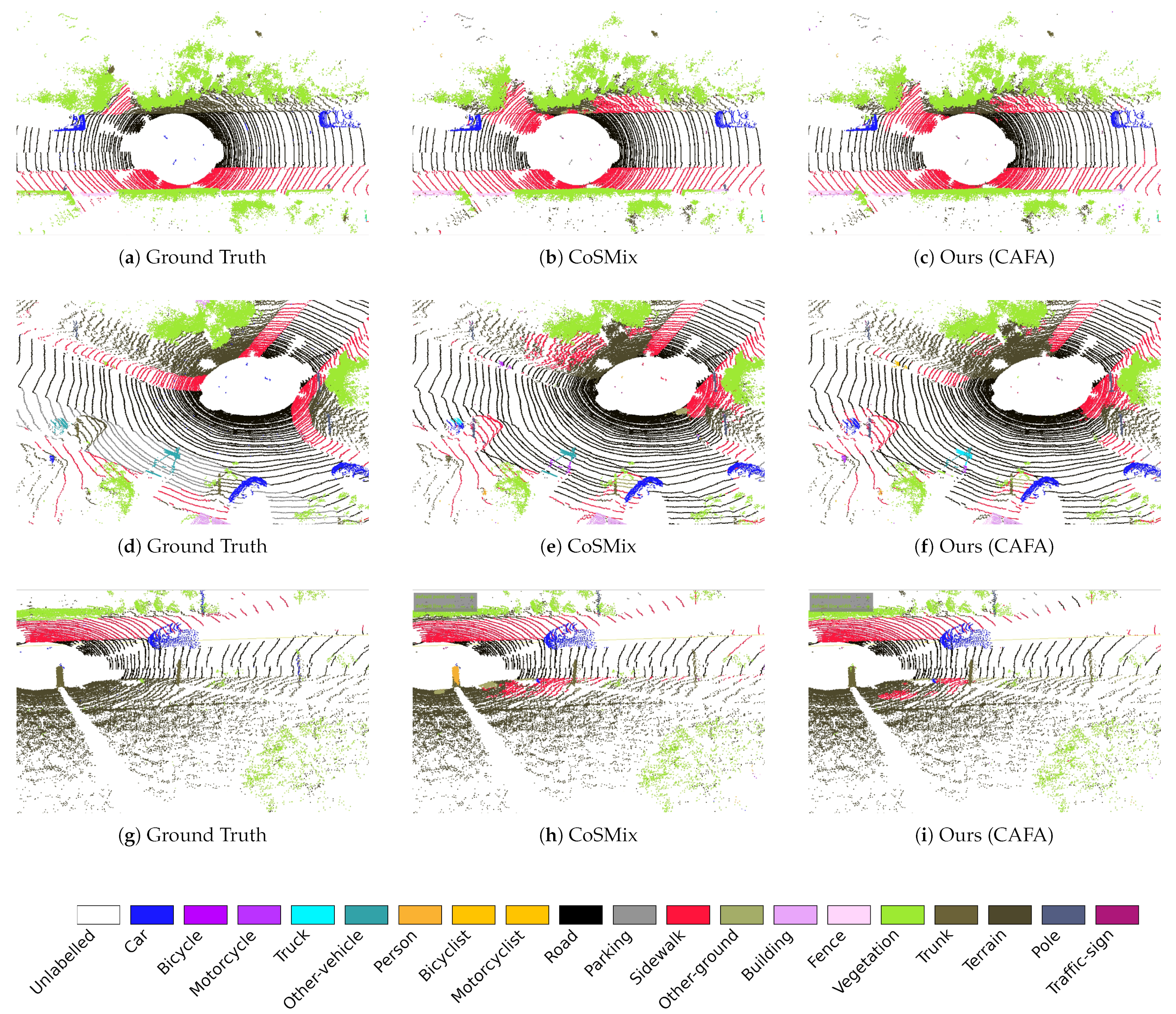

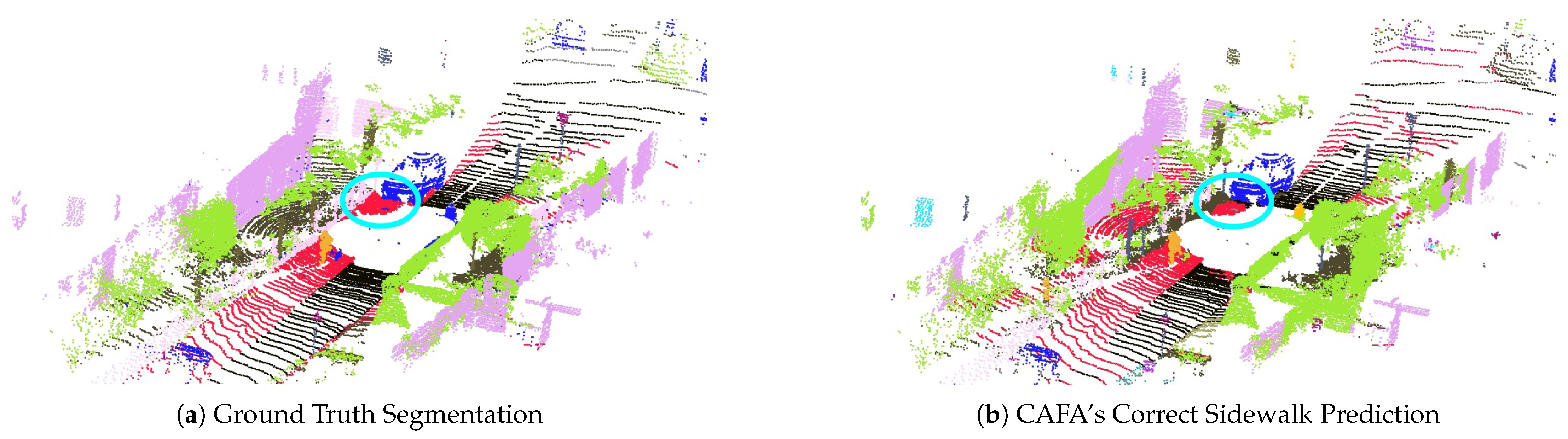

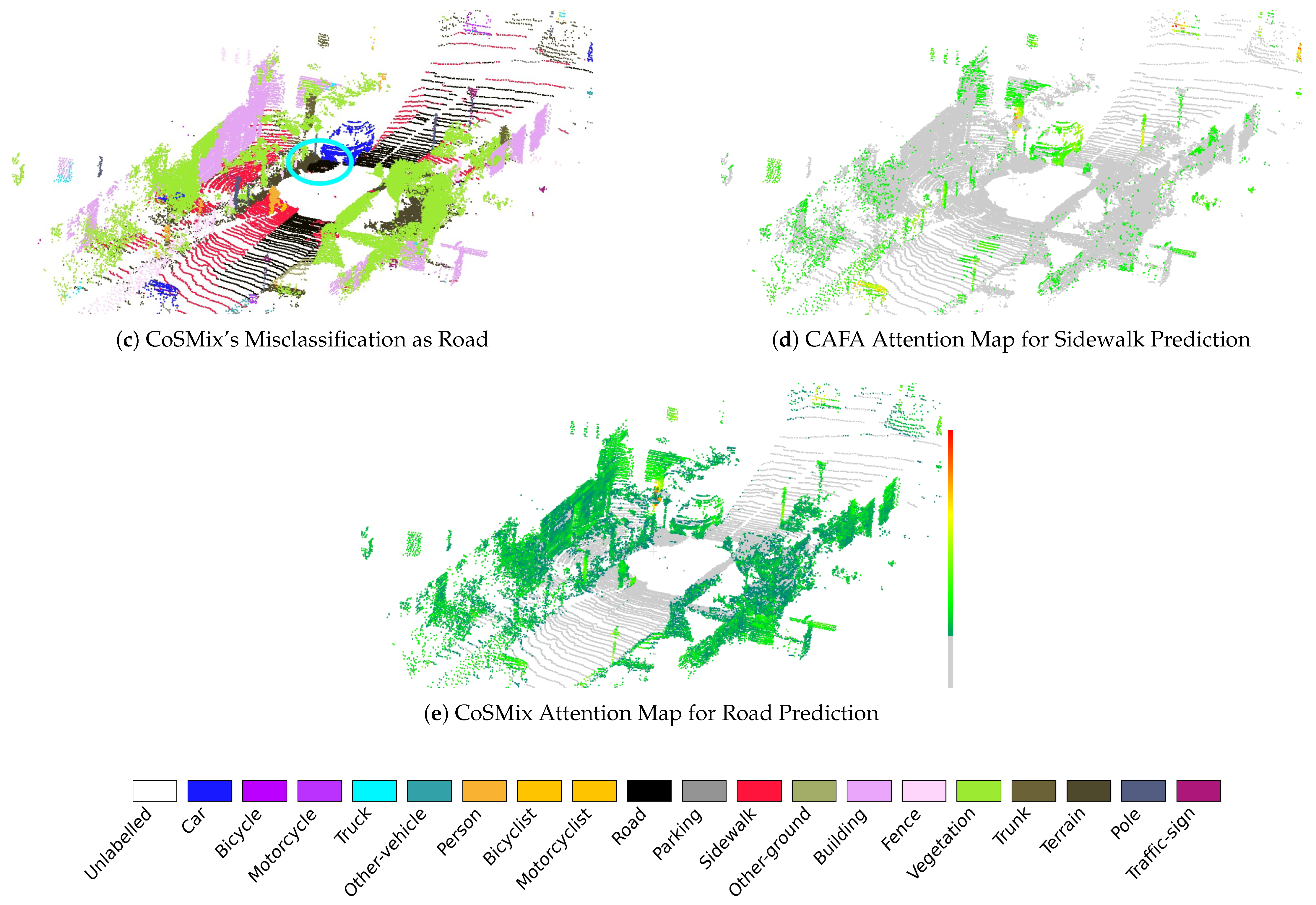

4.2. Feature Disentanglement Results

4.3. Comparison with Previous Methods

4.4. Qualitative Analysis

4.5. Ablation Study

4.6. Context Shift Performance Analysis

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| UDA | Unsupervised Domain Adaptation |
| NT | Negative Transfer |
| MLP | Multilayer Perceptron |
| mIoU | mean Intersection over Union |
| BEVs | Bird's-Eye-View images |
| DTE | Data Transferability Enhancement |
| MTE | Model Transferability Enhancement |
| SE-Net | Squeeze-and-Excitation Network |
| SGD | Stochastic Gradient Descent |

References

1. Wu, B.; Zhou, X.; Zhao, S.; Yue, X.; Keutzer, K. SqueezeSegV2: Improved Model Structure and Unsupervised Domain Adaptation for Road-Object Segmentation from a LiDAR Point Cloud. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 4376–4382.
2. Xiao, A.; Huang, J.; Guan, D.; Zhan, F.; Lu, S. Transfer Learning from Synthetic to Real LiDAR Point Cloud for Semantic Segmentation. Proc. AAAI Conf. Artif. Intell. 2022, 36, 2795–2803.
3. Saltori, C.; Galasso, F.; Fiameni, G.; Sebe, N.; Poiesi, F.; Ricci, E. Compositional Semantic Mix for Domain Adaptation in Point Cloud Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 14234–14247.
4. Yuan, Z.; Zeng, W.; Su, Y.; Liu, W.; Cheng, M.; Guo, Y.; Wang, C. Density-guided Translator Boosts Synthetic-to-Real Unsupervised Domain Adaptive Segmentation of 3D Point Clouds. arXiv 2024, arXiv:2403.18469.
5. Zhao, H.; Zhang, J.; Chen, Z.; Zhao, S.; Tao, D. UniMix: Towards Domain Adaptive and Generalizable LiDAR Semantic Segmentation in Adverse Weather. arXiv 2024, arXiv:2404.05145.
6. Xiao, A.; Huang, J.; Guan, D.; Cui, K.; Lu, S.; Shao, L. PolarMix: A General Data Augmentation Technique for LiDAR Point Clouds. arXiv 2022, arXiv:2208.00223.
7. Yuan, Z.; Cheng, M.; Zeng, W.; Su, Y.; Liu, W.; Yu, S.; Wang, C. Prototype-Guided Multitask Adversarial Network for Cross-Domain LiDAR Point Clouds Semantic Segmentation. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5700613.
8. Zhang, W.; Deng, L.; Zhang, L.; Wu, D. A Survey on Negative Transfer. IEEE/CAA J. Autom. Sin. 2023, 10, 305–329.
9. Liang, J.; Wang, Y.; Hu, D.; He, R.; Feng, J. A Balanced and Uncertainty-aware Approach for Partial Domain Adaptation. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020.
10. Li, S.; Xie, M.; Lv, F.; Liu, C.H.; Liang, J.; Qin, C.; Li, W. Semantic Concentration for Domain Adaptation. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021; pp. 9082–9091.
11. Saleh, K.; Abobakr, A.; Attia, M.; Iskander, J.; Nahavandi, D.; Hossny, M.; Nahvandi, S. Domain Adaptation for Vehicle Detection from Bird’s Eye View LiDAR Point Cloud Data. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision Workshop (ICCVW), Seoul, Republic of Korea, 27–28 October 2019; pp. 3235–3242.
12. Zhao, S.; Wang, Y.; Li, B.; Wu, B.; Gao, Y.; Xu, P.; Darrell, T.; Keutzer, K. ePointDA: An End-to-End Simulation-to-Real Domain Adaptation Framework for LiDAR Point Cloud Segmentation. In Proceedings of the AAAI Conference on Artificial Intelligence, AAAI, Online, 2–9 February 2021.
13. Yi, L.; Gong, B.; Funkhouser, T. Complete & Label: A Domain Adaptation Approach to Semantic Segmentation of LiDAR Point Clouds. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 15358–15368.
14. Li, G.; Kang, G.; Wang, X.; Wei, Y.; Yang, Y. Adversarially Masking Synthetic to Mimic Real: Adaptive Noise Injection for Point Cloud Segmentation Adaptation. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 20464–20474.
15. Wang, Z.; Ding, S.; Li, Y.; Zhao, M.; Roychowdhury, S.; Wallin, A.; Sapiro, G.; Qiu, Q. Range Adaptation for 3D Object Detection in LiDAR. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision Workshop (ICCVW), Seoul, Republic of Korea, 27–28 October 2019; pp. 2320–2328.
16. Luo, H.; Khoshelham, K.; Fang, L.; Chen, C. Unsupervised scene adaptation for semantic segmentation of urban mobile laser scanning point clouds. ISPRS J. Photogramm. Remote Sens. 2020, 169, 253–267.
17. Zhu, J.Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired Image-to-Image Translation Using Cycle-Consistent Adversarial Networks. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2242–2251.
18. Rosenstein, M.T.; Marx, Z.; Kaelbling, L.P.; Dietterich, T.G. To transfer or not to transfer. In Proceedings of the Neural Information Processing Systems, Vancouver, BC, Canada, 5–8 December 2005.
19. Zhu, Y.; Zhuang, F.; Wang, J.; Ke, G.; Chen, J.; Bian, J.; Xiong, H.; He, Q. Deep Subdomain Adaptation Network for Image Classification. IEEE Trans. Neural Netw. Learn. Syst. 2020, 32, 1713–1722.
20. Long, M.; Wang, J.; Ding, G.; Cheng, W.; Zhang, X.; Wang, W. Dual Transfer Learning. In Proceedings of the SDM, Anaheim, CA, USA, 26–28 April 2012.
21. Tan, B.; Zhang, Y.; Pan, S.J.; Yang, Q. Distant Domain Transfer Learning. In Proceedings of the AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017.
22. Na, J.; Jung, H.; Chang, H.; Hwang, W. FixBi: Bridging Domain Spaces for Unsupervised Domain Adaptation. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 1094–1103.
23. Wang, Z.; Dai, Z.; Póczos, B.; Carbonell, J.G. Characterizing and Avoiding Negative Transfer. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 11285–11294.
24. Chen, X.; Wang, S.; Fu, B.; Long, M.; Wang, J. Catastrophic Forgetting Meets Negative Transfer: Batch Spectral Shrinkage for Safe Transfer Learning. In Proceedings of the Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019.
25. Wang, X.; Jin, Y.; Long, M.; Wang, J.; Jordan, M.I. Transferable Normalization: Towards Improving Transferability of Deep Neural Networks. In Proceedings of the Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019.
26. Han, Z.; Sun, H.; Yin, Y. Learning Transferable Parameters for Unsupervised Domain Adaptation. IEEE Trans. Image Process. 2021, 31, 6424–6439.
27. You, K.; Kou, Z.; Long, M.; Wang, J. Co-Tuning for Transfer Learning. In Proceedings of the Neural Information Processing Systems, Vancouver, BC, Canada, 6–12 December 2020.
28. Zhang, J.O.; Sax, A.; Zamir, A.; Guibas, L.J.; Malik, J. Side-Tuning: A Baseline for Network Adaptation via Additive Side Networks. In Proceedings of the European Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019.
29. Yang, Y.; Huang, L.K.; Wei, Y. Concept-wise Fine-tuning Matters in Preventing Negative Transfer. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 18707–18717.
30. Yosinski, J.; Clune, J.; Bengio, Y.; Lipson, H. How transferable are features in deep neural networks? Adv. Neural Inf. Process. Syst. 2014, 27.
31. Zeiler, M.D.; Fergus, R. Visualizing and understanding convolutional networks. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 818–833.
32. Zhao, H.; Jiang, L.; Jia, J.; Torr, P.H.; Koltun, V. Point transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 16259–16268.
33. Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.
34. Pan, Y.; Gao, B.; Mei, J.; Geng, S.; Li, C.; Zhao, H. SemanticPOSS: A Point Cloud Dataset with Large Quantity of Dynamic Instances. In Proceedings of the 2020 IEEE Intelligent Vehicles Symposium (IV), Las Vegas, NV, USA, 19 October–13 November 2020; pp. 687–693.
35. Behley, J.; Garbade, M.; Milioto, A.; Quenzel, J.; Behnke, S.; Stachniss, C.; Gall, J. SemanticKITTI: A Dataset for Semantic Scene Understanding of LiDAR Sequences. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9296–9306.
36. Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.
37. Choy, C.; Gwak, J.; Savarese, S. 4D Spatio-Temporal ConvNets: Minkowski Convolutional Neural Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Los Alamitos, CA, USA, 15–20 June 2019; pp. 3070–3079.
38. Selvaraju, R.R.; Das, A.; Vedantam, R.; Cogswell, M.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. Int. J. Comput. Vis. 2016, 128, 336–359.
39. Ma, Y.; Guo, Y.; Liu, H.; Lei, Y.; Wen, G. Global Context Reasoning for Semantic Segmentation of 3D Point Clouds. In Proceedings of the 2020 IEEE Winter Conference on Applications of Computer Vision (WACV), Aspen, CO, USA, 1–5 March 2020; pp. 2920–2929.

| Hyperparameter | SemanticKITTI | SemanticPOSS |
|---|---|---|
| Maximum Epochs | 100,000 | 100,000 |
| Entropy Threshold | 0.05 | 0.05 |
| Adversarial Loss Weight | 0.001 | 0.001 |
| Mean Teacher | 0.9999 | 0.9999 |
| Voxel Size | 0.05 | 0.05 |
| Learning Rate (Generator) | 2.5 × 10⁻⁵ | 2.5 × 10⁻⁴ |
| Learning Rate (Discriminator) | 1 × 10⁻⁵ | 1 × 10⁻⁴ |

| Hyperparameter | SemanticKITTI | SemanticPOSS |
|---|---|---|
| Voxel Size | 0.05 | 0.05 |
| Number of Points | 80,000 | 50,000 |
| Epochs | 20 | 20 |
| Train Batch Size | 1 | 1 |
| Optimizer | SGD | SGD |
| Learning Rate | 0.001 | 0.001 |
| Selection Percentage | 0.5 | 0.5 |
| Target Confidence Threshold | 0.90 | 0.85 |
| Mean Teacher | 0.9 | 0.99 |
| Teacher Update Frequency | 500 | 500 |
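
The "Mean Teacher" and "Teacher update frequency" rows above presumably parameterize an exponential-moving-average (EMA) teacher, although the table itself does not say so. The Python sketch below illustrates that reading under two assumptions: the "Mean Teacher" value is the EMA decay, and the update frequency is counted in training iterations. The names `ema_update`, `DECAY`, and `UPDATE_EVERY` are hypothetical, and the weights are toy values rather than real network parameters.

```python
def ema_update(teacher, student, decay):
    """Move each teacher weight toward the student weight.

    Standard mean-teacher rule: t <- decay * t + (1 - decay) * s.
    """
    return [decay * t + (1.0 - decay) * s for t, s in zip(teacher, student)]


DECAY = 0.99          # assumed meaning of the "Mean Teacher" row (SemanticPOSS column)
UPDATE_EVERY = 500    # assumed meaning of "Teacher update frequency", in iterations

teacher = [0.0, 1.0]  # toy teacher weights
student = [1.0, 1.0]  # toy student weights, held fixed here for clarity

for step in range(1, 1501):
    # A real training loop would update the student here.
    if step % UPDATE_EVERY == 0:
        teacher = ema_update(teacher, student, DECAY)

print(teacher)
```

With a decay close to 1, the teacher changes slowly and smooths out noise in the student weights; three updates at a decay of 0.99 move the first toy weight only about 3% of the way toward the student value.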

| Model | Car | Bicycle | Motorcycle | Truck | Other-vehicle | Person | Bicyclist | Motorcyclist | Road | Parking | Sidewalk | Other-ground | Building | Fence | Vegetation | Trunk | Terrain | Pole | Traffic-sign | mIoU | Gain |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Source | 72.6 | 6.7 | 11.6 | 3.7 | 6.7 | 22.5 | 29.5 | 2.8 | 67.2 | 11.9 | 35.7 | 0.1 | 59.9 | 23.5 | 74.3 | 25.7 | 42.0 | 39.6 | 13.3 | 28.9 | - |
| CoSMix [3] | 83.6 | 10.2 | 14.4 | 8.8 | 15.2 | 27.4 | 23.5 | 0.7 | 77.4 | 17.4 | 43.6 | 0.3 | 55.1 | 24.5 | 72.7 | 44.8 | 40.8 | 45.3 | 19.8 | 32.9 | +0.0 |
| CoSMix + CWFT [29] | 85.1 | 11.2 | 14.3 | 4.0 | 11.9 | 28.0 | 16.5 | 3.9 | 76.4 | 16.9 | 44.9 | 0.1 | 52.1 | 25.2 | 72.4 | 42.2 | 42.8 | 44.2 | 19.6 | 32.2 | −0.7 |
| CoSMix + DSAN [19] | 84.6 | 1.8 | 15.0 | 5.1 | 10.7 | 33.5 | 26.9 | 2.7 | 76.6 | 18.8 | 44.2 | 0.2 | 61.0 | 30.8 | 74.3 | 41.8 | 39.6 | 42.9 | 24.8 | 33.4 | +0.5 |
| CoSMix + TransPar [26] | 83.8 | 5.4 | 13.1 | 7.1 | 13.0 | 22.5 | 15.4 | 2.5 | 77.2 | 17.3 | 43.4 | 0.1 | 58.6 | 24.9 | 73.4 | 41.6 | 38.5 | 43.5 | 15.8 | 31.4 | −1.5 |
| CoSMix + BSS [24] | 82.6 | 5.3 | 17.4 | 7.8 | 11.7 | 26.7 | 12.7 | 3.5 | 77.5 | 19.9 | 44.6 | 0.2 | 52.5 | 25.9 | 72.1 | 38.9 | 41.1 | 43.4 | 20.1 | 31.8 | −1.2 |
| CoSMix + CAFA (ours) | 83.2 | 9.4 | 22.1 | 7.9 | 13.4 | 33.2 | 31.4 | 5.7 | 78.0 | 15.9 | 46.5 | 0.1 | 52.6 | 28.5 | 72.5 | 43.0 | 47.5 | 45.0 | 19.9 | 34.5 | +1.6 |
| PCAN [4] | 85.6 | 16.2 | 27.4 | 9.9 | 10.4 | 28.4 | 64.2 | 2.9 | 77.1 | 13.9 | 50.3 | 0.1 | 67.4 | 19.4 | 75.9 | 41.4 | 47.7 | 40.8 | 21.7 | 36.9 | +0.0 |
| PCAN + CWFT [29] | 86.6 | 17.0 | 25.7 | 10.9 | 10.1 | 30.6 | 60.1 | 2.7 | 77.4 | 12.8 | 50.1 | 0.1 | 64.9 | 23.3 | 74.7 | 43.6 | 46.5 | 42.7 | 22.8 | 37.0 | +0.1 |
| PCAN + DSAN [19] | 85.8 | 16.9 | 27.7 | 9.9 | 10.3 | 28.7 | 65.3 | 2.8 | 77.0 | 14.2 | 50.4 | 0.1 | 69.0 | 19.9 | 76.4 | 42.1 | 46.6 | 41.4 | 22.1 | 37.2 | +0.3 |
| PCAN + TransPar [26] | 87.3 | 17.6 | 28.9 | 11.5 | 12.7 | 30.8 | 65.1 | 2.4 | 76.5 | 12.9 | 48.9 | 0.1 | 69.6 | 19.0 | 77.2 | 40.7 | 44.4 | 40.7 | 22.3 | 37.3 | +0.4 |
| PCAN + BSS [24] | 86.0 | 17.0 | 28.1 | 10.5 | 11.6 | 26.9 | 65.9 | 3.3 | 77.2 | 13.9 | 50.3 | 0.1 | 68.1 | 19.4 | 76.2 | 41.2 | 47.4 | 40.7 | 22.9 | 37.2 | +0.3 |
| PCAN + CAFA (ours) | 85.2 | 13.3 | 31.7 | 11.2 | 12.4 | 33.3 | 71.7 | 3.7 | 77.0 | 11.0 | 49.9 | 0.0 | 66.9 | 18.0 | 75.7 | 42.4 | 50.5 | 37.8 | 16.1 | 37.3 | +0.4 |
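
As a worked check of how the mIoU and Gain columns relate to the per-class scores, the following Python snippet recomputes both for the CoSMix and CoSMix + CAFA rows of the table above. It assumes that mIoU is the unweighted mean of the 19 per-class IoU values and that Gain is the difference between the rounded mIoU values; the helper name `mean_iou` is illustrative.

```python
# Per-class IoU values (%) copied from the CoSMix and CoSMix + CAFA rows.
cosmix = [83.6, 10.2, 14.4, 8.8, 15.2, 27.4, 23.5, 0.7, 77.4, 17.4,
          43.6, 0.3, 55.1, 24.5, 72.7, 44.8, 40.8, 45.3, 19.8]
cosmix_cafa = [83.2, 9.4, 22.1, 7.9, 13.4, 33.2, 31.4, 5.7, 78.0, 15.9,
               46.5, 0.1, 52.6, 28.5, 72.5, 43.0, 47.5, 45.0, 19.9]


def mean_iou(per_class_iou):
    """Unweighted mean of per-class IoU scores."""
    return sum(per_class_iou) / len(per_class_iou)


baseline = round(mean_iou(cosmix), 1)
ours = round(mean_iou(cosmix_cafa), 1)
gain = round(ours - baseline, 1)
print(baseline, ours, gain)  # 32.9 34.5 1.6
```

The recomputed values match the reported 32.9, 34.5, and +1.6, which is consistent with each class contributing equally to the mean regardless of how many points it contains.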

| Model | Person | Rider | Car | Trunk | Plants | Traffic-sign | Pole | Garbage | Building | Cone | Fence | Bike | Ground | mIoU | Gain |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Source | 45.7 | 40.2 | 51.5 | 22.1 | 71.9 | 4.9 | 22.2 | 21.9 | 71.9 | 4.8 | 29.8 | 2.6 | 76.1 | 35.8 | - |
| CoSMix [3] | 55.3 | 52.4 | 47.6 | 43.5 | 72.0 | 13.7 | 40.9 | 35.4 | 67.7 | 30.2 | 35.3 | 5.6 | 81.3 | 44.0 | +0.0 |
| CoSMix + CWFT [29] | 53.9 | 50.7 | 54.0 | 31.2 | 72.6 | 13.2 | 41.8 | 35.7 | 69.9 | 28.4 | 31.6 | 7.1 | 81.3 | 43.9 | −0.1 |
| CoSMix + DSAN [19] | 52.8 | 51.5 | 51.6 | 35.9 | 70.8 | 13.3 | 38.2 | 36.9 | 62.7 | 31.8 | 33.0 | 4.8 | 79.1 | 43.3 | −0.7 |
| CoSMix + TransPar [26] | 54.6 | 54.1 | 53.2 | 35.4 | 74.1 | 13.7 | 40.7 | 31.5 | 72.8 | 24.4 | 32.9 | 6.3 | 81.1 | 44.2 | +0.2 |
| CoSMix + BSS [24] | 55.6 | 52.7 | 48.0 | 35.2 | 73.3 | 15.5 | 40.1 | 28.4 | 70.8 | 29.6 | 38.4 | 6.2 | 81.4 | 44.3 | +0.3 |
| CoSMix + CAFA (ours) | 52.3 | 53.9 | 56.9 | 34.0 | 72.5 | 11.0 | 42.3 | 36.9 | 70.6 | 31.7 | 36.6 | 5.2 | 81.1 | 45.0 | +1.0 |
| PCAN [4] | 60.9 | 52.3 | 60.1 | 41.2 | 74.5 | 18.0 | 35.0 | 23.9 | 74.8 | 8.0 | 38.7 | 12.3 | 79.3 | 44.6 | +0.0 |
| PCAN + CWFT [29] | 59.7 | 51.5 | 59.1 | 40.9 | 74.0 | 17.6 | 35.0 | 24.8 | 74.4 | 8.7 | 40.2 | 11.3 | 79.2 | 44.3 | −0.3 |
| PCAN + DSAN [19] | 62.3 | 50.3 | 60.7 | 41.3 | 74.9 | 13.3 | 36.9 | 21.4 | 75.4 | 1.9 | 42.7 | 9.9 | 77.6 | 43.7 | −0.9 |
| PCAN + TransPar [26] | 64.0 | 55.2 | 60.4 | 42.8 | 74.5 | 16.3 | 36.1 | 19.9 | 74.3 | 4.5 | 40.9 | 15.4 | 79.9 | 44.9 | +0.3 |
| PCAN + BSS [24] | 62.2 | 52.5 | 60.5 | 38.7 | 74.6 | 20.0 | 35.6 | 18.3 | 77.1 | 4.2 | 44.4 | 14.1 | 79.8 | 44.8 | +0.2 |
| PCAN + CAFA (ours) | 64.4 | 54.0 | 63.9 | 40.9 | 74.1 | 17.7 | 36.0 | 25.2 | 76.0 | 3.2 | 45.5 | 10.7 | 79.7 | 45.5 | +0.9 |

| Method | mIoU | ΔmIoU |
|---|---|---|
| CAFA (full) | 45.0 | 0.0 |
| CAFA w/o fusion (simple summation) | 44.3 | −0.7 |
| CAFA w/ single-scale features | 44.1 | −0.9 |
| CAFA w/ object features only | 44.6 | −0.4 |
| CAFA w/o residual connection | 42.4 | −2.8 |
| CAFA w/ self-attention (source) | 44.2 | −0.8 |
| CAFA w/ self-attention (target) | 43.7 | −1.3 |
| CAFA w/ 3D context module [39] | 43.2 | −1.8 |

| Dataset | Class | PCAN Baseline | PCAN +NT | PCAN +CAFA | CoSMix Baseline | CoSMix +NT | CoSMix +CAFA |
|---|---|---|---|---|---|---|---|
| SynLiDAR → SemanticKITTI | person | 28.4 | 30.8 | 33.3 | 27.4 | 33.47 | 33.18 |
| SynLiDAR → SemanticKITTI | bicyclist | 64.2 | 65.1 | 71.7 | 23.4 | 26.89 | 31.36 |
| SynLiDAR → SemanticKITTI | motorcyclist | 2.9 | 2.4 | 3.7 | 0.7 | 2.66 | 5.74 |
| SynLiDAR → SemanticKITTI | mIoU | 31.8 | 32.7 | 36.2 | 17.2 | 21.0 | 23.4 |
| SynLiDAR → SemanticPOSS | Trunk | 41.2 | 42.8 | 74.1 | 34.48 | 35.22 | 34.03 |
| SynLiDAR → SemanticPOSS | Car | 60.1 | 60.4 | 40.9 | 47.57 | 48.02 | 56.94 |
| SynLiDAR → SemanticPOSS | Traffic-sign | 18.0 | 16.3 | 36.0 | 13.65 | 15.54 | 10.96 |
| SynLiDAR → SemanticPOSS | mIoU | 39.7 | 39.8 | 50.3 | 31.9 | 32.9 | 33.9 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

El Mendili, L.; Daniel, S.; Badard, T. Context-Aware Feature Adaptation for Mitigating Negative Transfer in 3D LiDAR Semantic Segmentation. Remote Sens. 2025, 17, 2825. https://doi.org/10.3390/rs17162825
