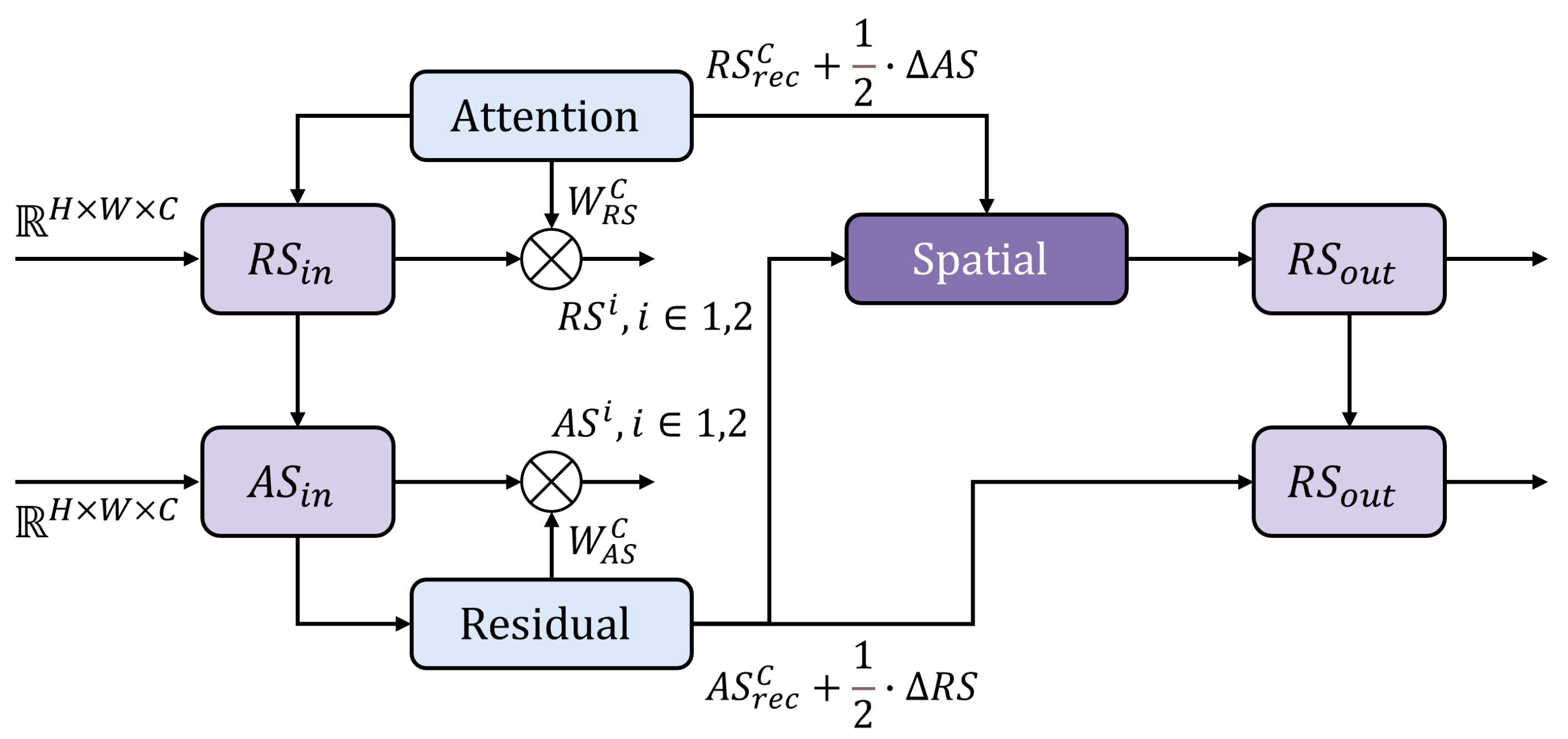

5.1. Experimental Setting

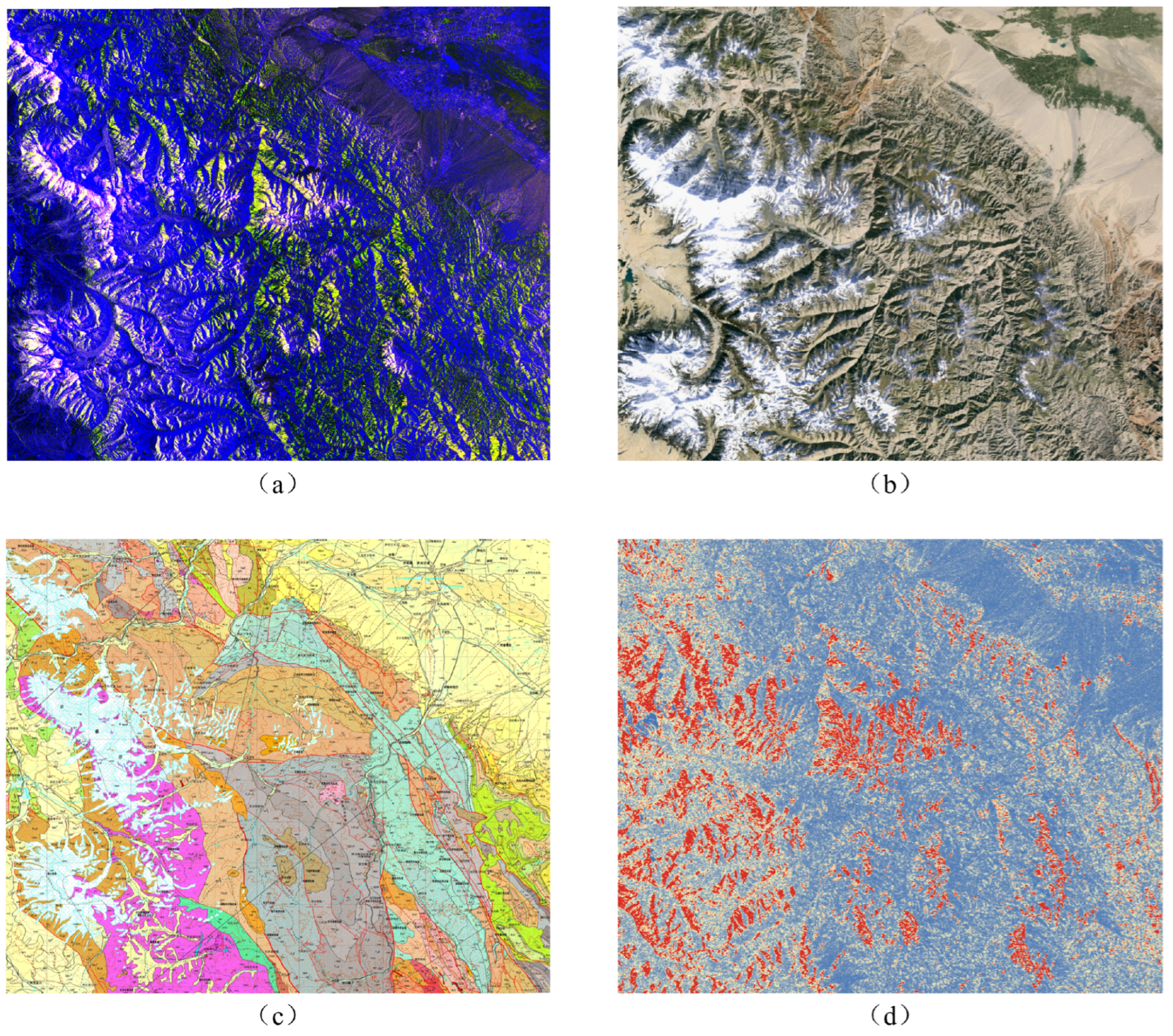

In this work, we use a variety of remote sensing data sources, including multispectral imagery, SAR imagery, DEM, slope, texture features, NDVI, and geological maps (GM). The dataset is randomly divided into training, validation, and test sets with a ratio of 6:2:2. To comprehensively evaluate how sensitive features and prior knowledge enhance intelligent remote sensing interpretation, we construct six input configurations based on different modality combinations: (1) MS, which fuses multispectral imagery and SAR imagery; (2) MGM, which combines MS with GM; (3) MDE, which combines MS with DEM; (4) MTS, which integrates MS with slope; (5) MGL, which adds gray-level co-occurrence matrix (GLCM) texture features extracted from SAR to MS; and (6) MNV, which integrates MS with the NDVI.
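The 6:2:2 random split and the six input configurations can be sketched as follows. This is a minimal illustration and not the authors' pipeline: the layer names (`multispectral`, `sar`, and so on), the `split_indices` helper, and the fixed seed are assumptions introduced here.

```python
import random

# Layers shared by every configuration (MS = multispectral + SAR).
# The layer names are hypothetical labels, not identifiers from the paper.
BASE = ["multispectral", "sar"]

# The six input configurations described in the text.
CONFIGS = {
    "MS": BASE,
    "MGM": BASE + ["geological_map"],
    "MDE": BASE + ["dem"],
    "MTS": BASE + ["slope"],
    "MGL": BASE + ["glcm_texture"],
    "MNV": BASE + ["ndvi"],
}


def split_indices(n_samples, ratios=(6, 2, 2), seed=0):
    """Randomly split sample indices into train/val/test by the given ratio."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # seeded for reproducibility
    total = sum(ratios)
    n_train = n_samples * ratios[0] // total
    n_val = n_samples * ratios[1] // total
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]  # remainder goes to the test set
    return train, val, test


train, val, test = split_indices(1000)
print(len(train), len(val), len(test))  # 600 200 200
```

Keeping the split independent of the configuration means that all six configurations are evaluated on the same test samples, which is what makes the cross-configuration comparison meaningful.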

To validate the effectiveness of MCDNet, we compare it with several mainstream deep learning segmentation models. These include classical semantic segmentation networks such as fully convolutional networks (FCNs, using ResNet-101 as the backbone) [43] and UPerNet (based on ResNet-50) [44], as well as advanced Transformer-based architectures including SegFormer [45], Vision Transformer (ViT) [46], and BEiT [47]. All experiments are conducted on a desktop with 64 GB RAM and an NVIDIA RTX 3090 GPU. The hyperparameters are set as follows: a batch size of 8, a learning rate of

, the Adam optimizer, and a total of 500 training epochs. To ensure the robustness of results, each experiment is repeated multiple times, and the average performance is reported.
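The training settings and the averaging over repeated runs can be captured in a small sketch. The `TrainConfig` class and the `average_runs` helper are hypothetical names introduced for illustration. The learning rate is left unset because its value does not appear in this passage, and the metric values in the usage example are placeholders rather than reported results.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass(frozen=True)
class TrainConfig:
    batch_size: int = 8
    epochs: int = 500
    optimizer: str = "adam"
    # The learning rate is not given in this passage; it must be set explicitly.
    learning_rate: Optional[float] = None


def average_runs(run_metrics):
    """Average each metric over repeated runs (list of dicts with identical keys)."""
    keys = run_metrics[0].keys()
    return {k: mean(run[k] for run in run_metrics) for k in keys}


# Placeholder values for two repeated runs (not results from the paper).
runs = [{"oPA": 0.70, "mIoU": 0.40}, {"oPA": 0.72, "mIoU": 0.42}]
print(average_runs(runs))
```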

5.4. Comparison Experiments

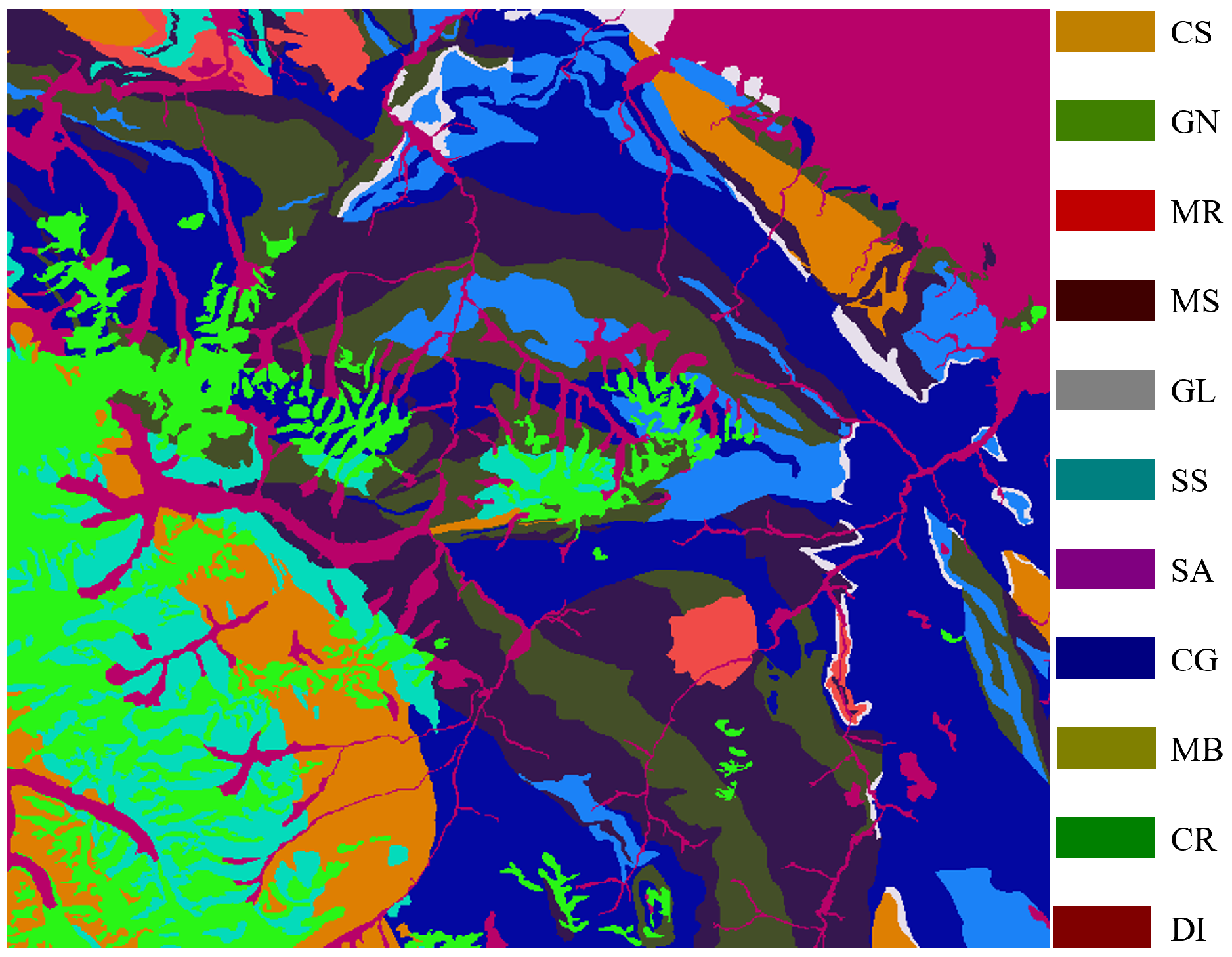

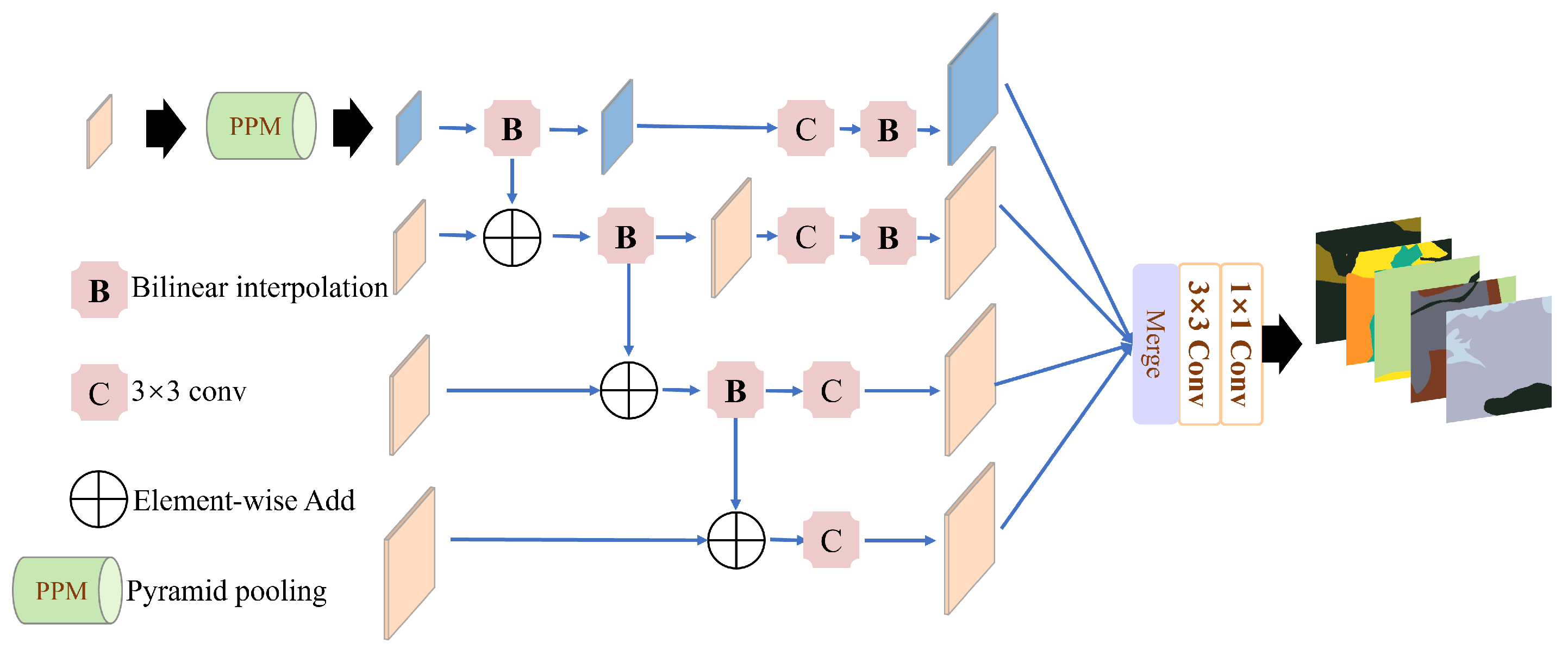

This study compares the performance of MCDNet with several mainstream semantic segmentation models in the task of intelligent geological element interpretation using remote sensing data. Furthermore, it evaluates the practical contributions of various sensitive features and prior knowledge in supporting the interpretation process.

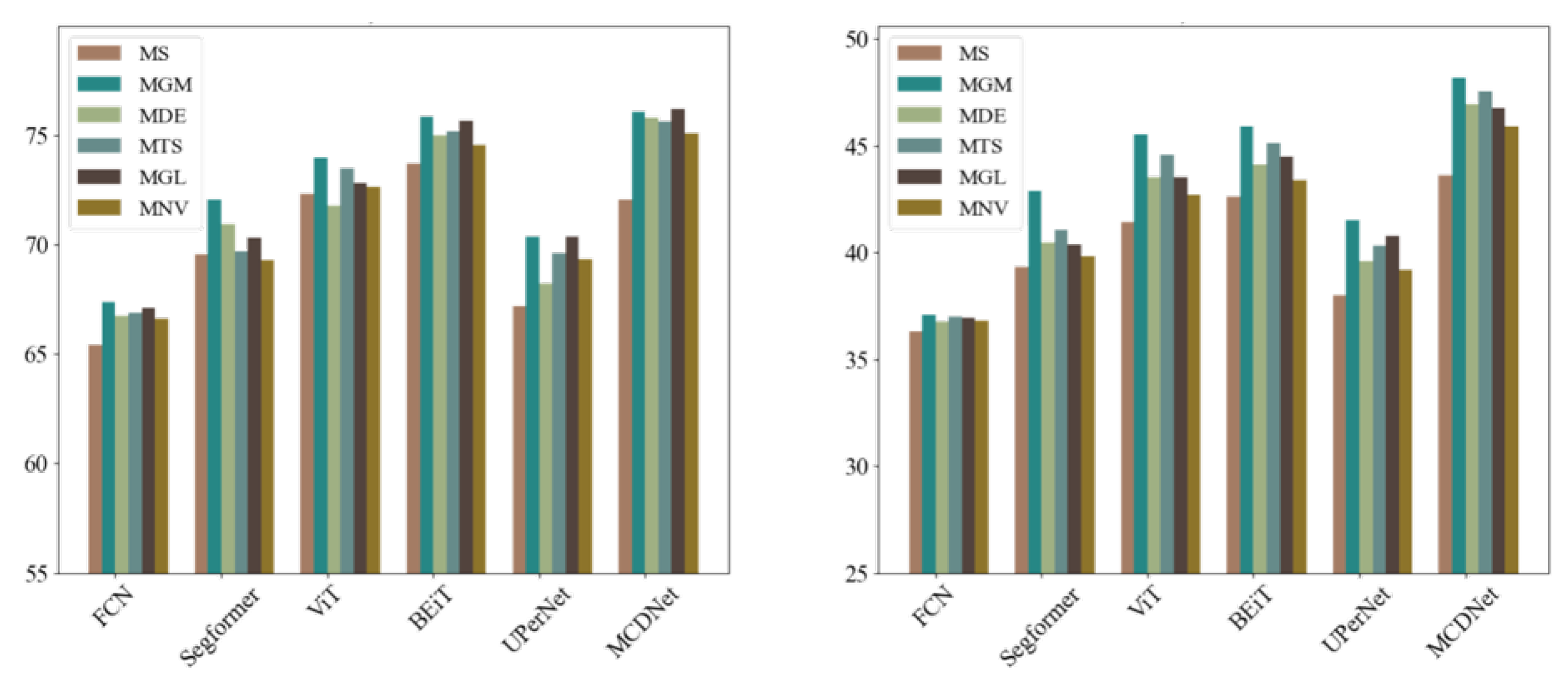

Figure 6 presents the oPA and mIoU achieved by different models under six input scenarios. Overall, all sensitive features and prior knowledge except the NDVI provide some positive performance gain, demonstrating the effectiveness of integrating remote sensing data with prior geological knowledge for intelligent interpretation. MCDNet consistently achieves the best results across all scenarios, highlighting its superior capability in heterogeneous data fusion and feature discrimination. In contrast, FCN and SegFormer show relatively weaker performance, particularly when only MS imagery is used as input, with mIoU values generally falling below 40%. This indicates limitations in their ability to model fused information. Transformer-based models such as ViT and BEiT achieve the second-best results, with noticeable improvements upon the integration of prior knowledge. This suggests that while these models are effective in high-dimensional data representation and feature modeling, they still lag behind MCDNet in terms of fusion efficiency and stability.
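For reference, oPA and mIoU can be computed from a confusion matrix as sketched below. The sketch assumes the standard definitions (overall pixel accuracy as the fraction of correctly labeled pixels, and mIoU as the mean of per-class intersection over union); the exact definitions and any ignored classes used in the experiments are not stated in this passage. The function names and the toy labels are illustrative only.

```python
def confusion_matrix(y_true, y_pred, n_classes):
    """Rows index the reference class, columns the predicted class."""
    cm = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def overall_pixel_accuracy(cm):
    """Fraction of all pixels whose predicted class matches the reference."""
    correct = sum(cm[i][i] for i in range(len(cm)))
    total = sum(sum(row) for row in cm)
    return correct / total


def mean_iou(cm):
    """Mean of TP / (TP + FP + FN) over classes that occur at least once."""
    n = len(cm)
    ious = []
    for c in range(n):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp
        fp = sum(cm[r][c] for r in range(n)) - tp
        denom = tp + fp + fn
        if denom > 0:  # skip classes absent from both reference and prediction
            ious.append(tp / denom)
    return sum(ious) / len(ious)


# Toy flattened label maps with three classes (not data from the paper).
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]
cm = confusion_matrix(y_true, y_pred, 3)
print(overall_pixel_accuracy(cm), mean_iou(cm))  # approximately 0.667 and 0.5
```

Because mIoU weights every class equally, it penalizes failures on rare classes more strongly than oPA does, which is one reason a model can reach a moderate oPA while its mIoU remains low.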

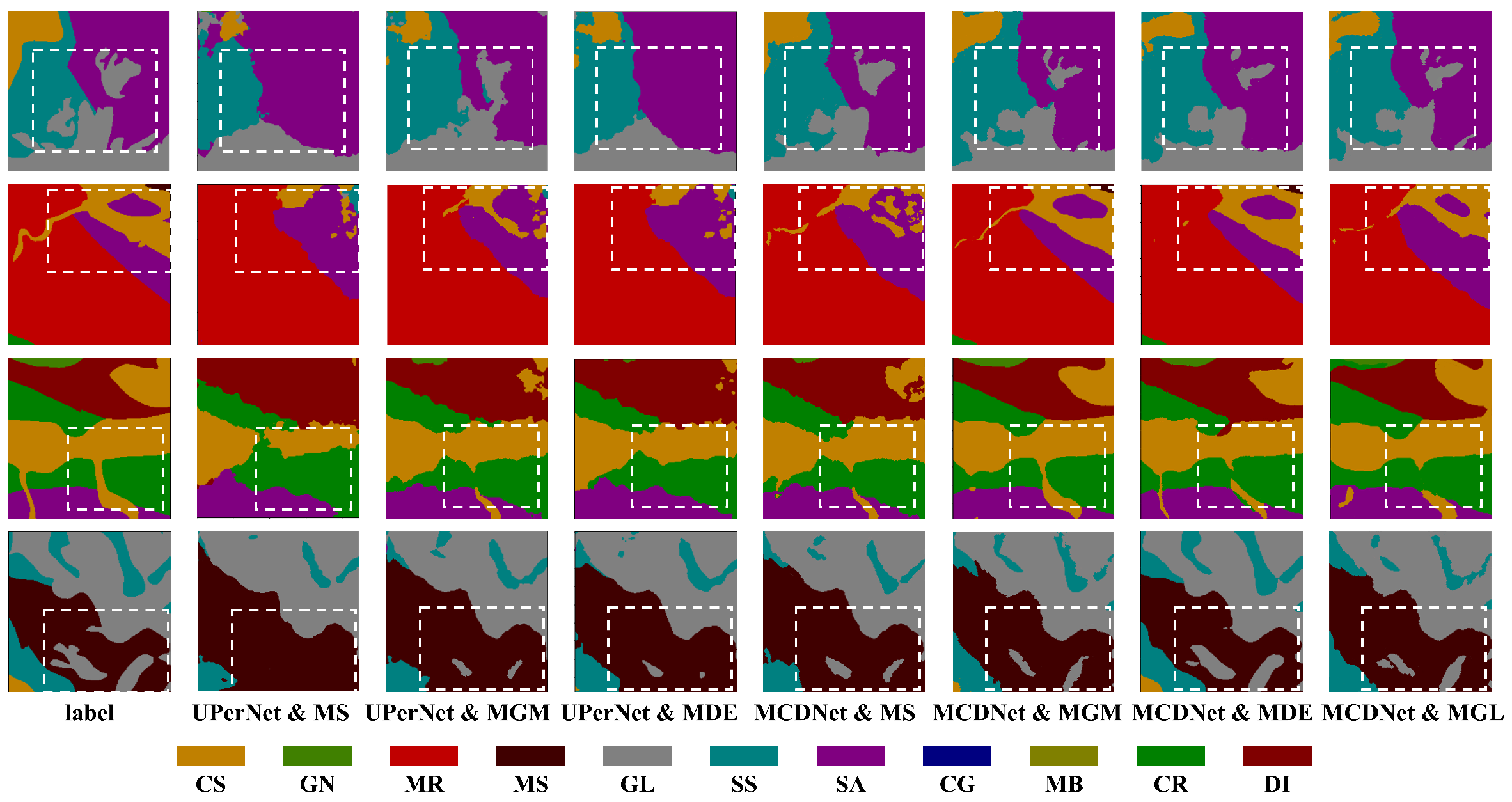

Figure 7 presents a visual comparison between the baseline model UPerNet and the proposed MCDNet under four representative conditions guided by sensitive features and prior knowledge: MS, MGM, MDE, and MGL. The MTS scenario is omitted because slope is spatially very similar to the DEM and therefore adds little visual discrimination. Similarly, the MNV scenario is excluded from visualization because the sparse vegetation cover in the study area contributes minimally to geological interpretation. Overall, MCDNet exhibits clearer boundary delineation and more accurate category discrimination across various regions, with particularly enhanced performance in complex zones (highlighted by white dashed boxes). Under the guidance of GM and DEM, MCDNet demonstrates superior recognition of lithological classes with ambiguous boundaries or similar textures, such as MR and CR, significantly reducing class confusion. These visual results support the advantages of MCDNet in discriminative power and robustness through multi-source data fusion and sensitive feature guidance. In particular, the model shows improved generalization capability in geologically complex regions and areas lacking dense prior knowledge, outperforming the baseline method.

Under the MS dataset input condition, significant performance differences are observed among the models in geological element interpretation, as summarized in Table 4. Among all compared models, MCDNet achieves the best overall performance, obtaining the highest pixel accuracy (PA) and intersection over union (IoU) in 7 out of 11 geological categories. For example, for the CG category, MCDNet reaches a PA of 63.0%, outperforming the second-best model, BEiT, by 6.2% in PA and 6.0% in IoU. This result demonstrates MCDNet’s robustness and superior representational capability when dealing with small-sample and boundary-ambiguous classes. Notably, GR, the most abundant class in the training dataset, achieves only the second-best segmentation performance, suggesting that class frequency alone does not guarantee the best results. Conversely, GL, which constitutes only 13% of the training data, still attains the highest accuracy. This highlights that high interpretation performance relies more on the model’s capacity to distinguish subtle geological differences and effectively model cross-modal interactions than merely on data distribution.
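Statements such as "highest PA in 7 out of 11 categories" and "outperforming the second-best model by 6.2%" amount to a per-class ranking of models. A minimal sketch of that bookkeeping is given below. The helper names are hypothetical. The CG values follow from the text (63.0% for MCDNet and a 6.2% margin over BEiT); all other numbers, including the class `XX`, are placeholders and not reported results.

```python
def leaders_and_margins(scores):
    """scores: {model: {cls: value}} -> {cls: (best, runner_up, margin)}."""
    classes = next(iter(scores.values())).keys()
    result = {}
    for cls in classes:
        ranked = sorted(scores, key=lambda m: scores[m][cls], reverse=True)
        best, second = ranked[0], ranked[1]
        margin = round(scores[best][cls] - scores[second][cls], 1)
        result[cls] = (best, second, margin)
    return result


def count_wins(scores, model):
    """Number of classes in which `model` has the highest score."""
    return sum(1 for best, _, _ in leaders_and_margins(scores).values()
               if best == model)


# Per-class PA in percent. Only the MCDNet and BEiT values for CG come from
# the text; every other number is a placeholder.
pa = {
    "MCDNet": {"CG": 63.0, "XX": 70.0},
    "BEiT": {"CG": 56.8, "XX": 71.0},
    "FCN": {"CG": 40.0, "XX": 50.0},
}
print(leaders_and_margins(pa)["CG"])  # ('MCDNet', 'BEiT', 6.2)
print(count_wins(pa, "MCDNet"))       # 1
```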

Under the MGM input condition, the experimental results are summarized in Table 5. MCDNet achieves the highest PA and IoU in 9 out of 11 geological categories. Notable improvements are observed in small-sample or low-texture classes such as CG, GN, and MR. For example, MCDNet achieves a PA of 64.4% for CG, representing a 5.6% improvement in PA and a 5.5% improvement in IoU over BEiT. This demonstrates that the high-level semantic information contained in geological maps (such as stratigraphic age and tectonic unit boundaries) provides clear prior constraints for the accurate classification of weak categories. Moreover, classes with limited sample representation in the training set, such as MB and CR, also show improved recognition performance, with PA values of 59.8% and 65.0%, respectively. These correspond to accuracy gains of 39.3% and 9.5% over FCN, effectively mitigating the bias toward dominant classes. These findings confirm the comprehensive advantages of MCDNet in handling sparse samples, modeling inter-class similarity, and leveraging high-level semantic features for improved geological element interpretation.

Under the MDE dataset input condition, the experimental results presented in Table 6 show overall performance improvements across all models compared to the baseline multi-source input. These improvements are particularly evident in categories with pronounced terrain variation and strong topographic dependence in their geological distribution. MCDNet achieves the highest PA and IoU in 8 out of the 11 geological categories. Notably, categories such as GN, CG, and MR, which are more sensitive to terrain changes, demonstrate substantial accuracy gains. For example, in the GN category, MCDNet achieves a PA of 64.2%, outperforming ViT by 1.6%, and an IoU of 41.1%, exceeding BEiT by 4.9%. It is also worth mentioning that although GL has a relatively low sample proportion, it consistently achieves the highest PA across all models, with MCDNet reaching 89.6%. This suggests that GL exhibits distinct terrain-related characteristics that are effectively captured through DEM-enhanced features, facilitating its accurate identification by the model. DEM is especially effective in mountainous and glaciated terrains where elevation and slope sharply constrain lithological distribution, such as in alpine sedimentary sequences and faulted metamorphic belts. The elevation gradient improves the visibility of stratigraphic layering and supports the delineation of geomorphological boundaries.

Under the MTS dataset input condition, the experimental results (Table 7) indicate particularly improved performance in geological categories strongly driven by topographic variation and gravitational deposition. MCDNet achieves the highest PA in 8 out of 11 categories and the highest IoU in 7 categories. Specifically, for MR, MCDNet reaches a PA of 62.7%, surpassing BEiT by 5.2%, and an IoU of 48.4%, outperforming ViT by 2.7%. GL consistently demonstrates high classification accuracy across all models, achieving a PA of 89.2% with MCDNet. This is attributed to the erosion-resistant nature of GL and its typical occurrence in high-elevation, steep-slope areas, where its topographic distinction from other lithologies enhances its separability. GN, despite having a low sample proportion, also benefits significantly from slope information, with its IoU increasing from 36.9% (BEiT) to 43.8% (MCDNet), highlighting slope’s ability to compensate for weak sample representation. These results suggest that the integration of slope features with MCDNet’s multi-scale interaction structure enhances the model’s capability to capture inter-feature geometric relationships. FCN and SegFormer, however, continue to exhibit limited robustness under complex terrain conditions, as evidenced by IoU scores falling below 10% for classes such as MB and DI, underscoring their deficiency in modeling spatial structure-dependent features.
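To illustrate the slope feature, the sketch below derives a slope map in degrees from a DEM grid using finite differences. This is an assumption about how such a layer is typically produced; the passage does not state how the slope input was computed, and the `slope_degrees` function and the toy grid are illustrative only.

```python
import math


def slope_degrees(dem, cell_size):
    """Slope in degrees from a 2D elevation grid (at least 2 x 2 cells).

    Uses central differences in the interior and one-sided differences
    at the borders; `cell_size` is the ground spacing in elevation units.
    """
    rows, cols = len(dem), len(dem[0])
    slope = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            r0, r1 = max(r - 1, 0), min(r + 1, rows - 1)
            c0, c1 = max(c - 1, 0), min(c + 1, cols - 1)
            dz_dx = (dem[r][c1] - dem[r][c0]) / ((c1 - c0) * cell_size)
            dz_dy = (dem[r1][c] - dem[r0][c]) / ((r1 - r0) * cell_size)
            slope[r][c] = math.degrees(math.atan(math.hypot(dz_dx, dz_dy)))
    return slope


# Toy DEM: a plane rising 10 m per 10 m cell in the x direction,
# so every cell has a slope of about 45 degrees.
dem = [[0, 10, 20], [0, 10, 20], [0, 10, 20]]
print(slope_degrees(dem, cell_size=10.0)[1][1])
```

Since slope is a local derivative of elevation, it emphasizes breaks in terrain rather than absolute height, which is consistent with the separate MDE and MTS configurations carrying related but not identical information.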

Texture features, which capture variations in surface roughness and structural patterns of lithological units, serve as critical sensitive information for distinguishing between geologically similar classes. They are particularly effective in regions where spectral similarities and morphological ambiguities coexist, addressing the challenge of high inter-class similarity and intra-class variability. Under the MGL dataset input condition, the experimental results (Table 8) show that MCDNet achieves the highest PA and IoU in 7 out of 11 geological categories. Notably, significant accuracy improvements are observed in classes such as GN, CG, and SA when guided by GLCM-based texture features. For GN, MCDNet attains a PA of 68.0% and an IoU of 44.8%, surpassing BEiT by 9.0% and 8.8%, respectively. This indicates the strong discriminative power of texture information for lithologies characterized by structural directionality and foliation. GL, known for its distinct crystal granularity in the texture domain, consistently achieves near-saturation accuracy across all models. Texture features are particularly beneficial in complex sedimentary basins and metamorphic zones, where lithologies often exhibit anisotropic structures and repeating foliated patterns. By encoding local variation in pixel intensity, texture descriptors help distinguish materials with similar spectral responses but different formation processes.
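The idea behind GLCM-based texture descriptors can be sketched as follows: a gray-level co-occurrence matrix counts how often pairs of quantized intensities occur at a fixed pixel offset, and scalar statistics are then derived from it. The window size, offsets, number of gray levels, and choice of statistics used in the experiments are not stated here, so the values below are assumptions and the function names are illustrative.

```python
def glcm(image, levels, dx=1, dy=0):
    """Symmetric, normalized co-occurrence matrix for the offset (dx, dy).

    `image` is a 2D list of integers in [0, levels). At least one pixel
    pair must fit inside the image for the chosen offset.
    """
    counts = [[0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                i, j = image[r][c], image[r2][c2]
                counts[i][j] += 1
                counts[j][i] += 1  # count both directions (symmetric GLCM)
    total = sum(map(sum, counts))
    return [[v / total for v in row] for row in counts]


def contrast(p):
    """Large when neighboring pixels differ strongly in gray level."""
    n = len(p)
    return sum(p[i][j] * (i - j) ** 2 for i in range(n) for j in range(n))


def homogeneity(p):
    """Large when neighboring pixels have similar gray levels."""
    n = len(p)
    return sum(p[i][j] / (1 + (i - j) ** 2) for i in range(n) for j in range(n))


smooth = [[1, 1], [1, 1]]    # uniform patch
checker = [[0, 1], [1, 0]]   # alternating patch
print(contrast(glcm(smooth, 2)), contrast(glcm(checker, 2)))        # 0.0 1.0
print(homogeneity(glcm(smooth, 2)), homogeneity(glcm(checker, 2)))  # 1.0 0.5
```

The two toy patches have the same mean intensity in the checkerboard case as a mid-gray surface would, yet their contrast and homogeneity differ sharply, which is the property that lets texture separate materials with similar spectral responses.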

The NDVI, primarily reflecting surface vegetation coverage, contributes to lithological discrimination depending on the correlation between vegetation patterns and lithology in the study area. Under the MNV dataset input condition, the results (Table 9) show that MCDNet continues to achieve leading performance across most categories, attaining the highest PA and IoU in 8 out of 11 lithological classes. However, compared to other guiding features such as geological maps or slope, the inclusion of NDVI does not yield a substantial performance gain. This is largely due to the sparse vegetation cover in the study area, which limits the effectiveness of NDVI in distinguishing between lithological types. Nevertheless, MCDNet still shows notable improvements in classes such as MR, SA, and MS, indicating that even weak NDVI signals can provide complementary information when integrated with multispectral and SAR features.
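For completeness, the NDVI layer follows the standard definition NDVI = (NIR - Red) / (NIR + Red), computed per pixel from the near-infrared and red bands. The sketch below is illustrative: which sensor bands were used is not stated in this passage, and the `ndvi` function, the zero-denominator fallback, and the toy reflectance values are assumptions.

```python
def ndvi(nir, red, eps=1e-12):
    """Per-pixel NDVI for two equally sized 2D band grids.

    Pixels where NIR + Red is (near) zero are set to 0.0 to avoid
    division by zero; this fallback is a choice made for this sketch.
    """
    out = []
    for nir_row, red_row in zip(nir, red):
        out.append([
            (n - r) / (n + r) if abs(n + r) > eps else 0.0
            for n, r in zip(nir_row, red_row)
        ])
    return out


# Toy reflectances: one vegetated pixel and one bare pixel.
nir = [[0.5, 0.2]]
red = [[0.1, 0.2]]
print(ndvi(nir, red))  # first value about 0.667, second value 0.0
```

In sparsely vegetated terrain most pixels fall near the bare-surface end of this index, so the layer varies little across lithological units, which is consistent with the limited gain reported for the MNV configuration.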