HAF-YOLO: Dynamic Feature Aggregation Network for Object Detection in Remote-Sensing Images

Abstract

1. Introduction

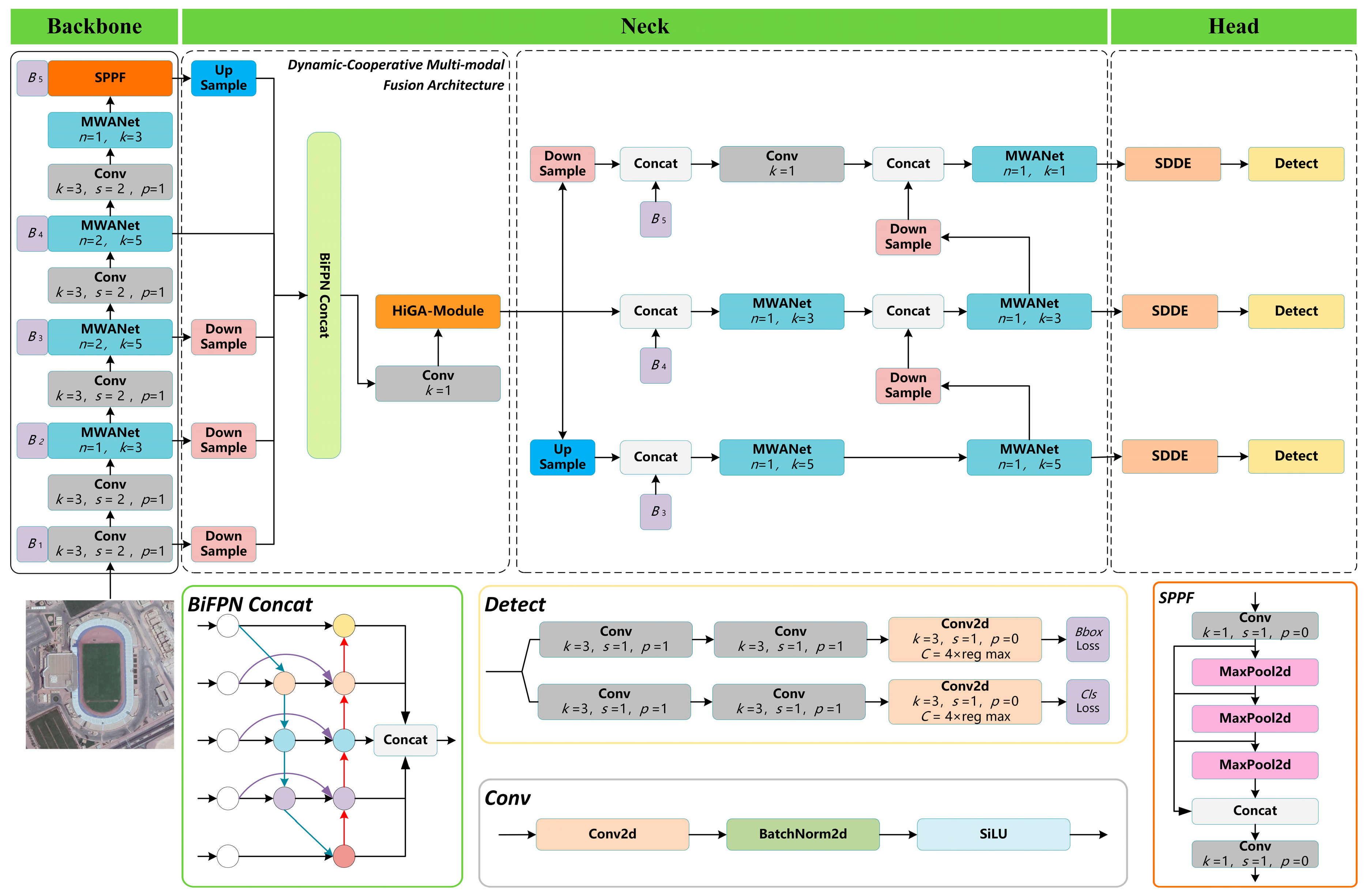

- We propose DyCoMF-Arch, which constructs a multigranularity feature space through multilevel progressive sampling operations. By integrating BiFPN-Concat for cross-level feature interaction, the architecture improves multiscale object detection in complex remote-sensing scenes.
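The weighted concatenation behind BiFPN-Concat can be sketched in plain Python. This sketch assumes the common BiFPN-style fast normalized fusion: each input gets a learnable scalar weight, the weights are clipped at zero and divided by their sum plus a small epsilon, each input is scaled by its weight, and the scaled inputs are concatenated along the channel axis. The function names, the epsilon of 1e-4, and the list-of-channels layout are hypothetical choices for illustration, not the authors' implementation.

```python
from typing import List

# A feature map is modeled as a list of channels; each channel is a flat list of values.
FeatureMap = List[List[float]]


def normalize_weights(raw: List[float], eps: float = 1e-4) -> List[float]:
    """Fast normalized fusion: clip weights at zero (ReLU), then divide by their sum plus eps."""
    clipped = [max(w, 0.0) for w in raw]
    total = sum(clipped) + eps
    return [w / total for w in clipped]


def bifpn_concat(inputs: List[FeatureMap], raw_weights: List[float]) -> FeatureMap:
    """Scale each input by its normalized weight, then stack all channels (channel concat).

    Inputs are assumed to be already resampled to the same spatial size.
    """
    if len(inputs) != len(raw_weights):
        raise ValueError("expected one weight per input")
    fused: FeatureMap = []
    for w, fmap in zip(normalize_weights(raw_weights), inputs):
        fused.extend([w * v for v in channel] for channel in fmap)
    return fused


# Two 2-channel inputs on a 2x2 grid, flattened to 4 values per channel.
shallow = [[1.0, 2.0, 3.0, 4.0], [0.5, 0.5, 0.5, 0.5]]
deep = [[4.0, 4.0, 4.0, 4.0], [2.0, 0.0, 2.0, 0.0]]
out = bifpn_concat([shallow, deep], raw_weights=[1.0, 3.0])
print(len(out))  # two channels from each input
print([round(v, 3) for v in out[2]])  # deep channel 0 scaled by 3 / (4 + 1e-4)
```

In a PyTorch module the raw weights would typically be an `nn.Parameter`, so backpropagation learns how much each pyramid level contributes. Concatenating, rather than summing as the original BiFPN does, keeps the scaled inputs in separate channels and leaves the mixing to the next convolution.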

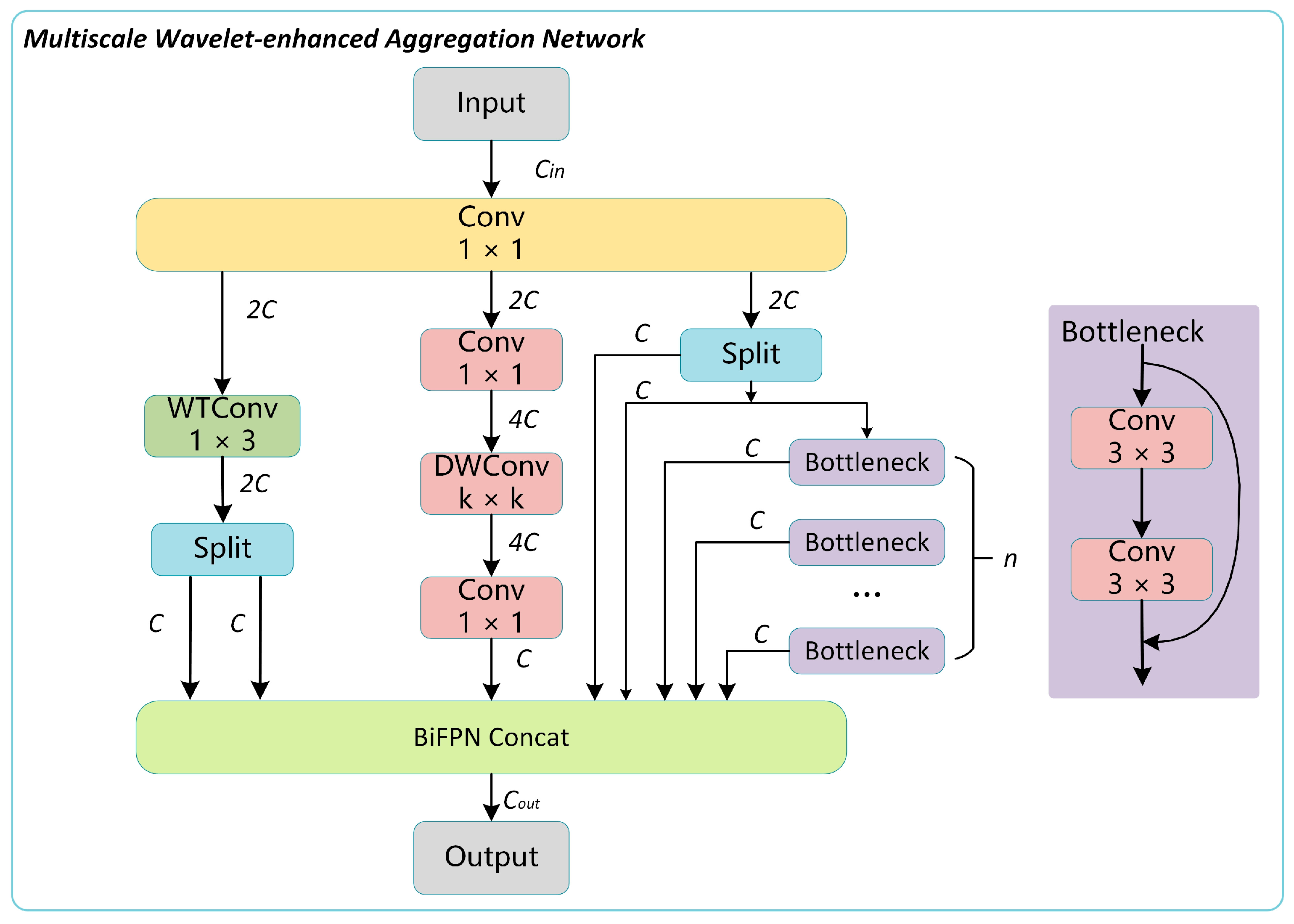

- We design MWA-Net to replace the original C2f module. The network builds multipath branches to extract richer image features and uses a dynamic fusion branch based on the BiFPN structure to perform adaptively weighted feature fusion. This strengthens the representation of small objects against complex backgrounds and mitigates the loss of feature detail that the C2f module suffers in remote-sensing scenes.
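The dynamic weighted fusion in MWA-Net can be illustrated with a toy multipath block. The three paths here (identity, plus 3- and 5-tap moving averages on a 1D signal) are hypothetical stand-ins for the network's convolutional branches, which are not reproduced; what the sketch does show is the BiFPN-style weighted sum, in which normalized learnable weights decide how much each path contributes to the output.

```python
from typing import Callable, List

Signal = List[float]


def box_filter(width: int) -> Callable[[Signal], Signal]:
    """Same-length moving average with zero padding (a stand-in for one conv branch)."""
    half = width // 2

    def apply(x: Signal) -> Signal:
        n = len(x)
        out = []
        for i in range(n):
            window = [x[j] if 0 <= j < n else 0.0 for j in range(i - half, i + half + 1)]
            out.append(sum(window) / width)
        return out

    return apply


def weighted_sum_fusion(branches: List[Signal], raw_weights: List[float], eps: float = 1e-4) -> Signal:
    """BiFPN-style fusion: sum_i relu(w_i) / (eps + sum_j relu(w_j)) * x_i."""
    clipped = [max(w, 0.0) for w in raw_weights]
    total = sum(clipped) + eps
    length = len(branches[0])
    return [sum(w / total * b[k] for w, b in zip(clipped, branches)) for k in range(length)]


def multipath_block(x: Signal, raw_weights: List[float]) -> Signal:
    """Run parallel paths with different receptive fields, then fuse them with learned weights."""
    paths = [list, box_filter(3), box_filter(5)]  # identity, narrow, wide
    return weighted_sum_fusion([p(x) for p in paths], raw_weights)


x = [0.0, 0.0, 9.0, 0.0, 0.0]  # one bright "small object"
print(multipath_block(x, [1.0, 0.0, 0.0]))  # all weight on the identity path
print(multipath_block(x, [0.0, 0.0, 1.0]))  # all weight on the widest path
```

With all weight on the identity path the isolated peak passes through almost unchanged, whereas moving the weight to the widest path spreads it evenly over neighboring positions. Learnable fusion weights let training choose this balance, which is one plausible way such a design helps preserve small-object detail.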

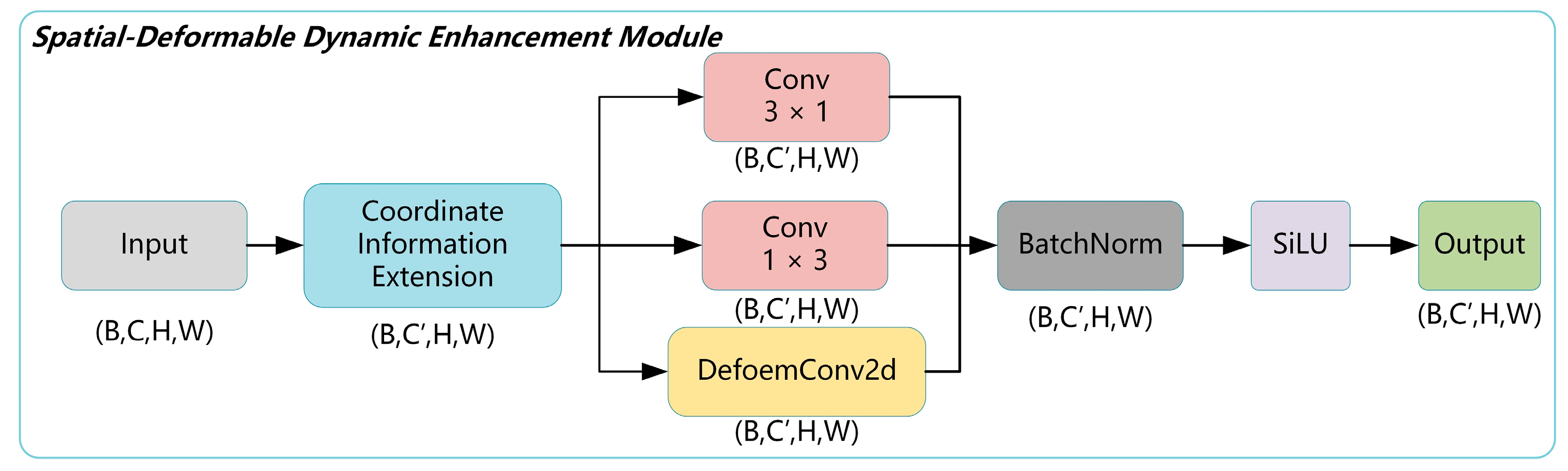

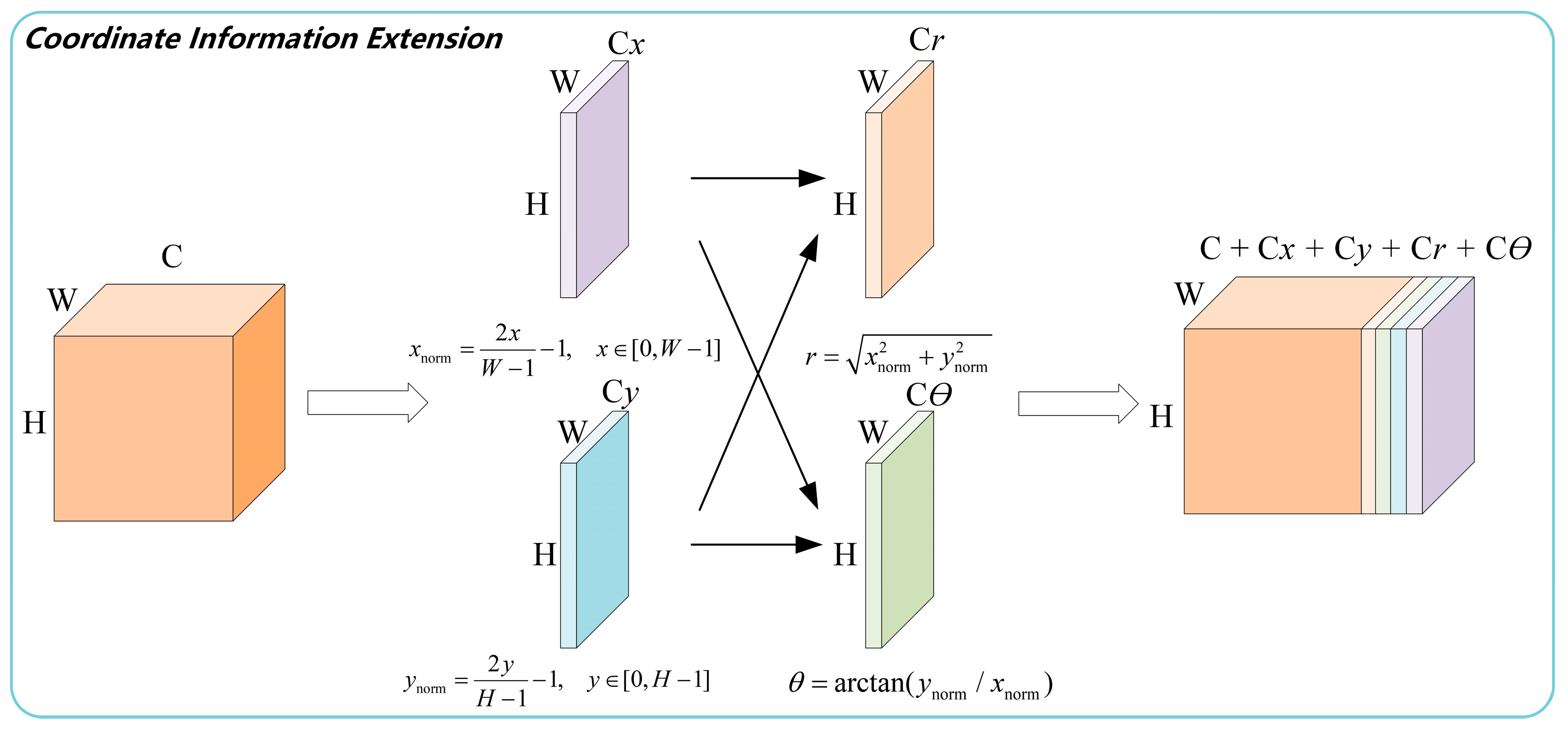

- To address the limitations of the YOLOv8 detection head in detecting small objects in remote-sensing images, we propose the spatial-deformable dynamic enhancement module (SDDE-Module). This module introduces a coordinate enhancement layer that embeds absolute coordinate information and adds a multibranch convolutional structure comprising horizontal, vertical, and deformable convolution branches. This design improves the localization of densely packed small objects under background interference and overcomes the inability of fixed sampling patterns to adapt to geometric deformation.
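The three ingredients named in the SDDE-Module description can be sketched on a single-channel map in plain Python. The sketch assumes CoordConv-style coordinate channels (x and y normalized to [-1, 1]) as one plausible form of the coordinate enhancement layer, 1×k and k×1 strip convolutions for the horizontal and vertical branches, and one output tap of a 3×3 deformable convolution in the DCNv1 style, where each sampling point is shifted by a learned fractional offset and read with bilinear interpolation. Function names and the single-channel layout are hypothetical, and how the module actually combines the branches is not reproduced. In PyTorch, `torchvision.ops.DeformConv2d` provides the batched operation, taking the offsets as a separate input tensor.

```python
import math
from typing import List, Tuple

Grid = List[List[float]]  # single-channel map indexed as [row][col]


def coord_channels(height: int, width: int) -> Tuple[Grid, Grid]:
    """CoordConv-style x and y channels, normalized to [-1, 1], to concatenate with the input."""
    def norm(i: int, n: int) -> float:
        return 0.0 if n == 1 else 2.0 * i / (n - 1) - 1.0

    xs = [[norm(c, width) for c in range(width)] for _ in range(height)]
    ys = [[norm(r, height) for _ in range(width)] for r in range(height)]
    return xs, ys


def strip_conv(fmap: Grid, taps: List[float], horizontal: bool) -> Grid:
    """Same-size 1xk (horizontal) or kx1 (vertical) cross-correlation with zero padding."""
    h, w = len(fmap), len(fmap[0])
    half = len(taps) // 2
    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            acc = 0.0
            for i, t in enumerate(taps):
                rr, cc = (r, c + i - half) if horizontal else (r + i - half, c)
                if 0 <= rr < h and 0 <= cc < w:
                    acc += t * fmap[rr][cc]
            out[r][c] = acc
    return out


def bilinear(fmap: Grid, y: float, x: float) -> float:
    """Bilinear sample at fractional (y, x); positions outside the map contribute zero."""
    h, w = len(fmap), len(fmap[0])
    y0, x0 = math.floor(y), math.floor(x)
    value = 0.0
    for yy in (y0, y0 + 1):
        for xx in (x0, x0 + 1):
            if 0 <= yy < h and 0 <= xx < w:
                value += (1 - abs(y - yy)) * (1 - abs(x - xx)) * fmap[yy][xx]
    return value


def deform_conv_at(fmap: Grid, kernel: Grid, center: Tuple[int, int],
                   offsets: List[Tuple[float, float]]) -> float:
    """One output of a 3x3 deformable convolution (DCNv1-style, no modulation mask).

    Each regular tap (dy, dx) is shifted by its own offset before bilinear sampling.
    """
    cy, cx = center
    taps = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1)]
    total = 0.0
    for (dy, dx), (oy, ox) in zip(taps, offsets):
        total += kernel[dy + 1][dx + 1] * bilinear(fmap, cy + dy + oy, cx + dx + ox)
    return total


fmap = [[float(4 * r + c) for c in range(4)] for r in range(4)]
xs, ys = coord_channels(4, 4)
horiz = strip_conv(fmap, [1.0, 1.0, 1.0], horizontal=True)
mean3x3 = [[1.0 / 9] * 3 for _ in range(3)]
print(deform_conv_at(fmap, mean3x3, (1, 1), [(0.0, 0.0)] * 9))  # zero offsets: plain 3x3 conv
print(deform_conv_at(fmap, mean3x3, (1, 1), [(0.0, 0.5)] * 9))  # every tap shifted half a pixel right
```

With zero offsets the deformable tap equals the ordinary 3×3 mean of the neighborhood (5, up to floating-point rounding); shifting every tap half a pixel to the right moves the result to 5.5. Because the offsets are learned per position, the sampling grid can bend to follow rotated or elongated objects instead of staying fixed.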

2. Related Work

2.1. Traditional Object Detection Methods

2.2. YOLO Series Object Detection Methods

2.3. Application of YOLO Series Algorithms in Remote-Sensing Image Detection

3. Methodology

3.1. DyCoMF-Arch

3.2. MWA-Net

3.3. SDDE-Module

4. Experiments and Results

4.1. Experimental Environment Configuration

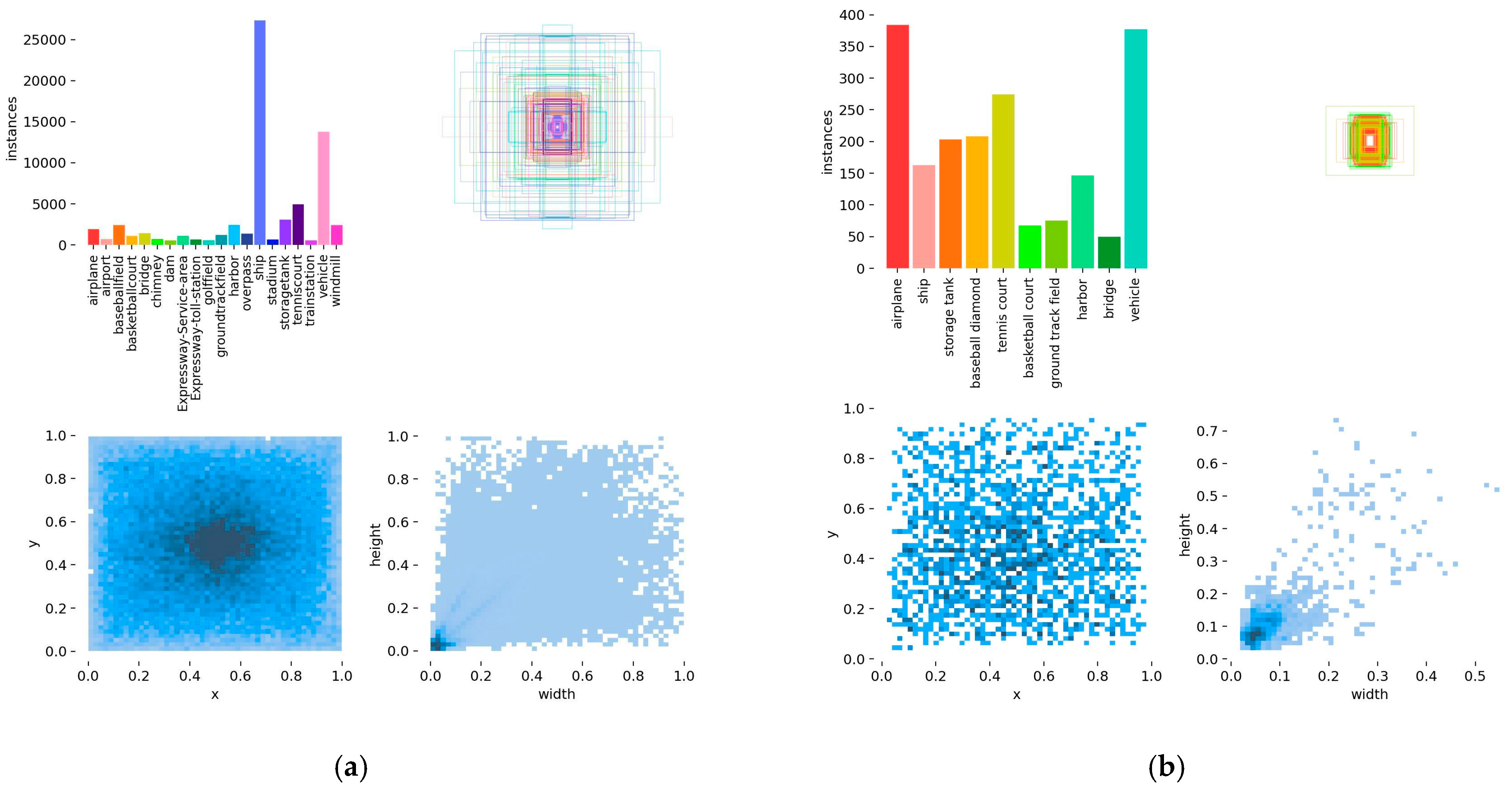

4.2. Experimental Datasets

4.3. Evaluation Metrics
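The result tables in this article report precision (P), recall (R), and AP-based metrics, all of which rest on IoU matching between predicted and ground-truth boxes. A minimal plain-Python sketch of the standard definitions follows, assuming corner-format boxes (x1, y1, x2, y2); the function names are illustrative. A prediction counts as a true positive when it matches a not-yet-matched ground-truth box of the same class with IoU at or above the threshold (0.5 for AP50); unmatched predictions are false positives and missed ground-truth boxes are false negatives. True negatives are not used, since there is no finite set of background boxes to count.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x2 > x1 and y2 > y1


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(tp: int, fp: int, fn: int) -> Tuple[float, float]:
    """P = TP / (TP + FP) and R = TP / (TP + FN), guarding against empty denominators."""
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    return p, r


print(iou((0, 0, 10, 10), (5, 0, 15, 10)))  # overlap 50, union 150
print(precision_recall(tp=8, fp=2, fn=4))
```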

4.4. Experimental Results and Analysis

4.4.1. Ablation Experiments

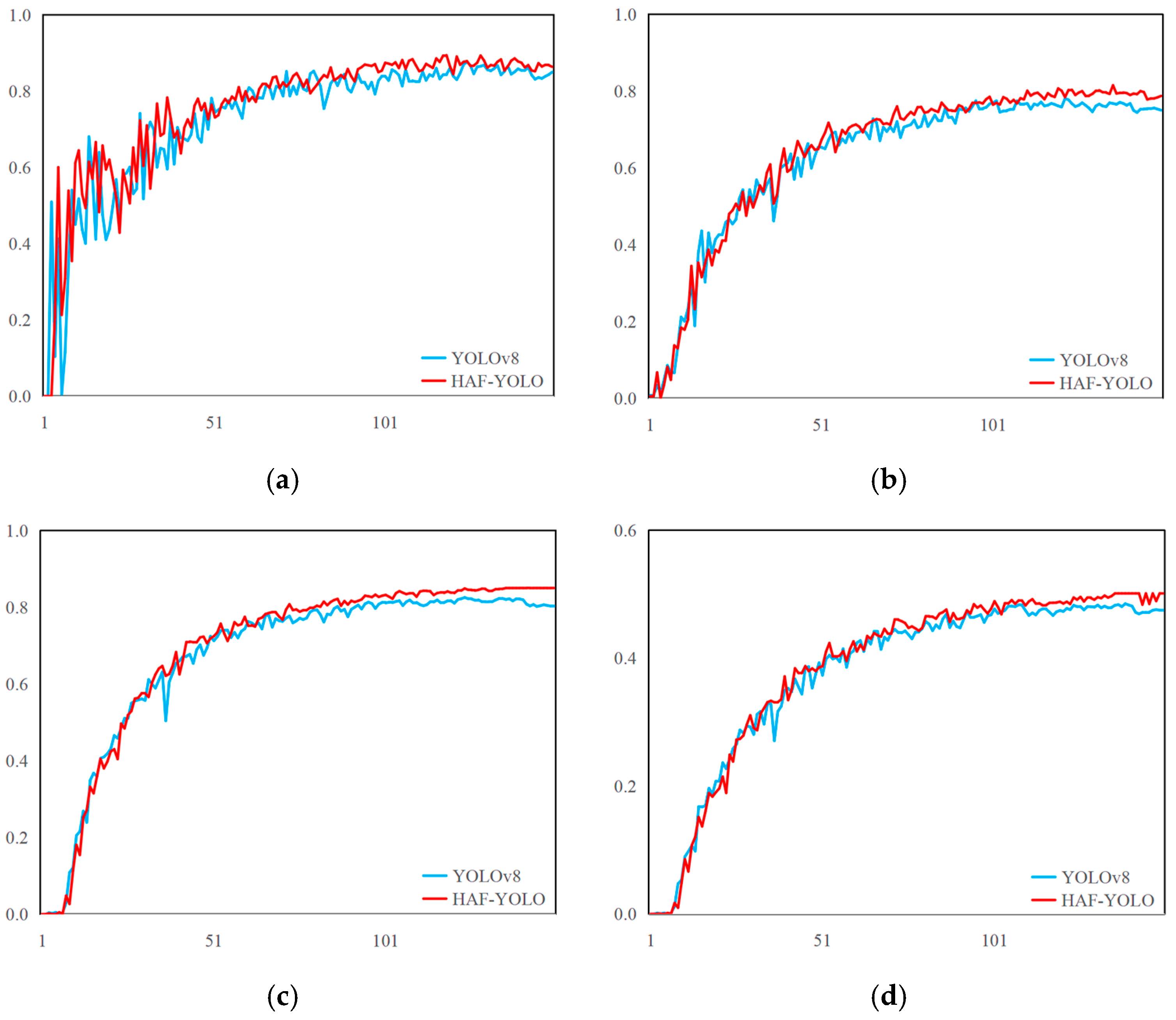

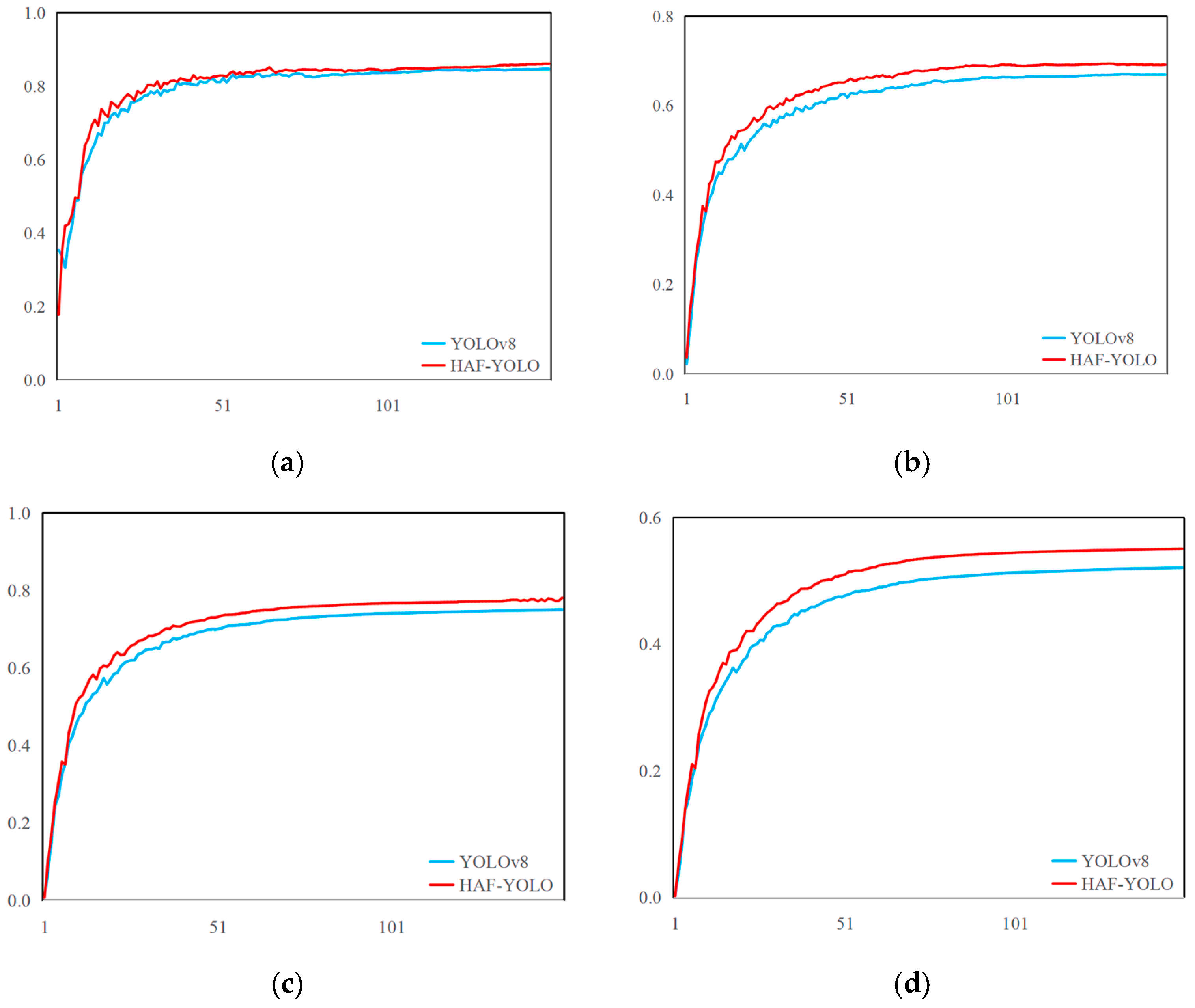

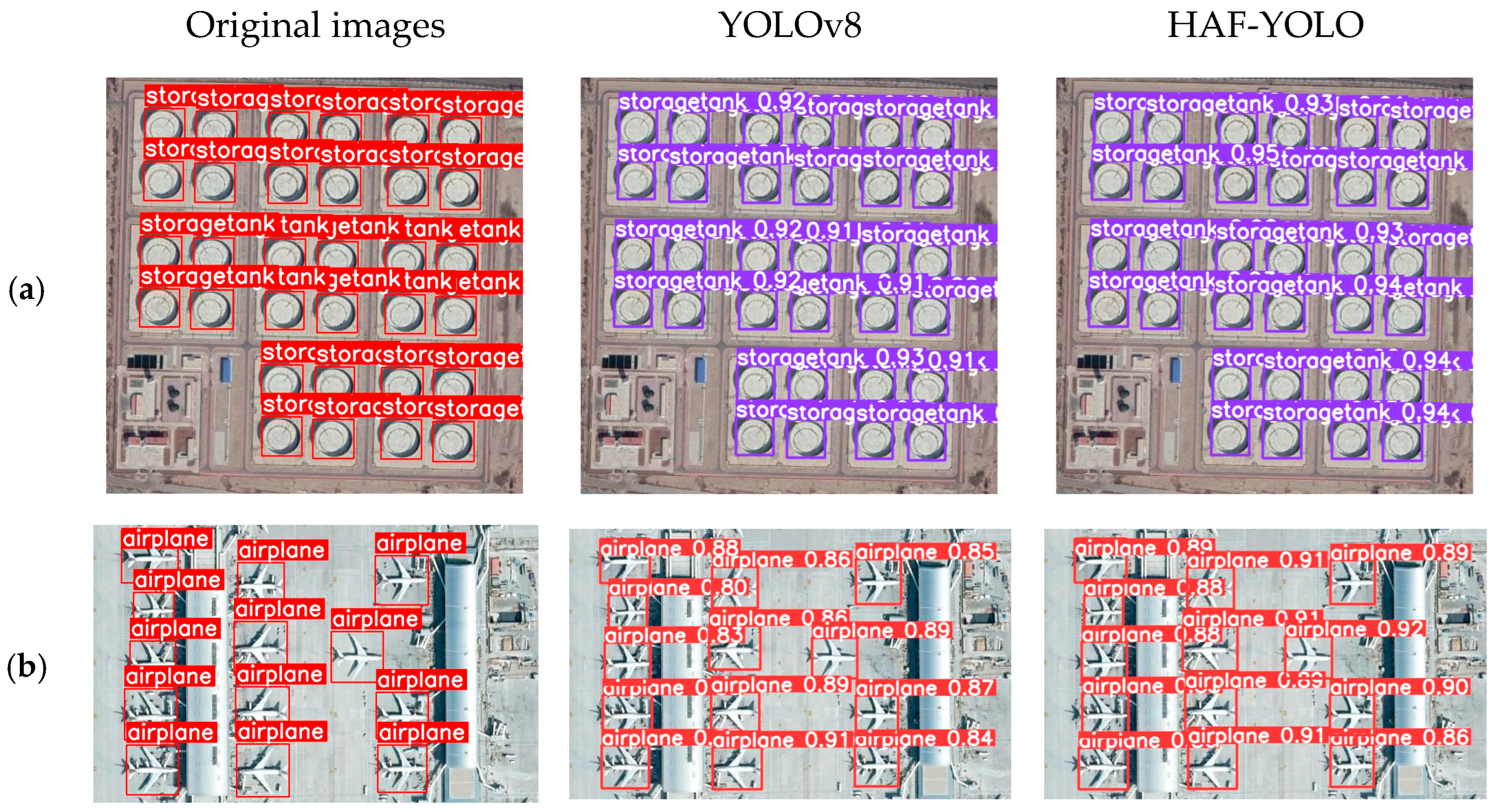

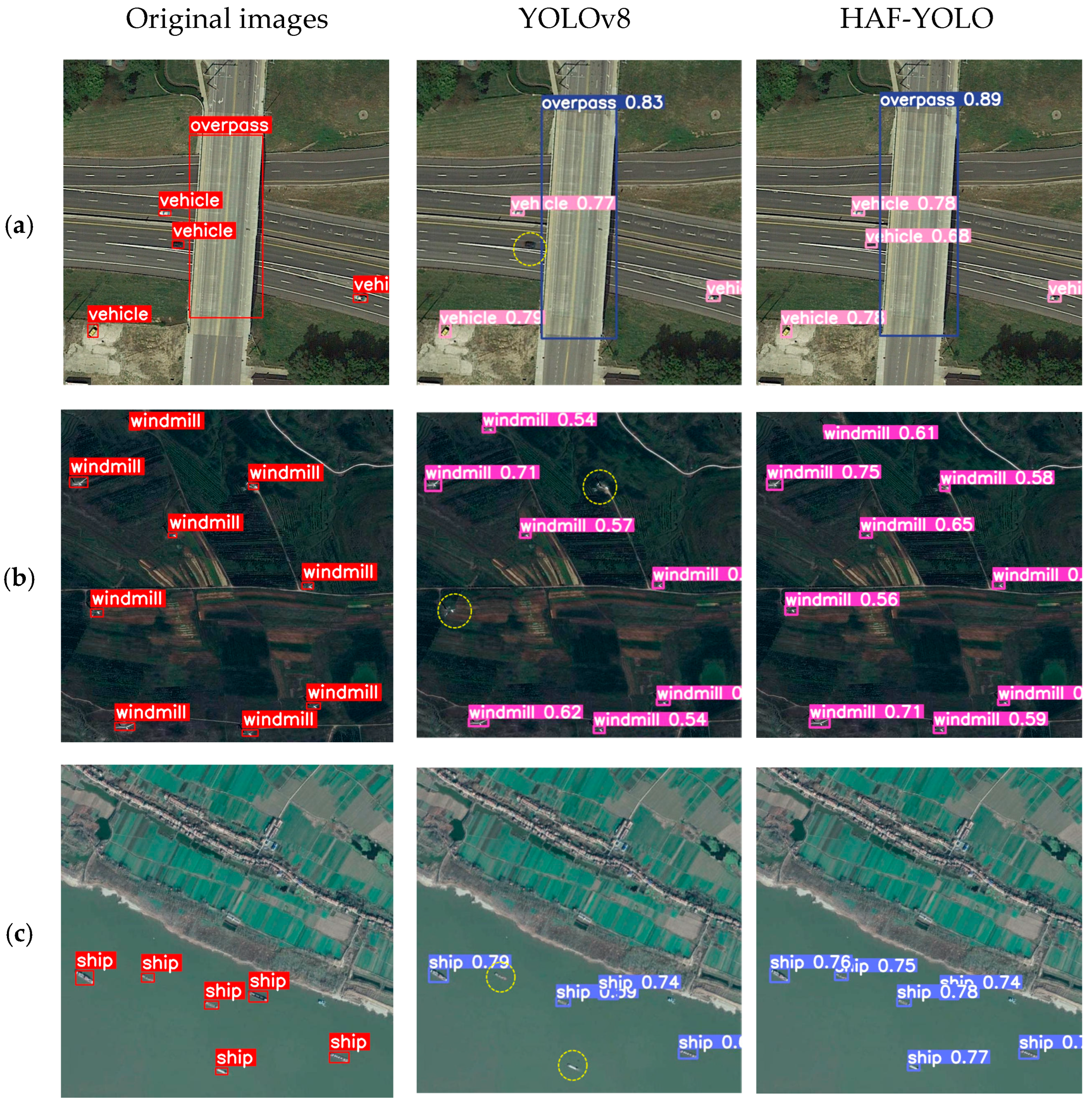

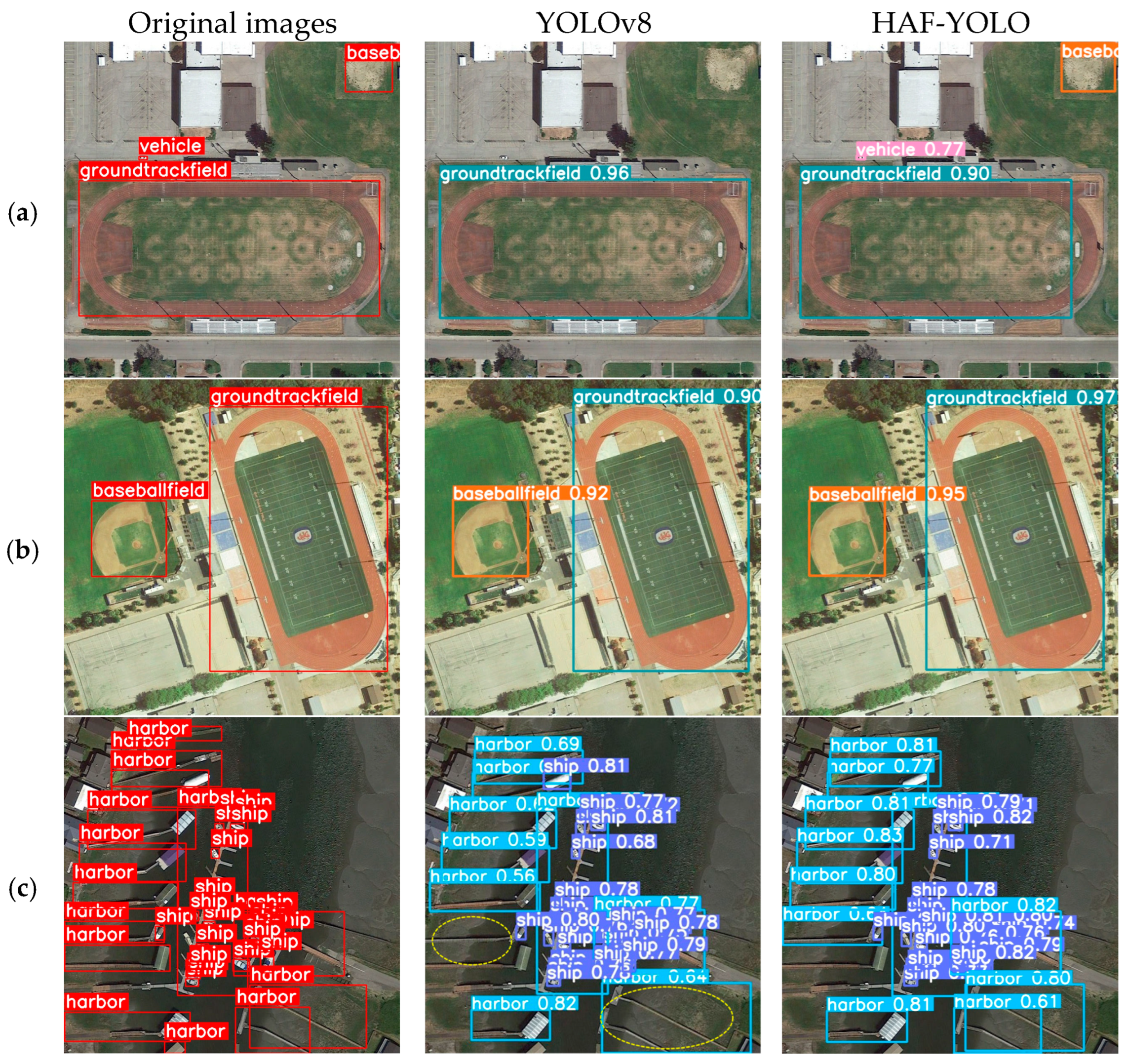

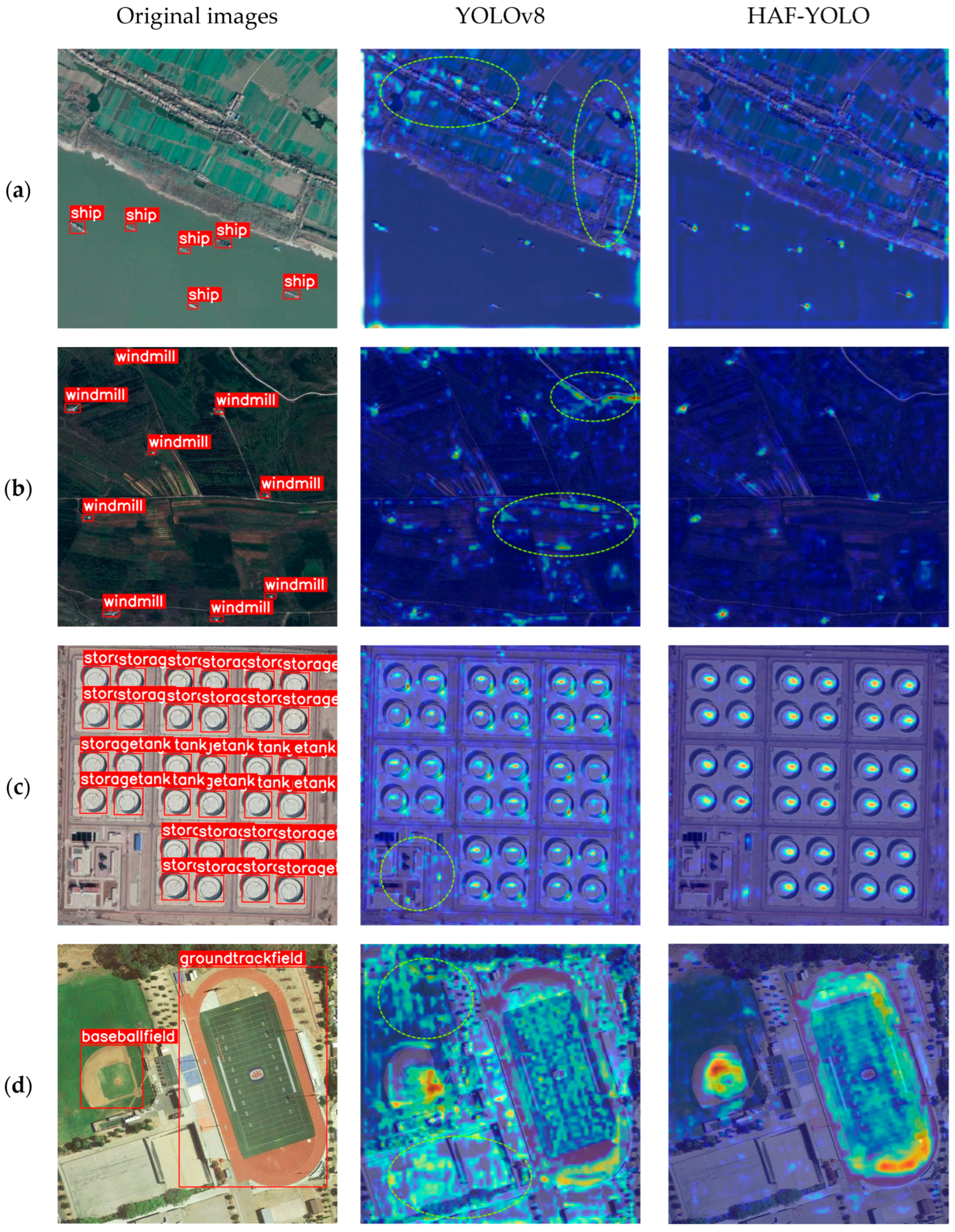

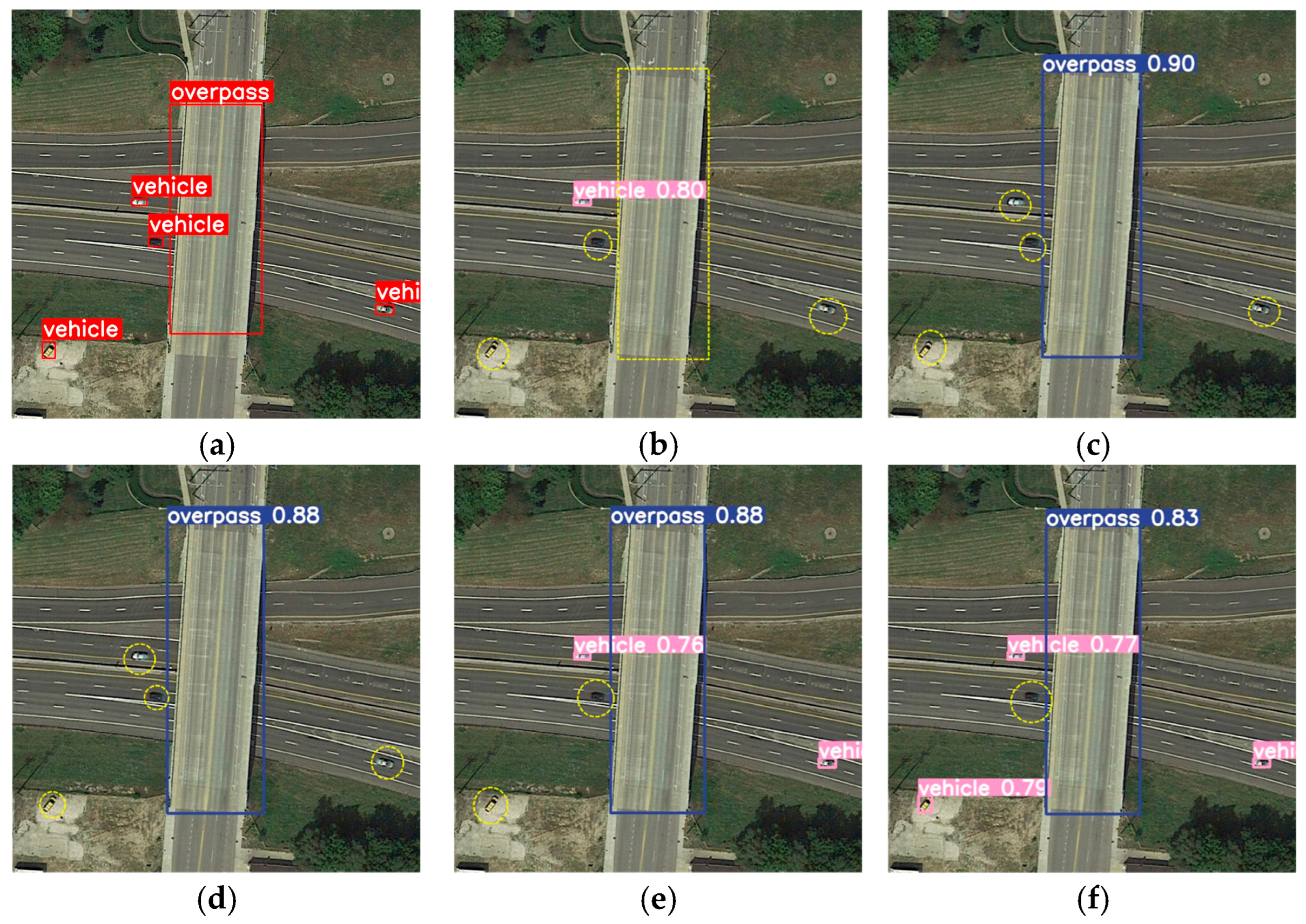

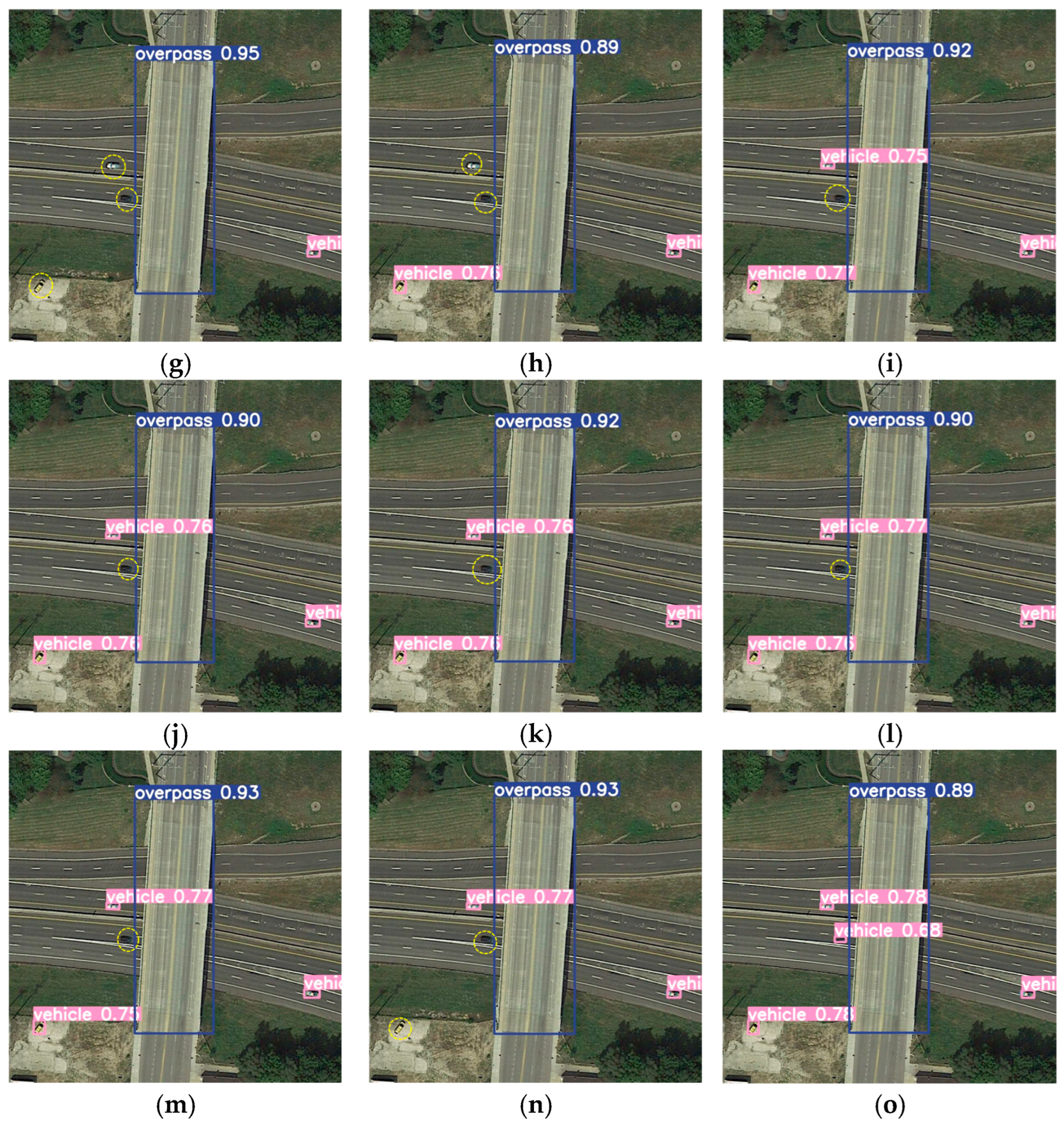

4.4.2. Comparative Analysis Between HAF-YOLO and YOLOv8

4.4.3. Comparative Experiments with Other Models

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AP | Average precision |
| CGAA | Chunked gated adaptive attention |
| CNN | Convolutional neural network |
| FLOP | Floating-point operation |
| FN | False negative |
| FP | False positive |
| FPN | Feature pyramid network |
| GELAN | General efficient layer aggregation network |
| HOG | Histogram of oriented gradients |
| IoU | Intersection over union |
| MHSA | Multihead self-attention |
| NMS | Non-maximum suppression |
| PGI | Programmable gradient information |
| ROI | Region of interest |
| RPN | Region proposal network |
| SAR | Synthetic aperture radar |
| SDDE | Spatial-deformable dynamic enhancement |
| SE | Squeeze-and-excitation |
| SPPF | Spatial pyramid pooling fast |
| SSD | Single-shot multibox detector |
| SVM | Support vector machine |
| TAA | Task-aligned assigner |
| TN | True negative |
| TP | True positive |
| UAV | Unmanned aerial vehicle |
| YOLO | You Only Look Once |

References

- Zheng, Z.; Yuan, J.; Yao, W.; Kwan, P.; Yao, H.; Liu, Q.; Guo, L. Fusion of UAV-acquired visible images and multispectral data by applying machine-learning methods in crop classification. Agronomy 2024, 14, 2670.

- Zhang, Z.; Yao, F.; Li, J. Dynamic Penetration Test Based on YOLOv5. In Proceedings of the 2022 3rd International Conference on Geology, Mapping and Remote Sensing (ICGMRS), Zhoushan, China, 22–24 April 2022.

- Morita, M.; Kinjo, H.; Sato, S.; Tansuriyavong; Sulyyon; Anezaki, T. Autonomous Flight Drone for Infrastructure (Transmission Line) Inspection. In Proceedings of the 2017 International Conference on Intelligent Informatics and Biomedical Sciences (ICIIBMS), Okinawa, Japan, 24–26 November 2017.

- Menkhoff, T.; Tan, E.K.B.; Ning, K.S.; Hup, T.G.; Pan, G. Tapping Drone Technology to Acquire 21st Century Skills: A Smart City Approach. In Proceedings of the 2017 IEEE SmartWorld, San Francisco, CA, USA, 4–8 August 2017.

- Ryoo, D.-W.; Lee, M.-S.; Lim, C.-D. Design of a Drone-Based Real-Time Service System for Facility Inspection. In Proceedings of the 2023 14th International Conference on Information and Communication Technology Convergence (ICTC), Jeju Island, Republic of Korea, 11–13 October 2023.

- Sun, X.; Zhang, W. Implementation of Target Tracking System Based on Small Drone. In Proceedings of the 2019 IEEE 4th Advanced Information Technology, Electronic and Automation Control Conference (IAEAC), Chengdu, China, 20–22 December 2019.

- Wang, Z.; Xia, F.; Zhang, C. FD_YOLOX: An Improved YOLOX Object Detection Algorithm Based on Dilated Convolution. In Proceedings of the 2023 IEEE 18th Conference on Industrial Electronics and Applications (ICIEA), Ningbo, China, 18–22 August 2023.

- Zong, H.; Pu, H.; Zhang, H.; Wang, X.; Zhong, Z.; Jiao, Z. Small Object Detection in UAV Image Based on Slicing Aided Module. In Proceedings of the 2022 IEEE 4th International Conference on Power, Intelligent Computing and Systems (ICPICS), Shenyang, China, 29–31 July 2022.

- Dalal, N.; Triggs, B. Histograms of Oriented Gradients for Human Detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005.

- Lowe, D.G. Object Recognition from Local Scale-Invariant Features. In Proceedings of the Seventh IEEE International Conference on Computer Vision, Corfu, Greece, 20–27 September 1999.

- Pan, W.; Huan, W.; Xu, L. Improving High-Voltage Line Obstacle Detection with Multi-Scale Feature Fusion in YOLO Algorithm. In Proceedings of the 2024 6th International Conference on Electronics and Communication, Network and Computer Technology (ECNCT), Guangzhou, China, 19–21 July 2024.

- LeCun, Y.; Boser, B.; Denker, J.S.; Henderson, D.; Howard, R.E.; Hubbard, W.; Jackel, L.D. Backpropagation applied to handwritten zip code recognition. Neural Comput. 1989, 1, 541–551.

- Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollar, P. Feature Pyramid Networks for Object Detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and Efficient Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020.

- Viola, P.; Jones, M. Rapid Object Detection Using a Boosted Cascade of Simple Features. In Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), Kauai, HI, USA, 8–14 December 2001.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014.

- Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Cai, Z.; Vasconcelos, N. Cascade R-CNN: Delving into high quality object detection. arXiv 2017, arXiv:1712.00726.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 8–16 October 2016.

- Huang, G.; Liu, Z.; Weinberger, K.Q. Densely connected convolutional networks. arXiv 2016, arXiv:1608.06993.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

- Jocher, G.; Stoken, A.; Chaurasia, A.; Borovec, J.; Kwon, Y.; Michael, K.; Changyu, L.; Fang, J.; Skalski, P.; Hogan, A.; et al. YOLOv5. Available online: https://github.com/ultralytics/yolov5 (accessed on 27 August 2024).

- Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W.; et al. YOLOv6: A single-stage object detection framework for industrial applications. arXiv 2022, arXiv:2209.02976.

- Wang, C.-Y.; Bochkovskiy, A.; Liao, H.-Y.M. YOLOv7: Trainable Bag-of-Freebies Sets New State-of-the-Art for Real-Time Object Detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023.

- Jocher, G.; Chaurasia, A.; Qiu, J. YOLOv8. Available online: https://github.com/ultralytics/ultralytics (accessed on 27 August 2024).

- Wang, C.-Y.; Yeh, I.-H.; Liao, H.-Y.M. YOLOv9: Learning what you want to learn using programmable gradient information. arXiv 2024, arXiv:2402.13616.

- Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. YOLOv10: Real-time end-to-end object detection. arXiv 2024, arXiv:2405.14458.

- Hu, J.; Pang, T.; Peng, B.; Shi, Y.; Li, T. A small object detection model for drone images based on multi-attention fusion network. Image Vis. Comput. 2025, 155, 105436.

- Liu, M.; Wang, X.; Zhou, A.; Fu, X.; Ma, Y.; Piao, C. UAV-YOLO: Small object detection on unmanned aerial vehicle perspective. Sensors 2020, 20, 2238.

- Wang, X.; He, N.; Hong, C.; Wang, Q.; Chen, M. Improved YOLOX-X based UAV aerial photography object detection algorithm. Image Vis. Comput. 2023, 135, 104697.

- Koyun, O.C.; Keser, R.K.; Akkaya, I.B.; Töreyin, B.U. Focus-and-detect: A small object detection framework for aerial images. Signal Process. Image Commun. 2022, 104, 116675.

- Qu, J.; Tang, Z.; Zhang, L.; Zhang, Y.; Zhang, Z. Remote sensing small object detection network based on attention mechanism and multi-scale feature fusion. Remote Sens. 2023, 15, 2728.

- Wu, Y.; Mu, X.; Shi, H.; Hou, M. An object detection model AAPW-YOLO for UAV remote sensing images based on adaptive convolution and reconstructed feature fusion. Sci. Rep. 2025, 15, 16214.

- Ji, C.L.; Yu, T.; Gao, P.; Wang, F.; Yuan, R.Y. YOLO-TLA: An efficient and lightweight small object detection model based on YOLOv5. J. Real-Time Image Process. 2024, 21, 141.

- Hu, W.; Jiang, X.; Tian, J.; Ye, S.; Liu, S. Land target detection algorithm in remote sensing images based on deep learning. Land 2025, 14, 1047.

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path Aggregation Network for Instance Segmentation. In Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018.

- Zhao, L.L.; Zhu, M.L. MS-YOLOv7: YOLOv7 based on multi-scale for object detection on UAV aerial photography. Drones 2023, 7, 188.

- Liao, H.; Zhu, W. YOLO-DRS: A bioinspired object detection algorithm for remote sensing images incorporating a multi-scale efficient lightweight attention mechanism. Biomimetics 2023, 8, 458.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 5998–6008.

- Finder, S.E.; Amoyal, R.; Treister, E.; Freifeld, O. Wavelet Convolutions for Large Receptive Fields. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024.

- Cheng, G.; Han, J.; Zhou, P.; Guo, L. Multi-class geospatial object detection and geographic image classification based on collection of part detectors. ISPRS J. Photogramm. Remote Sens. 2014, 98, 119–132.

- Li, K.; Wan, G.; Cheng, G.; Meng, L.; Han, J. Object Detection in Optical Remote Sensing Images: A Survey and A New Benchmark. arXiv 2019, arXiv:1909.00133.

| Parameter | Configuration |
|---|---|
| CPU | Intel(R) Core(TM) i9-10940X |
| GPU | NVIDIA GeForce RTX 3090 |
| Operating system | Windows 10 |
| Deep learning framework | PyTorch 2.0.0 |
| GPU accelerator | CUDA 11.6 |
| Integrated development environment | PyCharm |
| Scripting language | Python 3.8 |
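When reproducing these experiments, it helps to log the same fields as the environment table. The following is a minimal sketch using the standard `platform` module and, if PyTorch is installed, `torch.__version__`, `torch.version.cuda`, and `torch.cuda.get_device_name`; the function name and dictionary keys are hypothetical. Note that `torch.version.cuda` reports the CUDA version the PyTorch binary was built against, which can differ from the toolkit installed on the system.

```python
import platform
from typing import Dict


def describe_environment() -> Dict[str, str]:
    """Collect software and hardware details comparable to the environment table."""
    info = {
        "python": platform.python_version(),
        "system": f"{platform.system()} {platform.release()}",
        "cpu": platform.processor() or "unknown",
    }
    try:
        import torch  # optional: only reported when PyTorch is installed
    except ImportError:
        info["torch"] = "not installed"
        return info
    info["torch"] = torch.__version__
    info["cuda_build"] = str(torch.version.cuda)  # None on CPU-only builds
    if torch.cuda.is_available():
        info["gpu"] = torch.cuda.get_device_name(0)
    return info


for key, value in describe_environment().items():
    print(f"{key}: {value}")
```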

| Parameter | Configuration |
|---|---|
| Epochs | 150 |
| Data-loading workers | 10 |
| Batch size | 10 |
| lr0 (initial learning rate) | 0.01 |
| Momentum | 0.937 |
| weight_decay | 0.0005 |
| Network optimizer | SGD |
| box loss weight | 7.5 |
| cls loss weight | 0.5 |
| dfl loss weight | 1.5 |
| mosaic | 1.0 |
| IoU loss | CIoU |
| translate | 0.1 |
| scale | 0.5 |
| fliplr | 0.5 |
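The training configuration uses CIoU for box regression. As commonly defined (Zheng et al., 2020), CIoU subtracts two penalties from IoU: the squared distance between box centers normalized by the squared diagonal of the smallest enclosing box (ρ²/c²), and an aspect-ratio consistency term αv; the box loss is 1 − CIoU. In the Ultralytics YOLOv8 loss this term is scaled by the box loss weight (7.5 in the table). Below is a minimal plain-Python sketch for one pair of boxes in (x1, y1, x2, y2) form; the eps value and function names are assumptions, and training frameworks compute the same quantity on batched tensors.

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def ciou(pred: Box, gt: Box, eps: float = 1e-7) -> float:
    """Complete IoU: IoU minus a center-distance penalty and an aspect-ratio penalty."""
    # Plain IoU.
    iw = max(0.0, min(pred[2], gt[2]) - max(pred[0], gt[0]))
    ih = max(0.0, min(pred[3], gt[3]) - max(pred[1], gt[1]))
    inter = iw * ih
    w1, h1 = pred[2] - pred[0], pred[3] - pred[1]
    w2, h2 = gt[2] - gt[0], gt[3] - gt[1]
    iou = inter / (w1 * h1 + w2 * h2 - inter + eps)
    # rho^2: squared distance between box centers; c^2: squared diagonal of the enclosing box.
    rho2 = ((pred[0] + pred[2]) - (gt[0] + gt[2])) ** 2 / 4 + ((pred[1] + pred[3]) - (gt[1] + gt[3])) ** 2 / 4
    cw = max(pred[2], gt[2]) - min(pred[0], gt[0])
    ch = max(pred[3], gt[3]) - min(pred[1], gt[1])
    c2 = cw ** 2 + ch ** 2 + eps
    # v measures aspect-ratio mismatch; alpha trades it off against the IoU term.
    v = (4 / math.pi ** 2) * (math.atan(w2 / (h2 + eps)) - math.atan(w1 / (h1 + eps))) ** 2
    alpha = v / ((1 - iou) + v + eps)
    return iou - rho2 / c2 - alpha * v


def ciou_loss(pred: Box, gt: Box) -> float:
    """Box regression loss as used with CIoU: 1 - CIoU."""
    return 1.0 - ciou(pred, gt)


gt = (0.0, 0.0, 10.0, 10.0)
print(ciou((5.0, 0.0, 15.0, 10.0), gt))  # same shape, shifted: IoU 1/3 minus 25/325
print(ciou_loss((0.0, 0.0, 10.0, 20.0), gt))  # different aspect ratio adds the v penalty
```

Unlike plain IoU, the center-distance term still gives a useful gradient when boxes barely overlap, which matters for the small objects this article targets.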

| YOLOv8 | DyCoMF-Arch | MWA-Net | SDDE-Module | P (%) | R (%) | mAP50 (%) | mAP50:95 (%) | Param (M) | GFLOPs |
|---|---|---|---|---|---|---|---|---|---|
| √ | × | × | × | 84.6 | 66.9 | 75.0 | 52.1 | 3.0 | 8.2 |
| √ | √ | × | × | 85.4 | 68.8 | 76.2 | 53.3 | 3.3 | 9.0 |
| √ | × | √ | × | 85.5 | 68.0 | 76.1 | 53.2 | 3.7 | 10.4 |
| √ | × | × | √ | 85.2 | 67.8 | 76.3 | 53.7 | 3.2 | 8.7 |
| √ | √ | √ | × | 85.9 | 69.4 | 76.8 | 53.9 | 4.0 | 11.2 |
| √ | √ | × | √ | 86.2 | 69.8 | 77.5 | 54.2 | 3.6 | 9.6 |
| √ | × | √ | √ | 86.4 | 70.9 | 77.2 | 54.9 | 3.9 | 10.9 |
| √ | √ | √ | √ | 87.1 | 71.9 | 78.1 | 55.4 | 4.3 | 11.8 |
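The accuracy-versus-cost trade-off in this ablation table can be quantified directly from its first (YOLOv8 only) and last (all three modules) rows. The dictionary keys below mirror the table's column names; the helper names are illustrative.

```python
from typing import Dict

# Baseline (YOLOv8 only) and full model (all three modules), copied from the ablation table.
baseline = {"P": 84.6, "R": 66.9, "mAP50": 75.0, "mAP50:95": 52.1, "Param (M)": 3.0, "GFLOPs": 8.2}
full = {"P": 87.1, "R": 71.9, "mAP50": 78.1, "mAP50:95": 55.4, "Param (M)": 4.3, "GFLOPs": 11.8}


def deltas(base: Dict[str, float], new: Dict[str, float]) -> Dict[str, float]:
    """Absolute change per column (percentage points for metrics, raw units for cost)."""
    return {k: round(new[k] - base[k], 2) for k in base}


def relative_cost(base: Dict[str, float], new: Dict[str, float]) -> Dict[str, float]:
    """Relative growth of model size and compute, in percent."""
    return {k: round(100 * (new[k] - base[k]) / base[k], 1) for k in ("Param (M)", "GFLOPs")}


print(deltas(baseline, full))
print(relative_cost(baseline, full))
```

By these figures the full model gains 3.1 points of mAP50 and 3.3 points of mAP50:95 over the baseline, for about 43% more parameters (1.3 M) and about 44% more GFLOPs (3.6).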

| YOLOv8 | DyCoMF-Arch | MWA-Net | SDDE-Module | P (%) | R (%) | mAP50 (%) | mAP50:95 (%) | Param (M) | GFLOPs |
|---|---|---|---|---|---|---|---|---|---|
| √ | × | × | × | 84.9 | 75.1 | 80.2 | 47.4 | 3.0 | 8.2 |
| √ | √ | × | × | 85.6 | 77.1 | 81.4 | 47.9 | 3.3 | 9.0 |
| √ | × | √ | × | 85.7 | 76.8 | 81.5 | 47.5 | 3.7 | 10.4 |
| √ | × | × | √ | 85.4 | 76.6 | 81.7 | 48.1 | 3.2 | 8.7 |
| √ | √ | √ | × | 86.1 | 77.8 | 82.4 | 48.2 | 4.0 | 11.2 |
| √ | √ | × | √ | 86.2 | 78.1 | 83.8 | 48.7 | 3.6 | 9.6 |
| √ | × | √ | √ | 86.2 | 78.5 | 83.5 | 48.8 | 3.9 | 10.9 |
| √ | √ | √ | √ | 87.6 | 79.8 | 85.0 | 50.2 | 4.3 | 11.8 |

| Class | P (YOLOv8) | P (HAF-YOLO) | R (YOLOv8) | R (HAF-YOLO) | AP50 (YOLOv8) | AP50 (HAF-YOLO) | AP50:95 (YOLOv8) | AP50:95 (HAF-YOLO) |
|---|---|---|---|---|---|---|---|---|
| All | 0.847 | 0.871 | 0.669 | 0.719 | 0.750 | 0.781 | 0.521 | 0.554 |
| Airplane | 0.944 | 0.962 | 0.635 | 0.708 | 0.788 | 0.827 | 0.532 | 0.575 |
| Airport | 0.805 | 0.832 | 0.803 | 0.876 | 0.857 | 0.898 | 0.585 | 0.658 |
| Baseball field | 0.954 | 0.970 | 0.698 | 0.732 | 0.847 | 0.858 | 0.688 | 0.712 |
| Basketball court | 0.924 | 0.945 | 0.845 | 0.873 | 0.889 | 0.902 | 0.757 | 0.794 |
| Bridge | 0.739 | 0.768 | 0.349 | 0.405 | 0.432 | 0.487 | 0.239 | 0.290 |
| Chimney | 0.970 | 0.968 | 0.725 | 0.745 | 0.765 | 0.792 | 0.645 | 0.682 |
| Dam | 0.698 | 0.705 | 0.657 | 0.712 | 0.698 | 0.724 | 0.391 | 0.435 |
| Expressway service area | 0.836 | 0.852 | 0.811 | 0.856 | 0.863 | 0.888 | 0.606 | 0.660 |
| Expressway toll station | 0.908 | 0.912 | 0.561 | 0.625 | 0.655 | 0.696 | 0.500 | 0.545 |
| Golf course | 0.773 | 0.825 | 0.786 | 0.848 | 0.821 | 0.869 | 0.587 | 0.685 |
| Ground track field | 0.720 | 0.735 | 0.772 | 0.825 | 0.792 | 0.817 | 0.598 | 0.640 |
| Harbor | 0.765 | 0.785 | 0.611 | 0.642 | 0.668 | 0.684 | 0.474 | 0.510 |
| Overpass | 0.844 | 0.860 | 0.523 | 0.562 | 0.617 | 0.642 | 0.413 | 0.445 |
| Ship | 0.929 | 0.942 | 0.832 | 0.865 | 0.901 | 0.914 | 0.549 | 0.575 |
| Stadium | 0.843 | 0.885 | 0.595 | 0.652 | 0.783 | 0.802 | 0.604 | 0.625 |
| Storage tank | 0.961 | 0.968 | 0.553 | 0.582 | 0.733 | 0.751 | 0.457 | 0.472 |
| Tennis court | 0.950 | 0.958 | 0.860 | 0.875 | 0.911 | 0.923 | 0.764 | 0.788 |
| Train station | 0.633 | 0.635 | 0.650 | 0.705 | 0.653 | 0.670 | 0.339 | 0.395 |
| Vehicle | 0.889 | 0.905 | 0.334 | 0.368 | 0.484 | 0.513 | 0.268 | 0.300 |
| Windmill | 0.866 | 0.902 | 0.781 | 0.828 | 0.844 | 0.865 | 0.425 | 0.458 |
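The per-class AP50 and AP50:95 columns follow the usual detection recipe: AP is the area under a class's precision-recall curve, the "All" row averages over classes, and AP50:95 additionally averages over the IoU thresholds 0.50, 0.55, ..., 0.95. The following is a minimal sketch using PASCAL VOC-style all-point interpolation on toy data. Toolkits differ in the interpolation scheme (COCO, for example, samples 101 recall points), so this is illustrative rather than the exact evaluator behind the tables.

```python
from typing import List, Sequence


def average_precision(is_tp: Sequence[bool], num_gt: int) -> float:
    """All-point interpolated AP for one class at one IoU threshold.

    is_tp lists detections sorted by descending confidence; True marks a detection
    matched to a previously unmatched ground-truth box.
    """
    if num_gt == 0:
        return 0.0
    tp = fp = 0
    recalls: List[float] = []
    precisions: List[float] = []
    for hit in is_tp:
        tp += int(hit)
        fp += int(not hit)
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Precision envelope: make precision non-increasing as recall grows.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum envelope precision over each recall increment.
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


IOU_THRESHOLDS = [0.50 + 0.05 * i for i in range(10)]  # 0.50, 0.55, ..., 0.95


def map_50_95(ap_per_threshold: Sequence[float]) -> float:
    """Average the class-averaged AP over the ten IoU thresholds."""
    return sum(ap_per_threshold) / len(ap_per_threshold)


print(average_precision([True, True, False, True, False], num_gt=4))
```

In the toy run, recall reaches 0.75 and never 1.0, so the unrecovered quarter of recall contributes nothing to AP; this is why low-recall classes such as Bridge and Vehicle in the table also have the lowest AP.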

| Class | P (YOLOv8) | P (HAF-YOLO) | R (YOLOv8) | R (HAF-YOLO) | AP50 (YOLOv8) | AP50 (HAF-YOLO) | AP50:95 (YOLOv8) | AP50:95 (HAF-YOLO) |
|---|---|---|---|---|---|---|---|---|
| All | 0.846 | 0.876 | 0.751 | 0.798 | 0.804 | 0.850 | 0.475 | 0.502 |
| Airplane | 0.936 | 0.952 | 0.936 | 0.965 | 0.977 | 0.988 | 0.581 | 0.591 |
| Ship | 0.855 | 0.857 | 0.855 | 0.686 | 0.714 | 0.768 | 0.439 | 0.439 |
| Storage tank | 0.825 | 0.901 | 0.825 | 0.874 | 0.760 | 0.871 | 0.384 | 0.424 |
| Baseball diamond | 0.927 | 0.941 | 0.927 | 0.972 | 0.981 | 0.971 | 0.714 | 0.716 |
| Tennis court | 0.888 | 0.863 | 0.888 | 0.904 | 0.877 | 0.922 | 0.512 | 0.545 |
| Basketball court | 0.623 | 0.736 | 0.623 | 0.522 | 0.525 | 0.606 | 0.286 | 0.348 |
| Ground track field | 0.954 | 0.948 | 0.954 | 0.943 | 0.972 | 0.971 | 0.732 | 0.736 |
| Harbor | 0.860 | 0.854 | 0.860 | 0.903 | 0.923 | 0.925 | 0.468 | 0.510 |
| Bridge | 0.715 | 0.817 | 0.715 | 0.477 | 0.546 | 0.633 | 0.209 | 0.236 |
| Vehicle | 0.874 | 0.886 | 0.874 | 0.738 | 0.763 | 0.844 | 0.430 | 0.471 |

| Model | P (%) | R (%) | mAP50 (%) | mAP50:95 (%) | Param (M) | GFLOPs | FPS |
|---|---|---|---|---|---|---|---|
| Traditional Methods | | | | | | | |
| Faster R-CNN | 85.2 | 76.8 | 82.3 | 46.8 | 26.5 | 45.6 | 41 |
| SSD | 83.5 | 74.1 | 80.5 | 44.2 | 24.1 | 32.7 | 63 |
| YOLO Series | | | | | | | |
| YOLOv3 | 87.1 | 79.9 | 84.5 | 48.9 | 12.1 | 18.9 | 178 |
| YOLOv5 | 82.7 | 76.3 | 81.5 | 47.1 | 2.5 | 7.1 | 198 |
| YOLOv6 | 84.4 | 78.0 | 82.1 | 48.6 | 4.2 | 11.8 | 196 |
| YOLOv7 | 81.1 | 78.9 | 81.2 | 42.9 | 6.0 | 13.3 | 175 |
| YOLOv8 | 84.9 | 75.1 | 80.2 | 47.4 | 3.0 | 8.2 | 188 |
| YOLOv9 | 83.8 | 72.6 | 78.7 | 46.3 | 1.9 | 7.6 | 168 |
| YOLOv10 | 72.6 | 71.5 | 75.0 | 45.1 | 7.2 | 21.4 | 142 |
| YOLOv11 | 87.6 | 78.2 | 83.6 | 49.4 | 9.4 | 21.6 | 128 |
| YOLOv12 | 83.7 | 74.1 | 79.6 | 45.8 | 9.2 | 21.2 | 90 |
| RS-Specific SOTA | | | | | | | |
| AAPW-YOLO | 86.5 | 78.0 | 83.6 | 49.0 | 3.5 | 10.5 | 158 |
| YOLO-TLA | 85.0 | 77.5 | 82.1 | 48.0 | 3.2 | 9.0 | 195 |
| MS-YOLOv7 | 81.5 | 79.0 | 81.2 | 47.0 | 6.5 | 14.5 | 176 |
| YOLO-DRS | 86.0 | 78.5 | 83.6 | 49.6 | 3.9 | 10.9 | 169 |
| Ours | | | | | | | |
| HAF-YOLO | 87.6 | 79.8 | 85.0 | 50.2 | 4.3 | 11.8 | 161 |
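FPS figures are often easier to compare as per-image latency, 1000 / FPS milliseconds. This short sketch uses the YOLOv8 and HAF-YOLO rows of the comparison table above. FPS depends on hardware, batch size, and whether pre- and post-processing such as NMS are timed, so these should be held fixed when comparing models.

```python
def latency_ms(fps: float) -> float:
    """Average time per image in milliseconds for a given throughput."""
    return 1000.0 / fps


rows = {"YOLOv8": 188, "HAF-YOLO": 161}  # FPS values from the comparison table
for name, fps in rows.items():
    print(f"{name}: {latency_ms(fps):.2f} ms/image")
extra = latency_ms(rows["HAF-YOLO"]) - latency_ms(rows["YOLOv8"])
print(f"extra latency: {extra:.2f} ms/image")
```

By this measure HAF-YOLO costs under 1 ms more per image than YOLOv8 on the reported setup while remaining above 160 FPS.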

| Model | P (%) | R (%) | mAP50 (%) | mAP50:95 (%) | Param (M) | GFLOPs | FPS |
|---|---|---|---|---|---|---|---|
| Traditional Methods | | | | | | | |
| Faster R-CNN | 84.7 | 65.9 | 70.8 | 45.1 | 26.5 | 45.6 | 43 |
| SSD | 82.3 | 63.5 | 68.2 | 42.7 | 24.1 | 32.7 | 64 |
| YOLO Series | | | | | | | |
| YOLOv3 | 83.0 | 62.8 | 68.5 | 47.0 | 12.1 | 18.9 | 185 |
| YOLOv5 | 83.4 | 65.3 | 72.7 | 49.2 | 2.5 | 7.1 | 194 |
| YOLOv6 | 82.4 | 62.7 | 70.2 | 78.5 | 4.2 | 11.8 | 203 |
| YOLOv7 | 85.1 | 67.2 | 75.5 | 52.6 | 6.0 | 13.3 | 182 |
| YOLOv8 | 84.6 | 66.9 | 75.0 | 52.1 | 3.0 | 8.2 | 190 |
| YOLOv9 | 83.8 | 66.9 | 74.9 | 53.3 | 1.9 | 7.6 | 163 |
| YOLOv10 | 83.1 | 63.2 | 71.5 | 48.2 | 7.2 | 21.4 | 145 |
| YOLOv11 | 84.4 | 68.9 | 76.8 | 54.1 | 9.4 | 21.6 | 132 |
| YOLOv12 | 84.6 | 67.6 | 75.9 | 53.9 | 9.2 | 21.2 | 88 |
| RS-Specific SOTA | | | | | | | |
| AAPW-YOLO | 85.0 | 68.5 | 76.8 | 54.0 | 3.5 | 10.5 | 154 |
| YOLO-TLA | 83.8 | 67.0 | 74.5 | 52.0 | 3.2 | 9.0 | 192 |
| MS-YOLOv7 | 85.5 | 67.5 | 75.5 | 52.8 | 6.5 | 14.5 | 182 |
| YOLO-DRS | 84.8 | 69.0 | 76.8 | 54.0 | 3.9 | 10.9 | 173 |
| Ours | | | | | | | |
| HAF-YOLO | 87.1 | 71.9 | 78.1 | 55.4 | 4.3 | 11.8 | 164 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, P.; Liu, J.; Zhang, J.; Liu, Y.; Shi, J. HAF-YOLO: Dynamic Feature Aggregation Network for Object Detection in Remote-Sensing Images. Remote Sens. 2025, 17, 2708. https://doi.org/10.3390/rs17152708
