Abstract

Timely and accurate identification of surface damage in hydraulic structures is essential for maintaining structural integrity and ensuring operational safety. Traditional manual inspections are time-consuming, labor-intensive, and prone to subjectivity, especially for large-scale or inaccessible infrastructure. Leveraging advancements in aerial imaging, unmanned aerial vehicles (UAVs) enable efficient acquisition of high-resolution visual data across expansive hydraulic environments. However, existing deep learning (DL) models often lack architectural adaptations for the visual complexities of UAV imagery, including low-texture contrast, noise interference, and irregular crack patterns. To address these challenges, this study proposes a lightweight, robust, and high-precision segmentation framework, called LFPA-EAM-Fast-SCNN, specifically designed for pixel-level damage detection in UAV-captured images of hydraulic concrete surfaces. The developed DL-based model integrates an enhanced Fast-SCNN backbone for efficient feature extraction, a Lightweight Feature Pyramid Attention (LFPA) module for multi-scale context enhancement, and an Edge Attention Module (EAM) for refined boundary localization. The experimental results on a custom UAV-based dataset show that the proposed damage detection method achieves superior performance, with a precision of 0.949, a recall of 0.892, an F1 score of 0.906, and an IoU of 87.92%, outperforming U-Net, Attention U-Net, SegNet, DeepLab v3+, I-ST-UNet, and SegFormer. Additionally, it reaches a real-time inference speed of 56.31 FPS, significantly surpassing other models. The experimental results demonstrate the proposed framework’s strong generalization capability and robustness under varying noise levels and damage scenarios, underscoring its suitability for scalable, automated surface damage assessment in UAV-based remote sensing of civil infrastructure.

1. Introduction

Hydraulic structures, including dams, spillways, retaining walls, and canal linings, serve as critical components of water resource management systems worldwide [1,2]. They are fundamental to a range of essential functions, such as water supply regulation, flood mitigation, hydropower generation, navigation, and agricultural irrigation [3]. The long-term structural integrity of these facilities is paramount not only for ensuring operational performance but also for safeguarding public safety, protecting surrounding ecosystems, and supporting economic development.

Traditionally, inspection and damage assessment of hydraulic structures have relied heavily on manual visual surveys, which are often labor-intensive, time-consuming, subjective, and constrained by accessibility and environmental conditions, especially in hazardous or submerged areas [4,5]. Recent advances in remote sensing technologies, especially the widespread adoption of unmanned aerial vehicles (UAVs), have opened new avenues for the inspection and monitoring of hydraulic structures [6,7]. UAV-based imaging systems enable the rapid acquisition of high-resolution visual data over extensive and otherwise inaccessible areas around hydraulic buildings, significantly improving the efficiency, coverage, and safety of inspection activities. Moreover, UAV platforms equipped with advanced sensors, such as RGB cameras, multispectral imagers, and LiDAR [8,9], facilitate detailed and objective assessments of surface conditions, enabling the early detection of fine-scale defects like cracks, spalling, and material degradation in hydraulic structures [10,11]. In the context of UAV-based remote sensing for hydraulic structure inspection, optical imaging remains one of the most widely utilized modalities owing to its ability to capture fine-grained surface damage details at high spatial resolution, with low operational cost and flexible deployment [12].

Traditional digital image processing techniques, such as edge detection, texture analysis, and threshold-based segmentation, currently serve as the primary approach for analyzing UAV-based optical imagery of defects in hydraulic structures [13,14]. These conventional methods enable the automated identification and delineation of surface cracks by enhancing crack-related visual features against complex backgrounds [15]. However, they rely heavily on manually tuned parameters and handcrafted features, which limits their adaptability to diverse environmental conditions and reduces their robustness under varying lighting and surface textures.

When integrated with advanced artificial intelligence (AI) algorithms, UAV systems enable automated, accurate, and large-scale assessments of structural conditions, significantly enhancing the efficiency and reliability of hydraulic infrastructure monitoring [16,17,18,19,20]. A growing body of research has demonstrated the effectiveness of combining UAV-acquired imagery with convolutional neural network (CNN) architectures for structural damage detection across a range of infrastructure types. Specifically, classic deep networks such as U-Net [21,22], SegNet [23,24], and DeepLab [25,26] are commonly employed to build structural damage identification models for various types of infrastructure.

In addition to CNNs, recent studies have explored transformer-based architectures like SegFormer [27] and Swin Transformer [28,29], which offer improved global context modeling and robustness in visually complex environments. These DL-based models are particularly well-suited for pixel-wise segmentation tasks, enabling accurate localization and delineation of surface cracks, spalling, and other defects on concrete, masonry, and composite structural elements. Such techniques have been successfully applied across a wide range of infrastructure scenarios, including the detection of surface cracks in concrete dams, corrosion and spalling in bridge decks, crack detection in tunnel linings, and damage assessment in hydraulic gates [30,31,32,33,34]. For example, Savino et al. [35] employed UAV photogrammetry to assess the condition of hydraulic structures by generating high-resolution 3D models, demonstrating its effectiveness in detecting surface degradation over large areas. Ai et al. [36] proposed a pixel-wise crack detection method on rock surfaces using a combination of deep learning and spatial–temporal inference based on high-speed video data. Chu et al. [37] developed a multi-scale attention-based segmentation network, called Tiny-Crack-Net, designed to accurately detect tiny cracks in concrete structures by addressing class imbalance and integrating local and global features. From the above-mentioned studies, it can be inferred that integrating UAV platforms with advanced AI-based techniques like deep learning (DL) holds great promise for automated and accurate damage detection in large-scale infrastructure, especially for hydraulic buildings [38,39]. However, there remains a significant gap in the current research regarding the development of task-specific deep learning architectures designed explicitly for UAV-based inspection of hydraulic structures. 
Unlike buildings or bridges, hydraulic infrastructures exhibit distinctive visual textures, irregular geometric features, and are often captured under challenging environmental conditions, such as water interference, poor lighting, or turbid surroundings. These factors introduce fine-scale damage features and complex background noise, which substantially degrade the performance of general-purpose segmentation models. As a result, existing approaches frequently suffer from low detection accuracy and limited robustness when deployed in real-world UAV inspections of hydraulic environments. This calls for dedicated methods that can effectively address these domain-specific challenges and ensure reliable damage identification in large-scale water-related facilities.

To address these limitations, this study proposes a robust and high-precision DL-based damage segmentation architecture for hydraulic structures. The proposed model integrates an improved Fast-SCNN for high-speed feature extraction, a Lightweight Feature Pyramid Attention (LFPA) module for enhanced multi-scale contextual representation, and an Edge Attention Module (EAM) for refined boundary localization. Specifically, the improved Fast-SCNN provides a compact and efficient encoder–decoder framework that ensures real-time segmentation performance while maintaining the ability to capture essential structural features in large-scale hydraulic structure scenes. The LFPA module significantly enhances the model’s sensitivity to small-scale defects by adaptively weighting spatial and channel-wise information across different semantic layers, which is particularly beneficial for identifying faint cracks and surface erosion patterns in complex concrete textures. Meanwhile, the EAM introduces edge-aware attention mechanisms that sharpen crack boundaries and improve the delineation of intersecting or irregular crack shapes, effectively mitigating over-segmentation and false detections caused by background noise or structural joints.

The remainder of this paper is organized as follows. Section 2 details the proposed lightweight and accurate damage recognition model tailored for hydraulic concrete structures, which integrates an improved Fast-SCNN backbone, the LFPA module, and the EAM. Section 3 presents the experimental setup, including dataset preparation, implementation details, and evaluation metrics. Section 4 provides a comprehensive performance evaluation through quantitative comparisons and visual analyses, demonstrating the superiority of the proposed model over existing methods in terms of precision, recall, F1 score, and IoU. Section 5 further discusses the effectiveness of each module through ablation studies and highlights the proposed model’s robustness under challenging conditions, such as noise interference and low-contrast backgrounds.

2. Materials and Methods

2.1. Flowchart of the Developed Model

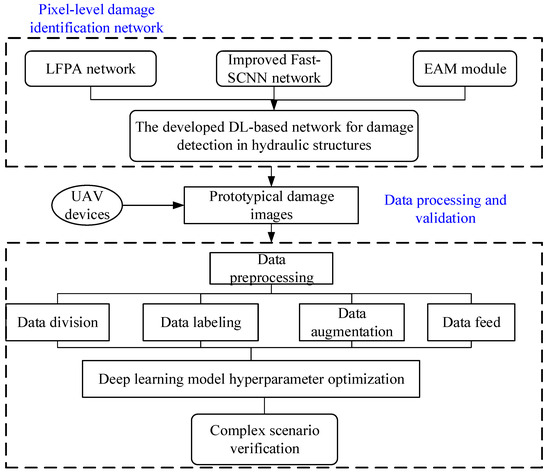

Figure 1 illustrates the overall framework of the proposed DL-based damage identification system for hydraulic concrete structures. The pipeline begins with raw image acquisition from complex concrete surfaces, followed by preprocessing steps to enhance feature clarity. These enhanced inputs are fed into a pixel-level damage identification network that integrates an improved Fast-SCNN backbone, the LFPA module, and the EAM to achieve high-precision segmentation of surface damages and defects. The segmented surface damage results are subsequently subjected to both qualitative and quantitative evaluations to assess the accuracy and robustness of the proposed method.

Figure 1.

Flowchart of the proposed framework.

2.2. Deep Learning Algorithms

2.2.1. Improved Fast-SCNN Network

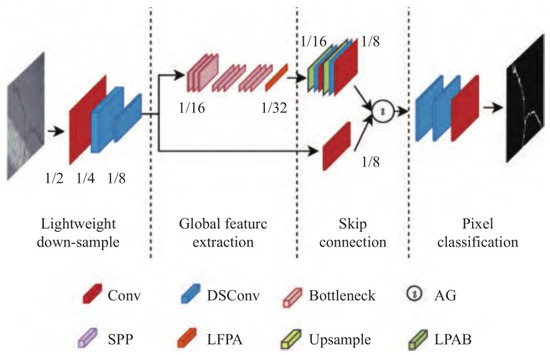

Fast-SCNN is a lightweight semantic segmentation framework originally designed for real-time applications, making it suitable for deployment in practical monitoring scenarios [40]. The Fast-SCNN network consists of four main modules: lightweight downsampling, global feature extraction, skip connection, and pixel-level classification. The downsampling path utilizes a series of convolutional and depthwise separable convolution (DSConv) layers to rapidly reduce the spatial resolution while preserving essential features. This is followed by a global feature extraction block that integrates spatial pyramid pooling (SPP), bottleneck modules, and the Lightweight Feature Pyramid Attention (LFPA) module to enhance multi-scale representation and improve sensitivity to fine damage patterns. Figure 2 depicts the architecture of the improved Fast-SCNN network used in this study for damage segmentation in hydraulic buildings. In the skip connection module, high-resolution shallow features are fused with deeper semantic features through attention gating (AG) and lightweight positional attention blocks (LPABs), allowing the model to maintain structural detail while suppressing background noise. Finally, the upsampling and pixel classification module refines the segmentation output, producing high-resolution damage maps.

Figure 2.

Diagram of improved Fast-SCNN network.

2.2.2. LFPA Module

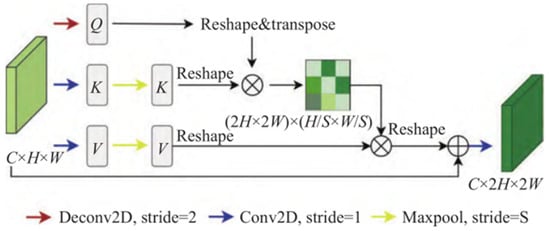

The proposed lightweight attention mechanism is illustrated in Figure 3. This architecture is designed to enhance both the accuracy and efficiency of defect recognition tasks. It utilizes a channel-wise query–key–value (QKV) structure, where the input feature map is processed through distinct convolutional and pooling operations to generate queries (Q), keys (K), and values (V). Specifically, Q is obtained via a Deconv2D operation (stride = 2) to upsample the spatial resolution, while K and V are derived through max pooling (stride = S) followed by Conv2D (stride = 1) for dimensionality reduction and feature abstraction. The attention map is computed through a reshaped dot-product between Q and K, capturing global contextual dependencies at a reduced computational cost. The attention-weighted values are then reshaped and fused with the original feature map to produce an output with doubled spatial resolution. This mechanism effectively captures long-range dependencies while maintaining low complexity, making it well-suited for real-time defect detection in high-resolution imagery of damaged surfaces.

Figure 3.

Schematic of the lightweight position attention module.

The standard positional attention module (PAM) incurs a significant computational burden because the cost of computing the self-attention map grows quadratically with the spatial dimensions (height H and width W) of the key (K) and value (V) matrices. Specifically, the computational complexity of PAM can be expressed as

$$O_{\mathrm{PAM}} = O\left(N^{2} C\right)$$

where $N = H \times W$ is the number of spatial positions, $C$ is the number of feature channels, and $O(\cdot)$ represents the order of the matrix operations. This quadratic cost makes the standard PAM particularly expensive when used during the upsampling stages. The extensive matrix multiplications involved in computing the self-attention map introduce substantial overhead, which becomes increasingly prohibitive at higher resolutions.

To mitigate this issue, a lightweight positional attention block (LPAB) is proposed in this study for use in the upsampling process. The LPAB is designed to retain global contextual awareness during upsampling while significantly reducing computational complexity. This is achieved by applying max pooling to the linearly projected key and value matrices, effectively extracting the most salient semantic features beneficial for upsampling. Such pooling suppresses redundant similarity computations in the self-attention matrix and reduces the overall attention computation cost. The resulting matrix operations in the lightweight attention module scale more efficiently, enabling practical deployment in high-resolution feature reconstruction scenarios. Specifically, the computational complexity of the LPAB can be expressed as

$$O_{\mathrm{LPAB}} = O\left(\frac{N^{2} C}{S^{2}}\right)$$

where $S$ represents the kernel size of the max pooling, which is applied with stride $S$ so that the key and value matrices retain $N / S^{2}$ spatial positions.
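The effect of pooling the keys and values on the attention cost can be illustrated with a rough operation count. The following is a minimal sketch under stated assumptions, not the authors' implementation: the function name `attention_macs` is hypothetical, only the two attention matrix products are counted, and the extra query positions created by the stride-2 deconvolution are ignored because they add only a constant factor.

```python
def attention_macs(height, width, channels, pool_stride=1):
    """Count multiply-accumulates for the two attention matrix products.

    Queries keep all height*width positions. Keys and values are assumed to be
    max-pooled with the given stride, leaving (height//s)*(width//s) positions.
    """
    n_query = height * width
    n_key = (height // pool_stride) * (width // pool_stride)
    similarity = n_query * n_key * channels   # Q @ K^T attention map
    aggregation = n_query * n_key * channels  # attention map @ V
    return similarity + aggregation


# Hypothetical feature-map size chosen only for illustration.
standard = attention_macs(32, 32, 64)                 # no pooling (standard PAM)
pooled = attention_macs(32, 32, 64, pool_stride=2)    # pooled keys and values
print(standard, pooled, standard / pooled)
```

Under these assumptions the cost shrinks by a factor of S squared, so a pooling stride of 2 gives a fourfold reduction for the two matrix products.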

2.2.3. Edge Attention Module

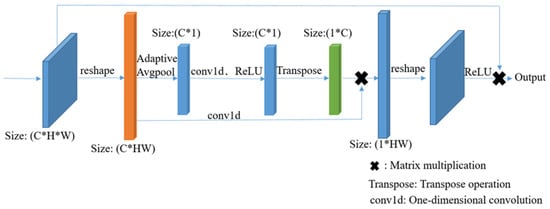

Although lightweight segmentation networks achieve high efficiency, their early downsampling operations often lead to the loss of edge-level details, which are crucial for detecting micro-defects such as hairline damage and surface erosion. To address this issue, an Edge Attention Module (EAM) is introduced to enhance the network’s sensitivity to fine-grained boundaries while preserving real-time performance. Figure 4 shows the diagram of the Edge Attention Module. The EAM consists of three core stages, including edge gradient extraction, edge attention generation, and feature reweighting, optionally followed by multi-scale fusion. In this module, an edge-aware attention map is learned from intermediate feature maps and is used to guide the refinement of spatial features, particularly in regions where defects are subtle or low in contrast. Importantly, this enhancement is achieved with minimal additional computational complexity, ensuring compatibility with real-time inspection frameworks.

Figure 4.

Diagram of EAM mechanism.

To identify potential defect boundaries, the intermediate feature map is first processed using directional gradient filters to compute spatial gradients. The corresponding mathematical formulations are defined as follows:

$$G_x = F * K_x, \qquad G_y = F * K_y$$

$$G = \sqrt{G_x^{2} + G_y^{2}}$$

where $F$ represents the intermediate feature map, $K_x$ and $K_y$ denote the Sobel kernels for horizontal and vertical edge detection, $*$ denotes the convolution operation, and $G$ represents the combined edge gradient magnitude map.

The edge map is transformed into an attention map using a convolutional layer followed by batch normalization and a sigmoid activation, enabling adaptive weighting of edge regions:

$$A = \sigma\left(\mathrm{BN}\left(W * G\right)\right)$$

where $A$ is the edge attention map, $G$ is the edge gradient magnitude map, $W$ denotes the learnable convolutional kernel, $\mathrm{BN}$ denotes the batch normalization, and $\sigma$ represents the sigmoid activation function.

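The original feature map is reweighted using the generated attention map, allowing the network to focus on edge-critical regions. The corresponding mathematical formulation is defined as follows:

$$F' = F + \lambda \left(A \odot F\right)$$

where $F'$ denotes the edge-enhanced feature map, $F$ is the original feature map, $A$ is the edge attention map, $\odot$ denotes element-wise multiplication, and $\lambda$ denotes the edge enhancement coefficient.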
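The three EAM stages (gradient extraction, attention generation, and feature reweighting) can be sketched on a single-channel feature map. This is an illustrative sketch rather than the authors' implementation: the learnable convolution and batch normalization are replaced by a hypothetical scalar `weight` and `bias`, borders are handled by replicating edge values, and the reweighting is assumed to take the residual form F · (1 + λA).

```python
import math

SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def filter3x3(feat, kernel):
    """Cross-correlate a 2D list with a 3x3 kernel, replicating border values."""
    h, w = len(feat), len(feat[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for di in (-1, 0, 1):
                for dj in (-1, 0, 1):
                    ii = min(max(i + di, 0), h - 1)
                    jj = min(max(j + dj, 0), w - 1)
                    acc += kernel[di + 1][dj + 1] * feat[ii][jj]
            out[i][j] = acc
    return out


def edge_attention(feat, weight=1.0, bias=-2.0, lam=0.5):
    """Return (attention map, edge-enhanced features) for a 2D feature map."""
    gx = filter3x3(feat, SOBEL_X)
    gy = filter3x3(feat, SOBEL_Y)
    h, w = len(feat), len(feat[0])
    attn = [[0.0] * w for _ in range(h)]
    enhanced = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            magnitude = math.hypot(gx[i][j], gy[i][j])
            # Scalar stand-in for conv + batch norm, followed by a sigmoid.
            attn[i][j] = 1.0 / (1.0 + math.exp(-(weight * magnitude + bias)))
            enhanced[i][j] = feat[i][j] * (1.0 + lam * attn[i][j])
    return attn, enhanced


# Toy feature map with a vertical step edge between columns 1 and 2.
step = [[0.0, 0.0, 1.0, 1.0] for _ in range(4)]
attention, enhanced = edge_attention(step)
```

In this toy case the attention is high in the two columns adjacent to the step and low in the flat columns, so features next to the edge receive the larger boost.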

2.3. Evaluation Indicators and Loss Functions

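The performance of the damage detection model was evaluated using a set of well-established metrics, including Intersection over Union (IoU), precision, recall, and F1 score. In parallel with the computation of the IoU, the model’s inference speed was measured on the validation set and reported as the average frames per second (FPS). The corresponding mathematical formulations are defined as follows:

$$\mathrm{IoU} = \frac{TP}{TP + FP + FN}$$

$$\mathrm{Precision} = \frac{TP}{TP + FP}, \qquad \mathrm{Recall} = \frac{TP}{TP + FN}$$

$$F1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

$$\mathrm{FPS} = \frac{N}{T}$$

where $\mathrm{FPS}$ represents the average frames per second, $N$ is the number of images processed, and $T$ denotes the total inference time. $TP$, $FP$, and $FN$ represent the numbers of true positive, false positive, and false negative pixels, respectively.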
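The pixel-level metrics above follow directly from the confusion counts. A minimal sketch is given below; the function names and the example counts are hypothetical and are not taken from the reported experiments.

```python
def segmentation_metrics(tp, fp, fn):
    """Compute precision, recall, F1, and IoU from pixel counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    union = tp + fp + fn
    iou = tp / union if union else 0.0
    return {"precision": precision, "recall": recall, "f1": f1, "iou": iou}


def average_fps(num_images, total_seconds):
    """Average frames per second over a batch of inferences."""
    return num_images / total_seconds


# Hypothetical counts for illustration only.
metrics = segmentation_metrics(tp=80, fp=20, fn=20)
print(metrics)
print(average_fps(num_images=100, total_seconds=2.0))
```

Note that IoU is always less than or equal to F1 for the same counts, which is why the two metrics are reported separately.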

To address the issue of sample imbalance in UAV-acquired structural damage imagery, this study adopts a weighted cross-entropy loss function. The standard cross-entropy loss, commonly used to measure the discrepancy between predicted and true probability distributions, is a convex function with well-established convergence properties, making it a widely adopted objective in classification tasks. However, in the context of concrete defect segmentation, the inherent imbalance between the sparse defect regions and the dominant background leads to suboptimal learning. By introducing class-specific weights, the modified weighted cross-entropy (WCE) loss function imposes greater penalties on misclassified defect pixels, thereby encouraging the model to focus more effectively on subtle and sparsely distributed anomalies during training. The relevant mathematical expression is as follows:

$$L_{\mathrm{WCE}}(x, class) = w_{class}\left(-x_{class} + \log \sum_{j} \exp\left(x_{j}\right)\right)$$

where $x$ denotes the raw prediction logits output by the model for all classes, $x_{class}$ denotes the predicted logit corresponding to the true class label, and $w_{class}$ is the weight assigned to that class.
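The per-pixel behavior of a weighted cross-entropy loss can be shown with a small numeric sketch. The class weights used here are hypothetical (the source does not report the weights), and the function is a plain-Python illustration of the standard formulation rather than the training code used in the study.

```python
import math


def weighted_cross_entropy(logits, target, class_weights):
    """Weighted cross-entropy for one pixel.

    logits: raw scores for all classes; target: index of the true class;
    class_weights: one weight per class.
    """
    peak = max(logits)  # subtract the maximum for numerical stability
    log_sum_exp = peak + math.log(sum(math.exp(x - peak) for x in logits))
    return class_weights[target] * (log_sum_exp - logits[target])


# Hypothetical weights: background = 1, defect = 5.
weights = [1.0, 5.0]
uncertain_logits = [0.0, 0.0]
loss_background = weighted_cross_entropy(uncertain_logits, 0, weights)
loss_defect = weighted_cross_entropy(uncertain_logits, 1, weights)
print(loss_background, loss_defect)
```

With equal logits, a misjudged defect pixel is penalized five times as heavily as a background pixel, which is the intended effect of the class weights.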

2.4. Study Case and Data

2.4.1. Project Description and UAV-Based Inspection System

The case study used in this research is located in southwestern China, where a large-scale hydropower project features a double-curvature concrete arch dam with a maximum height of 240 m and a crest length of over 770 m. The overview of the project is shown in Figure 5. The dam’s reservoir has a total capacity of 5.8 billion cubic meters, with an installed generating capacity of 3.3 million kilowatts, contributing to an average annual power generation of 17 billion kilowatt-hours. The flood discharge system, designed for extreme flood events, ensures the dam can handle flows of up to 23,900 m³/s.

Figure 5.

Overview of hydropower station infrastructure.

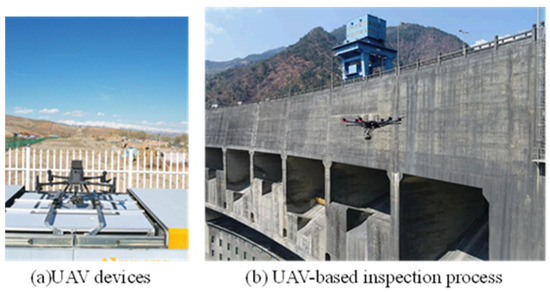

To promptly detect hidden structural defects, UAVs equipped with advanced sensors have been employed in recent years to inspect the dam structure, providing high-resolution imagery and 3D models. Figure 6 shows the UAV inspection process of hydraulic buildings. Table 1 presents the key technical specifications of the UAV devices used in this study. The DJI Matrice 350 RTK supports a payload capacity of approximately 2.7 kg and offers up to 30 min of flight time with typical inspection equipment, ensuring reliable performance for large-scale structural defect detection in hydraulic structures.

Figure 6.

UAV inspection process for hydraulic buildings.

Table 1.

Technical specifications of the UAV and its equipped sensors.

2.4.2. Damage Dataset of Hydraulic Structures

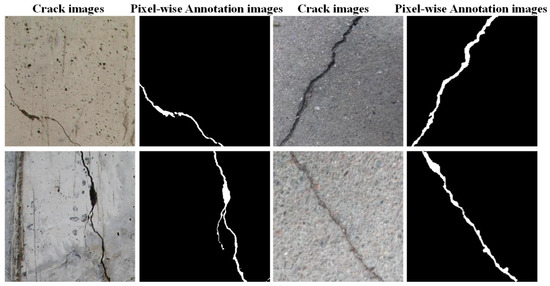

Figure 7 illustrates a set of representative damage images collected from hydraulic structures, accompanied by the corresponding pixel-wise damage annotations. A total of 2479 high-resolution images were captured using a manually piloted UAV to ensure safe and precise navigation in complex environments, especially around structural corners and elevation changes. The onboard RGB camera was configured with a fixed focal length of 35 mm, achieving a ground sampling distance of approximately 0.8 cm/pixel, which enabled clear visualization of fine-scale surface defects such as cracks, concrete spalling, and exposed reinforcement. All images were manually annotated by trained civil engineers using the open-source tool LabelImg. A standardized annotation protocol was followed to ensure consistency across the labeled categories, which included surface cracks, spalling, and exposed steel bars. To prepare the data for training, all the images were resized to 256 × 256 pixels and normalized based on the channel-wise mean and standard deviation. To improve model generalization and robustness, several data augmentation techniques were employed during preprocessing, including random rotations, horizontal flipping, and contrast adjustment. The entire dataset was randomly partitioned into training, validation, and test sets using a 6:2:2 ratio. Notably, the test set was constructed independently from the training and validation sets to ensure an unbiased evaluation of the model’s performance and to better assess its generalization capability in unseen scenarios.

Figure 7.

Collected damaged images in concrete structures with pixel-wise annotation.
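The random 6:2:2 partition described above can be sketched as follows. This is an assumption-laden illustration: the image identifiers, the seed, and the choice to floor the training and validation counts (leaving the remainder to the test set) are hypothetical, since the source does not report the exact subset sizes.

```python
import random


def split_dataset(items, ratios=(6, 2, 2), seed=0):
    """Shuffle items and split them into train/val/test by integer ratios."""
    items = list(items)
    random.Random(seed).shuffle(items)  # fixed seed for a reproducible split
    total = sum(ratios)
    n_train = len(items) * ratios[0] // total
    n_val = len(items) * ratios[1] // total
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]      # remainder goes to the test set
    return train, val, test


# Hypothetical identifiers standing in for the 2479 collected images.
image_ids = [f"img_{i:04d}" for i in range(2479)]
train_ids, val_ids, test_ids = split_dataset(image_ids)
print(len(train_ids), len(val_ids), len(test_ids))
```

Under this rounding convention, 2479 images yield 1487 training, 495 validation, and 497 test images, with no image shared between subsets.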

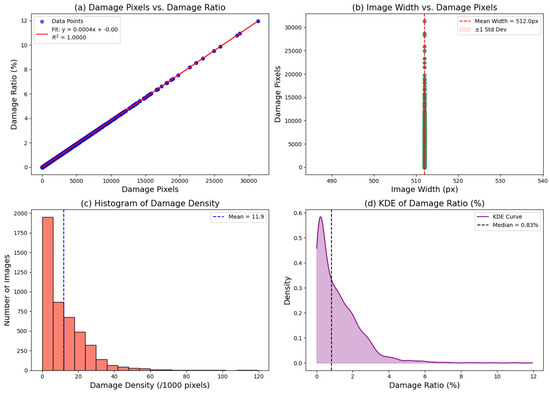

Figure 8 presents a statistical overview of damage masks from a hydraulic structure image dataset. Figure 8a shows a strong linear correlation between damaged pixels and damage ratio, reflecting consistent image size. Figure 8b confirms that most images have a fixed width, while damage extent varies widely. Figure 8c reveals that damage density is highly skewed toward low values, indicating most images contain minor or localized damage. The KDE in Figure 8d further supports this, with a peak near a 1% damage ratio. Based on the above-mentioned statistical analysis, it can be inferred that the structural damage dataset of hydraulic structures is dominated by low-severity damage, which is important for designing DL-based models sensitive to subtle structural defects.

Figure 8.

Statistical analysis of collected concrete damage data (a–d).

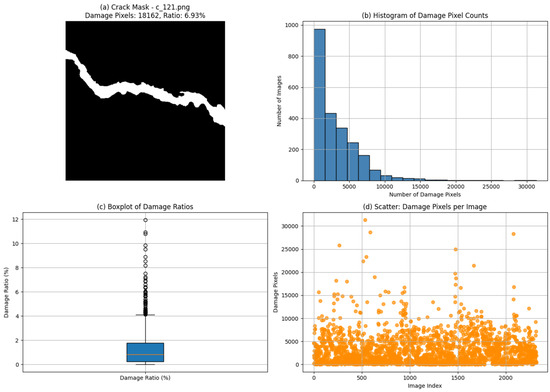

Figure 9 illustrates a statistical analysis based on a representative damage mask image from the developed damaged concrete hydraulic structure dataset. Figure 9a displays the binary damage mask, where the damaged region accounts for 18,162 pixels or 6.93% of the image area. The histogram in Figure 9b shows that most images exhibit a small number of damaged pixels, indicating a skewed distribution dominated by minor defects. The boxplot in Figure 9c further highlights that the majority of damage ratios are below 2%, with a few high-damage outliers. Figure 9d plots damage pixel counts across the dataset, confirming large variability in damage severity. This skewed distribution motivates the imbalance-aware design choices of the proposed method, such as the weighted cross-entropy loss described in Section 2.3, which are intended to maintain robust performance across the full range of damage conditions in hydraulic structures.

Figure 9.

Statistical analysis of damage images of typical hydraulic structures.
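The damage ratio reported for a mask is simply the share of damaged pixels in the image. The sketch below reproduces the arithmetic on a synthetic mask. The 512 × 512 mask size is an assumption, chosen only because it is consistent with the reported 18,162 pixels and 6.93%; the source does not state the mask dimensions, and the pixel layout here is artificial.

```python
def damage_ratio(mask):
    """Return (damaged pixel count, damaged fraction) for a binary 2D mask."""
    total = sum(len(row) for row in mask)
    damaged = sum(1 for row in mask for value in row if value)
    return damaged, damaged / total


# Synthetic mask: assumed 512 x 512, with 18,162 pixels marked as damaged.
SIZE = 512
flat = [1] * 18162 + [0] * (SIZE * SIZE - 18162)
mask = [flat[r * SIZE:(r + 1) * SIZE] for r in range(SIZE)]

count, ratio = damage_ratio(mask)
print(count, f"{ratio:.2%}")
```

Applying the same function to every mask in a dataset gives the per-image damage ratios summarized by the histogram and boxplot.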

3. Results

3.1. Base Model Training

The data processing and model training were conducted on a high-performance workstation equipped with an Intel Core i9-13900K CPU, an NVIDIA RTX 3090 GPU (24 GB), 64 GB RAM, and 2 TB NVMe SSD storage. The software environment included Python 3.9, PyTorch 2.0, CUDA 11.8, and OpenCV, running on Windows 11 Pro.

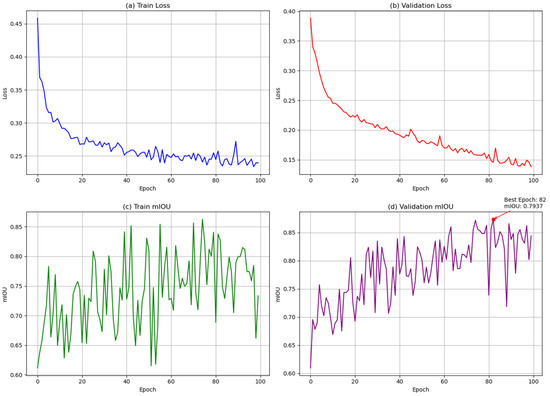

Figure 10 presents the learning curves of the surface damage segmentation model for hydraulic structures over 100 training epochs, including both loss and mIoU metrics for the training and validation sets. Throughout the training process, both the training and validation losses of the developed model show a decreasing trend, indicating effective convergence. The validation mIoU curve exhibits a steady improvement and reaches its peak at epoch 82, with a maximum value of 0.7937. This epoch represents the best generalization performance on the validation set. Therefore, the model checkpoint at epoch 82 is selected and saved as the final DL-based damage detection model for downstream damage inspection tasks.

Figure 10.

Learning curves of training and validation for defect pixel-wise segmentation model.
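Selecting the checkpoint with the highest validation mIoU, as described above, amounts to an argmax over the per-epoch validation history. A minimal sketch follows; the function name and the short history are hypothetical and do not reproduce the reported curve.

```python
def select_best_epoch(val_miou_history):
    """Return (1-based epoch, mIoU) of the highest validation mIoU.

    Ties are resolved in favor of the earliest epoch.
    """
    best_index = max(range(len(val_miou_history)),
                     key=val_miou_history.__getitem__)
    return best_index + 1, val_miou_history[best_index]


# Hypothetical validation mIoU values for four epochs.
history = [0.50, 0.70, 0.79, 0.78]
best_epoch, best_miou = select_best_epoch(history)
print(best_epoch, best_miou)
```

In a training loop, the model weights would be saved whenever the current epoch becomes the best one, so that the selected checkpoint is available after training ends.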

3.2. Ablation Experiment

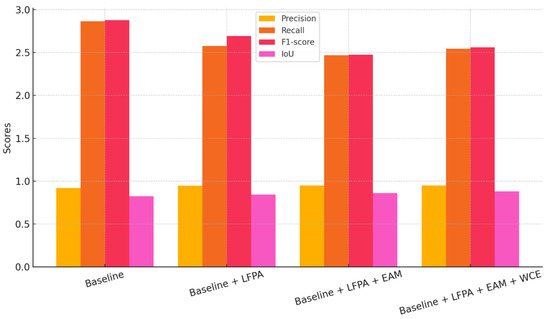

Figure 11 presents a comparative bar chart illustrating the impact of each proposed module on model performance across four key metrics, including precision, recall, F1 score, and IoU. Table 2 shows the ablation experiments of the proposed DL-based method for damage detection in hydraulic structures. The baseline model, based on the improved Fast-SCNN, shows high recall but suffers from relatively lower precision and IoU, indicating an over-prediction tendency and weaker spatial alignment. With the integration of the LFPA module, there is a significant improvement in precision and IoU, demonstrating the module’s effectiveness in enhancing multi-scale semantic understanding. The addition of the EAM further refines the boundary sensitivity, improving the IoU to 86.11% and maintaining high precision. Although a slight reduction in recall is observed, this trade-off results in better localization and reduced false positives. Finally, the inclusion of the weighted cross-entropy loss yields the highest IoU score of 87.92%, confirming its effectiveness in addressing class imbalance and reinforcing the proposed model’s focus on underrepresented defect pixels. Overall, the ablation analysis demonstrates the complementary contributions of the LFPA, EAM, and weighted cross-entropy loss (WCE) in achieving accurate, edge-aware, and robust damage recognition in hydraulic structures.

Figure 11.

Ablation study on hydraulic damage detection.

Table 2.

Ablation study results on hydraulic structure damage segmentation.

3.3. Damage Pixel-Wise Identification Quantitative Assessment

To provide a comprehensive performance benchmark, multiple advanced models were employed as comparative methods, including U-Net [41], SegNet [42], DeepLab v3+ [43], Attention U-Net [44], I-ST-UNet [45], and SegFormer [46]. The reasons for selecting these methods are as follows:

- (1) U-Net and SegNet are widely recognized baseline architectures in semantic segmentation, known for their simplicity, efficiency, and extensive adoption in structural damage identification tasks. Their inclusion enables benchmarking against foundational approaches commonly used in the automated detection and pixel-wise delineation of cracks and other defects in concrete and other infrastructure surfaces.

- (2) DeepLab v3+ represents a more advanced and high-performing segmentation model that incorporates atrous spatial pyramid pooling (ASPP), enabling effective multi-scale context learning. It is frequently employed in structural damage detection tasks, particularly in scenarios involving complex surface textures or the need to capture fine-scale crack patterns and intricate defect geometries.

- (3) Attention U-Net integrates attention mechanisms to enhance feature representation and selectively focus on relevant damage regions, improving segmentation accuracy in cluttered or noisy environments. This makes it a strong candidate for comparison in structural damage localization tasks, where distinguishing cracks or defects from background textures is critical for reliable assessment.

- (4) I-ST-UNet is selected as a representative of recent convolutional network advancements due to its ability to capture intricate spatial hierarchies through improved skip connections and multi-scale feature integration. Its architecture is particularly well-suited for structural damage segmentation, where the cracks and surface defects often exhibit varying shapes and scales that benefit from enhanced contextual representation and spatial consistency.

- (5) SegFormer exemplifies the transformer-based paradigm in semantic segmentation, offering strong global context modeling with lightweight efficiency. Its hierarchical design and attention-based encoding enable robust performance in complex visual environments. This makes it highly relevant for structural damage detection tasks, where cracks may appear subtle or fragmented across noisy concrete surfaces, requiring powerful long-range dependency modeling for accurate delineation.

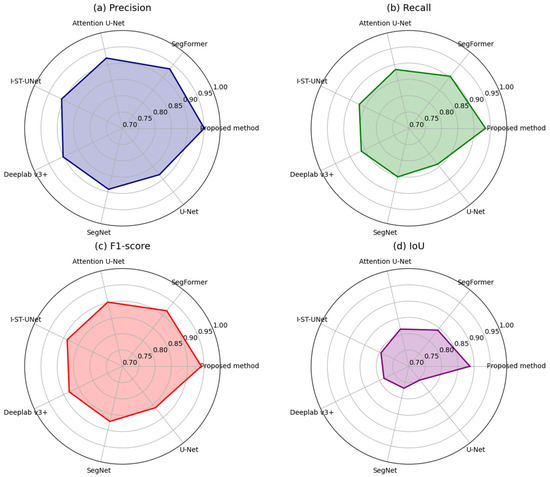

These DL-based methods represent a diverse set of segmentation strategies, ranging from classical encoder–decoder architectures to attention-enhanced and transformer-based networks. By evaluating these damage detection models under identical UAV-acquired imagery conditions, this study ensures a rigorous comparison in terms of detection accuracy, boundary preservation, and robustness to scale variation and background noise. The quantitative evaluation results of the proposed and other benchmark methods are summarized in Figure 12 and Table 3. It is evident from the figure and table that the proposed LFPA-EAM-Fast-SCNN model consistently outperforms the baseline models (U-Net, Attention U-Net, DeepLab v3+, SegNet, I-ST-UNet, and SegFormer) in all key performance indicators. Specifically, the proposed method achieves the highest precision (0.949), indicating its strong capability in minimizing false positives, and the highest recall (0.892), suggesting superior sensitivity in identifying true damage regions. It also attains the highest F1 score (0.906), reflecting a well-balanced and accurate segmentation performance. Furthermore, the Intersection over Union (IoU) reaches 87.92%, confirming the proposed model’s high-quality pixel-level damage localization. In addition to accuracy, the proposed method also demonstrates a significant advantage in inference efficiency. With a processing speed of 56.31 FPS, it substantially outperforms the benchmark models, including U-Net (16.27 FPS), Attention U-Net (14.39 FPS), SegNet (18.58 FPS), DeepLab v3+ (16.53 FPS), I-ST-UNet (12.64 FPS), and SegFormer (11.68 FPS). This high inference speed underscores the suitability of the proposed model for real-time or near-real-time damage inspection applications, especially in UAV-based large-area assessments where rapid processing is essential. The radar plot further emphasizes the proposed method’s consistent superiority across both accuracy and efficiency dimensions.

Figure 12.

Radar chart of evaluation indexes of different damage identification methods.

Table 3.

Evaluation of inference efficiency of structural damage identification algorithms.
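The reported inference speeds can be converted into relative speedups and per-frame latencies with simple arithmetic. The sketch below uses only the FPS values quoted in the text; the helper names are hypothetical.

```python
# FPS values as reported in the text.
FPS = {
    "Proposed": 56.31,
    "U-Net": 16.27,
    "Attention U-Net": 14.39,
    "SegNet": 18.58,
    "DeepLab v3+": 16.53,
    "I-ST-UNet": 12.64,
    "SegFormer": 11.68,
}


def speedups(fps_table, reference="Proposed"):
    """Ratio of the reference model's FPS to each other model's FPS."""
    ref = fps_table[reference]
    return {name: ref / value
            for name, value in fps_table.items() if name != reference}


def latency_ms(fps):
    """Average per-frame processing time in milliseconds."""
    return 1000.0 / fps


for name, factor in speedups(FPS).items():
    print(f"{name}: {factor:.2f}x")
print(f"Proposed latency: {latency_ms(FPS['Proposed']):.2f} ms per frame")
```

By these figures, the proposed model is roughly 3.0 times faster than the fastest baseline (SegNet) and roughly 4.8 times faster than the slowest (SegFormer), at under 18 ms per frame.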

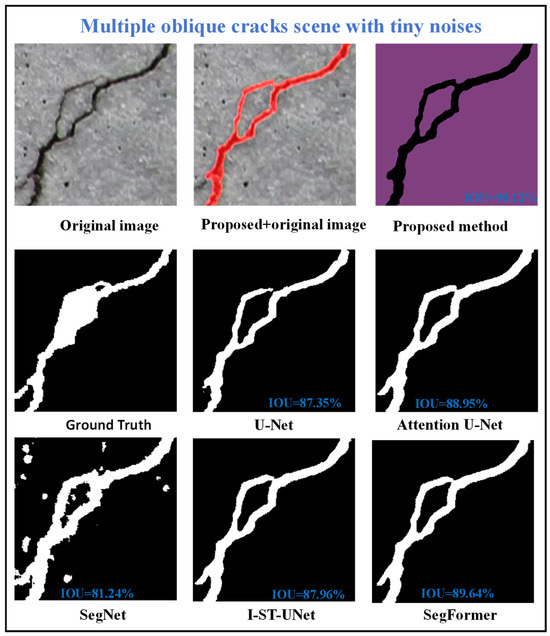

3.4. Evaluation of Damage Identification Performance Under Different Noise Levels

In real-world UAV-based inspections of hydraulic structures, captured imagery is often affected by various forms of noise and visual disturbances, such as water reflections, surface contamination, motion blur, and lighting variations, which can significantly impact the reliability of damage identification models. To assess the robustness of the proposed deep learning framework under such challenging conditions, a series of controlled experiments was conducted by introducing low, medium, and high levels of noise into the test images. Figure 13 shows the defect detection results of the proposed method in scenes with low noise. It can be inferred that the proposed method demonstrates superior performance in detecting complex damage patterns under low noise on hydraulic concrete surfaces. In a challenging scene characterized by multiple oblique damages and tiny surface holes, the proposed method achieves the highest IoU score of 90.12%, outperforming the comparative methods, which obtain IoUs of 87.35%, 88.95%, 81.24%, 87.96%, and 89.64%, respectively. Qualitatively, the proposed method provides more accurate and continuous damage delineation, effectively preserving fine structural details and avoiding over-segmentation or noise interference. These experimental results highlight its robustness and enhanced capability for precise damage identification in hydraulic structures, particularly in scenarios involving intricate damage geometries and surface irregularities.

Figure 13.

Damage identification results for multiple oblique damages with low noise.

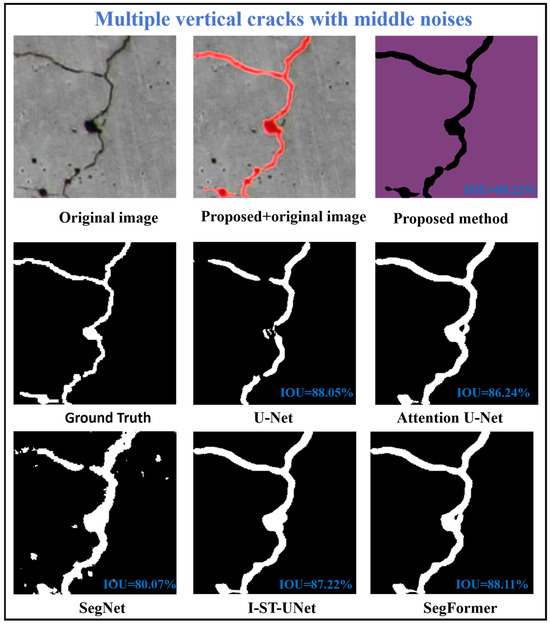
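The robustness experiments rely on test images perturbed at three noise levels. The section does not state the noise model or its parameters, so the sketch below is only one possible setup: it assumes additive zero-mean Gaussian noise on images scaled to [0, 1], with hypothetical standard deviations for the low, medium, and high levels. The names `NOISE_SIGMA` and `add_gaussian_noise` are illustrative.

```python
import numpy as np

# Assumed standard deviations per level; these values are NOT from the study.
NOISE_SIGMA = {"low": 0.02, "medium": 0.05, "high": 0.10}

def add_gaussian_noise(image, level, seed=None):
    """Add zero-mean Gaussian noise to an image with values in [0, 1]."""
    rng = np.random.default_rng(seed)
    sigma = NOISE_SIGMA[level]
    noisy = image + rng.normal(0.0, sigma, size=image.shape)
    # Clip so the result remains a valid image in [0, 1].
    return np.clip(noisy, 0.0, 1.0)

# Uniform grey test image (hypothetical) perturbed at each level.
clean = np.full((64, 64), 0.5)
noisy_versions = {
    level: add_gaussian_noise(clean, level, seed=0) for level in NOISE_SIGMA
}
for level, noisy in noisy_versions.items():
    print(level, round(float(noisy.std()), 4))
```

Fixing the seed keeps the perturbation reproducible, so that every compared model is evaluated on exactly the same noisy inputs.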

Figure 14 illustrates the effectiveness of the proposed method in segmenting multiple vertical damages on concrete surfaces under medium noise. With the highest IoU of 89.23%, the proposed method outperforms the other approaches, which obtain IoUs of 88.05%, 86.24%, 80.07%, 87.22%, and 88.11%, respectively. Visually, it demonstrates superior damage continuity, accurate edge delineation, and strong resistance to noise introduced by hole-like features. These results highlight the proposed method’s robustness and precision in detecting vertically oriented damage patterns, reinforcing its practical value for automated inspection and damage assessment of hydraulic structures.

Figure 14.

Identification results for multiple vertical damages with medium noise.

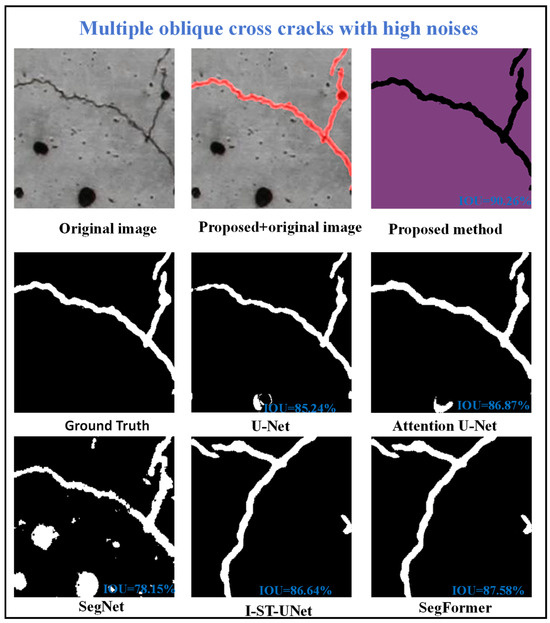

Figure 15 shows a challenging damage detection scenario involving multiple oblique cross damages with high noise interference. The top row presents the original image, the manually labeled ground truth, and the segmentation result of the proposed method, which achieves the highest IoU of 90.26%. The bottom row displays the outputs of three comparison methods, which yield lower IoUs of 85.24%, 86.87%, and 78.14%, respectively. Qualitatively, the proposed method shows a clear advantage by accurately capturing intersecting damage paths while suppressing false positives caused by noise artifacts. In contrast, U-Net, Attention U-Net, and SegNet suffer from misclassification around the noisy regions, either identifying noise as damage or losing continuity at the damage junctions. I-ST-UNet shows better continuity in crack delineation than the traditional networks but is prone to false positives in areas with dense noise. Similarly, SegFormer is robust against scattered noise yet struggles to preserve the intersections and complex geometry of the oblique damages. Overall, the proposed method exhibits superior robustness and generalization in complex noisy environments, accurately preserving the structural topology of oblique damages.

Figure 15.

Identification results of defect detection algorithms for vertical damages with high noise interference.

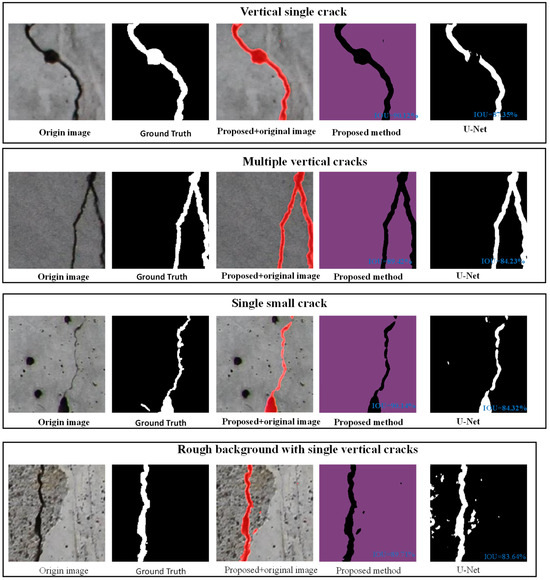

3.5. Comparison of Identification Effects Under Different Complex Damage Forms

Figure 16 compares the identification results under different complex damage forms. The proposed LFPA-EAM-Fast-SCNN model consistently outperforms both the baseline and the ablated variants across all scenarios. In cases with low contrast and fine cracks (Rows 1 and 2), the proposed model achieves IoUs of 87.35% and 84.23%, respectively, significantly higher than the baseline outputs, which exhibit fragmented and incomplete segmentation. For high-noise backgrounds and thin crack structures (Rows 3 and 4), the proposed model maintains accurate boundary continuity with IoUs of 84.32% and 86.11%, demonstrating robustness to background interference. In the most complex case, with intersecting cracks on textured surfaces (Row 5), the proposed method still achieves an IoU of 83.64%, whereas the baseline suffers from over-segmentation and background leakage. These results confirm that the LFPA module enhances multi-scale semantic representation, while the EAM refines edge localization, together enabling precise and reliable pixel-level damage identification under challenging visual conditions.

Figure 16.

Comparison of damage identification effects under different damage types.

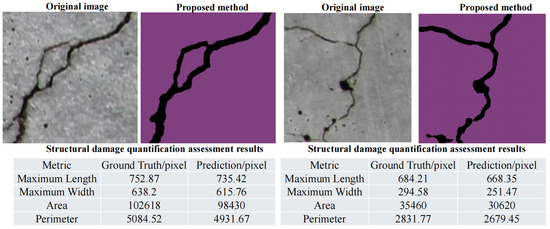

Figure 17 presents the identification and quantification results under different complex damage types. The visual comparison between the original images and the predicted segmentation masks demonstrates the method’s capability to capture fine-scale damage features. Quantitative assessments are given in terms of maximum crack length, maximum width, area, and perimeter, comparing the predictions with the ground truth annotations. As shown in the tables, the predicted geometric metrics closely approximate the manually annotated values, with only slight deviations. These results indicate that the proposed approach closely matches manual annotation in damage size estimation and morphological feature extraction, supporting its applicability to automated condition assessment of hydraulic structures.

Figure 17.

Comparison of damage identification effects under different complex damage types.
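As an illustration of how geometric descriptors can be extracted from a segmentation mask and compared with ground truth, the sketch below computes area, an approximate perimeter, and a bounding-box diagonal in pixel units, together with a relative deviation. It is a simplified stand-in rather than the study's measurement procedure: the helper names are hypothetical, the perimeter is approximated by counting boundary pixels, and the bounding-box diagonal is only a crude proxy for crack length (maximum width is not estimated here).

```python
import numpy as np

def mask_geometry(mask):
    """Area, boundary-pixel perimeter and bounding-box diagonal of a binary mask (pixels)."""
    m = np.asarray(mask, dtype=bool)
    area = int(m.sum())

    # A foreground pixel is "interior" when all four 4-neighbours are foreground.
    padded = np.pad(m, 1, constant_values=False)
    interior = (
        m
        & padded[:-2, 1:-1] & padded[2:, 1:-1]   # neighbours above and below
        & padded[1:-1, :-2] & padded[1:-1, 2:]   # neighbours left and right
    )
    perimeter = int((m & ~interior).sum())

    if area == 0:
        extent = 0.0
    else:
        rows, cols = np.nonzero(m)
        height = rows.max() - rows.min() + 1
        width = cols.max() - cols.min() + 1
        extent = float(np.hypot(height, width))
    return {"area": area, "perimeter": perimeter, "extent": extent}

def relative_deviation(predicted, reference):
    """Absolute deviation of a predicted value relative to the reference value."""
    return abs(predicted - reference) / reference

# Hypothetical masks: the prediction misses one row of the annotated region.
truth = np.zeros((6, 6), dtype=bool)
truth[1:5, 1:4] = True
pred = np.zeros((6, 6), dtype=bool)
pred[1:4, 1:4] = True

geom_truth = mask_geometry(truth)
geom_pred = mask_geometry(pred)
area_dev = relative_deviation(geom_pred["area"], geom_truth["area"])
print(geom_truth, geom_pred, area_dev)
```

Converting these pixel quantities to physical units requires the image scale (for example, millimetres per pixel), which depends on the camera and the flight distance.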

4. Discussion

4.1. Analysis of the Advantages and Disadvantages of the Proposed Method

The proposed LFPA-EAM-Fast-SCNN framework demonstrates several distinct advantages in the context of pixel-level damage segmentation for hydraulic concrete structures. First, the model achieves high segmentation accuracy across a wide spectrum of defect types, particularly in challenging scenarios involving oblique cracks, surface holes, and noise interference. Second, its lightweight architecture, based on the Fast-SCNN backbone, ensures real-time inference speed, making it well-suited for large-scale or time-constrained inspection tasks. Moreover, the incorporation of the LFPA module and EAM enhances the model’s robustness to variations in damage scale, texture complexity, and background interference, which are common in UAV-acquired inspection imagery.

Despite these advantages, the method also has certain limitations. As the model relies on supervised learning and annotated datasets, its adaptability to unseen structural environments may be restricted without additional retraining. Furthermore, variations in lighting conditions, surface moisture, or reflective noise may impact segmentation performance in highly unstructured field environments. Future work will focus on incorporating domain adaptation techniques and self-supervised learning strategies to improve generalization. Additionally, integrating geometric postprocessing and 3D reconstruction may further extend the applicability of the method for comprehensive structural assessment.

4.2. Combination of LiDAR and Vision for Structural Damage Identification

While UAV-based visual inspection has demonstrated significant potential for detecting and segmenting surface damage on hydraulic structures, its performance can be hindered by environmental factors such as water-induced image distortions, illumination variability, and surface occlusions. To address these limitations, future research should explore the integration of LiDAR and vision-based modalities to achieve more comprehensive and robust structural assessments. LiDAR offers accurate 3D spatial measurements and geometric profiling capabilities that complement the high-resolution texture and contextual information captured by visual sensors. Fusing depth information from LiDAR with pixel-wise damage predictions from vision-based DL models makes it possible to achieve not only more precise damage localization but also dimensional quantification (e.g., depth, volume, and surface area) of cracks and surface irregularities. Such multimodal approaches could significantly enhance defect interpretability, reduce false positives in complex backgrounds, and provide richer datasets for model training. Therefore, future UAV-based inspection systems should prioritize the development of lightweight, real-time multimodal fusion frameworks tailored to the specific challenges of hydraulic infrastructure environments.
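To indicate how depth information could turn a pixel-wise damage mask into a physical quantity, the following sketch estimates damaged surface area under a pinhole camera model. It is a simplified illustration of the fusion idea and not a method from this study: it assumes a depth map already registered to the image, a surface roughly perpendicular to the optical axis, and focal lengths `fx` and `fy` expressed in pixels. The function name `physical_area` and all numeric values are hypothetical.

```python
import numpy as np

def physical_area(mask, depth_m, fx, fy):
    """Approximate damaged area in square metres from a mask and a registered depth map."""
    mask = np.asarray(mask, dtype=bool)
    depth_m = np.asarray(depth_m, dtype=float)

    # Under the pinhole model, one pixel at range Z covers about (Z / fx) by (Z / fy) metres
    # on a surface perpendicular to the optical axis.
    pixel_area = (depth_m / fx) * (depth_m / fy)
    return float(pixel_area[mask].sum())

# Hypothetical example: a 2 x 2 pixel damage patch seen from 2 m with fx = fy = 1000 px.
mask = np.zeros((4, 4), dtype=bool)
mask[1:3, 1:3] = True
depth = np.full((4, 4), 2.0)
area_m2 = physical_area(mask, depth, fx=1000.0, fy=1000.0)
print(area_m2)
```

For inclined or curved surfaces, the per-pixel footprint would also need to account for the local surface normal, which is one reason full 3D geometry from LiDAR is attractive.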

4.3. Structural Damage Assessment and Diagnosis

Although this study focuses on the pixel-level segmentation and localization of surface defects in hydraulic structures, the extracted damage features, such as crack length, width, area, and spatial distribution, can serve as critical inputs for subsequent risk assessment frameworks. In practical applications, these damage maps can be postprocessed to identify regions of interest (e.g., cracks near joints or support elements) and to derive quantitative indicators of damage severity for hydraulic structures. By integrating such geometric and spatial attributes with expert knowledge or numerical structural models, the proposed method lays a foundation for data-driven structural health assessment. Future work will explore the coupling of segmentation outputs with domain-specific risk scoring criteria and structural mechanics-based evaluations to enable automated risk prioritization and maintenance decision-making for hydraulic structures.

4.4. Advantages and Disadvantages of the Proposed Method

The proposed LFPA-EAM-Fast-SCNN framework demonstrates substantial advantages in both segmentation performance and practical applicability. Extensive experimental results under varying noise levels and complex damage configurations confirm the model’s superior accuracy in detecting fine-scale and intersecting defects, with consistently higher IoU scores compared to baseline and state-of-the-art methods. The integration of the LFPA module enables effective multi-scale semantic representation, while the EAM enhances boundary delineation, allowing the model to accurately capture intricate geometries such as oblique cracks and surface holes. In addition, the lightweight Fast-SCNN backbone facilitates real-time inference, making the framework suitable for deployment in UAV-based inspection systems operating under resource constraints.

Despite these strengths, several limitations remain. The proposed method relies on supervised learning and requires high-quality annotated datasets, which may constrain its generalization to unseen environments without additional labeling or fine-tuning. Moreover, the model’s performance may be affected by extreme lighting conditions, such as strong reflections or low-contrast textures, which are frequently encountered in real-world hydraulic structure inspections. These issues suggest opportunities for future work, including the exploration of semi-supervised or unsupervised learning strategies, domain adaptation techniques, and integration with risk quantification frameworks to support comprehensive structural condition assessment.

5. Conclusions

In this study, a lightweight and accurate DL-based framework was proposed for the pixel-level identification of damage in hydraulic concrete structures. By integrating an improved Fast-SCNN backbone, the LFPA module, and the EAM, the proposed model achieves an effective balance between computational efficiency and segmentation precision. Experimental results on complex damage scenarios, including oblique damages, holes, and noise interference, demonstrate that the model consistently outperforms existing methods in terms of precision, recall, F1 score, and IoU. The proposed architecture exhibits strong generalization capability across various defect types and geometric complexities, and its edge-aware enhancement significantly improves the delineation of damage boundaries. This is particularly important for hydraulic infrastructure, where accurate detection of fine-scale surface degradation is critical for maintenance planning and risk assessment.

Nonetheless, several limitations remain. The current model relies on supervised learning and annotated datasets, which may limit its adaptability to unseen environments without retraining. Future work will explore domain adaptation techniques and self-supervised learning to further enhance model robustness. In addition, the integration of geometric postprocessing and 3D reconstruction could provide more comprehensive structural assessments beyond 2D segmentation. In summary, the proposed method provides a promising and scalable solution for intelligent damage recognition in hydraulic concrete structures, offering both high accuracy and practical deployability for large-scale infrastructure monitoring.

Author Contributions

Methodology, F.H.; Software, F.H.; Investigation, F.H.; Writing—original draft, F.H.; Writing—review & editing, C.G.; Supervision, C.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the National Natural Science Foundation of China (grant numbers U2243223 and 52079046) and the Open Project Program of the Engineering Research Center of High-efficiency and Energy-saving Large Axial Flow Pumping Station, Jiangsu Province, Yangzhou University (ECHEAP008).

Data Availability Statement

The data that support the findings of this study are available from the author upon reasonable request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Liu, X.; Li, Z.; Sun, L.; Khailah, E.Y.; Wang, J.; Lu, W.; Yahya, E.; Wang, J.; Lu, W. A Critical Review of Statistical Model of Dam Monitoring Data. J. Build. Eng. 2023, 80, 108106.

- Yuan, D.; Gu, C.; Wei, B.; Qin, X.; Xu, W. A High-Performance Displacement Prediction Model of Concrete Dams Integrating Signal Processing and Multiple Machine Learning Techniques. Appl. Math. Model. 2022, 112, 436–451.

- Su, H.; Wen, Z.; Wang, F.; Hu, J. Dam Structural Behavior Identification and Prediction by Using Variable Dimension Fractal Model and Iterated Function System. Appl. Soft Comput. J. 2016, 48, 612–620.

- Wei, B.; Chen, L.; Li, H.; Yuan, D.; Wang, G. Optimized Prediction Model for Concrete Dam Displacement Based on Signal Residual Amendment. Appl. Math. Model. 2020, 78, 20–36.

- Liu, D.; Chen, J.; Hu, D.; Zhang, Z. Dynamic BIM-Augmented UAV Safety Inspection for Water Diversion Project. Comput. Ind. 2019, 108, 163–177.

- Duan, Z.; Liu, J.; Ling, X.; Zhang, J.; Liu, Z. ERNet: A Rapid Road Crack Detection Method Using Low-Altitude UAV Remote Sensing Images. Remote Sens. 2024, 16, 1741.

- Zhang, F.; Hu, Z.; Fu, Y.; Yang, K.; Wu, Q.; Feng, Z. A New Identification Method for Surface Cracks from UAV Images Based on Machine Learning in Coal Mining Areas. Remote Sens. 2020, 12, 1571.

- Zhao, S.; Kang, F.; Li, J. Concrete Dam Damage Detection and Localisation Based on YOLOv5s-HSC and Photogrammetric 3D Reconstruction. Autom. Constr. 2022, 143, 104555.

- Zhu, Y.; Tang, H. Automatic Damage Detection and Diagnosis for Hydraulic Structures Using Drones and Artificial Intelligence Techniques. Remote Sens. 2023, 15, 615.

- Ajayi, O.G.; Palmer, M.; Salubi, A.A. Modelling Farmland Topography for Suitable Site Selection of Dam Construction Using Unmanned Aerial Vehicle (UAV) Photogrammetry. Remote Sens. Appl. Soc. Environ. 2018, 11, 220–230.

- Liu, Y.; Yeoh, J.K.W.; Chua, D.K.H. Deep Learning–Based Enhancement of Motion Blurred UAV Concrete Crack Images. J. Comput. Civ. Eng. 2020, 34, 4020028.

- Deng, L.; Yuan, H.; Long, L.; Chun, P.J.; Chen, W.; Chu, H. Cascade Refinement Extraction Network with Active Boundary Loss for Segmentation of Concrete Cracks from High-Resolution Images. Autom. Constr. 2024, 162, 105410.

- Rau, J.Y.; Hsiao, K.W.; Jhan, J.P.; Wang, S.H.; Fang, W.C.; Wang, J.L. Bridge Crack Detection Using Multi-Rotary UAV and Object-Base Image Analysis. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, 42, 311–318.

- Mohammadi, M.; Rashidi, M.; Mousavi, V.; Karami, A.; Yu, Y.; Samali, B. Quality Evaluation of Digital Twins Generated Based on UAV Photogrammetry and TLS: Bridge Case Study. Remote Sens. 2021, 13, 3499.

- Peng, X.; Zhong, X.; Zhao, C.; Chen, A.; Zhang, T. A UAV-Based Machine Vision Method for Bridge Crack Recognition and Width Quantification through Hybrid Feature Learning. Constr. Build. Mater. 2021, 299, 123896.

- Feroz, S.; Dabous, S.A. UAV-Based Remote Sensing Applications for Bridge Condition Assessment. Remote Sens. 2021, 13, 1809.

- Waqas, A.; Kang, D.; Cha, Y.J. Deep Learning-Based Obstacle-Avoiding Autonomous UAVs with Fiducial Marker-Based Localization for Structural Health Monitoring. Struct. Health Monit. 2023, 23, 971–990.

- Mondal, T.G.; Chen, G. Artificial Intelligence in Civil Infrastructure Health Monitoring—Historical Perspectives, Current Trends, and Future Visions. Front. Built Environ. 2022, 8, 1007886.

- Khaloo, A.; Lattanzi, D.; Jachimowicz, A.; Devaney, C. Utilizing UAV and 3D Computer Vision for Visual Inspection of a Large Gravity Dam. Front. Built Environ. 2018, 4, 31.

- Zhang, Y.; Chow, C.L.; Lau, D. Artificial Intelligence-Enhanced Non-Destructive Defect Detection for Civil Infrastructure. Autom. Constr. 2025, 171, 105996.

- Gao, Y.; Cao, H.; Cai, W.; Zhou, G. Pixel-level road crack detection in UAV remote sensing images based on ARD-Unet. Measurement 2023, 219, 113252.

- Liu, F.; Wang, L. UNet-based model for crack detection integrating visual explanations. Constr. Build. Mater. 2022, 322, 126265.

- Zheng, X.; Zhang, S.; Li, X.; Li, G.; Li, X. Lightweight bridge crack detection method based on segnet and bottleneck depth-separable convolution with residuals. IEEE Access 2021, 9, 161649–161668.

- Zhang, X.; Rajan, D.; Story, B. Concrete crack detection using context-aware deep semantic segmentation network. Comput.-Aided Civ. Infrastruct. Eng. 2019, 34, 951–971.

- Shen, Y.; Yu, Z.; Li, C.; Zhao, C.; Sun, Z. Automated detection for concrete surface cracks based on Deeplabv3+ BDF. Buildings 2023, 13, 118.

- Pan, Z.; Zhang, X.; Jiang, Y.; Li, B.; Golsanami, N.; Su, H.; Cai, Y. High-precision segmentation and quantification of tunnel lining crack using an improved DeepLabV3+. Undergr. Space 2025, 22, 96–109.

- Zhang, H.; Zhang, A.A.; Dong, Z.; He, A.; Liu, Y.; Zhan, Y.; Wang, K.C.P. Robust semantic segmentation for automatic crack detection within pavement images using multi-mixing of global context and local image features. IEEE Trans. Intell. Transp. Syst. 2024, 25, 11282–11303.

- Luo, H.; Li, J.; Cai, L.; Wu, M. STrans-YOLOX: Fusing swin transformer and YOLOX for automatic pavement crack detection. Appl. Sci. 2023, 13, 1999.

- Ju, X.; Zhao, X.; Qian, S. TransMF: Transformer-based multi-scale fusion model for crack detection. Mathematics 2022, 10, 2354.

- Ali, L.; Alnajjar, F.; Al Jassmi, H.; Gocho, M.; Khan, W.; Serhani, M.A. Performance evaluation of deep CNN-based crack detection and localization techniques for concrete structures. Sensors 2021, 21, 1688.

- Xu, X.; Zhao, M.; Shi, P.; Ren, R.; He, X.; Wei, X.; Yang, H. Crack detection and comparison study based on faster R-CNN and mask R-CNN. Sensors 2022, 22, 1215.

- Li, R.; Yu, J.; Li, F.; Yang, R.; Wang, Y.; Peng, Z. Automatic bridge crack detection using Unmanned aerial vehicle and Faster R-CNN. Constr. Build. Mater. 2023, 362, 129659.

- Ali, R.; Chuah, J.H.; Abu Talip, M.S.; Mokhtar, N.; Shoaib, M.A. Structural crack detection using deep convolutional neural networks. Autom. Constr. 2022, 133, 103989.

- Zhang, E.; Shao, L.; Wang, Y. Unifying transformer and convolution for dam crack detection. Autom. Constr. 2023, 147, 104712.

- Savino, P.; Graglia, F.; Scozza, G.; Di Pietra, V. Automated Corrosion Surface Quantification in Steel Transmission Towers Using UAV Photogrammetry and Deep Convolutional Neural Networks. Comput.-Aided Civ. Infrastruct. Eng. 2025, 40, 2050–2070.

- Ai, D.; Jiang, G.; Lam, S.K.; He, P.; Li, C. Automatic Pixel-Wise Detection of Evolving Cracks on Rock Surface in Video Data. Autom. Constr. 2020, 119, 103378.

- Chu, H.; Wang, W.; Deng, L. Tiny-Crack-Net: A Multiscale Feature Fusion Network with Attention Mechanisms for Segmentation of Tiny Cracks. Comput.-Aided Civ. Infrastruct. Eng. 2022, 37, 1914–1931.

- Sony, S.; Laventure, S.; Sadhu, A. A Literature Review of Next-Generation Smart Sensing Technology in Structural Health Monitoring. Struct. Control Health Monit. 2019, 26, e2321.

- Perez Jimeno, S.; Capa Salinas, J.; Perez Caicedo, J.A.; Rojas Manzano, M.A. An Integrated Framework for Non-Destructive Evaluation of Bridges Using UAS: A Case Study. J. Build. Pathol. Rehabil. 2023, 8, 80.

- Poudel, R.P.K.; Liwicki, S.; Cipolla, R. Fast-SCNN. arXiv 2019, arXiv:1902.04502.

- Liu, Z.; Cao, Y.; Wang, Y.; Wang, W. Computer Vision-Based Concrete Crack Detection Using U-Net Fully Convolutional Networks. Autom. Constr. 2019, 104, 129–139.

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. Segnet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

- Tang, W.; Zou, D.; Yang, S.; Shi, J.; Dan, J.; Song, G. A Two-Stage Approach for Automatic Liver Segmentation with Faster R-CNN and DeepLab. Neural Comput. Appl. 2020, 32, 6769–6778.

- Oktay, O.; Schlemper, J.; Folgoc, L.L.; Lee, M.; Heinrich, M.; Misawa, K.; Mori, K.; McDonagh, S.; Hammerla, N.Y.; Kainz, B.; et al. Attention U-Net: Learning Where to Look for the Pancreas. arXiv 2018, arXiv:1804.03999.

- Zhang, H.; Ma, L.; Yuan, Z.; Liu, H. Enhanced Concrete Crack Detection and Proactive Safety Warning Based on I-ST-UNet Model. Autom. Constr. 2024, 166, 105612.

- Li, H.; Zhang, H.; Zhu, H.; Gao, K.; Liang, H.; Yang, J. Automatic Crack Detection on Concrete and Asphalt Surfaces Using Semantic Segmentation Network with Hierarchical Transformer. Eng. Struct. 2024, 307, 117903.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).