1. Introduction

With the rapid development of satellite technology, satellite transmitter identification methods based on radar and optical imaging have matured. However, deep-learning methods that rely on a single image modality still have inherent limitations. Specific Emitter Identification (SEI), a physical-layer identity authentication mechanism, analyzes the unique radio frequency characteristics of received radio signals. Radio Frequency Fingerprints (RFFs), which are used to identify different emission sources [1], have received extensive attention in recent years. RFFs originate from inherent differences in the design or manufacturing process of hardware circuits and are tamper-resistant and difficult to forge [2], laying the physical foundation for SEI and providing an important complementary perspective for satellite transmitter identification.

Deep Learning (DL), with its powerful data analysis capabilities, has been extensively applied in wireless communication technologies [3,4,5], including SEI [6,7,8,9,10,11,12,13,14,15]. For instance, Ref. [16] proposed an SEI method utilizing Complex-Valued Neural Networks (CVNNs) to process In-phase and Quadrature (I/Q) signals directly, significantly enhancing recognition accuracy. Ref. [17] introduced Knowledge Graph (KG) technology to SEI, designing an Adaptive Feature Combination (AFC) strategy via attention mechanisms to assign optimal weights to features for building an efficient classifier. Ref. [18] explored the application of Graph Neural Networks (GNNs) in SEI, effectively improving system robustness in a complex wireless communication environment. Ref. [19] proposed a Transformer-based SEI method to better capture long-term dependencies within signals. These DL-based SEI approaches rely on massive historical RF signal samples and deep neural networks to extract robust and effective RFFs, demonstrating superior performance compared to traditional methods based on handcrafted features.

However, most existing DL-based SEI methods depend on supervised learning, requiring large amounts of precisely labeled data for effective training. In practical applications, especially for satellite signal data, obtaining reliable annotations during data acquisition is often challenging, resulting in scarce, accurately labeled samples. Consequently, the proportion of effectively labeled data is typically low, making the application of conventional supervised learning methods difficult under such constraints. To address the issue of limited labeled training samples, Few-Shot Learning (FSL) has recently been introduced to SEI. Ref. [20] applied meta-learning to SEI, directly utilizing I/Q data for training to reduce manual preprocessing steps. Validated on data collected from ZigBee devices and drones, this method achieved optimal performance with only 15 samples per class. Ref. [21] improved a meta-learning algorithm to better handle high-dimensional input data by calculating the distance and scatter between features, using this information to predict emitters. Compared to traditional meta-learning, the enhanced algorithm incorporates richer signal information to extract higher-quality, low-dimensional features. Ref. [22] proposed a novel FSL-SEI method based on Deep Metric Ensemble Learning (DMEL). Leveraging Complex-Valued Convolutional Neural Networks (CVCNNs) combined with contrastive loss and SoftMax loss, it extracts discriminative features characterized by compact intra-class distances and separable inter-class distances, ultimately enabling ADS-B signal identification via an ensemble classifier.

The above-mentioned FSL-SEI methods assume that only a small number of labeled samples exist. However, a more realistic scenario is that a large number of unlabeled samples and limited labeled data are available. Semi-supervised learning provides an effective approach for this scenario. It utilizes unlabeled samples to offer auxiliary information and regularization constraints during model training. Semi-supervised learning mainly includes generative methods, pseudo-labeling methods, consistency regularization methods, and hybrid methods. Generative methods, such as the Generative Adversarial Network (GAN) [23], the Convolutional Autoencoder (CAE) [24], and diffusion models [25], can generate pseudo-samples that conform to the real data distribution, directly alleviating the scarcity of labeled data. Ref. [26] introduced a semi-supervised Auxiliary Classifier GAN (ACGAN) for modulation recognition, incorporating training tricks from Improved GAN [27] (e.g., feature matching, minibatch discrimination, and classification backbone). Both labeled and unlabeled samples were used as real samples to train the ACGAN, while labeled samples participated in the discriminator training to achieve classification capability. Ref. [28] proposed a semi-supervised Deep Convolutional GAN (DCGAN) for modulation recognition and SEI, using unlabeled samples as real samples for DCGAN training and labeled samples to train the discriminator's classification ability. Generating pseudo-labels based on high-confidence model predictions is another effective strategy to regularize deep model training. Ref. [29] proposed a semi-supervised SEI method using Metric Adversarial Training (MAT). Specifically, it introduced pseudo-labels into metric learning, realizing Semi-Supervised Metric Learning (SSML), and designed an objective function alternately regularized by SSML and Virtual Adversarial Training (VAT) to extract discriminative and generalizable semantic features from radio signals. Consistency regularization methods aim to keep model outputs consistent for perturbed inputs. Ref. [30] proposed an SEI method based on Dual Consistency Regularization (DCR), combined with pseudo-labeling. It enforced consistency between the predicted class distributions of unlabeled data under different augmentations, as well as consistency between the semantic feature distributions of labeled samples and pseudo-labeled samples, to achieve more accurate emitter identification.

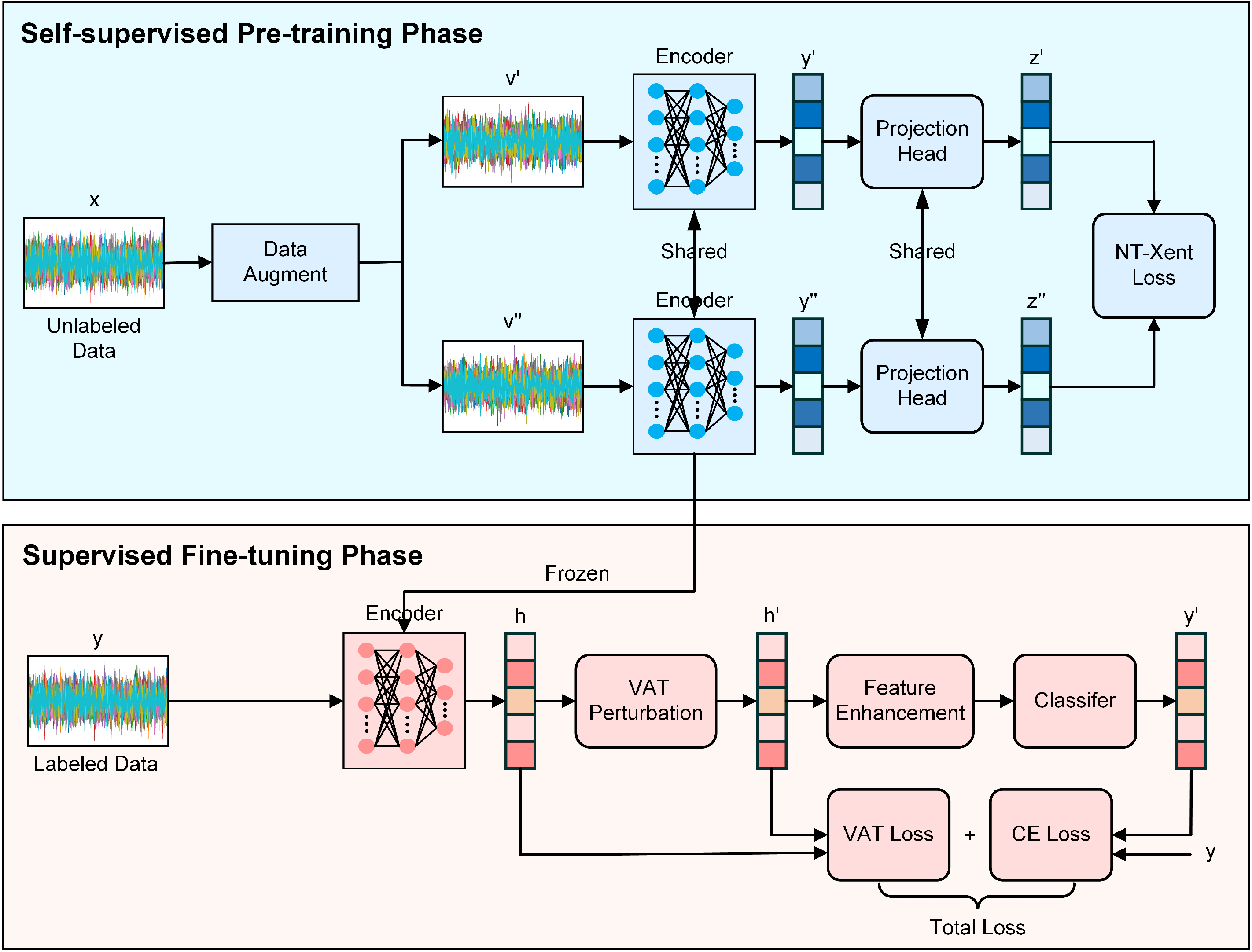

However, semi-supervised learning still relies on pseudo-labels or generated data whose quality cannot be guaranteed. Self-Supervised Learning (SSL) is a type of unsupervised learning. It uses contrastive learning to construct auxiliary tasks, trains the RFFs extractor with unlabeled data, and then requires only a small number of labeled samples to fine-tune the RFFs extractor for the SEI task. SSL is therefore a more balanced and effective paradigm: it learns meaningful representations from a large amount of unlabeled data and adapts to downstream tasks with few labeled samples. Ref. [31] introduced an efficient self-supervised learning method referred to as BYOL [32] to SEI and designed three optimized data augmentation schemes, namely phase rotation, random cropping, and jitter. Ref. [33] performed self-supervised learning on constellation trace figures to achieve feature extraction of unlabeled RF signals and SEI in the downstream task. Ref. [34] employed BYOL as the self-supervised backbone for pretraining, designed an Adversarial Augmentation (Adv-Aug) strategy, and introduced knowledge transfer to fine-tune the extractor and classifier. Ref. [35] designed an asymmetric dual-network architecture (online and target networks), employing contrastive loss to distinguish positive and negative sample pairs, combined with a non-contrastive consistency constraint for cross-network feature alignment, further enhancing the robustness and generalizability of the learned RFFs. In conclusion, both unsupervised and self-supervised learning methods can extract RFFs from unlabeled samples and serve the target SEI task. However, since the amount of labeled data available for fine-tuning is typically much smaller than the unlabeled data used in pretraining, models remain susceptible to overfitting during the fine-tuning stage.

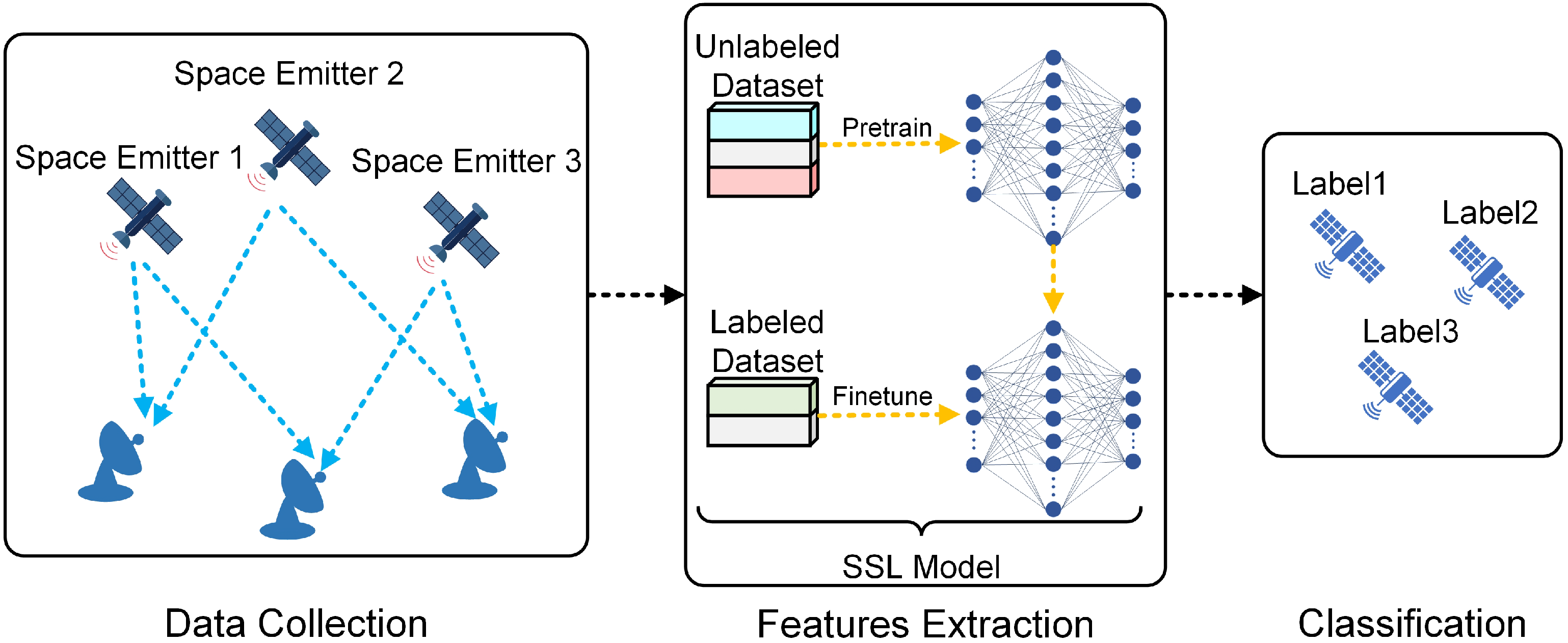

Inspired by the aforementioned research, this paper proposes a Self-Supervised Learning SEI (SSL-SEI) method. The method fully leverages unlabeled auxiliary datasets to train the RFFs extractor and achieves high-performance emitter identification by fine-tuning the RFFs extractor and classifier using a limited number of labeled samples. The main contributions of this paper are as follows:

We propose a novel SSL-SEI framework that integrates self-supervised contrastive learning with supervised fine-tuning. This framework effectively leverages unlabeled signals to learn generalizable RFFs and adapts to downstream SEI tasks using only a small number of labeled samples, thereby overcoming the limitations of dependence on labeled samples and achieving high-accuracy emitter identification.

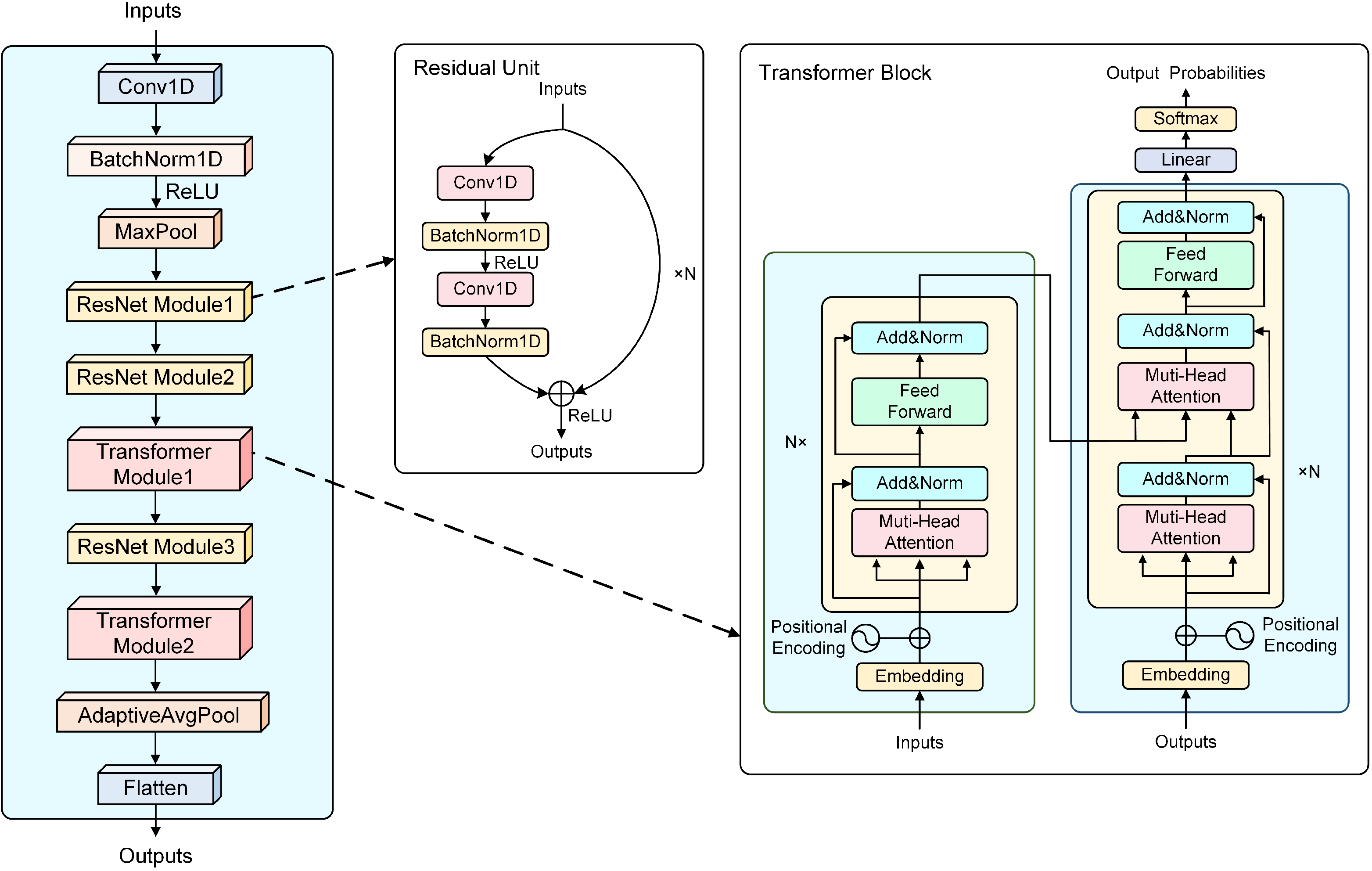

We design specialized data augmentation strategies for satellite signals and a hybrid encoder combining Transformer [36] and ResNet [37] modules to enhance RFF extraction. In the fine-tuning phase, we introduce VAT [38] as a regularization technique to further improve model robustness under label-scarce conditions.

We validate the proposed method on real-world BeiDou Navigation Satellite System (BDS) signal datasets with 10 emitter classes. Experimental results show that our approach significantly outperforms the competing methods across various settings with limited labeled samples, demonstrating its effectiveness and generalizability.

4. Experimental Results Analysis and Discussion

4.1. Datasets

To validate the effectiveness of the proposed method, we collected real-world satellite navigation signals using a 40-m antenna, as shown in Figure 4, and conducted extensive experiments. The data are BDS signals from 10 different categories, labeled from 0 to 9. Each sample is a 1D sequence of length 4000. In the pretraining stage, each class has 800 samples, totaling 8000 training samples. This ensures that the encoder learns generalizable representations of all categories without label supervision. During the fine-tuning stage, we simulated a limited labeled data scenario. The number of labeled samples per class was varied across experiments, taking values from the set {10, 15, 20, 25, 30, 35, 40}, to evaluate model performance under different levels of supervision. For each scenario, the labeled data are randomly divided into a training set and a validation set with a ratio of 0.8:0.2. The test set remained fixed throughout all experiments and consisted of 1500 samples, ensuring consistent and fair evaluation across all models.

To ensure a fair and objective evaluation, the pretraining dataset (unlabeled) and the fine-tuning dataset (labeled) are strictly non-overlapping. Specifically, the unlabeled data used during the self-supervised pretraining stage is entirely separate from the labeled data used in the fine-tuning stage. Furthermore, within the fine-tuning process, the training, validation, and test sets are constructed independently without any shared samples. This strict separation prevents data leakage and ensures that the experimental results accurately reflect the validity of the model. The details of the datasets are shown in Table 1.
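The k-shot sampling and the 0.8:0.2 split described above could be sketched as follows. This is only an illustration under stated assumptions: the function name `make_k_shot_split`, the per-class (stratified) split, and the fixed seed are hypothetical choices, since the text only states that the labeled data are randomly divided.

```python
import random
from collections import defaultdict


def make_k_shot_split(labels, k, train_ratio=0.8, seed=0):
    """Pick k labeled samples per class, then split them into train/val indices.

    Assumption: the 0.8:0.2 split is applied inside each class so that every
    class appears in both subsets. The paper does not state this detail.
    """
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, y in enumerate(labels):
        by_class[y].append(idx)

    train_idx, val_idx = [], []
    for y in sorted(by_class):
        chosen = rng.sample(by_class[y], k)   # k distinct samples of class y
        n_train = round(k * train_ratio)      # e.g. 8 of 10, 32 of 40
        train_idx.extend(chosen[:n_train])
        val_idx.extend(chosen[n_train:])
    return train_idx, val_idx


# Hypothetical labeled pool: 10 classes with 50 candidate samples each.
pool_labels = [c for c in range(10) for _ in range(50)]
train_idx, val_idx = make_k_shot_split(pool_labels, k=10)
```

Keeping the returned index lists disjoint from the pretraining and test indices is what enforces the non-overlap requirement stated in the text.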

4.2. Experimental Details

All experiments were implemented using Python 3.9.19 and the PyTorch 1.11 framework. Training was conducted on a workstation equipped with an NVIDIA GeForce RTX 4090 GPU. For the self-supervised pretraining stage, we trained the model for 200 epochs using the LARS optimizer, with a batch size of 256 and a learning rate set to

. The temperature parameter

in the contrastive loss is set to 0.1. During the fine-tuning stage, we trained for 100 epochs using a smaller batch size of 32, and adopted the Adam optimizer with a learning rate of

for stable convergence. The test batch size was also set to 32 to match the training configuration during evaluation. The VAT weight is set to 0.3, and the perturbation magnitude is set to 4.0. These values are selected to balance the trade-off between task loss and adversarial smoothing. The selection principle of VAT parameters will be discussed in detail in Section 4.6. The details and simulation parameters are shown in Table 2.
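To make the role of the temperature parameter concrete, the following is a minimal NumPy sketch of a SimCLR-style normalized temperature-scaled cross-entropy (NT-Xent) loss. It is an assumption that the contrastive loss used in pretraining takes this form; the function name `nt_xent_loss` and the toy embeddings are hypothetical, and only the temperature value 0.1 comes from the text.

```python
import numpy as np


def nt_xent_loss(z1, z2, temperature=0.1):
    """NT-Xent loss for two batches of embeddings of shape (N, D).

    Row i of z1 and row i of z2 are two augmented views of the same signal
    (a positive pair); every other row in the 2N batch acts as a negative.
    """
    n = z1.shape[0]
    z = np.concatenate([z1, z2], axis=0)
    z = z / np.linalg.norm(z, axis=1, keepdims=True)   # cosine similarity
    sim = z @ z.T / temperature
    np.fill_diagonal(sim, -np.inf)                     # exclude self-pairs

    # Index of the positive partner for each of the 2N rows.
    pos = np.concatenate([np.arange(n, 2 * n), np.arange(0, n)])

    # Numerically stable row-wise log-softmax.
    row_max = sim.max(axis=1, keepdims=True)
    log_prob = sim - row_max - np.log(
        np.exp(sim - row_max).sum(axis=1, keepdims=True))
    return float(-log_prob[np.arange(2 * n), pos].mean())


rng = np.random.default_rng(0)
views_a = rng.normal(size=(8, 16))
views_b = views_a + 0.01 * rng.normal(size=(8, 16))    # nearly identical views
loss_aligned = nt_xent_loss(views_a, views_b)
loss_random = nt_xent_loss(views_a, rng.normal(size=(8, 16)))
```

A smaller temperature sharpens the softmax over similarities, so hard negatives contribute more strongly to the gradient.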

We use four widely used evaluation metrics for SEI: Accuracy (ACC), Recall (R), Precision (P), and F1-score (F). Each metric ranges from 0 to 1, and a higher value indicates better model performance. These evaluation metrics are defined as follows:

Accuracy (ACC): Accuracy measures the proportion of correct predictions out of the total number of predictions. It is defined as:

$$ACC = \frac{TP + TN}{TP + TN + FP + FN}$$

where $TP$ represents the number of true positives, $TN$ represents the number of true negatives, $FP$ represents the number of false positives, and $FN$ represents the number of false negatives.

Recall (R): Recall measures the proportion of actual positives that are correctly identified:

$$R = \frac{TP}{TP + FN}$$

Precision (P): Precision measures the proportion of positive predictions that are actually correct:

$$P = \frac{TP}{TP + FP}$$

F1-score (F): The F1-score is the harmonic mean of precision and recall. It provides a balance between the two metrics:

$$F = \frac{2 \times P \times R}{P + R}$$
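For a multi-class task such as the 10-class setting here, these four metrics are commonly computed from a confusion matrix. The sketch below assumes macro-averaging over classes for recall, precision, and F1-score; the averaging scheme is not stated in the text, and the function name `macro_metrics` is hypothetical.

```python
import numpy as np


def macro_metrics(y_true, y_pred, num_classes):
    """Return (accuracy, macro recall, macro precision, macro F1)."""
    cm = np.zeros((num_classes, num_classes), dtype=int)
    for t, p in zip(y_true, y_pred):
        cm[t, p] += 1                      # rows: true class, cols: predicted

    tp = np.diag(cm).astype(float)
    fp = cm.sum(axis=0) - tp               # predicted as class k but wrong
    fn = cm.sum(axis=1) - tp               # class k samples that were missed

    precision = tp / np.maximum(tp + fp, 1)
    recall = tp / np.maximum(tp + fn, 1)
    f1 = 2 * precision * recall / np.maximum(precision + recall, 1e-12)

    accuracy = tp.sum() / cm.sum()
    return (float(accuracy), float(recall.mean()),
            float(precision.mean()), float(f1.mean()))


# Toy example with three classes.
acc, rec, prec, f1 = macro_metrics([0, 0, 1, 1, 2, 2], [0, 1, 1, 1, 2, 0], 3)
```

Macro-averaging weights every emitter class equally, which is a reasonable default when the test set is balanced across classes.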

4.3. Comparison Methods

To verify the effectiveness of the proposed method, four comparative methods are introduced as follows.

1. FineZero: FineZero directly trains the RFFs extractor and classifier without a pretraining stage, where the RFFs extractor is the hybrid encoder proposed in this paper. There is no knowledge transfer during training, and the parameters of the RFFs extractor and classifier are randomly initialized. The performance of FineZero therefore serves as the baseline.

2. MAML: MAML [39] is a model-agnostic meta-learning framework that aims to quickly adapt to new tasks with a small number of samples. The core idea is to learn a common set of initialization parameters so that the model can efficiently solve new tasks with a small number of gradient updates.

3. SimCLR: SimCLR [40] is a self-supervised contrastive learning method. SimCLR takes the augmented views of the same sample as positive examples and all other samples in the batch as negative examples.

4. SA2SEI: SA2SEI [34] is a few-shot SEI method based on self-supervised learning and adversarial augmentation. Specifically, adversarial-augmentation-driven self-supervised learning is used to pretrain the RFFs extractor with unlabeled auxiliary datasets, and knowledge transfer is introduced to fine-tune the extractor and classifier.

Table 3 presents the performance comparison of various methods applied to the BDS signal datasets across different scenarios ({10,15,20,25,30,35,40}-shot). The experimental results show that the proposed method significantly outperforms other methods on the BDS signal datasets.

As shown by the experimental results in Table 3, the proposed method consistently achieves significantly higher identification accuracy than existing mainstream approaches across all training sample settings. In the 10-shot setting, the proposed method reaches an accuracy of 54.73%, outperforming SimCLR (49.93%), SA2SEI (43.13%), MAML (36.67%), and the baseline (40.93%). As the number of training samples increases, the performance of the proposed method improves at a much faster rate than other methods. For instance, under the 20-shot setting, it achieves 89.27% accuracy, whereas SimCLR reaches 70.93%, SA2SEI 68.43%, MAML 68.52%, and the baseline only 60.27%. This pronounced performance gap demonstrates the effectiveness of the proposed framework in enabling the model to capture discriminative features even when labeled data are limited. Under larger sample settings ({25,30,35,40}-shot), the proposed method continues to maintain a strong lead, ultimately achieving an accuracy of 97.20% in the 40-shot setting, significantly surpassing SA2SEI (91.08%), SimCLR (86.00%), and all other methods.

In addition to accuracy, we also evaluate the performance of the model in terms of recall, precision, and F1-score, as shown in Table 4, Table 5 and Table 6. The proposed method consistently achieves the highest values on all three metrics across all shot settings. For example, in the 20-shot scenario, the proposed method achieves 88.05% recall, 91.15% precision, and 89.48% F1-score, substantially outperforming all comparison methods. This trend persists across increasing sample sizes, culminating in 40-shot scores of 96.15% recall, 97.60% precision, and 97.44% F1-score. These results further validate the robustness and effectiveness of the proposed approach from multiple evaluation perspectives.

Overall, these results indicate that the proposed approach offers high precision and robustness in scenarios with limited labeled data. The experimental results validate the effectiveness of the introduced hybrid encoder architecture, the self-supervised contrastive learning mechanism, and the VAT-based fine-tuning strategy for the SEI task.

4.4. Exploring the Impact of Different Data Augmentation Strategies on SSL

To investigate the impact of different data augmentation strategies on the effectiveness of self-supervised pretraining, we conducted a series of experiments using various augmentation combinations. All experiments use the same parameter settings described in Section 4.2. The experimental results are shown in Figure 5.

The results demonstrate that single augmentations, such as Noise, Scale, or Shift, generally provide moderate improvements as the number of labeled samples increases. Among them, the Scale and Shift augmentations outperform Noise, achieving 96.93% and 92.53% accuracy, respectively, at 40 labeled samples per class, compared to 75.87% for Noise. This suggests that geometric transformations (Scale and Shift) are more beneficial for representation learning than random Noise alone. The pairwise augmentation combinations (Noise + Scale, Noise + Shift, and Scale + Shift) show a less stable improvement trend and outperform single augmentations only at specific sample sizes. The proposed augmentation strategy (Noise + Scale + Shift) consistently outperforms all other configurations at all sample sizes. It achieves the highest accuracy of 97.20% with 40 samples per class and, more importantly, shows a clear advantage in low-resource scenarios. These results demonstrate the effectiveness of the proposed data augmentation in the SSL phase.
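As an illustration of how the three augmentations might be composed into one view-generation step, consider the following sketch. The concrete definitions are assumptions, because this section does not restate them: Noise is taken as additive Gaussian noise at a target SNR, Scale as a random amplitude factor, and Shift as a circular time shift. All function names and parameter ranges are hypothetical.

```python
import numpy as np


def add_noise(x, rng, snr_db=20.0):
    """Add white Gaussian noise at an assumed signal-to-noise ratio (dB)."""
    signal_power = np.mean(x ** 2)
    noise_power = signal_power / (10 ** (snr_db / 10))
    return x + rng.normal(0.0, np.sqrt(noise_power), size=x.shape)


def random_scale(x, rng, low=0.8, high=1.2):
    """Multiply the whole sequence by one random amplitude factor."""
    return x * rng.uniform(low, high)


def random_shift(x, rng, max_shift=100):
    """Circularly shift the sequence by a random number of samples."""
    shift = int(rng.integers(-max_shift, max_shift + 1))
    return np.roll(x, shift)


def augment(x, rng):
    """Compose Noise + Scale + Shift to produce one augmented view."""
    return random_shift(random_scale(add_noise(x, rng), rng), rng)


rng = np.random.default_rng(0)
signal = np.sin(np.linspace(0.0, 20.0, 4000))   # stand-in for one 4000-point sample
view_1 = augment(signal, rng)
view_2 = augment(signal, rng)                   # second view of the same sample
```

Two independently augmented views of the same sample form a positive pair for contrastive pretraining, while the sequence length of 4000 is preserved.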

4.5. Ablation Experiment

As shown in Table 7, we conducted ablation experiments on the 10-class BDS signal dataset under the 40-shot scenario to evaluate the effectiveness of each component of the proposed framework. All experiments use the same parameter settings described in Section 4.2. Model (A) adopts an RFFs extractor modified from ResNet-50. This model is trained in a supervised manner without any self-supervised pretraining stage and uses cross-entropy loss for end-to-end optimization. Model (B) uses the hybrid encoder proposed in this paper instead of ResNet and adopts the same training strategy as Model (A). Models (C) and (E) use the same encoder as Model (A), adopt the self-supervised training strategy, and introduce contrastive learning. Model (E) further introduces the VAT-based fine-tuning method proposed in this paper. Model (D) uses the same encoder as Model (B), adopts a self-supervised training strategy, and introduces contrastive learning. Model (F) is the method proposed in this paper.

1. Effectiveness Analysis of Hybrid Encoder: The hybrid encoder leverages ResNet to extract local signal features and Transformer to capture global dependencies via self-attention. This combination leads to more expressive and generalizable RFF representations for SEI. To validate the effectiveness of the proposed hybrid encoder architecture, we compare pairs of models trained under identical strategies but with different encoders. Specifically, comparing (A) and (B), the accuracy improves from 73.93% to 80.93%, indicating that the hybrid encoder provides a more expressive representation than the vanilla ResNet. Similarly, under the SSL setting, (C) achieves 86.00%, while (D) achieves 89.67%, again demonstrating consistent performance gains from the hybrid encoder. Finally, under the full framework including both SSL and VAT, (E) achieves 92.87%, whereas (F) reaches 97.20%, further highlighting the contribution of the hybrid encoder to overall performance improvement.

2. Effectiveness Analysis of SSL: The SSL framework guides the model to learn invariant and discriminative features by maximizing agreement between augmented views of the same signal. This contrastive objective encourages the encoder to enhance RFF extraction without relying on labels. To evaluate the impact of the SSL framework, we compare models with and without SSL while keeping the encoder fixed. For the ResNet-based models, (A) achieves 73.93%, whereas (C) improves to 86.00%, a gain of about 12 percentage points from introducing the SSL framework. A similar trend is observed with the hybrid encoder: (B) achieves 80.93%, while (D) achieves 89.67%. These results confirm that the self-supervised framework effectively improves feature representations by leveraging unlabeled data, especially when labeled samples are limited.

3. Effectiveness Analysis of VAT: VAT improves model robustness by introducing small adversarial perturbations, encouraging the classifier to maintain consistent predictions around labeled samples. This regularization smooths decision boundaries and prevents overfitting, which is crucial for SEI tasks under limited labeled data. To investigate the contribution of VAT, we compare models trained with and without VAT under the SSL setting. When using the ResNet encoder, (C) achieves 86.00%, while (E) improves to 92.87%. Similarly, with the hybrid encoder, performance increases from 89.67% in (D) to 97.20% in (F). These results demonstrate that VAT effectively enhances the model's robustness and generalization, particularly when combined with the hybrid encoder and SSL components.
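The pairwise comparisons in the three analyses above reduce to simple differences between the reported 40-shot accuracies. The short script below recomputes them from the values quoted in the text; the dictionary layout and the helper name `gain` are illustrative only.

```python
# 40-shot accuracies (%) quoted in the ablation analysis.
accuracy = {"A": 73.93, "B": 80.93, "C": 86.00,
            "D": 89.67, "E": 92.87, "F": 97.20}

# Each pair differs in exactly one component (baseline model, improved model).
comparisons = {
    "hybrid encoder": [("A", "B"), ("C", "D"), ("E", "F")],
    "SSL": [("A", "C"), ("B", "D")],
    "VAT": [("C", "E"), ("D", "F")],
}


def gain(base, improved):
    """Accuracy gain in percentage points, rounded to two decimals."""
    return round(accuracy[improved] - accuracy[base], 2)


gains = {name: [gain(a, b) for a, b in pairs]
         for name, pairs in comparisons.items()}

for name, values in gains.items():
    print(f"{name}: {values} percentage points")
```

The hybrid encoder contributes 7.00, 3.67, and 4.33 points across the three training strategies, SSL contributes 12.07 and 8.74 points, and VAT contributes 6.87 and 7.53 points.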

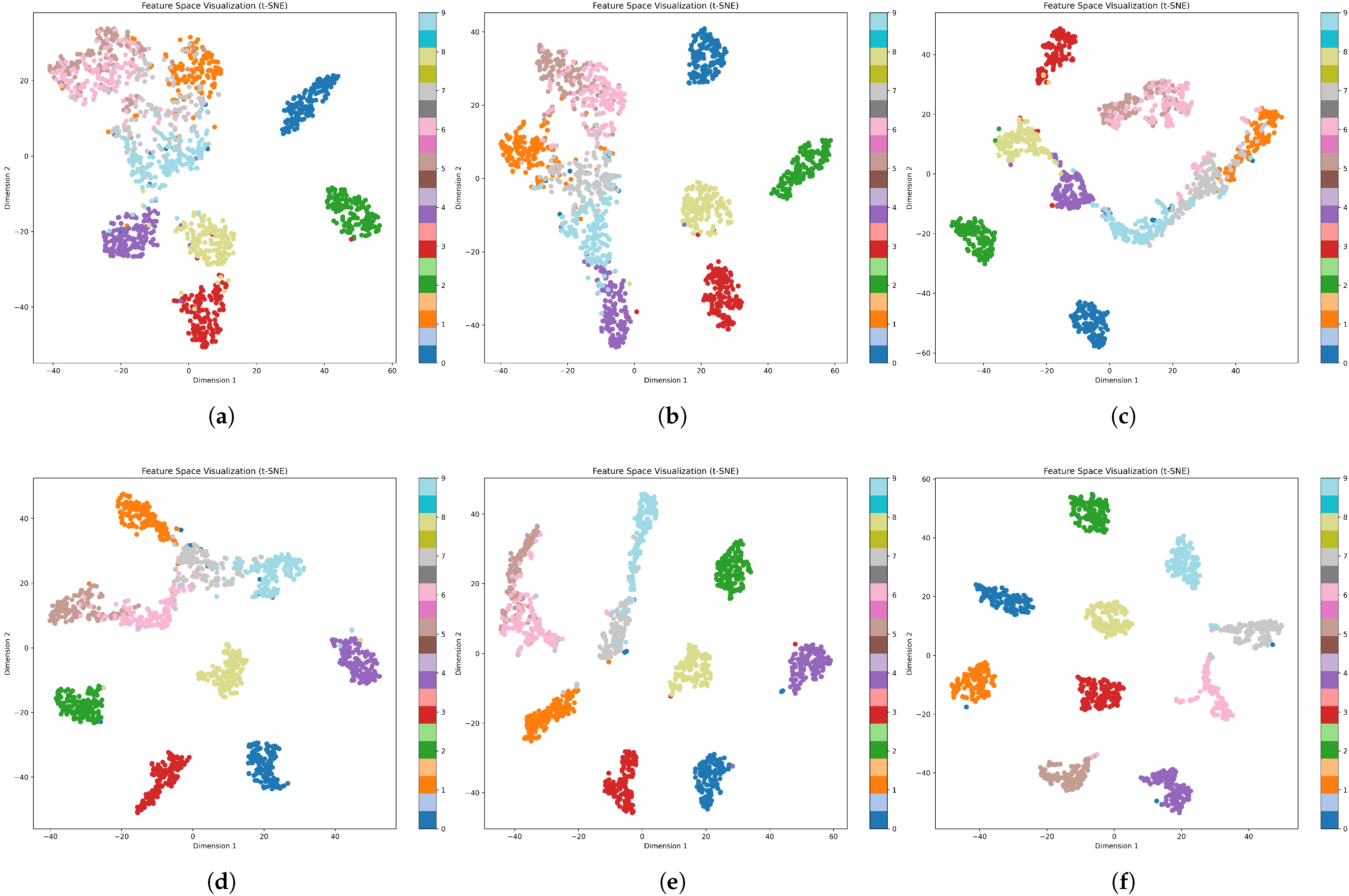

To further investigate the discriminative capability of the learned representations, we performed t-SNE visualization on the feature embeddings of all models. Figure 6 presents the scatter plots of features from 10 selected classes in the BDS signal datasets, extracted by different models. For models (A) and (B) without SSL or VAT, the clusters show obvious overlap and relatively poor intra-class compactness, indicating limited discriminative ability. After the introduction of self-supervised learning, (C) and (D) exhibit a more compact intra-class distribution and better inter-class separation, indicating that the SSL framework effectively guides the encoder to learn semantically meaningful representations. With the introduction of VAT, (E) shows clearer class boundaries and a more compact intra-class distribution than the model using only SSL. Finally, the complete Model (F) achieves the best visualization quality, featuring highly compact intra-class clusters and minimal inter-class overlap. This clear and well-separated feature distribution reflects the effectiveness of each component and shows that the proposed complete framework not only achieves high precision but also learns a highly discriminative feature space.

Table 8 provides a quantitative analysis based on the silhouette coefficient and the separation ratio, which we use to measure the distribution of class representations. The silhouette coefficient measures the separation quality between clusters, and higher values (closer to 1) indicate better clustering. It is defined as:

$$S = \frac{1}{n}\sum_{i=1}^{n}\frac{b(i) - a(i)}{\max\{a(i),\, b(i)\}}$$

where $a(i)$ represents the average Euclidean distance between sample $i$ and all other points in the same cluster, $b(i)$ represents the smallest average Euclidean distance between sample $i$ and the points in any other cluster, and $n$ is the total number of samples.

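The separation ratio is the ratio of inter-cluster separation to intra-cluster compactness. Higher values indicate superior clustering (well-separated and tight clusters). The separation ratio is defined as:

$$SR = \frac{D_{\mathrm{inter}}}{D_{\mathrm{intra}} + \epsilon}$$

where $\epsilon$ is a small constant to prevent division by zero. The intra-class compactness $D_{\mathrm{intra}}$ and the inter-class separation $D_{\mathrm{inter}}$ represent the average distance between samples within a cluster and the average distance between cluster centroids, respectively, and are defined as:

$$D_{\mathrm{intra}} = \frac{1}{N_{\mathrm{intra}}}\sum_{k=1}^{K}\sum_{\substack{x_i, x_j \in C_k \\ i < j}} d(x_i, x_j), \qquad D_{\mathrm{inter}} = \frac{1}{N_{\mathrm{inter}}}\sum_{k=1}^{K-1}\sum_{l=k+1}^{K} d(\mu_k, \mu_l)$$

where $C_k$ is the set of samples in cluster $k$ ($k = 1, \ldots, K$), $d(x_i, x_j)$ is the Euclidean distance between samples $x_i$ and $x_j$, $N_{\mathrm{intra}}$ is the total number of intra-cluster pairs, $\mu_k$ is the centroid of cluster $k$, $d(\mu_k, \mu_l)$ is the Euclidean distance between centroids, and $N_{\mathrm{inter}}$ is the total number of cluster pairs.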
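Both clustering metrics can be computed directly from the feature embeddings and their class labels. The following NumPy sketch follows the verbal definitions above; the function names, the default value of the small constant, and the toy data are assumptions made for illustration.

```python
import numpy as np


def pairwise_dist(a, b):
    """Euclidean distance matrix between the rows of a and the rows of b."""
    return np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)


def silhouette_coefficient(x, labels):
    """Mean silhouette value over all samples."""
    dist = pairwise_dist(x, x)
    classes = np.unique(labels)
    scores = []
    for i in range(len(x)):
        same = labels == labels[i]
        same[i] = False                      # exclude the sample itself
        if not same.any():                   # singleton cluster
            scores.append(0.0)
            continue
        a = dist[i, same].mean()
        b = min(dist[i, labels == c].mean()
                for c in classes if c != labels[i])
        denom = max(a, b)
        scores.append((b - a) / denom if denom > 0 else 0.0)
    return float(np.mean(scores))


def separation_ratio(x, labels, eps=1e-8):
    """Average centroid distance divided by average intra-cluster distance."""
    classes = np.unique(labels)
    intra_sum, intra_pairs = 0.0, 0
    for c in classes:
        xc = x[labels == c]
        upper = np.triu_indices(len(xc), k=1)   # each unordered pair once
        intra_sum += pairwise_dist(xc, xc)[upper].sum()
        intra_pairs += len(upper[0])
    centroids = np.stack([x[labels == c].mean(axis=0) for c in classes])
    upper = np.triu_indices(len(classes), k=1)
    inter = pairwise_dist(centroids, centroids)[upper].mean()
    intra = intra_sum / max(intra_pairs, 1)
    return float(inter / (intra + eps))


# Toy embeddings: two tight clusters that are far apart.
feats = np.array([[0.0, 0.0], [0.0, 1.0], [10.0, 0.0], [10.0, 1.0]])
labels = np.array([0, 0, 1, 1])
sil = silhouette_coefficient(feats, labels)
ratio = separation_ratio(feats, labels)
```

Both functions build full distance matrices, which is fine for a test set of a few thousand embeddings but would need batching for much larger sets.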

As shown in Table 8, (A) and (B) yield the lowest silhouette coefficients and separation ratios, indicating poor feature discrimination and clustering quality. The incorporation of self-supervised learning in (C) and (D) leads to consistent improvements across both metrics, demonstrating the effectiveness of contrastive pretraining in enhancing representation learning. Further performance gains are achieved with the addition of VAT in Model (E), highlighting its role in improving robustness and inter-class separability under limited supervision. The complete Model (F) achieves the highest silhouette coefficient of 0.8859 and a separation ratio of 11.3587, significantly outperforming all other variants.

These results collectively validate the effectiveness of the proposed SSL-SEI framework. By integrating contrastive pretraining with VAT-based fine-tuning, the framework successfully learns highly discriminative and robust feature representations, enabling accurate emitter identification even with limited labeled data.

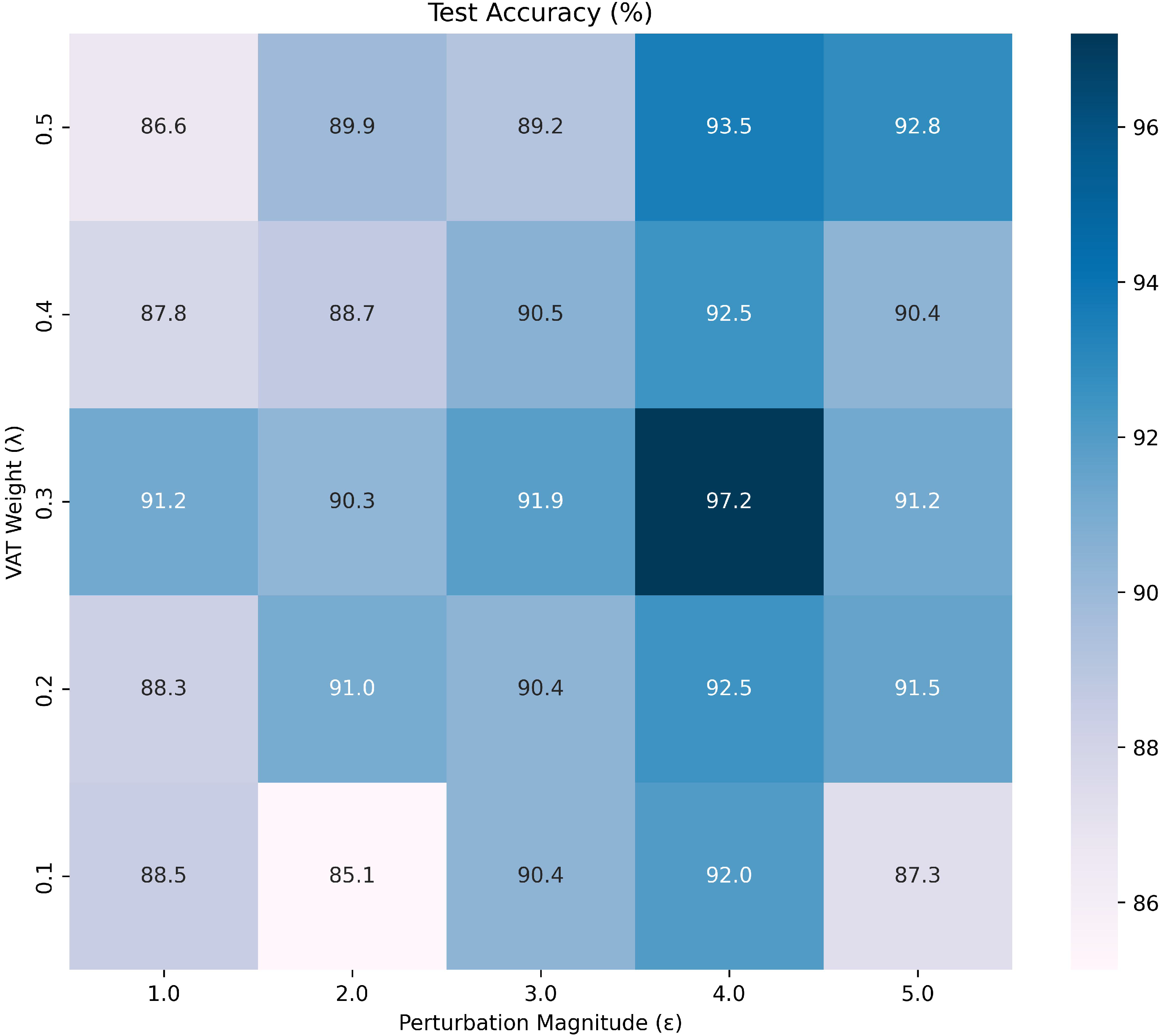

4.6. Impact of VAT Parameters

To evaluate the sensitivity of the VAT parameters, we conducted a grid search over the perturbation magnitude and the VAT weight on the 10-class BDS signal dataset under the 40-shot scenario. Figure 7 presents a heat map of the grid search results.

The experimental results indicate that, for a fixed VAT weight, the recognition accuracy first increases and then slowly decreases as the perturbation magnitude increases. The peak occurs at a moderate perturbation magnitude across the different settings, indicating that moderately strengthening the perturbation improves the robustness of the model. Larger perturbation magnitudes are generally superior to smaller ones, but the value still needs to be chosen appropriately to achieve the best performance. This aligns with VAT theory: when the perturbation magnitude is too small, the perturbation fails to sufficiently explore the decision boundary, limiting its regularization effect. Conversely, overly large perturbations distort the input signal representation beyond the manifold of valid samples, leading to performance degradation. The optimal range balances boundary exploration and feature integrity, since moderate perturbations enforce local smoothness without destroying the discriminative structure.

The VAT loss is weighted by a coefficient, the VAT weight, which controls the influence of the virtual adversarial regularization term relative to the supervised loss. A small VAT weight (e.g., 0.1) fails to sufficiently emphasize the VAT regularization, potentially leading to overfitting in data-scarce settings. On the other hand, excessively large values may overly constrain the model, interfering with supervised learning and resulting in underfitting. The best performance is observed with a VAT weight of 0.3, which achieves an optimal trade-off between maintaining decision boundary smoothness and preserving discriminative learning capacity.

In summary, the optimal configuration (a perturbation magnitude of 4.0 and a VAT weight of 0.3, as adopted in Section 4.2) aligns with the theoretical design of VAT, providing both sufficient boundary exploration and stability.
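To show how the two parameters enter the objective, the following NumPy sketch computes a VAT term for a linear softmax classifier applied to feature vectors and adds it to the cross-entropy loss. It is a simplified stand-in, not the fine-tuning code of this paper: the linear head, the single power-iteration step, the probe scale `xi`, and all function names are assumptions. Only the perturbation magnitude 4.0 and the VAT weight 0.3 come from the text.

```python
import numpy as np


def softmax(z):
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def kl_div(p, q, tiny=1e-12):
    """Row-wise KL(p || q)."""
    return np.sum(p * (np.log(p + tiny) - np.log(q + tiny)), axis=1)


def unit_rows(d):
    return d / (np.linalg.norm(d, axis=1, keepdims=True) + 1e-12)


def vat_loss(feats, W, b, epsilon=4.0, xi=1e-3, n_power=1, rng=None):
    """Return (mean KL under the adversarial perturbation, perturbation)."""
    rng = np.random.default_rng(0) if rng is None else rng
    p = softmax(feats @ W + b)               # clean predictions, held fixed
    d = rng.normal(size=feats.shape)         # random starting direction
    for _ in range(n_power):
        d = unit_rows(d)
        q = softmax((feats + xi * d) @ W + b)
        # For a linear softmax head, the gradient of KL(p || q) with respect
        # to the perturbation is (q - p) W^T, so no autograd is needed here.
        d = (q - p) @ W.T
    r_adv = epsilon * unit_rows(d)           # scale to the chosen magnitude
    q_adv = softmax((feats + r_adv) @ W + b)
    return float(kl_div(p, q_adv).mean()), r_adv


def total_loss(feats, labels, W, b, alpha=0.3, epsilon=4.0):
    """Cross-entropy plus alpha times the VAT regularizer."""
    p = softmax(feats @ W + b)
    ce = -np.log(p[np.arange(len(labels)), labels] + 1e-12).mean()
    vat, _ = vat_loss(feats, W, b, epsilon=epsilon)
    return float(ce + alpha * vat)


rng = np.random.default_rng(0)
features = rng.normal(size=(5, 8))           # stand-in encoder outputs
weights = rng.normal(size=(8, 10))           # 10 emitter classes
bias = np.zeros(10)
targets = np.array([0, 1, 2, 3, 4])
loss = total_loss(features, targets, weights, bias)
```

In a deep model the gradient step inside the loop would be obtained by automatic differentiation through the network rather than by the closed form used here.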

4.7. Impact of Environmental Diversity on Model Performance

To evaluate the adaptability and robustness of the proposed method under real-world conditions, we define the dataset described in Section 4.1 as Dataset1, and construct five additional datasets (Dataset2 through Dataset6) using signal data collected from the same 10 BDS satellites over a long time span from 2021 to 2024. These satellites were observed under varying temporal and orbital conditions, naturally introducing environmental variability such as changes in satellite hardware states, signal propagation conditions, and ground station configurations.

It is worth noting that in these supplementary experiments, we retain the model pretrained on Dataset1 and perform fine-tuning using only a small number of labeled samples from each of the new datasets. This setup reflects practical deployment scenarios where a general-purpose feature extractor, once pretrained, can be rapidly adapted to newly collected data without retraining from scratch. Instead, only minimal labeled data are required to fine-tune the model for evolving signal environments. Evaluation metrics, including Accuracy (ACC), Precision (P), Recall (R), and F1-score (F), are used to quantify classification performance across the datasets.

As shown in Table 9, Table 10, Table 11 and Table 12, the proposed model consistently maintains strong performance across all datasets. While a slight reduction in performance is observed under the 10-shot and 15-shot settings, which may be attributed to greater signal variability or environmental noise, the model exhibits rapid adaptation as the number of labeled samples increases. These findings demonstrate that the proposed SSL-SEI framework is capable of learning generalizable features and can be effectively fine-tuned on newly collected satellite observations using only a small amount of labeled data. This confirms the practical applicability of our method in dynamic and diverse environments.

5. Conclusions

In this paper, we propose a novel SSL-SEI framework based on self-supervised contrastive learning to address the challenge of limited labeled data in SEI. During the pretraining phase, we design tailored data augmentation strategies for satellite signals and construct a hybrid encoder that combines ResNet and Transformer modules to enhance feature learning. This enables the model to extract robust and discriminative RFFs from unlabeled satellite signals. To mitigate the overfitting risk caused by scarce labeled data, we introduce a VAT-based fine-tuning phase, where adversarial perturbations are applied in the feature space to improve the robustness and generalization of the model, thereby achieving high-accuracy emitter identification. We conduct extensive experiments on the real-world datasets constructed from collected BDS signals. Results demonstrate that the proposed SSL-SEI framework achieves excellent SEI performance using only a small number of labeled samples and significantly outperforms competing methods under various low-label settings, validating its effectiveness and generalizability.

Furthermore, to evaluate the robustness and adaptability of the proposed framework under realistic conditions, we conducted additional experiments using datasets constructed from long-term satellite observations spanning 2021 to 2024. These datasets naturally incorporate variations in signal propagation environments, satellite hardware conditions, and ground station configurations. Without retraining the model from scratch, we fine-tuned the pretrained encoder using only a small number of labeled samples from each new dataset. The results show that while distributional shifts can lead to reduced accuracy under extremely low-shot conditions, the model quickly recovers high identification performance as more labeled samples become available. These findings demonstrate the strong generalization capability of the proposed framework and confirm its practical feasibility in dynamic and evolving environments.

While the proposed framework demonstrates strong performance, it still relies on fine-tuning with a small amount of labeled data. As a result, it may not generalize immediately to entirely new emitter classes without some form of supervised adaptation. This limitation is particularly critical in scenarios involving previously unseen emitters, where the current method lacks the capability for instant recognition. In future work, considering the emergence of such unknown targets, we plan to incorporate domain adaptation and incremental learning techniques to enable the model to continuously adapt to new data while preserving previously acquired knowledge.