Small but Mighty: A Lightweight Feature Enhancement Strategy for LiDAR Odometry in Challenging Environments

Abstract

1. Introduction

- A stability-aware feature selection mechanism, based on the statistical distribution of local smoothness, is proposed to efficiently identify stable features among those produced by conventional geometric feature extraction.

- An adaptive weighting scheme is introduced to emphasize stable features during pose optimization, thereby suppressing the influence of low-quality residuals and enhancing estimation robustness.

- Extensive experiments on challenging datasets demonstrate consistent performance gains across diverse environments, validating the generality of the proposed strategy.
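The two methodological contributions, selecting stable features from the distribution of local smoothness and then up-weighting them in pose optimization, can be illustrated with a small sketch. It assumes the LOAM curvature definition of local smoothness, c_i = ||Σ_j (p_i − p_j)|| / (|S|·||p_i||) over the |S| neighbours of p_i on the same scan line. The paper's actual statistical criterion and threshold are not reproduced here. In their place, the sketch uses a simple z-score cutoff `k` over the candidates' smoothness values and a linear weight `1 + alpha * z`. Both are hypothetical stand-ins, and all function names and parameters are illustrative.

```python
import numpy as np


def loam_smoothness(points: np.ndarray, half_window: int = 5) -> np.ndarray:
    """LOAM-style local smoothness for points ordered along one scan line.

    c_i = ||sum_j (p_i - p_j)|| / (|S| * ||p_i||), where S holds the
    half_window neighbours on each side of p_i. Points without a full
    window on both sides are marked NaN.
    """
    n = len(points)
    c = np.full(n, np.nan)
    for i in range(half_window, n - half_window):
        nbrs = np.vstack((points[i - half_window:i], points[i + 1:i + 1 + half_window]))
        diff_sum = (points[i] - nbrs).sum(axis=0)
        c[i] = np.linalg.norm(diff_sum) / (len(nbrs) * np.linalg.norm(points[i]))
    return c


def select_stable(c: np.ndarray, candidates: np.ndarray, k: float = 1.5):
    """Hypothetical rule: keep candidates whose smoothness z-score exceeds k.

    The z-score is taken w.r.t. the smoothness distribution of the candidates
    themselves; this stands in for the paper's (unspecified here) statistic.
    """
    vals = c[candidates]
    std = vals.std()
    z = (vals - vals.mean()) / std if std > 0 else np.zeros_like(vals)
    return candidates[z > k], z


def stability_weights(z: np.ndarray, k: float = 1.5, alpha: float = 0.5) -> np.ndarray:
    """Hypothetical adaptive weights: 1 for ordinary features and
    1 + alpha * z for stable ones, so more distinctive features count more."""
    return np.where(z > k, 1.0 + alpha * z, 1.0)


def weighted_cost(residuals, weights) -> float:
    """Weighted least-squares cost sum_i w_i * r_i^2 for pose optimization."""
    return float(np.sum(np.asarray(weights) * np.asarray(residuals) ** 2))


# Synthetic scan line: a wall along x, then a corner at (10, 10) turning along y.
wall = np.array([[x, 10.0, 0.0] for x in range(11)], dtype=float)
side = np.array([[10.0, 10.0 + t, 0.0] for t in range(1, 11)])
scan = np.vstack((wall, side))

c = loam_smoothness(scan)
cand = np.flatnonzero(np.isfinite(c))  # points with a full neighbour window
stable, z = select_stable(c, cand)
w = stability_weights(z)
print(stable)  # [10]  (the corner point)
```

In this synthetic scan only the corner point passes the cutoff, so only its residual would receive a weight above 1. A fuller version would also treat planar candidates (low smoothness) and the temporal check of Section 3.5.2, which this sketch omits.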

2. Related Work

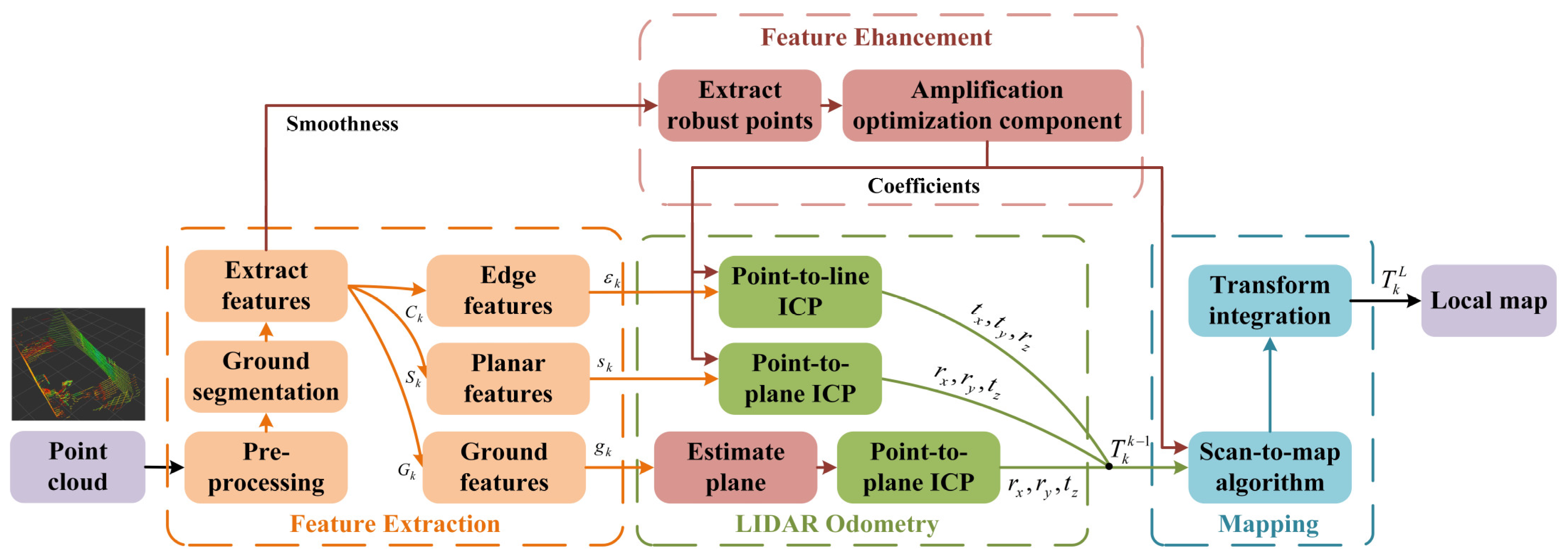

3. Methodology

3.1. System Overview

3.2. Point Cloud Preprocessing

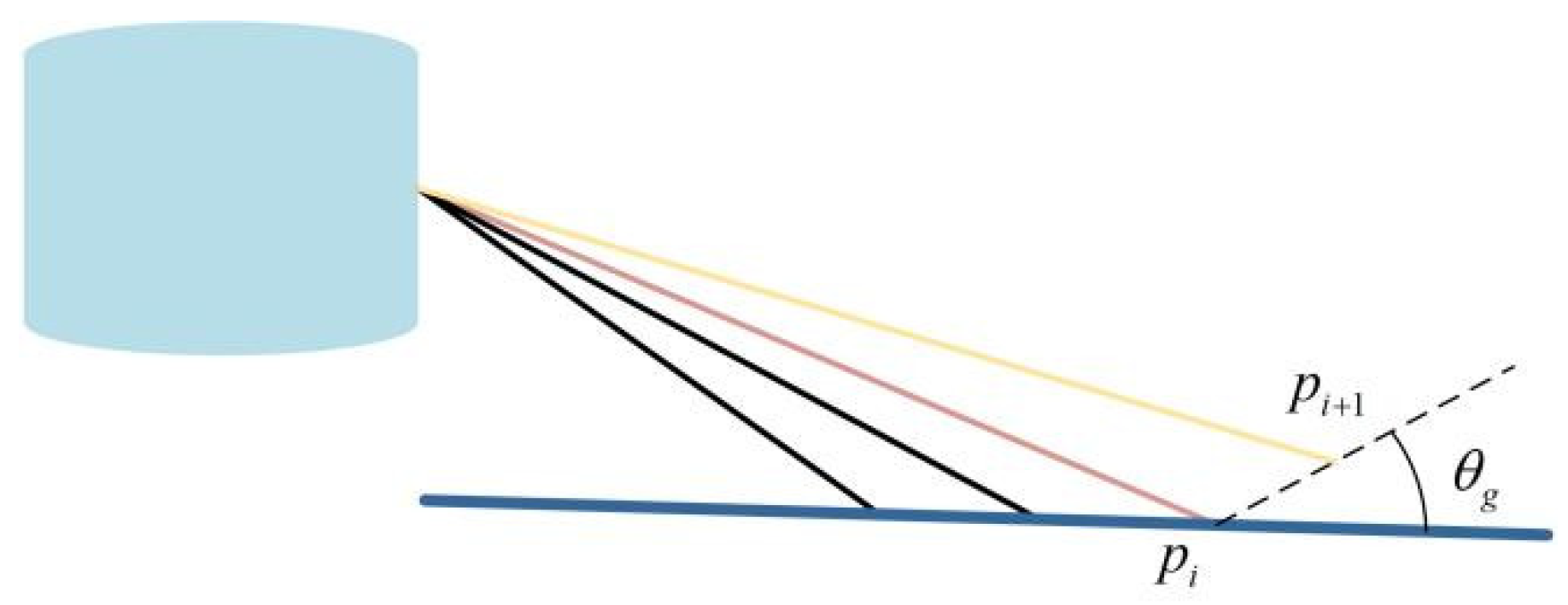

3.3. Ground Segmentation

3.4. Feature Extraction

3.5. Stable Feature Point Selection

3.5.1. Spatially Stable Feature Point Selection

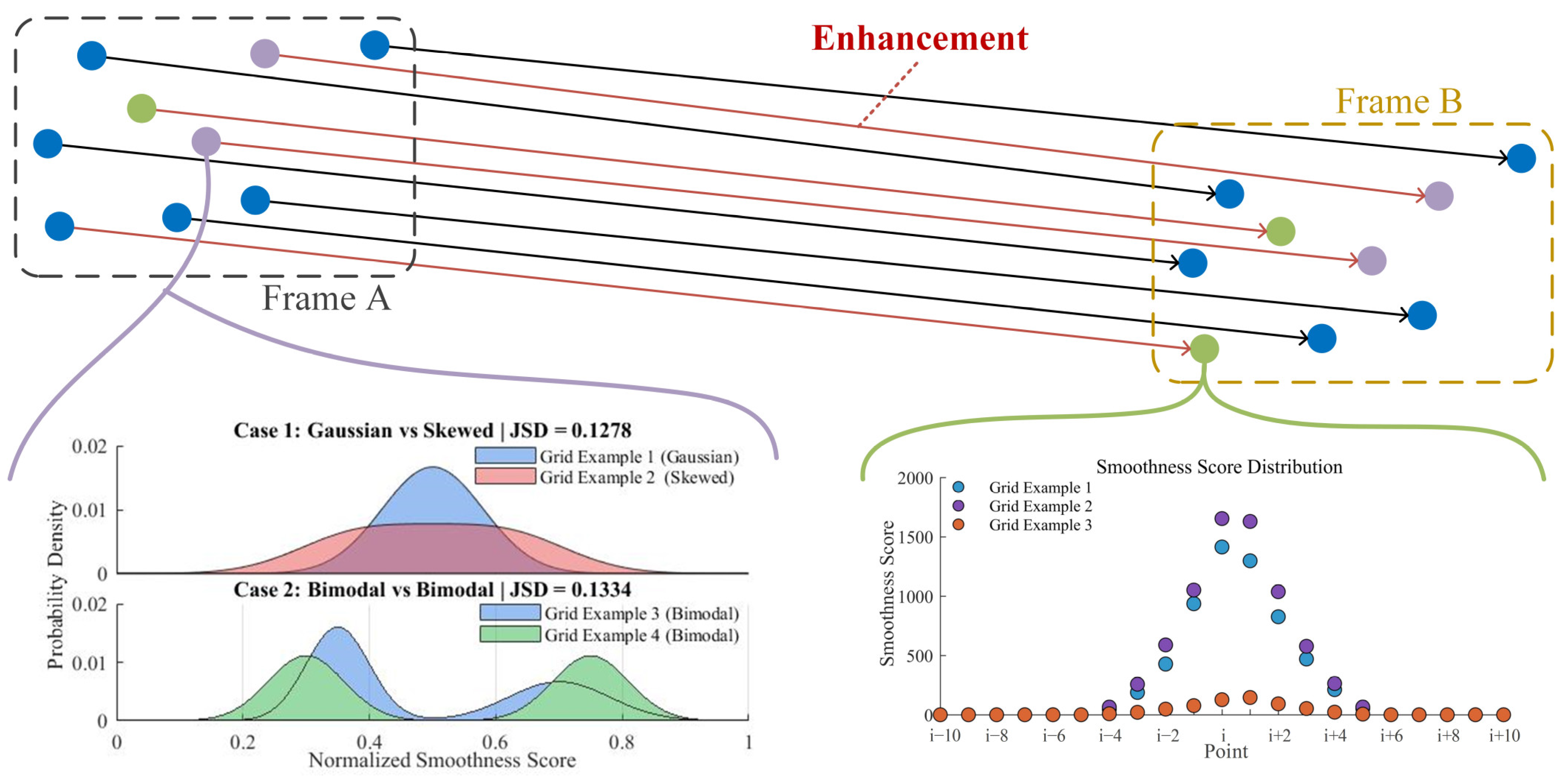

3.5.2. Temporally Stable Feature Point Selection

3.6. Feature Association

3.6.1. Edge and Planar Feature Associations

3.6.2. Ground Feature Association

3.7. Stability-Aware Feature Enhancement Weighting

4. Experiments

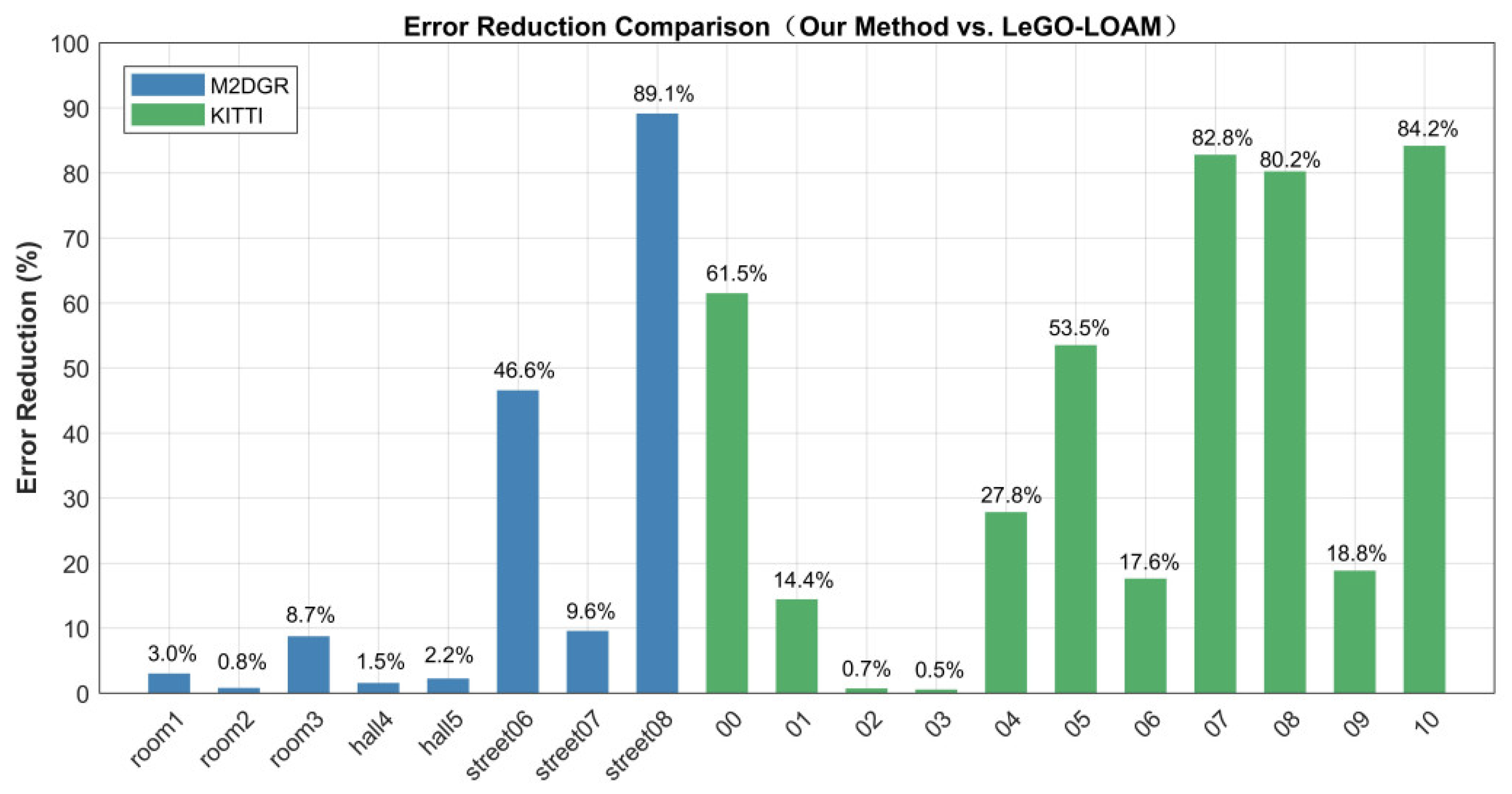

4.1. Comparison with Baseline Algorithm

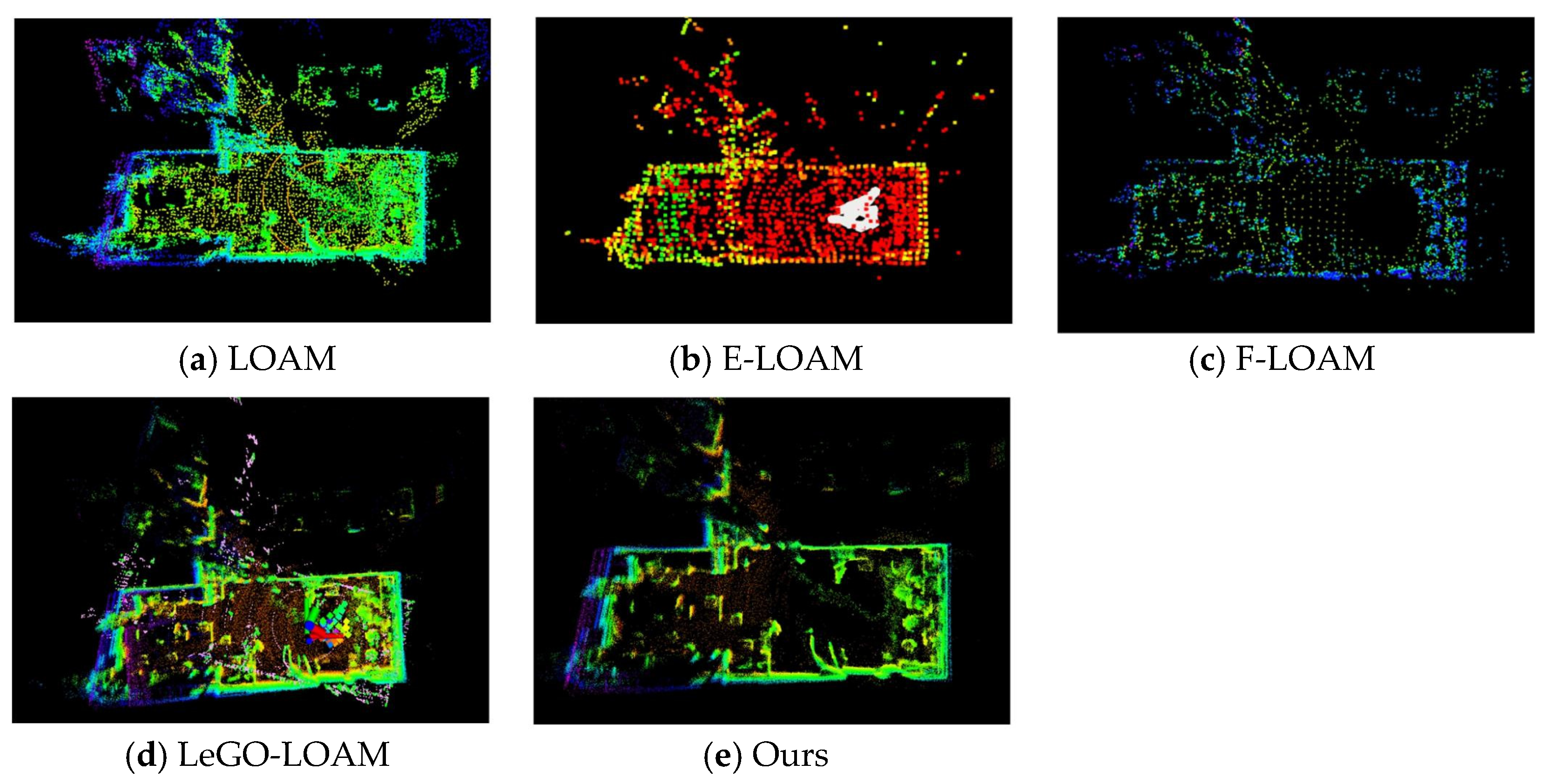

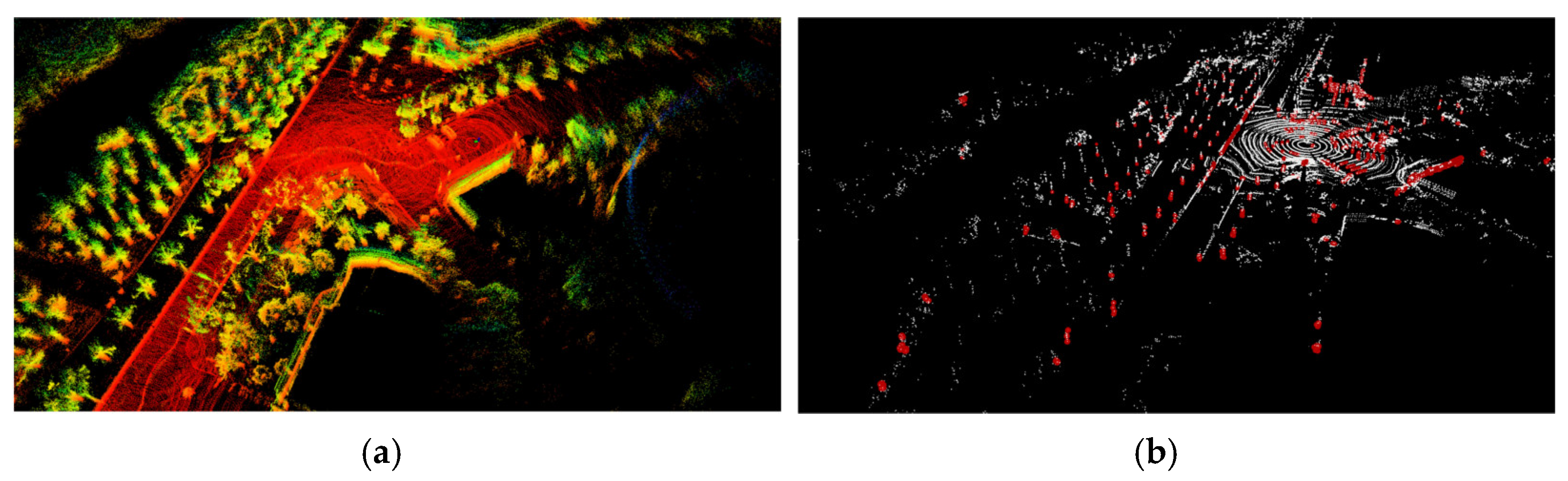

4.1.1. Subjective Map Quality Comparison

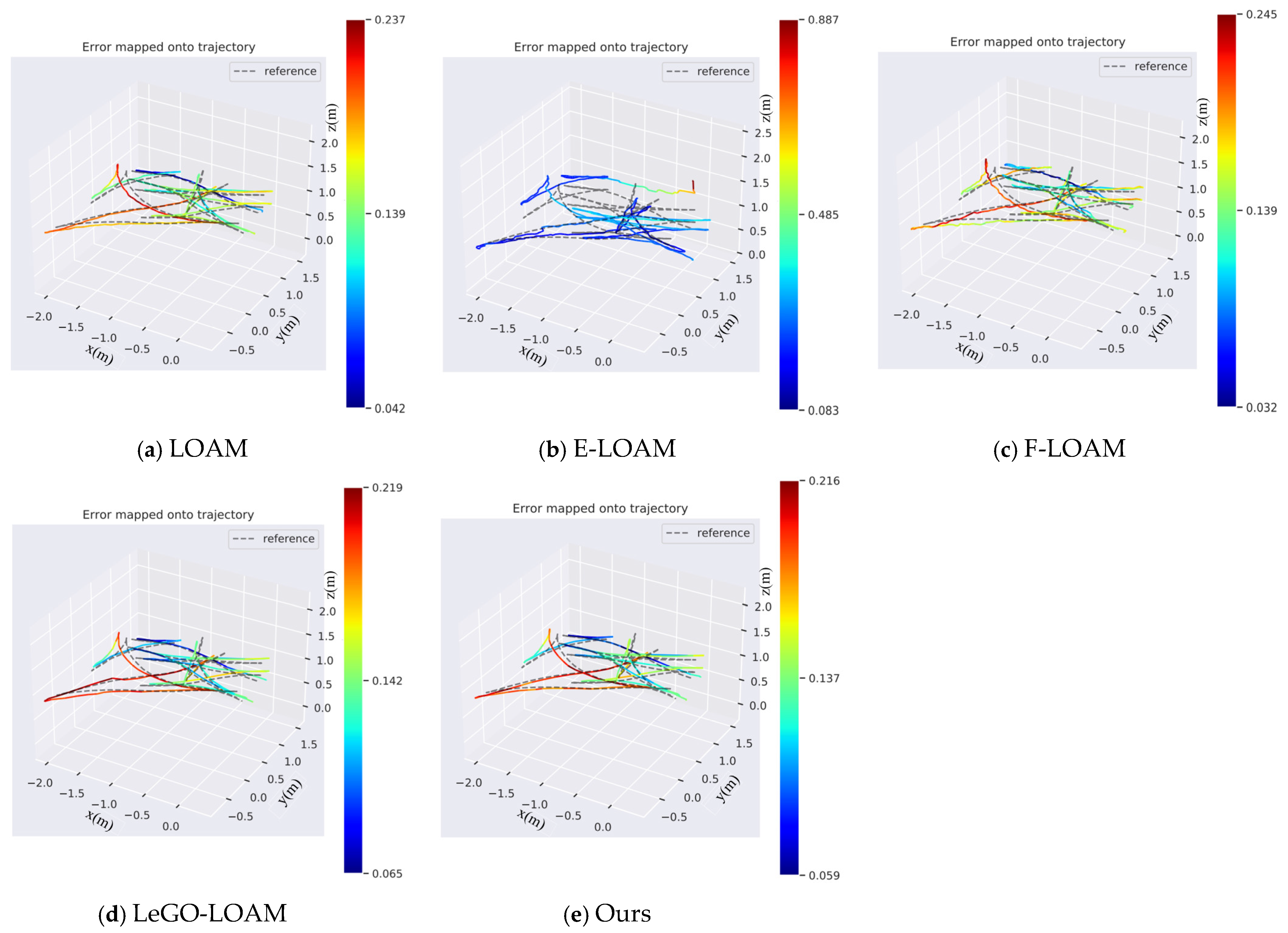

4.1.2. Objective Evaluation

4.2. Comparison with Representative SLAM Systems

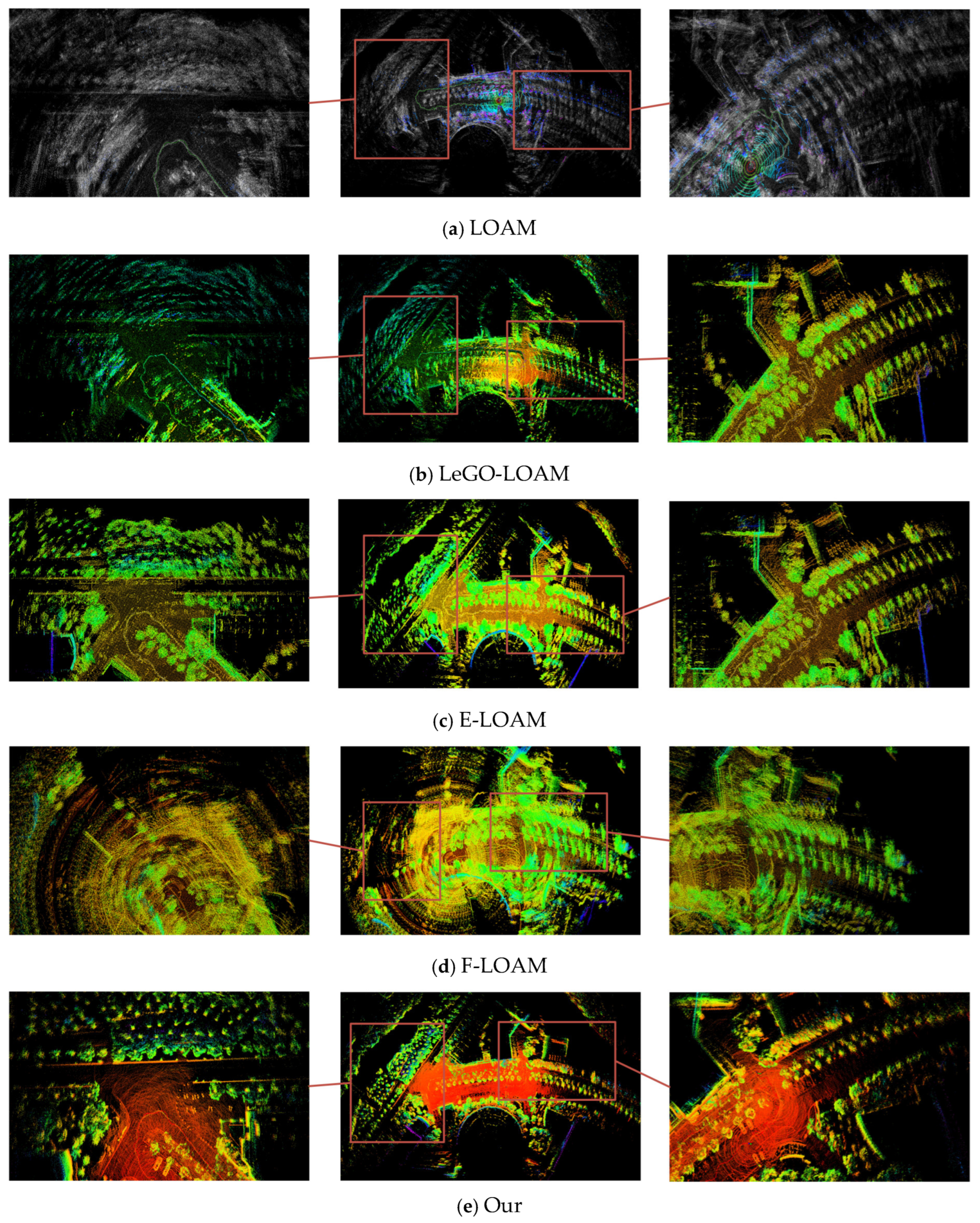

4.2.1. Subjective Map Quality Comparison

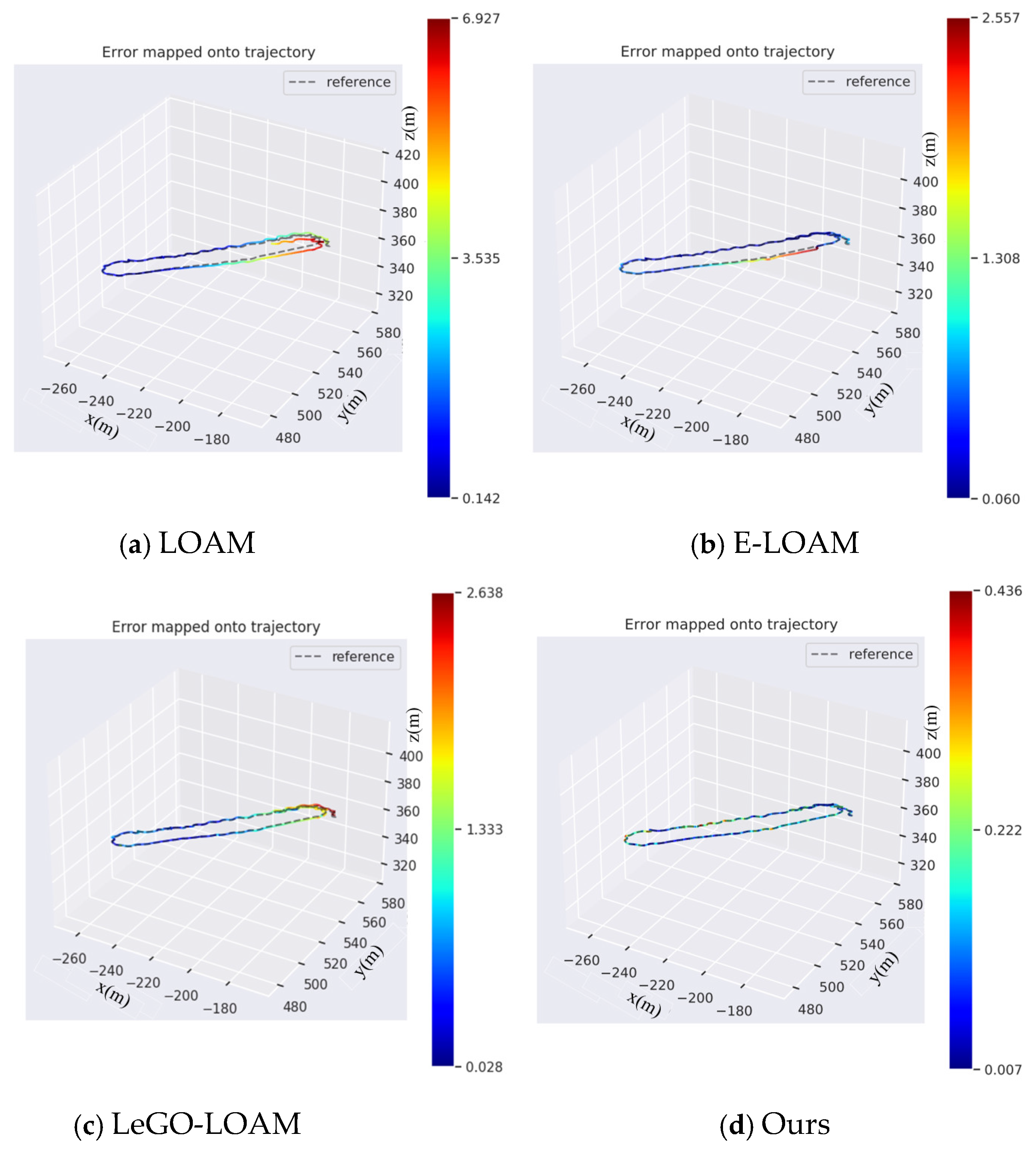

4.2.2. Objective Evaluation

4.3. Runtime Performance Evaluation

5. Discussion

5.1. Smoothness-Based Feature Stability

5.2. Threshold for Stable Feature Selection

5.3. Performance Boundaries and Application Potential

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Seq. | Scene Type | LeGO-LOAM | Ours | Error Reduction |
|---|---|---|---|---|
| room1 | Indoor–Room | 0.1539 | 0.1493 | 2.99% |
| room2 | Indoor–Room | 0.1303 | 0.1293 | 0.77% |
| room3 | Indoor–Room | 0.1615 | 0.1474 | 8.73% |
| hall4 | Indoor–Corridor | 0.9304 | 0.9162 | 1.53% |
| hall5 | Indoor–Corridor | 0.9161 | 0.8958 | 2.22% |
| street06 | Outdoor–Road | 0.8119 | 0.4338 | 46.57% |
| street07 | Outdoor–Road | 3.1782 | 2.8748 | 9.55% |
| street08 | Outdoor–Road | 1.3677 | 0.1488 | 89.12% |
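The Error Reduction column is consistent with the relative decrease of the proposed method's error against LeGO-LOAM, (e_LeGO-LOAM − e_Ours) / e_LeGO-LOAM × 100%. This definition is inferred from the numbers rather than stated in this section. The short script below recomputes the column from the table values; the function name `error_reduction` is illustrative.

```python
def error_reduction(baseline: float, ours: float) -> float:
    """Relative error reduction in percent: (baseline - ours) / baseline * 100."""
    return (baseline - ours) / baseline * 100.0


# (LeGO-LOAM, Ours, reported reduction in %) copied from the table above.
table = {
    "room1": (0.1539, 0.1493, 2.99),
    "room2": (0.1303, 0.1293, 0.77),
    "room3": (0.1615, 0.1474, 8.73),
    "hall4": (0.9304, 0.9162, 1.53),
    "hall5": (0.9161, 0.8958, 2.22),
    "street06": (0.8119, 0.4338, 46.57),
    "street07": (3.1782, 2.8748, 9.55),
    "street08": (1.3677, 0.1488, 89.12),
}

for seq, (base, ours, reported) in table.items():
    computed = error_reduction(base, ours)
    print(f"{seq}: computed {computed:.2f}%, reported {reported:.2f}%")
```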

| Seq. | Scene Type | LeGO-LOAM | Ours | Error Reduction |
|---|---|---|---|---|
| 00 | Urban | 5.9466 | 2.2901 | 61.48% |
| 01 | Highway | 93.6190 | 80.1161 | 14.42% |
| 02 | Urban | 56.8059 | 56.4135 | 0.69% |
| 03 | Rural | 0.9121 | 0.9074 | 0.52% |
| 04 | Urban | 0.3913 | 0.2824 | 27.81% |
| 05 | Urban | 2.2142 | 1.0298 | 53.49% |
| 06 | Urban | 0.9292 | 0.7655 | 17.61% |
| 07 | Urban | 1.1430 | 0.1969 | 82.77% |
| 08 | Urban + Rural | 3.8252 | 0.7570 | 80.21% |
| 09 | Urban + Rural | 2.2011 | 1.7866 | 18.83% |
| 10 | Urban + Rural | 2.2011 | 0.3488 | 84.15% |

| Seq. | Scene Type | LOAM | LeGO-LOAM | F-LOAM | E-LOAM | Ours |
|---|---|---|---|---|---|---|
| room1 | Indoor–Room | 0.1567 | 0.1539 | 0.1608 | 0.2980 | 0.1493 |
| room2 | Indoor–Room | 0.1324 | 0.1303 | 0.1336 | 0.1387 | 0.1293 |
| room3 | Indoor–Room | 0.1597 | 0.1615 | 0.1659 | 0.3454 | 0.1474 |
| hall4 | Indoor–Corridor | 0.9289 | 0.9304 | 0.9311 | 1.0243 | 0.9162 |
| hall5 | Indoor–Corridor | 0.9013 | 0.9161 | 0.8978 | 0.9319 | 0.8958 |
| street06 | Outdoor–Road | 0.5590 | 0.8119 | 0.9255 | 1.4031 | 0.4338 |
| street07 | Outdoor–Road | 21.2160 | 9.2723 | 83.4078 | 3.2085 | 2.8748 |
| street08 | Outdoor–Road | 0.5570 | 1.3677 | 50.1628 | 0.7543 | 0.1488 |

| Seq. | Scene Type | LOAM | LeGO-LOAM | F-LOAM | E-LOAM | Ours |
|---|---|---|---|---|---|---|
| 00 | Urban | 2.4395 | 5.9466 | 4.7688 | 2.4943 | 2.2901 |
| 01 | Highway | 18.6474 | 93.6190 | 18.9226 | 264.6704 | 80.1161 |
| 02 | Urban | 117.1589 | 56.8059 | 8.5632 | 122.1116 | 56.4135 |
| 03 | Rural | 0.9590 | 0.9121 | 0.9158 | 0.9533 | 0.9074 |
| 04 | Urban | 0.3889 | 0.3913 | 0.3618 | 33.3604 | 0.2824 |
| 05 | Urban | 2.4763 | 2.2142 | 3.4823 | 1.0605 | 1.0298 |
| 06 | Urban | 0.7676 | 0.9292 | 0.7906 | 0.7765 | 0.7655 |
| 07 | Urban | 0.5808 | 1.1430 | 0.6617 | 0.6738 | 0.1969 |
| 08 | Urban + Rural | 3.6795 | 3.8252 | 4.0830 | 4.9288 | 0.7570 |
| 09 | Urban + Rural | 1.7889 | 2.2011 | 1.8128 | 1.5503 | 1.7866 |
| 10 | Urban + Rural | 1.3463 | 2.2011 | 1.4030 | 1.9565 | 0.3488 |

| Scenario | Stage | LOAM | E-LOAM | F-LOAM | LeGO-LOAM | Ours |
|---|---|---|---|---|---|---|
| Indoor | Feature Extraction | 4.096 | 14.114 | 4.136 | 4.651 | 5.781 |
| Indoor | Backend Optimization | 21.641 | 65.472 | 41.207 | 1.292 | 1.037 |
| Indoor | Total Odometry | 25.737 | 79.586 | 45.343 | 5.943 | 6.818 |
| Outdoor | Feature Extraction | 11.215 | 13.864 | 3.807 | 8.039 | 8.426 |
| Outdoor | Backend Optimization | 24.190 | 111.696 | 86.769 | 0.808 | 0.374 |
| Outdoor | Total Odometry | 35.405 | 125.558 | 90.601 | 8.847 | 8.800 |
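For the proposed method and LeGO-LOAM, Total Odometry in the runtime table equals the sum of the Feature Extraction and Backend Optimization stages. This makes it easy to quantify how much the stability-aware additions cost relative to the baseline. Below is a minimal sketch that derives that overhead from the stage times. Units are not stated in this section, so the values are used as given, and `relative_overhead` is an illustrative helper.

```python
# Per-stage runtimes (Feature Extraction, Backend Optimization) copied from
# the runtime table above; units are as in the table.
stages = {
    "Indoor": {"LeGO-LOAM": (4.651, 1.292), "Ours": (5.781, 1.037)},
    "Outdoor": {"LeGO-LOAM": (8.039, 0.808), "Ours": (8.426, 0.374)},
}


def relative_overhead(baseline: float, ours: float) -> float:
    """Extra runtime of the proposed method relative to the baseline, in percent.

    A negative value means the proposed method is faster.
    """
    return (ours - baseline) / baseline * 100.0


for scene, methods in stages.items():
    # Total Odometry = Feature Extraction + Backend Optimization.
    base_total = sum(methods["LeGO-LOAM"])
    ours_total = sum(methods["Ours"])
    print(f"{scene}: LeGO-LOAM {base_total:.3f}, Ours {ours_total:.3f}, "
          f"overhead {relative_overhead(base_total, ours_total):+.2f}%")
```

The result is roughly 15% extra time indoors and near parity outdoors. In absolute terms both differences are small next to the LOAM, F-LOAM, and E-LOAM totals in the same table.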

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, J.; Jia, K.; Wei, Z. Small but Mighty: A Lightweight Feature Enhancement Strategy for LiDAR Odometry in Challenging Environments. Remote Sens. 2025, 17, 2656. https://doi.org/10.3390/rs17152656
