A Weakly Supervised Network for Coarse-to-Fine Change Detection in Hyperspectral Images

Abstract

1. Introduction

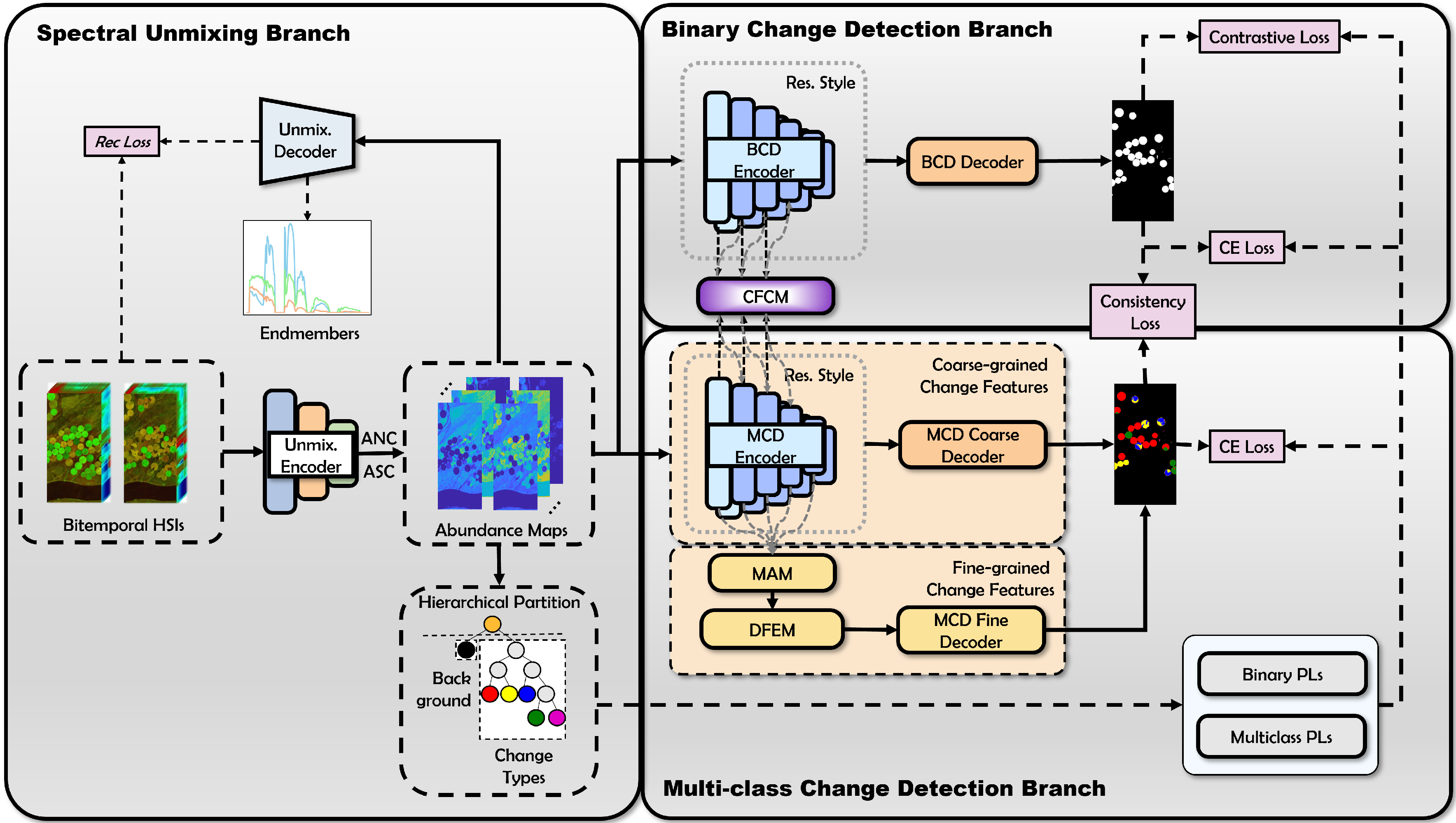

- We propose WSCDNet, a dual-branch learning framework that integrates binary and multiclass change detection. A spectral unmixing branch (SUB) extracts sub-pixel-level features, and a Cross-Feature Coupling Module (CFCM) optimizes feature interaction between the branches.

- To address the heterogeneous spatial scales of change targets, WSCDNet introduces a Multi-Granularity Aggregation Module (MAM) that integrates fine-grained spatial features with highly discriminative semantic features to detect easily confused areas. A Difference Feature Enhancement Module (DFEM) amplifies change features, improving detection in challenging regions.

- To address the scarcity of labeled hyperspectral data, WSCDNet generates pseudo-labels by combining SUB-extracted abundance features with hierarchical partitioning. It mitigates label unreliability through a dual-branch consistency loss that measures agreement between the branches' predicted probability distributions, and it employs a sample filtering mechanism to prevent overfitting to incorrect labels.
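The last contribution can be illustrated with a minimal sketch. It is not the authors' implementation: the symmetric KL divergence used as the agreement measure, the small-loss selection rule used for filtering, the convention that class 0 means "unchanged", and the names `consistency_loss`, `small_loss_filter`, and `keep_ratio` are all assumptions made for illustration.

```python
import numpy as np


def consistency_loss(p_binary_change, p_multi, eps=1e-8):
    """Per-sample symmetric KL divergence between the two branches.

    p_binary_change: (N,) change probability from the binary branch.
    p_multi: (N, K) class probabilities from the multiclass branch;
             class 0 is assumed to mean "unchanged" (an assumption).
    """
    # Binary branch as a two-event distribution: (unchanged, changed).
    p = np.stack([1.0 - p_binary_change, p_binary_change], axis=1)
    # Collapse the multiclass output onto the same two events.
    q = np.stack([p_multi[:, 0], 1.0 - p_multi[:, 0]], axis=1)
    p = np.clip(p, eps, 1.0)
    q = np.clip(q, eps, 1.0)
    kl_pq = np.sum(p * np.log(p / q), axis=1)
    kl_qp = np.sum(q * np.log(q / p), axis=1)
    return 0.5 * (kl_pq + kl_qp)


def cross_entropy(p_multi, labels, eps=1e-8):
    """Per-sample cross-entropy against (possibly noisy) pseudo-labels."""
    picked = p_multi[np.arange(labels.size), labels]
    return -np.log(np.clip(picked, eps, 1.0))


def small_loss_filter(per_sample_loss, keep_ratio):
    """Boolean mask keeping the keep_ratio fraction with the smallest loss.

    Small-loss selection is a common noisy-label heuristic, used here as a
    stand-in for the paper's filtering mechanism (an assumption).
    """
    n_keep = max(1, int(round(keep_ratio * per_sample_loss.size)))
    keep_idx = np.argsort(per_sample_loss)[:n_keep]
    mask = np.zeros(per_sample_loss.size, dtype=bool)
    mask[keep_idx] = True
    return mask


if __name__ == "__main__":
    # Toy predictions for four pixels and three classes (hypothetical data).
    p_bin = np.array([0.9, 0.1, 0.8, 0.2])
    p_mul = np.array([[0.10, 0.80, 0.10],
                      [0.90, 0.05, 0.05],
                      [0.70, 0.20, 0.10],
                      [0.80, 0.10, 0.10]])
    pseudo = np.array([1, 0, 2, 0])

    ce = cross_entropy(p_mul, pseudo)
    mask = small_loss_filter(ce, keep_ratio=0.75)  # [True, True, False, True]
    cons = consistency_loss(p_bin, p_mul)
    # Train only on retained samples; keep the agreement term for all pixels.
    total = ce[mask].mean() + cons.mean()
    print(mask, total)
```

In this toy example the third pixel is dropped: its pseudo-label disagrees with the multiclass prediction, so its cross-entropy is the largest, and the two branches also disagree on it, so its consistency term is the only nonzero one.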

2. Related Works

2.1. Hyperspectral Change Detection

2.2. Feature Extraction of Hyperspectral Images

3. Methods

3.1. Overview of Network Structure

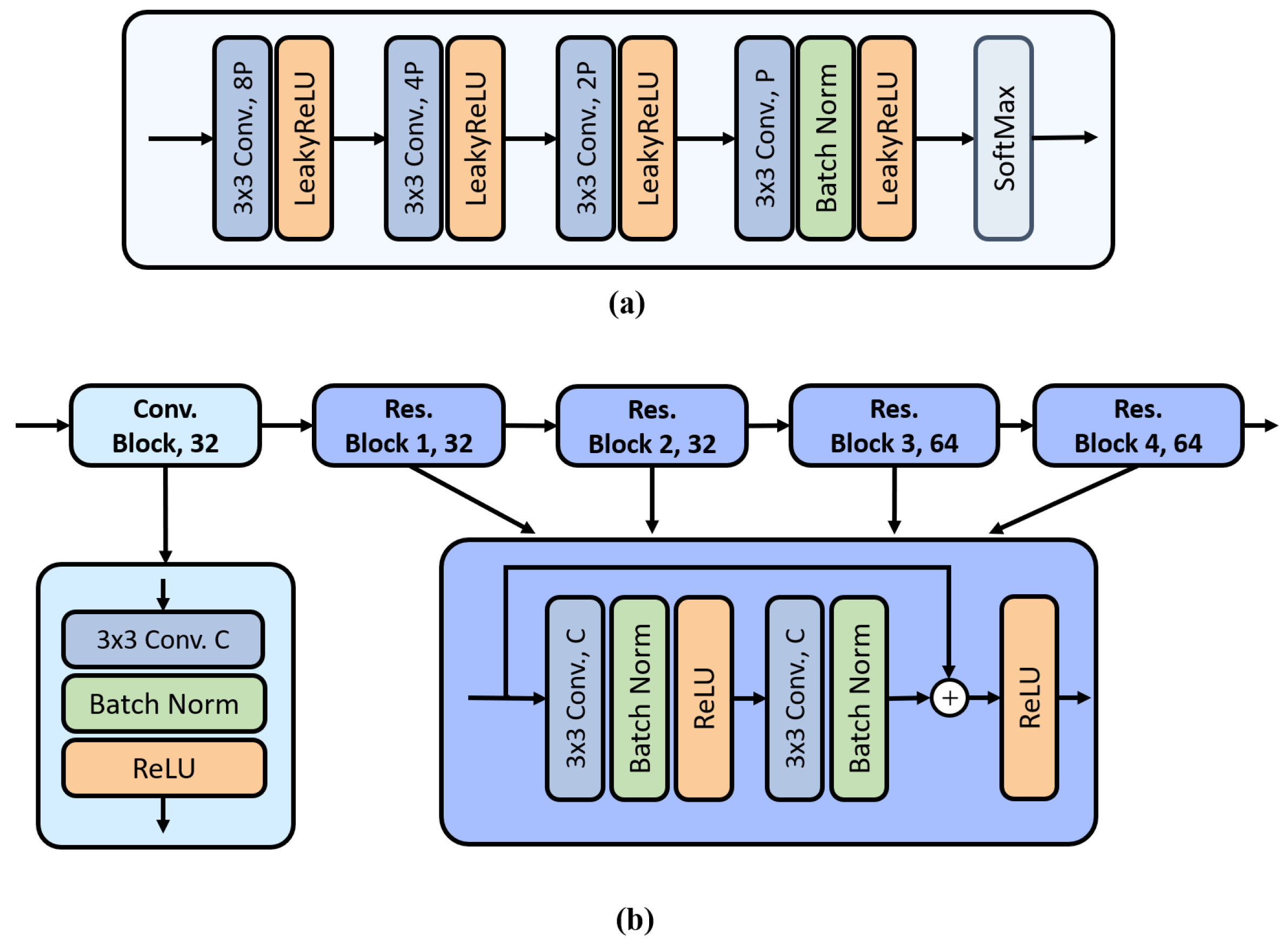

3.2. Spectral Unmixing Branch

3.3. Noisy Pseudo-Label Generation

3.4. Binary Change Detection Branch

3.5. Multiclass Change Detection Branch

3.6. Cross-Feature Coupling Module

3.7. Weakly Supervised Collaborative Learning with Noisy Labels

Algorithm 1: WSCDNet for Coarse-to-Fine CD

4. Experimental Validation and Analysis

4.1. Datasets

4.2. Setup

4.3. Results

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Method | Hermiston OA (%) | Hermiston Kappa | Yancheng OA (%) | Yancheng Kappa | Urban OA (%) | Urban Kappa |
|---|---|---|---|---|---|---|
| LSCD | 92.99 | 0.7068 | 91.51 | 0.7547 | 99.60 | 0.5307 |
| ASCD | 95.95 | 0.7938 | 93.48 | 0.8286 | 99.77 | 0.7795 |
| PCAkMeans | 96.75 | 0.8648 | 95.93 | 0.8891 | 99.75 | 0.8059 |
| DSFANet | 97.81 | 0.9018 | 94.57 | 0.8492 | 99.86 | 0.8830 |
| PCANet | 83.19 | 0.4140 | 93.08 | 0.8011 | 99.74 | 0.7619 |
| MaxTree | 95.86 | 0.8317 | 96.00 | 0.8924 | 99.72 | 0.7955 |
| MinTree | 95.95 | 0.8349 | 95.96 | 0.8913 | 99.72 | 0.7955 |
| DeepCVA | 97.24 | 0.8836 | 96.20 | 0.8963 | 99.47 | 0.6827 |
| KPCA-MNet | 92.79 | 0.7287 | 96.53 | 0.9068 | 99.74 | 0.8173 |
| HI-DRL | 97.72 | 0.8910 | 94.02 | 0.8358 | 99.81 | 0.8418 |
| SSTN | 98.63 | 0.9380 | 93.75 | 0.8216 | 99.83 | 0.8649 |
| WSCDNet | 98.77 | 0.9449 | 96.93 | 0.9176 | 99.88 | 0.9049 |

| Method | Hermiston OA (%) | Hermiston Kappa | Yancheng OA (%) | Yancheng Kappa | Urban OA (%) | Urban Kappa |
|---|---|---|---|---|---|---|
| DeepCVA | 95.11 | 0.8031 | 89.53 | 0.7534 | 99.12 | 0.4779 |
| KPCA-MNet | 89.27 | 0.6248 | 93.67 | 0.8438 | 99.42 | 0.5904 |
| MaxTree | 90.25 | 0.6254 | 94.19 | 0.8562 | 99.07 | 0.4626 |
| MinTree | 88.99 | 0.5762 | 93.67 | 0.8433 | 99.07 | 0.4626 |
| DSFANet | 94.44 | 0.7609 | 80.20 | 0.5072 | 99.33 | 0.5162 |
| SNTS | 97.36 | 0.8863 | 96.19 | 0.9058 | 99.70 | 0.7468 |
| WSCDNet | 97.76 | 0.9044 | 96.97 | 0.9248 | 99.72 | 0.7691 |

Dataset: Hermiston

| Experiment | Method | Binary Change Detection OA (%) | Binary Change Detection Kappa | Multiclass Change Detection OA (%) | Multiclass Change Detection Kappa |
|---|---|---|---|---|---|
| A | Baseline | 98.66 | 0.9403 | 97.58 | 0.8981 |
| B | Baseline + MAM | 98.68 | 0.9419 | 97.72 | 0.9023 |
| C | Baseline + DFEM | 98.68 | 0.9411 | 97.59 | 0.8960 |
| D | Baseline + CFCM | 98.69 | 0.9410 | 97.64 | 0.8990 |
| E | Baseline + Noisy Learning Strategy | 98.70 | 0.9411 | 97.60 | 0.8967 |
| F | PLs by K-Means | 98.63 | 0.9383 | 94.16 | 0.7490 |

Dataset: Hermiston

| Experiment | | | Binary Change Detection OA (%) | Binary Change Detection Kappa | Multiclass Change Detection OA (%) | Multiclass Change Detection Kappa |
|---|---|---|---|---|---|---|
| G | 0.001 | 0.3 | 98.67 | 0.9411 | 97.33 | 0.8870 |
| H | 0.001 | 0.2 | 98.77 | 0.9449 | 97.76 | 0.9044 |
| I | 0.001 | 0.1 | 98.71 | 0.9425 | 97.64 | 0.8997 |
| J | 0.01 | 0.2 | 98.72 | 0.9426 | 97.58 | 0.8973 |
| K | 0.0001 | 0.2 | 98.64 | 0.9330 | 97.71 | 0.9018 |
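The OA and Kappa columns in the tables above are standard agreement metrics. As a reminder of how they are usually computed from a confusion matrix, here is a minimal sketch; the helper names and the toy labels are illustrative only, and the paper's own evaluation code is not shown in this text.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, n_classes):
    """Rows index the reference class, columns the predicted class."""
    cm = np.zeros((n_classes, n_classes), dtype=np.int64)
    np.add.at(cm, (y_true, y_pred), 1)  # accumulate repeated index pairs
    return cm


def overall_accuracy(cm):
    """Fraction of pixels on the diagonal (correctly labeled)."""
    return np.trace(cm) / cm.sum()


def cohen_kappa(cm):
    """Cohen's kappa: observed agreement corrected for chance agreement."""
    n = cm.sum()
    p_o = np.trace(cm) / n
    # Chance agreement from the row and column marginals.
    p_e = np.sum(cm.sum(axis=0) * cm.sum(axis=1)) / n ** 2
    # Undefined when p_e == 1 (all pixels in one class in both maps).
    return (p_o - p_e) / (1.0 - p_e)


if __name__ == "__main__":
    # Toy binary example: 0 = unchanged, 1 = changed (hypothetical labels).
    y_true = np.array([0, 0, 0, 0, 1, 1])
    y_pred = np.array([0, 0, 0, 1, 1, 1])
    cm = confusion_matrix(y_true, y_pred, n_classes=2)
    print(overall_accuracy(cm) * 100.0)  # OA in percent, about 83.33
    print(cohen_kappa(cm))               # about 0.6667
```

Because kappa discounts chance agreement, a map dominated by unchanged pixels can reach a very high OA while its kappa stays much lower, which is the pattern visible in the Urban columns.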


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation

Zhao, Y.; Chen, Z. A Weakly Supervised Network for Coarse-to-Fine Change Detection in Hyperspectral Images. Remote Sens. 2025, 17, 2624. https://doi.org/10.3390/rs17152624