An Efficient Ship Target Integrated Imaging and Detection Framework (ST-IIDF) for Space-Borne SAR Echo Data

Abstract

1. Introduction

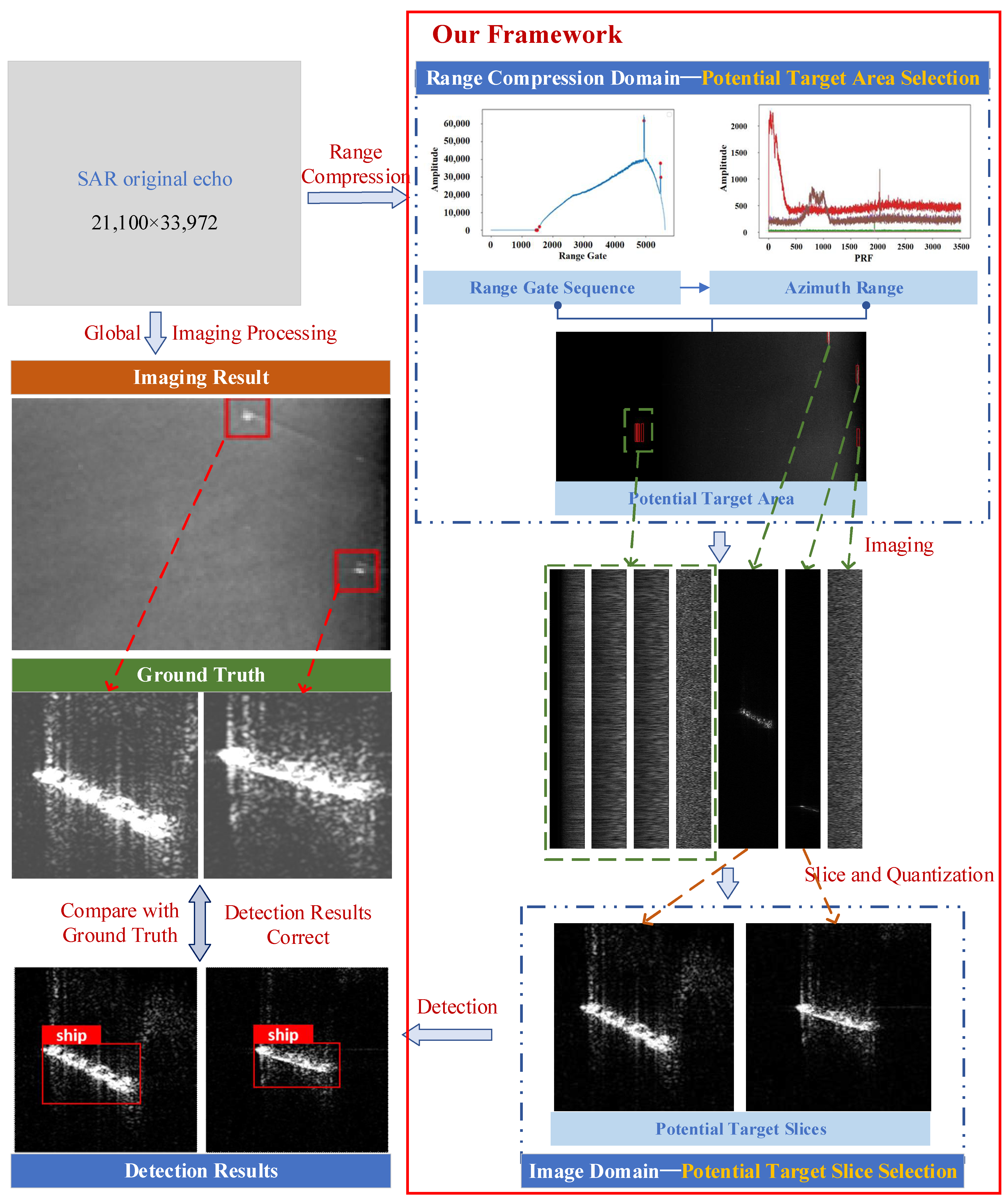

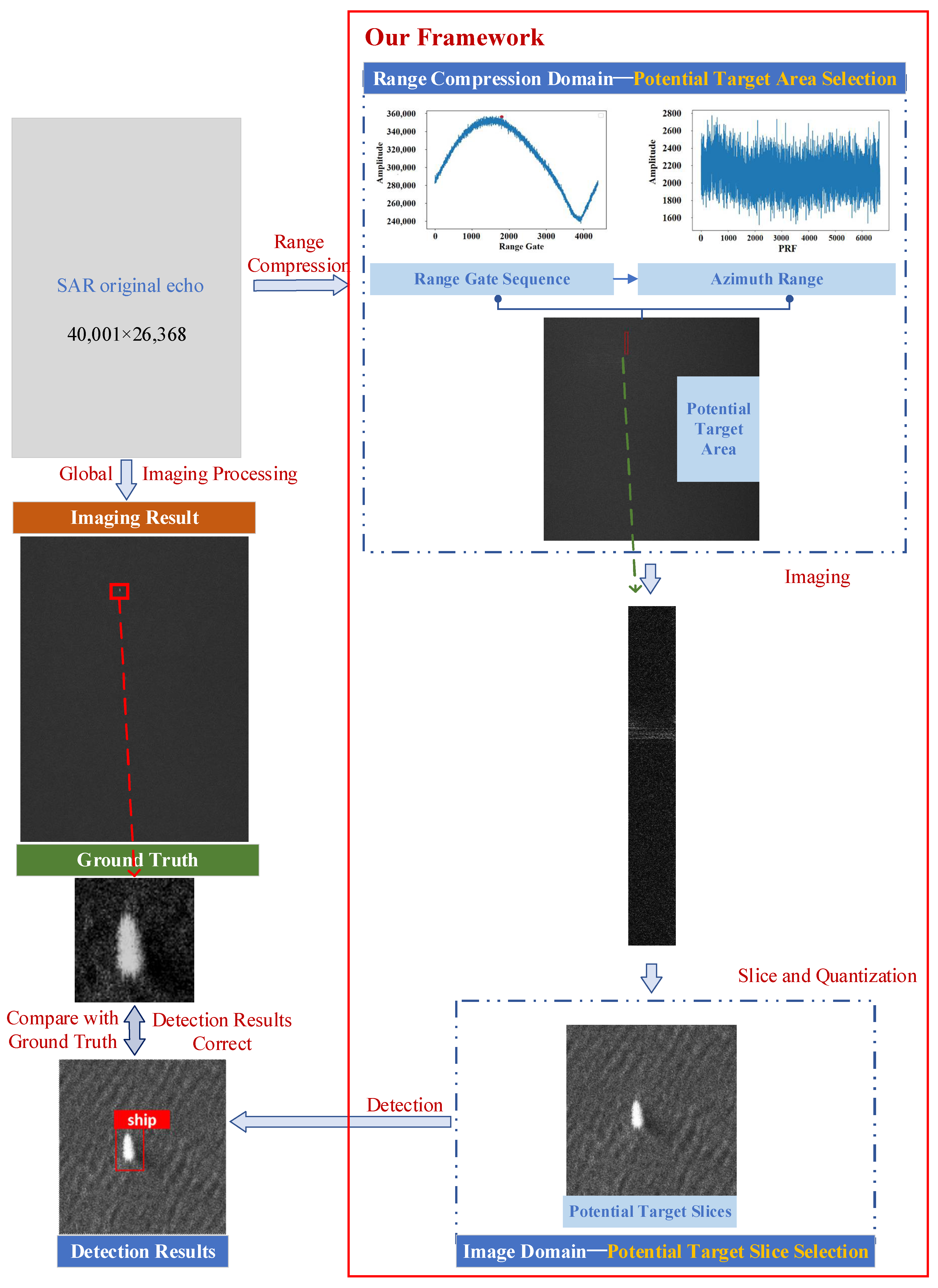

2. Methods

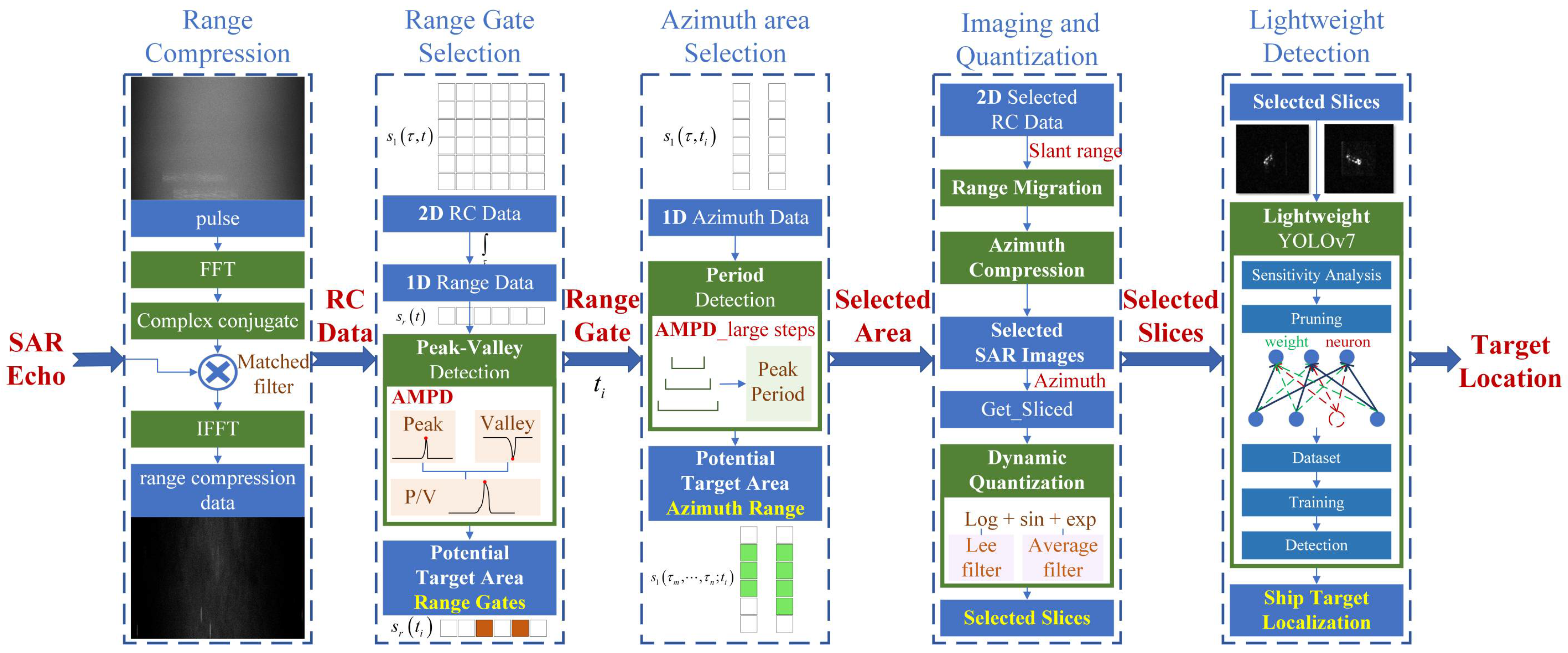

2.1. ST-IIDF for Wide-Area Offshore Scenarios

2.1.1. Range Compression

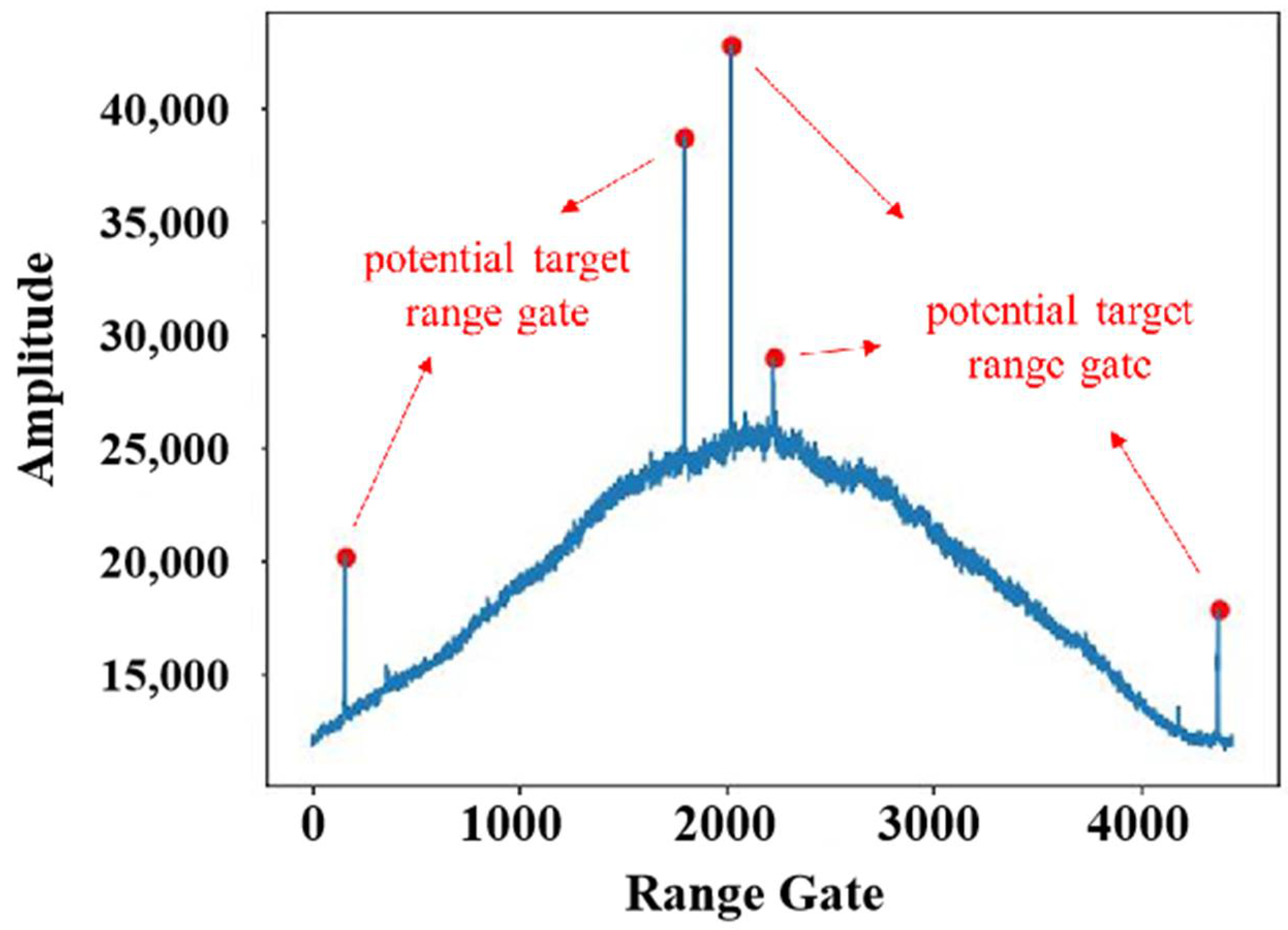

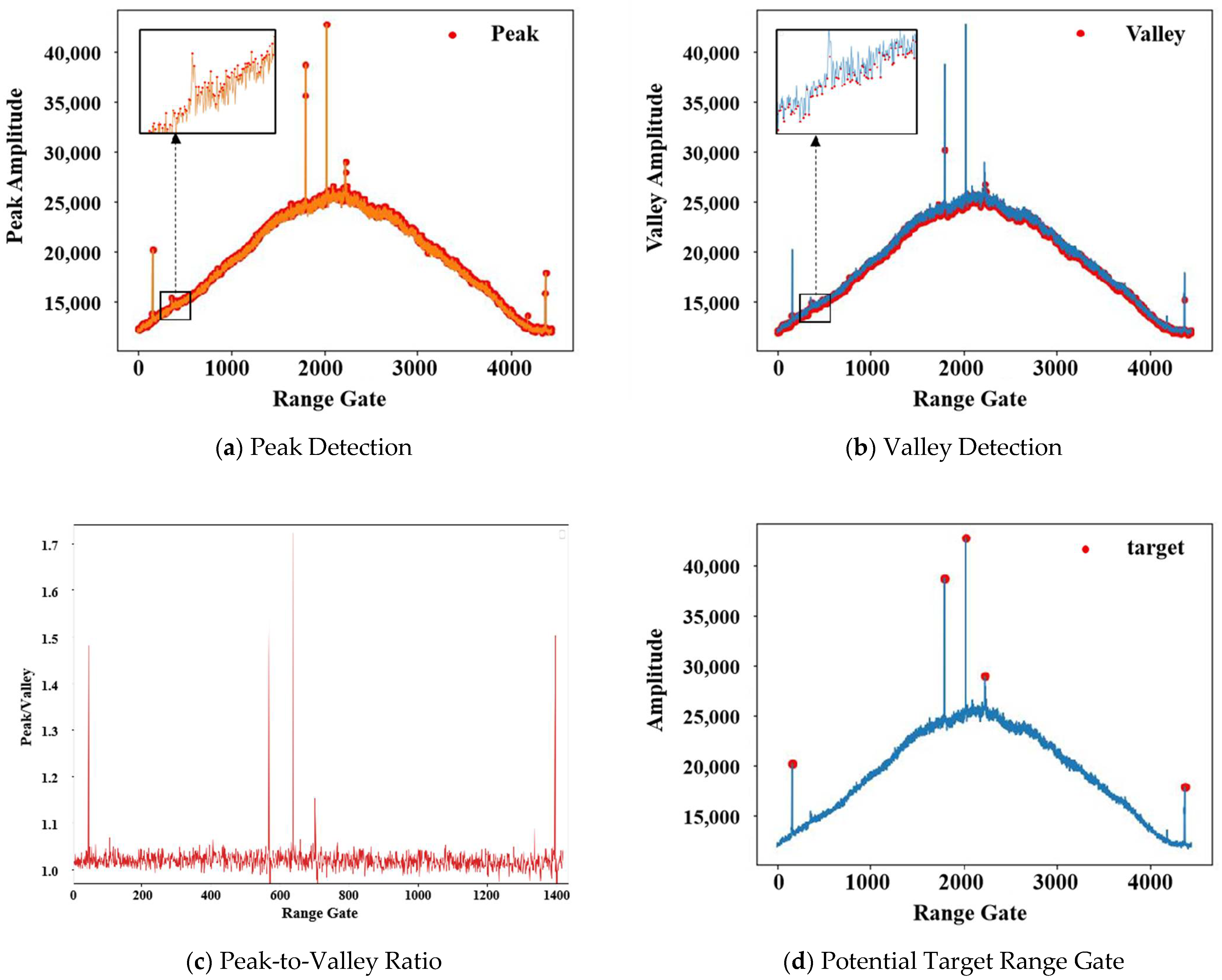
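Range compression is conventionally performed as matched filtering of each received pulse against a replica of the transmitted linear FM (chirp) pulse, usually in the frequency domain. The sketch below is a generic textbook illustration of that operation, not the ST-IIDF implementation. The helper names `lfm_chirp` and `range_compress` and the chirp parameters (100 MHz sampling, 50 MHz bandwidth, 10 µs pulse) are hypothetical.

```python
import numpy as np


def lfm_chirp(bandwidth_hz, pulse_s, fs_hz):
    """Baseband linear FM pulse exp(j*pi*K*t^2) with chirp rate K = B / T."""
    n = int(round(pulse_s * fs_hz))
    t = (np.arange(n) - n / 2) / fs_hz
    k = bandwidth_hz / pulse_s
    return np.exp(1j * np.pi * k * t**2)


def range_compress(echo, replica):
    """Matched-filter every range line (rows = azimuth pulses) in the frequency domain."""
    n_range = echo.shape[-1]
    n_fft = 1 << (n_range + replica.size - 2).bit_length()  # >= n_range + len - 1, so no wrap-around
    h = np.conj(np.fft.fft(replica, n_fft))                   # matched filter = conjugate replica spectrum
    spec = np.fft.fft(echo, n_fft, axis=-1)
    out = np.fft.ifft(spec * h, axis=-1)
    return out[..., :n_range]                                 # bin k <-> echo starting at range bin k


# Hypothetical parameters: 100 MHz sampling, 50 MHz bandwidth, 10 us pulse.
fs, bw, tp = 100e6, 50e6, 10e-6
replica = lfm_chirp(bw, tp, fs)
echo = np.zeros((2, 4096), dtype=complex)
for row, delay in enumerate([1200, 2500]):                    # one point target per pulse
    echo[row, delay:delay + replica.size] = replica
rc = range_compress(echo, replica)
peaks = np.argmax(np.abs(rc), axis=1)
print(peaks)
```

Each simulated point target collapses to a single peak at its delay bin: the 1000-sample pulse (time-bandwidth product 500) is concentrated into a mainlobe roughly fs/B = 2 samples wide.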

2.1.2. Range Gate Selection

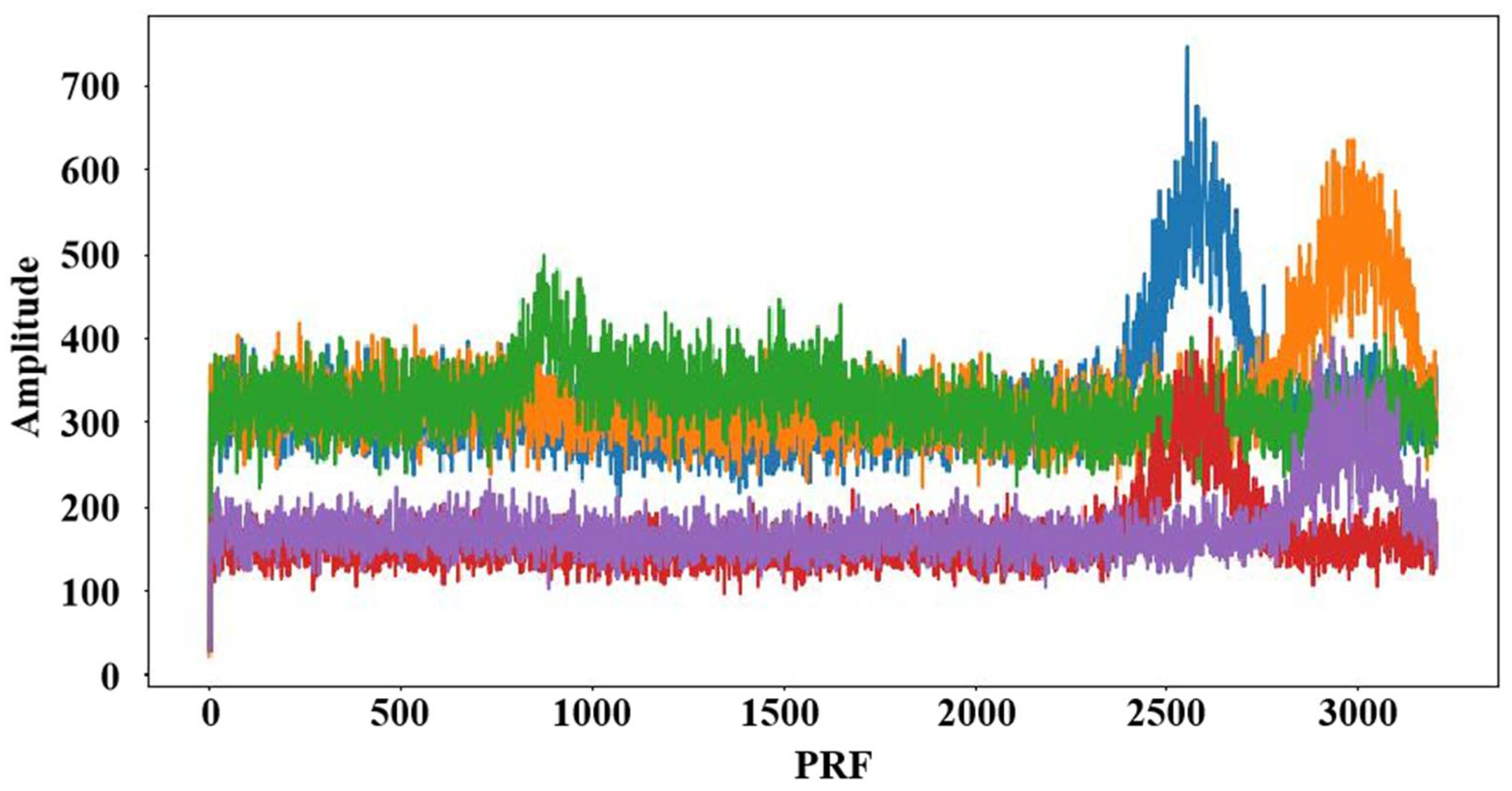

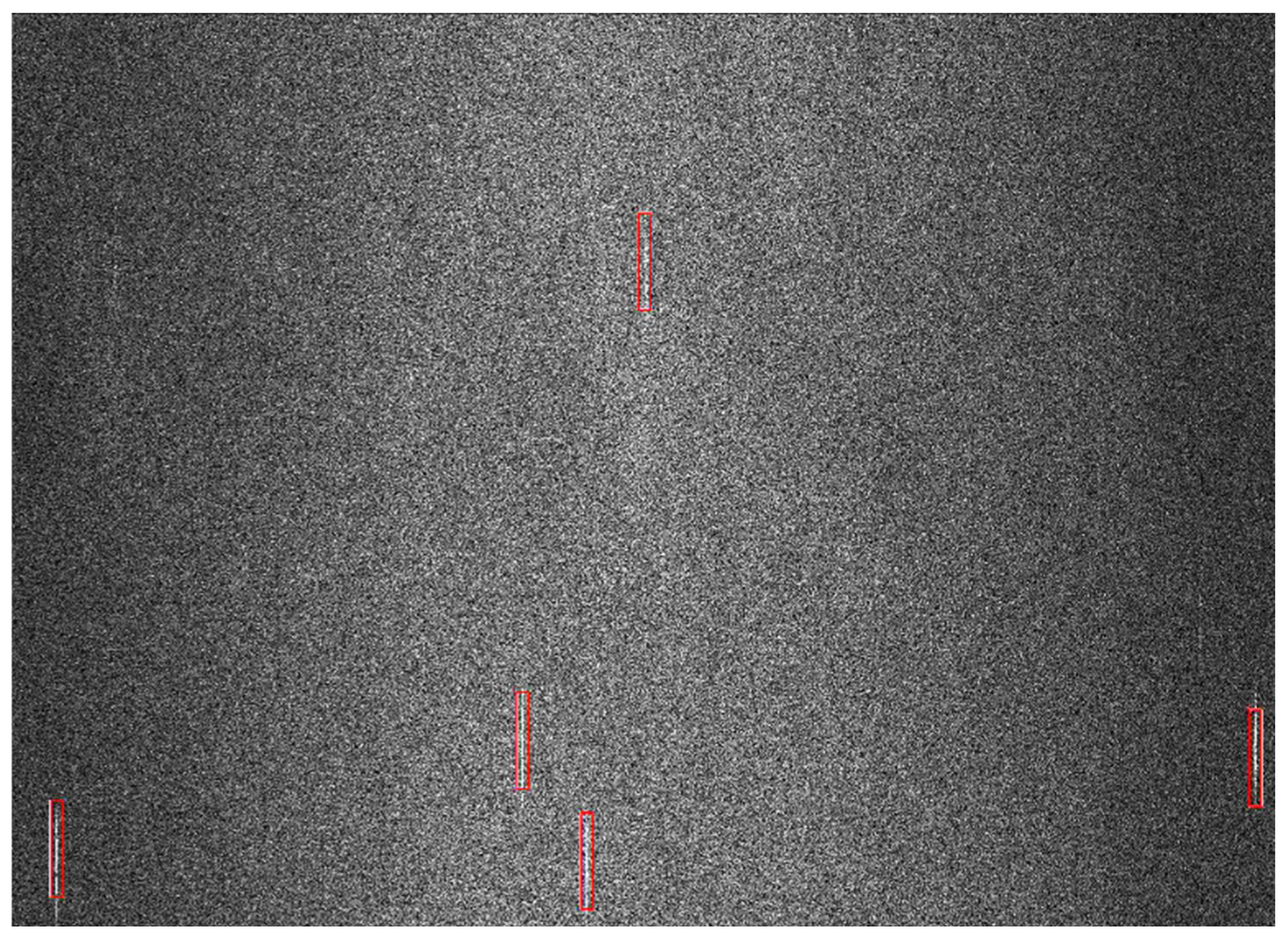

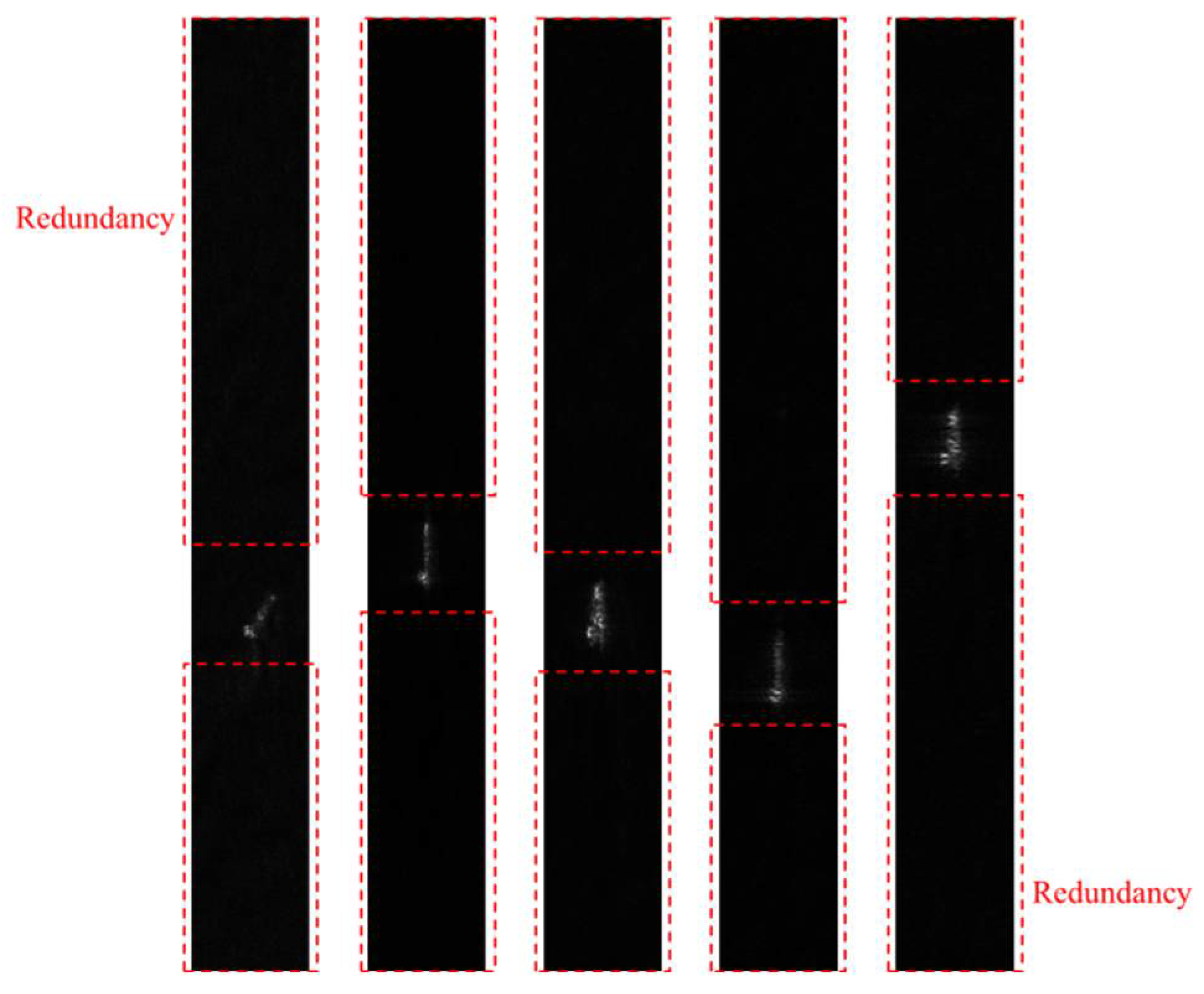
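As a hypothetical stand-in for the range gate selection rule (the exact ST-IIDF criterion is not reproduced here), one can exploit the fact that in range-compressed data a strong ship return forms a bright trajectory running along azimuth, so integrating power along azimuth yields a range profile in which ship gates stand out above sea clutter. The sketch flags gates above a robust median + k·MAD threshold and pads each run by a margin; `select_range_gates`, `k` and `margin` are illustrative assumptions, not the authors' method.

```python
import numpy as np


def select_range_gates(rc, k=8.0, margin=32):
    """Return merged [start, stop) range-gate intervals with anomalous azimuth-integrated power.

    Hypothetical stand-in, not the ST-IIDF criterion: median + k * robust-sigma threshold.
    """
    profile = np.sum(np.abs(rc) ** 2, axis=0)            # integrate power along azimuth (rows)
    med = np.median(profile)
    sigma = 1.4826 * np.median(np.abs(profile - med))    # MAD scaled to a Gaussian-consistent std
    mask = profile > med + k * sigma
    n = mask.size
    padded = np.concatenate(([0], mask.astype(int), [0]))
    edges = np.flatnonzero(np.diff(padded))              # alternating run starts and (exclusive) stops
    gates = []
    for start, stop in zip(edges[::2], edges[1::2]):
        lo, hi = max(0, int(start) - margin), min(n, int(stop) + margin)
        if gates and lo <= gates[-1][1]:                  # merge runs that touch after padding
            gates[-1] = (gates[-1][0], hi)
        else:
            gates.append((lo, hi))
    return gates


rng = np.random.default_rng(0)
shape = (512, 2048)                                       # azimuth pulses x range gates (synthetic)
rc = (rng.standard_normal(shape) + 1j * rng.standard_normal(shape)) / np.sqrt(2)  # unit-power clutter
rc[100:300, 700:704] += 10.0                              # two bright ship-like trajectories
rc[150:350, 1500:1503] += 10.0
gates = select_range_gates(rc)
print(gates)
```

On this synthetic input the function should report two padded intervals, one around range bins 700 to 703 and one around 1500 to 1502; the median/MAD statistics keep the threshold insensitive to the few bright gates themselves.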

2.1.3. Azimuth Range Selection

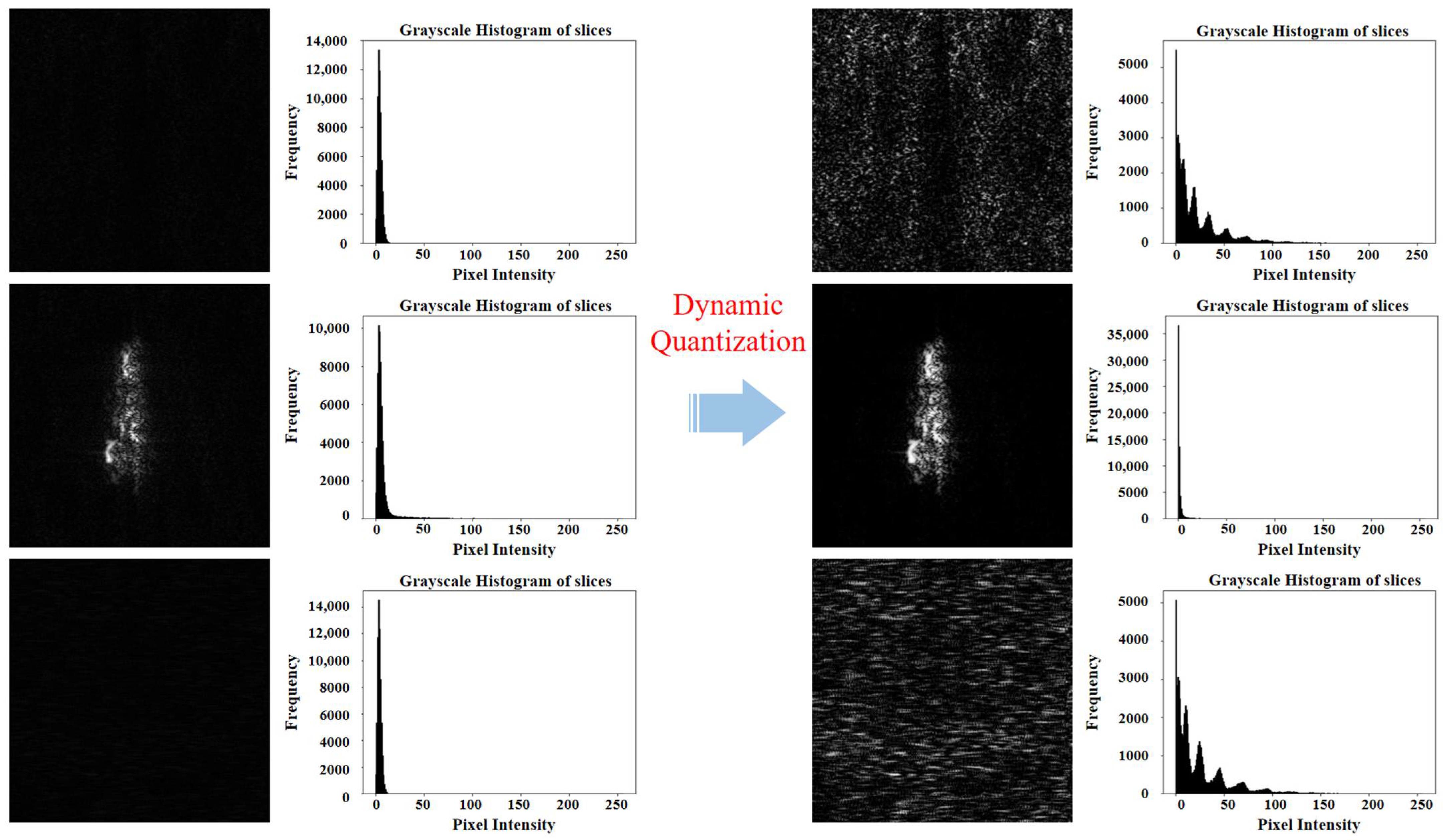
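Once a range-gate interval is chosen, the azimuth lines that carry the target's energy can be located in a similar way. In this hypothetical sketch (not the ST-IIDF rule), the power inside the interval is summed per pulse, smoothed with an odd-length moving average, thresholded, and padded with a safety margin so that the slice passed to imaging keeps the full target support. `select_azimuth_extent`, `smooth` and `margin_lines` are assumed names and values.

```python
import numpy as np


def select_azimuth_extent(rc, gate, k=8.0, smooth=17, margin_lines=128):
    """Return [start, stop) azimuth lines holding target energy inside one range-gate interval.

    Hypothetical stand-in: smoothed per-pulse power, robust threshold, then a safety margin.
    """
    lo, hi = gate
    power = np.sum(np.abs(rc[:, lo:hi]) ** 2, axis=1)                  # per-pulse power inside the gate
    power = np.convolve(power, np.ones(smooth) / smooth, mode="same")  # centred moving average (odd length)
    med = np.median(power)
    sigma = 1.4826 * np.median(np.abs(power - med))                    # robust spread estimate
    hits = np.flatnonzero(power > med + k * sigma)
    if hits.size == 0:
        return None                                                    # no target energy in this gate
    start = max(0, int(hits[0]) - margin_lines)
    stop = min(rc.shape[0], int(hits[-1]) + 1 + margin_lines)
    return start, stop


rng = np.random.default_rng(1)
shape = (1024, 2048)
rc = (rng.standard_normal(shape) + 1j * rng.standard_normal(shape)) / np.sqrt(2)  # unit-power clutter
rc[400:600, 700:704] += 20.0          # synthetic ship energy spread over ~200 pulses
extent = select_azimuth_extent(rc, (668, 736))
print(extent)
```

The smoothing widens the detected run by about half the window on each side, and the margin then adds a fixed number of lines; both are tuning choices in this sketch, not values from the paper.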

2.1.4. Imaging and Quantization

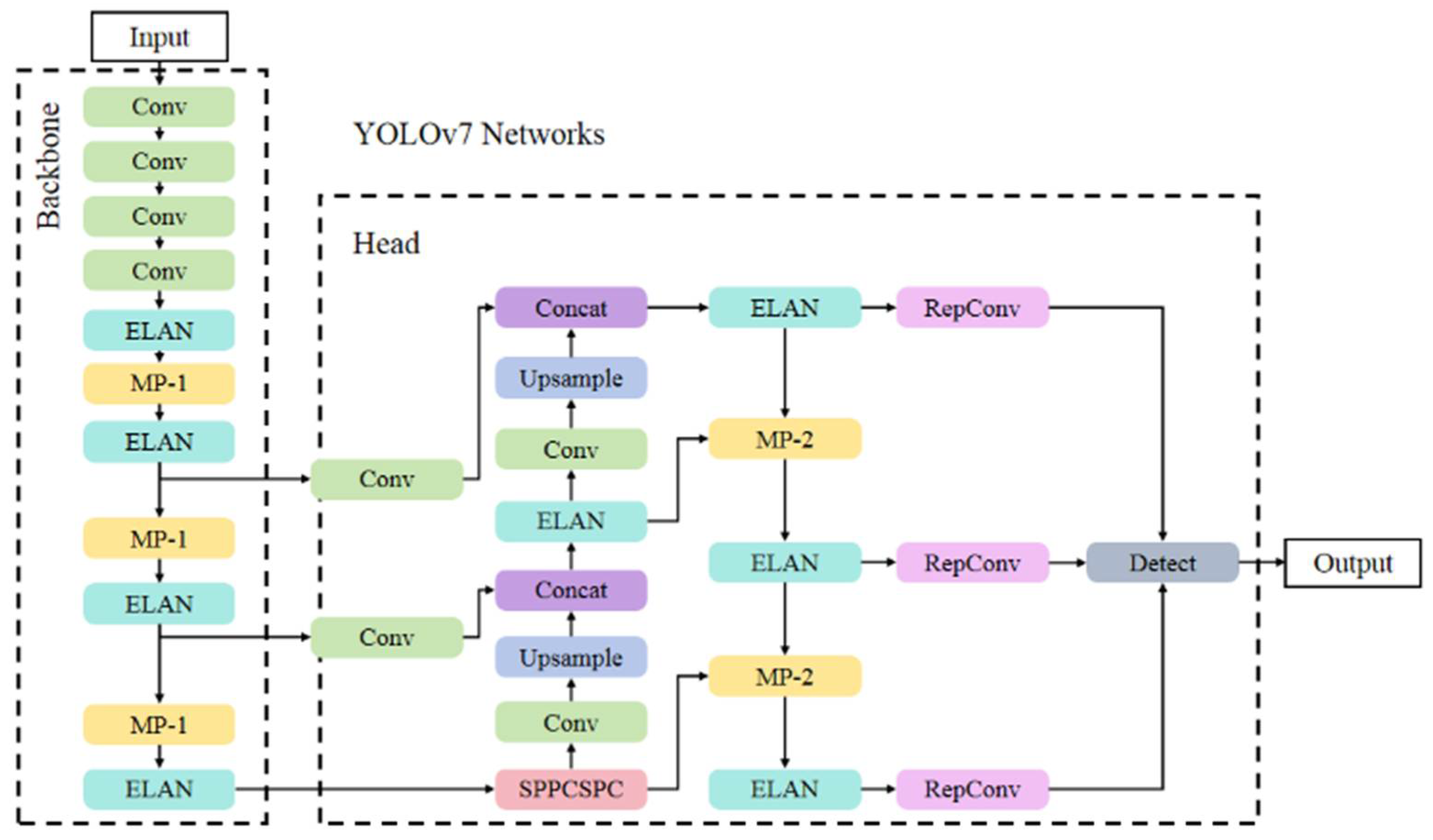
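Before detection, the focused complex slice has to be turned into an image the detector can ingest. A common choice, assumed here rather than taken from the paper, is to take the amplitude, clip it at a high percentile so a few very bright scatterers do not squeeze the sea clutter into the lowest gray levels, and map the result linearly to 8 bits. `quantize_to_uint8` and the 99.5th-percentile clip are hypothetical.

```python
import numpy as np


def quantize_to_uint8(slc, clip_percentile=99.5):
    """Map a focused complex image slice to 8-bit amplitude (hypothetical linear scheme)."""
    amp = np.abs(slc)
    top = np.percentile(amp, clip_percentile)     # clip the brightest pixels to keep clutter contrast
    if top <= 0:
        return np.zeros(amp.shape, dtype=np.uint8)
    scaled = np.clip(amp / top, 0.0, 1.0) * 255.0
    return np.round(scaled).astype(np.uint8)


rng = np.random.default_rng(2)
shape = (256, 256)
slc = ((rng.standard_normal(shape) + 1j * rng.standard_normal(shape)) / np.sqrt(2)).astype(np.complex64)
slc[120:124, 60:70] += 50.0                      # synthetic bright ship pixels
img = quantize_to_uint8(slc)
print(img.dtype, img.shape, img[121, 65], slc.nbytes // img.nbytes)
```

Storing the slice as 8-bit amplitude instead of `complex64` also reduces memory by a factor of 8 (1 byte versus 8 bytes per pixel).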

2.1.5. Lightweight Detection

2.2. Specific Implementation Method of ST-IIDF

2.2.1. Range Compression

2.2.2. Range Gate Selection

2.2.3. Azimuth Range Selection

2.2.4. Imaging and Quantization

2.2.5. Lightweight Detection

3. Experimental Results

3.1. Implementation Details

3.2. Experimental Data

3.3. Real Scenario Results

4. Discussion

4.1. Fundamental Analysis

4.2. Comparison Analysis

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Model | AP (SSDD) | AP0.5 (SSDD) | AP0.75 (SSDD) | AP (GF3) | Params | GFLOPs @ 640 |
|---|---|---|---|---|---|---|
| SSD | 52.4 | 89.4 | 54.9 | 84.4 | n/a | n/a |
| Faster R-CNN | 54.0 | 90.0 | 59.9 | 84.6 | n/a | n/a |
| YOLOv7 (original) | 58.7 | 93.7 | 68.1 | 91.8 | 11.2 M | 34.7 |
| YOLOv7-pruned (Ours) | 57.3 | 93.2 | 66.4 | 91.5 | 6.0 M | 12.9 |
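The trade-off made by pruning can be read directly off the original and pruned YOLOv7 rows of the table above. The short calculation below recomputes the relative savings and the accuracy cost; the dictionary keys are illustrative names, and the numbers are copied from the table.

```python
# Figures copied from the original and pruned YOLOv7 rows of the table above.
original = {"params_m": 11.2, "gflops": 34.7, "ap_ssdd": 58.7, "ap_gf3": 91.8}
pruned = {"params_m": 6.0, "gflops": 12.9, "ap_ssdd": 57.3, "ap_gf3": 91.5}


def reduction(before, after):
    """Relative reduction in percent."""
    return 100.0 * (before - after) / before


param_cut = reduction(original["params_m"], pruned["params_m"])
flop_cut = reduction(original["gflops"], pruned["gflops"])
ap_drop_ssdd = original["ap_ssdd"] - pruned["ap_ssdd"]
ap_drop_gf3 = original["ap_gf3"] - pruned["ap_gf3"]

print(f"parameters: -{param_cut:.1f}%  GFLOPs: -{flop_cut:.1f}%")
print(f"AP drop: SSDD {ap_drop_ssdd:.1f} points, GF3 {ap_drop_gf3:.1f} points")
```

Pruning removes about 46% of the parameters and about 63% of the GFLOPs at a cost of 1.4 AP points on SSDD and 0.3 on GF3.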

| Hardware | Configuration |
|---|---|
| GPU | NVIDIA Quadro RTX 6000/8000 |
| GPU memory | 24 GB |
| CPU | Intel Xeon Gold 6226R |
| CPU frequency | 2.9 GHz |
| System memory | 503 GB |

| Source | Band | Imaging Mode | Resolution | Observation Range |
|---|---|---|---|---|
| Pujiang-2 | X | Stripmap | 1 m | 20 km |
| Hisea-1 | C | Stripmap | 1–3 m | 20–40 km |

| Scene | Source | Band | Size | Center Longitude (°) | Center Latitude (°) |
|---|---|---|---|---|---|
| 1 | Pujiang-2 | X | 21,100 × 33,792 | −76.4271817431 | 36.9728933691 |
| 2 | Pujiang-2 | X | 21,789 × 23,552 | 11.7466221524 | 37.2469736600 |
| 3 | Hisea-1 | C | 40,001 × 26,368 | 113.8201076643 | 18.0575724603 |

| Scene | Size | No. of Ships | No. of Candidate Areas (Range-Compressed Domain) | No. of Selected Slices | Correct Detections | False Detections | Missed Detections | Total Time (Ours) | Traditional Processing Time | Speedup (×) |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 21,100 × 33,792 | 2 | 7 | 2 | 2 | 0 | 0 | 296.8 s | 10,078.3 s | 33.9 |
| 2 | 21,789 × 23,552 | 1 | 2 | 1 | 1 | 0 | 0 | 116.1 s | 6302.4 s | 54.3 |
| 3 | 17,455 × 33,792 | 3 | 5 | 3 | 3 | 0 | 0 | 227.6 s | 7095.0 s | 31.2 |
| 4 | 19,248 × 26,624 | 5 | 5 | 5 | 5 | 0 | 0 | 224.9 s | 6213.1 s | 27.6 |
| 5 | 17,546 × 34,816 | 12 | 22 | 12 | 12 | 0 | 0 | 762.7 s | 8061.1 s | 10.6 |
| 6 | 17,798 × 26,624 | 2 | 3 | 3 | 2 | 0 | 0 | 145.3 s | 3017.6 s | 20.8 |
| 7 | 17,670 × 35,840 | 4 | 5 | 5 | 4 | 0 | 0 | 189.6 s | 4696.1 s | 24.8 |
| 8 | 21,599 × 31,744 | 1 | 1 | 1 | 1 | 0 | 0 | 65.7 s | 3641.3 s | 55.4 |
| 9 | 40,001 × 26,368 | 1 | 1 | 1 | 1 | 0 | 0 | 203.2 s | 15,385.9 s | 75.7 |
| 10 | 40,001 × 19,712 | 2 | 6 | 4 | 2 | 0 | 0 | 455.8 s | 11,523.8 s | 25.3 |
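The speedup column appears to be the ratio of the traditional processing time to the ST-IIDF total time. The snippet below recomputes that ratio from the two time columns (this interpretation is an assumption), derives a time-weighted speedup over all ten scenes, and aggregates the detection counts into precision and recall. All values are copied from the table above.

```python
# (ours_s, traditional_s, reported_speedup, ships, correct, false_alarms, missed),
# copied row by row from the scene results table above.
rows = [
    (296.8, 10078.3, 33.9, 2, 2, 0, 0),
    (116.1, 6302.4, 54.3, 1, 1, 0, 0),
    (227.6, 7095.0, 31.2, 3, 3, 0, 0),
    (224.9, 6213.1, 27.6, 5, 5, 0, 0),
    (762.7, 8061.1, 10.6, 12, 12, 0, 0),
    (145.3, 3017.6, 20.8, 2, 2, 0, 0),
    (189.6, 4696.1, 24.8, 4, 4, 0, 0),
    (65.7, 3641.3, 55.4, 1, 1, 0, 0),
    (203.2, 15385.9, 75.7, 1, 1, 0, 0),
    (455.8, 11523.8, 25.3, 2, 2, 0, 0),
]

# Assumption: speedup = traditional processing time / ST-IIDF total time.
speedups = [trad / ours for ours, trad, *_ in rows]
max_dev = max(abs(s - row[2]) for s, row in zip(speedups, rows))

# Time-weighted speedup over all scenes (total traditional time / total ST-IIDF time).
overall = sum(row[1] for row in rows) / sum(row[0] for row in rows)

ships = sum(row[3] for row in rows)
tp = sum(row[4] for row in rows)
fp = sum(row[5] for row in rows)
fn = sum(row[6] for row in rows)
precision = tp / (tp + fp)
recall = tp / (tp + fn)

print(f"max |recomputed - reported| speedup: {max_dev:.3f}")
print(f"overall speedup: {overall:.2f}x")
print(f"ships: {ships}, precision {precision:.2f}, recall {recall:.2f}")
```

Every per-scene ratio agrees with the reported column to within 0.06 (scene 1 recomputes to about 33.96 against the listed 33.9), and the time-weighted speedup across the ten scenes is about 28.3×, with all 33 ships detected and no false alarms.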

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Su, C.; Yang, W.; Pan, Y.; Zeng, H.; Wang, Y.; Chen, J.; Huang, Z.; Xiong, W.; Chen, J.; Li, C. An Efficient Ship Target Integrated Imaging and Detection Framework (ST-IIDF) for Space-Borne SAR Echo Data. Remote Sens. 2025, 17, 2545. https://doi.org/10.3390/rs17152545