Abstract

The efficient and precise identification of urban slums is a significant challenge for urban planning and sustainable development, as their morphological diversity and complex spatial distribution make it difficult to use traditional remote sensing inversion methods. Current deep learning (DL) methods mainly face challenges such as limited receptive fields and insufficient sensitivity to spatial locations when integrating multi-source remote sensing data, and high-quality datasets that integrate multi-spectral and geoscientific indicators to support them are scarce. In response to these issues, this study proposes a DL model (coordinate-attentive U2-DeepLab network [CAU2DNet]) that integrates multi-source remote sensing data. The model integrates the multi-scale feature extraction capability of U2-Net with the global receptive field advantage of DeepLabV3+ through a dual-branch architecture. Thereafter, the spatial semantic perception capability is enhanced by introducing the CoordAttention mechanism, and ConvNextV2 is adopted to optimize the backbone network of the DeepLabV3+ branch, thereby improving the modeling capability of low-resolution geoscientific features. The two branches adopt a decision-level fusion mechanism for feature fusion, which means that the results of each are weighted and summed using learnable weights to obtain the final output feature map. Furthermore, this study constructs the São Paulo slums dataset for model training due to the lack of a multi-spectral slum dataset. This dataset covers 7978 samples of 512 × 512 pixels, integrating high-resolution RGB images, Normalized Difference Vegetation Index (NDVI)/Modified Normalized Difference Water Index (MNDWI) geoscientific indicators, and POI infrastructure data, which can significantly enrich multi-source slum remote sensing data. 
Experiments have shown that CAU2DNet achieves an intersection over union (IoU) of 0.6372 and an F1 score of 77.97% on the São Paulo slums dataset, indicating a significant improvement in accuracy over the baseline model. The ablation experiments verify that the improvements made in this study have resulted in a 16.12% increase in precision. Moreover, CAU2DNet also achieved the best results in all metrics during the cross-domain testing on the WHU building dataset, further confirming the model’s generalizability.

1. Introduction

Developing countries face governance challenges in impoverished urban areas amid the ongoing global urbanization. The expansion of slums not only reflects the challenges of structural inequality in urban development—where low-income groups are forced to cluster due to insufficient housing, environmental conditions, and sanitation infrastructure—but also directly impacts the quality of life for their residents and the effectiveness of urban governance. In this context, the establishment of an accurate and efficient settlement identification and monitoring system has become the primary technical challenge to achieving the United Nations’ (UN’s) housing security goals.

The formation of slums is primarily driven by rapid urban population growth. Its speed exceeds the government’s ability to provide housing, environmental services, and sanitary infrastructure, leaving low-income groups struggling to access adequate and affordable living conditions [1]. The traditional method based on field surveying and mapping [2] is inefficient and labor-intensive, making it difficult to meet the increasing needs due to the significant increase in the number of slums [3]. Therefore, using remote sensing technology combined with artificial intelligence to conduct large-scale, long-term “spatiotemporal dynamic” mapping of slums has become a new method that has attracted considerable attention [3].

Given that slums show significant morphological differences in different regions, cities, and development stages, their complexity and diversity make it extremely difficult to construct a generalized remote sensing inversion model of slums, limiting the applicability of traditional remote sensing inversion methods in this field [3]. Therefore, the method of visual interpretation based on remote sensing images has become popular. Such methods need to be performed by professionals. Professionals can be experts from local or non-local areas, but local experts possess contextual expertise, enabling them to avoid overlooking local opinions, geo-ethical issues, and other concerns during annotation [2]. Although the method based on manual labeling by professionals has significantly reduced the cost compared with traditional offline visits, it still faces the problem of high human and material consumption. Therefore, understanding how to efficiently and accurately extract slums through modern automated methods has become an important challenge in current remote sensing and urban planning research.

Against this backdrop, automatic recognition methods based on artificial intelligence have emerged. Machine learning (ML), as a discipline that combines statistical modeling with algorithm optimization, is capable of automatically extracting patterns and features from data, achieving task automation [4]. These algorithms typically possess a strong learning ability and can effectively adapt to the diverse characteristics of slum morphology. Algorithms such as random forest [5], K-means clustering [6], support vector machine (SVM) [7], and logistic regression [8] have been widely applied in the extraction of slums from remote sensing images. Researchers have made targeted improvements to these algorithms to further improve the recognition effect. Leonita et al. compared the performance of an improved SVM with the random forest algorithm in a slum identification task in Bandung, Indonesia, and pointed out that the optimized SVM can achieve a recognition accuracy of up to 88.5% [9]. Cunha et al. compared eight classification models based on data from the slums of Rio de Janeiro and found that GradientBoost and XGBoost exhibited high robustness and accuracy in complex urban environments [10]. Gram-Hansen et al. proposed the use of canonical correlation forests for slum extraction on low-resolution remote sensing data, providing an economically effective solution for urban monitoring under resource-constrained conditions [11].

In recent years, DL has gradually become a major research topic in the field of remote sensing image processing with the significant improvement in hardware performance and the development of big data technologies. DL is a branch of ML [12]. Moreover, DL possesses outstanding feature learning capabilities on high-dimensional, unstructured data due to its multi-layer neural network models. The representational power and adaptability of DL are more remarkable compared with traditional ML [13].

Convolutional neural networks (CNNs) are widely used in image analysis tasks, including classical models, such as LeNet-5 [14], AlexNet [15], VGG [16], and GoogLeNet (Inception) [17]. Numerous scholars are dedicated to exploring DL methods, which are suitable for slum extraction, based on these network structures. Ibrahim et al. developed the predictSLUMS model, which integrates spatial statistical information with artificial neural networks and utilizes street intersection data to achieve high-precision identification of slums with a small amount of data [18]. Haworth et al. proposed the URBAN-i model, which focuses on data fusion and integrated information from aerial and street view images to achieve the multi-scale detection of urban informal areas [19]. Verma et al. utilized a pre-trained CNN model to construct a specialized training set using ultra-high-resolution and medium-resolution satellite imagery for the detection and segmentation of slum areas [20]. Lu et al. designed GASlumNet, which combines a dual-branch architecture of Unet and ConvNeXt, utilizing the two branches to identify high-resolution images and geological feature images, respectively. The efficiency and robustness of the model in slum extraction tasks were validated in the case of Mumbai, India [21].

To recognize slum areas effectively, their distinguishing features must first be extracted, and previous studies have summarized these features extensively. For example, UN-Habitat asserts that slums typically lack access to clean water sources, sanitation facilities, adequate living space, stability, and safety [22]. Verma et al. proposed that slum settlements can be distinctly identified through their unique architectural morphology characterized by high building density and disorganized development patterns [21]. Pedro et al. identified the following defining features of slums: irregular spatial configurations, the absence of pathways wider than 3 m, lack of public green spaces, extreme building density (with roof coverage exceeding 90%), the dominance of small-scale structures, and the occupation of hazard-prone locations adjacent to railways, major roadways, and riverbanks [23].

This study summarizes the various characteristics of slums based on related research and classifies them based on the types of data sources, which are required when computers obtain these characteristics. This task is carried out to set the input image categories in the dataset for model recognition. The specific classification is shown in Table 1. This classification approach can better guide the construction of datasets. DL models demonstrate inherent superiority in image feature extraction through their capacity to automatically capture multi-scale features via convolutional operations. This hierarchical feature learning mechanism enables comprehensive characterization of visual patterns across spatial resolutions [24]. When creating the dataset, one simply sets the corresponding images, and the model will automatically extract the features of slums.

Table 1.

Summary of the slum characteristics in the new format.

However, the training of DL models is highly dependent on high-quality datasets. In the field of building extraction in remote sensing imagery, the current datasets that are widely used include the following: WHU Building Dataset [25], Massachusetts Buildings Dataset [26], and Open AI Tanzania Buildings Dataset. Fang et al. pointed out that the cross-modal fusion of complementary information from multi-spectral remote sensing images can enhance the perception capability of detection algorithms, thereby making them highly robust and reliable in broad applications [27]. However, remote sensing datasets focusing on slum areas are not only extremely scarce but also mostly contain only RGB band images, lacking multi-spectral or other specific band information that can fully characterize the geospatial semantic features of slums. Considering the significant role of multispectral images in the identification of slums, this study has constructed the São Paulo slums dataset. This dataset not only includes high-resolution RGB images but also integrates three key geospatial indicator images, enriching the research materials in this domain.

In the continuous evolution of DL models, U2-Net, a two-level nested U-shaped structure proposed by Qin et al., performs well in multi-scale feature extraction [28]. This model stacks residual U (RSU) blocks in the form of a U-Net, forming a two-level nested U-shaped structure that can extract multi-scale features from the image without reducing the image resolution. Their research shows that the model is strongly competitive with state-of-the-art (SOTA) methods on six public datasets. Although U2-Net was initially used mainly for salient object detection tasks, after targeted improvements, it can also be successfully applied to binary segmentation tasks, such as slum image segmentation. This capability provides a new technological approach to the automatic monitoring of urban poverty areas.

U2-Net achieves high-precision edge perception through its nested U-shaped architecture and deep supervision mechanism. The dense feature pyramid of the model has advantages in extracting local details. However, the direct application of U2-Net for slum extraction will result in numerous challenges. For instance, the multi-level recursive downsampling of the model results in a limited effective receptive field (ERF) [29]. When the input resolution decreases, the blurring of local textures exacerbates the issue of contextual discontinuity caused by insufficient receptive fields. Most multispectral images possess only low resolution due to technological constraints. Consequently, U2-Net is unsuitable for recognition tasks involving low-resolution geoscientific indicator images of slums. Furthermore, remote sensing images exhibit distinct geospatial sensitivity characteristics compared with conventional optical imagery. Nevertheless, the existing U2-Net model lacks a targeted spatial encoding mechanism, failing to adequately parse the geospatial coordinate attributes embedded in the imagery during feature extraction. This structural deficiency hinders the network’s ability to effectively capture the precise spatial correlation properties of surface targets.

By contrast, DeepLabV3+ [30] incorporates multi-scale dilated convolutions through Atrous Spatial Pyramid Pooling (ASPP), expanding the global receptive field while maintaining the resolution of feature maps. When combined with the strong representation ability of low-resolution features from backbone networks, such as Xception, this model compensates for the shortcoming of U2-Net, making it suitable for identifying slum geospatial indicators in images. Furthermore, because remote sensing images are highly sensitive to spatial position, this study improves U2-Net by adding a CoordAttention mechanism [31], enabling it to effectively extract the spatial coordinate semantics of remote sensing images.

The main contributions of this study are summarized as follows:

- (1) The São Paulo slums dataset has been created. The dataset contains 7978 images of 512 × 512 pixels, with spatial resolutions of 0.5 m for the RGB imagery and POI indicator images and 20 m for the NDVI and MNDWI indicator images.

- (2) The coordinate-attentive U2-DeepLab network (CAU2DNet) model is designed and constructed in this study for the slum extraction problem. The model is a two-branch network architecture that combines a U2-Net branch and a DeepLabV3+ branch and introduces the CoordAttention mechanism to fully explore the image and geo-semantic features. The code for the CAU2DNet model can be obtained from the following URL: https://github.com/luoooxia/CAU2DNet (accessed on 24 May 2025).

Section 2 will introduce the study area and the creation method of the São Paulo slums dataset; Section 3 will introduce the internal structure and specific details of CAU2DNet; Section 4 will introduce the datasets and methods used for experiments, the parameters set for model training, and the comparative evaluation of the models; and Section 5 will conduct ablation experiments and summarize the entire study.

2. Materials

2.1. Study Area

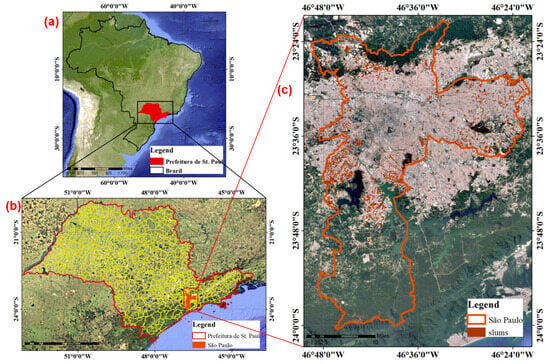

The study area of this work is the city of São Paulo, Brazil. São Paulo is located in the southeast of Brazil and is the capital of the state of São Paulo, situated at latitude 23°33′S, longitude 46°38′W, with its specific location shown in Figure 1. São Paulo is the largest city in Brazil by area and population, with a total area of approximately 1521 km² and a population exceeding 12 million (data from 2020). Furthermore, São Paulo is one of the cities in Brazil with the highest levels of income inequality, characterized by a pronounced wealth disparity and by affluent neighborhoods located in close proximity to slums. The census data released by the Brazilian Institute of Geography and Statistics (IBGE) in 2022 indicated that 15.1% of the residents of São Paulo city live in slum areas [32]. Because creating a dataset that covers the entire globe or the Southern Hemisphere is excessively difficult, selecting typical slum areas for dataset construction is more efficient for research purposes. The São Paulo region, characterized by significant wealth disparity and a large impoverished population, exemplifies the high-density, large-scale informal settlements that typify “slum expansion” in the rapid urbanization processes of many developing countries in the Southern Hemisphere. Therefore, the São Paulo region is a representative choice for this study.

Figure 1.

The location of the study area. (a) Brazilian region (b) São Paulo State region (c) São Paulo City region.

2.2. Data Sources

This study requires the following in creating the São Paulo slums dataset: (1) high-spatial-resolution remote sensing imagery for identifying the image features of slums; (2) multispectral remote sensing imagery for calculating geoscientific indicator images, thereby identifying the geoscientific features of slums; (3) POI data for identifying the spatial location features of slums; (4) official reference data for the slums in São Paulo for use during annotation; and (5) the administrative divisions of São Paulo city for defining the study area.

The high-spatial-resolution remote sensing images were sourced from the Google Earth website. We obtained these images using QGIS on 20 September 2024, with the resolution set to 0.5 m during the download. The multispectral remote sensing images are 20 m spatial resolution, L2A-level Sentinel-2 satellite images from the Copernicus Data Center, and the two images were generated on 5 February 2024. The POI data are sourced from the OpenStreetMap website and include the location and attribute information of three types of facilities: medical facilities, entertainment facilities, and dining facilities. The reference data for the slums in São Paulo come from the official GIS website of the São Paulo Municipal Housing Department (PMSP): habitaSAMPA. The administrative divisions of the city of São Paulo are sourced from the Global Administrative Areas Database. The specific information is shown in Table 2.

Table 2.

Data source websites.

However, these data sources have access restrictions. For instance, the RGB images downloaded from Google Earth do not allow the selection or identification of their specific generation time, and the POI data obtained from OpenStreetMap suffer from the same issue. Therefore, this study has reannotated slum areas in the RGB images to ensure temporal alignment between the labels and the imagery. As for the geoscientific indicator images, their lower resolution and auxiliary role mean that they carry minor weight in the model’s recognition process, rendering the spatiotemporal alignment issue less impactful. Therefore, this study primarily ensures that all utilized geoscientific indicator images are generated from recently acquired data, with their acquisition times annotated in Table 2.

2.3. Data Processing

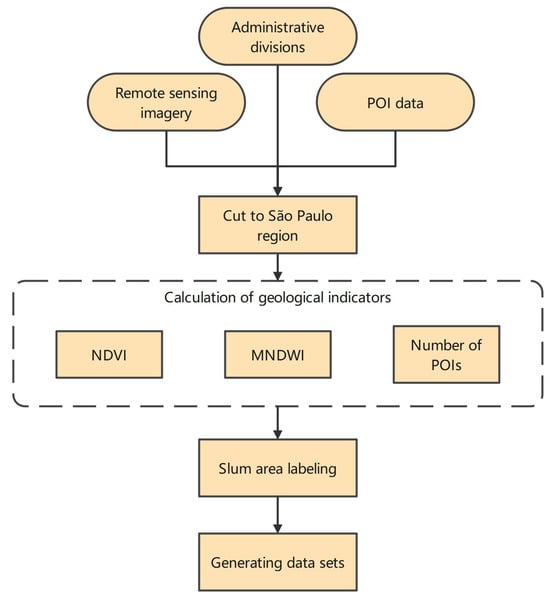

This study constructed a dataset covering the area of São Paulo city, which contains a total of 7978 sample images (all with a size of 512 × 512 pixels), to train CAU2DNet for slum recognition (Figure 2).

Figure 2.

São Paulo slum dataset construction process.

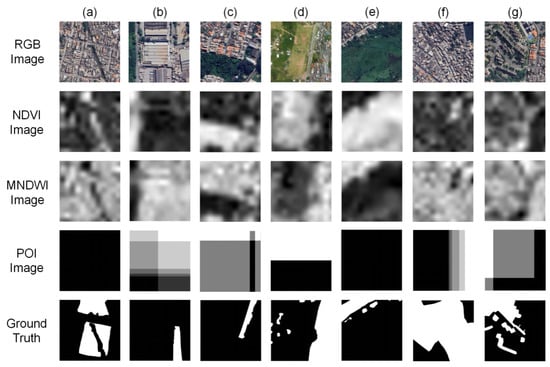

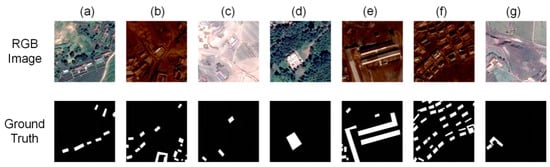

This dataset not only includes true color (RGB) images but also integrates NDVI, MNDWI index images, and infrastructure index images based on POI data, providing the model with rich multi-source information. Some representative images are shown in Figure 3. Each POI image is provided a separate color mapping for display convenience, that is, the gray levels are divided according to the number of different values within the same image, and different values are assigned.
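The display mapping described for the POI images can be illustrated with a minimal sketch. It assumes that each distinct value in an image is assigned an evenly spaced gray level between 0 and 255; the function name `rank_gray_levels` and the even spacing are illustrative assumptions, not details taken from the dataset construction code.

```python
import numpy as np


def rank_gray_levels(poi_counts: np.ndarray) -> np.ndarray:
    """Map each distinct value of one image to an evenly spaced gray level in [0, 255].

    Assumption: the levels are spread evenly over the number of distinct
    values present in this image only (per-image mapping, for display).
    """
    values, inverse = np.unique(poi_counts, return_inverse=True)
    inverse = inverse.reshape(poi_counts.shape)  # rank of each pixel's value
    if values.size == 1:
        # A constant image has a single level; show it as black.
        return np.zeros(poi_counts.shape, dtype=np.uint8)
    levels = np.linspace(0, 255, num=values.size)
    return np.rint(levels[inverse]).astype(np.uint8)


# Four distinct POI counts (0, 3, 12, 40) become four gray levels.
demo = np.array([[0, 0, 3], [3, 12, 40]])
gray = rank_gray_levels(demo)
```

Because the mapping depends only on the rank of each value within one image, gray levels are comparable within a sample but not across samples.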

Figure 3.

Example samples (512 × 512) from the São Paulo slums dataset. (a) Slums mixed with ordinary residential areas; (b) slums mixed with large buildings; (c) slums near forests; (d) slums near open land; (e) slums near grasslands; (f) concentrated large-scale slums; (g) relatively dispersed slums.

2.3.1. True Color (RGB) Image Processing

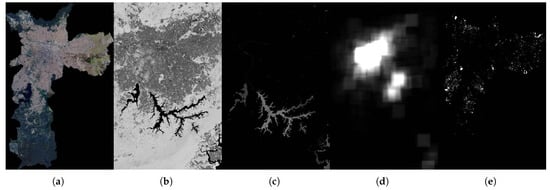

This study first utilized QGIS to obtain images with a 0.5 m spatial resolution of the São Paulo area from the Google Earth platform. Given that these images are commercial products processed by Google, we did not perform preprocessing operations on the remote sensing images. Instead, we directly combined the vector data of the administrative divisions of São Paulo city to crop the images. The processing results are shown in Figure 4a.

Figure 4.

Overall image of the São Paulo slums dataset. (a) RGB imagery; (b) NDVI imagery; (c) MNDWI imagery; (d) POI imagery; (e) slum label.

2.3.2. Calculation of Geoscience Indicator Images

Slum areas typically exhibit characteristics such as sparse vegetation, extensive impervious surfaces, and a lack of infrastructure, which are difficult to directly obtain in RGB images. Therefore, this study utilizes the 20 m resolution L2A-level multispectral imagery provided by Sentinel-2 to calculate the following geoscience indicator images, with the processing results shown in Figure 4b–d.

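NDVI is used to assess vegetation coverage and health conditions, and it can reflect the characteristics of vegetation coverage in slums. The calculation formula is as follows:

$$\mathrm{NDVI} = \frac{\rho_{\mathrm{NIR}} - \rho_{\mathrm{Red}}}{\rho_{\mathrm{NIR}} + \rho_{\mathrm{Red}}}$$

where $\rho_{\mathrm{NIR}}$ refers to the near-infrared band radiation value and $\rho_{\mathrm{Red}}$ denotes the red band radiation value.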

MNDWI is primarily used for detecting and extracting surface water bodies, effectively suppressing the interference from buildings and soil backgrounds. This index can reflect the widespread presence of impervious surfaces in slum areas. The calculation formula is as follows:

$$\mathrm{MNDWI} = \frac{\rho_{\mathrm{Green}} - \rho_{\mathrm{SWIR}}}{\rho_{\mathrm{Green}} + \rho_{\mathrm{SWIR}}}$$

where $\rho_{\mathrm{Green}}$ refers to the radiation value of the green band and $\rho_{\mathrm{SWIR}}$ denotes the radiation value of the short-wave infrared band.
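Both indices are normalized differences of two bands, so they can share one helper. The sketch below is illustrative only: the function names and the choice to return 0 where both bands are zero (for example, nodata pixels) are assumptions, and the mapping from Sentinel-2 band files to the `nir`, `red`, `green`, and `swir` arrays is left to the reader.

```python
import numpy as np


def normalized_difference(a, b, eps: float = 1e-12) -> np.ndarray:
    """Return (a - b) / (a + b) per pixel, with 0 where a + b is (near) zero."""
    a = np.asarray(a, dtype=np.float64)
    b = np.asarray(b, dtype=np.float64)
    denom = a + b
    empty = np.abs(denom) < eps
    safe_denom = np.where(empty, 1.0, denom)  # avoid division by zero
    return np.where(empty, 0.0, (a - b) / safe_denom)


def ndvi(nir, red) -> np.ndarray:
    """Normalized Difference Vegetation Index from NIR and red bands."""
    return normalized_difference(nir, red)


def mndwi(green, swir) -> np.ndarray:
    """Modified Normalized Difference Water Index from green and SWIR bands."""
    return normalized_difference(green, swir)


# Toy reflectance values: a vegetated pixel, a built-up pixel, a nodata pixel.
nir = np.array([0.5, 0.2, 0.0])
red = np.array([0.1, 0.2, 0.0])
green = np.array([0.2, 0.3, 0.0])
swir = np.array([0.6, 0.1, 0.0])
ndvi_values = ndvi(nir, red)
mndwi_values = mndwi(green, swir)
```

Both outputs lie in the range from -1 to 1, which allows the two indicator images to be stacked as input channels without further rescaling between them.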

Slums are typically characterized by a lack of infrastructure. To depict this information, this study utilizes point of interest (POI) data provided by OpenStreetMap to calculate the number of POIs within a 3 km² neighborhood for each pixel of the high-resolution remote sensing imagery. This metric can reflect the completeness of infrastructure in the area: regions with a larger number of POIs typically have more developed infrastructure, whereas areas with fewer POIs are likely to be slums.
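The POI indicator can be sketched as a neighborhood count. The shape of the 3 km² neighborhood is not specified here, so the example assumes a circle of that area (radius of roughly 977 m) and projected coordinates in meters. The function name `poi_count_grid` is hypothetical, and the brute-force distance computation is only practical on a coarse grid or in chunks.

```python
import math

import numpy as np


def poi_count_grid(poi_xy, x_coords, y_coords, area_km2: float = 3.0) -> np.ndarray:
    """Count POIs inside a circular neighborhood around each grid cell center.

    Assumptions: coordinates are projected (meters) and the neighborhood is
    a circle whose area equals `area_km2`.
    """
    radius_m = math.sqrt(area_km2 * 1e6 / math.pi)
    poi_xy = np.asarray(poi_xy, dtype=np.float64).reshape(-1, 2)
    grid_x, grid_y = np.meshgrid(x_coords, y_coords)  # shape (rows, cols)
    dx = grid_x[..., None] - poi_xy[:, 0]             # shape (rows, cols, n_poi)
    dy = grid_y[..., None] - poi_xy[:, 1]
    inside = dx * dx + dy * dy <= radius_m ** 2
    return np.count_nonzero(inside, axis=-1)


# Three toy POIs and a 2 x 2 grid of cell centers, 5 km apart.
pois = [(0.0, 0.0), (100.0, 0.0), (5000.0, 5000.0)]
counts = poi_count_grid(pois, x_coords=[0.0, 5000.0], y_coords=[0.0, 5000.0])
```

For a city-scale raster at 0.5 m, a spatial index or a convolution over a rasterized POI layer would be a more practical way to obtain the same counts.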

2.3.3. Labeled São Paulo Slums

Given the time difference between obtaining the base map from Google Earth and the release date of the slum vector data by PMSP, the official published slum areas may not exactly match with the areas corresponding to the remote sensing images used in this study. The red box marks the area officially designated as a slum area (Figure 5). However, no slum houses can be currently found on the base map imagery due to the possible demolition and relocation. The area marked in orange has been confirmed and verified as a slum area. However, this area has not been included in the official data released. Furthermore, considering the deficiencies in accuracy and timeliness of official data, this study adopted a method of manual visual interpretation using official vector data as a reference, along with the street view information provided by Google Earth for verification, to re-label the slum areas in the São Paulo region, thereby improving the accuracy of the labeling. The final labeling results are shown in Figure 4e.

Figure 5.

Schematic of the error in the official labeling of the slum area.

2.3.4. Cutting to São Paulo Slums Dataset

After completing the image annotation and the construction of the various geoscientific indicator images, this study resampled all feature images to a resolution of 0.5 m and cut them according to the administrative divisions of São Paulo to facilitate the integration of the various data sources within the dataset and their input to the model. Thereafter, this study used a 512 × 512 window to split the base map and the label data and discarded the tiles that do not contain any slum samples. Finally, a dataset of slums in São Paulo containing 7978 sample images was obtained, accompanied by complete remote sensing imagery and annotated vector data.
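The resampling and cutting steps can be sketched as follows. Several details are assumptions made for illustration: nearest-neighbor resampling (the method is not stated), an integer scale factor of 40 for bringing 20 m indicator images to 0.5 m, non-overlapping windows, and dropping partial tiles at the image border. The function names are hypothetical.

```python
import numpy as np

TILE = 512     # tile size in pixels, as used for the dataset
FACTOR = 40    # 20 m / 0.5 m, assuming an integer scale factor


def upsample_nearest(img: np.ndarray, factor: int) -> np.ndarray:
    """Nearest-neighbor upsampling of a 2-D raster by an integer factor."""
    return np.repeat(np.repeat(img, factor, axis=0), factor, axis=1)


def tile_with_slums(image: np.ndarray, label: np.ndarray, tile: int = TILE):
    """Split image and label into non-overlapping tiles; keep tiles with slum pixels.

    Returns a list of (row, col, image_tile, label_tile). Partial tiles at the
    right and bottom borders are dropped (an assumption of this sketch).
    """
    height, width = label.shape[:2]
    kept = []
    for row in range(0, height - tile + 1, tile):
        for col in range(0, width - tile + 1, tile):
            label_tile = label[row:row + tile, col:col + tile]
            if label_tile.any():  # at least one slum pixel in the tile
                kept.append((row, col, image[row:row + tile, col:col + tile], label_tile))
    return kept


# Small demonstration with 4 x 4 tiles instead of 512 x 512.
coarse = np.array([[0.1, 0.2], [0.3, 0.4]])
fine = upsample_nearest(coarse, 4)           # 8 x 8 raster
labels = np.zeros((8, 8), dtype=np.uint8)
labels[5, 6] = 1                             # a single slum pixel
tiles = tile_with_slums(fine, labels, tile=4)
```

In this demonstration only the bottom-right tile is kept, because it is the only one that contains a labeled slum pixel.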

3. Methodology

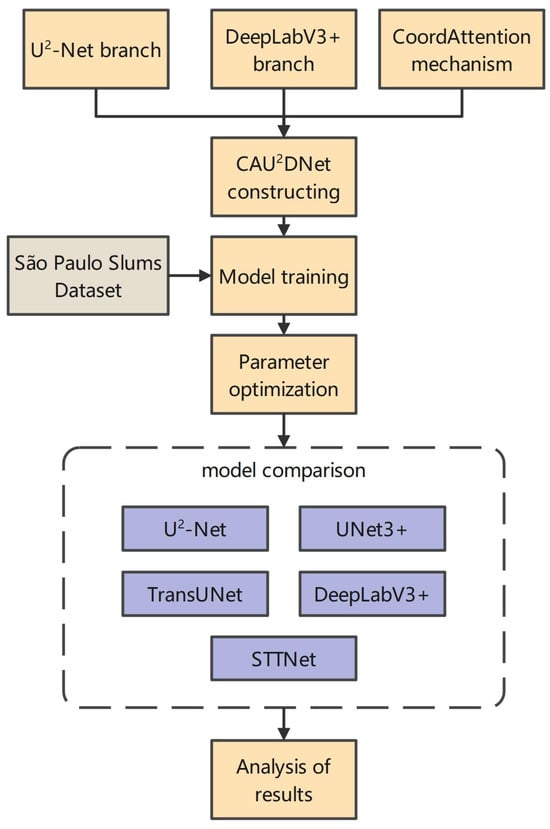

This section outlines the overall framework of the CAU2DNet model. The model feeds the RGB and geoscientific indicator images into two separate branches and fuses the branch outputs through a weighted sum. Moreover, this study made several improvements and introduced the CoordAttention mechanism to adapt the model to the task of slum recognition, thereby enhancing its accuracy. The technical roadmap of this study is shown in Figure 6.

Figure 6.

Technical roadmap of this study.

3.1. Model Overview

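CAU2DNet adopts a dual-stream encoding–decoding architecture, and its mathematical model can be formally defined as follows:

$$Y = \mathcal{F}\big(f_{\mathrm{U}}(X_{\mathrm{RGB}}),\; f_{\mathrm{D}}(X_{\mathrm{Geo}})\big)$$

where $\mathcal{F}$ represents the decision fusion function, and $f_{\mathrm{U}}$ and $f_{\mathrm{D}}$ correspond to the two branches of CAU2DNet (the U2-Net branch and the DeepLabV3+ branch), which take the RGB image $X_{\mathrm{RGB}}$ and the geoscientific indicator image $X_{\mathrm{Geo}}$ as inputs, respectively.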

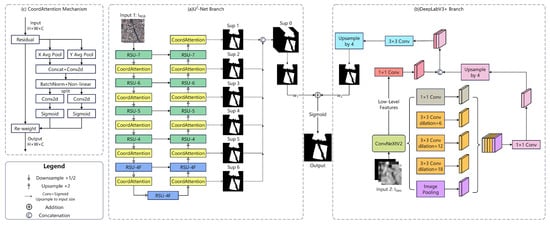

The model structure is shown in Figure 7.

Figure 7.

Overall structure of CAU2DNet: (a) the U2-Net branch; (b) the DeepLabV3+ branch; (c) the CoordAttention mechanism.

CAU2DNet consists of two branches. The U2-Net branch is responsible for processing the RGB image inputs $X_{\mathrm{RGB}}$. This branch achieves outstanding segmentation accuracy due to its nested U-shaped architecture, which effectively aggregates multi-scale features, making it particularly suitable for processing complex high-resolution remote sensing images. The DeepLabV3+ branch is responsible for processing the geoscientific indicator image inputs $X_{\mathrm{Geo}}$. Its ASPP module significantly expands the receptive field through multi-scale dilated convolutions, effectively capturing context information at different scales, making it suitable for processing lower-resolution geoscientific auxiliary images.

This study introduced the CoordAttention mechanism into the U2-Net branch to further enhance the performance of the model in slum remote sensing recognition tasks. This study cascades a CoordAttention mechanism module after each RSU block in the original U2-Net. This mechanism enhances the network’s spatial perception by explicitly modeling spatial coordinate information, which helps in accurately locating target areas and improving the segmentation accuracy. This study replaced the backbone network of the DeepLabV3+ branch from Xception to ConvNextV2 [33], which utilizes advanced convolutional design and sparse gating mechanisms, enabling efficient feature extraction, thereby enhancing the model’s recognition accuracy and receptive field range.

In terms of the integration mechanism, this study designed a decision-level integration strategy. Specifically, the inputs are processed on two separate branches to generate preliminary segmentation results, which are then fused through a weighted sum. The fusion weights are set as learnable model parameters $\alpha$ and $\beta$, which are optimized automatically during the training process. The calculation formula is as follows:

$$\mathcal{F} = \alpha \cdot f_{\mathrm{U}}(X_{\mathrm{RGB}}) + \beta \cdot f_{\mathrm{D}}(X_{\mathrm{Geo}})$$

where $f_{\mathrm{U}}(X_{\mathrm{RGB}})$ and $f_{\mathrm{D}}(X_{\mathrm{Geo}})$ denote the preliminary segmentation results of the U2-Net branch and the DeepLabV3+ branch, respectively.

The final fusion result is mapped to the interval $(0, 1)$ through the Sigmoid function to represent the probability of each pixel belonging to the slum area. The confidence threshold is set to 0.5. When the output value is greater than 0.5, the pixel is determined to belong to a slum area; otherwise, it is determined to belong to a non-slum area.
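The decision-level fusion and thresholding can be illustrated with a small NumPy sketch. It assumes that both branches output raw per-pixel scores (logits) of the same shape. The fusion weights are fixed numbers here purely for demonstration, whereas in the model they are learned during training; the names `fuse`, `alpha`, and `beta` are illustrative.

```python
import numpy as np


def sigmoid(z: np.ndarray) -> np.ndarray:
    """Logistic function mapping real scores to the open interval (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))


def fuse(scores_u2net, scores_deeplab, alpha: float, beta: float, threshold: float = 0.5):
    """Weighted-sum fusion of two branch outputs, then Sigmoid and thresholding.

    Returns (probability map, boolean slum mask).
    """
    fused = alpha * np.asarray(scores_u2net) + beta * np.asarray(scores_deeplab)
    prob = sigmoid(fused)
    mask = prob > threshold  # strictly greater than the threshold means slum
    return prob, mask


# Toy 1 x 3 outputs from the two branches; the weights are illustrative, not learned.
u2net_scores = np.array([[2.0, -1.0, 0.0]])
deeplab_scores = np.array([[0.0, -3.0, 0.0]])
prob, mask = fuse(u2net_scores, deeplab_scores, alpha=0.6, beta=0.4)
```

A pixel whose fused score is exactly zero has probability 0.5 and is therefore assigned to the non-slum class under the strict comparison.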

3.2. U2-Net Branch

U2-Net adopts a nested U-shaped architecture, following a recursive downsampling–upsampling paradigm (Figure 7a). The topological structure of this model can be formally described as an encoder–decoder structure with a hierarchical symmetry. The excellent recognition accuracy of this model makes it highly suitable for identifying RGB image information in CAU2DNet.

Specifically, the encoder module of this branch contains six consecutive downsampling stages. Meanwhile, the decoder module consists of five upsampling stages. The output of the sixth downsampling stage is connected to the decoder through a feature projection layer. Moreover, skip connections are utilized between encoders and decoders at the same level to pass shallow information, allowing the model to effectively integrate deep semantic information while retaining high-resolution details. This model enables the decoder to retain the high-resolution features of the encoder, thereby helping in the restoration of the details and maintaining segmentation accuracy.

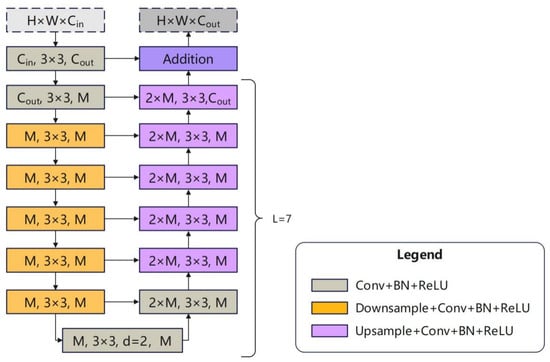

Each encoding and decoding stage utilizes the residual U (RSU) block, with its internal downsampling and upsampling structure shown in Figure 8 and also performs skip connections on stages at the same level.

Figure 8.

RSU block (taking RSU-7 as an example).

The depth of the RSU block at the k-th stage, denoted $L_k$, is determined by the network level at which it resides and follows a decreasing pattern as the stages deepen. This depth-adaptive design enables the shallow stages to achieve a larger ERF through multiple downsampling operations. In the last two downsampling stages ($En_5$ and $En_6$) and the first upsampling stage ($De_5$), further downsampling would result in the loss of substantial information because the feature maps have already been compressed to a considerably small size. Therefore, these three stages use dilated convolutions in place of the downsampling and upsampling operations, and the RSU used is referred to as RSU-4F. The output of the RSU block can be simply represented as follows:

$$\mathrm{RSU}(x) = x + U\big(D(x)\big)$$

where $x$ represents the input vector, and $D$ and $U$ represent the downsampling and the upsampling operations, respectively. In the U2-Net branch of the CAU2DNet model, the parameters of each RSU block are shown in Table 3. In the U2-Net branch, the encoder part of the input RGB image can be defined as follows:

$$En_k = \mathrm{RSU}_{L_k}\big(En_{k-1}\big), \quad En_0 = X_{\mathrm{RGB}}$$

where $k = 1, \dots, 6$, and $L_k$ represents the depth of the RSU block. The decoder part can be defined as follows:

$$De_k = \mathrm{RSU}_{L'_k}\big(U(De_{k+1}) \oplus En_k\big), \quad k = 5, \dots, 1, \quad De_6 = En_6$$

where $L'_k = L_k$ to maintain the encoding and decoding symmetry and ⊕ represents the feature concatenation operation (skip connection).

Table 3.

U2-Net branch parameters.

U2-Net utilizes a feature fusion pattern that combines skip connections with deep supervision. In each RSU block, the feature maps are directly passed between the encoder and the decoder via skip connections. Thereafter, the feature maps passed to the decoder are fused with the upsampled feature maps through an additive fusion, which is element-wise addition.

In terms of deep supervision, the feature maps of the different scales output by the various decoder stages are combined to achieve the fusion of multi-scale features, enabling U2-Net to preserve detailed information and capture multi-scale features. The final mathematical expression is as follows:

$$S_{\mathrm{fuse}} = \mathrm{Conv}_{1\times1}\Big(\bigotimes_{k=1}^{K} \mathrm{Up}(S_k)\Big)$$

where $S_k$ is the side output of the k-th stage, $\mathrm{Up}$ upsamples it to the input resolution, $K$ is the number of side outputs, $\mathrm{Conv}_{1\times1}$ represents a 1 × 1 convolution for channel compression, and ⊗ denotes the feature-level cascading operation, which is element-wise addition in this context.

In addressing the spatial location sensitivity characteristics of the remote sensing images that distinguish them from other types of images, this study improves the RSU module by cascading a coordinate attention module (CoordAttention) after its final upsampling layer to enable the model to identify slums. The calculation process of CoordAttention can be divided into feature encoding in two orthogonal directions, achieving location awareness through the embedding of coordinate information, thereby greatly improving the accuracy of identification in remote sensing images.
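The two-direction encoding of CoordAttention can be sketched in NumPy for a single feature map. This is a simplified illustration, not the implementation used in CAU2DNet: the 1 × 1 convolutions are written as plain matrix products with externally supplied weights, batch normalization is omitted, and ReLU stands in for the nonlinearity. The function and parameter names are assumptions.

```python
import numpy as np


def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))


def coord_attention(x, w_reduce, w_h, w_w) -> np.ndarray:
    """Simplified coordinate attention for one feature map x of shape (C, H, W).

    w_reduce: (M, C) shared 1x1 convolution; w_h, w_w: (C, M) per-direction
    1x1 convolutions. Batch normalization is omitted in this sketch.
    """
    channels, height, width = x.shape
    pooled_h = x.mean(axis=2)   # (C, H): averaged along width, keeps the row position
    pooled_w = x.mean(axis=1)   # (C, W): averaged along height, keeps the column position
    joint = np.concatenate([pooled_h, pooled_w], axis=1)   # (C, H + W)
    hidden = np.maximum(w_reduce @ joint, 0.0)             # (M, H + W)
    hidden_h, hidden_w = hidden[:, :height], hidden[:, height:]
    att_h = sigmoid(w_h @ hidden_h)   # (C, H) attention over rows
    att_w = sigmoid(w_w @ hidden_w)   # (C, W) attention over columns
    # Re-weight every position by its row attention and its column attention.
    return x * att_h[:, :, None] * att_w[:, None, :]


rng = np.random.default_rng(0)
features = rng.normal(size=(4, 6, 5))      # C = 4, H = 6, W = 5
w_reduce = rng.normal(size=(2, 4))         # reduce 4 channels to M = 2
w_h = rng.normal(size=(4, 2))
w_w = rng.normal(size=(4, 2))
attended = coord_attention(features, w_reduce, w_h, w_w)
```

The output keeps the input shape, so a block of this kind can be appended after an RSU block without changing the surrounding tensor dimensions.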

3.3. DeepLabV3+ Branch

The DeepLabV3+ model is an advanced semantic segmentation model, as shown in Figure 7b, which combines an encoder–decoder architecture and an ASPP module. Its large receptive field makes it particularly suitable for identifying low-resolution geoscientific indicator images within CAU2DNet.

The backbone primarily utilizes existing pretrained DL models, such as ResNet [34] or Xception [35]. To enhance our model’s ability to recognize slum areas more effectively, this study selects ConvNextV2, which features a sparse gating mechanism as the backbone and retrains it on the São Paulo slums dataset.

The ASPP module is the encoder of DeepLabV3+, which captures multi-scale contextual information through dilated separable convolutions with different sampling rates, thereby enhancing the model’s ability to recognize targets of different sizes. This module consists of three parallel dilated convolution layers with different sampling rates, a pooling layer, and a 1 × 1 convolution layer. This design allows DeepLabV3+ to have a multi-scale receptive field, which can effectively perceive geoscientific indicator images with low resolution. This model can be simply represented as follows:

where is the i-th dilated convolutional layer, and M is the number of dilated convolutional layers. The specific parameters of the ASPP module used in CAU2DNet are shown in Table 4. Given that the ASPP module does not change the size of the input feature map, the size of the input and output feature maps is 512 × 512.
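The link between the sampling rate of a dilated convolution and the area it covers can be illustrated with a minimal sketch. The dilation rates below are an assumption (the values commonly paired with DeepLabV3+), not the CAU2DNet settings, which are those given in Table 4; the function name is likewise hypothetical.

```python
def effective_kernel_size(kernel_size: int, dilation: int) -> int:
    """Spatial extent (in pixels) covered by one dilated convolution kernel."""
    return kernel_size + (kernel_size - 1) * (dilation - 1)


# Hypothetical dilation rates, chosen only for illustration.
ASSUMED_RATES = (6, 12, 18)

extents = {rate: effective_kernel_size(3, rate) for rate in ASSUMED_RATES}
for rate, extent in extents.items():
    print(f"3 x 3 kernel, rate {rate:2d} -> covers {extent} x {extent} pixels")
```

Under these assumed rates, the three parallel 3 × 3 branches cover progressively larger neighborhoods with the same number of weights, which is the source of the multi-scale receptive field described above.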

Table 4.

ASPP module parameters.

The decoder part of the DeepLabV3+ is the main optimization portion of the model compared with the DeepLabV3 [36]. This model utilizes skip connections to combine the output of the encoder with the output of the ASPP module. Specifically,

- (1) The feature maps produced by the encoder’s ASPP module are upsampled by a factor of four to the resolution of the shallow features using bilinear interpolation.

- (2) The shallow features of corresponding size are extracted from the backbone and concatenated with the upsampled ASPP output.

- (3) A 3 × 3 convolution is performed to refine the features.

- (4) The feature maps are restored to their original resolution by a second 4× upsampling.
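The four decoder steps above can be traced as simple size bookkeeping. This is a sketch under a stated assumption: because two successive 4× upsamplings restore the full resolution, the ASPP output is taken to be 1/16 of the input size and the shallow features 1/4 of it. The function name and dictionary keys are hypothetical.

```python
def decoder_shape_trace(input_size: int = 512, upsample_factor: int = 4) -> dict:
    """Trace square feature-map sizes through the four decoder steps.

    Assumption: the ASPP output is input_size / upsample_factor**2, which is
    what two successive upsamplings back to full resolution imply.
    """
    aspp_size = input_size // (upsample_factor ** 2)
    shallow_size = input_size // upsample_factor

    upsampled = aspp_size * upsample_factor      # step (1): bilinear upsampling
    if upsampled != shallow_size:                # step (2): concatenation needs equal sizes
        raise ValueError("upsampled ASPP output and shallow features differ in size")
    refined = upsampled                          # step (3): padded 3 x 3 conv keeps the size
    restored = refined * upsample_factor         # step (4): second upsampling

    return {"aspp": aspp_size, "shallow": shallow_size,
            "refined": refined, "restored": restored}


trace = decoder_shape_trace()
print(trace)
```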

These improvements can significantly enhance the segmentation effect of the model, especially in terms of restoring object boundaries. If this process is represented as , then the input geoscientific indicator image input . The DeepLabV3+ branch can be represented as follows:

where represents the backbone part of DeepLabV3+, and is the shallow part of the backbone.

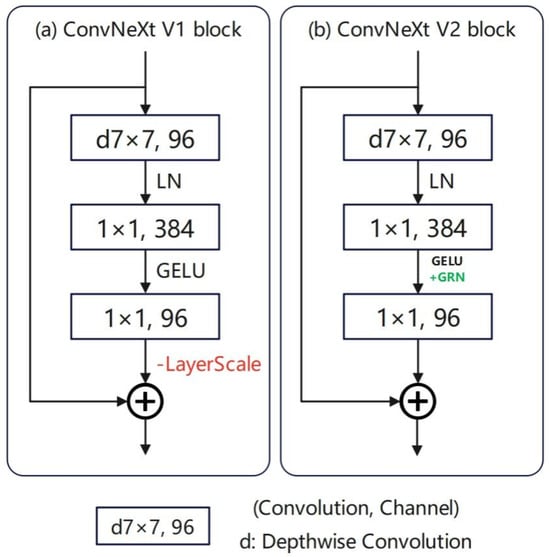

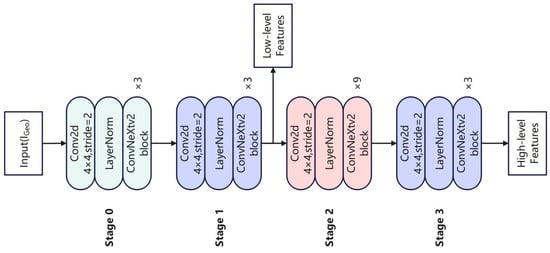

3.4. ConvNextV2 Backbone

ConvNextV2 is a CNN that has been improved from ConvNext, incorporating self-supervised learning and architectural innovations. ConvNext is a pure convolutional model inspired by the Swin Transformer architecture, consisting of multiple ConvNext blocks combined with downsampling blocks. This model aims to combine the advantages of modern Transformer architectures while maintaining the efficiency and simplicity of CNNs. In this study, ConvNextV2 replaces Xception as the backbone network in the DeepLabV3+ branch because of its higher computational efficiency and recognition accuracy.

The ConvNext block structure is shown in Figure 9a. The input feature map first enters a depthwise convolution layer to extract local feature information and then passes through a 1 × 1 point-wise convolutional layer, which adjusts the number of channels and performs feature fusion without changing the spatial resolution of the feature map. Subsequently, a layer normalization layer is applied to accelerate the training of the model. Finally, the GELU activation function is used to output the feature maps. The ConvNext block performs a skip connection at the end, adding the input to the output feature maps, to preserve feature information and alleviate the vanishing gradient problem.

Figure 9.

Comparison between the ConvNextV1 block and the ConvNextV2 block. (a) ConvNextV1 block; (b) ConvNextV2 block.

Compared with traditional CNN backbones, the main advantages of ConvNextV2 lie in its Global Response Normalization (GRN) layer (Figure 9b) and sparse convolution mechanism. The GRN layer mitigates feature collapse through inter-channel feature competition, enabling the model to extract key features across different channels more evenly and enhancing feature representation for low-resolution geoscientific indicator images. The sparse convolution mechanism performs convolution operations only on activated points, significantly reducing computational load while maintaining accuracy, which makes it particularly suitable for remote sensing image recognition tasks with large datasets. Additionally, the subsequent ablation experiments demonstrate that replacing the backbone of DeepLabV3+ with ConvNextV2 leads to a 1.11% increase in recall, a 1.03% improvement in F1 score, and an increase in IoU to 0.6372. These results support the benefit of ConvNextV2 for low-resolution geoscientific feature modeling.
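The inter-channel competition performed by GRN can be sketched in a few lines. The sketch follows the commonly published form of GRN (per-channel L2 norm over spatial positions, divisive normalization by the mean norm across channels, then a residual calibration). It uses scalar `gamma` and `beta` for brevity, whereas the real layer learns one value per channel; the function name and the toy input are assumptions.

```python
import math


def global_response_norm(x, gamma=1.0, beta=0.0, eps=1e-6):
    """Apply a simplified GRN to a feature map given as nested lists [C][H][W]."""
    # Global aggregation: L2 norm of each channel over all spatial positions.
    norms = [math.sqrt(sum(v * v for row in channel for v in row)) for channel in x]
    # Divisive normalization: each channel norm relative to the mean norm.
    mean_norm = sum(norms) / len(norms)
    scales = [n / (mean_norm + eps) for n in norms]
    # Calibration with a residual connection: gamma * (x * scale) + beta + x.
    return [[[gamma * v * s + beta + v for v in row] for row in channel]
            for channel, s in zip(x, scales)]


# Toy input: channel 0 has a strong response, channel 1 a weak one.
features = [[[3.0, 4.0]], [[0.0, 1.0]]]
calibrated = global_response_norm(features, gamma=1.0)
print(calibrated)
```

With `gamma = 0` the layer reduces to the identity, and with a positive `gamma` the channel with the larger global response is amplified more than the weaker one.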

In the DeepLabV3+ branch of the CAU2DNet model, the ConvNextV2 network primarily consists of four encoder stages, without an explicit decoder, as shown in Figure 10. Each stage sequentially passes through a Conv2d layer, a LayerNorm layer, and multiple ConvNextV2 block layers, with the number of ConvNextV2 block layers in the four stages being three, three, nine, and three in this study, respectively. The output of Stage 1 serves as low-level features, which are later fused with the output of the ASPP module. Meanwhile, the output of Stage 3 serves as the input for the ASPP module. The specific parameters of ConvNextV2 are shown in Table 5.

Figure 10.

ConvNextV2 structural diagram.

Table 5.

ConvNextV2 parameters.

The ConvNextV2 backbone can be mathematically expressed as follows:

where refers to the stacking of the k-th stage ConvNext block. refers to the output features of the stage, , and ⊗ refers to the feature cascading operation.

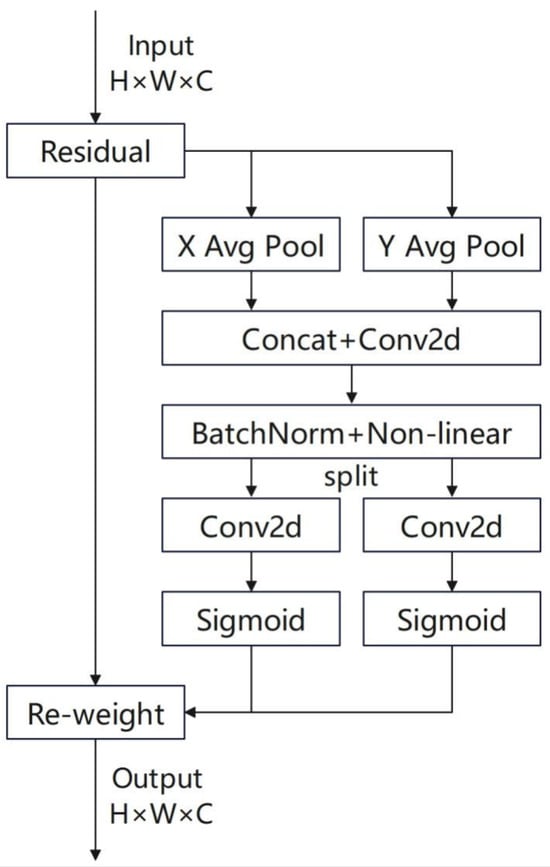

3.5. CoordAttention Mechanism

To improve the U2-Net architecture and its accuracy in slum detection, this study cascades a CoordAttention (CA) block after each RSU module to strengthen the network’s joint modeling of target spatial location and channel dependencies, thereby enhancing its ability to identify slum areas. CoordAttention decomposes channel attention into the aggregation of coordinate information along the horizontal and vertical directions through a decomposed feature encoding strategy. The specific structure of the model is shown in Figure 11.

Figure 11.

CoordAttention structural diagram.

The primary reason for selecting the CoordAttention mechanism over other attention mechanisms (such as CBAM or SE modules) lies in its ability to explicitly model the spatial coordinate information of remote sensing images, thereby enhancing the network’s perception of target spatial locations, which is crucial for slum identification tasks. The goal of CoordAttention is to simultaneously capture “inter-channel dependencies” and “spatial locations” to avoid information loss. By decoupling spatial dimensions, encoding positional information, and integrating the processes of channel and spatial attention, CoordAttention binds the spatial locations of targets to channel features.

For a given feature map $X \in \mathbb{R}^{C \times H \times W}$, coordinate information embedding is first performed by encoding the horizontal and vertical spatial context information separately to perceive directional features. With regard to the feature value $x_c(i, j)$ at the spatial position $(i, j)$ in the c-th channel, 1D pooling is performed along the horizontal and vertical directions:

$$z_c^h(i) = \frac{1}{W} \sum_{0 \le j < W} x_c(i, j), \qquad z_c^w(j) = \frac{1}{H} \sum_{0 \le i < H} x_c(i, j)$$

where $z^h$ and $z^w$ represent the spatial context information in the horizontal and vertical directions, respectively. After concatenating these factors along the spatial dimension, the intermediate feature is formed:

$$f = \delta\left(F_1\left(\left[z^h, z^w\right]\right)\right)$$

where $\delta$ represents the nonlinear activation function (such as ReLU), $F_1$ refers to the cross-channel interaction using a 1 × 1 convolution kernel, and $[\cdot, \cdot]$ indicates the spatial dimension concatenation. Thereafter, $f$ is decoupled into $f^h$ and $f^w$ through the splitting operation, and they are separately transformed using the $F_h$ and $F_w$ convolution kernels:

$$g^h = \sigma\left(F_h\left(f^h\right)\right), \qquad g^w = \sigma\left(F_w\left(f^w\right)\right)$$

where $\sigma$ refers to the Sigmoid function and $g^h$ and $g^w$ refer to the attention weight maps in the horizontal and vertical directions, respectively.

The final output feature $Y$ is obtained through position-sensitive attention weighting:

$$y_c(i, j) = x_c(i, j) \times g_c^h(i) \times g_c^w(j)$$
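The computation described in this section (directional pooling, shared 1 × 1 transform, split, and position-sensitive reweighting) can be sketched in plain Python. This is a minimal illustration and not the authors’ implementation: normalization layers are omitted, the 1 × 1 convolutions are written as small weight matrices, and all function names and weight values are hypothetical.

```python
import math


def sigmoid(value):
    return 1.0 / (1.0 + math.exp(-value))


def conv1x1(weights, vectors):
    """1 x 1 convolution: weights [out_ch][in_ch], vectors [in_ch][length]."""
    length = len(vectors[0])
    return [[sum(w * vectors[c][p] for c, w in enumerate(row)) for p in range(length)]
            for row in weights]


def coord_attention(x, w_shared, w_h, w_w):
    """Coordinate attention on a feature map x given as nested lists [C][H][W]."""
    channels, height, width = len(x), len(x[0]), len(x[0][0])
    # 1D pooling: average each row (over width) and each column (over height).
    z_h = [[sum(x[c][i]) / width for i in range(height)] for c in range(channels)]
    z_w = [[sum(x[c][i][j] for i in range(height)) / height for j in range(width)]
           for c in range(channels)]
    # Concatenate along the spatial dimension, shared 1 x 1 transform, ReLU.
    concatenated = [z_h[c] + z_w[c] for c in range(channels)]
    f = [[max(0.0, v) for v in row] for row in conv1x1(w_shared, concatenated)]
    # Split back into the two directions and build the attention weight maps.
    f_h = [row[:height] for row in f]
    f_w = [row[height:] for row in f]
    g_h = [[sigmoid(v) for v in row] for row in conv1x1(w_h, f_h)]
    g_w = [[sigmoid(v) for v in row] for row in conv1x1(w_w, f_w)]
    # Position-sensitive reweighting of every input value.
    return [[[x[c][i][j] * g_h[c][i] * g_w[c][j] for j in range(width)]
             for i in range(height)] for c in range(channels)]


# Toy example: 2 channels reduced to 1 intermediate channel (hypothetical weights).
feature_map = [[[1.0, 2.0], [3.0, 4.0]], [[0.0, 0.0], [4.0, 4.0]]]
output = coord_attention(feature_map,
                         w_shared=[[1.0, 1.0]],
                         w_h=[[0.0], [0.0]],
                         w_w=[[0.0], [0.0]])
print(output)
```

With zero weights in the two directional transforms, both attention maps equal 0.5 everywhere, so each value is scaled by 0.25; trained weights would instead produce row- and column-specific gains.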

3.6. Loss Function

Given that slum recognition is a typical binary image segmentation task, CAU2DNet adopts binary cross-entropy (BCE) as the final loss function. This loss evaluates the difference between the predicted probability values of the model and the actual values. For a given set of predicted values $\hat{y}$ and true values $y$, the BCE loss is expressed as follows:

$$L_{BCE} = -\frac{1}{N} \sum_{i=1}^{N} \left[ y_i \log \hat{y}_i + (1 - y_i) \log\left(1 - \hat{y}_i\right) \right]$$

where $N$ is the number of pixels.
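A minimal sketch of the BCE computation on a flat list of pixel probabilities is given below. The clamping constant `eps` is an assumption added to avoid taking the logarithm of zero, and the function name is hypothetical.

```python
import math


def bce_loss(predictions, targets, eps=1e-7):
    """Mean binary cross-entropy between predicted probabilities and 0/1 labels."""
    total = 0.0
    for p, y in zip(predictions, targets):
        p = min(max(p, eps), 1.0 - eps)  # clamp to keep log() finite
        total += y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return -total / len(predictions)


confident = bce_loss([0.9, 0.1], [1, 0])
uncertain = bce_loss([0.6, 0.4], [1, 0])
print(f"confident: {confident:.4f}, uncertain: {uncertain:.4f}")
```

Predictions closer to the true labels give a smaller loss, and a prediction of 0.5 for every pixel gives a loss of ln 2.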

4. Results and Analysis

4.1. Experimental Environment

In this study, all experiments were implemented in PyTorch. The Python version was 3.11.5, the PyTorch version was 2.4.1, and the operating system was Ubuntu 20.04.2 LTS. The model was trained for 100 epochs on an NVIDIA GeForce RTX 2080 Ti (NVIDIA, Santa Clara, CA, USA) with 24 GB of video memory. The batch size was set to two. The initial learning rate for the Adam optimizer was set to 0.01, with an optimizer momentum of 0.9 and a weight decay of 0.0001. A cosine annealing learning rate scheduler was used to adjust the learning rate automatically.
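The shape of a cosine annealing schedule can be illustrated with its closed form. The initial learning rate (0.01) and the number of epochs (100) come from the text; the annealing period equal to the full training length and the minimum learning rate of zero are assumptions, since neither is stated.

```python
import math


def cosine_annealing_lr(epoch, initial_lr=0.01, total_epochs=100, min_lr=0.0):
    """Closed-form cosine annealing: decays from initial_lr to min_lr."""
    cosine = math.cos(math.pi * epoch / total_epochs)
    return min_lr + 0.5 * (initial_lr - min_lr) * (1.0 + cosine)


for epoch in (0, 25, 50, 75, 100):
    print(f"epoch {epoch:3d}: lr = {cosine_annealing_lr(epoch):.6f}")
```

Under these assumptions, the learning rate falls slowly at first, reaches half of its initial value at the midpoint, and approaches zero at the final epoch.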

4.2. Experimental Dataset

This study conducted experiments using the self-constructed São Paulo slums dataset to verify the model’s ability in slum recognition. The construction process of this dataset is discussed in detail in Section 2.3, and the dataset is randomly divided into a training set and a testing set at a ratio of 8:2. Furthermore, this study selected the WHU building dataset to validate the model’s generalizability and superiority.
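A random 8:2 split of the 7978 samples can be sketched as follows. The random seed and the rounding convention (truncating the training count) are assumptions, so the exact set sizes used in the study may differ by one sample.

```python
import random


def split_indices(num_samples, train_fraction=0.8, seed=0):
    """Shuffle sample indices and split them into training and testing parts."""
    indices = list(range(num_samples))
    random.Random(seed).shuffle(indices)  # fixed seed for a reproducible split
    cut = int(num_samples * train_fraction)
    return indices[:cut], indices[cut:]


train_ids, test_ids = split_indices(7978)
print(len(train_ids), len(test_ids))
```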

The WHU (Wuhan University) building dataset is a public dataset released by the School of Remote Sensing and Information Engineering at Wuhan University, primarily serving research on intelligent extraction of buildings from remote sensing images and dynamic change detection. This dataset includes four distinct subsets: (1) The aerial image dataset is based on high-resolution aerial images of the Christchurch region in New Zealand, covering detailed annotations of approximately 22,000 buildings. (2) The Global Urban Satellite Dataset integrates multi-source sensor data (including QuickBird/WorldView), covering urban built-up areas across multiple climate zones. (3) The East Asian satellite data focuses on architectural features in the East Asian region, providing over 17,000 standardized samples. (4) The building change detection dataset is used for temporal analysis in post-earthquake reconstruction.

The WHU building dataset is characterized by a diverse urban environment. It offers extensive coverage, integrating multi-source sensor data that spans urban built-up areas across multiple climate zones and encompassing samples with varying lighting conditions, architectural forms, and scene complexities, so it can simulate the real-world environments of different cities globally. Additionally, it focuses specifically on building extraction from remote sensing imagery, a task similar to slum identification. Consequently, the results obtained on the WHU building dataset can indirectly validate a model’s capability to identify complex urban areas, thereby verifying its generalization performance.

This study selected the East Asian satellite dataset for training, which has a resolution of 0.45 m and contains 17,388 samples of 512 × 512 pixels, of which 1616 samples include building areas (Figure 12). Experiments were conducted only on these 1616 samples. Among them, the official training set and test set contain 713 and 903 samples, respectively. To make the training and evaluation of the model more stable, the two sets were swapped in this study, so that the larger set of 903 samples was used for training and the set of 713 samples for testing.

Figure 12.

Schematic of the WHU East Asia satellite building dataset. (a) Normally exposed remote sensing image; (b) underexposed remote sensing image; (c) overexposed remote sensing image; (d) rectangular building with a large roof area; (e) strip-shaped shed building; (f) a cluster of densely distributed buildings; (g) a sample with fewer buildings.

4.3. Evaluation Indicators

Five indicators were chosen to objectively and comprehensively evaluate the model proposed in this study: accuracy, precision, recall, F1 score, and intersection over union (IoU). Accuracy represents the proportion of correct predictions made by the model; precision indicates the proportion of samples predicted as the positive class that are actually positive; recall represents the proportion of actually positive samples that are correctly predicted as the positive class; the F1 score is the harmonic mean of precision and recall, which can comprehensively measure the performance of the model; IoU represents the overlap ratio between the predicted and the true regions and can be calculated from the confusion matrix. The formulas are expressed as follows:

$$\begin{aligned}
\text{Accuracy} &= \frac{TP + TN}{TP + TN + FP + FN}, &
\text{Precision} &= \frac{TP}{TP + FP}, &
\text{Recall} &= \frac{TP}{TP + FN}, \\
F1 &= \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}, &
\text{IoU} &= \frac{TP}{TP + FP + FN}
\end{aligned}$$

where $TP$, $FP$, $FN$, and $TN$ indicate true positives, false positives, false negatives, and true negatives, respectively.
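The five indicators can be computed directly from the four confusion-matrix counts, as in the sketch below. The counts in the example are made up for illustration and are not results from this study; the function name is hypothetical.

```python
def segmentation_metrics(tp, fp, fn, tn):
    """Compute accuracy, precision, recall, F1 score, and IoU from pixel counts."""
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1, "iou": iou}


# Hypothetical counts for a 100-pixel image.
metrics = segmentation_metrics(tp=6, fp=2, fn=2, tn=90)
print(metrics)
```

Because true negatives dominate in sparse-target scenes, accuracy stays high (0.96 here) even when the F1 score (0.75) and IoU (0.6) are moderate, which is why the latter two are more informative for slum segmentation. When both are computed from the same counts, they are linked by IoU = F1 / (2 − F1).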

4.4. Comparison with State-of-the-Art Methods

This study selected five baseline models for comparison to demonstrate the superiority of CAU2DNet. These models incorporate mainstream Unet and Transformer architectures, demonstrating strong representativeness.

- (1) U2-Net: This model adopts a nested U-shaped architecture, following a recursive downsampling–upsampling paradigm, and its topological structure can be formally described as an encoder–decoder structure with hierarchical symmetry. By combining dilated convolutions of different scales with a symmetric encoding–decoding structure, the RSU block can capture multi-scale contextual information without significantly increasing the computational load while maintaining high-resolution features.

- (2) DeepLabV3+: This classic model in the field of semantic segmentation utilizes an encoder–decoder architecture. The encoder uses backbone networks, such as Xception or ResNet, to extract features and integrates them with the ASPP module. This model captures multi-scale contextual information in parallel through atrous convolutions at different rates while also utilizing global pooling to integrate global features.

- (3) Unet3+ [37]: This model is an improvement on Unet++. Its main contribution is the proposal of full-scale skip connections, in which each layer of the decoder is connected to all encoder layers. These improvements enhance the model’s multi-scale interaction, make full use of multi-scale information, and improve segmentation accuracy.

- (4) TransUnet [38]: This model is the first to combine the Transformer architecture with the Unet architecture, effectively integrating the advantages of both. It leverages the Transformer’s ability to focus on the global context, compensating for the limited receptive field of Unet.

- (5) STTNet [39]: This model is relatively new in the field of building recognition. Given that buildings occupy particularly small areas in remote sensing images, this model adopts an efficient dual-path Transformer architecture and introduces a novel module called the “Sparse Token Sampler” to represent buildings as a set of “sparse” feature vectors in their feature space, thereby achieving optimal performance with low time costs.

4.5. Experimental Results on the WHU East Asia Satellite Building Dataset

The experimental results comparing CAU2DNet with other baseline methods are shown in Table 6. CAU2DNet outperforms all other baseline methods in nearly all evaluation metrics, with only a marginal 0.05% disadvantage in recall compared with STTNet. Given that this dataset does not include multispectral images, this study only conducted experiments using the U2-Net branch that includes the CoordAttention mechanism. Therefore, the outstanding performance of this model mainly stems from the CoordAttention’s ability to perceive spatial location information. CAU2DNet achieved leads of 0.31%, 0.27%, 6.82%, 0.28%, 2.84%, and 0.0155 in various metrics compared with the experimental results of U2-Net. In particular, the significant improvement in precision can prove that these enhancements give CAU2DNet a stronger ability to distinguish building areas.

Table 6.

Experimental results on the WHU East Asia satellite building dataset.

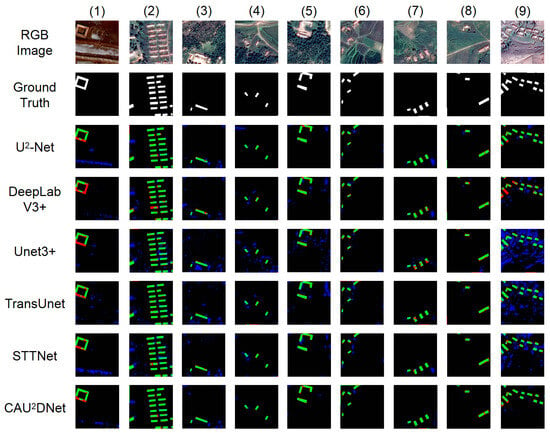

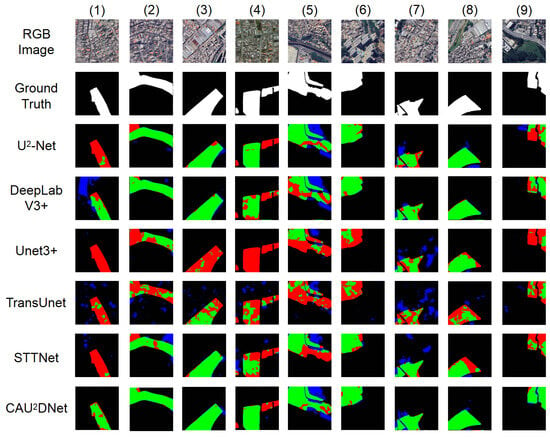

The recognition results of the different methods on the test set are exhibited in Figure 13. On the WHU East Asia satellite building dataset, all models have a certain ability to recognize building areas. However, CAU2DNet has better control over non-building areas, with a significantly smaller false positive area than the other models. Moreover, this model ensures a leading level of recognition accuracy for real building areas.

Figure 13.

Visual comparison of the different methods on the WHU East Asia satellite building dataset. Green indicates the true positive, blue denotes the false positive, red is the false negative, and black represents the true negative.

For example, in (1), U2-Net fails to distinguish the road area at the bottom of the image from the building area, a problem that is significantly reduced by the improvements made in this study. In (5) and (8), the comparison between CAU2DNet and U2-Net shows that U2-Net misidentifies two bare soil areas as buildings, whereas CAU2DNet does not. This indicates that the improvements made in this study can uncover a deeper level of semantic information about buildings. In (9), the overexposed images and large areas of bare soil caused Unet3+, TransUnet, and STTNet to produce large false detection regions. Although DeepLabV3+ did not identify these areas as buildings, it also failed to properly identify the real building areas. CAU2DNet and U2-Net accomplish the identification task better than the other models.

The WHU East Asia satellite building dataset has a limited number of samples, making it difficult for various models to be trained effectively. However, U2-Net and CAU2DNet, which is built upon U2-Net, still maintain excellent performance on this dataset. This indicates that the CAU2DNet architecture has a more efficient feature extraction capability than the other models.

Overall, Unet3+ and TransUnet perform poorly, with a large number of false positive areas indicating that they only extract shallow semantic information for building areas. CAU2DNet can distinguish similar areas, such as roads and bare soil, while ensuring the identification of building areas, demonstrating its ability to perceive deep semantic information.

4.6. Experimental Results on the São Paulo Slums Dataset

The experimental results of CAU2DNet and other baseline methods applied to the São Paulo slums dataset are shown in Table 7. CAU2DNet outperforms the other baseline methods on all evaluation metrics. The superior performance of CAU2DNet mainly stems from its dual-branch architecture and the use of CoordAttention. These enhancements effectively strengthen the model’s feature perception for slum areas.

Table 7.

Experimental results on the São Paulo slums dataset.

The results of the different methods on the test set are exhibited in Figure 14.

Figure 14.

Visual comparison of the different methods on the São Paulo slums dataset. Green indicates the true positive, blue denotes the false positive, red is the false negative, and black represents the true negative.

CAU2DNet produces a large number of true positive (TP) regions while effectively controlling the false positive (FP) regions. This result is consistent with the precision of this model being significantly ahead of the other methods.

In terms of the dense and complex building clusters represented by (1), U2-Net, Unet3+, TransUnet, and STTNet failed to detect the slum areas within them. DeepLabV3+ confused other buildings with slums, resulting in a large number of false positive areas. However, CAU2DNet was able to accurately identify the correct slum areas, with only partial missed detections in a few areas at the edges and inside, demonstrating its good perception of the deeper semantics of slums. With regard to the slum areas with clear edges represented by (3) and (5), CAU2DNet can identify smoother edges than the other methods, reflecting its excellent performance in edge extraction.

Unet3+ and TransUnet are the two methods with the worst performance. In particular, although Unet3+ has a large number of parameters and a very long training time, it still yields unsatisfactory results. This may indicate that the model is unsuitable for slum identification without improvements. The recognition results of TransUnet are distributed in a patchy manner, with a large number of false positive areas and numerous missed detections within the actual slum areas. This may be because the Transformer architecture requires a large number of training samples, and the number of slum samples in the São Paulo dataset is insufficient. In practice, slum samples are particularly scarce, and methods such as Unet can achieve excellent results on small datasets, making them highly suitable for the objectives of this study.

Although other baseline models demonstrate excellent performance in their respective domains, they fail to adequately adapt to the characteristics of remote sensing imagery, such as significant variations in resolution and the inclusion of spatial location information. In contrast, CAU2DNet features a dual-branch architecture, enabling the use of high-precision networks for identifying high-resolution images and wide-receptive-field networks for low-resolution images, thereby accommodating different resolutions of remote sensing imagery. Additionally, CAU2DNet incorporates a CoordAttention mechanism capable of recognizing implicit spatial location information in remote sensing images. These constitute the specific advantages of CAU2DNet in slum identification tasks.

5. Discussion

5.1. Ablation Experiments

In this section, ablation experiments are conducted to test the effectiveness of the improvements. CAU2DNet is primarily an improvement based on U2-Net. The main improvements are as follows:

- (1) Enhancing feature extraction capabilities by adding geoscientific indicator images as input.

- (2) Integrating the DeepLabV3+ branch for identifying geoscientific indicator images.

- (3) Integrating the CoordAttention mechanism to enhance the model’s sensitivity to spatial location information.

- (4) Replacing the backbone of DeepLabV3+ with ConvNextV2.

This study designed the following five ablation experiments to evaluate the effectiveness of these improvements:

- (1) U2-Net (RGB) refers to the U2-Net network without any modifications, which only takes RGB true-color images as input.

- (2) U2-Net (RGB + Geo) refers to the original U2-Net network without any modifications, which takes RGB true-color images and geoscientific indicator images as input.

- (3) U2-Net (RGB) + DeepLab (Geo) refers to the combination of the unmodified U2-Net and DeepLabV3+ as branches, utilizing the same decision-level feature fusion mechanism as CAU2DNet for feature integration. The RGB true-color image is used as the input for the U2-Net branch, while the geoscientific indicator image serves as the input for the DeepLabV3+ branch. The backbone of the DeepLabV3+ branch is the original Xception.

- (4) U2-Net + DeepLab + CoordAttention refers to adding the CoordAttention mechanism to the U2-Net branch on the basis of the model in (3).

- (5) CAU2DNet refers to the final version of the model in this study. It is based on the model in (4), where the backbone of the DeepLabV3+ branch is replaced with ConvNextV2.

These ablation experiments were conducted on the São Paulo slums dataset constructed in this study using the experimental environment described in Section 4.1 and the evaluation metrics described in Section 4.3. The results are shown in Table 8.

Table 8.

Experimental results on the São Paulo slum dataset.

The comparison of the results of experiments (1) and (2) indicates that all indicators improved, with the most significant increase in precision, which rose by 7.54%. Of the other indicators, IoU increased from 0.5485 to 0.5559, and the F1 score increased by 3.88%. This indicates that the introduction of multi-source images can effectively enhance the model’s ability to recognize slums.

Furthermore, the comparison of the results of experiments (2) and (3) indicates that using DeepLabV3+ to recognize low-resolution geoscientific indicator images increased the precision by 5.79%, the recall by 6.25%, and the F1 score by 6.05%, and raised the IoU to 0.6274. The ASPP module of the DeepLabV3+ branch, through multi-scale dilated convolutions, significantly enhances the modeling capability for low-resolution geoscientific features.

The comparison of the results of experiments (3) and (4) indicates that adding the CoordAttention mechanism increased the precision by 1.89%, but recall decreased by 1.72%. The CoordAttention mechanism enhances the model’s ability to focus on key features by strengthening position-sensitive attention, thereby improving recognition accuracy. However, it may reduce the coverage of all relevant samples due to stricter screening criteria, ultimately manifesting as an increase in precision but a decrease in recall in experimental results. This issue will be alleviated with the use of the ConvNextV2 backbone.

Meanwhile, the comparison of the results of experiments (4) and (5) shows that replacing the backbone with ConvNextV2 increased the recall by 1.11% and the F1 score by 1.03% and raised the IoU to 0.6372. The sparse convolution mechanism in ConvNextV2 can selectively focus on highly distinctive pixels, effectively balancing the overly stringent recognition criteria of the CoordAttention mechanism and leading to a recovery in recall.

These improvements have resulted in the proposed CAU2DNet outperforming the original U2-Net in every aspect, with an accuracy increase of 1.46%, a precision improvement of 16.12%, a recall improvement of 6.2%, an F1 score improvement of 10.85%, and an IoU improvement from 0.5485 to 0.6372.

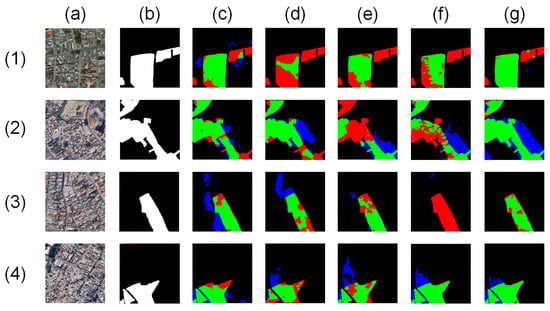

The specific identification results are shown in Figure 15.

Figure 15.

Ablation experiment visualization results. (a) RGB image; (b) ground truth; (c) U2-Net (RGB); (d) U2-Net (RGB + Geo); (e) U2-Net (RGB) + DeepLab (Geo); (f) U2-Net + DeepLab + CoordAttention; (g) CAU2DNet. Green indicates the true positive, blue denotes the false positive, red refers to the false negative, and black is the true negative.

The model gains an increasingly in-depth understanding of the semantics of slums, and the identification results become more accurate as the modifications are gradually added. CAU2DNet has the best recognition accuracy on these four highly challenging samples. However, in (1), U2-Net (RGB + Geo) shows a decrease in the identification of slums compared with U2-Net (RGB). We speculate that the low resolution of the geoscientific indicator images confuses the U2-Net model, which has a high recognition accuracy but a small receptive field, when it identifies the semantics of slums. The subsequent use of DeepLabV3+ for recognizing geoscientific indicator images restored the accuracy, which supports this hypothesis. In (3), U2-Net + DeepLab + CoordAttention completely missed the slum areas in the image. This may be because the introduction of the CoordAttention mechanism altered the model’s understanding of slum features, resulting in extensive missed detections in this area. This issue was also alleviated after replacing the backbone with ConvNextV2.

5.2. Model Efficiency

To evaluate the computational efficiency of the models, this study calculated the number of giga floating-point operations (GFLOPs) and the number of model parameters (Params) for each model. The results are shown in Table 9. CAU2DNet exhibits relatively high values in both metrics, primarily because of its dual-branch architecture. While such a structure enables the model to achieve high accuracy, it also increases the model’s complexity, resulting in lower computational efficiency. Subsequent research should focus on a lightweight design to explore the model’s potential for acceleration.
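How the two efficiency metrics arise can be illustrated for a single convolutional layer. The sketch below counts parameters and multiply-accumulate operations (MACs) for one forward pass; the layer dimensions are hypothetical, and counting one MAC as two floating-point operations is a common convention adopted here as an assumption, since profiling tools differ on this point.

```python
def conv2d_cost(in_channels, out_channels, kernel_size, out_height, out_width, bias=True):
    """Return (parameter count, multiply-accumulate count) of one conv layer."""
    weights_per_filter = kernel_size * kernel_size * in_channels
    params = weights_per_filter * out_channels + (out_channels if bias else 0)
    macs = weights_per_filter * out_channels * out_height * out_width
    return params, macs


# Hypothetical first layer: 3 x 3 kernel, 3 -> 64 channels, 512 x 512 output.
params, macs = conv2d_cost(3, 64, 3, 512, 512)
gflops = 2 * macs / 1e9  # assumption: 1 MAC = 2 floating-point operations
print(f"params = {params}, GFLOPs = {gflops:.3f}")
```

Summing these quantities over all layers of both branches gives the model-level totals, which explains why a dual-branch network roughly adds the costs of its two component networks.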

Table 9.

Model efficiency of different methods.

6. Conclusions

This study aims at the remote sensing recognition task of urban slums, summarizes the slum image features classified by data source type, constructs a São Paulo slum dataset integrating multispectral images, and proposes the CAU2DNet model based on a dual-branch DL architecture, which can better be used for identifying slums based on remote sensing images, providing data support and methodological reference for subsequent research and applications in this field.

The CAU2DNet model introduced in this study utilizes the U2-Net branch to identify high-resolution true-color remote sensing images and the DeepLabV3+ branch to recognize low-resolution geoscientific indicator images. This model can fully leverage the respective advantages of both branches and has achieved significant advantages compared with the baseline model. Furthermore, this study has added the CoordAttention mechanism to the U2-Net branch, enabling the model to effectively recognize spatial location features in remote sensing images. This study replaces the backbone of the DeepLabV3+ branch with ConvNextV2, which has a large receptive field. The experiments proved that this model can effectively improve the issue of recall index decline caused by missing detection of blurry boundary areas.

This study conducted experiments on the São Paulo slums dataset and the WHU East Asia satellite building dataset. The results showed that the CAU2DNet model achieved the best performance in terms of accuracy, precision, recall, F1 score, and IoU compared with other baseline methods, proving its highly competitive performance in the field of slum remote sensing recognition. Moreover, this study conducted ablation experiments on the various improvements using the São Paulo slums dataset. The results demonstrated that all modifications made in this research can effectively enhance model performance.

However, this study still has the following limitations. (1) Geographical limitations of the data: The current dataset only covers the city of São Paulo. In the future, this work needs to be expanded to areas with different climate zones and stages of urban development. (2) High computational costs: The dual-branch architecture has a large number of parameters. In subsequent work, knowledge distillation or lightweight design can be used to optimize inference efficiency. (3) Insufficient temporal dynamic analysis: This study focuses on static identification. In the future, temporal remote sensing data can be combined to explore the driving mechanisms behind the expansion and evolution of slums. This study encourages further research in this field and hopes that more scholars will contribute to addressing these limitations in the future.

Author Contributions

Conceptualization, L.M.; methodology, X.L. and L.M.; software, X.L.; validation, L.M., X.S. and X.Z.; formal analysis, C.Z. and X.S.; investigation, C.Z. and X.Y.; resources, Z.S.; data curation, X.L.; writing—original draft preparation, X.L. and C.Z.; writing—review and editing, X.L., Z.S., Y.P. and L.M.; visualization, X.S.; supervision, L.M. and Y.P.; project administration, X.Z.; All authors have read and agreed to the published version of the manuscript.

Funding

This study was supported by the Open Foundations of Jiangsu Province Engineering Research Center of Airborne Detecting and Intelligent Perceptive Technology (JSECF2023-14), the Key Laboratory of Land Satellite Remote Sensing Application, Ministry of Natural Resources of the People’s Republic of China (Grant No. KLSMNR-K202304) and the National Natural Science Foundation of China (42401516).

Data Availability Statement

The data presented in this study are openly available at https://github.com/luoooxia/CAU2DNet (accessed on 24 May 2025).

Acknowledgments

This manuscript was edited for proper English language, grammar, punctuation, spelling, and overall style by one or more of the highly qualified native English speaking editors.

Conflicts of Interest

The authors declare no conflicts of interest.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).