Abstract

The clever eye (CE) algorithm has been introduced for target detection in remote sensing image processing. It first introduced the concept of the data origin and achieves a lower average output energy than both the classical constrained energy minimization (CEM) and matched filter (MF) methods. In addition, it has been theoretically proven that finding the best data origins reduces to solving a linear equation, which makes CE computationally efficient. However, CE is designed only for single-target detection, whereas multiple-target detection is in greater demand in real applications. In this paper, by naturally extending CE to the multiple-target case, we propose a unified algorithm termed multi-target clever eye (MTCE). The theoretical results for CE prompt us to consider an interesting question: do the MTCE solutions share a similar structure to those of CE? To answer this question, we investigate a class of unconstrained non-convex optimization problems, of which both the CE and MTCE models are special cases, and show that, interestingly, its stationary points can also be obtained by solving a more general linear system. We further prove that all these solutions are globally optimal, so the analytical solutions of this generalized model can be deduced. A unified framework is therefore provided for such non-convex optimization problems, from which the solutions of both MTCE and CE can be succinctly derived. Furthermore, the computational complexity of MTCE is of the same order of magnitude as that of other multiple-target methods. Experiments on both simulated and real hyperspectral remote sensing data verify our theoretical conclusions, and a comparison of quantitative metrics demonstrates the advantage of the proposed MTCE method in multiple-target detection.

1. Introduction

Since remote sensing imagery consists of tens or hundreds of bands with a very high spectral resolution, it has prompted the development of various applications over the past decades. Target detection is one of the most important tasks in the field of remote sensing image processing, which aims to detect and highlight the specified target of interest and to distinguish it from the background pixels within a given environment or dataset. This task has a broad range of real applications, such as camouflage target detection [1], agricultural production [2], land use [3], climate change [4], urban monitoring [5], ship detection [6], etc.

Currently, existing target detection methods can be divided into the following four categories: (1) Algorithms based on a spectral similarity index, which calculate the spectral difference between the target and background pixels. The most commonly used one is the spectral angle mapper [7]; (2) Linear mixing model (LMM)-based algorithms, which assume that any mixed pixel can be regarded as a linear combination of endmembers that can be viewed as targets of interest [8]. Representative algorithms are orthogonal subspace projection [9,10] and non-negative matrix factorization [11,12]; (3) Learning-based algorithms, such as multitasking and metric learning [13,14,15,16]; (4) Algorithms based on statistical metrics. Currently, second-order statistics are widely applied, and the classical methods include constrained energy minimization (CEM) [17] and the matched filter (MF) method [18]; in addition to second-order statistics, higher-order statistics have also been introduced for this task [19,20]. In recent years, deep learning-based methods such as [21,22,23,24,25,26,27] have emerged and been developed for target detection tasks.

However, they require supervised pre-training on a large number of labeled samples to learn good representations and thus also suffer from a high computational burden. In this paper, we focus on the fourth category, the statistics-based algorithms, which can be grouped into the unsupervised type. As opposed to the deep learning class, this branch does not require a pre-training process and has received more attention in small-sample scenarios.

The most classical statistic-based target detection algorithm is CEM, which was developed from the field of signal processing. To detect targets of interest, it aims to find a projection direction that keeps the output of the target at a constant while suppressing the average filter output energy of the data to a minimum level. In this way, the target can be highlighted. Though MF is designed from the perspective of the binary statistical hypothesis, the MF detector is very similar to that of CEM, differing only in that the data should first be centralized for MF. From the perspective of the optimization model, both of them can be viewed as a convex optimization model with a linear constraint. Formally, a convex function is defined as follows: let $f$ be a function defined on an interval $I$ of the real numbers. If for all $x_1, x_2 \in I$ and for all $\lambda \in [0, 1]$ the inequality $f(\lambda x_1 + (1-\lambda) x_2) \le \lambda f(x_1) + (1-\lambda) f(x_2)$ holds, then $f$ is said to be convex on $I$. However, CEM and MF have different levels of performance, caused by the change of the data’s origin. This means that the selection of the data’s origin has an impact on the performance of target detection.

In this sense, the clever eye (CE) algorithm, which first introduced the concept of the data’s origin as a new variable, has been proposed, where both CEM and MF can be viewed as special cases [28]. Compared to CEM and MF, there are two coupled variables to be optimized in the CE model, leading to a non-convex optimization model. In [28], the authors adopted an iterative gradient method in order to obtain a feasible solution. More interestingly, after rigorous theoretical analysis, solving the non-convex optimization model of CE reduces to solving a system of linear equations. Meanwhile, it has further been shown that all of its stationary points are globally optimal [29] (see Theorems 1 and 2 later for details). In this sense, there exist analytical solutions for CE, making it computationally efficient and also theoretically guaranteed.

However, the above model is limited to single-target detection tasks and may not satisfy demands in real applications. Therefore, previous works have extended some models to multiple-target cases, including both linear and non-linear types. For the linear class, multi-target CEM (MTCEM) and multi-target MF (MTMF) can be easily generalized in a natural way. The non-linear type incorporates some non-linear operations into target detection, such as Sum CEM (SCEM) [30], Winner-Take-All CEM (WTACEM) [30], and Revised MTCEM (RMTCEM) [31]. Following the linear class, the CE model can also be extended to multiple-target cases, which is considered and analyzed in this work and is termed the multi-target CE (MTCE) algorithm for consistency. Inspired by theoretical results in CE, we consider an interesting question: do the MTCE solutions also share a similar structure to those of CE? In this paper, we investigate this issue and establish a theoretical analysis of it. Then, we evaluate the performance of MTCE in target detection tasks for remote sensing datasets.

The rest of this paper is organized as follows. In Section 2, we briefly review some classical algorithms and present existing theoretical results for CE. In Section 3, we propose the generalized MTCE model and derive its simplified model. In Section 4, we give a detailed theoretical analysis of a class of unconstrained non-convex models, of which both the CE and MTCE models are special cases; the computational complexity of MTCE is also presented there. Experimental results on the application of MTCE in target detection are given in Section 5. The conclusions and some possible future works are presented in Section 6.

2. Preliminaries

In this part, we introduce several classical algorithms for target detection tasks and discuss existing theoretical results for CE. For clarity, the notation used in this article is defined in Table 1.

Table 1.

Notational conventions adopted in this article.

2.1. CEM

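CEM originates from linearly constrained minimum variance beamforming in the field of digital signal processing and was proposed by Harsanyi in 1993. It has also become one of the most important target detection methods in the field of remote sensing image processing [17]. Mathematically, it is designed to find a direction $\mathbf{w}$ that minimizes the average output energy of the data projected along $\mathbf{w}$ while keeping the output of the target of interest constant. We denote the hyperspectral (or multispectral) data by $\mathbf{X} = [\mathbf{x}_1, \ldots, \mathbf{x}_N] \in \mathbb{R}^{L \times N}$, where $L$ and $N$ are the numbers of bands and pixels, respectively, and $\mathbf{d} \in \mathbb{R}^{L}$ is the target vector to be detected. The CEM model is

$$\min_{\mathbf{w}} \ \mathbf{w}^{T}\mathbf{R}\mathbf{w} \quad \text{s.t.} \quad \mathbf{w}^{T}\mathbf{d} = 1, \tag{1}$$

where $\mathbf{w} \in \mathbb{R}^{L}$, and $\mathbf{R} = \frac{1}{N}\mathbf{X}\mathbf{X}^{T}$ is the correlation matrix of $\mathbf{X}$. (1) is a quadratic convex model with an equality constraint. By using the Lagrangian method, the solution of (1) can be obtained by

$$\mathbf{w}_{\mathrm{CEM}} = \frac{\mathbf{R}^{-1}\mathbf{d}}{\mathbf{d}^{T}\mathbf{R}^{-1}\mathbf{d}}.$$

Then, the objective function is calculated by

$$\mathbf{w}_{\mathrm{CEM}}^{T}\mathbf{R}\,\mathbf{w}_{\mathrm{CEM}} = \frac{1}{\mathbf{d}^{T}\mathbf{R}^{-1}\mathbf{d}}.$$

The CEM output is given by

$$y_i = \mathbf{w}_{\mathrm{CEM}}^{T}\mathbf{x}_i, \quad i = 1, \ldots, N.$$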
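As an illustration of the closed-form CEM solution, the following is a minimal NumPy sketch rather than the authors' implementation. It assumes the data are stored as an $L \times N$ array; the function name `cem_detector` and the toy data are hypothetical.

```python
import numpy as np

def cem_detector(X, d):
    """Return the CEM filter w, its average output energy, and the output y.

    X is an (L, N) array (bands x pixels); d is an (L,) target spectrum.
    """
    n_pixels = X.shape[1]
    R = X @ X.T / n_pixels              # correlation matrix of the data
    Rinv_d = np.linalg.solve(R, d)      # R^{-1} d without forming the inverse
    scale = d @ Rinv_d                  # d^T R^{-1} d
    w = Rinv_d / scale                  # closed-form CEM filter
    energy = 1.0 / scale                # objective value w^T R w
    y = w @ X                           # detector output for every pixel
    return w, energy, y

# Hypothetical toy data: 5 bands, 2000 pixels.
rng = np.random.default_rng(0)
X = rng.normal(loc=1.0, scale=1.0, size=(5, 2000))
d = np.array([3.0, 1.0, 2.0, 0.5, 4.0])
w, energy, y = cem_detector(X, d)
```

The filter satisfies the constraint `w @ d == 1` up to rounding, and the mean of `y ** 2` equals the returned energy, which is the quantity that CEM minimizes.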

2.2. Multi-Target CEM (MTCEM)

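CEM is designed only for single-target detection. However, in many scenarios, multiple-target detection is in greater demand. Multi-target CEM (MTCEM) was therefore proposed by adding more target signatures to the CEM constraint. We assume that $\mathbf{D} = [\mathbf{d}_1, \ldots, \mathbf{d}_p] \in \mathbb{R}^{L \times p}$ consists of $p$ target spectra selected from the $N$ pixels, where $p \ll N$ in general. Then, the MTCEM model is

$$\min_{\mathbf{w}} \ \mathbf{w}^{T}\mathbf{R}\mathbf{w} \quad \text{s.t.} \quad \mathbf{D}^{T}\mathbf{w} = \mathbf{1}, \tag{4}$$

where $\mathbf{R}$ is the correlation matrix of the data and $\mathbf{1} \in \mathbb{R}^{p}$ is a vector with all of the elements equal to 1. By similarly using the Lagrangian method, the solution of (4) can be obtained:

$$\mathbf{w}_{\mathrm{MTCEM}} = \mathbf{R}^{-1}\mathbf{D}\left(\mathbf{D}^{T}\mathbf{R}^{-1}\mathbf{D}\right)^{-1}\mathbf{1}.$$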
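The MTCEM solution can be sketched in the same way. The code below is a minimal illustration under the assumption that the target spectra are stored as the columns of an $L \times p$ array; `mtcem_detector` and the random data are hypothetical names and values.

```python
import numpy as np

def mtcem_detector(X, D):
    """MTCEM filter for data X of shape (L, N) and target matrix D of shape (L, p)."""
    n_pixels = X.shape[1]
    R = X @ X.T / n_pixels                  # correlation matrix
    Rinv_D = np.linalg.solve(R, D)          # R^{-1} D, shape (L, p)
    gram = D.T @ Rinv_D                     # D^T R^{-1} D, shape (p, p)
    ones = np.ones(D.shape[1])
    return Rinv_D @ np.linalg.solve(gram, ones)

rng = np.random.default_rng(1)
X = rng.normal(loc=1.0, size=(6, 3000))
D = rng.normal(loc=3.0, size=(6, 2))        # two hypothetical target spectra
w = mtcem_detector(X, D)
responses = D.T @ w                          # should be all ones
```

Every target spectrum produces a unit response, which is the multi-target analogue of the single constraint in CEM.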

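2.3. Clever Eye (CE) Algorithm

The performance of CEM is influenced by the selection of the data’s origin, and different origins usually lead to different results. To search for the best data origin, the clever eye (CE) algorithm was proposed. It simultaneously finds the optimal data origin (denoted $\boldsymbol{\mu}$) and the projection direction $\mathbf{w}$ that minimize the average output energy of the data projected along $\mathbf{w}$ as observed from position $\boldsymbol{\mu}$, while maintaining the output of the selected target at a constant. The CE model is formulated as [28]

$$\min_{\mathbf{w},\,\boldsymbol{\mu}} \ \mathbf{w}^{T}\mathbf{R}_{\boldsymbol{\mu}}\mathbf{w} \quad \text{s.t.} \quad \mathbf{w}^{T}(\mathbf{d}-\boldsymbol{\mu}) = 1, \tag{6}$$

where $\mathbf{w}, \boldsymbol{\mu} \in \mathbb{R}^{L}$, and

$$\mathbf{R}_{\boldsymbol{\mu}} = \frac{1}{N}\sum_{i=1}^{N}(\mathbf{x}_i-\boldsymbol{\mu})(\mathbf{x}_i-\boldsymbol{\mu})^{T} = \mathbf{K} + (\mathbf{m}-\boldsymbol{\mu})(\mathbf{m}-\boldsymbol{\mu})^{T}, \tag{7}$$

where $\mathbf{x}_i$ is the $i$-th pixel, $\mathbf{m} = \frac{1}{N}\sum_{i=1}^{N}\mathbf{x}_i$ is the mean vector of $\mathbf{X}$, and

$$\mathbf{K} = \frac{1}{N}\sum_{i=1}^{N}(\mathbf{x}_i-\mathbf{m})(\mathbf{x}_i-\mathbf{m})^{T}$$

is the covariance matrix of $\mathbf{X}$. Similarly, by introducing the Lagrangian method, the filter vector corresponding to (6) can be obtained:

$$\mathbf{w}_{\boldsymbol{\mu}} = \frac{\mathbf{R}_{\boldsymbol{\mu}}^{-1}(\mathbf{d}-\boldsymbol{\mu})}{(\mathbf{d}-\boldsymbol{\mu})^{T}\mathbf{R}_{\boldsymbol{\mu}}^{-1}(\mathbf{d}-\boldsymbol{\mu})}.$$

Then, the objective function in (6) is transformed into

$$\frac{1}{(\mathbf{d}-\boldsymbol{\mu})^{T}\mathbf{R}_{\boldsymbol{\mu}}^{-1}(\mathbf{d}-\boldsymbol{\mu})}. \tag{10}$$

Since the numerator is a constant, minimizing (10) is equivalent to maximizing the following function:

$$f(\boldsymbol{\mu}) = (\mathbf{d}-\boldsymbol{\mu})^{T}\mathbf{R}_{\boldsymbol{\mu}}^{-1}(\mathbf{d}-\boldsymbol{\mu}). \tag{11}$$

The solution of (11) is called the best data origin.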

2.4. The Analytical Solutions of CE

With rigorous theoretical analysis, it has been proven that searching for the stationary points of (11) is equivalent to solving a system of linear equations, which makes the CE algorithm computationally efficient. Meanwhile, theoretical results show that all of these stationary points are globally optimal. The results are presented as the following two theorems.

Theorem 1

([29]). To achieve the optimal target detector from the perspective of the filter output energy, the target vector, $\mathbf{d}$; the data mean vector, $\mathbf{m}$; the data covariance matrix, $\mathbf{K}$; and the data origin, $\boldsymbol{\mu}$, must satisfy the following linear equation (represented in the matrix form):

$$(\mathbf{d}-\mathbf{m})^{T}\mathbf{K}^{-1}(\mathbf{m}-\boldsymbol{\mu}) = 1,$$

which is named the clever eye equation.
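The structure described by the two theorems can be checked numerically. The sketch below assumes that the CE objective has the form $1/\big((\mathbf{d}-\boldsymbol{\mu})^{T}\mathbf{R}_{\boldsymbol{\mu}}^{-1}(\mathbf{d}-\boldsymbol{\mu})\big)$ with $\mathbf{R}_{\boldsymbol{\mu}} = \mathbf{K} + (\mathbf{m}-\boldsymbol{\mu})(\mathbf{m}-\boldsymbol{\mu})^{T}$. Under that assumption, the Sherman–Morrison identity gives the linear condition $(\mathbf{d}-\mathbf{m})^{T}\mathbf{K}^{-1}(\mathbf{m}-\boldsymbol{\mu}) = 1$ and the optimal energy $1/(1+q)$ with $q = (\mathbf{d}-\mathbf{m})^{T}\mathbf{K}^{-1}(\mathbf{d}-\mathbf{m})$. This form is derived here for illustration and may be written differently in [29]; all function names and data are hypothetical.

```python
import numpy as np

def ce_energy(X, d, mu):
    """Average output energy of the optimal filter for data origin mu."""
    Z = X - mu[:, None]
    R_mu = Z @ Z.T / X.shape[1]
    a = d - mu
    return 1.0 / (a @ np.linalg.solve(R_mu, a))

def ce_best_origin(X, d):
    """One solution mu of (d - m)^T K^{-1} (m - mu) = 1 (smallest shift from m)."""
    m = X.mean(axis=1)
    K = np.cov(X, bias=True)               # covariance, normalised by N
    v = np.linalg.solve(K, d - m)          # K^{-1} (d - m)
    return m - v / (v @ v), m, K, v

rng = np.random.default_rng(2)
X = rng.normal(size=(4, 5000)) * np.array([[1.0], [2.0], [0.5], [1.5]]) + 2.0
d = np.array([4.0, 1.0, 3.0, 0.0])

mu_star, m, K, v = ce_best_origin(X, d)
q = (d - m) @ np.linalg.solve(K, d - m)

# A second solution: move mu_star along any direction orthogonal to v.
u = rng.normal(size=4)
u -= (u @ v) / (v @ v) * v
mu_other = mu_star + 5.0 * u

e_ce = ce_energy(X, d, mu_star)
e_ce_other = ce_energy(X, d, mu_other)
e_cem = ce_energy(X, d, np.zeros(4))       # origin at zero (CEM)
e_mf = ce_energy(X, d, m)                  # origin at the mean (MF)
```

Both origins on the solution hyperplane give the same energy, which is no larger than the CEM and MF energies, in line with the claim that every solution of the linear equation is globally optimal.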

3. Unified Multi-Target CE (MTCE)

3.1. Model Formulation

Similar to extending CEM into MTCEM, it is natural to generalize CE to the multi-target case, which is termed the multi-target CE (MTCE) algorithm and is better adapted to real applications. In the following, we will focus on this algorithm and further derive its simplified version.

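More specifically, the MTCE model simultaneously seeks a data origin $\boldsymbol{\mu}$ and a projection direction $\mathbf{w}$ that minimize the variance of the data projected along $\mathbf{w}$ when observing the data “standing” at $\boldsymbol{\mu}$, while maintaining the outputs of the selected target samples at a constant. It can be formulated as follows:

$$\min_{\mathbf{w},\,\boldsymbol{\mu}} \ \mathbf{w}^{T}\mathbf{R}_{\boldsymbol{\mu}}\mathbf{w} \quad \text{s.t.} \quad (\mathbf{D}-\boldsymbol{\mu}\mathbf{1}^{T})^{T}\mathbf{w} = \mathbf{1}, \tag{15}$$

in which $\mathbf{D} \in \mathbb{R}^{L \times p}$ is the target sample matrix, $\mathbf{1} \in \mathbb{R}^{p}$ is the all-ones vector, and $\mathbf{R}_{\boldsymbol{\mu}}$ is defined in (7).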

It should be noted that MTCEM can be viewed as a special case of MTCE when the data origin is set to $\boldsymbol{\mu} = \mathbf{0}$, and MTMF can be viewed as a special case of MTCE when $\boldsymbol{\mu} = \mathbf{m}$. CE can be viewed as a special case of MTCE when $p = 1$. Furthermore, when $p = 1$ and $\boldsymbol{\mu} = \mathbf{0}$, it reduces to the CEM model. When $p = 1$ and $\boldsymbol{\mu} = \mathbf{m}$, it reduces to the MF model. Thus, the proposed MTCE model provides a more unified framework that incorporates many existing models as special cases. These relations are summarized in Table 2.

Table 2.

Relation between the aforementioned algorithms and the generalized MTCE one.

3.2. Model Simplification

The Lagrangian function of (15) is defined as

where is the Lagrangian multiplier. By firstly fixing , the gradient leads to

where we use the subscript to indicate that is a function of . Substituting (17) into the constraint in (15), it follows that

The solution of (21) is the best data origin (denoted ) for the MTCE model.
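For a fixed data origin, the inner minimization over the filter has a closed form. The sketch below assumes that the reduced objective takes the form $\mathbf{1}^{T}\big[(\mathbf{D}-\boldsymbol{\mu}\mathbf{1}^{T})^{T}\mathbf{R}_{\boldsymbol{\mu}}^{-1}(\mathbf{D}-\boldsymbol{\mu}\mathbf{1}^{T})\big]^{-1}\mathbf{1}$, which is what the standard Lagrangian argument yields; whether this matches the exact notation of (21) is an assumption. The function name and data are hypothetical.

```python
import numpy as np

def detector_at_origin(X, D, mu):
    """Optimal filter and energy for a fixed origin mu (assumed reduced objective)."""
    Z = X - mu[:, None]
    R_mu = Z @ Z.T / X.shape[1]             # second moment about mu
    D_mu = D - mu[:, None]                  # targets seen from mu
    Rinv_D = np.linalg.solve(R_mu, D_mu)
    gram = D_mu.T @ Rinv_D                  # (p, p) matrix to be inverted
    coeff = np.linalg.solve(gram, np.ones(D.shape[1]))
    w = Rinv_D @ coeff                      # filter for this origin
    energy = coeff.sum()                    # 1^T gram^{-1} 1
    return w, energy, R_mu, D_mu

rng = np.random.default_rng(6)
X = rng.normal(size=(5, 3000)) + 1.0
D = rng.normal(loc=4.0, size=(5, 2))
mu = rng.normal(size=5)                     # an arbitrary, hypothetical origin
w, energy, R_mu, D_mu = detector_at_origin(X, D, mu)

# With a single target the (p, p) inverse collapses to a scalar reciprocal.
w1, e1, R1, D1 = detector_at_origin(X, D[:, :1], mu)
a = D[:, 0] - mu
e1_scalar = 1.0 / (a @ np.linalg.solve(R1, a))
```

Setting `mu` to the zero vector or to the data mean in this function would give the MTCEM and MTMF detectors, respectively.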

Motivated by the theoretical results in the CE model, as presented in Theorems 1 and 2, we were inspired to consider the following interesting question: as MTCE is a more generalized version of CE, do the solutions of the MTCE model also have a similar structure to that of the CE algorithm? In this paper, we focus on this question and provide a positive answer to it.

Note that the MTCE model is more complicated than the CE model: when extending the single-target case to the multiple-target case, the reciprocal in (10) turns into the inverse of a square matrix in (21). Thus, the equivalent transformation from (10) to (11) does not carry over to (21). As will be seen in the later analysis in (A29)–(A31), dealing with the inverse of a square matrix of the form in (21) is a key step in deducing our theoretical results.

4. Theoretical Analysis

In this section, inspired by the optimization model of MTCE, i.e., (21), we will investigate a class of non-convex problems taking the following more generalized form:

where is the variable vector to be optimized, is a column vector with all elements equal to 1, is an matrix. Some assumptions on these variables are made as follows: is column full rank, indicating that . takes the form of , where is assumed to be symmetric positive definite, and is known. It is easily checked that is invertible. We have some remarks concerning the model (22):

In this way, (22) is a more generalized model, of which both MTCE and CE are special cases, and these assumptions on the variables also fit the practical target detection task. Thus, in the following analysis, we focus on (22). After some algebraic derivations, we present the following two main theorems.

4.1. The Stationary Points of Model (22)

Theorem 3.

Regarding the stationary points of model (22) where and , the solution must satisfy the following linear equation:

The proof is given in Appendix A.3. Based on Theorem 3, we successfully transform the non-convex model (22) into the solutions of one system of linear Equations (23), which is similar to the single target case presented in Theorem 1 in [29]. One can refer to Section IV of [29] to intuitively understand the geometrical explanation of such a linear system. Our generalized MTCE method shares a similar structure. In detail, denoting and , it holds that and . Then, the solutions of can be given by . Because here there are n variables and only one equation, (23) is an under-determined system. Therefore, it has an infinite number of solutions. The following theorem further shows that all these solutions are globally optimal.

4.2. Global Optimality

Theorem 4.

All the stationary points of model (22) correspond to its globally optimal solutions, and the corresponding objective function is equal to

where is a constant irrelevant to .

The proof is given in Appendix A.4. Combining Theorems 3 with 4, we obtain the analytical solutions of the non-convex optimization model (22). In addition, we have some remarks concerning the above two theorems:

- In addition, similar to (13), the MTCE detector can be rewritten as in (27), which expresses it in terms of the MTMF detector. The detailed derivations of (27) are given in Appendix A.5.

In this way, instead of adopting an iterative optimization algorithm such as gradient descent, we can obtain the best data origin of the MTCE model by merely solving (25), which is computationally cheap. In addition, though there are infinitely many solutions for the best data origin, it is sufficient to select only one of them, based on the conclusion in Theorem 4. Then, we can obtain the MTCE detector and the corresponding target detection result.

Finally, the pseudo-code of MTCE is summarized in Algorithm 1.

Algorithm 1 Multi-target clever eye (MTCE)

Require: the dataset $\mathbf{X}$, and the target sample matrix $\mathbf{D}$.
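One possible end-to-end realization of this procedure is sketched below. It does not reproduce the steps of Algorithm 1 or the exact form of (25). Instead, it relies on a characterization derived independently from the MTCE model, which should be read as an assumption: with $\mathbf{C} = \mathbf{D} - \mathbf{m}\mathbf{1}^{T}$, MTMF filter $\mathbf{w}_{\mathrm{MF}}$ and MTMF energy $E_{\mathrm{MF}}$, any origin satisfying $(\mathbf{m}-\boldsymbol{\mu})^{T}\mathbf{w}_{\mathrm{MF}} = E_{\mathrm{MF}}$ attains the energy $E_{\mathrm{MF}}/(1+E_{\mathrm{MF}})$ with filter $\mathbf{w}_{\mathrm{MF}}/(1+E_{\mathrm{MF}})$. Function names and data are hypothetical.

```python
import numpy as np

def energy_at_origin(X, D, mu):
    """Reduced objective 1^T [D_mu^T R_mu^{-1} D_mu]^{-1} 1 for a fixed origin."""
    Z = X - mu[:, None]
    R_mu = Z @ Z.T / X.shape[1]
    D_mu = D - mu[:, None]
    gram = D_mu.T @ np.linalg.solve(R_mu, D_mu)
    return np.linalg.solve(gram, np.ones(D.shape[1])).sum()

def mtce(X, D):
    """Sketch of MTCE: one best origin, the filter, and the detection output."""
    m = X.mean(axis=1)
    K = np.cov(X, bias=True)                      # covariance, normalised by N
    C = D - m[:, None]                            # centred targets
    Kinv_C = np.linalg.solve(K, C)
    coeff = np.linalg.solve(C.T @ Kinv_C, np.ones(D.shape[1]))
    w_mf = Kinv_C @ coeff                         # MTMF filter
    e_mf = coeff.sum()                            # MTMF energy
    mu = m - (e_mf / (w_mf @ w_mf)) * w_mf        # one solution of the linear condition
    w = w_mf / (1.0 + e_mf)                       # MTCE filter, parallel to MTMF
    y = w @ (X - mu[:, None])                     # output observed from mu
    return mu, w, y, e_mf

rng = np.random.default_rng(3)
X = rng.normal(size=(5, 4000)) + 1.0
D = rng.normal(loc=4.0, size=(5, 3))              # three hypothetical targets
mu, w, y, e_mf = mtce(X, D)
e_mtce = np.mean(y ** 2)
e_mtcem = energy_at_origin(X, D, np.zeros(5))     # origin at zero
e_mtmf = energy_at_origin(X, D, X.mean(axis=1))   # origin at the mean
e_random = [energy_at_origin(X, D, rng.normal(size=5)) for _ in range(20)]
```

In this sketch no iteration is needed: the origin is obtained from one linear condition, and the resulting energy is below that of the zero origin, the mean origin, and randomly drawn origins.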

4.3. Computational Complexity Analysis

In this part, we will analyze the computational complexity of the proposed MTCE algorithm. Based on the framework in Algorithm 1, the complexity mainly includes the following parts:

- compute : .

- compute and : , .

- compute : .

- compute and : , .

- obtain the best data origin : .

- compute : .

- compute : .

Eventually, the computational complexity of MTCE is summarized as shown in Table 3. Similarly, the computational complexity of MTCEM and MTMF can also be analyzed, which is listed in Table 3 for comparison. In real application, since and , the main computational complexity of all three algorithms is to calculate the correlation matrix, which is given by , suggesting that the complexity of these three algorithms is of a similar magnitude. This indicates that although the MTCE model is non-convex and more complicated, compared to that of MTCEM and MTMF, using the above theoretical analysis, we can still obtain its solutions in a computationally cheap way. In addition, from the perspective of average output energy, the result of MTCE at the optimal data origin is also the lowest, which is theoretically superior to that of MTCEM and MTMF. To conclude, the MTCE algorithm will be preferred in terms of both its theoretical optimality and its lack of high computational complexity.

Table 3.

Computational complexity comparison of MTCE, MTCEM, and MTMF, where L, N, and p represent the numbers of bands, total pixels, and target samples, respectively.

5. Experimental Results

In this section, both simulated and real hyperspectral datasets are used to evaluate the performance of the proposed MTCE algorithm. The methods selected for comparison fall into two types: (1) Existing multi-target algorithms, namely multiple-target CEM (MTCEM) [30], Sum CEM (SCEM) [30], Winner-Take-All CEM (WTACEM) [30], and Revised MTCEM (RMTCEM) [31]. SCEM first computes the CEM result for each single target pixel and then sums them to obtain the final multi-target result, whereas WTACEM selects the maximum value at each pixel as the final one. RMTCEM is a revised version of MTCEM in which the target pixels to be detected are excluded when calculating the correlation matrix of the data. (2) Linear detectors that differ only in the data origin. The main differences between MTCEM, MTMF, and MTCE mentioned above lie in the selection of the data’s origin, so we also investigate the impact of different data origins on target detection performance. The data origins are set as follows:

- $\boldsymbol{\mu} = \mathbf{0}$, which corresponds to the MTCEM algorithm;

- $\boldsymbol{\mu} = \mathbf{m}$ (the data mean vector), which corresponds to the MTMF algorithm;

- two randomly generated data origins. For convenience, the two results are denoted MTR1 and MTR2;

- the best data origin, which corresponds to our proposed MTCE algorithm.

In total, these algorithms are compared with the proposed MTCE algorithm. Furthermore, to quantitatively evaluate target detection performance, several widely used metrics, including the area under the receiver operating characteristic (ROC) curve (AUC), overall accuracy (OA), F-score, and Cohen’s kappa coefficient, are calculated and compared. The AUC metric can be directly computed from the target detection output, while the other three metrics are calculated from a binary classification of the output. Here, a threshold is used to generate the binary result, where the optimal threshold is determined from the ROC curve using the Youden index [32]. The confusion matrix is defined in Table 4.

Table 4.

Illustration of the confusion matrix of a binary classification.
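The threshold selection step can be illustrated as follows. This is a minimal sketch assuming the usual definition of the Youden index, $J = \mathrm{TPR} - \mathrm{FPR}$, maximized over candidate thresholds; the function name `youden_threshold` and the toy scores are hypothetical.

```python
import numpy as np

def youden_threshold(scores, labels):
    """Return the threshold maximising J = TPR - FPR, together with J."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    n_pos = labels.sum()
    n_neg = (~labels).sum()
    best_t, best_j = None, -np.inf
    for t in np.unique(scores):              # every observed score is a candidate
        predicted = scores >= t
        tpr = (predicted & labels).sum() / n_pos
        fpr = (predicted & ~labels).sum() / n_neg
        if tpr - fpr > best_j:               # keep the first maximiser
            best_t, best_j = t, tpr - fpr
    return best_t, best_j

scores = [0.1, 0.2, 0.35, 0.4, 0.8, 0.9]     # hypothetical detector outputs
labels = [0, 0, 0, 1, 1, 1]                  # 1 marks a target pixel
threshold, j_value = youden_threshold(scores, labels)
binary_map = np.asarray(scores) >= threshold
```

For these perfectly separable toy scores, the selected threshold is the smallest target score, and the binary map reproduces the labels.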

Then, the OA index is computed by

The F-score metric (denoted ϝ) is calculated by

where and . is the weight of precision in the harmonic mean and is set to in our experiments. Denote

The Kappa coefficient (denoted $\kappa$) can be calculated by

The higher the values of the four quantitative metrics, the better the target detection result. Note that in the following experiments, the number of selected target pixels is usually much smaller than the number of reference background pixels. For a fair comparison of the quantitative metrics, we randomly select background pixels whose number is three times that of the reference target pixels. Then, the OA, F-score, and Kappa coefficient are computed in our experiments. In addition, since the proposed MTCE algorithm minimizes the average output energy, the energy of each algorithm, denoted E, is also recorded for an intuitive comparison. For the linear algorithms, E is obtained by evaluating the objective function in (21) at the corresponding data origin. For the three non-linear algorithms of the first type, namely SCEM, WTACEM, and RMTCEM, E is not computed, because they incorporate other strategies for target detection, so the objective value alone does not determine the final result.
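The three threshold-based metrics can be computed from the four entries of a binary confusion matrix. The sketch below assumes the standard definitions of overall accuracy, the $F_\beta$ score, and Cohen’s kappa; the argument names `tp`, `fp`, `fn`, `tn`, the default `beta=1.0`, and the example counts are hypothetical and are not taken from Table 4 or from the experimental settings.

```python
def binary_metrics(tp, fp, fn, tn, beta=1.0):
    """Overall accuracy, F-beta score, and Cohen's kappa from confusion counts."""
    total = tp + fp + fn + tn
    oa = (tp + tn) / total                       # fraction of correct decisions
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f_score = (1 + beta ** 2) * precision * recall / (beta ** 2 * precision + recall)
    # Agreement expected by chance, from the row and column marginals.
    expected = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / total ** 2
    kappa = (oa - expected) / (1 - expected)
    return oa, f_score, kappa

# Hypothetical counts with three times as many background as target pixels.
oa, f_score, kappa = binary_metrics(tp=40, fp=10, fn=10, tn=140)
```

With these counts, the target class has 50 reference pixels and the background class has 150, mirroring the 1:3 sampling ratio described above.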

All the algorithms are implemented in MATLAB R2019b on a laptop with 12 GB RAM and an Intel(R) Core(TM) i7-10510U CPU @ 1.80 GHz. The source code of the MTCE implementation can be downloaded from https://www.researchgate.net/publication/391798644_MTCE_code (accessed on 28 May 2025).

5.1. Simulation Experiment

In this part, to evaluate the performance of the MTCE model and its analytical solutions (25) in target detection, the simulated data is generated as follows.

(1) A background image with a size of is firstly generated, which follows a three-dimensional normal distribution. More specifically, the mean vector is given by , and the covariance matrix of the data is set to

(2) We add two different small target images with the same size of into the background data. Correspondingly, the groundtruth image for the targets is shown in Figure 1b. The target vector in the upper left of Figure 1b is set to , and that in the lower right is set to , respectively. Then, Gaussian white noise with a signal-to-noise ratio (SNR) of 10 dB is added to the two target images.
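The two generation steps above can be sketched as follows. Every numerical value in this example (image size, patch size, mean vector, covariance matrix, target spectra, and patch positions) is a hypothetical placeholder rather than the setting used in the experiment, and the target patches here replace the background pixels, which is also an assumption.

```python
import numpy as np

def add_white_noise(signal, snr_db, rng):
    """Add zero-mean Gaussian noise so that signal power / noise power matches snr_db."""
    signal_power = np.mean(signal ** 2)
    noise_power = signal_power / (10.0 ** (snr_db / 10.0))
    return signal + rng.normal(scale=np.sqrt(noise_power), size=signal.shape)

def simulate_scene(rng, height=100, width=100, patch=5, snr_db=10.0):
    """Three-band Gaussian background with two small noisy target patches."""
    mean = np.array([1.0, 1.0, 1.0])              # hypothetical background mean
    cov = np.diag([1.0, 1.0, 0.01])               # hypothetical, nearly flat background
    cube = rng.multivariate_normal(mean, cov, size=(height, width))
    truth = np.zeros((height, width), dtype=int)
    targets = {1: ((10, 10), np.array([1.0, 1.0, 3.0])),   # upper-left target
               2: ((80, 80), np.array([2.0, 0.0, 3.5]))}   # lower-right target
    for label, ((row, col), spectrum) in targets.items():
        clean = np.tile(spectrum, (patch, patch, 1))
        cube[row:row + patch, col:col + patch] = add_white_noise(clean, snr_db, rng)
        truth[row:row + patch, col:col + patch] = label
    return cube, truth

rng = np.random.default_rng(4)
cube, truth = simulate_scene(rng)

# Check the noise level on a long constant signal.
reference = np.ones(200000)
noisy = add_white_noise(reference, 10.0, rng)
measured_snr_db = 10.0 * np.log10(1.0 / np.mean((noisy - reference) ** 2))
```

The small variance in the third band makes the background cloud flat, so targets displaced along that band sit above the background in spectral space.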

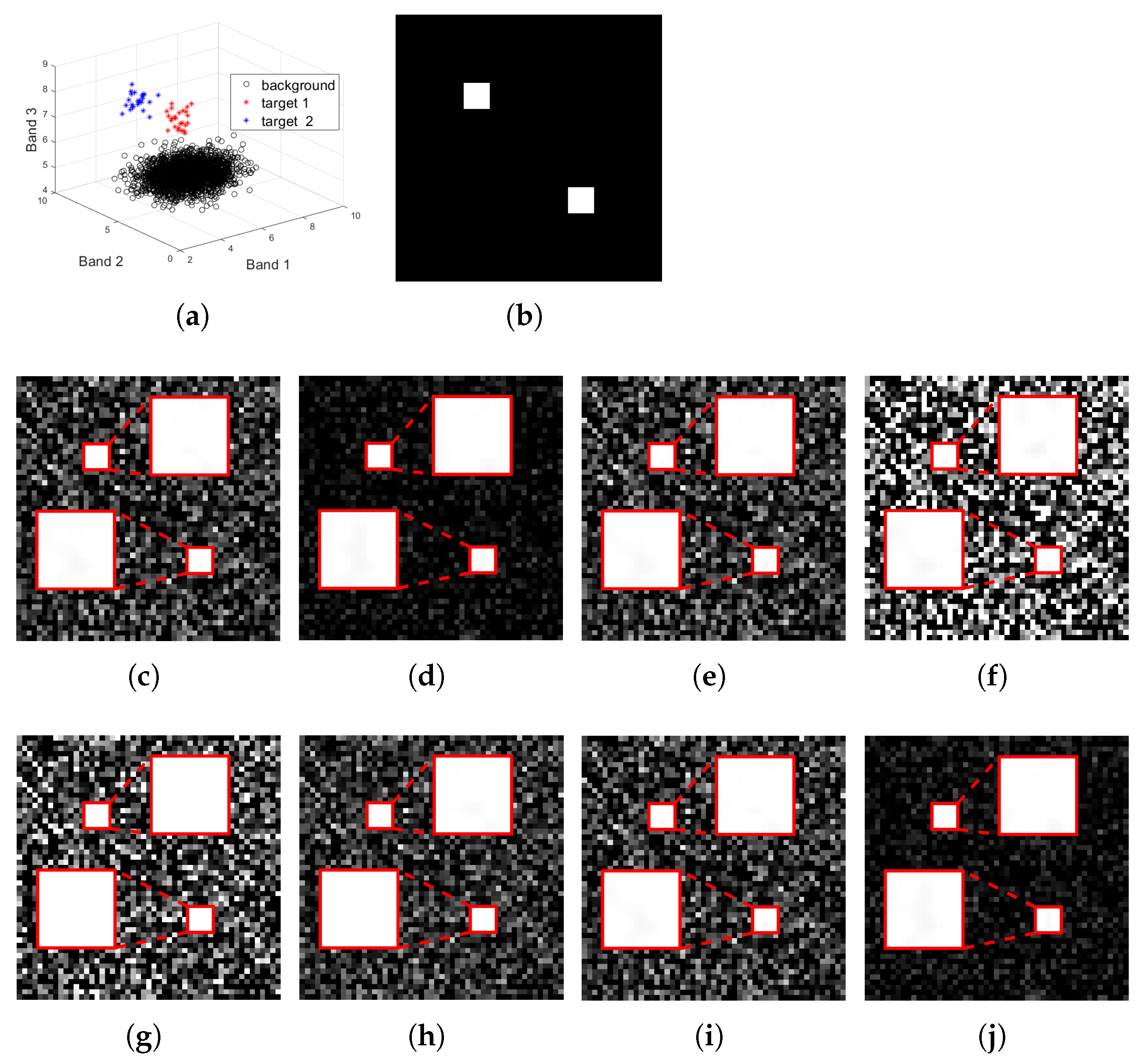

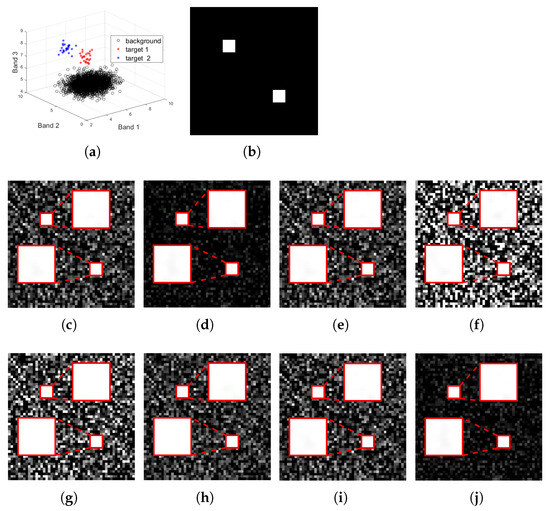

Figure 1.

Target detection results for the simulated data with a size of : (a): the distribution of the simulation image in three-dimensional spectral space, where the background pixels are marked in a black circle, and the targets are marked with red and blue asterisks; (b): the groundtruth image for the target and ; the detection result of (c) MTCEM where , (d) MTMF where , (e) MTR1 where , and (f) MTR2 where , (g) SCEM, (h) WTACEM, and (i) RMTCEM, respectively; (j): the detection result of MTCE ().

By reshaping the cubic remote sensing data with a size of into a size of , we obtain the corresponding matrix data . The distribution of the simulated dataset in the three-dimensional feature space is shown in Figure 1a (where the background points are marked in black circles, and the targets are marked in red and blue asterisks, respectively). It can be seen that the distribution of the background pixels is relatively flat, and the two classes of the targets are located in the top of the background. The target sample matrix is set to the groundtruth . By solving (25), we can obtain the best data origin denoted , and correspondingly, the detection result at can be obtained. Note that even though there are infinite feasible solutions for using Theorem 3, it is enough to select one of them as the final representative result, since their results are also the same when using Theorem 4.
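The reshaping step can be written in a few lines. The sketch below assumes a row-major cube of shape (height, width, bands) and produces a bands-by-pixels matrix; the tiny dimensions are hypothetical and chosen only to make the index mapping easy to follow.

```python
import numpy as np

height, width, bands = 4, 6, 3                   # hypothetical small cube
cube = np.arange(height * width * bands, dtype=float).reshape(height, width, bands)

# Flatten the two spatial axes, then transpose so that each column is one pixel.
X = cube.reshape(height * width, bands).T        # shape (bands, pixels)

# Pixel (row, col) of the image becomes column row * width + col of X.
row, col = 2, 5
pixel_index = row * width + col

# A per-pixel result (here simply band 0) is mapped back to image form.
score_image = X[0].reshape(height, width)
```

The same inverse reshape turns a detector output of length N back into an image for display.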

For comparison, we also calculate and obtain the results of target detection at different data origins , as mentioned above. The results for SCEM, WTACEM, and RMTCEM can also be obtained. Their target detection results are shown in Figure 1. Some comments and comparisons are made as follows:

- Although MTCEM () can also highlight the target of interest, the separability between them is weak;

- When randomly selecting the data origin, as shown in Figure 1e,f, the target detection performance is good in some cases, while it is unsatisfactory in the others;

- The performance of these non-linear algorithms, including SCEM, WTACEM, and RMTCEM, is also inferior to that of the proposed MTCE one;

- It is difficult for us to determine which is better between the results obtained by MTMF () and MTCE (), whose results are visually very similar. Such a phenomenon can be theoretically explained. Using some derivations (See Appendix A.5 for details), it can be concluded that the projection direction of the MTCE detector is parallel to that of MTMF, up to an extra scaling factor on the vector length. Thus, their target detection results are also the same, when the outputs of the detection result are normalized into the range . However, they still differ in the vector length. Thus, from the perspective of the average output energy , the MTCE value will always be the lowest based on the conclusion in Theorem 4 and thus is always superior to that of MTMF.
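The last observation can be illustrated numerically. If two detector outputs differ only by a positive scaling and a constant offset, they coincide after min-max normalization. The sketch below uses random numbers in place of real detector outputs, and the scale and offset values are hypothetical.

```python
import numpy as np

def min_max_normalize(y):
    """Map the values of y linearly onto the interval [0, 1]."""
    return (y - y.min()) / (y.max() - y.min())

rng = np.random.default_rng(5)
y_first = rng.normal(size=1000)                  # stand-in for one detector output
scale, shift = 0.37, -1.2                        # hypothetical positive scale and offset
y_second = scale * y_first + shift               # output of a parallel detector

same_map = np.allclose(min_max_normalize(y_first), min_max_normalize(y_second))
same_ranking = np.array_equal(np.argsort(y_first), np.argsort(y_second))
```

Because the pixel ranking is unchanged, ranking-based metrics such as AUC are also identical for the two outputs, while their output energies generally differ.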

To quantitatively compare and evaluate the target detection performance in different settings, we turn to calculating the quantitative metrics defined above for comparison. Correspondingly, the results are tabulated in Table 5. It can be seen that concerning the AUC, OA, F-score, and Kappa coefficient metrics, the results of the MTCE and MTMF detectors are superior to those of the other selections for data origin. In addition, the average output energy of MTCE is smaller than that of MTMF, consistent with the above theoretical analysis. To conclude, the experimental results indicate that the MTCE detector with the best data origin obtains the best target detection performance and the lowest output energy, which is consistent with the developed theorems and demonstrates its advantages over existing multi-target detection methods.

Table 5.

Quantitative comparison of the average output energy (denoted ) and four quantitative metrics, including AUC, OA, F-score (denoted ), and Kappa coefficient (denoted ), of different algorithms (including MTCEM, MTMF, MTR1, MTR2, and MTCE) for the simulated data. “-” means that their energy metric is not computed for a fair comparison. Bold numbers indicate the highest values in each row.

5.2. Hyperspectral Data 1: Xi’an Data

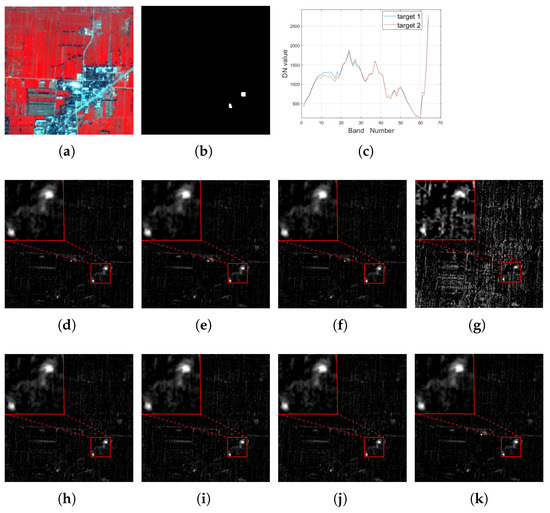

Here, a hyperspectral image is used to conduct an experiment concerning a target detection task. It was collected by the Operational Modular Imaging Spectrometer-II, which is a hyperspectral imaging system developed by the Shanghai Institute of Technical Physics, Chinese Academy of Sciences, and was acquired in the city of Xi’an, China in 2003 (named Xi’an data). It contains 64 bands from 460 nm to 10,250 nm in 3.6 m spatial resolution and each band has pixels. In this area, there are two small man-made targets, and the groundtruth is shown in Figure 2b. We visually inspect all the bands and find that there is no clear distinction between the targets and the background in any one band. Due to limitations on space, we only show the false color composite image in Figure 2a. It can be seen that it is very difficult to distinguish the target of interest from the background pixels.

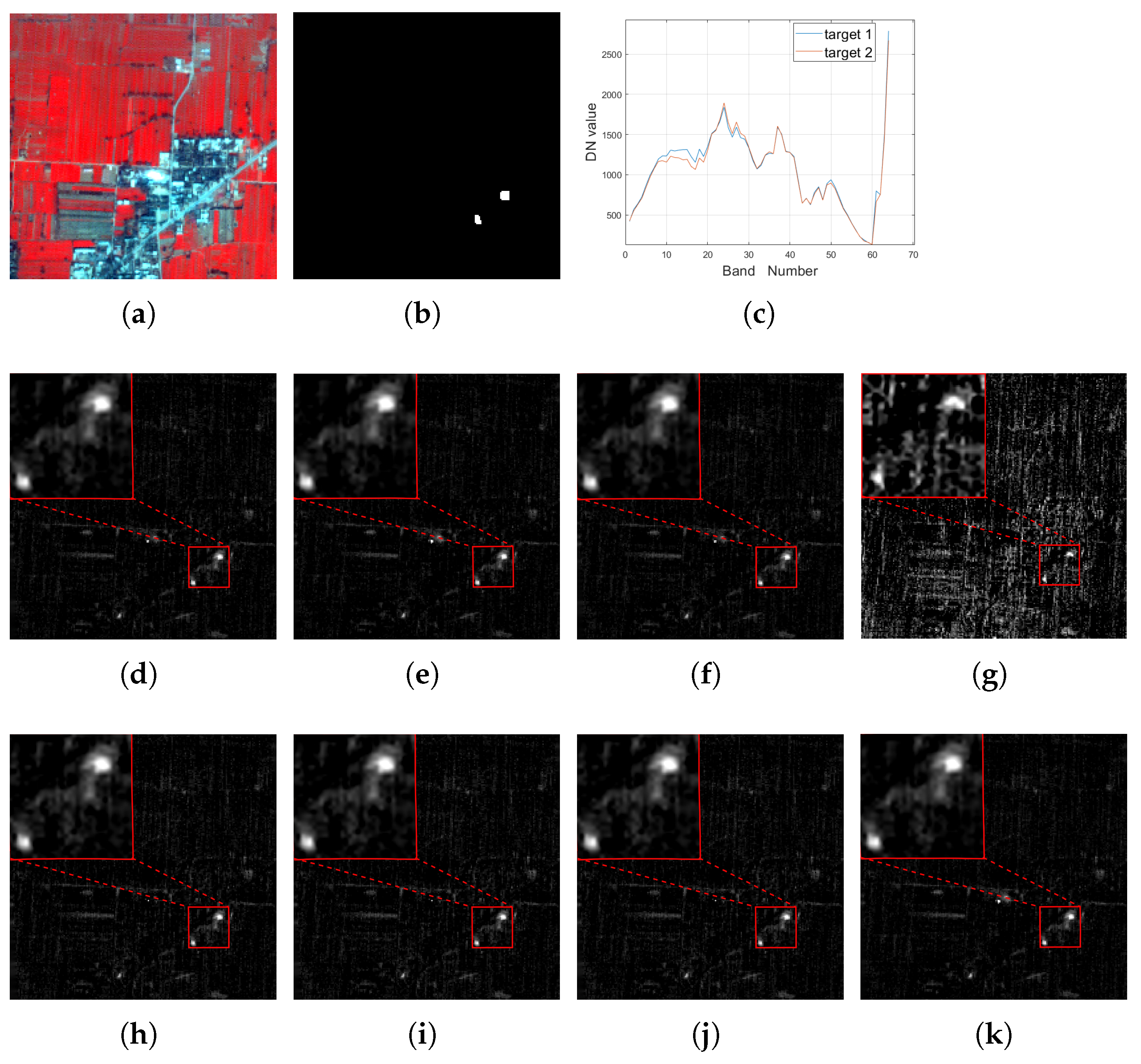

Figure 2.

For the Xi’an data, (a): the false color image (R: 750.6 nm, G: 559.6 nm, B: 510.1 nm); (b) the groundtruth image for the target of interest; (c): the spectrum curve for the target of interest; the detection result of (d) MTCEM where , (e) MTMF where , (f) MTR1 where , and (g) MTR2 where using (h) SCEM, (i) WTACEM, and (j) RMTCEM, respectively. (k): The detection result of MTCE ().

The target sample vectors are selected as the two representative pixels, which have a row index of 137 and column indexes of 157 and 159, respectively. The spectra of them are plotted in Figure 2c. By solving (25), we can obtain the best data origin denoted . Similar to the simulated part, the target detection results of these compared algorithms are also displayed for comparison, whose results are shown in Figure 2d–k. In addition, the metric comparison results are also listed in Table 6.

Table 6.

Quantitative comparison of the average output energy (denoted ) and four quantitative metrics, including AUC, OA, F-score (denoted ), and Kappa coefficient (denoted ), of different algorithms (including MTCEM, MTMF, MTR1, MTR2, and MTCE) for the hyperspectral Xian data. “-” means that their energy metric is not computed for a fair comparison. Bold numbers indicate the highest values in each row.

It can be visually seen that these compared methods can also efficiently extract these targets of interest, except for MTR2, whose data origin selection is inappropriate. The satisfactory performance on these compared methods is mainly due to the fact that here, we select small objects as our targets of interest, meaning they only account for a small percentage of all pixels. When the number of bands of the data is relatively large, the separability between the target and background pixels is sharp, and these compared algorithms can also achieve good performance, as shown by the metrics results in Table 6. In addition, by introducing the data origin and selecting the best one , the performance can be further improved. Note that some metrics of MTMF, such as OA, F-score and Kappa coefficient, are inferior to that of MTCE. In this experiment, this can be attributed to the random selection of background pixels when computing these metrics. However, their AUC values are still the same, which means that they can be treated the same in theory. Similarly, the energy of MTCE is also lower than that of MTMF. These results further confirm the superiority of the MTCE detector derived from the developed theorems concerning both target detection performance and the lowest average output energy.

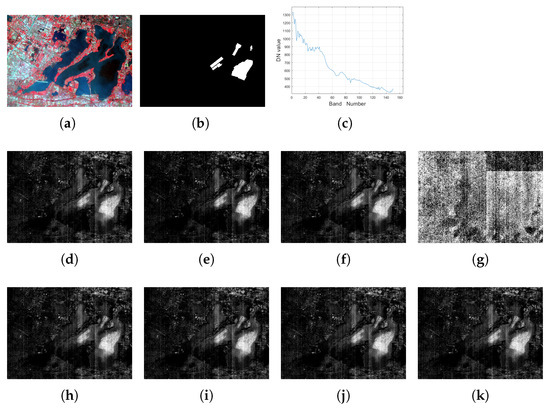

5.3. Hyperspectral Data 2: YCLake Data

In this part, we conduct an experiment on another hyperspectral dataset, termed the YCLake dataset for convenience. It contains 150 bands whose wavelengths range from 390.32 nm to 1029.18 nm in 30 m spatial resolution, and each band has pixels, which were acquired by Gaofen-5 (GF5) satellite at Yangcheng lake of Jiangsu Province, China. The corresponding false color image is shown in Figure 3a. With the development of fishery and aquaculture in lakes, pen culture is mainly conducted, which makes it the most important form of aquaculture in shallow lakes in China. In this experiment, we will select the pen culture as the target to be detected, and the groundtruth image for the pen culture is shown in Figure 3b.

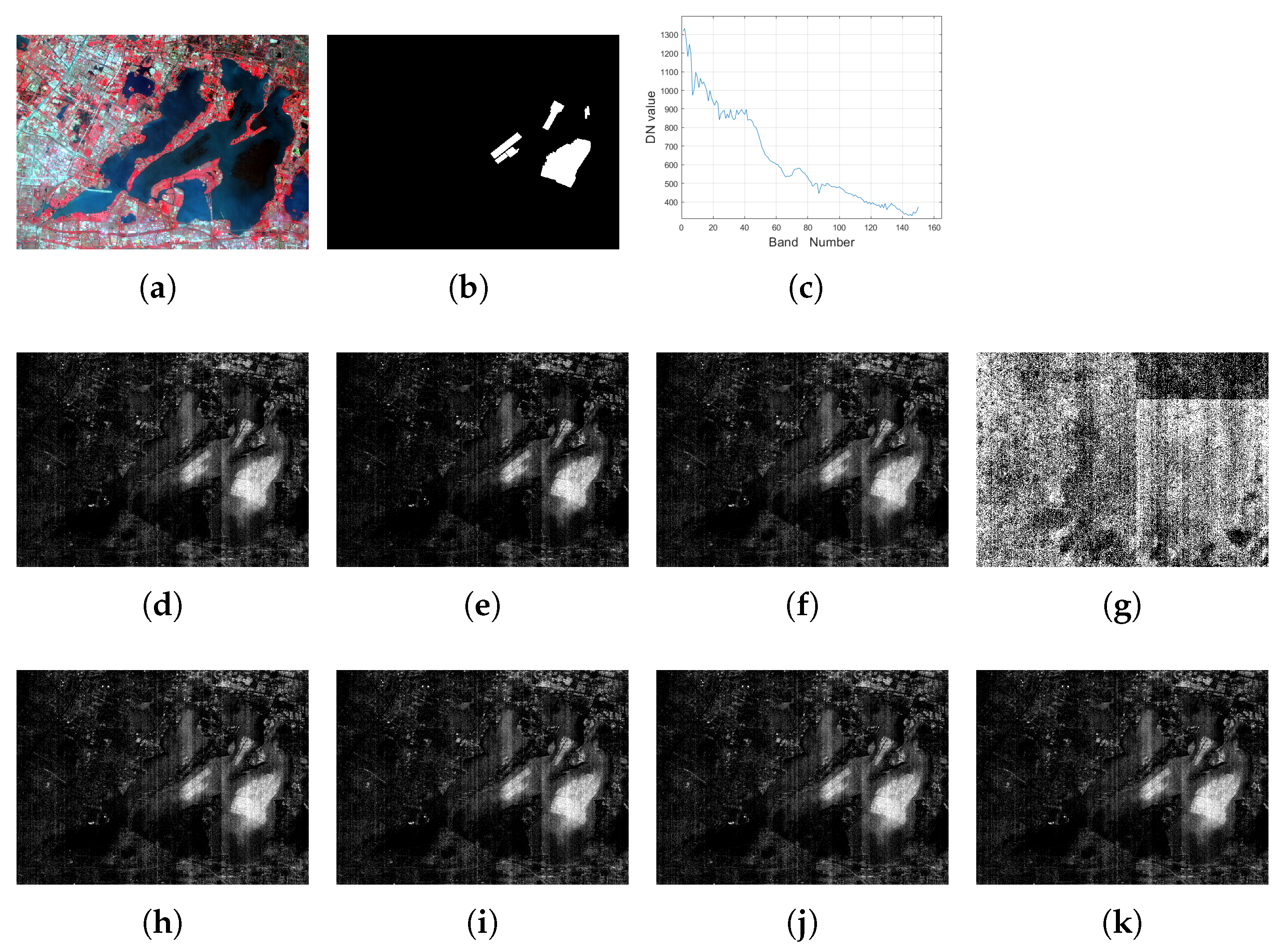

Figure 3.

For the YCLake data, (a): the false color image (R: 860.88 nm, G: 651.31 nm, B: 548.66 nm); (b) the groundtruth image for the target of interest; (c): the spectrum curve for the target of interest; the detection result with setting of (d) MTCEM where , (e) MTMF where , (f) MTR1 where , and (g) MTR2 where , (h) SCEM, (i) WTACEM, and (j) RMTCEM, respectively. (k): The detection result of MTCE ().

We consider two different settings for the target detection task: (1) We set $p$ to 1, which reduces to the case of single-target detection. We want to show that the proposed unified multi-target detection algorithm is still effective in such a situation. The selected spectrum of this single target is shown in Figure 3c; (2) For multi-target detection, unlike the previous two experiments, where true or specified target pixels are selected, here we randomly choose ten target pixels ($p = 10$) based on the groundtruth shown in Figure 3b and perform the target detection task.

The comparison results for the case are shown in Figure 3. To save space, the results for are not displayed in this part. Correspondingly, the metric comparison results for these two cases are listed in Table 7. Note again that, due to the randomness in calculating the OA, F-score, and Kappa coefficient, the results of MTMF may be larger than those of MTCE, such as in the setting of . However, the two detectors can be viewed as equivalent in terms of the target detection result, and AUC is a more reasonable metric with which to assess this consistency.
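The contrast between AUC and the thresholded metrics can be illustrated with a minimal sketch. It assumes binary groundtruth labels and a fixed decision threshold of 0.5; the function names `auc_score` and `thresholded_metrics` and the toy scores are hypothetical and not taken from the paper. It shows only one mechanism, namely that a positive rescaling of the detector output leaves the rank-based AUC unchanged while OA, F-score, and Kappa can change; it does not reproduce the random background selection used in the experiments.

```python
import numpy as np

def auc_score(scores, labels):
    """Rank-based AUC (Mann-Whitney statistic); needs no threshold."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    order = np.argsort(scores, kind="mergesort")
    ranks = np.empty(scores.size, dtype=float)
    ranks[order] = np.arange(1, scores.size + 1)
    for value in np.unique(scores):          # tied scores share their mean rank
        tie = scores == value
        ranks[tie] = ranks[tie].mean()
    n_pos = int(labels.sum())
    n_neg = labels.size - n_pos
    return float((ranks[labels].sum() - n_pos * (n_pos + 1) / 2) / (n_pos * n_neg))

def thresholded_metrics(scores, labels, threshold=0.5):
    """OA, F-score, and Cohen's Kappa at a fixed (assumed) threshold."""
    pred = np.asarray(scores, dtype=float) >= threshold
    labels = np.asarray(labels, dtype=bool)
    tp = int(np.sum(pred & labels))
    fp = int(np.sum(pred & ~labels))
    fn = int(np.sum(~pred & labels))
    tn = int(np.sum(~pred & ~labels))
    n = tp + fp + fn + tn
    oa = (tp + tn) / n
    f_score = 2 * tp / (2 * tp + fp + fn) if (2 * tp + fp + fn) else 0.0
    # Chance agreement for Kappa from the marginal totals.
    pe = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / n ** 2
    kappa = (oa - pe) / (1 - pe) if pe < 1 else 0.0
    return oa, f_score, kappa

if __name__ == "__main__":
    labels = np.array([0, 0, 1, 0, 1, 0])
    scores = np.array([0.1, 0.2, 0.9, 0.4, 0.8, 0.3])
    for name, s in (("original", scores), ("rescaled", 0.5 * scores)):
        print(name, auc_score(s, labels), thresholded_metrics(s, labels))
```

Because AUC depends only on the ordering of the outputs, two detectors whose outputs differ by a positive scale factor receive the same AUC even when their thresholded metrics disagree.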

Table 7.

The quantitative comparison of the average output energy (denoted E) and four quantitative metrics, including AUC, OA, F-score (denoted ), and Kappa coefficient (denoted ), of different algorithms (including MTCEM, MTMF, MTR1, MTR2, and MTCE) for the hyperspectral YCLake data. “-” means that their energy metric is not computed for a fair comparison. Bold numbers indicate the highest values in each row.

Meanwhile, the average output energy of MTCE is always lower than that of MTMF in all conducted experiments. Similar to the conclusion drawn in the previous parts, the proposed MTCE algorithm is still superior to these compared methods in both its target detection performance and lowest average output energy.
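To make the notion of average output energy concrete, the following is a minimal sketch under stated assumptions, not the authors' implementation. It assumes the usual constrained energy-minimizing form of a multi-target detector: for a given data origin, the filter minimizes the mean squared output of the origin-shifted data subject to unit response on every origin-shifted target. Setting the origin to zero then corresponds to an MTCEM-style detector and setting it to the data mean to an MTMF-style detector. The function names and array layout (bands by pixels) are hypothetical, and the best data origin of MTCE is not computed here because its linear equation is not restated in this section.

```python
import numpy as np

def multi_target_detector(X, T, origin):
    """Energy-minimizing filter for a given data origin (assumed form).

    X: (L, N) data matrix, T: (L, p) target spectra, origin: (L,) vector.
    Minimizes mean((w^T (x_i - origin))^2) s.t. w^T (t_j - origin) = 1.
    """
    Xc = X - origin[:, None]
    Tc = T - origin[:, None]
    R = Xc @ Xc.T / X.shape[1]                 # second-moment matrix about the origin
    R_inv_T = np.linalg.solve(R, Tc)           # R^{-1} Tc without an explicit inverse
    coeff = np.linalg.solve(Tc.T @ R_inv_T, np.ones(T.shape[1]))
    return R_inv_T @ coeff

def average_output_energy(w, X, origin):
    """Mean squared detector output over all pixels, relative to the origin."""
    y = w @ (X - origin[:, None])
    return float(np.mean(y ** 2))

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X = rng.normal(size=(8, 500)) + 2.0        # synthetic data, illustration only
    T = rng.normal(size=(8, 3)) + 2.0          # synthetic target spectra
    for name, origin in (("origin = 0", np.zeros(8)), ("origin = mean", X.mean(axis=1))):
        w = multi_target_detector(X, T, origin)
        print(name, average_output_energy(w, X, origin))
```

For a fixed origin, any other filter that satisfies the same constraints has an energy at least as large, which is the property the energy comparison relies on.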

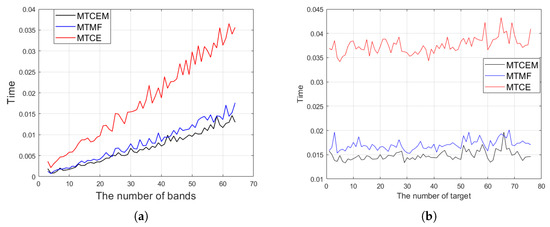

5.4. Time Consumption Comparison

In this part, the time consumption of the algorithms on the datasets used in the above experiments is also recorded and compared; the results are tabulated in Table 8. For clarity, L, N, and p, which represent the numbers of bands, total pixels, and target samples, respectively, are also listed in the table. Consistent with the complexity analysis discussed before, the time consumption of MTCE is slightly higher than that of MTCEM and MTMF, and this extra time is spent calculating the best data origin.

Table 8.

Time consumption comparison of MTCEM, MTMF, SCEM, WTACEM, RMTCEM, and MTCE for the different datasets used in the subsections A, B, and C, where L, N, and p represent the numbers of bands, pixels, and target samples, respectively.

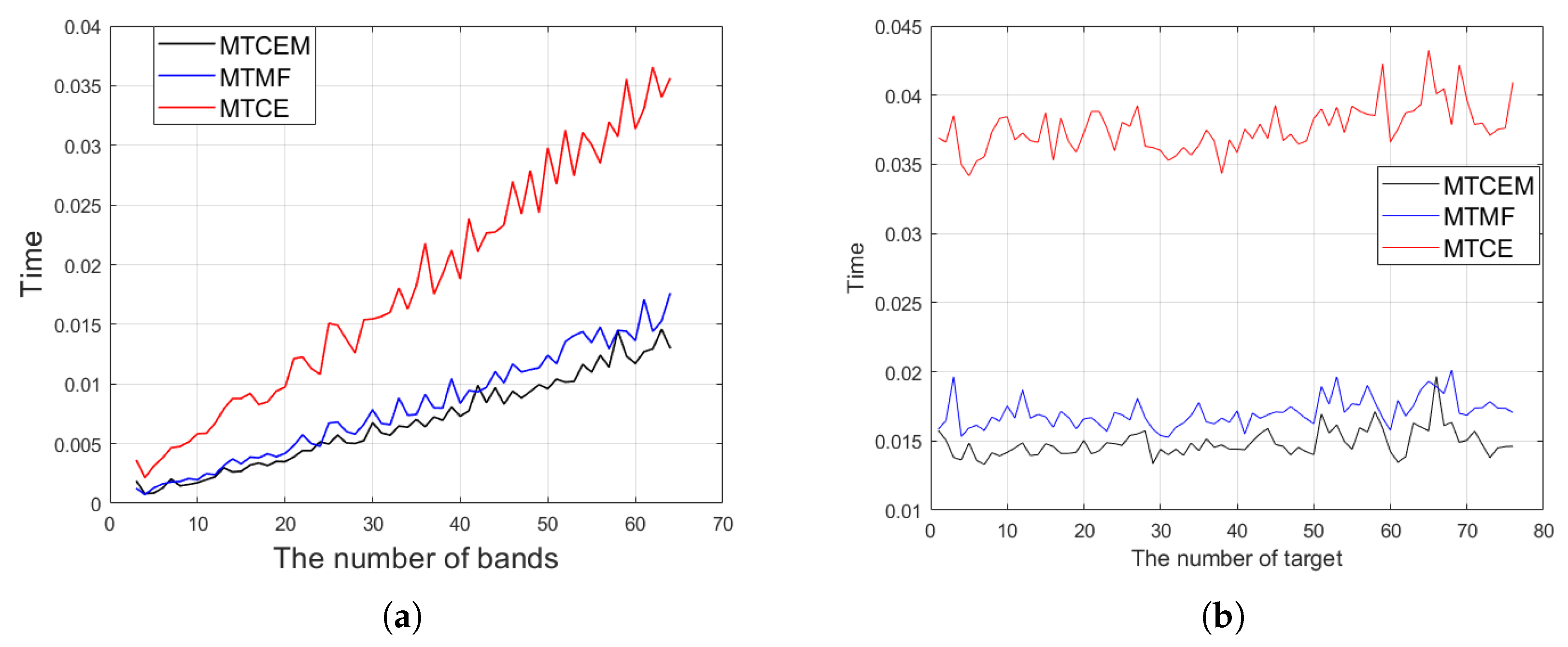

In addition, we plot the time consumption curves against the number of bands L and the number of target spectra p, respectively, with the other variables fixed. We adopt the Xi’an dataset as our test dataset. The total number of bands is 64, and there are 76 labeled targets of interest according to the groundtruth. Correspondingly, in the first test, L ranges from 3 to 64 bands, while p = 2 and N = 40,000 are fixed. In the second test, p ranges from 1 to 76 spectra, while L = 64 and N = 40,000 are fixed. The results are plotted in Figure 4. To reduce the experimental randomness, the average value of 10 runs is recorded as the final result. It can be seen that the time consumption grows as L increases. In contrast, the time consumption as a function of p is relatively flat, so p has a smaller impact on the time spent. This is consistent with our analysis in Table 3, which indicates that the number of bands L dominates the computational complexity.
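The timing protocol described above (fix two of L, N, p, vary the third, and average over 10 runs) can be sketched as follows. This is an illustrative benchmark on synthetic Gaussian data, not the code used for Table 8 or Figure 4: the function name `time_detector` is hypothetical, the timed detector is an assumed MTCEM-style filter, and p is kept no larger than L so that the small p-by-p system stays solvable.

```python
import time
import numpy as np

def time_detector(L, N, p, runs=10, seed=0):
    """Average wall-clock time of an MTCEM-style filter computation."""
    rng = np.random.default_rng(seed)
    X = rng.normal(size=(L, N))                # synthetic data, L bands by N pixels
    T = rng.normal(size=(L, p))                # synthetic target spectra
    elapsed = []
    for _ in range(runs):
        start = time.perf_counter()
        R = X @ X.T / N                        # O(N L^2): dominant cost when N >> L
        R_inv_T = np.linalg.solve(R, T)        # O(L^3 + L^2 p)
        coeff = np.linalg.solve(T.T @ R_inv_T, np.ones(p))   # O(L p^2 + p^3)
        _ = R_inv_T @ coeff
        elapsed.append(time.perf_counter() - start)
    return float(np.mean(elapsed))

if __name__ == "__main__":
    for L in (8, 16, 32, 64):                  # vary L with p and N fixed
        print("L =", L, time_detector(L, N=40_000, p=2))
    for p in (1, 8, 32, 64):                   # vary p with L and N fixed
        print("p =", p, time_detector(64, N=40_000, p=p))
```

With N much larger than L and p, the cost of forming the L-by-L matrix from the data is the dominant term, which is one plausible reason for the flat trend in p.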

Figure 4.

Time consumption curve as a function of (a) the number of bands L; (b) the number of target spectra p.

We conclude the experimental section of this paper with some remarks as follows.

- Theoretically, the MTCE solutions have the lowest average output energy, which is always superior to that of the other energy-based algorithms and thus is globally optimal from the perspective of output energy.

- Practically, for different datasets, the gap in complexity between MTCE and the compared methods is very small, which demonstrates that the proposed MTCE algorithm remains efficient in handling high-dimensional real remote sensing image data. This implies that it can achieve better target detection performance without much higher time consumption.

6. Conclusions

In this paper, by extending the CE algorithm into a multiple-target case, we present a more generalized version, termed the MTCE algorithm. Inspired by the MTCE model, we investigate a class of unconstrained non-convex optimization models and rigorously derive their analytical solutions, which can be equated to solving a linear equation. In this way, a unified framework for the solution of such a non-convex optimization model is provided, where the presented theoretical results for CE are classed as a special case, and the solutions of MTCE can also be solved in a computationally efficient way. Finally, we focus on the application of the MTCE algorithm in the field of remote sensing target detection. Experiments on both simulations and several real datasets demonstrate its superiority (in terms of lowest output energy and target detection performance) over the other compared algorithms.

Some interesting work may be carried out in the future. On the one hand, the proposed MTCE algorithm is designed for multiple-target detection within single-temporal data, whereas multi-temporal remote sensing data processing is receiving more and more attention [33,34]. It is worth studying whether MTCE can be generalized to multi-temporal cases. On the other hand, exploring potential application scenarios for the generalized non-convex model (22) could be another interesting direction.

Author Contributions

Experiment and writing: L.W.; methodology and data collection: L.J. and X.G. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Fund of China (62401088).

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

Appendix A.1

We first present some basic formulas of matrix calculus. Then, the detailed proofs of Theorems 3 and 4 are provided in Appendix A.3 and Appendix A.4, respectively, and the detailed derivations of (27) are provided in Appendix A.5.

Appendix A.2. Basic Formula

Suppose that is a scalar-valued function with the matrix as its variable, and is the partial derivative of with respect to . It then holds that

where is the derivative operator. The following properties will be used later [35]:

The well-known Sherman–Morrison–Woodbury (SMW) matrix inversion lemma is as follows:
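For reference, the standard statement of the lemma in generic notation is given below. The symbols $A$, $U$, $C$, and $V$ are assumptions chosen for illustration and need not match the notation used in (A6); the identity holds whenever $A \in \mathbb{R}^{n \times n}$ and $C \in \mathbb{R}^{k \times k}$ are invertible, $U \in \mathbb{R}^{n \times k}$, $V \in \mathbb{R}^{k \times n}$, and $C^{-1} + V A^{-1} U$ is invertible.

```latex
\left( A + U C V \right)^{-1}
  = A^{-1} - A^{-1} U \left( C^{-1} + V A^{-1} U \right)^{-1} V A^{-1}
```

In the rank-one case ($k = 1$, $C = 1$, $U = \mathbf{u}$, $V = \mathbf{v}^{T}$), this reduces to the Sherman–Morrison formula $(A + \mathbf{u}\mathbf{v}^{T})^{-1} = A^{-1} - \dfrac{A^{-1}\mathbf{u}\mathbf{v}^{T}A^{-1}}{1 + \mathbf{v}^{T}A^{-1}\mathbf{u}}$.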

Appendix A.3. Proof of Theorem 3

Theorem A3.

Regarding the stationary points of model (22) where , denoted , the solution must satisfy the following linear equation:

Proof.

For better organization, the proof is divided into the following steps. Step 1 is the form of a derivative of .

For simplicity, denote

Then, the objective function in (22) is simplified as

Step 2 is the simplification of .

According to (A6), it holds that

Correspondingly, we have

By recursively utilizing as listed in (A5), it holds that

Based on (A1) and after extracting the term , it follows that

Step 3 is the simplification of .

To further simplify (A22), we separately consider the following two terms:

Step 4 is the solution form of .

Since , is invertible, has full column rank, and is a non-zero vector. When , it holds that

Step 5: Further simplification on .

In the following, we mainly focus on calculating in (A26). Firstly, (A23) is reorganized as

Based on (A26) and (A29), we have

Step 6: Sign of (A30).

- Since is positive definite, . Meanwhile, has full column rank, and it is easily verified that and are also positive definite, indicating that .

- Here, we mainly investigate the sign of the denominator of the second term and aim to show that it is a non-negative term, which is organized and proven later in Lemma A1.

Thus, with these two conclusions, when (A30) holds, we have . By reusing (A6), we derive

Since , rearranging the numerator gives the result (A7). □

Lemma A1.

The denominator of the second term presented in (A30) is a non-negative term.

Proof.

First, it is reorganized as

Since the third term in (A32) is non-negative, we continue considering the sign of the following term:

Since is symmetric positive definite, can be decomposed into . Then, (A33) is expressed as

where is an identity matrix, and . is an orthogonal complement projection matrix; thus, it is non-negative definite. Then, it can be concluded that , and (A32) is also non-negative. □
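The key fact used in this proof, that an orthogonal complement projection matrix is non-negative definite, can be checked numerically. The sketch below is illustrative only: it assumes a generic full-column-rank matrix `B` (a hypothetical stand-in, not the specific matrix in (A34)) and forms the projector onto the orthogonal complement of its column space.

```python
import numpy as np

def orthogonal_complement_projector(B):
    """Return P = I - B (B^T B)^{-1} B^T for a full-column-rank B."""
    n = B.shape[0]
    return np.eye(n) - B @ np.linalg.solve(B.T @ B, B.T)

def is_nonnegative_definite(P, tol=1e-10):
    """Check symmetry and that all eigenvalues are >= -tol."""
    if not np.allclose(P, P.T):
        return False
    return bool(np.linalg.eigvalsh((P + P.T) / 2).min() >= -tol)

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    B = rng.normal(size=(6, 2))                # hypothetical full-column-rank matrix
    P = orthogonal_complement_projector(B)
    x = rng.normal(size=6)
    # P is symmetric and idempotent, so x^T P x = ||P x||^2 >= 0 for every x.
    print(is_nonnegative_definite(P), float(x @ P @ x), float(np.sum((P @ x) ** 2)))
```

Since such a projector is symmetric and idempotent, its eigenvalues are 0 or 1, and every quadratic form in it equals a squared norm, which gives the non-negativity claimed in the lemma.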

Appendix A.4. Proof of Theorem 4

Theorem A4.

All the stationary points of model (22) correspond to its globally optimal solutions, and the corresponding objective function is equal to

where is a constant irrelevant to .

Proof.

Based on (A6) and (A29), we have

where is a constant irrelevant to , and the third term is non-negative based on (A32)–(A34).

If and only if , the equality holds, which indicates that for any that satisfies (A7) (or equivalently, (A31)), the corresponding objective function always reaches the lowest value. Thus, the proof of Theorem 4 is complete. □

Appendix A.5. Derivations of (27)

For convenience, the MTCE detector is restated here:

where is the MTMF detector which corresponds to the form when is set to be the mean vector of the data. The above relationship indicates that the direction of is parallel to that of , up to a scale constant.

To see this, we first consider the generalized model (22), and based on the conclusion in Theorem 3, for any that satisfies (A7), by (A6) and (A29), we have

By combining (A27) with (A38) and reusing (A31), we have

and in the last equation, we utilize the conclusion in Theorem 3, i.e., (A31).

Furthermore, (20) can be viewed as a special case of (A39), when is considered the target vector , is the mean vector of the data , and is the covariance matrix of the data . The variable to be optimized corresponds to the data origin . We then have

and (A37) can be derived.

References

1. Su, Y.; Xie, C.; Yu, S.; Zhang, C.; Lu, W.; Capmany, J.; Luo, Y.; Nakano, Y.; Hao, Y.; Yoshikawa, A. Camouflage target detection via hyperspectral imaging plus information divergence measurement. In Proceedings of the International Conference on Optoelectronics and Microelectronics Technology and Application, Moscow, Russia, 20–22 September 2017; Volume 1024, p. 102440F.

2. Kader, D.; Nicolas, H.; Christian, W. Detecting salinity hazards within a semiarid context by means of combining soil and remote-sensing data. Geoderma 2017, 134, 217–230.

3. Marco, C.; Giovanni, P.; Carlo, S.; Luisa, V. Land Use Classification in Remote Sensing Images by Convolutional Neural Networks. Acta Ecol. Sin. 2015, 28, 627–635.

4. Lin, C.; Chen, S.; Chen, C.; Tai, C. Detecting newly grown tree leaves from unmanned-aerial-vehicle images using hyperspectral target detection techniques. ISPRS J. Photogramm. Remote Sens. 2018, 142, 174–189.

5. Li, X.; Zhang, S.; Pan, X.; Pat, D.; Roger, C. Straight road edge detection from high-resolution remote sensing images based on the ridgelet transform with the revised parallel-beam Radon transform. Int. J. Remote Sens. 2010, 31, 5041–5059.

6. Zou, Z.; Shi, Z. Ship Detection in Spaceborne Optical Image with SVD Networks. IEEE Trans. Geosci. Remote Sens. 2016, 54, 5832–5845.

7. Narumalani, S.; Mishra, D.; Burkholder, J.; Merani, P.; Willson, G. A Comparative Evaluation of ISODATA and Spectral Angle Mapping for the Detection of Saltcedar Using Airborne Hyperspectral Imagery. Geocarto Int. 2006, 21, 59–66.

8. Vincent, F.; Besson, O. One-Step Generalized Likelihood Ratio Test for Subpixel Target Detection in Hyperspectral Imaging. IEEE Trans. Geosci. Remote Sens. 2020, 58, 4479–4489.

9. Harsanyi, J.; Chang, C.I. Hyperspectral image classification and dimensionality reduction: An orthogonal subspace projection approach. IEEE Trans. Geosci. Remote Sens. 1994, 32, 779–785.

10. Chang, C.I. Orthogonal subspace projection (OSP) revisited: A comprehensive study and analysis. IEEE Trans. Geosci. Remote Sens. 2005, 43, 502–518.

11. Yokoya, N.; Yairi, T.; Iwasaki, A. Coupled Nonnegative Matrix Factorization Unmixing for Hyperspectral and Multispectral Data Fusion. IEEE Trans. Geosci. Remote Sens. 2012, 50, 528–537.

12. Arngren, M.; Schmidt, M.N.; Larson, J. Unmixing of Hyperspectral Images using Bayesian Non-negative Matrix Factorization with Volume Prior. J. Signal Process. Syst. 2011, 65, 479–496.

13. Zhang, Y.; Du, B.; Zhang, L.; Liu, T. Joint Sparse Representation and Multitask Learning for Hyperspectral Target Detection. IEEE Trans. Geosci. Remote Sens. 2017, 55, 894–906.

14. Zhang, L.; Zhang, L.; Tao, D.; Xin, H.; Du, B. Hyperspectral Remote Sensing Image Subpixel Target Detection Based on Supervised Metric Learning. IEEE Trans. Geosci. Remote Sens. 2014, 52, 4955–4965.

15. Jiang, T.; Li, Y.; Xie, W.; Du, Q. Discriminative Reconstruction Constrained Generative Adversarial Network for Hyperspectral Anomaly Detection. IEEE Trans. Geosci. Remote Sens. 2020, 58, 4666–4679.

16. Xie, W.; Lei, J.; Yang, J.; Li, Y.; Du, Q.; Li, Z. Deep Latent Spectral Representation Learning-Based Hyperspectral Band Selection for Target Detection. IEEE Trans. Geosci. Remote Sens. 2020, 58, 2015–2026.

17. Harsanyi, C.J. Detection and Classification of Subpixel Spectral Signatures in Hyperspectral Image Sequences; University of Maryland: Baltimore County, MD, USA, 1993.

18. Manolakis, D.; Shaw, G. Detection algorithms for hyperspectral imaging applications. Signal Process. Mag. IEEE 2002, 19, 29–43.

19. Yang, S.; Song, Z.; Yuan, H.; Zou, Z.; Shi, Z. Fast high-order matched filter for hyperspectral image target detection. Infrared Phys. Technol. 2018, 94, 151–155.

20. Geng, X.; Wang, L.; Ji, L. Identify Informative Bands for Hyperspectral Target Detection Using the Third-Order Statistic. Remote Sens. 2021, 13, 1776.

21. Xie, W.; Zhang, J.; Lei, J.; Li, Y.; Jia, X. Self-spectral learning with GAN based spectral-spatial target detection for hyperspectral image. Neural Netw. 2021, 142, 375–387.

22. Zhu, D.; Du, B.; Zhang, L. Two-Stream Convolutional Networks for Hyperspectral Target Detection. IEEE Trans. Geosci. Remote Sens. 2021, 59, 6907–6921.

23. Qin, H.; Xie, W.; Li, Y.; Du, Q. HTD-VIT: Spectral-Spatial Joint Hyperspectral Target Detection with Vision Transformer. In Proceedings of the IGARSS 2022 - 2022 IEEE International Geoscience and Remote Sensing Symposium, Kuala Lumpur, Malaysia, 17–22 July 2022; pp. 1967–1970.

24. Shi, Y.; Lei, J.; Yin, Y.; Cao, K.; Li, Y.; Chang, C.I. Discriminative Feature Learning with Distance Constrained Stacked Sparse Autoencoder for Hyperspectral Target Detection. IEEE Geosci. Remote Sens. Lett. 2019, 16, 1462–1466.

25. Xie, W.; Yang, J.; Lei, J.; Li, Y.; He, G. SRUN: Spectral Regularized Unsupervised Networks for Hyperspectral Target Detection. IEEE Trans. Geosci. Remote Sens. 2019, 58, 1463–1474.

26. Li, Y.; Qin, H.; Xie, W. HTDFormer: Hyperspectral Target Detection Based on Transformer with Distributed Learning. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5524715.

27. Yang, B.; He, Y.; Jiao, C.; Pan, X.; Wang, G.; Wang, L.; Wu, J. Multiple-Instance Metric Learning Network for Hyperspectral Target Detection. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5516016.

28. Geng, X.; Ji, L.; Sun, K. Clever eye algorithm for target detection of remote sensing imagery. ISPRS J. Photogramm. Remote Sens. 2016, 114, 32–39.

29. Geng, X.; Ji, L.; Yang, W. The Analytical Solution of the Clever Eye (CE) Method. IEEE Trans. Geosci. Remote Sens. 2021, 59, 478–487.

30. Ren, H.; Du, Q.; Chang, C.I.; Jensen, J. Comparison between constrained energy minimization based approaches for hyperspectral imagery. In Proceedings of the IEEE Workshop on Advances in Techniques for Analysis of Remotely Sensed Data, Greenbelt, MD, USA, 27–28 October 2003; pp. 244–248.

31. Yin, H.; Wang, Y.; Wang, Y. A Revised Multi-Target Detection Approach in Hyperspectral Image. Acta Electron. Sin. 2010, 38, 4.

32. Perkins, N.J.; Schisterman, E.F. The inconsistency of “optimal” cutpoints obtained using two criteria based on the receiver operating characteristic curve. Am. J. Epidemiol. 2006, 163, 670–675.

33. Geng, X.; Ji, L.; Zhao, Y. Filter tensor analysis: A tool for multi-temporal remote sensing target detection. ISPRS J. Photogramm. Remote Sens. 2019, 151, 290–301.

34. Zhao, X.; Liu, K.; Gao, K.; Li, W. Hyperspectral Time-Series Target Detection Based on Spectral Perception and Spatial–Temporal Tensor Decomposition. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5520812.

35. Zhang, X.D. Matrix Analysis and Applications; Cambridge University Press: Cambridge, UK, 2017.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).