Remote Sensing Image Semantic Segmentation Sample Generation Using a Decoupled Latent Diffusion Framework

Abstract

1. Introduction

2. Methodology

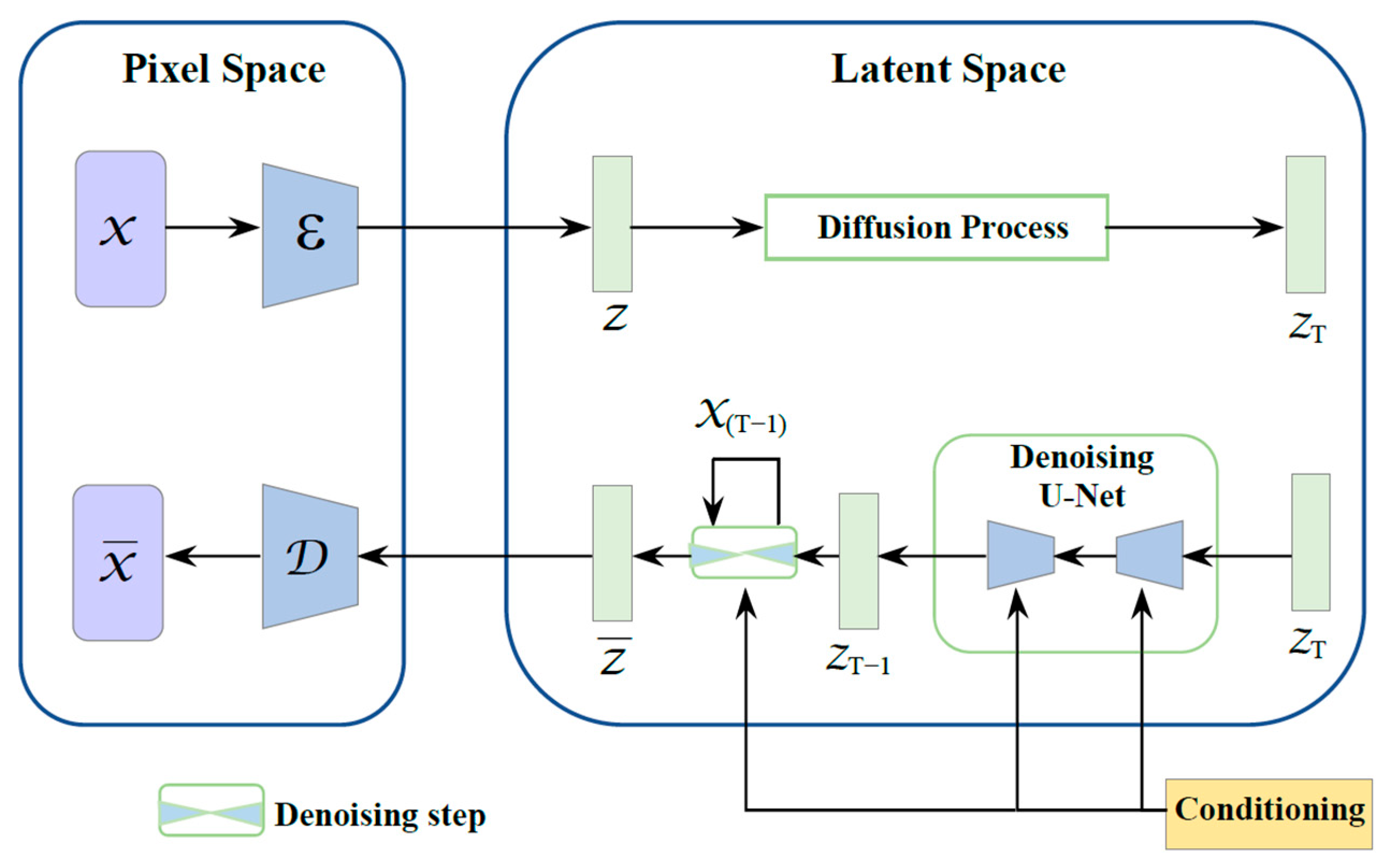

2.1. Overview of Latent Diffusion Model

2.1.1. Stable Diffusion Model

- Encoding to latent space: A pretrained Variational Autoencoder (VAE) compresses the input image into latent representations, reducing data dimensionality while extracting essential feature information. The result is a latent code.

- Forward diffusion: In the latent space, noise is added to the latent code step by step, producing a sequence of progressively noisier latent codes. This simulates the degradation of the image from ordered to disordered, laying the groundwork for subsequent denoising.

- Denoising with U-Net: The noisy latent code is fed into a U-Net-based denoising network. Here, condition embeddings are used to steer the denoising toward outputs that satisfy specified conditions. The conditions are derived from textual prompts and other priors, and are injected into the U-Net, guiding it to recover a cleaner latent code that adheres to the conditioning.

- Decoding with VAE: The denoised latent code is decoded back to pixel space by the VAE decoder, yielding the reconstructed image.
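The forward-diffusion step in the list above can be illustrated with a minimal sketch. It assumes the closed-form noising rule of DDPM-style models, $z_t = \sqrt{\bar{\alpha}_t}\, z_0 + \sqrt{1 - \bar{\alpha}_t}\, \epsilon$, and a linear noise schedule; the schedule values, the latent shape, and the function names `make_alpha_bar` and `add_noise` are illustrative assumptions, not settings reported in this paper.

```python
import numpy as np

def make_alpha_bar(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative product of (1 - beta_t) for a linear beta schedule (assumed)."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)

def add_noise(z0, t, alpha_bar, rng):
    """Draw z_t from q(z_t | z_0) in closed form; returns (z_t, eps)."""
    eps = rng.standard_normal(z0.shape)
    z_t = np.sqrt(alpha_bar[t]) * z0 + np.sqrt(1.0 - alpha_bar[t]) * eps
    return z_t, eps

rng = np.random.default_rng(0)
alpha_bar = make_alpha_bar()
z0 = rng.standard_normal((4, 64, 64))  # random stand-in for a VAE latent code
z_early, _ = add_noise(z0, 10, alpha_bar, rng)   # still close to z0
z_late, _ = add_noise(z0, 999, alpha_bar, rng)   # almost pure noise
```

During training, the denoising network receives `z_t`, the step `t`, and the condition embeddings, and is typically trained to predict the noise `eps` that was added.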

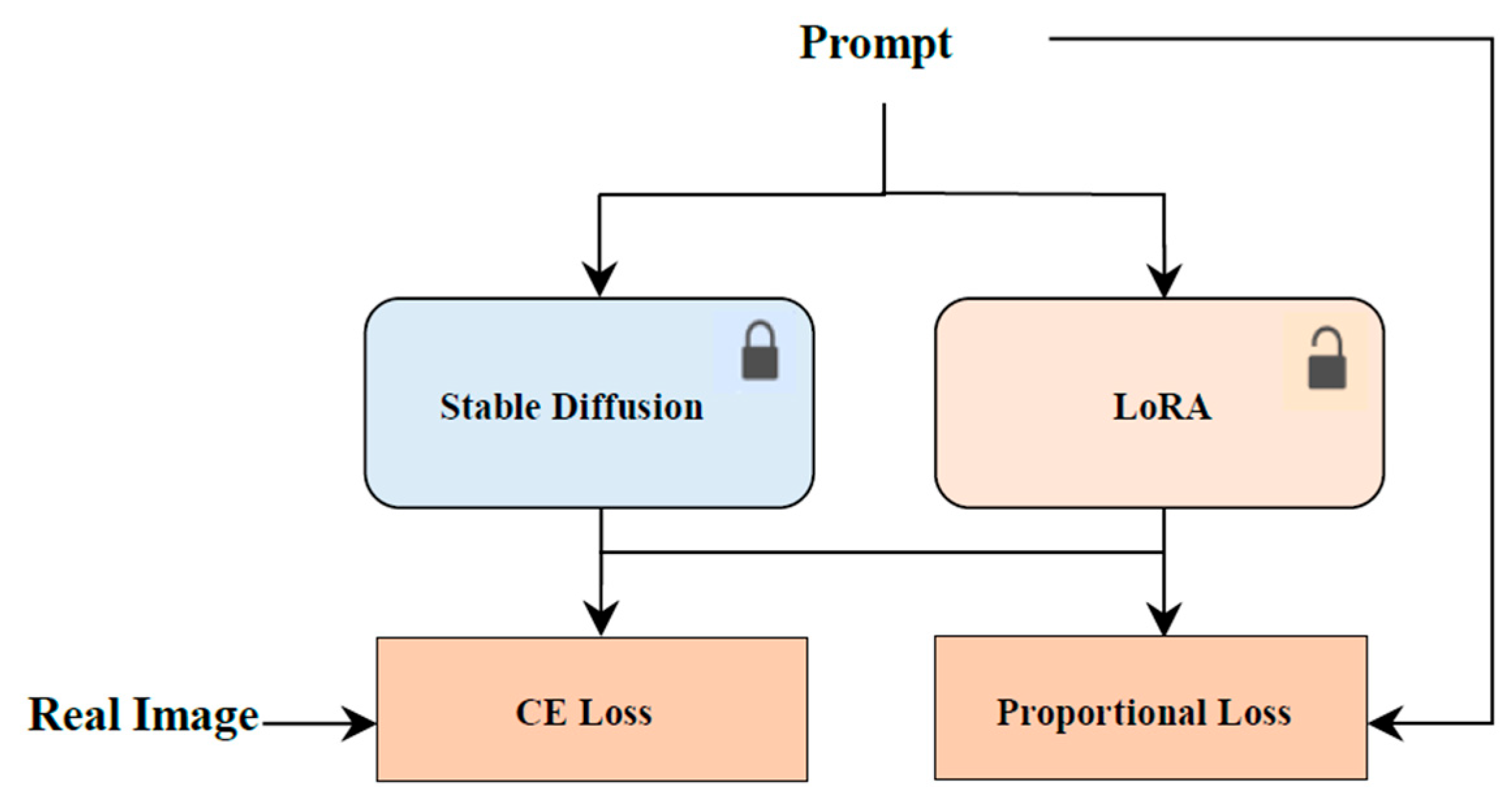

2.1.2. LoRA Method

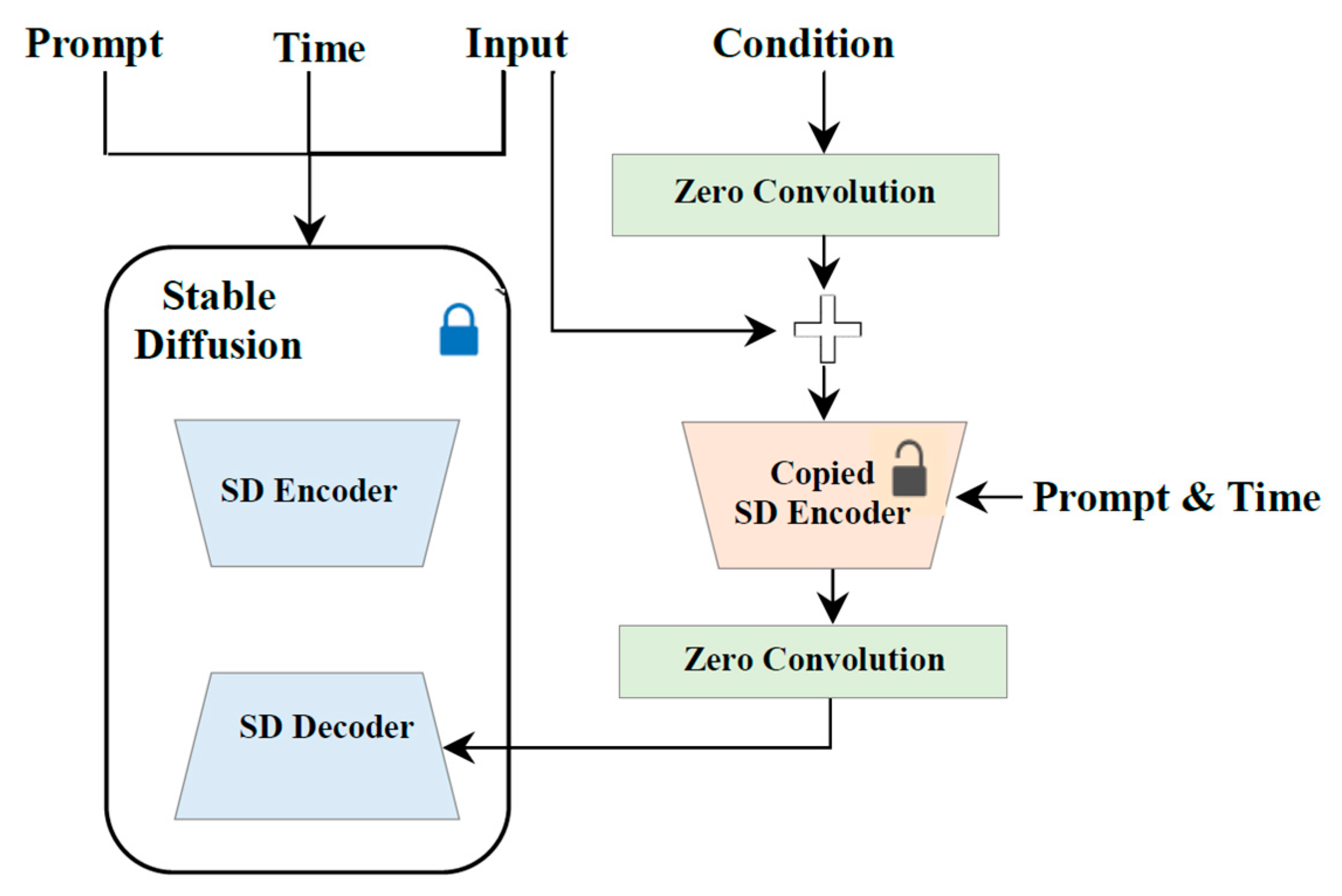

2.1.3. ControlNet Method

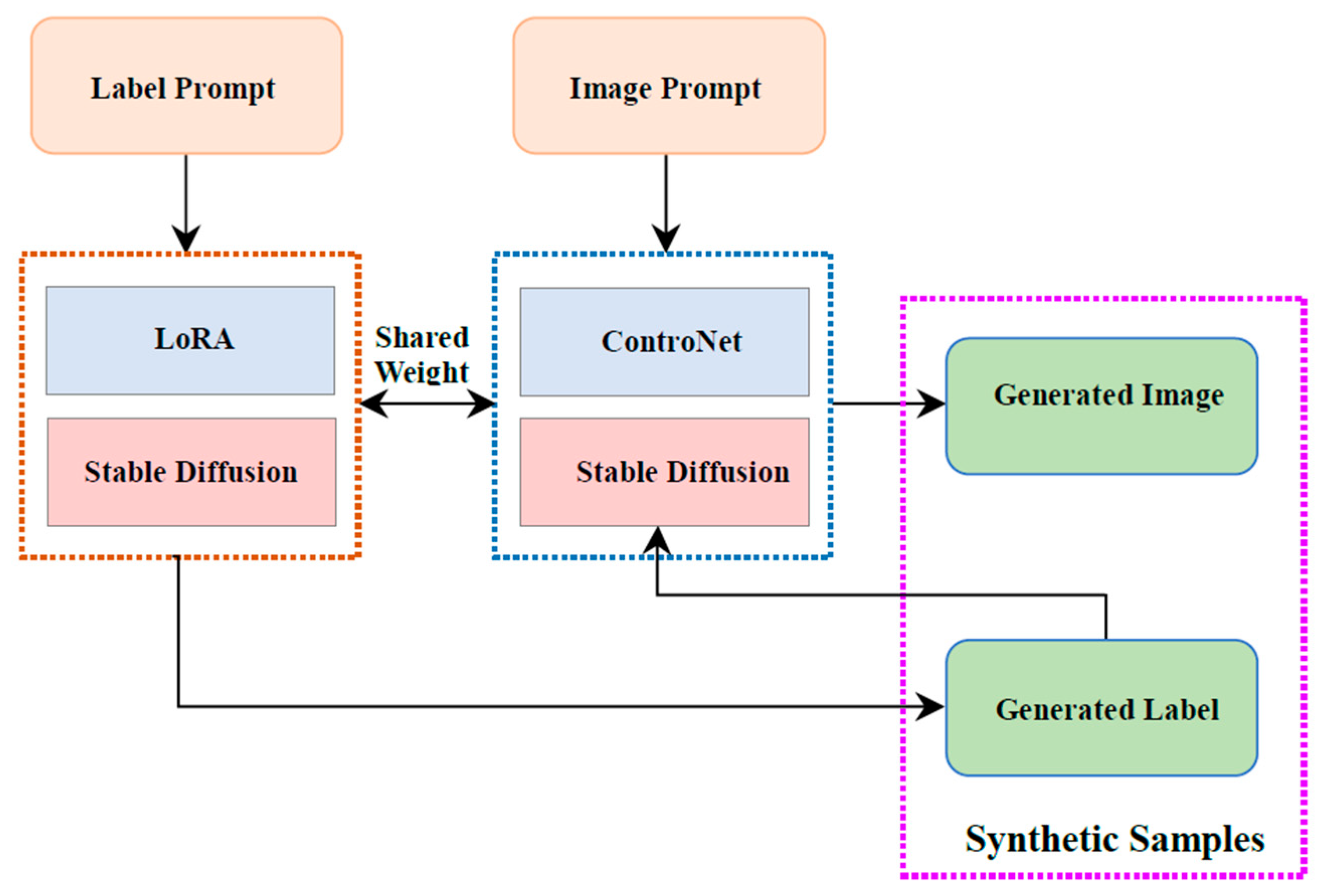

2.2. Decoupled Latent Diffusion Framework for Synthetic Sample Generation

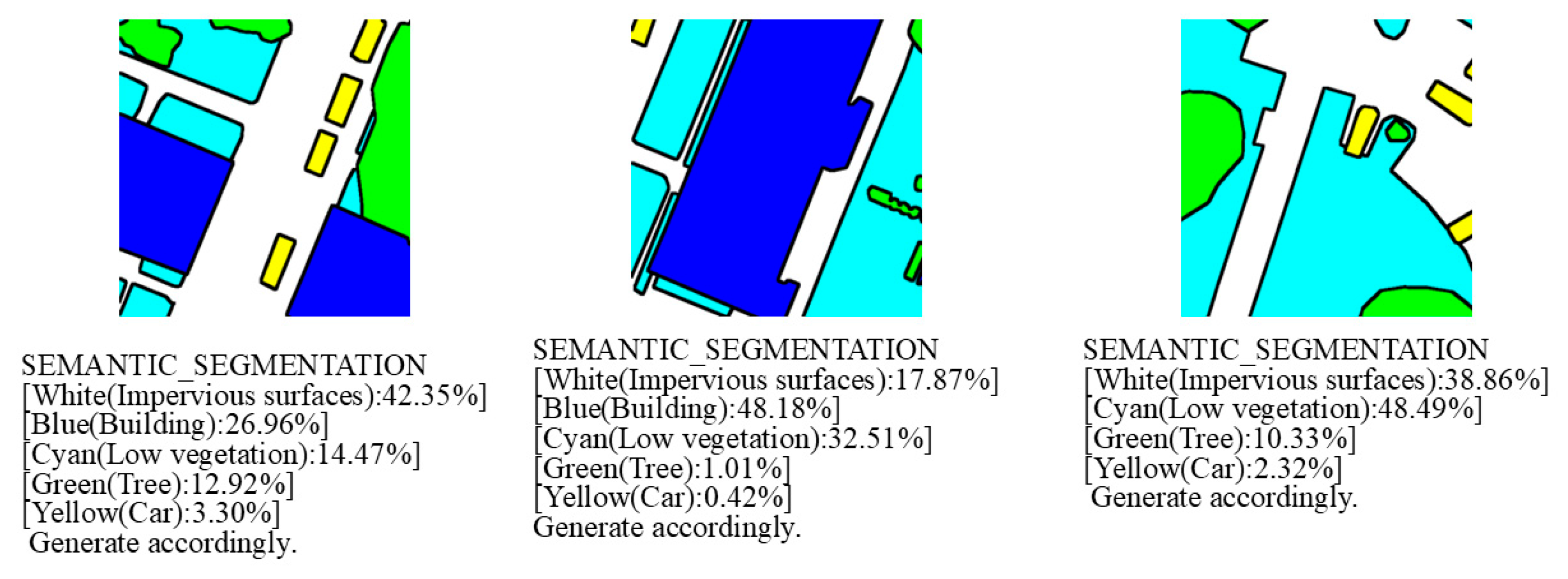

2.2.1. Text-Driven Label Generation with LoRA

2.2.2. Proportion-Aware Loss Function

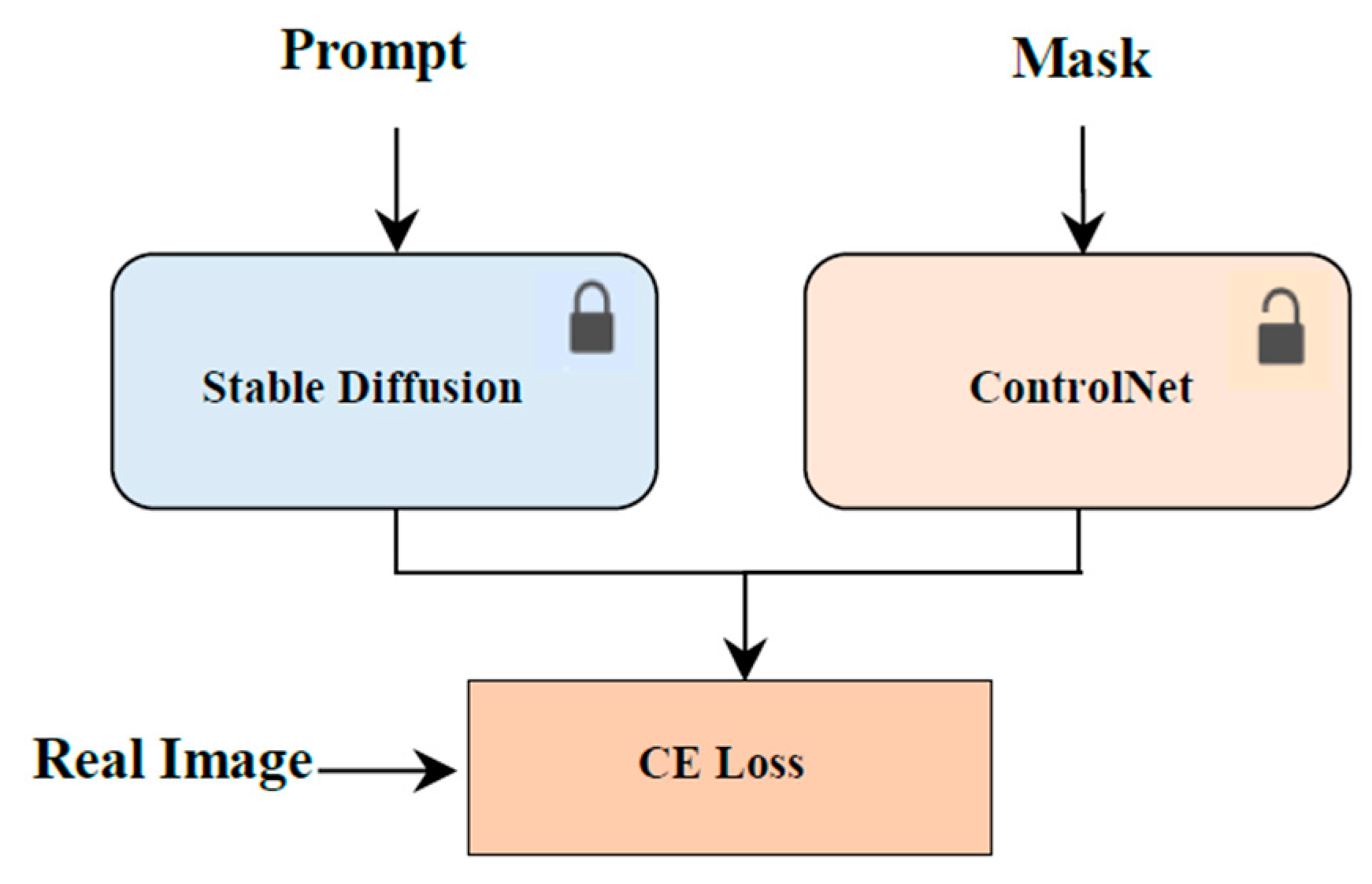

2.2.3. Image Generation Method Based on ControlNet

- Lines 7–16 implement LoRA fine-tuning with the proportion-aware loss ($L_{prop}$), which enforces the target class ratios $P_{target}$;

- Lines 18–25 train a ControlNet conditioned on both the generated mask and its caption, while keeping the Stable Diffusion backbone frozen;

- Lines 27–33 generate a user-defined number of synthetic image–mask pairs;

- Line 34 mixes them with real data at a configurable ratio α (e.g., 40%);

- Lines 35–36 perform standard supervised training of any segmentation model (e.g., DeepLabV3+, PSPNet, SegFormer) on the augmented dataset and evaluate it on the validation set.

| Algorithm 1: Synthetic-Sample Generation and Segmentation Pipeline |
|---|
| Require: real images and masks |
| Require: target class ratios |
| Require: , (frozen) |
| 1: Hyper-parameters: , |
| 2: /* Stage 1 – Label generation */ |
| 3: do |
| 4: |
| 5: , , ) |
| 6: end for |
| 7: Initialise LoRA adapters on () |
| 8: while training do |
| 9: Sample mini-batch , ) |
| 10: |
| 11: |
| 12: |
| 13: |
| 14: Update LoRA with |
| 15: end while |
| 16: trained mask generator |
| 17: /* Stage 2 – Image synthesis */ |
| 18: Initialise ControlNet weights |
| 19: while training do |
| 20: |
| 21: |
| 22: |
| 23: |
| 24: end while |
| 25: trained image generator |
| 26: /* Stage 3 – Segmentation training */ |
| 27: |
| 28: do |
| 29: random prompt |
| 30: |
| 31: |
| 32: |
| 33: end for |
| 34: |
| 35: Train segmentation net on |
| 36: Evaluate on validation set; return |
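The mixing of synthetic and real samples at ratio α in Stage 3 can be sketched as follows. The sketch assumes that α expresses the number of synthetic pairs relative to the number of real pairs (so α = 0.4 adds 4 synthetic pairs for every 10 real ones); the text does not spell out this definition, and the function name `mix_datasets` and the file names are hypothetical.

```python
import random

def mix_datasets(real_pairs, synthetic_pairs, alpha, seed=0):
    """Return all real pairs plus round(alpha * len(real_pairs)) synthetic pairs.

    Assumption: alpha is the synthetic-to-real sample count ratio.
    """
    if alpha < 0:
        raise ValueError("alpha must be non-negative")
    n_syn = round(alpha * len(real_pairs))
    if n_syn > len(synthetic_pairs):
        raise ValueError("not enough synthetic pairs for the requested ratio")
    rng = random.Random(seed)
    chosen = rng.sample(synthetic_pairs, n_syn)  # sample without replacement
    mixed = list(real_pairs) + chosen
    rng.shuffle(mixed)  # avoid blocks of purely real or synthetic samples
    return mixed

# Hypothetical (image, mask) file-name pairs standing in for actual data.
real = [(f"real_img_{i}.png", f"real_mask_{i}.png") for i in range(10)]
synthetic = [(f"syn_img_{i}.png", f"syn_mask_{i}.png") for i in range(10)]
augmented = mix_datasets(real, synthetic, alpha=0.4)
```

The augmented list can then be passed to the data loader of any segmentation model; only the training set is mixed, while validation uses real data.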

3. Results and Discussion

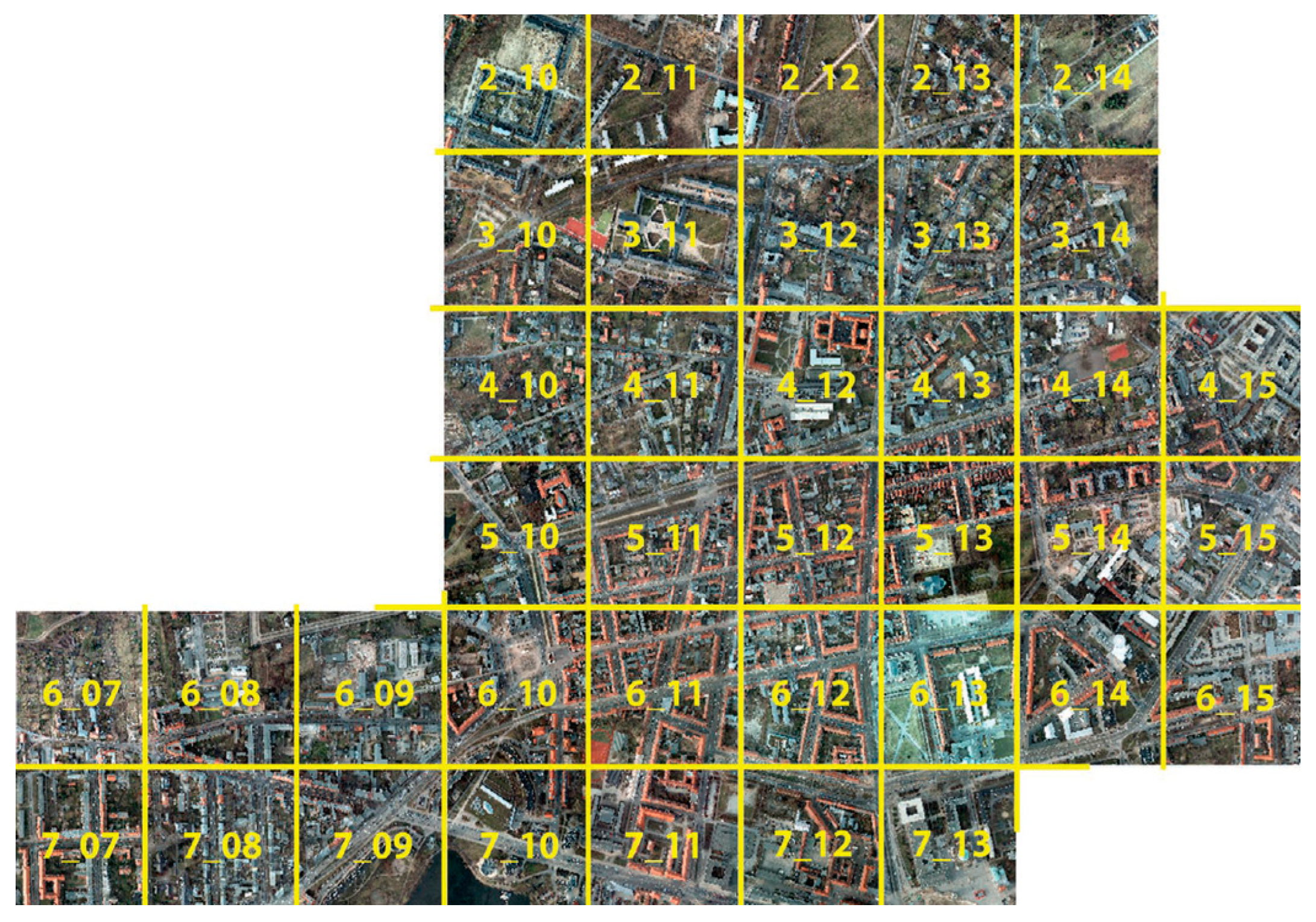

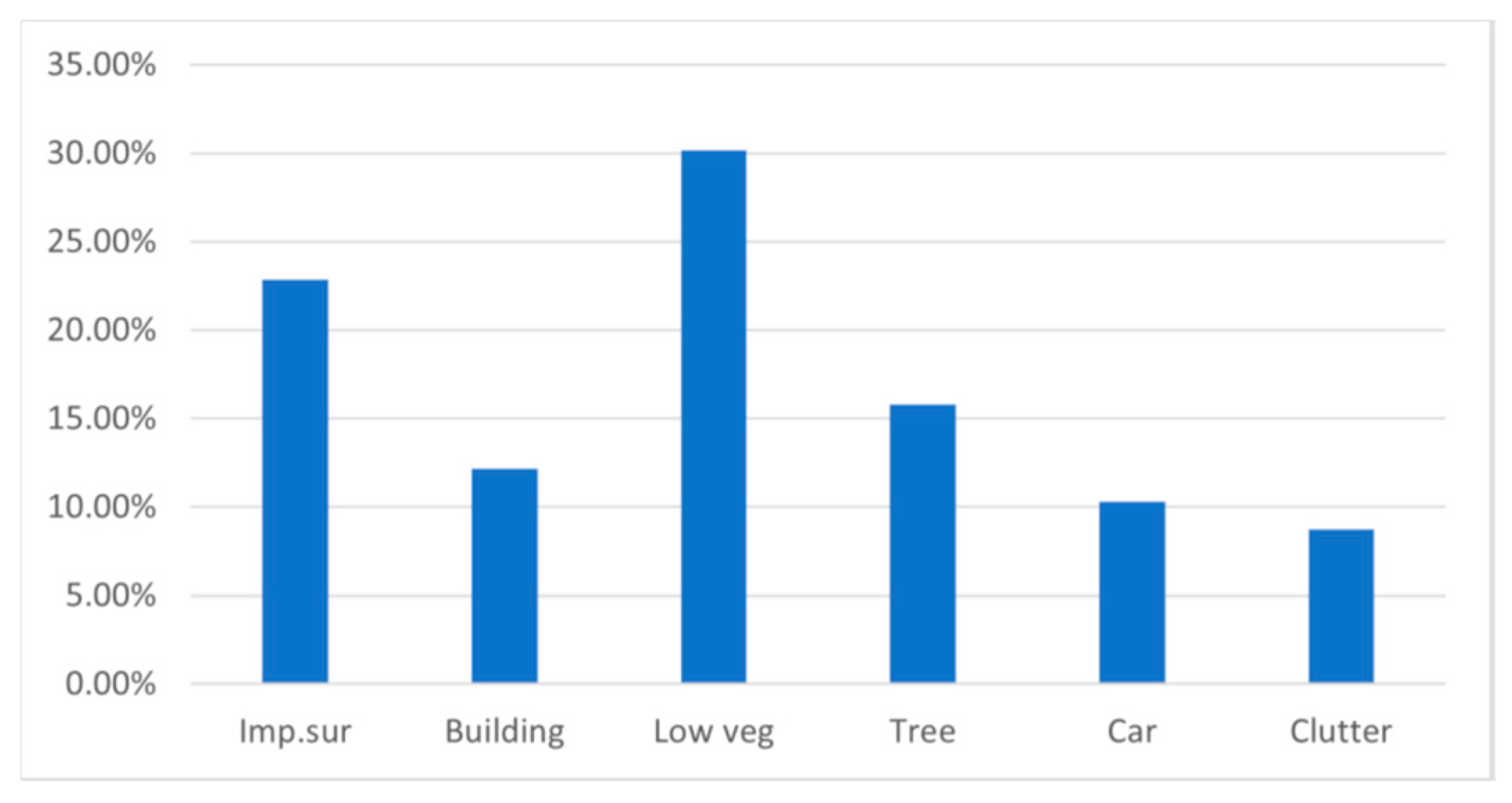

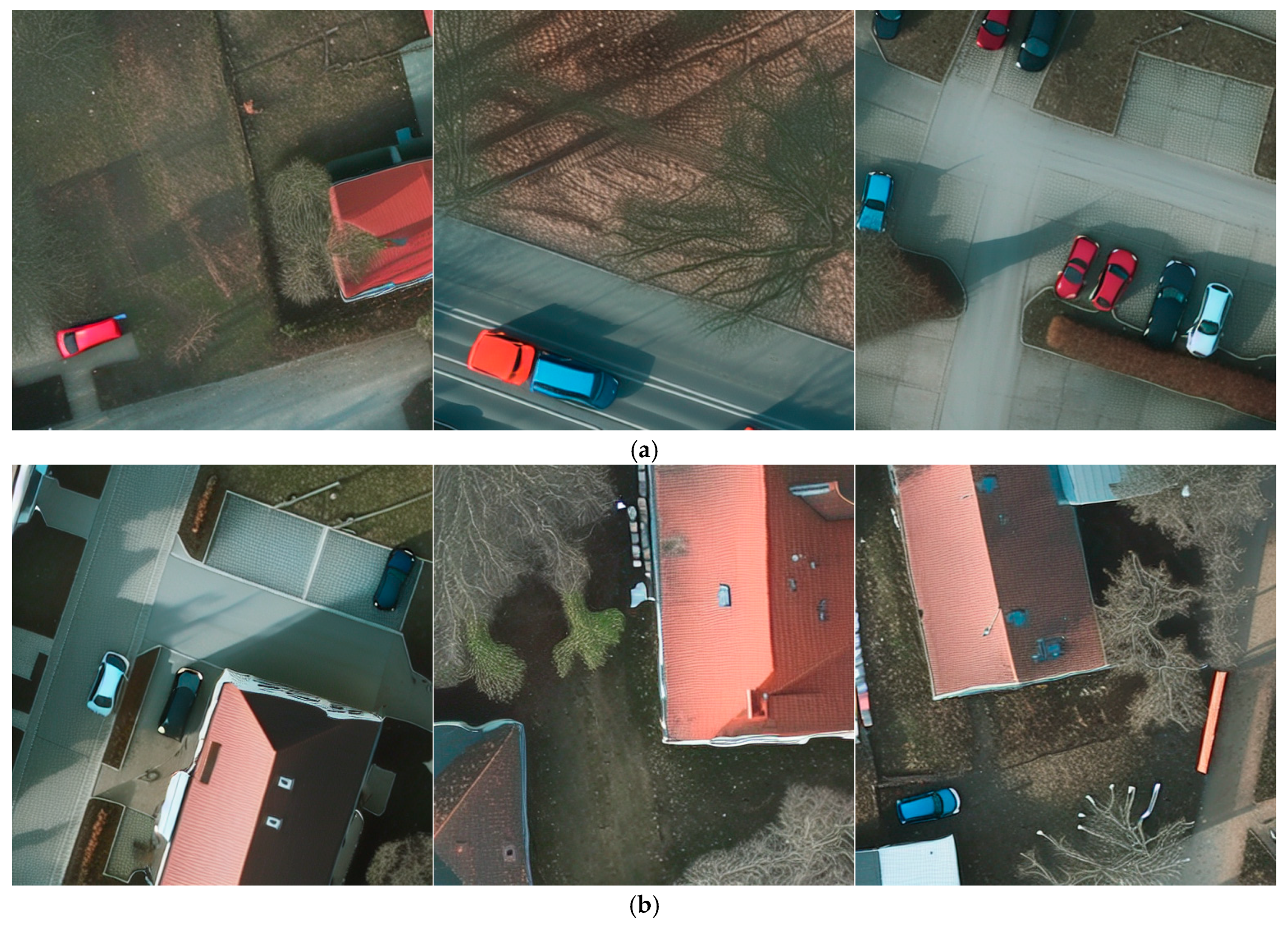

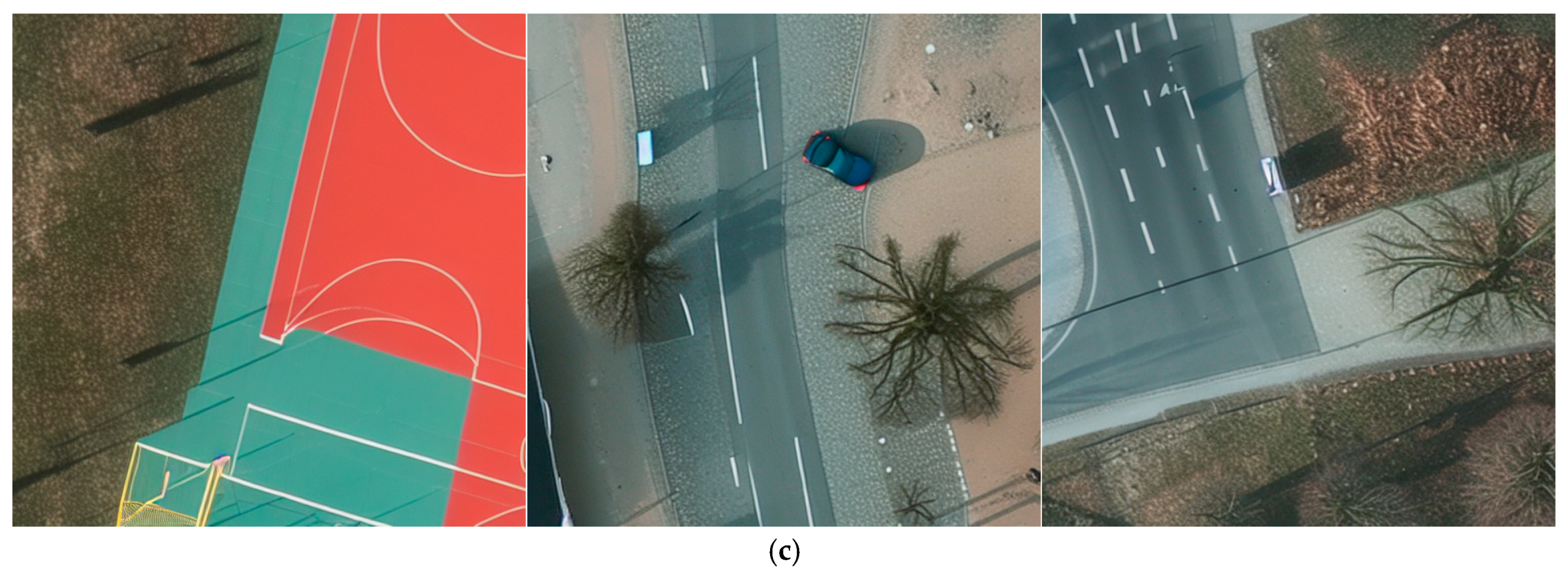

3.1. Dataset

3.2. Evaluation Metrics and Parameter Settings

3.2.1. Evaluation Metrics

- Mean Intersection over Union (mIoU): mIoU is a primary metric for segmentation performance, defined as the average of the per-class IoU. It is given by Equation (5):

  $$\mathrm{mIoU} = \frac{1}{N}\sum_{i=1}^{N}\frac{TP_i}{TP_i + FP_i + FN_i} \tag{5}$$

  where $N$ is the total number of categories, $TP_i$ is the number of pixels correctly categorized as positive instances in category $i$, $FP_i$ is the number of pixels incorrectly categorized as positive instances in category $i$, and $FN_i$ is the number of pixels incorrectly categorized as negative instances in category $i$.

- Mean F1 score (mF1): The per-class F1 score is given by Equation (6):

  $$F1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \tag{6}$$

  where Precision denotes the proportion of cases predicted as positive that are actually positive, calculated as TP/(TP + FP), and Recall denotes the proportion of actual positive cases that the model correctly predicts, calculated as TP/(TP + FN). The mean F1 score is the average over all classes, i.e., $\mathrm{mF1} = \frac{1}{N}\sum_{i=1}^{N} F1_i$.

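- Fréchet Inception Distance (FID): This measures the distributional difference between generated images and real images; a lower FID indicates higher generation quality. Its computation is defined by Equation (7):

  $$\mathrm{FID} = \left\lVert \mu_r - \mu_g \right\rVert_2^2 + \mathrm{Tr}\left(\Sigma_r + \Sigma_g - 2\left(\Sigma_r \Sigma_g\right)^{1/2}\right) \tag{7}$$

  Here, $\mu_r$ and $\mu_g$ denote the feature means of the real and generated images, respectively; $\Sigma_r$ and $\Sigma_g$ denote the feature covariance matrices of the real and generated images, respectively.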

- Mean Class Proportion Error (mCPE): This metric quantifies the deviation between the class proportions in the generated labels and the predefined target proportions. Lower mCPE values indicate that the generated labels align more closely with the expected class distribution. mCPE is represented by Equation (8):

  $$\mathrm{mCPE} = \frac{1}{n}\sum_{j=1}^{n}\frac{1}{m}\sum_{i=1}^{m}\left| p_{j,i}^{gen} - p_{j,i}^{text} \right| \tag{8}$$

  where $n$ is the total number of samples and $m$ is the total number of classes; $p_{j,i}^{gen}$ is the proportion of class $i$ in the $j$-th generated label; and $p_{j,i}^{text}$ is the proportion of class $i$ in the corresponding text-annotated label. The absolute difference represents the proportion error for class $i$. mCPE thus condenses the deviation from the desired class proportions into a single number that reflects the model's effectiveness at proportion control.
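The segmentation and proportion metrics above can be computed along the following lines. This is a minimal NumPy sketch under stated assumptions: label maps are integer arrays with class indices in `[0, num_classes)`, classes absent from both prediction and ground truth score 0 rather than being skipped, and `mcpe` returns a fraction rather than a percentage. All function names are illustrative.

```python
import numpy as np

def confusion_matrix(y_true, y_pred, num_classes):
    """Rows are ground-truth classes, columns are predicted classes."""
    idx = y_true.ravel() * num_classes + y_pred.ravel()
    counts = np.bincount(idx, minlength=num_classes ** 2)
    return counts.reshape(num_classes, num_classes)

def miou_and_mf1(cm):
    """Mean IoU and mean F1 from per-class TP, FP, and FN counts."""
    tp = np.diag(cm).astype(float)
    fp = cm.sum(axis=0) - tp  # predicted as class i but belonging elsewhere
    fn = cm.sum(axis=1) - tp  # belonging to class i but predicted elsewhere
    iou = tp / np.maximum(tp + fp + fn, 1.0)
    f1 = 2 * tp / np.maximum(2 * tp + fp + fn, 1.0)  # equals 2PR / (P + R)
    return iou.mean(), f1.mean()

def class_proportions(mask, num_classes):
    """Fraction of pixels belonging to each class in one label map."""
    return np.bincount(mask.ravel(), minlength=num_classes) / mask.size

def mcpe(generated_masks, target_props, num_classes):
    """Mean absolute gap between generated and target class proportions."""
    gen = np.stack([class_proportions(m, num_classes) for m in generated_masks])
    return np.abs(gen - np.asarray(target_props)).mean()

y_true = np.array([[0, 0], [1, 1]])
y_pred = np.array([[0, 1], [1, 1]])
cm = confusion_matrix(y_true, y_pred, num_classes=2)
miou, mf1 = miou_and_mf1(cm)  # IoU = [1/2, 2/3], F1 = [2/3, 4/5]
error = mcpe([y_pred], [[0.5, 0.5]], num_classes=2)  # |0.25-0.5|, |0.75-0.5|
```

In the toy example, one of four pixels is misclassified, giving mIoU ≈ 0.583 and mF1 ≈ 0.733; the predicted map holds 25% of class 0 against a 50% target, so the proportion error is 0.25.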

3.2.2. Parameter Settings

3.3. Comparative Experiments

3.3.1. Comparison of Different Models

3.3.2. Evaluation on Additional Dataset

3.3.3. Comparison with Random Oversampling

3.4. Ablation Study

3.4.1. Effect of Proportion-Aware Loss

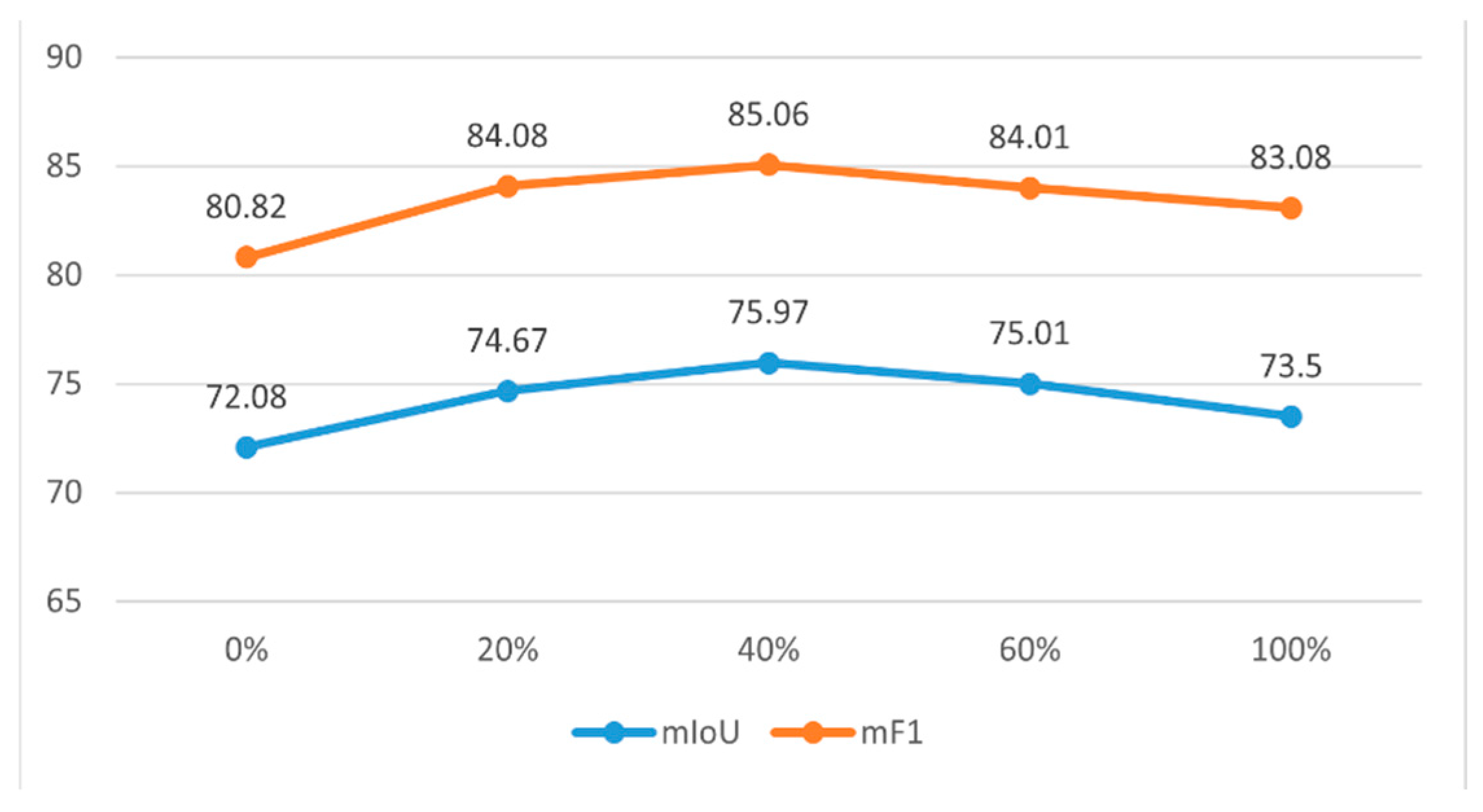

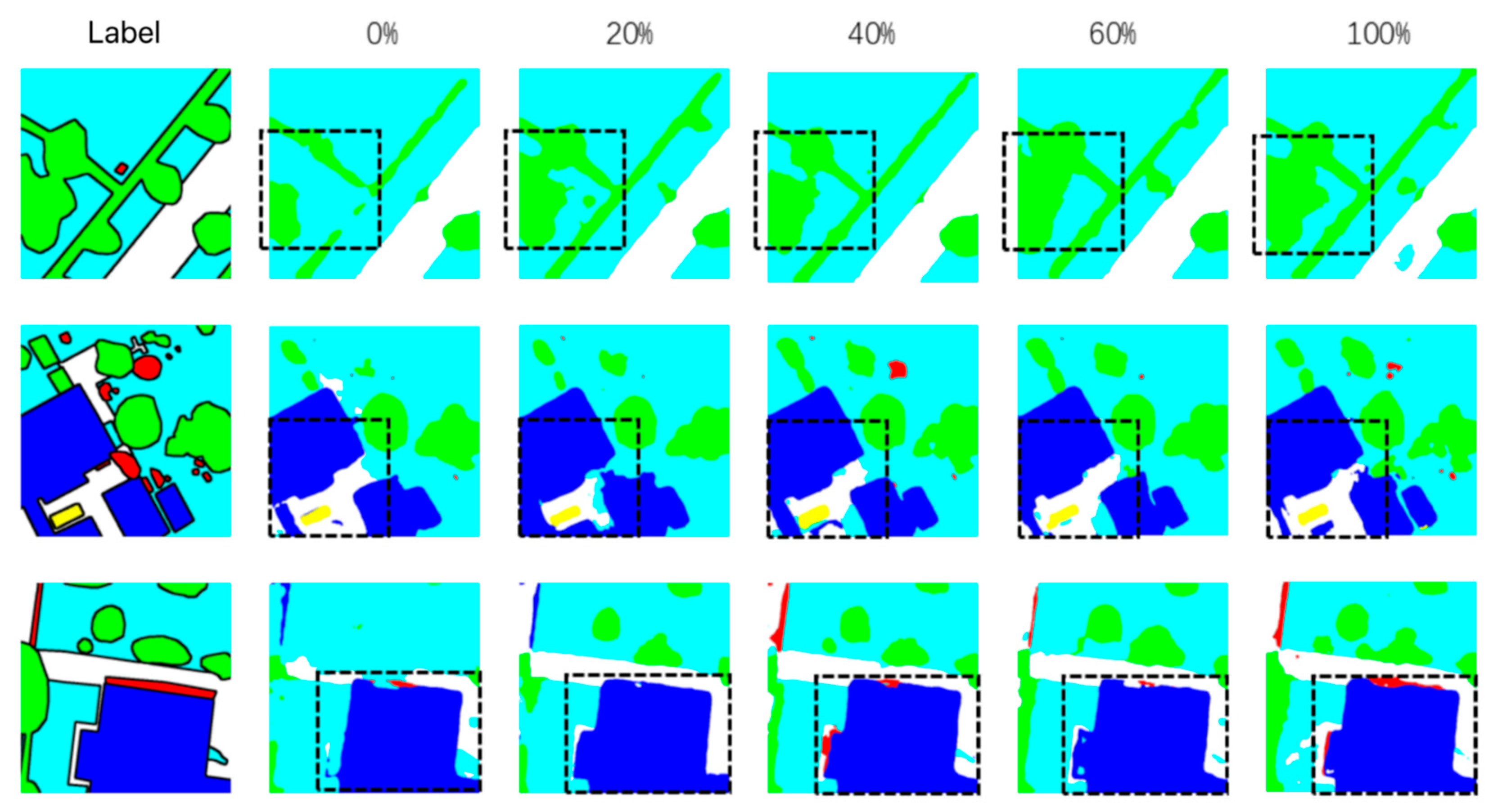

3.4.2. Effect of Synthetic Sample Proportion

3.4.3. Performance Across Different Models

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| GAN | Generative Adversarial Network |
| LDM | Latent Diffusion Model |
| LoRA | Low-Rank Adaptation |
| MLLM | Multimodal Large Language Model |
| mIoU | Mean Intersection over Union |
| mF1 | Mean F1 Score (average per-class F1) |
| mCPE | Mean Class Proportion Error |
| VAE | Variational Autoencoder |

| Method | FID |
|---|---|
| Pix2Pix | 129.09 |
| CycleGAN | 144.72 |
| Ours | 92.59 |
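FID values such as those in the table above follow Equation (7), which compares the mean and covariance of real and generated image features. The sketch below shows only the distance computation on precomputed feature arrays; extracting the features (in standard FID, Inception-v3 pooling features) is omitted, and the random arrays are stand-ins rather than data from this paper. The function name `frechet_distance` is illustrative.

```python
import numpy as np

def frechet_distance(feats_real, feats_gen):
    """Frechet distance between Gaussians fitted to two (samples, dims) arrays."""
    mu_r, mu_g = feats_real.mean(axis=0), feats_gen.mean(axis=0)
    sigma_r = np.cov(feats_real, rowvar=False)
    sigma_g = np.cov(feats_gen, rowvar=False)
    # The trace of the matrix square root of sigma_r @ sigma_g equals the sum
    # of the square roots of its eigenvalues, which are real and non-negative
    # for a product of covariance matrices (clipping absorbs round-off).
    eigvals = np.linalg.eigvals(sigma_r @ sigma_g)
    tr_sqrt = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()
    mean_term = np.sum((mu_r - mu_g) ** 2)
    return float(mean_term + np.trace(sigma_r) + np.trace(sigma_g) - 2.0 * tr_sqrt)

rng = np.random.default_rng(0)
feats = rng.standard_normal((500, 8))          # stand-in feature vectors
same = frechet_distance(feats, feats)          # identical sets: distance ~ 0
shifted = frechet_distance(feats, feats + 3.0) # mean shift of 3 in 8 dims: ~ 72
```

Shifting every feature by 3 leaves the covariance unchanged, so the distance reduces to the squared mean difference, 8 × 3² = 72, which is a convenient sanity check for an implementation.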

| Prompt | Loss | Impervious Surface | Building | Low Vegetation | Tree | Car | Clutter | mCPE |
|---|---|---|---|---|---|---|---|---|
| √ | √ | 2.57 | 23.76 | 4.24 | 18.98 | 1.44 | 1.24 | 8.705 |
| √ | - | 3.18 | 26.01 | 5.75 | 24.29 | 1.66 | 3.69 | 10.76 |

| Synthetic Sample Ratio | Impervious Surface (IoU/F1) | Building (IoU/F1) | Low Vegetation (IoU/F1) | Tree (IoU/F1) | Car (IoU/F1) | Clutter (IoU/F1) |
|---|---|---|---|---|---|---|
| 0% | 84.15/91.39 | 90.87/95.22 | 73.05/84.42 | 76.66/86.79 | 87.35/93.25 | 20.39/33.87 |
| 20% | 84.24/91.45 | 89.02/94.19 | 72.71/84.20 | 75.25/82.31 | 89.55/94.49 | 37.25/54.28 |
| 40% | 84.56/91.63 | 90.30/94.90 | 73.37/84.64 | 77.03/87.02 | 90.62/95.08 | 39.92/57.06 |
| 60% | 84.52/91.61 | 90.47/95.00 | 74.21/85.19 | 78.37/87.87 | 88.93/94.14 | 33.55/50.25 |
| 100% | 81.96/90.09 | 88.07/93.66 | 71.76/83.56 | 76.71/86.82 | 89.00/94.18 | 33.51/50.20 |

| Model/Synthetic Sample Ratio | Impervious Surface (IoU/F1) | Building (IoU/F1) | Low Vegetation (IoU/F1) | Tree (IoU/F1) | Car (IoU/F1) | Clutter (IoU/F1) | mIoU | mF1 |
|---|---|---|---|---|---|---|---|---|
| PSPNet/0% | 83.91/91.25 | 87.86/93.54 | 70.58/82.75 | 75.70/86.17 | 88.27/93.17 | 33.24/49.89 | 73.26 | 82.89 |
| PSPNet/40% | 84.48/91.59 | 89.61/94.52 | 72.79/84.25 | 76.73/86.83 | 89.26/94.32 | 33.32/49.98 | 74.36 | 83.58 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xu, Y.; Liu, H.; Yang, R.; Chen, Z. Remote Sensing Image Semantic Segmentation Sample Generation Using a Decoupled Latent Diffusion Framework. Remote Sens. 2025, 17, 2143. https://doi.org/10.3390/rs17132143
