Mapping the Cerrado–Amazon Transition Using PlanetScope–Sentinel Data Fusion and a U-Net Deep Learning Framework

Abstract

1. Introduction

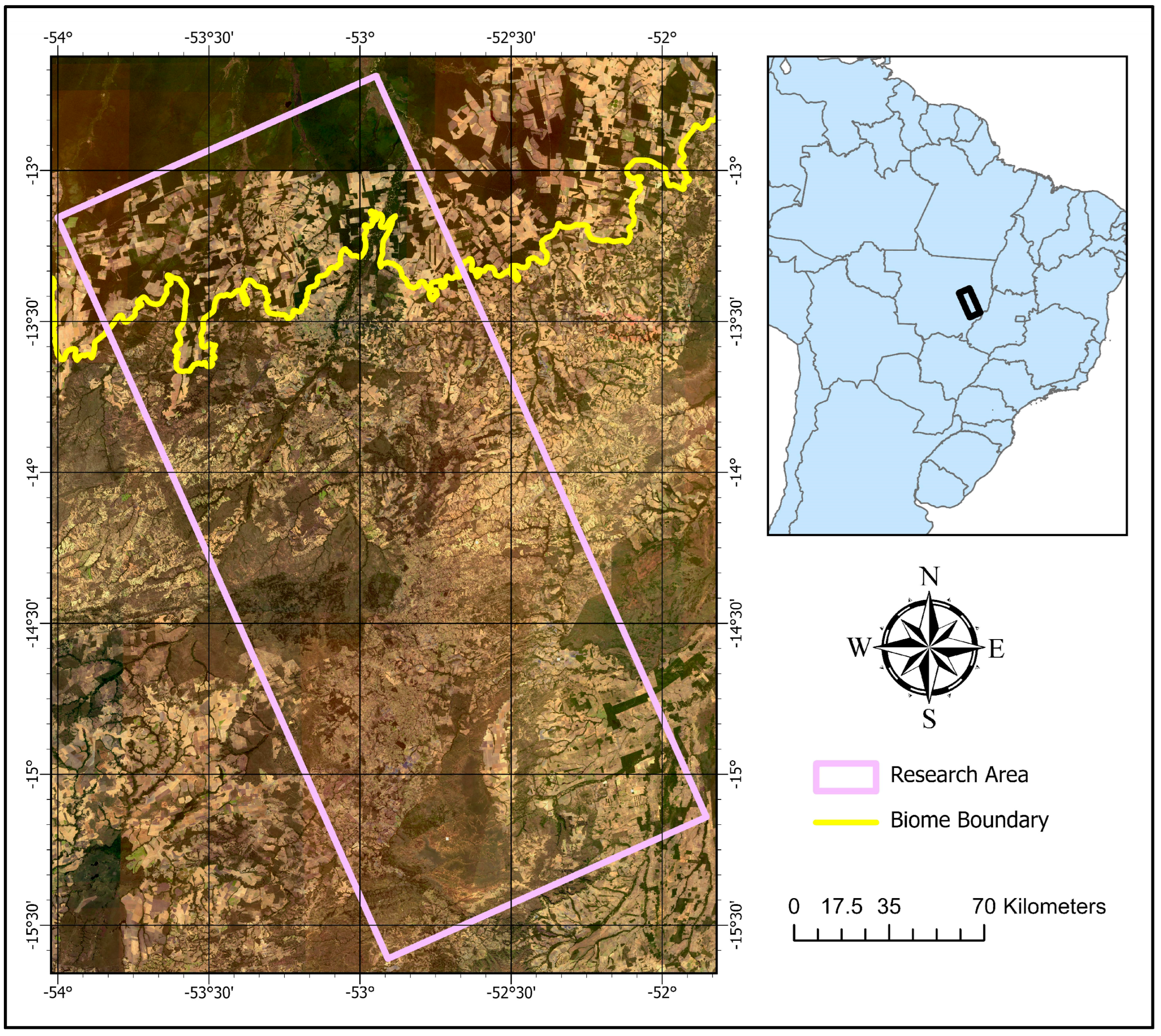

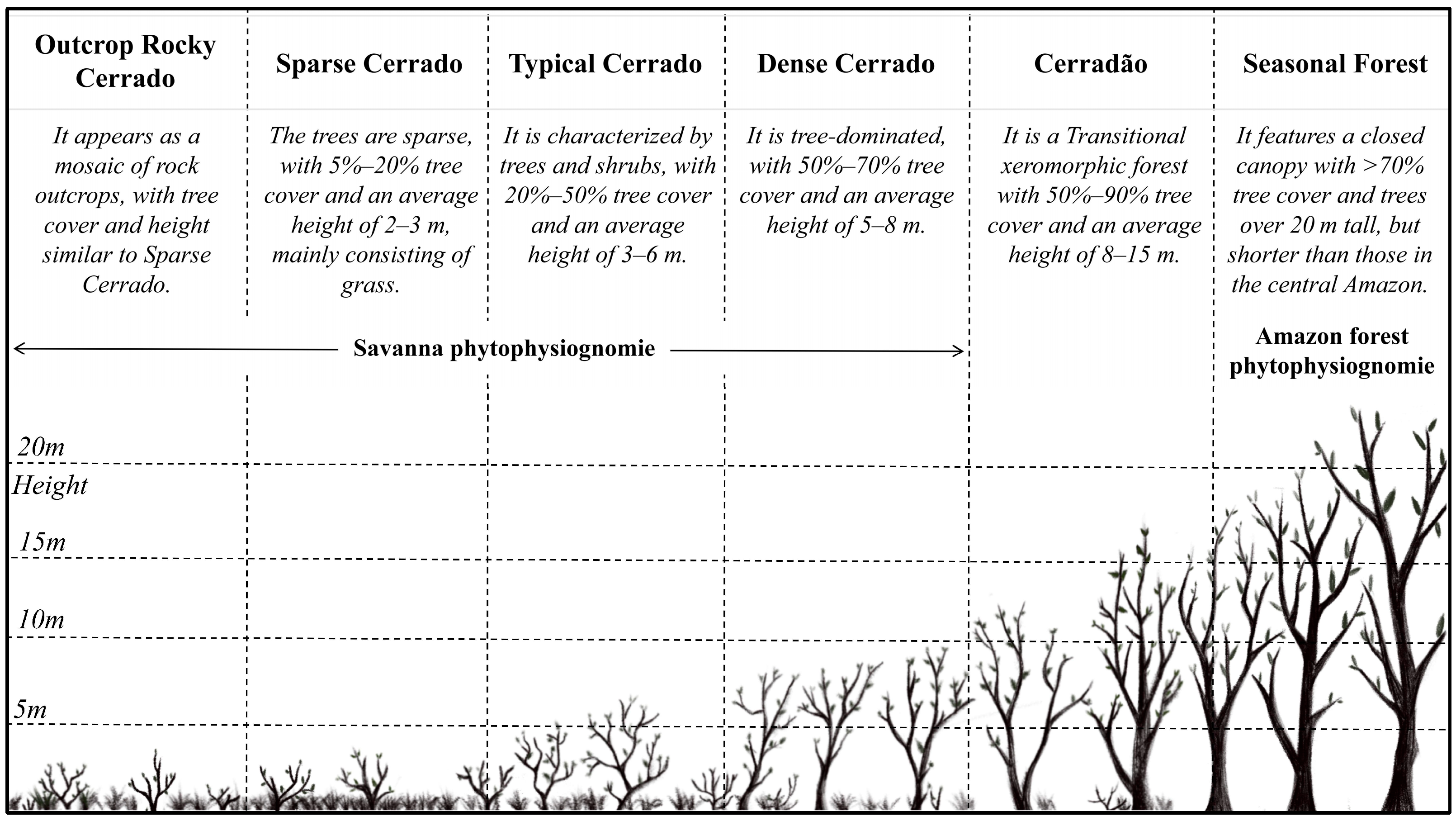

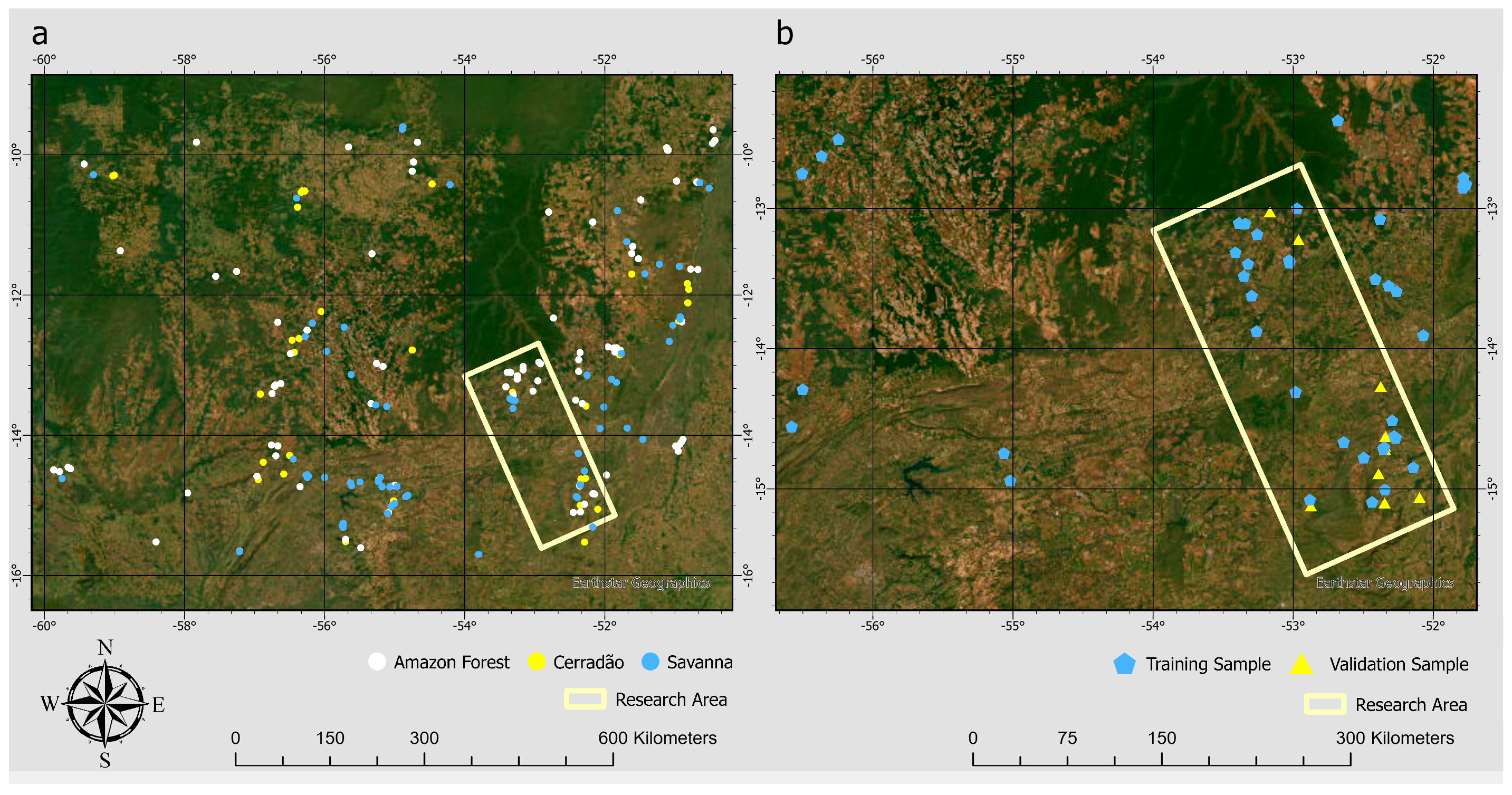

2. Study Area

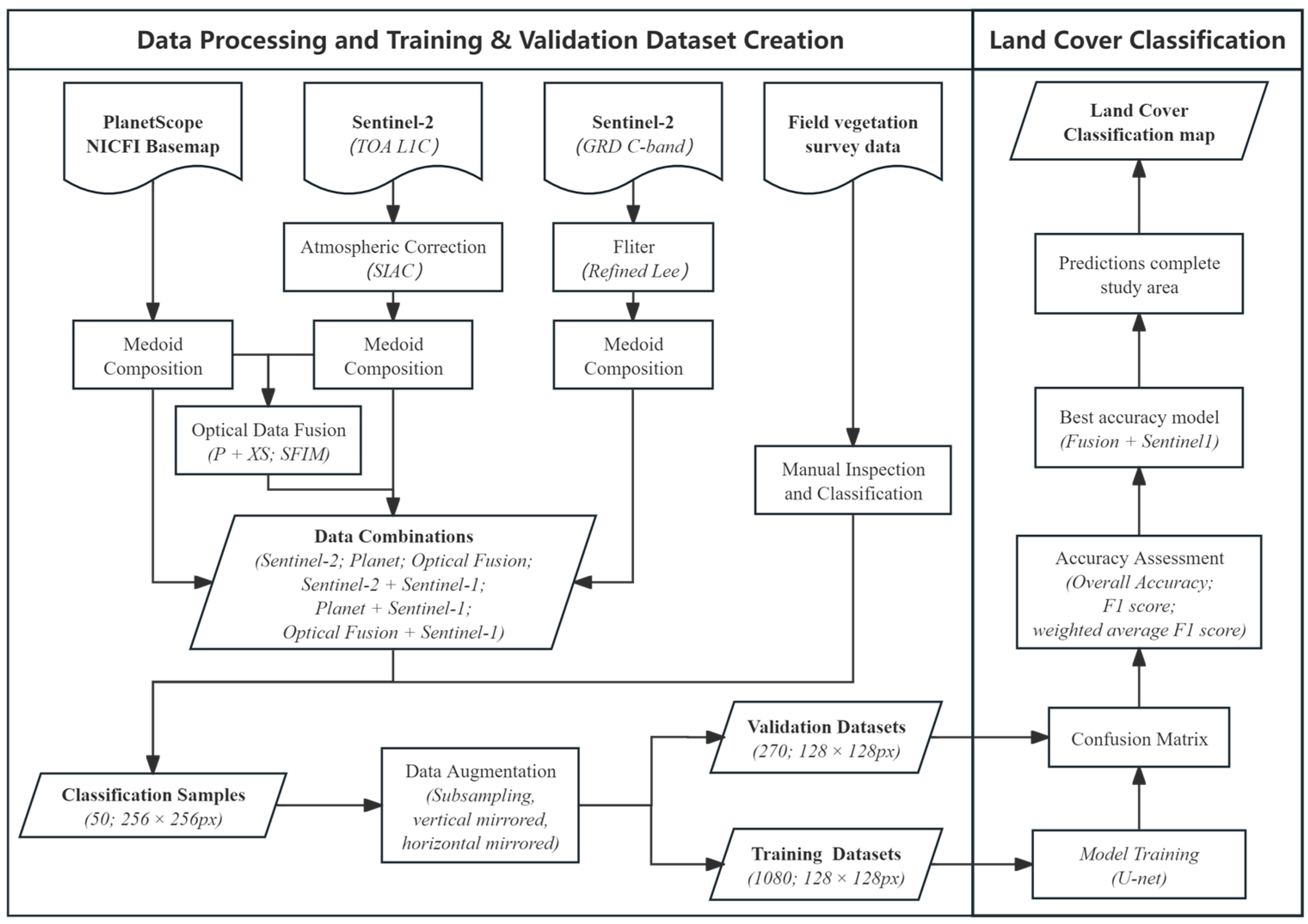

3. Data and Methods

3.1. Data Collection

3.1.1. Satellite Data

3.1.2. Ground Truth Data

3.2. Image Classification

U-Net

3.3. Optical Data Fusion

3.4. Accuracy Assessment

4. Results

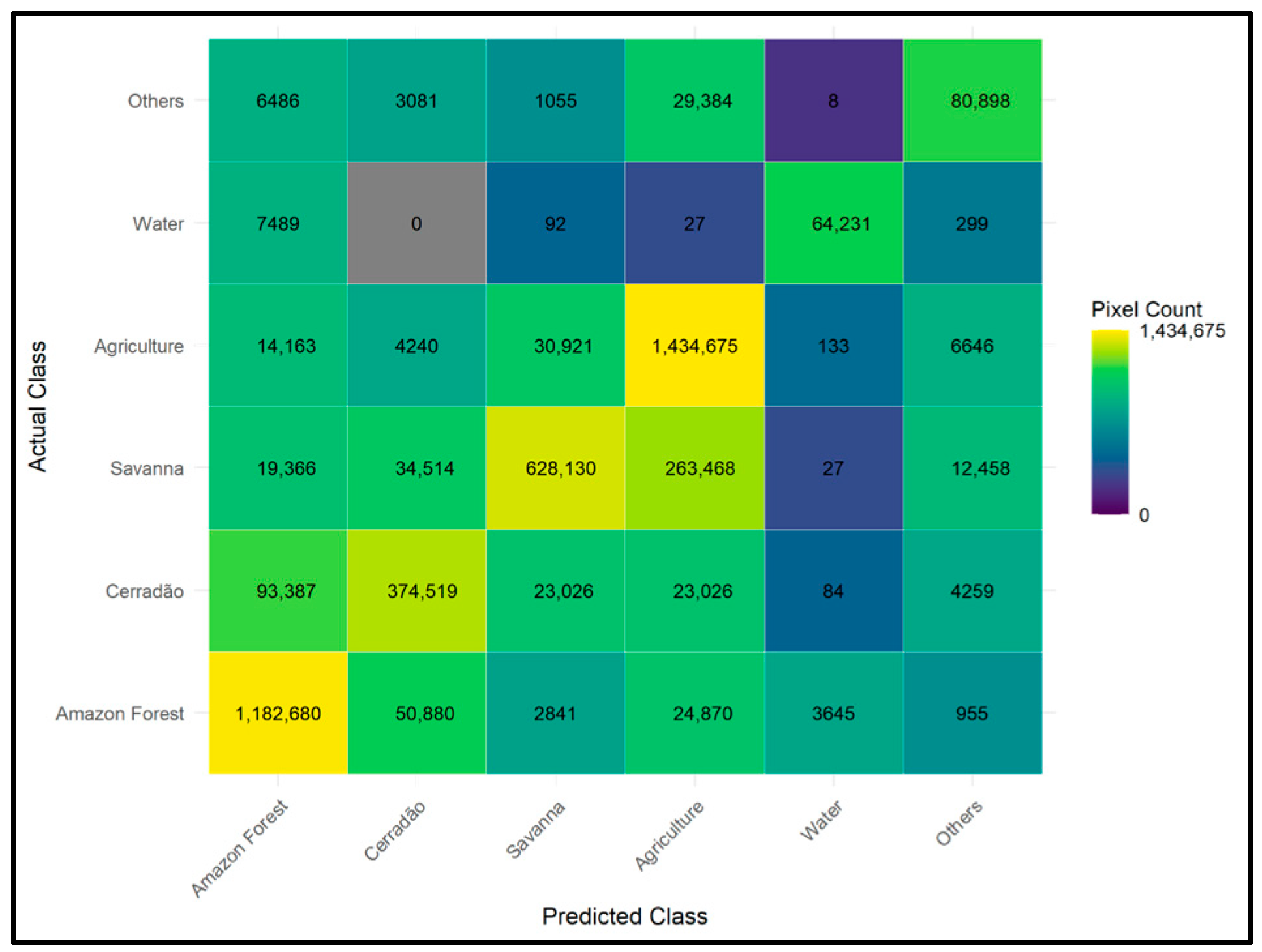

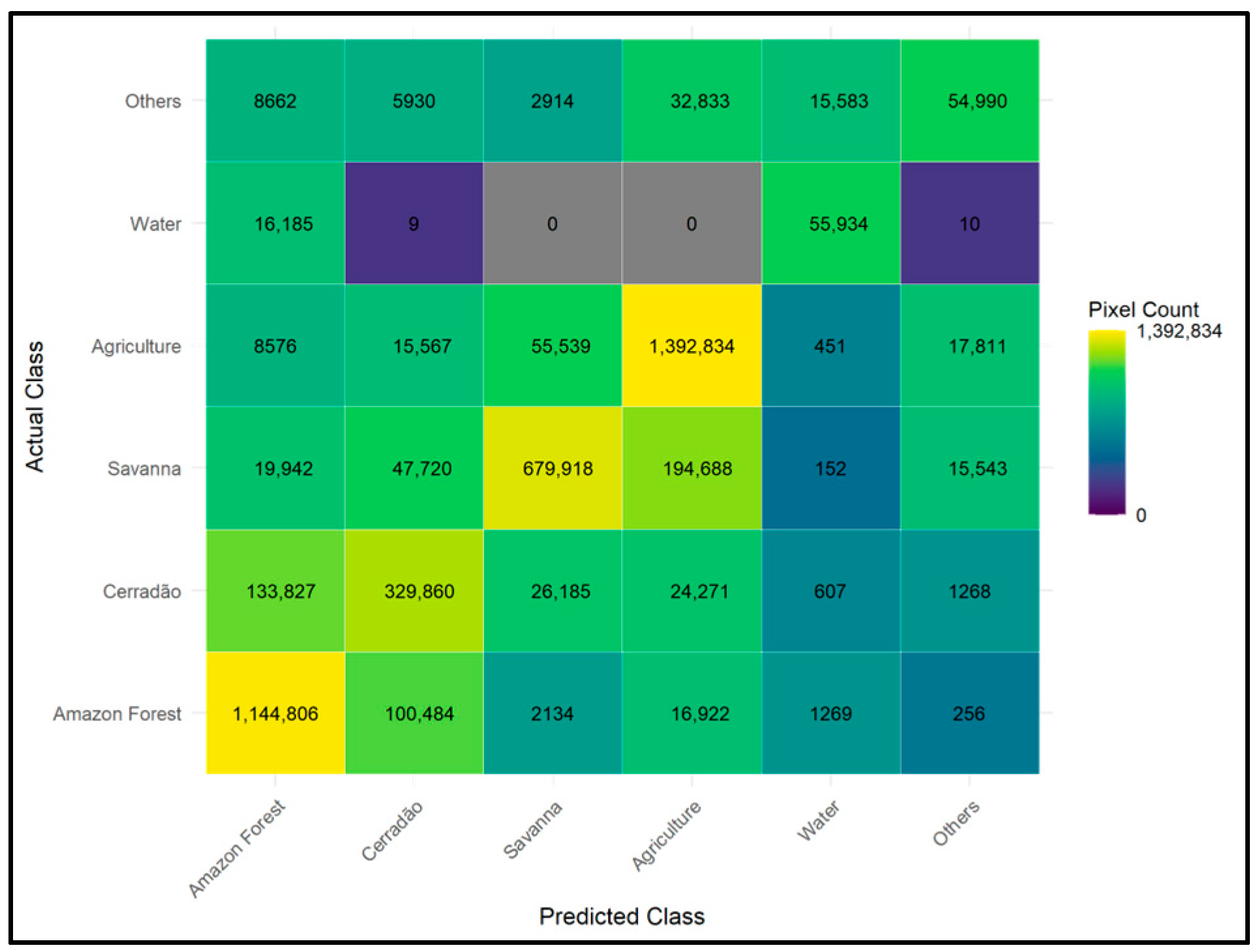

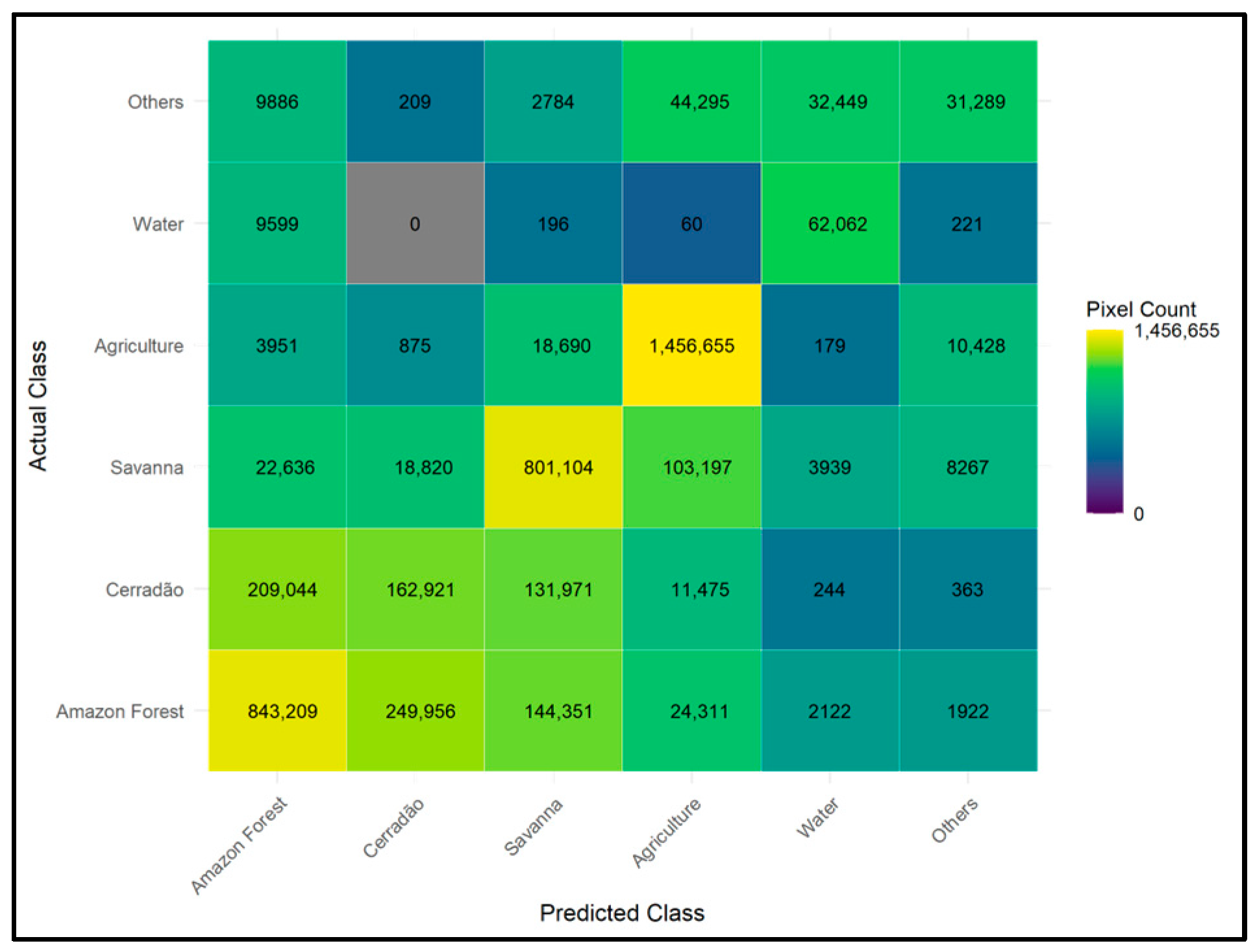

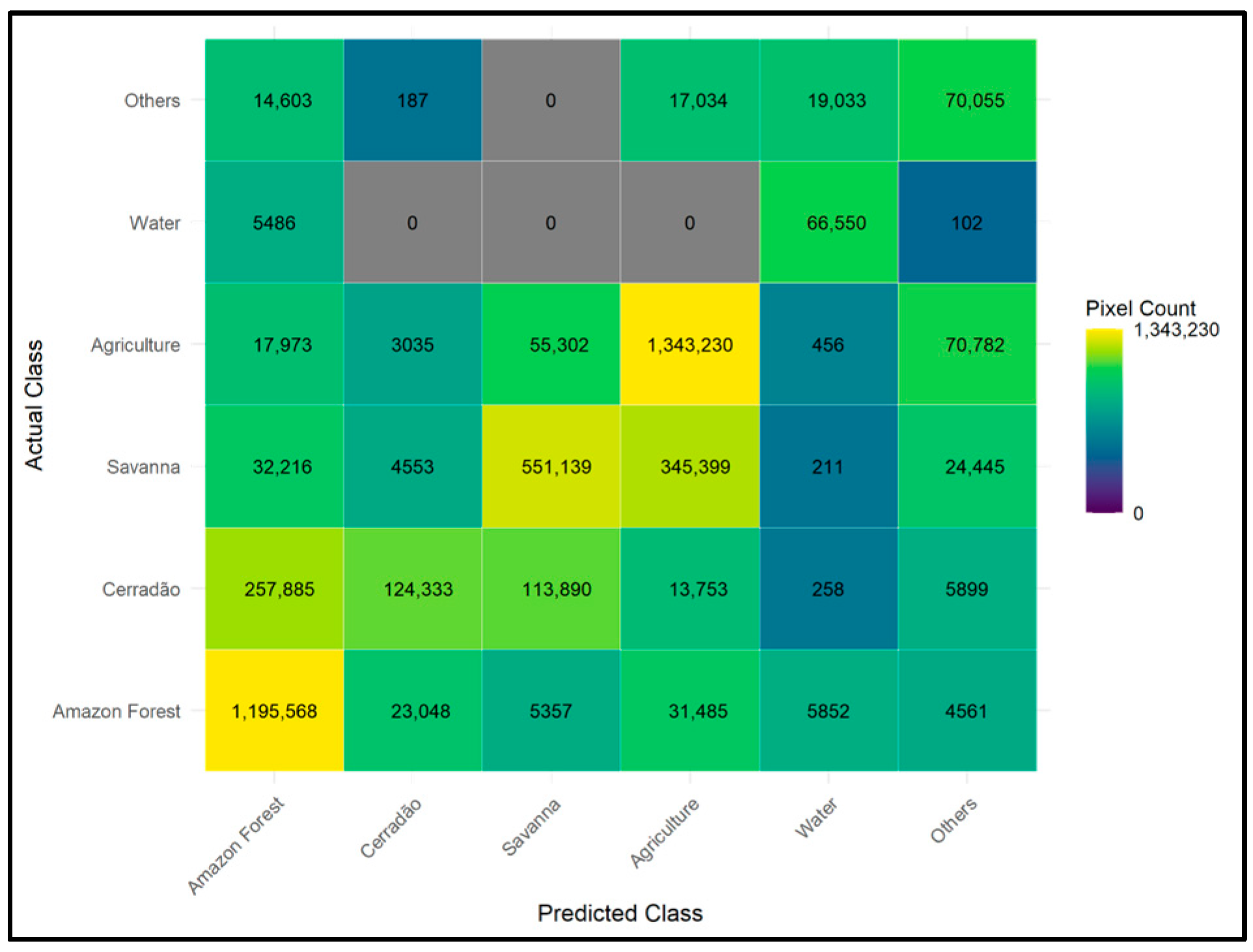

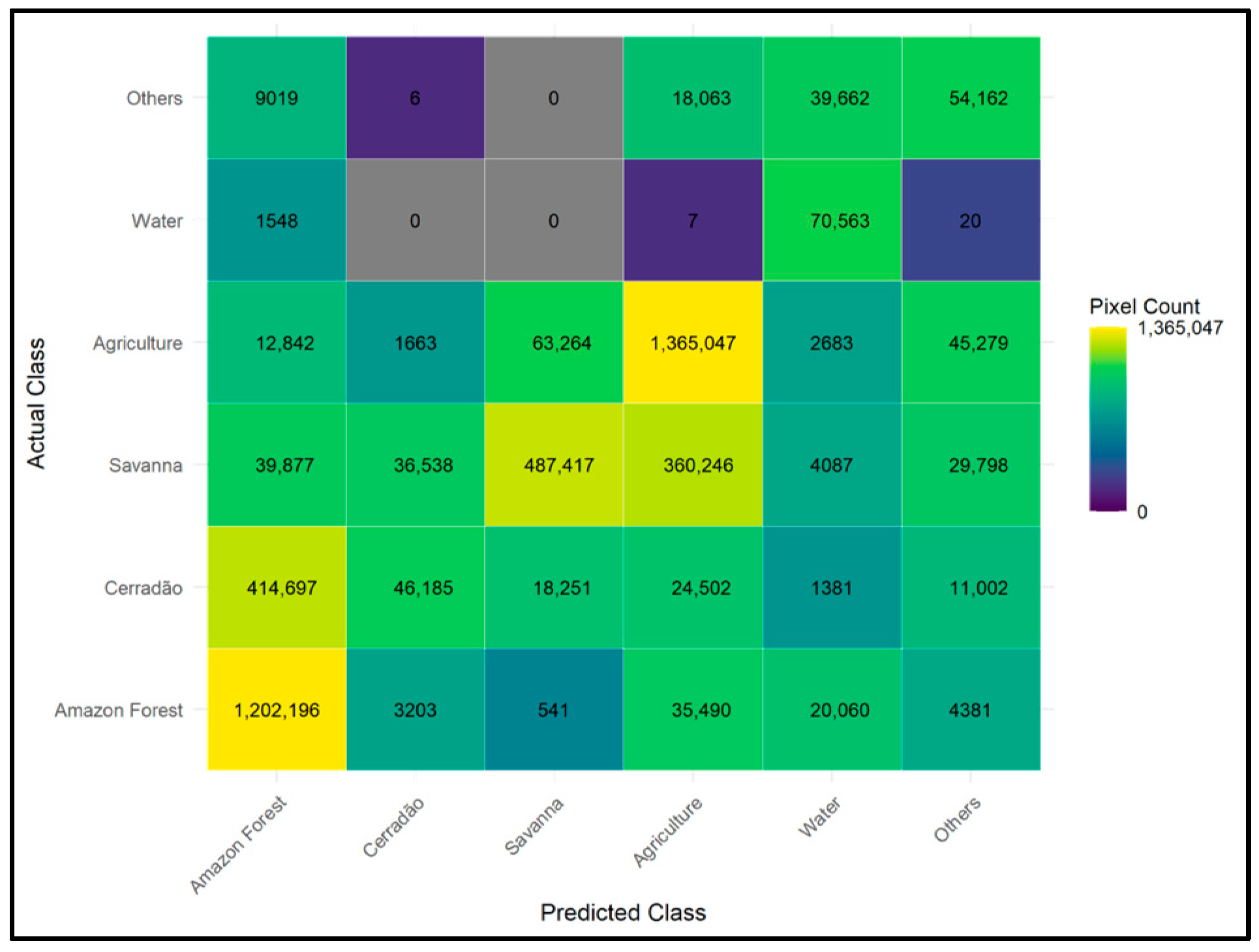

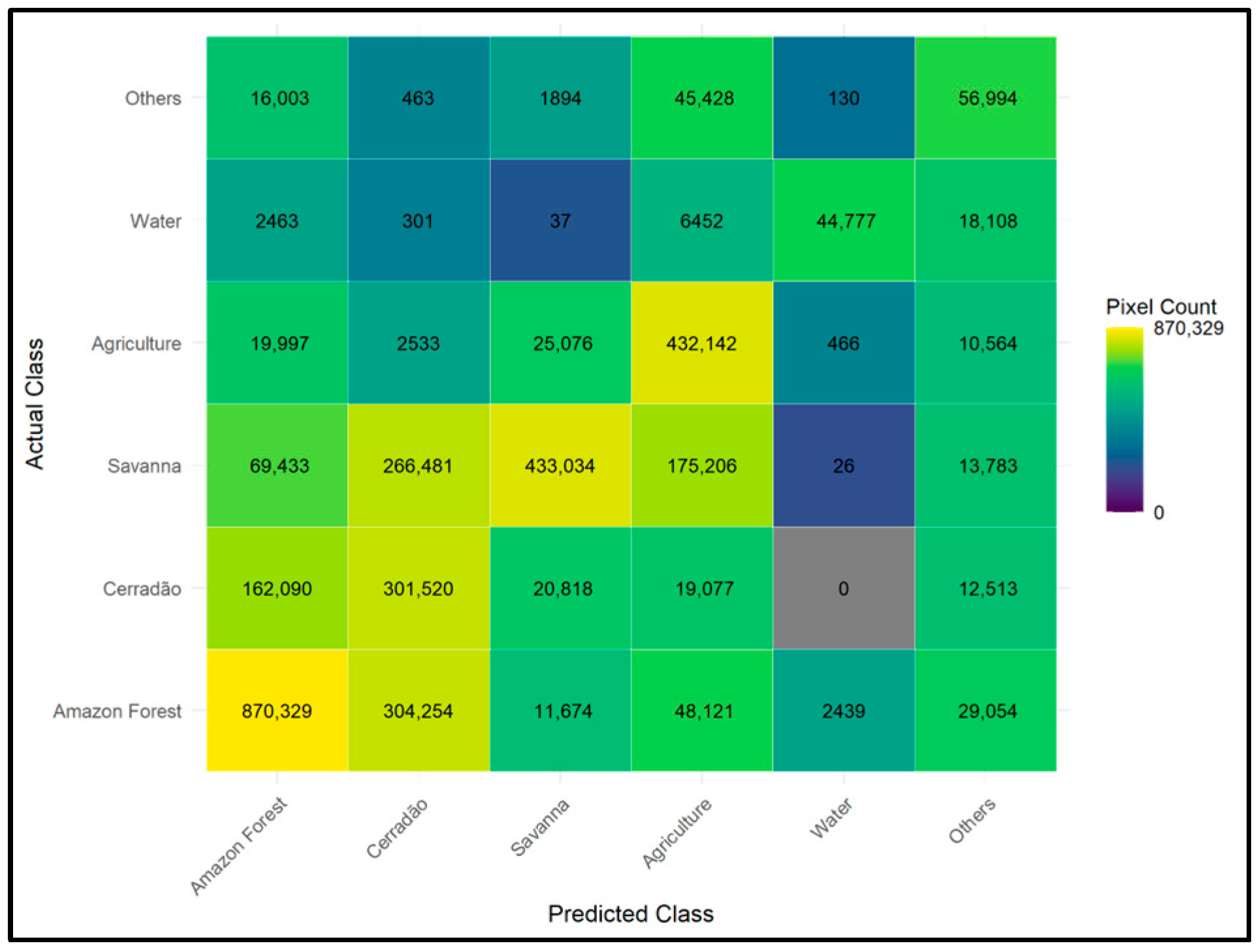

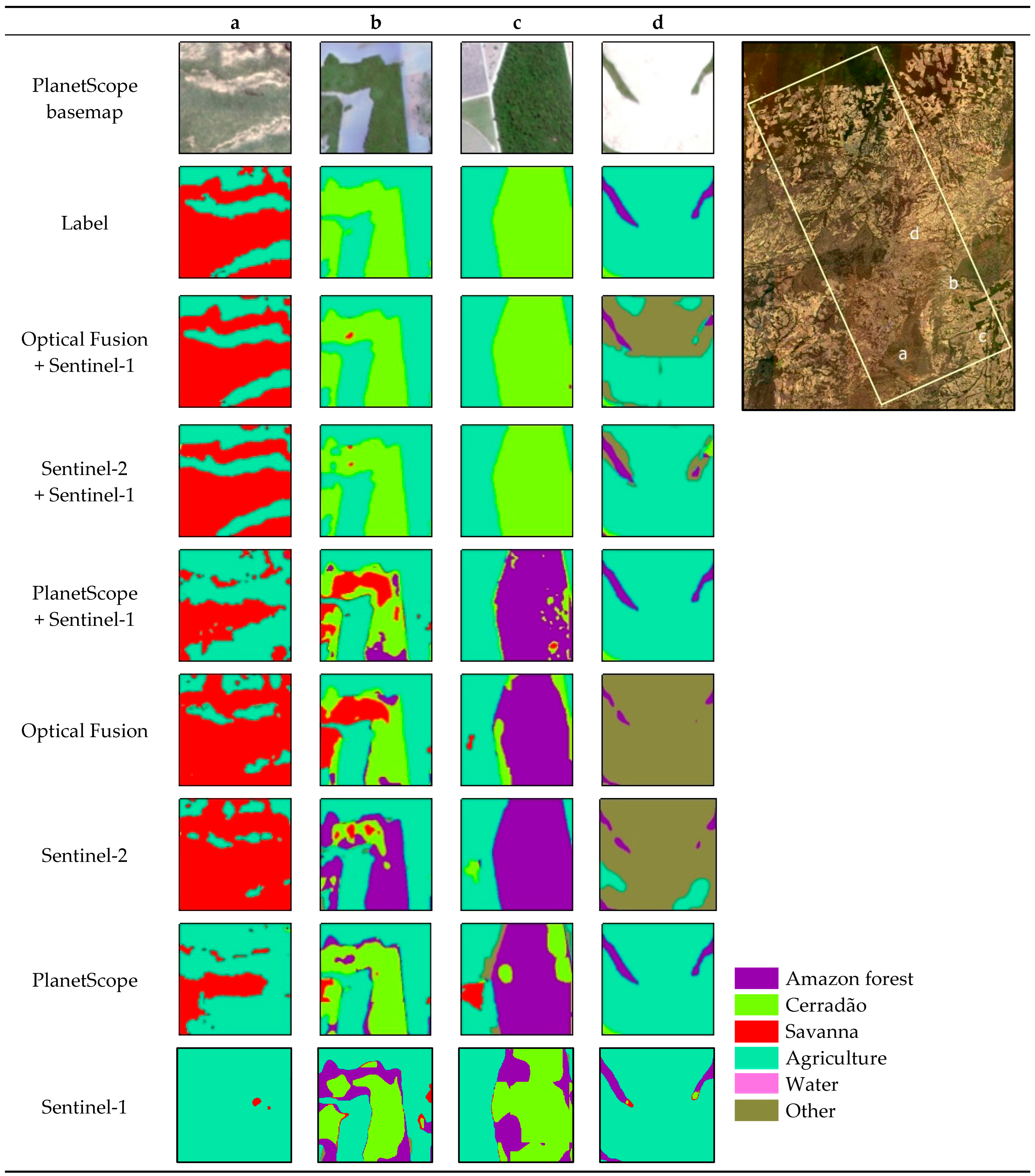

4.1. Identifying Which Sensor or Sensor Combination Results in the Highest Mapping Accuracies

4.2. Spatial Patterns of Land Cover Within the CAT

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A


- Wei, S.; Zhang, H.; Wang, C.; Wang, Y.; Xu, L. Multi-Temporal SAR Data Large-Scale Crop Mapping Based on U-Net Model. Remote Sens. 2019, 11, 68. [Google Scholar] [CrossRef]

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015; Navab, N., Hornegger, J., Wells, W.M., Frangi, A.F., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2015; Volume 9351, pp. 234–241. ISBN 978-3-319-24573-7. [Google Scholar]

- Yuan, Q.; Shen, H.; Li, T.; Li, Z.; Li, S.; Jiang, Y.; Xu, H.; Tan, W.; Yang, Q.; Wang, J. Deep Learning in Environmental Remote Sensing: Achievements and Challenges. Remote Sens. Environ. 2020, 241, 111716. [Google Scholar] [CrossRef]

- Masolele, R.N.; De Sy, V.; Herold, M.; Marcos, D.; Verbesselt, J.; Gieseke, F.; Mullissa, A.G.; Martius, C. Spatial and Temporal Deep Learning Methods for Deriving Land-Use Following Deforestation: A Pan-Tropical Case Study Using Landsat Time Series. Remote Sens. Environ. 2021, 264, 112600. [Google Scholar] [CrossRef]

- Rousset, G.; Despinoy, M.; Schindler, K.; Mangeas, M. Assessment of Deep Learning Techniques for Land Use Land Cover Classification in Southern New Caledonia. Remote Sens. 2021, 13, 2257. [Google Scholar] [CrossRef]

- Darbari, P.; Kumar, M.; Agarwal, A. SIT. Net: SAR Deforestation Classification of Amazon Forest for Land Use Land Cover Application. J. Comput. Commun. 2024, 12, 68–83. [Google Scholar] [CrossRef]

| Category | Training Dataset | Validation Dataset |
|---|---|---|
| Amazon forest | 3,735,681 | 1,265,871 |
| Cerradão | 3,418,998 | 516,018 |
| Savanna | 2,831,667 | 957,963 |
| Agriculture | 6,575,880 | 1,490,778 |
| Water | 274,896 | 72,138 |
| Other | 857,598 | 120,912 |

| Class Name | Description |
|---|---|
| Amazon Forest | Includes evergreen forests, semi-deciduous seasonal forests, gallery forests, and riparian forests. |
| Cerradão | Represents a transitional ecological woodland between the Amazon dry forest and the Brazilian savanna, characterized by dense vegetation. |
| Savanna | Encompasses typical Cerrado, sparse Cerrado, and rocky Cerrado, with typical Cerrado being the predominant type in the study area. |
| Agriculture | Covers all farmland and pastures. |
| Water | Includes rivers, streams, and lakes. |
| Other | Includes isolated buildings, urban areas, and other human-made surfaces such as paved roads. |

| Data Type | Sensor Combination | Number of Bands |
|---|---|---|
| Optical + SAR | Optical Fusion + Sentinel-1 | 12 |
| Optical + SAR | Sentinel-2 + Sentinel-1 | 12 |
| Optical + SAR | PlanetScope + Sentinel-1 | 6 |
| Optical | Optical Fusion | 10 |
| Optical | Sentinel-2 | 10 |
| Optical | PlanetScope | 4 |
| SAR | Sentinel-1 | 2 |
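
The band counts in the table above are consistent with each optical + SAR combination being a simple concatenation of its component datasets. The short check below is only a sketch of that arithmetic; the dictionary names are illustrative, and the concatenation itself is an assumption inferred from the counts rather than a description of the authors' code.

```python
# Band counts per single dataset, copied from the table above.
BANDS = {
    "Optical Fusion": 10,
    "Sentinel-2": 10,
    "PlanetScope": 4,
    "Sentinel-1": 2,
}

# Band counts reported for the optical + SAR combinations.
REPORTED_COMBINED = {
    ("Optical Fusion", "Sentinel-1"): 12,
    ("Sentinel-2", "Sentinel-1"): 12,
    ("PlanetScope", "Sentinel-1"): 6,
}


def stacked_band_count(*datasets: str) -> int:
    """Band count if the datasets are concatenated band by band (assumed)."""
    return sum(BANDS[name] for name in datasets)


for combo, reported in REPORTED_COMBINED.items():
    computed = stacked_band_count(*combo)
    status = "matches" if computed == reported else "differs"
    print(f"{' + '.join(combo)}: {computed} bands ({status} reported {reported})")
```

Under that assumption, the number of bands is also the number of input channels a network would receive for each combination.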

| Sentinel-2 Band | Sentinel-2 Description | Sentinel-2 Resolution | PlanetScope Band | PlanetScope Description | PlanetScope Resolution |
|---|---|---|---|---|---|
| Band 2 | Blue | 10 m | Band 1 | Blue | 4.77 m |
| Band 3 | Green | 10 m | Band 2 | Green | 4.77 m |
| Band 4 | Red | 10 m | Band 3 | Red | 4.77 m |
| Band 8 | NIR | 10 m | Band 4 | NIR | 4.77 m |
| Band 8A | Red Edge 4 | 20 m | Band 4 | NIR | 4.77 m |
| Band 5 | Red Edge 1 | 20 m | Band 4 | NIR | 4.77 m |
| Band 6 | Red Edge 2 | 20 m | Band 4 | NIR | 4.77 m |
| Band 7 | Red Edge 3 | 20 m | Band 4 | NIR | 4.77 m |

| Data Type | Sensor Combination | Overall Accuracy | F1 Score |
|---|---|---|---|
| Optical and SAR | Optical Fusion + Sentinel-1 | 85% | 0.85 |
| Optical and SAR | Sentinel-2 + Sentinel-1 | 83% | 0.82 |
| Optical and SAR | PlanetScope + Sentinel-1 | 76% | 0.75 |
| Optical | Optical Fusion | 76% | 0.74 |
| Optical | Sentinel-2 | 73% | 0.69 |
| Optical | PlanetScope | 80% | 0.80 |
| SAR | Sentinel-1 | 62% | 0.49 |

P = precision, R = recall, F1 = F1 score. The first three sensor combinations are optical + SAR, the next three are optical only, and Sentinel-1 is SAR only.

| Class | Optical Fusion + Sentinel-1 P | Optical Fusion + Sentinel-1 R | Optical Fusion + Sentinel-1 F1 | Sentinel-2 + Sentinel-1 P | Sentinel-2 + Sentinel-1 R | Sentinel-2 + Sentinel-1 F1 | PlanetScope + Sentinel-1 P | PlanetScope + Sentinel-1 R | PlanetScope + Sentinel-1 F1 | Optical Fusion P | Optical Fusion R | Optical Fusion F1 | Sentinel-2 P | Sentinel-2 R | Sentinel-2 F1 | PlanetScope P | PlanetScope R | PlanetScope F1 | Sentinel-1 P | Sentinel-1 R | Sentinel-1 F1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Amazon forest | 0.89 | 0.93 | 0.91 | 0.86 | 0.90 | 0.88 | 0.77 | 0.67 | 0.71 | 0.78 | 0.94 | 0.86 | 0.72 | 0.95 | 0.82 | 0.80 | 0.93 | 0.86 | 0.76 | 0.69 | 0.72 |
| Cerradão | 0.80 | 0.72 | 0.76 | 0.66 | 0.64 | 0.65 | 0.38 | 0.32 | 0.34 | 0.80 | 0.24 | 0.37 | 0.53 | 0.09 | 0.15 | 0.60 | 0.46 | 0.52 | 0.34 | 0.58 | 0.43 |
| Savanna | 0.92 | 0.66 | 0.76 | 0.89 | 0.71 | 0.79 | 0.73 | 0.84 | 0.78 | 0.76 | 0.58 | 0.65 | 0.86 | 0.51 | 0.64 | 0.93 | 0.62 | 0.74 | 0.88 | 0.45 | 0.60 |
| Agriculture | 0.81 | 0.96 | 0.88 | 0.84 | 0.93 | 0.88 | 0.89 | 0.98 | 0.93 | 0.77 | 0.90 | 0.83 | 0.76 | 0.92 | 0.83 | 0.86 | 0.94 | 0.90 | 0.59 | 0.88 | 0.71 |
| Water | 0.94 | 0.89 | 0.92 | 0.76 | 0.78 | 0.77 | 0.61 | 0.86 | 0.72 | 0.72 | 0.92 | 0.81 | 0.51 | 0.98 | 0.67 | 0.69 | 0.91 | 0.81 | 0.94 | 0.62 | 0.75 |
| Other | 0.77 | 0.67 | 0.71 | 0.61 | 0.45 | 0.52 | 0.60 | 0.26 | 0.36 | 0.40 | 0.58 | 0.47 | 0.34 | 0.45 | 0.41 | 0.46 | 0.66 | 0.54 | 0.40 | 0.47 | 0.44 |
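
The F1 values in the table above can be related to the precision and recall columns through the standard harmonic-mean definition, F1 = 2PR / (P + R). The sketch below recomputes F1 for the Optical Fusion + Sentinel-1 columns only; the function and variable names are illustrative. Because the published P and R are rounded to two decimals, the recomputed values are expected to agree with the reported F1 only to within about 0.01.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 if both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) for Optical Fusion + Sentinel-1,
# copied from the per-class table above.
OPTICAL_FUSION_S1 = {
    "Amazon forest": (0.89, 0.93, 0.91),
    "Cerradão": (0.80, 0.72, 0.76),
    "Savanna": (0.92, 0.66, 0.76),
    "Agriculture": (0.81, 0.96, 0.88),
    "Water": (0.94, 0.89, 0.92),
    "Other": (0.77, 0.67, 0.71),
}

# Largest gap between recomputed and reported F1 across the six classes.
max_gap = max(
    abs(f1_score(p, r) - reported)
    for p, r, reported in OPTICAL_FUSION_S1.values()
)

for name, (p, r, reported) in OPTICAL_FUSION_S1.items():
    print(f"{name}: recomputed {f1_score(p, r):.3f}, reported {reported:.2f}")
```

The same function can be applied to any other sensor combination by swapping in its P and R columns.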

| Category | Area (km²) | Proportion of Study Area (%) |
|---|---|---|
| Amazon forest | 7741.2 | 20.1 |
| Cerradão | 3232.1 | 8.4 |
| Savanna | 8317.3 | 21.6 |
| Agriculture | 18,667.4 | 48.6 |
| Water | 75.1 | 0.2 |
| Other | 391.5 | 1.0 |
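
The proportions in the table above follow from dividing each class area by the summed area of all six classes. The sketch below reproduces that arithmetic; the variable names are illustrative, and it assumes the six classes together make up the whole mapped study area.

```python
# Mapped area per class in km², copied from the table above.
AREAS_KM2 = {
    "Amazon forest": 7741.2,
    "Cerradão": 3232.1,
    "Savanna": 8317.3,
    "Agriculture": 18667.4,
    "Water": 75.1,
    "Other": 391.5,
}

# Total mapped area, assuming the six classes cover the full study area.
total_km2 = sum(AREAS_KM2.values())

# Share of the study area per class, as a percentage rounded to one decimal.
shares_pct = {
    name: round(100 * area / total_km2, 1)
    for name, area in AREAS_KM2.items()
}

for name, share in shares_pct.items():
    print(f"{name}: {share}%")
```

Because each share is rounded independently, the rounded percentages need not sum to exactly 100.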

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, C.; Harris, A.; Marimon, B.S.; Marimon Junior, B.H.; Dennis, M.; Bispo, P.d.C. Mapping the Cerrado–Amazon Transition Using PlanetScope–Sentinel Data Fusion and a U-Net Deep Learning Framework. Remote Sens. 2025, 17, 2138. https://doi.org/10.3390/rs17132138
