Abstract

Accurate extraction of plastic greenhouses from high-resolution remote sensing imagery is essential for agricultural resource management and facility-based crop monitoring. However, the dense spatial distribution, irregular morphology, and complex background interference of greenhouses often limit the effectiveness of conventional segmentation methods. This study proposes a deep learning framework that integrates a multi-scale Transformer-based decoder with a Swin-UNet architecture to improve feature representation and extraction accuracy. To enhance geometric consistency, a post-processing strategy is introduced, combining connected component analysis and morphological operations to suppress noise and refine boundary shapes. Using GF-2 satellite imagery over Weifang City, China, the model achieved a recall of 92.44%, precision of 91.47%, intersection-over-union of 85.13%, and F1-score of 91.95%. In addition to instance-level extraction, spatial distribution and statistical analysis were performed across administrative divisions, revealing regional disparities in protected agriculture development. The proposed approach offers a practical solution for greenhouse mapping and supports broader applications in land use monitoring, agricultural policy enforcement, and resource inventory.

1. Introduction

Plastic greenhouses, a key component of modern protected agriculture, are typically built on lightweight steel, bamboo or wood, or aluminum alloy frames and covered with plastic films such as polyethylene (PE), polyvinyl chloride (PVC), or ethylene-vinyl acetate (EVA) copolymers. Their primary function is to regulate the internal microclimate (temperature, humidity, light intensity, and carbon dioxide concentration), thereby enabling seasonal crop adjustment, off-season cultivation, and highly efficient intensive farming [1]. Plastic greenhouses originated in Japan in the 1950s and soon spread to other parts of Asia, the Americas, and Europe. In China, greenhouse agriculture began in the 1960s and 1970s and was initially used mainly for vegetable production [2]. Since the 1980s, China has experienced rapid growth in protected agriculture, with the total greenhouse cultivation area expanding from 15,000 hectares to 4.67 million hectares by 2010 [3]. By 2023, the cumulative area of constructed greenhouses in China had reached 18.36 million hectares [2], representing over 70% of the global total. Major greenhouse industry clusters have emerged in provinces such as Shandong, Hebei, Jiangsu, and Yunnan. Owing to their low construction costs and high adaptability, plastic greenhouses have become an essential means of ensuring a stable supply of vegetables, fruits, flowers, and seedlings in China, and they are particularly well suited to smallholder farmers and small- to medium-sized agricultural enterprises.

Amid the ongoing global transformation of agriculture, plastic greenhouse systems have been widely adopted in vertical farming and intensive agricultural models. These systems conserve land while substantially increasing yield per unit area, thereby playing a pivotal role in addressing global food security challenges [4,5,6]. In China, greenhouse cultivation is not only a critical source of vegetable supply but also a key means of increasing farmers’ income [7]. According to available statistics, the global area under greenhouse vegetable cultivation reached 3.91 million hectares by the end of 2017, with China accounting for 80.4% of the total [8]. By 2020, the protected vegetable cultivation area and yield in China represented 19% and 38% of the national totals, respectively [9], further reinforcing the country’s leading role in global greenhouse agriculture [5,10]. However, the rapid expansion of plastic greenhouses has also introduced a range of ecological and environmental challenges. On the one hand, greenhouse soils are prone to accumulating heavy metals, antibiotics, and other contaminants, which degrade soil quality and pose risks to human health [7]. On the other hand, plastic covering materials modify surface reflectance properties [11], increasing the reflection of radiation. Moreover, the widespread use and improper disposal of non-degradable plastic films contribute to agricultural non-point source plastic pollution [12,13]. Given these concerns, there is a pressing need for a high-precision remote sensing system for identifying and mapping plastic greenhouses. Such a system would enable rapid extraction and dynamic monitoring of greenhouse distributions, improve the efficiency of agricultural resource management, and provide critical support for the green transformation of agriculture and for ecological and environmental protection.

The rapid advancement of remote sensing technology has not only provided comprehensive data support for applications such as land cover classification and land use monitoring [14] but has also opened new avenues for the automated extraction of plastic greenhouses. Early efforts in greenhouse extraction relied primarily on spectral feature analysis or object-oriented classification [15]. For example, Taşdemir et al. (2014) extracted texture and spectral features of greenhouses from WorldView-2 imagery and applied an approximate spectral clustering algorithm for unsupervised classification; their method achieved an overall accuracy of 87.68% across 8000 samples and could partially distinguish glass greenhouses from plastic ones [16]. Similarly, Yang et al. (2017) proposed a Plastic Greenhouse Index based on enhanced spectral features, attaining an overall extraction accuracy of 91.2% using Landsat imagery of Weifang City, Shandong Province, China [17]. While spectral-based methods perform well in specific regions, their robustness remains limited. Tarantino and Figorito (2012) observed that changes in greenhouse covering materials significantly alter their spectral signatures, leading to frequent misclassifications [18]. Aguilar et al. (2015) further noted that during high-temperature periods, greenhouse films are often whitened for thermal regulation, producing substantial spectral variation that complicates reliable spectral-based extraction [19]. To overcome these limitations, object-oriented classification strategies have been introduced. Wu et al. (2016) developed an object-oriented greenhouse extraction model based on Landsat-8 imagery, using the Xiaoshan District of Hangzhou City, China, as a case study. By integrating the Random Forest (RF) algorithm, their approach outperformed purely spectral-based methods, although it still struggled to differentiate greenhouses from sparsely vegetated non-greenhouse areas [20]. Balcik et al. (2020) applied object-based greenhouse extraction to Sentinel-2 MSI and SPOT-7 imagery, achieving overall accuracies of 88.38% and 91.43%, respectively, and thereby demonstrating the potential of such methods [21]. However, they also highlighted classifier-specific limitations: K-Nearest Neighbors (KNN) and RF tended to misclassify bare soil and roads as greenhouses, while Support Vector Machines (SVM) were prone to confusing greenhouses with white-roofed structures [21].

In recent years, advances in deep learning techniques and computational resources have significantly enhanced the precision of surface feature identification and classification in remote sensing imagery. The application of deep learning models to ground object extraction has not only improved accuracy but also substantially reduced dependence on manual intervention [22]. For example, Deng et al. (2023) developed a Coastal Aquaculture Network (CANet) based on DeepLabV3+ to achieve large-scale extraction of marine aquaculture areas from GF-2 remote sensing imagery [23]; Tian et al. (2023) utilized the BsiNet framework for crop classification in the mountainous terrain of Xishui County [24]; Chen et al. (2023) developed a Generative Adversarial Network (GAN)-based method for road extraction from remote sensing images [25]; and Xie et al. (2024) applied YOLOv5 to detect wind turbines in GF-2 imagery [26]. In the domain of agricultural greenhouse extraction, several deep learning-based approaches have also emerged. Ma et al. (2021) introduced a method based on Fully Convolutional Networks (FCNs), achieving an accuracy of 92.68% [22]. Chen et al. (2022) employed a ResNet34-based architecture for large-scale greenhouse mapping in Shandong Province, demonstrating its utility in agricultural monitoring [27]. More recently, Tong et al. (2024) used Convolutional Neural Networks (CNNs) to map the global distribution of greenhouses, covering approximately 1.3 million hectares, thus visually documenting the rapid global expansion of protected agriculture [28]. Despite these achievements, current large-scale mapping methods remain limited in their ability to accurately delineate the boundary morphology and spatial distribution of individual greenhouse structures. This deficiency hinders detailed analysis of key parameters such as greenhouse count, shape, area, and structural type, which are critical for fine-scale agricultural research and management.

Accurate extraction of individual plastic greenhouses from remote sensing imagery remains a significant challenge due to factors such as narrow inter-structure spacing, high-density spatial distribution, and the inherent heterogeneity and variability of surrounding background environments. These challenges primarily manifest in two key aspects: (1) Adhesion artifacts in extraction results: In areas with densely distributed greenhouses and minimal spacing between structures, remote sensing-based extraction often suffers from large-scale adhesion artifacts, where adjacent greenhouses are incorrectly merged into single connected objects. This severely impairs the accuracy of greenhouse counting and morphological analysis. (2) Irregular contours and fragmented segments: Inaccurate segmentation of greenhouse boundaries frequently results in irregular edge contours and fragmented patches. These errors introduce noise and discontinuities in the extracted outputs, undermining the reliability of subsequent spatial analysis and information retrieval processes.

To address the aforementioned challenges, this study proposes a remote sensing image analysis method for the precise extraction of individual plastic greenhouses. The method integrates multi-scale feature learning with image post-processing mechanisms to improve extraction accuracy and completeness. Weifang City, located in Shandong Province, China, is selected as the representative study area due to its extensive greenhouse cultivation. The primary contributions of this study are as follows:

- Development of a Multi-scale Transformer Decoder (MTD) module: A novel decoder architecture is constructed by integrating hierarchical attention mechanisms and adaptive feature fusion for cross-scale dependency modeling. The MTD module incorporates lateral connections and cross-attention mechanisms to effectively aggregate greenhouse edge information across multiple spatial scales. By progressively refining feature representations from high-level semantics to low-level details, the module significantly improves the precision of edge detection.

- Design of the MTDSNet architecture: A new network, termed MTDSNet (Multi-scale Transformer Decoder Swin-UNet), is proposed to enhance the model’s sensitivity to edge features in complex background environments. The architecture effectively mitigates adhesion artifacts caused by insufficient spacing between adjacent greenhouses, improving the delineation of individual structures.

- Introduction of an image post-processing strategy: A Denoising and Smoothing (DS) module is developed to refine the preliminary segmentation results. This module performs patch-level denoising and edge smoothing, thereby reducing fragmentation and improving the geometric regularity of extracted greenhouse boundaries.

- Experimental validation of the proposed method: A series of deep learning experiments was conducted to evaluate the method’s performance in extracting individual greenhouses from sub-meter-resolution GF-2 imagery. The results confirm that the proposed framework delivers high-precision extraction, providing reliable remote sensing data support for facility agriculture censuses and the fine-scale management of cultivated land resources.

The remainder of this paper is organized as follows: Section 2 presents the study area, dataset construction, and methodology. Section 3 details the experimental results, including visual outputs and statistical evaluation. Section 4 discusses the strengths and limitations of the proposed method. Section 5 provides a comprehensive conclusion and outlook for future research.

2. Materials and Methods

2.1. Study Area

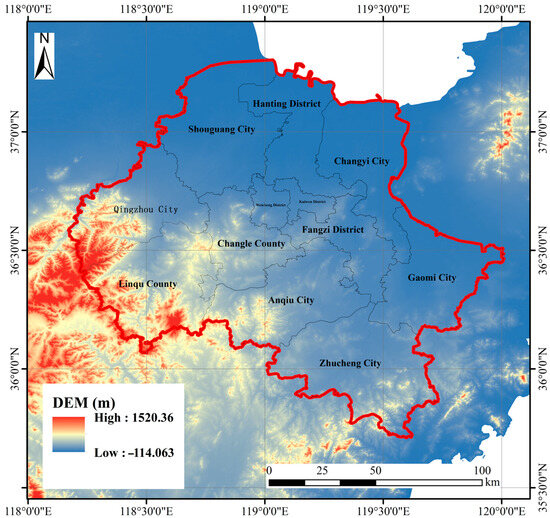

This study selects Weifang City, located in Shandong Province, China, as the research area, with the primary objective of achieving high-precision extraction and mapping of individual plastic greenhouses. Situated in the central-eastern part of Shandong (35°41′–37°26′N, 118°10′–120°00′E), Weifang lies within the warm temperate monsoon climate zone. The region is characterized by abundant sunlight, distinct seasonal variations, and an average annual sunshine duration exceeding 2500 h. Elevations range from −7.19 m (below sea level) to 1013.08 m, although the majority of the terrain consists of flat plains—ideal for the development of protected agriculture (Figure 1). Covering an area of approximately 16,100 square kilometers, Weifang serves as a strategic hub linking the Jiaodong Economic Circle and the Provincial Capital Economic Circle. It is one of China’s most prominent bases for protected vegetable cultivation and represents the region with the densest distribution and highest degree of industrialization in plastic greenhouse farming [10].

Figure 1.

Elevation distribution map of Weifang City, Shandong Province. The red demarcation lines denote the administrative boundary of Weifang City.

2.2. Datasets

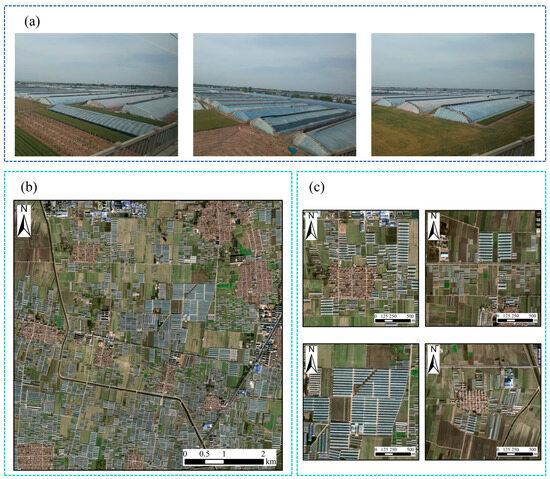

Weifang City in Shandong Province serves as a representative region for the development of protected agriculture in China, characterized by high cultivation density, large-scale individual greenhouse structures, and complex spatial configurations of facility clusters, as illustrated in Figure 2. In this study, GF-2 satellite imagery is selected as the primary dataset for model training and analytical purposes, with the goal of achieving accurate extraction of individual plastic greenhouses. The GF-2 satellite provides panchromatic imagery at a spatial resolution of 0.8 m and multispectral imagery at 3.2 m, allowing for clear delineation of surface features such as buildings, roads, and agricultural plots. This high spatial resolution, coupled with extensive regional coverage, makes GF-2 imagery particularly well-suited for both fine-scale individual greenhouse extraction and large-scale mapping applications. It offers an effective balance between precision and operational efficiency in agricultural monitoring tasks.
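The 0.8 m panchromatic and 3.2 m multispectral bands differ by a factor of four in ground sampling distance, and the panchromatic and multispectral fusion step of the preprocessing chain transfers the finer panchromatic detail to the colour bands. The source does not say which fusion algorithm is applied, so the following is only a minimal sketch using the Brovey transform, one common pan-sharpening method, under the assumptions that the two inputs are already co-registered and that the panchromatic grid is an exact integer multiple of the multispectral grid. The function name `brovey_pansharpen` and the array sizes are hypothetical.

```python
import numpy as np


def brovey_pansharpen(ms: np.ndarray, pan: np.ndarray, eps: float = 1e-6) -> np.ndarray:
    """Brovey-transform pan-sharpening (illustrative, not necessarily the study's method).

    ms  : (bands, h, w) multispectral array, e.g. GF-2 at 3.2 m.
    pan : (h * s, w * s) panchromatic array, e.g. GF-2 at 0.8 m (s = 4).
    Assumes both arrays are co-registered and radiometrically comparable.
    """
    bands, h, w = ms.shape
    scale = pan.shape[0] // h
    if scale < 1 or pan.shape != (h * scale, w * scale):
        raise ValueError("pan must be an integer multiple of the MS grid in both axes")
    # Nearest-neighbour upsampling of each MS band onto the pan grid.
    ms_up = ms.astype(np.float64).repeat(scale, axis=1).repeat(scale, axis=2)
    # Intensity component: mean of the upsampled MS bands.
    intensity = ms_up.mean(axis=0)
    # Rescale every band by pan / intensity so the band mean follows the pan detail.
    return ms_up * (pan / (intensity + eps))[np.newaxis]


# Hypothetical 4-band MS tile (3.2 m) and matching pan tile (0.8 m, 4x finer).
rng = np.random.default_rng(0)
ms_tile = rng.uniform(100, 1000, size=(4, 32, 32))
pan_tile = rng.uniform(100, 1000, size=(128, 128))
fused = brovey_pansharpen(ms_tile, pan_tile)
print(fused.shape)  # (4, 128, 128)
```

Brovey sharpening is simple but can distort spectral ratios between bands; component-substitution or multiresolution methods are common alternatives when spectral fidelity matters.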

Figure 2.

Real-world plastic greenhouses and their representations in remote sensing imagery. (a) Real-world plastic greenhouses; (b,c) plastic greenhouses on remote sensing images at varying scales.

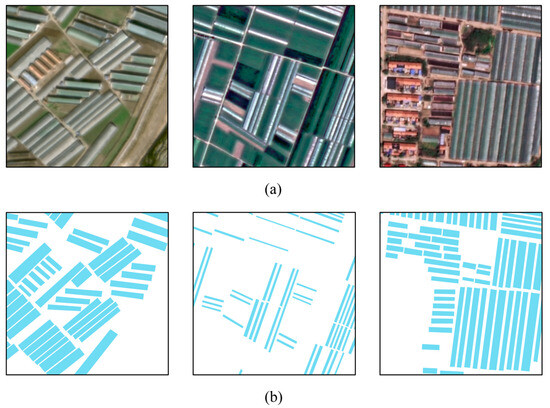

The GF-2 satellite imagery used for dataset construction encompasses a total volume of approximately 7.45 GB and covers several typical greenhouse cultivation zones across Weifang City, Shandong Province, China. This dataset provides spatially explicit support for agricultural monitoring and facilitates the development of high-resolution extraction models. It is important to note that plastic greenhouses in different subregions of Weifang exhibit noticeable variations in morphological characteristics and spatial configuration patterns—a phenomenon that has been well-documented in agricultural remote sensing studies. These regional differences present both challenges and opportunities for standardized data labeling and model generalization. Figure 3 presents representative examples of greenhouse typologies observed in the GF-2 imagery. These visual samples serve as essential references for the annotation workflow, supporting the consistent generation of individual greenhouse labels and contributing to the robustness of model training and evaluation.

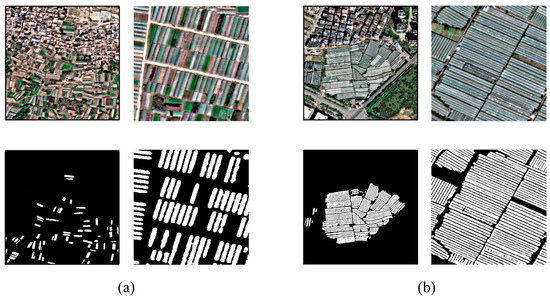

Figure 3.

Representative greenhouse typologies observed in Weifang City’s remote sensing imagery. (a) Plastic greenhouses with varying distribution densities and morphological features in remote sensing images. (b) The label corresponding to the plastic greenhouse in (a).

To enhance the model’s ability to capture local contextual features, this study adopts a tile-based segmentation strategy for processing high-resolution (HR) remote sensing imagery. This approach not only enables more effective learning of localized spatial patterns but also helps control the input data volume per batch, thereby preventing GPU memory overflow and improving training efficiency and stability.
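A minimal sketch of such a tile-based strategy, assuming NumPy arrays of shape (H, W) or (H, W, C) and the 512 × 512 tile size used for the dataset. The zero-padding of edge tiles and the helper names `tile_image` and `untile` are illustrative choices, not details taken from the study. Keeping the (row, col) offset of every tile lets per-tile predictions be stitched back into a full scene.

```python
from typing import List, Tuple

import numpy as np


def tile_image(image: np.ndarray, tile: int = 512) -> Tuple[np.ndarray, List[Tuple[int, int]]]:
    """Cut an (H, W[, C]) array into non-overlapping tile x tile patches.

    The array is zero-padded at the bottom/right so both sides become multiples
    of `tile`; the (row, col) offset of each patch is returned for reassembly.
    """
    h, w = image.shape[:2]
    pad = [(0, (-h) % tile), (0, (-w) % tile)] + [(0, 0)] * (image.ndim - 2)
    padded = np.pad(image, pad, mode="constant")
    patches, offsets = [], []
    for r in range(0, padded.shape[0], tile):
        for c in range(0, padded.shape[1], tile):
            patches.append(padded[r:r + tile, c:c + tile])
            offsets.append((r, c))
    return np.stack(patches), offsets


def untile(patches: np.ndarray, offsets: List[Tuple[int, int]], shape: Tuple[int, ...]) -> np.ndarray:
    """Stitch per-tile arrays (e.g. predicted masks) back together and crop the padding."""
    tile = patches.shape[1]
    height = max(r for r, _ in offsets) + tile
    width = max(c for _, c in offsets) + tile
    canvas = np.zeros((height, width) + patches.shape[3:], dtype=patches.dtype)
    for patch, (r, c) in zip(patches, offsets):
        canvas[r:r + tile, c:c + tile] = patch
    return canvas[: shape[0], : shape[1]]


# Hypothetical 4-band scene of 1000 x 1300 pixels.
scene = np.zeros((1000, 1300, 4), dtype=np.uint16)
patches, offsets = tile_image(scene)
print(patches.shape)  # (6, 512, 512, 4)
```

Non-overlapping tiles are the simplest option; predicting on overlapping tiles and keeping only their centres is a common way to reduce seam artifacts at tile borders.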

2.3. Methods

2.3.1. Extraction Workflow

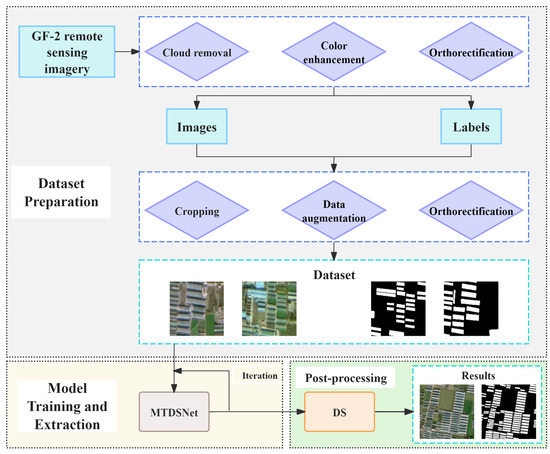

This study addresses the task of individual plastic greenhouse extraction in Weifang City, Shandong Province, through the design of a technical workflow comprising three primary operational stages, as illustrated in Figure 4: (1) dataset preparation, (2) model training and extraction, and (3) post-processing.

Figure 4.

The extraction workflow for individual plastic greenhouses in Weifang City, Shandong Province, China.

In the dataset preparation stage, GF-2 satellite imagery covering the study area was acquired from the China Center for Resources Satellite Data and Application. Because our research group frequently works with high-resolution satellite imagery such as GF-2 and GF-7, we have developed specialized preprocessing software tailored to this type of data. The software provides processing workflows such as cloud removal, enhancement, and color balancing, which users run by entering the corresponding commands. A series of preprocessing operations, including radiometric enhancement, panchromatic and multispectral fusion, orthorectification, and mosaic tiling, was performed to ensure geometric precision and radiometric consistency for downstream analysis. Subsequently, individual greenhouses were manually annotated in ArcMap 10.8 following standardized delineation protocols for high-resolution agricultural features. Priority was given to spatially distinct greenhouses with clearly defined boundaries, yielding a total of 58,236 annotated single-unit greenhouse instances. The vector annotations were converted to raster format, co-registered with the corresponding GF-2 imagery, and uniformly tiled into 512 × 512 pixel patches to construct image–label pairs for deep learning. The dataset was then partitioned into training and validation sets at a ratio of 8:2.
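An 8:2 partition can be made reproducible by fixing the random seed and shuffling a sorted list of pair identifiers. The sketch below assumes that each image patch and its label patch share one ID; the function name, ID format, and seed value are hypothetical.

```python
import random
from typing import List, Sequence, Tuple


def split_pairs(pair_ids: Sequence[str], train_ratio: float = 0.8,
                seed: int = 42) -> Tuple[List[str], List[str]]:
    """Randomly split image-label pair IDs into training and validation subsets.

    Sorting before shuffling makes the split depend only on the set of IDs and
    the seed, not on file-system listing order, so it can be reproduced exactly.
    """
    ids = sorted(pair_ids)
    random.Random(seed).shuffle(ids)
    n_train = int(round(len(ids) * train_ratio))
    return ids[:n_train], ids[n_train:]


# Hypothetical tile IDs; each ID names both an image patch and its label patch.
tile_ids = [f"tile_{i:05d}" for i in range(1000)]
train_ids, val_ids = split_pairs(tile_ids)
print(len(train_ids), len(val_ids))  # 800 200
```

Because neighbouring tiles cut from the same scene can look very similar, a purely random tile-level split may give optimistic validation scores; grouping tiles by scene or spatial block before splitting is a common alternative.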

In the model training and extraction phase, the proposed Multi-scale Transformer Decoder Swin-UNet (MTDSNet) network was employed to train the model on the prepared datasets. Leveraging hierarchical feature fusion mechanisms, the network enhanced the extraction of agricultural structures exhibiting diverse spatial scales and spectral textures. Through iterative optimization, high-performance model parameters were obtained, demonstrating strong generalization capability across varied operational scenarios. The trained model was subsequently applied to GF-2 imagery of Weifang City to generate preliminary extraction results of individual plastic greenhouses. Our model was implemented using the PyTorch (2.0.1) framework, running in an environment with Python 3.8, CUDA 11.6, and Windows 10, utilizing an NVIDIA GeForce RTX 3090 graphics card (Santa Clara, CA, USA).

In the post-processing stage, a Denoising and Smoothing (DS) module based on Connected Component Analysis (CCA) and Morphological Operations (MO) was implemented to refine the model outputs. This module removes small fragmented patches and morphologically inconsistent artifacts while simultaneously smoothing edges. As a result, the final outputs align more closely with the true geometric contours and spatial distribution patterns of plastic greenhouses in the study area. The post-processing workflow was implemented in Python, with edge smoothing and fragmented-patch removal relying primarily on the OpenCV (“cv2”) and NumPy (“numpy”) libraries and the “gaussian_filter” function.

2.3.2. MTDSNet Architecture

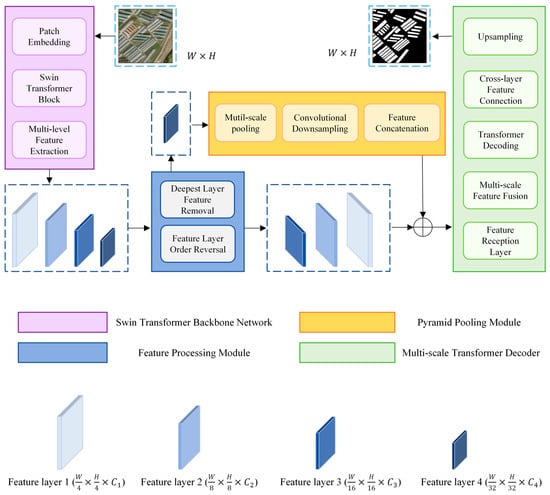

The proposed MTDSNet architecture is illustrated in Figure 5. The network builds upon the Swin-UNet framework, which integrates the powerful feature representation capabilities of the Swin Transformer within a U-shaped architecture originally designed for medical image segmentation tasks [29,30]. Our model retains the classical encoder–decoder structure of U-Net while incorporating a shifted window-based Swin Transformer as the backbone for hierarchical feature extraction. To satisfy the hierarchical feature requirements of the Multi-scale Transformer Decoder (MTD) module, the final-layer features extracted by the Swin Transformer are passed through a Pyramid Pooling Module (PPM) to enhance global contextual understanding. Simultaneously, the intermediate features from the remaining layers are reorganized from a shallow-to-deep to a deep-to-shallow sequence. These multiscale features are then fed into the MTD module, where learnable attention weights are used to achieve dynamic and adaptive feature fusion. This architectural design improves the compatibility between high-level semantic features and low-level spatial details, while avoiding redundancy with features generated by the PPM. Additionally, it mitigates the risk of losing fine-grained information due to the overwhelming influence of high-level abstract features.
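To make this feature routing concrete, the sketch below implements a PSPNet-style Pyramid Pooling Module in plain NumPy and then reorders the remaining encoder outputs from shallow-to-deep to deep-to-shallow. The bin sizes (1, 2, 3, 6), the nearest-neighbour resizing, the Swin-T-like channel widths, and the random weights standing in for learned 1 × 1 convolutions are all assumptions for illustration; the paper does not report these PPM settings, and a trainable implementation would use PyTorch layers instead.

```python
import math
from typing import Optional, Sequence

import numpy as np


def adaptive_avg_pool2d(x: np.ndarray, out: int) -> np.ndarray:
    """Average-pool a (C, H, W) map to (C, out, out) using PyTorch-style bin edges."""
    c, h, w = x.shape
    y = np.empty((c, out, out), dtype=np.float64)
    for i in range(out):
        r0, r1 = (i * h) // out, math.ceil((i + 1) * h / out)
        for j in range(out):
            c0, c1 = (j * w) // out, math.ceil((j + 1) * w / out)
            y[:, i, j] = x[:, r0:r1, c0:c1].mean(axis=(1, 2))
    return y


def upsample_nearest(x: np.ndarray, h: int, w: int) -> np.ndarray:
    """Nearest-neighbour resize of a (C, h0, w0) map to (C, h, w)."""
    rows = (np.arange(h) * x.shape[1]) // h
    cols = (np.arange(w) * x.shape[2]) // w
    return x[:, rows][:, :, cols]


def pyramid_pooling(x: np.ndarray, bins: Sequence[int] = (1, 2, 3, 6),
                    reduce_to: Optional[int] = None, seed: int = 0) -> np.ndarray:
    """PSPNet-style pyramid pooling on a (C, H, W) map.

    Each branch pools to a b x b grid, applies a 1x1 projection + ReLU, and is
    resized back to H x W; the branches are concatenated with the input.
    The random projection weights are placeholders for learned 1x1 convolutions.
    """
    c, h, w = x.shape
    k = reduce_to or max(1, c // len(bins))
    rng = np.random.default_rng(seed)
    branches = [x.astype(np.float64)]
    for b in bins:
        pooled = adaptive_avg_pool2d(x, b)                                    # (C, b, b)
        weight = rng.standard_normal((k, c)) / math.sqrt(c)                   # stand-in 1x1 conv
        projected = np.maximum(np.einsum("kc,cij->kij", weight, pooled), 0.0)  # conv + ReLU
        branches.append(upsample_nearest(projected, h, w))                    # (k, H, W)
    return np.concatenate(branches, axis=0)


# Hypothetical encoder outputs ordered shallow -> deep, as (C, H, W) arrays.
rng = np.random.default_rng(1)
encoder_feats = [rng.standard_normal((c, s, s))
                 for c, s in [(96, 128), (192, 64), (384, 32), (768, 16)]]
ppm_out = pyramid_pooling(encoder_feats[-1])   # deepest features -> PPM
skips = encoder_feats[-2::-1]                  # remaining features, now deep -> shallow
print(ppm_out.shape, [f.shape[0] for f in skips])  # (1536, 16, 16) [384, 192, 96]
```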

Figure 5.

MTDSNet architecture diagram.

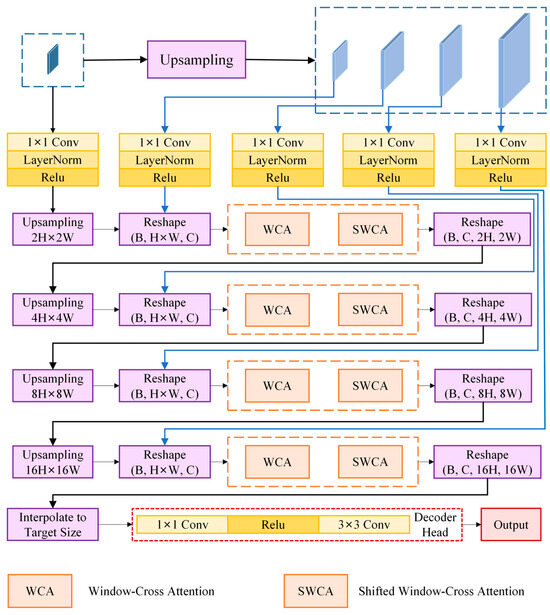

2.3.3. Multi-Scale Transformer Decoder (MTD)

Kim et al. (2022) developed a multi-scale Transformer architecture for Human–Object Interaction (HOI) detection [31], while Wu et al. (2023) introduced a Multi-scale Attention Fusion (MAF) module to integrate heterogeneous feature information across different levels [32]. These approaches significantly improved network performance through multi-scale refinement strategies. Drawing on this work, this study proposes the Multi-scale Transformer Decoder (MTD) module to address cross-scale feature conflicts and enhance feature integration. The architectural configuration of the MTD module is shown in Figure 6.

Figure 6.

MTD module architecture diagram.

The MTD module operates through four sequential processing stages:

Stage 1: The module receives two feature inputs: the deepest-level features processed by the Pyramid Pooling Module (PPM) and the reversed feature maps from shallower layers. Decoding proceeds through progressively upsampled resolutions of 2H × 2W, 4H × 4W, 8H × 8W, and 16H × 16W, where H and W denote the height and width of the deepest input feature map. Feature Map 1 is processed through lateral convolution, upsampled to 2H × 2W, and reshaped into a token tensor of shape (B, 2H × 2W, C), where B is the batch size and C is the number of channels. This tensor is refined using Window-Cross Attention and Shifted Window-Cross Attention and subsequently reshaped back to (B, C, 2H, 2W). This stage emphasizes small-object sensitivity via multi-scale attention fusion.

Stage 2: The output feature map from Stage 1 is further upsampled to 4H × 4W and concatenated with Feature Map 2. The combined features are reshaped into (B, 4H × 4W, C), passed through the same attention modules as in Stage 1, and reshaped back to (B, C, 4H, 4W), maintaining spatial consistency.

Stage 3: Similar to the previous stage, the output from Stage 2 is upsampled to 8H × 8W and concatenated with Feature Map 3. The concatenated features undergo attention-based refinement and reshaping as before.

Stage 4: Following the same processing pattern, the features are upsampled to 16H × 16W, concatenated with Feature Map 4, and passed through the same attention modules to generate the final fused representation.
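The four stages follow one repeated pattern: upsample by two, concatenate with the matching skip map (from Stage 2 onward), serialize to tokens, refine, and deserialize. The NumPy sketch below traces only that data flow and its tensor shapes. The `refine` argument is a placeholder for the attention blocks, nearest-neighbour upsampling is assumed, and any channel projection after concatenation is omitted, so the channel count simply grows from stage to stage. Serializing a (B, C, 2H, 2W) map yields 2H × 2W tokens per image.

```python
from typing import Callable, Sequence

import numpy as np


def upsample2x(x: np.ndarray) -> np.ndarray:
    """Nearest-neighbour 2x upsampling of a (B, C, H, W) array."""
    return x.repeat(2, axis=2).repeat(2, axis=3)


def to_tokens(x: np.ndarray) -> np.ndarray:
    """Serialize (B, C, H, W) -> (B, H*W, C)."""
    b, c, h, w = x.shape
    return x.reshape(b, c, h * w).transpose(0, 2, 1)


def from_tokens(t: np.ndarray, h: int, w: int) -> np.ndarray:
    """Deserialize (B, H*W, C) -> (B, C, H, W)."""
    b, n, c = t.shape
    if n != h * w:
        raise ValueError(f"expected {h * w} tokens, got {n}")
    return t.transpose(0, 2, 1).reshape(b, c, h, w)


def mtd_decode(fm1: np.ndarray, skips: Sequence[np.ndarray],
               refine: Callable[[np.ndarray], np.ndarray]) -> np.ndarray:
    """Coarse-to-fine flow: upsample, (concatenate), serialize, refine, deserialize.

    fm1    : (B, C, H, W) deepest map after lateral convolution (Feature Map 1).
    skips  : Feature Maps 2-4 at 4H x 4W, 8H x 8W and 16H x 16W.
    refine : token-wise operator standing in for the window cross-attention blocks.
    """
    x = upsample2x(fm1)                                    # Stage 1: 2H x 2W
    x = from_tokens(refine(to_tokens(x)), *x.shape[2:])
    for skip in skips:                                     # Stages 2-4
        x = upsample2x(x)
        if x.shape[2:] != skip.shape[2:]:
            raise ValueError("skip map must match the upsampled resolution")
        x = np.concatenate([x, skip], axis=1)              # channel-wise concatenation
        x = from_tokens(refine(to_tokens(x)), *x.shape[2:])
    return x


B, C, H, W = 2, 8, 4, 4
fm1 = np.zeros((B, C, H, W))
skips = [np.zeros((B, 4, H * s, W * s)) for s in (4, 8, 16)]
out = mtd_decode(fm1, skips, refine=lambda tokens: tokens)  # identity stand-in
print(out.shape)  # (2, 20, 64, 64)
```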

This hierarchical multi-scale refinement strategy enables the MTD module to dynamically integrate semantic and spatial features across resolutions, mitigating cross-scale feature conflicts and enhancing the model’s ability to extract detailed structural characteristics of individual plastic greenhouses. The computational workflow is formulated as follows:

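(1) Initial stage:

$$F_{\mathrm{lat}} = \mathrm{Conv}_{\mathrm{lat}}\left(F_{0}\right), \qquad U_{1} = \mathrm{Up}\left(F_{\mathrm{lat}}\right)$$

where $F_{0}$ denotes the initial input feature map; $\mathrm{Conv}_{\mathrm{lat}}(\cdot)$ denotes lateral convolution; $F_{\mathrm{lat}}$ denotes the initial feature derived from lateral convolution; and $U_{1}$ denotes the upsampled feature map.

(2) Multi-stage decoding, for $t = 1, \dots, T$ with $T = 4$:

$$U_{t} = \mathrm{Concat}\left(\mathrm{Up}\left(D_{t-1}\right), S_{t}\right), \quad t \geq 2$$

$$X_{t} = \mathrm{Reshape}\left(U_{t}\right) \in \mathbb{R}^{B \times H_{t} W_{t} \times C}$$

$$A_{t} = \mathrm{Softmax}\left(\frac{Q_{t} K_{t}^{\top}}{\sqrt{d}} + \mathcal{B}\right) V_{t}$$

$$D_{t} = \mathrm{Reshape}\left(A_{t}\right) \in \mathbb{R}^{B \times C \times H_{t} \times W_{t}}$$

where $X_{t}$ denotes the feature-aligned (serialized) feature map; $U_{t}$ denotes the $t$-th upsampled feature map, which for $t \geq 2$ is concatenated with Feature Map $t$, denoted $S_{t}$; $H_{t}$ and $W_{t}$ denote the height and width of the upsampled output; $(\cdot)^{\top}$ denotes the transpose operation; $A_{t}$ denotes the $t$-th level attention output; $Q_{t}$, $K_{t}$, and $V_{t}$ denote the query, key, and value matrices, respectively; $d$ denotes the feature dimension per head; $\mathcal{B}$ denotes the bias term; and $D_{t}$ denotes the deserialized feature map at the $t$-th stage.

(3) Final results:

$$F_{\mathrm{final}} = D_{T} \in \mathbb{R}^{B \times C \times H_{\mathrm{out}} \times W_{\mathrm{out}}}$$

$$Y = \mathrm{BN}\left(\mathrm{ReLU}\left(\mathrm{Conv}_{1 \times 1}\left(F_{\mathrm{final}}\right)\right)\right)$$

where $F_{\mathrm{final}}$ denotes the final feature map; $D_{T}$ denotes the final-stage feature map in the multi-level decoding hierarchy; $H_{\mathrm{out}}$ and $W_{\mathrm{out}}$ denote the height and width of the final output; $\mathrm{Conv}_{1 \times 1}$, $\mathrm{ReLU}$, and $\mathrm{BN}$ denote a 1 × 1 convolutional layer, the ReLU activation function, and batch normalization, respectively; and $Y$ is the final result.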
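The attention step at each stage can be illustrated with a single-head window cross-attention in NumPy that evaluates Softmax(Q K^T / sqrt(d) + bias) V inside non-overlapping m × m windows, with an optional cyclic shift for the shifted-window variant. This is a simplified sketch, not the MTD implementation: the window size m = 4, the single head, the choice of which map supplies queries versus keys and values, and the random projection matrices are assumptions, and the attention mask that Swin Transformer applies across shifted-window boundaries is omitted.

```python
from typing import Optional

import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)    # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def window_partition(x: np.ndarray, m: int) -> np.ndarray:
    """(H, W, C) -> (num_windows, m*m, C); H and W must be divisible by m."""
    h, w, c = x.shape
    x = x.reshape(h // m, m, w // m, m, c).transpose(0, 2, 1, 3, 4)
    return x.reshape(-1, m * m, c)


def window_merge(win: np.ndarray, m: int, h: int, w: int) -> np.ndarray:
    """Inverse of window_partition: (num_windows, m*m, C) -> (H, W, C)."""
    c = win.shape[-1]
    x = win.reshape(h // m, w // m, m, m, c).transpose(0, 2, 1, 3, 4)
    return x.reshape(h, w, c)


def window_cross_attention(query_map: np.ndarray, context_map: np.ndarray,
                           wq: np.ndarray, wk: np.ndarray, wv: np.ndarray, m: int = 4,
                           bias: Optional[np.ndarray] = None, shift: bool = False) -> np.ndarray:
    """Single-head Softmax(Q K^T / sqrt(d) + bias) V evaluated inside m x m windows.

    Queries are projected from `query_map`, keys and values from `context_map`
    (both (H, W, C)). With shift=True both maps are cyclically shifted by m // 2
    first, as in Swin's shifted windows; Swin's cross-boundary mask is omitted.
    """
    h, w, _ = query_map.shape
    s = m // 2 if shift else 0
    qm = np.roll(query_map, (-s, -s), axis=(0, 1))
    cm = np.roll(context_map, (-s, -s), axis=(0, 1))
    q = window_partition(qm, m) @ wq                      # (nW, m*m, d)
    k = window_partition(cm, m) @ wk                      # (nW, m*m, d)
    v = window_partition(cm, m) @ wv                      # (nW, m*m, d_v)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(q.shape[-1])
    if bias is not None:
        scores = scores + bias                            # (m*m, m*m), shared by all windows
    out = window_merge(softmax(scores) @ v, m, h, w)
    return np.roll(out, (s, s), axis=(0, 1))              # undo the cyclic shift


rng = np.random.default_rng(0)
H, W, C, D = 8, 8, 16, 8
dec_tokens = rng.standard_normal((H, W, C))    # hypothetical decoder features
skip_tokens = rng.standard_normal((H, W, C))   # hypothetical context features
wq, wk, wv = (rng.standard_normal((C, D)) for _ in range(3))
regular = window_cross_attention(dec_tokens, skip_tokens, wq, wk, wv)
shifted = window_cross_attention(dec_tokens, skip_tokens, wq, wk, wv, shift=True)
print(regular.shape, shifted.shape)  # (8, 8, 8) (8, 8, 8)
```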

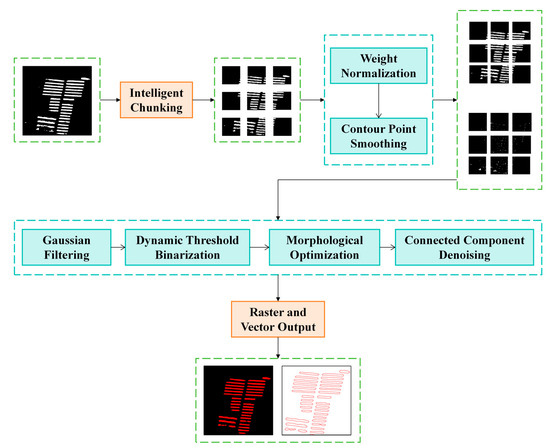

2.3.4. Denoising and Smoothing (DS) Module

In practical remote sensing imagery, complex background features such as roads, trees, and heterogeneous terrain often interfere with the accurate extraction of small individual plastic greenhouses. Under such conditions, deep learning models are prone to misclassifying these background elements as greenhouse structures, producing fragmented patches and irregular edge contours in the extraction outputs. These artifacts degrade segmentation accuracy and geometric consistency and may cause significant errors in estimated greenhouse counts and areas, ultimately compromising decision-making in precision agriculture, including crop monitoring and irrigation scheduling. To address these challenges, this study introduces a post-processing module, Denoising and Smoothing (DS), built upon Connected Component Analysis (CCA) and Morphological Operations (MO). CCA, a widely used technique in binary image processing, identifies and labels spatially adjacent pixel regions with identical values (termed connected components), enabling the effective removal of isolated noise patches. MO, through structuring-element operations such as erosion and dilation, suppresses noise, smooths contours, and fills small voids in target regions, playing a crucial role in geometric refinement. The DS module integrates these two techniques into a unified processing pipeline, allowing for simultaneous noise elimination and edge regularization. This integrated approach streamlines the post-processing workflow, enhances operational efficiency, and reduces computational resource consumption in large-scale remote sensing applications. The operational workflow of the DS module is illustrated in Figure 7.
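A self-contained sketch of the CCA and MO building blocks, written in NumPy so the logic is visible: breadth-first 8-connected component labelling, removal of components below an area threshold, and binary opening and closing with a square structuring element. OpenCV offers optimized equivalents (for example `cv2.connectedComponentsWithStats` and `cv2.morphologyEx`). The minimum area, the 3 × 3 kernel, and the opening-then-closing order are assumptions, since the paper does not report these settings.

```python
from collections import deque
from typing import Tuple

import numpy as np


def label_components(mask: np.ndarray) -> Tuple[np.ndarray, int]:
    """Label 8-connected foreground regions of a 2-D boolean mask by breadth-first search."""
    h, w = mask.shape
    labels = np.zeros((h, w), dtype=np.int32)
    n = 0
    for r0, c0 in zip(*np.nonzero(mask)):
        if labels[r0, c0]:
            continue                       # already reached from an earlier seed
        n += 1
        labels[r0, c0] = n
        queue = deque([(r0, c0)])
        while queue:
            r, c = queue.popleft()
            for rr in range(max(r - 1, 0), min(r + 2, h)):
                for cc in range(max(c - 1, 0), min(c + 2, w)):
                    if mask[rr, cc] and not labels[rr, cc]:
                        labels[rr, cc] = n
                        queue.append((rr, cc))
    return labels, n


def remove_small_components(mask: np.ndarray, min_area: int) -> np.ndarray:
    """Drop connected components with fewer than `min_area` pixels."""
    labels, n = label_components(mask)
    areas = np.bincount(labels.ravel(), minlength=n + 1)
    keep = areas >= min_area
    keep[0] = False                        # label 0 is background
    return keep[labels]


def _neighbourhoods(mask: np.ndarray, k: int, fill: bool) -> np.ndarray:
    """Stack the k*k shifted copies of `mask` covered by a square structuring element."""
    p = k // 2
    padded = np.pad(mask, p, mode="constant", constant_values=fill)
    h, w = mask.shape
    return np.stack([padded[i:i + h, j:j + w] for i in range(k) for j in range(k)])


def dilate(mask: np.ndarray, k: int = 3) -> np.ndarray:
    return _neighbourhoods(mask, k, fill=False).any(axis=0)


def erode(mask: np.ndarray, k: int = 3) -> np.ndarray:
    # Pad with True so objects touching the tile border are not eroded by the border itself.
    return _neighbourhoods(mask, k, fill=True).all(axis=0)


def ds_clean(mask: np.ndarray, min_area: int = 50, k: int = 3) -> np.ndarray:
    """Opening removes thin spurs, closing fills small gaps, then CCA drops small patches."""
    opened = dilate(erode(mask, k), k)
    closed = erode(dilate(opened, k), k)
    return remove_small_components(closed, min_area)


mask = np.zeros((20, 20), dtype=bool)
mask[2:8, 2:8] = True        # a 6 x 6 "greenhouse"
mask[15, 15] = True          # an isolated false-positive pixel
cleaned = ds_clean(mask, min_area=10)
print(int(cleaned.sum()))    # 36
```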

Figure 7.

The processing workflow of the DS module.

To enhance the processing efficiency of the DS module, a block-based strategy was first adopted to enable localized parallel processing of extraction results. The module then identified regions with irregular boundaries and applied a sequential optimization pipeline consisting of Gaussian filtering, dynamic threshold-based binarization, and morphological operations to perform edge smoothing. Following the refinement of edge contours, fragmented and noise-like patches were eliminated to improve the structural integrity of the extracted greenhouse features. Upon completion of these operations, the post-processing results were exported in both raster and vector formats, ensuring compatibility with various downstream geospatial analysis and application scenarios.
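The block-wise smoothing step can be sketched as follows. The paper does not specify the filter width or how the dynamic threshold is computed, so this sketch assumes the network output is a probability map in [0, 1], applies a Gaussian filter with σ = 1.5, thresholds each block with Otsu's method, and falls back to a fixed 0.5 threshold in low-contrast blocks, where Otsu would otherwise split pure background. Each block is filtered together with a small halo so that the Gaussian sees real neighbours at block seams. The morphological and patch-removal steps are left out to keep the example short.

```python
import numpy as np


def gaussian_kernel(sigma: float) -> np.ndarray:
    radius = max(1, int(round(3 * sigma)))
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()


def gaussian_blur(img: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian filter with edge-replicated borders; output has img's shape."""
    k = gaussian_kernel(sigma)
    r = len(k) // 2
    p = np.pad(img.astype(np.float64), r, mode="edge")
    rows = np.apply_along_axis(np.convolve, 1, p, k, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, k, mode="valid")


def otsu_threshold(values: np.ndarray, bins: int = 256) -> float:
    """Otsu's threshold: the histogram cut that maximizes between-class variance."""
    hist, edges = np.histogram(values, bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2
    w0 = np.cumsum(hist)                        # pixels at or below each bin
    w1 = w0[-1] - w0                            # pixels above each bin
    m0 = np.cumsum(hist * centers)
    mu0 = m0 / np.maximum(w0, 1)
    mu1 = (m0[-1] - m0) / np.maximum(w1, 1)
    between = w0 * w1 * (mu0 - mu1) ** 2
    return float(edges[np.argmax(between) + 1])  # lower edge of the upper class


def binarize(smoothed: np.ndarray, fallback: float = 0.5, min_contrast: float = 0.2) -> np.ndarray:
    """Per-block Otsu threshold; low-contrast blocks use a fixed fallback threshold."""
    if smoothed.max() - smoothed.min() < min_contrast:
        return smoothed >= fallback
    return smoothed >= otsu_threshold(smoothed)


def smooth_in_blocks(prob: np.ndarray, block: int = 512, sigma: float = 1.5) -> np.ndarray:
    """Smooth and re-binarize a probability map block by block.

    Blocks are independent, so this loop could be distributed across worker processes.
    """
    h, w = prob.shape
    halo = max(1, int(round(3 * sigma)))
    out = np.zeros((h, w), dtype=bool)
    for r in range(0, h, block):
        for c in range(0, w, block):
            r0, c0 = max(0, r - halo), max(0, c - halo)
            tile = gaussian_blur(prob[r0:min(h, r + block + halo), c0:min(w, c + block + halo)], sigma)
            bh, bw = min(block, h - r), min(block, w - c)
            out[r:r + bh, c:c + bw] = binarize(tile[r - r0:r - r0 + bh, c - c0:c - c0 + bw])
    return out


# Hypothetical probability map: one greenhouse plus three isolated false-positive spikes.
prob = np.zeros((600, 700))
prob[100:300, 200:400] = 1.0
for r, c in [(50, 50), (550, 600), (20, 650)]:
    prob[r, c] = 1.0
mask = smooth_in_blocks(prob)
print(int(mask[150:250, 250:350].sum()), bool(mask[50, 50]))  # 10000 False
```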

2.3.5. Accuracy Evaluation Metrics

This study evaluates the model’s performance using four commonly adopted metrics: Recall, Precision, F1-Score, and Intersection over Union (IoU). These metrics are used to systematically analyze the model’s effectiveness in extracting individual plastic greenhouses, assess its strengths and limitations, and guide further architectural optimizations to improve adaptability and accuracy in greenhouse extraction tasks within Weifang City. Recall and Precision jointly reflect the model’s classification capability. A higher Recall indicates a lower false negative rate—i.e., fewer missed detections of actual greenhouse instances—while a higher Precision implies a lower false positive rate, meaning fewer non-greenhouse regions are incorrectly identified as greenhouses. The F1-score, defined as the harmonic mean of Precision and Recall, serves as a balanced indicator of overall classification performance, particularly when dealing with imbalanced datasets. The Intersection over Union (IoU) quantifies the spatial agreement between the predicted extraction results and the ground truth labels. A higher IoU value indicates a better overlap and stronger spatial consistency between the model’s output and actual greenhouse locations. The computational formulas for each of these evaluation metrics are presented in Equations (11)–(14).

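$$\mathrm{Recall} = \frac{TP}{TP + FN} \tag{11}$$

$$\mathrm{Precision} = \frac{TP}{TP + FP} \tag{12}$$

$$\mathrm{F1} = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \tag{13}$$

$$\mathrm{IoU} = \frac{TP}{TP + FP + FN} \tag{14}$$

where True Positives (TP) are instances whose ground truth is positive and that the model correctly predicts as positive; True Negatives (TN) are instances whose ground truth is negative and that the model correctly predicts as negative; False Positives (FP) are instances whose ground truth is negative but that the model incorrectly predicts as positive; and False Negatives (FN) are instances whose ground truth is positive but that the model incorrectly predicts as negative.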
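A minimal sketch of Equations (11)–(14) computed from a binary prediction and label, assuming TP, FP, and FN are counted over pixels and pooled across the evaluated area (the paper does not state whether its metrics are pooled or averaged per tile). The last lines check that the reported Precision and Recall are consistent with the reported F1-score.

```python
import numpy as np


def pixel_metrics(pred: np.ndarray, truth: np.ndarray) -> dict:
    """Recall, Precision, F1 and IoU of a binary prediction against a binary label."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = int(np.count_nonzero(pred & truth))
    fp = int(np.count_nonzero(pred & ~truth))
    fn = int(np.count_nonzero(~pred & truth))
    recall = tp / (tp + fn) if tp + fn else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    return {"recall": recall, "precision": precision, "f1": f1, "iou": iou}


truth = np.array([1, 1, 1, 1, 0, 0])
pred = np.array([1, 1, 1, 0, 1, 0])      # TP = 3, FP = 1, FN = 1
print(pixel_metrics(pred, truth))        # {'recall': 0.75, 'precision': 0.75, 'f1': 0.75, 'iou': 0.6}

# Harmonic mean of the reported Precision (91.47%) and Recall (92.44%).
p, r = 0.9147, 0.9244
print(round(2 * p * r / (p + r), 4))     # 0.9195
```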

3. Results

3.1. Ablation Study

3.1.1. Quantitative Comparisons

To assess the effectiveness of the proposed MTDSNet architecture and the integrated Multi-scale Transformer Decoder (MTD) module, a series of experiments were conducted using the self-constructed Individual Plastic Greenhouse (IPG) Dataset. In these experiments, the Swin-UNet framework served as the baseline model, and the MTD module was incorporated to evaluate its contribution to extraction performance. The experimental results evaluated on the IPG dataset are presented in Table 1.

Table 1.

Test accuracy comparison table between Swin-UNet and MTDSNet.

In the evaluation conducted on the IPG dataset, the proposed MTDSNet model—integrated with the MTD module—achieved an F1-score of 91.95%, outperforming the baseline Swin-UNet, which attained 89.11%. This improvement highlights the superior overall classification performance of MTDSNet in extracting individual plastic greenhouses. Further comparative analysis of Recall and Precision values confirms the enhanced ability of MTDSNet to accurately detect greenhouse instances while minimizing false predictions. Additionally, higher IoU scores indicate stronger spatial consistency between the predicted greenhouse regions and the ground truth annotations. These experimental results validate the effectiveness of the proposed MTD module, which leverages multi-scale feature fusion as its core strategy. The module significantly improves the network’s representational capacity and overall extraction performance in complex remote sensing scenes.

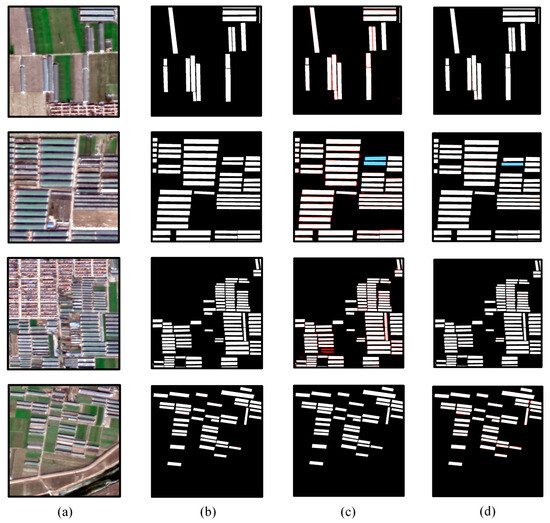

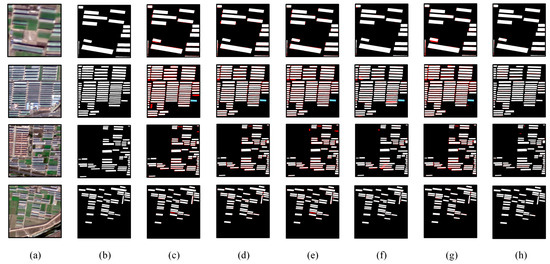

3.1.2. Extraction Result Comparison

To visually illustrate the performance differences between the two models, representative extraction results are presented in Figure 8, where each row corresponds to a typical spatial context of individual plastic greenhouses: sparsely distributed greenhouses (first row), densely clustered greenhouses (second row), greenhouses in urban environments (third row), and greenhouses in suburban areas (fourth row). In the sparsely distributed scene (first row), MTDSNet effectively separated closely spaced greenhouses and preserved the morphological integrity of individual structures, whereas Swin-UNet frequently merged adjacent greenhouses into a single entity, producing adhesion artifacts and blurred boundaries. In the densely clustered scene (second row), both models exhibited omission errors; however, MTDSNet produced fewer missed detections, indicating higher sensitivity. In the urban scene (third row), MTDSNet produced noticeably fewer false positives and delineated greenhouses more accurately amid complex background features. In the suburban scene (fourth row), both models performed comparably, with only marginal differences. Overall, these visual comparisons indicate that MTDSNet outperforms Swin-UNet across diverse spatial configurations, particularly in preserving structural details and reducing misclassification.

Figure 8.

Ablation study example: Red regions denote false extraction, while blue areas indicate missed extraction. (a) GF-2 remote sensing image; (b) corresponding labels for individual plastic greenhouses; (c) Swin-UNet; (d) MTDSNet.

3.2. Comparison of Methods

3.2.1. Quantitative Comparisons

To further evaluate the performance of MTDSNet, we conducted comparative experiments on the IPG dataset using several representative semantic segmentation models: UNet [33], UperNet [34], CPS-RAUnet++ [35], and UNETR++ [36]. UNet adopts a U-shaped symmetric architecture with skip connections that combine shallow spatial details with deep semantic representations. Originally designed for biomedical image segmentation, it preserves fine object boundaries well, even with limited training data. UperNet is a multi-task perceptual parsing framework that combines a feature pyramid network with a pyramid pooling module; by aggregating features across pyramid levels, it performs hierarchical visual parsing and is well suited to pixel-wise semantic segmentation of complex scenes. CPS-RAUnet++ was proposed by Gan et al. (2025) for detecting the jet stream axis in the atmosphere [35]; it integrates enhanced residual blocks and refined attention gates into the dense skip connections of U-Net++. UNETR++, proposed by Shaker et al. (2024), introduces an Efficient Paired Attention (EPA) module with a pair of interdependent branches based on spatial and channel attention, which efficiently learns discriminative features in both the spatial and channel dimensions [36].

Among the baseline methods evaluated on the IPG dataset (Table 2), UNETR++ achieved the best overall performance, with an F1-score of 91.28%. Although UperNet yielded a higher Recall than Swin-UNet, it recorded the lowest IoU, indicating poor spatial agreement between its predictions and the ground truth; consistent with this, it also had the lowest F1-score (87.81%). In contrast, the proposed MTDSNet achieved the highest values on all metrics, with a Recall of 92.44%, Precision of 91.47%, IoU of 85.13%, and F1-score of 91.95%, 0.67 percentage points above UNETR++ in F1-score. These results demonstrate the model’s ability to extract individual plastic greenhouses accurately and consistently, even in complex spatial environments.

Table 2.

Test accuracy of widely used semantic segmentation methods on the IPG dataset.

3.2.2. Extraction Result Comparison

Figure 9 presents extraction results for selected scenes to visually compare the models, with red indicating false positive areas and blue indicating false negative areas. The rows correspond to different spatial contexts of individual plastic greenhouses: sparsely distributed (first row), densely clustered (second row), urban environments (third row), and suburban areas (fourth row).

Figure 9.

Partial test results on the IPG dataset. (a) GF-2 remote sensing image; (b) corresponding labels for individual plastic greenhouses; (c) UperNet; (d) CPS-RAUnet++; (e) UNet; (f) UNETR++; (g) Swin-UNet; (h) MTDSNet.

In the first row, all methods performed comparably, with only a few objects in the Swin-UNet results deviating from typical greenhouse morphology. In the second row, all methods produced both false positive and false negative areas, with UperNet producing the most false positives, whereas MTDSNet preserved the structural integrity of greenhouses more completely. In the third row, all methods produced false positive areas, with fewer occurring for CPS-RAUnet++, UNETR++, and MTDSNet; however, the UNETR++ results showed minor adhesion. In the fourth row, UperNet and UNet produced a small number of false positive areas, while CPS-RAUnet++ showed mild adhesion in its outputs.

Overall, considering both the quantitative accuracy metrics and the qualitative visual comparisons, the proposed MTDSNet outperforms the compared semantic segmentation approaches in structure preservation, misclassification reduction, and robustness across complex spatial environments.

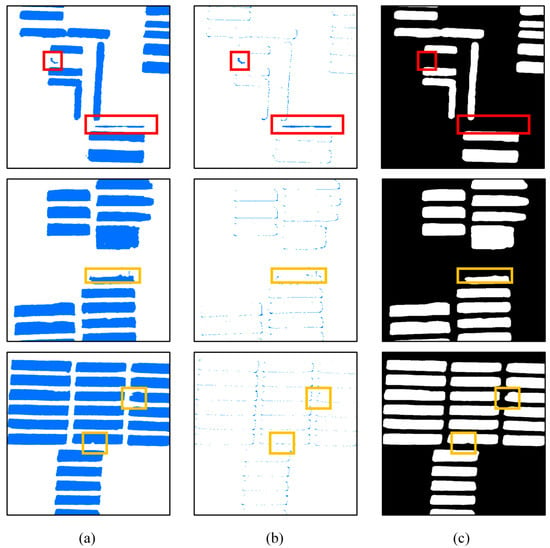

3.3. DS Module Post-Processing

Figure 10 illustrates the effect of the DS module: Figure 10a shows the original extraction results produced by MTDSNet, Figure 10b shows intermediate results after partial processing, and Figure 10c shows the final refined results. Red rectangles highlight typical speckle noise; comparing Figure 10a,c shows that these small false-positive regions are removed in the post-processed output. Yellow rectangles mark areas with substantial edge refinement, where Figure 10b illustrates the progressive smoothing of boundaries, so that the final extracted edges align more closely with the true contours of the greenhouses. Overall, the DS module improves the precision, cleanliness, and shape fidelity of the extraction results, providing a reliable basis for downstream applications such as greenhouse counting, area statistics, and spatial distribution analysis.
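The speckle-removal step of the DS module can be illustrated with connected component analysis. The following is a minimal sketch under stated assumptions, not the authors' implementation: the mask is a nested list of 0/1 values, components are traced by breadth-first search with 8-connectivity by default, and any component smaller than a hypothetical `min_area` (in pixels) is treated as noise and removed. The threshold used in the study is not given here, so `min_area` would need to be tuned to the image resolution and typical greenhouse size.

```python
from collections import deque


def label_components(mask, connectivity=8):
    """Label foreground regions of a 0/1 mask; return (labels, sizes).

    labels[y][x] is 0 for background and 1..N for the N components;
    sizes maps each label to its pixel count.
    """
    h, w = len(mask), len(mask[0])
    if connectivity == 4:
        offsets = ((-1, 0), (1, 0), (0, -1), (0, 1))
    else:  # 8-connectivity also links diagonal neighbours
        offsets = tuple((dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1) if dy or dx)
    labels = [[0] * w for _ in range(h)]
    sizes = {}
    next_label = 0
    for y in range(h):
        for x in range(w):
            if not mask[y][x] or labels[y][x]:
                continue
            next_label += 1
            labels[y][x] = next_label
            queue = deque([(y, x)])
            size = 0
            while queue:
                cy, cx = queue.popleft()
                size += 1
                for dy, dx in offsets:
                    ny, nx = cy + dy, cx + dx
                    if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not labels[ny][nx]:
                        labels[ny][nx] = next_label
                        queue.append((ny, nx))
            sizes[next_label] = size
    return labels, sizes


def remove_small_components(mask, min_area, connectivity=8):
    """Keep only components with at least `min_area` pixels (speckle removal)."""
    labels, sizes = label_components(mask, connectivity)
    return [[1 if lab and sizes[lab] >= min_area else 0 for lab in row] for row in labels]


if __name__ == "__main__":
    mask = [[1, 1, 1, 0, 0],
            [1, 1, 1, 0, 0],
            [0, 0, 0, 0, 1]]          # the lone pixel at the right is speckle noise
    for row in remove_small_components(mask, min_area=3):
        print(row)
```

The choice of connectivity matters: with 8-connectivity, pixels touching only at a corner belong to the same object, whereas 4-connectivity splits them, which changes both the sizes compared against `min_area` and the resulting object count.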

Figure 10.

Extraction results and post-processing results of individual plastic greenhouses. (a) Extraction results; (b) optimization details; (c) post-processing results.

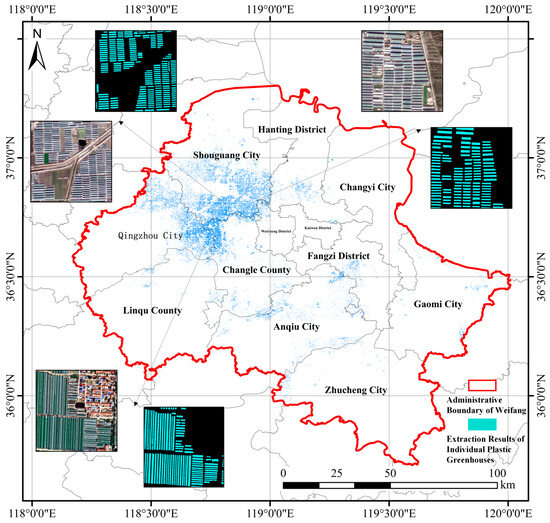

3.4. Mapping of Individual Plastic Greenhouses in Weifang City

Following the completion of remote sensing-based extraction and post-processing of individual plastic greenhouses in Weifang City, Shandong Province, this study conducted quantitative, areal, and spatial distribution analyses on the extracted results. As illustrated in Figure 11, the spatial distribution of greenhouses exhibits marked agglomeration patterns, with the highest concentrations located along the borders of Shouguang City, Qingzhou City, and Changle County—forming the primary concentration zone of intensive facility agriculture within Weifang. In contrast, relatively sparse greenhouse distributions were observed in regions such as the central Kuiwen District, southern Zhucheng City, and the eastern parts of Gaomi and Changyi Cities, indicating lower levels of facility-based agricultural development in these areas.

Figure 11.

Extraction results map of individual plastic greenhouses in Weifang City.

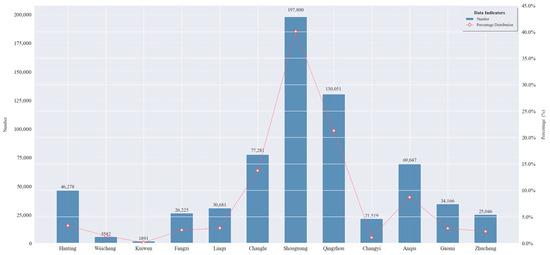

3.5. Statistics and Analysis of Individual Plastic Greenhouses

As part of the statistical analysis, Table 3 lists the number and area of individual plastic greenhouses, together with their proportions of the city totals, for each administrative division (district, county, or county-level city) of Weifang City, and Figure 12 shows the greenhouse counts as a bar chart. Shouguang City is the most intensive greenhouse cultivation area, with 197,800 greenhouses (29.72% of the municipal total) covering 260.70 km² (40.09% of the total greenhouse area), ranking first in both quantity and spatial extent. Qingzhou City and Changle County follow, with 130,051 and 77,281 greenhouses covering 138.24 km² and 89.10 km², respectively. Together, these three regions form the core of Weifang’s greenhouse industry. In contrast, Kuiwen District, the central urban core, has the lowest level of greenhouse development, with only 1,891 greenhouses and a total area of 0.83 km², accounting for just 0.28% of the city’s greenhouse count and 0.13% of the total area. This reflects the spatial constraints on facility-based agriculture in densely urbanized zones. Overall, the distribution of individual plastic greenhouses in Weifang follows a distinct pattern: denser in the north and sparser in the south, with low concentration in the central urban area and clustering in peripheral regions. This regional spatial heterogeneity provides data support for optimizing agricultural resource allocation, refining the spatial layout of the industry, and implementing precision agricultural management strategies.
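The per-division statistics in Table 3 amount to grouping greenhouse instances by division and summing their counts and areas. The sketch below is illustrative only and rests on assumptions not stated in the text: each extracted greenhouse has already been assigned to exactly one administrative division (for example by the location of its centroid), its area is its pixel count multiplied by the squared ground sampling distance `gsd_m`, and the division names and pixel counts in the example are made up.

```python
from collections import defaultdict


def division_statistics(instances, gsd_m):
    """Aggregate greenhouse counts and areas per administrative division.

    instances: iterable of (division_name, pixel_count), one entry per greenhouse.
    gsd_m: ground sampling distance in metres (side length of one pixel).
    Returns {division: {"count", "count_pct", "area_km2", "area_pct"}}.
    """
    counts = defaultdict(int)
    areas_km2 = defaultdict(float)
    for division, pixel_count in instances:
        counts[division] += 1
        areas_km2[division] += pixel_count * gsd_m ** 2 / 1e6   # m^2 -> km^2
    total_count = sum(counts.values())
    total_area = sum(areas_km2.values())
    return {
        division: {
            "count": counts[division],
            "count_pct": 100.0 * counts[division] / total_count,
            "area_km2": areas_km2[division],
            "area_pct": 100.0 * areas_km2[division] / total_area if total_area else 0.0,
        }
        for division in counts
    }


if __name__ == "__main__":
    # Hypothetical instances; division names and sizes are placeholders.
    demo = [("A", 1000), ("A", 3000), ("B", 1000)]
    for name, row in sorted(division_statistics(demo, gsd_m=1.0).items()):
        print(name, row)
```

The same proportions can be used to cross-check the reported figures. Shouguang’s 197,800 greenhouses at 29.72% imply a city total of roughly 665,500, and 1,891 / 665,500 ≈ 0.28%, matching the share reported for Kuiwen District. Likewise, 260.70 km² at 40.09% implies about 650.3 km² in total, of which 0.83 km² is about 0.13%.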

Table 3.

Statistics on the quantity and area of individual plastic greenhouses across districts (counties and county-level cities) in Weifang City.

Figure 12.

Bar chart of individual plastic greenhouse quantity statistics in Weifang City.

4. Discussion

4.1. Advantages

This study proposes a high-precision extraction framework for individual plastic greenhouses based on high-resolution remote sensing imagery. The approach integrates a Multi-scale Transformer Decoder (MTD) into the Swin-UNet architecture to form the MTDSNet model and adds a Denoising and Smoothing (DS) post-processing module to improve output quality. Within the network, the MTD performs multi-scale feature fusion, capturing the textural, geometric, and spectral characteristics of greenhouses in complex remote sensing scenes and thereby improving the model’s ability to recognize greenhouse structures against varied backgrounds. The strong spatial representation and global modeling abilities of the architecture make it particularly suitable for regions with dense greenhouse distributions, narrow spacing between structures, and diverse morphological forms. In the post-processing stage, the DS module, which combines Connected Component Analysis (CCA) and Morphological Operations (MO), refines boundaries and restores structure: it removes misclassified noise patches and improves edge regularity, so that the output better conforms to the true rectangular or arched forms of greenhouses. This helps mitigate issues common in traditional methods, such as adhesion between adjacent structures and blurred boundaries, although adhesion in very dense areas is not fully resolved (Section 4.2).
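The boundary-smoothing side of the DS module relies on morphological operations. As a hedged illustration (the structuring element and the order of operations used in the study are not specified here), the sketch below implements binary erosion and dilation with a square (2r+1) × (2r+1) structuring element in plain Python. It then builds opening (erosion followed by dilation), which removes specks and thin spurs, and closing (dilation followed by erosion), which fills small gaps and holes. Out-of-bounds pixels are simply skipped. This is an assumption that keeps objects touching the tile border from being eroded from outside, but it means closing can extend objects that lie within r pixels of the border. The combined `smooth` function (opening, then closing) is likewise a hypothetical ordering.

```python
def _window(mask, y, x, r):
    """Yield in-bounds pixel values in the (2r+1) x (2r+1) square centred on (y, x)."""
    h, w = len(mask), len(mask[0])
    for ny in range(max(0, y - r), min(h, y + r + 1)):
        for nx in range(max(0, x - r), min(w, x + r + 1)):
            yield mask[ny][nx]


def erode(mask, r=1):
    # A pixel survives only if every in-bounds pixel in its window is foreground.
    return [[1 if all(_window(mask, y, x, r)) else 0 for x in range(len(mask[0]))]
            for y in range(len(mask))]


def dilate(mask, r=1):
    # A pixel becomes foreground if any in-bounds pixel in its window is foreground.
    return [[1 if any(_window(mask, y, x, r)) else 0 for x in range(len(mask[0]))]
            for y in range(len(mask))]


def opening(mask, r=1):
    """Erosion then dilation: removes foreground parts too small to contain the window."""
    return dilate(erode(mask, r), r)


def closing(mask, r=1):
    """Dilation then erosion: fills background gaps too small to contain the window."""
    return erode(dilate(mask, r), r)


def smooth(mask, r=1):
    """Hypothetical smoothing order: opening to drop spurs, then closing to fill holes."""
    return closing(opening(mask, r), r)


if __name__ == "__main__":
    # A 3 x 3 greenhouse with a one-pixel spur and an isolated speck.
    noisy = [[0] * 9 for _ in range(7)]
    for y in range(2, 5):
        for x in range(2, 5):
            noisy[y][x] = 1
    noisy[3][5] = 1   # spur
    noisy[0][8] = 1   # speck
    for row in opening(noisy):
        print(row)
```

A larger radius `r` smooths more aggressively but also removes genuinely narrow greenhouses, so in practice it would be chosen relative to the smallest greenhouse width in pixels.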

In summary, the methodology presented in this study establishes a scalable and practically applicable technical foundation for large-scale greenhouse mapping in Weifang City. It holds significant potential for a wide range of applications, including agricultural facility supervision, crop subsidy verification, dynamic monitoring of cultivated land resources, and the implementation of rural revitalization strategies.

4.2. Limitations and Future Directions

Although MTDSNet achieved relatively high accuracy in extracting individual plastic greenhouses in Weifang City, certain limitations remain. To examine its behavior in other regions, greenhouse extraction experiments were also conducted in selected areas of Tianshui City, Gansu Province, and Kunming City, Yunnan Province, China (Figure 13). Greenhouses in Tianshui are relatively compact, and the extraction results were of similar quality to those in Weifang, with only sporadic adhesion between a few adjacent greenhouses. In Kunming, by contrast, the slender morphology and dense distribution of greenhouses led to widespread adhesion, and the model often failed to fully separate adjacent greenhouses. In high-density regions such as this, incomplete separation compromises the accuracy of subsequent counting and area-based analyses. In addition, the model has not been transferred to or validated at broader spatial scales (e.g., provincial or national levels), so its generalization capability remains to be comprehensively evaluated.
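Why adhesion degrades counting can be seen in a toy example. In the hypothetical masks below, two slender greenhouses are separated by a one-pixel background strip. Suppose greenhouse counts are derived by connected component counting, which is assumed here for illustration only. If the prediction fills a single pixel of that strip, the two greenhouses merge into one object under both 4- and 8-connectivity.

```python
from collections import deque


def count_components(mask, connectivity=4):
    """Count connected foreground regions in a 0/1 mask using breadth-first search."""
    if connectivity == 4:
        offsets = ((-1, 0), (1, 0), (0, -1), (0, 1))
    else:  # 8-connectivity also links diagonal neighbours
        offsets = tuple((dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1) if dy or dx)
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    count = 0
    for y in range(h):
        for x in range(w):
            if not mask[y][x] or seen[y][x]:
                continue
            count += 1                      # new greenhouse object found
            seen[y][x] = True
            queue = deque([(y, x)])
            while queue:
                cy, cx = queue.popleft()
                for dy, dx in offsets:
                    ny, nx = cy + dy, cx + dx
                    if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
    return count


if __name__ == "__main__":
    separated = [[1, 1, 1, 1, 1, 1],
                 [0, 0, 0, 0, 0, 0],
                 [1, 1, 1, 1, 1, 1]]
    adhered = [row[:] for row in separated]
    adhered[1][2] = 1                       # a single misclassified pixel bridges the gap
    print(count_components(separated))     # 2
    print(count_components(adhered))       # 1
```

Under this assumption, every such bridge lowers the greenhouse count by one and attributes the combined area to a single object. For this reason, adhesion in dense regions such as Kunming would directly bias per-division statistics like those in Table 3.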

Figure 13.

(a) Extraction results of plastic greenhouses in partial areas of Tianshui City, Gansu Province; (b) extraction results of plastic greenhouses in partial areas of Kunming City, Yunnan Province.

To address these challenges, future research will focus on the following two directions: (1) enhancing cross-regional generalization by incorporating multi-regional and multi-temporal remote sensing datasets into the training process, thereby improving model robustness and applicability across diverse geographical contexts, and (2) improving boundary delineation and instance-level segmentation by refining post-processing strategies to achieve more precise separation of adherent greenhouses. This will support higher-accuracy extraction results and enable large-scale, reliable identification and statistical analysis of individual plastic greenhouses.

5. Conclusions

This study presents a novel semantic segmentation framework, MTDSNet, which integrates a Multi-scale Transformer Decoder (MTD) into the Swin-UNet architecture. By leveraging window-based self-attention and multi-scale feature fusion, the model captures the textural, morphological, and spectral characteristics of plastic greenhouses in high-resolution remote sensing imagery. To further refine the extraction results, a Denoising and Smoothing (DS) module combining Connected Component Analysis (CCA) and Morphological Operations (MO) was introduced to eliminate noise artifacts and improve boundary continuity. The proposed approach mitigates issues common in conventional methods, such as greenhouse adhesion and irregular edge contours. Experiments on GF-2 imagery of Weifang City demonstrate the strong performance of the framework, with a Recall of 92.44%, Precision of 91.47%, IoU of 85.13%, and F1-score of 91.95%. Beyond extraction, quantitative and spatial analyses of greenhouse distribution across administrative divisions revealed distinct clustering patterns and regional disparities in facility agriculture development. These results highlight the method’s practical utility for large-scale agricultural monitoring, greenhouse resource inventory, and policy-oriented decision-making such as subsidy verification and land-use regulation. Nevertheless, the model still has difficulty separating adjacent greenhouses in extremely dense areas and has not been validated at broader spatial scales. Future work will focus on improving generalization through multi-regional, multi-temporal training and on improving boundary segmentation precision, enabling scalable application at provincial and national levels. These advancements will further promote the integration of remote sensing and artificial intelligence for the intelligent monitoring and sustainable management of protected agriculture.

Author Contributions

Conceptualization, Y.C. and Z.C.; methodology, X.Y. (Xiaoyu Yu), X.Y. (Xuan Yang) and X.Y. (Xu Yang); software, P.C., X.Y. (Xiaoyu Yu), and X.Y. (Xu Yang); validation, X.Y. (Xiaoyu Yu) and X.Y. (Xu Yang); formal analysis, X.Y. (Xiaoyu Yu); resources, Z.C. and Y.C.; data curation, X.Y. (Xiaoyu Yu); writing—original draft preparation, X.Y. (Xiaoyu Yu) and Y.C.; writing—review and editing, X.Y. (Xiaoyu Yu), Y.B., Z.C., and Y.C.; visualization, X.Y. (Xiaoyu Yu); supervision, Y.B. and X.Y. (Xiaoyu Yu); project administration, Z.C. and Y.C.; funding acquisition, Z.C. and Y.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the National Key Research and Development Program of China (No. 2023YFF0805901) and the Science and Disruptive Technology Program, Aerospace Information Research Institute, Chinese Academy of Sciences (No. E3Z219010F).

Data Availability Statement

Data are contained within the article.

Acknowledgments

We sincerely thank the editor and the anonymous reviewers for their dedicated efforts and insightful comments, which provided valuable guidance for improving the manuscript.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| MTDSNet | Multi-scale Transformer Decoder Swin-UNet |
| MTD | Multi-scale Transformer Decoder |
| DS | Denoising and Smoothing |
| DEM | Digital Elevation Model |
| HR | High-Resolution |
| CCA | Connected Component Analysis |
| MO | Morphological Operations |
| PPM | Pyramid Pooling Module |
| IoU | Intersection over Union |

References

- McMahon, R.W. An Introduction to Greenhouse Production; Curriculum Materials Service, Ohio State University: Columbus, OH, USA, 2000. Available online: https://eric.ed.gov/?id=ED451356 (accessed on 11 June 2025).

- Guo, B.; Zhou, B.; Zhang, Z.; Li, K.; Wang, J.; Chen, J.; Papadakis, G. A critical review of the status of current greenhouse technology in China and development prospects. Appl. Sci. 2024, 14, 5952.

- Chen, Y.; Hu, W.; Huang, B.; Weindorf, D.C.; Rajan, N.; Liu, X.; Niedermann, S. Accumulation and health risk of heavy metals in vegetables from harmless and organic vegetable production systems of China. Ecotoxicol. Environ. Saf. 2013, 98, 324–330.

- Ma, H.; Feng, T.; Shen, X.; Luo, Z.; Chen, P.; Guan, B. Greenhouse extraction with high-resolution remote sensing imagery using fused fully convolutional network and object-oriented image analysis. J. Appl. Remote Sens. 2021, 15, 046502.

- Aguilar, M.Á.; Jiménez-Lao, R.; Nemmaoui, A.; Aguilar, F.J.; Koc-San, D.; Tarantino, E.; Chourak, M. Evaluation of the consistency of simultaneously acquired Sentinel-2 and Landsat 8 imagery on plastic covered greenhouses. Remote Sens. 2020, 12, 2015.

- Van Delden, S.H.; SharathKumar, M.; Butturini, M.; Graamans, L.J.A.; Heuvelink, E.; Kacira, M.; Kaiser, E.; Klamer, R.S.; Klerkx, L.; Kootstra, G.; et al. Current status and future challenges in implementing and upscaling vertical farming systems. Nat. Food 2021, 2, 944–956.

- Hu, W.; Zhang, Y.; Huang, B.; Teng, Y. Soil environmental quality in greenhouse vegetable production systems in eastern China: Current status and management strategies. Chemosphere 2017, 170, 183–195.

- Wang, Y.; Li, L.; Zhang, X.; Ji, M. Pesticide residues in greenhouse leafy vegetables in cold seasons and dietary exposure assessment for consumers in Liaoning Province, Northeast China. Agronomy 2024, 14, 322.

- Liu, X.; Xin, L. Spatial and temporal evolution and greenhouse gas emissions of China’s agricultural plastic greenhouses. Sci. Total Environ. 2023, 863, 160810.

- Chen, W.; Li, J.; Wang, D.; Xu, Y.; Liao, X.; Wang, Q.; Chen, Z. Large-scale automatic extraction of agricultural greenhouses based on high-resolution remote sensing and deep learning technologies. Environ. Sci. Pollut. Res. 2023, 30, 106671–106686.

- Levin, N.; Lugassi, R.; Ramon, U.; Braun, O.; Ben-Dor, E. Remote sensing as a tool for monitoring plasticulture in agricultural landscapes. Int. J. Remote Sens. 2007, 28, 183–202.

- Picuno, P. Innovative material and improved technical design for a sustainable exploitation of agricultural plastic film. Polym.-Plast. Technol. Eng. 2014, 53, 1000–1011.

- Agüera, F.; Aguilar, F.J.; Aguilar, M.A. Using texture analysis to improve per-pixel classification of very high resolution images for mapping plastic greenhouses. ISPRS J. Photogramm. Remote Sens. 2008, 63, 635–646.

- Chi, M.; Plaza, A.; Benediktsson, J.A.; Sun, Z.; Shen, J.; Zhu, Y. Big data for remote sensing: Challenges and opportunities. Proc. IEEE 2016, 104, 2207–2219.

- Chen, W.; Xu, Y.; Zhang, Z.; Yang, L.; Pan, X.; Jia, Z. Mapping agricultural plastic greenhouses using Google Earth images and deep learning. Comput. Electron. Agric. 2021, 191, 106552.

- Taşdemir, K.; Koc-San, D. Unsupervised extraction of greenhouses using WorldView-2 images. In Proceedings of the 2014 IEEE Geoscience and Remote Sensing Symposium, Quebec City, QC, Canada, 13–18 July 2014; pp. 4914–4917.

- Yang, D.; Chen, J.; Zhou, Y.; Chen, X.; Chen, X.; Cao, X. Mapping plastic greenhouse with medium spatial resolution satellite data: Development of a new spectral index. ISPRS J. Photogramm. Remote Sens. 2017, 128, 47–60.

- Tarantino, E.; Figorito, B. Mapping rural areas with widespread plastic covered vineyards using true color aerial data. Remote Sens. 2012, 4, 1913–1928.

- Aguilar, M.A.; Vallario, A.; Aguilar, F.J.; García Lorca, A.; Parente, C. Object-based greenhouse horticultural crop identification from multi-temporal satellite imagery: A case study in Almeria, Spain. Remote Sens. 2015, 7, 7378–7401.

- Wu, C.F.; Deng, J.S.; Wang, K.; Ma, L.G.; Tahmassebi, A.R.S. Object-based classification approach for greenhouse mapping using Landsat-8 imagery. Int. J. Agric. Biol. Eng. 2016, 9, 79–88.

- Balcik, F.B.; Senel, G.; Goksel, C. Object-based classification of greenhouses using Sentinel-2 MSI and SPOT-7 images: A case study from Anamur (Mersin), Turkey. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 2769–2777.

- Ma, A.; Chen, D.; Zhong, Y.; Zheng, Z.; Zhang, L. National-scale greenhouse mapping for high spatial resolution remote sensing imagery using a dense object dual-task deep learning framework: A case study of China. ISPRS J. Photogramm. Remote Sens. 2021, 181, 279–294.

- Deng, J.; Bai, Y.; Chen, Z.; Shen, T.; Li, C.; Yang, X. A convolutional neural network for coastal aquaculture extraction from high-resolution remote sensing imagery. Sustainability 2023, 15, 5332.

- Tian, X.; Chen, Z.; Li, Y.; Bai, Y. Crop classification in mountainous areas using object-oriented methods and multi-source data: A case study of Xishui county, China. Agronomy 2023, 13, 3037.

- Chen, H.; Li, Z.; Wu, J.; Xiong, W.; Du, C. SemiRoadExNet: A semi-supervised network for road extraction from remote sensing imagery via adversarial learning. ISPRS J. Photogramm. Remote Sens. 2023, 198, 169–183.

- Xie, J.; Tian, T.; Hu, R.; Yang, X.; Xu, Y.; Zan, L. A Novel Detector for Wind Turbines in Wide-Ranging, Multi-Scene Remote Sensing Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 17725–17738.

- Chen, Z.; Wu, Z.; Gao, J.; Cai, M.; Yang, X.; Chen, P.; Li, Q. A convolutional neural network for large-scale greenhouse extraction from satellite images considering spatial features. Remote Sens. 2022, 14, 4908.

- Tong, X.; Zhang, X.; Fensholt, R.; Jensen, P.R.D.; Li, S.; Larsen, M.N.; Reiner, F.; Tian, F.; Brandt, M. Global area boom for greenhouse cultivation revealed by satellite mapping. Nat. Food 2024, 5, 513–523.

- Cao, H.; Wang, Y.; Chen, J.; Jiang, D.; Zhang, X.; Tian, Q.; Wang, M. Swin-Unet: Unet-like pure transformer for medical image segmentation. In Computer Vision—ECCV 2022 Workshops, Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer Nature: Cham, Switzerland, 2023; pp. 205–218.

- Xiao, H.; Li, L.; Liu, Q.; Zhu, X.; Zhang, Q. Transformers in medical image segmentation: A review. Biomed. Signal Process. Control 2023, 84, 104791.

- Kim, B.; Mun, J.; On, K.W.; Shin, M.; Lee, J.; Kim, E.S. MSTR: Multi-Scale Transformer for End-to-End Human-Object Interaction Detection. arXiv 2022, arXiv:2203.14709.

- Wu, H.; Huang, P.; Zhang, M.; Tang, W.; Yu, X. CMTFNet: CNN and multiscale transformer fusion network for remote-sensing image semantic segmentation. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–12.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention–MICCAI, Proceedings of the MICCAI 2015 18th International Conference, Munich, Germany, 5–9 October 2015; Springer International Publishing: Berlin/Heidelberg, Germany, 2015; pp. 234–241.

- Xiao, T.; Liu, Y.; Zhou, B.; Jiang, Y.; Sun, J. Unified Perceptual Parsing for Scene Understanding. arXiv 2018, arXiv:1807.10221.

- Gan, J.; Cai, K.; Fan, C.; Deng, X.; Hu, W.; Li, Z.; Wei, P.; Liao, T.; Zhang, F. CPS-RAUnet++: A Jet Axis Detection Method Based on Cross-Pseudo Supervision and Extended Unet++ Model. Electronics 2025, 14, 441.

- Shaker, A.; Maaz, M.; Rasheed, H.; Khan, S.; Yang, M.H.; Khan, F.S. UNETR++: Delving into efficient and accurate 3D medical image segmentation. IEEE Trans. Med. Imaging 2024, 43, 3377–3390.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).