Abstract

With the flourishing of deep learning, transformer models have achieved remarkable performance on many computer vision tasks. However, their application to infrared small target detection is limited by two factors: (1) the high computational complexity of conventional transformer models reduces detection efficiency; (2) small targets are easily missed against complex backgrounds. To address these issues, we propose IstdVit, a lightweight infrared small target detection method based on a linear transformer, which achieves high accuracy and low latency. The model consists of two parts: a multi-scale linear transformer and a lightweight dual feature pyramid network. It combines the strengths of a lightweight feature extraction module and the multi-head attention mechanism, effectively representing small targets in complex backgrounds at a low computational cost. Additionally, it incorporates rotary position embedding to improve its understanding of spatial context. Experiments on the NUDT-SIRST and IRSTD-1K datasets show that IstdVit achieves a good balance between speed and accuracy, outperforming other state-of-the-art methods while maintaining a low number of parameters.

1. Introduction

In modern military, maritime, and other fields, the ability to detect targets rapidly is essential. Rapid detection at medium and long ranges has become a primary focus of security tasks, which require timely detection and processing. However, in the presence of complex background clutter or strong electromagnetic interference, conventional visible-light and radar detection systems often struggle to detect targets effectively. Infrared (IR) systems offer several benefits over visible-light and radar systems, including passive monitoring, all-weather operation, and enhanced spatial resolution [1]. Owing to its passive operation in all weather conditions and airspace [2], the infrared search and track system (IRSTS) has emerged as an effective alternative to traditional detection methods. It also offers high resistance to jamming and can effectively recognize camouflaged targets, among other advantages. As a result, the IRSTS has promising potential for a wide range of applications. However, detecting small infrared targets still faces several challenges arising from the imaging conditions. Infrared sensors capture thermal radiation signals, but their effectiveness can be limited by atmospheric scattering, refraction, and noise. The resulting images therefore often lack detail, have low signal-to-noise ratios [3], and contain complex backgrounds, which can increase the false detection rate. Moreover, because of the long detection distance, small infrared targets are diminutive, often comprising fewer than a few dozen pixels [4], and lack visual features such as color and texture; as a result, important semantic and detailed information about the target can easily be lost. In addition, infrared small target detection algorithms are subject to strict real-time requirements [5].
Despite advancements in algorithms for natural scene target detection and semantic segmentation, research focused specifically on algorithms for small infrared targets remains limited.

Currently, many infrared small target detection algorithms rely on heuristic rules to design hand-crafted features based on the color, texture, and other characteristics of common targets [6,7]. Consequently, these methods often lack sufficient generalization and accuracy. Missed or falsely detected small infrared targets have a serious negative impact on subsequent processes, such as aircraft tracking systems. These characteristics of small infrared targets pose challenges that are not typically found in other target detection fields.

Deep learning has also been applied to infrared small target detection. MDvsFA [8] is an early exploration of deep learning for this task. It proposed a deep learning framework based on adversarial learning and highlighted the need to balance missed detections and false alarms. Owing to the limited datasets and theory available at the time, the method requires a large amount of computation and exhibits only moderate generalization ability. UIUNet [9] proposes a nested U-Net structure, in which a smaller U-Net is embedded in a larger U-Net backbone to achieve multilevel and multi-scale representation learning. Nevertheless, the large number of parameters in UIUNet results in significant computational effort, which hinders practical application. ISTDUNet [10] is a simple and reliable baseline model for infrared small target detection. Despite its simple architecture, it achieves state-of-the-art AUC values while maintaining a low parameter count. RDIAN [11] is a lightweight, multidirectional attention network designed for multisensory infrared small target detection. It achieves strong IoU scores with a very low parameter count and fast inference. The small size of infrared targets, combined with their lack of distinctive texture, makes effective feature extraction challenging and often leads to poor detection performance. Additionally, the methods above face a significant speed–accuracy trade-off: those that achieve a higher mean intersection over union (mIoU) tend to have more parameters, resulting in a lower frame rate (frames per second, FPS).

In recent years, the vision transformer (ViT) has brought the advantages of the transformer architecture to computer vision. By dividing an image into patches and treating them as a sequence, the ViT achieves performance comparable to, or even surpassing, that of convolutional neural networks (CNNs) in tasks such as image classification [12,13,14], object detection [15], motion recognition [13], and image segmentation [16]. SCTransNet [17] proposes a spatial-channel cross-transformer block (SCTB) that captures global information through interactions between features at different levels, and it enhances the discriminative ability of these features through a multi-scale strategy and cross spatial-channel information interaction. It is currently the state-of-the-art (SOTA) transformer-based method. However, transformer-based methods require substantial computational resources for self-attention. This makes it difficult to reconcile self-attention at native resolution, which infrared small target detection calls for, with the limited computing capability of edge devices. Therefore, this paper focuses on improving existing transformer-based algorithms for infrared small target detection.

This paper proposes IstdVit, a lightweight infrared small target detection method based on a linear transformer, to meet the practical requirements of high detection performance, low inference latency, few parameters, and low computation. The contributions of this paper are summarized as follows:

1. This paper proposes IstdVit, a lightweight, transformer-based method for infrared small target detection with a U-Net architecture, which aims to address the speed–accuracy trade-off.

2. This paper designs a multi-scale linear transformer (MLT) module. To improve the ability to distinguish small targets from the background, Mixed 2D rotary position embedding (RoPE) is introduced into the MLT to enhance the modeling of relative spatial positions.

3. To meet the varied demands of different small targets, a multi-scale parallel convolution strategy based on dilated convolution is proposed, followed by an attention mechanism. The multi-scale features are combined to achieve a finer and more robust feature representation.

4. Experiments on two datasets show that the proposed method outperforms other SOTA methods in both accuracy and speed. Its mIoU is 11.07% higher than that of ACM, and it reaches an mIoU of 70.22, a Pd of 94.59, and an Fa of 1.81 × 10⁻⁵ on the IRSTD-1K dataset.

2. Related Work

2.1. The Infrared Small Target Detection Datasets

For the infrared small target detection task, several datasets have been created for evaluation. Two typical datasets are IRSTD-1K [18] and NUDT-SIRST [19].

The IRSTD-1K [18] dataset consists of real-world scenes captured using infrared cameras. It has the following characteristics: (1) The targets typically occupy very few pixels in the image, generally between 1 and 10 pixels, and their size varies significantly from scene to scene. (2) It includes a diverse array of scenes, such as ocean, river, field, mountain, city, and cloud. The backgrounds of these scenes are complex, with substantial clutter and noise, and the contrast between the targets and the backgrounds is low. (3) The target signal-to-noise ratio is low owing to thermal noise and environmental disturbances: the infrared radiation of the target is weak, so it is easily overwhelmed by background noise. These characteristics make the dataset challenging for detection algorithms.

The NUDT-SIRST [19] dataset is the first large-scale dataset specifically designed for infrared small target detection. It consists of 1327 images. The researchers collected a substantial set of real infrared background images of various scenes, such as cities, fields, oceans, and clouds, and used Gaussian kernels along with pre-collected target templates (for example, airplanes, boats, and drones) to simulate point, small, and extended targets. NUDT-SIRST offers a rich variety of multi-target scenarios with targets of different sizes, shapes, and brightness levels. With its diversity and accurate labels, it provides a solid foundation for training and evaluating infrared small target detection models.

These datasets have been widely tested by many methods. So far, the state-of-the-art (SOTA) methods include LGGNet [1], SCTransNet [17], MSHNet [20], IRPruneDet [21], etc. Li et al. proposed LGGNet that combines local detail information and global contextual information. It is a transformer-based method and was compared with CNN-based approaches. Experiments conducted on the IRSTD-1K dataset showed that LGGNet achieved 65.01% on and 90.57% on . Yuan et al. [17] designed a Spatial-Channel Cross-Transformer Network (SCTransNet) for infrared small target detection. It introduces a spatially embedded single-head channel-cross-attention mechanism to learn global information by interacting between features at different levels. It achieves 68.03% on on the IRSTD-1K dataset, and 94.09% on on the NUDT-SIRST dataset. Liu et al. [20] proposed MSHNet, which is based on a plain U-Net without bells and whistles. Its effectiveness benefits from the design of a scale- and location-sensitive loss. It was tested using the metric, with 67.16% on on the IRSTD-1K dataset and 80.55% on on the NUDT-SIRST dataset. Zhang et al. [21] proposed an efficient infrared small target detection method called IRPruneDet, which is based on wavelet structure regularized soft channel pruning. This method achieved 64.54% on and 62.71% on on the IRSTD-1K dataset.

Overall, many methods have been proposed and tested on the IRSTD-1K and NUDT-SIRST datasets. SOTA methods such as SCTransNet [17], MSHNet [20], IRPruneDet [21], and LGGNet [1] have achieved good results; accordingly, they are selected as comparison methods in this paper.

2.2. The Infrared Small Target Detection Methods

For the infrared small target detection task, some research efforts have attempted to incorporate transformer or attention mechanisms into the model, which can be categorized into two main approaches.

The first type of approach uses convolution-based attention mechanisms. These mechanisms generally build on foundational attention techniques in computer vision, such as SENet and CBAM, and are implemented as simple relation-augmentation modules placed after ordinary convolutional layers. Representative methods include ACM [22], ALCNet [23], SGBNet [24], DNANet [19], and MIANet [25].

ACM [22] employs an asymmetric contextual modulation (ACM) module to address the challenge of feature fusion in infrared small target detection. The module uses a global channel attention module (GCAM) to pass high-level semantic information to the shallow network, while a pixel-level channel attention module (PCAM) preserves and highlights fine details of small infrared targets in the deep network. The module operates along two paths, top-down and bottom-up, enabling the bidirectional exchange of high-level semantic information and low-level details. ALCNet [23] designs a bottom-up local attentional modulation (BLAM) module that embeds details from low-level features into high-level semantic feature maps, effectively addressing the scarcity of intrinsic target features in infrared small target detection. DNANet [19] is a densely nested attention network for detecting small infrared targets. It addresses the loss of small targets in deep feature representations, a common issue in other methods, by using densely nested feature interaction modules and attention modules. In this type of approach, the attention module primarily helps the network identify where to attend. However, it does not model the relationship between the target and the background, so it fails to learn the spatial relevance of features.

The second type of approach uses transformer-based architectures, which typically include a transformer module consisting of a token mixer and a feed-forward network (FFN). The FFN is a crucial component of many neural network architectures, especially the transformer. It typically consists of two linear transformations with a ReLU activation in between. Despite its simple structure, the FFN plays a vital role in the model's ability to capture complex patterns and relationships in the data. MTUNet [26] is a multilevel TransUNet architecture for infrared small target detection. It designs a multilevel ViT module (MVTM) for coarse-to-fine feature extraction. Additionally, MTUNet proposes a new loss function, FocalIoU, which combines the benefits of focal loss and SoftIoU loss, enabling effective localization of small targets while preserving the target shape during learning.
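The FFN described above can be sketched in NumPy as follows. This is a minimal illustration, assuming row-vector tokens of shape (n, d) and a hypothetical hidden width of 4d; the weights are random stand-ins, not trained parameters.

```python
import numpy as np

def ffn(x, w1, b1, w2, b2):
    """Position-wise feed-forward network: Linear -> ReLU -> Linear.

    x: (n, d) tokens; w1: (d, d_hidden); w2: (d_hidden, d).
    """
    hidden = np.maximum(x @ w1 + b1, 0.0)  # first linear map followed by ReLU
    return hidden @ w2 + b2                 # second linear map back to width d

rng = np.random.default_rng(0)
n, d, d_hidden = 16, 8, 32  # hypothetical sizes (expansion ratio 4)
x = rng.standard_normal((n, d))
w1, b1 = 0.1 * rng.standard_normal((d, d_hidden)), np.zeros(d_hidden)
w2, b2 = 0.1 * rng.standard_normal((d_hidden, d)), np.zeros(d)
y = ffn(x, w1, b1, w2, b2)
print(y.shape)  # (16, 8)
```

Because the same weights are applied to every token independently, the FFN mixes information across channels but not across positions; mixing across positions is the job of the token mixer.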

For infrared small target detection, Liu et al. [27] proposed a transformer-based infrared small and dim target detection method that captures long-range dependencies and enhances target features, outperforming state-of-the-art methods in terms of complexity and generalization. However, this method incurs a computational cost of 42.65 GFLOPs and does not address the model's computational resource requirements, which may lead to intensive computation and long training times. Yu et al. [28] presented a strengthened spatial–temporal tri-layer local contrast method (SSTLCM) that effectively detects dim, small infrared moving targets in complex backgrounds and processes each image in 0.055 s, which is relatively fast and suitable for near-real-time scenarios. Nevertheless, its limitations include potential weaknesses in handling highly dynamic backgrounds and fast-moving targets, as well as a reliance on specific hardware for computational efficiency. Li et al. [29] introduced IST-TransNet, a transformer-based network for infrared small target detection that improves performance through an anti-aliasing contextual feature fusion module, a spatial and channel attention module, and a vision transformer branch that mitigates background and flash element interference. However, its ability to generalize in complex backgrounds and to detect small targets is limited, and the high computational complexity of the ViT module may increase detection time.

Infrared small target detection usually requires real-time operation with limited computational resources. However, the computational complexity of the transformer is quadratic in the spatial resolution (number of pixels) of its input. Simply replacing the layers of a common network architecture with transformers therefore yields a network that cannot meet real-time requirements. To address this issue, some methods [27] apply the transformer only to low-resolution feature maps, using a large patch or window. However, the lowest-resolution feature maps contain extremely sparse small-target features and have large receptive fields, so applying a coarse-grained transformer at this level weakens relational modeling. Coarse-grained transformers, such as those applied at low resolution or with large patches, struggle to improve the network's ability to detect small targets.

In summary, fine-grained transformers that operate at native resolution significantly increase the computational complexity of the network. This speed–accuracy trade-off poses a significant challenge for infrared small target detection. This paper proposes IstdVit, an algorithm based on a linear transformer for detecting small infrared targets. It uses the transformer to model relationships directly on high-resolution feature maps, constructing more distinctive target features in real time.

3. Proposed Method

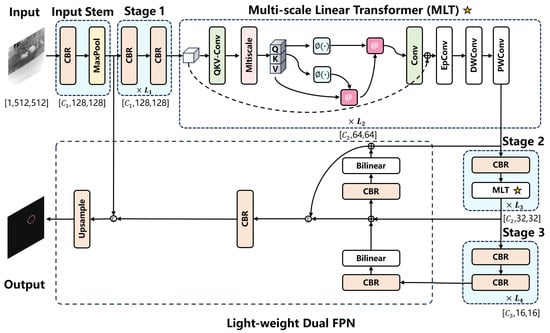

As shown in Figure 1, the IstdVit network serves as a robust model based on transformer architecture for detecting small infrared targets. Its design is straightforward, consisting mainly of a few essential components: the CBR module, the multi-scale linear transformer module, and the lightweight dual FPN module.

Figure 1.

The overall architecture of the proposed IstdVit model.

The CBR module, commonly used in convolutional neural networks (CNNs), stands for Convolution-BatchNorm-Rectified Linear Unit. The convolutional layer extracts features from the input data; the batch normalization layer normalizes the outputs of the convolutional layer to stabilize and accelerate the training process; and the ReLU activation function introduces non-linearity into the model. The CBR module is widely used due to its effectiveness in enhancing the performance of CNNs, improving the convergence speed and generalization ability.

The multi-scale linear transformer is designed to efficiently process and integrate information from multiple scales. It computes attention with linear complexity and processes features at several scales, enabling the model to capture both local and global features effectively. The term "bilinear" refers to bilinear interpolation, which produces smooth results when resizing feature maps.

The lightweight dual FPN builds on the feature pyramid network (FPN), which enhances object detection by integrating multi-scale features from a backbone network. An FPN constructs a feature pyramid that combines high-level semantic information from deeper layers with high-resolution details from shallower layers through a top-down pathway and lateral connections. This feature fusion allows the FPN to detect objects of various sizes more accurately, which is especially beneficial for small objects and objects with varying scales.
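A generic FPN top-down pathway can be sketched in NumPy as below. This is not the paper's lightweight dual FPN, whose internal details are not given here; the sketch assumes that each backbone level halves the resolution of the previous one, uses 1 × 1 convolutions (channel projections) as lateral connections, and uses nearest-neighbour upsampling for simplicity. All shapes are hypothetical.

```python
import numpy as np

def upsample2x_nearest(x):
    """(C, H, W) -> (C, 2H, 2W) by repeating each pixel 2 x 2 times."""
    return x.repeat(2, axis=1).repeat(2, axis=2)

def lateral(x, w):
    """1 x 1 convolution as a channel projection; w has shape (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", w, x)

def fpn_top_down(features, lateral_weights):
    """features: list of (C_i, H_i, W_i) maps, finest first, each level at half the
    resolution of the previous one. Returns fused C_out-channel maps, finest first."""
    fused = [lateral(features[-1], lateral_weights[-1])]  # start at the coarsest level
    for feat, w in zip(features[-2::-1], lateral_weights[-2::-1]):
        # lateral connection + upsampled coarser (more semantic) map
        fused.append(lateral(feat, w) + upsample2x_nearest(fused[-1]))
    return fused[::-1]

rng = np.random.default_rng(0)
channels, sizes, c_out = [16, 32, 64], [32, 16, 8], 8  # hypothetical backbone shapes
features = [rng.standard_normal((c, s, s)) for c, s in zip(channels, sizes)]
weights = [rng.standard_normal((c_out, c)) for c in channels]
outs = fpn_top_down(features, weights)
print([o.shape for o in outs])  # [(8, 32, 32), (8, 16, 16), (8, 8, 8)]
```

Every output level carries the same channel count, so the finest map combines local detail with semantics propagated down from the coarsest level.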

3.1. The Design of IstdVit Architecture

As shown in Figure 1, IstdVit combines convolution and the transformer to leverage their respective strengths. According to some studies [30], the main advantage of CNNs lies in their local inductive bias and translation invariance, which allow them to extract local image features effectively. Small infrared targets occupy only a few to a dozen pixels in an infrared image, and their distribution is extremely sparse [31]. Therefore, convolution is a natural feature descriptor for small infrared targets. Although the transformer [32] and the ViT [30] lack the local prior of convolution, they effectively capture global dependencies between arbitrary image locations through the self-attention mechanism, making them well suited to learning target context. In addition, a study [33] analyzed the Fourier spectrum and concluded that ViTs tend to capture low-frequency signals, similar to low-pass filters [34], whereas CNNs tend to capture high-frequency signals. Therefore, combining convolutional and transformer architectures can enhance the expressiveness of neural networks [33].

Some studies [31] suggest that, for an infrared image containing small targets, the background exhibits nonlocal autocorrelation and can therefore be approximated as a low-rank matrix, while the small targets can be treated as a sparse matrix. Thus, a given infrared image D can be decomposed into a low-rank matrix B, a sparse matrix T, and a noise term N, leading to the following model:

$$D = B + T + N$$

Additionally, the sparse matrix T can be further expressed as the sum of its high-frequency and low-frequency components:

$$T = T_{\text{high}} + T_{\text{low}}$$

Combining convolution and the transformer improves the capture of both high- and low-frequency information in infrared images, allowing better detection and restoration of small targets. Vanilla self-attention is the core mechanism of the original transformer. It computes attention scores between every pair of elements in a sequence from query and key vectors, normalizes these scores with softmax, and aggregates the value vectors using the resulting weights, allowing the model to focus on relevant elements and capture dependencies within the sequence. However, the computational complexity of vanilla self-attention is quadratic in the resolution of the input image. Using it directly for infrared small target detection is therefore very resource-intensive, and much of the computation is unrelated to the sparse small targets and their context. This paper therefore adopts a linear transformer in the MLT module, so that self-attention is computed with complexity linear in the feature resolution.

In the feature recovery phase, we designed a lightweight multi-scale feature fusion module called the lightweight dual FPN. It receives feature maps of different resolutions from the backbone network layer by layer and fuses high-level semantic features with low-level features. The lightweight dual FPN outputs the final logits Z, which are passed through the Sigmoid function to produce a probability map H. IstdVit is trained with the SoftIoU loss function:

$$\mathcal{L}_{\text{SoftIoU}} = 1 - \frac{\sum_{i} H_i Y_i}{\sum_{i} H_i + \sum_{i} Y_i - \sum_{i} H_i Y_i}, \qquad H = \sigma(Z)$$

where $\sigma(\cdot)$ denotes the Sigmoid function, Y is the ground-truth mask, and i indexes pixels.
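The SoftIoU loss can be sketched in NumPy as follows, assuming a single-channel logit map and a binary ground-truth mask whose sums run over all pixels. The small constant `eps` is an added assumption to avoid division by zero and is not specified in the text.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def soft_iou_loss(logits, target, eps=1e-6):
    """SoftIoU loss 1 - sum(H*Y) / (sum(H) + sum(Y) - sum(H*Y)) with H = sigmoid(logits)."""
    h = sigmoid(logits)                           # probability map H
    inter = np.sum(h * target)                    # soft intersection
    union = np.sum(h) + np.sum(target) - inter    # soft union
    return 1.0 - (inter + eps) / (union + eps)

target = np.zeros((8, 8))
target[3:5, 3:5] = 1.0                            # a 2 x 2 "small target"
good = np.where(target == 1.0, 20.0, -20.0)       # confident, correct logits
bad = np.full((8, 8), -20.0)                      # predicts background everywhere
print(soft_iou_loss(good, target) < 1e-3, soft_iou_loss(bad, target) > 0.99)  # True True
```

Because the loss is normalized by the soft union rather than by the image size, it is much less dominated by the many background pixels than a plain pixel-wise loss would be.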

3.2. Multi-Scale Linear Transformer

3.2.1. The Original Transformer

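First, consider the original transformer. For an input $X \in \mathbb{R}^{n \times d}$, n is the length of the input; for this task, n equals the number of pixels in the feature map (height × width). X is mapped to Q, K, and V through three linear layers $W_Q, W_K, W_V \in \mathbb{R}^{d \times d}$:

$$Q = XW_Q, \qquad K = XW_K, \qquad V = XW_V$$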

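The matrices Q, K, and V are split into h heads, each of dimension $d_h = d/h$:

$$Q = [Q_1, \dots, Q_h], \quad K = [K_1, \dots, K_h], \quad V = [V_1, \dots, V_h], \qquad Q_i, K_i, V_i \in \mathbb{R}^{n \times d_h}$$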

where $Q_i$, $K_i$, and $V_i$ represent the query, key, and value of the i-th head. Multi-head attention has two advantages: (1) different heads project the features into different subspaces, so each head performs self-attention on a different representation of the features, exploiting a greater diversity of attention patterns over the image; (2) the heads can be computed in parallel, which improves the efficiency of the algorithm. A self-attention operation is performed for each head:

$$\text{head}_i = \text{softmax}\left(\frac{Q_i K_i^{\top}}{\sqrt{d_h}}\right) V_i$$

Here, $Q_i K_i^{\top}$ is the matrix of similarity scores between queries and keys. The softmax function not only normalizes these scores but also sharpens the resulting probability distribution. Afterward, the h heads are concatenated and passed through the output linear layer $W_O \in \mathbb{R}^{d \times d}$:

$$\text{MHSA}(X) = \text{Concat}(\text{head}_1, \dots, \text{head}_h)\, W_O$$

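Thus, the time complexity of the process is

$$O(n^2 d + n d^2),$$

which is dominated by the quadratic term, from computing $Q_i K_i^{\top}$ and multiplying the $n \times n$ attention matrix by $V_i$, when $n \gg d$.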

As the image resolution grows, the computational cost therefore increases quadratically with the number of pixels. This presents a significant challenge for the transformer when handling high-resolution feature maps and prevents it from being used directly at native resolution. To address this issue, techniques such as chunking [30,35] or downsampling are usually applied to reduce the resolution of the feature map. Such operations either limit attention to a small window or lose important small-target features during downsampling. Therefore, a more efficient transformer that can operate at higher resolution is required for infrared small target detection.
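The multi-head self-attention described in this subsection can be sketched in NumPy as follows. It is an unbatched, single-image illustration with random weights and hypothetical sizes, written mainly to show where the n × n attention matrix, and hence the quadratic cost, appears.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def mhsa(x, w_q, w_k, w_v, w_o, h):
    """Vanilla multi-head self-attention. x: (n, d); w_*: (d, d); h heads."""
    n, d = x.shape
    d_h = d // h

    def split(t):
        # (n, d) -> (h, n, d_h): one slice of the channels per head
        return t.reshape(n, h, d_h).transpose(1, 0, 2)

    q, k, v = split(x @ w_q), split(x @ w_k), split(x @ w_v)
    attn = softmax(q @ k.transpose(0, 2, 1) / np.sqrt(d_h))  # (h, n, n): quadratic in n
    heads = attn @ v                                          # (h, n, d_h)
    concat = heads.transpose(1, 0, 2).reshape(n, d)           # concatenate the heads
    return concat @ w_o, attn

rng = np.random.default_rng(0)
side, d, h = 8, 16, 4          # an 8 x 8 feature map gives n = 64 tokens
n = side * side
x = rng.standard_normal((n, d))
w_q, w_k, w_v, w_o = (0.1 * rng.standard_normal((d, d)) for _ in range(4))
out, attn = mhsa(x, w_q, w_k, w_v, w_o, h)
print(out.shape, attn.shape)  # (64, 16) (4, 64, 64)
```

Doubling the side length of the feature map multiplies n by 4 and the size of `attn` by 16, which is the scaling problem described above.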

3.2.2. Design of Multi-Scale Linear Transformer

This paper introduces the linear transformer [36,37], which reduces the cost to linear in the number of pixels. The output of self-attention at position a can be equivalently expressed as follows:

$$O_a = \frac{\sum_{b=1}^{n} \text{sim}(Q_a, K_b)\, V_b}{\sum_{b=1}^{n} \text{sim}(Q_a, K_b)}$$

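where $O_a$ is a weighted sum of the values $V_b$ according to the similarity function $\text{sim}(\cdot,\cdot)$; vanilla attention corresponds to $\text{sim}(Q_a, K_b) = \exp\left(Q_a K_b^{\top}/\sqrt{d}\right)$. At this stage, the only requirement is $\text{sim}(Q_a, K_b) \ge 0$. Thus, let $\text{sim}(Q_a, K_b) = \phi(Q_a)\,\phi(K_b)^{\top}$, where $\phi(\cdot)$ is a kernel function with a non-negative range. The attention process can then be rewritten as follows:

$$O_a = \frac{\phi(Q_a) \sum_{b=1}^{n} \phi(K_b)^{\top} V_b}{\phi(Q_a) \sum_{b=1}^{n} \phi(K_b)^{\top}}$$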

Because the sums over b in the numerator and denominator are computed once and shared by all queries, the time complexity is $O(nd^2)$, which reduces the complexity from quadratic to linear in the resolution. As a result, self-attention can be performed at native resolution without operations such as chunking [30,35] of high-resolution feature maps. In this paper, a simple kernel function $\phi(\cdot)$ is chosen to ensure both the non-negativity of the result and the efficiency of the computation.
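The reordering that makes linear attention cheap can be checked numerically. The sketch below assumes ReLU as the kernel function φ, a hypothetical choice since the text only states that a simple non-negative kernel is used, and compares the linear-time form against the explicit quadratic form on random inputs.

```python
import numpy as np

def relu_kernel(x):
    # Hypothetical kernel phi: ReLU keeps the features non-negative.
    return np.maximum(x, 0.0)

def linear_attention(q, k, v, phi=relu_kernel, eps=1e-6):
    """O_a = phi(Q_a) sum_b phi(K_b)^T V_b / (phi(Q_a) sum_b phi(K_b)^T), in O(n d d_v)."""
    fq, fk = phi(q), phi(k)
    kv = fk.T @ v                      # (d, d_v): summed once over all n positions
    z = fq @ fk.sum(axis=0)            # (n,): per-query normaliser
    return (fq @ kv) / (z[:, None] + eps)

def quadratic_attention(q, k, v, phi=relu_kernel, eps=1e-6):
    """Same kernelized attention via the explicit (n, n) similarity matrix, in O(n^2 d)."""
    sim = phi(q) @ phi(k).T
    return (sim @ v) / (sim.sum(axis=1, keepdims=True) + eps)

rng = np.random.default_rng(0)
n, d, d_v = 256, 16, 16              # e.g. a 16 x 16 feature map
q, k = rng.random((n, d)), rng.random((n, d))
v = rng.standard_normal((n, d_v))
print(np.allclose(linear_attention(q, k, v), quadratic_attention(q, k, v)))  # True
```

Only the d × d_v matrix `kv` and the summed key vector are kept, so memory and time grow linearly with n instead of quadratically.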

To enhance the understanding of spatial context when learning small targets, the relative positional relationships between elements should be incorporated into the transformer. To achieve this, we introduce Mixed 2D RoPE [38], a 2D version of Rotary Position Embedding (RoPE) [39], into the multi-scale linear transformer. RoPE is a method used in transformer-based models to incorporate positional information into the model's understanding of sequences. Unlike traditional position embeddings that are added to the input vectors, RoPE rotates the query and key vectors in a way that encodes their relative positions: pairs of feature dimensions are treated as complex numbers and multiplied by unit complex numbers whose phase is proportional to position, allowing the model to capture the order and distance between elements. Briefly, RoPE uses Euler's formula to express relative positions through multiplication. To compute the attention value between the a-th and b-th elements of the feature map, RoPE is first applied to the query of the a-th element and the key of the b-th element:

$$\tilde{q}_a = q_a e^{i a\theta}, \qquad \tilde{k}_b = k_b e^{i b\theta}$$

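The corresponding entry of the attention matrix is then:

$$A_{a,b} = \mathrm{Re}\left[\tilde{q}_a \tilde{k}_b^{*}\right] = \mathrm{Re}\left[q_a k_b^{*} e^{i(a-b)\theta}\right]$$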

where $\mathrm{Re}[\cdot]$ takes the real part of a complex number and $^{*}$ denotes the complex conjugate. The product of elements at different positions thus reflects their relative position $(a-b)$ as a rotation in the complex domain. Finally, for an attention matrix with n rows and n columns, the relative position information after incorporating RoPE forms a Toeplitz matrix:

$$\begin{pmatrix}
0 & -1 & -2 & \cdots & -(n-1) \\
1 & 0 & -1 & \cdots & -(n-2) \\
2 & 1 & 0 & \cdots & -(n-3) \\
\vdots & \vdots & \vdots & \ddots & \vdots \\
n-1 & n-2 & n-3 & \cdots & 0
\end{pmatrix}$$
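The Toeplitz structure of RoPE attention scores can be verified numerically. The sketch below treats features as complex vectors and uses the standard RoPE frequency schedule θ_t = 10000^(−t/d_c) from the original RoPE formulation, an assumption here since the text does not give the frequencies. With the query and key content held fixed, the score matrix over positions should have constant diagonals.

```python
import numpy as np

def rope(x, pos, theta):
    """Rotate complex features x (one entry per dimension pair) by angles pos * theta."""
    return x * np.exp(1j * pos * theta)

rng = np.random.default_rng(0)
d_c = 4                                         # 4 complex pairs = 8 real dimensions
theta = 10000.0 ** (-np.arange(d_c) / d_c)      # standard RoPE frequency schedule
q = rng.standard_normal(d_c) + 1j * rng.standard_normal(d_c)
k = rng.standard_normal(d_c) + 1j * rng.standard_normal(d_c)

def score(a, b):
    # Re[sum_t q~_{a,t} * conj(k~_{b,t})]: the RoPE attention logit between positions a and b
    return np.real(np.sum(rope(q, a, theta) * np.conj(rope(k, b, theta))))

n = 6
S = np.array([[score(a, b) for b in range(n)] for a in range(n)])
# Toeplitz check: S[a, b] depends only on a - b, so each diagonal is constant.
is_toeplitz = all(np.allclose(np.diag(S, o), np.diag(S, o)[0]) for o in range(-n + 1, n))
print(is_toeplitz)  # True
```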

The values in the matrix represent relative positions. Incorporating this relative position information is highly beneficial for understanding the context of small infrared targets: it enhances the ability to learn the relationships between small targets and their surroundings, which is crucial for refining boundaries, shapes, and related details. In addition, the relative distance decay implicit in RoPE acts as an inductive bias toward local importance in attention, which helps the network converge. The mixed form [38] used in this paper to better handle 2D position information is as follows:

$$\mathbf{R}(a, t) = e^{i\left(\theta_t^{x} p_a^{x} + \theta_t^{y} p_a^{y}\right)}$$

For the element at position a, its two-dimensional position is represented as $(p_a^{x}, p_a^{y})$. Its t-th dimension is encoded as $\mathbf{R}(a, t)$, with frequencies $\theta_t^{x}$ and $\theta_t^{y}$ along the two axes, so the attention matrix contains two-dimensional relative position information:

$$\mathbf{R}(a, t)\,\mathbf{R}(b, t)^{*} = e^{i\left(\theta_t^{x}(p_a^{x} - p_b^{x}) + \theta_t^{y}(p_a^{y} - p_b^{y})\right)}$$

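The linear attention with RoPE is computed as follows:

$$O_a = \frac{\tilde{\phi}(Q_a) \sum_{b=1}^{n} \tilde{\phi}(K_b)^{\top} V_b}{\phi(Q_a) \sum_{b=1}^{n} \phi(K_b)^{\top}}$$

where $\tilde{\phi}(\cdot)$ denotes $\phi(\cdot)$ followed by the Mixed 2D RoPE rotation at the corresponding position.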
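A minimal NumPy sketch of linear attention with Mixed 2D RoPE might look as follows. Several details are assumptions rather than statements from the text: φ is taken to be ReLU, the per-pair frequencies θ^x_t and θ^y_t are random stand-ins for the values used in [38], and, as in the linear-attention variant described for RoPE [39], the rotation is applied only in the numerator while the normaliser stays unrotated and non-negative. The check confirms that shifting every position by the same offset leaves the output unchanged, because only relative offsets enter the rotated similarities.

```python
import numpy as np

def to_complex(f):
    """Pack a real (n, 2*d_c) array into (n, d_c) complex pairs."""
    return f[:, 0::2] + 1j * f[:, 1::2]

def mixed_rope(c, pos, theta_x, theta_y):
    """Mixed 2D RoPE: rotate pair t of a token at (x, y) by theta_x[t]*x + theta_y[t]*y."""
    angles = np.outer(pos[:, 0], theta_x) + np.outer(pos[:, 1], theta_y)  # (n, d_c)
    return c * np.exp(1j * angles)

def linear_attention_rope(q, k, v, pos, theta_x, theta_y, eps=1e-6):
    fq, fk = np.maximum(q, 0.0), np.maximum(k, 0.0)    # hypothetical phi = ReLU
    rq = mixed_rope(to_complex(fq), pos, theta_x, theta_y)
    rk = mixed_rope(to_complex(fk), pos, theta_x, theta_y)
    kv = np.conj(rk).T @ v                              # (d_c, d_v): one pass over n tokens
    num = np.real(rq @ kv)                              # sum_b Re<rq_a, rk_b> v_b
    den = fq @ fk.sum(axis=0)                           # unrotated, non-negative normaliser
    return num / (den[:, None] + eps)

rng = np.random.default_rng(0)
h, w, d_c, d_v = 4, 5, 4, 3
ys, xs = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
pos = np.stack([xs.ravel(), ys.ravel()], axis=1)        # (n, 2) rows of (x, y)
theta_x, theta_y = rng.random(d_c), rng.random(d_c)     # stand-ins for the frequencies
q, k = rng.random((h * w, 2 * d_c)), rng.random((h * w, 2 * d_c))
v = rng.standard_normal((h * w, d_v))
out = linear_attention_rope(q, k, v, pos, theta_x, theta_y)
shifted = linear_attention_rope(q, k, v, pos + np.array([3, 7]), theta_x, theta_y)
print(np.allclose(out, shifted))  # True
```

The numerator still uses a single d_c × d_v summary `kv`, so adding the rotation does not change the linear cost in n.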

To address the diverse receptive-field demands of different small targets, this paper proposes a multi-scale parallel convolution strategy based on dilated convolution, followed by an attention mechanism. Specifically, the input feature map is first processed in parallel by three 3 × 3 convolutional layers with dilation rates of 1, 2, and 4, respectively, whose effective kernel sizes are 3 × 3, 5 × 5, and 9 × 9. These multi-scale features are then fed as additional heads into the linear self-attention mechanism, which can adaptively focus on the important feature scales, combining the strengths of multi-scale features to achieve a finer and more robust feature representation.
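The effective kernel sizes follow from the fact that a k × k kernel with dilation rate r spans k + (k − 1)(r − 1) pixels per side. The NumPy sketch below builds the equivalent dense kernel of each branch by inserting r − 1 zeros between taps; it illustrates only the receptive fields, not the attention-based fusion of the branches.

```python
import numpy as np

def dilate_kernel(kernel, rate):
    """Equivalent dense kernel of a dilated convolution: (rate - 1) zeros between taps."""
    k = kernel.shape[0]
    size = k + (k - 1) * (rate - 1)          # effective extent per side
    dense = np.zeros((size, size), dtype=kernel.dtype)
    dense[::rate, ::rate] = kernel           # original taps land every `rate` pixels
    return dense

kernel = np.arange(1.0, 10.0).reshape(3, 3)  # any 3 x 3 kernel
branches = {r: dilate_kernel(kernel, r) for r in (1, 2, 4)}
print({r: b.shape for r, b in branches.items()})  # {1: (3, 3), 2: (5, 5), 4: (9, 9)}
```

All three branches keep nine weights each, so the larger receptive fields come at no extra parameter cost.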

3.3. Evaluation Indicators

Detection metrics such as the probability of detection ($P_d$) and the false alarm rate ($F_a$) are used to measure the performance of the algorithm in detection tasks. Meanwhile, the mean intersection over union (mIoU) is a crucial metric for assessing image segmentation results. It evaluates segmentation quality by considering both the overlap and the discrepancies between the predicted results and the ground truth. It is calculated as follows:

$$\text{mIoU} = \frac{1}{n} \sum_{i=1}^{n} \frac{A_{\text{inter}}^{(i)}}{A_{\text{union}}^{(i)}}$$

where n is the number of samples, $A_{\text{inter}}^{(i)}$ is the number of pixels in the intersection of the ground truth and the predicted result for the i-th sample, and $A_{\text{union}}^{(i)}$ is the total number of pixels in their union.
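The per-sample mIoU formula can be sketched as follows, assuming that predictions are probability maps binarized at a hypothetical threshold of 0.5 and that a sample with an empty prediction and an empty ground truth counts as IoU = 1. Some public infrared small target detection codebases instead accumulate intersections and unions over the whole test set before dividing, which can give different numbers.

```python
import numpy as np

def mean_iou(preds, gts, threshold=0.5):
    """mIoU = (1/n) * sum_i |P_i & G_i| / |P_i | G_i| over n samples."""
    ious = []
    for pred, gt in zip(preds, gts):
        p = pred > threshold                  # binarize the probability map
        g = gt > 0
        inter = np.logical_and(p, g).sum()    # A_inter for this sample
        union = np.logical_or(p, g).sum()     # A_union for this sample
        ious.append(inter / union if union > 0 else 1.0)
    return float(np.mean(ious))

gt1 = np.zeros((4, 4))
gt1[1:3, 1:3] = 1                # 4-pixel target
pred1 = gt1 * 0.9                # exact match -> IoU 1
gt2 = np.zeros((4, 4))
gt2[0, 0:2] = 1                  # 2-pixel target
pred2 = np.zeros((4, 4))
pred2[0, 1:3] = 0.9              # overlaps 1 pixel, union 3 -> IoU 1/3
print(mean_iou([pred1, pred2], [gt1, gt2]))  # about 0.6667
```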

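$P_d$ measures target-level detection accuracy as the ratio of correctly detected targets to all targets:

$$P_d = \frac{N_{\text{pred}}}{N_{\text{all}}}$$

where $N_{\text{pred}}$ is the number of correctly detected targets and $N_{\text{all}}$ is the total number of targets.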

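$F_a$ is the false alarm rate, the ratio of falsely predicted pixels to all pixels in the image:

$$F_a = \frac{P_{\text{false}}}{P_{\text{all}}}$$

where $P_{\text{false}}$ is the number of falsely predicted pixels and $P_{\text{all}}$ is the total number of pixels.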
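Both detection metrics can be sketched for a single image as below. The matching rule is an assumption borrowed from common practice in this field rather than something stated in the text: targets are 8-connected components, a ground-truth target counts as detected if a not-yet-matched predicted component has its centroid within a hypothetical distance of 3 pixels, and pixels of unmatched predicted components count as false alarms. Over a dataset, the hit, target, false-pixel, and pixel counts would be summed before dividing.

```python
from collections import deque

import numpy as np

def components(mask):
    """8-connected foreground components of a binary mask, each as an (m, 2) array of (row, col)."""
    seen = np.zeros(mask.shape, dtype=bool)
    rows, cols = mask.shape
    comps = []
    for r0, c0 in zip(*np.nonzero(mask)):
        if seen[r0, c0]:
            continue
        seen[r0, c0] = True
        queue, pixels = deque([(r0, c0)]), []
        while queue:                                   # breadth-first flood fill
            r, c = queue.popleft()
            pixels.append((r, c))
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < rows and 0 <= cc < cols and mask[rr, cc] and not seen[rr, cc]:
                        seen[rr, cc] = True
                        queue.append((rr, cc))
        comps.append(np.array(pixels))
    return comps

def pd_fa(pred, gt, dist_thresh=3.0):
    """Target-level Pd and pixel-level Fa for one image (binary masks)."""
    pred_comps, gt_comps = components(pred), components(gt)
    matched = set()
    hits = 0
    for g in gt_comps:
        centroid = g.mean(axis=0)
        for j, p in enumerate(pred_comps):
            if j not in matched and np.linalg.norm(p.mean(axis=0) - centroid) < dist_thresh:
                matched.add(j)                         # each prediction matches one target
                hits += 1
                break
    false_pixels = sum(len(p) for j, p in enumerate(pred_comps) if j not in matched)
    pd = hits / len(gt_comps) if gt_comps else 1.0
    return pd, false_pixels / pred.size

gt = np.zeros((16, 16), dtype=bool)
gt[2:4, 2:4] = True          # target 1
gt[10, 10] = True            # target 2 (missed below)
pred = np.zeros((16, 16), dtype=bool)
pred[2:4, 3:5] = True        # centroid 1 pixel from target 1 -> detected
pred[14:16, 0:2] = True      # 4-pixel false alarm
print(pd_fa(pred, gt))       # (0.5, 0.015625)
```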

4. Results of the Experiments

In this section, we compare the proposed IstdVit model with other state-of-the-art methods, including model-based, CNN-based, and transformer-based approaches, to demonstrate the effectiveness and advancements of IstdVit. Additionally, we present the ablation experiments conducted during the development of IstdVit. Our comparative experiments are primarily based on two datasets: NUDT-SIRST [19] and IRSTD-1K [18]. For the IRSTD-1K [18] dataset, we adhere to the same training and testing set division as outlined in the original paper.

4.1. Implementation Details

IstdVit was implemented, trained, and tested in PyTorch. The experiments used an NVIDIA GeForce RTX 3090 GPU and an Intel(R) Core(TM) i7-11700 CPU. IstdVit was trained for 400 epochs with the AdamW optimizer and a cosine annealing learning-rate scheduler. The SoftIoU loss function was used during training:

$$\mathcal{L}_{\text{SoftIoU}} = 1 - \frac{\sum_{i} \sigma(Z_i)\, Y_i}{\sum_{i} \sigma(Z_i) + \sum_{i} Y_i - \sum_{i} \sigma(Z_i)\, Y_i}$$

where Z denotes the logits output by the network, Y the ground-truth mask, and $\sigma(\cdot)$ the Sigmoid function, which maps logits to probabilities.

4.2. Quantitative Analysis

This section compares IstdVit with other state-of-the-art algorithms. The comparison includes model-based methods, namely Top-Hat [40], Max-Median [41], RLCM [42], WSLCM [43], TLLCM [44], MSLCM [45], MSPCM [45], IPI [31], NRAM [46], RIPT [47], PSTNN [48], and MSLSTIPT [43]; CNN-based deep learning methods, namely ACM [22], ALCNet [23], ISNet [18], RDIAN [11], DNANet [19], ISTDU-Net [10], UIU-Net [9], IRPruneDet [21], and MSHNet [20]; and transformer-based deep learning methods, namely SCTransNet [17], MTU-Net [26], and LGGNet [1]. To compare the methods, we use to measure their computational cost.

Table 1 presents extensive comparative experiments with model-based, CNN-based, and transformer-based methods on the challenging, publicly available IRSTD-1K dataset. IRSTD-1K [18] is a benchmark dataset for infrared small target detection (IRSTD) consisting of 1000 real-world infrared images captured with an infrared camera, with targets manually annotated pixel by pixel. Each image has a resolution of 512 × 512 pixels. The dataset includes various types of small targets, such as drones, creatures, boats, and vehicles, which vary in shape and size and are subject to interference from complex backgrounds. Compared with previous datasets, IRSTD-1K offers greater scene diversity and a wider variety of targets, together with lower image contrast, a lower signal-to-noise ratio, and targets that occupy a smaller fraction of the image on average. Overall, IRSTD-1K is a high-quality and demanding benchmark for infrared small target detection research, providing valuable resources and challenges for developing more robust detection algorithms.

Table 1.

Performance comparison of the proposed IstdVit and the model-based methods, the CNN-based methods, and the transformer-based methods on the IRSTD-1K [18] dataset.

The experimental results show that several strong transformer-based methods have been applied to infrared small target detection. For instance, SCTransNet achieves an mIoU of 68.07 with good detection accuracy and a low false alarm rate. However, despite its strict control over the number of parameters and computations, the high computational complexity of the transformer still leaves SCTransNet requiring 40.46 G of computation, which makes the model difficult to apply in real-world scenarios. In addition, because transformer-based models require large amounts of training data, SCTransNet must be trained jointly on multiple datasets to achieve its best performance.

Notably, IstdVit achieves 70.22% mIoU, 94.59% Pd, and 1.81 × 10⁻⁵ Fa on the IRSTD-1K dataset while using only 26% of the parameters and 7% of the computation of SCTransNet. It also clearly outperforms all the other infrared small target detection methods compared, reaching state-of-the-art (SOTA) performance. Moreover, at a computational cost comparable to that of ACM [22], IstdVit improves mIoU by 11.07 percentage points. This suggests that IstdVit achieves an excellent speed–accuracy trade-off while retaining the transformer architecture, which gives it greater practical value.

The experimental results on the synthetic NUDT-SIRST [19] dataset are shown in Table 2. IstdVit outperforms the other methods on NUDT-SIRST, most notably in Pd, which reaches 99.31%, clearly above the other SOTA methods. IstdVit's mIoU exceeds that of the low-computation methods ACM and ALCNet by 30 points. Compared with the high-accuracy methods ISTDU-Net, UIU-Net, and SCTransNet, IstdVit achieves a higher mIoU together with a higher Pd and a lower Fa. In particular, its Fa of 0.02 × 10⁻⁵ is the lowest among all methods, indicating that IstdVit detects small infrared targets reliably with very few false alarms. These results are obtained with a small number of parameters and minimal computation. Together, the experiments on IRSTD-1K and NUDT-SIRST indicate that IstdVit strikes a strong balance between speed and accuracy and delivers SOTA performance.

Table 2.

Performance comparison of the proposed IstdVit and the model-based methods, the CNN-based methods, and the transformer-based methods on the NUDT-SIRST [19] dataset.

4.3. Ablation Study

The ablation results for IstdVit are shown in Table 3. The baseline uses a basic residual convolution module at each stage and achieves 66.73% mIoU, 86.86% Pd, and 1.71 × 10⁻⁵ Fa with approximately 2.91 million parameters. Adding a standard transformer directly on top of the baseline raises mIoU to 69.52% and Pd to 89.89%, while Fa decreases slightly to 1.64 × 10⁻⁵. However, this improvement increases the model size to 3.40 million parameters, mainly because of the additional parameters required for global attention. To reduce the overall computational cost, the standard transformer is then replaced with the MLT: mIoU stays nearly unchanged at 69.44%, Pd rises substantially to 93.91%, and Fa increases slightly to 2.11 × 10⁻⁵, while the number of parameters drops to 2.92 million. This suggests that relational modeling with multi-scale information substantially benefits small target detection at low cost. Finally, RoPE is introduced into the multi-scale linear transformer, which raises mIoU to 70.22% and Pd to 94.59% and reduces Fa to 1.81 × 10⁻⁵. RoPE adds no parameters, so the model remains at 2.92 million parameters. These results further demonstrate the effectiveness and lightweight nature of IstdVit for detecting small infrared targets.
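
The observation that RoPE leaves the parameter count unchanged follows from how rotary encoding works: it applies fixed, position-dependent rotations to queries and keys instead of adding learned embeddings. The NumPy sketch below illustrates an axial 2D variant in the spirit of [38], in which half of the channels are rotated according to the column index and half according to the row index. The frequency base of 100, the channel split, and the function names are illustrative assumptions, not settings taken from IstdVit.

```python
import numpy as np


def rope_1d(x, pos, base=100.0):
    """Rotate each consecutive channel pair of x (N, d) by angle pos * theta_i."""
    _, d = x.shape
    theta = base ** (-np.arange(0, d, 2) / d)   # fixed frequencies, nothing learned
    angle = pos[:, None] * theta[None, :]        # (N, d/2)
    cos, sin = np.cos(angle), np.sin(angle)
    even, odd = x[:, 0::2], x[:, 1::2]
    out = np.empty_like(x)
    out[:, 0::2] = even * cos - odd * sin        # 2D rotation of each channel pair
    out[:, 1::2] = even * sin + odd * cos
    return out


def rope_2d_axial(x, height, width, base=100.0):
    """Axial 2D RoPE for row-major tokens: half the channels use the column index,
    the other half use the row index."""
    n, d = x.shape
    assert n == height * width and d % 4 == 0
    rows, cols = np.divmod(np.arange(n), width)
    half = d // 2
    return np.concatenate([rope_1d(x[:, :half], cols.astype(float), base),
                           rope_1d(x[:, half:], rows.astype(float), base)], axis=1)


rng = np.random.default_rng(0)
H = W = 4
q, k = rng.standard_normal(8), rng.standard_normal(8)
Q = rope_2d_axial(np.tile(q, (H * W, 1)), H, W)
K = rope_2d_axial(np.tile(k, (H * W, 1)), H, W)
# Pairs (0,0)->(1,2) and (2,1)->(3,3) share the offset (+1 row, +2 columns).
print(np.isclose(Q[0] @ K[1 * W + 2], Q[2 * W + 1] @ K[3 * W + 3]))  # True
```

Because each rotation is orthogonal, the query–key score depends only on the relative offset between tokens, which is why the two printed scores coincide.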

Table 3.

The ablation experiments of the proposed IstdVit on the IRSTD-1K [18] dataset.

4.4. Qualitative Analysis

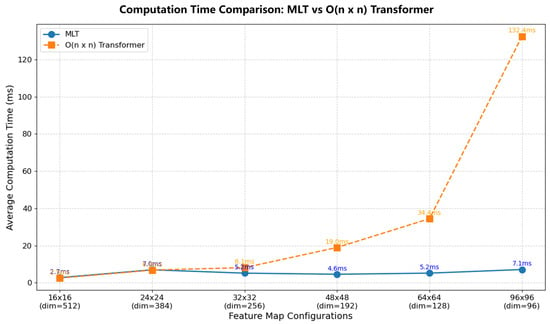

To further investigate the computational benefit of MLT over the standard quadratic-complexity transformer (i.e., the original scaled dot-product attention), we compare the time each consumes on feature maps of various resolutions, as illustrated in Figure 2.
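
The difference between the quadratic and linear attention variants compared here comes down to the order of matrix products. The NumPy sketch below assumes a ReLU-kernel linear attention of the kind used in EfficientViT [37]; the exact MLT kernel is not specified in this section. Computing φ(K)ᵀV first yields a d × d matrix, so cost grows linearly with the number of tokens N, whereas softmax attention must form an N × N score matrix. The quadratic-order version of the same ReLU attention is included only to confirm that reordering the products does not change the result; all function names are hypothetical.

```python
import numpy as np


def softmax_attention(q, k, v):
    """Standard scaled dot-product attention: builds an N x N score matrix."""
    scores = q @ k.T / np.sqrt(q.shape[1])            # (N, N)
    scores -= scores.max(axis=1, keepdims=True)       # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=1, keepdims=True)
    return weights @ v


def relu_linear_attention(q, k, v, eps=1e-6):
    """ReLU-kernel linear attention: computes phi(K)^T V first, a d x d matrix."""
    phi_q, phi_k = np.maximum(q, 0), np.maximum(k, 0)
    kv = phi_k.T @ v                                  # (d, d), cost O(N d^2)
    normalizer = phi_q @ phi_k.sum(axis=0)            # (N,)
    return (phi_q @ kv) / (normalizer[:, None] + eps)


def relu_linear_attention_quadratic(q, k, v, eps=1e-6):
    """Same ReLU attention computed the quadratic way, materializing N x N."""
    sim = np.maximum(q, 0) @ np.maximum(k, 0).T       # (N, N)
    return (sim @ v) / (sim.sum(axis=1, keepdims=True) + eps)


rng = np.random.default_rng(0)
n_tokens, dim = 1024, 32        # e.g. a 32 x 32 feature map with 32 channels
q, k, v = (rng.standard_normal((n_tokens, dim)) for _ in range(3))
fast = relu_linear_attention(q, k, v)
slow = relu_linear_attention_quadratic(q, k, v)
print(np.allclose(fast, slow))  # True
```

Softmax attention cannot be reordered in the same way, because the exponential couples each query with every key before normalization.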

Figure 2.

Elapsed time (ms) of MLT and the standard quadratic-complexity transformer on feature maps of different resolutions.

At a feature-map resolution of 16 × 16 with a corresponding channel dimension of 512, MLT and the standard transformer take similar amounts of time. From a resolution of 32 × 32, MLT begins to take significantly less time than the standard transformer. At 64 × 64, the elapsed time of MLT grows only slightly because the channel dimension decreases as the resolution increases, whereas the standard transformer already takes seven times as long as MLT. The advantage of MLT is therefore substantial on high-resolution feature maps, where it yields a noticeable speedup. The comparison also suggests that a standard transformer operating at the native feature resolution struggles to let infrared small target detection methods reach real-time performance.
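
The resolution trend in Figure 2 can be approximated with a rough operation count. The sketch below counts multiply-accumulates (MACs) for the attention core only, excluding the Q/K/V projections, and assumes, as a labeled simplification, that the channel dimension halves each time the resolution doubles, starting from the 16 × 16, d = 512 setting given in the text. It is a back-of-the-envelope estimate, not a reproduction of the measured timings.

```python
def attention_macs(height: int, width: int, dim: int) -> dict:
    """Rough MAC counts for the attention core (projections excluded)."""
    n = height * width
    return {
        "softmax": 2 * n * n * dim,   # Q K^T (N*N*d) plus weights @ V (N*N*d)
        "linear": 2 * n * dim * dim,  # phi(K)^T V (N*d*d) plus phi(Q) @ KV (N*d*d)
    }


# Assumption: channel width halves each time the resolution doubles.
for side, dim in [(16, 512), (32, 256), (64, 128)]:
    macs = attention_macs(side, side, dim)
    ratio = macs["softmax"] / macs["linear"]
    print(f"{side}x{side}, d={dim}: linear={macs['linear']:,} MACs, "
          f"softmax/linear ratio={ratio:.2f}")
```

The softmax-to-linear ratio equals N/d, giving 0.5, 4, and 32 for the three settings, while the linear count stays constant at about 1.34 × 10⁸ MACs. The measured gap (about seven times at 64 × 64) is smaller than 32×, which is plausible because runtime also depends on memory traffic, kernel launch overhead, and the rest of the network.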

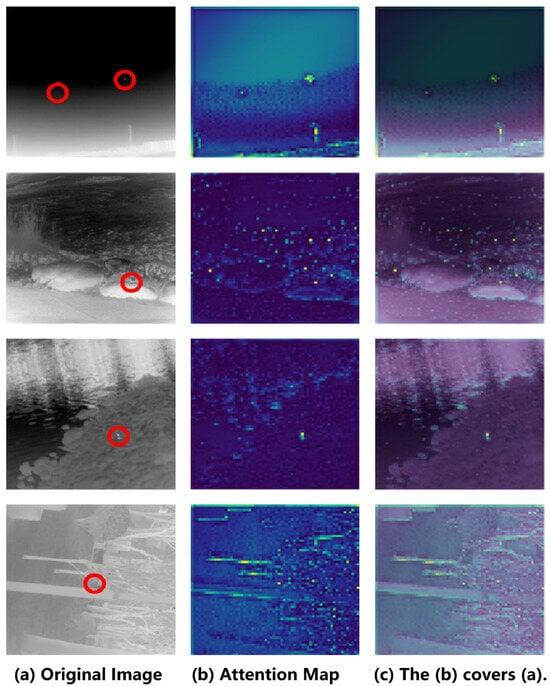

Figure 3 displays heat maps visualizing MLT attention, where warmer regions indicate higher MLT attention and cooler regions indicate lower MLT attention.

Figure 3.

Visualization of MLT attention. (a) Original input image; (b) MLT attention map; (c) input image overlaid with the attention map.

The visualization results indicate that MLT accurately identifies the region where the target is located while suppressing the influence of unrelated regions that do not contain the small target. Shallow layers of a network usually capture low-level information, such as image contours and corner points, without distinguishing targets. However, because MLT computes attention at the raw resolution, it can accurately capture information such as the location of small targets even at these shallow layers. In addition, attention is also assigned to the area around the small target, indicating that MLT already acquires a contextual understanding of it. For instance, when the small target is located in the woods, MLT attends not only to the target itself but also to the surrounding context, such as the edges of the trees.

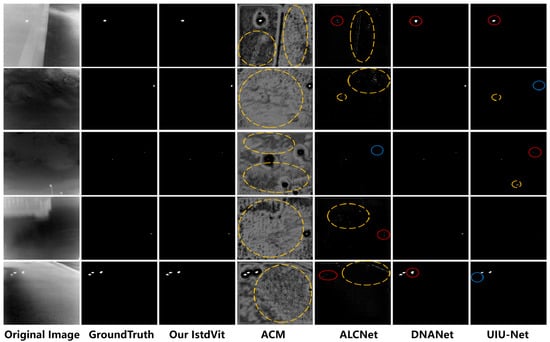

Figure 4 compares the visualization results of IstdVit with those of other infrared small target detectors, including ACM [22], ALCNet [23], DNANet [19], and UIU-Net [9], on the same scenes. Note that the masks produced by each model are not binarized. ACM and ALCNet generally produce more false detections of non-target artifacts, while UIU-Net tends to miss targets and is less effective at capturing fine details. Although DNANet achieves stronger overall detection accuracy, it still falls short in learning target details. In contrast, IstdVit performs better in both detection accuracy and detail learning, further confirming its advantages in infrared small target detection. In future work, IstdVit should be tested on a more diverse range of datasets to assess how well it generalizes across scenarios and environments, such as anti-UAV object detection.

Figure 4.

Comparison of the visualization results of IstdVit with other IR small target detectors. Red circles indicate poorly learned target details, blue circles indicate missed detections, and yellow circles indicate false detections.

5. Conclusions

This paper proposes IstdVit, a method that balances speed and accuracy for the infrared small target detection task. IstdVit is a U-shaped transformer-based architecture that improves on the original transformer with a lightweight multi-scale linear transformer, which reduces the computational complexity of attention from quadratic to linear in the size of the feature map. This allows the architecture to learn the contextual relationships of small infrared targets effectively while keeping computational demands low, enabling real-time detection of small infrared targets. With a computational load similar to that of the real-time method ACM, IstdVit improves mIoU by 11.07 percentage points on the IRSTD-1K dataset. On the NUDT-SIRST dataset, IstdVit also achieves a higher detection probability (Pd) and a lower false alarm rate (Fa) than other SOTA methods.

Author Contributions

All authors contributed to the idea for the article. B.W. and Q.M. provided the initial idea and framework for this manuscript; the literature search and analysis were performed by Y.W. and Q.M.; writing, B.W., Y.W. and Q.M.; visualization, Y.W. and Q.M.; supervision, B.W. and H.Z.; data curation, J.C. and H.Z.; investigation, J.C., H.Z. and L.Z.; review and editing, H.Z. and L.Z.; funding acquisition, B.W., H.Z. and L.Z. All authors participated in commenting on and revising the paper. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the Youth Fund of the National Natural Science Foundation of China under number 62406249, and the Natural Science Basic Research Program of Shaanxi under number 2024JC-YBQN-0612, and the National Key Laboratory of Space Target Awareness under number STA2024KGJ0202. This work is also supported by the Basic Research Programs of Taicang, 2023, under number TC2023JC23, and the Open Fund of National Engineering Laboratory for Big Data System Computing Technology, under number SZU-BDSC-OF2024-08.

Data Availability Statement

The code may be accessed at https://github.com/BingshuCV/ISTDViT, accessed on 10 June 2025.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Li, Q.; Mao, Q.; Liu, W.; Wang, J.; Wang, W.; Wang, B. Local information guided global integration for infrared small target detection. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; pp. 4425–4429.

2. Zhang, Y.; Li, Z.; Siddique, A.; Azeem, A.; Chen, W.; Cao, D. Infrared Small Target Detection Based on Interpretation Weighted Sparse Method. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5001415.

3. Kou, R.; Wang, C.; Peng, Z.; Zhao, Z.; Chen, Y.; Han, J.; Huang, F.; Yu, Y.; Fu, Q. Infrared small target segmentation networks: A survey. Pattern Recognit. 2023, 143, 109788.

4. Chen, D.; Qin, F.; Ge, R.; Peng, Y.; Wang, C. ID-UNet: A densely connected UNet architecture for infrared small target segmentation. Alex. Eng. J. 2025, 110, 234–244.

5. Xin, B.; Li, Q.; Mao, Q.; Wang, J.; Wang, B. FBI-Net: Frequency Band Integration Network for Infrared Small Target Segmentation. In Proceedings of the 2025 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Hyderabad, India, 6–11 April 2025; pp. 1–25.

6. Gan, W.; Liu, Z.; Chen, C.L.P.; Zhang, T. Siamese labels auxiliary learning. Inf. Sci. 2023, 625, 314–326.

7. Zhang, H.; Wang, K.; Wang, B.; Li, X. Hierarchical Multimodality Graph Reasoning for Remote Sensing Visual Question Answering. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5651312.

8. Wang, H.; Zhou, L.; Wang, L. Miss detection vs. false alarm: Adversarial learning for small object segmentation in infrared images. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 8509–8518.

9. Wu, X.; Hong, D.; Chanussot, J. UIU-Net: U-Net in U-Net for infrared small object detection. IEEE Trans. Image Process. 2023, 32, 364–376.

10. Hou, Q.; Zhang, L.; Tan, F.; Xi, Y.; Zheng, H.; Li, N. ISTDU-Net: Infrared small-target detection U-Net. IEEE Geosci. Remote Sens. Lett. 2022, 19, 7506205.

11. Sun, H.; Bai, J.; Yang, F.; Bai, X. Receptive-field and direction induced attention network for infrared dim small target detection with a large-scale dataset IRDST. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5000513.

12. Hu, X.; Chen, C.L.P.; Zhang, T. Dynamic Broad Metric Learning. IEEE Trans. Artif. Intell. 2025, 1–14.

13. Li, S.; Zhang, T.; Chen, C.L.P. SIA-Net: Sparse Interactive Attention Network for Multimodal Emotion Recognition. IEEE Trans. Comput. Soc. Syst. 2024, 11, 6782–6794.

14. Li, C.; Wang, B.; Zheng, J.; Zhang, Y.; Chen, C.L.P. Unsigned Road Incidents Detection Using Improved RESNET From Driver-View Images. IEEE Trans. Artif. Intell. 2025, 6, 1203–1216.

15. Wang, B.; Li, C.; Zou, W.; Zheng, Q. Foreign Object Detection Network for Transmission Lines from Unmanned Aerial Vehicle Images. Drones 2024, 8, 361.

16. Cao, Z.; Lu, Y.; Xin, H.; Wang, R.; Nie, F.; Sebilo, M. Superpixel-Based Bipartite Graph Clustering Enriched with Spatial Information for Hyperspectral and LiDAR Data. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5505115.

17. Yuan, S.; Qin, H.; Yan, X.; Akhtar, N.; Mian, A. SCTransNet: Spatial-channel cross transformer network for infrared small target detection. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5002615.

18. Zhang, M.; Zhang, R.; Yang, Y.; Bai, H.; Zhang, J.; Guo, J. ISNet: Shape matters for infrared small target detection. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 867–876.

19. Li, B.; Xiao, C.; Wang, L.; Wang, Y.; Lin, Z.; Li, M.; An, W.; Guo, Y. Dense nested attention network for infrared small target detection. IEEE Trans. Image Process. 2022, 32, 1745–1758.

20. Liu, Q.; Liu, R.; Zheng, B.; Wang, H.; Fu, Y. Infrared small target detection with scale and location sensitivity. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 17490–17499.

21. Zhang, M.; Yang, H.; Guo, J.; Li, Y.; Gao, X.; Zhang, J. IRPruneDet: Efficient infrared small target detection via wavelet structure-regularized soft channel pruning. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; pp. 7224–7232.

22. Dai, Y.; Wu, Y.; Zhou, F.; Barnard, K. Asymmetric contextual modulation for infrared small target detection. In Proceedings of the IEEE Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2021.

23. Dai, Y.; Wu, Y.; Zhou, F.; Barnard, K. Attentional local contrast networks for infrared small target detection. IEEE Trans. Geosci. Remote Sens. 2021, 59, 9813–9824.

24. Xu, Y.; Chen, C.L.P.; Wu, M.; Zhang, T. SGB-Net: Scalable Graph Broad Network. IEEE Trans. Neural Netw. Learn. Syst. 2025, 1–15.

25. Li, S.; Zhang, T.; Chen, B.; Chen, C.P. MIA-Net: Multi-Modal Interactive Attention Network for Multi-Modal Affective Analysis. IEEE Trans. Affect. Comput. 2023, 14, 2796–2809.

26. Wu, T.; Li, B.; Luo, Y.; Wang, Y.; Xiao, C.; Liu, T.; Yang, J.; An, W.; Guo, Y. MTU-Net: Multilevel TransUNet for space-based infrared tiny ship detection. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5601015.

27. Liu, F.; Gao, C.; Chen, F.; Meng, D.; Zuo, W.; Gao, X. Infrared small and dim target detection with transformer under complex backgrounds. IEEE Trans. Image Process. 2023, 32, 12.

28. Yu, J.; Li, L.; Li, X.; Jiao, J.; Su, X.; Chen, F. Infrared moving small-target detection using strengthened spatial-temporal tri-layer local contrast method. Infrared Phys. Technol. 2024, 140, 105367.

29. Li, C.; Huang, Z.; Xie, X.; Li, W. IST-TransNet: Infrared small target detection based on transformer network. Infrared Phys. Technol. 2023, 132, 104723.

30. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16 × 16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

31. Gao, C.; Meng, D.; Yang, Y.; Wang, Y.; Zhou, X.; Hauptmann, A.G. Infrared patch-image model for small target detection in a single image. IEEE Trans. Image Process. 2013, 22, 4996–5009.

32. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30.

33. Si, C.; Yu, W.; Zhou, P.; Zhou, Y.; Wang, X.; Yan, S. Inception transformer. arXiv 2022, arXiv:2205.12956.

34. Park, N.; Kim, S. How do vision transformers work? In Proceedings of the International Conference on Learning Representations, Virtual Event, 25–29 April 2022.

35. Zhao, T.; Cao, J.; Hao, Q.; Bao, C.; Shi, M. Res-SwinTransformer with local contrast attention for infrared small target detection. Remote Sens. 2023, 15, 4387.

36. Katharopoulos, A.; Vyas, A.; Pappas, N.; Fleuret, F. Transformers are RNNs: Fast autoregressive transformers with linear attention. arXiv 2020, arXiv:2006.16236.

37. Cai, H.; Li, J.; Hu, M.; Gan, C.; Han, S. EfficientViT: Lightweight multi-scale attention for high-resolution dense prediction. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 17302–17313.

38. Heo, B.; Park, S.; Han, D.; Yun, S. Rotary position embedding for vision transformer. In Proceedings of the European Conference on Computer Vision (ECCV), Milan, Italy, 29 September–4 October 2024.

39. Su, J.; Ahmed, M.; Lu, Y.; Pan, S.; Bo, W.; Liu, Y. RoFormer: Enhanced transformer with rotary position embedding. arXiv 2023, arXiv:2104.09864.

40. Tom, V.T.; Peli, T.; Leung, M.; Bondaryk, J.E. Morphology-based algorithm for point target detection in infrared backgrounds. In Signal and Data Processing of Small Targets 1993: Volume 1954; SPIE: San Francisco, CA, USA, 1993; pp. 2–11.

41. Deshpande, S.D.; Er, M.H.; Venkateswarlu, R.; Chan, P. Max-mean and max-median filters for detection of small targets. In Signal and Data Processing of Small Targets 1999: Volume 3809; SPIE: San Francisco, CA, USA, 1999; pp. 74–83.

42. Han, J.; Liang, K.; Zhou, B.; Zhu, X.; Zhao, J.; Zhao, L. Infrared small target detection utilizing the multiscale relative local contrast measure. IEEE Geosci. Remote Sens. Lett. 2018, 15, 612–616.

43. Han, J.; Moradi, S.; Faramarzi, I.; Zhang, H.; Zhao, Q.; Zhang, X.; Li, N. Infrared small target detection based on the weighted strengthened local contrast measure. IEEE Geosci. Remote Sens. Lett. 2021, 18, 1670–1674.

44. Han, J.; Moradi, S.; Faramarzi, I.; Liu, C.; Zhang, H.; Zhao, Q. A local contrast method for infrared small-target detection utilizing a tri-layer window. IEEE Geosci. Remote Sens. Lett. 2020, 17, 1822–1826.

45. Moradi, S.; Moallem, P.; Sabahi, M.F. A false-alarm aware methodology to develop robust and efficient multi-scale infrared small target detection algorithm. Infrared Phys. Technol. 2018, 89, 387–397.

46. Zhang, L.; Peng, L.; Zhang, T.; Cao, S.; Peng, Z. Infrared small target detection via non-convex rank approximation minimization joint l2,1 norm. Remote Sens. 2018, 10, 1821.

47. Dai, Y.; Wu, Y. Reweighted infrared patch-tensor model with both nonlocal and local priors for single-frame small target detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 3752–3767.

48. Zhang, L.; Peng, Z. Infrared small target detection based on partial sum of the tensor nuclear norm. Remote Sens. 2019, 11, 382.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).