Abstract

The management and identification of forest species in a city are essential tasks for current administrations, particularly in planning urban green spaces. However, these tasks are typically costly and time-consuming. This study evaluates the potential of RGB point clouds captured by unmanned aerial vehicles (UAVs) for automating tree species classification. A dataset of 809 trees (crowns) of eight species was analyzed using a random forest classifier and the deep learning models PointNet and PointNet++. With random forest, eleven variables based on the normalized red–blue difference index (NRBDI), intensity, brightness index (BI), Green Leaf Index (GLI), point density (normalized), and height (maximum and the 10th, 50th, and 90th percentiles) produced the highest reliability, with an overall accuracy of 0.70 and a Kappa index of 0.65. With deep learning, PointNet reached an overall accuracy of 0.62 and PointNet++ 0.64, using the features Z, red, green, blue, NRBDI, intensity, and BI. Populus alba L. and Melaleuca armillaris (Sol. ex Gaertn.) Sm. were identified with high accuracy. This work contributes a cost-effective workflow for urban tree monitoring using UAV data, comparing classical machine learning with deep learning approaches and analyzing the trade-offs.

1. Introduction

Tree species identification plays a fundamental role in forest management [1,2], as it contributes to the sustainable control and conservation of forest resources. This task is both relevant and challenging [3] given Latin America’s rich variety of forest species [4]. Ecuador is recognized as one of the most biodiverse countries in the world [5,6,7], with approximately 12.75 million hectares of forest [8]. Differentiating species is fundamental not only for designing effective conservation strategies but also for identifying species accurately, avoiding confusion between them, and supporting appropriate forestry practices [9]. Despite the relevance of these tasks, such studies are scarce in Latin American countries; most have been conducted in countries such as China [3,10,11,12,13] and the United States [14,15], or in regions such as Central Europe [14,16], which has forest resources but is not as lush and biodiverse as tropical regions.

Traditional single-tree species identification is often costly, time-consuming, and resource-intensive [17,18]. However, recent advances in remote sensing technologies have revolutionized ecological and forestry research, providing high-resolution data for understanding forest structure and composition [19,20,21,22]. Among these, unmanned aerial vehicles (UAVs) have introduced new approaches for tree species identification [23,24], using photogrammetric systems [18] with RGB [25], multispectral [26,27,28], and hyperspectral [29,30] sensors, as well as light detection and ranging (LiDAR) data [31,32,33].

In this context, one of the most relevant characteristics of UAVs is the very high spatial resolution they achieve at low flight altitudes [30]. This resolution supports object detection at distances ranging from a few meters to several hundred meters and, using three-dimensional (3D) data [34,35], facilitates the detailed assessment of individual trees [30], crown characterization [33,36], tree height estimation [32,37], tree species detection [2], and biomass estimation [36,38]. The most widely used sensors are LiDAR, either airborne (ALS) or terrestrial (TLS) laser scanning. LiDAR data enable the estimation of stand attributes across extensive areas at a significantly lower cost than traditional field-based methods [39]. For instance, Lindberg and Holmgren (2017) [40] evaluated individual tree crown (ITC) delineation from LiDAR data, achieving high accuracy in tree detection and vegetation mapping in urban environments and providing information on tree species at various spatial scales. Seidel et al. (2021) [14] used deep learning (DL) algorithms to predict tree species from TLS data in temperate forests in Germany and Oregon (USA), achieving an accuracy of 86%. Key variables included tree height, crown width, and 3D canopy structure, and the predicted species included several hardwoods, such as oak and maple. Despite its advantages, LiDAR presents challenges for species classification [12], including limited accuracy in classifying complex forest structures [41], insufficient data resolution to distinguish structural details [42], the need for substantial computational resources due to the size of the point clouds [43], and even the omission of certain species from classification [44].

Another alternative is UAV-based photogrammetry, which is cost-effective and offers technical advantages, including integration with other technologies [45,46]. This approach facilitates the extraction of individual tree crowns [47] or stand characteristics through methods such as multispectral sensors and UAV laser scanning (UAV-LS), spectral angle mapper (SAM) classification, and canopy height model (CHM) segmentation, among others [10], thereby improving classification accuracy [48]. However, identifying trees from a zenithal UAV view remains challenging because of heterogeneous and complex tree structures, overlapping canopies, diverse species compositions and canopy architectures, varying tree densities, and variations in foliage density, all of which can affect the accuracy of boundary detection [32,36].

A low-cost photogrammetric technique implemented in various studies is Structure-from-Motion (SfM) photogrammetry [49,50], which generates dense point clouds from UAV imagery [45]. SfM enables the acquisition of 3D remote sensing data in forestry at low cost and with limited technical expertise [49], and UAV-based SfM can be used to model forest type, tree density, and aboveground biomass in certain types of forests [51]. These point clouds have been successfully used for tree identification [27,37,52,53,54] and integrated with other data types to improve species classification [55]. Several methods that exploit the rich geometric and spectral information in 3D datasets offer a promising route to individual tree identification [30,33,56]. Other studies have demonstrated the effectiveness of point cloud-based methods for obtaining structural attributes such as tree height, crown diameter, and canopy volume [32,36]. Spectral information from multispectral or hyperspectral imagery improves species discrimination based on leaf chemistry and pigmentation [29]. Nevalainen et al. (2017) [30] evaluated UAV-based photogrammetry and hyperspectral imagery for detecting and classifying individual trees in boreal forests; classification experiments with random forest (RF) and multilayer perceptron (MLP) classifiers achieved overall accuracies of 95% and an F-score of 0.93, while the accuracy of individual tree identification from photogrammetric point clouds varied between 40% and 95%, depending on area characteristics. Sothe et al. (2019) [29] classified 12 major tree species in a subtropical forest in southern Brazil using UAV-based photogrammetric point clouds, hyperspectral data, and a support vector machine (SVM) algorithm, achieving an overall accuracy of 72.4% and a Kappa index of 0.70.
The study identified key features such as visible and near-infrared (VNIR) bands, the normalized difference vegetation index (NDVI), the pigment-specific simple ratio (PSSR), minimum noise fraction (MNF) components, and texture from specific spectral bands as crucial for species discrimination. The most common technique for analyzing dense point clouds (LiDAR or photogrammetric) in these works was machine learning, including RF [36,54,57,58], SVM [29,59], and more advanced methods such as deep learning [1,18,33,60,61,62]. These methods are widely used in this type of research, and refs. [58,63,64] have shown their significant potential for automated species classification from such multidimensional datasets, with noticeable improvements in classification accuracy [58].

In summary, to identify tree species at the individual level, it is advisable to combine spectral, textural, and spatial features from point clouds with machine learning or deep learning algorithms, the latter having shown superior performance [15]. Point cloud-based algorithms include point cloud tree species classification (PCTSCN), an intelligent classification strategy for tree species [11], and PointNet and PointNet++, deep learning frameworks that operate directly on point cloud data [12,65]; PointNet++ in particular processes the points in smaller local regions, which helps manage the large amount of data in these clouds. Recent studies have applied these two frameworks to classify tree species from LiDAR point clouds. These models can process unordered point clouds directly, automating the workflow and reducing manual feature extraction, which is promising for classifying forest species in different regions of the world [44]. Another useful option for classifying plant species is Class3Dp, a free software tool designed to classify plant species in RGB and multispectral point clouds, which performs supervised machine learning classification of point clouds based on 3D and spectral information [66].

Against this background, this study investigates the potential of RGB data and geometric features derived from UAV photogrammetric point clouds for identifying urban tree species in the city of Cuenca (Ecuador). Using RF, we evaluated classification performance to identify the most effective features and models for distinguishing tree species based on tree crown point cloud datasets. The analysis was then extended with PointNet and PointNet++ to determine whether neural networks can improve the identification of forest species from point clouds. The main contributions of this study are (i) assessing how effectively RGB data and geometric features derived from UAV-based photogrammetric point clouds can be used for urban tree species classification, and (ii) determining which classification algorithm among random forest, PointNet, and PointNet++ achieves the highest classification accuracy when applied to point cloud representations of urban tree crowns.

2. Materials and Methods

2.1. Study Zone

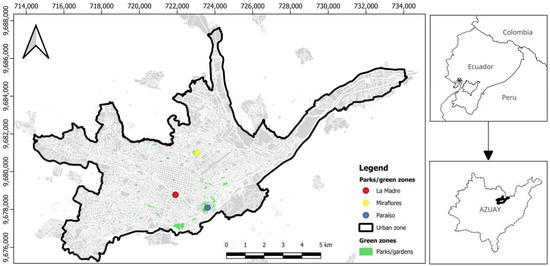

Cuenca is located in the central-southern region of Ecuador (Figure 1), at an average elevation of approximately 2500 m above sea level (m.a.s.l.). In 2017, the city had around 208 public parks and green spaces within its urban zone [67], complemented by riparian trees along its rivers. Among these green spaces, the parks with the largest surface area are Paraiso, Miraflores, and La Madre. The tree inventory of Cuenca (TIC) includes data on 16,737 trees and provides information on taxonomy, origin, crown and trunk structure, phenology, forest management, potential issues, and two photographs per tree [67]. Among these trees, 43% were introduced species, 41% native, and fewer than 16% either endemic or uncategorized. The most frequent species were Salix humboldtiana Willd., Tecoma stans (L.) Juss. ex Kunth, Fraxinus excelsior L., Jacaranda mimosifolia D. Don, Prunus serotina Ehrh., and Melaleuca armillaris (Sol. ex Gaertn.) Sm. [68]. Of the 171 parks inventoried in the TIC as of December 2024 [17] (according to https://gis.uazuay.edu.ec/iforestal/, accessed on 12 April 2025), this study focused on the 54 parks where UAV flights were conducted between 2015 and 2022.

Figure 1.

Location of parks and green areas of the study zone in Cuenca.

2.2. Workflow Description

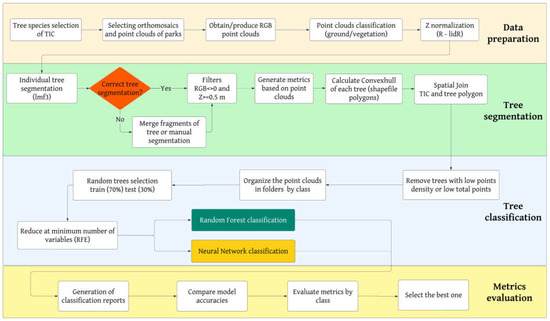

The methodology (Figure 2) was implemented in the following four stages: (1) Data preparation, which involved exploring the TIC database to select the target classes and their green zones; the point clouds of the parks containing these tree species were then prepared for processing. (2) Tree segmentation, in which individual trees were delineated (automatically or manually) from the point clouds and assigned to their respective class, and geometric and spectral features were extracted from the RGB point clouds. (3) Tree classification, in which recursive feature elimination (RFE) was used to reduce the number of features, and random forest (RF) and neural network models were trained to classify the point cloud of each tree. (4) Metrics evaluation, in which model performance was assessed using overall accuracy and the Kappa index. Classification reports with class-specific precision, recall, and F1-score were generated to support selection of the final model and to identify the classes with the highest accuracy.

Figure 2.

Workflow diagram.

2.2.1. UAV Data Collection

The TIC was evaluated to identify and select the classes with the highest occurrence, as described on the website https://gis.uazuay.edu.ec/herramientas/iforestal-dashboard/ (accessed on 12 April 2025). The most common species included Salix humboldtiana Willd., Tecoma stans (L.) Juss. ex Kunth, Prunus serotina Ehrh., Fraxinus excelsior L., Jacaranda mimosifolia D. Don, Melaleuca armillaris (Sol. ex Gaertn.) Sm., Alnus acuminata Kunth, Schinus molle L., Acacia dealbata Link, and Callistemon lanceolatus (Sm.) Sweet. Based on these data, 54 parks and green zones where these species are present were identified. UAV flights over these zones were conducted using various sensors (Table 1), all of them with red, green, and blue (RGB) channels. The flights were operated between 2015 and 2022, from 7:00 a.m. to 5:00 p.m., with altitudes ranging from 30 to 150 m above ground level.

Table 1.

RGB sensors used.

The aerial photographs captured during these flights were processed using Metashape software (Educational License), using various versions, including 1.7.0. The principal outputs were orthomosaics and point clouds, on which automatic point classification was performed. The classification codes for LAS files defined by the American Society for Photogrammetry and Remote Sensing (ASPRS) are stored in the classification attribute of each point; they include ground (value 2), low vegetation (value 3), medium vegetation (value 4), and high vegetation (value 5). The ground class was used to normalize tree height (Z coordinate) by k-nearest-neighbor (KNN) interpolation with inverse-distance weighting (IDW), while the vegetation classes were used to extract the points belonging to trees. This process was performed using the lidR package (version 4.1.2) in R (version 4.4.2).
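
The KNN-IDW height normalization can be illustrated with a minimal NumPy sketch. In lidR this step is usually performed with `normalize_height()` and the `knnidw()` algorithm; the Python functions below only re-express the idea and are not the code used in this study. The neighbour count `k = 10` and power `2` are assumed values, and the brute-force distance matrix is only suitable for small examples (a k-d tree would be used for full point clouds).

```python
import numpy as np

GROUND = 2                # ASPRS class code for ground
VEGETATION = (3, 4, 5)    # ASPRS low, medium, and high vegetation


def normalize_height(xyz, classification, k=10, power=2.0):
    """Height above ground for every point, via KNN inverse-distance weighting.

    xyz: (n, 3) array of X, Y, Z coordinates.
    classification: (n,) array of ASPRS class codes.
    k, power: assumed interpolation parameters (not taken from the study).
    """
    ground = xyz[classification == GROUND]
    if len(ground) == 0:
        raise ValueError("no ground points available for interpolation")
    k = min(k, len(ground))
    # Horizontal (XY) distance from every point to every ground point.
    d = np.linalg.norm(xyz[:, None, :2] - ground[None, :, :2], axis=2)
    idx = np.argsort(d, axis=1)[:, :k]            # k nearest ground points
    dk = np.take_along_axis(d, idx, axis=1)
    zk = ground[idx, 2]                           # their elevations
    with np.errstate(divide="ignore"):
        w = 1.0 / dk ** power                     # inverse-distance weights
    # A point that coincides with a ground point takes that elevation directly.
    exact = dk == 0
    w = np.where(exact.any(axis=1, keepdims=True), exact.astype(float), w)
    ground_z = (w * zk).sum(axis=1) / w.sum(axis=1)
    return xyz[:, 2] - ground_z


def vegetation_points(xyz, classification):
    """Keep only points classified as low, medium, or high vegetation."""
    return xyz[np.isin(classification, VEGETATION)]
```

For example, a canopy point 5 m above four ground points at equal horizontal distance receives a normalized height of 5 m, and every ground point receives 0.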

2.2.2. Tree Segmentation and Feature Extraction

The identification of individual trees was performed in two stages. First, the tree segmentation algorithm (LMF3) from the rLidar package was used to rasterize the canopy, detect individual trees, and assign a unique tree ID to all UAV points associated with each tree. Next, the segmented trees were manually evaluated to correct fragmentation, merging segments into a single tree where necessary. Where automated segmentation was insufficient, individual trees were manually delineated using CloudCompare software (version 2.13.2). For each tree, duplicate points, non-vegetation points, and vegetation-classified points with an RGB value of 0 (shadows) were removed. Each tree point cloud was then converted into a 2D polygon (convex hull) and linked to a TIC tree. The resulting dataset was further refined by keeping only the classes that could be linked to the point clouds (Table 2) and excluding trees with low point density or too few points. Some of the majority classes of the TIC, such as Prunus serotina Ehrh., had to be excluded because they could not be matched with an adequate number of segmented trees.
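
A minimal NumPy sketch of the per-tree cleaning and footprint steps follows. It assumes that a shadow point is one whose three RGB channels are all 0 and that duplicates are points with identical XYZ coordinates. The convex hull is computed here with Andrew's monotone chain rather than with CloudCompare or a GIS library, and linking the polygon to the TIC is not shown.

```python
import numpy as np

VEGETATION = (3, 4, 5)  # ASPRS low, medium, and high vegetation


def clean_tree_points(xyz, rgb, classification):
    """Drop non-vegetation points, black (RGB = 0, 0, 0) points, and exact duplicates."""
    keep = np.isin(classification, VEGETATION)
    keep &= ~np.all(rgb == 0, axis=1)            # shadows stored as pure black
    xyz, rgb = xyz[keep], rgb[keep]
    # Unique coordinate rows; sorting the first-occurrence indices keeps input order.
    _, first = np.unique(xyz, axis=0, return_index=True)
    first = np.sort(first)
    return xyz[first], rgb[first]


def convex_hull_2d(points):
    """Crown footprint as a 2D convex hull (Andrew's monotone chain).

    Returns the hull vertices in counter-clockwise order as (x, y) tuples.
    """
    pts = sorted(set(map(tuple, np.asarray(points, dtype=float)[:, :2].tolist())))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]
```

In practice, the hull vertices could be passed to a geometry library (for example, Shapely's `Polygon`) to intersect the crown footprint with the TIC tree locations.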

Table 2.

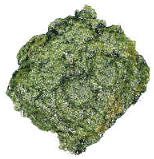

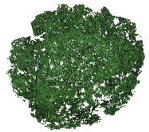

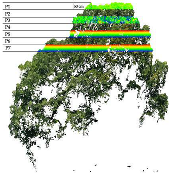

Examples of individual tree RGB photographs of some classes and point clouds from zenithal and front views (RGB channels). DBH is the diameter at breast height.

From the tree point clouds and their RGB channels, 74 variables were derived: 36 spectral (Table 3) and 38 geometric (Table 4), based on works such as [56,65]. Variables potentially influenced by flight conditions or photogrammetric factors (such as image overlap, flight altitude, illumination, and camera angle), including point density, color channels, and spectral indices, were normalized using Z-score standardization. Finally, the mean, minimum, maximum, and standard deviation of the RGB features and indices were computed at tree level.
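
As an illustration, the sketch below computes three per-point color features, applies Z-score standardization, and aggregates a feature to the tree level. The formulas are common definitions assumed here (GLI = (2G - R - B)/(2G + R + B), NRBDI = (R - B)/(R + B), and intensity taken as the mean of the three channels); Table 3 gives the definitions actually used. The grouping over which the Z-score is computed (for example, per flight) is also an assumption.

```python
import numpy as np


def color_features(rgb):
    """Per-point color features from an (n, 3) RGB array (assumed formulas)."""
    r, g, b = (rgb[:, i].astype(float) for i in range(3))
    eps = 1e-9  # guards against division by zero for very dark points
    return {
        "gli": (2 * g - r - b) / (2 * g + r + b + eps),
        "nrbdi": (r - b) / (r + b + eps),
        "intensity": (r + g + b) / 3.0,
    }


def zscore(values):
    """Z-score standardization: (x - mean) / standard deviation."""
    values = np.asarray(values, dtype=float)
    std = values.std()
    if std == 0:
        return np.zeros_like(values)
    return (values - values.mean()) / std


def tree_level_stats(values, tree_ids):
    """Mean, minimum, maximum, and standard deviation of a feature per tree ID."""
    values, tree_ids = np.asarray(values, dtype=float), np.asarray(tree_ids)
    stats = {}
    for tid in np.unique(tree_ids):
        v = values[tree_ids == tid]
        stats[tid.item()] = {"mean": v.mean(), "min": v.min(),
                             "max": v.max(), "std": v.std()}
    return stats
```

Applying `tree_level_stats` to each standardized per-point feature yields names such as `nrbdi_mean` and `nrbdi_std` at tree level.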

Table 3.

Spectral features obtained from the point clouds at tree level.

Table 4.

Geometric features obtained from the point clouds at tree level.

A total of 809 trees were obtained from the selected classes (Table 5) and split within each class into 70% for training and 30% for testing. Fraxinus excelsior L. had the most samples, while Jacaranda mimosifolia D. Don and Schinus molle L. had the fewest.
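
A per-class split of this kind can be written with scikit-learn's `train_test_split` and its `stratify` argument. This is a sketch: the random seed is an assumption, and because scikit-learn rounds the per-class counts, the split within each class is only approximately 70/30 when class sizes do not divide evenly.

```python
import numpy as np
from sklearn.model_selection import train_test_split


def split_trees(X, y, test_size=0.30, seed=42):
    """Stratified 70/30 split: each class keeps (approximately) its proportions."""
    return train_test_split(X, y, test_size=test_size, stratify=y, random_state=seed)


if __name__ == "__main__":
    # Hypothetical example: 100 trees of three classes with 50/30/20 samples.
    X = np.arange(200).reshape(100, 2)
    y = np.array(["FEX"] * 50 + ["SHU"] * 30 + ["SMO"] * 20)
    X_tr, X_te, y_tr, y_te = split_trees(X, y)
    print(dict(zip(*np.unique(y_te, return_counts=True))))
```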

Table 5.

Number of training and testing trees by class.

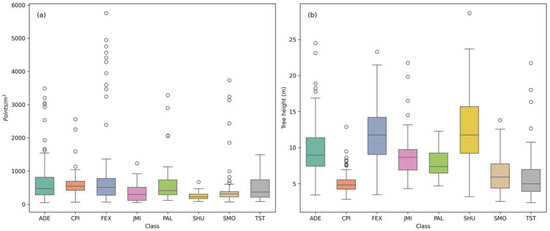

The final tree point cloud database shows the distribution of UAV point density (points/m2) among the vegetation classes (Figure 3a) and the distribution of maximum tree height (m) (Figure 3b). Point density varies considerably across classes, with FEX showing a wide spread, whereas SHU and SMO show lower densities with fewer extreme values. Maximum tree height is most variable in ADE and SHU, which also have the highest outliers, whereas CPI and SMO have lower maximum heights with tighter distributions, reflecting more uniform growth patterns. FEX and SHU have the highest median heights.

Figure 3.

Class distribution by (a) point density (points/m2) and (b) maximum tree height (m).

2.2.3. Feature Selection and Classification

RF was used for both variable selection and point cloud classification. RF is an ensemble of decision trees trained with bagging: each tree is fit on a different bootstrap sample of the data, and the trees’ predictions are aggregated by majority vote, which reduces the errors of individual trees. RF also provides a measure of the relative importance of each variable [71]. To select the most relevant variables, RFE was applied: variables are eliminated iteratively, and the importance measures are recomputed at each elimination step to refine the selection [72]. In this study, the RF hyperparameters were n_estimators = 500 (the number of trees in the model) and class_weight = ‘balanced’ (which adjusts the class weights to handle class imbalance in the data).
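
The feature-selection step can be sketched with scikit-learn's `RandomForestClassifier` and `RFECV`. This is an assumed setup rather than the study's exact procedure: it removes one feature per iteration and scores each subset by stratified cross-validated accuracy only, whereas the study also considered Kappa. The fold count, random seed, and synthetic data are illustrative.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_selection import RFECV
from sklearn.model_selection import StratifiedKFold


def select_features(X, y, feature_names, n_estimators=500, folds=5, seed=0):
    """Recursive feature elimination around a class-balanced random forest.

    At each step the feature with the lowest impurity-based importance is
    removed and the forest is refit, so importances are recomputed; RFECV
    keeps the subset with the best cross-validated accuracy.
    """
    rf = RandomForestClassifier(n_estimators=n_estimators,
                                class_weight="balanced", random_state=seed)
    cv = StratifiedKFold(n_splits=folds, shuffle=True, random_state=seed)
    selector = RFECV(rf, step=1, cv=cv, scoring="accuracy")
    selector.fit(X, y)
    return [name for name, keep in zip(feature_names, selector.support_) if keep]


if __name__ == "__main__":
    # Synthetic stand-in for the tree-level features (not the study's data).
    X, y = make_classification(n_samples=300, n_features=10, n_informative=4,
                               n_classes=4, random_state=0)
    names = [f"feature_{i}" for i in range(X.shape[1])]
    print(select_features(X, y, names, n_estimators=50))
```

With 74 features and 500 trees, RFE with `step=1` is computationally heavier; a larger `step` trades selection granularity for speed.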

The final model was selected based on the set of variables that achieved the highest accuracy (Equation (1)) and Kappa (Equation (2)). This model was also evaluated per class using precision (Equation (3)), the proportion of the model’s positive predictions that are correct; recall (Equation (4)), the proportion of actual positives that the model correctly identifies; and the F1-score (Equation (5)), the harmonic mean of precision and recall. These metrics can be generated with the classification_report function of the scikit-learn library, which is typically applied to model predictions to assess how well the model classifies the data into each category.
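
For reference, the standard forms of Equations (1)–(5) are given below in the notation of the following paragraph; we assume these match the definitions used. In the multi-class case, overall accuracy is the fraction of correctly classified test trees (the diagonal of the confusion matrix divided by its total), and precision, recall, and F1-score are computed per class by treating that class as positive.

```latex
\begin{align}
\text{Accuracy} &= \frac{TP + TN}{TP + TN + FP + FN} \tag{1}\\
\kappa &= \frac{p_o - p_e}{1 - p_e} \tag{2}\\
\text{Precision} &= \frac{TP}{TP + FP} \tag{3}\\
\text{Recall} &= \frac{TP}{TP + FN} \tag{4}\\
\text{F1} &= 2\,\frac{\text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{5}
\end{align}
```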

In these equations, TP denotes true positives, TN true negatives, FP false positives, and FN false negatives, as defined in the confusion matrix [73]. For Cohen’s Kappa, $p_o$ is the observed agreement, i.e., the actual probability that both annotators (here, the reference labels and the model predictions) assign the same label to a sample, while $p_e$ is the expected agreement if both annotators assigned labels at random [74].
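
These metrics can be computed with scikit-learn, and Kappa can also be obtained directly from the confusion matrix, which makes the observed agreement $p_o$ (the diagonal share) and the chance agreement $p_e$ (from the row and column totals) explicit. The sketch below uses hypothetical labels; `evaluate` and `kappa_from_confusion` are illustrative helper names, not functions from the study.

```python
import numpy as np
from sklearn.metrics import (accuracy_score, classification_report,
                             cohen_kappa_score, confusion_matrix)


def kappa_from_confusion(cm):
    """Cohen's kappa from a confusion matrix (rows = reference, columns = predicted)."""
    cm = np.asarray(cm, dtype=float)
    n = cm.sum()
    p_o = np.trace(cm) / n                                   # observed agreement
    p_e = (cm.sum(axis=1) * cm.sum(axis=0)).sum() / n ** 2   # agreement expected by chance
    return (p_o - p_e) / (1 - p_e)


def evaluate(y_true, y_pred, labels):
    """Overall accuracy, Kappa (scikit-learn and by hand), and per-class report."""
    cm = confusion_matrix(y_true, y_pred, labels=labels)
    return {
        "accuracy": accuracy_score(y_true, y_pred),
        "kappa": cohen_kappa_score(y_true, y_pred, labels=labels),
        "kappa_manual": kappa_from_confusion(cm),
        "confusion": cm,
        "report": classification_report(y_true, y_pred, labels=labels, zero_division=0),
    }


if __name__ == "__main__":
    # Hypothetical predictions for six test trees (not results from the study).
    y_true = ["ADE", "PAL", "PAL", "SMO", "CPI", "CPI"]
    y_pred = ["ADE", "PAL", "SMO", "SMO", "CPI", "PAL"]
    result = evaluate(y_true, y_pred, labels=["ADE", "CPI", "PAL", "SMO"])
    print(result["report"])
```

In this toy example, four of six trees are correct ($p_o = 2/3$), the marginal totals give $p_e = 0.25$, and Kappa is therefore 5/9.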

2.2.4. Tree Identification Using PointNet and PointNet++

As an alternative, classification was also performed with PointNet, a neural network architecture designed to process and classify 3D point clouds by operating directly on raw point data [75] within a supervised convolutional neural network architecture [76]. The original PointNet architecture focuses primarily on capturing the geometric shape of objects using only the spatial XYZ coordinates [75]. The second model evaluated, PointNet++, uses hierarchical feature learning to capture the detailed geometric structure of the local neighborhood of each point, which allows it to extract local features at multiple scales within 3D objects [44].
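
The key idea behind PointNet's invariance to point order can be shown with a small NumPy sketch: the same multilayer perceptron is applied to every point, and a symmetric max-pooling operation aggregates the per-point features into one global descriptor. This is a simplified, untrained illustration with hypothetical layer sizes and random weights; it omits PointNet's input and feature transformation networks and classification head, and it does not implement PointNet++'s hierarchical sampling and grouping.

```python
import numpy as np


def shared_mlp(points, layers):
    """Apply the same dense layers (ReLU activations) to every point independently."""
    h = points
    for w, b in layers:
        h = np.maximum(h @ w + b, 0.0)
    return h


def global_feature(points, layers):
    """Per-point MLP followed by max pooling over the points (a symmetric function)."""
    return shared_mlp(points, layers).max(axis=0)


def init_layers(dims, seed=0):
    """Random, untrained weights for layer sizes such as [7, 64, 128] (hypothetical)."""
    rng = np.random.default_rng(seed)
    return [(rng.normal(scale=0.1, size=(d_in, d_out)), np.zeros(d_out))
            for d_in, d_out in zip(dims[:-1], dims[1:])]


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    cloud = rng.normal(size=(1024, 7))     # e.g. Z, R, G, B, NRBDI, intensity, BI
    layers = init_layers([7, 64, 128])
    g1 = global_feature(cloud, layers)
    g2 = global_feature(cloud[rng.permutation(len(cloud))], layers)
    print(g1.shape, np.allclose(g1, g2))   # shuffling the points leaves the descriptor unchanged
```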

This work proposes a modification of the inputs of the deep learning models to enhance the classification. To achieve this, the inputs of PointNet and PointNet++ models were adapted to process information from the normalized Z coordinates and other relevant attributes associated with each point, such as RGB color values, intensity, and spectral indices (BI and NRBDI), according to the selection of variables obtained by RFE.
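
Because PointNet-style networks expect a fixed number of points per sample, each tree has to be resampled to, for example, 4096 points carrying the per-point channels listed above. The sketch below shows one assumed way to do this (random subsampling, or padding by repeating existing points); the sampling strategy is an assumption, and `FEATURES`, `sample_tree`, and `batch_trees` are hypothetical names.

```python
import numpy as np

FEATURES = ["z", "red", "green", "blue", "nrbdi", "intensity", "bi"]  # per-point channels


def sample_tree(features, n_points=4096, rng=None):
    """Fixed-size (n_points, n_channels) input for one tree.

    Larger clouds are randomly subsampled without replacement; smaller ones keep
    all their points and are padded with randomly repeated points.
    """
    rng = np.random.default_rng() if rng is None else rng
    n = len(features)
    if n >= n_points:
        idx = rng.choice(n, size=n_points, replace=False)
    else:
        extra = rng.choice(n, size=n_points - n, replace=True)
        idx = np.concatenate([np.arange(n), extra])
    return features[idx]


def batch_trees(trees, n_points=4096, seed=0):
    """Stack several trees into an (n_trees, n_points, n_channels) array."""
    rng = np.random.default_rng(seed)
    return np.stack([sample_tree(t, n_points, rng) for t in trees])
```

Padding by repetition keeps every original point of a sparse tree, so no measured information is discarded; the repeated points do not change a max-pooled descriptor.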

Table 6 lists the parameter settings for the PointNet model. Among these, batch size (BS) and the number of epochs are two crucial hyperparameters that significantly affect training. Batch size is the number of training samples processed before the model parameters are updated. Smaller batch sizes yield more frequent but noisier updates, which makes each epoch slower and can improve generalization, whereas larger batch sizes allow faster computation and more stable updates but require more memory and may generalize worse. The number of epochs is the number of complete passes through the training dataset. Increasing it lets the model learn more, but too many epochs can lead to overfitting, where the model fits the training data too closely and fails to generalize to new data [12]. Choosing the right combination of batch size and epochs is essential for good performance, balancing training speed, memory efficiency, and model accuracy.
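
The trade-off between batch size and the number of parameter updates can be made concrete with a short calculation. The sketch assumes roughly 566 training trees (70% of 809; Table 5 gives the exact counts) and that the last, incomplete batch of each epoch is kept; the function name is illustrative.

```python
import math


def updates_per_epoch(n_train, batch_size):
    """Mini-batches (parameter updates) per epoch, keeping the last partial batch."""
    return math.ceil(n_train / batch_size)


n_train = round(0.7 * 809)  # about 566 training trees (assumed; Table 5 has exact counts)
for batch_size in (32, 64, 96, 128):
    steps = updates_per_epoch(n_train, batch_size)
    print(f"BS={batch_size}: {steps} updates/epoch, {steps * 100} updates in 100 epochs")
```

Under these assumptions, a batch size of 32 gives 18 updates per epoch, whereas 96 and 128 give only 6 and 5, which is why larger batches usually need more epochs to reach a comparable number of updates.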

Table 6.

Hyperparameters settings evaluated for PointNet.

3. Results

In this section, we describe the results obtained with RF and the features it identified as relevant. These features were then used as inputs to the PointNet and PointNet++ neural networks. For each approach, the classification reports of the models are presented.

3.1. Model Accuracy and Feature Importance Using RF

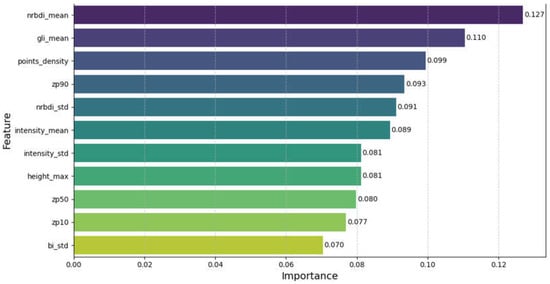

The classification model’s performance was evaluated using the following eight tree species: Acacia dealbata Link (ADE), Populus alba L. (PAL), Melaleuca armillaris (Sol. ex Gaertn.) Sm. (CPI), Tecoma stans (L.) Juss. ex Kunth (TST), Jacaranda mimosifolia D. Don (JMI), Schinus molle L. (SMO), Salix humboldtiana Willd. (SHU), and Fraxinus excelsior L. (FEX). Using all variables, the accuracy and Kappa values were 0.69 and 0.63, respectively. After the RFE process, the best combination of variables (Figure 4) achieved an accuracy of 0.70 and a Kappa of 0.65. The eleven selected variables were nrbdi_mean, gli_mean, points_density, zp90, nrbdi_std, intensity_mean, intensity_std, height_max, zp50, zp10, and bi_std.

Figure 4.

Variable importance of the final RF model.

In Table 7, the rows represent the true (reference) classes and the columns the predicted classes, showing how the test samples of each class were correctly or incorrectly classified. For instance, CPI has 35 correct predictions with minimal misclassifications, giving it the highest agreement between reference data and predictions. The right side of the table provides the evaluation metrics for each class (precision, recall, and F1-score), which summarize the model’s ability to correctly classify the samples of each class. For example, PAL achieved high performance, with an F1-score of 0.82 resulting from high precision (0.77) and recall (0.88). In contrast, SMO performed poorly, with an F1-score of 0.39 due to low precision (0.50) and recall (0.32). The model’s overall accuracy is 0.70, with a macro-average F1-score of 0.65 and a weighted-average F1-score of 0.69; the slightly higher weighted average indicates that the model performs somewhat better on the classes with more test samples.
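
The relationship between per-class scores and the averages reported in Table 7 can be checked with a few lines of Python. The functions are illustrative; the precision and recall values are those reported above for PAL and SMO, while the two-class averaging example uses hypothetical numbers.

```python
def f1(precision, recall):
    """F1-score: harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def macro_and_weighted(scores, supports):
    """Unweighted (macro) mean and support-weighted mean of per-class scores."""
    macro = sum(scores) / len(scores)
    weighted = sum(s * n for s, n in zip(scores, supports)) / sum(supports)
    return macro, weighted


# Consistency check with the PAL and SMO values reported in Table 7.
print(round(f1(0.77, 0.88), 2), round(f1(0.50, 0.32), 2))   # 0.82 0.39

# Hypothetical two-class example: the larger class scores higher,
# so the weighted average exceeds the macro average.
print(macro_and_weighted([0.9, 0.5], [30, 10]))
```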

Table 7.

Confusion matrix and evaluation report with RF.

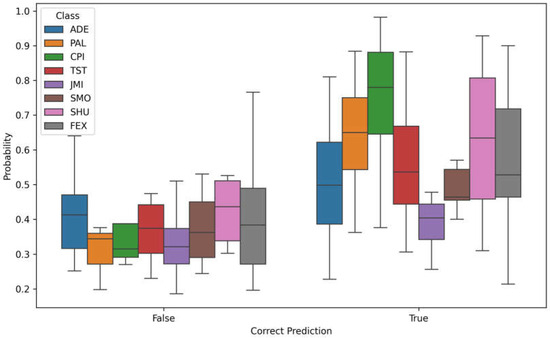

3.2. Classification Probabilities Using RF

Figure 5 shows the distribution of classification probabilities by class, separated into correctly classified (“True”) and misclassified (“False”) samples. The separation between the two distributions shows that the model assigns higher probabilities to correct predictions. Among the correct classifications (right graphic), the highest median probabilities are observed for CPI (green), PAL (orange), and SHU (pink), suggesting that the selected feature set effectively captures the distinctions among these three species. In contrast, the “False” category (left graphic) shows wider interquartile ranges for classes such as ADE (blue), JMI (purple), and SMO (brown), indicating greater variability in the model’s predictions and suggesting that these species are confused with others that share their traits.
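
The quantities plotted in Figure 5 can be derived from the class probabilities returned by a fitted random forest. The sketch assumes that the plotted value is the probability of the predicted class for each test tree; it splits these values into correct and incorrect predictions and summarizes them by median and interquartile range. Grouping by species, as in the figure, would apply the same functions to each class's subset.

```python
import numpy as np


def assigned_probabilities(proba, y_true, classes):
    """Probability of the predicted class, split into correct and incorrect predictions.

    proba: (n_samples, n_classes) array, e.g. from RandomForestClassifier.predict_proba.
    classes: labels in the column order of `proba` (e.g. the fitted model's classes_).
    """
    proba = np.asarray(proba, dtype=float)
    classes = np.asarray(classes)
    pred_idx = proba.argmax(axis=1)
    p_pred = proba[np.arange(len(proba)), pred_idx]
    correct = classes[pred_idx] == np.asarray(y_true)
    return p_pred[correct], p_pred[~correct]


def median_and_iqr(values):
    """Median and interquartile range, the statistics read from a box plot."""
    q1, median, q3 = np.percentile(values, [25, 50, 75])
    return median, q3 - q1
```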

Figure 5.

Distribution of probabilities classification assigned by RF.

3.3. Classification Results with PointNet and PointNet++

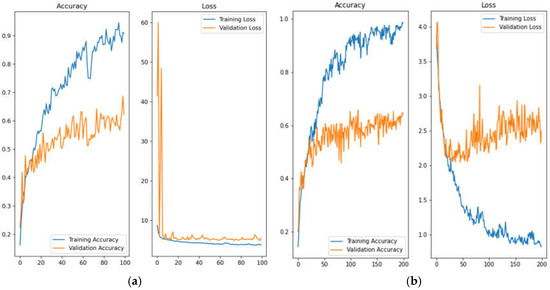

Several models were trained with different numbers of epochs, batch sizes, and points per tree (Table 8). Using only the XYZ coordinates, the highest accuracy achieved with PointNet and PointNet++ was 0.45. In this section, the models are therefore evaluated with the variables identified as most relevant by RF. For PointNet, with 4096 points and a batch size of 96, accuracy rose from 0.54 at 20 epochs to 0.62 at 100 epochs. Performance also improved with the number of points, particularly at 4096 points. The highest accuracy (0.62) was achieved with 4096 points, 100 epochs, and a batch size of 96, suggesting that this configuration offers the best balance between learning ability and generalization among those tested on this dataset. For PointNet++, the highest accuracy (0.64) was obtained with 3072 points, a batch size of 128, and 200 epochs. Across all configurations, 3072 and 4096 points consistently led to higher accuracy than smaller point sets, suggesting that PointNet++ benefits significantly from a larger number of points. For both models, larger batch sizes (96 and 128) produced better results than smaller ones (32 or 64) in most cases, and increasing the number of epochs generally improved accuracy, with significant gains from 20 to 200 epochs.

Table 8.

Model accuracy values categorized by training parameters: number of epochs, batch size, and number of input points.

Figure 6a shows the learning curves of the PointNet model with the highest accuracy (Epochs: 100, BS: 96, Points: 4096). The model learns well on the training data, but learning is more irregular on the validation data: validation accuracy improves, though not to the level of training accuracy, and validation loss decreases at first but then fluctuates and remains slightly above the training loss. This suggests that the model slightly overfits the training data, which limits its ability to generalize to new data, although the overfitting is not drastic. Figure 6b shows the learning curves of the PointNet++ model with the highest accuracy (Epochs: 200, BS: 128, Points: 3072), which reaches similarly high training accuracy but noticeably better validation accuracy. Its validation loss is more stable than that of PointNet, although some fluctuation persists. These patterns suggest that PointNet++ generalizes better, likely because its hierarchical structure captures local features more effectively, reducing overfitting and improving validation performance.

Figure 6.

Learning curves of model (a) PointNet with 4096 points, 100 epochs, and batch size of 96. (b) PointNet++ with 3072 points, 200 epochs, and batch size of 128.

The main results (Table 9) show that PointNet’s performance varies among classes, with an overall accuracy of 0.62, a macro average of 0.60, and a weighted average of 0.63. PointNet++ reaches an overall accuracy of 0.64, a macro average of 0.60, and a weighted average of 0.64. PAL and CPI perform best in both models, while JMI performs worst. Overall, PointNet++ provides better classification results in most classes, with a more balanced performance between precision and recall that yields higher F1-scores for six of the eight species evaluated. For example, ADE improves from an F1-score of 0.57 with PointNet to 0.65 with PointNet++. In contrast, CPI and SMO have higher F1-scores with PointNet. Although PointNet performs similarly in certain classes, PointNet++ gives stronger results overall, at a higher computational cost.

Table 9.

Confusion matrix and report of PointNet and PointNet++ model.

4. Discussion

This study evaluated the potential of UAV-derived RGB point clouds for identifying tree species, comparing a traditional machine learning method (random forest) with deep learning approaches based on PointNet and PointNet++. The following discussion is organized around four main topics: (1) the limitations of the dataset, (2) opportunities in the Ecuadorian context, (3) model comparison based on overall accuracy and class-level performance, and (4) the use of RF feature importance to select the input features for PointNet and PointNet++.

4.1. Limitations

One of the main limitations of UAV digital photogrammetric point clouds compared with UAV LiDAR is the lack of penetration through dense vegetation [77], which restricts the acquisition of information on the ground and the internal structure of the canopy. However, at the individual-tree level, crown width and height extracted from photogrammetric and LiDAR data are comparable [78].

Canopy height metrics, such as the 90th (ZP90) and 50th (ZP50) height percentiles, contributed positively to the RF classification, consistent with [27]. These metrics are particularly relevant for species with distinct height profiles, reflecting the importance of UAV structural data for differentiating species based on vertical stratification. However, the absence of GPS-surveyed ground control points (GCPs) in the UAV flights introduces potential errors in the height profiles derived from photogrammetric data. In addition, this work does not analyze tree segmentation in depth, since the trees within the selected parks were considerably spaced.

A spatial analysis of the predictions revealed that approximately 33% of the misclassifications were concentrated along the Tomebamba riverbank. To further investigate this pattern, three maps were generated (see Supplementary Materials), showing the classification results at the species level, separated into correctly classified and misclassified trees. Of the 73 misclassified trees, 24 were located along the Tomebamba River, for which an additional map was created.

Although PointNet and PointNet++ are deep models designed to process 3D point sets directly, without prior transformations such as voxelization or projection to images, their performance may be limited when the dataset does not contain sufficiently discriminative spatial features or when the network architecture does not adequately fit the problem.
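The property that lets PointNet consume raw point sets is a shared per-point network followed by a symmetric max-pooling operation, which makes the global descriptor independent of point order. The NumPy sketch below illustrates only that idea with random, untrained weights; it omits the input and feature transform networks (T-Nets) and the classification head of the published architecture [75], and the layer sizes are arbitrary assumptions. The seven input attributes mirror the features listed in the abstract (Z, red, green, blue, NRBDI, intensity, BI).

```python
import numpy as np

rng = np.random.default_rng(0)


def shared_mlp(points, w1, b1, w2, b2):
    """Apply the same two-layer ReLU MLP to every point (row) independently."""
    hidden = np.maximum(points @ w1 + b1, 0.0)
    return np.maximum(hidden @ w2 + b2, 0.0)


def global_feature(points, params):
    """PointNet-style global descriptor: shared MLP, then max pooling over points."""
    per_point = shared_mlp(points, *params)  # shape (n_points, n_features)
    return per_point.max(axis=0)             # symmetric, so order-independent


# Untrained, randomly initialized weights (illustrative sizes only)
n_in, n_hidden, n_out = 7, 32, 64
params = (rng.normal(size=(n_in, n_hidden)), np.zeros(n_hidden),
          rng.normal(size=(n_hidden, n_out)), np.zeros(n_out))

# Hypothetical crown: 500 points with 7 attributes each
crown = rng.normal(size=(500, n_in))
shuffled = crown[rng.permutation(len(crown))]

f_original = global_feature(crown, params)
f_shuffled = global_feature(shuffled, params)
print(f_original.shape, np.allclose(f_original, f_shuffled))  # (64,) True
```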

4.2. Opportunities

In terms of cost, acquiring specialized equipment such as multispectral cameras or LiDAR sensors can be challenging in Ecuador. RGB UAVs, however, offer an affordable alternative.

Regarding data dimensionality, works such as that of Man et al. (2025) [78] have focused on radiometric aspects in 2D, whereas our approach leverages the 3D information of the point cloud by analyzing the distribution of the Z coordinate. For some tree species, UAV RGB point clouds capture the three-dimensional structure of vegetation in more detail than traditional approaches, such as those based on multispectral data [52].

Another central aspect of this study is the comparison of two classification approaches, random forest (RF) versus PointNet and PointNet++, applied to spectral and geometric data. Traditional machine learning (ML) methods such as RF are often based on mathematical relationships or explicit rules that allow humans to understand how decisions are made. In contrast, deep learning models such as PointNet and PointNet++ operate as “black boxes” because of their high complexity and large number of parameters [32,79]. An ML analysis therefore benefits from a forestry expert who can analyze and interpret the variables selected by the model.
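As an illustration of why RF is easier to inspect, scikit-learn's `RandomForestClassifier` exposes impurity-based importances through its `feature_importances_` attribute, which an expert can then read against domain knowledge. The sketch below uses synthetic data, not the study's dataset, and generic feature names; the impurity-based measure is an assumption chosen for illustration.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier

rng = np.random.default_rng(42)

# Synthetic stand-in data (not the study's data): 300 crowns, 3 classes
n = 300
y = rng.integers(0, 3, size=n)
X = np.column_stack([
    y + rng.normal(0.0, 0.3, n),        # strongly related to the class
    0.5 * y + rng.normal(0.0, 0.5, n),  # weakly related to the class
    rng.normal(0.0, 1.0, n),            # pure noise
    rng.normal(0.0, 1.0, n),            # pure noise
])
feature_names = ["informative_a", "informative_b", "noise_1", "noise_2"]

clf = RandomForestClassifier(n_estimators=200, random_state=0)
clf.fit(X, y)

# Impurity-based importances sum to 1; sort from most to least important
ranking = sorted(zip(feature_names, clf.feature_importances_),
                 key=lambda item: item[1], reverse=True)
for name, importance in ranking:
    print(f"{name:14s} {importance:.3f}")
```

Impurity-based importances can favor correlated or high-cardinality features; `sklearn.inspection.permutation_importance` is a common cross-check.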

Although PointNet has great potential for 3D point cloud processing, traditional models such as RF remain robust, particularly for classification tasks involving heterogeneous data. The simplicity and computational efficiency of RF make it a practical choice in many scenarios.

At the species level, a strength of this study lies in identifying native species and understanding their key characteristics, such as ecological adaptation, medicinal benefits or, in some cases, potential negative impacts on health and ecosystems. Notable native species include Tecoma stans (L.) Juss. ex Kunth (TST), which exhibits remarkable drought tolerance, and Salix humboldtiana (SHU), which thrives in humid soils and is valued for its medicinal use and its ability to restore contaminated soils. On the other hand, some introduced species, such as Acacia dealbata (ADE), can significantly affect ecosystems because of their invasive nature and ease of propagation. Similarly, Populus alba (PAL) can displace native species and poses a potential health risk because of its allergenic properties [80].

4.3. Model and Class Performance

The results show that the RGB channels, height distribution, and point density of the point clouds allow RF to identify species such as Populus alba L. (PAL), Melaleuca armillaris (Sol. ex Gaertn.) Sm. (CPI), Salix humboldtiana Willd. (SHU), and Fraxinus excelsior L. (FEX) with high reliability. Conversely, classes such as Schinus molle L. (SMO) and Jacaranda mimosifolia D. Don (JMI) showed lower performance with this model.

Across all models, species such as PAL and CPI were discriminated well, with F1-scores above 0.71. In contrast, the JMI class had F1-scores below 0.45, possibly because it had fewer validation samples than the other classes. However, the SMO class, which also had a limited number of samples, reached moderate reliability with PointNet.

At the class level, the RF model outperformed the PointNet and PointNet++ models for most species. Based on the F1-score, RF was more reliable for ADE, TST, JMI, SHU, and FEX. PointNet, on the other hand, outperformed RF for CPI and SMO. Finally, PointNet++ achieved the highest reliability of the three models for PAL.
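The class-level comparison relies on the per-class F1-score, F1 = 2TP / (2TP + FP + FN). A minimal sketch, assuming confusion matrices with reference classes in rows and predictions in columns; the matrix, the class labels, and the second model's scores are made-up numbers, not results from this study:

```python
def per_class_f1(cm, labels):
    """Per-class F1 from a confusion matrix (rows: reference, columns: predicted)."""
    scores = {}
    for i, label in enumerate(labels):
        tp = cm[i][i]
        fn = sum(cm[i]) - tp                 # reference i predicted as another class
        fp = sum(row[i] for row in cm) - tp  # other classes predicted as i
        denom = 2 * tp + fp + fn
        scores[label] = 2 * tp / denom if denom else 0.0
    return scores


def best_model_per_class(f1_by_model):
    """Name of the model with the highest F1 for each class."""
    classes = next(iter(f1_by_model.values())).keys()
    return {c: max(f1_by_model, key=lambda m: f1_by_model[m][c]) for c in classes}


labels = ["A", "B", "C"]
cm_model_1 = [[8, 1, 1],
              [2, 6, 2],
              [0, 3, 7]]
f1_model_1 = per_class_f1(cm_model_1, labels)
print(f1_model_1)  # {'A': 0.8, 'B': 0.6, 'C': 0.7}

f1_by_model = {"model_1": f1_model_1, "model_2": {"A": 0.7, "B": 0.65, "C": 0.6}}
print(best_model_per_class(f1_by_model))  # {'A': 'model_1', 'B': 'model_2', 'C': 'model_1'}
```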

Despite relying solely on RGB data from UAVs, this study achieved an overall accuracy of 0.70 and a Kappa coefficient of 0.65, which is comparable to other studies. For instance, Seidel et al. (2021) reported an accuracy of 0.86 using LiDAR scanners and key variables such as tree height, crown width, and 3D canopy structure [14]. Similarly, Sothe et al. (2019) achieved an overall accuracy of 0.72 and a Kappa coefficient of 0.70 using UAV-based photogrammetric point clouds and hyperspectral data, although they classified 12 main tree species of a subtropical forest in southern Brazil [29]. Onishi and Ise (2021), combining RGB images with deep learning, achieved up to 90% accuracy in tree species classification [25]; however, their study was conducted in a limited geographic area. In contrast, the present study explores the potential of integrating 3D point clouds (XYZRGB) with geometric features in a more complex urban environment. These results suggest that tree species can be identified at a lower cost than with the more advanced technologies or traditional methods used in these studies.
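Overall accuracy and Cohen's Kappa can both be computed from the confusion matrix: p_o is the proportion of samples on the diagonal, p_e is the agreement expected by chance from the row and column totals, and κ = (p_o − p_e) / (1 − p_e). Because κ discounts chance agreement, it cannot exceed overall accuracy, which is consistent with 0.65 versus 0.70 here. A minimal sketch with a made-up two-class matrix; scikit-learn's `cohen_kappa_score` [74] computes the same unweighted statistic from label vectors.

```python
def overall_accuracy_and_kappa(cm):
    """Overall accuracy and Cohen's kappa from a square confusion matrix."""
    k = len(cm)
    n = sum(sum(row) for row in cm)
    p_o = sum(cm[i][i] for i in range(k)) / n
    row_totals = [sum(row) for row in cm]
    col_totals = [sum(cm[r][c] for r in range(k)) for c in range(k)]
    # Chance agreement: product of marginal proportions, summed over classes
    p_e = sum(row_totals[i] * col_totals[i] for i in range(k)) / n ** 2
    return p_o, (p_o - p_e) / (1 - p_e)


oa, kappa = overall_accuracy_and_kappa([[20, 5],
                                        [10, 15]])
print(f"OA = {oa:.2f}, kappa = {kappa:.2f}")  # OA = 0.70, kappa = 0.40
```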

Finally, PointNet++ entails higher computational demands and longer training times, mainly because of its greater model complexity. Although PointNet++ improved classification accuracy over PointNet for most classes, it remains to be assessed whether the magnitude of this improvement justifies the additional processing time.
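Part of the extra cost of PointNet++ comes from its hierarchical set-abstraction layers, which repeatedly select centroids with farthest point sampling and then group neighboring points around them before applying a small PointNet to each region. The sketch below shows only the greedy farthest point sampling step in NumPy: each of the k iterations updates a distance for all N points, so this step alone scales as O(N·k) per layer. It is an illustrative sketch, not the implementation used in the study.

```python
import numpy as np


def farthest_point_sampling(points, k, start=0):
    """Greedy farthest point sampling; returns the indices of k selected points.

    Each iteration updates the distance of every point to its nearest selected
    centroid, so the cost grows as O(N * k) for N input points.
    """
    n = points.shape[0]
    if not 0 < k <= n:
        raise ValueError("k must be between 1 and the number of points")
    selected = np.empty(k, dtype=int)
    selected[0] = start
    min_dist = np.linalg.norm(points - points[start], axis=1)
    for i in range(1, k):
        selected[i] = int(np.argmax(min_dist))  # farthest from all chosen so far
        new_dist = np.linalg.norm(points - points[selected[i]], axis=1)
        min_dist = np.minimum(min_dist, new_dist)
    return selected


pts = np.array([[0.0, 0.0, 0.0],
                [1.0, 0.0, 0.0],
                [2.0, 0.0, 0.0],
                [10.0, 0.0, 0.0]])
print(farthest_point_sampling(pts, 3))  # [0 3 2]
```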

4.4. Feature Importance

In the RF model, the relationship between tree crown density and the density of the UAV-derived point clouds was important for assessing vertical structural attributes in classes such as ADE, CPI, TST, JMI, and SMO. Dense tree crowns typically produce denser point clouds because of their larger surface area and canopy complexity, which enhances photogrammetric point retrieval during UAV data acquisition. This relationship underscores the importance of optimizing UAV flight parameters, such as altitude and longitudinal (forward) and transversal (side) overlap, to ensure sufficient point cloud density.
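The link between flight altitude, overlap, and data density can be made concrete with the standard ground sampling distance (GSD) relation for a nadir frame camera, GSD = (sensor width × altitude) / (focal length × image width in pixels), and the spacing between exposures, footprint × (1 − overlap). The camera values below are hypothetical and do not describe the UAV used in this study. With other factors equal, a finer GSD and higher overlap generally allow denser photogrammetric matching.

```python
def ground_sampling_distance(sensor_width_mm, focal_length_mm, image_width_px, altitude_m):
    """Ground sampling distance (m/pixel) for a nadir image over flat terrain."""
    return (sensor_width_mm * altitude_m) / (focal_length_mm * image_width_px)


def exposure_spacing(gsd_m, image_extent_px, overlap):
    """Distance (m) between consecutive photos or flight lines for a given overlap."""
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    footprint_m = gsd_m * image_extent_px
    return footprint_m * (1.0 - overlap)


# Hypothetical camera: 13.2 mm x 8.8 mm sensor, 8.8 mm lens, 5472 x 3648 px images
for altitude in (100.0, 50.0):
    gsd = ground_sampling_distance(13.2, 8.8, 5472, altitude)
    forward = exposure_spacing(gsd, 3648, 0.80)  # short image side along track
    side = exposure_spacing(gsd, 5472, 0.70)     # long image side across track
    print(f"{altitude:5.0f} m: GSD {gsd * 100:.2f} cm, "
          f"photo spacing {forward:.1f} m, line spacing {side:.1f} m")
```

At 100 m this gives a GSD of about 2.74 cm, 20 m between photos, and 45 m between flight lines; halving the altitude halves all three.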

The RF feature importance highlights the role of spectral and structural metrics in remote sensing-based forestry applications. The mean normalized red–blue difference index (NRBDI_Mean) emerged as the most important variable, underscoring its strong influence on classification accuracy and its relationship with vegetation stress, as reported for crops such as apple and citrus [81]. Similarly, the mean Green Leaf Index (GLI_Mean), the second most important feature, emphasizes the role of photosynthetic activity in species differentiation [82]. The classes with the highest accuracy in RF, such as PAL, CPI, SHU, and FEX, are usually found in soils well supplied with water [80], and their relationship with the NRBDI lies in the index’s ability to provide descriptions.
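The two leading spectral features can be derived per point from the RGB values and then averaged over each crown. A minimal sketch, assuming the commonly used definitions NRBDI = (R − B) / (R + B) and GLI = (2G − R − B) / (2G + R + B); the exact formulas and any radiometric scaling used in the study may differ, and the helper names are hypothetical.

```python
import numpy as np


def _ratio(num, den):
    """Element-wise num / den, returning 0 where the denominator is 0."""
    num = np.asarray(num, dtype=float)
    den = np.asarray(den, dtype=float)
    out = np.zeros_like(num)
    np.divide(num, den, out=out, where=den != 0)
    return out


def crown_spectral_features(rgb):
    """Mean NRBDI and GLI over the points of one crown.

    rgb: array of shape (n_points, 3) with red, green, blue values.
    Assumed definitions: NRBDI = (R - B) / (R + B), GLI = (2G - R - B) / (2G + R + B).
    """
    rgb = np.asarray(rgb, dtype=float)
    r, g, b = rgb[:, 0], rgb[:, 1], rgb[:, 2]
    nrbdi = _ratio(r - b, r + b)
    gli = _ratio(2 * g - r - b, 2 * g + r + b)
    return {"NRBDI_Mean": float(nrbdi.mean()), "GLI_Mean": float(gli.mean())}


# Two illustrative points of one crown
features = crown_spectral_features([[100, 150, 50],
                                    [80, 160, 40]])
print({k: round(v, 4) for k, v in features.items()})  # {'NRBDI_Mean': 0.3333, 'GLI_Mean': 0.3939}
```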

Structural metrics derived from the UAV point clouds also contributed significantly to classification performance. Point density, defined as the number of points per square meter, proved particularly useful for characterizing canopy heterogeneity. This finding suggests that structural information complements spectral data in distinguishing species with diverse canopy structures.
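Point density in points per square meter requires a crown area. One simple option, assumed here for illustration, divides the number of crown points by the area of the convex hull of their XY coordinates; the study's exact area definition and the normalization it applies to density are not reproduced, and the function names are hypothetical. The sketch uses only the Python standard library.

```python
def convex_hull(points):
    """Andrew's monotone chain; returns hull vertices in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def polygon_area(vertices):
    """Shoelace formula for a simple polygon given as ordered (x, y) vertices."""
    total = 0.0
    for (x1, y1), (x2, y2) in zip(vertices, vertices[1:] + vertices[:1]):
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


def point_density(xy):
    """Points per square meter over the convex hull of the crown's XY footprint."""
    area = polygon_area(convex_hull(xy))
    if area == 0.0:
        raise ValueError("degenerate crown footprint")
    return len(xy) / area


# Toy crown: corners of a 1 m x 1 m square plus its center
crown_xy = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0), (0.5, 0.5)]
print(point_density(crown_xy))  # 5.0
```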

5. Conclusions

This study compared two classification approaches for identifying eight tree species from RGB point clouds: random forest (RF), a machine learning (ML) method that used spectral and geometric attributes derived from the point clouds, and PointNet and PointNet++, deep learning (DL) models that directly process 3D coordinates and their associated attributes.

The results indicate that the random forest model achieved the best overall performance, with an accuracy of 0.70, compared with 0.62 for PointNet and 0.64 for PointNet++. In addition, the class-level analysis showed that RF outperformed the PointNet models for most species, especially Acacia dealbata Link (ADE), Tecoma stans (L.) Juss. ex Kunth (TST), Jacaranda mimosifolia D. Don (JMI), Fraxinus excelsior L. (FEX), and Salix humboldtiana Willd. (SHU). However, PointNet performed better on Melaleuca armillaris (Sol. ex Gaertn.) Sm. (CPI), and PointNet++ on Populus alba L. (PAL), suggesting that the ability of these networks to capture three-dimensional geometry may be particularly useful for these species.

The analysis of variable importance in RF revealed that spectral indices, namely the mean normalized red–blue difference index (NRBDI_Mean) and the mean Green Leaf Index (GLI_Mean), were the most relevant attributes for classification, followed by geometric characteristics such as maximum height, Z percentiles (ZP10, ZP50, ZP90), and normalized point density, and by radiometric attributes such as intensity (mean and standard deviation) and the standard deviation of brightness. This suggests that combining RGB information with structural variables can help differentiate the evaluated forest species from a 3D perspective.

Finally, future research should first focus on balancing the number of samples per class in the database, so that the comparison between RF and the PointNet models is more reliable. The use of multispectral attributes in addition to RGB data could also be evaluated. For the DL approach, other neural network architectures will be explored, and techniques such as transfer learning or data augmentation will be implemented to improve the generalization of the PointNet-based models. Our results open the door to more efficient monitoring and management of urban forests, particularly in regions such as Ecuador, where species diversity is high and developing and updating urban tree inventories is more challenging.
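As one example of data augmentation for point clouds, classifiers are often trained on randomly rotated and jittered copies of each sample; rotating only about the vertical axis leaves heights unchanged, which matters here because Z is an input feature. The sketch below is a hypothetical illustration, not the authors' planned pipeline: the jitter parameters are arbitrary assumptions, and generating extra augmented copies for small classes such as JMI is only one possible way to balance sample counts.

```python
import numpy as np


def augment_crown(xyz, rng, jitter_sigma=0.01, jitter_clip=0.05):
    """Rotate a crown randomly about the vertical axis and add clipped Gaussian jitter.

    xyz: array of shape (n_points, 3); color attributes would be carried over unchanged.
    """
    theta = rng.uniform(0.0, 2.0 * np.pi)
    c, s = np.cos(theta), np.sin(theta)
    rot_z = np.array([[c, -s, 0.0],
                      [s, c, 0.0],
                      [0.0, 0.0, 1.0]])
    centroid = xyz.mean(axis=0)
    rotated = (xyz - centroid) @ rot_z.T + centroid  # Z is unchanged by rot_z
    noise = np.clip(rng.normal(0.0, jitter_sigma, size=xyz.shape), -jitter_clip, jitter_clip)
    return rotated + noise


def oversample_class(crowns, target, rng):
    """Append augmented copies of randomly chosen crowns until `target` samples exist."""
    out = list(crowns)
    while len(out) < target:
        source = crowns[int(rng.integers(len(crowns)))]
        out.append(augment_crown(source, rng))
    return out


rng = np.random.default_rng(7)
crown = rng.uniform(0.0, 5.0, size=(200, 3))
no_jitter = augment_crown(crown, rng, jitter_sigma=0.0)
balanced = oversample_class([crown, crown[:150]], target=5, rng=rng)
print(np.allclose(no_jitter[:, 2], crown[:, 2]), len(balanced))  # True 5
```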

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/rs17111863/s1, Figure S1: Correctly classified validation samples by class map. Figure S2: Classification errors by class for validation samples map. Figure S3: Classification errors by class for validation samples-Banks of the Tomebamba River map.

Author Contributions

Conceptualization, D.P.-P. and L.Á.R.; methodology, D.P.-P., L.Á.R. and E.M.; investigation, D.P.-P., E.M. and E.B.-L.; writing—original draft preparation, D.P.-P., E.M. and E.B.-L.; supervision, L.Á.R. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by UNIVERSIDAD DEL AZUAY as part of research project 2024-0143, entitled “Estimación de Biomasa aérea en áreas urbanas utilizando drones. Estudio del caso de la ciudad de Cuenca (Ecuador)” (Estimation of aboveground biomass in urban areas using drones: a case study of the city of Cuenca, Ecuador).

Data Availability Statement

The original data presented in this study are openly available in the geoportal of the University of Azuay at https://gis.uazuay.edu.ec/datasets/tree-point-clouds.html (accessed on 13 May 2025).

Acknowledgments

The authors acknowledge the use of the High-Performance Computing (HPC) resources provided by the Corporación Ecuatoriana para el Desarrollo de la Investigación y la Academia (CEDIA), which significantly accelerated the training of deep learning models through GPU computing.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Liu, J.; Wang, X.; Wang, T. Classification of Tree Species and Stock Volume Estimation in Ground Forest Images Using Deep Learning. Comput. Electron. Agric. 2019, 166, 105012.

2. Pu, R. Mapping Tree Species Using Advanced Remote Sensing Technologies: A State-of-the-Art Review and Perspective. J. Remote Sens. 2021, 2021, 9812624.

3. Liu, B.; Chen, S.; Huang, H.; Tian, X. Tree Species Classification of Backpack Laser Scanning Data Using the PointNet++ Point Cloud Deep Learning Method. Remote Sens. 2022, 14, 3809.

4. Rieckmann, M.; Adomßent, M.; Härdtle, W.; Aguirre, P. Sustainable Development and Conservation of Biodiversity Hotspots in Latin America: The Case of Ecuador. In Biodiversity Hotspots; Springer: Berlin/Heidelberg, Germany, 2011; pp. 435–452.

5. MAE; FAO. Especies Forestales Leñosas Arbóreas y Arbustivas de Los Bosques Montanos Del Ecuador; MAE/FAO: Quito, Ecuador, 2015.

6. Jadán, O.; Donoso, D.A.; Cedillo, H.; Bermúdez, F.; Cabrera, O. Floristic Groups, and Changes in Diversity and Structure of Trees, in Tropical Montane Forests in the Southern Andes of Ecuador. Diversity 2021, 13, 400.

7. Aguirre, Z.; Valencia, E.; Veintimilla, D.; Pardo, S.; Jaramillo, N. Floristic Diversity, Structure, and Endemism of the Woody Component in the Lower Montane Evergreen Forest of Valladolid Parish, Zamora Chinchipe, Ecuador. Rev. Cienc. Tecnol. 2024, 20, 121–134.

8. Cuesta, F.; Peralvo, M.; Merino-Viteri, A.; Bustamante, M.; Baquero, F.; Freile, J.F.; Muriel, P.; Torres-Carvajal, O. Priority Areas for Biodiversity Conservation in Mainland Ecuador. Neotrop. Biodivers. 2017, 3, 93–106.

9. FAO. Global Forest Resources Assessment 2020; FAO: Rome, Italy, 2020; ISBN 978-92-5-132581-0.

10. Lian, X.; Zhang, H.; Xiao, W.; Lei, Y.; Ge, L.; Qin, K.; He, Y.; Dong, Q.; Li, L.; Han, Y.; et al. Biomass Calculations of Individual Trees Based on Unmanned Aerial Vehicle Multispectral Imagery and Laser Scanning Combined with Terrestrial Laser Scanning in Complex Stands. Remote Sens. 2022, 14, 4715.

11. Chen, J.; Chen, Y.; Liu, Z. Classification of Typical Tree Species in Laser Point Cloud Based on Deep Learning. Remote Sens. 2021, 13, 4750.

12. Fan, Z.; Wei, J.; Zhang, R.; Zhang, W. Tree Species Classification Based on PointNet++ and Airborne Laser Survey Point Cloud Data Enhancement. Forests 2023, 14, 1246.

13. Ma, Z.; Pang, Y.; Wang, D.; Liang, X.; Chen, B.; Lu, H.; Weinacker, H.; Koch, B. Individual Tree Crown Segmentation of a Larch Plantation Using Airborne Laser Scanning Data Based on Region Growing and Canopy Morphology Features. Remote Sens. 2020, 12, 1078.

14. Seidel, D.; Annighöfer, P.; Thielman, A.; Seifert, Q.E.; Thauer, J.-H.; Glatthorn, J.; Ehbrecht, M.; Kneib, T.; Ammer, C. Predicting Tree Species From 3D Laser Scanning Point Clouds Using Deep Learning. Front. Plant Sci. 2021, 12, 635440.

15. Hartling, S.; Sagan, V.; Sidike, P.; Maimaitijiang, M.; Carron, J. Urban Tree Species Classification Using a Worldview-2/3 and LiDAR Data Fusion Approach and Deep Learning. Sensors 2019, 19, 1284.

16. Shi, Y.; Wang, T.; Skidmore, A.K.; Heurich, M. Important LiDAR Metrics for Discriminating Forest Tree Species in Central Europe. ISPRS J. Photogramm. Remote Sens. 2018, 137, 163–174.

17. Pacheco-Prado, D.; Bravo-López, E.; Ruiz, L.Á. Tree Species Identification in Urban Environments Using TensorFlow Lite and a Transfer Learning Approach. Forests 2023, 14, 1050.

18. Diez, Y.; Kentsch, S.; Fukuda, M.; López Cáceres, M.L.; Moritake, K.; Cabezas, M. Deep Learning in Forestry Using UAV-Acquired RGB Data: A Practical Review. Remote Sens. 2021, 13, 2837.

19. Guimarães, N.; Pádua, L.; Marques, P.; Silva, N.; Peres, E.; Sousa, J.J. Forestry Remote Sensing from Unmanned Aerial Vehicles: A Review Focusing on the Data, Processing and Potentialities. Remote Sens. 2020, 12, 1046.

20. Lines, E.R.; Fischer, F.J.; Owen, H.J.F.; Jucker, T. The Shape of Trees: Reimagining Forest Ecology in Three Dimensions with Remote Sensing. J. Ecol. 2022, 110, 1730–1745.

21. Atkins, J.W.; Bhatt, P.; Carrasco, L.; Francis, E.; Garabedian, J.E.; Hakkenberg, C.R.; Hardiman, B.S.; Jung, J.; Koirala, A.; LaRue, E.A.; et al. Integrating Forest Structural Diversity Measurement into Ecological Research. Ecosphere 2023, 14, e4633.

22. Mouafik, M.; Chakhchar, A.; Fouad, M.; El Aboudi, A. Remote Sensing Technologies for Monitoring Argane Forest Stands: A Comprehensive Review. Geographies 2024, 4, 441–461.

23. Ecke, S.; Stehr, F.; Frey, J.; Tiede, D.; Dempewolf, J.; Klemmt, H.J.; Endres, E.; Seifert, T. Towards Operational UAV-Based Forest Health Monitoring: Species Identification and Crown Condition Assessment by Means of Deep Learning. Comput. Electron. Agric. 2024, 219, 108785.

24. Li, X.; Zheng, Z.; Xu, C.; Zhao, P.; Chen, J.; Wu, J.; Zhao, X.; Mu, X.; Zhao, D.; Zeng, Y. Individual Tree-Based Forest Species Diversity Estimation by Classification and Clustering Methods Using UAV Data. Front. Ecol. Evol. 2023, 11, 1139458.

25. Onishi, M.; Ise, T. Explainable Identification and Mapping of Trees Using UAV RGB Image and Deep Learning. Sci. Rep. 2021, 11, 903.

26. Feng, X.; Li, P. A Tree Species Mapping Method from UAV Images over Urban Area Using Similarity in Tree-Crown Object Histograms. Remote Sens. 2019, 11, 1982.

27. Xu, Z.; Shen, X.; Cao, L.; Coops, N.C.; Goodbody, T.R.H.; Zhong, T.; Zhao, W.; Sun, Q.; Ba, S.; Zhang, Z.; et al. Tree Species Classification Using UAS-Based Digital Aerial Photogrammetry Point Clouds and Multispectral Imageries in Subtropical Natural Forests. Int. J. Appl. Earth Obs. Geoinf. 2020, 92, 102173.

28. Briechle, S.; Krzystek, P.; Vosselman, G. Classification of Tree Species and Standing Dead Trees by Fusing UAV-Based LiDAR Data and Multispectral Imagery in the 3D Deep Neural Network Pointnet++. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, V-2–2020, 203–210.

29. Sothe, C.; Dalponte, M.; de Almeida, C.M.; Schimalski, M.B.; Lima, C.L.; Liesenberg, V.; Takahashi Miyoshi, G.; Garcia Tommaselli, A.M. Tree Species Classification in a Highly Diverse Subtropical Forest Integrating UAV-Based Photogrammetric Point Cloud and Hyperspectral Data. Remote Sens. 2019, 11, 1338.

30. Nevalainen, O.; Honkavaara, E.; Tuominen, S.; Viljanen, N.; Hakala, T.; Yu, X.; Hyyppä, J.; Saari, H.; Pölönen, I.; Imai, N.; et al. Individual Tree Detection and Classification with UAV-Based Photogrammetric Point Clouds and Hyperspectral Imaging. Remote Sens. 2017, 9, 185.

31. Kovanič, Ľ.; Topitzer, B.; Peťovský, P.; Blišťan, P.; Gergeľová, M.B.; Blišťanová, M. Review of Photogrammetric and LiDAR Applications of UAV. Appl. Sci. 2023, 13, 6732.

32. Chen, J.; Liang, X.; Liu, Z.; Gong, W.; Chen, Y.; Hyyppä, J.; Kukko, A.; Wang, Y. Tree Species Recognition from Close-Range Sensing: A Review. Remote Sens. Environ. 2024, 313, 114337.

33. Pleşoianu, A.I.; Stupariu, M.S.; Şandric, I.; Pătru-Stupariu, I.; Drăguţ, L. Individual Tree-Crown Detection and Species Classification in Very High-Resolution Remote Sensing Imagery Using a Deep Learning Ensemble Model. Remote Sens. 2020, 12, 2426.

34. Remondino, F.; Barazzetti, L.; Nex, F.; Scaioni, M.; Sarazzi, D. UAV Photogrammetry for Mapping and 3d Modeling–Current Status and Future Perspectives. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2011, 38-1/C22, 25–31.

35. Cetin, Z.; Yastikli, N. The Use of Machine Learning Algorithms in Urban Tree Species Classification. ISPRS Int. J. Geoinf. 2022, 11, 226.

36. Fassnacht, F.E.; White, J.C.; Wulder, M.A.; Næsset, E. Remote Sensing in Forestry: Current Challenges, Considerations and Directions. For. Int. J. For. Res. 2024, 97, 11–37.

37. Mesas-Carrascosa, F.-J.; de Castro, A.I.; Torres-Sánchez, J.; Triviño-Tarradas, P.; Jiménez-Brenes, F.M.; García-Ferrer, A.; López-Granados, F. Classification of 3D Point Clouds Using Color Vegetation Indices for Precision Viticulture and Digitizing Applications. Remote Sens. 2020, 12, 317.

38. Carbonell-Rivera, J.P.; Ruiz, L.Á.; Estornell, J.; Simó-Martí, M.; Quille-Mamani, J.; Torralba, J. Comparación de Biomasa Estimada a Partir de Mediciones Clásicas y Nubes de Puntos UAV Clasificadas Mediante Class3Dp. In Proceedings of the XX Congreso de la Asociación Española de Teledetección. Teledetección y Cambio Global: Retos y Oportunidades para un Crecimiento Azul, Cádiz, Spain, 4–7 June 2024; pp. 219–222.

39. Picos, J.; Bastos, G.; Míguez, D.; Alonso, L.; Armesto, J. Individual Tree Detection in a Eucalyptus Plantation Using Unmanned Aerial Vehicle (UAV)-LiDAR. Remote Sens. 2020, 12, 885.

40. Lindberg, E.; Holmgren, J. Individual Tree Crown Methods for 3D Data from Remote Sensing. Curr. For. Rep. 2017, 3, 19–31.

41. Xu, C.; Morgenroth, J.; Manley, B. Integrating Data from Discrete Return Airborne LiDAR and Optical Sensors to Enhance the Accuracy of Forest Description: A Review. Curr. For. Rep. 2015, 1, 206–219.

42. Favorskaya, M.; Tkacheva, A.; Danilin, I.M.; Medvedev, E.M. Fusion of Airborne LiDAR and Digital Photography Data for Tree Crowns Segmentation and Measurement. In Proceedings of the Smart Innovation, Systems and Technologies, Sorrento, Italy, 17–19 June 2015; Volume 40.

43. Tao, W. Multi-View Dense Match for Forest Area. Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci. 2014, XL-1, 397–400.

44. Liu, B.; Huang, H.; Su, Y.; Chen, S.; Li, Z.; Chen, E.; Tian, X. Tree Species Classification Using Ground-Based LiDAR Data by Various Point Cloud Deep Learning Methods. Remote Sens. 2022, 14, 5733.

45. Westoby, M.J.; Brasington, J.; Glasser, N.F.; Hambrey, M.J.; Reynolds, J.M. “Structure-from-Motion” Photogrammetry: A Low-Cost, Effective Tool for Geoscience Applications. Geomorphology 2012, 179, 300–314.

46. Greco, R.; Barca, E.; Raumonen, P.; Persia, M.; Tartarino, P. Methodology for Measuring Dendrometric Parameters in a Mediterranean Forest with UAVs Flying inside Forest. Int. J. Appl. Earth Obs. Geoinf. 2023, 122, 103426.

47. Ke, Y.; Quackenbush, L.J. A Review of Methods for Automatic Individual Tree-Crown Detection and Delineation from Passive Remote Sensing. Int. J. Remote Sens. 2011, 32, 4725–4747.

48. Burt, A.; Disney, M.; Calders, K. Extracting Individual Trees from Lidar Point Clouds Using Treeseg. Methods Ecol. Evol. 2019, 10, 438–445.

49. Iglhaut, J.; Cabo, C.; Puliti, S.; Piermattei, L.; O’Connor, J.; Rosette, J. Structure from Motion Photogrammetry in Forestry: A Review. Curr. For. Rep. 2019, 5, 155–168.

50. Eltner, A.; Sofia, G. Structure from Motion Photogrammetric Technique. In Developments in Earth Surface Processes; Tarolli, P., Mudd, S.M., Eds.; Elsevier: Amsterdam, The Netherlands, 2020; Volume 23, pp. 1–24. ISBN 978-0-444-64178-6.

51. Alonzo, M.; Andersen, H.E.; Morton, D.C.; Cook, B.D. Quantifying Boreal Forest Structure and Composition Using UAV Structure from Motion. Forests 2018, 9, 119.

52. Pacheco-Prado, D.; Bravo-López, E.; Martínez-Urgilés, E. Tree Species Identification Using UAV RGB and Multispectral Cloud Points. In Proceedings of the Emerging Research in Intelligent Systems, Ecuador, South America, 15 March 2024; Olmedo Cifuentes, G.F., Arcos Avilés, D.G., Lara Padilla, H.V., Eds.; Springer Nature: Cham, Switzerland, 2025; pp. 66–79.

53. Carbonell-Rivera, J.P.; Torralba, J.; Estornell, J.; Ruiz, L.Á.; Crespo-Peremarch, P. Classification of Mediterranean Shrub Species from UAV Point Clouds. Remote Sens. 2022, 14, 199.

54. Zeybek, M. Classification of UAV Point Clouds by Random Forest Machine Learning Algorithm. Turk. J. Eng. 2021, 5, 48–57.

55. Hartling, S.; Sagan, V.; Maimaitijiang, M. Urban Tree Species Classification Using UAV-Based Multi-Sensor Data Fusion and Machine Learning. GIsci Remote Sens. 2021, 58, 1250–1275.

56. Carbonell-Rivera, J.P.; Estornell, J.; Ruiz, L.A.; Torralba, J.; Crespo-Peremarch, P. Classification of UAV-Based Photogrammetric Point Clouds of Riverine Species Using Machine Learning Algorithms: A Case Study in the Palancia River, Spain. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, XLIII-B2-2020, 659–666.

57. Immitzer, M.; Atzberger, C.; Koukal, T. Tree Species Classification with Random Forest Using Very High Spatial Resolution 8-Band WorldView-2 Satellite Data. Remote Sens. 2012, 4, 2661–2693.

58. Zhong, H.; Zhang, Z.; Liu, H.; Wu, J.; Lin, W. Individual Tree Species Identification for Complex Coniferous and Broad-Leaved Mixed Forests Based on Deep Learning Combined with UAV LiDAR Data and RGB Images. Forests 2024, 15, 293.

59. Zhang, Z.; Liu, X. Support Vector Machines for Tree Species Identification Using LiDAR-Derived Structure and Intensity Variables. Geocarto Int. 2013, 28, 364–378.

60. Qi, Y.; Dong, X.; Chen, P.; Lee, K.-H.; Lan, Y.; Lu, X.; Jia, R.; Deng, J.; Zhang, Y. Canopy Volume Extraction of Citrus Reticulate Blanco Cv. Shatangju Trees Using UAV Image-Based Point Cloud Deep Learning. Remote Sens. 2021, 13, 3437.

61. Kim, D.H.; Ko, C.U.; Kim, D.G.; Kang, J.T.; Park, J.M.; Cho, H.J. Automated Segmentation of Individual Tree Structures Using Deep Learning over LiDAR Point Cloud Data. Forests 2023, 14, 1159.

62. Xu, N.; Qin, R.; Song, S. Point Cloud Registration for LiDAR and Photogrammetric Data: A Critical Synthesis and Performance Analysis on Classic and Deep Learning Algorithms. ISPRS Open J. Photogramm. Remote Sens. 2023, 8, 100032.

63. Modzelewska, A.; Fassnacht, F.E.; Stereńczak, K. Tree Species Identification within an Extensive Forest Area with Diverse Management Regimes Using Airborne Hyperspectral Data. Int. J. Appl. Earth Obs. Geoinf. 2020, 84, 101960.

64. Rana, P.; St-Onge, B.; Prieur, J.F.; Cristina Budei, B.; Tolvanen, A.; Tokola, T. Effect of Feature Standardization on Reducing the Requirements of Field Samples for Individual Tree Species Classification Using ALS Data. ISPRS J. Photogramm. Remote Sens. 2022, 184, 189–202.

65. Shrestha, A.; Mahmood, A. Review of Deep Learning Algorithms and Architectures. IEEE Access 2019, 7, 53040–53065.

66. Carbonell-Rivera, J.P.; Estornell, J.; Ruiz, L.Á.; Crespo-Peremarch, P.; Almonacid-Caballer, J.; Torralba, J. Class3Dp: A Supervised Classifier of Vegetation Species from Point Clouds. Environ. Model. Softw. 2024, 171, 105859.

67. Pacheco, D.; Ávila, L. Inventario de Parques y Jardines de La Ciudad de Cuenca Con UAV y Smartphones. In Proceedings of the XVI Conferencia de Sistemas de Información Geográfica, Cuenca, Ecuador, 27–29 September 2017; pp. 173–179.

68. Universidad del Azuay Dashboard Inventario Forestal Cuenca. Available online: https://gis.uazuay.edu.ec/herramientas/iforestal-dashboard/ (accessed on 12 April 2025).

69. Song, X.; Wu, F.; Lu, X.; Yang, T.; Ju, C.; Sun, C.; Liu, T. The Classification of Farming Progress in Rice–Wheat Rotation Fields Based on UAV RGB Images and the Regional Mean Model. Agriculture 2022, 12, 124.

70. Zhang, X.; Zhang, F.; Qi, Y.; Deng, L.; Wang, X.; Yang, S. New Research Methods for Vegetation Information Extraction Based on Visible Light Remote Sensing Images from an Unmanned Aerial Vehicle (UAV). Int. J. Appl. Earth Obs. Geoinf. 2019, 78, 215–226.

71. Gerón, A. Hands-On Machine Learning with Scikit-Learn, Keras & Tensorflow, 2nd ed.; O’Reilly Media: Sebastopol, CA, USA, 2019; ISBN 9781492032649.

72. Wang, D.; Wan, B.; Qiu, P.; Su, Y.; Guo, Q.; Wang, R.; Sun, F.; Wu, X. Evaluating the Performance of Sentinel-2, Landsat 8 and Pléiades-1 in Mapping Mangrove Extent and Species. Remote Sens. 2018, 10, 1468.

73. Zheng, H.; Sherazi, S.W.A.; Son, S.H.; Lee, J.Y. A Deep Convolutional Neural Network-based Multi-class Image Classification for Automatic Wafer Map Failure Recognition in Semiconductor Manufacturing. Appl. Sci. 2021, 11, 9769.

74. sklearn.metrics.cohen_kappa_score. Scikit-Learn 1.2.1 Documentation. Available online: https://scikit-learn.org/stable/modules/generated/sklearn.metrics.cohen_kappa_score.html (accessed on 1 January 2023).

75. Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. PointNet: Deep Learning on Point Sets for 3D Classification and Segmentation. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

76. García-García, A.; Gómez-Donoso, F.; García-Rodríguez, J.; Orts-Escolano, S.; Cazorla, M.; Azorin-López, J. Pointnet: A 3d Convolutional Neural Network for Real-Time Object Class Recognition. In Proceedings of the 2016 International Joint Conference on Neural Networks (IJCNN), Vancouver, BC, Canada, 24–29 July 2016; pp. 1578–1584.

77. Hong, Q.; Ge, Z.; Wang, X.; Li, Y.; Xia, X.; Chen, Y. Measuring Canopy Morphology of Saltmarsh Plant Patches Using UAV-Based LiDAR Data. Front. Mar. Sci. 2024, 11, 1378687.

78. Man, Q.; Yang, X.; Liu, H.; Zhang, B.; Dong, P.; Wu, J.; Liu, C.; Han, C.; Zhou, C.; Tan, Z.; et al. Comparison of UAV-Based LiDAR and Photogrammetric Point Cloud for Individual Tree Species Classification of Urban Areas. Remote Sens. 2025, 17, 1212.

79. Pu, L.; Xv, J.; Deng, F. An Automatic Method for Tree Species Point Cloud Segmentation Based on Deep Learning. J. Indian. Soc. Remote Sens. 2021, 49, 2163–2172.

80. Guzmán-Salinas, N.; Jiménez-Pesántez, M.; Minga-Ochoa, D.; Delgado-Inga, O.; Martínez, E. Árboles Urbanos de Cuenca; Casa Editora de la Universidad del Azuay: Cuenca, Ecuador, 2023; ISBN 9789942645180.

81. Glenn, D.M.; Tabb, A. Evaluation of Five Methods to Measure Normalized Difference Vegetation Index (NDVI) in Apple and Citrus. Int. J. Fruit. Sci. 2019, 19, 191–210.

82. Gitelson, A.; Viña, A.; Inoue, Y.; Arkebauer, T.; Schlemmer, M.; Schepers, J. Uncertainty in the Evaluation of Photosynthetic Canopy Traits Using the Green Leaf Area Index. Agric. Meteorol. 2022, 320, 108955.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).