IR-ADMDet: An Anisotropic Dynamic-Aware Multi-Scale Network for Infrared Small Target Detection

Abstract

1. Introduction

- (1)

- We propose IR-ADMDet, a specialized one-stage detector for small target detection in infrared imagery characterized by irregular background noise, designed to maximize detection accuracy while minimizing missed detection instances.

- (2)

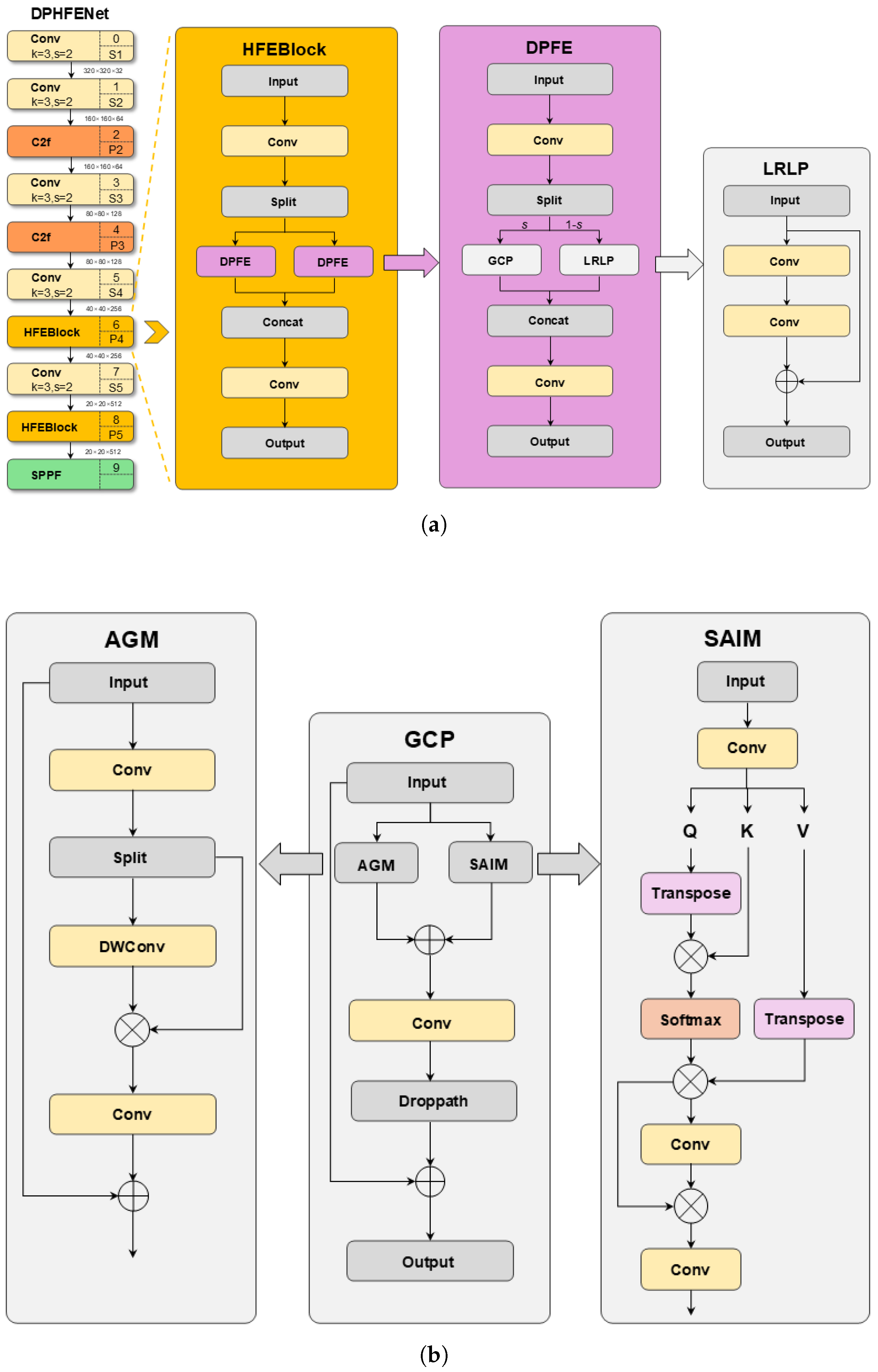

- The backbone DPHFENet incorporates Hybrid Feature Extractor Block (HFEBlock), combining CNN and Transformer architectural strengths to enhance feature extraction precision and efficiency, reduce parametric and computational requirements, and enrich contextual information representation.

- (3)

- The Neck HAFF implements the Bidirectional Gated Feature Symbiosis (BGFS) module for effective integration of local and global contextual features, complemented by Recurrent Graph-Enhanced Fusion (RGEF) and Interlink Fusion Core (IFC) modules that optimize model complexity and enhance detection performance through lightweight convolutions, reparameterization techniques, and attention mechanisms.

- (4)

- Comprehensive experimental evaluation demonstrated the superior performance of IR-ADMDet compared to state-of-the-art object detectors across benchmark datasets, including SIRSTv2, IRSTD-1k, and NUDT-SIRST, validating its enhanced detection capabilities.

2. Related Work

2.1. Bounding Box-Based Infrared Small Target Detection Methods

2.2. Segmentation-Based Infrared Small Target Detection Methods

2.3. Feature Fusion Network

3. Method

3.1. Overall Architecture

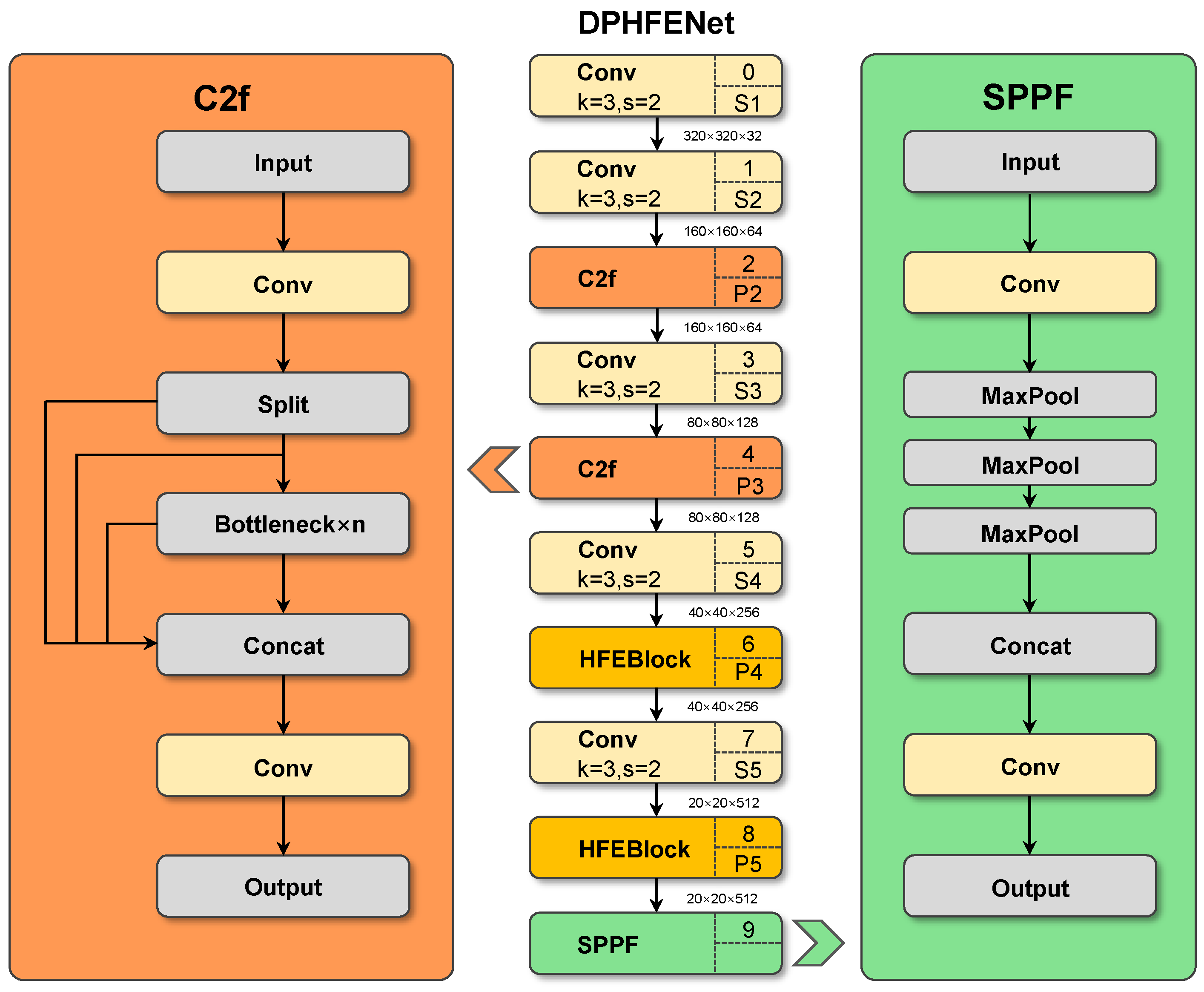

3.2. Dual-Path Hybrid Feature Extractor Network

3.3. Hierarchical Adaptive Fusion Framework

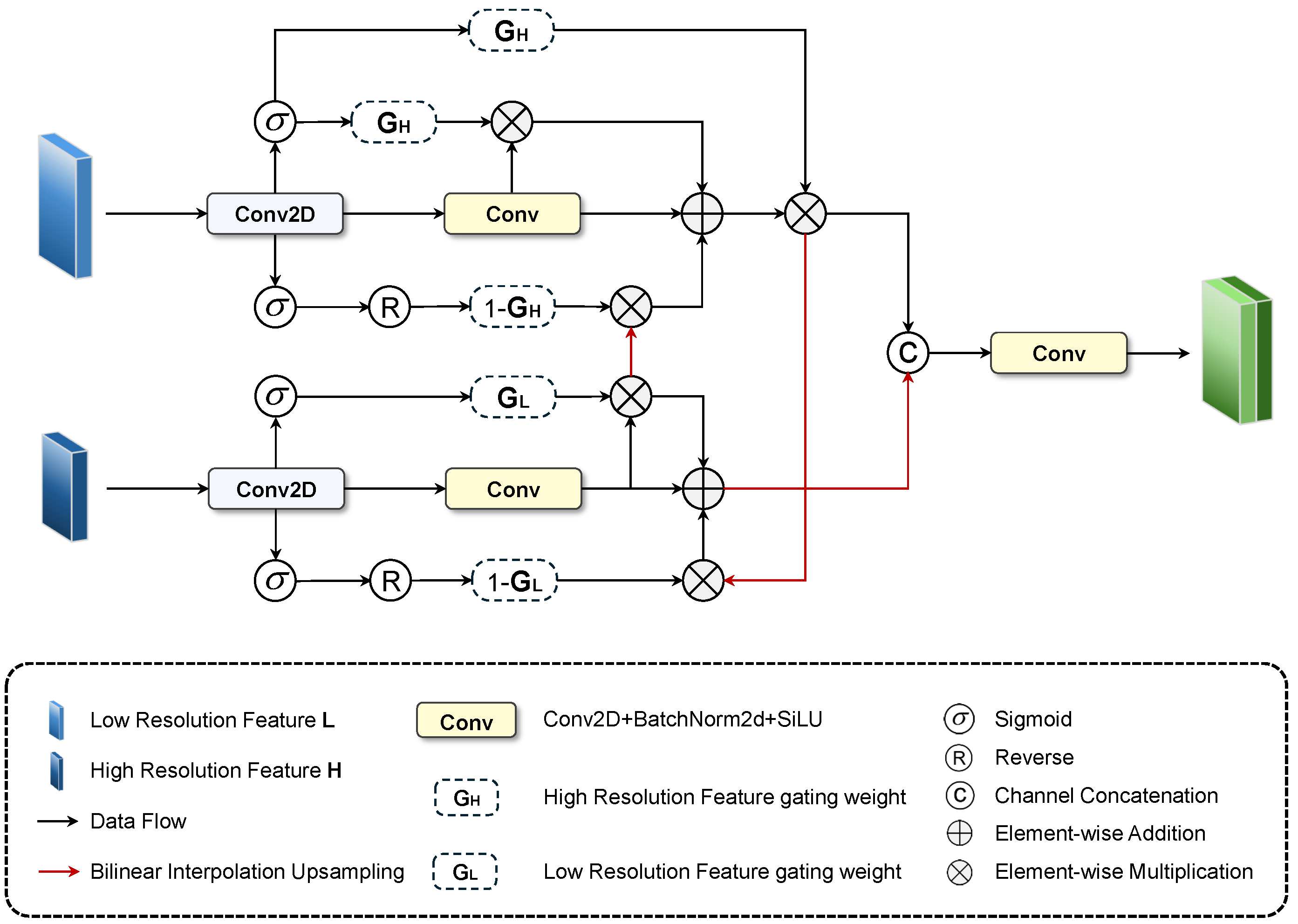

3.3.1. Bidirectional Gated Feature Symbiosis Module

3.3.2. Recurrent Graph-Enhanced Fusion Module

3.3.3. Interlink Fusion Core Module

3.4. Loss Function

4. Experiments and Analysis

4.1. Experimental Setup

4.1.1. Dataset and Training Settings

4.1.2. Evaluation Metrics

4.2. Ablation Study

- Group 1: YOLOv8s (Baseline).

- Group 2: YOLOv8s+P2.

- Group 3: YOLOv8s+P2+HFEBlock.

- Group 4: YOLOv8s+P2+HFEBlock+BGFS+RGEF.

- Group 5: YOLOv8s+P2+HFEBlock+BGFS+RGEF+IFC (Ours).

| Group | P2 | HFEBlock | BGFS+RGEF | IFC | F1 | AP50 | Parameters (M) |
|---|---|---|---|---|---|---|---|
| 1 (Baseline) | × | × | × | × | 0.851 | 0.881 | 11.125971 |
| 2 | ✓ | × | × | × | 0.852 | 0.895 | 6.947563 |
| 3 | ✓ | ✓ | × | × | 0.894 | 0.937 | 6.273452 |
| 4 | ✓ | ✓ | ✓ | × | 0.912 | 0.936 | 5.462284 |
| 5 (Ours) | ✓ | ✓ | ✓ | ✓ | 0.95 | 0.96 | 5.767821 |
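
To make the parameter column of the ablation table easier to read, the following minimal sketch computes how much each group shrinks the model relative to the YOLOv8s baseline. The parameter counts are copied from the table; the helper name `relative_reduction` and the dictionary layout are illustrative choices, not part of the original work.

```python
# Parameter counts (in millions) copied from the ablation table.
ABLATION_PARAMS_M = {
    "1 (Baseline)": 11.125971,
    "2": 6.947563,
    "3": 6.273452,
    "4": 5.462284,
    "5 (Ours)": 5.767821,
}


def relative_reduction(baseline: float, value: float) -> float:
    """Return the fraction of the baseline parameter count that was removed."""
    return (baseline - value) / baseline


baseline = ABLATION_PARAMS_M["1 (Baseline)"]
reductions = {
    group: relative_reduction(baseline, params)
    for group, params in ABLATION_PARAMS_M.items()
}

for group, reduction in reductions.items():
    params = ABLATION_PARAMS_M[group]
    print(f"Group {group}: {params:.3f} M ({reduction:.1%} fewer than baseline)")
```

Read this way, the full model (Group 5) keeps roughly half of the baseline parameters, and adding IFC on top of Group 4 costs about 0.3 M parameters in exchange for the F1 and AP50 gains listed in the table.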

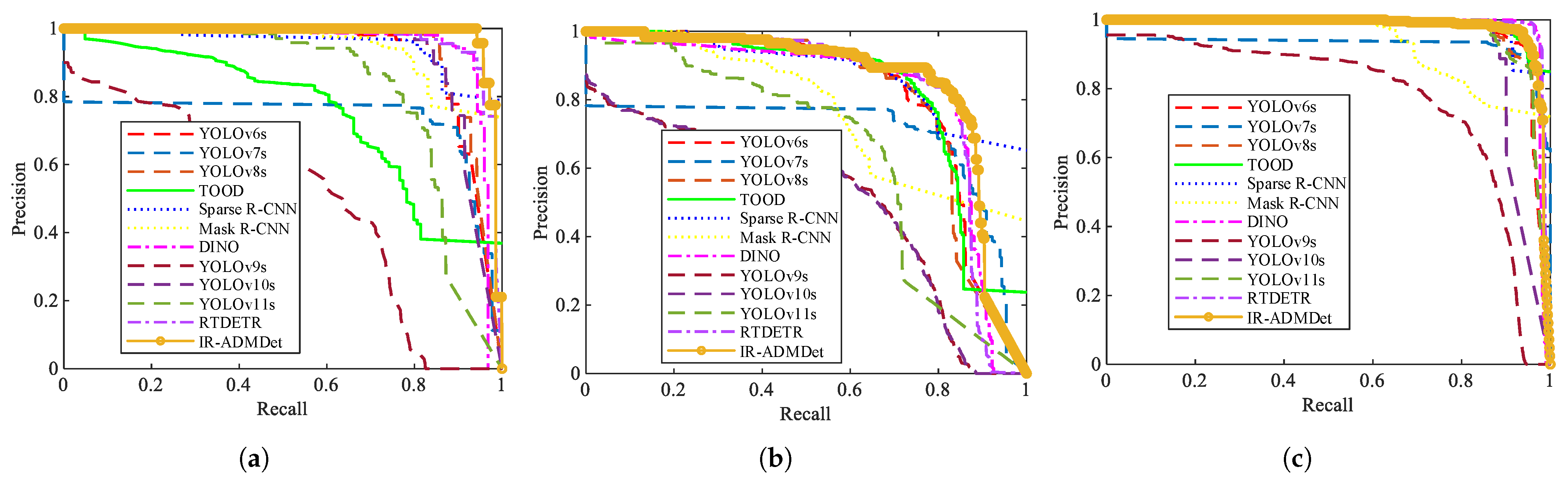

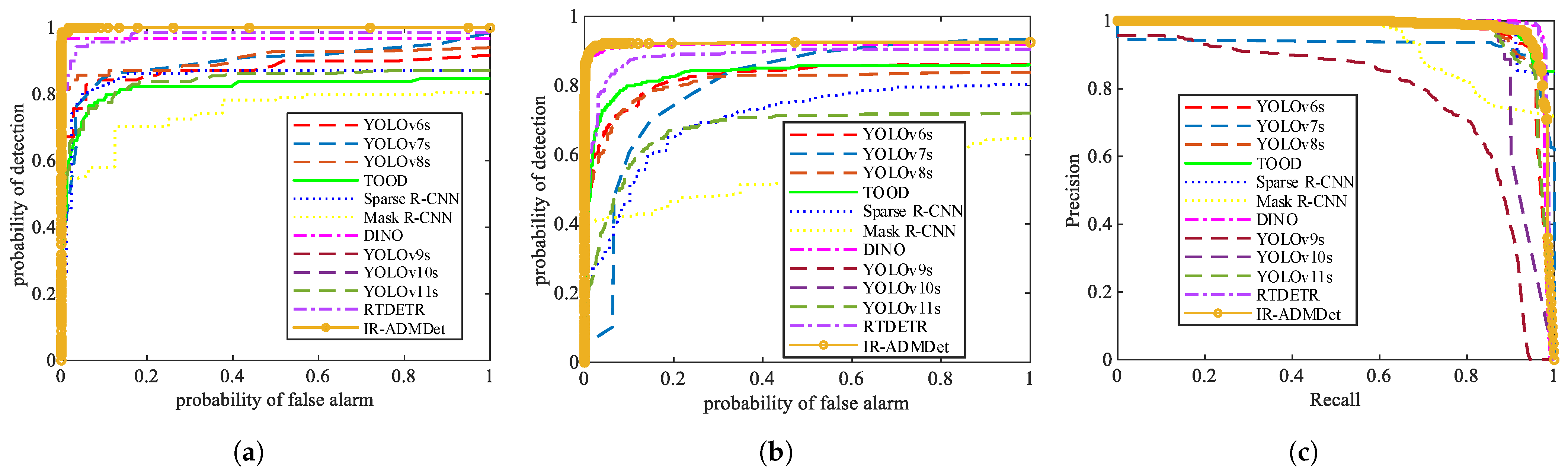

4.3. Comparative Experiment

4.3.1. Quantitative Analysis

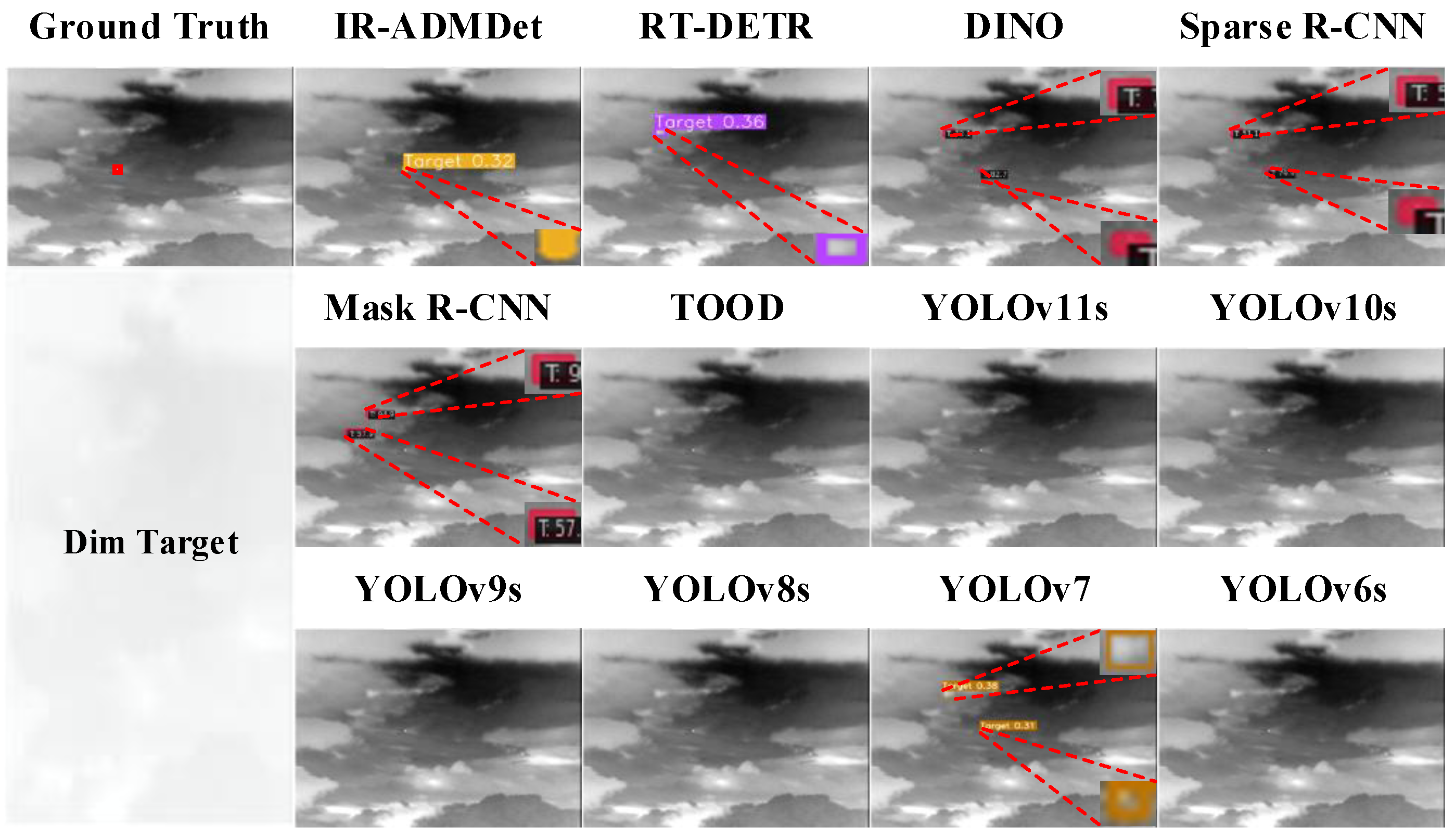

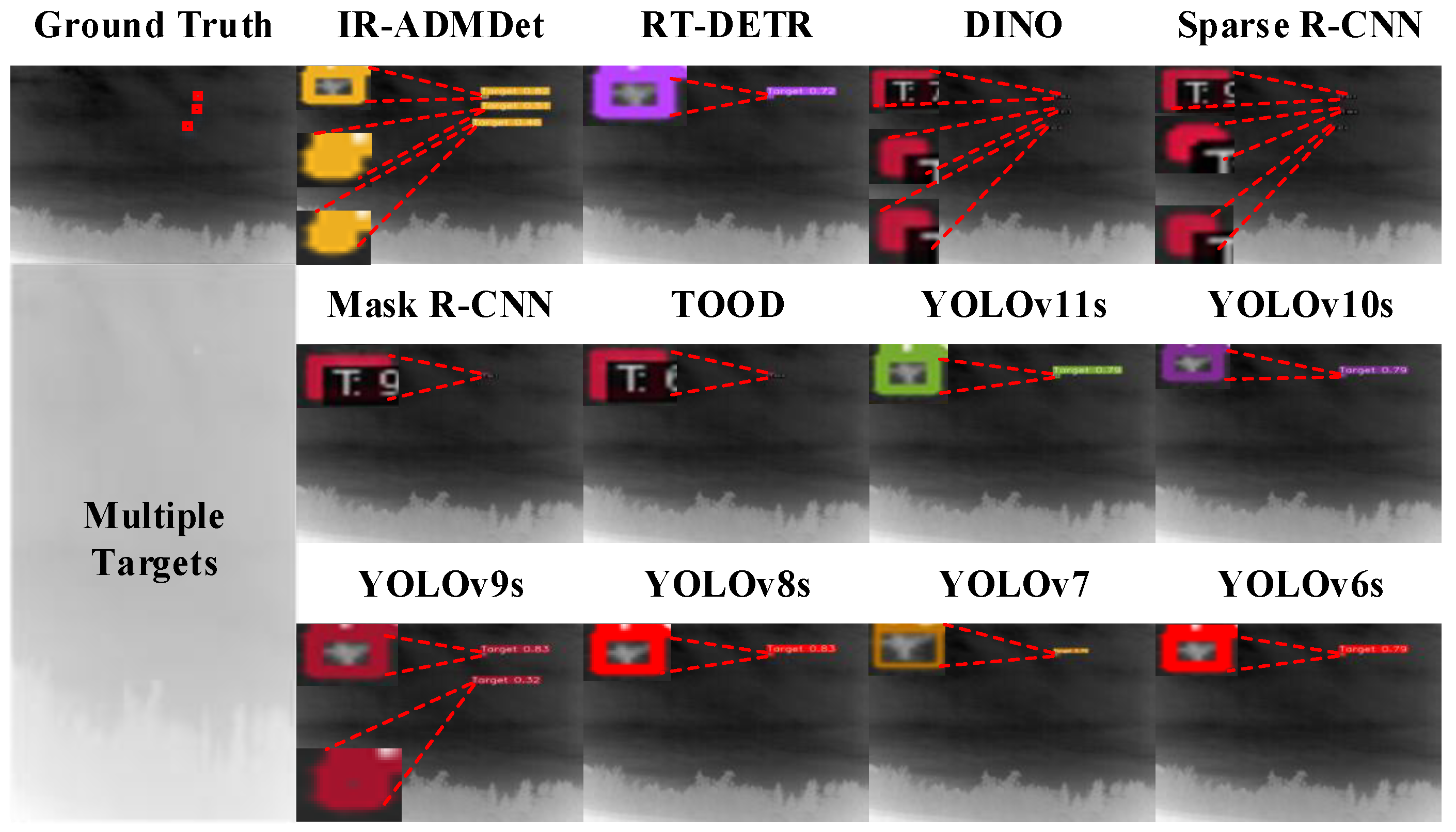

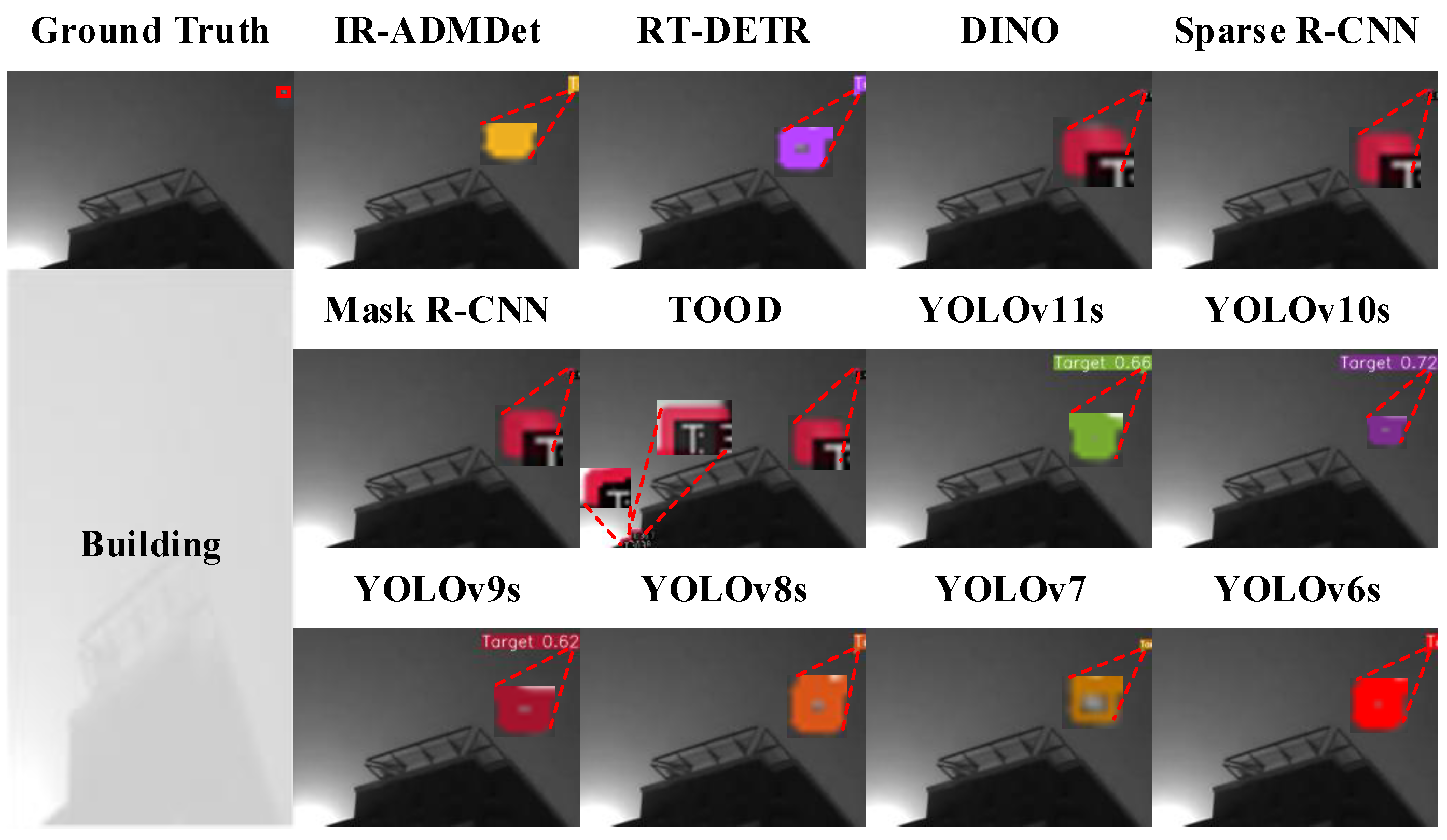

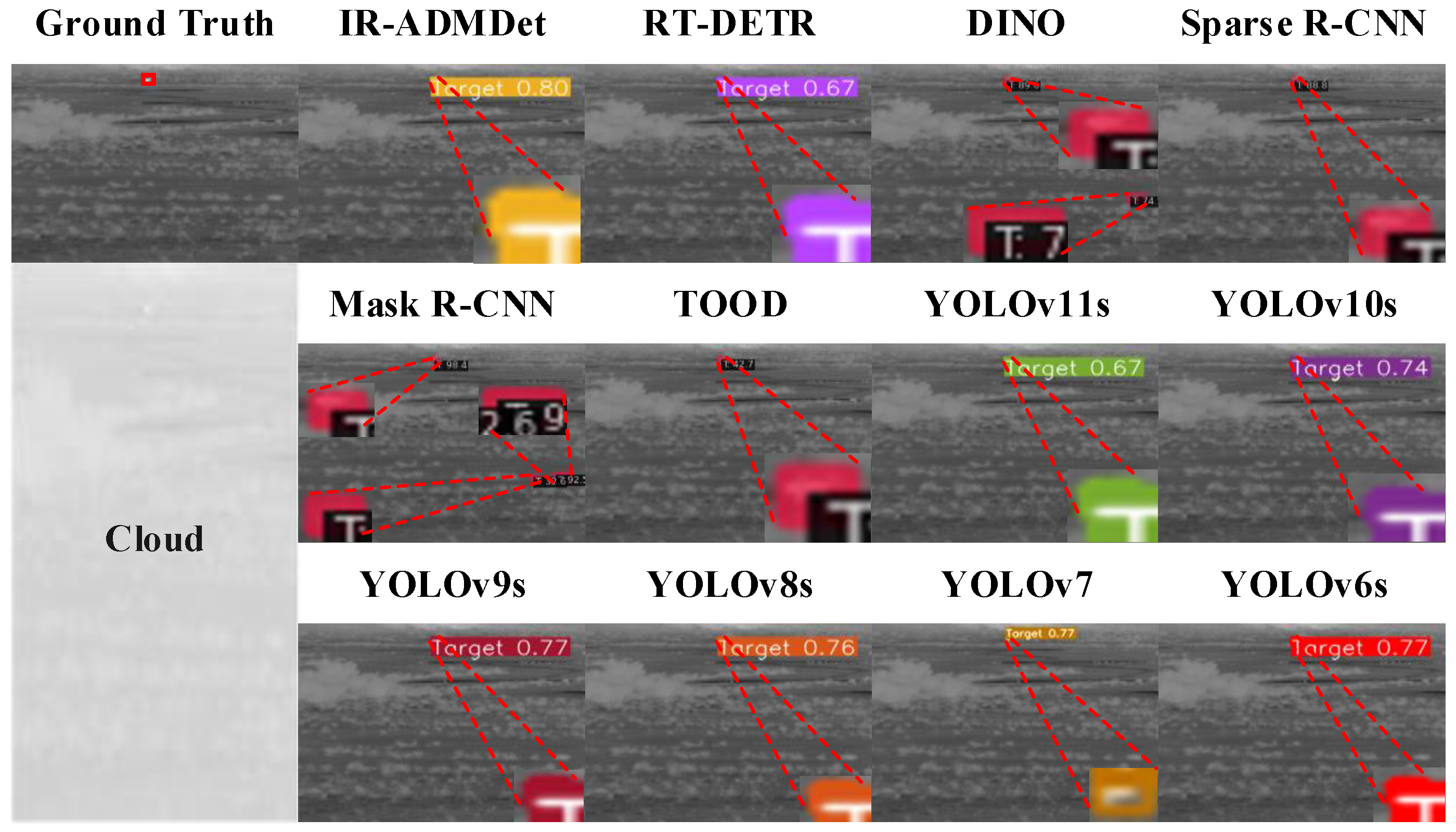

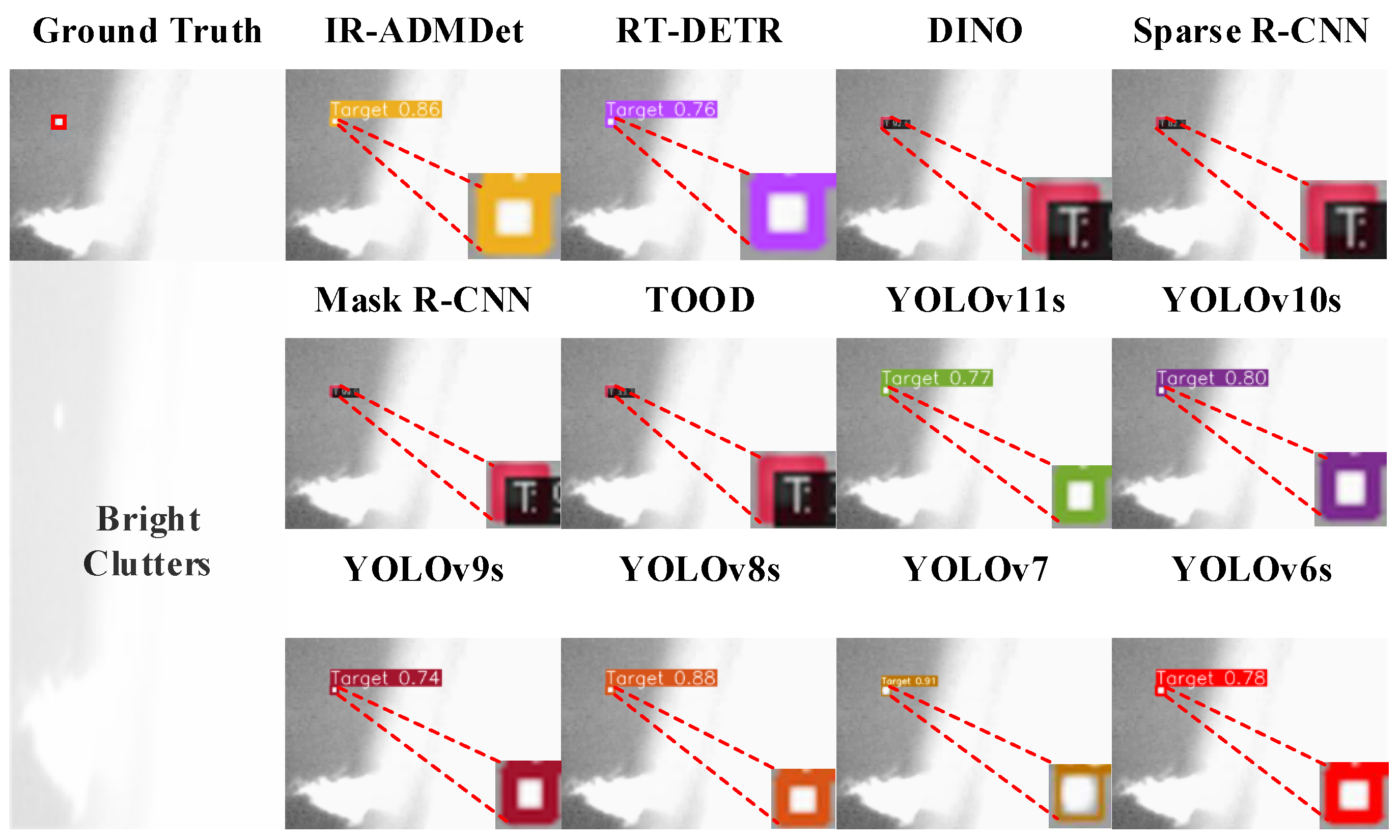

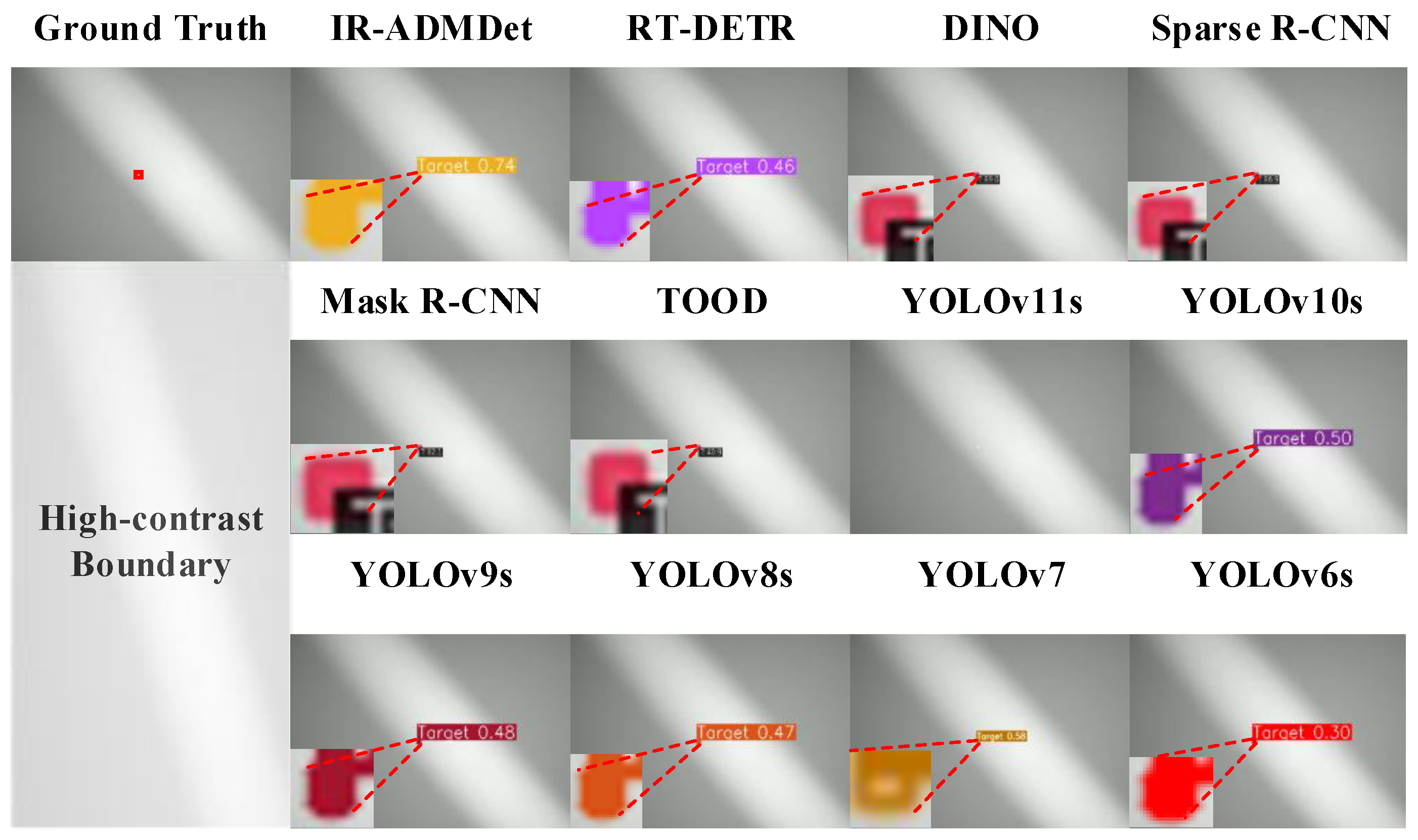

4.3.2. Qualitative Analysis

4.3.3. Comparison with Segmentation-Based Methods

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- [1] Hao, X.; Luo, S.; Chen, M.; He, C.; Wang, T.; Wu, H. Infrared small target detection with super-resolution and YOLO. Opt. Laser Technol. 2024, 177, 111221.

- [2] Tong, Y.; Leng, Y.; Yang, H.; Wang, Z. Target-Focused Enhancement Network for Distant Infrared Dim and Small Target Detection. IEEE Trans. Geosci. Remote Sens. 2024, 62, 4109711.

- [3] Ma, T.; Guo, G.; Li, Z.; Yang, Z. Infrared Small Target Detection Method Based on High-Low-Frequency Semantic Reconstruction. IEEE Geosci. Remote Sens. Lett. 2024, 21, 6012505.

- [4] Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 24–27 June 2014; pp. 580–587.

- [5] Chi, W.; Liu, J.; Wang, X.; Ni, Y.; Feng, R. A Semantic Domain Adaption Framework for Cross-Domain Infrared Small Target Detection. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–13.

- [6] Lin, F.; Bao, K.; Li, Y.; Zeng, D.; Ge, S. Learning Contrast-Enhanced Shape-Biased Representations for Infrared Small Target Detection. IEEE Trans. Image Process. 2024, 33, 3047–3058.

- [7] Sun, Z.; Leng, X.; Zhang, X.; Zhou, Z.; Xiong, B.; Ji, K.; Kuang, G. Arbitrary-Direction SAR Ship Detection Method for Multiscale Imbalance. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5208921.

- [8] Zhang, X.; Zhang, S.; Sun, Z.; Liu, C.; Sun, Y.; Ji, K.; Kuang, G. Cross-Sensor SAR Image Target Detection Based on Dynamic Feature Discrimination and Center-Aware Calibration. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5209417.

- [9] Li, X.; Xu, F.; Liu, F.; Lyu, X.; Tong, Y.; Xu, Z.; Zhou, J. A Synergistical Attention Model for Semantic Segmentation of Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5400916.

- [10] Li, X.; Xu, F.; Li, L.; Xu, N.; Liu, F.; Yuan, C.; Chen, Z.; Lyu, X. AAFormer: Attention-Attended Transformer for Semantic Segmentation of Remote Sensing Images. IEEE Geosci. Remote Sens. Lett. 2024, 21, 5002805.

- [11] Li, X.; Xu, F.; Liu, F.; Tong, Y.; Lyu, X.; Zhou, J. Semantic Segmentation of Remote Sensing Images by Interactive Representation Refinement and Geometric Prior-Guided Inference. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5400318.

- [12] Li, X.; Xu, F.; Tao, F.; Tong, Y.; Gao, H.; Liu, F.; Chen, Z.; Lyu, X. A Cross-Domain Coupling Network for Semantic Segmentation of Remote Sensing Images. IEEE Geosci. Remote Sens. Lett. 2024, 21, 5005105.

- [13] Li, X.; Xu, F.; Yu, A.; Lyu, X.; Gao, H.; Zhou, J. A Frequency Decoupling Network for Semantic Segmentation of Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5607921.

- [14] Kou, R.; Wang, C.; Peng, Z.; Zhao, Z.; Chen, Y.; Han, J.; Huang, F.; Yu, Y.; Fu, Q. Infrared small target segmentation networks: A survey. Pattern Recognit. 2023, 143, 109788.

- [15] Yao, R.; Li, W.; Zhou, Y.; Sun, J.; Yin, Z.; Zhao, J. Dual-Stream Edge-Target Learning Network for Infrared Small Target Detection. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5007314.

- [16] Redmon, J. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

- [17] Qiu, W.; Wang, K.; Li, S.; Zhang, K. YOLO-based Detection Technology for Aerial Infrared Targets. In Proceedings of the 2019 IEEE 9th Annual International Conference on CYBER Technology in Automation, Control, and Intelligent Systems (CYBER), Suzhou, China, 29 July–2 August 2019; pp. 1115–1119.

- [18] Gongguo, Z.; Junhao, W. An improved small target detection method based on Yolo V3. In Proceedings of the 2021 International Conference on Electronics, Circuits and Information Engineering (ECIE), Zhengzhou, China, 22–24 January 2021; pp. 220–223.

- [19] Lin, Z.; Huang, M.; Zhou, Q. Infrared small target detection based on YOLO v4. J. Phys. Conf. Ser. 2023, 2450, 012019.

- [20] Li, S.; Li, Y.; Li, Y.; Li, M.; Xu, X. YOLO-FIRI: Improved YOLOv5 for Infrared Image Object Detection. IEEE Access 2021, 9, 141861–141875.

- [21] Ciocarlan, A.; Le Hegarat-Mascle, S.; Lefebvre, S.; Woiselle, A.; Barbanson, C. A Contrario Paradigm for Yolo-Based Infrared Small Target Detection. In Proceedings of the ICASSP 2024—2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; pp. 5630–5634.

- [22] Cao, L.; Wang, Q.; Luo, Y.; Hou, Y.; Cao, J.; Zheng, W. YOLO-TSL: A lightweight target detection algorithm for UAV infrared images based on Triplet attention and Slim-neck. Infrared Phys. Technol. 2024, 141, 105487.

- [23] Betti, A.; Tucci, M. YOLO-S: A Lightweight and Accurate YOLO-like Network for Small Target Detection in Aerial Imagery. Sensors 2023, 23, 1865.

- [24] Hou, Y.; Tang, B.; Ma, Z.; Wang, J.; Liang, B.; Zhang, Y. YOLO-B: An infrared target detection algorithm based on bi-fusion and efficient decoupled. PLoS ONE 2024, 19, e0298677.

- [25] Jawaharlalnehru, A.; Sambandham, T.; Sekar, V.; Ravikumar, D.; Loganathan, V.; Kannadasan, R.; Khan, A.A.; Wechtaisong, C.; Haq, M.A.; Alhussen, A.; et al. Target Object Detection from Unmanned Aerial Vehicle (UAV) Images Based on Improved YOLO Algorithm. Electronics 2022, 11, 2343.

- [26] Liu, Y.; Li, N.; Cao, L.; Zhang, Y.; Ni, X.; Han, X.; Dai, D. Research on Infrared Dim Target Detection Based on Improved YOLOv8. Remote Sens. 2024, 16, 2878.

- [27] Dai, Y.; Wu, Y.; Zhou, F.; Barnard, K. Asymmetric Contextual Modulation for Infrared Small Target Detection. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 1–6 January 2021; pp. 950–959.

- [28] Dai, Y.; Wu, Y.; Zhou, F.; Barnard, K. Attentional Local Contrast Networks for Infrared Small Target Detection. IEEE Trans. Geosci. Remote Sens. 2021, 59, 9813–9824.

- [29] Li, B.; Xiao, C.; Wang, L.; Wang, Y.; Lin, Z.; Li, M.; An, W.; Guo, Y. Dense Nested Attention Network for Infrared Small Target Detection. IEEE Trans. Image Process. 2023, 32, 1745–1758.

- [30] Hou, Q.; Zhang, L.; Tan, F.; Xi, Y.; Zheng, H.; Li, N. ISTDU-Net: Infrared Small-Target Detection U-Net. IEEE Geosci. Remote Sens. Lett. 2022, 19, 7506205.

- [31] Sun, H.; Bai, J.; Yang, F.; Bai, X. Receptive-Field and Direction Induced Attention Network for Infrared Dim Small Target Detection with a Large-Scale Dataset IRDST. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5000513.

- [32] Dai, Y.; Li, X.; Zhou, F.; Qian, Y.; Chen, Y.; Yang, J. One-Stage Cascade Refinement Networks for Infrared Small Target Detection. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5000917.

- [33] Tong, X.; Su, S.; Wu, P.; Guo, R.; Wei, J.; Zuo, Z.; Sun, B. MSAFFNet: A Multiscale Label-Supervised Attention Feature Fusion Network for Infrared Small Target Detection. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5002616.

- [34] Shi, Q.; Zhang, C.; Chen, Z.; Lu, F.; Ge, L.; Wei, S. An infrared small target detection method using coordinate attention and feature fusion. Infrared Phys. Technol. 2023, 131, 104614.

- [35] Xu, H.; Zhong, S.; Zhang, T.; Zou, X. Multiscale Multilevel Residual Feature Fusion for Real-Time Infrared Small Target Detection. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5002116.

- [36] Fang, H.; Ding, L.; Wang, L.; Chang, Y.; Yan, L.; Han, J. Infrared Small UAV Target Detection Based on Depthwise Separable Residual Dense Network and Multiscale Feature Fusion. IEEE Trans. Instrum. Meas. 2022, 71, 5019120.

- [37] Zhang, P.; Wang, Z.; Bao, G.; Hu, J.; Shi, T.; Sun, G.; Gong, J. Multiscale Progressive Fusion Filter Network for Infrared Small Target Detection. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5602314.

- [38] Zhang, M.; Li, B.; Wang, T.; Bai, H.; Yue, K.; Li, Y. CHFNet: Curvature Half-Level Fusion Network for Single-Frame Infrared Small Target Detection. Remote Sens. 2023, 15, 1573.

- [39] Zuo, Z.; Tong, X.; Wei, J.; Su, S.; Wu, P.; Guo, R.; Sun, B. AFFPN: Attention Fusion Feature Pyramid Network for Small Infrared Target Detection. Remote Sens. 2022, 14, 3412.

- [40] Liu, S.; Chen, P.; Woźniak, M. Image Enhancement-Based Detection with Small Infrared Targets. Remote Sens. 2022, 14, 3232.

- [41] Chen, Y.; Li, L.; Liu, X.; Su, X. A Multi-Task Framework for Infrared Small Target Detection and Segmentation. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5003109.

- [42] Zhao, M.; Cheng, L.; Yang, X.; Feng, P.; Liu, L.; Wu, N. TBC-Net: A real-time detector for infrared small target detection using semantic constraint. arXiv 2019, arXiv:2001.05852.

- [43] Liu, F.; Gao, C.; Chen, F.; Meng, D.; Zuo, W.; Gao, X. Infrared Small and Dim Target Detection with Transformer Under Complex Backgrounds. IEEE Trans. Image Process. 2023, 32, 5921–5932.

- [44] Ma, X.; Dai, X.; Bai, Y.; Wang, Y.; Fu, Y. Rewrite the Stars. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 5694–5703.

- [45] Zhang, M.; Zhang, R.; Yang, Y.; Bai, H.; Zhang, J.; Guo, J. ISNet: Shape Matters for Infrared Small Target Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 877–886.

- [46] Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. DETRs Beat YOLOs on Real-time Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 16965–16974.

- [47] Zhang, H.; Li, F.; Liu, S.; Zhang, L.; Su, H.; Zhu, J.; Ni, L.M.; Shum, H.Y. Dino: Detr with improved denoising anchor boxes for end-to-end object detection. arXiv 2022, arXiv:2203.03605.

- [48] Sun, P.; Zhang, R.; Jiang, Y.; Kong, T.; Xu, C.; Zhan, W.; Tomizuka, M.; Li, L.; Yuan, Z.; Wang, C.; et al. Sparse R-CNN: End-to-End Object Detection with Learnable Proposals. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 14454–14463.

- [49] He, K.; Gkioxari, G.; Dollar, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017.

- [50] Feng, C.; Zhong, Y.; Gao, Y.; Scott, M.R.; Huang, W. TOOD: Task-aligned One-stage Object Detection. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Los Alamitos, CA, USA, 10–17 October 2021; pp. 3490–3499.

- [51] Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W.; et al. YOLOv6: A single-stage object detection framework for industrial applications. arXiv 2022, arXiv:2209.02976.

- [52] Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable Bag-of-Freebies Sets New State-of-the-Art for Real-Time Object Detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475.

- [53] Wang, C.Y.; Yeh, I.H.; Mark Liao, H.Y. YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information. In Computer Vision—ECCV 2024, Proceedings of the 18th European Conference, Milan, Italy, 29 September–4 October 2024; Leonardis, A., Ricci, E., Roth, S., Russakovsky, O., Sattler, T., Varol, G., Eds.; Springer: Cham, Switzerland, 2025; pp. 1–21.

- [54] Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. YOLOv10: Real-Time End-to-End Object Detection. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 9–15 December 2024; Curran Associates, Inc.: Newry, UK, 2024; Volume 37, pp. 107984–108011.

- [55] Mou, X.; Lei, S.; Zhou, X. YOLO-FR: A YOLOv5 Infrared Small Target Detection Algorithm Based on Feature Reassembly Sampling Method. Sensors 2023, 23, 2710.

- [56] Yue, T.; Lu, X.; Cai, J.; Chen, Y.; Chu, S. YOLO-MST: Multiscale deep learning method for infrared small target detection based on super-resolution and YOLO. Opt. Laser Technol. 2025, 187, 112835.

- [57] Ren, D.; Li, J.; Han, M.; Shu, M. DNANet: Dense Nested Attention Network for Single Image Dehazing. In Proceedings of the ICASSP 2021—2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 2035–2039.

| Module | Number | Output Resolution (Pixels) | Output Channel | s |
|---|---|---|---|---|
| Conv | 1 | 320 × 320 | 32 | - |
| Conv | 1 | 160 × 160 | 32 | - |
| C2f | 3 | 160 × 160 | 32 | - |
| Conv | 1 | 80 × 80 | 64 | - |
| C2f | 6 | 80 × 80 | 64 | - |
| Conv | 1 | 40 × 40 | 128 | - |
| HFEBlock | 6 | 40 × 40 | 128 | 0.25 |
| Conv | 1 | 20 × 20 | 256 | - |
| HFEBlock | 3 | 20 × 20 | 256 | 0.5 |
| SPPF | 1 | 20 × 20 | 256 | - |
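
The stage layout in the table above can be traced with a few lines of plain Python. This is only a sketch of the resolution bookkeeping, not the network itself. It assumes a 640 × 640 input and that every `Conv` stage downsamples by a factor of 2; neither is stated in the table, but together they reproduce its resolution column. The names `STAGES`, `INPUT_SIZE`, and `trace_resolutions` are hypothetical.

```python
# Backbone stages as (module, repeats, output_channels), copied from the table.
STAGES = [
    ("Conv", 1, 32),
    ("Conv", 1, 32),
    ("C2f", 3, 32),
    ("Conv", 1, 64),
    ("C2f", 6, 64),
    ("Conv", 1, 128),
    ("HFEBlock", 6, 128),
    ("Conv", 1, 256),
    ("HFEBlock", 3, 256),
    ("SPPF", 1, 256),
]

INPUT_SIZE = 640          # assumption: input resolution is not given in the table
DOWNSAMPLING = {"Conv"}   # assumption: each Conv stage uses stride 2


def trace_resolutions(stages, input_size):
    """Walk the stage list and record the spatial size after each stage."""
    size = input_size
    rows = []
    for name, repeats, channels in stages:
        if name in DOWNSAMPLING:
            size //= 2  # a stride-2 stage halves height and width
        rows.append((name, repeats, size, channels))
    return rows


rows = trace_resolutions(STAGES, INPUT_SIZE)
for name, repeats, size, channels in rows:
    print(f"{name:<9} x{repeats}  {size} x {size}  {channels} channels")
```

Under these assumptions, the two HFEBlock stages operate only on the 40 × 40 and 20 × 20 feature maps, while the higher-resolution stages use C2f.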

| Name | Configuration |
|---|---|
| Operating system | Windows 11 |
| Computing platform | CUDA 11.7 |
| CPU | AMD Ryzen 7 5800H |
| GPU | NVIDIA GeForce RTX 3060 |
| GPU memory size | 6 GB |

| k | P | R | F1 | AP50 |
|---|---|---|---|---|
| 3 | 0.959 | 0.886 | 0.921 | 0.94 |
| 5 | 0.948 | 0.952 | 0.95 | 0.96 |
| 7 | 0.96 | 0.926 | 0.943 | 0.957 |
| 9 | 0.946 | 0.9 | 0.922 | 0.953 |
| 11 | 0.941 | 0.906 | 0.923 | 0.943 |
| 13 | 0.945 | 0.909 | 0.927 | 0.941 |
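
The F1 column in the table above follows from the P and R columns if F1 is taken as the standard harmonic mean of precision and recall, F1 = 2PR / (P + R). The short sketch below recomputes it for each value of k and picks the setting with the highest F1. The table values are copied as printed; the names `K_ABLATION` and `f1_score` are illustrative.

```python
# (precision, recall, reported F1) for each k, copied from the table.
K_ABLATION = {
    3: (0.959, 0.886, 0.921),
    5: (0.948, 0.952, 0.95),
    7: (0.96, 0.926, 0.943),
    9: (0.946, 0.9, 0.922),
    11: (0.941, 0.906, 0.923),
    13: (0.945, 0.909, 0.927),
}


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    total = precision + recall
    return 2.0 * precision * recall / total if total > 0 else 0.0


for k, (p, r, reported) in K_ABLATION.items():
    print(f"k={k:>2}: recomputed F1={f1_score(p, r):.3f}, reported F1={reported}")

# Setting with the best recomputed F1.
best_k = max(K_ABLATION, key=lambda k: f1_score(*K_ABLATION[k][:2]))
print("best k by F1:", best_k)
```

The recomputed values agree with the reported column to three decimal places. Because the harmonic mean penalizes an imbalance between P and R, k = 5 scores highest even though k = 3 and k = 7 have slightly higher precision.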

| Methods | SIRSTv2 P | SIRSTv2 R | SIRSTv2 F1 | SIRSTv2 AP50 | IRSTD-1k P | IRSTD-1k R | IRSTD-1k F1 | IRSTD-1k AP50 | NUDT-SIRST P | NUDT-SIRST R | NUDT-SIRST F1 | NUDT-SIRST AP50 | Parameters (M) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| RT-DETR [46] | 0.958 | 0.911 | 0.934 | 0.94 | 0.824 | 0.827 | 0.825 | 0.83 | 0.99 | 0.959 | 0.974 | 0.98 | 20.184 |
| DINO [47] | 0.927 | 0.923 | 0.924 | 0.948 | 0.836 | 0.816 | 0.826 | 0.826 | 0.983 | 0.964 | 0.973 | 0.978 | 47.54 |
| Sparse R-CNN [48] | 0.897 | 0.863 | 0.88 | 0.888 | 0.826 | 0.743 | 0.782 | 0.81 | 0.986 | 0.91 | 0.946 | 0.944 | 77.8 |
| Mask R-CNN [49] | 0.923 | 0.79 | 0.851 | 0.888 | 0.807 | 0.561 | 0.662 | 0.691 | 0.811 | 0.814 | 0.812 | 0.877 | 43.991 |
| TOOD [50] | 0.689 | 0.661 | 0.675 | 0.704 | 0.839 | 0.745 | 0.789 | 0.809 | 0.952 | 0.925 | 0.938 | 0.958 | 32.018 |
| YOLOv6s [51] | 0.911 | 0.798 | 0.851 | 0.886 | 0.847 | 0.718 | 0.777 | 0.818 | 0.926 | 0.938 | 0.932 | 0.963 | 16.298 |
| YOLOv7 [52] | 0.898 | 0.71 | 0.793 | 0.792 | 0.796 | 0.704 | 0.747 | 0.749 | 0.945 | 0.894 | 0.919 | 0.941 | 6.195 |
| YOLOv8s | 0.908 | 0.801 | 0.851 | 0.881 | 0.826 | 0.743 | 0.782 | 0.81 | 0.948 | 0.893 | 0.92 | 0.962 | 11.126 |
| YOLOv9s [53] | 0.92 | 0.75 | 0.826 | 0.873 | 0.797 | 0.773 | 0.785 | 0.805 | 0.927 | 0.952 | 0.939 | 0.965 | 7.167 |
| YOLOv10s [54] | 0.881 | 0.798 | 0.837 | 0.885 | 0.829 | 0.711 | 0.765 | 0.817 | 0.927 | 0.899 | 0.913 | 0.965 | 7.218 |
| YOLOv11s | 0.908 | 0.797 | 0.857 | 0.888 | 0.8 | 0.728 | 0.762 | 0.801 | 0.849 | 0.902 | 0.925 | 0.964 | 9.413 |
| YOLO-FR [55] | 0.933 | 0.912 | 0.922 | 0.923 | 0.812 | 0.811 | 0.811 | 0.815 | 0.954 | 0.908 | 0.93 | 0.933 | 8.336 |
| YOLO-MST [56] | 0.941 | 0.925 | 0.933 | 0.935 | 0.825 | 0.819 | 0.822 | 0.831 | 0.971 | 0.911 | 0.94 | 0.947 | 12.7 |
| IR-ADMDet (Ours) | 0.948 | 0.952 | 0.95 | 0.96 | 0.831 | 0.823 | 0.827 | 0.852 | 0.963 | 0.938 | 0.95 | 0.978 | 5.768 |

| Methods | SIRSTv2 P | SIRSTv2 R | SIRSTv2 F1 | IRSTD-1k P | IRSTD-1k R | IRSTD-1k F1 | NUDT-SIRST P | NUDT-SIRST R | NUDT-SIRST F1 |
|---|---|---|---|---|---|---|---|---|---|
| ACM [27] | 0.721 | 0.777 | 0.748 | 0.679 | 0.757 | 0.716 | 0.706 | 0.869 | 0.779 |
| ALCNet [28] | 0.838 | 0.665 | 0.741 | 0.700 | 0.820 | 0.755 | 0.809 | 0.797 | 0.803 |
| DNANet [57] | 0.876 | 0.863 | 0.869 | 0.820 | 0.726 | 0.770 | 0.954 | 0.959 | 0.956 |
| ISTDU-Net [30] | 0.852 | 0.796 | 0.823 | 0.780 | 0.770 | 0.775 | 0.947 | 0.941 | 0.944 |
| RDIAN [31] | 0.899 | 0.720 | 0.800 | 0.828 | 0.670 | 0.741 | 0.917 | 0.882 | 0.900 |
| OSCAR [32] | 0.873 | 0.742 | 0.802 | 0.769 | 0.760 | 0.764 | 0.900 | 0.927 | 0.913 |
| IR-ADMDet (Ours) | 0.948 | 0.952 | 0.950 | 0.831 | 0.823 | 0.827 | 0.963 | 0.938 | 0.950 |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, N.; Wei, D. IR-ADMDet: An Anisotropic Dynamic-Aware Multi-Scale Network for Infrared Small Target Detection. Remote Sens. 2025, 17, 1694. https://doi.org/10.3390/rs17101694
