Detection of Invasive Species (Siam Weed) Using Drone-Based Imaging and YOLO Deep Learning Model

Abstract

1. Introduction

2. Materials and Methods

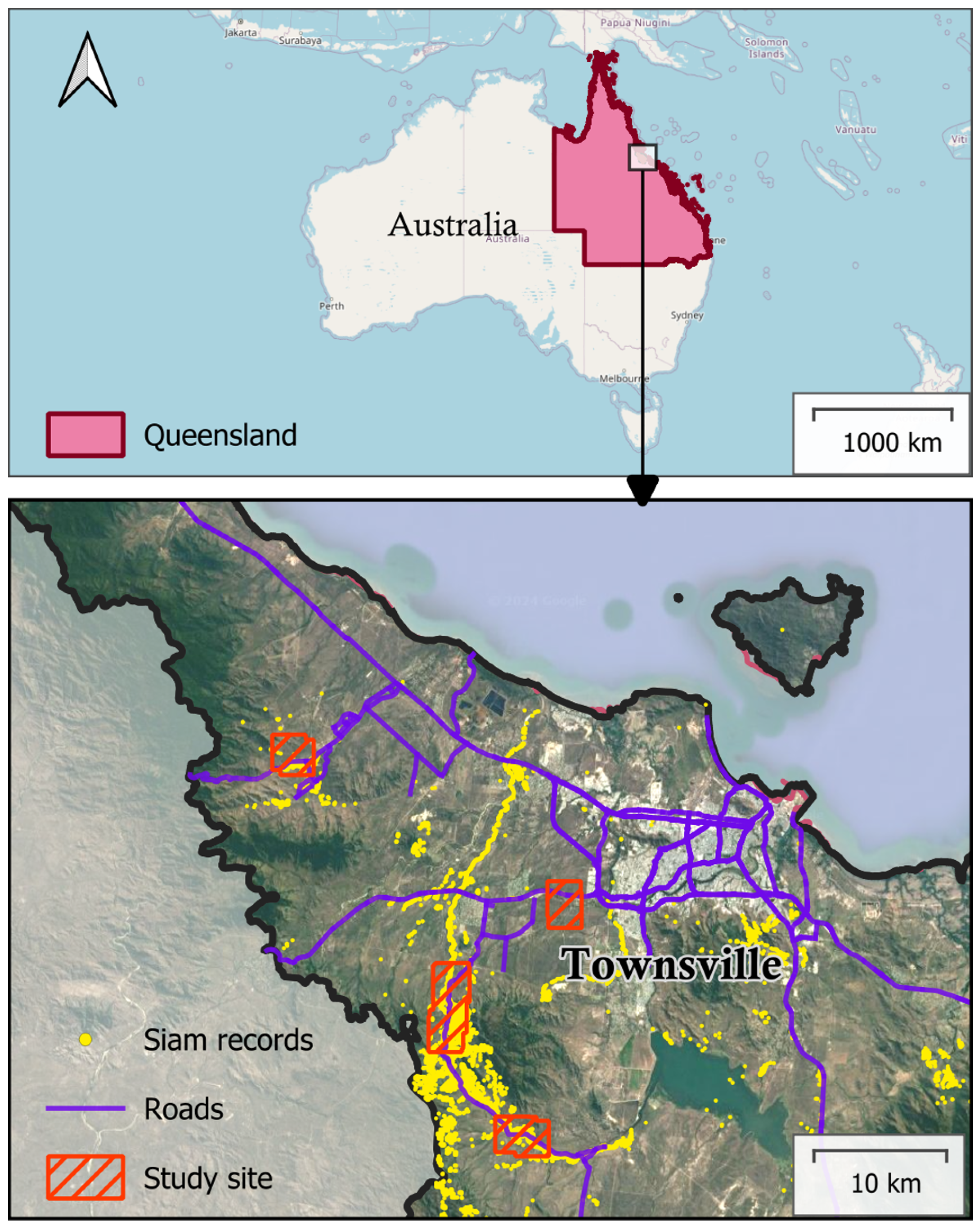

2.1. Study Area

2.2. Data Capture

2.3. Detection Model

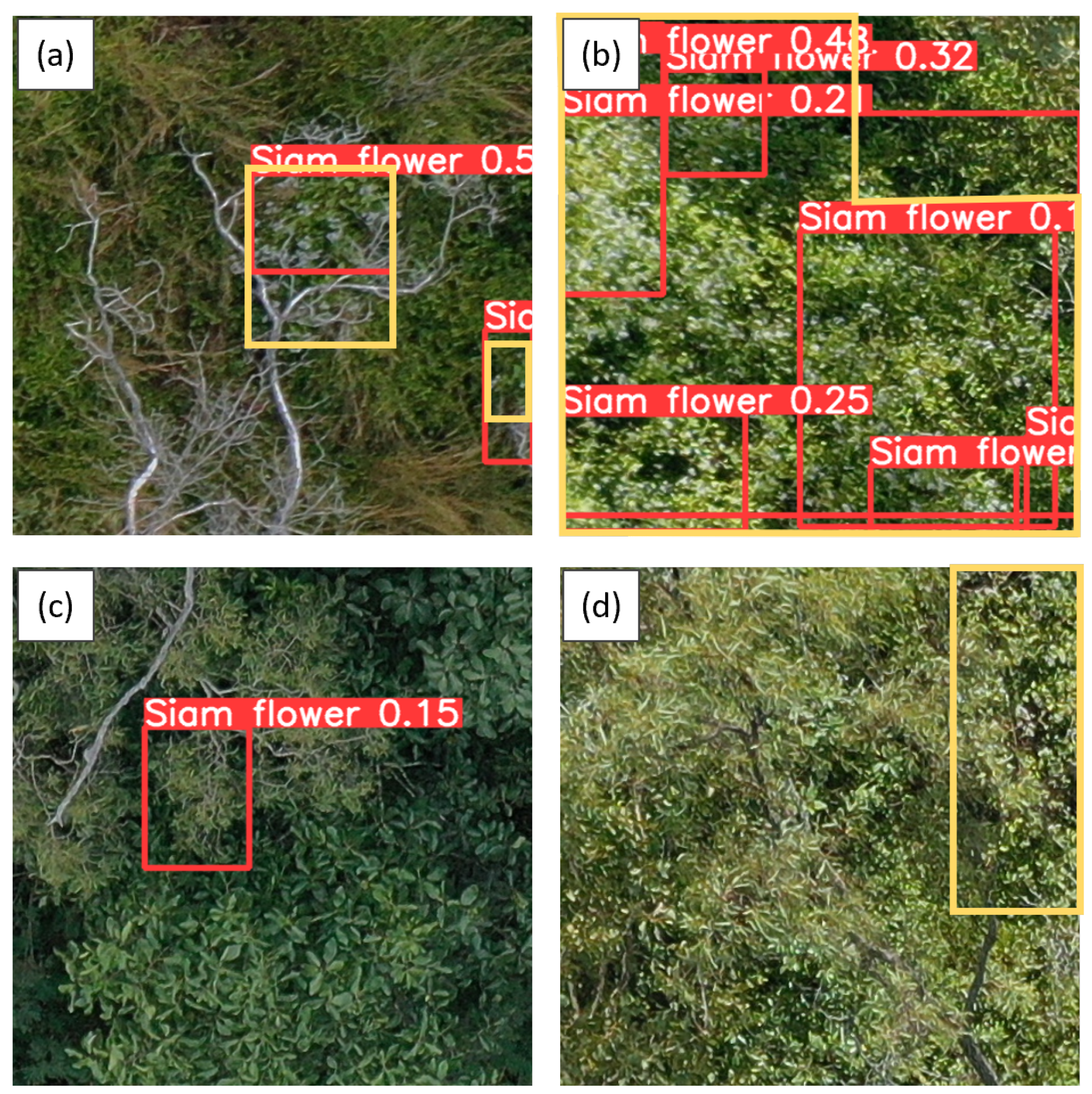

2.4. Validation of the Model

2.5. Accuracy Metrics

3. Results

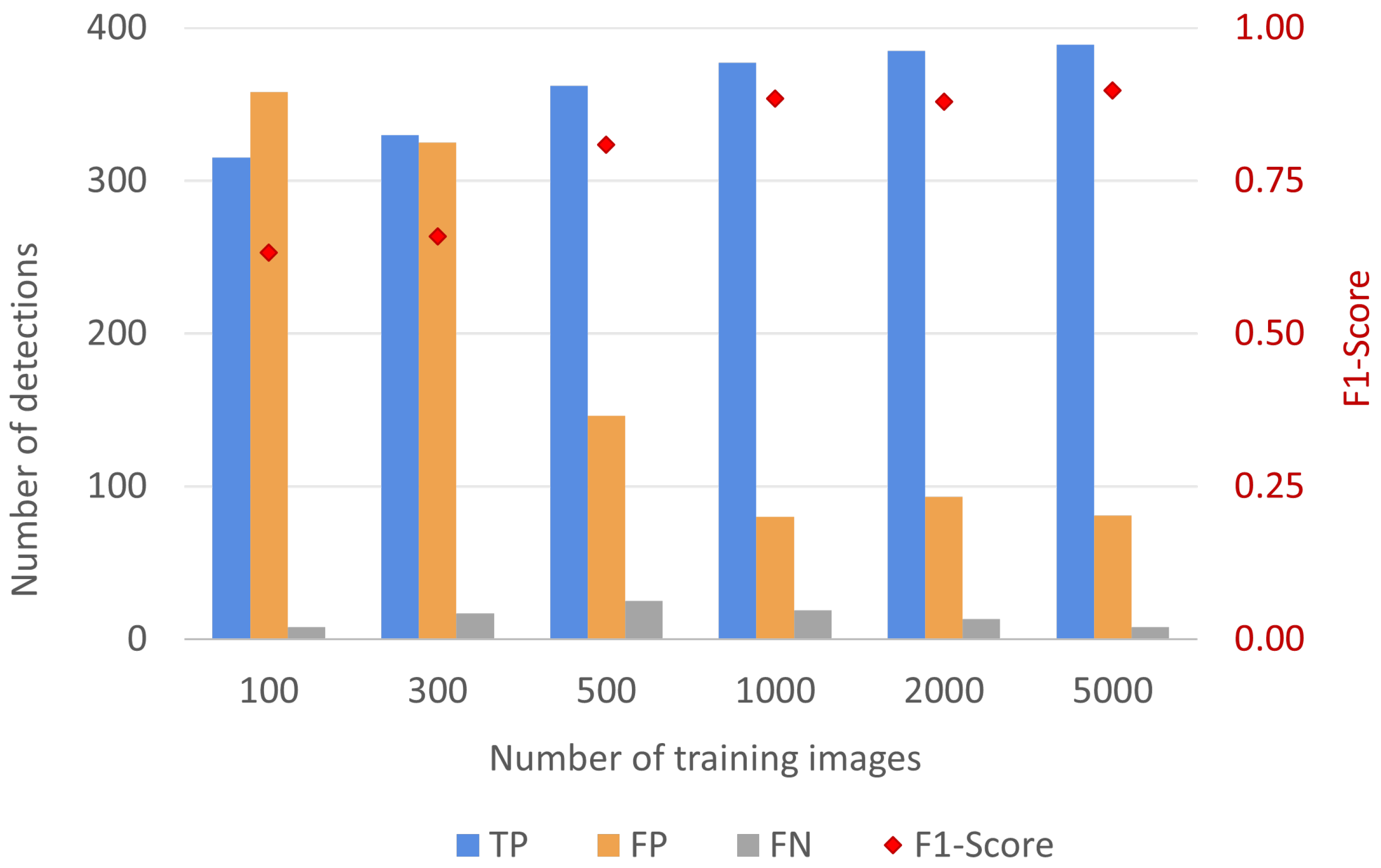

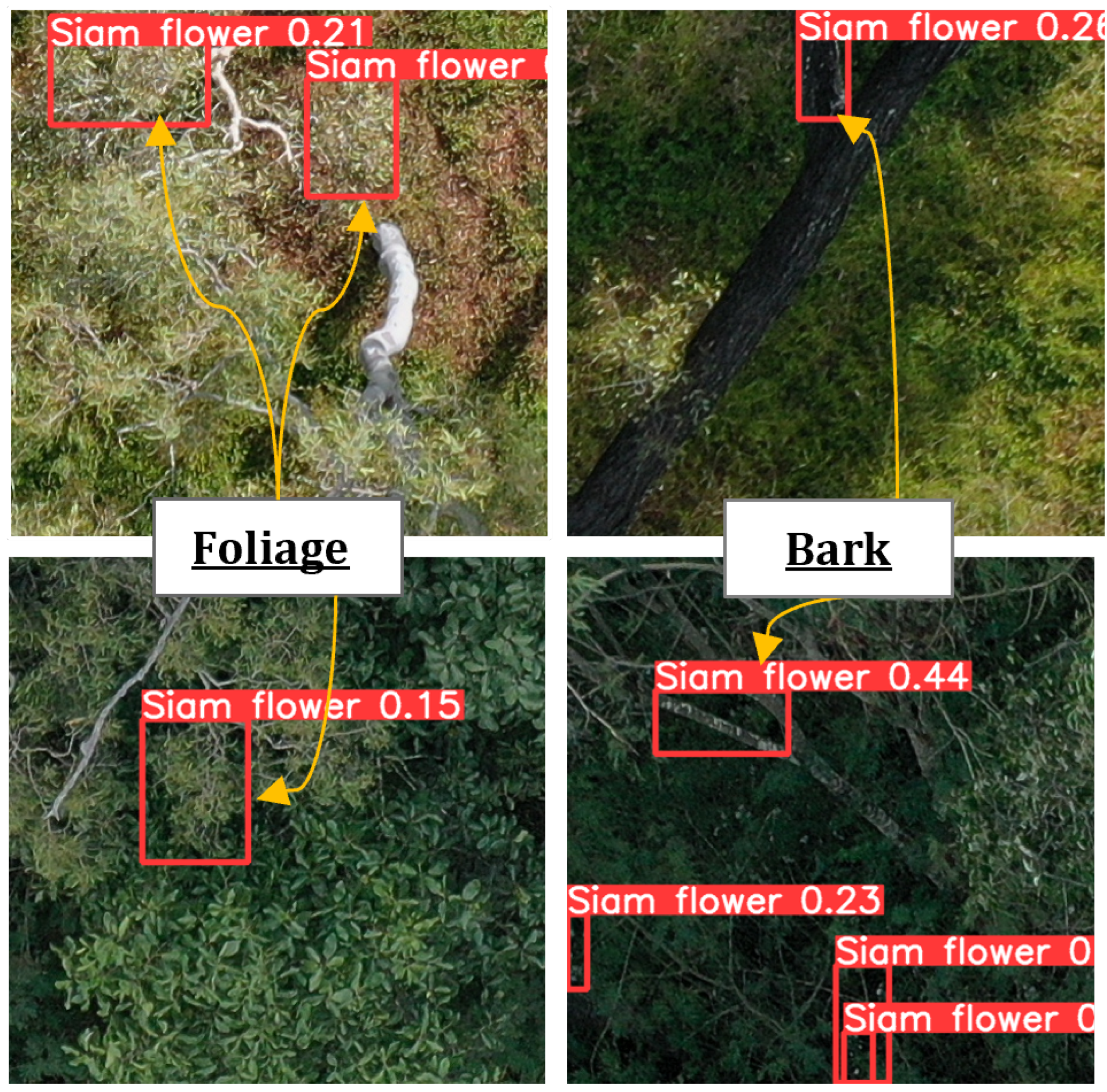

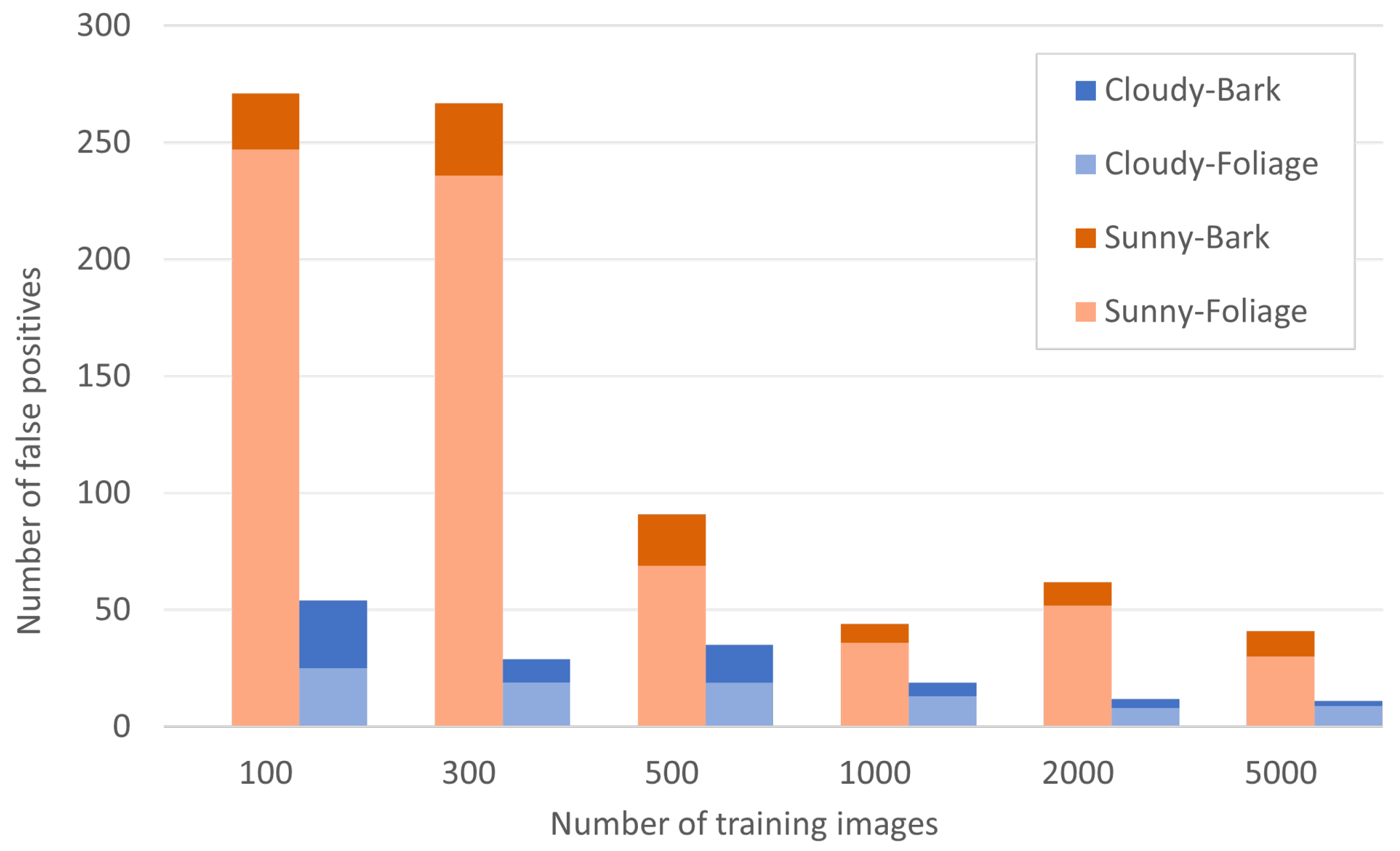

3.1. Effect of the Number of Input Training Images

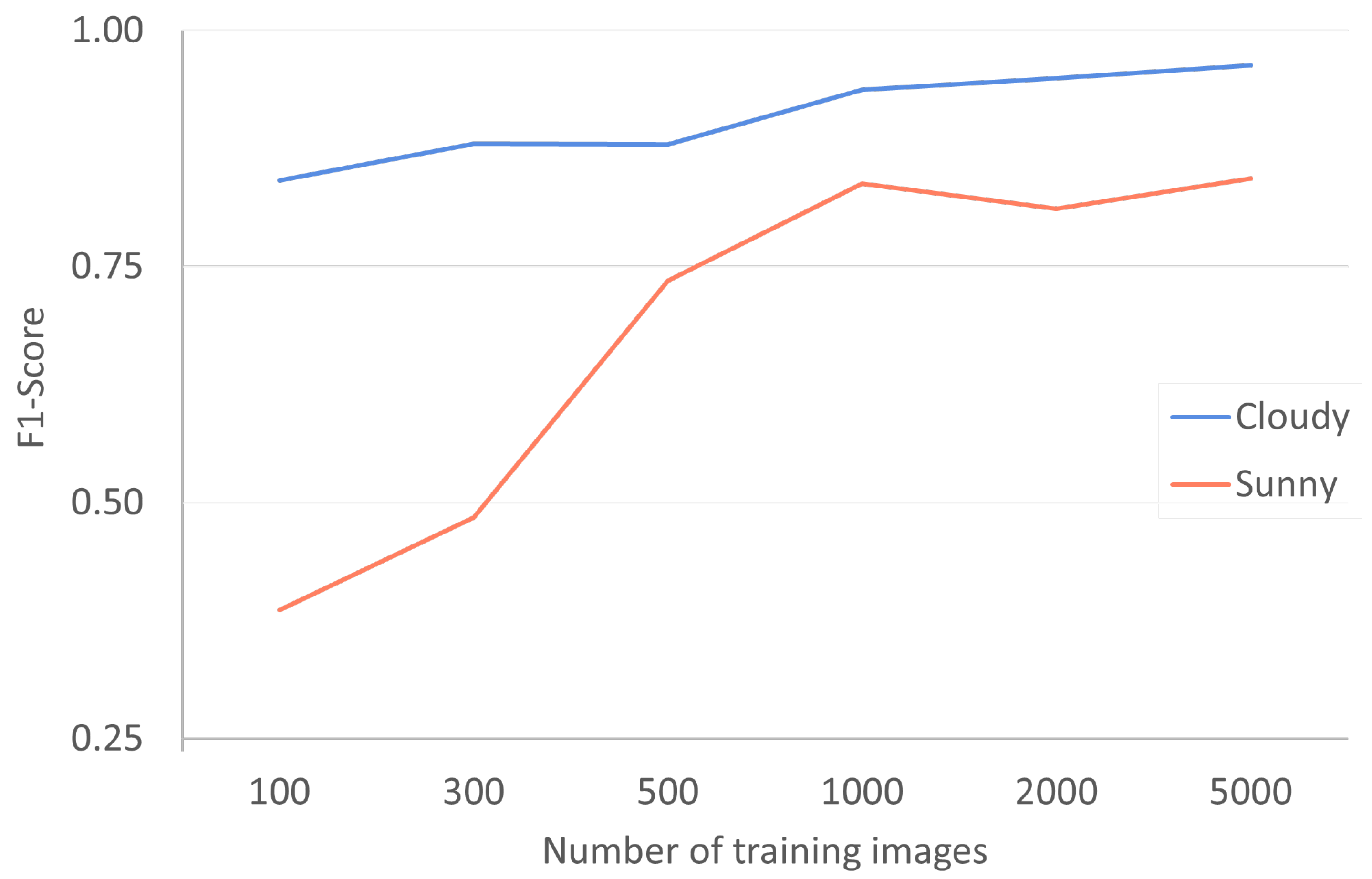

3.2. Effect of Solar Illumination

3.3. The Effect of Model Complexity

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Date | Cloud Cover [okta] | Wind Speed [m/s] |
|---|---|---|
| 21 June | 0–0 | 7–19 |
| 22 June | 0–1 | 0–28 |
| 23 June | 1–0 | 15–30 |
| 24 June | 8–8 | 11–13 |
| 25 June | 0–5 | 7–22 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Gautam, D.; Mawardi, Z.; Elliott, L.; Loewensteiner, D.; Whiteside, T.; Brooks, S. Detection of Invasive Species (Siam Weed) Using Drone-Based Imaging and YOLO Deep Learning Model. Remote Sens. 2025, 17, 120. https://doi.org/10.3390/rs17010120