Abstract

Conventional synthetic-aperture radar (SAR) imaging produces sharp images of stationary scenes, but moving targets are often blurred because their Doppler spectra differ from those of stationary scatterers. To address this problem, this study introduces an optical approach for imaging moving targets. A spatial light modulator (SLM) modulates the phase of the SAR data onto a light beam, and the propagation path is designed so that the free propagation of light compensates for the phase differences in the SAR data, bringing the targets into focus. Simulations and experiments show that the method achieves better focusing than conventional digital imaging techniques. In addition, the processing delay is only the propagation time of the light, and the simple optical path avoids complex assembly and alignment. This approach opens up new possibilities for SAR imaging of moving targets, with potential applications in moving-target extraction, separation, and velocity estimation.

1. Introduction

Synthetic-aperture radar (SAR) is an active observation system with all-day, all-weather imaging capability. Its applications include geological exploration, agricultural monitoring, and terrain mapping [,,,,,,,,]. In geological exploration, for example, SAR can map and analyze Earth's subsurface structures with precision: its penetrating radar waves can reveal geological features hidden beneath the surface, such as faults and mineral deposits, making it a vital tool for resource exploration and disaster management. SAR sensors can also detect subtle changes in terrain inclination caused by adverse geological formations and can provide early warning of collapse several days before a landslide []. SAR can be mounted on various moving platforms, such as aircraft and satellites. As the platform moves, the radar transmits pulses from successive positions; the time interval between two transmitted pulses is the pulse repetition interval (PRI). The relative motion between the radar and the target forms a synthetic aperture, which improves the resolution in the azimuth direction (parallel to the radar's direction of motion). High resolution in the range direction, perpendicular to the radar's motion, is obtained with wideband transmitted pulses, usually linear frequency-modulated (LFM) signals. SAR echo data are complex-valued and are stored in a two-dimensional matrix in which each row holds the echo of one transmitted pulse. The purpose of imaging is to reconstruct the backscattering information of the target from these data. Because the transmitted signal is known, focusing in the range direction is relatively straightforward and can be achieved with a matched filter.
The main challenge lies in focusing in the azimuth direction, primarily owing to the complexity of range migration.
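As a concrete illustration of range matched filtering, the NumPy sketch below compresses a toy LFM echo by multiplying its spectrum with the conjugate spectrum of the known pulse. The sampling rate, bandwidth, pulse length, and 300-sample delay are hypothetical values chosen only for the example, and the helper names `lfm_pulse` and `range_compress` are illustrative, not taken from the authors' code.

```python
import numpy as np


def lfm_pulse(bandwidth, duration, fs):
    """Baseband linear frequency-modulated (LFM) pulse sampled at rate fs."""
    n = int(round(duration * fs))
    t = np.arange(n) / fs - duration / 2          # fast time centred on the pulse
    chirp_rate = bandwidth / duration             # Hz/s
    return np.exp(1j * np.pi * chirp_rate * t**2)


def range_compress(echo, pulse):
    """Matched filtering in range: multiply each row's spectrum by the conjugate pulse spectrum."""
    n = echo.shape[-1]
    reference = np.conj(np.fft.fft(pulse, n))     # zero-padded replica, conjugated
    return np.fft.ifft(np.fft.fft(echo, axis=-1) * reference, axis=-1)


# Hypothetical toy parameters: 100 MHz sampling, 50 MHz bandwidth, 2 us pulse.
fs, bandwidth, duration = 100e6, 50e6, 2e-6
pulse = lfm_pulse(bandwidth, duration, fs)        # 200 samples
echo = np.zeros((1, 1024), dtype=complex)         # one pulse, i.e. one matrix row
echo[0, 300:300 + pulse.size] = pulse             # echo delayed by 300 range samples
compressed = range_compress(echo, pulse)
peak_bin = int(np.argmax(np.abs(compressed[0])))  # lands at the echo delay
```

The compressed peak falls at the echo delay, and its magnitude equals the pulse energy. Applying `range_compress` to every row of an echo matrix produces range-compressed data of the kind used as input in the following sections.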

Various classical SAR imaging algorithms have been developed [], including the matched filter [], back projection [], range Doppler [], chirp scaling [], and frequency scaling algorithms []. However, these algorithms were designed primarily for stationary scenes, so moving targets appear displaced and defocused in the resulting images []. The matched filter algorithm, for example, is designed under the maximum signal-to-noise ratio criterion and constructs a reference function whose phase is the conjugate of the echo phase. Because its signal model assumes a stationary target, target motion in real scenes causes a mismatch between the filter and the echo, which degrades imaging accuracy. If the target motion is taken into account and the filter is redesigned accordingly, the imaging results can be improved []. In practice, users often already have prior knowledge of the stationary scene; their main interest lies in accurately imaging the moving targets within it. This is particularly relevant in fields such as traffic monitoring, where scenes often contain numerous moving targets. Therefore, this study focuses on the imaging of moving targets.
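The mismatch effect can be illustrated with a one-dimensional sketch: an azimuth signal whose quadratic phase corresponds to a moving target is correlated once with a reference built for that Doppler rate and once with a reference built for a different, "stationary-scene" rate. Both rates, the PRF, and the window length are hypothetical, and the pure quadratic-phase model exp(jπK_a t²) is a simplifying assumption rather than the signal model derived in Section 2.

```python
import numpy as np


def azimuth_matched_filter(signal, t, doppler_rate):
    """Circularly correlate an azimuth signal with the reference exp(j*pi*Ka*t^2)."""
    reference = np.exp(1j * np.pi * doppler_rate * t**2)
    return np.fft.ifft(np.fft.fft(signal) * np.conj(np.fft.fft(reference)))


prf = 512.0                          # hypothetical pulse repetition frequency (Hz)
t = np.arange(1024) / prf - 1.0      # 2 s of slow time centred near 0
ka_moving = 40.0                     # hypothetical Doppler rate of the moving target (Hz/s)
ka_static = 30.0                     # hypothetical rate assumed for a stationary scene (Hz/s)
echo = np.exp(1j * np.pi * ka_moving * t**2)   # azimuth phase history of the target

peak_matched = np.abs(azimuth_matched_filter(echo, t, ka_moving)).max()
peak_mismatched = np.abs(azimuth_matched_filter(echo, t, ka_static)).max()
loss_db = 20 * np.log10(peak_mismatched / peak_matched)   # negative value: focusing loss
```

With the matched reference, the correlation peak equals the signal energy (1024 samples of unit amplitude); the mismatched reference leaves a residual quadratic phase that spreads the energy and lowers the peak.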

Existing SAR systems fall into two groups: multichannel and single-channel. A single-channel SAR requires only one receiving antenna, which reduces cost and avoids the registration issues associated with multi-antenna processing. It also generates smaller data volumes, which makes real-time processing more feasible. Therefore, this study focuses exclusively on moving-target imaging for single-channel SAR. The earliest moving-target imaging algorithm is the Doppler filter approach [,]. Because the Doppler centroid of a moving target differs from that of stationary targets and clutter, a filter can isolate the spectrum of the moving target in the Doppler domain for imaging. This method is simple and computationally cheap, but it only works for targets whose radial velocity exceeds a certain minimum. To overcome this limitation, S. Barbarossa proposed the Doppler-rate filtering approach []. After the stationary scene has been focused with an appropriate filter, the Doppler frequency of a moving target still varies linearly with time, and a Doppler-rate filter can then focus the target. The difficulty is that the target's Doppler rate is unknown, so a set of filters with different rates must be tried. If the Doppler centroid and Doppler rate of the moving target are known, focusing becomes much easier; many later moving-target imaging methods are therefore devoted to parameter estimation. Time-frequency analysis, a tool for handling non-stationary signals, has been instrumental in advancing Doppler parameter estimation and gave rise to various moving-target imaging methods, notably those based on the Wigner–Ville distribution and the short-time Fourier transform [,,].
These methods are accurate at high signal-to-noise and signal-to-clutter ratios, but their effectiveness drops otherwise. R. P. Perry et al. introduced an algorithm based on the Keystone transform [], which later spawned a series of algorithms [,,,]; these, however, also suffer from high computational cost and reduced accuracy. In 2001, J. K. Jao introduced a method based on the concept of relative speed [], which obtains clear images of moving targets by refocusing SAR images that are well focused on the static scene. Jao first derived the trajectories of moving targets in SAR images formed by the back projection algorithm, together with the phase along these trajectories, and then designed a filter matched to this phase to refocus the images, yielding sharp images of the moving targets. Later, V. T. Vu et al. proposed a series of more sophisticated moving-target imaging algorithms based on relative speed [,,]. The principle of the relative speed method is intuitive, and its practical implementation is relatively simple. The approach presented in this paper resembles the relative speed method to some extent, but differs markedly in its input data and its phase compensation method.

In addition to conventional SAR, interferometric SAR is widely used for motion detection. Building on the principles of SAR, this technique acquires a series of SAR images by observing the same region several times, either from different orbital paths or at different times, and then reconstructs the target information from the geometric relationships and phase differences between the images. Interferometric SAR has several operating modes, including the cross-track, along-track, and repeat-track modes []. The first two require dual antennas, which increases equipment cost, whereas the repeat-track mode uses a single antenna that revisits the same trajectory at different times to image a given area. Although cost-efficient, this mode demands precise control of the position and attitude of the flight platform, and changes between acquisitions can reduce data coherence. Moreover, interferometric processing is itself complex, involving steps such as image registration and phase unwrapping, each of which is critical to the quality of the final image.

In the early stages of SAR development, echo data were recorded on photographic film, and imaging was performed with optical systems [,]. With the advancement of memory devices and digital signal processors, digital storage replaced photographic film, and electronic imaging gradually replaced optical methods. Nevertheless, optical processing remains attractive because it is parallel, fast, and low in power consumption, which makes it well suited to various applications. Furthermore, modern photoelectric devices such as spatial light modulators (SLMs) have enabled new SAR optical and photoelectric cooperative processing systems [,,]. For these reasons, we adopt optical imaging.

This study presents an optical SAR imaging method based on the free propagation of light. Although devised for moving targets, the method can also be applied to stationary targets. It treats the SAR data as sub-light sources at different positions and uses the phase that the light from each sub-source accumulates during free propagation to compensate for the phase differences in the SAR data. By adjusting the shape or position of the receiving surface, the optical path can be controlled precisely, so that the light from all sub-sources arrives in phase at a predetermined point and is focused there.

The remainder of this paper is organized as follows. Section 2 details the method, beginning with the fundamental idea, followed by a full derivation of the formulas and an overview of the complete imaging process. Section 3 presents the experimental procedures and results, using a total of five experiments that cover different scenarios. Section 4 discusses the method and compares it with other imaging techniques, using the imaging results of a single moving point target as a case study. Section 5 presents the conclusions.

2. Materials and Methods

2.1. Basic Idea

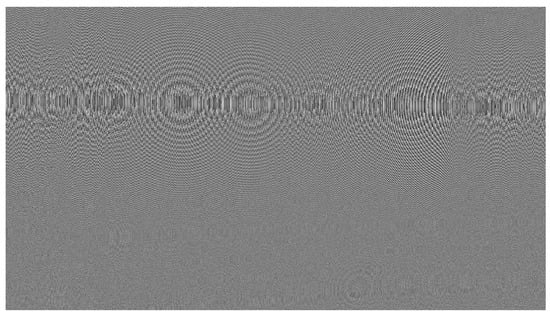

Figure 1 shows the amplitude of range-compressed echo data containing a single point target; the energy is distributed along an arc. The phase of the points along the arc follows an approximately quadratic pattern. Treat each point as an individual sub-light source whose initial phase equals the phase of the SAR data, and let the light propagate freely. If there is a point Q at which the light emitted by all sub-sources arrives with the same phase, the light focuses at Q and the radar data are imaged there (for the position of point Q, see Figure 3 and Section 2.2). According to Fourier optics [], light with a quadratic phase distribution is focused after free propagation over a specific distance (see Appendix A for details). Therefore, we expect such a point Q to exist.
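The focusing argument can be checked numerically with a direct Huygens-type sum. The sketch below places a one-dimensional row of sub-sources at the SLM pitch, gives them a quadratic initial phase designed to focus at a hypothetical distance `f_design`, and sums their spherical wavelets along the optical axis. The 532 nm wavelength and 3.74 μm pitch come from Section 3.2; the aperture size, `f_design`, the 1/r amplitude factor, and the scan range are assumptions of this sketch, which does not model the arc-shaped SAR data or the authors' geometry.

```python
import numpy as np

wavelength = 532e-9                 # laser wavelength used in the experiments (m)
pitch = 3.74e-6                     # SLM pixel pitch (m)
f_design = 0.2                      # hypothetical distance at which the phases should align (m)
k = 2 * np.pi / wavelength

# A 1-D row of sub-sources (about 4 mm wide) with a quadratic initial phase.
x = (np.arange(1070) - 535) * pitch
phase0 = -k * x**2 / (2 * f_design)

# Sum the spherical wavelets on the optical axis for a range of propagation distances z.
z = np.linspace(0.1, 0.3, 2001)
r = np.sqrt(x[None, :]**2 + z[:, None]**2)            # source-to-axis distances
field = np.sum(np.exp(1j * (phase0[None, :] + k * r)) / r, axis=1)
intensity = np.abs(field)**2
z_focus = z[np.argmax(intensity)]                      # distance of maximum on-axis intensity
```

Because the initial phases cancel the extra path length of roughly x²/(2z) only when z is close to `f_design`, the on-axis intensity peaks near that distance.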

Figure 1.

Amplitude distribution of SAR echo with a point target after range compression.

Starting from the geometric relationship between the radar and a moving point target, we analyze the phase of the echo data. By relating this echo phase to the phase variations caused by light propagation, we derive the conditions that the coordinates of point Q must satisfy (Section 2.2). Next, we examine whether multiple point targets can be imaged simultaneously on a single receiving surface (Section 2.3). Finally, Section 2.4 summarizes the method.

2.2. Search for the Focal Point

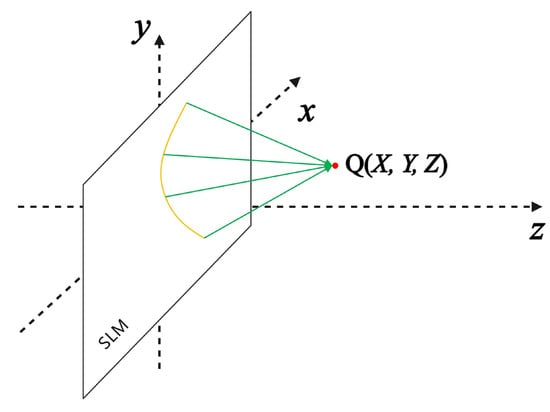

Figure 2 shows a geometric model of the radar imaging process for a moving point target. The model assumes that the radar moves at a constant velocity parallel to the x-axis. At time , the moving target P is located at , while the antenna phase center (APC) is located at (the reference time can be chosen as needed). The target's velocity along the x-axis is , and its velocity along the y-axis is .

Figure 2.

Geometry model for radar imaging.

The instantaneous slant range between the APC and the target at time t is given by

Let . Applying a Taylor expansion, Equation (1) can be approximated as

where .

The slant range in Equation (2) introduces a delay of (c is the speed of light) between transmitting a pulse and receiving its echo. This delay corresponds to the phase delay , denoted as . In practice, the range is often normalized to its minimum by subtracting a distance R, which gives

Considering the range-sampling rate of the SAR system as , the delay in Equation (3) can be converted into the number of sampling points:

Our approach uses a phase-only spatial light modulator, a device that modulates information onto the phase of light. Each range-sampling point corresponds to one pixel along the x-direction of the SLM. Assuming a pixel size of , the delay in Equation (4) can be converted into a pixel position:

Similar considerations apply to the azimuth direction. Sampling points of different pulses are mapped to distinct pixels along the y-direction of the SLM, with the pixel size remaining . Consequently, azimuth time t can be transformed into the position in the y-direction of the SLM:
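The two mappings just described can be sketched as a pair of helper functions. This is a minimal sketch under stated assumptions: the round-trip delay is taken as 2ΔR/c, one range sample is assumed to occupy one SLM pixel along x, and one pulse (pulses spaced by the PRI, i.e. 1/PRF) one pixel along y. The function and parameter names are hypothetical.

```python
C = 299_792_458.0  # speed of light (m/s)


def range_to_pixel_x(delta_r, fs, pixel_pitch):
    """Map a slant-range offset delta_r (m) to an x position on the SLM (m).

    Assumes a two-way delay 2*delta_r/c, sampled at the range sampling rate fs,
    with one range sample per SLM pixel of size pixel_pitch."""
    delay = 2.0 * delta_r / C          # two-way propagation delay (s)
    n_samples = delay * fs             # delay expressed in range samples
    return n_samples * pixel_pitch     # position along the SLM x-axis (m)


def azimuth_to_pixel_y(t, prf, pixel_pitch):
    """Map azimuth (slow) time t (s) to a y position on the SLM (m), one pulse per pixel row."""
    pulse_index = t * prf              # number of pulses elapsed since t = 0
    return pulse_index * pixel_pitch
```

For example, a range offset of ten range-sample spacings maps to ten pixel pitches along x, and 0.5 s of slow time at a PRF of 1000 Hz maps to 500 pixel rows along y.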

Let the light propagate to point to compensate for the phase, as shown in Figure 3. Consequently, the distance between point Q and the corresponding location on the SAR data curve at time t is given by

When a collimated beam with wavelength is incident on the SLM, the phase difference caused by the propagation of the reflected light to position is

Upon substituting and , the phase difference becomes

Let

Figure 3.

Diagram illustrating free propagation of light.

Because the light must arrive at point Q with the same phase from every point, the propagation phase difference must exactly compensate for the phase delay in the radar data. This relationship is expressed as

where denotes an arbitrary constant. Substituting and , and applying a Taylor expansion under the condition , we obtain

Because Equation (14) must hold for all values of t, the coefficients of each power of t on both sides must be equal:

For Equation (15a) to be valid, must be satisfied. That is,

Equation (18) describes an ellipse in a two-dimensional plane. By substituting and rewriting it in the standard form of an ellipse, we obtain the following expression:

where

and

Equation (20) represents an elliptical cylinder perpendicular to the plane. Equation (17) defines a plane perpendicular to the y-axis, and its intersection with the cylinder of Equation (20) is an ellipse. Hence, the points Q that satisfy these requirements lie on an ellipse, whose center is located at . Let the focal length of the ellipse be f, which can be expressed as

The foci of the ellipse are located at and , while a vertex is situated at . The semi-major axis of the ellipse is represented by

Accordingly, the semi-minor axis of the ellipse can be expressed as
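For reference, the standard relations among the semi-major axis, the focal distance, and the semi-minor axis can be checked numerically. The sketch assumes that f denotes the distance from the center of the ellipse to each focus and works in coordinates centred on the ellipse, with the major axis along the first coordinate; the helper names are hypothetical.

```python
import math


def semi_minor_axis(a, f):
    """Semi-minor axis b of an ellipse with semi-major axis a and centre-to-focus distance f."""
    if not 0 <= f < a:
        raise ValueError("require 0 <= f < a")
    return math.sqrt(a * a - f * f)


def on_ellipse(point, a, f, tol=1e-9):
    """Check the defining property: the distances to the two foci sum to 2a."""
    u, v = point                 # u along the major axis, v along the minor axis
    d1 = math.hypot(u - f, v)    # distance to the focus at (+f, 0)
    d2 = math.hypot(u + f, v)    # distance to the focus at (-f, 0)
    return abs(d1 + d2 - 2 * a) < tol
```

For instance, a = 5 and f = 3 give b = 4, and both the co-vertex (0, 4) and the vertex (5, 0) satisfy the focal-distance property.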

Theoretically, focusing is achievable at any point along the ellipse. For the present analysis, we choose a focal point with an x-coordinate of 0. Let the coordinates of the focal point be , leading to the following expression:

2.3. Multi-Target Situation

According to the analysis in Section 2.2, each point target is associated with its own focusing ellipse. To image multiple point targets simultaneously, we must select a focal point on each ellipse and a receiving surface that passes through all of these points. A simple surface is preferable, and ideally the surface is a plane. To simplify the problem, our analysis considers only point targets that share the same velocity.

The abscissa of the focal point was specified as . The focal point coordinates were set as , according to Equation (20),

Let (in cases where , an alternate reference time can be chosen to ensure at ), then . Substituting and into Equation (27) yields the relationship between and :

Equation (28) describes a hyperbola in a two-dimensional plane. Using MATLAB (https://www.mathworks.com/products/matlab.html), we plotted with respect to (see Table 1 for the relevant parameters). As Figure 4 shows, the curve is very close to a straight line. A linear fit gives a slope of 1.952410691902430 and an intercept of 0.233678882644849, with a root-mean-square error of 5.076 × 10⁻¹⁰.

Table 1.

Parameter List.

Figure 4.

with respect to .

This suggests approximating the hyperbola by a straight line. The Taylor expansion of Equation (28) at gives the following expression:

The resulting slope and intercept were calculated as 1.952408840666066 and 0.233678883785827, respectively. The difference between these computed values and the values obtained from the previous fitting remains below . Therefore, this approximation was considered reliable.
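The fit-versus-Taylor comparison can be reproduced in outline with NumPy. Because Equation (28) is not restated here, the sketch uses a hypothetical hyperbola branch y = sqrt(1 + 4x²) over a narrow interval around x0 = 1: it fits a least-squares line with `np.polyfit`, reports the RMS residual, and compares the coefficients with those of the first-order Taylor expansion at x0.

```python
import numpy as np


def fit_vs_taylor(func, dfunc, x0, half_width, n=1001):
    """Compare a least-squares line with the first-order Taylor expansion of func at x0."""
    x = np.linspace(x0 - half_width, x0 + half_width, n)
    y = func(x)
    slope_fit, intercept_fit = np.polyfit(x, y, 1)
    residual = np.polyval([slope_fit, intercept_fit], x) - y
    rmse = float(np.sqrt(np.mean(residual**2)))
    slope_taylor = dfunc(x0)
    intercept_taylor = func(x0) - slope_taylor * x0
    return (slope_fit, intercept_fit, rmse), (slope_taylor, intercept_taylor)


def hyperbola(x):
    """Hypothetical hyperbola branch y = sqrt(1 + 4 x^2); not Equation (28)."""
    return np.sqrt(1.0 + 4.0 * x**2)


def hyperbola_slope(x):
    """Derivative of the hypothetical branch."""
    return 4.0 * x / np.sqrt(1.0 + 4.0 * x**2)


(fit_slope, fit_intercept, rmse), (taylor_slope, taylor_intercept) = fit_vs_taylor(
    hyperbola, hyperbola_slope, x0=1.0, half_width=0.1)
```

Over a narrow interval the two lines nearly coincide, mirroring the agreement reported above; widening `half_width` increases both the RMS error and the gap between the two sets of coefficients.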

2.4. Method Summary

After range-pulse compression, the data are loaded onto the SLM and imaged by the free propagation of light. When the conditions in Equations (17) and (20) are met, the image of a single moving point target is focused on an ellipse lying in a plane perpendicular to the y-axis, and any point on this ellipse can be chosen to receive and reconstruct the image. Multiple point targets with identical velocities can be imaged simultaneously by positioning an inclined receiving plane as given by Equation (29). The complete imaging process is summarized in Figure 5.

Figure 5.

Moving target imaging process: First, the SAR echo data undergo range-pulse compression on a computer. Next, the phase information is extracted, converted into a grayscale image, and modulated onto a laser beam by the SLM. The light then compensates for the phase during free propagation. Finally, a camera placed at the appropriate position captures the focused image.
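The phase-to-grayscale step in this process can be sketched as follows, assuming the common convention for phase-only SLMs that gray levels 0 to 255 map linearly onto phase 0 to 2π. Real devices are usually calibrated with a lookup table, so the linear mapping and the helper names here are assumptions.

```python
import numpy as np


def phase_to_gray(phase, levels=256):
    """Wrap phase to [0, 2*pi) and map it linearly onto gray levels 0 .. levels-1 (levels <= 256)."""
    wrapped = np.mod(phase, 2 * np.pi)
    gray = np.round(wrapped / (2 * np.pi) * levels).astype(np.int64) % levels  # 2*pi wraps to 0
    return gray.astype(np.uint8)


def gray_to_phase(gray, levels=256):
    """Inverse mapping: gray level to phase in radians, under the same linear assumption."""
    return np.asarray(gray, dtype=np.float64) * (2 * np.pi / levels)


def complex_to_slm_gray(data, levels=256):
    """Keep only the phase of complex (range-compressed) data and encode it for a phase-only SLM."""
    return phase_to_gray(np.angle(data), levels)
```

Only the phase survives this encoding; the amplitude of the range-compressed data is discarded, which matches the use of a phase-only modulator.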

3. Experiments and Results

The previous section detailed the principles and processing flow of the proposed optical imaging method. This section examines the method experimentally. First, a simple simulation verifies the method. Next, dedicated experiments address three scenarios: a single moving point target, multiple moving point targets, and an area target. Finally, we investigate the imaging results when moving and stationary targets coexist.

3.1. Experiment 1: Validation of Methodology

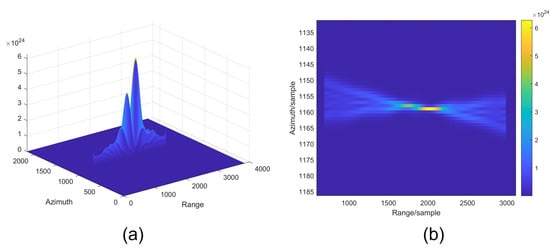

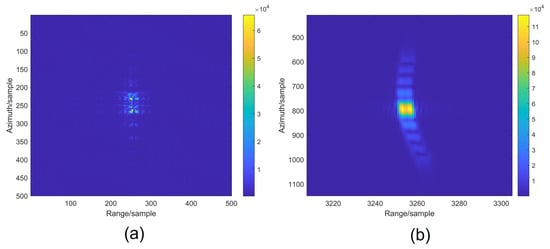

To validate the efficacy of the methodology proposed in Section 2, MATLAB simulations were executed. The initial simulation involved an echo generated by a single moving point. Subsequently, a range-pulse compression was performed. Finally, the free propagation of light was simulated using a computational method (Fourier transform was used to calculate Fresnel diffraction, see Appendix B for details), and the resulting light intensity distribution at the plane (the value of was calculated using Equation (26)) is shown in Figure 6. A subset of simulation parameters has been detailed in Table 1. The moving point target was located at while maintaining velocities of and .

Figure 6.

Light intensity distribution of a point target after free propagation. (a) Stereogram. (b) Partially enlarged top view.

As shown in Figure 6, after free propagation the data are well focused in the azimuth direction; however, the range direction, which was already focused by pulse compression, becomes defocused. This is readily corrected by superimposing a cylindrical lens that acts along the range direction. Figure 7 shows the result with the lens: the point target is sharply focused, which confirms the effectiveness of the proposed method.
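A minimal sketch of superimposing a cylindrical lens on the SLM phase pattern is shown below. It assumes the paraxial thin-lens phase −kx²/(2f_c) acting only along the range (x, column) axis, with the column at index N/2 taken as x = 0; the focal length, the sign convention, and the helper name are assumptions, and the authors' Fourier-domain implementation described in Section 3.2 may differ.

```python
import numpy as np


def add_cylindrical_lens(phase, pitch, wavelength, focal_length):
    """Superimpose a thin cylindrical-lens phase acting along the range (x, column) axis only.

    Uses the paraxial lens phase -k x^2 / (2 f); the azimuth (row) axis is left untouched."""
    n_cols = phase.shape[1]
    x = (np.arange(n_cols) - n_cols // 2) * pitch        # range coordinate of each column (m)
    k = 2 * np.pi / wavelength
    lens = -k * x**2 / (2 * focal_length)                 # quadratic phase along x only
    return np.mod(phase + lens[None, :], 2 * np.pi)        # wrapped for a phase-only SLM
```

Because the lens phase depends only on the column index, every row receives the same range focusing while the azimuth phase history is unchanged.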

Figure 7.

Light intensity distribution of a point target after free propagation with a cylindrical lens. (a) Stereogram. (b) Partially enlarged top view.

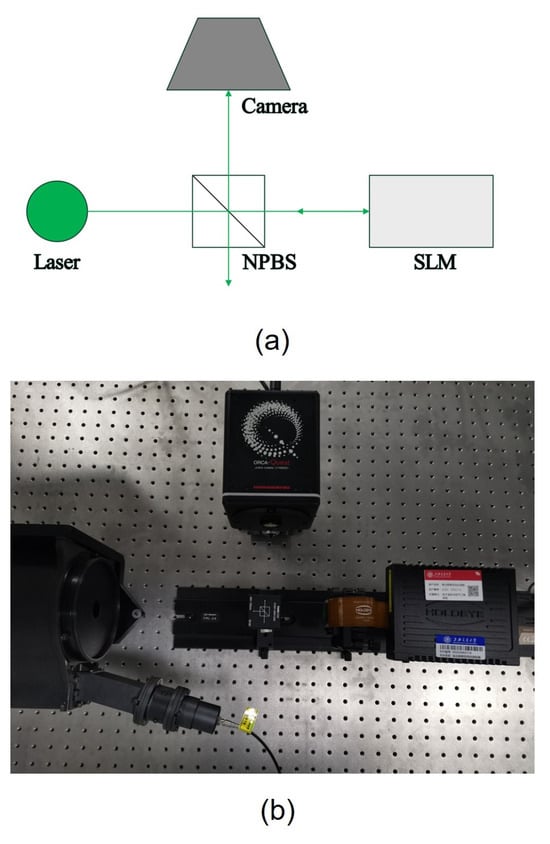

3.2. Experiment 2: Single-Point Target Focusing

This experiment uses an actual optical setup to focus a single point target. Figure 8 shows a schematic and a photograph of the setup. A 532 nm laser was used. The SLM is a reflective liquid-crystal-on-silicon (LCoS) modulator made by HOLOEYE with a pixel pitch of 3.74 μm. Because the SLM is reflective, a non-polarizing beam splitter (NPBS) is needed to redirect the light. The optical path length from the SLM to the camera is denoted as .

Figure 8.

Optical path utilized in the experiments. (a) Schematic. (b) Physical photograph.

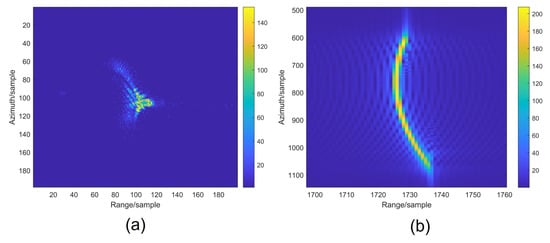

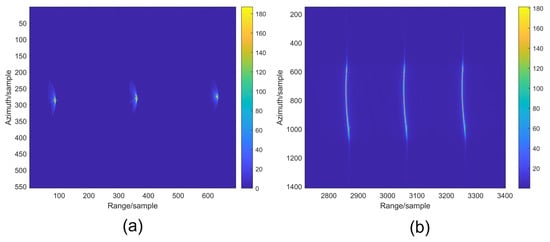

This experiment used the same range-compressed echo data as Experiment 1. The SLM also provides the effect of the cylindrical lens: the data to be loaded were Fourier transformed and then multiplied by a matrix equivalent to the lens. The data loaded onto the SLM are shown in Figure A1 in Appendix C. Figure 9a shows the final imaging result, and Figure 9b shows the result of the electronic imaging method (a matched filter algorithm). As Figure 9 shows, the electronic method focuses poorly in the azimuth direction, probably because it ignores the target motion. In contrast, the proposed method achieves significantly better focusing.

Figure 9.

Results of focused single-point target. (a) Optical method. (b) Electronic method.

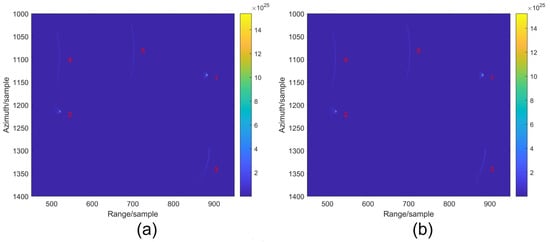

3.3. Experiment 3: Multiple Moving Point Target Focusing

We simulated an echo containing three moving point targets, with the simulation parameters listed in Table 1. The velocities assigned to the point targets were and . From Equation (29), the inclination angle of the receiving plane is 62.8790°, and the intercept is 0.2337. Because a tilted camera would impair reception, the tilt was compensated in the data loaded onto the SLM, which effectively transforms the tilted plane into a plane perpendicular to the optical axis. The data finally loaded onto the SLM are shown in Figure A2 in Appendix C. The resulting image, shown in Figure 10a, is markedly better focused than the electronic imaging result in Figure 10b.
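The reported inclination angle is consistent with taking the arctangent of the slope fitted in Section 2.3, as the short check below shows. That the angle is measured this way, relative to the axis used in Equation (29), is an assumption of the check.

```python
import math

slope = 1.952410691902430       # slope of the fitted line reported in Section 2.3
intercept = 0.233678882644849   # intercept reported in Section 2.3
tilt_deg = math.degrees(math.atan(slope))  # about 62.879 degrees
```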

Figure 10.

Focusing results for multiple-point targets. (a) Optical method. (b) Electronic method.

3.4. Experiment 4: Area Target Focusing

We simulated an echo from a rectangular area target, modeled as many closely spaced point targets. The area target is 4 m long and 1.8 m wide and moves with velocities and . The data finally loaded onto the SLM are shown in Figure A3 in Appendix C. Figure 11a shows the image obtained with the proposed method, and Figure 11b shows the image obtained with the electronic method. The electronic result exhibits severe tailing along the azimuth direction, whereas the optical result does not.

Figure 11.

Area target focusing results. (a) Optical method. (b) Electronic method.

3.5. Experiment 5: Focusing with Moving and Static Targets

We simulated echo data containing a moving point target and a stationary point target; the moving target again had velocities and . The optical imaging result in Figure 12 shows the moving target clearly focused and the stationary target strongly defocused. The intensity of the moving target is approximately 14 times that of the stationary target. The method is therefore well suited to scenarios whose goal is to identify moving targets in a static scene, and it may facilitate the detection of motion against stationary backgrounds.

Figure 12.

Focusing effect when moving and static targets coexist.

When the scene contains multiple moving point targets with different velocities, the results are qualitatively similar. The experimental procedures and results are given in Appendix D.

4. Discussion

To compare the optical and electronic methods quantitatively, we calculated the variance of each image in Figure 9, Figure 10 and Figure 11; the results are listed in Table 2. The variance is calculated using Equation (30):

Table 2.

Variance list.

Each image has pixels. denotes the pixel value (intensity) at column n, row m. is the mean value of the image, which is defined in Equation (31):

The images obtained by the optical method have much larger variances than those obtained by the electronic method; a larger variance indicates that the energy is more concentrated, i.e., that the image is better focused. We also calculated the peak-to-sidelobe ratios (PSLRs) for Figure 9a,b, which are −12.98 dB and −0.23 dB, respectively; a lower (more negative) PSLR indicates stronger sidelobe suppression. In Figure 10a,b, we selected one point target from each image and obtained PSLRs of −0.42 dB and −0.22 dB, respectively.
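The two image-quality metrics can be sketched as follows. `image_variance` follows the verbal description of Equations (30) and (31), the mean squared deviation of the pixel intensities from the image mean. `pslr_db` is an assumed implementation: the main lobe is taken to extend from the peak to the first local minimum on each side, and the result is 20·log10 of the largest remaining sample divided by the peak, so the input is treated as an amplitude profile (10·log10 would apply to an intensity profile).

```python
import numpy as np


def image_variance(img):
    """Mean of (I - mean(I))^2 over all pixels, following Equations (30)-(31)."""
    img = np.asarray(img, dtype=np.float64)
    return float(np.mean((img - img.mean()) ** 2))


def pslr_db(profile):
    """Peak-to-sidelobe ratio of a 1-D amplitude profile in dB (negative: sidelobes below peak).

    The main lobe extends from the peak down to the first local minimum on each side."""
    p = np.abs(np.asarray(profile, dtype=np.float64))
    i_peak = int(np.argmax(p))
    left = i_peak
    while left > 0 and p[left - 1] < p[left]:
        left -= 1                      # walk down the left flank of the main lobe
    right = i_peak
    while right < p.size - 1 and p[right + 1] < p[right]:
        right += 1                     # walk down the right flank of the main lobe
    sidelobes = np.concatenate([p[:left], p[right + 1:]])
    if sidelobes.size == 0:
        raise ValueError("profile has no sidelobes outside the main lobe")
    return float(20 * np.log10(sidelobes.max() / p[i_peak]))
```

For an ideal, finely sampled sinc profile, `pslr_db` returns about −13.26 dB, the textbook value for a uniformly weighted aperture.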

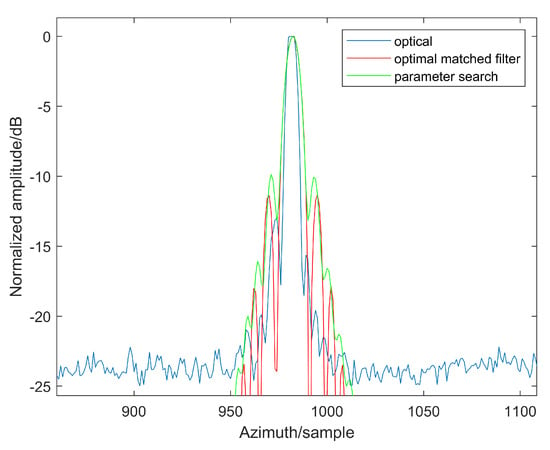

For a single moving point target with known velocity, the optimal matched filter parameters can in principle be determined, giving near-ideal imaging results. To compare our method against this stronger baseline, we calculated the matched filter parameters from the true motion parameters and applied the resulting filter to the echo data of Experiment 2. The result is shown in Figure 13a and has a variance of 9.2779 × 10⁵.

Figure 13.

Imaging results of a single moving point target using other electronic methods. (a) Optimal matched filter algorithm. (b) Parameter search method.

Comparing Figure 9a with Figure 13a, the optical result has a broadly similar contour. Figure 13a is perhaps slightly more symmetric and closer to the ideal sinc function, but the optical result is also good, and its variance even exceeds that of Figure 13a. Given the inevitable errors in a real optical path, some imperfection in the imaging results is unavoidable. Moreover, Figure 13a was obtained with the exact motion parameters; if these parameters had to be estimated, the result might be worse.

We also imaged the single moving point target with a parameter search approach. After pulse compression in the range direction, a set of azimuth matched filters with different quadratic coefficients was applied, and the image with the best focus was selected by the maximum variance criterion, as shown in Figure 13b. Its variance is 7.7238 × 10⁵, lower than that of Figure 9a. Figure 14 shows the azimuth profiles of the single-point target images obtained by the optical method, the optimal matched filter, and the parameter search approach; the corresponding PSLRs are −12.98 dB, −11.33 dB, and −9.87 dB, respectively.
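The parameter search can be sketched in one dimension. For simplicity, each trial azimuth filter is realized by removing a trial quadratic phase (dechirping) and taking an FFT, which is a spectral-analysis variant rather than the correlation-based matched filter used in the paper; the best candidate is then chosen by the maximum-variance criterion. The PRF, window length, true Doppler rate of 40 Hz/s, and candidate grid are hypothetical.

```python
import numpy as np


def dechirp_image(signal, t, rate):
    """Remove a trial quadratic phase exp(j*pi*rate*t^2) and transform to the Doppler domain."""
    return np.fft.fftshift(np.fft.fft(signal * np.exp(-1j * np.pi * rate * t**2)))


def search_doppler_rate(signal, t, candidates):
    """Return the candidate rate whose focused output has the largest intensity variance."""
    best_rate, best_var = None, -np.inf
    for rate in candidates:
        intensity = np.abs(dechirp_image(signal, t, rate)) ** 2
        var = np.var(intensity)          # maximum-variance focus criterion
        if var > best_var:
            best_rate, best_var = rate, var
    return best_rate, best_var


prf = 512.0                                  # hypothetical PRF (Hz)
t = np.arange(1024) / prf - 1.0              # hypothetical 2 s azimuth window
echo = np.exp(1j * np.pi * 40.0 * t**2)      # hypothetical target with Doppler rate 40 Hz/s
best_rate, best_var = search_doppler_rate(echo, t, np.arange(0.0, 81.0, 5.0))
```

Dechirping with a unit-modulus phase followed by an FFT leaves the total energy identical for every candidate, so the intensity variance is largest when that energy collapses into a single Doppler bin, i.e., when the trial rate matches the target's.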

Figure 14.

Azimuth profiles of single-point target imaging results.

5. Conclusions

Loading SAR data that contain moving targets onto an SLM makes it possible to focus these targets through the free propagation of light. For a single point target, the focal points lie on an ellipse, and the focused image can be captured by a plane perpendicular to the optical axis. For multiple point targets moving with the same velocity, a focused image can be obtained with a plane tilted at a specific angle to the optical axis. This study relates the position and tilt angle of this plane to the target velocity and experimentally demonstrates the focusing of moving targets. The results show that the method produces better images than electronic imaging methods. Moreover, the optical system used in this study consists of only four components (a laser, an NPBS, an SLM, and a camera), so its structure is very simple. Because imaging relies on the free propagation of light, the processing delay is minimal and depends only on the length of the optical path. The method also has limitations. Optical path alignment inevitably introduces alignment errors, a drawback common to optical imaging methods. In addition, the resolution of the SLM currently limits the data that can be processed at once to 3840 × 2160 samples. This limitation could be overcome by stitching together multiple SLMs, and SLMs with higher resolution are expected to become available as technology progresses.

In summary, the proposed method provides an effective solution for SAR imaging of moving targets. Because the focusing position of a moving target depends on its velocity, the method is applicable not only to detection and separation but also to velocity estimation. Future work will explore further applications of this method.

Author Contributions

Conceptualization, J.C. and K.W.; methodology, J.C. and C.Y.; software, J.C.; validation, D.W. and J.C.; formal analysis, J.C. and C.Y.; investigation, J.C. and D.W.; resources, K.W.; data curation, C.Y. and J.C.; writing—original draft preparation, J.C.; writing—review and editing, J.C. and K.W.; visualization, J.C.; supervision, K.W.; project administration, K.W.; funding acquisition, D.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (grant no: 62301312).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available from the corresponding author upon reasonable request.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| SAR | synthetic-aperture radar |
| NPBS | non-polarizing beam splitter |
| PRI | pulse repetition interval |
| SLM | spatial light modulator |
| APC | antenna phase center |
| PSLR | peak-to-sidelobe ratio |

Appendix A

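Fourier optics is a branch of optics that combines optics with communication and information theory. It uses tools such as the Fourier transform to analyze the propagation, diffraction, and imaging of light waves. By treating optical phenomena in the spatial-frequency domain, it provides insight into diffraction, image formation, and signal processing. Central to Fourier optics is the idea that an optical field can be represented as a superposition of plane waves with different spatial frequencies. Because it enables precise analysis of the frequency components of light, Fourier optics is crucial to imaging, holography, and optical information processing. Below, we use the principles of Fourier optics to show why light with a quadratic phase distribution is focused after propagating freely over a certain distance. Assume that the complex amplitude distribution of light with a quadratic phase is as follows:

$$U_0(\xi,\eta)=\exp\left[-\frac{jk}{2f}\left(\xi^{2}+\eta^{2}\right)\right]$$

where k = 2π/λ is the wave number, λ is the wavelength, and f is a non-zero constant. Then, according to the Fresnel diffraction formula [], the complex amplitude distribution of the light after free propagation over a distance z is given by:

$$U(x,y)=\frac{e^{jkz}}{j\lambda z}\iint U_0(\xi,\eta)\exp\left\{\frac{jk}{2z}\left[(x-\xi)^{2}+(y-\eta)^{2}\right]\right\}\mathrm{d}\xi\,\mathrm{d}\eta=\frac{e^{jkz}}{j\lambda z}\exp\left[\frac{jk}{2z}\left(x^{2}+y^{2}\right)\right]\iint\exp\left[\frac{jk}{2}\left(\frac{1}{z}-\frac{1}{f}\right)\left(\xi^{2}+\eta^{2}\right)\right]\exp\left[-\frac{jk}{z}\left(x\xi+y\eta\right)\right]\mathrm{d}\xi\,\mathrm{d}\eta$$

When z = f, the quadratic phase inside the integral vanishes, and the integral reduces to the Fourier transform of a constant:

$$U(x,y)=\frac{e^{jkf}}{j\lambda f}\exp\left[\frac{jk}{2f}\left(x^{2}+y^{2}\right)\right]\iint\exp\left[-\frac{j2\pi}{\lambda f}\left(x\xi+y\eta\right)\right]\mathrm{d}\xi\,\mathrm{d}\eta=-j\lambda f\,e^{jkf}\,\delta(x,y)$$

Therefore, the light can be regarded as focused at z = f.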
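The focusing argument can also be checked numerically. The sketch below assumes a converging quadratic phase of the form exp[−jk(ξ² + η²)/(2f)] (Goodman's sign convention) limited by a circular aperture, propagates it with the Fresnel transfer function, and scans the propagation distance for the maximum on-axis intensity. The wavelength, pixel pitch, grid size, f, and aperture radius are all hypothetical, chosen so that a common rule of thumb for the transfer-function method (pitch ≥ λz/L, with L the grid width) holds over the scanned range.

```python
import numpy as np

def fresnel_tf_propagate(u0, dx, wavelength, z):
    """Propagate a square, uniformly sampled field u0 over distance z using the
    Fresnel transfer function H = exp(jkz) * exp(-j*pi*wavelength*z*(fx^2 + fy^2))."""
    n = u0.shape[0]
    k = 2 * np.pi / wavelength
    fx = np.fft.fftfreq(n, d=dx)                 # spatial frequencies in FFT order
    FX, FY = np.meshgrid(fx, fx, indexing="xy")
    H = np.exp(1j * k * z) * np.exp(-1j * np.pi * wavelength * z * (FX**2 + FY**2))
    return np.fft.ifft2(np.fft.fft2(u0) * H)

# Hypothetical simulation parameters (not the paper's experimental values)
wavelength = 532e-9   # m
dx = 8e-6             # m, sampling pitch
n = 512               # samples per side
f = 0.03              # m, constant of the quadratic phase
radius = 0.8e-3       # m, radius of the circular aperture bounding the field

k = 2 * np.pi / wavelength
x = (np.arange(n) - n // 2) * dx               # centered grid, x = 0 at index n // 2
X, Y = np.meshgrid(x, x, indexing="xy")
aperture = (X**2 + Y**2) <= radius**2
u0 = aperture * np.exp(-1j * k * (X**2 + Y**2) / (2 * f))   # converging quadratic phase

# Scan the propagation distance and record the on-axis intensity
zs = np.linspace(0.02, 0.04, 21)
on_axis = [float(np.abs(fresnel_tf_propagate(u0, dx, wavelength, z)[n // 2, n // 2]) ** 2)
           for z in zs]
best_z = zs[int(np.argmax(on_axis))]
print(f"on-axis intensity peaks at z = {best_z * 100:.2f} cm (f = {f * 100:.2f} cm)")
```

With these parameters the Fresnel number of the aperture is large (about 40), so any focal shift from the finite aperture is far smaller than the 1 mm scan step.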

Appendix B

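According to [], the Fresnel diffraction integral can be written in the following Fourier transform form:

$$U(x,y)=\frac{e^{jkz}}{j\lambda z}\exp\left[\frac{jk}{2z}\left(x^{2}+y^{2}\right)\right]\mathcal{F}\left\{U(\xi,\eta)\exp\left[\frac{jk}{2z}\left(\xi^{2}+\eta^{2}\right)\right]\right\} \tag{A5}$$

where ξ and η are the coordinates of the aperture plane; x and y are the coordinates of the observation plane; z is the distance between the aperture plane and the observation plane; ℱ{·} denotes the two-dimensional Fourier transform; the function U denotes the complex amplitude distribution of light; and k = 2π/λ is the wave number, where λ is the wavelength. In Equation (A5), the frequency variables of the Fourier transform are related to the coordinates of the observation plane by

$$f_X=\frac{x}{\lambda z},\qquad f_Y=\frac{y}{\lambda z}$$

Therefore, Fresnel diffraction can be calculated with a single Fourier transform.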
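The Fourier transform form of Fresnel diffraction maps directly onto a single FFT: multiply the aperture field by the input quadratic phase, transform, then apply the output phase factor, reading the frequency samples as observation coordinates x = λz·f_X. The sketch below assumes Goodman's sign convention and a square grid with an even number of samples; the function name `fresnel_single_fft` and all parameter values are hypothetical.

```python
import numpy as np

def fresnel_single_fft(u_aperture, dxi, wavelength, z):
    """Single-FFT Fresnel propagation; returns the observation-plane field and its 1D coordinates."""
    n = u_aperture.shape[0]
    k = 2 * np.pi / wavelength
    xi = (np.arange(n) - n // 2) * dxi
    XI, ETA = np.meshgrid(xi, xi, indexing="xy")
    # Input quadratic phase, then a centered FFT scaled by dxi^2 to approximate the continuous transform
    g = u_aperture * np.exp(1j * k / (2 * z) * (XI**2 + ETA**2))
    G = np.fft.fftshift(np.fft.fft2(np.fft.ifftshift(g))) * dxi**2
    # Frequency samples m / (n * dxi) map to observation coordinates x = wavelength * z * f_X
    fx = (np.arange(n) - n // 2) / (n * dxi)
    x = wavelength * z * fx
    X, Y = np.meshgrid(x, x, indexing="xy")
    prefactor = np.exp(1j * k * z) / (1j * wavelength * z) * np.exp(1j * k / (2 * z) * (X**2 + Y**2))
    return prefactor * G, x

# Usage with hypothetical values: a Gaussian beam propagated 0.5 m
n, dxi, wavelength, z = 256, 10e-6, 633e-9, 0.5
xi = (np.arange(n) - n // 2) * dxi
XI, ETA = np.meshgrid(xi, xi, indexing="xy")
u = np.exp(-(XI**2 + ETA**2) / (0.3e-3) ** 2)
U, x = fresnel_single_fft(u, dxi, wavelength, z)
print(f"observation-plane pitch: {(x[1] - x[0]) * 1e6:.1f} um")
```

Note that the observation-plane pitch, λz/(NΔξ), grows with z; this single-FFT form is therefore convenient for longer distances, whereas a transfer-function approach keeps the pitch fixed.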

Appendix C

Figure A1.

Data loaded onto the SLM in Experiment 2.

Figure A2.

Data loaded onto the SLM in Experiment 3.

Figure A3.

Data loaded onto the SLM in Experiment 4.

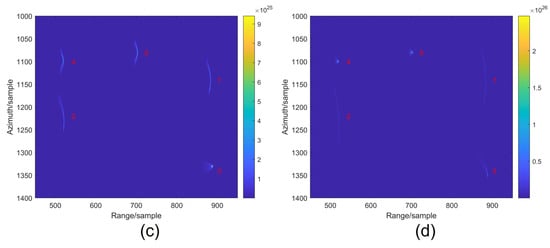

Appendix D

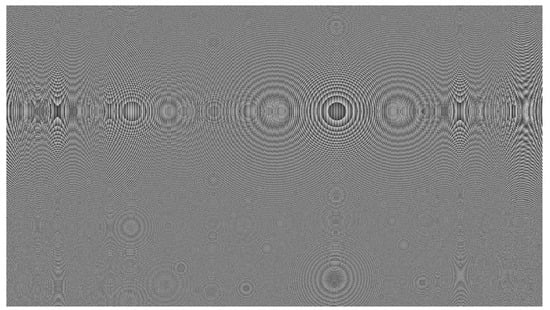

We simulated echo data containing four moving point targets and one stationary point target; the targets’ velocities and positions are listed in Table A1, and the radar parameters are consistent with those in Table 1. Positioning the receiving surface according to the motion parameters of different moving targets yields different imaging results. Figure A4 shows the imaging results obtained from MATLAB simulations. For ease of presentation, we enlarged the data size from 2160 × 3840 to 2160 × 7680. When the position of the receiving surface matches the motion parameters of a specific moving target, that target is optimally focused, while the other targets are defocused to varying degrees. Two targets with similar motion parameters exhibit similar focusing effects. This property can be used to selectively identify targets with specific motion parameters.
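The selective focusing in Figure A4 has a familiar digital analog: correlating a quadratic-phase (linear FM) signal with a reference of the same rate compresses it into a sharp peak, while a reference with a different rate leaves it spread out. The sketch below is a one-dimensional FFT matched-filter illustration of that principle, not the optical method of this paper; the sampling rate, the two chirp rates, and the target positions are hypothetical and are not derived from Table A1 or Table 1.

```python
import numpy as np

PRF = 1000.0                                  # hypothetical slow-time sampling rate, Hz
t = (np.arange(1000) - 500) / PRF             # 1 s of slow time, centered on 0

def lfm(t, rate, t0=0.0):
    """Unit-amplitude linear FM (quadratic-phase) signal centered at t0."""
    return np.exp(1j * np.pi * rate * (t - t0) ** 2)

def compress_peak(signal, t, rate):
    """Peak magnitude after FFT-based cross-correlation with a reference chirp of the given rate."""
    ref = lfm(t, rate)
    n = 2 * len(t)                            # zero-pad so the correlation is linear, not circular
    corr = np.fft.ifft(np.fft.fft(signal, n) * np.conj(np.fft.fft(ref, n)))
    return float(np.max(np.abs(corr)))

rates = {"A": 200.0, "B": 100.0}              # hypothetical quadratic-phase rates, Hz/s
centers = {"A": -0.05, "B": 0.05}             # hypothetical target positions in slow time, s

# Focus each target with each reference rate; matched pairs should give the sharpest peaks
peaks = {(tgt, ref): compress_peak(lfm(t, rates[tgt], centers[tgt]), t, rates[ref])
         for tgt in rates for ref in rates}
for (tgt, ref), p in sorted(peaks.items()):
    print(f"target {tgt}, reference rate of {ref}: peak = {p:.0f}")
```

A matched reference sums 950 overlapping samples coherently, whereas the mismatched reference leaves a residual quadratic phase that limits the peak to a small fraction of that, mirroring how a receiving surface positioned for one target defocuses the others.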

Table A1.

Velocities and locations of the targets.

| Target | (m/s) | (m/s) | Location |
|---|---|---|---|
| 1 | 10 | 0 | (−20,2550,0) |
| 2 | 10 | 1 | (−20,2650,0) |
| 3 | 4 | 4 | (20,2550,0) |
| 4 | 0 | 1 | (20,2650,0) |
| 5 | 0 | 0 | (0,2600,0) |

Figure A4.

Focusing effects of multiple moving point targets with different speeds. (a) Focusing parameters match target 1. (b) Focusing parameters match target 2. (c) Focusing parameters match target 3. (d) Focusing parameters match target 4.

References

- Lan, H.; Liu, X.; Li, L.; Li, Q.; Tian, N.; Peng, J. Remote Sensing Precursors Analysis for Giant Landslides. Remote Sens. 2022, 14, 4399.

- Pham, T.D.; Yokoya, N.; Bui, D.T.; Yoshino, K.; Friess, D.A. Remote Sensing Approaches for Monitoring Mangrove Species, Structure, and Biomass: Opportunities and Challenges. Remote Sens. 2019, 11, 230.

- Wang, C.; Chang, L.; Wang, X.S.; Zhang, B.; Stein, A. Interferometric Synthetic Aperture Radar Statistical Inference in Deformation Measurement and Geophysical Inversion: A review. IEEE Geosci. Remote Sens. Mag. 2024, 12, 8–35.

- Bovenga, F. Special Issue “Synthetic Aperture Radar (SAR) Techniques and Applications”. Sensors 2020, 20, 1851.

- Xie, T.; Ouyang, R.; Perrie, W.; Zhao, L.; Zhang, X. Proof and Application of Discriminating Ocean Oil Spills and Seawater Based on Polarization Ratio Using Quad-Polarization Synthetic Aperture Radar. Remote Sens. 2023, 15, 1855.

- Amitrano, D.; Di Martino, G.; Guida, R.; Iervolino, P.; Iodice, A.; Papa, M.N.; Riccio, D.; Ruello, G. Earth Environmental Monitoring Using Multi-Temporal Synthetic Aperture Radar: A Critical Review of Selected Applications. Remote Sens. 2021, 13, 604.

- Asiyabi, R.M.; Ghorbanian, A.; Tameh, S.N.; Amani, M.; Jin, S.; Mohammadzadeh, A. Synthetic Aperture Radar (SAR) for Ocean: A Review. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 9106–9138.

- Nunziata, F.; Meng, T.; Buono, A.; Migliaccio, M. Observing sea oil pollution using synthetic aperture radar measurements: From theory to applications. In Proceedings of the OCEANS 2023—Limerick, Limerick, Ireland, 5–8 June 2023; pp. 1–4.

- Zhang, Z.; Lin, H.; Wang, M.; Liu, X.; Chen, Q.; Wang, C.; Zhang, H. A Review of Satellite Synthetic Aperture Radar Interferometry Applications in Permafrost Regions: Current status, challenges, and trends. IEEE Geosci. Remote Sens. Mag. 2022, 10, 93–114.

- Cruz, H.; Véstias, M.; Monteiro, J.; Neto, H.; Duarte, R.P. A Review of Synthetic-Aperture Radar Image Formation Algorithms and Implementations: A Computational Perspective. Remote Sens. 2022, 14, 1258.

- Pi, Y.; Yang, J.; Fu, Y.; Yang, X. Principle of Synthetic Aperture Radar Imaging; University of Electronic Science and Technology of China Press: Chengdu, China, 2007.

- Munson, D.; O’Brien, J.; Jenkins, W.K. A Tomographic Formulation of Spotlight-Mode Synthetic Aperture Radar. Proc. IEEE 1983, 71, 917–925.

- Wu, C.; Liu, K.; Jin, M. Modeling and a Correlation Algorithm for Spaceborne SAR Signals. IEEE Trans. Aerosp. Electron. Syst. 1982, AES-18, 563–575.

- Raney, R.; Runge, H.; Bamler, R.; Cumming, I.; Wong, F. Precision SAR processing using chirp scaling. IEEE Trans. Geosci. Remote Sens. 1994, 32, 786–799.

- Mittermayer, J.; Moreira, A.; Loffeld, O. Spotlight SAR data processing using the frequency scaling algorithm. IEEE Trans. Geosci. Remote Sens. 1999, 37, 2198–2214.

- Raney, R.K. Synthetic Aperture Imaging Radar and Moving Targets. IEEE Trans. Aerosp. Electron. Syst. 1971, AES-7, 499–505.

- Sheng, W.; Mao, S. An Effective Method for Ground Moving Target Imaging and Location in SAR System. J. Electron. Inf. Technol. 2004, 26, 598–606.

- Freeman, A.; Currie, A. Synthetic aperture radar (SAR) images of moving targets. GEC J. Res. 1987, 5, 106–115.

- Barbarossa, S. Doppler-Rate Filtering For Detecting Moving Targets with Synthetic Aperture Radars. In Proceedings of the Defense, Security, and Sensing, Orlando, FL, USA, 27–28 March 1989.

- Barbarossa, S.; Farina, A. A novel procedure for detecting and focusing moving objects with SAR based on the Wigner-Ville distribution. In Proceedings of the IEEE International Conference on Radar, Arlington, VA, USA, 7–10 May 1990; pp. 44–50.

- Xia, X.; Wang, G.; Chen, V. Quantitative SNR analysis for ISAR imaging using joint time-frequency analysis-Short time Fourier transform. IEEE Trans. Aerosp. Electron. Syst. 2002, 38, 649–659.

- Barbarossa, S.; Farina, A. Detection and imaging of moving objects with synthetic aperture radar. Part 2: Joint time-frequency analysis by Wigner-Ville distribution. IEE Proc. F (Radar Signal Process.) 1992, 139, 89–97.

- Perry, R.; DiPietro, R.; Fante, R. SAR imaging of moving targets. IEEE Trans. Aerosp. Electron. Syst. 1999, 35, 188–200.

- Zhu, D.; Li, Y.; Zhu, Z. A Keystone Transform Without Interpolation for SAR Ground Moving-Target Imaging. IEEE Geosci. Remote Sens. Lett. 2007, 4, 18–22.

- Li, G.; Xia, X.G.; Peng, Y.N. Doppler Keystone Transform: An Approach Suitable for Parallel Implementation of SAR Moving Target Imaging. IEEE Geosci. Remote Sens. Lett. 2008, 5, 573–577.

- Huang, P.; Liao, G.; Yang, Z.; Xia, X.G.; Ma, J.T.; Ma, J. Long-Time Coherent Integration for Weak Maneuvering Target Detection and High-Order Motion Parameter Estimation Based on Keystone Transform. IEEE Trans. Signal Process. 2016, 64, 4013–4026.

- Zeng, C.; Li, D.; Luo, X.; Song, D.; Liu, H.; Su, J. Ground Maneuvering Targets Imaging for Synthetic Aperture Radar Based on Second-Order Keystone Transform and High-Order Motion Parameter Estimation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 4486–4501.

- Jao, J.K. Theory of synthetic aperture radar imaging of a moving target. IEEE Trans. Geosci. Remote Sens. 2001, 39, 1984–1992.

- Vu, V.T.; Sjogren, T.K.; Pettersson, M.I.; Gustavsson, A.; Ulander, L.M.H. Detection of Moving Targets by Focusing in UWB SAR—Theory and Experimental Results. IEEE Trans. Geosci. Remote Sens. 2010, 48, 3799–3815.

- Sjogren, T.K.; Vu, V.T.; Pettersson, M.I.; Gustavsson, A.; Ulander, L.M.H. Moving Target Relative Speed Estimation and Refocusing in Synthetic Aperture Radar Images. IEEE Trans. Aerosp. Electron. Syst. 2012, 48, 2426–2436.

- Vu, V.T.; Pettersson, M.I.; Sjögren, T.K. Moving Target Focusing in SAR Image With Known Normalized Relative Speed. IEEE Trans. Aerosp. Electron. Syst. 2017, 53, 854–861.

- Rosen, P.; Hensley, S.; Joughin, I.; Li, F.; Madsen, S.; Rodriguez, E.; Goldstein, R. Synthetic aperture radar interferometry. Proc. IEEE 2000, 88, 333–382.

- Cutrona, L.; Leith, E.; Porcello, L.; Vivian, W. On the application of coherent optical processing techniques to synthetic-aperture radar. Proc. IEEE 1966, 54, 1026–1032.

- Tomiyasu, K. Tutorial review of synthetic-aperture radar (SAR) with applications to imaging of the ocean surface. Proc. IEEE 1978, 66, 563–583.

- Marchese, L.; Bourqui, P.; Turgeon, S.; Harnisch, B.; Suess, M.; Doucet, M.; Turbide, S.; Bergeron, A. Extended capability overview of real-time optronic SAR processing. In Proceedings of the IET International Conference on Radar Systems (Radar 2012), Glasgow, UK, 22–25 October 2012; pp. 1–5.

- Wang, D.; Zhang, Y.; Yang, C.; Wang, K. Study on processing synthetic aperture radar data based on optical 4f system for fast imaging. Opt. Express 2022, 30, 44408–44419.

- Yang, C.; Zhang, Y.; Wang, D.; Wang, K. Compact optical real-time imaging system for high-resolution SAR data based on autofocusing. Opt. Commun. 2023, 546, 129751.

- Goodman, J.W. Introduction to Fourier Optics, 4th ed.; W. H. Freeman and Company: New York, NY, USA, 2017.

- Lv, N. Fourier Optics, 3rd ed.; China Machine Press: Beijing, China, 2016.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).