Meta-Analysis Assessing Potential of Drone Remote Sensing in Estimating Plant Traits Related to Nitrogen Use Efficiency

Abstract

1. Introduction

2. Materials and Methods

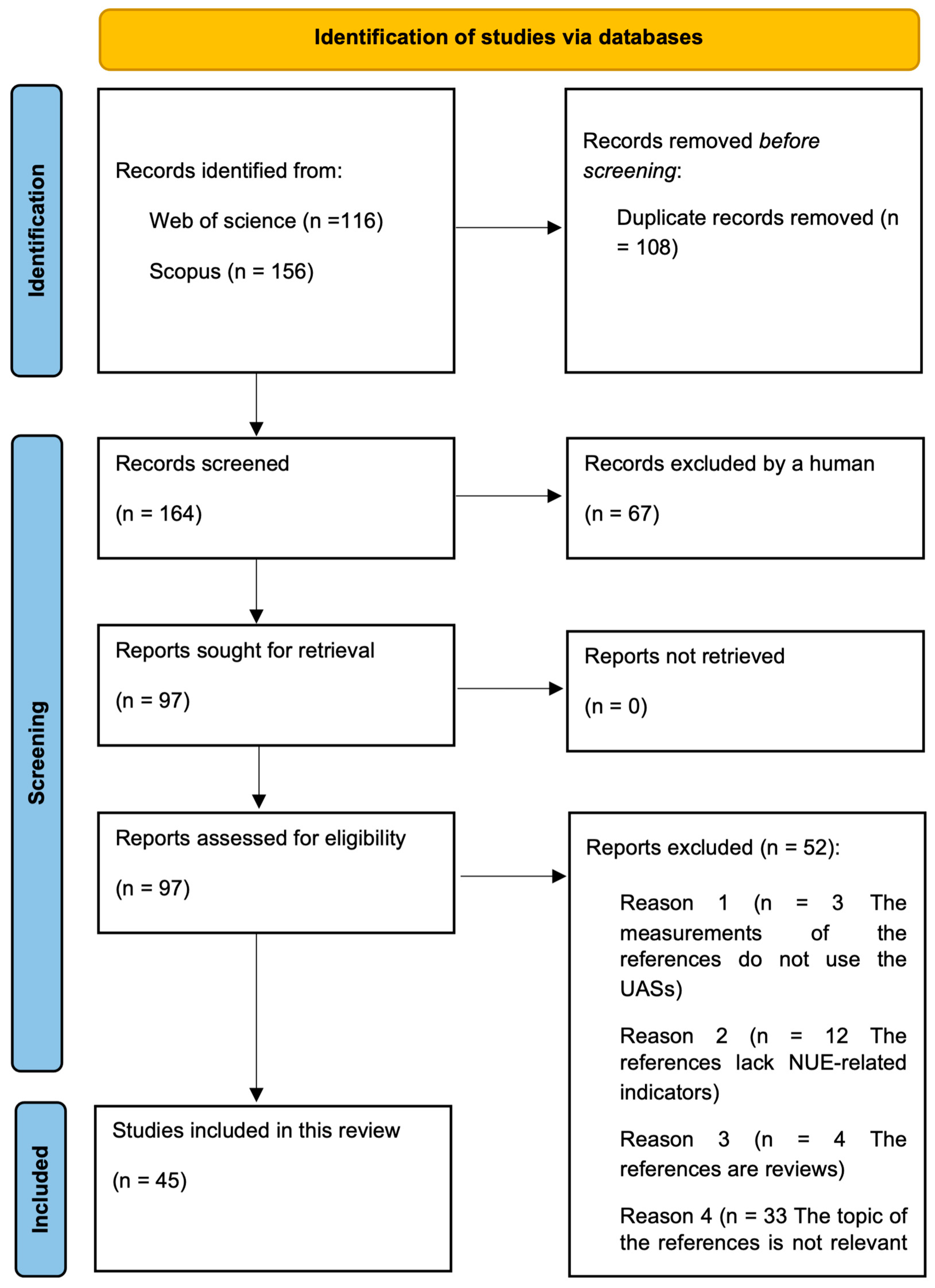

2.1. Literature Search

2.2. Data Extraction

- Sensor Types: We recorded four sensor categories as a potential influence on the accuracy of trait estimation: RGB, multispectral (MS), hyperspectral imaging (HSI), and a combination of RGB and MS sensors.

- Signal Processing Techniques: We grouped the techniques used to estimate vegetation characteristics into four categories, following [22]: Multivariate Linear Methods (e.g., Partial Least Squares Regression, Stepwise Multiple Linear Regression, and Multiple Linear Regression), Multivariate Non-Linear Methods (e.g., Random Forest and Support Vector Machine), Physically Based Approaches (utilizing specific formulas), and Univariate Methods (Vegetation Indices combined with either Linear or Non-Linear Regression).

- Model Evaluation Procedures: The existing literature reports model performance in two predominant ways, calibration and validation. Calibration accuracy is measured on the training data set used to fit the model. Validation accuracy is measured on an independent test data set and therefore indicates how well the model generalizes and how stable it is.

- Growth Stages: To standardize the data monitoring period across all studies, we converted the reported growth stages to the BBCH scale [23], a globally recognized scale for phenological staging in plants. We categorized the growth stages as follows: early stage (BBCH 0–30), mid-stage (BBCH 31–60), and late stage (BBCH 61–90). Additionally, we considered the entire growth period (BBCH 0–90) as a separate category. These categorizations were employed to assess the impact of different growth stages on the accuracy of plant trait prediction.

- Crop Types: The articles analyzed for prediction accuracy primarily focused on the following crops: winter wheat, maize, barley, winter oilseed rape, and rice. Each crop was treated as its own category to evaluate the effect of crop type on the accuracy of plant trait estimation.
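The coding of moderators described in the list above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' extraction script: the function names, the abbreviation keys (`PLSR`, `SMLR`, `MLR`, `RF`, `SVM`), and the rule that an observation window spanning more than one stage bin is assigned to the entire-period category are all assumptions made for the example. Only the BBCH bin boundaries and the category names come from the text.

```python
# Stage bins taken from the text: early 0-30, mid 31-60, late 61-90.
STAGE_BINS = [("early", 0, 30), ("mid", 31, 60), ("late", 61, 90)]

# Hypothetical abbreviation keys; the category labels follow the text.
METHOD_CATEGORIES = {
    "PLSR": "multivariate linear",
    "SMLR": "multivariate linear",
    "MLR": "multivariate linear",
    "RF": "multivariate non-linear",
    "SVM": "multivariate non-linear",
}


def growth_stage_category(bbch_start: int, bbch_end: int) -> str:
    """Map an observation window on the BBCH scale to a stage category."""
    if not 0 <= bbch_start <= bbch_end <= 90:
        raise ValueError("expected 0 <= bbch_start <= bbch_end <= 90")
    for name, low, high in STAGE_BINS:
        if low <= bbch_start and bbch_end <= high:
            return name
    # Assumption: a window that crosses a bin boundary counts as "entire".
    return "entire"


def method_category(abbreviation: str) -> str:
    """Look up the method category for a (hypothetical) abbreviation."""
    return METHOD_CATEGORIES[abbreviation.upper()]


if __name__ == "__main__":
    print(growth_stage_category(10, 25))  # window inside the early bin
    print(growth_stage_category(0, 90))   # whole growth period
    print(method_category("rf"))
```

Coding each study this way yields one categorical value per moderator, which is the form needed when moderators are later entered as fixed effects.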

2.3. Data Analysis

2.3.1. Data Transformation and Standardization

2.3.2. Model Formulation: Three-Level Random Effects Model

2.3.3. Incorporating Moderator Variables (Fixed Effect)

2.3.4. Assessing Variability and Intra-Class Correlation

2.3.5. Evaluating the Explained Variance

3. Results

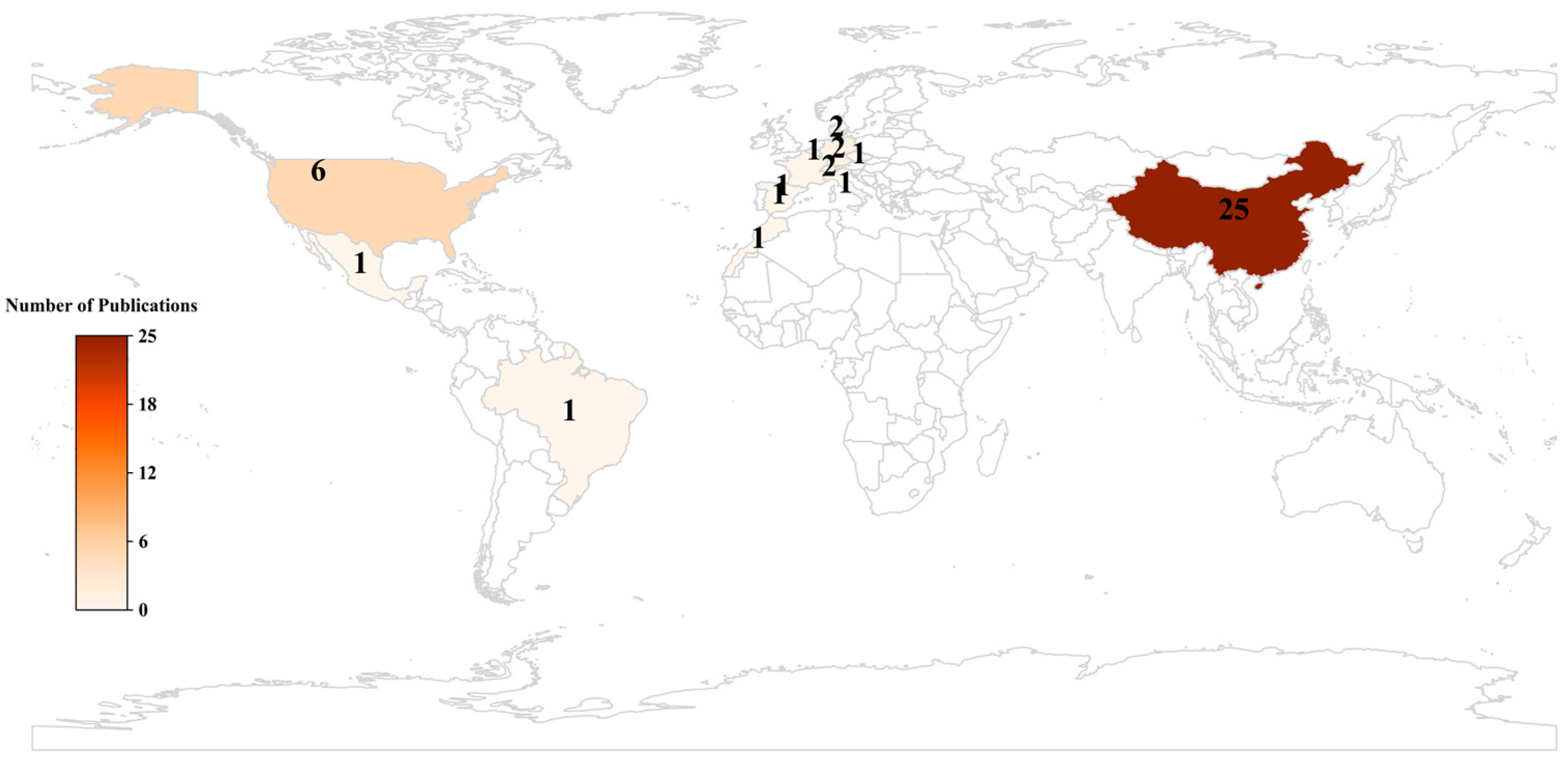

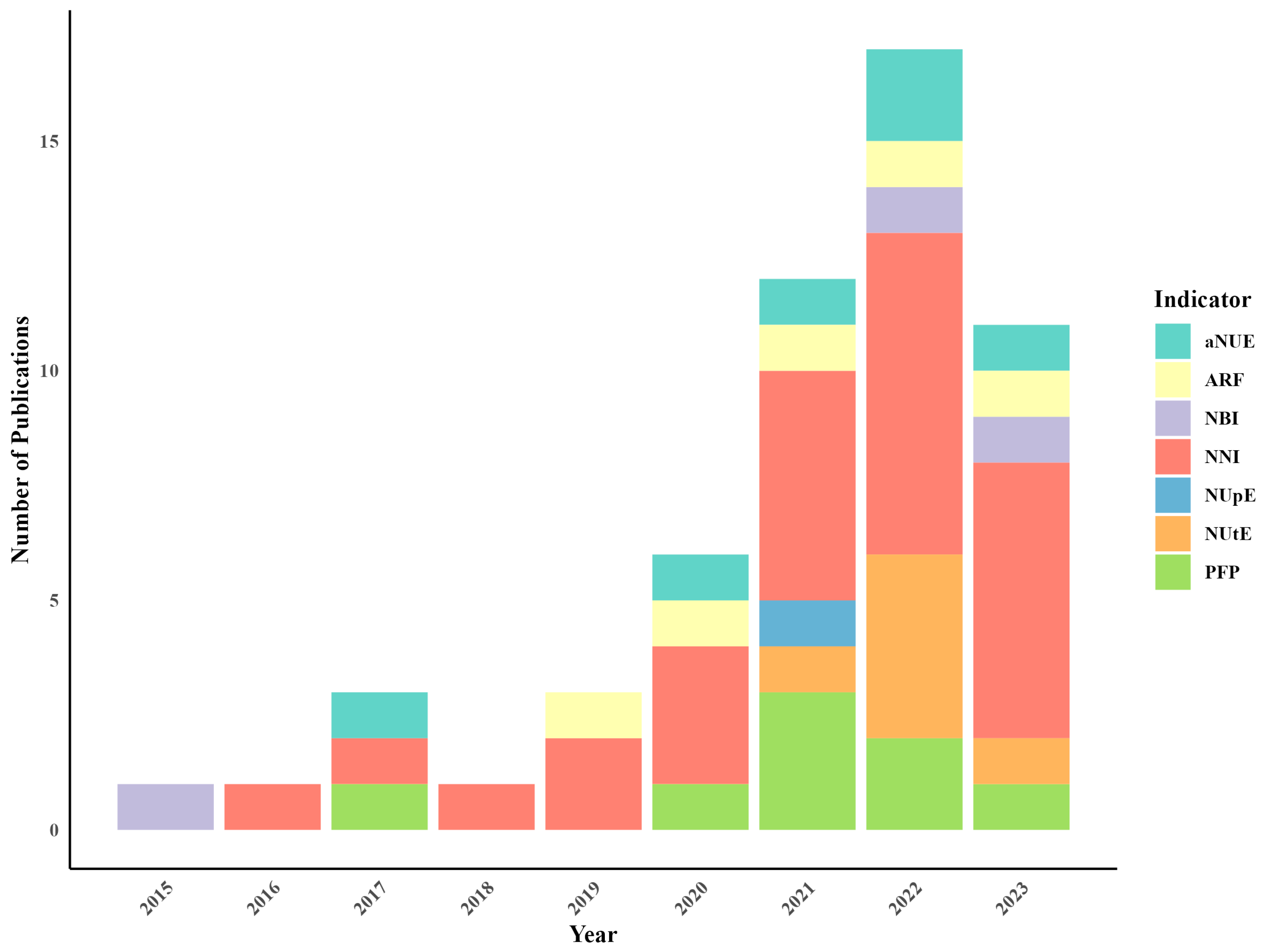

3.1. Geographical Distribution and Research Trends

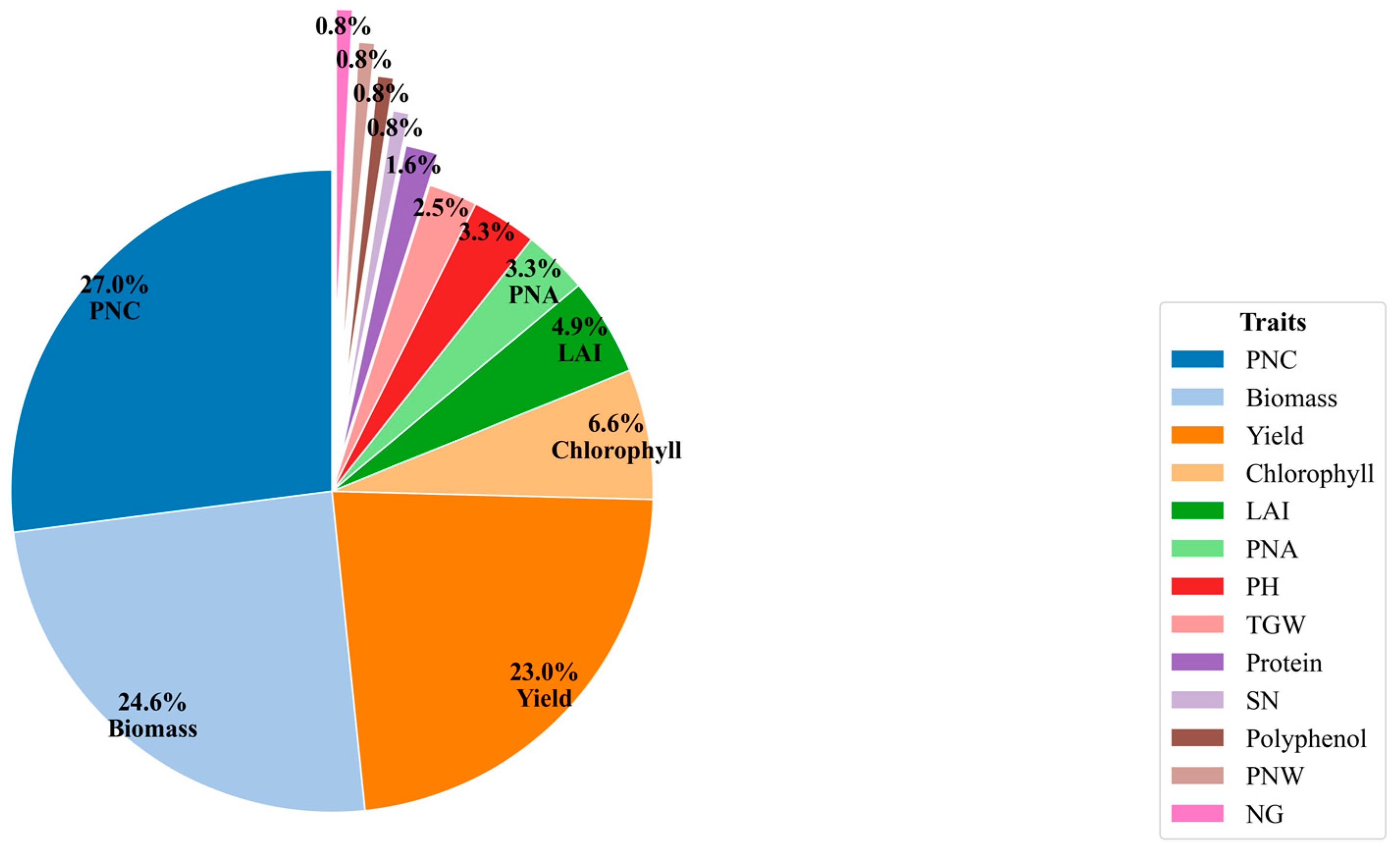

3.2. Comparing Indicators for Assessing NUE

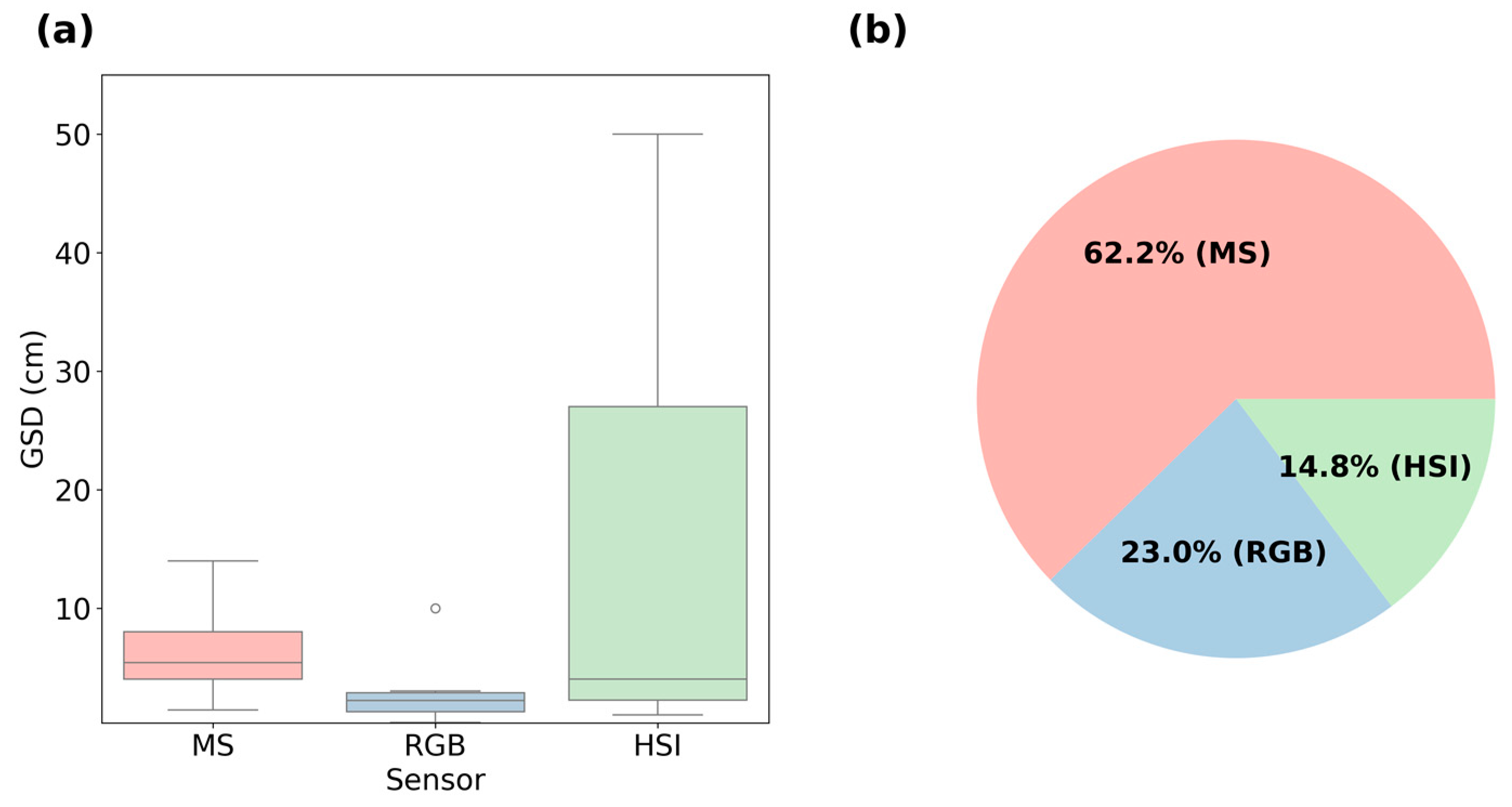

3.3. Specifications and Ground Sampling Distance (GSD)

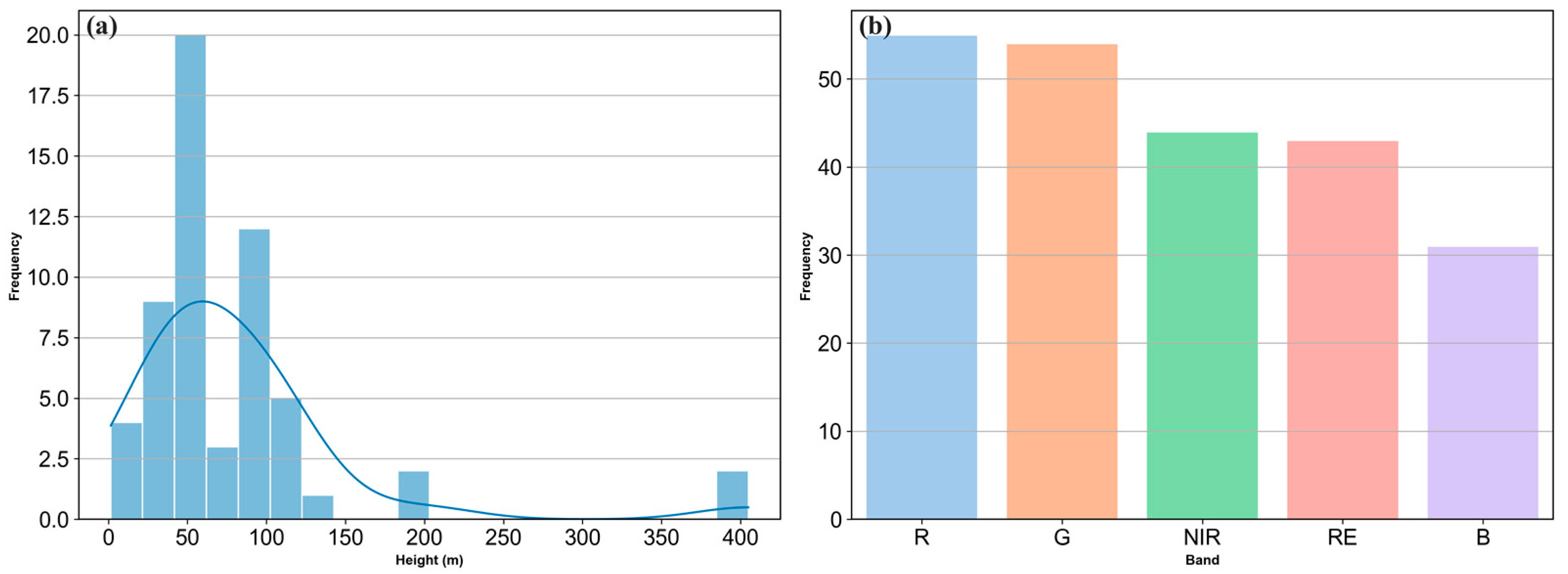

3.4. Flight Parameters and Spectral Characteristics

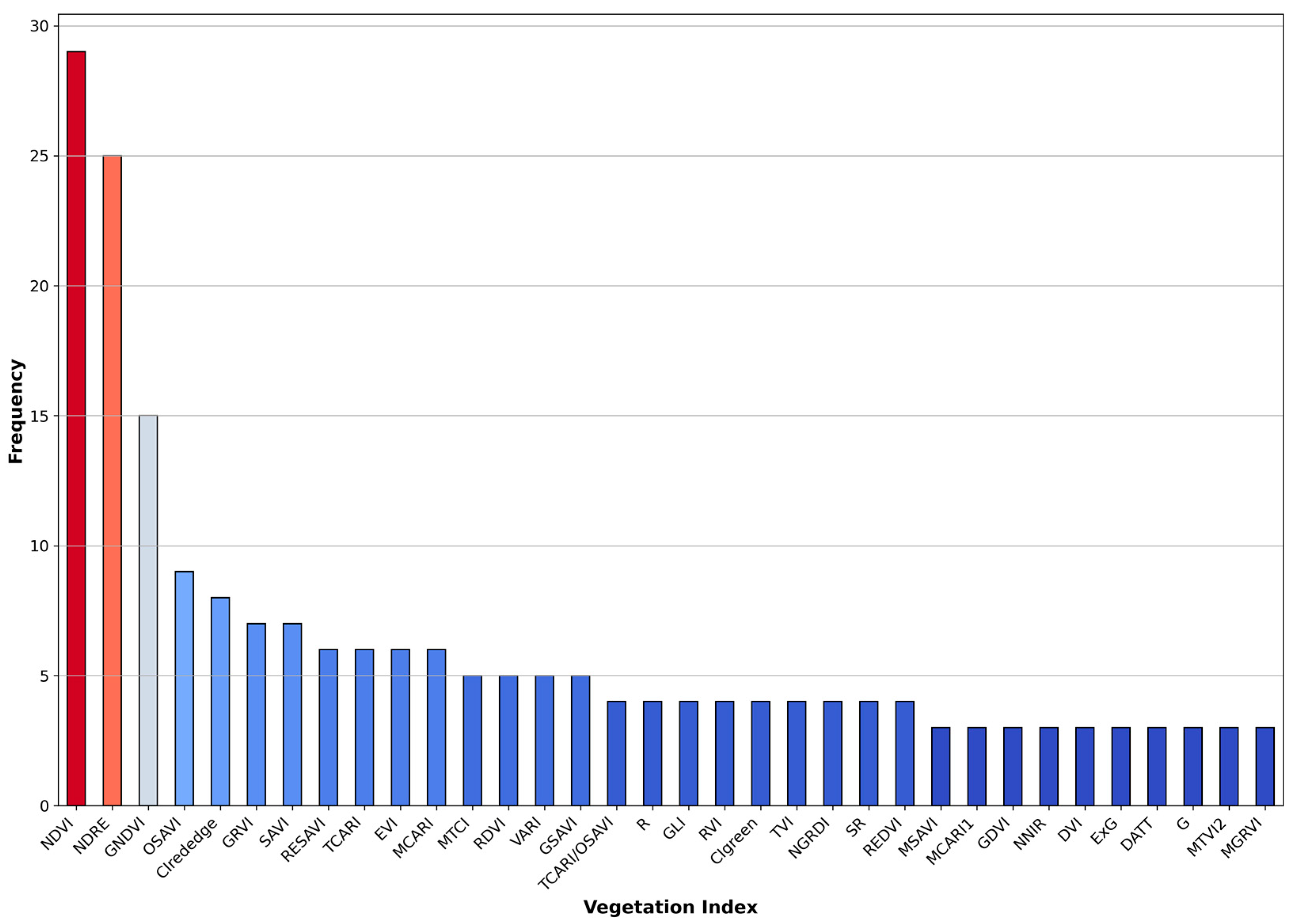

3.5. Commonly Used Vegetation Indices in NUE Assessment

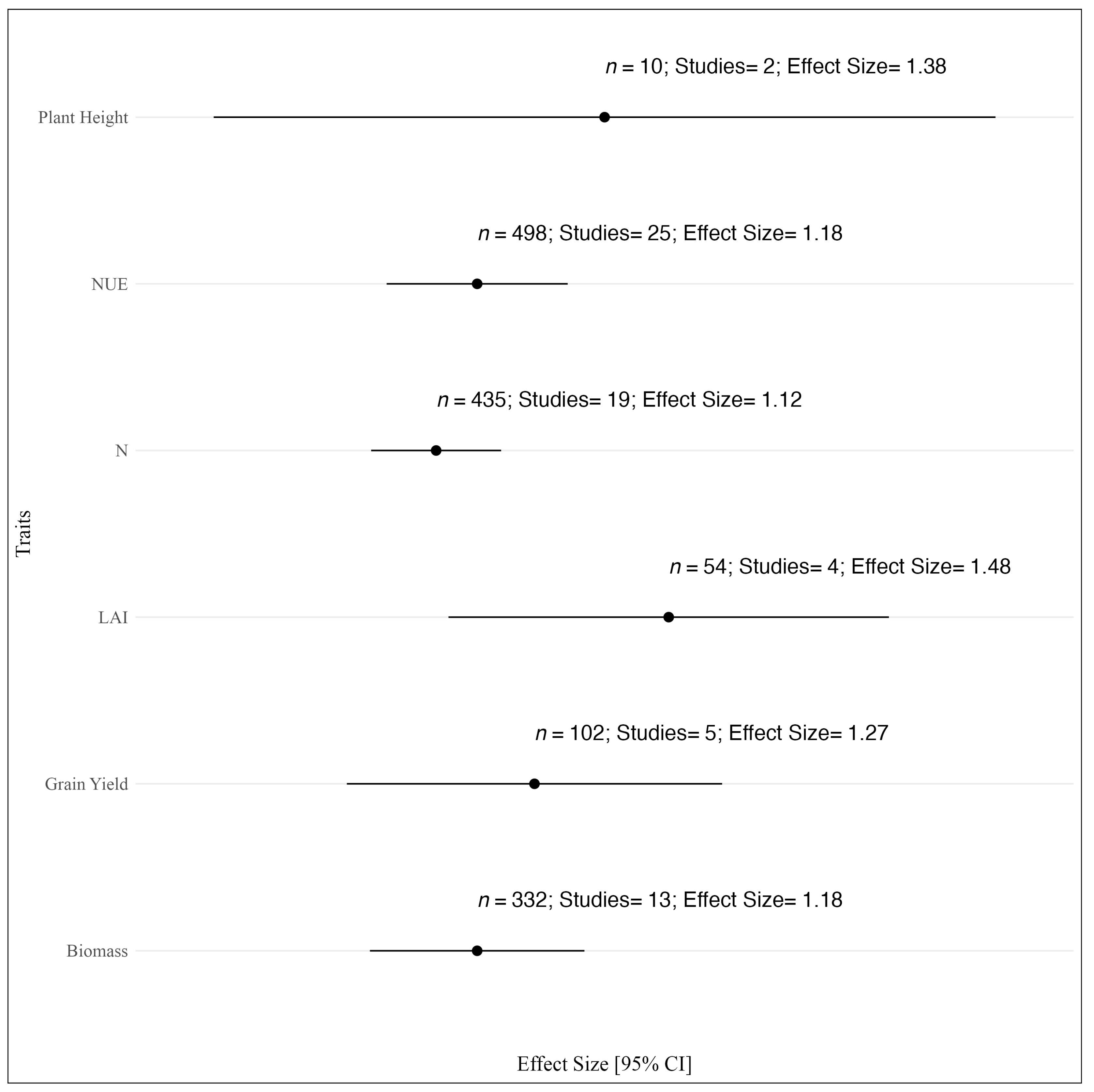

3.6. UAV-Based Trait Estimation for NUE Analysis

4. Challenges and New Opportunities for UAV Remote Sensing in NUE

4.1. Accounting for the Effects of Phenological Variations on Spectral Data and Indicators of NUE

4.2. Correcting Canopy Structural Effects on UAV-Derived NUE Estimates

4.3. Advancing Remote Sensing through Imagery Data Fusion and AI-Driven Feature Analysis

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Anas, M.; Liao, F.; Verma, K.K.; Sarwar, M.A.; Mahmood, A.; Chen, Z.-L.; Li, Q.; Zeng, X.-P.; Liu, Y.; Li, Y.-R. Fate of nitrogen in agriculture and environment: Agronomic, eco-physiological and molecular approaches to improve nitrogen use efficiency. Biol. Res. 2020, 53, 47.

- Ahmed, M.; Rauf, M.; Mukhtar, Z.; Saeed, N.A. Excessive use of nitrogenous fertilizers: An unawareness causing serious threats to environment and human health. Environ. Sci. Pollut. Res. 2017, 24, 26983–26987.

- EU Nitrogen Expert Panel. Nitrogen Use Efficiency (NUE): An Indicator for the Utilization of Nitrogen in Agriculture and Food Systems; Wageningen University: Wageningen, The Netherlands, 2015.

- Li, Y.; Li, B.; Yuan, Y.; Liu, Y.; Li, R.; Liu, W. Improved soil surface nitrogen balance method for assessing nutrient use efficiency and potential environmental impacts within an alpine meadow dominated region. Environ. Pollut. 2023, 325, 121446.

- Scheer, C.; Rowlings, D.W.; Antille, D.L.; De Antoni Migliorati, M.; Fuchs, K.; Grace, P.R. Improving nitrogen use efficiency in irrigated cotton production. Nutr. Cycl. Agroecosyst. 2023, 125, 95–106.

- Stahl, A.; Friedt, W.; Wittkop, B.; Snowdon, R.J. Complementary diversity for nitrogen uptake and utilisation efficiency reveals broad potential for increased sustainability of oilseed rape production. Plant Soil 2016, 400, 245–262.

- Wan, X.; Wu, W.; Shah, F. Nitrogen fertilizer management for mitigating ammonia emission and increasing nitrogen use efficiencies by 15N stable isotopes in winter wheat. Sci. Total Environ. 2021, 790, 147587.

- Han, L.; Yang, G.; Dai, H.; Xu, B.; Yang, H.; Feng, H.; Li, Z.; Yang, X. Modeling maize above-ground biomass based on machine learning approaches using UAV remote-sensing data. Plant Methods 2019, 15, 10.

- Brinkhoff, J.; Dunn, B.W.; Robson, A.J.; Dunn, T.S.; Dehaan, R.L. Modeling Mid-Season Rice Nitrogen Uptake Using Multispectral Satellite Data. Remote Sens. 2019, 11, 1837.

- Hegedus, P.B.; Ewing, S.A.; Jones, C.; Maxwell, B.D. Using spatially variable nitrogen application and crop responses to evaluate crop nitrogen use efficiency. Nutr. Cycl. Agroecosyst. 2023, 126, 1–20.

- Li, J.-L.; Su, W.-H.; Zhang, H.-Y.; Peng, Y. A real-time smart sensing system for automatic localization and recognition of vegetable plants for weed control. Front. Plant Sci. 2023, 14, 1133969.

- Ludovisi, R.; Tauro, F.; Salvati, R.; Khoury, S.; Mugnozza Scarascia, G.; Harfouche, A. UAV-Based Thermal Imaging for High-Throughput Field Phenotyping of Black Poplar Response to Drought. Front. Plant Sci. 2017, 8, 1681.

- Zhou, X.; Zheng, H.B.; Xu, X.Q.; He, J.Y.; Ge, X.K.; Yao, X.; Cheng, T.; Zhu, Y.; Cao, W.X.; Tian, Y.C. Predicting grain yield in rice using multi-temporal vegetation indices from UAV-based multispectral and digital imagery. ISPRS J. Photogramm. Remote Sens. 2017, 130, 246–255.

- Gašparović, M.; Zrinjski, M.; Barković, Đ.; Radočaj, D. An automatic method for weed mapping in oat fields based on UAV imagery. Comput. Electron. Agric. 2020, 173, 105385.

- Zha, H.; Miao, Y.; Wang, T.; Li, Y.; Zhang, J.; Sun, W.; Feng, Z.; Kusnierek, K. Improving Unmanned Aerial Vehicle Remote Sensing-Based Rice Nitrogen Nutrition Index Prediction with Machine Learning. Remote Sens. 2020, 12, 215.

- Wahab, I.; Hall, O.; Jirström, M. Remote Sensing of Yields: Application of UAV Imagery-Derived NDVI for Estimating Maize Vigor and Yields in Complex Farming Systems in Sub-Saharan Africa. Drones 2018, 2, 28.

- Yang, M.J.; Hassan, M.A.; Xu, K.J.; Zheng, C.Y.; Rasheed, A.; Zhang, Y.; Jin, X.L.; Xia, X.C.; Xiao, Y.G.; He, Z.H. Assessment of Water and Nitrogen Use Efficiencies Through UAV-Based Multispectral Phenotyping in Winter Wheat. Front. Plant Sci. 2020, 11, 927.

- Liang, T.; Duan, B.; Luo, X.Y.; Ma, Y.; Yuan, Z.Q.; Zhu, R.S.; Peng, Y.; Gong, Y.; Fang, S.H.; Wu, X.T. Identification of High Nitrogen Use Efficiency Phenotype in Rice (Oryza sativa L.) Through Entire Growth Duration by Unmanned Aerial Vehicle Multispectral Imagery. Front. Plant Sci. 2021, 12, 740414.

- Quan, Z.; Zhang, X.; Fang, Y.; Davidson, E.A. Different quantification approaches for nitrogen use efficiency lead to divergent estimates with varying advantages. Nat. Food 2021, 2, 241–245.

- Cormier, F.; Foulkes, J.; Hirel, B.; Gouache, D.; Moënne-Loccoz, Y.; Le Gouis, J. Breeding for increased nitrogen-use efficiency: A review for wheat (T. aestivum L.). Plant Breed. 2016, 135, 255–278.

- Hawkesford, M.J. Genetic variation in traits for nitrogen use efficiency in wheat. J. Exp. Bot. 2017, 68, 2627–2632.

- Van Cleemput, E.; Vanierschot, L.; Fernández-Castilla, B.; Honnay, O.; Somers, B. The functional characterization of grass- and shrubland ecosystems using hyperspectral remote sensing: Trends, accuracy and moderating variables. Remote Sens. Environ. 2018, 209, 747–763.

- Finn, G.A.; Straszewski, A.E.; Peterson, V. A general growth stage key for describing trees and woody plants. Ann. Appl. Biol. 2007, 151, 127–131.

- Liu, L.; Li, H.; Zhu, S.; Gao, Y.; Zheng, X.; Xu, Y. The response of agronomic characters and rice yield to organic fertilization in subtropical China: A three-level meta-analysis. Field Crops Res. 2021, 263, 108049.

- Wang, Y.; Li, Y.; Liang, J.; Bi, Y.; Wang, S.; Shang, Y. Climatic Changes and Anthropogenic Activities Driving the Increase in Nitrogen: Evidence from the South-to-North Water Diversion Project. Water 2021, 13, 2517.

- Mastrocicco, M.; Colombani, N.; Soana, E.; Vincenzi, F.; Castaldelli, G. Intense rainfalls trigger nitrite leaching in agricultural soils depleted in organic matter. Sci. Total Environ. 2019, 665, 80–90.

- Lu, Y.; Li, P.; Li, M.; Wen, M.; Wei, H.; Zhang, Z. Coupled Dynamics of Soil Water and Nitrate in the Conversion of Wild Grassland to Farmland and Apple Orchard in the Loess Drylands. Agronomy 2023, 13, 1711.

- Li, J.W.; Zhang, F.; Qian, X.Y.; Zhu, Y.H.; Shen, G.X. Quantification of rice canopy nitrogen balance index with digital imagery from unmanned aerial vehicle. Remote Sens. Lett. 2015, 6, 183–189.

- Schirrmann, M.; Giebel, A.; Gleiniger, F.; Pflanz, M.; Lentschke, J.; Dammer, K.H. Monitoring Agronomic Parameters of Winter Wheat Crops with Low-Cost UAV Imagery. Remote Sens. 2016, 8, 706.

- Thompson, L.J.; Puntel, L.A. Transforming Unmanned Aerial Vehicle (UAV) and Multispectral Sensor into a Practical Decision Support System for Precision Nitrogen Management in Corn. Remote Sens. 2020, 12, 1597.

- Kefauver, S.C.; Vicente, R.; Vergara-Diaz, O.; Fernandez-Gallego, J.A.; Kerfal, S.; Lopez, A.; Melichar, J.P.E.; Molins, M.D.S.; Araus, J.L. Comparative UAV and Field Phenotyping to Assess Yield and Nitrogen Use Efficiency in Hybrid and Conventional Barley. Front. Plant Sci. 2017, 8, 1733.

- Argento, F.; Anken, T.; Abt, F.; Vogelsanger, E.; Walter, A.; Liebisch, F. Site-specific nitrogen management in winter wheat supported by low-altitude remote sensing and soil data. Precis. Agric. 2021, 22, 364–386.

- Hu, D.-W.; Sun, Z.-P.; Li, T.-L.; Yan, H.-Z.; Zhang, H. Nitrogen Nutrition Index and Its Relationship with N Use Efficiency, Tuber Yield, Radiation Use Efficiency, and Leaf Parameters in Potatoes. J. Integr. Agric. 2014, 13, 1008–1016.

- Song, X.Y.; Yang, G.J.; Xu, X.G.; Zhang, D.Y.; Yang, C.H.; Feng, H.K. Winter Wheat Nitrogen Estimation Based on Ground-Level and UAV-Mounted Sensors. Sensors 2022, 22, 549.

- Dong, R.; Miao, Y.; Wang, X.; Chen, Z.; Yuan, F. Improving maize nitrogen nutrition index prediction using leaf fluorescence sensor combined with environmental and management variables. Field Crops Res. 2021, 269, 108180.

- Wang, J.; Meyer, S.; Xu, X.; Weisser, W.W.; Yu, K. Drone Multispectral Imaging Captures the Effects of Soil Nmin on Canopy Structure and Nitrogen Use Efficiency in Wheat. Available online: https://ssrn.com/abstract=4699313 (accessed on 18 January 2024).

- Olson, M.B.; Crawford, M.M.; Vyn, T.J. Hyperspectral Indices for Predicting Nitrogen Use Efficiency in Maize Hybrids. Remote Sens. 2022, 14, 1721.

- Luo, H.Z.; Dewitte, K.; Landschoot, S.; Sigurnjak, I.; Robles-Aguilar, A.A.; Michels, E.; De Neve, S.; Haesaert, G.; Meers, E. Benefits of biobased fertilizers as substitutes for synthetic nitrogen fertilizers: Field assessment combining minirhizotron and UAV-based spectrum sensing technologies. Front. Environ. Sci. 2022, 10, 988932.

- Fageria, N.K.; Baligar, V.C. Enhancing Nitrogen Use Efficiency in Crop Plants. Adv. Agron. 2005, 88, 97–185.

- Gianquinto, G.; Orsini, F.; Fecondini, M.; Mezzetti, M.; Sambo, P.; Bona, S. A methodological approach for defining spectral indices for assessing tomato nitrogen status and yield. Eur. J. Agron. 2011, 35, 135–143.

- Li, W.; Wu, W.; Yu, M.; Tao, H.; Yao, X.; Cheng, T.; Zhu, Y.; Cao, W.; Tian, Y. Monitoring rice grain protein accumulation dynamics based on UAV multispectral data. Field Crops Res. 2023, 294, 108858.

- Xu, G.; Fan, X.; Miller, A.J. Plant Nitrogen Assimilation and Use Efficiency. Annu. Rev. Plant Biol. 2012, 63, 153–182.

- Guo, X.; He, H.; An, R.; Zhang, Y.; Yang, R.; Cao, L.; Wu, X.; Chen, B.; Tian, H.; Gao, Y. Nitrogen use-inefficient oilseed rape genotypes exhibit stronger growth potency during the vegetative growth stage. Acta Physiol. Plant. 2019, 41, 175.

- Bendig, J.; Yu, K.; Aasen, H.; Bolten, A.; Bennertz, S.; Broscheit, J.; Gnyp, M.L.; Bareth, G. Combining UAV-based plant height from crop surface models, visible, and near infrared vegetation indices for biomass monitoring in barley. Int. J. Appl. Earth Obs. Geoinf. 2015, 39, 79–87.

- Jia, M.; Colombo, R.; Rossini, M.; Celesti, M.; Zhu, J.; Cogliati, S.; Cheng, T.; Tian, Y.; Zhu, Y.; Cao, W.; et al. Estimation of leaf nitrogen content and photosynthetic nitrogen use efficiency in wheat using sun-induced chlorophyll fluorescence at the leaf and canopy scales. Eur. J. Agron. 2021, 122, 126192.

- Liu, J.K.; Zhu, Y.J.; Tao, X.Y.; Chen, X.F.; Li, X.W. Rapid prediction of winter wheat yield and nitrogen use efficiency using consumer-grade unmanned aerial vehicles multispectral imagery. Front. Plant Sci. 2022, 13, 1032170.

- Liu, S.S.; Li, L.T.; Gao, W.H.; Zhang, Y.K.; Liu, Y.N.; Wang, S.Q.; Lu, J.W. Diagnosis of nitrogen status in winter oilseed rape (Brassica napus L.) using in-situ hyperspectral data and unmanned aerial vehicle (UAV) multispectral images. Comput. Electron. Agric. 2018, 151, 185–195.

- Qian, Y.-G.; Wang, N.; Ma, L.-L.; Liu, Y.-K.; Wu, H.; Tang, B.-H.; Tang, L.-L.; Li, C.-R. Land surface temperature retrieved from airborne multispectral scanner mid-infrared and thermal-infrared data. Opt. Express 2016, 24, A257–A269.

- Arroyo, J.A.; Gomez-Castaneda, C.; Ruiz, E.; de Cote, E.M.; Gavi, F.; Sucar, L.E. Assessing Nitrogen Nutrition in Corn Crops with Airborne Multispectral Sensors. In Proceedings of the Advances in Artificial Intelligence: From Theory to Practice (IEA/AIE 2017), PT II, Arras, France, 27–30 June 2017; pp. 259–267.

- Chen, Z.C.; Miao, Y.X.; Lu, J.J.; Zhou, L.; Li, Y.; Zhang, H.Y.; Lou, W.D.; Zhang, Z.; Kusnierek, K.; Liu, C.H. In-Season Diagnosis of Winter Wheat Nitrogen Status in Smallholder Farmer Fields Across a Village Using Unmanned Aerial Vehicle-Based Remote Sensing. Agronomy 2019, 9, 619.

- Zhang, J.Y.; Wang, W.K.; Krienke, B.; Cao, Q.; Zhu, Y.; Cao, W.X.; Liu, X.J. In-season variable rate nitrogen recommendation for wheat precision production supported by fixed-wing UAV imagery. Precis. Agric. 2022, 23, 830–853.

- Heinemann, P.; Schmidhalter, U. Spectral assessments of N-related maize traits: Evaluating and defining agronomic relevant detection limits. Field Crops Res. 2022, 289, 108710.

- Bernabe, S.; Sanchez, S.; Plaza, A.; Lopez, S.; Benediktsson, J.A.; Sarmiento, R. Hyperspectral Unmixing on GPUs and Multi-Core Processors: A Comparison. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2013, 6, 1386–1398.

- Sánchez, S.; Paz, A.; Martín, G.; Plaza, A. Parallel unmixing of remotely sensed hyperspectral images on commodity graphics processing units. Concurr. Comput. Pract. Exp. 2011, 23, 1538–1557.

- Liu, H.Y.; Zhu, H.C.; Li, Z.H.; Yang, G.J. Quantitative analysis and hyperspectral remote sensing of the nitrogen nutrition index in winter wheat. Int. J. Remote Sens. 2020, 41, 858–881.

- Du, R.; Chen, J.; Xiang, Y.; Zhang, Z.; Yang, N.; Yang, X.; Tang, Z.; Wang, H.; Wang, X.; Shi, H.; et al. Incremental learning for crop growth parameters estimation and nitrogen diagnosis from hyperspectral data. Comput. Electron. Agric. 2023, 215, 108356.

- Wang, L.; Gao, R.; Li, C.; Wang, J.; Liu, Y.; Hu, J.; Li, B.; Qiao, H.; Feng, H.; Yue, J. Mapping Soybean Maturity and Biochemical Traits Using UAV-Based Hyperspectral Images. Remote Sens. 2023, 15, 4807.

- Jiang, J.; Zhang, Z.Y.; Cao, Q.; Liang, Y.; Krienke, B.; Tian, Y.C.; Zhu, Y.; Cao, W.X.; Liu, X.J. Use of an Active Canopy Sensor Mounted on an Unmanned Aerial Vehicle to Monitor the Growth and Nitrogen Status of Winter Wheat. Remote Sens. 2020, 12, 3684.

- Pereira, F.R.D.; de Lima, J.P.; Freitas, R.G.; Dos Reis, A.A.; do Amaral, L.R.; Figueiredo, G.; Lamparelli, R.A.C.; Magalhaes, P.S.G. Nitrogen variability assessment of pasture fields under an integrated crop-livestock system using UAV, PlanetScope, and Sentinel-2 data. Comput. Electron. Agric. 2022, 193, 106645.

- Gong, Y.; Yang, K.; Lin, Z.; Fang, S.; Wu, X.; Zhu, R.; Peng, Y. Remote estimation of leaf area index (LAI) with unmanned aerial vehicle (UAV) imaging for different rice cultivars throughout the entire growing season. Plant Methods 2021, 17, 88.

- Vukasovic, S.; Alahmad, S.; Christopher, J.; Snowdon, R.J.; Stahl, A.; Hickey, L.T. Dissecting the Genetics of Early Vigour to Design Drought-Adapted Wheat. Front. Plant Sci. 2021, 12, 754439.

- Zheng, T.; Liu, N.; Wu, L.; Li, M.; Sun, H.; Zhang, Q.; Wu, J. Estimation of Chlorophyll Content in Potato Leaves Based on Spectral Red Edge Position. IFAC-PapersOnLine 2018, 51, 602–606.

- Kochetova, G.V.; Avercheva, O.V.; Bassarskaya, E.M.; Kushunina, M.A.; Zhigalova, T.V. Effects of Red and Blue LED Light on the Growth and Photosynthesis of Barley (Hordeum vulgare L.) Seedlings. J. Plant Growth Regul. 2023, 42, 1804–1820.

- da Costa, M.B.T.; Silva, C.A.; Broadbent, E.N.; Leite, R.V.; Mohan, M.; Liesenberg, V.; Stoddart, J.; do Amaral, C.H.; de Almeida, D.R.A.; da Silva, A.L.; et al. Beyond trees: Mapping total aboveground biomass density in the Brazilian savanna using high-density UAV-lidar data. For. Ecol. Manag. 2021, 491, 119155.

- Gu, Y.; Wylie, B.K.; Howard, D.M.; Phuyal, K.P.; Ji, L. NDVI saturation adjustment: A new approach for improving cropland performance estimates in the Greater Platte River Basin, USA. Ecol. Indic. 2013, 30, 1–6.

- Wang, H.; Mortensen, A.K.; Mao, P.S.; Boelt, B.; Gislum, R. Estimating the nitrogen nutrition index in grass seed crops using a UAV-mounted multispectral camera. Int. J. Remote Sens. 2019, 40, 2467–2482.

- Roy Choudhury, M.; Christopher, J.; Das, S.; Apan, A.; Menzies, N.W.; Chapman, S.; Mellor, V.; Dang, Y.P. Detection of calcium, magnesium, and chlorophyll variations of wheat genotypes on sodic soils using hyperspectral red edge parameters. Environ. Technol. Innov. 2022, 27, 102469.

- Singh, S.; Pandey, P.; Khan, M.S.; Semwal, M. Multi-temporal High Resolution Unmanned Aerial Vehicle (UAV) Multispectral Imaging for Menthol Mint Crop Monitoring. In Proceedings of the 2021 6th International Conference for Convergence in Technology (I2CT), Maharashtra, India, 2–4 April 2021; pp. 1–4.

- Peng, J.X.; Manevski, K.; Korup, K.; Larsen, R.; Andersen, M.N. Random forest regression results in accurate assessment of potato nitrogen status based on multispectral data from different platforms and the critical concentration approach. Field Crops Res. 2021, 268, 108158.

- Huang, S.; Miao, Y.; Zhao, G.; Ma, X.; Tan, C.; Bareth, G.; Rascher, U.; Yuan, F. Estimating rice nitrogen status with satellite remote sensing in Northeast China. In Proceedings of the 2013 Second International Conference on Agro-Geoinformatics (Agro-Geoinformatics), Fairfax, VA, USA, 12–16 August 2013; pp. 550–557.

- Ren, H.; Zhou, G. Determination of green aboveground biomass in desert steppe using litter-soil-adjusted vegetation index. Eur. J. Remote Sens. 2014, 47, 611–625.

- Pei, S.-Z.; Zeng, H.-L.; Dai, Y.-L.; Bai, W.-Q.; Fan, J.-L. Nitrogen nutrition diagnosis for cotton under mulched drip irrigation using unmanned aerial vehicle multispectral images. J. Integr. Agric. 2023, 22, 2536–2552.

- Lei, S.; Luo, J.; Tao, X.; Qiu, Z. Remote Sensing Detecting of Yellow Leaf Disease of Arecanut Based on UAV Multisource Sensors. Remote Sens. 2021, 13, 4562.

- Gevaert, C.M.; Suomalainen, J.; Tang, J.; Kooistra, L. Generation of Spectral–Temporal Response Surfaces by Combining Multispectral Satellite and Hyperspectral UAV Imagery for Precision Agriculture Applications. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 3140–3146.

- Wang, L.; Chen, S.; Li, D.; Wang, C.; Jiang, H.; Zheng, Q.; Peng, Z. Estimation of Paddy Rice Nitrogen Content and Accumulation Both at Leaf and Plant Levels from UAV Hyperspectral Imagery. Remote Sens. 2021, 13, 2956.

- Costa, L.; Nunes, L.; Ampatzidis, Y. A new visible band index (vNDVI) for estimating NDVI values on RGB images utilizing genetic algorithms. Comput. Electron. Agric. 2020, 172, 105334.

- Sakamoto, T.; Gitelson, A.A.; Wardlow, B.D.; Arkebauer, T.J.; Verma, S.B.; Suyker, A.E.; Shibayama, M. Application of day and night digital photographs for estimating maize biophysical characteristics. Precis. Agric. 2012, 13, 285–301.

- Qiu, Z.C.; Ma, F.; Li, Z.W.; Xu, X.B.; Du, C.W. Development of Prediction Models for Estimating Key Rice Growth Variables Using Visible and NIR Images from Unmanned Aerial Systems. Remote Sens. 2022, 14, 1384.

- Han, S.Y.; Zhao, Y.; Cheng, J.P.; Zhao, F.; Yang, H.; Feng, H.K.; Li, Z.H.; Ma, X.M.; Zhao, C.J.; Yang, G.J. Monitoring Key Wheat Growth Variables by Integrating Phenology and UAV Multispectral Imagery Data into Random Forest Model. Remote Sens. 2022, 14, 3723.

- Matsushita, B.; Yang, W.; Chen, J.; Onda, Y.; Qiu, G. Sensitivity of the Enhanced Vegetation Index (EVI) and Normalized Difference Vegetation Index (NDVI) to Topographic Effects: A Case Study in High-density Cypress Forest. Sensors 2007, 7, 2636–2651.

- Liang, L.; Di, L.; Zhang, L.; Deng, M.; Qin, Z.; Zhao, S.; Lin, H. Estimation of crop LAI using hyperspectral vegetation indices and a hybrid inversion method. Remote Sens. Environ. 2015, 165, 123–134.

- Astaoui, G.; Dadaiss, J.E.; Sebari, I.; Benmansour, S.; Mohamed, E. Mapping Wheat Dry Matter and Nitrogen Content Dynamics and Estimation of Wheat Yield Using UAV Multispectral Imagery Machine Learning and a Variety-Based Approach: Case Study of Morocco. AgriEngineering 2021, 3, 29–49.

- Somvanshi, S.S.; Kumari, M. Comparative analysis of different vegetation indices with respect to atmospheric particulate pollution using sentinel data. Appl. Comput. Geosci. 2020, 7, 100032.

- Qiu, Z.C.; Ma, F.; Li, Z.W.; Xu, X.B.; Ge, H.X.; Du, C.W. Estimation of nitrogen nutrition index in rice from UAV RGB images coupled with machine learning algorithms. Comput. Electron. Agric. 2021, 189, 106421.

- Nguyen, C.; Sagan, V.; Bhadra, S.; Moose, S. UAV Multisensory Data Fusion and Multi-Task Deep Learning for High-Throughput Maize Phenotyping. Sensors 2023, 23, 1827.

- Olson, M.B.; Crawford, M.M.; Vyn, T.J. Predicting Nitrogen Efficiencies in Mature Maize with Parametric Models Employing In-Season Hyperspectral Imaging. Remote Sens. 2022, 14, 5884.

- Sangha, H.S.; Sharda, A.; Koch, L.; Prabhakar, P.; Wang, G. Impact of camera focal length and sUAS flying altitude on spatial crop canopy temperature evaluation. Comput. Electron. Agric. 2020, 172, 105344.

- Wasilewska-Dębowska, W.; Zienkiewicz, M.; Drozak, A. How Light Reactions of Photosynthesis in C4 Plants Are Optimized and Protected under High Light Conditions. Int. J. Mol. Sci. 2022, 23, 3626.

- Sage, R.F.; Kubien, D.S. The temperature response of C3 and C4 photosynthesis. Plant Cell Environ. 2007, 30, 1086–1106.

- Zhang, X.; Pu, P.; Tang, Y.; Zhang, L.; Lv, J. C4 photosynthetic enzymes play a key role in wheat spike bracts primary carbon metabolism response under water deficit. Plant Physiol. Biochem. 2019, 142, 163–172.

- Huma, B.; Kundu, S.; Poolman, M.G.; Kruger, N.J.; Fell, D.A. Stoichiometric analysis of the energetics and metabolic impact of photorespiration in C3 plants. Plant J. 2018, 96, 1228–1241.

- Fatima, Z.; Abbas, Q.; Khan, A.; Hussain, S.; Ali, M.A.; Abbas, G.; Younis, H.; Naz, S.; Ismail, M.; Shahzad, M.I.; et al. Resource Use Efficiencies of C3 and C4 Cereals under Split Nitrogen Regimes. Agronomy 2018, 8, 69.

- Jiang, J.; Atkinson, P.M.; Chen, C.; Cao, Q.; Tian, Y.; Zhu, Y.; Liu, X.; Cao, W. Combining UAV and Sentinel-2 satellite multi-spectral images to diagnose crop growth and N status in winter wheat at the county scale. Field Crops Res. 2023, 294, 108860.

- Yu, F.; Bai, J.; Jin, Z.; Zhang, H.; Yang, J.; Xu, T. Estimating the rice nitrogen nutrition index based on hyperspectral transform technology. Front. Plant Sci. 2023, 14, 1118098.

- Pang, J.; Palta, J.A.; Rebetzke, G.J.; Milroy, S.P. Wheat genotypes with high early vigour accumulate more nitrogen and have higher photosynthetic nitrogen use efficiency during early growth. Funct. Plant Biol. 2014, 41, 215–222.

- White, P.J.; Bradshaw, J.E.; Brown, L.K.; Dale, M.F.B.; Dupuy, L.X.; George, T.S.; Hammond, J.P.; Subramanian, N.K.; Thompson, J.A.; Wishart, J.; et al. Juvenile root vigour improves phosphorus use efficiency of potato. Plant Soil 2018, 432, 45–63.

- Li, W.; He, X.; Chen, Y.; Jing, Y.; Shen, C.; Yang, J.; Teng, W.; Zhao, X.; Hu, W.; Hu, M.; et al. A wheat transcription factor positively sets seed vigour by regulating the grain nitrate signal. New Phytol. 2020, 225, 1667–1680.

- Kant, S.; Seneweera, S.; Rodin, J.; Materne, M.; Burch, D.; Rothstein, S.; Spangenberg, G. Improving yield potential in crops under elevated CO2: Integrating the photosynthetic and nitrogen utilization efficiencies. Front. Plant Sci. 2012, 3, 162.

- Sinha, S.K.; Sevanthi, V.A.M.; Chaudhary, S.; Tyagi, P.; Venkadesan, S.; Rani, M.; Mandal, P.K. Transcriptome Analysis of Two Rice Varieties Contrasting for Nitrogen Use Efficiency under Chronic N Starvation Reveals Differences in Chloroplast and Starch Metabolism-Related Genes. Genes 2018, 9, 206.

- Melino, V.J.; Fiene, G.; Enju, A.; Cai, J.; Buchner, P.; Heuer, S. Genetic diversity for root plasticity and nitrogen uptake in wheat seedlings. Funct. Plant Biol. 2015, 42, 942–956.

- Mu, X.; Chen, Y. The physiological response of photosynthesis to nitrogen deficiency. Plant Physiol. Biochem. 2021, 158, 76–82.

- Kayad, A.; Rodrigues, F.A.; Naranjo, S.; Sozzi, M.; Pirotti, F.; Marinello, F.; Schulthess, U.; Defourny, P.; Gerard, B.; Weiss, M. Radiative transfer model inversion using high-resolution hyperspectral airborne imagery—Retrieving maize LAI to access biomass and grain yield. Field Crops Res. 2022, 282, 108449.

- Rebolledo, M.C.; Dingkuhn, M.; Péré, P.; McNally, K.L.; Luquet, D. Developmental Dynamics and Early Growth Vigour in Rice. I. Relationship Between Development Rate (1/Phyllochron) and Growth. J. Agron. Crop Sci. 2012, 198, 374–384.

- Jia, Y.; Zou, D.; Wang, J.; Liu, H.; Inayat, M.A.; Sha, H.; Zheng, H.; Sun, J.; Zhao, H. Effect of low water temperature at reproductive stage on yield and glutamate metabolism of rice (Oryza sativa L.) in China. Field Crops Res. 2015, 175, 16–25.

- Li, Y.; Liang, S. Evaluation of Reflectance and Canopy Scattering Coefficient Based Vegetation Indices to Reduce the Impacts of Canopy Structure and Soil in Estimating Leaf and Canopy Chlorophyll Contents. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–15.

- Knyazikhin, Y.; Schull, M.A.; Stenberg, P.; Mottus, M.; Rautiainen, M.; Yang, Y.; Marshak, A.; Latorre Carmona, P.; Kaufmann, R.K.; Lewis, P.; et al. Hyperspectral remote sensing of foliar nitrogen content. Proc. Natl. Acad. Sci. USA 2013, 110, E185–E192.

- Kattenborn, T.; Schmidtlein, S. Radiative transfer modelling reveals why canopy reflectance follows function. Sci. Rep. 2019, 9, 6541.

- Yan, K.; Zhang, Y.; Tong, Y.; Zeng, Y.; Pu, J.; Gao, S.; Li, L.; Mu, X.; Yan, G.; Rautiainen, M.; et al. Modeling the radiation regime of a discontinuous canopy based on the stochastic radiative transport theory: Modification, evaluation and validation. Remote Sens. Environ. 2021, 267, 112728.

- Asseng, S.; Turner, N.C.; Ray, J.D.; Keating, B.A. A simulation analysis that predicts the influence of physiological traits on the potential yield of wheat. Eur. J. Agron. 2002, 17, 123–141.

- Falster, D.S.; Westoby, M. Leaf size and angle vary widely across species: What consequences for light interception? New Phytol. 2003, 158, 509–525.

- Kimes, D.S.; Knyazikhin, Y.; Privette, J.L.; Abuelgasim, A.A.; Gao, F. Inversion methods for physically-based models. Remote Sens. Rev. 2000, 18, 381–439.

- Zarco-Tejada, P.J.; Diaz-Varela, R.; Angileri, V.; Loudjani, P. Tree height quantification using very high resolution imagery acquired from an unmanned aerial vehicle (UAV) and automatic 3D photo-reconstruction methods. Eur. J. Agron. 2014, 55, 89–99.

- Schaepman-Strub, G.; Schaepman, M.E.; Painter, T.H.; Dangel, S.; Martonchik, J.V. Reflectance quantities in optical remote sensing—Definitions and case studies. Remote Sens. Environ. 2006, 103, 27–42.

- Zhang, Z.; Xu, S.; Wei, Q.; Yang, Y.; Pan, H.; Fu, X.; Fan, Z.; Qin, B.; Wang, X.; Ma, X.; et al. Variation in Leaf Type, Canopy Architecture, and Light and Nitrogen Distribution Characteristics of Two Winter Wheat (Triticum aestivum L.) Varieties with High Nitrogen-Use Efficiency. Agronomy 2022, 12, 2411.

- Li, H.; Li, D.; Xu, K.; Cao, W.; Jiang, X.; Ni, J. Monitoring of Nitrogen Indices in Wheat Leaves Based on the Integration of Spectral and Canopy Structure Information. Agronomy 2022, 12, 833.

- Rengarajan, R.; Schott, J.R. Modeling and Simulation of Deciduous Forest Canopy and Its Anisotropic Reflectance Properties Using the Digital Image and Remote Sensing Image Generation (DIRSIG) Tool. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 4805–4817.

- Camenzind, M.P.; Yu, K. Multi temporal multispectral UAV remote sensing allows for yield assessment across European wheat varieties already before flowering. Front. Plant Sci. 2024, 14, 1214931.

- Wang, N.; Guo, Y.; Wei, X.; Zhou, M.; Wang, H.; Bai, Y. UAV-based remote sensing using visible and multispectral indices for the estimation of vegetation cover in an oasis of a desert. Ecol. Indic. 2022, 141, 109155.

- Jiang, J.; Atkinson, P.M.; Zhang, J.Y.; Lu, R.H.; Zhou, Y.Y.; Cao, Q.; Tian, Y.C.; Zhu, Y.; Cao, W.X.; Liu, X.J. Combining fixed-wing UAV multispectral imagery and machine learning to diagnose winter wheat nitrogen status at the farm scale. Eur. J. Agron. 2022, 138, 126537.

- Zhu, X.X.; Tuia, D.; Mou, L.; Xia, G.S.; Zhang, L.; Xu, F.; Fraundorfer, F. Deep Learning in Remote Sensing: A Comprehensive Review and List of Resources. IEEE Geosci. Remote Sens. Mag. 2017, 5, 8–36.

- Bai, S.; Zhao, J. A New Strategy to Fuse Remote Sensing Data and Geochemical Data with Different Machine Learning Methods. Remote Sens. 2023, 15, 930. [Google Scholar] [CrossRef]

- Leung, C.K.; Braun, P.; Cuzzocrea, A. AI-Based Sensor Information Fusion for Supporting Deep Supervised Learning. Sensors 2019, 19, 1345. [Google Scholar] [CrossRef]

- Xu, X.; Li, W.; Ran, Q.; Du, Q.; Gao, L.; Zhang, B. Multisource Remote Sensing Data Classification Based on Convolutional Neural Network. IEEE Trans. Geosci. Remote Sens. 2018, 56, 937–949. [Google Scholar] [CrossRef]

- Maimaitijiang, M.; Sagan, V.; Sidike, P.; Daloye, A.M.; Erkbol, H.; Fritschi, F.B. Crop Monitoring Using Satellite/UAV Data Fusion and Machine Learning. Remote Sens. 2020, 12, 1357. [Google Scholar] [CrossRef]

- Belgiu, M.; Drăguţ, L. Random forest in remote sensing: A review of applications and future directions. ISPRS J. Photogramm. Remote Sens. 2016, 114, 24–31. [Google Scholar] [CrossRef]

- Huang, X.; Zhang, L. Comparison of Vector Stacking, Multi-SVMs Fuzzy Output, and Multi-SVMs Voting Methods for Multiscale VHR Urban Mapping. IEEE Geosci. Remote Sens. Lett. 2010, 7, 261–265. [Google Scholar] [CrossRef]

- Mou, L.; Lu, X.; Li, X.; Zhu, X.X. Nonlocal Graph Convolutional Networks for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2020, 58, 8246–8257. [Google Scholar] [CrossRef]

- Nguyen, P.; Shivadekar, S.; Chukkapalli, S.S.L.; Halem, M. Satellite Data Fusion of Multiple Observed XCO2 using Compressive Sensing and Deep Learning. In Proceedings of the IGARSS 2020—2020 IEEE International Geoscience and Remote Sensing Symposium, Waikoloa, HI, USA, 26 September–2 October 2020; pp. 2073–2076. [Google Scholar]

- Rußwurm, M.; Körner, M. Multi-Temporal Land Cover Classification with Sequential Recurrent Encoders. ISPRS Int. J. Geo-Inf. 2018, 7, 129. [Google Scholar] [CrossRef]

- Zhang, H.; Li, Q.; Liu, J.; Shang, J.; Du, X.; McNairn, H.; Champagne, C.; Dong, T.; Liu, M. Image Classification Using RapidEye Data: Integration of Spectral and Textual Features in a Random Forest Classifier. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 5334–5349. [Google Scholar] [CrossRef]

- Thenkabail, P.S.; Smith, R.B.; De Pauw, E. Hyperspectral Vegetation Indices and Their Relationships with Agricultural Crop Characteristics. Remote Sens. Environ. 2000, 71, 158–182. [Google Scholar] [CrossRef]

- Ojala, T.; Pietikainen, M.; Maenpaa, T. Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 971–987. [Google Scholar] [CrossRef]

- Shafi, U.; Mumtaz, R.; Haq, I.U.; Hafeez, M.; Iqbal, N.; Shaukat, A.; Zaidi, S.M.H.; Mahmood, Z. Wheat Yellow Rust Disease Infection Type Classification Using Texture Features. Sensors 2022, 22, 146. [Google Scholar] [CrossRef]

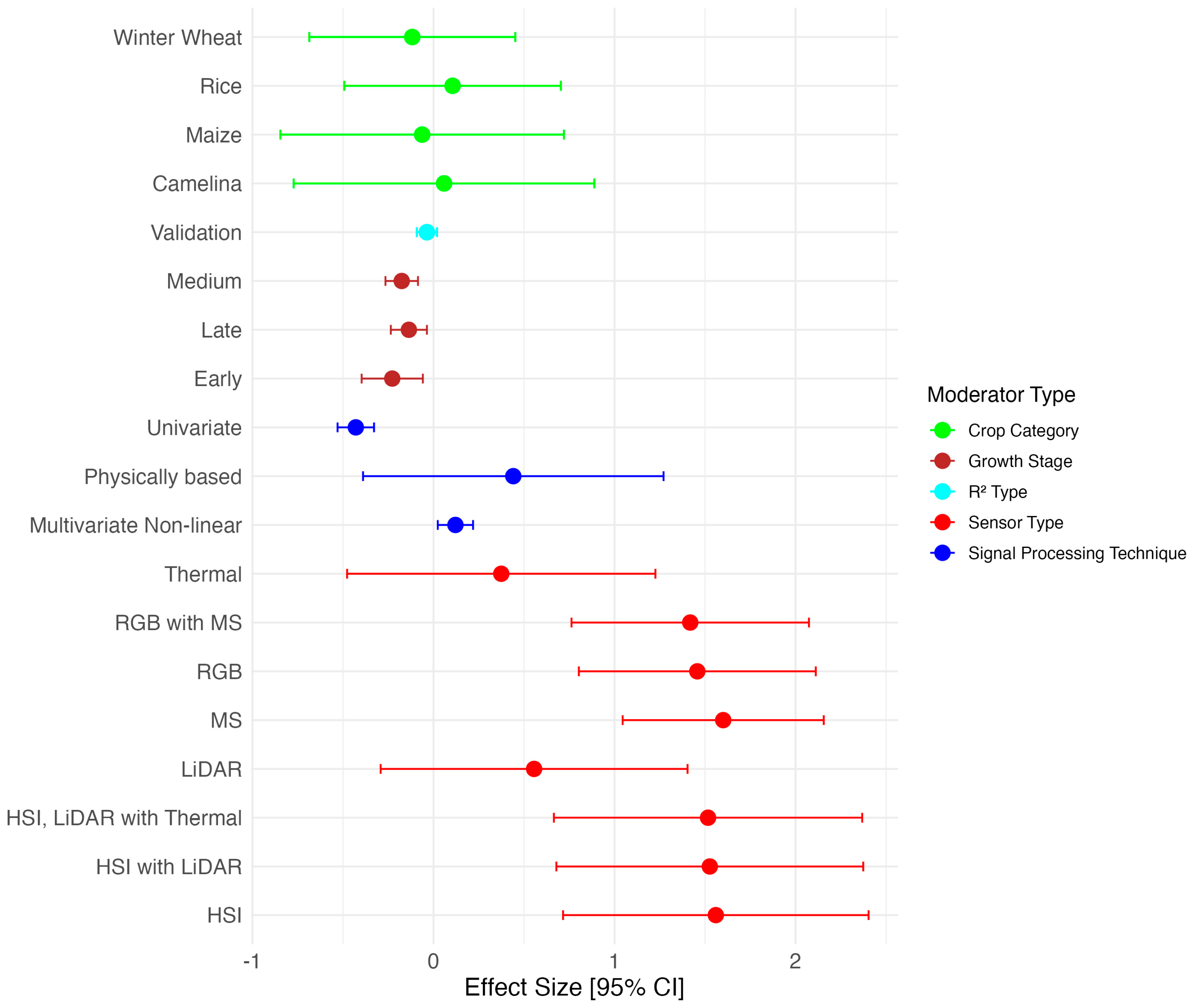

| Moderator | Level | Fixed-Effect Estimate | SE | Pr(>\|t\|) | ANOVA F | ANOVA df (num; den) | ANOVA p | Variance of Effect, Level 2 | Variance of Effect, Level 3 | Heterogeneity Measures |
|---|---|---|---|---|---|---|---|---|---|---|
| Null | | 1.116 | 0.052 | 0.000 *** | | | | 0.031 | 0.146 | 0.175 |
| Sensor Type | | | | | 71.704 | 8; 20.950 | 0 | 0.055 | 0.075 | 0.423; 0; 0.488 |
| | HSI | 1.395 | 0.174 | 0.000 *** | | | | | | |
| | HSI, LiDAR | 1.368 | 0.180 | 0.000 *** | | | | | | |
| | HSI, LiDAR, Thermal | 1.358 | 0.186 | 0.000 *** | | | | | | |
| | LiDAR | 0.397 | 0.180 | 0.0476 * | | | | | | |
| | MS | 1.167 | 0.084 | 0.000 *** | | | | | | |
| | RGB | 0.954 | 0.144 | 0.000 *** | | | | | | |
| | RGB, MS | 1.180 | 0.140 | 0.000 *** | | | | | | |
| | Thermal | 0.216 | 0.186 | 0.266 | | | | | | |
| Crop | | | | | 0.515 | 4; 12.530 | 0.726 | 0.025 | 0.146 | 0.144; 0.205; 0.003 |
| | Barley | 0.959 | 0.312 | 0.003 ** | | | | | | |
| | Camelina | 1.050 | 0.312 | 0.001 ** | | | | | | |
| | Cotton | 2.005 | 0.413 | 0.000 *** | | | | | | |
| | Maize | 1.182 | 0.104 | 0.000 *** | | | | | | |
| | Rice | 1.288 | 0.123 | 0.000 *** | | | | | | |
| | Winter Wheat | 1.032 | 0.062 | 0.000 *** | | | | | | |
| Signal Processing Technique | | | | | 40.446 | 3; 70.630 | 0 | 0.031 | 0.129 | 0.196; 0; 0.116 |
| | Multivariate Linear | 1.233 | 0.069 | 0.000 *** | | | | | | |
| | Multivariate Non-linear | 1.344 | 0.067 | 0.000 *** | | | | | | |
| | Physically based | 2.005 | 0.401 | 0.000 *** | | | | | | |
| | Univariate | 0.886 | 0.060 | 0.000 *** | | | | | | |
| Growth Stage | | | | | 6.295 | 3; 415.560 | 0 | 0.036 | 0.145 | 0.201; 0; 0.012 |
| | All | 1.229 | 0.072 | 0.000 *** | | | | | | |
| | Early | 0.995 | 0.138 | 0.000 *** | | | | | | |
| | Late | 1.093 | 0.078 | 0.000 *** | | | | | | |
| | Medium | 1.085 | 0.061 | 0.000 *** | | | | | | |
| Type | | | | | 1.655 | 1; 415.890 | 0.199 | 0.027 | 0.145 | 0.157; 0.128; 0.011 |
| | Calibration | 1.043 | 0.056 | 0.000 *** | | | | | | |
| | Validation | 1.148 | 0.051 | 0.000 *** | | | | | | |

Number of obs: 435; Number of studies: 19

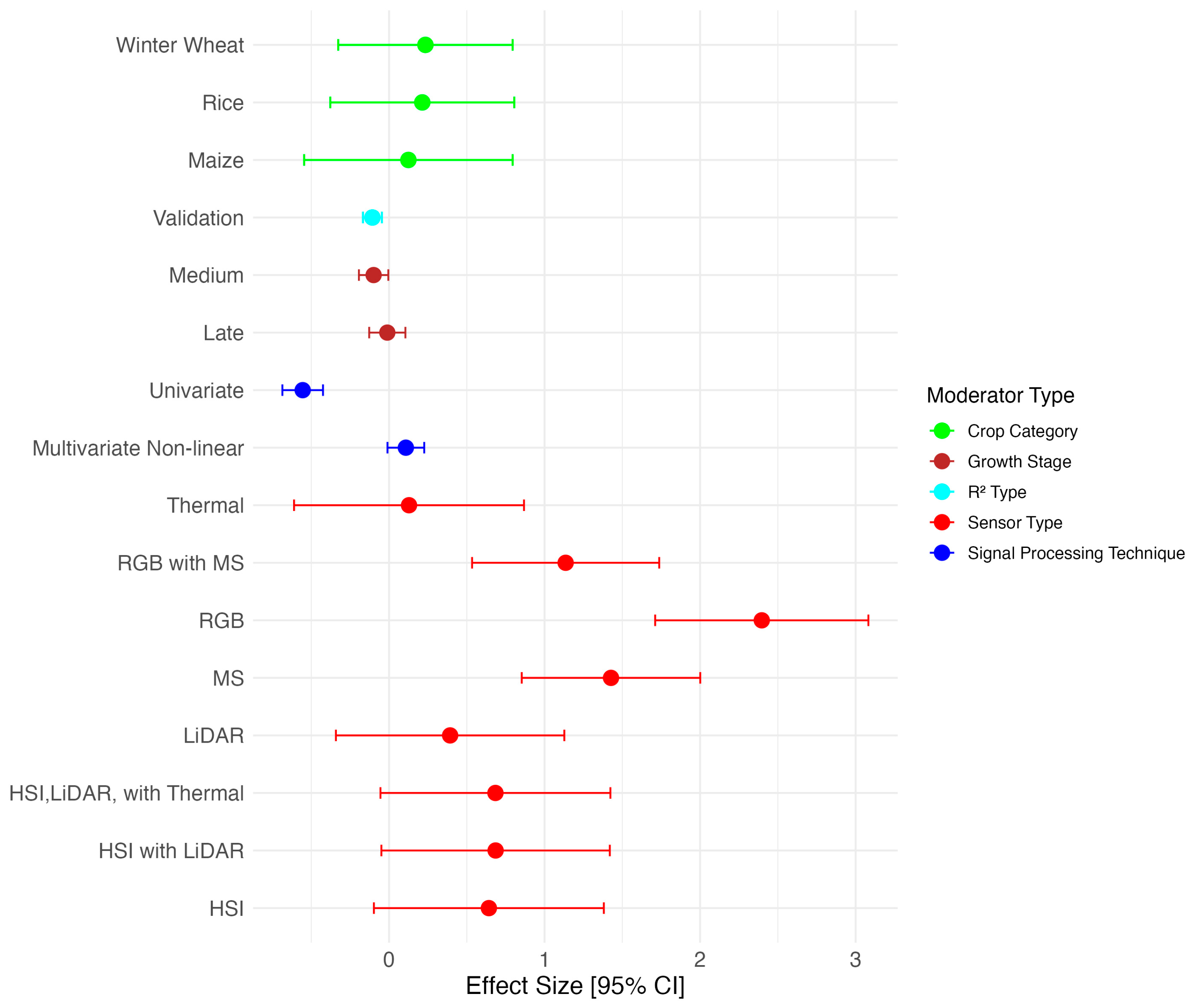

| Moderator | Level | Fixed-Effect Estimate | SE | Pr(>\|t\|) | ANOVA F | ANOVA df (num; den) | ANOVA p | Variance of Effect, Level 2 | Variance of Effect, Level 3 | Heterogeneity Measures |
|---|---|---|---|---|---|---|---|---|---|---|
| Null | | 1.18 | 0.072 | 0.000 *** | | | | 0.116 | 0.076 | 0.604 |
| Sensor Type | | | | | 5.256 | 8; 29.960 | 0 | 0.117 | 0.072 | 0.618; 0; 0.506 |
| | HSI | 1.144 | 0.160 | 0.000 *** | | | | | | |
| | HSI, LiDAR | 1.152 | 0.187 | 0.000 *** | | | | | | |
| | HSI, LiDAR, Thermal | 1.149 | 0.199 | 0.000 *** | | | | | | |
| | LiDAR | 0.872 | 0.187 | 0.000 *** | | | | | | |
| | MS | 1.177 | 0.104 | 0.000 *** | | | | | | |
| | RGB | 1.087 | 0.190 | 0.000 *** | | | | | | |
| | RGB, MS | 1.349 | 0.175 | 0.000 *** | | | | | | |
| | Thermal | 0.644 | 0.199 | 0.002 ** | | | | | | |
| Crop | | | | | 0.501 | 6; 15.000 | 0.798 | 0.134 | 0.076 | 0.639; 0; 0.483 |
| | Barley | 1.014 | 0.458 | 0.033 * | | | | | | |
| | Cotton | 1.003 | 0.369 | 0.015 * | | | | | | |
| | Maize | 1.394 | 0.187 | 0.000 *** | | | | | | |
| | Rice | 1.175 | 0.169 | 0.000 *** | | | | | | |
| | Soybean | 1.401 | 0.399 | 0.002 ** | | | | | | |
| | Winter Oilseed | 1.450 | 0.371 | 0.001 ** | | | | | | |
| | Winter Wheat | 1.092 | 0.112 | 0.000 *** | | | | | | |
| Signal Processing Technique | | | | | 30.372 | 3; 473.64 | 0 | 0.133 | 0.066 | 0.67; 0; 0.55 |
| | Multivariate Linear | 1.241 | 0.085 | 0.000 *** | | | | | | |
| | Multivariate Non-linear | 1.427 | 0.082 | 0.000 *** | | | | | | |
| | Physically Based | 1.117 | 0.133 | 0.000 *** | | | | | | |
| | Univariate | 0.989 | 0.080 | 0.000 *** | | | | | | |
| Growth Stage | | | | | 5.871 | 3; 474.31 | 0 | 0.126 | 0.075 | 0.629; 0; 0.491 |
| | All | 1.110 | 0.081 | 0.000 *** | | | | | | |
| | Early | 1.348 | 0.102 | 0.000 *** | | | | | | |
| | Late | 1.254 | 0.086 | 0.000 *** | | | | | | |
| | Medium | 1.181 | 0.079 | 0.000 *** | | | | | | |
| Type | | | | | 6.612 | 1; 467.48 | 0.01 | 0.116 | 0.076 | 0.604; 0; 0.481 |
| | Calibration | 1.176 | 0.075 | 0.000 *** | | | | | | |
| | Validation | 1.181 | 0.072 | 0.000 *** | | | | | | |

Number of obs: 498; Number of studies: 25

| Moderator | Level | Fixed-Effect Estimate | SE | Pr(>\|t\|) | ANOVA F | ANOVA df (num; den) | ANOVA p | Variance of Effect, Level 2 | Variance of Effect, Level 3 | Heterogeneity Measures |
|---|---|---|---|---|---|---|---|---|---|---|
| Null | | 1.18 | 0.085 | 0.000 *** | | | | 0.081 | 0.097 | 0.455 |
| Sensor Type | | | | | 18.311 | 8; 11.518 | 0 | 0.017 | 0.084 | 0.17; 0.444; 0.424 |
| | HSI | 0.810 | 0.150 | 0.000 *** | | | | | | |
| | HSI, LiDAR | 0.853 | 0.141 | 0.001 ** | | | | | | |
| | HSI, LiDAR, Thermal | 0.852 | 0.150 | 0.000 *** | | | | | | |
| | LiDAR | 0.561 | 0.141 | 0.008 ** | | | | | | |
| | MS | 1.201 | 0.063 | 0.000 *** | | | | | | |
| | RGB | 1.928 | 0.177 | 0.000 *** | | | | | | |
| | RGB, MS | 1.122 | 0.079 | 0.000 *** | | | | | | |
| | Thermal | 0.296 | 0.150 | 0.087 | | | | | | |
| Crop | | | | | 0.328 | 3; 6.220 | 0.805 | 0.073 | 0.097 | 0.428; 0; 0.336 |
| | Barley | 0.666 | 0.413 | 0.115 | | | | | | |
| | Maize | 0.900 | 0.201 | 0.002 ** | | | | | | |
| | Rice | 1.251 | 0.201 | 0.000 *** | | | | | | |
| | Winter Wheat | 1.264 | 0.102 | 0.000 *** | | | | | | |
| Signal Processing Technique | | | | | 65.225 | 2; 257.652 | 0 | 0.175 | 0.076 | 0.698; 0; 0.484 |
| | Multivariate Linear | 1.378 | 0.132 | 0.000 *** | | | | | | |
| | Multivariate Non-linear | 1.478 | 0.125 | 0.000 *** | | | | | | |
| | Univariate | 0.876 | 0.124 | 0.000 *** | | | | | | |
| Growth Stage | | | | | 2.408 | 2; 279.126 | 0.092 | 0.09 | 0.097 | 0.483; 0; 0.34 |
| | All | 1.265 | 0.101 | 0.000 *** | | | | | | |
| | Late | 1.193 | 0.105 | 0.000 *** | | | | | | |
| | Medium | 1.151 | 0.092 | 0.000 *** | | | | | | |
| Type | | | | | 11.976 | 1; 313.423 | 0.001 | 0.08 | 0.098 | 0.45; 0; 0.334 |
| | Calibration | 1.168 | 0.088 | 0.000 *** | | | | | | |
| | Validation | 1.186 | 0.086 | 0.000 *** | | | | | | |

Number of obs: 332; Number of studies: 13

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, J.; Hu, Y.; Li, F.; Fue, K.G.; Yu, K. Meta-Analysis Assessing Potential of Drone Remote Sensing in Estimating Plant Traits Related to Nitrogen Use Efficiency. Remote Sens. 2024, 16, 838. https://doi.org/10.3390/rs16050838
