Abstract

Three-dimensional reconstruction is a key technology for representing the real world in virtual form, and it is valuable in computer vision. Large-scale 3D models have broad application prospects in the fields of smart cities, navigation, virtual tourism, disaster warning, and search-and-rescue missions. Unfortunately, most image-based studies currently prioritize the speed and accuracy of 3D reconstruction in indoor scenes. While some studies address large-scale scenes, there has been no systematic, comprehensive effort to bring together the advancements made in 3D reconstruction for such scenes. Hence, this paper presents a comprehensive summary and analysis of vision-based 3D reconstruction techniques that use multi-view imagery of large-scale scenes. The 3D reconstruction algorithms are broadly categorized into traditional and learning-based methods. Furthermore, these methods can be categorized based on whether the sensor actively illuminates objects with light sources, resulting in two categories: active and passive methods. Two active methods, namely, structured light and laser scanning, are briefly introduced. The focus then shifts to structure from motion (SfM), stereo matching, and multi-view stereo (MVS), encompassing both traditional and learning-based approaches. Additionally, a novel approach of neural-radiance-field-based 3D reconstruction is introduced. The workflow and improvements in large-scale scenes are elaborated upon. Subsequently, some well-known datasets and evaluation metrics for various 3D reconstruction tasks are introduced. Lastly, a summary of the challenges encountered in the application of 3D reconstruction technology in large-scale outdoor scenes is provided, along with predictions for future trends in development.

1. Introduction

Three-dimensional reconstruction is a way to represent and process 3D objects by generating a digital model in a computer, which is the basis for visualizing, editing, and researching their properties. It is also a critical technology for generating a virtual world and combining reality with virtuality to express the real world. With the popularity of image-acquisition equipment and the development of computer vision in recent years, obtaining information from 2D images is no longer sufficient to meet the demands of various applications. Obtaining more accurate 3D reconstruction models through massive 2D images has become a research hot spot. At the same time, modern remote sensing technology is continuously improving. Furthermore, the high-resolution images obtained through remote sensing have demonstrated significant utility in areas such as urban planning and management, ground observation, and related fields.

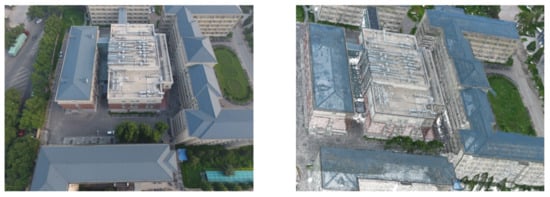

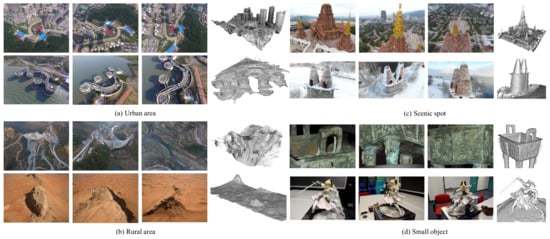

There is significant practical and theoretical research value in applying satellite images obtained in remote sensing scenarios and outdoor scene images obtained in aviation and UAV scenarios to 3D reconstruction technology. This approach offers an efficient means of generating precise large-scale scene models (Figure 1), which are applicable in smart city development, navigation, virtual tourism, disaster monitoring, early warning systems, and various other domains [1]. Additionally, for cultural heritage, 3D reconstruction techniques can be employed to reconstruct the original appearance of cultural heritage sites. By scanning and modeling ancient buildings, urban remains, or archaeological sites, researchers can recreate visual representations of historical periods, facilitating a better understanding and preservation of cultural heritage [2]. Most image-based studies currently prioritize the speed and accuracy of 3D reconstruction in indoor scenes. While there are some studies that address large-scale scenes, there are no systematic research reviews on this topic. Therefore, a comprehensive overview of 3D reconstruction techniques utilizing multi-view imagery from large-scale scenes is presented in this paper. It should be explicitly stated that the 3D reconstruction mentioned in this article solely pertains to generating point clouds of a scene and does not involve subsequent mesh models.

Figure 1.

An example of 3D reconstruction: (left) real image, (right) 3D model: point clouds.

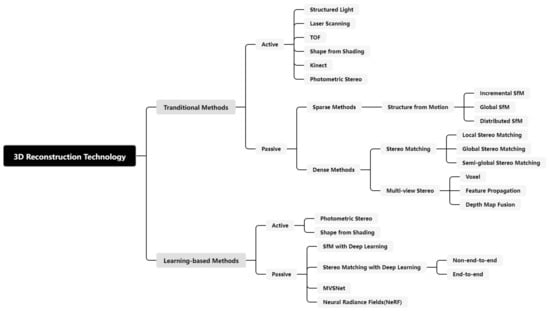

Three-dimensional reconstruction methods are divided into traditional and learning-based types according to whether they use neural networks. Based on how they acquire scene information, they can be further categorized into active and passive reconstruction methods [3]. An active 3D reconstruction method scans a target through a 3D scanning device, followed by calculating the depth information of the object and obtaining point cloud data, which are used to restore the 3D model of the target. The main steps are data registration, point cloud data pre-processing, segmentation, and triangle meshing [4]. At present, widely adopted active methods encompass structured light-based 3D reconstruction [5,6], reconstruction from 3D laser scanning data [7], shadow detection [8,9], time of flight (TOF) [10,11], photometric stereo [12,13,14,15], and Kinect methods [16]. Structured light-based reconstruction methods emit specific light waves through the corresponding equipment and obtain information about the changes in light on the surface of an object. The data are then employed to calculate the 3D information, such as the surface depth of the object, so that a 3D model of the object can be reconstructed. Laser-scanning reconstruction uses the time difference between an emitted and returned laser to calculate the distance from an object’s surface to the scanner in order to reconstruct a 3D model. Passive 3D reconstruction reconstructs a 3D model of an object from images collected using cameras. Since the images do not contain depth information about the object, 3D reconstruction can only be completed by predicting the surface depth of the object through geometric principles (Figure 2).

Figure 2.

Classification of 3D reconstruction technology.

Active methods, such as laser scanning, while capable of obtaining the precise depth information of objects, have their limitations. Firstly, their scanning equipment is typically expensive, bulky, and not affordable for the average research facility. Secondly, these scanning devices often rely on emitting and receiving light waves to calculate distances, making them susceptible to uncontrollable factors, such as lighting conditions in the environment. Consequently, they are usually more suitable for the 3D reconstruction of small objects and may not be ideal for large-scale scenes. Therefore, the focus has shifted toward passive methods that are reliant on camera images.
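The time-difference principle behind laser scanning described above can be illustrated with a minimal sketch. It assumes an idealized pulsed scanner in which the only measured quantity is the round-trip time of the pulse; the function name `tof_distance` is hypothetical and is not taken from any of the cited systems.

```python
# Minimal sketch (assumption: ideal pulsed time-of-flight measurement, no noise model).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance(round_trip_time_s: float) -> float:
    """Return the scanner-to-surface distance in metres.

    The pulse travels to the surface and back, so the one-way
    distance is half of the total path length.
    """
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


# A surface one metre away returns the pulse after 2 / c seconds.
distance_m = tof_distance(2.0 / SPEED_OF_LIGHT_M_PER_S)
```

Because the measured times are extremely short, small timing errors translate into noticeable range errors, which is one reason such equipment tends to be costly.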

The remainder of this article is organized as follows: Section 2 introduces traditional 3D reconstruction methods. In Section 3, 3D reconstruction methods based on deep learning are presented. Section 4 provides a list of datasets and evaluation metrics for large-scale scenes. Finally, the challenges and outlook are provided in Section 5, and conclusions are drawn in Section 6.

2. Traditional Methods

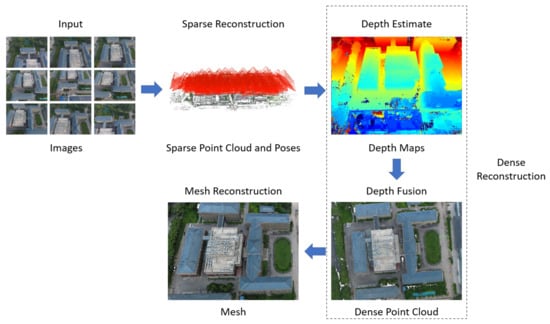

In traditional passive reconstruction methods, the initial stage involves detecting and matching feature points in images, followed by associating corresponding points across multiple images for subsequent camera pose estimation and point cloud reconstruction. A typical pipeline of 3D reconstruction is shown in Figure 3. It is worth noting that, in Figure 3, the final step shows a mesh reconstruction. However, this article exclusively focuses on the generation of point clouds, and therefore, mesh reconstruction will not be introduced. This section presents an overview of the advancements in point cloud reconstruction techniques, including structure from motion (SfM) for sparse reconstruction, stereo matching, and multi-view stereo (MVS) for dense reconstruction.

Figure 3.

A typical pipeline of 3D reconstruction. Step 1: Input multi-view images. Step 2: Use SfM to compute camera poses and reconstruct sparse point clouds. Step 3: Estimate depth using MVS. Step 4: Obtain a dense point cloud via depth fusion. Finally, obtain the mesh through mesh reconstruction.

2.1. Sparse Reconstruction: SfM

Structure from motion (SfM) is a technique that automatically recovers camera parameters and a 3D scene’s structure from multiple images or video sequences. SfM uses cameras’ motion trajectories to estimate camera parameters. By capturing images from different viewpoints, the camera’s position information and motion trajectory are computed. Subsequently, a 3D point cloud is generated in the spatial coordinate system. Existing SfM methods can be categorized into incremental, distributed, and global approaches according to the different methods for estimating the initial values of unknown parameters.

2.1.1. Incremental SfM

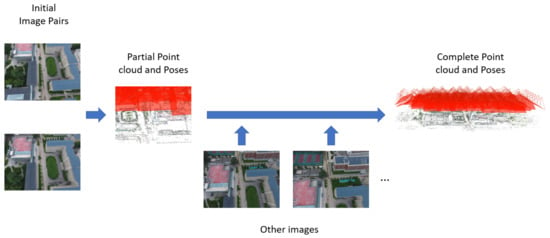

Incremental SfM [17], which involves selecting image pairs and reconstructing sparse point clouds, is currently the most widely used method. Photo Tourism, which was proposed by Snavely et al. [18], is the earliest incremental SfM system. It first selects a pair of images to compute the camera poses and reconstruct a partial scene. Then, it gradually adds new images and adjusts the previously computed camera poses and the scene model to obtain camera poses and scene information (Figure 4). Recently, a significant number of incremental SfM methods have been introduced, such as [19], EC-SfM [20], and AdaSfM [21].

Figure 4.

An overview of incremental SfM. First, an initial image pair is selected to compute the camera poses and reconstruct a partial scene; then, new images are gradually added to compute the complete point cloud.
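Once the poses of an image pair are known, each matched feature can be lifted to a 3D point by triangulation. The following is a minimal sketch of linear (DLT) triangulation for a single point, assuming noise-free pinhole cameras; the helper names `triangulate_dlt` and `project`, as well as the synthetic camera setup, are illustrative assumptions and do not reproduce the implementation of any cited system.

```python
import numpy as np


def triangulate_dlt(P1, P2, x1, x2):
    """Linear (DLT) triangulation of one 3D point from two views.

    P1, P2: 3x4 projection matrices; x1, x2: pixel coordinates (u, v).
    """
    # Each view contributes two linear equations in the homogeneous point X.
    A = np.stack([
        x1[0] * P1[2] - P1[0],
        x1[1] * P1[2] - P1[1],
        x2[0] * P2[2] - P2[0],
        x2[1] * P2[2] - P2[1],
    ])
    # The solution is the right singular vector of the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]


def project(P, X):
    """Project a 3D point with a 3x4 projection matrix."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]


# Synthetic two-view setup (assumed values): second camera centred at x = +0.5.
K = np.array([[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]])
P1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
P2 = K @ np.hstack([np.eye(3), np.array([[-0.5], [0.0], [0.0]])])
X_true = np.array([0.2, -0.1, 4.0])
X_est = triangulate_dlt(P1, P2, project(P1, X_true), project(P2, X_true))
```

In an incremental pipeline, a step of this kind would be repeated for every newly registered image, which is why errors in early poses can propagate to later points.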

In 2012, Moulon et al. [22] proposed Adaptive SfM (ASFM), an adaptive threshold estimation method that eliminates the need for manually setting hyperparameters. Due to the presence of noise and drift in pose and 3D point estimation, it is necessary to optimize the camera poses using the bundle adjustment (BA) algorithm after incorporating a certain number of new image pairs. In 2013, Wu et al. introduced VisualSFM [23], which improved the matching speed through a preemptive feature matching strategy and accelerated sparse reconstruction using a local–global bundle-adjustment technique. When the number of cameras increases, optimization is performed only on local images, and when the overall model reaches a certain scale, optimization is applied to all images, thus improving the reconstruction speed. In 2016, Schönberger et al. [24] integrated the classical SfM methods and made individual improvements to several key steps, such as geometric verification, view selection, triangulation, and bundle adjustment, which were consolidated into COLMAP.

When reconstructing large-scale scenes using incremental SfM, errors accumulate as the number of images increases, leading to scene drift and time-consuming and repetitive bundle adjustments. Therefore, the initial incremental SfM approach is not ideal for large-scale outdoor scene reconstruction. In 2017, Zhu et al. [25] proposed a camera-clustering algorithm that divided the original SfM problem into sub-SfM problems for multiple clusters. For each cluster, a local incremental SfM algorithm was applied to obtain local camera poses, which were then incorporated into a global motion-averaging framework. Finally, the corresponding partial reconstructions were merged to improve the accuracy of large-scale incremental SfM, particularly in camera registration. In 2018, Qu et al. [26] proposed a fast outdoor-scene-reconstruction algorithm for drone images. They first used principal component analysis to select key drone images and create an image queue. Incremental SfM was then applied to compute the queue images, and new key images were selected and added to the queue. This enabled the use of incremental SfM for large-scale outdoor scene reconstruction. In 2019, Duan et al. [27] combined the graph optimization theory with incremental SfM. When constructing the graph optimization model, they used the sum of the squared reprojection errors as the cost function for optimization, aiming to reduce errors. Liu et al. [28] proposed a linear incremental SfM system for the large-scale 3D reconstruction of oblique photography scenes. They addressed the presence of many pure rotational image pairs in oblique photography data using bundle adjustment and outlier filtering to develop a new strategy for selecting initial image pairs. They also reduced cumulative errors by combining local bundle adjustment, local outlier filtering, and local re-triangulation methods. 
Additionally, the conjugate gradient method was employed to achieve a reconstruction time that was close to linear. In 2020, Cui et al. [29] introduced a new SfM system that used track selection and camera prioritization to improve the robustness and efficiency of incremental SfM and to make it applicable to datasets of large-scale scenes.
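The sum of squared reprojection errors, which serves as the cost function in bundle adjustment and in the graph optimization model mentioned above, can be sketched as follows. The sketch assumes a shared pinhole intrinsic matrix without lens distortion; the function name `reprojection_cost` and the data layout are hypothetical and chosen only for illustration.

```python
import numpy as np


def reprojection_cost(K, rotations, translations, points3d, observations):
    """Sum of squared reprojection errors over all observations.

    observations: iterable of (camera_index, point_index, u, v).
    Assumes one shared intrinsic matrix K and no lens distortion.
    """
    cost = 0.0
    for cam, pt, u, v in observations:
        # Transform the point into the camera frame, then project it.
        X_cam = rotations[cam] @ points3d[pt] + translations[cam]
        uvw = K @ X_cam
        residual = uvw[:2] / uvw[2] - np.array([u, v])
        cost += float(residual @ residual)
    return cost


# Toy example (assumed values): one camera, one point, observation off by one pixel.
K = np.array([[100.0, 0.0, 50.0], [0.0, 100.0, 50.0], [0.0, 0.0, 1.0]])
rotations = [np.eye(3)]
translations = [np.zeros(3)]
points3d = [np.array([0.0, 0.0, 2.0])]
observations = [(0, 0, 51.0, 50.0)]
cost = reprojection_cost(K, rotations, translations, points3d, observations)
```

A bundle adjustment solver would minimize this quantity over the rotations, translations, and 3D points; restricting the variables to a subset of cameras gives the local variants discussed above.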

The main drawbacks of incremental SfM are as follows:

- Sensitivity to the selection of initial image pairs, which limits the quality of reconstruction to the initial pairs chosen.

- The accumulation of errors as new images are added, resulting in the scene-drift phenomenon.

- Incremental SfM is an iterative process where each image undergoes bundle adjustment optimization, leading to a significant number of redundant computations and lower reconstruction efficiency.

2.1.2. Global SfM

Global SfM encompasses the estimation of global camera rotations, positions, and point cloud generation. In contrast to incremental SfM, which processes images one by one, global SfM takes all of the images as input and performs bundle adjustment optimization only once, significantly improving the reconstruction speed. It evenly distributes errors and avoids error accumulation, resulting in higher reconstruction accuracy. It was first proposed by Sturm et al. [30]. In 2011, Crandall et al. [31] introduced a global approach based on Markov random fields. Hartley et al. [32] proposed the Weiszfeld algorithm based on the L1 norm to estimate camera rotations in the global SfM pipeline, achieving fast and robust results. In 2014, Wilson et al. [33] presented a 1D SfM method, which mapped the translation problem to a one-dimensional space, removed outliers, and then estimated global positions using non-convex optimization equations to reduce scene graph mismatches and improve the accuracy of position estimation. In 2015, Sweeney et al. [34] utilized the cycle-consistent optimization of a scene graph’s fundamental matrix to enhance the accuracy of camera pose estimation for image pairs. Cui et al. [35] proposed a novel global SfM method that optimized the solution process with auxiliary information, enabling it to handle various types of data.
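To give an intuition for why L1-based averaging is robust to outliers, the following sketch implements the classical Weiszfeld iteration for the geometric median of points in Euclidean space. This is a simplification made here for brevity: the method of [32] operates on rotations, whereas the sketch works on plain vectors. The function name `weiszfeld_median` and the sample data are assumptions.

```python
import numpy as np


def weiszfeld_median(points, iterations=200, eps=1e-12):
    """Geometric (L1) median of Euclidean points via Weiszfeld iteration."""
    points = np.asarray(points, dtype=float)
    estimate = points.mean(axis=0)  # start from the L2 mean
    for _ in range(iterations):
        dist = np.linalg.norm(points - estimate, axis=1)
        # Inverse-distance weights; eps guards against division by zero.
        weights = 1.0 / np.maximum(dist, eps)
        new_estimate = (weights[:, None] * points).sum(axis=0) / weights.sum()
        converged = np.linalg.norm(new_estimate - estimate) < 1e-10
        estimate = new_estimate
        if converged:
            break
    return estimate


# Four consistent measurements and one gross outlier (assumed values).
samples = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [100.0, 100.0]])
median = weiszfeld_median(samples)
mean = samples.mean(axis=0)
```

In this example, the mean is dragged far toward the outlier, while the median stays close to the four consistent samples.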

However, global SfM may not yield satisfactory results for large-scale scenes due to the varying inter-camera correlations. In 2018, Zhu et al. [36] introduced a distributed framework for global SfM based on nested decomposition. They segmented the initial camera distribution map and iteratively optimized multiple segmented maps to improve local motion averages. Then, they optimized the connections between the sub-distribution maps to enhance global motion averaging, thereby improving global SfM reconstruction in large-scale scenes. In 2022, Pang [37] proposed a segmented SfM algorithm based on global SfM for the UAV-based reconstruction of outdoor scenes. The algorithm grouped UAV images based on latitude and longitude, extracted and matched features while removing mismatches, performed global SfM for each image group to obtain camera poses and sparse point clouds, merged point clouds, optimized scene spatial points and camera poses according to the grouping order, and finally performed global SfM on the merged data to obtain the point cloud of the entire large scene. Yu et al. [38] presented a robust global SfM algorithm for UAV-based 3D reconstruction. They combined rotation averaging from Lie algebra, the L1 norm, and least-squares principles to propose the L1-IRLS algorithm for computing the rotation parameters of UAV images, and they also incorporated GPS data into bundle adjustment to obtain high-precision point cloud data.

The main advantages of global SfM are as follows:

- Global SfM aims to optimize the camera poses and 3D scene structure simultaneously, ensuring that the entire reconstruction is globally consistent. This results in more accurate and reliable reconstructions.

- Global SfM typically employs optimization techniques such as global bundle adjustment, allowing it to provide high-precision estimates of camera parameters and 3D point clouds.

- Global SfM is typically suitable for large-scale scenes.

The main disadvantages of global SfM are as follows:

- Global SfM methods are computationally intensive and may require significant amounts of time and computational resources, especially for large datasets with many images and 3D points.

- The global camera position estimation results are unstable.

- Global SfM can be sensitive to outliers in the data. If there are incorrect correspondences or noisy measurements, they can have a significant impact on the global optimization process.

2.1.3. Distributed SfM

Although incremental SfM has advantages in robustness and accuracy, its efficiency is not high enough. Additionally, with the accumulation of errors, the scene structure is likely to exhibit drift in large-scale scene reconstruction. Global SfM is more efficient than the incremental approach; however, it is sensitive to outliers. In 2017, Cui et al. [39] proposed a distributed SfM method that combined incremental and global approaches. It used the incremental SfM method to compute camera positions for each additional image, and the camera rotation matrices were computed using the global SfM method. Finally, a local bundle adjustment algorithm was applied to optimize the camera’s center positions and scene 3D coordinates, thereby improving the reconstruction speed while ensuring robustness. In 2021, Wang et al. [40] introduced a hybrid global SfM method for estimating global rotations and translations at the same time. Distributed SfM combines the advantages of both methods to some extent.

2.2. Dense Reconstruction: Stereo Matching and MVS

2.2.1. Stereo Matching

Derived from the human binocular vision system [41], binocular vision imitates the principles of human vision to obtain a vast amount of three-dimensional data. It captures left and right images of the same scene from different perspectives using two identical cameras. By utilizing the disparity formed from the two images, the depth of each pixel can be obtained. The process can be divided into four main steps: camera calibration, image rectification, stereo matching, and 3D reconstruction calculation [42]. Stereo matching, in particular, is the foundational and crucial step in binocular vision reconstruction.
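For a rectified image pair, the relationship between disparity and depth follows from similar triangles: depth equals the focal length times the baseline divided by the disparity. A minimal sketch is given below; the function name `disparity_to_depth` and the numerical values are assumptions used only for illustration.

```python
import numpy as np


def disparity_to_depth(disparity_px, focal_px, baseline_m):
    """Convert disparities (pixels) to depths (metres) for a rectified pair.

    Pixels with non-positive disparity have no valid match and are
    assigned an infinite depth.
    """
    disparity_px = np.asarray(disparity_px, dtype=float)
    depth = np.full(disparity_px.shape, np.inf)
    valid = disparity_px > 0
    depth[valid] = focal_px * baseline_m / disparity_px[valid]
    return depth


# Assumed camera: 800 px focal length, 0.12 m baseline.
depth_m = disparity_to_depth([64.0, 32.0, 0.0], focal_px=800.0, baseline_m=0.12)
```

Since depth is inversely proportional to disparity, a fixed disparity error causes a larger depth error for distant surfaces, which matters in large-scale outdoor scenes.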

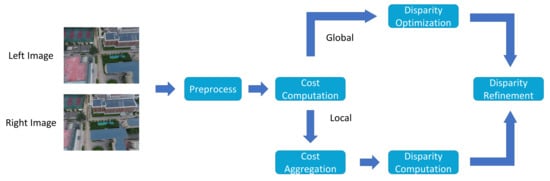

Stereo matching aims to find corresponding pixels between two images captured using left and right cameras, calculates the corresponding disparity values, and then uses the principles of triangle similarity to obtain the depth information between objects and the cameras. However, challenges in improving the matching accuracy arise due to factors such as uneven illumination, occlusion, blurring, and noise [43]. The matching process mainly consists of four steps: matching cost calculation, matching cost aggregation, disparity calculation, and disparity refinement [44] (Figure 5). Additionally, to enhance accuracy, constraints such as the epipolar, uniqueness, disparity–continuity, ordering–consistency, and similarity constraints are employed to simplify the search process [45]. Based on these constraint methods, stereo matching algorithms can be classified into global, local, and semi-global matching methods (SGMs) [46].

Figure 5.

Workflow of stereo matching. First, the noise is reduced through image filtering, images are normalized, and image features are extracted. Then, the cost is computed, such as through the absolute differences in pixels between the images in an image pair. Finally, the disparity maps are computed, and the disparity map is refined.
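The cost computation and disparity selection steps of this workflow can be sketched with a brute-force block matcher that uses the sum of absolute differences (SAD) as the matching cost and a winner-takes-all choice of disparity. This is a deliberately simple sketch assuming rectified grayscale images; the function name `sad_block_matching` and the synthetic test pair are assumptions, and no refinement step is included.

```python
import numpy as np


def sad_block_matching(left, right, max_disparity, half_window=1):
    """Winner-takes-all disparity from SAD costs over square windows."""
    left = np.asarray(left, dtype=float)
    right = np.asarray(right, dtype=float)
    h, w = left.shape
    r = half_window
    disparity = np.zeros((h, w), dtype=int)
    for y in range(r, h - r):
        for x in range(r, w - r):
            patch = left[y - r:y + r + 1, x - r:x + r + 1]
            best_cost, best_d = np.inf, 0
            # Only test disparities whose window stays inside the right image.
            for d in range(min(max_disparity, x - r) + 1):
                candidate = right[y - r:y + r + 1, x - d - r:x - d + r + 1]
                cost = np.abs(patch - candidate).sum()
                if cost < best_cost:
                    best_cost, best_d = cost, d
            disparity[y, x] = best_d
    return disparity


# Synthetic pair: the left image is the right image shifted by 3 pixels.
rng = np.random.default_rng(0)
right_img = rng.random((12, 24))
left_img = np.roll(right_img, 3, axis=1)
disparity_map = sad_block_matching(left_img, right_img, max_disparity=5)
```

On real images, repeated or textureless regions produce several near-equal costs, which is where the aggregation and refinement steps become necessary.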

Local Matching Method

Local matching methods in stereo vision rely on local constraints, such as windows, features, and phases, to perform matching, and they primarily utilize grayscale information from images. These methods determine disparities by establishing correspondences between the grayscale values of a given pixel and a small neighborhood of pixels. Currently, fixed-window [47], adaptive-window [48], and multi-window [49] algorithms are the main focus of research in window-based matching. While local matching algorithms can rapidly generate disparity maps, their accuracy is limited, particularly in regions with low texture and discontinuous disparities. In 2006, Yoon et al. [50] introduced the adaptive support weight (ASW) approach, which assigns different support weights to each pixel based on color differences and pixel distances. This technique improves matching accuracy in low-texture regions. In 2008, Wang et al. [51] proposed cooperative competition between regions to minimize the matching costs through collaborative optimization. In 2011, Liu et al. [52] introduced a new similarity measurement function to replace the traditional sum of absolute differences (SAD) function. They also employed different window sizes for matching in various regions and developed a new matching algorithm based on SIFT features and Harris corner points, improving both matching accuracy and speed. In 2014, Zhong et al. [53] introduced smoothness constraints and performed image segmentation using color images. They used a large window to match initial seed points and then expanded them using a small window to obtain a disparity map, effectively reducing matching errors in discontinuous disparity regions. In 2016, Hamzah et al. [45] addressed the limitations of single cost metrics by combining the absolute difference (AD), gradient matching (GM), and census transform (CN) into an iterative guided filter (GF) that enhanced edge information in images. 
Additionally, they introduced graph-cut algorithms to enhance robustness in low-texture regions and discontinuous disparity regions.

Local matching methods are efficient and flexible, yet they lack a holistic understanding of the scene, which makes them prone to local optima.
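The idea of weighting each pixel in a window by its color difference and distance to the central pixel, as in the adaptive support weight approach, can be sketched as follows. The exponential form used here is one common formulation and is an assumption; the exact expression and parameter values in [50] may differ, and `support_weights`, `gamma_c`, and `gamma_p` are illustrative names and values.

```python
import numpy as np


def support_weights(window, gamma_c=7.0, gamma_p=36.0):
    """Support weights of a square color window relative to its centre pixel.

    window: array of shape (2r+1, 2r+1, 3). Pixels that are similar in
    color and spatially close to the centre receive weights near 1.
    """
    window = np.asarray(window, dtype=float)
    r = window.shape[0] // 2
    color_diff = np.linalg.norm(window - window[r, r], axis=2)
    ys, xs = np.mgrid[-r:r + 1, -r:r + 1]
    spatial_dist = np.hypot(ys, xs)
    return np.exp(-(color_diff / gamma_c + spatial_dist / gamma_p))


# Toy window (assumed values): the top row belongs to a differently colored object.
patch = np.zeros((5, 5, 3))
patch[0, :] = 50.0
weights = support_weights(patch)
```

Weights of this kind would multiply the per-pixel matching costs during aggregation, so that pixels likely to lie on a different surface contribute little.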

Global Matching Method

Global stereo matching algorithms primarily utilize the complete pixel information in images and the disparity information of neighboring pixels to perform matching. They employ constraint conditions to create an energy function that integrates all of the pixels in the image, aiming to obtain as much global information as possible. Global stereo matching algorithms can optimize the energy function through methods such as dynamic programming, belief propagation, and graph cuts [54].
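A generic form of such an energy function, given here as an illustration rather than as the formulation of any specific cited method, combines a data term and a smoothness term:

$$
E(D) = \sum_{p} C(p, d_p) + \lambda \sum_{(p, q) \in \mathcal{N}} V(d_p, d_q)
$$

Here, $D$ is the disparity map, $d_p$ is the disparity assigned to pixel $p$, $C(p, d_p)$ is the matching cost, $\mathcal{N}$ is the set of neighboring pixel pairs, $V$ penalizes disparity differences between neighbors, and $\lambda$ balances the two terms. All of these symbols are notation assumed for this illustration.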

Dynamic programming matching algorithms have been established under the constraint of epipolar lines. Optimal point searching and matching along each epipolar line are performed using dynamic programming with the aim of minimizing the global energy function and obtaining a disparity map. However, since only the pixels along the horizontal epipolar lines are scanned, the resulting disparity map often exhibits noticeable striping artifacts [54]. In 2006, Sung et al. [55] proposed a multi-path dynamic programming matching algorithm, which introduced a new energy function that considered the correlation between epipolar lines and utilized edge information in the images to address the discontinuity caused by occlusion, resulting in more accurate disparity estimation at boundary positions and reducing striping artifacts. In 2009, Li et al. [56] utilized the scale-invariant feature transform (SIFT) to extract feature points from images and performed feature point matching using a nearest-neighbor search, effectively alleviating striping artifacts. In 2012, Hu et al. [57] proposed a single-directional four-connected tree search algorithm and improved the dynamic programming algorithm for disparity estimation in boundary regions, enhancing both the accuracy and the efficiency of disparity estimation in boundary areas.
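Scanline optimization by dynamic programming can be sketched for a single image row as follows. The sketch assumes a precomputed cost table and a linear smoothness penalty on disparity changes between neighboring pixels; the function name `scanline_dp` and the toy costs are assumptions and do not correspond to any particular cited algorithm.

```python
import numpy as np


def scanline_dp(cost, smooth_penalty=1.0):
    """Minimise sum_x cost[x, d_x] + smooth_penalty * |d_x - d_(x-1)| on one row.

    cost: array of shape (width, num_disparities).
    Returns the optimal disparity index for every pixel of the row.
    """
    cost = np.asarray(cost, dtype=float)
    width, num_disp = cost.shape
    d = np.arange(num_disp)
    transition = smooth_penalty * np.abs(d[:, None] - d[None, :])  # [previous, current]
    total = cost[0].copy()
    backptr = np.zeros((width, num_disp), dtype=int)
    for x in range(1, width):
        candidates = total[:, None] + transition
        backptr[x] = candidates.argmin(axis=0)
        total = candidates.min(axis=0) + cost[x]
    # Backtrack from the best final state.
    path = np.empty(width, dtype=int)
    path[-1] = int(total.argmin())
    for x in range(width - 1, 0, -1):
        path[x - 1] = backptr[x, path[x]]
    return path


# Toy row (assumed values): disparity 2 is correct; pixel 3 has a noisy cost.
row_cost = np.ones((6, 4))
row_cost[:, 2] = 0.0
row_cost[3] = [0.5, 1.0, 0.6, 1.0]
row_disparity = scanline_dp(row_cost, smooth_penalty=1.0)
```

Because each row is solved independently, nothing enforces consistency between adjacent rows, which is the source of the striping artifacts mentioned above.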

Stereo matching algorithms based on belief propagation are commonly formulated as Markov random fields. In these algorithms, each pixel acts as a network node containing two types of information: data information, which stores the disparity value, and message information, which represents the node’s information to be propagated. Belief propagation occurs among four neighboring pixels, enabling messages to propagate effectively in low-texture regions and ensuring accurate disparity estimation [58]. This approach achieves high matching accuracy by individually matching pixels throughout the entire image. However, it is characterized by a low matching efficiency and a long computation time. To address this issue, Zhou et al. [59] proposed a parallel algorithm in 2011. This algorithm divides the image into distinct regions for parallel matching, and the results from each region are subsequently combined to enhance the overall matching efficiency.

In stereo matching, the estimation of a disparity map can be formulated as minimizing a global energy function. Graph-cut-based matching algorithms construct a network graph of an image, where the problem of minimizing the energy function is equivalent to finding the minimum cut of the graph. By identifying the optimal image segmentation set, the algorithm achieves a globally optimal disparity map [60]. However, graph-cut algorithms often suffer from inaccurate initial matching in low-texture regions and require the computation of template parameters for all segmentation regions, leading to poor matching results in low-texture and occluded areas, as well as long computation times. In 2007, Bleyer et al. [61] proposed an improved approach that aggregated initial disparity segments into a set of disparity layers. By minimizing a global cost function, the algorithm selected the optimal disparity layer, resulting in improved matching performance in large textureless regions. Lempitsky et al. [62] achieved significant improvements in speed by parallelizing the computation of optimal segmentation and subsequently fusing the results. In 2014, He et al. [63] addressed the issues of blurry boundaries and error-prone matching in low-texture regions. They employed a mean-shift algorithm for image segmentation, performed singular value decomposition to fit disparity planes, and applied clustering and merging to neighboring segmented regions, leading to enhanced matching efficiency and accuracy in low-texture and occluded areas.

Global matching methods incorporate the advantages of local matching methods and adopt the cost aggregation approach used in local optimal dense matching methods. They introduce regularization constraints to obtain more robust matching results, but they consume more computational time and memory resources. Additionally, compared to local matching methods, global matching methods more readily incorporate additional prior information as constraints, such as the prevalent planar structural information in urban scenes, thus further enhancing the refinement of reconstruction results.

Semi-Global Matching Method

Semi-global matching (SGM) [64] also adopts the concept of energy function minimization. However, unlike global matching methods, SGM transforms the optimization problem of two-dimensional images into one-dimensional optimization along multiple paths (i.e., scanline optimization). It aggregates costs along paths from multiple directions and uses the Winner Takes All (WTA) algorithm to calculate disparities, achieving a good balance between matching accuracy and computational cost. The census transform proposed by Zabih [65] is widely used in matching cost computation. This method has a simple structure but is heavily reliant on the central pixel of the local window and is sensitive to noise. In 2012, Hermann et al. [66] introduced a hierarchical iterative semi-global stereo matching algorithm, resulting in a significant improvement in speed. Rothermel et al. [67] proposed a method for adjusting the disparity search range based on an image pyramid matching strategy called tSGM. They utilized the previous disparity to derive the dynamic disparity search range for each current pixel. This further reduced memory consumption while enhancing computational accuracy. In 2016, Tao [68] proposed a multi-measure semi-global matching method, building upon previous research. This method improved and expanded aspects such as the choice of penalty coefficients, similarity measures, and disparity range adjustments in classic semi-global matching algorithms. Compared to the method in [67], it offered enhancements in terms of reconstruction completeness and accuracy. In 2017, Li et al. [69] used mutual information combined with grayscale and gradient information as the matching cost function to calculate the cost values. They employed multiple adaptive path aggregations to optimize the initial cost values. Finally, they applied methods such as a left–right consistency check to complete the optimization. Additionally, they further refined the matching results using peak filters. 
In 2018, Chai et al. [70] introduced a semi-global matching method based on a minimum spanning tree. This approach calculated the cost values between pixels along four planned paths and aggregated the costs in both the leaf node and root node directions. The algorithm resulted in fewer mismatched points near the image edges and provided a more accurate disparity map. In 2020, Wang et al. [71] used SURF to detect potential matching points in remote sensing stereo image pairs. This was performed to modify the path weights in different aggregation directions, improving the matching accuracy in areas with weak texture and discontinuous disparities. However, the SURF step introduced additional computational burdens. Shrivastava et al. [72] extended the traditional semi-global matching (SGM) pipeline architecture by processing multiple pixels in parallel with relaxed dependency constraints, which improved the algorithm’s efficiency. However, it led to significant accuracy losses. In 2021, Huang et al. [73] introduced weights during the census transform phase, enabling the accurate selection of reference pixel values for the central point. They also used a multi-scale aggregation strategy with guided filtering as the cost aggregation kernel, resulting in improved matching accuracy. However, this significantly increased the algorithm’s complexity, making it less suitable for parallel implementation. Zhao et al. [74] replaced the central pixel with the surrounding pixels of the census window during the transformation process, making it more robust and achieving good disparity results. In 2022, Lu et al. [75] employed a strategy involving downsampling and disparity skipping. They also introduced horizontal path weighting during aggregation. However, this approach introduced a new path weight parameter, increasing the computational complexity of cost aggregation.

The current semi-global stereo matching algorithms have made significant advancements in both accuracy and efficiency. However, they have not achieved a well-balanced trade-off between accuracy and efficiency.
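The path-wise cost aggregation at the core of semi-global matching can be sketched for a single direction. The sketch below aggregates costs from left to right with a small penalty `p1` for disparity changes of one level and a larger penalty `p2` for bigger jumps; a full implementation would sum the results of several directions before the winner-takes-all step. The function name `aggregate_left_to_right`, the penalty values, and the toy cost volume are assumptions.

```python
import numpy as np


def aggregate_left_to_right(cost, p1=1.0, p2=2.0):
    """Aggregate matching costs along one SGM path (left to right).

    cost: array of shape (height, width, num_disparities).
    """
    cost = np.asarray(cost, dtype=float)
    h, w, _ = cost.shape
    agg = np.empty_like(cost)
    agg[:, 0] = cost[:, 0]
    for x in range(1, w):
        prev = agg[:, x - 1]
        prev_min = prev.min(axis=1, keepdims=True)
        # Costs of arriving from disparity d - 1 or d + 1 (penalty p1).
        from_lower = np.full_like(prev, np.inf)
        from_lower[:, 1:] = prev[:, :-1] + p1
        from_upper = np.full_like(prev, np.inf)
        from_upper[:, :-1] = prev[:, 1:] + p1
        best = np.minimum(np.minimum(prev, from_lower),
                          np.minimum(from_upper, prev_min + p2))
        # Subtracting prev_min keeps the aggregated values bounded.
        agg[:, x] = cost[:, x] + best - prev_min
    return agg


# Toy cost volume (assumed values): one row, disparity 2 correct, pixel 3 noisy.
volume = np.ones((1, 6, 4))
volume[0, :, 2] = 0.0
volume[0, 3] = [0.5, 1.0, 0.6, 1.0]
disparity_raw = volume.argmin(axis=2)
disparity_sgm = aggregate_left_to_right(volume).argmin(axis=2)
```

In this toy case, the winner-takes-all choice on the raw costs picks the wrong disparity at the noisy pixel, whereas the aggregated costs recover the consistent value.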

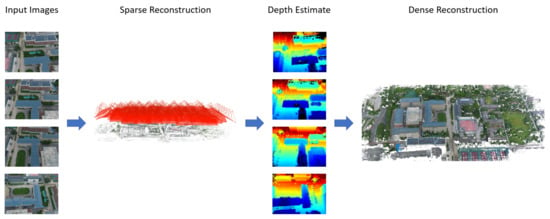

2.2.2. Multi-View Stereo

When SfM is used for scene reconstruction, the sparsity of the matched feature points often leads to a sparse point cloud and unsatisfactory reconstruction results. To overcome this limitation, multi-view stereo (MVS) techniques are employed to enhance the reconstruction. MVS leverages the camera pose parameters estimated by SfM to capture richer information about the scene. Moreover, the image rectification and stereo matching mentioned in Section 2.2 are used in MVS. The primary goal is to match corresponding points across different images as effectively as possible, thereby increasing the density of the reconstructed points and enhancing the quality of the reconstruction (Figure 6). MVS can be implemented through three main methods: voxel-based reconstruction, feature propagation, and depth map fusion [76].

Figure 6.

Dense reconstruction based on MVS.

The voxel-based algorithm defines a spatial range—typically a cube—that encapsulates the entire scene to be reconstructed. This cube is then subdivided into smaller cubes, which are known as voxels. By assigning occupancy values to the voxels based on scene characteristics, such as filling voxels in occupied regions and leaving others unfilled in unoccupied regions, a 3D model of the object can be obtained [77]. However, this algorithm has limitations. Firstly, it requires an initial determination of a fixed spatial range, which limits its ability to reconstruct objects beyond that range. Secondly, the algorithm’s complexity restricts the number of subdivisions, resulting in relatively lower object resolution.
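The fixed spatial range and the resolution limit described above can be seen in a minimal sketch. Here, occupancy is decided simply by whether a known 3D point falls inside a voxel, which is an assumption made for illustration; actual voxel-based MVS methods decide occupancy from image evidence. The name `voxelize` and its parameters are hypothetical.

```python
def voxelize(points, origin, size, resolution):
    """Mark the voxels of a cubic grid that contain at least one point.

    origin: minimum corner of the bounding cube, size: its edge length,
    resolution: number of subdivisions per axis.
    Returns the set of occupied (i, j, k) voxel indices.
    """
    step = size / resolution
    occupied = set()
    for point in points:
        index = tuple(int((c - o) // step) for c, o in zip(point, origin))
        # Points outside the predefined cube cannot be represented at all.
        if all(0 <= i < resolution for i in index):
            occupied.add(index)
    return occupied
```

Note that the number of voxels grows with the cube of `resolution`, which is why the number of subdivisions is restricted in practice.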

The feature propagation method generates surface slices (patches) and an initial point cloud from initial feature points. These feature points are projected onto the images and propagated to the surrounding areas. Finally, the surface slices are used to cover the scene surface for 3D reconstruction. Each surface slice can be visualized as a rectangle carrying information such as its center and surface normal vector. By estimating the surface slices and ensuring complete coverage of the scene, an accurate and dense point cloud structure can be obtained [78]. In 2010, Furukawa and Ponce proposed a popular feature-propagation-based MVS algorithm called PMVS [79].

The depth map fusion method is the most commonly used and effective approach in multi-view stereo vision. It typically involves four steps: reference image selection, depth map estimation, depth map refinement, and depth map fusion [80]. Estimating depth maps is a critical step in multi-view stereo reconstruction, where an appropriate depth value is assigned to each pixel in the image. This estimation is achieved by maximizing the photometric consistency between an image and a corresponding window in the reference image centered at that pixel (Figure 7). Common metrics for photometric consistency include the mean absolute difference (MAD), the sum of squared differences (SSD), the sum of absolute differences (SAD), and normalized cross-correlation (NCC) [52].
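The photometric consistency metrics listed above can be written compactly for two flattened image windows of equal size. This is a minimal sketch; the function names are chosen here for illustration, and the NCC variant shown subtracts the window means (often called zero-mean NCC), which is an assumption about the exact form used.

```python
import math


def sad(a, b):
    """Sum of absolute differences (lower means more similar)."""
    return sum(abs(x - y) for x, y in zip(a, b))


def ssd(a, b):
    """Sum of squared differences (lower means more similar)."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def mad(a, b):
    """Mean absolute difference (SAD normalized by the window size)."""
    return sad(a, b) / len(a)


def ncc(a, b):
    """Normalized cross-correlation in [-1, 1] (higher means more similar)."""
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    da = [x - mean_a for x in a]
    db = [y - mean_b for y in b]
    denom = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    # A constant window has zero variance, so the correlation is undefined.
    return sum(x * y for x, y in zip(da, db)) / denom if denom > 0 else 0.0
```

Unlike SAD, SSD, and MAD, NCC is unchanged when one window is a brightened or contrast-scaled copy of the other, which is one reason it is common in multi-view settings with varying exposure.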

Figure 7.

Flowchart of depth estimation. A cost volume is generated for a set of candidate depths using a matching criterion, such as variance. The cost volumes are aggregated, and the depth is estimated from the aggregated cost volume. Finally, depth maps are obtained after optimization.

3. Learning-Based Methods

Traditional 3D reconstruction methods have been widely applied in various industries and in daily life. While they still dominate the research field, an increasing number of researchers are using deep learning to explore 3D reconstruction or, in other words, the intersection and fusion of the two approaches. With the development of deep learning, convolutional neural networks (CNNs) have been extensively used in computer vision. CNNs have significant advantages in image processing, as they can directly take images as inputs, avoiding the complex feature extraction and data reconstruction steps of traditional image processing algorithms. Although deep-learning-based 3D reconstruction methods were developed relatively recently, they have progressed rapidly. What roles can deep learning play in 3D reconstruction? Firstly, deep learning can provide new insights into optimizing the performance of traditional reconstruction methods, as in CodeSLAM [81]. This approach employs deep learning to extract multiple basis functions from a single image, using neural networks to represent the depth of a scene. These representations can greatly simplify the optimization problems present in traditional geometric methods. Secondly, fusing deep-learning-based reconstruction algorithms with traditional 3D reconstruction algorithms leverages the complementary strengths of both approaches. Thirdly, deep learning can be used to mimic human vision and directly reconstruct 3D models. Since humans perform 3D reconstruction with their brains rather than through strict geometric calculations, it is theoretically feasible to use deep learning methods directly. Notably, some methods aim to perform 3D reconstruction directly from a single image. In theory, a single image lacks the 3D information of an object, so depth cannot be recovered from it geometrically. However, humans can make reasonable estimates of an object’s distance based on experience, which adds some plausibility to such methods.

This section will introduce the applications of deep learning in traditional methods such as structure from motion, stereo matching, and multi-view stereo vision, as well as in the novel approach of neural-radiance-field-based 3D reconstruction.

3.1. SfM with Deep Learning

The combination of deep learning and SfM enables the efficient estimation of camera poses and scene depth owing to the high accuracy and efficiency of CNN-based feature extraction and matching [82]. In 2017, Zhou et al. [83] achieved good results with unsupervised training that minimized the photometric error. They employed two jointly trained CNNs to predict depth maps and camera motion. Ummenhofer et al. [84] utilized optical flow features to estimate scene depth and camera motion, improving the generalization capabilities in unfamiliar scenes. In 2018, Wang et al. [85] incorporated a multi-view geometry constraint between depth and motion. They used a CNN to estimate the scene depth and a differentiable module to compute camera motion. In 2019, Tang et al. [86] proposed a deep learning framework called BA-Net (Bundle Adjustment Network). The core of the network was a differentiable bundle adjustment layer that predicted both the scene depth and camera motion based on CNN features. It emphasized the incorporation of multi-view geometric constraints, enabling reconstruction from an arbitrary number of images.

3.2. Stereo Matching with Deep Learning

In 2015, Zbontar and LeCun [87] introduced the use of convolutional neural networks (CNNs) for extracting image features in cost computation. Furthermore, they applied cross-based cost aggregation and a left-right consistency check. This approach eliminated erroneous matching areas, marking the emergence of deep learning as a significant technique in stereo matching.

3.2.1. Non-End-to-End Methods

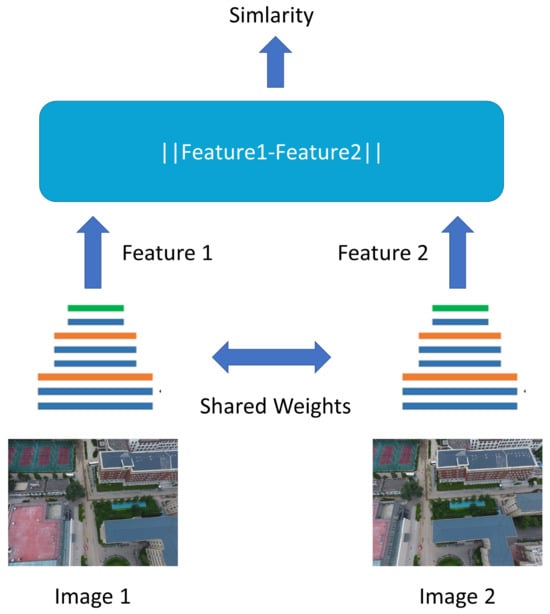

The image networks used for stereo matching can be categorized into three main types: pyramid networks [88], Siamese networks [89], and generative adversarial networks (GANs) [90].

In 2018, Chang et al. [91] incorporated a pyramid pooling module during the feature extraction stage. They utilized multi-scale analysis and a 3D-CNN structure to effectively address issues such as vanishing and exploding gradients, achieving favorable outcomes even under challenging conditions, such as weak textures, occlusion, and non-uniform illumination. There are also some other related works, such as CREStereo [92], ACVNet [93], and NIG [94].

Siamese networks, pioneered by Bromley et al. [89], consist of two weight-sharing CNNs that take the left and right images as inputs. Feature vectors are extracted from these images, and the L1 distance between the feature vectors is measured to estimate the similarity between the images (Figure 8). MC-CNN [87] is a classic example of a Siamese-based network. Zagoruyko et al. [95] enhanced the original Siamese network by incorporating the ReLU function and smaller convolutional kernels, thereby deepening the convolutional layers and improving the matching accuracy. In 2018, Khamis et al. [96] utilized a Siamese network to extract features from left and right images. They first computed a disparity map from a low-resolution cost volume and then introduced a hierarchical refinement network to capture high-frequency details. The guidance of a color input facilitated the generation of high-quality boundaries.

Figure 8.

Architecture of Siamese networks. Two CNNs with shared weights are used to extract image features.

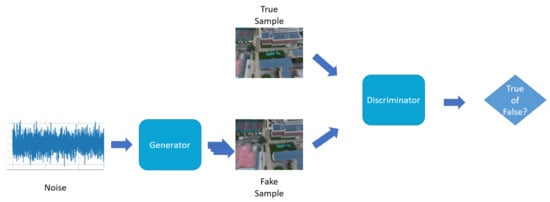

Generative adversarial networks (GANs), which were proposed by Luo et al. [90], consist of a generator model and a discriminator model. The generator model learns the features of input data and generates images similar to the input images, while the discriminator model continuously distinguishes between the generated images and the original images until a Nash equilibrium is reached (Figure 9). In 2018, Pilzer et al. [97] presented a GAN framework based on binocular vision. It comprised two generator sub-networks and one discriminator network. The two generator networks were used in adversarial learning to train the reconstruction of disparity maps. Through mutual constraints and supervision, they generated disparity maps from two different viewpoints, which were then fused to produce the final data. Experiments demonstrated that this unsupervised model achieved good results under non-uniform lighting conditions. Lore et al. [98] proposed a deep convolutional generative model that obtained multiple depth maps from neighboring frames, further enhancing the quality of depth maps in occluded areas. In 2019, Matias et al. [99] used a generative model to handle occluded areas and achieved satisfactory disparity results.

Figure 9.

Architecture of a GAN. The generator produces fake samples from noise and image features; the discriminator distinguishes true samples from the fake samples produced by the generator.

3.2.2. End-to-End Methods

The deep-learning-based non-end-to-end stereo matching methods mentioned in Section 3.2.1 essentially do not deviate from the framework of traditional methods. In general, they still require the addition of hand-designed regularization functions or post-disparity processing steps. This means that non-end-to-end stereo matching methods have the drawbacks of high computational complexity and low time efficiency, and they have not resolved the issues present in traditional stereo matching methods, such as limited receptive fields and a lack of image contextual information. In 2016, Mayer et al. [100] successfully introduced an end-to-end network structure into the stereo matching task for the first time and achieved good results. The design of more efficient end-to-end stereo matching networks has gradually become a research trend in stereo matching.

Current end-to-end stereo matching networks take left and right views as inputs. After feature extraction using convolutional modules with shared weights, they construct a cost volume using either correlation or concatenation operations. Finally, different convolution operations are applied based on the dimensions of the cost volume to regress the disparity map. End-to-end stereo matching networks can be categorized into two approaches: those based on 3D cost volumes and those based on 4D cost volumes according to the dimensions of the cost volume. In this article, the focus is on 4D cost volumes.
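To make the correlation option concrete, the sketch below builds a cost volume for a single scanline by taking the inner product of each left feature vector with the right feature vector shifted by every candidate disparity. The function name and the list-based layout are assumptions made for illustration; real networks operate on full feature maps. With concatenation, the two feature vectors would instead be stacked at each position, which adds the feature-channel dimension that turns the 3D volume into a 4D one.

```python
def correlation_cost_volume(left, right, max_disp):
    """Build a correlation volume for one scanline.

    left[x] and right[x] are feature vectors of the pixels at column x.
    Returns volume[d][x] = <left[x], right[x - d]>, or 0.0 where the
    shifted column x - d falls outside the image.
    """
    width = len(left)
    volume = []
    for d in range(max_disp):
        row = []
        for x in range(width):
            if x - d < 0:
                row.append(0.0)
            else:
                row.append(sum(l * r for l, r in zip(left[x], right[x - d])))
        volume.append(row)
    return volume
```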

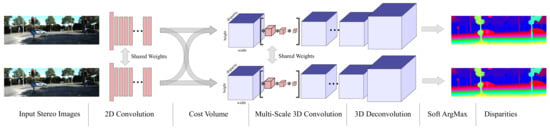

In contrast to architectures inspired by traditional neural network models, end-to-end stereo matching network architectures based on 4D cost volumes are specifically designed for the stereo matching task. In this architecture, the network no longer performs dimension reduction on the features, allowing the cost volume to retain more image geometry and contextual information. In 2017, Kendall et al. [101] proposed a novel deep disparity learning network named GC-Net, which introduced a 4D cost volume and, for the first time, utilized 3D convolutions in the regularization module to integrate contextual information from the 4D cost volume. This pioneering approach established a 3D network structure that was specifically designed for stereo matching (Figure 10). It first used weight-sharing 2D convolutional layers to separately extract high-dimensional features from the left and right images. At this stage, downsampling was performed to reduce the original resolution by half, which helped reduce the memory requirements. Then, the left feature map and the corresponding channel of the right feature map were combined pixel-wise along the disparity dimension to create a 4D cost volume. After that, an encoding–decoding module consisting of multi-scale 3D convolutions and deconvolutions regularized the cost volume, resulting in a regularized cost volume tensor. Finally, the cost volume was regressed with a differentiable Soft ArgMax to obtain the disparity map. GC-Net was considered state of the art due to its 4D (height, width, disparity, and feature channels) volume. In 2018, Chang et al. [91] proposed the pyramid stereo matching network (PSMNet). It was primarily composed of a spatial pyramid pooling (SPP) module and a stack of hourglass-shaped 3D CNN modules.
The pyramid pooling module was responsible for extracting multi-scale features to make full use of global contextual information, while the stacked hourglass 3D encoder–decoder structure regularized the 4D cost volume to provide disparity predictions. However, due to the inherent information loss in the pooling operations at different scales within the SPP module, PSMNet exhibited lower matching accuracy in image regions that contained a significant amount of fine detail information, such as object edges.

Figure 10.

Architecture of GC-Net [101]. Step 1: Weight-sharing 2D convolution is used to extract image features and downsample. Step 2: The 4D cost volume is created by combining left and right images’ feature maps. Step 3: The cost volume is regularized through multi-scale 3D convolution and 3D deconvolution. Step 4: The cost volume is regressed with a differentiable Soft ArgMax, and a disparity map is obtained.
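Step 4 can be illustrated for a single pixel. A hard arg-min over the disparity costs is not differentiable, so the regression instead takes the expected disparity under a softmax of the negated costs. The sketch below is a minimal stand-alone version; the function name `soft_argmin` is chosen here for illustration and is not taken from [101].

```python
import math


def soft_argmin(costs):
    """Differentiable disparity regression for one pixel.

    costs[d] is the regularized matching cost at disparity d.
    Returns the softmax(-cost)-weighted average of the disparity indices.
    """
    lowest = min(costs)
    # Shifting by the minimum cost avoids overflow without changing the result.
    weights = [math.exp(-(c - lowest)) for c in costs]
    total = sum(weights)
    return sum(d * w for d, w in enumerate(weights)) / total
```

Because the output is a weighted average, it can take sub-pixel values; for example, two equally likely neighboring disparities yield a value halfway between them.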

Although end-to-end networks based on 4D cost volumes achieve good matching results, the computational complexity of the 3D convolutional structure itself results in high costs in terms of both storage resources and computation time. In 2019, Wang et al. [102] introduced a three-stage disparity estimation network called AnyNet, which used a coarse-to-fine strategy. Firstly, the network constructed a low-resolution 4D cost volume using low-resolution feature maps as input. Then, it searched within a smaller disparity range using 3D convolutions to obtain a low-resolution disparity map. Finally, it upsampled the low-resolution disparity map to obtain a high-resolution disparity map. This method was progressive, allowing inference to be stopped at any time to obtain a coarser disparity map, thereby trading accuracy for matching speed. Zhang et al. [103] proposed GA-Net, which replaced many 3D convolutional layers in the regularization module with semi-global aggregation (SGA) layers and local guided aggregation (LGA) layers. SGA is a differentiable approximation of the cost aggregation used in SGM, and the penalty coefficients are learned by the network instead of being determined by prior knowledge. This provides better adaptability and flexibility for different regions of an image. The LGA layer is appended at the end of the network to aggregate local costs with the aim of refining disparity near thin structures and object edges.

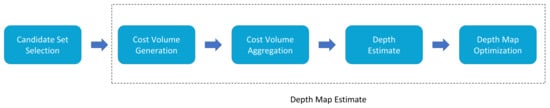

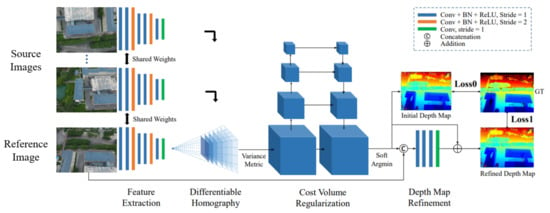

3.3. MVS with Deep Learning

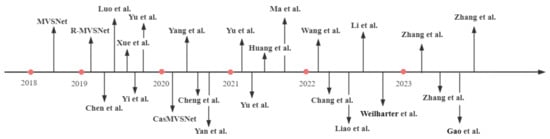

In 2018, Yao et al. pioneered the combination of convolutional neural networks and MVS, resulting in the development of MVSNet [104]. They extracted image features using a CNN, utilized a differentiable homography to construct a cost volume, and regularized the cost volume using a 3D U-Net, enabling multi-view depth estimation (Figure 11). Building upon this work, Yao et al. [105] introduced R-MVSNet (recurrent MVSNet) in 2019. By replacing the 3D CNN regularization in MVSNet with gated recurrent units (GRUs), they reduced memory consumption. Chen et al. [106] proposed PointMVSNet, which employed graph convolutional networks to refine the point cloud generated by MVSNet. Luo et al. [107] introduced P-MVSNet (Patchwise MVSNet), which significantly improved the accuracy and completeness of the depth maps and reconstructed point clouds through a patch-wise approach. Xue et al. [108] proposed MVSCRF (MVS Conditional Random Field), which incorporated a conditional random field (CRF) module to enforce smoothness constraints on the depth map. This approach resulted in enhanced depth estimation. Yi et al. [109] presented PVA-MVSNet (Pixel View Adaptive MVSNet), which generated depth estimates with higher confidence by adaptively aggregating views at both the pixel and voxel levels. Yu et al. [110] introduced Fast-MVSNet, which utilized a sparse cost volume and Gauss–Newton layers to improve the runtime speed of MVSNet. In 2020, Gu et al. [111] introduced Cascade MVSNet, a redesigned model that encoded features from different scales using an image feature pyramid in a cascading manner. This approach not only saved memory resources but also improved MVS speed and accuracy. Yang et al. [112] proposed CVP-MVSNet (Cost–Volume Pyramid MVSNet), which employed a pyramid-like cost–volume structure to adjust the depth map at different scales, from coarse to fine. Cheng et al.
[113] developed a network that automatically adjusted the depth interval to avoid dense sampling and achieved high-precision depth estimation. Yan et al. [114] introduced D2HC-RMVSNet (Dense Hybrid RMVSNet), a dense hybrid recurrent multi-view stereo network that incorporated dynamic consistency checks, yielding excellent results while significantly reducing memory consumption. Liu et al. [115] proposed RED-Net, which introduced a recurrent encoder–decoder (RED) architecture for the sequential regularization of the cost volume, achieving higher efficiency and accuracy while maintaining resolution, which was beneficial for large-scale reconstruction. In 2022, a significant number of works built on modules that have proven useful in computer vision, such as attention and transformers, were introduced [116,117,118,119,120]. In 2023, Zhang et al. [121] proposed DSC-MVSNet, which used depthwise separable convolution and attention modules to regularize the cost volume. Zhang et al. [122] proposed Vis-MVSNet, which estimated matching uncertainty and integrated pixel-level occlusion information within the network to enhance depth estimation accuracy in scenes with severe occlusions. There have also been further studies building on MVSNet (Figure 12), such as MS-REDNet [123], AACVP-MVSNet [124], Sat-MVSF [125], RA-MVSNet [126], M3VSNet [127], and Epp-MVSNet [128].

Figure 11.

Overview of MVSNet [104]. Image features are extracted by multiple CNNs that share weights. Then, a differentiable homography matrix and variance metric are utilized to generate the cost volume, and a 3D U-Net is used to regularize the cost volume. Finally, depth maps are estimated and refined with the regularized probability volume and a reference image.
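The variance metric mentioned in the caption can be sketched for a single voxel of the cost volume. After the features of all views have been warped to the same depth hypothesis, the cost is the per-channel variance across views, so it works for any number of input views. The function name and the list-based layout are illustrative assumptions; the homography warping itself is omitted here.

```python
def variance_cost(features):
    """Per-channel variance of warped features across views at one voxel.

    features[i] is the feature vector of view i at this voxel.
    Low variance means the views agree, i.e., the depth hypothesis is plausible.
    """
    num_views = len(features)
    channels = len(features[0])
    mean = [sum(f[c] for f in features) / num_views for c in range(channels)]
    return [
        sum((f[c] - mean[c]) ** 2 for f in features) / num_views
        for c in range(channels)
    ]
```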

Figure 12.

Chronological overview of MVSNet methods [104,105,106,107,108,109,110,111,112,113,114,115,116,117,118,119,120,121,122,123,124,125,126,127,128].

3.4. Neural Radiance Fields

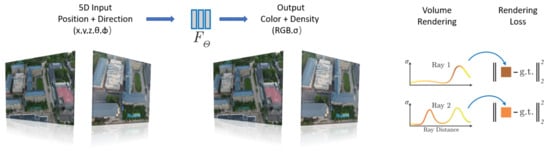

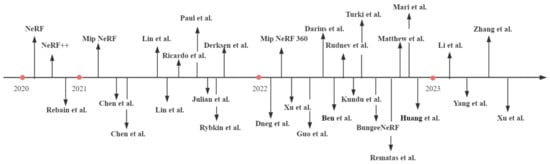

In 2020, a groundbreaking scene-rendering technique called Neural Radiance Fields (NeRF) was introduced by Mildenhall et al. [129]. NeRF is an end-to-end learning framework that takes the spatial coordinates of points in the scene and the viewing direction as input and uses a multi-layer perceptron (MLP) network to represent a neural field. This neural field outputs the volume density (opacity) at each point and its color as seen from the given direction. By tracing rays through the scene and integrating the colors along each ray according to the density, NeRF generates high-quality images or videos from novel viewpoints (Figure 13). Building upon NeRF, Zhang et al. [130] proposed NeRF++, which addresses the potential shape–radiance ambiguity: even if the geometry learned by a NeRF model trained on a scene’s dataset is incorrect, the model can still render accurate results on the training samples. However, for unseen views, incorrect shapes may result in poor generalization. NeRF++ tackles this challenge and resolves the parameterization issue that arises when applying NeRF to unbounded 360° scenes. This enhancement allows for better capturing of objects in large-scale, unbounded 3D scenes. Although NeRF itself does not possess inherent 3D object reconstruction capabilities, modifications and variants that incorporate geometric constraints have been developed. These NeRF-based methods enable the end-to-end reconstruction of 3D models of objects or scenes by integrating geometric constraints into the learning framework. There have been a significant number of studies on NeRF in recent years (Figure 14), such as DeRF [131], depth-supervised NeRF [132], Mip-NeRF [133], Mip-NeRF 360 [134], Ha-NeRF [135], DynIBaR [136], MRVM-NeRF [137], MVSNeRF [138], PointNeRF [139], and ManhattanNeRF [140].

Figure 13.

A pipeline of NeRF. The 5D input, consisting of the 3D position of a sample point in the target scene and the direction from which it is viewed, is fed into an MLP, which outputs the color and density at that point. Finally, volume rendering composites these values into pixel colors, and a rendering loss against the training images is used to optimize the MLP.
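The volume rendering step can be sketched for a single ray using the usual discrete approximation, in which each sample contributes its color weighted by its own opacity and by the transmittance accumulated in front of it. The function name and argument layout are illustrative assumptions; in a real system the densities and colors would come from the MLP.

```python
import math


def render_ray(densities, colors, deltas):
    """Composite the samples along one ray into a pixel color.

    densities[i]: volume density at sample i (front to back),
    colors[i]: (r, g, b) at sample i,
    deltas[i]: distance between sample i and the next sample.
    """
    transmittance = 1.0  # fraction of light not yet absorbed
    pixel = [0.0, 0.0, 0.0]
    for sigma, color, delta in zip(densities, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this segment
        weight = transmittance * alpha
        for k in range(3):
            pixel[k] += weight * color[k]
        transmittance *= 1.0 - alpha
    return pixel
```

Every operation here is differentiable with respect to the densities and colors, which is what allows the difference between rendered and observed pixels to train the network.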

Figure 14.

Chronological overview of NeRF methods [129,130,131,132,133,134,135,136,137,138,139,140,141,142,143,144,145,146,147,148,149,150,151,152,153,154,155,156,157,158,159].

When facing large-scale outdoor scenes, the main challenges that need to be addressed are as follows:

- Accurate six-DoF camera pose estimation;

- Normalization of lighting conditions to avoid overexposed scenes;

- Handling open outdoor scenes and dynamic objects;

- Striking a balance between accuracy and computational efficiency.

To obtain more accurate camera poses, in 2021, Lin et al. [141] proposed I-NeRF, which inverted the training of NeRF by using a pre-trained model to learn precise camera poses. Lin et al. [142] introduced BA-NeRF, which could optimize pixel-level loss even with noisy camera poses by computing the difference between the projected results of rotated camera poses and the given camera pose. They also incorporated an annealing mechanism in the position-encoding module, gradually introducing high-frequency components during the training process, resulting in accurate and stable reconstruction results.
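The annealing of the position encoding can be pictured as a weight applied to each frequency band, which rises from 0 to 1 as training progresses so that low frequencies are active first. The cosine ramp below is one way to write such a schedule and is an assumption made here for illustration, since this text does not give the exact formula used in [142]; the names `frequency_weight`, `alpha`, and `k` are likewise hypothetical.

```python
import math


def frequency_weight(alpha, k):
    """Weight of the k-th frequency band at training progress alpha.

    alpha grows from 0 to the number of frequency bands during training.
    Band k is switched off while alpha < k, ramps up smoothly while
    k <= alpha < k + 1, and is fully active afterwards.
    """
    t = alpha - k
    if t < 0:
        return 0.0
    if t < 1:
        return (1.0 - math.cos(t * math.pi)) / 2.0
    return 1.0
```

Each sine and cosine term of the positional encoding at band `k` would be multiplied by this weight before being passed to the network.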

To address the issue of lighting, in 2021, Martin-Brualla et al. [143] introduced two new encoding layers: appearance embedding, which modeled the static appearance of the scene, and transient embedding, which modeled transient factors and uncertainties, such as occlusions. By learning these embeddings, they achieved a control mechanism for adjusting a scene’s lighting. In 2022, Rückert et al. [144] proposed the ADOP (approximate differentiable one-pixel point) rendering method, which used a camera-processing pipeline to rasterize a point cloud and fed the result into a CNN, producing high-dynamic-range images. They then applied traditional differentiable image processing techniques, such as lighting compensation, and trained the network to learn the corresponding weights, achieving fine-grained modeling. Mildenhall et al. [145] identified the issue of inconsistent noise between RGB-processed images and the original data. They proposed training NeRF on the original images before RGB processing and obtaining RGB images afterward using image processing methods, which resulted in more consistent and uniform lighting. This approach leveraged the implicit alignment capabilities of NeRF and the consensus among multiple shots, which complemented each other’s information. Rudnev et al. [146] introduced the NeRF-OSR method, which learned an implicit neural scene representation by decomposing the scene into spatial occupancy, illumination, shadow, and diffuse reflectance. This method supported the meaningful editing of scene lighting and camera viewpoints simultaneously and in conjunction with semantics.

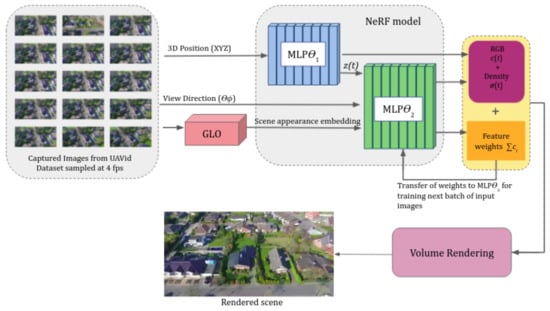

When outdoor scenes are modeled with NeRF, neglecting the far scene can result in background errors, while modeling it can reduce the resolution of the foreground due to scale issues. NeRF++, which was created by Zhang et al. [130], addressed this problem by proposing a simplified inverted sphere parameterization for free-viewpoint synthesis. The scene space was divided into two volumes: an inner unit sphere, which contained the foreground and all cameras, and an outer volume, which was represented by an inverted sphere covering the complement of the inner volume and contained the remaining environment. The two volumes were rendered using separate NeRF models. In 2021, Ost et al. [147] introduced the neural scene graph (NSG) method for dynamic rigid objects. It treated the background as the root node and the moving objects as the foreground (neighbor nodes). The relationships between poses and scaling factors were used to create edges in the associated graph, and intersections between rays and the 3D bounding boxes of the objects were verified along the edges. If there was an intersection, the rays were bent, and modeling was performed separately for the inside and outside of the detection box to achieve consistent foreground–background images. Paul et al. [148] proposed TransNeRF based on transfer learning, where they first used a generative model called GLO (generative latent optimization) [149] to learn and model dynamic objects based on NeRF-W [143]. Then, a pre-trained NeRF++ was used as the MLP module in the network (Figure 15). In 2022, Kundu et al. [150] introduced Panoptic NeRF, which decomposed dynamic 3D scenes into a series of foreground and background elements, representing each foreground element with a separate NeRF model.
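The inverted sphere idea used by NeRF++ for the outer volume can be sketched as a change of coordinates: a point at distance r > 1 from the origin is described by its unit direction together with the inverse distance 1/r, so the whole unbounded exterior maps to a bounded range. The function name and the error handling are assumptions made for this illustration.

```python
import math


def inverted_sphere(x, y, z):
    """Map a point outside the unit sphere to (x', y', z', 1/r).

    (x', y', z') is the unit direction of the point and 1/r lies in (0, 1),
    so arbitrarily distant background points stay numerically bounded.
    """
    r = math.sqrt(x * x + y * y + z * z)
    if r <= 1.0:
        raise ValueError("point lies inside the unit sphere (foreground volume)")
    return (x / r, y / r, z / r, 1.0 / r)
```

Sampling uniformly in 1/r places many samples near the foreground boundary and progressively fewer far away, which matches the lower level of detail that distant content needs.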

Figure 15.

Architecture of TransNeRF [148]. The 3D position is input into a color MLP to obtain color information and into the GLO module to generate a scene appearance embedding. The color information, view direction, and appearance embedding go through another density MLP to obtain the density information. Both of the MLPs come from an NeRF++ model, and the feature weights of the density MLP guide the training of the next batch of input images.

In terms of large-scale scenes, Turki et al. [151] proposed Mega-NeRF for drone scenes, which employed a top-down 2D grid approach to divide the scene into multiple cells. The training data were then assigned to each cell based on the intersections between camera rays and the scene, enabling an individual NeRF model to be trained for each cell. They also introduced a new guided sampling method that sampled points only near the object’s surface, improving the rendering speed. In addition, improvements were made to NeRF++ by dividing the scene into foreground and background regions using ellipsoids. Taking advantage of the camera height measurements, rays were terminated near the ground to further refine the sampling range. Derksen et al. [152] introduced S-NeRF, which was the first application of Neural Radiance Fields to the reconstruction of 3D models from multi-view satellite images. It explicitly modeled direct sunlight and a local light field and learned the diffuse light, such as sky light, as a function of the sun’s position. This approach leveraged non-correlated effects in satellite images to generate realistic images under occlusion and changing lighting conditions. In 2022, Xu et al. [153] proposed BungeeNeRF, which used progressive learning to gradually refine the fitting of large-scale city-level 3D models, starting from the distant view and progressively capturing different levels of detail. Rematas et al. [154] introduced Urban Radiance Fields (URFs), which incorporated information from LiDAR point clouds to guide NeRF in reconstructing street-level scenes. Tancik et al. [155] proposed BlockNeRF, which divided large scenes based on prior map information. They created circular blocks centered around the projected points of the map’s blocks and trained a separate NeRF model for each block. By combining the outputs of multiple NeRF models, they obtained optimal results. Mari et al.
[156] extended the work of S-NeRF by introducing a rational polynomial camera model to improve the robustness of the network to changing shadows and transient objects in satellite cameras. Huang et al. [157] took a traditional approach by assuming existing surface hypotheses for buildings. They introduced a new energy term to encourage roof preference and two additional hard constraints, explicitly obtaining the correct object topology and detail recovery based on LiDAR point clouds. In 2023, Zhang et al. [158] proposed GP-NeRF, which introduced a hybrid feature based on 3D hash grid features and multi-resolution plane features. They extracted the grid features and plane features separately and then combined them as inputs into NeRF for density prediction. The plane features were also separately input into the color-prediction MLP network, improving the reconstruction speed and accuracy of NeRF in large-scale outdoor scenes. Xu et al. [159] proposed Grid-NeRF, which combined feature grids and introduced a dual-branch structure with a grid branch and a NeRF branch trained in two stages. They captured scene information using a feature plane pyramid and input it into the shallow MLP network (grid branch) for the feature grid learning. The learned feature grid guided the NeRF branch to sample object surfaces, and the feature plane was bilinearly interpolated to predict the grid features of the sampled points. These features, along with positional encoding, were then input into the NeRF branch for rendering.

4. Datasets and Evaluation Metrics

According to the type of target task, the datasets for 3D reconstruction can also be divided into different categories (Table 1). The datasets mentioned in this section are commonly used in the corresponding tasks and mainly cover large-scale scenes. These datasets comprise high-resolution satellite images, low-altitude images captured by UAVs, and street-view images captured with handheld cameras in urban environments. They provide rich urban scenes, encompassing diverse architectural structures and land cover types, which are crucial for research on large-scale 3D reconstruction. Additionally, the evaluation metrics depend on the reconstruction methods and are introduced in detail in this section.

Table 1.

Brief introduction to the datasets for the 3D reconstruction of large-scale or outdoor scenes.

4.1. Structure from Motion

4.1.1. Datasets

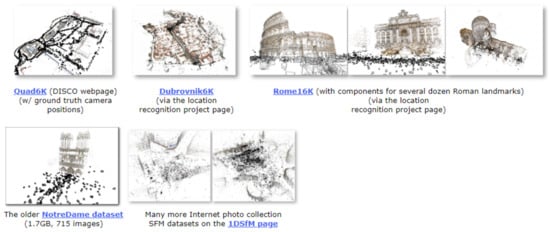

The BigSfM project, which was created at Cornell University, contains a large number of SfM datasets that are mainly used for the reconstruction of large-scale outdoor scenes. These datasets are usually collected from the Internet and include multiple sets of city landmark images downloaded from Flickr and Google (Figure 16), such as Quad6K [160], Dubrovnik6K [161], Rome16K [161], and the older NotreDame [18].

Figure 16.

Some famous and common datasets of the BigSfM project.

Quad6K: This dataset contains 6514 images of Cornell University’s Arts Quad; the geographic information from about 5000 images was recorded with the GPS receiver of the user’s own iPhone 3G, and the geographic information from 348 images was measured and recorded using high-precision GPS equipment.

Dubrovnik6K: This dataset contains 6844 images of landmarks in the city of Dubrovnik, and it consists of SIFT features, SfM models, and query images corresponding to SIFT features.

Rome16K: This dataset contains 16,179 images of landmarks in the city of Rome, and it consists of SIFT features, SfM models, and query images corresponding to SIFT features.

The older NotreDame: This dataset contains 715 images of Notre Dame.

4.1.2. Evaluation Metrics

SfM recovers a sparse 3D structure when the camera poses are unknown, and ground truth for the reconstruction is difficult to obtain, so indirect evaluation metrics are generally used to reflect the reconstruction quality. These metrics are the number of registered images (Registered), the number of points in the sparse point cloud (Points), the average track length (Track), and the point cloud reprojection error (Reprojection Error).

- Registered: The more registered images there are, the more information is used in the SfM reconstruction. This indirectly indicates that the points are reconstructed accurately, because registering an image depends on the accuracy of the points reconstructed in the intermediate steps.

- Points: The more points there are in the sparse point cloud, the better the camera poses agree with the 2D points, because the accuracy of triangulation depends on both.

- Track: The number of 2D points corresponding to each 3D point. The longer the track of a point, the more information is used, which indirectly indicates a higher accuracy.

- Reprojection Error: The average distance between the position of each 3D point projected into each frame with the estimated poses and the position of the actually detected 2D point. The smaller the reprojection error, the higher the accuracy of the overall structure.
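As a minimal sketch of how these four indirect metrics could be computed from a finished reconstruction, the NumPy code below assumes a simple pinhole camera without lens distortion. The function names (`project`, `sfm_metrics`) and the data layout (a dictionary of registered cameras and a list of 2D observations) are hypothetical choices made for this illustration and do not correspond to any particular SfM package.

```python
import numpy as np

def project(K, R, t, X):
    """Project Nx3 world points into a pinhole camera; returns Nx2 pixel coordinates."""
    Xc = X @ R.T + t               # world -> camera coordinates
    uv = Xc @ K.T                  # apply the intrinsic matrix
    return uv[:, :2] / uv[:, 2:3]  # perspective division

def sfm_metrics(points3d, cameras, observations):
    """Indirect SfM quality metrics.

    points3d:     (P, 3) array of reconstructed sparse points.
    cameras:      dict cam_idx -> (K, R, t) for every registered image (assumed layout).
    observations: list of (point_idx, cam_idx, u, v) detected 2D features.
    """
    errors = []
    track_len = np.zeros(len(points3d), dtype=int)
    for p_idx, c_idx, u, v in observations:
        K, R, t = cameras[c_idx]
        uv = project(K, R, t, points3d[p_idx][None, :])[0]
        errors.append(np.linalg.norm(uv - np.array([u, v])))
        track_len[p_idx] += 1      # one more 2D observation for this 3D point
    return {
        "registered": len(cameras),
        "points": len(points3d),
        "track": float(track_len.mean()),
        "reproj_error": float(np.mean(errors)),
    }
```

The reprojection error here is the plain mean of the per-observation pixel distances; some tools report a root mean square instead, so the values are only comparable when the same convention is used.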

4.2. Stereo Matching

4.2.1. Datasets

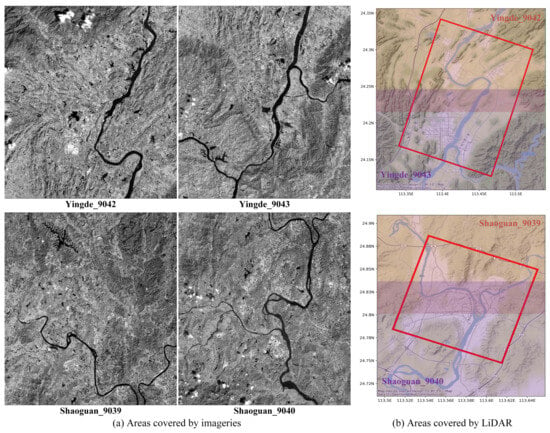

WHU-Stereo [162]: This dataset is based on GF-7 satellite images and airborne LiDAR point clouds (Figure 17), and it includes building and vegetation scenes in six cities in China: Shaoguan, Kunming, Yingde, Qichun, Wuhan, and Hengyang. There are 1757 image pairs with dense disparities.

Figure 17.

Examples from the WHU-Stereo dataset. Areas covered by GF-7 satellite images of the cities of Yingde and Shaoguan (a) and approximate LiDAR data (b).

US3D [163]: This dataset contains two urban scenes from Jacksonville, Florida and Omaha, Nebraska. A total of 4292 image pairs with dense disparities were constructed from 26 panchromatic, visible-light, and near-infrared images of Jacksonville and 43 images of Omaha, and they were collected using WorldView-3 (Figure 18). However, since many of the image pairs were captured from the same area and taken at different times, there may be seasonal differences in the appearance of land cover.

Figure 18.

Examples from the US3D dataset. Epipolar rectified images (top) with ground truth left disparities and semantic labels (bottom).

Satstereo [164]: Most of this dataset uses WorldView-3 images, and a small portion comes from WorldView-2. In addition to the dense disparity, it also builds masks and provides metadata for each image, but as with US3D, there are differences in the seasonal appearance of land cover due to the different acquisition times.

4.2.2. Evaluation Metrics

The main evaluation criteria for stereo matching algorithms are the disparity map’s accuracy and the time complexity. The evaluation metrics for the disparity map’s accuracy include the false matching rate, the mean absolute error (MAE), and the root mean square error (RMSE) [44].

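- The false matching rate is

  $$\mathrm{PBM} = \frac{1}{N}\sum_{(x,y)}\left[\,\left|d_c(x,y) - d_t(x,y)\right| > \delta_d\,\right],$$

  where $d_c(x,y)$ and $d_t(x,y)$ are the respective pixel values of the generated disparity map and the real disparity map. $\delta_d$ is the evaluation threshold that one sets, and when the difference is greater than $\delta_d$, the pixel is marked as a mismatched pixel. $N$ is the total number of pixels in the disparity map.

- MAE:

  $$\mathrm{MAE} = \frac{1}{N}\sum_{(x,y)}\left|d_c(x,y) - d_t(x,y)\right|$$

- RMSE:

  $$\mathrm{RMSE} = \sqrt{\frac{1}{N}\sum_{(x,y)}\left(d_c(x,y) - d_t(x,y)\right)^2}$$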
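The three accuracy metrics can be sketched in a few lines of NumPy. The function name `disparity_metrics`, the default threshold of 3 pixels, and the optional `valid` mask (used to skip pixels without ground truth, which are common in LiDAR-derived disparities) are assumptions made for this illustration rather than part of any benchmark's official evaluation code.

```python
import numpy as np

def disparity_metrics(d_est, d_gt, delta=3.0, valid=None):
    """False matching rate, MAE, and RMSE between two disparity maps.

    d_est: estimated disparity map.
    d_gt:  ground-truth disparity map (NaN where no ground truth exists).
    delta: mismatch threshold in pixels (assumed default).
    valid: optional boolean mask of pixels to evaluate.
    """
    d_est = np.asarray(d_est, dtype=np.float64)
    d_gt = np.asarray(d_gt, dtype=np.float64)
    if valid is None:
        valid = np.isfinite(d_gt)          # evaluate only where ground truth is defined
    diff = np.abs(d_est[valid] - d_gt[valid])
    return {
        "bad_rate": float(np.mean(diff > delta)),    # share of mismatched pixels
        "mae": float(diff.mean()),
        "rmse": float(np.sqrt(np.mean(diff ** 2))),
    }
```

Because the RMSE squares the differences, it penalizes a few large outliers more strongly than the MAE does, which is why the two are usually reported together.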

4.3. Multi-View Stereo

4.3.1. Datasets

ETH3D [165]: This dataset includes images captured with high-definition cameras and the ground truth of dense point clouds obtained with industrial laser scanners; it includes buildings, natural landscapes, indoor scenes, and industrial scenes (Figure 19). The data of the two modalities are aligned through an optimization algorithm.

Figure 19.

Examples from the ETH3D dataset. Some high-resolution images of buildings and outdoor scenes.

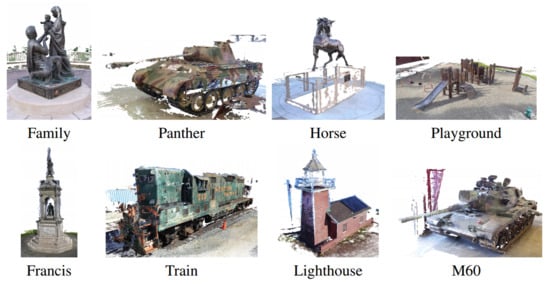

Tanks and Temples [166]: This dataset was built by shooting videos of real scenes with a high-definition camera (Figure 20). Each scene contains about 400 images, and the camera poses are unknown. The ground truth of the dense point cloud was obtained using an industrial laser scanner.

Figure 20.

Examples from Tanks and Temples dataset. Some high-resolution images of the palace scene.

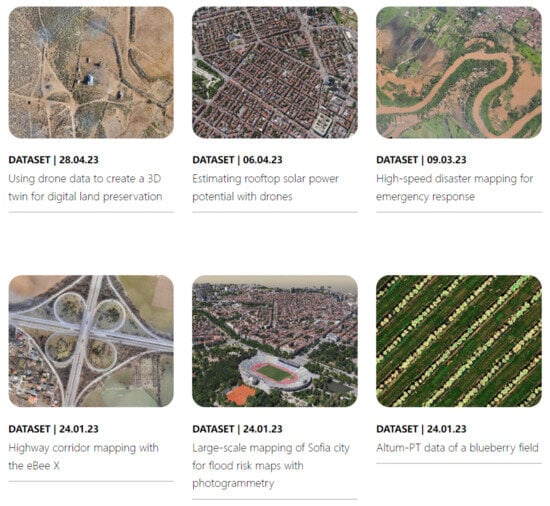

Sensefly [167]: This is an outdoor scene dataset released by Sensefly, a light fixed-wing UAV company, and it includes schools, parks, cities, and other scenes, with RGB, multi-spectral, point cloud, and other data types (Figure 21).

Figure 21.

Some typical examples from the dataset created by Sensefly, including cities, highways, blueberry fields, and other scenes.

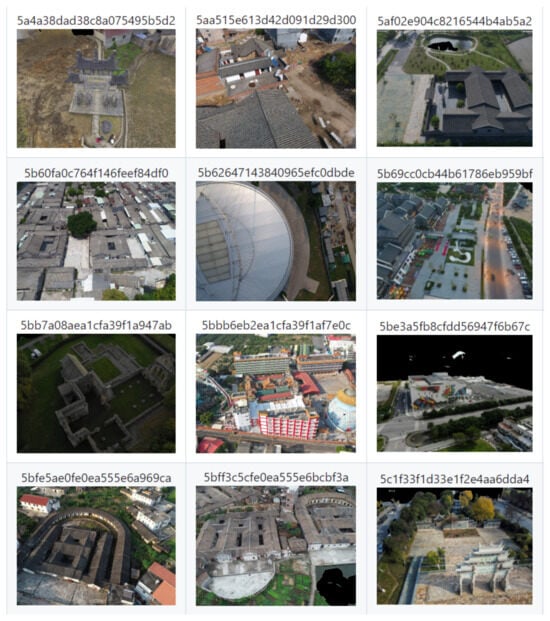

BlendedMVS [168]: This is a large-scale MVS dataset intended for training multi-view stereo networks that generalize well. It has a total of 17,000 MVS training samples from 113 scenes, including buildings, sculptures, small objects, and so on. The 113 scenes comprise 29 large scenes, 52 small scenes, and 32 scenes of sculptures (Figure 22).

Figure 22.

Examples from the BlendedMVS dataset. High-resolution images of 12 large scenes from a total of 113 scenes.

4.3.2. Evaluation Metrics

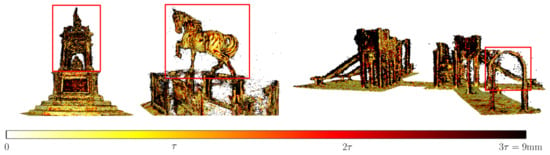

The purpose of multi-view stereo vision is to estimate a dense 3D structure under the premise of knowing the camera poses. If the camera poses are unknown, it is necessary to estimate the camera poses first with SfM. The evaluation of the dense structures is generally based on a point cloud obtained using LiDAR or depth cameras. Some of the corresponding camera poses are directly acquired using a robotic arm during collection, such as in DTU [171], and some are estimated based on the collected depth, such as in ETH3D or Tanks and Temples. The evaluation metrics are the accuracy and completeness, as well as the F1-score, which balances the two. Additionally, some evaluation and visualization results for typical MVSNet models are shown in Table 2, Figure 23 and Figure 24.

- Accuracy: For each estimated 3D point, a true 3D point is found within a certain threshold, and the final matching ratio is the accuracy. It should be noted that, since the ground truth of the point cloud itself is incomplete, it is necessary to estimate the unobservable part of the ground truth first and ignore it when estimating the accuracy.

- Completeness: The nearest estimated 3D point is found within a certain threshold for each true 3D point, and the final matching ratio is the completeness.

- F1-score: There is a trade-off between accuracy and completeness, because points can be filled into the entire space to achieve 100% completeness, or only very few absolutely accurate points can be kept to obtain a very high accuracy. Therefore, the final evaluation metric needs to integrate both. Assuming that the accuracy is $p$ and the completeness is $r$, the F1-score is their harmonic mean, i.e., $F_1 = \frac{2pr}{p + r}$.
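The three definitions above can be sketched with a brute-force nearest-neighbour search in NumPy. This is an illustration under simplifying assumptions: the function names are hypothetical, the pairwise distance matrix only fits in memory for small clouds (real benchmarks use spatial indices such as KD-trees), and the masking of unobservable ground-truth regions mentioned under Accuracy is omitted.

```python
import numpy as np

def nearest_dist(src, dst):
    """Distance from each point in src (N, 3) to its nearest neighbour in dst (M, 3)."""
    d2 = ((src[:, None, :] - dst[None, :, :]) ** 2).sum(axis=2)  # (N, M) squared distances
    return np.sqrt(d2.min(axis=1))

def mvs_scores(est, gt, tau):
    """Accuracy, completeness, and F1-score of an estimated cloud at threshold tau."""
    est = np.asarray(est, dtype=np.float64)
    gt = np.asarray(gt, dtype=np.float64)
    p = float(np.mean(nearest_dist(est, gt) < tau))  # accuracy: estimated points near the truth
    r = float(np.mean(nearest_dist(gt, est) < tau))  # completeness: true points that are covered
    f1 = 0.0 if p + r == 0 else 2 * p * r / (p + r)  # harmonic mean of the two
    return p, r, f1
```

The harmonic mean is dominated by the smaller of the two values, so neither filling space with points nor keeping only a handful of very accurate points yields a high F1-score.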

Table 2.

F1 results of different MVSNet models on the Tanks and Temples benchmark.

| Methods | Mean | Family | Francis | Horse | Lighthouse | M60 | Panther | Playground | Train |
|---|---|---|---|---|---|---|---|---|---|
| MVSNet [104] | 43.48 | 55.99 | 28.55 | 25.07 | 50.79 | 53.96 | 50.86 | 47.9 | 34.69 |
| RMVSNet [105] | 48.4 | 69.96 | 46.65 | 32.59 | 42.95 | 51.88 | 48.8 | 52 | 42.38 |
| PointMVSNet [106] | 48.27 | 61.79 | 41.15 | 34.2 | 50.79 | 51.97 | 50.85 | 52.38 | 43.06 |
| P-MVSNet [107] | 55.62 | 70.04 | 44.64 | 40.22 | **65.2** | 55.08 | 55.17 | 60.37 | 54.29 |
| MVSCRF [108] | 45.73 | 59.83 | 30.6 | 29.93 | 51.15 | 50.61 | 51.45 | 52.6 | 39.68 |
| PVA-MVSNet [109] | 54.46 | 69.36 | 46.8 | 46.01 | 55.74 | 57.23 | 54.75 | 56.7 | 49.06 |
| Fast-MVSNet [110] | 47.39 | 65.18 | 39.59 | 34.98 | 47.81 | 49.16 | 46.2 | 53.27 | 42.91 |
| CasMVSNet [111] | 56.84 | 76.37 | 58.45 | 46.26 | 55.81 | 56.11 | 54.06 | 57.18 | 49.51 |
| CVP-MVSNet [112] | 54.03 | 76.5 | 47.74 | 36.34 | 55.12 | 57.28 | 54.28 | 57.43 | 47.54 |
| DSC-MVSNet [121] | 53.48 | 68.06 | 47.43 | 41.6 | 54.96 | 56.73 | 53.86 | 53.46 | 51.71 |
| vis-MVSNet [122] | **60.03** | 77.4 | **60.23** | 47.07 | 63.44 | **62.21** | **57.28** | **60.54** | 52.07 |
| AACVP-MVSNet [124] | 58.39 | **78.71** | 57.85 | **50.34** | 52.76 | 59.73 | 54.81 | 57.98 | **54.94** |

Bold values indicate the best value in each column.

Figure 23.

Visualization of the error in point cloud models from the Tanks and Temples dataset reconstructed with DSC-MVSNet [121].

Figure 24.

Results of point clouds from vis-MVSNet [122] on the intermediate set of Tanks and Temples.

4.4. Neural Radiance Fields

4.4.1. Datasets

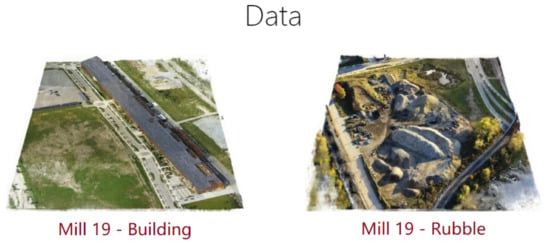

Mill 19 [151]: This dataset comprises photos of scenes near abandoned industrial parks that were taken directly using UAVs, with a resolution of 4608 × 3456. It contains two main scenes: Mill 19-Building and Mill 19-Rubble (Figure 25). Mill 19-Building consists of 1940 photos captured in a grid pattern over an area of 500 m × 250 m around an industrial building, and Mill 19-Rubble contains 1678 photos of the nearby ruins.

Figure 25.

Examples from the Mill 19 dataset. Ground-truth images of two different scenes called “building” and “rubble” captured by a drone.

4.4.2. Evaluation Metrics

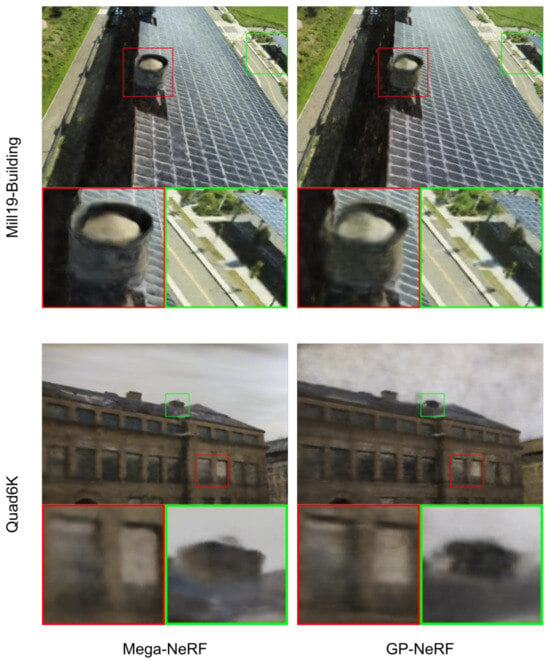

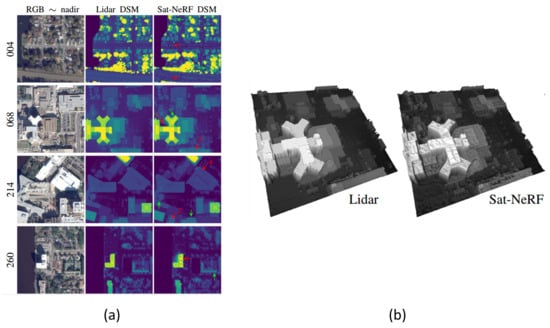

The evaluation metrics for NeRF are borrowed from the image generation task in computer vision, and they include the hand-crafted and relatively simple SSIM and PSNR, as well as the LPIPS, which compares features extracted using a deep learning network. Additionally, some evaluation and visualization results from typical NeRF models are shown in Tables 3 and 4 and Figures 26-28.

Table 3.

Results of different NeRF models on a synthetic NeRF benchmark dataset [129].

Table 4.

Results of different NeRF models on the Mill19 [151] and Quad6K [160] benchmarks.

Figure 26.

Results of Point-NeRF [122] on the Tanks and Temples dataset.

Figure 27.

Visualization results of Mega-NeRF [151] and GP-NeRF [158] on the Mill19 and Quad6K datasets.

Figure 28.

DSM results of Sat-NeRF [156] on their own dataset: (a) 2D visualization; (b) 3D visualization of scene 608.

SSIM (structural similarity index measure) [172]: This measure quantifies the structural similarity between two images, imitating the human visual system’s perception of structural similarity. It is designed to be sensitive to changes in an image’s local structure. The measure compares images in terms of brightness (luminance), contrast, and structure: the brightness is estimated using the mean, the contrast is measured using the standard deviation, and the structural similarity is judged using the covariance. For typical image pairs, the value of the SSIM lies between 0 and 1; negative values can occur only when the two images are anti-correlated. The larger the value, the more similar the two images are, and a value of 1 means that the two images are exactly the same. The formulas are as follows:

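- Luminance:

  $$l(x, y) = \frac{2\mu_x\mu_y + c_1}{\mu_x^2 + \mu_y^2 + c_1}$$

- Contrast:

  $$c(x, y) = \frac{2\sigma_x\sigma_y + c_2}{\sigma_x^2 + \sigma_y^2 + c_2}$$

- Structural Score:

  $$s(x, y) = \frac{\sigma_{xy} + c_3}{\sigma_x\sigma_y + c_3}$$

- SSIM:

  $$\mathrm{SSIM}(x, y) = l(x, y)^{\alpha} \cdot c(x, y)^{\beta} \cdot s(x, y)^{\gamma},$$

  where $\mu_x$, $\mu_y$, $\sigma_x$, and $\sigma_y$, respectively, represent the means and standard deviations of images x and y; $\sigma_{xy}$ is the covariance of images x and y; $c_1$, $c_2$, and $c_3$ are constants to prevent division by 0; $\alpha$, $\beta$, and $\gamma$ represent the weights of the different features when calculating the similarity.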
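As a minimal sketch, the SSIM can be evaluated over a whole image pair as a single window, computing the luminance, contrast, and structure terms from the global mean, standard deviation, and covariance. The function name `global_ssim` is hypothetical, and the stabilizing constants follow the commonly used defaults (0.01 and 0.03 times the dynamic range, with the third constant set to half of the second), which are assumptions not stated in this section. Practical implementations compute the same quantities in local sliding windows and average the result.

```python
import numpy as np

def global_ssim(x, y, data_range=255.0, alpha=1.0, beta=1.0, gamma=1.0):
    """Single-window SSIM over two equally sized grayscale images."""
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    c1 = (0.01 * data_range) ** 2   # assumed conventional constants
    c2 = (0.03 * data_range) ** 2
    c3 = c2 / 2.0
    mu_x, mu_y = x.mean(), y.mean()
    sigma_x, sigma_y = x.std(), y.std()
    sigma_xy = ((x - mu_x) * (y - mu_y)).mean()   # covariance of the two images
    lum = (2 * mu_x * mu_y + c1) / (mu_x ** 2 + mu_y ** 2 + c1)
    con = (2 * sigma_x * sigma_y + c2) / (sigma_x ** 2 + sigma_y ** 2 + c2)
    struct = (sigma_xy + c3) / (sigma_x * sigma_y + c3)
    # With the default unit exponents this is a plain product; non-integer
    # exponents are undefined when the structure term is negative.
    return float(lum ** alpha * con ** beta * struct ** gamma)
```

With unit exponents, identical images give a score of 1, while an image compared with its own photometric negative gives a negative score because the covariance term changes sign.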

PSNR (peak signal-to-noise ratio) [148]: The PSNR, which is the ratio between the maximum possible signal power of an image and the power of the noise that distorts it, is used to evaluate image quality. The larger the value, the less image distortion there is. Generally speaking, a PSNR higher than 40 dB indicates that the image quality is almost as good as that of the original image, a value between 30 and 40 dB usually indicates that the distortion loss of the image quality is within an acceptable range, a value between 20 and 30 dB indicates that the image quality is relatively poor, and a value lower than 20 dB indicates serious image distortion. Given a grayscale image I and a noisy image K of size m × n, the MSE (mean square error) and the PSNR are as follows:

$$\mathrm{MSE} = \frac{1}{mn}\sum_{i=0}^{m-1}\sum_{j=0}^{n-1}\left[I(i, j) - K(i, j)\right]^2,$$

$$\mathrm{PSNR} = 10\log_{10}\left(\frac{\mathrm{MAX}_I^2}{\mathrm{MSE}}\right),$$

where $\mathrm{MAX}_I$ is the maximum pixel value of the image.
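A short NumPy sketch of the PSNR computation follows. The function name `psnr` and the default maximum pixel value of 255 (8-bit images) are assumptions made for this illustration; images normalized to [0, 1] would use a maximum value of 1.

```python
import numpy as np

def psnr(reference, test, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized images."""
    reference = np.asarray(reference, dtype=np.float64)
    test = np.asarray(test, dtype=np.float64)
    mse = np.mean((reference - test) ** 2)   # mean square error over all pixels
    if mse == 0:
        return float("inf")                  # identical images: no noise at all
    return float(10.0 * np.log10(max_value ** 2 / mse))
```

For example, a uniform error of 10% of the dynamic range gives a ratio of 100 between the peak power and the MSE, that is, 20 dB, which sits on the boundary between poor quality and serious distortion in the ranges quoted above.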

LPIPS (learned perceptual image patch similarity): This metric was proposed by Zhang et al. [173] and is also called “perceptual loss”; it measures the difference between two images. When used as a loss, it encourages a generator to learn the inverse mapping from generated images to the ground truth, prioritizing the perceptual similarity between them. The LPIPS matches human perception better than traditional metrics do. A low LPIPS value represents high similarity between two images. Specifically, the metric calculates the feature difference between a real sample and a generated sample in a deep network. This difference is calculated in each channel, and it is the weighted average of all channels. Given the ground-truth image reference block $x$ and the noisy image distortion block $x_0$, the formula for the measure of perceptual similarity is as follows:

$$d(x, x_0) = \sum_{l}\frac{1}{H_l W_l}\sum_{h,w}\left\| w_l \odot \left(\hat{y}^{l}_{hw} - \hat{y}^{l}_{0hw}\right)\right\|_2^2,$$

where $w_l$ is the weight vector of layer l, ⊙ indicates element-by-element multiplication, $\hat{y}^{l}_{hw}$ and $\hat{y}^{l}_{0hw}$ are the image features of the two blocks at layer l and spatial position (h, w), normalized to unit length along the channel dimension, and $H_l$ and $W_l$ are the height and width of the feature map of layer l.
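The aggregation step of the LPIPS can be sketched independently of the network that produces the features. The NumPy code below takes per-layer feature maps and per-layer channel weights as plain arrays; the function names are hypothetical, and the inputs are placeholders, since the actual metric uses features from a pretrained network and weights learned from human judgments. It therefore only illustrates how the distance is assembled and does not reproduce published LPIPS values.

```python
import numpy as np

def unit_normalize(feat, eps=1e-10):
    """Scale a (C, H, W) feature map to unit length along the channel axis."""
    norm = np.sqrt((feat ** 2).sum(axis=0, keepdims=True))
    return feat / (norm + eps)

def lpips_like_distance(feats_ref, feats_dist, weights):
    """Sum over layers of the spatially averaged, channel-weighted squared difference.

    feats_ref, feats_dist: lists of (C_l, H_l, W_l) arrays, one per layer.
    weights:               list of (C_l,) arrays, one weight vector per layer.
    """
    total = 0.0
    for f_ref, f_dist, w in zip(feats_ref, feats_dist, weights):
        diff = unit_normalize(f_ref) - unit_normalize(f_dist)
        scaled = w[:, None, None] * diff                   # element-wise channel weighting
        total += float((scaled ** 2).sum(axis=0).mean())   # squared L2 norm, mean over H x W
    return total
```

Normalizing along the channel axis before taking the difference makes the distance depend on the direction of the feature vectors rather than on their magnitude.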

4.5. Comprehensive Datasets

GL3D (Geometric Learning with 3D Reconstruction [168,169]): This is a large-scale dataset created for 3D reconstruction and geometry-related problems with a total of 125,623 high-resolution images. Most of the images were captured by UAVs at multiple scales and angles, with a large geometric overlap, covering 543 scenes, such as cities, rural areas, and scenic spots (Figure 29). The data for each scene contain a complete image sequence, geometric labels, and reconstruction results. Besides large scenes, GL3D also includes reconstructions of small objects to enrich data diversity. For the SfM task, GL3D provides undistorted images and the corresponding camera parameters; for the MVS task, GL3D provides rendered blended images and depth maps for different viewpoints based on the BlendedMVS dataset.

Figure 29.

Examples from the GL3D dataset. Original images of different scenes, including large scenes, small objects, and 3D models of the scenes.