Abstract

Ship detection in synthetic aperture radar (SAR) imagery faces significant challenges due to the limitations of traditional methods, such as convolutional neural networks (CNNs) and anchor-based matching approaches, which struggle to detect small targets accurately and to adapt to varying environmental conditions. These methods, which rely on either intensity values or single-target characteristics, often fail to enhance the signal-to-clutter ratio (SCR) and are prone to false detections caused by environmental factors. To address these issues, a novel framework is introduced that leverages the detection transformer (DETR) model together with advanced feature fusion techniques to enhance ship detection. Its feature enhancement DETR (FEDETR) module manages clutter and improves feature extraction through preprocessing techniques such as filtering, denoising, and maximum and median pooling with various kernel sizes. It also combines the line spread function (LSF), peak signal-to-noise ratio (PSNR), and F1 score to predict optimal pooling configurations, thereby improving edge sharpness, image fidelity, and detection accuracy. Complementing this, the weighted feature fusion (WFF) module integrates polarimetric SAR (PolSAR) methods, such as Pauli decomposition, coherence matrix analysis, and decomposition into feature volume and helix scattering (Fvh) components, with FEDETR attention maps to provide detailed radar scattering information that improves the characterization of ship responses. Finally, integrating wave polarization properties improves the ability to distinguish and characterize targets, thereby increasing SCR and facilitating the detection of weakly scattered targets in SAR imagery. Overall, the new framework significantly boosts DETR’s performance, offering a robust solution for maritime surveillance and security.

1. Introduction

Object detection is a key component of SAR automatic target recognition technology, significantly influencing the effectiveness of surveillance and reconnaissance systems [1,2]. Because of their unique imaging properties, SAR images pose object detection challenges that differ from those encountered with conventional optical remote sensing [3,4]. Traditionally, constant false alarm rate (CFAR) techniques have been favored for SAR image detection because of their simplicity and adaptive thresholding [5]. However, the advent of deep learning has demonstrated the efficacy of deep CNNs for object detection in natural images, a trend that now extends to SAR image processing [6]. While these techniques can efficiently handle large datasets, they do not always improve accuracy in the early stages of training [7]. Improving network performance typically involves enhancing the prominence of object features and fine-tuning the detector’s capabilities [8,9]. Despite the effectiveness of CNNs in SAR image object detection [10,11], their dependence on local connectivity and weight sharing can limit their ability to capture global information, particularly in SAR images, where pixel correlation is significant [12].

Transformers, renowned for capturing global dependencies in natural language processing, offer a promising alternative [13]. These models excel at learning long-range feature dependencies, making them well suited to tasks that require a comprehensive understanding of global features. Specifically, the vision transformer (ViT) framework has been applied to SAR image classification by processing images as sequences of patches without relying on CNNs for encoding [14]. Unlike CNNs, which are constrained by local receptive fields, transformers can capture broader feature relationships. Recent transformer-based models, such as DETR [15] and ViT Faster R-CNN (FRCNN) [16], have been adapted for SAR image object detection, achieving performance on par with CNN-based methods [17].

In light of these potential benefits, a ViT-based domain adaptation (DA) framework for SAR image object detection is proposed. Unlike existing transformer-based DA frameworks, which generally rely on CNNs for feature encoding [18], this approach uses ViT FRCNN as the baseline network to emphasize global features and improve DA performance. By introducing domain-specific feature spaces with two classification tokens for the different domains, refining pseudo-labels through feature clustering, and reusing the existing detection head of FRCNN, this method aims to improve detection accuracy. Extensive experiments on multisource SAR image datasets demonstrate the effectiveness and superiority of this approach over current state-of-the-art methods.

However, general object detection frameworks such as RetinaNet, FCOS, GFL, R-CNN, YOLO, and DETR, along with modern backbones such as ConvNeXt, VAN, LSKNet, and Swin transformer, face challenges when applied to SAR imagery because of small object sizes, speckle noise, and sparse information. Recent deep learning methods for SAR detection have focused on network and module design, with techniques such as MGCAN, MSSDNet, SEFEPNet, Quad-FPN, PADN, EWFAN, and CRTransSar aiming to improve object feature detection and suppress noise. However, many of these approaches use ImageNet-pretrained backbones, overlooking the domain gap between natural scene datasets and SAR data. To overcome this, a specialized pretraining strategy tailored to SAR imagery has been proposed [19]. While transformer-based models like DETR have advanced SAR vessel detection by eliminating hand-crafted anchors and streamlining the detection process, challenges remain, such as the imbalance between positive and negative samples in anchor-based detection. These issues can be addressed with techniques such as focal loss or data augmentation.
Additionally, the high data requirements of these models pose challenges for small SAR datasets, but strategies like transfer learning and specialized pretraining can improve performance. Addressing these issues is essential for enhancing detection accuracy, particularly in complex environments.

SAR imagery is widely used for maritime monitoring, particularly for ship detection and oil spill identification in near-real-time systems [20,21,22]. Ships appear as bright spots because of strong backscattering, whereas sea clutter appears dark because of weak backscattering [23,24]. Various methods, including adaptive thresholding, wavelet techniques, and machine learning approaches, have been developed for target identification. Traditional methods, such as CFAR detectors, model sea clutter with statistical distributions, including Rayleigh [25], Gamma [26], and Gaussian [27] distributions. In addition, machine learning techniques such as deep neural networks and support vector machines are employed for ship detection [7,28]. Recent missions such as Sentinel-1 [29] provide open-access data with frequent revisit times, and their dual-polarization capability improves detection accuracy [30]. Polarimetric methods, such as the polarimetric notch filter, have also been effective in reducing false alarms in complex clutter scenarios [31].
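For background, the statistical CFAR idea mentioned above can be illustrated with a minimal sketch. It assumes Gaussian-distributed clutter [27] and a square training ring around each cell under test; the function name `gaussian_cfar` and the `guard`, `train`, and `alpha` values are hypothetical choices for illustration and are not part of the proposed framework.

```python
import numpy as np

def gaussian_cfar(img, guard=1, train=3, alpha=3.0):
    """Flag pixels brighter than mu + alpha * sigma of the surrounding clutter.

    Clutter statistics are estimated from a square training ring that
    excludes a guard region around the cell under test. guard, train and
    alpha are illustrative values, not parameters taken from the paper.
    """
    img = np.asarray(img, dtype=float)
    h, w = img.shape
    r = guard + train
    padded = np.pad(img, r, mode="reflect")
    # Boolean mask of the training ring: outer window minus the guard window.
    ring = np.ones((2 * r + 1, 2 * r + 1), dtype=bool)
    ring[train:train + 2 * guard + 1, train:train + 2 * guard + 1] = False
    detections = np.zeros((h, w), dtype=bool)
    for i in range(h):
        for j in range(w):
            window = padded[i:i + 2 * r + 1, j:j + 2 * r + 1]
            clutter = window[ring]
            mu, sigma = clutter.mean(), clutter.std()
            # Adaptive threshold derived from the local clutter estimate.
            detections[i, j] = img[i, j] > mu + alpha * sigma
    return detections
```

Because the threshold adapts to the local clutter mean and spread, the false alarm rate stays roughly constant under the Gaussian assumption; sea clutter that follows a Rayleigh or Gamma model [25,26] would require a different threshold rule.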

Object detection is critical in SAR technology for surveillance applications, addressing the unique challenges posed by SAR imagery compared to optical methods. While traditional techniques like CFAR have been effective, the increasing adoption of deep learning methods, particularly CNNs, highlights the evolution in this field. However, CNNs often struggle to capture global information, which can hinder their effectiveness in processing SAR data. To address these limitations, a ViT-based framework is proposed to enhance detection accuracy in SAR datasets by leveraging the model’s ability to capture long-range dependencies and global features. Furthermore, SAR technology plays a vital role in maritime monitoring, with advancements from the Sentinel-1 mission providing open-access data and dual-polarization capabilities that improve detection accuracy. Additionally, polarimetric methods enhance detection capabilities in complex scenarios, helping to reduce false alarms and improve the reliability of SAR-based surveillance systems.

1.1. Related Work

1.1.1. Convolutional Neural Network (CNN)-Based SAR Image Object Detection

Conventional CNN-based methods for SAR image object detection have achieved high accuracy, but often at the cost of increased model complexity. To address this, a lightweight segmented bidirectional feature pyramid network (FPN) combined with a feature fusion module has been proposed, which mitigates the impact of complex backgrounds in SAR images while maintaining strong detection performance [11]. Building on this approach, an enhanced feature extraction method has been developed by integrating an adaptive activation function into a backbone network and incorporating a convolutional block attention module (CBAM) within the FPN, which effectively identifies key regions in dense scenes [32]. To further improve feature extraction and alignment, an anchor-free method has been introduced that employs a power transform and a feature alignment guidance network [10]. Complementing these advancements, a lightweight framework based on threshold neural networks enables rapid detection by extracting grayscale features from SAR images. This method predicts optimal detection thresholds within a sliding window and refines object identification using a false alarm rejection network [5]. Despite these advancements, CNN-based methods often require deeper network structures, which impose significant computational demands and can compromise algorithm robustness.

1.1.2. Transformer-Based SAR Image Object Detection

Recent research emphasizes non-convolutional attention mechanisms in SAR object detection, with advancements highlighting the effectiveness of these techniques. For instance, Qu et al. employ a transformer encoder as a feature enhancement bottleneck [33]; in another approach, the encoder is positioned between a multi-scale backbone network and fully connected layers. The transformer encoder and decoder have also been integrated within the YOLO framework [34]. Another method employs a transformer within a local enhancement and transformer (LET) module. Additionally, CAENeck, inspired by the Swin transformer, has shown consistent performance across various SAR datasets [35]. In contrast, DETR, a fully transformer-based detector, stands out for its competitive performance and learning capacity, making it suitable for diverse detection scenarios while maintaining high accuracy. Building on this, a query-based detector similar to DETR has been developed; however, the complex integration of self-attention and cross-attention modules in this model has limited its ability to incorporate recent advancements in DETR [36].

1.1.3. Polarimetric SAR (PolSAR) Image Object Detection

Advances in imaging technology have made global Earth observation satellites such as Sentinel-1 valuable for SAR image object detection, particularly through PolSAR imaging. Multi-polarization imagery, which captures additional object polarization features, is more beneficial for detection tasks than single-polarization imagery [37,38]. This has led to significant progress in ship detection using PolSAR imagery [39]. Traditional methods rely on manually selected features, such as polarization characteristics and statistical properties of background clutter [40]. The CFAR technique, which applies suitable thresholds to filter target pixels, is commonly employed [41]. Polarization decomposition algorithms based on the scattering or covariance matrix have also been applied effectively [42,43]. Despite their effectiveness, conventional methods have limitations, including reliance on a small number of manually selected features, which results in insufficient representation of ships, and restricted applicability to specific environments, which reduces generalization and robustness [44]. To address these issues, new algorithms based on deep learning have been introduced. For example, dense attention pyramid networks (DAPN) and attention receptive pyramid networks (ARPN) for SAR images have shown competitive results [45]. However, current ship detection algorithms still face challenges, such as detecting weakly scattering targets and generating numerous false alarms due to the misidentification of strong scattering clutter pixels [43]. Recent advancements address these challenges in PolSAR ship detection through deep learning. A dualistic cascade CNN improves detection by using a parallel cascade architecture (BGFENet and PFENet) to extract robust geometric and polarization features from fully polarimetric SAR images, enhancing performance with limited labeled data through multi-scale fusion [46].
Additionally, the lightweight theory-driven network (LT-Net) combines domain knowledge with deep learning to detect small targets efficiently. Validated on the FPSD dataset, LT-Net outperforms existing methods while reducing computational complexity, underscoring the importance of tailored deep learning approaches for PolSAR detection [47].

1.2. Contributions

The analysis of current SAR image ship detection methods highlights several critical issues [48]. First, CNN-based approaches are limited by their convolution, pooling, and down-sampling operations, which can make weakly scattered targets less visible in the feature map. Furthermore, anchor-based matching strategies frequently struggle to detect smaller targets accurately, reducing the overall effectiveness of these algorithms. Traditional methods, which rely on intensity values or single-target characteristics, also have difficulty enhancing SCR and are highly sensitive to environmental conditions, imaging angles, and sensor variations, leading to both false negatives and false positives. While improving detector performance is essential for enhancing SCR, over-reliance on scattering characteristics alone can result in missed detections and increased false alarms in complex scenarios. Incorporating wave polarization properties, which differ between ocean waves and man-made targets, offers an opportunity to better distinguish and characterize targets in SAR imagery. Integrating these polarization features can enhance the detection of weakly scattered targets and improve SCR. Despite the advancements offered by transformers, their development is still heavily influenced by CNN framework designs. DETR, known for its robust performance and extensive learning capacity, is well suited to various detection scenarios; however, it requires specific adaptations for effective SAR object detection, as illustrated by query-based detectors, which struggle with the intricate integration of self-attention and cross-attention modules.

The novelty and contributions of the proposed approach for ship detection in remote sensing applications can be summarized as follows:

- Feature Enhancement DETR (FEDETR)

The FEDETR module has been introduced to significantly enhance the feature extraction capabilities of DETR models. This module incorporates a CNN with advanced preprocessing techniques such as filtering, denoising, and the application of maximum and median pooling using various kernel sizes. These techniques refine feature extraction by reducing noise, managing clutter, and emphasizing ship structures against complex backgrounds. Furthermore, a CNN module trained on labeled data integrates metrics like LSF, PSNR, and F1 score to predict optimal pooling configurations, effectively balancing edge sharpness, image fidelity, and detection accuracy.

- PolSAR Integration with Weighted Feature Fusion (WFF)

This approach integrates advanced PolSAR techniques, such as Pauli decomposition, coherence matrix analysis, and Fvh components, to characterize ship echoes based on unique scattering properties. These features are fused with attention maps from FEDETR using the WFF module, which optimizes spatial coherence and scattering information. This fusion enhances detection accuracy in complex environments, refining feature representations and reducing false alarms in SAR imagery.

- Generalization and Applicability

The proposed methods and improvements are applied to images from different sensors and SAR systems, extending the generalization of detection. The methodology is designed to perform effectively in various maritime environments, both inshore and offshore, improving ship detection accuracy and reducing false alarms.

However, despite these advancements, the combination of deep feature learning with advanced preprocessing and polarimetric feature extraction introduces increased computational complexity and higher model demands. This may potentially slow down detection speed, which poses a challenge for real-time applications. Balancing these trade-offs is crucial to optimizing the method’s effectiveness and ensuring its efficiency in real-world scenarios. Achieving this balance will enable the methodology to maintain high detection performance while managing the demands of operational efficiency in practical maritime surveillance systems.

2. Materials and Methods

The research methodology proposed here enhances ship detection in SAR imagery using the DETR model by integrating diverse information sources through feature fusion. The FEDETR module is introduced, significantly improving the feature extraction capability. To optimize the features, the WFF module is employed. This module effectively fuses PolSAR methods with the attention maps or features derived from FEDETR, ensuring a comprehensive integration of diverse data to enhance detection accuracy.

First, the FEDETR module enhances the performance of DETR by utilizing a CNN with robust preprocessing techniques, including filtering, denoising, and applying maximum and median pooling with various kernel sizes. These methods refine feature extraction by reducing noise, managing clutter, and highlighting significant ship structures against complex backgrounds, thus improving the quality of input data for the DETR model. Additionally, a CNN module trained on labeled data integrates metrics such as LSF, PSNR, and F1 score, predicting optimal pooling configurations within the DETR model to balance edge sharpness, image fidelity, and detection accuracy. By focusing on the nuanced features in SAR images characterized by diverse scales and irregular object distributions, our methodology ensures robust performance across various environmental conditions and operational scenarios.

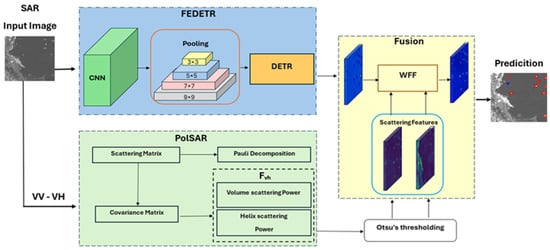

The WFF module integrates advanced PolSAR methods such as Pauli decomposition, coherence matrix analysis, and Fvh with attention maps or features from the FEDETR module to enhance ship detection accuracy in SAR imagery. Initially, enhanced preprocessing techniques refine feature representations through denoising and pooling methods, significantly improving the DETR model’s capability to generate accurate attention maps essential for identifying ships amidst cluttered backgrounds. The WFF module effectively combines insights from PolSAR analysis, focusing on spatial coherence features and detailed scattering characteristics obtained from coherence analysis and Fvh component decomposition. Each component’s contribution is carefully weighted based on its relevance to improving ship detection performance, ensuring a balanced fusion of features. This seamless integration allows for the DETR model to leverage a comprehensive suite of enhancements, ranging from refined feature representations to detailed radar scattering insights, thereby significantly improving detection accuracy across diverse maritime environments. By underscoring the synergy between advanced preprocessing techniques and PolSAR methodologies, the WFF module positions our approach as a superior solution for maritime surveillance and security applications, as illustrated in Figure 1.
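To make the PolSAR inputs to the WFF module more concrete, the sketch below computes the Pauli scattering vector, a boxcar-averaged coherency matrix, and a softmax-weighted fusion of normalized feature maps. It assumes reciprocal quad-pol data (S_HV = S_VH); the helper names, the 3 × 3 averaging window, the min-max normalization, and the softmax weighting are hypothetical choices, and neither the Fvh decomposition nor the actual FEDETR attention maps are reproduced here.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def pauli_vector(s_hh, s_hv, s_vv):
    """Pauli vector k = [S_HH + S_VV, S_HH - S_VV, 2 S_HV] / sqrt(2), shape (3, H, W)."""
    return np.stack([s_hh + s_vv, s_hh - s_vv, 2.0 * s_hv]) / np.sqrt(2.0)

def coherency_matrix(k, win=3):
    """Local 3x3 coherency matrix T = <k k^H>, averaged over a win x win boxcar."""
    # Per-pixel outer product: outer[a, b] = k[a] * conj(k[b]), shape (3, 3, H, W).
    outer = k[:, None] * np.conj(k[None, :])
    pad = win // 2
    padded = np.pad(outer, ((0, 0), (0, 0), (pad, pad), (pad, pad)), mode="edge")
    windows = sliding_window_view(padded, (win, win), axis=(2, 3))
    return windows.mean(axis=(-2, -1))

def normalize(x):
    """Min-max scale a real-valued map to [0, 1] (hypothetical preprocessing)."""
    x = np.asarray(x, dtype=float)
    value_range = x.max() - x.min()
    return (x - x.min()) / value_range if value_range > 0 else np.zeros_like(x)

def weighted_feature_fusion(features, logits):
    """Fuse same-sized 2-D maps with softmax-normalized weights (hypothetical WFF)."""
    logits = np.asarray(logits, dtype=float)
    w = np.exp(logits - logits.max())
    w /= w.sum()
    fused = sum(wi * normalize(f) for wi, f in zip(w, features))
    return fused, w
```

In practice the weight logits would be learned together with the detector; here they are fixed inputs so that the fusion step can be inspected in isolation. The diagonal terms T11, T22, and T33 of the coherency matrix correspond to the Pauli intensities commonly used as scattering features.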

Figure 1.

Flowchart of the proposed ship detection in SAR imagery.

2.1. Feature Enhancement DETR (FEDETR) Module

In SAR image preprocessing, techniques such as filtering and denoising are essential for improving feature representation in transformer networks by reducing speckle noise, clutter, and geometric distortions. Foreground objects in SAR images exhibit considerable intraclass variation, and background noise can form complicated patterns that are easily confused with these objects. Even after training the DETR model on the SAR Ship Detection Dataset (SSDD), challenges remain in detecting ships near harbors and amid clutter.

The pooling module in the proposed FEDETR model is essential for optimizing ship detection in SAR images, particularly within complex maritime environments characterized by speckle noise. By integrating both max pooling and median pooling strategies across varying kernel sizes (3, 5, 7, and 9), the model effectively balances noise reduction with feature preservation. Max pooling enhances the visibility of prominent ship structures against cluttered backgrounds, while median pooling mitigates noise and retains fine edges, crucial for delineating ship boundaries. Optimal pooling parameters are determined using metrics such as LSF, PSNR, and F1 score, ensuring minimized noise and preserved image quality. The selected configuration achieves the lowest LSF, indicating superior edge preservation, alongside the highest F1 score, reflecting enhanced detection accuracy. Extensive experiments were conducted utilizing ship datasets from SAR satellites, including Gaofen-3 and Sentinel-1, which provide various polarizations (VV and VH). These experiments rigorously assessed the effectiveness and generalization of the proposed method, particularly in challenging scenarios where initial detections were inaccurate. The combination of max and median pooling with varying kernel sizes was instrumental in improving detection accuracy across diverse environmental conditions. This comprehensive testing demonstrates the robustness and adaptability of the FEDETR model, underscoring the pivotal role of the pooling module in enhancing SAR image analysis and ship detection performance.

Detection accuracy was evaluated using F1 scores across different pooling types and kernel sizes. Metrics like LSF and PSNR guided the selection of optimal pooling parameters. LSF evaluates edge sharpness to maintain image detail, while PSNR quantifies image quality, aiding in choosing pooling methods that preserve fidelity during preprocessing. This approach aims to optimize ship detection performance using the FEDETR model, supported by customized pooling strategies and rigorous evaluation metrics. To further enhance detection accuracy, a CNN model was developed and trained on labeled data incorporating LSF, PSNR, and F1 score metrics to predict the best pooling type and kernel size. By leveraging these metrics as input features and target labels, the CNN effectively learns to recommend the optimal pooling configuration for enhancing ship detection in SAR images. This integrated approach ensures that selected pooling parameters balance edge sharpness, image quality, and detection accuracy, refining the methodology for optimized ship detection with the FEDETR model.

2.1.1. Pooling Methods in SAR Image Preprocessing

Pooling techniques are essential for enhancing object detection accuracy in SAR images by reducing noise and clutter. Among the primary methods, maximum (MAX) and median pooling play pivotal roles in feature extraction and computational efficiency.

Max pooling emphasizes dominant features, making it effective for detecting prominent structures such as ships. However, while it significantly reduces parameters and computational demands, it may neglect finer details and introduce artifacts because it relies on the highest pixel value within a kernel. Conversely, median pooling focuses on noise reduction and edge preservation, effectively mitigating noise without blurring crucial features [49]. For a kernel of size $k \times k$ centered at position $(i, j)$ in the input image or feature map $I$, with $r = \lfloor k/2 \rfloor$, the median pooling operation is given by the following equation:

$$P_{\mathrm{med}}(i, j) = \operatorname{median}\left\{ I(i+m,\, j+n) \;:\; m, n \in \{-r, \dots, r\} \right\} \qquad (1)$$

As for max pooling, it replaces each pixel’s value with the maximum value of its neighboring pixels within the same window, based on Equation (2):

$$P_{\max}(i, j) = \max\left\{ I(i+m,\, j+n) \;:\; m, n \in \{-r, \dots, r\} \right\} \qquad (2)$$

When considering kernel sizes, larger kernels in median pooling balance noise reduction with feature preservation, while smaller kernels in max pooling capture finer details. Experimentation is crucial to optimize these parameters for effective SAR image preprocessing, as the choice of pooling method can significantly impact detection performance.
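The median and max pooling operations described above can be sketched as stride-1 filters that keep the image size, which matches the description of replacing each pixel’s value. This is a NumPy sketch under stated assumptions: reflect padding at the borders and the restriction to odd kernel sizes are our choices, and `pool2d` is a hypothetical helper name.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def pool2d(img, k, mode):
    """Stride-1 k x k median or max pooling that preserves the image size.

    mode is "max" or "median". Borders use reflect padding, which is an
    assumption; the text does not specify border handling.
    """
    if k % 2 == 0:
        raise ValueError("kernel size must be odd")
    img = np.asarray(img, dtype=float)
    r = k // 2
    # windows[i, j] is the k x k neighborhood centered on pixel (i, j).
    windows = sliding_window_view(np.pad(img, r, mode="reflect"), (k, k))
    if mode == "max":
        return windows.max(axis=(-2, -1))
    if mode == "median":
        return np.median(windows, axis=(-2, -1))
    raise ValueError(f"unknown pooling mode: {mode}")
```

Sweeping `mode` over ("max", "median") and `k` over (3, 5, 7, 9) yields the eight candidate configurations evaluated in this section. On an isolated bright speckle pixel, a 3 × 3 median removes it, while a 3 × 3 max spreads it over its neighborhood, which illustrates the trade-off discussed here.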

Generally, both pooling techniques present advantages and disadvantages that should be carefully considered. While max pooling excels in retaining prominent features, median pooling’s strength lies in reducing noise and preserving image detail. Addressing these trade-offs is essential for improving the effectiveness and efficiency of object detection models in SAR imagery.

2.1.2. Data Preparation for Optimal Pooling Module

The LSF plays a critical role in image processing, particularly in tasks like edge detection and assessing image sharpness. Specifically, LSF measures edge sharpness, which is crucial for evaluating how well pooling operations preserve image details, and especially important for accurate ship boundary detection [50]. Furthermore, it quantifies the system’s ability to reproduce sharp edges, which is crucial for accurate feature representation in models like FEDETR. LSF is calculated separately for horizontal and vertical edges and is defined as follows:

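where $I(i, j)$ is the intensity of the pixel at position $(i, j)$ in the image. Based on Equations (3) and (4), the mean LSF is then calculated as follows:

$$\mathrm{LSF}_{\mathrm{mean}} = \frac{\mathrm{LSF}_{h} + \mathrm{LSF}_{v}}{2} \qquad (5)$$

where $\mathrm{LSF}_{h}$ and $\mathrm{LSF}_{v}$ are the horizontal and vertical LSF values, respectively.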

By selecting pooling parameters that minimize LSF, the FEDETR model can effectively preserve edge details, thereby improving its performance in selecting the most relevant features using attention mechanisms. This approach enhances the DETR model’s capability to focus on important features in SAR images, optimizing the attention mechanism to select the best features for ship detection. Maintaining edge sharpness through optimized pooling operations ensures that the DETR model can accurately detect ships even in complex environments like harbors and cluttered backgrounds, where edge preservation is crucial for precise boundary delineation.

PSNR is a key metric for evaluating the quality of processed images relative to their originals, aiding in the selection of pooling methods that maintain fidelity during preprocessing. Specifically, it quantifies the ratio between the maximum possible power of a signal and the power of the noise that affects the fidelity of its representation, with higher PSNR values indicating better image quality with less distortion or noise [51]. PSNR is calculated using the following formula:

$$\mathrm{PSNR} = 10 \log_{10}\left( \frac{\mathrm{MAX}_P^{2}}{\mathrm{MSE}} \right) \qquad (6)$$

where $\mathrm{MAX}_P$ represents the maximum possible pixel value (typically 255 for 8-bit images) and MSE is the mean squared error, i.e., the average squared difference between the original and processed images, given by the following equation:

$$\mathrm{MSE} = \frac{1}{MN} \sum_{i=1}^{M} \sum_{j=1}^{N} \left[ I(i, j) - \hat{I}(i, j) \right]^{2} \qquad (7)$$

where $I(i, j)$ is the original pixel value, $\hat{I}(i, j)$ is the processed pixel value, and $M \times N$ is the image size. Evaluating PSNR for each pooling method helps determine which approach better preserves important image details. Therefore, by minimizing information loss or distortion, PSNR-guided pooling strategies optimize image preprocessing, thereby improving the ability of the DETR and FEDETR models to accurately detect ships in challenging SAR scenarios.
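PSNR and MSE, as defined in this subsection, can be computed with a few lines of NumPy. This is a minimal sketch; `max_p` defaults to 255 for 8-bit images, and for floating-point SAR amplitudes it would have to be set to the data’s maximum possible value, which is an assumption the text does not pin down.

```python
import numpy as np

def mse(original, processed):
    """Mean squared error between two images of the same shape."""
    original = np.asarray(original, dtype=float)
    processed = np.asarray(processed, dtype=float)
    return np.mean((original - processed) ** 2)

def psnr(original, processed, max_p=255.0):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    err = mse(original, processed)
    if err == 0:
        return float("inf")
    return 10.0 * np.log10(max_p ** 2 / err)
```

For example, a constant error of 1 on every pixel of an 8-bit image gives MSE = 1 and PSNR = 20 log10(255), roughly 48.13 dB.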

Despite training the DETR model on the SSDD for ship detection, accurately identifying ships near harbors and in cluttered environments remains challenging. To address these difficulties, experiments were carried out using SAR ship datasets from satellites such as Gaofen-3 and Sentinel-1, which provide images with various polarizations (VV and VH). The F1 score was then computed for each combination of pooling type and kernel size within these datasets. This evaluation measured the model’s performance under complex conditions where initial ship detections were inaccurate. The F1 score, which balances precision and recall, provides a comprehensive metric for assessing ship detection accuracy across pooling techniques and kernel sizes. For pooling type $p$ and kernel size $k$, it is calculated as follows:

$$F1_{p,k} = \frac{2 \cdot \mathrm{Precision}_{p,k} \cdot \mathrm{Recall}_{p,k}}{\mathrm{Precision}_{p,k} + \mathrm{Recall}_{p,k}} \qquad (8)$$

where Precision is the ratio of true positive detections to all positive detections, and Recall is the ratio of true positive detections to all ground-truth positives.
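Given counts of true positives, false positives, and false negatives for one pooling configuration, the F1 score follows directly from its precision and recall. How detections are matched to ground-truth boxes (for example, with an IoU threshold) is not specified here and is left outside this sketch; `f1_score` is a hypothetical helper name.

```python
def f1_score(tp, fp, fn):
    """F1 from detection counts; returns 0.0 when precision and recall are both zero."""
    precision = tp / (tp + fp) if tp + fp else 0.0   # TP / all positive detections
    recall = tp / (tp + fn) if tp + fn else 0.0      # TP / all ground-truth positives
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)
```

For instance, 80 true positives, 20 false positives, and 20 false negatives give a precision and recall of 0.8 each, and therefore an F1 score of 0.8.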

Overall, by integrating LSF and PSNR, optimal pooling parameters can be effectively selected to strike a balance between edge sharpness and image quality, which are critical for accurate ship detection. Moreover, the decision-making process involves a comprehensive evaluation of F1 scores to assess detection accuracy, LSF values to measure edge sharpness, and PSNR to quantify image fidelity. This approach ensures that the pooling configuration chosen for preprocessing SAR images enhances the DETR model’s performance by preserving sharp edges while minimizing noise and distortion. In fact, by optimizing these parameters, the model becomes better equipped to detect ships accurately in challenging environments, such as near harbors or amidst cluttered backgrounds, where edge preservation and image quality are paramount.

2.1.3. Optimal Configuration Determination for Labeling Data

Selecting the most suitable pooling method and kernel size is important in preprocessing SAR images to improve ship detection accuracy. Max pooling is effective in capturing dominant features, making it advantageous for detecting prominent ship structures amid cluttered backgrounds. Conversely, median pooling reduces noise and preserves finer details, which is essential for maintaining image fidelity across diverse environmental conditions. Experimenting with different kernel sizes makes it possible to balance feature detail against computational complexity, optimizing feature extraction within the detection transformer framework. When optimizing pooling parameters, achieving a balance between edge sharpness and overall image quality is paramount. Integrating the LSF and PSNR metrics helps identify the optimal pooling configuration. LSF evaluates edge sharpness, ensuring that pooling operations preserve the sharp edges critical for accurate ship boundary detection. Meanwhile, PSNR quantitatively assesses image quality, ensuring that the selected pooling methods maintain high fidelity across the entire image. This approach prevents the loss or distortion of crucial features necessary for accurate ship detection. The decision-making process for selecting optimal pooling parameters involves several key steps:

- Evaluation of F1 scores for each pooling type and kernel size configuration to measure detection accuracy comprehensively. The highest F1 score over all configurations is determined as follows:

$$F1_{\max} = \max_{p \in \mathcal{P},\; k \in \mathcal{K}} F1_{p,k} \qquad (9)$$

where $\mathcal{P} = \{\text{max}, \text{median}\}$ and $\mathcal{K} = \{3, 5, 7, 9\}$.

- Integration of LSF and PSNR metrics to assess edge sharpness and image quality across different pooling configurations, so that well-informed decisions can be made about the optimal pooling parameters for preprocessing SAR images. This methodical approach ensures that the processed images retain sharp edges and high fidelity, thereby improving the efficacy of the FEDETR model in precisely detecting ships in complex environments. In this process, among the configurations with the highest F1 score, the one with the lowest LSF is selected:

$$(p^{*}, k^{*}) = \arg\min_{(p, k)\,:\; F1_{p,k} = F1_{\max}} \mathrm{LSF}_{p,k} \qquad (10)$$

Moreover, if multiple configurations share the same F1 score and LSF, the configuration with the highest PSNR is selected:

$$(p^{*}, k^{*}) = \arg\max_{(p, k) \in \mathcal{C}} \mathrm{PSNR}_{p,k} \qquad (11)$$

where $\mathcal{C}$ is the set of configurations tied on both F1 score and LSF.

- Implementation of labeling steps: In the final stage, the selected pooling parameters are applied during the labeling process. This involves using these configurations to label and process SAR images, ensuring that the model’s performance is optimized based on the refined parameters. To facilitate this, a Pooling Optimization Dataset was created for the CNN module, which is designed to estimate these parameters effectively.
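The selection rule described in the steps above can be expressed as a lexicographic choice: highest F1 score first, then the lowest LSF among those configurations, then the highest PSNR to break any remaining tie. This sketch reflects one reading of that rule; the `PoolingResult` record, the `select_configuration` name, and the floating-point tolerance `tol` are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class PoolingResult:
    pooling: str   # "max" or "median"
    kernel: int    # 3, 5, 7 or 9
    f1: float      # detection accuracy
    lsf: float     # edge sharpness measure (lower is better)
    psnr: float    # image fidelity in dB (higher is better)

def select_configuration(results, tol=1e-9):
    """Pick the best pooling configuration: max F1, then min LSF, then max PSNR."""
    best_f1 = max(r.f1 for r in results)
    top = [r for r in results if abs(r.f1 - best_f1) <= tol]
    best_lsf = min(r.lsf for r in top)
    tied = [r for r in top if abs(r.lsf - best_lsf) <= tol]
    # Remaining ties on F1 and LSF are broken by the highest PSNR.
    return max(tied, key=lambda r: r.psnr)
```

Each selected (pooling, kernel) pair would then serve as the label for the corresponding image in the Pooling Optimization Dataset.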

2.1.4. CNN Module for Pooling Parameter Estimation

SAR images often require advanced preprocessing techniques, such as filtering and denoising, to enhance feature representation before they are input into detection models. Because SAR targets are complex and easily confused with land and sea clutter, effective representation learning is crucial. Focusing solely on local ship features without considering the broader image context can hinder the model’s ability to capture and use semantic features, degrading overall detection performance.

This section describes how a CNN model is developed to predict the optimal pooling parameters, specifically the kernel size and pooling type, for preprocessing SAR images. Several strategies are used to achieve this goal, starting with a model architecture designed to capture intricate patterns in the LSF values.

Dataset Preparation and Structure for CNN Module

The Pooling Optimization Dataset used as input to the CNN module contains LSF values as the input feature; these represent image edge sharpness, which is crucial for evaluating how pooling affects the preservation of image details. The dataset also contains two target variables: the optimal kernel size, indicating the best kernel size configuration ((3, 3), (5, 5), (7, 7), or (9, 9)) for pooling operations, and the optimal pooling type (‘median’ or ‘max’).
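The section does not state how the LSF values are computed. One common approach, shown here as a hypothetical sketch rather than the authors’ procedure, differentiates a 1-D edge profile (the edge spread function, ESF) to obtain the LSF and summarizes it by its full width at half maximum (FWHM); a narrower width indicates a sharper edge. The function name `lsf_fwhm` and the sample-counting FWHM (no sub-pixel interpolation) are our assumptions.

```python
import numpy as np


def lsf_fwhm(edge_profile):
    """Width (in samples) of the line spread function at half its maximum.

    The LSF is taken as the absolute derivative of a 1-D edge spread function
    sampled across an edge; smaller widths indicate sharper edges.
    """
    esf = np.asarray(edge_profile, dtype=np.float64)
    lsf = np.abs(np.gradient(esf))       # LSF = d(ESF)/dx via central differences
    peak = lsf.max()
    if peak == 0:
        return float("inf")              # flat profile: no edge to measure
    above = np.flatnonzero(lsf >= peak / 2.0)
    return float(above[-1] - above[0] + 1)


sharp = [0, 0, 0, 1, 1, 1]
blurred = [0, 0, 0.25, 0.5, 0.75, 1, 1]
print(lsf_fwhm(sharp), lsf_fwhm(blurred))  # 2.0 5.0
```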

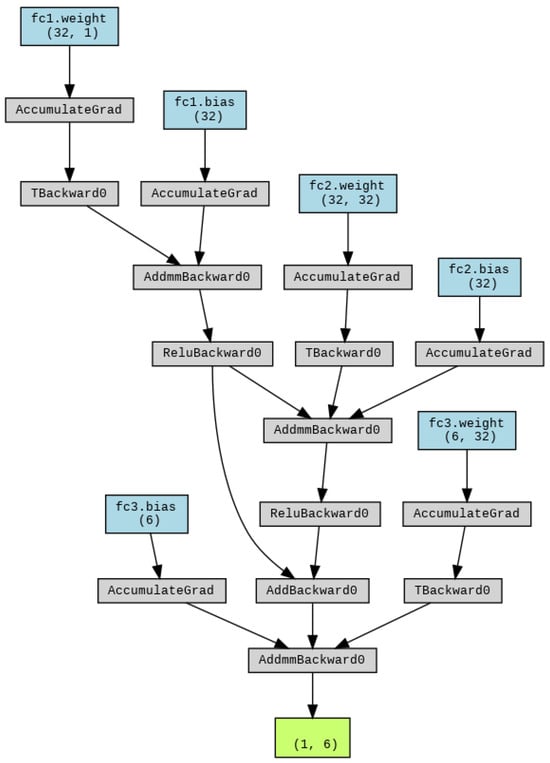

To predict these optimal pooling parameters, the CNN model is designed with a skip connection to improve gradient flow, which makes training more efficient and improves the prediction of kernel size and pooling type from the LSF values in the dataset, as outlined in Figure 2. The model begins with an input layer that receives the LSF values, which characterize edge sharpness in SAR images. It then incorporates three fully connected layers: fc1, fc2, and fc3. fc1 processes the input data, and fc2 follows with a residual connection from fc1 that mitigates vanishing gradients and improves information propagation. Both fc1 and fc2 are followed by the rectified linear unit (ReLU) activation function, which introduces the non-linearity needed to capture intricate patterns in the LSF values. The final layer, fc3, outputs logits for kernel size and pooling type without an output activation, since the cross-entropy loss is applied directly to raw logits. In terms of the layer names in Figure 2, the data flow from the input layer (Linear-1), where the LSF values undergo an initial linear transformation, through a ReLU activation after each of fc1 and fc2. The residual connection between ReLU-2 and ReLU-4 adds the activated output of fc1 directly to the activated output of fc2, which mitigates vanishing gradients and promotes robust information propagation throughout the network.
Finally, the output layer (Linear-5) consolidates these refined features to predict both the optimal kernel size and pooling type. This compact architecture keeps the computational cost low while supporting accurate predictions for SAR image preprocessing.
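A minimal PyTorch sketch of the architecture just described follows. It is an illustration under stated assumptions, not the authors’ implementation: the class name `PoolingParamNet`, the hidden width of 64, a single scalar LSF value per sample, and a single fc3 whose six outputs are split into four kernel-size logits and two pooling-type logits are all hypothetical choices.

```python
import torch
import torch.nn as nn

KERNEL_CHOICES = [(3, 3), (5, 5), (7, 7), (9, 9)]
POOL_CHOICES = ["median", "max"]


class PoolingParamNet(nn.Module):
    """fc1 -> ReLU -> fc2 -> ReLU, plus a skip from fc1's activation, then fc3 -> logits."""

    def __init__(self, in_features=1, hidden=64):
        super().__init__()
        self.fc1 = nn.Linear(in_features, hidden)                              # Linear-1
        self.fc2 = nn.Linear(hidden, hidden)                                   # Linear-3
        self.fc3 = nn.Linear(hidden, len(KERNEL_CHOICES) + len(POOL_CHOICES))  # Linear-5
        self.relu = nn.ReLU()

    def forward(self, x):
        h1 = self.relu(self.fc1(x))   # ReLU-2
        h2 = self.relu(self.fc2(h1))  # ReLU-4
        h = h2 + h1                   # residual: add fc1's activation to fc2's activation
        logits = self.fc3(h)          # raw logits, no output activation
        n_k = len(KERNEL_CHOICES)
        return logits[:, :n_k], logits[:, n_k:]   # kernel-size logits, pooling-type logits


model = PoolingParamNet()
lsf_batch = torch.rand(8, 1)                      # 8 samples, one LSF value each
kernel_logits, pool_logits = model(lsf_batch)
pred_kernel = [KERNEL_CHOICES[i] for i in kernel_logits.argmax(dim=1).tolist()]
pred_pool = [POOL_CHOICES[i] for i in pool_logits.argmax(dim=1).tolist()]
```

The skip connection requires fc1 and fc2 to produce outputs of the same width, which is why both use `hidden` units here.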

Figure 2.

CNN preprocessing model.

Because the CNN is trained on labels derived from image quality (LSF, PSNR) and detection accuracy (F1) metrics, its ability to predict optimal pooling parameters is a useful step forward in SAR image preprocessing for ship detection. Using this predictive capability, the FEDETR model can adapt its pooling strategy to each image, improving its robustness and accuracy in diverse and challenging scenarios. This integrated approach optimizes the preprocessing steps, ultimately leading to better feature extraction and improved object detection performance.

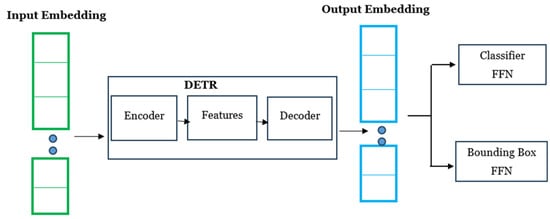

2.1.5. DETR Model

The DETR model used here builds on the original DETR architecture by incorporating the DC5-R101 backbone, a ResNet-101 whose final stage (C5) uses dilated convolutions in place of a stride, which enhances both feature extraction and contextual awareness. This upgrade helps address issues common to traditional object detection methods, such as variations in object scale, complex shapes, and crowded scenes with overlapping objects. In the DETR model, the input embeddings are processed by the transformer’s encoder–decoder, which produces output embeddings. These embeddings are then fed into a classification feed-forward network (FFN) and a bounding box FFN to generate the final predictions, as depicted in Figure 3 [52].

Figure 3.

DETR pipeline overview [52].

The model’s architecture begins with the input image x and integrates positional encodings PE(x) to accurately identify object positions. These positional encodings are also applied to the input feature maps, enabling the model to interpret spatial relationships. The encodings for a 2D position in the feature map are defined as follows [14]:

$$PE(i, 2k) = \sin\left(\frac{i}{10000^{2k/d}}\right), \qquad PE(i, 2k+1) = \cos\left(\frac{i}{10000^{2k/d}}\right)$$

where i and j represent the row and column indices in the feature map, k indexes the encoding dimensions, and d is the number of positional-encoding dimensions assigned to each axis. The column encoding PE(j, ·) is defined in the same way, and the row and column encodings are concatenated.
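The following NumPy sketch builds such a 2D sinusoidal encoding. It is a simplified, hypothetical variant for illustration: half of the channels encode the row index and half the column index, each using sine on even and cosine on odd channels; DETR’s reference implementation additionally normalizes the positions, which this sketch omits. The function name and the default `d_model=256` are assumptions.

```python
import numpy as np


def sine_pos_encoding_2d(height, width, d_model=256, temperature=10000.0):
    """2-D sinusoidal encoding of shape (height, width, d_model).

    The first d_model/2 channels encode the row index i, the last d_model/2 the column index j;
    within each half, channel 2k holds sin(pos / T^(2k/d)) and 2k+1 holds cos(...), with d = d_model/2.
    """
    if d_model % 4 != 0:
        raise ValueError("d_model must be divisible by 4")
    d = d_model // 2
    k = np.arange(d // 2)
    inv_freq = 1.0 / temperature ** (2 * k / d)

    def encode(positions):
        angles = positions[:, None] * inv_freq[None, :]
        enc = np.empty((len(positions), d))
        enc[:, 0::2] = np.sin(angles)
        enc[:, 1::2] = np.cos(angles)
        return enc

    rows = encode(np.arange(height, dtype=np.float64))   # (height, d)
    cols = encode(np.arange(width, dtype=np.float64))    # (width, d)
    return np.concatenate(
        [np.repeat(rows[:, None, :], width, axis=1), np.repeat(cols[None, :, :], height, axis=0)],
        axis=-1,
    )


pe = sine_pos_encoding_2d(4, 5, d_model=16)
print(pe.shape)  # (4, 5, 16)
```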

The DC5-R101 backbone, an essential component of this architecture, adjusts the receptive field of the feature maps to improve object detection. This is achieved by replacing the stride of the final ResNet stage with dilation, which yields a higher-resolution feature map output. This adjustment can be expressed with the following equation [53]:

$$F = \mathrm{DC5\text{-}R101}\big(PE(x)\big)$$

where F represents the output of the DC5-R101 backbone and PE(x) refers to the input image with positional encodings. This architectural enhancement, however, increases computational demands because the self-attention in the encoder operates on a larger feature map. The DC5-R101 backbone enlarges the receptive field of the feature maps through a dilation rate of 2. The DC5 operation can be expressed as a dilated convolution:

$$y(i, j) = \sum_{m}\sum_{n} w(m, n)\, f(i + d\,m,\; j + d\,n)$$

where w is the convolution kernel, f is the input feature map, (m, n) range over the kernel support, and d is the dilation rate.

According to Equation (14), DETR applies convolutions with a defined dilation rate (d) to improve its ability to capture fine-grained details. A k × k kernel with dilation d spans a neighborhood of size k + (k − 1)(d − 1); thus, with d = 2, a 3 × 3 kernel covers an effective 5 × 5 region, which is well suited to detecting complex patterns and spatial relationships. The dilation rate adds flexibility to the convolution by controlling how the receptive field is constructed. This allows the network to handle information across various scales, which is essential for detailed analytical tasks.
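The effect of dilation can be checked with a small PyTorch sketch. It is illustrative only: the channel count and input size are arbitrary assumptions, and it shows a single dilated convolution rather than a full backbone. In practice, DC5-style ResNets are commonly built with torchvision’s `replace_stride_with_dilation=[False, False, True]` option, which is how DETR’s reference code obtains its DC5 variant.

```python
import torch
import torch.nn as nn


def effective_kernel_size(k, d):
    """Spatial extent covered by a k x k kernel with dilation d."""
    return k + (k - 1) * (d - 1)


# A DC5-style 3x3 convolution with dilation 2; padding = d * (k - 1) // 2 keeps the spatial size,
# so the receptive field grows without downsampling the feature map.
conv = nn.Conv2d(in_channels=8, out_channels=8, kernel_size=3, dilation=2, padding=2)
feat = torch.randn(1, 8, 32, 32)
out = conv(feat)
print(effective_kernel_size(3, 2), tuple(out.shape))  # 5 (1, 8, 32, 32)
```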

Additionally, the DETR architecture features a transformer encoder that includes both self-attention and feed-forward layers. In the encoder, the self-attention mechanism computes attention scores for each pair of queries and keys derived from the image features, using the scaled dot-product formula:

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^{\top}}{\sqrt{d_k}}\right)V$$

where Q and K are the query and key matrices, $d_k$ denotes the dimension of the queries and keys, and V represents the values. Although DETR’s attention captures global context by relating positions across the entire image, its decoder requires a fixed number of object queries to be specified beforehand. This fixed query count, which sets an upper bound on the number of detections per image, can limit effectiveness in scenarios where the number of objects varies.
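Scaled dot-product self-attention as used in the encoder can be sketched in NumPy as below. The random projection matrices `W_q`, `W_k`, `W_v` and the 20 × 20 feature map are stand-ins chosen for illustration; in DETR, queries and keys are linear projections of the flattened feature map with positional encodings added.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax."""
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def scaled_dot_product_attention(Q, K, V):
    """Return softmax(Q K^T / sqrt(d_k)) V and the attention weights."""
    d_k = K.shape[-1]
    scores = Q @ K.swapaxes(-1, -2) / np.sqrt(d_k)   # (n_queries, n_keys)
    weights = softmax(scores, axis=-1)               # each row sums to 1
    return weights @ V, weights


rng = np.random.default_rng(0)
hw, d_model = 20 * 20, 32                  # a 20 x 20 feature map flattened to 400 tokens
tokens = rng.normal(size=(hw, d_model))
W_q, W_k, W_v = (rng.normal(size=(d_model, d_model)) / np.sqrt(d_model) for _ in range(3))
out, weights = scaled_dot_product_attention(tokens @ W_q, tokens @ W_k, tokens @ W_v)
print(out.shape, weights.shape)  # (400, 32) (400, 400)
```

Because every token attends to every other token, the cost grows quadratically with the number of feature-map positions, which is why the higher-resolution DC5 feature map increases encoder cost.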

While DETR simplifies the detection pipeline by removing hand-designed components such as anchor generation and non-maximum suppression, the architecture’s complexity and computational demands make it less suitable for real-time applications. The reliance on a fixed query count, combined with the intensive computational requirements introduced by the DC5-R101 backbone and its dilation, may lead to slower inference, limiting practical usability in dynamic environments.

2.2. PolSAR Detection Module

In PolSAR processing, Pauli decomposition and coherence matrix analysis are crucial for ship detection by breaking down the SAR scattering matrix into components like surface, double-bounce, and volume scattering. The Fvh components further enhance detection by quantifying volume and helix scattering. This section introduces a novel method combining Otsu’s thresholding with morphological operations to optimize threshold selection, effectively distinguishing ships from sea clutter.

2.2.1. Pauli Decomposition

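The Pauli decomposition breaks down the radar scattering matrix into components highlighting distinct scattering mechanisms:

$$[S] = \begin{bmatrix} S_{HH} & S_{HV} \\ S_{VH} & S_{VV} \end{bmatrix}$$

where H and V denote the orthogonal horizontal and vertical polarization bases, respectively. Assuming reciprocity ($S_{HV} = S_{VH}$), this decomposition yields three Pauli components, $k_{p0}$, $k_{p1}$, and $k_{p2}$, representing surface, double-bounce, and volume scattering, defined as follows:

$$k_{p0} = \frac{S_{HH} + S_{VV}}{\sqrt{2}}, \qquad k_{p1} = \frac{S_{HH} - S_{VV}}{\sqrt{2}}, \qquad k_{p2} = \sqrt{2}\,S_{HV}$$

These components form the Pauli vector $\mathbf{k}_p = [k_{p0}, k_{p1}, k_{p2}]^{\top}$, whose spatial average defines the coherence matrix [T], which captures spatial and polarimetric correlations:

$$[T] = \big\langle \mathbf{k}_p \mathbf{k}_p^{H} \big\rangle = \begin{bmatrix} T_{11} & T_{12} & T_{13} \\ T_{12}^{*} & T_{22} & T_{23} \\ T_{13}^{*} & T_{23}^{*} & T_{33} \end{bmatrix}$$

where ⟨·⟩ denotes spatial (ensemble) averaging and H the conjugate transpose; $T_{11}$ measures the total power of backscattered signals; $T_{12}$ indicates the complex correlation between polarizations; $T_{13}$ reflects the correlation between co-polarized and cross-polarized signals; $T_{22}$ quantifies the power of differential backscattered signals between horizontal and vertical polarizations, which is crucial for distinguishing between various types of targets; $T_{23}$ illustrates the correlation of differential and cross-polarized signals; and $T_{33}$ represents the power of the cross-polarized component.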
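A NumPy sketch of the Pauli vector and a boxcar-averaged coherence matrix follows. It assumes fully polarimetric channels `s_hh`, `s_hv`, `s_vv` (with reciprocity) and a 5 × 5 averaging window; both the window size and the random test data are hypothetical and serve only to show shapes and basic properties.

```python
import numpy as np
from scipy.ndimage import uniform_filter


def pauli_vector(s_hh, s_hv, s_vv):
    """Per-pixel Pauli vector k_p = [S_HH + S_VV, S_HH - S_VV, 2 S_HV] / sqrt(2)."""
    return np.stack([s_hh + s_vv, s_hh - s_vv, 2.0 * s_hv]) / np.sqrt(2.0)


def coherency_matrix(k_p, window=5):
    """T = <k_p k_p^H>, estimated with a window x window boxcar average; shape (3, 3, rows, cols)."""
    outer = k_p[:, None] * np.conj(k_p[None, :])
    size = (1, 1, window, window)  # average over space only, never across matrix entries
    return uniform_filter(outer.real, size=size) + 1j * uniform_filter(outer.imag, size=size)


rng = np.random.default_rng(0)
shape = (32, 32)
s_hh, s_hv, s_vv = (rng.normal(size=shape) + 1j * rng.normal(size=shape) for _ in range(3))
T = coherency_matrix(pauli_vector(s_hh, s_hv, s_vv))
T11, T22, T33 = T[0, 0].real, T[1, 1].real, T[2, 2].real   # surface, double-bounce, volume powers
```

The trace $T_{11} + T_{22} + T_{33}$ equals the total backscattered power (span), $|S_{HH}|^2 + 2|S_{HV}|^2 + |S_{VV}|^2$, before averaging.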

2.2.2. Volume and Helix Scattering Module (FVh)

In PolSAR processing, effective ship detection relies on analyzing the coherence matrix [T], which captures spatial and polarimetric relationships vital for understanding scattering mechanisms such as volume and helix scattering. Four expansion coefficients are computed from this matrix to quantify the scattering powers:

The scattering powers are then represented as

The combined influence of volume and helix scattering is captured in the ship detection feature $F_{vh}$, which is calculated as

Normalizing $F_{vh}$ improves its interpretability across SAR datasets. These equations use coherence matrix elements to identify ship areas in SAR imagery, improving detection by combining volume and helix scattering characteristics, reducing false alarms, and increasing sensitivity.

2.2.3. Threshold Selection

In our proposed method for enhancing ship detection in PolSAR imagery, we integrate Otsu’s thresholding algorithm with morphological operations, leveraging distinct scattering characteristics such as the Pauli components and the Fvh feature to improve sensitivity for targets with high SCR. Specifically, Otsu’s algorithm determines an optimal threshold that maximizes the separability λ between two classes in a normalized histogram $p_i$:

$$p_i = \frac{n_i}{N}, \qquad i = 0, 1, \dots, 255$$

where $n_i$ is the number of pixels with gray level i, N is the total number of pixels, and $\sum_{i=0}^{255} p_i = 1$. For each threshold t, the algorithm divides the histogram into two classes: Class 1 (ships) and Class 2 (background, representing the sea surface). Then, the occurrence probabilities $\omega_1(t)$ and $\omega_2(t)$ as well as the class mean levels $\mu_1(t)$ and $\mu_2(t)$ are computed as follows:

$$\omega_1(t) = \sum_{i=0}^{t} p_i, \quad \omega_2(t) = \sum_{i=t+1}^{255} p_i, \quad \mu_1(t) = \frac{1}{\omega_1(t)}\sum_{i=0}^{t} i\,p_i, \quad \mu_2(t) = \frac{1}{\omega_2(t)}\sum_{i=t+1}^{255} i\,p_i$$

where $\omega_1$ and $\omega_2$ are cumulative sums of the histogram values up to t and from t + 1 to 255, respectively, while $\mu_1$ and $\mu_2$ represent the centroids of Class 1 (ships) and Class 2 (background) at threshold t. The class variances are then computed from these probabilities and centroids:

$$\sigma_1^2(t) = \frac{1}{\omega_1(t)}\sum_{i=0}^{t}\big(i - \mu_1(t)\big)^2 p_i, \qquad \sigma_2^2(t) = \frac{1}{\omega_2(t)}\sum_{i=t+1}^{255}\big(i - \mu_2(t)\big)^2 p_i$$

while the intraclass (within-class) variance $\sigma_W^2$ and the interclass (between-class) variance $\sigma_B^2$ are given by the following expressions:

$$\sigma_W^2(t) = \omega_1(t)\,\sigma_1^2(t) + \omega_2(t)\,\sigma_2^2(t), \qquad \sigma_B^2(t) = \omega_1(t)\,\omega_2(t)\big(\mu_1(t) - \mu_2(t)\big)^2$$

Finally, the separability between the two classes is given by

$$\lambda(t) = \frac{\sigma_B^2(t)}{\sigma_W^2(t)}$$

and the threshold $t^{*} = \arg\max_t \lambda(t)$ that maximizes it is chosen for segmentation. To refine the binary mask obtained from Otsu’s thresholding and improve ship detection accuracy, morphological operations such as opening and closing are then applied. These operations eliminate noise and smooth the boundaries of detected ships, enhancing the reliability of ship detection in PolSAR imagery. By integrating Otsu’s thresholding, which is based on the class occurrence probabilities and mean levels, with morphological operations, our approach automates threshold determination and enhances ship detection across various environmental conditions.
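The thresholding and clean-up steps can be sketched with NumPy and SciPy as below. This is a hypothetical illustration rather than the authors’ code: the 0 to 255 rescaling of the input feature, the 3 × 3 structuring element, and the helper names `otsu_threshold` and `ship_mask` are assumptions, and the sketch treats pixels above $t^{*}$ as ship candidates because ships are typically bright in these features.

```python
import numpy as np
from scipy.ndimage import binary_closing, binary_opening


def otsu_threshold(image_u8):
    """Return t* in [0, 254] maximizing Otsu's separability lambda = sigma_B^2 / sigma_W^2."""
    p = np.bincount(image_u8.ravel(), minlength=256).astype(np.float64)
    p /= p.sum()                                   # normalized histogram, sums to 1
    levels = np.arange(256, dtype=np.float64)
    best_t, best_lam = 0, -1.0
    for t in range(255):
        lo, hi = slice(0, t + 1), slice(t + 1, 256)
        w1, w2 = p[lo].sum(), p[hi].sum()          # class occurrence probabilities
        if w1 == 0 or w2 == 0:
            continue                               # one class is empty: skip this t
        mu1 = (levels[lo] * p[lo]).sum() / w1      # class mean levels (centroids)
        mu2 = (levels[hi] * p[hi]).sum() / w2
        var1 = ((levels[lo] - mu1) ** 2 * p[lo]).sum() / w1
        var2 = ((levels[hi] - mu2) ** 2 * p[hi]).sum() / w2
        sigma_w = w1 * var1 + w2 * var2            # within-class variance
        sigma_b = w1 * w2 * (mu1 - mu2) ** 2       # between-class variance
        lam = sigma_b / sigma_w if sigma_w > 0 else np.inf
        if lam > best_lam:
            best_t, best_lam = t, lam
    return best_t


def ship_mask(feature, structure_size=3):
    """Rescale a feature map to 0-255, threshold with Otsu, then apply opening and closing."""
    f = np.asarray(feature, dtype=np.float64)
    span = f.max() - f.min()
    u8 = np.zeros(f.shape, np.uint8) if span == 0 else np.round(255 * (f - f.min()) / span).astype(np.uint8)
    mask = u8 > otsu_threshold(u8)                 # bright pixels = ship candidates
    se = np.ones((structure_size, structure_size), dtype=bool)
    mask = binary_opening(mask, structure=se)      # removes isolated clutter pixels
    return binary_closing(mask, structure=se)      # fills small gaps inside ships


feature = np.zeros((32, 32))
feature[10:16, 10:16] = 200.0   # a 6 x 6 "ship"
feature[2, 2] = 200.0           # an isolated clutter spike
mask = ship_mask(feature)
print(int(mask.sum()), bool(mask[2, 2]))  # 36 False
```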

2.2.4. Weighted Feature Fusion (WFF) Module

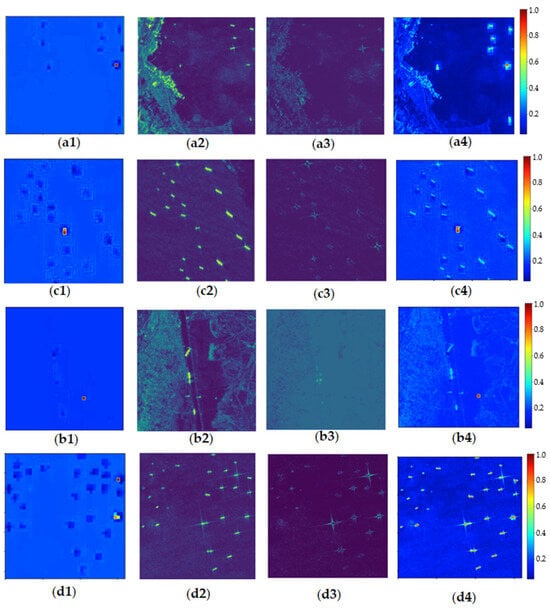

Our research methodology, in its second stage, concentrates on improving ship detection from SAR images through the FEDETR model. This stage integrates several components: the coherence matrix feature derived from Pauli decomposition, the Fvh feature computed from volume and helix scattering powers in PolSAR analysis as well as the attention maps generated by the FEDETR model. This approach also introduces a method for visualizing attention maps specifically designed for SAR imagery using FEDETR.

During model execution, we intercept critical outputs such as convolutional features and attention weights to elucidate the model’s data processing flow. These outputs include predicted logits and rescaled bounding boxes that highlight potential ship locations based on a predefined detection threshold. Furthermore, the attention maps, derived from the final decoder layer’s attention weights, visually represent the areas on which the model focuses most intensely, helping to explain how FEDETR leverages SAR image characteristics to improve ship detection accuracy. The attention map generated by FEDETR is computed as follows:

$$A = \mathrm{reshape}\big(W_0\big)$$

where $W_0$ represents the attention weights of the first index (b = 0) in the final decoder layer of FEDETR, reshaped to match the dimensions of the feature map. The attention weights of the remaining boxes are then accumulated via the following equation:

$$A \leftarrow A + \mathrm{reshape}\big(W_b\big), \qquad b = 1, 2, \dots, B - 1$$

where, for each bounding box index b greater than zero, $W_b$ denotes the attention weights corresponding to that index in the decoder layer, and B is the number of retained bounding boxes. These equations describe how FEDETR computes and accumulates attention weights to form the attention map, which visually represents areas of heightened focus within SAR images and aids in interpreting how FEDETR processes SAR data for ship detection.
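The accumulation can be sketched in NumPy as follows. We assume, hypothetically, that the last decoder layer’s attention weights have already been captured (for example with a PyTorch forward hook) and averaged over heads into an array of shape (num_queries, feat_h × feat_w), and that queries are kept when their best non-"no object" class probability exceeds a threshold of 0.7; the helper names and the threshold are illustrative.

```python
import numpy as np


def kept_queries(pred_logits, threshold=0.7):
    """Indices of queries whose best non-'no object' class probability exceeds the threshold.

    pred_logits: (num_queries, num_classes + 1); the last column is DETR's 'no object' class.
    """
    z = pred_logits - pred_logits.max(axis=-1, keepdims=True)
    probs = np.exp(z) / np.exp(z).sum(axis=-1, keepdims=True)
    return np.flatnonzero(probs[:, :-1].max(axis=-1) > threshold)


def accumulate_attention_map(dec_attn, keep, feat_h, feat_w):
    """Sum the kept queries' attention weights on the feature-map grid.

    dec_attn: (num_queries, feat_h * feat_w) weights from the final decoder layer.
    Starting from zeros and adding the first kept query is equivalent to A = reshape(W_0).
    """
    attn_map = np.zeros((feat_h, feat_w))
    for b in keep:
        attn_map += dec_attn[b].reshape(feat_h, feat_w)
    return attn_map


logits = np.array([[5.0, 0.0], [0.0, 5.0], [3.0, 0.0], [0.0, 0.0]])  # 4 queries: ship, no-object
keep = kept_queries(logits)                        # keeps queries 0 and 2
dec_attn = np.arange(24, dtype=float).reshape(4, 6)
attn_map = accumulate_attention_map(dec_attn, keep, 2, 3)
```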

Overall, the coherence matrix feature provides spatial coherence information, emphasizing structural details such as scattering intensity, while the attention maps identify potential ship locations by highlighting relevant SAR image features. The Fvh feature, which combines volume and helix scattering powers, further enhances ship detection accuracy. To integrate these features effectively, each component is normalized to a common range: the coherence matrix feature and the Fvh feature are normalized directly, and the attention map is resized to the image dimensions before being normalized. The normalized features are then fused using the following equation:

$$F_{WFF} = \alpha\,\tilde{C} + \beta\,\tilde{A} + \gamma\,\tilde{F}_{vh}$$

where $\tilde{C}$, $\tilde{A}$, and $\tilde{F}_{vh}$ are the normalized coherence matrix feature, attention map, and Fvh feature; α represents the weight for the coherence matrix feature, β signifies the importance of the attention map, and γ balances the contribution of the Fvh feature.
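A NumPy/SciPy sketch of the weighted fusion follows. It is an assumption-laden illustration: min-max scaling to [0, 1] stands in for the unspecified normalization, bilinear `scipy.ndimage.zoom` stands in for the resizing step, and the default weights α = 0.4, β = 0.3, γ = 0.3 are hypothetical (the section does not report the values used).

```python
import numpy as np
from scipy.ndimage import zoom


def minmax(x):
    """Rescale an array to [0, 1]; a constant array maps to zeros."""
    x = np.asarray(x, dtype=np.float64)
    span = x.max() - x.min()
    return np.zeros_like(x) if span == 0 else (x - x.min()) / span


def weighted_feature_fusion(coh, attn, fvh, alpha=0.4, beta=0.3, gamma=0.3):
    """F_WFF = alpha * C~ + beta * A~ + gamma * F~_vh on the grid of the coherence feature."""
    h, w = coh.shape
    # Bilinear (order=1) resize of the coarse attention map to the image grid
    attn_resized = zoom(np.asarray(attn, dtype=np.float64), (h / attn.shape[0], w / attn.shape[1]), order=1)
    return alpha * minmax(coh) + beta * minmax(attn_resized) + gamma * minmax(fvh)


rng = np.random.default_rng(0)
C = rng.random((64, 64))      # stand-in coherence matrix feature
F_vh = rng.random((64, 64))   # stand-in Fvh feature
A = rng.random((16, 16))      # stand-in attention map on a coarser feature grid
fused = weighted_feature_fusion(C, A, F_vh)
```

With weights that sum to 1 and inputs in [0, 1], the fused map also stays in [0, 1], which keeps a single detection threshold meaningful across scenes.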

2.3. Materials

2.3.1. Datasets

In this study, the SSDD was used to train the DETR model for ship detection, while the SAR-Ship-Dataset was used for testing. In addition, the FEDETR module was trained using the test sets of both the SSDD and the SAR-Ship-Dataset. By leveraging the diverse and abundant features of these datasets, FEDETR enhances detection against complex backgrounds, improving overall accuracy and robustness, especially for small ships and challenging environments.

Below, more information regarding the SSDD and the SAR-Ship-Dataset is provided, and their characteristics are summarized in Table 1.

Table 1.

Characteristics and specifications of ship detection datasets.

The SSDD comprises 1160 images containing a total of 2456 ship targets, averaging 2.12 ships per image. As a pioneering resource for SAR target detection, it includes images captured by three different satellite sensors across four polarization modes. The resolution ranges from 1 to 15 m, featuring ships in both nearshore and offshore environments. Ship labeling was conducted using the open-source software LabelImg v.1.8.6, enhancing the accuracy of the annotations. By employing the SSDD, our research draws upon established datasets, which enhances the credibility and significance of our findings [54].

The SAR-Ship-Dataset comprises 102 Gaofen-3 (GF-3) images and 108 Sentinel-1 images, from which a total of 43,819 ship slices were extracted. Each slice measures 256 × 256 pixels and depicts ships at varying scales and against varying backgrounds, enhancing the robustness and generalization of detection algorithms. The dataset is split into training, validation, and test sets in a 7:2:1 ratio, allowing the detection model to learn from a comprehensive set of image features and improve its accuracy.

The training process used a computer with a 12th Gen Intel(R) Core(TM) i7-12700H processor running at 2.70 GHz, 16.0 GB of installed RAM (15.7 GB usable), and a GeForce RTX 3070 graphics card. The deep learning framework employed for this work was PyTorch v2.4.

2.3.2. The Original SAR Imageries

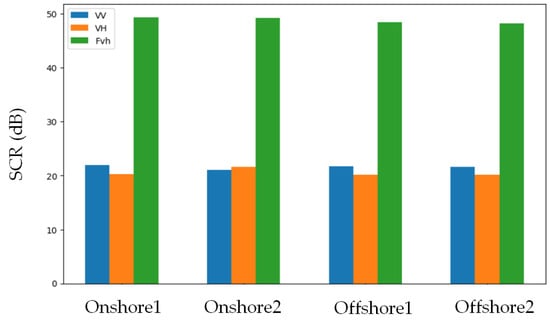

To ensure the quantity and quality of the ship-specific interpretation dataset, four Level 1 Sentinel-1 Interferometric Wide (IW) swath mode images were selected as the original construction data. According to the Sentinel-1 official guide by the European Space Agency (ESA), the IW mode captures three sub-swaths using Terrain Observation with Progressive Scans SAR (TOPSAR). This mode generates products with VV co-polarization and VH cross-polarization. In addition, the cross-polarization scattering provides stronger energy intensity than the co-polarization scattering, making the shape and skeleton of ships clearer. However, cross-polarization also has stronger inshore scattering and sea clutter noise compared to co-polarization. Ships appear as spindle-shaped clusters of bright pixels due to double-bounce reflection of the radar pulse emitted by the sensor. Details such as resolution and polarization are summarized in Table 1. Labeling was performed using the open-source software program LabelImg. Given the availability of VV and VH polarizations, polarimetric methods were employed for ship detection. Four distinct regions (Onshore1, Onshore2, Offshore1, and Offshore2) were selected and tested using VV and VH polarimetric SAR images. These regions, encompassing major ports, busy sea areas, and specific scenes, were chosen to ensure a representative and ample sample set. The regions cover extensive areas, including the Suez Canal, and all original images with broad swath coverage were sourced from the official website.

3. Results

3.1. Experiments of FEDETR on Datasets

We conducted a series of experiments on the SSDD using the previously described parameter settings; the overall and size-specific mean average precision metrics (i.e., AP, AP50, AP75, APS, APM, and APL) [55] are presented in Table 2.

Table 2.

Mean average precision of DETR results.

Based on this table, DETR performs well in ship detection in SAR images across all evaluated metrics, and these high values indicate its robustness and accuracy across conditions and object sizes, making it a reliable choice for maritime surveillance applications. However, DETR’s performance for small and medium-sized ships is lower than for large ships. Furthermore, DETR’s detection accuracy varied on complex scenes from the SSDD and the SAR-Ship-Dataset, which include Gaofen-3 and Sentinel-1 images with different polarizations and resolutions. While DETR performed strongly on the SSDD as a whole, its accuracy on these more challenging and diverse images was lower and less consistent, indicating the need to refine and adapt DETR to the complexities of diverse maritime environments.

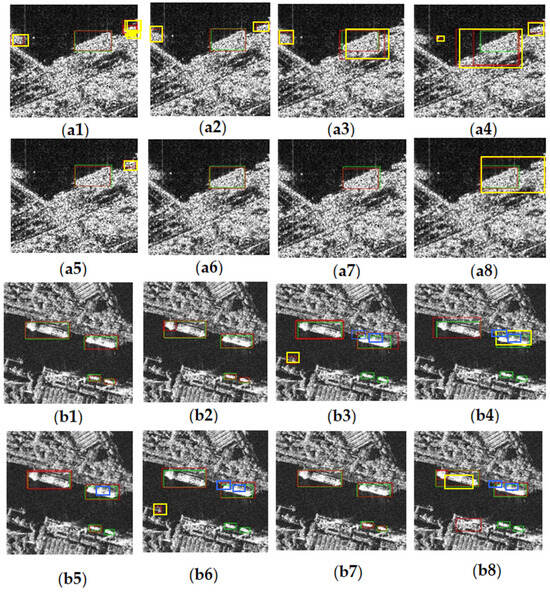

Overall, these results highlight DETR’s strong performance but also point to areas for improvement, particularly in detecting small- and medium-sized ships in SAR images. To address these limitations, the FEDETR module uses a CNN with robust preprocessing techniques to enhance feature extraction before detection with DETR. This CNN was trained and evaluated on complex scenes from the SSDD and the SAR-Ship-Dataset, which include Gaofen-3 and Sentinel-1 images with different polarizations and resolutions. Figure 4 shows ship detection using DETR after applying maximum and median pooling with kernel sizes of 3, 5, 7, and 9 to these images.

Figure 4.

Performance of FEDETR for two images from the test datasets SSDD and SAR Ship, including Gaofen-3 (a1–a8) and Sentinel-1 images (b1–b8) with different polarizations and resolutions. The ground truths, detection results, the false detection and missed detection results are indicated with green, red, yellow, and blue boxes, respectively.

In Figure 4, panels (a1–a4) illustrate maximum pooling, while panels (a5–a8) show median pooling with kernel sizes of 3, 5, 7, and 9 for a Gaofen-3 HH polarization image. Similarly, panels (b1–b4) and (b5–b8) present the corresponding results for an image from the SSDD. The FEDETR model recommended median pooling with a kernel size of 7 for the Gaofen-3 HH polarization image, as depicted in Figure 4(a7), and median pooling with a kernel size of 5 for the SSDD image, as shown in Figure 4(b6). These results indicate the efficacy of the proposed FEDETR model in reducing DETR’s detection errors in complex environments near harbors and across the image types and polarizations of the SAR-Ship-Dataset. This was achieved by integrating the LSF, PSNR, and F1 score metrics, which enabled accurate prediction of pooling configurations that balance edge sharpness, image quality, and detection accuracy. Specifically, the CNN model predicted the pooling type with an accuracy of 91% and the kernel size with an accuracy of 92%. This integrated approach therefore optimizes the preprocessing steps, enhancing robustness and accuracy in diverse and challenging scenarios and leading to better feature extraction and improved object detection performance.

3.2. Ablation Study

The ablation experiments conducted with the FEDETR model, illustrated in Figure 4, used the SSDD and the SAR-Ship-Dataset, with DETR as the baseline model. Four images showcasing large, medium, and small ships were selected to cover both distant-sea and nearshore regions. To ensure fair and stable comparisons, the FEDETR and WFF frameworks were evaluated on the same images. The analysis included Sentinel-1 SAR images from two onshore and two offshore locations, aiming to evaluate the effectiveness of the proposed method across varying ship sizes and complex environmental conditions. The detection performance metrics indicate that FEDETR improved upon the baseline DETR in most scenarios, particularly through the integration of a preprocessing CNN module. Furthermore, the WFF module yielded marked improvements in detecting small ships, contributing to the overall enhancement in detection performance.

The findings in Table 3 reveal critical differences in the performance of FEDETR compared to DETR across diverse scenarios. In the Onshore1 setting, FEDETR_VV achieved a precision of 88% and a recall of 70% using median pooling with a kernel size of 3, which is slightly lower in precision and notably lower in recall than DETR_VV (89% precision and 80% recall). This suggests that, although FEDETR’s specialized pooling techniques have potential, they do not consistently outperform the baseline in this scenario. In Onshore2, FEDETR attained a precision of only 75%, markedly lower than the 99% achieved by DETR, highlighting the difficulty of detecting ships in cluttered environments. In contrast, the offshore scenarios show the strengths of the FEDETR framework. In Offshore1, FEDETR_VH with maximum pooling and a kernel size of 5 reached a precision of 99%, surpassing the 98% of the baseline DETR_VH. Similarly, in Offshore2, FEDETR attained a precision of 99% for both VV and VH polarizations, improvements attributed to the preprocessing CNN module and optimized pooling.
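Since the comparison relies on precision, recall, and F1 score together, a short worked check may help. F1 is the harmonic mean of precision and recall; applying it to the Onshore1 VV values quoted in the text (not read from Table 3) gives the following. The helper name `f1_score` is ours.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0 when both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Onshore1, VV polarization: precision/recall values quoted in the text
print(round(f1_score(0.88, 0.70), 2))  # 0.78  (FEDETR_VV)
print(round(f1_score(0.89, 0.80), 2))  # 0.84  (DETR_VV)
```

Because the harmonic mean is dominated by the smaller of the two values, the 10-point recall gap outweighs the 1-point precision gap here.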

Table 3.

Performance comparison of FEDETR and baseline DETR on VV and VH polarizations in four areas in terms of precision, recall, and F1 score, with the values of the best method shown in bold.

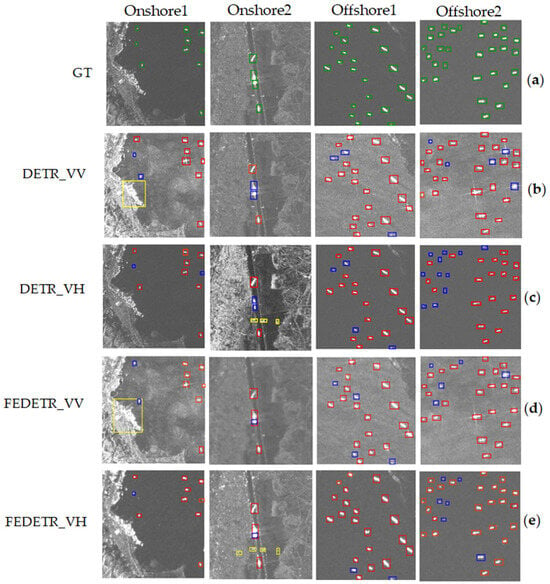

The DETR method provides baseline detection results, but its performance varies across regions and polarizations, highlighting the need for refinement. FEDETR improves detection by predicting the optimal pooling type and kernel size, enhancing accuracy. For instance, according to Table 3, in Onshore1, FEDETR_VV with median pooling and a kernel size of 3 outperforms DETR_VV, while FEDETR_VH with maximum pooling and a kernel size of 5 significantly improves detection compared to DETR_VH. Similarly, in Onshore2, FEDETR_VV with median pooling and a kernel size of 7 outperforms DETR_VV, and FEDETR_VH with maximum pooling and a kernel size of 3 enhances detection compared to DETR_VH. This continues in Offshore1, where FEDETR_VV with median pooling and a kernel size of 5 surpasses DETR_VV, and FEDETR_VH with maximum pooling and a kernel size of 5 outperforms DETR_VH. In Offshore2, FEDETR_VV with median pooling and a kernel size of 7 outperforms DETR_VV, while FEDETR_VH with maximum pooling and a kernel size of 5 significantly improves detection compared to DETR_VH. Figure 5 shows that, while DETR results are based on the original VV and VH images, FEDETR’s results are based on preprocessed images, optimized through suitable kernel sizes and pooling types for each polarization.

Figure 5.

Experimental results for ship detection in SAR images across four distinct regions: Onshore1, Onshore2, Offshore1, and Offshore2. (a) are the ground truth images; (b–e) are the detection results for DETR using VV and VH (DETR_VV, DETR_VH) as well as FEDETR using VV and VH (FEDETR_VV, FEDETR_VH) polarizations, respectively. Ground truths, detection results, false detection results, and missed detection results are marked with green, red, yellow, and blue boxes.

This preprocessing step improves detection performance, as evidenced by higher F1 scores and fewer false and missed detections in most regions, demonstrating FEDETR’s effectiveness in tuning pooling parameters. The integration of optimal pooling parameters in FEDETR thus shows its potential to enhance ship detection in SAR images, which is crucial for reliable maritime surveillance and security operations.

In the second stage of the experiments, various methods for ship detection were tested under diverse conditions, as illustrated in Table 4, which compares the FEDETR, FVh, Pauli basis, and WFF approaches in terms of precision, recall, and F1 score. The results indicate that the fusion between PolSAR techniques using Pauli and FVh with FEDETR’s attention map significantly enhanced ship detection, particularly for small vessels, through the effective utilization of the WFF module. In onshore scenarios, FEDETR and WFF achieved the highest precision and F1 scores of 99% and 95%, respectively, underscoring their robustness in accurately identifying ships. Although the Pauli method achieved perfect recall, it recorded a lower precision of 47% and an F1 score of 64%, suggesting a higher false positive rate. The FVh method showed notable limitations in onshore environments, with a substantially lower F1 score of 30%.

Table 4.

Performance evaluation of ship detection across onshore and offshore environments in terms of precision, recall, and F1 score, with the values of the most accurate method shown in bold.

In contrast to the performance observed in onshore areas, the results for offshore scenarios highlight the superior detection capabilities of the FEDETR, FVh, Pauli basis, and WFF methods. Here, both FEDETR and WFF demonstrated outstanding performance, with FEDETR achieving an F1 score of 95% and WFF achieving an impressive F1 score of 98%. The Pauli method also performed commendably offshore, reaching an F1 score of 93%. Although FVh showed good precision at 91%, its F1 score of 89% was comparatively lower. Overall, the proposed FEDETR and WFF methods consistently outperformed the FVh and Pauli approaches, particularly in terms of precision and F1 scores. These findings underscore the robustness and accuracy of the FEDETR and WFF methods in diverse and complex environments, emphasizing their suitability for reliable maritime surveillance and security operations.

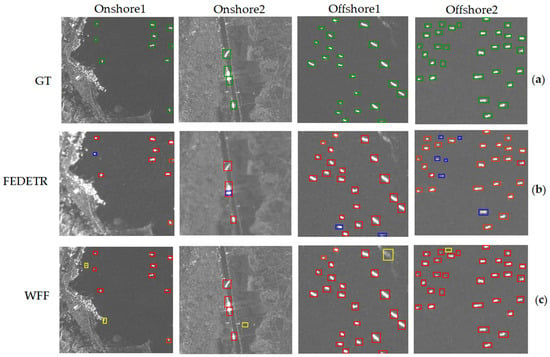

As shown in Figure 6, the WFF method substantially improves ship detection, especially for smaller vessels, by enhancing the separation of ships from the background. It refines detection more effectively than DETR: while FEDETR with optimal pooling settings already delivers strong detection performance, adding WFF further refines the results and better distinguishes ships in complex environments. The integration of WFF with FEDETR leads to a notable increase in overall detection accuracy, making it a powerful tool for maritime surveillance. The improvement is particularly significant for small ships, where WFF greatly reduces false and missed detections, highlighting its potential for precise and dependable maritime operations.

Figure 6.

Experimental results for ship detection in SAR images across four regions: Onshore1, Onshore2, Offshore1, and Offshore2. (a) are the ground truth images and (b,c) are the predicted results from FEDETR with optimal pooling and kernel size and the WFF method, respectively. Ground truths, detection results, false detections, and missed detections are marked with green, red, yellow, and blue boxes, respectively.

4. Discussion

4.1. Implementation Details

The training process for the FEDETR module is methodically divided into two stages to maximize detection performance and robustness. The first stage focuses on training the DETR for initial ship detection, while the second stage employs a CNN to preprocess and enhance features before the detection phase. This structured approach ensures optimal feature extraction and improves the overall accuracy of the detection model.

In the initial stage, we optimized DETR for detection with an initial learning rate of 0.0001 and a batch size of 2. To prevent overfitting, data augmentation techniques such as image flipping, rotation, and hue adjustments were applied. Each image was auto oriented, resized to 500 500 pixels, and subjected to random rotations between −15 and +15 degrees. The model was trained over 50 epochs on SSDDs. The second stage involves training a CNN to enhance features before feeding them into DETR, aiming to improve detection performance. The CNN model uses the Adam optimizer with a learning rate of 0.001 and cross-entropy loss (CEL). This training process extends over 100 epochs and encompasses both training and evaluation phases to effectively optimize the loss functions. The CEL function measures the difference between predicted and actual values, guiding the model to adjust its parameters for accurate predictions. Moreover, validation metrics, such as kernel size accuracy and pooling type accuracy, are monitored to evaluate the model’s performance in predicting optimal parameters. The CNN is trained to predict the optimal kernel size and pooling type, using the CEL function as follows:

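$$\mathcal{L}_{\mathrm{CE}} = -\sum_{c=1}^{C_k} y^{k}_{c}\log q^{k}_{c} \;-\; \sum_{c=1}^{C_p} y^{p}_{c}\log q^{p}_{c}$$

where $y^{k}$ and $y^{p}$ are the one-hot encodings of the actual kernel size and pooling type, respectively, $q^{k}_{c}$ and $q^{p}_{c}$ are the predicted probabilities of class $c$, and $C_k$ and $C_p$ are the numbers of candidate kernel sizes and pooling types. The accuracies of the kernel size and pooling type predictions are calculated as follows: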

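$$\mathrm{Acc}_{k} = \frac{1}{N}\sum_{n=1}^{N} \mathbb{1}\big(\hat{k}_n = k_n\big), \qquad \mathrm{Acc}_{p} = \frac{1}{N}\sum_{n=1}^{N} \mathbb{1}\big(\hat{p}_n = p_n\big)$$

where $\hat{k}_n$ and $\hat{p}_n$ denote the predicted kernel size and pooling type for the $n$-th of $N$ samples, $k_n$ and $p_n$ are the actual kernel size and pooling type, and $\mathbb{1}(\cdot)$ is the indicator function.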
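A minimal NumPy sketch of the loss and accuracies described above follows. It assumes that kernel size and pooling type are predicted by two separate classification heads, over four candidate kernel sizes (3, 5, 7, 9, the sizes shown in Figure 8) and two pooling types (max, median), and that the two cross-entropies are summed without weights and averaged over the batch. These choices and all function names are illustrative, not taken from the authors' code.

```python
import numpy as np

KERNEL_SIZES = [3, 5, 7, 9]      # assumed candidate kernel sizes
POOL_TYPES = ["max", "median"]   # assumed candidate pooling types

def softmax(logits: np.ndarray) -> np.ndarray:
    """Row-wise softmax, shifted by each row's max for numerical stability."""
    z = logits - logits.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def cross_entropy(logits: np.ndarray, targets: np.ndarray) -> float:
    """Batch-mean cross-entropy between logits and integer class targets."""
    probs = softmax(logits)
    picked = probs[np.arange(len(targets)), targets]  # probability of the true class
    return float(-np.mean(np.log(picked + 1e-12)))

def total_loss(k_logits, p_logits, k_true, p_true) -> float:
    """Assumed combined loss: unweighted sum of the two heads' cross-entropies."""
    return cross_entropy(k_logits, k_true) + cross_entropy(p_logits, p_true)

def accuracy(logits: np.ndarray, targets: np.ndarray) -> float:
    """Fraction of samples whose arg-max prediction equals the target class."""
    return float(np.mean(np.argmax(logits, axis=1) == targets))

# Toy batch of three images (made-up logits and labels).
k_logits = np.array([[2.0, 0.1, 0.1, 0.1],
                     [0.1, 0.1, 3.0, 0.1],
                     [0.1, 2.5, 0.1, 0.1]])
p_logits = np.array([[1.0, 0.0],
                     [0.0, 2.0],
                     [0.5, 0.0]])
k_true = np.array([0, 2, 3])  # kernel sizes 3, 7, 9
p_true = np.array([0, 1, 1])  # pooling types max, median, median

pred_k = [KERNEL_SIZES[i] for i in np.argmax(k_logits, axis=1)]
pred_p = [POOL_TYPES[i] for i in np.argmax(p_logits, axis=1)]
print("loss:", round(total_loss(k_logits, p_logits, k_true, p_true), 3))
print("kernel-size accuracy:", accuracy(k_logits, k_true))
print("pooling-type accuracy:", accuracy(p_logits, p_true))
print("predicted configs:", list(zip(pred_k, pred_p)))
```

Taking the arg-max of each head turns the logits into a concrete (kernel size, pooling type) pair, which is the form of output the preprocessing stage would consume.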

By training the CNN to predict optimal pooling parameters, SAR image preprocessing is optimized, ensuring the CNN effectively captures relevant features while maintaining computational efficiency. This methodology enhances ship detection accuracy in complex scenarios, such as cluttered backgrounds or harbors. Evaluations on a separate validation dataset demonstrated high accuracy in predicting optimal pooling configurations, validating the model’s effectiveness. Integrating LSF, PSNR, and F1 score metrics ensures that selected pooling parameters balance edge sharpness, image quality, and detection accuracy, leading to improved performance of the FEDETR model.

4.2. FEDETR Module Effect Validation

Effective SAR image preprocessing improves object detection accuracy in complex environments. To choose preprocessing parameters systematically, we analyze the correlations between pooling parameters and image quality metrics. Equation (46) provides the quantitative basis for understanding how changes in kernel size affect image sharpness (LSF) and fidelity (PSNR) in SAR image processing.

Here, we use the Pearson correlation coefficient to quantify the linear relationship between kernel size and LSF or PSNR, computed on the bad test images from the SSDD and SAR Ship datasets. The coefficient is calculated as follows:

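$$r = \frac{\sum_{i=1}^{n}\left(x_i-\bar{x}\right)\left(y_i-\bar{y}\right)}{\sqrt{\sum_{i=1}^{n}\left(x_i-\bar{x}\right)^{2}}\,\sqrt{\sum_{i=1}^{n}\left(y_i-\bar{y}\right)^{2}}} \tag{46}$$

where $x_i$ and $y_i$ represent individual data points of kernel size and LSF (or PSNR), $\bar{x}$ and $\bar{y}$ denote their means, and $n$ is the number of data points.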
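The coefficient can be checked numerically with a few lines of NumPy. The kernel-size and PSNR values below are made up for illustration and are not the measurements behind Figure 7; `pearson_r` is a hypothetical helper that implements the formula term by term and is cross-checked against `np.corrcoef`.

```python
import numpy as np

def pearson_r(x, y) -> float:
    """Pearson correlation coefficient computed directly from its definition."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    dx, dy = x - x.mean(), y - y.mean()  # deviations from the means
    return float(np.sum(dx * dy) / np.sqrt(np.sum(dx**2) * np.sum(dy**2)))

# Illustrative (made-up) measurements: kernel size vs. PSNR in dB.
kernel_sizes = [3, 3, 5, 5, 7, 7, 9, 9]
psnr_db = [31.2, 30.8, 29.9, 30.4, 28.7, 29.5, 28.1, 27.6]

r = pearson_r(kernel_sizes, psnr_db)
print(f"r(kernel size, PSNR) = {r:.3f}")
# Cross-check against NumPy's built-in correlation matrix.
assert np.isclose(r, np.corrcoef(kernel_sizes, psnr_db)[0, 1])
```

A negative value, as in this toy data, means that PSNR tends to fall as the kernel grows; the magnitude indicates how close the relationship is to linear.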

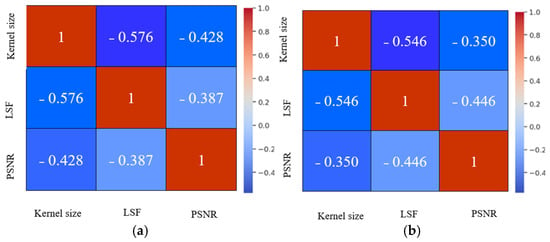

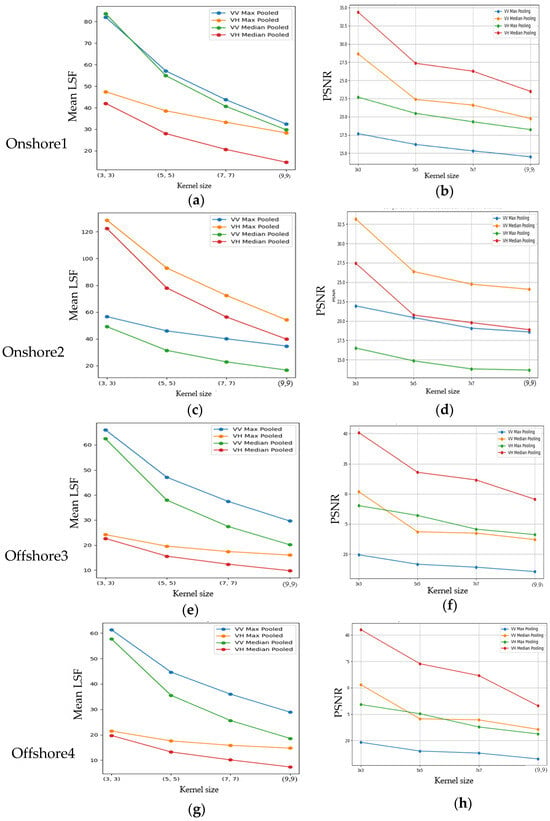

Figure 7 shows the correlations among kernel size, LSF, and PSNR for max pooling and median pooling, evaluated with Equation (46). For max pooling, kernel size is negatively correlated with both max-pooling LSF (−0.576) and max-pooling PSNR (−0.428). These findings suggest that larger kernel sizes broaden the LSF and reduce PSNR, indicating a trade-off in which increased feature sampling may compromise local image sharpness. For median pooling, kernel size shows a similar negative correlation with median LSF (−0.546), and median LSF is negatively correlated with median-pooling PSNR (−0.350). This implies that median pooling preserves the local image details captured by LSF more effectively, potentially yielding higher PSNR values than max pooling, which points to an advantage of median pooling in applications that prioritize image fidelity.

Figure 7.

Correlation matrices of kernel size, LSF, and PSNR for (a) max pooling and (b) median pooling on the SSDD and SAR Ship datasets, used to validate the effectiveness of the FEDETR module.

These correlation analyses underscore the importance of selecting pooling configurations that balance edge sharpness and feature representation in SAR image preprocessing. Lower LSF values indicate sharper image edges, which are essential for accurate object detection in SAR images, while higher PSNR values reflect better overall image quality and feature retention during pooling. Integrating LSF and PSNR into the decision-making process therefore allows pooling parameters to be optimized strategically: by prioritizing configurations that balance low LSF and high PSNR, the selected parameters enhance both edge sharpness and image fidelity. This systematic approach supports robust and precise object detection with models such as DETR in SAR imagery and highlights the value of quantitative metrics for guiding parameter selection in digital image processing.
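One way to operationalize this balance is sketched below. PSNR follows its standard definition, 10·log10(MAX²/MSE). The LSF is approximated as the derivative of a one-dimensional edge profile (the edge spread function), and its width is taken as the number of samples at or above half of its peak. This FWHM-style width, the min-max normalization, and the equal weighting in the hypothetical `select_config` are assumptions, since this section does not specify how LSF and PSNR are combined; the F1 term that FEDETR also uses is omitted.

```python
import numpy as np

def psnr(reference: np.ndarray, processed: np.ndarray, data_range: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB: 10 * log10(MAX^2 / MSE)."""
    mse = np.mean((reference.astype(float) - processed.astype(float)) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(data_range ** 2 / mse))

def lsf_width(edge_profile) -> int:
    """Approximate LSF width in samples: differentiate the 1-D edge spread
    function and count samples at or above half of the LSF peak (FWHM-style)."""
    lsf = np.abs(np.diff(np.asarray(edge_profile, dtype=float)))
    return int(np.count_nonzero(lsf >= 0.5 * lsf.max()))

def _minmax(v: np.ndarray) -> np.ndarray:
    """Scale values to [0, 1]; the epsilon avoids division by zero."""
    return (v - v.min()) / (v.max() - v.min() + 1e-12)

def select_config(metrics: dict, w_psnr: float = 0.5):
    """Hypothetical rule: reward high PSNR and low LSF with a weighted sum of
    min-max-normalized scores; returns the best (pooling, kernel_size) key."""
    keys = list(metrics)
    lsf = np.array([metrics[k][0] for k in keys], dtype=float)
    ps = np.array([metrics[k][1] for k in keys], dtype=float)
    score = w_psnr * _minmax(ps) + (1.0 - w_psnr) * (1.0 - _minmax(lsf))
    return keys[int(np.argmax(score))]

# A sharp step edge has a narrower LSF than a blurred one.
sharp = np.array([0, 0, 0, 0, 100, 100, 100, 100])
blurred = np.array([0, 0, 10, 40, 60, 90, 100, 100])
print(lsf_width(sharp), lsf_width(blurred))

# Made-up (LSF width, PSNR in dB) values for three candidate configurations.
candidates = {("max", 3): (2.0, 30.1), ("median", 5): (1.5, 31.0), ("median", 7): (1.2, 28.5)}
print(select_config(candidates))
```

Raising `w_psnr` favors fidelity over edge sharpness; the equal weighting here is only a neutral starting point.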

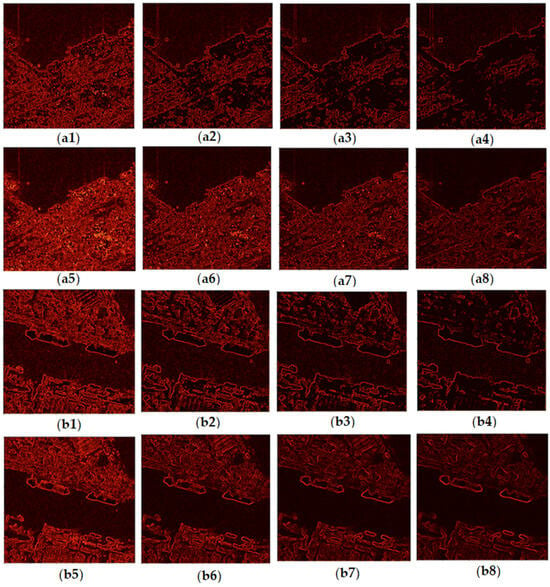

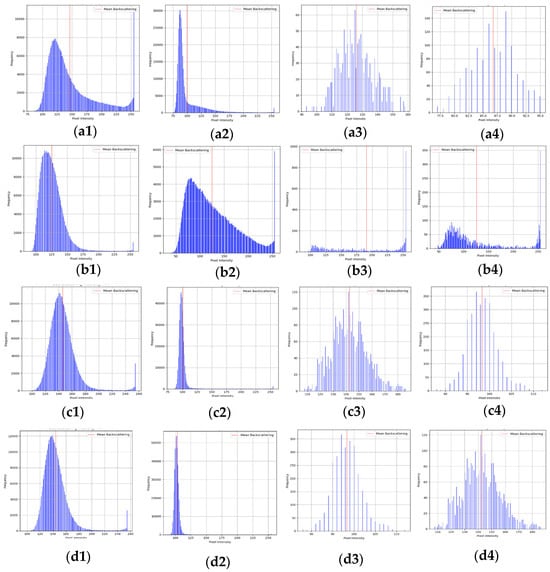

Next, we assess the impact of FEDETR on feature extraction, using LSF images to validate the enhancement provided by the preprocessing module. Figure 8 visualizes the effects of max pooling and median pooling with different kernel sizes on LSF images, illustrating how these pooling methods influence feature representation and noise reduction.

Figure 8.

LSF images obtained with different pooling types and kernel sizes. Panels (a1–a4) show LSF images after max pooling and panels (a5–a8) show LSF images after median pooling, with kernel sizes of 3, 5, 7, and 9, respectively, for Gaofen-3 HH images from the SAR Ship dataset. Panels (b1–b4) show LSF images after max pooling and panels (b5–b8) show LSF images after median pooling for images from the SSDD dataset.

Figure 8 presents the performance of FEDETR on selected large, medium, and small ships, including far-sea and near-shore scenes, from the test sets of the SSDD and the SAR Ship dataset (which includes Gaofen-3 and Sentinel-1 images with various polarizations and resolutions). The analysis highlights variations in edges, textures, and noise reduction that affect detection accuracy, and shows how FEDETR enhances DETR in ship detection tasks. Specifically, in Figure 8(a7), median pooling with kernel size 7 optimizes feature extraction for Gaofen-3 HH images, while Figure 8(b6) shows that median pooling with kernel size 5 suits SSDD images by preserving and enhancing the feature information crucial for detecting medium and small ships. For Gaofen-3 images, the LSF images after median pooling with kernel size 7 show reduced clutter and interference around ships in complex harbor environments, enabling DETR to extract features effectively and improving detection accuracy. Conversely, for the SSDD, median pooling with kernel size 5 addresses the challenge of retaining the original feature information of medium and small ships, demonstrating FEDETR's ability to predict the optimal preprocessing configuration under varying conditions.

This analysis confirms the effectiveness of the proposed methodology: FEDETR, combined with the selection of pooling methods and kernel sizes based on LSF and image quality metrics such as PSNR, enhances DETR's performance in SAR image processing. By systematically evaluating these parameters and their impact on feature extraction and noise reduction, our study demonstrates the robustness of the approach in improving ship detection accuracy across diverse SAR datasets and environmental conditions.
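The two pooling operators compared in Figure 8 can be sketched as stride-1 sliding-window filters. This section does not state whether FEDETR pools with stride 1 (preserving image size) or with a stride equal to the kernel size, so the stride-1, edge-padded form below is an assumption, and `pool2d` is a hypothetical helper. The toy example shows why the operators behave differently on an isolated bright pixel, a stand-in for a speckle spike: median pooling removes it, whereas max pooling spreads it over the whole kernel footprint.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def pool2d(image: np.ndarray, kernel_size: int, mode: str = "median") -> np.ndarray:
    """Stride-1 max or median pooling with edge padding, so the output keeps the
    input's shape (assumed form; the stride used in FEDETR is not specified)."""
    if kernel_size % 2 == 0:
        raise ValueError("kernel_size must be odd")
    pad = kernel_size // 2
    padded = np.pad(image, pad, mode="edge")
    # Shape (H, W, k, k): one k x k window centered on every input pixel.
    windows = sliding_window_view(padded, (kernel_size, kernel_size))
    if mode == "max":
        return windows.max(axis=(-2, -1))
    if mode == "median":
        return np.median(windows, axis=(-2, -1))
    raise ValueError(f"unknown mode: {mode}")

# Flat background with a single bright pixel (a toy speckle spike).
img = np.zeros((5, 5))
img[2, 2] = 100.0

med = pool2d(img, 3, "median")
mx = pool2d(img, 3, "max")
print("median-pooled max value:", med.max())
print("max-pooled nonzero pixels:", np.count_nonzero(mx))
```

The same trade-off appears at larger kernels: a wider median window suppresses more clutter but can erode small targets, while a wider max window enlarges bright scatterers.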

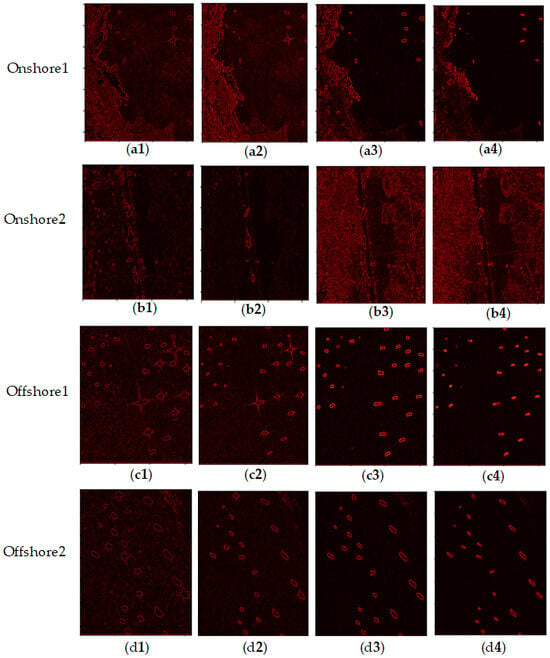

4.3. WFF Module Effect Validation

In this section, we analyze the WFF module, which fuses features from the FEDETR attention maps with PolSAR features to improve ship detection. First, we evaluate FEDETR on VV and VH polarizations across four areas, predicting the best pooling method (max or median) and kernel size for each region and polarization. We then analyze the backscattering characteristics and statistical properties (mean and standard deviation) of the VV and VH images in each area to understand why particular pooling choices are optimal for each polarization. The mean and standard deviation of pixel intensities within a region of interest are calculated as follows:

$$\mu = \frac{1}{N}\sum_{i=1}^{N} I_i, \qquad \sigma = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(I_i - \mu\right)^{2}}$$

where $I_i$ is the intensity of the $i$-th pixel and $N$ is the total number of pixels in the region of interest. These metrics characterize the scattering behavior and noise level of each image, providing insight into which polarization and pooling method best enhance ship detection. Table 5 summarizes the backscattering values for images and ships in the four regions.

Table 5.

Backscattering values for images and ships in the four regions, where bold values indicate the polarization providing the better accuracy.

As shown in Figure 9, in the first scenario (Onshore1), VV backscattering is higher than VH for both the images and the ships; however, VH has lower noise, as indicated by its lower standard deviation. For ship detection, VH images with max pooling and a kernel size of 5 yield the best results. In the second scenario (Onshore2), VV backscattering is again higher than VH for both images and ships, and VV images with median pooling and a kernel size of 7 perform better owing to their lower backscattering noise. In the third (Offshore1) and fourth (Offshore2) scenarios, VV backscattering is likewise higher than VH for both images and ships, and VH images with max pooling and a kernel size of 5 provide better detection owing to their lower noise levels. These results support FEDETR's selection of median pooling for VV and max pooling for VH to improve detection.

Figure 9.

Backscattering intensity in VV and VH polarizations and ship presence across four regions. (a1,a2) Backscattering intensity in VV and VH polarizations for Onshore1; (a3,a4) backscattering intensity for ships in Onshore1; (b1,b2) backscattering intensity in VV and VH polarizations for Onshore2; (b3,b4) backscattering intensity for ships in Onshore2; (c1,c2) backscattering intensity in VV and VH polarizations for Offshore1; (c3,c4) backscattering intensity for ships in Offshore1; (d1,d2) backscattering intensity in VV and VH polarizations for Offshore2; and (d3,d4) backscattering intensity for ships in Offshore2. In each subfigure, the x-axis represents pixel intensity, and the y-axis represents frequency.

Figure 10 presents the LSF and PSNR values for the four areas, validating our predictions for VV and VH with median and max pooling. In Onshore1, Offshore1, and Offshore2, VH with max pooling (kernel size 5) outperforms VV, whereas in Onshore2, VV with median pooling (kernel size 7) yields better results. We conclude that the choice between VV and VH polarization depends on the standard deviation, which reflects noise, together with the pooling type and kernel size that balance lower LSF and higher PSNR, as predicted by our FEDETR model.
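The decision rule stated in this paragraph can be summarized as a short sketch: pick the polarization whose region of interest has the lower intensity standard deviation, then apply the pooling setting observed to work best for that polarization in these four scenes (max pooling with kernel size 5 for VH, median pooling with kernel size 7 for VV). The mapping is read off the results above and is not a general rule; in the method itself, the pooling configuration is predicted by the FEDETR preprocessing CNN. The function `choose_polarization` and the toy intensities are hypothetical.

```python
import numpy as np

# Pooling settings observed in the four scenes discussed above (assumed mapping,
# not a general rule): VH -> max pooling, k=5; VV -> median pooling, k=7.
OBSERVED_POOLING = {"VH": ("max", 5), "VV": ("median", 7)}

def choose_polarization(roi_by_pol: dict) -> tuple:
    """Return (polarization, pooling, kernel_size) for the polarization whose
    ROI intensities have the lowest standard deviation (used as a noise proxy)."""
    noise = {pol: float(np.std(np.asarray(roi, dtype=float)))  # population std (1/N)
             for pol, roi in roi_by_pol.items()}
    best = min(noise, key=noise.get)
    pooling, kernel = OBSERVED_POOLING[best]
    return best, pooling, kernel

# Toy ROI intensities (made-up values): VV brighter but noisier, VH darker but smoother.
rois = {"VV": [80, 120, 90, 110], "VH": [38, 42, 39, 41]}
print(choose_polarization(rois))
```

Using the standard deviation alone ignores the mean backscatter, which is why VH can win here despite being darker; this mirrors the reasoning in the text rather than a tuned criterion.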

Figure 10.

LSF and PSNR comparisons for onshore and offshore areas, Onshore1 (a,b), Onshore2 (c,d), Offshore1 (e,f), and Offshore2 (g,h), using VV and VH polarizations with median and max pooling.

Figure 11 compares LSF images, showing the edges and features produced by different pooling strategies and kernel sizes, to assess the effectiveness of these strategies across regions. In the Onshore1, Offshore1, and Offshore2 areas, VH polarization with the proposed max pooling (kernel size 5), selected by the preprocessing CNN module, consistently outperforms median pooling with the same kernel size, preserving finer details and improving detection performance. Conversely, in these regions, VV polarization with the proposed median pooling (kernel size 5) performs better than VV with max pooling, underscoring the ability of median pooling to reduce noise and maintain image fidelity.

Figure 11.

Visual comparison of max and median pooling with different kernel sizes on onshore and offshore SAR imagery for VV and VH polarizations: (a1,a2) Onshore1 VV (max kernel size 3; median kernel size 3); (a3,a4) Onshore1 VV (median kernel size 5); (b1,b2) Onshore2 VV (max kernel size 3); (b3,b4) Onshore2 VH (median kernel size 5); (c1,c2) Offshore1 VV (max kernel size 7; median kernel size 7); (c3,c4) Offshore1 VH (max kernel size 3; median kernel size 3); (d1,d2) Offshore2 VV (max kernel size 5; median kernel size 5); (d3,d4) Offshore2 VH (max kernel size 5; median kernel size 5).

In the Onshore2 area, we compare the proposed median pooling with a kernel size of 7 against max pooling for VV polarization, and vice versa for VH polarization with the same kernel size. This comparison reveals nuanced differences in how each polarization and pooling method handles image features and edges, underscoring the importance of selecting the pooling strategy according to the characteristics of each region and polarization. Overall, these findings validate the proposed FEDETR approach, which balances LSF and PSNR to optimize ship detection. By better preserving critical image details, the approach performs well under diverse environmental conditions, yielding more accurate and reliable ship detection.

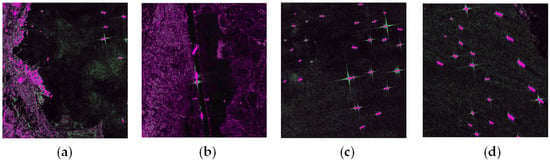

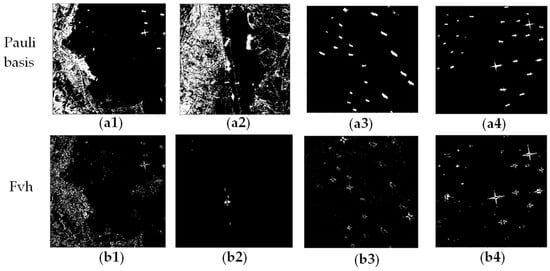

Applying PolSAR analysis with both Pauli decomposition and Fvh components enhances ship detection, as demonstrated in Figure 12 for the four regions. Pauli decomposition mitigates noise patterns such as sidelobes (unwanted radar reflections around the main ship signal) by generating pseudo-color images that clearly differentiate ships from background clutter. This eases the difficulty of distinguishing ships from similar noise and from land reflections, which can blend with ship returns in grayscale images when their brightness levels are similar. Pauli decomposition also preserves critical ship details, allowing more accurate identification. In Figure 12, the pseudo-color images highlight key features: the main body of the ship appears in pink, while cross-shaped spots and other noise clutter appear in green. This clear separation and preservation of ship features improve detection accuracy and reliability, demonstrating the benefit of combining Pauli decomposition with Fvh components for maritime surveillance.
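For reference, the standard Pauli basis and the coherency matrix can be sketched as follows. The sketch assumes fully polarimetric inputs S_HH, S_HV, and S_VV with reciprocity (S_HV = S_VH); the VV/VH data discussed above are dual-polarized, and how the authors adapt the decomposition to such data, as well as the Fvh decomposition, is not covered here. The function names are hypothetical. The usual color assignment is red for |S_HH − S_VV| (double bounce), green for the cross-polarized term (volume), and blue for |S_HH + S_VV| (surface scattering).

```python
import numpy as np

def pauli_vector(s_hh, s_hv, s_vv) -> np.ndarray:
    """Pauli scattering vector k = [S_HH + S_VV, S_HH - S_VV, 2 S_HV] / sqrt(2),
    stacked along the last axis (assumes reciprocity, S_HV = S_VH)."""
    s_hh, s_hv, s_vv = (np.asarray(s, dtype=complex) for s in (s_hh, s_hv, s_vv))
    return np.stack([s_hh + s_vv, s_hh - s_vv, 2 * s_hv], axis=-1) / np.sqrt(2)

def pauli_rgb(k: np.ndarray) -> np.ndarray:
    """Pauli RGB composite: R = |k2| (double bounce), G = |k3| (volume),
    B = |k1| (surface), scaled by the global maximum for display."""
    rgb = np.abs(k[..., [1, 2, 0]])
    return rgb / (rgb.max() + 1e-12)

def coherency_matrix(k: np.ndarray) -> np.ndarray:
    """3x3 coherency matrix T3 = <k k^H>, averaged over all pixels of the patch."""
    flat = k.reshape(-1, 3)
    return np.einsum("ni,nj->ij", flat, flat.conj()) / flat.shape[0]

# Toy 1x2 patch: a surface-like pixel (S_HH = S_VV) and a dihedral-like pixel (S_HH = -S_VV).
s_hh = np.array([[1.0, 1.0]])
s_hv = np.array([[0.0, 0.0]])
s_vv = np.array([[1.0, -1.0]])
k = pauli_vector(s_hh, s_hv, s_vv)
print(pauli_rgb(k))  # first pixel blue, second pixel red
T3 = coherency_matrix(k)
print(np.round(T3.real, 3))
```

The trace of T3 equals the mean total power (span) |S_HH|² + 2|S_HV|² + |S_VV|², which is a convenient sanity check when implementing the decomposition on real data.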

Figure 12.

Experimental results for ship detection in SAR images across four regions: (a) Onshore1, (b) Onshore2, (c) Offshore1, and (d) Offshore2. The figure illustrates the effectiveness of the Pauli decomposition method in reducing noise and distinguishing ships from the background. Ships are marked in pink, while noise clutter is shown in green.