1. Introduction

Flooding, a major global natural disaster [1], annually affects a vast population, especially in coastal regions that are vulnerable to combined climatic and human-induced hazards [2,3]. Notably, 80% of flood-related fatalities occur within 100 km of coasts [4]. Climate change intensifies typhoons, storm surges, and heavy rains [5], increasing flood risks. With growing coastal economic activities and advancing global warming, the disaster vulnerability in these areas is expected to rise [6], escalating future flood hazards [7,8].

Accurately estimating the spatial extent of flood characteristics such as velocity, depth, and frequency is crucial for flood risk analysis and has been the focus of extensive research [9,10,11]. Vojtek [12] and Skakun [13] integrated spatial data on flood inundation extent, depth, and flow velocity with relative inundation frequency for flood risk assessment, providing new ideas and methods for flood risk evaluation. These methods accurately assess the spatial extent and distribution of flood disasters. However, floods in coastal areas are often not caused by a single hazard but involve the interplay and combined impact of multiple factors. Recent research has shifted from single- to multiple-hazard studies, with the interaction and coupling of different hazards becoming a key challenge in comprehensive risk assessment. Storm cyclones, typically accompanied by heavy rain and storm surges [14,15,16,17,18], along with extreme river flow and astronomical tides, frequently lead to compound flooding. Coastal areas, with their flat terrain and dense river networks, are prone to flooding. Upstream heavy rains can cause river levels to exceed alert thresholds, and local heavy precipitation increases the risk of dam failures or overflows [19]. High tides, storm surges, and sea waves can further exacerbate flooding by causing seawater to back up, particularly when coinciding with river floods, hindering floodwater discharge and worsening drainage [20,21]. Additionally, coastal urbanization has increased the area of impervious surfaces [22,23,24], altering hydrological patterns and affecting precipitation and flow processes. Coastal cities also face challenges in flood management due to inadequate urban drainage infrastructure [25,26,27,28] and significant ground subsidence [29,30]. In summary, while significant progress has been made in understanding and mapping flood characteristics, there remains a critical gap in comprehensively assessing the risk of compound flooding, particularly in analyzing the complex interactions and cumulative impacts of multiple flood-inducing factors in coastal areas [31]. This highlights the need for more integrated and multifaceted research approaches to effectively address the nuances of compound flood risks.

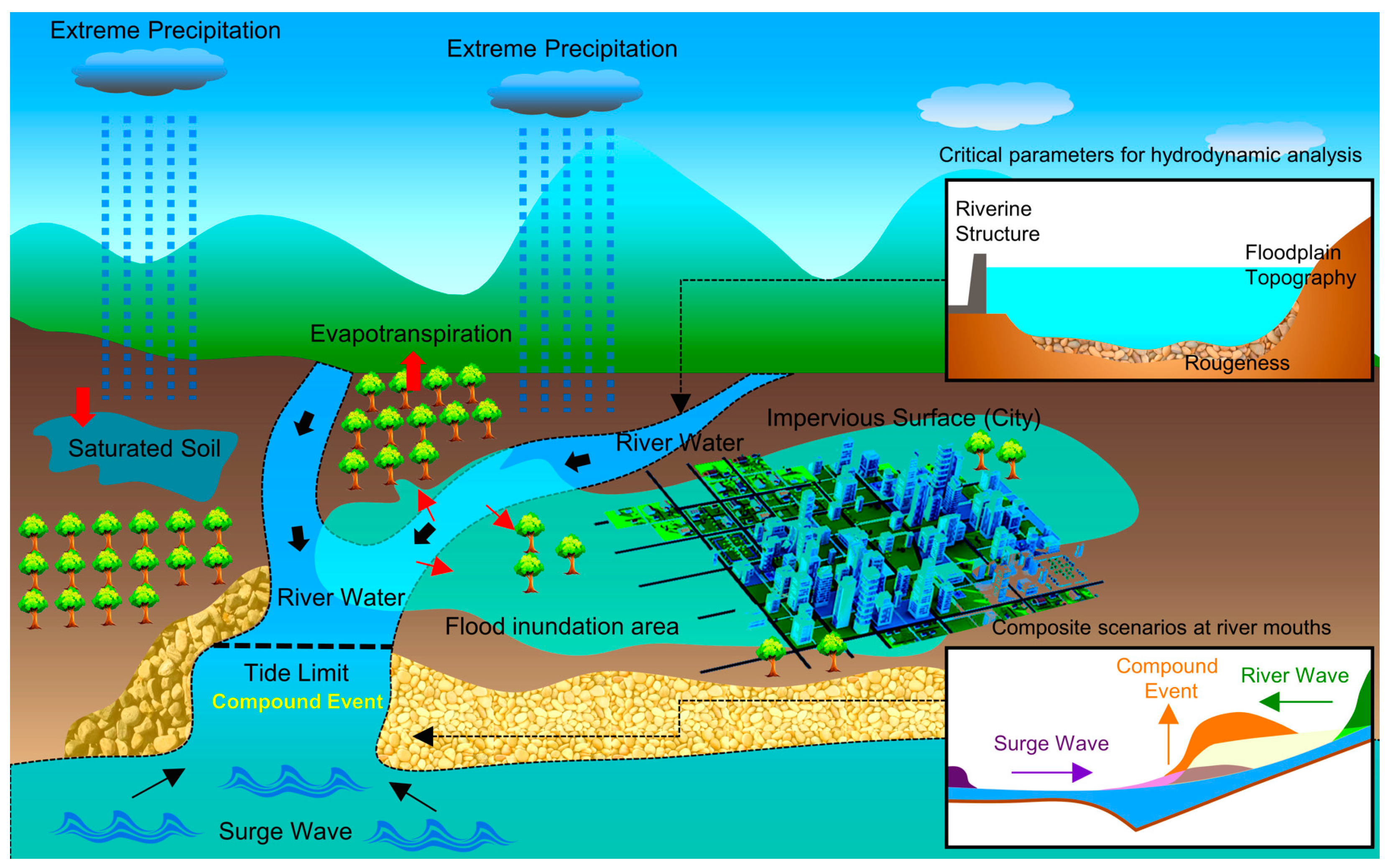

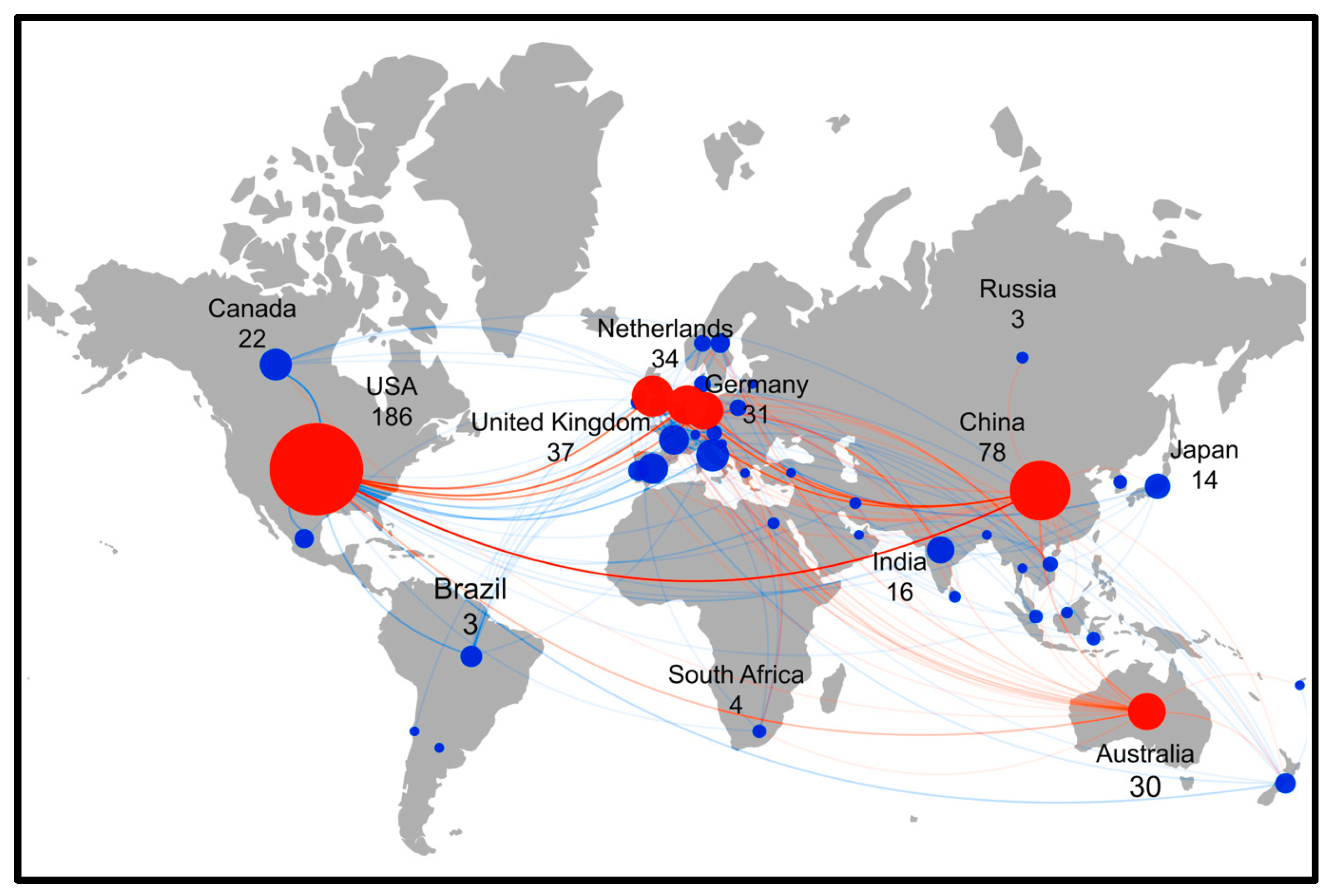

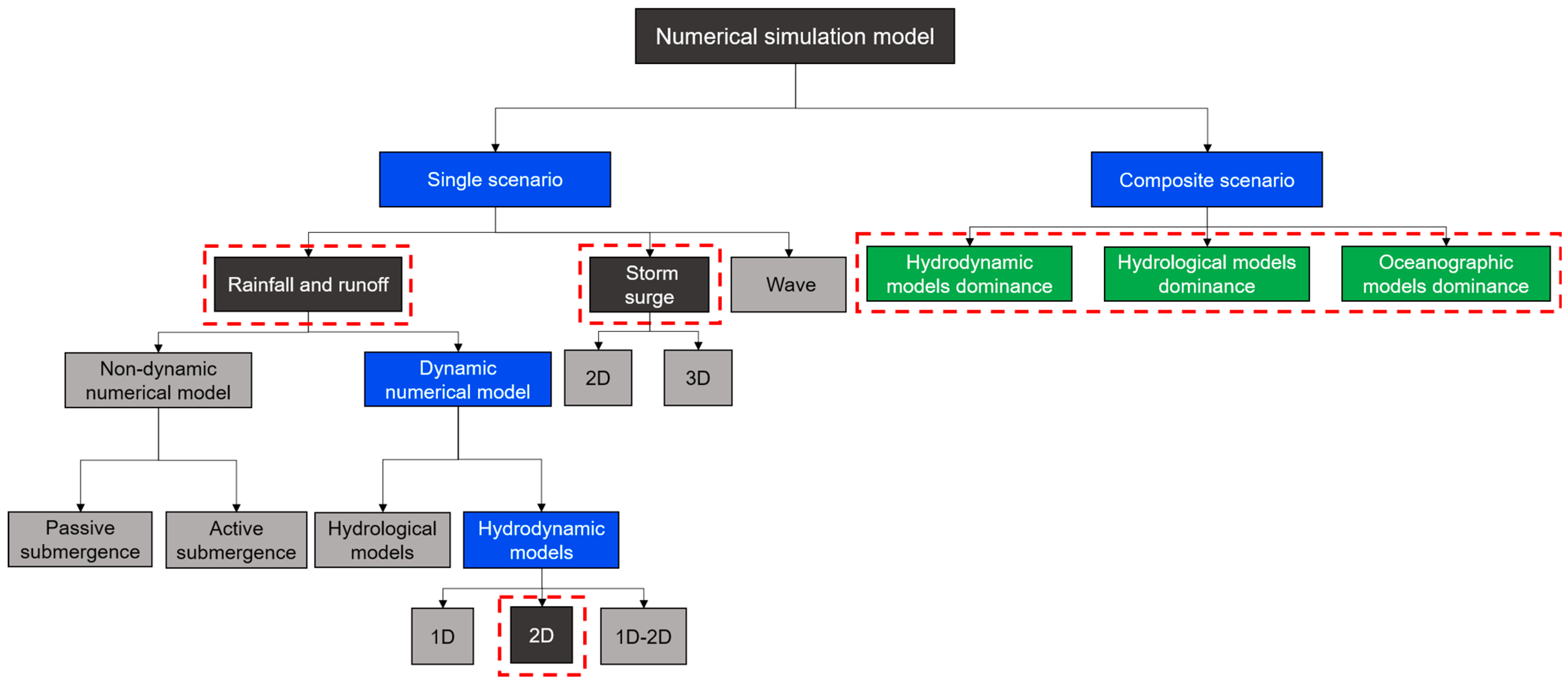

Therefore, this study comprehensively analyzed the processes and mechanisms of compound flooding in coastal regions by reviewing the pertinent literature from the past decade. It focused on three key areas: statistical models, dynamic numerical models, and risk mapping methods employed in assessing coastal compound flood risks. The paper is organized into the four parts outlined below, offering a clear and concise overview of current methodologies and findings in this field. For a detailed process flow, refer to Figure 1.

Part 1: Analysis of the disaster mechanisms of compound flooding. A review of the literature from the past decade was conducted based on keywords, and three methods were discussed: statistical models, numerical simulation models, and risk mapping methods. The risk mapping methods were further categorized into artificial intelligence (AI) and multi-criteria decision-making (MCDM).

Part 2: Various case studies related to statistical models, numerical simulation models, and risk mapping methods were introduced. Additionally, a comprehensive discussion of the strengths and limitations of each category and subcategory was conducted.

Part 3: Recent advancements in coupled methods, along with the associated uncertainties, were introduced, and recommendations for future applications were provided. By offering a comprehensive understanding of the methods, this part aims to make meaningful contributions to the field of research.

Part 4: A summary and recommendations for future research are presented in this section.

In summary, this study offers an in-depth review of the three aforementioned methods, successfully delineating all essential topics to formulate a review structure. Additionally, it sheds light on recent advancements in coupled flood risk analysis and pinpoints areas for further research.

3. Research Results and Discussion

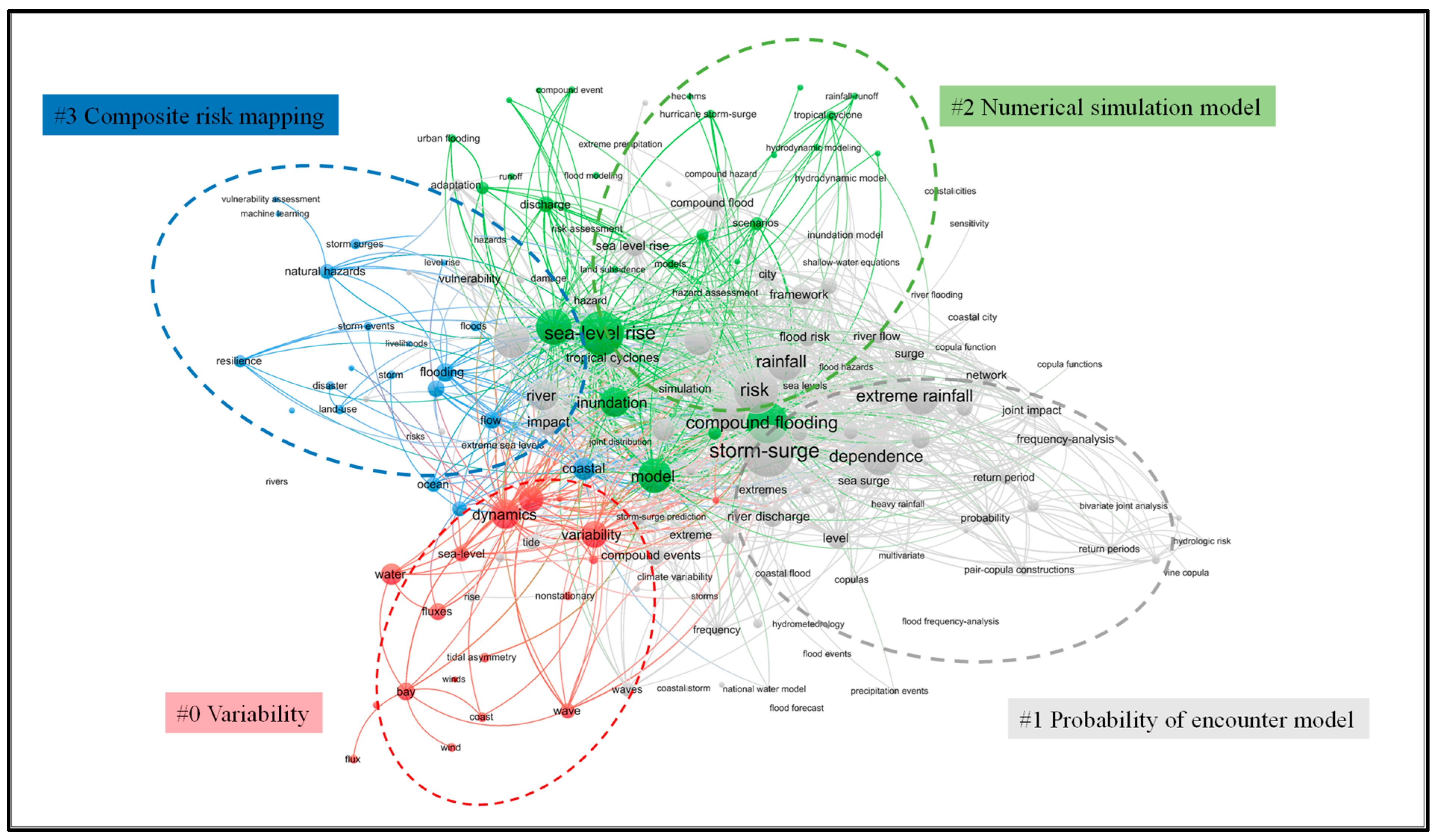

This article examines the risk of compound floods, which is defined by their frequency (return period), intensity (such as water level, volume, and duration), and potential impact (such as inundation depth). Statistical models are typically employed to determine flood frequency, while the intensity and scope of impacts are better assessed using dynamic numerical models and risk mapping. This paper systematically reviews advances in compound flood hazard simulation and evaluation, focusing on encounter probability models, numerical simulations, and integrated hazard mapping techniques.

Probabilistic modeling, numerical modeling, and risk mapping form an interconnected framework, where each component contributes to and utilizes data from the others. Probabilistic models use historical flood data to calculate flood occurrence probabilities or frequencies, known as return periods [

49]. This output then informs numerical models, especially for evaluating scenarios at different return periods. These numerical models incorporate data like meteorological conditions, topography, land use, and flood frequency from probabilistic models to detail flood characteristics, such as levels, velocities, and flow rates [

50,

51]. These details are essential for risk mapping, which employs numerical model outputs and integrates them with GIS technology to create inundation and risk maps [

52,

53]. These maps provide critical insights for decision-makers about a flood’s potential geographic spread, inundation depth, and damage distribution. The interplay of inputs and outputs among these models is detailed in Table 3.

In summary, probabilistic models estimate the probability of flood occurrence, numerical models delineate the flood’s specific features, and risk mapping presents these details visually to highlight the potential scope of a flood’s impact.
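As a minimal illustration of how a return period can be read from a historical record, the sketch below ranks a series of annual maxima with the Weibull plotting position, T = (n + 1)/rank, and converts a return period into the chance of at least one exceedance over a planning horizon. It assumes independent, identically distributed years. The function names and the sample water levels are hypothetical and are not taken from the reviewed studies.

```python
def empirical_return_periods(annual_maxima):
    """Pair each annual maximum with its empirical return period in years.

    Uses the Weibull plotting position T = (n + 1) / rank, where rank 1 is
    the largest value. Ties are not treated specially (assumption).
    """
    n = len(annual_maxima)
    ranked = sorted(annual_maxima, reverse=True)
    return [(value, (n + 1) / rank) for rank, value in enumerate(ranked, start=1)]


def prob_at_least_one_exceedance(return_period, horizon_years):
    """Chance that a T-year event is exceeded at least once within the horizon,
    assuming independent and identically distributed years."""
    annual_prob = 1.0 / return_period
    return 1.0 - (1.0 - annual_prob) ** horizon_years


# Hypothetical annual maximum water levels (m), for illustration only.
levels = [3.1, 2.4, 4.0, 2.9]
for level, period in empirical_return_periods(levels):
    print(f"{level:.1f} m -> about {period:.2f} years")

# Chance of seeing a 100-year event at least once in 30 years.
print(f"{prob_at_least_one_exceedance(100, 30):.3f}")
```

Real studies usually fit a parametric distribution to the record rather than relying on plotting positions alone; the sketch only shows how frequency and return period relate.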

3.1. Statistical Models

Within the realm of flood-related statistical model research, the initial studies mainly concentrated on the statistical analysis of rainfall, runoff, and storm surges.

Table 4 concisely outlines the methodologies, findings, and insights from key research on various flood hazard factors.

Although many early studies have acknowledged the individual impacts of extreme rainfall, coastal flooding, and river flooding, few have addressed their combined effects. This omission potentially compromises the accuracy of depicting the true dynamics of compound flooding events and the associated statistical features of disaster risk. Therefore, research on the joint analysis of multiple hazard factors, based on data from station observations and numerical simulations, is becoming increasingly prevalent. The primary research objectives of multivariate analysis include evaluating the correlation [

54], dependence [

55], encounter probability [

56], combination design, and conditional probability [

57] among multiple hazard factors. Correlation and dependence studies aim to quantify the interrelationships among these hazard factors. Combination design is carried out to design different encounter scenarios, such as storm surge–astronomical high tide levels, extreme rainfall–storm surge, storm surge–extreme runoff, extreme runoff–sea-level rise, and so on. Encounter probability represents the probability of events occurring under these different combination scenarios [

58]. Conditional probability indicates the probability of one event occurring given the condition of another event occurring [

59]. For a comprehensive overview, refer to

Table 5, which tabulates several case studies on multivariate flood analysis, encompassing causative factors, research outputs, scenario combinations, and employed methodologies.

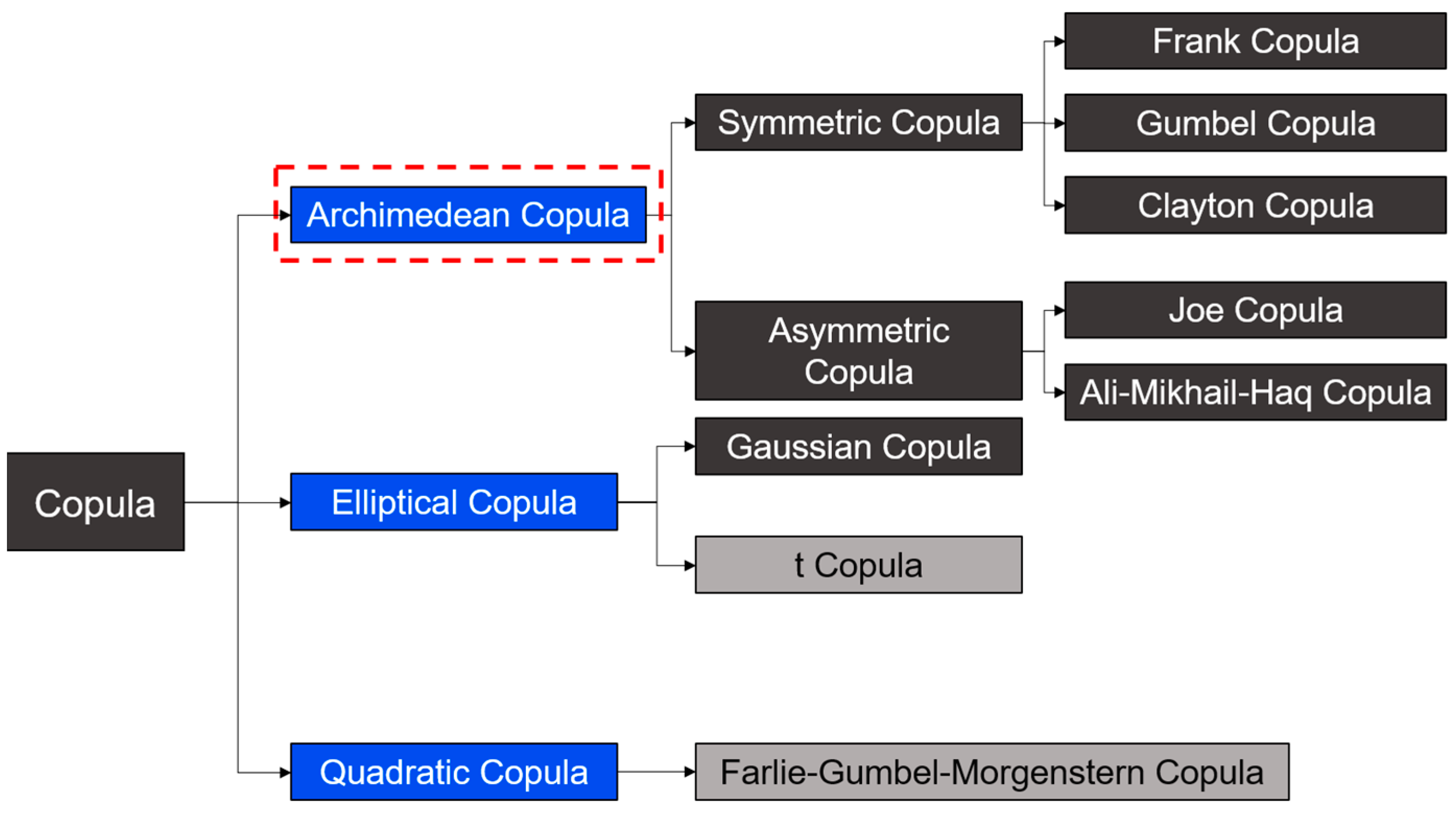

Table 5 highlights the predominant use of the copula method in recent studies. Copulas are one of the latest advancements in multivariate dependence modeling, and they can be used to calculate joint probability distributions, dependencies, and return periods of bivariate or even multivariate relationships in compound flood scenarios, such as rainfall–storm surge [14,69,70], rainfall–tide [66,71,72], or sea-level rise–river [73,74]. More than a dozen linear copula functions have been identified in known studies, each with a different number of parameters and with significantly different fitting performance [59]. In general, copula functions can be classified into three main categories: Archimedean copula, elliptical copula, and quadratic copula. The Archimedean copula family includes both symmetric copulas (such as the Frank copula) and asymmetric copulas (such as the Joe copula). The elliptical copula family includes the Gaussian copula and the t copula. Detailed categorization information can be found in Figure 7. The Frank copula, Gumbel copula, and Clayton copula, which are the most commonly used Archimedean copulas in hydrology, have different tail characteristics [75]. Because different copula functions can yield substantially different results, selecting a suitable copula function is especially critical. In practice, the copula function that best fits the available data is selected according to the minimum root-mean-square error (RMSE), minimum Akaike information criterion (AIC), and minimum Bayesian information criterion (BIC) [76]. Regardless of the type of copula function, however, bivariate copulas still predominate in current research. Multivariate modeling is more challenging than bivariate modeling, and extending the models to accommodate more variables should be a future consideration.
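To make the bivariate case concrete, the following sketch uses the Gumbel copula, chosen here only as an example of an Archimedean copula, to compute the joint "OR" and "AND" return periods and a conditional exceedance probability for two hazard drivers. It assumes the marginal non-exceedance probabilities u and v are already known, estimates the dependence parameter from Kendall's tau via theta = 1/(1 − tau), and takes a mean inter-arrival time of one year (annual maxima). The sample data and function names are hypothetical.

```python
import math


def kendall_tau(x, y):
    """Kendall's tau for paired samples (simple O(n^2) form; ties count as zero)."""
    n = len(x)
    score = 0
    for i in range(n):
        for j in range(i + 1, n):
            product = (x[i] - x[j]) * (y[i] - y[j])
            if product > 0:
                score += 1
            elif product < 0:
                score -= 1
    return score / (n * (n - 1) / 2)


def gumbel_theta_from_tau(tau):
    """Moment-type estimate for the Gumbel copula: theta = 1 / (1 - tau)."""
    if not 0.0 <= tau < 1.0:
        raise ValueError("the Gumbel copula needs 0 <= tau < 1")
    return 1.0 / (1.0 - tau)


def gumbel_copula(u, v, theta):
    """C(u, v) = exp(-[(-ln u)^theta + (-ln v)^theta]^(1/theta)), theta >= 1."""
    s = (-math.log(u)) ** theta + (-math.log(v)) ** theta
    return math.exp(-(s ** (1.0 / theta)))


def joint_return_periods(u, v, theta, mu=1.0):
    """Return (T_or, T_and) in years.

    T_or: either driver exceeds its threshold; T_and: both exceed.
    mu is the mean inter-arrival time of events (1 year for annual maxima).
    """
    c = gumbel_copula(u, v, theta)
    t_or = mu / (1.0 - c)
    t_and = mu / (1.0 - u - v + c)
    return t_or, t_and


def conditional_exceedance(u, v, theta):
    """P(V > v | U > u) for marginal non-exceedance probabilities u and v."""
    c = gumbel_copula(u, v, theta)
    return (1.0 - u - v + c) / (1.0 - u)


# Hypothetical paired annual maxima: rainfall (mm) and storm surge (m).
rain = [80.0, 120.0, 95.0, 150.0, 110.0, 70.0]
surge = [0.6, 1.1, 0.9, 1.0, 1.3, 0.5]
theta = gumbel_theta_from_tau(kendall_tau(rain, surge))

# Joint return periods when each driver is at its own 10-year level (u = v = 0.9).
t_or, t_and = joint_return_periods(0.9, 0.9, theta)
print(f"theta={theta:.2f}, T_or={t_or:.1f} yr, T_and={t_and:.1f} yr")
```

With theta = 1 the copula reduces to independence, and the "AND" return period of two 10-year events is 100 years; positive dependence shortens it, which is why ignoring dependence can understate compound flood risk. In an actual study, several candidate copulas would be fitted and compared with RMSE, AIC, and BIC rather than fixing the family in advance.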

3.2. Numerical Modeling Approaches

In the past, both numerical simulation models and statistical models primarily concentrated on singular hazard factors, including rainfall, storm surges, and river flows, rather than a combination of elements. Recent trends, however, have shifted towards encompassing multiple hazard factors in flood simulation studies. The application research of numerical simulation models can be categorized into two types, namely, single-type and coupled-type, based on the number of compounded flood factors involved.

Figure 8 displays the overall framework of the numerical simulation model.

3.2.1. Isolated Scenario Analysis (ISA)

In coastal regions, rainfall runoff serves as a principal driver of compound flooding events. Researchers use different control conditions and mathematical equations to create numerical models that simulate this process. These models are essential for predicting floods’ development and behavior in various environmental settings. Generally, these simulation models fall into two categories: static and dynamic numerical models.

In fluids with an extremely low viscosity coefficient, the resistance term becomes trivial to the extent that it can be omitted, and the momentum equation may also be considered unnecessary. When hydrodynamic equations exclude this dynamic term, the model is classified as a non-dynamic numerical model. In 2001, Liu introduced a flood assessment methodology utilizing digital elevation models (DEMs), categorized into two types: passive submergence and active submergence [77]. Both of these methods belong to the category of non-dynamic numerical models. The passive submergence analysis method, commonly referred to as the “bathtub model” [78], overlays a flat floodplain surface onto a DEM represented as a raster or triangulated irregular network (TIN) [79,80]. In this approach, all areas below the flat floodplain surface are classified as flooded, disregarding the impact of topography on water flow. However, previous studies have indicated that this method tends to overestimate the depth and extent of flooding due to its simplistic representation of flood routing [81,82]. Topography cannot be ignored in flood simulations, because it directly affects the flow of floodwaters and higher-elevation areas can block their spread. When the influence of both topography and discharge points is considered on top of the passive submergence model, it is referred to as active submergence. The cellular automaton (CA) method is commonly used to simulate active submergence. CA is a grid-based dynamical model that discretizes time, space, and states [83], enabling the capture of the spatiotemporal evolution of floods. Jamali (2019) proposed a rapid flood inundation model called CA-ffé, based on the CA method, and compared it with HEC-RAS and TUFLOW [84]. The results indicated that CA is effective. However, it is worth noting that CA models are often tested under ideal conditions and may exhibit lower accuracy in reproducing 2D fluid dynamics compared to models based on the full shallow-water equations (SWEs).
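The difference between passive and active submergence can be sketched on a toy raster DEM. The passive rule marks every cell below the water level as flooded, while the second function floods only low cells that are hydraulically connected (through 4-neighbour adjacency) to a source such as the coastline. The connectivity fill is a deliberately simple stand-in for active submergence and is not the CA-ffé algorithm or any other published CA scheme; the grid values, the source cells, and the function names are hypothetical.

```python
from collections import deque


def passive_submergence(dem, water_level):
    """Bathtub rule: every cell below the water level is marked as flooded."""
    return [[z < water_level for z in row] for row in dem]


def active_submergence(dem, water_level, sources):
    """Flood only low cells that are 4-connected to a source cell."""
    rows, cols = len(dem), len(dem[0])
    flooded = [[False] * cols for _ in range(rows)]
    queue = deque()
    for r, c in sources:
        if dem[r][c] < water_level and not flooded[r][c]:
            flooded[r][c] = True
            queue.append((r, c))
    # Breadth-first spread from the sources through neighbouring low cells.
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols:
                if dem[nr][nc] < water_level and not flooded[nr][nc]:
                    flooded[nr][nc] = True
                    queue.append((nr, nc))
    return flooded


def count_flooded(mask):
    """Number of flooded cells in a boolean mask."""
    return sum(cell for row in mask for cell in row)


# Toy DEM (m): the sea borders column 0; column 2 is a 3 m ridge.
dem = [
    [0.5, 1.0, 3.0, 0.8],
    [0.6, 1.2, 3.0, 0.7],
    [0.4, 0.9, 3.0, 0.9],
]
coast = [(0, 0), (1, 0), (2, 0)]

passive = passive_submergence(dem, 2.0)
active = active_submergence(dem, 2.0, coast)
print(count_flooded(passive), count_flooded(active))
```

For a 2 m water level, the bathtub rule floods 9 cells, including the low ground behind the ridge, whereas the connectivity rule floods only the 6 cells on the seaward side. This is the overestimation that the cited studies attribute to the bathtub model.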

In contrast with the approach of non-dynamic numerical models, many researchers incorporate the dynamic term in governing equations for more accurate flood flow simulation and precise data acquisition, known as dynamic numerical modeling. In the dynamic numerical models for single-element simulation, there are hydrological models, as well as 1D, 2D, and 3D hydrodynamic models. The hydrological model typically operates independently to calculate the inflow and outflow, determining the temporal variation in flow at upstream cross-sections and control sections of river branches [

85,

86]. This flow process can be used as an input boundary condition for hydrodynamic models. In hydrodynamic simulations, the 1D hydrodynamic model is inadequate for addressing the problem of urban surface floods that lack a fixed direction and path. At the same time, the computation of a 3D hydrodynamic model is both complex and time-consuming. Consequently, the application of 2D hydrodynamic models is more extensive.

In the realm of 2D hydrodynamic modeling, certain scholars have streamlined the governing equations, resulting in practical and computationally efficient models. Examples include 2D diffusion wave models that ignore the local acceleration term and the convective acceleration term, simplified inertial models that ignore the convective acceleration term (e.g., LISFLOOD-FP [

87]), and a 2D kinematic wave model omitting the local acceleration term, convective acceleration term, and pressure term [

88]. The kinematic wave model was initially developed for fluvial flooding with deeper water [

89,

90]. In simulations of relatively shallow urban surface water inundation, the kinematic wave model requires several strong assumptions and offers limited accuracy. Therefore, the diffusion wave model [

91,

92,

93] and the simplified inertial equation model [

94,

95] are considered to be more practical and commonly used simplified models in the field of flood disaster simulation and risk perception. However, simplified models may lead to inaccurate computational results.
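The simplifications described above can be summarized by writing out the momentum equation and marking which terms each model retains. The block below uses the one-dimensional form along x as an illustrative assumption (the y-direction form is analogous); the notation is introduced here and is not taken from the reviewed studies: u is the depth-averaged velocity, h the water depth, g the gravitational acceleration, S_0 the bed slope, and S_f the friction slope.

```latex
\begin{align}
\underbrace{\frac{\partial u}{\partial t}}_{\text{local acceleration}}
+ \underbrace{u\,\frac{\partial u}{\partial x}}_{\text{convective acceleration}}
+ \underbrace{g\,\frac{\partial h}{\partial x}}_{\text{pressure}}
+ \underbrace{g\,(S_f - S_0)}_{\text{friction and bed slope}} &= 0
&& \text{(full dynamic wave)} \\
\frac{\partial u}{\partial t}
+ g\,\frac{\partial h}{\partial x}
+ g\,(S_f - S_0) &= 0
&& \text{(simplified inertial)} \\
g\,\frac{\partial h}{\partial x}
+ g\,(S_f - S_0) &= 0
&& \text{(diffusion wave)} \\
S_f &= S_0
&& \text{(kinematic wave)}
\end{align}
```

Each step down the list removes one more term, trading physical completeness for computational speed. The kinematic wave form retains only the balance between friction and bed slope, which is consistent with its limited suitability for shallow urban inundation.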

Compared to simplified equations and models, the complete 2D SWE applies to various flow conditions and geographical environments, including meandering watercourses, complex terrain, and irregular water bodies. Because the complete 2D SWE model considers more physical details, it yields more accurate hydrodynamic simulation results and performs well in surface flood simulation research. However, in the full-process simulation of urban floods, the 2D model does not account for the influence of underground drainage pipes, which is a limitation. Therefore, to enhance the accuracy of coastal city flood simulations, many researchers have focused on the coupling of 1D drainage network models with 2D surface flow models [

96,

97,

98,

99]. By establishing a fluid exchange between the 2D and 1D models, a more comprehensive urban flood model can be constructed.

Storm surges are as significant a contributor to coastal flooding as rainfall runoff. Historically, most of the early storm-surge simulations were 2D models based on depth-integrated SWEs [

100,

101,

102,

103]. Their predominant advantages lie in their simplicity and computational efficiency, which is why they have been adopted extensively for storm-surge warnings across numerous countries [

104]. As computing capabilities advanced alongside the refinement of algorithms, the realm of oceanography saw a gradual shift towards 3D numerical models for storm-surge simulation and operational forecasting, such as the ADCIRC, DELFT3D, POM, and FVCOM models [

105]. For instance, Valle (2018) coupled the Weather Research and Forecasting (WRF) model with the Advanced Circulation Model (ADCIRC) to simulate the effects of storm surges [

106]. Liu (2018) created a 3D visualization of the flood pathway process based on the volume-of-fluid (VOF) numerical model [

107]. The study’s results indicated that 3D visualization can serve as an effective tool to visually and realistically present the dynamic changes in hydrological information. Ye (2020) proposed a 3D baroclinic model for river-to-ocean flow based on unstructured grids [

108]. This model integrates traditional hydrological and oceanographic models into a single modeling platform to simulate storm surges, subsequent river flooding events, and compound wave surges. This research demonstrates the significance of incorporating the 3D effect in storm-surge simulations. In comparison to two-dimensional (2D) modeling approaches, three-dimensional (3D) simulations provide a comprehensive inclusion of critical parameters such as topography, bathymetry, wind velocity, and wind direction. Additionally, by integrating these factors, more accurate storm-surge modeling and precise prediction of surge water levels can be achieved, leading to more precise estimations of inundation extents.

Expanding on this concept, it is important to highlight the distinct challenges posed by wave flooding. Wave flooding is a type of flooding caused by large amounts of seawater being pushed onto the coast by strong winds, leading to considerable infrastructural damages, including to structures like dikes and bridges. Notably, wave flooding intensifies the risks associated with compound flooding. Krishna’s 2023 study on India’s southwest coast delved into the interplay between waves, wind, and tides, illuminating wave activity’s pivotal role in flood catastrophes [

109]. The literature shows that wave flooding as a standalone hazard has been studied less than rainfall-induced or storm-surge flooding. This is because wave flooding is triggered by intense winds, and the extreme meteorological events that produce such winds, especially severe storms, typically also generate storm surges. Consequently, research on wave flooding typically integrates elements like tides and storm surges.

Broadly, global development issues like rising sea levels [

110,

111], the expansion of impervious surfaces [

22,

23,

24], and land subsidence [

112,

113] significantly impact flood patterns. Their effects are particularly pronounced in determining the severity and frequency of compound flooding in coastal regions. Rather than studying these elements separately, they are often analyzed in tandem with other influencing factors to gain a deeper understanding of compound flooding mechanisms. A compilation of frequently utilized dynamic numerical simulation models for single-scenario simulations, complete with the associated control equations, access methodologies, and results, can be found in

Table 6.

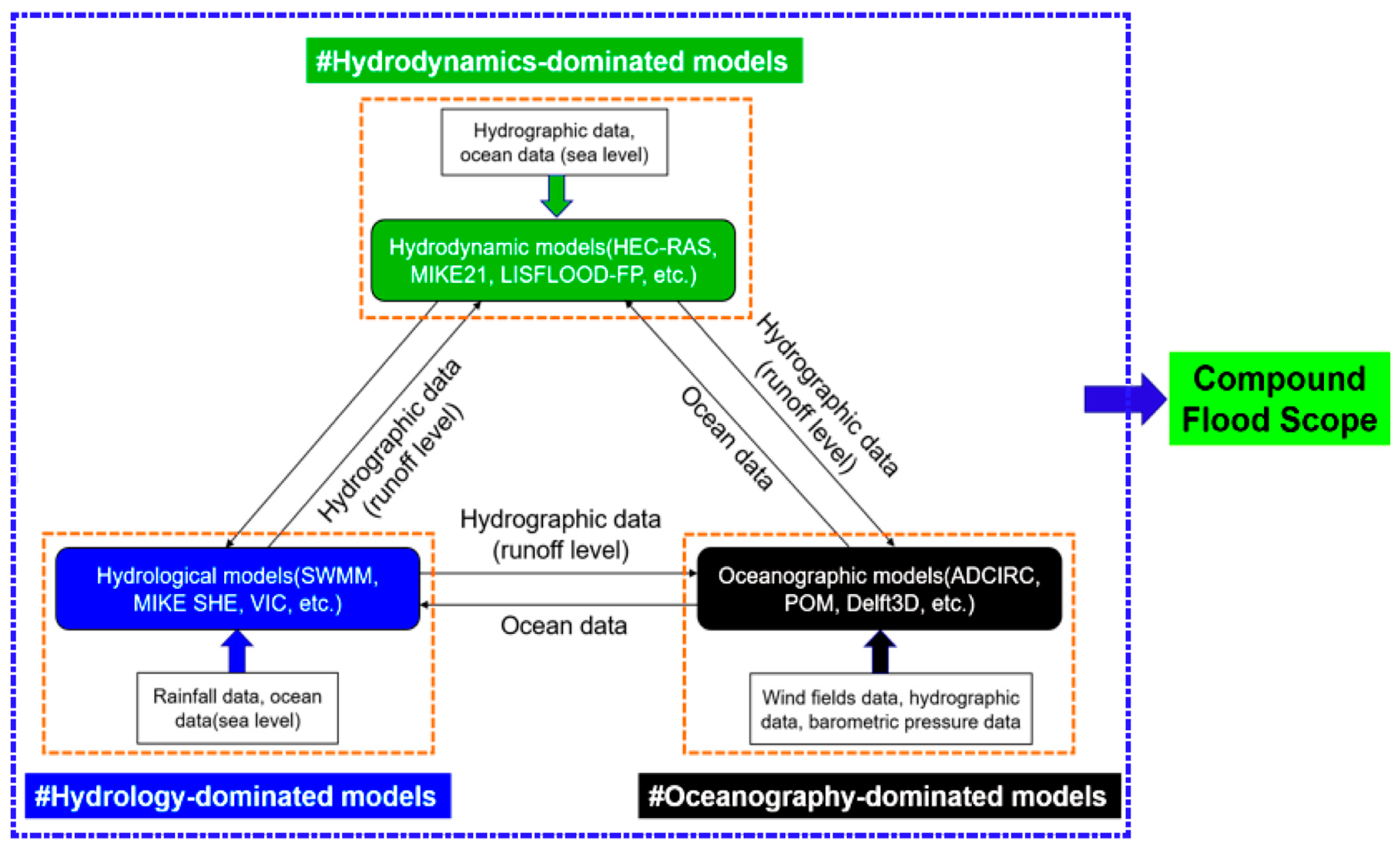

3.2.2. Composite Scenario Analysis (CSA)

Compound flood inundation typically encompasses multiple terrestrial processes, including rainfall and river flow, along with marine elements such as storm surges and waves [

59]. Therefore, simulating compound flood scenarios requires the coupling of oceanographic, hydrological, and hydrodynamic processes. In composite scenario numerical simulations, the results of one model are commonly used as input conditions for another model. Based on the type of model that ultimately determines the extent of flood inundation, these models can be classified into three categories [

117]: hydrodynamics-dominated models (such as HEC-RAS, MIKE21, and LISFLOOD-FP), hydrology-dominated models (like SWMM, MIKE SHE, and VIC), and oceanography-dominated models (such as ADCIRC, POM, and Delft3D), as shown in

Figure 9. Hydrodynamics-dominated models use observed or simulated hydrological and oceanographic datasets as inputs to direct the final inundation extents. For example, Yin (2016) coupled the storm-surge model ADCIRC with the urban flood inundation model FloodMap, using the ADCIRC storm-surge modeling as an input to simulate the urban inundation in New York City during Hurricane Sandy [

118]. Shen (2022) coupled a storm-surge model with a coupled 1D/2D urban flood model to quantify the impact of possible future climate scenarios on the transportation infrastructure in Norfolk, Virginia, USA [

119]. Bennett (2023) studied compound flooding in North Jakarta, Indonesia caused by waves, tides, and river flow, using a coupling of the Delft3D and HEC-RAS models [

120]. Hydrodynamics-dominated coupled models have the advantage of using readily available datasets or simulation results from open models as inputs, which avoids the need to run complex hydrological and ocean circulation models. However, because the output of one model is simply passed as input to the next and no two-way interaction is established between them, the final simulation results may be underestimated and inaccurate.

The hydrology-dominated coupled models focus on establishing hydrological simulation processes and combining observed data or model-generated sea levels to simulate the entire process from precipitation runoff to inundation. Silva-Araya (2018) proposed an approach combining the Simulating Waves Nearshore (SWAN) model and the Advanced Circulation Model (ADCIRC) with the gridded surface/subsurface hydrological analysis (GSSHA) 2D hydrological model to determine the levels of coastal flooding caused by a combination of storm surge and surface runoff [

121]. Shi (2022) investigated the effects of compound flooding when rainfall and storm surges occur simultaneously, developing a coupled model based on the 1D hydrological model SWMM and the 2D hydrodynamic model ADCIRC [

122]. Similarly, hydrology-dominated coupled models that do not consider the interaction between storm surges and runoff can underestimate inundation.

In oceanography-dominated coupled flood simulations, the focus is primarily on simulating marine processes while utilizing simulated or observed data of hydrological processes such as rainfall and runoff as inputs. Tromble (2013) utilized the distributed hydrological model HL-RDHM to generate flow boundary conditions for ADCIRC and simulated storm-surge inundation [

123]. Bacopoulos (2017) integrated the SWAT and ADCIRC models to develop a coupled model for flood prediction in coastal regions [

124]. Lee (2019) coupled the hydrological model HEC-HMS with the hydrodynamic model Delft3D, where HEC-HMS was used for hydrological simulation, while Delft3D incorporated the runoff data, wind speed, pressure, and topography from HEC-HMS to calculate compound flood water levels and velocities [

125]. In oceanography-dominated coupled flood simulations, the neglect of mutual interactions between rainfall, river flooding, and marine processes can also lead to an underestimation of the inundation outcomes in the coupled flood simulation.

Table 7 lists additional compound flood coupled-modeling studies, including their categories, models, and research subjects.

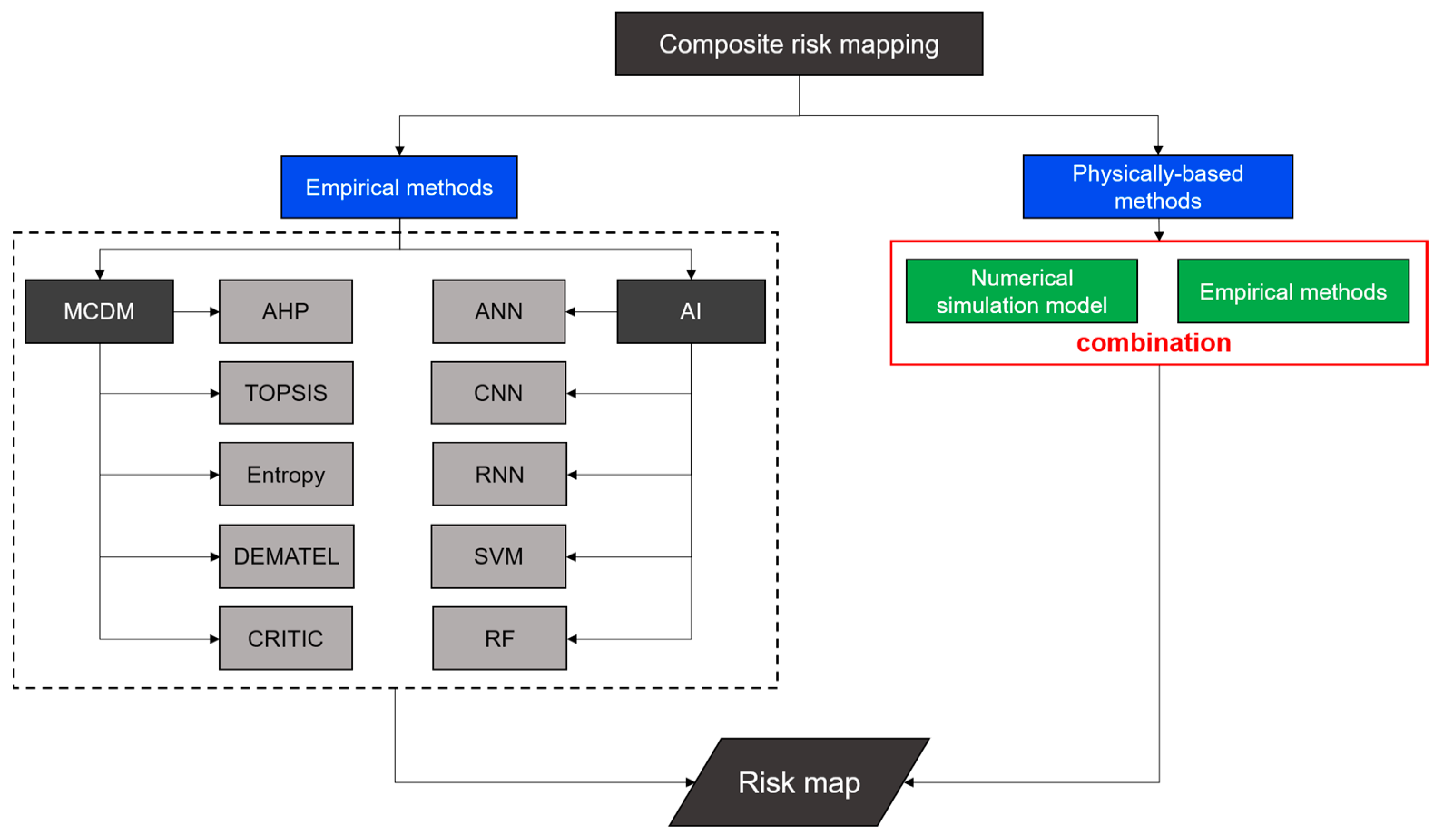

3.3. Integrated Hazard Risk Mapping

Flood hazard maps are essential for identifying areas that are exposed to flood hazards, and they provide an effective representation of the spatial extent and distribution of flood hazards. Flood risk mapping plays an instrumental role in the identification of potential flood hazard areas, hazard intensities, flood depths, and initial damage levels. Compared to probability models and numerical simulation models, integrated hazard risk mapping is simpler and more suitable for large-scale and long-term risk assessment, and it has strong integration capabilities [

134] with other methods. Based on the integration status with numerical simulation models, integrated hazard risk mapping methods can be classified into two categories: empirical methods and physical-based methods. Empirical methods include MCDM and AI methods [

135]. Physical-based methods combine empirical models with numerical simulation models, using accurate physical information such as water depth, flow velocity, and inundation area obtained from the simulation models for more precise risk assessment. The structure and classification of integrated hazard risk mapping methods are shown in

Figure 10.

MCDM refers to choosing among a finite (or infinite) set of alternatives that conflict with one another and cannot be measured on a common scale. It aims to help decision-makers integrate information to simplify the decision-making process. The result is usually a set of weights related to different goals [136,137]. Then, using the weighted linear combination and ordered weighted averaging methods [137], the attributes of each factor are multiplied by their weights and summed to determine the final score of the factors considered in the analysis. The analytic hierarchy process (AHP) [138,139] is the most popular MCDM method in flood risk assessment [140]. The AHP can rank alternative solutions based on several criteria through discrete or continuous pairwise comparisons to address decision-making problems [141]. Today it is seldom used alone and is mostly combined with other flood risk assessment tools. For example, Cui (2017) considered flood factors in coastal cities, such as the geological deposition rate, rising sea levels, precipitation, the length of urban drainage pipes, annual GDP, and population, utilizing the AHP, Grey model (GM), and artificial neural network (ANN) to predict flood losses [142]. Currently, the application of MCDM in the field of compound flood risk perception is limited, but it has great potential for development.
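The AHP weighting step and the subsequent weighted linear combination can be sketched as follows. The code derives criterion weights from a pairwise comparison matrix by approximating its principal eigenvector with power iteration, checks the judgments with Saaty's consistency ratio, and then scores a single map cell. The three criteria, the comparison values, and the normalized indicator values are hypothetical and chosen only for illustration.

```python
# Saaty's random consistency index for matrices of order 3 to 10.
RANDOM_INDEX = {3: 0.58, 4: 0.90, 5: 1.12, 6: 1.24, 7: 1.32,
                8: 1.41, 9: 1.45, 10: 1.49}


def ahp_weights(matrix, iterations=100):
    """Approximate the principal eigenvector of a pairwise comparison matrix
    by power iteration. Returns (weights summing to 1, lambda_max)."""
    n = len(matrix)
    weights = [1.0 / n] * n
    for _ in range(iterations):
        product = [sum(matrix[i][j] * weights[j] for j in range(n)) for i in range(n)]
        total = sum(product)
        weights = [p / total for p in product]
    product = [sum(matrix[i][j] * weights[j] for j in range(n)) for i in range(n)]
    lambda_max = sum(product[i] / weights[i] for i in range(n)) / n
    return weights, lambda_max


def consistency_ratio(lambda_max, n):
    """CR = CI / RI with CI = (lambda_max - n) / (n - 1); CR < 0.1 is the
    commonly used acceptance threshold."""
    if n < 3:
        return 0.0
    consistency_index = (lambda_max - n) / (n - 1)
    return consistency_index / RANDOM_INDEX[n]


def weighted_linear_combination(weights, indicators):
    """Risk score for one cell: sum of weight times normalized indicator."""
    return sum(w * x for w, x in zip(weights, indicators))


# Hypothetical criteria: inundation depth, flow velocity, population density.
# Entry [i][j] states how much more important criterion i is than criterion j.
comparison = [
    [1.0, 3.0, 5.0],
    [1.0 / 3.0, 1.0, 3.0],
    [1.0 / 5.0, 1.0 / 3.0, 1.0],
]
weights, lambda_max = ahp_weights(comparison)
cr = consistency_ratio(lambda_max, len(comparison))

# Hypothetical indicator values for one grid cell, each normalized to 0-1.
cell = [0.8, 0.4, 0.6]
score = weighted_linear_combination(weights, cell)
print([round(w, 3) for w in weights], round(cr, 3), round(score, 3))
```

Applying the same weights to every cell of a normalized indicator raster produces the kind of risk map discussed in this section. If the consistency ratio exceeds 0.1, the pairwise judgments would normally be revised before the weights are used.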

Recently, the application of AI techniques, such as machine learning (ML) and deep learning (DL), in geological hazard modeling has been rapidly advancing. ML is a subfield of AI capable of building predictive models from historical data. ML models use remote sensing (RS) and past flood data to detect spatial flood extents and susceptible areas. Depending on the available data and the needs of the study, researchers can choose different ML algorithms to determine the risk of coastal compound floods. Among these, the ANN is the most popular and widely used method [

143]. Wang (2016) developed an ANN-based system to predict storm surges along the Louisiana coast [

144]. Sahoo (2019) proposed an alternative approach to predict storm surges and onshore flooding using techniques such as ANNs, using pre-computed storm-surge and flood data scenarios to train ANN models for the entire Orissa coast, with a 99% success rate [

145]. Chondros (2021) proposed an integrated approach for flood warning applicable to the flood-prone coastal area of Rethymno, Crete, Greece, developing an ANN model and demonstrating the superior performance of the developed ANN [

146]. In addition to ANNs, other ML models—such as random forests (RF) [

147,

148,

149,

150,

151], the Gaussian process metamodel (GPM) [

152], support-vector machine (SVM) [

147,

150], and recurrent neural networks (RNNs) [

153]—have also been applied. The ML model can make full use of historical data without considering the physical process of flood formation and can quickly train, verify, test, and evaluate the risk of floods based only on the historical flood record dataset, which provides an easier-to-implement approach to flood prediction, with high performance and relatively little complexity compared to physical models [

154]. However, to improve accuracy, it is still necessary to strengthen the connection between ML and the physical process of flood formation. At present, few algorithms incorporate the relevant flood mechanisms [

155]. This will be one of the key research directions of ML methods in the field of flooding in the future. DL, as a subset of ML based on neural network structures [

156], consists of multiple processing layers and learns representations by composing nonlinear modules [

157]. Convolutional neural networks (CNNs) are a commonly used method in DL. Liu (2019) developed an effective and robust method for coastal inundation mapping based on a deep CNN with dual-time-phase and dual-polarized synthetic-aperture radar (SAR) image information [

158]. Munoz (2021) evaluated the performance of CNN and data fusion (DF) frameworks for generating composite flood maps along the southeast Atlantic coast of the United States under the influence of Hurricane Matthew [

159]. The results show that the resulting composite flood map matches well (80%) with the hindcast flood guidance map of the Coastal Emergency Risks Assessment. Although DL has been a hot research direction in recent years, the application of DL in flood risk perception is still a relatively new field.

Physical-based methods, as compared to empirical models, have several advantages. Firstly, physical-based methods can consider more complex scenarios by incorporating multiple interacting physical factors associated with compound flood hazards, leading to more accurate risk assessment results. Secondly, numerical simulation models used in physical-based methods can partition the study area into smaller grid cells, resulting in more precise risk assessment outcomes. Currently, many scholars have already employed physical-based methods for assessing flood risks. Hsu (2017) used the wind wave model (WWM), Princeton Ocean Model (POM), and WASH123D watershed model to simulate wave conditions, storm surges, and coastal inundation [

160]. Based on the results of the inundation simulation, an analytic hierarchy process (AHP) was employed to establish a research area risk map using vulnerability and hazard analysis [

160]. Afifi (2019) conducted a comprehensive study on flood risk by using the 3Di model and high-resolution DEM to simulate typhoon-induced flood inundation, assessing disaster losses and evaluating the vulnerability of the study area through the AHP [

161]. Yan (2021) proposed a method that couples neural networks with numerical models to simulate and identify high-risk areas for urban floods [

162]. The results indicated that this method achieved a high level of prediction accuracy. Ayyad (2022) utilized the ADCIRC model to simulate and generate a large dataset of synthetic tropical cyclones, which was then used to train, validate, and test an ANN model [

163]. The study demonstrated that physical-based simulations can be combined with ANN models to provide faster and more efficient predictions for low-probability events. Zhang (2022) proposed a flood prediction model called QPF-RIF, which combines a hydraulic model (SOBEK), support-vector machine–multistep forecast (SVM-MSF), and a self-organizing map (SOM) [

164]. The test results demonstrated that this model is capable of accurately predicting the long-term distribution and depth of floods, offering more reliable real-time and future information. Numerous studies have demonstrated that combining integrated hazard risk mapping methods with numerical simulation models can yield better predictive results.

Finally, some case studies are listed in

Table 8, showcasing the methods, classifications, input indicators, and output results applied in integrated hazard risk mapping.

4. Challenges and Future Perspectives

Under climate change and urbanization, sea levels will continue to rise [

170], typhoons and extreme rainfall will become more frequent and intense [

171], and the interactions between runoff and storm surges will shift [

172], so that compound flooding in coastal areas tends to become increasingly severe [

73]. Advancing research on compound floods in the context of climate change is crucial for enhancing flood response strategies and effective risk management. Given these circumstances, several key aspects should be considered in future studies of compound flooding in coastal regions.

Compound flood frequency analysis conventionally assumes stationarity, that is, that the variables follow a consistent distribution over time. However, this assumption has been increasingly challenged by the escalating frequency and intensity of typhoons, driven by global climate change, rising sea levels, and anthropogenic activities. These factors have significantly altered the stationarity of key variables such as precipitation, runoff, and tidal levels [

59,

173]. Consequently, compound flood studies that rest on the stationarity premise may not fully encapsulate the complexities of contemporary real-world scenarios [

174]. Additionally, the data underpinning these statistical methods, whether sourced from observational stations or simulation outputs, often face challenges related to their quality and duration [

175], which, in turn, can profoundly influence the assessment’s outcomes [

59]. Moreover, under the non-stationarity assumption, there is a noticeable dearth of research on multidimensional statistical models for compound floods, especially those extending beyond two dimensions. The intricacies of developing such models under nonstationary conditions present not only computational challenges but also theoretical ones, as they require the integration of multiple approaches. Further research endeavors in this direction are not only warranted but essential for enhancing our understanding and predictive capabilities.

For example, Razmi (2022) considered the non-stationarity of extreme sea levels and precipitation, conducting bivariate frequency analysis using the copula method [

176]. The results showed that under nonstationary conditions, the joint return period of compound floods was shorter. Naseri (2022) evaluated the dependencies and non-stationarity effects between variables in coastal–pluvial compound floods in the context of rising sea levels and changing precipitation patterns, using a copula-based Bayesian framework [

55]. It was found that the risk of compound floods was greater under nonstationary scenarios. Pirani (2023) considered the non-stationarity of rainfall, river flow, and coastal floods in both the temporal and spatial dimensions, developing a nonstationary multivariate model using the C-vine copula method with constant, linear, and quadratic link functions for the parameters, and the uncertainties were quantified based on the Bayesian approach [

177]. The results indicated that under nonstationary conditions, the joint return period of compound floods shortened significantly, and the failure probability exceeded that obtained under the stationarity assumption. However, because higher uncertainty and complex dependencies make joint multivariate statistical models difficult to construct, there are still few studies on joint multivariate probabilistic analyses of compound floods. Future studies should consider more joint variables and adopt more flexible modeling methods.
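To make the notion of a joint return period concrete, the following minimal Python sketch computes bivariate "AND" and "OR" return periods from a Gumbel copula, a family commonly used in copula-based frequency analysis. It is illustrative only and does not reproduce the method of any study cited above: the function names, the dependence parameter (theta = 2), and the marginal probabilities are assumptions. Non-stationarity is mimicked crudely by lowering the non-exceedance probability of a fixed design threshold from 0.98 to 0.96 for both drivers.

```python
import math

def gumbel_copula(u, v, theta):
    """Gumbel copula C(u, v); theta >= 1, and theta = 1 gives independence."""
    s = (-math.log(u)) ** theta + (-math.log(v)) ** theta
    return math.exp(-(s ** (1.0 / theta)))

def joint_return_periods(u, v, theta, mu=1.0):
    """Return (T_and, T_or) in years.

    u, v  : marginal non-exceedance probabilities of the two drivers
    theta : assumed Gumbel dependence parameter (hypothetical value)
    mu    : mean inter-arrival time of events in years (1.0 for annual maxima)
    """
    c = gumbel_copula(u, v, theta)
    t_and = mu / (1.0 - u - v + c)  # both drivers exceed their thresholds
    t_or = mu / (1.0 - c)           # at least one driver exceeds its threshold
    return t_and, t_or

# Same physical thresholds, evaluated under two assumed marginal distributions.
stationary = joint_return_periods(0.98, 0.98, theta=2.0)
shifted = joint_return_periods(0.96, 0.96, theta=2.0)
print("stationary T_and, T_or:", stationary)
print("shifted    T_and, T_or:", shifted)
```

In this toy setting, both joint return periods shorten when the marginals shift, which is the qualitative behavior reported in the nonstationary studies summarized above.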

Beyond the statistical treatment of non-stationarity, researchers also need better simulation tools to understand floods. In recent years, the coupling of numerical simulation models has become the predominant approach to composite flood simulation, with the aim of representing flood dynamics comprehensively and at fine resolution. Huehne (2016) divided model coupling into four categories: unidirectional-type coupling, loose coupling, tight coupling, and complete coupling [

178]. Specifically, the unidirectional transfer of information, as detailed in

Section 3.2.2, is indicative of unidirectional-type coupling. Loose coupling allows models to run independently while synchronizing to exchange information iteratively. Tight coupling, by contrast, is achieved through code integration, so that the models interact within the same code, while complete coupling represents the seamless integration of various models into a unified framework governed by a consistent set of equations [

117,

178].
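The difference between unidirectional information transfer and a synchronized two-way exchange can be sketched with a toy example. The Python code below is a deliberately simplified illustration, not any of the cited models: the "ocean" and "river" functions, their coefficients, and the feedback term are all hypothetical. In the one-way run, the ocean level is computed without knowledge of the river; in the two-way run, the river outflow from the previous step raises the nearshore level, which in turn hinders river drainage.

```python
import math

def ocean_level(t, river_outflow=0.0, feedback=0.0):
    """Toy nearshore water level (m): tide + surge + optional river feedback."""
    tide = 1.0 * math.sin(2.0 * math.pi * t / 12.0)
    surge = 1.5 * math.exp(-(((t - 24.0) / 6.0) ** 2))
    return tide + surge + feedback * river_outflow

def river_step(h, inflow, h_ocean, dt=1.0, k=0.5, area=10.0):
    """Advance the toy river stage h (m); outflow is blocked when the ocean is higher."""
    outflow = k * max(h - h_ocean, 0.0)
    h_new = h + dt * (inflow - outflow) / area
    return h_new, outflow

def run(two_way, steps=48, inflow=2.0, feedback=0.2):
    """Return the peak river stage for one-way or two-way information exchange."""
    h, outflow, peak = 1.0, 0.0, 1.0
    for t in range(steps):
        # One-way: the ocean ignores the river. Two-way: it sees the last outflow.
        fb = feedback if two_way else 0.0
        h_o = ocean_level(t, outflow, fb)
        h, outflow = river_step(h, inflow, h_o)
        peak = max(peak, h)
    return peak

print("peak stage, one-way:", run(two_way=False))
print("peak stage, two-way:", run(two_way=True))
```

Because the feedback only ever raises the downstream level in this toy setup, the two-way run yields a higher peak stage, which hints at what a purely unidirectional transfer can miss.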

Notably, current research heavily leans towards unidirectional coupling [

33,

117], despite its inherent limitations. For instance, unidirectional coupling between ocean and land hydrological models typically feeds ocean model outputs into the hydrological model as inputs, and can therefore miss critical land–ocean interactions. This limitation underscores the need for research into loose, tight, or even complete coupling for more accurate composite flood simulations. At present, studies of loose and tight coupling remain limited. Saleh (2017) employed a loose coupling technique, combining the HEC-HMS hydrological model, the NYHOPS ocean model, and the HEC-RAS hydraulic model to simulate inundation during Hurricanes Irene (2011) and Sandy (2012) [

128]. Similarly, Tang (2013) implemented a domain decomposition method, facilitating tight coupling between the Godunov-type shallow-water model (SWM) and the ocean model FVCOM [

179]. Moreover, Shi (2022) amalgamated the one-dimensional SWMM model into the two-dimensional ADCIRC model, achieving tight coupling to examine various flood factors [

122], while Li (2022) employed the tightly coupled ADCIRC-SWAN model to study storm-surge-induced coastal inundation in Laizhou Bay [

180]. Nonetheless, merely tightening the coupling may not suffice for accurate simulations. Coupled models inherently carry uncertainties, such as inaccuracies in the initial topography [

181,

182] and potential errors in prior driving data [

183]. Other uncertainties, including those stemming from model parameters like roughness coefficients and wind resistance, are elaborated upon in

Table 9. Addressing these challenges will be vital for future advancements in composite flood hazard assessment.

Data assimilation (DA) has emerged as a key technique for reducing such uncertainties. It combines model states with observational data, from either field measurements or remote sensing, to refine predictions [

184]. Its efficacy has been documented across various realms, including hydrology [

185,

186], meteorology [

187,

188], and ocean modeling [

189]. Asher (2019) introduced an optimal-interpolation-based DA approach, which significantly reduced the water-level residuals during Hurricane Matthew on the southeastern Atlantic coast of the United States [

190]. This method was observed to halve the storm’s overall surge error. Munoz (2022) coupled an ensemble Kalman filter with hydrodynamic modeling, underscoring DA’s capacity to improve composite flood hazard assessments in vulnerable regions, regardless of whether the primary flood drivers are fluvial, stormwater, or coastal [

191]. The potential of DA to address uncertainties in composite flood drivers presents a promising avenue for future research.
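The core idea of DA, blending a model forecast with an observation according to their respective uncertainties, can be illustrated with a scalar Kalman-type analysis step. The sketch below is a minimal Python example under assumed values; the function name and all numbers are hypothetical, and operational schemes such as optimal interpolation or ensemble Kalman filters apply the same principle to full spatial state vectors.

```python
def kalman_update(forecast, forecast_var, observation, obs_var):
    """Scalar analysis step: weight forecast and observation by their variances."""
    gain = forecast_var / (forecast_var + obs_var)       # Kalman gain in [0, 1]
    analysis = forecast + gain * (observation - forecast)
    analysis_var = (1.0 - gain) * forecast_var           # uncertainty after the update
    return analysis, analysis_var

# Hypothetical case: the model predicts a 2.0 m water level (variance 0.25 m^2),
# while a tide gauge reports 2.6 m (variance 0.05 m^2).
level, var = kalman_update(2.0, 0.25, 2.6, 0.05)
print(f"analysis level = {level:.2f} m, variance = {var:.3f} m^2")
```

The analysis is pulled towards the more certain of the two sources, and its variance is smaller than that of either input, which is why assimilation can reduce water-level residuals.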

However, a salient challenge remains: coupled models, given their complexity, are often too slow for real-time use. This has prompted a shift towards acceleration technologies such as GPU computing and parallel algorithms. GPU algorithms have been shown to greatly increase the computation speed of flood models [

192,

193,

194]. For instance, Li (2022) presented a 2D hydrodynamic parallel model that achieved a 42-fold speedup in flood simulation for Harbin’s Nangang district [

195]. Similarly, Buttinger-Kreuzhuber (2022) introduced a hybrid method combining GPU-accelerated runoff simulation with CPU-based sewer network simulation, achieving speedups of up to 1000 times [

196]. However, while GPUs excel at accelerating simpler models, they are less effective for intricate ones. This calls for refining the dynamical framework and simplifying the coupled model without sacrificing its essential behavior.

Statistical models, while proficient at calculating dependencies between flood drivers, lack the capacity to depict the scope and depth of flood inundation or to quantify the mitigation impact of hydraulic engineering constructions [

197]. In contrast, numerical modeling provides a detailed representation of compound flooding risks. Combining statistical methods with numerical models leverages the strengths of both. However, risk assessments based solely on historical data often neglect the uncertainties introduced by changing conditions. Hence, more research on the uncertainties of hazard factors is needed [

198,

199]. This can be achieved by generating extensive datasets through stochastic simulations that encompass random rainfall, typhoon trajectories, and intensities. Numerical models can then be used to examine the uncertainties inherent in these simulations. Notably, flood risk assessment under climate change uncertainty remains underexplored and tends to be limited to individual factors. For example, Chaudhary (2022) introduced a probabilistic deep learning technique to quantify uncertainties arising from intensified rainfall due to factors such as climate change and urban expansion [

200]. It is also crucial to acknowledge that extreme events, such as typhoons, can catalyze secondary hazards like landslides. A deeper understanding of the interconnected dynamics behind these cascading disaster sequences is vital. Equally important is the consideration of the evolving vulnerability of exposed elements [

201], influenced by diverse factors, ranging from coastal defense strategies amidst global climate change to demographic shifts and socioeconomic developments, all of which collectively shape coastal risk projections.
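The stochastic simulation idea mentioned above can be sketched as a small Monte Carlo experiment. The Python example below is a toy under loud assumptions: the event generator, the linear "depth" response standing in for a numerical model, and every coefficient are hypothetical. It only shows how many synthetic events can be turned into an exceedance probability for a chosen flood depth.

```python
import random

def simulate_event(rng):
    """Draw one synthetic typhoon event and return a toy flood depth in metres."""
    intensity = rng.uniform(0.0, 1.0)                               # normalized intensity
    rainfall = max(0.0, 200.0 * intensity + rng.gauss(0.0, 30.0))   # mm, assumed relation
    surge = max(0.0, 2.0 * intensity + rng.gauss(0.0, 0.3))         # m, assumed relation
    # Placeholder for a numerical model: depth responds linearly to both drivers.
    return 0.004 * rainfall + 0.5 * surge

def exceedance_probability(threshold, n_events=10000, seed=42):
    """Estimate P(depth > threshold) from n_events synthetic events."""
    rng = random.Random(seed)  # fixed seed keeps the experiment reproducible
    hits = sum(simulate_event(rng) > threshold for _ in range(n_events))
    return hits / n_events

for depth in (0.5, 1.0, 1.5):
    print(f"P(depth > {depth} m) ~ {exceedance_probability(depth):.3f}")
```

In a real application, the placeholder depth function would be replaced by a calibrated hydrodynamic model, and the sampling distributions would be fitted to observed or simulated typhoon and rainfall records.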

The approaches described above, while distinct, can be used in coordination. In practice, it is crucial to account for the study area’s disaster database, geographic characteristics, and related factors, and to tailor methods to the spatial scale and precision that the risk assessment requires. In future work, researchers and policymakers can select or combine methods according to their strengths, weaknesses, and suitability to improve compound flood assessments in coastal regions. A concise summary comparing the strengths, weaknesses, and best-use cases of these three flood hazard simulation methods is presented in

Table 10.