Abstract

This paper proposes an intelligent target detection method for high-frequency surface wave radar (HFSWR). The method cascades the adaptive variability index constant false alarm rate ((VI)CFAR) detector with a convolutional neural network (CNN) to form a cascade detector, (VI)CFAR-CNN. First, the (VI)CFAR algorithm performs first-level detection on the range–Doppler (RD) spectrum; then, for each detection, a two-dimensional window slice centered on the detection's position in the RD spectrum is extracted and input into the CNN model, which further discriminates between targets and clutter. When the detection rate of the detector reaches a certain level and cannot be improved further because the CNN model has converged, a dual-detection-map fusion method is used to compensate for the loss of detection performance: the two detection maps are first fused with optimized weights, and the connected components of the fused map are then processed so that an independent (VI)CFAR detector compensates the (VI)CFAR-CNN detection results. Because HFSWR data with comprehensive and accurate target ground truth are difficult to obtain, an RD spectrum dataset for HFSWR is constructed by embedding simulated targets into measured backgrounds. The proposed method is compared with various other methods to demonstrate its superiority. Additionally, a small amount of automatic identification system (AIS) and radar association data is used to verify the effectiveness and feasibility of the method on fully measured HFSWR data.

1. Introduction

High-frequency surface wave radar (HFSWR) operates in the medium- and high-frequency bands and provides over-the-horizon detection of targets at sea [1]. Its echo signal, however, contains ground clutter, sea clutter, ionospheric clutter, and various sources of noise and interference [2]. Ionospheric clutter is strong clutter produced by reflection of the radar signal from the ionosphere, while first-order sea clutter appears as a pair of Bragg peaks, symmetric in Doppler, produced by the reflection of electromagnetic waves from ocean waves. Clutter and noise make it difficult to detect targets effectively with traditional single-frame detection methods, leading to false alarms and missed detections, which in turn complicates the subsequent tracking of moving vessels [3].

The primary task of radar target detection is to extract target information from the echoes while suppressing noise and clutter as much as possible [4]. Constant false alarm rate (CFAR) detection is one of the most classical radar detection techniques. In 1968, Finn et al. [5] proposed the cell-averaging CFAR (CA-CFAR) detector, on which the smallest-of (SO-CFAR) and greatest-of (GO-CFAR) detectors were subsequently based. Later, to reduce the impact of strong interference in the reference background on clutter estimation, Rohling [6] proposed the ordered-statistics CFAR (OS-CFAR) detector in 1983. Mean-based CFAR methods share the use of averaging to estimate the local interference power level: CA-CFAR is the classic example, and SO-CFAR and GO-CFAR were developed to improve detection performance in non-uniform backgrounds. Among order-statistic-based methods, OS-CFAR stands out because it estimates the interference level from the ordered samples in the reference sliding window. In a uniform background, however, order-statistic methods generally perform worse than mean-based methods (pp. 34–72, [7]). In 1986, Barnum [8] was the first to use HFSWR to detect sea surface targets. The basic principle of CFAR processing is to select, from the reference cells, samples whose clutter characteristics match the background of the cell under test, and to estimate the clutter power level from them to form the detection threshold. Background clutter identification and intelligent processing have therefore become important research areas [9].
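To make the contrast between mean-based and order-statistic estimators concrete, the following Python sketch computes the interference-power estimates used by CA-, GO-, SO- and OS-CFAR from the two halves of a one-dimensional reference window. It is an illustrative sketch, not code from the cited works: the function name `noise_estimates` and the default OS rank of about 3N/4 are assumptions, and in a real detector each estimate is multiplied by its own threshold coefficient.

```python
import math


def noise_estimates(leading, trailing, k=None):
    """Interference-power estimates of classic CFAR variants (sketch).

    leading, trailing: square-law detected samples from the two halves of
    the reference window, guard cells already removed.
    k: 1-based rank for OS-CFAR; the ~3N/4 default is an assumed rule of
    thumb, not a value taken from the text.
    """
    mean_lead = sum(leading) / len(leading)
    mean_trail = sum(trailing) / len(trailing)
    window = sorted(list(leading) + list(trailing))
    if k is None:
        k = math.ceil(0.75 * len(window))
    return {
        "CA": sum(window) / len(window),   # average of all reference cells
        "GO": max(mean_lead, mean_trail),  # greater of the two half means
        "SO": min(mean_lead, mean_trail),  # smaller of the two half means
        "OS": window[k - 1],               # k-th smallest reference sample
    }


# One strong interferer in the trailing half inflates CA and GO, but not OS.
est = noise_estimates([1, 1, 1, 1], [1, 1, 1, 9])
print(est)  # {'CA': 2.0, 'GO': 3.0, 'SO': 1.0, 'OS': 1}
```

The single interferer raises the CA estimate from 1 to 2 and the GO estimate to 3, which would raise the threshold and mask a weak neighbouring target; the order statistic simply ignores the outlier.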

In recent years, many researchers have applied machine learning to intelligent radar target detection, although research on machine learning in radar signal processing has focused predominantly on target recognition [10]. Deep learning models for recognition, such as those applied to SAR or ISAR images, map input data (SAR images, high-resolution range profiles, micro-Doppler features of moving targets, etc.) to output categories; this is a form of nonlinear mapping. Radar target detection can be viewed in the same way, as a nonlinear mapping that decides whether a target is present. In the 1990s, Professor Simon Haykin of McMaster University, Canada, was the first to apply machine learning to clutter classification in radar target detection [11]. Building on the work of Haykin's team, researchers used multi-layer perceptrons (MLPs) and radial basis function networks to classify clutter from single-frame or dual-frame observations [12]. In 2006, Haykin [13] proposed the concept of cognitive radar, whose two main features are knowledge-aided signal processing and adaptive transmission. In 2007, Besson [14] studied knowledge-aided Bayesian detection in non-uniform backgrounds and proposed a knowledge-aided Bayesian detector. In 2012, Li Qinghua [15] proposed a knowledge-aided CFAR detection algorithm that effectively mitigates the adverse effects of clutter edges on detection.

In radar signal processing, numerous researchers have applied deep learning to target detection in high-resolution radars. HFSWR, however, is a low-resolution short-wave radar: if the entire range–Doppler (RD) spectrum were used as the input of a machine learning network, the information of a single target would occupy only a small fraction of the spectrum, which makes both target recognition and localization considerably harder for the network. For low-resolution radars, researchers therefore focus more on combining traditional signal detection or image processing techniques with deep learning. In 2019, Li Wang et al. [16] analyzed potential applications of deep neural networks (DNNs) in radar target detection. In 2020, Zhang Wandong [17] proposed an HFSWR target detection method based on an optimal error self-correcting extreme learning machine (OES-ELM), and Li Qingzhong [18] proposed a fast sea surface target detection algorithm based on cascaded classifiers. In 2021, Wu et al. [2] proposed an intelligent object detection algorithm based on deep feature fusion. These works share a basic framework: signal processing or image processing methods first perform initial detection on the RD spectrum, and machine learning or deep learning methods then perform further classification. Several issues in this framework remain to be explored and resolved:

- The signal or image processing methods used to detect potential target regions on the RD spectrum lack the flexibility of CFAR methods, which let the operator preset the false alarm probability according to specific needs and thereby balance the detection rate, false alarm rate, and miss rate.

- The first-level detection method has a significant impact on model training, so a comprehensive training plan for the model is needed.

- Any classification model has limited generalization ability and cannot recognize targets or clutter with perfect accuracy. For example, when the signal-to-clutter ratio is low, the model may misclassify some targets as clutter, which limits the detection rate.

To address these issues, this paper proposes a detection model that cascades a CFAR detector with a convolutional neural network (CNN), combining image processing and computer vision techniques with CFAR processing. To address the limited detection rate caused by the convergence of the CNN in this model, a dual-detection-map fusion method is introduced to compensate for the loss of detection performance; in brief, the results of an independent CFAR detector are used to compensate the results of the CFAR-CNN cascade detector. This study provides new ideas and solutions for the challenge of HFSWR target detection, and experimental results indicate that the proposed method has practical application value. The method may also offer a fresh perspective on target detection with other sensors; for instance, it could be applied to underwater target detection with side-scan sonar, which provides high-resolution images [19,20]. We believe the approach is applicable in this context and that such an extension could open new possibilities for target detection research in remote sensing.

2. (VI)CFAR-CNN Detector Model

2.1. (VI)CFAR Detector

In radar systems, the false alarm probability is the probability that the radar declares a target present when no target exists. To give the radar predictable and stable detection performance, designers usually aim to keep the false alarm probability constant; a detection processor that achieves this is called a constant false alarm rate (CFAR) processor. The general CFAR model is derived below [21].

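Assuming that the interference is independent and identically distributed, the probability density function of the (square-law detected) detection unit is

$$p(z)=\frac{1}{\sigma^{2}}\exp\left(-\frac{z}{\sigma^{2}}\right),\quad z\ge 0 \tag{1}$$

where $\sigma^{2}$ is the total power of the I and Q channel signal. The detection threshold of the detection unit must be set from an estimate of $\sigma^{2}$. The idea of CFAR detection is to estimate $\sigma^{2}$ from $N$ reference units surrounding the detection unit. These $N$ reference units form the observation vector $\mathbf{x}=[x_{1},x_{2},\ldots,x_{N}]^{T}$, whose joint probability density function is:

$$p(\mathbf{x})=\prod_{i=1}^{N}\frac{1}{\sigma^{2}}\exp\left(-\frac{x_{i}}{\sigma^{2}}\right)=\frac{1}{\sigma^{2N}}\exp\left(-\frac{1}{\sigma^{2}}\sum_{i=1}^{N}x_{i}\right) \tag{2}$$

Treating the joint probability density function of $\mathbf{x}$ as the likelihood function of $\sigma^{2}$, the maximum likelihood estimate $\hat{\sigma}^{2}$ of $\sigma^{2}$ is:

$$\hat{\sigma}^{2}=\frac{1}{N}\sum_{i=1}^{N}x_{i} \tag{3}$$

Multiplying $\hat{\sigma}^{2}$ by a threshold coefficient $\alpha$ gives the detection threshold:

$$T=\alpha\hat{\sigma}^{2} \tag{4}$$

Since $\hat{\sigma}^{2}$ is itself random, the false alarm probability is the expectation of $P\{z>T\}=\exp(-T/\sigma^{2})$ over $\hat{\sigma}^{2}$:

$$\bar{P}_{FA}=E\left[\exp\left(-\frac{\alpha\hat{\sigma}^{2}}{\sigma^{2}}\right)\right]=\left(1+\frac{\alpha}{N}\right)^{-N} \tag{5}$$

By presetting the desired false alarm probability, the threshold coefficient is obtained:

$$\alpha=N\left(\bar{P}_{FA}^{-1/N}-1\right) \tag{6}$$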
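The derivation can be sanity-checked numerically. The sketch below computes the threshold coefficient $\alpha=N(\bar{P}_{FA}^{-1/N}-1)$, confirms that it reproduces the closed-form false alarm probability $(1+\alpha/N)^{-N}$, and runs a small Monte Carlo test on exponentially distributed noise cells. The exponential noise model follows the density assumed above; the function names, trial count, and seed are illustrative assumptions.

```python
import random


def cfar_alpha(pfa, n):
    """Threshold coefficient alpha = N * (Pfa**(-1/N) - 1)."""
    return n * (pfa ** (-1.0 / n) - 1.0)


def analytic_pfa(alpha, n):
    """Closed-form false alarm probability (1 + alpha/N)**(-N)."""
    return (1.0 + alpha / n) ** (-n)


def simulated_pfa(pfa, n, trials=100_000, sigma2=1.0, seed=0):
    """Monte Carlo estimate of the CA-CFAR false alarm rate.

    Assumption: noise cells are exponential with mean sigma2 (square-law
    detected complex Gaussian noise); threshold T = alpha * mean(reference).
    """
    rng = random.Random(seed)
    alpha = cfar_alpha(pfa, n)
    false_alarms = 0
    for _ in range(trials):
        reference = [rng.expovariate(1.0 / sigma2) for _ in range(n)]
        cell_under_test = rng.expovariate(1.0 / sigma2)
        if cell_under_test > alpha * sum(reference) / n:
            false_alarms += 1
    return false_alarms / trials


alpha = cfar_alpha(1e-3, 16)
print(round(alpha, 3))
print(simulated_pfa(0.01, 16))
```

Note that the false alarm rate does not depend on `sigma2`: scaling the noise scales both the cell under test and the estimated threshold, which is exactly the CFAR property.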

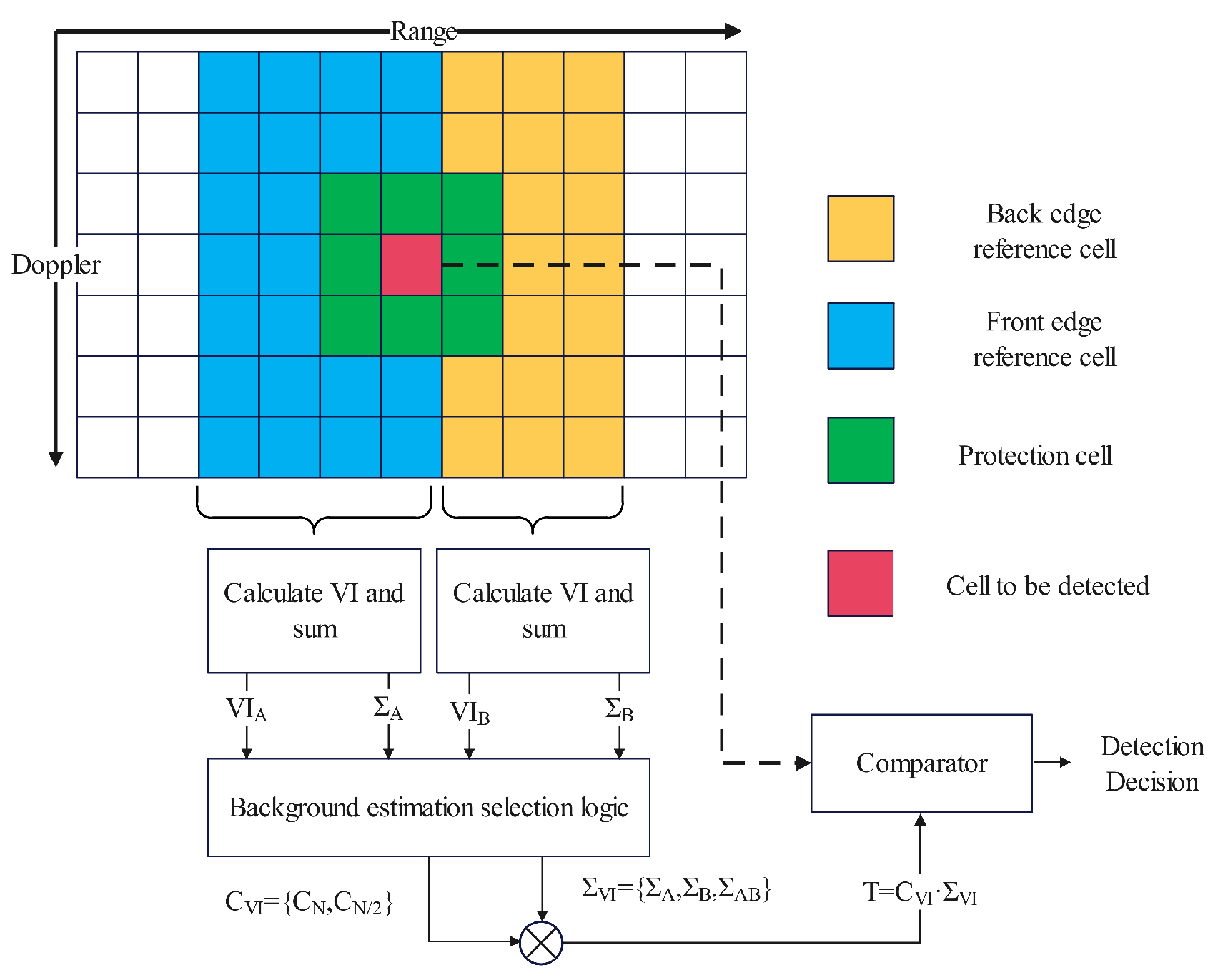

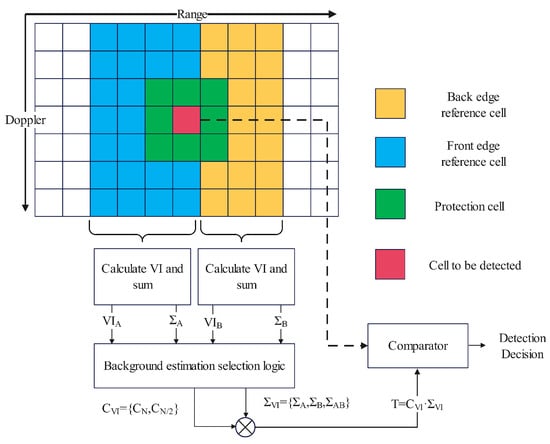

The variability index (VI)-CFAR detector, proposed by Smith and Varshney [22], is an adaptive detector that combines CA-CFAR, SO-CFAR, and GO-CFAR. It dynamically selects how the clutter power level is estimated by computing the variability index of the reference cells and the ratio of the means of the leading and trailing reference windows. Previous studies (pp. 151–153, [7]) have shown that VI-CFAR has lower complexity than OS-CFAR and better false alarm performance than other adaptive detectors such as the adaptive order statistic (AOS)-CFAR [23] and estimation test (ET)-CFAR [24] detectors. We apply a slow-time Fourier transform to the radar echo at each range gate to obtain the RD spectrum, in which the range dimension represents the radial distance of the target from the radar and the Doppler dimension represents the Doppler frequency shift of the target relative to the radar [8]. This article uses two-dimensional VI-CFAR processing; its block diagram is shown in Figure 1.

Figure 1.

Two-dimensional VI-CFAR structural block diagram.

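The variability index $VI$ is a second-order statistic computed separately for the leading-edge reference cells and the trailing-edge reference cells:

$$VI=1+\frac{\hat{\sigma}^{2}}{\hat{\mu}^{2}}=1+\frac{1}{n-1}\sum_{i=1}^{n}\frac{\left(x_{i}-\bar{x}\right)^{2}}{\bar{x}^{2}}$$

where $\hat{\sigma}^{2}$ is the estimated variance, $\hat{\mu}^{2}$ is the square of the estimated mean, and $\bar{x}$ is the arithmetic mean of the $n=N/2$ reference cells in the half window. Comparing $VI$ with the threshold $K_{VI}$:

$$\begin{cases}VI\le K_{VI}, & \text{uniform clutter}\\ VI>K_{VI}, & \text{non-uniform clutter}\end{cases}$$

determines whether the half window comes from uniform or non-uniform clutter.

The mean ratio of the leading-edge reference cells (set $A$) and the trailing-edge reference cells (set $B$) is defined as [25]:

$$MR=\frac{\bar{x}_{A}}{\bar{x}_{B}}=\frac{\sum_{i\in A}x_{i}}{\sum_{i\in B}x_{i}}$$

Comparing $MR$ with the threshold $K_{MR}$:

$$\begin{cases}K_{MR}^{-1}\le MR\le K_{MR}, & \text{same mean}\\ MR<K_{MR}^{-1}\ \text{or}\ MR>K_{MR}, & \text{different means}\end{cases}$$

determines whether the leading and trailing reference cells have the same mean. The VI-CFAR adaptive threshold is then chosen according to whether the leading and trailing halves are uniform and whether their means are equal. The threshold coefficient depends on the number of reference cells selected: when all $N$ leading and trailing reference cells are used, the coefficient $\alpha_{N}$ is used; when only the leading or only the trailing reference cells are used, the coefficient $\alpha_{N/2}$ is used. According to Equation (6):

$$\alpha_{N}=N\left(\bar{P}_{FA}^{-1/N}-1\right),\qquad \alpha_{N/2}=\frac{N}{2}\left(\bar{P}_{FA}^{-2/N}-1\right)$$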
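The two VI-CFAR statistics and their threshold comparisons can be sketched as follows. The helper names are hypothetical, equal-size half windows are assumed, and the thresholds $K_{VI}=4.76$ and $K_{MR}=1.806$ in the example are illustrative values rather than settings stated in this paper.

```python
def variability_index(cells):
    """VI = 1 + s^2 / xbar^2, using the unbiased sample variance s^2."""
    n = len(cells)
    mean = sum(cells) / n
    var = sum((x - mean) ** 2 for x in cells) / (n - 1)
    return 1.0 + var / mean ** 2


def mean_ratio(leading, trailing):
    """MR = sum(leading) / sum(trailing); equal half-window sizes assumed."""
    return sum(leading) / sum(trailing)


def window_flags(leading, trailing, k_vi, k_mr):
    """Return (leading_uniform, trailing_uniform, same_mean)."""
    leading_uniform = variability_index(leading) <= k_vi
    trailing_uniform = variability_index(trailing) <= k_vi
    same_mean = 1.0 / k_mr <= mean_ratio(leading, trailing) <= k_mr
    return leading_uniform, trailing_uniform, same_mean


# Illustrative thresholds (assumed values). A single strong scatterer in
# the trailing half makes it non-uniform and pulls the half means apart.
print(window_flags([1.0] * 8, [1.0] * 7 + [50.0], k_vi=4.76, k_mr=1.806))
# (True, False, False)
```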

The VI-CFAR adaptive threshold generation method is shown in Table 1.

Table 1.

VI-CFAR adaptive threshold generation method.

The VI-CFAR detector combines the advantages of CA-, GO-, and SO-CFAR. It is therefore suited to non-uniform backgrounds with many interfering targets and clutter edges, such as those encountered by HFSWR, and offers a degree of robustness.
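The threshold-selection logic can be sketched as below, under the assumption that Table 1 follows the standard VI-CFAR rules of Smith and Varshney [22]: CA over the whole window when both halves are uniform with equal means, GO when both are uniform with different means, CA over the clean half when only one half is uniform, and SO when neither is. The coefficient for $n$ averaged cells is $\alpha_{n}=n(\bar{P}_{FA}^{-1/n}-1)$, and the function names are hypothetical.

```python
def cfar_alpha(pfa, n):
    """alpha_n = n * (Pfa**(-1/n) - 1), the coefficient for n averaged cells."""
    return n * (pfa ** (-1.0 / n) - 1.0)


def vi_cfar_threshold(leading, trailing, leading_uniform, trailing_uniform,
                      same_mean, pfa):
    """Return (estimator label, threshold); standard VI-CFAR rules assumed."""
    half = len(leading)                  # N/2 cells per half window
    n = half + len(trailing)             # N cells in total
    sum_a, sum_b = sum(leading), sum(trailing)
    alpha_n, alpha_half = cfar_alpha(pfa, n), cfar_alpha(pfa, half)
    if leading_uniform and trailing_uniform:
        if same_mean:
            # Homogeneous background: CA-CFAR over all N cells.
            return "CA", alpha_n * (sum_a + sum_b) / n
        # Clutter edge: GO-CFAR on the half with the larger mean.
        return "GO", alpha_half * max(sum_a, sum_b) / half
    if trailing_uniform:
        # Interferer in the leading half: average the trailing half only.
        return "CA-trailing", alpha_half * sum_b / half
    if leading_uniform:
        # Interferer in the trailing half: average the leading half only.
        return "CA-leading", alpha_half * sum_a / half
    # Both halves non-uniform: SO-CFAR.
    return "SO", alpha_half * min(sum_a, sum_b) / half


print(vi_cfar_threshold([1.0] * 8, [2.0] * 8, True, True, False, 1e-3)[0])  # GO
```

The uniformity and equal-mean flags would come from the $VI$ and $MR$ comparisons; keeping them as explicit inputs makes each branch of the decision table easy to test in isolation.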

2.2. Clutter and Targets Classification by CNN

During radar detection, experienced radar operators inspecting the display visually often outperform many CFAR methods at detecting targets and rejecting false alarms. This can be attributed to the sophisticated human visual system and its exceptional ability to extract information from images, a capability that no single CFAR method replicates. Integrating image processing and computer vision techniques with CFAR processing is therefore a research direction of considerable value with promising application prospects.

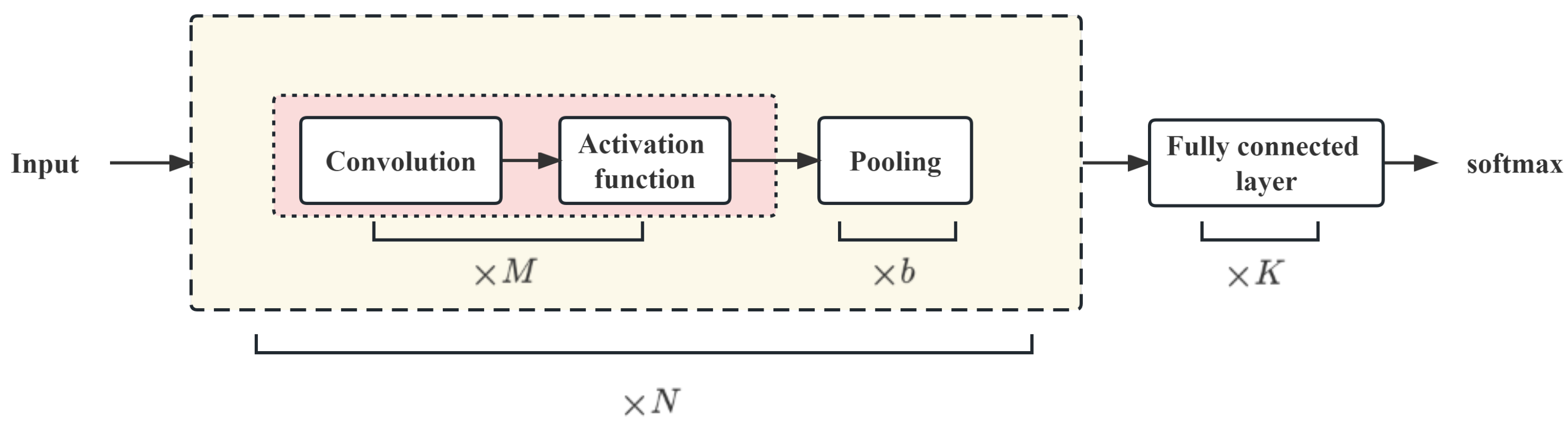

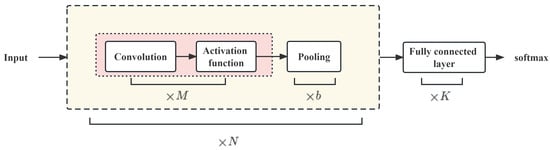

2.2.1. CNN Model

A convolutional neural network (CNN) is a deep neural network characterized by local connectivity and weight sharing. It is widely used in computer vision and pattern recognition. CNNs are inspired by the biological receptive field mechanism, in which a neuron receives signals only from the local region it governs: in the visual nervous system, the receptive field of a neuron is a specific region of the retina, and the neuron is activated only when that region is stimulated [26]. A standard CNN is built from convolutional layers, pooling layers, and fully connected layers. The network structure is depicted in Figure 2.

Figure 2.

Basic structure of CNN.

In a CNN, each convolutional block consists of $M$ consecutive convolutional layers followed by $b$ pooling layers, and a CNN may stack $N$ convolutional blocks followed by $K$ fully connected layers. Convolution is the core of a CNN: after preprocessing, the input data enter the convolutional layer. Given the input data $\mathbf{X}\in\mathbb{R}^{H\times L}$ and a convolution kernel $\mathbf{W}\in\mathbb{R}^{U\times V}$, their convolution is defined as:

$$\mathbf{Y}=\mathbf{W}\ast\mathbf{X}$$

where $\ast$ denotes the convolution operation. Element-wise, this is:

$$y_{ij}=\sum_{u=1}^{U}\sum_{v=1}^{V}w_{uv}\,x_{i-u+1,\,j-v+1}$$
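The element-wise definition can be checked with a direct, loop-based implementation. This is a teaching sketch that assumes output indices start at $(U,V)$, so only positions where the kernel fully overlaps the input are produced (a "valid" convolution of size $(H-U+1)\times(L-V+1)$); the function name is hypothetical. Deep learning frameworks usually implement cross-correlation (no kernel flip), which is equivalent up to flipping the learned kernel.

```python
def conv2d_valid(x, w):
    """y[i][j] = sum_u sum_v w[u][v] * x[i-u][j-v] with 0-based indices.

    Equivalent to the 1-based y_ij = sum w_uv x_{i-u+1, j-v+1} when output
    indices start at (U, V), i.e. an assumed 'valid' output region.
    """
    H, L = len(x), len(x[0])
    U, V = len(w), len(w[0])
    y = []
    for i in range(U - 1, H):
        row = []
        for j in range(V - 1, L):
            acc = 0.0
            for u in range(U):
                for v in range(V):
                    acc += w[u][v] * x[i - u][j - v]  # kernel is flipped
            row.append(acc)
        y.append(row)
    return y


x = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
print(conv2d_valid(x, [[1, 0], [0, -1]]))  # [[4.0, 4.0], [4.0, 4.0]]
```

With this diagonal-difference kernel every output is $x_{i,j}-x_{i-1,j-1}=4$, because the input grows by 4 along each diagonal step.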

The primary function of the convolutional layer is to extract features from local regions; with different convolution kernels, it acts as a bank of different feature extractors. The output of the convolutional layer can be written as:

$$\mathbf{Z}=f\left(\mathbf{W}\ast\mathbf{X}+b\right)$$

where $b$ is the bias and $f(\cdot)$ is the activation function. The activation function (activation layer) applies a nonlinear operation to the data; the CNN in this article uses the ReLU function, $f(x)=\max(0,x)$, as the activation function.

The output of the convolutional layer then enters the pooling layer, which reduces the dimensionality of the convolutional layer's output feature maps and thereby the number of parameters.

Suppose the input of the pooling layer is divided into regions $R_{k}$; pooling downsamples each region to a single value that summarizes it. Common pooling functions are max pooling and average pooling; the CNN in this article uses max pooling, defined as:

$$y_{k}=\max_{i\in R_{k}}x_{i}$$
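The bias-plus-ReLU activation and max pooling can be sketched on a small feature map. The 2 × 2 window with stride 2 is an assumed, common choice used only for illustration; the actual pooling sizes are those listed in Table 3. Function names are hypothetical.

```python
def relu_layer(feature_map, b):
    """Z = f(Y + b) with the ReLU activation f(x) = max(0, x)."""
    return [[max(0.0, v + b) for v in row] for row in feature_map]


def max_pool2d(feature_map, size=2, stride=2):
    """Max pooling: each size x size region R_k -> max of its values.

    size=2, stride=2 are illustrative assumptions (non-overlapping regions).
    """
    H, L = len(feature_map), len(feature_map[0])
    pooled = []
    for i in range(0, H - size + 1, stride):
        pooled.append([
            max(feature_map[i + di][j + dj]
                for di in range(size) for dj in range(size))
            for j in range(0, L - size + 1, stride)
        ])
    return pooled


conv_out = [[1, -2, 3, 0], [-1, 5, -3, 2], [0, 0, 1, 1], [4, -4, 2, -2]]
print(max_pool2d(relu_layer(conv_out, b=-1)))  # [[4, 2], [3, 1]]
```

A 4 × 4 map is reduced to 2 × 2, a fourfold reduction in the number of values passed to the next layer.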

The output of the last convolutional block is fed into the fully connected layers, which form a basic feedforward neural network (FNN). The fully connected layers take the features extracted by the convolutional blocks as input and perform classification for the whole CNN.

2.2.2. Experimental Data Acquisition

When the radar system receives multiple pulses, the range–pulse spectrum can be converted into an RD spectrum as follows:

$$S_{r}(f_{d},t)=\sum_{k=0}^{N_{p}-1}\mathrm{win}(k)\,s_{r}(k,t)\,e^{-j2\pi f_{d}kT_{r}}$$

where $s_{r}(k,t)$ is the single-frame range–pulse spectrum after pulse compression, $r$ is the frame index, $k$ is the pulse index, and $t$ is the fast time; $S_{r}(f_{d},t)$ is the single-frame RD spectrum, where $f_{d}$ is the Doppler frequency; $N_{p}$ is the number of pulses in a frame and $T_{r}$ is the pulse repetition interval. The RD spectrum is obtained by multiplying by the window function $\mathrm{win}$ in the slow-time domain and then applying the discrete Fourier transform. Here, a Hanning window is used to reduce spectral leakage and the sidelobes of spectral peaks, which helps to obtain the RD spectrum information of the target signal more accurately.
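The windowed slow-time DFT can be sketched directly. The sketch assumes the frame is stored as `frame[k][t]` (pulse, range cell), uses the symmetric Hann window, and returns Doppler bins in DFT order, so bin $l$ corresponds to $f_{d}=l/(N_{p}T_{r})$ for $l<N_{p}/2$ and to $(l-N_{p})/(N_{p}T_{r})$ otherwise. A plain $O(N_{p}^{2})$ DFT is used for clarity where an FFT would be used in practice; the function names are hypothetical.

```python
import cmath
import math


def hann(n):
    """Symmetric Hann (Hanning) window of length n."""
    if n == 1:
        return [1.0]
    return [0.5 - 0.5 * math.cos(2 * math.pi * k / (n - 1)) for k in range(n)]


def range_doppler(frame):
    """Windowed slow-time DFT of one frame (assumed layout frame[k][t]).

    Returns rd[l][t]: Doppler bin l (DFT order) at range cell t.
    """
    n_pulses, n_range = len(frame), len(frame[0])
    win = hann(n_pulses)
    rd = [[0j] * n_range for _ in range(n_pulses)]
    for t in range(n_range):
        for l in range(n_pulses):
            rd[l][t] = sum(
                win[k] * frame[k][t] * cmath.exp(-2j * math.pi * l * k / n_pulses)
                for k in range(n_pulses))
    return rd


# A target in range cell 1 whose phase advances by Doppler bin 3 per pulse.
n_pulses = 16
frame = [[0j, cmath.exp(2j * math.pi * 3 * k / n_pulses)] for k in range(n_pulses)]
rd = range_doppler(frame)
peak_bin = max(range(n_pulses), key=lambda l: abs(rd[l][1]))
print(peak_bin)  # 3
```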

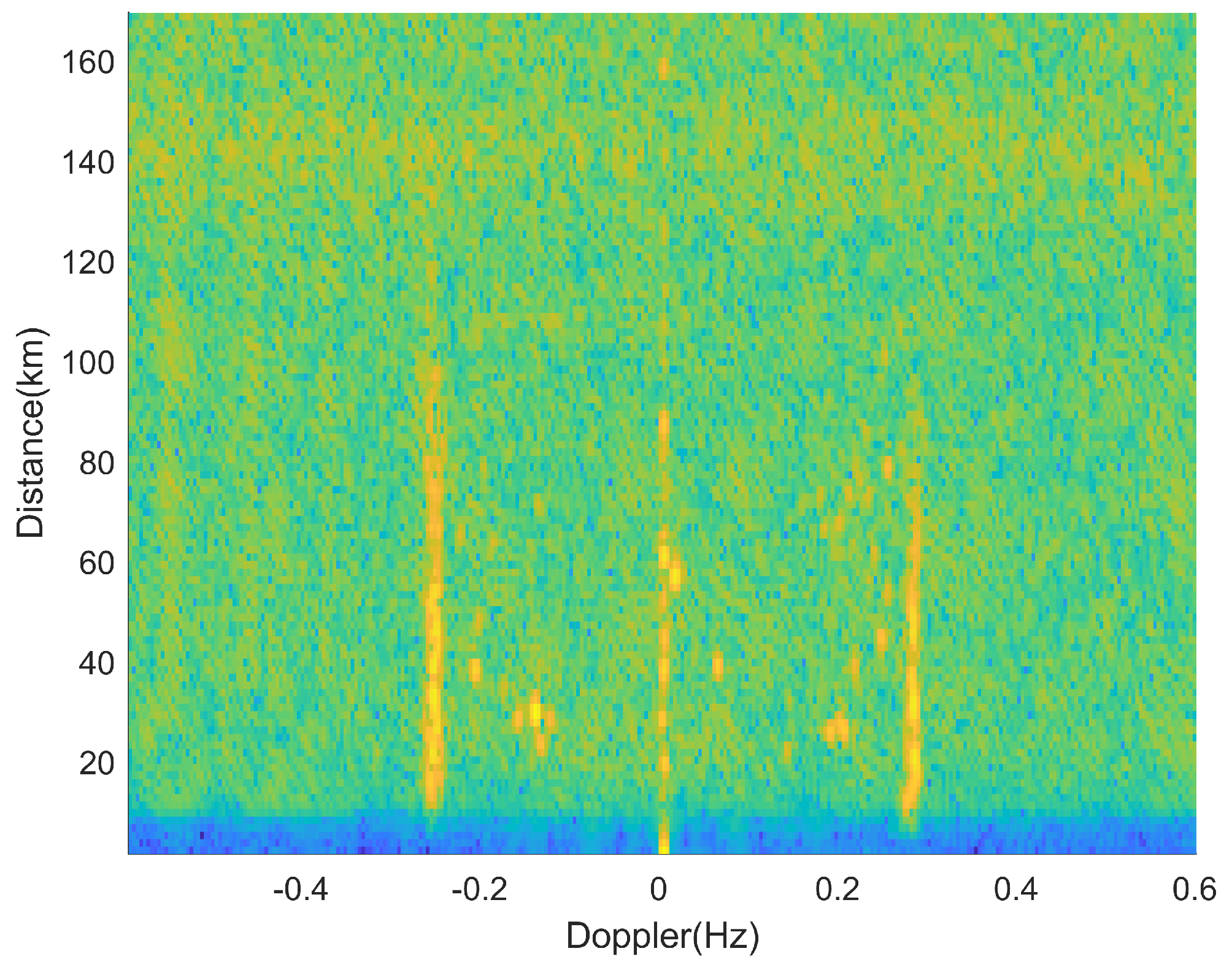

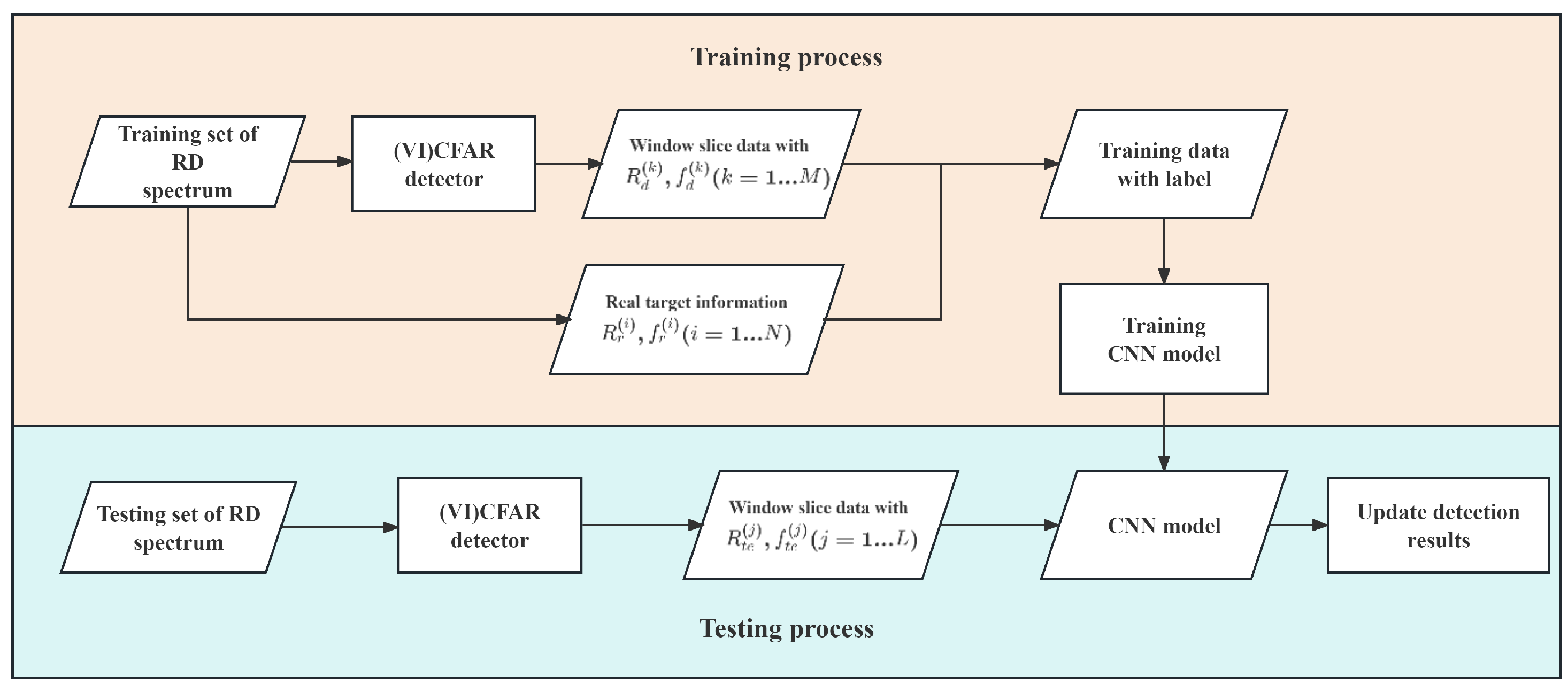

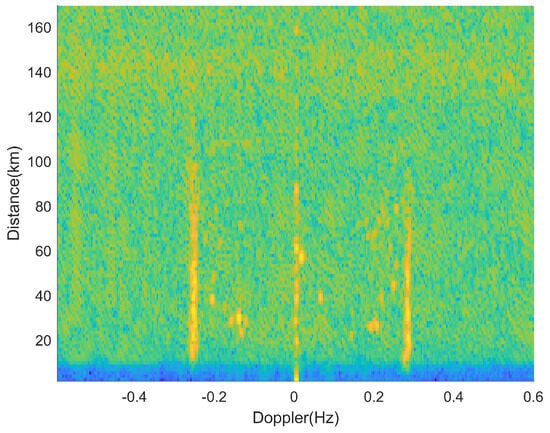

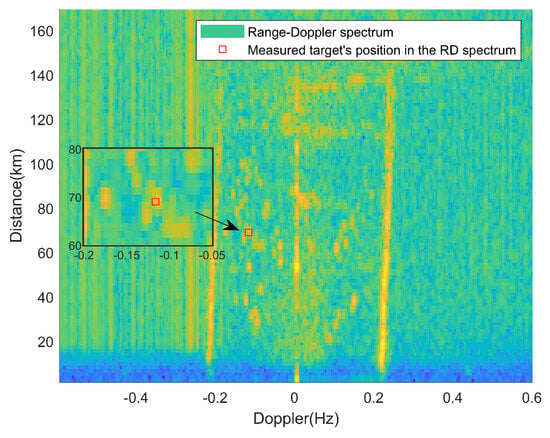

Figure 3 shows an RD spectrum obtained with the above processing: the echo signals received by an HFSWR system located in the Bohai Sea are processed with two successive FFTs (over fast time for range and over slow time for Doppler) to obtain the RD spectrum. The radar system parameters are listed in Table 2.

Figure 3.

HFSWR measured RD spectrum.

Table 2.

HFSWR system parameters.

From this spectrum, the range and Doppler frequency of targets can be extracted [27]. The spectrum describes the power spectral density of the electromagnetic signals received by the HFSWR within its detection range; colors from blue through green to yellow indicate increasing echo energy. The spectrum shows that the HFSWR detection environment is very complex. In actual sea operation, it is difficult to obtain the true number, locations, and states of sea surface targets accurately and comprehensively. Ship automatic identification system (AIS) data associated with radar echoes can serve as target ground truth, but vessels without AIS are still detected by the radar; selecting only targets with AIS data therefore cannot produce an RD spectrum dataset with comprehensive position and velocity ground truth, which would also compromise the training and testing of the subsequent models. Therefore, to verify the performance of the proposed method and compare it with existing methods, this paper adopts target embedding evaluation: a number of simulated targets are embedded in the measured clutter background to emulate realistic HFSWR target scenes [28]. In addition, a small amount of association data between AIS and radar target echoes was obtained to verify the feasibility of the proposed method on measured target data.

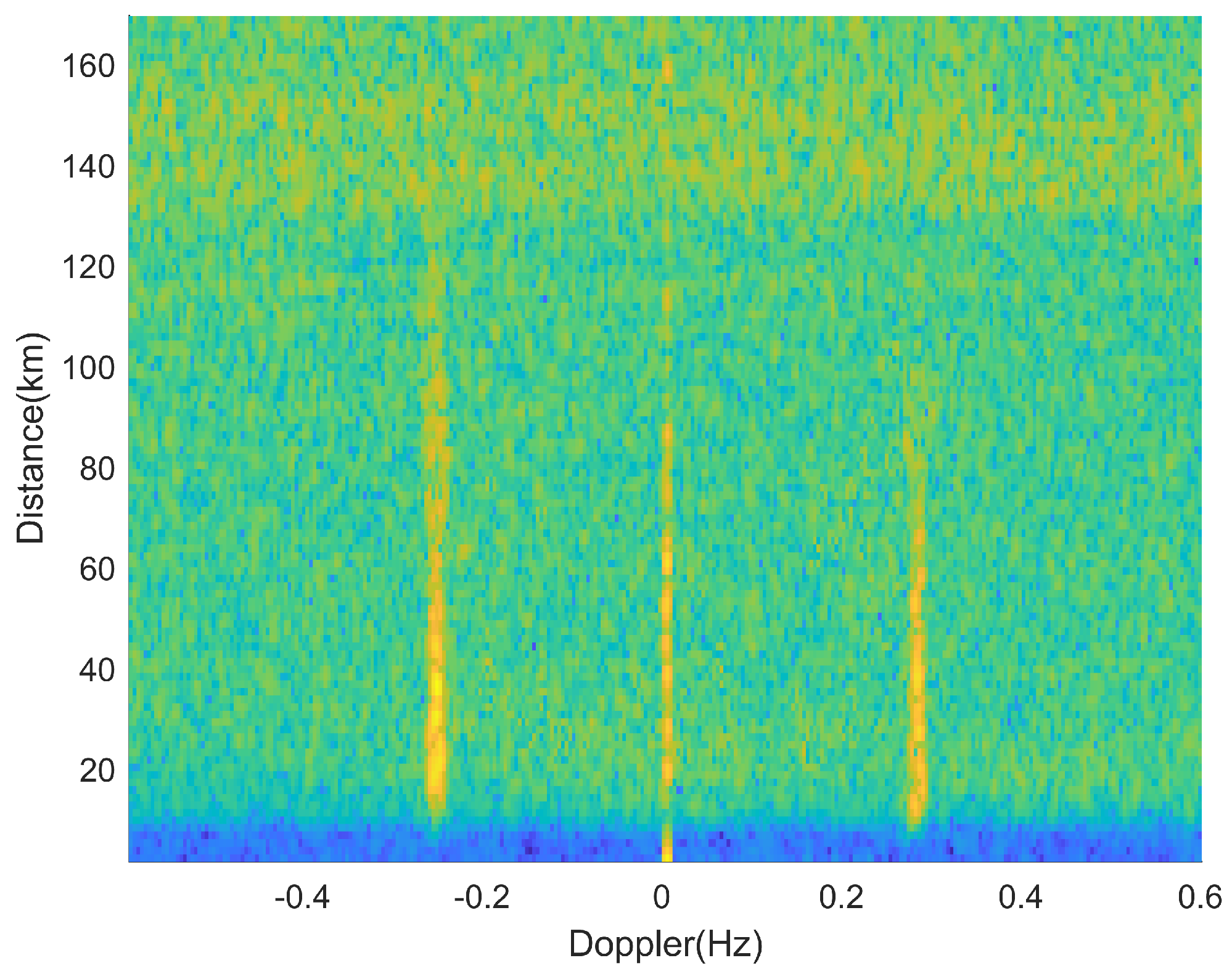

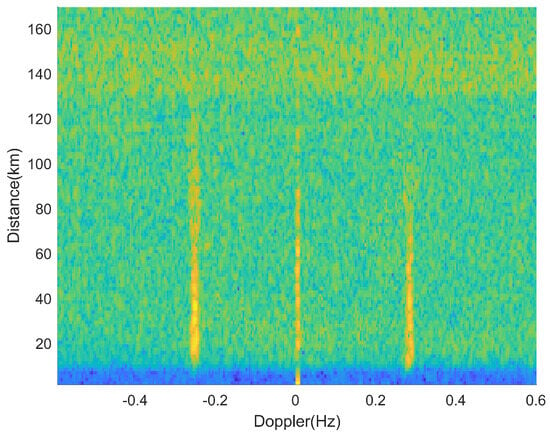

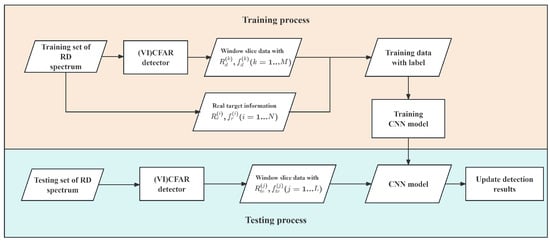

The detection background was extracted manually from the original radar RD spectrum. First, the region spanning 20–70 km in range and −0.5 to −0.3 Hz and 0.3 to 0.5 Hz in Doppler is selected from the measured RD spectrum as the background reference region, and the distribution of this region is computed. Then, the cells in the two regions lying between the positive Bragg peak and zero Doppler and between the negative Bragg peak and zero Doppler are replaced with random values drawn from the distribution of the background reference region, yielding the detection background spectrum shown in Figure 4.
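The background-construction step can be sketched as below. The text does not say how the distribution of the reference region is modeled, so this sketch assumes the simplest choice, resampling the empirical distribution (a bootstrap); a fitted parametric model would be an equally valid reading. Cell coordinates and the function name are hypothetical.

```python
import random


def build_background(rd_power, ref_cells, fill_cells, seed=None):
    """Replace fill_cells with random draws from the values in ref_cells.

    rd_power: 2D list [range][Doppler] of measured power values.
    ref_cells, fill_cells: iterables of (range_idx, doppler_idx) tuples.
    Assumption: the empirical (bootstrap) distribution of the reference
    region stands in for 'the distribution of this region'.
    """
    rng = random.Random(seed)
    samples = [rd_power[r][d] for r, d in ref_cells]
    background = [row[:] for row in rd_power]   # leave the input untouched
    for r, d in fill_cells:
        background[r][d] = rng.choice(samples)
    return background


measured = [[1, 2, 3], [4, 5, 6]]
bg = build_background(measured, ref_cells=[(0, 0), (0, 1)], fill_cells=[(1, 2)], seed=1)
print(bg[1][2] in (1, 2))  # True
```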

Figure 4.

Measured detection background.

Simulated targets are then added to the detection background. For each coherent integration period, we set the number of targets and their ranges and velocities. A linear frequency interrupted continuous wave (LFICW) waveform is used, in which $N_{p}$ pulses are transmitted within a coherent integration period. Each received pulse is sampled $N_{s}$ times, and a single fast Fourier transform (FFT) yields $N_{r}$ range bins. Assuming there are $m$ targets in a given range gate, the sum of the $m$ target signals can be modeled as:

$$s(k)=\sum_{i=1}^{m}A_{i}\exp\left[j2\pi\left(K\tau_{i}+f_{d_{i}}\right)kT\right]$$

where $T$ is the sampling interval, $k$ is the sampling index, $A_{i}$ and $f_{d_{i}}$ are the amplitude and Doppler frequency of the $i$th target, respectively, $\tau_{i}$ is the range delay of the $i$th target signal, and $K$ is the frequency modulation slope. The RD data $\mathbf{S}_{t}\in\mathbb{C}^{N_{r}\times N_{p}}$ of the simulated targets are then obtained according to Formula (17), where $N_{r}$ is the number of range gates and $N_{p}$ is the number of coherent accumulation cycles. $\mathbf{S}_{t}$ is expressed as:

$$\mathbf{S}_{t}=\begin{bmatrix}S_{t}(1,1)&\cdots&S_{t}(1,N_{p})\\ \vdots&\ddots&\vdots\\ S_{t}(N_{r},1)&\cdots&S_{t}(N_{r},N_{p})\end{bmatrix}$$

Finally, the simulated target RD data $\mathbf{S}_{t}$ are superimposed on the measured background data $\mathbf{S}_{b}$:

$$\mathbf{S}=\mathbf{S}_{t}+\mathbf{S}_{b}$$
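The target-embedding pipeline can be sketched end to end on a toy grid. Under the assumptions of this sketch, each target is a complex exponential whose fast-time frequency $K\tau_{i}$ sets its range bin and whose pulse-to-pulse phase step $f_{d_{i}}T_{r}$ sets its Doppler bin; the slow-time term, the pulse repetition interval $T_{r}$, and the neglect of intra-pulse Doppler are assumptions, no window is applied, and all names are hypothetical.

```python
import cmath
import math


def dft(seq):
    """Plain O(n^2) DFT; an FFT would be used in practice."""
    n = len(seq)
    return [sum(seq[k] * cmath.exp(-2j * math.pi * l * k / n) for k in range(n))
            for l in range(n)]


def simulate_target_rd(targets, n_samples, n_pulses, T, T_r, K):
    """targets: list of (A_i, f_d_i, tau_i). Returns S_t[range_bin][doppler_bin].

    Assumed model: beat frequency K*tau along fast time, Doppler phase
    advancing by f_d * T_r from pulse to pulse (intra-pulse Doppler ignored).
    """
    echoes = [[sum(A * cmath.exp(2j * math.pi * (K * tau * k * T + fd * p * T_r))
                   for A, fd, tau in targets)
               for k in range(n_samples)]
              for p in range(n_pulses)]
    profiles = [dft(row) for row in echoes]                     # range FFT per pulse
    return [dft([profiles[p][r] for p in range(n_pulses)])      # Doppler FFT per range
            for r in range(n_samples)]


def superimpose(s_t, s_b):
    """S = S_t + S_b, element by element."""
    return [[a + b for a, b in zip(row_t, row_b)] for row_t, row_b in zip(s_t, s_b)]


# One target in range bin 3 (K*tau*T = 3/16) and Doppler bin 2 (fd*T_r = 2/8).
s_t = simulate_target_rd([(1.0, 0.25, 3 / 16)], n_samples=16, n_pulses=8,
                         T=1.0, T_r=1.0, K=1.0)
background = [[0.1] * 8 for _ in range(16)]
s = superimpose(s_t, background)
```

The target's energy lands in a single RD cell with magnitude $N_{s}N_{p}=128$; in real data, windowing and off-bin frequencies spread it over neighbouring cells, which is the spreading discussed for the 9 × 9 window.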

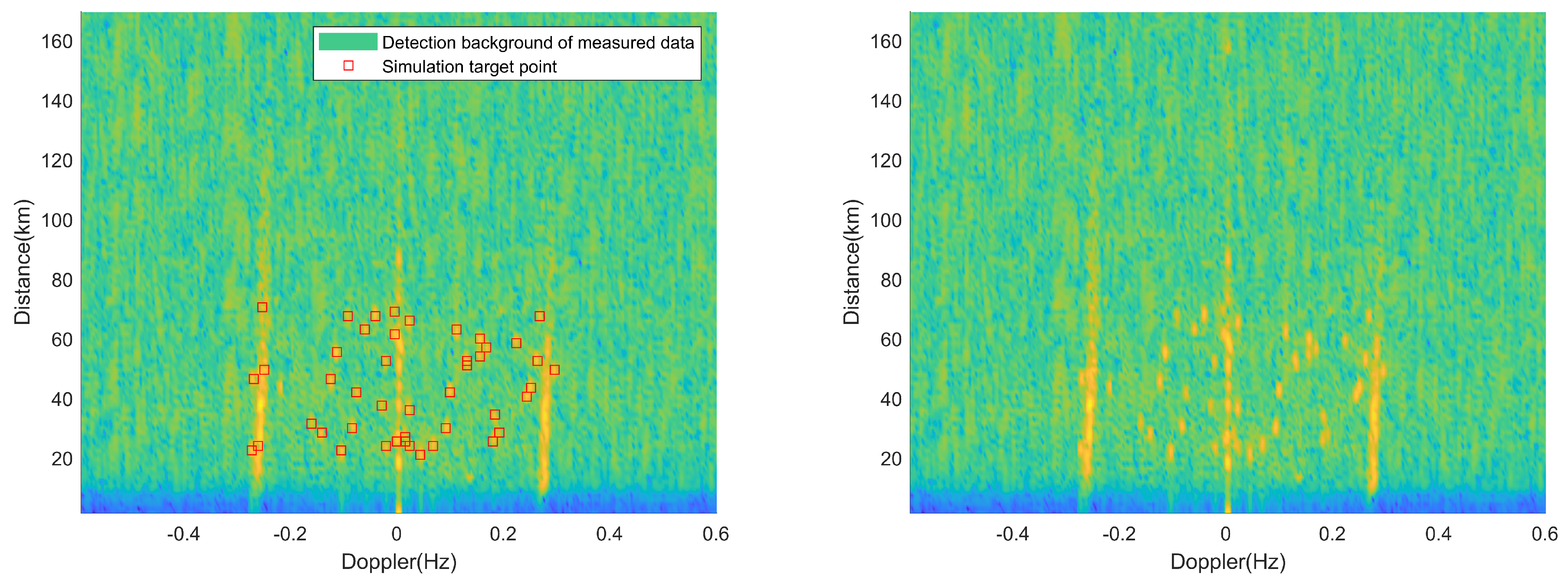

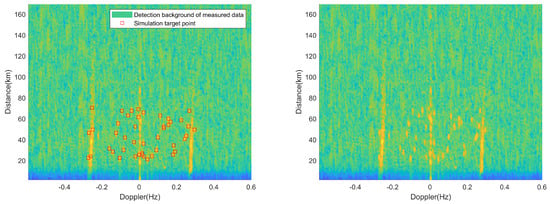

In this way, we obtain semi-measured, semi-simulated RD spectra that contain complete target ground-truth information. Figures 5 and 6 show single-frame RD spectra containing 45 and 35 simulated targets, respectively. For ease of viewing, the simulated targets are marked in the left panels and unmarked in the right panels.

Figure 5.

Single-frame RD spectrum containing 45 simulation targets.

Figure 6.

Single-frame RD spectrum containing 35 simulation targets.

Note that the simulated targets lie within the 20–70 km range interval; in practice, the HFSWR system has a blind zone of approximately 20 km. In addition, different targets are placed in different range and Doppler cells. The range resolution of the spectrum is 1.5 km and the Doppler resolution is 0.004 Hz. Because target energy spreads in both the range and Doppler domains, two targets that are close in the spectrum can overlap, as also occurs in practical detection.

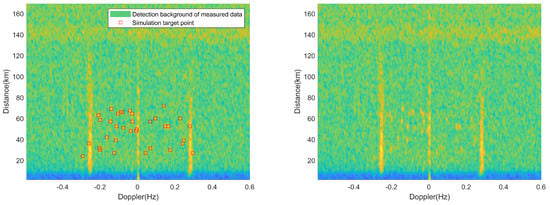

The electromagnetic waves emitted by the HFSWR interact with the rough sea surface, so the sea echo appears in the RD spectrum as ridge-like structures extending along the range dimension, symmetric about zero Doppler. Ionospheric clutter appears as band-like structures extending along the Doppler dimension, and ground clutter appears at zero Doppler as a ridge-like structure similar to sea clutter. In contrast, a target appears in the RD spectrum as an isolated peak with a certain amplitude [29]. These descriptions of clutter and targets come from human observation and perception of spectral structures; a CNN can instead automatically extract the features that distinguish targets from clutter and classify them in a single step, achieving end-to-end classification of clutter and targets.

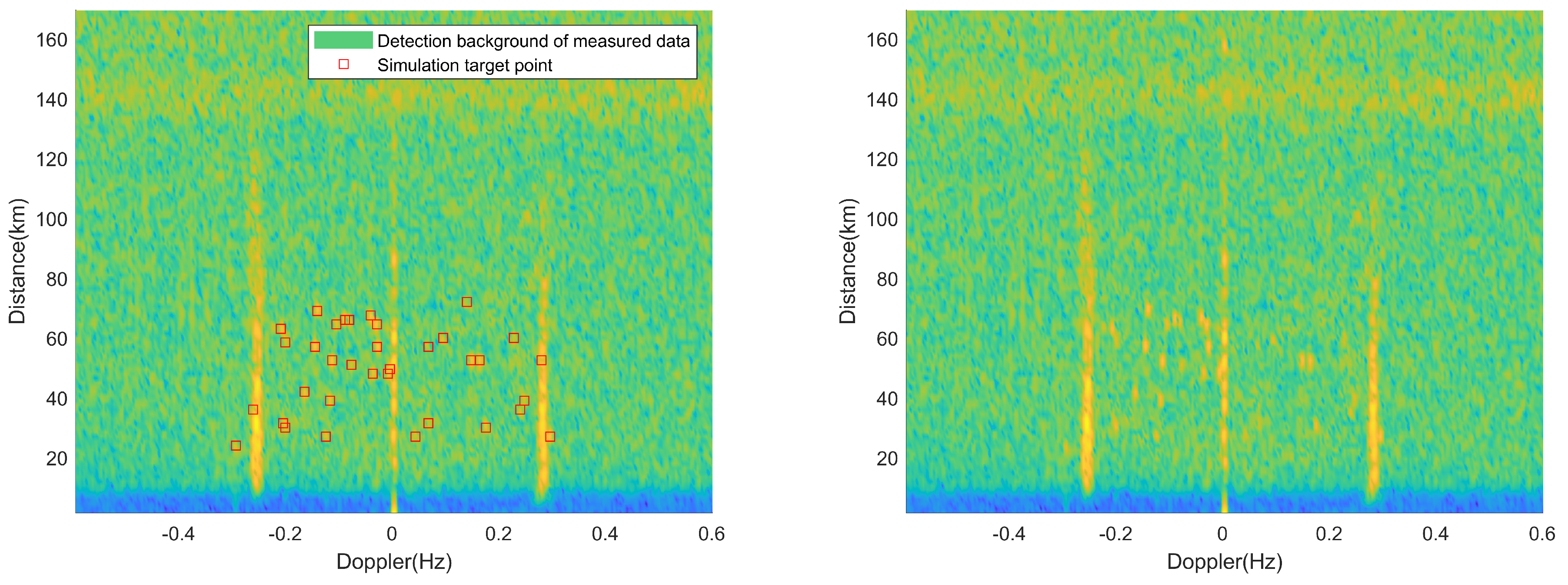

(VI)CFAR performs preliminary detection on a single frame of RD spectrum data to obtain the first-level detection results. Centered on the position of each detection in the RD spectrum, a 9 × 9 rectangular window is extracted to form a window slice, i.e., two-dimensional image data containing either a target or clutter. The window size takes into account the range and Doppler spreading of targets in the spectrum, which generally extends no more than four cells in each direction; a window of 2 × 4 + 1 = 9 cells per side therefore contains all the information about the target while avoiding larger windows that would add redundancy and may include interference from other targets or clutter. The CNN then classifies each slice as target or clutter. Figure 7 shows example window slices of clutter and targets.
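Window slicing can be sketched as follows; `half=4` gives the 9 × 9 window. How detections near the edge of the spectrum are handled is not specified in the text, so the sketch assumes constant padding with a `pad` value; the function name is hypothetical.

```python
def window_slice(rd, r0, d0, half=4, pad=0.0):
    """Cut a (2*half+1) x (2*half+1) slice centred on cell (r0, d0).

    half=4 gives the 9 x 9 window (four spreading cells on each side).
    Assumption: cells outside the spectrum are filled with `pad`.
    """
    n_r, n_d = len(rd), len(rd[0])
    return [[rd[r][d] if 0 <= r < n_r and 0 <= d < n_d else pad
             for d in range(d0 - half, d0 + half + 1)]
            for r in range(r0 - half, r0 + half + 1)]


rd = [[100 * r + d for d in range(64)] for r in range(32)]
patch = window_slice(rd, 10, 20)
print(len(patch), len(patch[0]), patch[4][4])  # 9 9 1020
```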

Figure 7.

Examples of window slices of clutter and targets.

2.2.3. Training and Testing Process of (VI)CFAR-CNN

When a deep learning network is used for radar target detection, training the network model is crucial. A simple training strategy is to slide a window over each frame of the RD spectrum to form window slices for the network. Since the range and velocity of the simulated targets are known, a slice is labeled "target" only when a target lies in its detection cell, and "non-target" otherwise. This strategy has two problems: if the signal-to-noise ratio (SNR) of a target is very low, the network will struggle to distinguish target from clutter during detection no matter how many such samples it is trained on; and the imbalance between positive examples (targets) and negative examples (clutter) within each frame of the RD spectrum also affects network training.

The training strategy adopted in this article is to first run the CFAR detector on each frame of the RD spectrum. Each detection is taken as the center of a 9 × 9 window, which is cut out to form a window slice. Comparing the known range and velocity of the targets in each frame with the range and velocity of the CFAR detection contained in each slice determines whether the slice contains a target, so each slice can be labeled as either target or non-target. In this labeling process, the CFAR detector effectively supervises and guides the CNN in distinguishing targets from non-targets.
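A minimal labeling sketch follows. The text does not state its matching tolerance, so the sketch assumes a detection matches a true target when it lies within a hypothetical tolerance of one range cell and one Doppler cell; both tolerances and the function name are illustrative.

```python
def label_detections(detections, truths, range_tol=1, doppler_tol=1):
    """Label each CFAR detection 'target' or 'non-target'.

    detections, truths: lists of (range_idx, doppler_idx) cells.
    range_tol, doppler_tol: hypothetical matching tolerances in cells.
    """
    labels = []
    for r, d in detections:
        matched = any(abs(r - tr) <= range_tol and abs(d - td) <= doppler_tol
                      for tr, td in truths)
        labels.append("target" if matched else "non-target")
    return labels


print(label_detections([(10, 20), (30, 5), (11, 22)], truths=[(11, 20)]))
# ['target', 'non-target', 'non-target']
```

A tighter tolerance produces fewer, cleaner positive labels; a looser one admits detections displaced by target spreading but risks labeling nearby clutter as target.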

Table 3 lists the CNN structure parameters of the proposed method. The window slices are the network input; the convolutional layers extract features and capture the spatial structure within each slice through convolution; batch normalization (BN) layers are inserted between the convolutional layers and the pooling layers to ease training and improve network stability; and the output of the last pooling layer is flattened into a one-dimensional vector and passed to the fully connected layers for classification.

Table 3.

CNN network structure parameters.

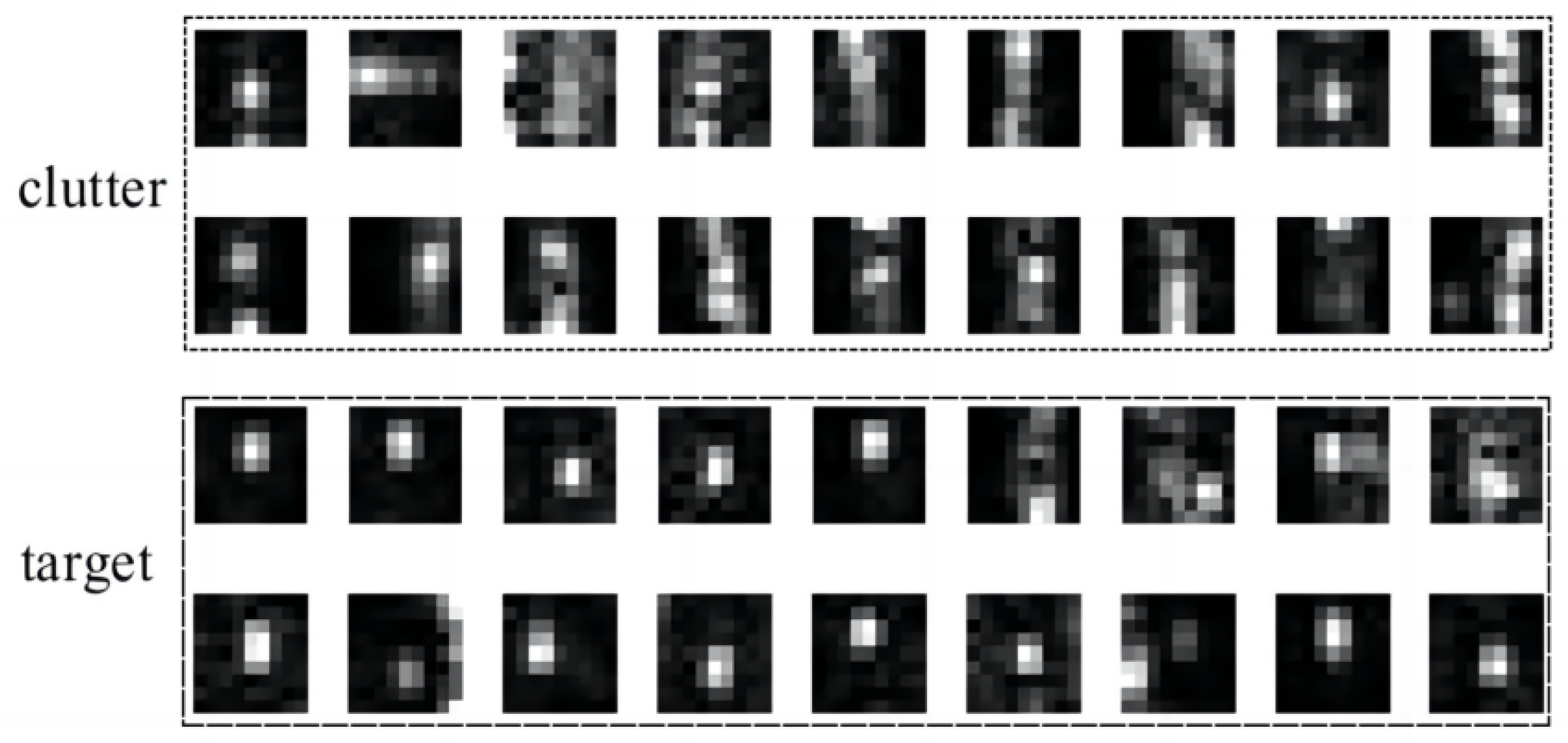

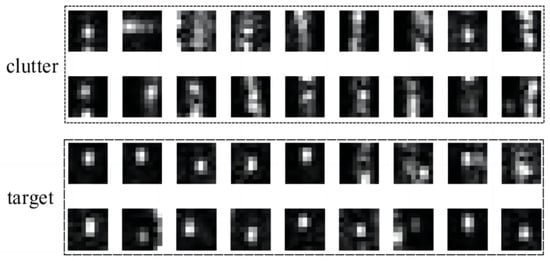

Figure 8 shows the training and testing processes of the (VI)CFAR-CNN detector.

Figure 8.

Training and testing process of (VI)CFAR-CNN.

During training, the CFAR detector finds "suspected targets", forms window slices from them, and records their range and Doppler frequency, which are compared with the true range and Doppler of the targets: a slice that matches a true target is labeled "target", and all others are labeled "non-target". Simulating multiple frames yields a training dataset of window slices and corresponding labels, which is used to train the CNN model. During testing, CFAR detection produces preliminary results, window slices are extracted around them, the trained CNN classifies each slice as clutter or target, and the detection results are updated accordingly to complete the detection.

In the (VI)CFAR-CNN detector, the training of the CNN model is supervised by the preceding CFAR detector, so different preset false alarm probabilities of the CFAR detector produce different window slice data. With a low preset false alarm probability, the detected targets generally have high SNR and clutter slices make up a small proportion of the data; with a high preset false alarm probability, the SNR of the detected targets spans a wider range and clutter slices make up a larger proportion of the data. The preset false alarm probability therefore affects the training of the CNN model. Here, we compare training and testing results on window slice data generated by CFAR detectors with different preset false alarm probabilities.

We used CFAR detectors with preset false alarm probabilities of 0.001, 0.002, 0.005, 0.01, 0.02, and 0.05 to generate six window slice datasets, and merged the slices generated at all of these settings into a seventh, fused dataset. We then trained and tested a CNN model on each of the seven datasets and computed four evaluation metrics: classification accuracy, recall, precision, and F1 score. For fairness, each dataset contains 2600 clutter slices and 1000 target slices, of which 1/10 is used for testing and the rest for training. The metrics in Table 4 show that the CNN model trained on window slices generated with multiple preset false alarm probabilities demonstrates superior performance. Therefore, in subsequent experiments, the CNN model was trained on window slices generated by CFAR detectors with multiple preset false alarm probabilities.
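The four metrics follow the usual binary-classification definitions with "target" as the positive class. With the split described above, each dataset has 2600 + 1000 = 3600 slices, so 360 are held out for testing and 3240 are used for training. The sketch below computes the metrics from true and predicted labels; the encoding 1 = target, 0 = clutter and the function name are assumptions.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, recall, precision and F1 (assumed encoding: 1 = target)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    accuracy = (tp + tn) / len(pairs)
    recall = tp / (tp + fn) if tp + fn else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "recall": recall,
            "precision": precision, "f1": f1}


m = classification_metrics([1, 1, 1, 0, 0, 0, 0, 0], [1, 1, 0, 1, 0, 0, 0, 0])
print(m["accuracy"])  # 0.75
```

Because clutter slices outnumber target slices by 2.6 to 1, accuracy alone can look good even when many targets are missed; recall and F1 expose that case.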

Table 4.

CNN model performance under different CFAR preset parameters.

3. Fusion of Dual-Detection Maps to Compensate for Detection Performance

3.1. Detection Rate Loss of (VI)CFAR-CNN

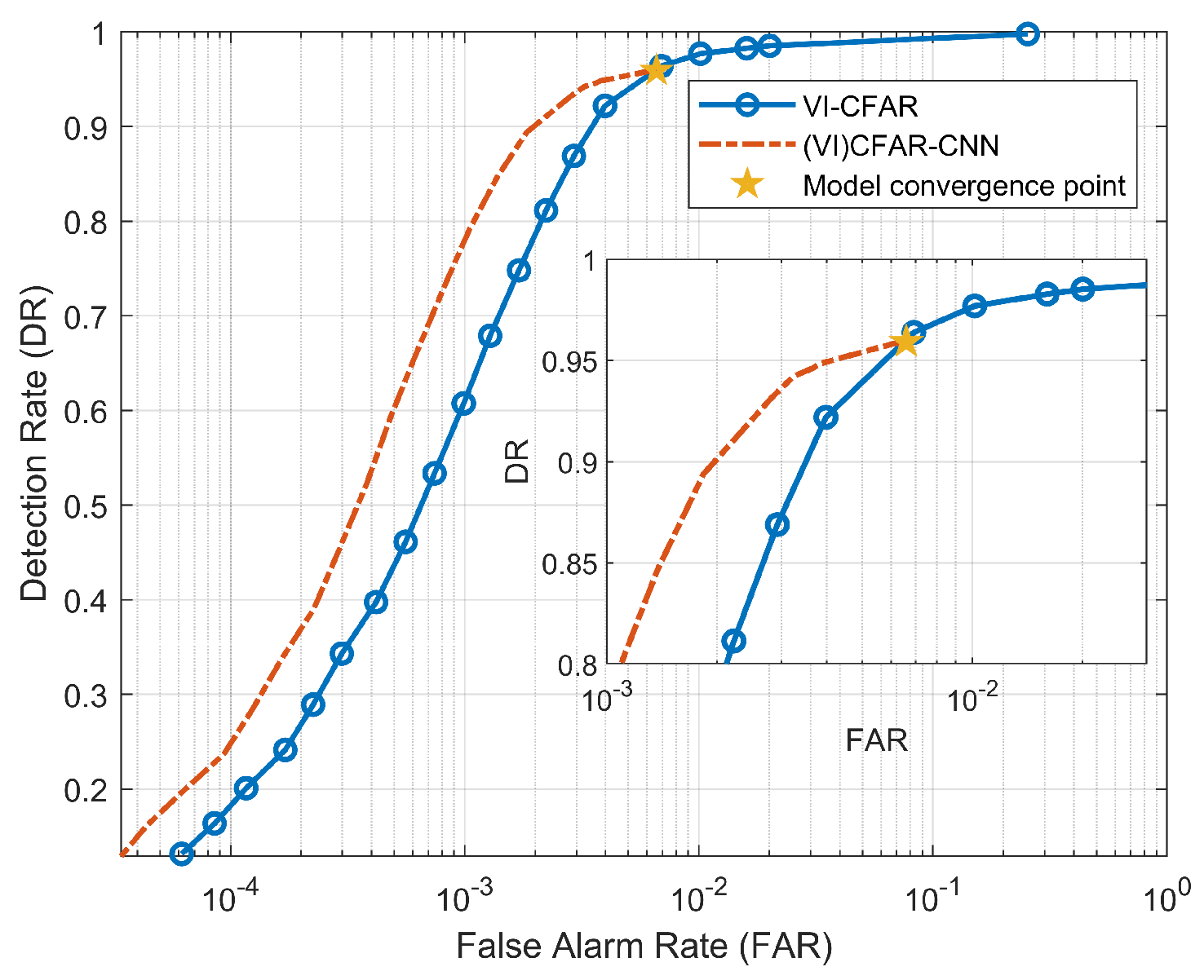

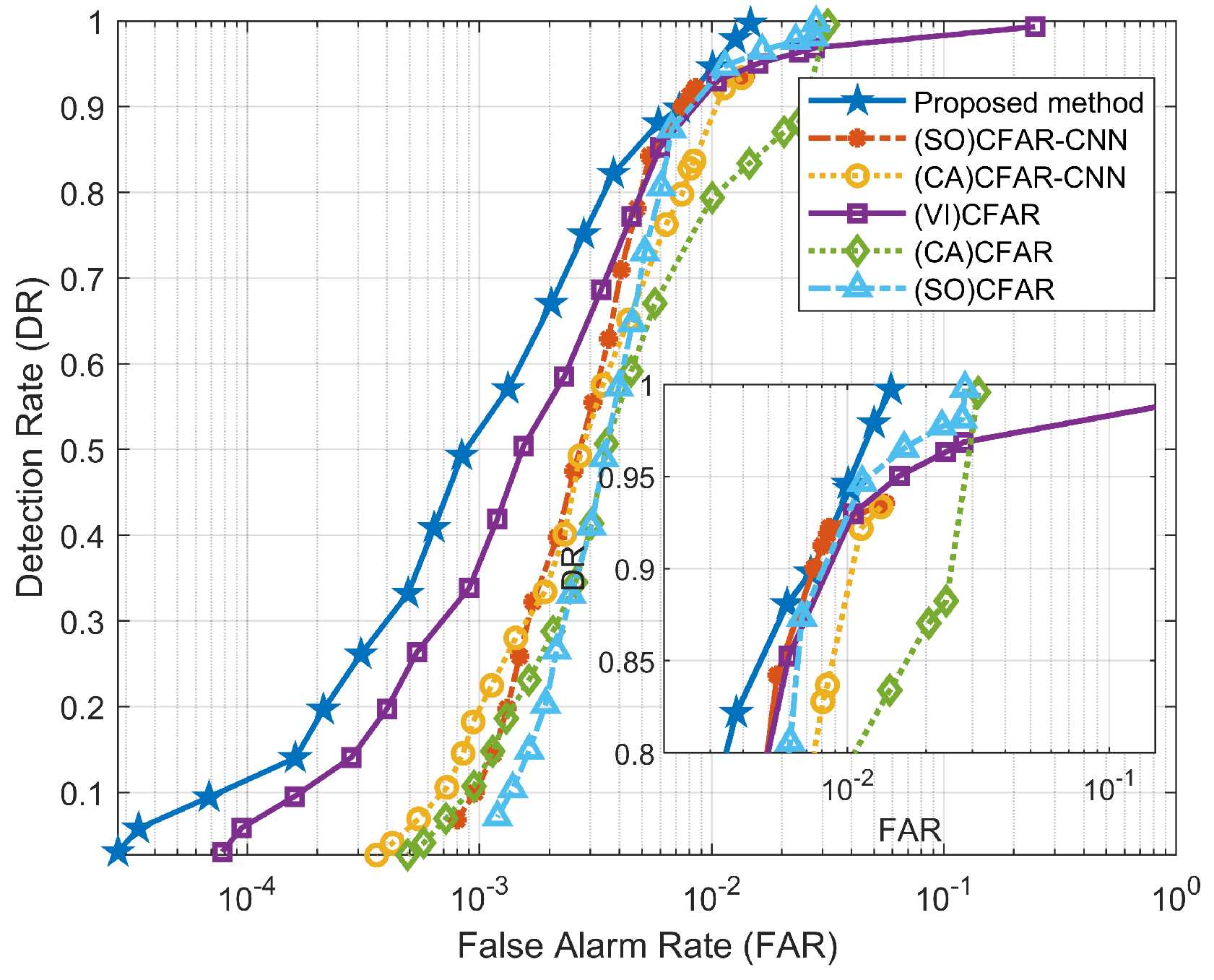

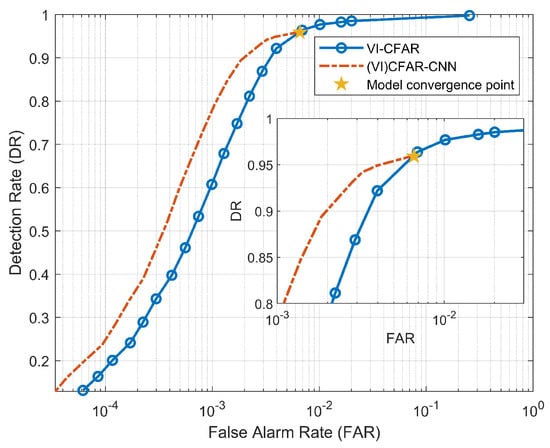

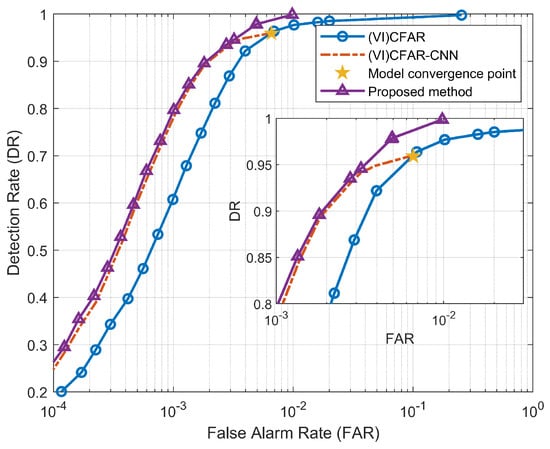

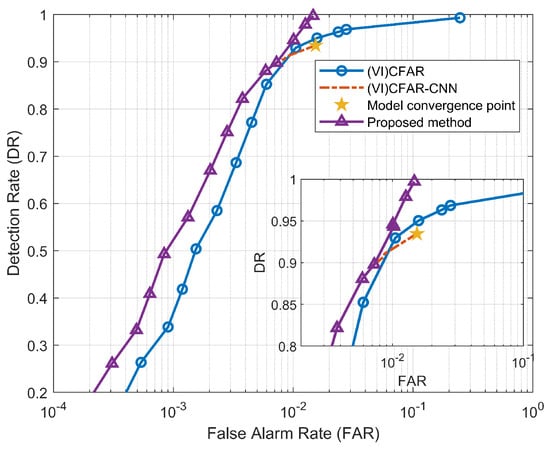

The previous section introduced the principle and process of (VI)CFAR-CNN detection, in which the detection rate of the detector is limited by the convergence of the CNN model. Figure 9 plots the ROC curves of (VI)CFAR and (VI)CFAR-CNN, showing the relationship between the detection rate (DR) and the false alarm rate (FAR), which are calculated as:

$$DR=\frac{N_{d}}{N_{t}},\qquad FAR=\frac{N_{f}}{N_{c}}$$

where $N_{d}$ is the number of correctly detected targets, $N_{t}$ is the total number of targets in the frame spectrum, $N_{f}$ is the number of false alarm cells, and $N_{c}$ is the total number of cells in the intercepted RD spectrum (in this article it is ). All DR and FAR values in this article are calculated with these formulas.
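Applied to one frame, the DR and FAR definitions can be sketched as follows. The sketch assumes that a target counts as detected only if its own cell is flagged and that every other flagged cell is a false alarm; this association rule is an assumption, and the total cell count defaults to the size of the map. The function name is hypothetical.

```python
def detection_and_false_alarm_rate(detection_map, truth_cells, n_cells=None):
    """DR = detected targets / all targets; FAR = false-alarm cells / all cells.

    detection_map: 2D list of 0/1 values; truth_cells: set of (r, d) cells.
    Assumption: exact-cell matching; n_cells defaults to the map size.
    """
    flagged = {(r, d) for r, row in enumerate(detection_map)
               for d, v in enumerate(row) if v}
    n_detected = len(flagged & truth_cells)
    n_false = len(flagged - truth_cells)
    if n_cells is None:
        n_cells = sum(len(row) for row in detection_map)
    return n_detected / len(truth_cells), n_false / n_cells


det = [[1, 0, 0, 0, 0],
       [0, 1, 0, 0, 0],
       [0, 0, 0, 0, 0],
       [0, 0, 0, 0, 1]]
print(detection_and_false_alarm_rate(det, {(0, 0), (2, 3)}))  # (0.5, 0.1)
```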

Figure 9.

ROC of (VI)CFAR-CNN and (VI)CFAR.

Because a CNN model is cascaded after (VI)CFAR, the detection performance of (VI)CFAR-CNN is significantly better than that of (VI)CFAR. However, once the CNN model has converged, the detector output stabilizes: the DR of (VI)CFAR-CNN stops increasing at 0.9592, which we call the detection rate loss. In contrast, the DR and FAR of (VI)CFAR always increase together, so (VI)CFAR suffers no loss of DR. According to the Neyman–Pearson criterion, the false alarm probability should be held within a specified range while the detection probability is maximized. The detection rate loss of (VI)CFAR-CNN caused by CNN convergence is inconsistent with this criterion: beyond the convergence point, the DR does not increase even if the preset false alarm probability is increased. A method to compensate for the (VI)CFAR-CNN detection rate loss is therefore needed.

3.2. Method of Fusion Decision Making of Dual-Detection Maps

This paper proposes a fusion decision method based on dual-detection maps to improve the detection performance.

First, the (VI)CFAR-CNN detector is applied to the RD spectrum with a preset false alarm probability $P_{fa1}$, where $P_{fa1}$ is the preset false alarm probability at which the detector model converges; this yields detection map A.

Then, the (VI)CFAR detector is applied with a preset false alarm probability $P_{fa2}$ ($P_{fa2} > P_{fa1}$), yielding detection map B.

Detection maps A and B are treated as two matrices $\mathbf{A} = [a_{ij}]$ and $\mathbf{B} = [b_{ij}]$ of the same size as the RD spectrum, with $a_{ij}, b_{ij} \in \{0, 1\}$: the value 1 indicates that a target is detected in a unit, and 0 indicates that no target is detected. The two detection results are then fused by weighting:

$$\mathbf{C} = w_1 \mathbf{A} + w_2 \mathbf{B},$$

where $\mathbf{w} = [w_1, w_2]$ is the weight vector of the two detectors and $\mathbf{C}$ is the detection matrix after weighted fusion. For each connected component in $\mathbf{C}$, the following calculations are performed:

where $n_k$ is the number of units in the $k$-th connected component, $c_{k,i}$ is the value of the $i$-th unit in the $k$-th connected component, and $\eta$ is the normalized factor of the connected component. The resulting component parameters are then judged, and the corresponding operations are performed, as follows:

After all connected components have been traversed and processed in this way, the final result of the dual-detection maps fusion decision is obtained.
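The fusion step can be sketched in Python as follows. The weighted sum of map A and map B with weights w1 and w2 follows the description above; the function names, the 4-connectivity, and the per-component rule (keep a component when the mean fused value of its units reaches the normalized factor eta) are hypothetical stand-ins, not the paper's exact judgment.

```python
from collections import deque

import numpy as np


def label_components(mask):
    """Label 4-connected components of a boolean mask; returns (labels, count)."""
    labels = np.zeros(mask.shape, dtype=int)
    rows, cols = mask.shape
    count = 0
    for r0 in range(rows):
        for c0 in range(cols):
            if not mask[r0, c0] or labels[r0, c0]:
                continue
            count += 1                      # start a new component at an unlabeled unit
            labels[r0, c0] = count
            queue = deque([(r0, c0)])
            while queue:                    # breadth-first flood fill
                r, c = queue.popleft()
                for rr, cc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
                    if 0 <= rr < rows and 0 <= cc < cols and mask[rr, cc] and not labels[rr, cc]:
                        labels[rr, cc] = count
                        queue.append((rr, cc))
    return labels, count


def fuse_detection_maps(a, b, w1, w2, eta):
    """Fuse map A ((VI)CFAR-CNN) and map B ((VI)CFAR), then decide per connected component.

    C = w1 * A + w2 * B. HYPOTHETICAL rule: a component of nonzero units in C is
    kept when the mean fused value over its n_k units is at least eta.
    """
    c = w1 * np.asarray(a, dtype=float) + w2 * np.asarray(b, dtype=float)
    labels, count = label_components(c > 0)
    fused = np.zeros(c.shape, dtype=np.uint8)
    for k in range(1, count + 1):
        members = labels == k
        n_k = np.count_nonzero(members)      # number of units in component k
        score = c[members].sum() / n_k       # mean fused value over component k
        if score >= eta:                     # hypothetical judgment against eta
            fused[members] = 1
    return fused
```

Under this rule, a unit detected only by (VI)CFAR survives when it belongs to a component that also contains (VI)CFAR-CNN detections, while isolated (VI)CFAR-only units are discarded unless w2 reaches eta. In practice, `scipy.ndimage.label` can replace the hand-written labelling.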

In effect, an independent (VI)CFAR detector compensates for the detection performance lost when the (VI)CFAR-CNN model converges: the preset false alarm probability of (VI)CFAR-CNN is kept unchanged, while that of (VI)CFAR is increased. This raises the final DR but also raises the FAR, because, as noted above, the DR and FAR of a detector rise and fall together. The objective is therefore to maximize the DR while keeping the FAR within an acceptable threshold.

3.3. Optimization of the Weights and Normalized Factor of the Dual-Detection Maps

In the fusion decision process described above, the weights $w_1$ and $w_2$ of the dual-detection maps and the normalized factor $\eta$ are crucial to the quality of the final fusion result.

For each preset false alarm probability of the independent (VI)CFAR, choosing the values of $w_1$, $w_2$, and $\eta$ becomes an optimization problem, which we describe with the following mathematical model:

where $f$ is the objective function and $\mathbf{x} = (w_1, w_2, \eta)$ is the vector composed of the weights and the normalized factor.

The preset false alarm probabilities of all (VI)CFAR detectors in the dual-detection maps fusion decision algorithm form a parameter space. For each element of this space, the corresponding optimization algorithm yields an optimal solution. The specific optimization process is presented next.

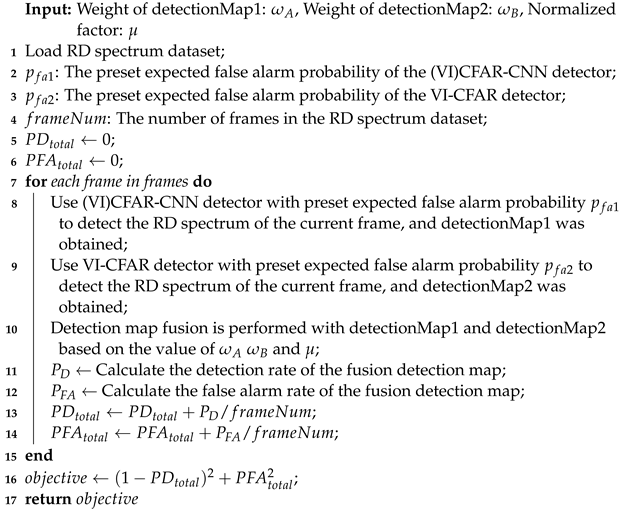

Algorithm 1 gives the pseudocode of the objective function. The objective function takes $w_1$, $w_2$, and $\eta$ as inputs, loads a fixed RD spectrum dataset, applies the dual-detection maps fusion decision method to obtain the detection result for each frame, and calculates the overall DR and FAR; finally, it outputs an objective value computed from the DR and FAR. Note that the preset false alarm probabilities used inside the objective function are global variables defined outside it: the value for (VI)CFAR-CNN is held constant, and only the value for (VI)CFAR is changed as needed.

| Algorithm 1: Objective function |

|

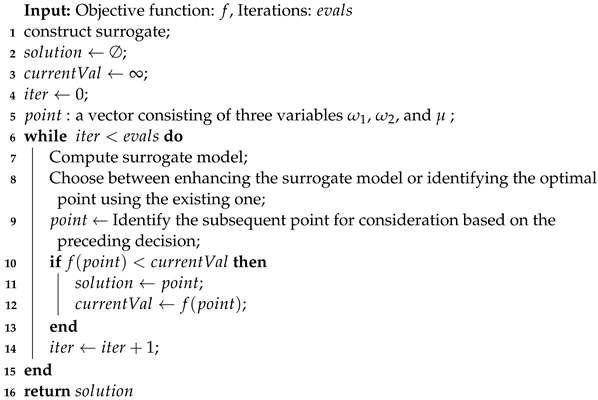
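The objective function of Algorithm 1 can be sketched as follows. Since the text does not specify how DR and FAR are combined into the objective value, the penalized score below (negative overall DR plus a penalty when the overall FAR exceeds a bound far_max) is a hypothetical choice, as are the signature and the detect callback; the preset false alarm probabilities are assumed to be fixed inside detect, mirroring the global variables described above.

```python
import numpy as np


def objective(x, frames, truths, detect, far_max=1e-3, penalty=100.0):
    """Hypothetical objective for tuning x = (w1, w2, eta); smaller is better.

    frames : list of RD spectra; truths : matching label maps (0 = background,
    k > 0 = units of target k); detect(frame, w1, w2, eta) -> 0/1 detection map,
    e.g. the dual-detection maps fusion with preset false alarm probabilities
    fixed outside this function. The score form is an assumption.
    """
    w1, w2, eta = x
    n_detected = n_targets = n_false = n_total = 0
    for frame, labels in zip(frames, truths):
        det = np.asarray(detect(frame, w1, w2, eta)).astype(bool)
        ids = np.unique(labels[labels > 0])
        n_targets += ids.size
        n_detected += sum(bool(det[labels == k].any()) for k in ids)
        n_false += int(np.count_nonzero(det & (labels == 0)))
        n_total += det.size
    dr = n_detected / n_targets if n_targets else 0.0   # overall DR over all frames
    far = n_false / n_total                             # overall FAR over all frames
    return -dr + penalty * max(0.0, far - far_max)
```

Negating the DR turns the problem into a minimization, which is the form most optimizers expect.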

We use the Surrogateopt algorithm to optimize $w_1$, $w_2$, and $\eta$; Algorithm 2 gives the pseudocode of the optimization function. Surrogateopt is an optimization algorithm based on a surrogate model and is suited to objective functions that are expensive to evaluate.

Algorithm 2.

Optimization process of $w_1$, $w_2$, and $\eta$.

The algorithm approximates the actual objective function with a surrogate model and thereby reduces the number of evaluations of the actual objective function [30]. In each iteration, Surrogateopt selects a suitable point at which to evaluate the actual objective function and updates the surrogate model with the result. Through repeated iterations, it finds the optimal solution or a solution close to it.
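`surrogateopt` is the surrogate optimization solver of MATLAB's Global Optimization Toolbox. The Python sketch below is not that solver; it is a minimal loop that illustrates the same idea under stated assumptions: fit a cheap Gaussian RBF surrogate to all points evaluated so far, pick the next point by minimizing a merit that mixes the surrogate prediction with the distance from evaluated points, and spend exactly one true evaluation per iteration. The kernel width, exploration weight, and candidate count are assumed values.

```python
import numpy as np


def surrogate_minimize(f, lower, upper, n_init=8, n_iter=20, n_candidates=500,
                       width=0.1, explore=0.5, seed=0):
    """Minimize an expensive f over a box with a Gaussian-RBF surrogate (illustrative)."""
    rng = np.random.default_rng(seed)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    scale = upper - lower

    def sq_dists(p, q):                      # pairwise squared distances in the unit box
        zp, zq = (p - lower) / scale, (q - lower) / scale
        return ((zp[:, None, :] - zq[None, :, :]) ** 2).sum(axis=-1)

    X = rng.uniform(lower, upper, size=(n_init, lower.size))   # initial random design
    y = np.array([f(x) for x in X])                              # expensive evaluations
    for _ in range(n_iter):
        # Fit the surrogate: RBF interpolant of the centred objective values.
        K = np.exp(-sq_dists(X, X) / width) + 1e-6 * np.eye(len(X))
        coef = np.linalg.solve(K, y - y.mean())
        # Score random candidates with the cheap surrogate instead of f.
        cand = rng.uniform(lower, upper, size=(n_candidates, lower.size))
        d2 = sq_dists(cand, X)
        pred = y.mean() + np.exp(-d2 / width) @ coef
        dist = np.sqrt(d2.min(axis=1))                           # distance to nearest evaluated point
        pred_n = (pred - pred.min()) / max(pred.max() - pred.min(), 1e-12)
        dist_n = dist / max(dist.max(), 1e-12)
        x_next = cand[np.argmin(pred_n - explore * dist_n)]      # low prediction, far from known points
        X = np.vstack([X, x_next])
        y = np.append(y, f(x_next))                              # one true evaluation per iteration
    best = int(np.argmin(y))
    return X[best], float(y[best])
```

Applied to this paper's setting, f would be the objective of Algorithm 1 and the box bounds would cover $w_1$, $w_2$, and $\eta$.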

After this optimization, each preset parameter (i.e., each preset false alarm probability of (VI)CFAR) corresponds to an optimal combination of weights and normalized factor. During detection, these parameters can be configured from a lookup table indexed by the preset value.
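The lookup-table step amounts to storing one optimized (w1, w2, eta) triple per preset (VI)CFAR false alarm probability. The sketch below is hypothetical: `optimize` stands for the offline optimization of one preset value, and falling back to the nearest stored preset on a logarithmic scale is an assumption for presets that are not in the table.

```python
import math


def build_lookup_table(pfa_grid, optimize):
    """Map each preset (VI)CFAR false alarm probability to its optimized (w1, w2, eta).

    optimize(pfa) is assumed to run the offline optimization for one preset value.
    """
    return {pfa: tuple(optimize(pfa)) for pfa in pfa_grid}


def lookup_parameters(table, pfa):
    """Return the parameters stored for the preset nearest to pfa on a log10 scale."""
    nearest = min(table, key=lambda p: abs(math.log10(p) - math.log10(pfa)))
    return table[nearest]
```

Because the expensive optimization runs once per preset, configuring the detector at run time reduces to a dictionary lookup.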

4. Results and Discussion

4.1. Introduction to Simulation Target Experimental Data

Following the experimental data acquisition method described above, we constructed two sets of target-embedded HFSWR RD spectrum data, DATA_1 and DATA_2, to evaluate the proposed method. Both datasets are summarized in Table 5.

Table 5.

Dataset Information.

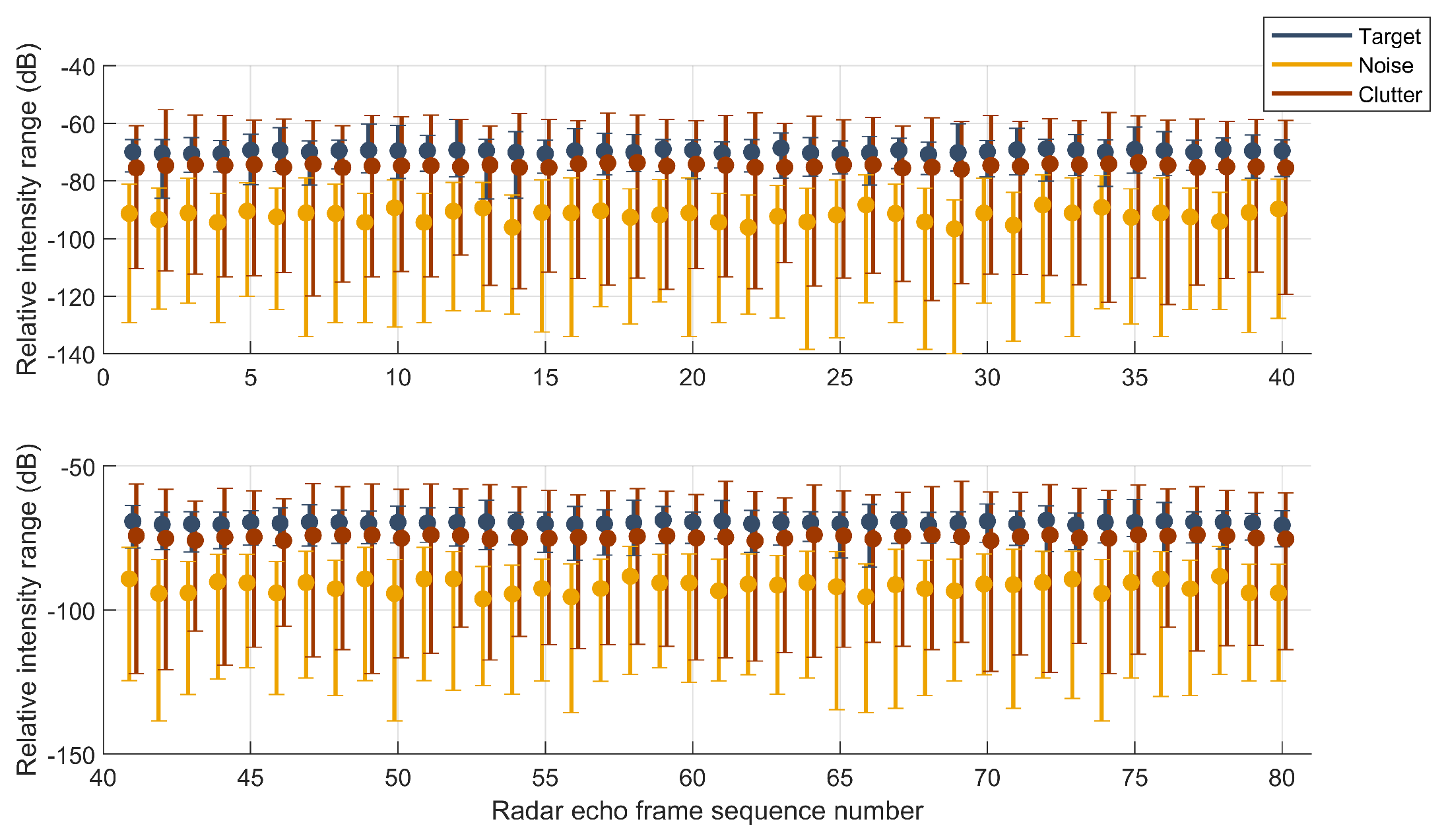

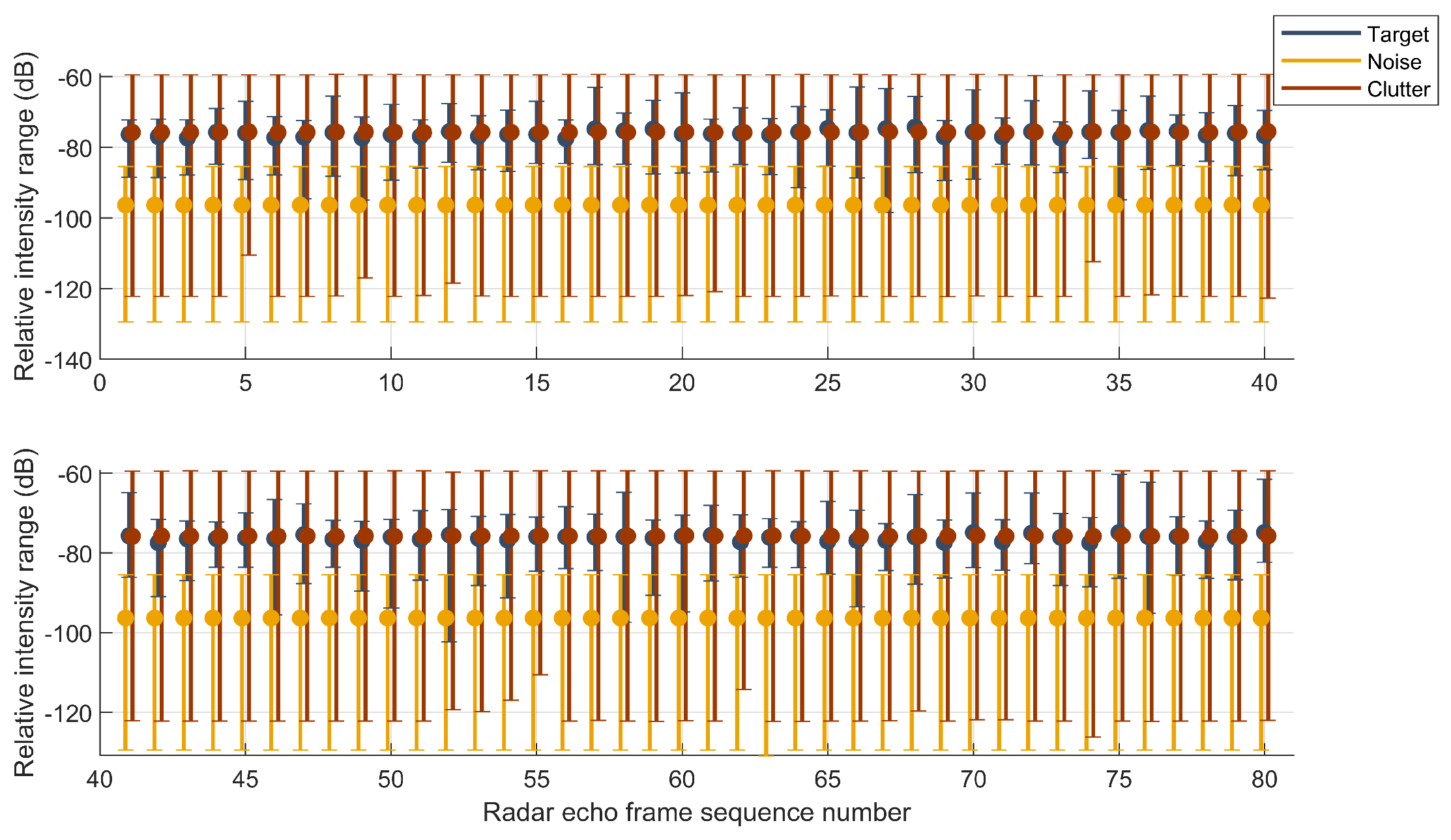

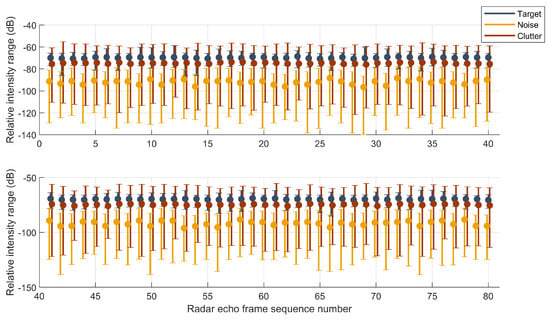

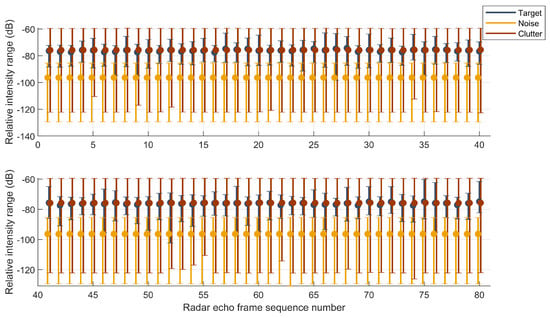

Figures 10 and 11 show the relative intensities of the targets, clutter, and noise in the RD spectra of the 80 test frames of DATA_1 and DATA_2, respectively. The three colors in each figure represent the target, background noise, and clutter; the dots mark the mean relative intensity, and the upper and lower horizontal bars mark its range.

Figure 10.

Relative intensity range of target, clutter, and noise of DATA_1.

Figure 11.

Relative intensity range of target, clutter, and noise of DATA_2.

4.2. Comparison of ROC Curves of Detection Methods

The receiver operating characteristic (ROC) curve is used to evaluate and compare the performance of detection methods; it describes the relationship between DR and FAR. The area under the curve (AUC) summarizes an ROC curve as a single number, and a larger AUC indicates better detection performance.
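The AUC of an ROC curve sampled at a few operating points can be approximated with the trapezoidal rule, as sketched below. The paper does not state how its AUC values were computed, so this rule is an assumption; because the FAR values involved are small, areas are only comparable when the curves span the same FAR interval.

```python
def roc_auc(far, dr):
    """Trapezoidal area under an ROC curve given matched FAR and DR samples."""
    pts = sorted(zip(far, dr))                      # order operating points by increasing FAR
    area = 0.0
    for (x0, y0), (x1, y1) in zip(pts, pts[1:]):
        area += (x1 - x0) * (y0 + y1) / 2.0         # trapezoid between neighbouring points
    return area
```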

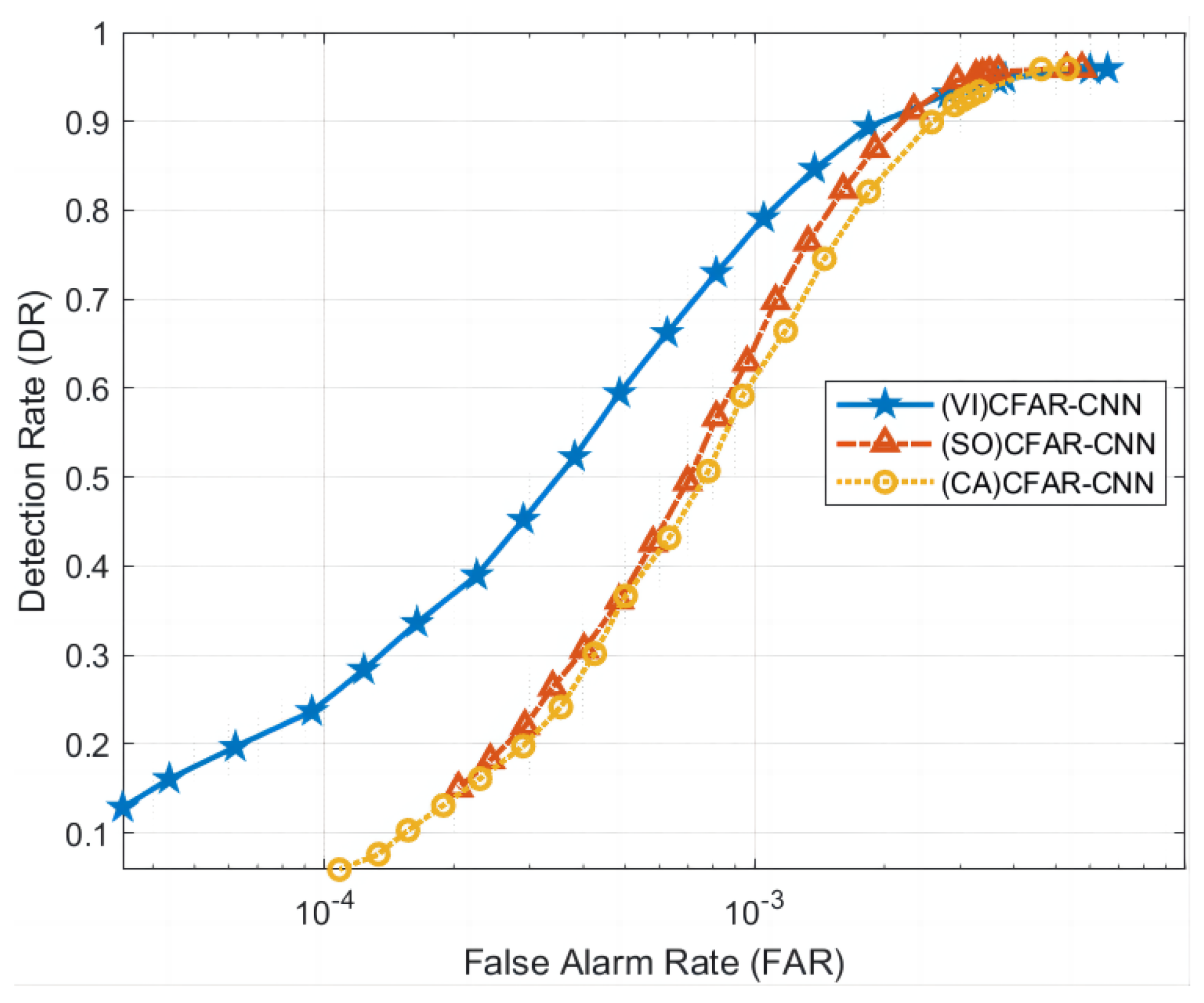

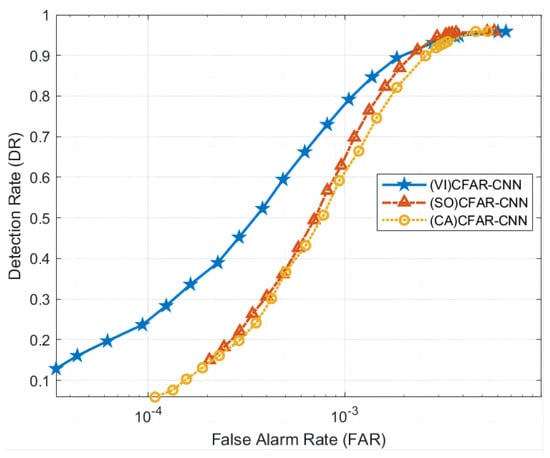

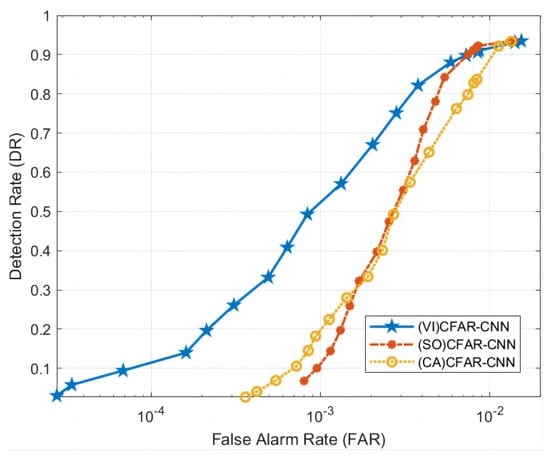

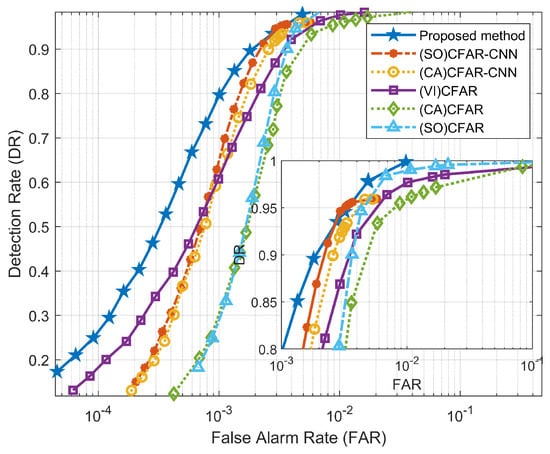

4.2.1. Comparison of Different CFAR Detectors Used as First-Level Detection

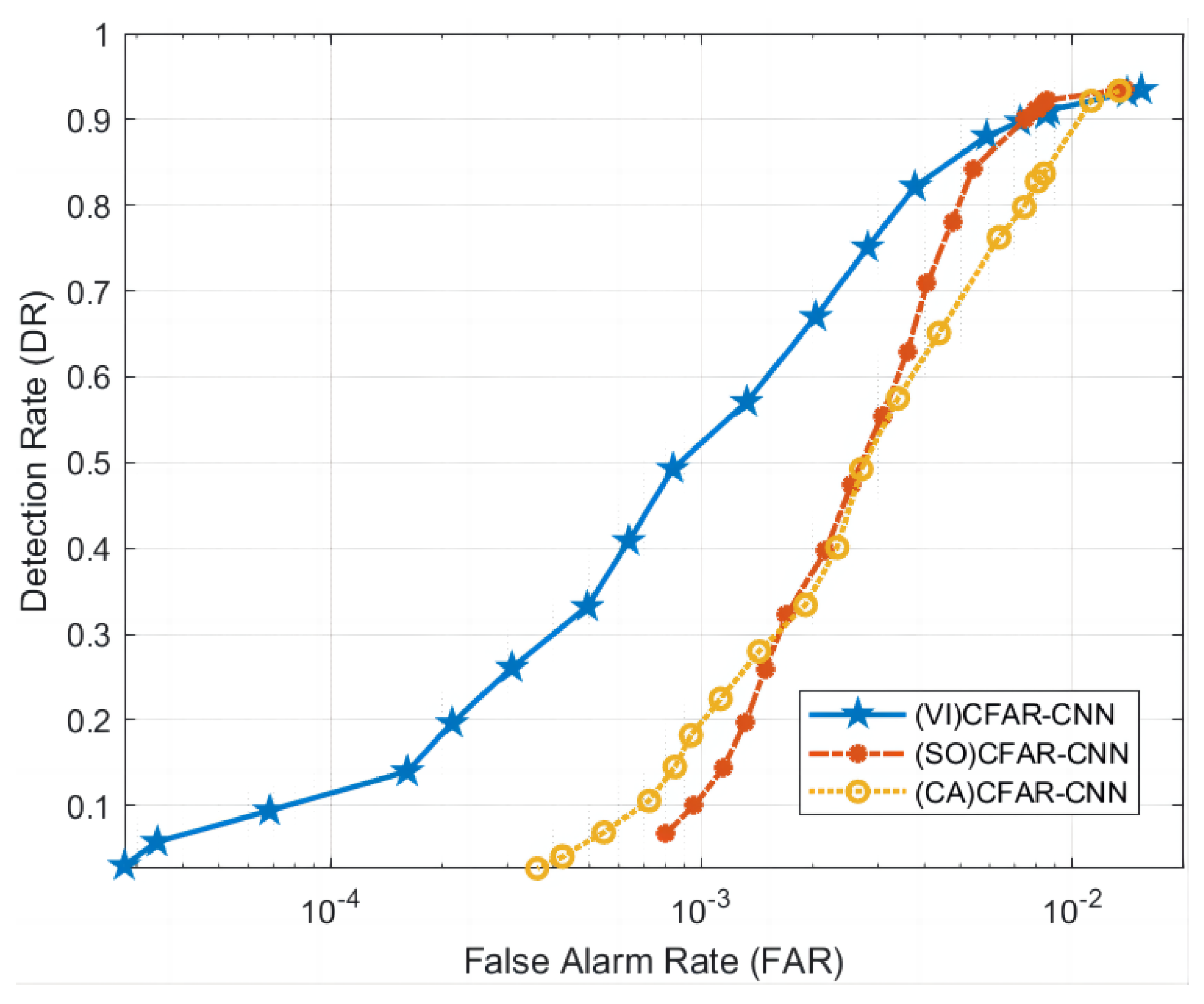

This section verifies the detection performance obtained when different CFAR detectors are used for first-level detection and the same CNN model is used for second-level classification. On the RD spectrum data of DATA_1 and DATA_2, we compared three schemes that use different CFAR detectors for first-level detection: (VI)CFAR-CNN, (SO)CFAR-CNN, and (CA)CFAR-CNN. As shown in Figures 12 and 13, (VI)CFAR-CNN achieves the best detection performance, while (SO)CFAR-CNN performs on par with (CA)CFAR-CNN. Because (VI)CFAR is an adaptive CFAR method, it is better suited to HFSWR data with a nonuniform background. These results agree with the underlying theory and support the use of (VI)CFAR as the first-level detector.
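To illustrate why (VI)CFAR adapts to nonuniform backgrounds, the sketch below implements a 1-D VI-CFAR following the decision logic of Smith and Varshney [22]: the variability index of each reference half-window and the ratio of the half-window means select a CA, GO, or SO style threshold for each cell under test. It is a simplified illustration, not the detector used in this paper: it runs along one axis instead of over the 2-D RD spectrum, uses window means in place of sums, and all constants (window lengths, k_vi, k_mr, alpha_full, alpha_half) are assumed values rather than thresholds derived from a design false alarm probability.

```python
import numpy as np


def vi_cfar_1d(x, n_half=8, guard=2, k_vi=4.0, k_mr=2.0, alpha_full=3.0, alpha_half=4.0):
    """Illustrative 1-D VI-CFAR on a power profile x; returns a boolean detection mask."""
    x = np.asarray(x, dtype=float)
    eps = 1e-12
    detections = np.zeros(x.size, dtype=bool)
    for i in range(n_half + guard, x.size - n_half - guard):
        lead = x[i - guard - n_half:i - guard]            # leading reference half-window
        lag = x[i + guard + 1:i + guard + 1 + n_half]     # lagging reference half-window
        mean_a, mean_b = lead.mean(), lag.mean()
        # Variability index VI = 1 + var / mean^2 flags a non-homogeneous half-window.
        variable_a = 1 + lead.var() / (mean_a ** 2 + eps) > k_vi
        variable_b = 1 + lag.var() / (mean_b ** 2 + eps) > k_vi
        # Mean ratio tests whether the two halves share the same clutter level.
        same_mean = 1 / k_mr <= mean_a / (mean_b + eps) <= k_mr
        if not variable_a and not variable_b:
            if same_mean:
                level, alpha = (mean_a + mean_b) / 2, alpha_full   # CA over both halves
            else:
                level, alpha = max(mean_a, mean_b), alpha_half     # GO at a clutter edge
        elif variable_a and not variable_b:
            level, alpha = mean_b, alpha_half                      # CA on the lagging half only
        elif variable_b and not variable_a:
            level, alpha = mean_a, alpha_half                      # CA on the leading half only
        else:
            level, alpha = min(mean_a, mean_b), alpha_half         # SO when both halves vary
        detections[i] = x[i] > alpha * level
    return detections
```

On a flat background with one strong cell, only that cell is declared: cells whose reference window contains the strong return see that half flagged as variable and fall back to the clean half.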

Figure 12.

Comparison of the ROC curves using different CFAR methods as the first level of detection in DATA_1.

Figure 13.

Comparison of ROC curves using different CFAR methods as the first level of detection in DATA_2.

However, regardless of which CFAR is used for first-level detection, the detection rate stops improving once the CNN model converges, and the ROC curves of (VI)CFAR-CNN, (SO)CFAR-CNN, and (CA)CFAR-CNN gradually converge.

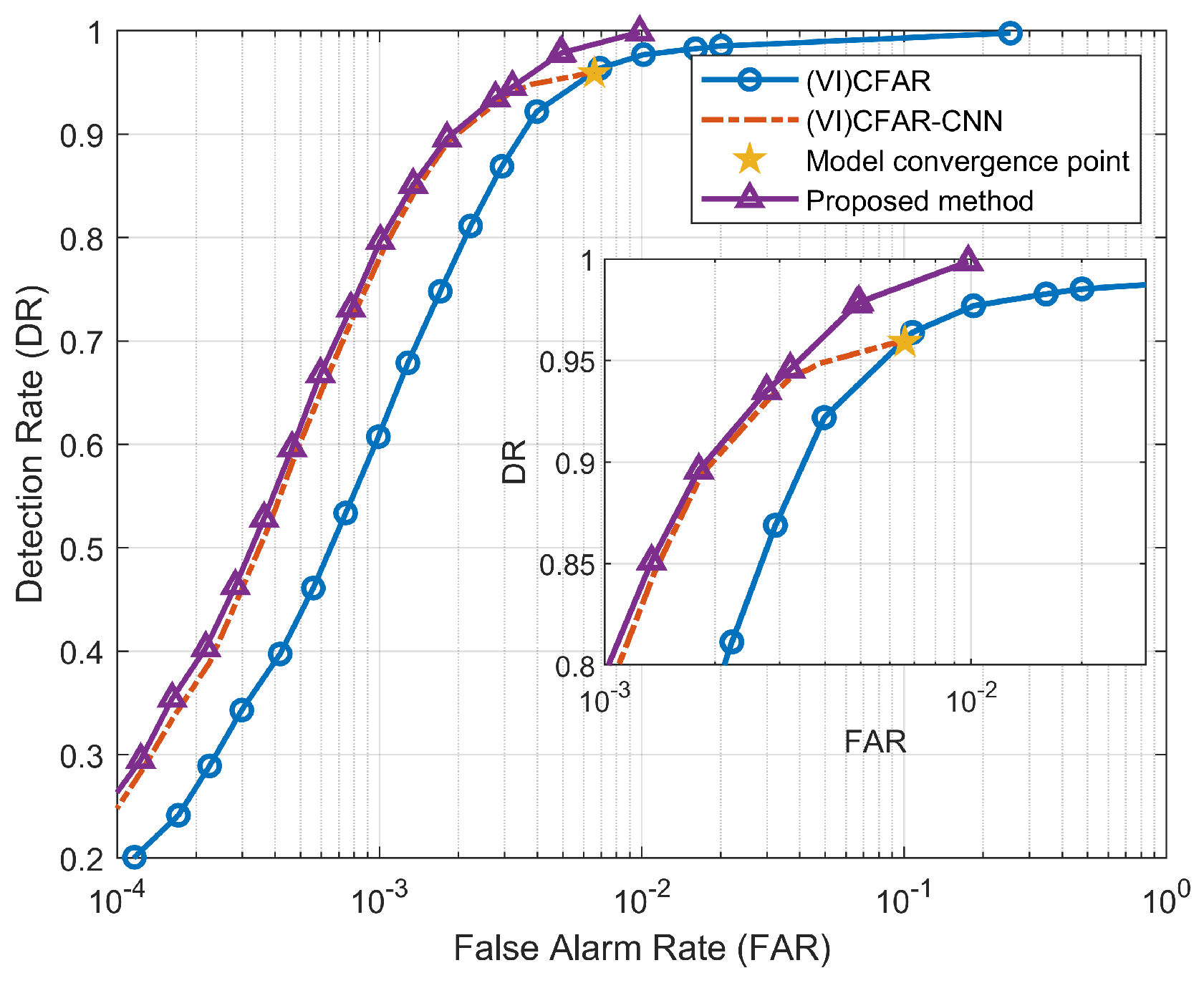

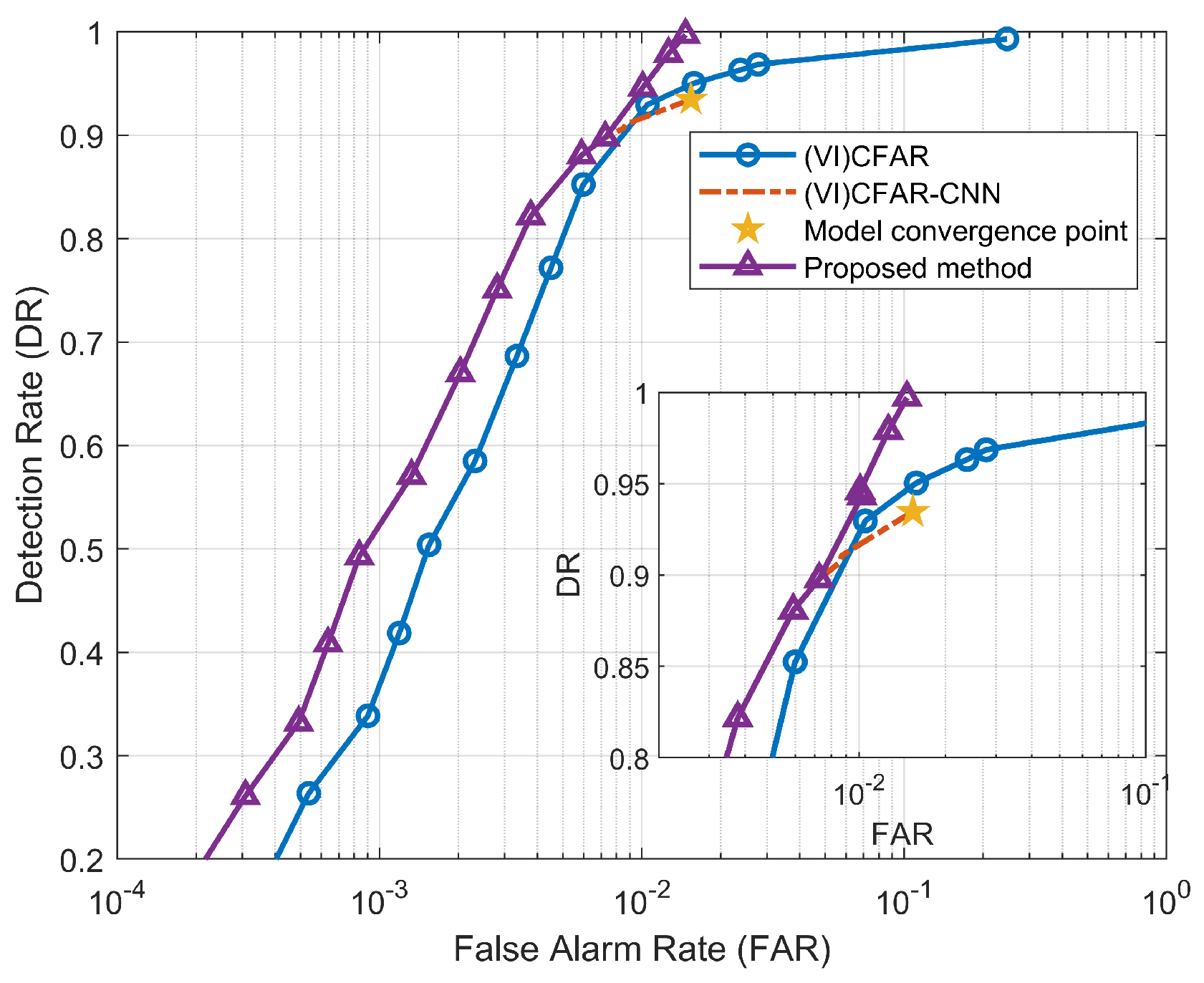

4.2.2. Detection Performance Compensation Effect of Dual-Detection Maps Fusion Decision-Making

To address the limited DR caused by the convergence of the CNN model in CFAR-CNN, this paper proposes the dual-detection maps fusion compensation method. In this method, the preset false alarm probability of the first detector, (VI)CFAR-CNN, is fixed, while the preset false alarm probability of the second detector, (VI)CFAR, the weights of the two detection maps, and the normalized factor must be set. The fusion decision over the two detection maps then compensates for the detection loss.

Figures 14 and 15 show the ROC curves after dual-detection map fusion compensation. The loss of detection performance caused by model convergence is compensated, and the DR is improved; in addition, the FAR is suppressed to some extent and remains lower than that of (VI)CFAR.

Figure 14.

ROC curve after the fusion and compensation of dual-detection maps in DATA_1.

Figure 15.

ROC curve after the fusion and compensation of dual-detection maps in DATA_2.

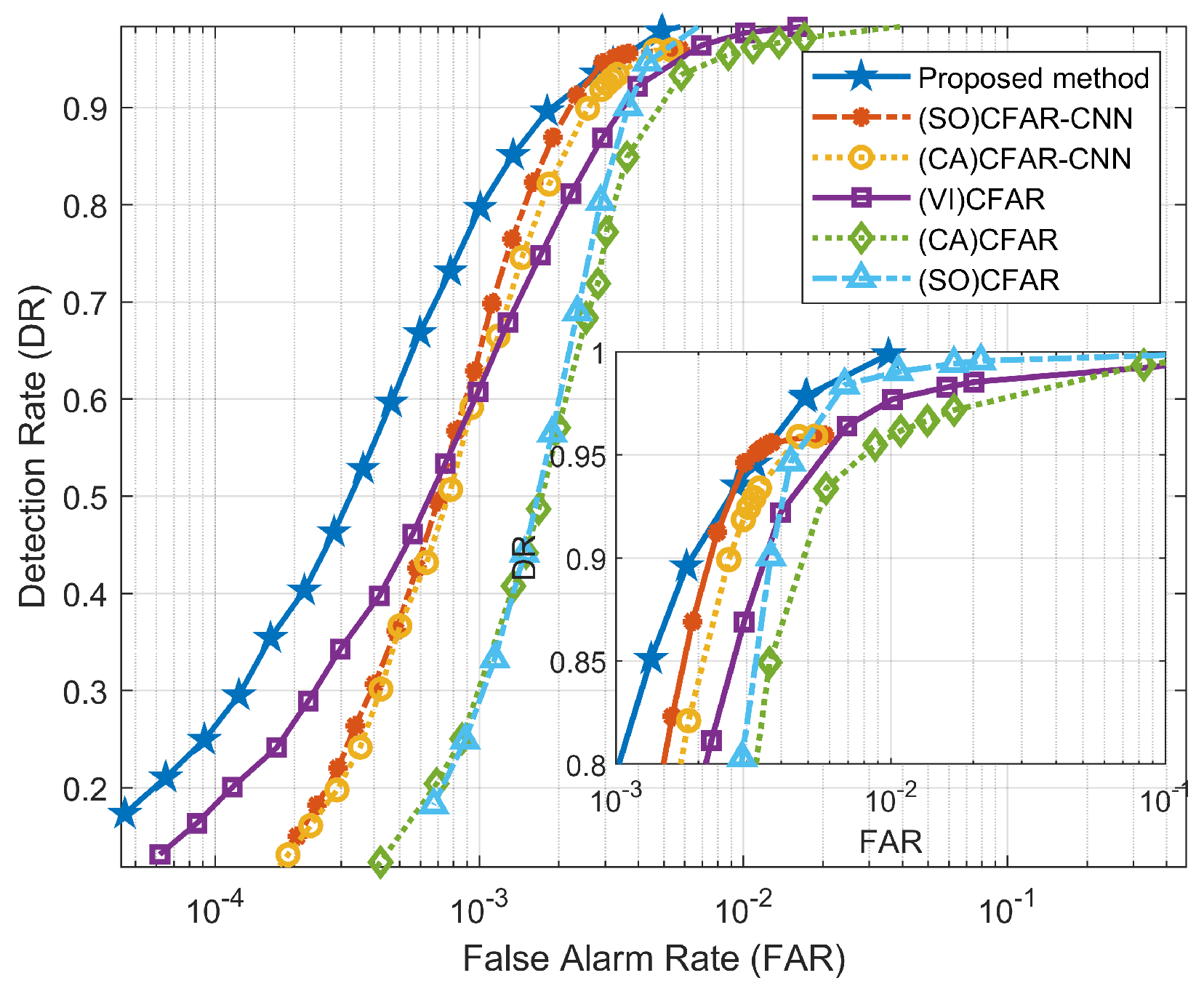

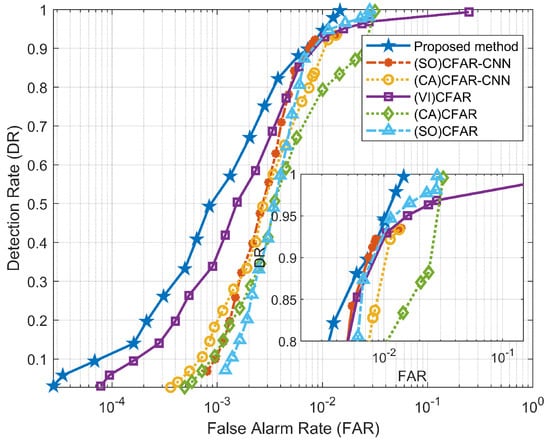

4.2.3. Comparison of ROC Curves between This Method and Other Methods

As shown in Figures 16 and 17, we compare the proposed method with two CFAR-CNN detectors that use other CFAR methods for first-level detection ((SO)CFAR-CNN and (CA)CFAR-CNN) and with three CFAR detectors ((VI)CFAR, (CA)CFAR, and (SO)CFAR). Comparing the performance of these methods on both datasets leads to the following conclusions.

Figure 16.

Comparison of ROC curves between proposed method and other methods in DATA_1.

Figure 17.

Comparison of ROC curves between the proposed method and other methods in DATA_2.

First, the proposed method has the largest area under the ROC curve, which indicates more accurate target detection with fewer false alarms.

Second, although DATA_2 has lower SNR and SCR than DATA_1, the proposed method performs well on both datasets, which indicates that it is robust and adapts to low-SNR and low-SCR data.

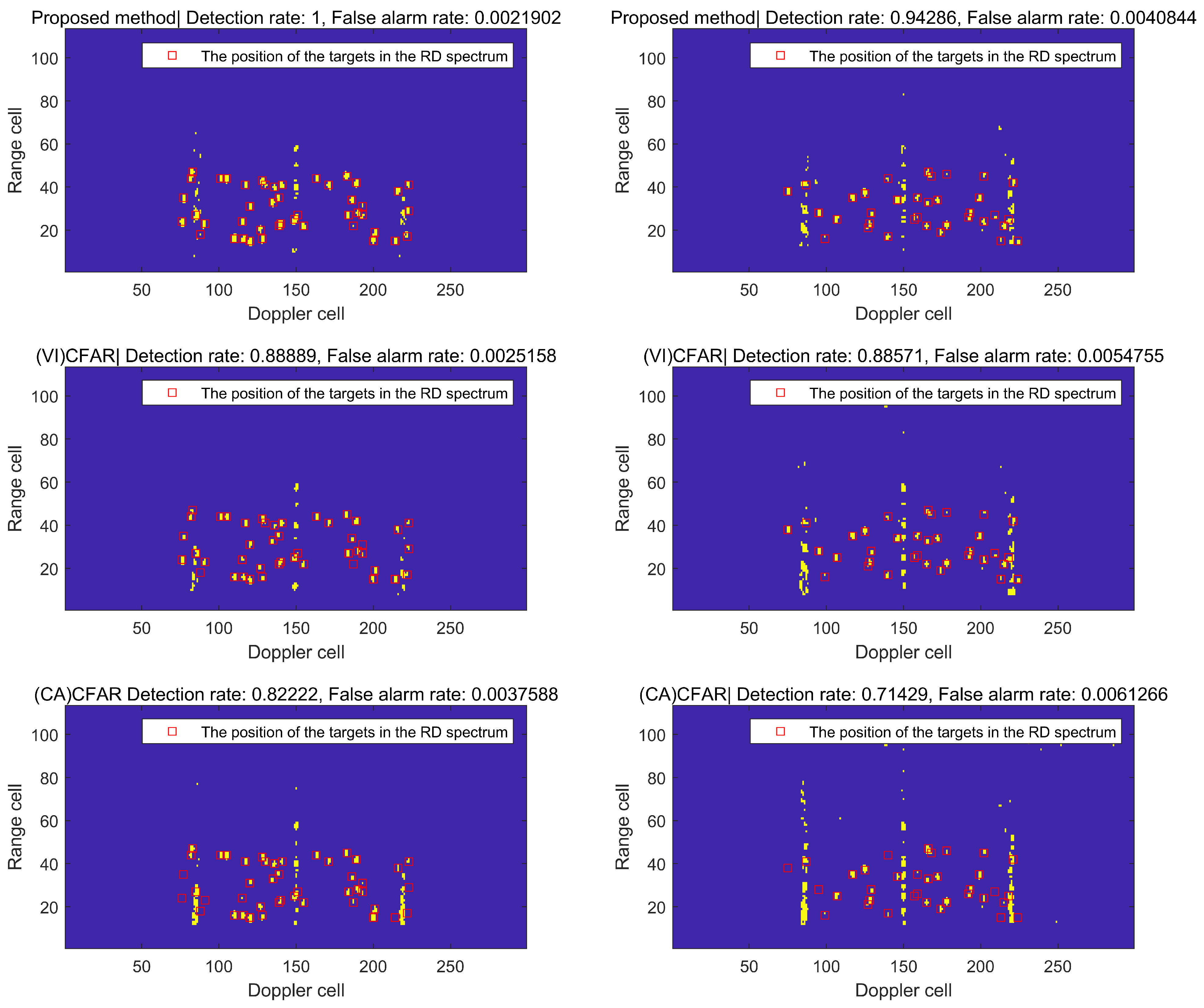

4.3. Comparison of RD Spectrum Detection Effects

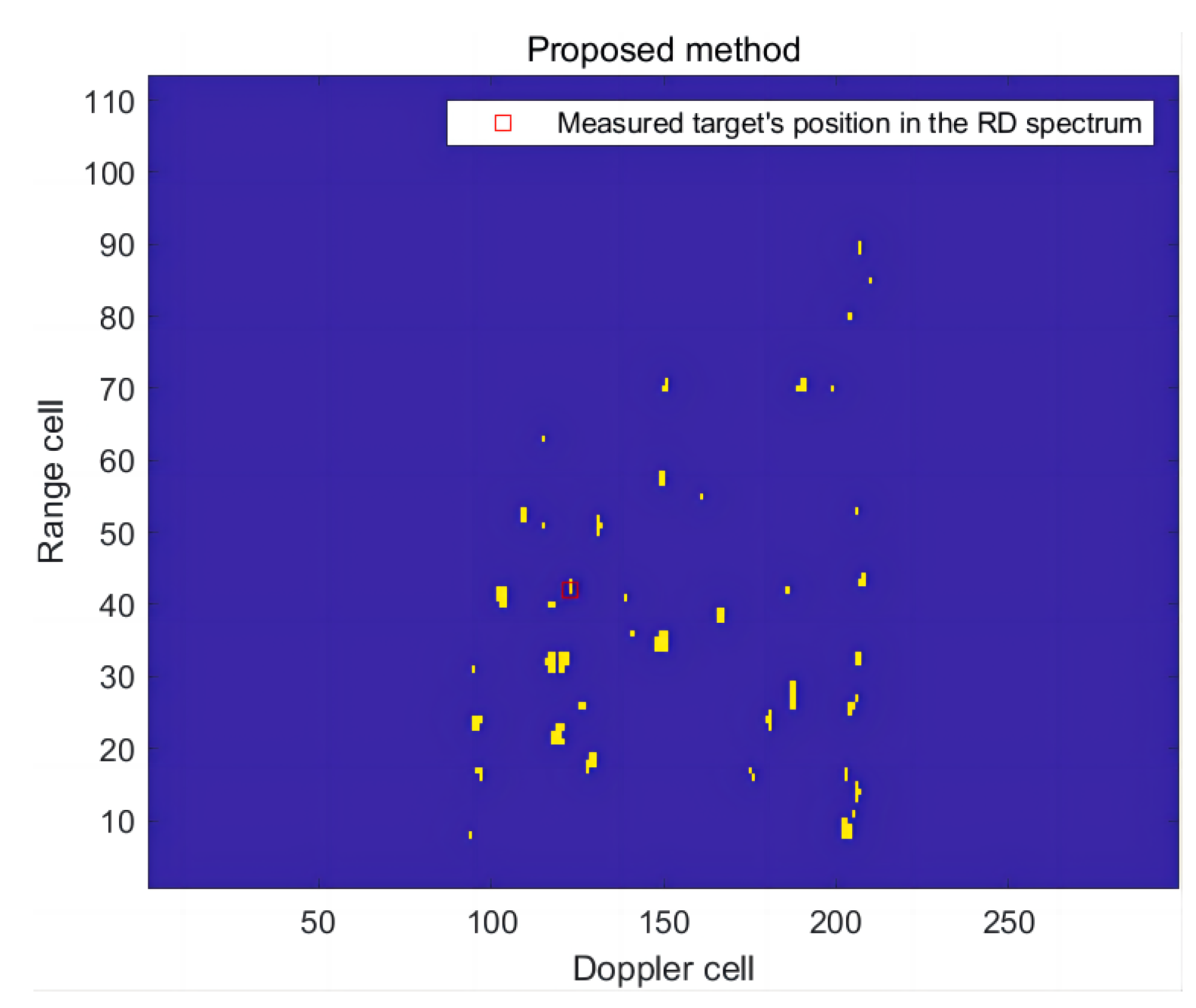

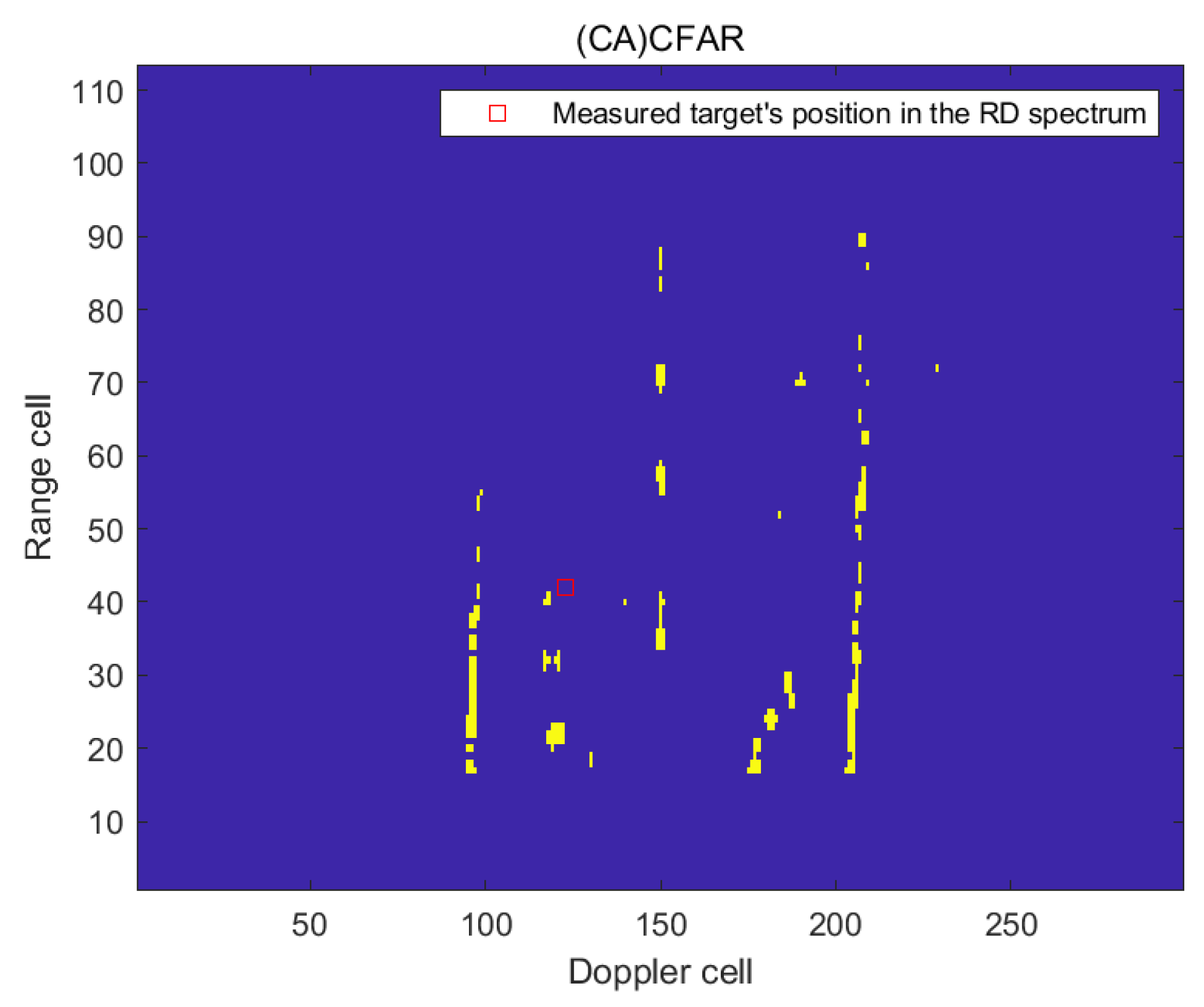

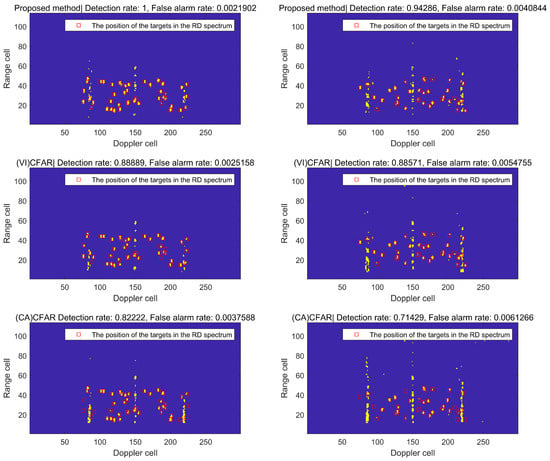

4.3.1. Comparison between the Proposed Method and Traditional CFAR Detection Method

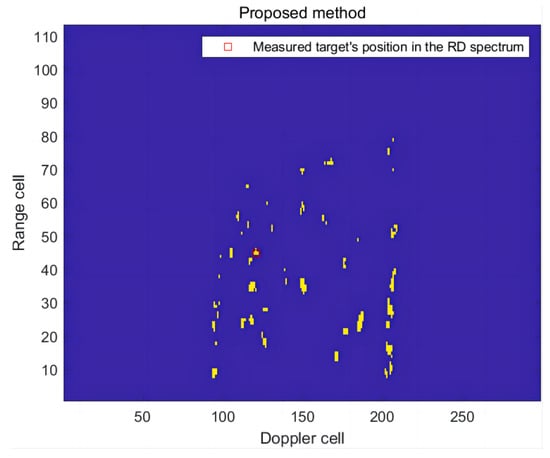

This section compares the detection results of the proposed method with those of traditional CFAR detection. One frame of RD spectrum data was selected from each of DATA_1 and DATA_2 as an example, and both the proposed method and the traditional CFAR methods were applied to it.

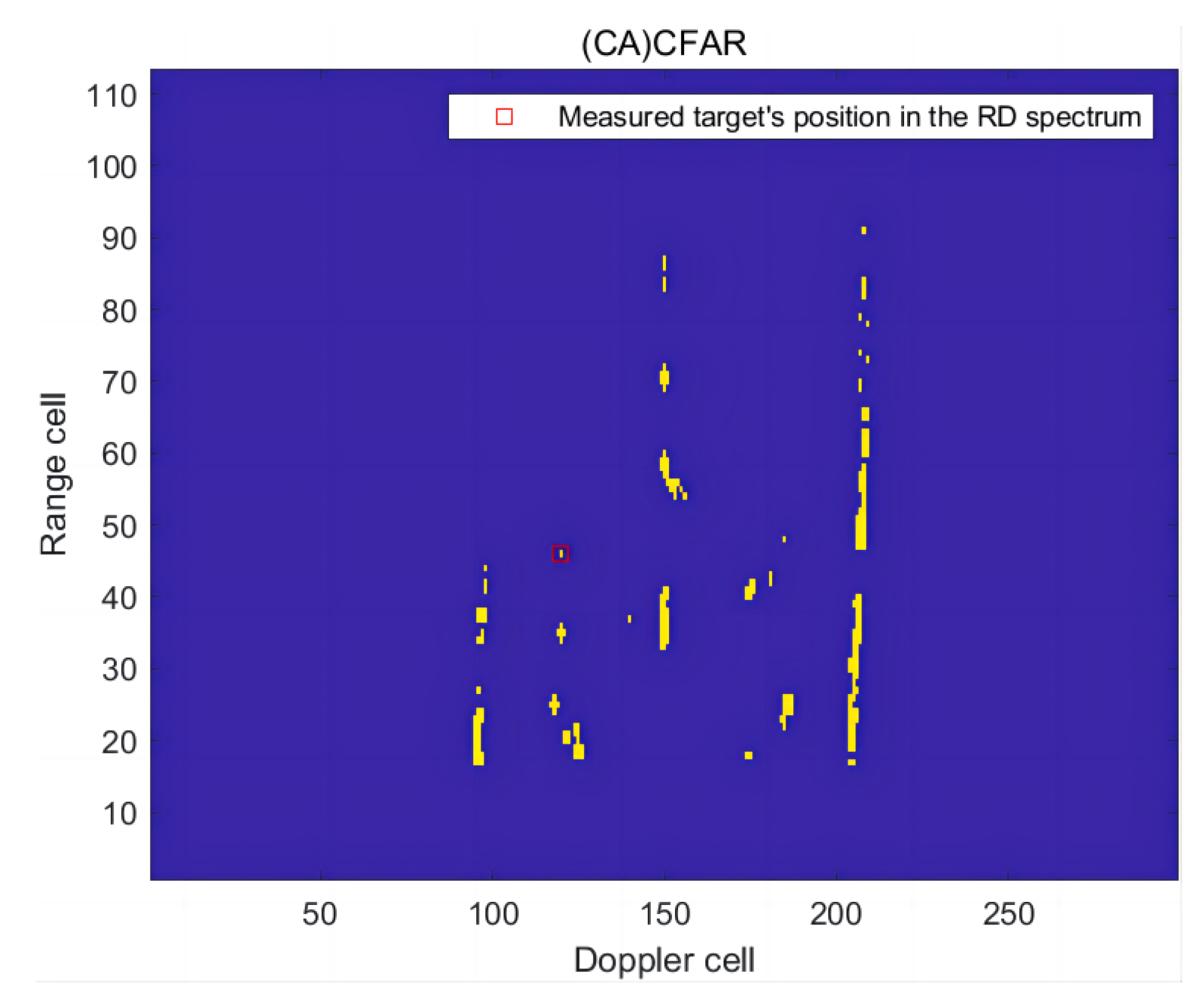

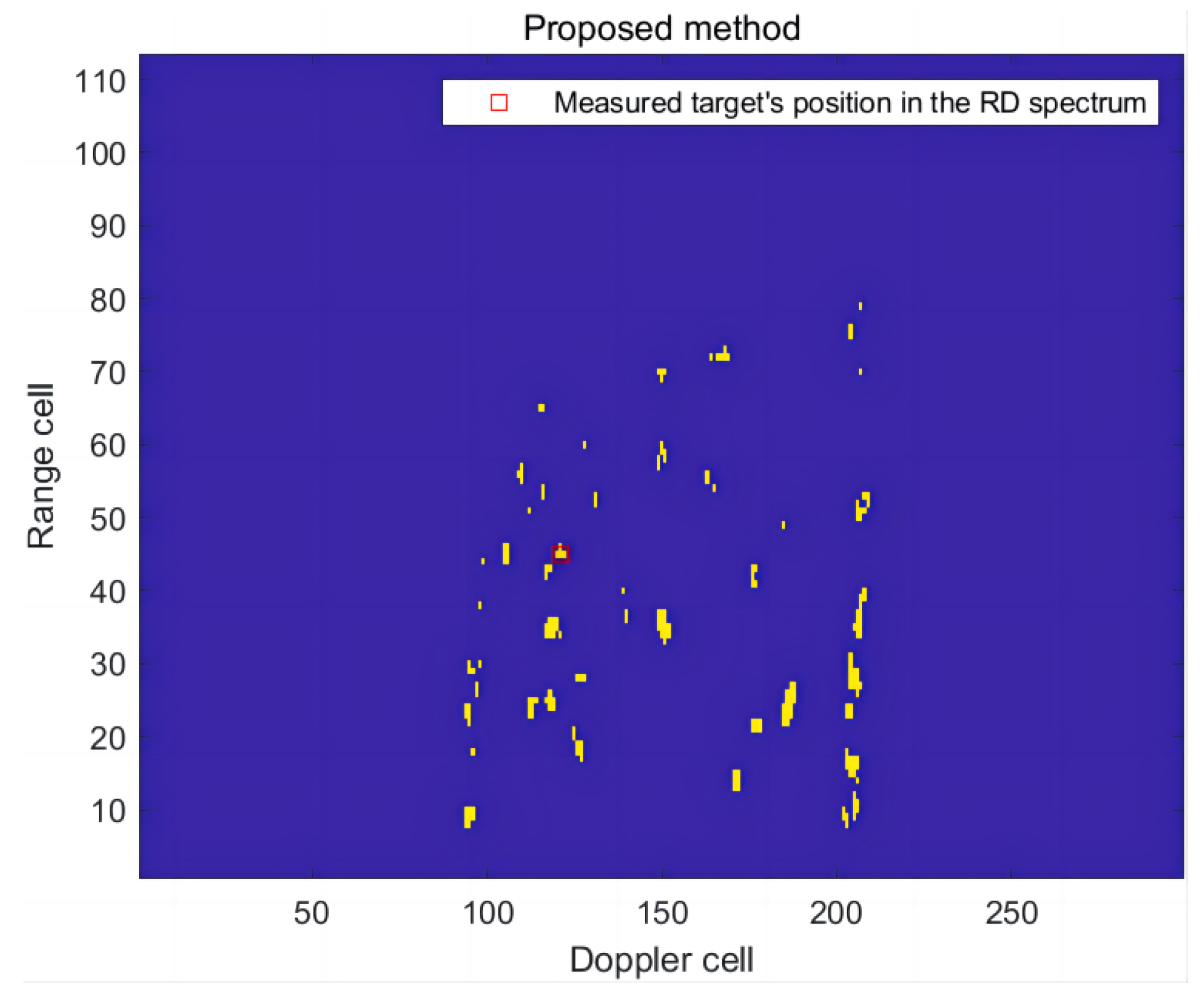

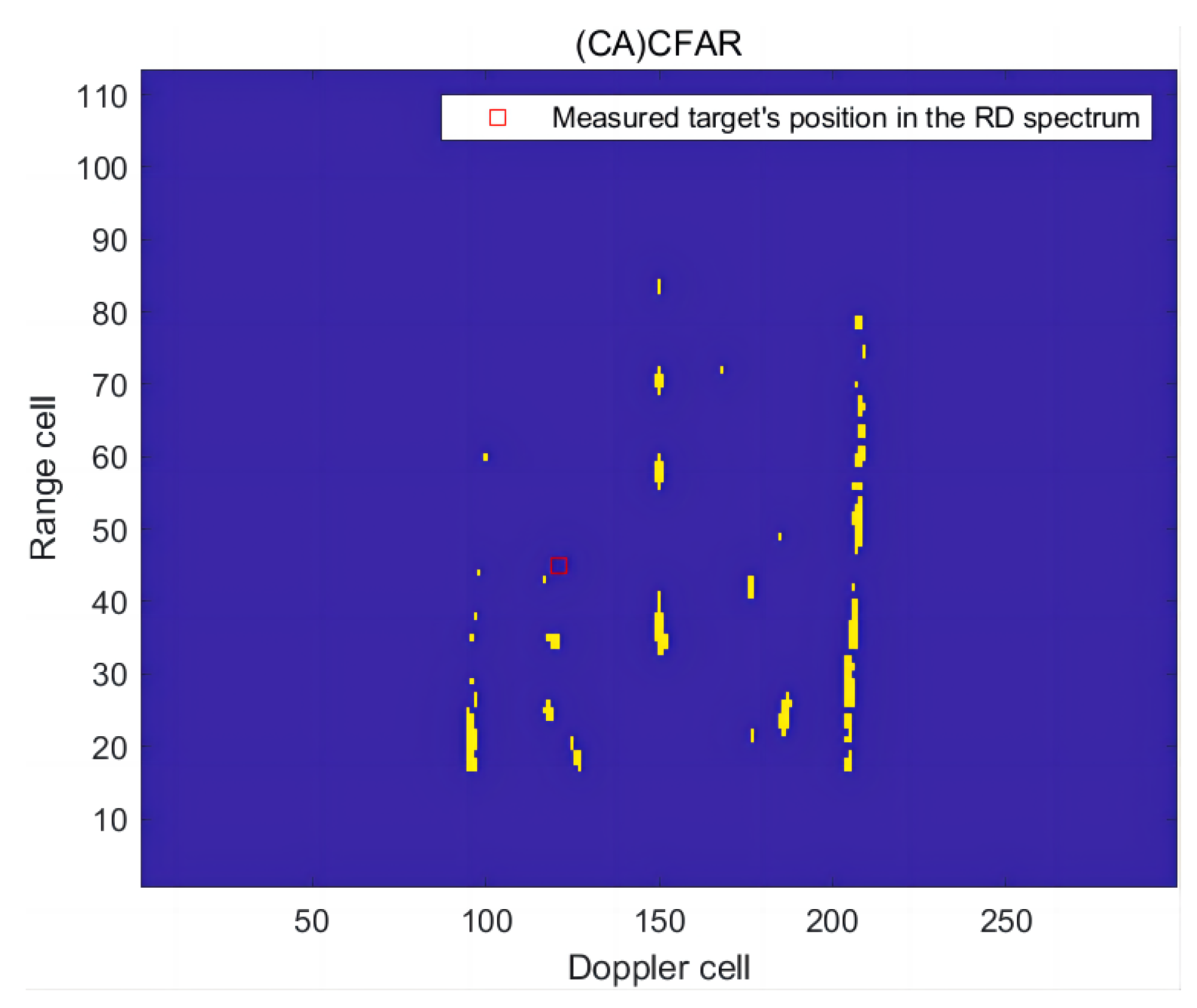

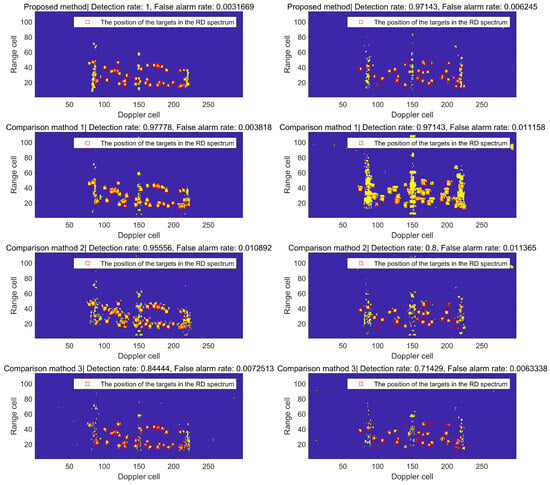

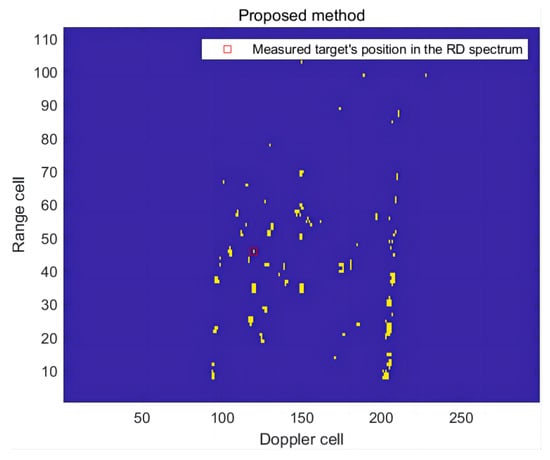

As shown in Figure 18, the left column shows the detection results on a single-frame RD spectrum from DATA_1, and the right column shows those on a single-frame RD spectrum from DATA_2. In each detection result, the yellow areas are detected targets, the blue areas indicate no detection, and the red boxes mark the true target positions in the RD spectrum. Two traditional detectors, (VI)CFAR and (CA)CFAR, are compared with the proposed method; the preset parameters of each method are listed in Table 6.

Figure 18.

Comparison of the detection effects between the proposed method and traditional CFAR.

Table 6.

Parameter settings for each method.

Comparing the detection results shows that the proposed method effectively mitigates false alarms while keeping the detection rate high. On the same frame of RD spectrum data, it outperforms both (VI)CFAR and (CA)CFAR in terms of FAR and DR.

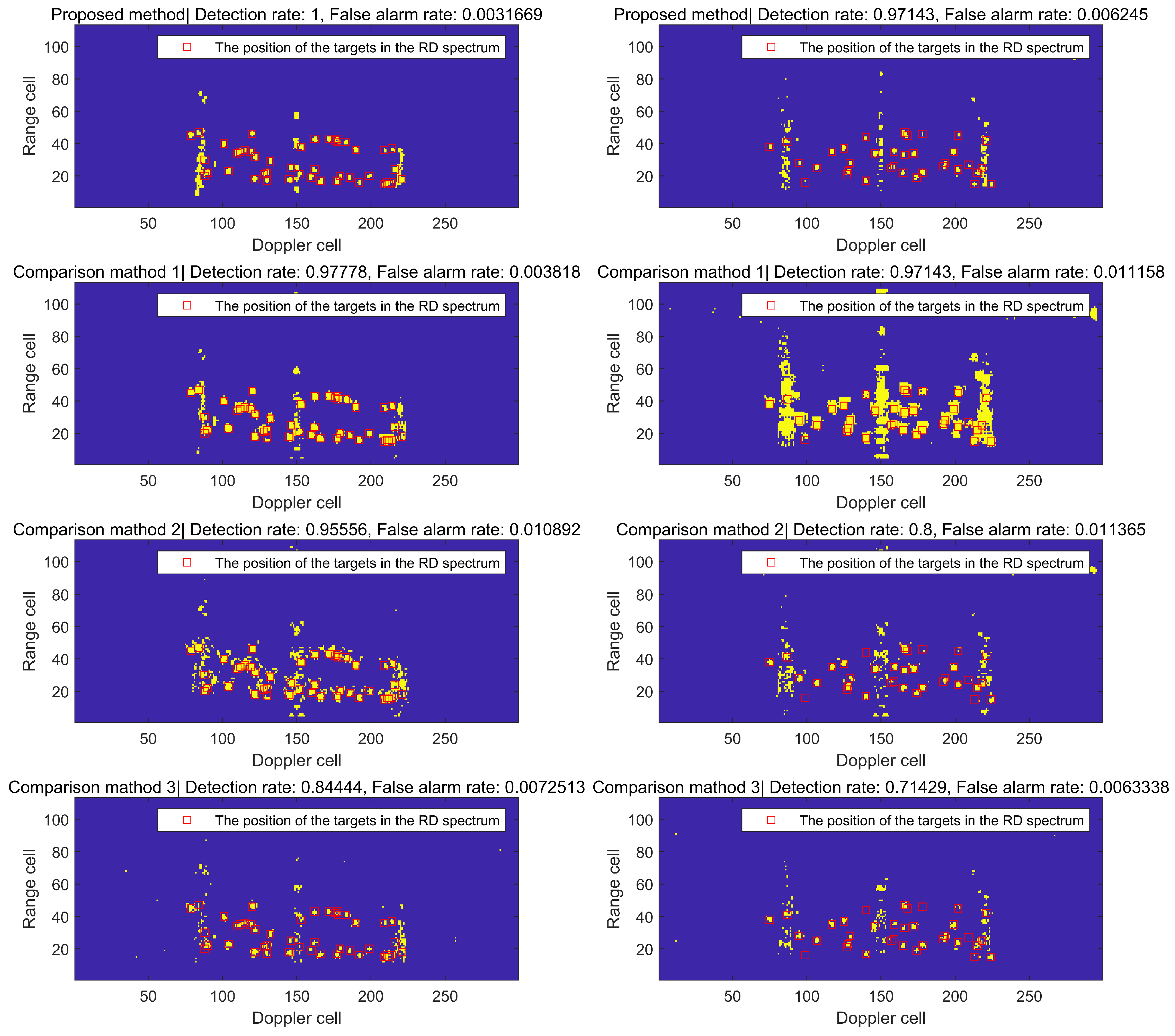

4.3.2. Comparison between the Proposed Method and Other Machine Learning-Based Detection Methods

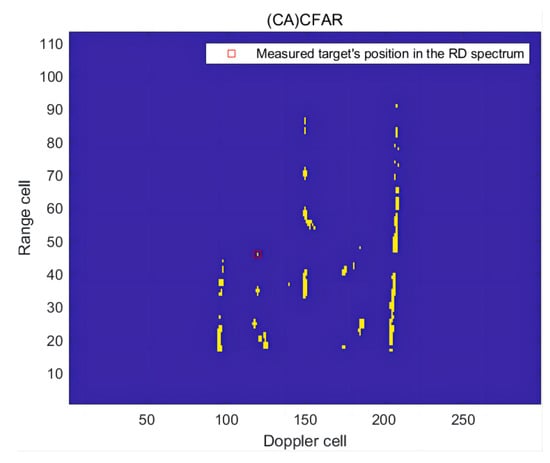

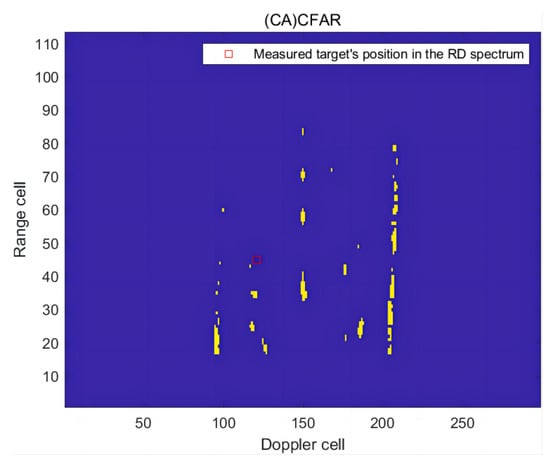

In this section, we present a comparison between the detection effects of the method proposed in this article and other radar target detection methods based on machine learning.

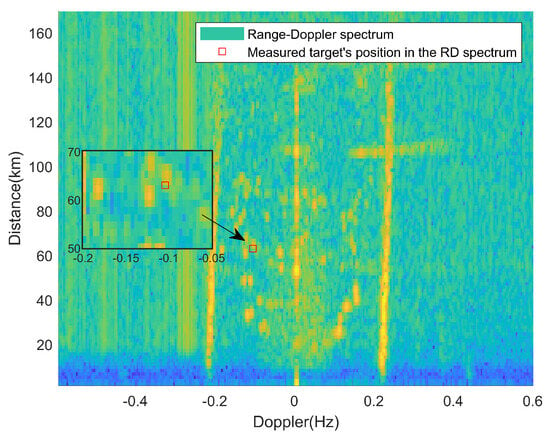

Similarly, one frame of RD spectrum data was selected from each of DATA_1 and DATA_2 as an example, and the detection performance of the various methods on these frames is illustrated in Figure 19. The left column shows the detection results on the single-frame RD spectrum from DATA_1, and the right column shows those on the single-frame RD spectrum from DATA_2.

Figure 19.

Comparison of the detection effects between the proposed method and other machine learning detection methods.

Three additional methods are compared in this part; Table 7 lists the basic framework and reference of each.

Table 7.

Ideas and parameter settings of different methods.

Comparison method 1 follows [16]. Doppler processing is first applied to the echo signal, and a sliding window then feeds window slices into a CNN for target detection; in effect, the CNN replaces the CFAR algorithm.
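Sliding-window slicing of an RD spectrum, which comparison method 1 uses at every unit and the proposed cascade uses around each first-level detection, can be sketched with NumPy's `sliding_window_view`. The function name, the window half-width, and the zero padding at the spectrum edges are assumptions for illustration.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def window_slices(rd, half=2):
    """Return a (rows, cols, 2*half+1, 2*half+1) view of windows centred on every RD unit.

    Zero padding at the spectrum edges is an assumption made for illustration.
    """
    padded = np.pad(rd, half, mode="constant")
    size = 2 * half + 1
    return sliding_window_view(padded, (size, size))
```

Indexing the result at a detected position yields the window centred on that unit, ready to be passed to a classifier.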

Comparison method 2 follows [31]. A sliding window feeds both the detection unit and its reference units into an artificial neural network, which determines whether the detection unit contains a target; (CA)CFAR is used to supervise the training of the network.
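A minimal sketch of how CA-CFAR decisions could serve as supervision labels for such a network is shown below. It only builds (features, labels) pairs along one axis and does not reproduce the network or training scheme of [31]; the function name, window lengths, and scale factor alpha are assumed values.

```python
import numpy as np


def ca_cfar_training_pairs(x, n_half=8, guard=2, alpha=3.0):
    """Build (features, labels) for training a detector network under CA-CFAR supervision.

    Each feature row holds the detection unit followed by its 2*n_half reference
    units; the label is the CA-CFAR decision for that unit.
    """
    x = np.asarray(x, dtype=float)
    feats, labels = [], []
    for i in range(n_half + guard, x.size - n_half - guard):
        ref = np.concatenate([x[i - guard - n_half:i - guard],
                              x[i + guard + 1:i + guard + 1 + n_half]])
        feats.append(np.concatenate([[x[i]], ref]))
        labels.append(int(x[i] > alpha * ref.mean()))   # CA-CFAR decision used as the label
    return np.array(feats), np.array(labels)
```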

Comparison method 3 follows the basic framework of [18]. A morphological detection operator is first applied to the RD spectrum for first-level detection; a window is then used to intercept the detection results and extract relevant features, which are passed to a classifier. To improve the generalizability and robustness of this comparison method, a support vector machine (SVM) is used as the classifier.

Among all compared methods, the proposed method achieves the lowest FAR and the highest DR.

4.3.3. Comparison of Overall Performance of Multiple Methods

In this section, 80 frames of RD spectra from DATA_1 and DATA_2 were used as test data. The proposed method and the methods mentioned above were applied, and the detection rate (DR), false alarm rate (FAR), missed detection rate (MR), and error rate (ER) were calculated, where $\mathrm{MR} = 1 - \mathrm{DR}$. The results are shown in Table 8.

Table 8.

Comparison of the performance indicators of different methods.

The results indicate that the proposed method outperforms the other methods on every performance index for both datasets: DATA_1, with higher SNR and SCR, and DATA_2, with lower SNR and SCR. Specifically, it achieves the highest DR, the lowest FAR, and the lowest MR and ER.

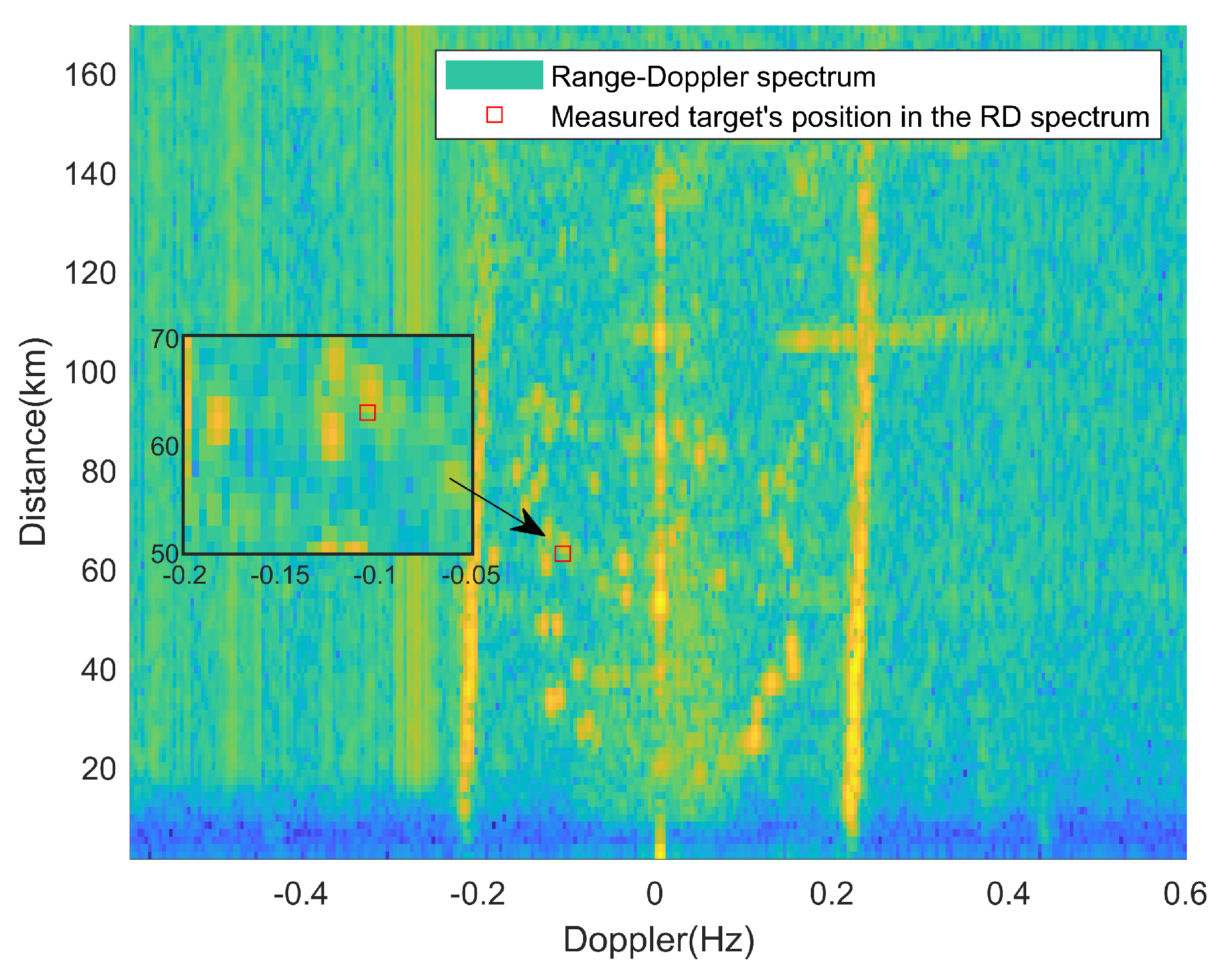

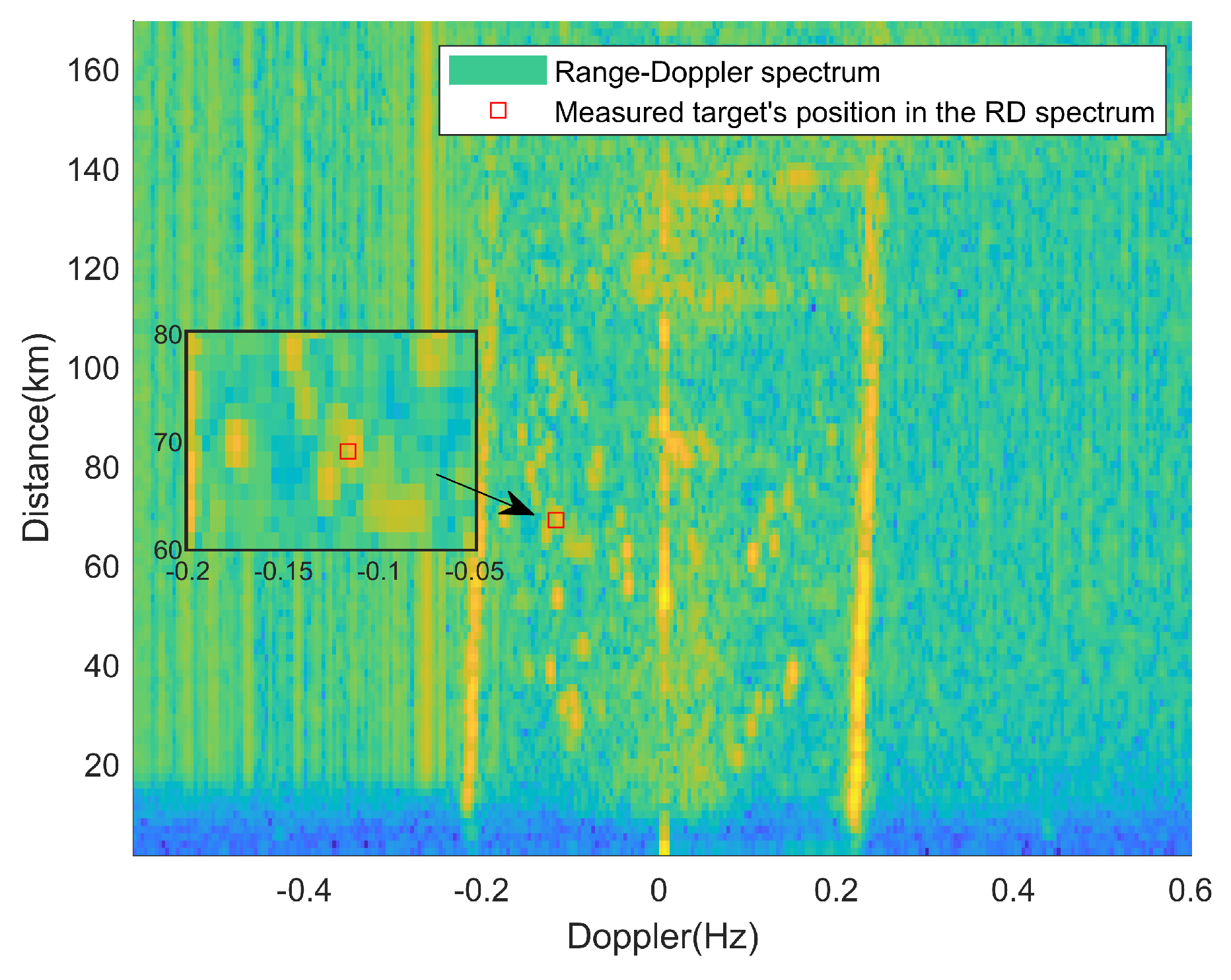

4.4. Verification of the Measured Target Data

In the previous experiments, we used the target embedding method to construct a large amount of data so that the performance of the proposed method could be evaluated comprehensively and accurately. To assess its feasibility in engineering applications, however, the method must also be tested on real measured target data. In this section, we correlated AIS data with HFSWR data, tracked the trajectory of a cooperative ship, and calibrated the target in three frames of RD spectra.

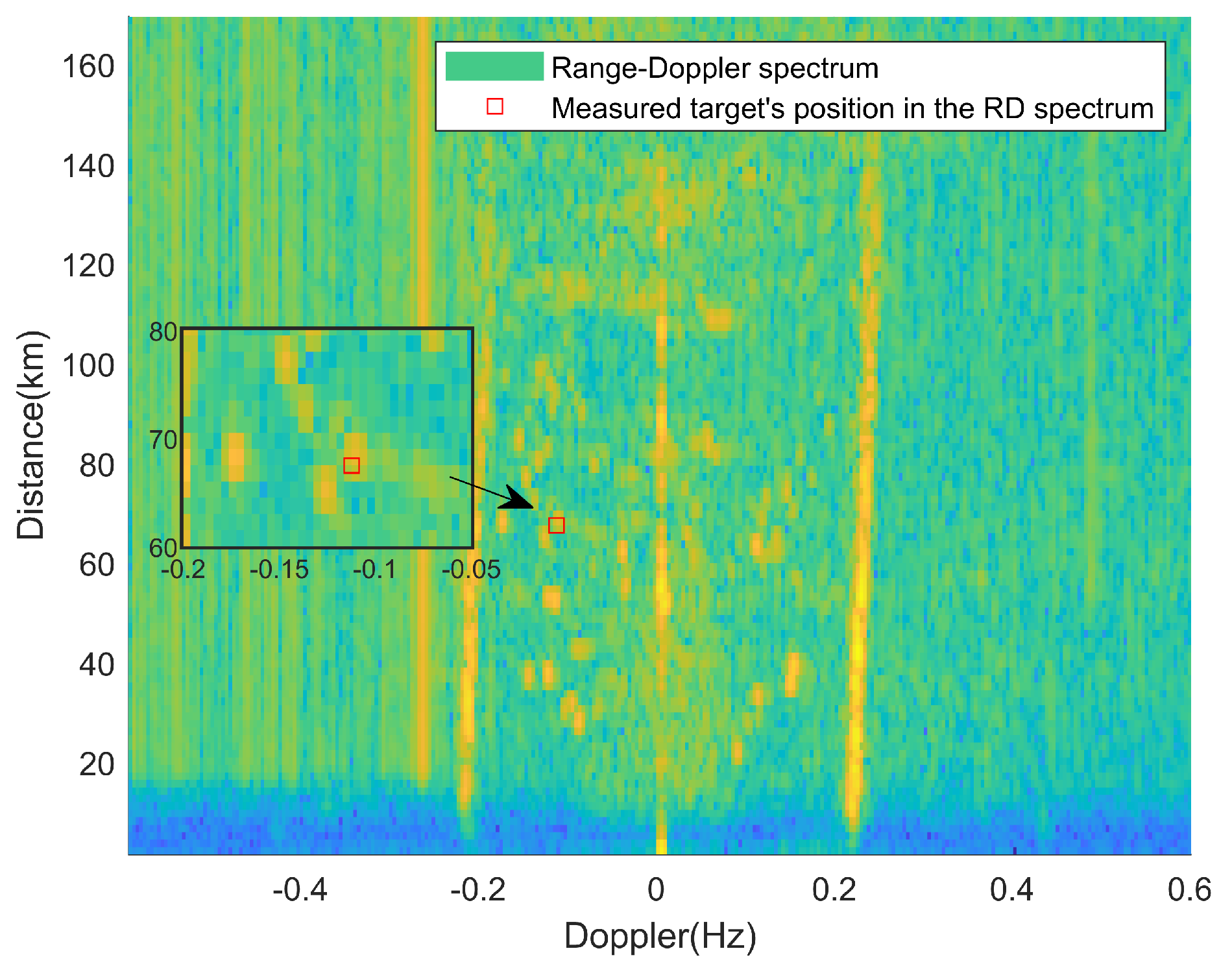

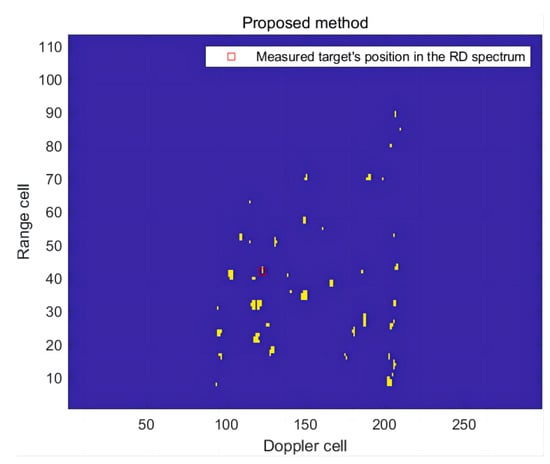

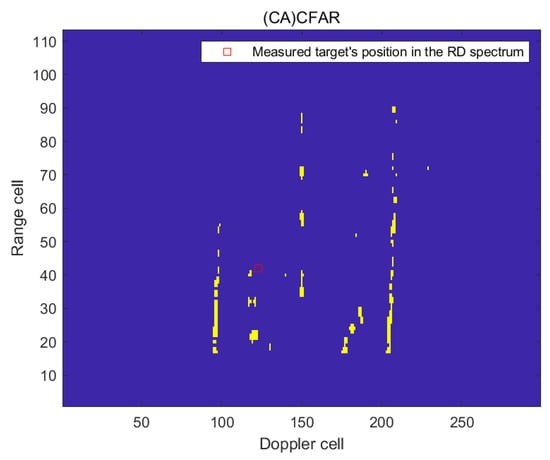

In the first frame of the RD spectrum, the ship target has a radial distance of 62.77 km and a radial speed of −3.46 m/s. The proposed method was applied to detect the measured target in this frame, and its result was compared with that of (CA)CFAR. The RD spectrum containing the measured target is shown in Figure 20, and the detection results are presented in Figures 21 and 22.

Figure 20.

RD spectrum of the measured target in the first frame.

Figure 21.

Detection result of the proposed method on the first-frame RD spectrum.

Figure 22.

Detection result of (CA)CFAR on the first-frame RD spectrum.

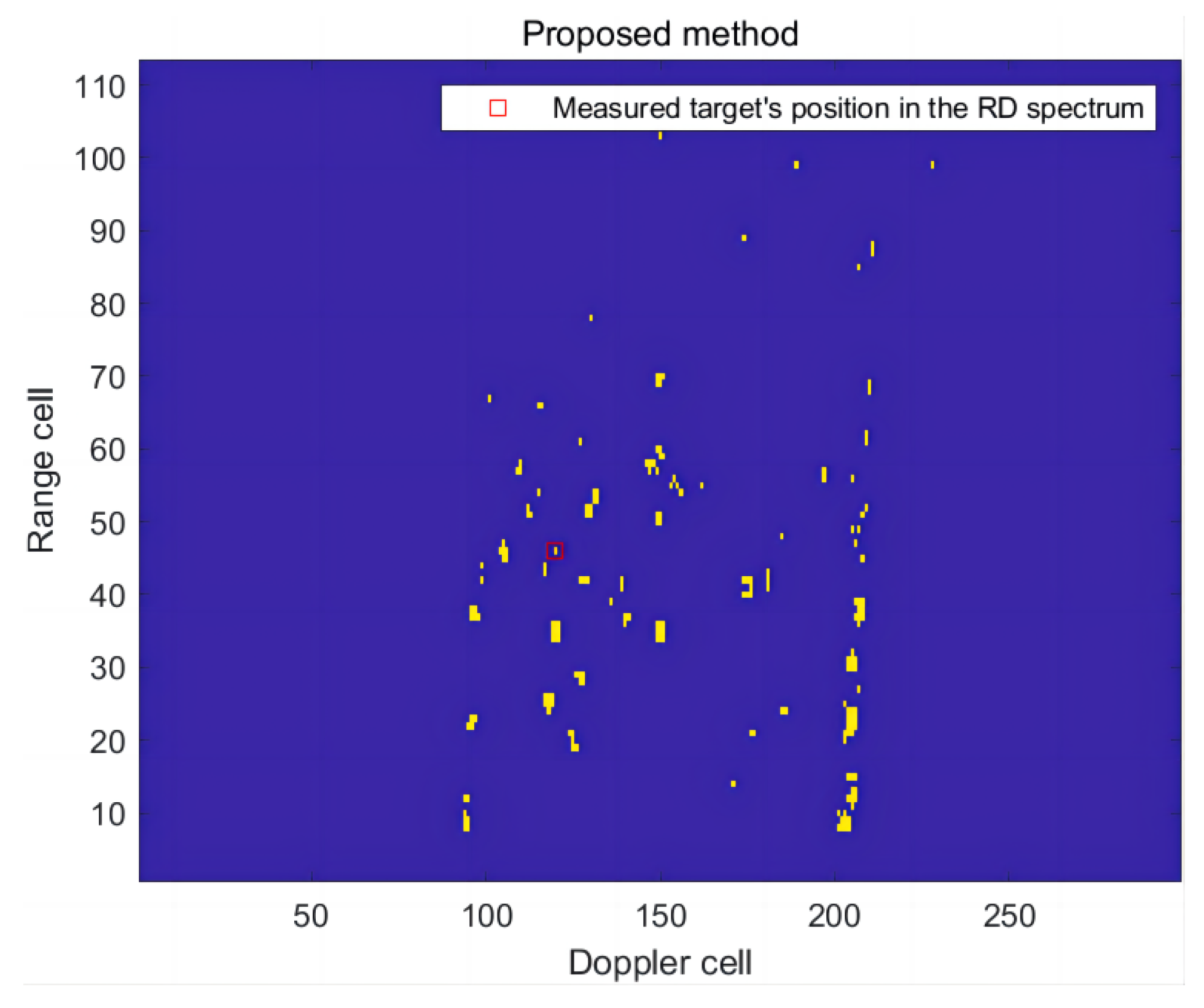

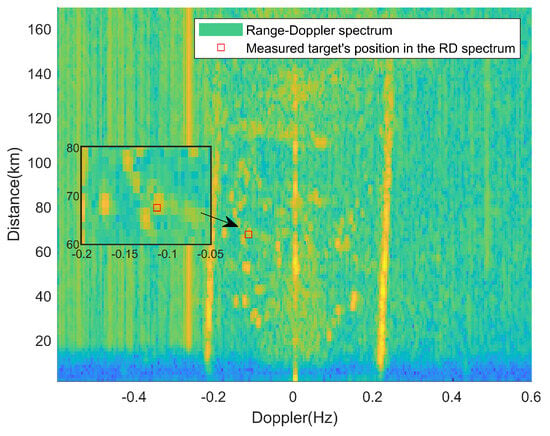

In the second frame of the RD spectrum, the radial distance of the ship target is 68.7 km, and the radial speed is −3.79 m/s. The RD spectrum containing the measured targets is shown in Figure 23. The detection results are presented in Figure 24 and Figure 25.

Figure 23.

RD spectrum of the measured target in the second frame.

Figure 24.

Detection result of the proposed method on the second-frame RD spectrum.

Figure 25.

Detection result of (CA)CFAR on the second-frame RD spectrum.

In the third frame of the RD spectrum, the radial distance of the ship target is 67.5 km, and the radial speed is −3.80 m/s. The RD spectrum containing the measured target is shown in Figure 26, and the detection results are presented in Figures 27 and 28.

Figure 26.

RD spectrum of the measured target in the third frame.

Figure 27.

Detection result of the proposed method on the third-frame RD spectrum.

Figure 28.

Detection result of (CA)CFAR on the third-frame RD spectrum.

The detection results on the three frames of measured RD spectra show that the proposed method, whose detection model was trained and built on simulation data, accurately detects targets in fully measured RD spectra. Furthermore, compared with the traditional CFAR method, it effectively mitigates false alarms caused by different types of clutter.

5. Conclusions

Inspired by the role of human visual processing in target detection and false alarm control, this paper proposes a cascade detector that combines the adaptive constant false alarm rate detector (VI)CFAR with a CNN for target detection on the RD spectrum of HFSWR. To address the limited detection performance of CFAR-CNN caused by the convergence of the CNN model, a dual-detection maps fusion method was further proposed to compensate for the loss of detection performance. Datasets were then constructed with the target embedding method, and the performance of the proposed method was verified and evaluated. The experimental results demonstrate the effectiveness and superiority of the method, and its feasibility in practical applications was confirmed on fully measured target data. The proposed method therefore has research value and offers a new direction for applying machine learning to CFAR processing.

Author Contributions

Conceptualization, Y.J., A.L. and C.Y.; methodology, Y.J.; software, Y.J.; validation, Y.J.; formal analysis, Y.J.; investigation, Y.J.; resources, Y.J. and X.C.; data curation, Y.J., J.W. and X.C.; writing—original draft preparation, Y.J.; writing—review and editing, Y.J.; visualization, Y.J.; supervision, A.L. and C.Y.; project administration, A.L. and C.Y.; funding acquisition, A.L. and C.Y. All authors have read and agreed to the published version of the manuscript.

Funding

The research and publication of the article were funded by the National Natural Science Foundation of China under Grant 62031015 and Mount Taishan Scholar Distinguished Expert Plan under Grant 20190957.

Data Availability Statement

The data pertaining to this scientific research are currently unavailable for public access due to confidentiality constraints. For inquiries regarding access to the data, please get in touch with the corresponding author.

Acknowledgments

We extend our appreciation to the editor and anonymous reviewers for their valuable insights and recommendations. Additionally, our gratitude goes to the research team stationed at the Harbin Institute of Technology, Weihai, for generously sharing the high-frequency surface wave radar data with us.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Sun, W.; Ji, M.; Huang, W.; Ji, Y.; Dai, Y. Vessel tracking using bistatic compact HFSWR. Remote Sens. 2020, 12, 1266.

- Wu, M.; Zhang, L.; Niu, J.; Wu, Q.M.J. Target detection in clutter/interference regions based on deep feature fusion for HFSWR. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 5581–5595.

- Sun, W.; Li, X.; Ji, Y.; Dai, Y.; Huang, W. Plot Quality Aided Plot-to-Track Association in Dense Clutter for Compact High-Frequency Surface Wave Radar. Remote Sens. 2022, 15, 138.

- Gini, F.; Rangaswamy, M. Knowledge-Based Radar Detection, Tracking, and Classification; Wiley Online Library: Hoboken, NJ, USA, 2008; pp. 1–269.

- Finn, H.M.; Johnson, R.S. Adaptive detection mode with threshold control as a function of spatially sampled clutter-level estimates. RCA Rev. 1968, 29, 414–465.

- Rohling, H. Radar CFAR thresholding in clutter and multiple target situations. IEEE Trans. Aerosp. Electron. Syst. 1983, AES-19, 608–621.

- He, Y.; Guan, J.; Huang, Y.; Jian, T. Radar Target Detection and Constant False Alarm Rate Processing; Tsinghua University Press: Beijing, China, 2023; pp. 34–72.

- Barnum, J. Ship detection with high-resolution HF skywave radar. IEEE J. Ocean. Eng. 1986, 11, 196–209.

- He, Y.; Guan, J.; Meng, X. Radar Target Detection and Constant False Alarm Processing; Tsinghua University Press: Beijing, China, 2011.

- Majumder, U.K.; Blasch, E.P.; Garren, D.A. Deep Learning for Radar and Communications Automatic Target Recognition. Microw. J. 2022, 1, 65.

- Stehwien, W.; Haykin, S.A. A statistical radar clutter classifier. In Proceedings of the IEEE National Radar Conference, Dallas, TX, USA, 29–30 March 1989.

- Pierucci, L.; Bocchi, L. Improvements of radar clutter classification in air traffic control environment. In Proceedings of the 2007 IEEE International Symposium on Signal Processing and Information Technology, Giza, Egypt, 15–18 December 2007; pp. 721–724.

- Haykin, S. Cognitive radar: A way of the future. IEEE Signal Process. Mag. 2006, 23, 30–40.

- Besson, O.; Tourneret, J.-Y.; Bidon, S. Knowledge-Aided Bayesian Detection in Heterogeneous Environments. IEEE Signal Process. Lett. 2007, 14, 355–358.

- Li, Q.; Yao, Y. A knowledge-based CFAR detector. Radar Sci. Technol. 2012, 10.

- Wang, L.; Tang, J.; Liao, Q. A Study on Radar Target Detection Based on Deep Neural Networks. IEEE Sens. Lett. 2019, 3, 1–4.

- Zhang, W.; Li, Q.; Li, M.; Wu, Q. High frequency ground wave radar based on optimal error self-correction extreme learning machine. J. Autom. 2021, 47, 108–120.

- Li, Q.; Han, Y. RD spectrum map sea surface target detection algorithm of high-frequency ground wave radar. Mod. Radar 2020, 42, 8.

- Zhang, X.; Wu, H.; Sun, H.; Ying, W. Multireceiver SAS imagery based on monostatic conversion. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 10835–10853.

- Yang, P. An imaging algorithm for high-resolution imaging sonar system. Multimed. Tools Appl. 2023.

- Richards, M. Fundamentals of Radar Signal Processing; McGraw-Hill: New York, NY, USA, 2005.

- Smith, M.E.; Varshney, P.K. Intelligent CFAR processor based on data variability. IEEE Trans. Aerosp. Electron. Syst. 2000, 36, 837–847.

- Gandhi, P.P.; Kassam, S.A. Analysis of CFAR processors in nonhomogeneous background. IEEE Trans. Aerosp. Electron. Syst. 1988, 24, 427–445.

- Viswanathan, R.; Eftekhari, A. A selection and estimation test for multiple target detection. IEEE Trans. Aerosp. Electron. Syst. 1992, 28, 509–519.

- Verma, A.K. Variability Index Constant False Alarm Rate (VI-CFAR) for Sonar Target Detection. In Proceedings of the 2008 International Conference on Signal Processing, Communications and Networking, Chennai, India, 4–6 January 2008; pp. 138–141.

- Qiu, X. Neural Network and Deep Learning. J. Chin. Inf. Sci. 2020, 7, 109.

- Sun, W. A Vessel Azimuth and Course Joint Re-Estimation Method for Compact HFSWR. IEEE Trans. Geosci. Remote Sens. 2020, 58, 1041–1051.

- Li, Q.; Zhang, W. Automatic Detection of Ship Targets Based on Wavelet Transform for HF Surface Wavelet Radar. IEEE Geosci. Remote Sens. Lett. 2017, 14, 714–718.

- Sun, W.; Huang, W.; Ji, Y. Vessel tracking with small-aperture compact high-frequency surface wave radar. In Proceedings of the OCEANS 2019, Marseille, France, 17–20 June 2019; pp. 1–4.

- Cruz, N.C.; González-Redondo, Á.; Redondo, J.L. Black-box and surrogate optimization for tuning spiking neural models of striatum plasticity. Front. Neuroinform. 2022, 16, 1017222.

- Akhtar, J.; Olsen, K.E. A Neural Network Target Detector with Partial CA-CFAR Supervised Training. In Proceedings of the 2018 International Conference on Radar (RADAR), Brisbane, Australia, 27–31 August 2018; pp. 1–6.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).