Mountain Vegetation Classification Method Based on Multi-Channel Semantic Segmentation Model

Abstract

1. Introduction

2. Data and Method

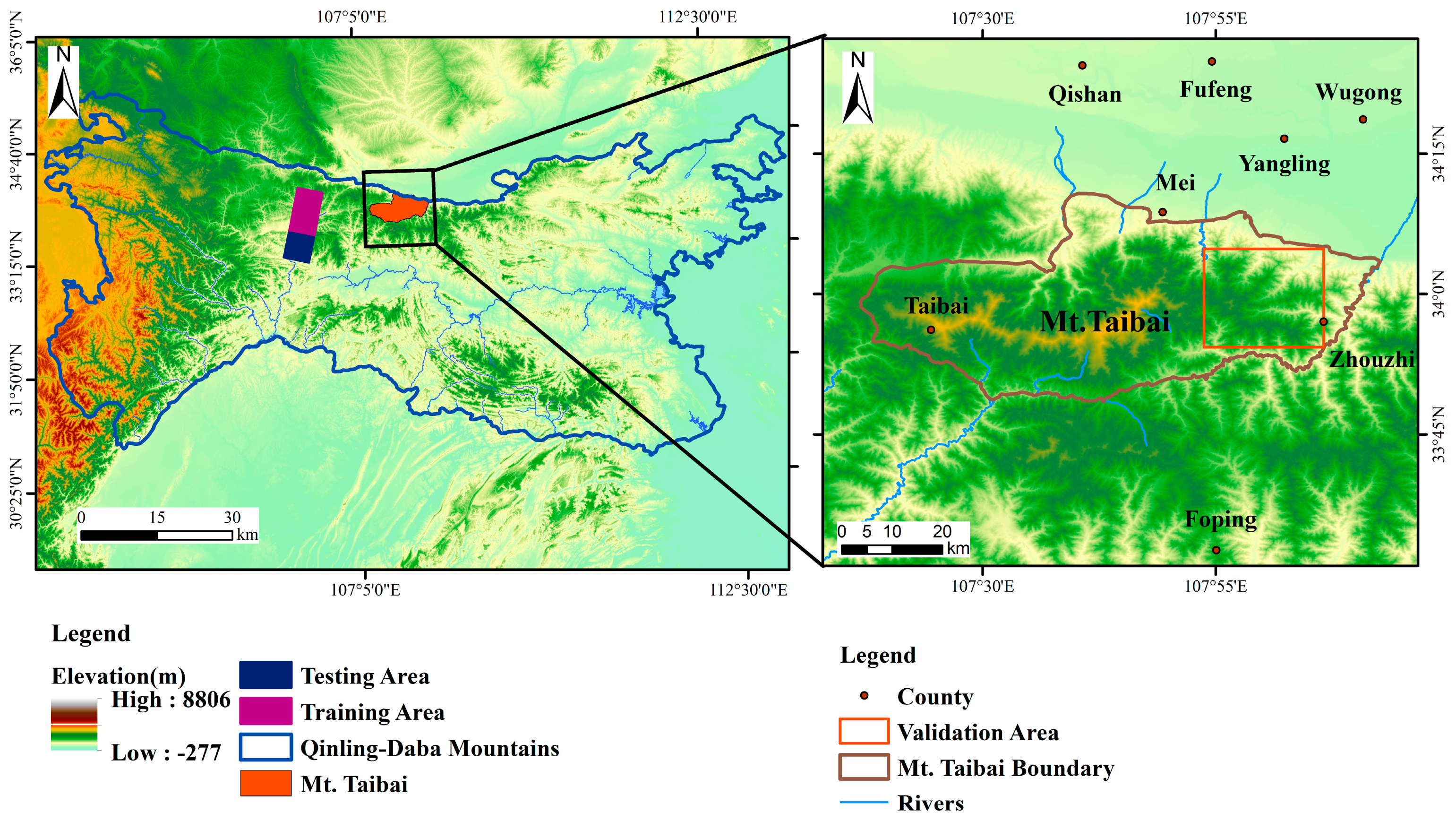

2.1. Study Area

2.2. Data Source and Preprocessing

2.3. Methods

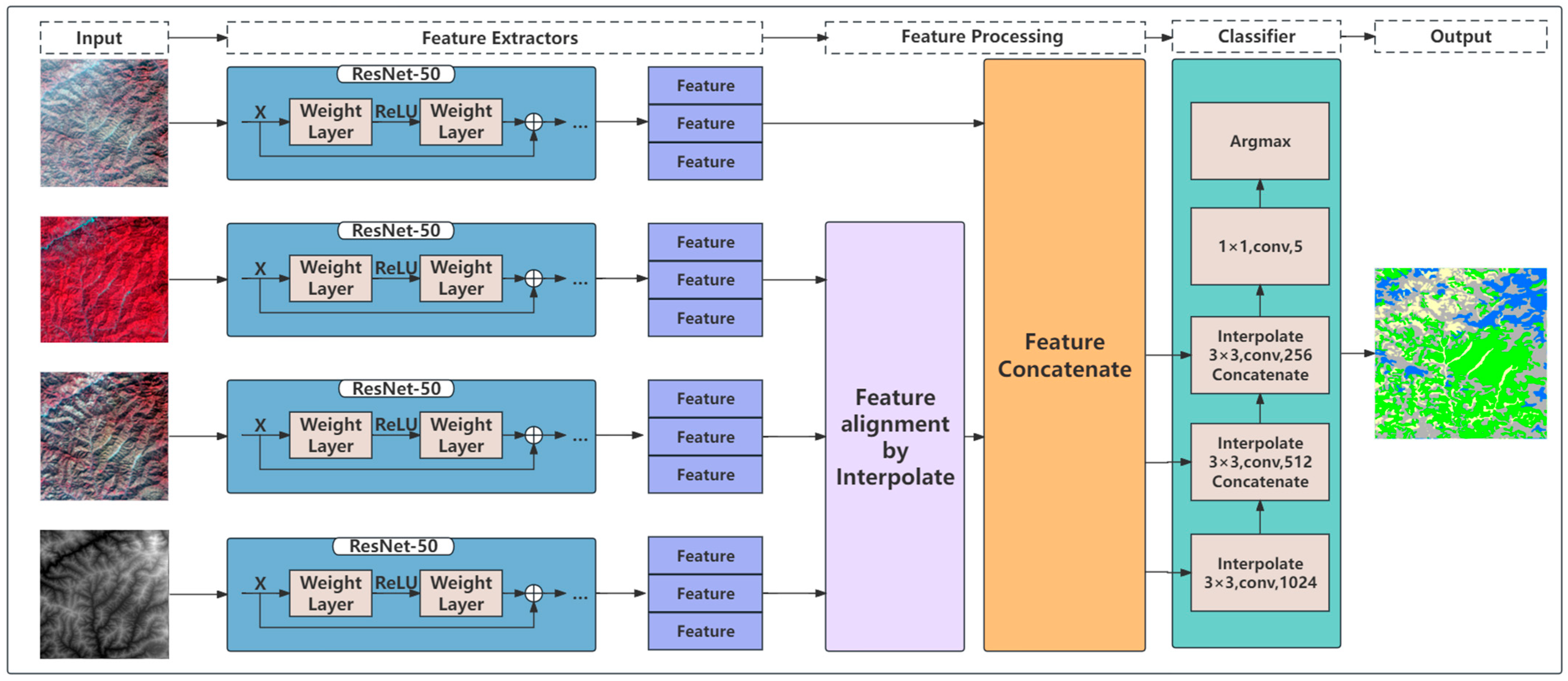

- (1) The model was modified to have a four-channel structure that extracts features from four sources: 2 m resolution winter remote sensing imagery, 16 m resolution winter imagery, 16 m resolution summer imagery, and 16 m resolution DEM data. Because these four inputs have different feature distributions, a ResNet-50 pre-trained on ImageNet was used to extract features from each channel separately, rather than simply stacking the bands of the different data sources.

- (2) The model was built to accept input images of different sizes, depending on the data source. The pixel size of the 16 m imagery is 8 times that of the 2 m imagery in each dimension, so one 16 m pixel covers the same ground area as 8 × 8 = 64 pixels at 2 m resolution. After the model extracted features from the input images, a scale check was therefore performed and the features were aligned by resampling or interpolation so that they could be fused in subsequent stages.
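The scale check and alignment step in (2) can be sketched as follows. This is a minimal, dependency-free illustration under stated assumptions, not the authors' implementation: it assumes each branch (one per data source, each with its own ResNet-50 encoder in the paper) has already produced a feature map stored as nested lists `[channels][rows][cols]`, and it uses nearest-neighbour upsampling followed by channel-wise concatenation as one possible resampling and fusion choice. The names `spatial_shape`, `upsample_nearest`, and `align_and_fuse` are hypothetical, and the toy values are invented. In a PyTorch model, the same step would typically use `torch.nn.functional.interpolate` before `torch.cat` along the channel dimension.

```python
from typing import List, Tuple

# Hypothetical layout: one feature map per data source, as [channels][rows][cols].
FeatureMap = List[List[List[float]]]

# One 16 m pixel spans 8 x 8 pixels of the 2 m grid (16 / 2 = 8 per axis).
SCALE_16M_TO_2M = 16 // 2


def spatial_shape(fmap: FeatureMap) -> Tuple[int, int]:
    """Return (rows, cols) of the first channel of a feature map."""
    return len(fmap[0]), len(fmap[0][0])


def upsample_nearest(fmap: FeatureMap, factor: int) -> FeatureMap:
    """Nearest-neighbour upsampling: each cell becomes a factor x factor block."""
    out: FeatureMap = []
    for channel in fmap:
        rows = []
        for row in channel:
            expanded = [value for value in row for _ in range(factor)]
            rows.extend(list(expanded) for _ in range(factor))
        out.append(rows)
    return out


def align_and_fuse(branches: List[FeatureMap]) -> FeatureMap:
    """Scale check, resample coarser maps to the finest grid, then concatenate channels."""
    # The finest grid is the one with the most cells.
    target = max((spatial_shape(b) for b in branches), key=lambda s: s[0] * s[1])
    aligned = []
    for fmap in branches:
        rows, cols = spatial_shape(fmap)
        if (rows, cols) != target:
            factor = target[0] // rows
            # Scale check: only integer, equal-per-axis scale factors are handled here.
            if rows * factor != target[0] or cols * factor != target[1]:
                raise ValueError(f"cannot align {rows}x{cols} to {target[0]}x{target[1]}")
            fmap = upsample_nearest(fmap, factor)
        aligned.append(fmap)
    # Channel-wise concatenation of the aligned branch features.
    return [channel for fmap in aligned for channel in fmap]


# Toy 32 m x 32 m patch: 2 x 2 cells at 16 m, 16 x 16 cells at 2 m (invented values).
coarse = 2
fine = coarse * SCALE_16M_TO_2M
winter_2m = [[[1.0] * fine for _ in range(fine)]]
winter_16m = [[[0.5, 0.25], [0.75, 1.0]]]
summer_16m = [[[0.1, 0.2], [0.3, 0.4]]]
dem_16m = [[[1200.0, 1250.0], [1300.0, 1350.0]]]

fused = align_and_fuse([winter_2m, winter_16m, summer_16m, dem_16m])
print(len(fused), spatial_shape(fused))  # 4 (16, 16)
```

Nearest-neighbour upsampling keeps each coarse value unchanged across its 8 × 8 footprint, whereas bilinear interpolation would smooth values between neighbouring 16 m cells; the text only specifies "resampling or interpolation", so either is a plausible reading.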

3. Results

3.1. Training Results of the Models

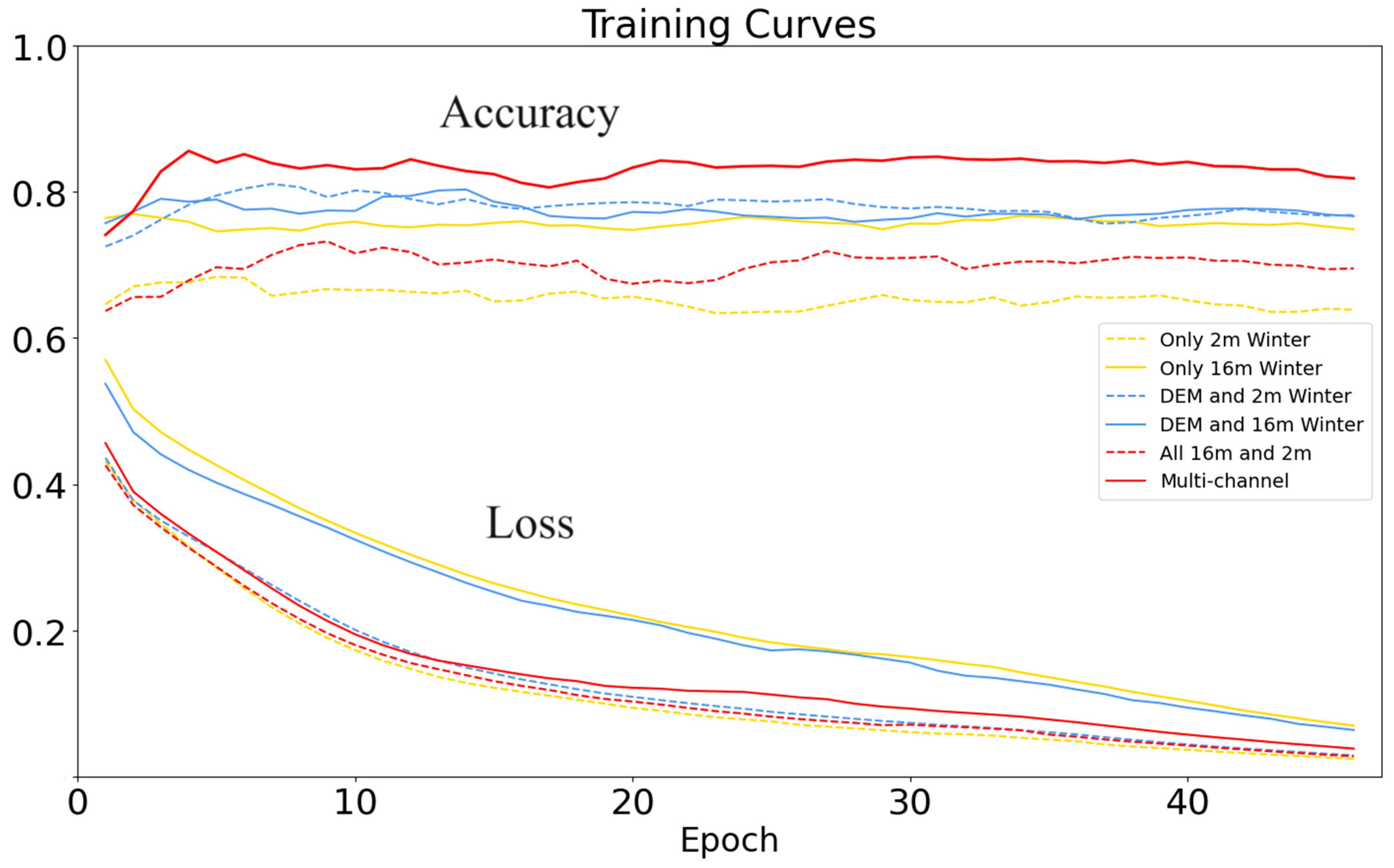

- (1) Training results of single-channel models: According to the results of Experiments 1 and 2 in Group 1 (Figure 3), the 16 m resolution remote sensing imagery yielded better classification results than the 2 m resolution imagery. This suggests that although the high-resolution imagery contains richer textural and structural features, the single-channel model did not fully exploit this information.

- (2) Training results of two-channel models: The training results of Experiments 3 and 4 in Group 2 (Figure 3) were very close to each other and higher than those of Group 1. This indicates that adding DEM data can significantly improve classification and that the two-channel models outperformed the single-channel models.

- (3) Training results of multi-channel models: Experiment 5 in Group 3 (Figure 3), which used three image channels, performed poorly: its training result was only slightly higher than that of Experiment 1 (single-channel 2 m resolution imagery) and significantly lower than those of Experiments 3 and 4 in Group 2 and of Experiment 2 in Group 1 (single-channel 16 m resolution imagery). Adding image channels alone, therefore, does not necessarily improve classification. In contrast, Experiment 6 (the multi-channel model with three image channels and one DEM channel) achieved significantly better training results than all other experiments, indicating that a multi-channel model fusing DEM data with multi-source imagery is effective and substantially improves the accuracy of mountain vegetation classification.

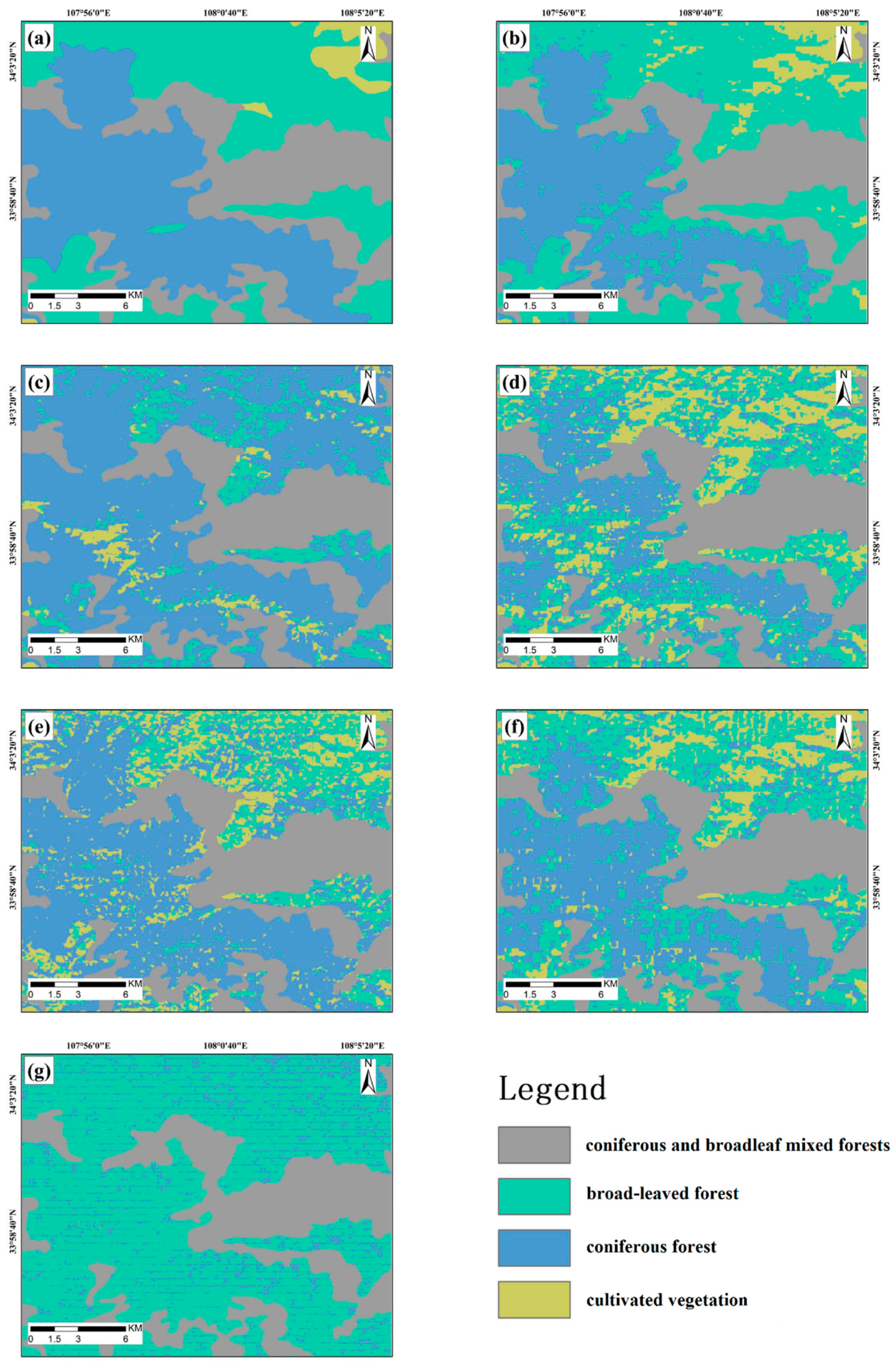

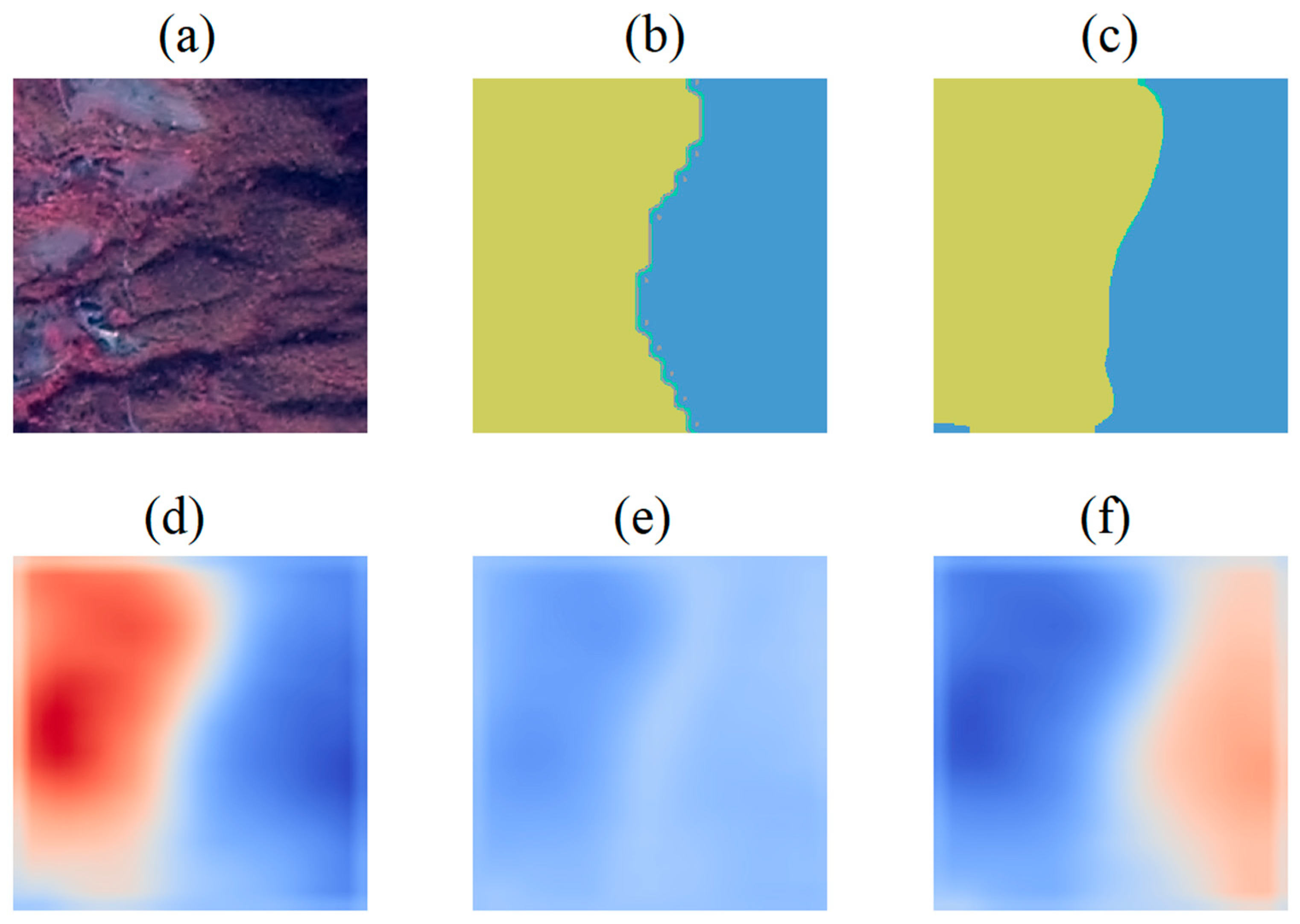

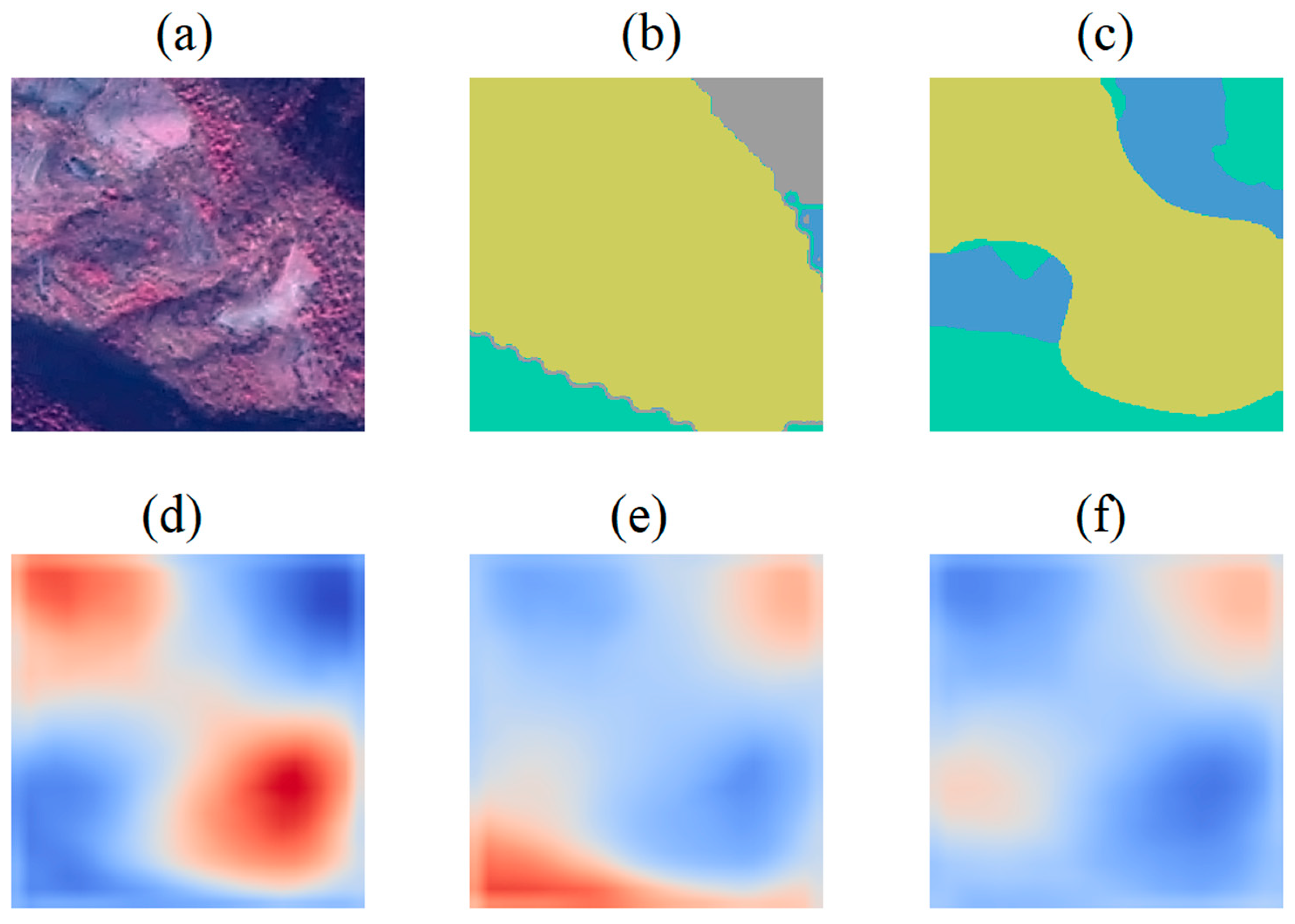

3.2. Classification Results of Mountain Vegetation

- (1) Classification results of the models

- (2) Classification accuracy of the models

4. Discussion

- (1) The effect of the labeled data on the classification accuracy

- (2) Effect of data slicing on classification accuracy

- (3) Effect of DEM on the vegetation classification

- (4) Comparison with other vegetation classification methods in Mt. Taibai

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Data Type | Sensor | Time | Bands |
|---|---|---|---|
| 2 m resolution remote sensing image | ZY3 and GF2 | winter | 4 |
| 16 m resolution remote sensing image | GF1 | winter and summer | 4 |
| digital elevation model (DEM) data | ZY3 | -- | 1 |

| Experiment | 2 m Resolution Winter Image | 16 m Resolution Winter Image | 16 m Resolution Summer Image | DEM Image |
|---|---|---|---|---|
| 1. Only 2 m winter | √ | | | |
| 2. Only 16 m winter | | √ | | |
| 3. DEM and 2 m winter | √ | | | √ |
| 4. DEM and 16 m winter | | √ | | √ |
| 5. 16 m and 2 m | √ | √ | √ | |
| 6. Multi-channel model | √ | √ | √ | √ |

| Experiment | Correct Pixels: Cultivated Vegetation | Correct Pixels: Broadleaved Forests | Correct Pixels: Coniferous Forests | Correct Pixels: Total | Total Pixels | PA (%) | MIoU (%) |
|---|---|---|---|---|---|---|---|
| 1. Only 2 m winter | 144,658 | 2,546,833 | 7,654,571 | 10,346,062 | 17,567,654 | 58.9 | 30.5 |
| 2. Only 16 m winter | 559,270 | 3,971,499 | 5,851,712 | 10,382,481 | 17,567,654 | 59.1 | 36.1 |
| 3. DEM and 2 m winter | 388,856 | 3,439,351 | 7,723,454 | 11,551,661 | 17,567,654 | 65.8 | 39.5 |
| 4. DEM and 16 m winter | 459,949 | 5,153,889 | 6,475,331 | 12,089,169 | 17,567,654 | 68.8 | 43.9 |
| 5. All 16 m and 2 m | 1229 | 7,099,200 | 724,230 | 7,824,659 | 17,567,654 | 44.5 | 16.7 |
| 6. Multi-channel model | 537,760 | 7,468,935 | 7,063,657 | 15,070,352 | 17,567,654 | 85.8 | 65.7 |
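As a consistency check on the accuracy table, pixel accuracy (PA) can be recomputed as the correct total divided by the total pixel count: every reported PA value matches this ratio to one decimal place, and each correct total equals the sum of its three per-class counts. The plain-Python sketch below performs that arithmetic. MIoU cannot be recomputed from the table because it also requires the pixels wrongly assigned to each class, so the hypothetical `mean_iou` helper is shown only on made-up confusion matrices; it uses the standard per-class IoU = TP / (TP + FP + FN) averaged over classes, which is an assumption about how the paper computed MIoU. All names in the block are illustrative.

```python
# Values transcribed from the accuracy table; names are illustrative.
TOTAL_PIXELS = 17_567_654

# experiment -> (cultivated vegetation, broadleaved forests, coniferous forests, correct total)
CORRECT_PIXELS = {
    1: (144_658, 2_546_833, 7_654_571, 10_346_062),
    2: (559_270, 3_971_499, 5_851_712, 10_382_481),
    3: (388_856, 3_439_351, 7_723_454, 11_551_661),
    4: (459_949, 5_153_889, 6_475_331, 12_089_169),
    5: (1_229, 7_099_200, 724_230, 7_824_659),
    6: (537_760, 7_468_935, 7_063_657, 15_070_352),
}
REPORTED_PA = {1: 58.9, 2: 59.1, 3: 65.8, 4: 68.8, 5: 44.5, 6: 85.8}


def pixel_accuracy(correct: int, total: int) -> float:
    """PA in percent: correctly classified pixels over all evaluated pixels."""
    return 100.0 * correct / total


def mean_iou(confusion):
    """MIoU in percent from a square confusion matrix (assumed standard definition).

    confusion[i][j] counts pixels of true class i predicted as class j.
    Classes absent from both reference and prediction are skipped.
    """
    n = len(confusion)
    ious = []
    for k in range(n):
        tp = confusion[k][k]
        fn = sum(confusion[k]) - tp                       # pixels of class k that were missed
        fp = sum(confusion[i][k] for i in range(n)) - tp  # other classes labelled as k
        if tp + fp + fn:
            ious.append(tp / (tp + fp + fn))
    return 100.0 * sum(ious) / len(ious)


computed_pa = {}
for exp, (*per_class, correct_total) in CORRECT_PIXELS.items():
    # Transcription check: per-class counts must add up to the reported correct total.
    assert sum(per_class) == correct_total, f"experiment {exp}: class counts do not add up"
    computed_pa[exp] = round(pixel_accuracy(correct_total, TOTAL_PIXELS), 1)
    print(f"Experiment {exp}: PA = {computed_pa[exp]}% (reported {REPORTED_PA[exp]}%)")

# Toy 2-class example with made-up counts: IoU is 0.5 for each class.
print(mean_iou([[2, 1], [1, 2]]))  # 50.0
```

The assertion inside the loop doubles as a transcription check: it fails if any per-class count in the table was mistyped.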

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, B.; Yao, Y. Mountain Vegetation Classification Method Based on Multi-Channel Semantic Segmentation Model. Remote Sens. 2024, 16, 256. https://doi.org/10.3390/rs16020256
