Abstract

Accurate cultivated land parcel data are an essential analytical unit for further agricultural monitoring, yield estimation, and precision agriculture management. However, the high degree of landscape fragmentation and the irregular shapes of cultivated land parcels, influenced by topography and human activities, limit the effectiveness of parcel extraction. The visual semantic segmentation model based on the Segment Anything Model (SAM) provides opportunities for extracting multi-form cultivated land parcels from high-resolution images; however, the performance of the SAM in extracting cultivated land parcels requires further exploration. To address the difficulty in obtaining parcel extraction that closely matches the true boundaries of complex large-area cultivated land parcels, this study used segmentation patches with cultivated land boundary information obtained from SAM unsupervised segmentation as constraints, which were then incorporated into the subsequent multi-scale segmentation. A combined method of SAM unsupervised segmentation and multi-scale segmentation was proposed, and it was evaluated in different cultivated land scenarios. In plain areas, the precision, recall, and IoU for cultivated land parcel extraction improved by 6.57%, 10.28%, and 9.82%, respectively, compared to basic SAM extraction, confirming the effectiveness of the proposed method. In comparison to basic SAM unsupervised segmentation and point-prompt SAM conditional segmentation, the SAM unsupervised segmentation combined with multi-scale segmentation achieved considerable improvements in extracting complex cultivated land parcels. This study confirms that, under zero-shot and unsupervised conditions, the SAM unsupervised segmentation combined with the multi-scale segmentation method demonstrates strong cross-region and cross-data source transferability and effectiveness for extracting complex cultivated land parcels across large areas.

1. Introduction

Cultivated land parcels are the fundamental units of precision agriculture management [1]. The precise delineation of cultivated land parcels holds significant scientific research value in various fields, such as crop asset estimation [2,3], crop-type mapping [4,5], and farmland change detection [6,7]. Traditional cultivated land parcel extraction relies on manual visual interpretation, which, while highly accurate, requires significant human resources [8]. The complete extraction of complex cultivated land parcels, characterized by high landscape fragmentation and irregular distribution, is of great importance for achieving precision agriculture. Although various cultivated land parcel extraction methods have been developed, most are limited to specific, more regular parcel types [9,10]. Currently, no effective method exists for extracting complex cultivated land parcels across large areas under different scenarios.

In cultivated land parcel extraction, accurate boundary information is a critical component for the effective delineation of parcels [11]. The identification of real parcels in remote sensing imagery relies on precise delineation that includes boundary line information. To address the challenges in extracting object boundaries from high-resolution remote sensing images, Refs. [12,13] employed a strategy of line–surface association constraints to improve segmentation quality. This method utilizes object edge lines as constraints, incorporating them into subsequent segmentation processes, which effectively reduces over-segmentation errors and enhances the completeness and accuracy of the segmentation. This line–surface combination segmentation model has also found many similar applications in cultivated land parcel extraction. Ref. [14] used a semantic segmentation network for coarse-scale image region segmentation, followed by richer convolutional features (RCFs) to optimize parcel boundary extraction at a finer scale. Ref. [15] used feature lines of cultivated land edges as constraints, integrating them into semantic segmentation results, and proposed a multi-level segmentation method for cultivated land parcel extraction based on semantic boundaries. This combined segmentation strategy effectively increased the completeness and accuracy of segmentation boundaries, providing a new technical approach for the precise extraction of cultivated land in complex mountainous areas. Additionally, cultivated land parcel boundaries can be further classified into hard boundaries and soft boundaries [16]. Hard boundaries are relatively stable, usually formed by roads (Figure 1a), and cultivated land parcels formed by hard boundaries are referred to as management parcels, within which multiple crop types may be grown during the same period. 
In contrast, soft boundaries are natural boundaries formed by different planting types and exhibit seasonal variation characteristics (Figure 1b). Cultivated land parcels formed by soft boundaries, or a combination of soft and hard boundaries, are called planting parcels, which consist of a single crop, thus exhibiting higher homogeneity. In complex cultivated land scenarios, the accurate extraction of planting parcels can facilitate more efficient monitoring and resource allocation for cultivated land.

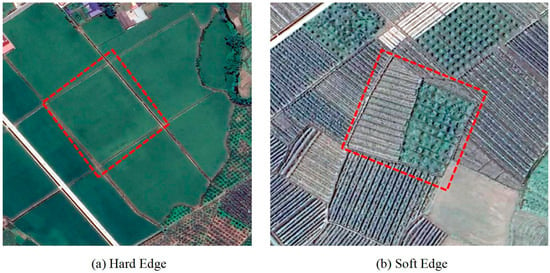

Figure 1.

The classification of field boundary types, in which the two red dashed frames enclose different types of field parcels: (a) hard boundaries are stable and regular, and fields composed of hard boundaries often contain a variety of crops; (b) soft boundaries are variable and irregular, and fields composed of soft boundaries or a combination of soft and hard boundaries are homogeneous, i.e., for a single crop.

Due to the morphological variability in cultivated land parcels and issues like blurred edges, the precise delineation of cultivated land parcels in high-resolution remote sensing images remains challenging. Previous methods for cultivated land parcel delineation were mostly developed for large cultivated areas [17]. However, there is currently no effective method for accurately extracting small, complex cultivated land parcels with irregular shapes, diverse planting types, and blurred boundaries. Cultivated land extraction methods can be categorized into two main approaches: traditional image segmentation methods and deep learning-based segmentation methods. Traditional image segmentation methods widely used for extracting cultivated land include edge detection [18,19] and region segmentation [20,21]. A commonly employed method for feature extraction in region segmentation is multi-scale segmentation. It achieves feature extraction by analyzing the spectral heterogeneity of pixels or regions at different scales and applying region growing or merging techniques [22,23]. The strength of multi-scale segmentation lies in its ability to quickly capture features at different scales, resulting in relatively complete segmented patches. Ref. [24] considered the scale differences between different types of features and used an iterative aggregation strategy to combine homogeneous pixels into objects, thereby generating cultivated land parcels with higher boundary consistency. Ref. [25] proposed an automatic optimal scale (MSAOS) method based on mean shift to extract parcels and confirmed the effectiveness of multi-scale segmentation for extracting cultivated land parcels. However, a major limitation of multi-scale segmentation lies in the difficulty of obtaining optimal parameters for segmenting complex cultivated land parcels [26], which can lead to severe under-segmentation or over-segmentation errors [27]. 
Additionally, the resulting segmented patches often fail to closely align with the true boundaries of parcels, making it difficult to directly apply the segmented cultivated land parcels, which lack precise boundary information, in precision agriculture management.

Deep learning methods have opened new possibilities for boundary extraction in complex scenarios [28], such as road extraction [29], building extraction [30], and cultivated land parcel extraction [31,32]. Compared to traditional image segmentation methods, deep learning methods can achieve higher accuracy in the task of extracting complex cultivated land parcels. Ref. [33] extracted complex cultivated land parcels based on an improved pyramid scene parsing network, achieving a maximum accuracy of 92.31%. Ref. [34] proposed a hierarchical strategy based on the fragmentation degree of cultivated land and combined it with a recurrent residual convolutional neural network, effectively reducing the impact of region on cultivated land parcel extraction. Although deep learning methods can produce relatively complete segmented patches in cultivated land parcel extraction, they often fail to extract results from imagery that match the visually perceived planting parcels. Therefore, many studies have employed combination strategies using different methods to enhance the ability to extract the true boundaries of complex fragmented cultivated land parcels. For example, Ref. [35] used watershed segmentation as a post-processing step to connect the discrete boundaries detected by the semantic segmentation model, thereby improving boundary accuracy in the segmentation results. Despite the strengths of deep learning methods in extracting cultivated land parcels, they are typically constrained to specific, more regular parcel types and heavily reliant on the quantity and quality of training samples. Additionally, deep learning models are susceptible to the heterogeneity of farmland areas [36], making them difficult to apply for large-scale cultivated land parcel extraction. Therefore, developing an efficient and transferable method for segmenting small, highly fragmented cultivated land parcels is of great significance.

The Segment Anything Model (SAM) is an efficient segmentation system released by Meta AI that can generalize segmentation to unfamiliar objects and images with zero-shot learning, without the need for additional training [37]. Compared to traditional segmentation models, the fundamental difference of the SAM lies in its ability to perform segmentation tasks directly using a pre-trained model, without the need for specialized training or fine-tuning for each task or dataset [38]. Currently, the powerful visual semantic segmentation capabilities of the SAM have been applied in various fields [39], such as medical image analysis [40,41], image rendering [42], and style transfer [43]. However, in remote sensing applications, the SAM often fails to produce optimal results when applied directly due to the unique imaging characteristics of remote sensing images [44]. Thus, the SAM needs to be further developed to adapt to remote sensing applications and address its limitations for specific tasks [45,46,47]. The powerful semantic segmentation capabilities of the SAM offer a new perspective on cultivated land parcel extraction. In the task of cultivated land parcel segmentation, especially in scenarios with significant contrast differences [48], the visual encoder advantages are more pronounced, meaning that the segmented patches contain the semantics of cultivated land boundaries, and the segmentation results are closer to the actual planting parcels. However, using the SAM for unsupervised segmentation in cultivated land parcel extraction also faces some challenges. In semantic segmentation tasks, the SAM is prone to boundary noise interference that is highly related to image quality, particularly for fragmented and small parcels, which may lead the model to mistakenly identify some local parcels as background, resulting in unsegmented areas [49]. 
Current research on extracting cultivated land parcels using the SAM remains at the technical and methodological level, lacking a practical solution for the extraction of complex cultivated land parcels across larger areas and in multiple scenarios.

Traditional parcel extraction methods struggle to obtain effective segmented patches that are close to the true boundaries. For example, multi-scale segmentation extracts parcels as irregular patches without boundary line features, which cannot directly correspond to the actual parcel boundaries. Semantic segmentation methods usually obtain accurate surface information for cultivated land parcels, but the boundary extraction results are often poor [50]. In contrast, the powerful visual semantic segmentation of the SAM in cultivated land extraction can effectively yield regular segmented patches that include the semantics of cultivated land boundaries. Although the use of the SAM for cultivated land parcel extraction in high-resolution imagery is currently in the exploratory stage, the SAM has shown great potential in effective cultivated land parcel delineation through its zero-shot learning, unsupervised segmentation, and generalization capabilities [51]. However, in remote sensing imagery, many cultivated areas contain low-contrast and complex fragmented regions that the SAM may misidentify as background. Using the SAM alone for cultivated land extraction makes it difficult to achieve the complete and effective extraction of complex cultivated land parcels in large areas. Therefore, in order to obtain an automatic and effective method for the true delineation of cultivated land parcels in complex, large-area scenarios, this study, under zero-shot and unsupervised conditions, utilizes SAM unsupervised segmentation to extract parcels that include parcel boundary semantics and leverages the advantage of multi-scale segmentation to achieve more complete segmentation of complex cultivated land parcels. The SAM unsupervised segmentation patches are used as base map constraints and integrated into subsequent multi-scale segmentation, employing a combined strategy of constrained segmentation. 
This study proposes a method that combines SAM unsupervised segmentation with multi-scale segmentation, validating its effectiveness and cross-regional transferability across various complex cultivated land scenarios. The aim is to achieve a practical application solution for large-area, multi-scenario complex cultivated land parcel extraction based on the SAM under zero-shot and unsupervised conditions, thereby promoting the wider application of the SAM in the field of cultivated land extraction.

2. Study Area and Data

2.1. Study Area

As one of the important agricultural production areas in Southwest China, Meishan City in Sichuan Province has abundant cultivated land resources and a history of cultivation. The cultivated land in Meishan City primarily grows staple crops such as rice, wheat, and corn, while also planting economic crops like vegetables and fruits, forming a diversified agricultural planting structure. As a standardized demonstration area for the construction of high-standard farmland in China, Meishan City has been committed to the protection and utilization of farmland, improving land use efficiency and agricultural production quality. Thus, conducting cultivated land parcel extraction work in this area holds significant importance, as it can provide a scientific basis for government decision-making. The terrain in this area is diverse, with plains, gentle hills, and mountainous regions coexisting, and the agricultural planting in this region is also diverse, with the distribution of cultivated land showing fragmentation and complexity, posing a significant challenge for model-based plot extraction. To explore the effectiveness and cross-regional transferability of different methods based on the SAM for extracting complex cultivated land parcels, this study delineated four rectangular areas within the study region, each corresponding to a specific terrain type (Figure 2).

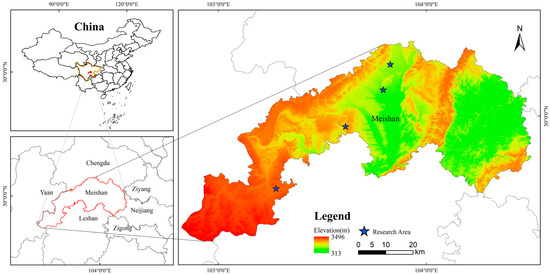

Figure 2.

Test areas for cultivated land parcel extraction (four blue five-pointed stars), selected across different terrain types using digital elevation model (DEM) data.

2.2. High-Resolution Remote Sensing Data

Previous studies have generally focused on extracting large cultivated land parcels, which do not require high image resolution. Conversely, this study focused on the extraction of complex and fragmented small areas of planted fields, using high-resolution remote sensing images to enhance the precision of parcel extraction. This study utilized data from the Jilin-1 KF satellite in the Jilin-1 satellite series, which can obtain a panchromatic resolution of 0.75 m, a multispectral resolution of 3 m, and a swath width of over 136 km. The data were fused to obtain RGB remote sensing images with a spatial resolution of 1 m [52]. The images were subjected to essential preprocessing procedures, including relative radiometric correction and orthorectification.

2.3. Other Data

The NASA DEM [53] is a seamless digital elevation model with a resolution of about 30 m, generated by reprocessing SRTM data. The elevation and slope information calculated from the NASA DEM data was used as a basis for selecting cultivated areas with different topographical features. Additionally, the non-cultivated areas were masked using the land use data of Meishan City from December 2022. These land use data were created using satellite imagery with a resolution better than 1 m and underwent strict visual interpretation by experts, quality checks, and field verification, achieving an accuracy of over 95%.
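As an illustration of how slope can be derived from gridded elevation data, the following minimal sketch applies central differences to a small DEM. The function name `slope_degrees`, the toy grid, and the 30 m default cell size are assumptions made for this example; the study does not state which tool or algorithm was used to compute slope.

```python
import math

def slope_degrees(dem, cell_size=30.0):
    """Slope in degrees at interior cells of a DEM, using central differences.

    dem is a list of rows of elevations in metres; border cells are left as None.
    """
    rows, cols = len(dem), len(dem[0])
    out = [[None] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            # Elevation gradients along the column (x) and row (y) directions
            dz_dx = (dem[r][c + 1] - dem[r][c - 1]) / (2 * cell_size)
            dz_dy = (dem[r + 1][c] - dem[r - 1][c]) / (2 * cell_size)
            out[r][c] = math.degrees(math.atan(math.hypot(dz_dx, dz_dy)))
    return out

# Toy 3 x 3 DEM that rises 30 m per 30 m cell towards the east
dem = [[100, 130, 160],
       [100, 130, 160],
       [100, 130, 160]]
slope = slope_degrees(dem)
```

Cells could then be grouped into terrain classes by thresholding elevation and slope; the thresholds themselves are not given in the text and would have to be chosen separately.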

3. Methods for Effective Cultivated Land Parcel Segmentation in Multiple Scenes

To verify the effectiveness and cross-region transferability of the SAM unsupervised segmentation combined with multi-scale segmentation for extracting cultivated land parcels, this study designed comparative experiments with different segmentation methods in various cultivated land scenarios using SAM segmentation. Figure 3 shows the overall workflow. Additionally, the SAM unsupervised segmentation in this experiment was conducted using the open-source Python package Segment-Geospatial (samgeo), version 0.10.2, which segments geospatial data using the Segment Anything Model [54]. The samgeo library currently supports the direct application of SAM segmentation to raster images, limited to 3-band 8-bit remote sensing images.

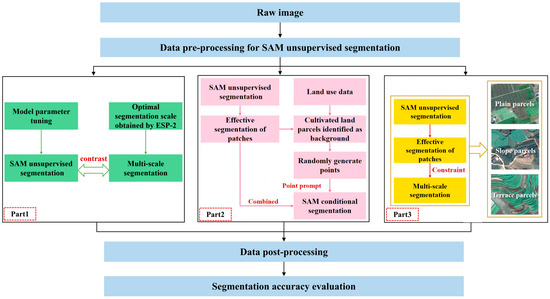

Figure 3.

A general flowchart of the segmentation framework. Part 1: to verify the feasibility of SAM unsupervised segmentation in extracting cultivated land parcels and to compare it with traditional multi-scale segmentation methods; Part 2: to evaluate the effectiveness of the SAM unsupervised segmentation combined with the point-prompt SAM conditional segmentation method for extracting cultivated land parcels; Part 3: to verify the effectiveness and cross-region transferability of the SAM unsupervised segmentation combined with multi-scale segmentation method in different cultivated land scenarios.

3.1. Data Preprocessing for SAM Unsupervised Segmentation

SAM unsupervised segmentation segments objects in images through visual semantic segmentation, where the contrast differences between objects can influence the final segmentation results to some extent [55]. In the process of extracting complex cultivated land parcels, the SAM typically identifies the cultivated land as the foreground and the roads between the fields as the background. However, for low-contrast, complex, and fragmented cultivated land parcels, the SAM may mistakenly identify the area as the background, leading to under-segmentation. Therefore, it is necessary to preprocess high-resolution images with different linear stretching methods before performing SAM unsupervised segmentation and compare the segmentation results to select the most suitable stretching method for extracting cultivated land parcels.
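The text does not name the specific linear stretches that were compared. As one plausible example, the sketch below implements a percentile-clip linear stretch that rescales a single band to the 8-bit range. The function name, the nearest-rank percentile rule, and the 2%/98% defaults are assumptions for illustration only.

```python
def percent_linear_stretch(values, lower_pct=2.0, upper_pct=98.0):
    """Linearly rescale one band to 0-255 after clipping at two percentiles."""
    ordered = sorted(values)
    n = len(ordered)
    # Nearest-rank percentiles (a simplification of what GIS packages do)
    lo = ordered[int(round((n - 1) * lower_pct / 100.0))]
    hi = ordered[int(round((n - 1) * upper_pct / 100.0))]
    if hi == lo:
        # A flat band carries no contrast to stretch
        return [0 for _ in values]
    stretched = []
    for v in values:
        clipped = min(max(v, lo), hi)  # saturate values outside [lo, hi]
        stretched.append(int(round(255 * (clipped - lo) / (hi - lo))))
    return stretched

# Hypothetical band with one bright outlier that would otherwise flatten contrast
band = [50, 60, 70, 80, 90, 100, 110, 120, 130, 900]
out = percent_linear_stretch(band, 0.0, 90.0)
```

Applying the same function to each of the three bands yields a 3-band 8-bit image. Different percentile pairs give the different stretches that can then be compared by their segmentation results.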

3.2. Experiments on Effective Division of Different Cultivated Land Parcels

3.2.1. Comparative Experiment of SAM and Multi-Scale Segmentation

The experiment selected a cultivated land scene of approximately 7 km² to test the performance of the SAM-based segmentation method in extracting cultivated land parcels. The land parcels in the experimental area had diverse cultivation types and varying distributions, and many areas of construction land and roads were interspersed among them. This blurred the boundaries of many cultivated land parcels and generated a large number of noise points during segmentation, making it harder for the model to extract cultivated land parcels accurately. Additionally, cultivated land parcels were classified into five levels based on their area: extra-large parcels (larger than 100 hectares), large parcels (16 to 100 hectares), medium parcels (2.56 to 16 hectares), small parcels (0.64 to 2.56 hectares), and very small parcels (smaller than 0.64 hectares) [56].
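The five area classes can be expressed as a small lookup function. This is a minimal sketch: the function names are hypothetical, and the handling of values that fall exactly on a class boundary (assigned here to the larger class, except at 100 hectares) is an assumption, since the text does not specify it.

```python
def classify_parcel(area_ha):
    """Return the size class of a parcel from its area in hectares."""
    if area_ha > 100:
        return "extra-large"
    if area_ha >= 16:
        return "large"
    if area_ha >= 2.56:
        return "medium"
    if area_ha >= 0.64:
        return "small"
    return "very small"

def square_metres_to_hectares(area_m2):
    """1 hectare = 10,000 square metres."""
    return area_m2 / 10000.0

# A 50 m x 60 m field is 0.3 ha and therefore a very small parcel
example_class = classify_parcel(square_metres_to_hectares(50 * 60))
```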

In this experiment, SAM unsupervised segmentation was used to delineate cultivated land parcels after applying a stretch to the high-resolution remote sensing images, with the SAM parameters adjusted accordingly. After model tuning and comparison, setting the batch option in the mask generation function to true and changing erosion_kernel from its default of none to 3 × 3 resulted in the best segmentation performance for complex, fragmented cultivated land parcels. Additionally, to verify the feasibility of extracting cultivated land parcels using SAM unsupervised segmentation, its results were compared with those obtained through traditional multi-scale segmentation. The multi-scale segmentation was conducted using eCognition Developer (EC) software, version 9.0. To select the optimal segmentation scale, the Estimation of Scale Parameter (ESP-2) tool developed by Ref. [57] was used, which computes the local variance (LV) of multi-layer images and its rate of change (ROC) to determine the optimal segmentation scale.
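The idea behind reading an LV-ROC curve can be sketched as follows. The rate of change is commonly computed as ROC = (LV_L − LV_{L−1}) / LV_{L−1} × 100, and local peaks of ROC mark candidate scales. ESP-2 itself runs inside eCognition; the code below only mirrors that logic, and the local variance values and function names are hypothetical.

```python
def rate_of_change(lv):
    """ROC (%) of local variance between consecutive scale levels."""
    return [100.0 * (lv[i] - lv[i - 1]) / lv[i - 1] for i in range(1, len(lv))]

def candidate_scales(scales, lv):
    """Scales at which ROC has a local peak (possible optimal scales)."""
    roc = rate_of_change(lv)  # roc[i] belongs to scales[i + 1]
    peaks = []
    for i in range(1, len(roc) - 1):
        if roc[i] > roc[i - 1] and roc[i] > roc[i + 1]:
            peaks.append(scales[i + 1])
    return peaks

# Hypothetical local variance values measured at six scale parameters
scales = [10, 20, 30, 40, 50, 60]
lv = [10.0, 12.0, 13.0, 15.6, 16.0, 16.2]
roc = rate_of_change(lv)
peaks = candidate_scales(scales, lv)
```

Several local peaks usually appear in practice, so the candidates still need to be compared against reference parcels before one scale is chosen.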

3.2.2. Effectiveness Experiment of SAM Conditional Segmentation with Point Prompts

Due to the highly fragmented landscape of complex cultivated land parcels, it is difficult to achieve ideal results by using a single segmentation method to extract cultivated land. The strength of the SAM lies in its nature as a promptable model, where effective masks can be ultimately predicted through high-quality and sufficient prompt interactions. Therefore, this study adopted a combined strategy of SAM unsupervised segmentation and SAM conditional segmentation with point prompts to evaluate the effectiveness of the SAM conditional segmentation method based on point prompts for extracting cultivated land parcels. The specific process of this method involves using the SAM unsupervised segmentation results from the first stage, overlaying them onto land use data, filtering out the areas identified as background in the SAM unsupervised segmentation process, and randomly generating enough points as model prompts. Points falling inside the unextracted cultivated land parcels are used, and SAM prompt segmentation is performed using single-point and multi-point prompts at different locations. Effective segmented patches are selected to supplement the unextracted cultivated land parcels from the first stage. By using the combination of SAM unsupervised segmentation and point-prompt SAM conditional segmentation, the performance of SAM-based cultivated land parcel extraction can be further improved.
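The prompt generation step can be sketched on a raster grid: cells that the land use data mark as cultivated but that the first-stage SAM result left as background are candidate prompt locations. This is a simplified illustration; the function name, the grid representation, and the fixed random seed are assumptions, and the actual SAM prompt call is not shown.

```python
import random

def sample_prompt_points(cultivated, extracted, n_points, seed=0):
    """Pick random (row, col) cells that are cultivated but not yet extracted."""
    candidates = [
        (r, c)
        for r, row in enumerate(cultivated)
        for c, is_cultivated in enumerate(row)
        if is_cultivated and not extracted[r][c]
    ]
    rng = random.Random(seed)  # fixed seed keeps the example reproducible
    return rng.sample(candidates, min(n_points, len(candidates)))

# 1 = cultivated according to land use data; 1 = already extracted by SAM
cultivated = [[1, 1, 0],
              [1, 1, 1],
              [0, 1, 1]]
extracted = [[1, 0, 0],
             [0, 0, 1],
             [0, 0, 0]]
points = sample_prompt_points(cultivated, extracted, n_points=3)
```

Each sampled cell would then be converted to image or map coordinates and passed to the model as a single-point prompt, or grouped with neighbours for multi-point prompts.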

3.2.3. Experiments of SAM Combined with Multi-Scale Segmentation in Various Scenarios

The cultivated land parcels segmented by SAM visual semantic segmentation contained semantic boundaries of cultivated land, which was difficult to achieve with traditional segmentation algorithms and deep learning semantic segmentation. This was also the crucial issue of accurately extracting cultivated land parcels. Therefore, this study proposed a combination method of SAM unsupervised segmentation and multi-scale segmentation to fully utilize the advantages of SAM visual semantic segmentation for cultivated land parcels and the ability of multi-scale segmentation to leverage spatial contextual information at different scales to ensure the completeness of cultivated land parcel extraction. First, SAM unsupervised segmentation was used to extract cultivated land parcels from preprocessed high-resolution remote sensing images, and the obtained results were post-processed by calculating the area and shape index of the segmented patches, and patches identified as background or noise, i.e., small patches below 100 square meters, were removed. Then, the processed SAM unsupervised segmentation patches containing the semantic boundaries of cultivated land parcels were used as conditional constraints. The combination strategy of constraint segmentation was applied, using the SAM unsupervised segmentation results as the base layer in EC for multi-scale segmentation. The ESP-2 tool was used to obtain the optimal segmentation scale suitable for extracting complex cultivated land, resulting in the optimal outcome of extracting cultivated land parcels using the SAM unsupervised segmentation combined with multi-scale segmentation method.
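The patch post-processing can be illustrated with polygon geometry. The sketch below computes area with the shoelace formula and removes patches below 100 square meters. The text does not define its shape index, so the perimeter / (4 × √area) form used here is an assumption, as are the function names and the vertex-list representation in metres.

```python
import math

def polygon_area(vertices):
    """Area of a simple polygon (shoelace formula); vertices in metres."""
    total = 0.0
    n = len(vertices)
    for i in range(n):
        x1, y1 = vertices[i]
        x2, y2 = vertices[(i + 1) % n]
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0

def polygon_perimeter(vertices):
    """Sum of edge lengths, closing the ring back to the first vertex."""
    n = len(vertices)
    return sum(math.dist(vertices[i], vertices[(i + 1) % n]) for i in range(n))

def shape_index(vertices):
    """Assumed definition: perimeter / (4 * sqrt(area)); equals 1 for a square."""
    return polygon_perimeter(vertices) / (4.0 * math.sqrt(polygon_area(vertices)))

def drop_small_patches(patches, min_area_m2=100.0):
    """Keep only patches whose area is at least min_area_m2."""
    return [p for p in patches if polygon_area(p) >= min_area_m2]

field = [(0, 0), (20, 0), (20, 20), (0, 20)]   # 400 square metres
noise = [(0, 0), (5, 0), (5, 5), (0, 5)]       # 25 square metres
kept = drop_small_patches([field, noise])
```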

Additionally, the spatial structure characteristics of cultivated land in different regions varied greatly, and this complexity reduced the accuracy of segmentation algorithms. Ref. [58] used a zonal classification method to divide cultivated land into different types. Based on elevation and slope data, the study area was divided into plains and mountainous regions, with the mountainous regions further divided into slopes and terraces. To further verify the effectiveness and cross-region transferability of SAM unsupervised segmentation combined with multi-scale segmentation in extracting cultivated land parcels in complex agricultural scenarios, experiments were conducted to extract cultivated land parcels in 752 plains, 936 slopes, and 830 terrace fields based on the topographic features of the study area.

3.3. Data Post-Processing

Since SAM unsupervised segmentation is a category-free form of segmentation, after obtaining the segmented patches, land use data needed to be used to mask roads and construction land. We refined the segmentation results by performing morphological operations to further smooth the boundaries of the fields and remove isolated small objects that are considered noise. Morphological refinement is a widely used image processing procedure for cleaning spatial objects and has been proven to significantly improve the accuracy of spatial object recognition [59]. To more accurately extract cultivated fields, segmentation patches smaller than 100 square meters were removed during post-processing.

3.4. Evaluation Metric

In this study, multiple evaluation metrics were used to comprehensively assess the performance of the segmentation models in terms of segmentation capability, recognition capability, and geometric accuracy. The performance of the different segmentation methods for extracting cultivated land parcels was evaluated using three metrics: precision, recall, and intersection over union (IoU). The area where the extracted field patches overlapped with the sample field patches was defined as true positive (TP), the area of the sample field patches that was not extracted was defined as false negative (FN), and the area of non-sample regions that was incorrectly extracted was defined as false positive (FP) (as shown in Figure 4).

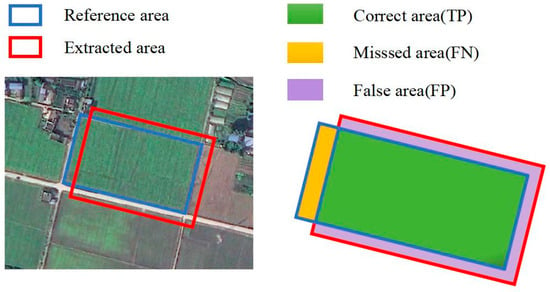

Figure 4.

Diagram showing the correctly extracted area, omissions, and errors in field plot area extraction.

The precision, recall, and IoU of the field extraction are evaluated using Equations (1)–(3) as follows:

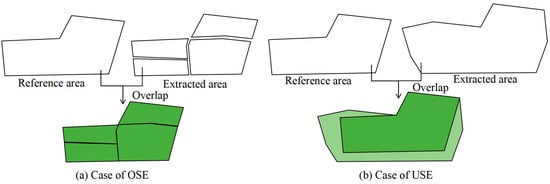
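Given the TP, FP, and FN areas defined above, the three metrics can be computed as in the following sketch. It uses the standard area-based definitions, precision = TP / (TP + FP), recall = TP / (TP + FN), and IoU = TP / (TP + FP + FN), which are assumed to correspond to Equations (1)–(3). The function name and the example areas are hypothetical.

```python
def extraction_metrics(tp_area, fp_area, fn_area):
    """Area-based precision, recall, and IoU for extracted field patches."""
    precision = tp_area / (tp_area + fp_area)
    recall = tp_area / (tp_area + fn_area)
    iou = tp_area / (tp_area + fp_area + fn_area)
    return precision, recall, iou

# Hypothetical areas in hectares: 80 correctly extracted, 10 wrongly extracted, 20 missed
precision, recall, iou = extraction_metrics(80.0, 10.0, 20.0)
```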

Global over-segmentation (GOSE) and global under-segmentation (GUSE) [60] were also utilized to assess the performance of the field extraction. Figure 5a illustrates an instance where a large field is incorrectly subdivided into several smaller fields, known as the over-segmentation phenomenon. Figure 5b depicts a scenario where smaller fields are incorrectly merged into a larger field, known as the under-segmentation phenomenon.

Figure 5.

Examples of segmentation errors in images: (a,b) represent over-segmentation and under-segmentation.

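GOSE and GUSE follow the definitions in Ref. [60]. In the form commonly used for field delineation, the over-segmentation and under-segmentation errors of a matched pair of patches are

$$\mathrm{OSE}_i = 1 - \frac{|S_i \cap T_i|}{|T_i|}, \qquad \mathrm{USE}_i = 1 - \frac{|S_i \cap T_i|}{|S_i|},$$

and GOSE and GUSE are the area-weighted averages of these errors over all matched pairs, where $|T_i|$ represents the area of the true field patch, and $|S_i|$ represents the area of the model-segmented field patch.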
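As a rough illustration of how such global errors can be computed, the sketch below assumes the widely used per-parcel definitions OSE = 1 − |S ∩ T| / |T| and USE = 1 − |S ∩ T| / |S|, averaged with weights equal to the true patch area. Both the exact formulation and the weighting are assumptions and may differ from the definitions in Ref. [60]; the function name and input tuples are hypothetical.

```python
def global_segmentation_errors(pairs):
    """Area-weighted over- and under-segmentation errors.

    pairs is a list of (true_area, segment_area, overlap_area) tuples, one per
    true field patch matched with its best-overlapping segmented patch.
    """
    total_true = sum(t for t, _, _ in pairs)
    # Weighting by true patch area is an assumption of this sketch
    gose = sum((1.0 - o / t) * t for t, _, o in pairs) / total_true
    guse = sum((1.0 - o / s) * t for t, s, o in pairs) / total_true
    return gose, guse

# First field is split (segment covers half of it); second is merged into a larger segment
pairs = [(100.0, 50.0, 50.0), (100.0, 200.0, 100.0)]
gose, guse = global_segmentation_errors(pairs)
```

In this toy case the split field contributes only to the over-segmentation error and the merged field only to the under-segmentation error, which is the behaviour the two metrics are meant to separate.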

4. Results

4.1. Comparison of SAM Unsupervised Segmentation and Multi-Scale Segmentation

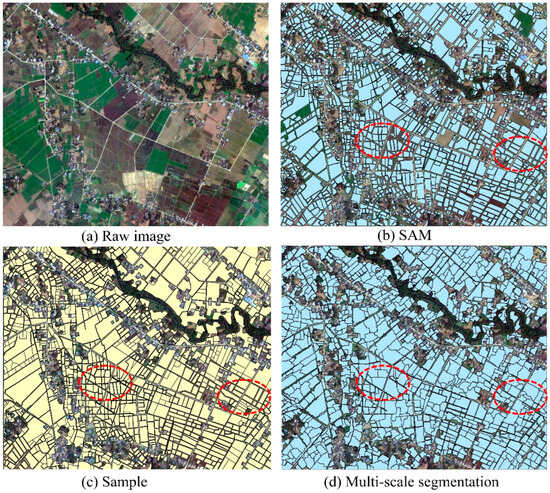

In this study area, more than 95.9% of the cultivated land parcels are classified as very small parcels, which poses a significant challenge for the model to effectively segment cultivated land parcels. This experiment aims to validate the potential of SAM unsupervised segmentation and traditional multi-scale segmentation methods for extracting complex cultivated land parcels. Figure 6 shows the segmentation results and sample annotations under different conditions. Specifically, Figure 6b,d show the results of SAM unsupervised segmentation and multi-scale segmentation, respectively.

Figure 6.

Segmentation results under different methods: (a) raw remote sensing image; (b) the result of parcel merging obtained by SAM unsupervised segmentation under different image stretching methods; (c) manually annotated samples; (d) the result of cultivated land parcels segmented solely using multi-scale segmentation. The red dashed frames in the figure indicate areas with larger differences in segmentation results.

According to the evaluation results shown in Figure 6 and Table 1, the precision, recall, and IoU of SAM unsupervised segmentation in extracting cultivated land parcels reached 86.47%, 74.66%, and 69.88%, respectively. Although it has some capability in extracting cultivated land parcels, a significant amount of cultivated land remains unsegmented, making it unsuitable for direct application in complex cultivated land parcel extraction. In the process of multi-scale segmentation, the ESP-2 tool was used to determine the optimal scale parameter, considering the rate of change in the LV-ROC graph, and several local optimal scales were compared and evaluated, ultimately selecting scale parameter 42. When the shape and compactness parameters were set to 0.4 and 0.5, respectively, the segmentation accuracy was higher. Although the recall rate of EC multi-scale segmentation reached 90.25%, higher than that of SAM unsupervised segmentation, its precision and IoU were much lower than those of SAM unsupervised segmentation. In addition, the GOSE of multi-scale segmentation reached 47.52%.

Table 1.

Segmentation evaluation results under different conditions.

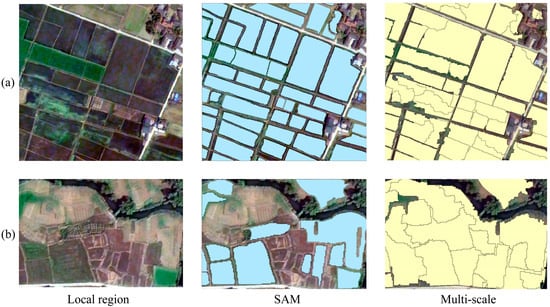

As shown in Figure 7, in fields with clear boundaries and homogeneous interiors, the patches obtained by SAM unsupervised segmentation can closely approximate the actual boundaries of the parcels, while multi-scale segmentation may lead to more over-segmentation and false boundaries (Figure 7a). When the field boundaries are blurred and complex, it is difficult to determine the exact true boundaries even through visual interpretation, leading SAM unsupervised segmentation to mistakenly identify some local areas as the background (Figure 7b). In contrast, multi-scale segmentation performs better in fields with blurred boundaries, despite a tendency toward over-segmentation.

Figure 7.

Comparison of SAM and multi-scale segmentation in local areas: (a) shows the segmentation results of SAM unsupervised segmentation and multi-scale segmentation in regular parcels; (b) shows the segmentation results of both methods in cases of complex and fuzzy boundaries.

SAM unsupervised segmentation relies on visual encoders for feature extraction, and indistinct visual boundary features may cause some areas to be considered the background, thus increasing GUSE. The GOSE ratio for SAM segmentation typically remains around 15%, as the SAM segments based on differences in land cover pixel values. When the interior of the field is uneven or the boundaries are unclear, noise points may be generated, leading to over-segmentation. Under unsupervised conditions, for areas with uniform distribution and consistent internal texture, SAM segmentation can typically obtain patches containing cultivated land boundary information. However, when field boundaries are complex or the interior is highly heterogeneous, the model is likely to mistakenly classify the field as background or over-segment it into multiple patches. Although applying different stretching methods before SAM unsupervised segmentation can effectively increase the accuracy of cultivated land parcel extraction, much cultivated land remains unsegmented. The cultivated land parcels obtained through multi-scale segmentation often lack true boundary information and cannot meet the requirements for precise extraction; however, multi-scale segmentation is more advantageous than SAM unsupervised segmentation in areas with blurred boundaries and high noise interference.

4.2. SAM Conditional Segmentation Results for Cultivated Land Extraction under Point Prompts

Based on the characteristics of SAM interactive segmentation with prompts, this experiment added SAM conditional segmentation under point prompts after SAM unsupervised segmentation to supplement the cultivated land not extracted in the first stage. Figure 8 shows the results of SAM unsupervised segmentation and the results after adding point prompts to SAM unsupervised segmentation.

Figure 8.

The results of segmentation using SAM unsupervised segmentation and SAM segmentation combined with point prompts. The red dashed frames in the figure indicate areas with larger differences in segmentation results.

As shown in the evaluation results in Table 2, compared to SAM unsupervised segmentation, the addition of point prompts improved the precision, recall, and IoU of field extraction by 2–3 percentage points, demonstrating that SAM conditional segmentation with point prompts enhances the performance of the SAM in cultivated land extraction. As shown in Figure 8, SAM point-prompt segmentation can supplement some cultivated land parcels not extracted by SAM unsupervised segmentation. However, in some fragmented areas or regions where the boundary differs little from the background, even increasing the number of prompt points or adjusting their positions results in irregular masks that do not correspond to the shape of cultivated land. This indicates that the combination of SAM unsupervised segmentation with SAM conditional segmentation under point prompts has certain limitations in extracting cultivated land parcels.

Table 2.

The assessment results of SAM unsupervised segmentation and SAM segmentation combined with point prompts.
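As a reading aid for the precision, recall, and IoU values reported in this section, the following is a minimal sketch of how these three metrics can be computed from a predicted and a reference binary mask. It assumes a pixel-based definition; the function name `pixel_metrics` and the toy arrays are illustrative and not taken from the study, whose evaluation may be area- or parcel-based and therefore give different numbers.

```python
import numpy as np


def pixel_metrics(pred, truth):
    """Pixel-based precision, recall, and IoU for two binary masks.

    Assumption: both inputs are arrays of the same shape in which
    non-zero values mark cultivated land.
    """
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)

    tp = np.count_nonzero(pred & truth)    # cultivated in both masks
    fp = np.count_nonzero(pred & ~truth)   # predicted only
    fn = np.count_nonzero(~pred & truth)   # reference only

    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    union = tp + fp + fn
    iou = tp / union if union else 0.0
    return precision, recall, iou


# Toy example: a 4-pixel reference parcel and a 4-pixel prediction
# that shares 3 pixels with it.
truth = np.array([[1, 1, 0, 0],
                  [1, 1, 0, 0],
                  [0, 0, 0, 0]])
pred = np.array([[1, 1, 1, 0],
                 [1, 0, 0, 0],
                 [0, 0, 0, 0]])

precision, recall, iou = pixel_metrics(pred, truth)
print(precision, recall, iou)  # 0.75 0.75 0.6
```

GOSE and GUSE are not included here because their definitions are given elsewhere in the paper and are not restated in this section.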

4.3. Results of SAM Combined with Multi-Scale Segmentation for Cultivated Land Extraction in Multiple Scenarios

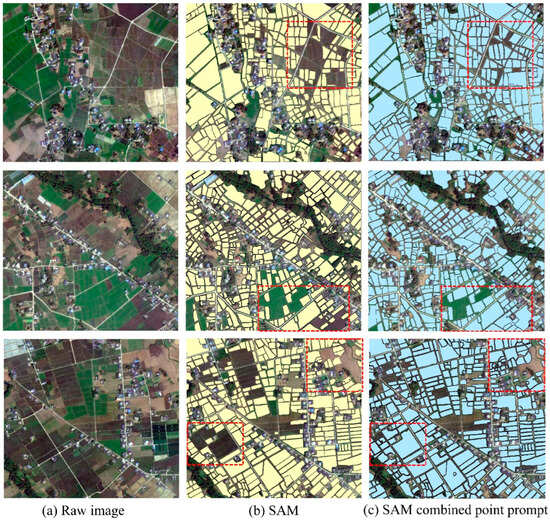

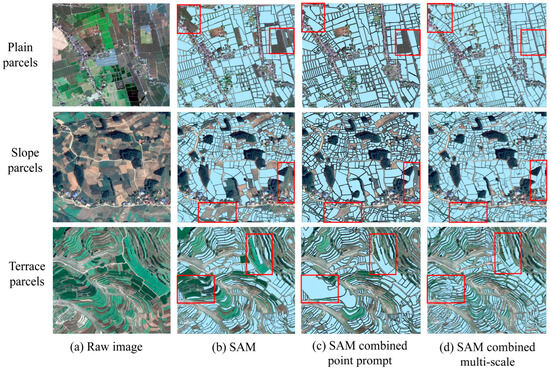

To fully leverage both the advantages of SAM visual semantic segmentation in extracting cultivated land parcels and those of multi-scale segmentation, which uses spatial contextual information at different scales to ensure the completeness of cultivated land extraction, a combined method of SAM unsupervised segmentation and multi-scale segmentation is proposed to handle complex cultivated land parcels. To validate the effectiveness and cross-region transferability of the proposed method, it is compared with SAM unsupervised segmentation alone and with SAM unsupervised segmentation combined with point-prompt SAM conditional segmentation. Three types of cultivated land (plains, slopes, and terraces) were selected based on terrain classification for extracting complex cultivated land parcels, as shown in Figure 9.

Figure 9.

Segmentation results of cultivated land parcels extracted by different methods in various complex scenarios. The first row shows the plain type, the second row shows the slope type, and the third row shows the terraced field type. The red dashed frames in the figure indicate areas with larger differences in segmentation results.

According to the segmentation results in Figure 9 and the evaluation results in Tables 3–5, SAM unsupervised segmentation performed well in segmenting regular cultivated parcels. However, for complex cultivated land parcels with unclear boundaries and fragmentation, both SAM unsupervised segmentation and its combination with point-prompt SAM conditional segmentation struggled to fully extract the parcels. In contrast, the proposed combination of SAM unsupervised segmentation with multi-scale segmentation achieved more complete extraction of cultivated land parcels with real boundary semantics. Multi-scale segmentation that uses the SAM unsupervised segmentation as the base map reduces the over-segmentation that occurs when multi-scale segmentation is used alone and compensates for the shortcomings of SAM unsupervised segmentation in irregular areas and regions with high noise interference. The cultivated land parcels extracted by SAM unsupervised segmentation contain boundary information, which is the greatest advantage of using the SAM for cultivated land extraction. By using these boundary-containing segments as constraints, so that lines carrying boundary information constrain the irregular regions obtained from multi-scale segmentation, more parcels with real boundary information can be preserved. In turn, multi-scale segmentation supplements the cultivated land parcels that SAM unsupervised segmentation misses when it treats blurred boundary areas as background. The combined strategy therefore integrates the strengths of both methods, increasing the completeness of cultivated land parcel extraction while improving the accuracy of boundary information, which supports the effectiveness of the proposed method for extracting complex cultivated land parcels.

Compared with the method combining SAM unsupervised segmentation with point prompts, the addition of multi-scale segmentation may over-segment some large and irregular parcels within the SAM segmentation constraint, resulting in a higher GOSE. Nevertheless, SAM unsupervised segmentation combined with multi-scale segmentation achieves significantly higher precision, recall, and IoU, demonstrating its feasibility for extracting complex cultivated land parcels.
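The idea of letting SAM patches constrain the multi-scale result can be illustrated with a deliberately simplified raster sketch. It is not the procedure used in this study, where the SAM patches act as line constraints inside the multi-scale segmentation itself; here, as an assumption made only for illustration, the two results are overlaid after the fact: SAM patches are kept unchanged, and multi-scale segments are clipped to the area that SAM left as background. The function name `combine_with_sam_constraint` and the label arrays are hypothetical.

```python
import numpy as np


def combine_with_sam_constraint(sam_labels, ms_labels):
    """Overlay two label maps, giving priority to SAM patches.

    Assumptions: label 0 means background / unsegmented in both maps,
    and positive integers identify individual patches.
    """
    sam_labels = np.asarray(sam_labels)
    ms_labels = np.asarray(ms_labels)

    combined = sam_labels.copy()
    gap = sam_labels == 0                  # area SAM did not segment
    next_id = int(sam_labels.max()) + 1    # first free patch id

    # Fill the gap with multi-scale segments, clipped at SAM boundaries.
    for ms_id in np.unique(ms_labels[gap]):
        if ms_id == 0:
            continue
        piece = gap & (ms_labels == ms_id)
        combined[piece] = next_id
        next_id += 1
    return combined


# Two SAM patches on the left; the right half was treated as background.
sam = np.array([[1, 1, 0, 0],
                [1, 1, 0, 0],
                [2, 2, 0, 0]])
# Multi-scale segments that ignore the SAM boundaries.
ms = np.array([[5, 5, 5, 6],
               [5, 5, 6, 6],
               [7, 7, 7, 6]])

combined = combine_with_sam_constraint(sam, ms)
print(combined)
```

In this toy case the SAM patches 1 and 2 keep their boundaries, while the remainders of the multi-scale segments 5, 6, and 7 become new patches 3, 4, and 5. A clipped segment may end up spatially disconnected, so a practical version would likely relabel connected components and filter small slivers.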

Table 3.

The evaluation results of plain parcel extraction.

Table 4.

The evaluation results of sloped parcel extraction.

Table 5.

The evaluation results of terraced parcel extraction.

In plain areas, all segmentation methods achieve good results. SAM unsupervised segmentation demonstrates the advantage of the SAM for extracting regular parcels in plain areas, achieving segmentation accuracy close to that of deep learning models trained on specific samples without requiring any training samples. SAM unsupervised segmentation combined with multi-scale segmentation achieved precision, recall, and IoU of 92.29%, 87.06%, and 83.07%, respectively. While GOSE increases by about 4 percentage points, GUSE is significantly reduced because the combined method supplements parcels not extracted by SAM unsupervised segmentation. This indicates the feasibility of combining SAM unsupervised segmentation with multi-scale segmentation for extracting cultivated land parcels in plain areas.

For slope areas, cultivated parcels vary greatly in shape and most parcel boundaries are soft, so all segmentation methods perform worse than in plain areas. The combination of SAM unsupervised segmentation with multi-scale segmentation improved precision, recall, and IoU by about 11 percentage points compared to SAM unsupervised segmentation alone.

Although terraced field parcels have clear boundaries and are densely distributed, their shapes are curved and varied. Compared to plains and slopes, the SAM is more likely to treat narrow terraced field parcels as background. SAM unsupervised segmentation performed poorly in extracting terraced field parcels, with relatively high GOSE and GUSE. After combining with multi-scale segmentation, precision, recall, and IoU improved to 75.96%, 69.91%, and 63.63%, respectively, a significant improvement over SAM unsupervised segmentation alone. However, because terraced fields are narrow and curved, the addition of multi-scale segmentation easily leads to over-segmentation, increasing GOSE to 46.27%.

Overall, the SAM unsupervised segmentation combined with multi-scale segmentation proposed in this study achieves better evaluation results across complex cultivated land scenarios in plains, slopes, and terraces than basic SAM unsupervised segmentation or SAM unsupervised segmentation combined with point-prompt conditional segmentation. SAM unsupervised segmentation has a greater advantage in extracting regular parcels with clear boundaries. Although the combination with multi-scale segmentation increases over-segmentation in some cases, it significantly improves the extraction of irregular parcels. Through its constraint-based segmentation strategy, the combined method integrates the advantages of both approaches and achieves zero-shot, unsupervised extraction of various complex cultivated land parcels, demonstrating its feasibility and cross-region transferability for extracting cultivated land parcels in complex scenarios.

5. Discussion

5.1. Data Transferability

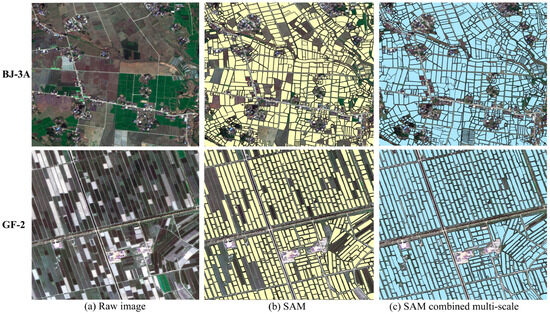

To evaluate the robustness and cross-data source transferability of the proposed SAM unsupervised segmentation combined with multi-scale segmentation method in terms of extracting complex cultivated land parcels from different data sources, two types of VHR satellite images, namely GF-2 and Beijing-3A, were selected for model evaluation. Among them, the BJ-3A satellite has a panchromatic resolution of 0.3–0.5 m and a multispectral resolution of 1.2–2 m [61], while the GF-2 satellite has a panchromatic resolution of 0.8 m and a multispectral resolution of 3.2 m [62]. To ensure data comparability, the selected VHR images were processed through data fusion to generate RGB remote sensing images and resampled to a resolution of 1 m.

The results of cultivated land parcel extraction are presented in Figure 10 and Table 6. Across different satellite data sources, the proposed SAM unsupervised segmentation combined with multi-scale segmentation method demonstrated superior performance compared to basic SAM unsupervised segmentation in terms of extracting cultivated land parcels and produced results comparable to related deep learning methods. This consistency with findings obtained from Jilin-1 satellite images further validates the robustness and cross-data source transferability of the proposed method for extracting cultivated land parcels from various high-resolution satellite data sources.

Figure 10.

Segmentation results of cultivated land parcels extracted by SAM unsupervised segmentation combined with multi-scale segmentation under different satellite data sources. The first row is the BJ-3A satellite image data, and the second row is the GF-2 satellite image data. (b) shows the results of cultivated land parcels extracted by SAM unsupervised segmentation, and (c) shows the results of cultivated land parcels extracted by the combined method of SAM unsupervised segmentation and multi-scale segmentation.

Table 6.

The evaluation results of cultivated land parcels extracted by basic SAM unsupervised segmentation and SAM unsupervised segmentation combined with multi-scale segmentation under different satellite data sources.

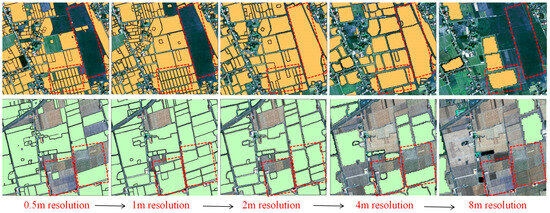

5.2. Influence of Image Resolution on Parcel Segmentation Effectiveness in High-Resolution Imagery

To further explore the impact of image resolution on parcel delineation effectiveness, a 0.5 m high-resolution image was resampled to resolutions of 1 m, 2 m, 4 m, and 8 m. SAM unsupervised segmentation was then used to extract parcels at each resolution to assess the influence of resolution on SAM segmentation accuracy.
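A resolution series of this kind can be produced in several ways, and the resampling method used in this study is not stated here. As one minimal sketch, assuming simple block averaging by an integer factor, a 0.5 m image can be coarsened to 1 m, 2 m, 4 m, and 8 m with factors 2, 4, 8, and 16. The function name `downsample_block_mean` and the toy array are illustrative only.

```python
import numpy as np


def downsample_block_mean(image, factor):
    """Coarsen an image by averaging non-overlapping factor x factor blocks.

    Works for single-band (H, W) and multi-band (H, W, C) arrays.
    Rows and columns that do not fill a complete block are cropped.
    """
    image = np.asarray(image, dtype=float)
    h, w = image.shape[:2]
    h2, w2 = h - h % factor, w - w % factor
    cropped = image[:h2, :w2]

    # Split each spatial axis into (blocks, pixels per block), then average.
    new_shape = (h2 // factor, factor, w2 // factor, factor) + cropped.shape[2:]
    return cropped.reshape(new_shape).mean(axis=(1, 3))


# Toy 4 x 4 single-band tile standing in for a 0.5 m image.
tile = np.arange(16).reshape(4, 4)
coarse = downsample_block_mean(tile, 2)   # 0.5 m -> 1 m
print(coarse)

# Factors that map a 0.5 m base resolution to the resolutions in the text.
base_res = 0.5
factors = {res: int(res / base_res) for res in (1, 2, 4, 8)}
print(factors)  # {1: 2, 2: 4, 4: 8, 8: 16}
```

For georeferenced rasters, a GIS resampling tool that also updates the geotransform would normally be used instead; the sketch only shows the pixel arithmetic.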

As shown in Figure 11, when the image was down-sampled to a resolution of 1 m, the segmentation results were more complete, and in some local areas the segmentation was better than that of the original 0.5 m image. This was because the excessively high resolution reduced the receptive field of the model, causing more cultivated land parcels to be incorrectly identified as background when segmenting the 0.5 m image. This shows that a higher resolution does not necessarily result in better segmentation performance [63,64]. When the image was resampled to 4 m, 8 m, or coarser, the SAM-based parcel delineation deteriorated correspondingly. The segmentation results indicate that when the resolution was reduced to 2 m or 4 m, the receptive field of the SAM expanded, making it more effective at identifying large parcels, although it may have overlooked some smaller parcels; these resolutions are therefore more suitable for identifying managed parcels. At higher resolutions, the SAM tended to delineate smaller parcels, making it more suitable for accurately extracting cultivated land parcels and thus supporting more precise yield estimation and finer agricultural management. This conclusion is consistent with the design of this study, which used 1 m high-resolution imagery to extract complex cultivated land parcels.
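The receptive field argument can be made concrete with simple arithmetic. The sketch below assumes a fixed tile of 1024 × 1024 pixels, which matches the input size of the SAM image encoder; the tiling actually used in this study is not specified here, and the 100 m parcel width is a hypothetical value chosen for illustration.

```python
def tile_footprint_m(tile_px, gsd_m):
    """Ground distance covered by one side of a square tile."""
    return tile_px * gsd_m


def parcel_width_px(parcel_width_m, gsd_m):
    """Number of pixels spanned by a parcel of the given width."""
    return parcel_width_m / gsd_m


TILE_PX = 1024           # assumed tile size in pixels
PARCEL_WIDTH_M = 100.0   # hypothetical parcel width

footprints = {gsd: tile_footprint_m(TILE_PX, gsd) for gsd in (0.5, 1, 2, 4, 8)}
widths = {gsd: parcel_width_px(PARCEL_WIDTH_M, gsd) for gsd in (0.5, 1, 2, 4, 8)}

for gsd in footprints:
    print(f"{gsd} m: tile side {footprints[gsd]:.0f} m, "
          f"parcel spans {widths[gsd]:.1f} px")
```

Under these assumptions, a tile side covers 512 m at 0.5 m resolution and 4096 m at 4 m, while the same 100 m parcel shrinks from 200 pixels to 25 pixels. This is in line with the observation that coarser imagery gives the model more context for large parcels but leaves small parcels with too few pixels to be delineated.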

Figure 11.

Image segmentation of the same location with different resolutions based on the SAM. The red dashed frames in the figure indicate areas with larger differences in segmentation results.

5.3. Influence of Different Complex Scenes

Cultivated land scenes vary across terrains and exhibit diverse landscapes and morphological characteristics. The SAM unsupervised segmentation method effectively delineates relatively regular plain parcels and slope parcels with distinct boundaries. However, in complex and fragmented cultivated land scenarios, the performance of SAM unsupervised segmentation in extracting cultivated land parcels declines. Point-prompt SAM conditional segmentation also performs poorly in highly fragmented cultivated land, because a point prompt often identifies only the most distinct parcel around the prompt point. Increasing the number of prompt points or changing their positions does not significantly enhance the performance of SAM conditional segmentation in extracting cultivated parcels.

Whether in plains or on slopes and terraces with more complex cropping patterns, SAM unsupervised segmentation combined with multi-scale segmentation performs better in extracting cultivated land parcels than SAM unsupervised segmentation alone or SAM conditional segmentation with point prompts. Although the extraction performance decreases as the complexity of the land type increases, the method benefits from using SAM unsupervised segmentation patches as constraints. This strategy preserves the accuracy of SAM unsupervised segmentation in regular, well-defined parcels while using multi-scale segmentation to achieve complete segmentation of fragmented, irregularly shaped parcels, making it more suitable for the effective delineation of complex cultivated land.

The effective and accurate extraction of cultivated land boundaries is crucial for cultivated land resource monitoring and fine agricultural management. However, due to the diverse spectral characteristics and rich texture features of cultivated land in high-resolution remote sensing images, traditional semantic segmentation has limited accuracy and imprecise boundary localization [65]. In contrast, the SAM segmentation method based on visual semantic segmentation can accurately identify the soft boundaries between different crops when extracting cultivated land parcels, thereby better serving precision agricultural management. Additionally, the accuracy of cultivated land parcels extracted by SAM unsupervised segmentation combined with multi-scale segmentation is comparable to that of related deep learning methods trained on samples. This approach enables zero-shot, unsupervised extraction of cultivated land parcels by leveraging the strong transferability of the SAM and can be used directly for rapid and effective large-scale extraction.

5.4. Limitations and Future Work

One limitation of using the SAM to extract complex cultivated land parcels is that SAM unsupervised segmentation is class-agnostic, so land use data or post-classification are required to extract elements of a single category. In addition, in complex scenarios with indistinct boundaries or high noise interference, the extraction of cultivated land parcels may yield suboptimal results. Through its strong generalization capability, the SAM significantly reduces the workload of manually labeling samples and has been widely explored and applied in the field of remote sensing. SAM unsupervised segmentation shows great application potential for targets with clear boundaries, regular arrangements, and small size variations, such as greenhouse extraction and marine aquaculture extraction. For complex scenarios with diverse object types, large scale variations, and significant background differences, a combined method similar to the one used in this study, integrating multi-scale segmentation, is required to achieve better results. Therefore, in future work, we will continue to explore the extraction of cultivated parcels based on SAM segmentation combined with multi-temporal imagery and will optimize the method based on SAM unsupervised segmentation combined with multi-scale segmentation to improve the accuracy of parcel extraction in complex scenes, providing more reliable data for precision agriculture. Meanwhile, by leveraging the advantages of SAM segmentation in terms of patch consistency with real boundaries and high homogeneity, we will conduct further research on large-scale parcel change monitoring based on SAM segmentation.

6. Conclusions

The extraction of complex cultivated land parcels has always faced challenges such as limited accessibility to high-resolution satellite images and the lack of ground truth labels for model training and validation. Additionally, significant differences in farmland across regions limit the generalizability of the models. This study employs a constrained segmentation strategy that leverages the advantage of SAM unsupervised segmentation in obtaining segmentation patches with parcel boundary semantic information. The parcels obtained by SAM unsupervised segmentation are used as line constraints and incorporated into subsequent multi-scale segmentation. The proposed SAM unsupervised segmentation combined with multi-scale segmentation method performs well in various cultivated land parcel scenarios. Compared to other segmentation models, its advantage is that it requires no sample training and can be used directly for unsupervised extraction in different complex scenarios. The SAM handles ambiguity well, predicting valid masks under fuzzy conditions; SAM segmentation is fast, the extracted parcel boundaries are highly consistent with real boundaries, and the segmented patches exhibit high homogeneity. Even small, fragmented parcels can be effectively segmented, showing strong cross-region and cross-data source transferability. Additionally, multi-scale segmentation compensates for the poor performance of SAM unsupervised segmentation in irregular parcel segmentation, enabling the effective extraction of complex cultivated land parcels.

By evaluating the effectiveness of various SAM-based segmentation methods for extracting complex cultivated land parcels, we found that the SAM shows great potential for cultivated land parcel extraction. The SAM unsupervised segmentation combined with multi-scale segmentation proposed in this study significantly improves the extraction of cultivated land parcels in various types of fields compared to both SAM unsupervised segmentation and point-prompt SAM conditional segmentation. This method offers a low-cost, high-efficiency technical solution for extracting complex cultivated land parcels under zero-shot, unsupervised conditions.

Author Contributions

Conceptualization, X.Y., H.J., and Z.H.; methodology, Y.L., Z.W., Z.H., and X.L.; software, K.G. and Z.H.; validation, X.L., H.L., and Z.H.; formal analysis, K.G. and Z.H.; investigation, Y.L. and Z.H.; resources, X.Y. and Z.H.; data curation, H.J. and Z.H.; writing—original draft preparation, X.Y., Z.H., and Y.L.; writing—review and editing, X.Y., Z.H., and H.J.; visualization, Y.L., X.L., and Z.H.; supervision, Z.W., K.G., and Z.H.; project administration, H.J., H.L., and Z.H.; funding acquisition, X.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Key Research and Development Program of China (Grant No. 2021YFB3900501).

Data Availability Statement

The open-source Python package Segment-Geospatial (samgeo) mentioned in this article can be accessed at https://github.com/opengeos/segment-geospatial (accessed on 16 October 2023).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Waldner, F.; Canto, G.S.; Defourny, P. Automated annual cropland mapping using knowledge-based temporal features. ISPRS J. Photogramm. Remote Sens. 2015, 110, 1–13.

- Yang, C.; Everitt, J.H.; Du, Q.; Luo, B.; Chanussot, J. Using high-resolution airborne and satellite imagery to assess crop growth and yield variability for precision agriculture. Proc. IEEE 2012, 101, 582–592.

- Mateo-Sanchis, A.; Piles, M.; Muñoz-Marí, J.; Adsuara, J.E.; Pérez-Suay, A.; Camps-Valls, G. Synergistic integration of optical and microwave satellite data for crop yield estimation. Remote Sens. Environ. 2019, 234, 111460.

- Campos-Taberner, M.; García-Haro, F.J.; Martínez, B.; Sánchez-Ruiz, S.; Moreno-Martínez, Á.; Camps-Valls, G.; Gilabert, M.A. Land use classification over smallholding areas in the European Common Agricultural Policy framework. ISPRS J. Photogramm. Remote Sens. 2023, 197, 320–334.

- Gao, H.; Wang, C.; Wang, G.; Fu, H.; Zhu, J. A novel crop classification method based on ppfSVM classifier with time-series alignment kernel from dual-polarization SAR datasets. Remote Sens. Environ. 2021, 264, 112628.

- Chen, J.; Chen, J.; Liu, H.; Peng, S. Detection of cropland change using multi-harmonic based phenological trajectory similarity. Remote Sens. 2018, 10, 1020.

- Liu, M.; Chai, Z.; Deng, H.; Liu, R. A CNN-transformer network with multiscale context aggregation for fine-grained cropland change detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 4297–4306.

- Tarasiewicz, T.; Tulczyjew, L.; Myller, M.; Kawulok, M.; Longépé, N.; Nalepa, J. Extracting High-Resolution Cultivated Land Maps from Sentinel-2 Image Series. In Proceedings of the IGARSS 2022—2022 IEEE International Geoscience and Remote Sensing Symposium, Kuala Lumpur, Malaysia, 17–22 July 2022; pp. 175–178.

- North, H.C.; Pairman, D.; Belliss, S.E. Boundary delineation of agricultural fields in multitemporal satellite imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 12, 237–251.

- Watkins, B.; Van Niekerk, A. Automating field boundary delineation with multi-temporal Sentinel-2 imagery. Comput. Electron. Agric. 2019, 167, 105078.

- Xu, Y.; Zhu, Z.; Guo, M.; Huang, Y. Multiscale edge-guided network for accurate cultivated land parcel boundary extraction from remote sensing images. IEEE Trans. Geosci. Remote Sens. 2023, 62, 4501020.

- Wang, M.; Li, R. Segmentation of high spatial resolution remote sensing imagery based on hard-boundary constraint and two-stage merging. IEEE Trans. Geosci. Remote Sens. 2014, 52, 5712–5725.

- Wang, M.; Huang, J.; Ming, D. Region-line association constraints for high-resolution image segmentation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 10, 628–637.

- Xu, L.; Ming, D.; Du, T.; Chen, Y.; Dong, D.; Zhou, C. Delineation of cultivated land parcels based on deep convolutional networks and geographical thematic scene division of remotely sensed images. Comput. Electron. Agric. 2022, 192, 106611.

- Wu, W.; Chen, T.; Yang, H.; He, Z.; Chen, Y.; Wu, N. Multilevel segmentation algorithm for agricultural parcel extraction from a semantic boundary. Int. J. Remote Sens. 2023, 44, 1045–1068.

- Xia, L.; Luo, J.; Sun, Y.; Yang, H. Deep extraction of cropland parcels from very high-resolution remotely sensed imagery. In Proceedings of the 2018 7th International Conference on Agro-Geoinformatics (Agro-Geoinformatics), Hangzhou, China, 6–9 August 2018; pp. 1–5.

- Belgiu, M.; Csillik, O. Sentinel-2 cropland mapping using pixel-based and object-based time-weighted dynamic time warping analysis. Remote Sens. Environ. 2018, 204, 509–523.

- Yan, L.; Roy, D. Automated crop field extraction from multi-temporal Web Enabled Landsat Data. Remote Sens. Environ. 2014, 144, 42–64.

- Graesser, J.; Ramankutty, N. Detection of cropland field parcels from Landsat imagery. Remote Sens. Environ. 2017, 201, 165–180.

- Chen, B.; Qiu, F.; Wu, B.; Du, H. Image segmentation based on constrained spectral variance difference and edge penalty. Remote Sens. 2015, 7, 5980–6004.

- Xue, Y.; Zhao, J.; Zhang, M. A watershed-segmentation-based improved algorithm for extracting cultivated land boundaries. Remote Sens. 2021, 13, 939.

- Song, Q.; Hu, Q.; Zhou, Q.; Hovis, C.; Xiang, M.; Tang, H.; Wu, W. In-season crop mapping with GF-1/WFV data by combining object-based image analysis and random forest. Remote Sens. 2017, 9, 1184.

- Tang, Z.; Wang, H.; Li, X.; Li, X.; Cai, W.; Han, C. An object-based approach for mapping crop coverage using multiscale weighted and machine learning methods. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 1700–1713.

- Xu, L.; Ming, D.; Zhou, W.; Bao, H.; Chen, Y.; Ling, X. Farmland extraction from high spatial resolution remote sensing images based on stratified scale pre-estimation. Remote Sens. 2019, 11, 108.

- Cai, Z.; Hu, Q.; Zhang, X.; Yang, J.; Wei, H.; He, Z.; Song, Q.; Wang, C.; Yin, G.; Xu, B. An adaptive image segmentation method with automatic selection of optimal scale for extracting cropland parcels in smallholder farming systems. Remote Sens. 2022, 14, 3067.

- Sun, Y.; Zhang, X.; Xin, Q.; Huang, J. Developing a multi-filter convolutional neural network for semantic segmentation using high-resolution aerial imagery and LiDAR data. ISPRS J. Photogramm. Remote Sens. 2018, 143, 3–14.

- Ming, D.; Li, J.; Wang, J.; Zhang, M. Scale parameter selection by spatial statistics for GeOBIA: Using mean-shift based multi-scale segmentation as an example. ISPRS J. Photogramm. Remote Sens. 2015, 106, 28–41.

- Waldner, F.; Diakogiannis, F.I. Deep learning on edge: Extracting field boundaries from satellite images with a convolutional neural network. Remote Sens. Environ. 2020, 245, 111741.

- Zhang, L.; Yuan, S.; Dong, R.; Zheng, J.; Gan, B.; Fang, D.; Liu, Y.; Fu, H. Swcare: Switchable learning and connectivity-aware refinement method for multi-city and diverse-scenario road mapping using remote sensing images. Int. J. Appl. Earth Obs. Geoinf. 2024, 127, 103665.

- Yan, G.; Jing, H.; Li, H.; Guo, H.; He, S. Enhancing building segmentation in remote sensing images: Advanced multi-scale boundary refinement with MBR-HRNet. Remote Sens. 2023, 15, 3766.

- Zhong, B.; Wei, T.; Luo, X.; Du, B.; Hu, L.; Ao, K.; Yang, A.; Wu, J. Multi-swin mask transformer for instance segmentation of agricultural field extraction. Remote Sens. 2023, 15, 549.

- Cai, Z.; Hu, Q.; Zhang, X.; Yang, J.; Wei, H.; Wang, J.; Zeng, Y.; Yin, G.; Li, W.; You, L.; et al. Improving agricultural field parcel delineation with a dual branch spatiotemporal fusion network by integrating multimodal satellite data. ISPRS J. Photogramm. Remote Sens. 2023, 205, 34–49.

- Zhang, D.; Pan, Y.; Zhang, J.; Hu, T.; Zhao, J.; Li, N.; Chen, Q. A generalized approach based on convolutional neural networks for large area cropland mapping at very high resolution. Remote Sens. Environ. 2020, 247, 111912.

- Song, W.; Wang, C.; Dong, T.; Wang, Z.; Wang, C.; Mu, X.; Zhang, H. Hierarchical extraction of cropland boundaries using Sentinel-2 time-series data in fragmented agricultural landscapes. Comput. Electron. Agric. 2023, 212, 108097.

- Persello, C.; Tolpekin, V.A.; Bergado, J.R.; De By, R.A. Delineation of agricultural fields in smallholder farms from satellite images using fully convolutional networks and combinatorial grouping. Remote Sens. Environ. 2019, 231, 111253.

- Ji, Z.; Wei, J.; Chen, X.; Yuan, W.; Kong, Q.; Gao, R.; Su, Z. SEDLNet: An unsupervised precise lightweight extraction method for farmland areas. Comput. Electron. Agric. 2023, 210, 107886.

- Kirillov, A.; Mintun, E.; Ravi, N.; Mao, H.; Rolland, C.; Gustafson, L.; Xiao, T.; Whitehead, S.; Berg, A.C.; Lo, W.-Y. Segment anything. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 4–6 October 2023; pp. 4015–4026.

- Mazurowski, M.A.; Dong, H.; Gu, H.; Yang, J.; Konz, N.; Zhang, Y. Segment anything model for medical image analysis: An experimental study. Med. Image Anal. 2023, 89, 102918.

- Zhang, C.; Liu, L.; Cui, Y.; Huang, G.; Lin, W.; Yang, Y.; Hu, Y. A comprehensive survey on segment anything model for vision and beyond. arXiv 2023, arXiv:2305.08196.

- Shi, P.; Qiu, J.; Abaxi, S.M.D.; Wei, H.; Lo, F.P.-W.; Yuan, W. Generalist vision foundation models for medical imaging: A case study of segment anything model on zero-shot medical segmentation. Diagnostics 2023, 13, 1947.

- Ma, J.; He, Y.; Li, F.; Han, L.; You, C.; Wang, B. Segment anything in medical images. Nat. Commun. 2024, 15, 654. [Google Scholar] [CrossRef]

- Yu, T.; Feng, R.; Feng, R.; Liu, J.; Jin, X.; Zeng, W.; Chen, Z. Inpaint anything: Segment anything meets image inpainting. arXiv 2023, arXiv:2304.06790. [Google Scholar]

- Liu, S.; Ye, J.; Wang, X. Any-to-any style transfer: Making picasso and da vinci collaborate. arXiv 2023, arXiv:2304.09728. [Google Scholar]

- Zhang, R.; Jiang, Z.; Guo, Z.; Yan, S.; Pan, J.; Ma, X.; Dong, H.; Gao, P.; Li, H. Personalize segment anything model with one shot. arXiv 2023, arXiv:2305.03048. [Google Scholar]

- Gui, B.; Bhardwaj, A.; Sam, L. Evaluating the efficacy of segment anything model for delineating agriculture and urban green spaces in multiresolution aerial and spaceborne remote sensing images. Remote Sens. 2024, 16, 414. [Google Scholar] [CrossRef]

- Ding, L.; Zhu, K.; Peng, D.; Tang, H.; Yang, K.; Bruzzone, L. Adapting segment anything model for change detection in VHR remote sensing images. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5611711. [Google Scholar] [CrossRef]

- Chen, T.; Zhu, L.; Ding, C.; Cao, R.; Wang, Y.; Li, Z.; Sun, L.; Mao, P.; Zang, Y. SAM Fails to Segment Anything?—SAM-Adapter: Adapting SAM in Underperformed Scenes: Camouflage, Shadow, Medical Image Segmentation, and More. arXiv 2023, arXiv:2304.09148. [Google Scholar]

- Zhang, C.; Puspitasari, F.D.; Zheng, S.; Li, C.; Qiao, Y.; Kang, T.; Shan, X.; Zhang, C.; Qin, C.; Rameau, F. A survey on segment anything model (sam): Vision foundation model meets prompt engineering. arXiv 2023, arXiv:2306.06211. [Google Scholar]

- Yan, Z.; Li, J.; Li, X.; Zhou, R.; Zhang, W.; Feng, Y.; Diao, W.; Fu, K.; Sun, X. RingMo-SAM: A foundation model for segment anything in multimodal remote-sensing images. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–16.

- Xia, L.; Liu, R.; Su, Y.; Mi, S.; Yang, D.; Chen, J.; Shen, Z. Crop field extraction from high resolution remote sensing images based on semantic edges and spatial structure map. Geocarto Int. 2024, 39, 2302176.

- Wang, L.; Zhang, M.; Shi, W. CS-WSCDNet: Class Activation Mapping and Segment Anything Model-Based Framework for Weakly Supervised Change Detection. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5624812.

- Guk, E.; Levin, N. Analyzing spatial variability in night-time lights using a high spatial resolution color Jilin-1 image–Jerusalem as a case study. ISPRS J. Photogramm. Remote Sens. 2020, 163, 121–136.

- Crippen, R.; Buckley, S.; Agram, P.; Belz, E.; Gurrola, E.; Hensley, S.; Kobrick, M.; Lavalle, M.; Martin, J.; Neumann, M.; et al. NASADEM global elevation model: Methods and progress. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 41, 125–128.

- Wu, Q.; Osco, L.P. samgeo: A Python package for segmenting geospatial data with the Segment Anything Model (SAM). J. Open Source Softw. 2023, 8, 5663.

- Ren, Y.; Yang, X.; Wang, Z.; Yu, G.; Liu, Y.; Liu, X.; Meng, D.; Zhang, Q.; Yu, G. Segment Anything Model (SAM) Assisted Remote Sensing Supervision for Mariculture—Using Liaoning Province, China as an Example. Remote Sens. 2023, 15, 5781.

- Lesiv, M.; Laso Bayas, J.C.; See, L.; Duerauer, M.; Dahlia, D.; Durando, N.; Hazarika, R.; Kumar Sahariah, P.; Vakolyuk, M.; Blyshchyk, V. Estimating the global distribution of field size using crowdsourcing. Glob. Chang. Biol. 2019, 25, 174–186.

- Drăguţ, L.; Tiede, D.; Levick, S.R. ESP: A tool to estimate scale parameter for multiresolution image segmentation of remotely sensed data. Int. J. Geogr. Inf. Sci. 2010, 24, 859–871.

- Jiao, S.; Hu, D.; Shen, Z.; Wang, H.; Dong, W.; Guo, Y.; Li, S.; Lei, Y.; Kou, W.; Wang, J. Parcel-level mapping of horticultural crop orchards in complex mountain areas using VHR and time-series images. Remote Sens. 2022, 14, 2015.

- Rishikeshan, C.; Ramesh, H. An automated mathematical morphology driven algorithm for water body extraction from remotely sensed images. ISPRS J. Photogramm. Remote Sens. 2018, 146, 11–21.

- Su, T.; Zhang, S. Local and global evaluation for remote sensing image segmentation. ISPRS J. Photogramm. Remote Sens. 2017, 130, 256–276.

- Wang, Y.; Mao, Z.; Xin, Z.; Liu, X.; Li, Z.; Dong, Y.; Deng, L. Assessing the Efficacy of Pixel-Level Fusion Techniques for Ultra-High-Resolution Imagery: A Case Study of BJ-3A. Sensors 2024, 24, 1410.

- Wu, C.; Guo, Y.; Guo, H.; Yuan, J.; Ru, L.; Chen, H.; Du, B.; Zhang, L. An investigation of traffic density changes inside Wuhan during the COVID-19 epidemic with GF-2 time-series images. Int. J. Appl. Earth Obs. Geoinf. 2021, 103, 102503.

- Salgueiro, L.; Marcello, J.; Vilaplana, V. SEG-ESRGAN: A multi-task network for super-resolution and semantic segmentation of remote sensing images. Remote Sens. 2022, 14, 5862.

- Osco, L.P.; Wu, Q.; de Lemos, E.L.; Gonçalves, W.N.; Ramos, A.P.M.; Li, J.; Junior, J.M. The segment anything model (SAM) for remote sensing applications: From zero to one shot. Int. J. Appl. Earth Obs. Geoinf. 2023, 124, 103540.

- Li, Z.; Chen, S.; Meng, X.; Zhu, R.; Lu, J.; Cao, L.; Lu, P. Full convolution neural network combined with contextual feature representation for cropland extraction from high-resolution remote sensing images. Remote Sens. 2022, 14, 2157.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).