Abstract

Point clouds are essential 3D data representations utilized across various disciplines, often requiring point cloud completion methods to address inherent incompleteness. Existing completion methods such as SnowflakeNet consider only local attention, lack global information about the complete shape, and tend to overfit as the model depth increases. To address these issues, we introduced self-positioning point-based attention to better capture global contextual features of the complete shape and designed a Channel Attention module for adaptive feature adjustment within the global vector. Additionally, we applied a grouped vector attention strategy in both the skip-transformer and the self-positioning point-based attention to mitigate overfitting, improving parameter efficiency and generalization. We evaluated our method on the PCN dataset as well as the ShapeNet55/34 datasets. The experimental results show that our method achieved an average CD-L1 of 7.09 on PCN and average CD-L2 scores of 8.0, 7.8, and 14.4 on the ShapeNet55, ShapeNet34, and ShapeNet-unseen21 benchmarks, respectively. Compared to SnowflakeNet, our method reduced the average CD by 1.6%, 3.6%, 3.7%, and 4.6% on the corresponding benchmarks, while also reducing model complexity and computational cost and accelerating training and inference. Compared to other existing point cloud completion networks, our method also achieves competitive results.

1. Introduction

Point clouds are 3D data composed of numerous points and are widely used in various fields. Compared to other 3D data formats such as voxels and meshes, point clouds represent objects more flexibly, losing fewer details and preserving the original geometric information of the scene or object. Point clouds can be acquired using sensors such as LiDAR and Kinect. However, due to sensor limitations and noise interference, raw point cloud data are often incomplete and sparse. Incomplete point clouds degrade the performance of downstream 3D computer vision tasks such as classification, segmentation [1,2], and object detection [3,4]. Point cloud completion methods are therefore needed to predict the complete 3D shape.

Deep learning-based point cloud completion methods have developed rapidly in recent years [5,6,7,8,9,10,11,12]. Initially, researchers voxelized point clouds and used 3D CNNs to generate complete shapes [13,14,15]. However, this approach caused severe detail loss and incurred high computational costs. After the introduction of PointNet [1], directly processing points became the primary research direction in the point cloud field due to its efficiency. The pioneering point cloud completion work, PCN [6], also benefited from this: it used the PointNet framework to extract a global shape code and decode it into a complete shape. However, decoding directly from global features cannot capture fine-grained information. Consequently, some methods incorporated local structural information into shape completion [9,10,16]. Nevertheless, detail loss still occurred during decoding. To generate higher-quality complete point clouds, the most popular current approach uses a multi-stage decoder that generates point clouds from coarse to fine [7,8,12]. SnowflakeNet [17] is a representative method of this approach, and many methods have further improved its structure [8,18,19]. In this work, we focus on designing a faster and more accurate SnowflakeNet.

SnowflakeNet uses a skip-transformer and 1D deconvolution (point-wise splitting operations) to achieve good point cloud completion results. However, experiments show that SnowflakeNet has two issues: (1) it considers only local attention and lacks global information about the complete shape; (2) it tends to overfit as the model deepens.

Regarding the first issue, the skip-transformer in SnowflakeNet follows the idea of the Point Transformer [20]: it uses the KNN algorithm to connect each point in the raw point cloud with its neighboring points to form a local structure, and then enhances features by computing a vector attention matrix from the feature differences between the center point and its neighbors. However, because the attention window is limited to the center point and its k nearest neighbors rather than the entire point cloud, it aggregates features only within local regions; that is, it applies only local attention. We therefore use Self-Positioning Point-based Attention (SPA) [21] to capture the global context of the input point cloud (as shown in Figure 1a). SPA only needs to compute attention weights within a small set of points, called self-positioning points (SP points), rather than over all points in the point cloud, and the context of the entire 3D point cloud is captured through these SP points.

Figure 1.

GSSnowflake motivation diagram. (a) The skip-transformer considers local context (blue) only, and we use a self-positioning point (SP point) to aggregate global context (red) into point features as well. (b) Grouped Vector Attention (GVA) can prevent a drastic increase in parameter count during channel deepening.

Regarding the second issue, both the skip-transformer and SPA use vector attention, which employs an MLP for weight encoding and modulates each channel of the value vector through the Hadamard product. However, as the model deepens, the embedding dimension increases, causing a sharp rise in the parameter count of the weight-encoding MLP; this leads to overfitting and limits the model's depth and performance. To address this, we introduce the GVST and GVSPA modules, which group the vector attention so that the channels in each group share the same weight (Figure 1b). This significantly reduces the number of parameters in the weight-encoding layer, allowing the model to be deepened while largely avoiding overfitting.

Additionally, considering that the seed generation module and the point generation module in SnowflakeNet focus on different features, we introduced the Channel Attention (CA) module. The purpose of this module is to allow the global feature vectors to adaptively adjust their feature representations according to the requirements of different modules. Essentially, the CA module uses two learnable weight vectors to adjust the feature values of all channels in the global vectors.

The contributions of this paper are summarized as follows:

- Proposed the Grouped Vector Skip-Transformer (GVST), which inherits the advantages of skip-transformers while improving the model's parameter efficiency and generalization ability.

- Proposed the Grouped Vector Self-Positioning Point-based Attention (GVSPA), which enhances SnowflakeNet’s ability to capture complete global information while maintaining parameter efficiency through vector grouping.

- Proposed two Channel Attention (CA) modules, enabling the global features to adaptively adjust the importance of different channels according to the needs of different modules.

2. Related Work

2.1. Point Cloud Transformer

The introduction of the transformer [22] has significantly advanced natural language processing and computer vision. Alongside the rapid development of vision transformers [23], researchers have also applied transformers to point clouds. Zhao et al. proposed the Point Transformer (PT) [20] and Guo et al. proposed the Point Cloud Transformer (PCT) [24], successfully applying the self-attention mechanism to point clouds and pioneering this research direction. PCT is a global transformer that computes attention across the entire point cloud and is therefore limited by memory consumption and computational complexity, whereas PT computes local attention within local neighborhoods, alleviating the memory issue. Subsequently, Wu et al. proposed Point Transformer V2 [25], an improved version of PT that increases parameter efficiency while enhancing the model's spatial representation capability.

In addition, Pan et al. applied transformers to the field of point cloud object detection [3], combining global and local attention. Park et al. introduced SpoTr [21], which addresses PCT’s computational complexity issue from a new perspective by calculating global attention only within a smaller set of points rather than all points.

2.2. Point Cloud Completion

2.2.1. Voxel-Based Methods

Inspired by the successful application of Convolutional Neural Networks (CNNs) in various fields such as image restoration and 3D reconstruction [26,27], an intuitive idea for 3D shape completion is to divide the 3D space into regular voxels and apply CNNs to the 3D space. Wu et al. [13] first proposed representing 3D shapes as probability distributions of binary variables on a 3D voxel grid using 3D CNNs to generate complete shapes. Ref. [5] reconstructs complete point clouds from coarse to fine, first using 3D CNNs to predict a coarse complete shape, then converting the output voxels to point clouds for further refinement. However, the memory and computational costs of voxel-based networks increase rapidly with higher spatial resolution. Consequently, generating high-resolution complete point clouds becomes challenging [14,15].

To address these issues, some works adopt different partitioning strategies [28,29] and sparse representations [30,31,32]. However, quantization effects inevitably lead to information loss, making them unsuitable for fine-grained generation tasks.

2.2.2. Point-Based Methods

The method proposed by Qi et al. [1] directly processes point sets without requiring intermediate representations, pioneering point-based methods for point cloud processing. Point-based methods avoid the time overhead of converting between point clouds and other data formats (such as voxels) and the loss of details caused by such conversion. Yang et al. proposed FoldingNet [33], one of the pioneering works in point cloud generation, which provided insights for subsequent point cloud completion methods. It uses a two-stage folding process that deforms a 2D grid into a 3D shape. Yuan et al. proposed PCN [6], an end-to-end point cloud completion method with an encoder–decoder structure that uses MLP and folding operations to generate complete point clouds from global features.

To generate higher-quality and more detailed complete point clouds, some works [7,34] have designed coarse-to-fine decoding structures that divide point cloud generation into multiple stages. This approach offers better interpretability and controllability and has attracted increasing attention. Wang et al. proposed the Cascaded Refinement Network [16], which refines the predicted point positions by calculating displacement offsets. Xiang et al. proposed SnowflakeNet [17], which splits points through a special deconvolution strategy and generates complete point clouds from coarse to fine through multiple stages of point splitting. The GSSnowflakeNet proposed in this paper has stronger global feature-capturing capability and higher parameter efficiency, achieving better performance.

3. Methods

3.1. Overview

The overall architecture of the GSSnowflakeNet is outlined in Figure 2. It consists of three modules: a feature extractor module, a seed generation module, and a point generation module. Next, the functions and structures of these three modules will be introduced in detail.

Figure 2.

The overall architecture of GSSnowflake includes three main modules: the feature extractor module, the seed generation module, and the point generation module. SSPD stands for the Snowflake Point Deconvolution module based on SP points. n, n0, n1, n2, and n3 all represent the number of points, f, f1, and f2 denote global vectors, c stands for the number of channels in the global vector, and CA indicates the Channel Attention module.

3.1.1. Feature Extractor Module

This module has the same structure as the corresponding module in SnowflakeNet, consisting of three set abstraction layers [2] interleaved with two Point Transformer blocks [20]. Its main function is to encode the original point set into a global vector f of size 1 × c. The difference is that we introduce Grouped Vector Attention into the Point Transformer blocks (see Section 3.3.1) to improve parameter efficiency.

Additionally, because the seed generation module and the point generation module may focus on different features, two Channel Attention (CA) modules are used to adjust the feature values of all channels in f.

3.1.2. Seed Generator Module

The seed generator module is identical to the corresponding module in SnowflakeNet. It generates a sparse but structurally complete seed point cloud from the global vector f1 and the original incomplete point cloud.

3.1.3. Point Generation Module

This module consists of three SSPD layers. It can be regarded as a point upsampling module that turns the sparse but complete seed point cloud into a dense, complete output point cloud. Each SSPD layer takes the point cloud from the previous layer as input and splits its points according to the upsampling factors (r1, r2, r3), so that the number of points grows layer by layer ($n_1 = r_1 n_0$, $n_2 = r_2 n_1$, $n_3 = r_3 n_2$).

3.2. SP Point and Snowflake Point-Based Deconvolution (SSPD)

The SP point-based Snowflake Point Deconvolution (SSPD) module treats the input points as parent points, splits each parent point into multiple child points, and then displaces the child points, increasing the number of points while preserving geometric details.

Figure 3 shows the structure of the i-th SSPD layer. SSPD takes the parent points produced by the previous layer (the $(i-1)$-th SSPD layer) and, through a point splitting (PS) operation, copies them ri times to obtain a set of child points. Then, following the method of PCN [6], it calculates an offset vector for each child point. These offsets update the child point positions so that the child points move within the neighborhood of their parent points, which is a sphere of radius r.

Figure 3.

The architecture of SSPD. Here, d is the number of point feature channels, n is the number of points, PS is the point-wise splitting operation, and ⊕ represents element-wise addition.

Specifically, the points $P_{i-1}$ output by the $(i-1)$-th layer and the global feature vector f2 are fed into the Grouped Vector Self-Positioning Point-based Attention module (GVSPA). This module combines each point's features with the global features of the complete point cloud to obtain the point features $Q_{i-1}$. Then, the Grouped Vector Skip-Transformer (GVST) receives $Q_{i-1}$, the point coordinates $P_{i-1}$, and the child-point offset features $K_{i-1}$ from the previous step, and outputs the shape context features $H_i$. Next, a 1D deconvolution performs the point-splitting operation on $H_i$, while $H_i$ is also replicated to the same number of points through nearest-neighbor interpolation. The former adds variation among the child points, while the latter retains the shape context information. Finally, an MLP generates the child-point offset features $K_i$ for this step; $K_i$ is used to generate the child-point coordinate offsets $\Delta P_i$ and also serves as input to GVST in the next step. $\Delta P_i$ is calculated as follows:

$$\Delta P_i = \tanh\left(\mathrm{MLP}(K_i)\right)$$

where tanh is the hyperbolic tangent function, which compresses its input to the range (−1, 1).
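The coordinate update described above can be sketched in NumPy. This is a minimal sketch under stated assumptions, not the authors' implementation: the final MLP is replaced by a single hypothetical linear map `w_out`, and random arrays stand in for the offset features. Only the copy-then-offset structure and the tanh bound come from the text.

```python
import numpy as np

def split_and_offset(parent_pts, offset_feats, r, w_out):
    """Sketch of one SSPD coordinate update (hypothetical helper).

    parent_pts:   (n, 3) parent point coordinates from the previous layer
    offset_feats: (n * r, d) child-point offset features K_i
    w_out:        (d, 3) hypothetical linear map standing in for the MLP
    """
    # Point splitting on coordinates: each parent is copied r times,
    # so rows j*r .. j*r + r - 1 are the children of parent j.
    children = np.repeat(parent_pts, r, axis=0)      # (n * r, 3)
    # tanh bounds every coordinate offset to (-1, 1).
    delta = np.tanh(offset_feats @ w_out)             # (n * r, 3)
    return children + delta, delta

rng = np.random.default_rng(0)
parents = rng.normal(size=(4, 3))
feats = rng.normal(size=(4 * 2, 16))
w = rng.normal(size=(16, 3))
children, delta = split_and_offset(parents, feats, r=2, w_out=w)
print(children.shape)  # (8, 3)
```

Because the offsets are bounded per coordinate, each child stays close to its parent; the network learns where inside that neighborhood to place it.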

The point-wise splitting operation mentioned above is a special 1D deconvolution whose kernel size and stride both equal the upsampling factor ri. Its purpose is to generate multiple child point features for each point feature $h_j$ in $H_i$. If the m-th logit of $h_j$ is denoted as $h_j^m$ and its corresponding convolutional kernel as $W_m$, then $W_m$ is a matrix of size $r_i \times d'$ (with d' output channels), whose k-th row is denoted as $w_m^k$. The k-th child point feature is given by the following equation:

$$g_j^k = \sum_{m} h_j^m \, w_m^k$$
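Because the kernel size equals the stride, the windows of this deconvolution never overlap, so each child feature depends on exactly one parent feature and the operation reduces to a per-point tensor contraction. The sketch below assumes the kernels are stored as a (d, ri, d') array and that there is no bias; shapes and data are illustrative.

```python
import numpy as np

def point_split_deconv(h, kernels):
    """Point-wise splitting as a 1D transposed convolution (kernel size = stride = r).

    h:       (n, d) shape-context features, one row per parent point
    kernels: (d, r, d_out); kernels[m] is the r x d_out kernel of input channel m
    Returns (n * r, d_out): child k of point j equals sum_m h[j, m] * kernels[m, k].
    """
    n, _ = h.shape
    _, r, d_out = kernels.shape
    # Contract over the input channel m for every (point j, child k) pair.
    g = np.einsum("jm,mko->jko", h, kernels)   # (n, r, d_out)
    # Children of the same parent end up in consecutive rows.
    return g.reshape(n * r, d_out)

rng = np.random.default_rng(1)
h = rng.normal(size=(5, 4))
kernels = rng.normal(size=(4, 3, 6))
children = point_split_deconv(h, kernels)
print(children.shape)  # (15, 6)
```

In a framework such as PyTorch, the same operation corresponds to a 1D transposed convolution with `kernel_size` and `stride` both set to ri, applied along the point dimension.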

Figure 3 illustrates the detailed structure of SSPD. Three SSPD layers are used in the point generation module to gradually increase the number of points. Collaboration among the three SSPDs is crucial for coherent point generation, because the splitting information from the previous layer can guide the point splitting in the current layer. GVST serves as the collaborative unit between layers: it uses the splitting information from the previous layer to generate vector attention that guides the point splitting in the current layer.

Since GVST can be seen as a variant of the Point Transformer, it can be classified as a local transformer, which aggregates information only in local blocks [35]. Therefore, this paper introduces GVSPA to capture global contextual information. The following sections will detail GVST and GVSPA.

3.3. Grouped Vector Skip-Transformer (GVST)

The Grouped Vector Skip-Transformer (GVST) learns and refines the spatial context between parent points and child points. “Skip” represents the connection between the displacement feature from the previous layer and the point feature from the current layer [17].

Figure 4 illustrates the detailed structure of the Grouped Vector Skip-Transformer. It takes Q, K, and “pos” as inputs, where Q denotes the point features with global contextual information output by GVSPA, K denotes the offset features output by the previous stage, and “pos” denotes the coordinates of the point set output by the previous stage. GVST concatenates Q and K point-wise and feeds the result into an MLP to generate features that fuse the previous point features with the splitting information; these features are used as the value vectors V.

Figure 4.

Grouped Vector Skip-Transformer. In the figure, “pos” represents the coordinates of the input points, “pe” stands for the position embedding, “pme” stands for the position multiplication embedding, d denotes the dimension of the point features, ni represents the number of points, k represents the number of nearest neighbors, and g represents the number of groups.

Next, the attention vectors are computed from Q and K. First, the KNN algorithm computes the indices of the k nearest points of each point in “pos”. Then, for each point, the features of its k nearest neighbors are gathered from K according to these indices, and each point feature in Q is duplicated k times. The similarity between the center point feature and the offset features of its neighbors is computed by element-wise subtraction, $q_j - k_{j,l}$, where j is the point index and l = 1, …, k indexes the k nearest neighbors. The softmax function is then applied to obtain the attention vectors $a_{j,l}$, where $a_{j,l}$ indicates how much attention the current splitting should pay to the splitting of the previous stage. Note that in the first stage there are no offset features from a previous stage; in this case, Q is used in place of K. Finally, the shape context features H can be calculated as follows:
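The neighbor gathering, subtraction relation, and softmax described above can be sketched as follows. Two parts are assumptions, since the corresponding formulas are not reproduced here: the weight-encoding MLP is reduced to one hypothetical linear map `w_enc`, and features are aggregated as a softmax-weighted sum of neighbor values, as in Point Transformer-style vector attention. Passing `K = Q` reproduces the first-stage case.

```python
import numpy as np

def knn_indices(pos, k):
    # Brute-force KNN on coordinates; a point's distance to itself is 0.
    d2 = ((pos[:, None, :] - pos[None, :, :]) ** 2).sum(-1)   # (n, n)
    return np.argsort(d2, axis=1)[:, :k]                        # (n, k)

def softmax(x, axis):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def local_vector_attention(Q, K, V, pos, k, w_enc):
    """Q, K, V: (n, d); pos: (n, 3); w_enc: (d, d) hypothetical weight encoder."""
    idx = knn_indices(pos, k)
    k_nb, v_nb = K[idx], V[idx]          # (n, k, d) neighbor offset features / values
    rel = Q[:, None, :] - k_nb           # q_j - k_{j,l}; broadcasting duplicates q_j k times
    a = softmax(rel @ w_enc, axis=1)     # one weight per channel, normalized over neighbors
    return (a * v_nb).sum(axis=1)        # (n, d)

rng = np.random.default_rng(2)
n, d = 10, 8
pos, Q = rng.normal(size=(n, 3)), rng.normal(size=(n, d))
V = rng.normal(size=(n, d))
H = local_vector_attention(Q, Q, V, pos, k=4, w_enc=rng.normal(size=(d, d)))
print(H.shape)  # (10, 8)
```

Note that `w_enc` maps d channels to d channels here, so every channel gets its own weight; this is exactly the per-channel weight encoding whose parameter count grows with d.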

Additionally, inspired by [25], the point set coordinates are used to compute a position embedding and a position multiplication embedding (“pe” and “pme” in Figure 4), which strengthen the model's ability to capture the spatial positional information of the point cloud. The positional encoding is calculated as follows:

Here, the relative position between the j-th center point and its l-th neighboring point is obtained with a relation operator, which in this case is element-wise subtraction.
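The relative positions and the two embeddings can be sketched as below. How “pe” and “pme” are combined is an assumption here: following the multiplier-plus-bias positional encoding of Point Transformer V2 [25], the feature relation is scaled by pme and shifted by pe. The two linear maps `w_pme` and `w_pe` are hypothetical stand-ins for the small MLPs used in practice.

```python
import numpy as np

def relative_positions(pos, idx):
    """pos: (n, 3); idx: (n, k) neighbor indices.
    Returns (n, k, 3): position of center j minus position of its l-th neighbor."""
    return pos[:, None, :] - pos[idx]

def encode_relation(relation, rel_pos, w_pme, w_pe):
    """relation: (n, k, d); rel_pos: (n, k, 3); w_pme, w_pe: (3, d) hypothetical maps."""
    pme = rel_pos @ w_pme        # multiplicative position embedding
    pe = rel_pos @ w_pe          # additive position embedding
    return relation * pme + pe

pos = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 2.0, 0.0]])
idx = np.array([[0, 1], [1, 0], [2, 0]])
rel = relative_positions(pos, idx)
print(rel[0, 1])  # [-1.  0.  0.]
```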

As noted in [17], the skip-transformer uses a relatively small number of channels. Experimental results show that increasing the number of channels significantly increases the number of parameters of the weight-encoding MLP in the skip-transformer, which in turn causes the model to overfit, limiting its depth and performance. We therefore group the attention weights so that they share parameters, which significantly reduces the number of parameters in the weight encoding and allows the model to be deepened with less overfitting. The following section introduces Grouped Vector Attention in detail.

3.3.1. Grouped Vector Attention

In traditional vector attention, the number of parameters of the multi-layer perceptron (MLP) used for weight encoding grows rapidly as the number of input embedding channels increases. This large parameter scale limits the model's generalization capability and leads to overfitting. To overcome this drawback, Grouped Vector Attention (GVA) [25] was introduced. GVA reduces the number of parameters through weight sharing along the channel dimension, much as convolutional layers share weights across different input positions.
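The growth can be made concrete by counting parameters. The layer layouts below are hypothetical: a two-layer d → d → d weight encoder for plain vector attention versus a d → g → g encoder for grouped attention (the general shape of the GVA weight encoder in Point Transformer V2 [25]), with biases included. The point of the example is the scaling trend, quadratic versus linear in d, not the exact numbers of this paper's model.

```python
def linear_params(c_in, c_out):
    # Weight matrix plus bias of a fully connected (or 1x1 conv) layer.
    return c_in * c_out + c_out

def vector_attention_params(d):
    # Hypothetical d -> d -> d weight encoder: one weight per channel.
    return linear_params(d, d) + linear_params(d, d)

def grouped_attention_params(d, g):
    # Hypothetical d -> g -> g weight encoder: one weight per group.
    return linear_params(d, g) + linear_params(g, g)

for d in (64, 128, 256, 512):
    print(d, vector_attention_params(d), grouped_attention_params(d, g=8))
```

At d = 512 the plain encoder has 525,312 parameters versus 4,176 for the grouped one with g = 8; doubling d roughly quadruples the former but only doubles the input-layer term of the latter.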

GVA divides the value vector evenly into g groups along the channel dimension (1 < g < d), and the weight encoding outputs an attention vector with only g channels, so all elements within a group share the same weight. In practice, as shown in the lower part of Figure 5, each channel of the g-channel attention vector is replicated d/g times and then multiplied element-wise with the value vector, which yields the same result.

Figure 5.

Vector Attention vs. Grouped Vector Attention. The upper part represents traditional vector attention, while the lower part represents Grouped Vector Attention with a group number g = 4. “Conv” denotes the convolutional layer, “bn” stands for batch normalization, ni represents the number of points, and k represents the number of nearest neighbors.

In the skip-transformer, given a point feature $q_j$ and the offset features $k_{j,l}$ (l = 1, …, k) of its k nearest points, the attention vector $a_{j,l}$ can be computed using the following formula:

Similarly, in GVST, the attention vector can be computed using the following formula:

where GL denotes Grouped Linear and Tile denotes channel-wise replication. Specifically, Grouped Linear multiplies the input embedding $x \in \mathbb{R}^{C}$ element-wise by a learnable parameter $w \in \mathbb{R}^{C}$, splits the result into g groups along the channel dimension, and sums each group, outputting an embedding with g channels (as illustrated in Figure 5). This can be expressed as:

$$s_i = \sum_{c=(i-1)C/g+1}^{iC/g} w_c \, x_c, \qquad i = 1, \dots, g, \qquad s = [s_1, s_2, \dots, s_g]$$

where $s_i$ is the summed result for the i-th group, C is the number of channels of the input tensor, g is the number of groups, i is the index of the current group ranging from 1 to g, and s is obtained by concatenating all $s_i$ along the last (grouping) dimension; s is then fed into the softmax and Tile functions.
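Grouped Linear, softmax, and Tile can be sketched in NumPy as follows. The sketch assumes contiguous channel groups and a softmax taken over the k neighbors; the final weighted sum of neighbor values is an assumption borrowed from standard vector attention. `np.repeat` along the channel axis copies each group weight C/g times into consecutive channels, which is exactly the Tile step.

```python
import numpy as np

def grouped_linear(x, w, g):
    """x: (..., C) input embedding; w: (C,) learnable per-channel weights.
    Multiplies element-wise, splits C into g contiguous groups, sums each group."""
    C = x.shape[-1]
    assert C % g == 0, "C must be divisible by g"
    return (x * w).reshape(*x.shape[:-1], g, C // g).sum(axis=-1)   # (..., g)

def grouped_vector_attention(rel, v_nb, w, g):
    """rel: (n, k, C) relation such as q_j - k_{j,l}; v_nb: (n, k, C) neighbor values."""
    logits = grouped_linear(rel, w, g)                      # (n, k, g)
    logits = logits - logits.max(axis=1, keepdims=True)
    a = np.exp(logits)
    a = a / a.sum(axis=1, keepdims=True)                    # softmax over the k neighbors
    a_full = np.repeat(a, rel.shape[-1] // g, axis=-1)      # Tile: (n, k, C)
    return (a_full * v_nb).sum(axis=1)                      # (n, C)

print(grouped_linear(np.arange(8.0), np.ones(8), g=2))  # [ 6. 22.]
```

Grouped Linear itself has only C parameters, independent of g, which is why it is so much cheaper than a full C × C weight-encoding layer.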

3.4. Grouped Vector Self-Positioning Point Attention (GVSPA)

In SnowflakeNet’s SPD module, feature enhancement is achieved through the skip-transformer. However, the skip-transformer follows the idea of [20], aggregating information only within local blocks, which is considered local attention. Additionally, [8] points out that the global feature vector f in SnowflakeNet is captured from an incomplete point cloud, meaning that this global vector f only represents information from the visible part of the point cloud. Furthermore, in SnowflakeNet, the complete global shape is captured solely by a mini PointNet, which results in insufficient global information and potential loss of details. Figure 6 illustrates the process of capturing global information in both SnowflakeNet and our method. Due to SnowflakeNet’s approach of capturing complete features with only an MLP and a max-pooling layer, there is a significant loss of detailed features. This results in blurry global shape information, causing some points to deviate from the shape’s outline, leading to noisy points.

Figure 6.

Global feature capture process, taking the first SPD/SSPD as an example and omitting the subsequent point generation process. (a) The global feature capture process in the Snowflake method, which only uses a mini PointNet to capture complete features, resulting in some noise points in the point cloud completion. (b) The global feature capture process in the GSSnowflake method, which uses GVSPA to recapture complete shape features and enhances features using the attention mechanism, resulting in fewer noise points in the point cloud completion.

To address this issue, one could consider capturing more global information and enhancing features by combining global attention from the complete shape’s point cloud. This can be achieved by placing a module after the seed points that aggregates global information. Inspired by [21], this paper proposes the Grouped Vector Self-Positioning Point-based Attention (GVSPA) module to capture the global context of the complete point cloud while maintaining efficient parameter utilization. The context of the entire 3D shape is represented solely by the Self-Positioning points (SP points) (as shown in Figure 7). Therefore, when calculating attention weights, it is only necessary to compute them within a small set of SP points rather than all points, greatly reducing the computational complexity.
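A rough count of attention scores illustrates the saving. As a simplification, the sketch assumes that each of n points is scored against the m SP points, compared with all n × n pairs for global attention; n = 2048 is only an illustrative size, while m = 16 matches the number of SP points used in GVSPA.

```python
def attention_scores(n, m=None):
    # Global attention scores every pair of points; SP attention scores
    # each point against the m self-positioning points only.
    return n * n if m is None else n * m

n, m = 2048, 16
global_cost = attention_scores(n)
sp_cost = attention_scores(n, m)
print(global_cost, sp_cost, global_cost // sp_cost)  # 4194304 32768 128
```

Under this simplification the cost grows linearly in n instead of quadratically, and the ratio between the two is n / m.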

Figure 7.

Comparison of local attention, SP attention, and global attention. (a) Local attention calculates attention weights among points within a local region. (b) Self-positioning point attention calculates attention weights only among SP points (colored points in the figure, assuming there are only four SP points). (c) Global attention calculates attention weights among all points.

Figure 8 shows the detailed structure of GVSPA. It takes the set of point coordinates and the set of point features as input. The point features are the output of a mini PointNet and incorporate the global feature vector f2 from the partial shape. The latent vectors $z_1, \dots, z_m$ (m denotes the number of SP points; in practice, we set m = 16) are learnable parameters that capture and express the local shape features around the SP points, allowing the SP points to adaptively position themselves according to different input shapes. This process is similar to how deformable convolutional networks [36] compute offsets from the features of each pixel.

Figure 8.

Grouped Vector Self-Positioning Point-based Attention (GVSPA) detailed structure. Here, m represents the number of SP points, “SP pos” represents the coordinates of SP points, “SP feat” represents the features of SP points, RBF (Radial Basis Function) measures spatial similarity, and PN stands for mini PointNet.

The computation of GVSPA can be summarized in three steps: (1) compute the SP point set $\{\delta_s\}_{s=1}^{m}$, where $\delta_s \in \mathbb{R}^3$; (2) use the SP point set to compute the SP point feature set $\{\psi_s\}_{s=1}^{m}$; (3) enhance the SP point features using SP attention, concatenate them with the input point features, and output Q as the input to the subsequent GVST module.

The first step computes the coordinates of the SP points. Let the input point coordinates be $\{p_i\}_{i=1}^{n}$ and the point features be $\{f_i\}_{i=1}^{n}$. First, the similarity matrix between the latent vectors $z_s$ and the point features is computed using a dot product. Then, the three coordinates of each SP point are obtained by multiplying this similarity matrix with the point coordinates. The latent vector $z_s$ represents general features learned from different input point clouds during training and can be regarded as a predefined value of the SP point features. Because the SP point coordinates are guided by the similarity between these general features and the features of a specific point cloud, the SP points automatically locate themselves at different positions depending on the input point cloud features. The SP point set can be formalized as:

$$\delta_s = \sum_{i=1}^{n} \mathrm{softmax}_i\left(z_s^{\top} f_i\right) p_i, \qquad s = 1, \dots, m$$

The second step computes the features of the SP points. As with the coordinates, the feature values for all channels of the SP points are obtained by multiplying the similarity matrix with the point feature matrix. The difference is that an additional spatial similarity between the points and the SP points is computed using the RBF function. The SP point features are calculated as:

$$\psi_s = \sum_{i=1}^{n} \mathrm{RBF}(\delta_s, p_i) \, \mathrm{softmax}_i\left(z_s^{\top} f_i\right) f_i$$

where the RBF (Radial Basis Function) measures the spatial similarity between SP points and the original points, and $\mathrm{softmax}_i(z_s^{\top} f_i)$ measures semantic feature similarity. In this way, the SP point features account for both spatial and semantic relationships. The RBF is expressed as:

$$\mathrm{RBF}(\delta_s, p_i) = \exp\left(-\gamma \lVert \delta_s - p_i \rVert_2^2\right)$$

where γ is a bandwidth parameter.
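The first two GVSPA steps described above can be sketched together in NumPy. The sketch is illustrative: `gamma` is a hypothetical RBF bandwidth, the softmax normalizes over the n input points, and random arrays stand in for the learned latent vectors and the mini-PointNet features.

```python
import numpy as np

def softmax(x, axis):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def sp_points_and_features(P, F, Z, gamma=1.0):
    """P: (n, 3) coordinates; F: (n, d) point features; Z: (m, d) latent vectors.
    Returns SP coordinates (m, 3) and SP features (m, d)."""
    S = softmax(Z @ F.T, axis=1)                              # (m, n) dot-product similarity
    sp = S @ P                                                # step 1: convex combination of points
    d2 = ((sp[:, None, :] - P[None, :, :]) ** 2).sum(-1)      # (m, n) squared distances
    rbf = np.exp(-gamma * d2)                                 # spatial similarity
    return sp, (rbf * S) @ F                                  # step 2: spatially weighted features

rng = np.random.default_rng(5)
P, F, Z = rng.normal(size=(100, 3)), rng.normal(size=(100, 32)), rng.normal(size=(16, 32))
sp, feats = sp_points_and_features(P, F, Z)
print(sp.shape, feats.shape)  # (16, 3) (16, 32)
```

Since each row of the similarity matrix sums to 1, every SP point lies inside the convex hull of the input points, so the SP points cannot drift away from the shape.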

3.5. Channel Attention Module

Considering that the seed generation module and the point generation module may focus on different features (the former emphasizes the geometric and overall features of the point cloud, while the latter focuses on detailed and contextual features), we introduced two Channel Attention (CA) modules. The goal is to adaptively enhance the global feature vector f to better suit each module's task. Essentially, two learnable weight vectors $w_1$ and $w_2$ are multiplied element-wise with f to obtain f1 and f2, respectively, adjusting the feature values of all channels in f. The detailed structure is shown in Figure 9. The calculation of the weight vectors is inspired by the method used in [37].

Figure 9.

The architecture of the CA module. C represents the number of channels in the global feature vector, and ⊙ denotes the element-wise multiplication.

Specifically, let the input feature vector be $f \in \mathbb{R}^{1 \times C}$, where C represents the number of channels, and let the two weight vectors to be learned be $w_1, w_2 \in \mathbb{R}^{1 \times C}$. First, an intermediate feature map is computed through a 1 × 1 convolution, and then the sigmoid function is applied to obtain the weight vectors:

$$w_k = \sigma\left(\mathrm{Conv}^{(k)}_{1\times1}(f)\right), \qquad k = 1, 2$$

where σ represents the sigmoid function.

Subsequently, f is multiplied element-wise by the two weight vectors $w_1$ and $w_2$, yielding the feature vectors $f_1$ and $f_2$. The entire calculation of the CA module can be summarized as:

$$f_k = w_k \odot f = \sigma\left(\mathrm{Conv}^{(k)}_{1\times1}(f)\right) \odot f, \qquad k = 1, 2$$
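A minimal sketch of the CA module, assuming the global vector is a single position with C channels. On such an input, a 1 × 1 convolution reduces to a C × C matrix product plus a bias, so `W1`/`b1` and `W2`/`b2` below are hypothetical stand-ins for the two convolutions.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def channel_attention(f, W1, b1, W2, b2):
    """f: (C,) global feature vector; W1, W2: (C, C); b1, b2: (C,)."""
    w1 = sigmoid(W1 @ f + b1)    # per-channel weights in (0, 1)
    w2 = sigmoid(W2 @ f + b2)
    return w1 * f, w2 * f        # f1, f2: element-wise rescaled copies of f

C = 4
f = np.array([1.0, -2.0, 3.0, 0.5])
zeros_W, zeros_b = np.zeros((C, C)), np.zeros(C)
f1, f2 = channel_attention(f, zeros_W, zeros_b, zeros_W, zeros_b)
print(f1)
```

With all-zero parameters every weight is sigmoid(0) = 0.5, so both outputs are f scaled by one half; after training, the two branches can emphasize different channels of the same global vector.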

3.6. Training Loss

3.6.1. Completion Loss

Chamfer Distance (CD) is a commonly used metric for measuring the similarity between two point clouds, and it is often used as the loss function in point cloud completion. We therefore use CD as the loss function. The L2 version of CD, based on the squared Euclidean distance, is defined as follows:

$$\mathcal{L}_{CD_2}(P, Q) = \frac{1}{|P|}\sum_{p \in P}\min_{q \in Q}\lVert p - q\rVert_2^2 + \frac{1}{|Q|}\sum_{q \in Q}\min_{p \in P}\lVert q - p\rVert_2^2$$

where P and Q represent two point sets, and p and q denote point coordinates. The L1 version of CD uses the non-squared Euclidean distance and is defined as follows:

$$\mathcal{L}_{CD_1}(P, Q) = \frac{1}{2}\left(\frac{1}{|P|}\sum_{p \in P}\min_{q \in Q}\lVert p - q\rVert_2 + \frac{1}{|Q|}\sum_{q \in Q}\min_{p \in P}\lVert q - p\rVert_2\right)$$
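Both CD variants can be computed with brute-force nearest neighbors, which is adequate for small clouds but quadratic in memory. The sketch follows the common convention in completion codebases of halving the L1 variant and not the L2 variant; with a different normalization, the reported numbers scale accordingly.

```python
import numpy as np

def _nn_sq_dists(A, B):
    # For each point in A, squared Euclidean distance to its nearest point in B.
    d2 = ((A[:, None, :] - B[None, :, :]) ** 2).sum(-1)
    return d2.min(axis=1)

def chamfer_l2(P, Q):
    return _nn_sq_dists(P, Q).mean() + _nn_sq_dists(Q, P).mean()

def chamfer_l1(P, Q):
    # Non-squared Euclidean nearest-neighbor distances, averaged over both directions.
    return (np.sqrt(_nn_sq_dists(P, Q)).mean() + np.sqrt(_nn_sq_dists(Q, P)).mean()) / 2

P = np.array([[0.0, 0.0, 0.0]])
Q = np.array([[1.0, 0.0, 0.0], [0.0, 2.0, 0.0]])
print(chamfer_l2(P, Q), chamfer_l1(P, Q))  # 3.5 1.25
```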

To constrain the generated seed points to the complete shape, we downsample the ground truth to the same number of points as the seed point cloud $P_0$, denoted as $\hat{P}_0$, and compute the corresponding Chamfer Distance (CD) loss. Similarly, to constrain the point clouds generated in the subsequent splitting process to the correct shape, we downsample the ground truth to the same numbers of points as the outputs $P_1$, $P_2$, and $P_3$ of the three SSPD layers, respectively, and compute the corresponding losses. This yields four complete-shape point clouds with different numbers of points, each with its own loss. The sum of these four losses is defined as the completion loss:

$$\mathcal{L}_{completion} = \sum_{i=0}^{3} \mathcal{L}_{CD}\left(P_i, \hat{P}_i\right)$$

where $\hat{P}_i$ represents the downsampled ground truth point cloud, which has the same number of points as $P_i$.

3.6.2. Preservation Loss

Following the approach of [38], we use the partial matching loss to preserve the shape of the input incomplete point cloud, calculated by the following equation:

Partial matching loss is a one-way constraint that matches one shape to another without constraining the reverse direction. Since it only requires the output point cloud to partially match the input point cloud, we use it as the preservation loss. The total training loss is defined as follows:
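The multi-stage completion loss and the one-way preservation loss can be combined as sketched below. Several details are assumptions rather than statements about the paper's code: the ground truth is downsampled by a random subset (farthest point sampling is a common alternative), CD-L1 is used for every stage, the partial matching term averages the distance from each input point to its nearest point in the final output, and the two terms are summed without weights.

```python
import numpy as np

def _nn_dists(A, B):
    # Euclidean distance from each point in A to its nearest point in B.
    d2 = ((A[:, None, :] - B[None, :, :]) ** 2).sum(-1)
    return np.sqrt(d2.min(axis=1))

def cd_l1(P, Q):
    return (_nn_dists(P, Q).mean() + _nn_dists(Q, P).mean()) / 2

def downsample(gt, n, rng):
    # Hypothetical random downsampling; requires n <= len(gt).
    return gt[rng.choice(len(gt), size=n, replace=False)]

def total_loss(stages, gt, partial, rng):
    """stages: [P0 (seeds), P1, P2, P3]; gt: (N, 3); partial: (M, 3) input cloud."""
    completion = sum(cd_l1(P, downsample(gt, len(P), rng)) for P in stages)
    # One-way partial matching: only input -> output distances are penalized.
    preservation = _nn_dists(partial, stages[-1]).mean()
    return completion + preservation

rng = np.random.default_rng(7)
gt = rng.normal(size=(64, 3))
stages = [gt[:8], gt[:16], gt[:32], gt]
print(total_loss(stages, gt, partial=gt[:20], rng=rng))
```

Because the preservation term only looks from the input toward the output, points the network adds in missing regions are not penalized by it; they are governed by the completion term.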

4. Experiment

We conducted comprehensive experiments on several widely used benchmarks, including the sparse PCN dataset [6] and the dense ShapeNet55/34 datasets [7]. The experimental environment was Ubuntu 22.04, Python 3.10.14, PyTorch 2.1.0, and CUDA 12.1 on an NVIDIA RTX 4090 (24 GB). We used the Adam optimizer on PCN, training for 400 epochs, and the AdamW optimizer on ShapeNet55/34, training for 200 epochs. The initial learning rates were 0.001 and 0.0005, respectively, and were gradually decayed. The batch size was 64 for all experiments.

4.1. Dataset and Evaluation Metric

4.1.1. PCN Dataset

The PCN dataset [6] was proposed by Yuan et al. in 2018 and contains 30,974 pairs of partial and complete point clouds from eight categories in ShapeNet [39]. For a fair comparison with previous methods, we adopted the same split: 28,974 training samples, 800 validation samples, and 1200 test samples. Additionally, because the number of points in the incomplete point clouds varies, we followed previous work and resampled each of them to 2048 points.

4.1.2. ShapeNet-55/34 Dataset

The ShapeNet-55/34 dataset was proposed by Yu et al. [7] in 2021 and is also derived from ShapeNet. ShapeNet-55 contains 55 categories, with 41,952 training samples and 10,518 test samples. ShapeNet-34 contains 34 categories, with 46,765 shapes for training and 5705 shapes for testing. Its test set is further divided into two parts: 3400 shapes from the 34 seen categories and 2305 shapes from 21 unseen categories. Following previous work, we evaluated the model on point clouds with different missing ratios (25%, 50%, and 75%), which correspond to three difficulty levels of the completion task: easy (S), moderate (M), and hard (H).

4.1.3. Evaluation Metric

To quantitatively evaluate the different algorithms, we used three common metrics: CD-L1, CD-L2, and F-Score@1%. Lower CD values are better, while higher F-Score values are better.
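The F-Score@1% metric can be sketched in a few lines. This version assumes the usual definition (harmonic mean of precision and recall, where a point counts as matched if its nearest neighbour in the other cloud lies within a distance threshold) and assumes that "1%" means a threshold of 0.01 on normalized shapes. The function name `f_score` is hypothetical.

```python
import math


def f_score(pred, gt, threshold=0.01):
    """F-Score@threshold: harmonic mean of precision and recall at distance `threshold`."""

    def covered(src, dst):
        # Fraction of src points whose nearest dst point is closer than the threshold.
        hits = sum(1 for p in src if min(math.dist(p, q) for q in dst) < threshold)
        return hits / len(src)

    precision = covered(pred, gt)  # predicted points that lie near the ground truth
    recall = covered(gt, pred)     # ground-truth points that are reproduced
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


pred = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
gt = [(0.0, 0.0, 0.0)]
print(f_score(pred, gt))
```

Here precision is 0.5 (one of the two predicted points is near the ground truth) and recall is 1.0, so the score is 2/3; unlike CD, the F-Score is insensitive to how far an outlier lies beyond the threshold.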

4.2. Evaluation on the PCN Dataset

4.2.1. Quantitative Comparison

To compare the proposed GSSnowflakeNet with SnowflakeNet and other recent point cloud completion networks, we evaluated it on the widely used PCN dataset using CD-L1 as the evaluation metric. Table 1 shows the quantitative comparison between our method and other point cloud completion methods. GSSnowflakeNet achieves better average performance than the compared methods. Compared to SnowflakeNet, the average CD is reduced by 0.12, a relative decrease of 1.66%, and the CD decreases in every individual category except Lamp. We attribute this stronger generalization capability to the grouped design and the global attention module. Compared to other recent point cloud completion models, our method also achieves superior performance in most categories.
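The relative reductions quoted in this section and in the Conclusions can be checked from the reported averages: the SnowflakeNet baseline is our average plus the absolute decrease, and the relative reduction is the decrease divided by that baseline. The helper name `relative_reduction` is illustrative; the numbers are the averages reported in the text.

```python
def relative_reduction(ours: float, decrease: float) -> float:
    """Percentage reduction relative to the baseline, reconstructed as ours + decrease."""
    baseline = ours + decrease
    return 100.0 * decrease / baseline


# (average CD of our method, absolute decrease versus SnowflakeNet), as reported in the text
reported = {
    "PCN (CD-L1)": (7.09, 0.12),
    "ShapeNet55 (CD-L2)": (8.0, 0.3),
    "ShapeNet34 (CD-L2)": (7.8, 0.3),
    "ShapeNet-unseen21 (CD-L2)": (14.4, 0.7),
}
for name, (ours, dec) in reported.items():
    print(f"{name}: baseline {ours + dec:.2f}, reduction {relative_reduction(ours, dec):.2f}%")
```

For PCN this gives 0.12 / 7.21 ≈ 1.66%. Because the ShapeNet averages are reported to one decimal place, the reconstructed ShapeNet percentages (about 3.6%, 3.7%, and 4.6%) are only approximate.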

Table 1.

Point cloud completion on the PCN dataset in terms of per-point L1 Chamfer distance ×10³ (lower is better). Bold indicates optimal performance.

4.2.2. Visual Comparison

To visually compare our method with others, we selected point clouds from four categories (airplane, car, sofa, and cabinet) in the PCN dataset and visualized the completion results. Figure 10 compares the completion results of several representative methods selected from Table 1. The point clouds completed by GSSnowflakeNet contain less noise, recover fine structures better, and have higher overall quality than those of the other methods. For the airplane category, all selected methods produce visually similar results on the visible side, but our method yields better symmetry and restores the original shape more faithfully on the invisible side, which we attribute to its richer global information. For the sofa category, our method predicts more accurate geometry, whereas PoinTr [7] predicts an incorrect sofa structure.

Figure 10.

Visual comparison on PCN dataset.

4.3. Evaluation on the ShapeNet-55 Dataset

4.3.1. Quantitative Comparison

We also compared our method with other recent methods on the ShapeNet55 dataset at three difficulty levels: easy, moderate, and hard. Table 2 reports the completion results of our method and the other methods, using CD-L2 and F-Score@1% as evaluation metrics. Compared to the original SnowflakeNet, our method reduces the L2 Chamfer Distance by 0.3 (5.7%), 0.3 (4.1%), and 0.4 (3.2%) at the easy, moderate, and hard levels, respectively, with an average reduction of 0.3 (3.6%). Meanwhile, the F-Score@1% improves by 0.021 (4.5%). Our method also achieves competitive results compared to other recent methods.

Table 2.

Point cloud completion on the ShapeNet-55 dataset in terms of the L2 Chamfer distance ×10⁴ (lower is better) and F-Score@1% (higher is better). We report the detailed results for each method in 10 categories. CD-S, CD-M, and CD-H denote the CD results under the three difficulty levels of Simple, Moderate, and Hard. Bold indicates optimal performance.

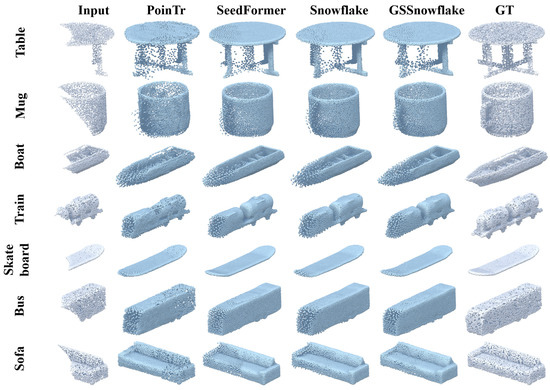

4.3.2. Visual Comparison

To visually compare the completion results of our method with those of other methods, we selected point clouds from seven categories in the ShapeNet55 dataset. As shown in Figure 11, our method still recovers geometric structures well at high occlusion rates, and its completed point clouds fit the ground truth more closely than those of SnowflakeNet. For instance, in the mug category, our method restores the handle of the cup with better quality, and in the table category, it produces fewer noise points. These results indicate that our method performs well on the ShapeNet55 dataset.

Figure 11.

Visual comparison on ShapeNet-55 dataset.

4.4. Evaluation on the ShapeNet-34/21 Dataset

4.4.1. Quantitative Comparison

To further compare our method with other existing point cloud completion methods, we conducted experiments on the ShapeNet-34/21 dataset at three difficulty levels: easy, moderate, and hard. Table 3 shows the quantitative results. On the 34 seen categories, our method achieves better CD results (CD-S, CD-M, and CD-H) and a better average CD at all three difficulty levels. Compared to SnowflakeNet, the CD decreases by 0.1 (approximately 1.9%), 0.3 (4.2%), and 0.4 (3.3%) at the three levels, respectively, with an average decrease of 0.3 (approximately 3.7%) and an F-Score improvement of 0.014 (3.3%). The gains at the moderate and hard levels are larger than at the easy level, indicating that the improvement is most pronounced at higher occlusion rates. On the 21 unseen categories, our method also outperforms the other methods in the CD metrics. Compared to SnowflakeNet, the CD decreases by 0.3 (3.9%), 0.6 (4.8%), and 1.1 (4.3%) at the three levels, respectively, with an average decrease of 0.7 (4.6%) and an F-Score improvement of 0.019 (5.0%). This suggests that our method generalizes better to unseen categories.

Table 3.

Point cloud completion on the ShapeNet-34/21 dataset in terms of the L2 Chamfer distance ×10⁴ (lower is better) and F-Score@1% (higher is better). CD-S, CD-M, and CD-H denote the CD results under the three difficulty levels of Simple, Moderate, and Hard. Bold indicates optimal performance.

4.4.2. Visual Comparison

For visual comparison, we selected eight categories from ShapeNet34 and seven categories from ShapeNet-unseen21 and visualized the completion results. Figure 12 compares our method with SnowflakeNet [17] and PoinTr [7] on the ShapeNet34 dataset. The point clouds completed by our method have smoother surfaces and better-preserved details. For example, in the bed category, our method smoothly repairs the planar surfaces without obvious abrupt points. For the geometrically complex faucet category, our method also restores the overall shape effectively, whereas the other methods produce clearly incorrect shapes. Figure 13 shows the visual comparison on ShapeNet-unseen21, where our method also produces higher-quality results on unseen categories. In contrast, the points output by SnowflakeNet and PoinTr are arranged less coherently, appearing inconsistent or scattered.

Figure 12.

Visual comparison on ShapeNet-34 dataset.

Figure 13.

Visual comparison on ShapeNet-unseen21 dataset.

4.5. Ablation Experiment

4.5.1. The Effect of the Number of Vector Attention Groups

We conducted ablation experiments on the number of groups in different modules, using the results on the PCN dataset as the performance reference (Table 4). The modules in GSSnowflakeNet that use grouping are the Point Transformer (PT) in the feature extractor module, and the Grouped Vector Skip-Transformer (GVST) and Grouped Vector Self-Positioning Point-based Attention (GVSPA) in the SSPD module; their numbers of groups are denoted as gPT, gGVST, and gGVSPA, respectively. Experiment VI uses the original SnowflakeNet model as the baseline for our method. Because the embedding dimension of all three modules is 64, gPT, gGVST, and gGVSPA are chosen as divisors of 64 so that the channels can be split evenly into groups.
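Why the group counts must divide 64 follows from how grouped vector attention works in Point Transformer V2 [25]: the weight encoding predicts one attention weight per group instead of one per channel, and every channel in a group shares that weight. The pure-Python sketch below shows this for a single query point. It assumes a single linear layer (`weight_enc`) as the weight encoding and takes precomputed relation vectors as input; both are hypothetical simplifications, not the exact GVST/GVSPA design.

```python
import math
from typing import List


def grouped_vector_attention(relations: List[List[float]],
                             values: List[List[float]],
                             weight_enc: List[List[float]],
                             groups: int) -> List[float]:
    """Aggregate K neighbour values (K x C) into one C-dim feature for a single query.

    relations:  K x C relation vectors (e.g. query - key + positional encoding).
    weight_enc: C x groups matrix, a hypothetical linear weight-encoding layer.
    """
    K, C = len(values), len(values[0])
    if C % groups != 0:
        raise ValueError("the number of groups must divide the channel dimension")
    per_group = C // groups
    # One logit per (neighbour, group).
    logits = [[sum(r[c] * weight_enc[c][g] for c in range(C)) for g in range(groups)]
              for r in relations]
    # Softmax over the K neighbours, independently for each group.
    weights = [[0.0] * groups for _ in range(K)]
    for g in range(groups):
        m = max(logits[j][g] for j in range(K))
        exps = [math.exp(logits[j][g] - m) for j in range(K)]
        total = sum(exps)
        for j in range(K):
            weights[j][g] = exps[j] / total
    # Channel c uses the weight of its group c // per_group.
    return [sum(weights[j][c // per_group] * values[j][c] for j in range(K))
            for c in range(C)]


values = [[1.0, 2.0, 3.0, 4.0], [3.0, 4.0, 5.0, 6.0]]
relations = [[10.0, 0.0, 0.0, 0.0], [0.0, 0.0, 0.0, 10.0]]
weight_enc = [[1.0, 0.0], [0.0, 0.0], [0.0, 0.0], [0.0, 1.0]]
print(grouped_vector_attention(relations, values, weight_enc, groups=2))
```

With two groups, channels 0 and 1 attend almost entirely to the first neighbour and channels 2 and 3 to the second, giving roughly [1, 2, 5, 6]. Setting `groups` equal to C recovers plain vector attention, while `groups=1` gives every channel the same weight.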

Table 4.

The effect of the number of vector attention groups. We report a performance comparison under different group numbers, using the average Chamfer distance over all categories of the PCN dataset (CD-Avg).

Table 4 and Figure 14 show that when the number of groups is too small (Experiments I and II), the attention mechanism cannot be fully exploited and the model fails to capture finer features, leading to potential underfitting and degraded performance. The model performs best with gPT = 16, gGVST = 32, and gGVSPA = 32 (Experiment IV). When the number of groups is too large (Experiment V), the performance decreases slightly.

Figure 14.

Visualization of the effect of vector attention grouping quantity.

4.5.2. Module Design Ablation

We conducted ablation experiments on different modules in the proposed method, including the Grouped Vector Skip-Transformer (GVST), Grouped Vector Self-Positioning Point-based Attention (GVSPA), and the Channel Attention (CA) module, with results shown in Table 5. Experiment A uses the original Snowflake model as the baseline for our method, while Experiments B-D adopt the optimal attention group number combination (gPT = 16, gGVST = 32, gGVSPA = 32). We provide the results on the PCN dataset and the model complexity for reference.

Table 5.

Module design ablation. We report the theoretical computation cost (FLOPs) and the number of parameters (Params) of different designs, together with the average Chamfer distance over all categories of the PCN dataset (CD-Avg).

As Table 5 and Figure 15 show, grouping the vectors in PT and ST alone (Experiment B) significantly reduces the number of parameters and the computational load while slightly improving the model’s performance. Experiment D, which adds GVSPA and applies Channel Attention (CA) to the global vectors, further improves the shape quality of the output at the cost of a slight increase in computational load. Nonetheless, the final parameter count and computational load remain lower than those of the original model (Experiment A), indicating that our method maintains good performance at relatively low computational complexity.

Figure 15.

Visual comparison of module design ablation. A denotes the baseline: SnowflakeNet, B indicates the addition of the GVST module to the baseline, C involves incorporating both the GVST and GVSPA modules, and D represents the integration of the GVST, GVSPA, and CA modules together.

4.5.3. The Effect of Module Embedding Depth

To show that our method avoids overfitting even when the model is enlarged, we designed several combinations of embedding dimensions for the attention-related modules, as shown in Table 6. Experiments A and B use our method with module embedding dimensions of 64 and 128, respectively (both with the optimal grouping combination gPT = 16, gGVST = 32, gGVSPA = 32). Experiments C and D use SnowflakeNet with module embedding dimensions of 64 and 128. For GSSnowflakeNet, the varied dimensions are those of the Point Transformer in the feature extractor module (dPT), the GVST (dGVST), and the GVSPA (dGVSPA); for SnowflakeNet, they are those of the Point Transformer (dPT) and the skip-transformer (dST).

Table 6.

The effect of module embedding depth. We report the theoretical computation cost (FLOPs) and the number of parameters (Params) of different designs, together with the average Chamfer distance over all categories of the PCN dataset (CD-Avg).

Table 6 also reports results on the PCN dataset and the model complexity for reference. When the embedding dimensions of the attention-related modules in SnowflakeNet increase, the number of parameters and the computational load grow substantially, while the CD becomes slightly worse than with the lower embedding dimensions. In contrast, doubling the module embedding dimensions of our method increases the parameters and computational load only slightly, and the overall performance improves slightly. This suggests that our vector attention grouping strategy effectively alleviates overfitting.
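A back-of-the-envelope count illustrates why grouping slows parameter growth when the embedding dimension C doubles. This sketch assumes, hypothetically, that the weight encoding is a single linear layer mapping C channels to one weight per channel (plain vector attention) or to one weight per group (grouped vector attention with g = 32); the real modules contain additional layers, so only the scaling trend is meaningful.

```python
def weight_encoding_params(channels: int, out_dim: int) -> int:
    """Weights plus biases of one hypothetical linear weight-encoding layer."""
    return channels * out_dim + out_dim


GROUPS = 32  # g used for GVST and GVSPA in the best setting of Table 4
for C in (64, 128):
    vector = weight_encoding_params(C, C)        # plain vector attention: one weight per channel
    grouped = weight_encoding_params(C, GROUPS)  # grouped vector attention: one weight per group
    print(f"C={C}: vector={vector}, grouped={grouped}")
```

Under this assumption, doubling C roughly quadruples the vector-attention weight encoding (4160 to 16512 parameters) but only doubles the grouped one (2080 to 4128), which is consistent in direction with the modest growth reported for GSSnowflakeNet.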

4.6. Complexity Analysis

Table 7 compares the model complexity of our method with that of other recent methods, together with their results on the ShapeNet55/34 and PCN datasets for reference. Our method achieves competitive performance across these datasets.

Table 7.

Complexity analysis. We report the theoretical computation cost (FLOPs) and the number of parameters (Params), together with the average Chamfer distance over all categories of ShapeNet55 (CD55), ShapeNet34 (CD34), ShapeNet-unseen21 (CD21), and the PCN benchmark (CDPCN) for reference. Bold indicates optimal performance.

Table 7 shows that our method achieves competitive performance with the lowest FLOPs, indicating that it requires the least computation among the compared methods, which is consistent with its faster training and inference. Although PMP-Net [9] and PMP-Net++ [10] have very small parameter counts, they perform worse than our method and have higher FLOPs. Compared to the original SnowflakeNet, GSSnowflakeNet has fewer parameters, which helps prevent overfitting, and also reduces FLOPs while improving performance.

4.7. Model Efficiency Analysis

We evaluate model efficiency in terms of average latency and memory consumption on the PCN dataset. Efficiency metrics are measured on a single RTX 4090, excluding the first iteration to ensure steady-state measurements. The results in Table 8 show that, compared to SnowflakeNet, our method has lower latency during both training and inference and consumes less memory. As shown in Figure 16, our method achieves lower loss at the training epochs shown, requires less training time, and surpasses SnowflakeNet’s best performance at epoch 200, indicating higher training efficiency.
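The measurement protocol (average latency with the first iteration discarded) can be sketched with a small timing harness. `measure_latency` is a hypothetical helper, and the toy workload stands in for a training or inference step. For a GPU model, the step should call `torch.cuda.synchronize()` before returning so that asynchronous kernels are included in the timing, and peak memory can be read with `torch.cuda.max_memory_allocated()`; both are assumptions about how such a measurement would typically be done, not a description of the authors' script.

```python
import time
from statistics import mean
from typing import Callable, List


def measure_latency(step: Callable[[], None], n_iters: int, warmup: int = 1) -> List[float]:
    """Run `step` n_iters times and return per-iteration latencies in seconds,
    discarding the first `warmup` iterations (warm-up, caching, lazy initialization)."""
    if n_iters <= warmup:
        raise ValueError("n_iters must exceed warmup")
    timings = []
    for i in range(n_iters):
        start = time.perf_counter()
        step()
        elapsed = time.perf_counter() - start
        if i >= warmup:
            timings.append(elapsed)
    return timings


latencies = measure_latency(lambda: sum(range(100_000)), n_iters=20)
print(f"mean latency over {len(latencies)} iterations: {mean(latencies) * 1e3:.3f} ms")
```

Discarding the first iteration matters because it typically includes one-off costs such as memory allocation and kernel selection that do not reflect steady-state throughput.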

Table 8.

Model efficiency. We report the latency and memory usage during training and inference to compare model efficiency. Bold indicates optimal performance.

Figure 16.

Comparison of training efficiency between Snowflake and GSSnowflake. The number before the “/” represents the time required to train up to the current epoch, measured in hours. The number after the “/” indicates the current average Chamfer Distance.

5. Discussion

(1) Discussion on Performance Improvement. SnowflakeNet extracts global features from two sources: the incomplete shape, via the feature extractor module, and the complete shape, via the mini PointNet. We additionally generate self-positioning (SP) points automatically around the complete shape point cloud to extract further global features, and we refine these features with a global attention mechanism, which SnowflakeNet lacks. The refined global feature is then integrated into the point features for more precise subsequent point generation. Additionally, CA enhances the global features to some extent, and grouped vector attention (GVA) reduces computational complexity while improving the model’s generalization ability, leading to better results on the test set (as shown in Table 5).

(2) Discussion on Sparse and Dense Benchmark Test Results. We conducted experiments on the sparse benchmark (PCN) and the dense benchmarks (ShapeNet55 and ShapeNet34). Our method achieves larger improvements on the dense benchmarks (Table 1, Table 2 and Table 3). We attribute this to the limited number of points in the sparse dataset, which provides less feature information, so that the global features are less able to represent the entire point cloud structure, reducing the effectiveness of the GVSPA and CA modules. On the dense datasets, GVSPA and CA can better select and enhance important features.

(3) Discussion on Limitations. GSSnowflakeNet still struggles to reconstruct all details of more complex and irregular shapes (e.g., the motorbike in Figure 12). Additionally, the current experimental results are based on specific datasets, and the model’s performance on other types of data or larger-scale datasets remains to be validated. Future research should explore these limitations further and seek solutions.

6. Conclusions

We propose GSSnowflakeNet, which addresses overfitting and the lack of global information in the original SnowflakeNet by introducing the GVST, GVSPA, and CA modules. These modules improve the model’s performance while reducing the number of parameters and the computational complexity. We evaluated our method on the PCN and ShapeNet55/34 datasets. Our method achieves an average CD-L1 of 7.09 on the PCN dataset, a decrease of 0.12 (1.6%) compared to SnowflakeNet. On the ShapeNet55 dataset, it achieves an average CD-L2 of 8.0, a decrease of 0.3 (3.6%) compared to SnowflakeNet. On the ShapeNet34 dataset, it achieves an average CD-L2 of 7.8, a decrease of 0.3 (3.7%) compared to SnowflakeNet. On the ShapeNet-unseen21 dataset, it achieves an average CD-L2 of 14.4, a decrease of 0.7 (4.6%) compared to SnowflakeNet. We also conducted ablation experiments and an embedding dimension analysis on the PCN dataset. These results demonstrate the effectiveness of our method.

Author Contributions

Conceptualization, Y.X.; methodology, Y.X.; software, Y.X.; validation, Y.X., Y.C., and D.L.; formal analysis, Y.X.; investigation, Y.X.; resources, C.C. and D.L.; data curation, Y.C.; writing—original draft preparation, Y.X.; writing—review and editing, Y.X., C.C., and D.L.; visualization, Y.X.; supervision, C.C. and D.L.; project administration, C.C. and D.L.; funding acquisition, C.C. and D.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research is supported in part by the project of State Grid Zhejiang Electric Power Company (5211HZ220007).

Data Availability Statement

The PCN dataset used in this paper can be found at https://gateway.infinitescript.com/s/ShapeNetCompletion (accessed on 23 March 2024). The ShapeNet55/34 datasets can be found at https://github.com/yuxumin/PoinTr/blob/master/DATASET.md (accessed on 18 May 2024).

Conflicts of Interest

The authors declare no conflicts of interest.

References

[1] Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. Pointnet: Deep learning on point sets for 3d classification and segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 652–660.

[2] Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. Pointnet++: Deep hierarchical feature learning on point sets in a metric space. In Advances in Neural Information Processing Systems 30; MIT Press: Cambridge, MA, USA, 2017.

[3] Pan, X.; Xia, Z.; Song, S.; Li, L.E.; Huang, G. 3d object detection with pointformer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 7463–7472.

[4] Ali, W.; Abdelkarim, S.; Zidan, M.; Zahran, M.; El Sallab, A. Yolo3d: End-to-end real-time 3d oriented object bounding box detection from lidar point cloud. In Proceedings of the Computer Vision—ECCV 2018 Workshops, Munich, Germany, 8–14 September 2018; pp. 716–728.

[5] Xie, H.; Yao, H.; Zhou, S.; Mao, J.; Zhang, S.; Sun, W. Grnet: Gridding residual network for dense point cloud completion. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer International Publishing: Cham, Switzerland, 2020; pp. 365–381.

[6] Yuan, W.; Khot, T.; Held, D.; Mertz, C.; Hebert, M. Pcn: Point completion network. In Proceedings of the 2018 IEEE International Conference on 3D Vision (3DV), Verona, Italy, 5–8 September 2018; pp. 728–737.

[7] Yu, X.; Rao, Y.; Wang, Z.; Liu, Z.; Lu, J.; Zhou, J. Pointr: Diverse point cloud completion with geometry-aware transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 12498–12507.

[8] Zhou, H.; Cao, Y.; Chu, W.; Zhu, J.; Lu, T.; Tai, Y.; Wang, C. Seedformer: Patch seeds based point cloud completion with upsample transformer. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Cham, Switzerland, 2022; pp. 416–432.

[9] Wen, X.; Xiang, P.; Han, Z.; Cao, Y.P.; Wan, P.; Zheng, W.; Liu, Y.S. Pmp-net: Point cloud completion by learning multi-step point moving paths. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 7443–7452.

[10] Wen, X.; Xiang, P.; Han, Z.; Cao, Y.P.; Wan, P.; Zheng, W.; Liu, Y.S. Pmp-net++: Point cloud completion by transformer-enhanced multi-step point moving paths. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 852–867.

[11] Zhang, S.; Liu, X.; Xie, H.; Nie, L.; Zhou, H.; Tao, D.; Li, X. Learning geometric transformation for point cloud completion. Int. J. Comput. Vis. 2023, 131, 2425–2445.

[12] Li, S.; Gao, P.; Tan, X.; Wei, M. Proxyformer: Proxy alignment assisted point cloud completion with missing part sensitive transformer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 9466–9475.

[13] Wu, Z.; Song, S.; Khosla, A.; Yu, F.; Zhang, L.; Tang, X.; Xiao, J. 3d shapenets: A deep representation for volumetric shapes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1912–1920.

[14] Dai, A.; Ruizhongtai Qi, C.; Nießner, M. Shape completion using 3d-encoder-predictor cnns and shape synthesis. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 5868–5877.

[15] Stutz, D.; Geiger, A. Learning 3d shape completion from laser scan data with weak supervision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 1955–1964.

[16] Wang, X.; Ang, M.H., Jr.; Lee, G.H. Cascaded refinement network for point cloud completion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 790–799.

[17] Xiang, P.; Wen, X.; Liu, Y.-S.; Cao, Y.-P.; Wan, P.; Zheng, W.; Han, Z. Snowflake point deconvolution for point cloud completion and generation with skip-transformer. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 6320–6338.

[18] Cai, P.; Scott, D.; Li, X.; Wang, S. Orthogonal Dictionary Guided Shape Completion Network for Point Cloud. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; Volume 38, pp. 864–872.

[19] Rong, Y.; Zhou, H.; Yuan, L.; Mei, C.; Wang, J.; Lu, T. CRA-PCN: Point Cloud Completion with Intra-and Inter-level Cross-Resolution Transformers. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; Volume 38, pp. 4676–4685.

[20] Zhao, H.; Jiang, L.; Jia, J.; Torr, P.H.; Koltun, V. Point transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 16259–16268.

[21] Park, J.; Lee, S.; Kim, S.; Xiong, Y.; Kim, H.J. Self-positioning point-based transformer for point cloud understanding. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 21814–21823.

[22] Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. In Advances in Neural Information Processing Systems 30; MIT Press: Cambridge, MA, USA, 2017.

[23] Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

[24] Guo, M.H.; Cai, J.X.; Liu, Z.N.; Mu, T.J.; Martin, R.R.; Hu, S.M. Pct: Point cloud transformer. Comput. Vis. Media 2021, 7, 187–199.

[25] Wu, X.; Lao, Y.; Jiang, L.; Liu, X.; Zhao, H. Point transformer v2: Grouped vector attention and partition-based pooling. In Advances in Neural Information Processing Systems 35; MIT Press: Cambridge, MA, USA, 2022; pp. 33330–33342.

[26] Fu, Y.; Lam, A.; Sato, I.; Sato, Y. Adaptive spatial-spectral dictionary learning for hyperspectral image restoration. Int. J. Comput. Vis. 2017, 122, 228–245.

[27] Xie, H.; Yao, H.; Sun, X.; Zhou, S.; Zhang, S. Pix2vox: Context-aware 3d reconstruction from single and multi-view images. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 2690–2698.

[28] Klokov, R.; Lempitsky, V. Escape from cells: Deep kd-networks for the recognition of 3d point cloud models. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 863–872.

[29] Tatarchenko, M.; Dosovitskiy, A.; Brox, T. Octree generating networks: Efficient convolutional architectures for high-resolution 3d outputs. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2088–2096.

[30] Graham, B.; Engelcke, M.; Van der Maaten, L. 3d semantic segmentation with submanifold sparse convolutional networks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 9224–9232.

[31] Su, H.; Jampani, V.; Sun, D.; Maji, S.; Kalogerakis, E.; Yang, M.H.; Kautz, J. Splatnet: Sparse lattice networks for point cloud processing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2530–2539.

[32] Wang, P.S.; Sun, C.Y.; Liu, Y.; Tong, X. Adaptive O-CNN: A patch-based deep representation of 3D shapes. ACM Trans. Graph. (TOG) 2018, 37, 1–11.

[33] Yang, Y.; Feng, C.; Shen, Y.; Tian, D. Foldingnet: Point cloud auto-encoder via deep grid deformation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 206–215.

[34] Xia, Y.; Xia, Y.; Li, W.; Song, R.; Cao, K.; Stilla, U. Asfm-net: Asymmetrical siamese feature matching network for point completion. In Proceedings of the 29th ACM International Conference on Multimedia, Virtual Event, China, 20–24 October 2021; pp. 1938–1947.

[35] Lu, D.; Xie, Q.; Wei, M.; Gao, K.; Xu, L.; Li, J. Transformers in 3d point clouds: A survey. arXiv 2022, arXiv:2205.07417.

[36] Dai, J.; Qi, H.; Xiong, Y.; Li, Y.; Zhang, G.; Hu, H.; Wei, Y. Deformable convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 764–773.

[37] Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient channel attention for deep convolutional neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11534–11542.

[38] Wen, X.; Han, Z.; Cao, Y.-P.; Wan, P.; Zheng, W.; Liu, Y.S. Cycle4completion: Unpaired point cloud completion using cycle transformation with missing region coding. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 13080–13089.

[39] Chang, A.X.; Funkhouser, T.; Guibas, L.; Hanrahan, P.; Huang, Q.; Li, Z.; Savarese, S.; Savva, M.; Song, S.; Su, H.; et al. Shapenet: An information-rich 3d model repository. arXiv 2015, arXiv:1512.03012.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).