1. Introduction

Crop-type mapping is highly relevant for numerous large-scale agricultural applications, such as sustainable crop management, food security, or agricultural policy [1,2]. In this context, satellite images have become increasingly important as they frequently provide useful information about cropland at a large scale. Earth observation (EO) missions such as the Sentinel (ESA) and the Landsat (NASA/USGS) programs freely provide petabytes of imagery, cover large areas with high spatial and temporal resolution, and thus offer new opportunities.

In recent years, the combination of multitemporal multispectral and radar satellite data has been increasingly used for crop-type mapping, as it has led to improved classification accuracies compared to mono-temporal or single-sensor data applications [3,4,5,6,7]. These studies have shown that synthetic aperture radar (SAR) data provides different but complementary information on land use and land cover (LULC) when compared to multispectral data. While multispectral data from optical sensors offers information about the spectral reflectance of crops, SAR data complements this by revealing the structural and physical attributes of plants, including details about surface roughness, moisture content, and overall geometry. The combination of multispectral and SAR data can therefore lead to increased classification accuracy [8,9], especially when the discrimination of crop types and LULC classes is difficult due to spectral ambiguities. Moreover, the use of multitemporal SAR data seems particularly interesting when the availability of multispectral data is limited due to cloud cover. Even a few additional Sentinel-1 images can therefore increase the accuracy of the LULC map [10]. A detailed review on applications of multispectral and SAR data for LULC monitoring is given by Joshi et al. [11].

Besides the increased availability of data from different complementary sensors, such as multispectral and SAR, research in LULC mapping was driven, among other factors, by the selection of representative spatial and temporal image information to increase classification accuracy. In the context of crop-type mapping, spatial image information has often been derived by applying texture filters [12,13,14] or by using object-oriented segmentation methods [15,16,17]. Although image segmentation can significantly increase the accuracy when compared to the results achieved by a pixel-based classification, the definition of adequate segmentation parameters can be challenging [16,18]. In order to represent important temporal patterns of crop dynamics, (spectral-)temporal metrics or phenological information were often derived from satellite imagery [3,5,19]. However, traditional approaches have limitations in automation and rely on human experience and domain knowledge when extracting relevant image features from such complex input data [20]. Currently, deep learning methods are gaining increasing attention because they learn contextual features automatically and efficiently from multi-dimensional input data and can process the huge datasets that demand adequate algorithms for data analysis. In particular, convolutional neural networks (CNNs) have recently been applied for crop-type mapping using the combination of multispectral and SAR imagery because they have proved to provide excellent classification accuracy compared to classical approaches [21,22,23,24,25].

Nevertheless, the combination of multisensor data remains challenging in the field of remote sensing [23]. It is particularly important to fuse multisensor data adequately so that relations between data modalities can be learned efficiently [26]. The main fusion strategies can be divided into three categories [24]: (1) input-level fusion: information from multiple sources or modalities is combined by simple data stacking into a single data layer; (2) feature-level fusion: features are extracted from each modality separately and then combined; (3) decision-level fusion: the decisions or outputs of multiple classifiers are combined to make a final decision. Initial studies have successfully applied deep learning methods as appropriate models for fusing multispectral and SAR data for crop-type mapping [7,21,23,24,25,27]. However, so far, input-level fusion methods, which mostly consist of an initial stacking of the individual image sources, have been used as the preferred fusion technique [21,25,27]. The drawback of this approach is the high dimensionality of the input data after layer stacking [28] as well as the fact that the interactions between data modalities are not fully considered. Therefore, dependencies that may be present within the different datasets are ignored [23]. The decision fusion technique can improve the classification results due to the combination of multiple classifiers [4]. The integration of multiple classification outcomes, however, requires specialized knowledge.

When it comes to combining remote sensing data from multiple sources, feature-level fusion methods are considered preferable to input-level and decision-level fusion methods due to the effective reduction of data redundancy and the ability of multi-level feature extraction. Currently, only a few studies have explored the advantages of CNNs as a reliable and automated feature fusion technique for crop type classification. In [23], a LULC classification was performed using a deep learning architecture based on recurrent and convolutional operations to extract multi-dimensional features from dense Sentinel-1 and Sentinel-2/Landsat time series data. For the classification, these features were combined using fully connected layers to learn the dependencies between the multi-modal features. Ref. [24] analyzed and compared three fusion strategies (input-, layer-, and decision-level) to identify the best strategy that optimizes optical-radar classification performance using a temporal attention encoder. The results showed that the input- and feature-level fusion achieved the best overall F-score, surpassing the accuracy achieved by the decision-level fusion by 2%. Ref. [29] proposed a novel method to learn multispectral and SAR features for crop classification through cross-modal contrastive learning based on an updated dual-branch network with partial weight-sharing. The experimental results showed that the model consistently outperforms traditional supervised learning approaches.

In the broader context of LULC classification, first studies have integrated CNNs as effective fusion techniques. For instance, ref. [30] introduced a two-branch supervised semantic segmentation framework enhanced by a novel symmetric attention module to fuse SAR and optical images. Similarly, ref. [31] proposed a comparable methodology, employing a spatial-aware circular module featuring a cross-modality receptive field. This mechanism was designed to prioritize critical spatial features present in both SAR and optical imagery. Both approaches notably leveraged attention mechanisms using CNN layers to optimize the integration of both modalities, resulting in significant enhancements in LULC classification. However, these approaches are constrained by their reliance on monotemporal input data and do not address spatio-temporal feature extraction across different modalities. Hence, the use of CNN techniques for classifying multispectral and SAR data in crop-type mapping remains relatively unexplored. In particular, most deep learning studies have relied on simplistic methods such as image stacking or single-stage feature fusion. Other approaches tend to be excessively complex, involving multiple intricate processing steps, which can increase computational costs. These methods may include advanced feature fusion techniques, multi-stage processing pipelines, or heavy reliance on additional data sources and annotations. Moreover, many early studies in the context of multispectral and SAR fusion were restricted to study sites smaller than 3000 km² or even to the plot/farm level, or considered only one cropping season [11]. However, the availability of Sentinel-1 and Sentinel-2 fosters the combination of SAR and multispectral data for large-scale applications and multiple cropping season analysis [4,5,6].

The presented study was intended to make an initial contribution for large-scale and multi-annual crop type classification using multitemporal multispectral satellite data from Sentinel-2/Landsat-8, as well as SAR images from Sentinel-1. Our research focused on developing a spatial and temporal multi-stage image feature fusion technique for multispectral and SAR images to extract relevant temporal vegetation patterns between the different image sources using state-of-the-art CNN methods. For this, we created an adapted dual-stream 3D U-Net architecture for multispectral and SAR images, respectively, to extract relevant spatio-temporal vegetation features based on vegetation indices and SAR backscatter coefficients. To improve channel interdependencies among the data sources, we extended the U-Net network with a feature fusion block, which was applied at multiple levels in the network based on a 3D squeeze-and-excitation (SE) technique by [32]. This technique allowed features from different input sources to be combined by emphasizing important features while suppressing less important ones. Additionally, the multi-level fusion method reduces the problem of extracting noisy image information due to clouds or speckle noise. From now on, the proposed model is referred to as 3D U-Net FF (3D U-Net Feature Fusion).

As the creation of cloud-free image composites from optical remote sensing data is still a challenging task, researchers frequently rely on spectral-temporal metrics over a certain time period or perform time series interpolation [6,24]. Nevertheless, the reduction of pixel-wise spectral reflectance into broad statistical metrics as well as the calculation of synthetic reflectance values may result in the loss of relevant spatial, spectral, and temporal information for crop-type mapping. Moreover, as with other feature extraction methods (e.g., image segmentation, texture), the definition of statistical metrics is user-dependent. For this reason, we used the “original” multitemporal data and implemented an adapted temporal sampling strategy to reduce cloud coverage in the data.

The overarching goal of our study was to develop a robust approach for seasonal crop-type mapping using CNNs and multisensor data. We expected the results of the proposed approach to outperform other classifiers in terms of classification accuracy. Moreover, we assumed that the model could learn meaningful spatio-temporal features and could thus be transferred to other cropping seasons at a large scale. In order to evaluate the potential of our concept and crop maps, we (i) evaluated whether the proposed approach increases the mapping accuracy when compared to commonly used 2D and 3D U-Net architectures, and (ii) analyzed the transferability of the proposed model to another cropping season without using additional training data.

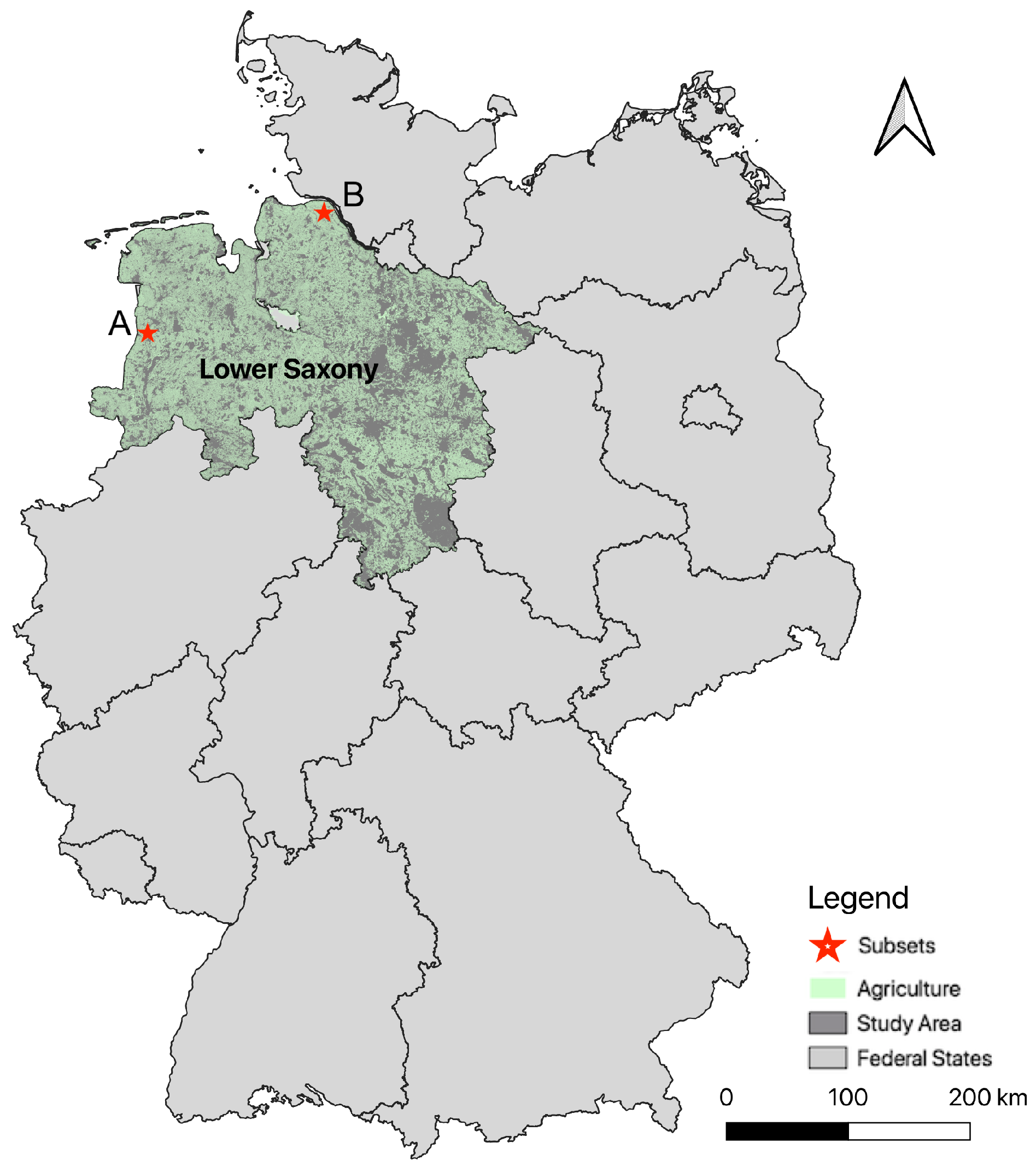

The specific objective of our study was the generation of large-scale crop type maps of Lower Saxony, Germany. We generated four seasonal crop type maps, comprising the cropping seasons 2017/18, 2018/19, 2019/20, and 2020/21. Each crop year began in October and ended in September of the following year.

To assess the effectiveness of our approach, we compared our proposed network with classical 2D and 3D U-Net models. Afterwards, the trained model was applied to generate a crop type map for the cropping season 2021/22 in Lower Saxony. Overall, the findings of this study were expected to contribute to an improved and (semi-)automated framework for large-scale crop-type mapping.

3. Methods

3.1. Patch-Based Multitemporal Image Composites

In contrast to traditional pixel-based classification algorithms, 2D and 3D CNN methods require patch-based input data, which enable the extraction of spatial information. Therefore, we spatially sliced all multispectral and SAR satellite images into non-overlapping patches of equal size using a sliding window method. The patch size specification is critical for the classification performance since it determines the extent to which spatial features within a patch can be extracted by the neural network. We used a window size of 128 × 128 pixels (approximately 163.84 hectares), which preserved crop-specific structures without becoming too computationally intensive for model training.
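The tiling step and the stated patch area can be sketched as follows. This is a minimal illustration and not the authors' code: the function names are hypothetical, and the 10 m pixel size is an assumption inferred from the reported 163.84 hectares. How incomplete patches at the scene border were handled is not described, so the sketch simply drops them.

```python
def patch_area_hectares(patch_size_px: int, pixel_size_m: float) -> float:
    """Ground area covered by a square patch, in hectares (1 ha = 10,000 m^2)."""
    side_m = patch_size_px * pixel_size_m
    return side_m * side_m / 10_000.0


def patch_origins(height: int, width: int, patch_size: int) -> list:
    """Upper-left (row, col) of every non-overlapping patch that fits completely.

    Assumption: partial patches at the right and bottom border are dropped.
    """
    rows = range(0, height - patch_size + 1, patch_size)
    cols = range(0, width - patch_size + 1, patch_size)
    return [(r, c) for r in rows for c in cols]


if __name__ == "__main__":
    # 128 px * 10 m = 1280 m per side (pixel size is an assumption)
    print(patch_area_hectares(128, 10.0))
    print(patch_origins(300, 260, 128))
```

With a stride equal to the patch size, the sliding window produces no overlap between neighbouring patches, which matches the description above.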

To derive valuable phenological crop features from our satellite data, we generated multitemporal image composites for all four considered seasons. However, rather than generating region-wide seasonal image composites, we produced multispectral and SAR composites for each considered patch individually.

When considering multispectral satellite data for image composites, clouds are commonly masked to minimize data noise [6,7,27]. However, because of the high temporal and spatial variability of clouds, it remains a major challenge to derive optical image composites with high temporal resolution. For this reason, we decided not to mask any clouds within our multitemporal patches; instead, we utilized a 14-day window across each growing season, aiming to capture relevant phenological changes in crops while managing the challenges posed by cloud cover as well as computational demands. Within each 14-day window, for each patch, we selected the multispectral image with the least cloud cover based on the Sentinel-2 s2cloudless and Landsat-8 fmask cloud detection algorithms. If the Sentinel-2 and Landsat-8 image patches had the same minimum cloud coverage, we preferred the Sentinel-2 data due to their higher spatial resolution. The aim of this method was to reduce the impact of clouds within a multitemporal patch composite while maintaining spatial interrelations between the pixels and keeping temporal information on vegetation phenological dynamics throughout the cropping year. Since remaining clouds were not masked and acquisition dates could differ between patch composites, we tried to force the applied classifiers to extract more general crop features to overcome the constraints of temporal cloud cover and the variability in temporal acquisition patterns.
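The per-patch selection rule can be illustrated with a short sketch. It is not the authors' implementation: the `Scene` record, the function names, and the alignment of windows to the season start are assumptions, and the cloud fraction is taken as already computed per patch (for example from s2cloudless or fmask masks). Windows without any acquisition are left empty here because the text does not say how such gaps were treated.

```python
from datetime import date
from typing import NamedTuple


class Scene(NamedTuple):
    acquired: date
    sensor: str            # "S2" (Sentinel-2) or "L8" (Landsat-8)
    cloud_fraction: float  # share of cloudy pixels within the patch, 0..1


def _rank(scene):
    # Lower is better: cloud fraction first, then Sentinel-2 before Landsat-8.
    return (scene.cloud_fraction, 0 if scene.sensor == "S2" else 1)


def select_least_cloudy(scenes, season_start, window_days=14, n_windows=24):
    """Pick one scene per window; ties in cloud cover go to Sentinel-2."""
    best = [None] * n_windows
    for scene in scenes:
        idx = (scene.acquired - season_start).days // window_days
        if not 0 <= idx < n_windows:
            continue  # outside the composited period
        if best[idx] is None or _rank(scene) < _rank(best[idx]):
            best[idx] = scene
    return best


if __name__ == "__main__":
    start = date(2017, 10, 1)
    demo = [
        Scene(date(2017, 10, 3), "L8", 0.1),
        Scene(date(2017, 10, 10), "S2", 0.1),
        Scene(date(2017, 10, 20), "L8", 0.3),
    ]
    for i, s in enumerate(select_least_cloudy(demo, start)[:3]):
        print(i, s)
```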

To create seasonal patch-based image composites from the Sentinel-1 data, we also applied a 14-day window for each patch, thus achieving the same temporal resolution for the composites as the multispectral data. As the SAR data is not much affected by clouds, we calculated the mean VH and VV backscatter values of all available images within a 14-day window. Finally, each patch-based composite contained 24 multispectral and SAR images.
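A minimal sketch of the SAR compositing step, under stated assumptions: observations are given as day offsets from the season start, the function name is hypothetical, and the mean is taken directly on the supplied backscatter values. Whether the averaging was done in linear power or in decibels is not stated in the text.

```python
def mean_backscatter_per_window(observations, window_days=14, n_windows=24):
    """Average VV and VH per window.

    observations: iterable of (day_offset, vv, vh) for one pixel or patch mean.
    Returns a list of (mean_vv, mean_vh) tuples, or None for empty windows.
    """
    sums = [[0.0, 0.0, 0] for _ in range(n_windows)]
    for day, vv, vh in observations:
        idx = day // window_days
        if 0 <= idx < n_windows:
            sums[idx][0] += vv
            sums[idx][1] += vh
            sums[idx][2] += 1
    return [
        (s[0] / s[2], s[1] / s[2]) if s[2] else None
        for s in sums
    ]


if __name__ == "__main__":
    obs = [(0, -10.0, -16.0), (6, -12.0, -18.0), (15, -9.0, -15.0)]
    print(mean_backscatter_per_window(obs)[:3])
```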

To balance the number of bands in the multispectral (6 bands) and SAR (2 bands) imagery data and to reduce spectral noise in the multispectral imagery [38], we applied two vegetation indices to the multispectral patch composites. Firstly, we computed the normalized difference vegetation index (NDVI), a widely used remote sensing index that can derive unique crop characteristics based on vegetation status and canopy structure [39]. Secondly, we derived the tasseled cap Greenness, which provides relevant information on vegetation phenological stages, using the tasseled cap coefficients ascertained by [40]. Both indices have been successfully used in the context of LULC classification [3,6,41,42].
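For orientation, the two indices can be written as small functions. NDVI follows its standard definition, (NIR − Red) / (NIR + Red). Tasseled cap Greenness is a weighted sum of the reflectance bands; the sensor-specific weights come from [40] and are not reproduced here, so the coefficients in the example are placeholders chosen only to make the snippet runnable.

```python
def ndvi(nir: float, red: float) -> float:
    """Normalized difference vegetation index for one pixel."""
    denom = nir + red
    # Guard against division by zero for no-data pixels (an assumption).
    return 0.0 if denom == 0 else (nir - red) / denom


def tasseled_cap_greenness(bands, coefficients) -> float:
    """Weighted sum of band reflectances.

    `coefficients` must be the sensor-specific Greenness weights; the caller
    supplies them, they are NOT hard-coded here.
    """
    if len(bands) != len(coefficients):
        raise ValueError("one coefficient per band is required")
    return sum(b * c for b, c in zip(bands, coefficients))


if __name__ == "__main__":
    print(ndvi(nir=0.5, red=0.1))
    # Placeholder weights for two bands, for illustration only.
    print(tasseled_cap_greenness([0.2, 0.6], [-0.5, 0.5]))
```

Reducing the six optical bands to these two indices gives the multispectral stream the same number of channels as the SAR stream (VV and VH).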

3.2. 2D and 3D U-Net Model

Our classification approach was based on the commonly used U-Net architecture by [43]. The U-Net model is a special type of deep learning architecture from the domain of semantic image segmentation that is particularly suitable for classification using remote sensing data, as it performs better with a small sample size and unbalanced classes than other CNN models. Due to its symmetric encoder-decoder structure coupled with skip connections, contextual information at multiple scales can be preserved, allowing the model to capture both local and global spatial dependencies within the imagery. This is crucial for accurately distinguishing between different crop types, as the spatial arrangement and contextual cues play a significant role in classification [44].

The typical 2D U-Net architecture is symmetric and consists of two connected parts, including a contracting path and an expansive path. The contracting path is a typical convolutional network, which uses a sequence of 3 × 3 convolutional and 2 × 2 max-pooling layers to extract and aggregate local spatial image features. After each step, the number of filters is doubled. The expansive path uses a series of 2 × 2 upsampling and convolutional layers to associate the derived image features with their corresponding location in the segmentation map. A distinctive characteristic of the U-Net models is skip connections. These are used to transfer feature maps, which are created in the downsampling process, to their corresponding output of the upsampling operation to preserve important spatial information that has been lost by pooling operations [45].

The 3D U-Net is composed of 3D kernels to extract features from volumetric data (voxels). 3D convolutions can therefore be applied in three dimensions to extract volumetric information from the input data. In our case, we applied the 3D U-Net model to capture spatio-temporal features from the generated multitemporal image patches.

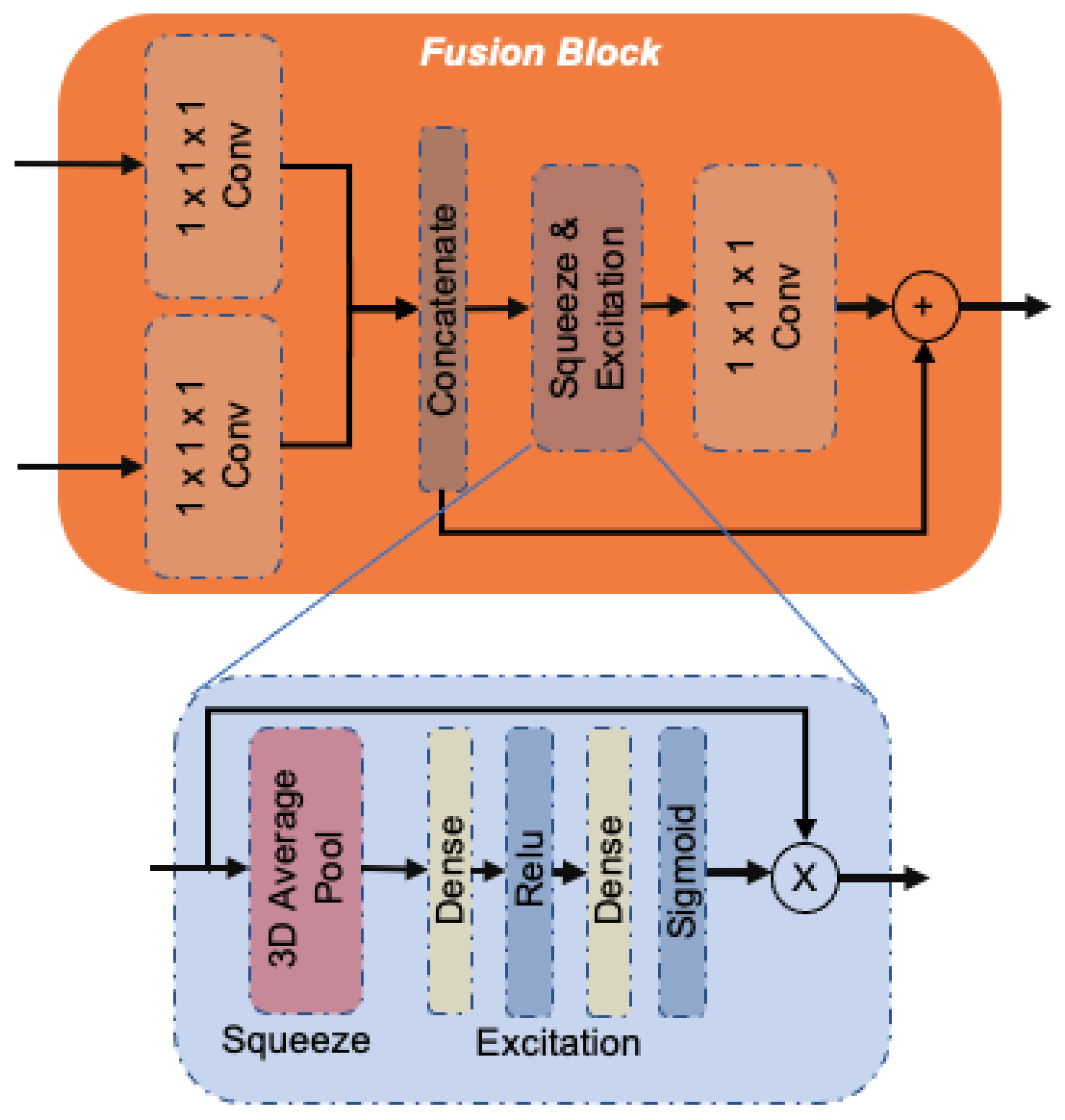

3.3. Proposed Multi-Stage 3D Feature Fusion U-Net Model (3D U-Net FF)

The proposed network model was based on a common 3D convolutional U-Net architecture with modifications to fuse spatio-temporal image features from multispectral and SAR data at different aggregation levels (see Figure 2). In contrast to the original U-Net architecture, we used a dual-stream 3D U-Net to split multispectral and SAR image data to learn the spatio-temporal features of both data sources separately. With this, we targeted enhanced feature representation and spatial context preservation, as both data streams can focus on sensor-specific image features, capturing both fine-grained details and high-level spatio-temporal semantic information.

The multispectral branch contained a stack of multitemporal patches of NDVI and Greenness values of Sentinel-2 and Landsat imagery, whereas the SAR stream included a stack of temporal VV and VH backscatter patches, derived from Sentinel-1. Therefore, both streams had an input size of 128 × 128 × 24 × 2 and were passed through the contracting and expansive path of the 3D network in parallel to learn features separately.

In detail, the contracting path of both streams involved three downsampling steps (S1, S2, and S3). Each step consisted of a convolutional block with two consecutive 3D convolutions (3 × 3 × 3) with batch normalization and a rectified linear unit (ReLU). Each convolutional block was followed by a 3D max-pooling operation (2 × 2 × 2) to gradually downsample the input size of the patches in the spatio-temporal domain. In the downsampling process, the number of feature maps was doubled with each subsequent step. To combine the contracting and the expansive paths, another convolutional block (S4) with doubled filter numbers was used. Mirroring the contracting path, the expansive path comprised three steps (S5, S6, and S7). Each step included a 3D transpose convolution with a stride of 2 × 2 × 2 to increase the sizes of the feature maps, followed by the concatenation of the corresponding skip connection. The combined features were then processed by a convolutional block with two consecutive 3D convolutions. The feature maps of both streams were finally added and passed to the output layer. As the output of the network needed to be a 2D classification image, the resulting 3D feature maps were reshaped into 2D arrays and converted to the number of classes using a 2D convolution. To obtain the final class probability maps, we used the softmax function.
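The feature-map sizes implied by this description can be traced with a small bookkeeping sketch. It assumes "same" padding in the convolutions (so only pooling and transpose convolution change the size), which the text does not state explicitly, and uses the 64 initial filters reported in the experimental setup. The function name is hypothetical, and the filter count listed for S5 to S7 is that of the convolutional block after concatenation.

```python
def unet3d_shapes(input_shape=(128, 128, 24), base_filters=64, n_down=3):
    """List (stage, (height, width, time), filters) for one U-Net stream.

    Assumes 'same' padding, 2 x 2 x 2 pooling, and stride-2 transpose
    convolutions, so each step halves or doubles every dimension.
    """
    h, w, t = input_shape
    filters = base_filters
    stages = []
    # Contracting path: S1..S3, filters double after each pooling step.
    for i in range(1, n_down + 1):
        stages.append((f"S{i}", (h, w, t), filters))
        h, w, t = h // 2, w // 2, t // 2
        filters *= 2
    # Bridge between both paths: S4.
    stages.append((f"S{n_down + 1}", (h, w, t), filters))
    # Expansive path: S5..S7, filters halve after each upsampling step.
    for i in range(n_down + 2, 2 * n_down + 2):
        h, w, t = h * 2, w * 2, t * 2
        filters //= 2
        stages.append((f"S{i}", (h, w, t), filters))
    return stages


if __name__ == "__main__":
    for name, shape, filters in unet3d_shapes():
        print(name, shape, filters)
```

Under these assumptions the 24 time steps shrink to 3 at the bridge (S4), which is one reason the temporal depth needs to be divisible by 8 for three pooling steps.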

To address the task of feature fusion, we modified the multi-level skip connections within the U-Net model. Each corresponding skip connection from both branches was passed to a novel fusion module to combine the spatio-temporal features from both data streams. With this, patterns and dependencies of the different sources should be learned at different aggregation levels, resulting in an efficient and flexible learning procedure.

Our fusion module (see Figure 3) was inspired by a squeeze-and-excitation (SE) network introduced by [32], which improves channel interdependencies of a single or multiple input sources at almost no computational cost. In our study, we first applied a 3D convolution with a 1 × 1 × 1 kernel to each input branch and concatenated the outputs along the channel dimension. Then we passed the results into a 3D squeeze-and-excitation (SE) network. This network selectively focused on significant feature maps using fully connected layers. Although this model structure has been successful in tasks like image segmentation or object detection, its application to data fusion has been limited.

The 3D SE network performs two main operations: squeezing and exciting. Squeezing reduces the 3D filter maps to a single value per channel (1 × 1 × 1 × C) via a 3D global average pooling layer, enabling channel-wise statistics computation without altering the channel count. The exciting operation involves a fully connected layer followed by a ReLU function to introduce nonlinearity. In this layer, the number of channels is reduced by a reduction ratio, which was set to 4 in our case. A second fully connected layer, followed by a sigmoid activation, provides each channel with a smooth gating function. This enables the network to adaptively enhance the channel interdependencies of both input sources at minimal computational cost. Finally, we weighted each feature map of the merged feature block based on the result of the squeezing and exciting network using simple matrix multiplication. The feature maps of the individual fusion blocks were passed to each corresponding level in the contracting path for both network streams. Therefore, each stream could benefit from the derived information for optimized feature learning.
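The squeeze, excite, and reweighting steps can be sketched in plain Python on a toy input. This is an illustration of the general SE mechanism, not the authors' fusion block: each channel is represented as a flat list of voxel values, the weights are hand-set rather than learned, and all function names are hypothetical. With C = 4 channels and a reduction ratio of 4, the hidden layer has a single unit.

```python
import math


def squeeze(feature_maps):
    """3D global average pooling: one mean value per channel."""
    return [sum(channel) / len(channel) for channel in feature_maps]


def dense(x, weights, bias):
    """Fully connected layer; weights holds one row per output unit."""
    return [sum(xi * wi for xi, wi in zip(x, row)) + b
            for row, b in zip(weights, bias)]


def excite(z, w1, b1, w2, b2):
    """FC + ReLU (C -> C / ratio), then FC + sigmoid (C / ratio -> C)."""
    hidden = [max(0.0, h) for h in dense(z, w1, b1)]
    return [1.0 / (1.0 + math.exp(-g)) for g in dense(hidden, w2, b2)]


def se_reweight(feature_maps, w1, b1, w2, b2):
    """Scale every channel by its gate in (0, 1); returns (maps, gates)."""
    gates = excite(squeeze(feature_maps), w1, b1, w2, b2)
    scaled = [[g * v for v in channel]
              for g, channel in zip(gates, feature_maps)]
    return scaled, gates


if __name__ == "__main__":
    maps = [[1.0, 1.0], [2.0, 2.0], [3.0, 3.0], [4.0, 4.0]]  # C = 4
    w1, b1 = [[1.0, 1.0, 1.0, 1.0]], [0.0]                    # 4 -> 1
    w2, b2 = [[1.0], [0.0], [-1.0], [0.0]], [0.0] * 4         # 1 -> 4
    scaled, gates = se_reweight(maps, w1, b1, w2, b2)
    print([round(g, 4) for g in gates])
```

In this toy setting the first channel is passed almost unchanged, the third is almost suppressed, and the other two are halved, which is the "emphasize or suppress" behaviour the gating is meant to provide.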

As part of the study, we compared our proposed multi-stage fusion model with classical 2D and 3D U-Net architectures for the cropping seasons 2017/18 to 2020/21. To achieve comparability, we implemented the structure of the two conventional U-Net models according to our presented architecture. In contrast to the proposed network, the input data (NDVI, Greenness, VV, and VH) for the traditional models were stacked (Input Fusion) and fed into a single U-Net network. In addition, traditional skip connections were used by simply concatenating the feature maps from the contracting path to the expansive path. As the input size and number of filters do not change in our model, a valid comparison of all models could be ensured. For the 2D U-Net model, the 3D convolutions were replaced with 2D convolutions.

3.4. Experimental Setup

The proposed 3D U-Net FF model as well as a conventional 2D and 3D U-Net architecture were trained and validated on reference data from Lower Saxony, including four cropping seasons (2017/18 to 2020/21). We considered multiple cropping seasons to address differences in data availability and plant growth due to seasonal climate variations.

Accordingly, the created image patches and the corresponding reference patches of Lower Saxony spanning 2017/18–2020/21 were randomly divided into 66% training data and 33% test data. A total of 10% of the training data was held out for internal validation during the training process. In total, 296,000 composite patches (with 128 × 128 × 24 pixels) including all four cropping seasons were created and used for model training. The training dataset exhibited a significant class imbalance, with some crop types covering much larger areas than others. Dominant classes in the data were grassland (2.1 million hectares) and maize (1.6 million hectares), whereas legumes (36,750 hectares) and aggregated classes like other winter cereals (15,660 hectares) and other spring cereals (36,300 hectares) had a relatively lower occurrence.
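A random split of this kind can be sketched as below. The function name, the fixed seed, and the rounding are assumptions; the stated shares (66% and 33%) do not sum to 100%, so the sketch treats everything outside the 33% test share as the training pool. Applied to 296,000 patches, a 33% share gives 97,680 patches, which is close to the 97,700 test patches reported for the accuracy assessment; this suggests, although the text does not say so explicitly, that 296,000 is the total number of patches before splitting.

```python
import random


def split_indices(n, test_frac=0.33, val_frac_of_train=0.10, seed=0):
    """Return (train, validation, test) index lists from a random shuffle."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    n_test = round(n * test_frac)
    test, pool = indices[:n_test], indices[n_test:]
    # Validation is drawn from the training pool, not from all patches.
    n_val = round(len(pool) * val_frac_of_train)
    return pool[n_val:], pool[:n_val], test


if __name__ == "__main__":
    train, val, test = split_indices(296_000)
    print(len(train), len(val), len(test))
```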

To facilitate a fair comparison, we employed the same parameters for all classification networks. Specifically, we utilized 64 filters to instantiate the networks, with the number of filters doubled at each step of the contracting path and halved at each step of the expansive path. The models were trained for 25 epochs, after which there was no discernible improvement in validation error for any model. As the model optimizer, we used Adam, a stochastic gradient-based optimization method. The training process was performed with a batch size of 16 and an initial learning rate of 0.0001.

3.5. Accuracy Assessment

The performance of the models was evaluated based on the independent test dataset (97,700 image patches), considering all four cropping seasons. Moreover, the classification accuracy as well as the mapped areas of each crop class in the individual years were analyzed separately from the perspective of model stability over time and the representative crop type areas. Finally, we investigated the temporal transferability of our model to verify its generalizability under different seasonal weather conditions and management practices. Therefore, we applied our proposed 3D U-Net FF to the full dataset for the federal state of Lower Saxony for the cropping season 2021/22.

The accuracy assessment was based on calculated area-weighted accuracies (at a 95% confidence interval), including overall accuracy (OA), user’s accuracy (UA), and producer’s accuracy (PA), as presented in [46]. As the dataset showed strong class imbalances, we also report the (class-wise) F1 metrics, which are the harmonic mean of precision and recall.
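How these measures relate to a confusion matrix is shown in the sketch below. It computes plain, count-based values and is therefore a simplification: the area-weighted estimators and confidence intervals of [46] are not implemented. The function names and the example matrix are made up for illustration. UA corresponds to precision and PA to recall.

```python
def class_metrics(confusion, k):
    """UA, PA, and F1 for class k.

    confusion[i][j] = number of samples with reference class i
    that were predicted as class j.
    """
    tp = confusion[k][k]
    predicted_k = sum(row[k] for row in confusion)   # column sum
    reference_k = sum(confusion[k])                  # row sum
    ua = tp / predicted_k if predicted_k else 0.0    # user's accuracy
    pa = tp / reference_k if reference_k else 0.0    # producer's accuracy
    f1 = 2 * ua * pa / (ua + pa) if (ua + pa) else 0.0
    return ua, pa, f1


def overall_accuracy(confusion):
    """Share of correctly classified samples over all classes."""
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[i][i] for i in range(len(confusion)))
    return correct / total if total else 0.0


if __name__ == "__main__":
    cm = [[8, 2],
          [1, 9]]
    print(class_metrics(cm, 0), overall_accuracy(cm))
```

Because F1 is a harmonic mean, a class with high UA but low PA (or vice versa) still receives a low score, which makes it informative for the small, aggregated classes in an imbalanced dataset.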

4. Results

4.1. Overall Accuracy Assessment

In order to evaluate the quality and reliability of the generated crop type maps of the individual models, it is essential to conduct an accuracy assessment. This assessment serves as a crucial step in the validation and verification of the classification results, allowing for an objective measurement of the agreement between the predicted crop types and the ground truth data of the models tested.

The classification accuracies of all cropping seasons are summarized in Figure 4. For all models, high overall accuracies of more than 92% could be achieved. With 94.5%, the proposed 3D U-Net FF model performed the best in terms of OA, while the conventional architectures achieved lower classification results (92.6% (2D U-Net) and 94.2% (3D U-Net)). Differences in accuracy were especially apparent between the 2D U-Net and the two 3D U-Net models, as for all classes, the 2D model provided significantly lower class-wise accuracies. The proposed 3D U-Net FF revealed the highest UA and PA values for almost all classes. This was especially pronounced for classes with lower classification accuracy, such as other winter cereals, other spring cereals, or legume. Finally, the proposed model revealed the highest F1-scores for all classes, with an improved accuracy balance between the crop type classes.

All models exhibited a satisfactory ability to distinguish between agricultural and non-agricultural (background class) pixels, as evidenced by F1-scores above 94%. Very high accuracies of more than 90% could also be achieved for the winter cereal classes winter wheat, winter rye, and winter barley. The results of the 3D U-Net models were higher compared to the 2D U-Net classifier, whereas the highest F1-scores for all classes were given by the proposed 3D U-Net FF. More challenging was the correct classification of winter triticale and other winter cereals, due to misclassification between all winter cereal classes. The 2D U-Net showed the lowest F1-scores of 82.2% and 63.1%, respectively. Both 3D models showed significant improvements for these two classes, whereas the 3D U-Net FF outperformed the common 3D U-Net by about 1% and 4%, respectively.

The classification trends of spring cereals were comparable among the tested models, with varying F1-scores. The highest F1-scores were achieved for spring barley (2D U-Net: F1-score = 87.2%, 3D U-Net: F1-score = 90.8%, and 3D U-Net FF: F1-score = 91.0%). Although lower accuracies were reached for spring oat (2D U-Net: F1-score = 69.9%, 3D U-Net: F1-score = 80.5%, and 3D U-Net FF: F1-score = 81.7%), both 3D models performed adequately in terms of accuracy. The largest variations as well as the lowest accuracies were found for other spring cereals (2D U-Net: F1-score = 48.7%, 3D U-Net: F1-score = 63.9%, and 3D U-Net FF: F1-score = 65.7%). These last two classes were mainly misclassified as spring barley by all classifiers. The proposed 3D U-Net FF also provided the highest accuracy for spring cereals, with major improvements for spring oat and other spring cereals.

The classification of winter rapeseed, maize, potatoes, beet, and grassland demonstrated an excellent accuracy level. These classes consistently achieved high F1-scores near or above 90% across all models. The legume class exhibited larger estimation errors, primarily due to confusion with maize and other crops. The performance varied significantly among the models, with the 2D U-Net achieving the lowest F1-score of 68.2%, while the 3D U-Net and 3D U-Net FF reached 77.8% and 79.4%, respectively.

Overall, the proposed network showed the most reliable and consistent classification results, outperforming the standard models in F1-score across all classes. When comparing the results to the results achieved by the traditional networks, differences in accuracy were particularly large when the classification seemed challenging. This was especially evident for aggregated (mixed) classes such as other winter cereals, other spring cereals, or legume, where very likely (spectral) ambiguities may occur.

4.2. Accuracy Assessment for the Individual Cropping Seasons

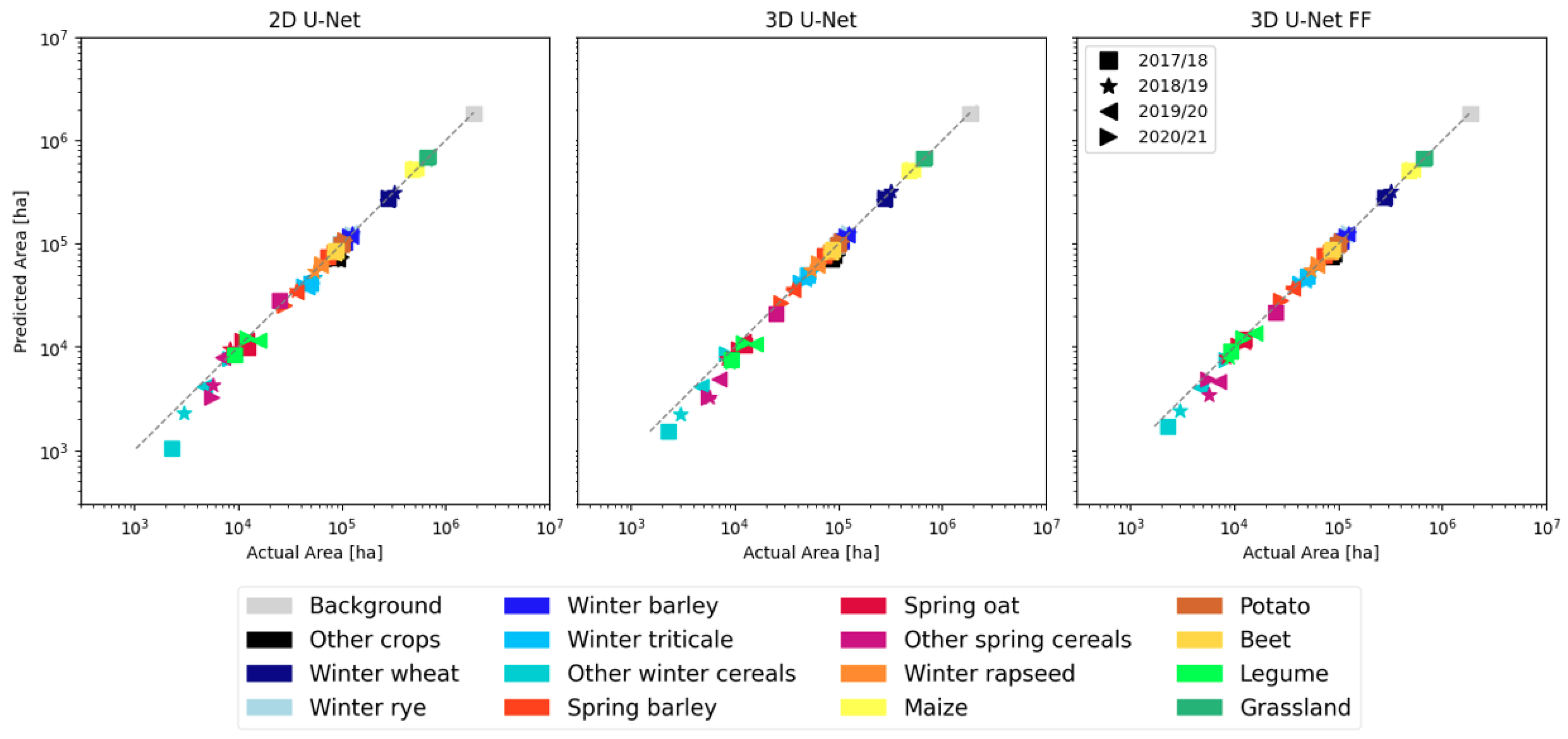

The classification accuracies across the four cropping seasons exhibited distinct similarities for all classifiers, as indicated in Table 2. Results for the cropping season 2018/19 demonstrated the highest classification accuracy, while the classification in 2017/18 exhibited the lowest OA. The 2D U-Net model showed lower classification accuracy for each individual season. Specifically, the results for season 2017/18 showed a higher misclassification rate, resulting in an overall accuracy of 91.5%. On the other hand, the 3D U-Net models demonstrated improved performances across all years, with OA values ranging from 93.2% to 94.6% for the common 3D model and 93.6% to 95.0% for the proposed 3D U-Net FF architecture. In terms of overall accuracy, the proposed 3D U-Net FF outperformed the common architectures in every season.

Figure 5 shows the correlation between the reported and estimated areas of the individual classes based on the independent test dataset. Despite the differences in class-wise accuracy as well as between the cropping seasons, excellent and consistent area accuracies with only minor variability were observed for all models. The mapping accuracy of the models improved with increasing crop area, while the variability between the cropping years decreased. Classes with smaller areas were slightly underestimated by all classifiers, and the common 2D U-Net model showed the highest error rates for most classes. The smallest class, other winter cereals, showed particularly large variations. When comparing the models, the 3D U-Net models showed an improvement in accuracy for almost all classes. Particularly for the smallest class, an increased area accuracy could be shown, which was consistent with the higher PA value in Figure 4. The proposed network showed the most reliable correlations between the referenced and estimated areas, with improvements for smaller classes such as other winter cereals, other spring cereals, or legume across the seasons when compared to the traditional models.
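The area comparison described above can be illustrated with a minimal sketch. It assumes that class areas are obtained by counting pixels per class in the reference and predicted label maps and multiplying by the pixel area. The function name `class_areas`, the 10 m pixel size (0.01 ha per pixel), and the toy label maps are illustrative assumptions and are not taken from the study.

```python
import numpy as np

def class_areas(label_map, n_classes, pixel_area_ha=0.01):
    """Return the area per class (in hectares) of an integer label map.

    pixel_area_ha=0.01 assumes 10 m x 10 m pixels (hypothetical choice).
    """
    counts = np.bincount(label_map.ravel(), minlength=n_classes)
    return counts * pixel_area_ha

# Toy reference and prediction maps with three classes (made-up values).
reference = np.array([[0, 0, 1], [1, 2, 2], [2, 2, 2]])
predicted = np.array([[0, 0, 1], [2, 2, 2], [2, 2, 2]])

ref_area = class_areas(reference, n_classes=3)
est_area = class_areas(predicted, n_classes=3)

# Relative area error per class: negative values indicate underestimation.
relative_error = (est_area - ref_area) / ref_area

# Pearson correlation between reference and estimated class areas.
r = np.corrcoef(ref_area, est_area)[0, 1]
```

In this toy case, the small class 1 is underestimated by half of its reference area, whereas the largest class is slightly overestimated. This is the kind of pattern the area comparison is meant to expose.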

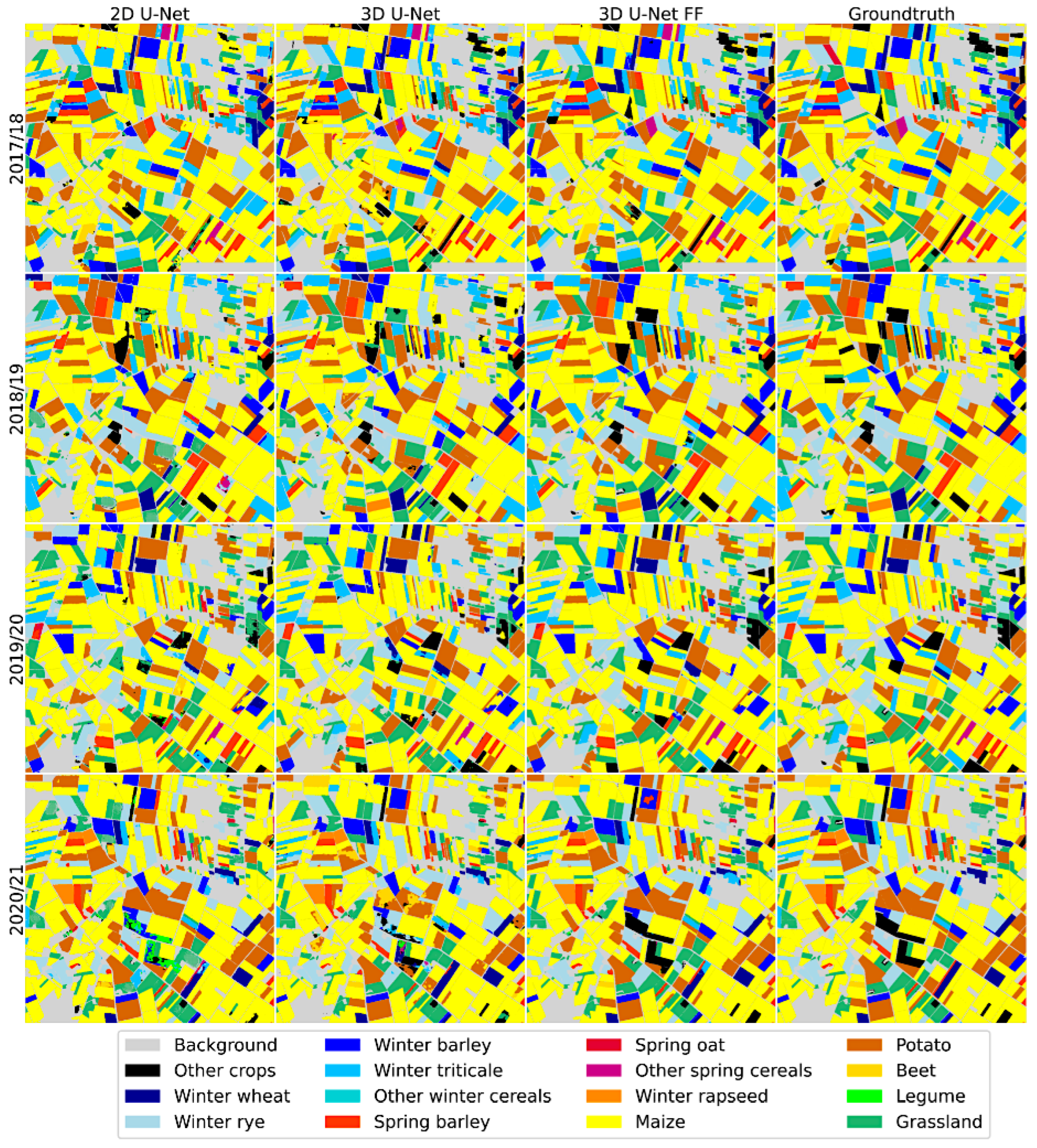

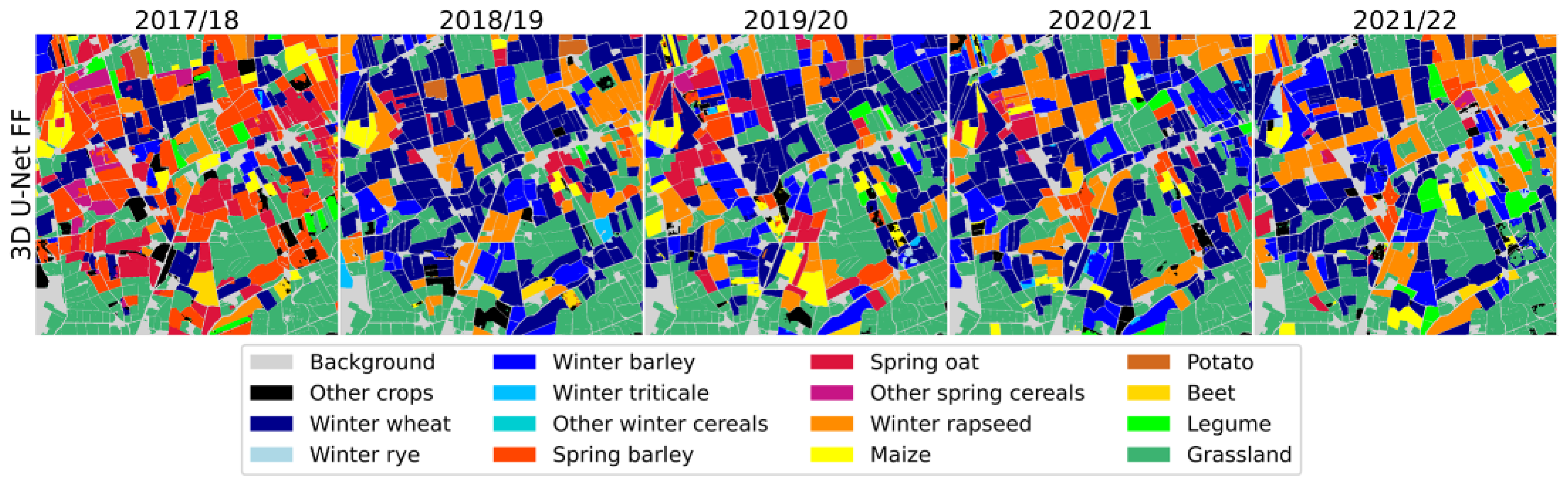

4.3. Seasonal Crop-Type Mapping

The crop-type mapping for Lower Saxony across all models showed that the majority of the fields had been identified correctly. Most fields were classified as a single crop type, with almost no isolated pixels or salt-and-pepper effects. Field boundaries were clearly delineated, and spatial structures were often correctly mapped. Class confusions were consistent and comparable across all cropping seasons, despite the yearly variations in climate conditions. Grassland areas dominated the classification maps of each classifier throughout the seasons, which corresponds well to the reported crop areas. In some cases, adjacent fields were merged by the classifiers, so that the boundaries between parcels were missing. Misclassifications appeared systematically at the field edges, as the correct distinction between field boundary and background was challenging.

Figure 6 shows the mapping results of the subset A (see Figure 1) in the district of Emsland in the western part of Lower Saxony across the four cropping seasons. The region was characterized by a high diversity of crop types and field sizes. A high level of agreement with the reference could be shown for all three models across all cropping seasons. Despite the variations in local and temporal environmental conditions, the results showed no significant spatial classification errors. Thus, each classifier used was able to effectively represent the crop type distribution across the considered seasons. Notably, confusion between crop types often occurred within the same broader crop group, such as winter or spring cereals. In addition, the quality of the results was found to be unrelated to the field size.

When comparing the maps of the models tested, the common 2D and 3D networks exhibited more misclassifications throughout the cropping seasons, affecting both entire fields and specific local areas within fields. These errors were almost exclusively restricted to classes with lower classification accuracy, such as winter triticale, spring oat, or legume, as well as aggregated classes. As already shown by the overall accuracies of the individual cropping years, mapping errors were particularly pronounced in the map for the 2017/18 cropping year. The proposed 3D U-Net model demonstrated a substantial improvement in crop-type mapping by reducing major misclassifications within fields, which confirms the finding of the accuracy assessment that its classification was more precise across all cropping seasons.

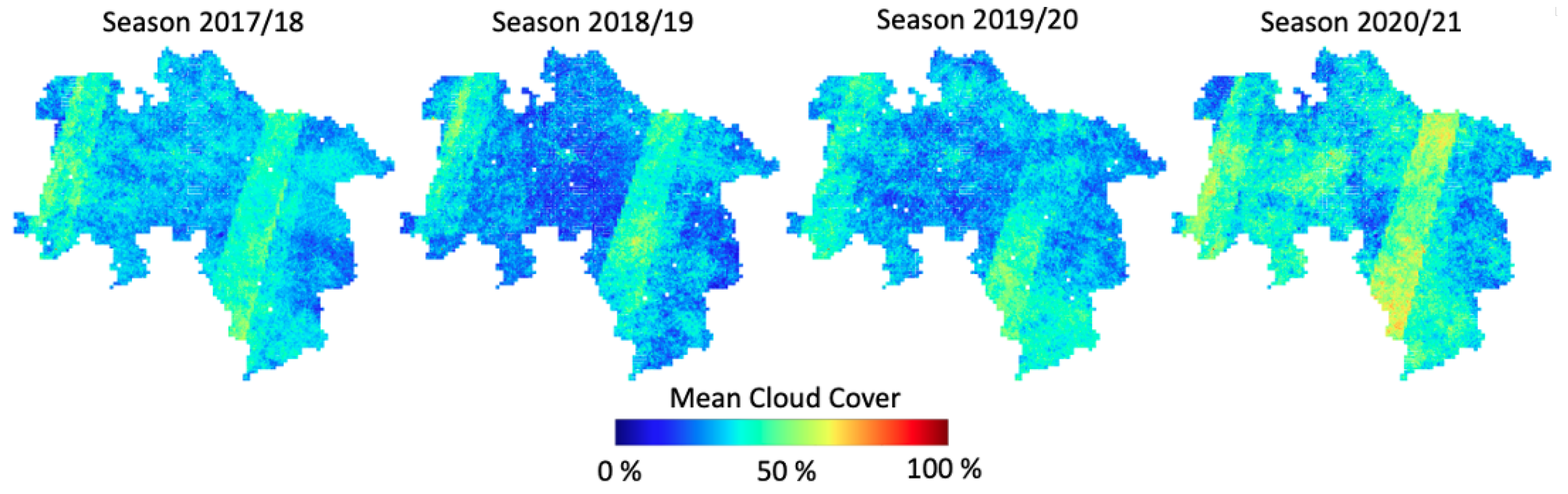

As clouds were not masked in our multispectral image composites, the patches exhibited varying degrees of cloud cover. In Figure 7, the mean cloud cover of the generated patch-based composites for each season is displayed, showing a significant spatial and temporal variation throughout the study period. For all seasons, two linear patterns of dense cloud cover were noticeable, indicating regions with limited availability of optical image data. The cropping season of 2020/21 was particularly affected by dense mean cloud cover, which extended over most parts of Lower Saxony. In comparison, the image data for the 2018/19 season were characterized by significantly less cloud cover, particularly for the central area of the federal state.

With respect to the visualized cloud cover, the mapping results showed only a minor correlation between cloud cover and classification accuracy. For example, all three models achieved better classification results for the season 2020/21 than for 2017/18, although the mean cloud cover was significantly higher in 2020/21. This suggests that other factors had a stronger impact on crop classification than cloud cover alone. Nonetheless, classification restrictions due to cloud cover could be recognized when clouds persisted for longer periods of the season or during the main phases of crop growth.

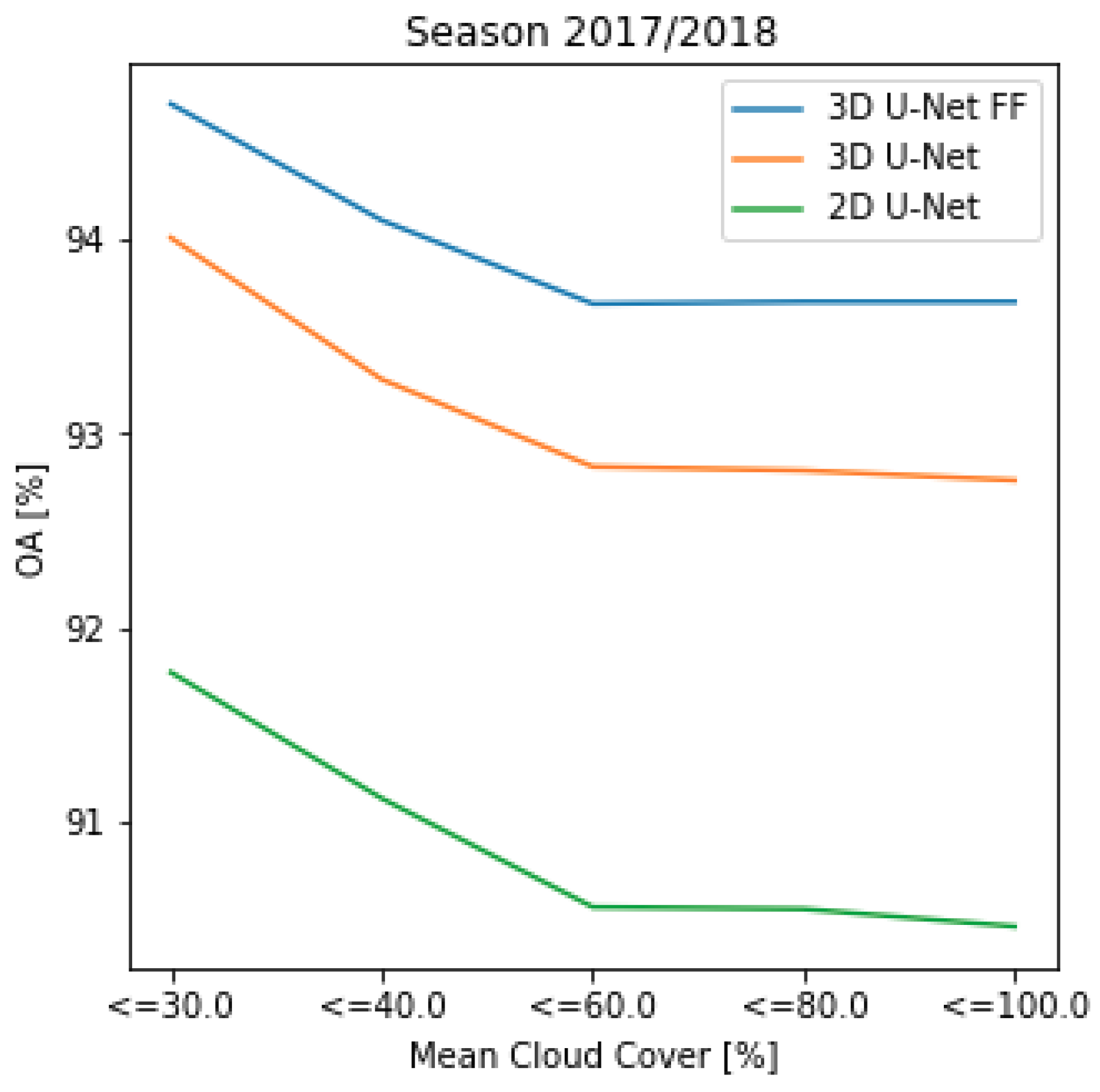

This is underlined by the results of a detailed, cloud-cover-specific accuracy assessment (Figure 8) for the 2017/18 season. In this assessment, we stepwise excluded pixels from the validation set based on cloud cover thresholds; for example, the ‘<=30%’ category includes only pixels with up to 30% cloud cover. The results clearly show a decrease in overall performance as cloud cover increases. In this context, differences in classification accuracy were evident between the models, with the proposed network demonstrating more accurate and stable results as cloud cover increased compared to the common 2D and 3D models. When considering the classification maps, the traditional models often failed to fully map parcels if cloud cover was too dominant in the multitemporal patches. This was also evident for classes that could otherwise be correctly classified with high accuracy. The results of the proposed 3D U-Net FF, however, were much more consistent compared to the traditional models. Field shapes were more often identified correctly, and crop types were mostly successfully assigned.
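The threshold-based assessment described above can be sketched as follows. The sketch assumes that a mean cloud cover value (in percent) is available for every validation pixel and that overall accuracy is recomputed for each cumulative category. The function name `oa_by_cloud_threshold`, the thresholds, and the toy arrays are hypothetical and only illustrate the procedure.

```python
import numpy as np

def oa_by_cloud_threshold(y_true, y_pred, cloud_cover, thresholds):
    """Overall accuracy for pixels whose cloud cover is <= each threshold."""
    results = {}
    for t in thresholds:
        mask = cloud_cover <= t  # keep only pixels up to this cloud cover
        if mask.any():
            results[t] = float(np.mean(y_true[mask] == y_pred[mask]))
        else:
            results[t] = float("nan")  # no pixel falls into this category
    return results

# Toy validation pixels (made-up labels and cloud cover percentages).
y_true = np.array([0, 1, 2, 1, 0, 2])
y_pred = np.array([0, 1, 2, 0, 0, 1])
cloud = np.array([10, 20, 30, 50, 60, 90])

oa = oa_by_cloud_threshold(y_true, y_pred, cloud, thresholds=[30, 60, 100])
```

With these toy values, the accuracy drops as pixels with higher cloud cover are admitted, which mirrors the qualitative trend reported for the 2017/18 season.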

4.4. Accuracy Assessment for Temporal Transfer of the 3D U-Net FF

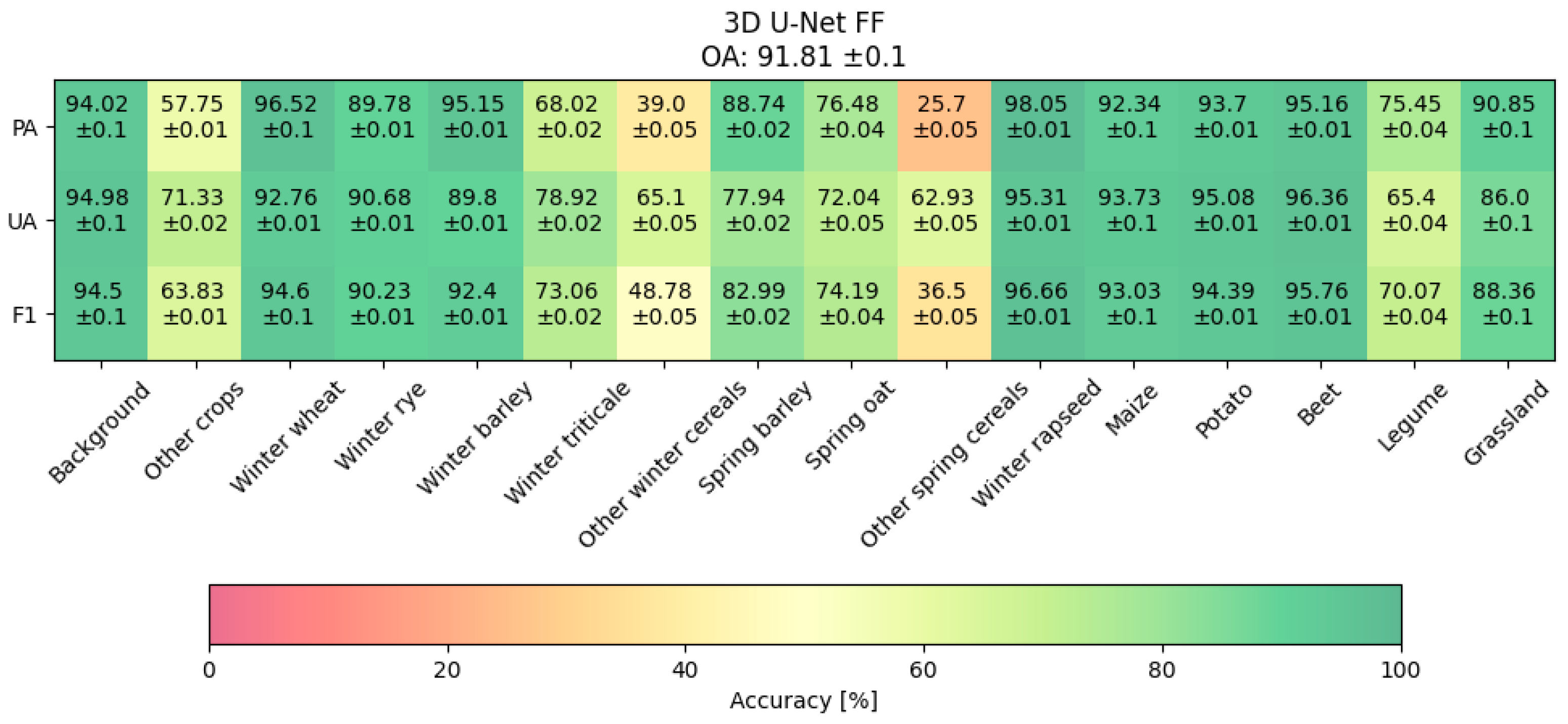

The proposed 3D U-Net FF (trained with data from four cropping seasons 2017/18 to 2020/21) was used to classify data from the 2021/22 season for Lower Saxony. The results displayed in Figure 9 indicated a good performance in terms of OA (91.8%) as well as a successful discrimination between agricultural and non-agricultural pixels, as evidenced by F1-scores surpassing 94%.

The winter cereals wheat, barley, and rye were successfully classified with only minimal deviations. The detection of winter triticale was more limited compared to the training seasons, resulting in a loss in F1-score of nearly 18%. The relatively low accuracy observed for this class could be attributed to its limited differentiation from other winter cereal classes, which was already observed for the previous seasons. For the spring classes barley (F1-score = 83%) and oats (F1-score = 74.2%), a notable agreement with the reference data was found. Very satisfactory results were achieved for winter rapeseed, maize, potato, beet, and grassland. With F1-scores above 93% for the first four classes and 88.4% for grassland, these classes were identified reliably and at a level comparable to the previous seasons. Legume still exhibited acceptable results (F1-score = 70.1%) in comparison to prior seasons. The lowest F1-scores were obtained for other winter cereals and other spring cereals. With 48.8% and 36.5%, respectively, the class estimation was clearly limited and significantly lower than in the previous cropping seasons. These findings suggest a notable decrease in accuracy, primarily influenced by exceedingly low PA values.
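As a brief illustration of how the class-wise measures used above relate to each other, the following sketch derives them from a confusion matrix. It assumes that PA denotes the producer's accuracy (recall) and UA the user's accuracy (precision), as is common in remote sensing. The function name `class_metrics` and the toy matrix are hypothetical.

```python
import numpy as np

def class_metrics(cm):
    """Per-class PA, UA, F1 and the overall accuracy from a confusion matrix.

    cm[i, j] = number of pixels of reference class i predicted as class j.
    Assumes every class occurs at least once in reference and prediction.
    """
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    pa = tp / cm.sum(axis=1)        # producer's accuracy (recall)
    ua = tp / cm.sum(axis=0)        # user's accuracy (precision)
    f1 = 2 * pa * ua / (pa + ua)    # harmonic mean of PA and UA
    oa = tp.sum() / cm.sum()        # overall accuracy
    return pa, ua, f1, oa

# Toy two-class confusion matrix (made-up counts).
pa, ua, f1, oa = class_metrics([[8, 2], [1, 9]])
```

Because the F1-score is the harmonic mean of PA and UA, a very low PA pulls the F1-score down even when UA is high. This is consistent with the behavior described for the aggregated classes.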

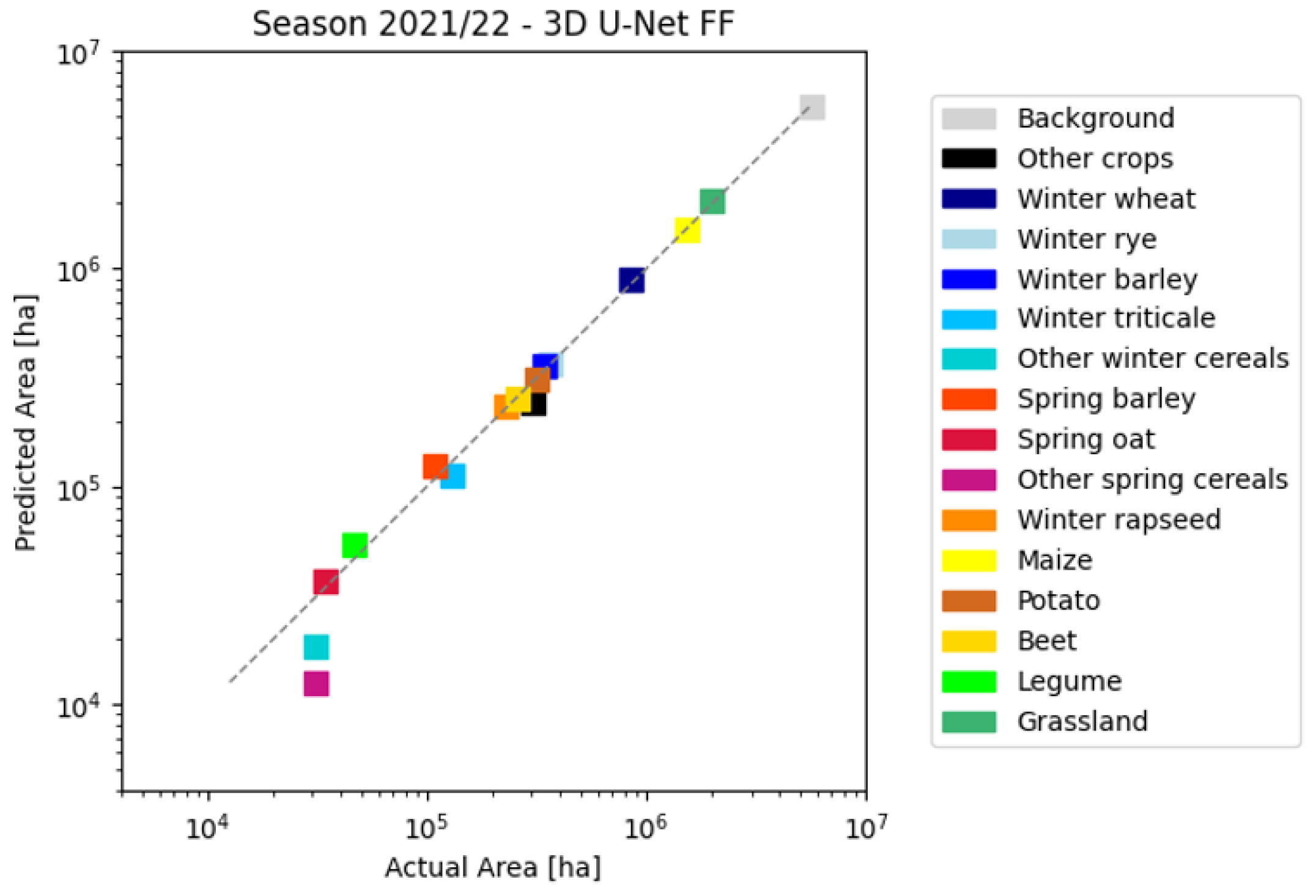

Figure 10 presents the estimated class areas for the season 2021/22, showing still sufficient mapping accuracy for almost all considered classes. A very strong correlation was observed for classes with larger areas, such as grassland, maize, winter rapeseed, and winter cereals (wheat, barley, and rye). Slight disparities were observed for classes like legumes and winter triticale. These discrepancies remained, however, within a range of high agreement. Higher mapping errors were only observed for other winter cereals and other spring cereals, resulting in underestimations of approximately 10% and 20%, respectively. These underestimations aligned with the low PA values depicted in Figure 9.

4.5. Mapping of Crop Types for the 2021/22 Season in Lower Saxony

The transfer of the proposed model to the image data of the unknown season 2021/22 showed persistent map accuracy. The small subsets A and B (see Figure 1) of the classification map of Lower Saxony are illustrated in Figure 11. The upper figure represents subset A (a region in the district of Emsland), which has already been considered for model comparison in Figure 6. The lower figure shows subset B, a marsh region in the northern part of Lower Saxony near the estuary of the Elbe River into the North Sea. The considered regions exhibited contrasting predominant crop profiles. The Emsland area is characterized by the predominant cultivation of maize and potato crops, whereas the marsh area displayed a notable prevalence of cereal and grassland cultivation.

For both regions, the majority of parcels were accurately predicted, and field structures were well represented compared to the previous seasons. Most pixels within a parcel were correctly assigned, allowing for reliable assessment of individual field crops. No significant spatial mapping errors that might have been caused by cloud cover or different local environmental conditions were observed. Rather, regional crop type patterns were consistently and accurately mapped. However, in comparison to the training seasons, a slight increase in mapping errors was noticeable. Especially for the classes winter triticale, other winter cereals, and other spring cereals, a higher misclassification rate could be shown, as indicated by the lower accuracy values. As limitations in crop type classification were predominantly restricted to specific local field areas, a correct crop type assignment of almost all parcels was possible.

5. Discussion

5.1. Accuracy Assessment across All Cropping Seasons

The presented results demonstrated satisfactory classification accuracy over all seasons, including major crop types like maize, winter rapeseed, and winter cereals like wheat, barley, or rye. Hence, it can be concluded that our proposed approach for generating multitemporal image composites still enables CNNs to effectively manage complex and extensive datasets of multisensor Earth observation (EO) data, even in the presence of temporal cloud cover and varying climate conditions. Further, the detailed accuracy assessment showed that the 3D models outperformed the 2D U-Net in terms of accuracy, resulting in an OA of over 94%.

When considering the class-wise accuracies, the successful classification of winter cereals by all classifiers should be highlighted. Due to spectral ambiguities and similar plant structures, these crops are usually very difficult to differentiate in both optical and SAR data [47]. An exceptional case was winter triticale. The separation of this crop type was more difficult due to its very similar appearance and phenological development to other winter cereals, as it is a hybrid form of wheat and rye [19]. While this was particularly evident for the standard 2D U-Net (F1-score = 82.2%), the proposed 3D U-Net FF performed very well (F1-score = 91.0%).

The 2D U-Net model exhibited significantly lower classification results for the classes spring oat and legume. Both classes are difficult to separate due to spectral similarities to other crop classes, which was also observed in previous studies [5]. The integration of additional temporal features by the 3D models had a substantial positive impact on the classification accuracy of these classes, resulting in very high accuracies, which also exceeded results from other comparable studies [4, 5, 6]. This demonstrated that the application of 3D convolutions allowed the networks to effectively learn and capture representative crop patterns related to vegetation dynamics in the temporal domain. Therefore, 3D convolutions proved to be advantageous in the context of multitemporal crop-type mapping.

A detailed accuracy assessment underlined the potential of the proposed 3D U-Net FF. Among all classification algorithms tested, the proposed 3D U-Net FF model performed the best in terms of OA as well as F1-scores, leading to an increase in accuracy for all classes. Moreover, the results of our model were more balanced between the classes, which is crucial for achieving reliable and accurate classification results. For this reason, it can be concluded that the proposed squeeze-and-excitation fusion module helped to focus on important features to increase and balance the classification performance.
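To make the channel re-weighting idea behind a squeeze-and-excitation module more concrete, the following sketch applies the generic squeeze-and-excitation pattern to a stacked optical and SAR feature volume. It is not the exact fusion module of the proposed network, whose design is not detailed in this section. The function name `se_fusion_3d`, the tensor shapes, the bottleneck size, and the random weights (which stand in for learned parameters) are assumptions for illustration only.

```python
import numpy as np

def se_fusion_3d(features, w1, w2):
    """Generic squeeze-and-excitation gate on a (C, T, H, W) feature volume."""
    # Squeeze: global average over time and space gives one value per channel.
    squeezed = features.mean(axis=(1, 2, 3))
    # Excitation: bottleneck layer with ReLU, then a sigmoid gate per channel.
    hidden = np.maximum(w1 @ squeezed, 0.0)
    gate = 1.0 / (1.0 + np.exp(-(w2 @ hidden)))
    # Scale: re-weight every channel of the volume by its gate value.
    return features * gate[:, None, None, None], gate

rng = np.random.default_rng(0)
optical = rng.normal(size=(4, 3, 8, 8))  # hypothetical optical feature maps
sar = rng.normal(size=(2, 3, 8, 8))      # hypothetical SAR feature maps
stacked = np.concatenate([optical, sar], axis=0)  # channels of both sensors

w1 = rng.normal(size=(3, 6))  # placeholder for learned bottleneck weights
w2 = rng.normal(size=(6, 3))  # placeholder for learned expansion weights
fused, gate = se_fusion_3d(stacked, w1, w2)
```

Channels with a gate value close to zero are suppressed, while channels with a gate value close to one pass through almost unchanged. In a trained network, this gating is what would allow the model to emphasize informative feature maps from either sensor.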

A particular strength of the 3D U-Net FF was the ability to accurately classify pixels belonging to aggregated (mixed) classes such as other winter cereals or other spring cereals. These classes encompassed a high level of variance as they included multiple crop types, each with distinct spectral signals and growing patterns. The reason for this excellent performance could stem from learned multi-stage patterns between SAR and multispectral data contributing to the identification of these classes.

5.2. Comparison of the Model Accuracies for the Individual Cropping Seasons

The individual cropping seasons are characterized by differing meteorological conditions and agro-ecological management practices, which may lead to differences in plant growth and corresponding changes in spectral signature patterns. This temporal variability adds complexity to the classification process, making it more difficult to differentiate between crop types. This is especially challenging for crops with similar spectral characteristics and when the availability and quality of remote sensing data are limited.

The results demonstrated robust and consistent classification results over multiple cropping seasons for all U-Net models, with differences in OA between the seasons of around 1.5%. However, our 3D model outperformed the common 2D and 3D classifiers for each season significantly, underlining the extraction of representative spatio-temporal plant features that are robust to inner-seasonal anomalies.

Our results showed that the class-related accuracies of the measured and estimated areas were consistent. However, the accuracies of classes with smaller acreages exhibited greater variability from year to year. This is likely due to the limited sample size and spatial extent of these smaller classes, making them more susceptible to fluctuations in environmental and weather conditions, which in turn leads to increased variability in their spectral behavior. This trend has been observed in other studies (e.g., [6]).

Within this context, our proposed feature fusion model showcased excellent prediction accuracy for smaller classes such as other winter cereals, other spring cereals, or legume. The first two classes also encompass multiple crop types, resulting in complex spectral signature patterns. By integrating complementary image features of the multispectral and SAR data using the proposed fusion module, our model effectively captured the subtle spectral differences and intricate patterns within these classes, leading to highly accurate classifications. This highlights the strength of our approach in handling the complexities and achieving precise results for classes with diverse crop compositions or limited sampling size.

For all models, the largest decrease in OA was observed for the season 2017/18, which was related to extreme weather conditions in the spring and summer months. High temperatures and precipitation deficits with low cloud cover led to permanent high temperature anomalies [48]. Especially for the spring crops, the very dry conditions caused severe drought damage [49] and therefore led to a temporal change in the spectral signal.

5.3. Comparison of the Mapping Accuracies of the Models

The findings of the accuracy assessment were underlined by the visual interpretation of the classification maps, which revealed promising results for all three classifiers. Moreover, each model was able to successfully map the regional crop rotation across the seasons, which provides valuable insights into the spatial distribution of crop types as well as a better understanding of temporal dynamics in agriculture. This information is particularly relevant in the context of climate change and increasing weather extremes to understand the spatial and temporal distribution of crop types for effective land management and planning. The detailed analysis of the classification maps revealed the advantage of the 3D networks over the 2D model in accurately representing individual parcels, enabling a more detailed and comprehensive view of the dynamics and patterns within agricultural fields.

The excellent classification maps across the growing years are especially noticeable in the context of changing cloud conditions, which differed significantly across the cropping seasons. It was shown that the overall influence of clouds on the classification quality was very limited for all models, supporting our approach of using cloud-affected image composites. Instead, the influence of weather extremes, as shown for the season 2017/18, was more relevant for reduced classification accuracy.

Differences between the models could be identified in the case of persistent cloud cover (see Figure 8). Among all models, the proposed 3D U-Net FF model showed the most stable performance in terms of classification accuracy, especially when classifying pixels that are characterized by strong cloud cover. At this point, it can be assumed that our proposed 3D squeeze-and-excitation fusion module succeeded in suppressing cloud-related feature maps to improve interdependencies between SAR and optical images. For this reason, it can be concluded that complementary multi-stage features are useful to compensate for cloud cover in optical data and therefore overcome temporal restrictions in satellite imagery. However, this hypothesis should be verified in further work.

In the classification maps, it was observed that the precise identification of the parcel boundaries was challenging, which can be attributed to various factors such as mixed pixels [27] or changes over time. However, when assigning the crop type to a specific field, the focus is primarily on classifying the spectral properties within the field itself. Therefore, this issue has relatively little impact on the correct assignment of the crop type to a specific field. Of greater importance for effective agricultural management and decision-making is the ability to distinguish between neighboring fields and individual parcels. As adjacent fields are often merged by the classifiers, the correct differentiation of two fields could be limited when neighboring parcels contain the same crop type.

5.4. Evaluation of Temporal Model Transfer

In the context of operational crop mapping strategies, the temporal transfer of a classifier is particularly interesting. While for “transfer learning” specific and rather complex workflows can be used, we assume that the 3D U-Net was able to learn adequate discriminative features and could be transferred to a certain degree to another cropping season. Therefore, the 3D U-Net FF model, which was trained with data from Lower Saxony from four cropping seasons, was used to classify data from another cropping season.

The transfer of our proposed model to Lower Saxony for the growing season 2021/22 was associated with only minor losses in classification accuracy. The ability of our model to adapt well to unknown growing seasons indicates that the network has captured important and generalizable patterns of vegetation dynamics across different temporal compositions, allowing it to perform effectively even in seasons with different environmental conditions and crop variations. Classification losses occurred for the mixed classes (other spring cereals and other winter cereals). A certain classification loss for these classes was expected due to variations in growth patterns and changes in spectral characteristics in a season that differs from the training data. The limited availability of characteristic patterns due to the relatively small number of training samples can further constrain the accurate assignment of these classes.

However, as the results were still very promising, our approach enabled the identification and assessment of spatial and temporal crop type patterns. An illustrative example (subset region B) with a significant crop rotation change is depicted in Figure 12. This example highlights the transition from predominantly spring cereals in the season 2017/18 to a dominant presence of winter cereals in the following seasons. This circumstance was attributed to the difficult sowing conditions in the marshland regions, primarily resulting from heavy rainfall events throughout the autumn of 2017. This was partly compensated in the spring period by the cultivation of spring cereals like barley or oats [50].

6. Conclusions

In this paper, we proposed a multi-stage feature fusion method based on a 3D U-Net architecture for annual crop-type mapping using multitemporal Sentinel-1 and Sentinel-2/Landsat-8 imagery. With this approach, operational monitoring of detailed crop type information was shown, enabling comprehensive assessments of agricultural landscapes.

In detail, our study highlighted the following findings: (1) We introduced a novel approach for creating multitemporal image composites by leveraging patch-based image combinations within a 14-day window based on minimum cloud coverage. In this way, we developed a flexible image composition method that can be applied across different cropping seasons and does not require additional user-dependent metric calculations. (2) The proposed feature fusion model resulted in the best overall accuracy, with improvements for all classes. This was evident based on all considered cropping seasons as well as within the individual years, where our model consistently exhibited more stable and balanced classification results. This indicated its ability to mitigate the challenges posed by differences in climate conditions and farming patterns. In addition, our model proved to be more robust to prolonged cloud cover. Based on these results, we concluded that the integration of our 3D fusion model, incorporating a 3D squeeze-and-excitation network, improved the extraction of spatio-temporal information and dependencies between SAR and multispectral images, leading to increased classification performances with almost no additional computational cost. (3) The results obtained showed that the proposed network was highly effective in ensuring stability and reliability when transferring it to a new cropping season, which is particularly significant in light of the rapidly changing environmental conditions and evolving agricultural practices that are becoming increasingly prevalent. Limitations were primarily observed for spectrally similar classes like spring crops or aggregated classes, whose spectral signals can alter due to differences in climate and soil conditions between larger regions.
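The compositing idea summarized in finding (1) can be sketched for a single patch as follows. The sketch assumes that each available acquisition of a patch carries a day-of-season index and a cloud cover percentage and that, within every 14-day window, the acquisition with the lowest cloud cover is kept. The function name `select_composite_scenes` and the toy values are hypothetical, and windows without any acquisition are simply skipped here.

```python
import numpy as np

def select_composite_scenes(days, cloud_cover, window=14):
    """Return, per time window, the index of the least cloudy acquisition."""
    days = np.asarray(days)
    cloud_cover = np.asarray(cloud_cover)
    bins = days // window  # assign each acquisition to a 14-day window
    selected = []
    for b in np.unique(bins):
        idx = np.flatnonzero(bins == b)
        # Keep the acquisition with the minimum cloud cover in this window.
        selected.append(int(idx[np.argmin(cloud_cover[idx])]))
    return selected

# Toy acquisitions of one patch (made-up days and cloud cover in percent).
days = [1, 6, 11, 16, 21, 30, 41]
cloud = [80, 20, 55, 90, 35, 10, 60]
chosen = select_composite_scenes(days, cloud)
```

Because the selection relies only on acquisition dates and cloud cover, such a rule can be applied unchanged to every season, in line with the flexibility noted in finding (1).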

In the future, it will be necessary to further optimize the presented model since the distinction between spectrally similar classes and classes with limited training samples remains challenging. It may be possible to improve the classification by increasing the temporal resolution of the patch-based composites. In particular, this would be useful for the spring and summer months, as these contain relevant phenological crop information. In this context, our approach, which uses an adapted temporal sampling strategy for cloud reduction, should be compared to classical methods for creating image composites (which compute predominantly spectral-temporal metrics over time based on cloud-free images). To address class imbalances, various techniques can be employed, such as oversampling or undersampling of the minority classes or applying class weighting during the model training process.
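As one example of the class weighting mentioned above, the following sketch computes inverse-frequency weights from a label array. This is a common heuristic and not a procedure used in this study. The function name `inverse_frequency_weights` and the toy labels are hypothetical.

```python
import numpy as np

def inverse_frequency_weights(labels, n_classes):
    """Weight each class inversely to its pixel frequency.

    Classes that do not occur in `labels` receive a weight of zero.
    """
    counts = np.bincount(labels.ravel(), minlength=n_classes).astype(float)
    weights = np.zeros(n_classes)
    present = counts > 0
    # total / (number of present classes * class count): rare classes weigh more.
    weights[present] = counts.sum() / (present.sum() * counts[present])
    return weights

# Toy labels with a strongly under-represented class 2 (made-up values).
labels = np.array([0, 0, 0, 0, 0, 0, 1, 1, 1, 2])
weights = inverse_frequency_weights(labels, n_classes=3)
```

Such weights could be passed to a weighted loss function during training so that errors on rare classes contribute more strongly to the loss.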

Since the classification method did not provide spatial model transfer, the application should be tested in other regions with different climates and farming patterns to assess the model’s stability in the spatial and temporal domains. In this context, the integration of further crop species and dealing with the spectral properties of non-crop-related classes (background) seem relevant for a flexible model application.

Altogether, this study showed that state-of-the-art deep learning methods offer great potential to learn representative multi-modal image features from multispectral and SAR satellite imagery for reliable crop-type mapping.