Abstract

Target detection in unmanned aerial vehicle (UAV) images is currently a research hotspot. Because targets vary greatly in scale and backgrounds are complex, current target detection models face challenges when applied to UAV images. To address these issues, we designed an effective and lightweight full-scale target detection network, FSTD-Net. The design of FSTD-Net is based on three principal aspects. Firstly, to optimize the extracted target features at different scales while minimizing background noise and sparse feature representations, a multi-scale contextual information extraction module (MSCIEM) is developed. Within MSCIEM, the multi-scale information extraction module (MSIEM) captures multi-scale features, and the contextual information extraction module (CIEM) captures long-range contextual information. Secondly, to better adapt to various target shapes at different scales in UAV images, we propose the feature extraction module fitting different shapes (FEMFDS), based on deformable convolutions. Finally, considering that low-level features contain rich details, a low-level feature enhancement branch (LLFEB) is designed. Experiments demonstrate that, compared to the second-best model, the proposed FSTD-Net achieves improvements of 3.8%, 2.4%, and 2.0% in AP50, AP, and AP75 on the VisDrone2019 dataset, respectively. Additionally, FSTD-Net achieves improvements of 3.4%, 1.7%, and 1% on the UAVDT dataset. These results indicate that FSTD-Net outperforms state-of-the-art detection models and is effective for target detection in UAV images.

1. Introduction

Target detection represents one of the most important fundamental tasks in the domain of computer vision, attracting substantial scholarly and practical interest in recent years. The core objective of target detection lies in accurately identifying and classifying various targets within digital images, such as humans, vehicles, or animals, and precisely determining their spatial locations. The advent and rapid evolution of deep learning technologies have significantly propelled advancements in target detection, positioning them as a focal point of contemporary research with extensive applications across diverse sectors [1,2]. Notably, drones, leveraging their exceptional flexibility, mobility, and cost-effectiveness, have been integrated into a wide array of industries. These applications span emergency rescue [3], traffic surveillance [4], military reconnaissance [5], agricultural monitoring [6], and so on.

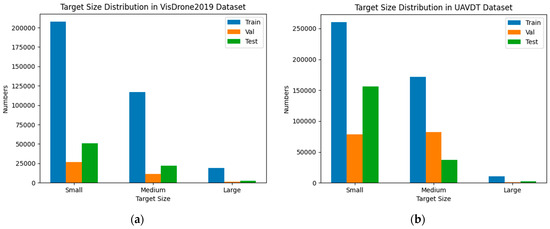

For natural images, target detection technology has made considerable progress. This technology can be divided into single-stage and two-stage detection methods. Two-stage detection methods primarily include region-based target detection approaches such as R-CNN [7], Fast R-CNN [8], Faster R-CNN [9], and so on. Single-stage detection methods encompass techniques like YOLO, SSD, and DETR [10,11]. Natural images are typically captured from a ground-level perspective, with a relatively fixed viewpoint and minimal changes in the shapes and sizes of targets. In contrast, drone images are usually taken from high altitudes, offering a bird’s-eye view. Under this perspective, especially when the drone’s flight altitude varies, the shapes and sizes of targets can change significantly. As shown in Figure 1, we analyzed the number of small, medium, and large targets in the VisDrone 2019 and UAVDT datasets (the latter being divided into training, testing, and validation sets for clarity). The results indicate that the training, testing, and validation sets of both the VisDrone 2019 and UAVDT datasets contain targets of various sizes, showing significant differences in target scale distribution.

Figure 1.

Target size distribution in the VisDrone 2019 and UAVDT datasets. (a) Number of targets of different sizes in VisDrone 2019, (b) number of targets of different sizes in UAVDT.
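The size statistics summarized in Figure 1 can be reproduced with a simple bucketing pass over the annotated boxes. The sketch below is illustrative only: it assumes the COCO area thresholds (32 × 32 and 96 × 96 pixels), which the text does not state, and the function names and sample boxes are hypothetical.

```python
from collections import Counter

# Assumption: COCO-style area thresholds; the text does not give its own.
SMALL_MAX_AREA = 32 * 32
MEDIUM_MAX_AREA = 96 * 96


def size_category(width, height):
    """Return 'small', 'medium', or 'large' for a box given in pixels."""
    area = width * height
    if area < SMALL_MAX_AREA:
        return "small"
    if area < MEDIUM_MAX_AREA:
        return "medium"
    return "large"


def count_by_size(boxes):
    """Count boxes per size category; each box is a (width, height) tuple."""
    counts = Counter(size_category(w, h) for w, h in boxes)
    return {name: counts.get(name, 0) for name in ("small", "medium", "large")}


# Hypothetical boxes, for illustration only.
example_boxes = [(10, 12), (40, 40), (120, 90), (31, 31), (96, 96)]
print(count_by_size(example_boxes))  # {'small': 2, 'medium': 1, 'large': 2}
```

Running the same count separately on each split gives the per-split distributions that the figure compares.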

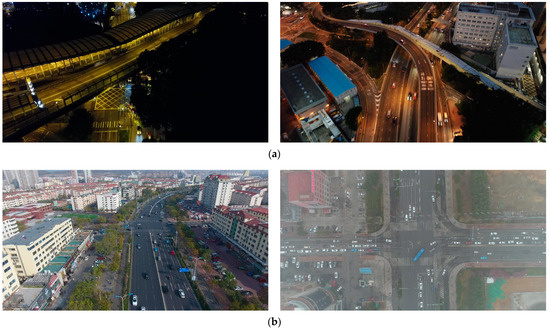

Moreover, targets in drone images often have more complex backgrounds due to variations in lighting, weather conditions, and surrounding environments. As shown in Figure 2a, the buildings, trees, and other non-target categories present in the images, along with the dim lighting conditions, make target detection in UAV images more challenging. In Figure 2b, the occlusion by trees, shadows, and adverse weather conditions introduces further challenges to the target detection task. Consequently, the significant scale differences and complex backgrounds make it difficult to directly apply existing detection models designed for natural images to UAV images.

Figure 2.

Examples from the VisDrone 2019 and UAVDT datasets. (a) Examples from the VisDrone 2019 dataset, (b) examples from the UAVDT dataset.

In this paper, to address the challenges of significant scale variability of targets and complex backgrounds in UAV images, we propose the UAV target detection network, FSTD-Net. The design of FSTD-Net is based on three principal aspects. Firstly, to capture more effective multi-level features and avoid the interference of complex backgrounds, the multi-scale and contextual information extraction module (MSCIEM) is designed. Secondly, to extract full-scale object information that can better represent targets of various scales, we designed the feature extraction module fitting different shapes (FEMFDS), which is guided by the deformable convolutions. The positions of the sampling points in deformable convolutions can be dynamically adjusted during the learning process; the FEMFDS is positioned before the detection heads for different scales, enabling it to effectively capture full-scale target features of different target shapes. Finally, to fully utilize the detailed information contained in the low-level features, we propose the low-level feature enhancement branch (LLFEB), which uses a top-down and bottom-up feature fusion strategy to incorporate low-level semantic features. In summary, the main contributions are as follows:

- (1) The MSCIEM is proposed to optimize the semantic features of targets at different scales while avoiding the interference of complex backgrounds. The MSIEM employs parallel convolutions with different kernel sizes to capture the features of targets at different scales, and the CIEM embedded in MSCIEM captures long-range contextual information. Thus, the MSCIEM can effectively capture multi-scale and contextual information.

- (2) We design the FEMFDS, which is built on deformable convolutions. Because the FEMFDS breaks through the conventional feature extraction paradigm of standard convolution, it can dynamically match full-scale target shapes.

- (3) Because low-level features contain rich details such as edges and textures, we designed the LLFEB. The LLFEB uses a top-down and bottom-up feature fusion strategy to combine low-level and high-level features, which effectively utilizes the detailed information in low-level features.

- (4) Experiments on the VisDrone2019 and UAVDT datasets show that the proposed FSTD-Net surpasses state-of-the-art detection models. FSTD-Net achieves more accurate detection of targets at various scales and in complex backgrounds while remaining lightweight.

This paper is structured into six distinct sections. Section 2 reviews the related work pertinent to this work. Section 3 elaborates on the design details of the FSTD-Net. Section 4 presents the parameter settings of the model training process, training environment, and experimental results. Section 5 provides an in-depth discussion of the advantages of FSTD-Net and the impacts of the cropping and resizing methods designed for the experiments. Finally, Section 6 summarizes the current work and proposes directions for future research.

2. Related Work

2.1. YOLO Object Detection Models and New Structure Innovations

The YOLO series of target detection models has played a significant role in advancing the task of target detection in natural images. YOLOv1 initially proposed an end-to-end target detection framework, treating target detection as a regression problem [12]. Subsequent versions, such as YOLOv2 [13], YOLOv3 [14], and YOLOv4 [15], continually optimized network architectures and training methods, leading to improvements in detection accuracy and speed. YOLOv2 improves on YOLOv1 by using anchor boxes for better handling of target sizes and shapes, higher-resolution feature maps for improved localization, and batch normalization for faster convergence and enhanced detection performance. YOLOv3 enhances YOLOv2 by using multi-scale predictions, a ResNet-based architecture, and logistic regression classifiers, improving small target detection, feature extraction, and suitability for multi-label classification, thus boosting accuracy and speed. YOLOv4 introduced the Bag of Specials and Bag of Freebies to enhance model expressiveness. YOLOv5 absorbed state-of-the-art structures and added modules that are easy to study. YOLOv6 customized networks of different scales for various applications and adopted a self-distillation strategy [16]. YOLOv7 addressed some issues through a trainable bag-of-freebies and extended the approach [17]. YOLOv8 employed the latest C2f structure and PAN structure, with a decoupled detection head [18]. YOLOv9 further improved the model structure, resolving the issue of information loss [19]. The latest YOLOv10 further advances the performance-efficiency boundary of YOLOs through improvements in both post-processing techniques and model architecture [20]. These enhancements have significantly advanced the YOLO series models in target detection tasks.

Feature Pyramid Networks (FPN) are widely adopted in target detection frameworks, including the YOLO series, and have been proven to significantly enhance detection performance [21]. Recently, several studies have focused on improving the FPN structure. DeNoising FPN (DN-FPN), proposed by Liu et al., employs contrastive learning to suppress noise at each level of the feature pyramid in the top-down pathway of the FPN [22]. GraphFPN is capable of adjusting the topological structure based on different intrinsic image structures, achieving feature interaction at the same and different scales in contextual layers and hierarchical layers [23]. AFPN enables feature fusion across non-adjacent levels to prevent feature loss or degradation during transmission and interaction. Additionally, through adaptive spatial fusion operations, AFPN suppresses information conflicts between features at different levels [24].

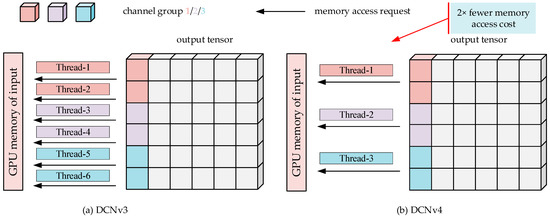

Furthermore, several enhancements to standard convolutional feature extraction have been proposed recently, with deformable convolution garnering significant attention. Standard convolution has a fixed geometric structure, which limits its ability to model targets of varying shapes. The Deformable Convolution series overcomes this limitation of the CNN building blocks. The original Deformable Convolution (DCNv1) introduced deformable convolution kernels and deformable ROI pooling to enhance the model’s modeling capability, and it can automatically learn offsets in target tasks without additional supervision [25]. DCNv2 addresses the issues of DCNv1 by further introducing a modulation mechanism to adjust the spatial support regions, which prevents irrelevant information from affecting the features and achieves better results than the original DCNv1 [26]. DCNv3 further extends DCNv2 by sharing weights among convolutional neurons, introducing a multi-group mechanism, and normalizing the modulation scalars along the sampling points [27]. DCNv4 addresses the limitations of DCNv3 by eliminating softmax normalization in spatial aggregation to enhance its dynamic properties and expressive power, and it optimizes memory access to minimize redundant operations and improve speed [28].

2.2. Methods of Mitigating the Impact of Complex Backgrounds on Target Detection

To mitigate the impact of complex backgrounds on target detection, Liu et al. designed a center-boundary dual attention (CBDA) module within their network, employing dual attention mechanisms to extract attention features from target centers and boundaries, thus learning more fundamental characteristics of rotating targets and reducing background interference [29]. Shao et al. developed a spatial attention mechanism to enhance target feature information and reduce interference from irrelevant background information [30]. Wang et al. introduced the target occlusion contrast module (TOCM) to improve the model’s ability to distinguish between targets and non-targets [31]. To alleviate interference caused by complex backgrounds, Zhang et al. designed a global-local feature guidance (GLFG) module that uses self-attention to capture global information and integrate it with local information [32]. Zhou proposed a novel encoder-decoder architecture, combining a transformer-based encoder with a CNN-based decoder, to address the limitations of CNNs in global modeling [33]. Zhang et al. constructed a spatial location attention (SLA) module to highlight key targets and suppress background information [34]. Gao et al. introduced a multitask enhanced structure (MES) that injects prominent information into different feature layers to enhance multi-scale target representation [35]. Gao et al. also designed the hidden recursive feature pyramid network (HRFPN) to improve multi-scale target representation by focusing on information changes and injecting optimized information into fused features [36]. Furthermore, Gao et al. designed an innovative scale-aware network with a plug-and-play global semantic interaction module (GSIIM) embedded within the network [37]. Dong et al. developed a novel multiscale deformable attention module (MSDAM), embedded in the FPN backbone network, to suppress background features while highlighting target information [38].

2.3. Methods of Addressing the Impact of Significant Scale Variations on Target Detection

To address the impact of significant scale variations on target detection, Shen et al. combined inertial measurement units (IMU) to estimate target scales and employed a divide-and-conquer strategy to detect targets of different scales [39]. Jiang et al. enhanced the model’s ability to perceive and detect small targets by designing a small target detection head to replace the large target detection head [40]. Liu et al. designed a widened residual block (WRB) to extract more features at the residual level, improving detection performance for small-scale targets [41]. Lan et al. developed a multiscale localized feature aggregation (MLFA) module to capture multi-scale local feature information, enhancing small target detection [42]. Mao et al. proposed an efficient receptive field module (ERFM) to extract multi-scale information from a variety of feature maps, mitigating the impact of scale variations on small target detection performance [43]. Hong et al. developed the scale selection pyramid network (SSPNet) to enhance the detection ability of small-scale targets in images [44]. Cui et al. created the context-aware block network (CAB Net), which establishes high-resolution and strong semantic feature maps, thereby better detecting small-scale targets [45]. Yang et al. introduced the QueryDet scheme, which utilizes a novel query mechanism to speed up the inference of feature pyramid-based target detectors by first predicting rough locations of small targets on low-resolution features and then using these rough locations to guide high-resolution features for precise detection results [46]. Zhang et al. proposed the semantic feature fusion attention (FFA) module, which aggregates information from all feature maps of different scales to better facilitate information exchange and alleviate the challenge of significant scale variations [47]. Nie et al. designed the enhanced context module (ECM) and triple attention module (TAM) to enhance the model’s ability to utilize multi-scale contextual information, thereby improving multi-scale target detection accuracy [48]. Wang et al. introduced a lightweight backbone network, MSE-Net, which better extracts small-scale target information while also providing good feature descriptions for targets of other scales [49]. Cai et al. designed a poly kernel inception network (PKINet) to capture features of targets more effectively at different scales, thereby addressing the significant variations in target scales more efficiently [50].

3. Methods

In this section, the baseline model YOLOv5s and the proposed FSTD-Net are introduced. First, the overall framework of the baseline model YOLOv5s is described. Then, we give an overall introduction to FSTD-Net. Finally, we discuss the components of FSTD-Net in detail: the LLFEB, which provides sufficient detailed information from low-level features; the MSCIEM, which effectively captures target features at different scales together with long-range context information; and the FEMFDS, which is based on deformable convolution and is sensitive to targets across the full range of scales.

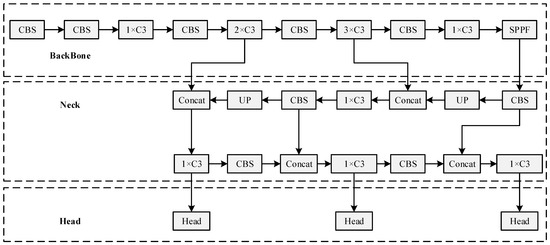

3.1. The Original Structure of Baseline YOLOv5s

YOLOv5 is a classic single-stage target detection algorithm known for its fast inference speed and good detection accuracy. It comprises five different models: n, s, m, l, and x, which can meet the needs of various scenarios. As shown in Figure 3, the YOLOv5s architecture consists of a backbone for feature extraction, which includes multiple CBS modules composed of convolutional layers, batch normalization layers, and SiLU activation functions, as well as C3 modules for complex feature extraction (comprising multiple convolutional layers and cross-stage partial connections). At the end of the backbone, global feature extraction is performed using the SPPF module. The SPPF (Spatial Pyramid Pooling-Fast) module in YOLOv5 serves the purpose of enhancing the receptive field and capturing multi-scale spatial information without increasing the computational burden significantly. By applying multiple pooling operations with different kernel sizes on the same feature map and then concatenating the pooled features, SPPF effectively aggregates contextual information from various scales. This helps in improving the model’s ability to detect targets of different sizes and enhances the robustness of feature representations, contributing to better overall performance in target detection tasks.
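The multi-scale pooling idea behind SPPF can be illustrated in one dimension. In the YOLOv5 implementation, SPPF chains the same 5 × 5 max-pooling layer three times instead of running separate 5 × 5, 9 × 9, and 13 × 13 pools, and the sketch below checks that the two are equivalent. It is a simplified, assumption-laden illustration (1-D lists instead of feature maps, hypothetical function names), not the actual module.

```python
def max_pool_1d(values, kernel):
    """Stride-1 max pooling with 'same' padding (border windows are truncated)."""
    half = kernel // 2
    n = len(values)
    return [max(values[max(0, i - half):min(n, i + half + 1)]) for i in range(n)]


def sppf_1d(values, kernel=5):
    """Return the four branches SPPF concatenates: input, 1x, 2x, and 3x pooled."""
    p1 = max_pool_1d(values, kernel)
    p2 = max_pool_1d(p1, kernel)
    p3 = max_pool_1d(p2, kernel)
    return [values, p1, p2, p3]


# Hypothetical 1-D signal standing in for one row of a feature map.
signal = [3, 1, 4, 1, 5, 9, 2, 6, 5, 3, 5, 8, 9, 7, 9, 3]
branches = sppf_1d(signal)

# Chaining 5-wide pools reproduces single 9-wide and 13-wide pools.
print(branches[2] == max_pool_1d(signal, 9))   # True
print(branches[3] == max_pool_1d(signal, 13))  # True
```

Reusing the intermediate results is what lets SPPF cover several receptive-field sizes at low extra cost; in the real module the four branches are concatenated along the channel axis and mixed by a 1 × 1 convolution.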

Figure 3.

The original structure of baseline YOLOv5s.

The Neck incorporates both FPN and PANet [51] structures, employing concatenation and nearest-neighbor interpolation for upsampling to achieve feature fusion. FPN is utilized to build a feature pyramid that enhances the model’s ability to detect targets at different scales by combining low-level and high-level feature maps. PANet further improves this by strengthening the information flow between different levels of the feature pyramid, thus enhancing the model’s performance in detecting small and large targets.
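The top-down fusion step described above, nearest-neighbor upsampling followed by concatenation, can be sketched with plain nested lists. The feature maps and function names below are hypothetical and chosen only to show the shape bookkeeping.

```python
def upsample_nearest_2x(feature_map):
    """Double the height and width of a 2-D map by repeating each value."""
    upsampled = []
    for row in feature_map:
        wide_row = [value for value in row for _ in range(2)]
        upsampled.append(wide_row)
        upsampled.append(list(wide_row))
    return upsampled


def concat_channels(*channel_groups):
    """Concatenate lists of 2-D maps along the channel axis."""
    merged = []
    for group in channel_groups:
        merged.extend(group)
    return merged


# Hypothetical single-channel maps: a coarse 2x2 high-level map and a 4x4 low-level map.
high_level = [[[1, 2], [3, 4]]]
low_level = [[[0] * 4 for _ in range(4)]]

upsampled = [upsample_nearest_2x(channel) for channel in high_level]
fused = concat_channels(low_level, upsampled)
print(len(fused), len(fused[0]), len(fused[0][0]))  # 2 4 4 (channels, height, width)
```

Concatenation keeps both sources intact and leaves it to the following convolution to decide how to mix them, which is why the channel count grows at each fusion point.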

The Head consists of several convolutional layers that transform the feature maps into prediction outputs, providing the class, bounding box coordinates, and confidence for each detected target. In the YOLOv5s, the detection head adopts a similar structure to YOLOv3 due to its proven efficiency and effectiveness in real-time target detection. This structure supports multi-scale predictions by detecting targets at three different scales, handles varying target sizes accurately, and utilizes anchor-based predictions to generalize well across different target shapes and sizes. Its simplicity and robustness make it easy to implement and optimize, leading to faster training and inference times while ensuring high performance and reliability across diverse applications.
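To make the three-scale, anchor-based output concrete, the following sketch computes the prediction tensor shapes for a YOLOv5-style head. The strides of 8, 16, and 32 and the three anchors per grid cell are the usual YOLOv5 defaults and are assumed here; the 800-pixel input and the 10 classes are illustrative values, and the function name is hypothetical.

```python
def head_output_shapes(image_size, num_classes, strides=(8, 16, 32), anchors_per_cell=3):
    """Return (grid_h, grid_w, anchors, values_per_anchor) for each detection scale."""
    # Each anchor predicts a box (x, y, w, h), one objectness score, and class scores.
    values_per_anchor = 4 + 1 + num_classes
    shapes = []
    for stride in strides:
        grid = image_size // stride
        shapes.append((grid, grid, anchors_per_cell, values_per_anchor))
    return shapes


# Assumed example: 800 x 800 input and 10 target categories.
shapes = head_output_shapes(image_size=800, num_classes=10)
for shape in shapes:
    print(shape)

total_boxes = sum(h * w * a for h, w, a, _ in shapes)
print(total_boxes)  # 39375 candidate boxes before non-maximum suppression
```

The finest grid (stride 8) contributes most of the candidates, which is one reason it matters most for small targets.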

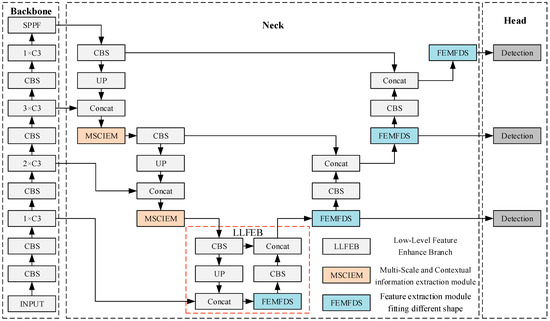

3.2. The Overall Framework of FSTD-Net

In this paper, our proposed FSTD-Net is based on the YOLOv5s architecture, as illustrated in Figure 4. The structure of FSTD-Net includes three main components, the Backbone, the Neck, and the Head, all integrated into an end-to-end framework for efficient and precise target detection. The Backbone is responsible for feature extraction, leveraging YOLOv5s for its lightweight design, which balances performance with computational efficiency. The Neck further processes and fuses the features from different levels of the Backbone, enhancing the network’s ability to recognize targets of varying scales against complex backgrounds through multi-scale feature fusion. The Head, employing a detection mechanism similar to YOLOv3, classifies targets and localizes them using regression methods, ensuring high accuracy and reliability. Overall, FSTD-Net’s design combines lightweight architecture with multi-scale feature integration, achieving high detection accuracy while maintaining low computational complexity and high processing efficiency, making it suitable for UAV target detection tasks.

Figure 4.

The overall framework of FSTD-Net.

For FSTD-Net, the Backbone adopts the CSP-Darknet network structure, which effectively extracts image features. In the Neck part, to better suppress complex background information in UAV images and extract information from targets of different scales, the MSCIEM replaces the original C3 module. The MSCIEM is mainly composed of MSIEM and CIEM. MSIEM utilizes multiple convolutional kernels with different receptive fields to better extract information from targets on different scales. Meanwhile, the context information extraction module (CIEM) is embedded in the MSCIEM to extract long-distance contextual semantic information. To improve the detection of targets of different scales in UAV images, the FEMFDS module breaks through the inherent feature extraction method of standard convolution. It can better fit the shapes of targets of different scales and extract more representative features. The designs of MSCIEM and FEMFDS aim to enhance the detection performance of FSTD-Net for targets of different sizes. Furthermore, considering that low-level features contain rich, detailed features such as edges, corners, and texture features, the LLFEB is separately designed to better utilize low-level semantic features and enhance the target detection performance of FSTD-Net.

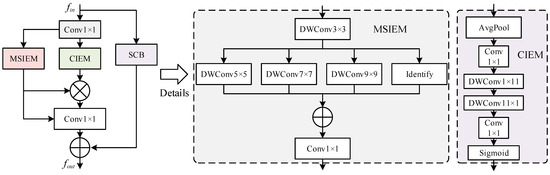

3.3. Details of MSCIEM

Since UAV images are captured from a high-altitude perspective, they introduce complex background information and contain various types of targets. The complexity of the background and the scale differences can significantly impact the performance of target detection. MSCIEM is designed to suppress complex background information and to address the scale differences among targets. Figure 5 illustrates the structure of MSCIEM. MSCIEM consists of an independent shortcut connection branch (SCB), MSIEM, and CIEM; the features from these three paths are fused into the final output feature. The MSIEM, designed with convolutional kernels of various sizes, effectively captures feature information from targets of different scales in feature maps. Meanwhile, the CIEM is employed to capture contextual information, enhancing the overall feature representation capability.

Figure 5.

The overall structure of MSCIEM.

For the independent SCB branch, the input feature map of MSCIEM passes through a convolutional layer with a kernel size of 3 × 3 and a stride of 1, which yields the SCB output feature, as shown in Equation (1).

Before being fed into MSIEM and CIEM, the input feature map passes through a convolutional layer with a 1 × 1 kernel that reduces the number of channels to 1/4 of the original; the result serves as the input to both MSIEM and CIEM. MSIEM follows an inception-style structure [52,53]. It first applies a 3 × 3 depth-wise convolution (DWConv) to extract local information, followed by a group of parallel DWConvs with kernel sizes of 5 × 5, 7 × 7, and 9 × 9 to extract cross-scale contextual information. The cross-scale contextual features extracted by the 5 × 5, 7 × 7, and 9 × 9 DWConvs and the local features extracted by the 3 × 3 DWConv are then fused using 1 × 1 convolutions, as shown in Equation (2).

The main role of CIEM is to capture long-range contextual information. CIEM is integrated into MSIEM to strengthen the central features while further capturing the contextual interdependencies between distant pixels. As shown in Figure 5, an average pooling layer followed by a 1 × 1 convolutional layer is first applied to the CIEM input to obtain the local features. Two depth-wise strip convolutions are then applied to these local features to extract long-range contextual information. Finally, the sigmoid activation function is used to produce the final attention feature map.

In Equation (3), the two depth-wise strip convolutions have kernel sizes of 11 × 1 and 1 × 11, respectively, and the activation function is the sigmoid. The resulting attention feature map is used to enhance the contextual expression of the features extracted by MSIEM. After the features extracted by MSIEM and CIEM are processed by a 1 × 1 convolution, they are fused with the features extracted by SCB to obtain the final features.
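One practical consequence of using an 11 × 1 and a 1 × 11 strip convolution, rather than a single 11 × 11 kernel, is a much smaller weight count for a comparable spatial extent. The arithmetic below is a sketch of that comparison; reading it as the motivation for the design is an interpretation rather than a statement from the text, and the channel count and function name are hypothetical.

```python
def depthwise_weights(kernel_h, kernel_w, channels):
    """Weight count of a depth-wise convolution (one kernel per channel, no bias)."""
    return kernel_h * kernel_w * channels


channels = 64  # hypothetical channel count

# Two stacked strip convolutions together span an 11 x 11 neighborhood.
strip_pair = depthwise_weights(11, 1, channels) + depthwise_weights(1, 11, channels)
square = depthwise_weights(11, 11, channels)

print(strip_pair)           # 1408
print(square)               # 7744
print(square / strip_pair)  # 5.5
```

The ratio of 121 to 22 weights per channel holds for any channel count.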

In Equation (4), the multiplication operator denotes element-wise multiplication.
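One plausible way to write Equations (1)-(4), consistent with the description of SCB, MSIEM, and CIEM, is sketched below. All symbols are assumed notation: $X$ and $Y$ for the MSCIEM input and output, $X'$ for the channel-reduced input, $L$ and $Z_k$ for the local and cross-scale features, $P$ for the MSIEM output, and $A$ for the attention map. The additive merge before the 1 × 1 convolution, the order of the two strip convolutions, and the generic $\mathrm{Fuse}$ operator are also assumptions, since the description does not fix them.

```latex
\begin{align*}
F_{\mathrm{SCB}} &= \mathrm{Conv}_{3\times3}(X) \tag{1} \\
X' &= \mathrm{Conv}_{1\times1}(X), \qquad
L = \mathrm{DWConv}_{3\times3}(X'), \qquad
Z_k = \mathrm{DWConv}_{k\times k}(L), \quad k \in \{5, 7, 9\} \\
P &= \mathrm{Conv}_{1\times1}\Big(L + \sum_{k \in \{5,7,9\}} Z_k\Big) \tag{2} \\
F_{\mathrm{pool}} &= \mathrm{Conv}_{1\times1}\big(\mathrm{AvgPool}(X')\big) \\
A &= \sigma\Big(\mathrm{DWConv}_{1\times11}\big(\mathrm{DWConv}_{11\times1}(F_{\mathrm{pool}})\big)\Big) \tag{3} \\
Y &= \mathrm{Fuse}\Big(F_{\mathrm{SCB}},\ \mathrm{Conv}_{1\times1}(A \otimes P)\Big) \tag{4}
\end{align*}
```

Here $\sigma$ is the sigmoid function and $\otimes$ is element-wise multiplication, so $A$ acts as a spatial gate on the multi-scale features $P$ before they are merged with the shortcut branch.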

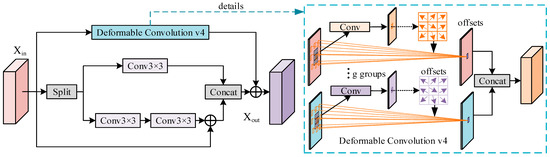

3.4. The Design of FEMFDS

Standard convolutions have fixed geometric structures and receptive fields; thus, they have certain limitations in feature extraction and cannot effectively model full-scale targets of different shapes present in UAV images. Deformable convolutions, by adding offsets to the sampling positions within the module and learning these offsets during the target task, can better model full-scale targets of various shapes present in UAV images. Thus, we designed the FEMFDS to better fit different full-scale target shapes. As shown in Figure 6, the FEMFDS consists of two branches: one branch is used to extract local features from the image, and the other branch is dedicated to extracting features that can better fit targets of different sizes. The features extracted by these two branches are then fused to obtain the final feature representation.

Figure 6.

The overall structure of FEMFDS.

The deformable convolution used in FEMFDS is deformable convolution v4 (DCNv4). DCNv4 is chosen because, building on DCNv3, it removes the softmax normalization to achieve stronger dynamic properties and expressiveness. Furthermore, as shown in Figure 7, memory access is further optimized: DCNv4 uses one thread to process multiple channels in the same group that share sampling offsets and aggregation weights. Thus, DCNv4 has a faster inference speed. Given an input feature map with height H, width W, and C channels, the deformable convolution is computed as follows:
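A plausible form of Equation (5), written in the notation commonly used for DCNv4 and assumed here rather than taken from the text, is given below. $p_0$ denotes the current output position, $K$ the number of sampling points, $G$ the number of groups, $x_g$ and $y_g$ the input and output slices of group $g$, $m_{gk}$ the aggregation weight, $p_k$ the regular grid position, and $\Delta p_{gk}$ the learned offset.

```latex
\begin{align*}
y_g &= \sum_{k=1}^{K} m_{gk}\, x_g\!\left(p_0 + p_k + \Delta p_{gk}\right), \\
y &= \mathrm{concat}\left([y_1, y_2, \dots, y_G],\ \text{axis} = -1\right). \tag{5}
\end{align*}
```

Because $p_0 + p_k + \Delta p_{gk}$ is generally fractional, $x_g(\cdot)$ is evaluated by bilinear interpolation, and in DCNv4 the weights $m_{gk}$ are not constrained by a softmax.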

Figure 7.

The improvements of DCNv4 over DCNv3.

In Equation (5), $G$ represents the number of groups for spatial grouping along the channel dimension. For the $g$-th group, $x_g$ and $y_g$ represent the input and output feature maps, respectively, each with $C' = C/G$ feature channels. $m_{gk}$ represents the spatial aggregation weight of the $k$-th sampling point in the $g$-th group. $p_k$ represents the $k$-th position of the predefined grid sampling, as in standard convolution, and $\Delta p_{gk}$ represents the offset corresponding to the grid sampling position $p_k$ in the $g$-th group.
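The sampling rule described above can be sketched for a single output position and a single channel group. This is a minimal pure-Python illustration, not the DCNv4 operator: it assumes a 3 × 3 grid, takes the offsets and aggregation weights as given instead of predicting them from the input, and uses hypothetical function names.

```python
import math


def bilinear_sample(feature_map, y, x):
    """Bilinearly interpolate a 2-D map at fractional (y, x); outside values count as 0."""
    height, width = len(feature_map), len(feature_map[0])
    y0, x0 = math.floor(y), math.floor(x)
    value = 0.0
    for yy in (y0, y0 + 1):
        for xx in (x0, x0 + 1):
            if 0 <= yy < height and 0 <= xx < width:
                weight = (1 - abs(y - yy)) * (1 - abs(x - xx))
                value += weight * feature_map[yy][xx]
    return value


def deformable_sample(feature_map, center, offsets, weights):
    """Compute sum_k m_k * x(p0 + p_k + dp_k) over a 3x3 grid for one channel group."""
    grid = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1)]
    cy, cx = center
    total = 0.0
    for (gy, gx), (oy, ox), m in zip(grid, offsets, weights):
        total += m * bilinear_sample(feature_map, cy + gy + oy, cx + gx + ox)
    return total


# Hypothetical 4x4 feature map with values 0..15.
feature = [[float(r * 4 + c) for c in range(4)] for r in range(4)]
uniform = [1.0 / 9] * 9

# Zero offsets and uniform weights reduce to a plain 3x3 average around (1, 1).
plain = deformable_sample(feature, (1, 1), [(0.0, 0.0)] * 9, uniform)
# Moving every sampling point half a pixel to the right shifts the result by 0.5.
shifted = deformable_sample(feature, (1, 1), [(0.0, 0.5)] * 9, uniform)
print(round(plain, 6), round(shifted, 6))  # 5.0 5.5
```

In a trained network each of the nine points gets its own offset, so the sampling pattern can stretch or bend to follow the target shape instead of shifting rigidly as in this toy case.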

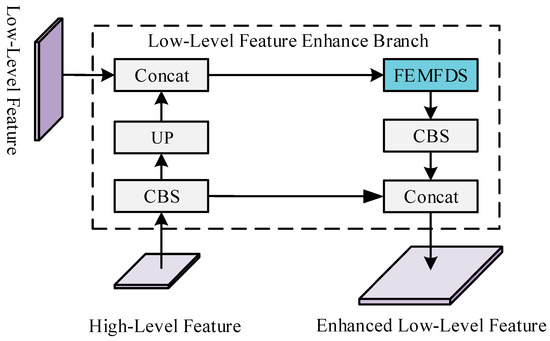

3.5. The Structure of LLFEB

In convolutional neural networks, low-level semantic features include rich details such as edges, corners, and textures. These features provide the foundation for subsequent high-level features, thereby enhancing the model’s detection performance on images. In the YOLOv5s baseline model, the feature maps obtained after two down-sampling operations are not directly used. Instead of directly fusing these low-level semantic features with the down-sampled features, we adopt a top-down and bottom-up feature fusion approach. The fusion of low-level and high-level features combines local, detailed information with global semantic information, forming a more comprehensive feature representation. This multi-level information integration helps FSTD-Net better understand and parse image content, thereby further enhancing the detection performance of FSTD-Net on UAV images.

As shown in Figure 8, the specific implementation process of LLFEB is as follows. Given a low-level feature map and its corresponding higher-level semantic feature map, a convolutional layer is first applied to the higher-level feature map, and the result is upsampled to the resolution of the low-level feature map. The upsampled feature map and the low-level feature map are then merged with the Concat operation. The fused feature map is further processed by FEMFDS and a convolutional layer, and finally the enhanced low-level semantic feature is obtained.

Figure 8.

The overall structure of LLFEB.

The overall process of LLFEB is as follows:

In Equation (6), the two operators represent the upsampling operation and the FEMFDS module, respectively.
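Following the implementation steps listed for LLFEB, Equation (6) can plausibly be written as below. The symbols are assumed notation: $F_l$ for the low-level feature map, $F_h$ for the higher-level semantic feature map, $F_h'$ for its upsampled version, and $F_{\mathrm{out}}$ for the enhanced low-level feature.

```latex
\begin{align*}
F_h' &= \mathrm{Up}\big(\mathrm{Conv}(F_h)\big), \\
F_{\mathrm{out}} &= \mathrm{Conv}\Big(\mathrm{FEMFDS}\big(\mathrm{Concat}(F_l, F_h')\big)\Big). \tag{6}
\end{align*}
```

Here $\mathrm{Up}$ is the upsampling operation and $\mathrm{Concat}$ joins the two maps along the channel dimension, which requires $F_h'$ and $F_l$ to share the same spatial size.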

3.6. Loss Function

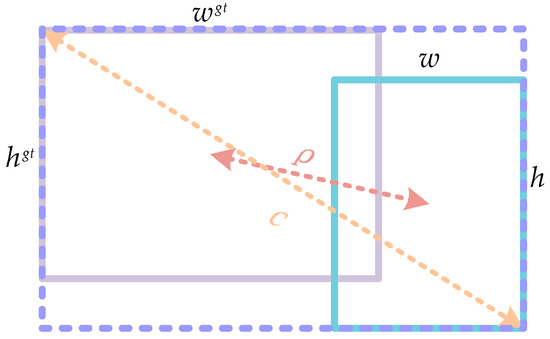

The loss function of FSTD-Net consists of three components: bounding box regression loss, classification loss, and objectness loss. For bounding box regression, the Complete Intersection over Union (CIoU) loss is used. The CIoU loss considers not only the IoU of the bounding boxes but also the distance between their center points and the difference in their aspect ratios, which makes the bounding box prediction more accurate.
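The standard definition of the CIoU loss, which Equation (7) presumably follows, is given below in the usual notation. That notation is assumed here: $b$ and $b^{gt}$ are the centers of the predicted and ground truth boxes, and the remaining symbols are the width, height, distance, and weighting terms explained for Equation (7).

```latex
\begin{align*}
L_{\mathrm{CIoU}} &= 1 - \mathrm{IoU} + \frac{\rho^2\!\left(b, b^{gt}\right)}{c^2} + \alpha v, \tag{7} \\
v &= \frac{4}{\pi^2}\left(\arctan\frac{w^{gt}}{h^{gt}} - \arctan\frac{w}{h}\right)^2, \qquad
\alpha = \frac{v}{(1 - \mathrm{IoU}) + v}.
\end{align*}
```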

In Equation (7), IoU denotes the intersection over union of the predicted and ground truth boxes. As shown in Figure 9, boxes of two different colors represent the ground truth boxes and the predicted boxes; $w^{gt}$, $h^{gt}$, $w$, and $h$ represent the width and height of the ground truth box and the predicted box, respectively. $\rho$ represents the Euclidean distance between the center point of the predicted box and the center point of the ground truth box. $c$ represents the length of the diagonal of the smallest enclosing box that contains both the predicted box and the ground truth box. $\alpha$ represents the weight parameter used to balance the impact of the aspect ratio, and $v$ represents the consistency measure of the aspect ratio.

Figure 9.

The design principles of the CIoU.
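The three CIoU terms (overlap, center distance, and aspect-ratio consistency) can be computed directly for a pair of boxes. The function below is a minimal sketch of the standard CIoU definition for boxes in corner format; the function name, the box format, and the small `eps` stabilizer are assumptions for illustration.

```python
import math


def ciou_loss(pred, target, eps=1e-9):
    """CIoU loss for two boxes given as (x_min, y_min, x_max, y_max)."""
    px1, py1, px2, py2 = pred
    tx1, ty1, tx2, ty2 = target
    w, h = px2 - px1, py2 - py1
    w_gt, h_gt = tx2 - tx1, ty2 - ty1

    # Intersection over union.
    inter_w = max(0.0, min(px2, tx2) - max(px1, tx1))
    inter_h = max(0.0, min(py2, ty2) - max(py1, ty1))
    inter = inter_w * inter_h
    union = w * h + w_gt * h_gt - inter
    iou = inter / (union + eps)

    # Squared center distance (rho^2) and squared enclosing-box diagonal (c^2).
    rho2 = ((px1 + px2) / 2 - (tx1 + tx2) / 2) ** 2 + ((py1 + py2) / 2 - (ty1 + ty2) / 2) ** 2
    c2 = (max(px2, tx2) - min(px1, tx1)) ** 2 + (max(py2, ty2) - min(py1, ty1)) ** 2

    # Aspect-ratio consistency v and its trade-off weight alpha.
    v = (4 / math.pi ** 2) * (math.atan(w_gt / (h_gt + eps)) - math.atan(w / (h + eps))) ** 2
    alpha = v / ((1 - iou) + v + eps)

    return 1 - iou + rho2 / (c2 + eps) + alpha * v


# Identical boxes give a loss close to 0; disjoint boxes are penalized beyond 1.
print(ciou_loss((0, 0, 2, 2), (0, 0, 2, 2)))
print(ciou_loss((0, 0, 1, 1), (2, 2, 3, 3)))
```

Unlike plain IoU, the distance term still provides a useful signal when the boxes do not overlap at all, as in the second call.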

The classification loss (CLoss) measures the difference between the predicted and true categories of the target within each predicted box. The binary cross-entropy loss (BCELoss) is used to calculate the classification loss.

Equation (8) is defined in terms of the total number of categories, the true label of class c (0 or 1), and the predicted probability of class c.

The objectness loss (OLoss) measures the difference between the predicted confidence and the true confidence regarding whether each predicted box contains a target.

Equation (9) is defined in terms of the true confidence (1 if the target is present, otherwise 0) and the predicted confidence.

The final total loss function (TLoss) is obtained by weighting and combining the three aforementioned loss components:
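Under the assumption that both the classification and objectness terms use binary cross-entropy, as in the YOLOv5 baseline, Equations (8)-(10) can plausibly be written as follows. The symbols are assumed notation: $C$ for the number of categories, $y_c$ and $p_c$ for the true label and predicted probability of class $c$, $\hat{y}$ and $\hat{p}$ for the true and predicted confidence, and $\lambda_1$, $\lambda_2$, $\lambda_3$ for the weight coefficients. Whether the class terms are summed or averaged is not specified, so the sum is an assumption.

```latex
\begin{align*}
L_{\mathrm{cls}} &= -\sum_{c=1}^{C}\left[\, y_c \log p_c + (1 - y_c)\log(1 - p_c) \right], \tag{8} \\
L_{\mathrm{obj}} &= -\left[\, \hat{y}\log \hat{p} + (1 - \hat{y})\log(1 - \hat{p}) \right], \tag{9} \\
L_{\mathrm{total}} &= \lambda_{1} L_{\mathrm{box}} + \lambda_{2} L_{\mathrm{cls}} + \lambda_{3} L_{\mathrm{obj}}. \tag{10}
\end{align*}
```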

In Equation (10), the three weight coefficients correspond to the bounding box regression loss, classification loss, and objectness loss, respectively. In this experiment, they are set to 0.05, 0.5, and 1.0.
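The weighted combination can be sketched for a single predicted box as follows. The weights 0.05, 0.5, and 1.0 come from the text; the helper names, the clamping constant, and the sample probabilities are hypothetical, and summing the per-class terms is an assumption.

```python
import math


def bce(probability, label, eps=1e-12):
    """Binary cross-entropy for one probability and a 0/1 label."""
    p = min(max(probability, eps), 1 - eps)  # clamp to avoid log(0)
    return -(label * math.log(p) + (1 - label) * math.log(1 - p))


def classification_loss(probabilities, labels):
    """Sum of per-class binary cross-entropy terms for one predicted box."""
    return sum(bce(p, y) for p, y in zip(probabilities, labels))


def total_loss(box_loss, cls_loss, obj_loss, weights=(0.05, 0.5, 1.0)):
    """Weighted sum of box regression, classification, and objectness losses."""
    w_box, w_cls, w_obj = weights
    return w_box * box_loss + w_cls * cls_loss + w_obj * obj_loss


# Hypothetical values for one predicted box with three classes.
cls = classification_loss([0.9, 0.2, 0.1], [1, 0, 0])
obj = bce(0.8, 1)
print(total_loss(box_loss=0.3, cls_loss=cls, obj_loss=obj))
```

With these weights the objectness term dominates the total, while the box term is scaled down by a factor of 20.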

4. Experiments and Analysis

In this section, the VisDrone2019 and UAVDT [54] datasets used in this paper are introduced first, including their characteristics and relevance to the experiments. The experimental setup is then elaborated, encompassing the training configuration, the computational platform used for training, and the evaluation metrics employed to assess the performance of the proposed FSTD-Net and other state-of-the-art models.

4.1. Dataset and Experimental Settings

4.1.1. Dataset Introduction

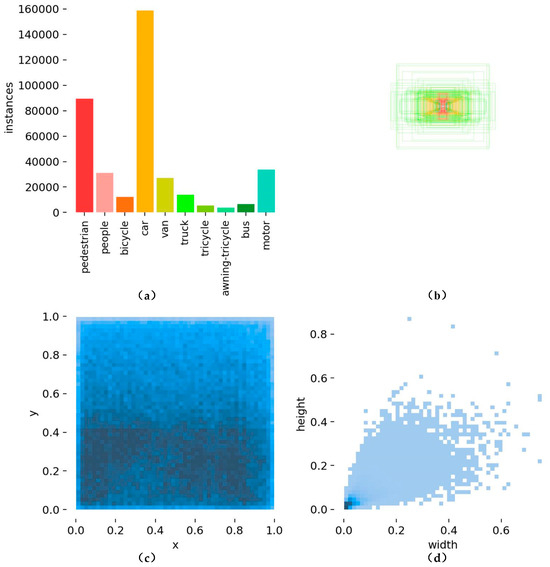

The datasets used in this experiment are the large-scale VisDrone2019 dataset and the UAVDT dataset. The VisDrone2019 dataset contains 6471 images for training, 548 images for validation, and 3190 images for testing. However, since 1580 of the test images are reserved for challenges and do not provide the ground truth, we use the remaining 1610 images for testing in this experiment. The VisDrone2019 dataset includes a large number of targets of different scales and complex background information, making it suitable for evaluating the performance of the proposed algorithm. Figure 10 shows the statistical information on the VisDrone2019 dataset, including the number of instances for different categories, the distribution of bounding box centers, the normalized coordinates of bounding box center points in the image, and the distribution of bounding boxes with different width-to-height ratios. During the training process, all images are cropped into 800 × 800 pixel tiles with a stride of 700. Additionally, to further validate the performance of the proposed network, we conducted experiments on the UAVDT dataset. The UAVDT dataset consists of 50 videos, comprising a total of 40,375 images, each with a resolution of 1024 × 540 pixels. The UAVDT has three categories: car, truck, and bus. Due to the high similarity among image sequences within each video, we selected image sequences from each video to construct the train and test sets. The train set comprises 6566 images, and the test set includes 1830 images.
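The sliding-window cropping described above (800 × 800 tiles with a stride of 700, so neighboring tiles overlap by 100 pixels) can be sketched as follows. How the image border is handled is not stated in the text; shifting the last tile back so that it stays inside the image is an assumption here, and the function names and the 1920 × 1080 frame in the usage note are hypothetical.

```python
def tile_origins(length, tile=800, stride=700):
    """Start offsets of tiles along one image axis."""
    if length <= tile:
        # Image is no larger than one tile; padding would be needed.
        return [0]
    origins = list(range(0, length - tile + 1, stride))
    if origins[-1] + tile < length:
        # Assumed border rule: shift a final tile back to cover the edge.
        origins.append(length - tile)
    return origins

def crop_boxes(width, height, tile=800, stride=700):
    """All tile rectangles (x1, y1, x2, y2) for an image of the given size."""
    return [(x, y, x + tile, y + tile)
            for y in tile_origins(height, tile, stride)
            for x in tile_origins(width, tile, stride)]
```

For a hypothetical 1920 × 1080 frame, this produces three horizontal offsets (0, 700, 1120) and two vertical offsets (0, 280), giving six tiles per image. Annotations would also need to be clipped and shifted into each tile's coordinate frame, which is omitted here.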

Figure 10.

Statistical information of VisDrone 2019. (a) The distribution of instance quantities for different categories, (b) the distribution of annotated bounding box center points, (c) the density of bounding box center points, further refining the positional distribution of targets within the image, (d) the width-to-height ratio distribution of annotated bounding boxes.

4.1.2. The Details of Experimental Environment

In this experiment, as shown in Table 1, we used a Windows environment equipped with a GeForce RTX 2080 Ti GPU. The code was written in Python 3.8 within the PyCharm integrated development environment, with CUDA 12.2 for GPU support. The PyTorch framework was used for model development and training.

Table 1.

Experimental environment configuration.

4.1.3. The Setting of Model Training

In this training session, as shown in Table 2, the batch size was set to 2, and the input image size was 800 × 800 for the VisDrone2019 dataset and 640 × 640 for the UAVDT dataset. The initial learning rate was set to 0.01 and gradually decayed to 0.001. A momentum of 0.937 was applied to accelerate convergence, and a weight decay of 0.0005 was employed to prevent overfitting. Because the risk of overfitting grows with the number of training epochs, FSTD-Net is trained for 80 epochs on both the VisDrone2019 and UAVDT datasets. The first three epochs form a warm-up stage, during which a lower learning rate and momentum are applied to stabilize the training process. Data augmentation techniques include changes in hue, saturation, and brightness, as well as geometric transformations such as rotation, translation, scaling, and shearing. Additionally, to improve model generalization and avoid overfitting, horizontal and vertical flips, as well as advanced augmentation methods such as Mosaic and Copy-Paste, are applied.
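To illustrate the schedule described above, the sketch below computes a per-epoch learning rate that decays from 0.01 to 0.001 over 80 epochs with a three-epoch warm-up. The text does not state the shape of the decay or of the warm-up; a linear decay and a linear warm-up ramp are assumed here, and the function name `learning_rate` is hypothetical.

```python
def learning_rate(epoch, epochs=80, lr0=0.01, lr_final=0.001, warmup_epochs=3):
    """Learning rate for a zero-based epoch index (assumed linear schedule)."""
    # Linear decay from lr0 at epoch 0 to lr_final at the last epoch.
    decayed = lr0 + (lr_final - lr0) * epoch / (epochs - 1)
    if epoch < warmup_epochs:
        # Assumed warm-up: scale the rate up linearly over the first epochs.
        return decayed * (epoch + 1) / warmup_epochs
    return decayed
```

Under these assumptions the rate starts at roughly a third of its nominal value, reaches the decay line at the end of the third epoch, and ends at 0.001 in the final epoch. A cosine decay would satisfy the same endpoints and could be substituted without changing the interface.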

Table 2.

The basic training parameters for the different datasets.

4.1.4. Evaluation Metrics

To better evaluate the detection performance of the model, we selected six metrics: average precision (AP), AP50, AP75, APs, APm, and APl. AP represents the average precision across ten IoU thresholds, ranging from 0.5 to 0.95. AP50 is the average precision calculated at an IoU threshold of 0.5, while AP75 is calculated at an IoU threshold of 0.75. APs, APm, and APl refer to the average precision for targets with areas below 32 × 32 pixels, between 32 × 32 and 96 × 96 pixels, and above 96 × 96 pixels, respectively. The process for calculating the metrics is as follows:

True Positive (TP), False Positive (FP), and False Negative (FN) are the basic counts used to evaluate classification and detection models. TP is the number of correctly identified positive instances, meaning the targets that are detected and match the ground truth. FP is the number of instances incorrectly identified as positive, meaning the detections that do not match any ground truth. FN is the number of positive instances missed by the model, meaning the targets that are present in the ground truth but were not detected. To calculate each AP value, we first calculate the Precision (P) and Recall (R) values. AP is then obtained as the sum of the precision values at successive recall levels, each weighted by the corresponding increase in recall. AP50, AP75, APs, APm, and APl correspond to the average precision at different IoU thresholds (such as 0.5 and 0.75) and different target scales (small, medium, and large targets). The calculation method is the same, but the precision and recall are computed under the corresponding conditions.
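The metric definitions above can be sketched in a few functions. This is an illustration rather than the evaluation code used in the experiments: AP is computed as the non-interpolated sum of precision weighted by the increase in recall, whereas standard COCO-style evaluators interpolate the precision-recall curve, and the handling of areas exactly at the 32 × 32 and 96 × 96 boundaries is an assumption. All names are hypothetical.

```python
# Ten IoU thresholds for the averaged AP: 0.50, 0.55, ..., 0.95.
IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]

def size_bucket(width, height):
    """Scale group of a box, by pixel area, for APs / APm / APl."""
    area = width * height
    if area < 32 * 32:
        return "small"
    if area <= 96 * 96:
        return "medium"
    return "large"

def precision_recall(tp, fp, fn):
    """Precision = TP / (TP + FP), Recall = TP / (TP + FN)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

def average_precision(is_tp, num_gt):
    """AP at one IoU threshold.

    is_tp lists the detections sorted by descending confidence, with True
    where a detection matched a ground truth box at that threshold.
    """
    if num_gt == 0:
        return 0.0
    tp = fp = 0
    ap = 0.0
    prev_recall = 0.0
    for hit in is_tp:
        if hit:
            tp += 1
        else:
            fp += 1
        precision = tp / (tp + fp)
        recall = tp / num_gt
        # Weight the precision by the increase in recall at this detection.
        ap += (recall - prev_recall) * precision
        prev_recall = recall
    return ap
```

The averaged AP is then the mean of `average_precision` over `IOU_THRESHOLDS` (and over categories), and the scale-specific variants restrict the ground truth and detections to one `size_bucket`.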

4.2. Experimental Results on VisDrone2019 Dataset

4.2.1. Detecting Results on the VisDrone2019 Dataset

To validate the detection performance of our proposed FSTD-Net, we compared it with several state-of-the-art target detection models, including YOLOv3, SSD, YOLOv6, YOLOv7, YOLOv8, YOLOv10, and Faster R-CNN, among others, on the VisDrone2019 dataset. As shown in Table 3, FSTD-Net outperforms the other models and achieves the best value on every evaluation metric. Specifically, FSTD-Net achieves a 2.4% higher AP, 3.8% higher AP50, and 2.0% higher AP75 than the second-best model. Moreover, in the APs, APm, and APl metrics, FSTD-Net scores 1.4%, 3.1%, and 3.7% higher than the second-best model. This indicates that FSTD-Net is more sensitive to targets of different scales and can better detect targets with significant scale variability in UAV images. At the same time, the parameter count of FSTD-Net remains relatively low: it is roughly the same as that of YOLOv8s, yet FSTD-Net achieves 5% higher AP50, 2.4% higher AP, 2% higher AP75, 1.4% higher APs, 3.1% higher APm, and 6.4% higher APl than YOLOv8s. Our proposed FSTD-Net can therefore achieve better detection performance with a lightweight architecture.

Table 3.

Detection results of FSTD-Net with state-of-the-art models on the VisDrone dataset.

4.2.2. Visual Comparisons of Detection Results

To better demonstrate the detection performance of FSTD-Net relative to other state-of-the-art models, we selected several images from the VisDrone2019 dataset and visualized the detection results, which allows a direct comparison between FSTD-Net and the other models in practical scenes. As shown in Figure 11, blue boxes highlight the areas used for comparison. For better visibility, we enlarge the area outlined by the blue box and place it in the upper right corner of the image. In the first column of images, the blue box area contains two “motor” targets. YOLOv6 and YOLOv8 failed to detect these two targets, and YOLOv5 detected only one of them. Because the proposed LLFEB, FEFMDS, and MSCIEM enhance FSTD-Net’s ability to learn features of targets at different scales, FSTD-Net detects both targets precisely. In the second column of images, YOLOv5, YOLOv6, and YOLOv8 all failed to detect the car target highlighted by the blue box, whereas our model detected it. This is attributed to LLFEB, which enhances FSTD-Net’s capability to learn detailed features of certain target types, such as texture and edge features. In the third column of images, YOLOv5, YOLOv6, and YOLOv8 all failed to detect the human target highlighted by the blue box, whereas FSTD-Net detected it, demonstrating its capability on difficult targets. In the fourth column of images, YOLOv6 and YOLOv8 both failed to fully detect the bicycle and the two motor targets in the blue box area, and YOLOv5 mistakenly identified the bicycle as a motor. FSTD-Net detected all of these targets. In the fifth column of images, YOLOv6 and YOLOv8 both failed to fully detect the “van” target highlighted by the blue box, which is situated in a relatively complex background area, whereas both FSTD-Net and YOLOv5 detected the entire target. Thanks to MSCIEM, FSTD-Net can effectively mitigate the interference from the complex background and detect the target.

Figure 11.

The visual detection results of FSTD-Net and other state-of-the-art models. The first column represents the original images. The second column represents the detection results of YOLOv5. The third column represents the detection results of YOLOv6. The fourth column represents the detection results of YOLOv8. The fifth column represents the detection results of our proposed FSTD-Net.

Overall, our proposed FSTD-Net produces better detection results for targets of different scales in UAV images and for targets affected by complex backgrounds. LLFEB provides FSTD-Net with sufficient detailed features, enabling it to detect targets that are otherwise difficult to detect. MSCIEM provides sufficient multi-scale information and, to some extent, avoids interference from complex backgrounds. FEFMDS enhances the model’s ability to learn targets of different shapes and scales. Together, MSCIEM and FEFMDS improve FSTD-Net’s detection of targets at different scales. FSTD-Net performs better than the compared state-of-the-art models and correctly identifies the majority of targets in the VisDrone2019 images.

4.2.3. Experimental Results of Different Categories on the VisDrone2019 Test Set

To further validate the effectiveness of the proposed FSTD-Net, we compare its detection performance with that of YOLOv8s on all categories of the VisDrone2019 dataset. As shown in Table 4, YOLOv8s surpasses FSTD-Net by only 0.1% in AP75 and APm for the awning-tricycle target. For the remaining categories, FSTD-Net outperforms YOLOv8s. As shown in Table 3, the parameter count of FSTD-Net is comparable to that of YOLOv8s. However, FSTD-Net exhibits better overall detection performance, providing more accurate detection for targets of varying scales in the UAV images. This indicates that the proposed FSTD-Net can effectively detect the various target categories present in the UAV dataset.

Table 4.

Experimental results of different categories on the VisDrone2019 test set.

4.2.4. Ablation Studies of FSTD-Net on the VisDrone 2019 Dataset

To further explore the effectiveness of the proposed method, we conducted ablation experiments to investigate the impact of the LLFEB, MSCIEM, and FEFMDS modules on FSTD-Net detection performance on the VisDrone2019 dataset. Table 5 lists the module combinations added to the baseline YOLOv5s model in the ablation experiments. Table 6 shows the detection performance of the baseline with the addition of the different modules. In Table 5, √ indicates that the corresponding module is embedded in the baseline.

Table 5.

Detailed settings of ablation experiment.

Table 6.

Detailed comparison of the proposed FSTD-Net in the ablation studies.

In the baseline model, the feature maps obtained after two down-sampling operations are not used; thus, the detailed information in the low-level features is not fully utilized. The LLFEB enhances the baseline’s learning and understanding of target features in UAV images by providing low-level features such as edges, corners, and textures as a basis for subsequent high-level feature extraction. After introducing LLFEB to the baseline, AP50, AP, AP75, APs, and APm on VisDrone2019 increase by 0.9%, 0.7%, 0.7%, 0.7%, and 0.8%, respectively, while APl remains comparable. With the integration of LLFEB, the overall detection accuracy of the model improves, particularly for medium and small targets.

The FEFMDS overcomes the limitation of standard convolutions, which extract features according to fixed geometric patterns, and provides more representative features for detecting targets of various scales. After introducing FEFMDS, APs, APm, and APl improve by 1%, 2%, and 3.1%, respectively, and AP50, AP, and AP75 on VisDrone2019 increase by 2.1%, 1.5%, and 1.9%, respectively. With FEFMDS, the model can better detect targets of different scales in UAV images.

The MSCIEM suppresses complex backgrounds in UAV images while enhancing the feature extraction of targets of different scales by utilizing multiple convolutions with different kernel sizes. The CIEM further enhances the baseline’s ability to capture long-range semantic information. With the introduction of MSCIEM, we observed improvements of 0.3% in APs, 0.8% in APm, and 0.7% in APl. MSCIEM can guide the model to detect targets of different scales in UAV images more accurately.

In summary, the proposed MSCIEM and FEFMDS modules enhance the baseline’s detection of targets of different scales, while LLFEB improves the baseline’s learning and understanding of target features in UAV images.

4.3. Experimental Results on UAVDT Dataset

To further validate the effectiveness of the proposed FSTD-Net, we conducted experiments on the UAVDT dataset and compared the detection performance of FSTD-Net with that of YOLOv6, YOLOv7, YOLOv8, YOLOv10, and so on. Table 7 presents the AP, AP50, AP75, and the detection precision for small, medium, and large targets (APs, APm, APl) on the UAVDT dataset. Compared to the other algorithms, FSTD-Net achieved the highest detection precision in AP50, AP, AP75, and APm, improving on the second-best model by 3.4%, 1.7%, 1%, and 2.1%, respectively. For APs and APl, FSTD-Net achieved competitive results. These results demonstrate the effectiveness of FSTD-Net in handling large scale variations in object detection.

Table 7.

Detection results of FSTD-Net with state-of-the-art models on the UAVDT dataset.

To further explore the effectiveness of the proposed method on the UAVDT dataset, we conducted ablation experiments to investigate the impact of the LLFEB, MSCIEM, and FEFMDS modules on FSTD-Net detection performance on the UAVDT dataset. As shown in Table 8, LLFEB can better improve the detection performance of medium and large targets. FEFMDS can help the model better learn small targets. MSCIEM enhances the model’s ability to comprehensively learn targets of various scales, effectively balancing the learning of small and large targets while significantly improving the learning of medium-sized targets. Overall, due to the design of the three modules, FSTD-Net achieves relatively optimal performance in object detection across various scales.

Table 8.

Ablation studies of FSTD-Net on the UAVDT dataset.

To further validate the effectiveness of the proposed FSTD-Net, we compared its detection performance with that of the baseline YOLOv5s across all categories of the UAVDT dataset. Table 9 presents the detection performance of FSTD-Net for each category. YOLOv5s outperforms FSTD-Net only in the APm of the bus category and the APl of the truck category. On all other metrics and target categories, FSTD-Net achieves better results. Overall, FSTD-Net achieves better detection performance on the UAVDT dataset.

Table 9.

Experimental results of different categories on the UAVDT dataset.

4.4. Extended Experimental Results on VOC Dataset

To validate the generalization capability of the FSTD-Net, we conducted further experiments on the Pascal VOC datasets [56], which include the VOC 2007 and VOC 2012 datasets. The training and validation sets from VOC 2007 and 2012 are combined to form new training and validation sets, respectively, while the test set from VOC 2007 is used as the new test set. The training set consists of 8218 images; the test set contains 4952 images; and the validation set comprises 8333 images. FSTD-Net was trained for a total of 300 epochs, and the results are presented in Table 10. As shown in Table 10, FSTD-Net achieves an AP50 of 68.2%, an AP of 44.1%, and an AP75 of 47.0%. Compared to the baseline, our proposed FSTD-Net achieves improvements of 1.5%, 2.9%, 3.6%, 0.5%, and 4.3% in AP50, AP, AP75, APm, and APl, respectively. It indicates that our proposed model is well-suited for generic image applications.

Table 10.

Detection results of FSTD-Net with baseline on the VOC dataset.

As shown in Table 11, the results demonstrate the detection accuracy of FSTD-Net compared to the baseline across various categories on the VOC dataset. It can be seen that our proposed model outperforms the baseline in most categories, indicating that our method also improves detection performance in general image applications.

Table 11.

The detection results of FSTD-Net with other state-of-the-art models on the VOC dataset.

5. Discussion

5.1. The Effectiveness of FSTD-Net

Targets in UAV images are more complex compared to those in natural images due to significant scale variability and complex background conditions. Target detection models suitable for natural images, such as the YOLO series of neural networks, cannot be directly applied to UAV images. To address this issue, we designed FSTD-Net, providing some insights on how to adapt models such as the YOLO series for use in UAV image detection.

Overall, the structure of FSTD-Net is essentially consistent with the YOLO series, including the backbone, neck, and head parts, maintaining a relatively lightweight structure. Its parameter count is 11.3M, which is roughly equivalent to that of YOLOv8s. However, compared to the YOLO series of neural networks, FSTD-Net is better suited for UAV image detection tasks.

Experimental results on the VisDrone 2019 and UAVDT datasets demonstrate that the methods proposed in this paper can enhance the applicability of the YOLO model for detection tasks involving UAV images. Additionally, we conducted further experiments on the general datasets VOC2007 and VOC2012. Experiments on the general datasets indicate that the proposed methods can also improve the baseline’s detection performance on natural images. Overall, FSTD-Net not only performs well on UAV image datasets but also achieves commendable results on general datasets.

5.2. The Impact of Image Resizing and Cropping on Target Detection Performance

We conducted a further experiment on VisDrone2019 in which the train, test, and val sets were cropped into images of 640 × 640 pixels. The original training set contained 6471 images, and after cropping, the number of images in the training set increased to 44,205. We then compared the results of training on two datasets: one with the original images resized to 640 × 640, containing 6471 images, and the other with the cropped images, containing 44,205 images. FSTD-Net was trained for 80 epochs on both datasets. The detection results are shown in Table 12.

Table 12.

Detection results of FSTD-Net with resizing and cropping methods.

As shown in Table 12, the detection performance on the cropped dataset is significantly better across all metrics than the performance on the resized dataset. On one hand, the cropping operation increases the number of samples in the training set, allowing the network to undergo more iterations during training, which improves detection performance. On the other hand, resizing changes the original shape of the targets in the UAV images, which may significantly degrade detection performance compared to the cropped dataset.
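The shape change caused by resizing can be illustrated with a small calculation. The sketch assumes that resizing stretches the whole image directly to 640 × 640 without preserving the aspect ratio, which is consistent with the statement that resizing changes target shape but is not confirmed by the text; the 1920 × 1080 frame size and the function name are hypothetical.

```python
def resized_box_size(box_w, box_h, img_w, img_h, out=640):
    """Size of a box after the image is stretched to out x out pixels."""
    return box_w * out / img_w, box_h * out / img_h

# A 30 x 30 pixel target in a hypothetical 1920 x 1080 frame.
w, h = resized_box_size(30, 30, 1920, 1080)
aspect_after = w / h   # the aspect ratio was 1.0 before resizing
```

Under these assumptions the square target shrinks to about 10 × 17.8 pixels, so it both loses most of its area and changes its width-to-height ratio from 1.0 to about 0.56. Cropping, by contrast, leaves the target at its original 30 × 30 size.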

6. Conclusions

In this paper, to address the target scale variability and complex background interference present in UAV images, we propose the FSTD-Net, which effectively detects multi-scale targets in UAV images and reduces the interference caused by complex backgrounds. Firstly, to better capture the features of targets at different scales in UAV images, MSCIEM utilizes a multi-kernel combination approach, and the CIEM in MSCIEM captures long-range contextual information. With MSCIEM, the model can effectively capture features of targets at different scales, perceive long-range contextual information, and respond to significant variations in scale. Secondly, to account for targets of different shapes at different scales, FEFMDS goes beyond conventional feature extraction by standard convolution, providing a more flexible approach to learning full-scale targets of different shapes. The FEFMDS can better fit full-scale targets of different shapes in UAV images, providing more representative features for the final detection of FSTD-Net. Finally, LLFEB is used to efficiently utilize low-level features, including edges, corners, and textures, providing a foundation for subsequent high-level feature extraction and guiding the model to better understand various target features. Experimental results demonstrate that FSTD-Net outperforms the selected state-of-the-art models in overall detection accuracy while maintaining a relatively lightweight structure. In the future, we will further explore more efficient and lightweight target detection models to meet the requirements for direct deployment on UAVs.

Author Contributions

W.Y. performed the experiments and wrote the paper; J.Z. and D.L. analyzed the data and provided experimental guidance. Y.X. and Y.W. helped to process the data. J.Z. and D.L. helped edit the manuscript. Y.X. and Y.W. checked and edited the manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

VisDrone2019 dataset: https://github.com/VisDrone (accessed on 7 August 2024). UAVDT dataset: https://sites.google.com/view/grli-uavdt (accessed on 7 August 2024). Pascal VOC dataset: The PASCAL Visual Object Classes Homepage (ox.ac.uk).

Conflicts of Interest

All authors declare no conflicts of interest.

References

- Zou, Z.; Chen, K.; Shi, Z.; Guo, Y.; Ye, J. Object detection in 20 years: A survey. Proc. IEEE 2023, 111, 257–276. [Google Scholar] [CrossRef]

- Zaidi, S.S.A.; Ansari, M.S.; Aslam, A.; Kanwal, N.; Asghar, M.; Lee, B. A survey of modern deep learning based object detection models. Digit. Signal Process. 2022, 126, 103514. [Google Scholar] [CrossRef]

- Waheed, M.; Ahmad, R.; Ahmed, W.; Alam, M.M.; Magarini, M. On coverage of critical nodes in UAV-assisted emergency networks. Sensors 2023, 23, 1586. [Google Scholar] [CrossRef] [PubMed]

- Gupta, H.; Verma, O.P. Monitoring and surveillance of urban road traffic using low altitude drone images: A deep learning approach. Multimed. Tools Appl. 2022, 81, 19683–19703. [Google Scholar] [CrossRef]

- Deng, A.; Han, G.; Chen, D.; Ma, T.; Liu, Z. Slight aware enhancement transformer and multiple matching network for real-time UAV tracking. Remote Sens. 2023, 15, 2857. [Google Scholar] [CrossRef]

- Feng, L.; Zhang, Z.; Ma, Y.; Sun, Y.; Du, Q.; Williams, P.; Drewry, J.; Luck, B. Multitask learning of alfalfa nutritive value from UAVbased hyperspectral images. IEEE Geosci. Remote Sens. Lett. 2022, 19, 5506305. [Google Scholar] [CrossRef]

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587. [Google Scholar]

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1440–1448. [Google Scholar]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149. [Google Scholar] [CrossRef] [PubMed]

- Wu, X.; Li, W.; Hong, D.; Tao, R.; Du, Q. Deep learning for unmanned aerial vehicle-based object detection and tracking: A survey. IEEE Geosci. Remote Sens. Mag. 2022, 10, 91–124. [Google Scholar] [CrossRef]

- Terven, J.; Cordova-Esparza, D. A comprehensive review of YOLO architectures in computer vision: From YOLOv1 to YOLOv8 and YOLO-NAS. arXiv 2023, arXiv:2304.00501. [Google Scholar] [CrossRef]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788. [Google Scholar]

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. arXiv 2016, arXiv:1612.08242. [Google Scholar]

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767. [Google Scholar]

- Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934. [Google Scholar]

- Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Wei, X. YOLOv6: A Single-Stage Object Detection Framework for Industrial Applications. arXiv 2022, arXiv:2209.02976. [Google Scholar]

- Wang, C.-Y.; Bochkovskiy, A.; Liao, H.-Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475. [Google Scholar]

- Reis, D.; Kupec, J.; Hong, J.; Daoudi, A. Real-Time Flying Object Detection with YOLOv8. arXiv 2023, arXiv:2305.09972. [Google Scholar]

- Wang, C.Y.; Yeh, I.H.; Liao, H.Y.M. YOLOv9: Learning what you want to learn using programmable gradient information. arXiv 2024, arXiv:2402.13616. [Google Scholar]

- Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. YOLOv10: Real-Time End-to-End Object Detection. arXiv 2024, arXiv:2405.14458. [Google Scholar]

- Lin, T.-Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 936–944. [Google Scholar]

- Liu, H.I.; Tseng, Y.W.; Chang, K.C.; Wang, P.J.; Shuai, H.H.; Cheng, W.H. A DeNoising FPN With Transformer R-CNN for Tiny Object Detection. IEEE Trans. Geosci. Remote Sens. 2024, 62, 4704415. [Google Scholar] [CrossRef]

- Zhao, G.; Ge, W.; Yu, Y. GraphFPN: Graph feature pyramid network for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, BC, Canada, 11–17 October 2021; pp. 2743–2752. [Google Scholar]

- Yang, G.; Lei, J.; Zhu, Z.; Cheng, S.; Feng, Z.; Liang, R. AFPN: Asymptotic feature pyramid network for object detection. In Proceedings of the IEEE International Conference on Systems, Man, and Cybernetics (SMC), Honolulu, HI, USA, 1–4 October 2023; pp. 2184–2189. [Google Scholar] [CrossRef]

- Dai, J.; Qi, H.; Xiong, Y.; Li, Y.; Zhang, G.; Hu, H.; Wei, Y. Deformable convolutional networks. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017. [Google Scholar]

- Zhu, X.; Hu, H.; Lin, S.; Dai, J. Deformable convnets v2: More deformable, better results. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 9308–9316. [Google Scholar]

- Wang, W.; Dai, J.; Chen, Z.; Huang, Z.; Li, Z.; Zhu, X.; Hu, X.; Lu, T.; Lu, L.; Li, H. InternImage: Exploring large-scale vision foundation models with deformable convolutions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 14408–14419. [Google Scholar]

- Xiong, Y.; Li, Z.; Chen, Y.; Wang, F.; Zhu, X.; Luo, J.; Dai, J. Efficient Deformable ConvNets: Rethinking Dynamic and Sparse Operator for Vision Applications. arXiv 2024, arXiv:2401.06197. [Google Scholar]

- Liu, S.; Zhang, L.; Lu, H.; He, Y. Center-boundary dual attention for oriented object detection in remote sensing images. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5603914. [Google Scholar] [CrossRef]

- Shao, Z.; Cheng, G.; Ma, J.; Wang, Z.; Wang, J.; Li, D. Realtime and accurate UAV pedestrian detection for social distancing monitoring in COVID-19 pandemic. IEEE Trans. Multimed. 2022, 24, 2069–2083. [Google Scholar] [CrossRef]

- Wang, M.; Zhang, B. Contrastive Learning and Similarity Feature Fusion for UAV Image Target Detection. IEEE Geosci. Remote Sens. Lett. 2024, 21, 6001105. [Google Scholar] [CrossRef]

- Zhang, Y.; Wu, C.; Zhang, T.; Liu, Y.; Zheng, Y. Self-attention guidance and multiscale feature fusion-based UAV image object detection. IEEE Geosci. Remote Sens. Lett. 2023, 20, 6004305. [Google Scholar] [CrossRef]

- Zhou, X.; Zhou, L.; Gong, S.; Zhang, H.; Zhong, S.; Xia, Y.; Huang, Y. Hybrid CNN and Transformer Network for Semantic Segmentation of UAV Remote Sensing Images. IEEE J. Miniat. Air Space Syst. 2024, 5, 33–41. [Google Scholar] [CrossRef]

- Zhang, Y.; Liu, T.; Yu, P.; Wang, S.; Tao, R. SFSANet: Multi-scale object detection in remote sensing image based on semantic fusion and scale adaptability. IEEE Trans. Geosci. Remote Sens. 2024, 62, 4406410. [Google Scholar] [CrossRef]

- Gao, T.; Li, Z.; Wen, Y.; Chen, T.; Niu, Q.; Liu, Z. Attention-free global multiscale fusion network for remote sensing object detection. IEEE Trans. Geosci. Remote Sens. 2023, 62, 5603214. [Google Scholar] [CrossRef]

- Gao, T.; Liu, Z.; Zhang, J.; Wu, G.; Chen, T. A task-balanced multiscale adaptive fusion network for object detection in remote sensing images. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5613515. [Google Scholar] [CrossRef]

- Gao, T.; Niu, Q.; Zhang, J.; Chen, T.; Mei, S.; Jubair, A. Global to local: A scale-aware network for remote sensing object detection. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5615614. [Google Scholar] [CrossRef]

- Dong, X.; Qin, Y.; Fu, R.; Gao, Y.; Liu, S.; Ye, Y.; Li, B. Multiscale deformable attention and multilevel features aggregation for remote sensing object detection. IEEE Geosci. Remote Sens. Lett. 2022, 19, 6510405. [Google Scholar] [CrossRef]

- Shen, H.; Lin, D.; Song, T. Object detection deployed on UAVs for oblique images by fusing IMU information. IEEE Geosci. Remote Sens. Lett. 2022, 19, 6505305.

- Jiang, L.; Yuan, B.; Du, J.; Chen, B.; Xie, H.; Tian, J.; Yuan, Z. MFFSODNet: Multi-Scale Feature Fusion Small Object Detection Network for UAV Aerial Images. IEEE Trans. Instrum. Meas. 2024, 73, 5015214.

- Liu, X.; Leng, C.; Niu, X.; Pei, Z.; Cheng, I.; Basu, A. Find small objects in UAV images by feature mining and attention. IEEE Geosci. Remote Sens. Lett. 2022, 19, 6517905.

- Lan, Z.; Zhuang, F.; Lin, Z.; Chen, R.; Wei, L.; Lai, T.; Yang, C. MFO-Net: A Multiscale Feature Optimization Network for UAV Image Object Detection. IEEE Geosci. Remote Sens. Lett. 2024, 21, 6006605.

- Mao, G.; Liang, H.; Yao, Y.; Wang, L.; Zhang, H. Split-and-Shuffle Detector for Real-Time Traffic Object Detection in Aerial Image. IEEE Internet Things J. 2024, 11, 13312–13326.

- Hong, M.; Li, S.; Yang, Y.; Zhu, F.; Zhao, Q.; Lu, L. SSPNet: Scale selection pyramid network for tiny person detection from UAV images. IEEE Geosci. Remote Sens. Lett. 2022, 19, 8018505.

- Cui, L.; Lv, P.; Jiang, X.; Gao, Z.; Zhou, B.; Zhang, L.; Shao, L.; Xu, M. Context-aware block net for small object detection. IEEE Trans. Cybern. 2022, 52, 2300–2313.

- Yang, C.; Huang, Z.; Wang, N. QueryDet: Cascaded sparse query for accelerating high-resolution small object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 13658–13667.

- Zhang, Y.; Wu, C.; Zhang, T.; Zheng, Y. Full-Scale Feature Aggregation and Grouping Feature Reconstruction-Based UAV Image Target Detection. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5621411.

- Nie, J.; Pang, Y.; Zhao, S.; Han, J.; Li, X. Efficient selective context network for accurate object detection. IEEE Trans. Circuits Syst. Video Technol. 2021, 31, 3456–3468.

- Wang, G.; Zhuang, Y.; Chen, H.; Liu, X.; Zhang, T.; Li, L.; Sang, Q. FSoD-Net: Full-scale object detection from optical remote sensing imagery. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5602918.

- Cai, X.; Lai, Q.; Wang, Y.; Wang, W.; Sun, Z.; Yao, Y. Poly Kernel Inception Network for Remote Sensing Detection. arXiv 2024, arXiv:2403.06258.

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

- Yu, W.; Zhou, P.; Yan, S.; Wang, X. InceptionNeXt: When Inception meets ConvNeXt. arXiv 2023, arXiv:2303.16900.

- Du, D.; Qi, Y.; Yu, H.; Yang, Y.; Duan, K.; Li, G.; Zhang, W.; Huang, Q.; Tian, Q. The unmanned aerial vehicle benchmark: Object detection and tracking. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 370–386.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016; pp. 21–37.

- Everingham, M.; Van Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The PASCAL visual object classes (VOC) challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).