Abstract

In recent years, the detection performance of deep-learning-based SAR-GMTI (synthetic aperture radar ground moving target indication) algorithms has been limited by the scarcity of measured data, owing to the high operational complexity and cost of real SAR systems. To solve this problem, this paper proposes an overall digital twin (DT)-based implementation framework for SAR ground moving target intelligent detection tasks. In particular, using an SAR imaging algorithm, a high-fidelity twin replica of SAR moving targets is established in digital space through parameter traversal, based on the prior target characteristics of the obtained measured datasets. The constructed SAR twin datasets are then fed into a neural network model to train an intelligent detector that fully learns the features of the moving targets and the preset SAR scene in the twin space. This enables robust detection of ground moving targets in related practical scenarios without repeated, complex field experiments. The effectiveness of the proposed framework is verified on the MiniSAR measured system and compared with the traditional CFAR (constant false alarm rate) detection method.

1. Introduction

Nowadays, with the development of intelligent technology and military aerospace technology, information warfare has become the main form of modern combat, and real-time monitoring of opposing combatants and military equipment in varying battlefield environments is key to victory. Intelligent warfare places greater emphasis on competition over battlefield data, and military sensors must develop rapidly to meet the challenges posed by highly mobile military targets [1,2]. Synthetic aperture radar (SAR) [3,4,5,6,7] is an active microwave imaging sensor that can provide high-resolution imaging and real-time monitoring of an ROI (region of interest) day and night, largely unaffected by weather and lighting conditions.

Ground moving target indication (GMTI) [8,9,10] is one of the key applications of SAR. Traditional SAR-GMTI algorithms operate on the echo signal and are highly susceptible to background clutter, so their detection performance deteriorates sharply, especially against complex urban backgrounds. In recent years, deep-learning-based intelligent detection algorithms have attracted great attention from researchers owing to their robust performance in SAR-GMTI tasks, and they have been continuously improved to adapt to field detection tasks in complex scenarios. Guo et al. [11] introduced an adaptive activation function and a convolutional block attention module into YOLOX to enhance the feature extraction ability of the network and improve detection performance in complex backgrounds. In [12], a new feature aggregation module and a mixed-attention mechanism were proposed to enrich the semantic information and spatial features of small targets, and experiments on several SAR datasets showed that the model recognized targets better than other detectors. Zhou et al. [13] proposed a lightweight meta-learning-based SAR target detection framework that improves generalization to new classes by integrating a meta-feature extractor with a new feature aggregation module. Wang et al. [14] proposed a visual attention-based model for target detection and recognition, and verification on MiniSAR datasets showed that the model could distinguish targets from complex background clutter accurately and quickly. Sun et al. [15] proposed a target detection algorithm based on feature fusion and a cross-layer connection network, which improved the detection performance for small targets and reduced computational complexity. To balance the detection accuracy and speed of the model, a lightweight method enhanced by hybrid representation learning of SAR image features was proposed in [16], and its detection performance was verified through extensive experiments.

However, neural-network-based SAR-GMTI algorithms face increasingly frequent, multi-task tests in complex scenarios. Because of the high equipment cost and operational complexity of SAR systems, field experiments are extremely difficult to conduct, and the detection performance and generalization ability of the models are consistently limited by insufficient measured data. The development of intelligent SAR-GMTI algorithms therefore calls for new technologies, and the strengths of digital twin (DT) [17,18] technology align closely with the current needs of intelligent detection algorithms in SAR-GMTI tasks. A DT is a digital replica of a physical entity that integrates artificial intelligence, data analysis, virtual modeling, and other technologies to simulate the behavior of objects in the real system for real-time state monitoring and prediction. For intelligent SAR-GMTI tasks, a virtual replica of the ground moving targets and the scenes under surveillance is the key to robust detection in real systems. Owing to its ability to model a system in advance, DT provides new impetus for intelligent SAR ground moving target detection algorithms aided by artificial intelligence networks. Yang et al. [19] proposed a construction method for a spacecraft digital twin (SDT) and discussed key issues such as data collection, system configuration, and data service, providing a theoretical basis for constructing intelligent integrated spacecraft systems in virtual and physical spaces. In [20], a digital twin model was established for real and virtual radar systems; on this basis, the architecture of the virtual model and how it becomes the main step in radar identification were further explained, which played a crucial role in improving the maturity of combat radar processing. Xie et al. [21] proposed a digital-twin-based framework for UAVs (unmanned aerial vehicles) and studied the application of 3D millimeter-wave radar imaging on UAVs; the experimental results verified that the developed digital platform could accurately realize intelligent operation and management of the UAV. In [22], using generated radar digital twin datasets, a machine learning classifier effectively detected the direction of potentially dangerous UAVs, obviating complex field experiments. A real-time processor operating in parallel with the radar was proposed in [23] to alleviate interference from strong scattering objects; by modeling the surface intercept of the radar's main beam, it effectively reduced the number of false alarms in airborne radar detection systems.

Inspired by the successful applications of digital twins in multiple fields, this paper proposes a framework for SAR ground moving target intelligent detection that integrates DT and artificial intelligence technology. The bright lines that defocused moving targets leave in the imaging results are crucial for recognizing target trajectories in the scene. However, the available measured data are insufficient to train a neural network model. In this paper, an SAR imaging algorithm is used to construct a high-fidelity SAR twin replica in digital space by parameter traversal, after which the trained model can robustly detect moving targets in related application scenarios without complex field experiments. The main innovations of this paper are as follows:

- This paper designs an overall framework for SAR moving target intelligent detection that integrates DT and artificial intelligence technology. Specifically, a high-fidelity virtual replica of the current physical system is constructed in digital space according to the characteristics of the measured data obtained from a real SAR system. A neural network model is then trained on this replica to obtain an intelligent detector that robustly detects moving targets in related application scenarios.

- Using the SAR imaging algorithm, the target motion, position, and SCR (signal-to-clutter ratio) parameters are fully traversed during echo synthesis to construct high-fidelity SAR twin datasets. These datasets reflect the characteristics of the moving targets and the preset scene in the real system and significantly improve the detection performance and generalization ability of the neural network model in practical application scenarios.

- The effectiveness of the proposed framework is verified on measured data obtained by radar sensors. The experimental results indicate that the DT-based framework for SAR ground moving target intelligent detection achieves robust target detection and synchronous state perception in real test scenarios without complex field experiments and is applicable to several SAR systems.

2. Methods

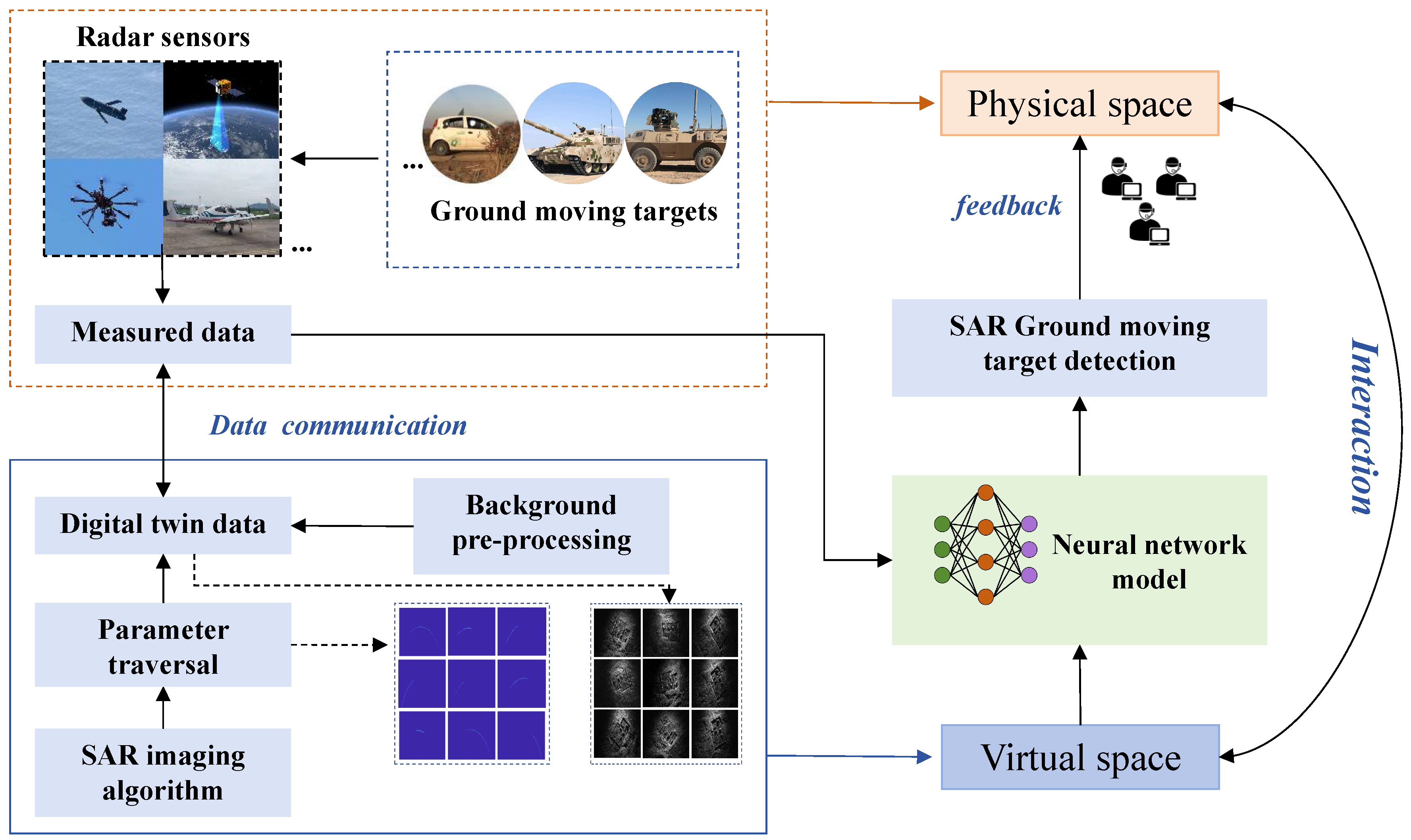

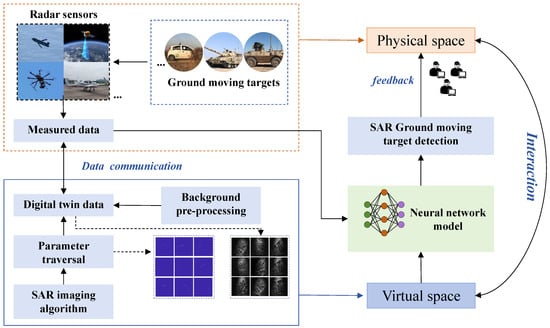

Information warfare places more stringent demands on the real-time monitoring of highly mobile military targets. Under limited conditions, however, it is impracticable to conduct SAR field experiments frequently, which seriously hinders accurate combat on the battlefield. In this regard, this paper proposes an overall framework for SAR ground moving target detection that integrates digital twin and artificial intelligence technology. Based on a small quantity of measured data obtained from a current real SAR system, a high-fidelity twin replica of SAR moving targets is established in digital space by parameter traversal. The SAR twin datasets are then fed into a neural network model for training to obtain an intelligent detector that robustly detects moving targets in current application scenarios. The DT-based framework for SAR ground moving target intelligent detection is shown in Figure 1.

Figure 1.

DT-based framework for an intelligent SAR-GMTI system.

The proposed DT-based framework for SAR moving target intelligent detection is applicable to various SAR systems. In practical applications, targets may be submerged in complex background clutter and cannot be distinguished accurately. However, the Doppler effects of moving targets defocus the echo energy and displace the target position in the imaging results, leaving distinct bright lines in the scene. These bright lines carry rich location information about the target trajectory and are an important basis for accurate moving target detection.
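The displacement effect can be illustrated with the textbook first-order relation for a straight-line, side-looking geometry. This is a standard approximation, not the model used in this paper. A target with radial velocity $v_r$ at closest range $R_0$ acquires a Doppler centroid of $-2v_r/\lambda$. It is therefore imaged displaced in azimuth by approximately $-R_0 v_r / v$, where $v$ is the platform speed. The sign convention (positive $v_r$ for a receding target) and the numbers in the example are assumptions chosen for illustration.

```python
def doppler_centroid(v_r, wavelength):
    """Doppler centroid (Hz) produced by a radial velocity v_r (m/s).

    Assumed convention: positive v_r means the target moves away from the radar,
    so the phase -4*pi*R(t)/wavelength gives a negative Doppler frequency.
    """
    return -2.0 * v_r / wavelength


def azimuth_shift(v, R0, v_r):
    """First-order azimuth displacement (m) of a moving target in a focused image.

    v: platform speed (m/s); R0: closest-approach slant range (m);
    v_r: target radial velocity (m/s). Straight-line side-looking geometry assumed.
    """
    return -R0 * v_r / v


# Hypothetical numbers: 30 m/s platform, 1 km range, 3 cm (X-band-like) wavelength.
print(doppler_centroid(3.0, 0.03))        # about -200 Hz
print(azimuth_shift(30.0, 1000.0, 3.0))   # -100.0 (metres)
```

Under these assumed numbers, a modest 3 m/s radial velocity shifts the target image by about 100 m. This illustrates why moving targets seldom appear at their true positions in the imaging results.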

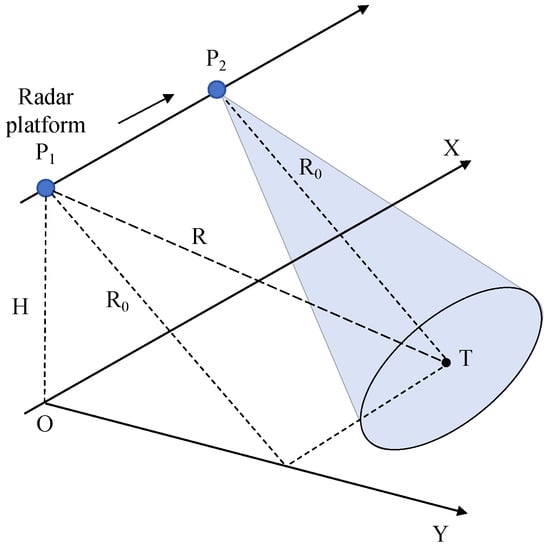

2.1. Physical SAR System

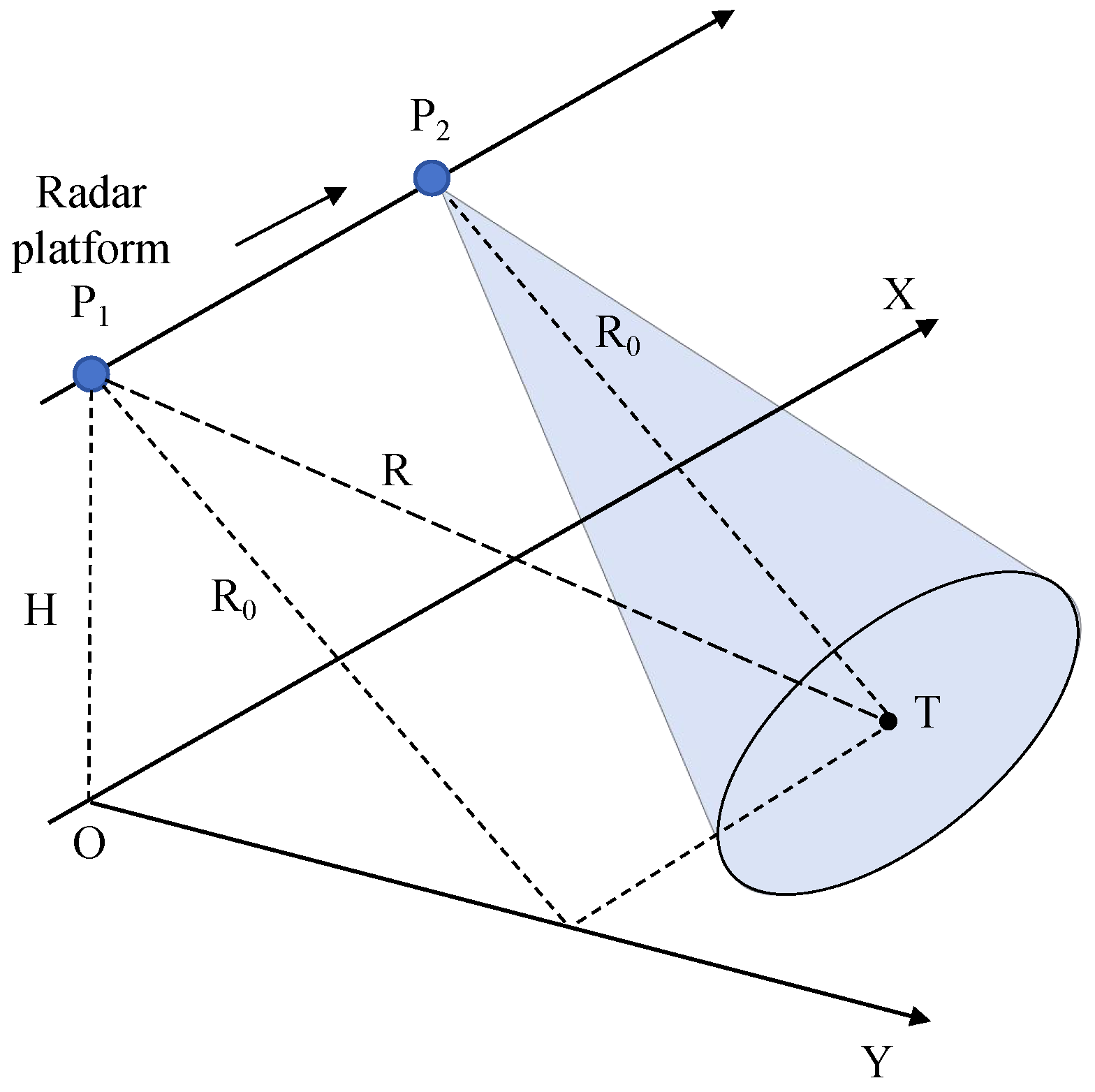

The physical SAR system provides the foundation for data acquisition and for constructing the DT-based model, and interaction with the virtual space takes place through the measured data obtained by the real SAR system. Accurate system parameters and an analysis of moving target characteristics are prerequisites for constructing a high-fidelity digital replica of the physical entity. A simple schematic of the SAR platform and beam coverage area is shown in Figure 2. The radar antenna emits pulses perpendicular to the sensor's motion vector and records the echoes reflected from the ground surface.

Figure 2.

Geometric model of radar data acquisition.

The radar platform moves along the X-axis at height H. Electromagnetic pulses are emitted toward the ground at fixed intervals, and the ground area covered by the beam projection is the ROI. For a point target T in the observation scene, R denotes its instantaneous slant distance to the APC (antenna phase center), and the minimum of this distance along the flight path is the shortest (closest-approach) distance. Considering the high complexity and cost of SAR field experiments, this paper aims to detect ground moving targets accurately in related application scenarios solely by learning and extracting the features of the constructed SAR twin data in digital space, aided by digital twin and artificial intelligence technology. The simulation parameters must therefore be consistent with those of the real system, and constructing high-fidelity SAR ground moving target twin samples is the core of this research.

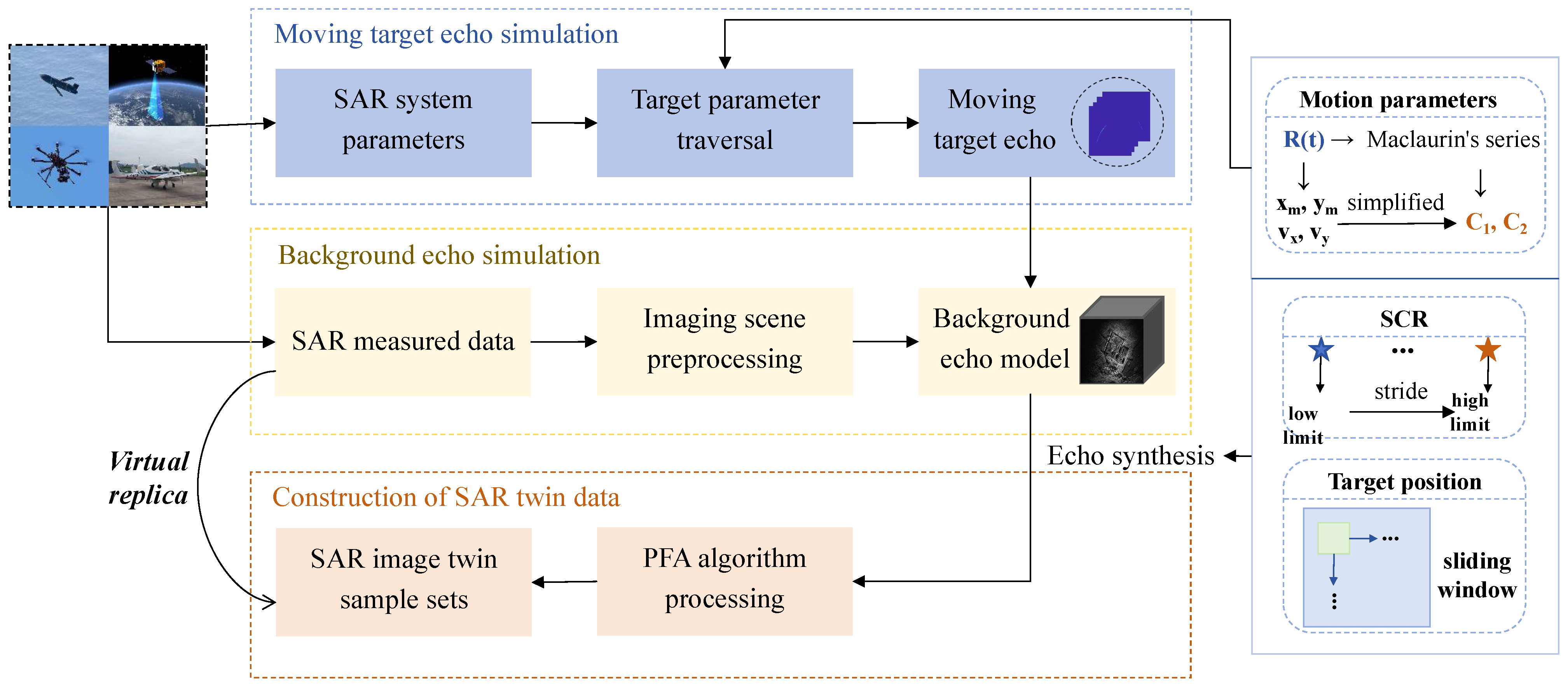

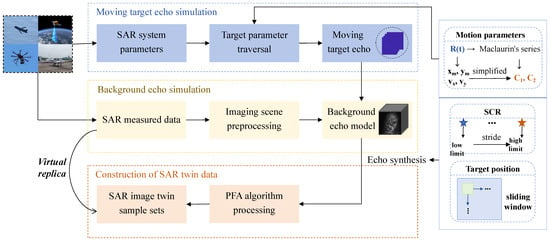

2.2. Virtual Replica of SAR-GMTI

According to the SAR imaging principle of ground observation, the range history of a target depends on its velocity, which manifests as differently shaped moving targets in the imaging results. When intelligent detection algorithms are applied to SAR-GMTI tasks, the measured data needed for model training are extremely difficult to obtain because SAR field experiments are complex to operate, which weakens the detection performance and generalization ability of the model. By combining an SAR imaging algorithm with parameter traversal, a high-fidelity SAR twin replica that simulates moving targets in an actual system can be constructed. Whether the SAR twin data fully reflect the characteristics of the targets and scene under surveillance is the key to robust detection of moving targets in test scenes. The key steps for constructing SAR twin data with a radar imaging algorithm are shown in Figure 3.

Figure 3.

Key steps for the construction of SAR twin data.

Unlike the range of a stationary target, the range of a moving target depends not only on the platform motion but also on the target's own motion. Consequently, moving targets with different motion parameters take different shapes in the final imaging results. On this basis, a large number of SAR samples for model training can be obtained by combining the SAR imaging algorithm with parameter traversal. In particular, the motion parameters of the targets, their position distribution in the scene, and the energy ratio between the target echo and scene echo are all considered during simulation. This ensures that the SAR twin data present features highly similar to those of the measured datasets.

- Target motion parameter traversal

Assume that the radar platform operates at a speed of v, the orbit height is H, and the azimuth and range velocity of the moving target are and , respectively. Initially, the coordinates of the APC and the observed moving target are and . As the platform moves, the positions of the APC and the moving target are recorded as and at the azimuth time t. On this basis, the distance history R(t) can be expressed as

where and . From Equation (1), the range history R(t) of a moving target depends not only on the radar platform but also on four target parameters, namely the target's velocity components and its initial position. Traversing these parameters yields a large number of SAR samples that simulate moving targets of different shapes. However, traversing four parameters jointly is difficult. To reduce the computational complexity, R(t) is expanded in a Maclaurin series (i.e., about t = 0) and truncated after the quadratic term, which can be expressed as

where , and and are equivalent coefficients expressed as

The number of variables to traverse is thus reduced to two, since the platform motion parameters are fixed for a specific SAR system. During simulation, the ranges of the four target parameters are estimated in advance to calculate the upper and lower limits of the two equivalent coefficients. These two coefficients are then traversed within this range to establish a high-fidelity virtual replica of SAR ground moving targets in the digital system.
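The sketch below shows how two equivalent coefficients arise from four target parameters, assuming one concrete straight-track geometry. The notation is chosen for illustration only.

- The APC starts at $(0, 0, H)$ and flies along $+X$ at speed $v$.
- The target starts at $(x_0, y_0, 0)$ with azimuth velocity $v_x$ and range velocity $v_y$.
- Let $u = v_x - v$ and $R_0 = \sqrt{x_0^2 + y_0^2 + H^2}$.

Under these assumptions, the second-order Maclaurin expansion is $R(t) \approx R_0 + \alpha t + \beta t^2$, where:

$$\alpha = \frac{x_0 u + y_0 v_y}{R_0}, \qquad \beta = \frac{u^2 + v_y^2}{2R_0} - \frac{(x_0 u + y_0 v_y)^2}{2R_0^3}.$$

For a stationary target with $x_0 = 0$, this reduces to $\alpha = 0$ and $\beta = v^2/(2R_0)$, the familiar hyperbolic-range result. The function names and the grid-based estimation of the coefficient limits are hypothetical.

```python
import itertools
import math


def range_history(t, v, H, x0, y0, vx, vy):
    """Exact slant range R(t) between the APC and a moving point target.

    Assumed geometry: the APC starts at (0, 0, H) and flies along +X at speed v;
    the target starts at (x0, y0, 0) with azimuth velocity vx (along X) and
    range velocity vy (along Y).
    """
    dx = x0 + (vx - v) * t
    dy = y0 + vy * t
    return math.sqrt(dx * dx + dy * dy + H * H)


def quadratic_coefficients(v, H, x0, y0, vx, vy):
    """R0, alpha, beta of the expansion R(t) ~= R0 + alpha*t + beta*t**2."""
    r0 = math.sqrt(x0 * x0 + y0 * y0 + H * H)
    u = vx - v                      # target velocity relative to the platform along X
    g = x0 * u + y0 * vy            # half of d(R**2)/dt at t = 0
    alpha = g / r0
    beta = (u * u + vy * vy) / (2 * r0) - g * g / (2 * r0 ** 3)
    return r0, alpha, beta


def coefficient_bounds(v, H, x0_rng, y0_rng, vx_rng, vy_rng, n=5):
    """Estimate (min, max) of alpha and beta by sampling each target parameter
    on an n-point grid within its (min, max) range."""
    def grid(lo, hi):
        return [lo + (hi - lo) * k / (n - 1) for k in range(n)]

    alphas, betas = [], []
    for x0, y0, vx, vy in itertools.product(grid(*x0_rng), grid(*y0_rng),
                                            grid(*vx_rng), grid(*vy_rng)):
        _, alpha, beta = quadratic_coefficients(v, H, x0, y0, vx, vy)
        alphas.append(alpha)
        betas.append(beta)
    return (min(alphas), max(alphas)), (min(betas), max(betas))


# Hypothetical values: 30 m/s platform at 500 m height, target 1 km away in range.
r0, alpha, beta = quadratic_coefficients(30.0, 500.0, 0.0, 1000.0, 5.0, 3.0)
t = 0.5
print(range_history(t, 30.0, 500.0, 0.0, 1000.0, 5.0, 3.0), r0 + alpha * t + beta * t * t)
print(coefficient_bounds(30.0, 500.0, (-50.0, 50.0), (900.0, 1100.0), (-10.0, 10.0), (-6.0, 6.0)))
```

Sampling the parameters on a grid and taking the extremes is only one simple way to estimate the limits. Because $\alpha$ and $\beta$ are not monotonic in every parameter, a denser grid gives tighter limits.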

- Target position traversal

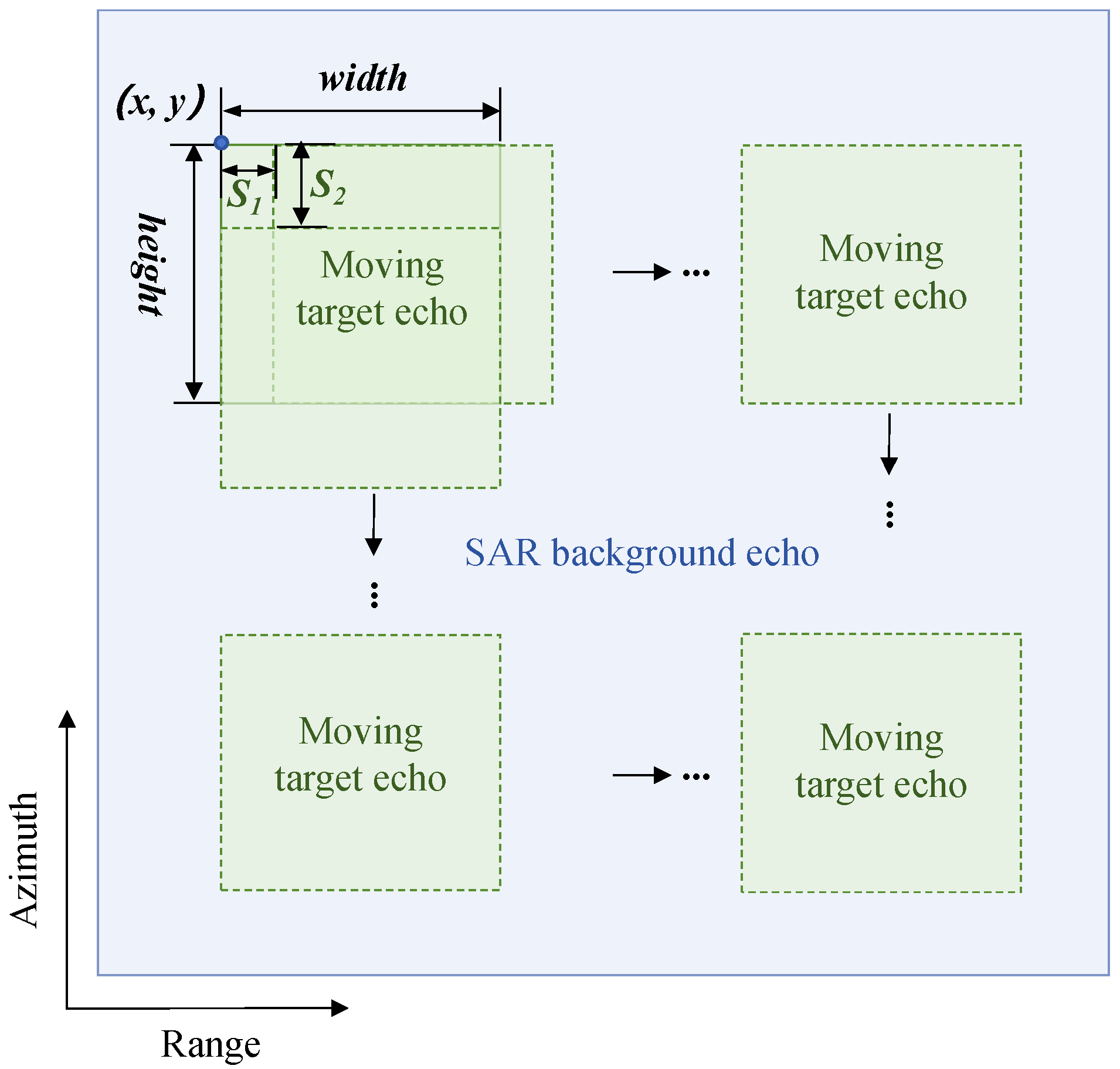

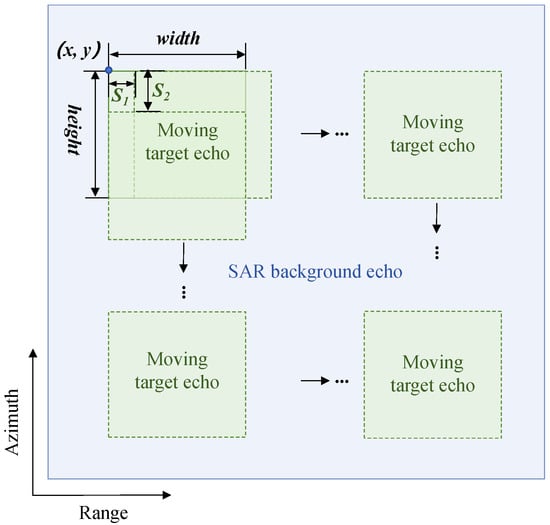

In practical applications, the position of the monitored target in the imaging scene varies. To bring the constructed SAR twin data closer to the real system, the target position is traversed while the moving target echo is fused with the background echo. This adds detail to the twin replica and significantly increases the number of SAR image samples. Inspired by the sliding-window technique, the target position traversal is illustrated schematically in Figure 4.

Figure 4.

Schematic diagram of target position parameter traversal.

The green and blue windows in Figure 4 represent the echo matrices of the moving target and the SAR scene, respectively. The upper-left corner coordinate of the target echo matrix is , and the imaging area is . The target window slides along the azimuth and range directions with step sizes of and , respectively, until the entire scene is traversed. In this way, the distribution of moving targets in the scene is simulated as completely as possible, which further helps establish a high-fidelity SAR twin replica in digital space.
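Below is a minimal sketch of the sliding-window position traversal. It assumes the following:

- Echo matrices are stored as nested Python lists, with rows along azimuth and columns along range.
- At each window position, the target echo is simply added to the scene echo.

The function names are hypothetical.

```python
def window_positions(scene_shape, target_shape, step):
    """Yield top-left corners (row, col) of the target window inside the scene.

    scene_shape, target_shape: (azimuth samples, range samples).
    step: (azimuth step, range step) in samples.
    """
    n_az, n_rg = scene_shape
    m_az, m_rg = target_shape
    s_az, s_rg = step
    if m_az > n_az or m_rg > n_rg:
        raise ValueError("target window is larger than the scene")
    for i in range(0, n_az - m_az + 1, s_az):        # slide along azimuth
        for j in range(0, n_rg - m_rg + 1, s_rg):    # slide along range
            yield i, j


def embed(scene, target, i, j):
    """Return a copy of the scene echo with the target echo added at (i, j)."""
    out = [row[:] for row in scene]
    for a, target_row in enumerate(target):
        for r, value in enumerate(target_row):
            out[i + a][j + r] += value
    return out


# Example: an 8 x 8 scene, a 4 x 4 target window, and a step of 2 samples.
positions = list(window_positions((8, 8), (4, 4), (2, 2)))
print(len(positions), positions[0], positions[-1])   # 9 (0, 0) (4, 4)
```

With this stepping rule, the last window stops short of the scene's far edge whenever the scene size minus the window size is not a multiple of the step.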

- SCR traversal

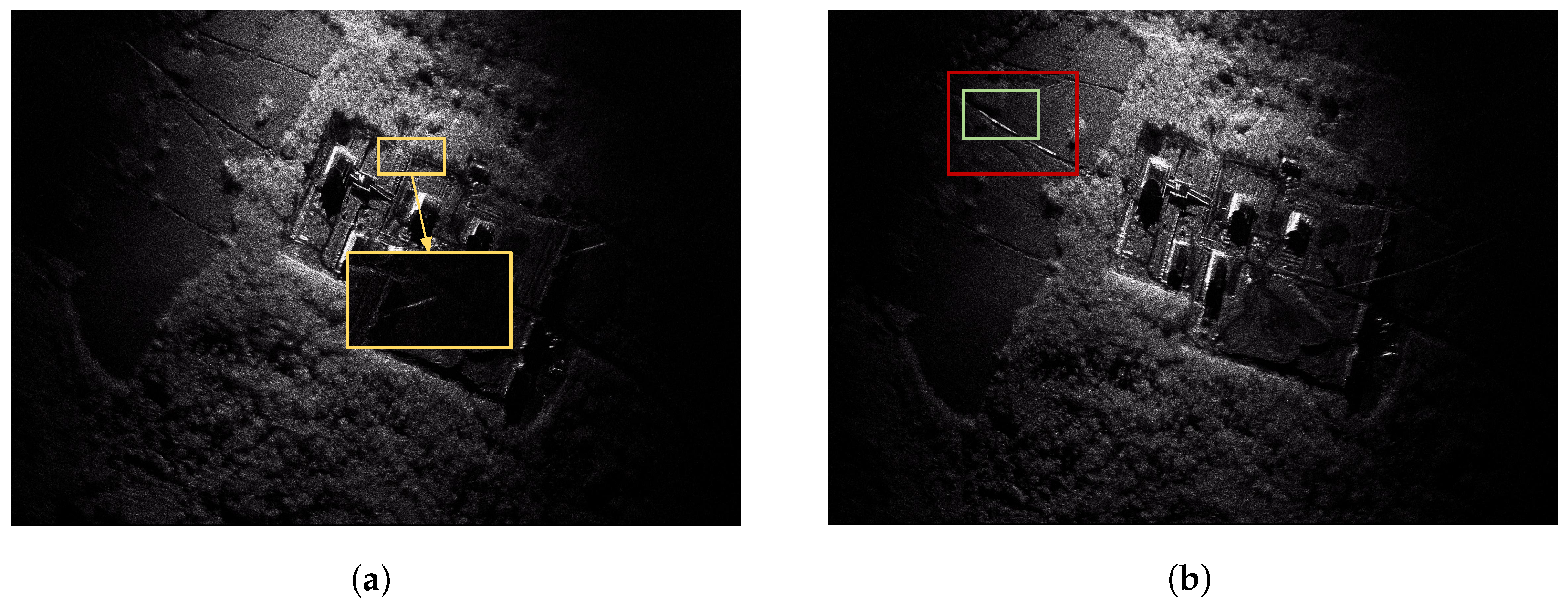

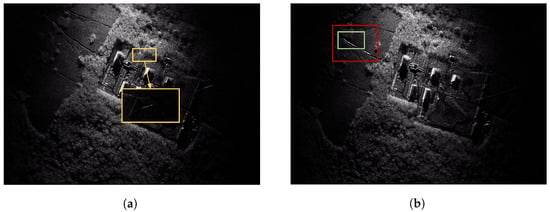

When the moving target echo is adaptively fused with the background echo, the impact of different SCR (signal-to-clutter ratio) values on the imaging results must be fully considered. If the SCR is set too low, the moving target echo is partially or completely submerged in strong background clutter and cannot be detected. In addition, some bright lines in the imaging results may be broken by background clutter and misjudged as two different targets in the detection results, as shown in Figure 5.

Figure 5.

Examples of detection results influenced by SCR. (a) Missed target (SCR = 10 dB). (b) False alarm (SCR = 13 dB). The red, green, and yellow rectangles represent correctly detected targets, false alarms, and missed targets, respectively.

In Figure 5a, the target echo is almost completely submerged in strong background clutter because of the low SCR and cannot be detected by the network model. In Figure 5b, the bright line is partially interrupted by surrounding clutter, and a fragment of it is also recognized as a target by the model. This fragment is actually a false alarm and results in overlapping multi-target detections. In an actual SAR system, the shape and brightness of moving targets in the imaging results are complex and variable, being affected by illumination conditions, background clutter power, and system instability. Therefore, the influence of the SCR should be considered when fusing the target echo with the background echo. The upper and lower limits of the SCR are set based on system characteristics and experience, and SAR twin data samples under different SCRs are obtained by parameter traversal within the corresponding range.
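One way to realize the SCR traversal is sketched below under stated assumptions:

- The SCR is taken as $10\log_{10}(P_t/P_c)$, where $P_t$ and $P_c$ are the mean powers of the target echo and the clutter echo over their samples.
- The target echo is rescaled by a single real gain before fusion.
- Clutter power is measured over whatever sample set is passed in, for example the whole scene or only the target window.

The text does not specify the clutter-power region, so this choice is an assumption. The function names, echo samples, and SCR limits are also hypothetical.

```python
import math


def mean_power(samples):
    """Mean power of a sequence of (complex) echo samples."""
    return sum(abs(s) ** 2 for s in samples) / len(samples)


def scale_to_scr(target, clutter, scr_db):
    """Rescale the target echo so that 10*log10(P_t / P_c) equals scr_db."""
    gain = math.sqrt(mean_power(clutter) * 10 ** (scr_db / 10) / mean_power(target))
    return [gain * s for s in target]


def measured_scr_db(target, clutter):
    """SCR in dB of a target echo relative to a clutter echo."""
    return 10 * math.log10(mean_power(target) / mean_power(clutter))


def scr_grid(lo_db, hi_db, step_db):
    """Evenly spaced SCR values from lo_db to hi_db inclusive."""
    n = int(round((hi_db - lo_db) / step_db))
    return [lo_db + k * step_db for k in range(n + 1)]


# Hypothetical echo samples and SCR limits, for illustration only.
target = [1 + 1j, 2 + 0j, -1j]
clutter = [0.5 + 0j, -0.5j, 0.3 + 0.4j]
for scr in scr_grid(10.0, 20.0, 2.5):
    scaled = scale_to_scr(target, clutter, scr)
    print(scr, round(measured_scr_db(scaled, clutter), 6))
```

Scaling the target rather than the clutter keeps the background statistics of the measured scene unchanged across all generated samples.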

At this point, the motion parameters, the position distribution of the target, and the SCR have all been considered comprehensively to construct a high-fidelity SAR twin replica in digital space. This enables robust detection of moving targets in related application scenarios without repeated, complex field experiments.

2.3. SAR Ground Moving Target Detection Based on Deep Learning

Traditional SAR-GMTI algorithms based on echo processing are easily affected by complex background clutter, the radar operating mode, and system parameters. For instance, when the radar operates in circular spotlight mode, the imaging scene rotates constantly with the changing observation angle, so image registration [24] is generally required to detect moving targets. With recent advances in artificial intelligence, a neural network model can instead be trained on the constructed SAR twin data. The model fully learns the characteristics of the preset scene and moving targets in digital space and can then detect moving targets robustly in related application scenarios.

Compared to optical images, grayscale SAR images contain less feature information, and targets often occupy very few pixels in the scene, which places higher demands on the performance of neural network models. With the continuous improvement of deep learning technology, several high-performing algorithms have emerged and been applied successfully to target detection tasks. As a typical single-stage object detection algorithm, YOLO (You Only Look Once) [25] offers high inference speed and real-time detection capability. It treats object detection as a regression problem and feeds the entire image into the network for inference in a single pass. With the introduction of the RPN (Region Proposal Network), Faster-RCNN (Faster Region-based Convolutional Neural Network) [26] raised detection accuracy substantially, relying on its strong feature extraction and representation capabilities. SSD (Single Shot MultiBox Detector) [27] adopts a multi-scale feature extraction strategy to locate targets quickly. However, it cannot achieve sufficient accuracy on shallow feature maps because it depends on prior boxes set manually from experience. DETR (DEtection TRansformer) [28] is an end-to-end object detection model based on the transformer architecture, which eliminates hand-designed steps such as preset anchor boxes and post-processing. Because the shapes of moving targets and observed scenes differ across SAR systems, the intelligent detection algorithm should be selected flexibly according to the specific application scenario and requirements.

3. Implementation Details

The DT-based SAR ground moving target intelligent detection framework proposed in this paper is applicable to various SAR systems, such as airborne, spaceborne, and missile-borne SAR. In this section, its implementation is demonstrated in a specific application. Taking PFA (the polar format algorithm) as the imaging algorithm, the SAR twin data used for neural network training are constructed by target parameter traversal. The Faster-RCNN network is then used to learn and extract features of the targets and preset scenes in digital space to detect moving targets robustly in MiniSAR measured data.

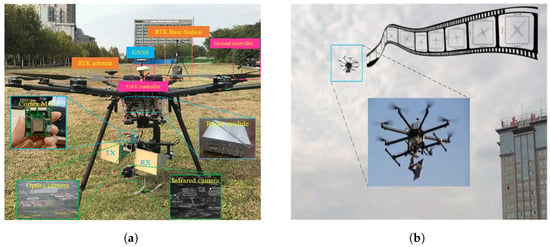

3.1. MiniSAR Measured System

Compared to conventional SAR systems, MiniSAR [29,30] is small, lightweight, and low-power while retaining the key advantages of SAR, such as day-and-night, all-weather operation and high-resolution imaging. This makes it suitable for flexibly deployed platforms such as mobile platforms, UAVs, and reconnaissance vehicles. MiniSAR systems currently operate in the X, Ku, and Ka bands, among others. In 2018, the College of Electronic and Information Engineering of Nanjing University of Aeronautics and Astronautics (NUAA) developed and tested an X-band MiniSAR system [31] for tasks such as radar imaging and ground moving target detection; some of its system parameters are shown in Table 1.

Table 1.

Parameters of the physical MiniSAR system.

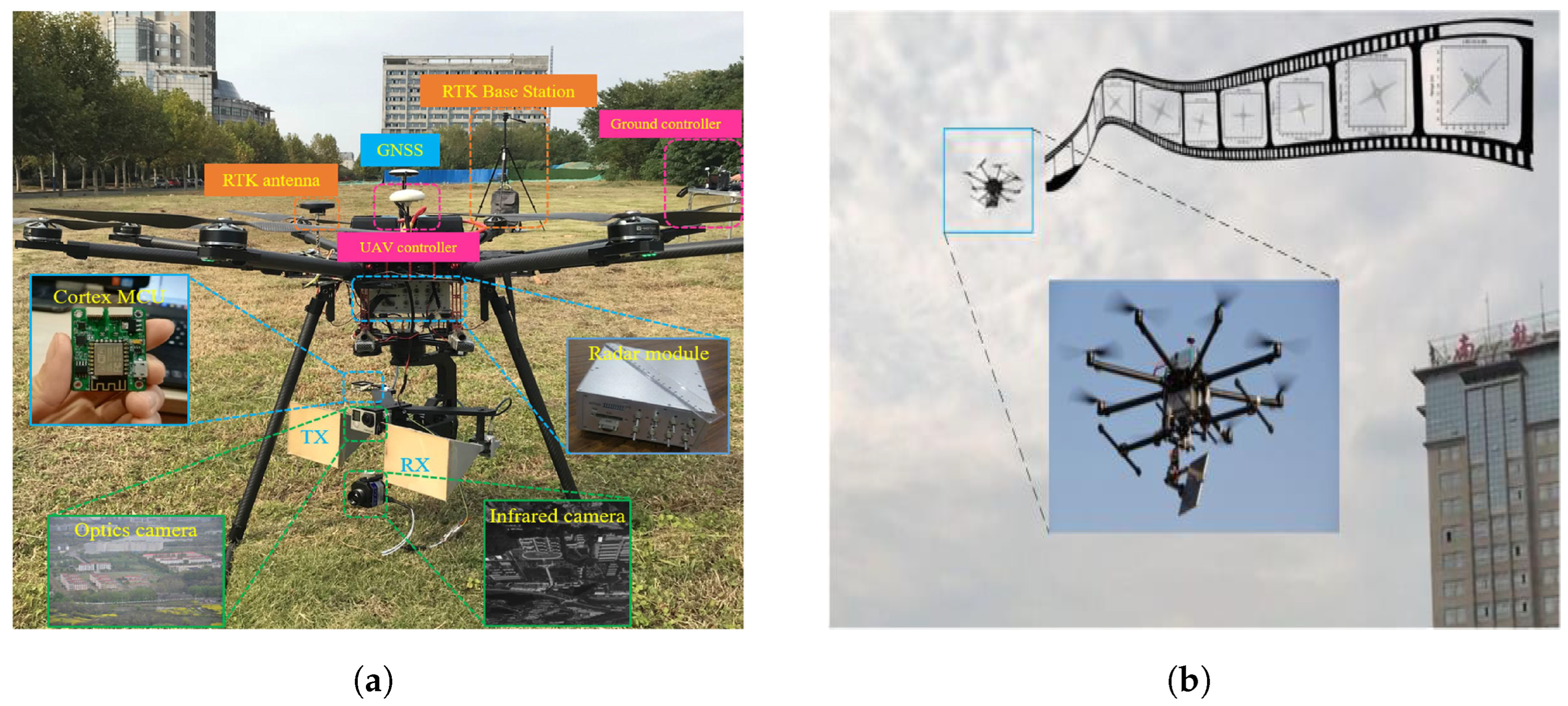

The MiniSAR system consists mainly of radar modules, a UAV, a Cortex MCU, a camera, and a system controller, as shown in Figure 6. With the NUAA MiniSAR system operating in circular spotlight mode, SAR-GMTI tasks can be deployed to collect field data and continuously monitor ROIs. In the measured MiniSAR data, the moving targets themselves are submerged in background clutter and cannot be distinguished. However, Doppler effects cause obvious defocusing and position displacement of the moving targets, leaving bright lines in the imaging results. These lines carry rich features for localization and provide an important basis for target recognition and trajectory reconstruction.

Figure 6.

Schematic of the NUAA-MiniSAR system. (a) System architecture. (b) Experimental scenario.

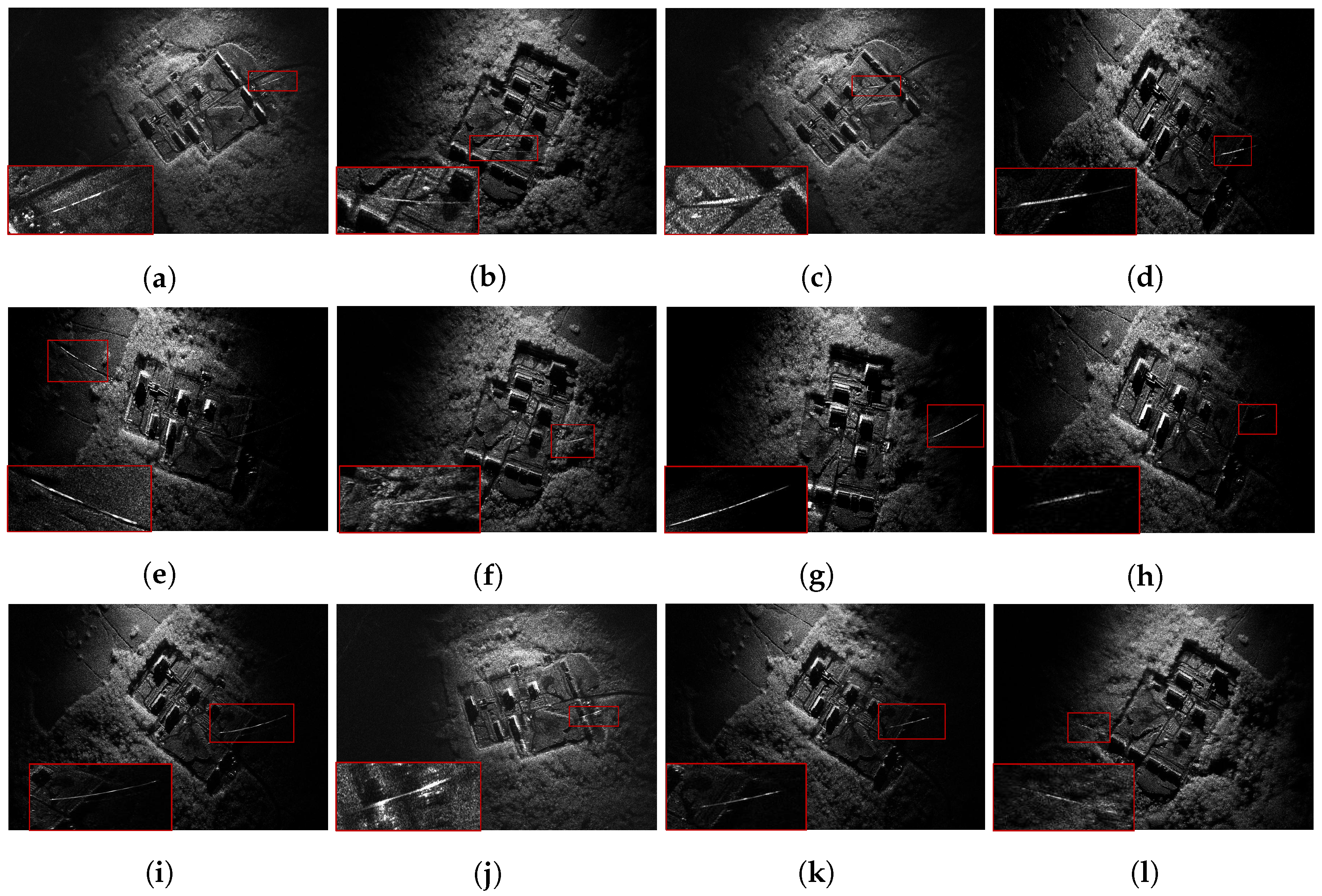

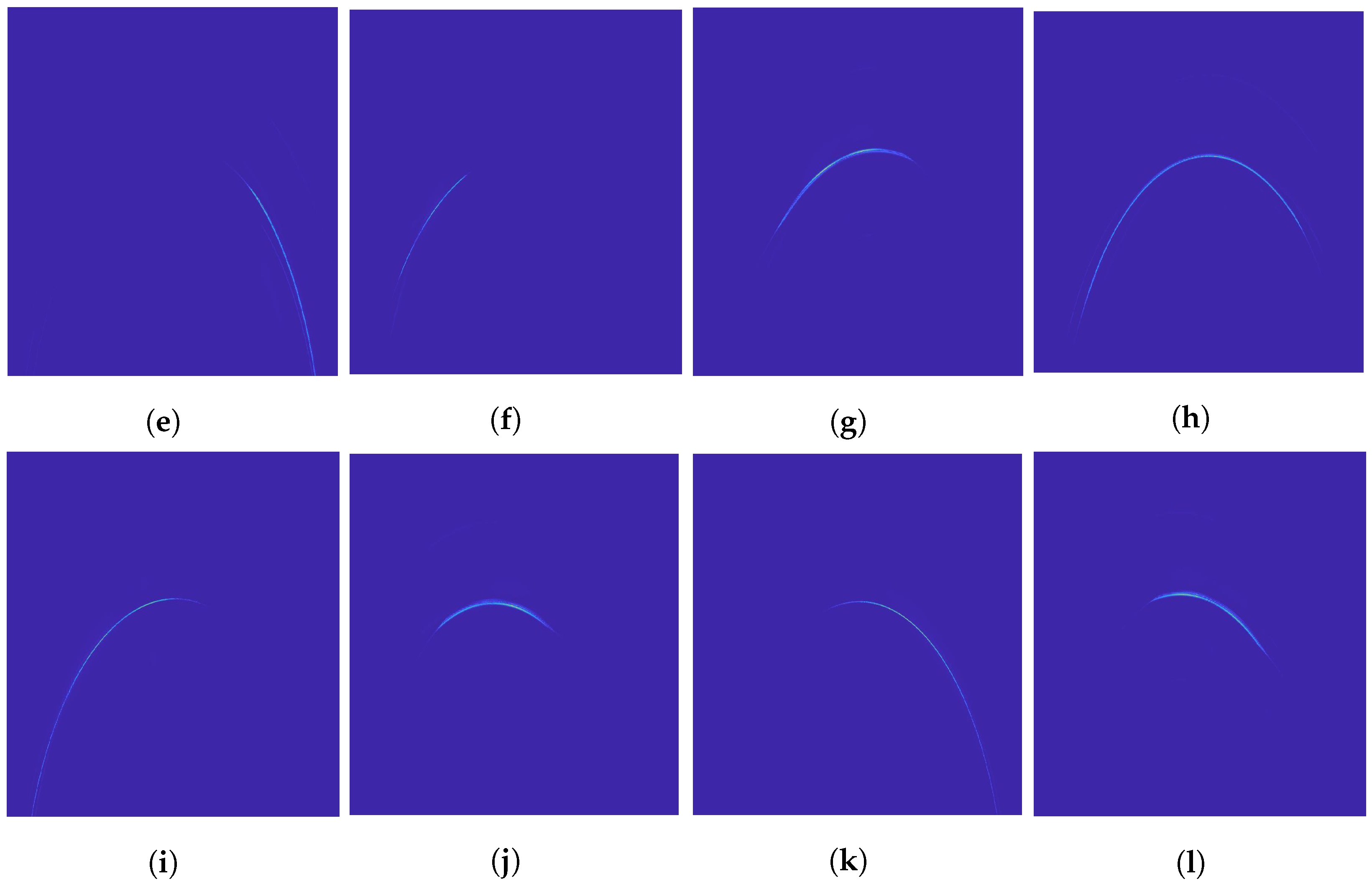

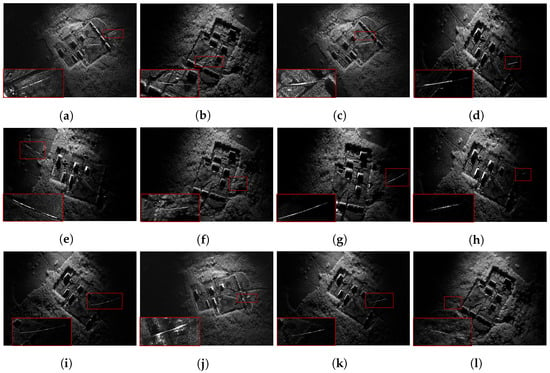

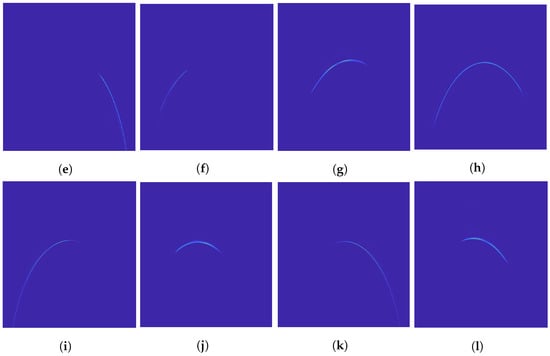

As the MiniSAR platform circles the ROI, the relative position and velocity between the radar and the target change constantly, and the system can capture 360° dynamic information of the observed scene. The bright lines generated by Doppler effects contrast clearly with the scene in the imaging results, which provides rich features for SAR ground moving target detection. Part of the MiniSAR measured data obtained from different angles is shown in Figure 7.

Figure 7.

(a–l) The measured data of the MiniSAR system. The red rectangle represents the real target in the scene.

Figure 7 shows that the shapes and positions of the bright lines in the imaging scene change constantly. Artificial buildings, vegetation, and stones in the observation scene scatter strongly. As a result, traditional echo-processing-based SAR-GMTI algorithms are easily affected by complex background clutter and produce many false alarms and missed targets. By comparison, a deep-learning-based intelligent detection network can achieve better detection performance in actual SAR systems by fully learning the morphological features of the moving targets and the monitored scene.

3.2. Construction of MiniSAR Twin Datasets

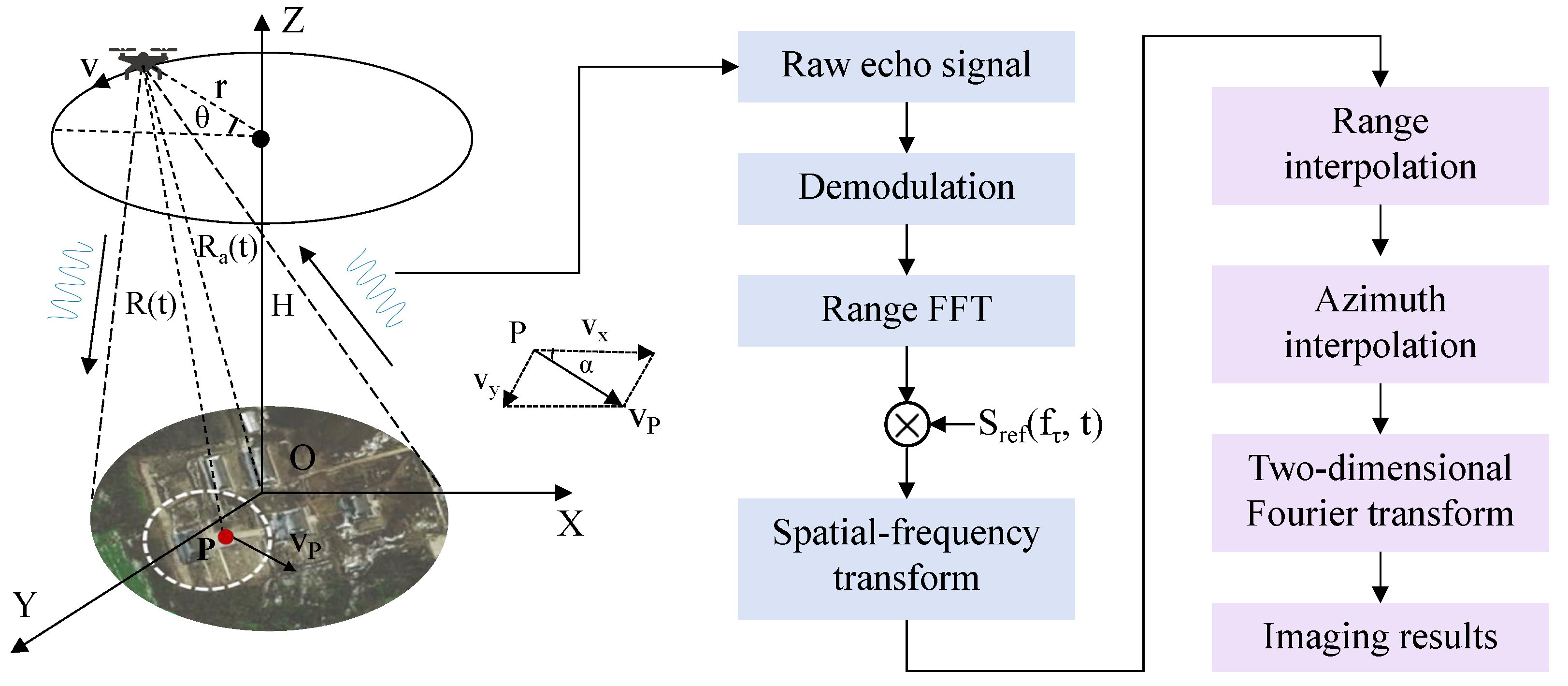

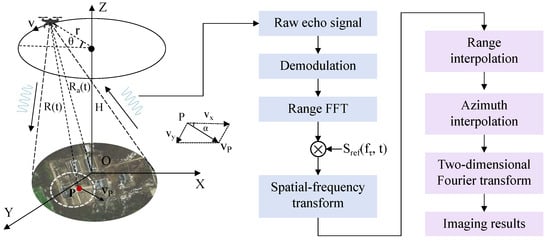

Spotlight-mode SAR is suited to high-resolution imaging of fixed scenes: it continuously observes an ROI by steering the antenna beam so that it always illuminates the same scene. PFA [32,33] is a classical imaging algorithm for spotlight SAR that stores the echo data in polar format. This effectively addresses migration through resolution cells in the edge area of the scene and greatly enlarges the effective focused imaging range of spotlight SAR. The flowchart of PFA is shown in the right part of Figure 8.

Figure 8.

Flowchart of the PFA algorithm.

The geometric observation model of MiniSAR operating in circular spotlight mode is shown in the left part of Figure 8, with the scene center as the coordinate origin. The X-axis, Y-axis, and Z-axis correspond to the range, azimuth, and height dimensions, respectively. The radar platform moves at speed v at flight height H, and the orbit radius is r. As the platform moves, the instantaneous coordinate of the APC is , and a point target P in the observation area has initial position . The target moves at a speed of with range and azimuth velocities of and , respectively. At azimuth time t, the coordinate of the target is . Ignoring the signal amplitude, the linear frequency-modulated continuous wave transmitted by the radar antenna can be expressed as

where represents the range time, is the pulse width, is the carrier frequency, is the range chirp rate, and the bandwidth is . The received signal is a delayed copy of the transmitted signal, with delay . After demodulation, the two-dimensional echo signal of the point target can be expressed as

where t is the azimuth time, and is the azimuth aperture time. Performing the range Fourier transform on Equation (6) using the principle of stationary phase, we obtain

Then, matched filtering and motion compensation are applied to the echo data by multiplying Equation (7) by the following reference function

where and are the distances from the radar platform to the point target P and the scene center O, respectively. The resulting signal can be expressed as

Under the assumption of a planar wavefront, in Equation (9) can be expressed as

where and are the real-time position vectors from the APC to the point target P and the scene center O, whose instantaneous magnitudes are and , and and are the azimuth and pitch angles of the APC. On this basis, the PFA imaging results can be obtained by following the relevant steps shown in Figure 8.
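The planar-wavefront step can be checked numerically under the following assumptions:

- The scene center O is at the origin.
- The APC is at slant range $R_O$ with azimuth angle $\theta$ and pitch angle $\phi$, that is, at $R_O(\cos\phi\cos\theta, \cos\phi\sin\theta, \sin\phi)$.
- The ground target P is at $(x_p, y_p, 0)$.

Expanding $|\mathbf{R}_{aP}|$ to first order in the target offset gives the commonly used approximation $\Delta R = R_P - R_O \approx -(x_p\cos\phi\cos\theta + y_p\cos\phi\sin\theta)$. The sign depends on how the position vectors are oriented, so this form and the angle conventions are assumptions and not necessarily identical to Equation (10). The residual phase is written in the usual compensated form $\exp[-j4\pi(f_c+f_\tau)\Delta R/c]$, again in assumed notation. The function names are hypothetical.

```python
import math

C = 299_792_458.0  # speed of light (m/s)


def apc_position(r_o, theta, phi):
    """APC coordinates at slant range r_o, azimuth angle theta and pitch angle phi (rad)."""
    return (r_o * math.cos(phi) * math.cos(theta),
            r_o * math.cos(phi) * math.sin(theta),
            r_o * math.sin(phi))


def delta_r_exact(apc, target):
    """Exact differential range R_P - R_O (scene centre at the origin)."""
    return math.dist(apc, target) - math.dist(apc, (0.0, 0.0, 0.0))


def delta_r_planar(theta, phi, target):
    """Planar-wavefront approximation of R_P - R_O for a ground target (z = 0)."""
    x, y, _ = target
    return -(x * math.cos(phi) * math.cos(theta) + y * math.cos(phi) * math.sin(theta))


def residual_phase(f_c, f_tau, delta_r):
    """Phase (rad) of exp(-j*4*pi*(f_c + f_tau)*delta_r/c) after compensation."""
    return -4.0 * math.pi * (f_c + f_tau) * delta_r / C


# Hypothetical geometry: 1 km slant range, target a few metres from the centre.
theta, phi = 0.3, 0.5
apc = apc_position(1000.0, theta, phi)
p = (2.0, -3.0, 0.0)
print(delta_r_exact(apc, p), delta_r_planar(theta, phi, p))
```

Under the planar approximation, the residual phase is linear in $(f_c + f_\tau)$ with a slope set by the projection of the target position onto the line of sight. The compensated samples can therefore be viewed as samples of the scene spectrum on a polar grid indexed by frequency and aperture angle. The neglected second-order term, of order $|\mathbf{p}|^2/(2R_O)$, grows with distance from the scene center.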

Moreover, according to Figure 8, the actual system in this paper operates in circular spotlight mode, and can be expressed as

where , , , , and . On this basis, Equation (11) can be further simplified as

From Equation (12), it can be seen that is related to , , , and , but parameter traversal based on four variables is extremely difficult to conduct. Therefore, in order to reduce the computational complexity, a Maclaurin expansion is performed on at , and we can obtain

where , and the equivalent coefficients and are expressed as

This significantly simplifies the simulation, since moving targets of many shapes can be obtained by traversing only the two equivalent coefficients. Specifically, the ranges of the target velocity and initial position are estimated to calculate the upper and lower limits of the two coefficients, which are then traversed within these limits using a suitable step size. In addition, the target position and SCR parameters described in Section 2.2 are also traversed to construct a high-fidelity MiniSAR twin replica in digital space.
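For the circular-spotlight case, the reduction to two coefficients can be reproduced under the following simplifying assumptions:

- The APC moves on $(r\cos\omega t, r\sin\omega t, H)$ with $\omega = v/r$, so $\cos\phi = r/\sqrt{r^2+H^2}$ is constant.
- The target moves as $(x_0 + v_x t, y_0 + v_y t, 0)$, with range velocity $v_x$ and azimuth velocity $v_y$.

Applying the planar-wavefront form $\Delta R(t) \approx -\cos\phi\,[x(t)\cos\omega t + y(t)\sin\omega t]$ and expanding to second order gives $\Delta R(t) \approx -x_0\cos\phi + a t + b t^2$. The coefficients are:

$$a = -\cos\phi\,(v_x + \omega y_0), \qquad b = -\cos\phi\,\left(\omega v_y - \tfrac{1}{2}\omega^2 x_0\right).$$

These expressions follow only from the stated assumptions and need not match Equations (13) and (14) term by term. The function names and numeric values are hypothetical.

```python
import math


def planar_delta_r(t, v, H, r, x0, y0, vx, vy):
    """Planar-wavefront Delta R(t) on a circular orbit (assumed geometry)."""
    w = v / r                            # angular rate of the orbit (rad/s)
    cos_phi = r / math.hypot(r, H)       # cosine of the (constant) pitch angle
    x, y = x0 + vx * t, y0 + vy * t      # target position at azimuth time t
    return -cos_phi * (x * math.cos(w * t) + y * math.sin(w * t))


def circular_coefficients(v, H, r, x0, y0, vx, vy):
    """Constant, linear and quadratic Maclaurin coefficients of planar_delta_r."""
    w = v / r
    cos_phi = r / math.hypot(r, H)
    d0 = -cos_phi * x0
    a = -cos_phi * (vx + w * y0)
    b = -cos_phi * (w * vy - 0.5 * w * w * x0)
    return d0, a, b


def traverse(a_lim, b_lim, a_step, b_step):
    """Yield (a, b) pairs on a regular grid between the given (min, max) limits."""
    na = int(round((a_lim[1] - a_lim[0]) / a_step))
    nb = int(round((b_lim[1] - b_lim[0]) / b_step))
    for i in range(na + 1):
        for k in range(nb + 1):
            yield a_lim[0] + i * a_step, b_lim[0] + k * b_step


# Hypothetical orbit and target values, for illustration only.
args = (30.0, 300.0, 500.0, 20.0, -10.0, 3.0, 4.0)   # v, H, r, x0, y0, vx, vy
d0, a, b = circular_coefficients(*args)
t = 0.5
print(planar_delta_r(t, *args), d0 + a * t + b * t * t)
```

In this sketch, only $a$ and $b$ change the shape of the trace, while $d_0$ merely shifts it in range. This is consistent with traversing two equivalent coefficients and handling position through a separate traversal.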

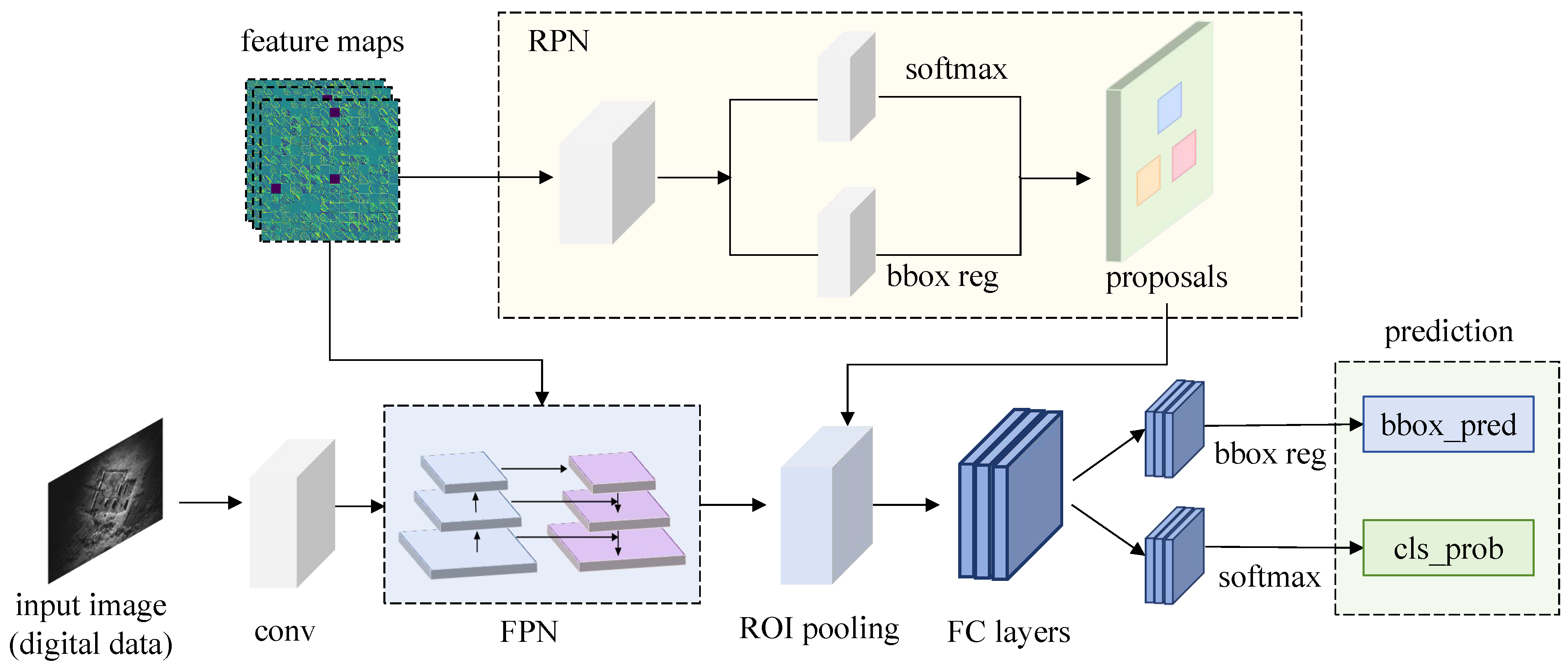

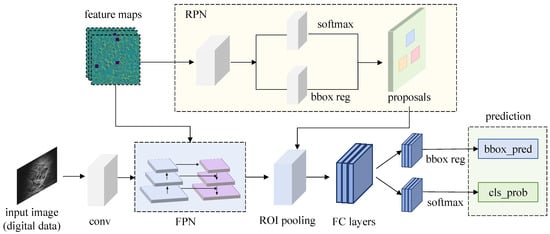

3.3. SAR Moving Target Intelligent Detection Based on Faster-RCNN

Taking Faster-RCNN [34] as an example, this paper demonstrates the overall implementation of SAR-GMTI tasks using artificial intelligence. Faster-RCNN has shown superior detection performance on multiple datasets; its structure is shown in Figure 9. The network consists mainly of two modules, namely an RPN and a Fast RCNN [35] detector, and its two-stage inference allows it to complete SAR-GMTI tasks effectively in complex backgrounds. Faster-RCNN uses an anchor mechanism to generate region proposals of different sizes and aspect ratios, which are then classified and refined to obtain the final detection results. The constructed high-fidelity twin datasets were used for network training, and the trained model was used to detect moving targets in related application scenarios. By fully extracting and learning features of the SAR twin replica in digital space, real-time testing and optimization can be carried out, helping combatants become familiar with the characteristics of the battlefield and achieve precise strikes.

Figure 9.

Model structure of Faster-RCNN.

Generally, Faster-RCNN extracts features from the input image through convolutional layers and performs subsequent inference and prediction on the output feature maps. The intelligent network used in this paper mainly includes four parts:

- Feature extraction: First, the feature extraction network uses convolution and pooling operations to generate the feature maps consumed by the subsequent RPN layers. Since SAR images are grayscale and the targets to be recognized are relatively small, ResNet50 was selected as the backbone of Faster-RCNN, and an FPN (Feature Pyramid Network) was introduced to further improve detection performance. In particular, the residual connections of ResNet50 allow additional layers to improve model performance, so the network can learn richer semantic information while mitigating vanishing and exploding gradients.

- Multi-scale feature fusion: During feature extraction, shallow layers contain less semantic information but precise target positions, whereas deep layers contain richer semantic information but coarse locations. A conventional CNN (convolutional neural network) extracts features bottom-up and uses only the features of the last layer, which sharply degrades detection performance for small objects. By comparison, the FPN combines bottom-up and top-down pathways and fuses features through lateral connections. This makes full use of both high-resolution low-level features and high-level semantic information for prediction. With little additional computation, a multi-scale feature pyramid is constructed simply by changing the network connections, enabling robust detection of targets at multiple scales.

- Region proposal: The RPN directly processes the feature maps output by the backbone feature extraction network. It generates a series of rectangular region proposals by sliding a window over these maps, with a set of preset anchors at each position. The convolution results are mapped to feature vectors and fed into fully connected layers for classification and regression. On the one hand, the class probability is computed with the SoftMax function, and each anchor is classified as target or background (binary classification). On the other hand, the regression layer predicts and outputs the coordinates of the bounding boxes that contain targets.

- Classification and regression: The proposals output by the RPN are pooled into fixed-length feature vectors (RoI pooling), which are then classified and localized by fully connected layers. The SoftMax function assigns the target category of each proposal, and the bounding-box coordinates are refined for the regions that contain targets.
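The top-down merge described under multi-scale feature fusion can be sketched in NumPy as follows. The sketch assumes four backbone levels whose spatial size halves from one level to the next. Random matrices stand in for the learned 1 × 1 lateral convolutions, and the 3 × 3 convolution that FPN applies after each merge is omitted. `fpn_top_down` is a hypothetical name. The usage lines use the C2 to C5 channel counts of ResNet50 (256, 512, 1024, 2048).

```python
import numpy as np

def fpn_top_down(features, out_channels=64, seed=0):
    """Top-down FPN merge (illustrative sketch).

    features: list of (C_i, H_i, W_i) arrays ordered shallow -> deep, each level
    half the spatial size of the previous one. Returns one
    (out_channels, H_i, W_i) map per level.
    """
    rng = np.random.default_rng(seed)
    # Lateral 1x1 convolutions are per-pixel channel projections;
    # random weights stand in for the learned ones.
    laterals = []
    for f in features:
        w = rng.standard_normal((out_channels, f.shape[0])) / np.sqrt(f.shape[0])
        laterals.append(np.einsum("oc,chw->ohw", w, f))
    merged = [laterals[-1]]                      # start from the deepest, most semantic level
    for lat in reversed(laterals[:-1]):
        up = merged[0].repeat(2, axis=1).repeat(2, axis=2)  # nearest-neighbour 2x upsampling
        merged.insert(0, lat + up)               # fuse via the lateral connection (addition)
    return merged

# Usage with ResNet50-like C2..C5 shapes (random data).
rng = np.random.default_rng(1)
feats = [rng.standard_normal((c, s, s)) for c, s in zip((256, 512, 1024, 2048), (32, 16, 8, 4))]
pyramid = fpn_top_down(feats)
```

Every output level now mixes its own high-resolution lateral features with upsampled semantics from all deeper levels. This is why the finest pyramid level is the one usually assigned to small targets.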

4. Experiment Results

Following the DT-based framework for SAR ground moving target intelligent detection described in Section 3, a high-fidelity SAR twin replica was first constructed in digital space by combining a radar imaging algorithm with parameter traversal. The SAR twin data were then fed into the neural network model for training, and the trained model was used to detect SAR ground moving targets in related practical application scenarios.

4.1. Construction Results of Digital Twin Data

The construction of high-fidelity SAR twin data is crucial to the robust detection of moving targets in measured scenarios. The simulation system parameters should be consistent with those of the actual system. The twin datasets should also cover as wide a variety of target shapes as possible, so that the network model can fully extract and learn the feature information of the monitored targets and the preset scene. The simulation parameters of the MiniSAR twin replica are listed in Table 2, and the simulation environment was MATLAB R2020a.

Table 2.

Simulation parameters of the MiniSAR twin replica.

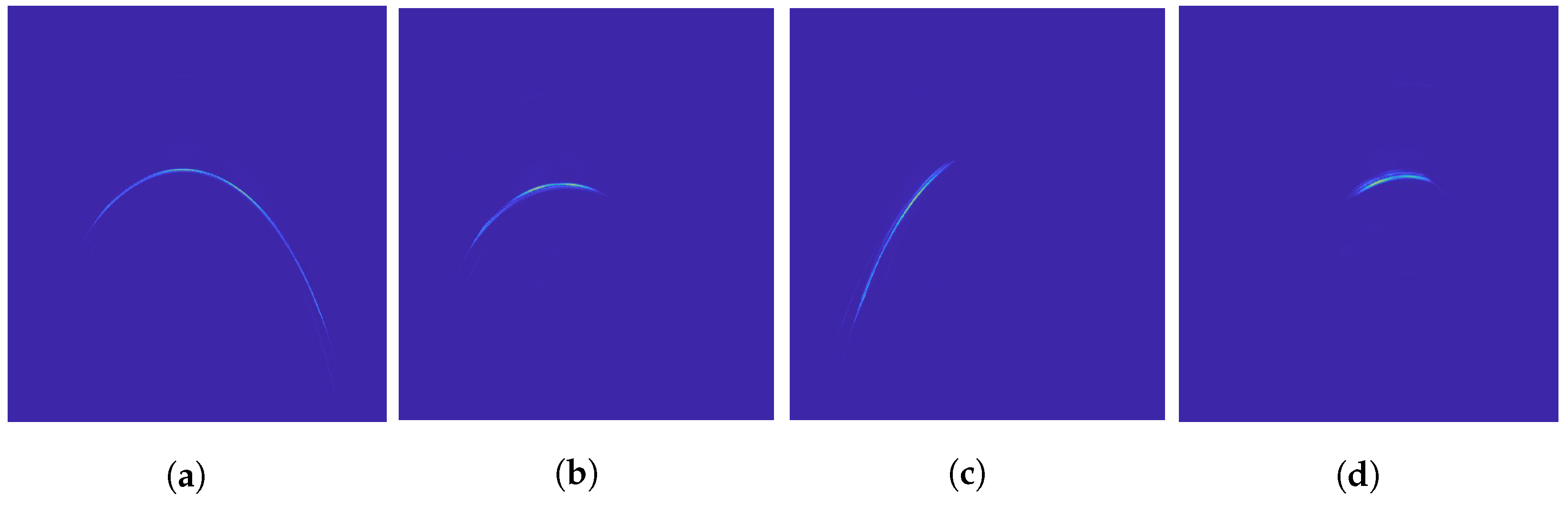

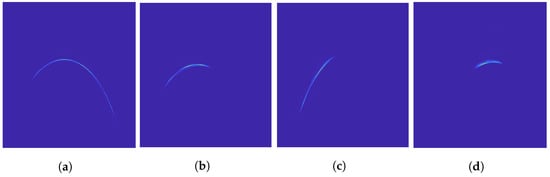

During the traversal of the motion parameters, the velocity of the moving targets was set between 3 m/s and 5 m/s. On this basis, the ranges of  and  were calculated to be [−8.2, −1.7] ∪ [1.7, 8.2] and [−0.038, 0.018], respectively. The traversal step sizes of  and  were set to  and , respectively. The simulation results of moving targets under different motion parameter settings are shown in Figure 10.
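The motion-parameter traversal amounts to enumerating a Cartesian grid over the two parameter ranges above. The parameter symbols are not reproduced here, so the sketch calls them `p1` and `p2` (hypothetical names). The step values 1 and 0.004 in the usage line are borrowed from the Table 4 settings in Section 4.2 purely for illustration. This assumes they are the two motion-parameter steps. Each grid point would then be passed to the imaging simulator, which is not shown.

```python
import itertools
import numpy as np

def stepped(lo, hi, step):
    """Values lo, lo + step, ... up to hi (inclusive, with a small float tolerance)."""
    return np.arange(lo, hi + 1e-9, step)

def motion_grid(p1_step, p2_step):
    """Cartesian grid over two motion parameters (hypothetical names p1, p2).

    p1 covers [-8.2, -1.7] U [1.7, 8.2]; p2 covers [-0.038, 0.018].
    """
    pos = stepped(1.7, 8.2, p1_step)
    p1_vals = np.concatenate([-pos[::-1], pos])   # two-sided interval, symmetric about zero
    p2_vals = stepped(-0.038, 0.018, p2_step)
    return list(itertools.product(p1_vals, p2_vals))

grid = motion_grid(1.0, 0.004)   # illustrative step sizes
```

Halving both steps roughly quadruples the number of simulated targets. This is the trade-off between sample richness and running time that the comparative experiments in Section 4.2 examine.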

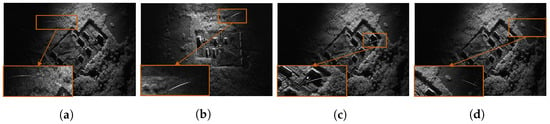

Figure 10.

(a–l) Simulation results of SAR moving targets based on parameter traversal.

Figure 10 shows that parameter traversal produced a large number of SAR moving target samples. These samples covered a rich variety of target shapes and closely reflected the real imaging characteristics of moving targets in actual SAR systems. On this basis, the neural network could fully learn the morphological characteristics of moving targets, improving detection in field application scenarios.

Additionally, in actual SAR systems, moving targets are randomly distributed in the scene. When the target echo is fused with the scene echo, the position parameters (azimuth–range coordinates) of the targets should therefore be as diverse as possible. The observed scene size was , and the effective imaging range of moving targets was . Following the sliding window-based target position traversal described in Section 2.2, the step sizes of the sliding window along the range and azimuth directions were both set to 10. In this way, the constructed SAR twin database covered target positions similar to those in the actual SAR system, and the number of samples increased significantly. Both effects can greatly improve the generalization ability and detection performance of the model.
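Below is a minimal sketch of the sliding-window position traversal. It assumes that the target window is placed at every step-10 offset inside the effective imaging region and that rows and columns correspond to azimuth and range. The region and window sizes in the usage line are hypothetical, since the actual values are not given in the text. `window_positions` is an illustrative name.

```python
def window_positions(region_shape, target_shape, step=10):
    """Top-left (row, col) of every target window that fits inside the region.

    Rows and columns are assumed to be the azimuth and range axes, respectively.
    """
    n_rows, n_cols = region_shape
    h, w = target_shape
    return [(r, c)
            for r in range(0, n_rows - h + 1, step)
            for c in range(0, n_cols - w + 1, step)]

# Hypothetical 100 x 100 effective region and 20 x 20 target window.
positions = window_positions((100, 100), (20, 20), step=10)
```

The number of positions grows roughly with the inverse square of the step. Each position multiplies the samples produced by the motion-parameter traversal.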

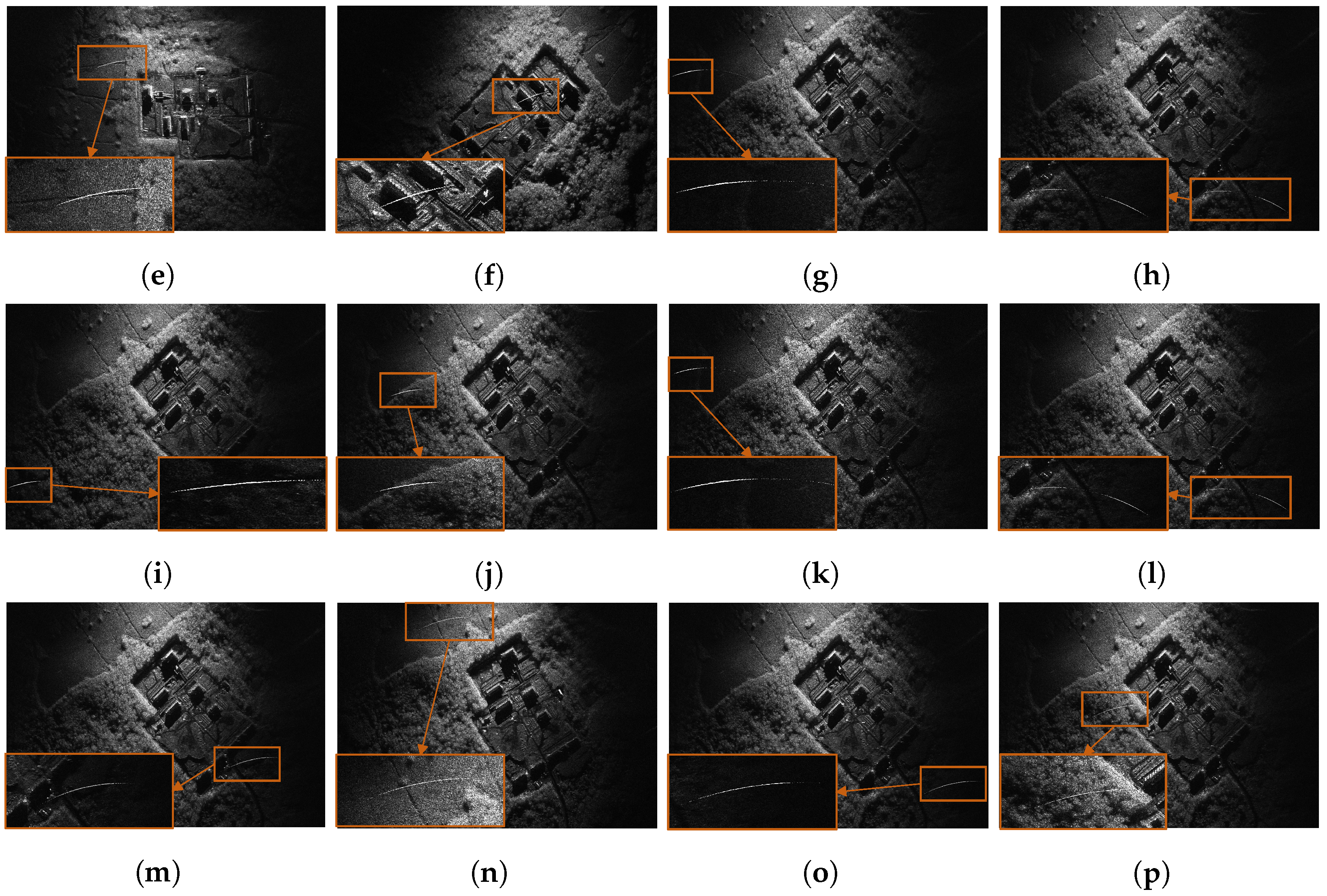

The SCR is the energy ratio between the target echo and the scene echo. It varies constantly with the system frequency, the radar antenna, and the target characteristics. The SCR was therefore traversed within the range of  with a step size of . This further improved the constructed SAR twin replica, so that it better matched the measured system and fully reflected the characteristics of the SAR datasets obtained from the actual system. Part of the constructed SAR twin sample set is shown in Figure 11.
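Traversing the SCR requires scaling the target echo before it is added to the scene echo. The sketch below assumes that SCR is the ratio of total target-echo energy to total scene-echo energy in dB; the paper may instead measure it over a local region. The function names are hypothetical. The 12 to 20 dB sweep with a 2 dB step is illustrative, with the range taken from Section 4.2.

```python
import numpy as np

def scr_db(target, clutter):
    """SCR in dB, taken here as total target energy over total clutter energy."""
    return 10 * np.log10(np.sum(np.abs(target) ** 2) / np.sum(np.abs(clutter) ** 2))

def fuse_at_scr(target, clutter, scr_target_db):
    """Scale the target echo to the requested SCR and add it to the scene echo.

    Returns (fused echo, scaled target echo).
    """
    # Scaling the amplitude by alpha scales the energy by alpha**2, shifting the SCR by
    # 10*log10(alpha**2) dB, so alpha is chosen to close the gap to the requested value.
    alpha = np.sqrt(10 ** ((scr_target_db - scr_db(target, clutter)) / 10))
    scaled = alpha * target
    return clutter + scaled, scaled

rng = np.random.default_rng(0)
clutter = rng.standard_normal(4096) + 1j * rng.standard_normal(4096)
target = np.zeros(4096, dtype=complex)
target[2000:2010] = 1.0                          # toy point-like target echo
samples = [fuse_at_scr(target, clutter, s) for s in np.arange(12, 21, 2)]
```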

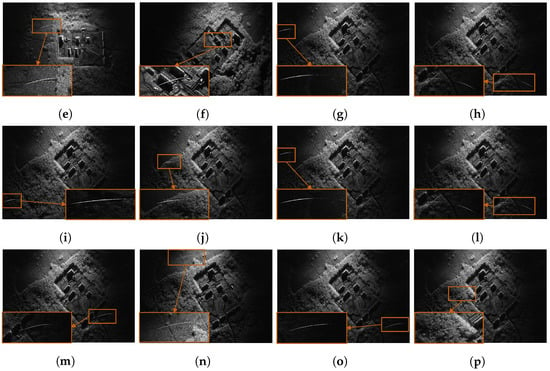

Figure 11.

(a–p) Imaging results of MiniSAR twin data. The orange rectangle represents the simulated SAR moving target.

As Figure 11 shows, once the target motion parameters, position distribution, and SCR were taken into account, the constructed SAR twin data were highly similar to the measured datasets obtained from the actual SAR system shown in Figure 7. These high-fidelity SAR twin data reflected the overall characteristics of the measured data. When fed into the neural network for model training, they supported robust detection of SAR moving targets in related practical scenarios.

4.2. Validation of DT-Based SAR-GMTI Framework

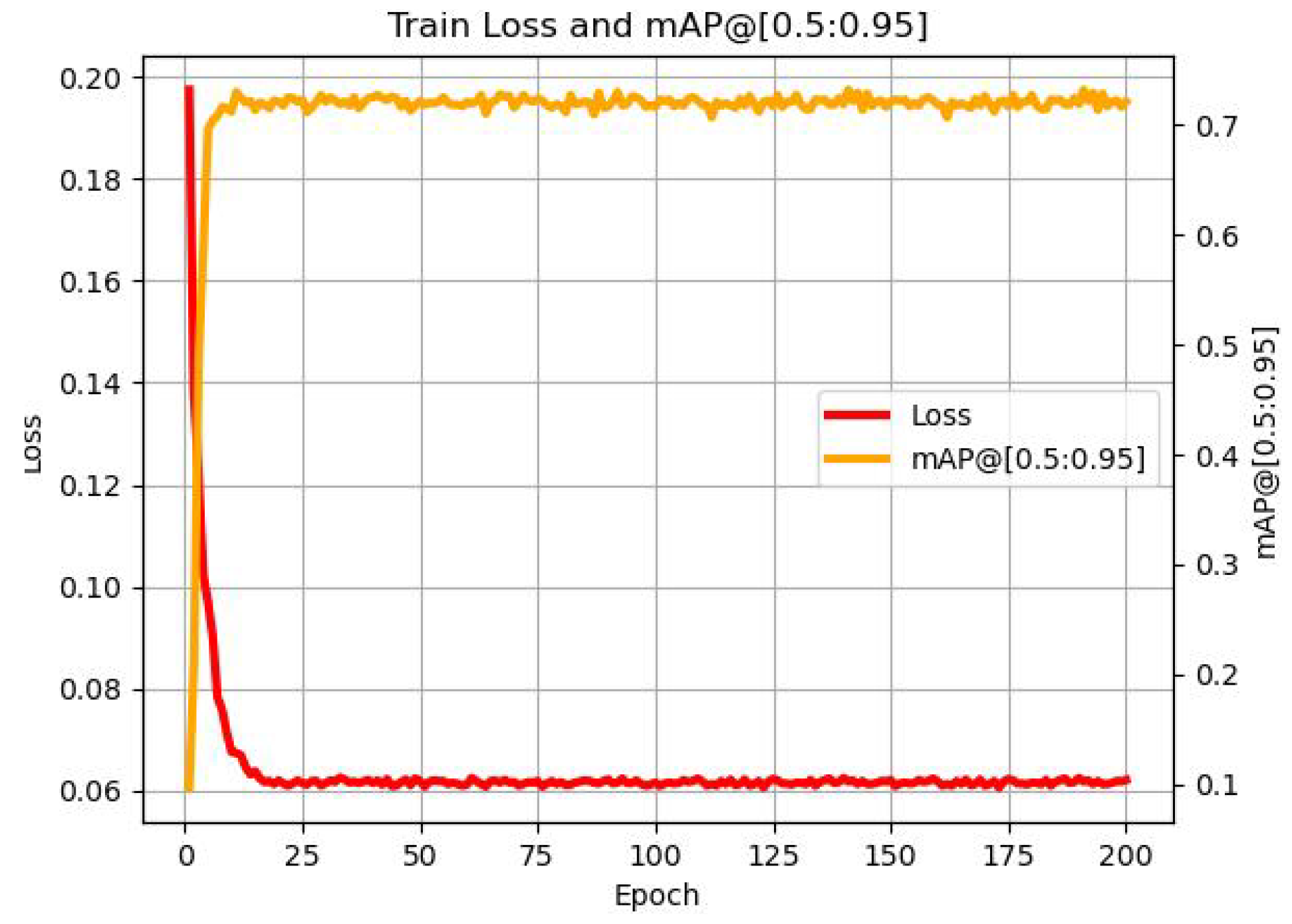

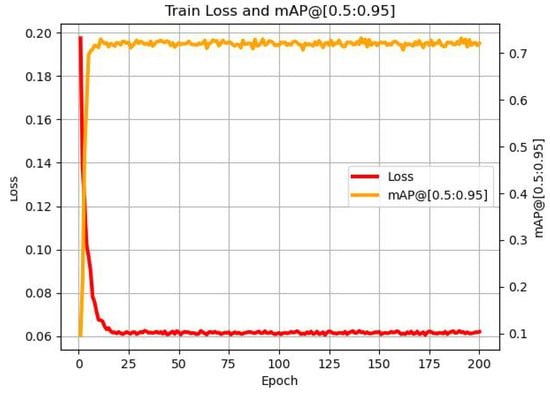

Based on the simulation results obtained by target parameter traversal in Section 4.1, 6820 SAR samples were used to train the neural network model, with a training-to-validation ratio of 7:3. The effectiveness of the proposed framework was then verified on the measured MiniSAR datasets. The experiments were conducted on an NVIDIA GeForce RTX 3060 Ti GPU with the PyTorch deep learning framework (version 1.7.1), and training ran for 200 epochs. The loss and mAP (mean average precision) curves during training are shown in Figure 12.
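The 7:3 partition of the 6820 twin samples can be reproduced with a seeded random split. The sketch below assumes that samples are assigned to the two subsets at random, since the text does not state how the split was drawn. `split_train_val` is a hypothetical helper.

```python
import random

def split_train_val(n_samples, train_ratio=0.7, seed=0):
    """Shuffle sample indices with a fixed seed and split them into training/validation subsets."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    n_train = round(n_samples * train_ratio)
    return indices[:n_train], indices[n_train:]

train_idx, val_idx = split_train_val(6820)
print(len(train_idx), len(val_idx))  # 4774 2046
```

Fixing the seed keeps the partition identical across runs, so different traversal settings can be compared on the same validation subset.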

Figure 12.

Training parameters of Faster-RCNN.

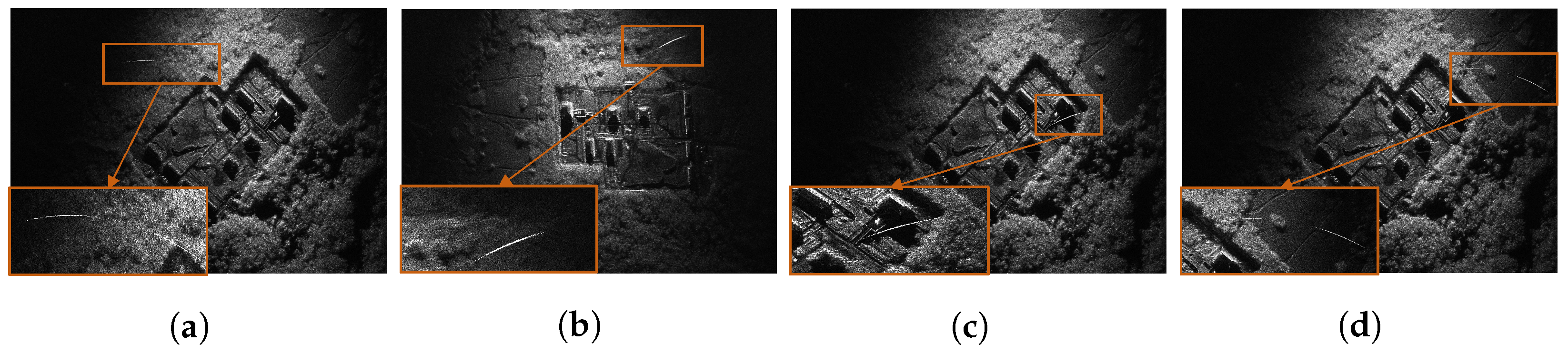

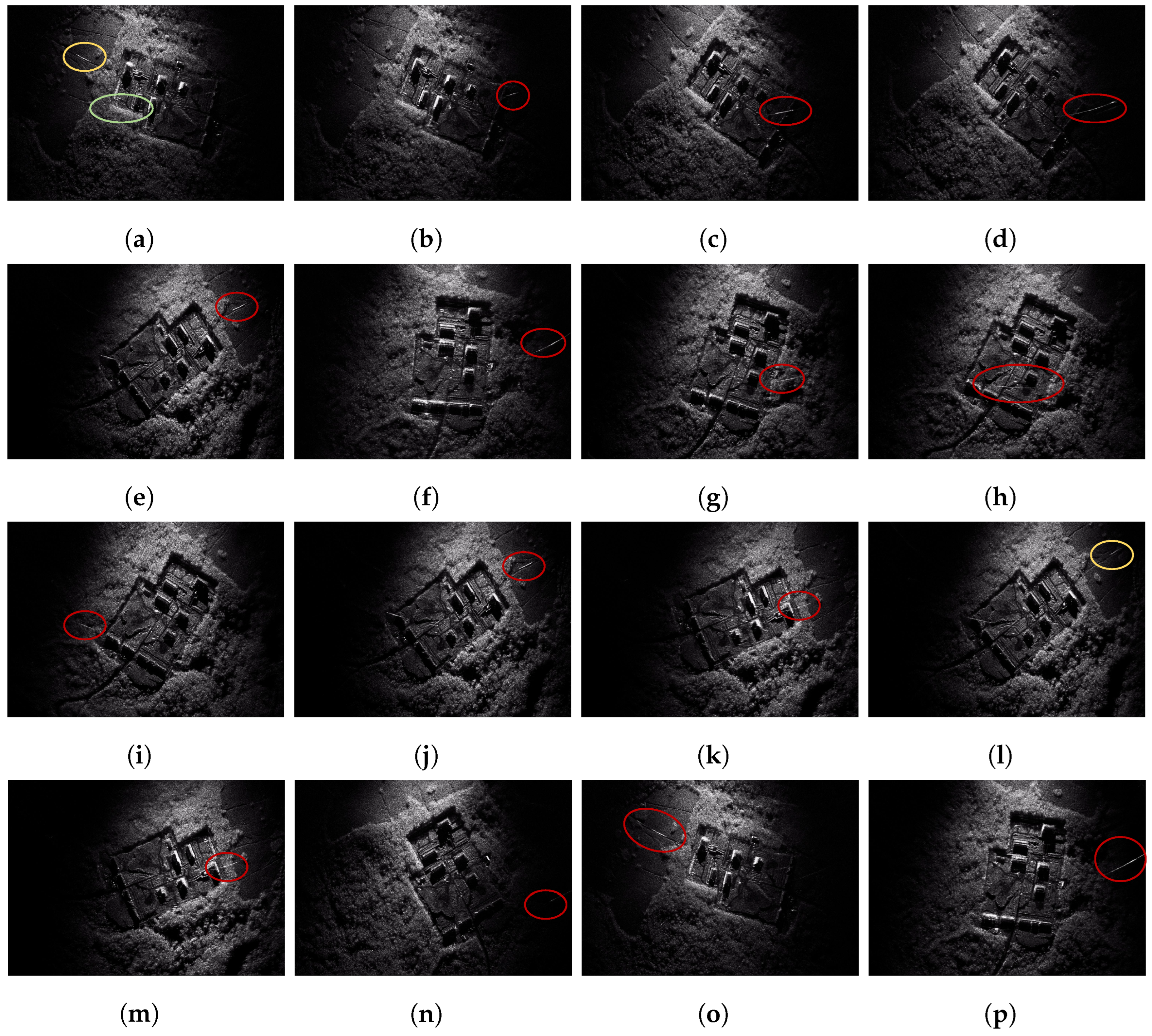

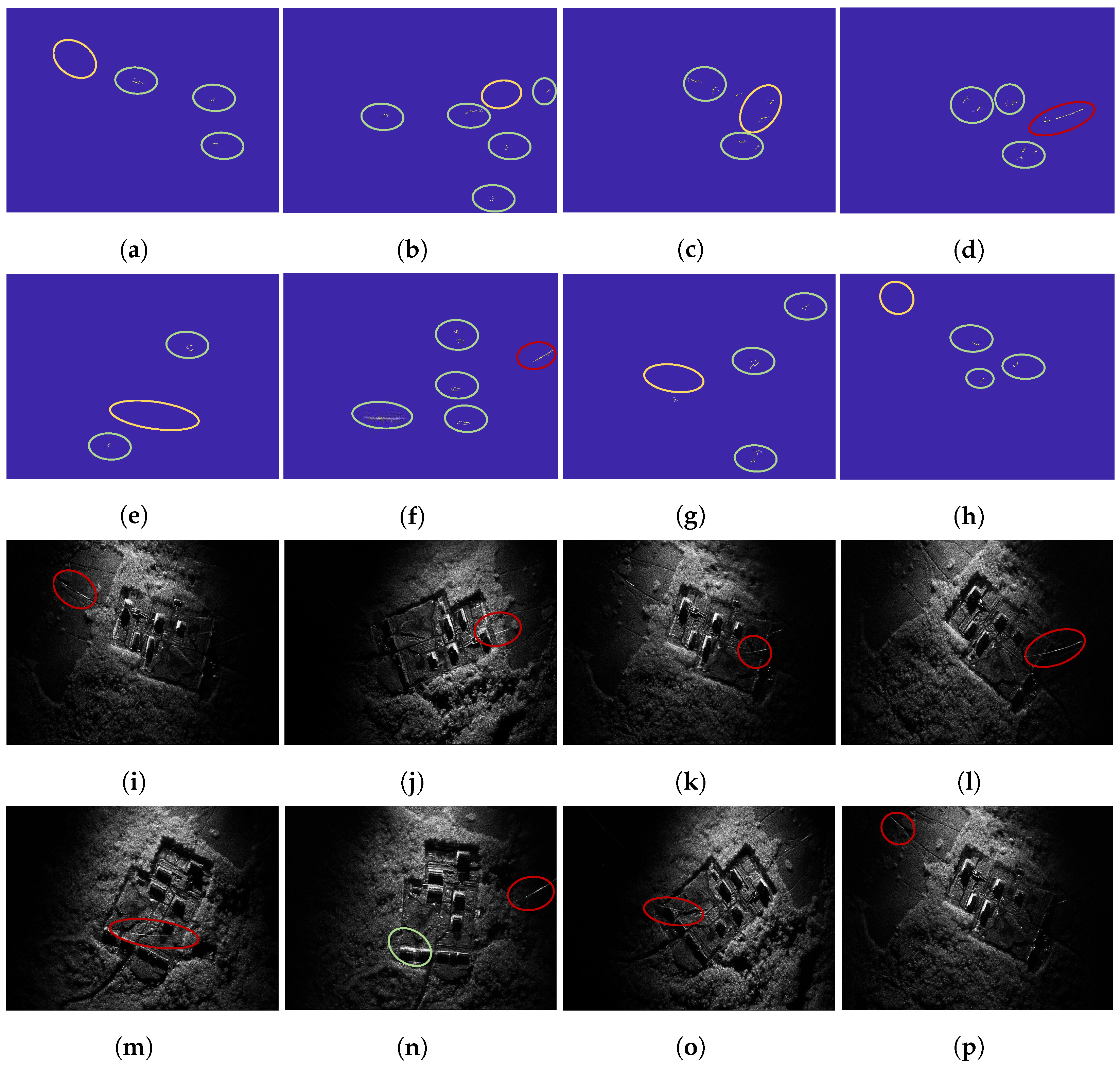

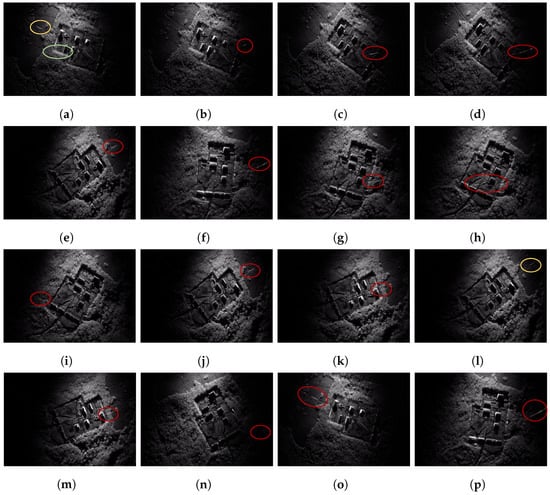

Figure 12 shows that the loss and mAP changed sharply during the first 20 epochs and then remained stable as the model converged. To further verify the detection performance on the test set, the trained Faster-RCNN was used to detect moving targets in the MiniSAR measured datasets. Part of the detection results are shown in Figure 13.

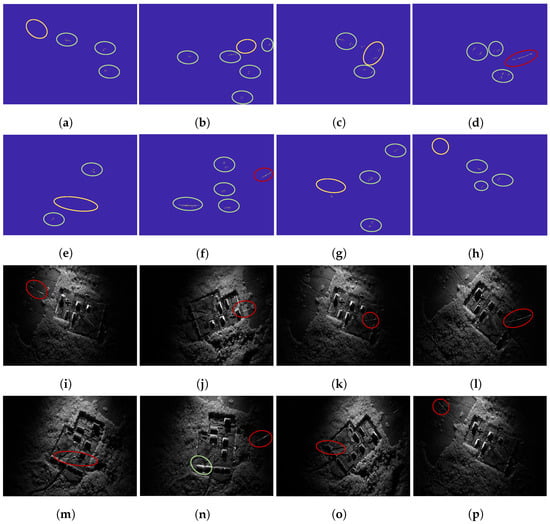

Figure 13.

(a–p) Detection results of partially measured sample sets. The red, green, and yellow ellipses represent the correctly detected target, false alarm, and missed target, respectively.

The scene contained considerable interference from system noise and ground objects. Moreover, the MiniSAR system has a limited operating speed and height, so the imaging results of moving targets were highly sensitive to minor variations in target velocity and position. This made the positions of moving target trajectories harder to distinguish and posed great challenges to accurate detection. Nevertheless, Figure 13 indicates that the DT-based framework for SAR moving target intelligent detection still achieved robust detection of moving targets in the MiniSAR measured data, owing to its strong pre-modeling and feature extraction capabilities.

The target motion parameters, position parameters, and SCR were all taken into account when constructing the SAR twin datasets. To further evaluate how different parameter traversal settings affect the detection performance of the model, multiple comparative experiments were conducted using a controlled-variable approach, and the results are shown in Table 3. In particular, the values of , , and the SCR varied within the ranges [−8.2, −1.7] ∪ [1.7, 8.2], [−0.038, 0.018], and [12, 20], respectively. The scene size was , and the effective imaging range of the moving target was . The traversal step sizes of , , and the SCR, and the sliding step sizes of the target window along the range and azimuth directions, are denoted as , , , , and , respectively. The total number of moving targets to be detected was 679.

Table 3.

Comparative detection results under different parameter traversal settings.

In Table 3, Group 9 is the parameter traversal setting used in this paper. Groups 1–3 examine the influence of the target motion parameters on the detection results by using different step sizes, with the target position parameters and SCR fixed at (981, 899) and 16, respectively. The results indicate that smaller step sizes yield a richer variety of moving target shapes in the SAR twin database. This facilitates full feature extraction of moving targets and improves the detection performance of the model. Because the background clutter is not homogeneous, the target positions in the scene also influence the detection results, as Groups 4–6 show. The more possible target positions the SAR twin data cover, the better the detection results, with fewer false alarms and missed targets. Moreover, the bright-line signatures of moving targets can be broken up by surrounding clutter. Without SCR traversal, part of such a bright line might be recognized as a target. This would constitute a false alarm, because the complete target was not accurately detected. The results of Groups 7–8 show that with SCR parameter traversal, more moving targets in the samples were correctly detected as the step size was refined.

To further evaluate the influence of the SCR on detection performance [36], the detection results under different SCRs are recorded in Table 4. The SCR varied within the range of , and , , , and  were set to 1, 0.004, 20, and 30, respectively. The detection precision is defined as

$$\mathrm{Precision} = \frac{TP}{TP + FP}$$

where TP and FP denote the true positive and false positive targets, which correspond to the number of correctly detected targets and false alarms, respectively.

Table 4.

Detection results under different SCRs.

If the SCR is set too small during simulation, the target echo is submerged in strong background clutter and is difficult to distinguish. Table 4 shows that as the SCR increased, the model achieved higher detection precision. However, compare these results with those of Groups 7–9 in Table 3. The detection performance of the model under a fixed SCR was inferior to that obtained with SCR parameter traversal. The twin datasets constructed with SCR traversal were closer to the measured SAR system and fully reflected how the imaging results of real moving targets vary.

In summary, the target motion parameters, position parameters, and SCR were all verified to affect the detection performance of the model. Refining the traversal step sizes improved the detection results, with fewer false alarms and missed targets, but it also significantly increased the running time and complexity. In the specific implementation, this paper therefore balanced model performance against operation time. The resulting setting (Group 9) achieved robust detection of moving targets in related field scenarios.

4.3. Comparative Experiment Results and Analysis

According to the experimental results in Section 4.2, the proposed DT-based framework for SAR ground moving target intelligent detection showed strong detection performance in the related field scenarios. It achieved this by learning the morphological characteristics of the moving targets and the preset scene in digital space. To further verify the effectiveness of the proposed framework, its results were compared with those obtained by a traditional CFAR (constant false alarm rate) detection algorithm.

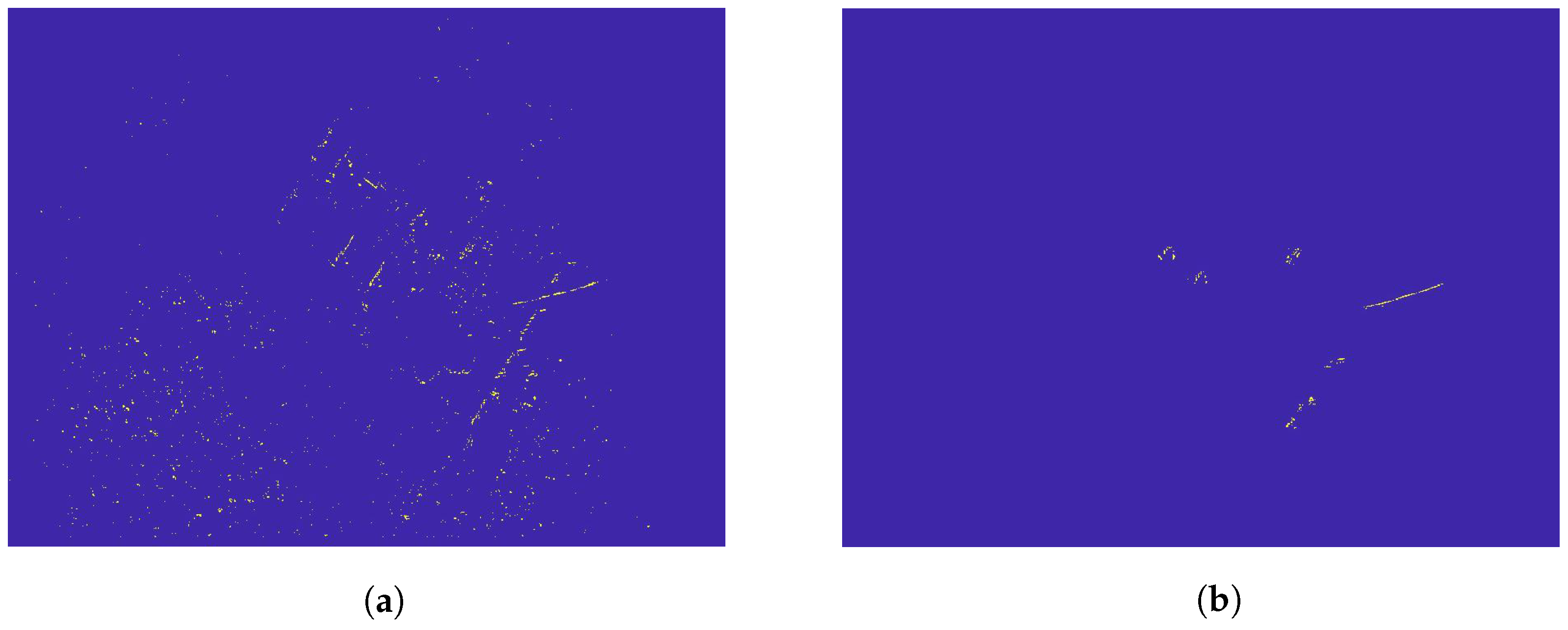

The CFAR detection algorithm [37] operates on the echo data and adjusts the detection threshold dynamically according to the local clutter power. It aims to maximize the detection probability while keeping the false alarm rate constant as the clutter level changes. Specifically, the two-dimensional CA-CFAR (cell-averaging CFAR) algorithm was used to detect targets in the measured datasets. CA-CFAR surrounds the CUT (cell under test) with a band of guard cells and estimates the local clutter power as the average over the training (reference) cells outside the guard band. The threshold T is obtained by scaling this estimate. If the power of the CUT exceeds T, a target is declared in the current CUT; otherwise, no target is declared. The same operation is then performed on the next CUT until all cells in the scene have been traversed.
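The two-dimensional CA-CFAR procedure can be sketched in NumPy as follows. The guard and training widths and the false alarm probability are hypothetical parameters, and the function names are illustrative. With N training cells, the threshold factor α = N(P_fa^(−1/N) − 1) is the textbook value for square-law detection in homogeneous, exponentially distributed clutter. Heavy-tailed SAR clutter violates this assumption, so the actual false alarm rate on measured data can differ. Border cells without a complete reference window are simply not tested.

```python
import numpy as np

def box_sum(img, r):
    """Sum over a (2r+1) x (2r+1) window centred on every pixel (zero padding), via an integral image."""
    k = 2 * r + 1
    p = np.pad(img, r)
    ii = np.zeros((p.shape[0] + 1, p.shape[1] + 1))
    ii[1:, 1:] = p.cumsum(axis=0).cumsum(axis=1)
    return ii[k:, k:] - ii[:-k, k:] - ii[k:, :-k] + ii[:-k, :-k]

def ca_cfar_2d(power, guard=2, train=4, pfa=1e-4):
    """2-D cell-averaging CFAR on a square-law (power) image; returns a boolean detection mask."""
    outer = box_sum(power, guard + train)            # CUT + guard cells + training cells
    inner = box_sum(power, guard)                    # CUT + guard cells
    n_train = (2 * (guard + train) + 1) ** 2 - (2 * guard + 1) ** 2
    noise = (outer - inner) / n_train                # mean power of the training cells
    alpha = n_train * (pfa ** (-1.0 / n_train) - 1.0)  # threshold factor, exponential clutter
    hits = power > alpha * noise                     # compare each CUT with T = alpha * noise
    b = guard + train                                # border cells lack a full reference window
    hits[:b, :] = hits[-b:, :] = False
    hits[:, :b] = hits[:, -b:] = False
    return hits

rng = np.random.default_rng(0)
power = rng.exponential(1.0, size=(64, 64))          # toy homogeneous clutter
power[32, 32] = 200.0                                # one strong point target
hits = ca_cfar_2d(power, guard=2, train=4, pfa=1e-4)
```

The guard band keeps energy from an extended target out of its own clutter estimate. The integral image makes the cost of each window sum independent of the window size.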

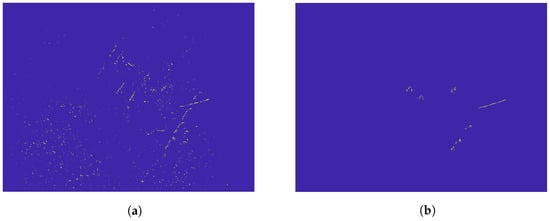

Interference from complex background clutter leaves many scattered points in the preliminary CA-CFAR detection results, so a clearer rule for declaring targets is needed. Based on the preliminary results, this paper placed a rectangular window of size  that exactly covers the imaging extent of a target in the measured data. The average amplitude of the pixels within this window was then calculated. Only windows whose average pixel amplitude exceeded a preset threshold were retained. The threshold was set to 23 according to the windows covering the real targets. In this way, the CA-CFAR results were substantially filtered, and only the retained windows were declared as targets. The detection results before and after filtering are compared in Figure 14.
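The window-based filtering step can be sketched as below. The 9 × 9 window size is a placeholder, because the actual size is not given here. Only the amplitude threshold of 23 comes from the text. This sketch also assumes that overlapping windows are suppressed, so that one target yields one window. `filter_cfar_hits` is a hypothetical name. Its `hits` argument could be, for example, `np.argwhere` applied to a CFAR detection mask.

```python
import numpy as np

def _overlaps(a, b):
    """True if boxes (r0, c0, r1, c1) with half-open bounds share any pixel."""
    return a[0] < b[2] and b[0] < a[2] and a[1] < b[3] and b[1] < a[3]

def filter_cfar_hits(amplitude, hits, win=(9, 9), threshold=23.0):
    """Keep windows around CFAR hits whose mean amplitude exceeds the threshold.

    Overlapping windows are reduced to the one with the highest mean amplitude.
    Assumes the image is at least as large as the window.
    """
    n_rows, n_cols = amplitude.shape
    h, w = win
    candidates = []
    for r, c in hits:
        r0 = min(max(r - h // 2, 0), n_rows - h)     # clamp so the window stays inside the image
        c0 = min(max(c - w // 2, 0), n_cols - w)
        mean_amp = amplitude[r0:r0 + h, c0:c0 + w].mean()
        if mean_amp > threshold:
            candidates.append((mean_amp, (r0, c0, r0 + h, c0 + w)))
    candidates.sort(key=lambda t: t[0], reverse=True)  # brightest windows first
    kept = []
    for _, box in candidates:
        if not any(_overlaps(box, k) for k in kept):
            kept.append(box)
    return kept

amp = np.zeros((50, 50))
amp[20:29, 20:29] = 40.0                             # toy bright target region
kept = filter_cfar_hits(amp, [(24, 24), (25, 25), (5, 5)])
```

In this toy case, the two hits on the bright region collapse to a single window, and the hit in the dark corner is discarded.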

Figure 14.

Comparison of detection results obtained by CA-CFAR. (a) Preliminary detection results. (b) Final detection results after filtering processing.

Figure 14 shows that the interference pixels from background clutter in the CFAR detection results were effectively filtered out, and every retained window was declared a target. This made the target declaration rule consistent and facilitated the calculation and comparative analysis of the detection results. On this basis, the detection results obtained by the proposed framework and by the CA-CFAR algorithm with the false alarm rate set to  are compared in Figure 15.

Figure 15.

Comparative detection results of MiniSAR measured datasets. (a–h) Detection results by using CA-CFAR (). (i–p) Detection results based on the proposed framework. The red, yellow, and green ellipses represent the correctly detected target, missed target, and false alarm, respectively.

When the target echo power was relatively strong, the CA-CFAR algorithm could accurately detect the moving targets in the scene, as shown in Figure 15d–f. However, its detection performance deteriorated sharply under interference from complex background clutter, producing a large number of missed targets and false alarms, as shown in Figure 15a,b,e,g. In comparison, the high-fidelity SAR twin data contained rich information on the moving targets and preset scene features. The DT-based framework for SAR moving target intelligent detection therefore still achieved robust detection of moving targets in the same scenarios, owing to its strong pre-modeling and feature extraction abilities, as shown in Figure 15i–l. To compare the proposed framework and the CA-CFAR algorithm quantitatively under different false alarm rates (), the numbers of correctly detected targets, false alarms, and missed targets were counted and are listed in Table 5. The recall rate and precision were also introduced to make the comparison more intuitive. The recall rate is expressed as

$$\mathrm{Recall} = \frac{TP}{TP + FN}$$

where FN denotes the false negative targets, which correspond to the number of missed targets.
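Counting TP, FP, and FN from detector output requires a rule for deciding when a detection matches a target. The sketch below assumes a greedy one-to-one matching at an IoU threshold of 0.5, a common convention that the paper does not specify. It then evaluates the precision and recall indicators described in this section. All function names are hypothetical.

```python
def iou(a, b):
    """Intersection-over-union of two (x1, y1, x2, y2) boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def count_detections(preds, gts, iou_thr=0.5):
    """Greedy one-to-one matching; preds are (score, box) pairs, gts are boxes.

    Returns (TP, FP, FN). Duplicate detections of an already matched target count as FP.
    """
    matched, tp = set(), 0
    for _, box in sorted(preds, key=lambda p: p[0], reverse=True):
        best, best_iou = None, iou_thr
        for j, g in enumerate(gts):
            if j not in matched and iou(box, g) >= best_iou:
                best, best_iou = j, iou(box, g)
        if best is not None:
            matched.add(best)
            tp += 1
    return tp, len(preds) - tp, len(gts) - tp

def precision_recall(tp, fp, fn):
    """Precision = TP / (TP + FP); Recall = TP / (TP + FN)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

gts = [(0, 0, 10, 10), (20, 20, 30, 30), (40, 40, 50, 50)]
preds = [(0.9, (1, 1, 11, 11)), (0.8, (21, 20, 31, 30)),
         (0.7, (60, 60, 70, 70)), (0.6, (0, 0, 10, 10))]
tp, fp, fn = count_detections(preds, gts)
```

In this toy example, two targets are found, one is missed, and two detections are false alarms, one of them a duplicate. This gives a precision of 0.5 and a recall of 2/3.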

Table 5.

Quantitative comparison of detection results obtained by different methods.

Table 5 shows that when the false alarm rate was set relatively high (), the CA-CFAR algorithm correctly detected most of the moving targets in the measured scene, but at the cost of a large number of false alarms. As the false alarm rate decreased, the detection performance of CA-CFAR deteriorated sharply under strong background clutter, with poor recall and precision. In comparison, the DT-based framework for SAR ground moving target intelligent detection showed better overall detection performance on the MiniSAR measured datasets, achieving good recall and good precision together. Owing to the pre-modeling ability of DT, the proposed framework can achieve robust detection of moving targets in complex measured scenarios. It is also applicable to various SAR systems for completing multiple field detection tasks flexibly and reliably.

5. Discussion

Figure 7 and Figure 11 show that, combined with radar imaging algorithms, parameter traversal can construct a high-fidelity twin replica in digital space. This enables the neural network model to detect moving targets accurately in actual systems. In addition, the proposed scheme for constructing SAR twin datasets by target parameter traversal is broadly applicable to various SAR systems. It makes innovative experiments in related fields possible even when publicly available measured datasets are insufficient.

The experimental results indicate that the proposed DT-based SAR moving target intelligent detection framework performs strongly on the measured data. Despite serious interference from complex background clutter, Figure 13 shows that the model still achieves robust detection of SAR moving targets in the test scenarios. With its outstanding pre-modeling ability, DT technology effectively promotes the further development of SAR ground moving target intelligent detection algorithms. In addition, the proposed framework outperforms the traditional CFAR method on the measured datasets, achieving good recall and precision simultaneously. By fully learning the features of SAR twin datasets in digital space, the proposed framework can therefore achieve robust moving target detection in actual scenarios without multiple complex field experiments. It is applicable to various SAR systems for completing detection tasks effectively.

However, the proposed framework still misses several targets because of the limited research time and incomplete parameter traversal. In future work, the constructed SAR twin database can be continuously enriched by adjusting the parameter traversal settings. The intelligent target detection algorithm can also be optimized or re-selected according to the characteristics of the measured SAR datasets to further improve the overall performance of the model in application scenarios.

6. Conclusions

Deep learning-based SAR-GMTI algorithms now face increasingly frequent, multi-task tests in complex scenarios. Because of the high operational complexity and cost of SAR systems, field experiments are extremely difficult to carry out. As a result, the detection performance and generalization ability of the models are greatly limited by insufficient datasets. This paper proposed a framework for intelligent ground moving target detection that integrates DT and artificial intelligence technology. By fully learning the characteristics of SAR twin data in digital space, the model can accurately recognize moving targets in a corresponding real system without complex field experiments.

In particular, the SAR imaging algorithm and parameter traversal were combined to construct a high-fidelity twin replica of the monitored targets. This replica was used to train the neural network model, and the detection performance of the model was then verified on measured SAR data. The results on the test datasets showed serious interference from complex scene clutter in the application scenarios. Despite this interference, the DT-based framework still achieved effective detection of moving targets in the test scenes, owing to its outstanding pre-modeling ability. This provides new impetus for the development of intelligent SAR-GMTI algorithms, and the framework can be widely applied to various SAR systems to complete field detection tasks.

Author Contributions

Conceptualization, H.L. and H.Y.; methodology, H.L. and H.Y.; software, H.L.; validation, H.L. and J.H.; formal analysis, W.X.; investigation, Z.M.; resources, H.Y.; data curation, H.L.; writing—original draft preparation, H.L.; writing—review and editing, H.L.; visualization, H.Y.; supervision, H.Y.; project administration, H.Y.; funding acquisition, H.Y. and D.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (grant numbers 62271252 and 62171220).

Data Availability Statement

The data that support the findings of this article are not publicly available due to confidentiality requirements but can be requested from the author at liu097@nuaa.edu.cn.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Li, K.; Jiu, B.; Pu, W.; Liu, H.; Peng, X. Neural Fictitious Self-Play for Radar Antijamming Dynamic Game with Imperfect Information. IEEE Trans. Aerosp. Electron. Syst. 2022, 58, 5533–5547.

- Barshan, B.; Eravci, B. Automatic Radar Antenna Scan Type Recognition in Electronic Warfare. IEEE Trans. Aerosp. Electron. Syst. 2012, 48, 2908–2931.

- Wang, Z.; Xu, N.; Guo, J.; Zhang, C.; Wang, B. SCFNet: Semantic Condition Constraint Guided Feature Aware Network for Aircraft Detection in SAR Images. IEEE Trans. Geosci. Remote. Sens. 2022, 60, 1–20.

- Luo, Y.; Song, H.; Wang, R.; Deng, Y.; Zhao, F.; Xu, Z. Arc FMCW SAR and Applications in Ground Monitoring. IEEE Trans. Geosci. Remote. Sens. 2014, 52, 5989–5998.

- Lin, Y.; Zhao, J.; Wang, Y.; Li, Y.; Shen, W.; Bai, Z. SAR Multi-Angle Observation Method for Multipath Suppression in Enclosed Spaces. Remote. Sens. 2024, 16, 621.

- Nan, Y.; Huang, X.; Guo, Y.J. An Universal Circular Synthetic Aperture Radar. IEEE Trans. Geosci. Remote. Sens. 2022, 60, 1–15.

- Zhu, S.; Liao, G.; Qu, Y.; Zhou, Z.; Liu, X. Ground Moving Targets Imaging Algorithm for Synthetic Aperture Radar. IEEE Trans. Geosci. Remote. Sens. 2011, 49, 462–477.

- Suwa, K.; Yamamoto, K.; Tsuchida, M.; Nakamura, S.; Wakayama, T.; Hara, T. Image-Based Target Detection and Radial Velocity Estimation Methods for Multichannel SAR-GMTI. IEEE Trans. Geosci. Remote. Sens. 2017, 55, 1325–1338.

- Ding, C.; Mu, H.; Zhang, Y. A Multicomponent Linear Frequency Modulation Signal-Separation Network for Multi-Moving-Target Imaging in the SAR-Ground-Moving-Target Indication System. Remote. Sens. 2024, 16, 605.

- Mu, H.; Zhang, Y.; Jiang, Y.; Ding, C. CV-GMTINet: GMTI Using a Deep Complex-Valued Convolutional Neural Network for Multichannel SAR-GMTI System. IEEE Trans. Geosci. Remote. Sens. 2022, 60, 1–15.

- Guo, Q.; Liu, J.; Kaliuzhnyi, M. YOLOX-SAR: High-Precision Object Detection System Based on Visible and Infrared Sensors for SAR Remote Sensing. IEEE Sens. J. 2022, 22, 17243–17253.

- Zhou, P.; Wang, P.; Cao, J.; Zhu, D.; Yin, Q.; Lv, J.; Chen, P.; Jie, Y.; Jiang, C. PSFNet: Efficient Detection of SAR Image Based on Petty-Specialized Feature Aggregation. IEEE J. Sel. Top. Appl. Earth Obs. Remote. Sens. 2024, 17, 190–205.

- Zhou, Z.; Chen, J.; Huang, Z.; Wan, H.; Chang, P.; Li, Z.; Yao, B.; Wu, B.; Sun, L.; Xing, M. FSODS: A Lightweight Metalearning Method for Few-Shot Object Detection on SAR Images. IEEE Trans. Geosci. Remote. Sens. 2022, 60, 1–17.

- Wang, Z.; Du, L.; Zhang, P.; Li, L.; Wang, F.; Xu, S.; Su, H. Visual Attention-Based Target Detection and Discrimination for High-Resolution SAR Images in Complex Scenes. IEEE Trans. Geosci. Remote. Sens. 2018, 56, 1855–1872.

- Sun, M.; Li, Y.; Chen, X.; Zhou, Y.; Niu, J.; Zhu, J. A Fast and Accurate Small Target Detection Algorithm Based on Feature Fusion and Cross-Layer Connection Network for the SAR Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote. Sens. 2023, 16, 8969–8981.

- Zhou, Z.; Chen, J.; Huang, Z.; Lv, J.; Song, J.; Luo, H.; Wu, B.; Li, Y.; Diniz, P.S.R. HRLE-SARDet: A Lightweight SAR Target Detection Algorithm Based on Hybrid Representation Learning Enhancement. IEEE Trans. Geosci. Remote. Sens. 2023, 61, 1–22.

- Mihai, S.; Yaqoob, M.; Hung, D.V.; Davis, W.; Towakel, P.; Raza, M.; Karamanoglu, M.; Barn, B.; Shetve, D.; Prasad, R.V.; et al. Digital Twins: A Survey on Enabling Technologies, Challenges, Trends and Future Prospects. IEEE Commun. Surv. Tutor. 2022, 24, 2255–2291.

- Thieling, J.; Frese, S.; Roßmann, J. Scalable and Physical Radar Sensor Simulation for Interacting Digital Twins. IEEE Sens. J. 2021, 21, 3184–3192.

- Yang, W.; Zheng, Y.; Li, S. Application Status and Prospect of Digital Twin for On-Orbit Spacecraft. IEEE Access 2021, 9, 106489–106500.

- Rouffet, T.; Poisson, J.B.; Hottier, V.; Kemkemian, S. Digital twin: A full virtual radar system with the operational processing. In Proceedings of the 2019 International Radar Conference (RADAR), Toulon, France, 23–27 September 2019; pp. 1–5.

- Xie, W.; Qi, F.; Liu, L.; Liu, Q. Radar Imaging Based UAV Digital Twin for Wireless Channel Modeling in Mobile Networks. IEEE J. Sel. Areas Commun. 2023, 41, 3702–3710.

- Sayed, A.N.; Ramahi, O.M.; Shaker, G. UAV Classification Utilizing Radar Digital Twins. In Proceedings of the 2023 IEEE International Symposium on Antennas and Propagation and USNC-URSI Radio Science Meeting (USNC-URSI), Portland, OR, USA, 23–28 July 2023; pp. 741–742.

- Ritchie, N.; Tierney, C.; Greig, D. Wind turbine clutter mitigation using a digital twin for airborne radar. In Proceedings of the International Conference on Radar Systems (RADAR 2022), Edinburgh, UK, 24–27 October 2022; Volume 2022, pp. 612–616.

- Ye, Y.; Shan, J.; Bruzzone, L.; Shen, L. Robust Registration of Multimodal Remote Sensing Images Based on Structural Similarity. IEEE Trans. Geosci. Remote. Sens. 2017, 55, 2941–2958.

- Yang, Y.; Miao, Z.; Zhang, H.; Wang, B.; Wu, L. Lightweight Attention-Guided YOLO with Level Set Layer for Landslide Detection from Optical Satellite Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote. Sens. 2024, 17, 3543–3559.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Liang, X.; Zhang, J.; Zhuo, L.; Li, Y.; Tian, Q. Small Object Detection in Unmanned Aerial Vehicle Images Using Feature Fusion and Scaling-Based Single Shot Detector With Spatial Context Analysis. IEEE Trans. Circuits Syst. Video Technol. 2020, 30, 1758–1770.

- Li, J.; Tian, P.; Song, R.; Xu, H.; Li, Y.; Du, Q. PCViT: A Pyramid Convolutional Vision Transformer Detector for Object Detection in Remote-Sensing Imagery. IEEE Trans. Geosci. Remote. Sens. 2024, 62, 1–15.

- Luomei, Y.; Xu, F. Segmental Aperture Imaging Algorithm for Multirotor UAV-Borne MiniSAR. IEEE Trans. Geosci. Remote. Sens. 2023, 61, 1–18.

- Lv, J.; Zhu, D.; Geng, Z.; Han, S.; Wang, Y.; Yang, W.; Ye, Z.; Zhou, T. Recognition of Deformation Military Targets in the Complex Scenes via MiniSAR Submeter Images with FASAR-Net. IEEE Trans. Geosci. Remote. Sens. 2023, 61, 1–19.

- Zhang, Y.; Zhu, D.; Mao, X.; Yu, X.; Li, Y. Multirotors Video Synthetic Aperture Radar: System Development and Signal Processing. IEEE Aerosp. Electron. Syst. Mag. 2020, 35, 32–43.

- Sun, G.C.; Xing, M.; Xia, X.G.; Wu, Y.; Bao, Z. Beam Steering SAR Data Processing by a Generalized PFA. IEEE Trans. Geosci. Remote. Sens. 2013, 51, 4366–4377.

- Shi, T.; Mao, X.; Jakobsson, A.; Liu, Y. Efficient BiSAR PFA Wavefront Curvature Compensation for Arbitrary Radar Flight Trajectories. IEEE Trans. Geosci. Remote. Sens. 2023, 61, 1–14.

- Zhang, X.; Hu, D.; Li, S.; Luo, Y.; Li, J.; Zhang, C. Aircraft Detection from Low SCNR SAR Imagery Using Coherent Scattering Enhancement and Fused Attention Pyramid. Remote. Sens. 2023, 15, 4480.

- Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Leng, X.; Ji, K.; Kuang, G. Ship Detection From Raw SAR Echo Data. IEEE Trans. Geosci. Remote. Sens. 2023, 61, 1–11.

- Joshi, S.K.; Baumgartner, S.V.; da Silva, A.B.; Krieger, G. Range-Doppler Based CFAR Ship Detection with Automatic Training Data Selection. Remote. Sens. 2019, 11, 1270.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).