Fire-Net: Rapid Recognition of Forest Fires in UAV Remote Sensing Imagery Using Embedded Devices

Abstract

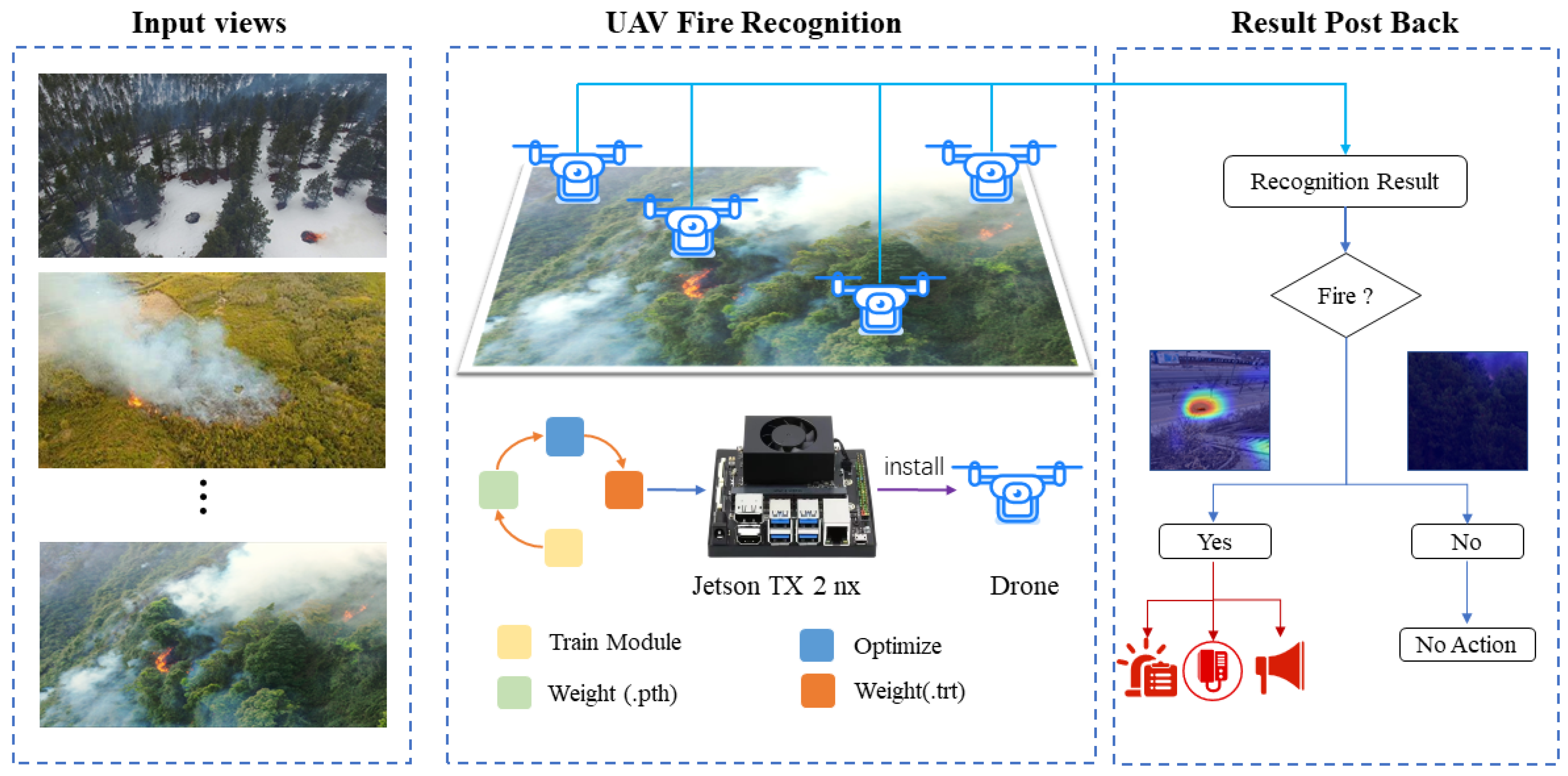

1. Introduction

- (1) We enrich a real-world forest fire image dataset with various types of fire images as well as images that are easily confused with fire. This helps the network learn subtler fire features, which improves the model's reliability in practical applications;

- (2) We introduce Fire-Net, a novel network for forest fire recognition that uses the cross-channel attention (CCA) module to capture more fire-related information while suppressing irrelevant information. In addition, the model is accelerated with TensorRT for embedded deployment, enabling the UAV monitoring system to detect forest fires in their early stages.

2. Materials and Methods

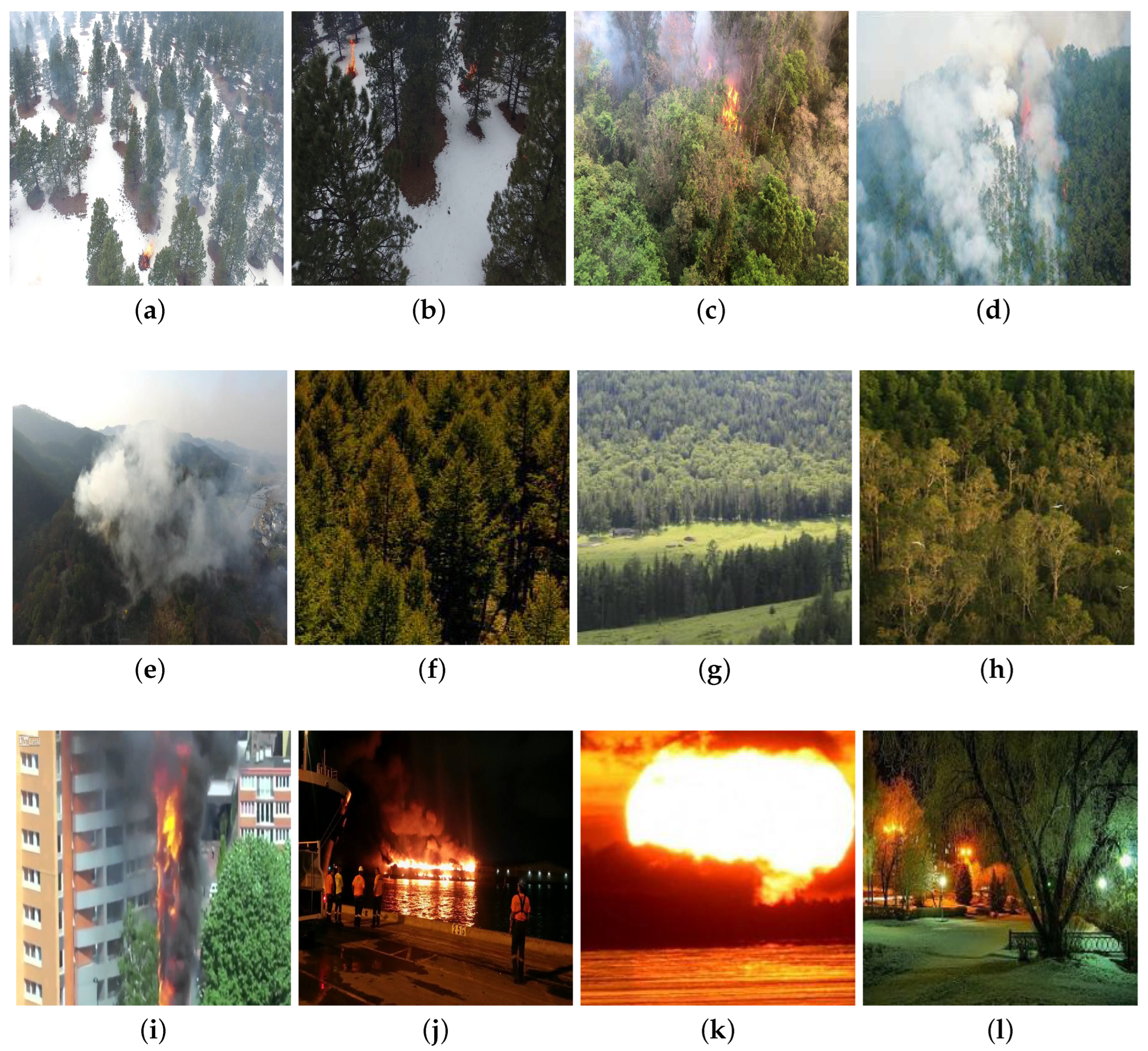

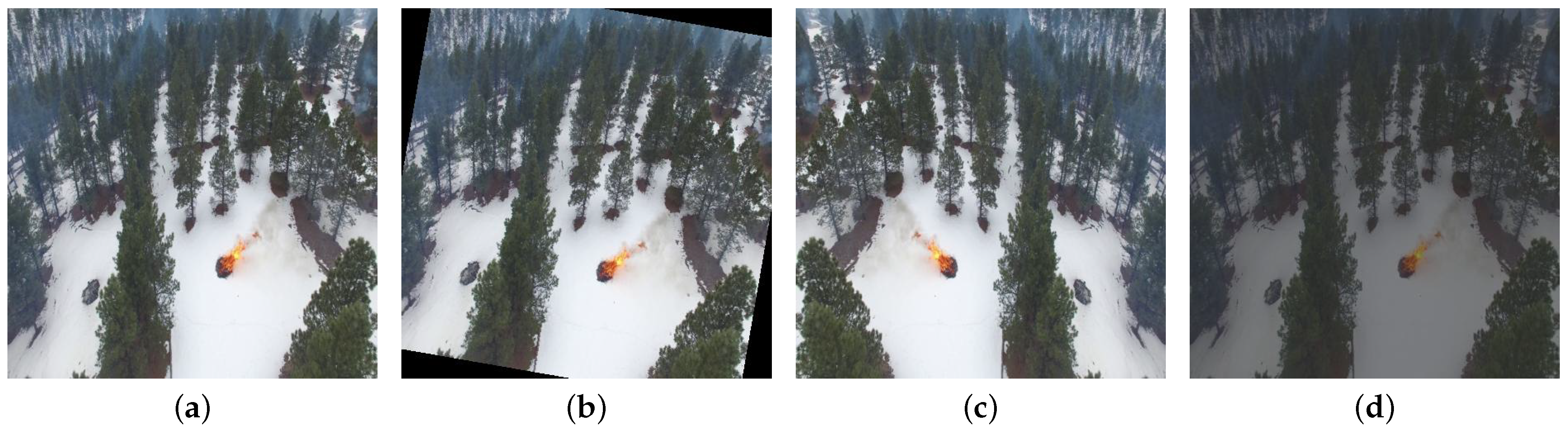

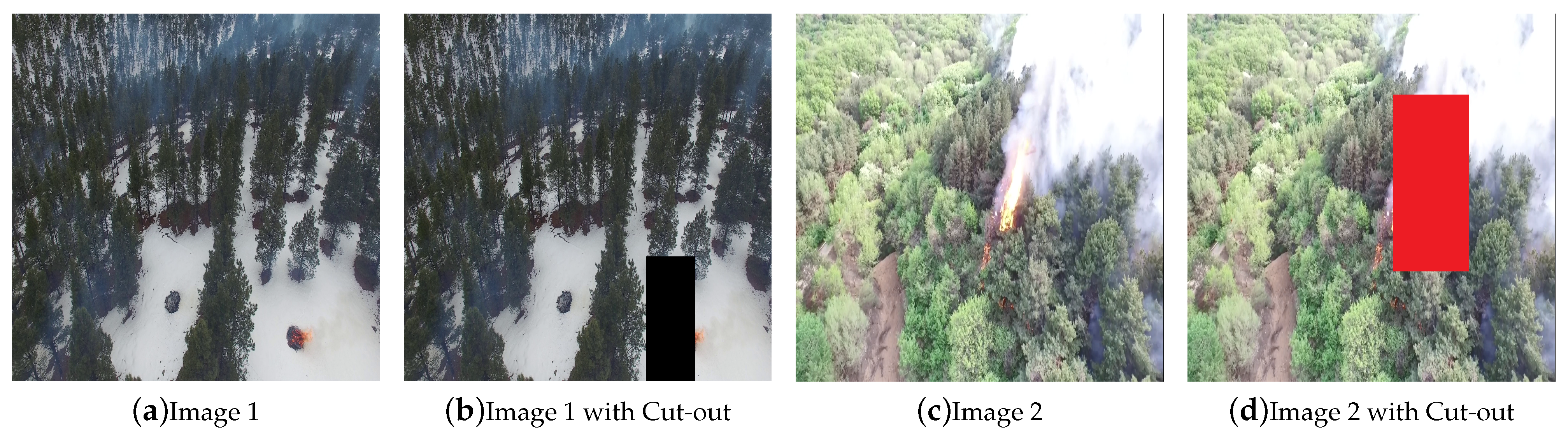

2.1. Datasets

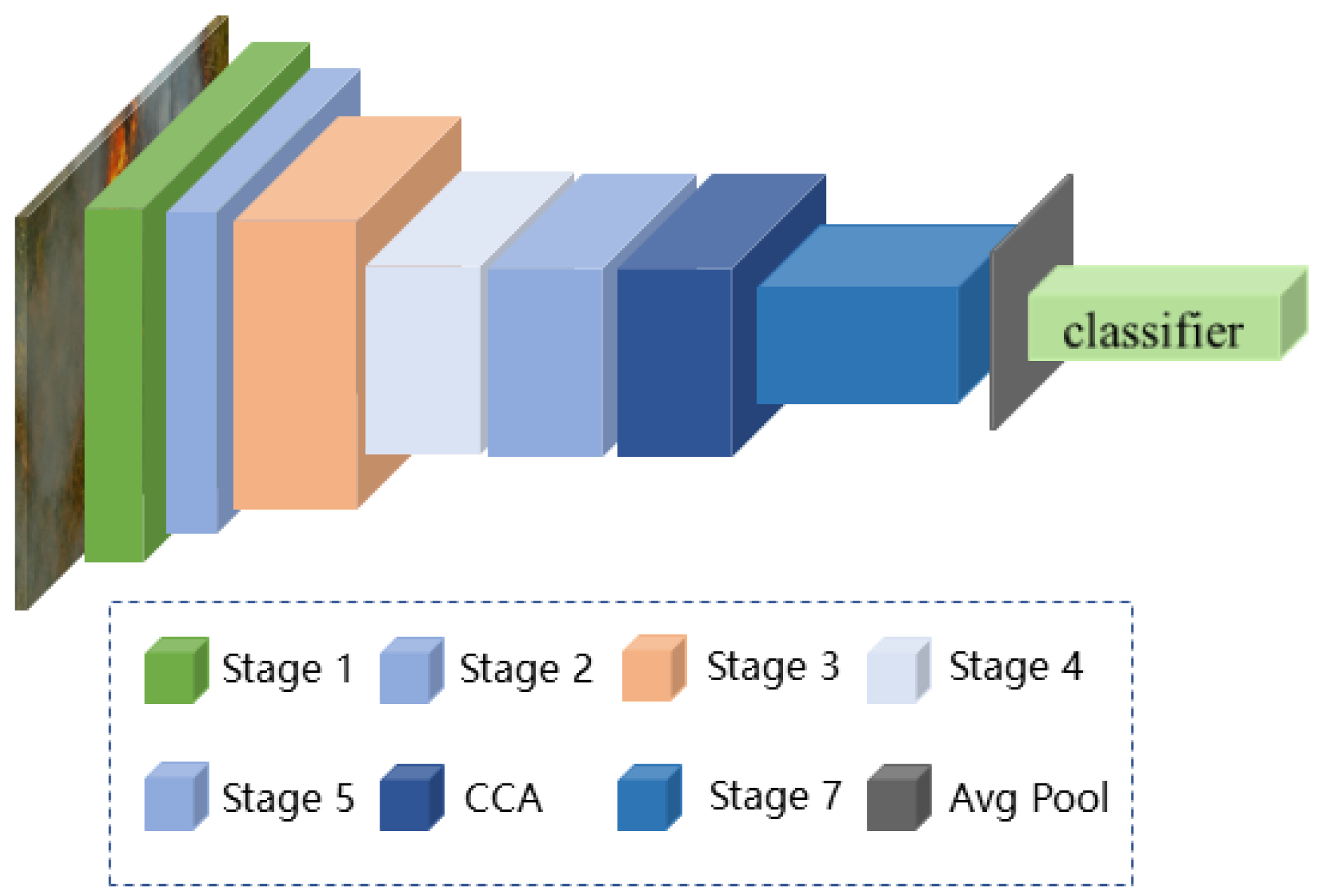

2.2. Fire-Net Detail

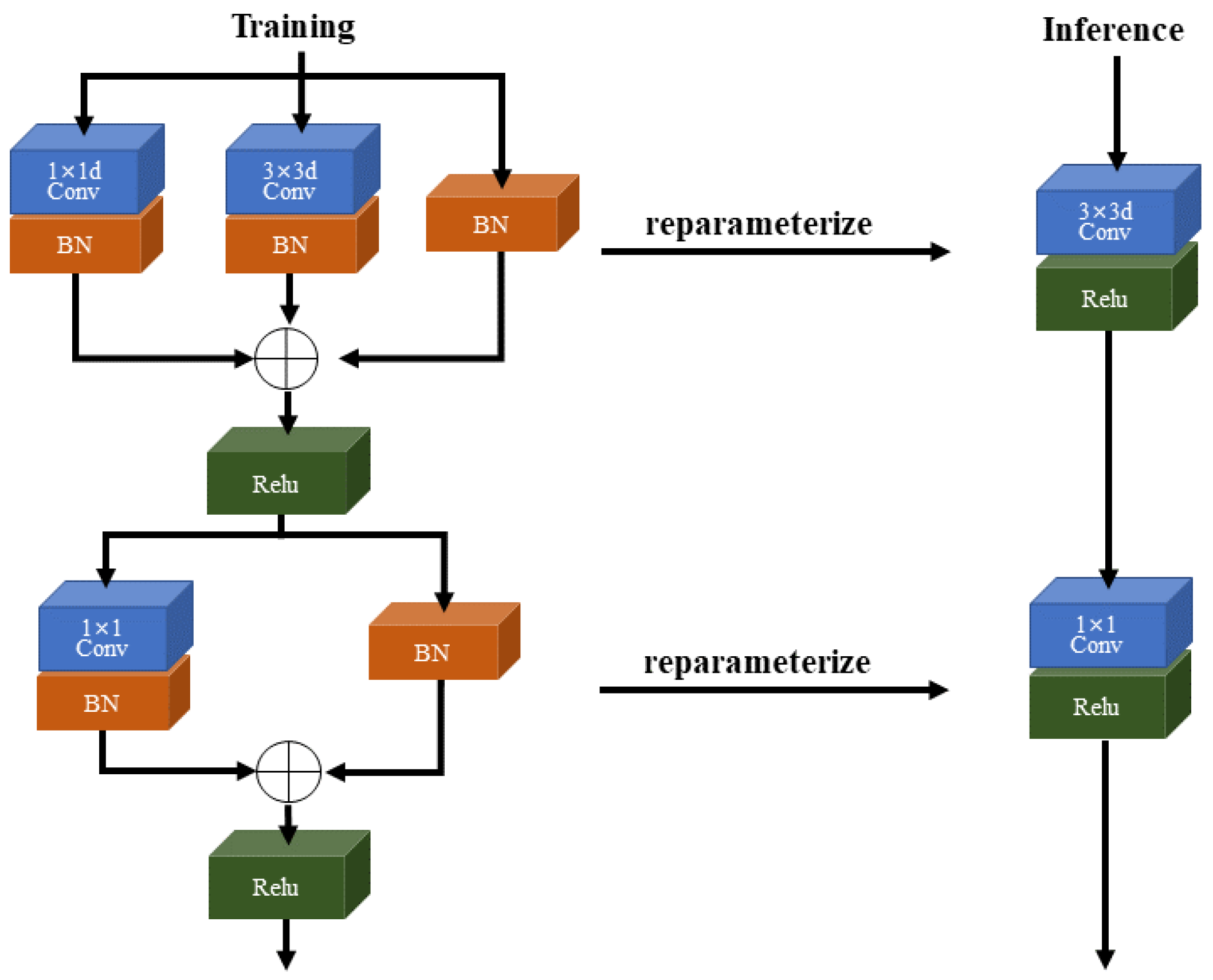

2.2.1. MobileOne Block

2.2.2. Cross-Channel Attention Mechanism

2.3. Data Processing

2.4. Embedded Device

2.5. Model Quantization

2.6. Evaluation Metrics

2.7. Training Environment

3. Experimental Results

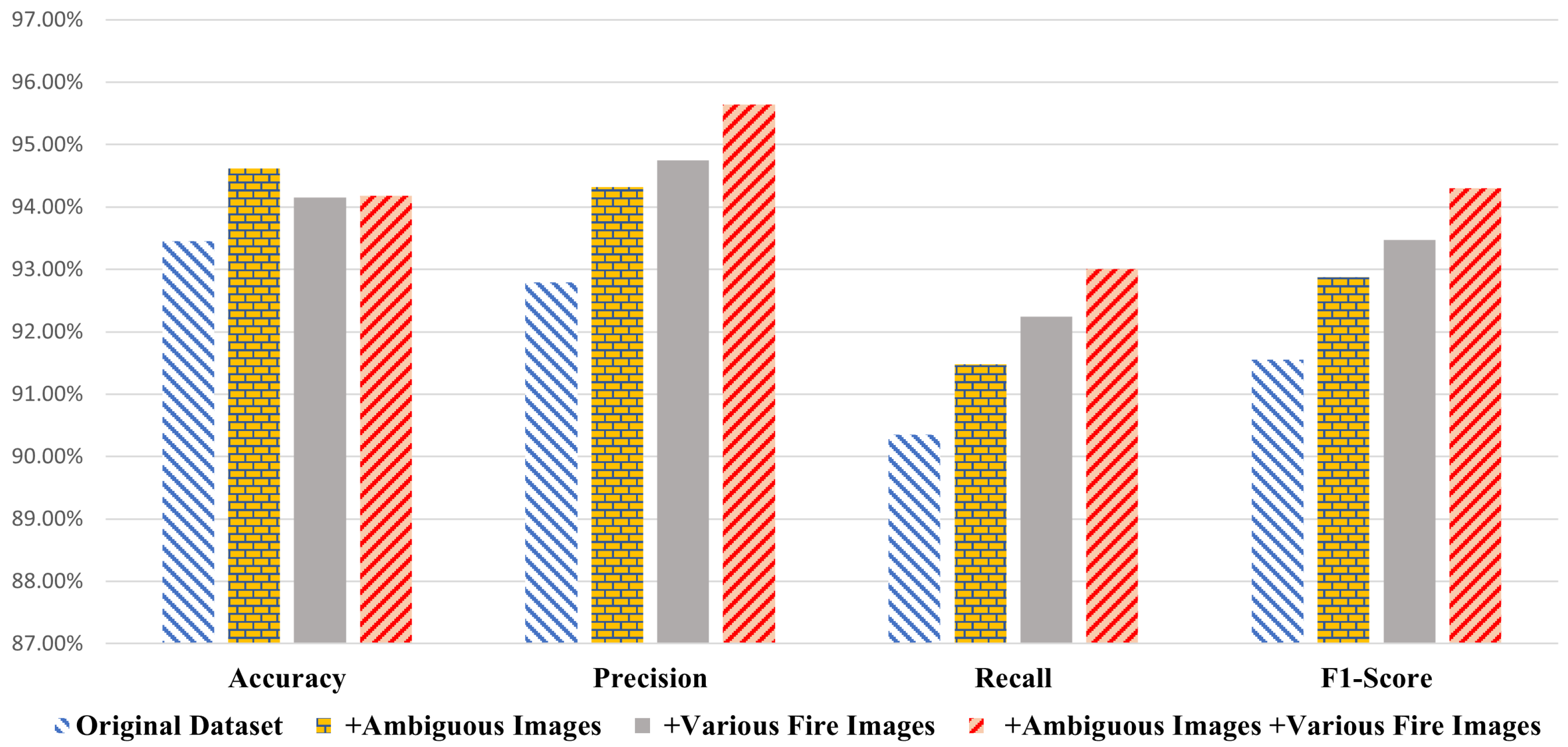

3.1. Comparison of Experimental Results under Different Dataset Compositions

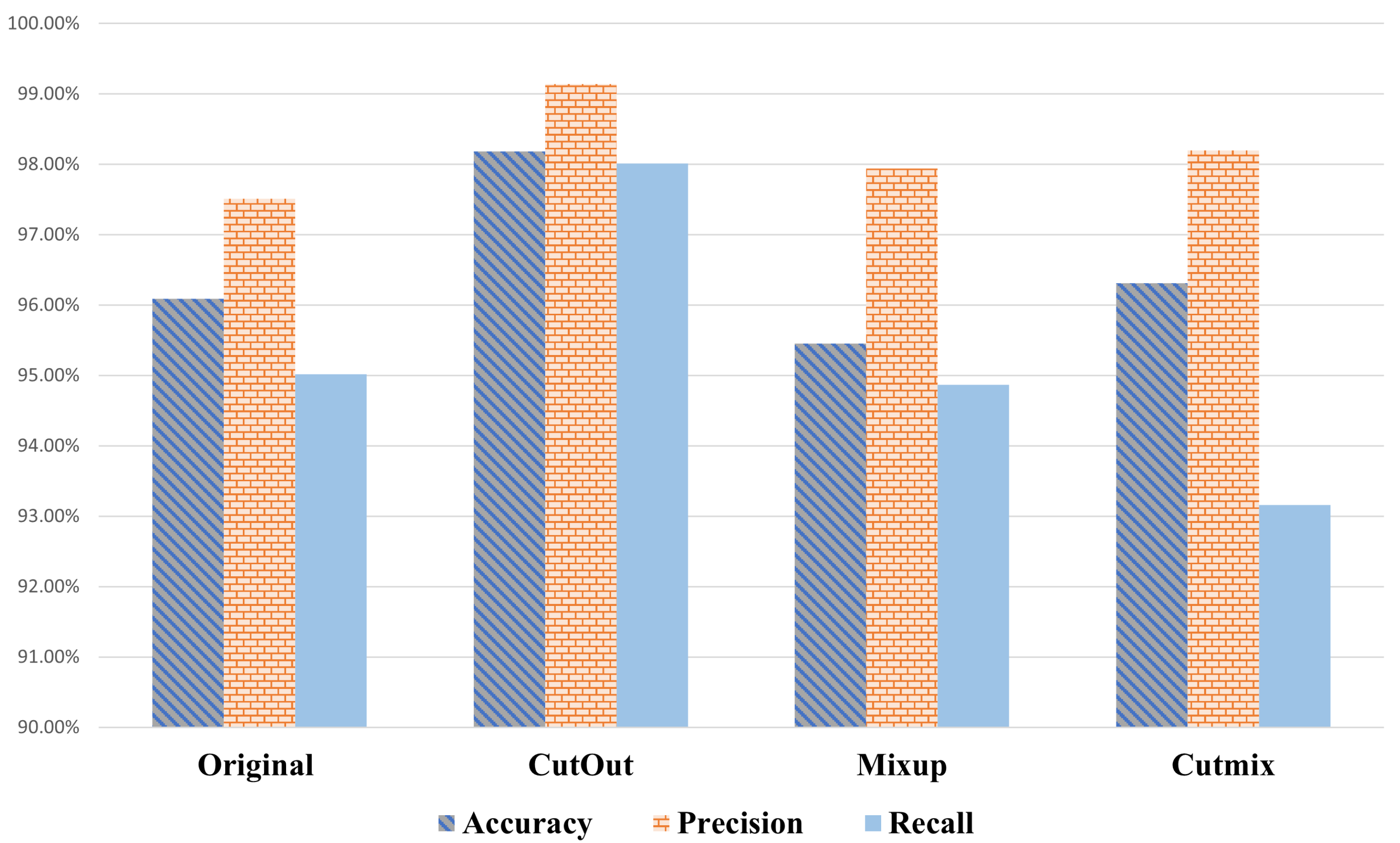

3.2. Comparison of Data Augmentation Techniques

3.3. Attention Mechanisms

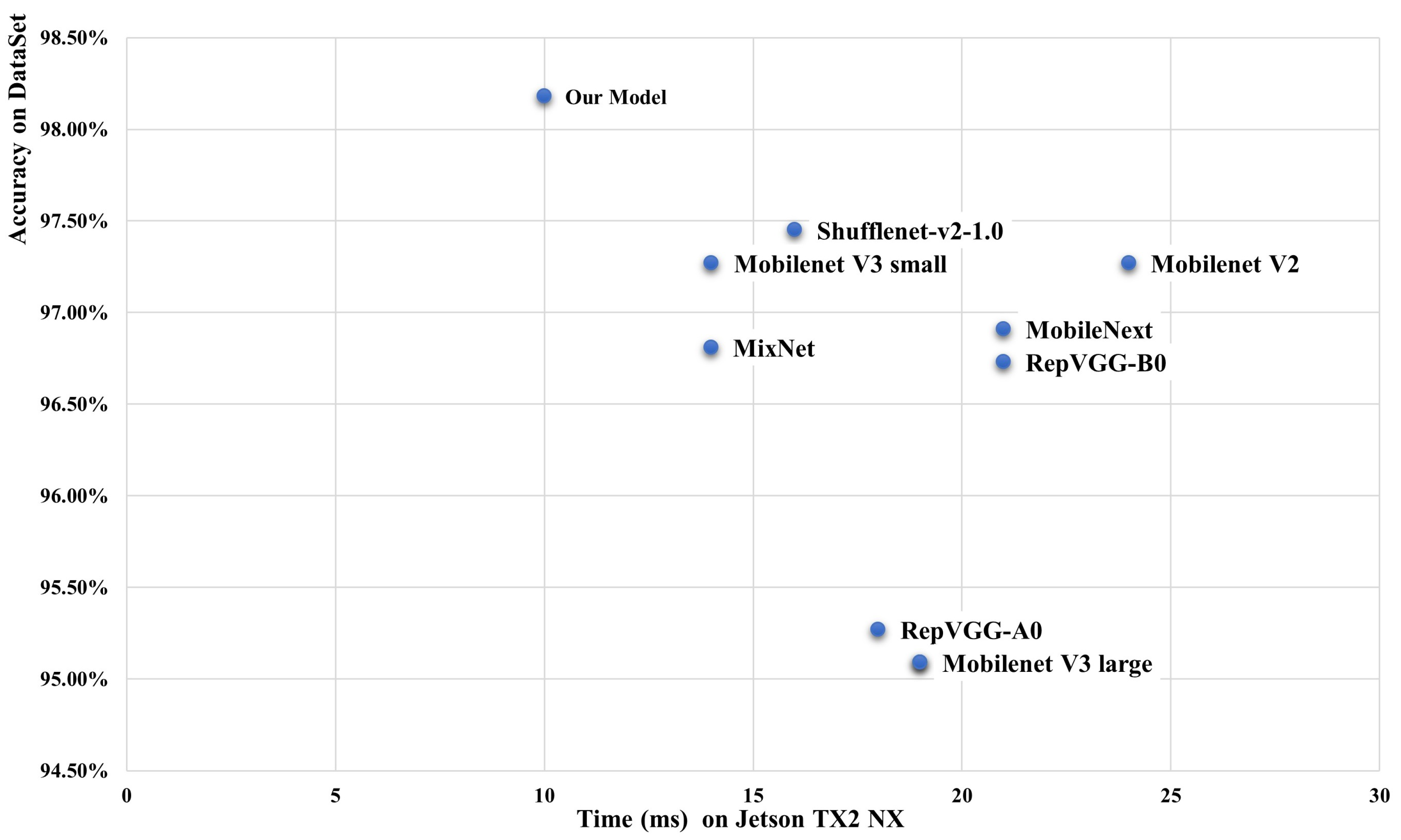

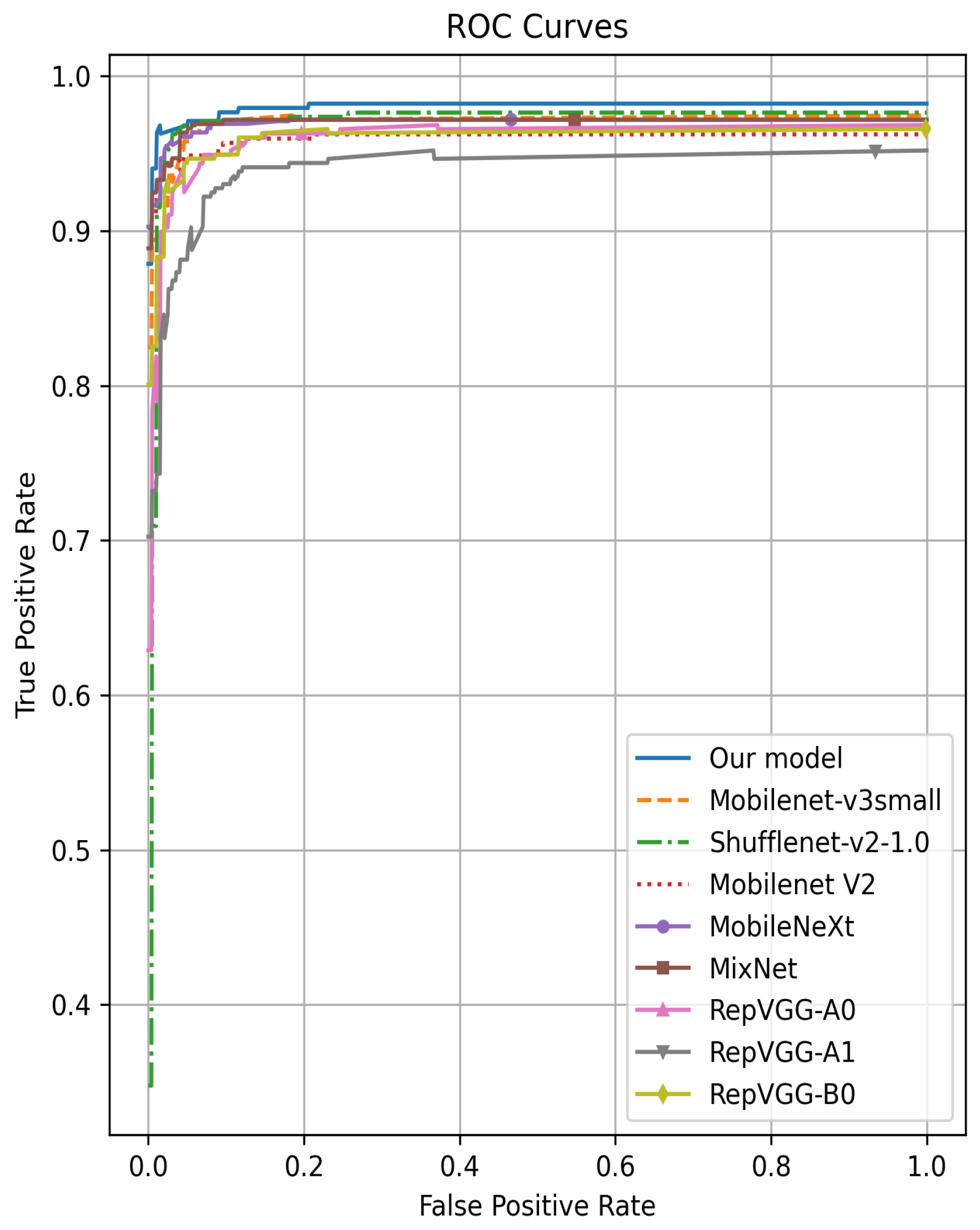

3.4. Comparison with Existing Models

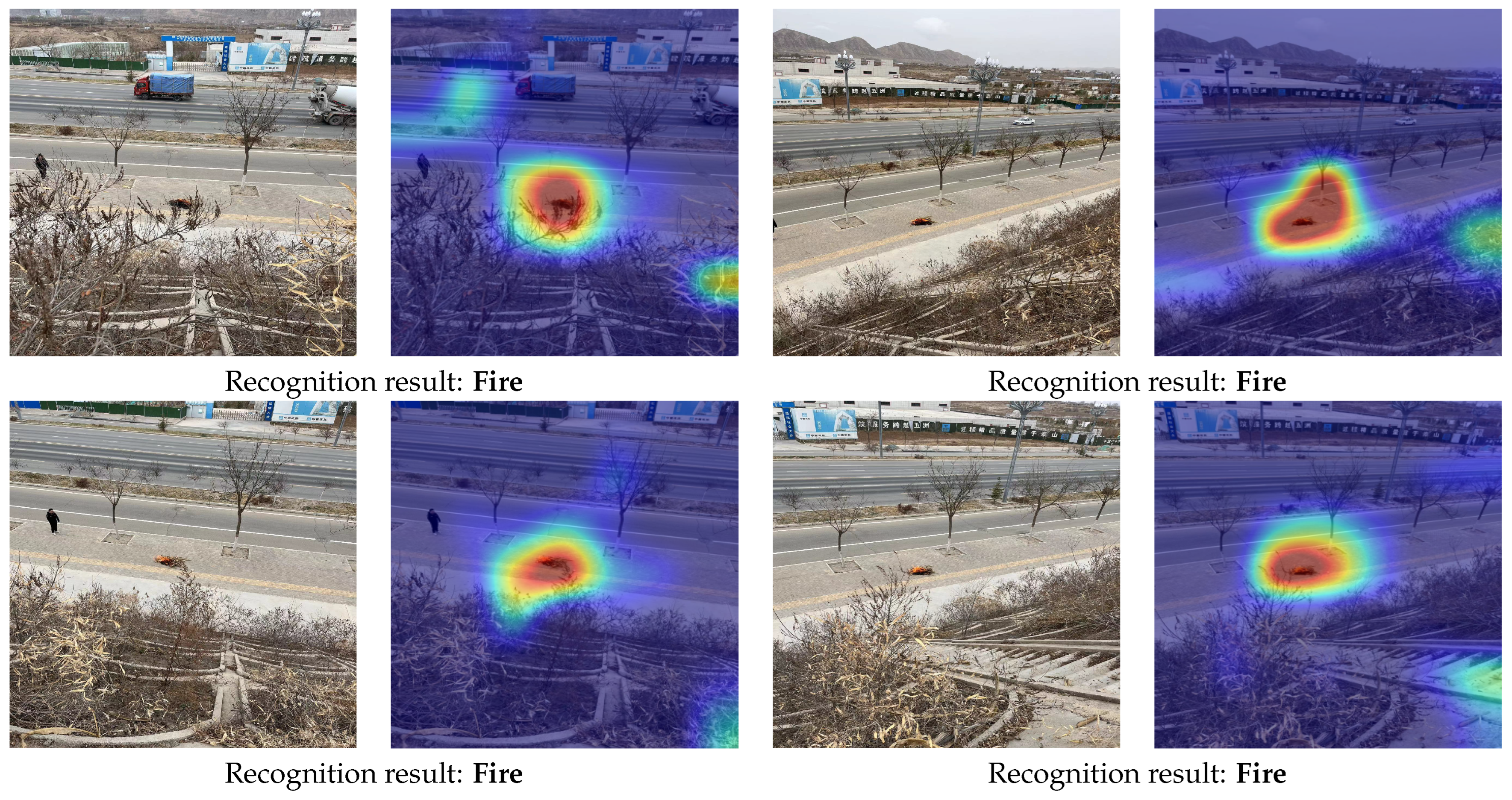

3.5. Field Test Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Menut, L.; Cholakian, A.; Siour, G.; Lapere, R.; Pennel, R.; Mailler, S.; Bessagnet, B. Impact of Landes forest fires on air quality in France during the 2022 summer. Atmos. Chem. Phys. 2023, 23, 7281–7296.

2. Sun, Y.; Jiang, L.; Pan, J.; Sheng, S.; Hao, L. A satellite imagery smoke detection framework based on the Mahalanobis distance for early fire identification and positioning. Int. J. Appl. Earth Obs. Geoinf. 2023, 118, 103257.

3. Yandouzi, M.; Grari, M.; Idrissi, I.; Moussaoui, O.; Azizi, M.; Ghoumid, K.; Elmiad, A.K. Review on forest fires detection and prediction using deep learning and drones. J. Theor. Appl. Inf. Technol. 2022, 100, 4565–4576.

4. Zahed, M.; Bączek-Kwinta, R. The Impact of Post-Fire Smoke on Plant Communities: A Global Approach. Plants 2023, 12, 3835.

5. Qin, Y.; Xiao, X.; Wigneron, J.P.; Ciais, P.; Canadell, J.G.; Brandt, M.; Li, X.; Fan, L.; Wu, X.; Tang, H.; et al. Large loss and rapid recovery of vegetation cover and aboveground biomass over forest areas in Australia during 2019–2020. Remote Sens. Environ. 2022, 278, 113087.

6. Feng, X.; Merow, C.; Liu, Z.; Park, D.S.; Roehrdanz, P.R.; Maitner, B.; Newman, E.A.; Boyle, B.L.; Lien, A.; Burger, J.R.; et al. How deregulation, drought and increasing fire impact Amazonian biodiversity. Nature 2021, 597, 516–521.

7. Mataix-Solera, J.; Cerdà, A.; Arcenegui, V.; Jordán, A.; Zavala, L. Fire effects on soil aggregation: A review. Earth-Sci. Rev. 2011, 109, 44–60.

8. Ferreira, A.; Coelho, C.d.O.; Ritsema, C.; Boulet, A.; Keizer, J. Soil and water degradation processes in burned areas: Lessons learned from a nested approach. Catena 2008, 74, 273–285.

9. Laurance, W.F. Habitat destruction: Death by a thousand cuts. Conserv. Biol. All 2010, 1, 73–88.

10. MacCarthy, J.; Tyukavina, A.; Weisse, M.J.; Harris, N.; Glen, E. Extreme wildfires in Canada and their contribution to global loss in tree cover and carbon emissions in 2023. Glob. Change Biol. 2024, 30, e17392.

11. Wang, Z.; Wang, Z.; Zou, Z.; Chen, X.; Wu, H.; Wang, W.; Su, H.; Li, F.; Xu, W.; Liu, Z.; et al. Severe global environmental issues caused by Canada’s record-breaking wildfires in 2023. Adv. Atmos. Sci. 2024, 41, 565–571.

12. Pelletier, F.; Cardille, J.A.; Wulder, M.A.; White, J.C.; Hermosilla, T. Revisiting the 2023 wildfire season in Canada. Sci. Remote Sens. 2024, 10, 100145.

13. Kurvits, T.; Popescu, A.; Paulson, A.; Sullivan, A.; Ganz, D.; Burton, C.; Kelley, D.; Fernandes, P.; Wittenberg, L.; Baker, E.; et al. Spreading Like Wildfire: The Rising Threat of Extraordinary Landscape Fires; UNEP: Nairobi, Kenya, 2022.

14. Li, R.; Hu, Y.; Li, L.; Guan, R.; Yang, R.; Zhan, J.; Cai, W.; Wang, Y.; Xu, H.; Li, L. SMWE-GFPNNet: A High-precision and Robust Method for Forest Fire Smoke Detection. Knowl.-Based Syst. 2024, 248, 111528.

15. Lucas-Borja, M.E.; Zema, D.A.; Carrà, B.G.; Cerdà, A.; Plaza-Alvarez, P.A.; Cózar, J.S.; Gonzalez-Romero, J.; Moya, D.; de las Heras, J. Short-term changes in infiltration between straw mulched and non-mulched soils after wildfire in Mediterranean forest ecosystems. Ecol. Eng. 2018, 122, 27–31.

16. Ertugrul, M.; Varol, T.; Ozel, H.B.; Cetin, M.; Sevik, H. Influence of climatic factor of changes in forest fire danger and fire season length in Turkey. Environ. Monit. Assess. 2021, 193, 28.

17. North, M.P.; Stephens, S.L.; Collins, B.M.; Agee, J.K.; Aplet, G.; Franklin, J.F.; Fulé, P.Z. Reform forest fire management. Science 2015, 349, 1280–1281.

18. Sudhakar, S.; Vijayakumar, V.; Sathiya Kumar, C.; Priya, V.; Ravi, L.; Subramaniyaswamy, V. Unmanned Aerial Vehicle (UAV) based Forest Fire Detection and monitoring for reducing false alarms in forest-fires. Comput. Commun. 2020, 149, 1–16.

19. Zhang, Y.; Fang, X.; Guo, J.; Wang, L.; Tian, H.; Yan, K.; Lan, Y. CURI-YOLOv7: A Lightweight YOLOv7tiny Target Detector for Citrus Trees from UAV Remote Sensing Imagery Based on Embedded Device. Remote Sens. 2023, 15, 4647.

20. Namburu, A.; Selvaraj, P.; Mohan, S.; Ragavanantham, S.; Eldin, E.T. Forest Fire Identification in UAV Imagery Using X-MobileNet. Electronics 2023, 12, 733.

21. Zhang, L.; Wang, M.; Fu, Y.; Ding, Y. A Forest Fire Recognition Method Using UAV Images Based on Transfer Learning. Forests 2022, 13, 975.

22. Guan, Z.; Miao, X.; Mu, Y.; Sun, Q.; Ye, Q.; Gao, D. Forest Fire Segmentation from Aerial Imagery Data Using an Improved Instance Segmentation Model. Remote Sens. 2022, 14, 3159.

23. Zhang, L.; Wang, M.; Ding, Y.; Bu, X. MS-FRCNN: A Multi-Scale Faster RCNN Model for Small Target Forest Fire Detection. Forests 2023, 14, 616.

24. Shamsoshoara, A.; Afghah, F.; Razi, A.; Zheng, L.; Fulé, P.Z.; Blasch, E. Aerial imagery pile burn detection using deep learning: The FLAME dataset. Comput. Netw. 2021, 193, 108001.

25. Rui, X.; Li, Z.; Zhang, X.; Li, Z.; Song, W. A RGB-Thermal based adaptive modality learning network for day–night wildfire identification. Int. J. Appl. Earth Obs. Geoinf. 2023, 125, 103554.

26. Barmpoutis, P.; Kastridis, A.; Stathaki, T.; Yuan, J.; Shi, M.; Grammalidis, N. Suburban Forest Fire Risk Assessment and Forest Surveillance Using 360-Degree Cameras and a Multiscale Deformable Transformer. Remote Sens. 2023, 15, 1995.

27. Lin, J.; Lin, H.; Wang, F. STPM_SAHI: A Small-Target forest fire detection model based on Swin Transformer and Slicing Aided Hyper inference. Forests 2022, 13, 1603.

28. Chen, G.; Zhou, H.; Li, Z.; Gao, Y.; Bai, D.; Xu, R.; Lin, H. Multi-Scale Forest Fire Recognition Model Based on Improved YOLOv5s. Forests 2023, 14, 315.

29. Huang, J.; Zhou, J.; Yang, H.; Liu, Y.; Liu, H. A Small-Target Forest Fire Smoke Detection Model Based on Deformable Transformer for End-to-End Object Detection. Forests 2023, 14, 162.

30. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. arXiv 2020, arXiv:2010.11929.

31. Touvron, H.; Cord, M.; Sablayrolles, A.; Synnaeve, G.; Jegou, H. Going deeper with Image Transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 32–42.

32. Shafi, O.; Rai, C.; Sen, R.; Ananthanarayanan, G. Demystifying TensorRT: Characterizing Neural Network Inference Engine on Nvidia Edge Devices. In Proceedings of the 2021 IEEE International Symposium on Workload Characterization (IISWC), Storrs, CT, USA, 7–9 November 2021; pp. 226–237.

33. Vasu, P.K.A.; Gabriel, J.; Zhu, J.; Tuzel, O.; Ranjan, A. MobileOne: An Improved One millisecond Mobile Backbone. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 7907–7917.

34. Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

35. Ding, X.; Guo, Y.; Ding, G.; Han, J. ACNet: Strengthening the Kernel Skeletons for Powerful CNN via Asymmetric Convolution Blocks. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1911–1920.

36. Ding, X.; Zhang, X.; Han, J.; Ding, G. Diverse Branch Block: Building a Convolution as an Inception-like Unit. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 10886–10895.

37. Ding, X.; Zhang, X.; Ma, N.; Han, J.; Ding, G.; Sun, J. RepVGG: Making VGG-style ConvNets Great Again. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 13733–13742.

38. Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient Channel Attention for Deep Convolutional Neural Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 11534–11542.

39. DeVries, T.; Taylor, G.W. Improved Regularization of Convolutional Neural Networks with Cutout. arXiv 2017, arXiv:1708.04552.

40. Chen, L.; Li, S.; Bai, Q.; Yang, J.; Jiang, S.; Miao, Y. Review of Image Classification Algorithms Based on Convolutional Neural Networks. Remote Sens. 2021, 13, 4712.

41. Choe, C.; Choe, M.; Jung, S. Run Your 3D Object Detector on NVIDIA Jetson Platforms: A Benchmark Analysis. Sensors 2023, 23, 4005.

42. Zhang, H.; Cisse, M.; Dauphin, Y.N.; Lopez-Paz, D. MixUp: Beyond empirical risk minimization. arXiv 2017, arXiv:1710.09412.

43. Yun, S.; Han, D.; Chun, S.; Oh, S.J.; Yoo, Y.; Choe, J. CutMix: Regularization Strategy to Train Strong Classifiers with Localizable Features. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6023–6032.

44. Omeiza, D.; Speakman, S.; Cintas, C.; Weldermariam, K. Smooth Grad-CAM++: An Enhanced Inference Level Visualization Technique for Deep Convolutional Neural Network Models. arXiv 2019, arXiv:1908.01224.

45. Fernandez, F.G. TorchCAM: Class Activation Explorer. 2020. Available online: https://github.com/frgfm/torch-cam (accessed on 24 March 2020).

46. Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

47. Zhou, H.; Li, J.; Peng, J.; Zhang, S.; Zhang, S. Triplet attention: Rethinking the similarity in transformers. In Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, Virtual, 14–18 August 2021; pp. 2378–2388.

48. Liu, Y.; Shao, Z.; Hoffmann, N. Global attention mechanism: Retain information to enhance channel-spatial interactions. arXiv 2021, arXiv:2112.05561.

49. Hou, Q.; Zhou, D.; Feng, J. Coordinate Attention for Efficient Mobile Network Design. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 13713–13722.

50. Fawcett, T. An introduction to ROC analysis. Pattern Recognit. Lett. 2006, 27, 861–874.

51. Howard, A.; Sandler, M.; Chu, G.; Chen, L.C.; Chen, B.; Tan, M.; Wang, W.; Zhu, Y.; Pang, R.; Vasudevan, V.; et al. Searching for mobilenetv3. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1314–1324.

52. Ma, N.; Zhang, X.; Zheng, H.T.; Sun, J. ShuffleNet v2: Practical guidelines for efficient cnn architecture design. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 116–131.

53. Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. Mobilenetv2: Inverted residuals and linear bottlenecks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

54. Zhou, D.; Hou, Q.; Chen, Y.; Feng, J.; Yan, S. Rethinking bottleneck structure for efficient mobile network design. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 680–697.

55. Tan, M.; Le, Q.V. Mixconv: Mixed depthwise convolutional kernels. arXiv 2019, arXiv:1907.09595.

56. De la Fuente, R.; Aguayo, M.M.; Contreras-Bolton, C. An optimization-based approach for an integrated forest fire monitoring system with multiple technologies and surveillance drones. Eur. J. Oper. Res. 2024, 313, 435–451.

| Stage | Input Size | Blocks | Stride | Block Type | Input Channels | Output Channels |
|---|---|---|---|---|---|---|
| 1 | 224 × 224 | 1 | 2 | MobileOne block | 3 | 96 |
| 2 | 112 × 112 | 2 | 2 | MobileOne block | 96 | 96 |
| 3 | 56 × 56 | 8 | 2 | MobileOne block | 96 | 192 |
| 4 | 28 × 28 | 5 | 2 | MobileOne block | 192 | 512 |
| 5 | 14 × 14 | 5 | 1 | MobileOne block | 512 | 512 |
| 6 | 14 × 14 | 5 | 1 | CCA Module | 512 | 512 |
| 7 | 14 × 14 | 1 | 2 | MobileOne block | 512 | 1280 |
| 8 | 7 × 7 | 1 | 1 | AvgPool | - | - |
| 9 | 1 × 1 | 1 | 1 | Linear | 1280 | 1 |
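
The spatial sizes and channel counts in the table above can be cross-checked with a few lines of arithmetic. The sketch below is only a sanity check of the table, not the authors' implementation: it assumes each stage divides the spatial size by its stride (that is, padding preserves the size up to the stride), and the names `STAGES`, `trace_shapes`, and `is_consistent` are illustrative.

```python
# Rows 1-7 of the architecture table:
# (input size, stride, block type, input channels, output channels)
STAGES = [
    (224, 2, "MobileOne block", 3, 96),
    (112, 2, "MobileOne block", 96, 96),
    (56, 2, "MobileOne block", 96, 192),
    (28, 2, "MobileOne block", 192, 512),
    (14, 1, "MobileOne block", 512, 512),
    (14, 1, "CCA Module", 512, 512),
    (14, 2, "MobileOne block", 512, 1280),
]


def trace_shapes(stages):
    """Return (output size, output channels) per stage.

    Assumption: output size = input size // stride.
    """
    return [(size // stride, out_ch) for size, stride, _, _, out_ch in stages]


def is_consistent(stages):
    """Check that each stage's output matches the next stage's listed input."""
    shapes = trace_shapes(stages)
    for (out_size, out_ch), nxt in zip(shapes, stages[1:]):
        if out_size != nxt[0] or out_ch != nxt[3]:
            return False
    return True


if __name__ == "__main__":
    for stage, shape in zip(STAGES, trace_shapes(STAGES)):
        print(stage[2], "->", shape)
    print("table is self-consistent:", is_consistent(STAGES))
```

Under that assumption, the seven convolutional stages reduce a 224 × 224 input to a 7 × 7 × 1280 feature map, which matches the input size listed for the AvgPool stage.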

| Computational Capability | GPU | CPU |
|---|---|---|
| 1.33 TFLOPs | NVIDIA Pascal™ architecture GPU with 256 CUDA cores | Dual-core 64-bit NVIDIA Denver 2 CPU and a quad-core ARM A57 complex |

| Parameter | Value |
|---|---|
| Batch size | 24 |
| Epochs | 100 |
| Input size | 224 × 224 |
| Initial learning rate | 0.001 |
| Optimizer | Adam |
| Loss | Binary cross-entropy loss |
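
Because the final Linear layer has a single output and the loss is binary cross-entropy, training presumably treats recognition as a fire / no-fire decision on one logit. The following sketch shows the per-sample loss in plain Python. Whether the sigmoid is applied inside the loss or in the model is not stated in the source, and the label convention (1 = fire) is likewise an assumption; the function names are illustrative.

```python
import math


def sigmoid(logit: float) -> float:
    """Map a raw logit to a probability, written to avoid overflow."""
    if logit >= 0:
        return 1.0 / (1.0 + math.exp(-logit))
    e = math.exp(logit)
    return e / (1.0 + e)


def bce_loss(logit: float, label: int, eps: float = 1e-12) -> float:
    """Binary cross-entropy for one sample.

    Assumption: label is 1 for fire and 0 for no fire.
    """
    p = sigmoid(logit)
    # Clamp so that log(0) cannot occur for very confident predictions.
    p = min(max(p, eps), 1.0 - eps)
    return -(label * math.log(p) + (1 - label) * math.log(1.0 - p))


def mean_bce(logits, labels):
    """Average the per-sample loss over a batch."""
    return sum(bce_loss(z, y) for z, y in zip(logits, labels)) / len(logits)


if __name__ == "__main__":
    # An undecided logit of 0 costs log(2) regardless of the label.
    print(bce_loss(0.0, 1), math.log(2))
```

A confident correct prediction (large positive logit for a fire image) drives the loss toward zero, while a confident wrong one is penalized heavily, which is the behaviour wanted when missed fires are costly.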

| Model | Accuracy | Precision | Recall | F1 Score | Weights (M) | Inference Time (ms) | Batch Inference Time (ms) | FPS |
|---|---|---|---|---|---|---|---|---|
| CCA-MobileOne | 98.18% | 99.14% | 98.01% | 0.9857 | 8.2 | 10.35 | 4.63 | 86 |
| SE-MobileOne | 97.22% | 98.25% | 95.26% | 0.9673 | 8.4 | 11.94 | 5.04 | 85 |
| CA-MobileOne | 97.21% | 98.84% | 96.87% | 0.9785 | 8.2 | 11.22 | 4.98 | 76 |
| Triplet-MobileOne | 96.32% | 96.27% | 95.27% | 0.9577 | 8.2 | 10.71 | 4.95 | 86 |
| GAM-MobileOne | 96.18% | 98.53% | 95.44% | 0.9696 | 21.3 | 15.23 | 5.25 | 72 |
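
The F1 scores in the table above follow from the reported precision and recall as their harmonic mean, F1 = 2PR / (P + R). A short check, with the table rows transcribed by hand into an illustrative `ROWS` dictionary (the helper names are not from the source):

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall, both given as fractions."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) copied from the attention comparison table
ROWS = {
    "CCA-MobileOne": (0.9914, 0.9801, 0.9857),
    "SE-MobileOne": (0.9825, 0.9526, 0.9673),
    "CA-MobileOne": (0.9884, 0.9687, 0.9785),
    "Triplet-MobileOne": (0.9627, 0.9527, 0.9577),
    "GAM-MobileOne": (0.9853, 0.9544, 0.9696),
}


def max_f1_gap(rows):
    """Largest absolute difference between recomputed and reported F1."""
    return max(abs(f1_score(p, r) - f1) for p, r, f1 in rows.values())


if __name__ == "__main__":
    for name, (p, r, reported) in ROWS.items():
        print(f"{name}: recomputed {f1_score(p, r):.4f}, reported {reported:.4f}")
```

All five rows reproduce the reported F1 to within 1e-4, so the precision, recall, and F1 columns are mutually consistent.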

| Model | Accuracy | Precision | Recall | F1 Score | AUC | FLOPs (M) | Params (M) | Inference Time (ms) | Batch Inference Time (ms) |
|---|---|---|---|---|---|---|---|---|---|
| Our Model | 98.18% | 99.14% | 98.01% | 0.9857 | 0.98 | 825 | 3.4 | 10 | 4.6 |
| MobileNet-V3-s [51] | 97.27% | 97.19% | 98.58% | 0.9788 | 0.97 | 56 | 2.6 | 14 | 6.2 |
| ShuffleNet-V2-1.0 [52] | 97.45% | 97.73% | 98.29% | 0.9802 | 0.97 | 146 | 2.3 | 16 | 8.6 |
| MobileNet V2 [53] | 97.27% | 99.70% | 96.58% | 0.9812 | 0.96 | 300 | 3.4 | 24 | 8.4 |
| MobileNeXt [54] | 96.91% | 98.83% | 96.30% | 0.9755 | 0.97 | 311 | 4.1 | 21 | 10.2 |
| MixNet [55] | 96.91% | 97.71% | 97.44% | 0.9757 | 0.97 | 256 | 3.4 | 14 | 10.5 |
| RepVGG-A0 [37] | 95.27% | 98.51% | 94.02% | 0.9621 | 0.96 | 1400 | 8.3 | 18 | 6.0 |
| RepVGG-A1 [37] | 95.09% | 97.65% | 94.59% | 0.9610 | 0.94 | 2400 | 12.8 | 20 | 7.2 |
| RepVGG-B0 [37] | 96.73% | 97.98% | 96.87% | 0.9742 | 0.96 | 3100 | 14.3 | 21 | 6.8 |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, S.; Han, J.; Chen, F.; Min, R.; Yi, S.; Yang, Z. Fire-Net: Rapid Recognition of Forest Fires in UAV Remote Sensing Imagery Using Embedded Devices. Remote Sens. 2024, 16, 2846. https://doi.org/10.3390/rs16152846
