Abstract

In computer vision, removing rain from a single image is crucial for improving the performance of subsequent high-level tasks in rainy conditions. Recently, numerous data-driven single-image deraining techniques have emerged, most of which rely on paired images (i.e., supervised training). However, in real deraining tasks, usually only unpaired images are available. In such scenarios, removing rain streaks in an unsupervised manner is challenging because there are no constraints between the input and target images, which leads to suboptimal restoration. In this paper, we introduce a new unsupervised single-image deraining method called SE-RRACycleGAN, which does not require a paired dataset for training and effectively leverages the constrained transfer learning capability and cyclic structure inherent in CycleGAN. Since rain removal is closely associated with the analysis of texture features in an input image, we propose a novel recurrent rain-attentive module (RRAM) that enhances rain-related information detection by simultaneously considering both rainy and rain-free images. We also apply squeeze-and-excitation enhancement in the generator network to effectively capture spatial contextual information among channels. Finally, a content loss is introduced to enhance the visual similarity between the input and generated images. Both quantitative and qualitative results show that, without requiring paired training images, our method removes more rain streaks, preserves a smoother background, and produces results closer to the ground truth than other approaches.
Extensive experiments on synthetic and real-world datasets demonstrate that our approach outperforms most state-of-the-art unsupervised techniques, particularly on the Rain12 dataset (PSNR of 34.60 dB and SSIM of 0.954) and on real rainy images (PSNR of 34.17 dB and SSIM of 0.953), and is highly competitive with supervised methods. Moreover, we evaluate our model using RMSE, FSIM, MAE, and the correlation coefficient; the results indicate accurate rain removal and strong preservation of the original image’s structural details.

1. Introduction

Weather conditions, including snow, haze, rain, and wind, reduce visibility in images and videos. This can considerably impair the performance of outdoor vision tasks, such as facial recognition, pedestrian detection, visual tracking, traffic sign identification, object detection, and intelligent surveillance [1,2,3,4,5,6]. Removing rain from rainy input images is therefore crucial for building trustworthy computer vision systems, and algorithms that can do so reliably are of great interest [7].

To address rain degradation, various strategies have been proposed, including video deraining [8,9] and single-image deraining (SID) [10,11,12,13]. Video deraining exploits the temporal information between consecutive frames to detect rain streaks and restore the background image. However, it performs poorly when the camera moves dynamically. Additionally, because it analyzes multiple sequential frames, it requires significant computational time, which is a critical limitation for applications such as self-driving cars [10]. In contrast, SID relies solely on the spatial information between adjacent pixels and the visual characteristics of rain streaks to remove rain [14]. SID is therefore more difficult, since it can exploit only the spatial information in a single image, whereas video deraining methods can benefit from the dynamics of rainfall and temporal redundancy. In this study, we focus on removing rain streaks from a single image, that is, eliminating raindrops or streaks from an input image and restoring the clean background. Single-image rain streak removal is an important research topic that has recently gained considerable attention in computer vision and pattern recognition [15,16].

Existing SID techniques can be broadly divided into two categories: model-based methods and data-driven methods [10,12]. Model-based methods incorporate the physical characteristics of rain and knowledge of the background scene into an optimization problem and develop algorithms to solve it. They typically estimate raindrops or rain streaks using various priors or assumptions, such as sparse coding, low-rank structure, and Gaussian distributions. Despite the significant progress made by these approaches, their performance is usually limited, especially when the background is cluttered and contains intricate illumination. The main reason for this limitation is that real-world raindrops and rain streaks do not strictly conform to a sparse or Gaussian distribution. More recently, data-driven methods have been developed that design specific network architectures and pre-collect pairs of rainy and clean (ground-truth) images to train the network parameters in a supervised manner, aiming to learn sophisticated rain removal functions [10,17]. However, obtaining accurately paired datasets in the real world is difficult due to environmental constraints. Consequently, supervised learning methods rely on synthetic datasets, and the gap between synthetic and real data limits their generalization.

Hence, studying unsupervised SID techniques is essential for improving rain removal on real images, as such techniques can be trained on real rainy images without ground truth. The unsupervised CycleGAN network [18] is a natural candidate for rain removal. Although CycleGAN has proven effective in multiple low-level tasks, applying it to single-image rain removal remains challenging because of the asymmetric domain knowledge between rainy and rain-free images: a rainy image contains both background and rain information, whereas a rain-free image contains only the background. Consequently, directly applying CycleGAN may cause color and structural distortion and may fail to completely remove rain streaks (see Figure 1b).

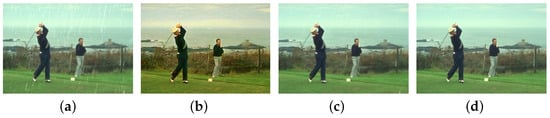

Figure 1.

Comparisons of our result with CycleGAN [18] on Rain100L. (a) Input, (b) CycleGAN [18], (c) our approach, and (d) GT.

In this study, we introduce a novel unsupervised SID approach that eliminates the need for aligned image pairs. Our method incorporates a rain-attentive module that can be applied to both rainy and rain-free images and leverages the cyclic architecture of CycleGAN. Through this multi-loss-constrained rain-attentive module, our model retains the color and structure of the input images (see Figure 1c). The contributions of our work can be summarized as follows:

1. We propose SE-RRACycleGAN, which can generate high-quality, clean, derained images without supervision in the form of aligned rainy and clean images.

2. We propose a novel rain streak extractor termed the Recurrent Rain-Attentive Module (RRAM), which can detect rain information in both rainy and rain-free images.

3. We introduce a squeeze-and-excitation (SE) component into the generator network to encourage it to learn more useful features, reduce overfitting, and improve its generalization ability. We also introduce a content loss so that the generated image remains visually similar to the input image.

4. Extensive experiments on synthetic and real datasets show that our method is competitive with semi-supervised and supervised methods and outperforms state-of-the-art unsupervised methods on real rainy images.

2. Related Works

In this section, we provide a concise overview of the existing literature on SID and position the proposed method within the appropriate context. As discussed in Section 1, methods for SID can be broadly categorized into two groups: model-based approaches and data-driven approaches.

2.1. Model-Based Approaches

Numerous model-based methods treat single-image deraining as an image decomposition problem, in which a rainy image is typically represented as the sum of a rain layer and a rain-free background layer. Using a bilateral filter, Kang et al. [19] decomposed a rainy image into low-frequency (LF) and high-frequency (HF) components. They then used sparse coding and dictionary learning based on morphological component analysis (MCA) to separate the rain streaks in the HF component. Luo et al. [20] developed a patch-based discriminative sparse coding framework that distinguishes rain streaks from the rain-free background. Li et al. [21] proposed patch-based priors for the rain and rain-free background layers. Because these priors are based on Gaussian mixture models, they can account for different rain streak directions and scales. Wang et al. [22] proposed an algorithm that takes advantage of both image decomposition and dictionary learning. While these model-based techniques, relying on assumptions and priors, perform well in certain scenarios, they struggle to remove complex rain patterns in real-world environments. This is because their underlying assumptions and priors do not always hold: real-world raindrops and rain streaks do not strictly follow a sparse or Gaussian distribution.

2.2. Data-Driven Approaches

2.2.1. Supervised Learning Method

To enhance prediction accuracy, these methods employ networks specifically designed to automatically learn rain streak properties from large amounts of paired data. Ahn et al. [10] proposed a two-step rain removal method. It first predicts rain streak information, including rain density and streak intensity, from the input rainy image and then uses it to effectively remove rain streaks from images taken under diverse rain conditions. Zhang et al. [23] presented an image-deraining conditional generative adversarial network (ID-CGAN), which incorporates discriminative, quantitative, and visual performance into the objective function. Yang et al. [24] introduced a rain removal architecture that effectively detects and removes rain streaks and performs particularly well in heavy rain. They used a recurrent process to progressively eliminate overlapping rain streaks of diverse shapes and directions. Wang et al. [17] proposed a kernel-guided convolutional neural network (KGCNN) that effectively addresses both over-deraining and under-deraining. However, all of the techniques mentioned above rely on paired datasets, which are difficult to obtain in real-world scenarios.

2.2.2. Unsupervised Learning Method

Recently, some researchers have proposed GAN-based unsupervised learning approaches for SID, inspired by the remarkable success of GANs in image-to-image translation [25]. Zhu et al. [18] proposed CycleGAN for learning image-to-image translation without paired images. It is constrained by an adversarial loss, which makes the translated images indistinguishable from real images of the target domain, and by a cycle-consistency loss. Yang et al. [26] presented an unsupervised end-to-end rain removal network termed Rain Removal-GAN (RR-GAN). By introducing a physical model that explicitly learns the recovered images and the corresponding rain streaks from a differentiable programming perspective, their network relaxes the need for paired training data. Moreover, to recover the clean image, it employs a multi-scale attention memory generator and a multi-scale discriminator that impose constraints on the clean output image. However, its attention memory relies only on a single branch, from rainy to rain-free images, and is not constrained to learn more about the rain streaks in the rainy image; this limited detection ability makes it unstable in an unsupervised setting. Guo et al. [13] proposed the unsupervised derain attention-guided GAN (Derain Attention GAN), which contains a generator with an attention mechanism to produce rain-free images and a multi-scale discriminator to distinguish the generated rain-free images from real ones. They also introduced a perceptual consistency loss and an internal feature perceptual loss to reduce artifacts in the generated images. However, this method cannot accurately extract rain streaks from a rainy image by relying solely on the cycle-consistency loss, because it imposes no constraint on the attention mechanism, resulting in a weak constraint between the rainy and rain-free images. Wei et al. [11] presented an unsupervised framework for single-image rain removal and generation termed DerainCycleGAN. They developed an unsupervised rain-attentive detector (URAD) to improve the detection of rain information in both rainy and rain-free images. Moreover, unlike previous methods, they generated rain streaks with varied shapes and directions. However, the constraint on URAD is weak, and it erroneously detects rain masks in rain-free images.

2.3. Visual Attention

Visual attention models have been used to pinpoint specific areas within an image in order to capture distinctive local features [27]. The idea has been employed for visual recognition, categorization, and image classification [28,29,30]. It has likewise proven effective in both supervised SID [31] and unsupervised SID [12,26], as it enables the network to determine where the removal or restoration process should concentrate and thus improves the precision of SID. However, the attention modules used in [26,31] rely primarily on a single branch, focusing only on the mapping from rainy to rain-free images, and their constraints are relatively weak, while the attention module used in [12] is unconstrained. Thus, in the unsupervised setting, these modules tend to be unstable owing to their limited detection capability, as they rely solely on the rain information in rainy images. In contrast, our RRAM detects rain-related information by simultaneously considering both rainy and rain-free images and is constrained by attentive losses so that it can learn without supervision. Consequently, the rain information detected by RRAM is more stable and accurate than that of previous attention modules.

3. The Proposed Method

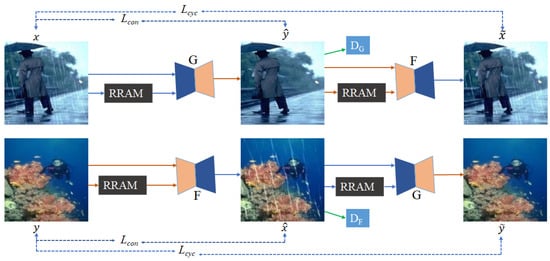

Our objective is to learn to remove rain streaks from a single input image without relying on paired training data. Figure 2 illustrates the architecture of SE-RRACycleGAN, which consists of three components: (1) RRAM, which focuses on rain-related details in both rainy and rain-free images; (2) a pair of generators, G and F, which generate rain-free and rainy images, respectively; and (3) a pair of discriminators, $D_Y$ and $D_X$, which distinguish real rain-free and rainy images, respectively, from generated ones. In the following sections, we describe each of the three components and the objective function in detail.

Figure 2.

The architecture of SE-RRACycleGAN. The process involves two branches. The rainy-to-rainy branch starts with a rainy image and uses generator G to produce a rain-free image, which generator F then uses to reconstruct the rainy image. The rain-free-to-rain-free branch starts with a rain-free image, which is first transformed into a rainy image by F and then mapped back to a rain-free image by G.

3.1. Recurrent Rain-Attentive Module (RRAM)

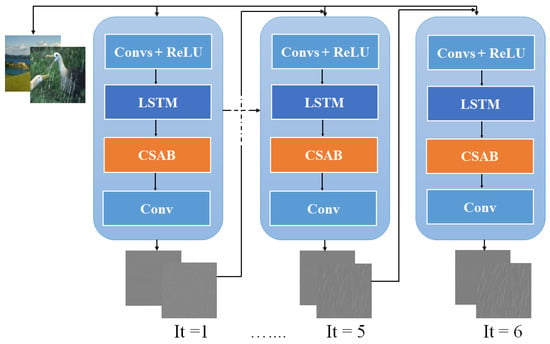

We introduce RRAM (Figure 3) to enhance rain-related information detection by simultaneously considering both rainy and rain-free images. To identify rain information within arbitrary images without supervision, RRAM is constrained by attentive losses on both rain-free and rainy images. As a result, the rain information detected by RRAM is more accurate than that detected by previous attention modules. RRAM differs from Cycle-Attention-Derain [12] and CBAM [32] in that it not only incorporates channel and spatial attention blocks but also uses an LSTM unit [33]. This combination allows RRAM to capture spatial dependencies within the image as well as dependencies across recurrent iterations, facilitating the modeling of intricate patterns of rain streaks interacting with the scene. Because an LSTM retains past states [34], it can model how the detected rain streaks accumulate and how the estimated rain pattern evolves across iterations on a single image, while mitigating the vanishing gradient problem. Furthermore, the LSTM helps discern rain streaks from other image elements, so that the rain mask generated by RRAM represents the presence of rain more accurately than the masks generated by previous attention modules. Its ability to handle variable-length sequences is also valuable when the density and size of rain streaks vary across the image.

Figure 3.

Recurrent rain attentive module architecture.

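In each iteration of RRAM, Conv + ReLU layers extract features from the input image together with the mask produced in the previous iteration. These features then pass through an LSTM unit [33], a CSAB, and a final Conv layer that generates a 2D attention map. The LSTM unit comprises an input gate $i_t$, a forget gate $f_t$, an output gate $o_t$, and a cell state $C_t$. The interactions of these gates and states over time are described as follows:

$$
\begin{aligned}
i_t &= \sigma\left(W_i \ast [X_t, H_{t-1}] + b_i\right),\\
f_t &= \sigma\left(W_f \ast [X_t, H_{t-1}] + b_f\right),\\
o_t &= \sigma\left(W_o \ast [X_t, H_{t-1}] + b_o\right),\\
C_t &= f_t \otimes C_{t-1} + i_t \otimes \tanh\left(W_c \ast [X_t, H_{t-1}] + b_c\right),\\
H_t &= o_t \otimes \tanh(C_t),
\end{aligned}
\tag{1}
$$

where $X_t$ represents the features obtained by the Conv + ReLU unit; $C_t$ denotes the cell state that is fed to the next iteration of the LSTM unit; $H_t$ denotes the output features of the LSTM unit; $W$ and $b$ are the convolution kernels and bias vectors, respectively; ∗ denotes the convolution operation; $[\cdot, \cdot]$ represents concatenation along the channel dimension; ⊗ denotes element-wise multiplication; and $\sigma$ denotes the sigmoid activation function. Each convolution in the LSTM uses 32 + 32 input channels, 32 output channels, a 3 × 3 kernel, a stride of 1, and a padding of 1.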
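As an illustration of the LSTM update above, the following NumPy sketch implements one step of a standard ConvLSTM with 3 × 3 convolutions over the concatenated features and previous hidden state (32 + 32 input channels, 32 output channels). It is a minimal sketch under stated assumptions, not the authors' code: the helper `conv2d_same`, the `params` dictionary layout, and the random weights in the usage lines are hypothetical.

```python
import numpy as np


def conv2d_same(x, weight, bias):
    """Stride-1 'same' 2D convolution (cross-correlation, as in deep-learning libraries).

    x: (C_in, H, W); weight: (C_out, C_in, k, k) with odd k; bias: (C_out,).
    Zero padding of k // 2 keeps the spatial size unchanged (k = 3 gives padding 1).
    """
    _, h, w = x.shape
    c_out, _, k, _ = weight.shape
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    out = np.zeros((c_out, h, w))
    for di in range(k):
        for dj in range(k):
            # Contract over input channels for one kernel tap.
            out += np.einsum("oc,chw->ohw", weight[:, :, di, dj], xp[:, di:di + h, dj:dj + w])
    return out + bias[:, None, None]


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def conv_lstm_step(x_t, h_prev, c_prev, params):
    """One ConvLSTM update in the standard gate form (hypothetical parameter layout).

    x_t, h_prev, c_prev: (32, H, W).
    params: dict mapping 'i', 'f', 'o', 'c' to (weight, bias), with weight of
            shape (32, 64, 3, 3), i.e. 32 + 32 concatenated input channels.
    """
    z = np.concatenate([x_t, h_prev], axis=0)    # [X_t, H_{t-1}] along channels
    i_t = sigmoid(conv2d_same(z, *params["i"]))  # input gate
    f_t = sigmoid(conv2d_same(z, *params["f"]))  # forget gate
    o_t = sigmoid(conv2d_same(z, *params["o"]))  # output gate
    g_t = np.tanh(conv2d_same(z, *params["c"]))  # candidate cell update
    c_t = f_t * c_prev + i_t * g_t
    h_t = o_t * np.tanh(c_t)
    return h_t, c_t


# Usage with small random weights (hypothetical values, for shape checking only).
rng = np.random.default_rng(0)
params = {g: (0.01 * rng.standard_normal((32, 64, 3, 3)), np.zeros(32)) for g in "ifoc"}
x = rng.standard_normal((32, 16, 16))
h, c = np.zeros_like(x), np.zeros_like(x)
for _ in range(3):  # a few recurrent iterations
    h, c = conv_lstm_step(x, h, c, params)
print(h.shape)  # (32, 16, 16)
```

Carrying `h` and `c` across the loop is what lets later iterations refine earlier estimates, which is the role the LSTM plays in RRAM's recurrence.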

In RRAM, CSAB denotes the combination of a channel attention block (CAB) and a spatial attention block (SAB), as illustrated in Figure 4. We employ the CAB to distinguish rain streak features from the background and the SAB to capture specific attributes of the rain streaks, such as their size, shape, and position. The LSTM output $H_t$ passes through three convolution layers (Figure 4): the first is followed by batch normalization and ReLU [35], the second by batch normalization, and the third produces the intermediate feature map $Y$. $Y$ is fed to the CAB to obtain the channel attention map $M_c(Y)$, which is multiplied with $Y$ to yield the feature map $Y'$. $Y'$ then passes through the SAB to generate the spatial attention map $M_s(Y')$, which is multiplied with $Y'$ to yield $Y''$. These steps are summarized as follows:

$$
Y' = M_c(Y) \otimes Y, \qquad Y'' = M_s(Y') \otimes Y'
\tag{2}
$$

where ⊗ denotes element-wise multiplication (with the attention maps broadcast over the missing dimensions), $M_c(\cdot)$ represents the CAB, and $M_s(\cdot)$ represents the SAB.

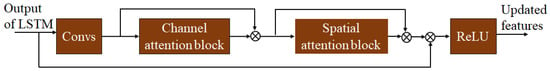

Figure 4.

The structure of our CSAB. The convolution block represents three convolution layers: the first is followed by batch normalization and ReLU, the second is followed by batch normalization, and the third produces the intermediate feature map.

Lastly, the output feature $F$ is obtained by adding $Y''$ to $H_t$ and passing the result through a ReLU nonlinearity (Equation (3)). $F$ is then passed through the last convolution layer of RRAM to generate the rain mask $A_t$ (Equation (4)).

$$
F = \mathrm{ReLU}\left(Y'' \oplus H_t\right)
\tag{3}
$$

$$
A_t = \mathrm{Conv}(F)
\tag{4}
$$

where ⊕ denotes element-wise addition, $\mathrm{ReLU}(\cdot)$ is the ReLU activation function, and $\mathrm{Conv}(\cdot)$ is a convolution layer with a stride of 1 and a padding of 1.
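The composition of the CSAB path with the residual connection and the mask head can be sketched as follows. This is a minimal NumPy illustration, assuming that the residual adds $Y''$ to the CSAB input $H_t$ and that the three-convolution stack preserves the channel count; the function name `csab_with_residual` and the toy stand-ins passed to it are hypothetical, chosen so that the arithmetic is easy to check by hand.

```python
import numpy as np


def csab_with_residual(h_t, conv3, cab, sab, conv_out):
    """Sketch of the CSAB path, residual connection, and mask head.

    h_t:      LSTM output, shape (C, H, W)
    conv3:    the three-convolution stack producing Y; assumed to keep C channels
              so that the residual addition is shape-compatible
    cab, sab: channel / spatial attention, returning (C, 1, 1) and (1, H, W) maps
    conv_out: final convolution producing the rain mask
    Assumption: the residual adds Y'' to the CSAB input h_t.
    """
    y = conv3(h_t)
    y1 = cab(y) * y                   # Y'  = M_c(Y) * Y   (broadcast over H, W)
    y2 = sab(y1) * y1                 # Y'' = M_s(Y') * Y' (broadcast over channels)
    feat = np.maximum(y2 + h_t, 0.0)  # F = ReLU(Y'' + H_t)
    mask = conv_out(feat)             # rain mask for this iteration
    return feat, mask


# Toy stand-ins (hypothetical) that make the arithmetic easy to follow.
h = np.arange(24.0).reshape(2, 3, 4) - 12.0
feat, mask = csab_with_residual(
    h,
    conv3=lambda t: t,                                  # identity "convolutions"
    cab=lambda y: np.full((y.shape[0], 1, 1), 0.5),     # halve every channel
    sab=lambda y: np.ones((1,) + y.shape[1:]),          # keep every position
    conv_out=lambda f: f.mean(axis=0, keepdims=True),   # collapse to one channel
)
print(mask.shape)  # (1, 3, 4)
```

With these stand-ins, $Y'' = 0.5\,H_t$, so the block returns $\mathrm{ReLU}(1.5\,H_t)$; the residual path keeps the LSTM features available even when the attention maps suppress most of $Y$.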

The following presents the specifics of the channel attention block and the spatial attention block.

Channel Attention Block (CAB): Because each channel of a feature map acts as a feature detector, channel attention focuses on determining what constitutes the rain streaks in the input rainy image. To better differentiate rain streak characteristics from background attributes, we integrate the CAB [32] into RRAM. In our CAB, we use average-pooling and max-pooling simultaneously for feature aggregation, which improves the representational power of RRAM compared with using either pooling operation alone. Spatial information is aggregated from the feature map $Y$ by average-pooling and max-pooling, producing two distinct spatial context descriptors: the average-pooled and max-pooled features. Both descriptors are fed into a shared convolutional network consisting of two convolution layers, and the outputs are merged by element-wise summation to obtain the channel attention map [32]. In summary, the channel attention map is computed as follows:

$$
M_c(Y) = \sigma\left(\mathrm{Conv}_2\left(\mathrm{AvgPool}(Y)\right) + \mathrm{Conv}_2\left(\mathrm{MaxPool}(Y)\right)\right)
\tag{5}
$$

where $\sigma(\cdot)$ represents the sigmoid activation function and $\mathrm{Conv}_2(\cdot)$ denotes the two shared convolution layers with a stride of 1 and a padding of 0.
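A minimal NumPy sketch of the channel attention computation follows, assuming that the two shared layers are 1 × 1 convolutions with a ReLU between them (as in CBAM [32]); on a pooled 1 × 1 map such convolutions reduce to matrix-vector products. The function name, the weight shapes, and the reduction ratio r = 8 in the usage lines are illustrative assumptions, not values reported for RRAM.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def channel_attention(y, w1, b1, w2, b2):
    """CBAM-style channel attention map M_c(Y).

    y:  feature map of shape (C, H, W)
    w1: (C // r, C), b1: (C // r,); first 1x1 convolution (channel reduction)
    w2: (C, C // r), b2: (C,); second 1x1 convolution (channel expansion)
    Assumption: a ReLU sits between the two layers, as in CBAM.
    Returns M_c with shape (C, 1, 1).
    """
    avg = y.mean(axis=(1, 2))  # average-pooled descriptor, shape (C,)
    mx = y.max(axis=(1, 2))    # max-pooled descriptor, shape (C,)

    def shared_net(v):
        # The same two layers are applied to both descriptors.
        return w2 @ np.maximum(w1 @ v + b1, 0.0) + b2

    return sigmoid(shared_net(avg) + shared_net(mx))[:, None, None]


# Usage: the map rescales each channel of Y to obtain Y' = M_c(Y) * Y.
rng = np.random.default_rng(1)
C, r = 32, 8  # hypothetical channel count and reduction ratio
y = rng.standard_normal((C, 16, 16))
m_c = channel_attention(
    y,
    rng.standard_normal((C // r, C)), np.zeros(C // r),
    rng.standard_normal((C, C // r)), np.zeros(C),
)
y_prime = m_c * y
print(m_c.shape, y_prime.shape)  # (32, 1, 1) (32, 16, 16)
```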

Spatial Attention Block (SAB): Unlike the channel attention map, the spatial attention map focuses on where rain streaks are located in the rainy image, complementing channel attention. Pooling along the channel axis is effective at highlighting rain streak regions [36]. We therefore apply max-pooling and average-pooling to $Y'$ along the channel axis, producing two 2D maps, $Y'_{max}$ (max-pooled features) and $Y'_{avg}$ (average-pooled features), and concatenate them into an effective feature descriptor. A convolution layer applied to this descriptor yields the spatial attention map $M_s(Y')$, which indicates the areas to emphasize or suppress (Equation (6)):

$$
M_s(Y') = \sigma\left(\mathrm{Conv}\left([Y'_{max}; Y'_{avg}]\right)\right)
\tag{6}
$$

where $\sigma(\cdot)$ represents the sigmoid activation function, $[\cdot\,;\cdot]$ denotes concatenation, and $\mathrm{Conv}(\cdot)$ denotes a convolution layer with a stride of 3 and a padding of 0.
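The spatial attention step can be sketched in NumPy as follows. This sketch assumes a stride of 1 with zero "same" padding so that the resulting map has the same height and width as $Y'$ and can multiply it element-wise; the 7 × 7 kernel in the usage lines follows CBAM's default and is likewise an assumption, as is the function name `spatial_attention`.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def spatial_attention(y1, weight, bias):
    """Spatial attention map M_s(Y') from channel-wise max and average pooling.

    y1:     refined feature map Y', shape (C, H, W)
    weight: (2, k, k) kernel for the single-output convolution (odd k)
    bias:   scalar
    Assumption: stride 1 with zero 'same' padding, so that M_s has the same
    H x W as Y' and can multiply it element-wise.
    Returns M_s with shape (1, H, W).
    """
    _, h, w = y1.shape
    desc = np.stack([y1.max(axis=0), y1.mean(axis=0)])  # [max-pooled; avg-pooled], (2, H, W)
    k = weight.shape[-1]
    pad = k // 2
    dp = np.pad(desc, ((0, 0), (pad, pad), (pad, pad)))
    out = np.full((h, w), float(bias))
    for di in range(k):
        for dj in range(k):
            # Sum over the two descriptor channels for one kernel tap.
            out += np.tensordot(weight[:, di, dj], dp[:, di:di + h, dj:dj + w], axes=1)
    return sigmoid(out)[None, :, :]


# Usage with a hypothetical 7x7 kernel; Y'' = M_s(Y') * Y'.
rng = np.random.default_rng(2)
y1 = rng.standard_normal((32, 16, 16))
m_s = spatial_attention(y1, 0.1 * rng.standard_normal((2, 7, 7)), 0.0)
y2 = m_s * y1
print(m_s.shape, y2.shape)  # (1, 16, 16) (32, 16, 16)
```

Because $M_s$ has a single channel, the same spatial weight is applied to every channel of $Y'$ at a given pixel, which is what lets the block emphasize streak locations independently of which channels respond to them.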

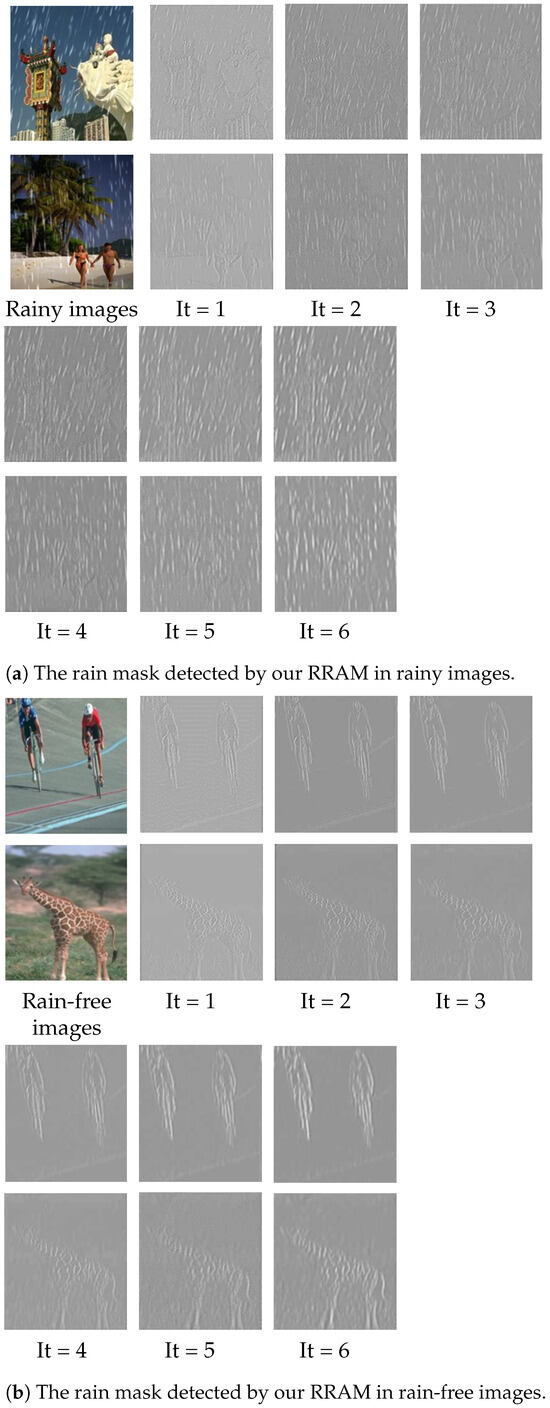

To validate the effectiveness of RRAM, Figure 5 shows the rain masks it detects in both rainy and rain-free images. The input to RRAM can be either a rainy or a rain-free image, and the output captures the rain information present in that input. Figure 5a shows rainy images and the corresponding rain masks identified by RRAM; the rain mask becomes clearer with each iteration. Figure 5b shows rain-free images, for which RRAM correctly detects no rain mask from the first iteration onward. This distinguishes RRAM from previous rain attention mechanisms, which erroneously detect rain masks in rain-free images during the initial iterations. To clearly illustrate the differences between iterations, we adjusted the grey scale of the displayed rain masks: the scale was shifted from 255 to 176, and the brightness was reduced from 0 to −6. This adjustment makes subtle variations in the rain mask visible while maintaining a distinct contrast with the background.

Figure 5.

The rain mask detected by RRAM in (a) rainy images and (b) rain-free images. The rain mask in rainy images becomes clearer as the number of iterations increases, whereas no rain mask is detected in rain-free images. "It" denotes the iteration.

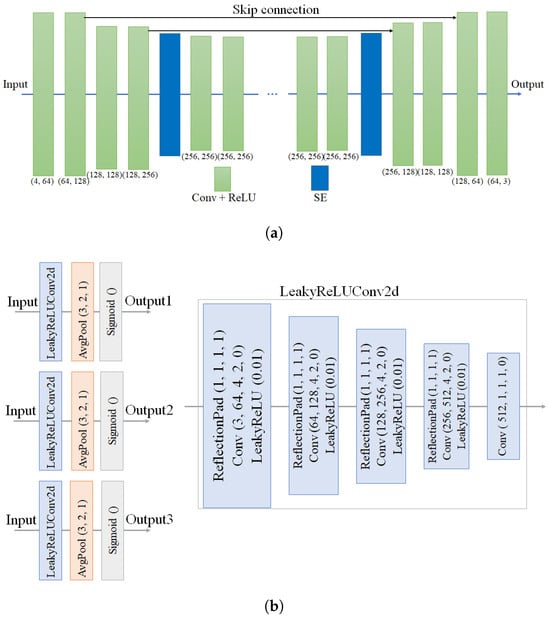

3.2. Generator

To generate rain-free images from rainy images, we use a generator with a structure similar to the U-Net contextual encoder–decoder network [37]. However, our generator additionally incorporates squeeze-and-excitation (SE) blocks [38], which adaptively re-weight feature channels. The encoder comprises eight Conv-ReLU blocks with strided convolutions that reduce the spatial dimensions of the feature maps, downsampling the input image to extract hierarchical features. It also contains one SE block, as depicted in Figure 6a (the SE blocks are highlighted in blue, with the left part for the encoder and the right part for the decoder). The output of the fourth Conv-ReLU block is fed to the SE block, whose output is in turn fed to the fifth Conv-ReLU block. Similarly, the decoder consists of eight Conv-ReLU blocks and one SE block. In the decoder, transposed convolutional layers (also known as deconvolutional layers) increase the spatial dimensions of the feature maps (i.e., upsampling). The SE block is placed among the decoder's Conv-ReLU blocks at the position mirroring its placement in the encoder. Moreover, two skip connections propagate fine-grained information from earlier layers to later layers, aiding in the recovery of spatial details lost during encoding. The generator takes as input the concatenation of the original image and the rain information identified by RRAM. To the best of our knowledge, this is the first use of SE in a generator for unsupervised single-image rain streak removal. SE is discussed in detail below.

Figure 6.

The structure of (a) generator and (b) discriminator.

Squeeze-and-Excitation Block

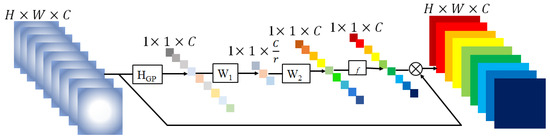

Hu et al. [38] introduced SE, a channel relationship representation that adaptively recalibrates channel-wise feature responses by modeling interdependencies between channels. In SID tasks, rain components can vary in density and direction, and each channel has a different capacity to extract them. It is therefore generally inappropriate to treat all feature maps equally when extracting the rain component layer, since different feature maps may contribute differently to it. We thus apply SE enhancement within our generator network, leveraging its ability to capture spatial contextual information among channels, which has proven important for SID tasks. In SID, certain channels may hold essential information for rain streak removal, while others contain noise or irrelevant details. The novelty of our approach lies in using SE blocks to enhance rain-related features, thereby reducing the impact of noise and yielding more accurate and effective deraining results. Integrating an SE block into the generator enables the model to learn discriminative features, facilitating the capture of complex rain patterns and the representation of the image’s underlying structure. This capability is particularly important for deraining, which requires both local and global features. Moreover, by dynamically adjusting feature weights according to the characteristics of the input image, the SE block helps the generator generalize across diverse rain patterns, which improves the robustness and effectiveness of the deraining model in various rain scenarios.

The SE process is illustrated in Figure 7 and briefly reviewed as follows. Let $F = [f_1, f_2, \dots, f_C] \in \mathbb{R}^{H \times W \times C}$ be the input feature map of the SE block. First, global average pooling is applied to $F$, producing a vector $z \in \mathbb{R}^{C}$ whose $c$th element is given by Equation (7):

$$
z_c = g_p(f_c) = \frac{1}{H \times W} \sum_{i=1}^{H} \sum_{j=1}^{W} f_c(i, j)
\tag{7}
$$

where $f_c(i, j)$ and $z_c$ represent the value at position $(i, j)$ of the $c$th channel and the corresponding output, respectively.

To fully capture the channel-wise interdependencies from the information aggregated by global average pooling, we follow it with a second operation (excitation). This operation must satisfy two requirements. First, it must be able to learn nonlinear interactions among channels. Second, it must be able to learn a non-mutually exclusive relationship, so that multiple channel-wise features can be emphasized rather than enforcing a one-hot activation. To meet these requirements, we use a simple gating mechanism with a sigmoid activation:

$$
s = \sigma\left(W_U \ast \delta\left(W_D \ast z\right)\right)
\tag{8}
$$

where $\sigma(\cdot)$ and $\delta(\cdot)$ denote the sigmoid and ReLU functions, respectively, and ∗ indicates the convolution operation. $W_D$ is the weight set of a convolutional layer that downscales the channels with a reduction ratio of $r$. After ReLU activation, the lower-dimensional signal is upscaled by a factor of $r$ through a channel-upscaling layer with weight set $W_U$. This yields the final channel statistics $s$, which are used to recalibrate the input.

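Finally, the output of SE is expressed as Equation (9):

$$
\tilde{F} = s \otimes F, \quad \text{i.e.,} \quad \tilde{f}_c = s_c \, f_c
\tag{9}
$$

where ⊗ denotes channel-wise multiplication between the feature channels and their corresponding channel weights.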
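The three SE steps (squeeze by global average pooling, excitation through a bottleneck gate, and channel-wise rescaling) can be sketched in NumPy as below. Because the excitation operates on a 1 × 1 pooled map, the downscaling and upscaling convolutions reduce to matrix-vector products. The channel count C = 64 and reduction ratio r = 16 in the usage lines are hypothetical values chosen for illustration, not the generator's actual configuration.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def squeeze_excitation(feat, w_down, b_down, w_up, b_up):
    """Squeeze-and-excitation on a (C, H, W) feature map.

    w_down: (C // r, C); channel-downscaling layer (1x1 conv on the pooled vector)
    w_up:   (C, C // r); channel-upscaling layer
    """
    z = feat.mean(axis=(1, 2))                                       # squeeze: global average pooling, (C,)
    s = sigmoid(w_up @ np.maximum(w_down @ z + b_down, 0.0) + b_up)  # excitation: per-channel weights, (C,)
    return s[:, None, None] * feat                                   # recalibration: scale each channel


rng = np.random.default_rng(3)
C, r = 64, 16  # hypothetical channel count and reduction ratio
feat = rng.standard_normal((C, 8, 8))
out = squeeze_excitation(
    feat,
    rng.standard_normal((C // r, C)), np.zeros(C // r),
    rng.standard_normal((C, C // r)), np.zeros(C),
)
print(out.shape)  # (64, 8, 8)
```

The sigmoid gate lets several channels be boosted at once (each weight lies independently in (0, 1)), which is the non-mutually-exclusive behavior the text asks for; a softmax gate would instead force the channels to compete.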

In general, the SE block assigns a weight to each channel. This allows the response of each feature map to be recalibrated adaptively, making it easier to capture additional spatial contextual information [39].

Figure 7.

Squeeze-and-excitation [40], where $g_p(\cdot)$ and $\sigma(\cdot)$ denote the global pooling function and the activation function, respectively.

3.3. Discriminator

In the GAN framework, removing rain from an image requires more than visually appealing output and good quantitative scores against the ground truth: the derained output must closely resemble the ground-truth image. This calls for a robust discriminator that captures both local and global information to distinguish real images from generated ones. We use two discriminators, $D_X$ and $D_Y$, with structures similar to [41]; each adopts a multi-scale structure in which the feature maps pass through five convolutional layers and are then fed into a sigmoid function (Figure 6b). The inputs to each discriminator are real (ground-truth) images and images generated by our generator.

3.4. Objective Function

Our objective function contains four types of losses as elaborated below.

Attentive losses: As in DerainCycleGAN [11], the unsupervised learning of RRAM must be constrained so that it identifies rain-related information in any given image. Because no ground truth is available for rain information, we train RRAM with a combination of prior knowledge and self-supervision. Specifically, we first constrain the mask of the rain-free image through the loss $\mathcal{L}_{att}^{y}$ in Equation (10):

$$
\mathcal{L}_{att}^{y} = \mathbb{E}_{y \sim P_Y}\left[\lVert R(y) - Z \rVert_2^2\right]
\tag{10}
$$

where $R(y)$ is the mask of the rain-free image identified by RRAM and $Z$ is an all-zero map with the same shape as the mask. Since the rain-free image $y$ contains no rain information, $R(y)$ should be close to $Z$.

Then, we apply self-supervision to the mask of the rainy image through the loss $\mathcal{L}_{att}^{x}$ (Equation (11)):

$$
\mathcal{L}_{att}^{x} = \mathbb{E}_{x \sim P_X}\left[\lVert R(x) - \lvert x - \hat{x} \rvert \rVert_2^2\right]
\tag{11}
$$

where $R(x)$ is the mask identifying the rainy regions of $x$ as detected by RRAM, and $\hat{x} = G(x)$ and $x$ are the derained image and the original rainy image, respectively. This self-supervised learning enables RRAM to focus on rain-related information, as illustrated in Figure 5.
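The two attentive constraints can be illustrated with a small NumPy sketch. It assumes a mean-squared-error form for both terms and assumes that the RGB residual |x − x̂| is averaged over channels to match the single-channel mask; both choices, and the function name `attentive_losses`, are hypothetical. The usage lines build a synthetic rainy image by adding one vertical streak to a flat background, so a mask equal to the streak gives zero loss.

```python
import numpy as np


def attentive_losses(mask_rainfree, mask_rainy, rainy, derained):
    """Hypothetical attentive losses for RRAM (squared-error form assumed).

    mask_rainfree:   R(y), shape (1, H, W); should be all zeros
    mask_rainy:      R(x), shape (1, H, W); should match the removed rain
    rainy, derained: x and x_hat = G(x), shape (3, H, W)
    Assumption: the self-supervised target is |x - x_hat| averaged over RGB.
    """
    z = np.zeros_like(mask_rainfree)                               # Z: all-zero map
    loss_y = np.mean((mask_rainfree - z) ** 2)                     # prior: no rain in y
    target = np.abs(rainy - derained).mean(axis=0, keepdims=True)  # removed rain, (1, H, W)
    loss_x = np.mean((mask_rainy - target) ** 2)                   # self-supervision on x
    return loss_x, loss_y


bg = np.full((3, 4, 4), 0.3)    # flat rain-free background
rain = np.zeros((1, 4, 4))
rain[0, :, 1] = 0.5             # one vertical rain streak
x = bg + rain                   # synthetic rainy image (streak added to every channel)
loss_x, loss_y = attentive_losses(np.zeros((1, 4, 4)), rain, x, bg)
print(np.isclose(loss_x, 0.0), np.isclose(loss_y, 0.0))  # True True
```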

Cycle-consistency losses: As in CycleGAN [18], we define a cycle-consistency loss that encourages the reconstructed image $F(G(x))$ to match the original rainy image $x$, and $G(F(y))$ to match the input rain-free image $y$:

$$
\mathcal{L}_{cyc}(G, F) = \mathbb{E}_{x \sim P_X}\left[\lVert F(G(x)) - x \rVert_1\right] + \mathbb{E}_{y \sim P_Y}\left[\lVert G(F(y)) - y \rVert_1\right]
\tag{12}
$$

where $\mathcal{L}_{cyc}$ is the cycle-consistency loss, $x \sim P_X$ and $y \sim P_Y$, and $P_X$ and $P_Y$ represent the data distributions of locally cropped patches randomly extracted from rainy and rain-free images, respectively.
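A minimal NumPy sketch of the L1 cycle-consistency loss follows, using toy mappings in place of the generators. `G` and `F` here are hypothetical stand-ins (subtracting and adding a constant offset), chosen so that each undoes the other and the loss vanishes.

```python
import numpy as np


def cycle_consistency_loss(x, y, G, F):
    """L1 cycle-consistency loss on batches of rainy (x) and rain-free (y) patches."""
    forward = np.mean(np.abs(F(G(x)) - x))   # rainy -> rain-free -> rainy
    backward = np.mean(np.abs(G(F(y)) - y))  # rain-free -> rainy -> rain-free
    return forward + backward


def G(img):  # hypothetical "derainer": removes a constant rain offset
    return img - 0.1


def F(img):  # hypothetical "rain generator": adds the offset back
    return img + 0.1


rng = np.random.default_rng(4)
x = rng.random((2, 3, 8, 8))  # batch of rainy patches
y = rng.random((2, 3, 8, 8))  # batch of rain-free patches
print(round(cycle_consistency_loss(x, y, G, F), 6))  # 0.0: the mappings invert each other
```

Note that this loss alone is satisfied by any pair of mutually inverse mappings, including ones that leave rain in the image, which is why the method adds the attentive, adversarial, and content terms.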

Adversarial losses: The goal of a GAN is to employ a min-max game strategy, aiming to train generators G and F to produce samples resembling the data distribution so that the discriminator cannot distinguish between generated and real samples [42]. Simultaneously, the goal is to train discriminators and to effectively discern generated and real images. To achieve this, the suggested approach iteratively updates both the generators and discriminators following the framework outlined in [43]. denotes the adversarial relationship between G and , as shown in Equation (13):

where tries to maximize the objective by distinguishing generated rain-free images from real ones, whereas G tries to minimize it by making the generated rain-free images more realistic. Similarly, is obtained by replacing G and with F and .
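As an illustration of the min-max objective, the NumPy sketch below evaluates the standard value function of the original GAN formulation [43], E[log D(y)] + E[log(1 − D(G(x)))], from discriminator output probabilities. The inputs are placeholder probabilities rather than outputs of the networks in Figure 6, and the function name and clipping constant are assumptions of this sketch.

```python
import numpy as np

def adversarial_value(d_real, d_fake, eps=1e-8):
    """Standard GAN value function for one generator/discriminator pair.

    d_real: discriminator probabilities on real rain-free images y.
    d_fake: discriminator probabilities on generated images G(x).
    The discriminator maximizes this value; the generator minimizes it.
    Probabilities are clipped to avoid log(0).
    """
    d_real = np.clip(np.asarray(d_real, dtype=np.float64), eps, 1.0 - eps)
    d_fake = np.clip(np.asarray(d_fake, dtype=np.float64), eps, 1.0 - eps)
    return float(np.mean(np.log(d_real)) + np.mean(np.log(1.0 - d_fake)))
```

A discriminator that outputs 0.5 everywhere (unable to tell real from fake) yields 2 log 0.5, whereas a confident, correct discriminator pushes the value toward 0, which is exactly what the generator tries to prevent.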

Content losses: The cycle-consistency loss minimizes the differences between the original image x (or y) and its reconstruction F(G(x)) (or G(F(y))). However, it does not consider whether the generated image (or ) visually resembles the original x (or y). Inspired by [44], we therefore include a content-loss regularizer in the objective function for SID. Our aim is to preserve the detailed information of the input image x (or y) while adjusting the colors of the resulting image (or ) to improve its visual quality. To this end, a pre-trained VGG16 network is used to extract feature maps from the Conv2_3 layer for both the input and the generated images. We measure the content loss with the L1 norm because it is more robust to noise and outliers, which facilitates a more effective recovery of the details of the rainy image. The content-loss regularizer is formulated as

where is the content loss and denotes the layer of the VGG16 network [45] pre-trained on ImageNet [46]. To our knowledge, this is the first time that a content loss has been added to the objective function for single-image rain streak removal.
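The structure of the content loss, an L1 distance between feature maps of the input and the generated image, can be sketched independently of the feature extractor. In the NumPy sketch below, `phi` stands for the pre-trained VGG16 layer described above; to keep the example self-contained, it is replaced by a hypothetical toy extractor (horizontal intensity gradients), which is not VGG16.

```python
import numpy as np

def content_loss(phi, inp, generated):
    """L1 distance between feature maps phi(input) and phi(generated)."""
    f_in = np.asarray(phi(inp), dtype=np.float64)
    f_gen = np.asarray(phi(generated), dtype=np.float64)
    return float(np.mean(np.abs(f_in - f_gen)))

def toy_features(img):
    """Hypothetical stand-in for the VGG16 feature extractor:
    horizontal intensity differences along the last axis."""
    img = np.asarray(img, dtype=np.float64)
    return img[..., 1:] - img[..., :-1]
```

With this toy extractor, a uniform brightness shift of the whole image leaves the loss at zero, while erasing the image structure is penalized; this mirrors, in a simplified way, the stated goal of preserving details while allowing color adjustment. Real VGG16 features are not shift-invariant in this exact sense.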

Total losses: The overall loss function of our proposed network for unsupervised training is expressed as follows:

where , and are trade-off parameters.

4. Experimental Results and Discussion

In this section, we describe the experimental setup, including the datasets, the training procedure, and the quality metrics used to assess the proposed approach. We then compare the proposed method with state-of-the-art approaches and present ablation studies.

4.1. Network Training and Parameter Setting

4.1.1. Implementation Details

Our model is implemented in PyTorch 2.2.2 [47] with CUDA 11.8 in a Python 3.11.3 environment and trained on an NVIDIA GeForce RTX 2080 Ti GPU (NVIDIA Corporation, Taipei, Taiwan) with 16 GB of memory. During training, we randomly crop 256 × 256 patches from the original input images and augment the dataset with their horizontal flips. We optimize the model parameters using the Adam optimizer [48] with a mini-batch size of 1, a weight decay of , and a momentum of . These hyperparameters were chosen on the basis of empirical observations and prior research, in which similar values have proven effective for optimizing deep learning models. Training spans 400 epochs, starting with an initial learning rate of , which is annealed according to a PyTorch learning-rate policy after 200 epochs to aid convergence; the number of epochs and the learning-rate schedule were selected empirically. The parameters , , and in Equation (15) are tuned by trial and error to balance the contributions of the different loss components: we experiment with various values and select those that yield the best performance on the test set. To obtain the final rain mask, we set the number of iterations to . We employ blocks in our generator as a trade-off between performance and model complexity: increasing the number of blocks increases the network's complexity and requires additional training time and memory, whereas blocks strike a good balance between performance and computational cost in our experiments. In general, the parameters are selected through empirical experimentation on the available training and test sets to achieve the best performance on the given task.
The architectures and parameter settings of the generator and RRAM are listed in Table 1 and Table 2, respectively, and those of the discriminator are depicted in Figure 6b.
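The patch sampling and learning-rate schedule described above can be sketched as follows. Because the exact PyTorch policy is not stated, this sketch assumes a linear decay of the learning rate to zero between epoch 200 and epoch 400, and it assumes that each patch is flipped horizontally with probability 0.5. The function names `random_crop_and_flip` and `lr_factor` are hypothetical.

```python
import numpy as np

def random_crop_and_flip(img, size=256, rng=None):
    """Take a random size x size crop of an (H, W, C) image and flip it
    horizontally with probability 0.5 (assumed augmentation policy)."""
    rng = np.random.default_rng() if rng is None else rng
    h, w = img.shape[:2]
    top = int(rng.integers(0, h - size + 1))   # upper bound is exclusive
    left = int(rng.integers(0, w - size + 1))
    patch = img[top:top + size, left:left + size]
    if rng.random() < 0.5:
        patch = patch[:, ::-1]  # horizontal flip along the width axis
    return patch

def lr_factor(epoch, total_epochs=400, decay_start=200):
    """Multiplier on the initial learning rate: constant for the first
    `decay_start` epochs, then linear decay to zero (assumed policy)."""
    if epoch < decay_start:
        return 1.0
    return 1.0 - (epoch - decay_start) / (total_epochs - decay_start)
```

In PyTorch, a multiplier function such as `lr_factor` can be passed to `torch.optim.lr_scheduler.LambdaLR(optimizer, lr_lambda=lr_factor)` and stepped once per epoch, so that the learning rate equals the initial value times `lr_factor(epoch)`.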

Table 1.

The architecture of the generator and parameter settings.

Table 2.

The architecture of RRAM and parameter settings.

4.1.2. Datasets and Evaluation Metrics

To train and evaluate the proposed SE-RRACycleGAN for single-image rain streak removal, we use widely recognized synthetic and real-world image datasets. The synthetic datasets used for training and testing are (1) Rain100L [49], comprising 200 rainy image pairs for training and 100 pairs for testing; (2) Rain800 [23], consisting of 700 rainy image pairs for training and 100 pairs for testing; and (3) Rain12 [21], which contains 12 pairs of rain-free and rainy images and is used only to test the model trained on Rain100L. The real-world datasets are (1) SPANet-Data [50], a collection of 1000 rainy images with corresponding ground-truth images, and (2) SIRR-Data [51], which comprises 147 rainy images without ground-truth images. We chose these datasets because they are widely used in the research community and cover a diverse range of rainy scenes: the synthetic datasets provide controlled conditions for training and testing, while the real-world datasets allow us to assess the model's performance under more challenging and varied conditions.

We evaluate the results of the various techniques using two widely used quantitative measures, Peak Signal-to-Noise Ratio (PSNR) and the Structural Similarity Index (SSIM) [52], on images with ground truth; for SIRR-Data, which lacks ground truth, we provide visual results. In addition, we assess our model's performance using the Root Mean Square Error (RMSE) [53], Feature Similarity Index (FSIM) [54], Mean Absolute Error (MAE) [55], and Correlation Coefficient (CC) [56]. Note that the state-of-the-art methods we compare against did not report these additional measures, which precludes a direct comparison based on them.
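For reference, the pixel-level measures listed above can be computed in a few lines. The NumPy sketch below implements PSNR, RMSE, MAE, and CC, assuming images stored as arrays with values in [0, 255] (`data_range=255`). It does not implement SSIM or FSIM, and any color-space conversion used for the reported numbers is not specified here and is left out; the function names are illustrative.

```python
import numpy as np

def _as_float(img):
    return np.asarray(img, dtype=np.float64)

def rmse(pred, target):
    """Root mean square error over all pixels and channels."""
    diff = _as_float(pred) - _as_float(target)
    return float(np.sqrt(np.mean(diff ** 2)))

def mae(pred, target):
    """Mean absolute error over all pixels and channels."""
    return float(np.mean(np.abs(_as_float(pred) - _as_float(target))))

def psnr(pred, target, data_range=255.0):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    mse = np.mean((_as_float(pred) - _as_float(target)) ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(data_range ** 2 / mse))

def correlation_coefficient(pred, target):
    """Pearson correlation between the flattened images."""
    p = _as_float(pred).ravel()
    t = _as_float(target).ravel()
    return float(np.corrcoef(p, t)[0, 1])
```

For example, two images that differ by a constant 10 gray levels everywhere have RMSE = MAE = 10 and a PSNR of 10·log10(255²/100) ≈ 28.1 dB, while CC is insensitive to such a global offset.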

4.2. Comparisons with State-of-the-Art Methods

We evaluate the performance of our method by comparing it with five supervised networks (i.e., DetailNet [57], Clear [58], RESCAN [59], PReNet [60], and SPANet [50]), one semi-supervised technique (SIRR [51]), and five unsupervised techniques (i.e., CycleGAN [18], RR-GAN [26], DerainCycleGAN [11], Derain Attention GAN [13], and Cycle-Attention-Derain [12]). Because our model is unsupervised, we focus primarily on the comparison with unsupervised methods, although our method is also highly competitive with the existing semi-supervised and supervised methods.

4.2.1. Comparisons Using Synthetic Datasets

In the first set of experiments, we evaluate the proposed method on test images from the synthetic datasets (i.e., Rain100L [49], Rain800 [23], and Rain12 [21]) and compare its quantitative and qualitative performance against numerous state-of-the-art methods. For a fair comparison, for the supervised networks DetailNet [57], Clear [58], and SPANet [50] and the semi-supervised network SIRR [51], we directly adopt the results reported in [11,61], since the evaluation metrics are the same. For CycleGAN [18], PReNet [60], DerainCycleGAN [11], and RESCAN [59], we use the source code provided by the authors to train and test on the synthetic datasets. Finally, for the unsupervised rain removal techniques RR-GAN [26], Derain Attention GAN [13], and Cycle-Attention-Derain [12], we directly use the results reported in their respective papers because their code is not publicly available. The quantitative results are given in Table 3, and the qualitative results in Figure 8 and Figure 9. Table 3 shows that, among unsupervised techniques, the proposed SE-RRACycleGAN achieves the best performance on Rain12 and the best SSIM on Rain800, and it ranks just behind Derain Attention GAN [13] on Rain100L and just behind Cycle-Attention-Derain [12] in PSNR on Rain800. On Rain12, the proposed method even outperforms SIRR, despite the advantage that SIRR gains from semi-supervised learning. Moreover, the performance of the supervised methods drops sharply from Rain100L to Rain800, whereas that of our network remains stable. This stability may be attributed to the RRAM, which allows our unsupervised network to gain a deeper understanding of rain-related features when handling challenging samples. Table 4 further reports our model's performance in terms of RMSE, FSIM, MAE, and CC.
Together, these quantitative metrics assess the model's effectiveness for single-image deraining. The strong results across all of them indicate that our model removes rain effectively while preserving important image details, making it a promising approach for real-world applications such as improving visibility in rainy conditions.

Table 3.

Quantitative comparison on four datasets.

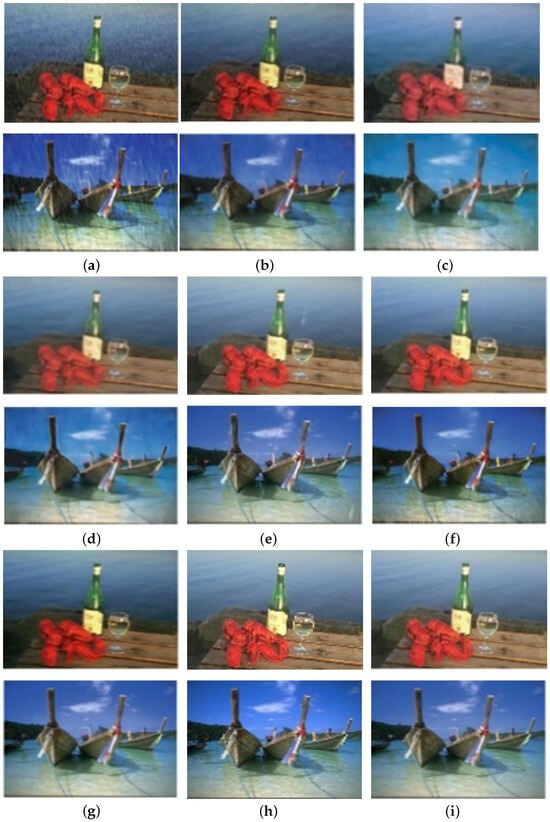

Figure 8.

Comparisons of qualitative results on Rain100L [49]. (a–f) are input rainy images, RESCAN [59], CycleGAN [18], PReNet [60], ours, and ground truth, respectively.

Figure 9.

Comparisons of qualitative results on Rain800 [23]. (a–i) are input rainy images, SPANet [50], CycleGAN [18], RR-GAN [26], DerainCycleGAN [11], Derain Attention GAN [13], Cycle-Attention-Derain [12], ours, and ground truth, respectively.

Table 4.

Quantitative results of our model on four datasets in terms of RMSE, FSIM, MAE, and CC.

Figure 8 and Figure 9 present qualitative comparisons on Rain100L [49] and Rain800 [23], respectively. In Figure 8, CycleGAN [18] changes the colors of the output image and leaves rain streaks, and even the supervised RESCAN [59] leaves some residual rain streaks. In contrast, our method removes numerous rain streaks, preserves a smooth background, and produces results closer to the ground truth than the other approaches. Figure 9 shows that most of the methods leave some rain streaks, while others, such as CycleGAN [18] and Cycle-Attention-Derain [12], exhibit noticeable color shifts. Our method, by contrast, eliminates the rain streaks while preserving the background color, yielding results similar to the ground truth.

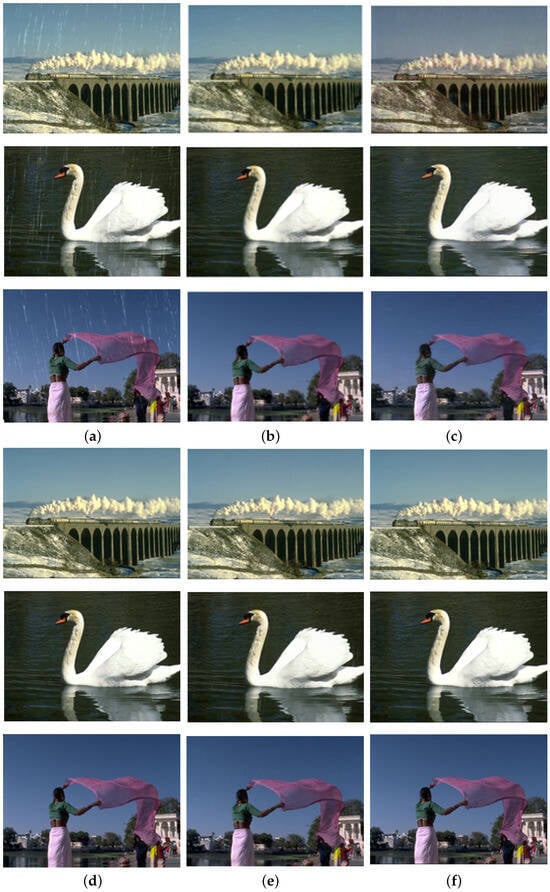

4.2.2. Comparisons Using Real Datasets

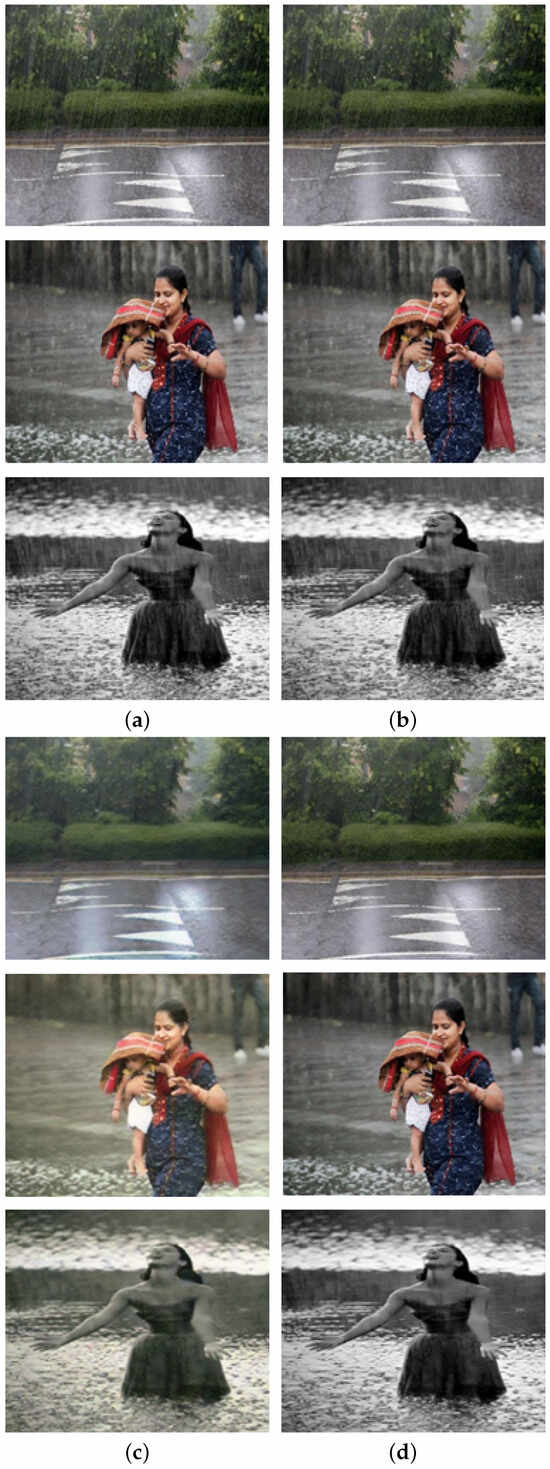

We then evaluate each approach on two real rainy datasets: SPANet-Data [50] and SIRR-Data [51]. For all methods, the models trained on Rain100L [49] are tested on these datasets. Because SPANet-Data contains ground-truth images, it can be evaluated with numerical metrics. As shown in Table 3, our method obtains the best result among the unsupervised methods on SPANet-Data and is highly competitive with the semi-supervised and supervised approaches. We also visually compare our method with a supervised and an unsupervised method on SIRR-Data. As illustrated in Figure 10, the supervised PReNet [60] leaves rain streaks in the image, and the unsupervised CycleGAN [18] changes the colors of the output image. Our unsupervised method performs better than even the supervised method. A possible reason is that conventional supervised methods generalize poorly to real rainy images, whose rain distribution differs substantially from that of the synthetic datasets on which they are trained.

Figure 10.

Comparisons of deraining results on SIRR-Data [51]. (a–d) are rainy images, PReNet [60], CycleGAN [18], and ours, respectively.

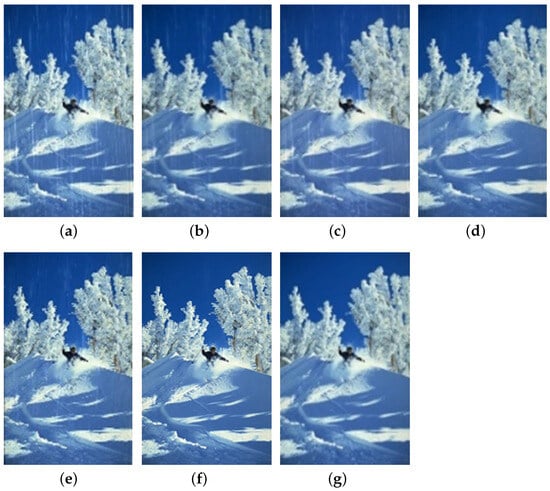

4.3. Ablation Study

We conducted ablation experiments on Rain100L [49] to evaluate the effectiveness of each component of our proposed method. The five configurations are CycleGAN (baseline), CycleGAN + SE, SE-RRACycleGAN without LSTM, SE-RRACycleGAN without SE, and the full SE-RRACycleGAN. The results, shown in Figure 11 and Table 5, demonstrate that the introduced components significantly improve rain removal performance compared with CycleGAN. Every component proves crucial: the variants in Figure 11c–e, each of which lacks at least one component, exhibit undesired artifacts, which underscores the importance of the LSTM in our recurrent rain attentive module and of the SE blocks in the generator. The full method removes these artifacts and yields a smoother background.
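Since one ablation removes the SE blocks from the generator, it may help to recall what such a block computes. The NumPy sketch below follows the squeeze-and-excitation design of Hu et al. [38]: global average pooling (squeeze), two fully connected layers with ReLU and sigmoid (excitation), and channel-wise rescaling of the feature map. The weight shapes, reduction ratio, and the name `se_block` are illustrative assumptions, not the settings in Table 1.

```python
import numpy as np

def se_block(feat, w1, b1, w2, b2):
    """Squeeze-and-excitation on a (C, H, W) feature map.

    w1: (C // r, C), b1: (C // r,)  first FC layer (reduction ratio r)
    w2: (C, C // r), b2: (C,)       second FC layer
    """
    z = feat.mean(axis=(1, 2))                # squeeze: global average pool -> (C,)
    s = np.maximum(w1 @ z + b1, 0.0)          # excitation FC1 + ReLU -> (C // r,)
    a = 1.0 / (1.0 + np.exp(-(w2 @ s + b2)))  # FC2 + sigmoid -> channel weights (C,)
    return feat * a[:, None, None]            # rescale each channel by its weight
```

The channel weights depend on the input through the pooled statistics z, which is how an SE block reweights features according to the characteristics of each input image; with all-zero weights and biases, every channel is simply scaled by 0.5.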

Figure 11.

Visual comparisons of components used in our method. (a–g) are a rainy image, only CycleGAN, CycleGAN + SE, SE-RRACycleGAN without LSTM, SE-RRACycleGAN without SE, our SE-RRACycleGAN, and ground truth, respectively.

Table 5.

Ablation study on different components of our method.

5. Conclusions

In this paper, we introduce an unsupervised SE-RRACycleGAN network for SID. We propose a novel RRAM that enhances the detection of rain-related information by simultaneously analyzing both rainy and rain-free images. In addition, we incorporate SE blocks into the generator architecture to improve its ability to generalize across diverse rain patterns by dynamically adjusting feature weights according to the characteristics of the input image. Moreover, we add a content loss to the objective function to enhance the visual similarity between the generated image and the input image. Extensive experiments on both synthetic and real-world datasets show that our method surpasses most unsupervised state-of-the-art methods both quantitatively and qualitatively, especially on Rain12 and real rainy images, and is highly competitive with supervised techniques. However, our method may not achieve optimal results when rain streaks closely resemble the background texture of the input image. For instance, as shown in the top row of Figure 8, although our method outperforms the other techniques in rain removal, it introduces some blurring in the background regions. In future work, we aim to address this issue and to extend our model to more severe conditions, such as the heavy rain in the Rain100H dataset, snow, and fog, in which the background is heavily obscured and accurate restoration without reference values (i.e., ground truth) is challenging.

Author Contributions

Conceptualization, methodology, analysis, review, editing, and revision, G.N.W. and S.S.-D.X.; coding, simulation, and writing, G.N.W. All authors have read and agreed to the published version of the manuscript.

Funding

This work is partially supported by the Ministry of Science and Technology (MOST), Taiwan, under the Grant MOST 111-2221-E-011-146-MY2.

Data Availability Statement

No new datasets were created in this study. All datasets used in this paper are available at the following locations: Rain800 [23] https://github.com/hezhangsprinter/ID-CGAN (accessed on 1 January 2024), Rain100L [49] https://github.com/ZhangXinNan/RainDetectionAndRemoval (accessed on 1 January 2024), Rain12 [21] https://github.com/yu-li/LPDerain (accessed on 1 January 2024), SPANet [50] https://github.com/stevewongv/SPANet (accessed on 1 January 2024), and SIRR-Data [51] https://github.com/wwzjer/Semi-supervised-IRR (accessed on 1 January 2024).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Qiao, J.; Song, H.; Zhang, K.; Zhang, X.; Liu, Q. Image super-resolution using conditional generative adversarial network. IET Image Process. 2019, 13, 2673–2679.

- Mao, J.; Xiao, T.; Jiang, Y.; Cao, Z. What can help pedestrian detection? In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3127–3136.

- Song, Y.; Ma, C.; Wu, X.; Gong, L.; Bao, L.; Zuo, W.; Shen, C.; Lau, R.W.; Yang, M.H. VITAL: Visual tracking via adversarial learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8990–8999.

- Zhu, Z.; Liang, D.; Zhang, S.; Huang, X.; Li, B.; Hu, S. Traffic-sign detection and classification in the wild. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2110–2118.

- Tripicchio, P.; Camacho-Gonzalez, G.; D’Avella, S. Welding defect detection: Coping with artifacts in the production line. Int. J. Adv. Manuf. Technol. 2020, 111, 1659–1669.

- Chen, H.; He, X.; Qing, L.; Wu, Y.; Ren, C.; Sheriff, R.E.; Zhu, C. Real-world single image super-resolution: A brief review. Inf. Fusion 2022, 79, 124–145.

- Lian, Q.; Yan, W.; Zhang, X.; Chen, S. Single image rain removal using image decomposition and a dense network. IEEE/CAA J. Autom. Sin. 2019, 6, 1428–1437.

- Liu, J.; Yang, W.; Yang, S.; Guo, Z. D3R-Net: Dynamic routing residue recurrent network for video rain removal. IEEE Trans. Image Process. 2018, 28, 699–712.

- Li, M.; Xie, Q.; Zhao, Q.; Wei, W.; Gu, S.; Tao, J.; Meng, D. Video rain streak removal by multiscale convolutional sparse coding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6644–6653.

- Ahn, N.; Jo, S.Y.; Kang, S.J. EAGNet: Elementwise attentive gating network-based single image de-raining with rain simplification. IEEE Trans. Circuits Syst. Video Technol. 2021, 32, 608–620.

- Wei, Y.; Zhang, Z.; Wang, Y.; Xu, M.; Yang, Y.; Yan, S.; Wang, M. DerainCycleGAN: Rain attentive CycleGAN for single-image deraining and rainmaking. IEEE Trans. Image Process. 2021, 30, 4788–4801.

- Chen, M.; Wang, P.; Shang, D.; Wang, P. Cycle-Attention-Derain: Unsupervised rain removal with CycleGAN. Vis. Comput. 2023, 39, 3727–3739.

- Guo, Z.; Hou, M.; Sima, M.; Feng, Z. DerainAttentionGAN: Unsupervised single-image deraining using attention-guided generative adversarial networks. Signal Image Video Process. 2022, 16, 185–192.

- Yang, W.; Tan, R.T.; Wang, S.; Fang, Y.; Liu, J. Single-image deraining: From model-based to data-driven and beyond. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 4059–4077.

- Yu, X.; Zhang, G.; Tan, F.; Li, F.; Xie, W. Progressive hybrid-modulated network for single-image deraining. Mathematics 2023, 11, 691.

- Liu, T.; Zhou, B.; Luo, P.; Zhang, Y.; Niu, L.; Wang, G. Two-Stage and Two-Channel Attention Single-Image Deraining Network for Promoting Ship Detection in Visual Perception System. Appl. Sci. 2022, 12, 7766.

- Wang, Y.T.; Zhao, X.L.; Jiang, T.X.; Deng, L.J.; Chang, Y.; Huang, T.Z. Rain streaks removal for single image via kernel-guided convolutional neural network. IEEE Trans. Neural Netw. Learn. Syst. 2020, 32, 3664–3676.

- Zhu, J.Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2223–2232.

- Kang, L.W.; Lin, C.W.; Fu, Y.H. Automatic single-image-based rain streaks removal via image decomposition. IEEE Trans. Image Process. 2011, 21, 1742–1755.

- Luo, Y.; Xu, Y.; Ji, H. Removing rain from a single image via discriminative sparse coding. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 3397–3405.

- Li, Y.; Tan, R.T.; Guo, X.; Lu, J.; Brown, M.S. Rain streak removal using layer priors. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2736–2744.

- Wang, Y.; Liu, S.; Chen, C.; Zeng, B. A hierarchical approach for rain or snow removing in a single color image. IEEE Trans. Image Process. 2017, 26, 3936–3950.

- Zhang, H.; Sindagi, V.; Patel, V.M. Image de-raining using a conditional generative adversarial network. IEEE Trans. Circuits Syst. Video Technol. 2019, 30, 3943–3956.

- Yang, W.; Tan, R.T.; Feng, J.; Guo, Z.; Yan, S.; Liu, J. Joint rain detection and removal from a single image with contextualized deep networks. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 42, 1377–1393.

- Isola, P.; Zhu, J.Y.; Zhou, T.; Efros, A.A. Image-to-image translation with conditional adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1125–1134.

- Zhu, H.; Peng, X.; Zhou, J.T.; Yang, S.; Chanderasekh, V.; Li, L.; Lim, J.H. Singe image rain removal with unpaired information: A differentiable programming perspective. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 9332–9339.

- Tian, C.; Xu, Y.; Li, Z.; Zuo, W.; Fei, L.; Liu, H. Attention-guided CNN for image denoising. Neural Netw. 2020, 124, 117–129.

- Zhao, B.; Wu, X.; Feng, J.; Peng, Q.; Yan, S. Diversified visual attention networks for fine-grained object classification. IEEE Trans. Multimed. 2017, 19, 1245–1256.

- Mnih, V.; Heess, N.; Graves, A.; Kavukcuoglu, K. Recurrent models of visual attention. Adv. Neural Inf. Process. Syst. 2014, 27.

- Zheng, M.; Xu, J.; Shen, Y.; Tian, C.; Li, J.; Fei, L.; Zong, M.; Liu, X. Attention-based CNNs for image classification: A survey. In Proceedings of the Journal of Physics: Conference Series; IOP Publishing: Bristol, UK, 2022; Volume 2171, p. 012068.

- Qian, R.; Tan, R.T.; Yang, W.; Su, J.; Liu, J. Attentive generative adversarial network for raindrop removal from a single image. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2482–2491.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Chao, Z.; Pu, F.; Yin, Y.; Han, B.; Chen, X. Research on real-time local rainfall prediction based on MEMS sensors. J. Sens. 2018, 2018, 6184713.

- Liu, R.W.; Hu, K.; Liang, M.; Li, Y.; Liu, X.; Yang, D. QSD-LSTM: Vessel trajectory prediction using long short-term memory with quaternion ship domain. Appl. Ocean Res. 2023, 136, 103592.

- Brown, M.J.; Hutchinson, L.A.; Rainbow, M.J.; Deluzio, K.J.; De Asha, A.R. A comparison of self-selected walking speeds and walking speed variability when data are collected during repeated discrete trials and during continuous walking. J. Appl. Biomech. 2017, 33, 384–387.

- Zagoruyko, S.; Komodakis, N. Paying more attention to attention: Improving the performance of convolutional neural networks via attention transfer. arXiv 2016, arXiv:1612.03928.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention: 18th International Conference, Munich, Germany, 5–9 October 2015; pp. 234–241.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Wang, C.; Fan, W.; Zhu, H.; Su, Z. Single-image deraining via nonlocal squeeze-and-excitation enhancing network. Appl. Intell. 2020, 50, 2932–2944.

- Zhang, Y.; Li, K.; Li, K.; Wang, L.; Zhong, B.; Fu, Y. Image super-resolution using very deep residual channel attention networks. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 286–301.

- Lee, H.Y.; Tseng, H.Y.; Huang, J.B.; Singh, M.; Yang, M.H. Diverse image-to-image translation via disentangled representations. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 35–51.

- Qiao, J.; Song, H.; Zhang, K.; Zhang, X. Conditional generative adversarial network with densely-connected residual learning for single image super-resolution. Multimed. Tools Appl. 2021, 80, 4383–4397.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. Adv. Neural Inf. Process. Syst. 2014, 27, 139–144.

- Du, R.; Li, W.; Chen, S.; Li, C.; Zhang, Y. Unpaired underwater image enhancement based on cyclegan. Information 2021, 13, 1.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Paszke, A.; Gross, S.; Chintala, S.; Chanan, G.; Yang, E.; DeVito, Z.; Lin, Z.; Desmaison, A.; Antiga, L.; Lerer, A. Automatic Differentiation in PyTorch. 2017. Available online: https://openreview.net/forum?id=BJJsrmfCZ (accessed on 1 January 2024).

- Kingma, D.; Ba, J. Adam: A Method for Stochastic Optimization. In Proceedings of the International Conference on Learning Representations (ICLR), San Diego, CA, USA, 7–9 May 2015.

- Yang, W.; Tan, R.T.; Feng, J.; Liu, J.; Guo, Z.; Yan, S. Deep joint rain detection and removal from a single image. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1357–1366.

- Wang, T.; Yang, X.; Xu, K.; Chen, S.; Zhang, Q.; Lau, R.W. Spatial attentive single-image deraining with a high quality real rain dataset. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 12270–12279.

- Wei, W.; Meng, D.; Zhao, Q.; Xu, Z.; Wu, Y. Semi-supervised transfer learning for image rain removal. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3877–3886.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Sara, U.; Akter, M.; Uddin, M.S. Image quality assessment through FSIM, SSIM, MSE and PSNR—A comparative study. J. Comput. Commun. 2019, 7, 8–18.

- Zhang, L.; Zhang, L.; Mou, X.; Zhang, D. FSIM: A feature similarity index for image quality assessment. IEEE Trans. Image Process. 2011, 20, 2378–2386.

- Su, Z.; Zhang, Y.; Shi, J.; Zhang, X.P. A Survey of Single Image Rain Removal Based on Deep Learning. ACM Comput. Surv. 2023, 56, 1–35.

- Ratner, B. The correlation coefficient: Its values range between +1/−1, or do they? J. Target. Meas. Anal. Mark. 2009, 17, 139–142.

- Fu, X.; Huang, J.; Zeng, D.; Huang, Y.; Ding, X.; Paisley, J. Removing rain from single images via a deep detail network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3855–3863.

- Fu, X.; Huang, J.; Ding, X.; Liao, Y.; Paisley, J. Clearing the skies: A deep network architecture for single-image rain removal. IEEE Trans. Image Process. 2017, 26, 2944–2956.

- Li, X.; Wu, J.; Lin, Z.; Liu, H.; Zha, H. Recurrent squeeze-and-excitation context aggregation net for single image deraining. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 254–269.

- Ren, D.; Zuo, W.; Hu, Q.; Zhu, P.; Meng, D. Progressive image deraining networks: A better and simpler baseline. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3937–3946.

- Wang, H.; Wu, Y.; Li, M.; Zhao, Q.; Meng, D. Survey on rain removal from videos or a single image. Sci. China Inf. Sci. 2022, 65, 111101.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).