Abstract

Recent advancements in deep learning have spurred the development of numerous novel semantic segmentation models for land cover mapping, showcasing exceptional performance in delineating precise boundaries and producing highly accurate land cover maps. However, to date, no systematic literature review has comprehensively examined semantic segmentation models in the context of land cover mapping. This paper addresses this gap by synthesizing recent advancements in semantic segmentation models for land cover mapping from 2017 to 2023, drawing insights on trends, data sources, model structures, and performance metrics based on a review of 106 articles. Our analysis identifies the top journals in the field, including MDPI Remote Sensing, IEEE Journal of Selected Topics in Earth Science, IEEE Transactions on Geoscience and Remote Sensing, IEEE Geoscience and Remote Sensing Letters, and ISPRS Journal of Photogrammetry and Remote Sensing. We find that research predominantly focuses on land cover, urban areas, precision agriculture, environment, coastal areas, and forests. Geographically, 35.29% of the study areas are located in China, followed by the USA (11.76%), France (5.88%), Spain (3.92%), and others. Sentinel-2, Sentinel-1, and Landsat satellites emerge as the most used data sources. Benchmark datasets such as ISPRS Vaihingen and Potsdam, LandCover.ai, DeepGlobe, and GID are frequently employed. Model architectures predominantly use encoder–decoder and hybrid convolutional neural network-based structures because of their strong performance, with limited adoption of transformer-based architectures due to their computational complexity and slow convergence. Lastly, this paper highlights key research gaps in the field to guide future research directions.

1. Introduction

Semantic segmentation models are crucial in land cover mapping, especially for generating precise Land Cover (LC) maps [1]. LC maps show various types of land cover, such as forests, grasslands, wetlands, urban areas, and bodies of water. These maps are typically created using Remote Sensing (RS) data such as satellite imagery or aerial photography [2]. Land cover maps serve different purposes, including land use management [3], disaster management, urban planning [4], precision agriculture [5], forestry [6], building infrastructure development [7], climate change problems [8], and others.

Due to advancements in Deep Convolutional Neural Network (DCNN) models, the domain of land cover mapping has progressively evolved [9]. DCNNs have shown promise for extracting information from high-resolution RS data [10]. They possess deep layers and hierarchical architectures that aim to automatically identify high-level patterns in data [11]. Although DCNNs have shown impressive performance in image classification, conventional models still struggle to capture comprehensive global information and the long-range dependencies inherent to RS data [12]. Consequently, they may not achieve precise image segmentation because they can overlook certain edge details of objects [13]. Nevertheless, they have contributed valuably to semantic segmentation methodologies.

A semantic segmentation model allocates every pixel in an image to a predefined class [14]. Its effectiveness in land cover segmentation has established it as a mainstream method [15], and it has demonstrated enhanced performance that leads to more accurate segmentation outcomes. A prominent example of a state-of-the-art (SOTA) semantic segmentation model is UNet [16]. Recently, many novel semantic segmentation models tailored for land cover mapping have been proposed, including DFFAN [12], MFANet [17], Sgformer [18], UNetFormer [19], and CSSwin-unet [20]. These models have demonstrated exceptional segmentation accuracy in this domain.
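As a minimal illustration of the per-pixel assignment described above, the following sketch labels each pixel with the class that has the highest score. The class names, the toy scores, and the helper `label_pixels` are assumptions made for illustration only; they are not taken from any of the reviewed models, which produce such scores with a trained network.

```python
# Hypothetical class names, used only for this illustration.
CLASSES = ["forest", "water", "urban"]


def label_pixels(scores):
    """Return a 2D label map from per-class score maps.

    scores[c][i][j] is the score of class c at pixel (i, j).
    Each pixel receives the index of its highest-scoring class
    (the first such class if several are tied).
    """
    num_classes = len(scores)
    height, width = len(scores[0]), len(scores[0][0])
    return [
        [max(range(num_classes), key=lambda c: scores[c][i][j])
         for j in range(width)]
        for i in range(height)
    ]


# Toy 2 x 2 image with made-up scores for the three classes.
scores = [
    [[0.7, 0.1], [0.2, 0.3]],  # forest
    [[0.2, 0.8], [0.1, 0.3]],  # water
    [[0.1, 0.1], [0.7, 0.4]],  # urban
]
label_map = label_pixels(scores)
named_map = [[CLASSES[k] for k in row] for row in label_map]
print(named_map)
```

The output has the same height and width as the input image, which is what distinguishes segmentation from image-level classification.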

In recent years, literature reviews have predominantly explored Deep Learning (DL) semantic segmentation models. The review by [21] presented significant methods, their origins, and contributions, including insights into datasets, performance metrics, execution time, and memory consumption relevant to DL-based segmentation projects. Similar reviews by [14,22] categorized existing semantic segmentation with DL methods based on criteria like supervision degree during training and architectural design. In addition, ref. [23] summarized various semantic segmentation models for RS Imagery. These reviews offer comprehensive overviews of DL-based semantic segmentation models but have not specifically examined their application to land cover mapping. To address this gap, this literature review focuses on emerging semantic segmentation models in land cover mapping, aiming to answer predefined research questions quantitatively and qualitatively. Our objective is to identify knowledge gaps in semantic segmentation models applied to land cover mapping and understand the evolution of these models in relation to domain-specific studies, data sources, model structures, and performance metrics. Furthermore, this review offers insights for future research directions in land cover mapping.

The remaining sections of this review are structured as follows: Section 2 provides the research questions and the method used in conducting the review. Section 3 presents the results of the systematic literature review and discusses the evolution and trends, the domain studies, the data, and the semantic segmentation methodologies. Section 4 discusses challenges and provides future insights into land cover mapping. Finally, Section 5 summarizes the highlights of the review.

2. Materials and Methods

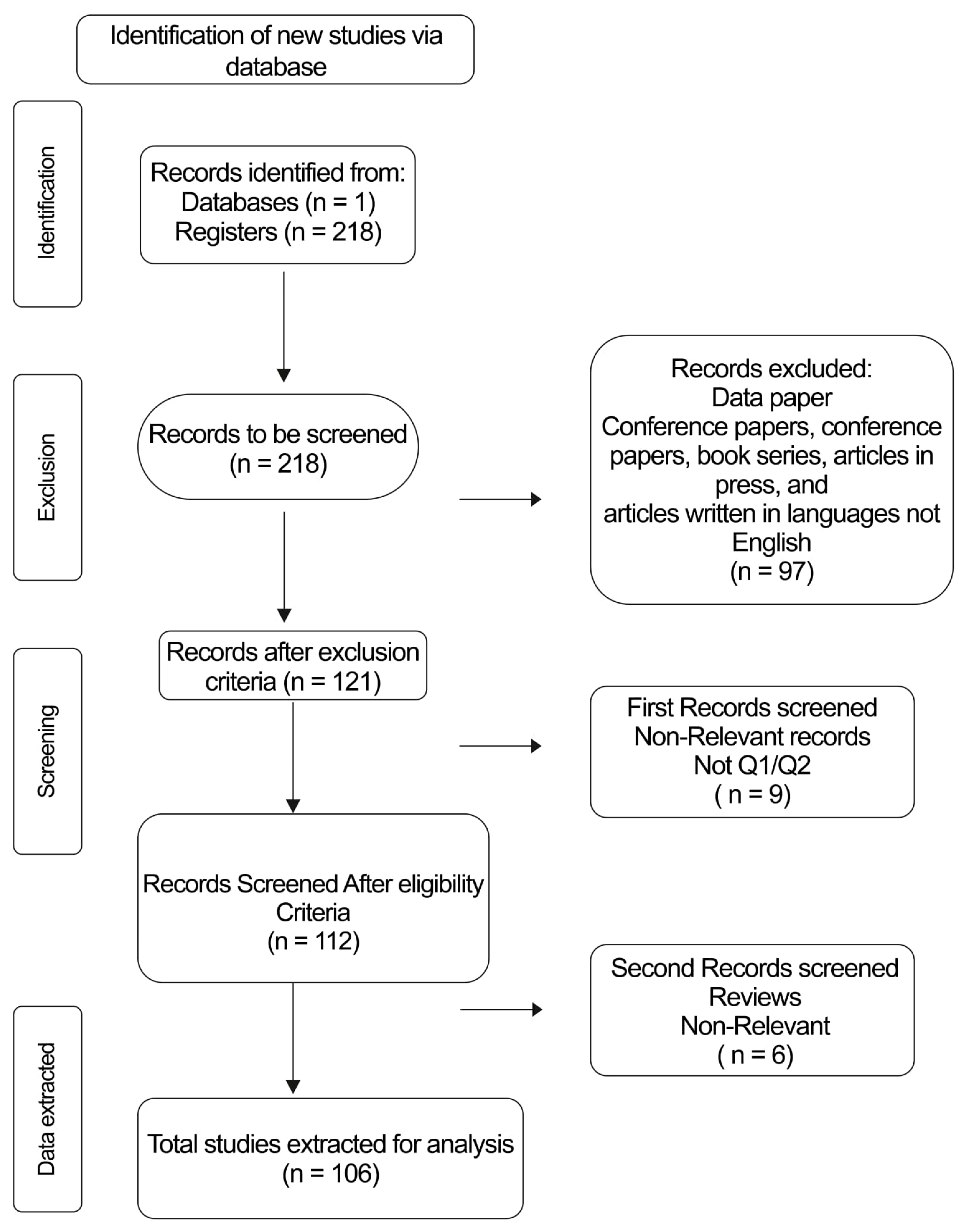

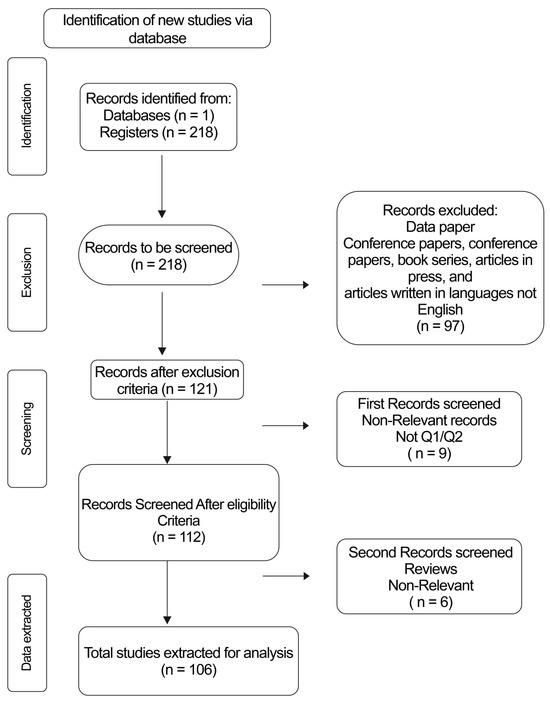

The methodology used in conducting this literature review follows the PRISMA framework outlined by [24], which comprises identification, eligibility, screening, and data extraction. A search strategy was developed to identify the literature for this review (Figure 1). Peer-reviewed papers published in relevant journals between 2017 and 2023 were reviewed. In this section, the research questions are formulated; thereafter, the search strategy is defined using the Scopus database (https://scopus.com accessed on 23 February 2024). The inclusion and exclusion criteria are then explained, together with the eligibility criteria used to assess the articles that form the final record for the bibliometric analysis.

Figure 1.

Flow chart of peer-review procedure.

2.1. Research Questions (RQs)

The objectives of the study are addressed through four main research questions. These RQs were selected to elicit trends, benchmark datasets, state-of-the-art architectures, and the performance of semantic segmentation in land cover mapping. This review is built around the following RQs:

- RQ1. What are the emerging patterns in land cover mapping?

- RQ2. What are the domain studies of semantic segmentation models in land cover mapping?

- RQ3. What are the data used in semantic segmentation models for land cover mapping?

- RQ4. What are the architecture and performances of semantic segmentation methodologies used in land cover mapping?

2.2. Search Strategy

The search was carried out using the Scopus (Elsevier) database. This database is renowned in the scientific community for its high-quality journals and its extensive, multidisciplinary abstract collection, which makes it an excellent fit for review articles [25]. The keywords used as search criteria to identify articles in Scopus were ‘semantic AND segmentation’, AND ‘land AND use AND land AND cover’, AND ‘deep AND learning’, AND ‘land AND cover AND classification’. This search string finds relevant papers that link deep learning semantic segmentation to land use and land cover.

2.3. Study Selection Criteria

After the defined search strings were entered in Scopus, a total of 218 articles were initially retrieved. To further process the retrieved data, the papers were filtered to the period 2017 to 2023, excluding conference papers, conference reviews, data papers, books, book chapters, articles in press, conference proceedings, book series, and articles not written in English. The period 2017–2023 was selected to capture recent developments in the field; in addition, the search returned almost no notable articles on the subject published before 2017. Consequently, the retrieved record was reduced to 121 articles.

2.4. Eligibility and Data Analysis

To determine eligibility and assess the quality of the extracted papers, we removed articles published in journals that are not ranked Q1 or Q2. This ensures that articles with a rigorous peer-review process and high-quality research output are selected and synthesized. Following this criterion, 9 articles were excluded and 112 records were retained. Next, titles and abstracts were assessed for their relevance to the study. Relevant articles were those that implemented deep learning semantic segmentation models for land use/land cover classification, and/or applied semantic segmentation to satellite datasets in different applications. At the end of this step, 6 further articles were excluded, bringing the record down to 106 articles published between 2017 and 2023, which are eligible for bibliometric analysis.
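The record counts reported in Sections 2.3 and 2.4 can be checked with a short calculation. The sketch below only restates the numbers given in the text; the variable names are illustrative.

```python
# Record counts as reported in Sections 2.3 and 2.4.
initial_records = 218      # retrieved from Scopus
after_filters = 121        # after year, document-type, and language filters
excluded_quality = 9       # journals not ranked Q1 or Q2
excluded_relevance = 6     # removed after title/abstract screening

removed_by_filters = initial_records - after_filters
after_quality = after_filters - excluded_quality
final_records = after_quality - excluded_relevance

print(f"Removed by filters:        {removed_by_filters}")
print(f"After quality assessment:  {after_quality}")
print(f"Eligible for analysis:     {final_records}")
```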

2.5. Data Synthesis

Data synthesis is an important step in answering the RQs. The data are visualized so that findings are presented and synthesized through quantitative and qualitative analysis. Categorization and visualization were carried out to draw out important trends and findings on deep learning semantic segmentation models in land cover mapping in relation to datasets, applications, architecture, and performance. The study’s synthesizing themes were developed by full-text content analysis of the 106 articles. VOSviewer software version 1.6.18 (https://vosviewer.com accessed on 24 February 2024) was used to provide graphical visualizations of the occurrence of key terms, taking title, abstract, and keywords as input.

3. Results and Discussion

This section investigates the results derived from the performed systematic literature review. Using 106 extracted articles, the results are presented and discussed based on the RQs.

3.1. RQ1. What Are the Emerging Patterns in Land Cover Mapping?

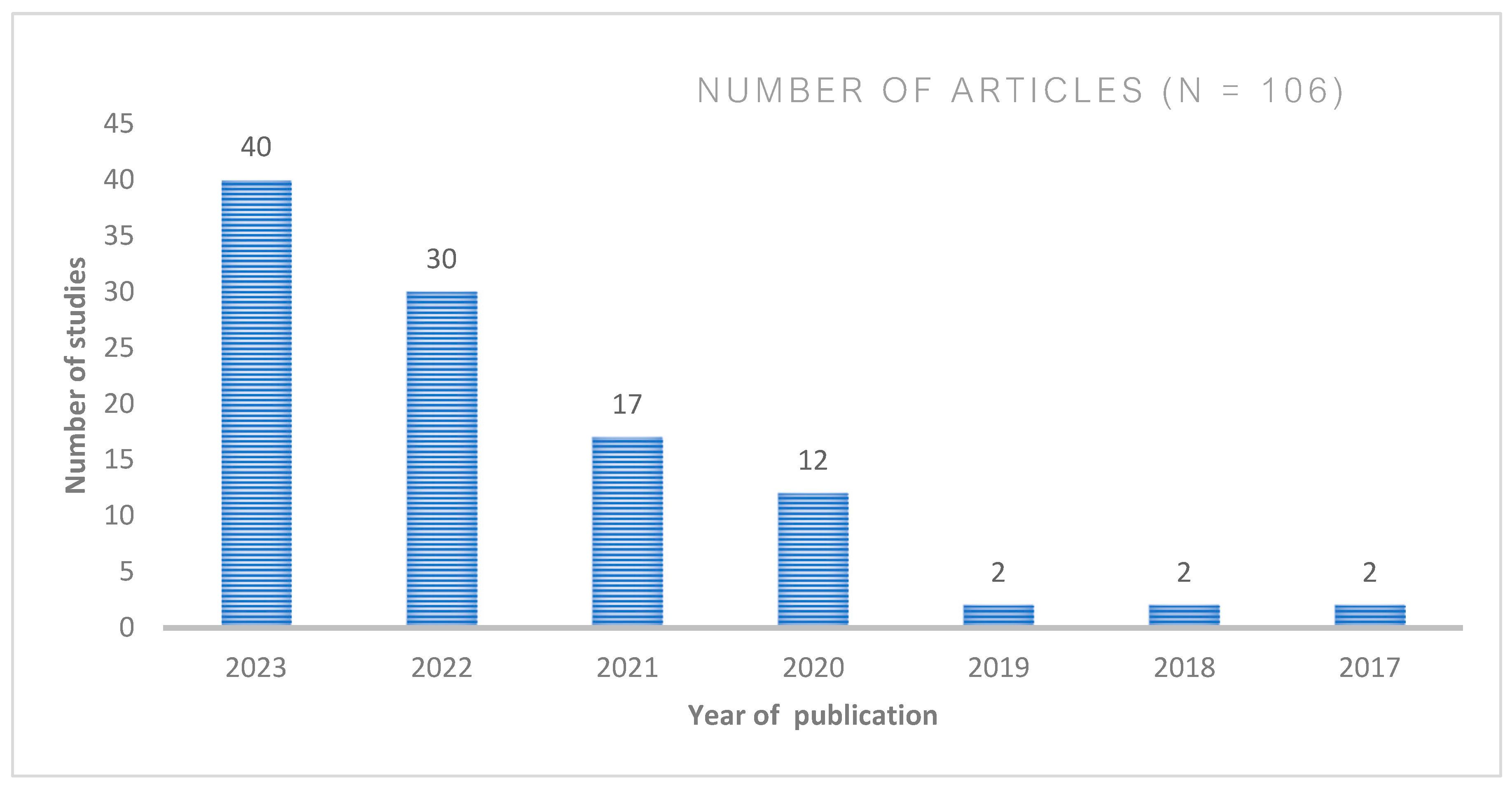

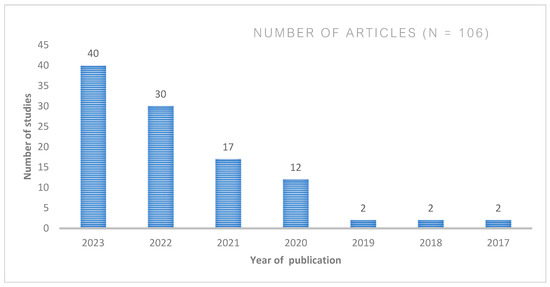

- Annual distribution of research studies

Figure 2 shows the number of research articles published annually from 2017 to 2023. The year 2017 saw a modest output of merely two articles. There is a surge in the number of articles to 12 in 2020, 17 articles by the year 2021, 30 articles by 2022 and 40 articles by 2023. This observation aligns with the understanding that the adoption of deep learning semantic segmentation models on satellite imagery gained significant momentum in 2020 and subsequent years.

Figure 2.

Annual distribution of research studies.

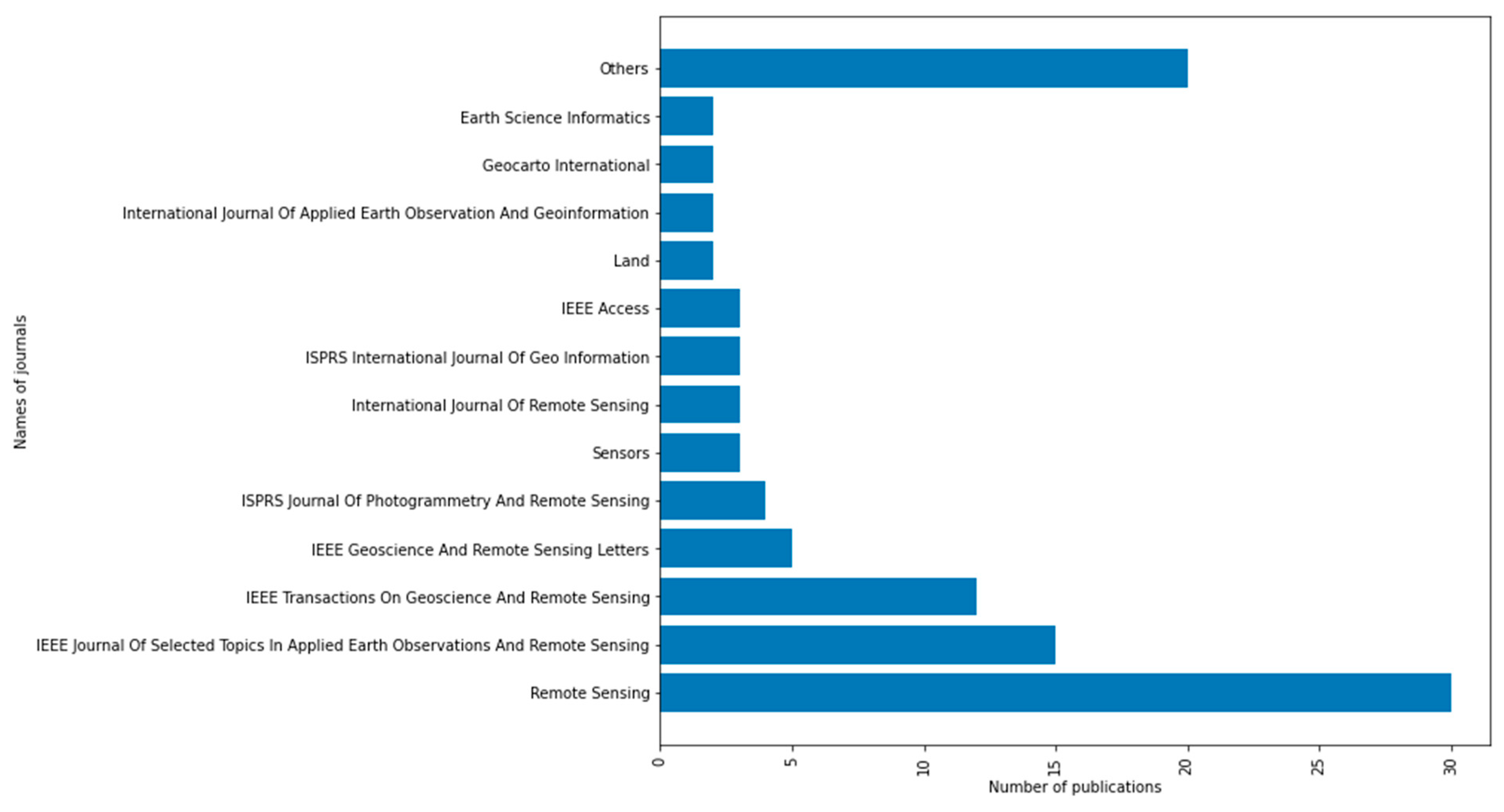

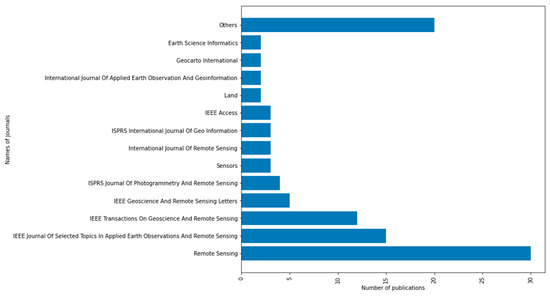

- Leading Journals

Figure 3 depicts the number of articles published per academic journal in this domain. The top 13 journals produced over 81% of the research studies on semantic segmentation in land cover mapping. MDPI Remote Sensing (30) has the highest number of published articles in this domain, followed by IEEE Journal of Selected Topics in Earth Science (15), IEEE Transactions on Geoscience and Remote Sensing (12), IEEE Geoscience and Remote Sensing Letters (5), ISPRS Journal of Photogrammetry and Remote Sensing (4), and so on, while 20 other journals have one article each and are grouped in the “other” category.

Figure 3.

Number of relevant publications in this field distributed per relevant journals.

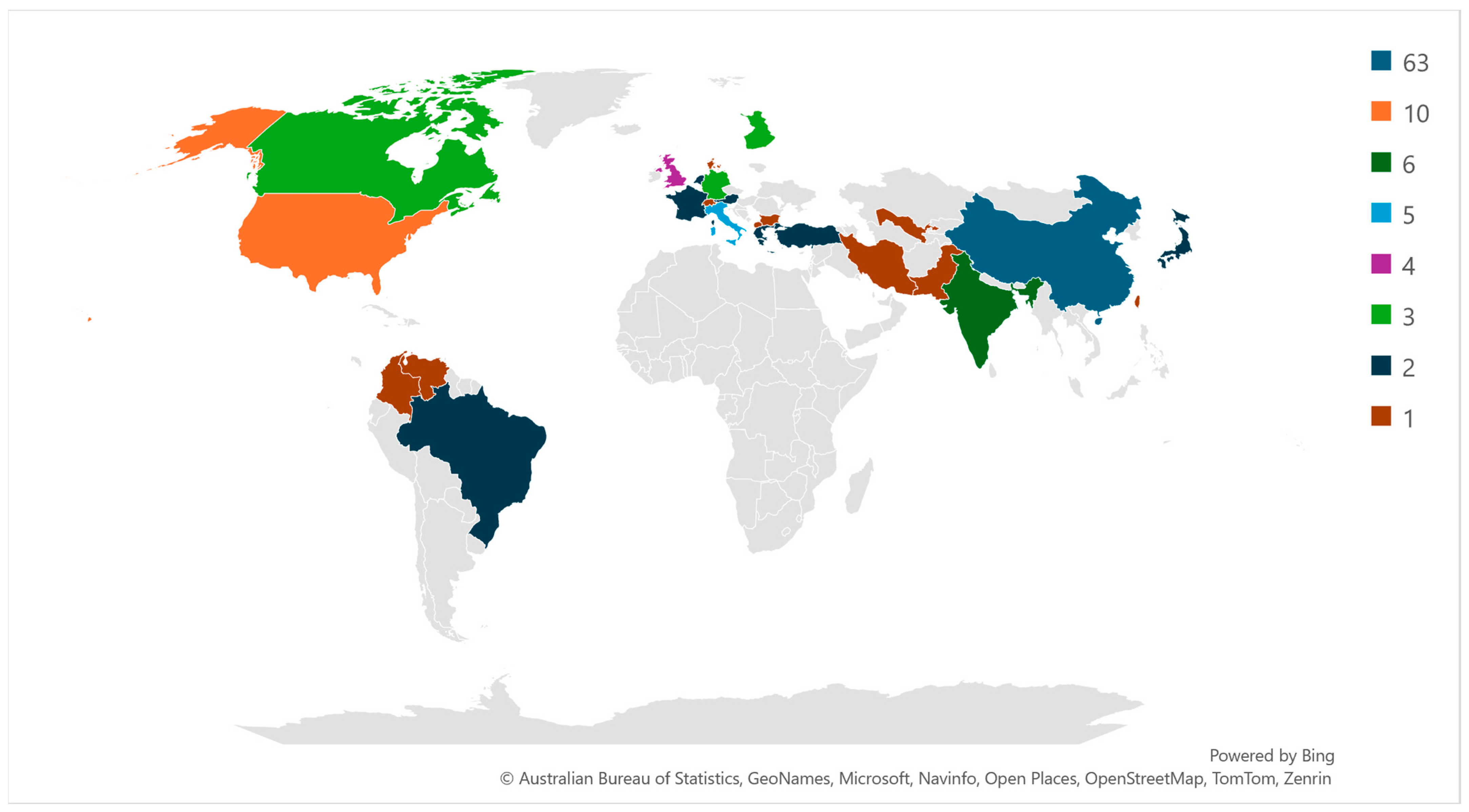

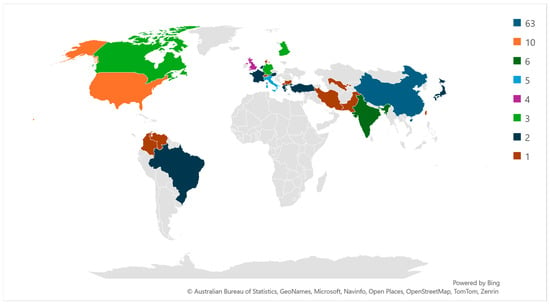

- Geographic distribution of studies

In terms of the geographical distribution of studies extracted from the Scopus database, 35 countries contributed to the study domain. Almost all continents are represented, with the exception of Africa. Figure 4 shows that China published 63 of the 106 articles, followed by the United States with 10 articles, India (6), Italy (5), South Korea (4), the United Kingdom (4), Canada, Finland, and Germany (3 each), then Austria, Australia, Brazil, France, Greece, Turkey, the Netherlands, and Japan (2 each), while the remaining countries have one article each.

Figure 4.

Geographic distribution of studies per country.

- Leading Themes and Timelines

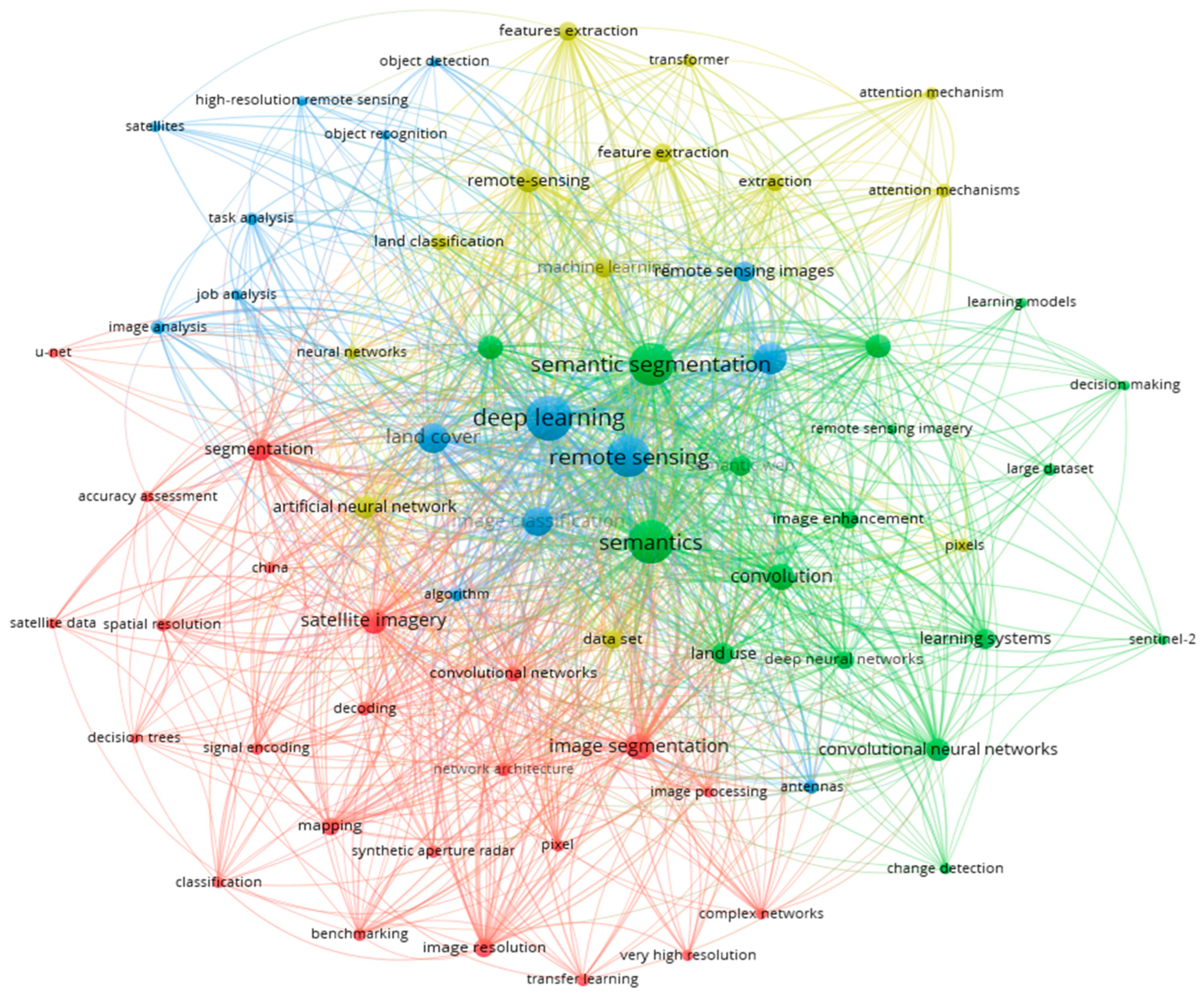

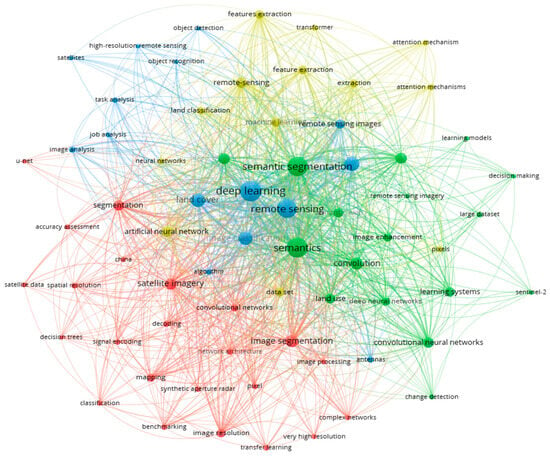

The significant keyword occurrences are obtained from the titles and abstracts of the extracted articles. Figure 5 shows the relevant and leading keywords. A threshold of five was set as the minimum number of occurrences of a keyword, and only 68 of the 897 keywords met it. The bibliometric analysis reveals that keywords such as "high-resolution RS images", "Remote Sensing", "satellite imagery", and "very high resolution" are prominent and strongly associated with neural network-related terms including "Semantic Segmentation", "Deep Learning", "Machine Learning", and "Neural Network". "Semantic Segmentation" is linked to "attention mechanisms" and "transformer" as distinct architectural components of the models. These learning models are further linked to various application domains, evident in their connections to terms like "Land Cover Classification", "Image Classification", "Image Segmentation", "Land Cover", "Land Use", "Change Detection", and "Object Detection". In 2020, research revolved around network architectures, object detection, and image processing. In the later part of 2021, there was a notable shift towards image segmentation, image classification, and land cover segmentation. In 2022 and 2023, research focused more strongly on semantic segmentation of high-resolution satellite images for change detection, land use, and land cover classification and segmentation.

Figure 5.

Significant keywords occurrence driving domain theme.
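The occurrence threshold used for the keyword map can be illustrated with a small counting sketch. This is not VOSviewer's implementation, whose counting options may differ; the function `frequent_keywords`, the case-insensitive matching, the once-per-article counting, and the toy records are all assumptions made for illustration.

```python
from collections import Counter


def frequent_keywords(records, min_occurrences=5):
    """Return keywords found in at least `min_occurrences` records.

    Each record is a list of keywords for one article. A keyword is
    counted once per record and matched case-insensitively.
    """
    counts = Counter()
    for keywords in records:
        counts.update({kw.strip().lower() for kw in keywords})
    return {kw: n for kw, n in counts.items() if n >= min_occurrences}


# Made-up records: two keywords occur five times, one occurs twice.
records = [["Deep Learning", "Remote Sensing"]] * 5 + [["Transformer"]] * 2
frequent = frequent_keywords(records, min_occurrences=5)
print(frequent)
```

With the threshold of five used in this review, the same filtering step kept 68 of the 897 extracted keywords.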

3.2. RQ2. What Are Domain Studies of Semantic Segmentation Models in Land Cover Mapping?

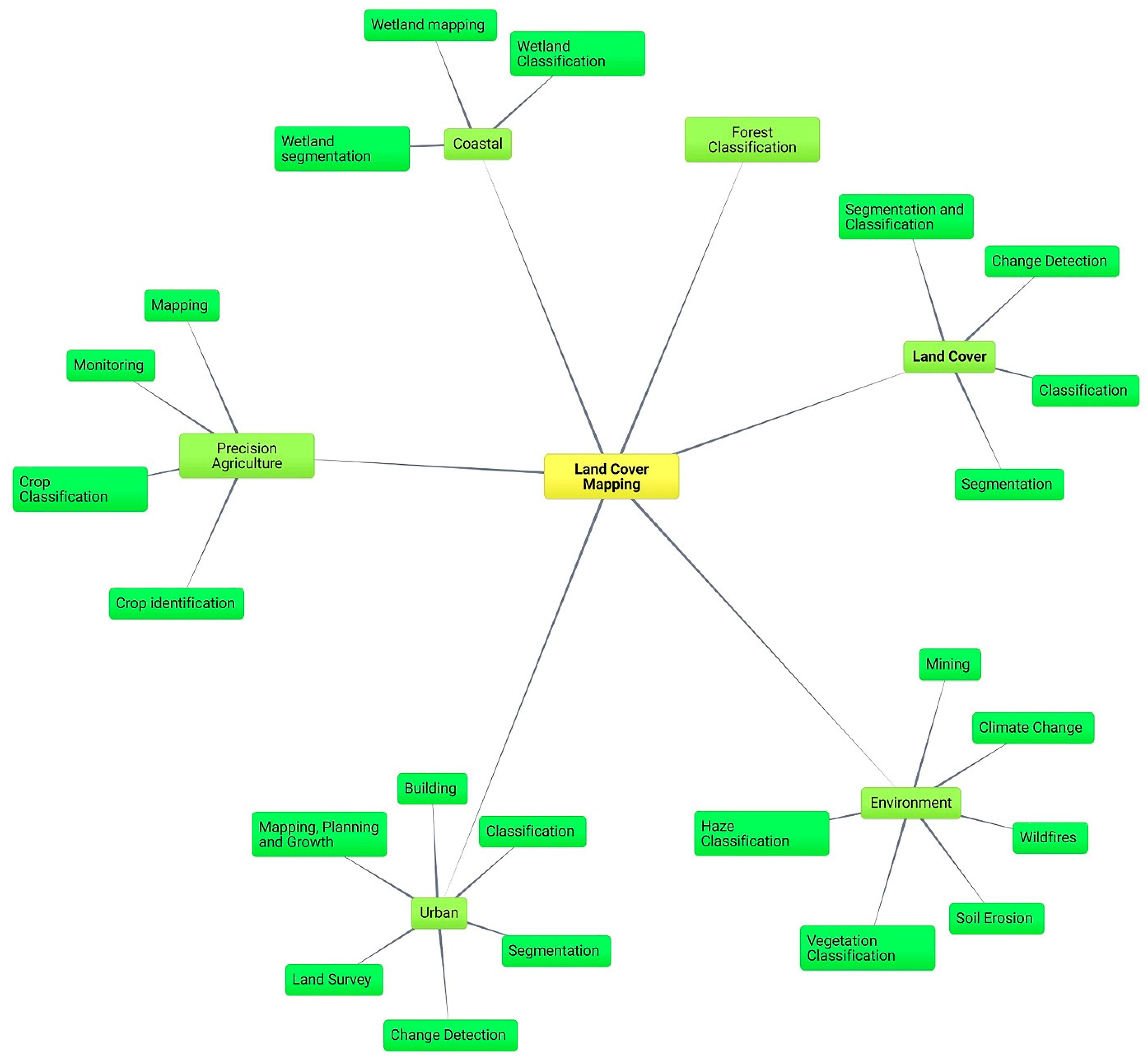

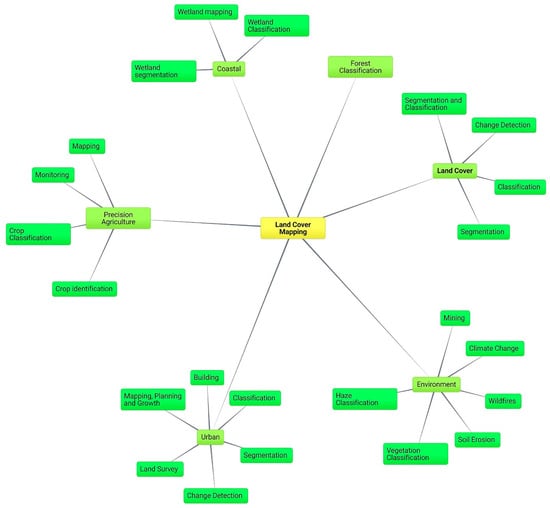

In this section, the extracted papers are clustered by similar study domain. Figure 6 shows the overall mind map of the domain studies. Land cover, urban, precision agriculture, environment, coastal areas, and forest are the most studied domain areas.

Figure 6.

Land cover mapping domain studies.

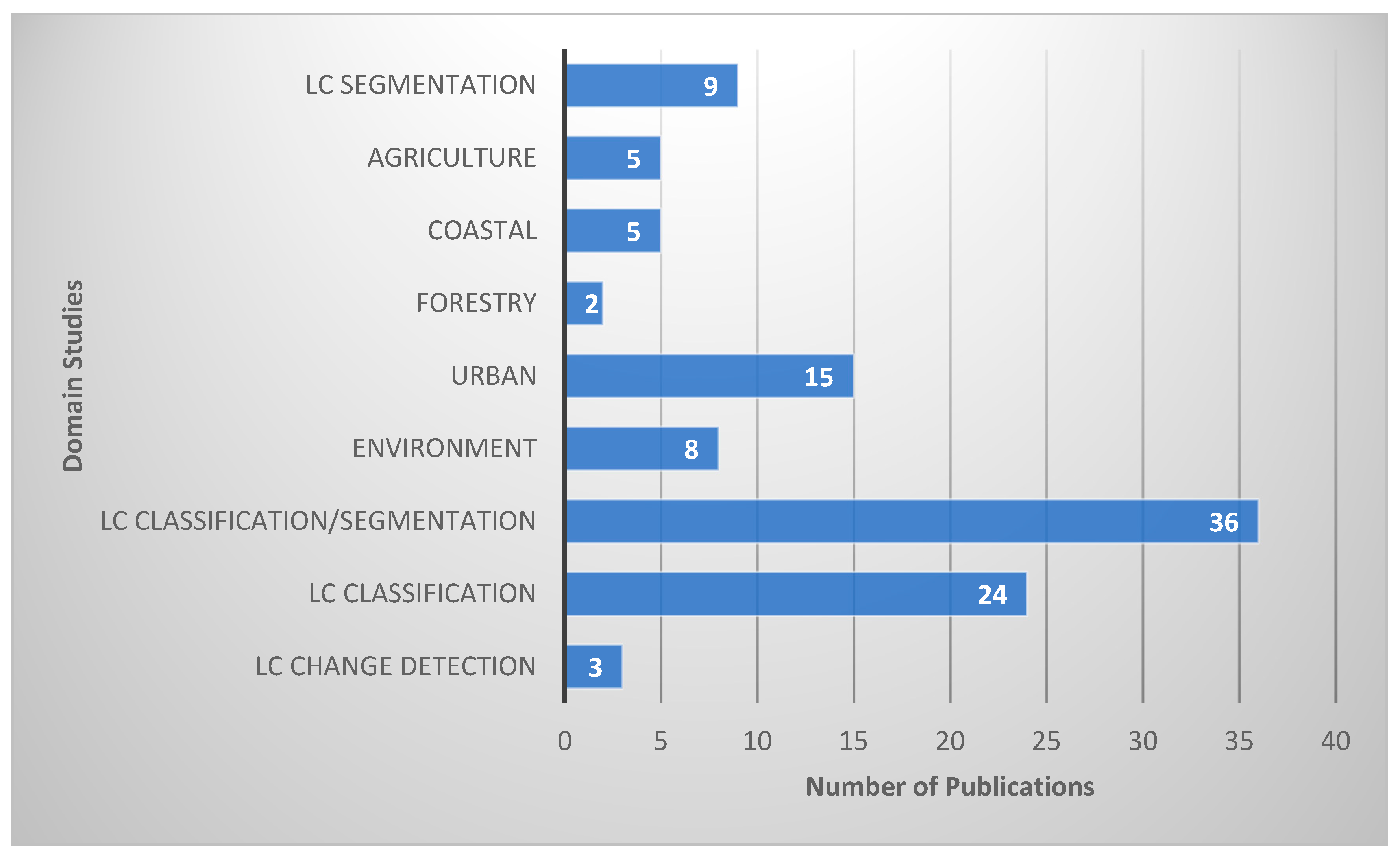

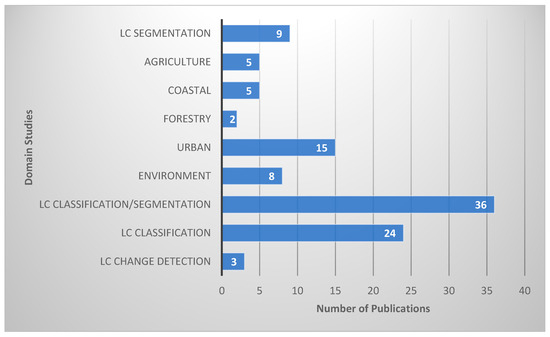

Figure 7 shows that among the 106 articles, 36 studies cover both land cover classification and segmentation, 24 focus specifically on land cover classification, 15 concentrate on urban applications, 9 address land cover segmentation alone, 8 address environmental issues, 5 center on precision agriculture, 5 are oriented toward coastal applications, 3 address land cover change detection, and 2 focus on forestry.

Figure 7.

Number of publications per domain studies.

- Land Cover Studies

In the 72 out of 106 studies related to land cover, research activities encompass land cover classification (33.3%), land cover segmentation (12.5%), change detection (4.2%), and the combined application of land cover classification and segmentation (50%). These areas of study are extensively documented and represent the most widely researched applications within land cover studies. Land cover classification involves assigning an image to one of several classes of land use and land cover (LULC), while land cover segmentation entails assigning a semantic label to each pixel within an image [26]. Land cover refers to various classes of biophysical Earth cover, while land use describes how human activities modify land cover. On the other hand, change detection plays a crucial role in monitoring LULC changes by identifying changes over time periods, which can help predict future events or environmental impacts. Change detection methods employing DL have attained remarkable achievements [27] across various domains, including urban change detection [4], agriculture, forestry, wildfire management, and vegetation monitoring [28].
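The percentages quoted for the 72 land cover studies follow directly from the per-category counts shown in Figure 7. The sketch below reproduces that arithmetic; the dictionary keys are shorthand labels chosen for illustration.

```python
# Article counts per land cover sub-domain, as reported for Figure 7.
counts = {
    "classification and segmentation": 36,
    "classification": 24,
    "segmentation": 9,
    "change detection": 3,
}

total = sum(counts.values())
shares = {name: round(100 * n / total, 1) for name, n in counts.items()}

print(f"Total land cover studies: {total}")
for name, pct in shares.items():
    print(f"{name}: {pct}%")
```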

- Urban

Among the 15 publications related to urban studies, 38% focus on segmentation applications, including urban scene segmentation [19,29,30], while 31% address urban change detection for mapping, planning, and growth [31,32]. For instance, change detection techniques provide insights into urban dynamics by identifying changes from remote sensing imagery [7], including changes in settlement areas. Additionally, these studies involve predicting urban trends and growth over time, managing land use [3], and monitoring urban densification [33] as well as mapping built-up areas to assess human activities across large regions [34].

Publications in urban studies also cover land survey management (6%) [35] and urban classification and detection (25%) [36,37,38,39,40], particularly building applications and urban land-use classification [40].

- Precision Agriculture

The five publications concerning precision agriculture focus on aspects such as crop mapping [41], identification [42,43], classification [44], and monitoring [45]. Examples include mapping large-scale rice farms [5], monitoring crops to analyze different growth stages [42], and classifying Sentinel data to create an oil palm land cover map [46].

- Environment

Among the eight environmental studies analyzed, the research spans various applications. Of these, 25% focus on soil erosion applications [47,48], which involve rapid monitoring of ground cover to mitigate soil erosion risks. Additionally, 12.5% of the studies center on wildfire applications [49], encompassing burned area mapping, wildfire detection [50], and smoke monitoring [51], along with initiatives for preventing wildfires through sustainable land planning [8]. Another 12.5% of the studies involve haze classification [52], specifically cloud classification using Sentinel-2 imagery. Climate change research [53] accounts for another 12.5% of the studies, focusing on aspects such as the urban thermal environment. Furthermore, 12.5% of the studies are dedicated to vegetation classification [54], and the remaining 25% address mining applications [55], including the detection of changes in mining areas [56].

- Forest

Research in this domain encompasses forest classification, including the classification of landscapes affected by deforestation [57]. Moreover, change detection in vegetation and forest areas enables decision-makers, conservationists, and policymakers to make informed decisions through forest monitoring initiatives [6] and mapping strategies tailored to tropical forests [58].

- Coastal Areas

Within this field, 5 of the 106 articles focus on wetland mapping, classification, and segmentation [10,59,60,61,62]. However, remote sensing image segmentation of coastal areas remains relatively underexplored, as noted by [60]. This is primarily attributed to the significant complexities associated with coastal land categories, including homogeneity, multiscale features, and class imbalance, as highlighted by [63].

3.3. RQ3. What Are the Data Used in Semantic Segmentation Models for Land Cover Mapping?

This section synthesizes the study locations, data sources, and benchmark datasets most extensively employed for land cover mapping.

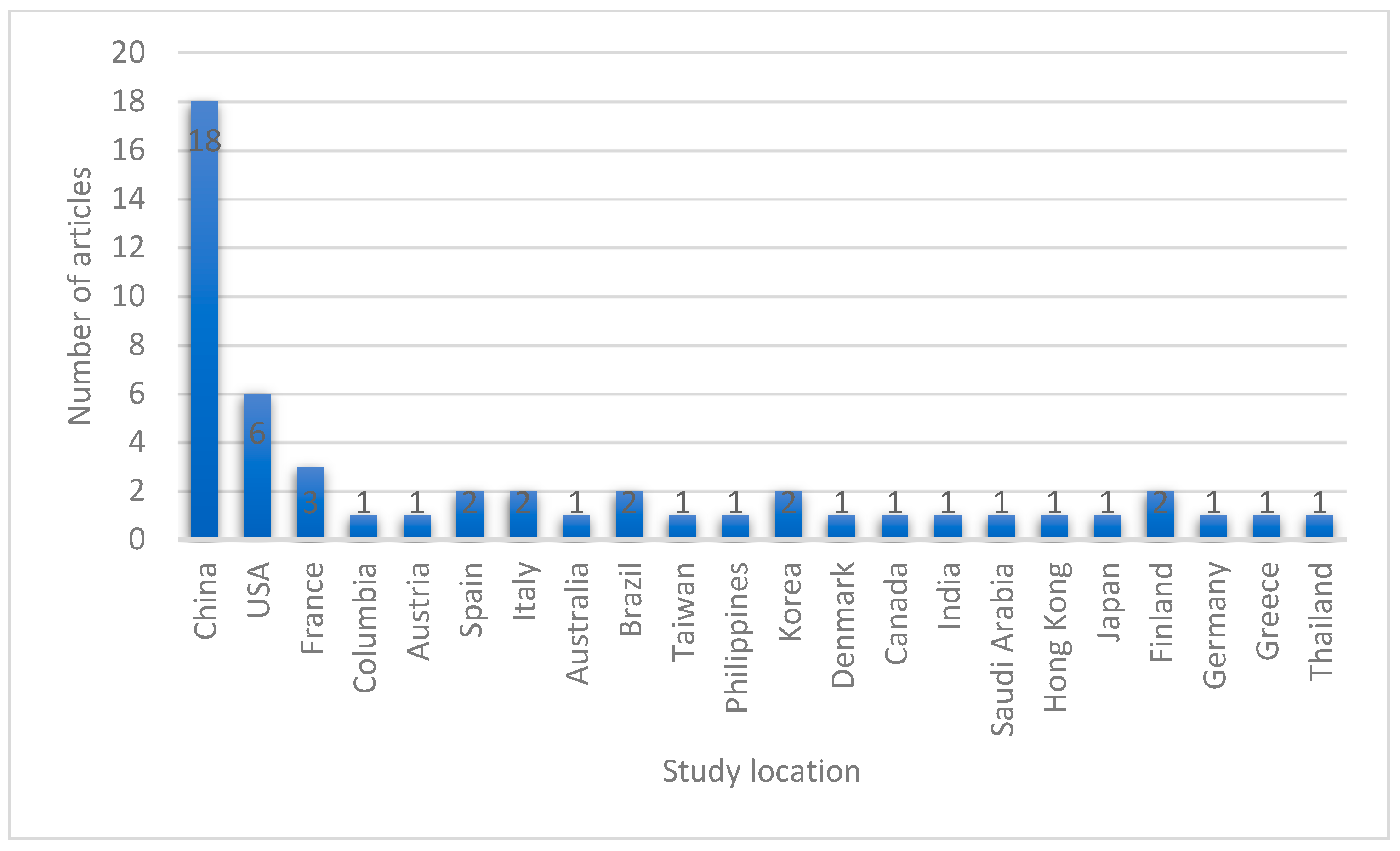

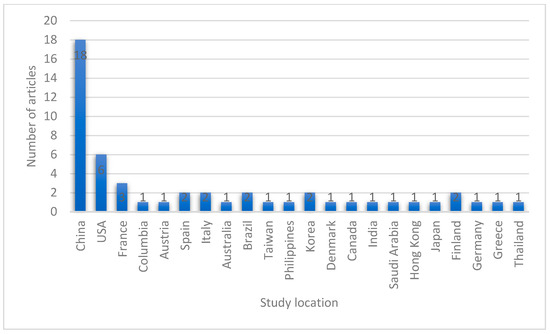

- Study Locations

Figure 8 illustrates the countries where the study areas were located and where in-depth research was conducted among the extracted articles. Among the 22 countries represented in 51 studies, 35.29% of the study areas are located in China, with 11.76% in the USA, 5.88% in France, and 3.92% in Spain, Italy, Brazil, South Korea, and Finland each. Other countries in the chart each account for 1.96% of the study areas.

Figure 8.

Number of publications per study location.
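The study-location percentages can be converted back into approximate study counts, which also gives a quick consistency check against the 22 countries and 51 studies reported above. The conversion below is a sketch; rounding to the nearest whole study is an assumption, and the counts are implied by the percentages rather than stated in the source.

```python
total_studies = 51
total_countries = 22

# Percentages reported for Figure 8.
reported_pct = {"China": 35.29, "USA": 11.76, "France": 5.88}
tied_pct, tied_countries = 3.92, 5   # Spain, Italy, Brazil, South Korea, Finland
single_pct = 1.96                    # every other country in the chart


def implied_count(pct, total=total_studies):
    """Convert a reported percentage into the nearest whole number of studies."""
    return round(pct * total / 100)


counts = {country: implied_count(p) for country, p in reported_pct.items()}
remaining_countries = total_countries - len(reported_pct) - tied_countries
accounted = (
    sum(counts.values())
    + tied_countries * implied_count(tied_pct)
    + remaining_countries * implied_count(single_pct)
)

print(counts)
print(f"Studies accounted for: {accounted} of {total_studies}")
```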

- Data Sources

Table 1 presents the identified data sources along with the number of articles and their corresponding references. The data sources identified in the literature include RS satellites, RS Unmanned Aerial Vehicles (UAVs) and Unmanned Aircraft Systems (UAS), mobile phones, Google Earth, Synthetic Aperture Radar (SAR), and Light Detection and Ranging (LiDAR) sources. Among these, Sentinel-2, Sentinel-1, and Landsat satellites are the most frequently utilized data sources. It is important to recognize that the primary RS technologies include RS satellite imagery, SAR, and LiDAR.

Table 1.

Data sources identified in review.

RS imagery is the most extensively used data in land cover mapping. In RS, data are collected from satellite sources such as Sentinel-1, Landsat, Sentinel-2, WorldView-2, and QuickBird at set time intervals over a period. The data products of these satellites include panchromatic (single-channel, 2D), multispectral, and hyperspectral images [1]. RS imagery can also take the form of aerial images [2] captured using drones or UAVs. These data are characterized by their spatial resolution, spectral resolution, and image size.

SAR stands out as a notable radar-based data source for land cover mapping [81]. SAR relies on radio detection technology and constitutes an essential tool in this field. SAR data carry distinct advantages, especially in scenarios where optical imagery faces limitations such as cloud cover or limited visibility. Because SAR signals can penetrate cloud cover, SAR can image the Earth’s surface even in the presence of clouds or unfavorable weather conditions. This is one of the key advantages of SAR over optical sensors, whose signals are obstructed by clouds [82].

The literature identifies different types of SAR used for land cover mapping, distinguished by their parameters, such as polarimetric synthetic aperture radar (PolSAR) [80], E-SAR, AIRSAR, and interferometric synthetic aperture radar [6]. Notable benchmark SAR datasets include the Gaofen-3 and RADARSAT-2 datasets [83]. At present, semantic segmentation of PolSAR images holds significant utility in the interpretation of SAR imagery, particularly within agricultural contexts [84]. Similarly, the high-resolution GaoFen-3 SAR dataset is useful for the semantic segmentation of buildings [34,79,85]. The benchmark dataset Gaofen-3 (GF-3), composed of single-polarization SAR images, holds significant importance [86]. This dataset is derived from China’s pioneering civilian C-band polarimetric SAR satellite, designed for high-resolution RS. Notably, FUSAR-Maps are generated from extensive semantic segmentation efforts using high-resolution GF-3 single-polarization SAR images [87], while the GID dataset is collected from the Gaofen-2 satellite.

LiDAR plays a significant role in land cover mapping and climate change studies [88]. LiDAR emits laser pulses and measures their return times to gauge distances precisely, creating highly accurate and detailed elevation models of the Earth’s surface. It provides detailed information, including topographic features, terrain variations, and the vertical structure of vegetation, which makes it an indispensable data source for land cover mapping. Notable examples include multispectral LiDAR [54], an advanced RS technology that combines conventional LiDAR principles with the capacity to capture multiple spectral bands concurrently. Another example is the Follo 2014 LiDAR data, which were captured in the Follo region during 2014. Additionally, the NIBIO AR5 (Norwegian Institute of Bioeconomy Research, Assessment Report 5) Land Resources dataset represents a comprehensive evaluation of land resources. This dataset encompasses a range of attributes including land cover, land use, and pertinent environmental factors [89].
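The ranging principle mentioned above, in which distance is derived from the return time of a laser pulse, reduces to halving the round-trip path travelled at the speed of light. The sketch below shows this relation; the function name and the example timings are illustrative assumptions, and real LiDAR processing also involves corrections (for example for scan geometry) that are not modelled here.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # speed of light in vacuum


def lidar_range_m(round_trip_time_s):
    """Distance to the reflecting surface from a pulse's round-trip time.

    The pulse travels to the target and back, so the one-way range
    is half of the total path length.
    """
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


# A pulse that returns after 2 microseconds hit a surface about 300 m away.
example_range = lidar_range_m(2e-6)
print(f"{example_range:.3f} m")

# A 1 ns timing difference corresponds to roughly 15 cm in range.
range_per_ns = lidar_range_m(1e-9)
print(f"{range_per_ns:.4f} m per nanosecond")
```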

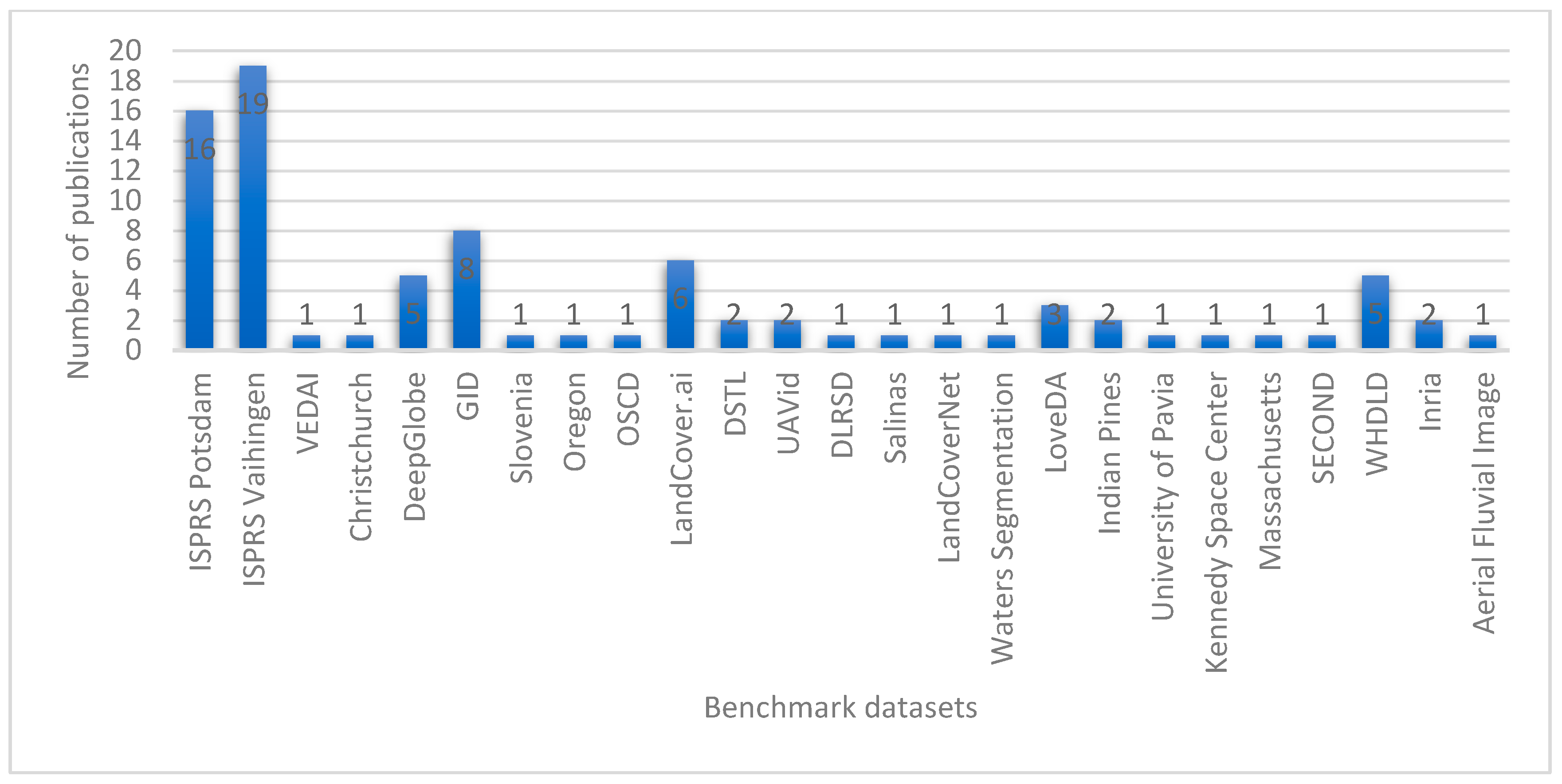

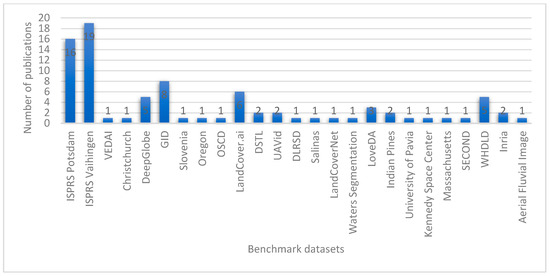

- Benchmark datasets

The benchmark datasets used for evaluation in this domain, as identified in the review, are shown in Figure 9. ISPRS Vaihingen and Potsdam are the most widely used benchmark datasets, followed by GID, LandCover.ai, DeepGlobe, and WHDLD.

Figure 9.

Benchmark datasets identified in the literature.

The ISPRS Vaihingen comprises 33 aerial image patches in IRRGB format along with their associated Digital Surface Model (DSM) data, each with a size of around 2500 × 2500 pixels at 9 cm spatial resolution [90,91]. Similarly, the publicly accessible ISPRS Potsdam dataset encompasses Potsdam city, Germany. It is composed of 38 aerial IRRGB images measuring 6000 × 6000 pixels each at a spatial resolution of 5 centimeters [92].

The Gaofen Image Dataset (GID) [93] contains 150 GaoFen-2 (GF-2) images [94,95] collected from different cities in China. GF-2 images are high-resolution images acquired by the GaoFen-2 satellite, which captures panchromatic (PAN) and multispectral (MS) bands. The dataset offers valuable insights into land cover characteristics and patterns. Each image measures 6908 × 7300 pixels and contains R, G, B, and NIR bands at a spatial resolution of 4 meters. The GID dataset consists of five land-use categories: farmland, meadow, forest, water, and built-up [96].

The LandCover.ai dataset [97] comprises images chosen from aerial photographs covering 216.27 square kilometers of Poland. The dataset includes 41 RS images: 33 images with an approximate resolution of 25 cm, measuring around 9000 × 9000 pixels, and 8 images with a resolution of approximately 50 cm, measuring about 4200 × 4700 pixels [12,17,98]. The dataset was manually labeled with four classes: buildings, woodland, water, and background.
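The relationship between image size, spatial resolution, and ground coverage can be made concrete with a short calculation. The sketch below, with the illustrative helper `footprint_km2`, multiplies pixel dimensions by the ground sampling distance. Because the LandCover.ai image sizes quoted above are approximate, the estimate is expected to land near, not exactly on, the reported 216.27 square kilometers.

```python
def footprint_km2(width_px, height_px, gsd_m):
    """Ground area covered by an image, in square kilometers.

    gsd_m is the ground sampling distance (meters per pixel).
    """
    return (width_px * gsd_m) * (height_px * gsd_m) / 1e6


# One ISPRS Potsdam tile: 6000 x 6000 pixels at 5 cm covers 300 m x 300 m.
potsdam_tile = footprint_km2(6000, 6000, 0.05)

# LandCover.ai, using the approximate image sizes quoted in the text.
landcover_ai = (
    33 * footprint_km2(9000, 9000, 0.25)
    + 8 * footprint_km2(4200, 4700, 0.50)
)

print(f"Potsdam tile: {potsdam_tile:.2f} km^2")
print(f"LandCover.ai estimate: {landcover_ai:.2f} km^2 (reported: 216.27)")
```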

DeepGlobe [99] is another important dataset in land cover mapping. It stands out as the first publicly available collection of high-resolution satellite imagery primarily emphasizing urban and rural regions. The dataset comprises a total of 1146 satellite images, each with dimensions of 2448 × 2448 pixels [100], and it underpins the Land Cover Classification Challenge. Likewise, the Inria dataset [101] consists of aerial images covering 10 regions in the United States and Austria, collected at a 30 cm resolution with RGB bands [102]. The training and test data each cover five cities. Every city includes 36 image tiles, each sized at 5000 × 5000 pixels, and these tiles are labeled with two semantic categories: building and non-building.

In addition, the Disaster Reduction and Emergency Management Building dataset closely resembles the Inria dataset. Its image tiles measure 5000 × 5000 pixels at a spatial resolution of 30 cm, and all tiles contain R, G, and B bands [96]. The building dataset from Wuhan University is an aerial dataset of 8189 image patches captured at a 30 cm resolution. These images are in RGB format, with each patch measuring 512 × 512 pixels [103]. The Aerial Imagery for Roof Segmentation dataset [104] is composed of aerial images covering the Christchurch city area in New Zealand, captured at a resolution of 7.5 cm with RGB bands [102]. The images were acquired following the seismic event that struck Christchurch. Four images, each with dimensions of 5000 × 4000 pixels, were labeled with the following categories: buildings, cars, and vegetation [105]. Other benchmark datasets include the Massachusetts building and road datasets [106], the Dense Labeling RS dataset [107], the VEhicle Detection in Aerial Imagery (VEDAI) dataset, and the LoveDA dataset [108].

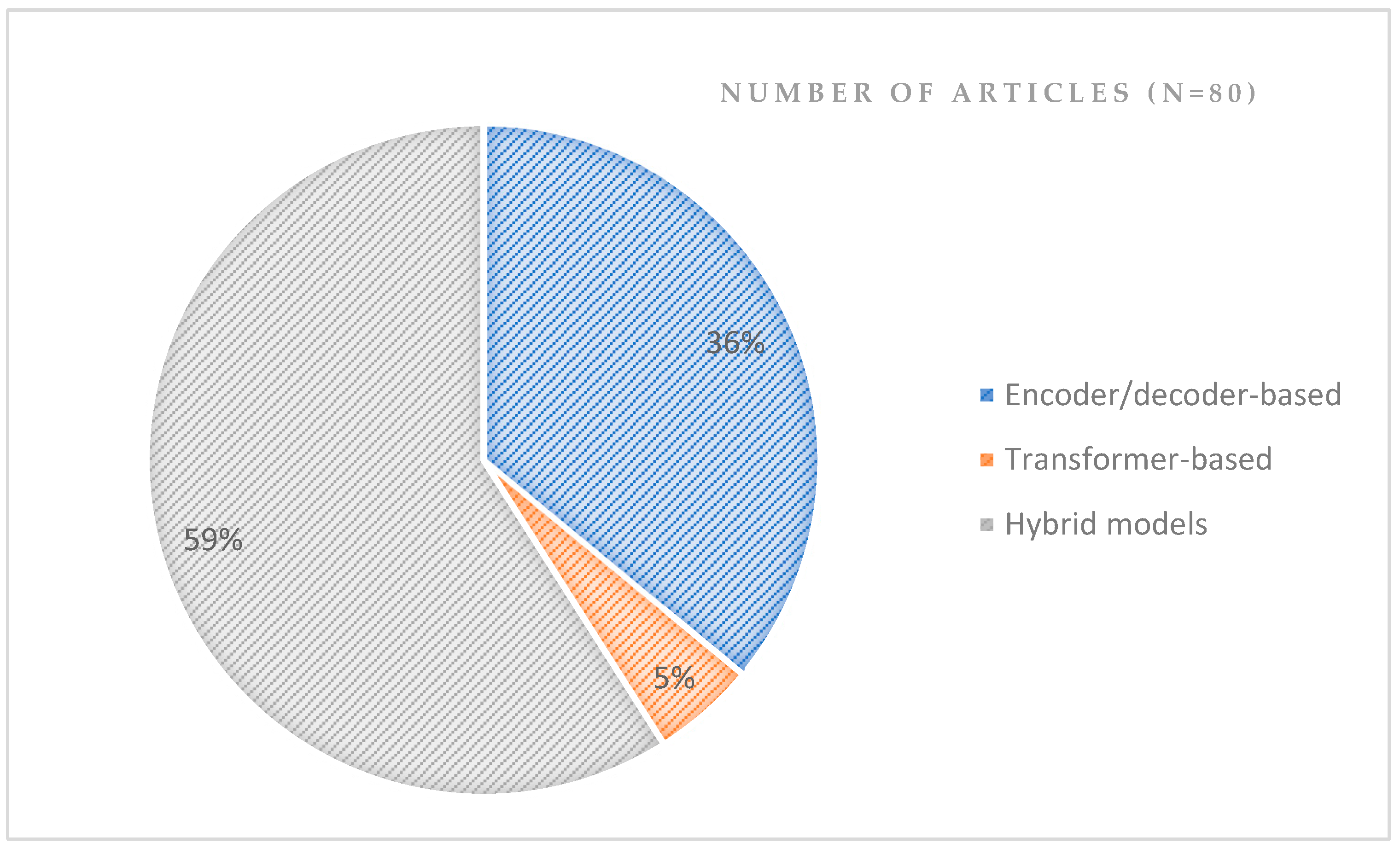

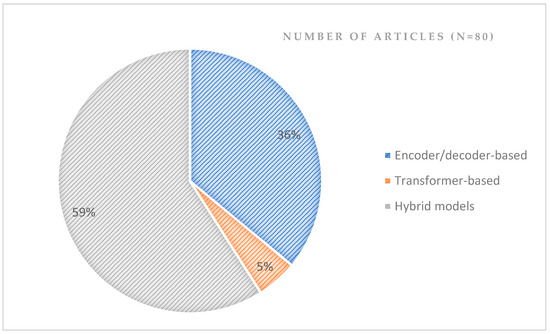

3.4. RQ4. What Are the Architecture and Performances of Semantic Segmentation Methodologies Used in Land Cover Mapping?

This section investigates the design and effectiveness of recent advancements in novel semantic segmentation methodologies applied to land cover mapping. In this paper, the methodologies employed in land cover mapping are classified based on similarities in their structural components. We have identified three primary architectural structures: (i) encoder-decoder structures; (ii) transformer structures; and (iii) hybrid structures. Figure 10 shows that among 80 articles employing different model structures, 59% utilized hybrid structures, 36% utilized encoder-decoder structures, and 5% utilized transformer-based structures.

Figure 10.

Percentage of articles employing different model structures in the data retrieved.

- Encoder-Decoder based structure

Encoder-decoder structures consist of two main parts: an encoder that processes the input data and extracts high-level features, and a decoder that generates the output (e.g., a segmentation map) based on the encoder’s representations [16]. The authors of [109] proposed an encoder-decoder technique known as the Full Receptive Field network, which uses two types of attention mechanisms with ResNet-101 as the backbone. Similarly, the Dual-Gate Fusion Network (DGFNet) [110] is a DL segmentation framework that adopts an encoder-decoder design. Typically, encoder-decoder architectures encounter difficulties with the semantic gap. To address this, the DGFNet framework comprises two modules, the Feature Enhancement Module and the Dual Gate Fusion Module, which mitigate the impact of semantic gaps in deep convolutional neural networks and lead to improved performance in land cover classification. The model was evaluated on both the landcover dataset and the Potsdam dataset, achieving MIoU scores of 88.87% and 72.25%, respectively.

The article [111] proposed a U-Net incorporating asymmetry and fusion coordination. It is an encoder-decoder architecture that integrates a coordinated attention mechanism, a non-symmetric convolution block, and a refinement fusion block to capture long-term dependencies and intricate information from RS data. The method was evaluated on the DeepGlobe dataset and reportedly performed best, with an MIoU of 85.54%, compared to other models such as UNet, MAResU-Net, PSPNet, and DeepLab v3+. However, the model has low network efficiency and is not recommended for mobile applications. Also, ref. [112] proposed the Attention Dilation-LinkNet neural network, which has an encoder-decoder structure. It takes advantage of serial-parallel combined dilated convolution, two channel-wise attention mechanisms, and a pretrained encoder, making it useful for satellite image segmentation, particularly road extraction. The best-performing ensemble of the model achieved an IoU of 64.49% on the DeepGlobe road extraction dataset. Table 2 lists some semantic segmentation models that use the encoder-decoder structure. It shows that these models generalize relatively well across different data; however, accuracy can be further enhanced through parameter optimization.

Table 2.

Encoder-decoder-based semantic segmentation models for land cover segmentation.
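Since the models above are compared by IoU, MIoU, and overall accuracy (OA), the sketch below shows how these metrics are commonly computed from a confusion matrix over pixel labels. It is a generic illustration under stated assumptions, not the evaluation code of any reviewed paper: the function names and toy labels are hypothetical, and individual studies may differ in details such as how classes absent from an image are handled.

```python
def confusion_matrix(y_true, y_pred, num_classes):
    """cm[t][p] counts pixels of true class t predicted as class p."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def per_class_iou(cm):
    """IoU per class: TP / (TP + FP + FN); NaN if the class never occurs."""
    n = len(cm)
    ious = []
    for c in range(n):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp
        fp = sum(cm[r][c] for r in range(n)) - tp
        denom = tp + fp + fn
        ious.append(tp / denom if denom else float("nan"))
    return ious


def mean_iou(cm):
    """Mean of the per-class IoU values, skipping undefined (NaN) classes."""
    valid = [v for v in per_class_iou(cm) if v == v]
    return sum(valid) / len(valid)


def overall_accuracy(cm):
    """Fraction of all pixels whose predicted class matches the true class."""
    correct = sum(cm[c][c] for c in range(len(cm)))
    return correct / sum(sum(row) for row in cm)


# Flattened toy label maps with three classes (made-up values).
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]
cm = confusion_matrix(y_true, y_pred, num_classes=3)
print(per_class_iou(cm), mean_iou(cm), overall_accuracy(cm))
```

OA can remain high when a dominant class is predicted well, whereas MIoU weights every class equally, which is one reason both are usually reported for imbalanced land cover data.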

- Transformer-based structure

Transformer-based architectures are neural network structures originally designed for natural language processing (NLP), utilizing transformer modules as their fundamental building components. In the context of land cover mapping tasks, transformer-based architectures such as the Swin-S-GF [117], BANet [30], DWin-HRFormer [29], spectral spatial transformer [118], Sgformer [18], and Parallel Swin Transformer have been developed. Table 3 presents various transformer-based structures alongside their performance metrics and limitations. Researchers have noted that while these architectures achieve effective segmentation accuracy with an average OA of approximately 89%, transformers can exhibit slow convergence and be computationally expensive, particularly in land cover mapping tasks. This limitation contributes to their relatively low adoption in land cover segmentation applications.

Table 3.

Transformer-based semantic segmentation models for land cover segmentation.

- Hybrid-based structure

A hybrid-based structure combines elements from different neural network architectures or techniques to create a unified model for semantic segmentation. Traditional convolutional neural network methods face limitations in accurately capturing boundary details and small ground objects, potentially leading to the loss of crucial information. Although deep convolutional neural networks are applied to classify land use and land cover, their results are often suboptimal in the land cover segmentation task [73]. Hybrid approaches can address this limitation by introducing encoder-decoder style semantic segmentation models, leveraging existing deep learning backbones [68], and exploring diverse data settings and parameters in experimentation [121]. Other ways of enhancing the structure include architectural modifications through the integration of attention mechanisms, transformer architectures, module fusion, and multi-scale feature fusion [122,123]. An example is the SOCNN framework [124], which addresses the limitations of CNNs by integrating three modules: (i) a module for semantic segmentation; (ii) a module for superpixel optimization; and (iii) a module for fusion. Although the evaluated performance of the framework demonstrated improvement, boundary retrieval can be further enhanced by incorporating superior boundary adhesion and integrating it into the boundary optimization module.
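To make the idea of combining a segmentation module with a superpixel module more concrete, the sketch below refines a per-pixel class map by majority vote inside each superpixel. This is only one plausible reading of "superpixel optimization" and is an assumption for illustration; it is not the procedure confirmed for SOCNN [124]. The function name `superpixel_majority_vote` and the toy inputs are hypothetical.

```python
from collections import Counter, defaultdict
from typing import List

Grid = List[List[int]]


def superpixel_majority_vote(pred: Grid, segments: Grid) -> Grid:
    """Replace each pixel's class with the most frequent class in its superpixel.

    pred     -- per-pixel class labels from any segmentation model
    segments -- per-pixel superpixel IDs with the same shape as pred
    (Hypothetical helper; an illustrative assumption, not the SOCNN module.)
    """
    votes = defaultdict(Counter)
    for pred_row, seg_row in zip(pred, segments):
        for label, seg_id in zip(pred_row, seg_row):
            votes[seg_id][label] += 1
    # Ties are resolved in favour of the class encountered first.
    winner = {seg_id: counts.most_common(1)[0][0] for seg_id, counts in votes.items()}
    return [[winner[seg_id] for seg_id in seg_row] for seg_row in segments]


if __name__ == "__main__":
    pred = [[0, 0, 1], [0, 1, 1], [1, 1, 1]]
    segments = [[0, 0, 1], [0, 0, 1], [0, 1, 1]]
    for row in superpixel_majority_vote(pred, segments):
        print(row)
```

Because superpixels tend to follow image edges, such a vote can snap noisy predictions to object outlines, which is the kind of boundary improvement the hybrid designs above aim for.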

Moreover, ref. [125] proposed a multi-level context-guided classification method based on an object-based CNN. It fuses high-level features and employs a Conditional Random Field for better classification performance. The model attained an overall accuracy comparable to DeepLabV3+ at various segmentation scale parameters on the Vaihingen dataset, but a lower overall accuracy than DeepLabV3+ on the Potsdam dataset. Another approach is to use Generative Adversarial Networks for domain adaptation, such as the Full Space Domain Adaptation Network [103], as well as to leverage domain adaptation and transfer learning [126]. This approach has been shown to enhance accuracy in scenarios where source and target images originate from distinct domains. However, domain adaptation for the segmentation of RS images remains largely underexplored [127]. The authors of [92] presented a CNN-based SegNet model that classifies terrain features using 3D geospatial data; the model performed better on buildings than on natural objects. The model was validated on the Vaihingen dataset and tested on the Potsdam dataset, achieving an IoU of 84.90%.

In addition, ref. [128] proposed the Semantic Boundary Awareness Network (SBANet), which uses ResNet as its backbone to extract sharp boundaries. The network was enhanced with a boundary attention module and adaptive weights in multitask learning to incorporate both low- and high-level features, with the goals of improving land cover boundary precision and speeding up network convergence. The method was evaluated on the Potsdam and Vaihingen semantic labelling datasets, where the authors reported that SBANet outperformed models such as UNet, FCN, SegNet, PSPNet, and DeepLabV3+. The DenseNet-based model [129] modified the DenseNet backbone by adding two novel fusions: unit fusion and cross-level fusion. Unit fusion is detail-oriented, whereas cross-level fusion integrates information from different levels. The model with both fusions performed best on the DeepGlobe dataset.

Furthermore, ref. [93] proposed a bidirectional grid fusion network, a two-way fusion architecture for classifying land in very-high-resolution RS data. It encourages bidirectional information flow with mutual benefits for feature propagation, and a grid fusion architecture is attached for further improvement. The best refined model was tested on the ISPRS and GID datasets, achieving MIoU values of 68.88% and 64.01%, respectively. Table 4 shows some identified hybrid semantic segmentation models and their performance metrics in land cover mapping. These models have demonstrated effective performance, with an average overall accuracy of 91.3% across the presented datasets.

Table 4.

Hybrid-based semantic segmentation models for land cover segmentation.

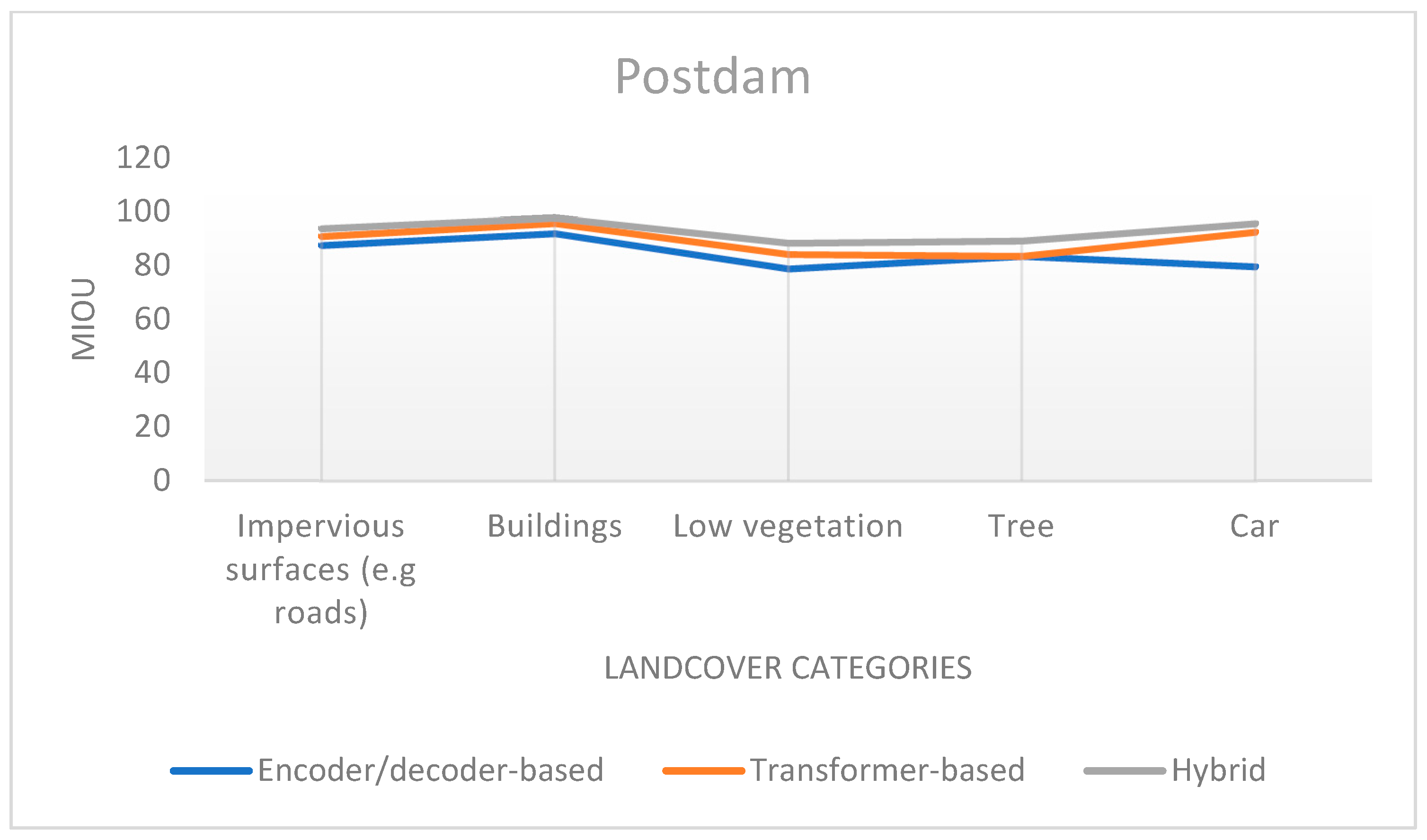

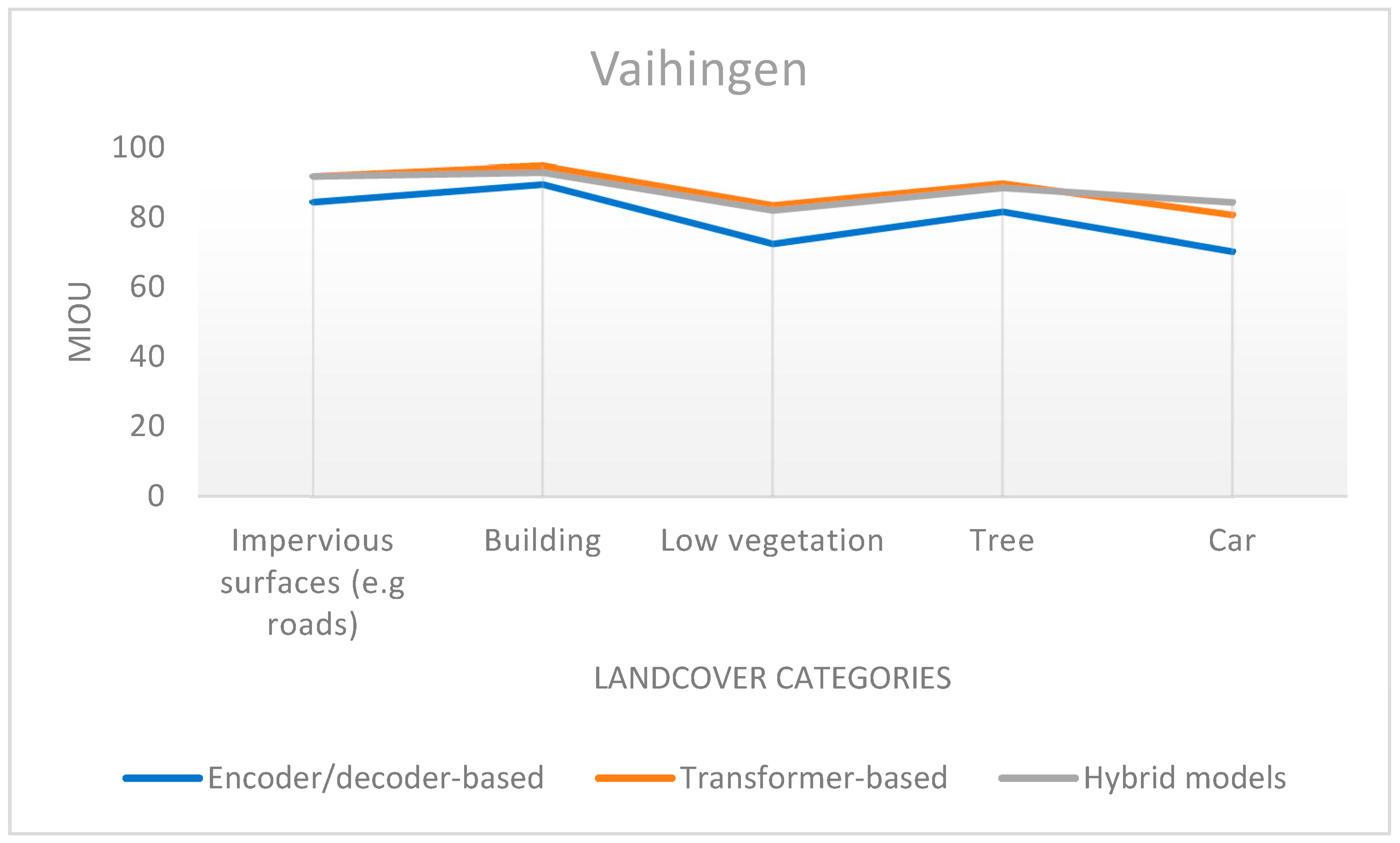

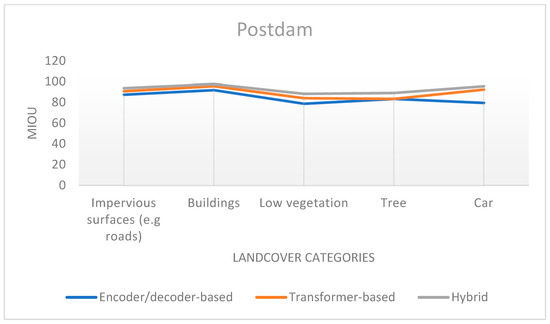

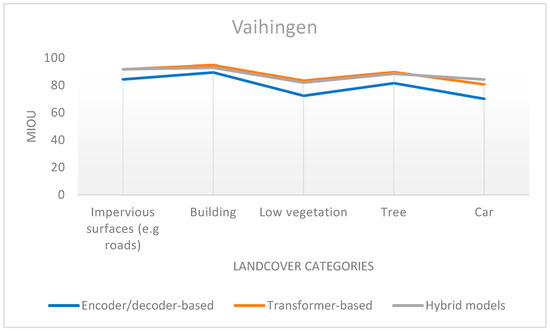

- Performance analysis of semantic segmentation model structures on ISPRS 2-D labelling Potsdam and Vaihingen datasets

Our study examines the performance of various semantic segmentation model structures using the most widely utilized datasets in land cover mapping. The models are categorized based on the three primary architectural structures, and their performances are averaged across ISPRS benchmark datasets.

Figure 11 and Figure 12 show that semantic segmentation models demonstrate impressive generalization capabilities across all ISPRS land cover categories. Among the three structures, the encoder-decoder-based models performed least well across the ISPRS land cover categories. On the Potsdam dataset, hybrid models outperformed the others in all land cover categories. On the Vaihingen dataset, transformer-based models slightly outperformed the other structures in classifying buildings, low vegetation, and trees. Notably, all three model structures were most effective in the building category, while the weakest generalization performance was observed in either the cars or the low vegetation category. In RS applications, semantic segmentation models therefore categorize buildings better than natural objects such as low vegetation and forests. This supports the assertion in [92] that the model is more effective on man-made features, owing to their geometric properties, than on natural features.

Figure 11.

Semantic segmentation model structures analysis on ISPRS 2-D labelling Potsdam.

Figure 12.

Semantic segmentation model structures analysis on ISPRS 2-D labelling Vaihingen.

- Common experimental training settings

The common experimental training settings found in studies are detailed in Table 5.

Table 5.

Frequently used and common hyper-parameter values for segmentation model structures.

Table 5 shows that all three model structures typically use data augmentation during pre-processing. Both the encoder-decoder and hybrid structures use the ResNet model as their backbone, while the transformer-based structure employs either the ResNet or the Swin-Tiny model. For evaluation, commonly used metrics include Mean Intersection over Union (MIoU), Overall Accuracy (OA), and F1 score, among others. Notably, the Adam optimizer is frequently used for both transformer-based and hybrid structures, as it helps to improve convergence speed. This is particularly important for transformer-based structures, whose high number of parameters tends to slow down convergence.
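The evaluation metrics named above can all be derived from a confusion matrix. The following is a minimal sketch using the standard definitions (OA as the fraction of correctly labelled pixels, per-class IoU as TP/(TP+FP+FN), and per-class F1 as 2TP/(2TP+FP+FN)), with MIoU and F1 macro-averaged over classes. The function names and the choice to skip classes absent from both reference and prediction are assumptions made for illustration; individual reviewed studies may average differently.

```python
from typing import Dict, List, Sequence


def confusion_matrix(y_true: Sequence[int], y_pred: Sequence[int],
                     num_classes: int) -> List[List[int]]:
    """Rows index the reference class, columns index the predicted class."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def segmentation_metrics(cm: List[List[int]]) -> Dict[str, float]:
    """Compute OA, MIoU, and macro-averaged F1 from a confusion matrix."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    correct = sum(cm[k][k] for k in range(n))
    ious, f1s = [], []
    for k in range(n):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                        # reference k, predicted other
        fp = sum(cm[r][k] for r in range(n)) - tp   # predicted k, reference other
        if tp + fp + fn == 0:
            continue  # class absent from reference and prediction (assumption: skip)
        ious.append(tp / (tp + fp + fn))
        f1s.append(2 * tp / (2 * tp + fp + fn))
    return {
        "OA": correct / total if total else 0.0,
        "MIoU": sum(ious) / len(ious) if ious else 0.0,
        "F1": sum(f1s) / len(f1s) if f1s else 0.0,
    }


if __name__ == "__main__":
    # Toy flattened label vectors, purely illustrative.
    reference = [0, 0, 1, 1, 2, 2]
    predicted = [0, 1, 1, 1, 2, 0]
    print(segmentation_metrics(confusion_matrix(reference, predicted, 3)))
```

OA is dominated by the most frequent classes, whereas MIoU and macro F1 weight every class equally, which is one reason studies often report several metrics together.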

4. Challenges, Future Insights and Directions

In this section, we highlight the challenges in land cover mapping and semantic segmentation methodologies in the context of land cover mapping, as revealed through an extensive review of the existing literature. We found persistent gaps requiring targeted attention and innovative solutions in forthcoming research efforts. This section examines these challenges and outlines potential avenues for future investigation.

4.1. Land Cover Mapping

In land cover mapping, the key challenges include extracting land cover boundary information and generating precise land cover maps.

- Extracting boundary information

The precise delineation of sharp and well-defined boundaries [133], the refinement of object edges [134], the extraction of boundary details [128], and the acquisition of content details [135] from RS imagery are all aimed at achieving accurate land cover segmentation. This research gap needs further exploration.

Defining clear land cover boundaries is a crucial aspect of RS and geospatial analysis. It involves precisely delineating the borders that divide distinct land cover categories on the Earth’s surface. Semantic segmentation encounters performance degradation due to the loss of crucial boundary information [124]. The challenge of delineating precise boundaries in land cover maps is exacerbated by the heightened probability of prediction errors occurring at borders and within smaller segments [19,136]. This holds particularly true in scenarios like the segmentation and classification of vegetation land covers [54]. An illustrative example of this complexity is encountered when attempting to segment the boundaries among ecosystems characterized by a combination of both forest and grassland in regions with semi-arid to semi-humid climates [137].

Unclear boundaries and the loss of essential, detailed information at boundaries decrease the chance of producing fine segmentation results [128]. Effectively capturing complete and well-defined boundaries in intricate very-high-resolution RS images remains open for further research and improvement.
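The observation that prediction errors concentrate at borders can be checked by scoring boundary and interior pixels separately. The sketch below is an illustrative assumption rather than a procedure taken from the reviewed studies: it marks a reference pixel as a boundary pixel when any 4-connected neighbour carries a different class, then reports accuracy for each region. The helper names `boundary_mask` and `accuracy_by_region` are hypothetical.

```python
from typing import Dict, List

Grid = List[List[int]]


def boundary_mask(labels: Grid) -> List[List[bool]]:
    """Mark pixels that have at least one 4-connected neighbour of another class."""
    h, w = len(labels), len(labels[0])
    mask = [[False] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                rr, cc = r + dr, c + dc
                if 0 <= rr < h and 0 <= cc < w and labels[rr][cc] != labels[r][c]:
                    mask[r][c] = True
                    break
    return mask


def accuracy_by_region(reference: Grid, predicted: Grid) -> Dict[str, float]:
    """Pixel accuracy computed separately for boundary and interior pixels."""
    mask = boundary_mask(reference)
    hits = {"boundary": 0, "interior": 0}
    totals = {"boundary": 0, "interior": 0}
    for r, row in enumerate(reference):
        for c, ref in enumerate(row):
            key = "boundary" if mask[r][c] else "interior"
            totals[key] += 1
            hits[key] += int(predicted[r][c] == ref)
    return {k: (hits[k] / totals[k] if totals[k] else float("nan")) for k in totals}


if __name__ == "__main__":
    # Toy 4x4 maps with two classes split down the middle, purely illustrative.
    reference = [[0, 0, 1, 1]] * 4
    predicted = [[0, 0, 0, 1], [0, 0, 1, 1], [0, 1, 1, 1], [0, 0, 1, 1]]
    print(accuracy_by_region(reference, predicted))
```

A large gap between the two scores would indicate that a model's remaining errors are mostly boundary errors, which is the situation the studies above describe.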

- Generating Precise Land Cover Maps

Accurate and timely high-resolution land cover maps are in high demand and are of immediate importance to various sectors and communities [125]. The creation of precise land cover maps holds significant value for subsequent applications. These applications encompass a diverse range of tasks, including vehicle detection [105], the extraction of building footprints [138], building segmentation [32], road extraction, surface classification [112], determining optimal seamlines for orthoimage mosaicking within settlements [139], and land consumption [140]. Such maps offer valuable assistance in monitoring and reporting data within rapidly changing urban regions. However, it has been reported that generating accurate land cover maps, and automating their generation, still presents a formidable challenge [141,142].

4.2. Semantic Segmentation Methodologies

The key challenges of semantic segmentation methodologies in land cover mapping, as identified in the reviewed articles, include enhancing the performance of semantic segmentation models, improving the analysis of RS images using these models, and addressing imbalanced and unlabeled RS data.

- Enhancing deep learning model performance

Improving deep learning semantic segmentation architectures is a notable research gap in land cover mapping. This gap is characterized by the ongoing need to advance the capabilities of these models to address complex challenges arising in land cover mapping, such as improving performance on natural images and convergence speed. Various studies have critically compared the accuracy and effectiveness of models on both natural images and RS imagery, aiming to enhance their generalization performance [10] and convergence speed. Moreover, multiple studies, such as [143], have modified semantic segmentation models to improve generalization performance for land cover mapping.

- Analysis of RS images

Extracting information from RS imagery remains a challenge. Several factors contribute to inaccurate RS classification: the complexity of deciphering intricate spatial and spectral patterns within RS imagery [13,125], the diverse distributions of ground objects with variations within the same class [26], and significant intra-class and limited inter-class pixel differences [144,145,146]. Other contributing factors include data complexity, geographical time difference [147], foggy conditions [148], and data acquisition errors [141]. Moreover, several studies have addressed very-high-resolution RS images, whereas only a few have focused on low- and medium-resolution images [68,75,114,149]. As a future direction, we recommend more research using fast and efficient DL methods for low- and medium-resolution RS data.

Another area is the analysis of SAR images. SAR images are extremely important for many applications, especially in agriculture. However, classifying SAR data is very challenging, and its segmentation remains poorly understood [84]. Some studies have undertaken land cover classification and segmentation tasks across diverse categories of SAR data [86], including polarimetric SAR imagery [77,84,85], single-polarization SAR images [87], and multi-temporal SAR data [81]. We recommend investigating a roadmap for simplified and automated semantic segmentation of SAR images.

In LiDAR data analysis, ref. [89] pioneered novel deep learning architectures designed specifically for land cover classification and segmentation, which were extensively validated using airborne LiDAR data. Additionally, ref. [150] developed DL models that synergistically leverage airborne LiDAR data and high-resolution RS Imagery to achieve enhanced generalization performance. Beyond RS Imagery and LiDAR, semantic segmentation is applied to high-resolution SAR images [79,83] and aerial images of high resolution [2,151].

- Unlabeled and Imbalanced RS Data

A large majority of RS images lack high-quality labels or are largely unlabeled [152], and weak or missing annotations reduce model performance. Obtaining well-annotated RS data is expensive, laborious, and highly time-consuming. This hampers the generation of accurate land use maps and, in turn, of precise land cover maps. To mitigate this challenge, domain adaptation techniques [153] and DL models can be explored to facilitate the creation of accurate land cover maps [154]. Another suggested approach for addressing the shortage of well-annotated data is to use networks that can be trained with labels derived from lower-resolution land cover data [155]. Furthermore, it is advisable to harness multi-modal RS data, as its potential to enhance model performance, particularly when training samples are limited, has not been fully realized [156]. To overcome the laborious and time-consuming process of manually labeling data, studies such as [148,157] introduced DL models that address this issue. The authors of [135] reported the effectiveness of semi-supervised adversarial learning methods for handling limited and unannotated high-resolution satellite images. Furthermore, the investigations in [39,149,158,159] sought remedies for the scarcity of well-annotated pixel-level data, while other studies proposed steps for tackling class imbalance [64,154,160].

Class and sample imbalance in RS images, together with limited annotation, degrades model performance, especially on minority classes [161,162].
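One widely used way to counter class imbalance, offered here as an illustrative assumption rather than the specific remedy of any cited study, is to weight the training loss so that rare classes count more. The sketch below derives per-class weights as the median class frequency divided by each class's frequency, a simplified variant of median-frequency balancing that uses global pixel frequencies. The function name `median_frequency_weights` is hypothetical.

```python
from collections import Counter
from statistics import median
from typing import Dict, Iterable


def median_frequency_weights(labels: Iterable[int]) -> Dict[int, float]:
    """Return a loss weight per class: median class frequency / class frequency.

    Classes rarer than the median receive weights above 1, and more common
    classes receive weights below 1. (Simplified, illustrative variant that
    uses global frequencies over all supplied labels.)
    """
    counts = Counter(labels)
    total = sum(counts.values())
    freqs = {c: n / total for c, n in counts.items()}
    med = median(freqs.values())
    return {c: med / f for c, f in freqs.items()}


if __name__ == "__main__":
    # Toy flattened label vector: class 0 dominates, class 2 is rare.
    pixel_labels = [0] * 6 + [1] * 3 + [2] * 1
    print(median_frequency_weights(pixel_labels))
```

The resulting weights would typically be passed to a weighted cross-entropy loss, so that misclassifying a minority-class pixel costs more than misclassifying a majority-class pixel.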

5. Conclusions

This study conducted an analysis of emerging patterns, performance, applications, and data sources related to semantic segmentation in land cover mapping. The objective is to identify knowledge gaps within this domain and offer readers a roadmap and detailed insights into semantic segmentation for land use/land cover mapping. Employing the PRISMA methodology, a comprehensive review is undertaken to address predefined research questions.

The results reveal a substantial increase in publications between 2020 and 2023, with 81% appearing in the top 13 journals. These studies originate from diverse global institutions, with over 59% attributed to Chinese institutions, followed by the USA and India. Research focuses primarily on land cover, urban areas, precision agriculture, environment, coastal areas, and forests, particularly in tasks such as land use change detection, land cover classification, and segmentation of forests, buildings, roads, agriculture, and urban areas. RS satellites, UAV and UAS, mobile phones, Google Earth, SAR, and LiDAR are the major data sources, with Sentinel-2, Sentinel-1, and Landsat satellites being the most utilized. Many studies use publicly available benchmark datasets for semantic segmentation model evaluation, with ISPRS Vaihingen and Potsdam being the most widely employed, followed by the GID, Landcover.ai, DeepGlobe, and WHDLD datasets.

In terms of semantic segmentation models, three primary architectural structures are identified: encoder-decoder structures, transformer structures, and hybrid structures. Based on our research, all three model structures demonstrate effective performance in land cover mapping. Hybrid and encoder-decoder structures are the most popular due to their impressive generalization capabilities and speed. Transformer-based structures show good generalization but slower convergence. Current research directions and expanding frontiers in land cover mapping emphasize the introduction and implementation of innovative semantic segmentation techniques for satellite imagery in RS. Furthermore, the key research gaps identified include the need to enhance model accuracy on RS data, improve existing model architectures, extract precise boundaries in land cover maps, address the scarcity of well-labeled datasets, and tackle challenges associated with low- and medium-resolution RS data. This study provides useful domain-specific information. However, there are some threats to the validity of the review, including database choice, search keywords, and classification bias.

Author Contributions

Conceptualization, S.A.; methodology, S.A.; software, S.A.; validation, S.A., and P.C.; investigation, S.A.; data curation, S.A.; writing—original draft preparation, S.A.; writing—review and editing, S.A. and P.C.; visualization, S.A.; supervision, P.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the European Union’s Horizon 2020 research and innovation program under the Marie Sklodowska-Curie-RISE, Project SUSTAINABLE (https://www.projectsustainable.eu) with grant number 101007702. This work was partially supported by national funds through FCT (Fundação para a Ciência e a Tecnologia), under the project UIDB/04152/2020 (DOI:10.54499/UIDB/04152/2020), Centro de Investigação em Gestão de Informação (MagIC)/NOVA IMS.

Data Availability Statement

Data sharing is not applicable to this article.

Acknowledgments

The authors are grateful to the editors and anonymous reviewers for their informative suggestions.

Conflicts of Interest

The author Segun Ajibola was employed by Afridat UG. The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| BANet | Bilateral Awareness Network |
| CNN | Convolutional Neural Networks |
| DCNN | Deep Convolutional Neural Network |
| DEANET | Dual Encoder with Attention Network |
| DGFNET | Dual-Gate Fusion Network |
| DL | Deep Learning |
| DSM | Digital Surface Model |
| FCN | Fully Convolutional Networks |
| GF-2 | GaoFen-2 |
| GF-3 | GaoFen-3 |
| GID | GaoFen Image Data |
| HFENet | Hierarchical Feature Extraction Network |
| HMRT | Hybrid Multi-resolution and Transformer semantic extraction Network |
| IEEE | Institute of Electrical and Electronics Engineers |
| IoU | Intersection over Union |
| ISPRS | International Society for Photogrammetry and Remote Sensing |
| LC | Land Cover |
| LiDAR | Light Detection and Ranging |
| LoveDA | Land-cOVEr Domain Adaptive |
| LULC | Land Use and Land Cover |
| MARE | Multi-Attention REsu-Net |
| MDPI | Multidisciplinary Digital Publishing Institute |
| MIoU | Mean Intersection over Union |
| NLP | Natural Language Processing |
| OA | Overall Accuracy |
| PolSAR | Polarimetric Synthetic Aperture Radar |
| RAANET | Residual ASPP with Attention Net |
| RQ | Research Question |
| RS | Remote Sensing |
| RSI | Remote Sensing Imagery |
| SAR | Synthetic Aperture Radar |
| SBANet | Semantic Boundary Awareness Network |
| SEG-ESRGAN | Segmentation Enhanced Super-Resolution Generative Adversarial Network |
| SOCNN | Superpixel-Optimized convolutional neural network |
| SOTA | State-Of-The-Art |
| UAS | Unmanned Aircraft System |
| UAV | Unmanned Aerial Vehicle |
| VEDAI | VEhicle Detection in Aerial Imagery |
| WHDLD | Wuhan Dense Labeling Dataset |

References

- Vali, A.; Comai, S.; Matteucci, M. Deep Learning for Land Use and Land Cover Classification Based on Hyperspectral and Multispectral Earth Observation Data: A Review. Remote Sens. 2020, 12, 2495.

- Ma, J.; Wu, L.; Tang, X.; Liu, F.; Zhang, X.; Jiao, L. Building Extraction of Aerial Images by a Global and Multi-Scale Encoder-Decoder Network. Remote Sens. 2020, 12, 2350.

- Pourmohammadi, P.; Adjeroh, D.A.; Strager, M.P.; Farid, Y.Z. Predicting Developed Land Expansion Using Deep Convolutional Neural Networks. Environ. Model. Softw. 2020, 134, 104751.

- Di Pilato, A.; Taggio, N.; Pompili, A.; Iacobellis, M.; Di Florio, A.; Passarelli, D.; Samarelli, S. Deep Learning Approaches to Earth Observation Change Detection. Remote Sens. 2021, 13, 4083.

- Wei, P.; Chai, D.; Lin, T.; Tang, C.; Du, M.; Huang, J. Large-Scale Rice Mapping under Different Years Based on Time-Series Sentinel-1 Images Using Deep Semantic Segmentation Model. ISPRS J. Photogramm. Remote Sens. 2021, 174, 198–214.

- Dal Molin Jr., R.; Rizzoli, P. Potential of Convolutional Neural Networks for Forest Mapping Using Sentinel-1 Interferometric Short Time Series. Remote Sens. 2022, 14, 1381.

- Sun, Y.; Zhang, X.; Huang, J.; Wang, H.; Xin, Q. Fine-Grained Building Change Detection from Very High-Spatial-Resolution Remote Sensing Images Based on Deep Multitask Learning. IEEE Geosci. Remote Sens. Lett. 2022, 19, 8000605.

- Trenčanová, B.; Proença, V.; Bernardino, A. Development of Semantic Maps of Vegetation Cover from UAV Images to Support Planning and Management in Fine-Grained Fire-Prone Landscapes. Remote Sens. 2022, 14, 1262.

- Zhang, X.; Wang, Z.; Zhang, J.; Wei, A. MSANet: An Improved Semantic Segmentation Method Using Multi-Scale Attention for Remote Sensing Images. Remote Sens. Lett. 2022, 13, 1249–1259.

- Scepanovic, S.; Antropov, O.; Laurila, P.; Rauste, Y.; Ignatenko, V.; Praks, J. Wide-Area Land Cover Mapping with Sentinel-1 Imagery Using Deep Learning Semantic Segmentation Models. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 10357–10374.

- Guo, Y.; Liu, Y.; Oerlemans, A.; Lao, S.; Wu, S.; Lew, M.S. Deep Learning for Visual Understanding: A Review. Neurocomputing 2016, 187, 27–48.

- Huang, J.; Weng, L.; Chen, B.; Xia, M. DFFAN: Dual Function Feature Aggregation Network for Semantic Segmentation of Land Cover. ISPRS Int. J. Geoinf. 2021, 10, 125.

- Chen, S.; Wu, C.; Mukherjee, M.; Zheng, Y. Ha-Mppnet: Height Aware-Multi Path Parallel Network for High Spatial Resolution Remote Sensing Image Semantic Segmentation. ISPRS Int. J. Geoinf. 2021, 10, 672.

- Hao, S.; Zhou, Y.; Guo, Y. A Brief Survey on Semantic Segmentation with Deep Learning. Neurocomputing 2020, 406, 302–321.

- Chen, G.; Zhang, X.; Wang, Q.; Dai, F.; Gong, Y.; Zhu, K. Symmetrical Dense-Shortcut Deep Fully Convolutional Networks for Semantic Segmentation of Very-High-Resolution Remote Sensing Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 1633–1644.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), Munich, Germany, 5–9 October 2015; Volume 9351.

- Chen, B.; Xia, M.; Huang, J. Mfanet: A Multi-Level Feature Aggregation Network for Semantic Segmentation of Land Cover. Remote Sens. 2021, 13, 731.

- Weng, L.; Pang, K.; Xia, M.; Lin, H.; Qian, M.; Zhu, C. Sgformer: A Local and Global Features Coupling Network for Semantic Segmentation of Land Cover. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 6812–6824.

- Wang, L.; Li, R.; Zhang, C.; Fang, S.; Duan, C.; Meng, X.; Atkinson, P.M. UNetFormer: A UNet-like Transformer for Efficient Semantic Segmentation of Remote Sensing Urban Scene Imagery. ISPRS J. Photogramm. Remote Sens. 2022, 190, 196–214.

- Xiao, D.; Kang, Z.; Fu, Y.; Li, Z.; Ran, M. Csswin-Unet: A Swin-Unet Network for Semantic Segmentation of Remote Sensing Images by Aggregating Contextual Information and Extracting Spatial Information. Int. J. Remote Sens. 2023, 44, 7598–7625.

- Garcia-Garcia, A.; Orts-Escolano, S.; Oprea, S.; Villena-Martinez, V.; Martinez-Gonzalez, P.; Garcia-Rodriguez, J. A Survey on Deep Learning Techniques for Image and Video Semantic Segmentation. Appl. Soft Comput. J. 2018, 70, 41–65.

- Lateef, F.; Ruichek, Y. Survey on Semantic Segmentation Using Deep Learning Techniques. Neurocomputing 2019, 338, 321–348.

- Yuan, X.; Shi, J.; Gu, L. A Review of Deep Learning Methods for Semantic Segmentation of Remote Sensing Imagery. Expert. Syst. Appl. 2021, 169, 114417.

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 Statement: An Updated Guideline for Reporting Systematic Reviews. BMJ 2021, 372, n71.

- Manley, K.; Nyelele, C.; Egoh, B.N. A Review of Machine Learning and Big Data Applications in Addressing Ecosystem Service Research Gaps. Ecosyst. Serv. 2022, 57, 101478.

- Tian, T.; Chu, Z.; Hu, Q.; Ma, L. Class-Wise Fully Convolutional Network for Semantic Segmentation of Remote Sensing Images. Remote Sens. 2021, 13, 3211.

- Wan, L.; Tian, Y.; Kang, W.; Ma, L. D-TNet: Category-Awareness Based Difference-Threshold Alternative Learning Network for Remote Sensing Image Change Detection. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5633316.

- Picon, A.; Bereciartua-Perez, A.; Eguskiza, I.; Romero-Rodriguez, J.; Jimenez-Ruiz, C.J.; Eggers, T.; Klukas, C.; Navarra-Mestre, R. Deep Convolutional Neural Network for Damaged Vegetation Segmentation from RGB Images Based on Virtual NIR-Channel Estimation. Artif. Intell. Agric. 2022, 6, 199–210.

- Zhang, Z.; Huang, X.; Li, J. DWin-HRFormer: A High-Resolution Transformer Model With Directional Windows for Semantic Segmentation of Urban Construction Land. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5400714.

- Wang, L.; Li, R.; Wang, D.; Duan, C.; Wang, T.; Meng, X. Transformer Meets Convolution: A Bilateral Awareness Network for Semantic Segmentation of Very Fine Resolution Urban Scene Images. Remote Sens. 2021, 13, 3065.

- Akcay, O.; Kinaci, A.C.; Avsar, E.O.; Aydar, U. Semantic Segmentation of High-Resolution Airborne Images with Dual-Stream DeepLabV3+. ISPRS Int. J. Geoinf. 2022, 11, 23.

- Sun, Z.; Zhou, W.; Ding, C.; Xia, M. Multi-Resolution Transformer Network for Building and Road Segmentation of Remote Sensing Image. ISPRS Int. J. Geoinf. 2022, 11, 165.

- Chen, T.-H.K.; Qiu, C.; Schmitt, M.; Zhu, X.X.; Sabel, C.E.; Prishchepov, A.V. Mapping Horizontal and Vertical Urban Densification in Denmark with Landsat Time-Series from 1985 to 2018: A Semantic Segmentation Solution. Remote Sens. Environ. 2020, 251, 112096.

- Wu, F.; Wang, C.; Zhang, H.; Li, J.; Li, L.; Chen, W.; Zhang, B. Built-up Area Mapping in China from GF-3 SAR Imagery Based on the Framework of Deep Learning. Remote Sens. Environ. 2021, 262, 112515.

- Xu, S.; Zhang, S.; Zeng, J.; Li, T.; Guo, Q.; Jin, S. A Framework for Land Use Scenes Classification Based on Landscape Photos. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 6124–6141.

- Xu, L.; Shi, S.; Liu, Y.; Zhang, H.; Wang, D.; Zhang, L.; Liang, W.; Chen, H. A Large-Scale Remote Sensing Scene Dataset Construction for Semantic Segmentation. Int. J. Image Data Fusion 2023, 14, 299–323.

- Sirous, A.; Satari, M.; Shahraki, M.M.; Pashayi, M. A Conditional Generative Adversarial Network for Urban Area Classification Using Multi-Source Data. Earth Sci. Inf. 2023, 16, 2529–2543.

- Vasavi, S.; Sri Somagani, H.; Sai, Y. Classification of Buildings from VHR Satellite Images Using Ensemble of U-Net and ResNet. Egypt. J. Remote Sens. Space Sci. 2023, 26, 937–953.

- Kang, J.; Fernandez-Beltran, R.; Sun, X.; Ni, J.; Plaza, A. Deep Learning-Based Building Footprint Extraction with Missing Annotations. IEEE Geosci. Remote Sens. Lett. 2022, 19, 3002805.

- Yu, J.; Zeng, P.; Yu, Y.; Yu, H.; Huang, L.; Zhou, D. A Combined Convolutional Neural Network for Urban Land-Use Classification with GIS Data. Remote Sens. 2022, 14, 1128.

- Wei, P.; Chai, D.; Huang, R.; Peng, D.; Lin, T.; Sha, J.; Sun, W.; Huang, J. Rice Mapping Based on Sentinel-1 Images Using the Coupling of Prior Knowledge and Deep Semantic Segmentation Network: A Case Study in Northeast China from 2019 to 2021. Int. J. Appl. Earth Obs. Geoinf. 2022, 112, 102948.

- Liu, S.; Peng, D.; Zhang, B.; Chen, Z.; Yu, L.; Chen, J.; Pan, Y.; Zheng, S.; Hu, J.; Lou, Z.; et al. The Accuracy of Winter Wheat Identification at Different Growth Stages Using Remote Sensing. Remote Sens. 2022, 14, 893.

- Bem, P.P.D.; de Carvalho Júnior, O.A.; Carvalho, O.L.F.D.; Gomes, R.A.T.; Guimarães, R.F.; Pimentel, C.M.M. Irrigated Rice Crop Identification in Southern Brazil Using Convolutional Neural Networks and Sentinel-1 Time Series. Remote Sens. Appl. 2021, 24, 100627.

- Niu, B.; Feng, Q.; Su, S.; Yang, Z.; Zhang, S.; Liu, S.; Wang, J.; Yang, J.; Gong, J. Semantic Segmentation for Plastic-Covered Greenhouses and Plastic-Mulched Farmlands from VHR Imagery. Int. J. Digit. Earth 2023, 16, 4553–4572. [Google Scholar] [CrossRef]

- Sykas, D.; Sdraka, M.; Zografakis, D.; Papoutsis, I. A Sentinel-2 Multiyear, Multicountry Benchmark Dataset for Crop Classification and Segmentation With Deep Learning. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 3323–3339. [Google Scholar] [CrossRef]

- Descals, A.; Wich, S.; Meijaard, E.; Gaveau, D.L.A.; Peedell, S.; Szantoi, Z. High-Resolution Global Map of Smallholder and Industrial Closed-Canopy Oil Palm Plantations. Earth Syst. Sci. Data 2021, 13, 1211–1231. [Google Scholar] [CrossRef]

- He, J.; Lyu, D.; He, L.; Zhang, Y.; Xu, X.; Yi, H.; Tian, Q.; Liu, B.; Zhang, X. Combining Object-Oriented and Deep Learning Methods to Estimate Photosynthetic and Non-Photosynthetic Vegetation Cover in the Desert from Unmanned Aerial Vehicle Images with Consideration of Shadows. Remote Sens. 2023, 15, 105. [Google Scholar] [CrossRef]

- Wan, L.; Li, S.; Chen, Y.; He, Z.; Shi, Y. Application of Deep Learning in Land Use Classification for Soil Erosion Using Remote Sensing. Front. Earth Sci. 2022, 10, 849531. [Google Scholar] [CrossRef]

- Cho, A.Y.; Park, S.-E.; Kim, D.-J.; Kim, J.; Li, C.; Song, J. Burned Area Mapping Using Unitemporal PlanetScope Imagery With a Deep Learning Based Approach. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 242–253.

- Bergado, J.R.; Persello, C.; Reinke, K.; Stein, A. Predicting Wildfire Burns from Big Geodata Using Deep Learning. Saf. Sci. 2021, 140, 105276.

- Wang, Z.; Yang, P.; Liang, H.; Zheng, C.; Yin, J.; Tian, Y.; Cui, W. Semantic Segmentation and Analysis on Sensitive Parameters of Forest Fire Smoke Using Smoke-Unet and Landsat-8 Imagery. Remote Sens. 2022, 14, 45.

- Liu, C.-C.; Zhang, Y.-C.; Chen, P.-Y.; Lai, C.-C.; Chen, Y.-H.; Cheng, J.-H.; Ko, M.-H. Clouds Classification from Sentinel-2 Imagery with Deep Residual Learning and Semantic Image Segmentation. Remote Sens. 2019, 11, 119.

- Ji, W.; Chen, Y.; Li, K.; Dai, X. Multicascaded Feature Fusion-Based Deep Learning Network for Local Climate Zone Classification Based on the So2Sat LCZ42 Benchmark Dataset. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 449–467.

- Ayhan, B.; Kwan, C. Tree, Shrub, and Grass Classification Using Only RGB Images. Remote Sens. 2020, 12, 1333.

- Maxwell, A.E.; Bester, M.S.; Guillen, L.A.; Ramezan, C.A.; Carpinello, D.J.; Fan, Y.; Hartley, F.M.; Maynard, S.M.; Pyron, J.L. Semantic Segmentation Deep Learning for Extracting Surface Mine Extents from Historic Topographic Maps. Remote Sens. 2020, 12, 4145.

- Zhou, G.; Xu, J.; Chen, W.; Li, X.; Li, J.; Wang, L. Deep Feature Enhancement Method for Land Cover With Irregular and Sparse Spatial Distribution Features: A Case Study on Open-Pit Mining. IEEE Trans. Geosci. Remote Sens. 2023, 61, 4401220.

- Lee, S.-H.; Han, K.-J.; Lee, K.; Lee, K.-J.; Oh, K.-Y.; Lee, M.-J. Classification of Landscape Affected by Deforestation Using High-resolution Remote Sensing Data and Deep-learning Techniques. Remote Sens. 2020, 12, 3372.

- Yu, T.; Wu, W.; Gong, C.; Li, X. Residual Multi-Attention Classification Network for a Forest Dominated Tropical Landscape Using High-Resolution Remote Sensing Imagery. ISPRS Int. J. Geoinf. 2021, 10, 22.

- Pashaei, M.; Kamangir, H.; Starek, M.J.; Tissot, P. Review and Evaluation of Deep Learning Architectures for Efficient Land Cover Mapping with UAS Hyper-Spatial Imagery: A Case Study over a Wetland. Remote Sens. 2020, 12, 959.

- Fang, B.; Chen, G.; Chen, J.; Ouyang, G.; Kou, R.; Wang, L. CCT: Conditional Co-Training for Truly Unsupervised Remote Sensing Image Segmentation in Coastal Areas. Remote Sens. 2021, 13, 3521.

- Buchsteiner, C.; Baur, P.A.; Glatzel, S. Spatial Analysis of Intra-Annual Reed Ecosystem Dynamics at Lake Neusiedl Using RGB Drone Imagery and Deep Learning. Remote Sens. 2023, 15, 3961.

- Wang, Z.; Mahmoudian, N. Aerial Fluvial Image Dataset for Deep Semantic Segmentation Neural Networks and Its Benchmarks. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 4755–4766.

- Chen, J.; Chen, G.; Wang, L.; Fang, B.; Zhou, P.; Zhu, M. Coastal Land Cover Classification of High-Resolution Remote Sensing Images Using Attention-Driven Context Encoding Network. Sensors 2020, 20, 7032.

- Li, Y.; Zhou, Y.; Zhang, Y.; Zhong, L.; Wang, J.; Chen, J. DKDFN: Domain Knowledge-Guided Deep Collaborative Fusion Network for Multimodal Unitemporal Remote Sensing Land Cover Classification. ISPRS J. Photogramm. Remote Sens. 2022, 186, 170–189.

- Tzepkenlis, A.; Marthoglou, K.; Grammalidis, N. Efficient Deep Semantic Segmentation for Land Cover Classification Using Sentinel Imagery. Remote Sens. 2023, 15, 2027.

- Billson, J.; Islam, M.D.S.; Sun, X.; Cheng, I. Water Body Extraction from Sentinel-2 Imagery with Deep Convolutional Networks and Pixelwise Category Transplantation. Remote Sens. 2023, 15, 1253.

- Bergamasco, L.; Bovolo, F.; Bruzzone, L. A Dual-Branch Deep Learning Architecture for Multisensor and Multitemporal Remote Sensing Semantic Segmentation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 2147–2162.

- Yang, X.; Zhang, B.; Chen, Z.; Bai, Y.; Chen, P. A Multi-Temporal Network for Improving Semantic Segmentation of Large-Scale Landsat Imagery. Remote Sens. 2022, 14, 5062.

- Yang, X.; Chen, Z.; Zhang, B.; Li, B.; Bai, Y.; Chen, P. A Block Shuffle Network with Superpixel Optimization for Landsat Image Semantic Segmentation. Remote Sens. 2022, 14, 1432.

- Boonpook, W.; Tan, Y.; Nardkulpat, A.; Torsri, K.; Torteeka, P.; Kamsing, P.; Sawangwit, U.; Pena, J.; Jainaen, M. Deep Learning Semantic Segmentation for Land Use and Land Cover Types Using Landsat 8 Imagery. ISPRS Int. J. Geoinf. 2023, 12, 14.

- Bergado, J.R.; Persello, C.; Stein, A. Recurrent Multiresolution Convolutional Networks for VHR Image Classification. IEEE Trans. Geosci. Remote Sens. 2018, 56, 6361–6374.

- Karila, K.; Matikainen, L.; Karjalainen, M.; Puttonen, E.; Chen, Y.; Hyyppä, J. Automatic Labelling for Semantic Segmentation of VHR Satellite Images: Application of Airborne Laser Scanner Data and Object-Based Image Analysis. ISPRS Open J. Photogramm. Remote Sens. 2023, 9, 100046.

- Zhang, X.; Du, L.; Tan, S.; Wu, F.; Zhu, L.; Zeng, Y.; Wu, B. Land Use and Land Cover Mapping Using RapidEye Imagery Based on a Novel Band Attention Deep Learning Method in the Three Gorges Reservoir Area. Remote Sens. 2021, 13, 1225.

- Zhu, Y.; Geis, C.; So, E.; Jin, Y. Multitemporal Relearning with Convolutional LSTM Models for Land Use Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 3251–3265.

- Fan, Z.; Zhan, T.; Gao, Z.; Li, R.; Liu, Y.; Zhang, L.; Jin, Z.; Xu, S. Land Cover Classification of Resources Survey Remote Sensing Images Based on Segmentation Model. IEEE Access 2022, 10, 56267–56281.

- Clark, A.; Phinn, S.; Scarth, P. Pre-Processing Training Data Improves Accuracy and Generalisability of Convolutional Neural Network Based Landscape Semantic Segmentation. Land 2023, 12, 1268.

- Mohammadimanesh, F.; Salehi, B.; Mahdianpari, M.; Gill, E.; Molinier, M. A New Fully Convolutional Neural Network for Semantic Segmentation of Polarimetric SAR Imagery in Complex Land Cover Ecosystem. ISPRS J. Photogramm. Remote Sens. 2019, 151, 223–236.

- Wenger, R.; Puissant, A.; Weber, J.; Idoumghar, L.; Forestier, G. Multimodal and Multitemporal Land Use/Land Cover Semantic Segmentation on Sentinel-1 and Sentinel-2 Imagery: An Application on a MultiSenGE Dataset. Remote Sens. 2023, 15, 151.

- Xia, J.; Yokoya, N.; Adriano, B.; Zhang, L.; Li, G.; Wang, Z. A Benchmark High-Resolution GaoFen-3 SAR Dataset for Building Semantic Segmentation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 5950–5963.

- Kotru, R.; Turkar, V.; Simu, S.; De, S.; Shaikh, M.; Banerjee, S.; Singh, G.; Das, A. Development of a Generalized Model to Classify Various Land Covers for ALOS-2 L-Band Images Using Semantic Segmentation. Adv. Space Res. 2022, 70, 3811–3821.

- Mehra, A.; Jain, N.; Srivastava, H.S. A Novel Approach to Use Semantic Segmentation Based Deep Learning Networks to Classify Multi-Temporal SAR Data. Geocarto Int. 2022, 37, 163–178.

- Pešek, O.; Segal-Rozenhaimer, M.; Karnieli, A. Using Convolutional Neural Networks for Cloud Detection on VENμS Images over Multiple Land-Cover Types. Remote Sens. 2022, 14, 5210.

- Jing, H.; Wang, Z.; Sun, X.; Xiao, D.; Fu, K. PSRN: Polarimetric Space Reconstruction Network for PolSAR Image Semantic Segmentation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 10716–10732.

- Zhang, R.; Chen, J.; Feng, L.; Li, S.; Yang, W.; Guo, D. A Refined Pyramid Scene Parsing Network for Polarimetric SAR Image Semantic Segmentation in Agricultural Areas. IEEE Geosci. Remote Sens. Lett. 2022, 19, 4014805.

- Garg, R.; Kumar, A.; Bansal, N.; Prateek, M.; Kumar, S. Semantic Segmentation of PolSAR Image Data Using Advanced Deep Learning Model. Sci. Rep. 2021, 11, 15365.

- Zheng, N.-R.; Yang, Z.-A.; Shi, X.-Z.; Zhou, R.-Y.; Wang, F. Land Cover Classification of Synthetic Aperture Radar Images Based on Encoder–Decoder Network with an Attention Mechanism. J. Appl. Remote Sens. 2022, 16, 014520.

- Shi, X.; Fu, S.; Chen, J.; Wang, F.; Xu, F. Object-Level Semantic Segmentation on the High-Resolution Gaofen-3 FUSAR-Map Dataset. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 3107–3119.

- Yoshida, K.; Pan, S.; Taniguchi, J.; Nishiyama, S.; Kojima, T.; Islam, M.T. Airborne LiDAR-Assisted Deep Learning Methodology for Riparian Land Cover Classification Using Aerial Photographs and Its Application for Flood Modelling. J. Hydroinformatics 2022, 24, 179–201.

- Arief, H.A.; Strand, G.-H.; Tveite, H.; Indahl, U.G. Land Cover Segmentation of Airborne LiDAR Data Using Stochastic Atrous Network. Remote Sens. 2018, 10, 973.

- Xu, Z.; Su, C.; Zhang, X. A Semantic Segmentation Method with Category Boundary for Land Use and Land Cover (LULC) Mapping of Very-High Resolution (VHR) Remote Sensing Image. Int. J. Remote Sens. 2021, 42, 3146–3165.

- Liu, M.; Zhang, P.; Shi, Q.; Liu, M. An Adversarial Domain Adaptation Framework with KL-Constraint for Remote Sensing Land Cover Classification. IEEE Geosci. Remote Sens. Lett. 2022, 19, 3002305.

- Lee, D.G.; Shin, Y.H.; Lee, D.C. Land Cover Classification Using SegNet with Slope, Aspect, and Multidirectional Shaded Relief Images Derived from Digital Surface Model. J. Sens. 2020, 2020, 8825509.

- Wang, Y.; Shi, H.; Zhuang, Y.; Sang, Q.; Chen, L. Bidirectional Grid Fusion Network for Accurate Land Cover Classification of High-Resolution Remote Sensing Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 5508–5517.

- Shi, H.; Fan, J.; Wang, Y.; Chen, L. Dual Attention Feature Fusion and Adaptive Context for Accurate Segmentation of Very High-Resolution Remote Sensing Images. Remote Sens. 2021, 13, 3715.

- He, S.; Lu, X.; Gu, J.; Tang, H.; Yu, Q.; Liu, K.; Ding, H.; Chang, C.; Wang, N. RSI-Net: Two-Stream Deep Neural Network for Remote Sensing Images-Based Semantic Segmentation. IEEE Access 2022, 10, 34858–34871.

- Yang, N.; Tang, H. Semantic Segmentation of Satellite Images: A Deep Learning Approach Integrated with Geospatial Hash Codes. Remote Sens. 2021, 13, 2723.

- Boguszewski, A.; Batorski, D.; Ziemba-Jankowska, N.; Dziedzic, T.; Zambrzycka, A. LandCover.ai: Dataset for Automatic Mapping of Buildings, Woodlands, Water and Roads from Aerial Imagery. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, Nashville, TN, USA, 20–25 June 2021.

- Gao, J.; Weng, L.; Xia, M.; Lin, H. MLNet: Multichannel Feature Fusion Lozenge Network for Land Segmentation. J. Appl. Remote Sens. 2022, 16, 016513.

- Demir, I.; Koperski, K.; Lindenbaum, D.; Pang, G.; Huang, J.; Basu, S.; Hughes, F.; Tuia, D.; Raskar, R. DeepGlobe 2018: A Challenge to Parse the Earth through Satellite Images. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–23 June 2018; Volume 2018.

- Wei, H.; Xu, X.; Ou, N.; Zhang, X.; Dai, Y. DEANet: Dual Encoder with Attention Network for Semantic Segmentation of Remote Sensing Imagery. Remote Sens. 2021, 13, 3900.

- Maggiori, E.; Tarabalka, Y.; Charpiat, G.; Alliez, P. Can Semantic Labeling Methods Generalize to Any City? The Inria Aerial Image Labeling Benchmark. In Proceedings of the International Geoscience and Remote Sensing Symposium (IGARSS), Fort Worth, TX, USA, 23–28 July 2017; Volume 2017.

- Li, W.; He, C.; Fang, J.; Zheng, J.; Fu, H.; Yu, L. Semantic Segmentation-Based Building Footprint Extraction Using Very High-Resolution Satellite Images and Multi-Source GIS Data. Remote Sens. 2019, 11, 403.

- Ji, S.; Wang, D.; Luo, M. Generative Adversarial Network-Based Full-Space Domain Adaptation for Land Cover Classification from Multiple-Source Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2021, 59, 3816–3828.

- Chen, Q.; Wang, L.; Wu, Y.; Wu, G.; Guo, Z.; Waslander, S.L. Aerial Imagery for Roof Segmentation: A Large-Scale Dataset towards Automatic Mapping of Buildings. ISPRS J. Photogramm. Remote Sens. 2019, 147, 42–55.

- Audebert, N.; Le Saux, B.; Lefèvre, S. Segment-before-Detect: Vehicle Detection and Classification through Semantic Segmentation of Aerial Images. Remote Sens. 2017, 9, 368.

- Abdollahi, A.; Pradhan, B.; Shukla, N.; Chakraborty, S.; Alamri, A. Multi-Object Segmentation in Complex Urban Scenes from High-Resolution Remote Sensing Data. Remote Sens. 2021, 13, 3710.

- Khan, S.D.; Alarabi, L.; Basalamah, S. Deep Hybrid Network for Land Cover Semantic Segmentation in High-Spatial Resolution Satellite Images. Information 2021, 12, 230.

- Liu, R.; Tao, F.; Liu, X.; Na, J.; Leng, H.; Wu, J.; Zhou, T. RAANet: A Residual ASPP with Attention Framework for Semantic Segmentation of High-Resolution Remote Sensing Images. Remote Sens. 2022, 14, 3109.