An Enhanced Feature Extraction Framework for Cross-Modal Image–Text Retrieval

Abstract

1. Introduction

2. Methodology

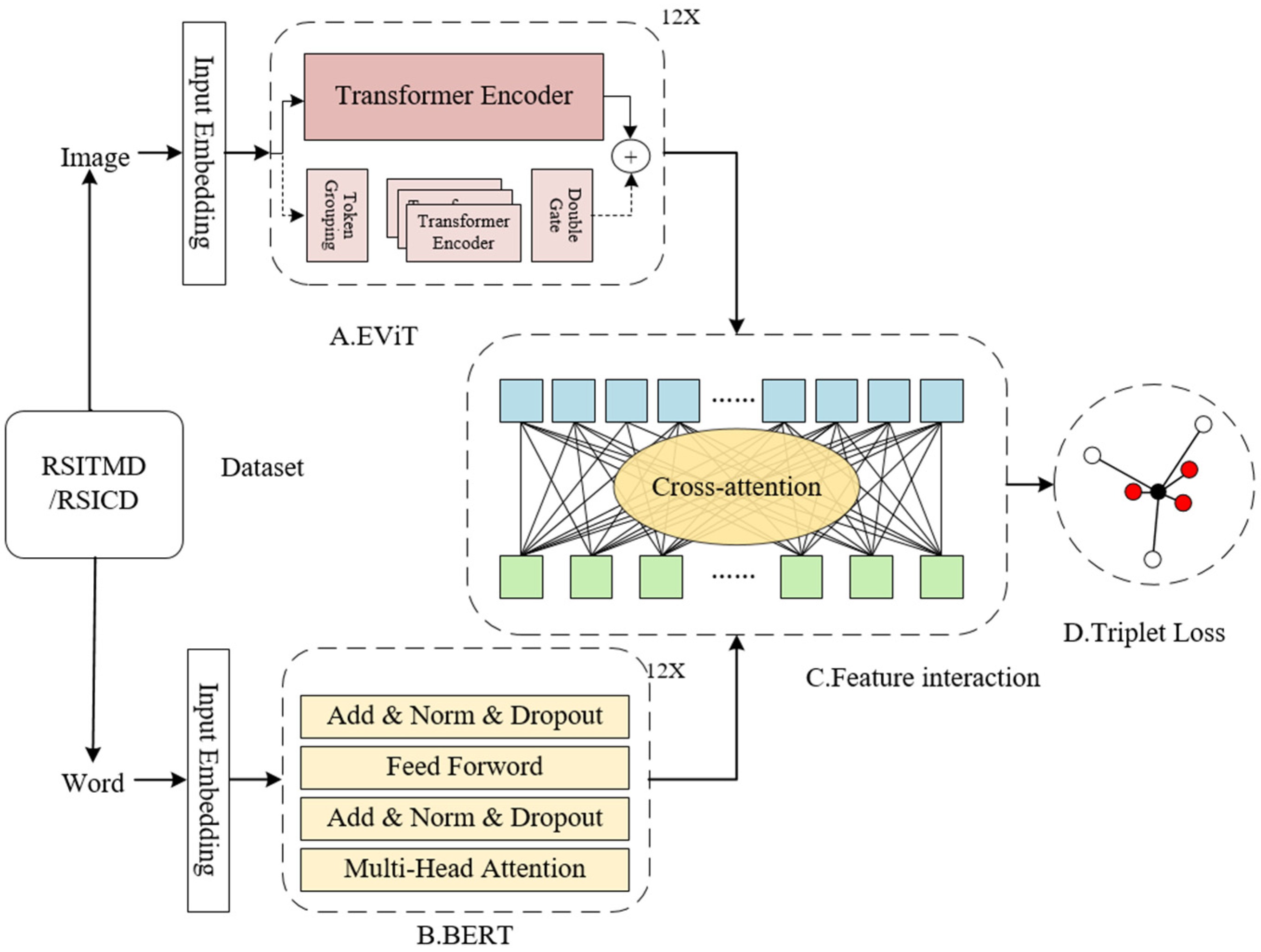

2.1. Overall Framework

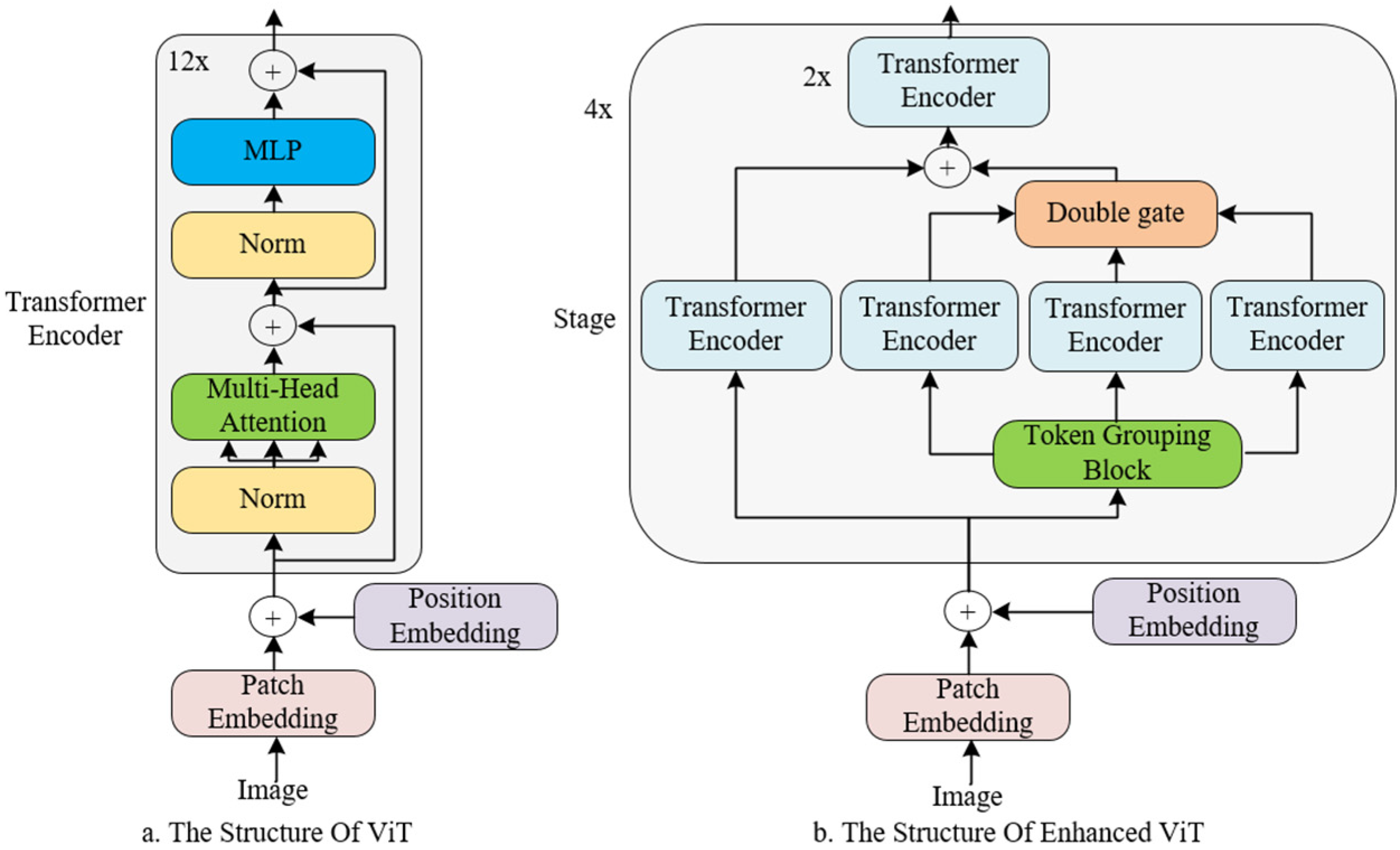

2.2. EViT

2.2.1. Token Grouping Block

2.2.2. DoubleGate

2.3. Feature Interaction and Target Function

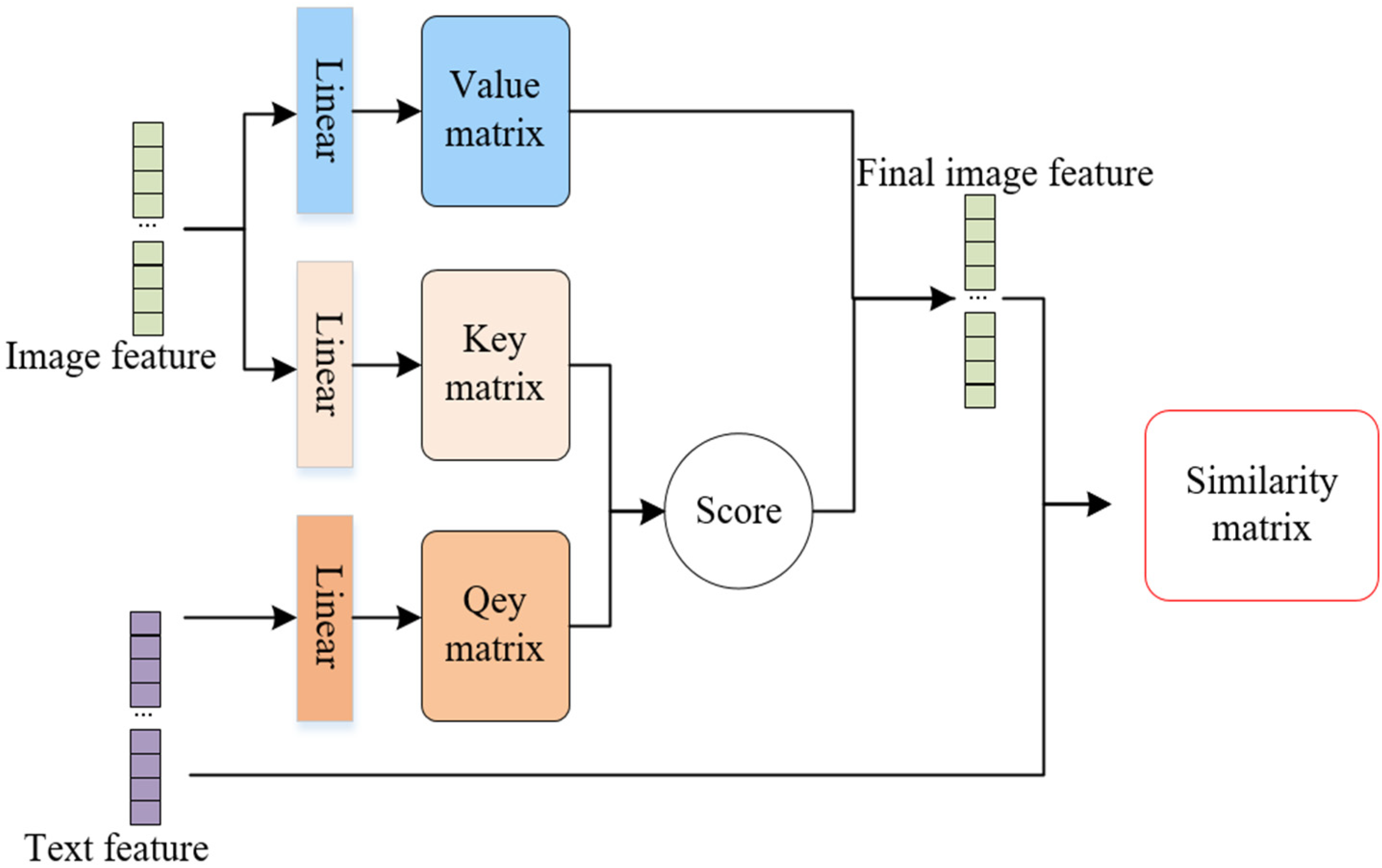

2.3.1. Cross-Attention Layer

2.3.2. Objective Function

3. Experimental

3.1. Experiment Details

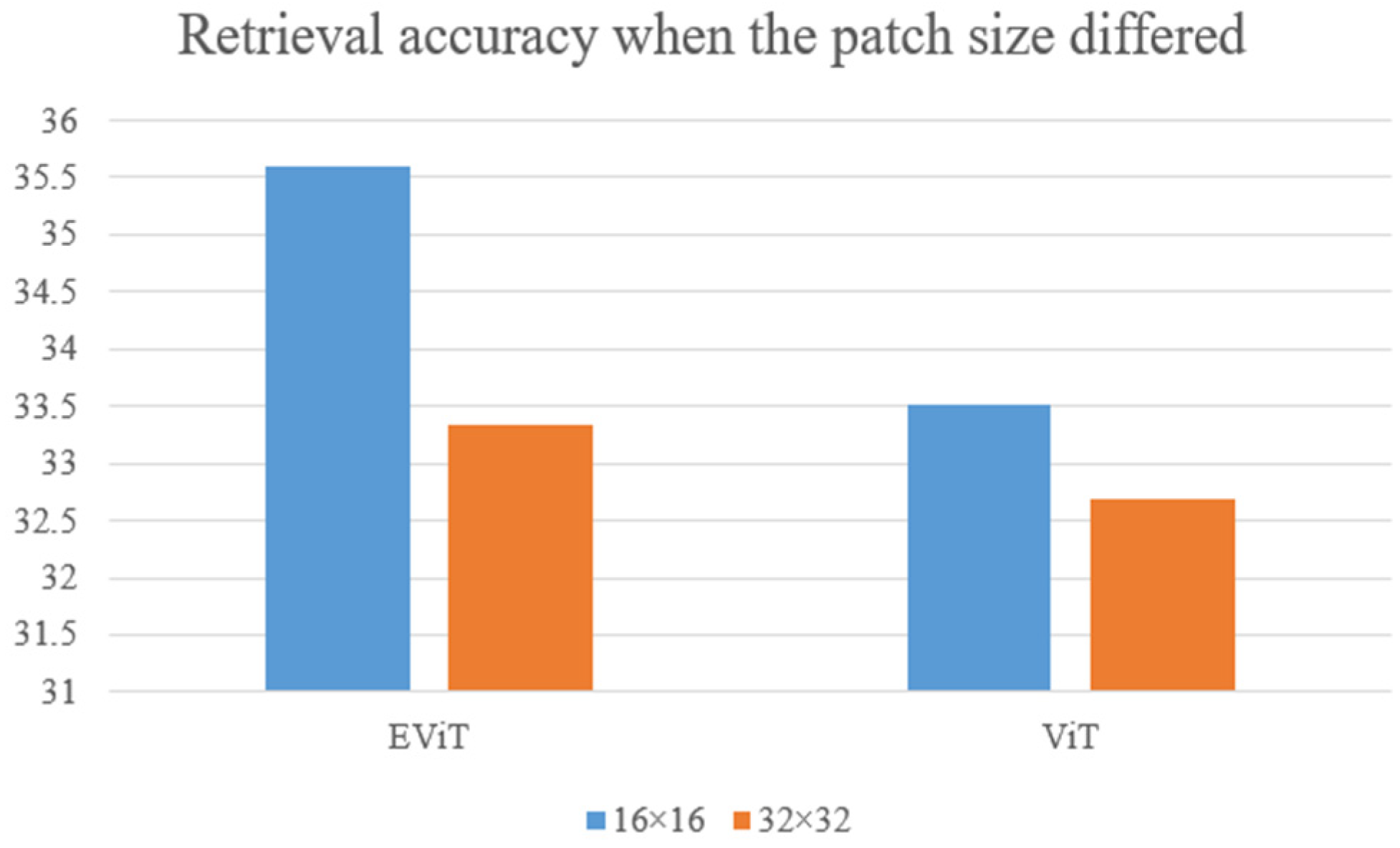

3.2. Experimental Design and Results

3.2.1. Comparisons with Other Approaches

3.2.2. Ablation Studies

3.2.3. Visualization Results

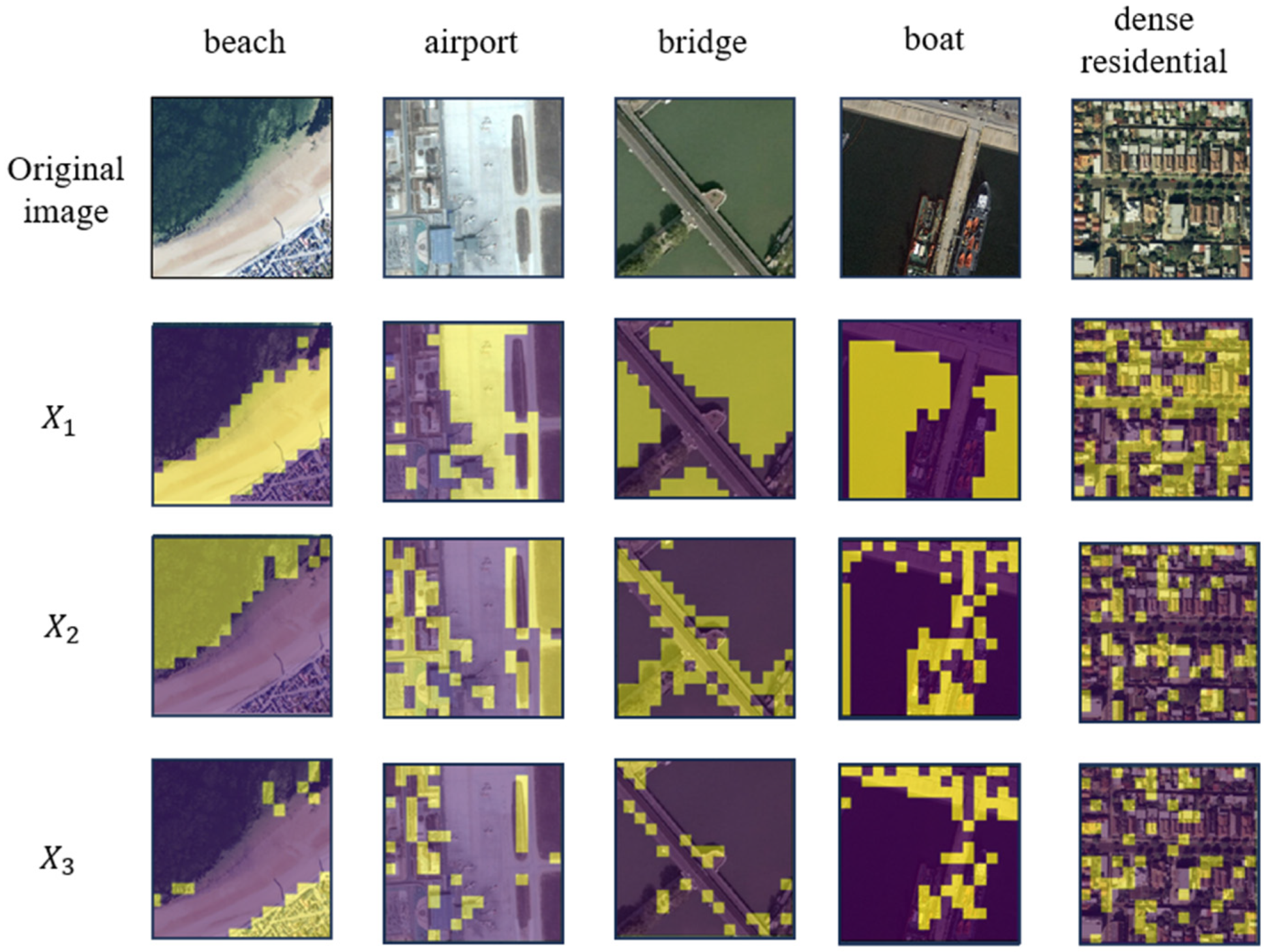

Effect Graph of the Token Grouping Block

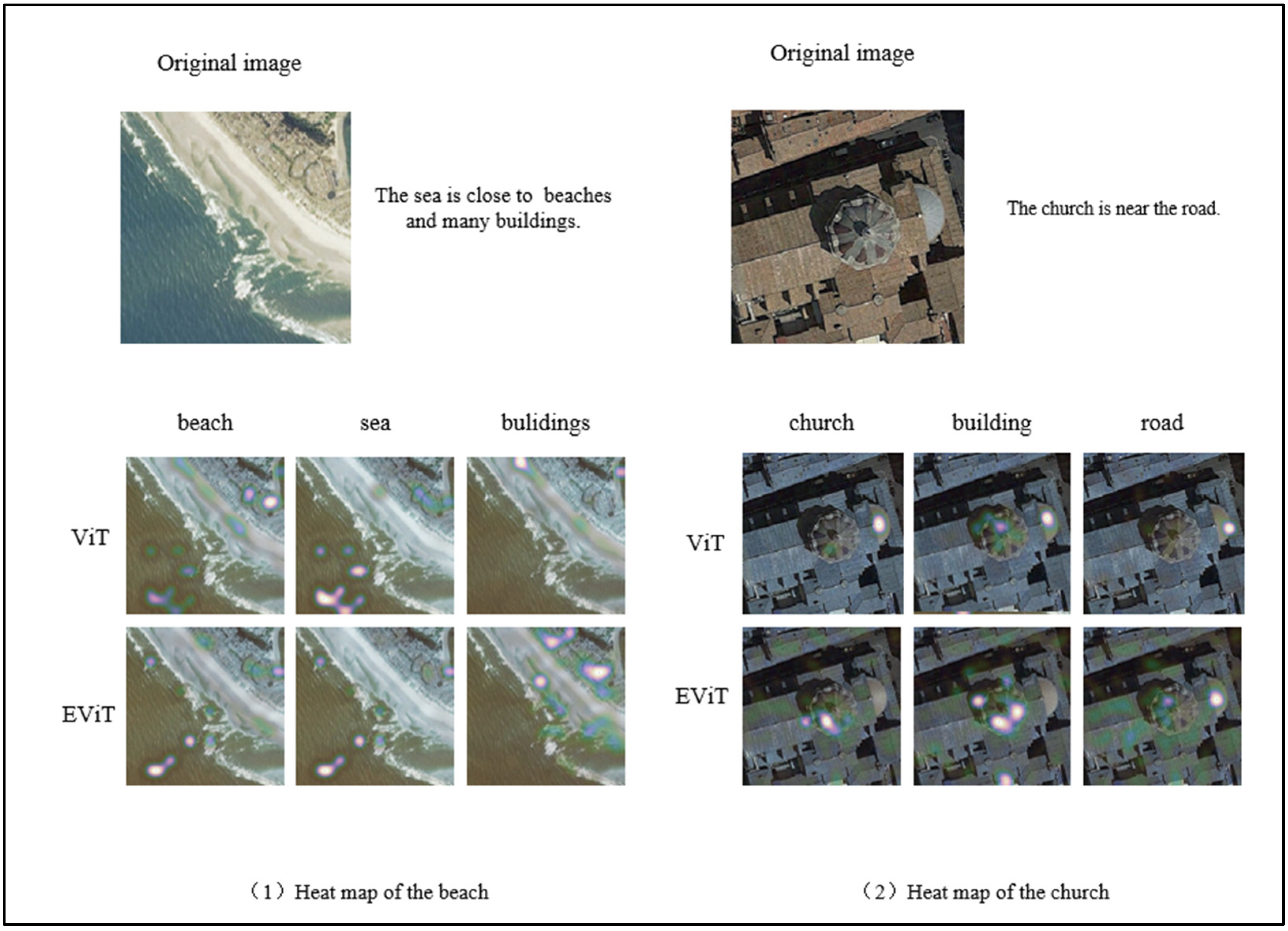

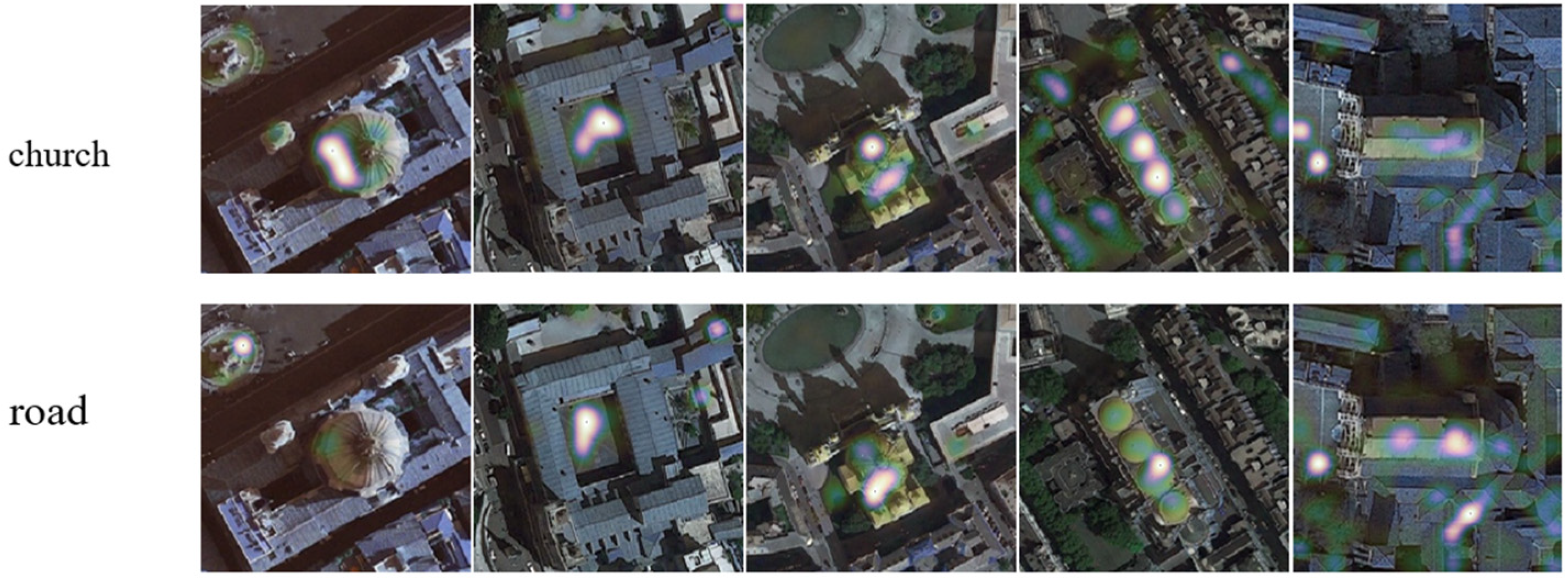

Attention Heat Maps

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Zhang, X.; Li, W.; Wang, X.; Wang, L.; Zheng, F.; Wang, L.; Zhang, H. A Fusion Encoder with Multi-Task Guidance for Cross-Modal Text–Image Retrieval in Remote Sensing. Remote Sens. 2023, 15, 4637. [Google Scholar] [CrossRef]

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, preprint. arXiv:2010.11929. [Google Scholar] [CrossRef]

- Zheng, F.; Wang, X.; Wang, L.; Zhang, X.; Zhu, H.; Wang, L.; Zhang, H. A Fine-Grained Semantic Alignment Method Specific to Aggregate Multi-Scale Information for Cross-Modal Remote Sensing Image Retrieval. Sensors 2023, 23, 8437. [Google Scholar] [CrossRef] [PubMed]

- Yang, L.; Feng, Y.; Zhou, M.; Xiong, X.; Wang, Y.; Qiang, B. A Jointly Guided Deep Network for Fine-Grained Cross-Modal Remote Sensing Text–Image Retrieval. J. Circuits Syst. Comput. 2023, 32, 2350221. [Google Scholar] [CrossRef]

- Cheng, Q.; Zhou, Y.; Fu, P.; Xu, Y.; Zhang, L. A deep semantic alignment network for the cross-modal image-text retrieval in remote sensing. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 4284–4297. [Google Scholar] [CrossRef]

- Ding, Q.; Zhang, H.; Wang, X.; Li, W. Cross-modal retrieval of remote sensing images and text based on self-attention unsupervised deep common feature space. Int. Remote Sens. 2023, 44, 3892–3909. [Google Scholar] [CrossRef]

- Rahhal, M.M.A.; Bazi, Y.; Abdullah, T.; Mekhalfi, M.L.; Zuair, M. Deep unsupervised embedding for remote sensing image retrieval using textual cues. Appl. Sci. 2020, 10, 8931. [Google Scholar] [CrossRef]

- Lv, Y.; Xiong, W.; Zhang, X.; Cui, Y. Fusion-based correlation learning model for cross-modal remote sensing image retrieval. IEEE Geosci. Remote Sens. Lett. 2021, 19, 1–5. [Google Scholar] [CrossRef]

- Abdullah, T.; Bazi, Y.; Al Rahhal, M.M.; Mekhalfi, M.L.; Rangarajan, L.; Zuair, M. TextRS: Deep bidirectional triplet network for matching text to remote sensing images. Remote Sens. 2020, 12, 405. [Google Scholar] [CrossRef]

- Zheng, F.; Li, W.; Wang, X.; Wang, L.; Zhang, X.; Zhang, H. A cross-attention mechanism based on regional-level semantic features of images for cross-modal text-image retrieval in remote sensing. Appl. Sci. 2022, 12, 12221. [Google Scholar] [CrossRef]

- Yuan, Z.; Zhang, W.; Rong, X.; Li, X.; Chen, J.; Wang, H.; Fu, K.; Sun, X. A lightweight multi-scale crossmodal text-image retrieval method in remote sensing. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–19. [Google Scholar] [CrossRef]

- Li, H.; Xiong, W.; Cui, Y.; Xiong, Z. A fusion-based contrastive learning model for cross-modal remote sensing retrieval. Int. J. Remote Sens. 2022, 43, 3359–3386. [Google Scholar] [CrossRef]

- Alsharif, N.A.; Bazi, Y.; Al Rahhal, M.M. Learning to align Arabic and English text to remote sensing images using transformers. In Proceedings of the 2022 IEEE Mediterranean and Middle-East Geoscience and Remote Sensing Symposium (M2GARSS), Istanbul, Turkey, 7–9 March 2022; pp. 9–12. [Google Scholar]

- Yu, H.; Deng, C.; Zhao, L.; Hao, L.; Liu, X.; Lu, W.; You, H. A Light-Weighted Hypergraph Neural Network for Multimodal Remote Sensing Image Retrieval. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 2690–2702. [Google Scholar] [CrossRef]

- Yao, F.; Sun, X.; Liu, N.; Tian, C.; Xu, L.; Hu, L.; Ding, C. Hypergraph-enhanced textual-visual matching network for cross-modal remote sensing image retrieval via dynamic hypergraph learning. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 16, 688–701. [Google Scholar] [CrossRef]

- Yu, H.; Yao, F.; Lu, W.; Liu, N.; Li, P.; You, H.; Sun, X. Text-image matching for cross-modal remote sensing image retrieval via graph neural network. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 16, 812–824. [Google Scholar] [CrossRef]

- He, L.; Liu, S.; An, R.; Zhuo, Y.; Tao, J. An end-to-end framework based on vision-language fusion for remote sensing cross-modal text-image retrieval. Mathematics 2023, 11, 2279. [Google Scholar] [CrossRef]

- Yuan, Z.; Zhang, W.; Tian, C.; Rong, X.; Zhang, Z.; Wang, H.; Fu, K.; Sun, X. Remote sensing cross-modal text-image retrieval based on global and local information. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–16. [Google Scholar] [CrossRef]

- Chen, G.; Wang, W.; Tan, S. Irstformer: A hierarchical vision transformer for infrared small target detection. Remote Sens. 2022, 14, 3258. [Google Scholar] [CrossRef]

- Peng, J.; Zhao, H.; Zhao, K.; Wang, Z.; Yao, L. CourtNet: Dynamically balance the precision and recall rates in infrared small target detection. Expert Syst. Appl. 2023, 233, 120996. [Google Scholar] [CrossRef]

- Li, C.; Huang, Z.; Xie, X.; Li, W. IST-TransNet: Infrared small target detection based on transformer network. Infrared Phys. Technol. 2023, 132, 104723. [Google Scholar] [CrossRef]

- Ren, S.; Zhou, D.; He, S.; Feng, J.; Wang, X. Shunted self-attention via multi-scale token aggregation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 10853–10862. [Google Scholar]

- Faghri, F.; Fleet, D.J.; Kiros, J.R.; Fidler, S.V. Improving visual-semantic embeddings with hard negatives. arXiv 2017, arXiv:1707.05612. [Google Scholar]

- Yuan, Z.; Zhang, W.; Fu, K.; Li, X.; Deng, C.; Wang, H.; Sun, X. Exploring a Fine-Grained Multiscale Method for Cross-Modal Remote Sensing Image Retrieval. IEEE Trans. Geosci. Remote Sens. 2022, 60, 3078451. [Google Scholar] [CrossRef]

- Lu, X.; Wang, B.; Zheng, X.; Li, X. Exploring models and data for remote sensing image caption generation. IEEE Trans. Geosci. Remote Sens. 2017, 56, 2183–2195. [Google Scholar] [CrossRef]

- Huang, Y.; Wu, Q.; Song, C.; Wang, L. Learning semantic concepts and order for image and sentence matching. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6163–6171. [Google Scholar]

| Approach | Text Retrieval | Image Retrieval | mR | ||||

|---|---|---|---|---|---|---|---|

| R@1 | R@5 | R@10 | R@1 | R@5 | R@10 | ||

| AMFMN-soft | 11.06 | 25.88 | 39.82 | 9.82 | 33.94 | 51.90 | 28.74 |

| AMFMN-fusion | 11.06 | 29.20 | 38.72 | 9.96 | 34.03 | 52.96 | 29.32 |

| AMFMN-sim | 10.63 | 24.78 | 41.81 | 11.51 | 34.69 | 54.87 | 29.72 |

| CMFM-Net | 10.84 | 28.76 | 40.04 | 10.00 | 32.83 | 47.21 | 28.28 |

| GaLR | 14.82 | 31.64 | 42.48 | 11.15 | 36.68 | 51.68 | 31.41 |

| AEFEF (ViT) | 11.06 | 31.64 | 47.79 | 11.99 | 40.27 | 58.36 | 33.52 |

| AEFEF | 13.27 | 36.06 | 51.33 | 11.55 | 41.42 | 60.0 | 35.60 |

| Approach | Text Retrieval | Image Retrieval | mR | ||||

|---|---|---|---|---|---|---|---|

| R@1 | R@5 | R@10 | R@1 | R@5 | R@10 | ||

| AMFMN-soft | 5.05 | 14.53 | 21.57 | 5.05 | 19.74 | 31.04 | 16.02 |

| AMFMN-fusion | 5.39 | 15.08 | 23.04 | 4.90 | 18.28 | 31.44 | 16.42 |

| AMFMN-sim | 5.21 | 14.72 | 21.57 | 4.08 | 17.00 | 30.60 | 15.53 |

| CMFM-Net | 5.40 | 18.66 | 28.55 | 5.31 | 18.57 | 30.03 | 17.75 |

| GaLR | 6.59 | 19.9 | 31 | 4.69 | 19.5 | 32.1 | 18.96 |

| AEFEF (ViT) | 6.86 | 16.27 | 26.05 | 6.07 | 21.85 | 36.60 | 18.95 |

| AEFEF | 7.13 | 16.27 | 24.95 | 6.33 | 23.27 | 38.85 | 19.47 |

| Parameters | FLOPS | Training Time/Epoch | |

|---|---|---|---|

| AEFEF (ViT) | 209.31 M | 50.315 G | 350 s |

| AEFEF | 212.26 M | 33.921 G | 406 s |

| EViT(a)1 | EViT(a)2 | EViT(a)3 | EViT(a)4 | |

|---|---|---|---|---|

| EViT(3) | 34.47 | 35.20 | 33.87 | 33.60 |

| EViT(4) | 35.60 | 34.96 | 34.26 | — |

| Text Retrieval | Image Retrieval | mR | |||||

|---|---|---|---|---|---|---|---|

| R@1 | R@5 | R@10 | R@1 | R@5 | R@10 | ||

| EViT(1) | 13.27 | 32.30 | 46.24 | 12.39 | 40.49 | 58.27 | 33.83 |

| EViT(2) | 14.82 | 32.96 | 46.02 | 11.02 | 39.60 | 58.94 | 33.89 |

| EViT(3) | 15.04 | 35.62 | 46.90 | 11.90 | 41.11 | 60.62 | 35.20 |

| EViT(4) | 13.27 | 36.06 | 51.33 | 11.55 | 41.42 | 60.0 | 35.61 |

| Text Retrieval | Image Retrieval | mR | |||||

|---|---|---|---|---|---|---|---|

| R@1 | R@5 | R@10 | R@1 | R@5 | R@10 | ||

| EViT(3) | 12.61 | 30.75 | 45.35 | 10.88 | 40.97 | 60.35 | 33.49 |

| EViT(4) | 13.05 | 31.64 | 50.00 | 11.64 | 41.06 | 60.04 | 34.57 |

| EViT(6) | 14.16 | 34.96 | 46.90 | 9.96 | 40.18 | 58.14 | 34.05 |

| EViT(8) | 10.84 | 31.64 | 46.02 | 11.02 | 39.34 | 59.87 | 33.12 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, J.; Wang, L.; Zheng, F.; Wang, X.; Zhang, H. An Enhanced Feature Extraction Framework for Cross-Modal Image–Text Retrieval. Remote Sens. 2024, 16, 2201. https://doi.org/10.3390/rs16122201

Zhang J, Wang L, Zheng F, Wang X, Zhang H. An Enhanced Feature Extraction Framework for Cross-Modal Image–Text Retrieval. Remote Sensing. 2024; 16(12):2201. https://doi.org/10.3390/rs16122201

Chicago/Turabian StyleZhang, Jinzhi, Luyao Wang, Fuzhong Zheng, Xu Wang, and Haisu Zhang. 2024. "An Enhanced Feature Extraction Framework for Cross-Modal Image–Text Retrieval" Remote Sensing 16, no. 12: 2201. https://doi.org/10.3390/rs16122201

APA StyleZhang, J., Wang, L., Zheng, F., Wang, X., & Zhang, H. (2024). An Enhanced Feature Extraction Framework for Cross-Modal Image–Text Retrieval. Remote Sensing, 16(12), 2201. https://doi.org/10.3390/rs16122201