Abstract

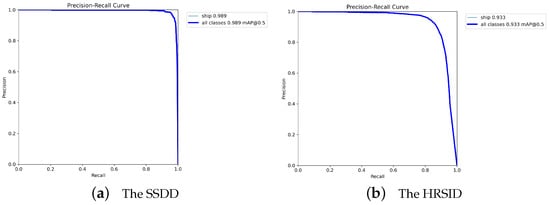

In recent years, deep learning has found widespread application in SAR image object detection. However, when detecting multi-scale targets against complex backgrounds, these models often struggle to strike a balance between accuracy and speed. Furthermore, there is a continuous need to enhance the performance of current models. Hence, this paper proposes LRMSNet, a new multi-scale target detection model designed specifically for SAR images in complex backgrounds. Firstly, the paper introduces an attention module designed to enhance contextual information aggregation and capture global features, which is integrated into a backbone network with an expanded receptive field for improving SAR image feature extraction. Secondly, this paper develops an information aggregation module to effectively fuse different feature layers of the backbone network. Lastly, to better integrate feature information at various levels, this paper designs a multi-scale aggregation network. We validate the effectiveness of our method on three different SAR object detection datasets (MSAR-1.0, SSDD, and HRSID). Experimental results demonstrate that LRMSNet achieves outstanding performance with a mean average precision (mAP) of 95.2%, 98.9%, and 93.3% on the MSAR-1.0, SSDD, and HRSID datasets, respectively, with only 3.46 M parameters and 12.6 G floating-point operations (FLOPs). When compared with existing SAR object detection models on the MSAR-1.0 dataset, LRMSNet achieves state-of-the-art (SOTA) performance, showcasing its superiority in addressing SAR detection challenges in large-scale complex environments and across various object scales.

1. Introduction

Synthetic Aperture Radar (SAR) is an active microwave imaging radar that employs synthetic aperture principles to attain high-resolution microwave images, enabling all-weather and all-day observation of the ground, unaffected by complex weather conditions []. In recent years, object detection has garnered increasing attention in the field of computer vision. The utilization of SAR imagery for object detection has emerged as a prominent research direction, finding diverse applications in defense and civil fields such as marine development, maritime transportation management, and maritime safety monitoring [,].

Represented by the constant false alarm rate (CFAR) [] detection method, most traditional SAR image target detection algorithms require manual design of features to distinguish between the background and detected targets []. The CFAR algorithm and its derivative algorithms can adaptively adjust the threshold for the input signal, relying heavily on prior knowledge and the contrast between background and targets. Conte et al. [] dealt with CFAR detection of multidimensional signals embedded in Gaussian noise with unknown covariance. Schwegmann et al. [] presented a method involving the conversion of a scalar threshold into a threshold manifold, which is adjusted using a simulated annealing (SA) algorithm to optimally align with the information provided by the ship distribution map generated from transponder data. Qin et al. [] proposed a novel CFAR detection algorithm for high-resolution SAR images, utilizing the generalized Gamma distribution to model the background. However, when the detection environment switches to complex backgrounds and multiple targets, the CFAR algorithm is prone to noise interference and demonstrates limited generalization. Consequently, it is usually only applicable for target detection in SAR images with relatively simple backgrounds.
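The adaptive thresholding idea behind CFAR can be illustrated with a minimal one-dimensional cell-averaging sketch. It is not the method of any of the works cited above: the function name `ca_cfar`, the window sizes `num_train` and `num_guard`, and the fixed `scale` factor are assumptions chosen for illustration (in practice the scale is usually derived from the desired false alarm rate and the assumed clutter distribution).

```python
def ca_cfar(signal, num_train=4, num_guard=1, scale=3.0):
    """Return indices flagged as targets by a 1D cell-averaging CFAR (illustrative)."""
    detections = []
    half = num_train + num_guard
    for i in range(half, len(signal) - half):
        # Training cells on both sides, skipping the guard cells next to the cell under test.
        left = signal[i - half : i - num_guard]
        right = signal[i + num_guard + 1 : i + half + 1]
        noise = (sum(left) + sum(right)) / (len(left) + len(right))
        # The threshold adapts to the local background estimate.
        if signal[i] > scale * noise:
            detections.append(i)
    return detections


# Hypothetical clutter profile with one bright scatterer at index 7.
clutter = [1.0, 1.2, 0.9, 1.1, 1.0, 0.8, 1.1, 9.0, 1.0, 1.2, 0.9, 1.1, 1.0, 0.9, 1.0]
hits = ca_cfar(clutter)
```

Because the threshold depends only on the local mean of neighbouring cells, strong clutter or closely spaced targets inside the training window raise the noise estimate and can mask real targets, which is consistent with the limitation in complex backgrounds noted above.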

Recently, deep learning has gained widespread popularity and has become mainstream in various application fields. In the domain of object detection, deep learning has been increasingly applied due to its exceptional accuracy and scalability. Object detection algorithms are primarily categorized into two types: one-stage and two-stage detection algorithms. One-stage detection algorithms, exemplified by SSD [], RetinaNet [], and the YOLO series, predict object classes and locations in a single pass. Two-stage detection algorithms, such as R-CNN [], Fast R-CNN [], and Faster R-CNN [], first generate region proposals and then classify and refine them. Generally, although two-stage detection algorithms can achieve high detection accuracy, they come with high computational costs and a large number of parameters, making deployment on edge devices challenging. In contrast, the YOLO series, known for its high detection accuracy and lower computational costs, is convenient for deployment in edge environments and has therefore attracted increasing attention.

Although existing models have struck a balance between detection accuracy and model complexity, their performance may not be optimal when facing challenges such as complex environmental contexts, dense targets, and numerous small targets. One of the fundamental reasons for the poor performance of detection models, when confronted with challenges like complex backgrounds and wide-ranging target scales, is the inherent trade-off in neural networks. As these networks deepen, they enhance their capability to extract deep abstract features; however, this often comes at the expense of losing shallow spatial information. In SAR image object detection tasks, the targets are typically small and situated amidst complex environmental interference, with varying scales across different targets. Consequently, the spatial information extracted by neural networks in shallow layers may be overwhelmed by interference signals, leading to inaccurate recognition of small targets. Although semantic information extracted from deep layers is more representative, it is confined to local regions and lacks long-range dependencies, making it challenging to effectively differentiate between interference and target features. Therefore, optimizing feature extraction, integrating spatial and semantic information, and establishing long-range dependencies between different features are critical for addressing the multi-scale and complex background interference issues in SAR image target detection.

To address these challenges, this paper proposes a novel SAR image object detection approach tailored for complex backgrounds and multi-scale targets. This paper introduces an attention mechanism and a novel backbone network to enhance long-distance dependencies and improve feature extraction from SAR images for better detection. Additionally, it proposes an aggregation module to fuse feature layers and redesigns a feature pyramid network with a multi-scale feature extraction module to mitigate information loss and enhance aggregation. The SAR object detection model proposed in our paper can achieve a commendable balance between detection accuracy and the number of model parameters. Our main contributions are summarized as follows:

- We have devised a novel context aggregation module termed the Global Enhancement (GE) block, which comprises two attention modules adept at capturing global information more effectively within SAR images.

- Given that the majority of targets in SAR images are small and the background environment is susceptible to interference, we design a new backbone network with a large receptive field to significantly enhance feature expression ability. We add the GE block to the backbone network to increase the network’s depth and enhance long-range dependencies.

- We designed an Information Fusion Module (IFM) to effectively integrate the multi-layer feature layers obtained from the backbone network and re-output them without altering the scale. This approach enables better aggregation of feature information extracted from the backbone network and helps prevent information loss.

- To enhance feature extraction and aggregation while addressing potential information loss during the extraction process of SAR targets, we have devised an efficient multi-scale feature fusion network. This network comprises feature extraction modules for effectively aggregating feature layers and a feature pyramid network.

2. Related Work

2.1. SAR Image Object Detection

Following the remarkable success of deep learning, SAR image detection based on deep learning has also emerged in recent years. Li et al. [] proposed a new dataset and four strategies to improve the standard Faster R-CNN algorithm. Zhao et al. [] developed a coupled CNN for small and densely clustered SAR ship detection. Chang et al. [] used YOLOv2 to detect ships from SAR images. Jiang et al. [] proposed a multi-channel fusion SAR image processing method based on YOLOv4 to leverage both image information and the network’s feature extraction capabilities to their fullest extent. Hong et al. [] proposed a new improvement on the YOLOv3 framework for ship detection in marine surveillance. Xu et al. [] proposed a lightweight on-board SAR ship detector based on YOLOv5 called Lite-YOLOv5, which reduced the model volume, decreased the floating-point operations (FLOPs), and realized on-board ship detection without sacrificing accuracy. Zhou et al. [] proposed a new methodology for better detection of multi-scale ship objects in SAR images, which is based on YOLOv5s. Hu et al. [] proposed an anchor-free framework for multi-scale ship detection in SAR images based on a balanced attention network. Tang et al. [] proposed a scale-aware feature pyramid network (SARFNet), which comprises a scale-adaptive feature extraction module and a learnable anchor assignment strategy. Miao et al. [] proposed an improved lightweight RetinaNet for ship detection in SAR images. Zhao et al. [] proposed an automatic SAR image object detection method based on domain adaptation to adapt to unlabeled target domain datasets acquired by different satellites. Feng et al. [] proposed a new lightweight position-enhanced anchor-free SAR ship detection algorithm called LPEDet. While these methods have achieved promising results in SAR image target detection, they still suffer from low accuracy or overly complex models. Additionally, ensuring high detection accuracy while keeping the model lightweight for deployment on mobile platforms remains a pressing challenge.

The latest SAR image object detection models are beginning to tackle these challenges. Chen et al. [] proposed a multi-scale ship detection model for complex scenes, based on YOLOv7, called CSD-YOLO. Zhang et al. [] introduced a novel deep learning network named YOLO-FA, which incorporates a frequency attention module (FAM) capable of adaptively processing frequency domain information from SAR images. Ren et al. [] proposed an efficient lightweight network called YOLO-Lite specifically designed for real-time ship detection applications. Zhou et al. [] introduced an improved lightweight RetinaNet for ship detection in SAR images. Wang et al. [] proposed a novel SAR ship detection method, NAS-YOLOX, which leverages the efficient feature fusion of the neural architecture search feature pyramid network (NAS-FPN) and the effective feature extraction of the multi-scale attention mechanism. Tang et al. [] proposed a Pyramid Pooling Attention Network (PPA-Net) for SAR multi-scale ship detection. Zhang et al. [] proposed a spatial cross-scale attention network (SCSA-Net) for SAR image ship detection, which includes a novel spatial cross-scale attention (SCSA) module for eliminating the interference of land background. However, when dealing with complex environmental backgrounds, dense targets, and numerous small targets, the performance of these models may not be optimal.

2.2. YOLOv8

YOLOv8, the latest algorithm in the YOLO series, was released by Ultralytics on 10 January 2023. Compared to its predecessors, YOLOv8 offers faster speed and higher detection performance, making it an excellent choice for a wide range of object detection tasks []. YOLOv8 offers five models of different sizes (n/s/m/l/x), obtained through scaling coefficients, to accommodate various scenarios. It replaces the C3 structure of YOLOv5 with the C2f structure, which provides richer gradient flow, and adjusts the number of channels for the different model scales. The head of YOLOv8 has been upgraded to a decoupled structure that separates the classification and regression branches, and it has transitioned from an anchor-based to an anchor-free design [].

3. Materials and Methods

3.1. Overall Network Structure

We propose LRMSNet, a neural network with a large receptive field tailored for multi-scale SAR image object detection. The overall network structure of LRMSNet is depicted in Figure 1, where the LR block and IFM are our newly designed backbone feature extraction module and information fusion module, respectively. We choose YOLOv8n as the baseline framework: among the YOLOv8 networks, it has the fewest parameters and the fastest detection speed while still maintaining high detection accuracy, which makes it a suitable choice for lightweight SAR image detection.

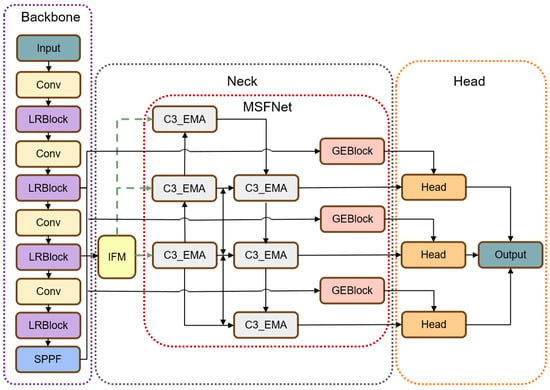

Figure 1.

The overall network structure of LRMSNet.

Our LRMSNet consists of four primary components: a backbone network built from blocks with a large receptive field (LR blocks), an information fusion module (IFM), a multi-scale feature fusion network (MSFFNet), and a detection head. Initially, the input image is processed by the backbone network, whose large receptive field allows it to extract deep feature information and capture targets of different scales within complex SAR image backgrounds. Subsequently, the IFM combines the three feature layers extracted by the backbone network and passes them to the feature pyramid structure of the neck network, which utilizes the C3 module integrated with the EMA attention mechanism (C3_EMA) to enhance feature aggregation and prevent feature loss during information transmission. Finally, the feature layers extracted by the backbone network are fused with the feature layers extracted by the neck network using the GE block attention mechanism, and detection is performed using the anchor-free decoupled detection head.

3.2. Global and Efficient Context Block (GE Block)

The proposed global and efficient context (GE) block consists of two cascaded components: the global context (GC) block [] and the efficient context (EC) block. Firstly, the GC block integrates global contextual information into the channels, thereby enhancing the module’s ability to extract long-range dependency information. Subsequently, the EC block generates aggregated features by employing a context aggregation block and generates channel attention through rapid one-dimensional convolution. The specific module structure of the GE block is depicted in Figure 2.

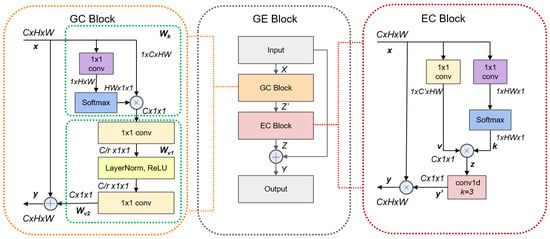

Figure 2.

The specific module structure of the GE block, including GC block [] and EC block. X and Y represent the input and output of the GE block, and denote the input and output of the GC block and the EC block, and , , and denote linear transform matrices. CxHxW denotes an input feature map with channels C, height H and width W. ⊗ denotes matrix multiplication and ⊕ denotes broadcast element-wise addition.

The GC block combines the squeeze and excitation (SE) block [] with the non-local (NL) block []. It adopts the simplified NL block’s approach to context modeling, utilizing global attention pooling and fusion, employing addition. Additionally, it employs the same transform step as the SE block, utilizing a two-layer bottleneck. This combination can fully leverage the strong global context modeling ability of the NL block and the computational efficiency of the SE block, making it suitable for the task requirements of lightweight detection in SAR images with complex backgrounds. The GC block in Figure 2 can be represented as []

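$$z_i = x_i + W_{v2}\,\mathrm{ReLU}\!\left(\mathrm{LN}\!\left(W_{v1}\sum_{j=1}^{N_p}\frac{e^{W_k x_j}}{\sum_{m=1}^{N_p} e^{W_k x_m}}\,x_j\right)\right)$$

where $x$ and $z$ denote the input and output of the GC block, $N_p$ is the number of positions in the feature map (e.g., $N_p = H \cdot W$ for an image), $i$ is the index of query positions, and $j$ enumerates all possible positions. $W_k$, $W_{v1}$, and $W_{v2}$ denote linear transform matrices. $\delta(\cdot) = W_{v2}\,\mathrm{ReLU}(\mathrm{LN}(W_{v1}(\cdot)))$ denotes the bottleneck transform and $\alpha_j = \frac{e^{W_k x_j}}{\sum_{m=1}^{N_p} e^{W_k x_m}}$ is the weight for global attention pooling.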
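The three steps of the GC block (global attention pooling, bottleneck transform, and fusion by addition) can be sketched in plain Python. This is a minimal illustration under stated assumptions rather than the implementation used in LRMSNet: the feature map is flattened to a list of position vectors, the names `w_k`, `w_v1`, and `w_v2` are assumed labels for the three linear transforms, and the toy weights are hypothetical.

```python
import math


def softmax(scores):
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(mat, vec):
    return [sum(w * v for w, v in zip(row, vec)) for row in mat]


def layer_norm(vec, eps=1e-5):
    mean = sum(vec) / len(vec)
    var = sum((v - mean) ** 2 for v in vec) / len(vec)
    return [(v - mean) / math.sqrt(var + eps) for v in vec]


def gc_block(x, w_k, w_v1, w_v2):
    """x: list of N_p position vectors, each of length C."""
    # Global attention pooling: one softmax weight per position, shared by all queries.
    alpha = softmax([sum(w * v for w, v in zip(w_k, xj)) for xj in x])
    channels = len(x[0])
    context = [sum(a * xj[c] for a, xj in zip(alpha, x)) for c in range(channels)]
    # Bottleneck transform: W_v2 ReLU(LN(W_v1 context)).
    hidden = [max(0.0, h) for h in layer_norm(matvec(w_v1, context))]
    delta = matvec(w_v2, hidden)
    # Fusion: the same context vector is broadcast-added to every position.
    return [[xc + d for xc, d in zip(xi, delta)] for xi in x]


# Hypothetical toy input: 3 positions, 4 channels, bottleneck width 2.
x = [[1.0, 0.0, 2.0, 1.0], [0.0, 1.0, 1.0, 3.0], [2.0, 2.0, 0.0, 1.0]]
w_k = [0.1, -0.2, 0.3, 0.0]
w_v1 = [[0.5, 0.0, -0.5, 1.0], [1.0, 1.0, 0.0, -1.0]]
w_v2 = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [-1.0, 1.0]]
z = gc_block(x, w_k, w_v1, w_v2)
```

Since the context vector does not depend on the query position, it is computed once and reused for every position, which is where the efficiency of this design comes from.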

The EC block is an improvement of the effective channel attention (ECA) module in [], by replacing the global average pooling module of ECA with multiple convolutional blocks for better preserving feature information and positional relationships between different features. This modification stems from the observation that global average pooling compresses the entire feature map into a single value, consequently weakening the relative positional combination relationship between different features. In the EC block, the input initially undergoes two distinct convolutional layers to obtain attention weights and value vectors separately. Subsequently, the softmax function is applied to the key matrix to normalize the weights. These normalized weights are then multiplied with the value matrix to achieve the attention-weighted sum across all positions. Finally, a series of transformations including reshaping, convolution, and activation function processing are applied to generate after the bottleneck transform, and the final output feature is then obtained by fusing with the input . The entire process can be represented as follows:

where and represent the weight parameters of the convolutional operations, and and denote the bias terms. ∗ denotes the convolutional operation and · denotes the multiplication of matrices. Conv1d indicates 1D convolution with a kernel size of 3. Reshape rearranges the dimensions of a tensor to match a new shape. Softmax and indicate the Softmax and Sigmoid functions, respectively. Additionally, Expand_as signifies the operation of expanding to match the shape of , followed by element-wise multiplication. The algorithm details of the EC block are shown in Algorithm 1.

| Algorithm 1 The EC Block. |
| --- |
| Input: input tensor x, number of input channels, reduction factor, kernel size |
| Output: output tensor y |
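One possible reading of the EC block description is sketched below in plain Python. It is an assumption-laden illustration, not the authors' Algorithm 1: the two convolutions are modelled as 1 × 1 convolutions (`w_key` producing one score per position, `w_val` producing a value vector per position), bias terms and the reduction factor are omitted, and all names and toy weights are hypothetical.

```python
import math


def dot(a, b):
    return sum(p * q for p, q in zip(a, b))


def softmax(scores):
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def conv1d_same(seq, kernel):
    """1D convolution across channels with zero padding (output length = input length)."""
    pad = len(kernel) // 2
    padded = [0.0] * pad + list(seq) + [0.0] * pad
    return [sum(k * padded[i + t] for t, k in enumerate(kernel)) for i in range(len(seq))]


def ec_block(x, w_key, w_val, kernel):
    """x: list of positions, each a C-vector; kernel: 1D kernel of size 3."""
    keys = [dot(w_key, xj) for xj in x]                    # attention score per position
    values = [[dot(row, xj) for row in w_val] for xj in x]  # value vector per position
    attn = softmax(keys)                                    # normalize over positions
    channels = len(x[0])
    # Attention-weighted sum over all positions replaces global average pooling.
    context = [sum(a * v[c] for a, v in zip(attn, values)) for c in range(channels)]
    # 1D convolution over the channel axis, then Sigmoid, gives channel attention.
    gate = [1.0 / (1.0 + math.exp(-g)) for g in conv1d_same(context, kernel)]
    # The gate is expanded to the input shape and applied element-wise.
    return [[xc * g for xc, g in zip(xj, gate)] for xj in x]


# Hypothetical toy input: 3 positions, 4 channels.
x = [[1.0, 0.0, 2.0, 1.0], [0.0, 1.0, 1.0, 3.0], [2.0, 2.0, 0.0, 1.0]]
w_key = [0.2, -0.1, 0.0, 0.3]
w_val = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0], [0.0, 0.0, 0.0, 1.0]]
y = ec_block(x, w_key, w_val, [0.25, 0.5, 0.25])
```

The design point being illustrated is that the pooled descriptor is a learned, position-weighted sum rather than a plain average, so positions with high attention scores contribute more to the channel gates.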

Given the aforementioned EC and GC blocks, the operation of the GE block can be expressed as:

where X and Y represent the input and output of the GE block, Z and represent the intermediate feature layers produced when the input X passes through the GC block and EC block, respectively, and Concat denotes the concatenation of two or more tensors along a specified dimension.

3.3. Large Receptive Field Backbone (LRFB)

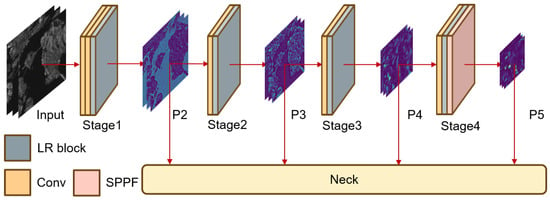

Traditional large-kernel neural networks, such as AlexNet [] and SwinTransformer [], commonly employ convolutional kernel sizes ranging from to . Large-kernel convolutions provide the benefit of attaining larger receptive fields and capturing better global features without heavily relying on deep stacking. However, they also pose challenges such as an excessive parameter count and inadequate model depth. Large convolutional kernels can assist in capturing a broader spectrum of spatial information and analyzing targets of various scales and shapes in SAR images, well-suited to the high resolution and intricate environmental backgrounds of SAR images. Nevertheless, the substantial parameter count resulting from these large convolutional kernels may impede the light weight of the model, and their usage can render the model network incapable of efficiently extracting intricate features from SAR images due to insufficient depth. Hence, in this paper, we integrate and convolutional kernels to mitigate the impact of large convolutional kernels on the model’s parameter count, while achieving a larger receptive field compared to standard networks. Additionally, we incorporate the GE block to augment the model’s depth and enhance the network’s feature extraction capability. Our network built up following such methods has individually achieved favorable results, as it uses a modest number of large kernels to guarantee a large Effective Receptive Field (ERF), multiple small kernels to extract more complicated spatial patterns more efficiently, and efficient structures to further increase the depth to enhance the representational capacity []. The detailed structure of the proposed backbone is shown in Figure 3, which mainly consists of larger receptive field (LR) blocks, convolutional blocks, and a SPPF module. 
Firstly, the backbone network achieves downsampling through convolutional blocks, then uses LR blocks to extract feature information from the input image and deepen the network depth. Finally, an adaptive size output is achieved through the SPPF module. The LR block we designed can help the backbone network better extract the feature information of SAR images without losing global information. Its detailed structure is shown in Figure 4.
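The trade-off between kernel size, parameter count, and receptive field discussed above can be made concrete with a short calculation. The kernel sizes (7 and 3) and the channel width (64) below are illustrative placeholders, not the values used in LRMSNet, and the helper names are hypothetical.

```python
def conv_params(kernel, c_in, c_out, groups=1):
    """Number of weights in a k x k convolution (bias ignored)."""
    return kernel * kernel * (c_in // groups) * c_out


def stacked_receptive_field(kernels):
    """Receptive field of stride-1 convolutions applied one after another."""
    field = 1
    for k in kernels:
        field += k - 1
    return field


# Hypothetical comparison at 64 input and 64 output channels.
large_dense = conv_params(7, 64, 64)                  # one dense 7 x 7 kernel
small_stack = 3 * conv_params(3, 64, 64)              # three stacked 3 x 3 kernels
large_depthwise = conv_params(7, 64, 64, groups=64)   # depthwise 7 x 7 kernel
same_field = stacked_receptive_field([3, 3, 3])       # equals one 7 x 7 kernel
```

Under these assumptions, one dense 7 × 7 layer has 200,704 weights, three stacked 3 × 3 layers reach the same nominal receptive field of 7 with 110,592 weights, and a depthwise 7 × 7 layer needs only 3,136. This is the kind of arithmetic that motivates combining a few large kernels with several small ones.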

Figure 3.

The structure of our backbone network.

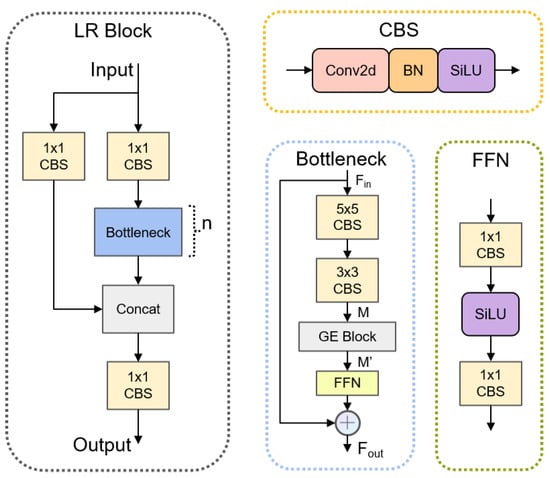

Figure 4.

The structure of the LR block and related modules.

The LR block consists of three CBS (Conv-Bn-SiLU) modules and a bottleneck block. Two CBS modules with a stride of 1 are initially employed to transform the input features along the channel dimension, with one of the outputs being fed into the bottleneck block and looped n times. Subsequently, a CBS module is utilized to fuse the feature information that has passed through the two different convolution paths, aiming to generate more representative feature representations and enhance the performance and expressive power of the model.

The CBS module is composed of a convolutional layer, followed by a Batch Normalization (BN) layer, and a SiLU activation function. The bottleneck block, a residual module, comprises four components: two CBS modules with convolution kernel sizes of and , a GE block, and a Feedforward Neural Network (FFN) composed of two CBS modules and a SiLU activation function. We use to represent the input feature layer of our bottleneck, and its formula is as follows:

specifically, the CBS block is responsible for achieving a larger receptive field, the GE block augments the model’s depth, and the CBS block further enhances depth and strengthens the model’s expressive capabilities. The algorithm details of the bottleneck of the LR block are shown in Algorithm 2.

| Algorithm 2 The Bottleneck of LR Block. |
| --- |
| Input: input tensor x, number of input channels, number of output channels, shortcut flag, groups g, expansion factor e |
| Output: output feature map |

3.4. Information Fusion Module

As feature maps at various levels encompass distinct information, low-level feature maps contain texture details and precise object positions, while high-level feature maps contain abstract and semantic information of larger objects []. Therefore, fusing feature maps across different levels can effectively enhance the detection accuracy of targets across various scales. Feature Pyramid Networks (FPN) combine low-resolution, semantically strong features with high-resolution, semantically weak features via a top-down pathway and lateral connections [], offering a solution to address multi-scale problems.

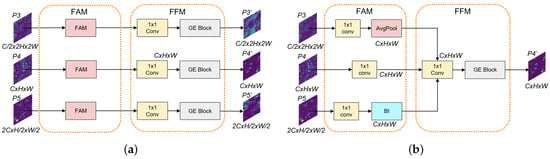

Based on this concept, we have devised an Information Fusion Module, the specific structure of which is illustrated in Figure 5a.

Figure 5.

The structure and fusion process of IFM. (a) The general structure of IFM; (b) The detailed information aggregation process of IFM with P4 as an example.

- Feature alignment module (FAM). We choose the P3, P4, and P5 features outputted by the backbone network for fusion. By performing up-sample and down-sample on the three feature layers to achieve uniform scale size, we retain both the detailed information of low-level features and the semantic information of high-level features. In FAM, we employ the average pooling (AvgPool) operation to down-sample and the bilinear interpolation to up-sample input features. Subsequently, we employ convolution modules to process different branches, ensuring the attainment of an equal number of channels for subsequent processing.

- Feature fusion module (FFM). FFM consists of a convolutional fusion module and an attention module. Initially, a convolutional layer is utilized to concatenate the feature tensors of the three branches along the channel dimension, followed by the employment of an attention module (GE block) to further aggregate the fused features. Using the information aggregation of the P4 feature layer illustrated in Figure 5b as an example, the convolutional fusion module receives three feature layers with an identical number of channels as inputs and generates a feature layer . GE blocks are subsequently utilized to aggregate the fused features, resulting in () as the final output. The formula is as follows:
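The scale alignment performed by FAM (average pooling to down-sample, bilinear interpolation to up-sample) can be sketched on single-channel maps in plain Python. The map sizes, the helper names, and the corner-aligned interpolation convention are assumptions made for illustration; the source does not specify them.

```python
def avg_pool2x2(fmap):
    """Down-sample by 2 with non-overlapping 2 x 2 average pooling."""
    h, w = len(fmap), len(fmap[0])
    return [[(fmap[r][c] + fmap[r][c + 1] + fmap[r + 1][c] + fmap[r + 1][c + 1]) / 4.0
             for c in range(0, w - 1, 2)]
            for r in range(0, h - 1, 2)]


def bilinear_resize(fmap, out_h, out_w):
    """Bilinear interpolation with corner pixels aligned (assumed convention)."""
    in_h, in_w = len(fmap), len(fmap[0])
    out = []
    for r in range(out_h):
        y = r * (in_h - 1) / (out_h - 1) if out_h > 1 else 0.0
        y0 = int(y)
        y1 = min(y0 + 1, in_h - 1)
        fy = y - y0
        row = []
        for c in range(out_w):
            x = c * (in_w - 1) / (out_w - 1) if out_w > 1 else 0.0
            x0 = int(x)
            x1 = min(x0 + 1, in_w - 1)
            fx = x - x0
            top = fmap[y0][x0] * (1 - fx) + fmap[y0][x1] * fx
            bottom = fmap[y1][x0] * (1 - fx) + fmap[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out


# Hypothetical single-channel maps standing in for P3 (4 x 4), P4 (2 x 2), P5 (1 x 1).
p3 = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
p4 = [[1.0, 0.0], [0.0, 1.0]]
p5 = [[2.0]]
# Align everything to the P4 scale, then stack along the channel axis for fusion.
aligned = [avg_pool2x2(p3), p4, bilinear_resize(p5, 2, 2)]
```

After alignment all three branches share the spatial size of the target level, so they can be concatenated along the channel dimension and passed to the fusion convolution and the GE block.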

3.5. Multi-Scale Feature Fusion Network

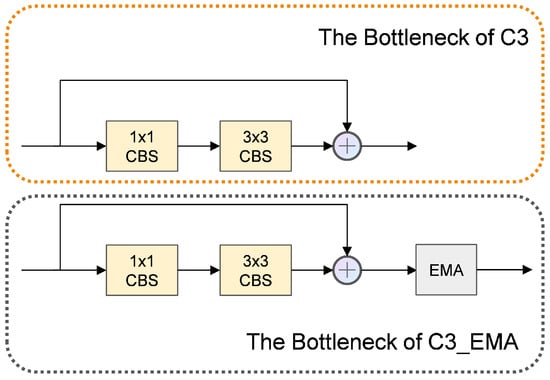

Our multi-scale feature fusion network (MSFFNet) comprises two main components: the feature extraction modules C3_EMA and a feature pyramid network enhanced by BiFPN [].

3.5.1. C3_EMA

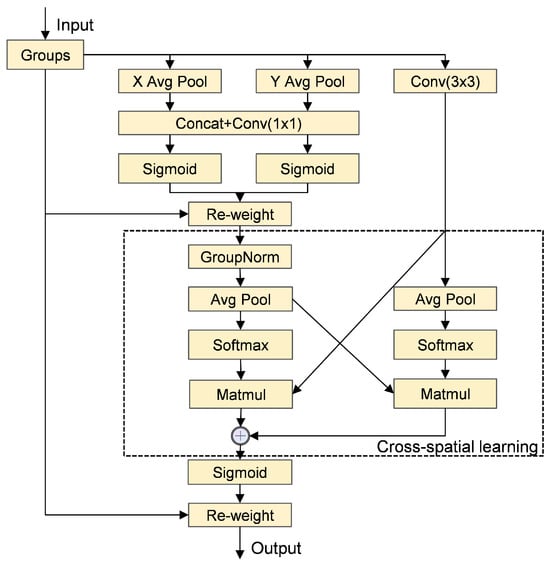

The efficient multi-scale attention (EMA) is a novel cross-spatial learning attention mechanism that utilizes multi-scale parallel subnetworks to establish both short- and long-range dependencies []. EMA reshapes part of the channel dimension into the batch dimension and fuses the output feature maps of the two parallel subnetworks through cross-spatial learning []; its structure is shown in Figure 6.

Figure 6.

The structure of the EMA [].

We integrate the EMA attention mechanism into the bottleneck block of the C3 module to enhance the feature aggregation capability of the neck network. Given that SAR images are prone to noise and interference in complex scenes, we employ the EMA attention mechanism to assist the model in enhancing feature representation capability while preserving lightweight characteristics. This enables further extraction of features with enriched semantic information without significantly altering the spatial information of the original features. The structure of our enhanced bottleneck block and its comparison with the original version are depicted in Figure 7. The output can be expressed by the following formula:

where is the input feature layer and is the intermediate layer after convolution with convolutional layers. The reason for incorporating the attention mechanism after the residual block is to better utilize the residual connections for propagating attention information, thereby enhancing the feature representation of the residual block.

Figure 7.

The structure of the bottleneck of C3_EMA and C3.

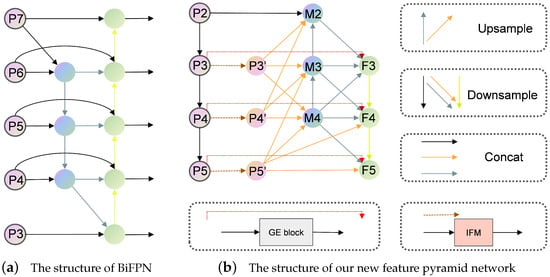

3.5.2. Bi-Directional Feature Pyramid Network (BiFPN)

BiFPN is an efficient bidirectional feature pyramid network, an enhancement of PANet [], which integrates both bidirectional cross-scale connections and fast normalized fusion []. The structure of BiFPN is depicted in Figure 8a. Our new feature pyramid network adopts the concept of bidirectional propagation from BiFPN to enhance the flow of features across different levels and mitigate the loss incurred during feature propagation. The structure is illustrated in Figure 8b.

Figure 8.

The comparison between our new feature pyramid network and the original BiFPN. Upsample and Downsample refer to the upsampling and downsampling operations, while Concat denotes the concatenation of two or more tensors along a specified dimension.
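For reference, the fast normalized fusion used by the original BiFPN can be sketched as follows. This illustrates the BiFPN fusion rule only; the source does not state whether LRMSNet's modified pyramid uses this rule or plain concatenation, and the function name and toy values are hypothetical.

```python
def fast_normalized_fusion(features, weights, eps=1e-4):
    """Fuse same-sized feature vectors as sum(w_i * I_i) / (eps + sum(w_j))."""
    # ReLU keeps every learnable weight non-negative.
    w = [max(0.0, wi) for wi in weights]
    norm = eps + sum(w)
    size = len(features[0])
    return [sum(wi * f[k] for wi, f in zip(w, features)) / norm for k in range(size)]


# Hypothetical flattened features from two pyramid levels (already resized to match).
fused = fast_normalized_fusion([[1.0, 2.0], [3.0, 4.0]], [1.0, 1.0])
```

Compared with softmax-based weighting, this normalization avoids exponentials while still bounding each effective weight between 0 and 1.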

Firstly, to mitigate the loss of low-level and high-level features during feature propagation, we select the four feature layers (P2, P3, P4, and P5) from the backbone network to be fused with the three feature layers (P3’, P4’, and P5’) from the IFM. This enables a better fusion of feature layers containing diverse information. Secondly, we fuse feature layers spanning multiple levels, allowing the fused low-level feature layer to retain detailed information while incorporating information from different higher levels, thereby enriching the feature representation. Finally, we fuse the previously obtained feature layers with the backbone feature layers (P3”, P4”, and P5”) after GE block aggregation, and then utilize them as the input feature layers (F3, F4, and F5) of the detection head. This process enriches the feature information of the target, filters out interference information, and enhances the robustness of the model.

3.6. Evaluation Metrics

To evaluate the performance of our proposed method, we choose $mAP$ and the F-measure ($F1$) as the evaluation metrics for our experiments. The mean average precision ($mAP$) can be calculated from the precision $P$ and recall $R$, with the formulas for precision $P$ and recall $R$ as follows:

$$P = \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN}$$

where $TP$ and $FP$ denote the number of true positives and false positives, and $FN$ denotes the number of false negatives. A detection is counted as a true positive ($TP$) only when its Intersection over Union (IoU) with a ground-truth box is greater than the specified IoU threshold. With the IoU threshold fixed, sweeping the confidence threshold yields the precision–recall (PR) curve for each target category, and the area under this curve represents the average precision ($AP$). Each class $i$ corresponds to an $AP_i$, and the average value of these individual average precisions is denoted as $mAP$. The formulas for $AP$ and $mAP$ are as follows:

$$AP = \int_0^1 P(R)\,dR, \qquad mAP = \frac{1}{N}\sum_{i=1}^{N} AP_i$$

where $N$ denotes the total number of categories. In our paper, we set the IoU threshold for $mAP$ to 0.5. $F1$ represents the harmonic mean of $P$ and $R$. Its calculation is as follows:

$$F1 = \frac{2 \times P \times R}{P + R}$$
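The metrics in this section can be sketched in a few lines of Python. This is a minimal illustration, not the evaluation code used in the experiments: it assumes each detection has already been marked as a true or false positive at the chosen IoU threshold, uses all-point interpolation for the area under the PR curve, and all names and sample values are hypothetical.

```python
def precision_recall_f1(tp, fp, fn):
    """Precision, recall, and F1 from raw counts."""
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f1


def average_precision(scored_hits, num_gt):
    """scored_hits: (confidence, is_true_positive) per detection of one class."""
    ranked = sorted(scored_hits, key=lambda d: d[0], reverse=True)
    tp = 0
    points = []
    for n, (_, hit) in enumerate(ranked, start=1):
        tp += 1 if hit else 0
        points.append((tp / num_gt, tp / n))  # (recall, precision) after n detections
    ap, prev_recall = 0.0, 0.0
    for idx, (recall, _) in enumerate(points):
        # Interpolated precision: best precision achieved at this recall or higher.
        best = max(p for _, p in points[idx:])
        ap += (recall - prev_recall) * best
        prev_recall = recall
    return ap


def mean_average_precision(ap_per_class):
    return sum(ap_per_class) / len(ap_per_class)


# Hypothetical example: 3 detections of one class, 2 ground-truth objects.
p, r, f1 = precision_recall_f1(tp=8, fp=2, fn=2)
ap = average_precision([(0.9, True), (0.8, False), (0.7, True)], num_gt=2)
m_ap = mean_average_precision([ap, 1.0])
```

In the toy example the ranked detections give PR points (0.5, 1.0), (0.5, 0.5), and (1.0, 2/3), so the interpolated area is 0.5 × 1.0 + 0.5 × 2/3 = 5/6.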

4. Experiments and Results

In this section, we conduct a series of experiments to evaluate the performance of our model. Firstly, we introduce the dataset, environment configuration, and evaluation metrics used in our experiments. Next, we verify the effectiveness of our model through ablation experiments. Finally, we assess the generalization of our model using different datasets and compare the experimental results with popular generic object detection models as well as the latest SAR image object detection models to illustrate the advantages of our model.

4.1. Datasets

We selected three publicly available datasets to validate the effectiveness and generalization of our proposed method: the large-scale multi-class SAR target detection dataset (MSAR-1.0) [], the SAR Ship Detection Dataset (SSDD) [], and the High-Resolution SAR Image Dataset (HRSID) []. The statistics for these publicly available datasets are presented in Table 1.

Table 1.

Statistics of the three datasets.

- MSAR-1.0: MSAR-1.0 is a large-scale multi-class SAR image target dataset released in 2022, comprising 28,449 images that cover scenes such as airports, ports, nearshore areas, islands, open seas, and urban areas. The targets fall into four categories: 6368 aircraft, 29,858 ships, 1851 bridges, and 12,319 oil tanks []. As the official dataset does not specify a partition, we randomly divided the dataset into training, validation, and testing sets in a ratio of 7:2:1.

- SSDD: The SSDD dataset, released in 2017, comprises 1160 images, including 2546 ships. The ship targets in the SSDD dataset are categorized into three types: large, medium, and small, with small targets being the majority []. Following the official protocol, we divided the dataset into training and testing sets in a ratio of 8:2 for fair comparison.

- HRSID: The HRSID dataset, released in 2020, comprises 5604 images and 16,951 ships, with 98% of them being small to medium-sized targets, making it suitable for evaluating the model’s small object detection performance []. Following the official protocol, we divided the dataset into training and testing sets in a ratio of 6.5:3.5.
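A random partition such as the 7:2:1 split described for MSAR-1.0 can be sketched as follows. The function name, the fixed seed, and the rounding rule (floor for training and validation, remainder to testing) are assumptions; the source does not report how the split was drawn or the resulting subset sizes.

```python
import random


def split_dataset(items, ratios=(7, 2, 1), seed=0):
    """Shuffle items reproducibly and split them into train/val/test by ratio."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    total = sum(ratios)
    n_train = len(shuffled) * ratios[0] // total
    n_val = len(shuffled) * ratios[1] // total
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # the remainder goes to the test set
    return train, val, test


# Image indices stand in for the 28,449 MSAR-1.0 images.
train, val, test = split_dataset(range(28449))
```

Fixing the seed makes the partition repeatable across runs, which matters when results on a self-defined split are compared between models.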

4.2. Experiment Settings

To ensure the reliability and repeatability of the experiments, all experiments were conducted on computers equipped with an Intel(R) Xeon(R) Gold 6226R CPU and an NVIDIA GeForce RTX 4080 GPU (16 GB). The specific experimental setup is detailed in Table 2.

Table 2.

Experimental parameter settings.

4.3. Experiments on MSAR-1.0 Dataset

We selected the MSAR-1.0 as the primary dataset to validate the effectiveness of our proposed method. The MSAR-1.0 offers advantages such as richer dataset categories, a larger data volume, and diverse sizes of large scene images, making it more suitable for assessing the model’s ability to detect multiple classes and small objects. YOLOv8n was chosen as the baseline model for our method, and we conducted a series of ablation experiments on the MSAR-1.0 dataset. The results are presented in Table 3. These experimental findings demonstrate that our proposed method achieves remarkably high detection accuracy while maintaining the model’s lightweight design.

Table 3.

Results of ablation experiments on the MSAR-1.0 dataset.

We compared the LRMSNet model with current mainstream general object detection models, and the results are summarized in Table 4. From the data presented, it is evident that our model achieved competitive performance with fewer parameters and FLOPs. For instance, compared with YOLOX-s, we reduced the parameter count by 51.2% and FLOPs by 5.4%, while increasing mAP by 7.8%. Similarly, using the latest YOLOv8s as a reference, we managed to decrease the parameter count by 59.7% and FLOPs by 54.2%, with only a marginal decrease of 0.34% in mAP. These experiments highlight the highly competitive performance of the LRMSNet model while maintaining lightweight characteristics.
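
The percentage reductions quoted above are relative changes with respect to the reference model. The short sketch below shows that arithmetic; the function name `relative_change_percent` and the numbers in the example are illustrative assumptions and are not values from Table 4. We also assume that the mAP differences are stated in percentage points, whereas the parameter and FLOPs figures are relative percentages.

```python
def relative_change_percent(reference, ours):
    """Signed relative change of `ours` with respect to `reference`, in percent."""
    if reference == 0:
        raise ValueError("reference must be non-zero")
    return (ours - reference) / reference * 100.0


def percentage_point_gain(reference_map, our_map):
    """Absolute difference between two mAP values that are already in percent."""
    return our_map - reference_map


# Illustrative values only (not from Table 4): 10.0 M vs. 4.0 M parameters.
change = relative_change_percent(10.0, 4.0)
print(f"parameters: {change:+.1f}%")

# Illustrative mAP values in percent (not from Table 4).
gain = percentage_point_gain(90.0, 92.5)
print(f"mAP: {gain:+.1f} points")
```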

Table 4.

Comparison of different general object detection models based on the MSAR-1.0 dataset.

Compared with the latest lightweight SAR image object detection models, our proposed method also exhibits significant advantages, as demonstrated by the experimental results presented in Table 5. Our model achieved the best detection accuracy at the cost of only a small increase in parameters and FLOPs, reaching state-of-the-art (SOTA) accuracy relative to existing models. The experimental results on the MSAR-1.0 dataset illustrate that our proposed method achieves higher accuracy and better detection performance while keeping the parameter count and FLOPs low.

Table 5.

Comparison of different SAR image object detection models based on the MSAR-1.0 dataset.

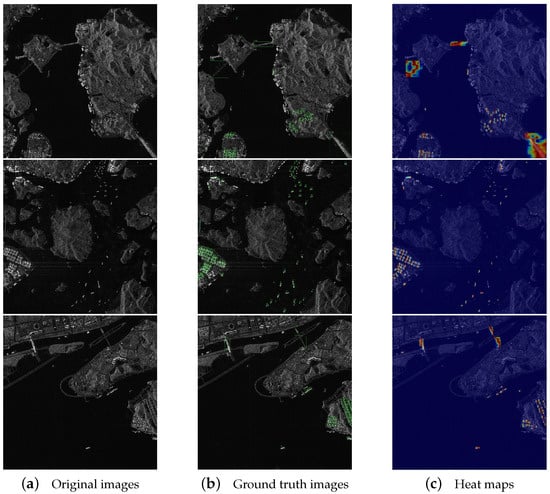

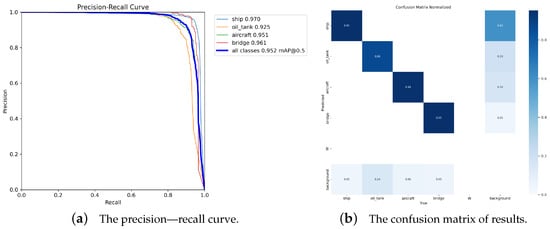

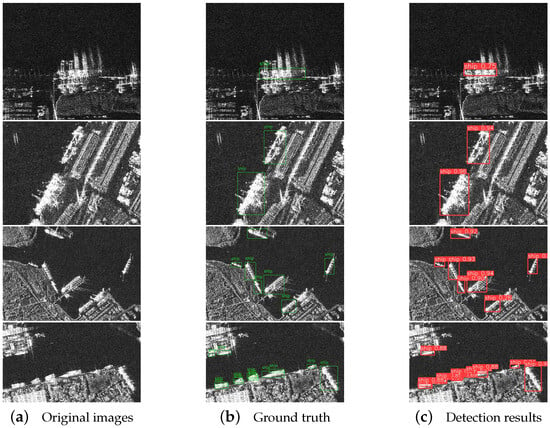

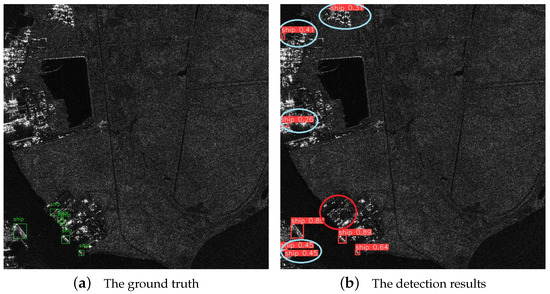

The following subsections give further experimental details on the results obtained on the MSAR-1.0 dataset and validate the effectiveness of each proposed component through ablation experiments. A comparison between our model’s detection results and the ground truth on the MSAR-1.0 dataset is shown in Figure 9. Additionally, Figure 10a shows the precision–recall curve of our model on the MSAR-1.0 dataset, and Figure 10b presents the corresponding confusion matrix.

Figure 9.

The visualization results and comparison experiments on the MSAR-1.0 dataset.

Figure 10.

The PR curve and confusion matrix of LRMSNet on the MSAR-1.0 dataset.
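
For readers less familiar with the metric, the area under a precision–recall curve such as the one in Figure 10a can be computed as sketched below. This is a generic all-point-interpolation sketch, not necessarily the exact evaluation code used in our experiments; the function names and the per-class values in the example are illustrative assumptions.

```python
def average_precision(recalls, precisions):
    """Area under a precision-recall curve using all-point interpolation.

    `recalls` must be sorted in non-decreasing order, with `precisions` aligned.
    """
    # Sentinel points at recall 0 and recall 1.
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    # Replace each precision by the maximum precision at any higher recall,
    # which makes the curve monotonically non-increasing.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Sum the rectangles between consecutive recall values.
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


def mean_average_precision(per_class_ap):
    """Mean of per-class AP values (a dict mapping class name to AP)."""
    return sum(per_class_ap.values()) / len(per_class_ap)


# Illustrative two-point curve: precision 1.0 up to recall 0.5, then 0.5.
ap = average_precision([0.5, 1.0], [1.0, 0.5])

# Illustrative per-class APs for the four MSAR-1.0 categories (not real results).
m_ap = mean_average_precision(
    {"aircraft": 0.5, "ship": 1.0, "bridge": 0.75, "oil tank": 0.75}
)
print(ap, m_ap)
```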

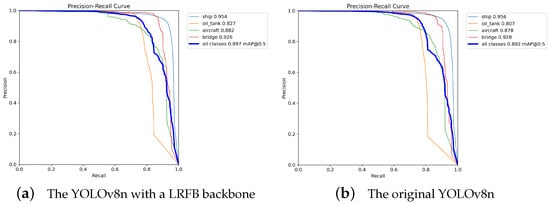

4.3.1. Influence of the LRFB Network on the Experimental Results

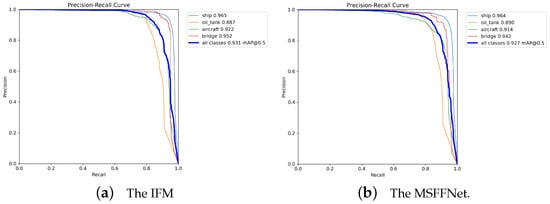

In this ablation experiment, we replaced the backbone network of YOLOv8n with our LRFB network while keeping the other modules unchanged. To ensure a fair comparison, we trained both models without pre-trained weights and used MSAR-1.0 as the dataset. The experimental results are summarized in Table 6. As shown, we achieved a 0.5% increase in mAP at the cost of an increase in parameter count of 0.50 M and in FLOPs of 1.2 G. These findings demonstrate that our backbone module enhances the detection accuracy of the model while keeping it lightweight, making it more suitable for target detection in large-sized SAR images. The precision–recall curves of the two models are shown in Figure 11.

Table 6.

The ablation experiment results of LRFB.

Figure 11.

The precision–recall curves of two different models on MSAR-1.0.

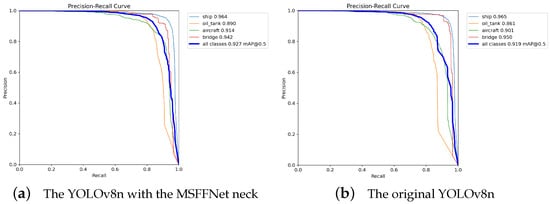

4.3.2. Influence of MSFFNet on the Experimental Results

To verify the effectiveness of our proposed Multi-Scale Feature Fusion Network (MSFFNet), we replaced the neck network of YOLOv8n with MSFFNet while keeping the other components unchanged, and trained the model after loading pre-trained weights. The experimental results are summarized in Table 7. As indicated, MSFFNet achieved a 0.8% increase in mAP at the cost of increasing the parameter count by 0.14 M and FLOPs by 1.4 G. These findings illustrate that MSFFNet can effectively fuse features and enhance the detection performance of the model without significantly increasing the number of parameters. The precision–recall curves of the two models are shown in Figure 12.

Table 7.

The ablation experiment results of MSFFNet.

Figure 12.

The precision–recall curves of two different models loaded with pre-trained weights on the MSAR-1.0 dataset.

4.3.3. Influence of IFM on the Experimental Results

In this section, we validate the effectiveness of the Information Fusion Module (IFM). As the IFM further fuses features on top of MSFFNet, we used the model whose neck network had been replaced with MSFFNet as the baseline for comparison. The experimental results are presented in Table 8, and the precision–recall curves of the two models are shown in Figure 13.

Table 8.

The ablation experiment results of IFM.

Figure 13.

The precision–recall curves of two different models on MSAR-1.0.

4.4. Experiments on SSDD and HRSID Datasets

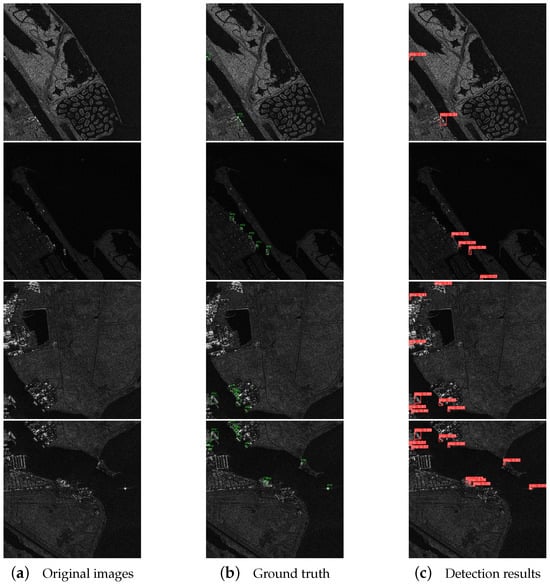

To verify the generalization of our model, we conducted experiments on two SAR ship detection datasets, SSDD and HRSID, and compared it with mainstream general object detection models and the latest SAR image object detection models. The experimental results are presented in Table 9 and Table 10. SSDD is currently one of the most common datasets in SAR ship detection tasks [], while HRSID is a high-resolution SAR image dataset for ship detection that can validate the feature extraction ability and robustness of our model. The detection results of our model on both datasets are illustrated in Figure 14 and Figure 15, while the precision–recall curves are depicted in Figure 16. These experimental results demonstrate that our model generalizes well across different datasets, delivering better detection performance with fewer model parameters and FLOPs.

Table 9.

Comparison of different object detection models based on the SSDD dataset.

Table 10.

Comparison of different object detection models based on the HRSID dataset.

Figure 14.

The detection results on the SSDD dataset.

Figure 15.

The detection results on the HRSID dataset.

Figure 16.

The PR curves on two different datasets.

5. Discussion

This paper proposes LRMSNet, a novel SAR image object detection network composed of three key components: a large receptive field backbone, an information fusion module, and a multi-scale feature fusion network. LRMSNet aims to address challenges such as complex background interference, multi-scale objects, and numerous small targets encountered in SAR image object detection. Through experiments on three diverse datasets and ablation studies, we validate the effectiveness of the individual modules of LRMSNet. In the ablation experiment on the MSAR-1.0 dataset, LRMSNet improves mAP by 3.3% and F1 score by 3% over the baseline. These experiments underscore the capability of LRMSNet to detect targets of varying scales within large-scale, complex scenes and showcase its generalization across different datasets. Compared with existing mainstream SAR image object detection models, LRMSNet is highly competitive: it achieves a 2.2% gain in mAP over the current leading SAR image object detection model on MSAR-1.0, reaching state-of-the-art (SOTA) performance. It also performs robustly on the SSDD and HRSID datasets.

However, LRMSNet still has certain limitations. As shown in Figure 17, it may struggle to accurately detect single-scale targets within complex scenes, leading to missed or false detections. Furthermore, LRMSNet could be made more lightweight: while its parameter count and FLOPs are relatively low compared to current mainstream detection models, there remains scope for further optimization. Addressing these limitations is a key direction for future research.

Figure 17.

Detection deficiencies of LRMSNet in complex SAR image backgrounds. The blue elliptical box represents over-detection, while the red elliptical box indicates missed detection.
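
The two error types marked in Figure 17 can be counted by matching predicted boxes to ground-truth boxes with an IoU threshold. The sketch below uses a greedy, score-ordered matching, which is a common convention and an assumption here rather than the exact procedure behind the figure; the function names, the 0.5 threshold, and the example boxes are illustrative.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def count_errors(predictions, ground_truth, iou_threshold=0.5):
    """Return (true_positives, false_detections, missed_detections).

    `predictions` is a list of (score, box); `ground_truth` is a list of boxes.
    Each ground-truth box can be matched by at most one prediction.
    """
    matched = set()
    tp = fp = 0
    # Higher-confidence predictions get the first chance to claim a target.
    for _, box in sorted(predictions, key=lambda item: item[0], reverse=True):
        best_iou, best_j = 0.0, None
        for j, gt_box in enumerate(ground_truth):
            if j in matched:
                continue
            overlap = iou(box, gt_box)
            if overlap > best_iou:
                best_iou, best_j = overlap, j
        if best_j is not None and best_iou >= iou_threshold:
            matched.add(best_j)
            tp += 1
        else:
            fp += 1  # over-detection: no sufficiently overlapping target
    missed = len(ground_truth) - len(matched)
    return tp, fp, missed


# Illustrative boxes: one correct detection, one false detection, one missed target.
gt_boxes = [(0, 0, 10, 10), (20, 20, 30, 30)]
preds = [(0.9, (0, 0, 10, 10)), (0.8, (50, 50, 60, 60))]
print(count_errors(preds, gt_boxes))
```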

6. Conclusions

In this paper, we introduce LRMSNet, a model tailored for detecting multi-scale targets in complex SAR image environments. First, we devised an attention mechanism, the GE block, capable of aggregating contextual information and effectively establishing long-range dependencies. Building upon this attention mechanism, we proposed a novel backbone network, LRFB, characterized by a large receptive field. This backbone network significantly enhances the extraction and fusion of target feature information in SAR images, thereby improving the model’s detection ability. Subsequently, we developed an aggregation module, IFM, which integrates feature layers from different levels. This module reintegrates three feature layers extracted from the backbone network, effectively combining spatial and semantic information from diverse feature layers. Finally, to mitigate feature information loss, we designed a new neck network, MSFFNet. Within this network structure, we redesigned the feature pyramid network and introduced a novel multi-scale feature extraction module, C3_EMA, which aggregates and extracts feature information from various levels for subsequent detection tasks. Experimental results across different datasets demonstrate LRMSNet’s strong performance while remaining lightweight, making it competitive with mainstream object detection models.

Author Contributions

Conceptualization, H.W. and Z.Z.; methodology, H.W. and W.G.; validation, H.W. and H.S.; writing—original draft preparation, H.W.; writing—review and editing, Z.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Natural Science Foundation of China under Grant 62271311 and Grant 62071333.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Ren, X.; Bai, Y.; Liu, G.; Zhang, P. YOLO-Lite: An Efficient Lightweight Network for SAR Ship Detection. Remote Sens. 2023, 15, 3771.

- Zhang, L.; Liu, Y.; Qu, L.; Cai, J.; Fang, J. A Spatial Cross-Scale Attention Network and Global Average Accuracy Loss for SAR Ship Detection. Remote Sens. 2023, 15, 350.

- Liu, C.; Chen, Z.; Shao, Y.; Chen, J.; Hasi, T.; Pan, H. Research Advances of SAR Remote Sensing for Agriculture Applications: A Review. J. Integr. Agric. 2019, 18, 506–525.

- Yang, B.; Zhang, H. A CFAR Algorithm Based on Monte Carlo Method for Millimeter-Wave Radar Road Traffic Target Detection. Remote Sens. 2022, 14, 1779.

- Lu, Z.; Wang, P.; Li, Y.; Ding, B. A New Deep Neural Network Based on SwinT-FRM-ShipNet for SAR Ship Detection in Complex Near-Shore and Offshore Environments. Remote Sens. 2023, 15, 5780.

- Conte, E.; De Maio, A.; Galdi, C. CFAR Detection of Multidimensional Signals: An Invariant Approach. IEEE Trans. Signal Process. 2003, 51, 142–151.

- Schwegmann, C.P.; Kleynhans, W.; Salmon, B.P. Manifold Adaptation for Constant False Alarm Rate Ship Detection in South African Oceans. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 3329–3337.

- Qin, X.; Zhou, S.; Zou, H.; Gao, G. A CFAR Detection Algorithm for Generalized Gamma Distributed Background in High-Resolution SAR Images. IEEE Geosci. Remote Sens. Lett. 2013, 10, 806–810.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of the Computer Vision—ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part I 14. pp. 21–37.

- Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollar, P. Focal Loss for Dense Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 318–327.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Li, J.; Qu, C.; Shao, J. Ship detection in SAR images based on an improved faster R-CNN. In Proceedings of the 2017 SAR in Big Data Era: Models, Methods and Applications (BIGSARDATA), Beijing, China, 13–14 November 2017; pp. 1–6.

- Zhao, J.; Guo, W.; Zhang, Z.; Yu, W. A Coupled Convolutional Neural Network for Small and Densely Clustered Ship Detection in SAR Images. Sci. China Inf. Sci. 2018, 62, 42301.

- Chang, Y.-L.; Anagaw, A.; Chang, L.; Wang, Y.C.; Hsiao, C.-Y.; Lee, W.-H. Ship Detection Based on YOLOv2 for SAR Imagery. Remote Sens. 2019, 11, 786.

- Jiang, J.; Fu, X.; Qin, R.; Wang, X.; Ma, Z. High-Speed Lightweight Ship Detection Algorithm Based on YOLO-V4 for Three-Channels RGB SAR Image. Remote Sens. 2021, 13, 1909.

- Hong, Z.; Yang, T.; Tong, X.; Zhang, Y.; Jiang, S.; Zhou, R.; Han, Y.; Wang, J.; Yang, S.; Liu, S. Multi-Scale Ship Detection From SAR and Optical Imagery Via A More Accurate YOLOv3. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 6083–6101.

- Xu, X.; Zhang, X.; Zhang, T. Lite-YOLOv5: A Lightweight Deep Learning Detector for On-Board Ship Detection in Large-Scene Sentinel-1 SAR Images. Remote Sens. 2022, 14, 1018.

- Zhou, K.; Zhang, M.; Wang, H.; Tan, J. Ship Detection in SAR Images Based on Multi-Scale Feature Extraction and Adaptive Feature Fusion. Remote Sens. 2022, 14, 755.

- Hu, Q.; Hu, S.; Liu, S. BANet: A Balance Attention Network for Anchor-Free Ship Detection in SAR Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–12.

- Tang, L.; Tang, W.; Qu, X.; Han, Y.; Wang, W.; Zhao, B. A Scale-Aware Pyramid Network for Multi-Scale Object Detection in SAR Images. Remote Sens. 2022, 14, 973.

- Miao, T.; Zeng, H.; Yang, W.; Chu, B.; Zou, F.; Ren, W.; Chen, J. An Improved Lightweight RetinaNet for Ship Detection in SAR Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 4667–4679.

- Zhao, S.; Zhang, Z.; Guo, W.; Luo, Y. An Automatic Ship Detection Method Adapting to Different Satellites SAR Images With Feature Alignment and Compensation Loss. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–17.

- Feng, Y.; Chen, J.; Huang, Z.; Wan, H.; Xia, R.; Wu, B.; Sun, L.; Xing, M. A Lightweight Position-Enhanced Anchor-Free Algorithm for SAR Ship Detection. Remote Sens. 2022, 14, 1908.

- Chen, Z.; Liu, C.; Filaretov, V.F.; Yukhimets, D.A. Multi-Scale Ship Detection Algorithm Based on YOLOv7 for Complex Scene SAR Images. Remote Sens. 2023, 15, 2071.

- Zhang, L.; Liu, Y.; Zhao, W.; Wang, X.; Li, G.; He, Y. Frequency-Adaptive Learning for SAR Ship Detection in Clutter Scenes. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–14.

- Zhou, Y.; Fu, K.; Han, B.; Yang, J.; Pan, Z.; Hu, Y.; Yin, D. D-MFPN: A Doppler Feature Matrix Fused with a Multilayer Feature Pyramid Network for SAR Ship Detection. Remote Sens. 2023, 15, 626.

- Wang, H.; Han, D.; Cui, M.; Chen, C. NAS-YOLOX: A SAR Ship Detection Using Neural Architecture Search and Multi-Scale Attention. Connect. Sci. 2023, 35, 1–32.

- Tang, G.; Zhao, H.; Claramunt, C.; Zhu, W.; Wang, S.; Wang, Y.; Ding, Y. PPA-Net: Pyramid Pooling Attention Network for Multi-Scale Ship Detection in SAR Images. Remote Sens. 2023, 15, 2855.

- Ultralytics. YOLOv8. Available online: https://github.com/ultralytics/ultralytics?tab=readme-ov-file (accessed on 7 March 2024).

- Cao, Y.; Xu, J.; Lin, S.; Wei, F.; Hu, H. GCNet: Non-Local Networks Meet Squeeze-Excitation Networks and Beyond. In Proceedings of the 2019 IEEE International Conference on Computer Vision Workshops (ICCVW), Seoul, Republic of Korea, 27–29 October 2019.

- Hu, J.; Shen, L.; Albanie, S.; Sun, G.; Wu, E. Squeeze-and-excitation networks. In Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

- Wang, X.; Girshick, R.; Gupta, A.; He, K. Non-Local Neural Networks. In Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 7794–7803.

- Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient Channel Attention for Deep Convolutional Neural Networks. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 11534–11542.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. Commun. ACM 2017, 60, 84–90.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer Using Shifted Windows. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021; pp. 10012–10022.

- Ding, X.; Zhang, Y.; Ge, Y.; Zhao, S.; Song, L.; Yue, X.; Shan, Y. UniRepLKNet: A Universal Perception Large-Kernel ConvNet for Audio, Video, Point Cloud, Time-Series and Image Recognition. arXiv 2023, arXiv:2311.15599.

- Wang, C.; He, W.; Nie, Y.; Guo, J.; Liu, C.; Han, K.; Wang, Y. Gold-YOLO: Efficient Object Detector via Gather-and-Distribute Mechanism. Adv. Neural Inf. Process. Syst. 2024, 36, 51094–51112.

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

- Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and Efficient Object Detection. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 10781–10790.

- Ouyang, D.; He, S.; Zhang, G.; Luo, M.; Guo, H.; Zhan, J.; Huang, Z. Efficient multi-scale attention module with cross-spatial learning. In Proceedings of the ICASSP 2023—IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023; pp. 1–5.

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path Aggregation Network for Instance Segmentation. In Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

- Xia, R.; Chen, J.; Huang, Z.; Wan, H.; Wu, B.; Sun, L.; Yao, B.; Xiang, H.; Xing, M. CRTransSar: A Visual Transformer Based on Contextual Joint Representation Learning for SAR Ship Detection. Remote Sens. 2022, 14, 1488.

- Zhang, T.; Zhang, X.; Li, J.; Xu, X.; Wang, B.; Zhan, X.; Xu, Y.; Ke, X.; Zeng, T.; Su, H.; et al. SAR Ship Detection Dataset (SSDD): Official Release and Comprehensive Data Analysis. Remote Sens. 2021, 13, 3690.

- Wei, S.; Zeng, X.; Qu, Q.; Wang, M.; Su, H.; Shi, J. HRSID: A High-Resolution SAR Images Dataset for Ship Detection and Instance Segmentation. IEEE Access 2020, 8, 120234–120254.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Feng, C.; Zhong, Y.; Gao, Y.; Scott, M.R.; Huang, W. TOOD: Task-aligned one-stage object detection. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021; pp. 3490–3499.

- Ultralytics. YOLOv5. Available online: https://github.com/ultralytics/yolov5 (accessed on 21 March 2024).

- Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. YOLOX: Exceeding YOLO Series in 2021. arXiv 2021, arXiv:2107.08430.

- Wang, C.-Y.; Bochkovskiy, A.; Liao, H.-Y.M. YOLOv7: Trainable Bag-of-Freebies Sets New State-of-the-Art for Real-Time Object Detectors. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–21 June 2023; pp. 7464–7475.

- Zhou, Z.; Chen, J.; Huang, Z.; Lv, J.; Song, J.; Luo, H.; Wu, B.; Li, Y.; Diniz, P.S.R. HRLE-SARDet: A Lightweight SAR Target Detection Algorithm Based on Hybrid Representation Learning Enhancement. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5203922.

- Chang, H.; Fu, X.; Dong, J.; Liu, J.; Zhou, Z. MLSDNet: Multiclass Lightweight SAR Detection Network Based on Adaptive Scale Distribution Attention. IEEE Geosci. Remote Sens. Lett. 2023, 20, 1–5.

- Du, W.; Chen, J.; Zhang, C.; Zhao, P.; Wan, H.; Zhou, Z.; Cao, Y.; Huang, Z.; Li, Y.; Wu, B. SARNas: A Hardware-Aware SAR Target Detection Algorithm via Multiobjective Neural Architecture Search. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–5.

- Li, J.; Chen, J.; Cheng, P.; Yu, Z.; Yu, L.; Chi, C. A Survey on Deep-Learning-Based Real-Time SAR Ship Detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 3218–3247.

- Feng, K.; Lun, L.; Wang, X.; Cui, X. LRTransDet: A Real-Time SAR Ship-Detection Network with Lightweight ViT and Multi-Scale Feature Fusion. Remote Sens. 2023, 15, 5309.

- Gao, S.; Liu, J.M.; Miao, Y.H.; He, Z.J. A High-Effective Implementation of Ship Detector for SAR Images. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).