Abstract

Arnica montana L. is a medicinal plant with significant conservation importance. It is crucial to monitor this species, ensuring its sustainable harvesting and management. The aim of this study is to develop a practical system that can effectively detect A. montana inflorescences utilizing unmanned aerial vehicles (UAVs) with RGB sensors (red–green–blue, visible light) to improve the monitoring of A. montana habitats during the harvest season. From a methodological point of view, a model was developed based on a convolutional neural network (CNN) ResNet101 architecture. The trained model offers quantitative and qualitative assessments of A. montana inflorescences detected in semi-natural grasslands using low-resolution imagery, with a correctable error rate. The developed prototype is applicable in monitoring a larger area in a short time by flying at a higher altitude, implicitly capturing lower-resolution images. Despite the challenges posed by shadow effects, fluctuating ground sampling distance (GSD), and overlapping vegetation, this approach revealed encouraging outcomes, particularly when the GSD value was less than 0.45 cm. This research highlights the importance of image clarity in low-resolution imagery, of the phenophase represented in the training data, and of training across different photoperiods to enhance model flexibility. This innovative approach provides guidelines for mission planning in support of reaching sustainable management goals. The robustness of the model can be attributed to the fact that it has been trained with real-world imagery of semi-natural grassland, making it practical for fieldwork with accessible portable devices. This study confirms the potential of ResNet CNN models for transfer learning to new plant communities, contributing to the broader effort of using high-resolution RGB sensors, UAVs, and machine-learning technologies for sustainable management and biodiversity conservation.

1. Introduction

1.1. Biodiversity Conservation for Semi-Natural Grasslands in Europe

The Convention on Biological Diversity (1992) and subsequent treaties and protocols have brought the sustainable management of ecosystems to the forefront of global challenges. The implementation of these sustainability protocols is achieved with knowledge-intensive management practices. Sustainable management of grasslands must conserve their ecosystem services, and these can be grouped into four types: supporting, provisioning, regulating, and cultural services [1]. The sustainability of natural and semi-natural grassland management is an unresolved issue, with arguments appearing from the global scale to the regional scale, and across multiple domains [2]. Accordingly, at the European level, grasslands represent a significant part of the ≈30% of total agricultural land that has been marked as being of “High Nature Value” (HNV) [3,4,5]. HNV systems are areas where agricultural activities maintain a higher level of biodiversity [6]. The primary challenges faced by these HNV grasslands include agricultural abandonment, intensified farming practices, and socio-economic decline [7]. Romania has 4.8 million ha of grasslands, 2 million of which are marked as HNV, representing ecosystems of the Carpathian arc [8]. The long-term traditional management of grasslands has created a great diversity of species and habitats [9]. To address these threats, it is essential to gather local knowledge about the environment, cultural practices, and socio-economic conditions [10]. Enhancing the economic significance of HNV grasslands is crucial for sustainable management and discouraging land use changes. Unfortunately, the existing European Union support programs are not sufficient for maintaining semi-natural grasslands [11], so new strategies need to be developed. One alternative is the sustainable harvesting of medicinal plants, with an added-value chain. HNV grasslands offer various economic prospects, including those related to medicinal plants. The southeastern European region is a significant source of medicinal plants [12]. However, realizing these opportunities necessitates deeper local and regional knowledge.

A successful example of integrating the agricultural use of HNV grasslands, harvesting of medicinal plants, resource management, and value-adding economic activity is the harvesting of Arnica montana Linné in the Apuseni Mountains, Romania [13].

1.2. A. montana—A Flagship Species of HNV Grasslands in Romania’s Apuseni Mountains

A. montana is used for its medicinal properties, such as its anti-inflammatory, antimicrobial, and cytotoxic effects. A. montana flowerheads, which are rich in sesquiterpene lactones, are the most pharmacologically investigated organ of the plant [14].

A. montana is a herbaceous perennial species from the Asteraceae family. It develops hardy rhizomes and a leaf rosette at the base of its 20–60 cm tall stem [15,16]. The plant’s large rosette leaves are ovate, rounded at the tip, and veiny, while the leaves attached to the upper stem are opposite, smaller, narrower, and spear-shaped. The A. montana inflorescence, a capitulum typical of Asteraceae, grows to approximately 5 cm in diameter and is ringed with long yellow ray (ligulate) florets, while disc (tubular) florets range in hue between yellow, golden-yellow, and yellow-orange. The anemochorous species depends on the wind to disperse small achenes equipped with a pappus organ. However, its most fertile achenes are dispersed only over short distances, highlighting the importance of conserving A. montana habitats [17]. Nardus stricta grasslands, in which A. montana grows, are officially protected in the European Union under the EU-FPH directive (92/43, Annex V, code 6230) [18]. A. montana is included in the Natura 2000 habitat network under the code 1762 [19] and is classified by the IUCN as being of “Least Concern” [20].

A. montana is native to the temperate biomes of Europe, growing in mountainous oligotrophic grassland ecosystems at altitudes of up to 3000 m [21]. The main subspecies (montana) grows in Central and Eastern Europe, while the other subspecies (atlantica) grows in South-West Europe [14,22,23,24]. Southeastern Europe is where important populations of A. montana are found [25] growing in oligotrophic and traditionally managed grasslands [26,27,28]. These semi-natural grasslands are dependent on traditional management, which adds further complexity to conservation efforts by integrating socio-economic aspects for community-based conservation [29,30,31,32]. Monitoring A. montana populations and their habitats is essential for assessing the long-term effects of harvesting on A. montana flowerheads as a resource [13,33]. Current monitoring activities in Romania are conducted only in the perimeter of the Gârda de Sus community, Apuseni Mountains. The entire area under monitoring covers 183.5 ha, representing 33.3% of the total area of A. montana habitats (550 ha) in the Gârda community. Future activity will be extended to the areas of the other communities, and this will require new and more efficient monitoring methods.

Sustainable harvesting of A. montana flowerheads necessitates estimating and monitoring the population size in this region.

1.3. The Use of Drone-Based Remote Sensing (RS) and Machine Learning for Detecting Small-Scale Patterns in Vegetation

Biodiversity conservation relies on data-driven management, but these efforts often lack adequate support, hindering the achievement of conservation goals. However, there is promising potential in UAVs and remote sensing technologies [34,35]. New hardware and software technology could be used to increase the power and efficiency of biodiversity monitoring by relying on small UAVs and RGB (red–green–blue, visible light) sensors to obtain aerial georeferenced imagery. Further improvements in the scale of analysis of aerial imagery could be achieved with the help of machine learning algorithms. In a review of the literature from 2020 regarding the use of UAVs for biodiversity conservation [36], the lead author found that small and low-cost UAVs or “conservation drones” [37] carrying a sensor payload—usually RGB cameras—can be successfully used for surveys of natural or semi-natural grasslands [38,39,40,41,42,43]. The key performance factor in these efforts was the GSD value of images—representing the level of detail.

A different factor to be considered is the effect of shadows because shadows are darkened pixels that distort the information used by algorithms in RS, and working with low-resolution imagery implies that every bit of useful information is needed. Shadow effects are a known problem, and there are different parallel efforts to develop tools for correcting these errors, even for vegetation shadows [44].

Forests are the more common focus in UAV and RGB sensor applications [45,46], with CNN models trained on imagery acquired from low-altitude drones generating accurate detections of trees [47]. Fromm et al. (2019) [48] showed that using CNN models (including ResNet101) with drone imagery works for detecting conifer seedlings. However, A. montana is at the scale of herbaceous vegetation, which is closer to the work of Li et al. (2023) [49], in which different CNN object detection models, trained on UAV imagery, were used successfully to count Brassica napus L. inflorescences. There was sufficient evidence overall to show that grassland vegetation may be a candidate for this detection challenge [50,51,52,53], with flower phenotypes and flowering timing being key aspects of this effort [54].

Automatic image object detection facilitates the rapid analysis of imagery regardless of the nature of the 2D content. The first powerful image classification and detection convolutional neural network (CNN) models were trained on large data sets like MS COCO [55], which contained many common objects, including flowers. The accuracy of automated plant classification, when compared with that conducted by humans, exhibited potential soon after the publication of these extensive models [56,57]. Furthermore, the models demonstrated success in structured and orderly environments, particularly when trained to identify floral characteristics from high-resolution imagery captured at low altitudes [58]. CNNs were shown [59,60] to be especially powerful for classification, while having generous tolerance for noisy training data [61]. Datasets represent the critical input for the development of these models, and there are collective efforts to build them [59] or to collect them from crowds of plant photography amateurs [62]. Heylen et al. (2021) [63] showed that it is possible to train a CNN model with RGB imagery with good accuracy for counting Fragaria × ananassa Duch. flowers. Karila et al. (2022) [64] also compared different CNN models, trained on UAV multi-sensor imagery, to successfully assess fresh and dry biomass and other grassland agronomic quality indicators. A similar effort was undertaken by Gallmann et al. (2022) [65], who used R-CNN model architecture to detect the number of inflorescences in several grassland species simultaneously. In a pre-print, John et al. (2023) [66] used mask R-CNN models, trained with crowd-sourced and ground-based imagery of sixteen flowering meadow species from handheld cameras, to successfully detect and count the species in similar conditions.

1.4. Main Objective

In order to fulfill the objectives of monitoring, it is imperative to conduct surveys across hundreds of hectares of grasslands during each flowering season of A. montana, all within the constraints of a limited budget. The monitoring strategy necessitates a delicate equilibrium between the extent of grassland surveys, the temporal window of the season, and the limitations of management resources. The incorporation of aerial surveys, utilizing unmanned aerial vehicle (UAV)-based imagery, introduces additional constraints, including weather conditions, natural lighting conditions, and the resolution of the target imagery. The acquisition of high-resolution details in the imagery is a valuable objective in this process, yet it imposes restrictions on the available coverage using the UAV. This necessitates the development of a methodology to utilize imagery with lower-resolution details.

Planning for low-resolution imagery allows for an increase in the flight altitude relative to the ground, thereby enabling greater coverage and facilitating a balance between the quantity of monitoring, the quality of monitoring, and the cost of monitoring. The primary goal is to develop a comprehensive and practical system that can effectively utilize UAVs and RGB sensors in the monitoring of A. montana during harvest season. The specific objectives of this study involve examining the potential of a CNN object detection model in identifying flowerheads in semi-natural grassland environments using low-resolution imagery, as well as evaluating the impact of various technological factors on the accuracy of the CNN model. The automatic detection application must be standalone and usable on site. This approach pertains specifically to flowering A. montana plants and excludes first-year plants. Additionally, the study does not encompass the detection of multiple flowerheads on a single A. montana plant.

2. Materials and Methods

2.1. Image Acquisition Approach

The training and evaluation imagery was generated through UAV surveys undertaken in two different regions. Specifically, the training imagery was obtained from a grassland at Todtnau in the Black Forest, Germany, whereas the evaluation imagery originated from various grasslands located in the Apuseni Mountains around Ghețari, Gârda de Sus commune, Romania. Notably, the distance between these two locations exceeds 1100 km, which ensures phenotypical variations among the A. montana populations. Despite the distance, the habitats share similarities [67]. Table 1 presents the general survey parameters for both areas mentioned above.

Table 1.

Survey broad parameters: area, UAV, camera, image size, and flight altitude range. MP—camera sensor size in megapixels.

2.2. Research Locations

2.2.1. Germany

The studied A. montana habitat is a semi-natural oligotrophic grassland of the type Nardo-Callunetea, situated within the Gletscherkessel Präg reservation, in Todtnau, in the Black Forest region of Germany. The habitat has characteristics that are analogous to those of the locations in Ghețari [67]. Drone-based RGB imagery from this location was used exclusively for CNN model training data.

2.2.2. Romania

The research locations in Romania are situated within the commune of Gârda de Sus, on the northern side, between the villages of Ghețari and Poiana Călineasa. Several oligotrophic grassland ecosystems exist in and around Ghețari. From this point forward, “Ghețari” will be used as a shorthand for these locations as a group. Drone-based RGB imagery from this location was used exclusively for evaluating the precision of the trained CNN model.

The topographical relief of the area of Ghețari is complex, with slopes oriented in various directions, and altitudes ranging between 1100 and 1400 m. The morphological type is karst plateau, with advanced flattening in certain places. The petrographic profile of the area consists of soil-generating groups of compact rocks (limestone, sandstone, etc.) and fewer loose rocks, with mature pedogenetic processes producing loamy Terra fusca soils [68,69]. According to the research weather station at Ghețari, the multi-year average temperature was around 5.8 °C for the 2000–2023 period. Rainfall varies considerably from one place to another, with a multi-year average of 931.7 mm for the 2000–2023 period.

The oligotrophic grasslands below the climatic tree line are the result of historical deforestation [31,70,71,72]. These oligotrophic grasslands are rich in plant diversity and listed in Annex I of the Natura 2000 program of the European Union as “Nardus stricta grasslands” (code R 6230) and “mountain hay meadows” (code R 6520). A. montana occurs in grassland types within a floristic and edaphic gradient from the nutrient-poor, oligotrophic Festucetum rubrae to the oligo-mesotrophic Molinio-Arrhenatheretea (Festuca rubra-Agrostis capillaris) [9,32,73]. A. montana can be considered an indicator species associated with other species like Polygala vulgaris L., Gentianella lutescens Velen., Scorzonera rosea Waldst. et Kit., Pilosella aurantiaca (L.) F.W. Schultz et Sch.Bip (Hieracium aurantiacum L.), Viola declinata Waldst. et Kit., Crocus heuffelianus Herb., Gymnadenia conopsea (L.) R.Br., and Traunsteinera globosa (L.) Rchb. 1842 [74].

2.3. Aerial Surveys—Critical Flight Conditions

The flight missions aimed to closely follow the terrain surface using 3D waypoint plans and built-in drone capabilities. Relative altitude is important due to the study area being a mountainous semi-natural grassland with uneven topographical features and a small number of dispersed trees that are a hazard for very-low-altitude flights.

Ground sampling distance (GSD) is a commonly used parameter in photogrammetry. GSD measures the size of an area on the ground as represented by a single pixel in the image captured by the camera attached to the drone. This factor is not immediately obvious in technical specifications and must be calculated. It is a function of flight altitude, camera sensor size, image size in pixels, and lens focal length [75]: it increases with altitude and sensor size and decreases with focal length and pixel count. Lower GSD values imply that a smaller area is represented by each pixel, so there is more detail in the image. Higher GSD values imply that a larger area is represented by each pixel, so there is less detail in the image. As the object detection model uses details to learn, the more details there are, the better it can learn and accurately predict. It is tempting to decrease drone flight altitude to obtain lower GSD values, but this may not be useful in fieldwork because it limits the rate of land surveying. Average GSD values for each UAV mission are listed in Table 2 and Table 3. The photogrammetry software “Pix4D Mapper 4.7” was used to obtain average GSD values for each aerial survey as part of the orthomosaic building process.
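The relationship between flight altitude and GSD can be illustrated with the standard nadir-camera approximation. The following is a minimal sketch, not the computation performed by Pix4D Mapper; the function name and the camera values in the usage lines are hypothetical and do not correspond to the cameras listed in Table 1.

```python
def ground_sampling_distance_cm(altitude_m, sensor_width_mm,
                                focal_length_mm, image_width_px):
    """Approximate GSD in cm per pixel for a camera pointing straight down.

    Standard approximation: GSD = (sensor width * altitude) /
    (focal length * image width). Terrain relief and lens distortion
    are ignored.
    """
    values = (altitude_m, sensor_width_mm, focal_length_mm, image_width_px)
    if min(values) <= 0:
        raise ValueError("all inputs must be positive")
    altitude_cm = altitude_m * 100.0
    return (sensor_width_mm * altitude_cm) / (focal_length_mm * image_width_px)


# Hypothetical camera (assumed values, for illustration only):
# 13.2 mm sensor width, 8.8 mm focal length, 5472 px image width.
for altitude in (10, 20, 40):
    gsd = ground_sampling_distance_cm(altitude, 13.2, 8.8, 5472)
    print(f"{altitude} m above ground: {gsd:.3f} cm/px")
```

Because GSD scales linearly with relative altitude in this approximation, doubling the altitude doubles the GSD, which is the trade-off between image detail and survey coverage discussed above.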

Table 2.

Summary descriptions of aerial surveys for training in Todtnau. The GSD value was computed by “Pix4D Mapper”.

Table 3.

Summary descriptions of aerial surveys for evaluation in Ghețari. The ID number represents series order. The GSD value was computed by “Pix4D Mapper”. Some image count values are approximated due to inconsequential data loss.

In computer vision technology and RS, every pixel matters, and shadows can affect colors and textures [44,76,77,78]. To consider this factor, the takeoff time was used to obtain a retrospectively calculated value for shadow length. During the day, the length of the shadow cast by sunlight increases or decreases depending on the solar angle. An astronomical tool called “SunCalc” [79] was used to calculate the shadow length for each area based on the flight time, for an object height of 30 cm, representing the average height of A. montana plants. This fixed value, combined with different solar angles, projected shadows of different lengths depending on time, date, and place. Shadow length values for each UAV mission are listed in Table 2 and Table 3.
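The geometric step behind the shadow length can be sketched as follows, assuming flat ground and a solar altitude angle that has already been obtained for the flight time, date, and place (for example, from a tool such as SunCalc). The function name is hypothetical, and the angles in the usage lines are illustrative rather than values from the surveys.

```python
import math


def shadow_length_m(object_height_m, solar_altitude_deg):
    """Shadow length on flat ground for a vertical object.

    Uses length = height / tan(solar altitude). The solar altitude is the
    angle of the sun above the horizon, in degrees.
    """
    if not 0 < solar_altitude_deg <= 90:
        raise ValueError("solar altitude must be in (0, 90] degrees")
    return object_height_m / math.tan(math.radians(solar_altitude_deg))


# 0.30 m is the plant height used in the text; the angles are assumed.
for angle in (30, 45, 60):
    print(f"sun at {angle} degrees: {shadow_length_m(0.30, angle):.2f} m")
```

A lower sun produces a longer shadow for the same plant height, which is why flights at different times of day yield different shadow length values.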

The images for training, from Germany, belong to four aerial surveys carried out on 14 June 2018 (Table 2). Flight conditions were sunny with a mostly cloudless sky, and the flight times ranged from late morning to around noon.

The evaluation imagery from Romania was acquired in 2021 from 9 aerial surveys, 6 in the last week of June and 3 in the first week of July (Table 3), with similar weather conditions to the training ones, and using the terrain following feature of the drone.

2.4. Training the Model—Inputs and Configuration

Image and data processing, model training, model evaluation, and other supporting operations were conducted on a workstation operating with “Microsoft Windows”, in a “Python 3.6” environment. The training effort relied on a CUDA-enabled graphics card.

The data for training resulted from a common pool of images corresponding to the four flights, from which 155 images were randomly selected to be part of the training set, ensuring a large variety of instances of A. montana inflorescences in a wide GSD range [80].

For data processing, the “SSD ResNet101” object detection base model was chosen from the “TensorFlow2” (TF2) “Detection Model Zoo” list [81,82]. There were several reasons for this choice: a closer fit to the training data images, good performance balance between the mean average precision (mAP) score (39.5) and speed (104 ms) [82,83], and evidence that it worked well with low-resolution or distorted imagery [84,85,86] or small vegetation [48].

The next step consisted of the preparation of images for annotation with labels. The preparation of training data started with the division of the original images into pieces with a fixed width and height, an approach known as “patch-based”. This process resulted in 4309 new image files, each named after its original file with a serialized unique suffix. The division process was executed with a custom Python 3.6 program. Images that fit on an average desktop screen may be easier to annotate with labels than high-resolution images that require scaling and scrolling to reach the visible portions.
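The division into patches can be sketched as below. This is not the custom program used in the study: the patch size, the file naming pattern, and the handling of image edges (partial patches are clipped rather than discarded) are assumptions made for illustration, and the function names are hypothetical.

```python
import os


def tile_boxes(image_width, image_height, tile_width, tile_height):
    """Return (left, upper, right, lower) pixel boxes in row-major order.

    Assumption: patches at the right and bottom edges are clipped to the
    image bounds, so they may be smaller than the nominal patch size.
    """
    boxes = []
    for upper in range(0, image_height, tile_height):
        for left in range(0, image_width, tile_width):
            right = min(left + tile_width, image_width)
            lower = min(upper + tile_height, image_height)
            boxes.append((left, upper, right, lower))
    return boxes


def tile_name(original_name, index):
    """Build a patch file name from the original name and a serial suffix."""
    stem, extension = os.path.splitext(original_name)
    return f"{stem}_{index:03d}{extension}"


# Hypothetical 5472 x 3648 px image split into 1024 x 1024 px patches.
boxes = tile_boxes(5472, 3648, 1024, 1024)
for index, box in enumerate(boxes[:3]):
    print(tile_name("DJI_0001.JPG", index), box)
```

Each box could then be passed to an image library's crop function to write the patch to disk; keeping the original file name in the patch name allows detections to be traced back to the source image.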

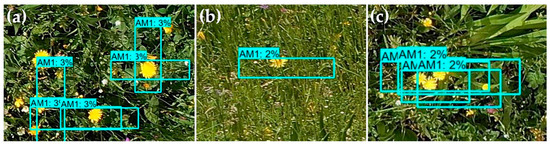

Label names were established as two categories with eight subcategories each. The top-level categories, clear (AM) and blurry (BAM), each had 8 homologous labels: AM0, AM1, AM2, AM3, AM4, AM5, AM10, and AMN, and BAM0, BAM1, BAM2, BAM3, BAM4, BAM5, BAM10, and BAMN, respectively, to mark solitary or grouped inflorescences (Figure 1). AM0 and BAM0 were assigned for half inflorescences. AM1 and BAM1 were assigned to distinct single inflorescences, the main targets of detection. AMN and BAMN were assigned as a catch-all label for ambiguous groups of inflorescences. Labels were allowed to overlap, so that the group labels could include smaller labels, but this practice is optional if the images contain an abundance of target flowers, and labeling does not have to be exhaustive. Distinguishable inflorescences of all sizes were marked with labels.

Figure 1.

Samples from the program’s user interface from two different images: (a) an example of the challenge of labeling blurry or minimum-resolution inflorescences; (b) an example of labeling clear but low-resolution inflorescences. Labels were edited to be more visible. Each label is rectangular, with its corners marked with red circles. The label text is colored violet (BAM1) or light blue (AM1).

As this study represents a prototyping effort, only AM1, BAM1, and BAM2 instances were included in the CNN model and annotated at a large scale across the whole training image set, while the rest remained as prospective presets for future studies.

In the next step, the annotated set of image and label files was randomly separated into a training set with 90% of the files and a testing set with the remaining 10%. The training set contained 4914 label instances of AM1, 13,226 label instances of BAM1, and 3209 label instances of BAM2 (Table 4).
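A random 90/10 separation of this kind can be sketched as follows. The function name, the fixed seed, and the use of file stems to keep each image together with its label file are assumptions for illustration, not details reported for the study.

```python
import random


def split_train_test(file_stems, test_fraction=0.10, seed=42):
    """Randomly split file stems into (train, test) lists.

    Splitting on the shared stem keeps an image and its label file in the
    same subset. The seed is fixed only to make the sketch reproducible.
    """
    stems = sorted(file_stems)
    random.Random(seed).shuffle(stems)
    n_test = round(len(stems) * test_fraction)
    return stems[n_test:], stems[:n_test]


# Hypothetical patch names; the real set contained 4309 patch files.
stems = [f"patch_{i:04d}" for i in range(100)]
train, test = split_train_test(stems)
print(len(train), "training files,", len(test), "testing files")
```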

Table 4.

Training label counts and label size statistics for each implemented label class. Area is measured in pixels (px) or megapixels (Mpx).

Using TensorFlow2 (TF2) as a platform for machine learning, the training configuration (the model’s “pipeline.config”) was based on the TF2 documentation [87,88]. Adjustments were made to manage the hardware capabilities of the workstation. The SSD image resizer property was set to a fixed size of 1024 × 1024 pixels. Maximum detection boxes per image were limited to 100 per class for testing purposes. Training configuration data augmentation options included a random horizontal flip and random image crop. Optimizer properties were set to 50,000 total training steps for the learning rate schedule, with 2000 warmup steps. Overall, the default configuration settings were preserved to maintain predictability and increase difficulty, thus allowing the quality and quantity of training data (images and labels) to carry larger significance in the object detection results.
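The role of the total and warmup step counts can be illustrated with a warmup followed by cosine decay, a schedule commonly used in SSD training configurations. Whether this exact schedule was used here is an assumption; the step counts below come from the text, while the base and warmup learning rates are assumed values and the function name is hypothetical.

```python
import math


def cosine_decay_with_warmup(step, base_lr, total_steps,
                             warmup_lr, warmup_steps):
    """Learning rate at a given step: linear warmup, then cosine decay."""
    if step < warmup_steps:
        # Linear ramp from warmup_lr up to base_lr.
        return warmup_lr + (base_lr - warmup_lr) * step / warmup_steps
    progress = (step - warmup_steps) / (total_steps - warmup_steps)
    return 0.5 * base_lr * (1.0 + math.cos(math.pi * min(progress, 1.0)))


# 50,000 total steps and 2000 warmup steps as stated in the text;
# base_lr and warmup_lr are assumed for illustration.
for step in (0, 1000, 2000, 26000, 50000):
    lr = cosine_decay_with_warmup(step, base_lr=0.04, total_steps=50000,
                                  warmup_lr=0.0133, warmup_steps=2000)
    print(step, round(lr, 5))
```

Under these assumptions the rate rises quickly during warmup, peaks at the base value, and then declines smoothly to zero at the final step.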

2.5. Evaluation of the Model

Evaluation of the performance of the new model was achieved by manually calculating the precision: true positive A. montana detections in relation to the total reported detections. Recall, intersection over union (IoU), and mAP were not assessed. The evaluation imagery produced in Ghețari, Romania, was randomly sampled to provide a consistently large collection of images from each aerial survey. Images that did not contain any A. montana flowers were removed from the set to help focus only on true positives.

To allow for more model errors with the higher-difficulty goal, the threshold for the detection score was decreased to 0.2; this increase in error tolerance produced more detections (positives), but also more false positives, which reduced precision.
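The effect of the score threshold on precision can be sketched as below. In the study the true and false positives were judged manually; here each detection is represented as a hypothetical (score, is_true_positive) pair, and the function name and sample values are illustrative only.

```python
def precision_at_threshold(detections, score_threshold=0.2):
    """Precision = true positives / all reported detections.

    detections: iterable of (score, is_true_positive) pairs, where the
    boolean stands in for the manual judgment of each detection box.
    Returns a fraction in [0, 1], or None if nothing passes the threshold.
    """
    kept = [is_tp for score, is_tp in detections if score >= score_threshold]
    if not kept:
        return None
    return sum(kept) / len(kept)


# Hypothetical detections for one image.
sample = [(0.90, True), (0.50, True), (0.25, False), (0.10, False)]
print(precision_at_threshold(sample, 0.5))
print(precision_at_threshold(sample, 0.2))
```

In this example, lowering the threshold admits one additional detection, which happens to be a false positive, so the number of reported detections rises while precision falls.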

The detections were automatically reported and aggregated for each used label (AM1, BAM1, and BAM2) and every image. The relationships between precision, GSD, and shadow size were investigated with statistical measures in order to understand the role of the independent variables in the precision outcome [89].

3. Results

3.1. Image and Label Dataset

Table 4 describes the total amount of label instances provided for the training of the ResNet101 base model, and the surface area (size in px) aggregated for each label class. Label classes with no annotations were not included.

The analysis of the surface area corresponding to each label class shows that most label instances have a small size. The size distribution of the training label area size for AM1 has a median value of 810 px (<29 × 29 px), the distribution for BAM1 has a median value of 648 px (<26 × 26 px), and the distribution for BAM2 has a median value of 1118 px (<34 × 34 px). This type of subsampling analysis provides a way to understand the extent of A. montana inflorescences used to train the model, and it indicates what type of data should be provided for future training efforts.
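The square sizes quoted in parentheses follow from the median areas by taking the square root and rounding up. A minimal sketch of that arithmetic, with a hypothetical function name:

```python
import math


def enclosing_square_side(area_px):
    """Smallest whole-pixel side length whose square is at least area_px."""
    return math.ceil(math.sqrt(area_px))


# Median label areas reported in the text for each class.
for label, median_area in (("AM1", 810), ("BAM1", 648), ("BAM2", 1118)):
    side = enclosing_square_side(median_area)
    print(f"{label}: {median_area} px fits within {side} x {side} px")
```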

Given a recommended minimum label area size of 64 px (8 × 8 px), the limits imposed on precision should be kept in mind: training focused on lower-resolution imagery and higher-difficulty detection, with the further goal of maximizing flight altitude and coverage for drone surveys.

3.2. Post-Training Analysis

Inspection of the model training log, generated by the TF training process and converted into data for the internal inspection tool (“TensorBoard”), showed that the new ResNet model was trained successfully. Descriptive statistics for these machine learning indicators are reported in Table 5.

Table 5.

Learning and loss indicators from model training.

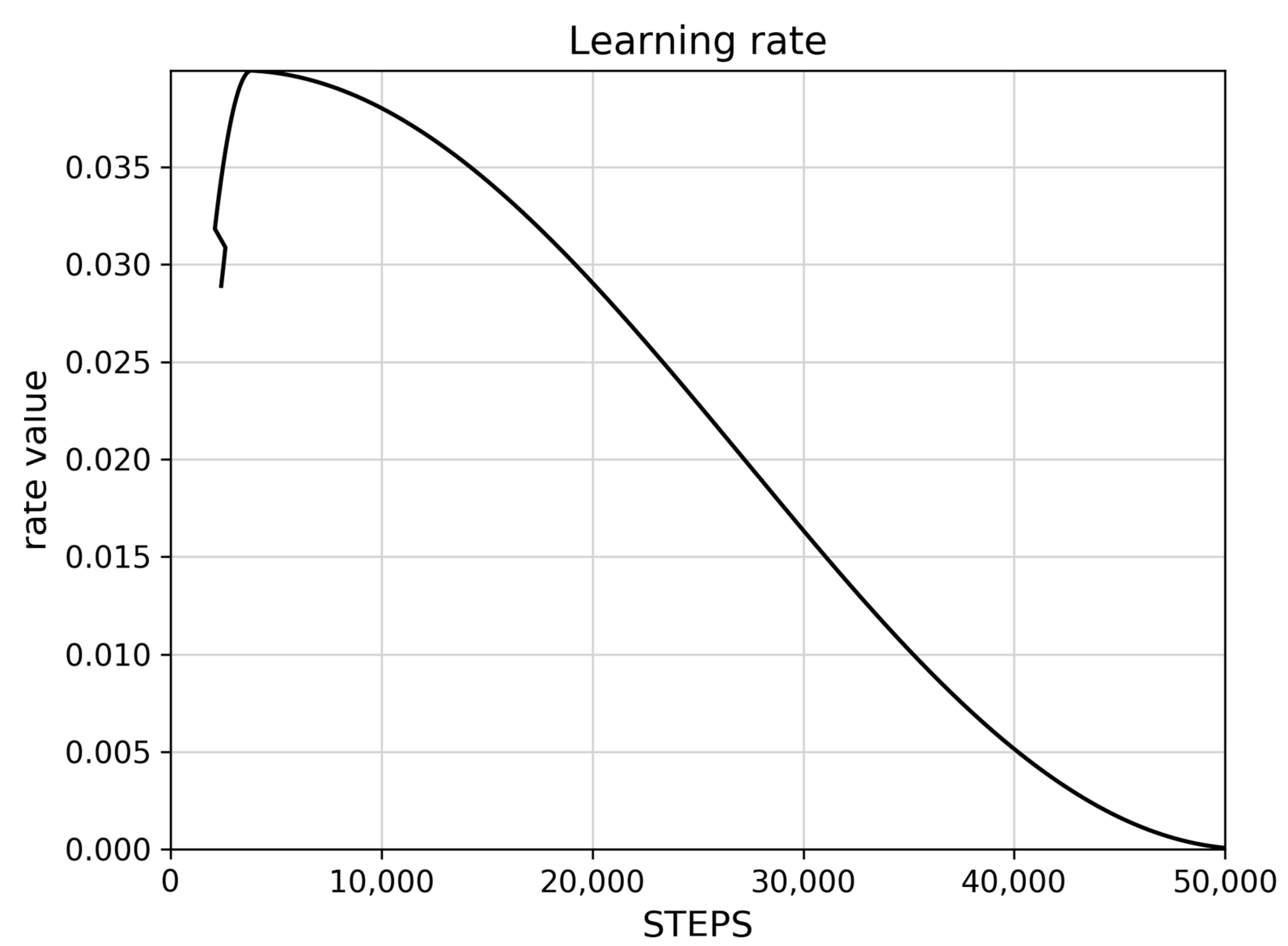

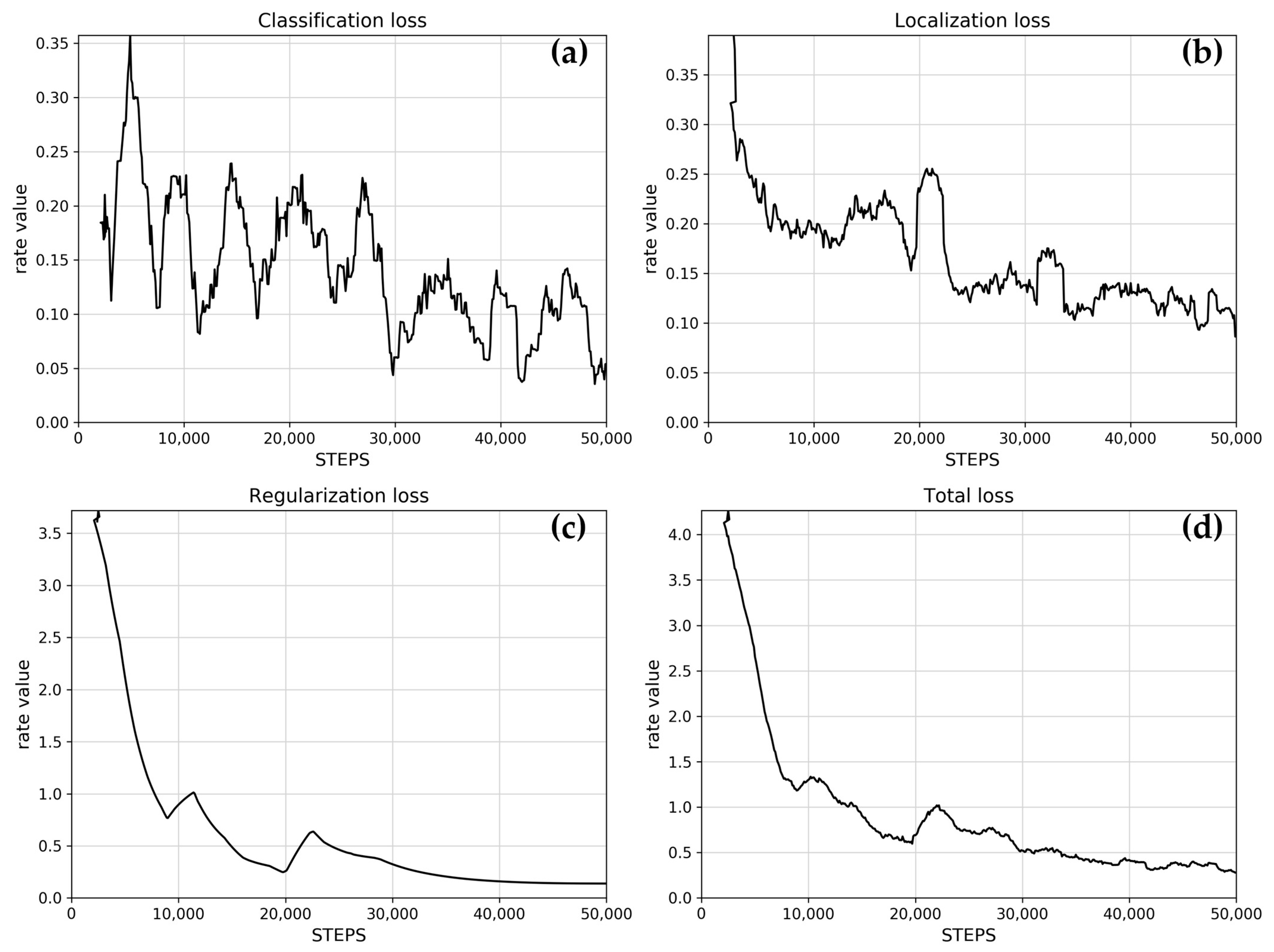

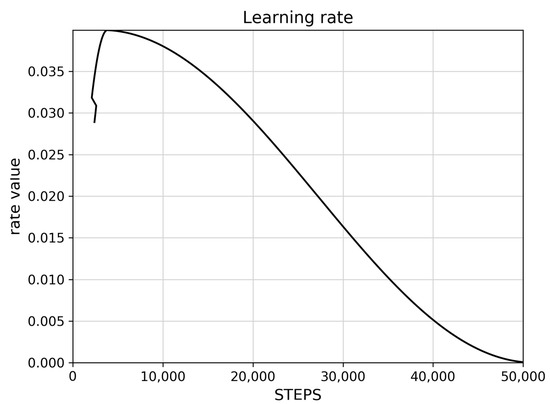

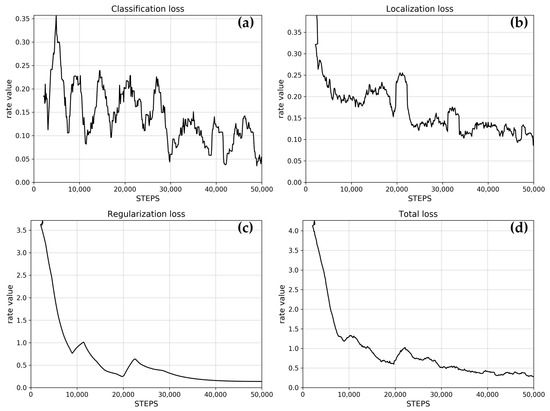

The learning rate controls how much the model updates its weights based on new data. Figure 2 shows a typical warmup-and-decay profile: the rate initially increases rapidly, reaches a peak, and then slowly decreases until it reaches zero. Figure 3 shows the reported loss indicators. The classification loss rate measures how well the model distinguishes between different objects and different A. montana classes, and the localization loss rate measures how well the model predicts the bounding box around an object such as an A. montana inflorescence.

Figure 2.

Learning rate for trained SSD ResNet101 model.

Figure 3.

Loss indicators: (a) classification loss, (b) localization loss, (c) regularization loss, and (d) total loss. Data values are presented as a rolling mean (window: 25).

The trained model shows classification and localization loss rates trending below 0.5, toward zero, which is typically a reliable result.

The regularization loss rate penalizes overly complex models, preventing overfitting, which improves the quality of learning. The trained model shows an early drop in the rate, which is a typically good outcome.

The total loss rate is the sum of classification, localization, and regularization loss rates, with the regularization loss making up most of the total in this training run. The loss rate shows a typically good trend toward zero.

Classification and localization loss curves were promising, but not ideal, which implies a decrease in overall performance (Figure 3a,b). Overall, the post-training inspection confirmed that the training process was successful and that there were sufficient training data, images, and labels to provide the base ResNet model with the necessary information to learn to detect A. montana inflorescences.

3.3. Detection Box Qualities

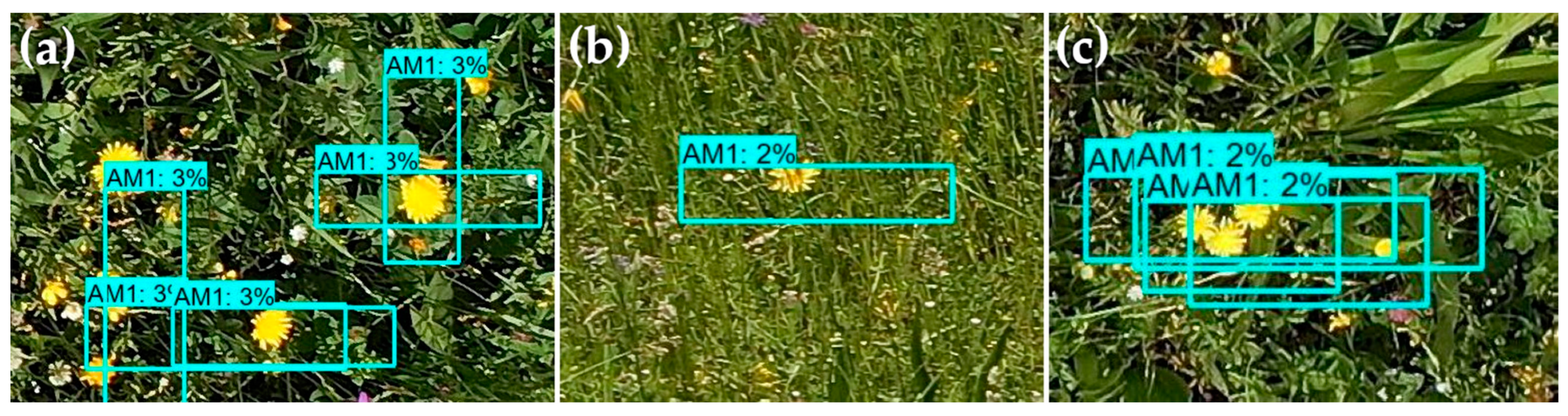

The samples in Figure 4 show how the model can detect clear A. montana individual inflorescences at different angles, at resolutions ranging from very low to medium, and even with some occlusions such as grass stems or leaves. The images also hint at the model’s fit for the edges of the A. montana inflorescence, which is where most of the detail exists as the ligulate flowers contrast with the background.

Figure 4.

Sample of AM1 detections. (a) Multiple TP detections with overlapping boxes; (b) single TP detection with some shadows and occlusions; (c) multiple TP detections with inflorescences forming a group.

Overlapping detection (bounding) boxes containing A. montana inflorescences were observed frequently, with the boxes extending to areas around the inflorescence, while the actual A. montana inflorescence was not as neatly centered as in the labels from the training dataset. Figure 4 shows a sample of true positive (TP) detections with excessively large and overlapping boxes.

3.4. Detection Precision

A randomly sampled subset was created from each evaluation set of images (from aerial surveys in Ghețari, Table 3). The precision was manually evaluated for each image in the subset (true positive predictions out of all predictions). Since the only detected label class was the main candidate, AM1, the precision reporting focused on it. The precision value was also averaged for the entire set for each aerial survey (Table 6).

Table 6.

Results for single inflorescence detection (AM1) in evaluation imagery (Ghețari).

For JUN1, there were 1570 detections in total, with a standard deviation (SD) of 29.28 and a mean precision of 51.23. For JUN2, there were 1648 detections, with a SD of 33.65 and a mean precision of 49.05. For JUN3, there were 1450 detections, with a SD of 32.14 and a mean precision of 44.92. For JUN4, there were 1470 detections, with a SD of 15.82 and a mean precision of 51.62, the highest mean precision. For JUN5, there were 265 detections, with a SD of 3.37 and a mean precision of 45.75. For JUN6, there were 911 detections, with a SD of 15.09 and a mean precision of 16.03. For JUL1, there were 65 detections, with a SD of 2.93 and a mean precision of 10, the lowest survey mean precision. For JUL2, there were 111 detections, with a SD of 2.22 and a mean precision of 10.54. For JUL3, there were 237 detections, with a SD of 8.29 and a mean precision of 12.75 (Table 6).

The model did not detect BAM1 despite there being more label instances (see Table 4). Group labels that were part of the training data were not detected at all (BAM2). The model did not detect any blurry A. montana inflorescence label instances (BAM).

For the clear (sharp) A. montana inflorescence labels, the training data only contained individual inflorescence labels (AM1), so detections were only from the AM1 class of labels. As observed in Figure 4, groups of A. montana inflorescences are a challenge in terms of detecting and placing bounding boxes, but this also shows that the model tried to identify individual inflorescences in groups. However, visual decomposition of an overlapping bundle of A. montana inflorescences, which is a natural phenomenon, is unlikely to be successful, because it is difficult to achieve even with the human eye.

Blurry inflorescences remained undetected. Likely, the low-resolution images did not provide the ResNet model with enough features to detect them. However, since all label classes were compared during the learning process (classification loss), blurry labels forced the model to look for sharper edges for the AM1 label. This can improve performance, but it may cause overfitting too.

3.5. Correlations between Precision, GSD, and Timing

3.5.1. Key Aerial Survey Factors

The precision value for each sample set of aerial imagery of A. montana presented visible variability (Table 7), and the characteristics of each flight may reveal the effects of some factors on the performance of the trained model. Since the same drone and camera were used in the aerial surveys, and the A. montana populations living in the study areas in Ghețari have similar phenotypes, the main factors left must be based on flight plans. Precision values for each image from the aerial survey sample sets were pooled into one large dataset for extensive statistical analysis.

Table 7.

Descriptive statistics for variables between aerial survey sample sets from Ghețari.

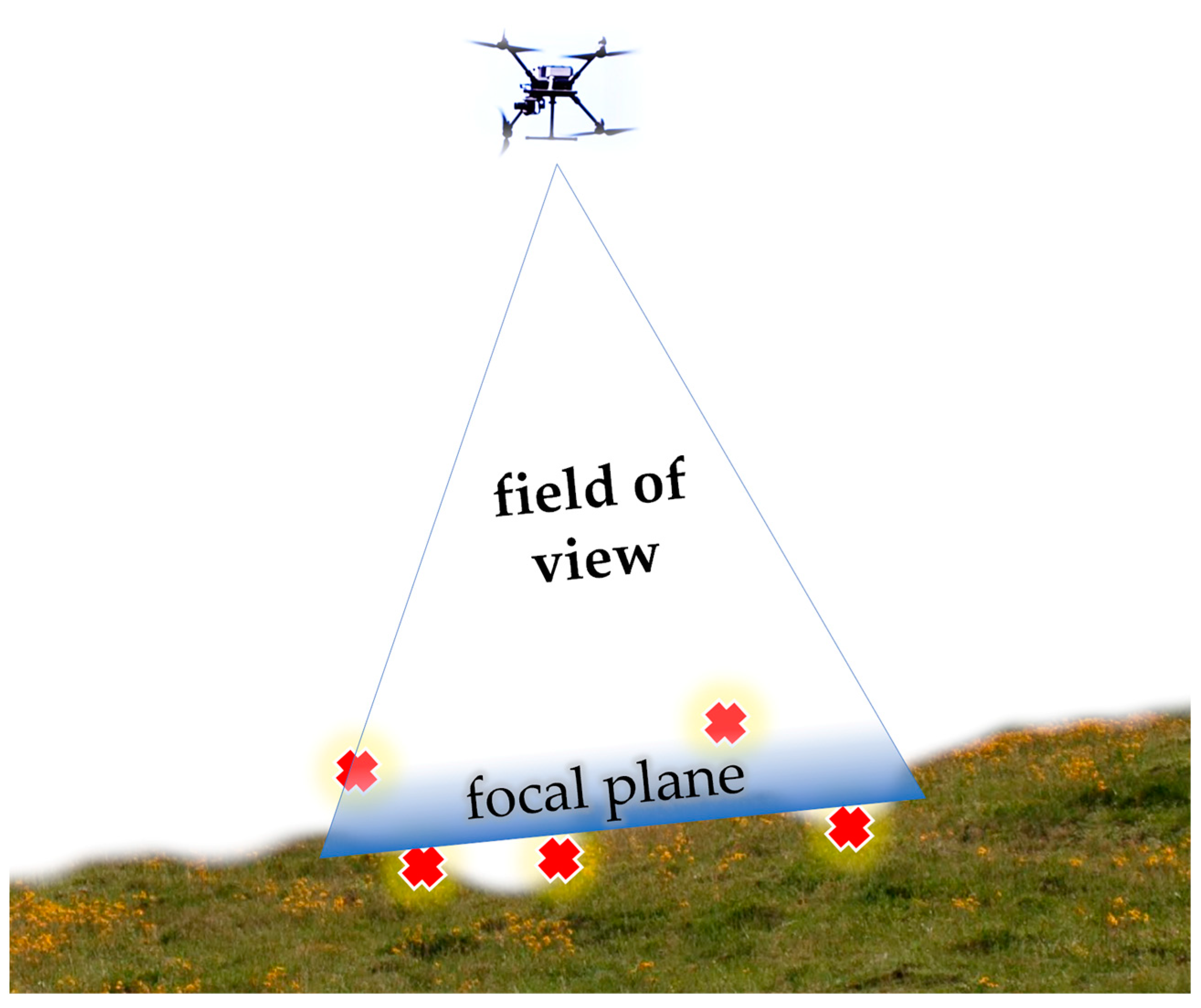

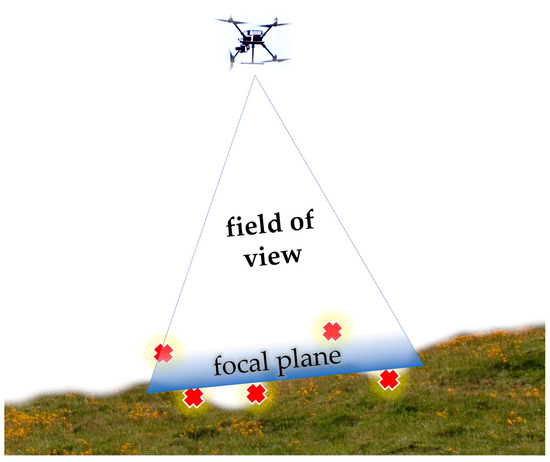

The ground sampling distance (GSD) is a key factor in remote sensing (RS) technology as a direct measure of the ground distance between the centers of adjacent pixels (the real-world size of each pixel). GSD is similar to spatial resolution but is not based on the criterion of the smallest recognizable detail (good for flat surfaces). GSD is calculated based on sensor size, focal length, pixel size and shape, and the UAV’s relative altitude from the ground. Differences appear when mapping uneven topography, as shown in Figure 5. For the aerial surveys in Ghețari, the GSD differences are based on the relative altitude differences, since the sensor size and focal length are the same. The other major difference between these aerial surveys is timing, which determines natural lighting conditions. Shadows can affect the precision of an object detection model because the CNN model is particularly focused on textures, and shadows change these textures.
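As an illustration of how GSD depends on these parameters, the following sketch uses the commonly applied relation for a nadir image over flat ground (sensor width times altitude, divided by focal length times image width). The function name and the camera values are hypothetical and do not describe the camera used in this study.

```python
def ground_sampling_distance_cm(sensor_width_mm: float,
                                focal_length_mm: float,
                                image_width_px: int,
                                altitude_m: float) -> float:
    """Ground distance covered by one pixel (cm), assuming a nadir view over flat ground."""
    if min(sensor_width_mm, focal_length_mm, image_width_px, altitude_m) <= 0:
        raise ValueError("all parameters must be positive")
    altitude_cm = altitude_m * 100.0
    # The mm units of sensor width and focal length cancel out.
    return (sensor_width_mm * altitude_cm) / (focal_length_mm * image_width_px)


# Hypothetical camera: 13.2 mm sensor width, 8.8 mm focal length, 5472 px image width.
example_gsd = ground_sampling_distance_cm(13.2, 8.8, 5472, altitude_m=20.0)
```

With a fixed camera, GSD scales linearly with relative altitude, which is why uneven terrain produces different GSD values within a single flight.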

Figure 5.

The challenge of focusing on uneven terrain. Illustration: how the focal plane can fail to capture clear images of A. montana inflorescences. Red “⨯” symbols represent unfocused spots.

3.5.2. Correlations from Aerial Survey Image Sets as Variables

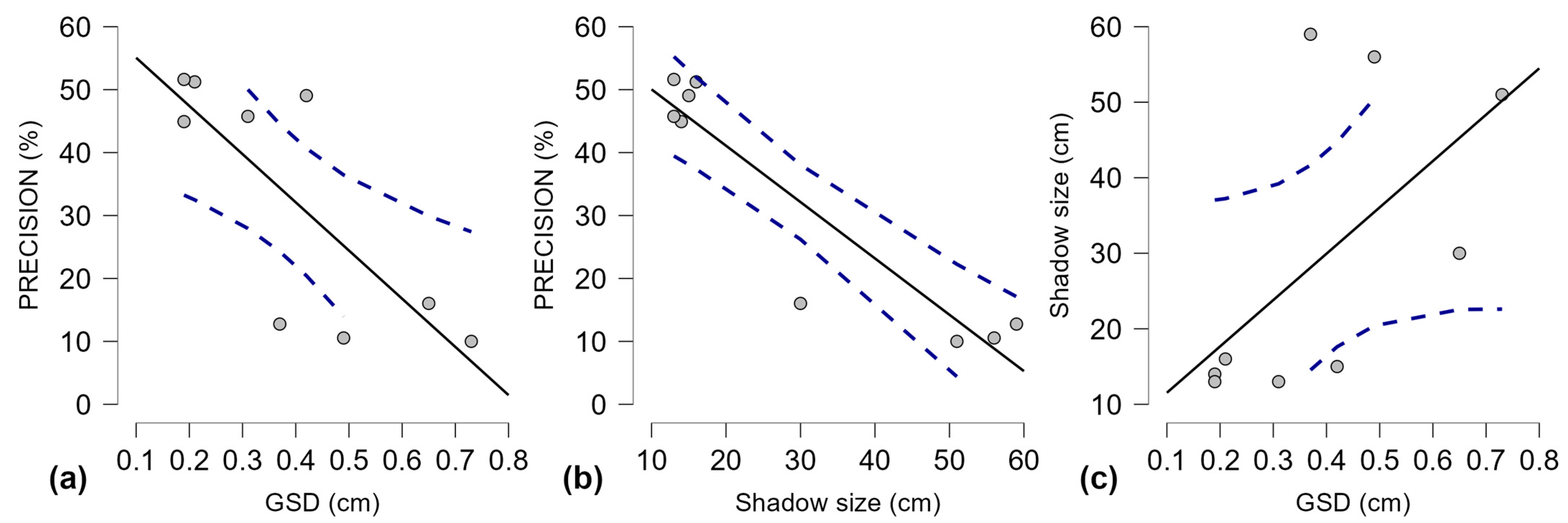

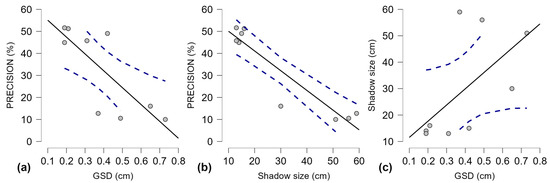

GSD and shadow size were treated as independent variables between the A. montana image sets from each aerial survey (Table 7, Figure 6).

Figure 6.

Scatter plots for Pearson’s correlation for precision, GSD, and Shadow size between aerial survey sample sets, with 95% confidence intervals for linear regression lines; (a) Precision and GSD; (b) Precision and Shadow size; (c) Shadow size and GSD; Gray dots—individual values; solid black line—trend line; dashed blue lines—confidence intervals.

Descriptive statistics are presented in Table 7. The average GSD values attributed to the imagery for each aerial survey were, as noted previously (Table 3), calculated with “Pix4D Mapper” in the orthomosaic building process.

The correlations between the three variables for the individual survey sample sets (Figure 6) show how a larger shadow size can impact precision (Figure 6b) and weaken the effect of GSD values on precision. Pearson’s correlation test was applied to these aerial survey results (Table 8) to measure the effect size in the relationship between the dependent variable, precision, and the other two variables: GSD and shadow size.

Table 8.

Pearson’s correlations for precision, GSD, and shadow size of aerial survey sample sets. r—correlation coefficient; p—probability; CI—confidence interval.

The distribution cannot be normal because the dependent variable (precision) is affected by GSD and shadow size. A negative relationship is expected, with higher Y values (precision) when X values are low. The results revealed a strong negative correlation between precision and GSD values (r is −0.786). Precision is expected to improve when GSD shrinks, as a lower GSD provides more details for the A. montana detection model to work with. However, this relationship was expected to be stronger, which suggests that other variables interfere. The negative correlation between precision and shadow size is very strong (r is −0.931), meaning that precision decreases significantly when shadow size increases. For the trained model, this suggests that larger shadow size values interfere with the algorithm of A. montana inflorescence detection. Regarding GSD and shadow size, the correlation reveals a moderate positive relationship (r is 0.606), indicating a bias caused by shadow size in the correlation between precision and GSD (Table 8). Precision increases when GSD and shadow size decrease. A further analysis is required on pooled results.
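For readers who wish to reproduce this type of test, Pearson’s correlation coefficient can be computed as in the sketch below. The function name and the GSD and precision values are hypothetical placeholders, not the survey values from Table 7.

```python
import math


def pearson_r(x, y):
    """Pearson's correlation coefficient between two equally long sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sums of cross-products and squared deviations from the means.
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical survey-level values: mean GSD (cm) and mean precision (%).
gsd_cm = [0.2, 0.3, 0.4, 0.5, 0.6]
precision_pct = [55.0, 50.0, 45.0, 30.0, 15.0]

r_precision_gsd = pearson_r(gsd_cm, precision_pct)
```

A negative coefficient, as obtained for these placeholder values, corresponds to precision decreasing as GSD increases.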

3.5.3. Correlations from the Pooled Image Sample Sets

To further investigate the shadow size distortion observed in Table 8, results were investigated with a pooled approach. Results and parameters from all A. montana inflorescence evaluation sample sets were pooled, resulting in a set of 503 images and their precision scores. The GSD value for each corresponding image was included based on image width, focal length, sensor size, and relative altitude.

Descriptive statistics of the pooled dataset are shown in Table 9. If these results are compared with the average GSD values for the individual survey sample set (Table 7), then individual GSD values are smaller, with their mean being 0.40 cm, while the pooled mean GSD is 0.64 cm.

Table 9.

Descriptive statistics for pooled results with precision, GSD, and shadow size as variables.

This difference is probably due to the fact that sampling was not optimal. Ideally, all the images with A. montana from each drone-based aerial survey from the study areas in Ghețari should have been evaluated.

The pooled set correlation (Table 10) between precision and GSD is moderately negative (r is −0.449) and significant (p < 0.001). Compared with that in the analysis of individual aerial survey sample sets (Table 8), the correlation strength decreases, but is more significant. The correlation between precision and shadow size is weakly negative (r is −0.258), and it is also significant (p < 0.001). Compared with that in the individual aerial survey sample set analysis, the correlation decreases noticeably. Finally, the correlation between GSD and shadow size indicates a weak positive relationship (r = 0.237), and it is also significant (p < 0.001).

Table 10.

Pearson’s correlations for precision, GSD, and shadow in pooled results. r—correlation coefficient; p—probability; SE—standard error.

Compared with the analysis of the individual aerial survey sample sets, the correlation has also decreased, but it has become significant. Overall, the correlations between the three variables have weakened and become statistically significant, compared with those in the previous analysis.

It is likely that a complete evaluation of all the images, which would include more images with lower GSD values to reach the same average value obtained with “Pix4D Mapper”, would further increase the strength of the correlations.

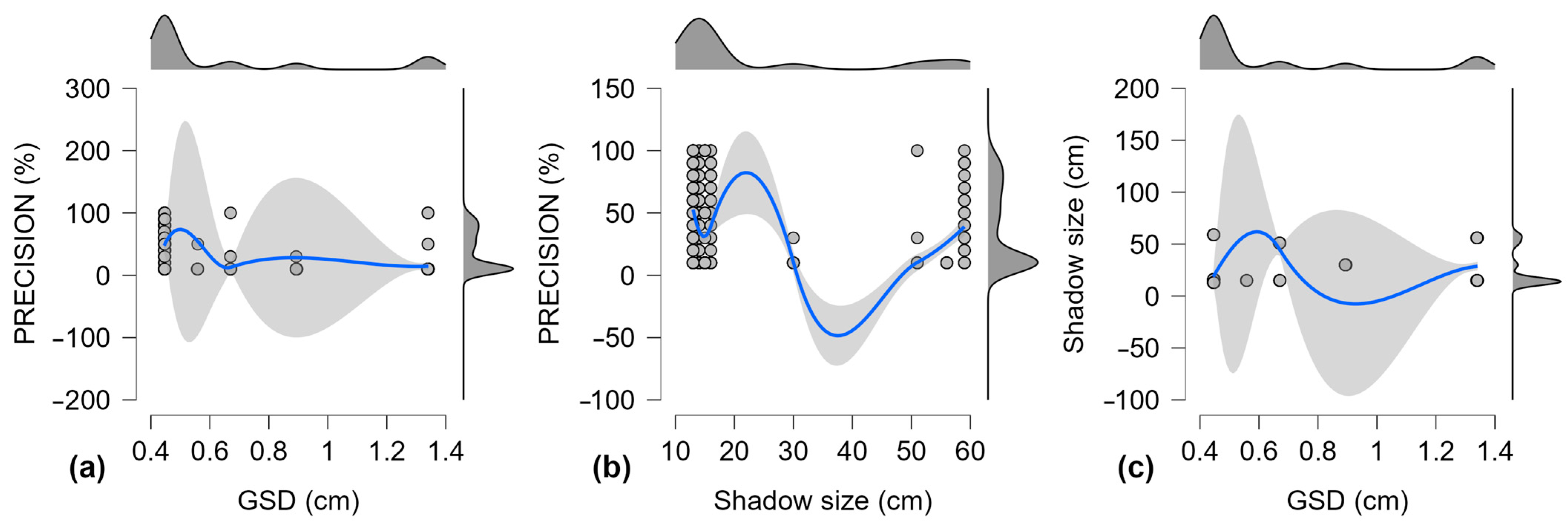

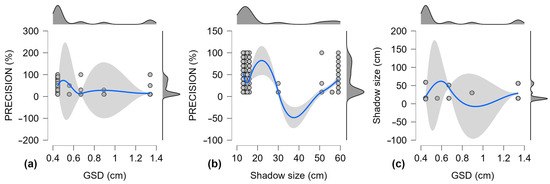

Figure 7 shows the correlations between the three variables from the sample pool. For precision and GSD (Figure 7a), the upper confidence interval is most remarkable in the 0.45–0.55 cm GSD range. For precision and shadow size (Figure 7b), there is a major inflection point around a shadow size of 25 cm.

Figure 7.

Combined scatter and density plots for Pearson’s correlation for precision, GSD, and shadow size for the pooled sample set, with 95% confidence intervals for smooth regression lines. (a) Precision and GSD; (b) Precision and Shadow size; (c) Shadow size and GSD. Scatter plots: gray dots—individual values; solid blue line—trend line; gray area—confidence intervals. Density plots: dark gray area—distribution of values; black line—kernel density estimation.

The correlational analysis provided significant evidence that both GSD and shadow size play a role in the inferences made by the trained CNN model. Having significant effects implies that useful coefficients can be calculated with a regression analysis.

3.5.4. Linear Regression

ANOVA and linear regression analyses were performed to establish the shape of the polynomial model based on precision, GSD and shadow size. These tests were based on the pooled sample of 503 evaluation results. The mathematical polynomial model was based on precision as the dependent variable, and GSD and shadow size as independent variables (Equation (1)):

$$Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 \quad (1)$$

$Y$: dependent variable, precision (%)

$\beta_0$: precision intercept (%)

$\beta_1$: GSD coefficient (% per cm)

$X_1$: GSD independent variable (cm)

$\beta_2$: shadow size coefficient (% per cm)

$X_2$: shadow size independent variable (cm)

GSD and shadow size were added, as variables, to the null model in order to investigate the null hypothesis (H0) and determine the coefficients.
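A model of the form given in Equation (1) can be fitted by ordinary least squares. The sketch below solves the normal equations directly and is only an illustration, not the statistical software used in this study; the function name and the input values are hypothetical.

```python
def fit_two_predictor_ols(x1, x2, y):
    """Return (b0, b1, b2) minimizing the squared error of y = b0 + b1*x1 + b2*x2."""
    rows = [(1.0, a, b) for a, b in zip(x1, x2)]
    # Normal equations (X^T X) b = X^T y, stored as an augmented 3x4 matrix.
    m = [[sum(r[i] * r[j] for r in rows) for j in range(3)]
         + [sum(r[i] * t for r, t in zip(rows, y))]
         for i in range(3)]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("predictors are collinear; coefficients are not unique")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(3):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [a - factor * b for a, b in zip(m[r], m[col])]
    return tuple(m[i][3] / m[i][i] for i in range(3))


# Hypothetical, noise-free inputs: GSD (cm), shadow size (cm), precision (%).
gsd = [0.2, 0.4, 0.6, 0.8]
shadow = [10.0, 30.0, 20.0, 40.0]
precision = [60.0 - 40.0 * g - 0.3 * s for g, s in zip(gsd, shadow)]

b0, b1, b2 = fit_two_predictor_ols(gsd, shadow, precision)
```

Because the placeholder data are generated without noise, the fit recovers the generating coefficients; real evaluation data would also require standard errors and significance tests, which this sketch does not compute.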

The ANOVA test, used for validating the statistical model with an alternate measure (Table 11), indicated that the three-variable model is statistically significant (p < 0.001) with an F-value of 72.817.

Table 11.

ANOVA test: df—degrees of freedom; F—Fisher test value; p—probability value.

The main test, a linear regression, was conducted to calculate the intercept and the coefficients for GSD and shadow size (Table 12).

Table 12.

Precision model coefficients. S.E.—standard error; CI—confidence interval; t—Student’s t-test score; p—probability value.

The intercept for the precision (in percent) of the A. montana inflorescence detection model is 69.173, with a standard error of 2.959, and it is statistically significant with a p-value of less than 0.001. The intercept is the precision predicted when GSD and shadow size approach zero, which indicates that the maximum precision of the trained A. montana inflorescence detection model is ≈69%.

Ground sampling distance (cm) has a coefficient of −39.283 with a standard error of 3.874, indicating a negative relationship with the dependent variable, precision. The t-value, −10.140, is statistically significant with a p-value of less than 0.001.

Finally, the coefficient for shadow size (cm) is −0.296 with a standard error of 0.075, suggesting a smaller negative effect on precision compared with the GSD value. The t-value, −3.956, is statistically significant with a p-value of less than 0.001.

Based on the calculated coefficients (Table 12), the following function emerges to describe the effect of GSD and shadow size on precision (Equation (2)):

$$\text{Precision}_{\%} = 69.173 - 39.283 \times \text{GSD}_{\text{cm}} - 0.296 \times \text{Shadow size}_{\text{cm}} \quad (2)$$

Intercept: 69.173 ± 2.959

GSD: −39.283 ± 3.874

Shadow size: −0.296 ± 0.075

The precision function (Equation (2)) allows for predictions and corrections. Both variables, GSD and shadow size, are available and usable on site in fieldwork, thus allowing quick corrections of the results produced by the trained CNN model.

For example, with an image that has a GSD of 0.9 cm, a shadow size of 50 cm, and a reported A. montana count of 75 single inflorescence detections, the following calculations apply:

- Maximum precision: 69.173 − 39.283 × 0.9 − 0.296 × 50 = 19.018 (%)

- Corrected count estimate: (75 × 100)/19.018 ≈ 394 (AM1)

In this example, using the calculated maximum precision, the number of inflorescences is estimated by reference to 100%. Thus, a reported number of 75 detections would lead to an estimated total of 394 clear and individual inflorescences (AM1) when the GSD is 0.9 cm and the shadow size is 50 cm.
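The worked example above can be reproduced with a short script. This is a sketch that applies Equation (2) and the correction exactly as described in the text; the function and constant names are hypothetical, while the coefficients are those reported in Table 12.

```python
# Coefficients of the precision function (Equation (2), Table 12).
INTERCEPT = 69.173
GSD_COEFFICIENT = -39.283
SHADOW_COEFFICIENT = -0.296


def predicted_precision(gsd_cm: float, shadow_size_cm: float) -> float:
    """Predicted precision (%) for a given GSD (cm) and shadow size (cm)."""
    return INTERCEPT + GSD_COEFFICIENT * gsd_cm + SHADOW_COEFFICIENT * shadow_size_cm


def corrected_count(detections: int, gsd_cm: float, shadow_size_cm: float) -> float:
    """Count estimate obtained by scaling the detections with the predicted precision."""
    precision = predicted_precision(gsd_cm, shadow_size_cm)
    if precision <= 0:
        raise ValueError("predicted precision is not positive; outside the usable range")
    return detections * 100.0 / precision


precision_example = predicted_precision(0.9, 50.0)
count_example = corrected_count(75, 0.9, 50.0)
```

The guard against non-positive values is an added assumption: the linear function can drop below zero for large GSD and shadow size values, where the correction would no longer be meaningful.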

Further adjustments could be made to the model by factoring in recall along with precision, and by comparing the count values to those obtained via manual counting in the field.

4. Discussion

The objective of this study was not to construct an impeccable detector for the inflorescences of A. montana. Instead, the objective was to investigate the potential and constraints of identifying low-resolution A. montana inflorescences in semi-natural grasslands with accessible devices (using civil drones and cameras). This was carried out while balancing effective coverage and the brief flowering period of 3–4 weeks. To achieve this, it was necessary to operate the drones at a higher altitude, which inherently risked capturing images of a lower resolution. Given that this is a pioneering application for detecting low-resolution A. montana inflorescences, the aim was to develop a functional technology prototype. This prototype would serve as a foundation for progressive enhancements following field tests.

4.1. Model Training

The training dataset of images and labels was sufficient for successful machine learning to retrain the ResNet base model into the A. montana inflorescence detection model, based on the learning rate and total loss reports. As this step is not the focus of this study, it cannot be determined whether less training data would have been sufficient. For the trained model, ≈21 thousand labels were used in total, while the conventional minimum for good results in classification tasks is 20 thousand labels [90], which would apply to each class of labels. Gütter et al. (2022) [91] found that performance decreased significantly with fewer than 10 thousand combined labels. Classification learning in the trained model worked between label classes (AM1, BAM1, and BAM2), and the variability of the classification loss value hints at the learning struggle to distinguish between the clear AM1 labels and the blurry BAM1 and BAM2 labels. Splitting into clear and blurry classes has been tried before, and solutions are more limited for lens blur than for motion blur [92,93]. This tension also exists in the labeling process, especially at low resolutions, as blurriness and clarity represent a gradient. This classification process likely resulted in an overfitting of the model to instances of A. montana inflorescences with sharper edges (AM1) and a focus away from the center of the inflorescence, which is usually a solid spot at low resolutions (blurry or not). The individual disk flowers are not visible at low resolutions, but there may be a contrast between the darker disk (yellow, golden-yellow, and orange-yellow) and the lighter ray flowers (yellow).

An alternative strategy for training the model would be to merge the AM1 and BAM1 labels, giving the model more features to learn from without the image clarity challenge. Ideally, all images should be clear, but this is not possible with a single aerial survey due to uneven terrain (Figure 5). This is especially challenging for A. montana, a species that grows in mountainous terrains with numerous heterogeneous slopes [9,67]. A practical solution would require bigger camera sensors that can obtain a lower GSD value and clarity with the focal plane set infinitely far away and at a higher altitude. Alternatively, a telephoto camera lens that can keep its focal distance setting constant during flight may provide clearer images, but with increased requirements for lighting and an increased risk of motion blur.

4.2. Evaluation

The results show that the randomly selected survey sample image sets exhibited significant heterogeneity, reflecting the organic distribution of the species within semi-natural grasslands (Table 6). Setting the minimum threshold score at 0.2 meant that the trained model was able to detect some rarer and more challenging instances of A. montana inflorescences, but with more false-positive results, which reduced the precision. Raising the threshold would improve the precision, but this decision would depend on the goal of the monitoring effort: whether it is to find the dense hotspots of A. montana or to map the extent of its habitat and discover where A. montana could be expanding or retreating. The challenge of counting A. montana groups of flowers is unresolved, as expected given that the training label data focused mainly on AM1 individual instances. The model managed to occasionally detect single inflorescences that were very close to each other, suggesting that adding training data for groups (AM2, AM3, AM4, AM5, and AMN) may provide useful results.

Further model-centered improvements can be added during detection as a form of post-processing filtering [94]. For example, the relatively low-score boxes can be pruned for the overlapping group by removing redundant predictions or merging them to obtain a higher score. During A. montana inflorescence detection, other strategies could be implemented: using different CNN models in advance (image classifiers); using concurrent models and averaging the result; and incorporating more context variables in order to filter and sort results. For example, the GSD value could be calculated for the target aerial image, and this value could be used to check whether a detected object (an A. montana inflorescence) is within a predetermined natural range of inflorescence sizes (e.g., 2–10 cm); anything larger would be considered an ambiguous group of inflorescences. Another strategy could include training the model to find other A. montana aerial parts and using these to improve the result. Future research may focus on statistically correcting results by understanding the natural frequency and density of clusters of A. montana in a semi-natural grassland. Other studies have tried to exclusively detect flowers, cutting out the background around flowers by using masks and models such as Mask R-CNN, but with mixed results and similar challenges of clustering and occlusion [95].
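The GSD-based size check suggested above could be sketched as follows. The function name, the box format (pixel corner coordinates), the use of the longest box side, and the "too_small" category are assumptions made for illustration; only the 2–10 cm example range comes from the text.

```python
def classify_by_size(box_px, gsd_cm, min_cm=2.0, max_cm=10.0):
    """Label a detection by its real-world size, derived from the image GSD.

    box_px is (x_min, y_min, x_max, y_max) in pixels (assumed format).
    """
    x_min, y_min, x_max, y_max = box_px
    # Convert the longest side of the bounding box from pixels to centimeters.
    longest_side_cm = max(x_max - x_min, y_max - y_min) * gsd_cm
    if longest_side_cm > max_cm:
        return "ambiguous_group"
    if longest_side_cm < min_cm:
        return "too_small"  # assumption: the text only defines the upper case
    return "single_inflorescence"


# Hypothetical detections in an image with a GSD of 0.4 cm.
labels = [
    classify_by_size((0, 0, 12, 10), gsd_cm=0.4),  # 4.8 cm
    classify_by_size((0, 0, 40, 20), gsd_cm=0.4),  # 16 cm
    classify_by_size((0, 0, 3, 3), gsd_cm=0.4),    # 1.2 cm
]
```

Such a filter relies on a reliable per-image GSD, so it would be most useful where the relative altitude is well known.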

Tuning the training configuration could increase performance by improving the parameters of the anchor generator to better fit the inflorescence shapes (e.g., square box); for example, the multiscale anchor generator could be tuned to manage smaller objects, to have a smaller base size of anchor boxes, and to have fewer or more aspect ratios for anchor boxes. Other tuning aspects, such as adjusting non-maximum suppression [96], would help to reduce false positive detections.
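To make the role of this suppression step concrete, the sketch below shows a generic greedy variant based on intersection over union (IoU). It is not the implementation used by the trained model; the function names, the box format, and the IoU threshold of 0.5 are assumptions, while the score threshold of 0.2 matches the minimum score used in the evaluation.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes, scores, iou_threshold=0.5, score_threshold=0.2):
    """Return indices of kept boxes, highest score first."""
    candidates = [i for i, s in enumerate(scores) if s >= score_threshold]
    order = sorted(candidates, key=lambda i: scores[i], reverse=True)
    kept = []
    for i in order:
        # Keep a box only if it does not overlap too much with a better-scoring one.
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in kept):
            kept.append(i)
    return kept


# Hypothetical detections: two overlapping boxes, one separate, one low-score.
boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30), (40, 40, 50, 50)]
scores = [0.9, 0.6, 0.8, 0.1]
kept_indices = non_max_suppression(boxes, scores)
```

A lower IoU threshold removes more overlapping boxes, which reduces duplicate detections but can also suppress genuinely adjacent inflorescences.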

In a similar effort based on a CNN model trained on orthomosaics of semi-natural grassland to classify vegetation relevés from four types of plant communities, Alopecurus pratensis L., Lolium perenne L., Bromus hordeaceus L., and Rumex obtusifolius L., the authors confirmed that low-resolution plants limit performance, that overlapping vegetation increases errors, and that the variety of phenotypes by itself and across phenological stages requires much more training data [97]. Improving training data requires increasing the variety of features covered by the training set, which involves adding instances of A. montana inflorescences from more angles, with more backgrounds (grass cover and soil), at more resolutions, and from different hours in the day [90].

The precision of A. montana inflorescence detection was structurally reduced in the experimental design to increase the generalizability of learning (model robustness), while also allowing for the measurement of the influence of aerial survey parameters (GSD and shadow size). Because the success rate is limited, real-world precision can be obtained by applying correction coefficients using the statistically derived precision function and by using manual readings to further refine the counts and get a sense of the number of A. montana inflorescences in the semi-natural grasslands. To narrow down the population of individual plants in a study area, further statistical modeling would be required to account for the number of inflorescences per plant.

4.3. Statistical Precision

The time of day differs between the datasets, because the flights providing imagery for evaluating the trained CNN model (from Ghețari, Romania) cover a longer time range than the training flights (from Todtnau, Germany). For the training flights, take-off times ranged from 10:49 to 12:11, which was around solar noon (Table 2). For the evaluation flights, take-off times ranged from 10:16 to 18:31, covering a much larger span of the day (Table 3). Compared with the other June flights, the first flight from 10:16 produced images with the lowest precision scores, the largest shadow size, and the largest GSD (Table 6). The largest shadow size in the training imagery is 25 cm (Table 2), which matches the decrease in precision observed with statistical analysis (Figure 7b). For GSD, the best-performing aerial image samples were JUN1, JUN2, and JUN4, with an average GSD range of 0.19 to 0.42 cm, the tail end of which matches the peak precision found with statistical analysis (Figure 7a).

The failure in the detection of blurry inflorescences (BAM1 and BAM2) will be investigated in future studies focusing on resolution and clarity. A single low-resolution or blurry A. montana inflorescence is very poor in features, and resembles a solid yellow spot, which is a hard limit as texture is a key target of CNN models [98].

The statistical noise caused by shadows could be mitigated by extending the architecture with models that recognize shadows [99] or replace shadows with lighter areas [100], and other methods [76,101]. A limitation of these attempts to compensate for shadows is that the studies focus primarily on large anthropogenic features [102], not on small natural vegetation. The drop in precision when shadows are larger than 25 cm means that the trained CNN model is specific to the period of daytime in which it is trained, and that aerial images analyzed with this model should be acquired within the same period of the day and season, aiming for a GSD of less than 0.45 cm, to report the most accurate A. montana inflorescence quantity. During training efforts, more images from earlier or later in the day could be used to extend the photoperiod and to improve results by increasing CNN model robustness.
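For mission planning, the GSD target of less than 0.45 cm can be translated into a maximum relative flight altitude by inverting the commonly used GSD relation for a nadir image over flat ground. The sketch below is an illustration only; the function name and the camera values are hypothetical and do not describe the camera used in this study.

```python
def max_altitude_m(target_gsd_cm: float,
                   sensor_width_mm: float,
                   focal_length_mm: float,
                   image_width_px: int) -> float:
    """Relative altitude (m) at which the GSD equals target_gsd_cm (flat ground, nadir view)."""
    if min(target_gsd_cm, sensor_width_mm, focal_length_mm, image_width_px) <= 0:
        raise ValueError("all parameters must be positive")
    # Inverse of GSD = (sensor width * altitude) / (focal length * image width).
    altitude_cm = target_gsd_cm * focal_length_mm * image_width_px / sensor_width_mm
    return altitude_cm / 100.0


# Hypothetical camera: 13.2 mm sensor width, 8.8 mm focal length, 5472 px image width.
ceiling_m = max_altitude_m(0.45, 13.2, 8.8, 5472)
```

Flying below the resulting altitude keeps the GSD under the target on flat ground; on slopes, the relative altitude changes within a flight, so a safety margin would be needed.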

The evaluation results also confirm the methodological findings of Gallmann et al. (2022) [65], who relied on a similar approach to detect multi-species inflorescences; that study used digitally modified GSD to test GSD ranges up to 0.2 cm for images of a small plot of grassland. In contrast, the trained A. montana inflorescence detection model used real-world GSD values in the average range of 0.19–0.73 cm with images from multiple large plots of semi-natural grassland. Gallmann et al. (2022) [65] found that larger flower heads are easier to detect, compared with smaller flower heads, as the GSD increases, thus suggesting the presence of a GSD threshold for performance in detecting inflorescences with a diameter similar to that of A. montana inflorescences. The authors also reported difficulties occurring in training and detection due to multiple inflorescences overlapping as occlusions. This type of under-detection caused by inflorescence overlap was also reported by Li et al. (2023) [49] in the effort to count B. napus inflorescences using UAV-acquired imagery to train CenterNet CNN models.

The analysis of the pooled dataset results confirms, with a statistical significance of p < 0.001, the importance of having a low GSD value, and shows the loss in accuracy caused by unexpected shadows for the detection of A. montana inflorescences in a semi-natural grassland.

The power of this developed detection prototype comes from using large low-resolution images for training the model and evaluating the performance of the model in accordance with field data from another site (far away from the A. montana population in the training sets). Due to its robustness, the model is feasible for remote fieldwork on a standard portable computer that can run an application with the trained CNN model.

4.4. Limitations

The accuracy of the trained A. montana inflorescence detection model is negatively affected by shadow patterns that are not covered by training data. Blurriness and occlusions caused by topographical heterogeneity remain a challenge for detection with this model and approach. While the trained model focuses on low-resolution imagery of A. montana inflorescences, it could benefit from enriching the training set with some higher-resolution imagery. The application of the trained model is untested across the full range of A. montana habitats, and it needs to be used during hours between morning and noon.

The information generated with the trained model refers only to A. montana inflorescences, and it does not quantify individual A. montana plants.

In the use of the technology, limitations with regard to shadows can be overcome at various points, but increasing the photoperiod of the training data would maintain the flexibility of the tool. Lens blur issues may be compensated for in the field by conducting secondary surveys with different camera lens adjustments and then averaging the resulting counts of inflorescences. The quantification of individual A. montana plants may be achieved by training the model to identify A. montana rosettes that can be used to assess A. montana population structure [103].

5. Conclusions

Using a remote sensing system based on civil UAVs and RGB cameras, a convolutional neural network (CNN) ResNet model was successfully trained and tested to detect Arnica montana L. inflorescences. The low-resolution images of A. montana used for training provided sufficient features for successful machine learning. The trained model demonstrated robust transferability to new sites with different A. montana populations. Furthermore, the model is operational in field conditions, running in a Python 3 application on a standard laptop. This demonstrates the practicality and accessibility of the model for field applications.

As expected, the ground sampling distance (GSD) was confirmed as a critical factor in using UAV imagery for assessing herbaceous flora. Image detail, expressed as the GSD, exhibits a threshold value of 0.45 cm, beyond which there is a noted decline in performance. Aerial surveys configured with settings that yield a GSD less than 0.45 cm enable the trained model to attain superior performance. This underlines the importance of optimal GSD settings in enhancing the effectiveness of machine learning models in flora assessments.

The temporal range of image acquisition, specifically during daylight hours, plays a substantial role in the performance of the CNN model. Drone flights between 11:00 and 12:00 during the A. montana flowering season allow the model to attain superior performance by mitigating the effect of unexpected shadows in the images.

A constraint of this methodology for quantifying A. montana inflorescences is caused by the decline in performance, attributable to image blurriness. Additionally, it is important to note that A. montana detections are limited to inflorescences, rather than individual plants.

The performance of the model can be enhanced by considering several factors. These include the corrections of error rates identified during analysis, the fine-tuning of the model’s configuration parameters, upgrades to the hardware infrastructure supporting the model, and the specific context in which the model is applied. Each of these aspects plays a crucial role in optimizing the performance of the model and ensuring its robustness.

The developed prototype is capable of assessing both quantitative and qualitative information from drone-captured aerial imagery. Future experiments for improving the technology and accuracy of the model should focus on training with higher-resolution images, on expanding the photoperiod, and on tuning the architecture. As technological advancements continue to enhance camera sensors, drone sensors, and batteries, it is anticipated that the accuracy and functionalities of drone-enabled automatic surveillance of semi-natural grasslands will see substantial improvements.

Author Contributions

Conceptualization, D.D.S., H.W. and A.R.; methodology, D.D.S. and H.W.; software, D.D.S.; validation, D.D.S., H.W. and E.R.; formal analysis, D.D.S.; investigation, D.D.S.; resources, E.R., I.R. and F.P.; data curation, D.D.S.; writing—original draft preparation, D.D.S.; writing—review and editing, D.D.S., A.R., E.R. and I.V.; visualization, D.D.S.; supervision, F.P. and I.R.; project administration, F.P., E.R. and I.V.; funding acquisition, E.R. and I.V. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Deutsche Bundesstiftung Umwelt (DBU), project no. 35006/01.

Data Availability Statement

Project data can be explored on GitHub: https://github.com/dragomirsangeorzanUSAMVCJ/ArnicaMontanaDetection/ (accessed on 1 May 2024).

Acknowledgments

This study is a part of a PhD thesis titled “Research on the potential use of drones for mapping oligotrophic grasslands with Arnica montana in the Apuseni Mountains” by D.D.S. with I.R. as a doctoral supervisor. We also want to thank our drone pilot colleagues for their complex technical efforts.

Conflicts of Interest

The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Buchmann, N.; Fuchs, K.; Feigenwinter, I.; Gilgen, A.K. Multifunctionality of permanent grasslands: Ecosystem services and resilience to climate change. In Proceedings of the Joint 20th Symposium of the European Grassland Federation and the 33rd Meeting of the EUCARPIA Section “Fodder Crops and Amenity Grasses”, Zürich, Switzerland, 24–27 June 2019; Volume 24, pp. 19–26.

- Klaus, V.H.; Gilgen, A.K.; Lüscher, A.; Buchmann, N. Can We Deduce General Management Recommendations from Biodiversity-Ecosystem Functioning Research in Grasslands? In Proceedings of the Joint 20th Symposium of the European Grassland Federation and the 33rd Meeting of the EUCARPIA Section “Fodder Crops and Amenity Grasses”, Zürich, Switzerland, 24–27 June 2019; Volume 24, pp. 63–65. Available online: https://www.research-collection.ethz.ch/handle/20.500.11850/353577 (accessed on 9 July 2023).

- Lomba, A.; Guerra, C.; Alonso, J.; Honrado, J.P.; Jongman, R.; McCracken, D. Mapping and monitoring High Nature Value farmlands: Challenges in European landscapes. J. Environ. Manag. 2014, 143, 140–150.

- Lomba, A.; Moreira, F.; Klimek, S.; Jongman, R.H.; Sullivan, C.; Moran, J.; Poux, X.; Honrado, J.P.; Pinto-Correia, T.; Plieninger, T.; et al. Back to the future: Rethinking socioecological systems underlying high nature value farmlands. Front. Ecol. Environ. 2020, 18, 36–42.

- Paracchini, M.L.; Petersen, J.-E.; Hoogeveen, Y.; Bamps, C.; Burfield, I.; van Swaay, C. High Nature Value Farmland in Europe; European Commission Joint Research Centre: Luxembourg, 2008; ISBN 978-92-79-09568-9. Available online: https://core.ac.uk/download/pdf/38617607.pdf (accessed on 11 May 2024).

- Defour, T. EIP-AGRI Focus Group New Entrants: Final Report. Available online: https://ec.europa.eu/eip/agriculture/en/publications/eip-agri-focus-group-new-entrants-final-report (accessed on 9 July 2023).

- Peyraud, J.-L.; Peeters, A. The Role of Grassland Based Production System in the Protein Security. In Proceedings of the General Meeting of the European Grassland Federation (EGF), Trondheim, Norway, 8 September 2016; Wageningen Academic Publishers: Wageningen, The Netherlands, 2016; Volume 21. Available online: https://hal.inrae.fr/hal-02743435 (accessed on 5 April 2024).

- Ministerului Agriculturii și Dezvoltării Rurale. Planul PAC 2023–2027 pentru România (v1.2); PS PAC 2023–2027; Guvernul României: Romania, 2022; p. 1094. Available online: https://www.madr.ro/planul-national-strategic-pac-post-2020/implementare-ps-pac-2023-2027/ps-pac-2023-2027.html (accessed on 26 November 2023).

- Brinkmann, K.; Păcurar, F.; Rotar, I.; Rușdea, E.; Auch, E.; Reif, A. The grasslands of the Apuseni Mountains, Romania. In Grasslands in Europe of High Nature Value; Veen, P., Jefferson, R., de Smidt, J., van der Straaten, J., Eds.; Brill: Leiden, The Netherlands, 2014; pp. 226–237. ISBN 978-90-04-27810-3.

- Herzon, I.; Raatikainen, K.; Wehn, S.; Rūsiņa, S.; Helm, A.; Cousins, S.; Rašomavičius, V. Semi-natural habitats in boreal Europe: A rise of a social-ecological research agenda. Ecol. Soc. 2021, 26, 13.

- McGurn, P.; Browne, A.; NíChonghaile, G.; Duignan, L.; Moran, J.; ÓHuallacháin, D.; Finn, J.A. Semi-Natural Grasslands on the Aran Islands, Ireland: Ecologically Rich, Economically Poor. In Proceedings of the Grassland Resources for Extensive Farming Systems in Marginal Lands: Major Drivers and Future Scenarios, the 19th Symposium of the European Grassland Federation, CNR-ISPAAM. Alghero, Italy, 7–10 May 2017; pp. 197–199. Available online: https://www.cabdirect.org/cabdirect/abstract/20173250639 (accessed on 9 July 2023).

- Lange, D.; Schippmann, U. Wild-Harvesting in East Europe. In Trade Survey of Medicinal Plants in Germany: A Contribution to International Plant Species Conservation; Bundesamt für Naturschutz: Bonn, Germany, 1997; p. 29. ISBN 978-3-89624-607-3. Available online: https://d-nb.info/950197920/04 (accessed on 9 July 2023).

- Păcurar, F.; Reif, A.; Rușdea, E. Conservation of Oligotrophic Grassland of High Nature Value (HNV) through Sustainable Use of Arnica montana in the Apuseni Mountains, Romania. In Medicinal Agroecology; Fiebrig, I., Ed.; CRC Press: Boca Raton, FL, USA, 2023; pp. 177–201. ISBN 978-1-00-314690-2. Available online: https://www.taylorfrancis.com/chapters/edit/10.1201/9781003146902-12/conservation-oligotrophic-grassland-high-nature-value-hnv-sustainable-use-arnica-montana-apuseni-mountains-romania-florin-p%C4%83curar-albert-reif-evelyn-ru%C5%9Fdea (accessed on 8 March 2024).

- Kriplani, P.; Guarve, K.; Baghael, U.S. Arnica montana L.—A plant of healing: Review. J. Pharm. Pharmacol. 2017, 69, 925–945.

- Ciocârlan, V. Ord. Asterales (Compositales). In Flora Ilustrată a României: Pteridophyta et Spermatophyta; Ceres: Bucharest, Romania, 2009; p. 810. ISBN 978-973-40-0817-9.

- Bundesamt für Naturschutz (BfN). Artsteckbrief: Biologische Merkmale—Arnica montana L. Available online: https://www.floraweb.de/php/biologie.php?suchnr=585 (accessed on 28 March 2024).

- Strykstra, R.; Pegtel, D.; Bergsma, A. Dispersal Distance and Achene Quality of the Rare Anemochorous Species Arnica montana L.: Implications for Conservation. Acta Bot. Neerl. 1998, 47, 45–56. Available online: https://natuurtijdschriften.nl/pub/541121 (accessed on 26 April 2024).

- EU Commission. Commission Regulation (EU) No 1320/2014 of 1 December 2014 Amending Council Regulation (EC) No 338/97 on the Protection of Species of Wild Fauna and Flora by Regulating Trade Therein. Off. J. Eur. Union 2014, L361, 1–93. Available online: http://data.europa.eu/eli/reg/2014/1320/oj/eng (accessed on 10 March 2024).

- BISE. Arnica montana. Available online: https://biodiversity.europa.eu/species/153665 (accessed on 10 March 2024).

- Bazos, I.; Hodálová, I.; Lansdown, R.; Petrova, A. IUCN Red List of Threatened Species: Arnica montana. In IUCN Red List of Threatened Species. 2010. Available online: https://www.iucnredlist.org/species/162327/5574104 (accessed on 10 March 2024).

- Duwe, V.K.; Muller, L.A.H.; Borsch, T.; Ismail, S.A. Pervasive genetic differentiation among Central European populations of the threatened Arnica montana L. and genetic erosion at lower elevations. Perspect. Plant Ecol. Evol. Syst. 2017, 27, 45–56.

- Arnica montana L. Plants of the World Online. Kew Science. Available online: http://powo.science.kew.org/taxon/urn:lsid:ipni.org:names:30090722-2 (accessed on 28 March 2024).

- Schmidt, T.J. Arnica montana L.: Doesn’t Origin Matter? Plants 2023, 12, 3532.

- Dapper, H. Liste der Arzneipflanzen Mitteleuropas: Check-list of the medicinal plants of Central Europe. In Liste der Arzneipflanzen Mitteleuropas: Check-List of the Medicinal Plants of Central Europe; Innova: Ulm, Germany, 1987; p. 73. ISBN 3-925096-01-9.

- Hultén, E.; Fries, M. Atlas of North European vascular plants north of the Tropic of Cancer. In Atlas of North European Vascular Plants North of the Tropic of Cancer; Koeltz Botanical Books: Königstein, Germany, 1986; p. 1188. ISBN 978-3-87429-263-4.

- Maurice, T.; Colling, G.; Muller, S.; Matthies, D. Habitat characteristics, stage structure and reproduction of colline and montane populations of the threatened species Arnica montana. Plant Ecol. 2012, 213, 831–842.

- Sugier, P.; Kolos, A.; Wolkowycki, D.; Sugier, D.; Plak, A.; Sozinov, O. Evaluation of species inter-relations and soil conditions in Arnica montana L. habitats: A step towards active protection of endangered and high-valued medicinal plant species in NE Poland. Acta Soc. Bot. Pol. 2018, 87, 3592.

- Michler, B. Leitprojekt Heilpflanzen. In Perspektiven für eine traditionelle Kulturlandschaft in Osteuropa. Ergebnisse eines inter-und transdisziplinären, partizipativen Forschungsprojektes in Osteuropa; Rușdea, E., Reif, A., Povara, I., Konold, W., Eds.; Culterra—The Series of Publications by the Professorship for Land Management; Schriftenreihe des Instituts für Landespflege, Albert-Ludwigs-Universität Freiburg: Freiburg, Germany, 2005; Volume 34, pp. 378–380. ISBN 978-3-933390-21-9. Available online: https://www.landespflege.uni-freiburg.de/ressourcen/pub/2005%20-%20Endbericht%20Proiect%20Apuseni.pdf#page=378 (accessed on 13 December 2023).

- Michler, B.; Wolfgang, K.; Susanne, S.; Ioan, R.; Florin, P. Conservation of eastern European medicinal plants: Arnica montana in Romania. In Proceedings of the 4th Conference on Medicinal and Aromatic Plants of South-East European Countries, 9th National Symposium “Medicinal Plants—Present and Perspectives”, 3rd National Conference of Phytotherapy, Iași, România, 28–31 May 2006; Association for Medicinal and Aromatic Plants of Southeast European Countries (AMAPSEEC): Belgrade, Serbia, 2006; pp. 158–160.

- Pacurar, F.; Rotar, I.; Gârda, N.; Morea, A. The Management of Oligotrophic Grasslands and the Approach of New Improvement Methods. Transylv. Rev. Syst. Ecol. Res. 2009, 7, 59–68. Available online: https://stiinte.ulbsibiu.ro/trser/trser7/59-68.pdf (accessed on 3 March 2024).

- Reif, A.; Coldea, G.; Harth, G. Pflanzengesellschaften des Offenlandes und der Wälder. In Perspektiven für eine Traditionelle Kulturlandschaft in Osteuropa. Ergebnisse Eines Inter- und Transdisziplinären, Partizipativen Forschungsprojektes in Osteuropa; Rușdea, E., Reif, A., Povara, I., Konold, W., Eds.; Culterra; Schriftenreihe des Instituts für Landespflege, Albert-Ludwigs-Universität Freiburg: Freiburg, Germany, 2005; Volume 34, pp. 78–87. ISBN 978-3-933390-21-9. Available online: https://www.landespflege.uni-freiburg.de/ressourcen/pub/2005%20-%20Endbericht%20Proiect%20Apuseni.pdf#page=78 (accessed on 13 December 2023).

- Michler, B.; Rotar, I.; Pacurar, F.; Stoie, A. Arnica montana, an Endangered Species and a Traditional Medicinal Plant: The Biodiversity and Productivity of Its Typical Grasslands Habitats. In Proceedings of the Grassland Science in Europe, Proceedings of EGF, Tartu, Estonia, 29–31 August 2005; Lillak, R., Viiralt, R., Linke, A., Geherman, V., Eds.; EGF: Tartu, Estonia, 2005; Volume 10, pp. 336–339. Available online: https://www.europeangrassland.org/fileadmin/documents/Infos/Printed_Matter/Proceedings/EGF2005_GSE_vol10.pdf (accessed on 5 March 2021).

- Kathe, W. Conservation of Eastern-European Medicinal Plants: Arnica montana in Romania. In Medicinal and Aromatic Plants: Agricultural, Commercial, Ecological, Legal, Pharmacological and Social Aspects; Bogers, R.J., Craker, L.E., Lange, D., Eds.; Springer: Berlin/Heidelberg, Germany, 2006; Volume 17, pp. 203–211. ISBN 978-1-4020-5448-8. Available online: https://library.wur.nl/ojs/index.php/frontis/article/view/1233 (accessed on 3 March 2024).

- Díaz-Delgado, R.; Mücher, S. Editorial of special issue “Drones for biodiversity conservation and ecological monitoring”. Drones 2019, 3, 47.

- Sun, Z.; Wang, X.; Wang, Z.; Yang, L.; Xie, Y.; Huang, Y. UAVs as remote sensing platforms in plant ecology: Review of applications and challenges. J. Plant Ecol. 2021, 14, 1003–1023.

- Sângeorzan, D.D.; Rotar, I. Evaluating plant biodiversity in natural and semi-natural areas with the help of aerial drones. Bull. Univ. Agric. Sci. Vet. Med. Cluj-Napoca. Agric. 2020, 77, 64–68.

- Koh, L.P.; Wich, S.A. Dawn of drone ecology: Low-cost autonomous aerial vehicles for conservation. Trop. Conserv. Sci. 2012, 5, 121–132.

- Pande-Chhetri, R.; Abd-Elrahman, A.; Liu, T.; Morton, J.; Wilhelm, V.L. Object-based classification of wetland vegetation using very high-resolution unmanned air system imagery. Eur. J. Remote Sens. 2017, 50, 564–576.

- de Sá, N.C.; Castro, P.; Carvalho, S.; Marchante, E.; López-Núñez, F.A.; Marchante, H. Mapping the Flowering of an Invasive Plant Using Unmanned Aerial Vehicles: Is There Potential for Biocontrol Monitoring? Front. Plant Sci. 2018, 9, 238724.

- Petrich, L.; Lohrmann, G.; Neumann, M.; Martin, F.; Frey, A.; Stoll, A.; Schmidt, V. Detection of Colchicum autumnale in drone images, using a machine-learning approach. Precis. Agric. 2020, 21, 1291–1303.

- Wijesingha, J.; Astor, T.; Schulze-Brüninghoff, D.; Wachendorf, M. Mapping invasive Lupinus polyphyllus Lindl. in semi-natural grasslands using object-based image analysis of UAV-borne images. PFG 2020, 88, 391–406.

- Strumia, S.; Buonanno, M.; Aronne, G.; Santo, A.; Santangelo, A. Monitoring of plant species and communities on coastal cliffs: Is the use of unmanned aerial vehicles suitable? Diversity 2020, 12, 149.

- Torresani, M.; Rocchini, D.; Ceola, G.; de Vries, J.P.R.; Feilhauer, H.; Moudrý, V.; Bartholomeus, H.; Perrone, M.; Anderle, M.; Gamper, H.A.; et al. Grassland vertical height heterogeneity predicts flower and bee diversity: An UAV photogrammetric approach. Sci. Rep. 2024, 14, 809.

- Alavipanah, S.K.; Karimi Firozjaei, M.; Sedighi, A.; Fathololoumi, S.; Zare Naghadehi, S.; Saleh, S.; Naghdizadegan, M.; Gomeh, Z.; Arsanjani, J.J.; Makki, M.; et al. The shadow effect on surface biophysical variables derived from remote sensing: A review. Land 2022, 11, 2025.

- Salamí, E.; Barrado, C.; Pastor, E. UAV flight experiments applied to the remote sensing of vegetated areas. Remote Sens. 2014, 6, 11051–11081.

- Watts, A.C.; Ambrosia, V.G.; Hinkley, E.A. Unmanned aircraft systems in remote sensing and scientific research: Classification and considerations of use. Remote Sens. 2012, 4, 1671–1692.

- Haq, M.A.; Ahmed, A.; Gyani, J. Implementation of CNN for plant identification using UAV imagery. Int. J. Adv. Comput. Sci. Appl. 2023, 14, 369–378.

- Fromm, M.; Schubert, M.; Castilla, G.; Linke, J.; McDermid, G. Automated detection of conifer seedlings in drone imagery using convolutional neural networks. Remote Sens. 2019, 11, 2585.

- Li, J.; Li, Y.; Qiao, J.; Li, L.; Wang, X.; Yao, J.; Liao, G. Automatic counting of rapeseed inflorescences using deep learning method and UAV RGB imagery. Front. Plant Sci. 2023, 14, 1101143.

- Whitehead, K.; Hugenholtz, C.H. Remote sensing of the environment with small unmanned aircraft systems (UASs), part 1: A review of progress and challenges. J. Unmanned Veh. Syst. 2014, 2, 69–85.

- Lopatin, J.; Fassnacht, F.E.; Kattenborn, T.; Schmidtlein, S. Mapping plant species in mixed grassland communities using close range imaging spectroscopy. Remote Sens. Environ. 2017, 201, 12–23.

- Neumann, C.; Behling, R.; Schindhelm, A.; Itzerott, S.; Weiss, G.; Wichmann, M.; Müller, J. The colors of heath flowering—Quantifying spatial patterns of phenology in Calluna life-cycle phases using high-resolution drone imagery. Remote Sens. Ecol. Conserv. 2020, 6, 35–51.

- Petti, D.; Li, C. Weakly-supervised learning to automatically count cotton flowers from aerial imagery. Comput. Electron. Agric. 2022, 194, 106734.

- James, K.; Bradshaw, K. Detecting plant species in the field with deep learning and drone technology. Methods Ecol. Evol. 2020, 11, 1509–1519.

- Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Proceedings of the Computer Vision—ECCV 2014, Zurich, Switzerland, 6–12 September 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer International Publishing: Cham, Switzerland, 2014; pp. 740–755.

- Bonnet, P.; Goëau, H.; Hang, S.T.; Lasseck, M.; Sulc, M.; Malécot, V.; Jauzein, P.; Melet, J.-C.; You, C.; Joly, A. Plant identification: Experts vs. machines in the era of deep learning. In Multimedia Tools and Applications for Environmental & Biodiversity Informatics; Springer: Berlin/Heidelberg, Germany, 2018; p. 131.

- Bonnet, P.; Joly, A.; Goëau, H.; Champ, J.; Vignau, C.; Molino, J.-F.; Barthélémy, D.; Boujemaa, N. Plant identification: Man vs. machine. Multimed. Tools Appl. 2016, 75, 1647.

- She, Y.; Ehsani, R.; Robbins, J.; Nahún Leiva, J.; Owen, J. Applications of high-resolution imaging for open field container nursery counting. Remote Sens. 2018, 10, 2018.

- Garcin, C.; Joly, A.; Bonnet, P.; Lombardo, J.-C.; Affouard, A.; Chouet, M.; Servajean, M.; Lorieul, T.; Salmon, J. Pl@ntNet-300K: A Plant Image Dataset with High Label Ambiguity and a Long-Tailed Distribution. 2021. Available online: https://hal.inria.fr/hal-03474556 (accessed on 13 April 2023).