A Registration Method of Overlap Aware Point Clouds Based on Transformer-to-Transformer Regression

Abstract

1. Introduction

2. Materials and Methods

2.1. Problem Setting

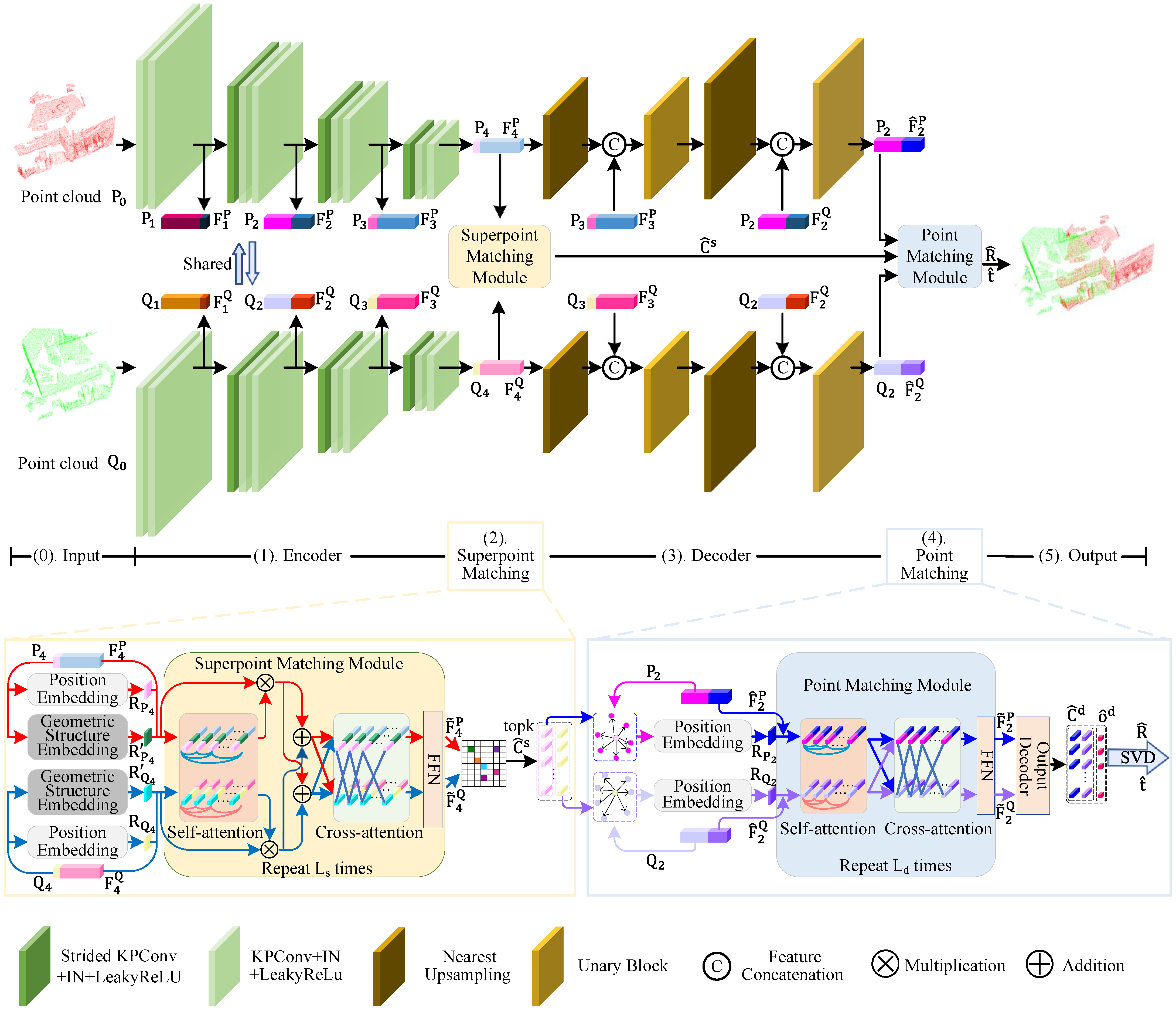

2.2. Overview of Our Method

- We propose a Rotation-Invariant Geometric Transformer Cross-Encoder module (RIG-Transformer) that combines the geometric features and positional encoding of superpoint coordinates to extract more distinctive features for predicting superpoints located in the overlapping region.

- Through the fusion of our RIG-Transformer and Transformer Cross-Encoder, we introduce a Transformer-to-Transformer dense regression (TTReg) that leverages dense point clouds from overlapping regions for both training and testing phases to compute the transformation matrix.

- Through extensive experiments, our method showcases strong matching capabilities on public 3DMatch and ModelNet benchmark, with a notable improvement of 7.2% in matching recall on datasets with small overlap ratios.

2.3. Feature Extraction and Correspondences Sampling

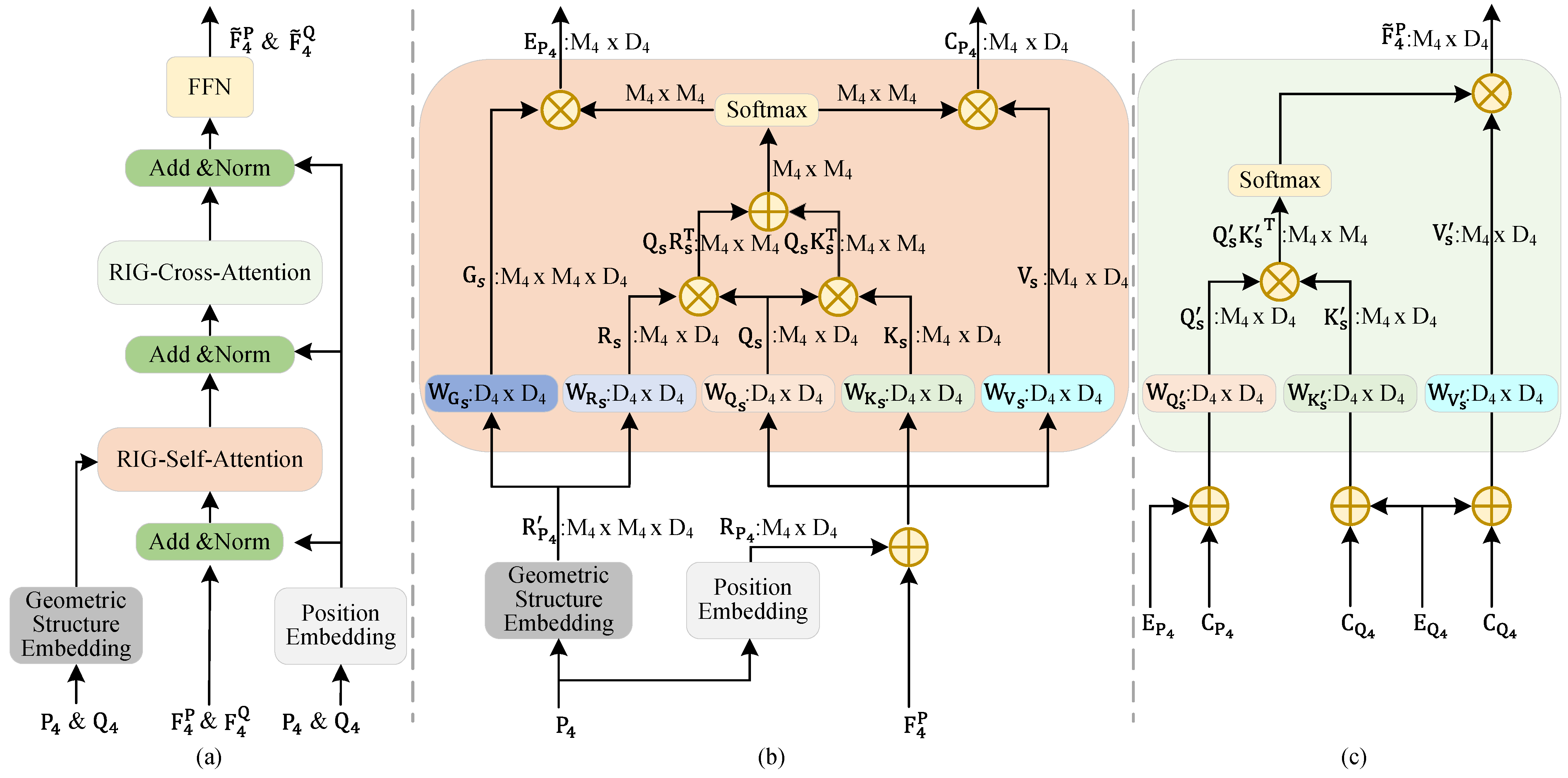

2.4. Superpoint Matching Module

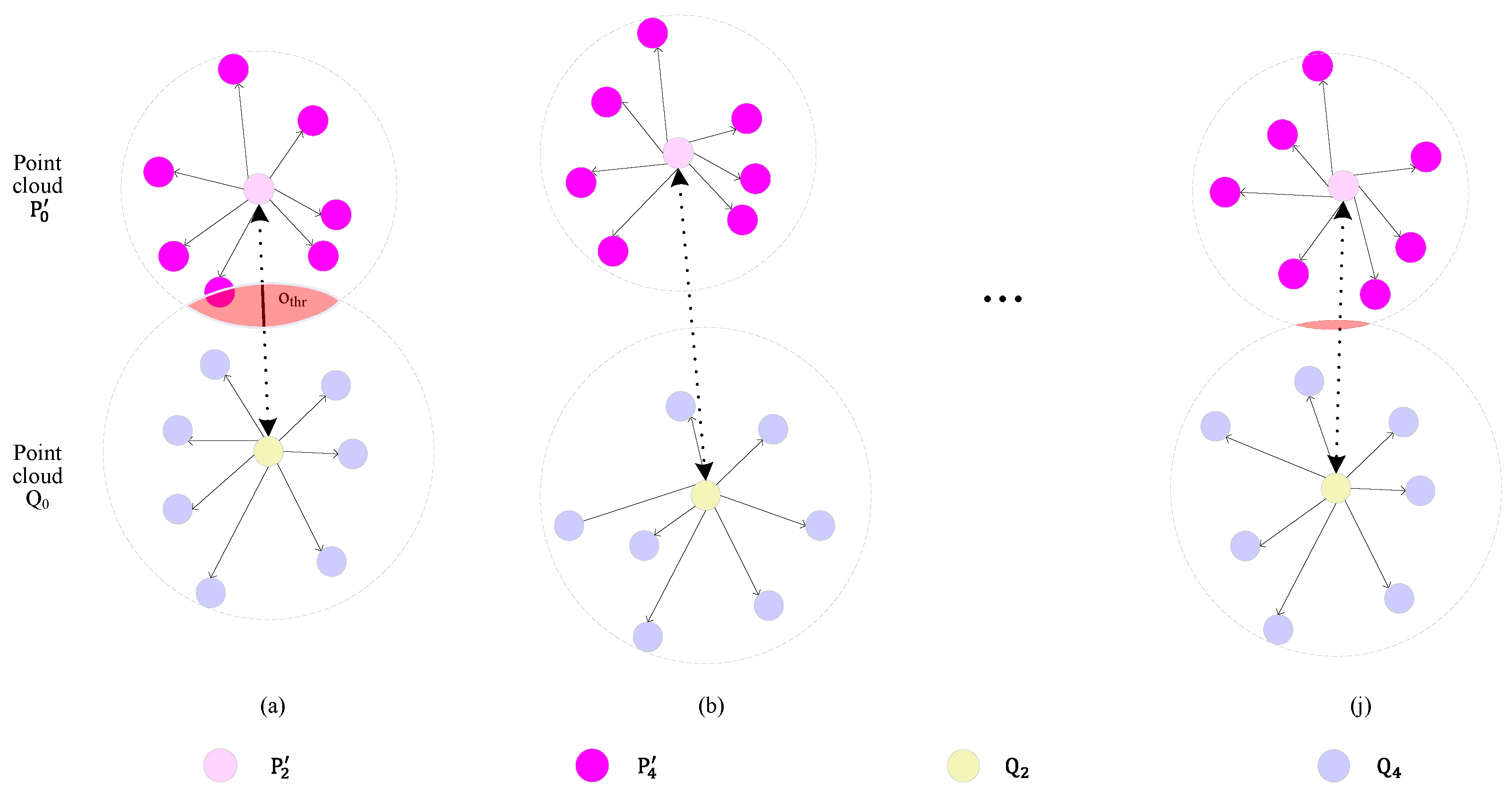

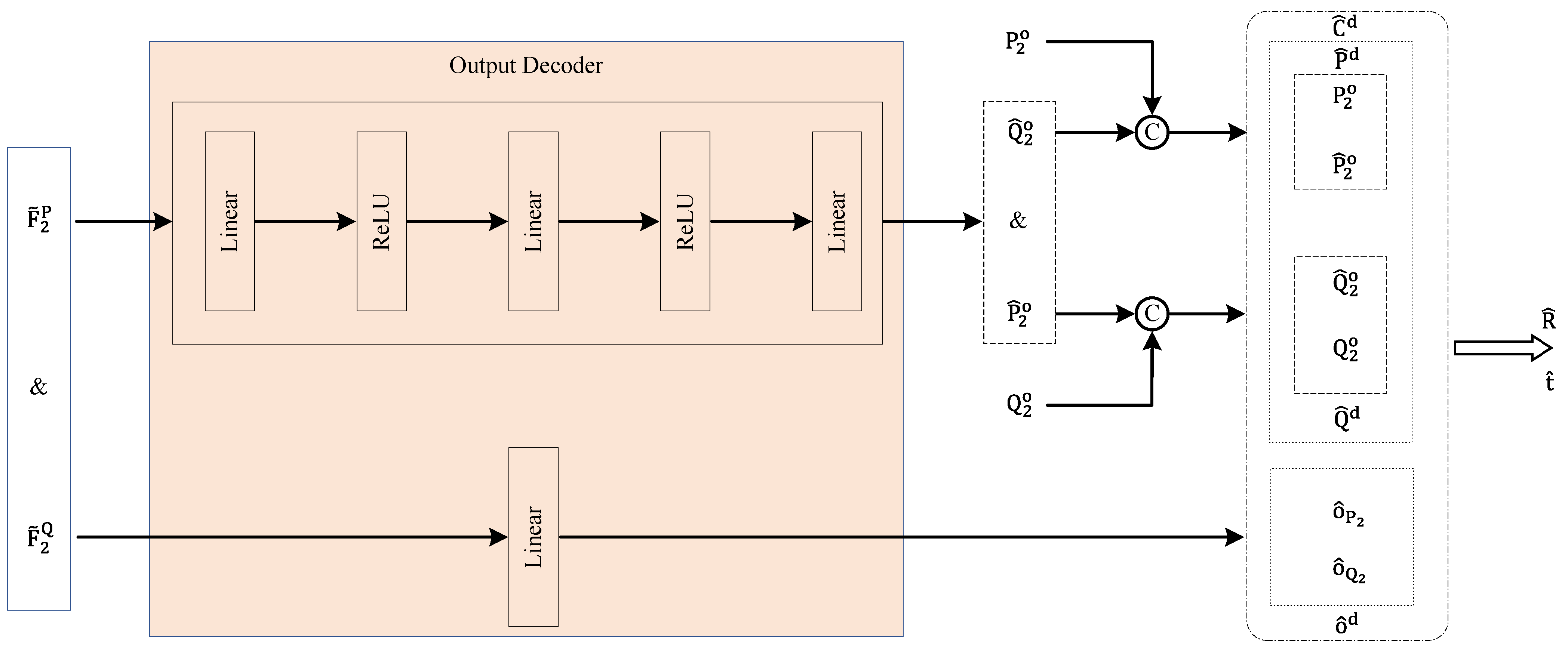

2.5. Point Matching Module

2.6. Loss Function

2.6.1. Superpoint Correspondences Loss Function

2.6.2. Point Correspondences Loss Function

Overlap Loss

Corresponding Point Loss

Feature Loss

3. Results

3.1. Datasets

3.1.1. Indoor Benchmarks: 3DMatch and 3DLoMatch

3.1.2. Synthetic Benchmarks: ModelNet and ModelLoNet

3.2. Experiment Details

3.3. Evaluation

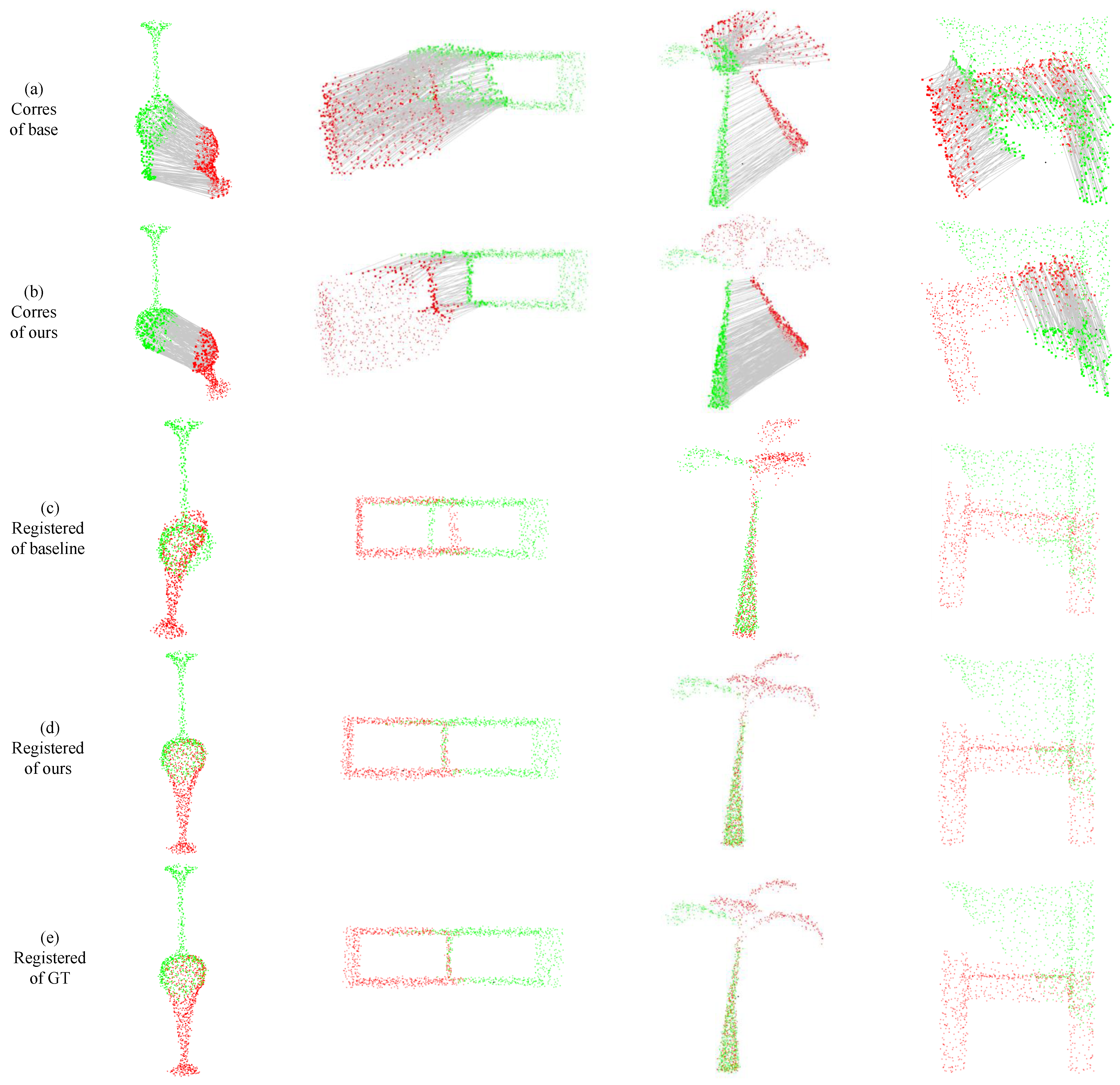

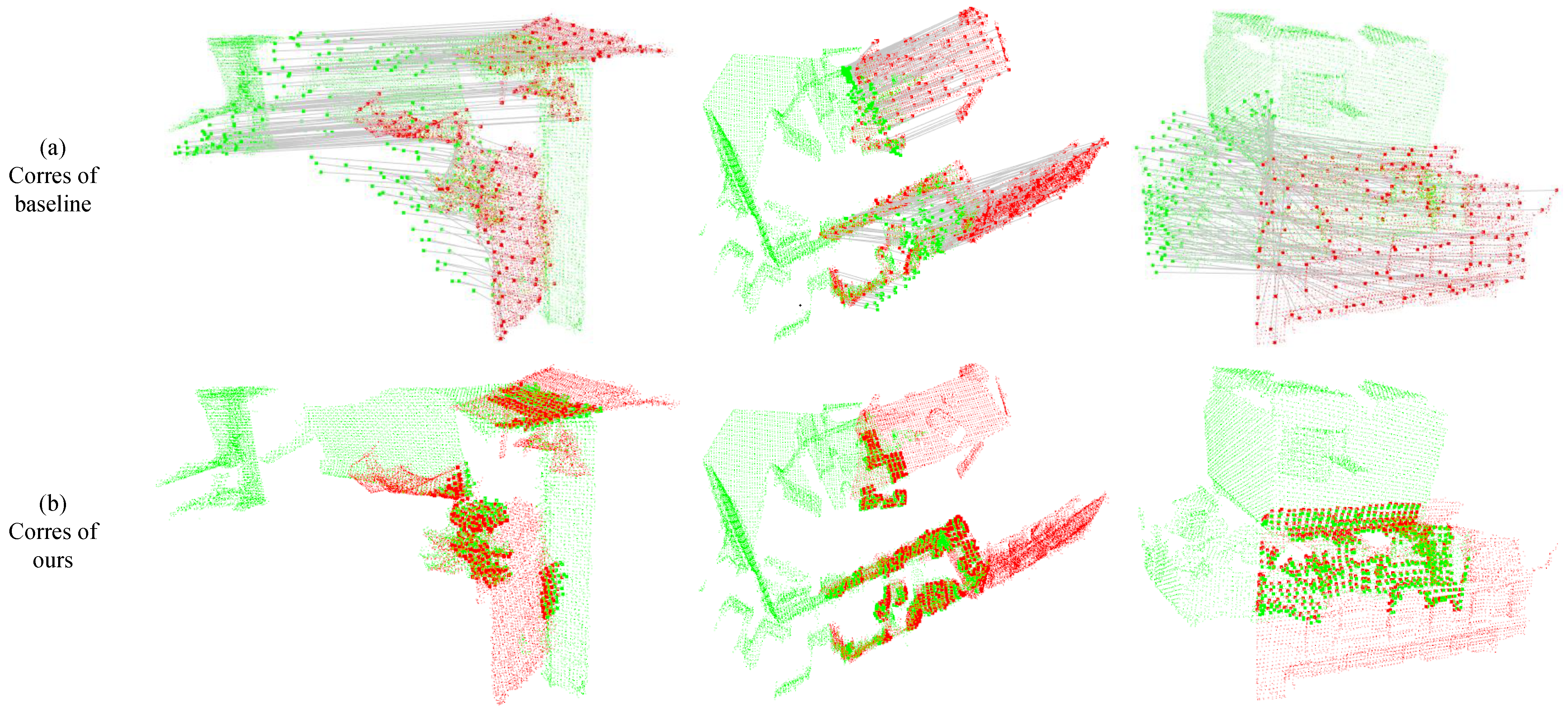

3.3.1. Evaluation of 3DMatch and 3DLoMatch

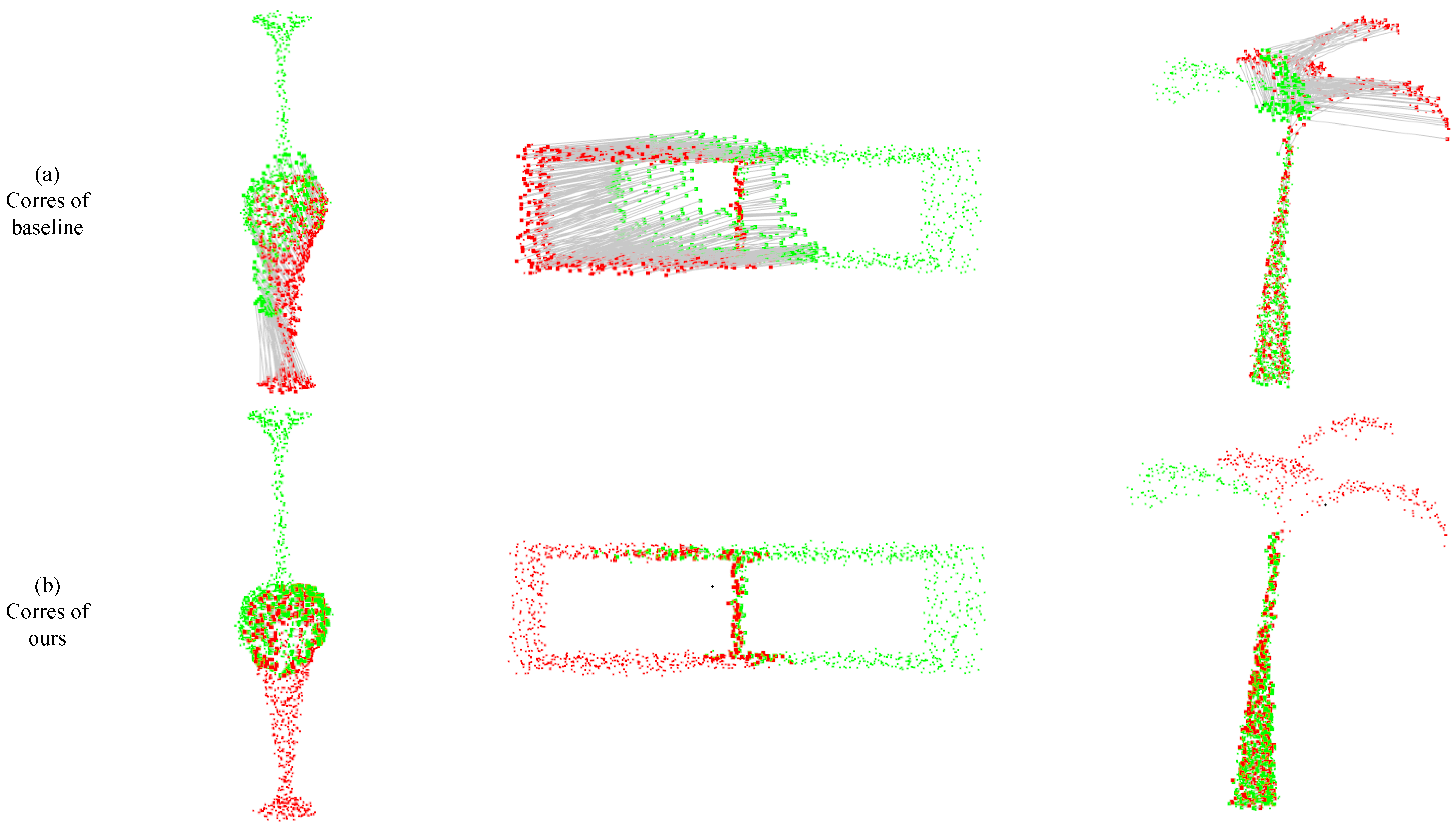

3.3.2. Evaluation of ModelNet and ModelLoNet

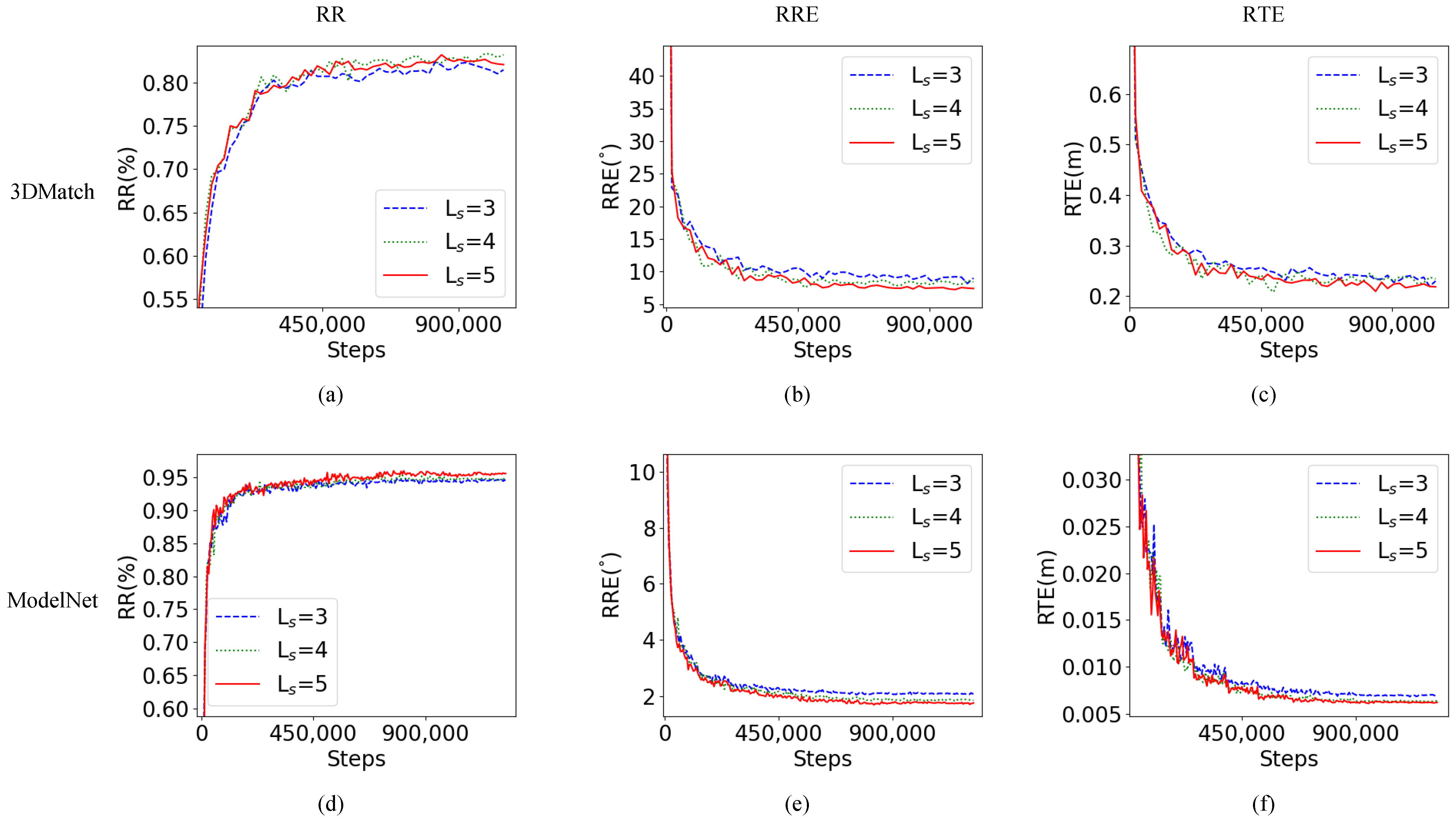

3.4. Ablation

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| RIG-Transformer | Rotation-Invariant Geometric Transformer Cross-Encoder |

| TTReg | Transformer-to-Transformer Regression |

| RANSAC | Random Sample Consensus |

| FPS | Farthest Point Sampling |

| RR | Registration Recall |

| RRE | Relative Rotation Error |

| RTE | Relative Translation Error |

| CD | Chamfer Distance |

References

- Chen, Y.; Mei, Y.; Yu, B.; Xu, W.; Wu, Y.; Zhang, D.; Yan, X. A robust multi-local to global with outlier filtering for point cloud registration. Remote Sens. 2023, 15, 5641. [Google Scholar] [CrossRef]

- Sumetheeprasit, B.; Rosales Martinez, R.; Paul, H.; Shimonomura, K. Long-range 3D reconstruction based on flexible configuration stereo vision using multiple aerial robots. Remote Sens. 2024, 16, 234. [Google Scholar] [CrossRef]

- Choy, C.; Park, J.; Koltun, V. Fully convolutional geometric features. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 8958–8966. [Google Scholar]

- Han, T.; Zhang, R.; Kan, J.; Dong, R.; Zhao, X.; Yao, S. A point cloud registration framework with color information integration. Remote Sens. 2024, 16, 743. [Google Scholar] [CrossRef]

- Mei, G.; Tang, H.; Huang, X.; Wang, W.; Liu, J.; Zhang, J.; Van Gool, L.; Wu, Q. Unsupervised deep probabilistic approach for partial point cloud registration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 13611–13620. [Google Scholar]

- Cuturi, M. Sinkhorn distances: Lightspeed computation of optimal transport. Adv. Neural Inf. Process. Syst. 2013, 26, 2292–2300. [Google Scholar]

- Qin, Z.; Yu, H.; Wang, C.; Guo, Y.; Peng, Y.; Xu, K. Geometric transformer for fast and robust point cloud registration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 11143–11152. [Google Scholar]

- Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395. [Google Scholar] [CrossRef]

- Yu, H.; Qin, Z.; Hou, J.; Saleh, M.; Li, D.; Busam, B.; Ilic, S. Rotation-invariant transformer for point cloud matching. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 5384–5393. [Google Scholar]

- Yew, Z.J.; Lee, G.H. Regtr: End-to-end point cloud correspondences with transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 6677–6686. [Google Scholar]

- Wu, Y.; Zhang, Y.; Ma, W.; Gong, M.; Fan, X.; Zhang, M.; Qin, A.; Miao, Q. Rornet: Partial-to-partial registration network with reliable overlapping representations. IEEE Trans. Neural Netw. Learn. Syst. 2023. [Google Scholar] [CrossRef] [PubMed]

- Zhao, Y.; Chen, L.; Hu, B.; Wang, H.; Ren, M. HR-Net: Point cloud registration with hierarchical coarse-to-fine regression network. Comput. Electr. Eng. 2024, 113, 109056. [Google Scholar] [CrossRef]

- Wang, H.; Liu, Y.; Hu, Q.; Wang, B.; Chen, J.; Dong, Z.; Guo, Y.; Wang, W.; Yang, B. Roreg: Pairwise point cloud registration with oriented descriptors and local rotations. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 10376–10393. [Google Scholar] [CrossRef] [PubMed]

- Thomas, H.; Qi, C.R.; Deschaud, J.E.; Marcotegui, B.; Goulette, F.; Guibas, L.J. Kpconv: Flexible and deformable convolution for point clouds. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6411–6420. [Google Scholar]

- Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. Pointnet: Deep learning on point sets for 3D classification and segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 652–660. [Google Scholar]

- Arya, S.; Mount, D.M.; Netanyahu, N.S.; Silverman, R.; Wu, A.Y. ANN: A library for approximate nearest neighbor searching. ACM Trans. Math. Softw. (TOMS) 1999, 26, 469–483. [Google Scholar]

- Huang, S.; Gojcic, Z.; Usvyatsov, M.; Wieser, A.; Schindler, K. Predator: Registration of 3d point clouds with low overlap. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 4267–4276. [Google Scholar]

- Li, J.; Chen, B.M.; Lee, G.H. SO-Net: Self-organizing network for point cloud analysis. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 9397–9406. [Google Scholar]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30. [Google Scholar]

- Rocco, I.; Cimpoi, M.; Arandjelović, R.; Torii, A.; Pajdla, T.; Sivic, J. Neighbourhood consensus networks. Adv. Neural Inf. Process. Syst. 2018, 31, 1651–1662. [Google Scholar]

- Sun, J.; Shen, Z.; Wang, Y.; Bao, H.; Zhou, X. LoFTR: Detector-free local feature matching with transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 8922–8931. [Google Scholar]

- Kabsch, W. A solution for the best rotation to relate two sets of vectors. Acta Crystallogr. Sect. A Cryst. Phys. Diffr. Theor. Gen. Crystallogr. 1976, 32, 922–923. [Google Scholar] [CrossRef]

- Umeyama, S. Least-squares estimation of transformation parameters between two point patterns. IEEE Trans. Pattern Anal. Mach. Intell. 1991, 13, 376–380. [Google Scholar] [CrossRef]

- Lu, F.; Chen, G.; Liu, Y.; Zhang, L.; Qu, S.; Liu, S.; Gu, R. Hregnet: A hierarchical network for large-scale outdoor lidar point cloud registration. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 16014–16023. [Google Scholar]

- Zeng, A.; Song, S.; Nießner, M.; Fisher, M.; Xiao, J.; Funkhouser, T. 3dmatch: Learning local geometric descriptors from rgb-d reconstructions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1802–1811. [Google Scholar]

- Wu, Z.; Song, S.; Khosla, A.; Yu, F.; Zhang, L.; Tang, X.; Xiao, J. 3D shapenets: A deep representation for volumetric shapes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1912–1920. [Google Scholar]

- Yew, Z.J.; Lee, G.H. Rpm-net: Robust point matching using learned features. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11824–11833. [Google Scholar]

- Bai, X.; Luo, Z.; Zhou, L.; Fu, H.; Quan, L.; Tai, C.L. D3feat: Joint learning of dense detection and description of 3d local features. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 6359–6367. [Google Scholar]

- Gojcic, Z.; Zhou, C.; Wegner, J.D.; Wieser, A. The perfect match: 3d point cloud matching with smoothed densities. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 5545–5554. [Google Scholar]

- Xu, H.; Liu, S.; Wang, G.; Liu, G.; Zeng, B. Omnet: Learning overlapping mask for partial-to-partial point cloud registration. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Nashville, TN, USA, 19–25 June 2021; pp. 3132–3141. [Google Scholar]

- Choy, C.; Dong, W.; Koltun, V. Deep global registration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 2514–2523. [Google Scholar]

- Cao, A.Q.; Puy, G.; Boulch, A.; Marlet, R. PCAM: Product of cross-attention matrices for rigid registration of point clouds. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Nashville, TN, USA, 19–25 June 2021; pp. 13229–13238. [Google Scholar]

- Aoki, Y.; Goforth, H.; Srivatsan, R.A.; Lucey, S. Pointnetlk: Robust & efficient point cloud registration using pointnet. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 7163–7172. [Google Scholar]

- Wang, Y.; Solomon, J.M. Deep closest point: Learning representations for point cloud registration. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 3523–3532. [Google Scholar]

| Model | 3DMatch | 3DLoMatch | ||||

|---|---|---|---|---|---|---|

| RR (%)↑ | RRE (°)↓ | RTE (m)↓ | RR (%)↑ | RRE (°)↓ | RTE (m)↓ | |

| 3DSN [29] | 78.4 | 2.199 | 0.071 | 33.0 | 3.528 | 0.103 |

| FCGF [3] | 85.1 | 1.949 | 0.066 | 40.1 | 3.147 | 0.100 |

| D3Feat [28] | 81.6 | 2.161 | 0.067 | 37.2 | 3.361 | 0.103 |

| Predator-5k [17] | 89.0 | 2.029 | 0.064 | 59.8 | 3.048 | 0.093 |

| Predator-1k [17] | 90.5 | 2.062 | 0.068 | 62.5 | 3.159 | 0.096 |

| Predator-NR [17] | 62.7 | 2.582 | 0.075 | 24.0 | 5.886 | 0.148 |

| OMNet [30] | 35.9 | 4.166 | 0.105 | 8.4 | 7.299 | 0.151 |

| DGR [31] | 85.3 | 2.103 | 0.067 | 48.7 | 3.954 | 0.113 |

| PCAM [32] | 85.5 | 1.808 | 0.059 | 54.9 | 3.529 | 0.099 |

| RegTR [10] | 92.0 | 1.567 | 0.049 | 64.8 | 2.827 | 0.077 |

| HR-Net [12] | 93.1 | 1.424 | 0.044 | 67.6 | 2.513 | 0.073 |

| Ours | 93.8 | 1.448 | 0.043 | 73.0 | 2.271 | 0.065 |

| Model | ModelNet | ModelLoNet | ||||||

|---|---|---|---|---|---|---|---|---|

| RR (%)↑ | RRE (°)↓ | RTE (m)↓ | CD (m)↓ | RR (%)↑ | RRE (°)↓ | RTE (m)↓ | CD (m)↓ | |

| PointNetLK [33] | - | 29.725 | 0.297 | 0.02350 | - | 48.567 | 0.507 | 0.0367 |

| OMNet [30] | - | 2.9470 | 0.032 | 0.00150 | - | 6.5170 | 0.129 | 0.0074 |

| DCP-v2 [34] | - | 11.975 | 0.171 | 0.01170 | - | 16.501 | 0.300 | 0.0268 |

| RPM-Net [27] | - | 1.7120 | 0.018 | 0.00085 | - | 7.3420 | 0.124 | 0.0050 |

| Predator [17] | - | 1.7390 | 0.019 | 0.00089 | - | 5.2350 | 0.132 | 0.0083 |

| RegTR [10] | 96.29 * | 1.4730 | 0.014 | 0.00078 | 68.17 * | 3.9300 | 0.087 | 0.0037 |

| HR-Net [12] | 97.71 * | 1.1970 | 0.011 | 0.00072 | 74.33 * | 3.5710 | 0.078 | 0.0034 |

| Ours | 97.24 | 1.3538 | 0.011 | 0.00078 | 72.35 | 3.9580 | 0.086 | 0.0039 |

| Model | 3DMatch | 3DLoMatch | ||||

|---|---|---|---|---|---|---|

| RR (%)↑ | RRE (°)↓ | RTE (m)↓ | RR (%)↑ | RRE (°)↓ | RTE (m)↓ | |

| Baseline [10] | 92.0 | 1.567 | 0.049 | 64.8 | 2.827 | 0.077 |

| 92.2 | 1.494 | 0.044 | 67.5 | 2.289 | 0.070 | |

| 93.8 | 1.516 | 0.045 | 71.4 | 2.212 | 0.068 | |

| 93.8 | 1.448 | 0.043 | 73.0 | 2.271 | 0.065 | |

| Model | ModelNet | ModelLoNet | ||||||

|---|---|---|---|---|---|---|---|---|

| RR (%)↑ | RRE (°)↓ | RTE (m)↓ | CD (m)↓ | RR (%)↑ | RRE (°)↓ | RTE (m)↓ | CD (m)↓ | |

| Baseline [10] | 96.29 * | 1.4730 | 0.014 | 0.00078 | 68.17 * | 3.9300 | 0.087 | 0.0037 |

| 96.05 | 1.8128 | 0.015 | 0.00086 | 70.14 | 4.5655 | 0.089 | 0.0038 | |

| 97.08 | 1.5521 | 0.013 | 0.00083 | 70.77 | 4.2219 | 0.086 | 0.0038 | |

| 97.24 | 1.3538 | 0.011 | 0.00078 | 72.35 | 3.9580 | 0.086 | 0.0039 | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhao, Y.; Chen, L.; Zhou, Q.; Zuo, J.; Wang, H.; Ren, M. A Registration Method of Overlap Aware Point Clouds Based on Transformer-to-Transformer Regression. Remote Sens. 2024, 16, 1898. https://doi.org/10.3390/rs16111898

Zhao Y, Chen L, Zhou Q, Zuo J, Wang H, Ren M. A Registration Method of Overlap Aware Point Clouds Based on Transformer-to-Transformer Regression. Remote Sensing. 2024; 16(11):1898. https://doi.org/10.3390/rs16111898

Chicago/Turabian StyleZhao, Yafei, Lineng Chen, Quanchen Zhou, Jiabao Zuo, Huan Wang, and Mingwu Ren. 2024. "A Registration Method of Overlap Aware Point Clouds Based on Transformer-to-Transformer Regression" Remote Sensing 16, no. 11: 1898. https://doi.org/10.3390/rs16111898

APA StyleZhao, Y., Chen, L., Zhou, Q., Zuo, J., Wang, H., & Ren, M. (2024). A Registration Method of Overlap Aware Point Clouds Based on Transformer-to-Transformer Regression. Remote Sensing, 16(11), 1898. https://doi.org/10.3390/rs16111898