Abstract

This paper investigates remote sensing data recognition and classification with multimodal data fusion. To address the low recognition and classification accuracy of existing methods and their difficulty in integrating multimodal features, a multimodal remote sensing data recognition and classification model based on a heatmap and a Hirschfeld–Gebelein–Rényi (HGR) correlation pooling fusion operation is proposed. A novel HGR correlation pooling fusion algorithm is developed by combining a feature fusion method with the HGR maximal correlation algorithm. By performing reverse operations on the sample data, the method can restore the original signal without changing the values of the transmitted information. This enhances feature learning for images and improves performance in specific interpretation tasks by efficiently using multimodal information with varying degrees of relevance. Ship recognition experiments conducted on the QXS-SAROPT dataset demonstrate that the proposed method surpasses existing remote sensing data recognition methods. Furthermore, land cover classification experiments conducted on the Houston 2013 and MUUFL datasets confirm the generalizability of the proposed method. The experimental results validate the effectiveness of the proposed method and its advantage over the compared methods in the recognition and classification of multimodal remote sensing data.

1. Introduction

In practical applications, single-mode scenes are limited in information content, resolution, and spectrum, which makes it difficult to meet application requirements [,,]. Multimodal image fusion has therefore become an attractive research direction. Recently, with in-depth research on fusion algorithms, multimodal recognition technology has made rapid progress [,,]. The multimodal multi-task foundation model has been widely studied in the field of computer vision. It combines image data with text or speech data as multimodal input and sets different pre-training tasks for different modal branches so that the model can learn and understand the information shared between modalities. Multimodal fusion can improve the recognition rate and offers better robustness and stability [], further promoting the development of multimodal image fusion technology. At present, image fusion has been widely used in remote sensing image fusion [], visible and infrared image fusion [], multi-focus image fusion [], multi-exposure image fusion [], medical imaging fusion [], etc.

In recent years, marine ship detection has been extensively used in many fields such as fishery management and navigation supervision. Achieving accurate detection of marine ships through multimodal fusion has great strategic significance in both civil and military fields. Cao et al. [] proposed a ship recognition method based on morphological watershed image segmentation and Zernike moments for ship extraction and recognition in video surveillance frame images. Wang et al. [] developed a SAR ship recognition method based on multi-scale feature attention and an adaptive weighted classifier. Zhang et al. [] presented a fine-grained ship image recognition network based on the bilinear convolutional neural network (BCNN). Han et al. [] proposed a new efficient information reuse network (EIRNet) and, based on EIRNet, designed a dense feature fusion network (DFF-Net), which reduces information redundancy and further improves the recognition accuracy of remote sensing ships. Liu, Chen, and Wang [] fused optical images with SAR images, utilized feature point matching, contour extraction, and brightness saliency to detect ship components, and identified ship target types based on component information voting results. However, it remains challenging to separate information while making maximum use of it without compromising image quality.

Meanwhile, in the past decade, deep-learning-based remote sensing has made significant advances in object detection, scene classification, land use segmentation, and recognition. This is mainly because deep neural networks can effectively map remote sensing observations into the needed geographic knowledge through their strong feature extraction and representation capabilities [,,]. Existing remote sensing interpretation methods mainly adopt manual visual interpretation and semi-automatic techniques based on accumulated expert knowledge, showing high accuracy and reliability. Artificial intelligence technology represented by deep learning is widely used in remote sensing image interpretation [] and has greatly improved the efficiency of remote sensing data interpretation. For instance, entropy decomposition was utilized to identify crops from synthetic aperture radar (SAR) images []. Similarly, normative forests were used to classify hyperspectral images []. Jafarzadeh et al. [] employed bagging and boosting ensembles of several tree-based classifiers to classify SAR, hyperspectral, and multispectral images.

Compared to single-sensor data, multi-sensor remote sensing data provide different descriptions of ground objects, thereby providing richer information for various application tasks. In the field of remote sensing, a modality can usually be regarded as the imaging result of the same scene and target under a different sensor, and using multimodal data for prediction and estimation is a research hotspot in this field. Given this, an integration method using the intensity–hue–saturation (IHS) transform and wavelets was adopted to fuse SAR images with medium-resolution multispectral images (MSIs) []. Cao et al. [] proposed a method for monitoring mangrove species using rotation forest fusion of HSI and LiDAR images. Hu et al. [] developed a fusion method for PolSAR and HSI data, which extracts features from the two modalities at the target level and then fuses them for land cover classification. Li et al. [] introduced an asymmetric feature fusion idea for hyperspectral and SAR images. This idea can be extended to hyperspectral and LiDAR images. Although the above studies have realized the fusion of multiple remote sensing data, the specific design of the loss function needs further investigation. Multimodal data fusion [,] is one of the most promising research directions for deep learning in remote sensing, particularly when SAR and optical data are combined, because they have highly different geometrical and radiometric properties [,].

Meanwhile, the Hirschfeld–Gebelein–Rényi (HGR) maximum correlation [] has been widely used as an information metric for studying inference and learning problems []. In the field of multimodal fusion based on HGR correlation, Liang et al. [] introduced the HGR maximum correlation terms into the loss function for person recognition in multimodal data. Wang et al. [] proposed Soft-HGR, a novel framework to extract informative features from multiple data modalities. Ma et al. [] developed an efficient data augmentation framework by designing a multimodal conditional generative adversarial network (GAN) for audiovisual emotion recognition. However, the values of the transmitted data are changed in the data fusion process.

Inspired by previous studies, the issues of remote sensing data recognition and classification with multimodal data fusion are studied. The main innovations of this article are stated as follows:

- (1) An HGR correlation pooling fusion algorithm is developed by integrating a feature fusion method with an HGR correlation algorithm. This framework adheres to the principle of relevance correlation and segregates information based on its intrinsic relevance into distinct classification channels. It enables the derivation of loss functions for positive, zero, and negative samples. Then, a tailored overall loss function is designed for the model, which significantly enhances feature learning in multimodal images.

- (2) A multimodal remote sensing data recognition and classification model is proposed, which can achieve information separation under maximum utilization. The model enhances the precision and accuracy of target recognition and classification while preserving image information integrity and image quality.

- (3) The HGR pooling specifically addresses multimodal pairs (vectors) and intervenes in the information transmission process without changing the value of the transmitted information. It enables inversion operations on positive, zero, and negative sample data in the original signal of the framework, thereby supporting traceability for the restoration of the original signal. This advancement greatly improves the interpretability of the data.

2. Related Work

To date, multimodal data fusion has been widely used in remote sensing [,]. In most cases, multimodal data recognition systems are much more accurate than the corresponding optimal single-modal data recognition systems []. According to the fusion level, the fusion strategies between modalities can be mainly divided into data-level fusion, feature-level fusion, and decision-level fusion []. Data-level fusion operates on the data without special processing for each modality: the original data of each modality are combined without preprocessing to obtain the fused data, which are then taken as the input of the recognition network for training or recognition. Feature-level fusion concatenates the features of each modality into a large feature vector, which is then fed into a classifier for classification and recognition. Decision-level fusion first obtains the prediction probabilities of each modality through a classifier, then determines the weights and fusion strategy of each modality based on its credibility, and finally obtains the fused classification results.
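The three fusion levels can be contrasted on toy data. The following is a minimal sketch rather than any cited system: the feature extractor is a stand-in random linear map, and the array sizes and credibility weights `w1` and `w2` are hypothetical values chosen only for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy inputs: 4 samples, two modalities with different widths.
x1 = rng.normal(size=(4, 6))   # modality 1 raw data
x2 = rng.normal(size=(4, 3))   # modality 2 raw data

def extract(x, out_dim, seed):
    """Stand-in feature extractor: a fixed random linear map plus ReLU."""
    w = np.random.default_rng(seed).normal(size=(x.shape[1], out_dim))
    return np.maximum(x @ w, 0.0)

def softmax(z):
    """Row-wise softmax with the usual max-shift for numerical stability."""
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

# Data-level fusion: combine the raw data, then feed a single network.
data_fused = np.concatenate([x1, x2], axis=1)         # shape (4, 9)

# Feature-level fusion: extract per-modality features, then concatenate.
f1, f2 = extract(x1, 5, seed=1), extract(x2, 5, seed=2)
feature_fused = np.concatenate([f1, f2], axis=1)      # shape (4, 10)

# Decision-level fusion: weight the per-modality class probabilities.
p1 = softmax(extract(x1, 3, seed=3))                  # 3 classes
p2 = softmax(extract(x2, 3, seed=4))
w1, w2 = 0.6, 0.4                                     # assumed credibility weights
decision_fused = w1 * p1 + w2 * p2                    # each row still sums to 1
```

Because the weights sum to one, the decision-level output remains a valid probability distribution per sample, which is what makes this strategy easy to bolt onto independently trained classifiers.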

The complexity of the above three fusion strategies decreases in sequence, and their dependence on the rest of the system processes increases in sequence. Usually, a multimodal fusion strategy is selected based on the specific situation. With the improvement in hardware computing power and the increasing demand for applications, studies on data recognition, which involves massive amounts of data and has mature data collection methods, are constantly being enriched.

To ascertain the degree of correlation and identify the most informative features, the Hirschfeld–Gebelein–Rényi (HGR) maximal correlation is employed as a normalized measure of the dependence between two random variables. It has been widely applied as an information metric to study inference and learning problems. In [], the sample complexity of estimating the HGR maximal correlation functions with the alternating conditional expectation algorithm, using training samples from large datasets, is studied. In [], the HGR maximal correlation is used to model the high dependence between the different modalities in generated multimodal data, so that the different modalities in the generated data can be exploited to approximate the real data. Although these studies have yielded promising outcomes, it is difficult to achieve accurate detection through multimodal fusion of marine ships, and the interrelationships between modules have not been fully elucidated.

Feature-level fusion can preserve more data information. It first extracts features from the image and then performs fusion. Pedergnana et al. [] used optical and LiDAR data by extracting extended attribute profiles of the two modalities and concatenating them with the original modalities. Then, a two-layer DBN network structure was proposed, which first learns the features of the two modalities separately and then concatenates them to learn the second layer. Finally, a support vector machine (SVM) was utilized to evaluate and classify the concatenated features [].

However, feature-level fusion requires high computing power and is prone to the curse of dimensionality, so decision-level fusion is also commonly applied. For example, a SAR and infrared fusion scheme based on decision-level fusion was introduced []. This scheme uses a dual-weighting strategy to measure the confidence of offline sensors and the reliability of online sensors. Decision-level fusion has relatively low structural complexity and does not require strict temporal synchronization, so it performs well in some application scenarios.

3. Methodology

In this section, the details of the proposed CNN-based special HGR correlation pooling fusion framework for multimodal data are introduced. The framework can preserve adequate multimodal information and extract the correlation between modal 1 and modal 2 data so that discriminative information can be learned more directly.

3.1. Problem Definition

Given paired observations from multimodal data $\{(x^{(i)}, y^{(i)}) \mid x^{(i)} \in \mathbb{R}^{n_1},\ y^{(i)} \in \mathbb{R}^{n_2},\ i = 1, \ldots, N\}$, let $x$ and $y$ represent the modal 1 image and modal 2 image, whose components lie in $\mathbb{R}^{n_1}$ and $\mathbb{R}^{n_2}$, respectively. The $i$th components $x^{(i)} \in x$ and $y^{(i)} \in y$ match each other and come from the same region, while the $i$th component $x^{(i)} \in x$ and the $j$th component $y^{(j)} \in y$ with $j \neq i$ do not match each other and come from different regions.

3.2. Model Overview

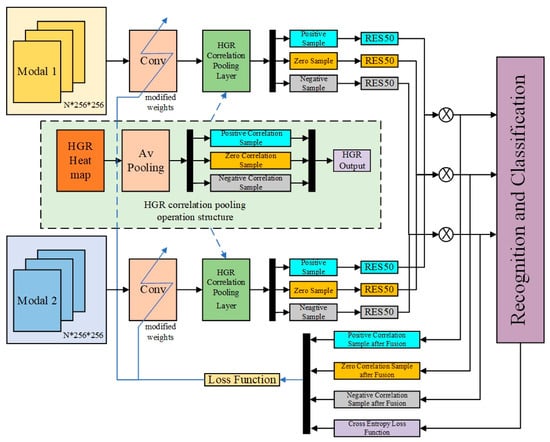

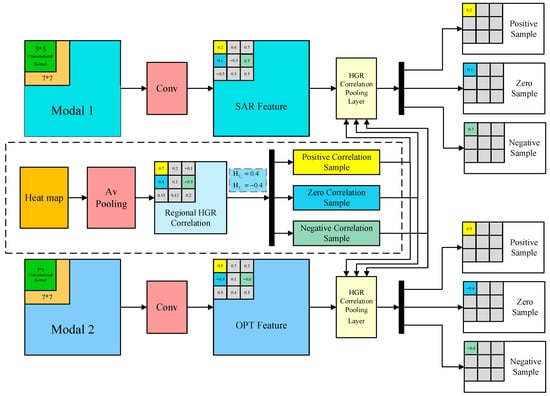

In this paper, to solve the problems of low recognition and classification accuracy and difficulty in effectively integrating multimodal features, an HGR maximal correlation pooling fusion framework is proposed for recognition and classification in multimodal remote sensing data. The overall structure of the framework is shown in Figure 1. In the subsequent subsections, the model will be discussed in detail.

Figure 1.

The overall framework of the proposed multimodal data fusion model.

For multimodal image pairs, the framework has two separate feature extraction networks. To reduce the dimensionality of the features, a 1 × 1 convolution layer follows each feature extraction backbone, and the modal 1 feature map and modal 2 feature map are obtained separately. Then, a special HGR maximal correlation pooling layer is employed. The HGR pooling handles multimodal pairs (vectors) and only intervenes in the information transmission without changing the value of the transmitted information. The principle is to filter the values of information with different relevance characteristics and transmit them to the corresponding subsequent classification channels. The feature data are processed to obtain positive sample data, zero sample data, and negative sample data for modal 1 and modal 2 features, respectively. Then, the three types of sample data from modal 1 and modal 2 are input into the ResNet50 [] network to extract feature vectors, and feature-level fusion is performed on the corresponding feature vectors to obtain fused positive samples, fused zero samples, and fused negative samples.

Finally, the positive, zero, and negative samples of modal 1/modal 2 images are fused respectively using the recognition and classification network, thereby accomplishing multimodal recognition tasks.
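The dimensionality reduction step in this pipeline, a 1 × 1 convolution after each backbone, amounts to applying the same linear map across channels at every pixel. The sketch below illustrates only that step; the channel counts and the random weights are hypothetical, and the helper name `conv1x1` is introduced here for illustration.

```python
import numpy as np

def conv1x1(feature_map, weight):
    """1 x 1 convolution: the same linear map applied at every pixel.

    feature_map: (c_in, h, w); weight: (c_out, c_in). Returns (c_out, h, w).
    """
    return np.einsum("oc,chw->ohw", weight, feature_map)

rng = np.random.default_rng(0)
backbone_out = rng.normal(size=(8, 4, 4))   # hypothetical 8-channel feature map
weight = rng.normal(size=(2, 8))            # reduce 8 channels to 2
reduced = conv1x1(backbone_out, weight)     # shape (2, 4, 4)
```

The spatial layout is untouched, which matters here because the subsequent pooling layer relies on pixel positions staying aligned between the two modalities.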

3.3. Heatmap and HGR Correlation Pooling

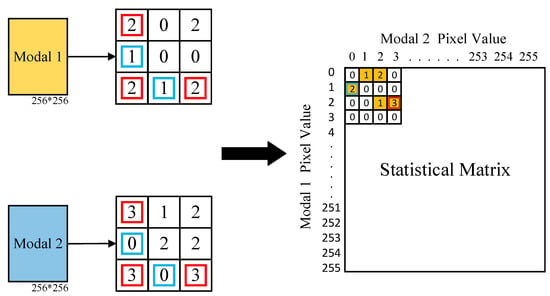

The input set of multimodal images needs to be pre-aligned to generate heatmaps. denotes the input data of modal 1 pixel matrix of size , and denotes the input data of modal 2 pixel matrix of size . The statistical matrices of modal 1 and modal 2 images are illustrated by empirical distributions and defined as follows:

For each pixel of modal 1 and modal 2 images:

where ps and po represent the pixel positions in the modal 1 and modal 2 images, respectively, and # represents the number of pixels in the modal 1 and modal 2 images. The functions X(ps, x) and Y(po, y) count the occurrences of the specific pixel positions ps and po in the two modal images, respectively.

According to the definition of X and Y, the statistical matrix calculation process is as follows:

where is the statistical matrix calculation function and Ds is the statistical matrix. The calculation of the statistical matrix process is shown in Figure 2.

Figure 2.

Statistical matrix calculation process.

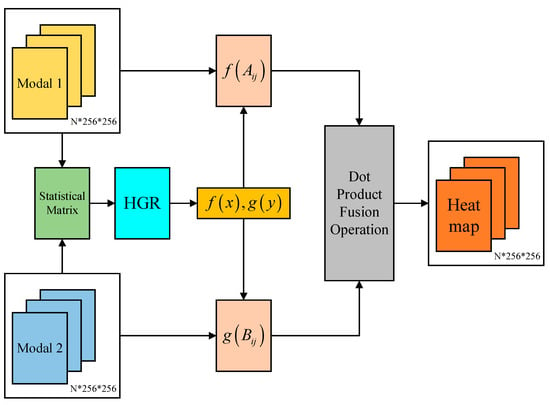
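One plausible reading of the statistical matrix is an empirical joint distribution of co-located pixel values in the two aligned images. The sketch below follows that reading only; the function name `statistical_matrix`, the quantisation into `levels` integer values, and the toy images are assumptions made for illustration and are not taken from the source.

```python
import numpy as np

def statistical_matrix(img1, img2, levels):
    """Empirical joint distribution of co-located pixel values.

    img1, img2: aligned integer images of equal shape with values in [0, levels).
    Returns a (levels, levels) matrix whose entries sum to 1.
    """
    if img1.shape != img2.shape:
        raise ValueError("images must be pre-aligned to the same shape")
    counts = np.zeros((levels, levels), dtype=np.float64)
    # np.add.at accumulates repeated (value1, value2) pairs correctly.
    np.add.at(counts, (img1.ravel(), img2.ravel()), 1.0)
    return counts / counts.sum()

img1 = np.array([[0, 1], [1, 2]])
img2 = np.array([[0, 0], [1, 2]])
D = statistical_matrix(img1, img2, levels=3)
```

In this toy case the four pixel pairs (0, 0), (1, 0), (1, 1), and (2, 2) each receive probability 0.25, and every other entry of `D` is zero.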

Then, the pixel-level maximal nonlinear cross-correlation between sets is given by:

where f(x) represents modal 1 at each pixel position, g(y) represents modal 2 at each pixel position, and H represents the nonlinear correlation between the pixel points in the modal 1 and modal 2 images.
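For reference, the standard definition of the HGR maximal correlation between two random variables $X$ and $Y$ is usually written as below. The symbol $\rho$ is notation introduced here; the pixel-level quantity H described above is understood as an instance of this definition, although its exact form in the source may differ.

$$
\rho(X, Y) = \sup_{f,\, g} \ \mathbb{E}\left[f(X)\, g(Y)\right]
\quad \text{s.t.} \quad
\mathbb{E}[f(X)] = \mathbb{E}[g(Y)] = 0, \qquad
\mathbb{E}[f^{2}(X)] = \mathbb{E}[g^{2}(Y)] = 1 .
$$

The value lies in $[0, 1]$, equals 0 exactly when $X$ and $Y$ are independent, and the maximizing pair $(f, g)$ gives the projection functions.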

According to the obtained statistical matrix, the HGR cross-correlation is calculated as follows:

where is the HGR cross-correlation calculation function, and f(x) and g(y) are projection vectors. Based on the obtained projection vector, the heatmap pixel matrix is calculated as follows:

where is a heatmap pixel matrix of size . The heatmap calculation process is shown in Figure 3.

Figure 3.

Heatmap calculation process.

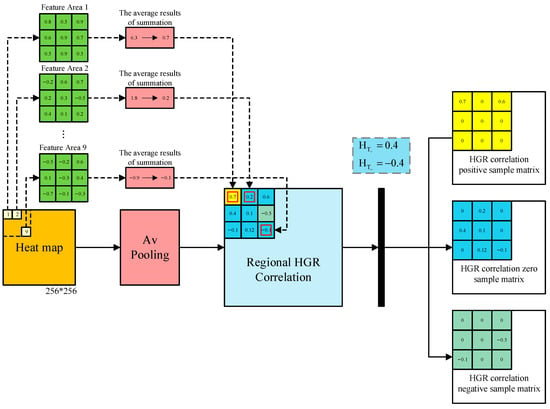
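For discrete variables, a classical result is that the HGR maximal correlation equals the second-largest singular value of the joint distribution matrix normalised by the square roots of its marginals, and the matching singular vectors give the projection vectors. The sketch below uses that result; it is not necessarily the computation used by the authors, and the function name `hgr_discrete` and the toy distributions are illustrative assumptions.

```python
import numpy as np

def hgr_discrete(joint):
    """HGR maximal correlation of a discrete joint distribution.

    joint: (n, m) non-negative matrix summing to 1 with no all-zero row or column.
    Returns (rho, f, g): rho is the second-largest singular value of the
    normalised matrix, and f, g are the matching projection vectors.
    """
    px = joint.sum(axis=1)
    py = joint.sum(axis=0)
    b = joint / np.sqrt(np.outer(px, py))
    u, s, vt = np.linalg.svd(b)
    rho = s[1]                     # s[0] == 1 belongs to the trivial constant pair
    # When the top singular value is simple, f and g have zero mean and
    # unit variance under px and py respectively.
    f = u[:, 1] / np.sqrt(px)
    g = vt[1, :] / np.sqrt(py)
    return rho, f, g

# Independent variables: the normalised matrix has rank one, so rho is 0.
indep = np.outer([0.5, 0.5], [0.3, 0.7])
rho_indep, _, _ = hgr_discrete(indep)

# Correlated binary variables.
dep = np.array([[0.4, 0.1],
                [0.1, 0.4]])
rho_dep, f_dep, g_dep = hgr_discrete(dep)   # rho_dep is 0.6 up to rounding
```

In the second example the normalised matrix is [[0.8, 0.2], [0.2, 0.8]], whose singular values are 1 and 0.6, so the maximal correlation is 0.6.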

Based on the heatmap pixel matrix obtained above, the average pooling is calculated as follows:

where is the average pooling function, and is the HGR region cross-correlation matrix of size . Furthermore, the calculation of cross-correlation positive, zero, and negative sample matrices can be expressed as:

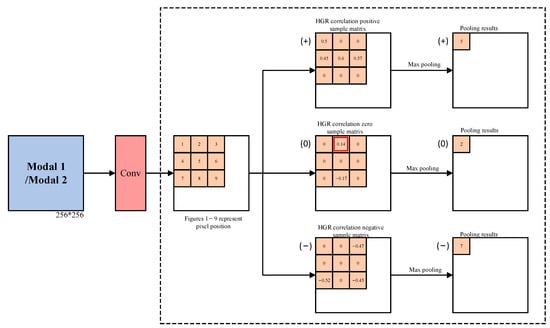

where , and , , and represent positive, zero, and negative cross-correlation sample matrices, respectively. The calculation of the HGR cross-correlation sample matrix is shown in Figure 4.

Figure 4.

The calculation process of the HGR correlation sample matrix.
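The region-level step can be sketched as average pooling of the heatmap followed by a split of the pooled correlation values by sign. This is a minimal illustration under stated assumptions: the non-overlapping window, the tolerance `eps` that decides what counts as zero correlation, and the function names are all hypothetical, since the source's exact rule is not recoverable from the text.

```python
import numpy as np

def average_pool(heatmap, k):
    """Non-overlapping k x k average pooling (heatmap sides divisible by k)."""
    h, w = heatmap.shape
    return heatmap.reshape(h // k, k, w // k, k).mean(axis=(1, 3))

def split_by_sign(corr, eps=1e-6):
    """Boolean masks for positive, zero, and negative correlation regions.

    eps is an assumed tolerance: |value| <= eps counts as zero correlation.
    """
    positive = corr > eps
    negative = corr < -eps
    zero = ~(positive | negative)
    return positive, zero, negative

heatmap = np.array([
    [ 0.4,  0.6,  0.0,  0.0],
    [ 0.5,  0.5,  0.0,  0.0],
    [-0.2, -0.4,  0.3, -0.3],
    [-0.6, -0.8, -0.3,  0.3],
])
region_corr = average_pool(heatmap, k=2)
pos, zero, neg = split_by_sign(region_corr)
```

Here the pooled matrix is approximately [[0.5, 0.0], [-0.5, 0.0]], so one region is positive, one is negative, and two are zero. The three masks are disjoint and together cover every region.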

Meanwhile, A is defined as the image pixel position matrix, and is defined as the matrix maximum pooling dot product operation. Based on the cross-correlation positive, zero, and negative sample matrix obtained by the above calculation, the maximum pooling calculation process is given by:

where , , and are HGR cross-correlation positive, zero, and negative sample matrix maximum pooling results, respectively. The HGR cross-correlation pooling process is shown in Figure 5.

Figure 5.

HGR correlation pooling process.

Finally, for the special HGR maximum correlation pooling layer, the key point is to obtain the transfer position from the generated correlation matrix and to transfer the value at that position in the intermediate matrix during pooling. Because pooling reduces dimensionality, only one value is passed on from each 3 × 3 window, and that value is selected by correlation rather than by the usual maximum.

As shown in Figure 6, the corresponding modal 1/modal 2 features are transmitted through the special HGR pooling layer, which intervenes in the information transmission based on the HGR maximum correlation matrix instead of normal pooling methods without changing the value of the transmitted information. Meanwhile, the modal 1/modal 2 feature maps are divided into positive samples, zero samples, and negative samples for modal 1 and modal 2 data, respectively.

Figure 6.

Sample division.
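The idea of choosing the transfer position by correlation while passing the feature value through unchanged can be sketched as follows. This is an illustrative reading of the description, not the authors' layer: the function name `correlation_guided_pool`, the non-overlapping 3 × 3 windows, and the random inputs are assumptions.

```python
import numpy as np

def correlation_guided_pool(features, corr, k=3):
    """Pick one value per k x k window, chosen by correlation, not magnitude.

    features, corr: arrays of equal shape whose sides are divisible by k.
    Returns (pooled, rows, cols): the transmitted feature values and the
    source coordinates they came from, which keeps the transfer traceable.
    """
    h, w = features.shape
    oh, ow = h // k, w // k

    def windows(a):
        # Rearrange so that every window becomes one row of k*k entries.
        return a.reshape(oh, k, ow, k).transpose(0, 2, 1, 3).reshape(oh, ow, k * k)

    idx = windows(corr).argmax(axis=-1)             # position of highest correlation
    pooled = np.take_along_axis(windows(features), idx[..., None], axis=-1)[..., 0]
    rows = np.arange(oh)[:, None] * k + idx // k    # source row in the input
    cols = np.arange(ow)[None, :] * k + idx % k     # source column in the input
    return pooled, rows, cols

rng = np.random.default_rng(0)
features = rng.normal(size=(6, 6))
corr = rng.uniform(-1, 1, size=(6, 6))
pooled, rows, cols = correlation_guided_pool(features, corr)
```

Every entry of `pooled` is an unmodified entry of `features`, and the returned coordinates record where it came from. Ordinary max pooling would instead select by the feature magnitude itself.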

3.4. Learning Objective

The cross-entropy loss and the Soft-HGR loss with modified weights are taken as the loss for the whole network. Thus, the learning objective of the framework can be represented as:

where Lce represents the cross-entropy loss and Lsoft-HGR denotes the Soft-HGR loss. α is the penalty parameter that balances Lce and Lsoft-HGR; it is a designer-defined parameter ranging from 0 to 1.

The cross-entropy loss Lce is used to measure the difference between the predicted result and the ground-truth label , and can be expressed as follows:

The Soft-HGR loss Lsoft-HGR [] is utilized to maximize the correlation between multimodal images, and can be represented as follows:

where and are weighting factors, which are designer-defined parameters ranging from 0 to 1. Lpositive, Lzero, and Lnegative represent the loss for positive samples, zero samples, and negative samples, and their definitions are given below:

where fp(X) and gp(Y) represent a pair of positive samples. Similarly, fZ(X) and gZ(Y) represent a pair of zero samples, and fN(X) and gN(Y) represent a pair of negative samples. As a supplement, the expectations and covariance are approximated through the sample mean and sample covariance.
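The pieces of the learning objective can be sketched numerically. The Soft-HGR objective below follows its commonly published form, the expected inner product of the centred features minus half the trace of the product of their covariances, with sample estimates in place of expectations. How the source signs and weights the positive, zero, and negative terms is not recoverable from the text, so the `weights` dictionary, the negation of each term, and the function `total_loss` are assumptions made purely for illustration.

```python
import numpy as np

def soft_hgr(f, g):
    """Soft-HGR objective for feature batches f, g of shape (n, k).

    Returns E[f^T g] - 0.5 * tr(cov(f) cov(g)) on centred features,
    with expectations replaced by sample means and sample covariances.
    """
    f = f - f.mean(axis=0)
    g = g - g.mean(axis=0)
    n = f.shape[0]
    inner = np.sum(f * g) / (n - 1)
    cov_f = f.T @ f / (n - 1)
    cov_g = g.T @ g / (n - 1)
    return inner - 0.5 * np.trace(cov_f @ cov_g)

def cross_entropy(probs, labels):
    """Mean negative log-likelihood of integer labels under predicted probs."""
    return -np.mean(np.log(probs[np.arange(len(labels)), labels]))

def total_loss(probs, labels, pairs, weights, alpha):
    """Cross-entropy plus alpha times a weighted Soft-HGR term.

    pairs: dict with keys "positive", "zero", "negative" mapping to (f, g).
    weights: dict with the same keys (assumed weighting, not from the source).
    Each term is the negated Soft-HGR objective, so minimising the loss
    maximises the correlation of that pair (also an assumption).
    """
    l_hgr = sum(weights[k] * -soft_hgr(*pairs[k]) for k in pairs)
    return cross_entropy(probs, labels) + alpha * l_hgr

z = np.array([[-1.0], [0.0], [1.0]])
aligned = soft_hgr(z, z)      # 0.5: identical unit-variance features
opposed = soft_hgr(z, -z)     # -1.5: perfectly anti-correlated features

probs = np.array([[0.5, 0.5]])
labels = np.array([0])
pairs = {"positive": (z, z), "zero": (z, z), "negative": (z, z)}
weights = {"positive": 1.0, "zero": 0.5, "negative": 0.5}
loss = total_loss(probs, labels, pairs, weights, alpha=0.1)
```

The covariance penalty is what keeps the objective bounded without whitening the features, which is the practical motivation for the soft formulation.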

4. Experiments and Analysis

4.1. Dataset

The ship recognition experiments were conducted on the QXS-SAROPT dataset, and the land cover classification experiments were conducted on the Houston 2013 and MUUFL datasets, to verify the effectiveness of the proposed model and to test the improvement in remote sensing data recognition and classification when the utilization of multimodal information is maximized.

QXS-SAROPT [] contains 20,000 pairs of optical and SAR images collected from Google Earth remote sensing optical images and GaoFen-3 high-resolution spotlight images. Each image is 256 × 256 pixels, at a resolution of 1 m, to fit the neural network. This dataset covers San Diego, Shanghai, and Qingdao.

The HSI-LiDAR Houston 2013 dataset [], provided for IEEE GRSS DFC2013, consists of imagery collected by the ITRES CASI-1500 imaging sensor. This imagery encompasses the University of Houston campus and its adjacent rural areas in Texas, USA. This dataset is widely used for land cover classification.

The HSI-LiDAR MUUFL dataset was constructed over the campus of the University of Southern Mississippi using the Reflective Optics System Imaging Spectrometer (ROSIS) sensor [,]. This dataset contains HSI and LiDAR data and is widely used for land cover classification.

4.2. Data Preprocessing and Experimental Setup

Since the image pairs in the QXS-SAROPT dataset do not contain labels and are not aligned, manual alignment was performed on 131 image pairs in this study. The proposed model learns the pixel correlation from SAR and optical image pairs and calculates the maximum pixel-level HGR cross-correlation between the SAR and optical data, together with the corresponding projection vectors f(x) and g(y), to generate the heatmap and the HGR cross-correlation pooling matrix. The pixel-level HGR has potential for improving the network's learning of multimodal information.

In the Houston 2013 dataset [], the HSI image contains 349 × 1905 pixels and features 144 spectral channels at a spectral resolution of 10 nm, spanning a range from 364 to 1046 nm. Meanwhile, LiDAR data for a single band provide elevation information for the same image area. The study scene encompasses 15 distinct land cover and land use categories. This dataset contains 2832 training samples and 12,197 test samples, as listed in Table 1 [].

Table 1.

The Houston2013 dataset with 2832 training samples and 12,197 testing samples.

In the MUUFL dataset [], the HSI image contains 325 × 220 pixels, covering 72 spectral bands. The LiDAR imagery incorporates elevation data across two grids. Due to noise considerations, 8 initial and final bands were discarded, and 64 bands remained. The data encompass 11 urban land cover classes, comprising 53,687 ground truth pixels. Table 2 presents the distribution of 5% samples randomly extracted from each category.

Table 2.

The MUUFL Gulfport dataset with 2669 training samples and 51,018 testing samples.
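Drawing a fixed fraction of samples at random from each category is a stratified split. The sketch below shows one way to do it; the rounding rule, the random seed, and the toy class sizes are assumptions, so it would not necessarily reproduce the exact counts in Table 2.

```python
import numpy as np

def stratified_sample(labels, fraction, seed=0):
    """Indices of a random `fraction` of the samples from each class."""
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    chosen = []
    for cls in np.unique(labels):
        members = np.flatnonzero(labels == cls)
        # Assumed rounding rule: nearest integer, but at least one sample.
        n_take = max(1, int(round(fraction * members.size)))
        chosen.append(rng.choice(members, size=n_take, replace=False))
    return np.sort(np.concatenate(chosen))

# Toy label vector with three imbalanced classes.
labels = np.repeat([0, 1, 2], [200, 100, 40])
train_idx = stratified_sample(labels, fraction=0.05)
test_idx = np.setdiff1d(np.arange(labels.size), train_idx)
```

Sampling per class rather than over the whole image keeps rare categories represented in the training set.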

The proposed model was trained using the Lion optimizer for 500 epochs with a batch size of 32 and an initial learning rate of 0.0001. After 30 epochs, the learning rate was decayed by a factor of $10^{-0.01}$ in each epoch. All experiments were conducted on a computer equipped with an Intel(R) Xeon(R) Gold 6133 CPU @ 2.50 GHz and an NVIDIA GeForce RTX 3090 GPU (NVIDIA, Santa Clara, CA, USA) with 24 GB of memory, running 64-bit Ubuntu 20.04 with CUDA 12.2 and cuDNN 8.8. The source code was implemented using PyTorch 2.1.1 and Python 3.9.16.
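The learning rate schedule can be written as a small function. This sketch assumes the decay means multiplying the rate by 10 to the power −0.01 (about 0.977) once per epoch after the first 30 epochs; the function name and the 0-indexed epoch convention are illustrative choices, not details from the source.

```python
def learning_rate(epoch, base_lr=1e-4, hold_epochs=30, decay_exponent=0.01):
    """Learning rate at a 0-indexed epoch.

    Constant for the first `hold_epochs` epochs, then multiplied by
    10 ** (-decay_exponent) once per subsequent epoch.
    """
    steps = max(0, epoch - hold_epochs + 1)
    return base_lr * 10.0 ** (-decay_exponent * steps)

schedule = [learning_rate(e) for e in range(500)]
```

Under this reading the rate drops by one order of magnitude every 100 epochs after the hold period, so it reaches roughly 1e-5 at the 130th epoch.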

4.3. Ship Recognition Experiment

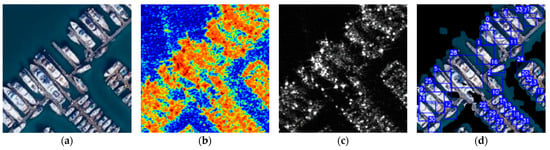

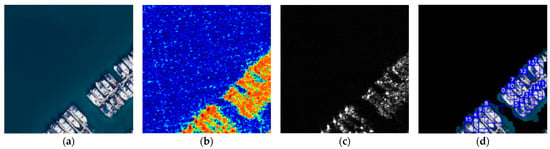

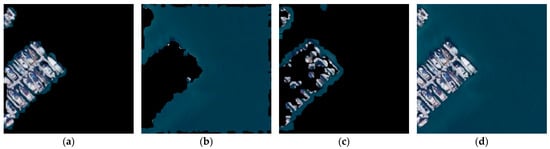

To verify the performance of the proposed HGRPool method, a series of experiments were conducted on the QXS-SAROPT dataset (100 training samples with 4358 instances, 31 testing samples with 1385 instances) to perform ship feature recognition on SAR–optical image pairs. The results of three experiments are illustrated in Figure 7, Figure 8 and Figure 9, where (a) illustrates the optical image, (b) shows the heatmap, (c) displays the SAR image, and (d) depicts the ship recognition results. The results from these figures demonstrate that the HGRPool method effectively identifies different ships, achieving commendable recognition performance and effectively distinguishing water bodies.

Figure 7.

Ship recognition experiment 1: (a) optical image; (b) heatmap; (c) SAR image; (d) ship recognition results.

Figure 8.

Ship recognition experiment 2: (a) optical image; (b) heatmap; (c) SAR image; (d) ship recognition results.

Figure 9.

Ship recognition experiment 3: (a) optical image; (b) heatmap; (c) SAR image; (d) ship recognition results.

The proposed HGRPool method was compared with the BNN method proposed by Bao et al. []. Table 3 lists the values of four commonly used indicators, namely precision (P), recall (R), F1-score (F1), and accuracy (Acc) for the recognition results. Meanwhile, under the same experimental conditions, comparative experiments were conducted with other existing methods, including MoCo-BNN [], CCR-Net [], and MFT []. The experimental data are presented in Table 3. The precision, recall, F1-score, and accuracy of the proposed method reached 0.908, 0.988, 0.946, and 0.898, respectively. The proposed method achieved better results than existing methods, especially in terms of accuracy. The results suggest that the proposed method has greater recognition stability and accuracy and higher localization accuracy.
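Since the F1-score is the harmonic mean of precision and recall, the reported values can be cross-checked directly. The short sketch below does this for the figures quoted above; the helper names are introduced here for illustration.

```python
def precision_recall(tp, fp, fn):
    """Precision and recall from true-positive, false-positive, false-negative counts."""
    return tp / (tp + fp), tp / (tp + fn)

def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)

# Precision and recall quoted in the text, with the F1-score reported next to them.
reported_p, reported_r, reported_f1 = 0.908, 0.988, 0.946
recomputed_f1 = f1_score(reported_p, reported_r)
print(f"F1 from P and R = {recomputed_f1:.3f}, reported = {reported_f1:.3f}")
```

The recomputed value rounds to 0.946, which agrees with the reported F1-score.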

Table 3.

Ship identification experimental results (best results are bolded).

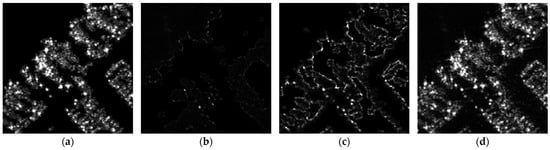

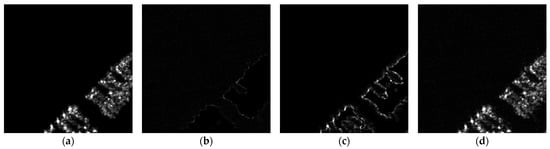

4.4. Information Traceability Experiment

To demonstrate information integrity throughout the processing stages, an information traceability experiment was conducted using images in three distinct forms: positive, negative, and zero. The experiment aimed to retrieve the original images from these three forms. This part of the experiment involved six sets of tests, three with optical images and three with SAR images. The results are illustrated in Figures 10–15, where (a) represents the positive image, (b) the negative image, (c) the zero image, and (d) the target traceability result image. It can be observed that the traced images are consistent with the original images, which indicates that the proposed algorithm maintains information integrity throughout the processing stages.
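One way to see why such a restoration can be lossless: if the positive, zero, and negative parts are obtained with disjoint masks that together cover every pixel, and the pixel values themselves are left unchanged, then adding the three parts returns the original image. The sketch below illustrates this with random data; the sign-based masks are an assumption about how the three forms are produced.

```python
import numpy as np

rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(4, 4))
corr = rng.uniform(-1, 1, size=(4, 4))
corr[0, 0] = 0.0                          # force at least one zero-correlation pixel

# Disjoint masks that cover every pixel exactly once.
pos_mask, neg_mask = corr > 0, corr < 0
zero_mask = corr == 0

# Each part keeps the original values where its mask holds and 0 elsewhere.
positive = np.where(pos_mask, image, 0)
zero = np.where(zero_mask, image, 0)
negative = np.where(neg_mask, image, 0)

restored = positive + zero + negative     # identical to the original image
```

The check works only because no value is rescaled on the way through, which is the property the HGR pooling layer is described as preserving.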

Figure 10.

Optical information traceability experiment 1: (a) optical positive image; (b) optical negative image; (c) optical zero image; (d) optical original image.

Figure 11.

Optical information traceability experiment 2: (a) optical positive image; (b) optical negative image; (c) optical zero image; (d) optical original image.

Figure 12.

Optical information traceability experiment 3: (a) optical positive image; (b) optical negative image; (c) optical zero image; (d) optical original image.

Figure 13.

SAR information traceability experiment 1: (a) SAR positive image; (b) SAR negative image; (c) SAR zero image; (d) SAR original image.

Figure 14.

SAR information traceability experiment 2: (a) SAR positive image; (b) SAR negative image; (c) SAR zero image; (d) SAR original image.

Figure 15.

SAR information traceability experiment 3: (a) SAR positive image; (b) SAR negative image; (c) SAR zero image; (d) SAR original image.

4.5. Land Cover Classification Experiment on the Houston 2013 Dataset

To validate the generalizability of the proposed method, land cover classification experiments were conducted on the Houston 2013 dataset. Our method was compared with traditional machine learning algorithms and state-of-the-art deep learning methods, including CCF [], CoSpace [], Co-CNN [], FusAT-Net [], ViT [], S2FL [], Spectral-Former [], CCR-Net [], MFT [], and DIMNet []. The specific results are shown in Figure 16, where (a) displays the DSM of the LiDAR data, (b) shows the heatmap, (c) represents the three-band color composite for HSI spectral information, (d) shows the train ground-truth map, (e) shows the test ground-truth map, and (f) illustrates the classification results, with good contrast after reconstruction. The values of three widely used indicators, namely overall accuracy (OA), average accuracy (AA), and the Kappa coefficient, are presented in Table 4 for comparison, where the top outcomes are highlighted in bold. Our method outperforms the others in terms of OA (92.23%), AA (93.55%), and Kappa coefficient (0.9157). It surpasses the other methods in eight categories (stressed grass, synthetic grass, water, residential, road, parking lot 1, tennis court, and running track), and it achieves the highest accuracy of 100% in the four categories of synthetic grass, tennis court, water, and running track. In the remaining seven categories, our method still provides commendable results. Overall, our method performs better than the compared models on all three aggregate indicators, which suggests that it generalizes well and is a reliable model.
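The three indicators can all be computed from a confusion matrix using their standard definitions. The sketch below uses a toy two-class matrix with made-up counts; the function name is introduced here for illustration.

```python
import numpy as np

def classification_scores(confusion):
    """OA, AA, and Cohen's kappa from a confusion matrix.

    confusion[i, j] counts samples of true class i predicted as class j.
    """
    confusion = np.asarray(confusion, dtype=np.float64)
    total = confusion.sum()
    oa = np.trace(confusion) / total                       # overall accuracy
    per_class = np.diag(confusion) / confusion.sum(axis=1)
    aa = per_class.mean()                                  # mean of per-class accuracies
    # Agreement expected by chance from the row and column marginals.
    expected = (confusion.sum(axis=0) @ confusion.sum(axis=1)) / total**2
    kappa = (oa - expected) / (1.0 - expected)
    return oa, aa, kappa

toy = [[8, 2],
       [1, 9]]
oa, aa, kappa = classification_scores(toy)   # 0.85, 0.85, 0.7 up to rounding
```

OA weights every sample equally, AA weights every class equally, and the Kappa coefficient discounts the agreement expected by chance, which is why the three can rank methods differently on imbalanced land cover data.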

Figure 16.

Houston 2013 dataset: (a) DSM obtained from LiDAR; (b) heatmap; (c) three-band color composite for HSI images (bands 32, 64, 128); (d) train ground-truth map; (e) test ground-truth map; (f) classification map.

Table 4.

Comparison of various methods on the Houston 2013 dataset (best results are bolded).

4.6. Land Cover Classification Experiment on the MUUFL Dataset

To validate the generalizability of the proposed method, land cover classification experiments were also conducted on the HSI-LiDAR MUUFL dataset. Our method was compared with traditional machine learning algorithms and state-of-the-art deep learning methods, including CCF, CoSpace, Co-CNN, FusAT-Net, ViT [], S2FL, Spectral-Former, CCR-Net, and MFT. The specific results are illustrated in Figure 17, where (a) displays the three-band color composite for HSI spectral information, (b) shows the heatmap, (c) represents the LiDAR image, (d) shows the train ground-truth map, (e) shows the test ground-truth map, and (f) illustrates the classification results. The values of three widely used indicators, namely OA, AA, and the Kappa coefficient, are presented in Table 5, with the top outcomes highlighted in bold. Our method outperforms the others in terms of OA (94.99%), AA (88.13%), and Kappa coefficient (0.9339). It surpasses the other methods in five categories (grass-pure, water, buildings’-shadow, buildings, and sidewalk). In the remaining six categories, our method still obtains commendable results. Overall, our method performs better than the compared models on all three aggregate indicators, which suggests that it generalizes well and is a reliable model.

Figure 17.

MUUFL dataset: (a) three-band color composite for HSI images (bands 16, 32, 64); (b) heatmap; (c) LiDAR image; (d) train ground-truth map; (e) test ground-truth map; (f) classification map.

Table 5.

Comparison of various methods on the HSI-LiDAR MUUFL dataset (best results are bolded).

5. Discussion

5.1. Ablation Experiment

The ablation experiments were conducted on the QXS-SAROPT dataset, the Houston 2013 dataset, and the MUUFL dataset to evaluate the proposed HGR correlation pooling fusion framework.

In the ablation experiments on the QXS-SAROPT dataset, the performance was observed when partially using the HGRPool, i.e., using the HGRPool for positive and negative samples or for positive and zero samples, as well as when completely omitting it. The results of the ablation experiments on the QXS-SAROPT dataset are presented in Table 6. The proposed model demonstrates optimal performance in ship recognition experiments on the QXS-SAROPT dataset when it incorporates all components, i.e., when fully utilizing the HGRPool. There is a notable decline in ship recognition accuracy when the HGRPool component is only partially employed or entirely excluded.

Table 6.

Ablation study by removing different modules on the QXS-SROPT dataset (best results are bolded).

In the ablation experiments on the Houston 2013 and MUUFL datasets, performance was measured when the HGRPool was used only partially and when it was omitted completely. The results are shown in Table 7. As on the QXS-SROPT dataset, the proposed model achieves its best land cover classification performance on both datasets when it incorporates all components, i.e., when the HGRPool is fully used. Land cover classification accuracy decreases markedly when the HGRPool component is only partially used or entirely excluded.

Table 7.

Ablation study by removing different modules on the Houston 2013 and MUUFL datasets (best results are bolded).

5.2. Analyzing the Effect of Experiments

The comparative experimental results support the precision and accuracy of our method. Compared with various advanced matching networks, the method achieves accurate and stable matching in ship recognition and shows particularly clear advantages in land cover classification. The model integrates a feature fusion method with an HGR correlation algorithm so that information is separated, according to its intrinsic correlation, into different classification channels while information integrity is maintained. This separation makes fuller use of the multimodal data and thereby improves the precision and accuracy of target recognition and classification.
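To make the role of the HGR maximal correlation more concrete, the sketch below estimates it for a small discrete joint distribution using the classical alternating conditional expectations iteration. This is only an illustration of the underlying dependence measure, not the HGRPool operation proposed in this paper. The function name `hgr_maximal_correlation`, the fixed iteration count, the deterministic starting function, and the toy distributions are assumptions made for the example, and all marginal probabilities are assumed to be strictly positive.

```python
import math


def _standardize(values, weights):
    """Rescale values to zero mean and unit variance under the given
    probability weights; return None if the values are (numerically) constant."""
    mean = sum(w * v for v, w in zip(values, weights))
    centered = [v - mean for v in values]
    var = sum(w * c * c for c, w in zip(centered, weights))
    if var < 1e-18:
        return None
    scale = math.sqrt(var)
    return [c / scale for c in centered]


def hgr_maximal_correlation(joint, iters=200):
    """Estimate the HGR maximal correlation of two discrete variables.

    joint[i][j] is the (possibly unnormalized) weight of the pair (x_i, y_j).
    The iteration alternates f(x) <- E[g(Y) | X = x] and g(y) <- E[f(X) | Y = y],
    standardizing after each step, and returns E[f(X) g(Y)].
    """
    nx, ny = len(joint), len(joint[0])
    total = sum(sum(row) for row in joint)
    p = [[v / total for v in row] for row in joint]
    px = [sum(row) for row in p]
    py = [sum(p[i][j] for i in range(nx)) for j in range(ny)]

    # Deterministic, non-constant starting function g(y_j) = j (an assumption;
    # a start orthogonal to the optimal g would need a different initial guess).
    g = _standardize([float(j) for j in range(ny)], py)
    if g is None:
        return 0.0

    rho = 0.0
    for _ in range(iters):
        f = _standardize(
            [sum(p[i][j] * g[j] for j in range(ny)) / px[i] for i in range(nx)], px)
        if f is None:  # conditional mean is constant: no dependence is detected
            return 0.0
        g = _standardize(
            [sum(p[i][j] * f[i] for i in range(nx)) / py[j] for j in range(ny)], py)
        if g is None:
            return 0.0
        rho = sum(p[i][j] * f[i] * g[j] for i in range(nx) for j in range(ny))
    return rho


# X uniform on {-1, 0, 1} and Y = X**2: rows are x = -1, 0, 1; columns are y = 0, 1.
nonlinear = [[0, 1],
             [1, 0],
             [0, 1]]
print(hgr_maximal_correlation(nonlinear))
```

In the last example Y is a deterministic but nonlinear function of X, so the Pearson correlation between X and Y is zero while the HGR maximal correlation is one. This ability to capture nonlinear dependence is what makes the measure attractive for relating features from different modalities.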

From Table 6, it can be seen that the proposed model achieves its best performance (accuracy, precision, recall, and F1-score of 0.898, 0.908, 0.988, and 0.946, respectively) in the ship recognition experiments on the QXS-SROPT dataset when it incorporates all components, i.e., when fully using the HGRPool. Ship recognition accuracy declines notably when the HGRPool component is partially used or entirely excluded. When partially using HGRPool (positive/negative sample), only recall remains high, at 0.947, while accuracy, precision, and F1-score drop to 0.810, 0.849, and 0.895, respectively. The result of partially using HGRPool (positive/zero sample) is slightly worse than that of partially using HGRPool (positive/negative sample). The result without HGRPool is the worst, with accuracy, precision, recall, and F1-score of only 0.722, 0.803, 0.877, and 0.838, respectively.
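As a quick consistency check on the ship recognition figures, the F1-score should equal the harmonic mean of precision and recall. The sketch below applies this check to the values quoted in the text. For the full-HGRPool configuration the four values are read in the order accuracy, precision, recall, F1-score; this reading is an assumption, adopted because it matches the order used for the other two configurations and is the only one under which the quoted F1-score agrees with the harmonic mean. The names `f1_score` and `quoted` are illustrative.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


# (precision, recall, quoted F1-score) for each configuration discussed above.
quoted = {
    "full HGRPool": (0.908, 0.988, 0.946),
    "partial HGRPool (positive/negative)": (0.849, 0.947, 0.895),
    "without HGRPool": (0.803, 0.877, 0.838),
}

for name, (precision, recall, f1_quoted) in quoted.items():
    f1_computed = f1_score(precision, recall)
    # Values are reported to three decimals, so allow half a unit in the last place.
    consistent = abs(f1_computed - f1_quoted) < 5e-4
    print(f"{name}: computed F1 = {f1_computed:.3f}, quoted = {f1_quoted:.3f}, "
          f"consistent = {consistent}")
```

All three configurations pass the check to three decimal places.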

Similarly, Table 7 shows that land cover classification accuracy declines notably when the HGRPool component is partially used or entirely excluded. When partially using HGRPool (positive/negative sample), OA, AA, and Kappa drop to 90.81%, 91.46%, and 0.9013 on the Houston 2013 dataset and to 93.79%, 85.07%, and 0.9180 on the MUUFL dataset. The result of partially using HGRPool (positive/zero sample) is slightly worse than that of partially using HGRPool (positive/negative sample). The result without HGRPool is the worst, with OA, AA, and Kappa of only 89.64%, 90.26%, and 0.8851 on the Houston 2013 dataset and 92.72%, 80.94%, and 0.9040 on the MUUFL dataset. These results indicate that the proposed HGR correlation pooling fusion framework is effective and helps to improve accuracy.
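The size of the decline on the MUUFL dataset can be read off directly from the values quoted in this paper. The short sketch below computes the drop of each ablated configuration relative to the full model (OA 94.99%, AA 88.13%, Kappa 0.9339, as reported for the MUUFL comparison). The helper name `drops_from_full` is illustrative, and the Houston 2013 dataset is left out because the full-model values for it are not restated in this section.

```python
# (OA in %, AA in %, Kappa) on the MUUFL dataset, as quoted in the text.
muufl = {
    "full HGRPool": (94.99, 88.13, 0.9339),
    "partial HGRPool (positive/negative)": (93.79, 85.07, 0.9180),
    "without HGRPool": (92.72, 80.94, 0.9040),
}


def drops_from_full(results, baseline="full HGRPool"):
    """Return the per-metric decrease of every configuration relative to the baseline."""
    base = results[baseline]
    return {
        name: tuple(round(b - v, 4) for b, v in zip(base, values))
        for name, values in results.items()
        if name != baseline
    }


muufl_drops = drops_from_full(muufl)
for name, (d_oa, d_aa, d_kappa) in muufl_drops.items():
    print(f"{name}: OA -{d_oa:.2f} points, AA -{d_aa:.2f} points, Kappa -{d_kappa:.4f}")
```

On MUUFL, removing HGRPool entirely lowers OA by 2.27 percentage points but AA by 7.19 points, so the per-class average is affected considerably more than the overall accuracy.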

6. Conclusions

The fusion of multimodal images has long been a research hotspot in the field of remote sensing. To address the low recognition and classification accuracy of existing remote sensing data recognition and classification methods and their difficulty in integrating multimodal features, this paper proposes a multimodal remote sensing data recognition and classification model based on a heatmap and an HGR correlation pooling fusion operation. The HGR correlation pooling fusion algorithm is obtained by combining a feature fusion method with the HGR maximum correlation algorithm. The model first calculates the statistical matrix from multimodal image pairs, extracts multimodal image features using convolutional layers, and then computes the heatmap from these features. Subsequently, by performing HGR correlation pooling operations, the model separates information with intrinsic relevance into the respective classification channels, which reduces the dimensionality of the multimodal image features. In this approach, less feature data are used to represent the image area information of multimodal images while the original image information is maintained, thereby avoiding the problem of feature dimension explosion. Finally, point multiplication fusion is performed on the dimensionality-reduced feature samples, which are then input into the recognition and classification network for training to achieve recognition and classification of remote sensing data. This method makes fuller use of multimodal information, enhances the feature learning capability for multimodal images, improves the performance of specific interpretation tasks related to multimodal image fusion, and classifies and efficiently uses information with different degrees of relevance.

Ship recognition experiments on the QXS-SROPT dataset and land cover classification experiments on the Houston 2013 and MUUFL datasets show that the proposed method outperforms the compared state-of-the-art remote sensing data recognition and classification methods. In future research, efforts will be made to further enhance recognition and classification accuracy and to extend the method to more complex scenes and additional modalities. Adaptive tuning of the parameters will also be investigated to achieve the best recognition and classification results.

Author Contributions

Conceptualization, H.Z. and S.-L.H.; methodology, H.Z., S.-L.H. and E.E.K.; validation, H.Z. and S.-L.H.; investigation, H.Z., S.-L.H. and E.E.K.; data curation, H.Z. and S.-L.H.; writing—original draft, H.Z.; writing—review and editing, S.-L.H. and E.E.K.; supervision, S.-L.H. and E.E.K.; funding acquisition, S.-L.H. and E.E.K. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Key R&D Program of China under Grant 2021YFA0715202, the Shenzhen Key Laboratory of Ubiquitous Data Enabling (Grant No. ZDSYS20220527171406015), and the Shenzhen Science and Technology Program under Grant KQTD20170810150821146 and Grant JCYJ20220530143002005.

Data Availability Statement

The Houston 2013 dataset used in this study is available at https://hyperspectral.ee.uh.edu/?page_id=1075 (accessed on 28 August 2023); the MUUFL dataset is available at https://github.com/GatorSense/MUUFLGulfport/ (accessed on 19 October 2023); the QXS-SAROPT dataset, released under the open-access CC BY license, is available at https://github.com/yaoxu008/QXS-SAROPT (accessed on 27 June 2023).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Ghamisi, P.; Rasti, B.; Yokoya, N.; Wang, Q.; Hofle, B.; Bruzzone, L.; Bovolo, F.; Chi, M.; Anders, K.; Gloaguen, R.; et al. Multisource and multitemporal data fusion in remote sensing: A comprehensive review of the state of the art. IEEE Geosci. Remote Sens. Mag. 2019, 7, 6–39.

- Wu, X.; Hong, D.F.; Chanussot, J. Convolutional Neural Networks for Multimodal Remote Sensing Data Classification. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–10.

- Hong, D.F.; Gao, L.R.; Yokoya, N.; Yao, J.; Chanussot, J.; Du, Q.; Zhang, B. More Diverse Means Better: Multimodal Deep Learning Meets Remote-Sensing Imagery Classification. IEEE Trans. Geosci. Remote Sens. 2020, 59, 4340–4354.

- Li, X.; Lu, G.; Yan, J.; Zhang, Z. A survey of dimensional emotion prediction by multimodal cues. Acta Autom. Sin. 2018, 44, 2142–2159.

- Wang, C.; Li, Z.; Sarpong, B. Multimodal adaptive identity-recognition algorithm fused with gait perception. Big Data Min. Anal. 2021, 4, 10.

- Zhou, W.J.; Jin, J.H.; Lei, J.S.; Hwang, J.N. CEGFNet: Common Extraction and Gate Fusion Network for Scene Parsing of Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–10.

- Asghar, M.; Khan, M.; Fawad; Amin, Y.; Rizwan, M.; Rahman, M.; Mirjavadi, S. EEG-Based multi-modal emotion recognition using bag of deep features: An optimal feature selection approach. Sensors 2019, 19, 5218.

- Yang, R.; Wang, S.; Sun, Y.Z.; Zhang, H.; Liao, Y.; Gu, Y.; Hou, B.; Jiao, L.C. Multimodal Fusion Remote Sensing Image–Audio Retrieval. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 6220–6235.

- Li, H.; Wu, X. DenseFuse: A fusion approach to infrared and visible images. IEEE Trans. Image Process. 2019, 28, 2614–2623.

- Yang, B.; Zhong, J.; Li, Y.; Chen, Z. Multi-Focus image fusion and super-resolution with convolutional neural network. Int. J. Wavelets Multiresolution Inf. Process. 2017, 15, 1750037.

- Zhang, X. Benchmarking and comparing multi-exposure image fusion algorithms. Inf. Fusion 2021, 74, 111–131.

- Song, X.; Wu, X.; Li, H. MSDNet for medical image fusion. In Proceedings of the International Conference on Image and Graphic, Nanjing, China, 22–24 September 2019; pp. 278–288.

- Cao, X.F.; Gao, S.; Chen, L.C.; Wang, Y. Ship recognition method combined with image segmentation and deep learning feature extraction in video surveillance. Multimedia Tools Appl. 2020, 79, 9177–9192.

- Wang, C.; Pei, J.; Luo, S.; Huo, W.; Huang, Y.; Zhang, Y.; Yang, J. SAR ship target recognition via multiscale feature attention and adaptive-weighed classifier. IEEE Geosci. Remote Sens. Lett. 2023, 20, 1–5.

- Zhang, Z.L.; Zhang, T.; Liu, Z.Y.; Zhang, P.J.; Tu, S.S.; Li, Y.J.; Waqas, M. Fine-Grained ship image recognition based on BCNN with inception and AM-Softmax. CMC-Comput. Mater. Contin. 2022, 73, 1527–1539.

- Han, Y.Q.; Yang, X.Y.; Pu, T.; Peng, Z.M. Fine-Grained recognition for oriented ship against complex scenes in optical remote sensing images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–18.

- Liu, J.; Chen, H.; Wang, Y. Multi-Source remote sensing image fusion for ship target detection and recognition. Remote Sens. 2021, 13, 4852.

- Cheng, G.; Xie, X.; Han, J.; Guo, L.; Xia, G.S. Remote sensing image scene classification meets deep learning: Challenges, methods, benchmarks, and opportunities. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 3735–3756.

- Zhu, X.; Tuia, D.; Mou, L.; Xia, G.; Zhan, L.; Xu, F.; Fraundorfer, F. Deep learning in remote sensing: A comprehensive review and list of resources. IEEE Geosci. Remote Sens. Mag. 2017, 5, 8–36.

- Tsagkatakis, G.; Aidini, A.; Fotiadou, K.; Giannopoulos, M.; Pentari, A.; Tsakalides, P. Survey of deep-learning approaches for remote sensing observation enhancement. Sensors 2019, 19, 3929.

- Gargees, R.S.; Scott, G.J. Deep Feature Clustering for Remote Sensing Imagery Land Cover Analysis. IEEE Geosci. Remote Sens. Lett. 2020, 17, 1386–1390.

- Tan, C.; Ewe, H.; Chuah, H. Agricultural crop-type classification of multi-polarization SAR images using a hybrid entropy decomposition and support vector machine technique. Int. J. Remote Sens. 2011, 32, 7057–7071.

- Xia, J.; Yokoya, N.; Iwasaki, A. Hyperspectral image classification with canonical correlation forests. IEEE Trans. Geosci. Remote Sens. 2016, 55, 421–431.

- Jafarzadeh, H.; Mahdianpari, M.; Gill, E.; Mohammadimanesh, F.; Homayouni, S. Bagging and boosting ensemble classifiers for classification of multispectral, hyperspectral and PolSAR data: A comparative evaluation. Remote Sens. 2021, 13, 4405.

- Li, X.; Lei, L.; Zhang, C.G.; Kuang, G.Y. Multimodal Semantic Consistency-Based Fusion Architecture Search for Land Cover Classification. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–14.

- Cao, J.; Liu, K.; Zhuo, L.; Liu, L.; Zhu, Y.; Peng, L. Combining UAV-based hyperspectral and LiDAR data for mangrove species classification using the rotation forest algorithm. Int. J. Appl. Earth Obs. Geoinf. 2021, 102, 102414.

- Yu, K.; Zheng, X.; Fang, B.; An, P.; Huang, X.; Luo, W.; Ding, J.F.; Wang, Z.; Ma, J. Multimodal Urban Remote Sensing Image Registration Via Roadcross Triangular Feature. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 4441–4451.

- Li, W.; Gao, Y.H.; Zhang, M.M.; Tao, R.; Du, Q. Asymmetric feature fusion network for hyperspectral and SAR image classification. IEEE Trans. Neural Netw. Learn. Syst. 2022, 34, 8057–8070.

- Schmitt, M.; Zhu, X. Data fusion and remote sensing: An ever-growing relationship. IEEE Geosci. Remote Sens. Mag. 2016, 4, 6–23.

- Zhang, Z.; Vosselman, G.; Gerke, M.; Persello, C.; Tuia, D.; Yang, M. Detecting Building Changes between Airborne Laser Scanning and Photogrammetric Data. Remote Sens. 2019, 11, 2417.

- Schmitt, M.; Tupin, F.; Zhu, X. Fusion of SAR and optical remote sensing data–challenges and recent trends. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Fort Worth, TX, USA, 23–28 July 2017.

- Kulkarni, S.; Rege, P. Pixel level fusion recognition for SAR and optical images: A review. Inf. Fusion 2020, 59, 13–29.

- Rényi, A. On measures of dependence. Acta Math. Hung. 1959, 3, 441–451.

- Huang, S.; Xu, X. On the sample complexity of HGR maximal correlation functions for large datasets. IEEE Trans. Inf. Theory 2021, 67, 1951–1980.

- Liang, Y.; Ma, F.; Li, Y.; Huang, S. Person recognition with HGR maximal correlation on multimodal data. In Proceedings of the 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 25 August 2021.

- Wang, L.; Wu, J.; Huang, S.; Zheng, L.; Xu, X.; Zhang, L.; Huang, J. An efficient approach to informative feature extraction from multimodal data. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 5281–5288.

- Ma, F.; Li, Y.; Ni, S.; Huang, S.; Zhang, L. Data augmentation for audio-visual emotion recognition with an efficient multimodal conditional GAN. Appl. Sci.-Basel 2022, 12, 527.

- Pande, S.; Banerjee, B. Self-Supervision assisted multimodal remote sensing image classification with coupled self-looping convolution networks. Neural Netw. 2023, 164, 1–20.

- Poria, S.; Cambria, E.; Bajpai, R.; Hussain, A. A review of affective computing: From unimodal analysis to multimodal fusion. Inf. Fusion 2017, 37, 98–125.

- Pan, J.; He, Z.; Li, Z.; Liang, Y.; Qiu, L. A review of multimodal emotion recognition. CAAI Trans. Int. Syst. 2020, 15, 633–645.

- Pedergnana, M.; Marpu, P.; Dalla, M.; Benediktsson, J.; Bruzzone, L. Classification of remote sensing optical and LiDAR data using extended attribute profiles. IEEE J. Sel. Top. Signal Process. 2012, 6, 856–865.

- Kim, Y.; Lee, H.; Provost, E. Deep learning for robust feature generation in audiovisual emotion recognition. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, Vancouver, BC, Canada, 26 May 2013.

- Kim, S.; Song, W.; Kim, S. Double weight-based SAR and infrared sensor fusion for automatic ground target recognition with deep learning. Remote Sens. 2018, 10, 72.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 January 2016.

- Huang, M.; Xu, Y.; Qian, L.; Shi, W.; Zhang, Y.; Bao, W.; Wang, N.; Liu, X.; Xiang, X. The QXS-SAROPT dataset for deep learning in SAR-optical data fusion. arXiv 2021, arXiv:2103.08259.

- Debes, C.; Merentitis, A.; Heremans, R.; Hahn, J.; Frangiadakis, N.; Van Kasteren, T.; Liao, W.; Bellens, R.; Pizurica, A.; Gautama, S. Hyperspectral and LiDAR data fusion: Outcome of the 2013 GRSS data fusion contest. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 2405–2418.

- Gader, P.; Zare, A.; Close, R.; Aitken, J.; Tuell, G. MUUFL Gulfport Hyperspectral and Lidar Airborne Data Set; University of Florida: Gainesville, FL, USA, 2013.

- Du, X.; Zare, A. Technical Report: Scene Label Ground Truth Map for MUUFL Gulfport Data Set; University of Florida: Gainesville, FL, USA, 2017.

- Hong, D.; Hu, J.; Yao, J.; Chanussot, J.; Zhu, X. Multimodal remote sensing benchmark datasets for land cover classification with a shared and specific feature learning model. ISPRS J. Photogramm. Remote Sens. 2021, 178, 68–80.

- Bao, W.; Huang, M.; Zhang, Y.; Xu, Y.; Liu, X.; Xiang, X. Boosting ship detection in SAR images with complementary pretraining techniques. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 8941–8954.

- Qian, L.; Liu, X.; Huang, M.; Xiang, X. Self-Supervised pre-training with bridge neural network for SAR-optical matching. Remote Sens. 2022, 14, 2749.

- Roy, S.K.; Deria, A.; Hong, D.; Rasti, B.; Plaza, A.; Chanussot, J. Multimodal fusion transformer for remote sensing image classification. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–20.

- Franco, A.; Oliveira, L. Convolutional covariance features: Conception, integration and performance in person re-identification. Pattern Recognit. 2017, 61, 593–609.

- Hong, D.; Yokoya, N.; Chanussot, J.; Zhu, X. CoSpace: Common subspace learning from hyperspectral-multispectral correspondences. IEEE Trans. Geosci. Remote Sens. 2019, 57, 4349–4359.

- Hang, R.; Li, Z.; Ghamisi, P.; Hong, D.; Xia, G.; Liu, Q. Classification of hyperspectral and LiDAR data using coupled CNNs. IEEE Trans. Geosci. Remote Sens. 2020, 58, 4939–4950.

- Mohla, S.; Pande, S.; Banerjee, B.; Chaudhuri, S. FusAtNet: Dual attention based SpectroSpatial multimodal fusion network for hyperspectral and lidar classification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), New Orleans, LA, USA, 14 June 2020.

- Khan, A.; Raufu, Z.; Sohail, A.; Khan, A.R.; Asif, A.; Farooq, U. A survey of the vision transformers and their CNN-transformer based variants. Artif. Intell. Rev. 2023, 56, 2917–2970.

- Hong, D.; Han, Z.; Yao, J.; Gao, L.; Zhang, B.; Plaza, A.; Chanussot, J. SpectralFormer: Rethinking hyperspectral image classification with transformers. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–15.

- Xu, G.; Jiang, X.; Zhou, Y.; Li, S.; Liu, X.; Lin, P. Robust land cover classification with multimodal knowledge distillation. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–16.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).