A Method for Extracting Photovoltaic Panels from High-Resolution Optical Remote Sensing Images Guided by Prior Knowledge

Abstract

1. Introduction

1.1. Background and Significance

1.2. Current Methods

1.3. Limitations and Proposed Solutions

- Repeated downsampling progressively lowers the resolution of the feature maps, so models tend to lose low-level feature information, which reduces the completeness of the extracted PV panels [17].

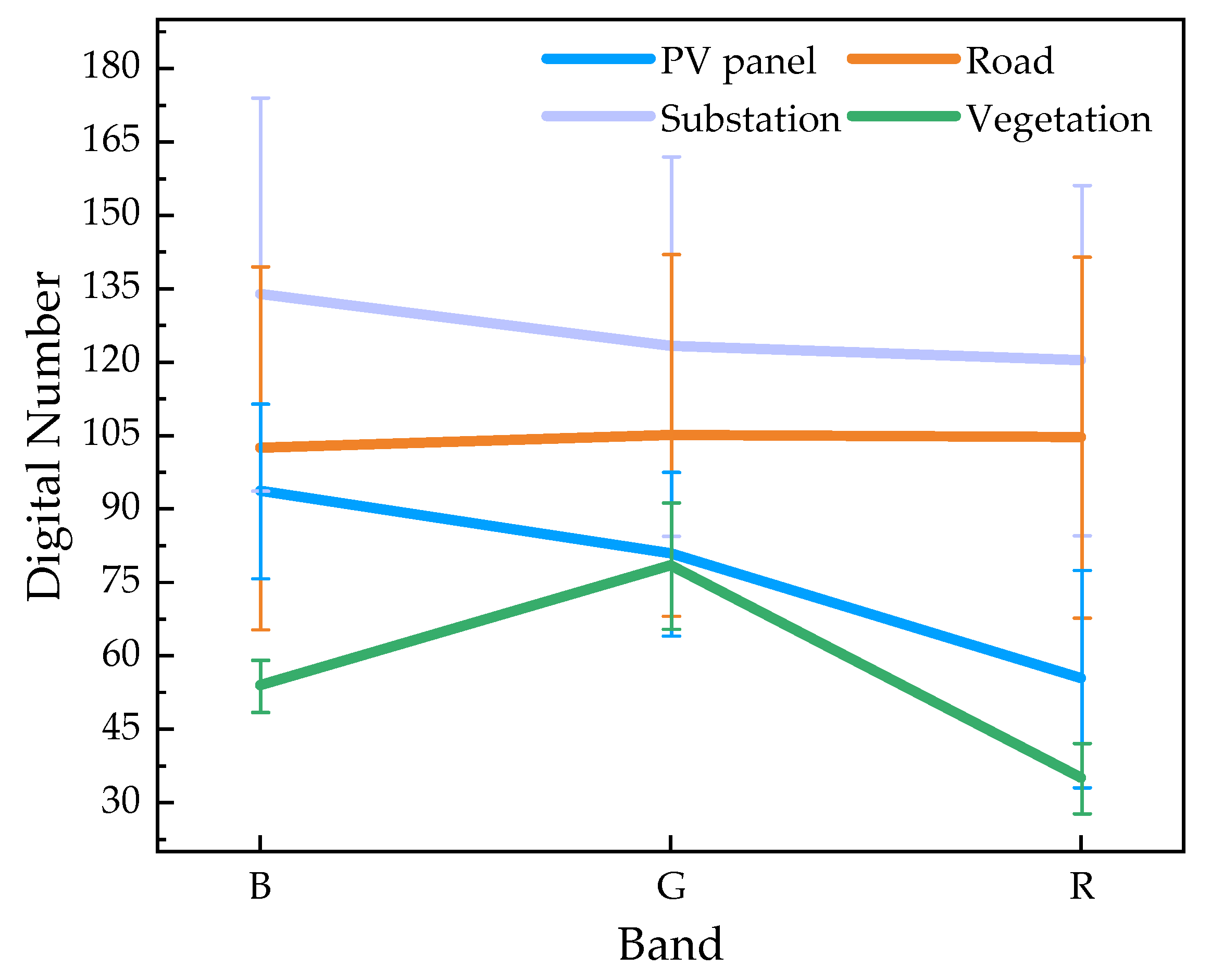

- Although deep learning-based methods can produce good PV panel extraction results, they do not take into account the distinctive characteristics of PV imagery, such as color features [21]. To reduce confusion between targets and background, a Photovoltaic Index (PVI) is constructed from the optical characteristics of PV panels and used as prior knowledge to distinguish PV panels from non-PV surfaces.

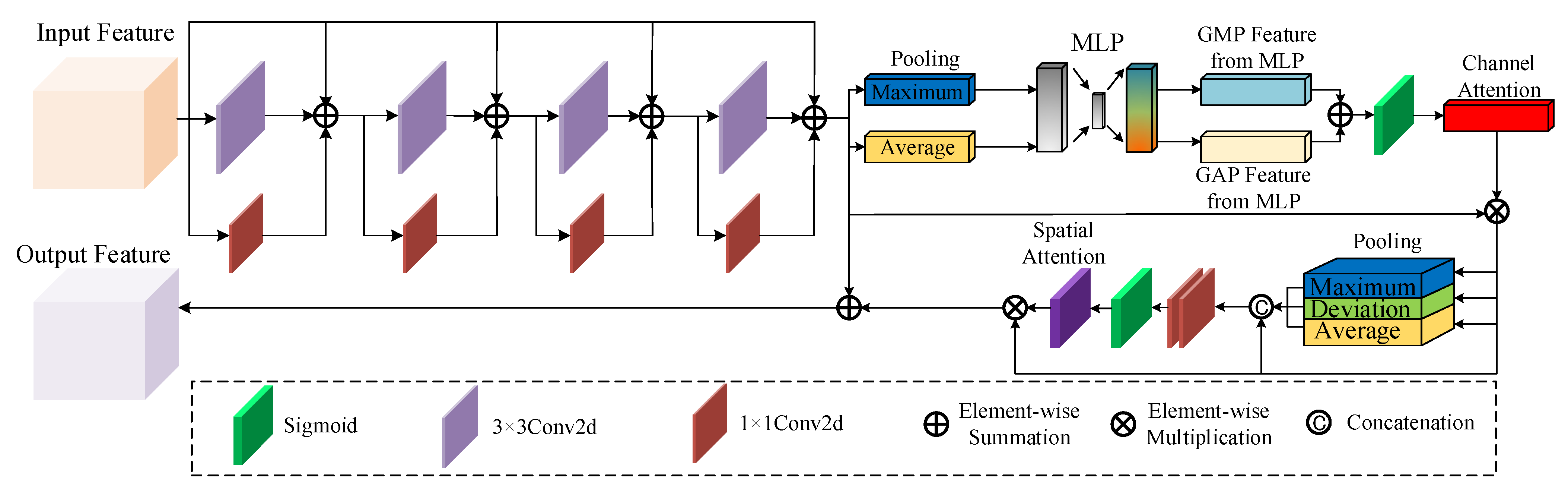

- To reduce the loss of low-level features during downsampling and to preserve useful feature information in both the spatial and channel domains, a Residual Convolution Hybrid Attention Module (RCHAM) is proposed to further improve the accuracy and completeness of PV panel extraction. The module introduces multilevel convolutions and attention mechanisms into the skip connections of the encoder–decoder structure to strengthen the information exchange between shallow and deep features.

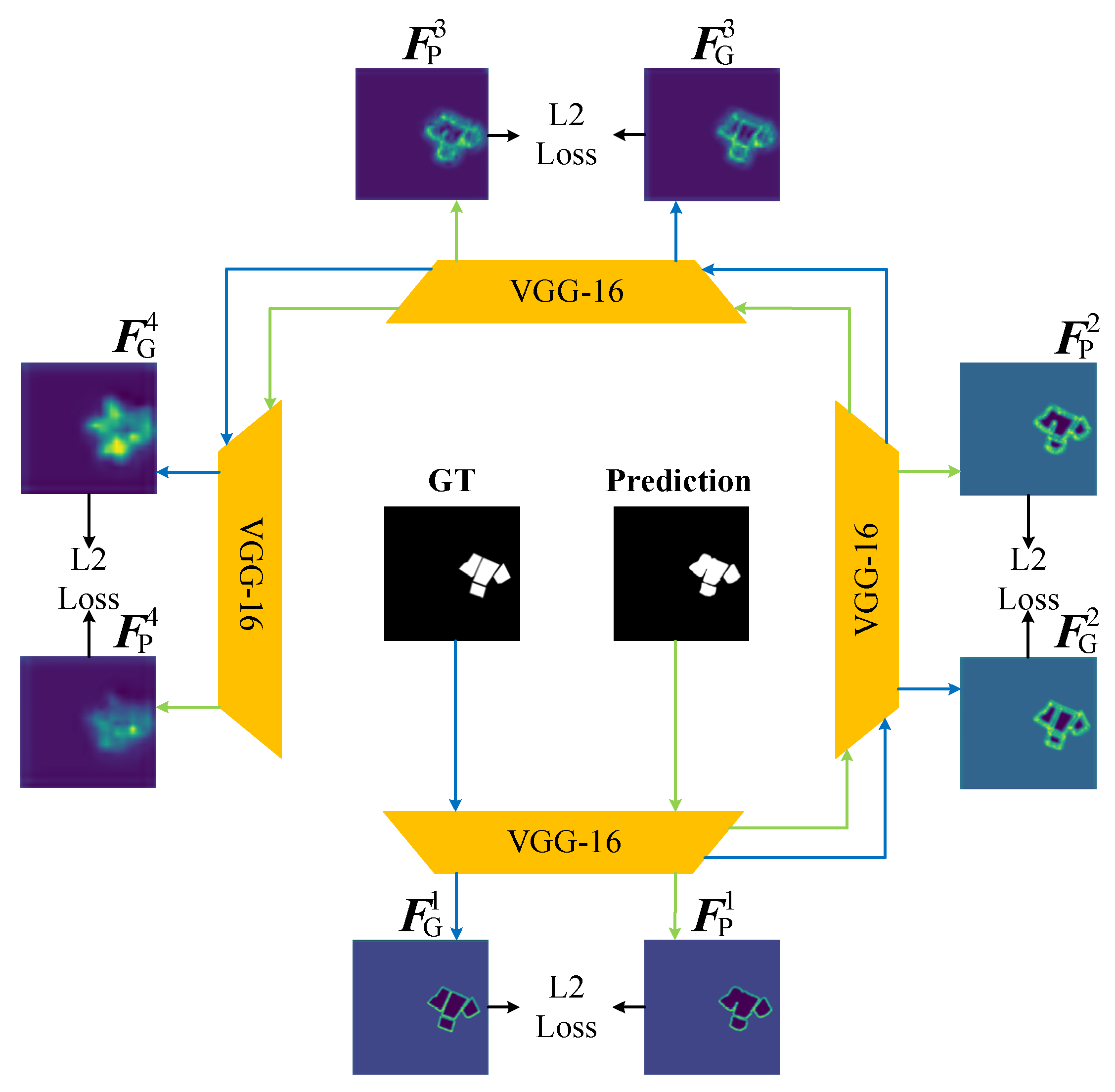

- To reduce blurred edges in the extraction results, a multilevel Feature Loss (FL) function is designed to capture boundary contour information and improve the accuracy of boundary segmentation.
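To make the idea of attention on a skip connection concrete, the following is a minimal NumPy sketch of a channel-then-spatial attention gate with a residual shortcut. It is not the authors' RCHAM: the abbreviations list names CBAM, CAM, SAM, and MLP, which suggests a CBAM-style design, but the multilevel convolutions are omitted here, the spatial branch uses a weighted sum of pooled maps as a stand-in for a learned convolution, and every function name, weight shape, and parameter below is a hypothetical choice made for illustration.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def channel_attention(feat, w1, w2):
    """Reweight channels of a (C, H, W) feature map, CBAM-style.

    w1 (C//r, C) and w2 (C, C//r) form a shared two-layer MLP
    (hypothetical weights; r is the channel reduction ratio).
    """
    avg = feat.mean(axis=(1, 2))  # (C,) average-pooled descriptor
    mx = feat.max(axis=(1, 2))    # (C,) max-pooled descriptor

    def mlp(v):
        return w2 @ np.maximum(w1 @ v, 0.0)  # ReLU hidden layer

    weights = sigmoid(mlp(avg) + mlp(mx))    # (C,) values in (0, 1)
    return feat * weights[:, None, None]


def spatial_attention(feat, k_avg=1.0, k_max=1.0):
    """Reweight spatial positions of a (C, H, W) feature map.

    Assumption: a weighted sum of the two pooled maps replaces the
    learned convolution that CBAM applies to them.
    """
    avg = feat.mean(axis=0)  # (H, W)
    mx = feat.max(axis=0)    # (H, W)
    weights = sigmoid(k_avg * avg + k_max * mx)
    return feat * weights[None, :, :]


def hybrid_attention_skip(feat, w1, w2):
    """Channel attention, then spatial attention, plus a residual shortcut."""
    refined = spatial_attention(channel_attention(feat, w1, w2))
    return feat + refined
```

In an encoder–decoder network, a gate of this kind would be applied to the encoder feature map before it is concatenated with the decoder features, so that the skip connection passes a reweighted version of the shallow features instead of the raw ones.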

2. Related Work

2.1. Object Detection Network

2.2. Semantic Segmentation Network

2.3. Spatial Attention Mechanism and Channel Attention Mechanism

3. Methodology

3.1. Overview

3.2. Photovoltaic Index

3.3. Residual Convolution Hybrid Attention Module

3.4. Feature Loss

4. Results

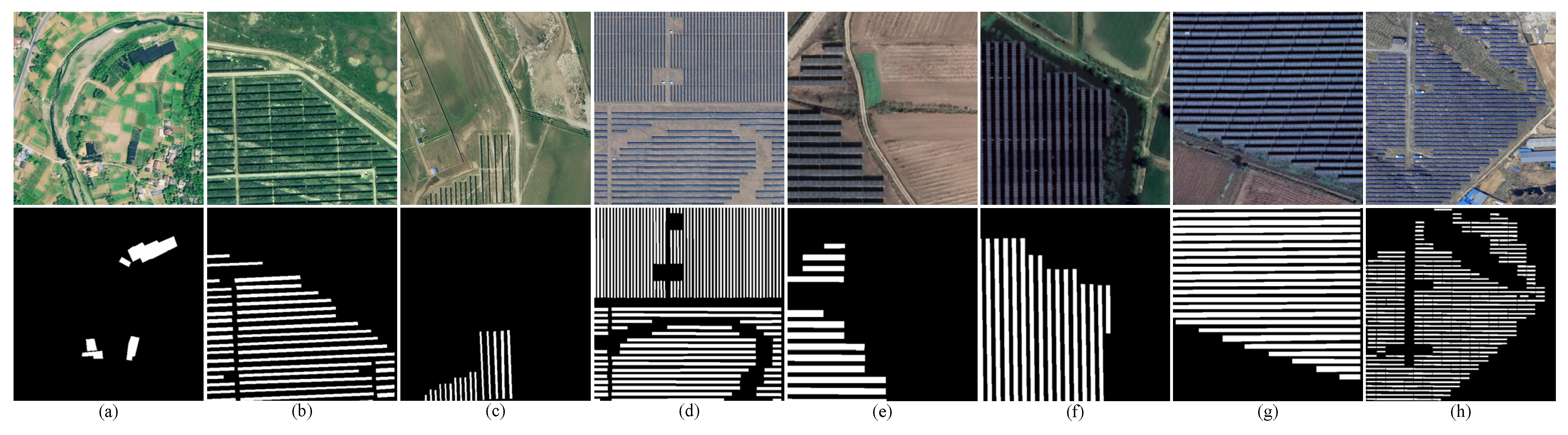

4.1. Datasets and Parameter Settings

4.2. Evaluation Metrics

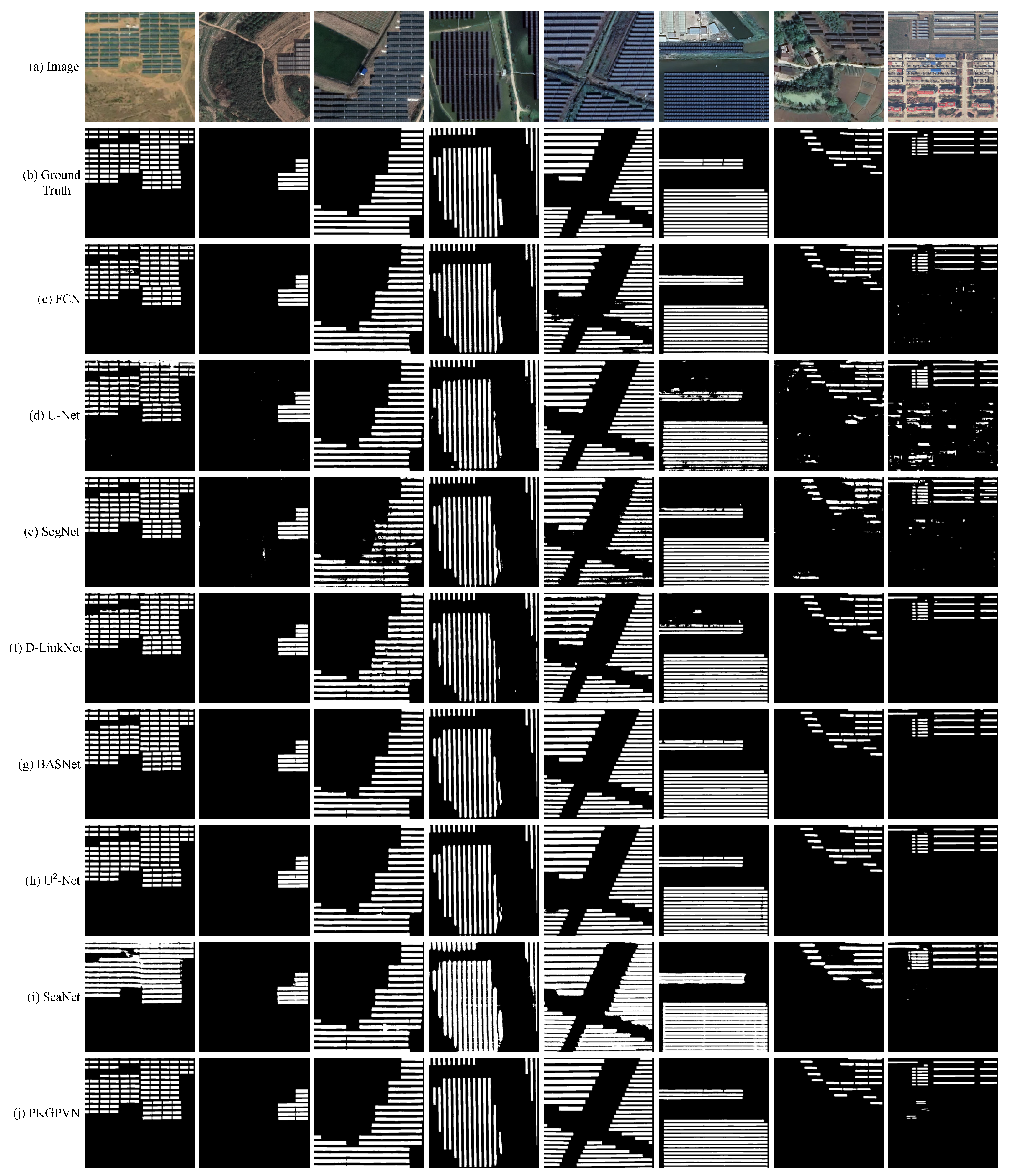

4.3. Comparative Experiments

4.3.1. Experiments on the AIR-PV Dataset

4.3.2. Experiments on the PVP Dataset

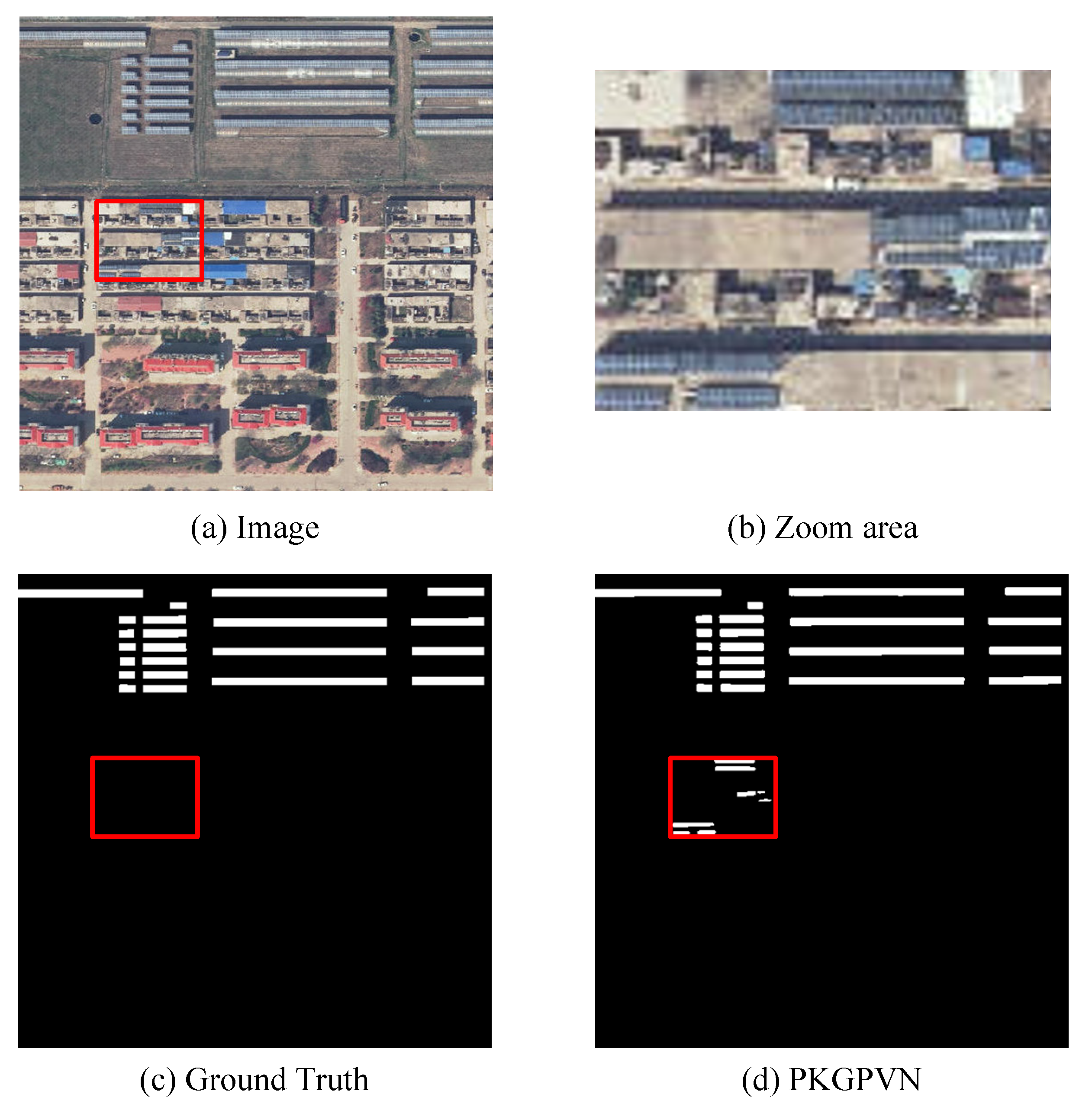

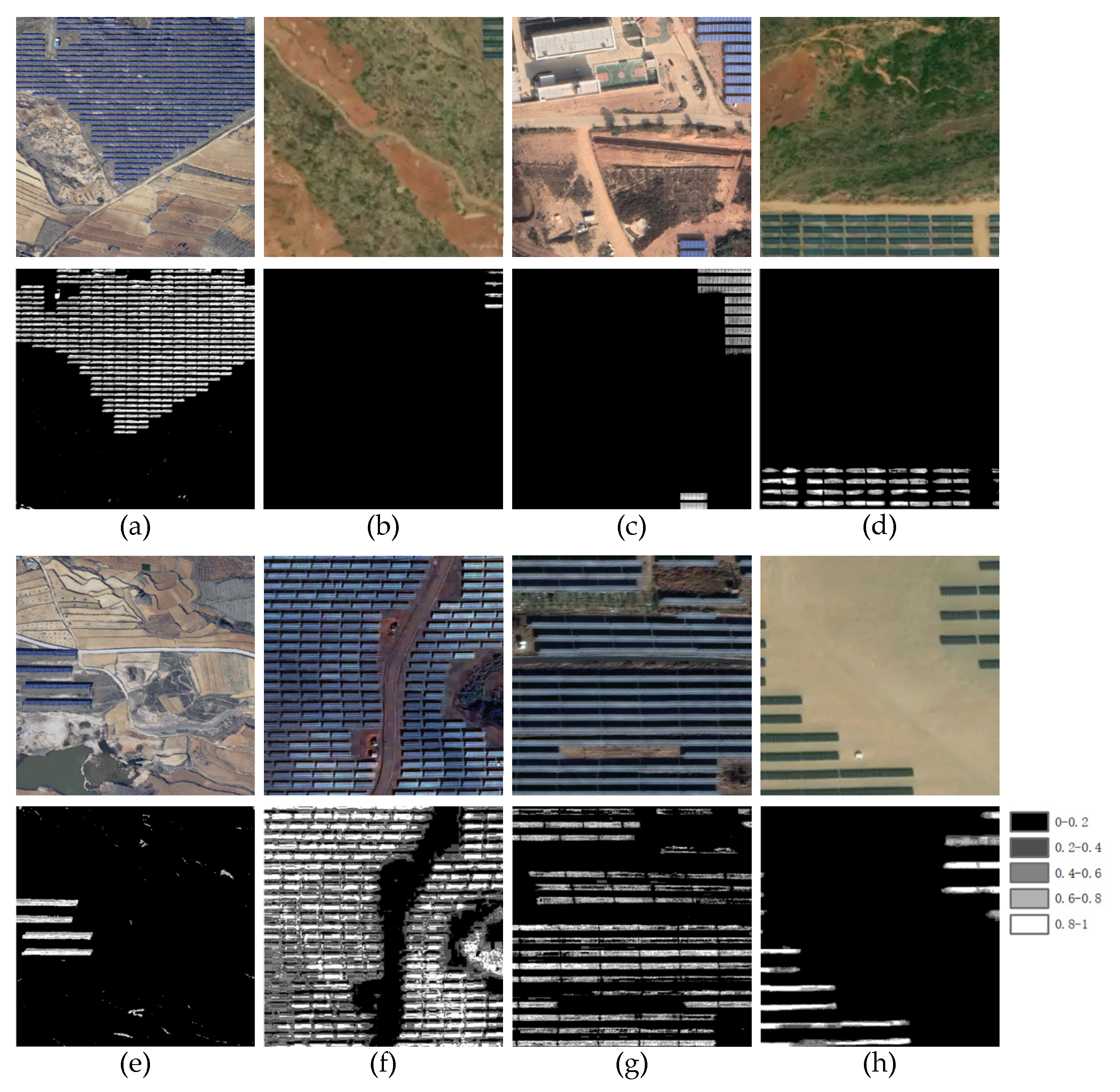

5. Discussion

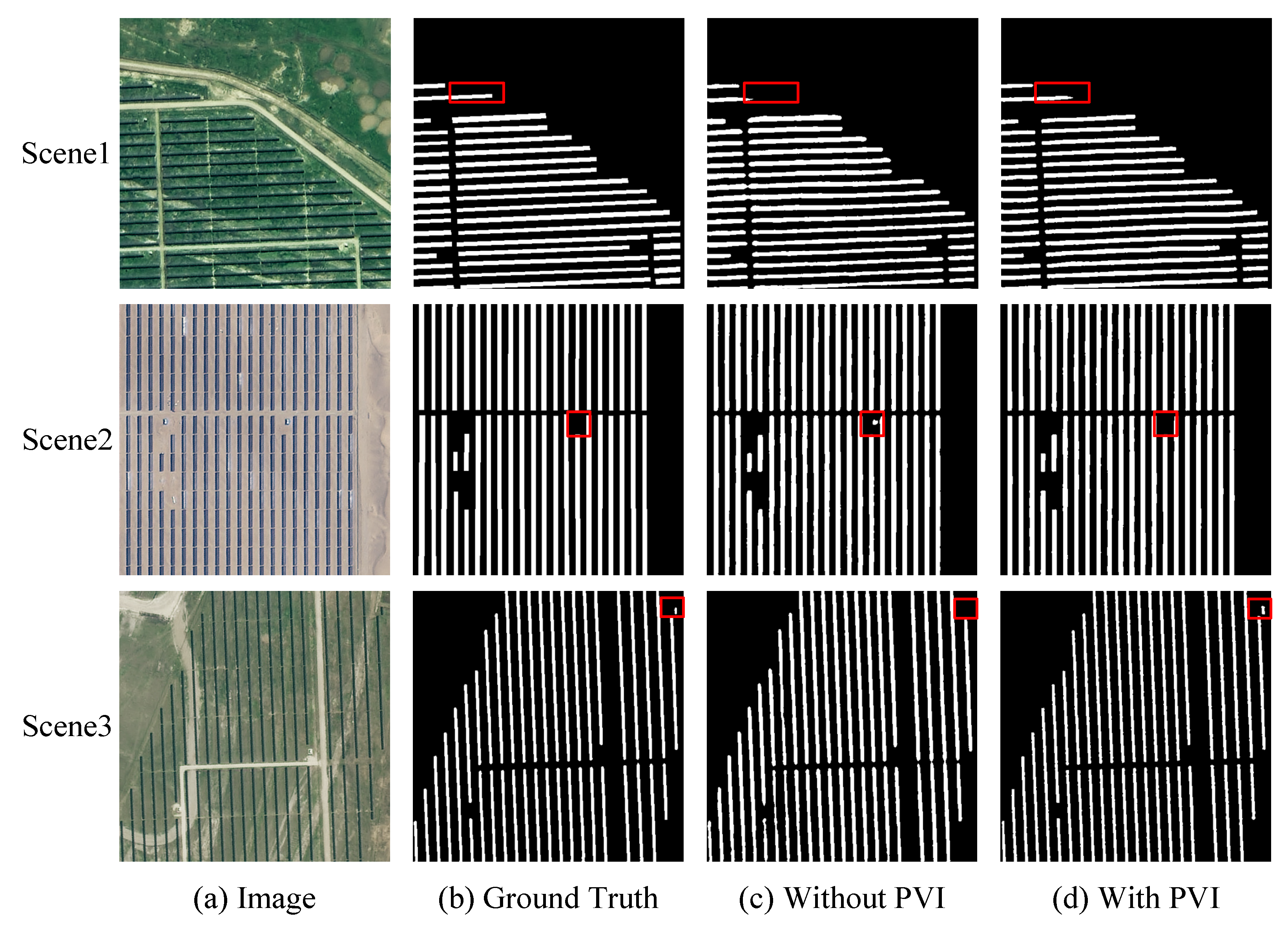

5.1. The Effectiveness of PVI

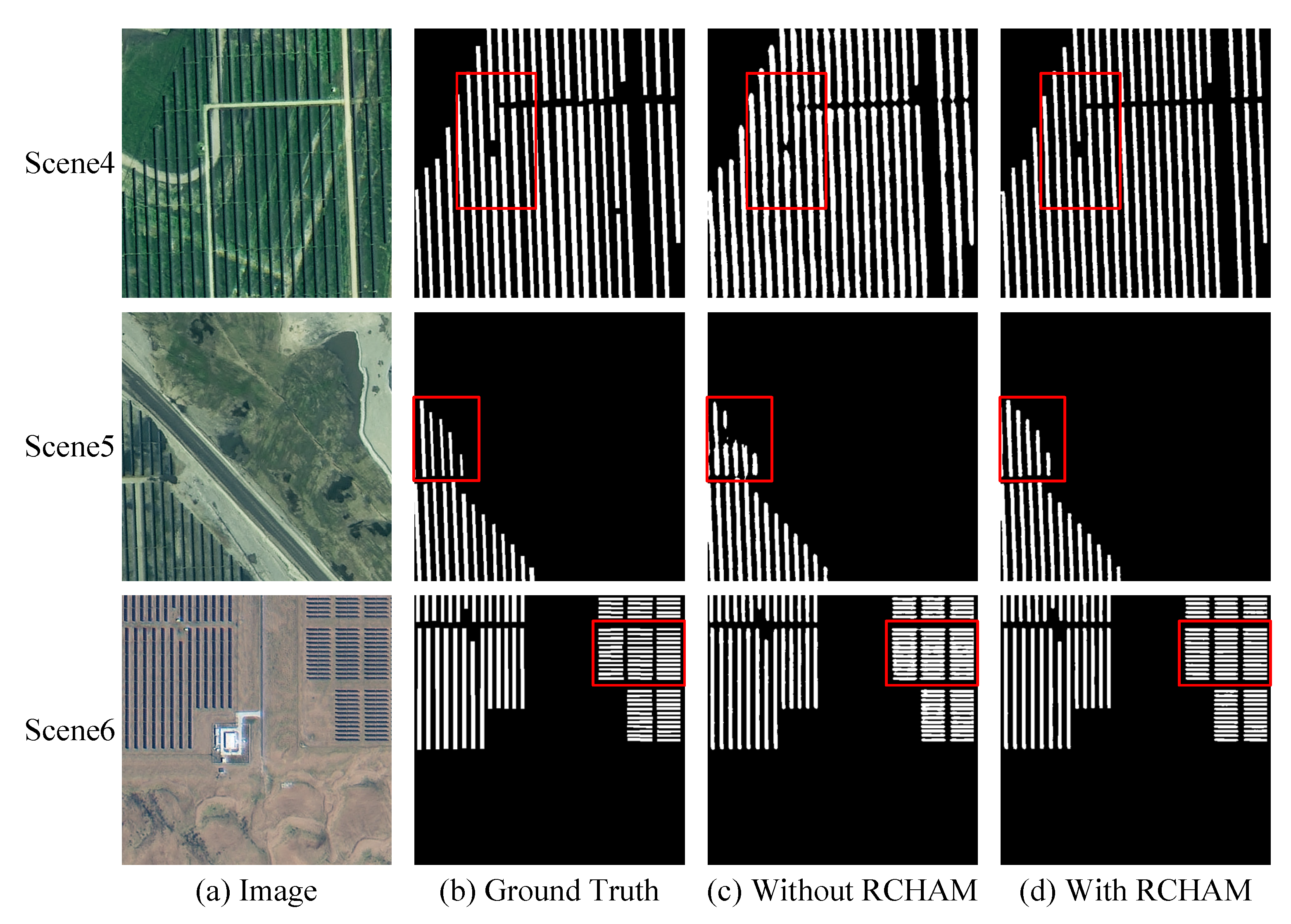

5.2. The Effectiveness of RCHAM

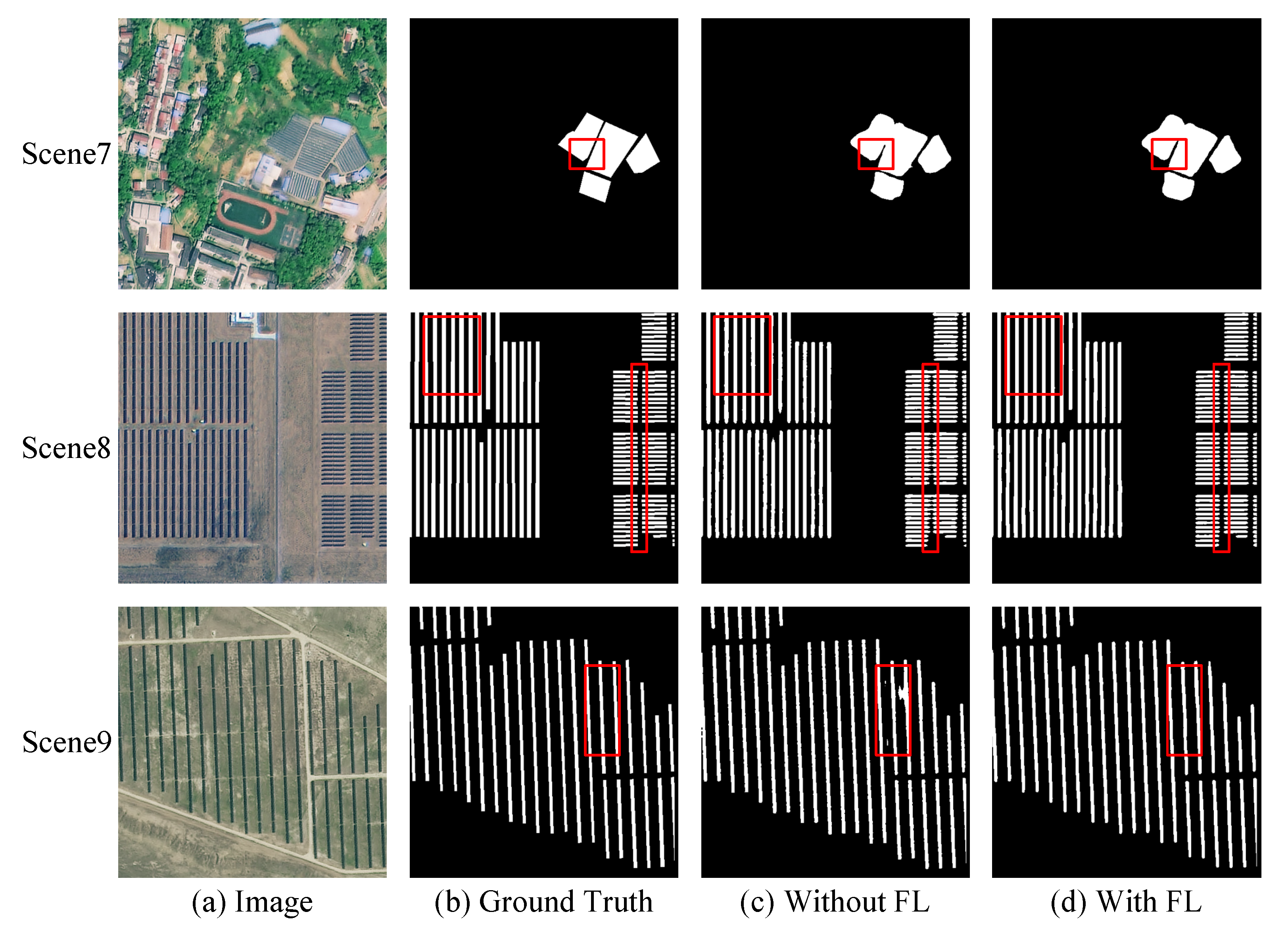

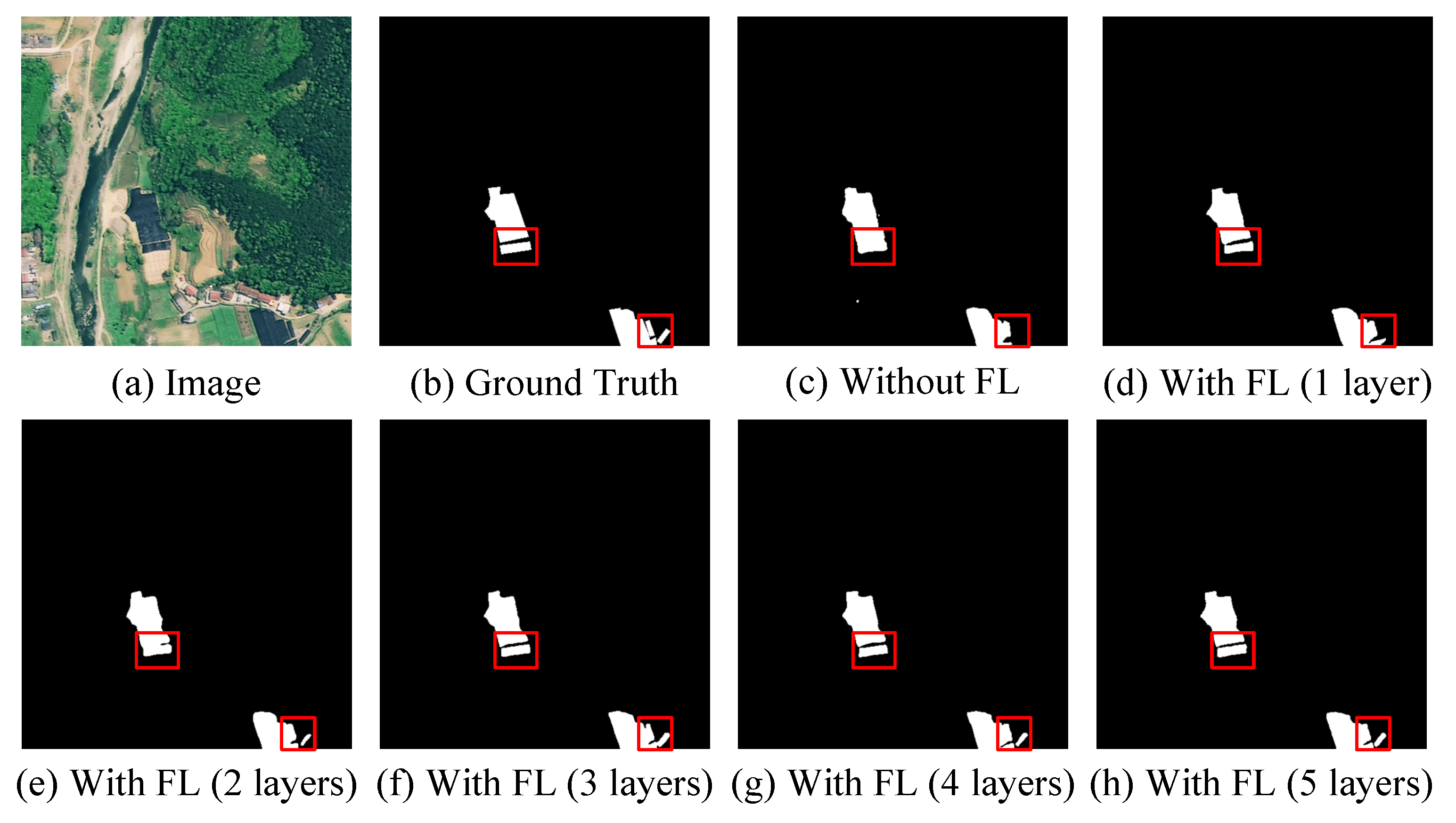

5.3. The Effectiveness of FL

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Full name |
|---|---|
| PV | Photovoltaic |
| PVI | Photovoltaic Index |
| RCHAM | Residual Convolution Hybrid Attention Module |
| FL | Feature Loss |
| UAV | Unmanned Aerial Vehicle |
| CNN | Convolutional Neural Network |
| SVM | Support Vector Machine |
| RF | Random Forest |
| RSU | Residual U-block |
| CBAM | Convolutional Block Attention Module |
| CAM | Channel Attention Module |
| SAM | Spatial Attention Module |
| MLP | Multilayer Perceptron |
| BCE | Binary Cross-Entropy |
| GT | Ground Truth |
| IoU | Intersection-over-Union |

Appendix A

| Algorithm A1. PV panel extraction method (PKGPVN). |
|---|
| Input: PV panel images with shape [b, c, h, w] |
| Output: final prediction map |
| 1: Compute the PVI using Equation (2): |
| B = input[:, 0:1, :, :], G = input[:, 1:2, :, :], R = input[:, 2:3, :, :] |
| PVI = (B − R)/(R + G + B) |
| 2: Get network input: |
| network input = torch.cat([input, PVI], 1) |
| 3: while epoch < 100 do |
| 4: Get encoder output: |
| encoder output = RSU(network input) |
| 5: Get RCHAM output: |
| RCHAM output = RCHAM(encoder output) |
| 6: Get decoder output: |
| decoder output = RSU(torch.cat([…], dim = 1)) |
| 7: Sub-prediction maps: |
| sub-prediction map = F.interpolate(…, size = [h, w], mode = 'bilinear', align_corners = False) |
| 8: Final prediction map: |
| final prediction map = nn.Conv2d(torch.cat([…], dim = 1)) |
| 9: Compute the loss using Equations (3)–(6) |
| 10: … |
| 11: end while |
| 12: Obtain the PV panel extraction results through steps 4 to 8 |
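Steps 1 and 2 of Algorithm A1 can be sketched in a few lines. The version below uses NumPy rather than PyTorch so that it runs without a deep learning framework. It follows the channel slicing shown in the algorithm (B, G, R at channel indices 0, 1, 2) and the ratio (B − R)/(R + G + B). The function names and the small `eps` term that guards against division by zero on black pixels are assumptions added for illustration and do not come from the paper.

```python
import numpy as np


def photovoltaic_index(images, eps=1e-6):
    """Compute the PVI channel for a batch of images.

    images: float array of shape (b, 3, h, w) with channels ordered
            B, G, R, matching the slicing in Algorithm A1.
    eps:    hypothetical stabiliser, not part of the published formula.
    """
    blue = images[:, 0:1, :, :]
    green = images[:, 1:2, :, :]
    red = images[:, 2:3, :, :]
    return (blue - red) / (red + green + blue + eps)


def add_pvi_channel(images):
    """Append the PVI as a fourth channel (the NumPy analogue of
    torch.cat([images, pvi], 1) in step 2 of Algorithm A1)."""
    pvi = photovoltaic_index(images)
    return np.concatenate([images, pvi], axis=1)
```

A bluish pixel with (B, G, R) = (0.6, 0.3, 0.1) gets a PVI of about 0.5, while a grey pixel with equal channels gets 0, which illustrates how the extra channel can separate bluish panel surfaces from neutral backgrounds.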

References

- Hou, J.; Luo, S.; Cao, M. A Review on China’s Current Situation and Prospects of Poverty Alleviation with Photovoltaic Power Generation. J. Renew. Sustain. Energy 2019, 11, 013503.

- Tian, J.; Ooka, R.; Lee, D. Multi-scale solar radiation and photovoltaic power forecasting with machine learning algorithms in urban environment: A state-of-the-art review. J. Clean. Prod. 2023, 426, 139040.

- Yao, Y.; Hu, Y. Recognition and Location of Solar Panels Based on Machine Vision. In Proceedings of the 2017 2nd Asia-Pacific Conference on Intelligent Robot System (ACIRS), Wuhan, China, 16–18 June 2017; pp. 7–12.

- Malof, J.; Hou, R.; Collins, L.; Bradbury, K.; Newell, R. Automatic Solar Photovoltaic Panel Detection in Satellite Imagery. In Proceedings of the 2015 International Conference on Renewable Energy Research and Applications (ICRERA), Palermo, Italy, 22–25 November 2015; pp. 1428–1431.

- Malof, J.; Bradbury, K.; Collins, L.; Newell, R. Automatic Detection of Solar Photovoltaic Arrays in High Resolution Aerial Imagery. Appl. Energy 2016, 183, 229–240.

- Chen, Z.; Kang, Y.; Sun, Z.; Wu, F.; Zhang, Q. Extraction of Photovoltaic Plants Using Machine Learning Methods: A Case Study of the Pilot Energy City of Golmud, China. Remote Sens. 2022, 14, 2697.

- Zhang, R.; Newsam, S.; Shao, Z.; Huang, X.; Wang, J.; Li, D. Multi-scale adversarial network for vehicle detection in UAV imagery. ISPRS J. Photogramm. Remote Sens. 2021, 180, 283–295.

- Li, J.; Yang, B.; Bai, L.; Dou, H.; Li, C.; Ma, L. TFIV: Multi-grained Token Fusion for Infrared and Visible Image via Transformer. IEEE Trans. Instrum. Meas. 2023, 72, 2526414.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. arXiv 2015, arXiv:1505.04597.

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

- Ji, S.; Wei, S.; Lu, M. Fully Convolutional Networks for Multisource Building Extraction from an Open Aerial and Satellite Imagery Data Set. IEEE Trans. Geosci. Remote Sens. 2018, 57, 574–586.

- Mei, J.; Li, R.; Gao, W.; Cheng, M. CoANet: Connectivity Attention Network for Road Extraction from Satellite Imagery. IEEE Trans. Image Process. 2021, 30, 8540–8552.

- Yu, J.; Wang, Z.; Majumdar, A.; Rajagopal, R. DeepSolar: A Machine Learning Framework to Efficiently Construct a Solar Deployment Database in the United States. Joule 2018, 2, 2605–2617.

- Parhar, P.; Sawasaki, R.; Todeschini, A.; Reed, C.; Vahabi, H.; Nusaputra, N.; Vergara, F. HyperionSolarNet: Solar Panel Detection from Aerial Images. arXiv 2022, arXiv:2201.02107.

- Zhu, R.; Guo, D.; Wong, M.; Qian, Z.; Chen, M.; Yang, B.; Chen, B.; Zhang, H.; You, L.; Heo, J.; et al. Deep solar PV refiner: A detail-oriented deep learning network for refined segmentation of photovoltaic areas from satellite imagery. Int. J. Appl. Earth Obs. Geoinf. 2023, 116, 103134.

- Kirillov, A.; Wu, Y.; He, K.; Girshick, R. PointRend: Image Segmentation as Rendering. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 9796–9805.

- Wang, J.; Chen, X.; Jiang, W.; Hua, L.; Liu, J.; Sui, H. PVNet: A novel semantic segmentation model for extracting high-quality photovoltaic panels in large-scale systems from high-resolution remote sensing imagery. Int. J. Appl. Earth Obs. Geoinf. 2023, 119, 103309.

- Ahn, H.K.; Park, N. Deep RNN-Based Photovoltaic Power Short-Term Forecast Using Power IoT Sensors. Energies 2021, 14, 436.

- Wang, Y.; Li, S.; Teng, F.; Lin, Y.; Wang, M.; Cai, H. Improved Mask R-CNN for Rural Building Roof Type Recognition from UAV High-Resolution Images: A Case Study in Hunan Province, China. Remote Sens. 2022, 14, 265.

- Kruitwagen, L.; Story, K.; Friedrich, J.; Byers, L.; Skillman, S.; Hepburn, C. A global inventory of photovoltaic solar energy generating units. Nature 2021, 598, 604–610.

- Li, P.; Zhang, H.; Guo, Z.; Lyu, S.; Chen, J.; Li, W.; Song, X.; Shibasaki, R.; Yan, J. Understanding rooftop PV panel semantic segmentation of satellite and aerial images for better using machine learning. Adv. Appl. Energy 2021, 4, 100057.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Li, L.; Wang, Z.; Zhang, T. GBH-YOLOv5: Ghost Convolution with BottleneckCSP and Tiny Target Prediction Head Incorporating YOLOv5 for PV Panel Defect Detection. Electronics 2023, 12, 561.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 386–397.

- Chen, L.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 833–851.

- Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid Scene Parsing Network. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6230–6239.

- Li, L.; Lu, N.; Jiang, H.; Qin, J. Impact of Deep Convolutional Neural Network Structure on Photovoltaic Array Extraction from High Spatial Resolution Remote Sensing Images. Remote Sens. 2023, 15, 4554.

- Qin, X.; Zhang, Z.; Huang, C.; Dehghan, M.; Zaiane, O.; Jagersand, M. U2-Net: Going Deeper with Nested U-Structure for Salient Object Detection. Pattern Recognit. 2020, 106, 107404.

- Li, Y.; Li, H.; Fan, D.; Li, Z.; Ji, S. Improved Sea Ice Image Segmentation Using U2-Net and Dataset Augmentation. Appl. Sci. 2023, 13, 9402.

- Ge, F.; Wang, G.; He, G.; Zhou, D.; Yin, R.; Tong, L. A Hierarchical Information Extraction Method for Large-Scale Centralized Photovoltaic Power Plants Based on Multi-Source Remote Sensing Images. Remote Sens. 2022, 14, 4211.

- Li, J.; Huo, H.; Li, C.; Wang, R.; Feng, Q. AttentionFGAN: Infrared and Visible Image Fusion Using Attention-Based Generative Adversarial Networks. IEEE Trans. Multimed. 2020, 23, 1383–1396.

- Geng, X.; Ji, L.; Sun, K.; Zhao, Y. CEM: More Bands, Better Performance. IEEE Geosci. Remote Sens. Lett. 2014, 11, 1876–1880.

- Yan, Z.; Wang, P.; Xu, F.; Sun, X.; Diao, W. AIR-PV: A benchmark dataset for photovoltaic panel extraction in optical remote sensing imagery. Sci. China Inf. Sci. 2023, 66, 140307.

- Czirjak, D.W. Detecting photovoltaic solar panels using hyperspectral imagery and estimating solar power production. J. Appl. Remote Sens. 2017, 11, 026007.

- Wang, W.; Cai, J.; Tian, G.; Cheng, L.; Kong, D. Research on Accurate Extraction of Photovoltaic Power Station from Multi-source Remote Sensing. Beijing Surv. Mapp. 2021, 35, 1534–1540.

- Ji, L.; Geng, X.; Sun, K.; Zhao, Y.; Gong, P. Target Detection Method for Water Mapping Using Landsat 8 OLI/TIRS Imagery. Water 2015, 7, 794–817.

- Ibtehaz, N.; Rahman, M. MultiResUNet: Rethinking the U-Net Architecture for Multimodal Biomedical Image Segmentation. Neural Netw. 2020, 121, 74–87.

- Qin, X.; Zhang, Z.; Huang, C.; Gao, C.; Dehghan, M.; Jagersand, M. BASNet: Boundary-Aware Salient Object Detection. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 7471–7481.

- Li, J.; Huo, H.; Li, C.; Wang, R.; Sui, C.; Liu, Z. Multi-grained Attention Network for Infrared and Visible Image Fusion. IEEE Trans. Instrum. Meas. 2020, 70, 5002412.

- Zhao, X.; Jia, H.; Pang, Y.; Lv, L.; Tian, F.; Zhang, L.; Sun, W.; Lu, H. M2SNet: Multi-scale in Multi-scale Subtraction Network for Medical Image Segmentation. arXiv 2023, arXiv:2303.10894.

- Li, B.; Xiao, C.; Wang, L.; Wang, Y.; Lin, Z.; Li, M.; An, W.; Guo, Y. Dense Nested Attention Network for Infrared Small Target Detection. IEEE Trans. Image Process. 2023, 32, 1745–1758.

- Yin, D.; Wang, L. Individual mangrove tree measurement using UAV-based LiDAR data: Possibilities and challenges. Remote Sens. Environ. 2019, 223, 34–49.

- Lei, L.; Yin, T.; Chai, G.; Li, Y.; Wang, Y.; Jia, X.; Zhang, X. A novel algorithm of individual tree crowns segmentation considering three-dimensional canopy attributes using UAV oblique photos. Int. J. Appl. Earth Obs. Geoinf. 2022, 112, 102893.

- Shelhamer, E.; Long, J.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 640–651.

- Zhou, L.; Zhang, C.; Wu, M. D-LinkNet: LinkNet with Pretrained Encoder and Dilated Convolution for High Resolution Satellite Imagery Road Extraction. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018; pp. 192–1924.

- Li, G.; Liu, Z.; Zhang, X.; Lin, W. Lightweight Salient Object Detection in Optical Remote-Sensing Images via Semantic Matching and Edge Alignment. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5601111.

- Otsu, N. A Threshold Selection Method from Gray-Level Histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

- Li, J.; Zhu, J.; Li, C.; Chen, X.; Yang, B. CGTF: Convolution-Guided Transformer for Infrared and Visible Image Fusion. IEEE Trans. Instrum. Meas. 2022, 71, 5012314.

| Symbols | Descriptions |
|---|---|
| | Input feature map of CBAM |
| | Output feature map of CBAM |
| | Channel attention weight |
| | Spatial attention weight |
| | Sub-prediction map |
| | Final prediction map |
| | The i-th layer feature extracted from the prediction values |
| | The i-th layer feature extracted from the ground truth |
| | Weights of sub-prediction map loss |
| | Weights of final prediction map loss |
| | L2 norm calculation between the i-th layer features in FL |
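The symbol descriptions indicate that FL compares features extracted from the prediction and from the ground truth layer by layer with an L2 norm, and that the sub-prediction and final prediction losses are weighted. The sketch below shows one way such a loss could be assembled. It is an assumption-laden illustration, not Equations (3)–(6) of the paper. The feature extractor is a hypothetical stand-in (repeated 2×2 average pooling), all function names are invented for this example, and any BCE term is omitted.

```python
import numpy as np


def avg_pool2(x):
    """2x2 average pooling on an (H, W) map with even H and W."""
    h, w = x.shape
    return x.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))


def pyramid_features(x, levels):
    """Hypothetical stand-in for the feature extractor: returns one
    progressively pooled map per level."""
    feats = []
    for _ in range(levels):
        x = avg_pool2(x)
        feats.append(x)
    return feats


def feature_loss(pred, truth, levels=4):
    """Sum over levels of the L2 norm between prediction features
    and ground-truth features."""
    pred_feats = pyramid_features(pred, levels)
    truth_feats = pyramid_features(truth, levels)
    return sum(np.linalg.norm(p - t) for p, t in zip(pred_feats, truth_feats))


def total_feature_loss(sub_preds, final_pred, truth,
                       sub_weights, final_weight, levels=4):
    """Weighted sum of the feature loss over the sub-prediction maps
    and the final prediction map (weights are free parameters here)."""
    loss = final_weight * feature_loss(final_pred, truth, levels)
    for weight, sub_pred in zip(sub_weights, sub_preds):
        loss += weight * feature_loss(sub_pred, truth, levels)
    return loss
```

The default of four levels mirrors the ablation tables, where the four-layer FL variant reports the highest IoU. Inputs must be divisible by 2 at every level, for example 16×16 maps with four levels.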

| Model | IoU | Precision | Recall | F1 |
|---|---|---|---|---|
| FCN | 74.09 | 82.77 | 87.73 | 84.97 |
| U-Net | 71.30 | 78.57 | 88.69 | 82.84 |
| SegNet | 56.89 | 62.12 | 87.89 | 70.76 |
| D-LinkNet | 62.03 | 89.54 | 67.54 | 74.86 |
| BASNet | 74.31 | 82.49 | 89.98 | 84.67 |
| U2-Net | 79.12 | 91.01 | 86.17 | 87.96 |
| SeaNet | 79.69 | 89.13 | 88.00 | 88.47 |
| PKGPVN | 82.34 | 91.41 | 89.45 | 90.25 |

| Model | IoU | Precision | Recall | F1 |
|---|---|---|---|---|
| FCN | 82.51 | 93.77 | 86.52 | 89.94 |
| U-Net | 74.92 | 85.19 | 84.49 | 85.03 |
| SegNet | 77.12 | 83.02 | 93.77 | 85.57 |
| D-LinkNet | 81.50 | 91.94 | 87.89 | 88.32 |
| BASNet | 89.85 | 94.99 | 94.71 | 94.79 |
| U2-Net | 90.68 | 95.40 | 94.44 | 94.89 |
| SeaNet | 87.01 | 95.63 | 99.26 | 95.35 |
| PKGPVN | 91.71 | 96.51 | 94.85 | 95.74 |
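The comparison tables report IoU, Precision, Recall, and F1 for each model. As a reference for how such values are commonly obtained, here is a small sketch that computes the four metrics from a predicted and a ground-truth binary mask using the standard pixel-count definitions. The paper's own metric formulas are not reproduced in this text, so these definitions, the percentage scaling, and the function name are assumptions.

```python
def segmentation_metrics(pred, truth):
    """Return IoU, Precision, Recall and F1 (in percent) for two binary masks.

    pred, truth: equal-length sequences of 0/1 pixel labels (1 = PV panel).
    """
    if len(pred) != len(truth):
        raise ValueError("masks must contain the same number of pixels")

    tp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 1)
    fp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 0)
    fn = sum(1 for p, t in zip(pred, truth) if p == 0 and t == 1)

    def ratio(num, den):
        # Guard against empty denominators (e.g. no predicted PV pixels).
        return 100.0 * num / den if den else 0.0

    precision = ratio(tp, tp + fp)
    recall = ratio(tp, tp + fn)
    iou = ratio(tp, tp + fp + fn)
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = 0.0
    return {"IoU": iou, "Precision": precision, "Recall": recall, "F1": f1}
```

For example, with a prediction of `[1, 1, 1, 0, 0, 0]` against a ground truth of `[1, 1, 0, 1, 0, 0]` there are two true positives, one false positive, and one false negative, giving an IoU of 50% and Precision, Recall, and F1 of about 66.7%. Values in the tables need not satisfy the F1 identity exactly when computed from the listed Precision and Recall, which would be expected if the metrics were averaged over images; the averaging scheme is not stated here.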

| Model | Scene 1 IoU | Scene 1 Precision | Scene 1 Recall | Scene 1 F1 | Scene 2 IoU | Scene 2 Precision | Scene 2 Recall | Scene 2 F1 | Scene 3 IoU | Scene 3 Precision | Scene 3 Recall | Scene 3 F1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Without PVI | 63.57 | 79.13 | 76.37 | 77.73 | 87.71 | 92.43 | 94.57 | 93.45 | 75.82 | 78.20 | 96.13 | 86.25 |
| With PVI | 73.22 | 86.65 | 82.53 | 84.54 | 87.76 | 93.49 | 93.42 | 93.49 | 82.34 | 90.40 | 90.22 | 90.31 |
| Rise | 9.65 | 7.52 | 6.16 | 6.81 | 0.05 | 1.06 | −1.15 | 0.04 | 6.52 | 12.20 | −5.91 | 4.06 |

| Model | Scene 4 IoU | Scene 4 Precision | Scene 4 Recall | Scene 4 F1 | Scene 5 IoU | Scene 5 Precision | Scene 5 Recall | Scene 5 F1 | Scene 6 IoU | Scene 6 Precision | Scene 6 Recall | Scene 6 F1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Without RCHAM | 72.34 | 78.29 | 90.50 | 83.95 | 65.03 | 72.95 | 85.69 | 78.81 | 81.55 | 88.42 | 91.29 | 89.83 |
| With RCHAM | 75.00 | 88.58 | 83.03 | 85.71 | 74.38 | 79.59 | 91.92 | 85.31 | 84.39 | 93.83 | 89.35 | 91.54 |
| Rise | 2.66 | 10.29 | −7.47 | 1.76 | 9.35 | 6.64 | 6.23 | 6.50 | 2.84 | 5.41 | −1.94 | 1.71 |

| Model | Scene 7 IoU | Scene 7 Precision | Scene 7 Recall | Scene 7 F1 | Scene 8 IoU | Scene 8 Precision | Scene 8 Recall | Scene 8 F1 | Scene 9 IoU | Scene 9 Precision | Scene 9 Recall | Scene 9 F1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Without FL | 90.98 | 93.81 | 96.79 | 95.28 | 81.85 | 89.59 | 90.45 | 90.02 | 80.88 | 86.86 | 92.16 | 89.43 |
| With FL | 92.34 | 95.90 | 96.13 | 96.02 | 82.80 | 91.92 | 89.30 | 90.58 | 82.97 | 88.94 | 92.51 | 90.69 |
| Rise | 1.36 | 2.09 | −0.66 | 0.74 | 0.95 | 2.33 | −1.15 | 0.56 | 2.09 | 2.08 | 0.35 | 1.26 |

| Model | IoU | Precision | Recall | F1 |
|---|---|---|---|---|
| Without FL | 84.27 | 91.54 | 91.38 | 91.46 |
| With FL (1 layer) | 86.86 | 93.68 | 92.26 | 92.97 |
| With FL (2 layers) | 87.96 | 93.51 | 93.55 | 93.03 |
| With FL (3 layers) | 89.32 | 94.57 | 94.15 | 94.36 |
| With FL (4 layers) | 90.19 | 95.33 | 94.36 | 94.84 |
| With FL (5 layers) | 89.34 | 94.39 | 94.36 | 94.37 |

| Model | IoU | Precision | Recall | F1 |
|---|---|---|---|---|
| Without FL | 82.97 | 90.93 | 90.40 | 90.57 |
| With FL (1 layer) | 84.51 | 92.83 | 89.73 | 91.31 |
| With FL (2 layers) | 85.03 | 92.58 | 90.92 | 91.72 |
| With FL (3 layers) | 85.97 | 93.42 | 91.10 | 91.76 |
| With FL (4 layers) | 86.08 | 93.91 | 91.15 | 92.34 |
| With FL (5 layers) | 86.06 | 93.71 | 91.13 | 92.27 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liu, W.; Huo, H.; Ji, L.; Zhao, Y.; Liu, X.; Li, J. A Method for Extracting Photovoltaic Panels from High-Resolution Optical Remote Sensing Images Guided by Prior Knowledge. Remote Sens. 2024, 16, 9. https://doi.org/10.3390/rs16010009