Multi-Dimensional Low-Rank with Weighted Schatten p-Norm Minimization for Hyperspectral Anomaly Detection

Abstract

1. Introduction

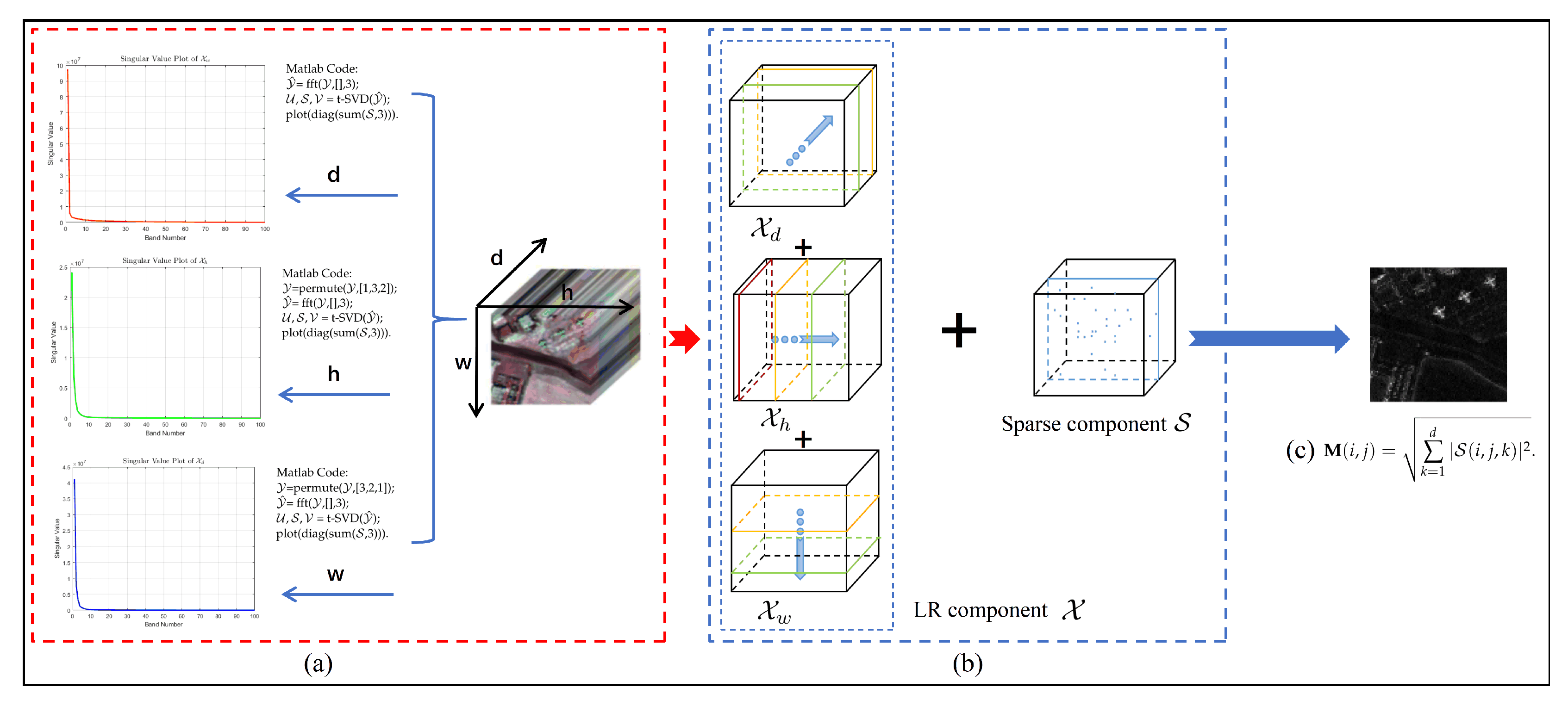

- Low-rankness along all three dimensions is exploited in the frequency domain. Our analysis of the tensor's low-rank property along different dimensions shows that measuring low-rankness along only one dimension is insufficient. Therefore, multi-dimensional low-rankness is embedded into different tensors by applying t-SVD along different slice directions. These tensors are then fused into a background tensor that captures the low-rank characteristics of all three dimensions, which enables MDLR to exploit more comprehensive background information.

- To enforce low-rankness in the background tensor, WSNM is applied to the frontal slices of the f-diagonal tensor produced by the t-SVD, which better preserves the low-rank structure of the background tensor.
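The claim that a single t-SVD direction is not enough can be checked on synthetic data. The NumPy sketch below, which is not the authors' code, moves each mode of an H × W × B cube into the third position, takes the t-SVD-style FFT along that mode, and reports a rank proxy for each view. The energy-based rank proxy, the 0.99 threshold, the mode names, and the synthetic cube are all illustrative assumptions.

```python
import numpy as np


def fourier_slice_singular_values(X):
    """t-SVD view of a 3-way array: FFT along the third mode, then the singular
    values of every frontal slice. Returns an array of shape (n3, min(n1, n2))."""
    Xf = np.fft.fft(X, axis=2)
    return np.stack([np.linalg.svd(Xf[:, :, k], compute_uv=False)
                     for k in range(X.shape[2])])


def tubal_rank_proxy(X, energy=0.99):
    """Hypothetical rank measure: over all Fourier-domain frontal slices, the largest
    number of singular values needed to keep `energy` of a slice's squared energy."""
    ranks = []
    for s in fourier_slice_singular_values(X):
        total = np.sum(s ** 2)
        if total == 0:  # an all-zero slice contributes rank 0
            ranks.append(0)
            continue
        cum = np.cumsum(s ** 2) / total
        ranks.append(min(int(np.searchsorted(cum, energy)) + 1, len(s)))
    return max(ranks)


# Each permutation moves a different mode of an (H, W, B) cube into the third
# position, so the t-SVD's FFT runs along that mode (assumed naming convention).
PERMS = {"bands": (0, 1, 2), "height": (1, 2, 0), "width": (2, 0, 1)}


def multi_dim_ranks(cube, energy=0.99):
    """Rank proxy of the cube when the t-SVD is taken along each of its three modes."""
    return {name: tubal_rank_proxy(np.transpose(cube, perm), energy)
            for name, perm in PERMS.items()}


# Synthetic cube whose spectra all lie in a 3-dimensional subspace.
rng = np.random.default_rng(0)
U = rng.standard_normal((20, 20, 3))   # per-pixel coefficients (hypothetical)
E = rng.standard_normal((3, 30))       # three spectral signatures (hypothetical)
cube = np.einsum("hwr,rb->hwb", U, E)
ranks = multi_dim_ranks(cube)
print(ranks)
```

On this cube, the views with the FFT along height or width report a rank of at most 3, while the view with the FFT along the bands sees nearly full-rank frontal slices. A model that measured low-rankness only along the bands would therefore miss this structure.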

2. Notations and Preliminaries

3. Proposed Method

3.1. Tensor Low-Rank Linear Representation

3.2. Weighted Schatten p-Norm Minimization

3.3. Multi-Dimensional Tensor Low-Rank Norm

3.4. Optimization Procedure

- (1)

- (2)

- (3)

- (4)

- (5)

- (6)

- Lagrange multiplier and

Algorithm 1: WSNM based on t-SVD.

Input:

Algorithm 2: MDLR for HSI anomaly detection.
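The algorithm bodies are not reproduced above. As a rough illustration of the idea stated in the contributions (WSNM applied to the frontal slices of the f-diagonal tensor), the NumPy sketch below shrinks the singular values of every Fourier-domain frontal slice with the generalized soft-thresholding (GST) scalar solver that Xie et al. [51] use for WSNM, repeats this with the FFT along each of the three modes, and averages the results. It solves a proximal step with a 1/2-scaled data term; the weight rule w_i = C/(σ_i + ε), the values of C and p, and the plain averaging used for fusion are assumptions, and the paper's Algorithms 1 and 2 may differ in all of these.

```python
import numpy as np


def gst(y, lam, p, iters=10):
    """Generalized soft-thresholding: approx. argmin_x 0.5*(x - y)**2 + lam*|x|**p
    for a scalar y >= 0 and 0 < p <= 1 (p = 1 reduces to soft-thresholding)."""
    if lam <= 0:
        return y
    base = 2.0 * lam * (1.0 - p)
    tau = base ** (1.0 / (2.0 - p)) + lam * p * base ** ((p - 1.0) / (2.0 - p))
    if y <= tau:
        return 0.0
    x = y
    for _ in range(iters):  # fixed-point iteration, decreasing monotonically from y
        x = y - lam * p * x ** (p - 1.0)
    return x


def wsnm_prox(M, C, p, eps=1e-8):
    """Weighted Schatten p-norm shrinkage of a (possibly complex) matrix M.
    Assumed weights w_i = C / (sigma_i + eps) shrink small singular values more."""
    U, s, Vh = np.linalg.svd(M, full_matrices=False)
    w = C / (s + eps)
    d = np.array([gst(si, wi, p) for si, wi in zip(s, w)])
    return (U * d) @ Vh


def t_wsnm(X, C, p):
    """Apply WSNM to every frontal slice of X in the Fourier domain (t-SVD style)."""
    Xf = np.fft.fft(X, axis=2)
    Lf = np.empty_like(Xf)
    for k in range(X.shape[2]):
        Lf[:, :, k] = wsnm_prox(Xf[:, :, k], C, p)
    # Conjugate-symmetric slices receive identical shrinkage, so the inverse FFT
    # is real up to round-off.
    return np.real(np.fft.ifft(Lf, axis=2))


PERMS = [(0, 1, 2), (1, 2, 0), (2, 0, 1)]


def multi_dim_wsnm(X, C=1.0, p=0.8):
    """Run t_wsnm with the FFT along each mode and fuse by plain averaging
    (the averaging rule is an assumption, not the paper's fusion step)."""
    out = np.zeros(X.shape)
    for perm in PERMS:
        inv = np.argsort(perm)  # permutation that undoes `perm`
        out += np.transpose(t_wsnm(np.transpose(X, perm), C, p), inv)
    return out / len(PERMS)


rng = np.random.default_rng(1)
X = rng.standard_normal((8, 9, 10))
L = multi_dim_wsnm(X, C=1.0, p=0.8)
print(L.shape)
```

Setting C = 0 disables shrinkage and returns the input unchanged, while a very large C zeroes every singular value; in practice C and p trade off how aggressively the background is forced toward low rank.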

3.5. Computational Complexity

4. Experimental Results

4.1. HSI Datasets

4.2. Compared Methods and Parameter Setting

- RX [19]: The classical anomaly detector, which computes the Mahalanobis distance between the pixel under test and the background pixels. The parameter of RX is set to 1/min(w,h).

- LSMAD [29]: A method based on low-rank and sparse matrix decomposition (LRaSMD) combined with the Mahalanobis distance. We set r = 3 and k = 0.8.

- LRASR [34]: Learns a low-rank representation (LRR) of the background by constructing a dictionary. Both of its parameters are set to 0.1.

- GTVLRR [35]: Adds total variation (TV) and graph regularization to the background reconstruction of the LRR-based model. Following the original GTVLRR paper, its three parameters are set to 0.5, 0.2, and 0.05.

- PTA [40]: Exploiting the different properties of the spatial and spectral dimensions of the HSI, PTA imposes TV regularization on the spatial dimensions and a low-rank constraint on the spectral dimension. Its three parameters are set to 1, 1, and 0.01, respectively.

- DeCNN-AD [36]: Uses convolutional neural network (CNN)-based denoisers as the prior for the dictionary representation coefficients. The number of clusters is set to 8, and its two remaining parameters are both set to 0.01.

- PCA-TLRSR [43]: The first method to extend LRR to tensor LRR for HSI anomaly detection. The number of dimensions retained by PCA is tuned as in the original PCA-TLRSR paper, and its remaining parameter is set to 0.4.
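Of the compared methods, RX is simple enough to sketch directly. The NumPy code below is a minimal global RX under stated assumptions: the background mean and covariance are estimated from the whole scene, and the small diagonal loading `reg` is a hypothetical numerical safeguard. It does not model the 1/min(w,h) parameter quoted above.

```python
import numpy as np


def global_rx(cube, reg=1e-6):
    """Global RX detector: squared Mahalanobis distance of every pixel from the
    scene-wide mean spectrum, using the scene-wide covariance matrix."""
    h, w, b = cube.shape
    X = cube.reshape(-1, b).astype(float)          # one spectrum per row
    Xc = X - X.mean(axis=0)
    cov = Xc.T @ Xc / (X.shape[0] - 1)
    # Small diagonal loading keeps the inverse stable (hypothetical default).
    cov_inv = np.linalg.inv(cov + reg * np.eye(b))
    scores = np.einsum("ij,jk,ik->i", Xc, cov_inv, Xc)
    return scores.reshape(h, w)


rng = np.random.default_rng(0)
cube = rng.standard_normal((30, 30, 10))
cube[5, 7, :] += 10.0                              # implant one anomalous pixel
scores = global_rx(cube)
print(np.unravel_index(np.argmax(scores), scores.shape))
```

Because the anomalous pixel is included when the statistics are estimated, it slightly contaminates the covariance; this is one motivation for the low-rank background models compared here.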

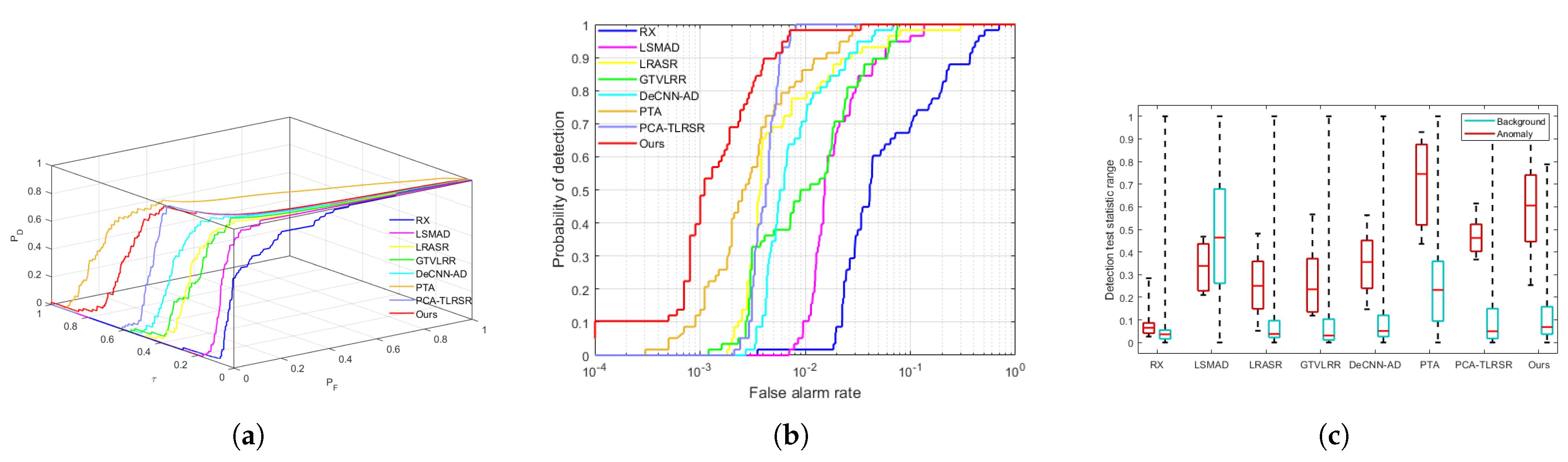

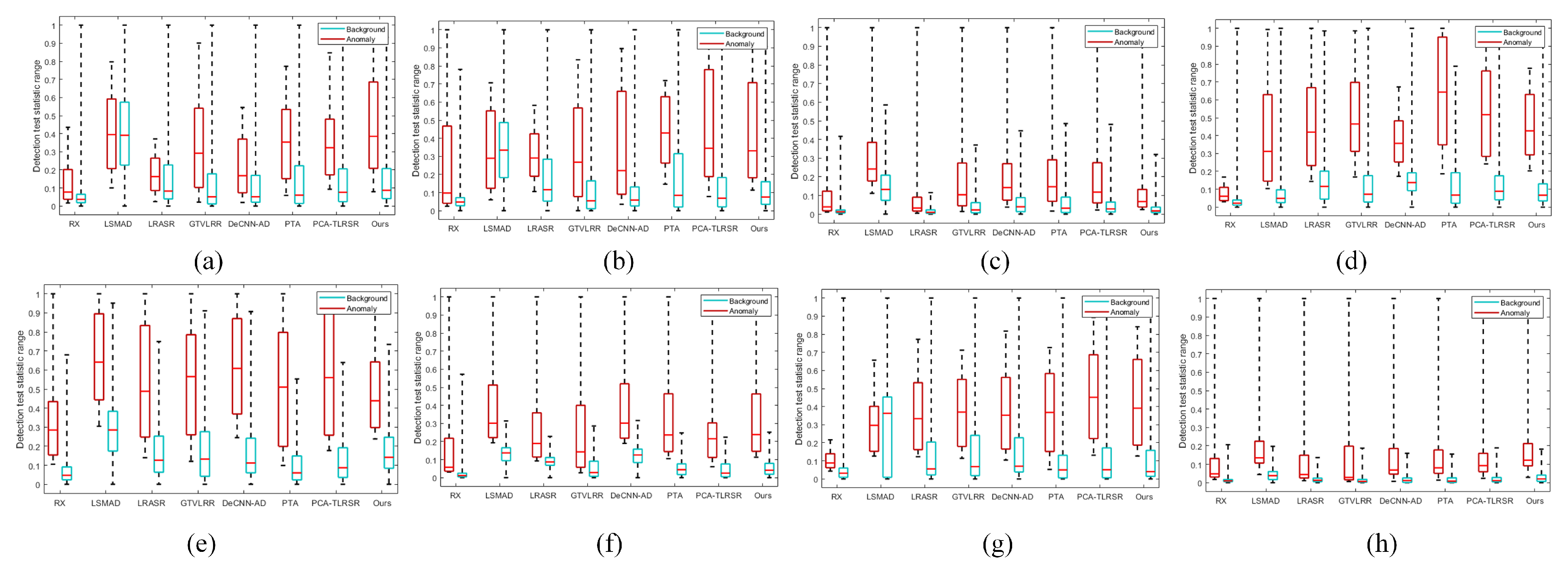

4.3. Detection Performance

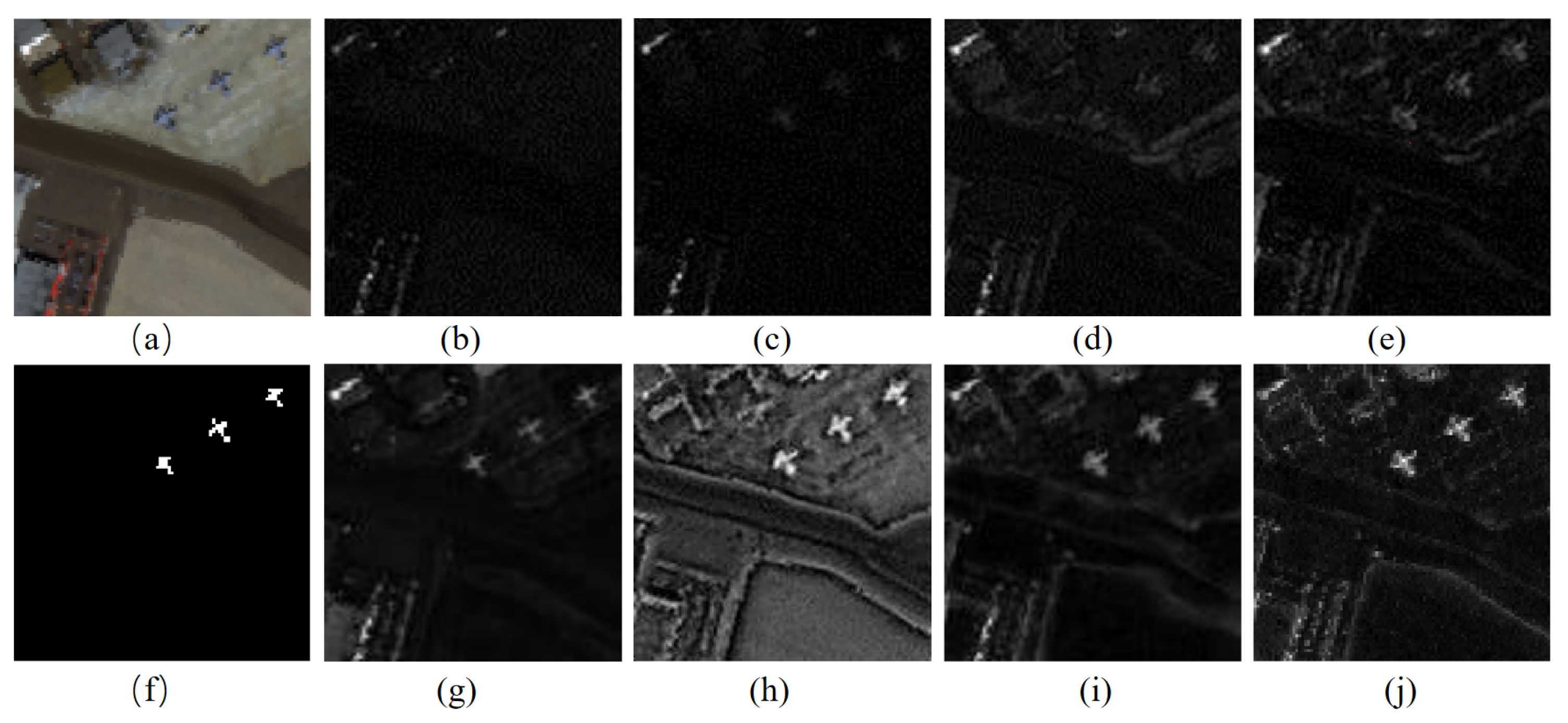

4.3.1. San Diego

4.3.2. HYDICE-Urban

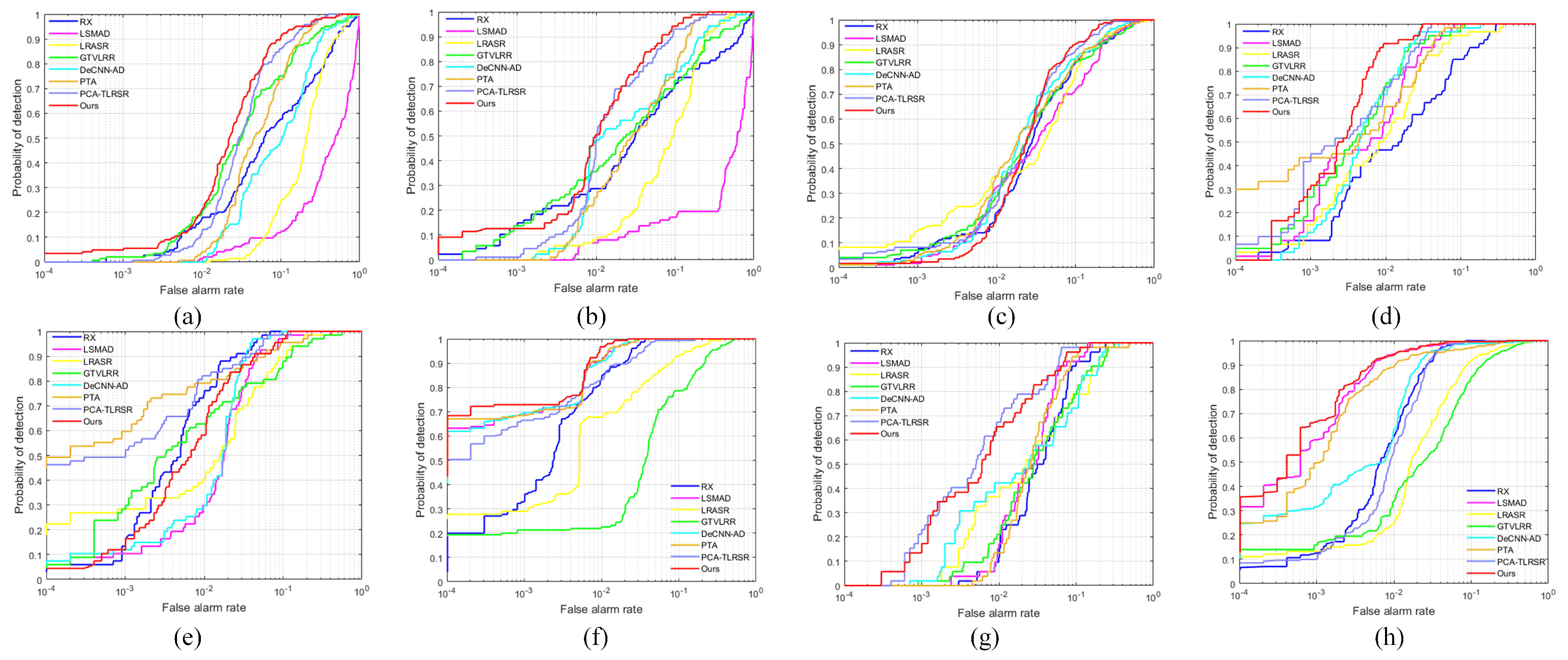

4.3.3. Airport 1–4

4.3.4. Urban 1–4

4.4. Discussion of Multi-Dimensional Low-Rank

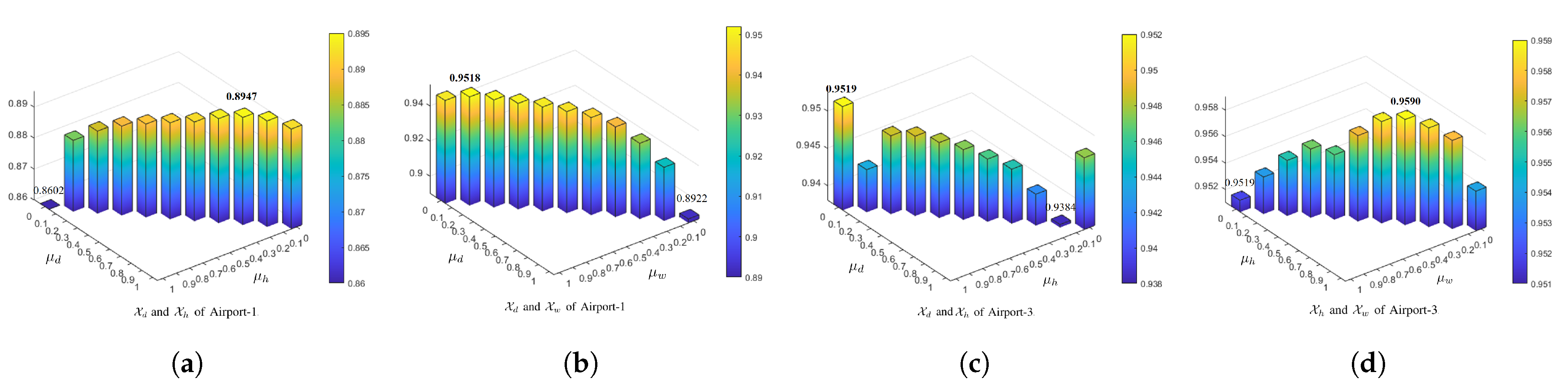

4.5. Parameter Tuning

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Zhang, X.; Wu, H.; Sun, H.; Ying, W. Multireceiver SAS Imagery Based on Monostatic Conversion. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 10835–10853.

- Zhu, J.; Song, Y.; Jiang, N.; Xie, Z.; Fan, C.; Huang, X. Enhanced Doppler Resolution and Sidelobe Suppression Performance for Golay Complementary Waveforms. Remote Sens. 2023, 15, 2452.

- Hong, D.; Gao, L.; Yokoya, N.; Yao, J.; Chanussot, J.; Du, Q.; Zhang, B. More Diverse Means Better: Multimodal Deep Learning Meets Remote-Sensing Imagery Classification. IEEE Trans. Geosci. Remote Sens. 2020, 59, 4340–4354.

- Liu, F.; Wang, Q. A sparse tensor-based classification method of hyperspectral image. Signal Process. 2020, 168, 107361.

- An, W.; Zhang, X.; Wu, H.; Zhang, W.; Du, Y.; Sun, J. LPIN: A Lightweight Progressive Inpainting Network for Improving the Robustness of Remote Sensing Images Scene Classification. Remote Sens. 2021, 14, 53.

- Tan, K.; Wu, F.; Du, Q.; Du, P.; Chen, Y. A Parallel Gaussian–Bernoulli Restricted Boltzmann Machine for Mining Area Classification With Hyperspectral Imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 627–636.

- Ren, Z.; Sun, L.; Zhai, Q.; Liu, X. Mineral Mapping with Hyperspectral Image Based on an Improved K-Means Clustering Algorithm. In Proceedings of the IGARSS 2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; pp. 2989–2992.

- Rukhovich, D.I.; Koroleva, P.V.; Rukhovich, D.D.; Rukhovich, A.D. Recognition of the Bare Soil Using Deep Machine Learning Methods to Create Maps of Arable Soil Degradation Based on the Analysis of Multi-Temporal Remote Sensing Data. Remote Sens. 2022, 14, 2224.

- Wang, Q.; Li, J.; Shen, Q.; Wu, C.; Yu, J. Retrieval of water quality from China’s first satellite-based Hyperspectral Imager (HJ-1A HSI) data. In Proceedings of the 2010 IEEE International Geoscience and Remote Sensing Symposium, Honolulu, HI, USA, 25–30 July 2010; pp. 371–373.

- Zhang, X.; Han, L.; Dong, Y.; Shi, Y.; Huang, W.; Han, L.; González-Moreno, P.; Ma, H.; Ye, H.; Sobeih, T. A Deep Learning-Based Approach for Automated Yellow Rust Disease Detection from High-Resolution Hyperspectral UAV Images. Remote Sens. 2019, 11, 1554.

- Wan, Y.; Hu, X.; Zhong, Y.; Ma, A.; Wei, L.; Zhang, L. Tailings Reservoir Disaster and Environmental Monitoring Using the UAV-ground Hyperspectral Joint Observation and Processing: A Case of Study in Xinjiang, the Belt and Road. In Proceedings of the IGARSS 2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; pp. 9713–9716.

- Farrar, M.B.; Wallace, H.M.; Brooks, P.R.; Yule, C.M.; Tahmasbian, I.; Dunn, P.K.; Bai, S.H. A Performance Evaluation of Vis/NIR Hyperspectral Imaging to Predict Curcumin Concentration in Fresh Turmeric Rhizomes. Remote Sens. 2021, 13, 1807.

- Légaré, B.; Bélanger, S.; Singh, R.K.; Bernatchez, P.; Cusson, M. Remote Sensing of Coastal Vegetation Phenology in a Cold Temperate Intertidal System: Implications for Classification of Coastal Habitats. Remote Sens. 2022, 14, 3000.

- Cen, Y.; Huang, Y.H.; Hu, S.; Zhang, L.; Zhang, J. Early Detection of Bacterial Wilt in Tomato with Portable Hyperspectral Spectrometer. Remote Sens. 2022, 14, 2882.

- Yin, C.; Lv, X.; Zhang, L.; Ma, L.; Wang, H.; Zhang, L.; Zhang, Z. Hyperspectral UAV Images at Different Altitudes for Monitoring the Leaf Nitrogen Content in Cotton Crops. Remote Sens. 2022, 14, 2576.

- Thornley, R.H.; Verhoef, A.; Gerard, F.F.; White, K. The Feasibility of Leaf Reflectance-Based Taxonomic Inventories and Diversity Assessments of Species-Rich Grasslands: A Cross-Seasonal Evaluation Using Waveband Selection. Remote Sens. 2022, 14, 2310.

- Pang, D.; Ma, P.; Shan, T.; Li, W.; Tao, R.; Ma, Y.; Wang, T. STTM-SFR: Spatial–Temporal Tensor Modeling With Saliency Filter Regularization for Infrared Small Target Detection. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–18.

- Gao, Q.; Zhang, P.; Xia, W.; Xie, D.; Gao, X.; Tao, D. Enhanced Tensor RPCA and its Application. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 2133–2140.

- Reed, I.S.; Yu, X. Adaptive multiple-band CFAR detection of an optical pattern with unknown spectral distribution. IEEE Trans. Acoust. Speech Signal Process. 1990, 38, 1760–1770.

- Manolakis, D.G.; Shaw, G.A. Detection algorithms for hyperspectral imaging applications. IEEE Signal Process. Mag. 2002, 19, 29–43.

- Molero, J.M.; Garzón, E.M.; García, I.; Plaza, A.J. Analysis and Optimizations of Global and Local Versions of the RX Algorithm for Anomaly Detection in Hyperspectral Data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2013, 6, 801–814.

- Taitano, Y.P.; Geier, B.A.; Bauer, K.W. A Locally Adaptable Iterative RX Detector. Signal Process. 2010, 2010, 1–10.

- Sun, W.; Liu, C.; Li, J.; Lai, Y.M.; Li, W. Low-rank and sparse matrix decomposition-based anomaly detection for hyperspectral imagery. J. Appl. Remote Sens. 2014, 8, 15823048.

- Farrell, M.D.; Mersereau, R.M. On the impact of covariance contamination for adaptive detection in hyperspectral imaging. IEEE Signal Process. Lett. 2005, 12, 649–652.

- Billor, N.; Hadi, A.S.; Velleman, P.F. BACON: Blocked adaptive computationally efficient outlier nominators. Comput. Stat. Data Anal. 2000, 34, 279–298.

- Sun, W.; Tian, L.; Xu, Y.; Du, B.; Du, Q. A Randomized Subspace Learning Based Anomaly Detector for Hyperspectral Imagery. Remote Sens. 2018, 10, 417.

- Sun, W.; Yang, G.; Li, J.; Zhang, D. Randomized subspace-based robust principal component analysis for hyperspectral anomaly detection. J. Appl. Remote Sens. 2018, 12, 015015.

- Qu, Y.; Wang, W.; Guo, R.; Ayhan, B.; Kwan, C.; Vance, S.D.; Qi, H. Hyperspectral Anomaly Detection Through Spectral Unmixing and Dictionary-Based Low-Rank Decomposition. IEEE Trans. Geosci. Remote Sens. 2018, 56, 4391–4405.

- Zhang, Y.; Du, B.; Zhang, L.; Wang, S. A Low-Rank and Sparse Matrix Decomposition-Based Mahalanobis Distance Method for Hyperspectral Anomaly Detection. IEEE Trans. Geosci. Remote Sens. 2016, 54, 1376–1389.

- Xu, Y.; Du, B.; Zhang, L.; Chang, S. A Low-Rank and Sparse Matrix Decomposition-Based Dictionary Reconstruction and Anomaly Extraction Framework for Hyperspectral Anomaly Detection. IEEE Geosci. Remote Sens. Lett. 2020, 17, 1248–1252.

- Li, L.; Li, W.; Du, Q.; Tao, R. Low-Rank and Sparse Decomposition With Mixture of Gaussian for Hyperspectral Anomaly Detection. IEEE Trans. Cybern. 2020, 51, 4363–4372.

- Liu, G.; Lin, Z.; Yu, Y. Robust Subspace Segmentation by Low-Rank Representation. In Proceedings of the International Conference on Machine Learning, Haifa, Israel, 21–24 June 2010.

- Liu, G.; Lin, Z.; Yan, S.; Sun, J.; Yu, Y.; Ma, Y. Robust Recovery of Subspace Structures by Low-Rank Representation. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 171–184.

- Xu, Y.; Wu, Z.; Li, J.; Plaza, A.J.; Wei, Z. Anomaly Detection in Hyperspectral Images Based on Low-Rank and Sparse Representation. IEEE Trans. Geosci. Remote Sens. 2016, 54, 1990–2000.

- Cheng, T.; Wang, B. Graph and Total Variation Regularized Low-Rank Representation for Hyperspectral Anomaly Detection. IEEE Trans. Geosci. Remote Sens. 2020, 58, 391–406.

- Fu, X.; Jia, S.; Zhuang, L.; Xu, M.; Zhou, J.; Li, Q. Hyperspectral Anomaly Detection via Deep Plug-and-Play Denoising CNN Regularization. IEEE Trans. Geosci. Remote Sens. 2021, 59, 9553–9568.

- Zhang, K.; Zuo, W.; Zhang, L. FFDNet: Toward a Fast and Flexible Solution for CNN-Based Image Denoising. IEEE Trans. Image Process. 2017, 27, 4608–4622.

- Guo, S.; Chen, X.; Jia, H.; Han, Z.; Duan, Z.; Tang, Y. Fusing Hyperspectral and Multispectral Images via Low-Rank Hankel Tensor Representation. Remote Sens. 2022, 14, 4470.

- Zhang, Z.; Ding, C.; Gao, Z.; Xie, C. ANLPT: Self-Adaptive and Non-Local Patch-Tensor Model for Infrared Small Target Detection. Remote Sens. 2023, 15, 1021.

- Li, L.; Li, W.; Qu, Y.; Zhao, C.; Tao, R.; Du, Q. Prior-Based Tensor Approximation for Anomaly Detection in Hyperspectral Imagery. IEEE Trans. Neural Netw. Learn. Syst. 2020, 33, 1037–1050.

- Song, S.; Zhou, H.; Gu, L.; Yang, Y.; Yang, Y. Hyperspectral Anomaly Detection via Tensor-Based Endmember Extraction and Low-Rank Decomposition. IEEE Geosci. Remote Sens. Lett. 2020, 17, 1772–1776.

- Shang, W.; Peng, J.; Wu, Z.; Xu, Y.; Jouni, M.; Mura, M.D.; Wei, Z. Hyperspectral Anomaly Detection via Sparsity of Core Tensor Under Gradient Domain. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–16.

- Wang, M.; Wang, Q.; Hong, D.; Roy, S.K.; Chanussot, J. Learning Tensor Low-Rank Representation for Hyperspectral Anomaly Detection. IEEE Trans. Cybern. 2022, 53, 679–691.

- Sun, S.; Liu, J.; Zhang, Z.; Li, W. Hyperspectral Anomaly Detection Based on Adaptive Low-Rank Transformed Tensor. IEEE Trans. Neural Netw. Learn. Syst. 2023, 1–13.

- Lu, C.; Feng, J.; Chen, Y.; Liu, W.; Lin, Z.; Yan, S. Tensor Robust Principal Component Analysis: Exact Recovery of Corrupted Low-Rank Tensors via Convex Optimization. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 5249–5257.

- Zhang, D.; Hu, Y.; Ye, J.; Li, X.; He, X. Matrix Completion by Truncated Nuclear Norm Regularization. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 2192–2199.

- Oh, T.H.; Kim, H.; Tai, Y.W.; Bazin, J.C.; Kweon, I.S. Partial Sum Minimization of Singular Values in RPCA for Low-Level Vision. In Proceedings of the 2013 IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 145–152.

- Gu, S.; Zhang, L.; Zuo, W.; Feng, X. Weighted Nuclear Norm Minimization with Application to Image Denoising. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 2862–2869.

- Lu, C.; Feng, J.; Chen, Y.; Liu, W.; Lin, Z.; Yan, S. Tensor Robust Principal Component Analysis with a New Tensor Nuclear Norm. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 42, 925–938.

- Liu, G.; Yan, S. Latent Low-Rank Representation for Subspace Segmentation and Feature Extraction. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 1615–1622.

- Xie, Y.; Gu, S.; Liu, Y.; Zuo, W.; Zhang, W.; Zhang, L. Weighted Schatten p-Norm Minimization for Image Denoising and Background Subtraction. IEEE Trans. Image Process. 2015, 25, 4842–4857.

- Kerekes, J.P. Receiver Operating Characteristic Curve Confidence Intervals and Regions. IEEE Geosci. Remote Sens. Lett. 2008, 5, 251–255.

- Khazai, S.; Homayouni, S.; Safari, A.; Mojaradi, B. Anomaly Detection in Hyperspectral Images Based on an Adaptive Support Vector Method. IEEE Geosci. Remote Sens. Lett. 2011, 8, 646–650.

- Chang, C.I. Comprehensive Analysis of Receiver Operating Characteristic (ROC) Curves for Hyperspectral Anomaly Detection. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–24.

- Li, W.; Du, Q. A survey on representation-based classification and detection in hyperspectral remote sensing imagery. Pattern Recognit. Lett. 2016, 83, 115–123.

- Ma, L.; Crawford, M.M.; Tian, J. Local Manifold Learning-Based k-Nearest-Neighbor for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2010, 48, 4099–4109.

- Kang, X.; Zhang, X.; Li, S.; Li, K.; Li, J.Y.; Benediktsson, J.A. Hyperspectral Anomaly Detection with Attribute and Edge-Preserving Filters. IEEE Trans. Geosci. Remote Sens. 2017, 55, 5600–5611.

| HSI Data | RX | LSMAD | LRASR | GTVLRR | DeCNN-AD | PTA | PCA-TLRSR | MDLR |
|---|---|---|---|---|---|---|---|---|
| San Diego | 2.054 | 38.46 | 56.394 | 214.343 | 256.589 | 34.344 | 8.312 | 132.46 |

| HSI Datasets | RX | LSMAD | LRASR | GTVLRR | DeCNN-AD | PTA | PCA-TLRSR | MDLR |
|---|---|---|---|---|---|---|---|---|
| San Diego | 0.8885 | 0.9773 | 0.9853 | 0.9795 | 0.9901 | 0.9946 | 0.9957 | |
| HYDICE-Urban | 0.9856 | 0.9901 | 0.9918 | 0.9856 | 0.9935 | 0.9953 | 0.9941 | 0.9975 |
| Airport-1 | 0.8220 | 0.8334 | 0.7854 | 0.9013 | 0.8503 | 0.9207 | 0.9478 | |
| Airport-2 | 0.8403 | 0.9189 | 0.8657 | 0.8695 | 0.9204 | 0.9428 | 0.9697 | |
| Airport-3 | 0.9228 | 0.9401 | 0.9408 | 0.9295 | 0.9434 | 0.9355 | 0.9574 | |
| Airport-4 | 0.9526 | 0.9862 | 0.9723 | 0.9875 | 0.9897 | 0.9875 | 0.9943 | |
| Urban-1 | 0.9829 | 0.9797 | 0.9605 | 0.9820 | 0.9826 | 0.9902 | 0.9835 | |
| Urban-2 | 0.9946 | 0.9836 | 0.9628 | 0.8539 | 0.9973 | 0.9970 | 0.9941 | |
| Urban-3 | 0.9513 | 0.9636 | 0.9415 | 0.9385 | 0.9394 | 0.9578 | 0.9812 | |
| Urban-4 | 0.9887 | 0.9809 | 0.9575 | 0.9205 | 0.9868 | 0.9907 | 0.9869 | |

| HSI dataset | San Diego | Airport-1 | Airport-2 | Airport-3 | Airport-4 |
|---|---|---|---|---|---|
| Single-dimensional | 0.9966 | 0.8957 | 0.9655 | 0.9345 | 0.9921 |
| Multi-dimensional | | | | | |

| HSI dataset | HYDICE-Urban | Urban-1 | Urban-2 | Urban-3 | Urban-4 |
|---|---|---|---|---|---|
| Single-dimensional | 0.9546 | 0.9619 | 0.9928 | 0.9527 | |
| Multi-dimensional | | | | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, X.; Wang, Z.; Wang, K.; Jia, H.; Han, Z.; Tang, Y. Multi-Dimensional Low-Rank with Weighted Schatten p-Norm Minimization for Hyperspectral Anomaly Detection. Remote Sens. 2024, 16, 74. https://doi.org/10.3390/rs16010074
