Abstract

Edge detection is one of the most critical operations for moving from data to information. Finding edges between objects is relevant for image understanding, classification, segmentation, and change detection, among other applications. The Gambini Algorithm is a good choice for finding evidence of edges: it finds the point at which a function of the difference of properties is maximized. This algorithm is very general and accepts many types of objective functions; we use an objective function built with likelihoods. Imaging with active microwave sensors has a revolutionary role in remote sensing. This technology can provide high-resolution images regardless of the Sun’s illumination and almost independently of the atmospheric conditions. Images from polarimetric synthetic-aperture radar (PolSAR) sensors are sensitive to the target’s dielectric properties and structure in several polarization states of the electromagnetic waves. Edge detection in PolSAR imagery is challenging because of the low signal-to-noise ratio and the data format (complex matrices). Several known marginal models stem from the complex Wishart model for the full complex format, and each of them renders a different likelihood. This work generalizes previous studies by incorporating the ratio of intensities as evidence for edge detection. We discuss solutions for the often challenging problem of parameter estimation. We propose a technique that rejects edge estimates built with thin evidence. Building on this idea of discarding potentially irrelevant evidence, we propose a technique for fusing edge evidence from different channels that incorporates only the channels likely to contribute positively. We use this approach for both edge and change detection in single- and multilook images from three different sensors.

1. Introduction

Blake and Isard [1], among others, stressed the importance of prior knowledge in image processing and machine vision for a wide range of application domains. Such knowledge can be posed in several ways, edges being among its basic elements. An edge establishes a discontinuity in the image data properties, usually intensity. Most computer vision algorithms assume that edges occur at positions with rapid intensity or color variation [2]. Such an assumption is, at best, misleading when using synthetic-aperture radar (SAR) imagery, for two reasons. First, SAR images are contaminated by an interference pattern, speckle, which is neither additive nor Gaussian and reduces the signal-to-noise ratio. Second, the usual formats are either scalar (an intensity band), bivariate (two intensity polarizations), or multivariate complex (covariance matrix). None of them can be naturally translated into “colors”.

Gambini et al. [3] provided a solution: an edge detection algorithm that finds changes between the statistical properties of the interior and exterior of a boundary. The boundary evolves towards a configuration that maximizes this difference. Gambini et al. [4] optimized this approach with a line-search algorithm: instead of searching for the whole boundary, the authors proposed estimating edge points along lines and then joining them with a B-spline curve.

The line-search approach proved its usefulness with several estimation criteria: maximum likelihood [5], stochastic distances [6], geodesic distances [7], and nonparametric statistics [8] among them.

Borba et al. [9] exploited the strength of the statistical modeling approaches described in [4,5,6]. They extracted the edge evidence separately from each polarimetric intensity channel with the maximum-likelihood method under the Wishart model for PolSAR data, and then fused this evidence to extract the final edge. The edge information that distinguishes the land-cover classes (agricultural fields, urban areas, sea, etc.) was observed to be distributed over the polarimetric channels, which justified fusing the edge evidence to aggregate the boundary information piecewise.

Rey et al. [10] addressed the need to monitor inland and coastal water bodies in the presence of observational issues such as surrounding vegetation and wind. Such issues lead to fuzzy edges, extending the zone of radiometric transition and making the edge boundary increasingly difficult to identify.

This statistical approach to edge detection relies on specifying the data distribution. With PolSAR data, there are several options, e.g., the full covariance matrix, each of the three intensity channels, pairs of intensity channels, the three intensity channels jointly, the ratio of intensities, phase differences, and pairs of intensity and phase difference. Previous works did not exploit the last three models.

Change detection is one of the most active and challenging research areas in remote sensing. Change detection techniques aim at identifying relevant natural or man-made alterations using at least two images of the area under study (Asokan and Anitha [11]). Bouhlel et al. [12] proposed a change detection method named determinant ratio test (DRT), which uses the constant false alarm rate (CFAR) criterion to provide the threshold for building a binary change map. See Touzi et al. [13] and Schou et al. [14] for details about CFAR methods. Edges can also be used for change detection: rather than trying to identify changes in pixels, we show the viability of studying the temporal evolution of the edges between regions.

The previous fusion methods employ all the sources of evidence in an unweighted manner. We present a technique based on the statistical receiver operating characteristic information fusion (S-ROC), which we call S-ROC, that incorporates the quality of the estimated edge. Furthermore, we propose a change in the Gambini algorithm that solves instability problems. Moreover, we verify the new method’s feasibility for change detection.

This article presents three improvements: two marginal models, the S-ROC information fusion, and a decision rule for the Gambini algorithm. First, we add two densities: the ratio of pairs of intensities and the gamma model for the span. Second, the S-ROC technique uses the PCA coefficients to limit the number of channels that enter the information fusion. Third, we enhance the Gambini algorithm with a statistically based decision rule that may decide not to identify an edge.

This article is structured as follows. Section 2 describes:

- (i) The computational resources used in this research.
- (ii) The data (images) used in this research.
- (iii) The ground references (GR) for each image.
- (iv) The Gambini algorithm (GA).
- (v) The statistical models, stipulated through their probability density functions. In this article, we add two models not previously used in the literature for edge detection.
- (vi) Information fusion. We propose a new approach named S-ROC, which uses principal component analysis (PCA) and a threshold to compute each image’s weight in the fusion process.
- (vii) The Hausdorff distance (Hd) as a quality measure, which concludes the section.

2. Materials and Methods

2.1. Computational Resources

We performed the experiments presented in this work on an Intel® Core i7-9750HQ CPU 2.6 GHz computer with 16 GB of RAM running Debian 11. All the code was written in Python 3.9.

2.2. Data

In this work, we use a simulated image and PolSAR images from three sensors:

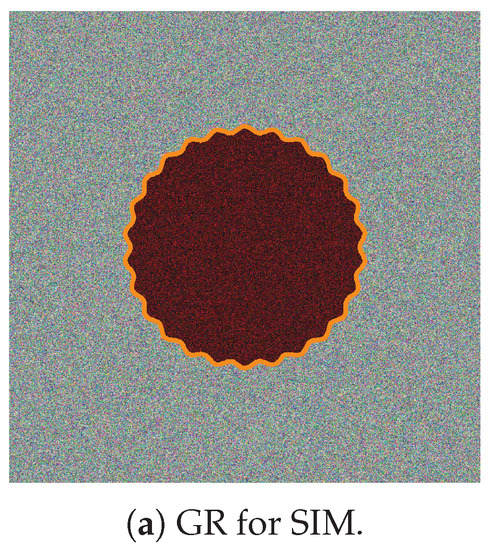

- The Flower, a simulated image (SIM) produced using the Wishart distribution. Details about this phantom can be found in Gambini et al. [3]; Figure 1a shows a sample along with the true edge between background and foreground.

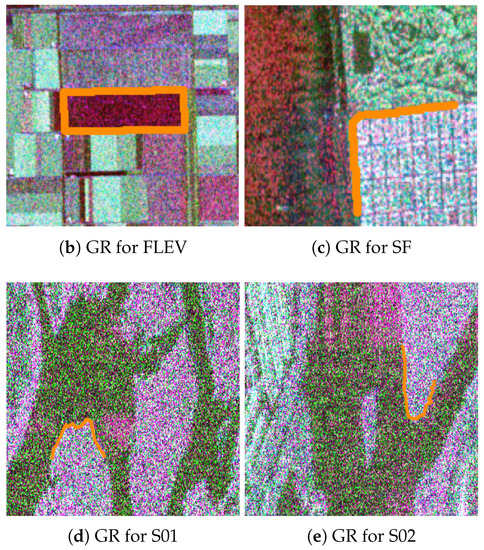

Figure 1. Ground reference images overlain on the Pauli color composites.

- Airborne AIRSAR sensor in L-Band, data available from [15]:

- Airborne Uninhabited Aerial Vehicle Synthetic Aperture Radar (UAVSAR) sensor in L-Band, data available from [16]:

- OrbiSAR-2 sensor in P-Band, data available from [17]:

- (a) Sub-scene 01 of Santos City, Brazil (S01), acquired with the airborne OrbiSAR-2 sensor in P-Band (Figure 3a).

- (b) Sub-scene 02 of Santos City, Brazil (S02), also acquired with the airborne OrbiSAR-2 sensor in P-Band (Figure 3b). Both images were acquired on 12 August 2015.

Figure 3. Optical Santos city images.

2.3. Ground References and Images

The edge in the simulated image (SIM, cf. Figure 1a) is stipulated as an expression in polar coordinates. In this case, the ground reference (GR) is the discrete version of this edge.

We analyzed the images from actual sensors pixel-by-pixel using false-color compositions of intensity bands and Pauli decompositions. We marked all the pixels that separate different classes.

2.4. The Gambini Algorithm

The Gambini algorithm (GA) searches for evidence of an edge along a thin strip of data. The border between regions with different characteristics along the strip is placed at the position of maximal change of an arbitrary feature. The strength of the GA lies in its applicability under noisy conditions with any model that describes the data well.

We use the Bresenham line algorithm to extract the strips of pixels on which the GA operates. At this stage, we also discard zero-valued pixels, which would prevent the computation of ratios and logarithms.
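The strip extraction can be sketched in Python as follows. This is a minimal illustration, not the authors' code. It assumes the image is a 2-D NumPy array of intensities and that each strip is a ray leaving a chosen center at a given angle. `bresenham` is the standard integer (all-octant) form of the line algorithm, while `ray_strip` and its parameters `center`, `angle`, and `length` are hypothetical names.

```python
import numpy as np


def bresenham(x0, y0, x1, y1):
    """Integer pixel coordinates (x, y) on the segment (x0, y0)-(x1, y1), any octant."""
    points = []
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    while True:
        points.append((x0, y0))
        if x0 == x1 and y0 == y1:
            break
        e2 = 2 * err
        if e2 >= dy:  # step along x
            err += dy
            x0 += sx
        if e2 <= dx:  # step along y
            err += dx
            y0 += sy
    return points


def ray_strip(image, center, angle, length):
    """Values along a ray from `center` = (row, col); drops out-of-image and zero pixels.

    The angle is measured from the column axis (rows grow downwards).
    """
    r0, c0 = center
    r1 = int(round(r0 + length * np.sin(angle)))
    c1 = int(round(c0 + length * np.cos(angle)))
    rows, cols = image.shape
    # bresenham works in (x, y) = (col, row) order
    coords = [(r, c) for c, r in bresenham(c0, r0, c1, r1)
              if 0 <= r < rows and 0 <= c < cols]
    values = np.array([image[r, c] for r, c in coords], dtype=float)
    keep = values > 0  # zeros would break ratios and logarithms
    return values[keep], [p for p, k in zip(coords, keep) if k]
```

Discarding zeros shortens the strip. Any position found by a later edge search therefore refers to the filtered strip, and the returned coordinates map it back to the image.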

The first version of the GA [3] was computationally intensive because it required iterating a likelihood function. Nascimento et al. [6] reduced the computational cost by using stochastic distances between the PolSAR samples. Naranjo-Torres et al. [7] employed the geodesic distance between SAR intensity samples to measure the dissimilarity between models. These approaches used statistical models with parameters that describe varying degrees of roughness, a feature that proved essential for edge detection.

2.5. Distributions

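PolSAR systems record the amplitude and phase of the received signal relative to the transmitted one in four polarization channels: $S_{HH}$, $S_{HV}$, $S_{VH}$, and $S_{VV}$ (H for horizontal and V for vertical). If the conditions of the reciprocity theorem [18] are satisfied, then $S_{HV}=S_{VH}$. Defining the single-look scattering vector as

$$\mathbf{s}=\begin{bmatrix} S_{HH} & S_{HV} & S_{VV}\end{bmatrix}^{T},$$

the scaled multilook PolSAR return has, in each pixel, the form:

$$\mathbf{Z}=\frac{1}{L}\sum_{\ell=1}^{L}\mathbf{s}_{\ell}\,\mathbf{s}_{\ell}^{*T},$$

where $L$ is the number of looks, $\mathbf{s}_{\ell}$, with $\ell=1,\dots,L$, are the single-look scattering vectors, and $S_{ij}$, with $i,j\in\{H,V\}$, is the scattering in each polarization. The superscript $*$ represents the complex conjugate.

Goodman [19] discussed the properties of the full covariance matrix, and Lee et al. [20] derived several of its marginal distributions. Hagedorn et al. [21] obtained the joint distribution of the three intensity channels.

The Wishart distribution is the accepted model for data in covariance matrix format under fully developed speckle. The reader is referred to the work by Ferreira et al. [22] for details and extensions. The probability density function (PDF) that characterizes the Wishart distribution is

$$f_{\mathbf{Z}}(\mathbf{Z};\boldsymbol{\Sigma},L)=\frac{L^{mL}\,|\mathbf{Z}|^{L-m}}{|\boldsymbol{\Sigma}|^{L}\,\Gamma_m(L)}\exp\!\big(-L\operatorname{tr}(\boldsymbol{\Sigma}^{-1}\mathbf{Z})\big),$$

where $m=3$ is the number of polarization channels, $\operatorname{tr}(\cdot)$ is the trace operator, and $\boldsymbol{\Sigma}=\mathrm{E}[\mathbf{s}\mathbf{s}^{*T}]$ is the complex covariance matrix with entries $\Sigma_{ij}=\mathrm{E}[s_is_j^{*}]$, $i,j\in\{1,2,3\}$, where $s_i$ is the $i$th entry of $\mathbf{s}$. If $i=j$, then $\Sigma_{ii}=\mathrm{E}[|s_i|^2]$ is the mean intensity of channel $i$. Finally, $\Gamma_m(L)$ is the multivariate gamma function defined by:

$$\Gamma_m(L)=\pi^{m(m-1)/2}\prod_{i=0}^{m-1}\Gamma(L-i),$$

and $\Gamma(\cdot)$ is the gamma function. The Wishart distribution describes fully polarimetric data.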
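The following NumPy sketch makes the notation concrete. It forms the multilook return $\mathbf{Z}$ from $L$ single-look scattering vectors and evaluates the log of the scaled complex Wishart density in its standard form with $m$ channels. The function names are hypothetical, and $L\ge m$ is assumed so that $\mathbf{Z}$ is nonsingular. With $m=1$ the density reduces to the gamma law for a single intensity, which provides a quick consistency check.

```python
import math

import numpy as np


def multilook_covariance(s):
    """Z = (1/L) * sum_l s_l s_l^{*T} for an (L, m) array of single-look scattering vectors."""
    L = s.shape[0]
    # entry [i, j] = (1/L) * sum_l s[l, i] * conj(s[l, j])
    return (s.T @ s.conj()) / L


def log_multigamma(L, m):
    """log Gamma_m(L) = (m(m-1)/2) log(pi) + sum_{i=0}^{m-1} log Gamma(L - i)."""
    return 0.5 * m * (m - 1) * math.log(math.pi) + sum(math.lgamma(L - i) for i in range(m))


def wishart_logpdf(Z, Sigma, L):
    """Log of the scaled complex Wishart density; Z, Sigma are m x m Hermitian, L >= m."""
    m = Z.shape[0]
    _, logdet_Z = np.linalg.slogdet(Z)          # log |Z| (Z is positive definite)
    _, logdet_Sigma = np.linalg.slogdet(Sigma)  # log |Sigma|
    trace_term = np.trace(np.linalg.solve(Sigma, Z)).real  # tr(Sigma^{-1} Z)
    return (m * L * math.log(L) + (L - m) * logdet_Z - L * logdet_Sigma
            - log_multigamma(L, m) - L * trace_term)
```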

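In this work, we are interested in the amount of information that different marginal components provide for edge detection. To use such components in the GA, we use the following distributions that stem from the Wishart model:

- Gamma univariate PDF for intensities: the distribution of each intensity channel follows a gamma law with probability density function
$$f_Z(z;\mu,L)=\frac{L^{L}}{\mu^{L}\,\Gamma(L)}\,z^{L-1}\exp\!\Big(-\frac{Lz}{\mu}\Big)\,\mathbb{1}_{\mathbb{R}_{+}}(z),$$
where $L>0$ (rather than $L\in\mathbb{N}$, to allow for flexibility), $\mu>0$ is the mean, and $\mathbb{1}_A$ is the indicator function of the set $A$. Given the random sample $\mathbf{z}=(z_1,\dots,z_n)$, its reduced log-likelihood under this model is
$$\ell(\mu,L;\mathbf{z})=nL\ln\frac{L}{\mu}-n\ln\Gamma(L)+L\sum_{k=1}^{n}\ln z_k-\frac{L}{\mu}\sum_{k=1}^{n}z_k.$$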

- The density that characterizes the ratio of any pair of intensities iswhere and . We can normalize the ratio of intensities asIf we define , with with and , where is the ratio intensity parameter, then (6) becomesThe reduced log-likelihood function under this model is

- Feng et al. [23] show that the gamma distribution models the span, i.e., the sum of the intensities:where and . The reduced log-likelihood under this model for the random sample is:
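Below is a minimal sketch of the maximum-likelihood fit for the gamma model (mean $\mu$, looks $L$). It can be applied to an intensity channel or to the span, and it uses SciPy's BFGS implementation through `scipy.optimize.minimize(..., method="BFGS")`. Two choices are ours and should be read as assumptions. The first is the log-parametrization $\theta=(\ln\mu,\ln L)$, which keeps both parameters positive during an unconstrained search. The second is the moment-based initial point, $\mu_0$ equal to the sample mean and $L_0$ equal to mean²/variance. The function names are hypothetical.

```python
import numpy as np
from scipy.optimize import minimize
from scipy.special import gammaln


def gamma_neg_reduced_loglik(theta, z):
    """Negative reduced gamma log-likelihood; theta = (log mu, log L)."""
    mu, L = np.exp(theta)
    n = z.size
    loglik = (n * L * np.log(L / mu) - n * gammaln(L)
              + L * np.log(z).sum() - L * z.sum() / mu)
    return -loglik


def fit_gamma_bfgs(z):
    """MLE of (mu, L) for positive intensities or span values of a strip."""
    z = np.asarray(z, dtype=float)
    z = z[z > 0]  # zero-valued pixels are discarded
    mean, var = z.mean(), z.var()
    theta0 = np.log([mean, mean ** 2 / var])  # moment estimates as the initial point
    result = minimize(gamma_neg_reduced_loglik, theta0, args=(z,), method="BFGS")
    mu_hat, L_hat = np.exp(result.x)
    return mu_hat, L_hat
```

For the span, the input would be the pixel-wise sum of the intensity channels, i.e., the trace of each $\mathbf{Z}$.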

We obtain the maximum-likelihood parameter estimates (MLE) by maximizing the reduced log-likelihood function with the Broyden–Fletcher–Goldfarb–Shanno (BFGS) method [24], which requires an initial point. After estimating the parameters, we build the total log-likelihood of the data strip and find the edge evidence by maximizing it with the generalized simulated annealing (GenSA) method [25]. The total log-likelihood is non-differentiable and oscillates at the extremities of its domain. GenSA handles the first problem. We solve the second by defining a data slack at the extremities, i.e., a number of pixels at each end of the strip that are excluded from the search. For more details, see Borba et al. [9].
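The following sketch shows the line search on a single strip under the gamma model. It departs from the procedure described above in two deliberate ways. First, the number of looks `L` is held fixed, so the MLE of each segment mean is simply its sample mean and no BFGS fit is needed. Second, the maximizer of the total log-likelihood is found by exhaustive search over the admissible positions rather than by GenSA. The `slack` argument excludes that many positions at each end of the strip. The names are hypothetical, and the strip is assumed to contain positive values only.

```python
import math

import numpy as np


def gamma_loglik(z, L):
    """Gamma log-likelihood of positive values z with L fixed and mu at its MLE (the sample mean)."""
    z = np.asarray(z, dtype=float)
    n = z.size
    mu = z.mean()
    return (n * (L * math.log(L / mu) - math.lgamma(L))
            + (L - 1) * np.log(z).sum() - L * z.sum() / mu)


def gambini_edge(strip, L, slack):
    """Split position j maximizing the total log-likelihood of strip[:j] and strip[j:].

    Positions closer than `slack` pixels to either end of the strip are not searched.
    """
    strip = np.asarray(strip, dtype=float)
    n = strip.size
    if slack < 1 or n < 2 * slack:
        raise ValueError("need slack >= 1 and a strip at least 2 * slack long")
    best_j, best_total = None, -np.inf
    for j in range(slack, n - slack + 1):
        total = gamma_loglik(strip[:j], L) + gamma_loglik(strip[j:], L)
        if total > best_total:
            best_j, best_total = j, total
    return best_j, best_total
```

Exhaustive search is cheap for strips of a few hundred pixels, such as the radii of 90 to 300 pixels used in Section 3.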

2.6. Information Fusion Methods

Borba et al. [9] discussed the fusion of the edge evidence detected in each intensity channel of a PolSAR image. Based on these ideas, we propose to include the ratio of intensities and the span. We use the method based on principal component analysis (PCA) because it indicates the relative weights of the channels, thus allowing us to decide whether or not to use each data layer.

The edge information from the selected channels is used in the statistical receiver operating characteristic (S-ROC) information fusion method proposed by Giannarou and Stathaki [26]. We call S-ROC the fusion of the thresholded information, i.e., of the channels selected with the PCA weights.

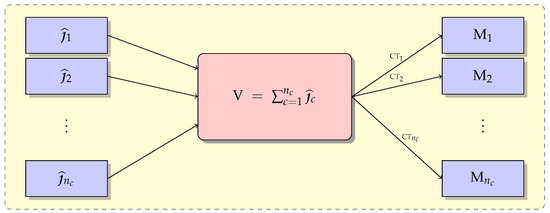

The fusion operates on binary images in which 1 denotes the presence of edge evidence and 0 otherwise. They all have the same size $m\times n$; denote them by $D_i$, $i=1,\dots,N$. These images are fused to obtain the image $F$.

2.6.1. PCA Fusion Methods

PCA fusion is based on Refs. [27,28] and comprises the following steps:

- (i) Stack the binary images $D_1,\dots,D_N$ as column vectors to obtain the matrix $X$, with one column per channel.
- (ii) Calculate the covariance matrix of $X$.
- (iii) Compute the eigenvalues ($\lambda_1,\dots,\lambda_N$) and the corresponding eigenvectors ($\mathbf{v}_1,\dots,\mathbf{v}_N$) of the covariance matrix. Sort the eigenvalues and corresponding eigenvectors in decreasing order.
- (iv) Compute the vector $\mathbf{P}=\mathbf{v}_1\big/\sum_{i=1}^{N}v_{1i}$, where $\mathbf{v}_1=(v_{11},\dots,v_{1N})$ is the eigenvector associated with the largest eigenvalue $\lambda_1$; notice that $\sum_{i=1}^{N}P_i=1$.

The PCA-based method provides a coefficient that weights each channel’s edge evidence in the information fusion. This weight indicates the channel’s importance in the fusion process, so it can be used to choose which channels enter the S-ROC fusion.
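Steps (i) to (iv) of the PCA weighting, together with the 10% channel-selection rule used in the S-ROC procedure, can be sketched with NumPy as follows. The sketch assumes that all edge maps have the same shape and that the entries of the leading eigenvector do not sum to zero. The function names and the `fraction` parameter are hypothetical.

```python
import numpy as np


def pca_fusion_weights(edge_maps):
    """PCA weights (summing to one) for N binary edge-evidence maps of equal shape."""
    # (i) stack each map as one column: X has shape (pixels, N)
    X = np.column_stack([np.asarray(m, dtype=float).ravel() for m in edge_maps])
    # (ii) N x N covariance matrix between channels
    C = np.cov(X, rowvar=False)
    # (iii) eigen-decomposition of the symmetric matrix; keep the leading eigenvector
    eigvals, eigvecs = np.linalg.eigh(C)
    v1 = eigvecs[:, np.argmax(eigvals)]
    # (iv) normalize so the weights sum to one (this also removes the sign ambiguity)
    return v1 / v1.sum()


def select_channels(weights, fraction=0.10):
    """Indices of the channels whose weight exceeds `fraction` of the sum of the weights."""
    threshold = fraction * np.sum(weights)
    return [i for i, w in enumerate(weights) if w > threshold]
```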

2.6.2. S-ROC and S-ROC Fusion Methods

S-ROC was proposed by Giannarou and Stathaki [26] and can be summarized in steps (i), (ii), (iii), and (v) below. We propose the modification described in (iv):

- (i) Add the binary channel edge maps $D_i$, $i=1,\dots,N$, to produce the frequency matrix $V$.
- (ii) Apply the thresholds $t=1,\dots,N$ to $V$ to generate the binary matrices $M_t$.
- (iii) Compare each $M_t$ with all $D_i$, find the confusion matrices, and generate the ROC curve. The optimal threshold corresponds to the point of the ROC curve at the smallest Euclidean distance to the diagnostic line.
- (iv) Use PCA information to choose the channels that will be fused: only the channels whose PCA coefficient is above a threshold enter the S-ROC procedure. We used 10% of the sum of the PCA coefficients as the threshold. We named this method S-ROC.
- (v) The fusion result is the matrix $M_t$ that corresponds to the optimal threshold.

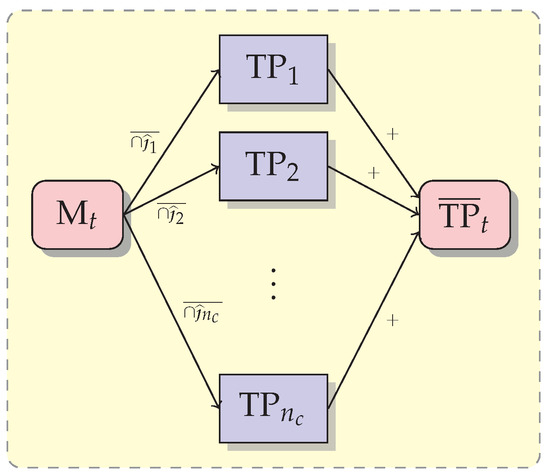

We generate binary images $D_i$, $i=1,\dots,N$, in which one denotes evidence of edge, where $N$ is the number of channels. We build the frequency matrix $V$, of the same size as the $D_i$, such that each position stores the number of channels with edge evidence at that position; i.e., $V=\sum_{i=1}^{N}D_i$ is the pixel-by-pixel sum of all edge evidence images. The larger the pixel value is, the higher its chance of being an edge. We apply the thresholds $t=1,\dots,N$ to $V$ and generate the edge evidence maps $M_t$, which are one at the positions where $V\geq t$. The goal of this step is to estimate the optimal global threshold from the set of partial thresholds. Figure 4 shows the flowchart corresponding to this process.

Figure 4.

Flowchart for applying thresholds in S-ROC evidence fusion.

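Figure 5 shows the comparison of each thresholded matrix $M_t$, with $t$ fixed, with all channel edge maps $D_i$, $i=1,\dots,N$. This comparison results in the probabilities of true positive $TP_{t,i}$, false positive $FP_{t,i}$, true negative $TN_{t,i}$, and false negative $FN_{t,i}$. Furthermore, we define the mean true positive $\overline{TP}_t$, false positive $\overline{FP}_t$, true negative $\overline{TN}_t$, and false negative $\overline{FN}_t$ as

$$\overline{TP}_t=\frac{1}{N}\sum_{i=1}^{N}TP_{t,i},\tag{12}$$

$$\overline{FP}_t=\frac{1}{N}\sum_{i=1}^{N}FP_{t,i},\tag{13}$$

$$\overline{TN}_t=\frac{1}{N}\sum_{i=1}^{N}TN_{t,i},\tag{14}$$

$$\overline{FN}_t=\frac{1}{N}\sum_{i=1}^{N}FN_{t,i}.\tag{15}$$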

Figure 5.

Structure for evidence fusion with arbitrarily chosen .

The comparison between each thresholded map and each channel edge map is performed pixel by pixel, as the intersection between the two maps, and the results are averaged over all channels according to (12)–(15).

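Next, we find the true positive and false positive ratios of each edge map $M_t$ as

$$\mathrm{TPR}_t=\frac{\overline{TP}_t}{\overline{TP}_t+\overline{FN}_t}$$

and

$$\mathrm{FPR}_t=\frac{\overline{FP}_t}{\overline{FP}_t+\overline{TN}_t},$$

where $\overline{TP}_t$, $\overline{FP}_t$, $\overline{TN}_t$, and $\overline{FN}_t$ are the mean true positive, false positive, true negative, and false negative probabilities of $M_t$.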

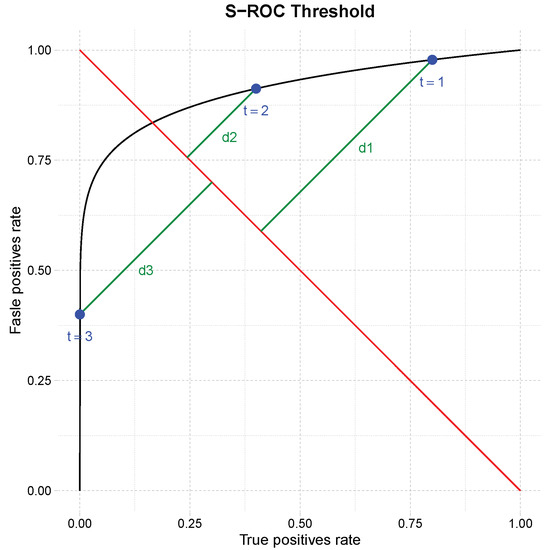

The false positive ratios, $\mathrm{FPR}_t$, are measured on the horizontal axis, and the true positive ratios, $\mathrm{TPR}_t$, on the vertical axis. Thus, each edge map $M_t$ produces a point $(\mathrm{FPR}_t,\mathrm{TPR}_t)$ on the two-dimensional ROC graph.

The optimal CT threshold, corresponding to the parameter t that generates the point at the shortest Euclidean distance to the diagnostic line, determines the image with the highest accuracy of detected edges.

We illustrate this technique in Figure 6. The points , , and correspond to three images. The diagnostic line is in red, and the distances between the points and this line are the green segments. As is the closest point to the diagnostic line, the optimal threshold is , and the image performs best in the edge detection task.

Figure 6.

ROC curve for the simulated image of two sheets.
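Below is a compact NumPy sketch of the S-ROC selection described in this subsection. It reflects our reading of the steps, not the authors' implementation. Each thresholded map $M_t=(V\ge t)$ is compared with every channel map $D_i$, the four rates are averaged over $i$, and the chosen $t$ minimizes the Euclidean distance from $(\mathrm{FPR}_t,\mathrm{TPR}_t)$ to the diagnostic line. The text does not define that line explicitly. As an assumption, the sketch uses the line through $(0,1)$ and $(P,P)$, where $P$ is the average fraction of edge pixels, so check [26] before relying on it. For the thresholded variant, pass only the channels whose PCA coefficient exceeds the threshold. The function name is hypothetical.

```python
import numpy as np


def s_roc_fusion(edge_maps):
    """Fuse N binary edge maps by S-ROC-style threshold selection; returns (fused map, t)."""
    D = np.stack([np.asarray(m, dtype=bool) for m in edge_maps])  # shape (N, rows, cols)
    N = D.shape[0]
    V = D.sum(axis=0)  # (i) frequency matrix
    P = D.mean()       # average fraction of edge pixels (assumed prevalence)
    best = None
    for t in range(1, N + 1):
        M = V >= t     # (ii) thresholded map M_t
        # (iii) confusion rates of M_t against each D_i, averaged over i
        tp = np.mean([np.mean(M & Di) for Di in D])
        fp = np.mean([np.mean(M & ~Di) for Di in D])
        tn = np.mean([np.mean(~M & ~Di) for Di in D])
        fn = np.mean([np.mean(~M & Di) for Di in D])
        tpr = tp / (tp + fn) if tp + fn > 0 else 0.0
        fpr = fp / (fp + tn) if fp + tn > 0 else 0.0
        # distance from (fpr, tpr) to the assumed line through (0, 1) and (P, P)
        cross = P * (tpr - 1.0) - (P - 1.0) * fpr
        dist = abs(cross) / np.hypot(P, P - 1.0)
        if best is None or dist < best[0]:
            best = (dist, t, M)
    _, t_opt, fused = best  # (v) map at the optimal threshold
    return fused, t_opt
```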

2.7. Measures of Quality

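We used the Hausdorff distance (Hd) to measure the quality of the edge estimates, including those obtained by S-ROC and S-ROC fusion. This metric is defined as

$$\mathrm{Hd}(P,\widehat{P})=\max\Big\{\max_{p\in P}\min_{\hat{p}\in\widehat{P}}\|p-\hat{p}\|,\;\max_{\hat{p}\in\widehat{P}}\min_{p\in P}\|p-\hat{p}\|\Big\},$$

where $P$ and $\widehat{P}$ are the sets of points of the actual (ground reference, GR) and estimated edges, respectively, and $\|\cdot\|$ is the Euclidean distance. The smaller the Hd value is, the closer the estimated edge is to the GR. For details, see Refs. [10,29].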
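For reference, here is a direct NumPy sketch of the symmetric Hausdorff distance between ground-reference and estimated edge points, each given as an array of pixel coordinates. It assumes the standard max-min definition and builds the full pairwise distance matrix, so memory grows with the product of the two set sizes. For large sets, SciPy's `scipy.spatial.distance.directed_hausdorff` computes each directed term. The function name is ours.

```python
import numpy as np


def hausdorff_distance(P, Q):
    """Symmetric Hausdorff distance between two point sets given as (k, 2) arrays."""
    P = np.asarray(P, dtype=float)
    Q = np.asarray(Q, dtype=float)
    # pairwise Euclidean distances, shape (len(P), len(Q))
    d = np.linalg.norm(P[:, None, :] - Q[None, :, :], axis=-1)
    # farthest nearest-neighbor distance in each direction
    return max(d.min(axis=1).max(), d.min(axis=0).max())
```

A single spurious estimated point far from the GR dominates the value, which is why Hd penalizes outliers strongly.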

3. Results

3.1. Flower Simulated Image

For the Flower with dimension pixels, we defined the slack constant as 10 pixels, 100 radii, and each radius with 300 pixels. The image has four nominal looks and nine channels.

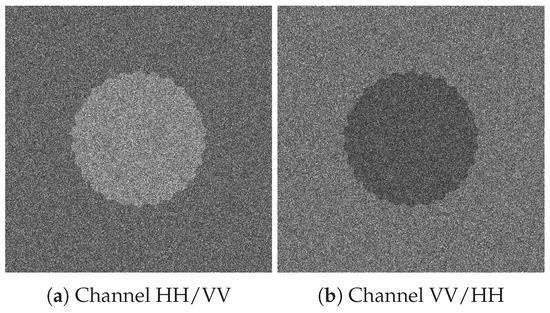

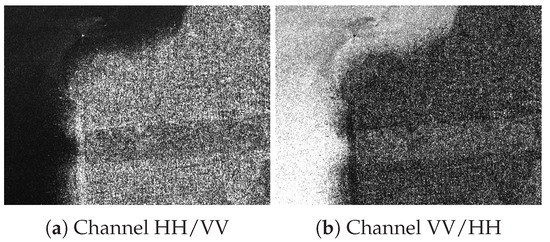

Figure 7.

HH/VV and VV/HH ratios.

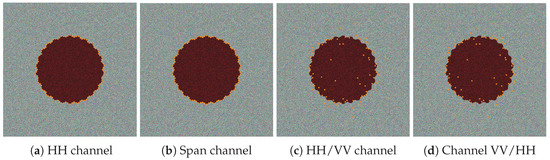

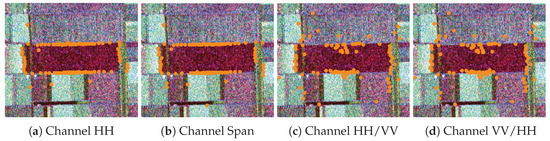

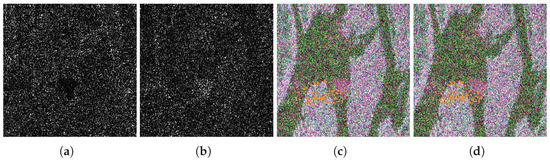

Figure 8 shows the detected points overlaid on the Pauli false-color image. We show two good (HH in Figure 8a and span in Figure 8b) and two poor (HH/VV in Figure 8c and VV/HH in Figure 8d) results to emphasize the need to measure performance and to use this information in the fusion process. Figure 9 and Figure 10 show the same analysis for images from actual PolSAR sensors.

Figure 8.

Four-channel edge evidence overlaid on the Pauli polarimetric color composite.

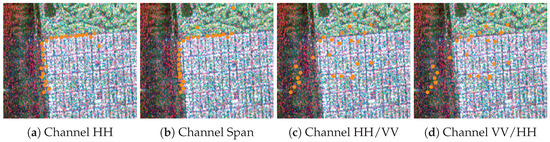

Figure 9.

Edge evidence detected in different channels of the FLEV image, overlaid on the polarimetric color composite.

Figure 10.

Edge evidence detected in different channels of the SF image, overlaid on the polarimetric color composite.

3.2. Flevoland Images

The FLEV image has pixels with four nominal looks. For this image, we defined a slack of 15 pixels and 100 radii of 120 pixels each. Zero-valued pixels are removed to avoid divisions by zero and logarithms of zero.

3.3. San Francisco Image

The San Francisco (SF) image has dimension pixels, with four nominal looks and nine channels. We defined a slack of 25 pixels and 25 radii of 120 pixels each. Again, zero-valued pixels are discarded.

Figure 10 shows examples of good (HH in Figure 10a and span in Figure 10b) and poor (HH/VV in Figure 10c and VV/HH in Figure 10d) detections.

The ratio images provide little edge information. This was observed in all the images from actual sensors. Therefore, Figure 9 and Figure 10 illustrate the need to incorporate some quality measure in the fusion, which we perform with the S-ROC fusion.

Figure 11 shows the intensity-ratio images of the San Francisco scene, which make apparent why detection with ratio images performs poorly.

Figure 11.

San Francisco intensity-ratio images.

3.4. Sub-Scene 01 of Santos City

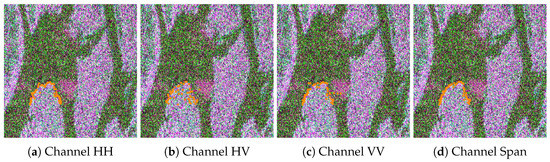

Figure 12 shows the Pauli false-color composition of a high-resolution image acquired with one look by OrbiSAR-2 over Santos City, Brazil, in P-band. The image has pixels and 1 m per pixel. For this image, the slack is 15 pixels, and we used 50 radii of 90 pixels each. Zero-valued pixels are removed. The edge evidence is visualized as in the previous examples.

Figure 12.

Edge evidence detected in different channels of the S01 image, overlaid on the polarimetric color composite.

Figure 12 shows the results built with univariate gamma and span distributions. Channels HH, VV, and Span lead to accurate detection; see Figure 12a,c,d. Channel HV does not provide good information; see Figure 12b.

Figure 13c,d shows edge estimates obtained with the ratios of intensities; they perform very poorly. Such poor performance can be explained by the lack of visual evidence of edge in the ratio images, as shown in Figure 13a,b.

Figure 13.

Ratios of intensities and edge estimates overlaid on polarimetric color composite. (a) Channel HH/VV. (b) Channel VV/HH. (c) Detection in channel HH/VV. (d) Detection in channel VV/HH.

The methodology applied to image S02 of Santos City behaves similarly to its application to image S01, and we omit those results for brevity.

3.5. Hd Metric Applied to Edge Evidence Estimates

Table 1 shows the Hd metric applied to the edge estimates from the images described in Section 2.2.

Table 1.

Hausdorff Distance—Hd.

Notably, Table 1 quantifies the poor performance of the method with the ratios of intensities, confirming what the corresponding images show for these channels. The span performs well, in agreement with the visual results.

3.6. PCA Analysis

The columns of Table 2 show the weight with which each detection channel enters the principal component decomposition. Notice that, in all cases, the individual intensities are the most relevant channels. The span follows them in all cases except in the Flevoland data set.

Table 2.

PCA Coefficients.

We use PCA to quantify the influence of each channel and then choose the most relevant channels for the fusion process. We set the threshold empirically to 10% of the sum of the PCA coefficients, with good results.

3.7. S-ROC and S-ROC Information Fusion

Table 3 shows the Hausdorff distance of the results using (i) all the channels, named here S-ROC, and (ii) the S-ROC proposal that selects the channels using principal components.

Table 3.

Hd applied to the results of information fusion techniques.

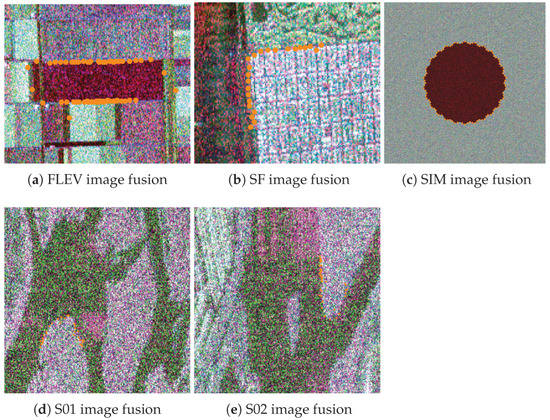

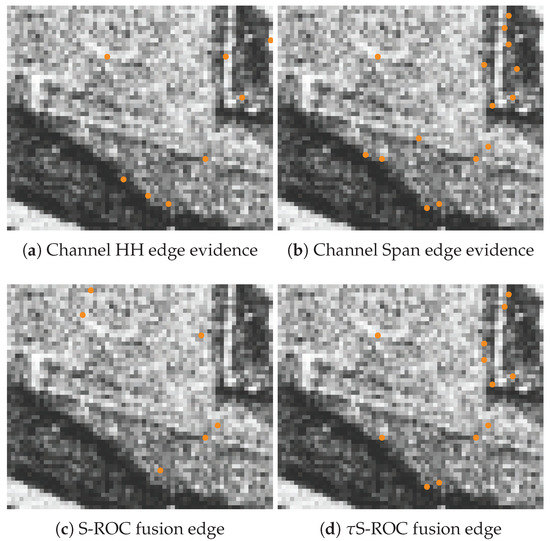

The images in Figure 14 show the results using S-ROC, which are better than those produced by S-ROC (we omit them for brevity). Table 3 presents a quantitative analysis of the Hausdorff distance applied to the results provided by S-ROC and S-ROC.

Figure 14.

Image S-ROC fusion.

We used the SIM image to quantify the sensitivity of the S-ROC method to the variation of the PCA threshold, because the actual edge position is known in this image. Table 4 was constructed using the S-ROC and S-ROC fusion methods applied to the SIM image, varying the threshold values: , , and . The table presents which channels were selected with each threshold.

Table 4.

Hd applied to the results of the fusion techniques to examine the sensitivity to variation.

Table 5 shows the mean execution times of edge detection, S-ROC, and S-ROC over ten runs of each method. The median values were almost the same as the sample means.

Table 5.

Execution times S-ROC and S-ROC consume for detecting edges.

3.8. Change Detection

In this section, we use our edge detection method as the basis of a new change detection approach.

One of the challenges of finding changes in a noisy field is distinguishing relevant changes from random fluctuations. To this end, we calculate the log-likelihood function at each point of the ray, find its sample mean $\bar{\ell}$ and sample standard deviation $s_{\ell}$, and define the interval $[\bar{\ell}-k\,s_{\ell},\ \bar{\ell}+k\,s_{\ell}]$. The constant $k$ was chosen empirically. Only values outside this interval are considered relevant changes.
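The decision rule can be sketched per ray as follows. We read it this way, which is an assumption: the candidate edge is the position of the maximum of the ray's log-likelihood profile. Because the maximum can never fall below the lower bound of the interval, it counts as a relevant change only when it exceeds the upper bound, i.e., the sample mean plus $k$ sample standard deviations. The function name is hypothetical, and `k` must be supplied by the user because its empirical value is not reported in this section.

```python
import numpy as np


def thresholded_edge(loglik_profile, k):
    """Argmax of a ray's log-likelihood profile, or None if it is not a relevant change.

    The maximum is kept only if it lies above mean + k * std of the profile,
    i.e., outside the interval [mean - k*std, mean + k*std].
    """
    profile = np.asarray(loglik_profile, dtype=float)
    mean, std = profile.mean(), profile.std(ddof=1)  # sample mean and sample std
    j = int(np.argmax(profile))
    return j if profile[j] > mean + k * std else None
```

Returning `None` lets the GA decline to place an edge on a ray, which is how false positives on homogeneous areas are rejected.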

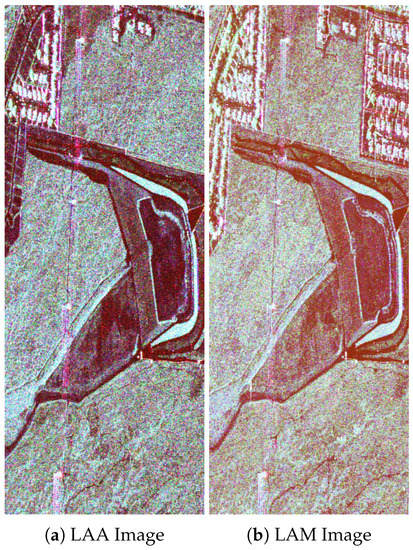

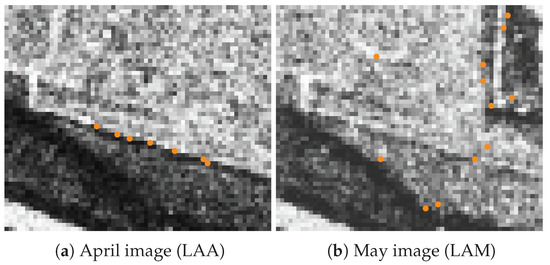

Figure 2 shows two coregistered images of the same region in Los Angeles, CA, USA. The Jet Propulsion Laboratory/National Aeronautics and Space Administration (JPL) acquired the images with the Uninhabited Aerial Vehicle Synthetic Aperture Radar (UAVSAR) in multilook L-band polarimetric mode. Figure 2a was acquired on 23 April 2009, and Figure 2b on 11 May 2015. The images have pixels.

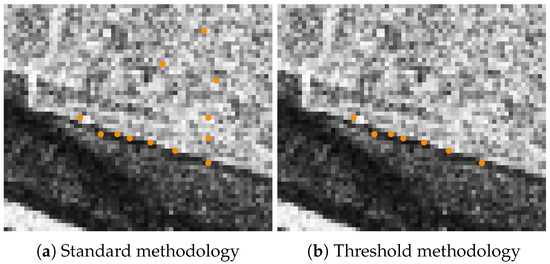

We apply both methodologies to the LAA image, namely, the standard one and the one with likelihood thresholding; Figure 15 shows the results. Figure 15a shows that the standard methodology produces outliers. Figure 15b shows the results of thresholding by the likelihood: this approach performs well and does not wrongly identify edges in the relatively flat area. The edge estimates agree with the transition between the two regions. We only show channel HH; however, the results are consistent across all channels: thresholding by the likelihood systematically reduces the number of false positives and, at the same time, does not noticeably increase the number of false negatives.

Figure 15.

Edge evidence methodologies applied to the LAA image (HH intensity channel).

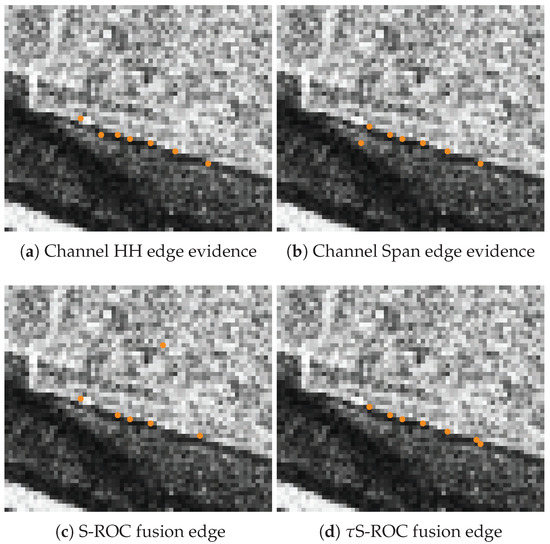

The images in Figure 16 show Pauli decompositions in gray-scale overlaid with edge evidence (Figure 16a,b) and edge detected with S-ROC and S-ROC (Figure 16c,d). The results shown in this figure were built thresholding by the likelihood of the points in each ray.

Figure 16.

Edge evidence and edge detection with uniform region control applied to the LAA image.

The images in Figure 17 show Pauli decomposition in gray-scale overlaid with edge evidence (Figure 17a,b) and edges detected with information fusion (Figure 17c,d). The results shown in this figure were built thresholding by the likelihood.

Figure 17.

Edge evidence and edge detection with uniform region control applied to the LAM image.

Combining edge evidence detection with likelihood thresholding yields accurate change detection in multilook PolSAR images. Figure 18a,b shows the result of applying S-ROC.

Figure 18.

Change detection in the multilook Los Angeles PolSAR image.

4. Discussion

The main results we obtained are summarized in the following:

- (i) Although the estimation by maximization of the log-likelihood with the BFGS algorithm is stable, it is most sensitive to the initial point with the distribution of ratios. We used first- and second-order moment estimates as initial points, with good results.
- (ii) Table 2 shows that the PCA method recommends the fusion of the intensity or span channels, except in image S02; we used these channels to build the S-ROC fusion.
- (iii) The S-ROC method performs best concerning Hd.
- (iv) S02 (Figure 1) is the only data set in which the ratio of intensities contributes to the S-ROC fusion.
- (v) S-ROC is better than S-ROC at discarding outliers.
- (vi) S-ROC and S-ROC work well in images from various sensors.
- (vii) S-ROC rejects false positive detections on homogeneous areas.
- (viii) Figure 16 and Figure 17 show the results of thresholding by the likelihood using the HH and Span channels, and of edge detection by S-ROC and S-ROC. These results motivated us to test change detection on two images of Los Angeles taken over the same region at different times. The result is shown in Figure 18. Visual inspection of Figure 18a (LAA image) and Figure 18b (LAM image) shows the method’s ability to identify changes.
- (ix) We used images with different numbers of looks: images S01 and S02 are single-look, while the others have four; our methods present similar performance in both cases.

5. Conclusions

Using the ratio channels does not improve edge detection. Even when some ratio channels contribute to edge detection, as in the case of image S02 (see Table 3), their PCA coefficients are very close to the threshold (cf. Table 2).

The span produces the best Hd; this result is compatible with the high visual quality of the span images. Including span in the fusion process is, thus, a recommendation. The worst detection performance occurs when using the HH/VV and VV/HH channels; therefore, including these bands in the process is not recommended.

The S-ROC is a suitable approach for edge and change detection. It is computationally more efficient than S-ROC, cf. Table 5. The thresholding operation is fast, and using fewer channels compensates for this step.

Extracting the edge evidence is the most time-consuming stage of the processing pipeline. Under the Gambini algorithm, the detection along each ray can be implemented as an independent thread. This approach is, thus, suitable for distributed and parallel architectures.

Thresholding by the likelihood rejects false positives and, thus, is adequate for change detection.

The information captured by the S-ROC method could be improved by adding optical channels and ancillary information to the fusion process, in the form of other physically based and/or empirical models.

Author Contributions

All authors (A.A.D.B., A.M., M.M. and A.C.F.) discussed the results and contributed to the research. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Conselho Nacional de Desenvolvimento Científico e Tecnológico (CNPq), grant number 200725/2022-0, and by Victoria University of Wellington, FSRG 2022 – Time Series Analysis with Ordinal Patterns: Transformative Theoretical Advances and New Applications, project number 410695.

Data Availability Statement

The Python code, data, and LaTeX files used in this research are available at: https://github.com/anderborba/remote_sensing_2023 (accessed on 30 March 2023).

Acknowledgments

A.A.D.B. thanks the Conselho Nacional de Desenvolvimento Científico e Tecnológico (CNPq) for its support.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| PDF | Probability Density Function |
| GR | Ground Reference |
| SAR | Synthetic-Aperture Radar |
| PolSAR | Polarimetric Synthetic-Aperture Radar |
| AIRSAR | Airborne Synthetic-Aperture Radar |
| OrbiSAR-2 | Orbital SAR |
| JPL | Jet Propulsion Laboratory |
| UAVSAR | Uninhabited Aerial Vehicle Synthetic-Aperture Radar |
| SIM | Flower Simulated Image |
| FLEV | Flevoland Image |
| SF | San Francisco Image |
| S01 | Sub-scene 01 of Santos City |
| S02 | Sub-scene 02 of Santos City |
| LAA | Los Angeles Image, April 2009 |
| LAM | Los Angeles Image, May 2015 |
| ROI | Region Of Interest |
| MLE | Maximum-Likelihood Estimator |
| BFGS | Broyden–Fletcher–Goldfarb–Shanno |
| GenSA | Generalized Simulated Annealing |
| S-ROC | Statistic Receiver Operating Characteristic |
| S-ROC | Statistic Receiver Operating Characteristic with a threshold |
| PCA | Principal Component Analysis |
| Hd | Hausdorff Distance |

References

1. Blake, A.; Isard, M. Active Contours; Springer: Berlin/Heidelberg, Germany, 1998.

2. Szeliski, R. Computer Vision: Algorithms and Applications, 2nd ed.; Springer: Berlin/Heidelberg, Germany, 2022.

3. Gambini, J.; Mejail, M.; Jacobo-Berlles, J.; Frery, A.C. Feature Extraction in Speckled Imagery using Dynamic B-Spline Deformable Contours under the G0 Model. Int. J. Remote Sens. 2006, 27, 5037–5059.

4. Gambini, J.; Mejail, M.; Jacobo-Berlles, J.; Frery, A.C. Accuracy of Edge Detection Methods with Local Information in Speckled Imagery. Stat. Comput. 2008, 18, 15–26.

5. Frery, A.C.; Jacobo-Berlles, J.; Gambini, J.; Mejail, M. Polarimetric SAR Image Segmentation with B-Splines and a New Statistical Model. Multidimens. Syst. Signal Process. 2010, 21, 319–342.

6. Nascimento, A.D.C.; Horta, M.M.; Frery, A.C.; Cintra, R.J. Comparing Edge Detection Methods Based on Stochastic Entropies and Distances for PolSAR Imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 648–663.

7. Naranjo-Torres, J.; Gambini, J.; Frery, A.C. The Geodesic Distance between GI0 Models and its Application to Region Discrimination. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 987–997.

8. Girón, E.; Frery, A.C.; Cribari-Neto, F. Nonparametric edge detection in speckled imagery. Math. Comput. Simul. 2012, 82, 2182–2198.

9. Borba, A.A.D.; Marengoni, M.; Frery, A.C. Fusion of Evidences in Intensities Channels for Edge Detection in PolSAR Images. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

10. Rey, A.; Revollo Sarmiento, N.; Frery, A.C.; Delrieux, C. Automatic Delineation of Water Bodies in SAR Images with a Novel Stochastic Distance Approach. Remote Sens. 2022, 14, 5716.

11. Asokan, A.; Anitha, J. Change detection techniques for remote sensing applications: A survey. Earth Sci. Informatics 2019, 12, 143–160.

12. Bouhlel, N.; Akbari, V.; Méric, S. Change Detection in Multilook Polarimetric SAR Imagery with Determinant Ratio Test Statistic. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–15.

13. Touzi, R.; Lopes, A.; Bousquet, P. A statistical and geometrical edge detector for SAR images. IEEE Trans. Geosci. Remote Sens. 1988, 26, 764–773.

14. Schou, J.; Skriver, H.; Nielsen, A.; Conradsen, K. CFAR edge detector for polarimetric SAR images. IEEE Trans. Geosci. Remote Sens. 2003, 41, 20–32.

15. Chapman, B.; Shi, J. CLPX-Airborne: Airborne Synthetic Aperture Radar (AIRSAR) Imagery, Version 1 [Data Set]; NASA National Snow and Ice Data Center Distributed Active Archive Center: Boulder, CO, USA, 2004.

16. Bouhlel, N.; Akbari, V.; Méric, S. Change Detection in Multilook Polarimetric SAR Imagery with DRT Statistic. IEEE Dataport 2021.

17. Nobre, R.; Rodrigues, A.; Rosa, R.; Medeiros, F.; Feitosa, R.; Estevão, A.; Barros, A. GRSS SAR/PolSAR Database. IEEE Dataport 2017.

18. Long, D.G.; Ulaby, F.T. Microwave Radar and Radiometric Remote Sensing; The University of Michigan Press: Ann Arbor, MI, USA, 2014.

19. Goodman, N.R. Statistical Analysis Based on a Certain Complex Gaussian Distribution (an Introduction). Ann. Math. Stat. 1963, 34, 152–177.

20. Lee, J.S.; Hoppel, K.W.; Mango, S.A.; Miller, A.R. Intensity and phase statistics of multilook polarimetric and interferometric SAR imagery. IEEE Trans. Geosci. Remote Sens. 1994, 32, 1017–1028.

21. Hagedorn, M.; Smith, P.J.; Bones, P.J.; Millane, R.P.; Pairman, D. A trivariate chi-squared distribution derived from the complex Wishart distribution. J. Multivar. Anal. 2006, 97, 655–674.

22. Ferreira, J.A.; Nascimento, A.D.C.; Frery, A.C. PolSAR Models with Multimodal Intensities. Remote Sens. 2022, 14, 5083.

23. Feng, Y.; Wen, M.; Zhang, J.; Ji, F.; Ning, G. Sum of arbitrarily correlated Gamma random variables with unequal parameters and its application in wireless communications. In Proceedings of the 2016 International Conference on Computing, Networking and Communications (ICNC), Kauai, HI, USA, 15–18 February 2016; pp. 1–5.

24. Henningsen, A.; Toomet, O. maxLik: A package for maximum likelihood estimation in R. Comput. Stat. 2011, 26, 443–458.

25. Xiang, Y.; Gubian, S.; Suomela, B.; Hoeng, J. Generalized Simulated Annealing for Global Optimization: The GenSA Package. R J. 2013, 5, 13–28.

26. Giannarou, S.; Stathaki, T. Optimal edge detection using multiple operators for image understanding. EURASIP J. Adv. Signal Process. 2011, 2011, 28.

27. Naidu, V.P.S.; Raol, J.R. Pixel-level Image Fusion using Wavelets and Principal Component Analysis. Def. Sci. J. 2008, 58, 338–352.

28. Mitchell, H. Image Fusion: Theories, Techniques and Applications; Springer: Berlin/Heidelberg, Germany, 2010.

29. Taha, A.A.; Hanbury, A. An Efficient Algorithm for Calculating the Exact Hausdorff Distance. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 2153–2163.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).