Abstract

Obtaining high-resolution remote sensing images is an important goal in space exploration. However, when the sparse signals collected by photonic integrated interferometric imaging detectors are reconstructed with traditional compressed sensing using the orthogonal matching pursuit (OMP) algorithm, the resulting images are of low quality, which limits the development of photonic integrated interferometric remote sensing detection and imaging. We improved the OMP algorithm and proposed a threshold limited-generalized orthogonal matching pursuit (TL-GOMP) algorithm. In comparative simulations against OMP-family algorithms, TL-GOMP increased the peak signal-to-noise ratio (PSNR) of the reconstructed image by up to 18.02% and reduced the mean square error (MSE) by up to 53.62% relative to OMP. The TL-GOMP algorithm achieves high-quality image reconstruction and shows great potential for photonic integrated interferometric remote sensing detection and imaging.

1. Introduction

As the fabrication of photonic integrated devices and interference detection technology have matured, the segmented planar imaging detector for electro-optical reconnaissance (SPIDER), whose core technology is photonic integrated interferometric imaging, has attracted considerable attention from researchers in astronomical observation and remote sensing. It has been proposed as a replacement for traditional optical telescopes, which have large volume, weight, and power consumption [1], for target detection. For example, the Hubble Space Telescope is 13.3 m long and weighs 27,000 pounds [2].

Interferometry is the core technology of photonic integrated interferometric imaging systems. It exploits the superposition of electromagnetic waves to extract information about the source and thereby supports the reconstruction of high-resolution images. Optical interferometry combines the light collected by many lens pairs on a photonic integrated chip (PIC), and the remote sensing image is then reconstructed from the interferometrically measured optical signal. Optical interferometer arrays are the preferred instruments for high-resolution imaging; examples include the CHARA array [3,4], the large telescope interferometer [5], and the Navy Precision Optical Interferometer [6]. These systems use far-field spatial coherence measurements to form intensity images of light source targets [7]. In our previous publications [8,9], we described a small-scale interferometric imager, which we called SPIDER. The SPIDER imager [8] consists of one-dimensional interferometer arms arranged radially at multiple azimuth angles, all with the same design. Any two lenses on an arm form an interferometric baseline: the collected light is coupled into the PIC and combined in multimode interferometers (MMIs), and the resulting fringe data are read out by a two-dimensional detector array. Because the arms are distributed over azimuth angles in [0, 2π] and each baseline on an arm corresponds to one spatial frequency in the two-dimensional Fourier domain, the PIC obtains spatial-frequency information through sparse sampling in all directions. Compressed sensing (CS) algorithms can therefore be used to reconstruct the sparse optical signal data and recover the content of the detected target.
CS theory can thus be applied to photonic integrated interferometric imaging to meet needs in daily life, industrial production, and scientific exploration.
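The baseline-to-frequency correspondence described above can be made concrete with a short Python sketch. It relies on the standard interferometry relation that a baseline of length B oriented at azimuth θ samples the spatial frequency (B/λ)(cos θ, sin θ) at wavelength λ. The function name `spider_uv_samples`, the equal lens spacing, and all parameter values (number of arms, lenses per arm, lens pitch, wavelength) are hypothetical illustrations, not SPIDER design values.

```python
import math


def spider_uv_samples(n_lenses, lens_pitch_m, wavelength_m, n_arms):
    """Return the (u, v) spatial frequencies, in cycles per radian, sampled by the arms.

    Hypothetical layout: each arm carries n_lenses equally spaced lenses and the
    arms are spread evenly over azimuths in [0, 2*pi). Two lenses m pitches apart
    form a baseline B = m * lens_pitch_m, which samples (B / lambda)(cos t, sin t).
    """
    samples = []
    for arm in range(n_arms):
        theta = 2.0 * math.pi * arm / n_arms
        # Equal spacing gives only n_lenses - 1 distinct baseline lengths per arm.
        for m in range(1, n_lenses):
            rho = m * lens_pitch_m / wavelength_m  # radial spatial frequency
            samples.append((rho * math.cos(theta), rho * math.sin(theta)))
    return samples


# Hypothetical example: 8 arms, 4 lenses per arm, 1 cm pitch, 1 um wavelength.
uv = spider_uv_samples(n_lenses=4, lens_pitch_m=0.01, wavelength_m=1e-6, n_arms=8)
print(len(uv))  # 24 (8 arms x 3 distinct baselines)
```

Because the samples fall on a few radial spokes of the (u, v) plane, the measured Fourier data cover the plane only sparsely, which is why a reconstruction step such as CS is needed.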

In recent years, CS has attracted increasing attention in signal processing. The theory was proposed by Donoho et al. in 2006. The traditional Nyquist sampling theorem [10] requires the sampling frequency to be at least twice the highest frequency contained in the signal. Compressed sensing overcomes this limitation: if a signal is sufficiently sparse, it can be reconstructed from its projections onto a small number of random vectors, that is, from samples acquired at a rate well below the Nyquist rate. Because it improves sampling efficiency, this theory has attracted great interest in digital signal processing [11], optical imaging [12], medical imaging [13], radio communication [14], radar imaging [15], and pattern recognition [16]. Research on compressed sensing covers three main areas: (1) the sparse representation of original signals, with commonly used sparse transforms such as the Fourier Transform (FT) [17], the Discrete Cosine Transform (DCT) [18], and the Discrete Wavelet Transform (DWT) [19]; (2) the design of the measurement matrix, including random measurement matrices [20,21] and deterministic measurement matrices [22,23]; and (3) reconstruction algorithms, such as the basis pursuit (BP) algorithm [24,25], the matching pursuit (MP) algorithm [26], and the orthogonal matching pursuit algorithm [27,28,29,30].

The OMP algorithm is one of the most representative greedy algorithms for compressed sensing; it is simple and stable, has low computational complexity, and has been widely studied. However, images reconstructed by the traditional OMP algorithm contain considerable noise, which significantly degrades their quality. The traditional OMP algorithm has therefore been continuously improved, yielding variants such as stagewise orthogonal matching pursuit (STOMP), generalized orthogonal matching pursuit (GOMP), and stagewise weak orthogonal matching pursuit (SWOMP), which improve the quality of the reconstructed image. To address these problems further, we built on the traditional OMP algorithm and propose the threshold limited-generalized orthogonal matching pursuit (TL-GOMP) algorithm.

The main contributions of this paper can be summarized as follows:

- (1) We improved the traditional OMP algorithm and proposed the TL-GOMP algorithm, which we used to reconstruct the sparse spatial-frequency information collected by the PIC and recover the content of the detected target. In simulations, we compared TL-GOMP with other improved OMP-family reconstruction algorithms and with non-OMP reconstruction algorithms, and confirmed its advantages in image reconstruction.

- (2) In addition, we used the algorithm to reconstruct, in simulation, the sparse signals collected by photonic integrated chips at different distances. The results show that TL-GOMP can be applied to photonic integrated interferometric remote sensing detection and imaging to recover the content of unknown targets.

2. Related Work

Image reconstruction starts from sparse original signals of a target or image and restores its content and features by means of a reconstruction algorithm. Compressed sensing reconstruction algorithms have become mainstream in image reconstruction, mainly because image signals have two characteristics: they are high-dimensional and they can be sparsely represented. Research on compressed sensing theory mainly covers three aspects: sparse signal representation, measurement matrix design, and reconstruction algorithm design.

2.1. Sparse Signal Representation

The sparse representation of signals is an important premise and foundation of compressed sensing theory. A signal that becomes approximately sparse in some transform domain is said to be sparse or compressible, which reduces the storage it requires and enables efficient compressed sampling. If a signal of length N has no more than k non-zero elements after representation in the sparse basis, it is called a k-sparse signal. The sparsity k directly affects the accuracy of the reconstructed signal: the sparser the signal (the smaller k), the more accurately it can be reconstructed from a given number of measurements. The sparse basis matrix must therefore be chosen carefully. Commonly used transform bases include the Fourier Transform basis [17], the Discrete Cosine Transform basis [18], the Discrete Wavelet Transform basis [19], the Contourlet Transform basis [31], and the K-singular value decomposition (K-SVD) method based on matrix decomposition [32].
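The following Python sketch illustrates k-sparsity in the DCT basis mentioned above. The helper names are our own and the signal is synthetic: a 16-sample signal built from two DCT atoms has no zero samples, yet after the orthonormal DCT-II it has only k = 2 non-zero coefficients. Real images are only approximately sparse, which the sketch mimics by treating coefficients below `tol` as zero.

```python
import math


def dct_matrix(n):
    """Orthonormal n x n DCT-II matrix D (row u = u-th cosine atom): s = D x, x = D^T s."""
    return [[(math.sqrt(1.0 / n) if u == 0 else math.sqrt(2.0 / n))
             * math.cos(math.pi * (2 * i + 1) * u / (2 * n)) for i in range(n)]
            for u in range(n)]


def transform(D, x):
    """Sparse representation s = D x."""
    return [sum(d * xi for d, xi in zip(row, x)) for row in D]


def sparsity(v, tol=1e-9):
    """Number of entries with magnitude above tol, i.e. the k of a k-sparse vector."""
    return sum(1 for c in v if abs(c) > tol)


n = 16
D = dct_matrix(n)
# Signal synthesized from two DCT atoms: dense in the sample domain ...
x = [3.0 * a + 0.5 * b for a, b in zip(D[1], D[5])]
s = transform(D, x)
print(sparsity(x), sparsity(s))  # 16 2  (... but 2-sparse after the DCT)
```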

2.2. Design of Measurement Matrix

In compressed sensing theory, the measurement matrix samples the original signal, so its selection is very important. It projects the signal from a high-dimensional space to a low-dimensional space to obtain the corresponding measurements. To obtain an accurate sparse representation from the measurements, the measurement matrix should be incoherent with the sparse basis matrix so that the combined matrix satisfies the Restricted Isometry Property (RIP), which guarantees a one-to-one mapping between the original space and the sparse space. In addition, any matrix formed by extracting as many columns as there are measurements should be non-singular. Commonly used measurement matrices include the Gaussian random matrix [33], the measurement matrix constructed from balanced Gold sequences [34], the partial Fourier matrix [35], and the partial Hadamard matrix [36]. Wang Xia proposed a deterministic random sequence measurement matrix [37] and verified its effectiveness experimentally.
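For reference, the RIP is usually stated for the sensor matrix $A = \Phi\Psi$, the product of the measurement matrix $\Phi$ and the sparse basis $\Psi$ (this notation is introduced here for illustration). $A$ satisfies the RIP of order $k$ if there is a constant $\delta_k \in (0, 1)$ such that, for every $k$-sparse vector $s$,

$$(1 - \delta_k)\,\|s\|_2^2 \;\le\; \|A s\|_2^2 \;\le\; (1 + \delta_k)\,\|s\|_2^2 .$$

If $\delta_{2k} < 1$, the difference of two distinct $k$-sparse vectors is a non-zero $2k$-sparse vector and is not mapped to zero, so distinct $k$-sparse vectors always produce distinct measurements; this is the one-to-one property referred to above.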

2.3. Design of Reconstruction Algorithm

In recent years, remarkable achievements have been made in the research on compressed sensing reconstruction algorithms, which can be divided into the traditional iterative compressed sensing reconstruction algorithm and the deep compressed sensing network-based reconstruction algorithm.

2.3.1. Traditional Iterative Compressed Sensing Reconstruction Algorithm

The goal of compressed sensing is to find the sparsest original signal consistent with the measurements, which can be formulated as an l0-norm minimization problem. Methods for solving it include the following: (1) convex relaxation methods, which, under certain conditions, convert the non-convex minimum l0-norm problem into a convex minimum l1-norm problem, such as the basis pursuit algorithm [38] and Gradient Projection for Sparse Reconstruction (GPSR) [39]; (2) greedy pursuit algorithms, such as the matching pursuit algorithm [26] and the orthogonal matching pursuit algorithm [27,28]; (3) non-convex optimization methods, such as the Bayesian Compressed Sensing (BCS) algorithm [40]; and (4) model-based optimization algorithms, such as the improved Total Variation (TV)-based algorithm [41]. The first three classes rely on the sparsity of the original signal and may therefore be ineffective for ordinary signals that are not sparse.
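Using the notation $y = A s$ for the measurements $y$, sensor matrix $A$, and sparse representation $s$ (notation assumed here for illustration), the two problems contrasted in item (1) read

$$(P_0)\quad \hat{s} = \arg\min_{s} \|s\|_0 \quad \text{subject to} \quad y = A s,$$

$$(P_1)\quad \hat{s} = \arg\min_{s} \|s\|_1 \quad \text{subject to} \quad y = A s.$$

Here $\|s\|_0$ counts the non-zero entries of $s$, which makes $(P_0)$ a combinatorial search that is NP-hard in general, whereas $\|s\|_1 = \sum_j |s_j|$ is convex, so $(P_1)$ can be solved as a linear program. Greedy algorithms such as OMP instead approximate $(P_0)$ by adding non-zero entries one at a time.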

2.3.2. Reconstruction Algorithm Based on Deep Compressed Sensing Network

As deep learning has spread to various research fields, it has gradually been introduced into compressed sensing image reconstruction. Ali Mousavi et al. proposed a Stacked Denoising Autoencoder (SDA) that learns an end-to-end mapping between measurements and reconstructed images using unsupervised learning. Kulkarni et al. proposed ReconNet [42], a non-iterative framework based on convolutional neural networks, which was the first application of convolutional neural networks to compressed sensing reconstruction; the network consists of one fully connected layer and six convolutional layers. Yao and Dai et al. combined residual learning with ReconNet and proposed the cascaded Deep Residual Reconstruction Network (DR2-Net) for compressed sensing image reconstruction [43]. Kulkarni K, Lohit S et al. [44] further deepened the ReconNet architecture and replaced the original Gaussian measurement matrix with a fully connected layer to perform image sampling; this network is called adaptive sampling ReconNet. Xuemei Xie et al. [45] improved the sampling process of compressed sensing and used fully connected and deconvolution layers to optimize the compressed sensing network. Nie and Fu et al. proposed the ResConv network [46], which uses a convolutional neural network for both image reconstruction and image denoising. The CSnet network proposed by Shi et al. [47] redesigned the sampling process: like the previous networks, it reconstructs images, but it also introduces a novel sampling mechanism matched to the reconstruction network.

3. Methods

The theoretical framework of compressed sensing consists of three main aspects: the sparse representation of the original signal vector $x \in \mathbb{R}^{N}$; the measurement matrix, which maps the high-dimensional original signal to a low-dimensional measurement vector $y \in \mathbb{R}^{M}$ ($M < N$); and the reconstruction algorithm, which computes an approximate sparse representation from which the original signal is recovered.

3.1. The Reconstruction Principle of the OMP Algorithm Based on Compressed Sensing

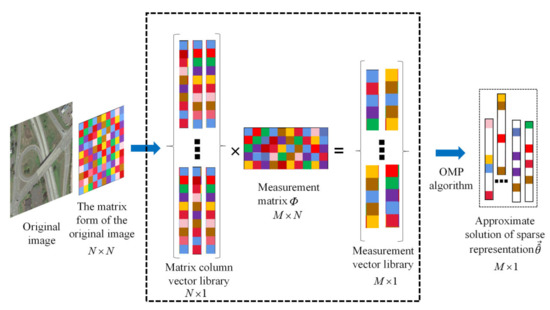

Figure 1 shows a schematic of solving for the sparse representation in compressed sensing. We model compressed sensing as the linear system

$$y = \Phi x,$$

where $y \in \mathbb{R}^{M}$ and $x \in \mathbb{R}^{N}$ are corresponding column vectors of the observation data and the unknown image, respectively, and the measurement matrix $\Phi \in \mathbb{R}^{M \times N}$ is known. The unknown image $X$ is processed column by column, $X = [x_1, x_2, \ldots, x_N]$.

Figure 1.

Schematic of the sparse representation of compressed sensing.

Because the original signal is generally not strictly sparse, a sparse basis matrix $\Psi \in \mathbb{R}^{N \times N}$ is adopted to make it compressible: it transforms the original signal into a sparse domain, $x = \Psi s$, where $s$ is the sparse representation vector. If $s$ has $k$ non-zero values ($k \ll N$), it is called a $k$-sparse representation. With the measurement matrix $\Phi$, the measured data of the target can then be written as

$$y = \Phi \Psi s.$$

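Here, we define the sensor matrix $A = \Phi \Psi \in \mathbb{R}^{M \times N}$, the product of the measurement matrix and the sparse basis matrix, which establishes the linear relationship between the sparse representation $s$ and the measurement $y$.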

The measurement data of the target can then be expressed as

$$y = A s.$$

Here, we use the widely used OMP algorithm [30,31] to illustrate how an approximate sparse representation is found. A column vector $y$ of the measurement data is selected, and $r_t$ denotes the residual after the $t$-th iteration, with initial value $r_0 = y$. $A_t$ denotes the matrix that stores the columns of the sensor matrix selected up to the $t$-th iteration, with initial value $A_0 = \emptyset$ (the empty matrix). The sensor matrix is written column-wise as

$$A = [a_1, a_2, \ldots, a_N], \quad a_j \in \mathbb{R}^{M}.$$

Multiplying the transpose of the sensor matrix $A$ by the initial residual $r_0$ gives the vector

$$g = A^{\mathsf{T}} r_0.$$

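Each element of $g$ is the inner product of a row of $A^{\mathsf{T}}$ (that is, a column of $A$) with $r_0$: $g_j = \langle a_j, r_0 \rangle$, $j = 1, \ldots, N$. The column of the sensor matrix with the largest absolute inner product, $\lambda_t = \arg\max_j |g_j|$, is selected and appended to the matrix, $A_t = [A_{t-1}, a_{\lambda_t}]$. The least-squares method then gives the coefficients that minimize the residual, $\hat{s}_t = \arg\min_{s} \|y - A_t s\|_2$, and the residual after the $t$-th iteration is

$$r_t = y - A_t \hat{s}_t,$$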

where $A_t$ contains the columns selected from the sensor matrix up to the $t$-th iteration. After $k$ iterations, we obtain the $k$-sparse representation (approximate solution $\hat{s}$), which contains $k$ non-zero values. This approximates the solution of the minimum $\ell_0$-norm problem

$$\hat{s} = \arg\min_{s} \|s\|_0 \quad \text{subject to} \quad y = A s.$$

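Algorithm 1 lists the steps of the OMP algorithm. As shown in Figure 2, multiplying the approximate solution $\hat{s}$ by the sparse basis matrix $\Psi$ recovers the original signal $\hat{x} = \Psi \hat{s}$. Repeating this for every column gives the final reconstructed image:

$$\hat{X} = [\hat{x}_1, \hat{x}_2, \ldots, \hat{x}_N] = \Psi\, [\hat{s}_1, \hat{s}_2, \ldots, \hat{s}_N].$$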

Figure 2.

Schematic of original image reconstruction using the sparse representation $\hat{s}$.

| Algorithm 1: Orthogonal Matching Pursuit |

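| Input: Sensor matrix A, measurement vector y, sparsity k |

| Output: Sparse representation $\hat{s}$ |

| Initialize: $r_0 = y$, $\Lambda_0 = \emptyset$, $A_0 = \emptyset$, t = 1 |

| Loop performs the following five steps: (1) Find the most correlated column: $\lambda_t = \arg\max_{j} |\langle a_j, r_{t-1} \rangle|$; (2) Update the index set: $\Lambda_t = \Lambda_{t-1} \cup \{\lambda_t\}$; update the atom set: $A_t = [A_{t-1}, a_{\lambda_t}]$; (3) Least-squares method: $\hat{s}_t = \arg\min_{s} \|y - A_t s\|_2$; (4) Update the residual: $r_t = y - A_t \hat{s}_t$; (5) Judgment: set t = t + 1; if t > k, stop the iteration; otherwise, go to step (1). |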
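Algorithm 1 can be sketched in plain Python as follows. This is a minimal illustration, not the authors' code: matrices are lists of rows, and the least-squares step solves the normal equations $(A_t^{\mathsf T} A_t)\hat{s}_t = A_t^{\mathsf T} y$ by Gaussian elimination, which is adequate for the few columns OMP selects but less numerically robust than the QR-based solvers used in practice. The names `omp` and `lstsq` are our own.

```python
def lstsq(cols, y):
    """Least squares over the selected columns, via the normal equations."""
    n = len(cols)
    aug = [[sum(a * b for a, b in zip(ci, cj)) for cj in cols]
           + [sum(a * b for a, b in zip(ci, y))] for ci in cols]
    for p in range(n):  # Gaussian elimination with partial pivoting
        piv = max(range(p, n), key=lambda row: abs(aug[row][p]))
        aug[p], aug[piv] = aug[piv], aug[p]
        for row in range(p + 1, n):
            f = aug[row][p] / aug[p][p]
            for c in range(p, n + 1):
                aug[row][c] -= f * aug[p][c]
    s = [0.0] * n
    for p in reversed(range(n)):  # back substitution
        s[p] = (aug[p][n] - sum(aug[p][c] * s[c] for c in range(p + 1, n))) / aug[p][p]
    return s


def omp(A, y, k):
    """Orthogonal matching pursuit (Algorithm 1) for y approximately equal to A s.

    A is a list of M rows; returns (k-sparse s_hat, final residual).
    """
    M, N = len(A), len(A[0])
    cols = [[A[i][j] for i in range(M)] for j in range(N)]
    support, coef, r = [], [], list(y)                  # r_0 = y, empty atom set
    for _ in range(k):
        # Step 1: |<a_j, r_{t-1}>| for every column; mask atoms already chosen.
        g = [abs(sum(a * b for a, b in zip(c, r))) for c in cols]
        for j in support:
            g[j] = -1.0
        support.append(max(range(N), key=g.__getitem__))  # Step 2: index set
        coef = lstsq([cols[j] for j in support], y)        # Step 3: least squares
        approx = [sum(cols[j][i] * c for j, c in zip(support, coef)) for i in range(M)]
        r = [yi - ai for yi, ai in zip(y, approx)]         # Step 4: residual
    s_hat = [0.0] * N
    for j, c in zip(support, coef):
        s_hat[j] = c
    return s_hat, r


# Identity sensing matrix: OMP simply picks the largest entries of y.
I5 = [[1.0 if i == j else 0.0 for j in range(5)] for i in range(5)]
s_hat, r = omp(I5, [0.0, 3.0, 0.0, -2.0, 0.0], k=2)
print(s_hat)  # [0.0, 3.0, 0.0, -2.0, 0.0]
```

Already-selected atoms are masked explicitly; in exact arithmetic the residual is orthogonal to them anyway, but the mask guards against rounding.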

3.2. The Reconstruction Principle of the TL-GOMP Algorithm Based on Compressed Sensing

In this section, we introduce the TL-GOMP algorithm, an improvement on the traditional OMP algorithm. To illustrate its principle, we take the unknown target image and convert it into column vectors $x$, each of which is measured as

$$y = \Phi x.$$

Here, $\Phi$ is the measurement matrix. Let $r_t$ denote the residual after the $t$-th iteration; one column $y$ of the measurement data is taken as the initial residual, $r_0 = y$. Multiplying the residual by the transpose of the sensor matrix $A$ gives the vector of inner products

$$g = A^{\mathsf{T}} r_{t-1}.$$

Here, we define two parameters. One of them is a threshold coefficient, a variable chosen from a range of values that controls or adjusts the threshold. It is selected by varying the threshold and combining the columns of the sensor matrix that correspond to the largest inner products into a matrix, from which the optimal least-squares solution forming the sparse representation is computed. The number of cycles determines the sparsity of the resulting sparse representation. $M$ denotes the number of rows of the sensor matrix and the measurement matrix, that is, the number of measurements. The threshold is then computed from the threshold coefficient and $M$.

Next, we take the absolute value of each element of the inner-product vector and sort the values in descending order. The indices of the elements that satisfy the inequality in Equation (12), that is, whose magnitudes exceed the threshold, are stored.

The algorithm runs for $k$ cycles in total, where $k$ is the number of non-zero elements in the sparse representation. In each cycle, up to a maximum number of indices that satisfy the threshold condition are stored. The columns of the sensor matrix corresponding to all stored indices are collected in the matrix $A_t$, and the least-squares method gives the approximate solution for the sparse representation:

$$\hat{s}_t = \arg\min_{s} \|y - A_t s\|_2 = \left(A_t^{\mathsf{T}} A_t\right)^{-1} A_t^{\mathsf{T}} y.$$

After each iteration, the residual is updated as

$$r_t = y - A_t \hat{s}_t.$$

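Finally, the reconstructed image is obtained by transforming each recovered sparse column back with the sparse basis matrix $\Psi$:

$$\hat{X} = \Psi\, [\hat{s}_1, \hat{s}_2, \ldots, \hat{s}_N].$$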

As listed in Algorithm 2, the core function of the algorithm is to effectively select the maximum number using the additional threshold value. After the algorithm iterates times, the sparse representation vector has sparsity. The threshold value used was . In the subsequent simulations, we selected and .

| Algorithm 2: Threshold Limited–Generalized Orthogonal Matching Pursuit |

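| Input: Sensor matrix A, measurement vector y, sparsity k |

| Output: Sparse representation $\hat{s}$ |

| Initialize: $r_0 = y$, $\Lambda_0 = \emptyset$, $A_0 = \emptyset$, t = 1 |

| Loop performs the following five steps: (1) Compute $g = A^{\mathsf{T}} r_{t-1}$, sort $|g|$ in descending order, and select the indices of the largest entries that satisfy the threshold condition in Equation (12); (2) Update the index set $\Lambda_t$ with the selected indices and the atom set $A_t$ with the corresponding columns of $A$; (3) Least-squares method: $\hat{s}_t = \arg\min_{s} \|y - A_t s\|_2$; (4) Update the residual: $r_t = y - A_t \hat{s}_t$; (5) Judgment: set t = t + 1; if t > k, stop the iteration; otherwise, go to step (1). |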

The advantage of this algorithm is that setting a limiting threshold quickly screens out the inner products that satisfy the threshold condition, and the corresponding columns of the sensor matrix can be located directly from the indices of the largest inner products. In the code, inner products that pass the threshold are marked with logical value 1 and all others with logical value 0. In addition, adjusting the threshold coefficient changes the limiting threshold, which controls how many of the largest inner products have their columns combined into the matrix used for the least-squares solution; this gives the algorithm good universality and flexibility. After $k$ iterations, the image information of the target can be reconstructed from the resulting sparse representation.
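The selection rule described above can be sketched in plain Python as follows. Because the threshold formula (Equation (12)) is not reproduced in this text, the sketch substitutes the StOMP-style threshold $t_s \|r_{t-1}\|_2/\sqrt{M}$, which likewise depends on the number of measurements $M$. This choice, the cap of `S` atoms per iteration, the default values `S=3` and `ts=2.5`, and the fallback to the single best atom when no inner product passes the threshold are all assumptions, not the authors' settings; as in StOMP, the threshold scale presumes columns of roughly unit norm. The boolean filter `g[j] > th` plays the role of the logical 1/0 marking mentioned above, and the function names are our own.

```python
import math


def lstsq(cols, y):
    """Least squares over the selected columns, via the normal equations."""
    n = len(cols)
    aug = [[sum(a * b for a, b in zip(ci, cj)) for cj in cols]
           + [sum(a * b for a, b in zip(ci, y))] for ci in cols]
    for p in range(n):  # Gaussian elimination with partial pivoting
        piv = max(range(p, n), key=lambda row: abs(aug[row][p]))
        aug[p], aug[piv] = aug[piv], aug[p]
        for row in range(p + 1, n):
            f = aug[row][p] / aug[p][p]
            for c in range(p, n + 1):
                aug[row][c] -= f * aug[p][c]
    s = [0.0] * n
    for p in reversed(range(n)):  # back substitution
        s[p] = (aug[p][n] - sum(aug[p][c] * s[c] for c in range(p + 1, n))) / aug[p][p]
    return s


def tl_gomp(A, y, k, S=3, ts=2.5):
    """Threshold-limited generalized OMP sketch (ASSUMED threshold rule).

    Each of at most k iterations adds up to S atoms, keeping only those whose
    |<a_j, r>| exceeds ts * ||r||_2 / sqrt(M).
    """
    M, N = len(A), len(A[0])
    cols = [[A[i][j] for i in range(M)] for j in range(N)]
    support, coef, r = [], [], list(y)
    for _ in range(k):
        r_norm = math.sqrt(sum(v * v for v in r))
        if r_norm < 1e-12:
            break                                      # measurements fully explained
        g = [abs(sum(a * b for a, b in zip(c, r))) for c in cols]
        chosen = set(support)
        # Unused atoms sorted by |<a_j, r>| in descending order.
        order = sorted((j for j in range(N) if j not in chosen), key=lambda j: -g[j])
        th = ts * r_norm / math.sqrt(M)                # ASSUMED StOMP-style threshold
        picked = [j for j in order[:S] if g[j] > th]   # threshold-limited, at most S
        if not picked:
            picked = order[:1]                         # ASSUMED fallback: best atom
        picked = picked[:M - len(support)]             # never exceed M atoms in total
        if not picked:
            break
        support += picked
        coef = lstsq([cols[j] for j in support], y)
        approx = [sum(cols[j][i] * c for j, c in zip(support, coef)) for i in range(M)]
        r = [yi - ai for yi, ai in zip(y, approx)]
    s_hat = [0.0] * N
    for j, c in zip(support, coef):
        s_hat[j] = c
    return s_hat, r


# Identity sensing matrix, k = 1 iteration, S = 3, ts = 1.0 (illustrative values).
I6 = [[1.0 if i == j else 0.0 for j in range(6)] for i in range(6)]
s_hat, r = tl_gomp(I6, [0.0, 5.0, 0.0, 4.0, 0.0, 0.1], k=1, S=3, ts=1.0)
print(s_hat)  # [0.0, 5.0, 0.0, 4.0, 0.0, 0.0]
```

In the example, a single iteration selects two atoms at once (as in GOMP), while the threshold of about 2.61 rejects the small 0.1 entry, which is left in the residual.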

4. Experiments

To demonstrate the performance of the TL-GOMP algorithm (Algorithm 2) in reconstructing target images, we present simulation results in this section. First, we compared TL-GOMP with the OMP-family algorithms OMP, STOMP, and GOMP by reconstructing the test image shown in Figure 3; the image reconstructed by TL-GOMP was of higher quality than those reconstructed by the other OMP-family algorithms. Next, to further demonstrate the advantages of TL-GOMP, we compared it with algorithms outside the OMP family on the same target, and again the TL-GOMP reconstruction was better. We then applied TL-GOMP to photonic integrated interferometric image reconstruction, using it to reconstruct the sparse spatial-frequency information collected by the PIC at different distances; the reconstructed images show that the algorithm recovers the content of the detected target well. Finally, we examined how the number of measurements and the sparsity in the TL-GOMP algorithm affect the quality of the reconstructed images.

Figure 3.

Original image.

In all the experiments below, we used a Gaussian random matrix as the measurement matrix; it was generated with the randn function, so each element follows the standard normal distribution. We used the discrete cosine transform matrix as the sparse basis, which provides the sparse representation (compression) of the original signal. Because a new measurement matrix was generated every time the code was run, the results vary randomly between runs; we therefore repeated the simulations several times in each part of the study and checked that the conclusions held across the results.
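The experimental setup can be sketched in plain Python: a Gaussian measurement matrix with standard normal entries (the analogue of `randn`), an orthonormal DCT-II basis, and column-by-column measurement of an image. The function names are hypothetical, and taking the sparse basis as $\Psi = D^{\mathsf T}$ (so that each column satisfies $y_j = A s_j$ with $s_j = D x_j$) is an assumption about conventions. Passing `seed=None` draws a fresh matrix on every call, mirroring the per-run randomness described above, while a fixed seed makes a run repeatable.

```python
import math
import random


def gaussian_matrix(m, n, rng):
    """m x n matrix with i.i.d. standard normal entries (the analogue of randn(m, n))."""
    return [[rng.gauss(0.0, 1.0) for _ in range(n)] for _ in range(m)]


def dct_matrix(n):
    """Orthonormal n x n DCT-II matrix D: s = D x and x = D^T s."""
    return [[(math.sqrt(1.0 / n) if u == 0 else math.sqrt(2.0 / n))
             * math.cos(math.pi * (2 * i + 1) * u / (2 * n)) for i in range(n)]
            for u in range(n)]


def matmul(P, Q):
    """Plain matrix product of two matrices stored as lists of rows."""
    return [[sum(p * q for p, q in zip(row, col)) for col in zip(*Q)] for row in P]


def measure_columns(X, m, seed=None):
    """Measure every column of image X (n rows) with one Gaussian matrix Phi.

    Returns (Y, A) with Y = Phi X and sensor matrix A = Phi Psi, where Psi = D^T,
    so each column obeys y_j = A s_j with s_j = D x_j.
    """
    rng = random.Random(seed)
    n = len(X)
    Phi = gaussian_matrix(m, n, rng)
    Psi = [list(col) for col in zip(*dct_matrix(n))]  # transpose of D
    return matmul(Phi, X), matmul(Phi, Psi)
```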

4.1. Comparison of Simulation Results of the TL-GOMP and OMP Series Algorithms

In this section, we selected test images of 350 × 350, 500 × 500, 650 × 650, and 800 × 800 pixels as target scenes, reconstructed them with four algorithms (OMP, STOMP, GOMP, and TL-GOMP), and evaluated image quality using the peak signal-to-noise ratio and mean square error. Figure 4a–d show the reconstruction results for the 800 × 800 image. Visually, the TL-GOMP algorithm further improves the quality of the reconstructed image. Table 1 presents the quality metrics and running times for the 350 × 350 reconstructions. Quantitatively, the PSNR of the image obtained by TL-GOMP is 15.82% higher than that of GOMP, 14.60% higher than that of STOMP, and 16.63% higher than that of OMP, and the MSE is lower by 48.64%, 46.30%, and 50.12%, respectively, with a relatively short running time. These simulation data indicate that the compressed-sensing-based TL-GOMP algorithm can use the sparse data collected by the PIC to restore the content of the detected target.
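The two quality metrics and the percentage comparisons reported in this section can be computed as sketched below. The PSNR formula $10\log_{10}(\mathrm{MAX}^2/\mathrm{MSE})$ with MAX = 255 assumes 8-bit images, and computing the percentages as (new − baseline)/baseline is our reading of "increased by" and "decreased by"; neither detail is stated in the text, and the function names are illustrative.

```python
import math


def mse(ref, rec):
    """Mean square error between two equally sized images given as lists of rows."""
    n = sum(len(row) for row in ref)
    return sum((a - b) ** 2 for ra, rb in zip(ref, rec) for a, b in zip(ra, rb)) / n


def psnr(ref, rec, peak=255.0):
    """Peak signal-to-noise ratio in dB; peak=255 assumes 8-bit images."""
    e = mse(ref, rec)
    return math.inf if e == 0 else 10.0 * math.log10(peak ** 2 / e)


def relative_change(new, baseline):
    """Percentage change of a metric against a baseline algorithm's value."""
    return 100.0 * (new - baseline) / baseline


ref = [[52.0, 55.0], [61.0, 59.0]]
rec = [[54.0, 55.0], [60.0, 59.0]]
print(mse(ref, rec))  # 1.25
```

A positive `relative_change` in PSNR and a negative one in MSE both indicate that the new algorithm performs better than the baseline.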

Figure 4.

Reconstruction results of four different algorithms: (a) OMP; (b) STOMP; (c) GOMP; and (d) TL-GOMP.

Table 1.

PSNR, MSE, and time of the 350 × 350 image reconstructed by OMP series algorithms.

Table 2 shows the simulation results for the 500 × 500 test image. The PSNR of the image reconstructed by TL-GOMP increased by 18.05% compared with GOMP, 17.82% compared with STOMP, and 18.02% compared with OMP, and the MSE decreased by 53.68%, 53.28%, and 53.62%, respectively.

Table 2.

PSNR, MSE, and time of the 500 × 500 image reconstructed by OMP series algorithms.

Table 3 shows the simulation results for the 650 × 650 test image. The PSNR of the image reconstructed by TL-GOMP increased by 17.58% compared with GOMP, 15.40% compared with STOMP, and 15.45% compared with OMP, and the MSE decreased by 52.95%, 48.97%, and 49.08%, respectively.

Table 3.

PSNR, MSE, and time of the 650 × 650 image reconstructed by OMP series algorithms.

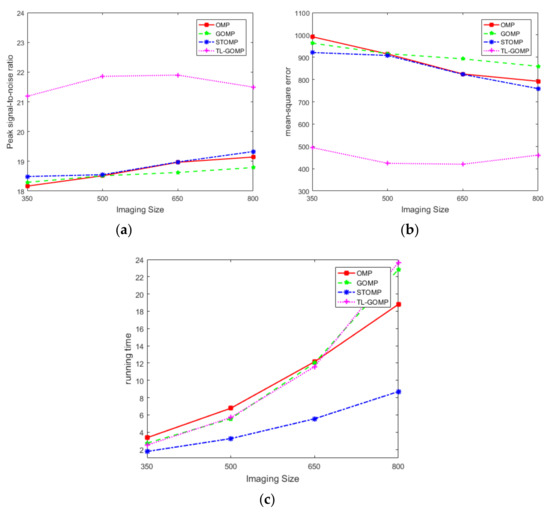

Table 4 shows the simulation results for the 800 × 800 test image. The PSNR of the image reconstructed by TL-GOMP increased by 14.40% compared with GOMP, 11.21% compared with STOMP, and 12.29% compared with OMP, and the MSE decreased by 46.36%, 39.28%, and 41.82%, respectively. In image quality evaluation, a higher PSNR and a lower MSE both indicate better image quality. The red curves in Figure 5 show the results of TL-GOMP reconstruction at different resolutions; image quality improves as the resolution increases, and TL-GOMP improves the quality of the reconstructed image. Tables 5–8 list the PSNR, MSE, and running time from repeated reconstructions of images of different sizes by the TL-GOMP, OMP, STOMP, and GOMP algorithms, respectively. These results again show the advantage of the TL-GOMP image reconstruction algorithm.

Table 4.

PSNR, MSE, and time of the 800 × 800 image reconstructed by OMP series algorithms.

Figure 5.

(a) Relationship between the image size and PSNR of reconstructed images; (b) relationship between the image size and MSE of reconstructed images; (c) relationship between the image size and running times.

Table 5.

PSNR, MSE, and running time of images of different sizes reconstructed by the TL-GOMP algorithm.

Table 6.

PSNR, MSE, and running time of images of different sizes reconstructed by the OMP algorithm.

Table 7.

PSNR, MSE, and running time of images of different sizes reconstructed by the STOMP algorithm.

Table 8.

PSNR, MSE, and running time of images of different sizes reconstructed by the GOMP algorithm.

4.2. Comparison of Simulation Results of the TL-GOMP and Other Algorithms

In this section, to evaluate the image reconstruction performance of TL-GOMP further, we reconstructed the same targets with other algorithms and compared the results. We simulated test images of 350 × 350, 500 × 500, 650 × 650, and 800 × 800 pixels. Figure 6 shows the reconstruction results for the 800 × 800 test image using different types of algorithms; the image reconstructed by TL-GOMP preserves the details and content of the target. A quantitative analysis of the simulation results follows. Overall, the TL-GOMP algorithm shows great potential for applications in photonic integrated interferometric imaging.

Figure 6.

Reconstructed image using different algorithms: (a) CoSaMP; (b) IHT; (c) IRLS; (d) GBP; (e) SP; and (f) TL-GOMP.

Here, we used a 350 × 350 test image as the scene target and reconstructed it with six algorithms: Compressive Sampling Matching Pursuit (CoSaMP), Generalized Back Propagation (GBP), Iterative Hard Thresholding (IHT), Iteratively Reweighted Least Squares (IRLS), Subspace Pursuit (SP), and TL-GOMP. Image quality was evaluated using the peak signal-to-noise ratio and mean square error. Table 9 lists the quality metrics and running times of the reconstructed images. Quantitatively, the PSNR of the image obtained by TL-GOMP increased by 25.76% compared with CoSaMP, 10.32% compared with GBP, 38.50% compared with IHT, 5.15% compared with IRLS, and 17.18% compared with SP, and the MSE decreased by 63.19%, 36.64%, 74.23%, 21.27%, and 51.09%, respectively, with a relatively short running time.

Table 9.

PSNR, MSE, and running time of the 350 × 350 image reconstructed by different algorithms.

Table 10 shows the simulation results for the 500 × 500 test image. The PSNR of the image reconstructed by TL-GOMP improved by 27.74% compared with CoSaMP, 10.69% compared with GBP, 42.08% compared with IHT, 4.01% compared with IRLS, and 19.75% compared with SP, and the MSE decreased by 66.47%, 38.51%, 77.47%, 17.64%, and 56.39%, respectively.

Table 10.

PSNR, MSE, and running time of the 500 × 500 image reconstructed by different algorithms.

Table 11 shows the simulation results for the 650 × 650 test image. The PSNR of the image reconstructed by TL-GOMP improved by 26.29% compared with CoSaMP, 8.87% compared with GBP, 42.31% compared with IHT, 2.83% compared with IRLS, and 17.26% compared with SP, and the MSE decreased by 64.99%, 33.70%, 77.67%, 12.97%, and 52.39%, respectively.

Table 11.

PSNR, MSE, and running time of the 650 × 650 image reconstructed by different algorithms.

Table 12 lists the simulation results for the 800 × 800 test image. The PSNR of the image reconstructed by TL-GOMP increased by 22.92% compared with CoSaMP, 5.35% compared with GBP, 37.98% compared with IHT, 0.40% compared with IRLS, and 14.74% compared with SP, and the MSE decreased by 60.26%, 22.23%, 74.40%, 1.91%, and 47.05%, respectively. In Figure 7, the red curves represent the TL-GOMP results. The images reconstructed by this algorithm have a higher PSNR and a much lower MSE than those reconstructed by the other algorithms, and the algorithm also has a shorter running time. In summary, the TL-GOMP algorithm achieves better image reconstruction performance.

Table 12.

PSNR, MSE, and running time of the 800 × 800 image reconstructed by different algorithms.

Figure 7.

(a) Relationship between the image size and PSNR of the reconstructed image; (b) relationship between the image size and MSE of the reconstructed image; (c) relationship between image size and running time.

Tables 13–17 list the PSNR, MSE, and running time of images of different resolutions repeatedly reconstructed by the CoSaMP, GBP, IHT, IRLS, and SP algorithms, respectively. These repeated results again show the advantages of the TL-GOMP image reconstruction algorithm.

Table 13.

PSNR, MSE, and running time of images of different resolutions reconstructed by the CoSaMP algorithm.

Table 14.

PSNR, MSE, and running time of images of different resolutions reconstructed by the GBP algorithm.

Table 15.

PSNR, MSE, and running time of images of different resolutions reconstructed by the IHT algorithm.

Table 16.

PSNR, MSE, and running time of images of different resolutions reconstructed by the IRLS algorithm.

Table 17.

PSNR, MSE, and running time of images of different resolutions reconstructed by the SP algorithm.

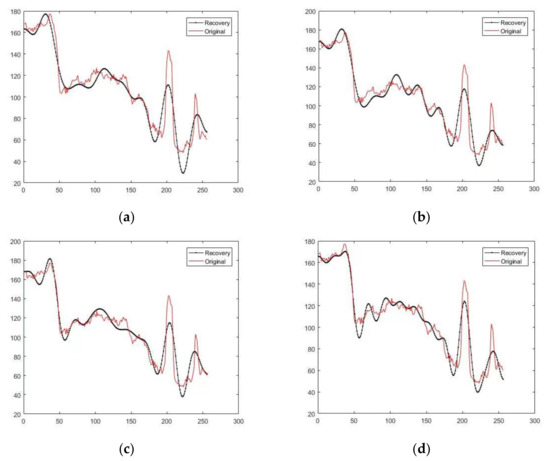

4.3. Simulation Results of Single-Column Signal Reconstruction by the CS TL-GOMP Algorithm

Figure 8 shows the 256 × 256 target test image. Figure 9 shows the results of using the TL-GOMP algorithm to reconstruct a single column of the original signal with different numbers of runs. We first selected an image with a resolution of 256 × 256, arbitrarily selected a 256 × 1 column vector as the original signal, and then performed signal reconstruction 1, 50, 100, and 200 times. As Figure 9 shows, the reconstructed signal fluctuates around the original signal and gradually approaches it as the number of reconstructions increases. Table 18 shows that, compared with the original signal, the residual values of the TL-GOMP algorithm after these runs are 168.5664, 161.6117, 150.3473, and 136.5506, respectively. The residual is an important index of the size of the error, that is, of the degree of deviation from the original signal. The residual decreases as the number of reconstructions increases; that is, the reconstructed signal gradually approaches the original signal. The TL-GOMP algorithm therefore exhibits good stability in reconstructing the original signal. Table 19 lists the data from multiple simulations with different numbers of signal reconstructions.
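The threshold-limiting rule that distinguishes TL-GOMP is not given in this section, so the sketch below implements plain generalized OMP (GOMP), the same-family baseline named in the Conclusions, on a synthetic, exactly sparse 256 × 1 column. It is not the authors' TL-GOMP. The function name `gomp`, the number of atoms per iteration `s`, the Gaussian matrix normalization, and all signal values are assumptions. It reports both the measurement residual and the error against the original column, the latter being analogous to the residuals in Table 18.

```python
import numpy as np

def gomp(A, y, k, s=2, tol=1e-10):
    """Generalized OMP (plain GOMP, not the paper's TL-GOMP threshold rule).

    Each iteration adds the s columns of A most correlated with the residual,
    then re-fits all selected coefficients by least squares.
    """
    m, n = A.shape
    residual = y.astype(float)
    support, coef = [], np.zeros(0)
    for _ in range(min(k, m // s)):
        corr = np.abs(A.T @ residual)
        corr[support] = -1.0                   # exclude already-selected atoms
        support.extend(int(i) for i in np.argsort(corr)[-s:])
        coef, *_ = np.linalg.lstsq(A[:, support], y, rcond=None)
        residual = y - A[:, support] @ coef
        if np.linalg.norm(residual) < tol:     # measurements fully explained
            break
    x_hat = np.zeros(n)
    x_hat[support] = coef
    return x_hat, float(np.linalg.norm(residual))

# Hypothetical setup: an 8-sparse 256 x 1 column and 96 Gaussian measurements
rng = np.random.default_rng(0)
n, m, k = 256, 96, 8
x = np.zeros(n)
idx = rng.choice(n, size=k, replace=False)
x[idx] = rng.choice([-1.0, 1.0], size=k) * (1.0 + rng.random(k))
A = rng.standard_normal((m, n)) / np.sqrt(m)
y = A @ x

x_hat, meas_residual = gomp(A, y, k)
signal_error = float(np.linalg.norm(x - x_hat))   # compare with the original column
print(f"measurement residual = {meas_residual:.2e}, signal error = {signal_error:.2e}")
```

Selecting several atoms per iteration is what separates GOMP from OMP: it needs fewer iterations, and wrongly selected atoms receive near-zero coefficients in the least-squares refit.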

Figure 8.

Original 256 × 256 test image.

Figure 9.

Reconstruction results of randomly selected single-column signals: (a) 1 time; (b) 50 times; (c) 100 times; (d) 200 times.

Table 18.

Numbers of signal reconstructions and the corresponding residual values.

Table 19.

Data from multiple simulations with different numbers of signal reconstructions.

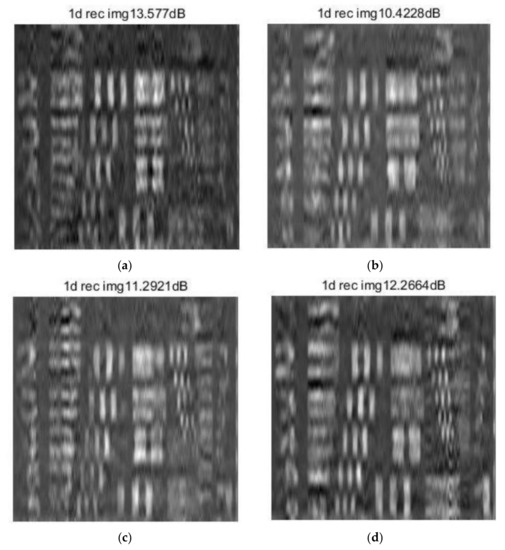

4.4. Simulation Results of the CS TL-GOMP Algorithm in Image Reconstruction at Different Distances

We used the PIC to collect the spatial frequency information emitted by the target and form the restored image. Figure 10 shows the images restored from the frequency information collected by the microlens array on the PIC at different distances d; the resolution of the restored images is 256 × 256. In Figure 10, "Original image" denotes the image restored by the microlens array on the PIC, which serves as the target test image. Because signal acquisition by the PIC is an undersampling process, a sparse signal reconstruction algorithm is required to recover the content information of the detected target.
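Why the distance d matters can be illustrated with the standard small-angle interferometry relation (general background, not taken from this paper): a baseline B at wavelength λ samples B/λ cycles per radian, that is, B/(λd) cycles per metre on a target at range d. The baselines and the 1550 nm wavelength below are hypothetical, not the PIC's actual layout, and `target_plane_frequency` is an illustrative name.

```python
def target_plane_frequency(baseline_m: float, wavelength_m: float, distance_m: float) -> float:
    """Spatial frequency (cycles per metre on the target plane) sampled by one baseline.

    Small-angle relation: a baseline B samples B / lambda cycles per radian,
    i.e. B / (lambda * d) cycles per metre at range d.
    """
    return baseline_m / (wavelength_m * distance_m)

# Hypothetical values: 1-4 cm baselines at 1550 nm
baselines = [0.01, 0.02, 0.03, 0.04]
wavelength = 1.55e-6
for d in (75.0, 125.0, 175.0, 225.0):
    freqs = [target_plane_frequency(b, wavelength, d) for b in baselines]
    finest_period_mm = 1e3 / max(freqs)   # period of the highest sampled frequency
    print(f"d = {d:5.0f} m: f_max = {max(freqs):7.1f} cycles/m, period = {finest_period_mm:.2f} mm")
```

With a fixed set of baselines, a more distant target is sampled at lower target-plane frequencies, so finer details of the target fall outside the sampled set.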

Figure 10.

Restoration image results of the PIC at different distances: (a) d = 75 m; (b) d = 125 m; (c) d = 175 m; and (d) d = 225 m.

In the experiment, a Gaussian random matrix was used as the measurement matrix, and the discrete cosine transform (DCT) matrix was used as the sparse matrix. We conducted several simulation experiments; Figure 11 and Table 20 present the data and reconstructed images from one of them.
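A minimal sketch of this measurement setup, assuming the orthonormal DCT-II as the sparse matrix and i.i.d. Gaussian entries with variance 1/M (a common normalization that the paper does not specify). The function names, the 85 × 256 size, and the smooth test column are hypothetical choices for illustration; the sensing matrix that acts on the DCT coefficients is then Φ multiplied by the transposed DCT matrix.

```python
import numpy as np

def dct_matrix(n: int) -> np.ndarray:
    """Orthonormal DCT-II matrix Psi: theta = Psi @ x and x = Psi.T @ theta."""
    u = np.arange(n)[:, None]       # frequency index (rows)
    j = np.arange(n)[None, :]       # sample index (columns)
    Psi = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * j + 1) * u / (2 * n))
    Psi[0, :] /= np.sqrt(2.0)       # DC row scaled to 1/sqrt(n)
    return Psi

def gaussian_measurement_matrix(m: int, n: int, rng: np.random.Generator) -> np.ndarray:
    """i.i.d. N(0, 1/m) entries (the 1/m variance is an assumed normalization)."""
    return rng.standard_normal((m, n)) / np.sqrt(m)

rng = np.random.default_rng(1)
n, m = 256, 85
Psi = dct_matrix(n)
Phi = gaussian_measurement_matrix(m, n, rng)
A = Phi @ Psi.T                     # maps DCT coefficients theta to measurements y

# A smooth, hypothetical 256 x 1 column is compressible in the DCT basis
t = np.linspace(0.0, 1.0, n)
x = 200.0 * t ** 2
theta = Psi @ x
energy = np.sort(theta ** 2)[::-1]
print(f"energy in 18 largest DCT coefficients: {energy[:18].sum() / energy.sum():.6f}")
```

The energy check shows why a DCT basis suits natural image columns: most of the energy of a smooth signal concentrates in a few low-frequency coefficients, which is the sparsity that the reconstruction exploits.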

Figure 11.

Simulation results of the TL-GOMP algorithm reconstruction of restored images at different distances: (a) d = 75 m; (b) d = 125 m; (c) d = 175 m; and (d) d = 225 m.

Table 20.

PSNR and MSE of reconstructed images at different distances.

Figure 11 shows the results of reconstructing the restored images at d = 75, 125, 175, and 225 m with the compressed sensing TL-GOMP algorithm; "1d rec img" in Figure 11 denotes the TL-GOMP reconstruction of the target. We used two image quality indices, the PSNR and the MSE: a higher PSNR indicates better image quality, and a lower MSE likewise indicates better image quality. The PSNR values of the reconstructed images in Figure 11a–d are 13.5770 dB, 10.4228 dB, 11.2921 dB, and 12.2664 dB, respectively. The simulation data in Table 20 show that the compressed sensing TL-GOMP image reconstruction algorithm can restore the content of detected targets at different distances.

4.5. Influence of Measurement Number M in the CS TL-GOMP Algorithm

Figure 8 shows the 256 × 256 target test image. The observation (measurement) matrix is an important parameter in the CS TL-GOMP algorithm: it samples the original signal, the algorithm then recovers the sparse representation, and the desired signal is finally reconstructed from it. Figure 12a shows how the number of measurements M affects the sparsity and the quality of the reconstructed image. M ranged from 56 to 256 with a step size of 5. The simulation data show that the sparsity (the number of non-zero values) of the sparse representation is 18. As M, the length of the observation vector, increases, the PSNR of the image increases and its MSE decreases. We therefore conclude that the quality of the reconstructed image improves as M increases. In these experiments, a Gaussian random matrix was used as the measurement matrix and the discrete cosine transform matrix as the sparse matrix; Figure 12 shows the results of four repeated experiments.
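The trend in Figure 12 (better quality with more measurements) can be reproduced in miniature. The sketch below is an assumption-laden illustration, not the paper's experiment: it uses plain OMP (one atom per iteration) on synthetic, exactly k-sparse signals with N = 128 and k = 5, and averages the relative reconstruction error over ten random trials per value of M. All names and sizes are hypothetical.

```python
import numpy as np

def omp(A, y, k):
    """Plain OMP: one atom per iteration, k iterations, least-squares refit."""
    residual, support = y.copy(), []
    for _ in range(k):
        corr = np.abs(A.T @ residual)
        corr[support] = -1.0                 # never pick the same atom twice
        support.append(int(np.argmax(corr)))
        coef, *_ = np.linalg.lstsq(A[:, support], y, rcond=None)
        residual = y - A[:, support] @ coef
    x_hat = np.zeros(A.shape[1])
    x_hat[support] = coef
    return x_hat

def mean_relative_error(m, n=128, k=5, trials=10, seed=0):
    """Average ||x - x_hat|| / ||x|| over random k-sparse signals and Gaussian matrices."""
    rng = np.random.default_rng(seed)
    errors = []
    for _ in range(trials):
        x = np.zeros(n)
        idx = rng.choice(n, size=k, replace=False)
        x[idx] = rng.choice([-1.0, 1.0], size=k) * (1.0 + rng.random(k))
        A = rng.standard_normal((m, n)) / np.sqrt(m)
        x_hat = omp(A, A @ x, k)
        errors.append(np.linalg.norm(x - x_hat) / np.linalg.norm(x))
    return float(np.mean(errors))

for m in (8, 16, 32, 64, 96):
    print(f"M = {m:3d}: mean relative error = {mean_relative_error(m):.3e}")
```

With too few measurements, the greedy selection frequently picks wrong atoms. Once M is comfortably larger than k log N, recovery of exactly sparse signals becomes essentially exact. A real image column is only approximately sparse, so the paper's curves improve gradually instead of dropping sharply to zero error.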

Figure 12.

Influence of measurement number M on sparsity k and reconstructed image quality: (a) results of the first experiment; (b) results of the second experiment; (c) results of the third experiment; (d) results of the fourth experiment.

4.6. Influence of Measurement Matrix M × N and Sparsity k in the CS TL-GOMP Algorithm on the Quality of the Reconstructed Image

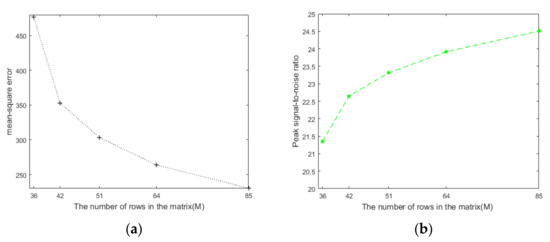

Figure 8 shows the 256 × 256 target test image. Figure 13 shows the simulated relationship between sparsity and the quality of the reconstructed image. For the sparsity k, we selected the values 9, 10, 11, 12, and 13 and simulated the 256 × 256 test image. The results show that the PSNR increases and the MSE decreases as k increases. Figure 14 shows the simulated relationship between the measurement matrix size and the quality of the reconstructed image. We simulated the same test image with measurement matrices of size 36 × 256, 42 × 256, 64 × 256, and 85 × 256, that is, with N = 256 fixed and M increasing. The PSNR increases and the MSE decreases as the measurement matrix grows. Tables 21 and 22 list the results of one of these simulations. We therefore conclude that the quality of the reconstructed image improves as k and the size of the measurement matrix increase.
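One way to see why quality rises with k: in an orthonormal basis such as the DCT, keeping the k largest coefficients (the best k-term approximation) leaves an error equal to the energy of the discarded coefficients, so this error cannot increase as k grows. It is also the smallest error achievable by any k-sparse representation in that basis. The sketch below is an oracle approximation, not CS recovery; the test column and function names are hypothetical, and an 8-bit peak of 255 is assumed for PSNR.

```python
import numpy as np

def dct_matrix(n):
    """Orthonormal DCT-II matrix (rows are basis vectors)."""
    u = np.arange(n)[:, None]
    j = np.arange(n)[None, :]
    Psi = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * j + 1) * u / (2 * n))
    Psi[0, :] /= np.sqrt(2.0)
    return Psi

def best_k_term(x, k, peak=255.0):
    """Keep the k largest-magnitude DCT coefficients; return (MSE, PSNR in dB)."""
    Psi = dct_matrix(x.size)
    theta = Psi @ x
    theta_k = np.zeros_like(theta)
    keep = np.argsort(np.abs(theta))[-k:]
    theta_k[keep] = theta[keep]
    mse = float(np.mean((x - Psi.T @ theta_k) ** 2))
    return mse, float(10.0 * np.log10(peak ** 2 / mse))

# Hypothetical 256 x 1 column: a smooth profile with one edge
t = np.linspace(0.0, 1.0, 256)
x = 100.0 + 60.0 * np.sin(2 * np.pi * t) + 50.0 * (t > 0.6)
results = {k: best_k_term(x, k) for k in (9, 10, 11, 12, 13)}
for k, (mse, psnr) in results.items():
    print(f"k = {k}: MSE = {mse:8.3f}, PSNR = {psnr:6.2f} dB")
```

The sparsity values 9 to 13 mirror those in Figure 13. The edge keeps the MSE non-zero, which is typical of real image columns that are compressible but not exactly sparse.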

Figure 13.

(a) Variation curves for the PSNR of the reconstructed images with sparsity k; (b) variation curves for the MSE of the reconstructed images with sparsity k.

Figure 14.

(a) Variation curves for the MSE of reconstructed images with matrix size M × N; (b) variation curves for the PSNR of reconstructed images with matrix size M × N.

Table 21.

PSNR and MSE of the reconstructed images with different sparsity k.

Table 22.

PSNR and MSE of the reconstructed images with different matrix sizes M × N.

We studied the influence of sparsity and measurement matrix size on reconstruction quality, using a Gaussian random matrix as the measurement matrix and the discrete cosine transform matrix as the sparse matrix. For each sparsity value k = 9, 10, 11, 12, and 13, we conducted several experiments; the results are shown in Table 23. Similarly, we simulated the same test image with measurement matrices of size 36 × 256, 42 × 256, 64 × 256, and 85 × 256, again running multiple experiments for each size with the same type of measurement matrix and the same procedure; the results are shown in Table 24. These repeated experiments support the conclusion that the quality of the reconstructed image improves as k and the size of the measurement matrix increase. However, measurement matrices and sparse matrices for compressed sensing remain an active research topic in the pursuit of high-quality image reconstruction, and in future research we will verify these conclusions with other measurement matrices.
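When several runs are performed per condition, as in Tables 23 and 24, summarizing them as a mean and sample standard deviation makes the trend across k or M easier to judge against run-to-run scatter. The sketch below uses only the Python standard library. The function names are hypothetical, and the numbers are placeholders that only demonstrate usage; they are not the paper's data.

```python
from statistics import mean, stdev

def summarize(runs: dict[str, list[float]]) -> dict[str, tuple[float, float]]:
    """Mean and sample standard deviation per experimental condition."""
    return {cond: (mean(vals), stdev(vals)) for cond, vals in runs.items()}

def trend_is_increasing(summary: dict[str, tuple[float, float]], order: list[str]) -> bool:
    """True if the mean strictly increases along the given condition order."""
    means = [summary[c][0] for c in order]
    return all(a < b for a, b in zip(means, means[1:]))

# Placeholder numbers only, to show usage; they are NOT the values in Tables 23-24
runs = {"k=9": [1.0, 2.0, 3.0], "k=10": [2.0, 3.0, 4.0]}
summary = summarize(runs)
print(summary)                                         # {'k=9': (2.0, 1.0), 'k=10': (3.0, 1.0)}
print(trend_is_increasing(summary, ["k=9", "k=10"]))   # True
```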

Table 23.

PSNR and MSE from multiple simulations with different sparsity k.

Table 24.

PSNR and MSE from multiple simulations with different matrix sizes M × N.

5. Conclusions

In this study, we improved the traditional image reconstruction algorithm and proposed the TL-GOMP pursuit algorithm for compressed sensing. In the simulations, we applied the TL-GOMP algorithm and the traditional OMP, STOMP, and GOMP algorithms of the same family to the same test targets. The images reconstructed by the TL-GOMP algorithm were of better quality than those reconstructed by the other algorithms of the same family. To demonstrate the advantages of the algorithm more rigorously, we also compared TL-GOMP with other image reconstruction algorithms (CoSaMP, GBP, IHT, IRLS, and SP), and again the TL-GOMP reconstructions were of better quality. To verify the stability of the algorithm, we arbitrarily extracted one column vector of the original signal and reconstructed it several times; the reconstructed signal gradually approached the original signal as the number of reconstruction runs increased. We also used the TL-GOMP algorithm to reconstruct images restored at different detection distances, and the simulation results showed that it could recover the content information of the target. The TL-GOMP algorithm is therefore well suited to photonic integrated interference imaging: it can reconstruct the sparse spatial frequency information collected by the PIC and recover the content information of unknown detected targets. This could benefit scientific and technological exploration and production, and the algorithm has good application potential in photonic integrated interference detection technology.

Author Contributions

Conceptualization, C.C., Z.F. and J.Y.; methodology, J.Y.; software, C.C.; validation, Z.W., R.W. and K.L.; formal analysis, S.Y. and B.S.; investigation, J.Y.; resources, C.C.; data curation, J.Y. and Z.F.; writing—original draft preparation, J.Y.; writing—review and editing, Z.F. and C.C.; visualization, C.C.; supervision, Z.W.; project administration, J.Y.; funding acquisition, J.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data used to support the findings of this study are available from the corresponding author upon request.

Acknowledgments

The authors thank the optical sensing and measurement team of Xidian University for their help. This research was supported by the National Natural Science Foundation of Shaanxi Province (Grant No. 2020JM-206), the National Defense Basic Research Foundation (Grant No. 61428060201), and the 111 Project (B17035).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Ogden, C.; Wilm, J.; Stubbs, D.M.; Thurman, S.T.; Su, T.; Scott, R.P.; Yoo, S.J.B. Flat panel space based space surveillance sensor. In Proceedings of the Advanced Maui Optical and Space Surveillance Technologies (AMOS) Conference, Maui, HI, USA, 10–13 September 2013.

- Su, T.H.; Liu, G.Y.; Badham, K.E.; Thurman, S.T.; Kendrick, R.L.; Duncan, A.; Wuchenich, D.; Ogden, C.; Chriqui, G.; Feng, S.; et al. Interferometric imaging using Si3N4 photonic integrated circuits for a SPIDER imager. Opt. Express 2018, 26, 12801–12812.

- Aufdenberg, J.; Mérand, A.; Foresto, V.C.D.; Absil, O.; Di Folco, E.; Kervella, P.; Ridgway, S.; Berger, D.; Brummelaar, T.T.; McAlister, H. First results from the CHARA Array. VII. Long-baseline interferometric measurements of Vega consistent with a pole-on, rapidly rotating star. Astrophys. J. 2006, 645, 664–675.

- Brummelaar, T.A.; McAlister, H.A.; Ridgway, S.T.; Bagnuolo, W.G., Jr.; Turner, N.H.; Sturmann, L.; Sturmann, J.; Berger, D.H.; Ogden, C.E.; Cadman, R.; et al. First Results from the CHARA Array. II. A Description of the Instrument. Astrophys. J. 2005, 628, 453–465.

- Petrov, R.G.; Malbet, F.; Weigelt, G.; Antonelli, P.; Beckmann, U.; Bresson, Y.; Chelli, A.; Dugué, M.; Duvert, G.; Gennari, S.; et al. AMBER, the near-infrared spectro-interferometric three-telescope VLTI instrument. Astron. Astrophys. 2007, 464, 1–12.

- Armstrong, J.T.; Mozurkewich, D.; Rickard, L.J.; Hutter, D.J.; Benson, J.A.; Bowers, P.; Elias, N., II; Hummel, C.; Johnston, K.; Buscher, D.; et al. The navy prototype optical interferometer. Astrophys. J. 1998, 496, 550–571.

- Pearson, T.; Readhead, A. Image formation by self-calibration in radio astronomy. Annu. Rev. Astron. Astrophys. 1984, 22, 97–130.

- Badham, K.; Kendrick, R.L.; Wuchenich, D.; Ogden, C.; Chriqui, G.; Duncan, A.; Thurman, S.T.; Su, T.; Lai, W.; Chun, J.; et al. Photonic integrated circuit-based imaging system for SPIDER. In Proceedings of the 2017 Conference on Lasers and Electro-Optics Pacific Rim (CLEO-PR), Singapore, 31 July–4 August 2017.

- Scott, R.P.; Su, T.; Ogden, C.; Thurman, S.T.; Kendrick, R.L.; Duncan, A.; Yu, R.; Yoo, S. Demonstration of a photonic integrated circuit for multi-baseline interferometric imaging. In Proceedings of the 2014 IEEE Photonics Conference (IPC), San Diego, CA, USA, 12–16 October 2014; pp. 1–2.

- Mishali, M.; Eldar, Y.C. From theory to practice: Sub-Nyquist sampling of sparse wideband analog signals. IEEE J. Sel. Top. Signal Process. 2010, 4, 375–391.

- Hariri, A.; Babaie-Zadeh, M. Compressive detection of sparse signals in additive white Gaussian noise without signal reconstruction. Signal Process. 2017, 131, 376–385.

- Usala, J.D.; Maag, A.; Nelis, T.; Gamez, G. Compressed sensing spectral imaging for plasma optical emission spectroscopy. J. Anal. At. Spectrom. 2016, 31, 2198–2206.

- Chen, Y.; Ye, X.; Huang, F. A novel method and fast algorithm for MR image reconstruction with significantly under-sampled data. Inverse Probl. Imaging 2017, 4, 223–240.

- Lv, S.T.; Liu, J. A novel signal separation algorithm based on compressed sensing for wideband spectrum sensing in cognitive radio networks. Int. J. Commun. Syst. 2014, 27, 2628–2641.

- Bu, H.; Tao, R.; Bai, X.; Zhao, J. A novel SAR imaging algorithm based on compressed sensing. IEEE Geosci. Remote Sens. Lett. 2017, 12, 1003–1007.

- He, Z.; Zhao, X.; Zhang, S.; Ogawa, T.; Haseyama, M. Random combination for information extraction in compressed sensing and sparse representation-based pattern recognition. Neurocomputing 2014, 145, 160–173.

- Kajbaf, H.; Case, J.T.; Yang, Z.; Zheng, Y.R. Compressed sensing for SAR-based wideband three-dimensional microwave imaging system using non-uniform fast Fourier transform. IET Radar Sonar Navig. 2013, 7, 658–670.

- Li, Q.; Han, Y.H.; Dang, J.W. Image decomposing for inpainting using compressed sensing in DCT domain. Front. Comput. Sci. 2014, 8, 905–915.

- Zhang, J.; Xia, L.; Huang, M.; Li, G. Image reconstruction in compressed sensing based on single-level DWT. In Proceedings of the IEEE Workshop on Electronics, Computer & Applications, Ottawa, ON, Canada, 8–9 May 2014.

- Monajemi, H.; Jafarpour, S.; Gavish, M.; Stat 330/CME 362 Collaboration; Donoho, D.L.; Ambikasaran, S.; Bacallado, S.; Bharadia, D.; Chen, Y.; Choi, Y.; et al. Deterministic matrices matching the compressed sensing phase transitions of Gaussian random matrices. Proc. Natl. Acad. Sci. USA 2013, 110, 1181–1186.

- Lu, W.; Li, W.; Kpalma, K.; Ronsin, J. Compressed sensing performance of random Bernoulli matrices with high compression ratio. IEEE Signal Process. Lett. 2015, 22, 1074–1078.

- Li, X.; Zhao, R.; Hu, S. Blocked polynomial deterministic matrix for compressed sensing. In Proceedings of the International Conference on Wireless Communications Networking & Mobile Computing, Chengdu, China, 23–25 September 2010.

- Yan, T.; Lv, G.; Yin, K. Deterministic sensing matrices based on multidimensional pseudo-random sequences. Circ. Syst. Signal Process. 2014, 33, 1597–1610.

- Boyd, S.; Vandenberghe, L. Convex optimization. IEEE Trans. Autom. Control 2006, 51, 1859.

- Mota, J.F.; Xavier, J.M.; Aguiar, P.M.; Puschel, M. Distributed basis pursuit. IEEE Trans. Signal Process. 2012, 60, 1942–1956.

- Lee, J.; Choi, J.W.; Shim, B. Sparse signal recovery via tree search matching pursuit. J. Commun. Netw. 2016, 18, 699–712.

- Wang, R.; Zhang, J.; Ren, S.; Li, Q. A reducing iteration orthogonal matching pursuit algorithm for compressive sensing. Tsinghua Sci. Technol. 2016, 21, 71–79.

- Sahoo, S.K.; Makur, A. Signal recovery from random measurements via extended orthogonal matching pursuit. IEEE Trans. Signal Process. 2015, 63, 2572–2581.

- Tropp, J.A.; Gilbert, A.C. Signal recovery from random measurements via orthogonal matching pursuit. IEEE Trans. Inf. Theory 2007, 53, 4655–4666.

- Hu, Z.; Liang, D.; Xia, D.; Zheng, H. Compressing sampling in computed tomography: Method and application. Nucl. Instrum. Methods Phys. Res. 2014, 748, 26–32.

- Shang, F.F.; Li, K.Y. The Application of Wavelet Transform to Breast-Infrared Images. Cogn. Inform. 2006, 2, 939–943.

- Rubinstein, R.; Bruckstein, A.M.; Elad, M. Dictionaries for sparse representation modeling. Proc. IEEE 2010, 98, 1045–1057.

- He, J.; Wang, T.; Wang, C.; Chen, Y. Improved Measurement Matrix Construction with Random Sequence in Compressed Sensing. Wirel. Pers. Commun. 2022, 123, 3003–3024.

- Wang, X.; Cui, G.; Wang, L.; Jia, X.L.; Nie, W. Construction of measurement matrix in compressed sensing based on balanced Gold sequence. Chin. J. Sci. Instrum. 2014, 35, 97–102.

- Xu, G.; Xu, Z. Compressed sensing matrices from Fourier matrices. IEEE Trans. Inf. Theory 2015, 61, 469–478.

- Lum, D.J.; Knarr, S.H.; Howell, J.C. Fast Hadamard transforms for compressive sensing of joint systems: Measurement of a 3.2 million-dimensional bi-photon probability distribution. Opt. Express 2015, 23, 27636–27649.

- Wang, X.; Wang, K.; Wang, Q.Y.; Liang, R.; Zuo, J.; Zhao, L.; Zou, C. Deterministic Random Measurement Matrices Construction for Compressed Sensing. J. Signal Process. 2014, 30, 436–442.

- Narayanan, S.; Sahoo, S.; Makur, A. Greedy pursuits assisted basis pursuit for reconstruction of joint-sparse signals. Signal Process. 2018, 142, 485–491.

- Figueiredo, M.A.T.; Nowak, R.D.; Wright, S.J. Gradient Projection for Sparse Reconstruction: Application to Compressed Sensing and Other Inverse Problems. IEEE J. Sel. Top. Signal Process. 2008, 1, 586–597.

- Ji, S.H.; Xue, Y.; Carin, L. Bayesian compressive sensing. IEEE Trans. Signal Process. 2008, 56, 2346–2356.

- Zhang, J.; Liu, S.; Xiong, R.; Ma, S.; Zhao, D. Improved total variation based image compressive sensing recovery by nonlocal regularization. In Proceedings of the 2013 IEEE International Symposium on Circuits and Systems (ISCAS), Beijing, China, 19–23 May 2013; pp. 2836–2839.

- Kulkarni, K.; Lohit, S.; Turaga, P.; Kerviche, R.; Ashok, A. Reconnet: Non-iterative reconstruction of images from compressively sensed measurements. In Proceedings of the Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2016; pp. 449–458.

- Yao, H.; Dai, F.; Zhang, D.; Ma, Y.; Zhang, S.; Zhang, Y.; Tian, Q. DR2-Net: Deep Residual Reconstruction Network for Image Compressive Sensing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

- Lohit, S.; Kulkarni, K.; Kerviche, R.; Turaga, P.; Ashok, A. Convolutional neural networks for noniterative reconstruction of compressively sensed images. IEEE Trans. Comput. Imaging 2018, 4, 326–340.

- Du, J.; Xie, X.; Wang, C.; Shi, G.; Xu, X.; Wang, Y. Fully convolutional measurement network for compressive sensing image reconstruction. Neurocomputing 2019, 328, 105–112.

- Nie, G.; Fu, Y.; Zheng, Y.; Huang, H. Image Restoration from Patch-based Compressed Sensing Measurement. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

- Shi, W.; Jiang, F.; Zhang, S.; Zhao, D. Deep networks for compressed image sensing. In Proceedings of the 2017 IEEE International Conference on Multimedia and Expo (ICME), Hong Kong, China, 10–14 July 2017; pp. 877–882.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).