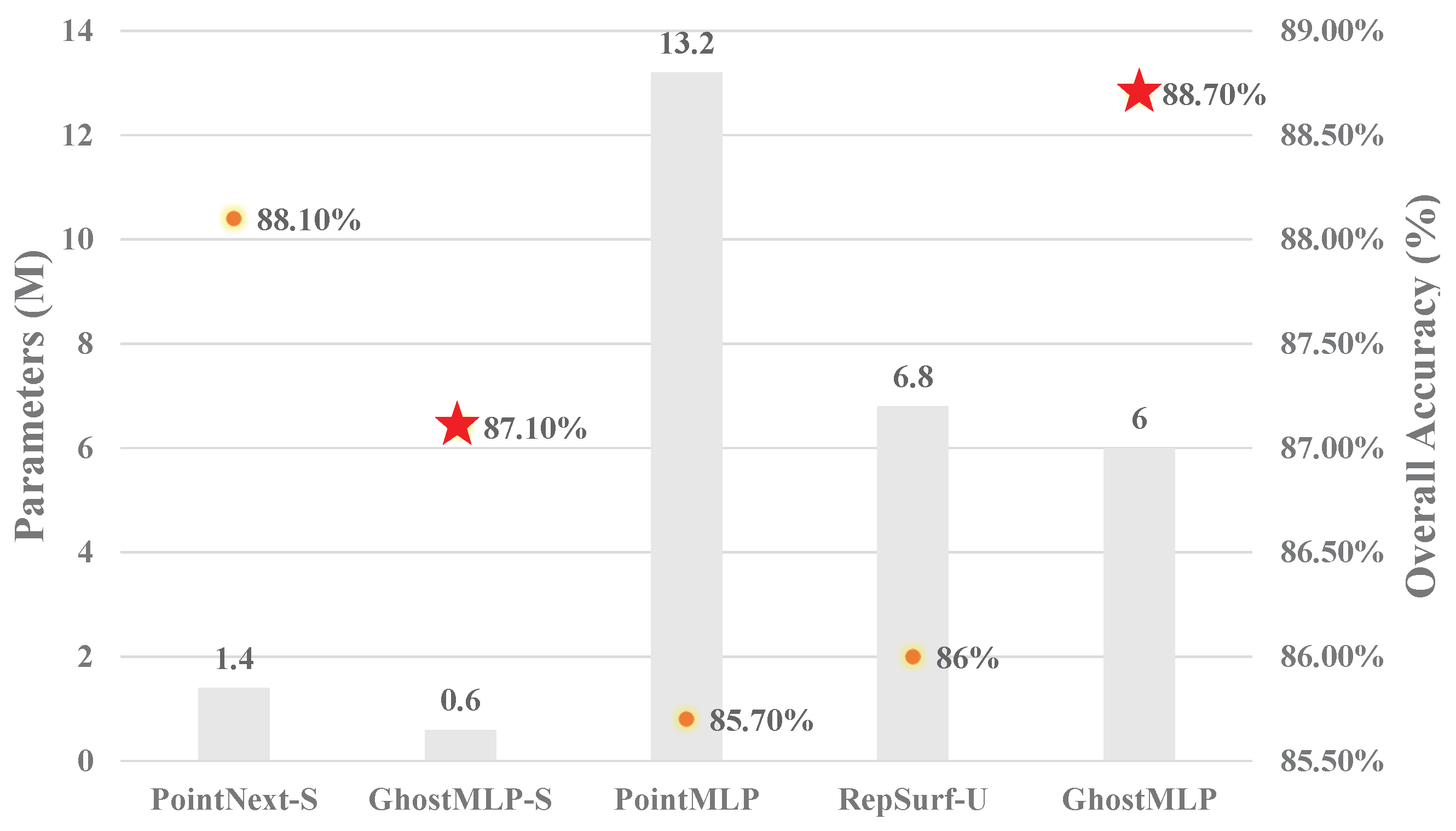

In this section, we introduce the GhostMLP architecture, shown in Figure 2. It uses “Sample_and_Group” and “Ghost Set Abstraction” as the main feature extractors in every stage. Architecturally, GhostMLP is a selective combination of two other deep learning models: PointMLP and PointNeXt. Together with suitable training strategies, GhostMLP aims to be an efficient point cloud object classification network. It extracts more features with fewer resources, which reduces computation time and makes it run faster in practical use.

Another inspiration for an efficient point cloud object classification network comes from I3D [26]. One contribution of I3D is to expand a 2D neural network into a 3D network. Conversely, a well-designed 2D neural network can be contracted into an MLP network to build a point cloud object classification network. However, contraction alone may not yield an efficient network. Therefore, we take the original GhostNet, which is designed around the concept of grouped convolution, and adapt it through this contraction idea into a network for point cloud object classification.

Furthermore, we note that the PointMLP baseline is trained without data augmentation, which limits its overall performance. Adding the PointNeXt component brings in extra data augmentation, and this improves the performance of the proposed network. Therefore, training with data augmentation can enhance both PointMLP and our proposed GhostMLP.

3.1. Framework of GhostMLP

Motivation of a lightweight PointMLP: Many advanced point cloud feature extractors are complex. The extractor in PointMLP is effective while keeping a simple framework. Despite this simplicity, it runs slowly for point cloud classification on devices with limited memory and computing resources, as many other neural networks do. We would like to save these resources for subsequent downstream tasks. We found that incorporating GhostNet components into our proposed GhostMLP significantly speeds up computation in the network.

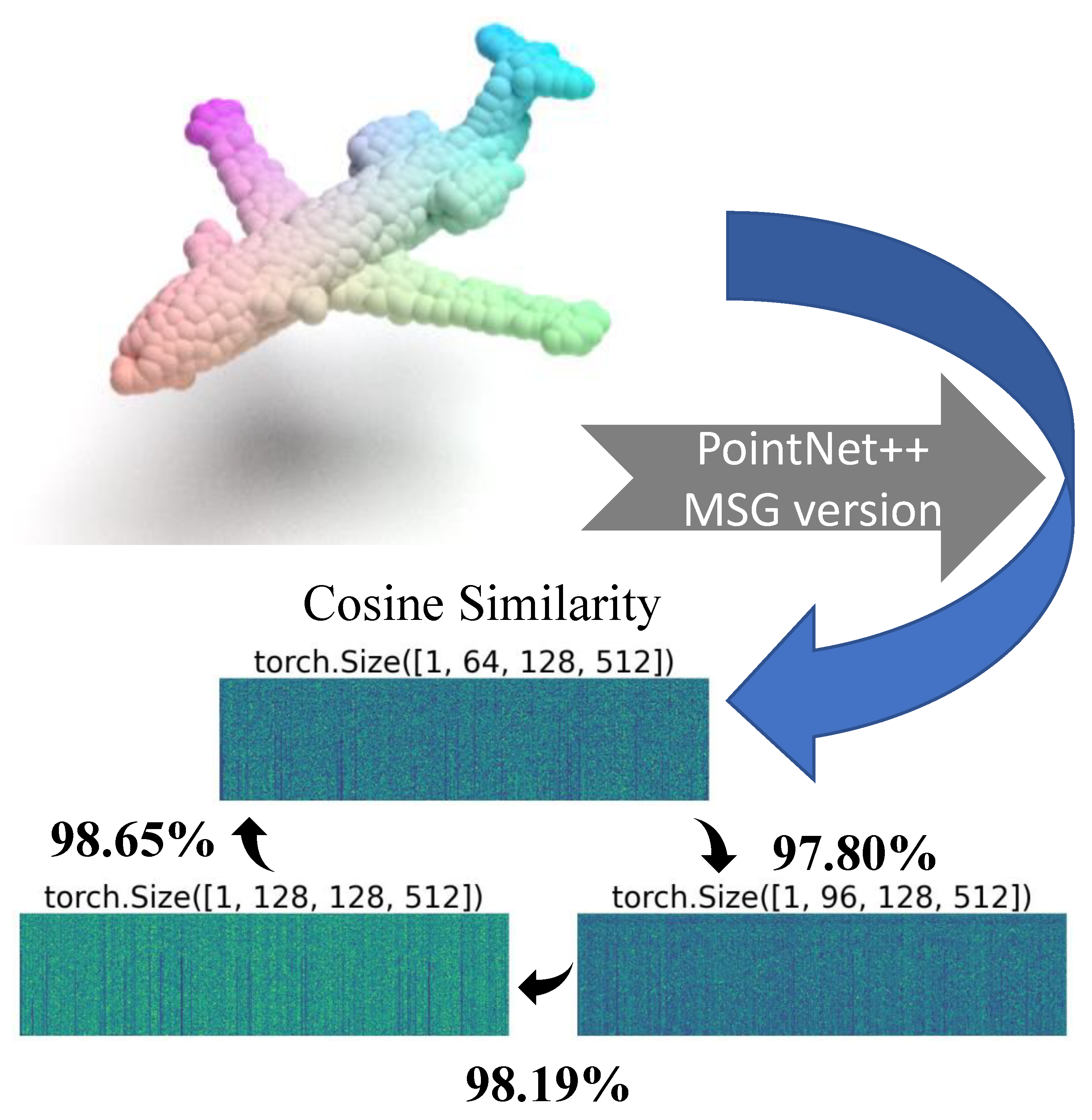

Explore the feature maps in the point cloud: Neural networks for image processing produce one kind of feature map. PointNet++ instead creates feature maps whose dimensions follow the sampled points, as shown in Figure 3. These feature maps are nontrivial, yet redundancy among them can still be found by computing their cosine similarity. These findings suggest that the concepts of GhostNet can be applied to point cloud analysis.
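The redundancy check can be sketched as follows. This is a minimal NumPy sketch. It assumes each channel of a feature map is treated as a vector over the sampled points. The helper names `channel_cosine_similarity` and `redundant_pairs` and the 0.9 threshold are hypothetical choices for illustration. They are not necessarily the exact procedure behind the analysis above.

```python
import numpy as np

def channel_cosine_similarity(features):
    """Pairwise cosine similarity between channels.

    features: array of shape (C, N), one row per channel over N sampled points.
    Returns a (C, C) matrix with ones on the diagonal.
    """
    norms = np.linalg.norm(features, axis=1, keepdims=True)
    unit = features / np.maximum(norms, 1e-12)  # guard against all-zero channels
    return unit @ unit.T

def redundant_pairs(features, threshold=0.9):
    """Channel pairs (i, j), i < j, whose cosine similarity exceeds threshold."""
    sim = channel_cosine_similarity(features)
    c = sim.shape[0]
    return [(i, j) for i in range(c) for j in range(i + 1, c) if sim[i, j] > threshold]

# Toy feature map: channel 4 is a scaled copy of channel 0, i.e. a "ghost".
rng = np.random.default_rng(0)
base = rng.standard_normal((4, 128))
feats = np.vstack([base, 2.0 * base[:1]])
pairs = redundant_pairs(feats)
```

In the toy input, only the scaled copy is flagged. Random channels are nearly orthogonal in 128 dimensions, so their similarity stays far below the threshold.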

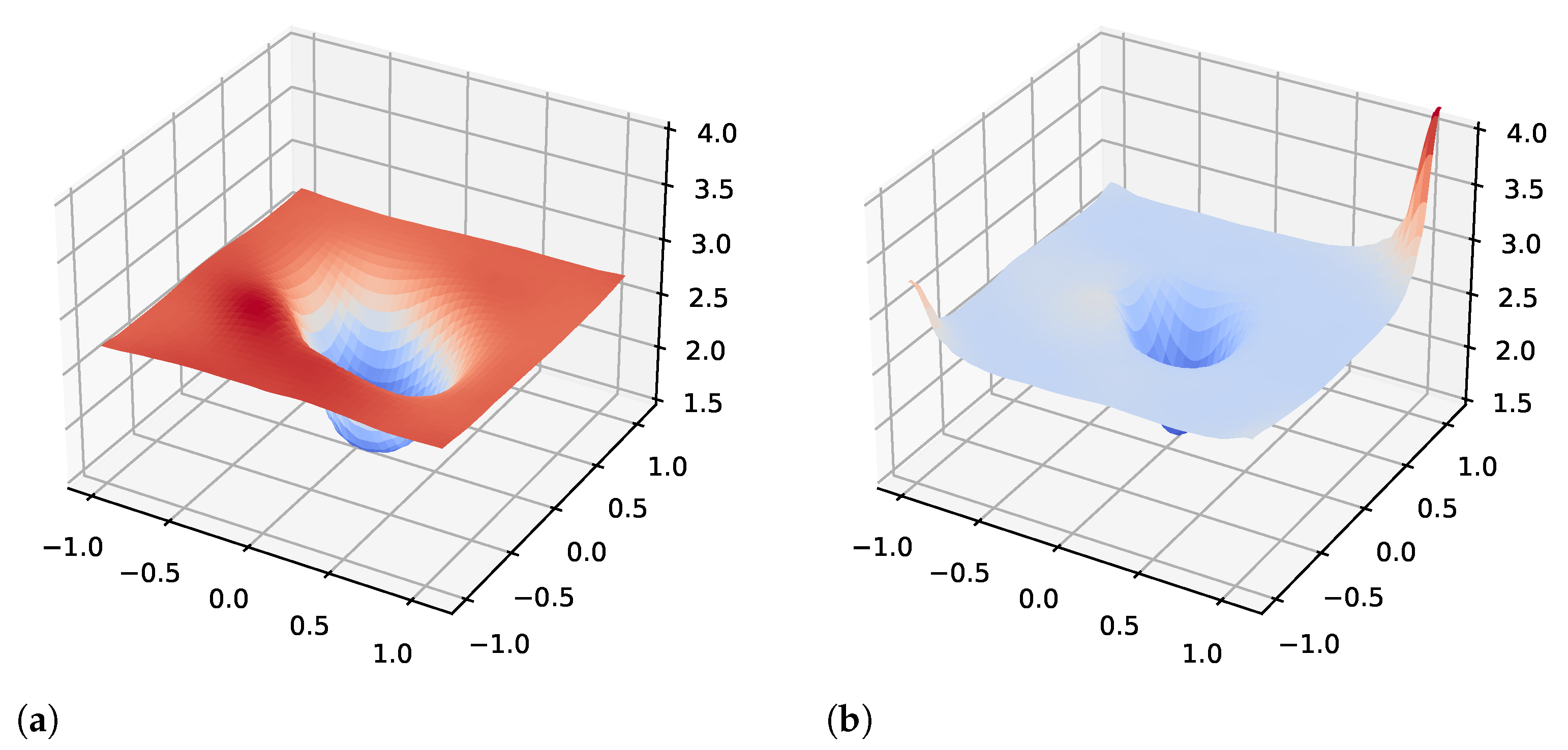

Craft the Ghost block: In this subsection, our goal is to speed up networks for point cloud classification by avoiding heavy computation that would burden downstream tasks. We noticed that adopting the GhostNet design within GhostMLP may bring significant improvement. However, using GhostNet structures directly does not meet this goal, because they still run slowly on systems with constrained memory and computation. Therefore, we modify the GhostNet blocks into two MLP blocks, denoted primary_MLP and ghost_MLP, without duplicating neural network parameters. We call them the “primary part” and the “ghost part” below, respectively. We then introduce a residual structure to combine these modified blocks and enable efficient point cloud classification.

Algorithm 1 shows the pseudocode of GhostMLP. It takes three inputs: the data, the number of channels, and the ratio. First, we set the intrinsic_channel size as the product of the number of input channels and the ratio. Then, we set up two MLP components: primary_MLP and ghost_MLP. The primary_MLP is the main feature extractor, and the ghost_MLP is the secondary “cheap operation.” Each of these two MLPs consists of a 1D convolutional layer, a batch normalization layer, and a ReLU activation function. After this initialization, the input data passes through the primary_MLP to obtain the first set of features. The ghost_MLP is then applied to the output of the primary_MLP to obtain the second set of features. Finally, the two sets of features are concatenated along the channel dimension to produce the result.

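Algorithm 1 GhostMLP in a PyTorch-like style.

    # Primary MLP: main feature extractor
    # Ghost MLP: cheap operation
    function GhostMLP(x, channel, ratio)
        Input: x, the input data
        Parameter: channel, ratio
        Output: the feature of data
        # Init the channel of primary_MLP
        intrinsic_channel = channel * ratio
        # Params: channel, intrinsic_channel
        primary_MLP = MLP(channel, intrinsic_channel)       # Conv1d + BatchNorm1d + ReLU
        # Init the channel of ghost_MLP
        ghost_channel = ...
        # Params: intrinsic_channel, ghost_channel
        ghost_MLP = MLP(intrinsic_channel, ghost_channel)   # Conv1d + BatchNorm1d + ReLU
        # Forward
        x1 = primary_MLP(x)           # first set of features
        x2 = ghost_MLP(x1)            # second set of features
        out = cat([x1, x2], dim=1)    # concatenate along the channel dimension
        return out  # The feature of data
    end function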
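To make the data flow concrete, here is a minimal NumPy sketch of the primary/ghost split, using random weights in place of trained ones. It makes three assumptions:

- The 1D convolution has kernel size 1, which makes it a shared per-point linear layer.
- Batch normalization uses batch statistics, as in training mode.
- `ghost_channel = channel - intrinsic_channel`, so the output keeps `channel` channels.

The helpers `conv1d_bn_relu`, `init_mlp`, and `ghost_mlp` are hypothetical names.

```python
import numpy as np

def conv1d_bn_relu(x, weight, bias, gamma, beta, eps=1e-5):
    """Kernel-size-1 Conv1d -> BatchNorm1d (batch statistics) -> ReLU.

    x: (B, C_in, N); weight: (C_out, C_in); bias, gamma, beta: (C_out,).
    """
    y = np.einsum("oc,bcn->bon", weight, x) + bias[None, :, None]
    mean = y.mean(axis=(0, 2), keepdims=True)
    var = y.var(axis=(0, 2), keepdims=True)
    y = gamma[None, :, None] * (y - mean) / np.sqrt(var + eps) + beta[None, :, None]
    return np.maximum(y, 0.0)

def init_mlp(c_in, c_out, rng):
    """Random stand-in parameters (weight, bias, gamma, beta) for one MLP."""
    return (rng.standard_normal((c_out, c_in)) / np.sqrt(c_in),
            np.zeros(c_out), np.ones(c_out), np.zeros(c_out))

def ghost_mlp(x, primary_params, ghost_params):
    """Primary MLP on the input, ghost MLP on the primary output, then concat."""
    intrinsic = conv1d_bn_relu(x, *primary_params)          # first set of features
    ghost_feats = conv1d_bn_relu(intrinsic, *ghost_params)  # cheap second set
    return np.concatenate([intrinsic, ghost_feats], axis=1) # along channel dim

rng = np.random.default_rng(0)
channel, ratio = 32, 0.5
intrinsic_channel = int(channel * ratio)
ghost_channel = channel - intrinsic_channel  # assumption: keep width at `channel`
primary = init_mlp(channel, intrinsic_channel, rng)
ghost = init_mlp(intrinsic_channel, ghost_channel, rng)
x = rng.standard_normal((2, channel, 64))    # (batch, channels, points)
out = ghost_mlp(x, primary, ghost)
```

The ghost MLP reads only the `intrinsic_channel` features rather than all `channel` inputs. Its weight matrix is therefore smaller than the one a single full-width MLP would need.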

In summary, the GhostMLP algorithm passes the input data through the two MLP components (primary_MLP and ghost_MLP) to extract feature maps efficiently. By concatenating the features generated by these MLPs, the design obtains more feature maps with fewer parameters than a traditional MLP design.
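The parameter saving can be checked with simple arithmetic. The sketch below counts learnable parameters for a kernel-size-1 Conv1d with bias followed by an affine BatchNorm1d. It compares one full-width MLP against the primary/ghost pair. Giving the ghost part `out_channels - intrinsic` outputs is an assumption, since the text does not state how `ghost_channel` is chosen.

```python
def mlp_params(c_in, c_out):
    """Learnable parameters of Conv1d(c_in, c_out, kernel_size=1) + BatchNorm1d(c_out)."""
    conv = c_in * c_out + c_out  # weights + bias
    bn = 2 * c_out               # affine scale and shift (running stats are buffers)
    return conv + bn

def ghost_mlp_params(channel, ratio, out_channels):
    """Primary MLP (channel -> intrinsic) plus ghost MLP (intrinsic -> remainder)."""
    intrinsic = int(channel * ratio)
    ghost = out_channels - intrinsic  # assumption: outputs are split this way
    return mlp_params(channel, intrinsic) + mlp_params(intrinsic, ghost)

plain = mlp_params(64, 64)
ghosted = ghost_mlp_params(64, 0.5, 64)
```

With 64 input and output channels and a ratio of 0.5, the count falls from 4288 to 3264, roughly 24% fewer. Lowering the ratio reduces it further.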

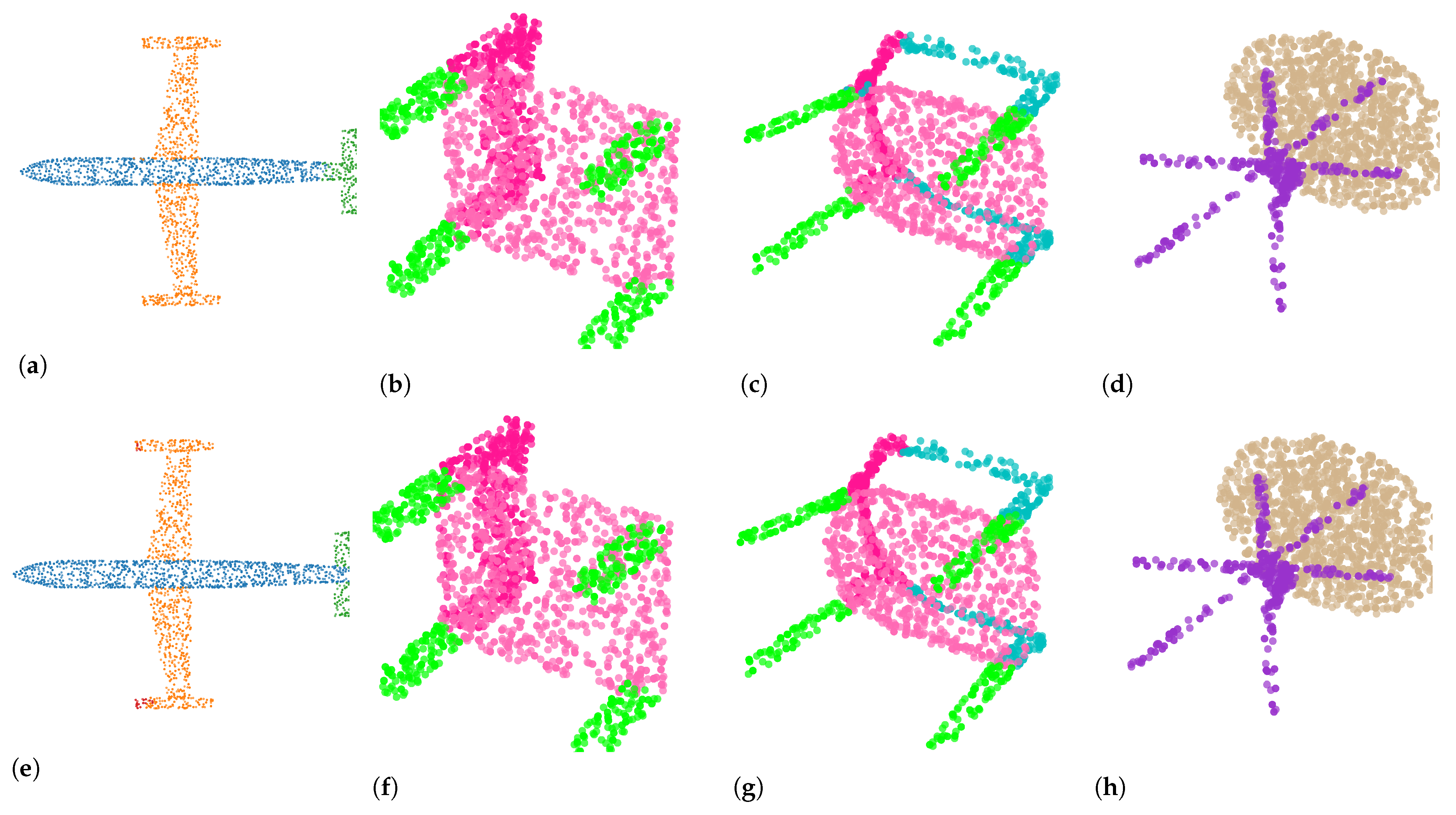

Structure of GhostMLP: Our proposed GhostMLP architecture combines the strengths of the PointNet++ and PointMLP models and adopts units such as the geometric affine module for sampling and grouping. Figure 2 shows the forward pass of GhostMLP with only one stage. We adopt the geometric affine design from PointMLP for robust performance. It lets the network adapt to the different geometric structures of different local regions when sampling and grouping the point cloud data.

Equations (1) and (2) define the structure of the geometric affine module. The first equation computes the output of the module, and the second computes the standard deviation of the feature maps of a local region.

Equation (3) represents the GhostMLP module in one network stage, which consists of two ghost set abstraction blocks and a series of MLPs. First, the input point cloud data is grouped using a module with learnable geometric affine parameters. Second, the network reshapes the data from this module so that it can pass through the subsequent MLP layers. Third, the data is sent to the module shown in Algorithm 1, followed by a MAX operation that aggregates the features in each local region.
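The three steps can be sketched end to end for a single point cloud. This is a minimal NumPy sketch built on several assumptions:

- Farthest point sampling and k-nearest-neighbor grouping handle the sampling step.
- A PointMLP-style geometric affine normalization handles the learnable grouping step.
- A single linear layer with ReLU stands in for the Algorithm 1 block.

All function names are hypothetical, and the sketch omits batching and other implementation details.

```python
import numpy as np

def farthest_point_sample(xyz, m):
    """Greedy farthest point sampling; xyz: (N, 3), returns m indices."""
    n = xyz.shape[0]
    idx = np.zeros(m, dtype=int)  # idx[0] = 0: deterministic start point
    dist = np.full(n, np.inf)
    for s in range(1, m):
        dist = np.minimum(dist, np.sum((xyz - xyz[idx[s - 1]]) ** 2, axis=1))
        idx[s] = int(np.argmax(dist))
    return idx

def knn_group(xyz, feats, centers, k):
    """Gather the k nearest neighbors (by xyz) of each sampled center.

    Returns grouped features (m, k, d) and center features (m, d).
    """
    d2 = np.sum((xyz[centers][:, None, :] - xyz[None, :, :]) ** 2, axis=-1)  # (m, N)
    nbr = np.argsort(d2, axis=1)[:, :k]
    return feats[nbr], feats[centers]

def geometric_affine(grouped, center, alpha, beta, eps=1e-5):
    """Normalize neighbors around their center by one shared scalar std."""
    diff = grouped - center[:, None, :]
    sigma = np.sqrt(np.mean(diff ** 2))  # pooled over regions, neighbors, channels
    return alpha * diff / (sigma + eps) + beta

def stage(xyz, feats, m, k, weight, alpha, beta):
    centers = farthest_point_sample(xyz, m)
    grouped, center = knn_group(xyz, feats, centers, k)
    normed = geometric_affine(grouped, center, alpha, beta)    # step 1: (m, k, d)
    flat = normed.reshape(m * k, -1)                           # step 2: reshape
    hidden = np.maximum(flat @ weight.T, 0.0)                  # step 3: stand-in MLP
    return xyz[centers], hidden.reshape(m, k, -1).max(axis=1)  # MAX per region

rng = np.random.default_rng(0)
xyz = rng.random((256, 3))
feats = rng.standard_normal((256, 8))
new_xyz, new_feats = stage(xyz, feats, m=32, k=8,
                           weight=rng.standard_normal((16, 8)),
                           alpha=np.ones(8), beta=np.zeros(8))
```

Each stage halves nothing by itself. The reduction from 256 to 32 points comes from the choice of `m`, and the MAX over the `k` neighbors makes each region's feature independent of neighbor order.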

So far, GhostMLP has a design similar to PointNet. PointNet's main contribution lies in learning features directly from point clouds, which matters because multi-layer perceptrons (MLPs) struggle with the irregularity of point clouds. However, if features already well learned by MLPs are fed into another MLP, this irregularity is no longer a challenge. Hence, a module from Algorithm 1 is placed at the bottom of the ghost set abstraction to further learn the features. In summary, our model can be expressed as follows:

where the two operators denote the MLP block in Algorithm 1 and a standard MLP, respectively, and x represents the input data. We built two variants: GhostMLP, the deeper network with 40 layers, and GhostMLP-S, with 28 layers.