1. Introduction

With the rapid development of aerospace remote sensing technology, remote sensing images are now used in a wide range of applications. Moving object detection (MOD) in aerial remote sensing image sequences is one of the most critical tasks in military surveillance and civil navigation. The MOD task refers to detecting change regions in an image sequence and extracting moving objects from the background. The extracted moving objects provide reference regions for subsequent tasks, such as action recognition [1,2], tracking [3,4,5,6,7], behavior analysis [8], and intelligent video surveillance [9,10]. Recently, advances in UAV technology have made remote sensing images easy to acquire, and relatively inexpensive UAVs are used in a wide range of applications. As the use of moving cameras grows, so does the need to detect moving objects in their footage, which makes it critical to develop robust MOD methods for moving cameras.

MOD tasks can be divided into static camera and moving camera tasks, depending on whether the camera is in motion. Research on static camera tasks is relatively mature [11,12,13], whereas moving camera tasks still require more research [14,15]. Unlike with a static camera, in an image sequence obtained by a moving camera, stationary objects appear to move because of the camera's own motion. In remote sensing image sequences, camera motion relative to the ground can be characterized as translation, rotation, and scaling. When a MOD algorithm designed for static cameras is applied to an image sequence obtained from a moving camera, it fails because the image background appears to move. For moving cameras, MOD methods must address not only all the problems that arise with a stationary camera, but also the difficulty of compensating for camera motion. Additionally, moving targets in remote sensing image sequences are typically far from the imaging platform, which leads to small target sizes and weak color and texture features. Moreover, the targets are susceptible to background interference, noise, and motion blur, which makes detection difficult. Detecting moving targets in remote sensing image sequences therefore involves many challenges, which are described in detail below.

Moving background: With a static camera, only the moving objects appear to move, but with a moving camera, the entire scene appears to move as the camera moves. Additionally, parts of the scene leave the field of view over time. This makes separating moving objects from the stationary background more difficult.

Shadows: The shadow of a moving object should generally be treated as background rather than as part of the moving object. However, many classical MOD algorithms fail to separate the shadow from the moving object itself.

Few target pixels: Remote sensing image sequences have a large field of view, so a moving target occupies very few pixels. MOD algorithms therefore need to focus on small targets.

Complex background: Remote sensing images contain many irrelevant background regions with characteristics similar to those of moving targets, as well as static targets that look the same as moving targets. Therefore, both the motion features and the semantic features of moving objects are needed for MOD.

In recent decades, many MOD algorithms for stationary cameras have been proposed, but relatively little research has directly targeted moving cameras. For moving target detection under camera motion, traditional MOD methods fall into three main types: the frame difference method, the optical flow method, and the background subtraction method [16,17,18,19,20]. The frame difference method usually compensates for background motion first and then applies static-camera frame differencing. This method is computationally simple, but it is sensitive to changes in lighting conditions and places high demands on the background motion compensation algorithm. The optical flow method is accurate because it computes the magnitude and direction of the displacement of each pixel in the image. However, it is computationally expensive and cannot run in real time. The background subtraction method identifies moving targets by subtracting a background model from the current frame, which requires the construction of a robust background model. It is the most commonly used moving target detection method and typically relies on a general background model, such as a Gaussian mixture model or kernel density estimation [21,22]. However, it performs poorly when the background moves. These algorithms do not adapt well to complex scenes and require a better balance between robustness and real-time performance. In addition, they can only detect the contours of moving targets and cannot obtain the precise coordinates and categories of each target.
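To make the two classical pixel-level ideas concrete, the following is a minimal NumPy sketch, assuming the two frames have already been registered (background motion compensated) and are 8-bit grayscale arrays. The threshold of 25 and the update rate `alpha=0.05` are hypothetical values, and the running-average background is a deliberately simple stand-in for the Gaussian mixture and kernel density models cited above, not an implementation of them.

```python
import numpy as np

def frame_difference(prev, curr, threshold=25):
    """Binary motion mask from two registered grayscale frames (uint8 arrays)."""
    # cast to a signed type so the subtraction cannot wrap around
    diff = np.abs(curr.astype(np.int16) - prev.astype(np.int16))
    return diff > threshold

def update_background(bg, frame, alpha=0.05):
    """Running-average background model: a simple stand-in for GMM or KDE models."""
    return (1.0 - alpha) * bg + alpha * frame.astype(np.float64)

def background_subtraction(bg, frame, threshold=25):
    """Foreground mask: pixels that deviate strongly from the background model."""
    return np.abs(frame.astype(np.float64) - bg) > threshold

# toy example: an empty 8 x 8 scene in which a bright 2 x 2 object appears
prev = np.zeros((8, 8), dtype=np.uint8)
curr = prev.copy()
curr[2:4, 2:4] = 200

print(int(frame_difference(prev, curr).sum()))  # 4
bg = update_background(prev.astype(np.float64), curr)  # object starts blending in
print(int(background_subtraction(bg, curr).sum()))  # 4
```

Both masks mark only the changed pixels; neither yields object coordinates or categories, which is the limitation the paragraph above points out.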

With the rise of deep learning, object detection methods based on convolutional neural networks (CNNs) have been widely used in various fields [23,24,25]. Proposed methods include two-stage algorithms, such as R-CNN, Fast R-CNN, and Faster R-CNN [26,27,28], which achieve state-of-the-art accuracy, and the end-to-end YOLO series [29,30,31,32,33,34], which achieves the highest detection speed.

Motivated by the success of CNNs in object detection, researchers have applied CNNs to MOD tasks with good results. Compared with traditional methods, CNN-based MOD methods show significant advantages in accuracy and robustness. In [35], F. Lateef et al. proposed a CNN-based method to detect moving targets in vehicular video sequences. It consists of two deep learning networks: an encoder–decoder-based network (ED-Net) and an instance segmentation network (Mask R-CNN). Mask R-CNN detects the objects of interest, and ED-Net classifies their motion (moving/static) across two consecutive frames. Finally, the outputs of the two networks are combined to detect moving targets. In [36], C. Xiao et al. proposed a moving target detection method for satellite video based on a two-stream detection network, the dynamic and static fusion network (DSF-Net). DSF-Net extracts static contextual information from single frames and dynamic motion cues from consecutive frames, and the two streams are fused layer by layer for moving target detection. In [37], H. Zhu et al. proposed a framework consisting of coarse-grained and fine-grained detection: a connected region detection algorithm extracts moving regions, and a CNN then refines the object coordinates and identifies the object classes. In [38], J. Zhu et al. combined a background compensation method for detecting moving regions with a neural-network-based approach for accurately localizing moving objects, and fused the two results to determine the moving targets. In [39], D. Li et al. used CNNs exclusively for single-strain estimation and target detection, feeding motion information from consecutive frames to the detection network as an additional input to improve detection performance.

As mentioned above, traditional motion detection methods can achieve good detection accuracy. However, they are time-consuming and can only obtain regional information rather than specific target information, such as object class and location. CNN-based approaches solve this problem by enabling the network to determine the specific location and class of each moving target. Most CNN-based algorithms divide the MOD task into two steps: they first extract motion information, either by compensating for the moving background or by extracting optical flow, and then feed it into an object detection network to obtain moving targets. These methods achieve good results, but they require additional preprocessing, which makes the detection process complex. Moreover, optical flow is expensive to compute, which makes it unsuitable for remote sensing image sequences. In summary, MOD methods designed for stationary cameras cannot be applied well to moving camera scenes, traditional image-registration-based MOD methods rely heavily on the accuracy of the registration algorithm, and deep-learning-based algorithms often need to extract motion information as an auxiliary input to the network.

To address these problems, and inspired by two-stream networks that combine motion and semantic information, we propose an end-to-end MOD method for aerial remote sensing image sequences that fuses enhanced motion information from two frames with semantic information from a single frame. Specifically, we introduce a Motion Feature Enhancement (MFE) module to perform preliminary extraction and enhancement of the motion information between two frames. The enhanced motion features are then fed into a 3D convolutional Motion Information Extraction (MIE) network to further extract motion features at different convolutional depths. Finally, the extracted motion information is fused with single-frame semantic features at the corresponding feature levels and decoded by a detection head to identify moving targets. In summary, the main contributions are as follows:

- (1) We propose a feasible MOD model, the motion feature enhancement and motion information extraction network (MFE-MIE), which enhances motion features via feature differences, extracts multilayer motion information using 3D convolution, and fuses the multilayer motion information with the corresponding semantic information to detect moving targets accurately. The proposed method is implemented entirely with CNNs and performs end-to-end detection. It requires only two input frames and no additional auxiliary information. In addition, it meets the real-time requirements of MOD tasks.

- (2) We propose an MFE module that highlights motion features between two frames by computing the difference between their features. This difference is used as a weight to suppress the background and highlight the foreground. The MFE module enhances the motion features and provides cues for subsequent processing.

- (3) We design an MIE network that uses three groups of lightweight, improved 3D convolutional layers to further extract motion information from the enhanced motion features. Compared with directly using standard 3D convolution, our MIE network is more lightweight and achieves better detection performance.

- (4) We conduct a series of experiments to validate the effectiveness of the proposed method. Experiments on two benchmark datasets demonstrate that MFE-MIE outperforms other state-of-the-art models while achieving real-time detection.

The rest of this paper is organized as follows. Section 2 presents the details of the proposed MOD model. In Section 3, we first introduce the adopted datasets and data processing methods, and then evaluate and discuss the proposed method through several experiments. Section 4 presents the discussion. Finally, the conclusions are given in Section 5.

2. Methods

In this section, we first present the overall structure of the proposed method. Then, we introduce the proposed MFE module and the MIE network.

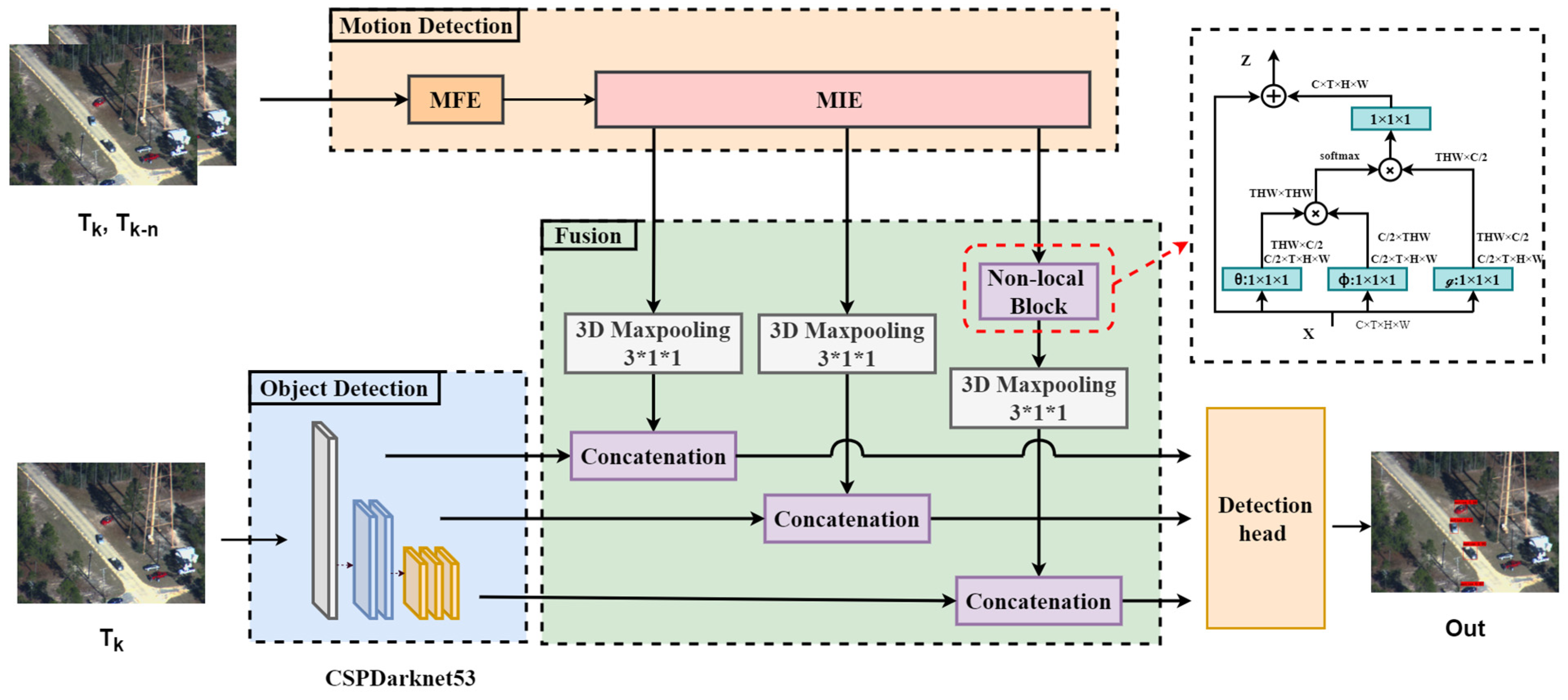

In this work, we propose a moving object detection method named MFE-MIE, which fuses motion information from two frames with object information from a single frame. The overall framework of the method is shown in Figure 1. It is composed of three main parts: motion detection, object detection, and temporal–space fusion. In the motion detection module, a pair of registered images, $T_k$ and $T_{k-n}$, are first processed by the proposed motion feature enhancement (MFE) module, which integrates their motion information, and then encoded by the proposed motion information extraction (MIE) network, which consists of improved 3D convolutions and produces motion features at three different convolutional depths. The object detection module uses the generic CSPDarknet53 [31] feature extraction network to extract object semantic information at three different convolutional depths. The temporal–space fusion module fuses the motion information from the motion detection branch with the object semantic information from the object detection branch at each corresponding level to facilitate the subsequent extraction of moving objects. Finally, the fused information is decoded by two convolutional layers to output the moving target information.

2.1. Motion Detection Module

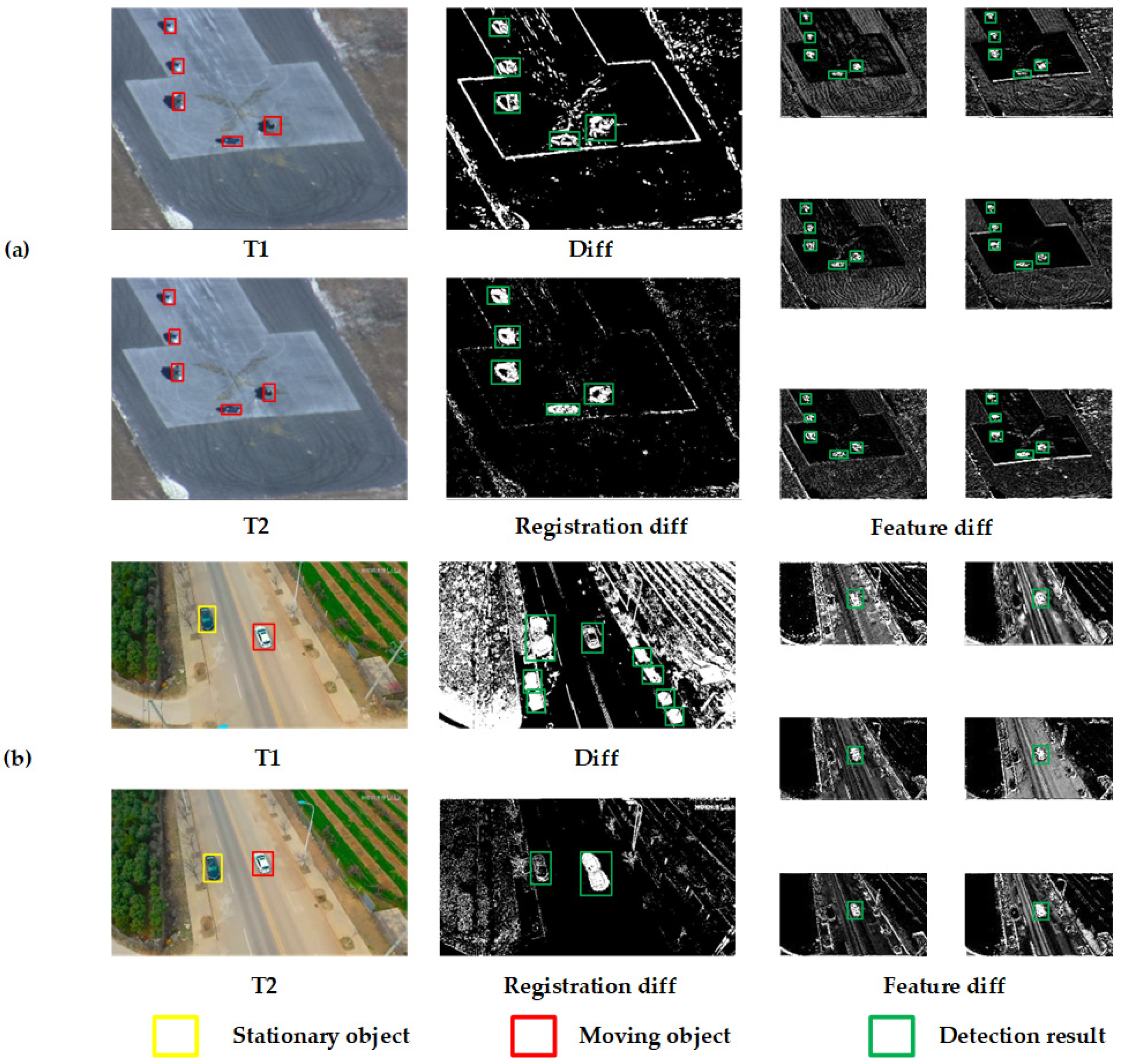

Previous motion detection methods usually rely either on the simple difference between two frames or on costly optical flow extraction to obtain motion features. However, these two approaches are poorly suited to moving target detection tasks and often result in insufficient motion information or a huge overhead. To resolve this dilemma, we propose a motion detection network consisting of the MFE module and the MIE network. It aims to effectively capture the motion information between two frames and provide cues for identifying moving targets. The MFE module integrates and enhances the motion information between the two images, and the MIE network further extracts motion information from the features output by the MFE module.

2.1.1. MFE Module

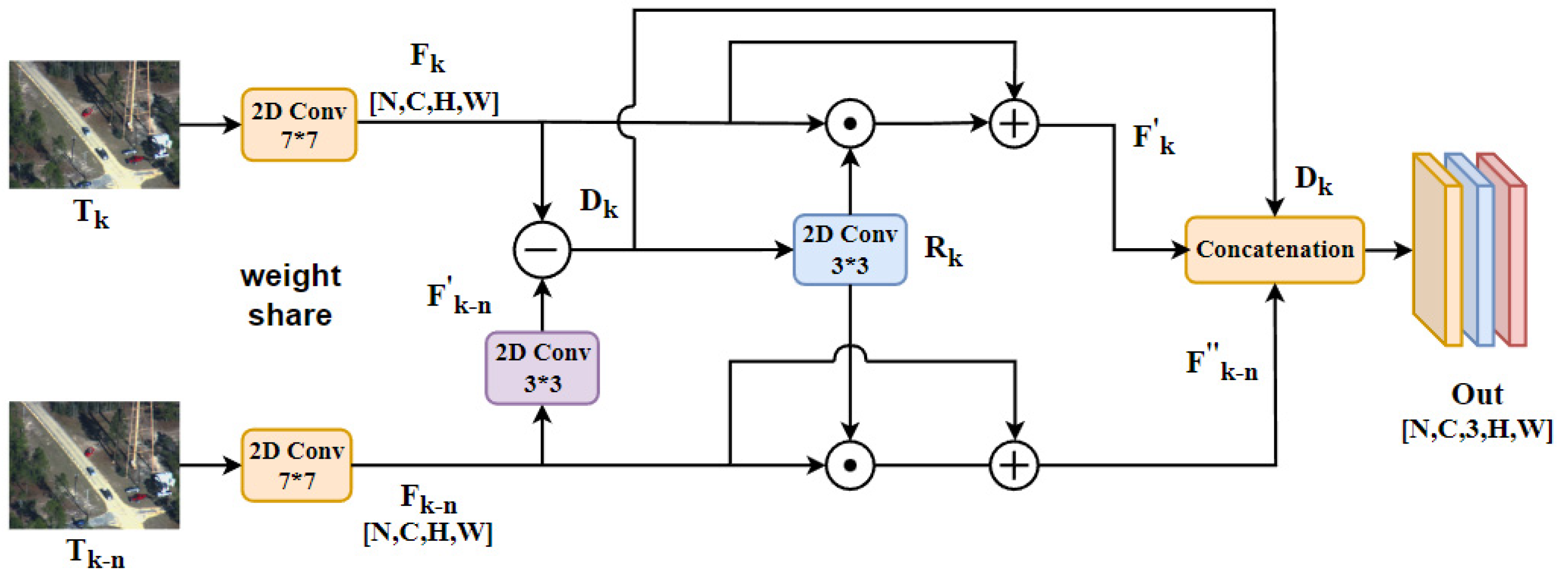

The framework of the MFE module is shown in Figure 2. It enhances the motion features between two frames, performs a preliminary extraction of motion information, and suppresses background information. It was inspired by the frame difference method [40]: we extend the difference between two frames used in the frame difference method to the difference between the feature maps of two frames, which makes it better suited to a network. Note that our goal is to find a motion representation that helps to identify motion efficiently, rather than to calculate the exact pixel displacement between two frames, as in optical flow. Therefore, we use only two RGB frames as the input and do not add precomputed optical flow images.

First, the two input images are encoded by two weight-sharing 2D convolution modules as

$$F_k = \phi_{7\times7}(T_k), \qquad F_{k-n} = \phi_{7\times7}(T_{k-n}),$$

where $T_k$ and $T_{k-n}$ denote the 3D tensors of the two input images; $\phi_{7\times7}(\cdot)$ represents a convolutional layer with a 7 × 7 filter followed by a batch normalization layer and a ReLU activation function; and $F_k$ and $F_{k-n}$ represent the feature maps of frame $k$ and frame $k-n$, respectively. Between the two frames, the moving target moves relative to the background, and the background also moves relative to the moving camera. We therefore apply a further convolutional transform to the frame $k-n$ feature to compensate for the background motion, obtaining $\tilde{F}_{k-n}$:

$$\tilde{F}_{k-n} = \phi_{3\times3}(F_{k-n}),$$

where $\phi_{3\times3}(\cdot)$ denotes a convolutional layer with a 3 × 3 filter followed by a batch normalization layer and a ReLU activation function. The difference between the feature maps of the two frames is then used to approximate motion saliency. We compute it by element-wise subtraction followed by taking the absolute value:

$$D = \left| F_k - \tilde{F}_{k-n} \right|,$$

where $D$ represents the motion difference feature and $|\cdot|$ denotes the absolute value operation.

Because $D$ contains rich motion information, we pass it through a 2D convolutional layer with 3 × 3 filters followed by a sigmoid function $\sigma(\cdot)$, which transforms the motion features into weights $A$. The two feature maps $F_k$ and $F_{k-n}$ are then enhanced by element-wise multiplication with $A$, and the original feature maps are added to the enhanced features to obtain refined motion features that suppress the background and highlight the foreground:

$$A = \sigma\!\left(\mathrm{Conv}_{3\times3}(D)\right), \qquad \hat{F}_k = F_k \oplus (A \otimes F_k), \qquad \hat{F}_{k-n} = F_{k-n} \oplus (A \otimes F_{k-n}),$$

where $\oplus$ and $\otimes$ are element-wise addition and multiplication operations, respectively.

Finally, we concatenate $\hat{F}_k$, $\hat{F}_{k-n}$, and $D$ along the time dimension:

$$M = \mathrm{Cat}_t\!\left(\hat{F}_k, \hat{F}_{k-n}, D\right),$$

where $\mathrm{Cat}_t(\cdot)$ is a feature concatenation operation along the time dimension, and $M$ denotes the output feature map of size $B \times C \times 3 \times H \times W$, where $B$ is the batch size, $C$ is the number of channels in the feature map, 3 is the number of feature maps, and $H$ and $W$ are the height and width of the feature map.

Since shallow image features mainly contain precise position and motion information, whereas deep features mainly contain semantic information, the MFE module is applied only to shallow features, and the refined motion features are further processed by improved 3D convolutions. In addition, all filters used in the MFE module are 2D convolutional kernels, so the module is lightweight.
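The data flow of the MFE module can be sketched in NumPy as below. This is an illustrative sketch, not the authors' code: it starts from already-encoded feature maps (the 7 × 7 encoder is skipped), and the learned 3 × 3 compensation layer and the 3 × 3 weight layer are replaced by hypothetical callables `compensate` and `weight_conv` that default to the identity. Batch normalization and training are omitted.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def mfe(f_k, f_kn, compensate=lambda x: x, weight_conv=lambda x: x):
    """Sketch of the MFE data flow on (B, C, H, W) feature maps.

    compensate and weight_conv are hypothetical stand-ins for the learned
    3x3 convolutions; both default to the identity for this demonstration.
    """
    f_kn_comp = compensate(f_kn)        # background-compensated frame k-n feature
    d = np.abs(f_k - f_kn_comp)         # motion difference feature D
    a = sigmoid(weight_conv(d))         # weights A in (0, 1)
    f_k_hat = f_k + a * f_k             # F_k + A * F_k (residual enhancement)
    f_kn_hat = f_kn + a * f_kn          # F_{k-n} + A * F_{k-n}
    # stack the three maps along a new time axis -> (B, C, 3, H, W)
    return np.stack([f_k_hat, f_kn_hat, d], axis=2)

rng = np.random.default_rng(0)
f_k = rng.standard_normal((2, 8, 16, 16))
f_kn = f_k.copy()
f_kn[:, :, 4:6, 4:6] += 1.0             # hypothetical "moving" patch
m = mfe(f_k, f_kn)
print(m.shape)  # (2, 8, 3, 16, 16)
```

With the identity placeholders, static positions have $D = 0$, so their features are scaled by $1 + \sigma(0) = 1.5$, whereas the changed patch is scaled by $1 + \sigma(1) \approx 1.73$. The foreground is thus amplified relative to the background; in the trained module, the learned layers decide how strongly this happens.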

2.1.2. MIE Network

For the 3D-convolution-based motion information extraction (MIE) network, shown in Figure 3, the output $M$ of the MFE module is fed into a self-developed 3D network consisting of three 3D convolutional layers, which generates motion features $M_1$, $M_2$, and $M_3$ at three different convolutional depths. Because standard 3D convolutional blocks are computationally expensive, we replace each 3D convolutional block with three convolutional layers with kernels of 3 × 1 × 1, 1 × 3 × 3, and 1 × 1 × 1 to increase speed and reduce computational cost.

First, we decompose a 3D convolutional layer with 3 × 3 × 3 filters into a 1D temporal convolutional layer with 3 × 1 × 1 filters and a 2D spatial convolutional layer with 1 × 3 × 3 filters. Then, the features are integrated through a convolutional layer with a 1 × 1 × 1 filter. Finally, to further reduce the computational cost, the number of channels in the second and third 3D convolutions is halved and then adjusted using the convolutional layer with 1 × 1 × 1 filters. The details of the MIE network are shown in Table 1.
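The savings from this factorization can be checked with simple weight counting. The sketch below compares one standard 3 × 3 × 3 convolution with the 3 × 1 × 1 → 1 × 3 × 3 → 1 × 1 × 1 decomposition, once at full width and once with the intermediate width halved. The width of 256 channels is a hypothetical value (Table 1 gives the actual configuration), biases are ignored, and interpreting "halved" as halving the intermediate width is an assumption.

```python
def conv3d_params(c_in, c_out, kt, kh, kw):
    """Weight count of a 3D convolution with a kt x kh x kw kernel (no bias)."""
    return c_in * c_out * kt * kh * kw

def full_block(c):
    # one standard 3x3x3 convolution, c -> c channels
    return conv3d_params(c, c, 3, 3, 3)

def factorized_block(c, mid):
    # 3x1x1 temporal -> 1x3x3 spatial -> 1x1x1 channel adjustment back to c
    return (conv3d_params(c, mid, 3, 1, 1)
            + conv3d_params(mid, mid, 1, 3, 3)
            + conv3d_params(mid, c, 1, 1, 1))

c = 256  # hypothetical channel width
print(full_block(c))                # 1769472
print(factorized_block(c, c))       # 851968
print(factorized_block(c, c // 2))  # 278528
```

Under these assumptions, the factorization alone removes roughly half of the weights (13 versus 27 multiples of $C^2$), and halving the intermediate width brings the block to about one sixth of a standard 3D convolution.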

We denote the spatiotemporal size by $C \times T \times S^2$, where $C$ is the number of channels, $T$ is the temporal length, and $S$ is the height and width of a square spatial crop. The MIE network was inspired by SlowFast [41]. It takes $T = 3$ frames of feature maps as the input: the frame $k$ feature, the frame $k-n$ feature, and the feature difference between them. No temporal down-sampling is performed, because down-sampling would be harmful when the number of input frames is this small; therefore, the output of each improved 3D convolutional block has a temporal dimension of $T = 3$.

Note that the designed MIE network is lightweight and computationally efficient, since it consists of only three layers of improved 3D convolution blocks. Moreover, since the 3D convolution can extract the motion information from the features, we can extract more effective motion cues from the designed MIE network.
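The following NumPy sketch illustrates one factorized block as a forward pass: a naive stride-1, zero-padded 3D convolution applied with a 3 × 1 × 1, a 1 × 3 × 3, and a 1 × 1 × 1 kernel in sequence. It shows that "same" padding without temporal striding keeps $T = 3$. It is a sketch under stated assumptions: random weights, no batch dimension, and no batch normalization or activation functions. The widths `c = 8` and `mid = 4` are hypothetical.

```python
import numpy as np

def conv3d_same(x, w):
    """Naive stride-1, zero-padded 'same' 3D convolution (cross-correlation).

    x: (C_in, T, H, W) feature volume; w: (C_out, C_in, kt, kh, kw), odd kernel sizes.
    """
    c_out, _, kt, kh, kw = w.shape
    _, t, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (kt // 2,) * 2, (kh // 2,) * 2, (kw // 2,) * 2))
    out = np.zeros((c_out, t, h, wd))
    for dt in range(kt):
        for dh in range(kh):
            for dw in range(kw):
                patch = xp[:, dt:dt + t, dh:dh + h, dw:dw + wd]
                # mix input channels with the kernel tap at offset (dt, dh, dw)
                out += np.einsum("oc,cthw->othw", w[:, :, dt, dh, dw], patch)
    return out

def factorized_block(x, w_t, w_s, w_p):
    """3x1x1 temporal conv -> 1x3x3 spatial conv -> 1x1x1 channel adjustment."""
    x = conv3d_same(x, w_t)
    x = conv3d_same(x, w_s)
    return conv3d_same(x, w_p)

rng = np.random.default_rng(0)
c, mid = 8, 4  # hypothetical widths; mid = c // 2 mimics the halved channels
x = rng.standard_normal((c, 3, 16, 16))  # T = 3: frame k, frame k-n, difference
w_t = rng.standard_normal((mid, c, 3, 1, 1))
w_s = rng.standard_normal((mid, mid, 1, 3, 3))
w_p = rng.standard_normal((c, mid, 1, 1, 1))
y = factorized_block(x, w_t, w_s, w_p)
print(y.shape)  # (8, 3, 16, 16): no temporal down-sampling, so T stays 3

# sanity check: a centred delta kernel must reproduce its input
w_id = np.zeros((c, c, 3, 3, 3))
w_id[np.arange(c), np.arange(c), 1, 1, 1] = 1.0
x_id = conv3d_same(x, w_id)
```

In a deep learning framework, each stage would be a single 3D convolution layer with the corresponding kernel size and padding; the loops here only make the arithmetic explicit.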

2.2. Object Detection Module

For the MOD task, motion features across multiple frames help to discover the boundaries of moving targets, while the semantic information of a single image helps to locate moving targets and identify their classes. Therefore, single-frame semantic information is also vital for the MOD task. Since YOLO series algorithms achieve satisfactory results in object detection, we use the CSPDarknet53 backbone in the object detection module to extract semantic information at three different convolutional depths from a single image frame. CSPDarknet53 consists of five CSP modules, each preceded by a down-sampling convolutional layer with 3 × 3 filters, and we use the outputs of its last three modules as the output features of the object detection branch.
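Because fusion happens level by level, it helps to know the spatial sizes of the three backbone outputs. The sketch below assumes that each of the five down-sampling layers is a 3 × 3 convolution with stride 2 and padding 1, which gives overall strides of 8, 16, and 32 for the last three modules. The input sizes of 608 and 640 pixels are hypothetical examples.

```python
def backbone_output_sizes(input_size, num_stages=5, keep_last=3):
    """Spatial size after each stride-2 down-sampling stage; return the last few."""
    sizes = []
    s = input_size
    for _ in range(num_stages):
        # 3x3 conv, stride 2, padding 1: floor((s + 2 - 3) / 2) + 1 == ceil(s / 2)
        s = (s + 1) // 2
        sizes.append(s)
    return sizes[-keep_last:]

print(backbone_output_sizes(608))  # [76, 38, 19]
print(backbone_output_sizes(640))  # [80, 40, 20]
```

The motion features produced by the MIE network must reach the same spatial sizes at each level for the fusion step to be well defined.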

2.3. Temporal–Space Fusion Module

In this section, we fuse information from the motion detection and object detection branches to identify moving targets using both motion and semantic information. Each branch extracts feature maps at three different convolutional depths.

First, we feed the third output of the motion detection branch into a nonlocal block [42] to further integrate its motion features across channels. The nonlocal block is effective at integrating information between channels, but it is computationally expensive, so we apply it only to the last motion feature. Then, for the first two motion features and for the third motion feature output by the nonlocal block, the information along the temporal dimension is fused into the channel dimension by pooling; this study tested two pooling methods, max pooling and average pooling, for this step. This yields three motion features at different convolutional depths whose spatial dimensions correspond to those of the three object features produced by the object detection branch. Since the features to be fused at each level have the same spatial resolution, following the findings of C. Feichtenhofer [43], we tested two fusion methods: computing the element-wise sum of the two feature maps at each spatial location, and stacking the features along the channel direction.
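The shape bookkeeping of this fusion step can be sketched as follows. The sketch assumes that temporal fusion is a max or mean over the time axis of a $(B, C, T, H, W)$ tensor, omits the nonlocal block, and uses a hypothetical 19 × 19 spatial size. The channel counts of 1024 (motion) and 768 (semantic) echo the configuration reported in the Discussion.

```python
import numpy as np

def temporal_pool(m, mode="max"):
    """Collapse the time axis of a (B, C, T, H, W) motion feature into (B, C, H, W)."""
    return m.max(axis=2) if mode == "max" else m.mean(axis=2)

def fuse(motion, semantic, mode="concat"):
    """Fuse (B, C_m, H, W) motion features with (B, C_s, H, W) semantic features."""
    if mode == "sum":
        # element-wise summation needs identical channel counts and spatial sizes
        if motion.shape != semantic.shape:
            raise ValueError("sum fusion requires identical shapes")
        return motion + semantic
    # channel concatenation only needs identical spatial sizes
    if motion.shape[2:] != semantic.shape[2:]:
        raise ValueError("concat fusion requires identical spatial sizes")
    return np.concatenate([motion, semantic], axis=1)

rng = np.random.default_rng(0)
motion = temporal_pool(rng.standard_normal((1, 1024, 3, 19, 19)), mode="max")
semantic = rng.standard_normal((1, 768, 19, 19))
fused = fuse(motion, semantic, mode="concat")
print(fused.shape)  # (1, 1792, 19, 19)
```

Calling `fuse(motion, semantic, mode="sum")` with these inputs raises a `ValueError`, which illustrates why concatenation allows the two branches to use different channel counts.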

4. Discussion

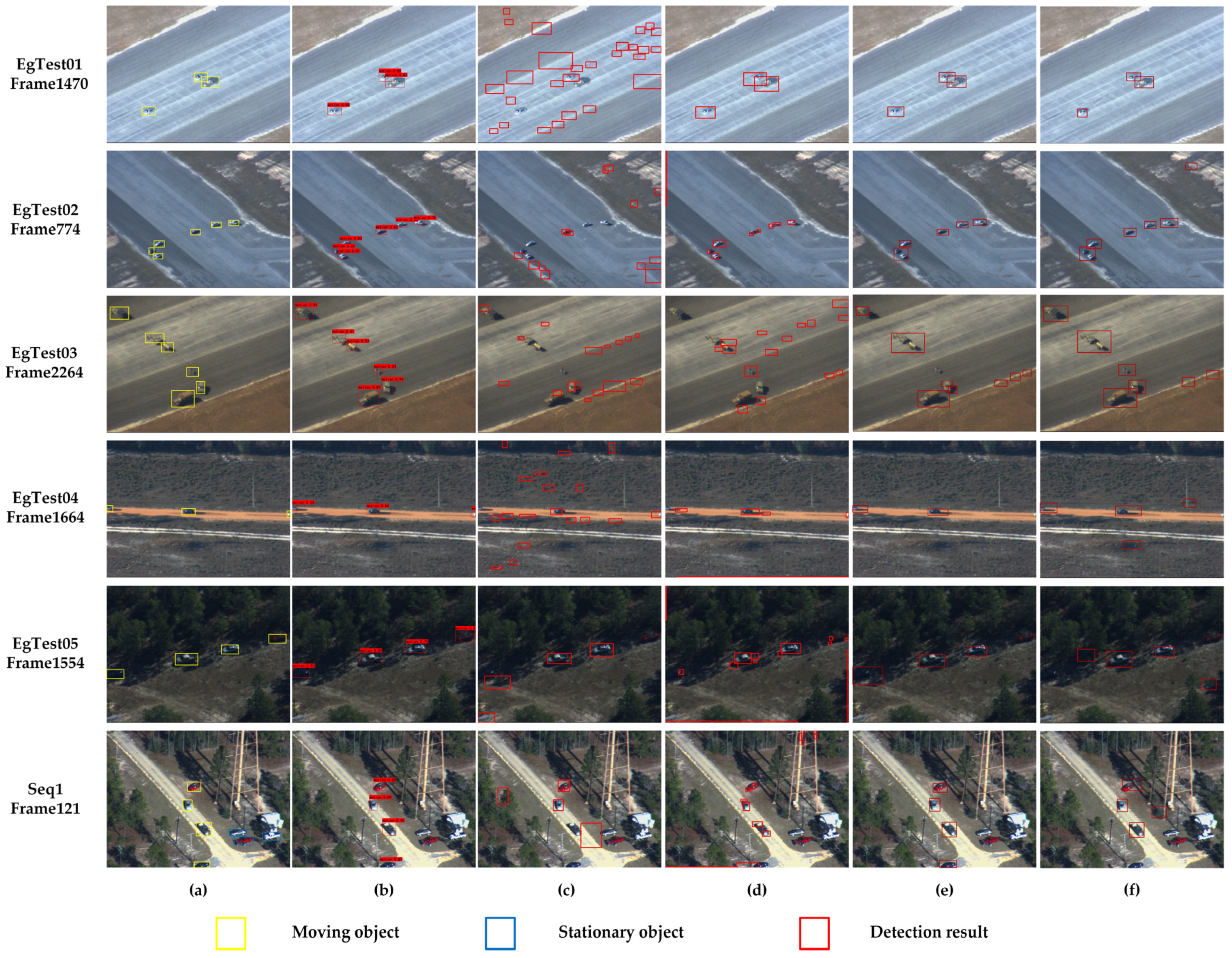

In this work, inspired by the strong performance of two-stream networks that combine spatial and temporal information for action recognition, we proposed an end-to-end, real-time moving object detection model for remote sensing image sequences. The experimental results show that MFE-MIE achieves a real-time detection speed (25.25 fps) with the highest F1 score (0.93). In addition, ablation experiments quantitatively demonstrate that the proposed MFE module enhances motion feature extraction. We also proposed an MIE network based on improved 3D convolution and experimentally demonstrated that it improves accuracy and reduces the false alarm rate.

In Section 3, we analyzed which pooling method better retains motion information when fusing the temporal dimension of the motion features. The experiments show that max pooling retains more information than average pooling, increasing precision, recall, and F1 score by 1.45%, 5.78%, and 0.04, respectively.

Additionally, we applied the background-compensation convolutional layer at different positions and examined its influence on the detection results. The results show that enhancing the features with the weights, without background compensation, achieves better detection results. This is because multiplying the compensated features by the weights artificially weakens the motion information between the subsequent enhanced features of frames $k$ and $k-n$, which hinders the further extraction of motion information by the MIE network. During motion feature enhancement, the original features should be preserved and enhanced as much as possible, rather than artificially reducing the interframe information, which would harm the subsequent motion information extraction.

We also studied the impact of different ways of fusing motion and semantic information on the MOD task. The results show that the precision of concatenation along the channel was 1.89% lower than that of element-wise summation for the same feature dimension. Nonetheless, this sacrifice in precision is worthwhile, as it brings gains of 0.07 in F1 score and 14.5% in recall. Concatenation along channels is also more flexible than element-wise summation: element-wise summation requires the motion and semantic features to have the same spatial dimensions and number of channels, whereas concatenation only requires the same spatial dimensions. When we set the number of semantic feature channels to 768 and the number of motion feature channels to 1024, we achieved the same F1 score (0.93) as when both branches had 1024 channels, while also reducing the overall detection time.
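The trade-off between a small loss in precision and a large gain in recall follows from the definition of the F1 score as the harmonic mean of precision and recall. The operating points below are hypothetical numbers chosen only to illustrate this effect; they are not the measurements reported in this paper.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)

# hypothetical operating points, not the paper's measurements
print(round(f1_score(0.95, 0.80), 4))  # 0.8686
print(round(f1_score(0.93, 0.94), 4))  # 0.935
```

Because the harmonic mean is dominated by the smaller of the two values, raising a low recall improves F1 far more than a two-point drop in precision reduces it.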

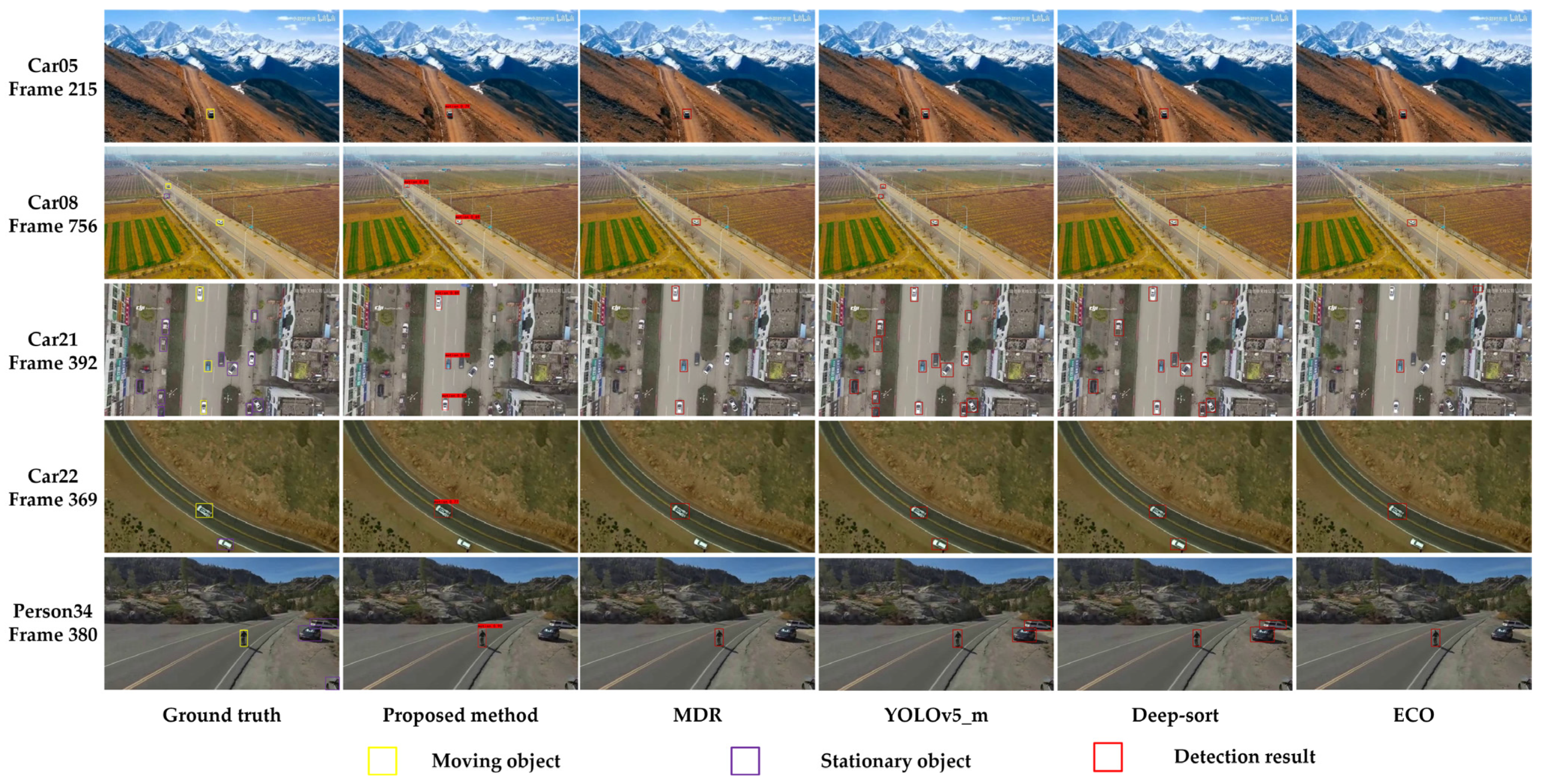

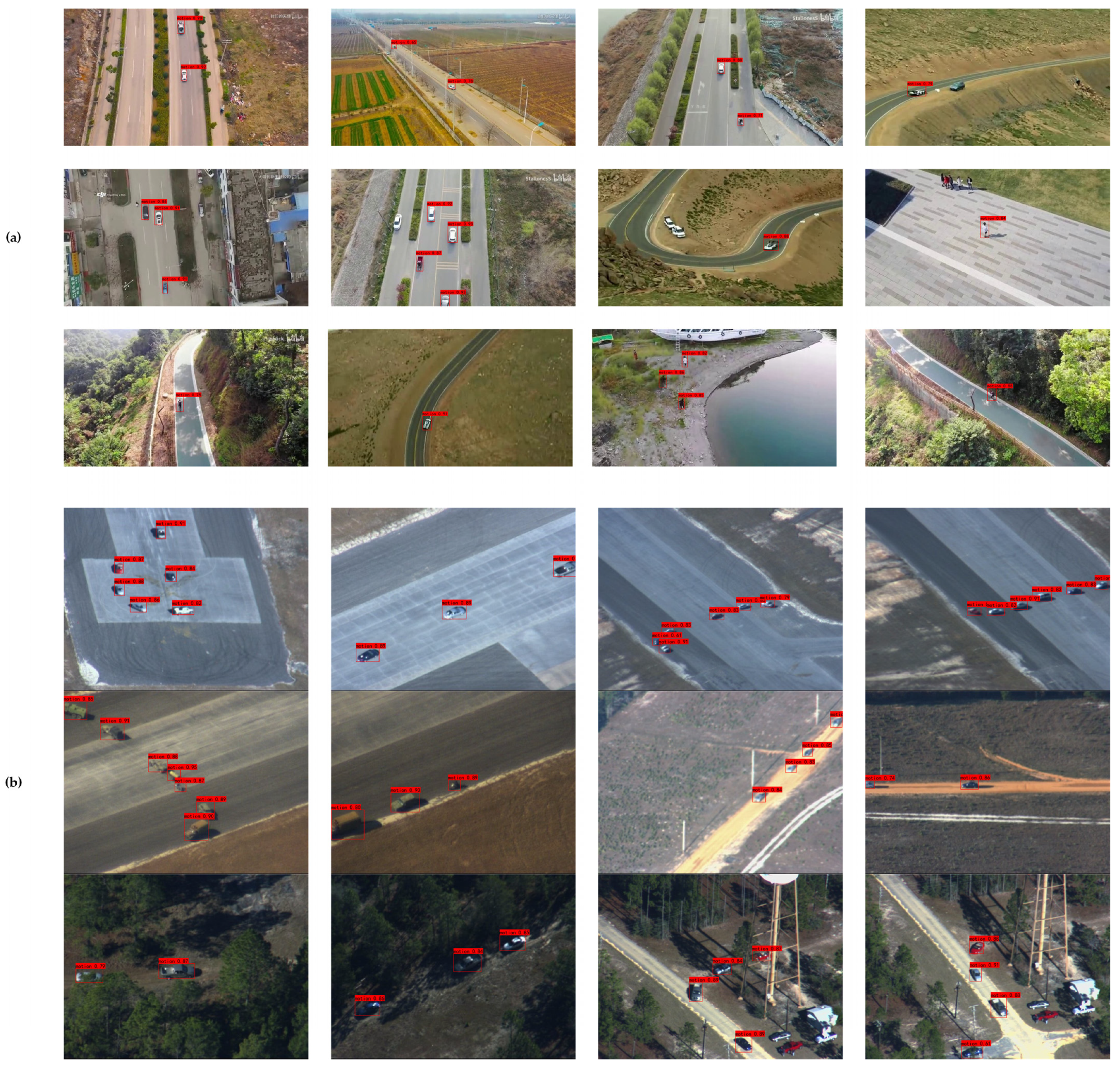

The experimental results on the VIVID dataset show that, compared with classical MOD methods, the proposed method localizes moving objects better and achieves real-time detection. In contrast, the performance of the classical algorithms is affected by the registration method, and they cannot achieve real-time detection; their performance also depends on postprocessing. The proposed algorithm achieves the best detection performance across different image sequences, which shows that combining motion information with semantic information is effective for MOD tasks.

The detection results on the MDR105 dataset show that our method, which is designed specifically for the MOD task, achieves the best detection results among the deep-learning-based methods compared. Unlike the algorithm presented in [38], our method is implemented entirely with CNNs. It requires only two RGB images as input, with no additional data, and outputs the detection results in an end-to-end manner. Object detection algorithms and multiobject tracking algorithms can both detect all objects in an image; however, they cannot tell whether an object is in motion.

The detection results on the VIVID and MDR105 moving object datasets show that, in most cases, the proposed method accurately locates moving objects, even though the targets in VIVID are smaller. Nevertheless, our method produces some false detections and missed detections. When a target's displacement is small, the motion detection branch fails to capture its motion, so our method treats the moving target as stationary, resulting in a missed detection. This reflects the limited motion detection capability of the current design. In addition, our method of fusing motion information with semantic information is relatively simple.