1. Introduction

Seabed sediment classification is of great significance in the fields of acoustic remote sensing [1], marine engineering [2], seabed mapping [3], and mineral resource development [4]. With the development of society and the economy, the ocean has received increasing attention. Traditionally, seabed sediment data are acquired mainly by physical sampling with devices such as clam samplers and gravity samplers. These sampling methods have obvious disadvantages: they are time consuming and expensive, they cannot easily provide continuous, large-area data, and they are difficult to apply in the deep sea [5]. Compared with traditional methods, acoustic seabed classification (ASC) not only reduces the cost of acquiring seabed sediment data but also greatly improves efficiency [6]. Moreover, continuous and large-area seabed sediment information can be obtained. Furthermore, ASC has been shown by many researchers to be a very effective method for seabed sediment classification [7]. Because different types of seabed sediments have different reflection and absorption coefficients for acoustic waves, classifiers can be constructed from the acoustic echo data [8].

In recent years, many network structures with powerful classification capabilities have been proposed. GoogLeNet improves feature expression by increasing both the depth and the width of the network. Its Inception module makes the network sparser and achieves better classification performance with only a modest increase in computational requirements [9]. The residual connection in ResNet adds the input of a block to its output through an identity shortcut, which alleviates the vanishing-gradient and degradation problems. ResNet can be easily optimized, and its performance can be increased with depth [10]. DenseNet has been reported to outperform both GoogLeNet [9] and ResNet [10]. Its dense connections, in which each layer receives the outputs of all preceding layers as input, enhance feature propagation, encourage feature reuse, mitigate vanishing gradients, and allow for a more compact network structure [11]. The emergence of Transformer has broken the dominance of the convolutional neural network (CNN) in the field of image classification. The attention mechanism used in Transformer is able to pick out the small amount of important information from a large amount of input and ignore the mostly irrelevant remainder. Transformer performs very well on large-scale datasets, but its performance on small-scale datasets is only average [12]. Ding et al. proposed a novel local preserving dense graph neural network with an autoregressive moving average filter and context-aware learning method for hyperspectral image classification. This method has the advantages of better capturing the global graph structure, being more robust to noise, retaining local features in the convolutional layer, and making feature extraction easier [13]. A novel multifeature fusion network was proposed to address the problem that most graph neural networks ignore pixel-wise spectral-spatial features in hyperspectral image classification. This method uses a multiscale graph neural network to refine multiscale spatial features and deal with the problem of insufficient labeling, and uses a multiscale convolutional neural network to extract multiscale pixel-wise local features [14].
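The difference between residual and dense connectivity can be illustrated with a toy sketch. The helper `toy_layer` is a hypothetical stand-in for a convolutional layer and is not part of any of the cited architectures; the only point is that a residual block sums its input with the layer output, whereas a dense block concatenates the outputs of all earlier layers.

```python
def toy_layer(inputs, width):
    """Hypothetical stand-in for a convolutional layer: emits `width` features."""
    mean = sum(inputs) / len(inputs)
    return [mean + i for i in range(width)]


def residual_block(x):
    # ResNet-style shortcut: the block output is F(x) + x, so the width is unchanged.
    fx = toy_layer(x, len(x))
    return [f + v for f, v in zip(fx, x)]


def dense_block(x, num_layers, growth_rate):
    # DenseNet-style connectivity: every layer reads the concatenation of the
    # block input and all earlier layer outputs, then appends `growth_rate`
    # new features to that running concatenation.
    features = list(x)
    for _ in range(num_layers):
        features = features + toy_layer(features, growth_rate)
    return features


x = [1.0, 2.0, 3.0, 4.0]
res_out = residual_block(x)
dense_out = dense_block(x, num_layers=3, growth_rate=2)
print(len(res_out), len(dense_out))
```

In this sketch the residual output keeps the input width, while the dense block grows by `growth_rate` features per layer and still carries the original input unchanged at the front of the feature list.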

In recent decades, most seabed sediment classifiers have been based on traditional machine learning or deep learning [15], using methods such as decision trees (DT) [16], random forests (RF) [17], support vector machines (SVM) [18], and CNNs [19]. ASC mainly uses seabed sediment information collected by multibeam echosounder systems (MBES), sub-bottom profilers (SBP), and side-scan sonar (SSS) to achieve seabed sediment classification.

Combining MBES bathymetric data with backscatter intensity data, multisource data were used to construct a classification model based on the SVM with the Askey–Wilson kernel function. This method can improve the performance of the prior sample input classifier [20]. The curve of backscattering intensity versus incidence angle was fitted by the least squares method using a genetic algorithm, after which four characteristic parameters were extracted from the MBES data with a good fitting effect and input into the K-medoids clustering model [21]. Zhao et al. constructed a classification model based on RF, which can achieve good classification results with fewer computational resources. A feature extraction method called the Weyl transform was used to characterize MBES backscatter images, and the effects of different feature extraction methods at different scales were discussed. The experimental results showed that the RF classification method based on the Weyl transform performs better than methods based on some traditional features [3]. A Bayesian classification method using multifrequency MBES data was proposed by Gaida et al. Using multiple frequencies allows better discrimination of seabed sediments than using a single frequency [22]. Pang et al. extracted multiple features from MBES data, selected features using RF, and fed the selected features into three classifiers: SVM, K-Nearest Neighbor (KNN), and DT. The experimental results showed that all three classifiers performed well, with no less than 99% accuracy [23].

He et al. proposed a wavelet back propagation (BP) neural network classification model based on the preferred characteristic parameter selection method, using the relative backscatter intensity difference and the attenuation compensation residual in the SBP data [24]. In order to overcome the problem of unsoundness in the attribute calculation of SBP data, a classification method was constructed based on a combination of relaxation time, Wigner–Ville distribution, and modified variational mode decomposition [25]. Zheng et al. proposed a classification method for seabed sediments based on the Biot model and SBP data. This method performed well only for soft seabed sediments, such as mud and sand; it is not applicable to hard sediments, such as rock [26]. In addition, Wang et al. used the grain size parameters of offshore seabed sediment samples as the input features of the classifier and proposed an efficient sediment classification model based on XGBoost [27].

The entropy, standard deviation, and intensity of SSS image pixel values were used as the input to the classifier to effectively identify different types of seabed sediments [28]. Multiple first-order and second-order features of SSS images were extracted, and after feature selection, the three features of standard deviation, kurtosis, and correlation were combined. This combined feature was then used as the input to SVM and DT classifiers, among which the SVM with a linear kernel performed best [29]. Compared with optical images, acoustic images have a lower resolution, a problem that Annalakshmi et al. addressed with a super-resolution method. First, low-resolution SSS images were converted to high-resolution images using super-resolution techniques. Then, the texture features of the images were extracted using the local orientation mode. Finally, the super-resolution images were classified using SVM. Classification using the super-resolution images performed better than classification using the original low-resolution images [30]. Traditional seabed sediment classification extracts features from acoustic data and then uses the extracted features for classification. Berthold et al. achieved effective seabed sediment classification by directly feeding SSS images into a CNN, which automatically learns effective features during training and then uses them for classification. This approach also gave good results [31]. Xi et al. discussed the effect of different training functions of the BP network on convergence time and seabed sediment classification accuracy. According to extensive experiments, the network trained with the trainlm function, which uses the Levenberg–Marquardt algorithm, converges faster and has good accuracy [32].
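As an illustration of the first-order statistics mentioned above (entropy, standard deviation, and kurtosis of pixel values), the following is a minimal sketch that computes them for a flat list of gray levels. The function name and the toy patch are assumptions made for illustration; the cited studies may define or normalize these features differently, and second-order features such as correlation are not covered here.

```python
import math
from collections import Counter


def first_order_features(pixels):
    """Return (entropy in bits, standard deviation, kurtosis) of gray levels."""
    n = len(pixels)
    mean = sum(pixels) / n
    var = sum((p - mean) ** 2 for p in pixels) / n
    std = math.sqrt(var)

    # Shannon entropy of the gray-level histogram.
    counts = Counter(pixels)
    entropy = -sum((c / n) * math.log2(c / n) for c in counts.values())

    # Kurtosis as the fourth central moment divided by the squared variance
    # (non-excess form); defined as 0.0 for a constant patch.
    m4 = sum((p - mean) ** 4 for p in pixels) / n
    kurtosis = m4 / var ** 2 if var > 0 else 0.0
    return entropy, std, kurtosis


# Hypothetical 8-pixel patch with two equally frequent gray levels.
patch = [0, 0, 255, 255, 0, 255, 0, 255]
entropy, std, kurtosis = first_order_features(patch)
print(entropy, std, kurtosis)
```

A feature vector of this kind could then be passed to a conventional classifier such as an SVM or a decision tree.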

SSS has the following advantages. First, it can provide high-resolution images [33], which is particularly important for seabed sediment classification. Second, it can obtain continuous two-dimensional underwater acoustic images. Third, it costs less than MBES. Thus, SSS is widely used in underwater archaeology [34], underwater target recognition [35], and underwater building inspection [36]. These are also the reasons why we use SSS data for seabed sediment classification.

Furthermore, if the sample size of the dataset is small, many deep learning algorithms will find it difficult to learn the exact mapping relationships. To solve the small-sample problem, many data augmentation methods have been proposed. Data augmentation based on basic image processing mainly consists of geometric transformations such as flipping, cropping, rotation, and translation [37]. These methods have alleviated the data shortage to some extent. However, they add only a limited amount of information, can lead to repeated memorization of the data, and may change the original semantic annotation of the image if applied inappropriately. The development of deep learning techniques in recent years has provided a new solution for data augmentation [38]. Goodfellow et al. proposed generative adversarial networks (GAN), which learn data distributions to achieve data augmentation [39]. Zhang et al. proposed SAGAN, which can use cues from all feature locations to generate details [40]. GAN or SAGAN can generate a large number of new high-quality image samples, but training the model consumes a lot of computing resources.
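The basic geometric augmentations mentioned above can be sketched in a few lines. This is only an illustration on a small nested-list "image"; the function names and the toy tile are assumptions, and it is not the augmentation pipeline used in this study, which relies on SAGAN.

```python
def flip_horizontal(img):
    # Mirror each row left to right.
    return [row[::-1] for row in img]


def flip_vertical(img):
    # Reverse the order of the rows (top to bottom).
    return [row[:] for row in img[::-1]]


def rotate90_clockwise(img):
    # Reverse the rows, then transpose: the top row becomes the right column.
    return [list(col) for col in zip(*img[::-1])]


def augment(img):
    """Return the original tile plus three geometrically transformed copies."""
    return [img, flip_horizontal(img), flip_vertical(img), rotate90_clockwise(img)]


# Hypothetical 2 x 3 tile of gray levels.
tile = [[1, 2, 3],
        [4, 5, 6]]
for variant in augment(tile):
    print(variant)
```

Every variant contains exactly the same pixel values as the original tile, which illustrates why such transformations add only a limited amount of new information.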

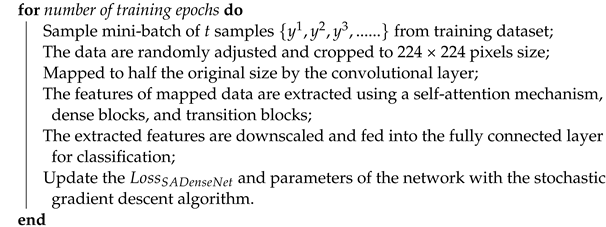

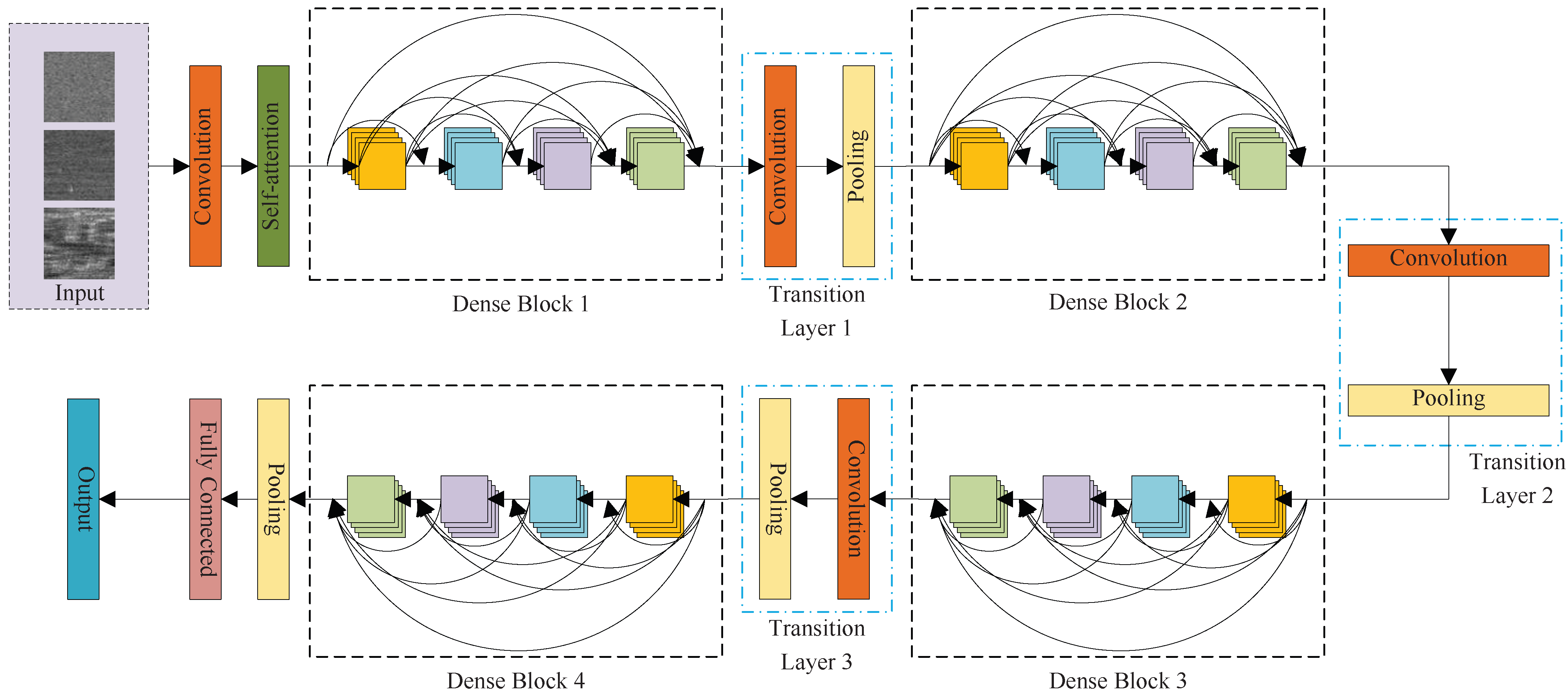

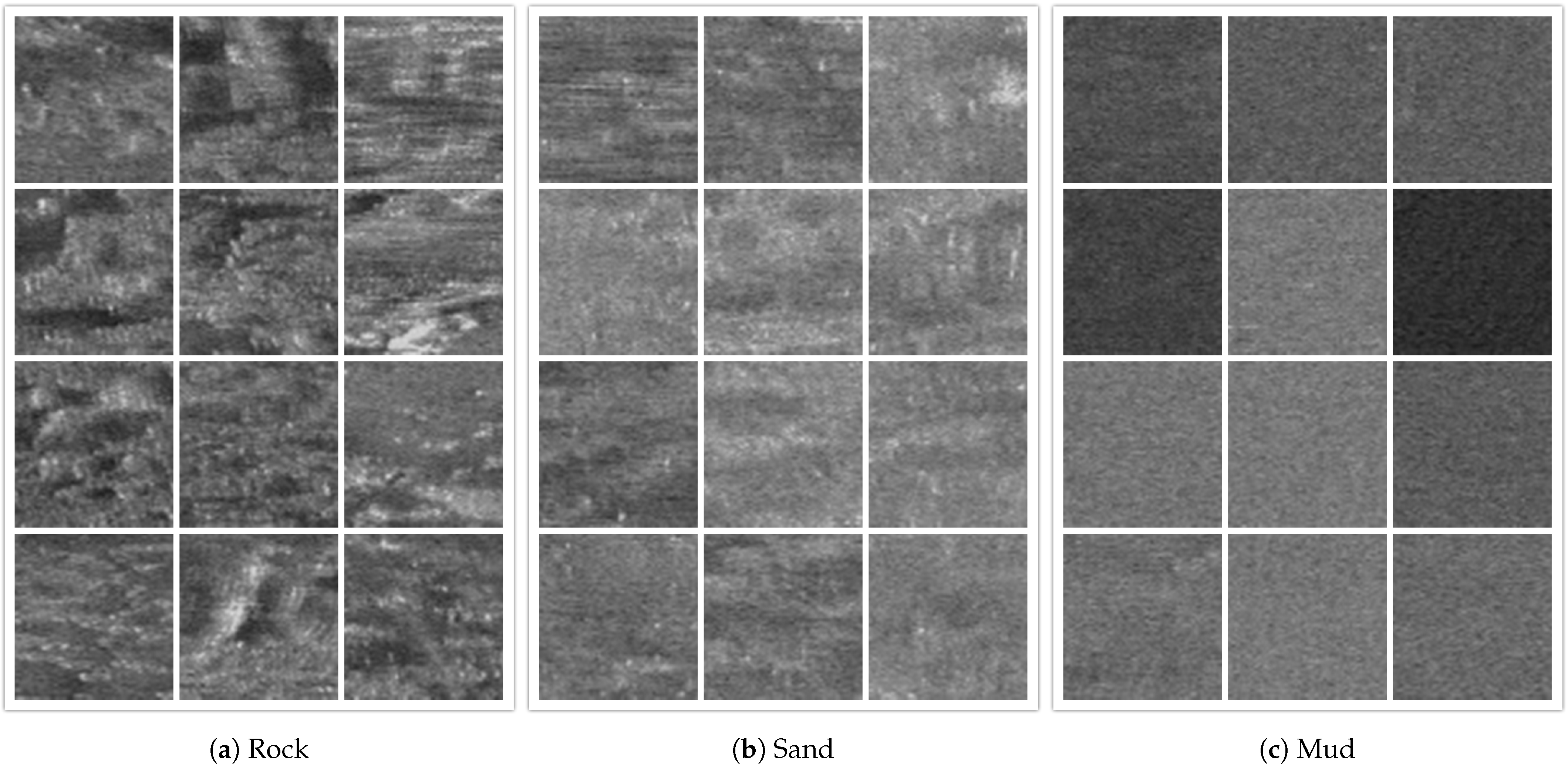

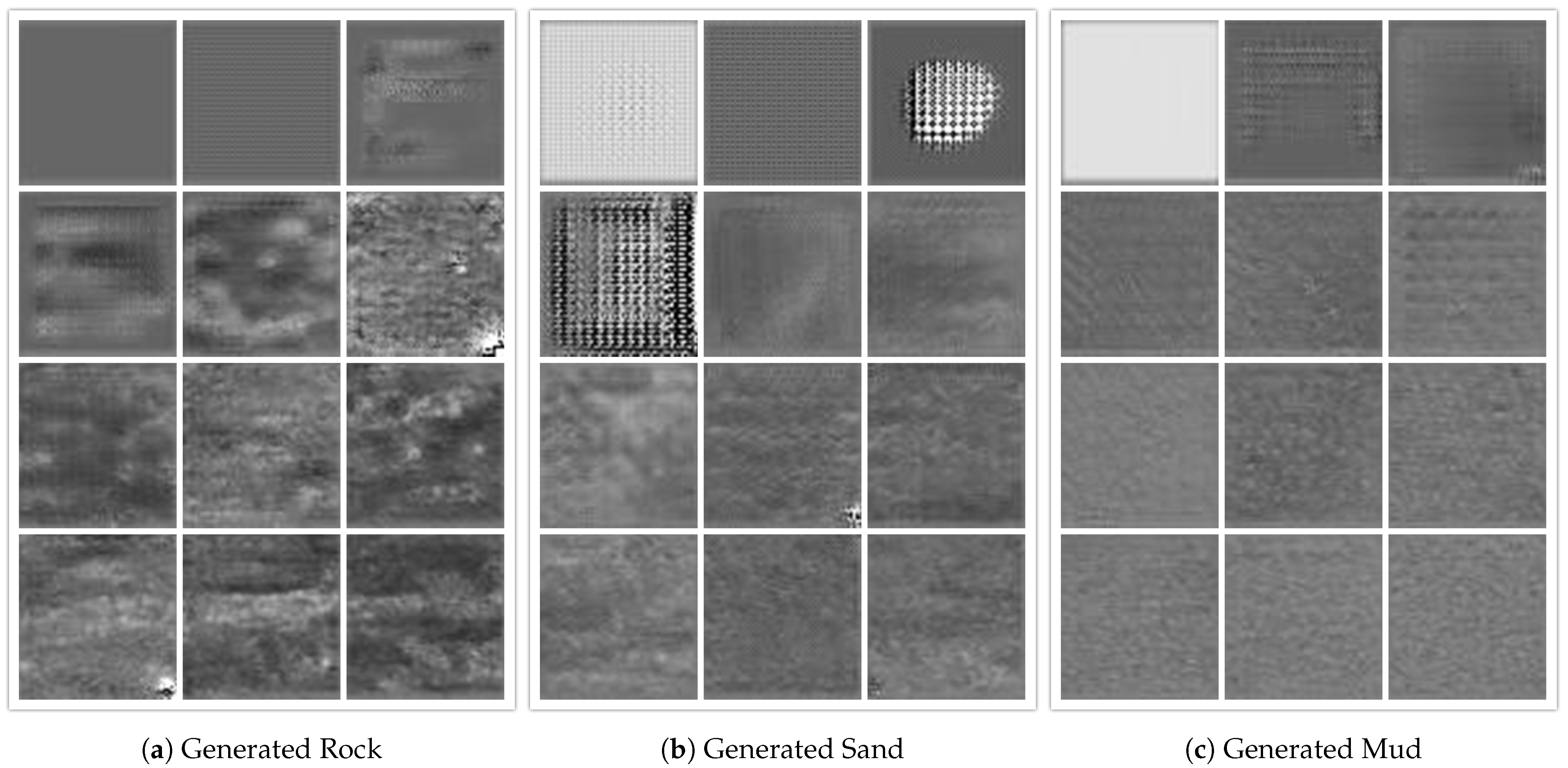

To sum up, it is extremely time consuming, expensive, and difficult to collect large amounts of seabed sediment data. Deep learning classification algorithms are data driven, so it is particularly important to obtain a large amount of training data through image augmentation. Previous researchers have made substantial contributions; however, how to use small-sample data to establish an effective seabed sediment classifier has not been considered. In addition, compared to the data in CIFAR-10 and ImageNet, the features of seabed sediment images are relatively abstract, and some images are visually similar to noise, making it difficult to extract their image features. In order to resolve the data shortage and build a classifier with better performance, SAGAN was introduced, and a new classifier called SADenseNet was proposed. SAGAN is used to generate additional images from the small set of samples, and the generated and original images are then used together to train the classifier. SADenseNet aims to raise the accuracy of seabed sediment classification.

There are three contributions of this study: (1) The self-attention mechanism is introduced for the first time in the classification of small-sample seabed sediments; (2) SAGAN was used to achieve the augmentation of the training data, and the classifier can be better trained with both original and generated images; (3) A novel seabed sediment classifier called SADenseNet is proposed, which has better classification performance compared with the state-of-the-art methods.

We use SSS and sampling data collected in the Weihai sea area to validate our proposed methods. The remainder of this paper is organized as follows. Section 2 describes our survey area, data, and the proposed methods. Section 3 presents the experiments and results. The experimental results are discussed in Section 4. The conclusions are given in Section 5.

4. Discussion

In seabed sediment classification, to build a classifier with higher classification accuracy and kappa coefficient, there are several approaches, as follows:

- (1) Improving the data quality of seabed sediments by eliminating the influence of the disturbance factors during collection.

- (2) Constructing a better feature extractor.

- (3) Building a better classifier that can better distinguish between different types of seabed sediments.

- (4) Using more data to train the seabed sediment classifier so that the classifier can be trained better.

In this study, we investigate factors (2)–(4) above as follows.

SAGAN is used for data augmentation, which enables SADenseNet to have more training samples to better learn the differences between different types of seabed sediments, thus improving the classification accuracy.

Moreover, a deep-learning-based neural network called SADenseNet is constructed, which performs both feature construction and classification of seabed sediment images. The self-attention mechanism introduced in SADenseNet enables the network to better distinguish seabed sediments.

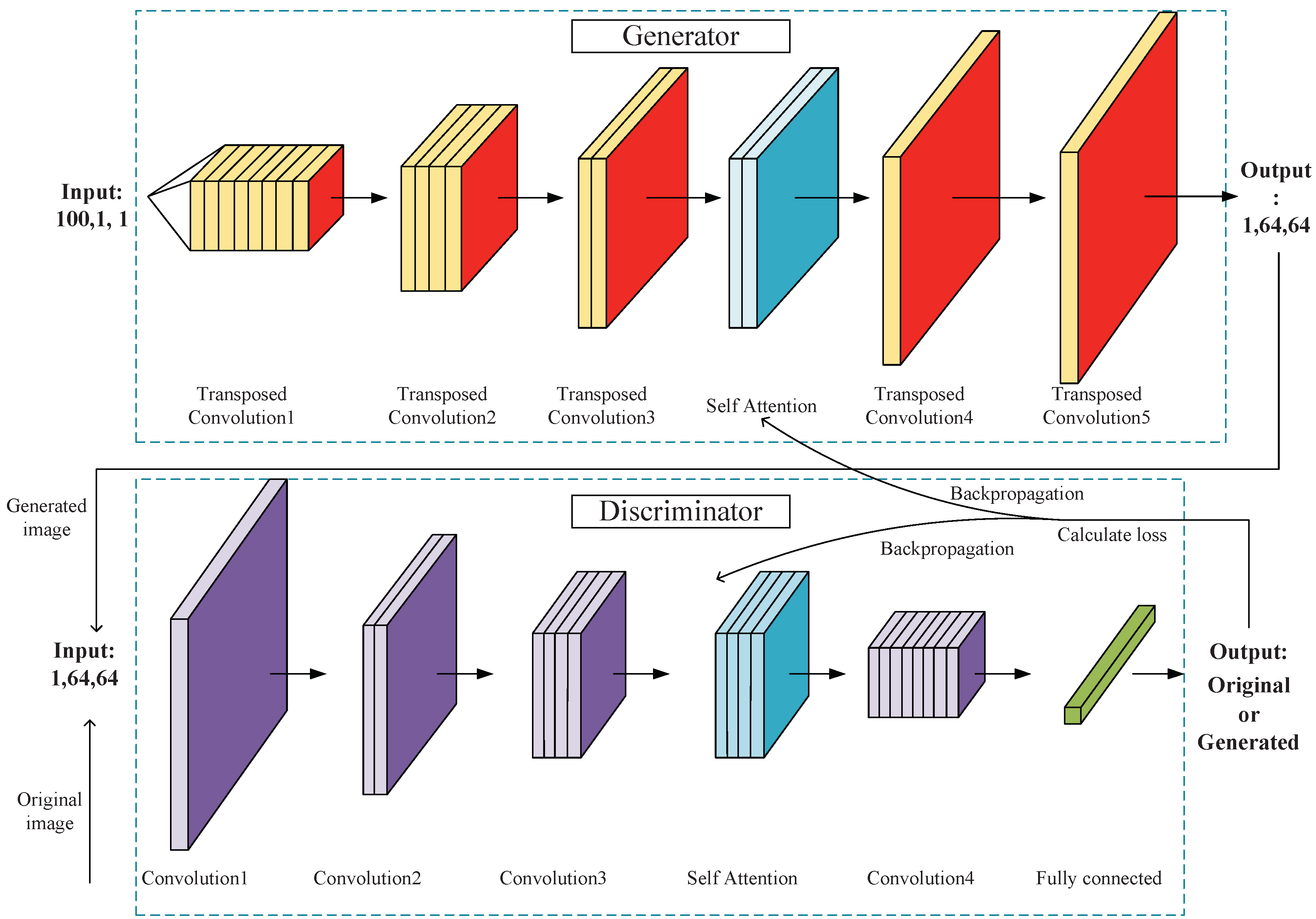

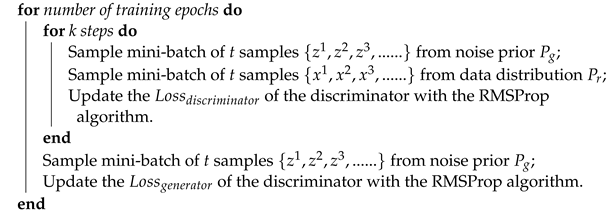

4.1. Image Augmentation

The texture structure presented by SSS images in some sea areas may span a large spatial neighborhood, and some classes of images are even close to a random distribution. Therefore, in order to better capture the global features of images and generate clear images, larger convolutional kernels as well as deep neural network structures are required. However, such a GAN is often difficult to train and rarely produces satisfactory images. In order to obtain good-quality images, the self-attention mechanism was introduced into the GAN, giving the network called SAGAN. By adding a self-attention layer to the GAN, the network gains a strong global information extraction capability [39]. In order to improve the network’s ability to analyze and generalize global features, both the discriminator and the generator adopt a deep network structure. The addition of the self-attention layer also further enhances the network’s ability to process long-range information, enabling the generated images to reflect the texture patterns of the original images over a larger spatial range [53].
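How a self-attention layer lets every spatial position draw on all other positions can be sketched as follows. This is a simplified illustration, not the layer used in our network: it assumes identity query, key, and value projections (a real layer would learn 1 × 1 convolutions for these), and the residual weight `gamma` is a plain argument rather than a learned parameter.

```python
import math


def softmax(scores):
    # Subtract the maximum for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(features, gamma=1.0):
    """features: list of N position vectors. Returns gamma * attention(x) + x."""
    out = []
    for query in features:
        # Similarity of this position to every position, however distant.
        scores = [sum(q * k for q, k in zip(query, key)) for key in features]
        weights = softmax(scores)
        # Weighted sum of all position vectors (identity value projection).
        attended = [sum(w * v[d] for w, v in zip(weights, features))
                    for d in range(len(query))]
        # Residual form: the attended signal is added back onto the input.
        out.append([gamma * a + x for a, x in zip(attended, query)])
    return out


# Hypothetical feature map flattened to three positions with two channels each.
feats = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(self_attention(feats, gamma=0.5))
```

With `gamma=0.0` the layer reduces to the identity, so the attended signal can be blended in gradually as `gamma` grows.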

We can see from Figure 7 that as the number of training epochs increases, the generated images gradually change from abstract to concrete and from blurred to clear. In the end, when an image is randomly drawn from the manually selected images, experts cannot tell whether it was produced by the generator. The Fréchet inception distance (FID) between the generated and real images is 47.29, which is a good result compared to many advanced GANs [54]. Furthermore, the images that have been manually selected have a smaller FID than those that have not. In addition, the classification results obtained using generated images as the test set are very similar to those obtained using real images. All of these results support the effectiveness of SAGAN. We add the generated images to the training set to increase the feature richness of the dataset and thus improve the final classification performance of the classifier. Although in theory this should improve the processing of seabed sediment data, experiments are still needed to demonstrate its effectiveness. We describe and analyze the effectiveness of this method in Section 4.3.
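For readers unfamiliar with the FID, the following sketch shows the Fréchet distance between two Gaussians fitted to feature vectors. It makes a strong simplifying assumption: the covariances are treated as diagonal, so the matrix square root reduces to per-dimension terms. The standard FID uses full covariance matrices of Inception features, so this is not how the value of 47.29 was computed, and the toy feature vectors are hypothetical.

```python
import math


def mean_and_var(vectors):
    """Per-dimension mean and (population) variance of a list of vectors."""
    n = len(vectors)
    dim = len(vectors[0])
    mu = [sum(v[d] for v in vectors) / n for d in range(dim)]
    var = [sum((v[d] - mu[d]) ** 2 for v in vectors) / n for d in range(dim)]
    return mu, var


def fid_diagonal(real_feats, fake_feats):
    # Frechet distance between N(mu_r, diag(var_r)) and N(mu_f, diag(var_f)):
    #   ||mu_r - mu_f||^2 + sum(var_r + var_f - 2 * sqrt(var_r * var_f))
    mu_r, var_r = mean_and_var(real_feats)
    mu_f, var_f = mean_and_var(fake_feats)
    mean_term = sum((a - b) ** 2 for a, b in zip(mu_r, mu_f))
    cov_term = sum(vr + vf - 2.0 * math.sqrt(vr * vf)
                   for vr, vf in zip(var_r, var_f))
    return mean_term + cov_term


# Hypothetical 2-D features; the "fake" set is the "real" set shifted by 1.
real = [[0.0, 0.0], [2.0, 2.0]]
fake = [[1.0, 1.0], [3.0, 3.0]]
print(fid_diagonal(real, real), fid_diagonal(real, fake))
```

A distance of zero means the two fitted Gaussians coincide; larger values indicate that the generated features drift away from the real ones in mean, in spread, or in both.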

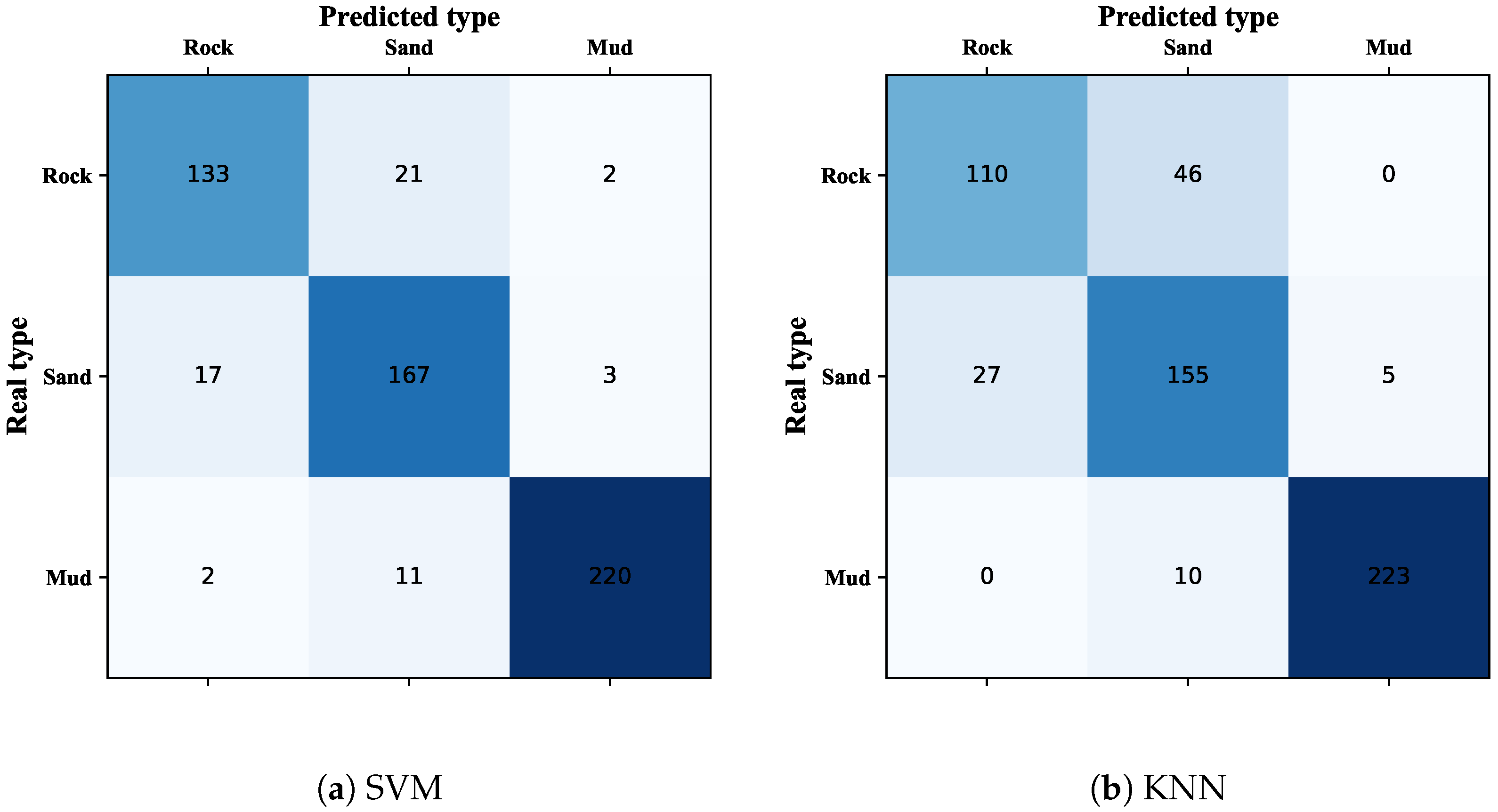

4.2. Classification Accuracy Using Original SSS Images

We can see from the data in Table 8 that the traditional machine learning methods have good classification results, especially SVM, which performs better than many deep-learning-based classification models. Deep-learning-based classification models are data-driven, and a large amount of training data is required for these networks to adequately learn the characteristics of different types of sediments. Because the training set in this group of experiments was small, some of the deep-learning-based classification models performed worse than the traditional machine learning models. In contrast, SVM requires only a small amount of data to determine the separating hyperplane, so it also performs well under small-sample conditions. ViT has the worst performance. The number of parameters of each deep learning model is given in Table 7. Compared to the other deep-learning-based classification models, ViT is a very large model, which means that more data are needed to train it to satisfactory performance. Our training set is very small, which makes it difficult to train ViT adequately. Furthermore, ViT is much more data-dependent than convolutional neural networks of various structures. GoogLeNet and DenseNet introduce the inception module and dense connection, respectively. The inception module concatenates feature maps of different sizes, and the dense connection improves feature flow between layers and enhances feature reuse. Both reduce the data dependency of the network to some extent. In small-sample classification tasks, a network with an inception module or dense connections will have better classification accuracy and kappa coefficients than a network without them.

The proposed SADenseNet has higher sediment classification accuracy and larger kappa coefficients than the state-of-the-art models. The self-attention mechanism is introduced into the proposed SADenseNet. With the current development of deep learning technology, the feature extraction ability of deep networks is becoming more and more powerful, which inevitably produces a large amount of feature redundancy while improving network performance. Self-attention is similar to human vision in that it can automatically distinguish important information from global information, reduce the interference of unimportant information, and reduce the waste of computational resources caused by redundant features. In addition, the self-attention mechanism can better capture the global features of images and improve the classification performance of the network. The proposed SADenseNet also introduces dense connectivity. Dense connectivity improves the information flow between layers, with each layer obtaining input from all previous layers and passing its output to each subsequent layer, which greatly enhances feature reuse and thus improves the performance of the network. Moreover, each layer of the network is designed to be narrow, which reduces the number of parameters of the network, decreases the computational cost, and makes the network easier to train.

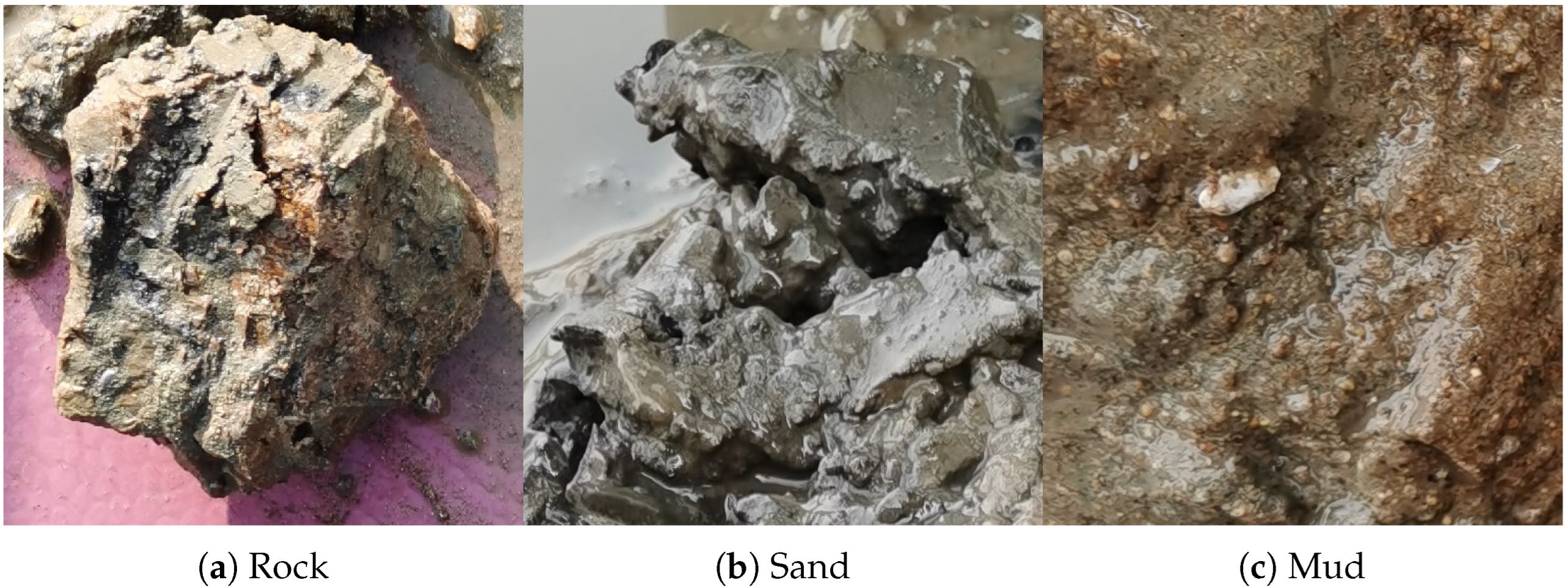

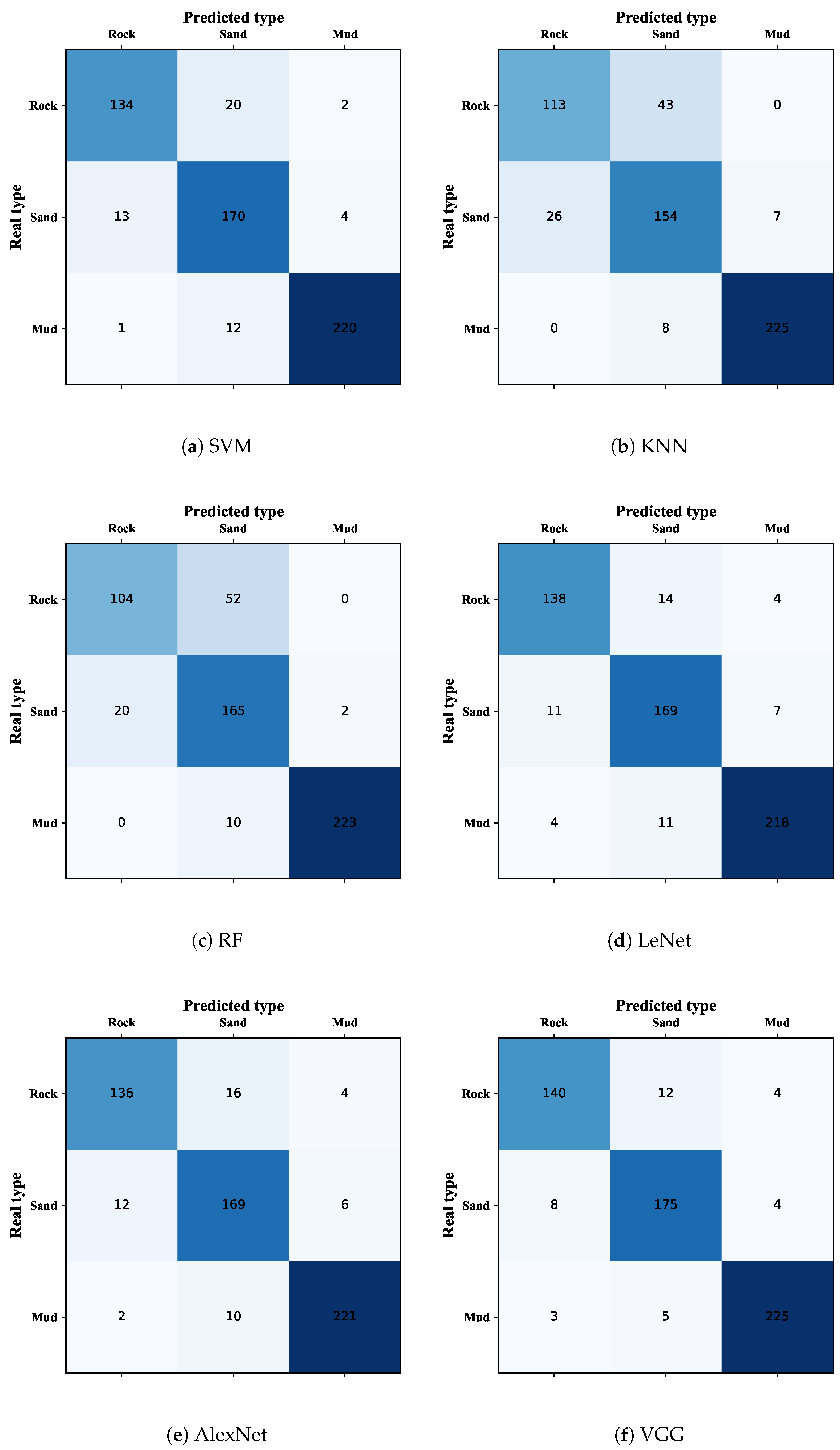

As can be seen from the confusion matrix of each classification model in Figure 9, the models rarely identify rock as mud or mud as rock. This is because there is a large difference in both physical grain size and texture characteristics between rock and mud. Sand with a larger grain size has features more similar to those of rock, which causes the models to confuse rock and sand. Similarly, sand with a smaller grain size has characteristics similar to those of mud, which also causes misclassification.
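The two metrics used throughout this discussion, overall accuracy and the kappa coefficient, can both be derived from a confusion matrix. The sketch below uses a hypothetical three-class matrix (rows are true classes, columns are predicted classes, in the order rock, sand, mud); the numbers are invented for illustration and are not taken from Figure 9 or Table 8.

```python
def accuracy_and_kappa(cm):
    """Overall accuracy and Cohen's kappa from a square confusion matrix."""
    k = len(cm)
    total = sum(sum(row) for row in cm)
    # Observed agreement: fraction of samples on the diagonal.
    observed = sum(cm[i][i] for i in range(k)) / total
    # Chance agreement: product of matching row and column marginals.
    row_tot = [sum(row) for row in cm]
    col_tot = [sum(cm[i][j] for i in range(k)) for j in range(k)]
    expected = sum(r * c for r, c in zip(row_tot, col_tot)) / total ** 2
    kappa = (observed - expected) / (1.0 - expected)
    return observed, kappa


# Hypothetical counts: rock and mud are never confused with each other,
# and every error involves sand.
cm = [[18, 2, 0],
      [3, 15, 2],
      [0, 1, 19]]
acc, kappa = accuracy_and_kappa(cm)
print(round(acc, 4), round(kappa, 4))
```

Because kappa discounts the agreement expected by chance, it is lower than the overall accuracy for the same matrix, which is why both metrics are reported side by side.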

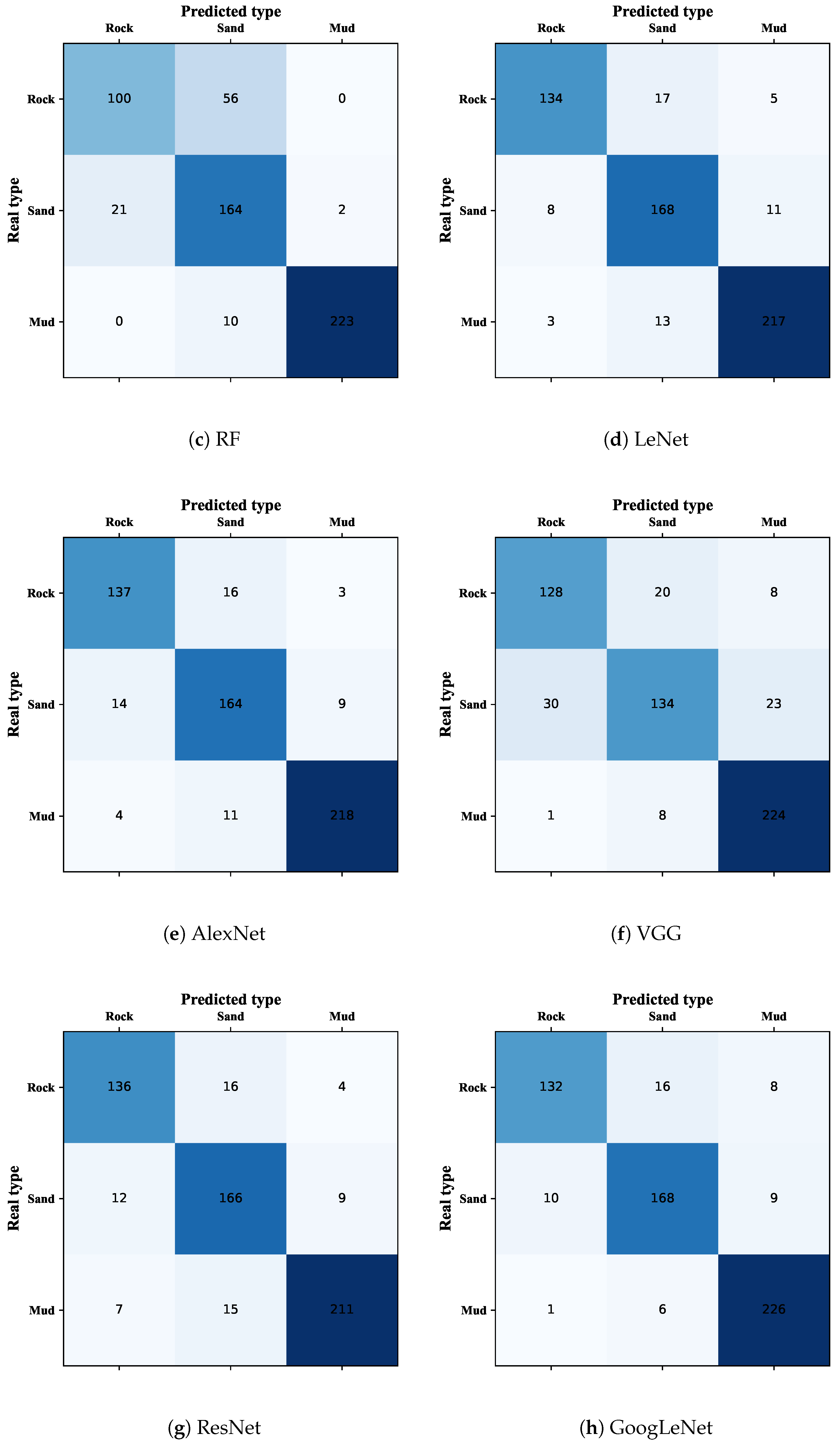

4.3. Classification Accuracy Using Augmented SSS Images

Figure 10 and Table 9 show the classification performance of all models, and we can see that the proposed SADenseNet again has the best performance. Meanwhile, the models almost never confuse rock and mud with each other. Most of the misidentifications consist of identifying rock or mud as sand, or sand as rock or mud, which is consistent with the results obtained using the original dataset.

By analyzing the performance of the classification models listed in Table 8 and Table 9, it can be seen that only a few classification accuracies decreased slightly or remained the same, while the vast majority of the accuracies, as well as all kappa coefficients, increased. Artificial intelligence algorithms are data-driven; SAGAN was used for data augmentation, which produced information not available in the original dataset and enriched the features in the data. This allows the classification models to be better trained and to map the acoustic images more accurately to the correct sediment type. The model with the largest improvement compared to the original training set was VGG, whose overall accuracy improved by 9.37% and whose kappa coefficient improved by 0.144. VGG has the largest number of parameters among these networks (Table 6) and is more data-dependent, so VGG trained with the augmented training set showed the largest improvement in performance. These experimental results demonstrate that data augmentation of the training set using SAGAN can indeed improve the performance of the classification model.

When each classification model was trained with the augmented dataset, the deep-learning-based classification models performed better than the traditional machine-learning-based ones, which indicates that deep-learning-based classification models can better fit the function that maps sediment images to sediment types when the dataset is larger.

5. Conclusions

In this study, SAGAN is used to learn the data distribution of the original dataset and generate new SSS images to perform data augmentation on the original dataset. Through our experiments, we demonstrate that the images generated by SAGAN are very similar to real images and can augment the features of the original dataset, enabling the classification model to better learn the mapping from SSS images to real sediment types and thus achieve higher classification accuracy and kappa coefficients.

The self-attention mechanism is introduced into the proposed SADenseNet. The self-attention mechanism can automatically scan the SSS images to obtain the key information of the images and highlight the key features of the images, which can reduce the waste of computational resources caused by feature redundancy. Besides, the self-attention mechanism can also better capture the correlation of the internal information and global features of the images, which contributes to raising the performance of SADenseNet. Dense connectivity is also used in SADenseNet. Dense connectivity enhances feature propagation and feature reuse. The output of each layer will be the input of all the subsequent layers so that the input of each layer is jointly determined by all the preceding layers, which can alleviate the gradient disappearance of the network and improve the classification performance. The transition layer reduces the size of the feature map, which improves the computational efficiency of the network.

The data augmentation method, SAGAN, can also be transferred to other data-starved tasks, and SADenseNet can be extended to challenging tasks, such as underwater target recognition. However, our proposed methods have several limitations, as follows: (1) The quality of data generated by SAGAN is unstable and requires manual selection; (2) SAGAN was trained for more than 30 days in order to generate visually appealing images of seabed sediments, which consumed a large amount of computational resources; (3) In order for the proposed SADenseNet to achieve good classification results, parameter tuning took about 20 days, which is time-consuming.

In our future research, we will investigate designing a less computationally expensive and more stable data generator, and build a classifier with a relatively simple structure and better classification performance. In addition, image super-resolution reconstruction technology can effectively solve the problem of low resolution of sonar images, which will be the focus of our research in the future.